Computer Modeling in Engineering & Sciences

DOI: 10.32604/cmes.2022.016985

REVIEW

Human Stress Recognition from Facial Thermal-Based Signature: A Literature Survey

1School of Computer Sciences, Universiti Sains Malaysia, Penang, 11800, Malaysia

2School of Distance Education, Universiti Sains Malaysia, Penang, 11800, Malaysia

*Corresponding Author: Ahmad Sufril Azlan Mohamed. Email: sufril@usm.my

Received: 17 April 2021; Accepted: 20 August 2021

Abstract: Stress is a normal reaction of the human organism that is triggered in situations requiring a certain level of activation. This reaction has both positive and negative effects on everyone's life; therefore, stress management is vital for maintaining a person's psychological balance. Thermal-based imaging is becoming popular among researchers because it is non-contact and non-invasive, and it has shown promising results in detecting stress. Compared with other non-contact stress detection methods such as pupil dilation, keystroke behavior, social media interaction, and voice modulation, thermal-based imaging provides better features with clear boundaries and requires no heavy methodology. This paper presents a brief review of previous work on thermal imaging-based stress detection in humans. It also presents the stages of stress detection based on thermal face signatures, including dataset type, face detection in thermal images, feature descriptors, and a comparison of classification performance. This paper can help future researchers understand thermal imaging-based stress detection by presenting the popular methods that previous researchers have used.

Keywords: Stress state; stress recognition; skin temperature; thermal signature; thermal imaging

The word “stress” is described in many contexts [1]. An inclusive definition of stress refers to the biological response to a physiological or psychological stimulus [2]. Emotional and physical stressors can be detrimental to the human body. The effects of stress on human wellbeing and its symptoms have been extensively researched in recent times [3–9]. Kim [10] found that people in their 30s experience the highest stress level due to mask-wearing. Early stress detection techniques relied on psychological questionnaires [11] and consultations [12].

In recent years, researchers have been exploring non-invasive methods to detect stress. Skin temperature is one of the established stress markers based on physiological signals. The heat dissipated by the body can be used to measure the temperature of the human skin. Body temperature is affected by blood flow, metabolic activity, subcutaneous tissue structure, sympathetic nervous system (SNS) activity, and muscle contractions [13–15]. Under normal conditions, the body temperature of healthy people has been recorded between 35.5°C and 37.7°C. The human body regulates its temperature to keep it stable [16]. A noticeable change in core body temperature may indicate an illness, such as fever or hypothermia, or a change in the human affective state [17]. The hypothalamus, located at the base of the brain, is responsible for regulating body temperature, but it may fail to function well under abnormal conditions [18,19]. Leaving a continuously high body temperature untreated may lead to harmful consequences such as damage to body organs. Muscle contraction is another factor that affects body temperature, because muscle movement generates heat [20]. Internal body heat is transferred from the internal tissues to the skin by the blood supply in the vascular system. The control of skin blood flow by vasoconstriction and vasodilation is part of the thermoregulatory mechanism, i.e., the thermal homeostasis that maintains internal body temperature against external factors such as cold and heat [18,21]. The skin surface is essential in regulating core body temperature: body heat is transferred to the skin via internal vessels, and the skin loses heat in several ways: evaporation, thermal radiation, conduction, and exhalation through the respiratory process [22–25].
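The link between radiated heat and skin temperature can be made concrete with the Stefan-Boltzmann law, M = εσT⁴, which governs thermal radiation from a surface. The sketch below is a minimal illustration assuming an idealized grey body with a skin emissivity of 0.98 (an assumed value). Real thermal cameras integrate only over a limited infrared band and rely on calibration, so this shows the underlying physical relationship, not how any camera in the surveyed studies converts its readings.

```python
# Idealized grey-body relation between skin temperature and emitted power.
# Assumptions (not taken from the surveyed studies): skin emissivity of 0.98,
# no reflected ambient radiation, no atmospheric absorption, total (not band-limited) power.
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant in W m^-2 K^-4


def radiant_exitance(temp_c: float, emissivity: float = 0.98) -> float:
    """Power radiated per unit area (W/m^2) by a grey body at temp_c degrees Celsius."""
    temp_k = temp_c + 273.15
    return emissivity * SIGMA * temp_k ** 4


def temperature_from_exitance(exitance: float, emissivity: float = 0.98) -> float:
    """Invert the Stefan-Boltzmann law to recover surface temperature in degrees Celsius."""
    temp_k = (exitance / (emissivity * SIGMA)) ** 0.25
    return temp_k - 273.15


if __name__ == "__main__":
    m = radiant_exitance(34.0)  # an illustrative facial skin temperature
    print(f"{m:.1f} W/m^2 corresponds to {temperature_from_exitance(m):.2f} C")
```

Because exitance grows with the fourth power of absolute temperature, small skin temperature changes still produce measurable differences in emitted radiation.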

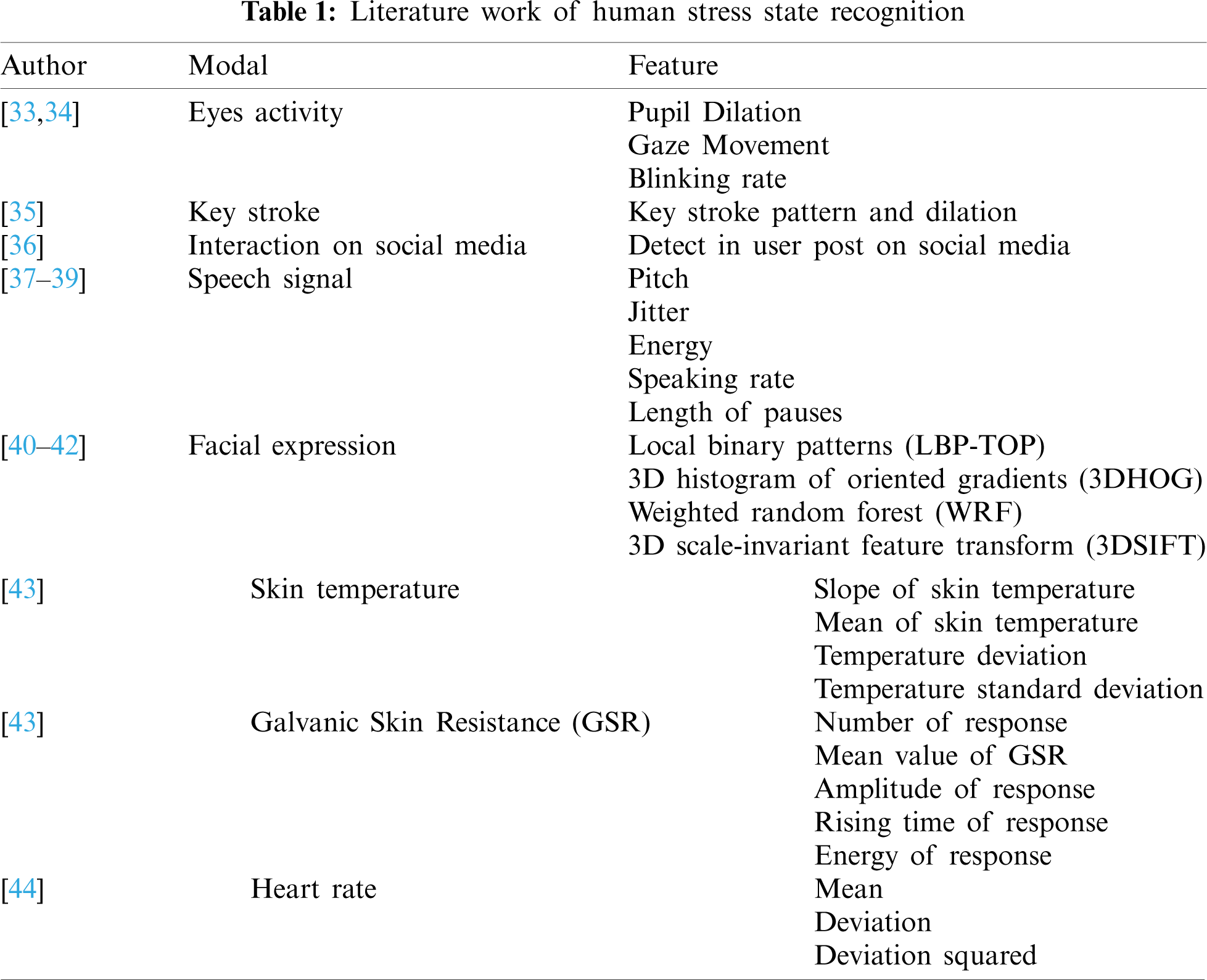

Pavlidis was the first to identify the alarming thermal signature, reporting an increased blood demand in the periorbital region [26–30]. This finding supports effective feature extraction and has led to extensive research on physiological signals of the human body such as breathing [28], sweating [30], blood flow velocity [31,32], and heart rate [29]. Table 1 summarizes the models commonly used in the literature to detect human stress.

Many studies have explored the possibility of stress detection from facial skin temperature [45–47]. Researchers have also investigated other modalities such as pupil dilation, breathing patterns, behavior patterns, keystroke patterns, and social media activity. In [25,41,42], the authors proposed a novel method to detect stress based on facial expressions, and the results demonstrated that its accuracy is similar to that of other state-of-the-art methods. However, this method is based on RGB images; developing a similar method for thermal images is a potential new research direction. Compared with other modalities, thermal-based stress detection provides a reliable accuracy rate while respecting user privacy. To investigate facial skin temperature for human stress recognition, Al Qudah et al. [48] explored the recent use of thermal imaging in distinguishing human affective states and the problems that have surfaced, and suggested a framework for solving the issues and challenges discussed. Accordingly, this paper discusses previous literature on face detection in thermal images and stress recognition based on facial thermal signatures. More importantly, this paper reveals future research potential, the challenges faced by previous work, and the solutions proposed to overcome these limitations.

The remainder of this paper is structured as follows: Section 2 discusses the stages in thermal-based stress state detection, namely dataset type, face detection method, facial temperature as a stress signature, and a comparison of stress classification performance. Section 3 details the modalities listed above. Section 6 proposes future work to extend knowledge of stress detection based on thermal imaging.

2 Thermal Based Stress State Recognition

Thermal imaging is a popular research topic for detecting stress in a non-invasive manner. Thermal camera technology was initially impractical because of its low resolution, high cost, and heavy weight, combined with the need to control the surroundings to maintain a steady ambient temperature [49]. Advances in thermal systems paved the way for accessible and adaptable thermal sensors that are lightweight, low-cost, and high-resolution, such as handheld thermal sensors. As a result, these sophisticated sensors encourage researchers to investigate thermal imaging in laboratories and real-world settings for many applications, including human stress recognition [50]. Moreover, the COVID-19 pandemic increased the use of thermal sensors, which can measure human facial temperature in a non-invasive and contactless manner. From a psychophysiological standpoint, the autonomic nervous system (ANS) coordinates human physiological signals such as heart rate, respiration rate, blood perfusion, and body temperature during the stress state. Thermal imaging can measure temporal changes in facial temperature [51], which makes it a realistic solution for contactless stress detection.

Several studies have also used thermal imaging to explore other physiological signals that correlate with human stress states, such as respiration rate, pulse rate, and skin temperature. Thermal imaging has the potential to overcome the limitations of contact-based and intrusive physiological sensors [52]. When thermal imaging is compared with visual (RGB) imaging, studies have shown that thermal images have many benefits. Variations in human skin colour, facial structure, texture, ethnicity, cultural distinctions, and eyes could affect the accuracy of visual-based methods for recognizing human emotions, including the stress state. Visual-based systems are also sensitive to illumination changes, and in unregulated settings their recognition precision is unreliable [52]. Thermal imaging, on the other hand, is unaffected by lighting conditions and can be used in low-light situations. The connection between the human stress state and variations in skin temperature is attributed to thermo-muscular and hemodynamic-metabolic components [16,23]. This has encouraged researchers to use thermal imaging to measure transient temperature values from selected facial regions to detect the stress state. In the literature, a temperature difference between the left and right sides of the face, and temperature changes in the periorbital and nasal regions, have been linked to human stress.
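The two thermal cues mentioned above, left-right facial asymmetry and the temperature change of a periorbital or nasal ROI relative to baseline, reduce to simple averages over pixel regions. The sketch below assumes a face crop that is already available as a 2-D list of temperatures and ROI rectangles given as (top, left, height, width); both layouts are hypothetical. Note that "left" here means image-left, which corresponds to the subject's right side unless the camera image is mirrored.

```python
from statistics import fmean
from typing import List

Frame = List[List[float]]  # hypothetical: a 2-D grid of temperatures in degrees Celsius


def roi_mean(frame: Frame, top: int, left: int, height: int, width: int) -> float:
    """Mean temperature inside a rectangular region of interest (ROI)."""
    return fmean(v for row in frame[top:top + height] for v in row[left:left + width])


def left_right_asymmetry(face: Frame) -> float:
    """Mean of the image-left half minus the image-right half of a face crop.

    For odd widths the centre column is excluded so both halves have equal size.
    """
    width = len(face[0])
    half = width // 2
    left = fmean(v for row in face for v in row[:half])
    right = fmean(v for row in face for v in row[width - half:])
    return left - right


def roi_change(baseline: Frame, current: Frame, roi) -> float:
    """Temperature change of one ROI (e.g. periorbital or nasal) relative to baseline."""
    return roi_mean(current, *roi) - roi_mean(baseline, *roi)
```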

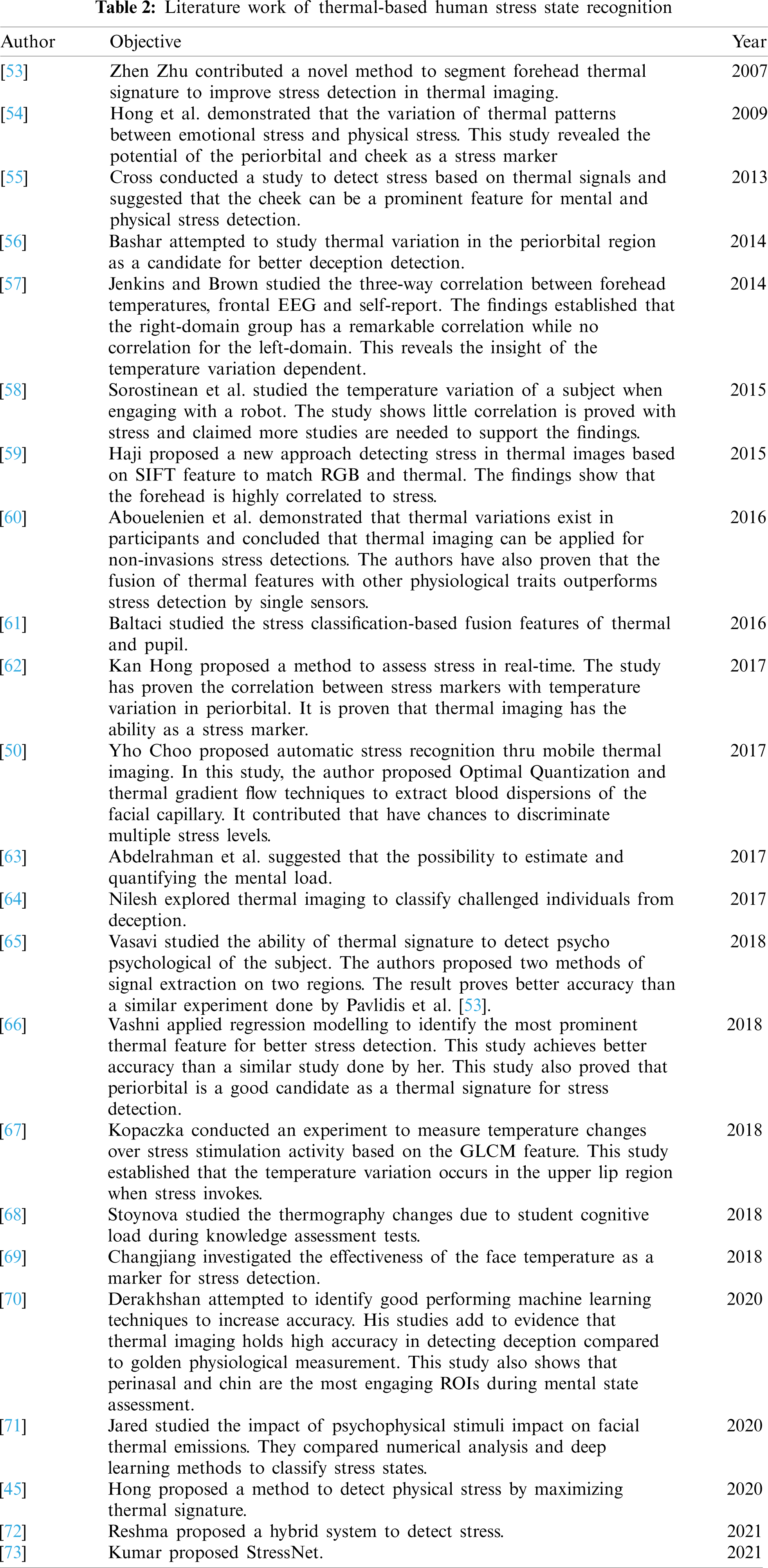

Furthermore, changes in blood flow in the periorbital area allow both instantaneous and prolonged stress conditions to be measured [51]. The stress detection procedure begins with a thermal dataset, i.e., collecting thermal signature data. These data are typically collected in laboratory experiments: a stress stimulus is applied to induce stress in participants, and their facial thermal signatures are measured. Preprocessing and feature extraction are then applied to extract facial features, which are assigned to classes by a classification procedure. Accuracy varies across methods and depends on the number of chosen criteria, the feature descriptor, and the classification method. Table 2 summarizes previous work on human stress detection based on facial skin temperature in chronological order.
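The procedure above (collect thermal frames under baseline and stress conditions, extract an ROI feature, classify) can be sketched end to end in a few functions. This is a minimal illustration, not the pipeline of any study in Table 2: the ROI coordinates are assumed to come from an earlier face detection step, and the 0.3 °C threshold in the hypothetical `classify` helper is a placeholder for the trained classifiers used in the surveyed work.

```python
from statistics import fmean
from typing import List, Sequence, Tuple

Frame = List[List[float]]          # hypothetical: temperatures in degrees Celsius
Roi = Tuple[int, int, int, int]    # (top, left, height, width)


def roi_series(frames: Sequence[Frame], roi: Roi) -> List[float]:
    """Feature extraction: mean ROI temperature for every frame of a recording."""
    top, left, height, width = roi
    return [fmean(v for row in f[top:top + height] for v in row[left:left + width])
            for f in frames]


def stress_feature(baseline: Sequence[Frame], task: Sequence[Frame], roi: Roi) -> float:
    """Mean ROI temperature during the stress task minus the mean during baseline."""
    return fmean(roi_series(task, roi)) - fmean(roi_series(baseline, roi))


def classify(feature: float, threshold: float = 0.3) -> str:
    """Classification with a hypothetical fixed threshold in degrees Celsius.

    This sign convention suits an ROI that warms under stress; an ROI whose
    temperature drops under stress would need the comparison reversed.
    """
    return "stress" if feature >= threshold else "baseline"
```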

Two types of data have been identified in previous literature: static facial images and moving faces. Early studies began with static facial images and recognized a few regions of interest on the face. In the study conducted in [45], participants had to restrict their head movement. In the real world, instructing subjects to remain static is impractical, which emphasizes the importance of tracking the face in motion. Several studies focused on automating face detection in thermal imaging so that participants could move freely. Most studies conducted their own experiments to collect datasets; for example, laboratory experiments were conducted in [62,68,69,74] to collect thermal images and other physiological signals.

2.2 Methods for Face Detection in Thermal-Based Image

Face detection is the first step in stress detection based on facial thermal images. Thermal images are commonly used where ordinary perception is limited, hindered, or inadequate, for example, during night surveillance and fugitive searches. Early face detection algorithms for thermal images were inefficient and less sophisticated than those for visual RGB images. Many studies reported this as a limitation that affected their methodology and findings. Several stress detection methods prohibited head movement during data collection because thermal imaging had limitations in handling substantial head movement; in [62], for instance, the authors cropped the regions of interest (ROIs) manually because of this limitation. Common face detection practices in thermal-based stress detection include knowledge-based techniques, feature-invariant approaches, template matching, appearance-based methods, colour information, and fusion with visible spectrum imaging. In previous literature, face detection methods for thermal imaging are classified as appearance-based [75,76], feature extraction [77–79], fusion with RGB images [80–85], and multimodal analysis.

Zheng [86] proposed the Projection Profile Analysis (PPA) algorithm for face detection, and studies on facial thermal images adopted this algorithm to detect face regions. Zheng [75] extended this work by adapting PPA to detect faces wearing eyeglasses. One of the main limitations of thermal imaging is facial occlusion, which arises in people who wear eyeglasses because glass is opaque to thermal radiation. Such occlusion prevents thermal sensors from reading the heat pattern produced in that region. Several models have been introduced to handle this limitation, and different facial ROIs have been explored to tackle this challenge. Basu et al. [87] proposed a model for occluded thermal images, using the Kotani Thermal Facial Expression Database (KTFE) as input images and the Viola-Jones algorithm for face detection. The study applied a median filter to remove noise and Contrast Limited Adaptive Histogram Equalization (CLAHE) for image enhancement, and selected six facial patches: forehead, eyes, right and left cheeks, nose, and mouth. Hu's seven moment invariants [88] were chosen for feature extraction, and a multi-scale SVM was used to classify four basic emotions, with an average accuracy of 87.5%.
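Hu's moment invariants, used for feature extraction in [87], are built from scale-normalized central moments of a patch's intensity. The sketch below computes only the first two of the seven invariants, φ1 = η20 + η02 and φ2 = (η20 − η02)² + 4η11², in plain Python, to show why they do not change when the patch moves within the image. It is an illustration, not the feature code of Basu et al.; the patch representation is an assumption.

```python
from typing import List, Tuple

Image = List[List[float]]  # hypothetical: a grayscale facial patch, pixel values >= 0


def hu_first_two(img: Image) -> Tuple[float, float]:
    """First two of Hu's seven moment invariants for a 2-D intensity patch."""
    pixels = [(x, y, v) for y, row in enumerate(img) for x, v in enumerate(row)]
    m00 = sum(v for _, _, v in pixels)
    if m00 == 0:
        raise ValueError("patch has zero total intensity")
    xc = sum(x * v for x, _, v in pixels) / m00   # centroid gives translation invariance
    yc = sum(y * v for _, y, v in pixels) / m00

    def eta(p: int, q: int) -> float:
        # Central moment mu_pq, normalized for scale: eta_pq = mu_pq / mu00^(1 + (p+q)/2)
        mu = sum(((x - xc) ** p) * ((y - yc) ** q) * v for x, y, v in pixels)
        return mu / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    phi1 = n20 + n02
    phi2 = (n20 - n02) ** 2 + 4 * n11 ** 2
    return phi1, phi2
```

For example, a 3 × 3 bright square yields the same φ1 wherever it sits in the frame, and φ2 = 0 because a square has no elongation.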

A number of studies [45,89,90] applied the state-of-the-art Haar-based Viola-Jones face detection algorithm [91]. Reese et al. [90] showed that learning-based methods, namely Viola-Jones and Gabor, detect faces reliably, and that the Viola-Jones algorithm can be used as a mainstream method for face detection in thermal images. Basbrain et al. [92] suggested strategies for improving the face detection performance of the Viola-Jones algorithm in thermal images. Their findings suggest that the Viola-Jones method performs significantly better in thermal images with LBP features than with Haar-like features, and that applying the Otsu technique in the preprocessing stage improves the detection rate. In a recent study, Tran et al. [93] proposed integrating cross-examples into their scheme, which effectively improved face detection accuracy. Kowalski et al. [94] evaluated three common face detection algorithms: Viola-Jones, YOLO, and CNN. The deep learning networks outperformed the Viola-Jones algorithm, and Faster R-CNN performed best, with a near-perfect detection rate and a low false detection rate. In [95], researchers presented an identification method based on a bioheat model that creates a thinned vascular network, similar to the work in [96]. Cho et al. [97] integrated Modified Hausdorff Distance features to improve the precision of the method in [95].
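Otsu's method, which [92] found helpful during preprocessing, picks the grey-level threshold that maximizes the between-class variance of the image histogram. A plain-Python sketch follows, assuming the thermal frame has already been rescaled to 8-bit integer values. How [92] fed the threshold into the Viola-Jones pipeline is not described here, so the `foreground_mask` helper is only a hypothetical use.

```python
from typing import List, Sequence


def otsu_threshold(pixels: Sequence[int], levels: int = 256) -> int:
    """Return the threshold t maximizing between-class variance (class 0: value <= t).

    Assumes pixels are integers in [0, levels), e.g. a thermal frame rescaled to 8 bits.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    weight_bg, sum_bg = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(levels):
        weight_bg += hist[t]
        sum_bg += t * hist[t]
        weight_fg = total - weight_bg
        if weight_bg == 0:
            continue
        if weight_fg == 0:
            break
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance, up to the constant factor 1 / total^2
        var_between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def foreground_mask(pixels: Sequence[int], t: int) -> List[bool]:
    """Warm pixels (e.g. skin against a cooler background) above the Otsu threshold."""
    return [p > t for p in pixels]
```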

Kopaczka et al. [98] showed that state-of-the-art machine learning algorithms designed for visual images outperform dedicated algorithms for detecting faces in thermal images in terms of accuracy and false-positive rate. Five algorithms, the Haar cascade Viola-Jones classifier, the Haar cascade classifier with local binary patterns (LBPs), Histograms of Oriented Gradients (HOG), the Deformable Parts Model (DPM), and Pixel Intensity Comparisons Organized in Decision Trees (PICO), were compared with two algorithms dedicated to face detection in thermal imaging: Eye Corner Detection (ED) [99] and Projection Profile Analysis (PPA) [100]. The comparison revealed that the algorithms are sensitive to changes in pose and facial expression, which affect the accuracy rate. VJ-LBP and HOG produce similarly good detection rates. DPM performs best in terms of detection and false-positive rates, at the cost of the longest computation time. The authors recommend PICO, which is fast and produces good results. Kopaczka et al. [100,101] proposed one of the earliest works on thermal facial landmark detection based on active appearance models (AAMs) [102]. The authors applied PCA to the landmark data and trained the AAM on dense HOG and SIFT features. Chu et al. [103] tested whether an image transfer model that translates a thermal image into the visible domain would allow a landmark detector designed for visual images to be used. The results indicated the need for a dedicated facial landmark detector for thermal imaging, which led the authors to propose thermal facial landmark detection based on deep multi-task learning. Kopaczka et al. [101] suggested an AAM-based face tracker for thermal videos and images. The study focused on strong landmarks to detect and track ROIs under head pose changes and rotation.
Furthermore, the study proposed several enhancement algorithms, such as unsharp masking (USM), USM with bilateral filtering, and USM with a Gaussian kernel. The study used several descriptors with the AAM, such as the scale-invariant feature transform (SIFT) and the histogram of oriented gradients (HOG), together with the project-out inverse compositional, alternating inverse compositional (IC), and simultaneous inverse compositional fitting algorithms.
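Unsharp masking, one of the enhancement steps evaluated in [101], sharpens an image by adding back the difference between the image and a blurred copy. The sketch below shows the Gaussian-kernel variant using a 3 × 3 binomial approximation of the Gaussian and an `amount` gain. The kernel size, gain, and border handling are assumptions rather than the settings of [101]; the bilateral-filter variant would replace the blur step.

```python
from typing import List

Image = List[List[float]]  # hypothetical: a single-channel thermal frame

# 3x3 binomial approximation of a Gaussian kernel (weights sum to 16).
KERNEL = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]


def gaussian_blur3(img: Image) -> Image:
    """Blur with the 3x3 kernel, replicating border pixels at the image edges."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += KERNEL[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc / 16.0
    return out


def unsharp_mask(img: Image, amount: float = 1.0) -> Image:
    """USM: add back `amount` times the detail layer (original minus blurred)."""
    blurred = gaussian_blur3(img)
    return [[v + amount * (v - b) for v, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]
```

Flat regions pass through unchanged, while edges gain an undershoot on the cool side and an overshoot on the warm side, which is what makes facial contours easier to fit.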

Sonkusare et al. [104] proposed a novel deep learning-assisted facial landmark detection method for thermal images to extract thermal signals from facial regions. The authors applied a sudden, loud auditory stimulus to invoke physiological responses, aiming to characterize the spatial changes in the temperatures of different facial regions (i.e., nose tip, right and left cheeks, and forehead). GSR and HR were selected as benchmarks. The authors compared two methods: (1) a task-constrained deep convolutional network (TCDCN) and (2) an OpenPose detector. For method (1), the TCDCN was trained on RGB images and then fine-tuned on thermal images. Method (2), the OpenPose detector presented in [105], employs a robust multi-view bootstrapping architecture. The two methods were then combined to improve landmark localization accuracy.

Studies such as [90] demonstrated that face detection in thermal images is possible without the aid of a visual image. Researchers have also explored artificial intelligence methods: Mohd et al. [59] suggested a BoCNN architecture to overcome one such limitation, namely detecting occluded faces in thermal images, and a variety of CNN models have been shown to perform well in thermal imaging [106–110]. Hong [45] proposed a multi-subject correlation [100] method to detect ROIs (forehead, nose, and mouth) in thermal images.

Many stress stimuli are widely used in the literature: the Stroop test, the Trier Social Stress Test (TSST) [55,62,74], arithmetic questionnaires [61,68,69,71], mock crime scenarios [70], and physical activities [55]. In [45], participants were required to run on a treadmill to induce physical stress, as the study aimed to differentiate physical stress from the baseline state. This study achieved an accuracy rate of 90%.

2.4 Feature Extraction (Descriptors)

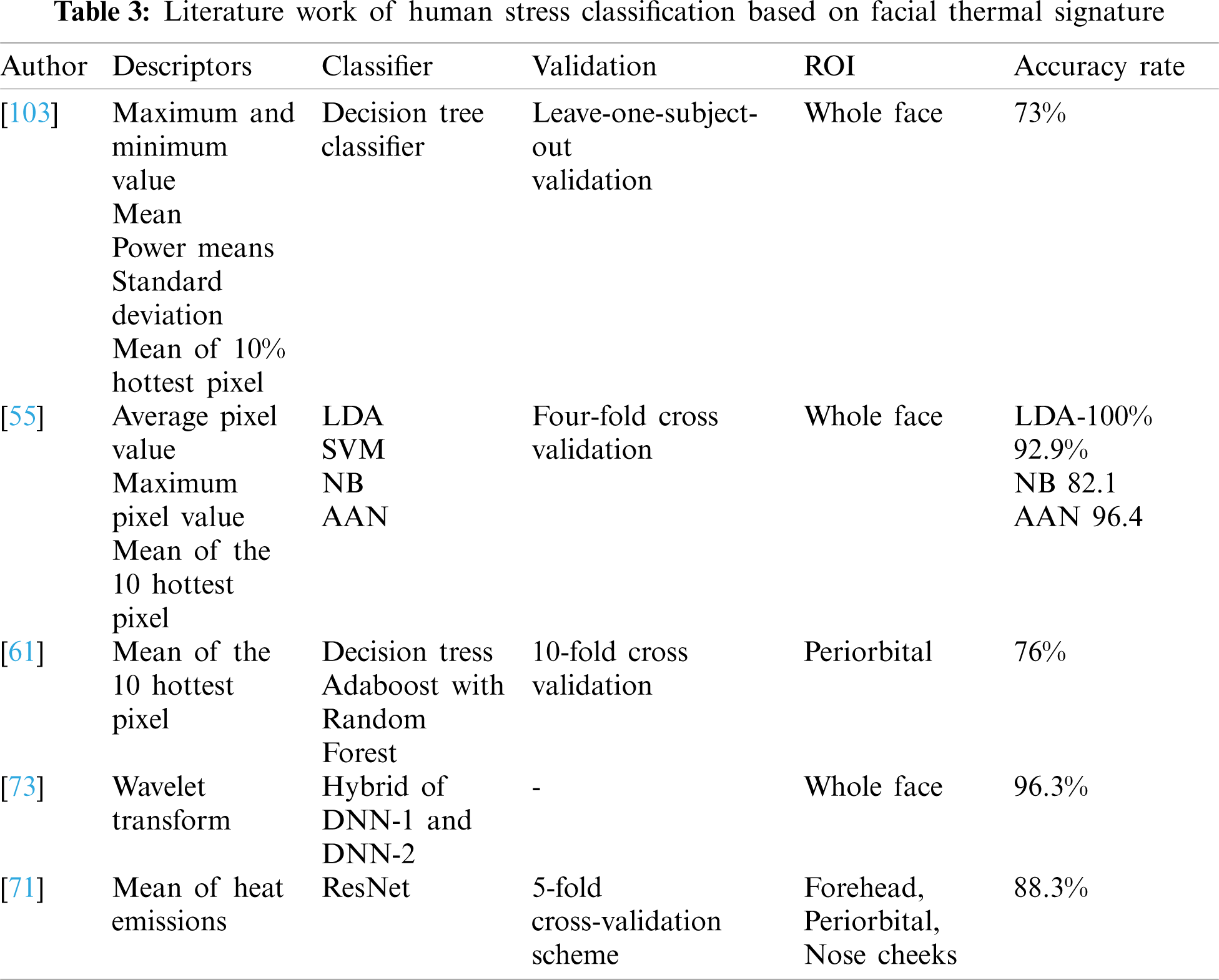

Thermal images, which differ from RGB images in texture, appearance, and form, play a crucial role in identifying human stress. Various thermal descriptors have been used in previous research. Stress state identification requires effective facial features, extracted from either the entire face or facial parts (ROIs). Numerous types of feature extraction are shown in Tab. 3 of this survey; as Tab. 3 shows, the majority of studies have used statistical features. For example, He et al. [69] used the maximum and mean temperature. Derakhshan et al. [70] extracted six temporal features: the mean, minimum, maximum, standard deviation, and the means of the absolute values of the first and second derivatives of the preprocessed signals. Vasavi et al. [65] calculated heart rate based on the mean value of each frame over time. The authors of [64] extracted the mean of the top 10% hottest pixels, the minimum, the maximum, and the standard deviation. The authors of [71] calculated the mean value of emissivity and applied min-max normalization. In [61], the authors used the mean of the top 10% of the pixels. Cross et al. [55] extracted the average pixel value, the maximum pixel value, and the mean of the top 10% of the pixels. Berlovskaya et al. [111] also extracted the average pixel value and standard deviation.
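Most descriptors listed above are simple statistics of ROI pixel values or of an ROI temperature signal over time. The sketch below computes them in plain Python. Using the population standard deviation, rounding the top-10% pixel count up, and approximating derivatives with finite differences are assumptions, since the surveyed papers may define these details differently.

```python
from statistics import fmean, pstdev
from typing import Dict, List, Sequence


def top_percent_mean(pixels: Sequence[float], percent: int = 10) -> float:
    """Mean of the hottest `percent` % of pixels (rounded up, at least one pixel)."""
    k = max(1, -(-len(pixels) * percent // 100))  # integer ceiling division
    return fmean(sorted(pixels, reverse=True)[:k])


def roi_features(pixels: Sequence[float]) -> Dict[str, float]:
    """Per-frame statistical descriptors of one ROI."""
    return {
        "mean": fmean(pixels),
        "min": min(pixels),
        "max": max(pixels),
        "std": pstdev(pixels),
        "top10_mean": top_percent_mean(pixels, 10),
    }


def temporal_features(signal: Sequence[float]) -> Dict[str, float]:
    """Six temporal features of an ROI temperature signal (needs >= 3 samples)."""
    d1 = [b - a for a, b in zip(signal, signal[1:])]   # first difference
    d2 = [b - a for a, b in zip(d1, d1[1:])]           # second difference
    return {
        "mean": fmean(signal),
        "min": min(signal),
        "max": max(signal),
        "std": pstdev(signal),
        "mean_abs_d1": fmean(abs(v) for v in d1),
        "mean_abs_d2": fmean(abs(v) for v in d2),
    }


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Rescale to [0, 1]; a constant sequence maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]
```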

2.5 Facial Thermal Signature Correlation with Stress State

Several studies have sought to establish the correlation between thermal features and the human stress state. The authors of [74] suggested that an individual's stress state is measurable from facial temperature with thermal imaging. Kan Hong and his colleagues [62] found a Pearson correlation value less than 1 between facial thermal signatures and known stress indicators such as heart rate (HR) and cortisol level. In this study, the authors proposed a new physiological signal extraction method, the Eulerian magnification algorithm, to amplify physiological signals. The study also showed a significant correlation between the perinasal ROI and the ground truth, and the proposed algorithm achieved 96% accuracy compared with ground truth features.
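Correlating a thermal signature with a reference indicator such as HR usually reduces to Pearson's r between two time-aligned series, r = Σ(x − x̄)(y − ȳ) / √(Σ(x − x̄)² Σ(y − ȳ)²). A minimal implementation follows; it assumes the thermal and reference signals have already been resampled onto common time windows, which is not shown. On Python 3.10 and later, `statistics.correlation` returns the same value.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long signals."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant signal")
    return sxy / math.sqrt(sxx * syy)
```

A typical call would be `pearson_r(roi_temperature_per_window, heart_rate_per_window)`, where both argument names are hypothetical.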

The authors of [64] applied thermal imaging to distinguish challenged participants from threatened participants. The findings show that features extracted from the forehead and nose regions yield better stress detection accuracy: classification based on individual features such as the forehead and nose yields 80% accuracy, and combining the forehead and nose data improves accuracy further. This research provides insight into temperature variations in facial regions that can be used to identify different human emotional states.

The authors of [71] studied the impact of psychophysical stimuli on facial thermal emissions, attempting to distinguish the facial patterns produced by physical activity and mental stress. The findings show that psychological stimuli cause greater changes in pixel intensity than physical stimuli. Reference [68] investigated the impact of student cognitive load on facial thermograms during a knowledge assessment test. The results provide strong evidence of a correlation between student cognitive load and thermal changes, and the authors concluded that changes in facial thermal signatures can serve as criteria for stress detection.

Vasavi et al. [66] attempted to identify the most informative feature for stress detection by applying regression modelling. The findings support that the periorbital region provides the most informative thermal feature for stress detection. The authors of [112] investigated the correlation between stress and the topography of facial temperature changes over time; using Bonferroni-corrected pairwise comparisons, they confirmed the correlation between the induced stress state and temperature changes in the forehead, cheeks, and perioral region. He et al. [69] demonstrated the usefulness of facial temperature for evaluating mental stress. The authors also evaluated the effectiveness of facial temperature as a mental stress biomarker in comparison with other established biomarkers: HRV, TLI, and PDM. The results established that facial temperature can provide a reliable indicator for non-contact human stress recognition. Derakhshan et al. [70] found that the perinasal and chin areas were the regions most correlated with the stress state. Hong et al. [54] found that the number of hot pixels in the periorbital region increases when physical stress is induced in participants, whereas under emotional stress, pixel temperatures increase in the prefrontal region. These results reveal characteristic patterns in the thermal distribution.
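Bonferroni correction, used in [112], controls the family-wise error rate when several pairwise comparisons (for example, between recording phases for one facial region) are tested at once: each raw p-value is compared against α/m, where m is the number of comparisons. The sketch assumes the raw p-values have already been obtained from some pairwise test; the condition names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def pairwise_pairs(conditions: List[str]) -> List[Pair]:
    """All k*(k-1)/2 unordered pairs of conditions to be compared."""
    return list(combinations(conditions, 2))


def bonferroni(p_values: Dict[Pair, float],
               alpha: float = 0.05) -> Dict[Pair, Tuple[float, bool]]:
    """Bonferroni correction: adjusted p = min(1, m * p); significant if p <= alpha / m."""
    m = len(p_values)
    return {pair: (min(1.0, p * m), p <= alpha / m) for pair, p in p_values.items()}
```

With three conditions there are three comparisons, so a raw p-value of 0.02 is no longer significant at α = 0.05, while 0.01 still is.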

2.6 Stress Classification Based on Facial Skin Temperature Model

Researchers investigated the stress classification based on the extracted thermal signature of the face. Table 2 provides the summary.

In [60], the authors conducted experiments to detect acute stress by integrating physiological and thermal features, evaluating the performance of the chosen features individually and in combination. Used alone, the thermal features achieved the highest accuracy of 73%, similar to the fusion of four physiological features that are state-of-the-art stress biomarkers. These findings support that thermal features are reliable for measuring stress remotely. The authors suggested that combining the thermal and respiration rate features can improve the accuracy of stress detection; this combination improved accuracy by 26%. A decision tree classifier was employed for stress classification and validated by leave-one-subject-out cross-validation.
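Leave-one-subject-out validation, as used in [60], holds out all recordings of one participant at a time so that the classifier is always tested on an unseen person. The sketch below pairs it with a depth-one decision tree (a decision stump) on a single thermal feature. The stump is a deliberately simplified stand-in for the full decision tree in [60], and the sample layout is hypothetical.

```python
from typing import Sequence, Tuple

Sample = Tuple[str, float, int]  # hypothetical: (subject_id, thermal feature, label 0/1)


def predict(x: float, threshold: float, polarity: int) -> int:
    """Polarity +1 predicts stress at or above the threshold, -1 predicts it below."""
    return int(x >= threshold) if polarity == 1 else int(x < threshold)


def fit_stump(train: Sequence[Sample]) -> Tuple[float, int]:
    """Depth-one decision tree: choose the threshold/polarity with most correct labels."""
    values = sorted({x for _, x, _ in train})
    # Candidate thresholds are midpoints between consecutive distinct feature values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])] or values
    best = (-1, 0.0, 1)
    for t in candidates:
        for polarity in (1, -1):
            correct = sum(predict(x, t, polarity) == y for _, x, y in train)
            if correct > best[0]:
                best = (correct, t, polarity)
    return best[1], best[2]


def loso_accuracy(samples: Sequence[Sample]) -> float:
    """Leave-one-subject-out: train on all other subjects, test on the held-out one."""
    subjects = sorted({s for s, _, _ in samples})
    correct = 0
    for held_out in subjects:
        train = [smp for smp in samples if smp[0] != held_out]
        test = [smp for smp in samples if smp[0] == held_out]
        threshold, polarity = fit_stump(train)
        correct += sum(predict(x, threshold, polarity) == y for _, x, y in test)
    return correct / len(samples)
```

Splitting by subject rather than by sample matters because frames from the same person are strongly correlated; mixing them across training and test folds would inflate accuracy.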

Cross et al. [55] demonstrated a system that classifies mental stress vs. physical stress with high accuracy. The features used are derived from frequency analysis of the respiratory and cardiovascular pulse. The authors extracted statistical descriptors, such as the average pixel value, the maximum value, and the mean of the 10% hottest pixels. The authors also compared four classifiers: an artificial neural network (ANN), a naive Bayes (NB) classifier, linear discriminant analysis (LDA), and SVM, each validated with four-fold cross-validation. The classification accuracies were 96.4% (ANN), 100% (LDA), 92.9% (SVM), and 82.1% (NB). LDA performed best, providing highly accurate classification with short computation time. The authors claimed that isolating face regions has the potential to improve classification accuracy.
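With a single feature, LDA reduces to comparing the linear discriminant scores δ_c(x) = x·μ_c/σ² − μ_c²/(2σ²) + ln π_c, where μ_c is a class mean, σ² the pooled within-class variance, and π_c the class prior. The sketch below combines this one-feature LDA with four-fold cross-validation as in [55]. The study itself used several descriptors (multivariate LDA), and its fold assignment is not stated, so the unshuffled, interleaved folds here are an assumption.

```python
import math
from statistics import fmean
from typing import Dict, Sequence, Tuple

Model = Tuple[Dict[int, float], Dict[int, float], float]  # class means, priors, pooled variance


def fit_lda_1d(xs: Sequence[float], ys: Sequence[int]) -> Model:
    """One-feature LDA: per-class means, class priors, and a shared (pooled) variance."""
    classes = sorted(set(ys))
    means = {c: fmean(x for x, y in zip(xs, ys) if y == c) for c in classes}
    priors = {c: sum(1 for y in ys if y == c) / len(ys) for c in classes}
    ss = sum((x - means[y]) ** 2 for x, y in zip(xs, ys))
    var = max(ss / max(len(xs) - len(classes), 1), 1e-12)  # guard against zero variance
    return means, priors, var


def predict_lda_1d(model: Model, x: float) -> int:
    """Pick the class with the largest linear discriminant score."""
    means, priors, var = model

    def score(c: int) -> float:
        return x * means[c] / var - means[c] ** 2 / (2 * var) + math.log(priors[c])

    return max(means, key=score)


def k_fold_accuracy(xs: Sequence[float], ys: Sequence[int], k: int = 4) -> float:
    """k-fold cross-validation with interleaved (unshuffled) folds."""
    correct = 0
    for fold in range(k):
        test_idx = list(range(fold, len(xs), k))
        held_out = set(test_idx)
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        model = fit_lda_1d(train_x, train_y)
        correct += sum(predict_lda_1d(model, xs[i]) == ys[i] for i in test_idx)
    return correct / len(xs)
```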

Baltaci et al. [61] proposed a method to detect the stress state of computer users. The authors investigated the classification accuracy of each feature type individually and in fusion. Individually, thermal features performed better, with an accuracy of 76%, while pupil features achieved 73%; the combined thermal and pupil features achieved 83%. Two classifiers were compared, and AdaBoost with Random Forest outperformed decision trees; 10-fold cross-validation was applied. The authors noted a limitation of the stress stimulus used in this study: emotional factors may have influenced the results. The mean of the hottest pixels in the periorbital region was used as the descriptor.

Derakhshan et al. [70] conducted an experiment to discriminate deception from truth by comparing four machine learning techniques: SVM, KNN, LDA, and decision tree (DT). The experiment aimed to improve the accuracy of thermal imaging and to identify the ROIs that show significant results. From a physiological perspective, deceptive anxiety leads to spontaneous physiological signs, including perspiration, increased heart rate, and blood flow changes [70]. According to Cannon, this physiological reaction to acute stress is called the “fight or flight” response [113]. The raw measurements obtained from the thermal data are maximum and minimum values, from which six temporal features are extracted: mean, minimum, maximum, standard deviation, and the means of the absolute values of the first and second derivatives of the signals. The authors used two scenarios to trigger deception: a mock crime scenario and a best friend scenario. In the mock crime scenario, the classification accuracies of thermal data, GSR, and PPG were 83.8%, 67.7%, and 64.5%, respectively, so thermal data performed best. In the best friend scenario, the accuracies for thermal data, GSR, and PPG were 62.9%, 66.6%, and 79.6%, showing that the physiological signals had stronger discriminative power than the thermal data. After feature reduction was applied, the accuracy of the thermal data jumped from 41% to 90%. The DT performed better than the other models, and the LDA classifier achieved 90% accuracy after feature reduction. The authors also compared four feature reduction methods: t-test, relative entropy, ROC, and MWW. ROC and MWW produced high accuracies, and the t-test improved the accuracy of other classifiers. The authors used leave-one-out validation to obtain classifier accuracy. The study showed that thermal features can outperform gold-standard measurements and that their accuracy can be improved with feature reduction.
The authors also found that the perinasal and chin regions contributed to high classification accuracy with the help of feature reduction.
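Two of the feature reduction criteria compared in [70], ROC and MWW, are closely related: the area under the ROC curve of a single feature equals the Mann-Whitney U statistic divided by n_pos·n_neg. The sketch below ranks features by how far their AUC lies from chance (0.5) and keeps the top k. The feature names and the top-k rule are assumptions, and the t-test and relative-entropy criteria are not shown.

```python
from typing import Dict, List, Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC via pairwise comparison (equals Mann-Whitney U / (n_pos * n_neg))."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def rank_features(features: Dict[str, Sequence[float]], labels: Sequence[int],
                  top_k: int) -> List[str]:
    """Keep the top_k features whose AUC is farthest from 0.5 (chance level)."""
    separation = {name: abs(auc(vals, labels) - 0.5) for name, vals in features.items()}
    return sorted(separation, key=separation.get, reverse=True)[:top_k]
```

Measuring distance from 0.5 rather than raw AUC keeps features whose values drop under deception as well as those that rise.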

The authors of [65] presented a framework that measures thermal signatures to detect cardiovascular activity and stress. They extracted thermal signatures such as the cardiac pulse, stress responses (heart rate and heart rate variability), breathing rate, and sudomotor responses, and categorized the stress state using rules. For the stress responses, they used two methods to calculate heart rate: the first applies FFT, and the second applies a wavelet transform followed by FFT. The first method achieved 91% accuracy and the second 90.3%. The proposed method performed better than the similar method proposed in [114].
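The FFT-based heart rate method works by transforming the pulsatile component of an ROI temperature signal to the frequency domain and converting the strongest peak in a plausible cardiac band to beats per minute. A minimal sketch follows. The 0.7 to 4 Hz band (42 to 240 bpm) and the use of a direct DFT instead of an FFT routine are assumptions made to keep the example dependency-free; this is not the implementation of [65], and the wavelet-based variant is not shown.

```python
import cmath
import math
from typing import Sequence


def dominant_frequency(signal: Sequence[float], fs: float,
                       f_lo: float = 0.7, f_hi: float = 4.0) -> float:
    """Frequency (Hz) of the strongest spectral peak within [f_lo, f_hi].

    A direct DFT is evaluated only at in-band bins; an FFT library would give
    the same magnitudes faster.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC (absolute temperature) level
    best_f, best_mag = None, -1.0
    for k in range(1, n // 2 + 1):
        f = k * fs / n
        if f < f_lo or f > f_hi:
            continue
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        if abs(coeff) > best_mag:
            best_f, best_mag = f, abs(coeff)
    if best_f is None:
        raise ValueError("no frequency bin falls inside the requested band")
    return best_f


def heart_rate_bpm(signal: Sequence[float], fs: float) -> float:
    """Heart rate in beats per minute from a pulsatile ROI temperature signal."""
    return 60.0 * dominant_frequency(signal, fs)
```

The frequency resolution is fs/n, so a 10-second window at 30 frames per second resolves heart rate to 6 bpm; longer windows give finer estimates.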

Hong [45] proposed a contact-free model to detect physical stress by magnifying thermal signatures in the facial region. After the ROIs were extracted with the multi-object correlation method, the stress signal was extracted, decomposed into independent components by independent component analysis (ICA), a blind source separation method, and then amplified by the Eulerian magnification (EM) algorithm. A deep learning model was applied to classify baseline and physical stress, achieving 90% accuracy. Before [45], the authors also conducted studies on detecting stress by analyzing facial temperature, achieving 96% accuracy between the proposed EM algorithm and a ground truth consisting of established stress markers. In that work, the authors magnified the stress signal after preprocessing it with the FFT algorithm.
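Eulerian magnification amplifies subtle temporal variations by band-pass filtering each pixel's intensity over time and adding the amplified band back to the original, I′ = I + α·B(I). The sketch below shows only this temporal step for a single pixel or ROI series, using an ideal (DFT-mask) band-pass filter. The spatial decomposition of full Eulerian video magnification is omitted, the pass band and gain α are assumptions, and this is not the implementation used by Hong et al.

```python
import cmath
import math
from typing import List, Sequence


def ideal_bandpass(signal: Sequence[float], fs: float, f_lo: float, f_hi: float) -> List[float]:
    """Zero all DFT bins outside [f_lo, f_hi] Hz and transform back (O(n^2) DFT)."""
    n = len(signal)
    spectrum = [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in range(n)]
    for k in range(n):
        freq = min(k, n - k) * fs / n  # bins k and n-k share the same |frequency|
        if not f_lo <= freq <= f_hi:
            spectrum[k] = 0
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def magnify(signal: Sequence[float], fs: float, f_lo: float, f_hi: float,
            alpha: float) -> List[float]:
    """Temporal magnification of one pixel/ROI series: I + alpha * bandpass(I)."""
    band = ideal_bandpass(signal, fs, f_lo, f_hi)
    return [s + alpha * b for s, b in zip(signal, band)]
```

Components inside the pass band grow by a factor of 1 + α, while the mean temperature and out-of-band variations are left untouched.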

In recent years, only a few studies have explored deep learning techniques to achieve higher-accuracy stress classification. Reshma [72] presented a hybrid deep learning network for stress detection in thermal images. Z-normalization based on the mean and standard deviation was applied for better training. In this work, the authors combined two deep neural networks: the raw image is provided as input to the first network, DNN-1, which generates frequency features based on the wavelet transform, and these frequency features are given as input to the second network, DNN-2. Compared with machine learning techniques, the hybrid system produced higher accuracy, 96.2%. The system was also compared with two transfer learning networks, AlexNet and VGG-16, and outperformed both: AlexNet achieved 92%, VGG-16 achieved 94.5%, and the proposed system achieved 96.2%.
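Two preprocessing steps described for [72] are easy to make concrete: z-normalization of the input and wavelet-based frequency features. The sketch below shows z-normalization and a one-level 2-D Haar wavelet transform. The Haar wavelet, the single decomposition level, and the band names are assumptions, because the wavelet family and how DNN-1 produces its features are not specified here.

```python
from statistics import fmean, pstdev
from typing import Dict, List, Sequence

Image = List[List[float]]  # hypothetical single-channel thermal image with even height and width


def z_normalize(values: Sequence[float]) -> List[float]:
    """Subtract the mean and divide by the (population) standard deviation."""
    mu, sd = fmean(values), pstdev(values)
    if sd == 0:
        return [0.0] * len(values)
    return [(v - mu) / sd for v in values]


def haar_dwt2(img: Image) -> Dict[str, Image]:
    """One-level orthonormal 2-D Haar transform over non-overlapping 2x2 blocks."""
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    bands: Dict[str, Image] = {"approx": [], "col_detail": [], "row_detail": [], "diag_detail": []}
    for y in range(0, h, 2):
        rows: Dict[str, List[float]] = {name: [] for name in bands}
        for x in range(0, w, 2):
            a, b = img[y][x], img[y][x + 1]
            c, d = img[y + 1][x], img[y + 1][x + 1]
            rows["approx"].append((a + b + c + d) / 2)       # low-pass in both directions
            rows["col_detail"].append((a - b + c - d) / 2)   # change across columns
            rows["row_detail"].append((a + b - c - d) / 2)   # change across rows
            rows["diag_detail"].append((a - b - c + d) / 2)  # diagonal change
        for name in bands:
            bands[name].append(rows[name])
    return bands
```

Because the transform is orthonormal, the total energy of the four bands equals that of the input image, so no information is lost before the features reach the next network.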

Kumar et al. [73] presented a novel deep learning-based methodology that explored a new feature, ISTI, to detect stress in thermal videos. The study also proposed emission representation modules that model variations in emitted radiation due to blood motion and head movements. Working from facial skin temperature, the authors established evidence that the new feature performs similarly to state-of-the-art features. Biomarkers of Stress State (BOSS) and the cold pressor test were used to induce stress. Mean squared error (MSE) and Pearson's correlation coefficient (R) were used to evaluate ISTI prediction, and the findings show that the ISTI extracted by the proposed model has a high prediction rate. Average precision (AP) was used as the validation metric for stress detection. The predicted ISTI signal detects the stress state better than HR (12% higher AP) and HRV (4% higher AP), and the higher AP of the ground truth ISTI signal confirms that ISTI is the best-performing index of stress state in the experiment. Panasiuk et al. [71] compared numerical analysis and a deep learning method for stress state detection, both relying on heat emissions as features. The proposed deep learning method achieved a classification accuracy of 88.21%, while numerical analysis achieved 76.40% and 78.10% for the psychological and physical tests, respectively.
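Average precision summarizes a ranking of detection scores by averaging the precision at the rank of each true positive, and MSE measures how closely a predicted signal tracks its reference. A plain-Python sketch of both follows. It computes a simplified, non-interpolated AP (ties broken by input order), which may differ slightly from the exact AP variant used in [73].

```python
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Non-interpolated AP: mean of precision@k over the ranks k of the positives.

    Ties in score are broken by input order, which is a simplification.
    """
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("AP is undefined without positive samples")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank  # precision at this rank
    return total / n_pos


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between a predicted and a reference signal."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
```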

Bara et al. [115] presented a preliminary deep learning approach to multimodal stress detection and evaluated several deep learning methods. The modalities used in this work are: thermal video; close-up RGB video; wide-angle RGB video; audio; the question-answering (QA) and monologue sessions; physiological signals, namely (1) heart rate, (2) body temperature, (3) skin conductance and (4) breathing rate; and text transcripts extracted from the QA sessions. The proposed architecture is based on convolutional autoencoders and recurrent neural networks, implemented with Gated Recurrent Units (GRUs). Subject-based leave-one-out cross-validation was used for validation. The results demonstrate that deep learning methods can generate rich state representations related to stress despite the relatively limited amount of data.
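Subject-based leave-one-out cross-validation, as used by Bara et al. [115], holds out all samples of one subject per fold. The sketch below generates such folds from a list of per-sample subject IDs; the function name and the input format are assumptions for illustration only.

```python
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject.

    subject_ids[k] is the subject who produced sample k.
    """
    groups: Dict[Hashable, List[int]] = {}
    for idx, sid in enumerate(subject_ids):
        groups.setdefault(sid, []).append(idx)  # dicts keep first-seen subject order
    for sid, test_idx in groups.items():
        test_set = set(test_idx)
        train_idx = [i for i in range(len(subject_ids)) if i not in test_set]
        yield sid, train_idx, test_idx
```

Grouping by subject rather than by sample keeps recordings of the same person out of both the training and test sets of a fold, so the reported performance reflects generalization to unseen people rather than memorization of individual thermal signatures.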

Gupta [116] proposed a stress detection method based on deep learning. The model consists of an LSTM layer followed by a fully connected layer, whose output is passed to a softmax function to predict a person's stress state. Five-fold cross-validation was used to train the model, which achieved an average classification accuracy of 87%. The proposed model performs better than the approach reported in [117].
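The architecture described for [116] (one LSTM layer, then a fully connected layer, then softmax) can be sketched as a forward pass in plain Python. Layer sizes, input features and weights are not given in the description, so this sketch assumes a generic per-time-step feature vector (for example, hypothetical facial ROI temperatures per frame), two output classes, and small random untrained weights. The class `LSTMClassifier` is hypothetical, and training and the 5-fold cross-validation are not shown.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max logit for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class LSTMClassifier:
    """One LSTM layer -> fully connected layer -> softmax (random, untrained weights)."""

    def __init__(self, input_size: int, hidden_size: int, num_classes: int = 2, seed: int = 0):
        rng = random.Random(seed)

        def mat(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        # One (W, U, b) triple per gate: input (i), forget (f), candidate (g), output (o).
        self.gates = {
            name: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
            for name in ("i", "f", "g", "o")
        }
        self.w_fc = mat(num_classes, hidden_size)
        self.b_fc = [0.0] * num_classes

    def _pre(self, name: str, x: Vector, h: Vector) -> Vector:
        w, u, b = self.gates[name]
        return [a + c + d for a, c, d in zip(matvec(w, x), matvec(u, h), b)]

    def forward(self, sequence: List[Vector]) -> Vector:
        """Return class probabilities for one sequence of feature vectors."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            i = [sigmoid(v) for v in self._pre("i", x, h)]
            f = [sigmoid(v) for v in self._pre("f", x, h)]
            g = [math.tanh(v) for v in self._pre("g", x, h)]
            o = [sigmoid(v) for v in self._pre("o", x, h)]
            c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
            h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        # The final hidden state feeds the fully connected layer, then softmax.
        logits = [a + b for a, b in zip(matvec(self.w_fc, h), self.b_fc)]
        return softmax(logits)
```

Using only the last hidden state for classification is one common design choice for sequence-level labels; whether [116] does the same is not stated in the description above.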

Human stress detection is crucial in many disciplines. This paper has discussed approaches to stress detection that use thermal imaging of facial skin temperature. This modality was selected to focus on contactless, physiological, imaging-based measurement. The paper has covered the stages involved in stress detection: face detection in the thermal image, ROI localization, feature extraction and stress classification. Detecting a face in a thermal image is more complicated than in a visible-light image, and far more research has been done on face detection in visible images than in thermal images. While robust state-of-the-art face detection algorithms are well established for visible images, thermal imaging has relied on simple algorithms tailored to fill the gap. This is a major limitation of thermal-based stress detection. To overcome it, researchers have compared the performance of state-of-the-art visible-image face detection algorithms on thermal images, and the results show that visible-image face detectors can be adapted to thermal images. Researchers have also adopted deep learning techniques for thermal face detection. These contributions ease the current limitation of thermal-based stress detection.
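As an illustration of the kind of simple heuristic mentioned above, the sketch below localizes a face in a temperature map by keeping pixels inside an assumed skin-temperature range and returning the bounding box of the largest 4-connected warm region. The 30 to 38 °C range, the single-subject cool-background setting and the function name are assumptions, not values taken from the surveyed studies; this is neither Viola-Jones nor any specific published detector.

```python
from collections import deque
from typing import List, Optional, Tuple


def largest_warm_region_box(
    temps: List[List[float]], low: float = 30.0, high: float = 38.0
) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right), inclusive, of the largest connected region
    whose temperatures lie in [low, high] degrees Celsius, or None if there is none."""
    rows, cols = len(temps), len(temps[0])
    mask = [[low <= t <= high for t in row] for row in temps]
    seen = [[False] * cols for _ in range(rows)]
    best: List[Tuple[int, int]] = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill of one 4-connected warm component.
            component: List[Tuple[int, int]] = []
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                component.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(component) > len(best):
                best = component
    if not best:
        return None
    rs = [r for r, _ in best]
    cs = [c for _, c in best]
    return min(rs), min(cs), max(rs), max(cs)
```

Keeping only the largest component discards small warm spots such as a hand or a warm object in the background, but a heuristic like this still fails for cluttered scenes, which is why the surveyed work turned to adapted visible-image detectors and deep learning.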

This paper also highlights thermal patterns in facial regions as indicators of stress. Thermal-based modalities have focused on the binary relationship between facial temperature and a person's stress state. Variations in thermal distribution patterns can be used to distinguish different types of stress, such as emotional and physical stress. Many studies have demonstrated a correlation between facial temperature changes and a person's stress state. The stress classification performance of thermal features has been compared with that of physiological biomarkers such as heart rate, GSR and HRV, which are considered state-of-the-art biomarkers in traditional stress detection. These findings establish thermal imaging as a good candidate for detecting stress in a non-invasive manner. The approach could also be evaluated in the scenario where a person wears a face mask or other protective equipment against the COVID-19 pandemic. Because protective gear covers a large portion of the face, only the periorbital region remains available for feature extraction. More studies are needed on ROI localization and feature extraction when a person wears a face shield, since the shield may hide the actual facial temperatures.
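A common way to turn a facial region into a stress-related thermal signal is to track the mean ROI temperature over time relative to a resting baseline. The sketch below assumes the ROI (for example, a periorbital box when the lower face is covered by a mask) has already been localized as a fixed rectangle, and that the first few frames are a baseline period; the box coordinates, the baseline length and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Frame = List[List[float]]            # one thermal frame, temperatures in degrees Celsius
Box = Tuple[int, int, int, int]      # (top, left, bottom, right), inclusive


def roi_mean(frame: Frame, box: Box) -> float:
    """Mean temperature inside the ROI box of one frame."""
    top, left, bottom, right = box
    vals = [frame[r][c] for r in range(top, bottom + 1) for c in range(left, right + 1)]
    return sum(vals) / len(vals)


def roi_delta_series(frames: Sequence[Frame], box: Box, baseline_frames: int) -> List[float]:
    """Mean ROI temperature of each frame minus the mean over the first
    `baseline_frames` frames (assumed to be a resting, non-stressed period)."""
    if not 0 < baseline_frames <= len(frames):
        raise ValueError("baseline_frames must be between 1 and len(frames)")
    means = [roi_mean(f, box) for f in frames]
    baseline = sum(means[:baseline_frames]) / baseline_frames
    return [m - baseline for m in means]
```

The resulting series of temperature changes could then serve as input to a classifier or be correlated with a reference stress measure; a real pipeline would also need to re-localize the ROI in every frame to follow head movement.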

However, the measurement of facial skin temperature with low-cost thermal imaging equipment to infer human stress states has not been explored in previous literature. Most studies have investigated the frontal face and correlated its temperature with the stress state, while there are insufficient studies establishing the relationship between the side face and the frontal face. Very few studies have investigated the side of the face, especially the side of the neck and the ear, to study the impact of stress. More studies are needed in this area, which may lead to the discovery of new ROIs and to an established 3D thermal pattern model for studying the impact of stress in more detail.

Based on this discussion, several gaps have been identified, and this section proposes ways to fill them. The proposed future work on human stress recognition is based on the thermal signature. Future studies should investigate the relationship between lateral and frontal faces, and should correlate the findings with the stress level experienced by the individual. Future work should also consider novel methodologies for extracting facial temperature when a person wears a protective face shield, which could be useful for detecting stress among frontline workers. A methodology that computes the stress level and correlates it with temperature changes in the face is also needed; it would be useful for detecting an individual's stress level and responding appropriately to the perceived stress level. Stress detection based on facial expressions in thermal images is another potential research direction.

This paper has reviewed studies on stress detection based on thermal imaging. Many studies have established that thermal features are useful for detecting stress remotely, and the performance of thermal features has been compared with that of state-of-the-art physiological stress markers. However, this methodology has its own limitations, and more studies are needed to overcome them. Based on the comparison of quantitative results, it can be concluded that thermal features have strong potential for stress detection. Although face detection in thermal images remains a limiting factor, facial temperature changes do carry stress information. Further research is needed to determine the temperature changes on the lateral sides of the face and to understand their relationship with the frontal face when a person experiences stress.

Funding Statement: This research was pursued under the Research University Grant by Universiti Sains Malaysia [1001/PKOMP/8014001].

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. Selye, H. (1975). Confusion and controversy in the stress field. Journal of Human Stress, 1(2), 37–44. DOI 10.1080/0097840X.1975.9940406. [Google Scholar] [CrossRef]

2. Lederbogen, F., Baranyai, R., Gilles, M., Menart-Houtermans, B., Tschoepe, D. et al. (2004). Effect of mental and physical stress on platelet activation markers in depressed patients and healthy subjects: A pilot study. Psychiatry Research, 127(1–2), 55–64. DOI 10.1016/j.psychres.2004.03.008. [Google Scholar] [CrossRef]

3. Otto, M. (2014). Physical stress and bacterial colonization. FEMS Microbiology Reviews, 38(6), 1250–1270. DOI 10.1111/1574-6976.12088. [Google Scholar] [CrossRef]

4. Tripathi, R. K., Salve, B. A., Petare, A. U., Raut, A. A., Rege, N. N. (2016). Effect of withania somnifera on physical and cardiovascular performance induced by physical stress in healthy human volunteers. International Journal of Basic & Clinical Pharmacology, 5, 2510–2516. DOI 10.18203/2319-2003.ijbcp20164114. [Google Scholar] [CrossRef]

5. Pardeshi, A. M., Kirtikar, S. N. (2016). Comparison of anthropometric parameters and blood pressure changes in response to physical stress test in normotensive subjects with or without family history of hypertension. Indian Journal of Physiology and Pharmacology, 60(2), 208–212. PMID: 29809380. [Google Scholar]

6. Øktedalen, O. (1988). The influence of prolonged physical stress on gastric juice components in healthy man. Scandinavian Journal of Gastroenterology, 23(9), 1132–1136. DOI 10.3109/00365528809090180. [Google Scholar] [CrossRef]

7. Wallen, N. H., Held, C., Rehnqvist, N., Hjemdahl, P. (1997). Effects of mental and physical stress on platelet function in patients with stable angina pectoris and healthy controls. European Heart Journal, 18(5), 807–815. DOI 10.1093/oxfordjournals.eurheartj.a015346. [Google Scholar] [CrossRef]

8. Trapp, M., Trapp, E. M., Egger, J. W., Domej, W., Schillaci, G. et al. (2014). Impact of mental and physical stress on blood pressure and pulse pressure under normobaric versus hypoxic conditions. PLoS One, 9(5), e89005. DOI 10.1371/journal.pone.0089005. [Google Scholar] [CrossRef]

9. Irfan, M., Raja, G. K., Murtaza, S., Mansoor, R., Qayyum, M. et al. (2012). Physical stress may result in growth suppression and pubertal delay in working boys. Journal of Medical Hypotheses and Ideas, 6(1), 35–39. DOI 10.1016/j.jmhi.2012.03.006. [Google Scholar] [CrossRef]

10. Kim, H. S. (2021). A study on the skin stress recognition and beauty care status due to wearing masks. Journal of the Korean Applied Science and Technology, 38(2), 465–475. DOI 10.12925/jkocs.2021.38.2.465. [Google Scholar] [CrossRef]

11. Cohen, S., Kamarck, T., Mermelstein, R. (1983). A global measure of perceived stress. Journal of Health and Social Behavior, 24, 385–396. DOI 10.2307/2136404. [Google Scholar] [CrossRef]

12. Dupéré, V., Dion, E., Harkness, K., McCabe, J., Thouin, É. et al. (2017). Adaptation and validation of the life events and difficulties schedule for use with high school dropouts. Journal of Research on Adolescence, 27(3), 683–689. DOI 10.1111/jora.12296. [Google Scholar] [CrossRef]

13. Gillan, W., Naquin, M., Zannis, M., Bowers, A., Brewer, J. et al. (2013). Correlations among stress, physical activity and nutrition: School employee health behavior. ICHPER-SD Journal of Research, 8(1), 55–60. ISSN: 1930-4595. [Google Scholar]

14. Mizuno, M., Siddique, K., Baum, M., Smith, S. A. (2013). Prenatal programming of hypertension induces sympathetic overactivity in response to physical stress. Hypertension, 61(1), 180–186. DOI 10.1161/HYPERTENSIONAHA.112.199356. [Google Scholar] [CrossRef]

15. Taylor, A. H., Dorn, L. (2006). Stress, fatigue, health, and risk of road traffic accidents among professional drivers: The contribution of physical inactivity. Annual Review of Public Health, 27, 371–391. DOI 10.1146/annurev.publhealth.27.021405.102117. [Google Scholar] [CrossRef]

16. Jones, B. F. (1998). A reappraisal of the use of infrared thermal image analysis in medicine. IEEE Transactions on Medical Imaging, 17(6), 1019–1027. DOI 10.1109/42.746635. [Google Scholar] [CrossRef]

17. Jones, B. F., Plassmann, P. (2002). Digital infrared thermal imaging of human skin. IEEE Engineering in Medicine and Biology Magazine, 21(6), 41–48. DOI 10.1109/MEMB.2002.1175137. [Google Scholar] [CrossRef]

18. Khan, M. M. (2008). Cluster-analytic classification of facial expressions using infrared measurements of facial thermal features (Doctoral Dissertation). University of Huddersfield. [Google Scholar]

19. Fujimasa, I., Chinzei, T., Saito, I. (2000). Converting far infrared image information to other physiological data. IEEE Engineering in Medicine and Biology Magazine, 19(3), 71–76. DOI 10.1109/51.844383. [Google Scholar] [CrossRef]

20. Bale, M. (1998). High-resolution infrared technology for soft-tissue injury detection. IEEE Engineering in Medicine and Biology Magazine, 17(4), 56–59. DOI 10.1109/51.687964. [Google Scholar] [CrossRef]

21. Hessler, C., Abouelenien, M., Burzo, M. (2018). A survey on extracting physiological measurements from thermal images. Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference, pp. 229–236. Corfu, Greece. [Google Scholar]

22. Zhao, W., Zhao, Z., Li, C. (2018). Discriminative-CCA promoted by EEG signals for physiological-based emotion recognition. 2018 First Asian Conference on Affective Computing and Intelligent Interaction (ACII Asia), pp. 1–6. Beijing, China. [Google Scholar]

23. Barclay, C. J., Launikonis, B. S. (2021). Components of activation heat in skeletal muscle. Journal of Muscle Research and Cell Motility, 42(1), 1–16. [Google Scholar]

24. Youssef, A., Verachtert, A., de Bruyne, G., Aerts, J. M. (2019). Reverse engineering of thermoregulatory cold-induced vasoconstriction/Vasodilation during localized cooling. Applied Sciences, 9(16), 3372. DOI 10.3390/app9163372. [Google Scholar] [CrossRef]

25. Khan, M. M., Ward, R. D., Ingleby, M. (2009). Classifying pretended and evoked facial expressions of positive and negative affective states using infrared measurement of skin temperature. ACM Transactions on Applied Perception, 6(1), 1–22. DOI 10.1145/1462055.1462061. [Google Scholar] [CrossRef]

26. Pavlidis, I., Eberhardt, N. L., Levine, J. A. (2002). Human behavior: Seeing through the face of deception [Brief communication]. Nature, 415, 35. DOI 10.1038/415035a. [Google Scholar] [CrossRef]

27. Pavlidis, I. (2003). Continuous physiological monitoring. Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE Cat. No. 03CH37439), vol. 2, pp. 1084–1087. Cancun, Mexico. [Google Scholar]

28. Pavlidis, I., Levine, J., Baukol, P. (2001). Thermal image analysis for anxiety detection. Proceedings 2001 International Conference on Image Processing (Cat. No. 01CH37205), vol. 2, pp. 315–318. Thessaloniki, Greece. [Google Scholar]

29. Pavlidis, I., Dowdall, J., Sun, N., Puri, C., Fei, J. et al. (2007). Interacting with human physiology. Computer Vision and Image Understanding, 108(1–2), 150–170. DOI 10.1016/j.cviu.2006.11.018. [Google Scholar] [CrossRef]

30. Pavlidis, I., Tsiamyrtzis, P., Shastri, D., Wesley, A., Zhou, Y. et al. (2012). Fast by nature-how stress patterns define human experience and performance in dexterous tasks. Scientific Reports, 2(1), 1–9. DOI 10.1038/srep00305. [Google Scholar] [CrossRef]

31. Ebisch, S. J., Aureli, T., Bafunno, D., Cardone, D., Romani, G. L. et al. (2012). Mother and child in synchrony: Thermal facial imprints of autonomic contagion. Biological Psychology, 89(1), 123–129. DOI 10.1016/j.biopsycho.2011.09.018. [Google Scholar] [CrossRef]

32. Ioannou, S., Ebisch, S., Aureli, T., Bafunno, D., Ioannides, H. A. et al. (2013). The autonomic signature of guilt in children: A thermal infrared imaging study. PLoS One, 8(11), e79440. DOI 10.1371/journal.pone.0079440. [Google Scholar] [CrossRef]

33. Hirt, C., Eckard, M., Kunz, A. (2020). Stress generation and non-intrusive measurement in virtual environments using eye tracking. Journal of Ambient Intelligence and Humanized Computing, 11(12), 5977–5989. DOI 10.1007/s12652-020-01845-y. [Google Scholar] [CrossRef]

34. Yamanaka, K., Kawakami, M. (2009). Convenient evaluation of mental stress with pupil diameter. International Journal of Occupational Safety and Ergonomics, 15(4), 447–450. DOI 10.1080/10803548.2009.11076824. [Google Scholar] [CrossRef]

35. Gunawardhane, S. D., de Silva, P. M., Kulathunga, D. S., Arunatileka, S. M. (2013). Non invasive human stress detection using key stroke dynamics and pattern variations. 2013 International Conference on Advances in ICT for Emerging Regions (ICTer), pp. 240–247. Colombo, Sri Lanka. [Google Scholar]

36. Lin, H., Jia, J., Guo, Q., Xue, Y., Li, Q. et al. (2014). User-level psychological stress detection from social media using deep neural network. Proceedings of the 22nd ACM International Conference on Multimedia, pp. 507–516. Orlando, Florida, USA. [Google Scholar]

37. Hansen, J. H., Patil, S. (2007). Speech under stress: Analysis, modeling and recognition. Speaker classification I. pp. 108–137. Berlin, Heidelberg: Springer. [Google Scholar]

38. Han, H., Byun, K., Kang, H. G. (2018). A deep learning-based stress detection algorithm with speech signal. Proceedings of the 2018 Workshop on Audio-Visual Scene Understanding for Immersive Multimedia, pp. 11–15. Seoul, Korea. [Google Scholar]

39. Hansen, J. H., Womack, B. D. (1996). Feature analysis and neural network-based classification of speech under stress. IEEE Transactions on Speech and Audio Processing, 4(4), 307–313. DOI 10.1109/89.506935. [Google Scholar] [CrossRef]

40. Giannakakis, G., Pediaditis, M., Manousos, D., Kazantzaki, E., Chiarugi, F. et al. (2017). Stress and anxiety detection using facial cues from videos. Biomedical Signal Processing and Control, 31, 89–101. DOI 10.1016/j.bspc.2016.06.020. [Google Scholar] [CrossRef]

41. Zhang, J., Mei, X., Liu, H., Yuan, S., Qian, T. (2019). Detecting negative emotional stress based on facial expression in real time. 2019 IEEE 4th International Conference on Signal and Image Processing (ICSIP), pp. 430–434. Wuxi, China. [Google Scholar]

42. Gao, H., Yüce, A., Thiran, J. P. (2014). Detecting emotional stress from facial expressions for driving safety. 2014 IEEE International Conference on Image Processing (ICIP), pp. 5961–5965. Paris, France. [Google Scholar]

43. Zhai, J., Barreto, A. (2006). Stress detection in computer users based on digital signal processing of noninvasive physiological variables. 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, pp. 1355–1358. New York, NY, USA. [Google Scholar]

44. Shi, Y., Nguyen, M. H., Blitz, P., French, B., Fisk, S. et al. (2010). Personalized stress detection from physiological measurements. International Symposium on Quality of Life Technology, pp. 28–29. Las Vegas, NV, USA. [Google Scholar]

45. Hong, K. (2020). Non-contact physical stress measurement using thermal imaging and blind source separation. Optical Review, 27(1), 116–125. DOI 10.1007/s10043-019-00573-9. [Google Scholar] [CrossRef]

46. Adachi, H., Oiwa, K., Nozawa, A. (2019). Drowsiness level modeling based on facial skin temperature distribution using a convolutional neural network. IEEJ Transactions on Electrical and Electronic Engineering, 14(6), 870–876. DOI 10.1002/tee.22876. [Google Scholar] [CrossRef]

47. Oiwa, K., Nozawa, A. (2019). Feature extraction of blood pressure from facial skin temperature distribution using deep learning. IEEJ Transactions on Electronics, Information and Systems, 139(7), 759–765. DOI 10.1541/ieejeiss.139.759. [Google Scholar] [CrossRef]

48. Al Qudah, M. M., Mohamed, A. S., Lutfi, S. L. (2021). Affective state recognition using thermal-based imaging: A survey. Computer Systems Science & Engineering, 37(1), 47–62. DOI 10.32604/csse.2021.015222. [Google Scholar] [CrossRef]

49. Cho, Y., Bianchi-Berthouze, N. (2019). Physiological and affective computing through thermal imaging: A survey. arXiv preprint arXiv:1908.10307. [Google Scholar]

50. Cho, Y. (2017). Automated mental stress recognition through mobile thermal imaging. 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), IEEE, pp. 596–600. San Antonio, TX, USA. [Google Scholar]

51. Elanthendral, V. S., Rekha, R. K., Rameshkumar, M. (2014). Thermal imaging for facial expression–Fatigue detection. International Journal for Research in Applied Science & Engineering Technology, 2(XII), 14. [Google Scholar]

52. Chu, C. H., Peng, S. M. (2015). Implementation of face recognition for screen unlocking on mobile device. Proceedings of the 23rd ACM International Conference on Multimedia, pp. 1027–1030. Brisbane, Australia. [Google Scholar]

53. Zhu, Z., Tsiamyrtzis, P., Pavlidis, I. (2007). Forehead thermal signature extraction in lie detection. 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, IEEE, pp. 243–246. Lyon, France. [Google Scholar]

54. Hong, K., Yuen, P., Chen, T., Tsitiridis, A., Kam, F. et al. (2009). Detection and classification of stress using thermal imaging technique. Optics and photonics for counterterrorism and crime fighting V, vol. 7486. pp. 74860I. International Society for Optics and Photonics, Berlin, Germany. [Google Scholar]

55. Cross, C. B., Skipper, J. A., Petkie, D. T. (2013). Thermal imaging to detect physiological indicators of stress in humans. Thermosense: Thermal infrared applications XXXV, vol. 8705. pp. 87050I. International Society for Optics and Photonics, Baltimore, Maryland, United States. [Google Scholar]

56. Rajoub, B. A., Zwiggelaar, R. (2014). Thermal facial analysis for deception detection. IEEE Transactions on Information Forensics and Security, 9(6), 1015–1023. DOI 10.1109/TIFS.10206. [Google Scholar] [CrossRef]

57. Jenkins, S. D., Brown, R. D. H. (2014). A correlational analysis of human cognitive activity using infrared thermography of the supraorbital region, frontal EEG and self-report of core affective state. Paper presented at the 12th International Conference on Quantitative Infrared Thermography, Bordeaux, France. [Google Scholar]

58. Sorostinean, M., Ferland, F., Tapus, A. (2015). Reliable stress measurement using face temperature variation with a thermal camera in human-robot interaction. IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids), pp. 14–19. Seoul, Korea (South). [Google Scholar]

59. Mohd, M. N. H., Kashima, M., Sato, K., Watanabe, M. (2015). Mental stress recognition based on non-invasive and non-contact measurement from stereo thermal and visible sensors. International Journal of Affective Engineering, 14(1), 9–17. DOI 10.5057/ijae.14.9. [Google Scholar] [CrossRef]

60. Abouelenien, M., Burzo, M., Mihalcea, R. (2016). Human acute stress detection via integration of physiological signals and thermal imaging. Proceedings of the 9th ACM International Conference on Pervasive Technologies Related to Assistive Environments, pp. 1–8. Corfu, Greece. [Google Scholar]

61. Baltaci, S., Gokcay, D. (2016). Stress detection in human–computer interaction: Fusion of pupil dilation and facial temperature features. International Journal of Human–Computer Interaction, 32(12), 956–966. DOI 10.1080/10447318.2016.1220069. [Google Scholar] [CrossRef]

62. Hong, K., Liu, G. (2017). Facial thermal image analysis for stress detection. International Journal of Engineering Research and Technology, 6(10), 94–98. [Google Scholar]

63. Abdelrahman, Y., Velloso, E., Dingler, T., Schmidt, A., Vetere, F. (2017). Cognitive heat: Exploring the usage of thermal imaging to unobtrusively estimate cognitive load. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 1(3), 1–20. DOI 10.1145/3130898. [Google Scholar] [CrossRef]

64. Powar, N. U., Schneider, T. R., Skipper, J. A., Petkie, D. T., Asari, V. K. et al. (2017). Thermal facial signatures for state assessment during deception. Electronic Imaging, 2017(13), 95–104. DOI 10.2352/ISSN.2470-1173.2017.13.IPAS-207. [Google Scholar] [CrossRef]

65. Vasavi, S., Neeharica, P., Poojitha, M., Harika, T. (2018). Framework for stress detection using thermal signature. International Journal of Virtual and Augmented Reality, 2(2), 1–25. DOI 10.4018/IJVAR. [Google Scholar] [CrossRef]

66. Vasavi, S., Neeharica, P., Wadhwa, B. (2018). Regression modelling for stress detection in humans by assessing most prominent thermal signature. IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference, pp. 755–762. Vancouver, BC, Canada. [Google Scholar]

67. Kopaczka, M., Jantos, T., Merhof, D. (2018). Towards analysis of mental stress using thermal infrared tomography. Bildverarbeitung für die Medizin, pp. 157–162. Informatik aktuell. DOI 10.1007/978-3-662-56537-7_47. [Google Scholar] [CrossRef]

68. Stoynova, A. (2018). Infrared thermography monitoring of the face skin temperature as indicator of the cognitive state of a person. 14th Quantitative InfraRed Thermography Conference. pp. 30–35. [Google Scholar]

69. He, C., Mahfouf, M., Torres-Salomao, L. A. (2018). Facial temperature markers for mental stress assessment in human-machine interface (HMI) control system. ICINCO, 2(2), 31–38. DOI 10.5220/0006820700210028. [Google Scholar] [CrossRef]

70. Derakhshan, A., Mikaeili, M., Gedeon, T., Nasrabadi, A. M. (2020). Identifying the optimal features in multimodal deception detection. Multimodal Technologies and Interaction, 4(2), 25. DOI 10.3390/mti4020025. [Google Scholar] [CrossRef]

71. Panasiuk, J., Prusaczyk, P., Grudzień, A., Kowalski, M. (2020). Study on facial thermal reactions for psycho-physical stimuli. Metrology and Measurement Systems, 27(3), 399–415. DOI 10.24425/mms.2020.134591. [Google Scholar] [CrossRef]

72. Reshma, R. (2021). Emotional and physical stress detection and classification using thermal imaging technique. Annals of the Romanian Society for Cell Biology, 25, 8364–8374. ISSN:1583-6258. [Google Scholar]

73. Kumar, S., Iftekhar, A. S. M., Goebel, M., Bullock, T., MacLean, M. H. et al. (2021). Stressnet: Detecting stress in thermal videos. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 999–1009. Waikoloa, HI, USA. [Google Scholar]

74. Engert, V., Merla, A., Grant, J. A., Cardone, D., Tusche, A. et al. (2014). Exploring the use of thermal infrared imaging in human stress research. PLoS One, 9(3), e90782. DOI 10.1371/journal.pone.0090782. [Google Scholar] [CrossRef]

75. Zheng, Y. (2012). Face detection and eyeglasses detection for thermal face recognition. Image processing: Machine vision applications V, vol. 8300. pp. 83000C. International Society for Optics and Photonics. [Google Scholar]

76. Cutler, R. G. (1996). Face recognition using infrared images and eigenfaces, University of Maryland at College Park, College Park, MD, USA. [Google Scholar]

77. Chen, X., Flynn, P. J., Bowyer, K. W. (2003). PCA-Based face recognition in infrared imagery: Baseline and comparative studies. Proceedings of the IEEE International SOI Conference (Cat. No. 03CH37443), pp. 127–134. Nice, France. [Google Scholar]

78. Srivastava, A., Liu, X. (2003). Statistical hypothesis pruning for identifying faces from infrared images. Image and Vision Computing, 21(7), 651–661. DOI 10.1016/S0262-8856(03)00061-1. [Google Scholar] [CrossRef]

79. Buddharaju, P., Pavlidis, I. T., Tsiamyrtzis, P. (2006). Pose-invariant physiological face recognition in the thermal infrared spectrum. Conference on Computer Vision and Pattern Recognition Workshop, pp. 53–53. New York, NY, USA. [Google Scholar]

80. Heo, J., Kong, S. G., Abidi, B. R., Abidi, M. A. (2004). Fusion of visual and thermal signatures with eyeglass removal for robust face recognition. Conference on Computer Vision and Pattern Recognition Workshop, pp. 122–122. Washington, DC, USA. [Google Scholar]

81. Gyaourova, A., Bebis, G., Pavlidis, I. (2004). Fusion of infrared and visible images for face recognition. European Conference on Computer Vision, pp. 456–468. Springer, Berlin, Heidelberg. [Google Scholar]

82. Socolinsky, D. A., Selinger, A. (2004). Thermal face recognition in an operational scenario. Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2, pp. II. Washington DC, USA. [Google Scholar]

83. Wang, J. G., Sung, E., Venkateswarlu, R. (2004). Registration of infrared and visible-spectrum imagery for face recognition. Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition, pp. 638–644. Seoul, Korea (South). [Google Scholar]

84. Chen, X., Flynn, P. J., Bowyer, K. W. (2005). IR and visible light face recognition. Computer Vision and Image Understanding, 99(3), 332–358. DOI 10.1016/j.cviu.2005.03.001. [Google Scholar] [CrossRef]

85. Kong, S. G., Heo, J., Abidi, B. R., Paik, J., Abidi, M. A. (2005). Recent advances in visual and infrared face recognition—A review. Computer Vision and Image Understanding, 97(1), 103–135. DOI 10.1016/j.cviu.2004.04.001. [Google Scholar] [CrossRef]

86. Zheng, Y. (2010). A novel thermal face recognition approach using face pattern words. Biometric technology for human identification VII, vol. 7667. pp. 766703. International Society for Optics and Photonics. [Google Scholar]

87. Basu, A., Routray, A., Shit, S., Deb, A. K. (2015). Human emotion recognition from facial thermal image based on fused statistical feature and multi-class SVM. 2015 Annual IEEE India Conference, pp. 1–5. New Delhi, India. [Google Scholar]

88. Hu, M. K. (1962). Visual pattern recognition by moment invariants. IRE Transactions on Information Theory, 8(2), 179–187. [Google Scholar]

89. Mostafa, E., Hammoud, R., Ali, A., Farag, A. (2013). Face recognition in low resolution thermal images. Computer Vision and Image Understanding, 117(12), 1689–1694. DOI 10.1016/j.cviu.2013.07.010. [Google Scholar] [CrossRef]

90. Reese, K., Zheng, Y., Elmaghraby, A. (2012). A comparison of face detection algorithms in visible and thermal spectrums. International Conference on Advances in Computer Science and Application, Amsterdam, Netherlands. [Google Scholar]

91. Viola, P., Jones, M. J. (2004). Robust real-time face detection. International Journal of Computer Vision, 57(2), 137–154. DOI 10.1023/B:VISI.0000013087.49260.fb. [Google Scholar] [CrossRef]

92. Basbrain, A. M., Gan, J. Q., Clark, A. (2017). Accuracy enhancement of the viola-jones algorithm for thermal face detection. International Conference on Intelligent Computing, pp. 71–82. Springer, Cham. [Google Scholar]

93. Tran, H., Dong, C., Naghedolfeizi, M., Zeng, X. (2021). Using cross-examples in viola-jones algorithm for thermal face detection. Proceedings of the 2021 ACM Southeast Conference, pp. 219–223. Virtual Event, USA. [Google Scholar]

94. Kowalski, M. Ł., Grudzień, A., Ciurapiński, W. (2021). Detection of human faces in thermal infrared images. Metrology and Measurement Systems, 28(2), 307–321. [Google Scholar]

95. Buddharaju, P., Pavlidis, I. T., Tsiamyrtzis, P., Bazakos, M. (2007). Physiology-based face recognition in the thermal infrared spectrum. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(4), 613–626. DOI 10.1109/TPAMI.2007.1007. [Google Scholar] [CrossRef]

96. Prokoski, F. J., Riedel, R. B. (1996). Infrared identification of faces and body parts. Biometrics. pp. 191–212. Boston, MA: Springer. [Google Scholar]

97. Cho, S. Y., Wang, L., Ong, W. J. (2009). Thermal imprint feature analysis for face recognition. 2009 IEEE International Symposium on Industrial Electronics, pp. 1875–1880. Seoul, Korea (South). [Google Scholar]

98. Kopaczka, M., Nestler, J., Merhof, D. (2017). Face detection in thermal infrared images: A comparison of algorithm-and machine-learning-based approaches. International Conference on Advanced Concepts for Intelligent Vision Systems, pp. 518–529. Springer, Cham. [Google Scholar]

99. Friedrich, G., Yeshurun, Y. (2002). Seeing people in the dark: Face recognition in infrared images. International Workshop on Biologically Motivated Computer Vision, pp. 348–359. Springer, Berlin, Heidelberg. [Google Scholar]

100. Kopaczka, M., Schock, J., Nestler, J., Kielholz, K., Merhof, D. (2018). A combined modular system for face detection, head pose estimation, face tracking and emotion recognition in thermal infrared images. IEEE International Conference on Imaging Systems and Techniques, pp. 1–6. [Google Scholar]

101. Kopaczka, M., Acar, K., Merhof, D. (2016). Robust facial landmark detection and face tracking in thermal infrared images using active appearance models. VISIGRAPP (4: VISAPP), pp. 150–158. [Google Scholar]

102. Antonakos, E., Alabort-i-Medina, J., Tzimiropoulos, G., Zafeiriou, S. P. (2015). Feature-based lucas–kanade and active appearance models. IEEE Transactions on Image Processing, 24(9), 2617–2632. DOI 10.1109/TIP.2015.2431445. [Google Scholar] [CrossRef]

103. Chu, W. T., Liu, Y. H. (2019). Thermal facial landmark detection by deep multi-task learning. IEEE 21st International Workshop on Multimedia Signal Processing, pp. 1–6. Kuala Lumpur, Malaysia. [Google Scholar]

104. Sonkusare, S., Ahmedt-Aristizabal, D., Aburn, M. J., Nguyen, V. T., Pang, T. et al. (2019). Detecting changes in facial temperature induced by a sudden auditory stimulus based on deep learning-assisted face tracking. Scientific Reports, 9(1), 1–11. DOI 10.1038/s41598-019-41172-7. [Google Scholar] [CrossRef]

105. Cao, Z., Hidalgo, G., Simon, T., Wei, S. E., Sheikh, Y. (2019). OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(1), 172–186. DOI 10.1109/TPAMI.34. [Google Scholar] [CrossRef]

106. Kumar, S., Singh, S. K. (2020). Occluded thermal face recognition using bag of CNN (BoCNN). IEEE Signal Processing Letters, 27, 975–979. DOI 10.1109/LSP.97. [Google Scholar] [CrossRef]

107. Wu, Z., Peng, M., Chen, T. (2016). Thermal face recognition using convolutional neural network. International Conference on Optoelectronics and Image Processing, pp. 6–9. Warsaw, Poland. [Google Scholar]

108. Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. [Google Scholar]

109. He, K., Zhang, X., Ren, S., Sun, J. (2016). Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778. Las Vegas, NV, USA. [Google Scholar]

110. Muller, M., Baier, G., Rummel, C., Schindler, K., Stephani, U. et al. (2008). A multivariate approach to correlation analysis based on random matrix theory. Seizure prediction in epilepsy: From basic mechanisms to clinical applications, pp. 209–226. Weinheim, Germany: Wiley. [Google Scholar]

111. Berlovskaya, E. E., Isaychev, S. A., Chernorizov, A. M., Ozheredov, I. A., Adamovich, T. V. et al. (2020). Diagnosing human psychoemotional states by combining psychological and psychophysiological methods with measurements of infrared and THz radiation from face areas. Psychology in Russia: State of the Art, 13(2), 64–83. DOI 10.11621/pir.2020.0205. [Google Scholar] [CrossRef]

112. Kandus, J. T. (2018). Using functional infrared thermal imaging to measure stress responses. Humboldt State University, California, USA. [Google Scholar]

113. Jacobs, G. D. (2001). The physiology of mind–body interactions: The stress response and the relaxation response. The Journal of Alternative & Complementary Medicine, 7(1), 83–92. DOI 10.1089/107555301753393841. [Google Scholar] [CrossRef]

114. Garbey, M., Sun, N., Merla, A., Pavlidis, I. (2007). Contact-free measurement of cardiac pulse based on the analysis of thermal imagery. IEEE Transactions on Biomedical Engineering, 54(8), 1418–1426. DOI 10.1109/TBME.2007.891930. [Google Scholar] [CrossRef]

115. Bara, C. P., Papakostas, M., Mihalcea, R. (2020). A deep learning approach towards multimodal stress detection. AffCon@AAAI, CEUR Workshop Proceedings, pp. 67–81. New York, USA. [Google Scholar]

116. Gupta, S. (2019). Stress recognition from image features using deep learning. Australian National University. [Google Scholar]

117. Irani, R., Nasrollahi, K., Dhall, A., Moeslund, T. B., Gedeon, T. (2016). Thermal super-pixels for bimodal stress recognition. Sixth International Conference on Image Processing Theory, Tools and Applications, pp. 1–6. Oulu, Finland. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |