Computer Modeling in Engineering & Sciences

DOI: 10.32604/cmes.2022.018424

ARTICLE

BMRMIA: A Platform for Radiologists to Systematically Learn Automated Medical Image Analysis by Three Dimensional Medical Decision Support System

1School of Software, Shandong University, Jinan, 250101, China

2School of Information Science and Engineering, Shandong University, Qingdao, 266237, China

3School of Computer Science and Technology, Shandong Jianzhu University, Jinan, 250012, China

4Department of Nephrology, Qilu Hospital of Shandong University, Jinan, 250012, China

5State Key Laboratory of ASIC & Systems, The School of Microelectronics, Fudan University, Shanghai, 200433, China

6Shenzhen Institute of Information Technology, Shenzhen, 518172, China

*Corresponding Author: Geng Yang. Email: yangg@sziit.edu.cn

Received: 28 July 2021; Accepted: 27 October 2021

Abstract: Contribution: This paper designs a learning and training platform that helps radiologists systematically learn automated medical image analysis technology. The platform helps radiologists master deep learning theory and medical applications such as a three-dimensional medical decision support system. It also strengthens the teaching practice of deep-learning-related courses in hospitals, so that doctors can better understand deep learning and improve the efficiency of computer-aided diagnosis. Background: In recent years, deep learning has been widely used in academia, industry, and medicine. An increasing number of companies are recruiting deep learning professionals, and an increasing number of colleges and universities offer deep learning courses to help radiologists learn automated medical image analysis techniques. At present, however, there is no practical training platform that helps radiologists learn automated medical image analysis systematically. Application Design: The platform proposes the basic learning, model combat, radiological training application (BMR) concept. It includes a learning guidance system and an assessment training system, which together form a closed-loop “learning-assessment-training-learning” guidance mode. Findings: The survey results show that most radiologists met their learning expectations by using this platform, and that the platform can help radiologists master deep learning techniques quickly, comprehensively and firmly.

Keywords: BMR; deep learning; three dimensional medical decision support system; deep learning engineer standard

In recent years, deep learning has developed rapidly in academia and industry, especially in speech recognition, image recognition and natural language processing [1], because it achieves accuracy that traditional methods cannot match. In 2012, deep learning broke through the bottleneck of traditional image recognition technology and won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [2]. Deep learning is expected to keep breaking through the limits of traditional techniques in an increasing number of fields, such as genetic technology [3], personalized medicine [4–6], social media [7], public safety [8,9], art [10], and finance [11].

Deep learning has greatly promoted the development of machine learning and has attracted the attention of researchers and high-tech companies worldwide. It has spread not only from graduate to undergraduate courses [12] but also from university laboratories and leading IT companies into public life. With the development of open-source tools and the maturing of the technology, an increasing number of companies and individuals can use deep learning to solve practical problems. Many companies, led by Internet enterprises, have begun to recruit large numbers of deep learning professionals, and an increasing number of hospitals and universities have opened deep learning courses [13]. However, new results in deep learning appear daily: the threshold for entering the field keeps rising, and the range of application fields keeps growing. The needs of academia and society have prompted hospitals and universities to offer deep learning courses and to train deep learning professionals. Yet hospitals and universities currently lack a practical learning and training platform designed specifically to help radiologists systematically learn automated medical image analysis technology. As far as we know, few platforms exist for training practitioners to train and test deep learning models. One example is NiftyNet [14], an open-source deep learning platform for medical imaging built on the TensorFlow APIs.
However, NiftyNet illustrates its key features with only four example applications: segmentation, regression, image generation, and representation learning. In contrast, our platform first provides basic knowledge of each network, so that radiologists who have never been exposed to deep learning can learn from scratch. In addition, our platform provides a complete pipeline from classification to segmentation to three-dimensional reconstruction of radiology images, allowing doctors to understand deep learning more systematically and use it to assist diagnosis.

Therefore, this paper proposes the basic learning, model combat, radiological training application (BMR) concept and uses it to design a learning and training platform for deep learning. The platform includes a learning guidance system and an assessment training system, which together form a closed-loop “learning-assessment-training-learning” guidance mode. Radiologists can study deep learning frameworks [15,16] that support their work and improve their efficiency in diagnosing patients. The platform also includes a medical decision support system through which radiologists learn, in concrete terms, how deep learning is applied in medicine. The platform aims to provide radiologists with learning guidance, online learning assessment, targeted reporting, and personalized training services for deep learning technology, helping learners master deep learning techniques quickly, comprehensively and firmly, and helping hospitals and universities narrow the gap between the deep learning professionals they train and the standards that companies demand.

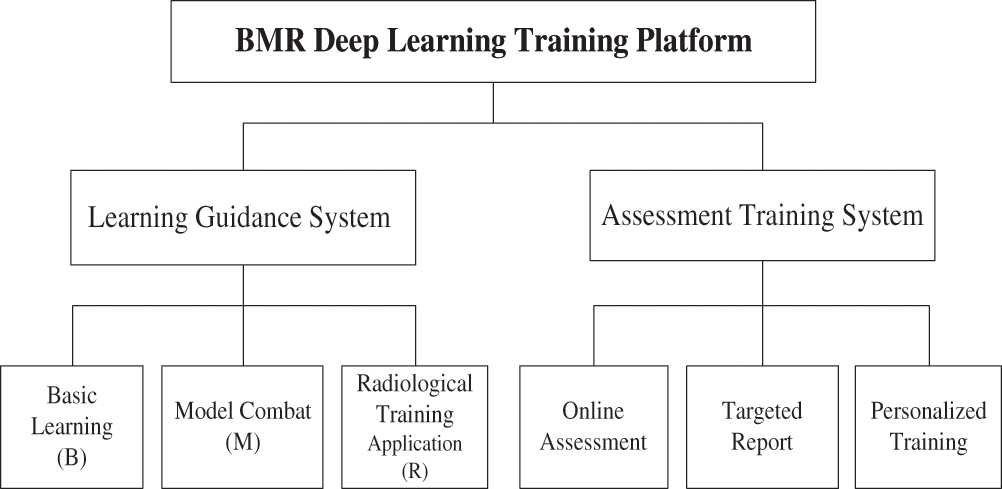

The BMR Medical Image Analysis Platform (BMRMIA) is a deep learning evaluation and training platform based on the concept of BMR, which aims to provide radiologists with learning guidance, online assessment, targeted reporting and personalized training services oriented toward deep learning technology. The BMRMIA learning and training platform has two systems: the learning guidance system and the assessment training system, as shown in Fig. 1.

Figure 1: Framework of the BMRMIA learning and training platform

2.1 BMR Learning Guidance System

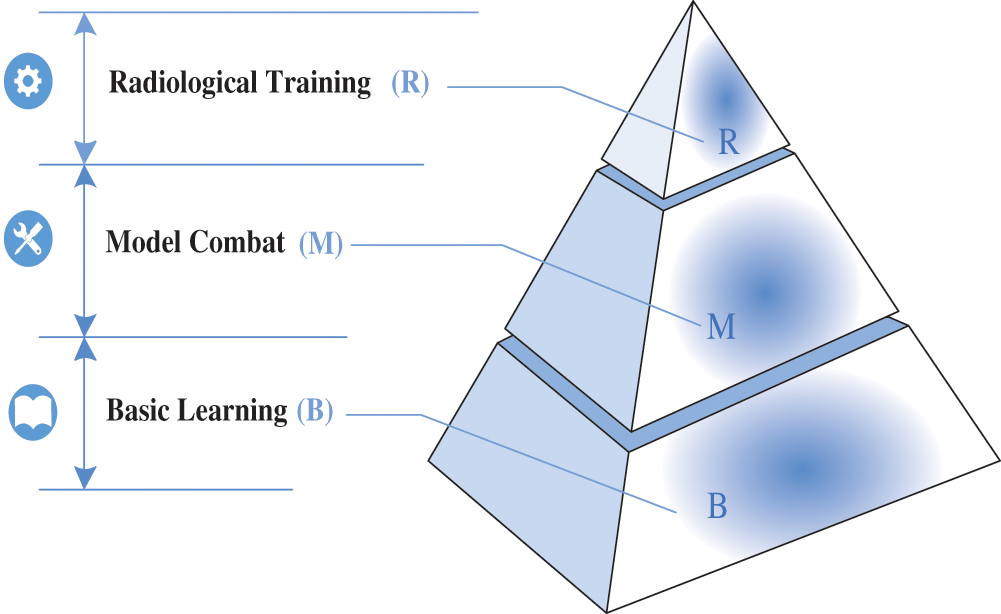

Based on the outcome-based engineering education (OBE) model [17–21], this paper proposes the concept of BMR pyramid-like learning guidance, which covers three abilities: basic learning (B), model combat (M) and radiological training application (R). Basic learning (B) provides basic knowledge of deep learning networks; radiologists can freely choose among various basic networks and study their frameworks, basic components, and so on. Model combat (M) means that radiologists use deep learning frameworks to carry out a complete training run of a network and save the trained model. Radiological training application (R) works on radiology images: radiologists can select one or more networks to classify, segment, and reconstruct radiology images in 3D. The three abilities are built from the bottom up like a pyramid, each resting on the ability below it, as shown in Fig. 2. This hierarchical and complementary pyramid-like concept, driven by learning guidance and ability enhancement, helps radiologists firmly grasp the basic knowledge of deep learning and build the abilities of model development and project application.

Figure 2: The concept of BMR pyramid learning guidance includes three kinds of abilities: basic learning (B), model combat (M) and radiological training application (R)

2.1.1 The Basic Learning Layer (B)

The bottom layer of the pyramid-like concept is the basic learning layer. At this layer, radiologists respond to self-assessment statements that measure their understanding of the fundamental concepts of deep learning, such as:

• I can master the function of each layer of CNN

• I can master 5 regularization and 7 optimization strategies in model training

The content of the basic learning layer is developed based on the radiologists’ responses. This layer includes the following specific contents: (i) Mathematical foundations of deep learning. This section explains in detail the probability basics, common distributions, mathematical statistics and parameter estimation required by deep learning. (ii) Theoretical basis of deep learning. This section introduces deep learning theory, regularization, optimization strategies and related topics. It also uses accessible and engaging teaching tools (such as JGOMAS) to increase radiologists’ interest in learning [22]. (iii) Basics of neural network models. This section explains classic neural network models, including neuron models, deep neural networks (DNN) [23], convolutional neural networks (CNN) [24], recurrent neural networks (RNN) [25], AlexNet [2], VGGNet [26], GoogLeNet [27], and ResNet [28]. (iv) Deep learning network resources. This includes many excellent resources, such as Professor Hinton’s homepage, the free neural network course taught by Professor Hinton on Coursera, and the online tutorials offered by Professor Andrew Ng of Stanford University. (v) A guide list of papers on deep learning. This includes classic deep learning papers published at conferences such as the International Conference on Machine Learning (ICML), the Conference and Workshop on Neural Information Processing Systems (NIPS), the IEEE International Conference on Computer Vision (ICCV), the International Conference on Acoustics, Speech and Signal Processing (ICASSP), and the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). (vi) Getting started with Python [29,30]. This section helps radiologists quickly master Python 2 and Python 3. Python’s design is simple and elegant, offering high development efficiency and rich resources.
Being open source and supported by a large technical community are further advantages. The evaluation indicators of the online assessment module are shown in Fig. 3.

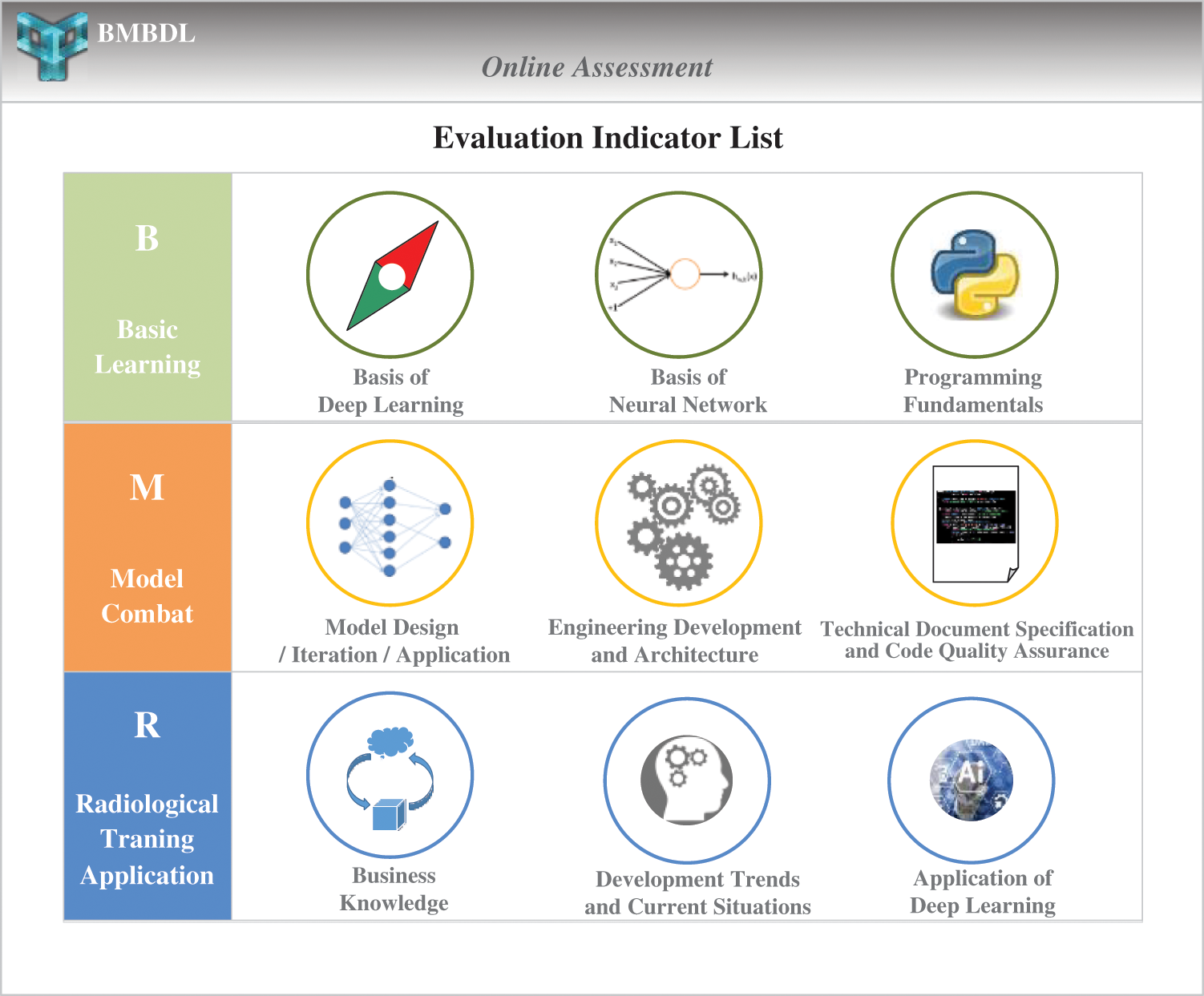

Figure 3: The evaluation indicators of the online assessment module. The BMRMIA learning and training platform provides three large assessment dimensions and nine types of skills assessment projects
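To make item (ii) of the basic learning layer more concrete, the following minimal Python sketch works through two of the topics it covers, optimization and regularization. It is a teaching illustration under stated assumptions, not the platform’s own material: the toy composite loss, the learning rate, and all function names are hypothetical. The gradient is obtained with the chain rule, plain gradient descent follows it to the minimum, and inverted dropout rescales the surviving activations so that their expected value is unchanged.

```python
import random


def loss(w: float) -> float:
    """Hypothetical composite loss L(w) = g(h(w)) with h(w) = 2w - 6, g(u) = u**2."""
    u = 2.0 * w - 6.0
    return u * u


def grad_loss(w: float) -> float:
    """Chain rule: dL/dw = g'(h(w)) * h'(w) = (2 * (2w - 6)) * 2."""
    u = 2.0 * w - 6.0
    return (2.0 * u) * 2.0


def gradient_descent(w0: float, lr: float = 0.05, steps: int = 200) -> float:
    """Repeatedly step against the gradient: w <- w - lr * dL/dw."""
    w = w0
    for _ in range(steps):
        w -= lr * grad_loss(w)
    return w


def inverted_dropout(activations, p_drop: float, rng: random.Random):
    """Zero each activation with probability p_drop and divide the survivors
    by (1 - p_drop), so the expected value of every activation is preserved."""
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


if __name__ == "__main__":
    w_star = gradient_descent(w0=0.0)
    print(f"w* = {w_star:.4f}, loss = {loss(w_star):.2e}")  # minimum is at w = 3
    print(inverted_dropout([1.0] * 8, p_drop=0.5, rng=random.Random(0)))
```

Because the gradient of this toy loss is 8(w - 3), each step with a learning rate of 0.05 shrinks the distance to the minimum at w = 3 by a factor of 0.6, which is why 200 steps are ample.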

2.1.2 The Model Combat Layer (M)

The middle layer of the pyramid-like concept is the model combat layer, which covers the entire deep learning development cycle and includes the following parts: (i) Setting up three mainstream open-source deep learning frameworks (TensorFlow, Caffe and MXNet). A deep learning framework provides implementations of neural network models and is used for model training. (ii) Deep network model development. This part includes one-click sample model development and custom model development. (iii) Deep network model training. This part includes i) writing training code locally, ii) packaging the training task code, iii) uploading the code and data package, iv) creating a training task, and v) viewing the training task. (iv) Deep network model service. This part includes i) exporting the model, for which the platform supports both one-click and on-demand deployment; ii) starting the server, where the trained model is used to create a model service through the console, deploying the model and serving requests; and iii) creating a client, for which the platform supports user-written client code.
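The sketch below is a minimal, framework-free stand-in for the train, export, serve and client cycle described in items (iii) and (iv). It does not use TensorFlow, Caffe, MXNet or any platform API; the logistic-regression model, the JSON export format, the toy data, and every function name are hypothetical choices made only to keep the example self-contained and runnable.

```python
import json
import math
import os
import tempfile


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(xs, ys, lr=0.5, epochs=2000):
    """Training step: batch gradient descent on the mean cross-entropy loss."""
    n_features, n = len(xs[0]), len(xs)
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(n_features):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        w = [wi - lr * gw for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b
    return {"weights": w, "bias": b}


def export_model(model, path):
    """Model export: serialize the trained parameters (plain JSON here)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(model, f)


def load_model(path):
    """Server side: reload the exported parameters."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def predict(model, x):
    """Client request: return a class label (0 or 1) for one input vector."""
    z = sum(wi * xi for wi, xi in zip(model["weights"], x)) + model["bias"]
    return int(sigmoid(z) >= 0.5)


if __name__ == "__main__":
    # Toy, linearly separable data (made up for illustration).
    xs = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.3], [0.9, 1.0], [1.0, 0.8], [0.8, 0.9]]
    ys = [0, 0, 0, 1, 1, 1]
    path = os.path.join(tempfile.mkdtemp(), "model.json")
    export_model(train_logistic(xs, ys), path)
    served = load_model(path)
    print([predict(served, x) for x in xs])
```

In a real deployment the JSON file would be replaced by a framework’s own export format and `predict` by a request to a model server; those parts are outside the scope of this sketch.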

2.1.3 The Radiological Training Application Layer (R)

The top layer of the pyramid-like concept is the radiological training application layer, which focuses on the application of deep learning technology and includes the following specific contents: (i) Disease application services. This section guides radiologists through application training in the following fields: i) disease classification based on radiological images, ii) lesion segmentation based on radiological images, and iii) disease progression prediction based on radiological images. (ii) Image application services. This part guides radiologists through application training in the following fields: i) radiographic image enhancement, ii) radiographic image denoising, and iii) 3D reconstruction of radiographic images.
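Deep models for radiographic image enhancement are usually compared against simple classical baselines. As a hedged illustration of such a baseline (a classical technique, not a deep learning method and not taken from the platform), the sketch below applies a percentile-based linear contrast stretch to a grayscale image stored as nested lists. The percentile defaults, the 0 to 255 output range, and the function name are assumptions.

```python
def contrast_stretch(image, low_pct=2.0, high_pct=98.0, out_max=255):
    """Linearly map intensities between two percentiles onto [0, out_max].

    Values below the low percentile clip to 0 and values above the high
    percentile clip to out_max. `image` is a list of rows of numbers."""
    if not 0.0 <= low_pct <= high_pct <= 100.0:
        raise ValueError("need 0 <= low_pct <= high_pct <= 100")
    flat = sorted(v for row in image for v in row)
    if not flat:
        raise ValueError("image is empty")

    def percentile(p):
        # Simple nearest-index percentile on the sorted intensities.
        return flat[round(p / 100.0 * (len(flat) - 1))]

    lo, hi = percentile(low_pct), percentile(high_pct)
    if hi <= lo:  # constant image: there is no contrast to stretch
        return [[0 for _ in row] for row in image]
    scale = out_max / (hi - lo)
    return [[round((min(max(v, lo), hi) - lo) * scale) for v in row] for row in image]


if __name__ == "__main__":
    print(contrast_stretch([[10, 20], [30, 40]], low_pct=0.0, high_pct=100.0))
```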

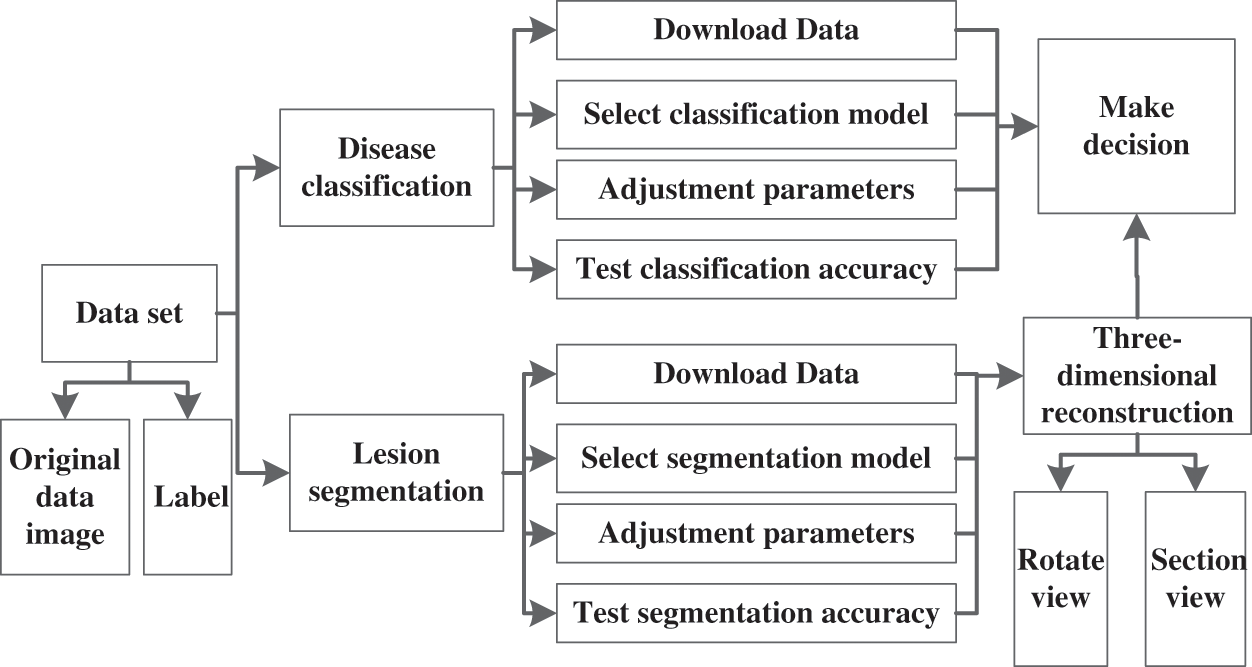

To help radiologists master the application of deep learning more systematically, this paper designs a 3D medical decision support system using deep learning techniques. The system provides a data set of medical images in DICOM format, from which radiologists learn how to read medical images. Because ultrasound imaging offers high resolution of soft-tissue structures and real-time, painless imaging without ionizing radiation, the data set in this 3D reconstruction platform consists mainly of ultrasound images [31,32]. The data set also provides labels, which radiologists can use to classify the disease in each image and segment the lesions. After completing these two steps, radiologists can perform a three-dimensional reconstruction based on the segmented images. The system provides a generative adversarial network (GAN) for three-dimensional reconstruction that efficiently generates 3D structures from a number of slices of the original images. The reconstruction shows the lesions clearly, assisting the doctor in making diagnostic and treatment decisions.

Through this three-dimensional medical decision support system, radiologists can systematically master the use of deep learning to deal with medical problems, so as to more deeply understand the theory and application of deep learning. The block diagram of the 3D medical decision support system is shown in Fig. 4.

Figure 4: The block diagram of the 3D medical decision support system
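The GAN-based reconstruction of the 3D medical decision support system is not reproduced here. As a much simpler, hedged illustration of how segmented 2D slices become a 3D result, the sketch below stacks equally sized binary lesion masks into a volume and derives a lesion volume and bounding box. The voxel spacing values are hypothetical; in practice they would come from the image metadata (for DICOM, attributes such as Pixel Spacing), and all function names are illustrative.

```python
from typing import List, Optional, Sequence, Tuple

Mask2D = List[List[int]]


def stack_slices(masks: Sequence[Mask2D]) -> List[Mask2D]:
    """Stack equally sized 2D binary masks into a volume indexed [z][y][x]."""
    if not masks:
        raise ValueError("need at least one slice")
    h, w = len(masks[0]), len(masks[0][0])
    for m in masks:
        if len(m) != h or any(len(row) != w for row in m):
            raise ValueError("all slices must share the same shape")
    return [[list(row) for row in m] for m in masks]


def lesion_volume_mm3(volume, spacing_mm: Tuple[float, float, float]) -> float:
    """Count foreground voxels and multiply by the voxel size (dz, dy, dx) in mm."""
    dz, dy, dx = spacing_mm
    voxels = sum(1 for sl in volume for row in sl for v in row if v)
    return voxels * dz * dy * dx


def bounding_box(volume) -> Optional[tuple]:
    """Return ((z0, z1), (y0, y1), (x0, x1)) of the foreground, or None if empty."""
    coords = [(z, y, x) for z, sl in enumerate(volume)
              for y, row in enumerate(sl) for x, v in enumerate(row) if v]
    if not coords:
        return None
    zs, ys, xs = zip(*coords)
    return (min(zs), max(zs)), (min(ys), max(ys)), (min(xs), max(xs))


if __name__ == "__main__":
    vol = stack_slices([[[0, 1], [1, 1]], [[0, 0], [0, 1]]])
    print(lesion_volume_mm3(vol, (2.0, 0.5, 0.5)), bounding_box(vol))
```

A learned model such as the GAN described above would additionally synthesize plausible structure between sparse slices, which plain stacking cannot do.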

2.2 Assessment Training System of the Platform

The assessment training system is another important part of the BMRMIA learning and training platform. The system consists of three modules: online assessment, targeted report and personalized training. The system not only helps radiologists accurately assess their mastery of each part of the learning guidance system but also customizes a personalized learning and training plan for each radiologist.

2.2.1 Module of Online Assessment

This module draws on the current evaluation criteria that well-known Internet enterprises use for deep learning engineers and defines a series of quantitative evaluation indicators for radiologists learning automated medical image analysis technology. The BMRMIA learning and training platform provides three assessment dimensions and nine types of skills assessment projects (as shown in Fig. 3). This module tracks the radiologists’ strengths and weaknesses in basic learning, model combat, and radiological training application. The evaluation indicators of the platform are divided as follows.

First Assessment Dimension—Basic Learning: This dimension contains the following evaluation indicators: (i) Basics of deep learning. This indicator examines how well radiologists understand the basic concepts, principles and relevant mathematics of deep learning. It is evaluated with exam and quiz problems such as:

• What is the formula for the partial derivative of a composite function?

• What is the mathematical principle of the Dropout regularization strategy?

• Please write the mathematical derivation process of the gradient descent method.

Radiologists are required to submit answers to the exam or quiz. (ii) Basics of neural networks. This indicator examines radiologists’ mastery of the basic concepts, principles, technical characteristics and usage scenarios of important neural network models (such as DNN, CNN and RNN). It is evaluated with exam problems such as:

• What do local perception (local receptive fields) and weight sharing mean in a CNN?

• Which layers does LeNet-5 contain, and what is the function of each?

Radiologists are required to submit an answer. (iii) Programming fundamentals. This indicator examines how well radiologists master fundamental programming skills in languages such as, but not limited to, Python and C++. It is evaluated with project assignments, for example using Python to implement a CNN that recognizes images of handwriting. Radiologists are required to submit the resulting project artifacts.
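The two CNN quiz questions of the first dimension can be answered concretely with a few lines of arithmetic. The sketch below (plain Python; all function names are hypothetical) counts the parameters of LeNet-5’s first convolutional layer C1, which maps a 32×32 input to six 28×28 feature maps with 5×5 kernels, and compares it with a hypothetical fully connected layer producing the same number of outputs. It also implements a naive single-channel convolution in which each output value depends only on a local patch of the input.

```python
def conv_output_size(n: int, k: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one axis."""
    return (n + 2 * padding - k) // stride + 1


def conv_params(in_ch: int, out_ch: int, k: int) -> int:
    """Weight sharing: one k x k x in_ch kernel plus one bias per output
    channel, reused at every spatial position."""
    return out_ch * (k * k * in_ch + 1)


def dense_params(n_in: int, n_out: int) -> int:
    """A fully connected layer has a separate weight for every input-output pair."""
    return n_in * n_out + n_out


def conv2d_valid(image, kernel):
    """Naive single-channel 'valid' cross-correlation, as computed by CNN layers.
    Each output pixel sees only a k x k local patch (local perception)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(ow)]
            for i in range(oh)]


if __name__ == "__main__":
    side = conv_output_size(32, 5)
    print(side)                                   # 28
    print(conv_params(1, 6, 5))                   # 156
    print(dense_params(32 * 32, side * side * 6)) # 4821600
```

The 156 parameters agree with the count reported for C1 in [24]; a dense layer with the same 4704 outputs would need roughly 30,000 times as many, which is the practical payoff of local perception and weight sharing.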

Second Assessment Dimension—Model Combat: This dimension contains the following evaluation indicators: (i) Model design, iteration and application. This indicator examines how well radiologists master the iterative development process of deep learning models and algorithms. (ii) Engineering development and architecture. This indicator examines how well radiologists master the specific technical aspects involved in the entire life cycle of deep learning application development. (iii) Technical documentation standards and code quality assurance. This indicator examines radiologists’ ability to write standard technical documents and their awareness of code quality assurance. The second dimension is evaluated with laboratory assignments, including tasks such as:

• Write a technical document on a deep-learning-based object detection method

• Submit the implementation code of the above document

In response, radiologists are required to submit project reports and artifacts.

Third Assessment Dimension—Radiological Training Application: This dimension contains the following evaluation indicators: (i) Radiology knowledge. This indicator examines radiologists’ ability to translate clinical radiology requirements into deep learning technologies. (ii) Development trends and current situation. This indicator examines radiologists’ ability to reflect on the current state of deep learning technology and on unexplored application fields. (iii) Application of deep learning. This indicator examines radiologists’ ability to solve specific application problems with the most critical deep learning elements and technologies, and their ability to explore the three major application areas of deep learning in depth. The third dimension is evaluated with quiz and exam problems, for example: propose the best deep learning solution for segmenting brain tumors in medical images. Radiologists are required to submit answers to the exam or quiz.
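The nine indicators above lend themselves to a simple per-indicator record from which a targeted report can highlight weaknesses. The following sketch is hypothetical: the shortened indicator keys, the normalization of every score to [0, 1], and the 0.6 threshold are assumptions for illustration, not the platform’s actual scoring rules.

```python
from typing import Dict, List, Tuple

# Three assessment dimensions and their nine indicators, as named in the text.
# The short keys are hypothetical labels chosen for this sketch.
INDICATORS: Dict[str, List[str]] = {
    "basic_learning": ["dl_basics", "nn_basics", "programming"],
    "model_combat": ["model_iteration", "engineering", "docs_and_code_quality"],
    "radiology_application": ["radiology_knowledge", "trends", "dl_application"],
}


def targeted_report(scores: Dict[str, float], threshold: float = 0.6):
    """Return per-dimension mean scores and the indicators below `threshold`.

    `scores` maps each indicator key to a normalized score in [0, 1]
    (an assumed scale); weak indicators are sorted from weakest upward."""
    all_keys = [k for keys in INDICATORS.values() for k in keys]
    missing = [k for k in all_keys if k not in scores]
    if missing:
        raise KeyError(f"missing scores for: {missing}")
    dim_means = {dim: sum(scores[k] for k in keys) / len(keys)
                 for dim, keys in INDICATORS.items()}
    weak: List[Tuple[str, float]] = sorted(
        ((k, scores[k]) for k in all_keys if scores[k] < threshold),
        key=lambda item: item[1],
    )
    return dim_means, weak


if __name__ == "__main__":
    demo = {k: 0.8 for keys in INDICATORS.values() for k in keys}
    demo.update({"programming": 0.4, "trends": 0.5})  # made-up example scores
    means, weak = targeted_report(demo)
    print(weak)  # [('programming', 0.4), ('trends', 0.5)]
```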

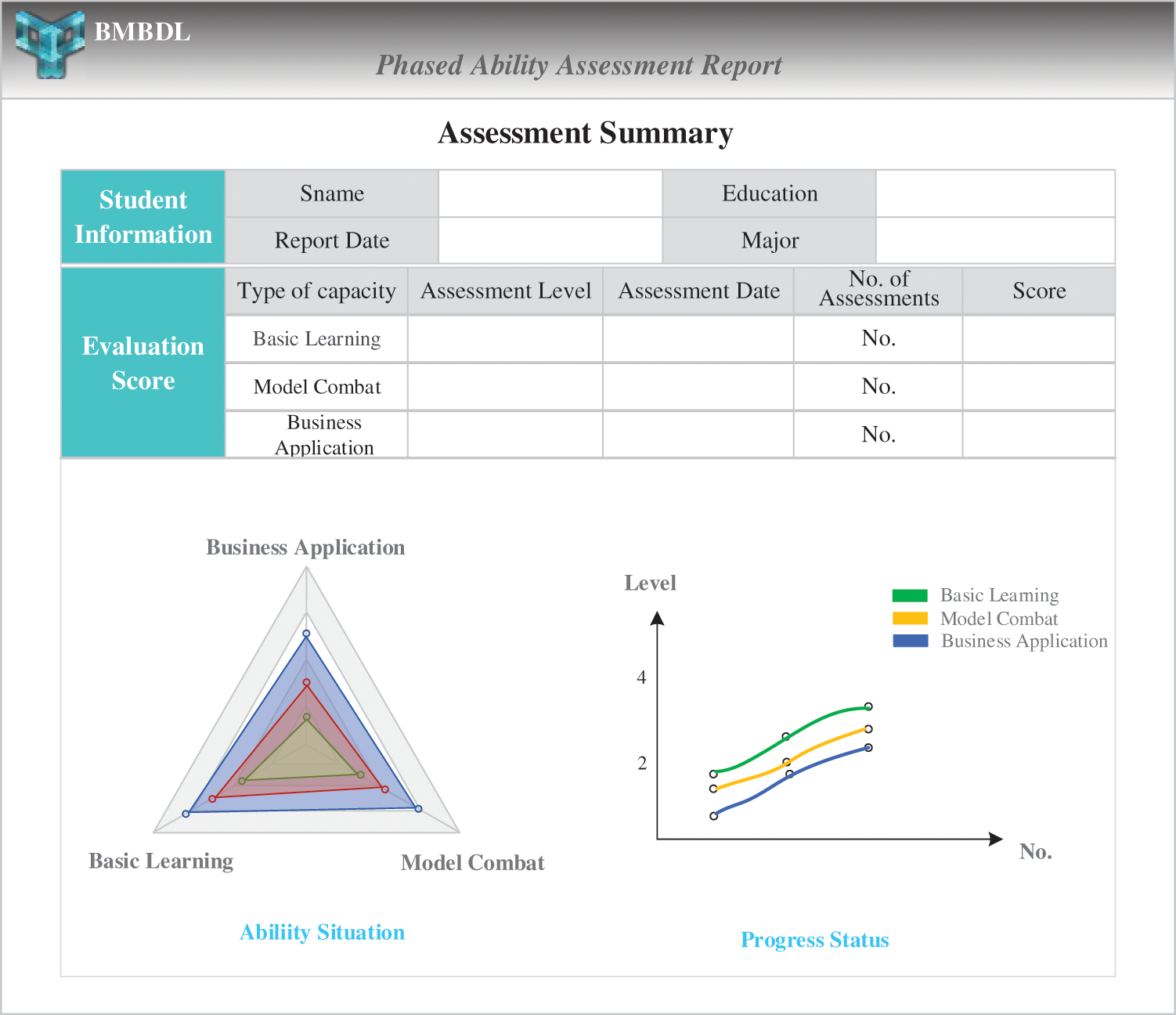

2.2.2 Module of Targeted Report

This module generates a targeted assessment report after every online assessment. After a radiologist completes staged learning and repeated evaluations, the module can also generate a phased ability assessment report. As shown in Fig. 5, radiologists can clearly see their current ability assessment results and their progress in the report. This makes it convenient for radiologists to measure their mastery of deep learning technology quantitatively and to set learning objectives for the next stage.

Figure 5: The phased ability assessment report

The evaluation has four grade levels, and the maximum score is 100 points. Of the total, the first dimension accounts for 50 points, and the second and third dimensions account for 25 points each. Answers to exams and quizzes submitted by the radiologists are scored by the teacher against standard answers. Project documents and reports are marked subjectively by several professors from the local hospital according to the quality of the submitted work, and the teacher then takes the average of these marks as the final score of the submission.
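The weighting described above can be written as a short function. In this hedged sketch the 50/25/25 split and the averaging of several reviewers’ marks follow the text, while the cut-off scores for the four grade levels, the level labels, and all function names are hypothetical placeholders, because the paper does not state them.

```python
from statistics import mean

# Maximum points per dimension, as stated in the text (total = 100).
MAX_POINTS = {"basic_learning": 50.0, "model_combat": 25.0, "radiology_application": 25.0}

# HYPOTHETICAL cut-offs for the four grade levels; the text does not give them.
GRADE_CUTOFFS = [(85.0, "Level 4"), (70.0, "Level 3"), (60.0, "Level 2"), (0.0, "Level 1")]


def project_score(professor_marks):
    """Average the subjective marks several reviewers gave one submission."""
    if not professor_marks:
        raise ValueError("at least one mark is required")
    return mean(professor_marks)


def total_score(fractions):
    """Weight each dimension's earned fraction (clipped to 0..1) by its maximum points."""
    return sum(MAX_POINTS[d] * min(max(fractions[d], 0.0), 1.0) for d in MAX_POINTS)


def grade(score):
    """Map a 0-100 total score to one of the four (hypothetical) grade levels."""
    for cutoff, label in GRADE_CUTOFFS:
        if score >= cutoff:
            return label
    raise ValueError("score must be non-negative")


if __name__ == "__main__":
    lab = project_score([80, 70, 90]) / 100  # three reviewers, marks out of 100
    total = total_score({"basic_learning": 0.9, "model_combat": lab,
                         "radiology_application": 0.6})
    print(total, grade(total))
```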

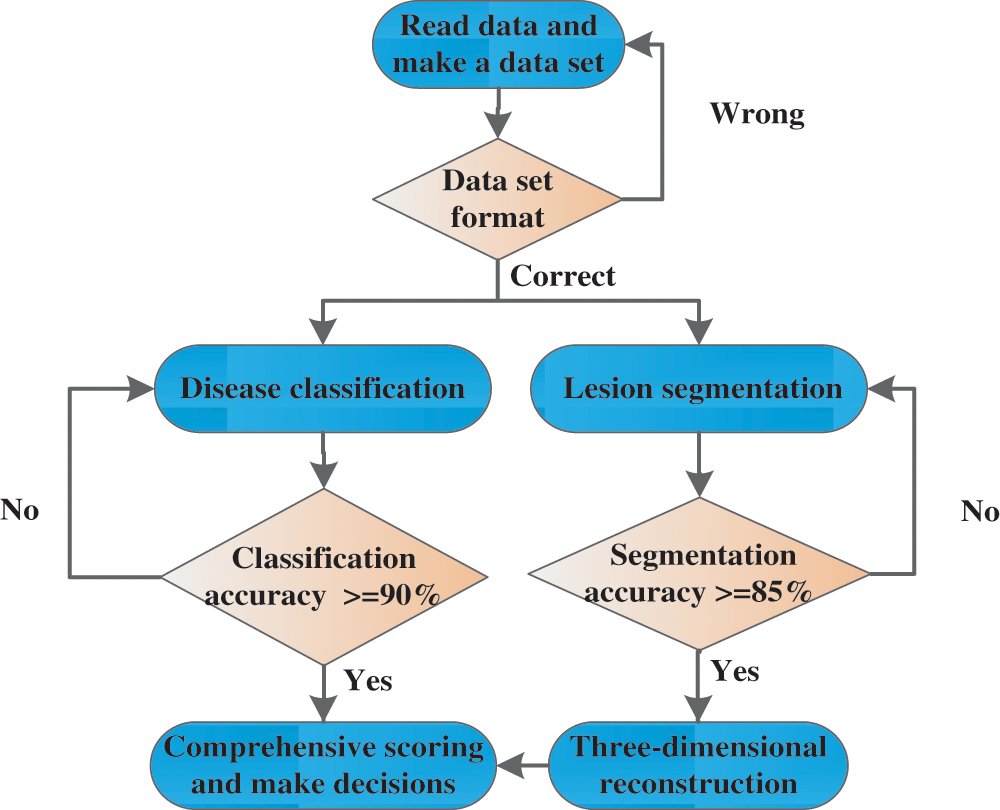

2.2.3 Three-Dimensional Medical Decision Support System Testing

After passing the online assessment module and the targeted report module, radiologists move on to a complete 3D medical decision support system that systematically tests their deep learning skills. In this three-dimensional decision system, radiologists first enter the data reading module and build their own data sets from the medical data provided by the platform. Only when the data set has been prepared correctly can they proceed to the next module, disease classification and lesion segmentation. In this module, radiologists train and test models and tune their parameters. The module is passed when the classification accuracy and the segmentation accuracy reach preset standards; for example, the minimum classification accuracy may be set to 90% and the minimum segmentation accuracy to 85%. Radiologists then proceed to the 3D reconstruction module, in which the segmentation results of the previous module are reconstructed in three dimensions, displayed, and uploaded to the back end, where a professional doctor scores the final result. The overall classification accuracy and the doctor’s evaluation of the 3D reconstruction result together form the final score of this test system. The flow chart of the entire test system is shown in Fig. 6.

Figure 6: 3D medical decision support system test flow chart
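The gates in the test flow of Fig. 6 can be sketched as a small decision function. The 90% and 85% thresholds are the example values given in the text; everything else is an assumption. In particular, the paper does not name its segmentation metric, so this sketch uses the Dice coefficient as a stand-in, and the module names and function names are hypothetical.

```python
CLS_MIN_ACCURACY = 0.90  # example minimum classification accuracy from the text
SEG_MIN_SCORE = 0.85     # example minimum segmentation score from the text


def accuracy(pred, truth):
    """Fraction of case-level labels predicted correctly."""
    if not truth or len(pred) != len(truth):
        raise ValueError("need equal-length, non-empty label lists")
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


def dice(pred_mask, true_mask):
    """Dice = 2 * |A intersect B| / (|A| + |B|) on flattened binary masks.

    ASSUMPTION: Dice stands in for the unspecified segmentation metric.
    Two empty masks are treated as a perfect match."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same length")
    inter = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    total = sum(1 for p in pred_mask if p) + sum(1 for t in true_mask if t)
    return 1.0 if total == 0 else 2.0 * inter / total


def next_module(data_ready, cls_acc, seg_score):
    """Decide which module of the test flow the learner works on next."""
    if not data_ready:
        return "data_reading"
    if cls_acc >= CLS_MIN_ACCURACY and seg_score >= SEG_MIN_SCORE:
        return "3d_reconstruction"
    return "classification_and_segmentation"


if __name__ == "__main__":
    acc = accuracy([1, 0, 1, 1, 1, 0, 1, 1, 0, 1], [1, 0, 1, 1, 1, 0, 1, 1, 0, 0])
    seg = dice([1, 1, 1, 0, 0], [1, 1, 1, 1, 0])
    print(acc, seg, next_module(True, acc, seg))
```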

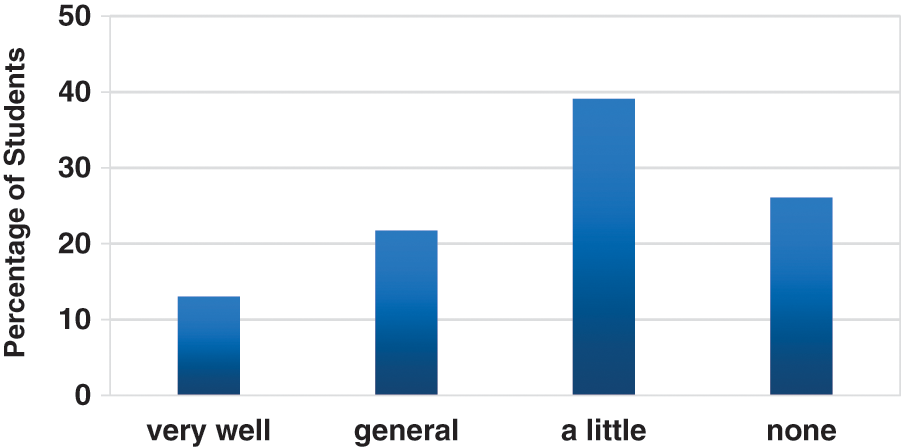

Before the BMRMIA learning and training platform was established, 23 radiologists from the School of Information Science and Engineering of Shandong University, including 13 undergraduate and 10 graduate radiologists, were invited to complete the Cognitive Questionnaire for Deep Learning Technology. Their understanding of deep learning is presented in Fig. 7. More than 60% of the radiologists did not know the basics of deep learning technology, and fewer than 14% were well aware of them.

Figure 7: Responses of the radiologists—understanding

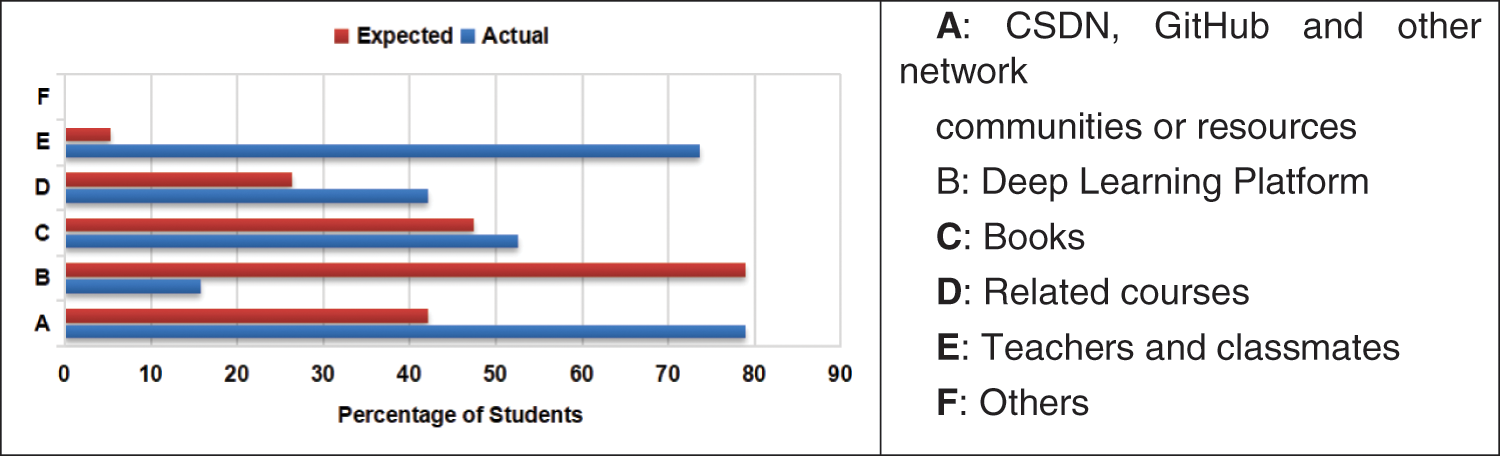

The 89% of radiologists who had some understanding of deep learning were then asked how they currently learn about deep learning and what their ideal learning channels would be. As shown in Fig. 8, more than 70% of them learned through channels A and E, and only 3 through an ordinary deep learning platform. However, among the desired learning channels, the expectation rate for a systematic learning platform is 79%, far exceeding channels A, C, and D, which indicates the need for a deep learning platform. The questionnaire also asked radiologists to suggest learning guidance content that the platform should provide. The answers focused mainly on the basic theory of deep learning and on how to apply it in practice. Therefore, the BMRMIA learning and training platform focuses on building the basic learning layer of the learning guidance system and on giving radiologists a practical medical application through the three-dimensional medical decision support system.

Figure 8: Responses of the radiologists—actual and expected learning ways

Subsequently, the 23 radiologists were asked to use the BMRMIA learning and training platform for two months. The online assessment module produced their targeted assessment reports, which show that their levels improved over the two months. The learning outcomes of the platform were also measured by a survey. To measure the basic skills radiologists acquired and whether the platform met their learning expectations, the survey included statements such as:

• I have mastered several important neural network models

• I can use Python to implement image classifiers based on deep learning

• I can systematically master the application of deep learning through a three-dimensional medical decision support system.

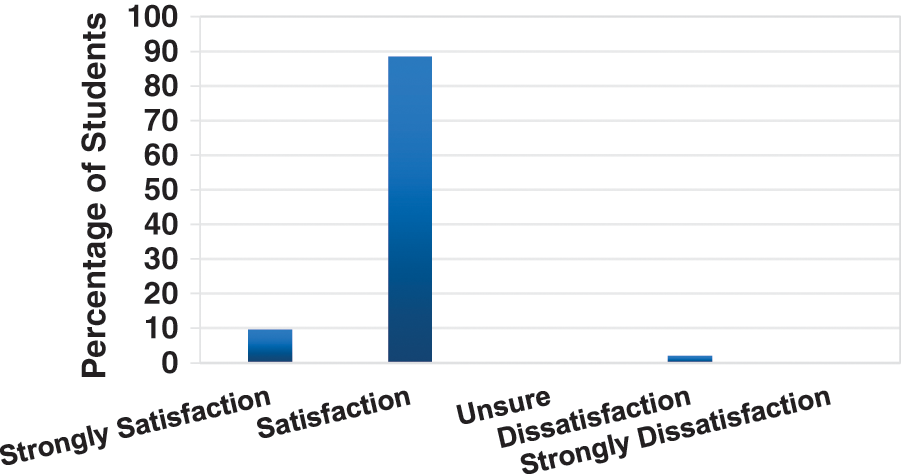

The statistical results of the survey questionnaire responses are provided in Fig. 9.

As shown in the figure, to assess radiologists’ satisfaction with the platform, we defined five levels: Strongly satisfied, Satisfied, Unsure, Dissatisfied and Strongly dissatisfied. Of the respondents, 10% expressed strong satisfaction and 88% expressed satisfaction with the platform’s learning guidance services, while the remaining 2% were dissatisfied. In follow-up consultations, the dissatisfied respondents mainly said that some methods were too difficult for doctors, and they hoped the platform would offer more basic, easy-to-use algorithms for practice. These results include responses from 21 radiologists over the two-month period.

Figure 9: Cumulative learner outcomes—satisfaction with learning expectations

The experimental data indicate that the BMRMIA learning and training platform improved the deep learning skills of both groups, graduate and undergraduate radiologists, and initially achieved the desired goal. After learning and training on the platform, these radiologists improved the skills they had lacked, and they rated the rationality and practicability of the platform highly. The BMRMIA learning and training platform, comprising the learning guidance system and the assessment training system, creates a good deep learning experience for radiologists. It helps them master the theoretical knowledge and practical skills of deep learning in a hierarchical and systematic way, narrows the gap between the deep learning professionals trained by hospitals and universities and the talent demand standards of enterprises, and, through a concrete three-dimensional medical decision support system, lets them learn the application of deep learning in practice. However, the number of participants was small, so the experiments may not accurately predict platform usage at a larger scale. In addition, companies do not have uniform standards for recruiting deep learning engineers, so there is no comprehensive measure of how effectively the platform narrows the gap between university training and corporate demand. Future work includes extending the platform to other hospitals and universities to test its effectiveness, further investigating the deep learning talent requirements of more enterprises, supplementing the platform’s learning guidance content and online evaluation indicators, and providing better services to hospitals and universities that cultivate deep learning talent.

Funding Statement: This work is supported in part by the Major Fundamental Research of Natural Science Foundation of Shandong Province under Grant ZR2019ZD05, and Joint Fund for Smart Computing of Shandong Natural Science Foundation under Grant ZR2020LZH013, and the Scientific Research Platform and Projects of Department of Education of Guangdong Province under Grant 2019GKQNCX121 and the Intelligent Perception and Computing Innovation Platform of the Shenzhen Institute of Information Technology under Grant PT2019E001.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. Deng, L., Yu, D. (2014). Deep learning: Methods and applications. Foundations and Trends in Signal Processing, 7(3), 197–387. DOI 10.1561/9781601988157.

2. Krizhevsky, A., Sutskever, I., Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. Communications of the ACM, 60(6), 84–90. DOI 10.1145/3065386.

3. Gurovich, Y., Hanani, Y., Bar, O., Fleischer, N., Gelbman, D. et al. (2018). DeepGestalt-Identifying rare genetic syndromes using deep learning. arXiv:1801.07637.

4. Dekhil, O., Hajjdiab, H., Ayinde, B. O., Shalaby, A., Switala, A. et al. (2018). Using resting state functional MRI to build a personalized autism diagnosis system. IEEE 15th International Symposium on Biomedical Imaging. Washington DC, USA. DOI 10.1109/ISBI.2018.8363829.

5. Su, S., Hu, Z., Lin, Q., Hau, W. K., Gao, Z. et al. (2017). An artificial neural network method for lumen and media-adventitia border detection in IVUS. Computerized Medical Imaging and Graphics, 57, 29–39. DOI 10.1016/j.compmedimag.2016.11.003.

6. Gao, Z., Wu, S., Liu, Z., Luo, J., Zhang, H. et al. (2019). Learning the implicit strain reconstruction in ultrasound elastography using privileged information. Medical Image Analysis, 58, 101534. DOI 10.1016/j.media.2019.101534.

7. Nguyen, D. T., Joty, S., Imran, M., Sajjad, H., Mitra, P. (2016). Applications of online deep learning for crisis response using social media information. arXiv:1610.01030.

8. Wang, B., Yin, P., Bertozzi, A. L., Brantingham, P. J., Osher, S. J. et al. (2017). Deep learning for real-time crime forecasting and its ternarization. Chinese Annals of Mathematics, Series B, 40(6), 949–966. DOI 10.1007/s11401-019-0168-y.

9. Shams, S., Goswami, S., Lee, K., Yang, S., Park, S. J. (2018). Towards distributed cyberinfrastructure for smart cities using big data and deep learning technologies. IEEE 38th International Conference on Distributed Computing Systems, pp. 1276–1283. Vienna, Austria, IEEE.

10. Lecoutre, A., Negrevergne, B., Yger, F. (2017). Recognizing art style automatically in painting with deep learning. Asian Conference on Machine Learning, pp. 327–342. Yonsei University, Seoul, Korea.

11. Hasan, A., Kalıpsız, O., Akyokuş, S. (2017). Predicting financial market in big data: Deep learning. International Conference on Computer Science and Engineering, pp. 510–515. Antalya, Turkey, IEEE.

12. Georgiopoulos, M., Demara, R. F., Gonzalez, A. J., Wu, A. S., Mollaghasemi, M. et al. (2009). A sustainable model for integrating current topics in machine learning research into the undergraduate curriculum. IEEE Transactions on Education, 52(4), 503–512. DOI 10.1109/TE.2008.930511.

13. Lavesson, N. (2010). Learning machine learning: A case study. IEEE Transactions on Education, 53(4), 672–676. DOI 10.1109/TE.2009.2038992.

14. Gibson, E., Li, W., Sudre, C., Fidon, L., Shakir, D. I. et al. (2018). NiftyNet: A deep-learning platform for medical imaging. Computer Methods and Programs in Biomedicine, 158, 113–122. DOI 10.1016/j.cmpb.2018.01.025.

15. Tang, Z., Zhao, G., Ouyang, T. (2021). Two-phase deep learning model for short-term wind direction forecasting. Renewable Energy, 173, 1005–1016. DOI 10.1016/j.renene.2021.04.041.

16. Wong, K. K. L., Fortino, G., Abbott, D. (2020). Deep learning-based cardiovascular image diagnosis: A promising challenge. Future Generation Computer Systems, 110, 802–811. DOI 10.1016/j.future.2019.09.047.

17. Spady, W. G. (1994). Outcome-based education: Critical issues and answers. American Association of School Administrators, Arlington, VA. https://eric.ed.gov/?id=ED380910.

18. Mohamad, I. A. A. S. (2009). Implementation of OBE in engineering education. International Conference on Engineering Education, pp. 164–166. Kuala Lumpur, Malaysia. DOI 10.1109/ICEED.2009.5490591.

19. Dai, H., Wei, W., Wang, H., Wong, T. (2017). Impact of outcome-based education on software engineering teaching: A case study. IEEE International Conference on Teaching, pp. 261–264. Hong Kong, China.

20. Prasad, M. R., Reddy, D. K. (2016). Computer based teaching methodology for outcome-based engineering education. IEEE International Conference on Advanced Computing, pp. 809–814. Bhimavaram, India.

21. Midraj, S. (2018). Outcome-based education (OBE). John Wiley & Sons.

22. Barella, A., Valero, S., Carrascosa, C. (2009). JGOMAS: New approach to AI teaching. IEEE Transactions on Education, 52(2), 228–235. DOI 10.1109/TE.2008.925764.

23. Hinton, G. E., Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science, 313(5786), 504–507. DOI 10.1126/science.1127647.

24. LeCun, Y., Bottou, L., Bengio, Y., Haffner, P. (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278–2324. DOI 10.1109/5.726791.

25. Lipton, Z. C. (2015). A critical review of recurrent neural networks for sequence learning. arXiv:1506.00019.

26. Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556.

27. Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S. et al. (2014). Going deeper with convolutions. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–9. Boston, MA, USA.

28. He, K., Zhang, X., Ren, S., Sun, J. (2016). Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778. Las Vegas, NV, USA.

29. Monk, S. (2013). Programming the Raspberry Pi: Getting started with Python. New York, USA: McGraw-Hill.

30. Unpingco, J. (2016). Getting started with scientific Python. Python for Probability, Statistics, and Machine Learning, pp. 1–33. Springer, Cham.

31. Gao, Z., Xiong, H., Liu, X., Zhang, H., Ghista, D. et al. (2017). Robust estimation of carotid artery wall motion using the elasticity-based state-space approach. Medical Image Analysis, 37, 1–21. DOI 10.1016/j.media.2017.01.004.

32. Hoffman, E. A., Sonka, M., Zhang, X., Siebes, M., Chada, R. R. et al. (1994). Automated detection of wall and plaque borders in intravascular ultrasound images. Proceedings of SPIE-The International Society for Optical Engineering, 2168, 13–22. DOI 10.1117/12.174406.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.