DOI:10.32604/cmc.2020.012165

Computers, Materials & Continua

Article

Image Recognition of Citrus Diseases Based on Deep Learning

1Central South University of Forestry and Technology, Changsha, 410004, China

2School of Information Technology and Management, Hunan University of Finance and Economics, Changsha, 410205, China

3Department of Mathematics and Computer Science, Northeastern State University, Tahlequah, OK, 74464, USA

*Corresponding Author: Xuyu Xiang. Email: xyuxiang@163.com

Received: 17 June 2020; Accepted: 28 July 2020

Abstract: In recent years, the development of machine learning and deep learning has made it possible to identify, and even help control, crop diseases with electronic devices instead of manual observation. In this paper, we propose an image recognition method for citrus diseases based on deep learning. We built a citrus image dataset covering six common citrus diseases and trained a deep learning network on these images so that it can effectively identify and classify the diseases. In the experiments, we use MobileNetV2 as the primary network and compare it with other network models in terms of speed, model size, and accuracy. The results show that our method reduces prediction time and model size while keeping good classification accuracy. Finally, we discuss the significance of using MobileNetV2 to identify and classify agricultural diseases on mobile terminals and put forward relevant suggestions.

Keywords: Deep learning; image classification; citrus diseases; agriculture science and technology

Agricultural disasters are diverse, frequent, and highly damaging; they not only cause losses in crop production but also threaten food safety [1]. Crop disease is one of the main types of such disasters, with many varieties and a wide range of influence. Disease problems inevitably affect crops throughout their growing cycle. Moreover, because of synergistic effects in the ecological environment, diseases spread by insects may develop into large-scale, serious infections, resulting in widespread crop loss. Therefore, automatic and accurate identification of crop diseases is the key to crop disease prevention.

At present, some scholars have researched disease image recognition based on deep learning [2]. Replacing agricultural disease monitoring technicians with artificial intelligence [3] can greatly improve the efficiency of disease prevention and control, ease the shortage of forecasting personnel at China’s agricultural grassroots level, and further the intellectualization of agricultural production. Promoting intelligent disease prediction and prevention in agricultural production [4] can therefore address the delay, low efficiency, and poor objectivity of manual monitoring and identification, and reduce the workload of personnel. Rapid and accurate diagnosis of crop diseases plays an essential role in ensuring crop yield and food safety: farmers can photograph diseased crops with mobile devices to detect diseases and treat them in time [5]. Citrus is one of the most important commercial crops all over the world, and citrus diseases bring great economic losses to farmers. The most threatening diseases include Anthracnose [6], Huanglongbing (HLB), Canker [7], Scabis [8], Black spot [9] and Sand paper rust. This paper selects several common citrus diseases as experimental objects.

HLB is the most influential and harmful citrus disease in the world. It is commonly known as the citrus cancer and brings great trouble to farmers. Over long distances, HLB is transmitted mainly through scion grafting and diseased seedlings. HLB makes citrus fruit bitter and causes it to fall early. In later stages, the whole tree withers and dies.

Anthracnose is the most common citrus disease with a wide range and long duration. It is mainly harmful to leaves, branches, flowers, fruits and stalks. Severe cases of Anthracnose often lead to defoliation, shoot dieback, the fall of flowers and fruits in abundance, and fruit decay.

Canker is a significant challenge in citrus cultivation and is harmful to citrus leaves, shoot tips and fruits. It can also cause small trees to lose their leaves and their shoots to die. Severe canker leads to fruit drop, and mild canker causes fruit rot and reduced storability, which significantly reduces the commercial value of the fruit and increases the cost of disease and insect control.

Black spot is a common disease in most citrus cultivation areas. It is harmful to fruits, and its symptoms are mostly found in nearly mature fruits. Black spot mainly infects fruitlets, which show no obvious symptoms while immature. Symptoms begin to appear between the fruit swelling stage and the mature stage and easily lead to fruit decay.

Sand paper rust is a fungal disease caused by Diaporthe citri. It mainly damages spires, tender tips and immature fruits of citrus and produces black and brown colloid small dots on the surface.

Scabis is one of the main fungal diseases of citrus, which is usually caused by Elsinoe fawcettii. It damages not only the young fruit of a new shoot, but also the calyx and the petal.

Deep learning is a subclass of machine learning that has significantly improved the recognition rate in many traditional recognition tasks [10]. In recent years, deep learning has been used extensively not only in image processing, image recognition and classification [11–16], but also in other fields such as agriculture. Compared with earlier artificial neural network methods, deep learning recognizes more accurately and better solves image classification and visualization problems; hence it is now regarded as the most promising technology in modern agriculture [17]. A deep network resembles a shallow neural network in structure but contains many hidden layers. Typical deep learning networks include the convolutional neural network (CNN), restricted Boltzmann machine (RBM), deep belief network (DBN), deep Boltzmann machine (DBM) [18], recurrent neural network (RNN), generative adversarial network (GAN) and CapsNet. Compared with shallow neural networks, deep learning has stronger learning ability, higher recognition accuracy and lower requirements on external environmental conditions, so it can be applied in daily life and agricultural production [19], for example to detect plant diseases [20]. Current methods of crop disease investigation cannot meet the needs of agricultural production; deep learning can replace manual work, using electronic equipment to identify diseases and even help prevent and control them. At present, some scholars have studied disease image recognition using deep learning.

In farming practice, identification of the diseases above relies mainly on grassroots technicians. Through field observation, they compare their observation records with recorded disease specimens to determine the type of disease. However, long-term use of this manual investigation method consumes substantial human and material resources and cannot guarantee accuracy or effectiveness, so it cannot meet current needs for disease detection and prevention. Therefore, more and more researchers focus on deep learning for crop disease identification. Although performance has improved, the gains require more computing power, and deep learning is constrained on mobile devices by limited energy, computing resources and storage space.

Efficiency is mainly determined by two aspects: storage and prediction speed. Only when the efficiency problem of CNNs is solved can they leave the laboratory and be used more extensively on mobile devices. The general way to improve efficiency is to compress a trained model, addressing the memory and speed problems with fewer network parameters. Lightweight model design takes another approach: it designs a more efficient way of computing the network (mainly the convolution) that reduces the parameters without losing performance.

MobileNet is a lightweight model proposed for mobile devices and other embedded devices that effectively alleviates the problems mentioned above. It mainly utilizes depthwise separable convolution to simplify the network structure.

3.1 Network Structure Comparison

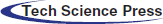

MobileNetV1 and MobileNetV2 both use Depthwise (DW) convolution and Pointwise (PW) convolution to extract features. The combination of these two operations is also called depth-separable convolution. In theory, this method can exponentially reduce the time and space complexity of the convolution layer. According to the following formula, since the number of the convolution kernel is less than the number of output channels, the computational complexity of standard convolution is approximately K2 times of the combination of DW and PW convolution.

Figure 1: The difference between MobileNetV1 and MobileNetV2
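The cost comparison between standard convolution and the DW plus PW combination can be checked numerically. The sketch below counts multiply-accumulate operations for both schemes, assuming stride 1 and an output feature map of the same size as the input; the function names and the example layer dimensions are illustrative and do not come from the paper.

```python
def standard_conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulates of a k x k standard convolution (stride 1, same-size output)."""
    return h * w * c_in * c_out * k * k


def separable_conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulates of a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # the 1 x 1 convolution mixes the channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical layer: 56 x 56 feature map, 64 -> 128 channels, 3 x 3 kernel.
    std = standard_conv_macs(56, 56, 64, 128, 3)
    sep = separable_conv_macs(56, 56, 64, 128, 3)
    print(f"standard: {std}, separable: {sep}, ratio: {std / sep:.2f}")
```

For this hypothetical layer the ratio is about 8.4, just under K² = 9, and it moves closer to 9 as the number of output channels grows.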

As Fig. 1 shows, MobileNetV1 and MobileNetV2 differ in several ways. MobileNetV2 adds a new PW convolution before the DW convolution. By construction, the DW convolution cannot change the number of channels; it can only output the number of channels given by the previous layer. Thus, if the previous layer has very few channels, DW can only extract features in a low-dimensional space, and the result is poor. To solve this problem, MobileNetV2 places a PW convolution before each DW convolution specifically to raise the dimension, with the expansion factor set to t = 6. In this way, regardless of the number of input channels Cin, once the first PW has raised the dimension, DW always works in a relatively high-dimensional space (t·Cin). MobileNetV2 also removes the activation function after the second PW. This is called a linear bottleneck [21]: although an activation function effectively adds nonlinearity in a high-dimensional space, it destroys features in a low-dimensional space, where a linear output works better. Since the main function of the second PW is dimension reduction, ReLU6 should not be applied after it.
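To make the channel flow through one MobileNetV2 block concrete, the following sketch lists the three convolutions with their channel counts and activations. It is a bookkeeping illustration rather than a trainable layer; the function and layer names are hypothetical, and the shortcut condition (stride 1 and equal input and output channels) follows common MobileNetV2 implementations rather than anything stated in this paper.

```python
def relu6(x):
    """ReLU6 clips an activation to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


def inverted_residual_plan(c_in, c_out, stride, t=6):
    """Describe the three convolutions of one MobileNetV2-style block.

    Returns a list of (layer, in_channels, out_channels, activation) tuples
    and a flag telling whether the residual shortcut can be applied.
    """
    hidden = t * c_in  # the first PW raises the dimension to t * c_in
    layers = [
        ("pw_expand", c_in, hidden, "relu6"),
        ("dw_3x3", hidden, hidden, "relu6"),   # DW keeps the channel count
        ("pw_project", hidden, c_out, None),   # linear bottleneck: no activation
    ]
    # Assumption: the shortcut is only valid when input and output shapes match.
    use_shortcut = stride == 1 and c_in == c_out
    return layers, use_shortcut


if __name__ == "__main__":
    layers, shortcut = inverted_residual_plan(c_in=24, c_out=24, stride=1)
    for name, cin, cout, act in layers:
        print(f"{name}: {cin} -> {cout}, activation={act}")
    print("residual shortcut:", shortcut)
```

With 24 input channels and t = 6, the DW convolution operates on 144 channels before the final PW projects back down without an activation.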

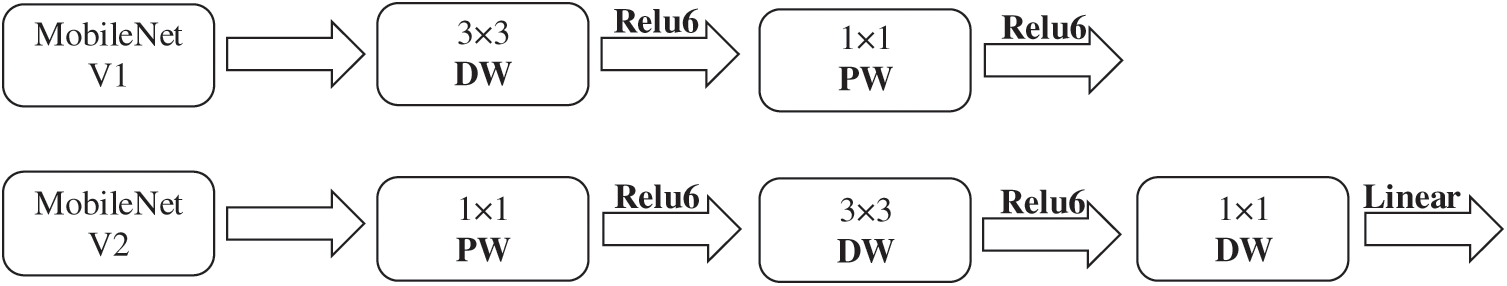

Figure 2: The network structure of MobileNetV1 and MobileNetV2. (a) MobileNetV2 (b) MobileNetV1

In Fig. 2, MobileNetV1 does not have the residual connection and applies ReLU in the last part, while MobileNetV2 has the residual connection and removes ReLU in the last part.

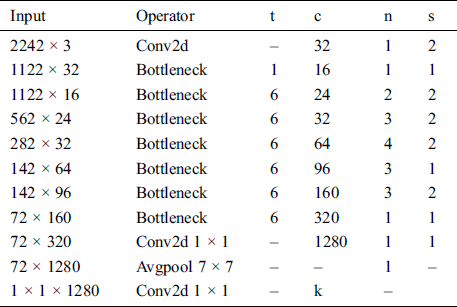

In Tab. 1 [21], t is the multiplication coefficient of the input channel, c is the number of output channels, n is the repetitions of modules, and s is the stride of the first repetition of the module (all subsequent repetitions are stride 1).

Table 1: Overall structure diagram of MobileNetV2
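The meaning of the (t, c, n, s) parameters can be illustrated by unrolling them into one entry per block. In the sketch below, the row values are the bottleneck rows as recalled from reference [21] and should be treated as an assumption, since the table itself appears here only as an image; the starting point of 32 channels at 112 × 112 resolution assumes a 224 × 224 input followed by the initial stride-2 convolution.

```python
# (t, c, n, s) rows for the bottleneck stages, recalled from reference [21] (assumption).
BOTTLENECK_ROWS = [
    (1, 16, 1, 1),
    (6, 24, 2, 2),
    (6, 32, 3, 2),
    (6, 64, 4, 2),
    (6, 96, 3, 1),
    (6, 160, 3, 2),
    (6, 320, 1, 1),
]


def expand_rows(rows, in_channels=32, resolution=112):
    """Unroll (t, c, n, s) rows into one dictionary per block."""
    blocks = []
    for t, c, n, s in rows:
        for i in range(n):
            stride = s if i == 0 else 1  # only the first repetition uses stride s
            resolution //= stride
            blocks.append({"t": t, "in": in_channels, "out": c,
                           "stride": stride, "resolution": resolution})
            in_channels = c
    return blocks


if __name__ == "__main__":
    for index, block in enumerate(expand_rows(BOTTLENECK_ROWS), start=1):
        print(index, block)
```

Unrolling shows the pattern described in the text: the spatial resolution shrinks stage by stage while the channel count grows.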

The network architecture of MobileNetV2 has much in common with many earlier CNNs. For example, it first uses an ordinary convolution for basic feature extraction and then a series of the novel residual modules for further processing; the feature maps become progressively smaller while the number of channels increases. Moreover, the expansion factor used inside each residual module is 6 (the authors of [21] report experimenting with values in the range 5–10 and choosing 6).

3.2 Citrus Disease Detection Based on MobileNetV2

In this paper, to establish an efficient citrus disease detection method, we adopt MobileNetV2, a lightweight network. The dataset is augmented in five ways in advance, and the experiments are run with the augmented dataset on an adjusted MobileNetV2. The intention is to reduce the training and testing time of the network, as well as the size of the network model, while maintaining classification accuracy. In this way, our method can run on mobile terminals, which is convenient for users anytime and anywhere.

4 Experimental Results and Analysis

The experiments were run on an Intel(R) Core(TM) i7-8750H CPU @ 2.20 GHz with 16 GB of RAM and an Nvidia GeForce GTX 1070 GPU. The framework adopted in this paper is Keras, a high-level neural network API. We set the learning rate to 0.001, the batch size to 8, and the number of epochs to 50.
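A setup of this kind might look as follows in Keras. This is a minimal sketch, not the authors' training script: the learning rate, batch size, epoch count and number of classes come from the text, while the Adam optimizer, the 224 × 224 input size, training from scratch (`weights=None`) and the single dense softmax head are assumptions. TensorFlow is imported inside the function so the configuration can be inspected without it installed.

```python
# Hyperparameters stated in the text; six classes correspond to the six diseases.
CONFIG = {"learning_rate": 0.001, "batch_size": 8, "epochs": 50, "num_classes": 6}


def build_model(config=CONFIG, input_shape=(224, 224, 3)):
    """Build a MobileNetV2 classifier with Keras (TensorFlow is imported lazily)."""
    import tensorflow as tf

    backbone = tf.keras.applications.MobileNetV2(
        input_shape=input_shape,  # assumption: the paper does not state the input size
        include_top=False,        # drop the 1000-class ImageNet head
        weights=None,             # assumption: pretrained weights are not mentioned
        pooling="avg",
    )
    outputs = tf.keras.layers.Dense(
        config["num_classes"], activation="softmax")(backbone.output)
    model = tf.keras.Model(backbone.input, outputs)
    model.compile(
        # Assumption: the optimizer is not named in the paper; Adam is used here.
        optimizer=tf.keras.optimizers.Adam(learning_rate=config["learning_rate"]),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Training would then call `model.fit` with `epochs=CONFIG["epochs"]` on a data pipeline that yields batches of `CONFIG["batch_size"]` images with one-hot labels.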

4.2 The Establishment of Datasets

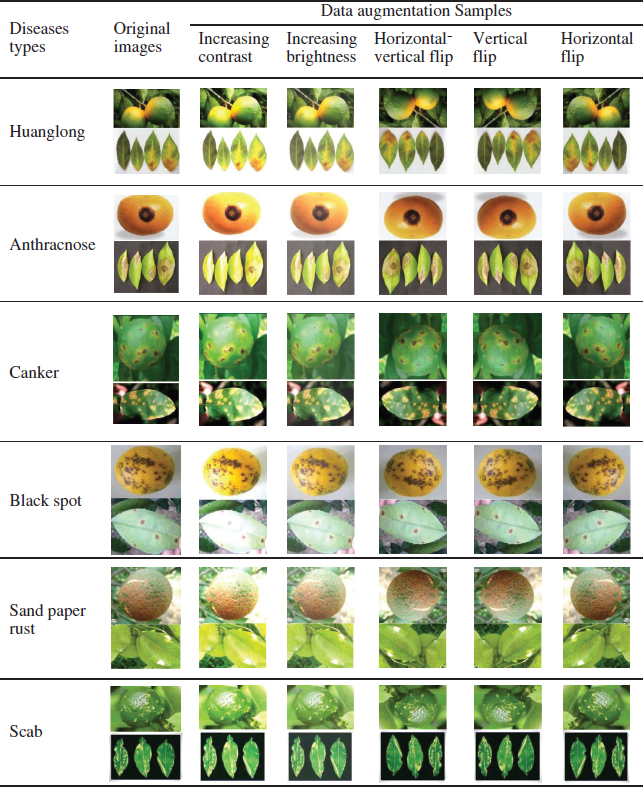

In this experiment, we used the six typical citrus cases mentioned in the previous article. We collected these pictures through Internet search and field photography. The dataset includes six categories: Huanglongbing, Anthracnose, Canker, Black spot, Sand paper rust, and Scabis. We divided the dataset into the training set, testing set, and validation set in a ratio of 6:2:2. Because our data set is small, the training results may be poor, such as the phenomenon of overfitting. To solve this problem, we enhanced the dataset to improve accuracy and efficiency [22]. We changed the brightness, contrast, horizontal flip, vertical flip, and horizontal vertical flip of the data set, resulting in a five-fold increase in the size of the training set and the testing set [23]. The original and enhanced image of dataset are shown in Tab. 2.

Table 2: Data samples of the original image and data augmentation
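The 6:2:2 split and the five augmentation operations can be sketched in plain Python. The example below is illustrative only: it treats an image as a list of rows of 8-bit grayscale values, and the brightness offset, contrast factor and random seed are hypothetical values, since the paper does not report the ones it used.

```python
import random


def split_dataset(items, ratios=(6, 2, 2), seed=0):
    """Shuffle items and split them into train/test/validation parts by ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_test = len(items) * ratios[1] // total
    train = items[:n_train]
    test = items[n_train:n_train + n_test]
    val = items[n_train + n_test:]  # the remainder goes to validation
    return train, test, val


def clip(value):
    """Keep a pixel value inside the 8-bit range."""
    return max(0, min(255, int(round(value))))


def augment(image, brightness=30, contrast=1.5):
    """Return five augmented copies of a grayscale image given as a list of rows."""
    mean = sum(map(sum, image)) / (len(image) * len(image[0]))
    return {
        "brightness": [[clip(p + brightness) for p in row] for row in image],
        "contrast": [[clip(mean + contrast * (p - mean)) for p in row] for row in image],
        "hflip": [row[::-1] for row in image],
        "vflip": [row[:] for row in image[::-1]],
        "hvflip": [row[::-1] for row in image[::-1]],
    }


if __name__ == "__main__":
    train, test, val = split_dataset(range(100))
    print(len(train), len(test), len(val))
    for name, result in augment([[0, 100], [200, 250]]).items():
        print(name, result)
```

In practice the same operations would be applied to colour images with an image library; the list-based version only shows what each operation does to the pixel grid.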

4.3 Analysis of the Classification Accuracy of Different Models

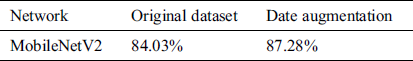

We used the trained model in MobileNetV2 to test our validation set, and the classification accuracy comparison of the original data and data augmentation is shown in Tab. 3. Experimental results show that the overall accuracy after data augmentation has been improved by about 3%.

Table 3: Classification accuracy of the original image and data augmentation

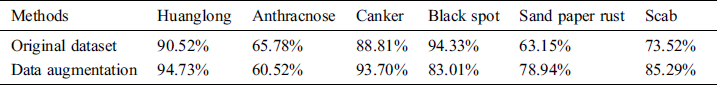

At the same time, we test the accuracy of each class in dataset with MobileNetV2, which are shown in Tab. 4.

Table 4: Accuracy comparison the of each category before and data augmentation

As can be seen from Tab. 4, after data augmentation the accuracy of four diseases improved, while the accuracy of two diseases decreased. This may be related to the small number of raw images in these two categories and the complexity of the image backgrounds.
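Overall and per-class accuracy of the kind compared above can be computed from true and predicted labels as follows. This is a generic sketch; the function names are illustrative and the labels in the usage example are made up for demonstration, not taken from the paper's results.

```python
from collections import Counter


def overall_accuracy(y_true, y_pred):
    """Fraction of all samples whose prediction matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_accuracy(y_true, y_pred):
    """Fraction of correctly classified samples for each true class."""
    total = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / total[label] for label in total}


if __name__ == "__main__":
    # Made-up labels for illustration only.
    y_true = ["HLB", "HLB", "Canker", "Canker", "Canker", "Scabis"]
    y_pred = ["HLB", "Canker", "Canker", "Canker", "HLB", "Scabis"]
    print(overall_accuracy(y_true, y_pred))
    print(per_class_accuracy(y_true, y_pred))
```

Reporting both numbers matters here because an overall gain can hide a drop in individual classes with few samples.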

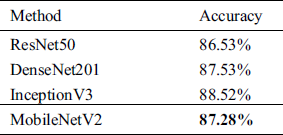

We also use the dataset after data enhancement to train in different networks and compare the accuracy of various networks, and the results are shown in Tab. 5.

Table 5: The classification accuracy of the different network

Although MobileNetV2 is not as complex as the other networks, it still classifies our dataset well, even slightly better than ResNet50 (+0.75%).

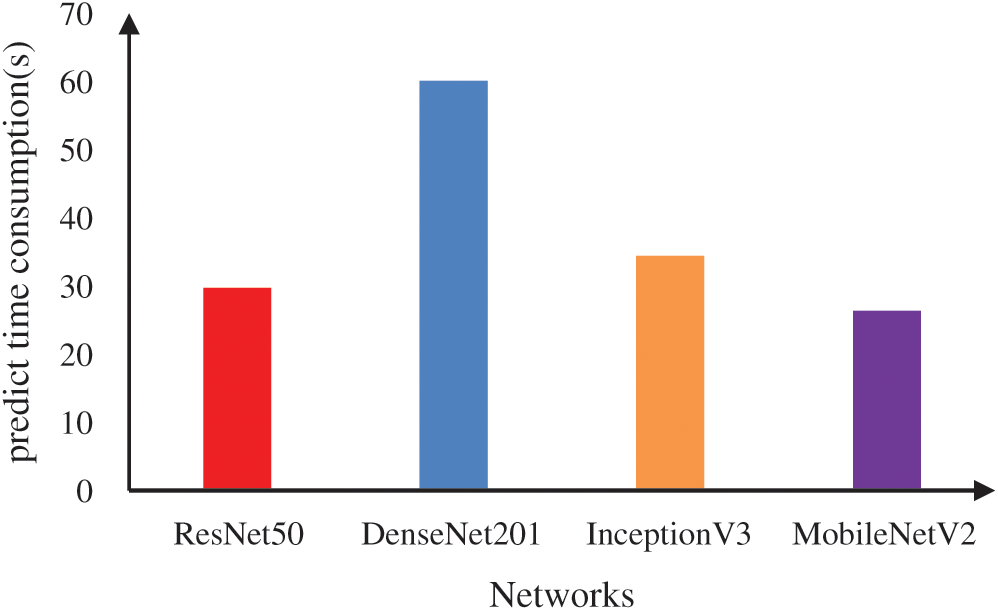

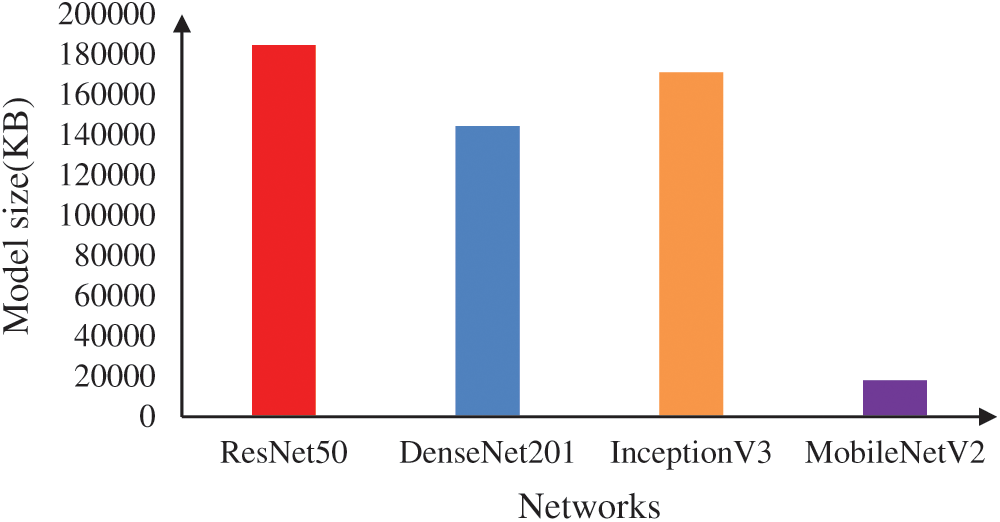

4.4 Predict Time Consumption and Model Size of Different Network

We put images of the validation set into several network models that we trained, recording the predicted time and model size used to test each model.

From Figs. 3 and 4, it can be seen that MobileNetV2 has little difference with other networks in the test accuracy. At the same time, Mobilenetv2 has a great advantage in the time used to verify the accuracy of the model compared with other network models, so it is more applicable on the mobile end. In this citrus disease classification, Mobilenetv2 keeps better accuracy and faster detection speed. It’s worth noting that the dataset used in this experiment is not large enough, if the dataset increases gradually, the speed advantage of MobileNetV2 will become more obvious.

Figure 3: The compares of predict time consumption

Figure 4: The compares of the model size of different model
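Prediction time and model size of the kind compared here can be measured with a small harness such as the one below. It is a sketch under stated assumptions: `predict` stands for any callable that classifies one sample (for example a wrapper around a trained model), and the file whose size is measured is a hypothetical saved model; neither name comes from the paper.

```python
import os
import tempfile
import time


def mean_prediction_time(predict, samples, repeats=3):
    """Average wall-clock seconds per sample over several passes."""
    start = time.perf_counter()
    for _ in range(repeats):
        for sample in samples:
            predict(sample)
    elapsed = time.perf_counter() - start
    return elapsed / (repeats * len(samples))


def file_size_mb(path):
    """Size of a saved model file in megabytes (1 MB = 1024 * 1024 bytes)."""
    return os.path.getsize(path) / (1024 * 1024)


if __name__ == "__main__":
    # Stand-in for a trained network; replace with a real prediction call.
    samples = [[0.1] * 1000 for _ in range(50)]
    print(f"{mean_prediction_time(sum, samples):.6f} s per sample")

    # Hypothetical 2 MB file standing in for a saved model.
    with tempfile.NamedTemporaryFile(delete=False) as handle:
        handle.write(b"\0" * (2 * 1024 * 1024))
    print(f"{file_size_mb(handle.name):.1f} MB")
    os.remove(handle.name)
```

Averaging over several passes reduces the influence of one-off costs such as the first call to a freshly loaded model.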

This method mainly uses MobileNetV2 to detect citrus diseases efficiently. A lightweight network model can therefore play a meaningful role when verification speed and model storage are limited. For example, mobile phones are terminals that people use frequently. We can embed the trained network model in an App that automatically identifies the disease from a photo and presents an introduction to the disease and countermeasures.
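Inside such an App, the last step is to turn the model's output probabilities into a disease name. The sketch below assumes the model returns one probability per class; the order of the class names is an assumption and would have to match the label order used during training.

```python
# Assumed label order; it must match the order used when the model was trained.
CLASS_NAMES = ["Huanglongbing", "Anthracnose", "Canker",
               "Black spot", "Sand paper rust", "Scabis"]


def top_prediction(probabilities, class_names=CLASS_NAMES):
    """Return the most probable class name and its probability."""
    if len(probabilities) != len(class_names):
        raise ValueError("one probability per class is required")
    index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return class_names[index], probabilities[index]


if __name__ == "__main__":
    # Hypothetical softmax output for one photo.
    name, confidence = top_prediction([0.05, 0.10, 0.70, 0.05, 0.05, 0.05])
    print(f"{name} ({confidence:.0%})")
```

The returned name could then be used as the key for looking up the disease introduction and countermeasures shown to the user.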

After identification, a purchase link for the required pesticides can be attached, and the results can provide disease treatment experience for experts and fruit farmers. This approach may also be applied to mobile intelligent sensing equipment and automatic identification systems for diseases and pests. It can help fruit farmers quickly determine disease types and take measures to reduce economic losses and protect the healthy growth and quality of fruit [24].

1) A rich database is an important basis for the classification and identification of crop diseases and insect pests, and a large dataset can increase the recognition accuracy after model training. Only by accumulating enough data can we give full play to the power of deep learning tools and technologies. In the future, we will continue to expand the number and types of images in the database to improve the generalization ability of the model.

2) The collected citrus disease data set can be used for image segmentation to remove complex picture backgrounds to obtain more accurate disease pictures for training models and improve the identification accuracy.

3) Optimizing the convolution network algorithm is a direct way to improve identification accuracy. Once massive datasets have been obtained, optimizing the convolution operation can further improve the identification accuracy.

In this paper, we trained MobileNetV2 to classify and identify six common citrus diseases. Comparing it with other network models in terms of accuracy, model size and validation speed, we can see that MobileNetV2 performs well in the classification and identification of citrus diseases. As a lightweight network, MobileNetV2 achieves accuracy similar to that of the other network models while validating faster.

Acknowledgement: The authors would like to thank Central South University of Forestry & Technology and the National Natural Science Foundation of China for their support.

Funding Statement: This work was supported in part by the National Natural Science Foundation of China under Grant 61772561, author J. Q, http://www.nsfc.gov.cn/; in part by the Key Research and Development Plan of Hunan Province under Grant 2018NK2012, author J. Q, http://kjt.hunan.gov.cn/; in part by the Key Research and Development Plan of Hunan Province under Grant 2019SK2022, author Y. T, http://kjt.hunan.gov.cn/; in part by the Science Research Projects of Hunan Provincial Education Department under Grant 18A174, author X. X, http://kxjsc.gov.hnedu.cn/; in part by the Science Research Projects of Hunan Provincial Education Department under Grant 19B584, author Y. T, http://kxjsc.gov.hnedu.cn/; in part by the Degree & Postgraduate Education Reform Project of Hunan Province under Grant 2019JGYB154, author J. Q, http://xwb.gov.hnedu.cn/; in part by the Postgraduate Excellent teaching team Project of Hunan Province under Grant [2019]370-133, author J. Q, http://xwb.gov.hnedu.cn/; in part by the Postgraduate Education and Teaching Reform Project of Central South University of Forestry & Technology under Grant 2019JG013, author X. X, http://jwc.csuft.edu.cn/; in part by the Natural Science Foundation of Hunan Province (No. 2020JJ4140), author Y. T, http://kjt.hunan.gov.cn/; and in part by the Natural Science Foundation of Hunan Province (No. 2020JJ4141), author X. X, http://kjt.hunan.gov.cn/.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

[1] S. P. Jia, H. J. Gao and X. Hang. (2019). “Advances in image recognition technology of crop diseases and insect pests based on deep learning,” Transactions of the Chinese Society of Agricultural Machinery, vol. 1000, no. 1298, pp. 314–316.

[2] Q. S. Zhou and X. Z. Tang. (2017). “Application of image recognition processing technology in agricultural engineering,” Modern Electronics Technique, vol. 40, no. 4, pp. 107–110.

[3] S. P. Lv, D. H. Li and R. H. Xian. (2019). “The application of deep learning in agriculture in China computer engineering and applications,” Computer Engineering and Applications, vol. 55, no. 20, pp. 24–32.

[4] L. L. Pan, J. H. Qin, H. Chen, X. Y. Xiang, C. Li et al. (2019). “Image augmentation-based food recognition with convolutional neural networks,” Computers, Materials & Continua, vol. 59, no. 19, pp. 297–313.

[5] J. H. Li, X. Hao, M. L. Niu, J. W. Wang, P. A. Li et al. (2019). “Identification of crop diseases based on convolution neural network,” China Agricultural Information, vol. 31, no. 3, pp. 39–47.

[6] L. L. Deng, K. F. Zeng, Y. H. Zhou and Y. Huang. (2015). “Effects of postharvest oligochitosan treatment on anthracnose disease in citrus fruit,” European Food Research and Technology, vol. 240, no. 4, pp. 795–804.

[7] H. G. Jia, Y. Z. Zhang, O. Vladimir, J. Xu, F. F. White et al. (2017). “Genome editing of the disease susceptibility gene CsLOB1 in citrus confers resistance to citrus canker,” Plant Biotechnology Journal, vol. 15, no. 7, pp. 817–823.

[8] M. S. Wang, Y. Q. Meng, X. Hou, Z. R. Zhu and Y. H. Li. (2017). “Two fungal diseases of citrus worthy of attention,” Plant Quarantine, vol. 30, no. 1, pp. 65–68.

[9] N. T. Tran, A. K. Miles, R. G. Dietzgen, M. M. Dewdney, K. Zhang et al. (2017). “Sexual reproduction in the citrus black spot pathogen, Phyllosticta citricarpa,” Phytopathology, vol. 107, no. 6, pp. 732–739.

[10] A. Krizhevsky, I. Sutskever and G. Hinton. (2012). “ImageNet classification with deep convolutional neural networks,” Proc. of the Advances in Neural Information Processing Systems, vol. 60, no. 6, pp. 84–90.

[11] Y. J. Luo, J. H. Qin, X. Y. Xiang, Y. Tan, Q. Liu et al. (2020). “Coverless real-time image information hiding based on image block matching and dense convolutional network,” Journal of Real Time Image Processing, vol. 17, no. 1, pp. 125–135.

[12] J. H. Qin, H. Li, X. Y. Xiang, Y. Tan, W. Y. Pan et al. (2019). “An encrypted image retrieval method based on harris corner optimization and LSH in cloud computing,” IEEE Access, vol. 7, no. 1, pp. 24626–24633.

[13] J. Wang, J. H. Qin, X. Y. Xiang, Y. Tan and N. Pan. (2019). “Captcha recognition based on deep convolutional neural network,” Mathematical Biosciences and Engineering, vol. 16, no. 5, pp. 5851–5861.

[14] Y. Tan, J. H. Qin, X. Y. Xiang, W. T. Ma, W. Y. Pan et al. (2019). “A robust watermarking scheme in YCbCr color space based on channel coding,” IEEE Access, vol. 7, no. 1, pp. 25026–25036.

[15] J. H. Qin, W. Y. Pan, X. Y. Xiang, Y. Tan and G. M. Hou. (2020). “A biological image classification method based on improved CNN,” Ecological Informatics, vol. 58, pp. 1–8.

[16] Q. Liu, X. Y. Xiang, J. H. Qin, Y. Tan, J. S. Tan et al. (2020). “Coverless steganography based on image retrieval of DenseNet features and DWT sequence mapping,” Knowledge Based Systems, vol. 192, pp. 105375–105389.

[17] A. Kamilaris, B. Prenafeta and X. Francesc. (2018). “Deep learning in agriculture: A survey,” Computers and Electronics in Agriculture, vol. 147, no. 12, pp. 70–90.

[18] Y. X. Wang, Y. Zhang and C. Y. Yang. (2019). “Advances in crop disease image recognition based on deep learning,” Acta Agriculturae Zhejiangensis, vol. 31, no. 4, pp. 669–676.

[19] K. P. Ferentinos. (2018). “Deep learning models for plant disease detection and diagnosis,” Computers and Electronics in Agriculture, vol. 145, pp. 311–318.

[20] S. P. Mohanty, D. P. Hughes and M. Salathé. (2016). “Using deep learning for image based plant disease detection,” Frontiers in Plant Science, vol. 7, no. 1419, pp. 1–10.

[21] M. Sandler, A. Howard, M. L. Zhu, A. Zhmoginov and L. C. Chen. (2018). “MobileNetV2: Inverted residuals and linear bottlenecks,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, pp. 4510–4520.

[22] L. Perez and J. Wang. (2017). “The effectiveness of data augmentation in image classification using deep learning,” Convolutional Neural Networks Vis. Recognit, vol. 11, pp. 1–8.

[23] W. Y. Pan, J. H. Qin, X. Y. Xiang, Y. Wu, Y. Tan et al. (2019). “A smart mobile diagnosis system for citrus diseases based on densely connected convolutional networks,” IEEE Access, vol. 7, no. 1, pp. 87534–87542.

[24] T. J. Chen, J. Zeng, C. J. Xie and R. J. Wang. (2019). “Intelligent pest identification system based on deep learning,” China Plant Protection, vol. 39, no. 4, pp. 26–34.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.