DOI:10.32604/cmc.2021.013878

Computers, Materials & Continua

Article

Smart Object Detection and Home Appliances Control System in Smart Cities

1Department of Computer Science, University of Swabi, KP, Pakistan

2Department of Accounting and Information Systems, College of Business and Economics, Qatar University, Doha, Qatar

*Corresponding Author: Habib Ullah Khan. Email: habib.khan@qu.edu.qa

Received: 25 August 2020; Accepted: 04 November 2020

Abstract: During the last decade, the emergence of Internet of Things (IoT) based applications has inspired the world by providing state-of-the-art solutions to many common problems, from traffic management systems and urban planning and development to IoT based home monitoring systems and many other smart applications. Despite these facilities, most IoT based solutions are data driven and yield low accuracy on small datasets. To address this problem, this paper presents a deep learning based hybrid approach for the development of an IoT-based intelligent home security and appliance control system for smart cities. The hybrid model consists of a convolution neural network and a binary long short term memory (BLSTM) model for object detection, to ensure the safety of homes, together with IoT based hardware components such as Raspberry Pi, Amazon Web Services (AWS) cloud, and GSM modems for remotely accessing and controlling home appliances. An Android application is developed and deployed on the AWS cloud for remote monitoring of home appliances. A GSM device and Message Queuing Telemetry Transport (MQTT) are integrated for communicating with the connected IoT devices to ensure both online and offline communication. For object detection, a camera is connected to a Raspberry Pi running the proposed hybrid neural network model. The applicability of the proposed model is tested by calculating results for objects at varying distances from the camera and for different light intensity levels. The proposed model provides good results for small amounts of data, with a recognition rate of 95.34% compared to 76% for a conventional recognition model (k nearest neighbours).

Keywords: Hybrid deep learning model; IoT; smart cities; home appliances control system; Amazon Web Services

In this modern technological age, smart IoT based systems play a key role in our daily life. Numerous applications have been developed using IoT based communication devices, ranging from smart healthcare systems to smart transportation systems, smart banking systems to smart business management systems, and eHealth to mHealth. Sensors are the generic components of these devices and play a key role in machine perception and pattern recognition [1]. In the last few years, IoT based smart systems have been gaining significant attention from the IT industry. Engineers and the research community are trying to develop complex IoT systems that can fulfil the future requirements of the smart world. These IoT based systems are designed to assist the user in multiple areas and provide services in healthcare, home automation, transportation, security, the banking sector, and so on. Significant research work has been reported in the home automation field and has been extended to other relevant fields. Typically, a home automation system interconnects several electrical devices and remotely monitors them for different purposes [2]. Bluetooth, Wi-Fi, GSM/GPRS, etc., serve as the communication channels for the remote connectivity of these devices.

Cloud computing services are extensively used because they offer high security and allow computing in the cloud. Amazon Elastic Compute Cloud (Amazon EC2) is a type of web service that provides both security and computing in the cloud [3]. The proposed research work uses Amazon Web Services (EC2) because it is a highly popular and stable commercial cloud [4]. It is designed to let software developers easily build and manage web-based cloud computing. We have developed a mobile based web application, the intelligent home security and appliances control system (IHSACS), and deployed it on the AWS cloud. The web application is hosted on Node.js, developed by Ryan Dahl in 2009 [5]. Node.js is an open source, cross-platform environment built on the Google Chrome JavaScript engine for the development of network and server-side applications [6]. Node.js applications are developed in the JavaScript language. By integrating relevant libraries from different JavaScript modules, Node.js makes it simple for developers to build web-based applications. The proposed IHSACS web application lets the user define virtual rooms and monitor and control different appliances through the internet. Installed on a smartphone (both iOS and Android operating systems are supported), it allows the user to control home appliances remotely from outside the home. A status button (Red/Green) is shown next to each device to control its status (ON/OFF).

Multiple communication protocols, such as Message Queuing Telemetry Transport (MQTT) and the Constrained Application Protocol (CoAP), have been introduced for data transmission purposes [7]. MQTT is a lightweight messaging protocol generally used for machine-to-machine communication and IoT connectivity [8]. Several implementations are available, such as paho-mqtt, hive-mqtt, and Mosquitto. MQTT is based on publishing and subscribing to messages between devices. It is designed as a lightweight messaging transport protocol, which results in low battery consumption in embedded devices. The MQTT broker is responsible for connecting multiple devices such as actuators, sensors, and cameras. For the proposed IHSACS system, we have used the MQTT-Paho library, and the broker is configured and installed on the AWS cloud using Python. We have proposed the concept of a distributed broker to reduce the load on the central broker. In case the internet (Wi-Fi/mobile data) is unavailable, a static database is developed using SQLiteOpenHelper in Android, and a GSM modem is integrated with an Arduino UNO for offline communication to control the state (ON/OFF) of the devices (home appliances). When the internet connection is restored, the data is automatically saved to the online database.
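The publish and subscribe pattern described above can be illustrated with a minimal in-memory sketch. This is not MQTT itself and not the authors' broker: the `TinyBroker` class, its methods, and the topic names are hypothetical, and a real deployment would use a network broker with a client library such as Paho.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

Callback = Callable[[str, str], None]


class TinyBroker:
    """Hypothetical in-memory stand-in for a broker (exact topic match only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        """Register a callback that is invoked for every message on `topic`."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: str) -> int:
        """Deliver `payload` to all subscribers of `topic`; return how many got it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(topic, payload)
        return len(callbacks)


# An actuator (here just a list) subscribes to its own topic.
received: List[Tuple[str, str]] = []
broker = TinyBroker()
broker.subscribe("home/room1/led", lambda topic, payload: received.append((topic, payload)))

delivered = broker.publish("home/room1/led", "ON")   # reaches the LED subscriber
ignored = broker.publish("home/kitchen/fan", "ON")   # no subscriber on this topic
```

The publisher never addresses a device directly; it only names a topic, which is what allows sensors and actuators to be added without changing each other's code.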

In the fields of digital image processing and computer vision, object detection is the process of identifying objects (person, chair, vehicle, etc.) in videos or images [9,10]. Many research domains exist under the umbrella of object detection, such as face detection and recognition, theft detection, gender classification, and many others. Researchers have suggested different techniques for object detection; for example, Khan et al. [11] suggested sonar sensors for object detection and avoidance, and Mtshali et al. [12] developed a smart home system for physically disabled persons.

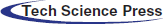

After the shallow architectures (support vector machine, k nearest neighbours, hidden Markov model, Naïve Bayes, and so on), deep neural networks (DNN) gained significant attention from the research community because of their ability to automatically extract salient features from images and perform classification and recognition based on those features. Fig. 1 represents a generic DNN model. It consists of an input layer, hidden layers, and an output layer. DNNs have proven to be more effective in solving complex problems [13]. Due to their high applicability and promising results, DNNs are applied in many fields such as text recognition [14], malware threat hunting [15], and speech recognition [16]. The learning phase is classified into supervised learning, semi-supervised learning, and unsupervised learning. In the literature, several types of implementations can be found for object detection, such as back propagation neural network (BPNN), recurrent neural network (RNN), feed forward neural network (FNN), binary long short term memory (BLSTM), and many others.

Figure 1: Generalized DNN model

The proposed research work uses a hybrid model based on a convolution neural network (CNN) and BLSTM for object detection. The images are classified and recognised using the CNN, while the temporal information is captured by the BLSTM. The proposed model uses a Raspberry Pi with a camera connected as an IoT based sensing device. A person is detected in the live stream using the hybrid technique running on Raspbian OS, and an MQTT message is published to the cloud server when the detection phase completes. Notification messages are transmitted to the user via email and SMS, and a command message is sent to the Arduino board (ESP8266) to turn ON the LED that acts as an actuating device. A GSM module is also integrated with the Arduino board to handle cases where no Wi-Fi signal or mobile data is available. An SMS package is activated on the SIM card to send offline messages. The applicability of the proposed model for object detection is tested under different environmental conditions, such as light intensity level and varying distance from the camera. Both online and offline databases (MySQL and SQLite) are developed for storing IoT device data and information. The main contributions of the proposed work are:

• A hybrid deep neural network model is used for the identification and recognition of objects.

• Both online and offline databases (MySQL and SQLite) are used for record storage.

• MQTT and CoAP are used for online communication, while a GSM modem is integrated for offline communication (to send and receive offline messages).

• A user can control home devices remotely (far away from home) using the GSM modem.

The rest of the paper is organized as follows. Section 2 reviews the related work in the field. Section 3 describes the methodology followed for the development of the IHSACS system, including the virtual model of the proposed system, the entity relation diagram, and the object detection mechanism. The design strategy and the performance of the system are evaluated in Section 4. The results and discussions are detailed in Section 5, followed by the conclusion in Section 6.

Sensors play a significant role in the field of object detection and recognition. Object detection is a computer technology most relevant to the image processing and computer vision fields. Object detection techniques are used in multiple smart industrial fields such as healthcare, farms, homes, factories, and many others. Among these diverse fields, our focus is to develop a smart home appliances control system based on advanced deep neural network techniques. Deep neural networks have revolutionized the research field by solving many complex problems within a limited time and with high accuracy. Diverse applications have been developed using DNN models: Yang et al. [17] developed a social robot for smart homes using a convolution neural network for the detection of embarrassing situations, while Erol et al. [18] developed a robot for object detection in smart homes. Zhang et al. proposed a seven layer DNN model for the detection of cerebral micro-bleed (CMB) voxels in the brain. Rajan et al. [19] suggested a conceptual design for smart cities using an IoT based DNN model. Their model provides a conceptual view of the smart building, smart parking, and street lights. Kumar et al. [20] proposed a BLSTM model for the control of a residual microgrid.

The IoT is the networked inter-connection of everyday physical devices, automobiles, actuator control devices, home appliances, sensor devices, and other components, integrated via omnipresent intelligence, programs, and other connectivity mechanisms that enable these devices to share useful information with each other [21,22]. MQTT, a connection oriented machine-to-machine protocol, is used for communication between these devices. The Internet of Things targets both the enterprise and consumer electronics markets for rapid prototyping of applications in smart homes, smart cities, and many other areas. For the development of these smart applications, numerous low-cost sensors with efficient power backup are available on the market. In these systems, the computational work is performed on the server side, while the sensing and actuating work is performed on the client side. To minimize the risk, the MQTT protocol is used for asynchronous communication.

During the last few years, the demand for smart applications has increased significantly. Many smart applications have been developed, ranging from healthcare to transportation systems, business to banking systems, navigation systems to tracking systems, and so on. In real-time systems, speed and accuracy are major concerns. To address them, many advanced machine learning techniques have been designed for the detection of objects in video streams in real time. These algorithms not only classify and recognize the objects but also draw a rectangular bounding box around each detected object. In computer vision, object detection is a comparatively more difficult task than image classification [23]. Mehmood et al. [24] proposed a single shot detector for real-time object detection.

The proposed research work uses a hybrid model of deep neural networks for object detection. The applicability of the system is tested on a standard dataset named Common Objects in Context (COCO), a large scale object detection, captioning, and segmentation dataset [25]. The proposed hybrid model requires only a small dataset for training and gives good results in a limited amount of time. A GSM modem is also integrated in the proposed IoT model for uninterrupted communication between the devices when no internet service is available. From the literature cited, it is concluded that significant work has been reported on home security systems, but all these systems use online communication and fail when no Wi-Fi or data network is available. To address this problem, a smart home security system is developed using an advanced deep neural network model for object identification and recognition. The proposed model is capable of both online and offline communication, and it also helps in controlling the devices remotely (far away from home).

3 Overview of the Proposed Methodology

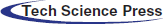

The overall mechanism of the proposed intelligent home appliances control system is shown in Fig. 2. It consists of three main layers: the application services layer, the data storage layer, and the object detection and control layer. The application services layer is responsible for providing smart home management and control services to the user. A user can create virtual rooms and IoT devices in their smart home via an Android based mobile application. The middle layer is the data storage layer, where the web server is hosted on the AWS cloud. The MQTT broker is configured in an Ubuntu instance created on the AWS cloud. The bottom layer contains the actuating and IoT sensing devices that help in collecting contextual information and in environmental control. For live video stream capturing and monitoring, a Raspberry Pi camera is used as a sensing IoT device.

Figure 2: Proposed IHSACS model

An advanced hybrid neural network architecture is used for object detection on the Raspberry Pi, and an MQTT message is published to the central broker when a person is detected. Users are notified via SMS and email, and a command message is sent to an actuating device, i.e., an LED attached to the Arduino board. To handle cases where no Wi-Fi signal or mobile data is available, a GSM device is integrated with the Arduino board for sending and receiving messages to control the IoT devices, and an offline database is created using SQLiteOpenHelper for offline data storage and control. The data is sent back to the online server when the mobile device regains access to Wi-Fi or mobile data.
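The offline storage and later synchronisation described above can be sketched as a small store-and-forward queue. The sketch below uses Python's `sqlite3` module rather than Android's SQLiteOpenHelper, and the table name `pending`, the function names, and the `upload` callback are all assumptions made for illustration.

```python
import sqlite3
from typing import Callable, List, Tuple


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the local database and create the (hypothetical) pending table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pending ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "device TEXT NOT NULL, state TEXT NOT NULL)"
    )
    return conn


def record_change(conn: sqlite3.Connection, device: str, state: str) -> None:
    """Store a device state change locally while the phone is offline."""
    conn.execute("INSERT INTO pending (device, state) VALUES (?, ?)", (device, state))
    conn.commit()


def sync_pending(conn: sqlite3.Connection, upload: Callable[[str, str], bool]) -> int:
    """Upload queued changes in order; stop at the first failure. Returns the count synced."""
    rows = conn.execute("SELECT id, device, state FROM pending ORDER BY id").fetchall()
    synced = 0
    for row_id, device, state in rows:
        if not upload(device, state):
            break  # still offline: keep this row and the remaining ones queued
        conn.execute("DELETE FROM pending WHERE id = ?", (row_id,))
        synced += 1
    conn.commit()
    return synced


# Demo with a fake uploader standing in for the online server.
uploaded: List[Tuple[str, str]] = []


def fake_upload(device: str, state: str) -> bool:
    uploaded.append((device, state))
    return True


store = open_store()
record_change(store, "bulb", "ON")
record_change(store, "fan", "OFF")
synced_count = sync_pending(store, fake_upload)
remaining = store.execute("SELECT COUNT(*) FROM pending").fetchone()[0]
```

Deleting a row only after its upload succeeds means an interrupted sync can be retried without losing queued changes.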

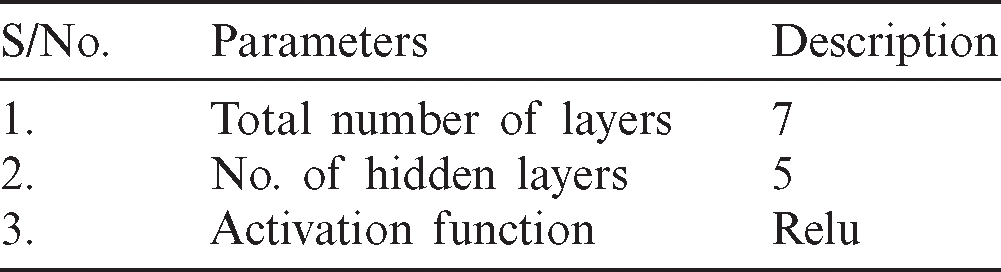

The parameters selected for training the hybrid model are listed in Tab. 1.

Table 1: Parameters of the proposed hybrid model

The proposed system is developed in Android Studio. The virtual design of the proposed model is shown in Fig. 3, where a user can add new rooms or add devices to a room. A single room, a kitchen, and a bathroom are available in the selection menu, while new rooms can be added to the model along with new devices. Some devices, such as the washing machine and the electric water pump, are mostly located outside the rooms, so they are not available in the rooms list but are available in the home menu. The end-user can turn the devices on and off through the GSM modem and control circuit.

Figure 3: Virtual sketch of IHSACS model

The virtual design in Fig. 3 is divided into three sections: the storage section, the user interface, and the data model (control section). All these sections are discussed in detail below.

• Storage section: The proposed IHSACS model uses both online and offline databases (MySQL and SQLite) for storage. MQTT and CoAP are used for online communication, while a GSM modem is integrated with the hardware for offline communication to keep the application working smoothly when no Wi-Fi or data package is available. In such cases the application works through the short message service (SMS). The data is stored in the SQLite database (using SQLiteOpenHelper), which is lightweight and provides offline storage for users of the Android operating system.

• User interface: The user interface of the proposed model is developed in Android Studio for Android phone users. The activities used in this model are Login, Contact (to select the contact for sending and receiving messages and controlling the electric devices remotely), AddNewRoom, AddHomeDevices, etc., as shown in Fig. 3. This section of the model lets the user control the virtual devices (switch them ON/OFF) like the actual devices. A user can also check the total expenses for all the devices based on the number of units consumed by each device, and can schedule a device for a defined interval of time. The interface is designed to be user-friendly so that even illiterate persons can use it.

• Data model (control section): The data model, in other words the getters and setters class, provides object oriented facilities to save data to and retrieve data from the database. It also helps to send data from the activity classes to the database. This class not only reduces the amount of code but also provides smooth communication between the activities and the database.
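As a rough illustration of the data model and the expense check mentioned in the list above, the following sketch uses a Python dataclass in place of an Android getters and setters class. The field names, the row layout, and the assumption that consumption is stored in kWh-like "units" are hypothetical and are not taken from the authors' schema.

```python
from dataclasses import dataclass
from typing import Tuple

Row = Tuple[str, str, int, float]


@dataclass
class Device:
    """Hypothetical device record exchanged between the UI and the database."""
    name: str
    room: str
    is_on: bool = False
    units_consumed: float = 0.0  # assumed unit of consumption (e.g. kWh)

    def to_row(self) -> Row:
        """Flatten the object into a tuple suitable for a database insert."""
        return (self.name, self.room, int(self.is_on), self.units_consumed)

    @classmethod
    def from_row(cls, row: Row) -> "Device":
        """Rebuild the object from a database row."""
        name, room, is_on, units = row
        return cls(name, room, bool(is_on), float(units))

    def expense(self, price_per_unit: float) -> float:
        """Cost of this device: units consumed times an assumed flat price."""
        return self.units_consumed * price_per_unit


fan = Device("fan", "bedroom", is_on=True, units_consumed=12.5)
restored = Device.from_row(fan.to_row())
fan_cost = fan.expense(price_per_unit=2.0)
```

Keeping the row conversion inside the class is what lets the activities pass whole objects around instead of repeating column handling in every screen.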

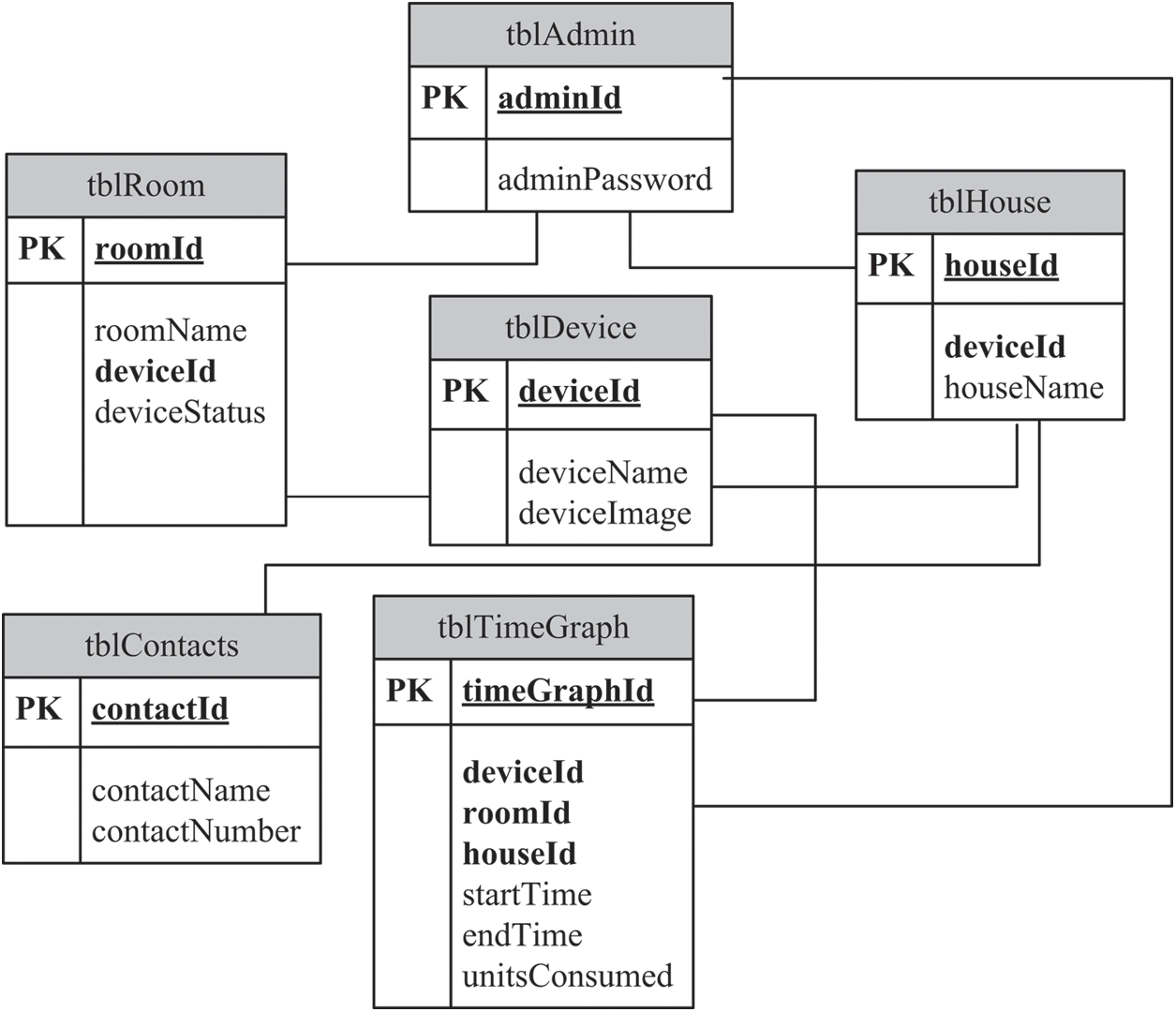

The entity relation diagram (ERD) is depicted in Fig. 4. It consists of the major tables used in the IHSACS model. This ERD is implemented in both SQLite and MySQL.

Figure 4: ERD diagram of the proposed IHSACS model

3.3 Object Detection Mechanism

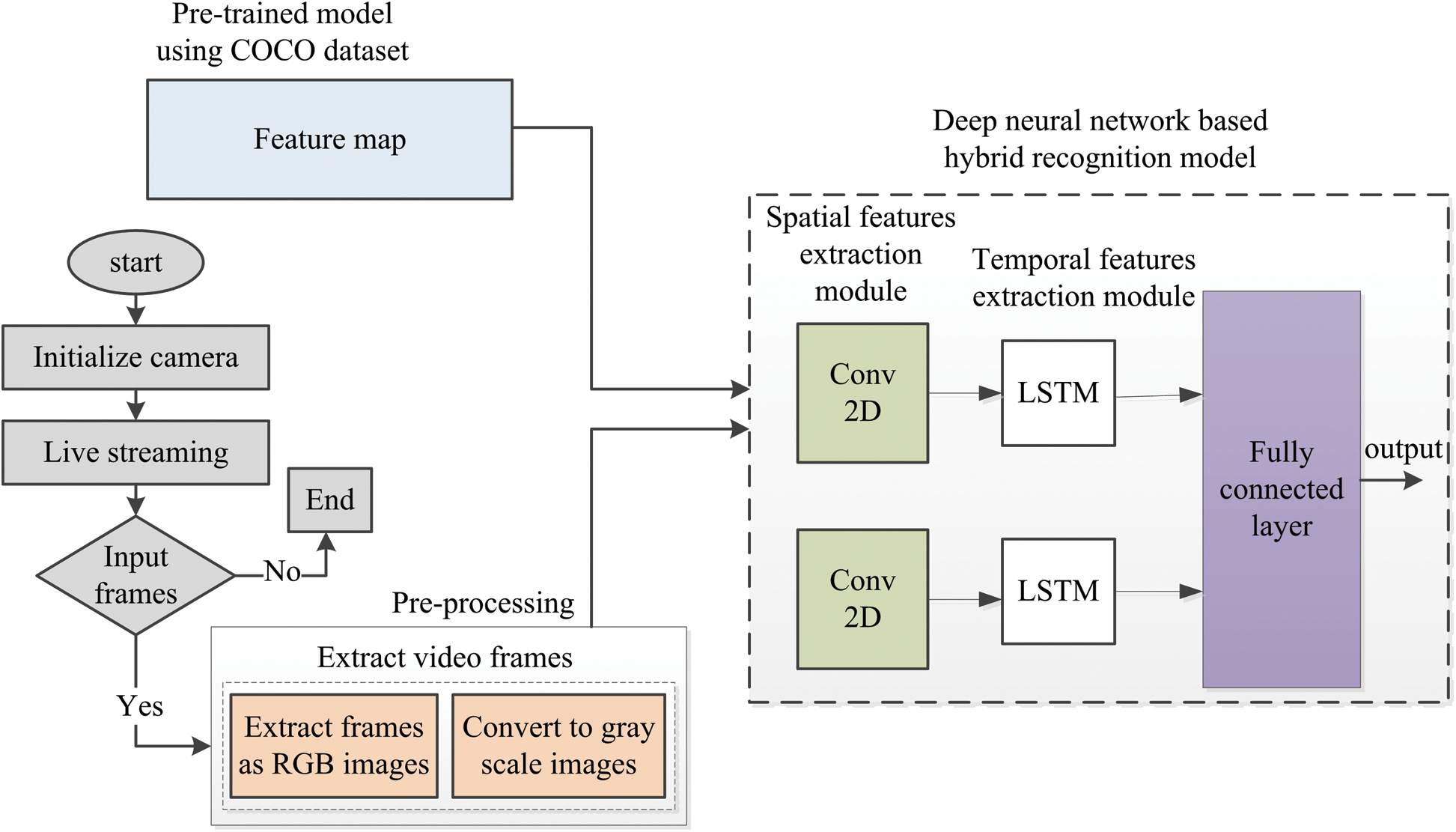

The detailed process diagram of the object detection and recognition mechanism is depicted in Fig. 5. A model pre-trained on the COCO dataset is used for the classification of objects in the live video stream. The convolution neural network (CNN) is prominent in classifying images; in other words, it is good at extracting spatial features, while long short term memory (LSTM) is good at extracting temporal information. CNN and LSTM have gained significant attention in many research fields such as healthcare [26], phishing detection [27–29], intrusion detection [30–35], and many others. In the proposed research work, the CNN is used to recognize the object in the frames captured by the Raspberry Pi camera connected to the hardware, while the LSTM is used to keep track of the temporal information of the object. A hybrid model of both these techniques is used to provide optimum recognition results. The object detection process is initiated whenever a user comes in front of the Raspberry Pi camera, which is a sensing IoT device. Whenever a person is detected, a message is sent to the MQTT broker configured on the cloud. The broker transmits the message to the corresponding device. The LED, acting as an actuating IoT device, is subscribed to the corresponding topic. It performs the action accordingly and sends an acknowledgment back to the broker. The acknowledgment is passed to the web application, and a notification is sent to the user.

Figure 5: Process diagram of object detection mechanism
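The detection, command, and acknowledgment steps of this mechanism can be sketched without any networking. Everything below is an assumption made for illustration: the detection dictionaries, the 0.5 confidence threshold, the JSON field names, and the `LED_ON` command are hypothetical and do not come from the authors' implementation.

```python
import json
from typing import Dict, List, Optional

PERSON_THRESHOLD = 0.5  # assumed minimum confidence for raising an alert


def person_alert(detections: List[Dict], threshold: float = PERSON_THRESHOLD) -> Optional[str]:
    """Sensing side: return a JSON command if a person is detected, else None."""
    people = [d for d in detections if d["label"] == "person" and d["score"] >= threshold]
    if not people:
        return None
    best = max(people, key=lambda d: d["score"])
    message = {"event": "person_detected", "score": best["score"], "command": "LED_ON"}
    return json.dumps(message, sort_keys=True)


def handle_command(payload: str) -> str:
    """Actuating side: apply the command and return a JSON acknowledgment."""
    message = json.loads(payload)
    led_state = "ON" if message.get("command") == "LED_ON" else "OFF"
    return json.dumps({"ack": True, "led": led_state}, sort_keys=True)


# One frame's worth of (made-up) detector output.
frame = [{"label": "person", "score": 0.95}, {"label": "cup", "score": 0.43}]
payload = person_alert(frame)
ack = handle_command(payload) if payload is not None else None
no_alert = person_alert([{"label": "chair", "score": 0.9}])
```

In a deployment the two functions would sit on different devices, with the broker carrying `payload` one way and `ack` the other.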

The simulation results and performance of the proposed system are discussed below.

4 System Design and Performance Analysis

The proposed IHSACS system consists of two implementation modules: the hardware module for home/room device control and communication, and the mobile application module (user interface). Both modules are discussed in detail below.

4.1 Hardware/ Circuitry Module

The system is divided into two phases: (1) Arduino-based circuit design and (2) mobile application.

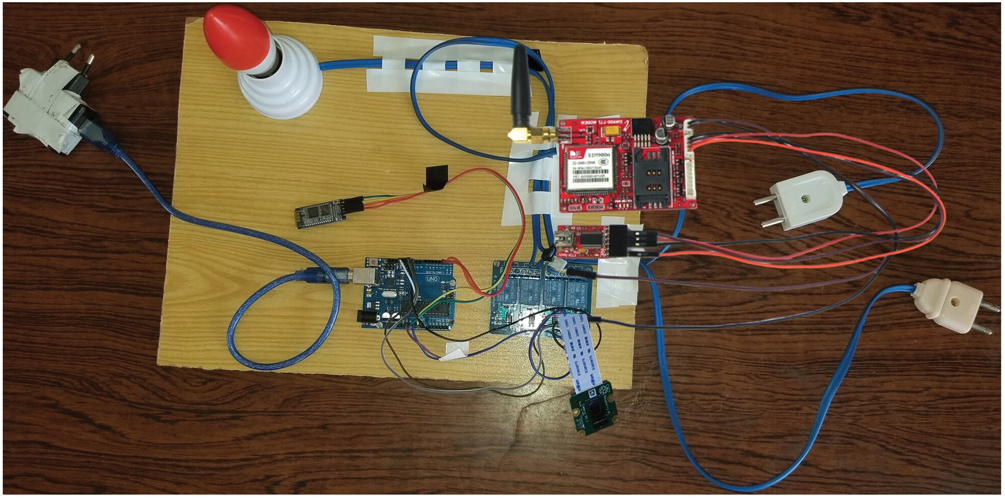

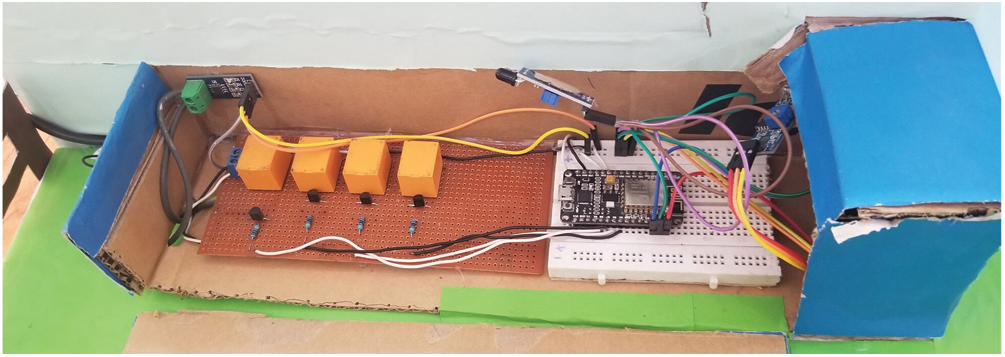

Fig. 6 depicts the circuitry module of the proposed intelligent home security and appliances control system. This system uses a hybrid mechanism based on deep learning algorithms for the detection and recognition of objects in the IHSACS system. The clients communicate with the web server using web sockets. The MQTT broker is configured on the web server, i.e., the AWS cloud. An ESP8266 Wi-Fi development board with an LED attached is used as the actuating IoT device, while a Raspberry Pi with a camera is used as the sensing device for object detection. The hybrid deep learning mechanism runs on the Raspberry Pi board to analyse the live stream for object detection and recognition, such as the detection of a person. Whenever a person is detected, an MQTT message is transmitted to the broker running on the cloud. Notification messages are transmitted using email services if the internet is available; otherwise they are transmitted using the GSM modem connected to the Arduino board.

Figure 6: Hardware design of the proposed IHSACS system
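The choice between email and GSM notification described for this module can be sketched as a simple fallback. The function name `notify_user`, the boolean-returning sender callbacks, and the extra rule that a failed email also falls back to SMS are assumptions for illustration, not the authors' code.

```python
from typing import Callable, List, Tuple


def notify_user(
    message: str,
    internet_available: bool,
    send_email: Callable[[str], bool],
    send_sms: Callable[[str], bool],
) -> str:
    """Use email when online; otherwise (or if email fails) use SMS over GSM."""
    if internet_available and send_email(message):
        return "email"
    # No internet, or the email attempt failed: fall back to the GSM modem.
    if send_sms(message):
        return "sms"
    return "failed"


# Fake senders that only record what they were asked to send.
sent: List[Tuple[str, str]] = []


def fake_email(text: str) -> bool:
    sent.append(("email", text))
    return True


def fake_sms(text: str) -> bool:
    sent.append(("sms", text))
    return True


online_channel = notify_user("Person detected", True, fake_email, fake_sms)
offline_channel = notify_user("Person detected", False, fake_email, fake_sms)
```

Passing the senders in as callbacks keeps the routing rule testable without an email account or a SIM card.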

The ESP8266 Wi-Fi module is a small system-on-chip (SoC) with an integrated TCP/IP protocol stack that allows microcontrollers to access a Wi-Fi network. It is mainly designed and used for embedded applications. The SoC comes pre-programmed with AT command firmware and is a highly cost-effective development board. It has sufficiently powerful on-board processing and storage capabilities to be integrated with sensors and other application specific devices through its GPIOs, with minimal up-front development and minimal loading during runtime.

The circuit in Fig. 6 can operate only one device. It was also tested with multiple devices by using relays, as shown in Fig. 7. Using the relays, two bulbs, a fan, and an electric motor were controlled with the circuit depicted in Fig. 7. More than four devices can be controlled by adding further relays according to the requirements of the home/room(s).

Figure 7: Controlling multiple electric devices
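One way to picture the multi-relay control is a lookup from device names to relay channels, driven by short text commands such as those that could arrive by SMS. The channel numbers, the device names, and the `"<DEVICE> <ON|OFF>"` command format below are hypothetical; the paper does not specify the message format.

```python
from typing import Dict

# Assumed wiring: one relay channel per appliance in Fig. 7.
RELAY_CHANNELS: Dict[str, int] = {"BULB1": 0, "BULB2": 1, "FAN": 2, "MOTOR": 3}


def apply_command(states: Dict[int, bool], command: str) -> Dict[int, bool]:
    """Return a new relay-state map after applying a '<DEVICE> <ON|OFF>' command."""
    parts = command.strip().upper().split()
    if len(parts) != 2 or parts[0] not in RELAY_CHANNELS or parts[1] not in ("ON", "OFF"):
        raise ValueError(f"unrecognized command: {command!r}")
    updated = dict(states)  # copy so the caller's map is not mutated
    updated[RELAY_CHANNELS[parts[0]]] = parts[1] == "ON"
    return updated


# All relays start open (devices off); then the fan is switched on.
states = {channel: False for channel in RELAY_CHANNELS.values()}
states = apply_command(states, "fan on")
```

Adding a fifth appliance would only require another entry in `RELAY_CHANNELS`, mirroring the point that more relays extend the circuit.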

An Android mobile application (app) is developed for users to remotely control the devices and to provide an interface for communication with them. A static database is also developed for storing the status of the electric devices to address the unavailability of internet or data packages. This static database automatically (without user interaction) uploads the data to the server when an internet connection becomes available. A user-friendly interface is developed for the proposed IHSACS system so that even a lay person can use it. A help menu is also added at the start of the app and in the selection menu to guide the user on how to use the application. A login activity is created to prevent unauthorized access. Some of the major features of the mobile application are shown below.

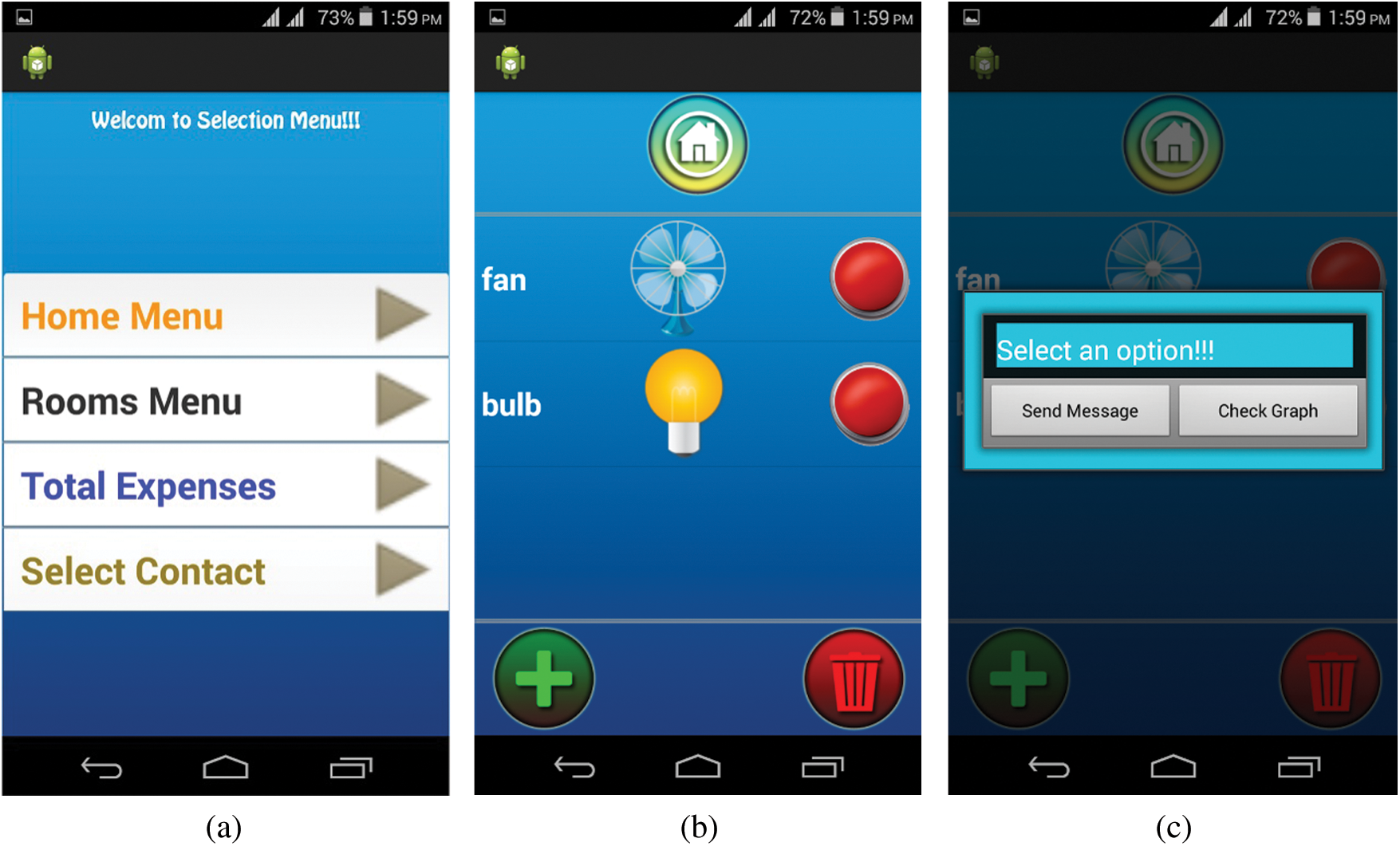

The selection menu shown in Fig. 8a provides multiple options for the user, such as selecting the home menu (which consists of the washing machine, water pump, and other devices that cannot be placed in a room) or selecting the room menu to add multiple rooms and devices to a room. A user can also check the total expenses of the home based on the total units consumed, and can select any contact from the contact list to communicate with the circuit board for controlling different devices. Fig. 8b shows the different electric devices selected for a room. When a device is switched ON/OFF, a message is transmitted to the circuit board to change the status of that particular device, as shown in Fig. 8c. A user can also check the total consumption of that particular device by selecting the option “check graph.”

Figure 8: The selection and switch ON/OFF menu of the IHSACS mobile app

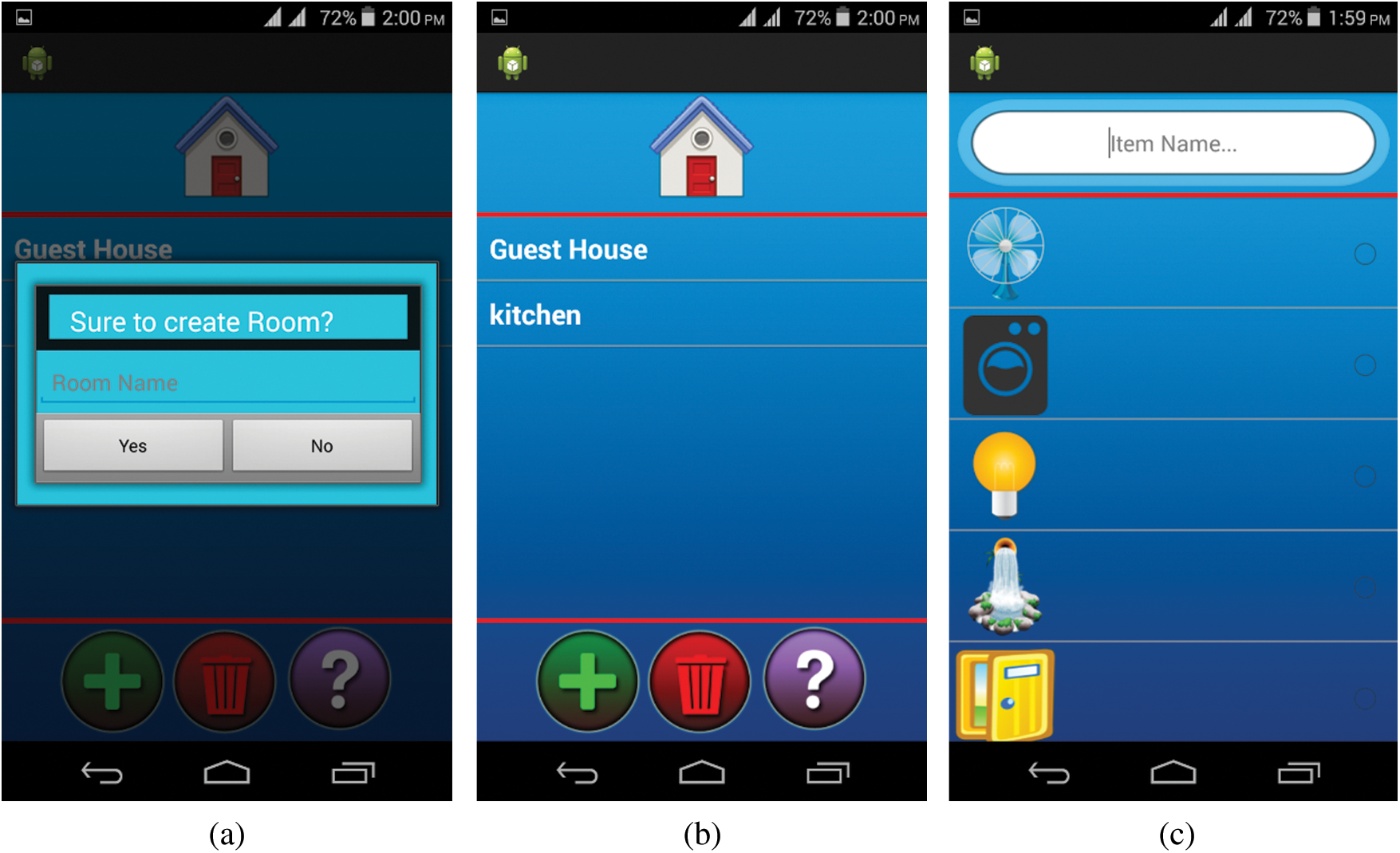

The room creation and item selection phases are shown in Fig. 9. One can search for an item in the list or select an item using the checkbox next to each device.

Figure 9: The room creation and item selection features

The proposed deep learning based hybrid model is tested for object detection under real world conditions and parameters such as light intensity level, varying training and test sets, video frame size, distance of the object from the camera, time consumption, and the accuracy calculated based on these parameters. All of these are discussed in detail below.

Light intensity level is a key factor that directly affects the recognition rate of an object detection system: if the light level is dark, the object cannot be easily detected, which ultimately results in low recognition rates, whereas if the light level is bright, the object can be easily detected and recognized. In the SI system, it is measured in lux, as depicted in Eq. (1).
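Eq. (1) is not reproduced in this text. As a reference point only, the standard SI definition of illuminance relates the luminous flux $\Phi_v$ (in lumens) falling on a surface to its area $A$ (in square metres); the authors' own notation for Eq. (1) may differ:

```latex
E_v = \frac{\Phi_v}{A}, \qquad 1\,\mathrm{lx} = 1\,\mathrm{lm}/\mathrm{m}^2
```

For example, 500 lm spread evenly over 2 m² gives an illuminance of 250 lx.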

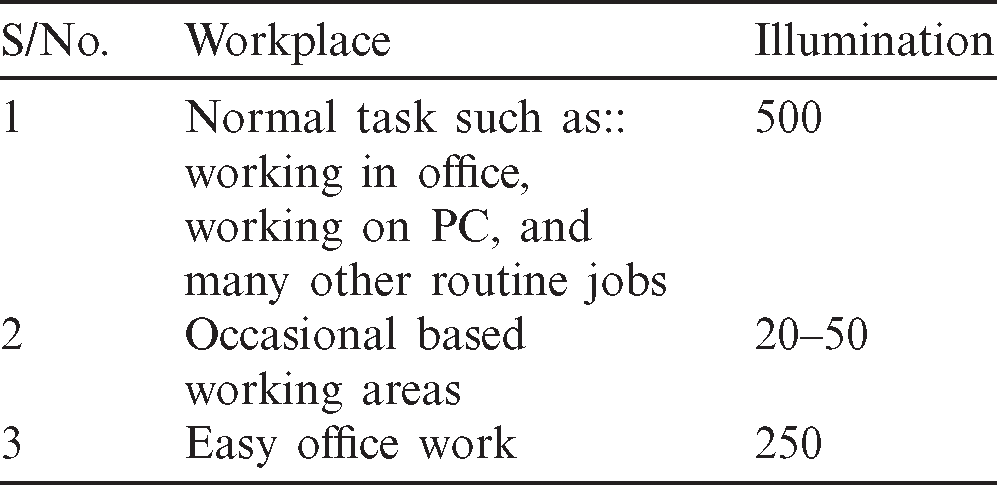

Typically, the quantity and quality of light are the two major factors that directly affect the illumination level, as shown in Tab. 2.

Table 2: Workplace based recommended lighting level for simulation

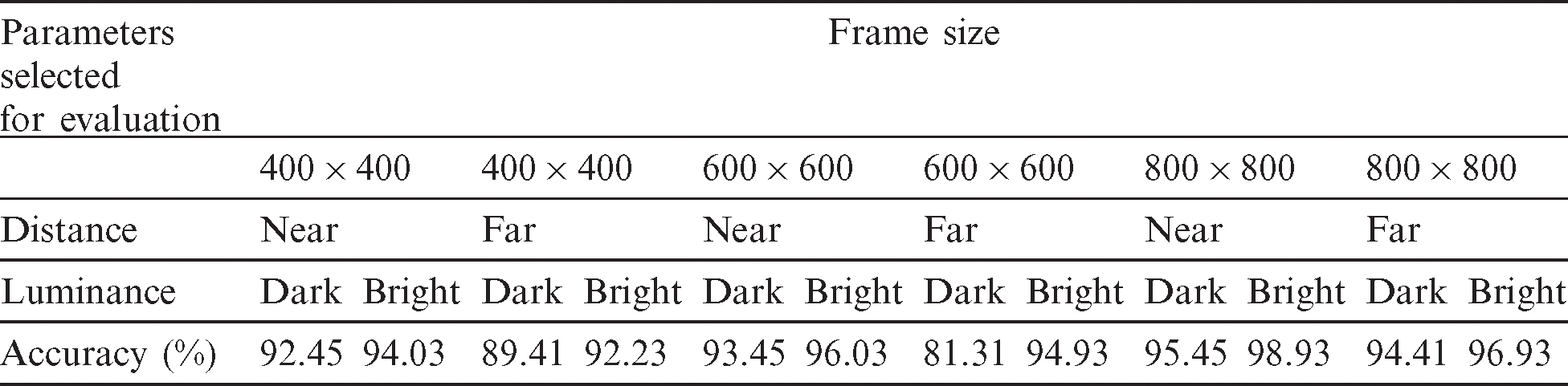

Based on the recommended intensity levels in Tab. 2, the experimental results of the proposed technique are calculated as shown in Tab. 3. These results are based on different lighting levels, i.e., dark and bright.

Table 3: Light level for normal official work

4.2.2 Varying Training and Test Sets

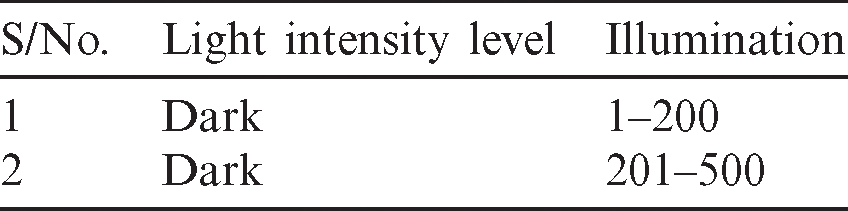

The second parameter is the varying size of the training and test sets used for the proposed model. After analysing the performance of the hybrid model for object detection, it was concluded that as the training set grows, the accuracy of the system, measured by the recognition rate, increases exponentially as shown in Eq. (2).

The results of the proposed object detection algorithm are shown in Fig. 10.

Figure 10: Recognition abilities of the hybrid model based on varying training sets

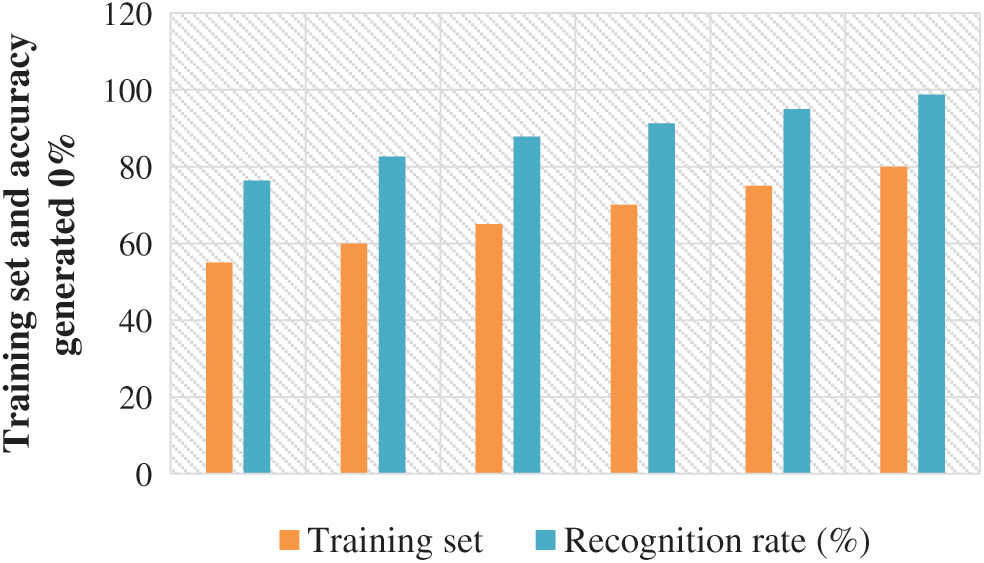

Another parameter used to check the validity of the proposed hybrid object detection algorithm was the frame size. The frame size matters especially in recognition tasks, because the larger the frame size, the higher the recognition rate. If the frame size decreases, the video becomes blurred and it becomes difficult for the object detection algorithm to provide optimum results. The experiments are carried out on three different frame sizes (in pixels), as shown in Fig. 11.

Figure 11: Frame size vs. recognition rate

From Fig. 11 it is concluded that the recognition rate increases with the frame size, because a larger frame size yields higher video quality, which ultimately results in more accurate detection of the selected object.

4.2.4 Distance of Object from Camera

Distance plays a vital role in the detection of an object; the accuracy of object detection is highly dependent upon the distance of the object from the camera. If the object is near the camera, it can be easily detected, resulting in high accuracy. If the object is far away from the camera, it is difficult to recognize it accurately. In the object detection algorithm, an object at a distance of 3 feet or more is considered far away from the camera, and an object within the 3 feet range is considered near the camera. To calculate the distance of the object from the camera, a picture is first captured with the camera from any chosen distance. Then, using the concept of triangle similarity [36–40], the focal length of the camera can be obtained using the formula shown in Eq. (3).

where P is the width of the object in pixels, D is the distance of the object from the camera, and W is the actual width of the object. The distance of the object can be derived from Eq. (3) as shown in Eq. (4).

Using Eq. (4), the distance of the object from the camera is calculated, and the object is treated as near the camera if it is within the 3 feet range and as far away otherwise.
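Eq. (3) and Eq. (4) are not reproduced in this text. Assuming they take the usual triangle-similarity forms consistent with the symbol definitions above, namely F = (P × D) / W for the focal length and D = (W × F) / P for the distance, the calculation and the 3 feet near/far rule can be sketched as follows. The function names and the sample measurements are illustrative only.

```python
NEAR_LIMIT_FEET = 3.0  # from the text: within 3 feet is "near", otherwise "far"


def focal_length(pixel_width: float, known_distance: float, real_width: float) -> float:
    """Assumed form of Eq. (3): F = (P * D) / W, from one calibration picture."""
    return pixel_width * known_distance / real_width


def distance_to_object(real_width: float, focal: float, pixel_width: float) -> float:
    """Assumed form of Eq. (4): D = (W * F) / P, for a new picture."""
    return real_width * focal / pixel_width


def is_near(distance_feet: float, limit: float = NEAR_LIMIT_FEET) -> bool:
    """Classify the object as near (True) or far (False) from the camera."""
    return distance_feet < limit


# Calibration: an object 1.0 ft wide, placed 2.0 ft away, spans 300 pixels.
focal = focal_length(pixel_width=300.0, known_distance=2.0, real_width=1.0)

# Later the same object spans only 150 pixels, so it must be farther away.
distance = distance_to_object(real_width=1.0, focal=focal, pixel_width=150.0)
near = is_near(distance)
```

Because the apparent width in pixels is inversely proportional to distance, halving the pixel width doubles the estimated distance.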

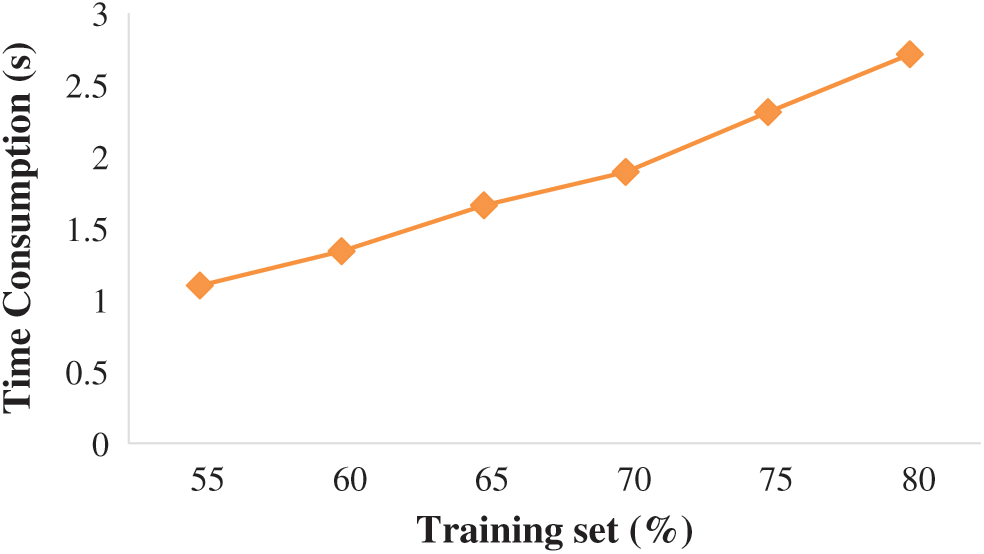

The time consumption of the proposed object detection algorithm is measured for varying training and test sets, as depicted in Fig. 12.

Figure 12: Time-consumption vs. training set

From Fig. 12 it is concluded that the time consumption increases as the training set grows.

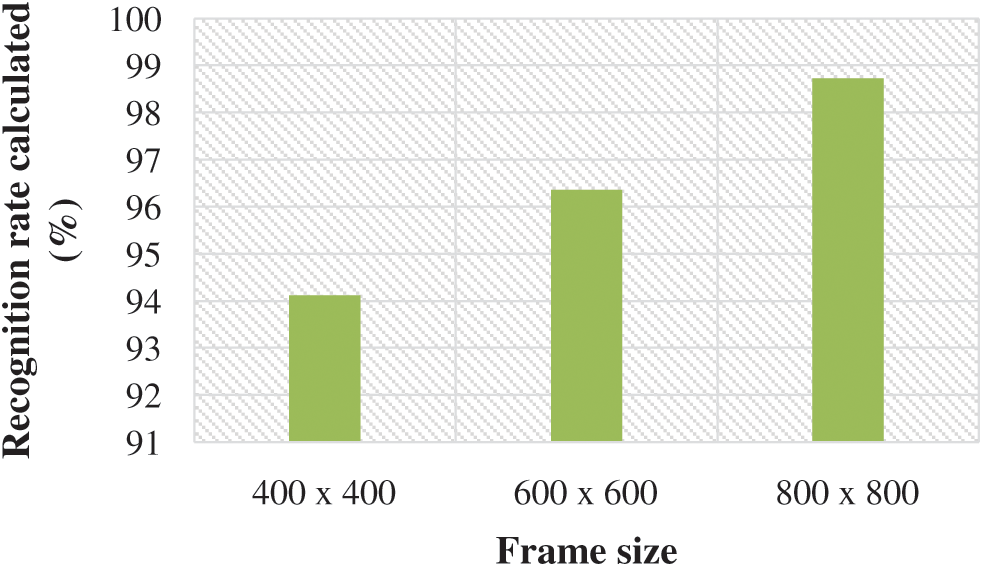

The sixth parameter is the accuracy. Higher accuracy reflects the applicability of an algorithm in the proposed field, and vice versa. Based on the accuracy rates obtained for varying training and test sets, intensity levels, and frame sizes, it was concluded that the proposed object detection algorithm provides good results.

After testing the camera from varying directions, at varying distances (near and far), with different frame sizes, and with varying training and test sets, a generic output is shown in Fig. 13 for one of the tested frame sizes with the object near the camera. This output is generated by the hybrid deep learning based object detection algorithm. Three objects are detected in the picture: a person with an accuracy of 95.34%, a cup with 42.59%, and a chair with 42.04%. The accuracy for the chair is low because it is hidden and far from the camera. These outputs are generated using the Raspberry Pi camera (an embedded device) integrated in the circuitry.

Figure 13: Object detection using embedded device (Raspberry Pi)

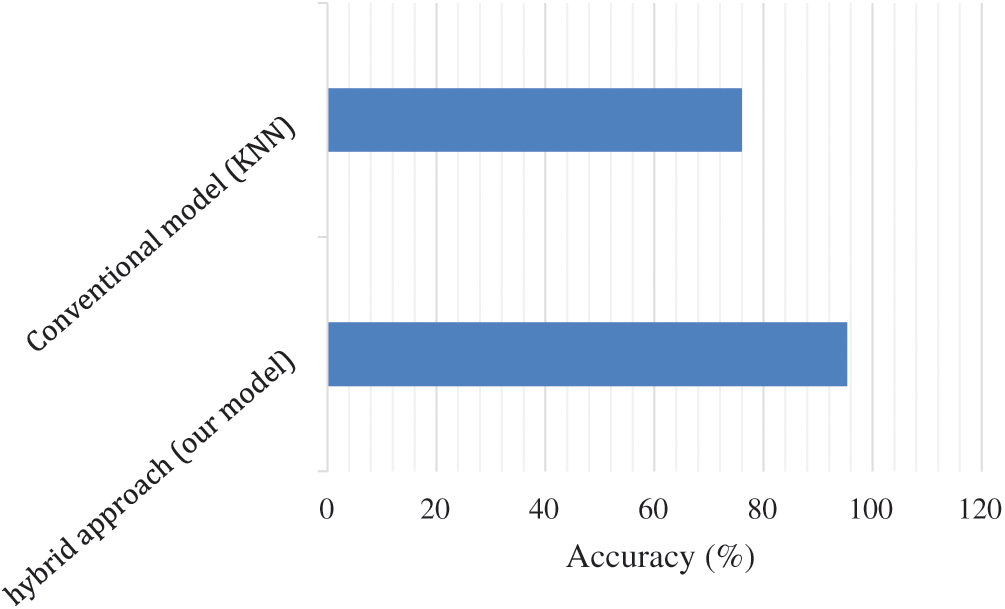

The performance of the proposed hybrid approach is compared with that of the conventional K nearest neighbors (KNN) classifier for object detection. The comparison shows that the proposed method outperforms the conventional KNN model, providing a recognition rate of 95.34% compared with 76%, as depicted in Fig. 14.

Figure 14: Performance analysis of proposed model with KNN classifier

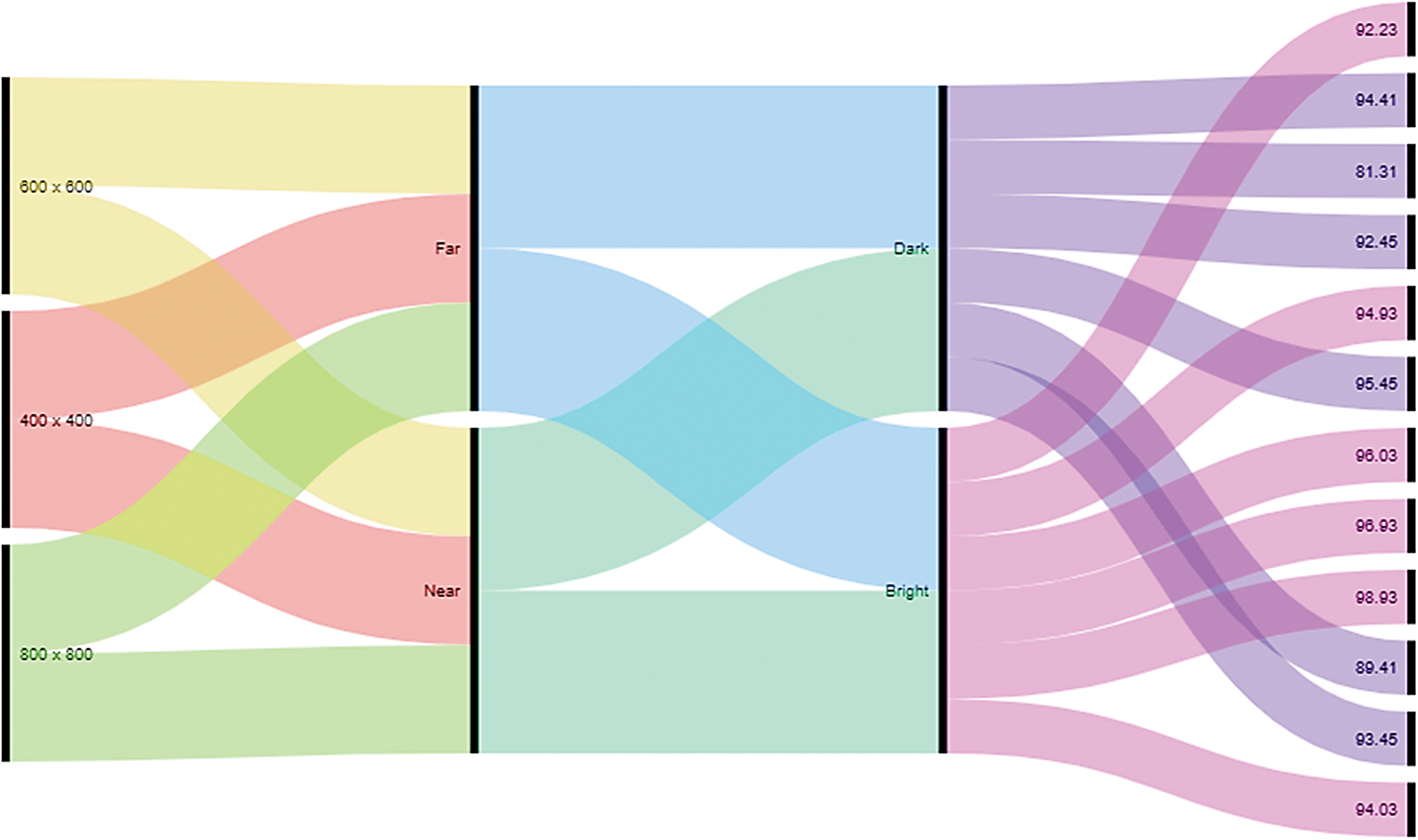

Tab. 4 presents the performance measures of the proposed object detection algorithm under varying experimental parameters. The results in Tab. 4 show that accuracy is highest when the frame size is large, the intensity level (luminance) is bright, and the object is near the camera, which reflects the high recognition ability of the proposed hybrid object detection algorithm. The highest recognition rates are obtained for an 800 × 800 frame size. If the intensity level decreases, accuracy also drops because the object becomes harder for the algorithm to detect. Accuracy also decreases if the object lies three feet or more from the camera (far), and the recognition rate decreases as the frame size decreases.

Table 4: Accuracy measures for varying frame size based on luminance and distance parameters

The simulation results for varying parameters are shown in Fig. 15. From Fig. 15 it is concluded that the object detection algorithm based on a deep neural network provides its best results when the object is near the camera and the luminance is bright.

Figure 15: Object detection algorithm accuracy based on distance, frame size, and luminance

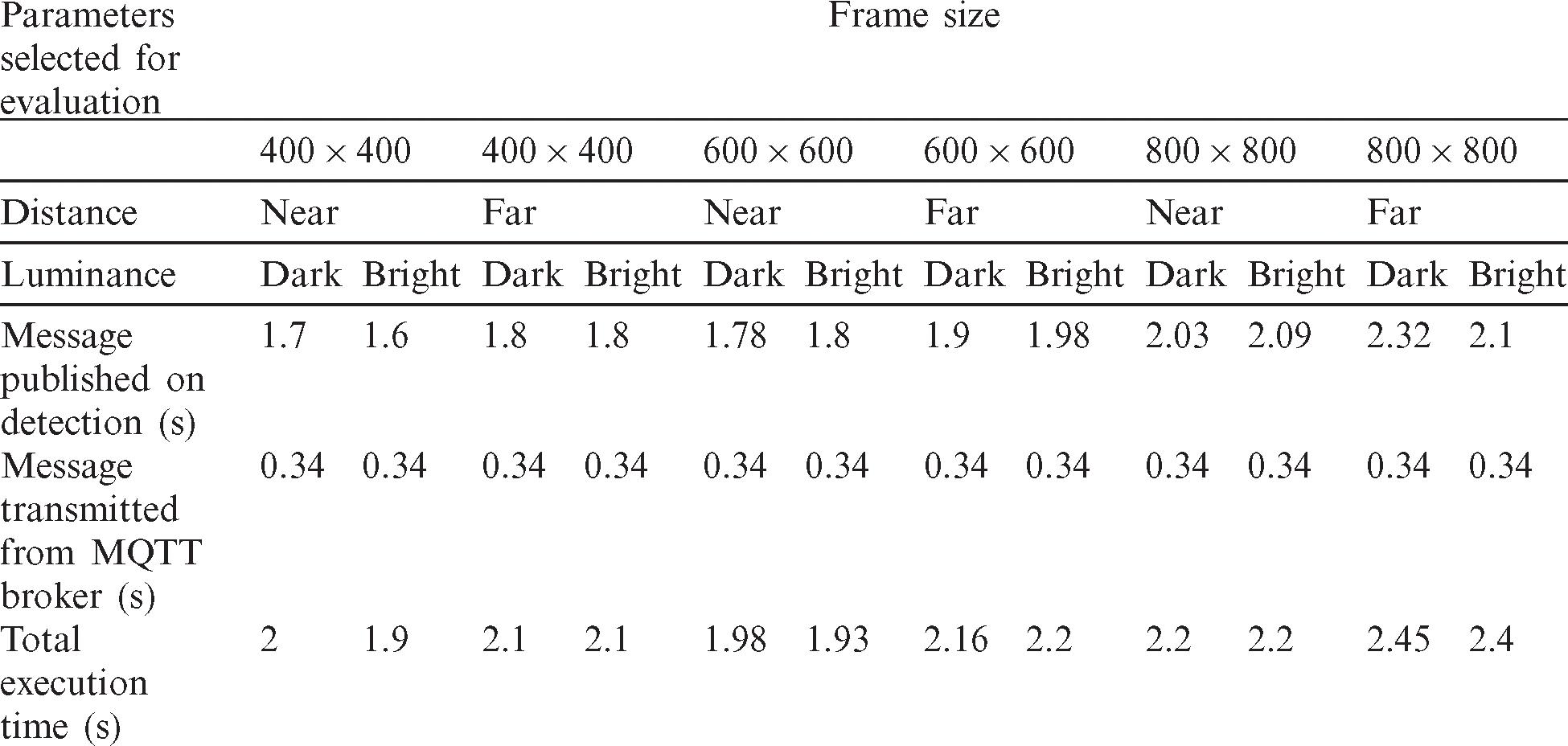

The proposed algorithm is also evaluated in terms of execution time and communication delay. The corresponding results are presented in Tab. 5. Android Studio and Node.js provide mechanisms for measuring execution time. "Message published on detection" is the time taken by the object detection algorithm running on the Raspberry Pi to detect the desired object in the frame, measured from connection establishment until a person is detected by the camera. "Message published from MQTT broker" is the communication delay until the published message is received by the MQTT broker. The total execution time is the duration from connection establishment to device activation. Results were collected for varying light levels, frame sizes, and distances of the object from the camera. Tab. 5 lists the execution times and communication delays for both bright and dark light levels. The total execution time is greater when the light level is dark and smaller when it is bright.
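The three timing measures defined above can be sketched with a small stage timer. This is only an illustration in Python, not the Android Studio or Node.js instrumentation used in the experiments. The class `StageTimer`, the stage names, and the reading of the broker delay as the interval between detection and receipt at the broker are assumptions.

```python
import time


class StageTimer:
    """Record a timestamp per named stage and report intervals between stages."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock  # injectable so the timer can be tested deterministically
        self._marks = {}

    def mark(self, stage):
        """Store the current clock reading under the given stage name."""
        self._marks[stage] = self._clock()

    def elapsed(self, start, end):
        """Return the seconds elapsed between two previously marked stages."""
        return self._marks[end] - self._marks[start]


def summarize(timer):
    """Derive the three measures described in the text (stage names are hypothetical)."""
    return {
        "publish_on_detection": timer.elapsed("connected", "detected"),
        "publish_from_broker": timer.elapsed("detected", "broker_received"),
        "total_execution": timer.elapsed("connected", "activated"),
    }


# Demonstration with a fake clock; a real run would call mark() at each event.
ticks = iter([0.0, 1.25, 1.5, 2.0])
timer = StageTimer(clock=lambda: next(ticks))
for stage in ("connected", "detected", "broker_received", "activated"):
    timer.mark(stage)
print(summarize(timer))
```

In a real deployment, `mark` would be called from the connection, detection, broker, and device-activation callbacks, and the default `time.perf_counter` clock would be used instead of the fake one.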

Table 5: Total execution time of MQTT broker based on luminance

This paper presents a smart home appliance control system and an intelligent object detection mechanism for the smart home. It uses a deep neural network based hybrid model for the detection and recognition of objects in smart homes. This hybrid model consists of a convolutional neural network for spatial feature extraction and recognition and a long short-term memory network for temporal feature extraction and classification. The model is evaluated under varying environmental conditions. The system presents a cloud-based layered architecture for smart appliance control, using a hybrid deep neural network for object detection embedded in IoT devices. The applicability of the hybrid model is tested for different parameters: distance from the camera (near or far), time consumption (processing delay), frame rate, light intensity level, and accuracy of object recognition. An overall accuracy rate of 95.34% is achieved after testing for varying parameters and directions. It was concluded that the recognition rate decreases as the object moves away from the camera, as the intensity level falls below 200 lux, or as the frame rate from the camera increases. A higher frame rate not only blurs the object but also delays the output of the system.

An experimental prototype is implemented in Android Studio for Android phone users. The proposed system provides a user-friendly interface that allows users (even a layperson) to create virtual rooms, select devices, and check the total consumption of the devices based on their start and end times and power. It was also concluded that object detection depends solely on the camera and the algorithm selected. Furthermore, after testing for varying training and test sets, it was concluded that the proposed deep learning based hybrid model is not a data-hungry algorithm and provides good results for a limited amount of data.

In future work, we plan to select different recognition algorithms and generate comparative results against the proposed model. We also plan to develop a mobile application for iPhone users.

Acknowledgement: This research work is supported by the Department of Accounting and Information Systems, College of Business and Economics, Qatar University, Doha, Qatar and the Department of Computer Science, University of Swabi, KP, Pakistan.

Funding Statement: This research was funded by Qatar University Internal Grant under Grant No. IRCC-2020-009. The findings achieved herein are solely the responsibility of the authors.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. J. Park, K. Jang and S. B. Yang. (2018). “Deep neural networks for activity recognition with multi-sensor data in a smart home,” in 2018 IEEE 4th World Forum on Internet of Things, Singapore, pp. 155–160.

2. B. Li and J. Yu. (2011). “Research and application on the smart home based on component technologies and Internet of Things,” Procedia Engineering, vol. 15, pp. 2087–2092.

3. K. R. Jackson, L. Ramakrishnan, K. Muriki, S. Canon, S. Cholia et al. (2010). “Performance analysis of high performance computing applications on the Amazon Web Services cloud,” in 2010 IEEE Second Int. Conf. on Cloud Computing Technology and Science, Indianapolis, IN, pp. 159–168.

4. G. Juve, E. Deelman, K. Vahi, G. Mehta, B. Berriman et al. (2009). “Scientific workflow applications on Amazon EC2,” in 2009 5th IEEE Int. Conf. on e-Science Workshops, Oxford, pp. 59–66.

5. R. Dahl. (2009). “Node.js,” [Online]. Available: http://s3.amazonaws.com/four.livejournal/20091117/jsconf.pdf.

6. D. Guinard and V. Trifa. (2016). Building the Web of Things. Shelter Island: Manning.

7. K. Fysarakis, I. Askoxylakis, O. Soultatos, I. Papaefstathiou, C. Manifavas et al. (2016). “Which IoT protocol? Comparing standardized approaches over a common M2M application,” in 2016 IEEE Global Communications Conf., Washington, DC, pp. 1–7.

8. U. Hunkeler, H. L. Truong and A. Stanford-Clark. (2008). “MQTT-S—A publish/subscribe protocol for wireless sensor networks,” in 2008 3rd Int. Conf. on Communication Systems Software and Middleware and Workshops, Bangalore, pp. 791–798.

9. S. Khan, H. Ali, Z. Ullah and M. F. Bulbul. (2018). “An intelligent monitoring system of vehicles on highway traffic,” in 2018 12th Int. Conf. on Open Source Systems and Technologies, Lahore, Pakistan, pp.

10. P. Druzhkov, V. Erukhimov, N. Y. Zolotykh, E. Kozinov, V. Kustikova et al. (2011). “New object detection features in the OpenCV library,” Pattern Recognition and Image Analysis, vol. 21, pp. 384.

11. S. Khan, K. Ahmad, M. Murad and I. Khan. (2013). “Waypoint navigation system implementation via a mobile robot using global positioning system (GPS) and global system for mobile (GSM) modems,” International Journal of Computational Engineering Research, vol. 3, no. 7, pp.

12. P. Mtshali and F. Khubisa. (2019). “A smart home appliance control system for physically disabled people,” in 2019 Conf. on Information Communications Technology and Society, pp. 1–5.

13. S. Kumari, M. Karuppiah, A. K. Das, X. Li, F. Wu et al. (2018). “Design of a secure anonymity-preserving authentication scheme for session initiation protocol using elliptic curve cryptography,” Journal of Ambient Intelligence and Humanized Computing, vol. 9, no. 3, pp. 643–653.

14. S. Khan, H. Ali, Z. Ullah, N. Minallah, S. Maqsood et al. (2018). “KNN and ANN-based recognition of handwritten Pashto letters using zoning features,” International Journal of Advanced Computer Science and Applications, vol. 9, no. 10, pp. 570–577.

15. H. HaddadPajouh, A. Dehghantanha, R. Khayami and K.-K. R. Choo. (2018). “A deep recurrent neural network based approach for Internet of Things malware threat hunting,” Future Generation Computer Systems, vol. 85, pp. 88–96.

16. F. Ge and Y. Yan. (2017). “Deep neural network based wake-up-word speech recognition with two-stage detection,” in 2017 IEEE Int. Conf. on Acoustics, Speech and Signal Processing, pp. 2761–2765.

17. G. Yang, J. Yang, W. Sheng, F. E. F. Junior and S. Li. (2018). “Convolutional neural network-based embarrassing situation detection under camera for social robot in smart homes,” Sensors, vol. 18, pp. 1530.

18. B. A. Erol, A. Majumdar, J. Lwowski, P. Benavidez, P. Rad et al. (2018). “Improved deep neural network object tracking system for applications in home robotics,” in W. Pedrycz, S. M. Chen (Eds.), Computational Intelligence for Pattern Recognition, Cham: Springer, vol. 777, pp. 369–395.

19. P. Rajan Jeyaraj and E. R. S. Nadar. (2019). “Smart-monitor: Patient monitoring system for IoT-based healthcare system using deep learning,” IETE Journal of Research, no. 1, pp. 1–8.

20. G. Kumar, M. Sindhu and S. S. Kumar. (2019). “Deep neural network based hierarchical control of residential Microgrid using LSTM,” in TENCON 2019-2019 IEEE Region 10 Conf. (TENCON), Kochi, India, pp. 2129–2134.

21. F. Xia, L. T. Yang, L. Wang and A. Vinel. (2012). “Internet of Things,” International Journal of Communication Systems, vol. 25, pp. 1101.

22. X. Li, R. Lu, X. Liang, X. Shen, J. Chen et al. (2011). “Smart community: An Internet of Things application,” IEEE Communications Magazine, vol. 49, no. 11, pp. 68–75.

23. C. Szegedy, S. Reed, D. Erhan, D. Anguelov and S. Ioffe. (2014). “Scalable, high-quality object detection,” arXiv preprint arXiv:1412.1441.

24. F. Mehmood, I. Ullah, S. Ahmad and D. Kim. (2019). “Object detection mechanism based on deep learning algorithm using embedded IoT devices for smart home appliances control in CoT,” Journal of Ambient Intelligence and Humanized Computing, pp. 1–17.

25. T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona et al. (2014). “Microsoft COCO: Common objects in context,” in D. Fleet, T. Pajdla, B. Schiele, T. Tuytelaars (Eds.), European Conf. on Computer Vision, Cham: Springer, vol. 8693, pp. 740–755.

26. H. Chen, S. Khan, B. Kou, S. Nazir, W. Liu et al. (2020). “A smart machine learning model for the detection of brain hemorrhage diagnosis based Internet of Things in smart cities,” Complexity, vol. 2020, 3047869.

27. S. Wang, S. Khan, C. Xu, S. Nazir and A. Hafeez. (2020). “Deep learning-based efficient model development for phishing detection using random forest and BLSTM classifiers,” Complexity, vol. 2020, 8694796.

28. H. U. Khan and A. Alhusseini. (2015). “Optimized web design in the Saudi culture,” in IEEE Science and Information Conf., London, UK, pp. 906–915.

29. H. U. Khan and M. A. Awan. (2017). “Possible factors affecting internet addiction: A case study of higher education students of Qatar,” International Journal of Business Information Systems, vol. 26, no. 2, pp. 261–276.

30. Y. He, S. Nazir, B. Nie, S. Khan and J. Zhang. (2020). “Developing an efficient deep learning-based trusted model for pervasive computing using an LSTM-based classification model,” Complexity, vol. 2020, 4579495.

31. Z. Gu, S. Nazir, C. Hong and S. Khan. (2020). “Convolution neural network-based higher accurate intrusion identification system for the network security and communication,” Security and Communication Networks, vol. 2020, 8830903.

32. G. M. Bashir and H. U. Khan. (2016). “Factors affecting learning capacity of information technology concepts in a classroom environment of adult learner,” in 15th Int. Conf. on Information Technology Based Higher Education and Training (IEEE Conf.), Istanbul, Turkey.

33. O. A. Bankole, M. Lalitha, H. U. Khan and A. Jinugu. (2017). “Information technology in the maritime industry past, present and future: Focus on LNG carriers,” in 7th IEEE Int. Advance Computing Conf., Hyderabad, India, pp. 7.

34. H. U. Khan and S. Uwemi. (2018). “Possible impact of E-commerce strategies on the utilization of E-commerce in Nigeria,” International Journal of Business Innovation and Research, vol. 15, no. 2, pp. 231–246.

35. H. U. Khan and A. C. Ejike. (2017). “An assessment of the impact of mobile banking on traditional banking in Nigeria,” International Journal of Business Excellence, vol. 11, no. 4, pp. 463.

36. R. G. Smuts, M. Lalitha and H. U. Khan. (2017). “Change management guidelines that address barriers to technology adoption in an HEI context,” in 7th IEEE Int. Advance Computing Conf., Hyderabad, India, pp. 7.

37. H. U. Khan. (2016). “Possible effect of video lecture capture technology on the cognitive empowerment of higher education students: A case study of gulf-based university,” International Journal of Innovation and Learning, vol. 20, no. 1, pp. 68–84.

38. V. F. Brock and H. U. Khan. (2017). “Are enterprises ready for big data analytics? A survey based approach,” International Journal of Business Information Systems, vol. 25, no. 2, pp. 256–277.

39. H. U. Khan and K. Alshare. (2019). “Violators versus non-violators of information security measures in organizations—A study of distinguishing factors,” Journal of Organizational Computing and Electronic Commerce, vol. 29, no. 1, pp. 4–23.

40. A. Rosebrock. (2015). “Find distance from camera to object/marker using Python and OpenCV,” Pyimagesearch. [Online]. Available: https://www.pyimagesearch.com/2015/01/19/find-distance-camera-objectmarker-using-python-opencv/.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |