DOI:10.32604/cmc.2021.016804

| Computers, Materials & Continua DOI:10.32604/cmc.2021.016804 |  |

| Article |

Accurate and Computational Efficient Joint Multiple Kronecker Pursuit for Tensor Data Recovery

1Guangdong Key Laboratory of Intelligent Information Processing, College of Electronics and Information Engineering, Shenzhen University, Shenzhen, 518061, China

2Department of Housing and Public Works, Queensland, Australia

3Customer Service Centre, Guangdong Power Grid Corporation, Guangzhou, China

*Corresponding Author: Peng Zhang. Email: pzhang66@126.com

Received: 12 January 2021; Accepted: 17 February 2021

Abstract: This paper addresses the problem of tensor completion from limited samplings. Generally speaking, in order to achieve good recovery result, many tensor completion methods employ alternative optimization or minimization with SVD operations, leading to a high computational complexity. In this paper, we aim to propose algorithms with high recovery accuracy and moderate computational complexity. It is shown that the data to be recovered contains structure of Kronecker Tensor decomposition under multiple patterns, and therefore the tensor completion problem becomes a Kronecker rank optimization one, which can be further relaxed into tensor Frobenius-norm minimization with a constraint of a maximum number of rank-1 basis or tensors. Then the idea of orthogonal matching pursuit is employed to avoid the burdensome SVD operations. Based on these, two methods, namely iterative rank-1 tensor pursuit and joint rank-1 tensor pursuit are proposed. Their economic variants are also included to further reduce the computational and storage complexity, making them effective for large-scale data tensor recovery. To verify the proposed algorithms, both synthesis data and real world data, including SAR data and video data completion, are used. Comparing to the single pattern case, when multiple patterns are used, more stable performance can be achieved with higher complexity by the proposed methods. Furthermore, both results from synthesis and real world data shows the advantage of the proposed methods in term of recovery accuracy and/or computational complexity over the state-of-the-art methods. To conclude, the proposed tensor completion methods are suitable for large scale data completion with high recovery accuracy and moderate computational complexity.

Keywords: Tensor completion; tensor Kronecker decomposition; Kronecker rank-1 decomposition

The problem of data recovery from limited number of observed entries, referred to as matrix completion or tensor recovery problem, had attracted significant attention in the pass decade and had been applied in various fields such as recommendation system [1,2], Bioinformatics data [3], computer vision [4,5] and image data [6,7].

In practice, the matrix data we observed is often of low rank structure. Therefore, the matrix completion problem can be solved by finding a low rank matrix to approximate the data matrix to be recovered. However, the process of rank minimization is NP-hard [8]. In order to relax the non-convex NP-hard problem to a traceable and convex one, [9,10] proposed to minimize the matrix nuclear norm instead of matrix rank. Based on this concept, many algorithms, such as the singular values thresholding (SVT) [11] and singular value projection (SVP) [12] approaches. Unfortunately, a great number of singular value decomposition (SVD) operations or iterations are required for these methods, leading to high computational and storage complexity.

In real world systems, the observed data is sometimes of high dimensional structure. The recovery of these tensor data, which can be regarded as a generalization of that of matrix completion to the high dimensional case, is becoming more and more important. It is assumed that the observed data tensor contains a low rank structure and thus the missing entries can be recovered by the minimizing the tensor rank. In fact, several tensor decomposition methods and definitions of tensor rank are employed for the completion problem. Reference [13] proposed to minimize the number of rank-1 tensors from CANDECOMP/PARAFAC (CP) decomposition to recover the original tensor data. However, the decomposition is very costly, and it might fail to give reasonable results in some cases because of the ill-posedness property [14]. Other than the CP decomposition, the Tucker decomposition that yields a core tensor and corresponding subspace matrices of each dimension, are employed for tensor completion [15,16]. However, these methods require the knowledge of tensor rank, and might be not applicable in some applications.

Recently, some new types of tensor decomposition methods are proposed. By operating the 3-dimensional (3-D) tensors as 2-D matrices using the tensor product, the tensor singular value decomposition (t-SVD) is proposed [17]. Then the corresponding tensor rank and tensor nuclear norm [18] are defined and used for tensor data recovery. This method can transform the decomposition of one 3-D tensor to slice-wise matrix ones. However, for tensor with number of dimension higher than 3, it will fold all the dimensions higher than 3 into the third one, resulting in a lost of higher order information. Other researchers, proposed to employ the Kronecker tensor decomposition (KTD) based on the Kronecker structure of general R-D tensor to solve the tensor completion problem [19]. This approach employed the idea that folding an R-D tensor into a high-order tensor and then unfolding it into a matrix by general unfolding [20]. By reducing the tensor computation to matrix computation, a similar matrix rank can be defined, and good recovery results can be obtained. However, the computational complexity of the KTD based methods are still unsatisfying because of the SVD operation of large matrices.

Furthermore, in order to improve the efficiency of data recovery, some orthogonal rank-1 matrix and tensor pursuit approaches had been proposed [21,22]. These methods transfer the minimization of the matrix rank or tensor rank to an iterative rank-1 pursuit problem, and achieve a moderate data recovery result with low computational complexity. In this work, we will propose two novel tensor completion methods based on the minimization of tensor Kronecker rank as well as technique of matching pursuit, and apply the tensor data recovery methods to several real world data completion applications.

The remainder of the paper is organized as follows. Section 2 presents the notations and definitions. In Section 3, the tensor recovery algorithms are developed in details. Experiments are provided in Section 4 and conclusions are drawn in Section 5.

The notations used in this paper is first defined. Scalars, vectors, matrices and tensors are denoted by italic, bold lower-case, bold upper-case and bold calligraphic symbols, respectively. The Frobenius-norm of a tensor

Definition 2.1. The 2-way folding of an R-D tensor

We can now go on to define the Kronecker tensor product [19] of two tensors

Definition 2.2. The

Definition 2.3. The KTD of a tensor

where

Following this definition, the tensor rank of

The task is to recover the R-D tensor

where

where

where F is the maximum number of Kronecker rank. We can solve this problem by a greedy matching pursuit type method.

3.1 Iterative Multiple Kronecker Tensor Pursuit

In this Iterative multiple Kronecker Tensor Pursuit (IKTP) method, we update one Kronecker rank-1 basis tensor

where

Supposing that after the (k −1)-

where

After solving the new Kronecker rank-1 basis tensor

By reshaping the tensor

Then we can go on to the

Table 1: Iterative Kronecker tensor pursuit

3.2 Joint Multiple Kronecker Tensor Pursuit

The proposed IKTP approach updates one Kronecker rank-1 basis tensor according to one of the G patterns alternatively. When the number of ranks to be recovered is large, a lot of iteration is required, making the proposed approach highly computational inefficient. To overcome this problem, we propose a Joint multiple Kronecker Tensor Pursuit (JKTP) method that updates all the G Kronecker rank-1 basis tensors in one iteration under the given patterns.

According to (4), suppose that after the (k −1)-

Under the given patterns

where

After solving the new Kronecker rank-1 basis tensors

Then the optimal solution

where

Table 2: Joint Kronecker tensor pursuit

In each iteration, the proposed IKTP algorithm will track all pursued bases and save them in the memory, leading to a high requirement of storage space. Furthermore, when the number of iteration is large, the LS solution (8) will compute the inverse of a large matrix, making it be rather computationally unattractive. To overcome these problems, an economic updating scheme

which update

And the updated recovered tensor becomes

Similarly, the economic updating technique can be used to update

And the recovered tensor can be obtained by

In this section, we conduct experiments based on both visual data and SAR imaging data completion. The proposed IKTP and JKTP methods as well as they economic realization, IKTP-econ and JKTP-econ, are evaluated, and the state-of-the-art algorithms, including Tucker [15], KTD [19], R1TP [21] and R1MP [22] are employed for comparison. For all algorithms, the stop criterion is met if the two conditions are reached:

First, the problem of image recovery by JKTP, JKTP-econ, IKTP, IKTP-econ, Tucker [15], KTD [19], and R1TP [22] methods from randomly sampled entries is tackled. Six images of dimensions

Figure 1: Images for testing: group 1 contain some patterns and those in group 2 are not

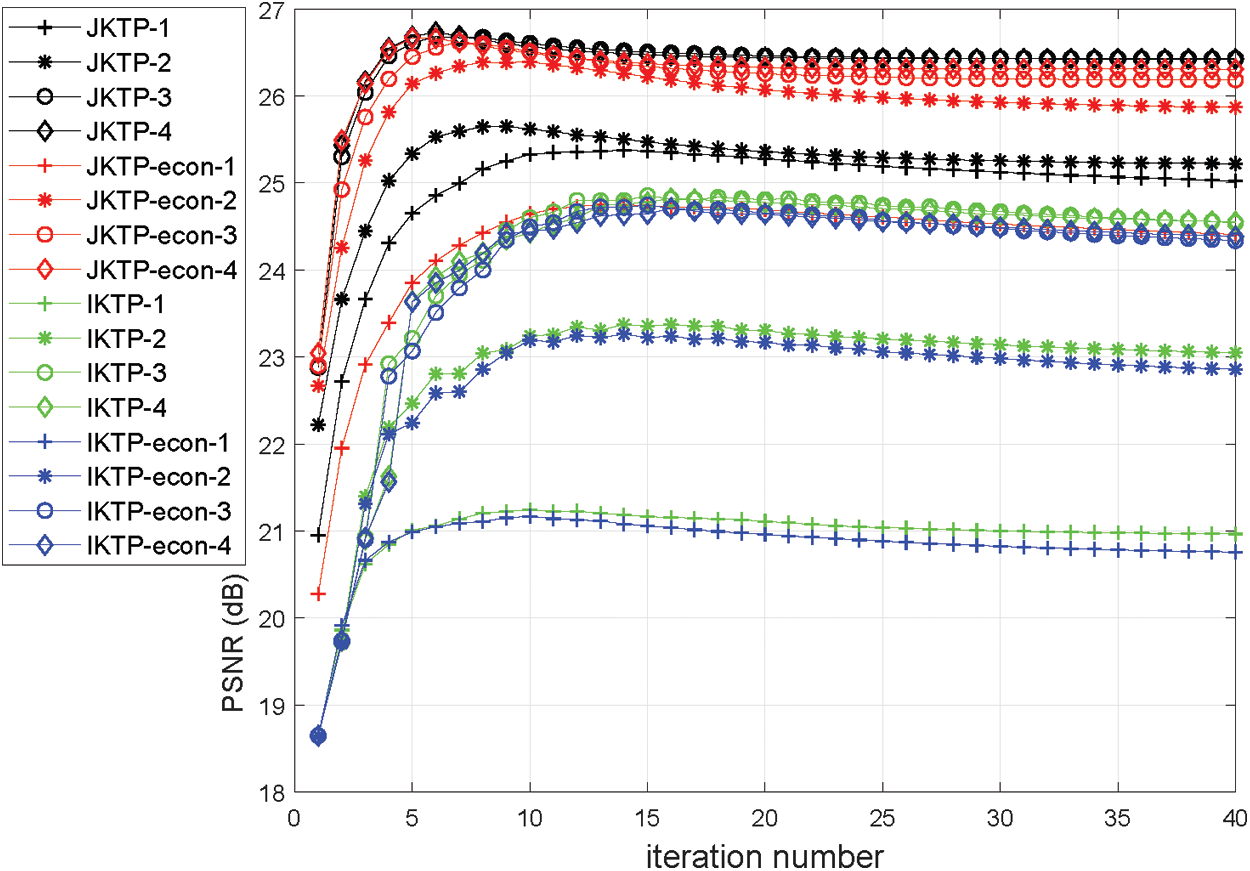

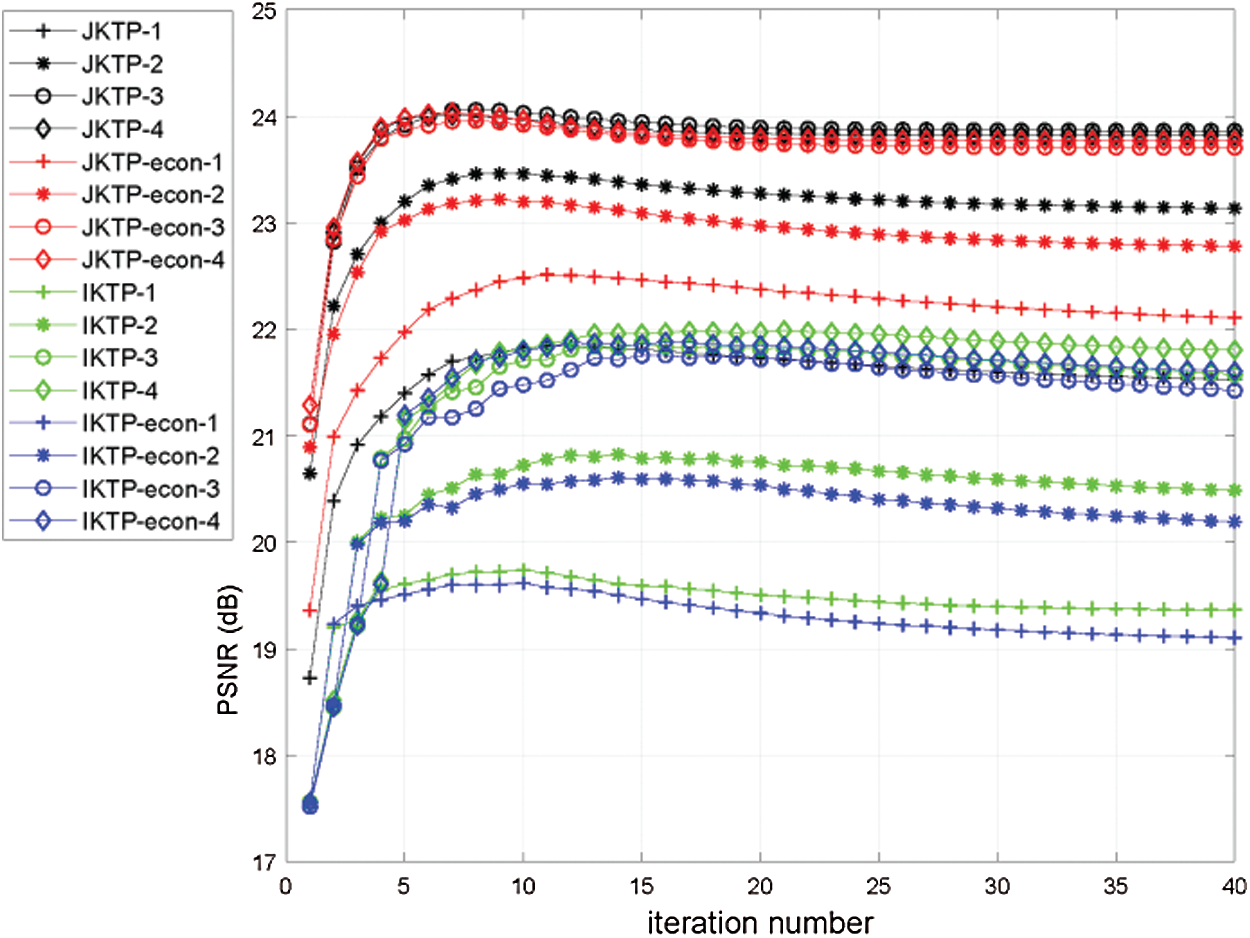

In the first test, the convergence performances of the proposed algorithms are verified. The images of dimensions

Figure 2: PSNR vs. numbers of iterations with 20% observed entries for group 1 images

Figure 3: PSNR vs. numbers of iterations with 20% observed entries for group 2 images

In the second test, the completion performances of the proposed approaches under different number of patterns and the state-of-the-art methods for image recovery under 10% observation rate are tested. The four patterns are the same as those in the first test. The average PSNR and SSIM results as well as the computational time in seconds of different methods on the first image, namely, the ‘Group 1: Beans’ under the resolution of

Table 3: Image recovery performance of different algorithms

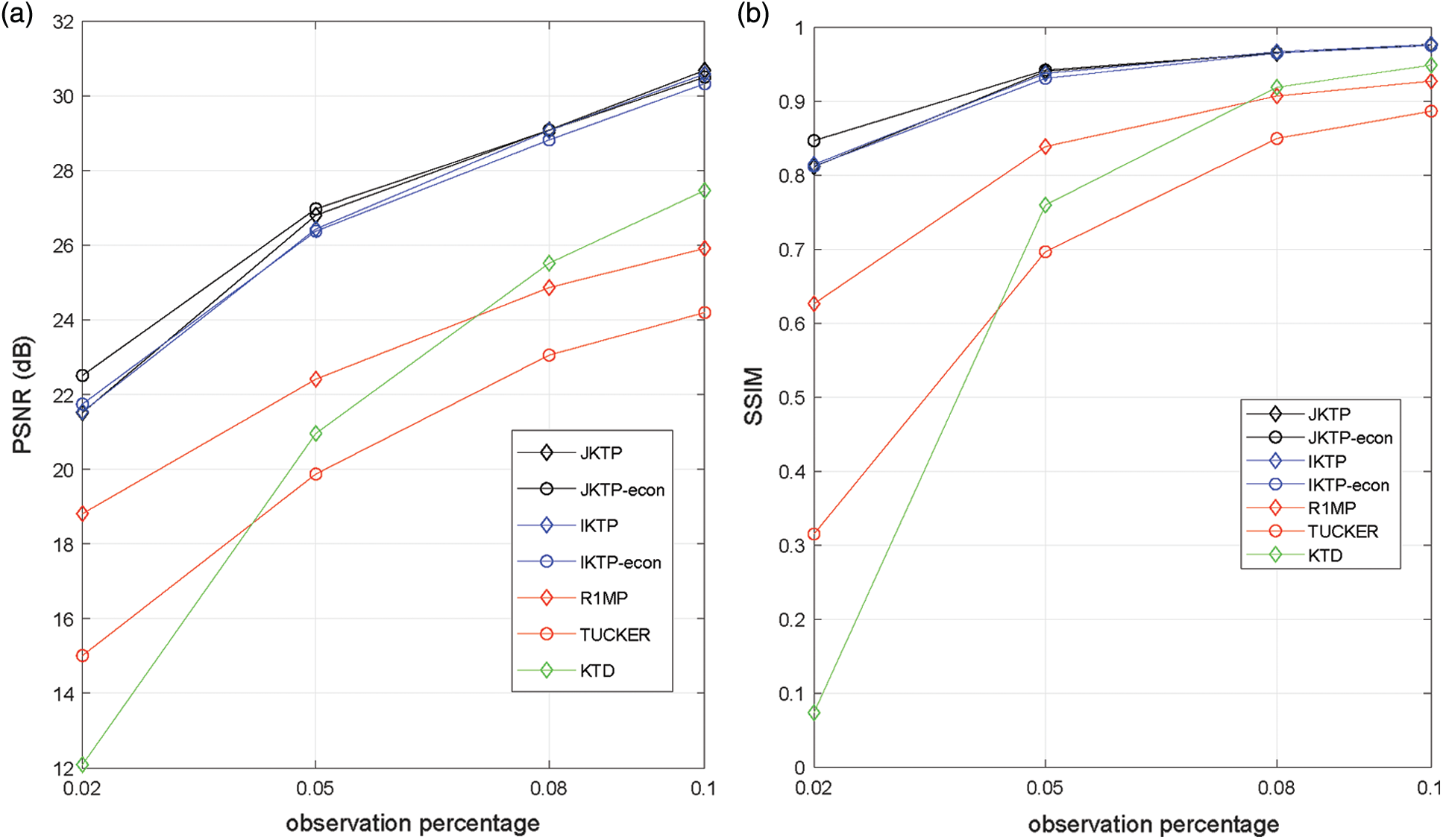

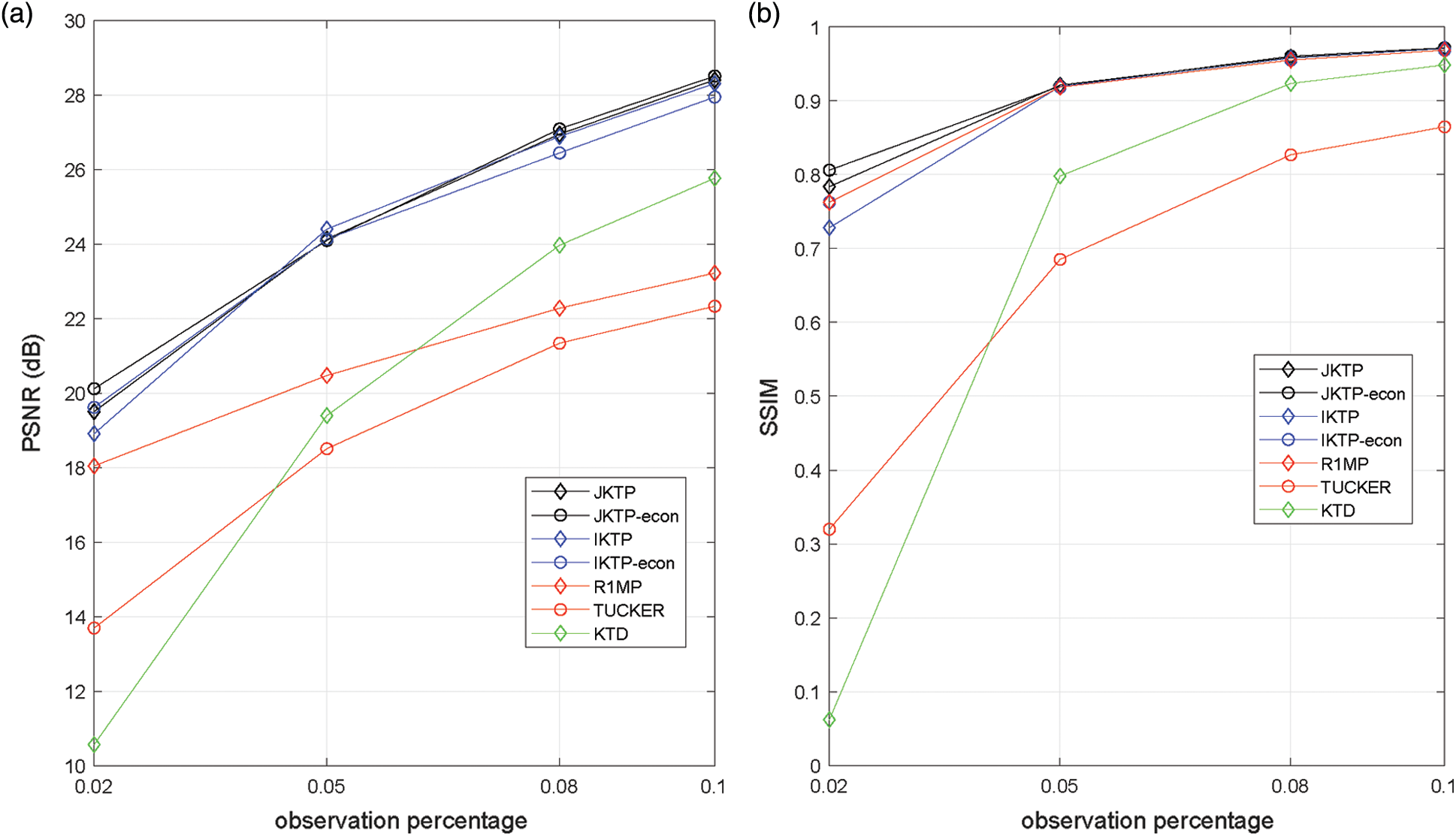

In the third test, the performance under different percentage of observation percentages, ranging from 2% to 10 %, is evaluated. The images with resolution

Figure 4: Average PSNR and SSIM vs. observation percentage of group 1 images (a) PSNR (b) SSIM

Figure 5: Average PSNR and SSIM vs. observation percentage of group 2 images (a) PSNR (b) SSIM

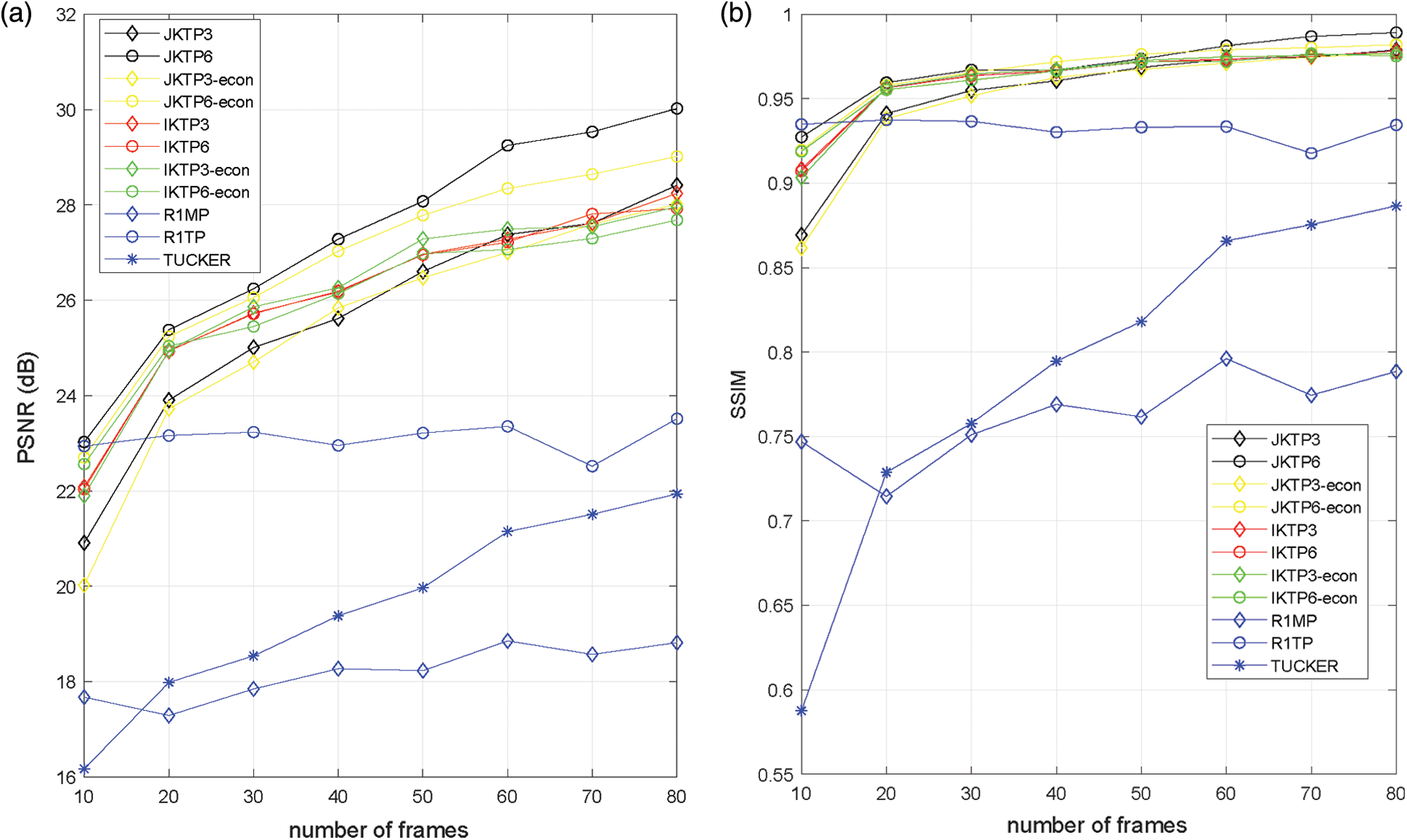

In this part, we test the algorithms JKTP, JKTP-econ, IKTP, IKTP-econ, Tucker [15], R1MP [19] and R1TP [21] under ‘Gun Shooting’ video [25,26] completion from randomly sampled entries, and the first and last frames of the video are shown in Fig. 6. Note that the KTD approach is not include for comparison because of its high computational complexity. The video is of dimensions

Figure 6: video data for testing

And the situations of different number of frames ranging from 10 to 80 are tested. The observation rate is set to 0.03, and for all the methods, a maximum number of 50 iterations is used. The patterns for the proposed methods are

Figure 7: PSNR and SSIM results vs. number of frames (a) PSNR (b) SSIM

Figure 8: Computational complexity vs. number of frames

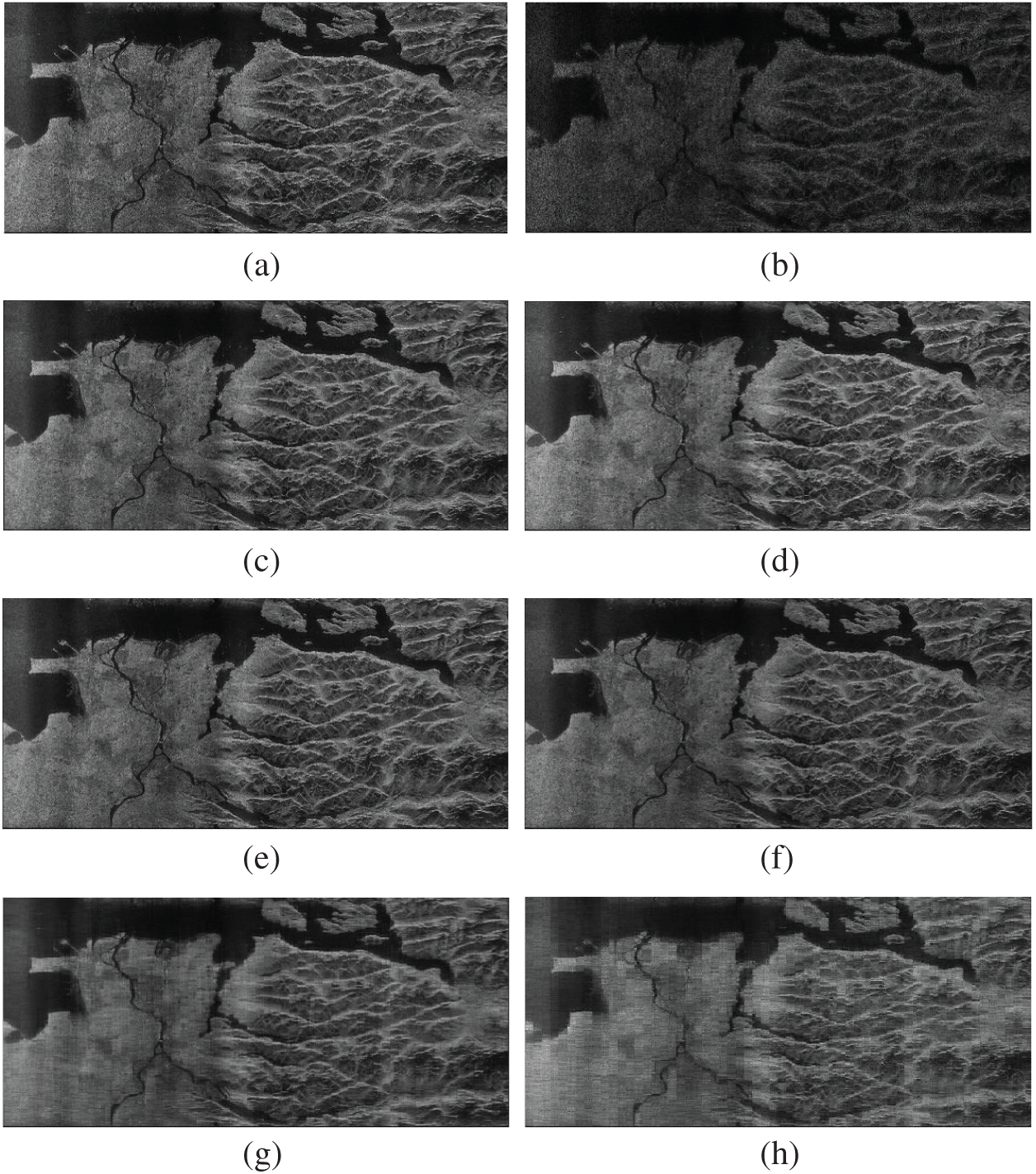

4.3 High Resolution SAR Imaging

In the end, we evaluate the algorithms with under-sampled high resolution SAR imaging data [27,28]. We employ the model shown in Fig. 9 to conduct our experiments as follows. First, scan the test scene to generate the data

Figure 9: Model of SAR image recovery

Figure 10: The original, sampled and recovered SAR images (a) Original (b) Sampled (c) JKTP (d) JKTP-econ (e) IKTP (f) IKTP-econ (g) R1MP (h) Tucker

Considering that the size

Table 4: Completion results with different step-sizes of completion under 10% to 30% observed entries

In this paper, the novel tensor completion algorithms combining the ideas of tensor Kronecker Decomposition and rank-1 tensor pursuit, named IKTP and JKTP, are proposed and applied to color image, video and SAR image inpainting. A novel weight update algorithm, to reduce the time and storage complexity, is also derived. Experimental results show that the proposed methods outperform the state-of-the-art algorithms in terms of PSNR, SSIM and MSE. For the tensor based algorithms, the results also show the advantage of the proposed methods in terms of computational complexity.

Funding Statement: The work described in this paper was supported in part by the Foundation of Shenzhen under Grant JCYJ20190808122005605, and in part by National Science Fund for Distinguished Young Scholars under grant 61925108, and in part by the National Natural Science Foundation of China (NSFC) under Grant U1713217 and U1913203.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. L. Gongshen, M. Kui, D. Jiachen, J. P. Nees, G. Hongyi et al., “An entity-association-based matrix factorization recommendation algorithm,” Computers, Materials & Continua, vol. 58, no. 1, pp. 101–120, 2019. [Google Scholar]

2. H. Bai, X. Li, L. He, L. Jin, C. Wang et al., “Recommendation algorithm based on probabilistic matrix factorization with adaboost,” Computers, Materials & Continua, vol. 65, no. 2, pp. 1591–1603, 2020. [Google Scholar]

3. N. Li and B. Li, “Tensor completion for on-board compression of hyper-spectral images,” in 17th IEEE Int. Conf. on Image Processing, Hong Kong, China, pp. 517–520, 2010. [Google Scholar]

4. J. Bengua, H. Phien, H. Tuan and M. Do, “Efficient tensor completion for color image and video recovery: Low-Rank tensor train,” IEEE Transaction on Image Processing, vol. 26, no. 5, pp. 2466–2479, 2017. [Google Scholar]

5. S. Gandy, B. Recht and I. Yamada, “Tensor completion and low-n-rank tensor recovery via convex optimization,” Inverse Problem, vol. 27, no. 2, pp. 25–43, 2011. [Google Scholar]

6. H. Yang, J. Yin and Y. Yang, “Robust image hashing scheme based on low-rank decomposition and path integral LBP,” IEEE Access, vol. 7, pp. 51656–51664, 2019. [Google Scholar]

7. R. Cheng and D. Lin, “Based on compressed sensing of orthogonal matching pursuit algorithm image recovery,” Journal of Internet of Things, vol. 2, no. 1, pp. 37–45, 2020. [Google Scholar]

8. L. Vandenberghe and S. Boyd, “Semidefinite programming,” SIAM Review, vol. 38, no. 1, pp. 49–95, 1996. [Google Scholar]

9. M. Fazel, H. Hindi and S. P. Boyd, “A rank minimization heuristic with application to minimum order system approximation,” in Proc. of the American Control Conf., Arlington, VA, USA, pp. 4734–4739, 2001. [Google Scholar]

10. M. Fazel, “Matrix rank minimization with applications,” Stanford University: Department of Electrical Engineering, Ph.D. thesis, 2002. [Google Scholar]

11. J. F. Cai, E. J. Candes and Z. Shen, “A singular value thresholding algorithm for matrix completion,” SIAM Journal on Optimization, vol. 20, no. 4, pp. 1956–1983, 2010. [Google Scholar]

12. P. Jain, R. Meka and I. S. Dhillon, “Guaranteed rank minimization via singular value projection,” Advanced in Neural Information Processing, vol. 20, no. 4, pp. 1956–1982, 2008. [Google Scholar]

13. T. G. Kolda and B. W. Bakder, “Tensor decompositions and applications,” SIAM Review, vol. 51, no. 3, pp. 455–500, 2009. [Google Scholar]

14. V. D. Silva and L. H. Lim, “Tensor rank and the ill-posedness of the best low-rank approximation problem,” SIAM Journal on Matrix Analysis Applications, vol. 30, no. 32, pp. 1084–1127, 2008. [Google Scholar]

15. W. Sun and H. C. So, “Accurate and computationally efficient tensor-based subspace approach for multi-dimensional harmonic retrieval,” IEEE Transactions on Signal Processing, vol. 60, no. 10, pp. 5077–5088, 2012. [Google Scholar]

16. T. Thieu, H. Yang, T. Vu and S. Kim, “Recovering incompelte data using tucker model for tensor with low-n-rank,” International Journal of Bioscience, Biochemistry and Bioinformatics, vol. 12, no. 3, pp. 34–40, 2016. [Google Scholar]

17. M. Kilmer and C. Martin, “Factorization strategies for third-order tensors,” Linear Algebra and Its Application, vol. 465, no. 3, pp. 641–658, 2011. [Google Scholar]

18. Z. Zhang and S. Aeron, “Exact tensor completion using t-SVD,” IEEE Transaction on Signal Processing, vol. 65, no. 6, pp. 1511–1526, 2017. [Google Scholar]

19. A. H. Phan, A. Cichocki, P. Tichavsky, D. P. Mandic and K. Matsuoka, “On revealing replicating structures in multiway data: A novel tensor decomposition approach,” in Proc. of Latent Variable Analysis and Signal Separationinternational Conf., Tel Aviv, Israel, pp. 297–305, 2012. [Google Scholar]

20. M. Wang, K. D. Duc, J. Fischer, G. Luta and A. Brockmeier, “Operator norm inequalities between tensor unfoldings on the partition lattice,” Linear Algebra and Its Application, vol. 520, no. 1, pp. 44–66, 2017. [Google Scholar]

21. W. Sun, L. Huang, H. C. So and J. J. Wan, “Orthogonal tubal rank-1 tensor pursuit for tensor completion,” Signal Processing, vol. 157, no. 2, pp. 213–224, 2019. [Google Scholar]

22. Z. Wang, M. J. Lai, L. Lu, W. Fan, H. Davulcu et al., “Orthogonal rank one matrix pursuit for low rank matrix completion,” SIAM Journal on Scientifific Computing, vol. 37, no. 1, pp. A488–A514, 2014. [Google Scholar]

23. M. Jaggi and M. Sulovsk, “A simple algorithm for nuclear norm regularized problems,” in Int. Conf. on Machine Learning, Haifa, Isreal, 2010. [Google Scholar]

24. M. Dudik, Z. Harchaoui and J. Malick, “Lifted coordinate descent for learning with trace-norm regularization,” in AISTATS, La Palma, Canary Islands, Spain, 2012. [Google Scholar]

25. “Pistol shot recorded at 73,000 frames per second,” Published by Discovery, 2015. [Online]. Available: https://youtu.be/7y9apnbI6GA. [Google Scholar]

26. W. Wang, V. Aggarwal and S. Aeron, “Efficient low rank tensor ring completion,” in IEEE Int. Conf. on Computer Vision, Venice, Italy, pp. 5698–5706, 2017. [Google Scholar]

27. D. Yang, G. S. Liao, S. Q. Zhu, X. Yang and X. P. Zhang, “SAR imaging with undersampled data via matrix completion,” IEEE Geoscience and Remote Sensing Letters, vol. 11, pp. 9, 2014. [Google Scholar]

28. S. Zhang, G. Dong and G. Kuang, “Matrix completion for downward-looking 3-D SAR imaging with a random sparse linear array,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 4, pp. 1994–2006, 2018. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |