Computers, Materials & Continua
DOI: 10.32604/cmc.2022.023172

Article

A BPR-CNN Based Hand Motion Classifier Using Electric Field Sensors

1Department of Computer Science, Georgia State University, Atlanta, 30302, USA

2Nat'l Program of Excellence in Software Centre, Chosun University, Gwangju, 61452, Korea

3Department of Computer Inf. & Communication Eng., Chonnam Nat'l Univ., Gwangju, 61186, Korea

*Corresponding Author: Youngchul Kim. Email: yckim@jnu.ac.kr

Received: 30 August 2021; Accepted: 18 November 2021

Abstract: In this paper, we propose a BPR-CNN (Biomimetic Pattern Recognition-Convolution Neural Network) classifier for hand motion classification, together with a dynamic threshold algorithm for detecting and extracting motion signals from EF (Electric Field) sensors. EF sensor, or EPS (Electric Potential Sensor), systems are attracting attention as a next-generation motion sensing technology because of their low computational cost and price and their high sensitivity and recognition speed compared with other sensor systems. However, accurately detecting and locating the authentic motion signal frame automatically and in real time remains a challenging problem when sensing body motions such as hand motions, because the electric-charge state varies with heterogeneous surroundings and operating conditions. This hinders further use of EF sensing; a robust and credible methodology for detecting and extracting motion-derived signals is therefore critical for applying EF sensor technology to consumer electronics such as mobile devices. In this study, we propose a motion detection algorithm using a dynamic offset-threshold method to overcome the uncertainty in the initial electrostatic charge state of the sensor, which is affected by the user and the surrounding environment. The method is designed to detect hand motions and extract the genuine motion signal frame with high accuracy. After setting the motion frames, we normalize the signals and feed them to the proposed BPR-CNN motion classifier to recognize the motion types. Experiments and analysis show that the proposed dynamic threshold method combined with a BPR-CNN classifier can detect hand motions and extract the actual frames effectively, with 97.1% accuracy, 99.25% detection rate, 98.4% motion frame matching rate and 97.7% detection & extraction success rate.

Keywords: BPR-CNN; dynamic offset-threshold method; electric potential sensor; electric field sensor; multiple convolution neural network; motion classification

The EF (Electric Field) sensor extracts information by sensing variations of the electric charge near the sensor surface and converting the signal into a voltage-level output. Today, EF sensors fall into two main modes: contact and non-contact. The contact mode has been widely applied in healthcare and medical applications for sensing bioelectric signals such as electrocardiograms, electromyograms and electroencephalograms [1–5]. The non-contact mode, in contrast, measures the electric potential signal induced on the surface of the EF sensor by disturbances of the surrounding electric field, which are caused through the coupling effect by the movement of dielectric substances such as human bodies or hands [6]. A few non-contact EF sensor systems have been applied in commercial products, while several academic studies have reported applications in proximity sensing, placement identification, etc. [7–10]. As EF proximity sensing systems gain attention, recent studies have addressed hand or body motion detection and recognition [11–21]. Our past studies [11–14] focused on non-contact EF sensing, extracting and processing the signals obtained through EF sensors. Moreover, multiple hand gesture classification mechanisms using deep learning algorithms such as LSTM and CNN were proposed, applied after a series of signal processing steps. Wimmer et al. [22] introduced the ‘Thracker’ device, which uses capacitive sensing and demonstrated the possibility of human-computer interaction through non-contact capacitive sensing. Singh et al. [15] proposed a gesture recognition system called Inviz for paralysis patients that uses textile-built capacitive sensors to measure the capacitive interaction between the patient's body and the sensor. Aezinia et al. [16] designed a three-dimensional finger tracking system based on a capacitive sensor, which was functional within a 10 cm range of the sensor.

In this paper, we present a real-time hand motion detection and classification system that adopts Biomimetic Pattern Recognition-Convolution Neural Network (BPR-CNN) classifiers combined with a dynamic threshold method for automatic motion detection and motion-frame extraction in EF signals (Fig. 1). The proposed system is fully automated: it detects motions in real time, extracts the true frame and classifies motion types occurring within a range of up to 30 cm from the EF sensors. The accuracy of hand motion detection and signal frame extraction is rated quantitatively through our proposed metrics. Furthermore, we present simulation results for our CNN architectures, Multiple CNN (MCNN) and BPR-CNN, alongside other classification algorithms, and empirically evaluate the hand gesture classification performance.

Figure 1: A proposed hand motion extraction and classification process

This paper is organized as follows. Section 2 describes our methods for computing the dynamic threshold for motion detection and frame extraction by analyzing the intrinsic features of the EF signals. We also explain the two CNN-based motion classifiers that were designed and applied in our system. In Section 3, we present the results of classifying four hand gestures through multiple experiments. Finally, we conclude our study and suggest future work.

In this section, we explain our dynamic threshold method for motion detection and frame extraction in the EF sensor signals. After setting the optimal signal frame, we normalize it and then transform the dimensions of the normalized data so that it can be used to train our proposed CNN models. We implement the MCNN and BPR-CNN to learn the features of the transformed signals effectively and thus classify the inputs into the corresponding gestures. Note that we handle signals that have already been processed by a Low-Pass Filter (LPF) and a Simple Moving Average (SMA). Since the natural frequency of the human hand or arm is known to be 5∼10 Hz [23], which lies in the extremely low frequency domain, we use 10 Hz as the cut-off frequency of the LPF. Readers can refer to our previous studies [11–14] for more information on implementing the LPF to filter out unnecessary noise and applying the moving average to smooth the filtered gesture signal from the sensor.
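To make the preprocessing stage concrete, the following Python sketch chains a low-pass filter with a 10 Hz cut-off and a simple moving average. It is only an illustration: the filter type (a first-order IIR filter), the sampling rate `fs_hz` and the averaging `window` are assumptions and are not taken from this paper or from [11–14].

```python
import math


def low_pass(samples, fs_hz, cutoff_hz=10.0):
    """First-order IIR low-pass filter (an assumed stand-in for the LPF)."""
    dt = 1.0 / fs_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor derived from the cut-off
    out = []
    prev = samples[0] if samples else 0.0
    for x in samples:
        prev = prev + alpha * (x - prev)
        out.append(prev)
    return out


def simple_moving_average(samples, window=5):
    """Trailing SMA; early outputs average only the samples seen so far."""
    out = []
    running = 0.0
    for i, x in enumerate(samples):
        running += x
        if i >= window:
            running -= samples[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))
    return out


def preprocess(samples, fs_hz=1000.0, cutoff_hz=10.0, window=5):
    """LPF followed by SMA; fs_hz and window are hypothetical values."""
    return simple_moving_average(low_pass(samples, fs_hz, cutoff_hz), window)
```

In this sketch a constant input passes through unchanged, while components far above 10 Hz are strongly attenuated before the averaging step.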

2.1 Dynamic Offset and Threshold

One of the challenging problems in dynamic thresholding, in order to detect the signal and locate the “genuine” signal frame, is to compute an offset voltage for each Electric Potential Sensor (EPS) and to adjust the threshold values periodically before detecting the target hand motion. As most hand motions and gestures are completed within a short period of time, we set the update cycle to one second. We used two EPSs (Sensors A and B, sensor type PS25401) [14,23], and each sensor started with a different initial offset because the electric charging and discharging states near the sensors vary with the environmental conditions at that moment.

Through our empirical past simulations, the initial voltage

While a sensor is operational, which would constantly generate S, two thresholds

Likewise, due to heterogeneous property of S, dynamic

Figure 2: (a) distribution of

Due to these features, dynamic
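The idea of re-estimating an offset and a pair of thresholds once per update cycle can be sketched in Python as follows. The specific rule used here (offset as the mean of the last cycle, thresholds at the offset plus or minus a multiple of the standard deviation) is an assumption chosen for illustration, as are the names `k` and `min_margin`; it is not the update formula of this paper.

```python
import statistics


def update_thresholds(window, k=3.0, min_margin=0.01):
    """Return (offset, lower, upper) estimated from one cycle of idle samples.

    offset is the mean voltage of the window (assumed offset estimate);
    lower/upper sit k standard deviations away, with a floor of min_margin.
    """
    offset = statistics.fmean(window)
    margin = max(k * statistics.pstdev(window), min_margin)
    return offset, offset - margin, offset + margin


def thresholds_per_cycle(samples, fs_hz, cycle_s=1.0, k=3.0):
    """Recompute the thresholds once per update cycle (one second in the text)."""
    n = int(fs_hz * cycle_s)
    return [update_thresholds(samples[i:i + n], k)
            for i in range(0, len(samples) - n + 1, n)]
```

Each sensor would keep its own triple, since the two sensors start from different initial offsets.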

2.2 Motion Detection and Extraction

Each individual hand motion generates different signal phases depending on conditions such as hand movement speed, distance and direction. To detect and extract the corresponding motion frames, we considered not only the duration but also the time frame, which can be divided into stages of motion. The detection consists of four steps, where the left and right terms enclosed in braces indicate the two contrasting cases (cases 1 and 2). The detection steps are as follows:

Note that when

However, this would cause intrinsic features to be lost, because such a frame includes only part of the actual motion signal and there may be more features on

Fig. 3 shows the signal of a hand gesture moving from left to right (LR). When another hand gesture is made, the system detects the motion and locates the following frame continuously. Fig. 3 contains two motion signals, one from each of the two sensors (A in red and B in blue); the dotted lines intersecting the signals mark the starting point (magenta dot) and ending point (black dot) of the frame. Each red and blue dot indicates the intersection point where

Figure 3: Motion frame extraction visualization

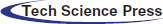
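A threshold-crossing frame extractor in the spirit of this subsection can be sketched in Python as below. This is a simplified illustration rather than the four-step procedure of this paper: it assumes a frame starts at the first sample outside the band between two thresholds and ends once a hypothetical number of consecutive samples (`hold`) has returned inside the band.

```python
def extract_frames(samples, lower, upper, hold=3):
    """Return (start, end) index pairs of motion frames.

    A frame starts at the first sample outside [lower, upper] and ends at the
    last outside sample once `hold` consecutive samples are back in the band.
    """
    frames = []
    start = None
    inside_run = 0
    for i, v in enumerate(samples):
        outside = v < lower or v > upper
        if start is None:
            if outside:
                start = i
                inside_run = 0
        elif outside:
            inside_run = 0  # the motion is still going on
        else:
            inside_run += 1
            if inside_run >= hold:
                frames.append((start, i - hold))
                start = None
    if start is not None:
        # A frame still open at the end of the buffer is closed at the last sample.
        frames.append((start, len(samples) - 1))
    return frames
```

Requiring several consecutive in-band samples before closing a frame keeps a brief zero crossing in the middle of a motion from splitting it into two frames.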

2.3 Normalization of an Extracted Motion Frame

When hand motions are identified by the sensor, the duration of the extracted frame varies even for motions of the same type, owing to the speed or distance range of the motion. Likewise, the amplitude of the motion signal tends to change with every new motion, since the subject's electrostatic potential state varies with numerous conditions such as clothing textile, location or nearby machines [4,9,11,12]. It is therefore imperative to normalize the frames before training deep learning models, as normalizing input data is known to be an effective way to improve performance. In our signal, time (X-axis) and voltage (Y-axis) are the two axes to be normalized. For Y-axis, standardization was applied and let

Figure 4: Visualization of original clockwise hand motion frame signal and normalized frame signal

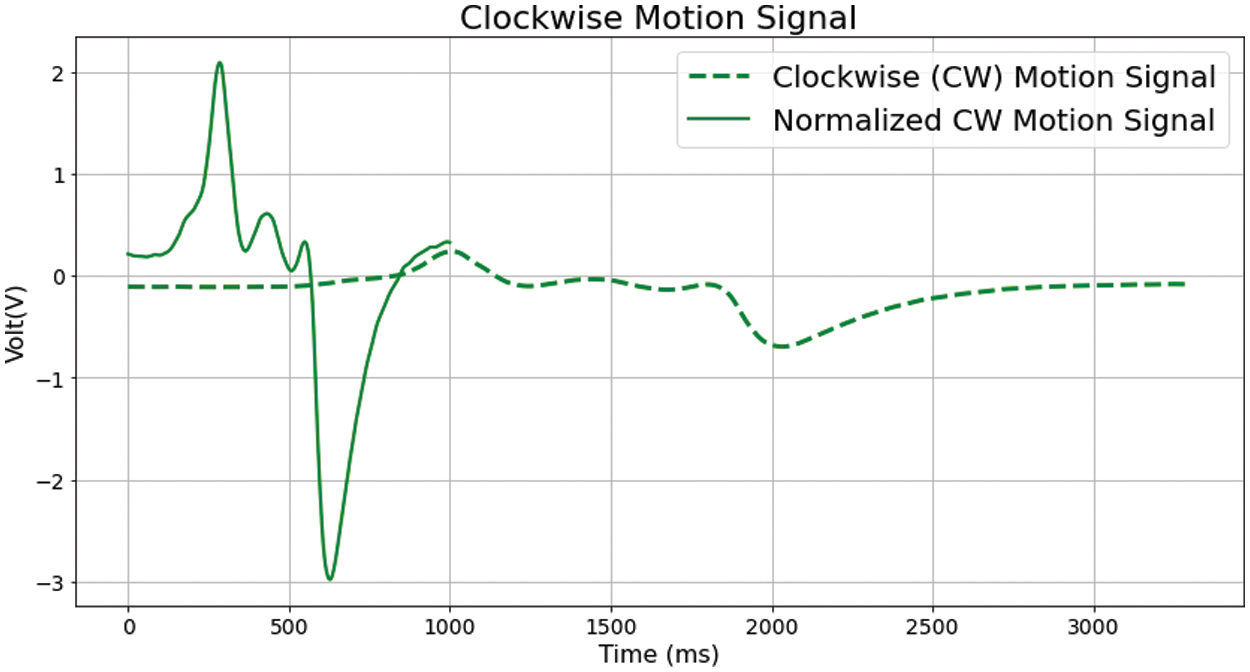
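The two normalization axes can be illustrated with a short Python sketch. The Y-axis step is ordinary z-score standardization, as named in the text. The X-axis step shown here, linear interpolation onto a fixed number of points, is an assumption, and the default length of 900 is chosen only because it matches a 30 × 30 image.

```python
import statistics


def standardize(frame):
    """Z-score the voltage values: zero mean and unit variance."""
    mean = statistics.fmean(frame)
    std = statistics.pstdev(frame)
    if std == 0.0:
        return [0.0] * len(frame)  # a flat frame carries no shape information
    return [(v - mean) / std for v in frame]


def resample(frame, length=900):
    """Linearly interpolate the frame onto `length` (>= 2) evenly spaced points."""
    if len(frame) == 1:
        return [frame[0]] * length
    step = (len(frame) - 1) / (length - 1)
    out = []
    for j in range(length):
        pos = j * step
        lo = min(int(pos), len(frame) - 2)
        frac = pos - lo
        out.append(frame[lo] * (1.0 - frac) + frame[lo + 1] * frac)
    return out


def normalize_frame(frame, length=900):
    """Standardize the voltage axis, then fix the length of the time axis."""
    return resample(standardize(frame), length)
```

With both steps applied, a slow and a fast execution of the same gesture yield frames of equal length and comparable amplitude.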

2.4 Signal Dimension Transformation

After normalizing the motion frame, the signal frame must be transformed from a 1-dimensional voltage signal into a 2-dimensional image in order to train the CNN model. Based on

Figure 5: Dimensional transformation of a motion frame: (a) the transformation schematization reshaped into (30 × 30 × 1) grayscale image, (b) the transformed images for 4 hand gestures
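One plausible way to carry out such a transformation is sketched below in Python. The row-major reshape and the min-max scaling to 8-bit gray levels are assumptions made for illustration; the mapping actually used in this paper may differ.

```python
def to_image(frame, side=30):
    """Reshape side*side samples into a side x side grayscale image (0..255)."""
    if len(frame) != side * side:
        raise ValueError("frame must contain exactly side * side samples")
    lo, hi = min(frame), max(frame)
    span = hi - lo
    # Min-max scale to gray levels; a flat frame maps to an all-zero image.
    pixels = [0 if span == 0 else round(255 * (v - lo) / span) for v in frame]
    # Row-major layout: the first `side` samples form the top row.
    return [pixels[r * side:(r + 1) * side] for r in range(side)]
```

Stacking the images obtained for the sensor A, sensor B and A-B signals would then give the three input channels described in Section 2.5.1.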

2.5 Motion Classification Through CNN Models

In this section, we define the structure of our two CNN-based classifiers, MCNN and BPR-CNN, which were implemented to categorize the types of input hand motion signal images effectively.

2.5.1 Multiple CNN Classifiers with Voting Logics

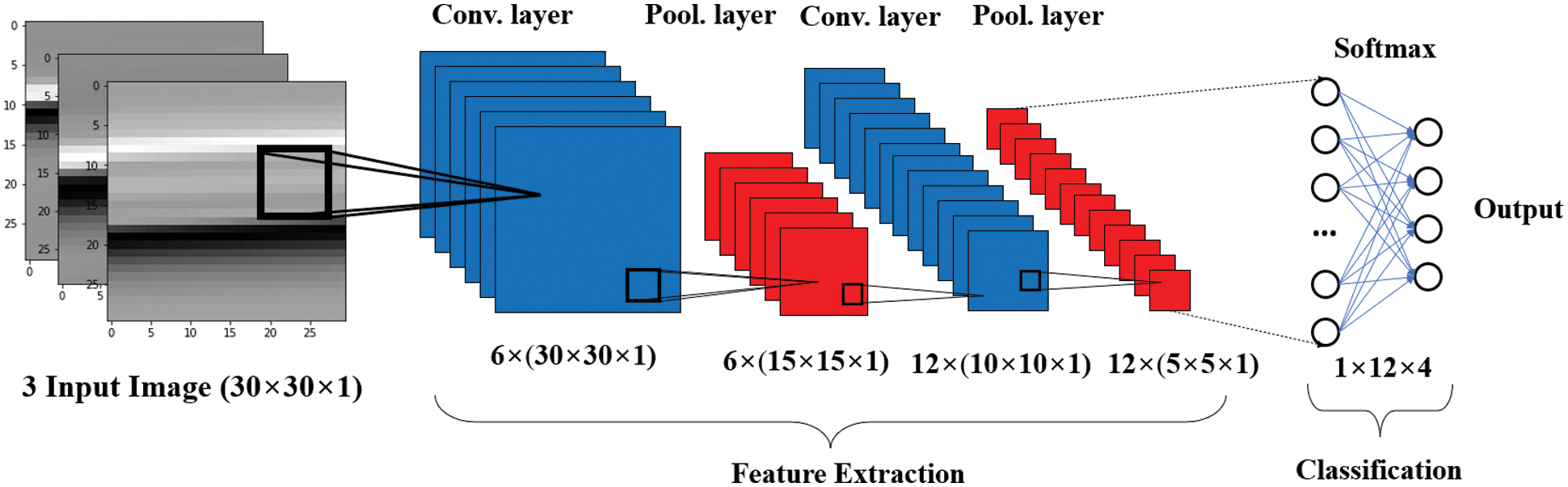

The first classifier is composed of five pre-trained CNN models, which operate in parallel with different filter sizes in their convolution layers. Fig. 6 shows a five-layer CNN structure that was applied in our MCNN.

Figure 6: An example of implemented CNN structure

This model was trained on the feature patterns extracted by the convolution layers, which learn from local features to global features. After the fully-connected layer and softmax function, it outputs the class probability

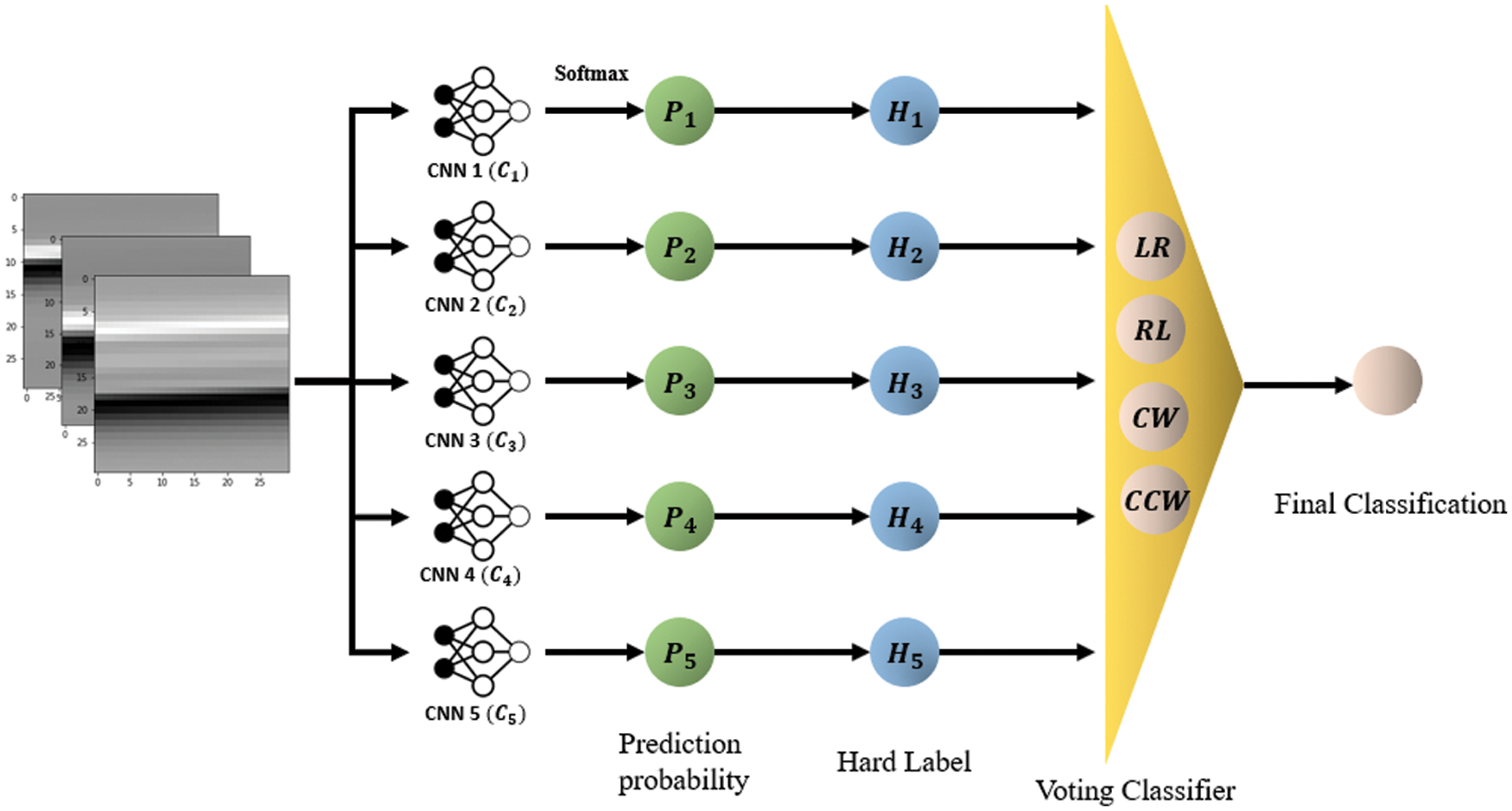

The outputs of the five CNNs are combined to make the final classification prediction through majority voting, as shown in Fig. 7. Each input consists of three 30 × 30 × 1 images, which represent the three channels for the sensor A, sensor B and A-B signals. These three motion signal images are extracted in real time and fed into the pre-trained CNN classifiers of Fig. 6.

Figure 7: Parallel multiple CNN with majority voting classifier

Our ultimate goal is to classify the four types of hand motions (Tab. 1) with high accuracy. Recall that the five CNNs use different kernel sizes (refer to Fig. 6 for the kernel sizes in each layer) and that the MCNN classifier conducts majority voting (Fig. 7) between soft labels. Let

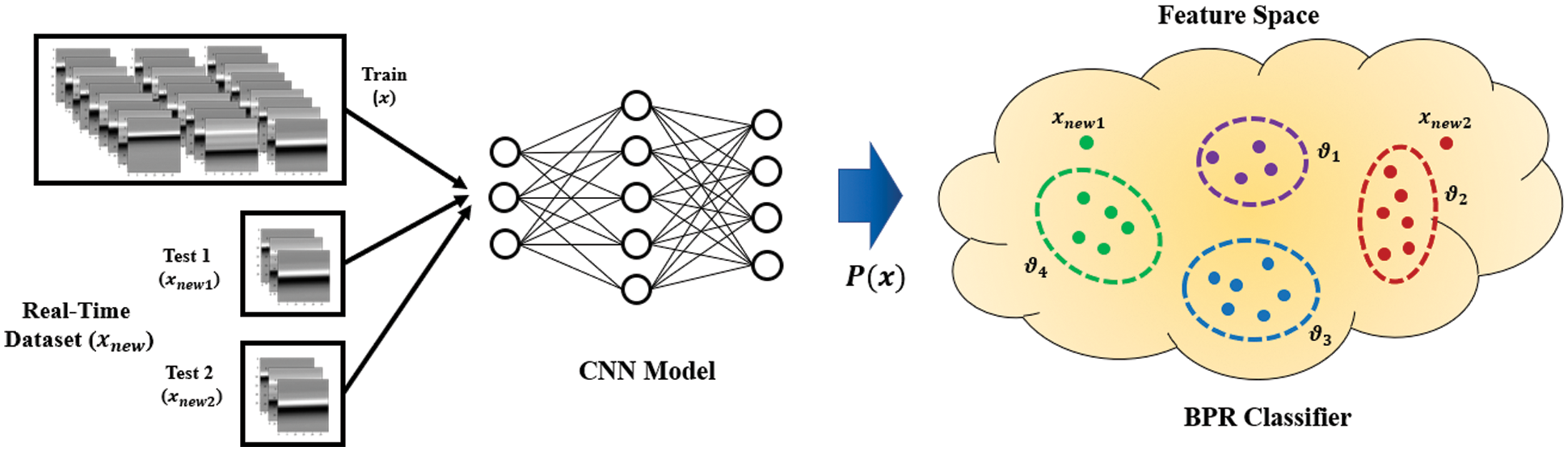
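The voting stage of the MCNN can be illustrated with a small Python sketch that takes the softmax outputs of several models. Classes are referred to by index, and the tie-breaking rule (the largest summed probability among the tied classes) is an assumption added so that the function always returns a label.

```python
from collections import Counter


def majority_vote(prob_vectors):
    """Pick the class most models vote for; break ties by summed probability."""
    # Each model votes for the index of its largest class probability.
    votes = Counter(max(range(len(p)), key=p.__getitem__) for p in prob_vectors)
    top = max(votes.values())
    tied = [c for c, n in votes.items() if n == top]
    if len(tied) == 1:
        return tied[0]
    totals = {c: sum(p[c] for p in prob_vectors) for c in tied}
    return max(tied, key=totals.__getitem__)
```

With five voters and four classes a tie such as 2-2-1 is possible, which is why the sketch falls back on the soft labels in that case.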

Biomimetic Pattern Recognition (BPR) [16,23–25] uses high-level topological features of biomimetic signals to discover patterns, focusing on the concept of cognizing feature topology. Combining BPR with a CNN (BPR-CNN) yields higher performance, as features are extracted by the CNN model and BPR computes the topological manifold properties in the given Euclidean parameter space based on Complex Geometry Coverage (CGC) [26], as shown in Fig. 8. Manipulating the prediction probability

Figure 8: Visualization of the BPR-CNN mechanism

However,

Our case specifically requires robust features in a high-level feature space where the trend of each hand motion signal image can be found. By implementing the BPR-CNN, we could obtain better input signal classification performance than with a conventional CNN, and we validate this through the experiments in Section 3. In BPR classifier, pre-trained CNN model
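The geometric coverage idea behind BPR can be illustrated with a simplified Python sketch. It assumes that each class is covered by line segments joining pairs of training feature vectors, and that a sample belongs to the class whose nearest segment lies within a coverage `radius`. The segment construction, the `radius` value and the rejection rule are illustrative assumptions, not the exact BPR-CNN procedure of this paper.

```python
import math


def point_to_segment(x, a, b):
    """Euclidean distance from point x to the segment between a and b."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ax = [xi - ai for ai, xi in zip(a, x)]
    denom = sum(v * v for v in ab)
    # Projection parameter clamped to [0, 1] so the closest point stays on the segment.
    t = 0.0 if denom == 0.0 else max(
        0.0, min(1.0, sum(u * v for u, v in zip(ax, ab)) / denom))
    closest = [ai + t * v for ai, v in zip(a, ab)]
    return math.dist(x, closest)


def bpr_classify(x, class_segments, radius):
    """Return the label whose coverage is nearest to x, or None if uncovered.

    class_segments maps a label to a list of (a, b) feature-vector pairs.
    """
    best_label, best_dist = None, float("inf")
    for label, segments in class_segments.items():
        d = min(point_to_segment(x, a, b) for a, b in segments)
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label if best_dist <= radius else None
```

Here `x` would be a feature vector taken from the pre-trained CNN, and returning `None` corresponds to a sample that falls outside the coverage of every class.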

We evaluate the performance of our methodology through empirical experiments. Using the EPS sensor [14,28], the four hand motion types listed in Tab. 1 were collected from each of six subjects, 100 gestures per motion, for a total of 2400 motion samples. We randomly split the dataset into 2160 samples for training and 240 for testing. The raw signal was processed through consecutive signal processing steps: LPF and SMA, followed by automatic hand motion detection and signal frame setting by the dynamic threshold, and signal normalization. Next, we transform the signal into an image, and a pre-trained classifier determines its label. Note that

Tab. 2 shows the results for our three designated metrics, which indicate that the proposed method works with a high accuracy of around 98% on average.

Tab. 3 displays the experimental results of the four classifier algorithms. Their performance was evaluated by classification accuracy on identical test data for the four hand motions in Tab. 1. We denote the average accuracy over the four motions as the Classification Correction Rate (CCR), which is reported in Tab. 3. For each motion in Tab. 1, three distinct output signals are produced: the sensor A value, the sensor B value and the subtracted value (A-B). The performance of the two CNN classifiers (MCNN and BPR-CNN) was also compared with other algorithms such as HMM and SVM. Our experimental results show that the proposed motion detection and frame extraction based on the two thresholds achieves high CDR and MFMR, and that the BPR-CNN classifier outperformed the other competing models in classification accuracy.
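Since CCR is described as the average of the per-motion accuracies, it can be computed as in the following Python sketch. The function name and the integer labels are hypothetical, and the example values are made up for illustration rather than taken from Tab. 3.

```python
def ccr(y_true, y_pred):
    """Return (mean of per-class accuracies, per-class accuracy dict)."""
    correct, total = {}, {}
    for t, p in zip(y_true, y_pred):
        total[t] = total.get(t, 0) + 1
        correct[t] = correct.get(t, 0) + (t == p)
    per_class = {c: correct[c] / total[c] for c in total}
    return sum(per_class.values()) / len(per_class), per_class


# Toy example with two classes: class 0 is right half the time, class 1 always.
score, per_class = ccr([0, 0, 1, 1], [0, 1, 1, 1])
```

Averaging per class rather than over all samples gives each motion type equal weight even when the test set is not perfectly balanced.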

In this paper, we proposed dynamic thresholding and framing algorithms to set an accurate motion frame in the EF signal in real time, and evaluated their performance using the following metrics: 99.4% in CDR, 98.4% in MFMR and 97.8% in DESR. Moreover, we implemented the MCNN and BPR-CNN motion classifiers and compared their accuracy with other algorithms. Based on the features extracted from the 3-channel (sensor A, B, A-B) input signal images, BPR-CNN showed the highest performance, 97.1% in CCR. EF sensing is regarded as a promising research domain with practical uses in industry owing to advantages such as low computation and price and high sensitivity and recognition speed. Our future work is to bring the introduced methods to mobile devices and apply the algorithms to control interfaces through non-contact hand motions. Training and classifying more diverse and detailed gestures to gain algorithmic robustness and versatility is also part of our future work. By combining our studies with interface technologies such as Human Computer Interaction (HCI) or Natural User Interface (NUI), we expect further use of EF sensing for controlling various applications through user-friendly interfaces.

Funding Statement: This work was supported by the NRF of Korea grant funded by the Korea government (MIST) (No. 2019 R1F1A1062829).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. R. J. Prance, A. Debray, T. D. Clark, H. Prance, M. Nock et al., “An ultra-low-noise electrical potential probe for human body scanning,” Measurement Science and Technology, vol. 11, no. 3, pp. 291–297, 2000.

2. E. Rendon-Morales, R. J. Prance, H. Prance and R. Aviles-Espinosa, “Non-invasive electrocardiogram detection of in vivo zebrafish embryos using electric potential sensors,” Applied Physics Letters, vol. 107, no. 19, pp. 3701, 2015.

3. R. K. Rajavolu and K. Sakumar, “Fetal ECG extraction on light of adaptive filters and wavelet transform approval and application in fetal heart rate variability analysis of low power IOT platform,” International Journal of Pure and Applied Mathematics, vol. 118, no. 14, pp. 307–313, 2018.

4. T. G. Zimmerman, J. R. Smith, J. A. Paradiso, D. Allport and N. Gershenfeld, “Applying electric field sensing to human-computer interfaces,” International Journal of Smart Home, vol. 11, no. 4, pp. 1–10, 2017.

5. H. Prance, “Sensor developments for electrophysiological monitoring in healthcare,” Applied Biomedical Engineering, pp. 265–286, 2011. [Online]. Available: https://www.intechopen.com.

6. M. Nath, S. Maity, S. Avlani, S. Weigand and S. Sen, “Inter-body coupling in electro-quasistatic human body communication: Theory and analysis of security and interference properties,” Scientific Reports, vol. 11, no. 1, pp. 1–15, 2021.

7. X. Tang and S. Mandal, “Indoor occupancy awareness and localization using passive electric field sensing,” IEEE Transactions on Instrumentation and Measurement, vol. 68, no. 11, pp. 4535–4549, 2019.

8. B. Fu, F. Kirchbuchner, J. V. Wilmsdorff, T. Grosse-Puppendahl, A. Braun et al., “Performing indoor localization with electric potential sensing,” Journal of Ambient Intelligence and Humanized Computing, vol. 10, no. 2, pp. 731–746, 2018.

9. D. R. Roggen, A. P. Yazan, F. Javier, O. Marales, R. J. Prance et al., “Electric field phase sensing for wearable orientation and localization applications,” in Proc. of 15th Int. Semantic Web Conf. 2016, Heidelberg, Germany, pp. 52–53, 2016.

10. S. Beardsmore-Rust, P. Watson, P. B. Stiffell, R. J. Prance, C. J. Harland et al., “Detecting electric field disturbances for passive through wall movement and proximity sensing,” in Proc. of Smart Biomedical and Physiological Sensor Technology, Orlando, USA, vol. 7313, 2009.

11. Y. R. Lee, “EPS gesture signal pattern recognition using multi-convolutional neural networks,” M.S. thesis, Chonnam National University, Gwangju, Korea, 2017.

12. S. I. Jeong and Y. C. Kim, “Hand gesture signal extraction and application to light controller using passive electric sensor,” in Proc. 5th Int. Conf. on Next Generation Computing 2019, ChiangMai, Thailand, pp. 130–132, 2019.

13. S. I. Jung, V. Kamoliddin and Y. C. Kim, “Automatic hand motion signal extraction algorithm for electric field sensor using dynamic offset and thresholding method,” in Proc. Korean Institute Smart Media 2021, Busan, Korea, pp. 169–172, 2021.

14. H. M. Lee, S. I. Jung and Y. C. Kim, “Real-time hand motion frame extraction using electric potential sensors,” in Proc. 9th Int. Conf. on Smart Media and Applications 2020, Guam, USA, pp. 1–5, 2020.

15. G. Singh, A. Nelson, R. Rubocci, C. Patel and N. Banerejee, “Inviz: low-power personalized gesture recognition using wearable textile capacitive sensor arrays,” in Proc. 2015 IEEE Int. Conf. on Pervasive Computing and Communications, St. Louis, MO, USA, pp. 198–206, 2015.

16. F. Aezinia, Y. Wang and B. Bahreyni, “Three dimensional touchless tracking of objects using integrated capacitive sensors,” IEEE Transactions on Consumer Electronics, vol. 58, no. 3, pp. 886–890, 2012.

17. D. N. Tai, I. S. Na and S. H. Kim, “HSFE network and fusion model based dynamic hand gesture recognition,” KSII Transactions on Internet and Information Systems, vol. 14, no. 9, pp. 3924–3940, 2020.

18. I. S. Na, C. Tran, D. Nguyen and S. Dinh, “Facial UV map completion for pose-invariant face recognition: A novel adversarial approach based on coupled attention residual UNets,” Human-centric Computing and Information Sciences, vol. 10, no. 45, pp. 1–17, 2020.

19. P. N. Huu, Q. T. Minh and H. L. The, “An ANN-based gesture recognition algorithm for smart-home applications,” KSII Transactions on Internet and Information Systems, vol. 14, no. 5, pp. 1967–1983, 2020.

20. S. Y. Lee, J. K. Lee and H. J. Cho, “A hand gesture recognition method using inertial sensor for rapid operation on embedded device,” KSII Transactions on Internet and Information Systems, vol. 14, no. 2, pp. 757–770, 2020.

21. H. M. Lee, I. S. Na and Y. C. Kim, “A multiple CNN hand motion classifier with a dynamic threshold method for motion extraction using EF sensors,” in Proc. of 16th Asia Pacific Int. Conf. on Information Science and Technology 2021, Busan, Korea, pp. 34–36, 2021.

22. R. Wimmer, P. Holleis, M. Kranz and A. Schmidt, “Thracker-using capacitive sensing for gesture recognition,” in Proc. of 26th IEEE Int. Conf. on Distributed Computing Systems Workshops, Lisboa, Portugal, pp. 64–64, 2006.

23. “Plessy semiconductors, datasheet for PS25014,” “PS25014A, PS25014B Application Boards for EPIC sensor PS25401,” [Online]. Available: https://www.mouser.com/datasheet/2/613/PS25201-EPIC-sensor-electrophysiology-high-gain-Da-1387263.pdf.

24. L. Zhou, Q. Li, G. Huo and Y. Zhou, “Image classification using biomimetic pattern recognition with convolutional neural networks features,” Computational Intelligence and Neuroscience, vol. 2017, pp. 1–12, 2017.

25. S. Wang and X. Chen, “Biomimetic (topological) pattern recognition-a new model of pattern recognition theory and its application,” in Proc. Int. Joint Conf. on Neural Networks, Portland, OR, USA, vol. 3, pp. 2258–2262, 2003.

26. S. Wang and X. Zhao, “Biomimetic pattern recognition theory and its applications,” Chinese Journal of Electronics, vol. 13, no. 3, pp. 373–377, 2004.

27. L. Aristidis, N. Vlassis and J. Verbeek, “The global k-means clustering algorithm,” Pattern Recognition, vol. 36, no. 2, pp. 451–461, 2003.

28. D. Mariama and J. Wessberg, “Human 8-to 10-hz pulsatile motor output during active exploration of textured surfaces reflects the textures’ frictional properties,” Journal of Neurophysiology, vol. 122, no. 3, pp. 922–932, 2019.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.