DOI:10.32604/cmc.2022.024431

Computers, Materials & Continua

Article

Deep Reinforcement Learning Enabled Smart City Recycling Waste Object Classification

1Department of Natural and Applied Sciences, College of Community-Aflaj, Prince Sattam bin Abdulaziz University, Saudi Arabia

2Department of Information Systems-Girls Section, King Khalid University, Mahayil, 62529, Saudi Arabia

3Department of Computer Science, King Khalid University, Muhayel Aseer, Saudi Arabia

4Faculty of Computer and IT, Sana'a University, Sana'a, Yemen

5Department of Information Systems, King Khalid University, Muhayel Aseer, 62529, Saudi Arabia

6Department of Computer and Self Development, Preparatory Year Deanship, Prince Sattam bin Abdulaziz University, AlKharj, Saudi Arabia

7Department of Information Systems, College of Computer and Information Sciences, Princess Nourah Bint Abdulrahman University, Saudi Arabia

8Department of Mathematics, Faculty of Science, Cairo University, Giza, 12613, Egypt

*Corresponding Author: Manar Ahmed Hamza. Email: ma.hamza@psau.edu.sa

Received: 17 October 2021; Accepted: 29 November 2021

Abstract: The Smart City concept revolves around gathering real-time data from citizens, personal vehicles, public transport, buildings, and other urban infrastructure such as the power grid and the waste disposal system. The insights obtained from these data can help municipal authorities manage assets and services effectively. At the same time, the massive increase in environmental pollution and degradation, which leads to ecological imbalance, has become an active research topic. Moreover, the progressive development of smart cities around the globe requires intelligent waste management systems that properly categorize waste according to its biodegradability. Some of the most common types of waste are paper, paper boxes, food, and glass. Computer-vision-based solutions offer a cost-effective way to classify waste objects and separate them from large dumps of garbage and trash. Recent developments in deep learning (DL) and deep reinforcement learning (DRL) have made it possible to classify waste objects by identifying and detecting them. In this context, this paper designs an intelligent DRL-based recycling waste object detection and classification (IDRL-RWODC) model for smart cities. The goal of the IDRL-RWODC technique is to detect and classify waste objects using DL and DRL techniques. The IDRL-RWODC technique is a two-stage process consisting of Mask Region-based Convolutional Neural Network (Mask RCNN) based object detection and DRL-based object classification. DenseNet is used as the baseline (backbone) network of the Mask RCNN model, and a deep Q-learning network (DQLN) is employed as the classifier. Moreover, a dragonfly algorithm (DFA) based hyperparameter optimizer is derived to improve the efficiency of the DenseNet model.
To validate the waste classification performance of the IDRL-RWODC technique, a series of simulations was carried out on a benchmark dataset, and the experimental results show better performance than recent techniques, with a maximum accuracy of 0.993.

Keywords: Smart cities; deep reinforcement learning; computer vision; image classification; object detection; waste management

In smart cities, intelligent waste management is a new frontier for local authorities that aim to reduce municipal solid waste and raise community recycling rates. Since cities spend heavily on managing waste in public places, smart city waste management programs can improve performance. The rapid growth of urbanization, global population, and industrialization has drawn increasing attention to environmental degradation. With the global population growing at an alarming rate, the environment has degraded severely, resulting in dire conditions. According to a 2019 report, India generates over 62 million tons (MT) of solid waste each year [1]. Concern has grown about the need to segregate waste into non-biodegradable and biodegradable categories. In the Indian context, waste generally consists of plastic, paper, metal, rubber, textiles, glass, sanitary products, organics, infectious materials (clinical and hospital), electrical and electronic items, and hazardous substances (chemicals, paint, and sprays); these are broadly categorized as non-biodegradable (NBD) and biodegradable (BD) wastes, with shares of 48% and 52%, respectively [2]. Furthermore, according to a recent Indian government report, the items most commonly disposed of in garbage are food, glass, paper, and paper boxes [3]. Together these make up over 99.5% of the garbage collected, which clearly indicates that people dispose of wet and dry waste together.

Effective waste segregation supports the appropriate recycling and disposal of waste according to its biodegradability. Modern conditions therefore call for a smart waste segregation scheme that addresses the above-mentioned causes of ecological damage. Consequently, waste segregation has attracted attention from many academics and researchers around the world. Recycling systems could become more effective by keeping pace with industrial development, yet waste separation in these systems still relies on human labor [4]. However, advances in deep learning architectures and artificial intelligence may soon raise system productivity beyond what manual sorting achieves. In particular, decision-making previously performed by humans can be transferred quickly and successfully to machines through AI models. Given these developments, it seems inevitable that recycling systems built on DL frameworks will be employed for waste classification [5].

Conventional waste classification relies largely on manual sorting, which is inefficient. Although current waste classification driven by machine learning (ML) methods can operate effectively, its classification performance still needs to be improved. An analysis of existing DL-based waste classification methods points to two reasons why their classification performance is difficult to improve [6]. First, because DL methods have different architectures, they behave differently across datasets. Second, only a small number of waste image datasets are available for training, and there is no large-scale database comparable to ImageNet [7]. In addition, because garbage comprises many different kinds of items, the absence of an accurate and clear category scheme lowers classification performance. For example, plastic bags and plastic bottles are both made of plastic, but they differ in characteristics and shape and are disposed of in different ways.

Deep reinforcement learning (DRL) is a subfield of ML that combines reinforcement learning (RL) and deep learning (DL). The literature contains a variety of algorithms built on the underlying concept of iterative learning [7], which represent and refine data in order to predict better outcomes and make improved decisions. The scientific community largely trains DL models on graphics processing units (GPUs) to accelerate research and applications, and these models now exceed the performance of most traditional ML algorithms in video analytics. DRL techniques have also been extended to three dimensions to extract spatio-temporal features from video feeds that can distinguish objects from one another.

This paper designs an intelligent DRL-based recycling waste object detection and classification (IDRL-RWODC) model. The IDRL-RWODC technique is a two-stage process consisting of Mask Region-based Convolutional Neural Network (Mask RCNN) based object detection and DRL-based object classification. DenseNet is used as the baseline (backbone) network of the Mask RCNN model, and a deep Q-learning network (DQLN) is employed as the classifier. To validate the waste classification performance of the IDRL-RWODC technique, an extensive experimental analysis evaluates its efficacy in terms of several measures.

Ziouzios et al. [8] proposed a cloud-based classification approach, using ML algorithms, for automated machines in recycling factories. They trained an efficient MobileNet model capable of classifying five distinct kinds of waste, with inference performed in real time on a cloud server. Several techniques, such as hyperparameter tuning and data augmentation, were used to improve classification performance. Adedeji and Wang [9] proposed an intelligent waste material classification system that uses a pretrained 50-layer residual network (ResNet-50) CNN as the feature extractor and a support vector machine (SVM) to classify waste into groups such as metal, glass, plastic, and paper.

Chu et al. [10] propose a multilayer hybrid system (MHS) based on DL for automatically sorting waste disposed of by individuals in urban public areas. The system uses a high-resolution camera to capture images of the waste and sensors to collect other useful feature data. The MHS uses a CNN to extract image features and a multilayer perceptron (MLP) to combine these image features with the other feature data and classify whether the waste is recyclable. Gan and Zhang [11] propose a DL-based approach to municipal solid waste (MSW) recycling and classification that uses a CNN to build the classification algorithm and to recognize garbage intelligently in real time, improving the speed and accuracy of garbage image detection. Narayan [12] proposed DeepWaste, an easy-to-use mobile app that uses highly optimized DL models to give users instantaneous classification of waste into recycling, compost, and trash; the work evaluated various CNN architectures for detecting and classifying waste materials. Huang et al. [13] proposed a combination classification model based on three CNNs pretrained on ImageNet (NASNetLarge, VGG19, and DenseNet169) to achieve higher classification performance. In this method, a transfer learning (TL) model built on each pretrained network serves as a candidate classifier, and the best output of the three candidate classifiers is selected as the final classification result.

Zhang et al. [14] aim to improve waste sorting performance with a DL model, enabling smart waste classification on mobile phones or computer vision terminals. A self-monitoring module is added to the residual network; it integrates the relevant features of each channel's feature map, compresses the spatial dimension of the features, and has a global receptive field. In Toğaçar et al. [15], the waste classification dataset was reconstructed using an autoencoder (AE) network. Feature sets were then extracted from the two datasets with CNN architectures and combined. A ridge regression (RR) model applied to the combined feature set reduced the number of features and revealed the most effective ones. An SVM was used as the classifier in every experiment.

Wang [16] explores applications of DL in environmental protection, applying the VGG16 CNN model to the classification and identification of domestic garbage. The solution first uses the OpenCV library to locate and select the object to be recognized and preprocesses the image into a 224 × 224 pixel RGB image, the input expected by the VGG16 network. Next, after data augmentation, a VGG16 CNN is built on the TensorFlow framework with ReLU activations and batch normalization (BN) layers, which accelerate convergence while preserving detection performance. Rajak et al. [17] present an automated DL-based waste classification system for wastes such as paper, metal, plastic, and non-recyclable waste. Their computer vision model classifies waste in real time using the AlexNet CNN, so that each item can be dropped into the proper compartment as it is thrown into the dustbin.

Kumar et al. [18] investigate a DL-based method for segregating waste to enable its efficient disposal and recycling. The YOLOv3 model, implemented in the Darknet neural network framework, was trained on a self-made dataset covering six object classes: glass, cardboard, paper, metal, organic, and plastic waste. Sousa et al. [19] proposed a hierarchical DL model for waste detection and classification in food trays. Their two-stage method retains the benefits of current object detectors (such as Faster RCNN) and allows classification to be performed on high-resolution bounding boxes. They also collected, annotated, and released to the research community a new dataset called Labeled Waste in the Wild.

In this study, a new IDRL-RWODC technique is presented for recycling waste object detection and classification. The IDRL-RWODC technique uses Mask RCNN with a DenseNet backbone to detect and mask waste objects in the scene, and the DRL-based DQLN technique then classifies the detected objects into distinct class labels. The details of these processes are given in the following subsections.

Given a waste object image dataset

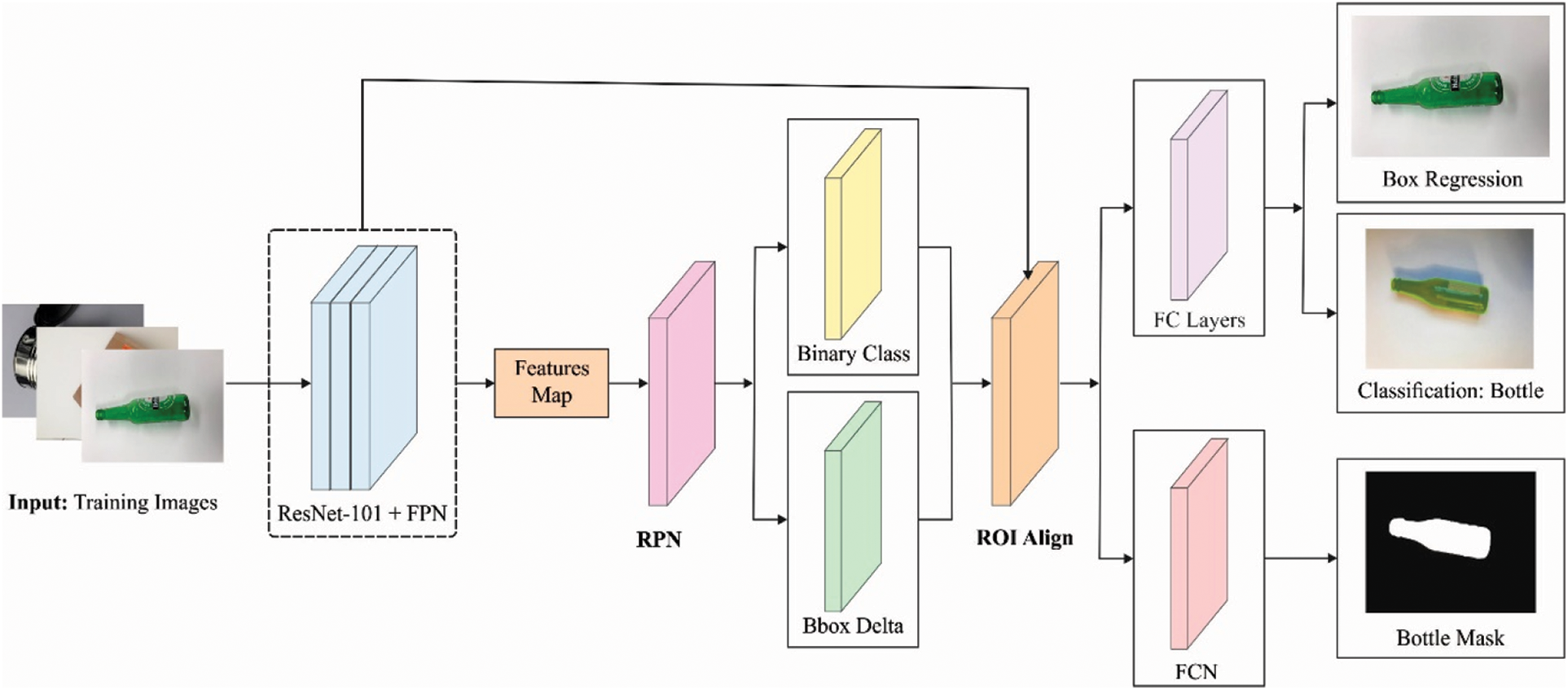

3.2 Mask RCNN Based Object Detection Model

In the first stage, the Mask RCNN model detects waste objects in the image. Mask RCNN is a simple, flexible, and general framework for object recognition, detection, and instance segmentation that efficiently detects objects in an image while generating a high-quality segmentation mask for each instance. The region proposal network (RPN), the second component of Mask R-CNN, shares full-image convolutional features with the detection network, which makes region proposals nearly cost-free; this is why Mask R-CNN uses the RPN instead of selective search. The RPN is a fully convolutional network (FCN) that simultaneously predicts object bounds and objectness scores at each position.
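To illustrate how the RPN predicts object bounds at every position of the shared feature map, the NumPy sketch below enumerates anchor boxes at each feature-map location and applies box-regression offsets to them. It assumes the standard single-level Faster R-CNN anchor scheme (stride 16, scales 128/256/512, aspect ratios 0.5/1/2) and the usual (dx, dy, dw, dh) parameterization; the FPN-based Mask RCNN in this paper assigns anchor scales per pyramid level, and its exact settings are not given in the text. `generate_anchors` and `decode_boxes` are hypothetical helper names, and the convolutional head that predicts the offsets and objectness scores is omitted.

```python
import numpy as np

def generate_anchors(feat_h, feat_w, stride=16, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (feat_h * feat_w * len(scales) * len(ratios), 4) anchors as (x1, y1, x2, y2)."""
    base = []
    for scale in scales:
        for ratio in ratios:                 # ratio = height / width; area stays scale**2
            w = scale / np.sqrt(ratio)
            h = scale * np.sqrt(ratio)
            base.append([-w / 2, -h / 2, w / 2, h / 2])
    base = np.array(base)                                      # (A, 4), centred at the origin
    cx = (np.arange(feat_w) + 0.5) * stride                    # anchor centres in image pixels
    cy = (np.arange(feat_h) + 0.5) * stride
    cxx, cyy = np.meshgrid(cx, cy)                             # both (feat_h, feat_w)
    shifts = np.stack([cxx, cyy, cxx, cyy], axis=-1).reshape(-1, 1, 4)
    return (shifts + base[None, :, :]).reshape(-1, 4)

def decode_boxes(anchors, deltas):
    """Apply regression offsets (dx, dy, dw, dh) to anchors (standard R-CNN parameterization)."""
    widths = anchors[:, 2] - anchors[:, 0]
    heights = anchors[:, 3] - anchors[:, 1]
    ctr_x = anchors[:, 0] + 0.5 * widths
    ctr_y = anchors[:, 1] + 0.5 * heights
    dx, dy, dw, dh = deltas.T
    pred_ctr_x = ctr_x + dx * widths
    pred_ctr_y = ctr_y + dy * heights
    pred_w = widths * np.exp(dw)
    pred_h = heights * np.exp(dh)
    return np.stack([pred_ctr_x - pred_w / 2, pred_ctr_y - pred_h / 2,
                     pred_ctr_x + pred_w / 2, pred_ctr_y + pred_h / 2], axis=1)

anchors = generate_anchors(feat_h=4, feat_w=5)
print(anchors.shape)   # (180, 4): 4 * 5 positions x 9 anchors
```

Each of the 4 × 5 positions receives 3 × 3 = 9 anchors; an RPN head would output one objectness score and one set of offsets per anchor, and zero offsets leave an anchor unchanged.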

Mask R-CNN performs three tasks: target recognition, detection, and segmentation. The input image is first passed to the feature pyramid network (FPN), which produces five groups of feature maps of different sizes, and the RPN then generates candidate regions [21]. From the candidate regions and the feature maps, the model obtains the class, bounding box, and mask of each target. To further increase computational speed, the model was adapted to the real-time requirements of a fast-driving anti-collision warning system by improving the FPN structure and the RPN parameter settings, so that targets are recognized, detected, and segmented simultaneously. Fig. 1 illustrates the structure of Mask RCNN for waste object detection.

Figure 1: Structure of mask RCNN for waste object detection

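During training, Mask RCNN minimizes a multi-task loss defined on each sampled region of interest (RoI):

$$L = L_{cls} + L_{box} + L_{mask}$$

where $L_{cls}$ is the classification loss, $L_{box}$ is the bounding-box regression loss, and $L_{mask}$ is the mask loss, computed as the average binary cross-entropy over the mask predicted for the ground-truth class.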

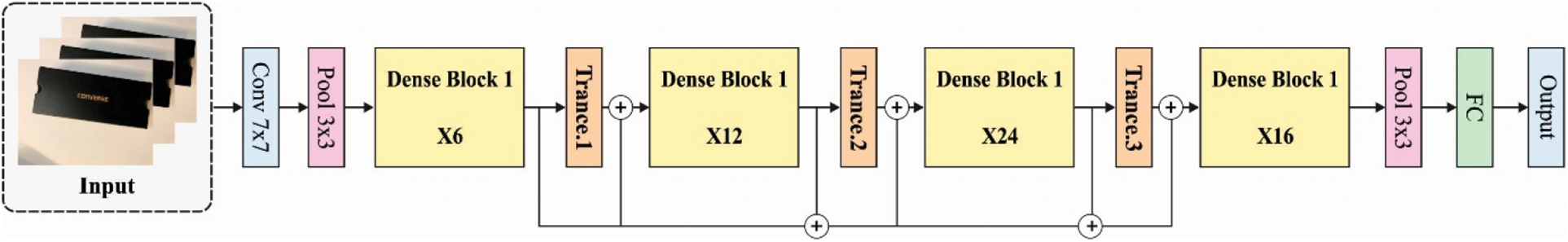
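The multi-task objective $L = L_{cls} + L_{box} + L_{mask}$ for a single positive RoI can be sketched in NumPy as follows. The sketch assumes the standard Mask R-CNN definitions (softmax cross-entropy for $L_{cls}$, smooth-L1 for $L_{box}$, and mean per-pixel sigmoid binary cross-entropy on the ground-truth class's mask for $L_{mask}$) with equal weights. For brevity it omits the background class and the class-specific box regressors of the real model; the function names, the six-class setting, and the 4×4 mask size are illustrative assumptions.

```python
import numpy as np

def softmax_cross_entropy(logits, label):
    """L_cls: negative log of the softmax probability assigned to the true class."""
    shifted = logits - logits.max()                   # subtract max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[label])

def smooth_l1(pred, target, beta=1.0):
    """L_box: smooth-L1 loss summed over the four box-regression offsets."""
    diff = np.abs(pred - target)
    per_coord = np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)
    return float(per_coord.sum())

def mask_bce(mask_logits, gt_mask, label):
    """L_mask: mean per-pixel binary cross-entropy on the true class's mask only."""
    logits = mask_logits[label]                       # masks of the other classes are ignored
    # BCE with logits: log(1 + e^x) - y * x, written stably with logaddexp.
    bce = np.logaddexp(0.0, logits) - gt_mask * logits
    return float(bce.mean())

def roi_loss(cls_logits, label, box_pred, box_target, mask_logits, gt_mask):
    """Multi-task loss L = L_cls + L_box + L_mask for one positive RoI."""
    parts = (softmax_cross_entropy(cls_logits, label),
             smooth_l1(box_pred, box_target),
             mask_bce(mask_logits, gt_mask, label))
    return sum(parts), parts

rng = np.random.default_rng(0)
num_classes, m = 6, 4                                 # e.g. six waste classes, 4x4 mask head
total, parts = roi_loss(
    cls_logits=rng.normal(size=num_classes),
    label=2,
    box_pred=np.array([0.1, -0.2, 0.05, 0.3]),
    box_target=np.zeros(4),
    mask_logits=rng.normal(size=(num_classes, m, m)),
    gt_mask=(rng.random((m, m)) > 0.5).astype(float),
)
```

Computing the mask loss only on the ground-truth class's mask decouples mask prediction from classification, which is the main design choice that distinguishes Mask R-CNN's mask branch from a per-pixel softmax over classes.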

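In this study, the DenseNet model is employed as the baseline (backbone) network of Mask RCNN. DenseNet [22] is a DL architecture in which layers are directly connected to one another, which ensures efficient information flow between them: each layer is connected to every subsequent layer of the network, hence the name DenseNet. Consider an input image $x_0$ passed through a network of $L$ layers, where the $\ell$-th layer implements a nonlinear transformation $H_\ell(\cdot)$. The $\ell$-th layer receives the feature maps of all preceding layers as input:

$$x_\ell = H_\ell\left( [x_0, x_1, \ldots, x_{\ell-1}] \right)$$

where $[x_0, x_1, \ldots, x_{\ell-1}]$ denotes the concatenation of the feature maps produced by layers $0, \ldots, \ell-1$.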

Figure 2: Structure of DenseNet

Each composite function $H_\ell$ is a BN-ReLU-Conv sequence: batch normalization (BN), followed by a rectified linear unit (ReLU), followed by a convolution, so the ReLU acts on the batch-normalized feature maps produced by the preceding convolution. The ReLU introduces nonlinearity into the CNN and is expressed as $f(x) = \max(0, x)$.
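The dense connectivity $x_\ell = H_\ell([x_0, \ldots, x_{\ell-1}])$ with a BN-ReLU-Conv composite function can be sketched in NumPy as follows. For brevity the sketch assumes a 1×1 convolution (a per-pixel linear map over channels) instead of the 3×3 convolution of real DenseNet layers, uses batch statistics without a learned scale and shift, and leaves out the bottleneck and transition layers of DenseNet-169; the growth rate `k = 12` and the channel counts are illustrative. The point it demonstrates is that every layer sees the concatenation of all earlier feature maps, so the channel count grows as $k_0 + \ell k$.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalize each channel over the batch and spatial axes (no learned scale/shift)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def relu(x):
    """f(x) = max(0, x), applied element-wise."""
    return np.maximum(0.0, x)

def conv1x1(x, weight):
    """1x1 convolution mixing channels at every pixel. x: (N, C_in, H, W), weight: (C_out, C_in)."""
    return np.einsum("oc,nchw->nohw", weight, x)

def composite_h(x, weight):
    """H_l: the BN-ReLU-Conv sequence applied by each layer of the dense block."""
    return conv1x1(relu(batch_norm(x)), weight)

def dense_block(x0, num_layers, growth_rate, rng):
    """x_l = H_l([x_0, ..., x_{l-1}]); returns the concatenation of all feature maps."""
    features = [x0]
    for _ in range(num_layers):
        inputs = np.concatenate(features, axis=1)           # channel-wise concatenation
        weight = rng.normal(scale=0.1, size=(growth_rate, inputs.shape[1]))
        features.append(composite_h(inputs, weight))         # each layer adds k new channels
    return np.concatenate(features, axis=1)

rng = np.random.default_rng(0)
x0 = rng.normal(size=(2, 16, 8, 8))        # batch of 2, k0 = 16 channels, 8x8 maps
out = dense_block(x0, num_layers=4, growth_rate=12, rng=rng)
print(out.shape)  # (2, 64, 8, 8): 16 + 4 * 12 channels
```

Because features are concatenated rather than summed, the original input $x_0$ is still present, unchanged, in the first 16 channels of the block's output.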

Average pooling divides the input into pooling regions and computes the average value of each region. Global average pooling (GAP) computes the average of each entire feature map, and the resulting vector is passed to the softmax layer. This study uses the DenseNet-169 model, which builds on the basic DenseNet framework; an $L$-layer DenseNet has $L(L+1)/2$ direct connections.
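Global average pooling followed by softmax, and the $L(L+1)/2$ connection count, can be checked with a short NumPy sketch. The feature-map shape, the hypothetical classifier weights `w_fc`, and the six output classes are illustrative assumptions rather than values from the paper.

```python
import numpy as np

def global_average_pool(feature_maps):
    """Average each (H, W) feature map to a single value: (N, C, H, W) -> (N, C)."""
    return feature_maps.mean(axis=(2, 3))

def softmax(z):
    """Row-wise softmax turning class scores into probabilities."""
    z = z - z.max(axis=1, keepdims=True)          # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def dense_connections(num_layers):
    """Direct connections in an L-layer dense network: layer l has l inputs, total L(L+1)/2."""
    return sum(range(1, num_layers + 1))

rng = np.random.default_rng(0)
feats = rng.normal(size=(2, 64, 7, 7))      # e.g. output of the last dense block
pooled = global_average_pool(feats)          # shape (2, 64)
w_fc = rng.normal(scale=0.1, size=(64, 6))   # hypothetical classifier weights, 6 waste classes
probs = softmax(pooled @ w_fc)               # shape (2, 6); each row sums to 1
```

GAP has no trainable parameters, so the only weights between the last feature maps and the softmax are those of the final linear layer.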

To boost the object detection outcomes of the DenseNet model, its hyperparameters are optimized with the dragonfly algorithm (DFA). The DFA was introduced by Mirjalili in 2016 [23]. It is a metaheuristic inspired by the static and dynamic swarming behaviors of dragonflies in nature. Optimization has two important phases, exploration and exploitation, which are modeled by the dynamic and static swarming of dragonflies as they search for food or avoid enemies.

Swarm intelligence (SI) appears in dragonflies in two situations: feeding and migration. Feeding is modeled as a static swarm in the optimization, and migration as a dynamic swarm. Swarm behavior follows three primitive principles: separation, alignment, and cohesion. Separation means that individuals avoid static collisions with their neighbors (Eq. (7)). Alignment refers to individuals matching their velocity to that of neighboring individuals (Eq. (8)). Cohesion describes the tendency of individuals to move toward the center of the neighborhood (Eq. (9)). The DFA adds two further behaviors to these three: attraction toward food and distraction away from enemies. These behaviors are included because the main objective of any swarm is survival, so every individual should move toward food sources (Eq. (10)) while simultaneously avoiding enemies (Eq. (11)).

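These behaviors are computed for the $i$-th individual as

$$S_i = -\sum_{j=1}^{N} \left( X - X_j \right) \quad (7)$$

$$A_i = \frac{\sum_{j=1}^{N} V_j}{N} \quad (8)$$

$$C_i = \frac{\sum_{j=1}^{N} X_j}{N} - X \quad (9)$$

$$F_i = X^{+} - X \quad (10)$$

$$E_i = X^{-} + X \quad (11)$$

where $X$ is the current position of the individual, $X_j$ and $V_j$ are the position and velocity of the $j$-th neighboring individual, $N$ is the number of neighbors, and $X^{+}$ and $X^{-}$ are the positions of the food source and the enemy. The step vector and the position are then updated as

$$\Delta X_{t+1} = \left( s S_i + a A_i + c C_i + f F_i + e E_i \right) + w \, \Delta X_t \quad (12)$$

$$X_{t+1} = X_t + \Delta X_{t+1}$$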

where $s$, $a$, and $c$ in Eq. (12) are the separation, alignment, and cohesion coefficients, and $f$, $e$, $w$, and $t$ denote the food factor, the enemy factor, the inertia weight, and the iteration number, respectively. These coefficients allow the optimizer to perform both exploratory and exploitative behavior. In a dynamic swarm, dragonflies tend to align their flight, whereas in a static swarm alignment is very low and cohesion is high so that the swarm can close in on prey. Therefore, the alignment coefficient is high and the cohesion coefficient is low during exploration, while during exploitation the alignment coefficient is low and the cohesion coefficient is high.
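The DFA update loop can be sketched in NumPy as below. This is a simplified illustration under stated assumptions, not the authors' implementation: every other dragonfly is treated as a neighbor (the original DFA in [23] uses a neighborhood radius that grows over the iterations and a Lévy-flight step when an individual has no neighbors), the coefficient schedules are assumed (alignment decreasing and cohesion increasing over the run, as described above, with the inertia weight falling from 0.9 to 0.4), and the toy `sphere` function is a hypothetical stand-in for the DenseNet validation criterion that the hyperparameters would actually be tuned against. The five behaviors follow the forms of Eqs. (7) to (11) given in [23].

```python
import numpy as np

def sphere(x):
    """Hypothetical stand-in objective (e.g. for a DenseNet validation error)."""
    return float(np.sum(x ** 2))

def dragonfly_optimize(objective, lb, ub, n_agents=20, n_iter=100, seed=0):
    """Simplified DFA: every other agent counts as a neighbor (no radius, no Levy flight)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    X = rng.uniform(lb, ub, size=(n_agents, lb.size))     # positions
    dX = np.zeros_like(X)                                   # step vectors
    max_step = (ub - lb) / 10.0
    fit = np.array([objective(x) for x in X])
    food, food_fit = X[fit.argmin()].copy(), fit.min()      # X+: best position so far
    enemy, enemy_fit = X[fit.argmax()].copy(), fit.max()    # X-: worst position so far
    history = [food_fit]

    for t in range(n_iter):
        frac = t / max(n_iter - 1, 1)
        w = 0.9 - 0.5 * frac            # inertia weight decreases over time
        a = 0.1 * (1.0 - frac)          # alignment high early (exploration) ...
        c = 0.1 * frac                  # ... cohesion high late (exploitation)
        s = 0.05 * (1.0 - frac)         # separation weight (assumed schedule)
        e = 0.1 * (1.0 - frac)          # enemy weight (assumed schedule)
        f = 1.0                         # food weight
        for i in range(n_agents):
            nb = np.arange(n_agents) != i                   # mask selecting the neighbors
            S = -np.sum(X[i] - X[nb], axis=0)                # separation, Eq. (7)
            A = dX[nb].mean(axis=0)                          # alignment, Eq. (8)
            C = X[nb].mean(axis=0) - X[i]                    # cohesion, Eq. (9)
            F = food - X[i]                                  # food attraction, Eq. (10)
            E = enemy + X[i]                                 # enemy distraction, Eq. (11)
            r = rng.random(5)
            step = (s * r[0] * S + a * r[1] * A + c * r[2] * C
                    + f * r[3] * F + e * r[4] * E) + w * dX[i]   # Eq. (12)
            dX[i] = np.clip(step, -max_step, max_step)
            X[i] = np.clip(X[i] + dX[i], lb, ub)            # X_{t+1} = X_t + dX_{t+1}
        fit = np.array([objective(x) for x in X])
        if fit.min() < food_fit:
            food, food_fit = X[fit.argmin()].copy(), fit.min()
        if fit.max() > enemy_fit:
            enemy, enemy_fit = X[fit.argmax()].copy(), fit.max()
        history.append(food_fit)
    return food, food_fit, history

best_x, best_f, hist = dragonfly_optimize(sphere, lb=[-10.0, -10.0], ub=[10.0, 10.0])
```

In a real hyperparameter search, each coordinate of a position would encode one hyperparameter (for example, a learning rate on a log scale), and each objective evaluation would require training and validating the network, so the `n_agents * (n_iter + 1)` evaluations dominate the cost.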

3.3 DRL Based Waste Classification Model

Once the waste objects have been detected in the image, the next step classifies them using the DQLN technique. DRL involves three components: state, action, and reward. The DRL agent learns a mapping from the state space to the action space, receives a reward after each action, and aims to maximize the total reward. In the DQLN model, the classification policy is a function that receives an instance and returns the probability of each label.

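The aim of the classification agent is to label the instances in the training data as correctly as possible. Since the agent receives a positive reward when it correctly identifies an instance, it achieves this aim by maximizing the cumulative discounted reward

$$G_t = \sum_{k=0}^{\infty} \gamma^{k} r_{t+k}$$

where $r_t$ is the reward at step $t$ and $\gamma \in [0, 1)$ is the discount factor.

In reinforcement learning, the quality of a state-action pair is measured by the Q function:

$$Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[ G_t \mid s_t = s, a_t = a \right]$$

Based on the Bellman equation, the Q function can be written as:

$$Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[ r_t + \gamma Q^{\pi}(s_{t+1}, a_{t+1}) \mid s_t = s, a_t = a \right]$$

The classification agent maximizes the cumulative reward by solving for the optimal Q function $Q^{*}$, which satisfies the Bellman optimality equation:

$$Q^{*}(s, a) = \mathbb{E}\left[ r_t + \gamma \max_{a'} Q^{*}(s_{t+1}, a') \mid s_t = s, a_t = a \right]$$

In a low-dimensional finite state space, the Q function can be stored in a table. In a high-dimensional continuous state space, however, a table is infeasible, so a deep Q-network with parameters $\theta$ approximates the Q function, $Q(s, a; \theta) \approx Q^{*}(s, a)$, and is trained by minimizing the loss

$$\mathcal{L}(\theta) = \mathbb{E}\left[ \left( y - Q(s, a; \theta) \right)^{2} \right]$$

where $y$ is the target estimate of the Q function:

$$y = \begin{cases} r_t, & \text{if } s_{t+1} \text{ is terminal} \\ r_t + \gamma \max_{a'} Q(s_{t+1}, a'; \theta^{-}), & \text{otherwise} \end{cases}$$

where $\theta^{-}$ denotes the parameters of the target network. Once the optimal $Q^{*}$ has been learned, the classification policy assigns each instance the label $a = \arg\max_{a'} Q^{*}(s, a')$.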

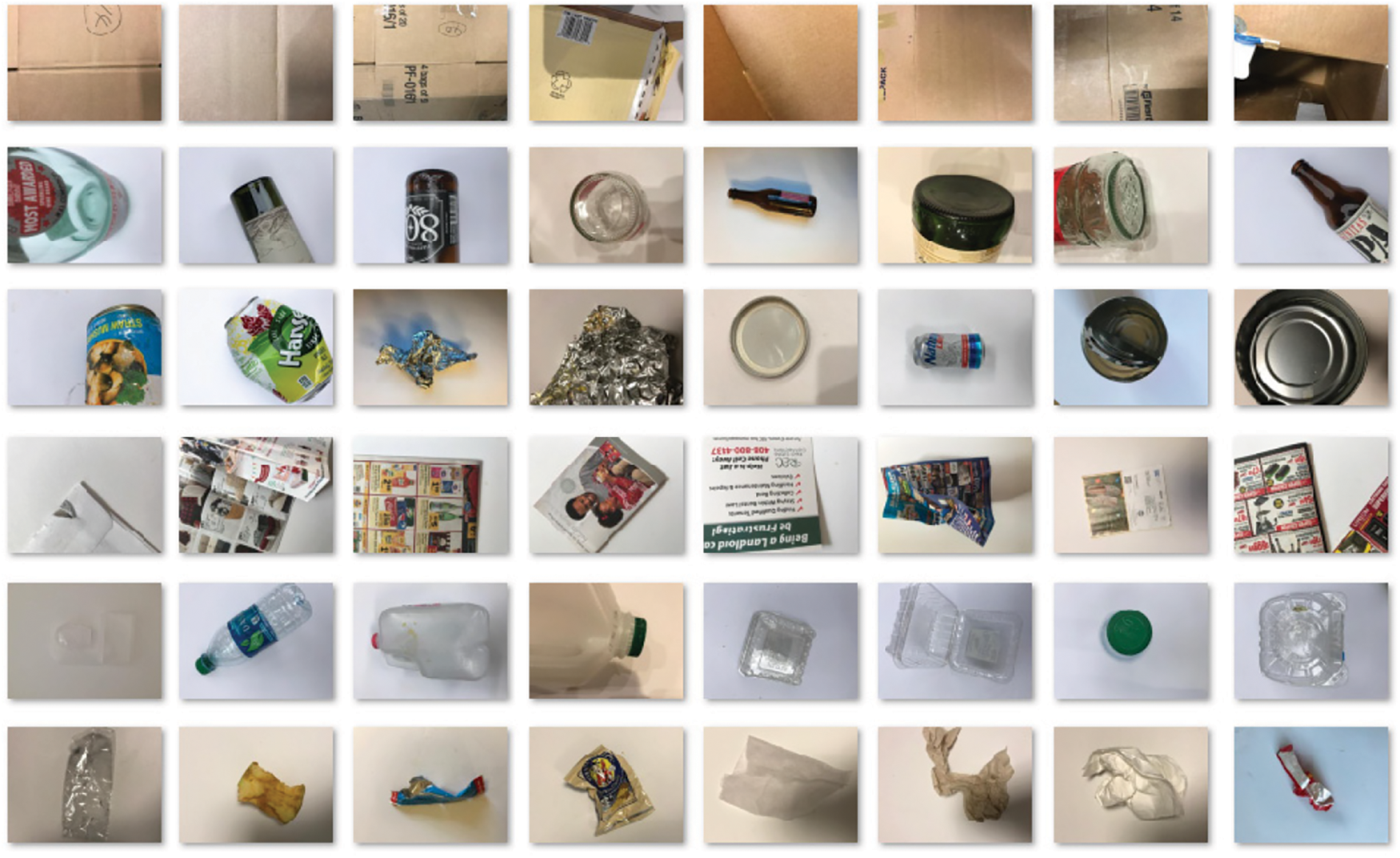
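The following NumPy sketch shows how a classification problem can be cast as the Q-learning task described in this subsection. It uses an assumed setup in the spirit of DRL-based classification [25]: each state is a training sample, each action is a predicted label, the reward is +1 for a correct label and -1 otherwise, the next state is another randomly drawn sample, and an episode ends on a misclassification. To keep it short and self-contained, a linear model (`LinearQ`, a hypothetical name) stands in for the deep Q-network, two synthetic Gaussian blobs replace the detected waste objects, and all hyperparameters (γ, learning rate, ε, target-sync interval) are illustrative assumptions.

```python
import numpy as np

def make_blobs(n_per_class, rng):
    """Synthetic 2-D data: class 0 centred at (-2, -2), class 1 at (2, 2)."""
    x0 = rng.normal(loc=-2.0, scale=1.0, size=(n_per_class, 2))
    x1 = rng.normal(loc=2.0, scale=1.0, size=(n_per_class, 2))
    return np.vstack([x0, x1]), np.repeat([0, 1], n_per_class)

class LinearQ:
    """Q(s, a; theta) = W[a] . s + b[a]; a linear stand-in for the deep Q-network."""
    def __init__(self, n_features, n_actions):
        self.W = np.zeros((n_actions, n_features))
        self.b = np.zeros(n_actions)

    def __call__(self, s):
        return self.W @ s + self.b

    def copy(self):
        clone = LinearQ(self.W.shape[1], self.W.shape[0])
        clone.W, clone.b = self.W.copy(), self.b.copy()
        return clone

def train_q_classifier(X, y, episodes=300, max_steps=50, gamma=0.5,
                       lr=0.01, epsilon=0.1, sync_every=100, seed=0):
    rng = np.random.default_rng(seed)
    n_actions = int(y.max()) + 1
    q = LinearQ(X.shape[1], n_actions)
    q_target = q.copy()                       # theta^-: frozen copy used for the targets
    steps = 0
    for _ in range(episodes):
        idx = rng.integers(len(X))
        for _ in range(max_steps):
            s = X[idx]
            # Action = predicted label, chosen epsilon-greedily.
            if rng.random() < epsilon:
                a = int(rng.integers(n_actions))
            else:
                a = int(np.argmax(q(s)))
            r = 1.0 if a == y[idx] else -1.0
            terminal = r < 0                  # episode ends on a misclassification
            next_idx = rng.integers(len(X))   # next state: another random sample
            target = r if terminal else r + gamma * np.max(q_target(X[next_idx]))
            td_error = target - q(s)[a]
            # Semi-gradient step on (y - Q(s, a; theta))^2 for the chosen action only.
            q.W[a] += lr * td_error * s
            q.b[a] += lr * td_error
            steps += 1
            if steps % sync_every == 0:
                q_target = q.copy()
            if terminal:
                break
            idx = next_idx
    return q

def predict(q, X):
    """Greedy policy: label = argmax_a Q(s, a)."""
    return np.array([int(np.argmax(q(s))) for s in X])

rng = np.random.default_rng(42)
X, y = make_blobs(200, rng)
q = train_q_classifier(X, y)
accuracy = float(np.mean(predict(q, X) == y))
```

Replacing `LinearQ` with a neural network over learned image features would give the deep Q-network structure described above; class-dependent reward weighting, such as the imbalance-aware rewards studied in [25], is omitted here.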

The proposed model was implemented in Python 3.6.5 and evaluated on the benchmark Garbage Classification dataset [26] from the Kaggle repository. The dataset contains six waste classes with 2,437 images in total: 403 cardboard, 501 glass, 410 metal, 504 paper, 482 plastic, and 137 trash images. Fig. 3 shows some sample images from the dataset.

Figure 3: Sample images

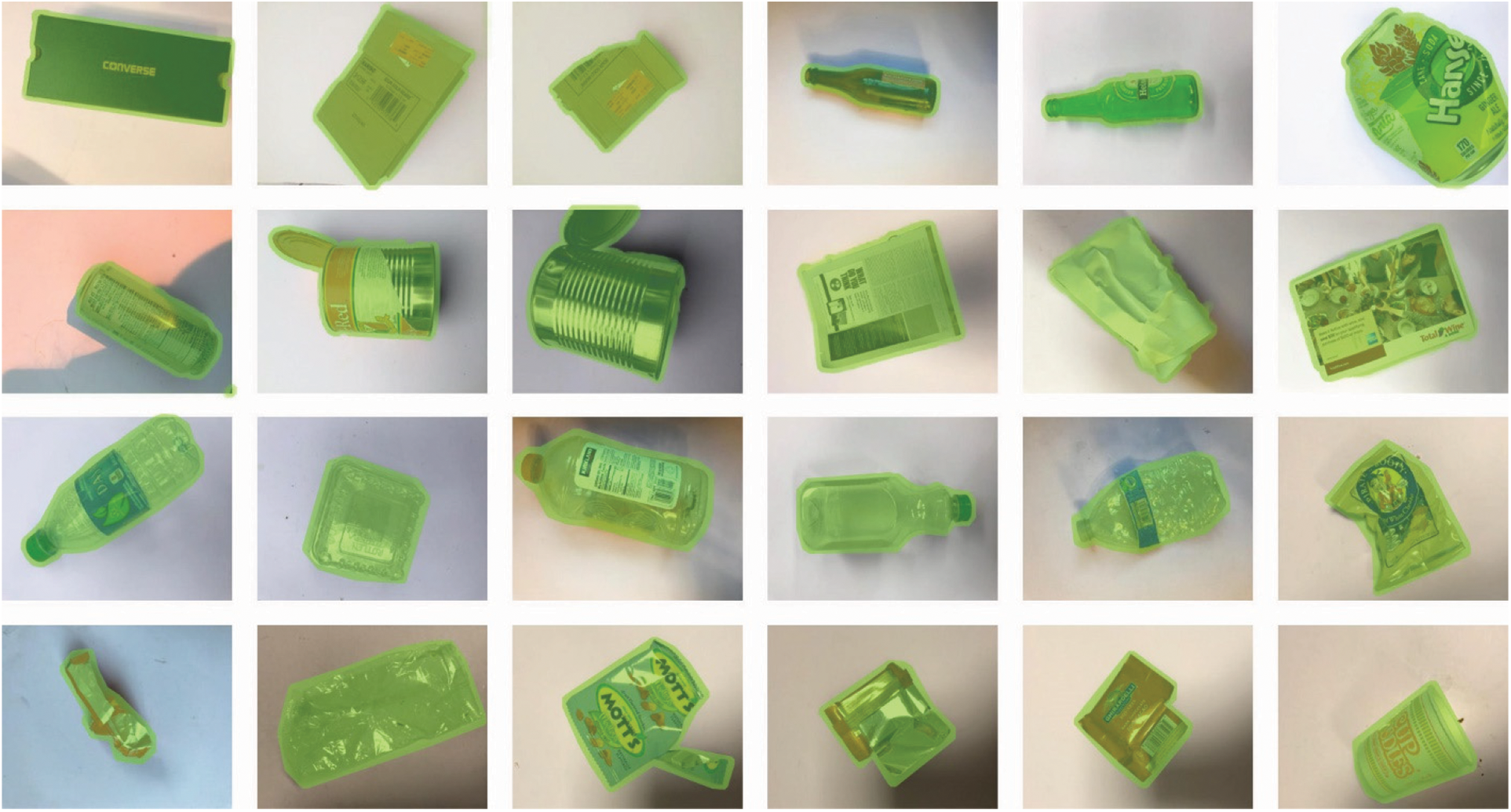

Fig. 4 shows sample object detection results of the Mask RCNN model. The figure shows that the Mask RCNN model effectively detected and masked the objects.

Figure 4: Sample object detection results

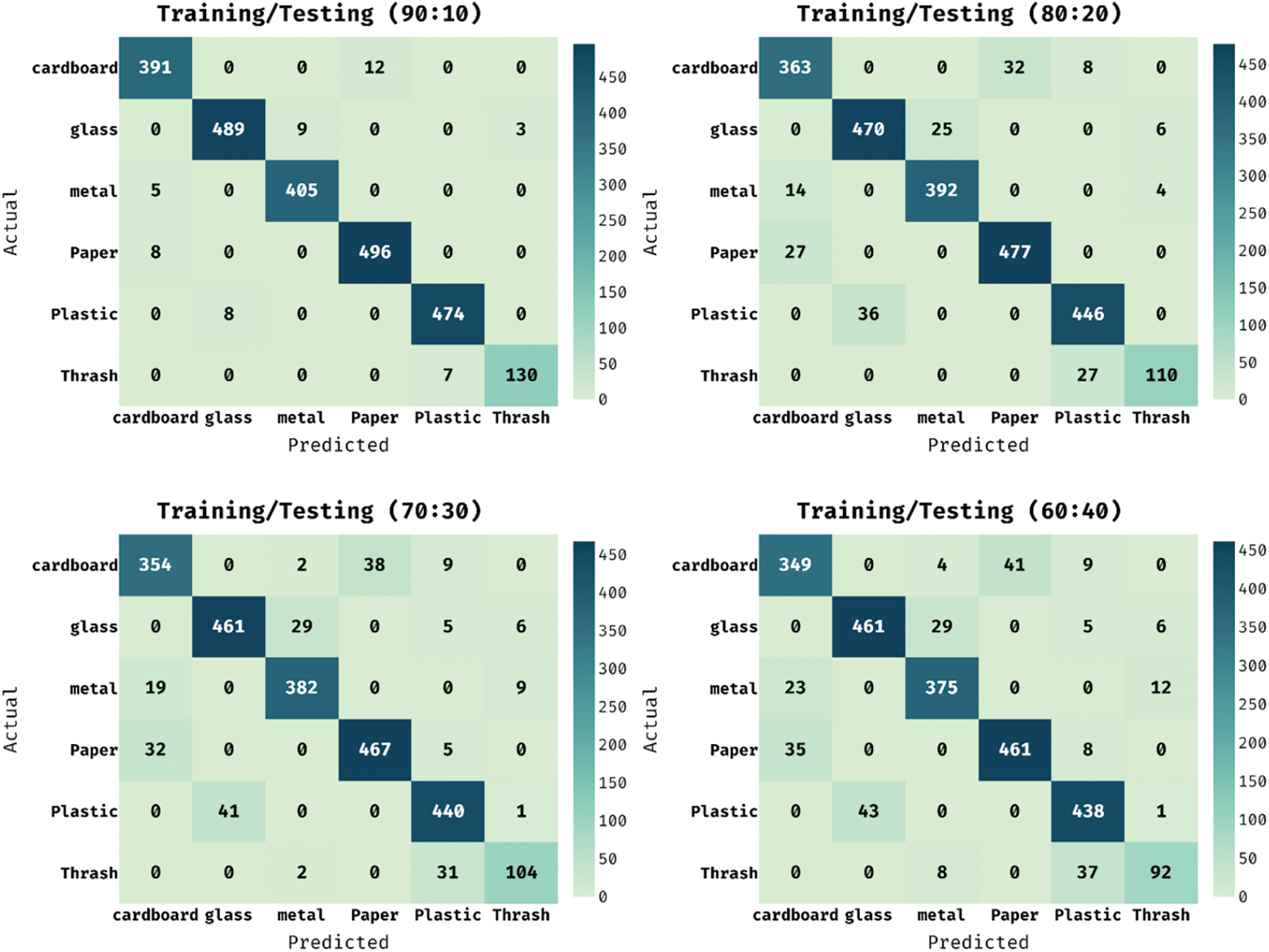

Fig. 5 shows the confusion matrices of the IDRL-RWODC technique for different training/testing splits. With the 90:10 split, the IDRL-RWODC technique correctly classified 391 images as cardboard, 489 as glass, 405 as metal, 496 as paper, 474 as plastic, and 130 as trash. With the 80:20 split, it correctly classified 363 images as cardboard, 470 as glass, 392 as metal, 477 as paper, 446 as plastic, and 110 as trash. With the 70:30 split, it correctly classified 354 images as cardboard, 461 as glass, 382 as metal, 467 as paper, 440 as plastic, and 104 as trash. With the 60:40 split, it correctly classified 349 images as cardboard, 461 as glass, 375 as metal, 461 as paper, 438 as plastic, and 92 as trash.

Figure 5: Confusion matrix of IDRL-RWODC model

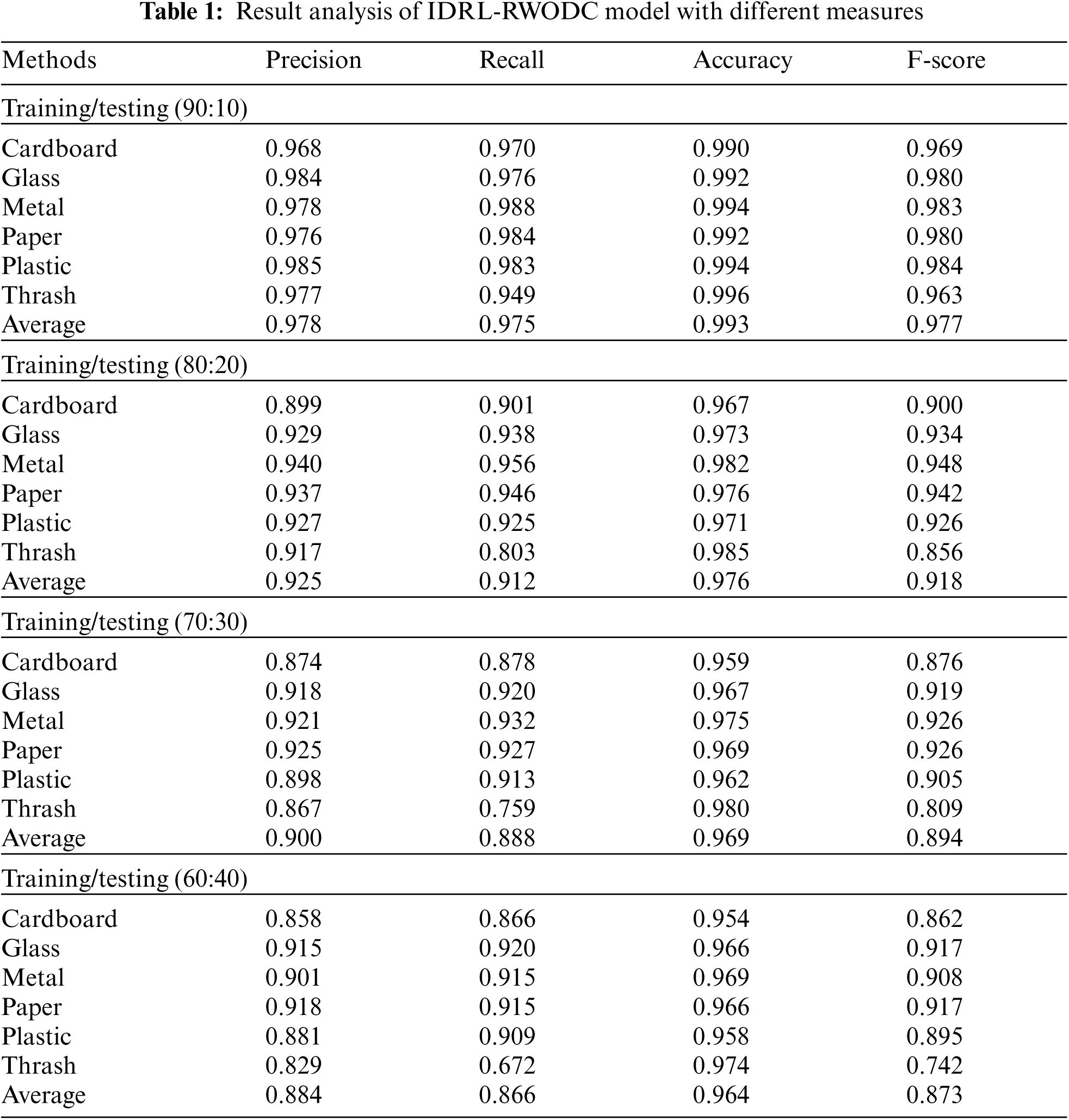

Tab. 1 reports the waste object classification results of the IDRL-RWODC technique for the different training/testing splits. Performance is highest with the 90:10 split and decreases as the share of training data shrinks. With the 90:10 split, the IDRL-RWODC technique attained an average recall of 0.975, precision of 0.978, F-score of 0.977, and accuracy of 0.993. With the 80:20 split, it attained an average recall of 0.912, precision of 0.925, F-score of 0.918, and accuracy of 0.976. With the 70:30 split, it reached an average recall of 0.888, precision of 0.900, F-score of 0.894, and accuracy of 0.969. With the 60:40 split, it obtained an average recall of 0.866, precision of 0.884, F-score of 0.873, and accuracy of 0.964.
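The average recall and accuracy in Tab. 1 can be recomputed from the correctly classified counts of Fig. 5 and the class totals of the dataset. The sketch below assumes that each confusion matrix covers all 2,437 images (the diagonal counts exceed the size of any test split); under this assumption it reproduces the reported recall and accuracy for every split. It also suggests that the reported accuracy is the class-averaged one-vs-rest accuracy: for the 90:10 split, the share of all images classified correctly is 2,385/2,437 ≈ 0.979, not 0.993. Precision and F-score cannot be recomputed this way because they need the off-diagonal entries, which the text does not give.

```python
import numpy as np

# Class totals from the dataset description (cardboard, glass, metal, paper, plastic, trash).
CLASS_TOTALS = np.array([403, 501, 410, 504, 482, 137])

# Correctly classified (diagonal) counts reported for each training/testing split.
DIAGONALS = {
    "90:10": np.array([391, 489, 405, 496, 474, 130]),
    "80:20": np.array([363, 470, 392, 477, 446, 110]),
    "70:30": np.array([354, 461, 382, 467, 440, 104]),
    "60:40": np.array([349, 461, 375, 461, 438, 92]),
}

def macro_recall(diagonal, totals):
    """Average of per-class recall TP_k / (TP_k + FN_k)."""
    return float(np.mean(diagonal / totals))

def mean_one_vs_rest_accuracy(diagonal, totals):
    """Average over classes of the binary (class k vs. rest) accuracy.

    Each misclassified image is a false negative for its true class and a false
    positive for its predicted class, so the K binary problems contain 2 * E errors
    in total, giving 1 - 2E / (K * N) without needing the off-diagonal entries.
    """
    n_total = totals.sum()
    errors = n_total - diagonal.sum()
    k = len(totals)
    return float(1.0 - 2.0 * errors / (k * n_total))

results = {
    split: (macro_recall(d, CLASS_TOTALS), mean_one_vs_rest_accuracy(d, CLASS_TOTALS))
    for split, d in DIAGONALS.items()
}
for split, (rec, acc) in results.items():
    print(f"{split}: recall={rec:.3f} accuracy={acc:.3f}")
# 90:10: recall=0.975 accuracy=0.993
# 80:20: recall=0.912 accuracy=0.976
# 70:30: recall=0.888 accuracy=0.969
# 60:40: recall=0.866 accuracy=0.964
```

Because each error is counted in two of the six binary problems but every image appears in all six, the one-vs-rest average is always at least as high as the overall fraction of correct predictions, which is why it reads higher than the per-image accuracy.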

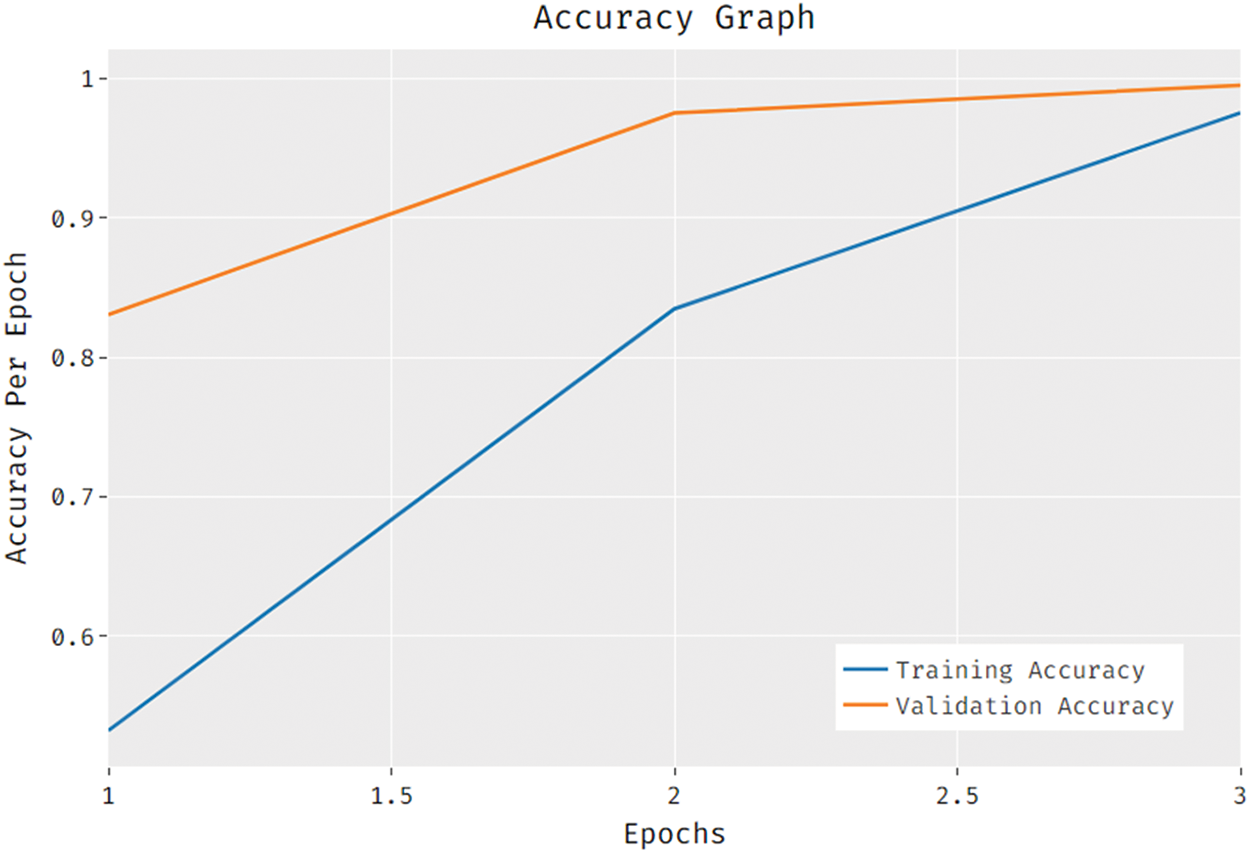

Fig. 6 shows the training and validation accuracy of the IDRL-RWODC method on the applied dataset. The method reaches high accuracy on both the training and the validation data, and the validation accuracy is higher than the training accuracy.

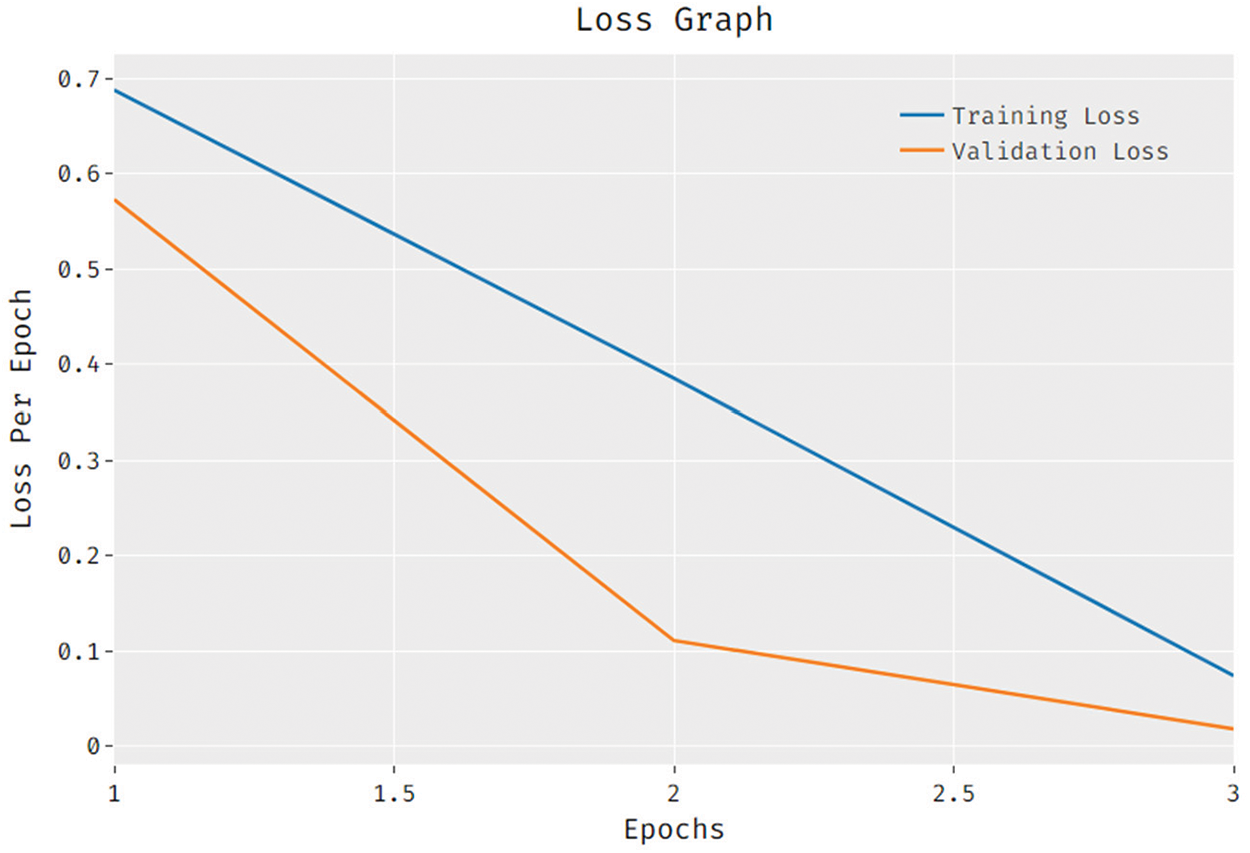

Fig. 7 shows the training and validation loss of the IDRL-RWODC method on the applied dataset. The method reaches a low loss on both the training and the validation data, and the validation loss is lower than the training loss.

Figure 6: Accuracy analysis of IDRL-RWODC model

Figure 7: Loss analysis of IDRL-RWODC model

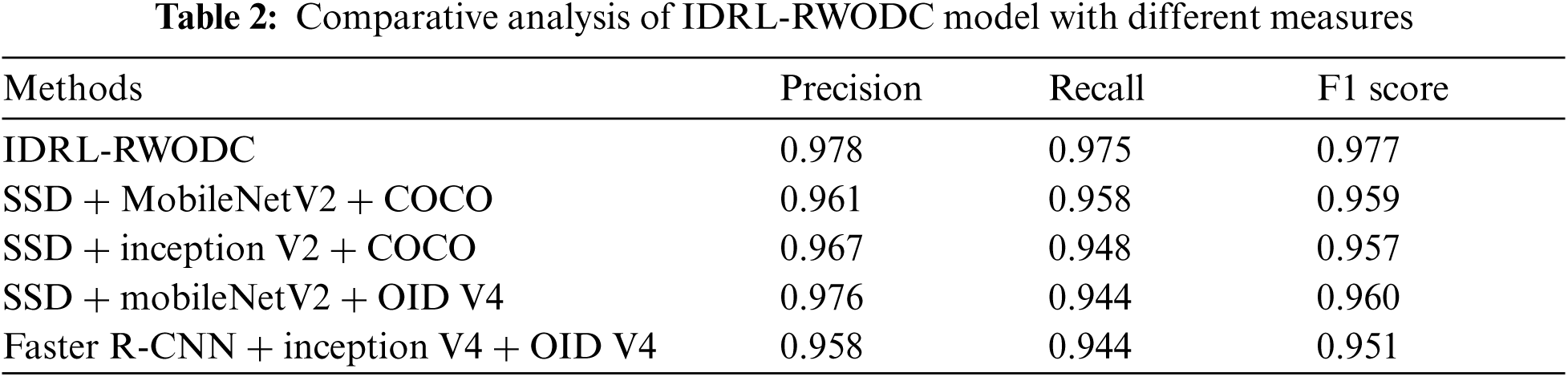

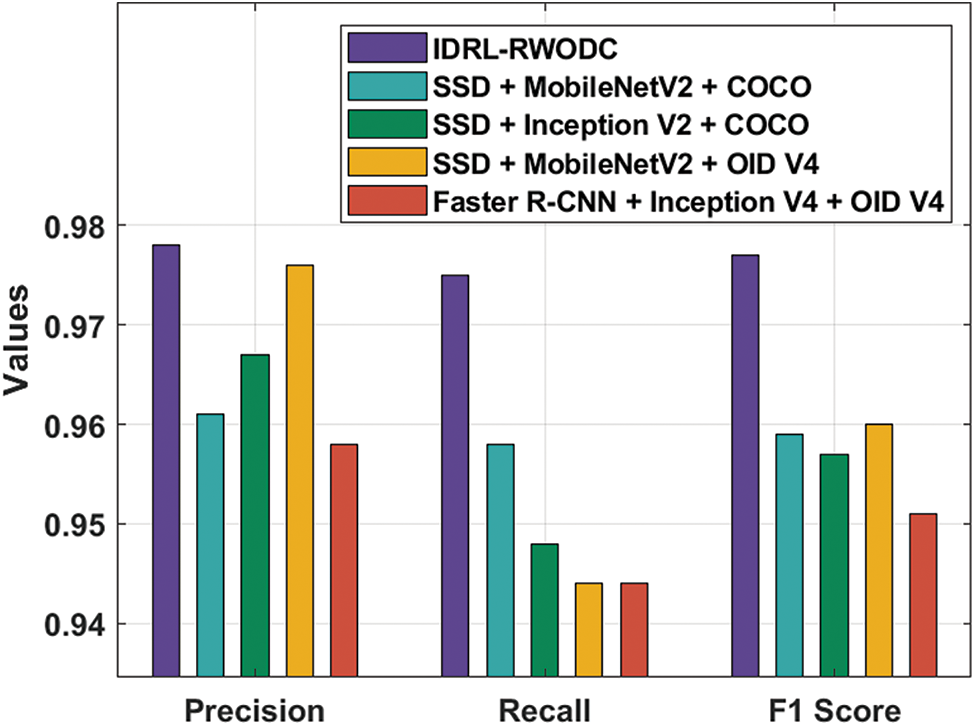

Tab. 2 and Fig. 8 compare the IDRL-RWODC method with existing approaches. Faster R-CNN + Inception V4 + OID V4 achieved a comparatively low precision of 0.958, recall of 0.94, and F1 score of 0.951. SSD + MobileNetV2 + COCO obtained slightly better results, with a precision of 0.961, recall of 0.958, and F1 score of 0.959. SSD + Inception V2 + COCO gained moderate results, with a precision of 0.967, recall of 0.948, and F1 score of 0.957. SSD + MobileNetV2 + OID V4 achieved competitive results, with a precision of 0.976, recall of 0.944, and F1 score of 0.960. The IDRL-RWODC method achieved the highest values on all three measures, with a recall of 0.975, precision of 0.978, and F1 score of 0.977.

Figure 8: Comparative analysis of IDRL-RWODC model with existing techniques

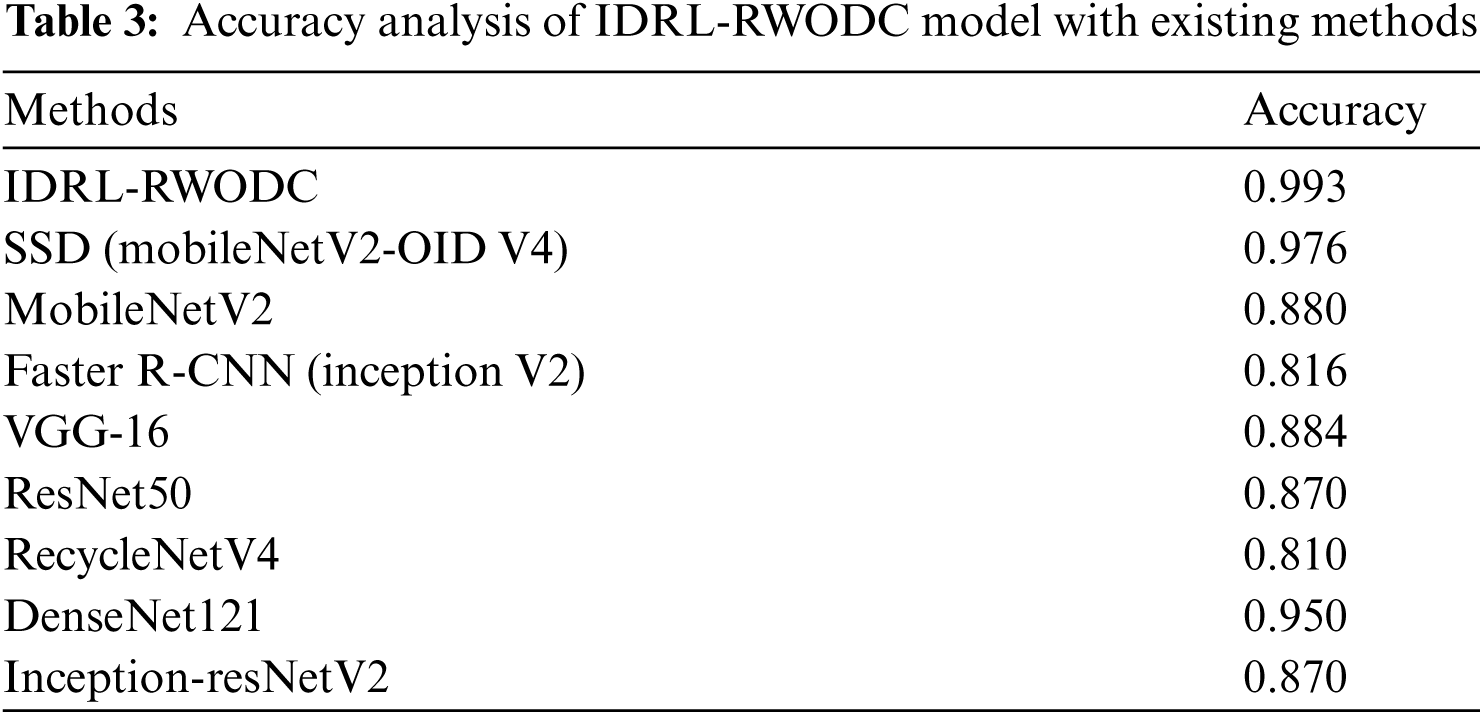

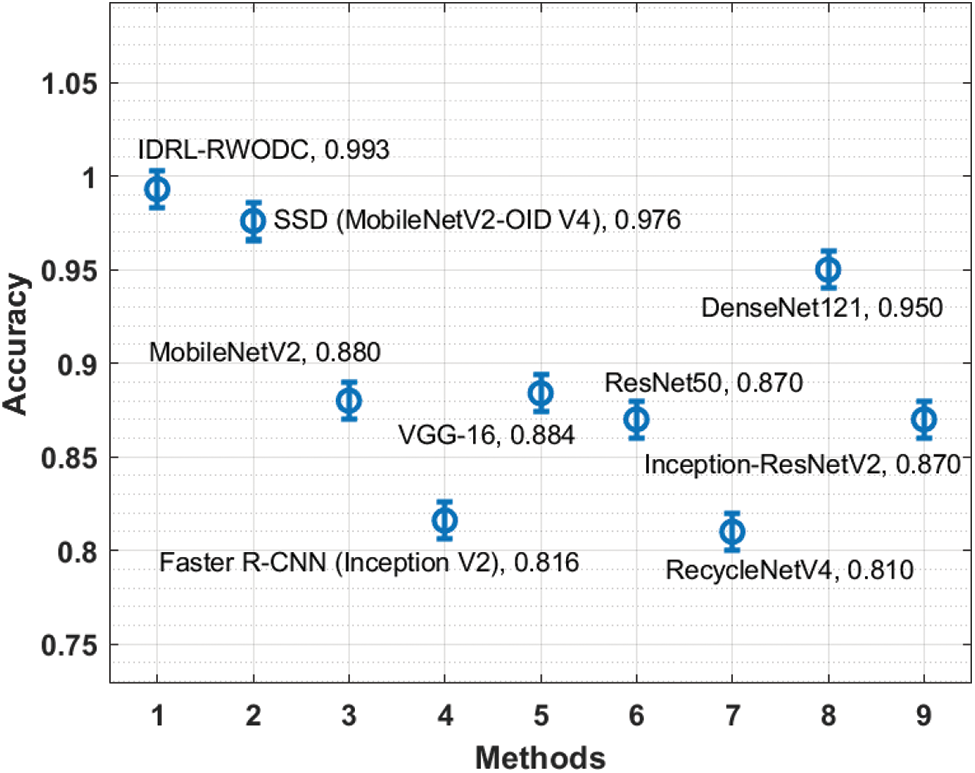

Tab. 3 and Fig. 9 compare the accuracy of the IDRL-RWODC technique with existing methods. RecycleNetV4 and Faster R-CNN (Inception V2) attained the lowest accuracies, 0.810 and 0.816, respectively. ResNet50 and Inception-ResNetV2 achieved a somewhat higher accuracy, both 0.870.

MobileNetV2 and VGG-16 reached similar accuracies of 0.880 and 0.884, respectively, and DenseNet121 and SSD (MobileNetV2-OID V4) reached higher accuracies of 0.950 and 0.976, respectively. The IDRL-RWODC algorithm achieved the highest accuracy, 0.993.

Figure 9: Accuracy analysis of IDRL-RWODC model with existing techniques

In this study, a new IDRL-RWODC technique has been presented for recycling waste object detection and classification in smart cities. The IDRL-RWODC technique uses Mask RCNN with a DenseNet backbone to detect and mask waste objects in the scene, and the hyperparameters of the DenseNet model are optimized with the DFA to boost object detection outcomes. The DRL-based DQLN technique then classifies the detected objects into distinct class labels. The IDRL-RWODC technique can recognize objects of varying scales and orientations. An extensive experimental analysis evaluated the efficacy of the IDRL-RWODC technique in terms of several measures, and the results showed that it outperforms current techniques. In future work, the IDRL-RWODC technique could be implemented as a smartphone application to support real-time waste object classification.

Funding Statement: The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work under Grant Number (RGP 1/282/42). This research was funded by the Deanship of Scientific Research at Princess Nourah bint Abdulrahman University through the Fast-Track Research Funding Program.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. K. D. Sharma and S. Jain, “Overview of municipal solid waste generation, composition, and management in India,” Journal of Environmental Engineering, vol. 145, no. 3, pp. 04018143, 2019.

2. Y. Wang and X. Zhang, “Autonomous garbage detection for intelligent urban management,” in Proc. of the MATEC Web Conf., vol. 232, China, pp. 01056, 2018.

3. R. S. S. Devi, V. R. Vijaykumar and M. Muthumeena, “Waste segregation using deep learning algorithm,” International Journal of Innovative Technology and Exploring Engineering, vol. 8, pp. 401–403, 2018.

4. N. J. G. J. Bandara and J. P. A. Hettiaratchi, “Environmental impacts with waste disposal practices in a suburban municipality in Sri Lanka,” International Journal of Environment and Waste Management, vol. 6, no. 1/2, pp. 107, 2010.

5. A. T. García, O. R. Aragón, O. L. Gandara, F. S. García and L. E. G. Jiménez, “Intelligent waste separator,” Computación y Sistemas, vol. 19, no. 3, pp. 487–500, 2015.

6. J. Zheng, M. Xu, M. Cai, Z. Wang and M. Yang, “Modeling group behavior to study innovation diffusion based on cognition and network: An analysis for garbage classification system in Shanghai, China,” International Journal of Environmental Research and Public Health, vol. 16, no. 18, pp. 3349, 2019.

7. Y. Chu, C. Huang, X. Xie, B. Tan, S. Kamal et al., “Multilayer hybrid deep-learning method for waste classification and recycling,” Computational Intelligence and Neuroscience, vol. 2018, pp. 1–9, 2018.

8. D. Ziouzios, D. Tsiktsiris, N. Baras and M. Dasygenis, “A distributed architecture for smart recycling using machine learning,” Future Internet, vol. 12, no. 9, pp. 141, 2020.

9. O. Adedeji and Z. Wang, “Intelligent waste classification system using deep learning convolutional neural network,” Procedia Manufacturing, vol. 35, pp. 607–612, 2019.

10. Y. Chu, C. Huang, X. Xie, B. Tan, S. Kamal et al., “Multilayer hybrid deep-learning method for waste classification and recycling,” Computational Intelligence and Neuroscience, vol. 2018, pp. 1–9, 2018.

11. B. Gan and C. Zhang, “Research on the algorithm of urban waste classification and recycling based on deep learning technology,” in 2020 Int. Conf. on Computer Vision, Image and Deep Learning (CVIDL), Chongqing, China, pp. 232–236, 2020.

12. Y. Narayan, “DeepWaste: Applying deep learning to waste classification for a sustainable planet,” arXiv preprint arXiv:2101.05960, 2021.

13. G. Huang, J. He, Z. Xu and G. Huang, “A combination model based on transfer learning for waste classification,” Concurrency and Computation: Practice and Experience, vol. 32, no. 19, pp. 1–12, 2020.

14. Q. Zhang, X. Zhang, X. Mu, Z. Wang, R. Tian et al., “Recyclable waste image recognition based on deep learning,” Resources, Conservation and Recycling, vol. 171, pp. 105636, 2021.

15. M. Toğaçar, B. Ergen and Z. Cömert, “Waste classification using AutoEncoder network with integrated feature selection method in convolutional neural network models,” Measurement, vol. 153, pp. 107459, 2020.

16. H. Wang, “Garbage recognition and classification system based on convolutional neural network VGG16,” in 2020 3rd Int. Conf. on Advanced Electronic Materials, Computers and Software Engineering (AEMCSE), Shenzhen, China, pp. 252–255, 2020.

17. A. R. A. Rajak, S. Hasan and B. Mahmood, “Automatic waste detection by deep learning and disposal system design,” Journal of Environmental Engineering and Science, vol. 15, no. 1, pp. 38–44, 2020.

18. S. Kumar, D. Yadav, H. Gupta, O. P. Verma, I. A. Ansari et al., “A novel YOLOv3 algorithm-based deep learning approach for waste segregation: Towards smart waste management,” Electronics, vol. 10, no. 1, pp. 14, 2020.

19. J. Sousa, A. Rebelo and J. S. Cardoso, “Automation of waste sorting with deep learning,” in 2019 XV Workshop de Visão Computacional (WVC), São Bernardo do Campo, Brazil, pp. 43–48, 2019.

20. L. Sun and Y. Gong, “Active learning for image classification: A deep reinforcement learning approach,” in 2019 2nd China Symposium on Cognitive Computing and Hybrid Intelligence (CCHI), Xi'an, China, pp. 71–76, 2019.

21. C. Xu, G. Wang, S. Yan, J. Yu, B. Zhang et al., “Fast vehicle and pedestrian detection using improved Mask R-CNN,” Mathematical Problems in Engineering, vol. 2020, pp. 1–15, 2020.

22. Y. Zhou, Z. Li, H. Zhu, C. Chen, M. Gao et al., “Holistic brain tumor screening and classification based on DenseNet and recurrent neural network,” in Int. MICCAI Brainlesion Workshop, pp. 208–217, 2018.

23. S. Mirjalili, “Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems,” Neural Computing and Applications, vol. 27, no. 4, pp. 1053–1073, 2016.

24. Ç. İ. Acı and H. Gülcan, “A modified dragonfly optimization algorithm for single- and multiobjective problems using Brownian motion,” Computational Intelligence and Neuroscience, vol. 2019, pp. 1–17, Jun. 2019.

25. E. Lin, Q. Chen and X. Qi, “Deep reinforcement learning for imbalanced classification,” Applied Intelligence, vol. 50, no. 8, pp. 2488–2502, 2020.

26. Dataset: https://www.kaggle.com/asdasdasasdas/garbage-classification, 2021.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.