DOI:10.32604/cmc.2022.026309

Computers, Materials & Continua

Article

A New Intelligent Approach for Deaf/Dumb People based on Deep Learning

1Computer Science Department, Faculty of Computers and Information, Kafrelsheikh University, Kafrelsheikh, Egypt

2Industrial Engineering Department, College of Engineering, King Saud University, Riyadh 11421, Saudi Arabia

3Industrial Engineering Department, College of Engineering, University of Houston, Houston, TX 77204-4008, USA

4Department of Computer Science, Faculty of Computers and Artificial Intelligence, University of Sadat City, Sadat City, Egypt

*Corresponding Author: Haitham Elwahsh. Email: haitham.elwahsh@gmail.com

Received: 22 December 2021; Accepted: 23 February 2022

Abstract: People who are deaf or have difficulty speaking use sign language, which consists of hand gestures with particular motions that symbolize the “language” they are communicating. A gesture in a sign language is a particular movement of the hands with a specific shape formed by the fingers and the whole hand. In this paper, we present an intelligent approach for deaf/dumb people that works in real time and is based on deep learning using gloves (IDLG). The IDLG approach contributes deep-learning-based recognition, multi-mode command techniques, real-time operation, effective use, and high accuracy rates. For this purpose, smart gloves working in real time were designed. The data obtained from the gloves were processed using deep-learning-based approaches and classified into multi-mode commands that allow dumb people to speak with regular people via their smartphone. Internally, the glove has five flex sensors and an accelerometer, which are used to achieve a low-cost control system. Each flex sensor generates a proportional change in resistance for each individual movement. These hand gestures are processed by an Atmega32A microcontroller, which is an advanced version of the microcontroller, and by the LabVIEW software. IDLG compares the input signal to specified voltage values stored in memory. The performance of the IDLG approach was verified on a dataset created using different hand gestures from 20 different people. In a test of the IDLG approach on 10,000 data points, a processing time on the order of milliseconds was achieved with 97% accuracy.

Keywords: Deep learning; flex sensor; LabVIEW; Atmega32A; low-cost; control system; accelerometer; intelligent glove

Speech is the most basic means of interacting and communicating with others. As a result, a person with hearing loss often cannot communicate well with people and society, which naturally leads to isolation. Those with hearing disabilities, especially those born deaf, do not speak and thus cannot easily express themselves to others, and some people assume when dealing with deaf and dumb people that their ability to understand is limited. Deaf persons generally have a hard time talking with others who do not understand sign language. Even those who do talk aloud are often self-conscious of their “deaf voice,” which can make them hesitant. No widely available tool exists for conveying the ideas of physically challenged people. Furthermore, gesture-based devices and circuits are expensive and unavailable on the market. The existing means of communication is sign language, which not everyone understands, so there is a communication barrier between the two communities. For example, a person may ask for help in an emergency through sign language, but no one will understand him. Because so few people are fluent in sign language, conversing in public is difficult, and this project was created to assist deaf and hard-of-hearing persons who communicate using sign language. We aim to make it easier for deaf people to speak with others by providing a mobile application that connects to the glove via Bluetooth, allowing them to converse wirelessly and easily. The smart gloves consist of a regular glove with an accelerometer and flex sensors running the length of each finger and thumb. The sensors generate a stream of data that changes in response to the degree of bend. The sensor output is an analogue value, which is converted to digital and processed by a microcontroller before being transmitted over a wireless link to the receiver, where it is processed by a mobile application.

Flex sensors change their resistance depending on how much they are bent. They convert a change in bend to electrical resistance; the greater the bend, the higher the resistance value. They usually come in the shape of a thin strip that ranges in length from 1″ to 5″ and has a resistance of 10 to 50 kΩ. They are frequently found in gloves to detect finger movement. Flex sensors are analogue resistors that function as adjustable analogue voltage dividers. Inside the flex sensor, carbon resistive elements are contained within a thin flexible substrate; the more carbon, the lower the resistance. Deaf-dumb people rely on sign language as their primary and only means of communication. As a formal language, sign language uses a system of hand gestures to communicate. The major goal of the proposed project is to break down this communication barrier by creating a low-cost system that uses smart gloves to provide speech and text to voiceless people, so that communication is no longer a barrier between the two groups. Disabled people can also benefit from these gloves because the gloves allow them to grow in their particular environments at a low cost, and the use of such equipment by disabled people contributes to the growth of the nation. This paper covers the design of two smart gloves for deaf/dumb people and the classification methods applied to the collected data to identify hand gestures. The hardware and software components of the IDLG architecture are discussed in detail in the relevant subsections.

The contributions of this paper are summarized as follows.

• We designed the hardware and software for a soft, flexible pair of smart gloves that people of all ages and genders can wear easily.

• With these smart gloves, signals from the flex sensors and the accelerometer integrated on the gloves are received by the Atmega32A microcontroller and passed to the Android device so that hand movements are detected correctly.

• The user interface we built facilitates displaying, perceiving, and transforming these received signals into digital values. These data are translated into text and sound at the least cost so that they are meaningful and hand movements can be recognized.

• We propose IDLG, an intelligent real-time approach based on deep learning using gloves for deaf/dumb people.

• The IDLG approach includes multi-mode command techniques and offers real-time operation, effective use, and high accuracy rates.

The rest of this paper is organized as follows: Section 2 presents previous studies on gesture recognition methods and intelligent glove approaches. Section 3 explains our system and its technologies. Section 4 provides the explanation and interpretation of the system results. Finally, a conclusion and future work are given in Section 5.

A few attempts have been made in the past to identify hand motions using the concept of gestures, but with limits in recognition rate and time, such as:

1. The use of a CMOS camera.

2. A glove that is based on leaf switches.

3. A glove with a flex sensor (Kanika Rastogi, May 2016) [1].

4. A glove based on the Internet of Things (IoT) and deep learning.

The image data from a CMOS camera is transmitted via the UART serial connection [2]. The UART performs serial-to-parallel conversion on data received from a peripheral device (in this case, a CMOS camera) and parallel-to-serial conversion on data received from the CPU (in this case, the microcontroller).

Three steps were used to detect hand motions using a CMOS camera:

1. Capturing the gesture’s image

2. Detection of the image’s edges

3. Detection of the image’s peak

Disadvantages include high cost, delay, and the fact that each image takes up 50 KB of memory [1].

In the leaf-switch-based glove, the leaf switches are similar to regular switches, but they are built so that when pressure is applied to the switch, the two ends make contact and the switch closes. These leaf switches are attached to the glove’s fingers in such a way that when the finger is bent, the switch’s two terminals come into contact. Disadvantage: after a period of time, the switch will stay closed instead of open when the finger is straight, resulting in erroneous gesture transmission [1].

In the prototype of the copper-plate-based glove, a copper plate is placed on the palm as ground. In the rest state, the copper strips show a logic 1 voltage level. When the copper strips come into contact with the ground plate, however, the voltage on them is drained, and they show a logic 0 voltage level. Disadvantage: the copper plate makes the glove heavy, making it unsuitable for long-term wear [1].

In the context of the flex-sensor-based glove, flex denotes “bend” or “curve”. A sensor is a transducer that converts physical energy into electrical energy. A flex sensor is a resistive sensor whose resistance changes with its bend or curvature, and this change is converted into an analogue voltage. This is a haptic-based strategy in which flex sensors are used to capture physical values for processing [1].

Mohammed et al. (2021) [3] developed a model that employs a glove equipped with sensors that can decode the American Sign Language (ASL) alphabet. The glove has flex sensors, touch sensors, and a 6 DOF accelerometer/gyroscope on a single chip. All of these sensors are attached to the hand and collect data on each finger’s location as well as the hand’s orientation in order to distinguish the letters. Sensor data is transmitted to a processing unit (an Arduino microcontroller), where it is translated and presented. The accelerometer/gyroscope is positioned on the upper side of the hand and is used to detect movement and orientation. The contact or force sensors determine which fingers are in contact and how they are positioned in relation to one another. A Bluetooth module is used to enable wireless communication between the controller and a smartphone [4]. The Bluetooth module, a serial Bluetooth device, was chosen for its ease of use and wide interoperability with today’s products. The similarity between measurements for various letters might be quite strong in some circumstances.

Shukor et al. (2015) [5] created a model and stated, “To the best of the authors’ knowledge, this is the first application of the tilt sensor in sign language detection.” They used it to translate Malaysian Sign Language. An Arduino microcontroller interprets the data from the sensors (tilt and accelerometer) before sending it to the Bluetooth module, which then sends the interpreted sign/gesture to the Bluetooth-enabled mobile phone. They claimed that making a data glove with flex sensors could be rather expensive due to the high cost of flex sensors. Furthermore, the flex sensor’s reading is not very steady and is susceptible to noise. All of the tests showed a high level of accuracy: at least seven of the ten trials for each sign were successful, and because tilt sensors are used, the alphabet/number signs have a higher accuracy [6].

2.3 A Glove with a Flex Sensor

The word “flex” means “to bend” or “to curve”. A sensor is a transducer that converts physical energy into electrical energy. A flex sensor is a resistive sensor whose resistance changes with its bend or curvature, and this change is converted into an analogue voltage. This is a haptic-based approach in which flex sensors are used to capture physical values for processing [1].

2.4 A Glove Made of the Internet of Things (IoT) Based on Deep Learning

Labazanova et al. [7] collected right-hand samples from six participants and used a wearable tactile glove to allow the user to intuitively and safely control a drone swarm in virtual reality. ANOVA was used to assess the signal samples, and the results were 86.1 percent successful. Muezzinoglu et al. [8] used GSR and IMU sensors to collect data from eight people (two women and six men) with 4–10 years of driving experience who wore the glove built for the study. The authors used the data to create a stress classifier model that uses an SVM to distinguish between stressful and non-stressful driving circumstances. Song et al. [9] developed a smart glove that measures a badminton player’s kinetic and kinematic characteristics during forehand and backhand grips by sensing finger pressures and bending angles. The authors used an Arduino board, 21 pressure sensors, and 11 flex sensors to create the smart glove, and then visualised the parameters measured by these sensors to assess the various grip movements. 10,000 data points were obtained from the measured joint angles and pressure data, and a method for displaying these data on a computer was proposed. Benatti et al. [10] used IMU sensors to create an sEMG-based hand gesture detection system. In a previous study, Benatti employed the SVM classification method [11] to recognize seven motions with data from four participants and had a 90 percent success rate. Benatti also evaluated [12] a wearable electromyographic (EMG) motion recognition application based on a hyper-dimensional computational paradigm operating on a parallel ultra-low-power (PULP) platform, as well as the system’s capacity to learn online. Zhu et al. [13] used triboelectric finger flexure sensors, palm shift sensors, and piezoelectric mechanical stimulators to create a tactile-feedback smart glove. By detecting multi-directional bending and shifting states, the glove realizes human–machine interaction via self-generated triboelectric signals across multiple degrees of freedom of the human hand, with tactile mechanical stimulation provided by piezoelectric chips. Using the SVM machine learning technique combined with smart schooling, the authors achieved object recognition with 96 percent accuracy in this study. Berezhnoy et al. [14] proposed a wearable glove system with an Arduino Nano microcontroller, an IMU, and flex sensors to build a control interface based on hand gesture recognition for UAV control. The authors gathered right-hand samples from six persons and used fuzzy C-means (FCM) clustering for motion capture and evaluation, as well as fuzzy membership functions to classify the data.

Reference [15] suggested a sensory glove that employed machine learning techniques to measure the angles of the hand’s joints and detect 15 motions with an average accuracy of 89.4%. Chen et al. [16] demonstrated a wearable hand rehabilitation device that can perform 16 different finger movements with 93.32 percent accuracy. Pan et al. [17] demonstrated a wireless smart glove that can recognize ten American Sign Language motions, with a classification accuracy of 99.7% in testing. Maitre et al. [18] created a data glove prototype that can recognize items in eight basic daily tasks with 95 percent accuracy.

Lee et al. [19] proposed a real-time gesture recognition system that classifies 11 motions using a data glove, and the recognition result was 100 percent accurate. Ayodele et al. [20] proposed using convolutional neural networks (CNN) for 6 grasp classifications with a piezoresistive data glove; the average classification accuracy of the CNN algorithm was 88.27 percent in the object-seen scenario and 75.73 percent in the object-unseen scenario. Chauhan et al. [21] reported grasp prediction algorithms for 5 activities that can be employed for naturalistic, synergistic control of exoskeleton gloves, with an average accuracy of about 75%. In [22], convolutional neural networks were used to translate a video clip of ASL signs into text, achieving an accuracy of over 90%.

On the Ninapro DB5 dataset, [23] suggested an automatic recognition system that identifies hand movements (17 gestures) from surface electromyogram signals with an average accuracy of 93.53 percent. A data glove prototype was proposed in [24] that performs object identification well across 13 basic daily activities, with an accuracy of around 93%. Huang et al. [25] employed a prefabricated data glove to monitor the bending angle of the finger joint in real time and subsequently achieved 98.3 percent accuracy in the recognition of 9 Chinese Sign Language (CSL) words.

In [26], a novel deep learning framework based on graph convolutional neural networks (GCNs-Net) was presented, and on the High Gamma dataset, GCNs-Net attained the highest averaged accuracy of 96.24 percent at the subject level (4 movements). Reference [27] developed an attention-based BiLSTM-GCN to successfully categorise four-class electroencephalogram motor imagery tasks, with 98.81 percent prediction accuracy based on individual training.

Despite the fact that many researchers have achieved significant progress in the field of hand movement detection, there is still a need to increase the accuracy of multiple hand movement classification [28].

Recognizing multiple hand movements is difficult since the accuracy often declines as the number of hand movements increases [29]. To the best of our knowledge, only a few movements can be identified with high precision in present studies using data gloves alone. The average accuracy in the aforementioned studies employing data gloves was greater than 90%; however, the number of hand movements was limited to 20. A new classification system should be built in order to recognize more hand movements accurately from raw data glove signals.

Therefore, in this study, we propose using deep learning on a larger dataset containing more movements and data glove sensor measurements. First, our IDLG approach identifies the movement state by acquiring the sensor readings of a hand movement over time. Second, a neural network-based classification model is presented to recognize movements from the movement-state sensor data.

3 Intelligent Approach in Real Time Based on Deep Learning Using Gloves (IDLG)

In this paper, we created a flexible, soft pair of smart gloves that can be worn by people of all ages and genders. Signals from the flex sensors embedded in the gloves are received by the STM32 microcontroller and forwarded so that hand movements can be detected correctly. The user interface we built facilitates displaying, perceiving, and transforming these received signals into digital values. These data are turned into a digital dataset that is classified using deep learning methods to make the data meaningful and to distinguish hand movements.

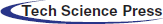

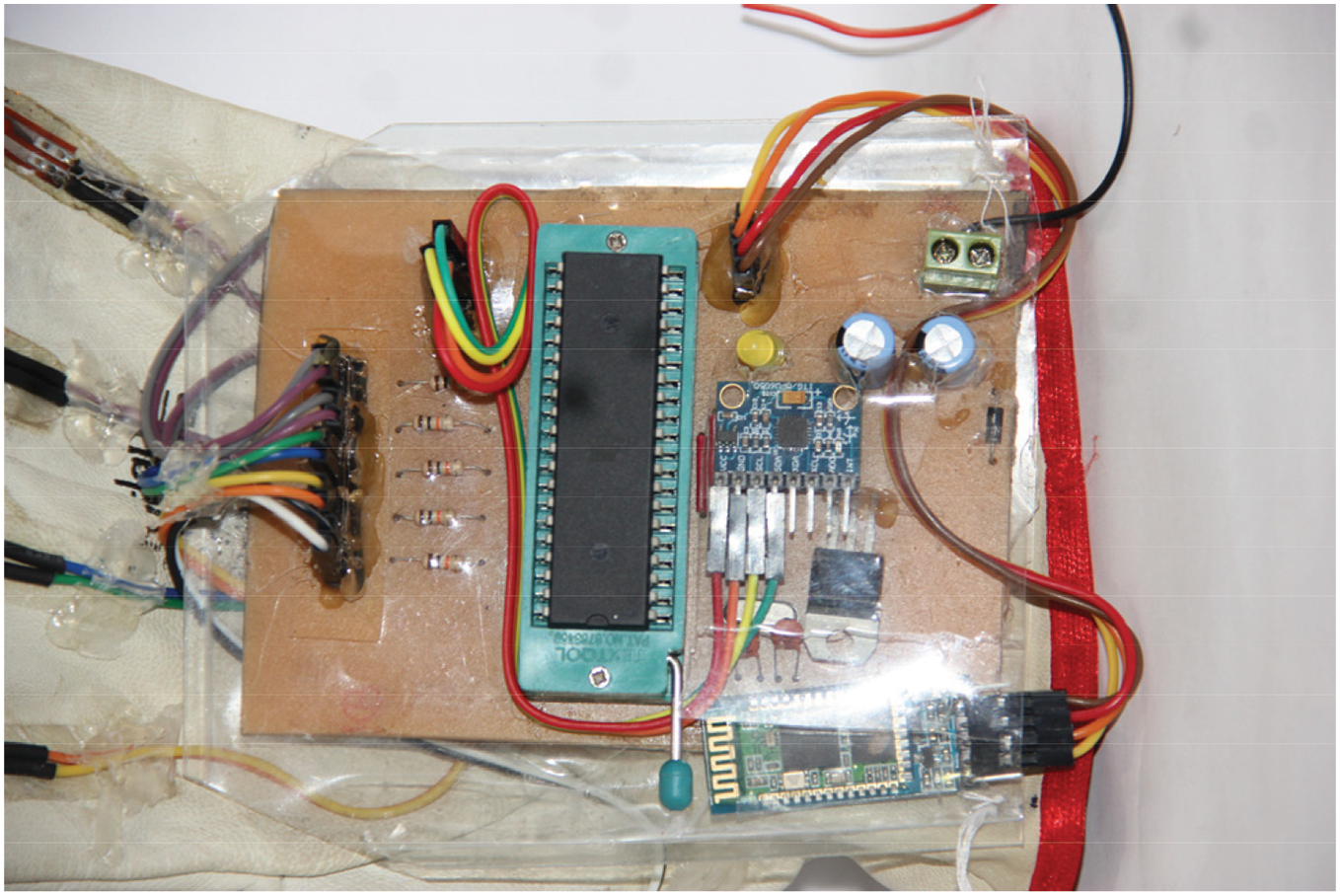

As a result of this classification, a real-time approach is proposed for issuing the text and converting it to the appropriate sound based on task scheduling commands. Fig. 1 depicts the proposed architecture for estimating hand movement using a pair of smart gloves. This section explains the development methods and guidelines for creating and building the IDLG. The IDLG development has two primary components: the circuit design and construction, and the development of the software and algorithms, including the use of deep learning.

Figure 1: The intelligent gloves consisting of the sensor and the recognition approach

3.1 Flex Sensors as Fingers Gesture Recognition

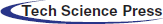

A flex sensor (Fig. 2) is a device with a wide range of applications. The resistance of the sensor increases markedly when it is flexed. By using a voltage divider consisting of the flex sensor and a carefully chosen fixed resistor, and connecting its output to a comparator circuit, we can determine when the sensor is flexed and when it is not. The flex sensor is well suited to measuring and capturing finger movement. When a sensor in the glove is bent, its resistance changes with the radius of the bend; the smaller the radius, the higher the resistance value. The resistance range of a flex sensor under bending is roughly 30 to 125 kΩ. Because most letters can be recognized from finger flexes, the flex sensors are the most important sensors (Electronics).
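The divider-plus-comparator idea described above can be illustrated with a small numerical sketch. The 47 kΩ fixed resistor, the 5 V supply, the 2.0 V comparator threshold, and the choice to read the voltage across the fixed resistor are all illustrative assumptions, not values taken from the paper; only the 30 to 125 kΩ range comes from the text.

```python
def divider_voltage(r_flex_ohm, r_fixed_ohm=47_000.0, v_supply=5.0):
    """Voltage across the fixed resistor of a flex/fixed series divider.

    r_fixed_ohm and v_supply are assumed example values.
    """
    return v_supply * r_fixed_ohm / (r_flex_ohm + r_fixed_ohm)


def is_flexed(r_flex_ohm, v_threshold=2.0, **divider_kwargs):
    """Comparator model: more bend -> higher flex resistance -> lower voltage.

    v_threshold is a hypothetical comparator reference.
    """
    return divider_voltage(r_flex_ohm, **divider_kwargs) < v_threshold


flat = divider_voltage(30_000.0)    # low end of the stated range, about 3.05 V
bent = divider_voltage(125_000.0)   # high end of the stated range, about 1.37 V
```

With these assumed values the two ends of the resistance range fall on opposite sides of the threshold, which is the property the fixed resistor would be chosen to guarantee.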

Figure 2: Flex sensor in IDLG

The sensing operation of a contact sensor is performed by a transducer. Potentiometers, strain gauges, and other sensors are commonly used. The contact or touch sensor is one of the most common sensors in robotics. Such sensors are used to detect changes in position, velocity, acceleration, force, or torque at the manipulator joints and end-effector. The two major types are bumper and tactile. Bumper sensors give a yes-or-no response indicating whether they are touching something; they cannot provide information on the intensity of the contact or on what they are touching. Tactile sensors are more complicated because they provide information on how hard the sensor is pressed, as well as the direction and velocity of relative movement. Strain gauges or pressure-sensitive resistances are used to measure the touch pressure. Carbon fibers, conductive rubber, piezoelectric crystals, and piezo-diodes are examples of pressure-sensitive resistor variations. These resistances can work in one of two ways: either the material conducts better under pressure, or the pressure increases some area of electrical contact with the material, allowing more current to flow. A voltage divider is formed by connecting a pressure-sensitive resistor in series with a fixed resistance across a DC voltage supply.

If the variable resistance becomes too small, the fixed resistor limits the current through the circuit. The sensor’s output is the voltage across the pressure-variable resistor, which depends on the pressure on the resistor. Except for the piezo-diode, which has a linear output over a wide range of pressures, the relationship is frequently non-linear. To connect these sensors to a computer, an analogue-to-digital converter is required [30].
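As a rough illustration of the analogue-to-digital step, the sketch below quantizes a divider voltage to an integer code and converts it back. The 10-bit resolution and 5 V reference are assumptions chosen for the example; the paper does not state the converter parameters.

```python
def adc_code(v_in, v_ref=5.0, bits=10):
    """Quantize an analogue voltage to an unsigned ADC code, clamped to range.

    v_ref and bits are assumed example values.
    """
    levels = (1 << bits) - 1
    clamped = min(max(v_in, 0.0), v_ref)
    return int(clamped / v_ref * levels + 0.5)  # round to nearest code


def code_to_voltage(code, v_ref=5.0, bits=10):
    """Map an ADC code back to the voltage it represents."""
    return code * v_ref / ((1 << bits) - 1)


code = adc_code(1.37)               # e.g. a bent-sensor divider voltage
recovered = code_to_voltage(code)   # within one quantization step of 1.37 V
```

The round trip loses at most half of one quantization step, which for these assumed parameters is about 2.4 mV.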

3.2 Accelerometer Used in IDLG as a Hand Motion Recognition

When attached to a glove, the flex sensors and accelerometer act as motion-based sensors that can detect hand motions. To detect motion precisely, the sensors are secured on the finger and wrist sections of the glove. These sensors report the coordinates and angles produced during each sign. The accelerometer captures changes in the wrist’s coordinates on all three axes. The results combine the flex sensor readings with the accelerometer’s x and y values, which give the slope of the hand while a gesture is formed. These readings make the difference in values between gestures clear.

The InvenSense MPU-6050 sensor in Fig. 3 combines a MEMS accelerometer and a MEMS gyro. It is very precise, as each channel has a 16-bit analogue-to-digital converter, and it captures the x, y, and z channels simultaneously. The I2C bus is used to connect the sensor to the Arduino. The MPU-6050 is reasonably priced, especially considering that it incorporates both an accelerometer and a gyro (Arduino Playground) [31].
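To show how raw 16-bit accelerometer counts might be turned into a hand slope, the following sketch converts counts to units of g and then to roll and pitch angles. It assumes the sensor is at its ±2 g full-scale setting (16384 counts per g) and that the hand is nearly static so that gravity dominates the reading; the function names are hypothetical and the paper does not describe this computation.

```python
import math

LSB_PER_G = 16384.0  # MPU-6050 sensitivity at the +/-2 g full-scale setting (assumed range)


def raw_to_g(raw):
    """Convert a signed 16-bit accelerometer count to units of g."""
    return raw / LSB_PER_G


def tilt_angles(ax_raw, ay_raw, az_raw):
    """Estimate roll and pitch in degrees from a static accelerometer reading."""
    ax, ay, az = (raw_to_g(v) for v in (ax_raw, ay_raw, az_raw))
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


level = tilt_angles(0, 0, 16384)      # hand flat, gravity along z
on_side = tilt_angles(0, 16384, 0)    # hand rolled so gravity lies along y
```

These angle estimates are only meaningful while the hand is not accelerating; dynamic gestures would need the gyro data as well.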

Figure 3: Accelerometer sensor should give out the correct coordinates for its position

3.3 ARDUINO UNO as Gesture Recognition

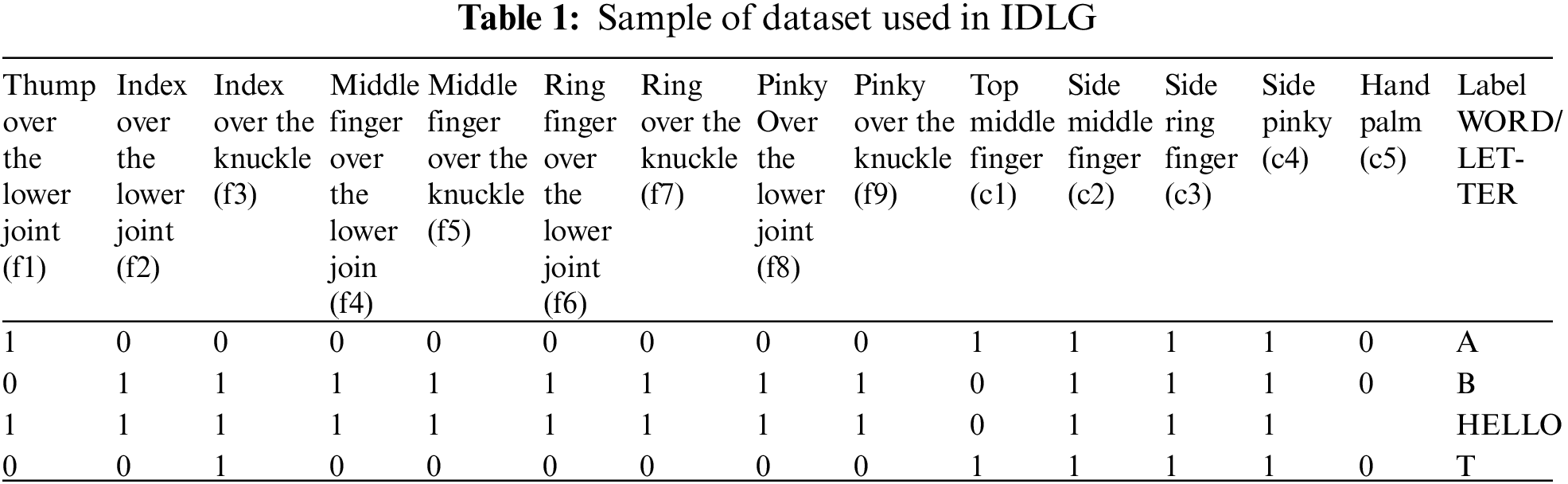

The IDLG is based on a series of microcontroller board designs primarily produced by Smart sensor, which use various 8-bit Atmel AVR microcontrollers or 32-bit Atmel ARM processors. These systems provide digital and analogue I/O pins that can be connected to a variety of expansion boards (“shields”) and other circuits. For loading applications from personal computers, the boards provide serial communications interfaces, including USB on some variants. The Arduino platform provides an integrated development environment (IDE), based on the Processing project, that supports the C and C++ programming languages for programming the microcontrollers (Arduino). Comparing the number of movements and the classification in Tab. 1, we conclude that the proposed algorithm of the IDLG approach performs better in recognizing hand movements and maintains classification.

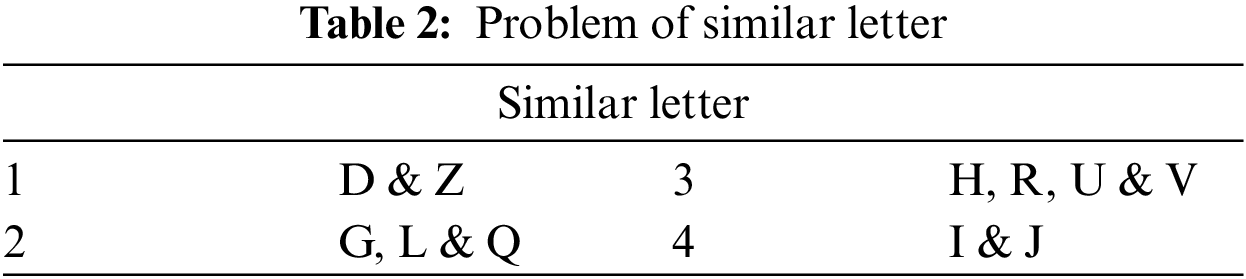

We used variable resistors to act as flex sensors in the simulation. There are 9 flex sensors (variable resistors) connected to comparators, and the comparator outputs enter the Arduino at digital pins 0 and 2–9. There are also 4 contact sensors, represented by 4 normal switches, and the sensor values are processed inside the Arduino as shown in Tab. 1. In all previous works, the flex ranges recorded for each letter (see Appendix A) reveal a problem: similar letters have the same flex sensor values. Several attempts were made to solve this problem, as in Tab. 2, but they did not achieve the required result.

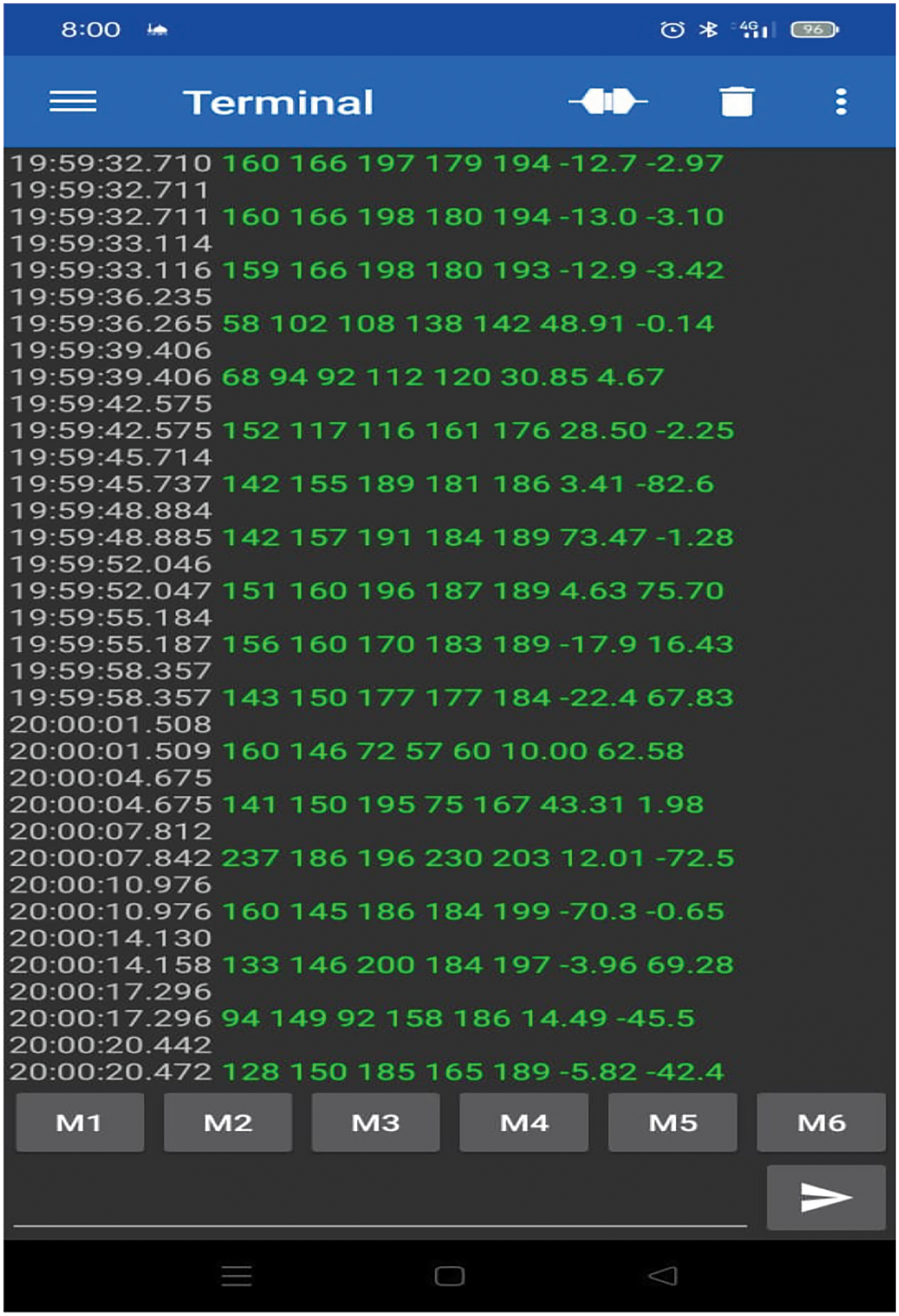

The motions of multiple people were captured to create a training corpus for testing the entire solution. The glove is connected to the smart application through a serial cable at a baud rate of 9500 bits per second. The data is collected from the glove into our model via the serial port on the Arduino board in the form of ‘.txt’ files, as shown in Fig. 4. This serial interface is also used for debugging the embedded system on the glove and for loading classifier settings into the EEPROM of the Atmega2560 on the Arduino.
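A logged ‘.txt’ file of this kind could be read back into numeric feature rows along the following lines. The line format (comma-separated values, five flex readings followed by three accelerometer axes) is purely an assumption for illustration, since the layout shown in Fig. 4 is not reproduced in this text, and the function names are hypothetical.

```python
def parse_glove_line(line, n_flex=5):
    """Parse 'f1,...,fn,ax,ay,az' into (flex_values, accel_values).

    Returns None for malformed lines. The field layout is an assumed format.
    """
    parts = line.strip().split(",")
    if len(parts) != n_flex + 3:
        return None
    try:
        values = [float(p) for p in parts]
    except ValueError:
        return None
    return values[:n_flex], values[n_flex:]


def parse_glove_log(text, n_flex=5):
    """Parse a whole log, silently skipping blank or malformed lines."""
    rows = (parse_glove_line(l, n_flex) for l in text.splitlines() if l.strip())
    return [r for r in rows if r is not None]


sample_log = "512,300,298,610,455,120,-40,16200\nnoise\n500,310,,600,450,118,-42,16190\n"
rows = parse_glove_log(sample_log)
```

Skipping malformed lines rather than raising keeps a single corrupted serial frame from discarding an entire recording.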

Figure 4: Sample of data Outputs of the IDLG

3.4 Deep Learning for Data in IDLG Approach

The flex sensor and accelerometer signals corresponding to hand gestures are captured in real time. To avoid distortion at the beginning and end of each motion, the first and last 2% of the signals received from the human operator were removed. In the first stage, the signals received from the gloves were preprocessed and converted into digital data to create a dataset of digital values. In the second stage, we apply the IDLG approach based on deep learning.

In this paper, the data obtained by the flex sensors and accelerometer are used to train an artificial deep network. Because the data change in response to gestures, particular flex and accelerometer readings correspond to particular gestures. By learning from this diverse cloud data, the approach builds a predictive model for predicting gestures in future attempts.
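The trimming step described above, removing the first and last 2% of each recorded signal, can be sketched as follows. The function name is hypothetical, and rounding the cut length down to a whole number of samples is an assumption.

```python
def trim_edges(samples, fraction=0.02):
    """Drop the first and last `fraction` of a recorded signal.

    The cut length is rounded down, so very short signals are left unchanged.
    """
    n_cut = int(len(samples) * fraction)
    return samples[n_cut:len(samples) - n_cut]


signal = list(range(100))
trimmed = trim_edges(signal)   # drops 2 samples at each end
```

Slicing with an explicit end index avoids the empty result that a negative-zero slice would give when nothing needs to be cut.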

The IDLG technique is employed as part of a layered feed-forward artificial deep network. Back-propagation, which follows the gradient descent learning rule [32], operates on multiple layers and uses supervised learning. The approach is given examples of the inputs and the outputs we want it to produce, and the resulting error is then determined. Training reduces this error until the artificial deep network has learned the training data [33]. To compute the activation, the inputs xi are multiplied by the corresponding weights and summed; see Eq. (1).

The sigmoid function is used as the activation function for this model; see Eq. (2).

Since the weights determine the error between the actual and expected results, we must change the weights to reduce the error. For each neuron’s output, the error function can be expressed as in Eq. (3).
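Equations (1)–(3) are not reproduced in this text. A common textbook form of the quantities they describe is given below as an assumption, not as the authors' exact notation: $A_j$ is the weighted sum for neuron $j$, $w_{ji}$ is the weight from input $x_i$ to neuron $j$, $O_j$ is the neuron output, and $d_j$ is the desired output.

```latex
\begin{align}
A_j &= \sum_{i=1}^{n} x_i \, w_{ji} \tag{1} \\
O_j &= \frac{1}{1 + e^{-A_j}} \tag{2} \\
E_j &= \left( O_j - d_j \right)^2 \tag{3}
\end{align}
```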

The error value is then determined in relation to the inputs, outputs, and weights.
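A minimal sketch of one gradient-descent update for a single sigmoid neuron with a squared-error loss is shown below. It assumes the standard textbook formulation of back-propagation; the learning rate, initial weights, and inputs are arbitrary illustrative values, and this is not the authors' implementation.

```python
import math


def sigmoid(a):
    """Logistic activation."""
    return 1.0 / (1.0 + math.exp(-a))


def train_step(weights, inputs, target, lr=0.5):
    """One gradient-descent update for a single sigmoid neuron with squared error."""
    activation = sum(x * w for x, w in zip(inputs, weights))
    output = sigmoid(activation)
    error = (output - target) ** 2
    # Chain rule: dE/dw_i = 2 (O - d) * O (1 - O) * x_i
    grad_common = 2.0 * (output - target) * output * (1.0 - output)
    new_weights = [w - lr * grad_common * x for w, x in zip(weights, inputs)]
    return new_weights, error


weights = [0.1, -0.2, 0.05]   # arbitrary starting weights
errors = []
for _ in range(50):
    weights, err = train_step(weights, [1.0, 0.5, -1.0], target=1.0)
    errors.append(err)
```

Repeating the step on the same example drives the recorded error down, which is the behaviour the weight update is meant to produce.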

Different classification approaches were used to build the deep-learning model for the acquired dataset. A normalisation method was applied to clean the data of duplication, and the dataset to be used was then obtained. The classification process is as follows.
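The paper does not say which normalisation method was applied. As one plausible illustration, the sketch below removes exact duplicate rows and then rescales each feature column to the range 0 to 1 (min-max normalisation); both function names and the choice of min-max scaling are assumptions.

```python
def drop_duplicates(rows):
    """Keep the first occurrence of each feature row, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(row)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def min_max_normalise(rows):
    """Rescale each column to [0, 1]; constant columns map to 0.0."""
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [
        tuple((v - lo) / (hi - lo) if hi > lo else 0.0
              for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]


raw = [(0, 10), (5, 10), (10, 10), (5, 10)]
clean = min_max_normalise(drop_duplicates(raw))
```

Guarding against constant columns avoids a division by zero when a sensor never changes during a recording.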

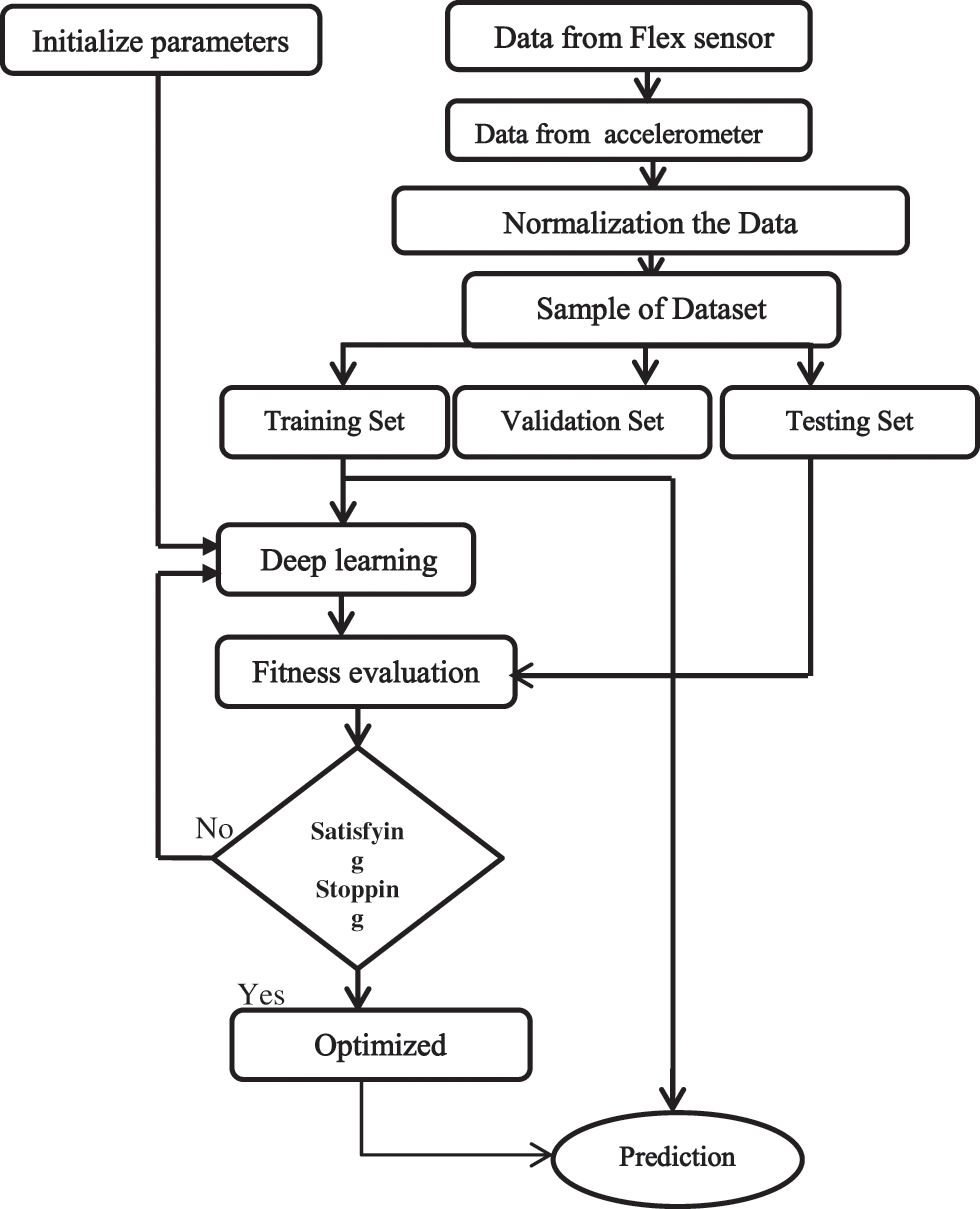

We applied a classification model based on deep neural networks to the dataset, as shown in Fig. 5. Datasets: The classifier data were randomly divided into a test set (20% of the original dataset) and a training set (the remaining 80%). The validation set was created by dividing the training set into two parts. As is usual practice in the literature, we employed a holdout validation approach, which used 20% of the training samples for model selection and hyper-parameter tuning and the remaining 80% for training the model. Pre-processing: Using cubic spline interpolation with not-a-knot end conditions, all data were re-sampled to a fixed number of samples and then normalised using the normalisation function.
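The two-level holdout split described above (20% test, then 20% of the remaining training data for validation) can be sketched with the standard library alone. The function name and the fixed random seed are illustrative assumptions.

```python
import random


def holdout_split(samples, test_fraction=0.2, val_fraction=0.2, seed=0):
    """Shuffle, hold out a test set, then hold out a validation set from the rest."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    test, rest = shuffled[:n_test], shuffled[n_test:]
    n_val = int(round(len(rest) * val_fraction))
    val, train = rest[:n_val], rest[n_val:]
    return train, val, test


train, val, test = holdout_split(range(1000))
```

For 1,000 samples this yields 640 training, 160 validation, and 200 test samples, so the model is fitted on 64% of the original data.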

Figure 5: Flowchart for intelligent Glove IDLG

The IDLG approach is designed as shown in Fig. 5. Joint-measurement data from the glove are used as the input of the neural network, and the outputs of each repeating neural cell are concatenated into a dense layer for further prediction. In the IDLG approach, fully connected layers are added after two layers.

In this network, the sensor inputs are concatenated and mapped to two hidden layers. The combination of layers increases the amount of data, which can then improve the accuracy and classification.
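To make the shape of such a network concrete, the sketch below runs a forward pass through a small feed-forward classifier with two hidden layers. The 14 inputs and 27 output classes follow figures mentioned elsewhere in the paper; the hidden layer width of 32, the random untrained weights, and the softmax output layer are assumptions made only for illustration and do not reproduce the authors' trained model.

```python
import math
import random


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


def softmax(values):
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(features, layers):
    """Sigmoid hidden layers followed by a softmax output layer."""
    hidden = features
    for weights, biases in layers[:-1]:
        hidden = [sigmoid(a) for a in dense(hidden, weights, biases)]
    out_w, out_b = layers[-1]
    return softmax(dense(hidden, out_w, out_b))


rng = random.Random(0)
sizes = [14, 32, 32, 27]  # inputs, two hidden layers (assumed width), classes
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
probs = forward([0.5] * 14, layers)
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

Because the weights here are random, the predicted class is meaningless; the sketch only shows how a 14-value sensor vector is mapped to a probability for each of the 27 gesture classes.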

4 Implementation of the Intelligent Glove IDLG Approach

Experimental results: training and testing of the IDLG approach were carried out with deep learning in Python on an Intel i7 2.20 GHz CPU with 16 GB RAM.

The first stage was to gather information on similar initiatives in the field and the components that are employed in these projects. After that, we looked at each component individually to see how it operates and what values it provides. The next step was to learn about ASL (American Sign Language) and how it differs from the English alphabet.

4.1 Create Dataset Used in IDLG Approach

Flex sensors are utilised to determine the extent of finger bending in this paper, while contact detectors are employed to detect finger interactions. Accelerometers are used to track dynamic movements and the orientation of the hand for various gestures. The signal conditioning unit refines the signals collected from the sensors in order to make them compatible with the processing unit.

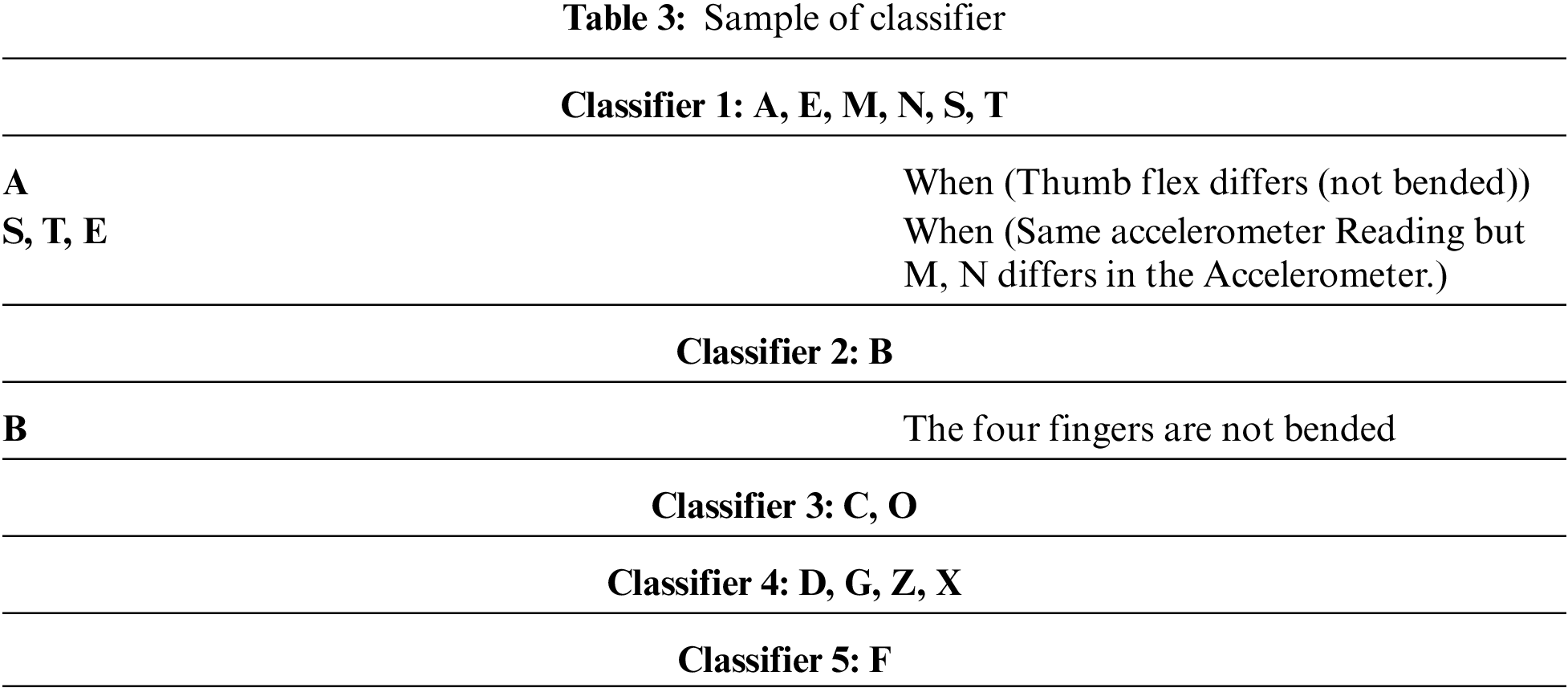

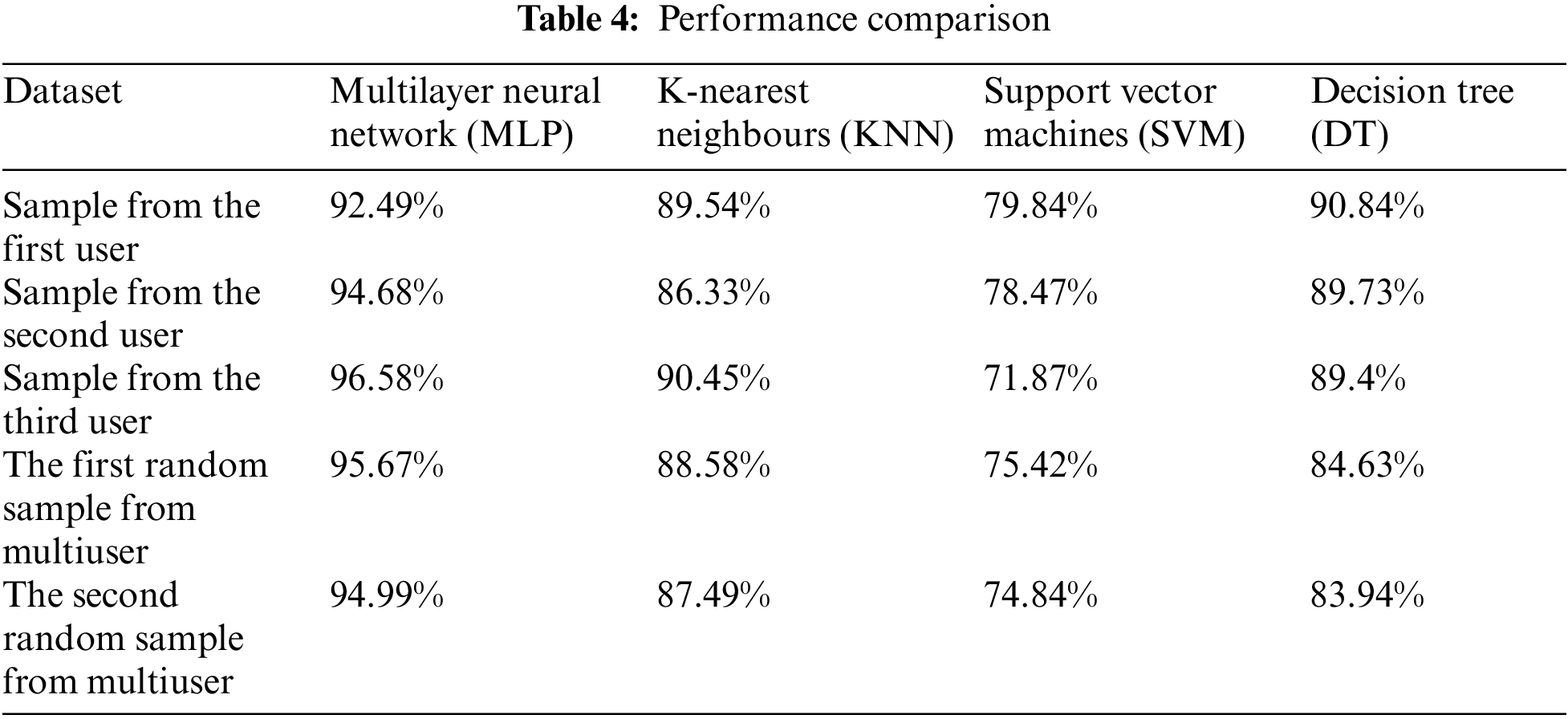

In this paper, our dataset contains features from the flex sensors and accelerometers. There are 27 classes: 26 are the letters of the ASL alphabet and one is a self-made gesture signifying a space. Users contributed to the training corpus. As shown in Tab. 3, data samples (S) for each of the twenty-seven classes (C) are collected from each user, and samples are gathered from N users to obtain the total number of samples.

4.2 Multiuser for IDLG Approach

Tab. 4 displays the findings for the case where testing is conducted with user-specific data: when testing the first user, only the first user’s own training data is used. In this scenario, the multilayer neural network outperforms the K-nearest neighbours (KNN) classifier, which also achieves excellent accuracy. The support vector machine (SVM) classifier performs poorly because there is insufficient data to estimate all of the parameters involved, while the decision tree (DT) classifier performs well only for small data corpora.

We collected data from many users to train the models in the second arrangement.
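The difference between the two arrangements can be sketched as follows. This is an illustration only: a 1-nearest-neighbour rule stands in for the classifiers compared in the paper, the split ratio is arbitrary, and all function names are hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

Sample = Tuple[List[float], int]  # (feature vector, class index)


def nearest_neighbour(train: List[Sample], x: List[float]) -> int:
    """Predict the label of the closest training sample (1-NN, Euclidean distance)."""
    return min(train, key=lambda s: math.dist(s[0], x))[1]


def accuracy(train: List[Sample], test: List[Sample]) -> float:
    """Fraction of test samples whose predicted label matches the true label."""
    hits = sum(nearest_neighbour(train, x) == y for x, y in test)
    return hits / len(test)


def split(samples: List[Sample], test_fraction: float,
          rng: random.Random) -> Tuple[List[Sample], List[Sample]]:
    """Shuffle a copy of the samples and cut it into (train, test)."""
    shuffled = samples[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def evaluate(per_user: Dict[str, List[Sample]], test_fraction: float = 0.25,
             seed: int = 0) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Return (user-specific accuracies, pooled-training accuracies) keyed by user."""
    rng = random.Random(seed)
    splits = {u: split(s, test_fraction, rng) for u, s in per_user.items()}
    # Second arrangement: one training set built from every user's training data.
    pooled_train = [s for train, _ in splits.values() for s in train]
    # First arrangement: each user is tested against their own training data only.
    own = {u: accuracy(train, test) for u, (train, test) in splits.items()}
    pooled = {u: accuracy(pooled_train, test) for u, (_, test) in splits.items()}
    return own, pooled
```

Both arrangements are scored on the same held-out samples per user, so any gap between the two accuracy dictionaries reflects only the change in training data.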

IDLG can potentially reduce the communication gap between people who have difficulty speaking and other people. The device should be economical so that ordinary people can access it easily. Compared with sensing gloves currently on the market, such as sign language recognition (SLR) systems based on sensory gloves, the IDLG approach achieves low-cost performance.

The challenges created by the rapid increase in data and the complexity of problems related to people of determination who are deaf or have difficulty speaking require more and more computing power. In such a situation, it is important to ensure that the proposed approach is improved and used efficiently. This paper addresses the issue by using variables from flex and accelerometer sensors. Several neural network configurations based on deep learning were presented, together with the data required to reproduce the experiments. The new approach shows very promising results, giving a network accuracy of 97% in training with these parameters. Moreover, 14 variables were sufficient to reach a network accuracy of 97%. The results of this work introduce new types of parameters that sensors can supply during neural training, and they create a solid foundation for continued research.

Acknowledgement: The authors extend their appreciation to King Saud University for funding this work through Researchers Supporting Project Number (RSP-2021/164), King Saud University, Riyadh, Saudi Arabia.

Funding Statement: This work is funded by King Saud University, Riyadh, Saudi Arabia, under Researchers Supporting Project Number (RSP-2021/164). https://ksu.edu.sa/.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. K. A. Bhaskaran, A. G. Nair, K. D. Ram, K. Ananthanarayanan and H. N. Vardhan, “Smart gloves for hand gesture recognition: Sign language to speech conversion system,” in Int. Conf. on Robotics and Automation for Humanitarian Applications (RAHA), Kerala, India, pp. 1–6, 2016. [Google Scholar]

2. N. Bang-iam, Y. Udnan and P. Masawat, “Design and fabrication of artificial neural network-digital image-based colorimeter for protein assay in natural rubber latex and medical latex gloves,” Microchemical Journal, vol. 106, pp. 270–275, 2013. [Google Scholar]

3. A. Q. Baktash, S. L. Mohammed and H. F. Jameel, “Multi-sign language glove based hand talking system,” in IOP Conference Series: Materials Science and Engineering, Baghdad, Iraq, vol. 1105, no. 1, pp. 012078, 2021. [Google Scholar]

4. M. Yan and H. Shi, “Smart living using bluetooth-based android smartphone,” International Journal of Wireless & Mobile Networks,” vol. 5, no. 65, 2013. [Google Scholar]

5. A. Z. Shukor, M. F. Miskon, M. H. Jamaluddin, F. bin Ali, M. F. Asyraf et al., “A new data glove approach for Malaysian sign language detection,” Procedia Computer Science, vol. 76, pp. 60–67, 2015. [Google Scholar]

6. E. Abana, K. H. Bulauitan, R. K. Vicente, M. Rafael and J. B. Flores, “Electronic glove: A teaching AID for the hearing impaired,” International Journal of Electrical and Computer Engineering, vol. 8, no. 4, pp. 2290–2298, 2018. [Google Scholar]

7. Z. Wu, L. Labazanova, P. Zhou and D. Navarro-Alarcon, “A novel approach to model the kinematics of human fingers based on an elliptic multi-joint configuration,” arXiv preprint arXiv:2107.1469, 2021. [Google Scholar]

8. T. Muezzinoglu and M. Karakose, “An intelligent human–unmanned aerial vehicle interaction approach in real time based on machine learning using wearable gloves,” Sensors, vol. 21, no.1766, 2021. [Google Scholar]

9. X. Song, Y. Peng, B. Hu and W. Liu, “Characterization of the fine hand movement in badminton by a smart glove,” Instrumentation Science & Technology, vol. 48, no. 4, pp. 443–458, 2020. [Google Scholar]

10. S. Benatti, B. Milosevic, M. Tomasini, E. Farella, P. Schoenle et al., “Multiple biopotentials acquisition system for wearable applications,” in Proc. INSTICC, Lisbon, Portugal, pp. 260–268, 2015. [Google Scholar]

11. M. Rossi, S. Benatti, E. Farella and L. Benini, “Hybrid EMG classifier based on HMM and SVM for hand gesture recognition in prosthetics,” in IEEE Int. Conf. on Industrial Technology (ICIT), Seville, Spain, pp. 1700–1705, 2015. [Google Scholar]

12. M. Zanghieri, S. Benatti, A. Burrello, V. Kartsch, F. Conti et al., “Robust real-time embedded EMG recognition framework using temporal convolutional networks on a multicore IoT processor,” IEEE Transactions on Biomedical Circuits and Systems, vol. 14, pp. 244–256, 2019. [Google Scholar]

13. M. Zhu, Z. Sun, Z. Zhang, Q. Shi, T. He et al., “Haptic-feedback smart glove as a creative human-machine interface (HMI) for virtual/augmented reality applications,” Science Advances, vol. 6, pp. 8693, 2020. [Google Scholar]

14. V. Berezhnoy, D. Popov, I. Afanasyev and N. Mavridis, “The hand-gesture-based control interface with wearable glove system,” in ICINCO, Porto, Portugal, vol. 2, pp. 458–465, 2018. [Google Scholar]

15. J. Nassour, H. G. Amirabadi, S. Weheabby, A. A. Ali, H. Lang et al., “A robust data-driven soft sensory glove for human hand motions identification and replication,” IEEE Sensors Journal, vol. 20, no. 21, pp. 12972–12979, 2020. [Google Scholar]

16. X. Chen, L. Gong, L. Wei, S. C. Yeh, L. Da Xu et al., “A wearable hand rehabilitation system with soft gloves,” IEEE Transactions on Industrial Informatics, vol. 17, no. 2, pp. 943–952, 2020. [Google Scholar]

17. J. Pan, Y. Luo, Y. Li, C. K. Tham, C. H. Heng et al., “A wireless multi-channel capacitive sensor system for efficient glove-based gesture recognition with AI at the edge,” IEEE Transactions on Circuits and Systems, vol. 67, no. 9, pp. 1624–1628, 2020. [Google Scholar]

18. J. Maitre, C. Rendu, K. Bouchard, B. Bouchard and S. Gaboury, “Basic daily activity recognition with a data glove,” Procedia Computer Science, vol. 151, pp. 108–115, 2019. [Google Scholar]

19. M. Lee and J. Bae, “Deep learning based real-time recognition of dynamic finger gestures using a data glove,” IEEE Access, vol. 8, pp. 219923–219933, 2020. [Google Scholar]

20. E. Ayodele, T. Bao, S. A. R. Zaidi, A. M. Hayajneh, J. Scott et al., “Grasp classification with weft knit data glove using a convolutional neural network,” IEEE Sensors Journal, vol. 21, no. 9, pp. 10824–10833, 2021. [Google Scholar]

21. R. Chauhan, B. Sebastian and P. Ben-Tzvi, “Grasp prediction toward naturalistic exoskeleton glove control,” IEEE Transactions on Human-Machine Systems, vol. 50, no. 1, pp. 22–31, 2019. [Google Scholar]

22. A. K. B. Bhokse and A. R. Karwankar, “Hand gesture recognition using neural network,” International Journal of Innovative Science, Engineering and Technology, vol. 2, 2015. [Google Scholar]

23. B. Fatimah, P. Singh, A. Singhal and R. B. Pachori, “Hand movement recognition from sEMG signals using Fourier decomposition method,” Biocybernetics and Biomedical Engineering, vol. 41, no. 2, pp. 690–703, 2021. [Google Scholar]

24. J. Maitre, C. Rendu, K. Bouchard, B. Bouchard and S. Gaboury, “Object recognition in performed basic daily activities with a handcrafted data glove prototype,” Pattern Recognition Letters, vol. 147, pp. 181–188, 2021. [Google Scholar]

25. X. A. Huang, Q. Wang, S. Zang, J. Wan, G. Yang et al., “Tracing the motion of finger joints for gesture recognition via sewing RGO-coated fibers onto a textile glove,” IEEE Sensors Journal, vol. 19, no. 20, pp. 9504–9511, 2019. [Google Scholar]

26. X. Lun, S. Jia, Y. Hou, Y. Shi, Y. Li et al., “GCNs-net: A graph convolutional neural network approach for decoding time-resolved eeg motor imagery signals,” Signal Processing, vol. 14, no. 8, 2020. [Google Scholar]

27. Y. Hou, S. Jia, S. Zhang, X. Lun, Y. Shi et al., “Deep feature mining via attention-based BiLSTM-GCN for human motor imagery recognition,” Signal Processing, vol. 14, no. 8, 2021. [Google Scholar]

28. Y. Hou, L. Zhou, S. Jia and X. Lun, “A novel approach of decoding EEG four-class motor imagery tasks via scout ESI and CNN,” Journal of Neural Engineering, vol. 17, no. 1, 2020. [Google Scholar]

29. X. Tang, Y. Liu, C. Lv and D. Sun, “Hand motion classification using a multi-channel surface electromyography sensor,” Sensors, vol. 12, no. 2, pp. 1130–1147, 2012. [Google Scholar]

30. N. M. Mohan, A. R. Shet, S. Kedarnath and V. J. Kumar, “Digital converter for differential capacitive sensors,” IEEE Transactions on Instrumentation and Measurement, vol. 57, pp. 2576–2581, 2008. [Google Scholar]

31. A. H. Kelechi, M. H. Alsharif, C. Agbaetuo, O. Ubadike, A. Aligbe et al., “Design of a low-cost air quality monitoring system using arduino and thing speak,” Computers, Materials & Continua, vol. 70, pp. 151–169, 2022. [Google Scholar]

32. J. Li, J. Cheng, J. Shi and F. Huang, “Brief introduction of back propagation (BP) neural network algorithm and its improvement,” Advances in Computer Science and Information Engineering, vol. 169, pp. 553–558, 2012. [Google Scholar]

33. X. H. Yu and S. X. Cheng, “Training algorithms for back propagation neural networks with optimal descent factor,” Electronics Letters, vol. 26, pp. 1698–1700, 1990. [Google Scholar]

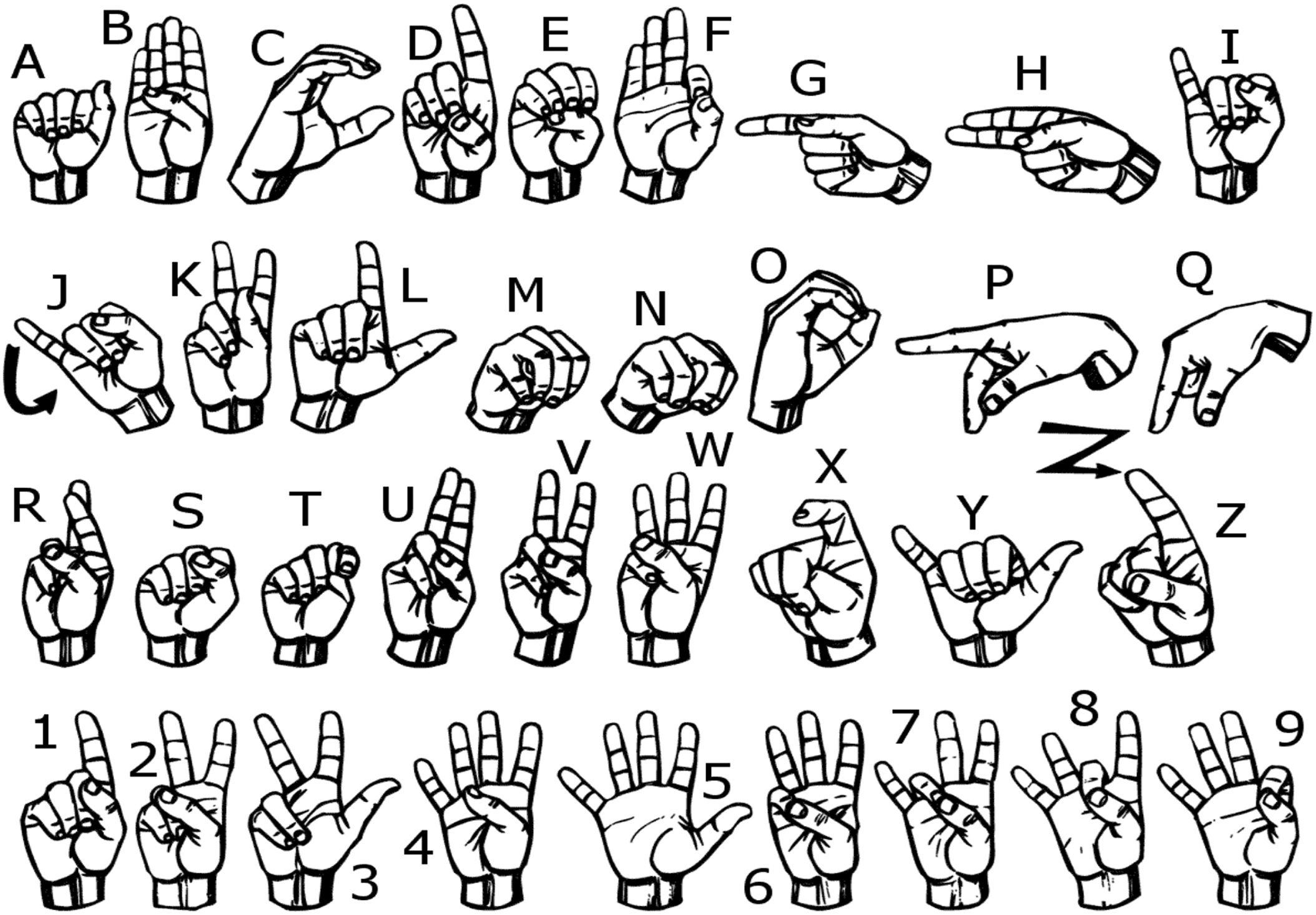

Appendix A: The representation of all ASL alphabet letters is shown in Fig. 6.

Figure 6: ASL representation of the English alphabet

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |