DOI:10.32604/cmc.2022.027064

| Computers, Materials & Continua DOI:10.32604/cmc.2022.027064 |  |

| Article |

Vertex Cover Optimization Using a Novel Graph Decomposition Approach

1Department of Mathematics, University of Gujrat, Gujrat, 50700, Pakistan

2Department of Physics, University of Gujrat, Gujrat, 50700, Pakistan

*Corresponding Author: Abdul Majid. Email: abdulmajid40@uog.edu.pk

Received: 10 January 2022; Accepted: 30 March 2022

Abstract: The minimum vertex cover problem (MVCP) is a well-known combinatorial optimization problem of graph theory. The MVCP is an NP (nondeterministic polynomial) complete problem and it has an exponential growing complexity with respect to the size of a graph. No algorithm exits till date that can exactly solve the problem in a deterministic polynomial time scale. However, several algorithms are proposed that solve the problem approximately in a short polynomial time scale. Such algorithms are useful for large size graphs, for which exact solution of MVCP is impossible with current computational resources. The MVCP has a wide range of applications in the fields like bioinformatics, biochemistry, circuit design, electrical engineering, data aggregation, networking, internet traffic monitoring, pattern recognition, marketing and franchising etc. This work aims to solve the MVCP approximately by a novel graph decomposition approach. The decomposition of the graph yields a subgraph that contains edges shared by triangular edge structures. A subgraph is covered to yield a subgraph that forms one or more Hamiltonian cycles or paths. In order to reduce complexity of the algorithm a new strategy is also proposed. The reduction strategy can be used for any algorithm solving MVCP. Based on the graph decomposition and the reduction strategy, two algorithms are formulated to approximately solve the MVCP. These algorithms are tested using well known standard benchmark graphs. The key feature of the results is a good approximate error ratio and improvement in optimum vertex cover values for few graphs.

Keywords: Combinatorial optimization; graph theory; minimum vertex cover problem; maximum independent set; maximum degree greedy approach; approximation algorithms; benchmark instances

The Minimum Vertex Cover Problem (MVCP) is a subset of NP complete problems. Solution of NP class of problems is one of the seven outstanding millennium problems stated by the Clay Mathematics institute. The solution of these problems can be verified in polynomial time scale, but time complexity for solving these problems grow exponentially with size of the problems [1]. The MVCP involves finding a set U such that

The MVCP has a wide range of applications; for example, cyber security, setting up or dismantling of a network, circuit design, biochemistry, bioinformatics, electrical engineering, data aggregation, immunization strategies in network, network security, internet traffic monitoring, wireless network design, network source location problem, marketing and franchising, pattern recognition and cellular phone networking [3–9].

Due to its wide range of applications, the MVCP has received special attention in the scientific community. Several approximate algorithms for solving the problem have been proposed, e.g., the depth first search algorithm, the maximum degree greedy algorithm, the edge weighting algorithm, the deterministic distributed algorithm, the genetic algorithm, the edge deletion algorithm, the support ratio algorithm, the list left algorithm, the list right algorithm and iterated local search algorithm etc [10]. Since all these algorithms provide approximate results with certain accuracy, there is a certain space to improve accuracy and to reduce complexity by introducing faster and more accurate algorithms. The scientific community around the globe has proposed approximate solutions of the problem with polynomial complexity. Some of the efforts by scientific community are described in following paragraph.

Jiaki Gu et al. proposed an algorithm that uses a general three stage strategy to solve the minimum vertex cover problem. Their method includes graph reduction, finding minimum vertex cover of bipartite graph components and finally finding the vertex cover of actual graph [11]. Changsheng Quan and coworkers proposed an edge waiting algorithm to solve MVCP. They claim that their algorithm has a fast-searching performance for solving large-scale real-world problem [12]. Shaowei Cai et al. in their work proposed a heuristic algorithm that make use of a preprocessing algorithm, construction algorithms and search algorithms to solve the MVCP. They claim that their algorithm is fast and accurate as compared to other existing heuristic algorithms [13]. Chuan Luo et al. proposed an algorithm that uses a highly parametric framework and incorporates many effective local search techniques to solve the MVCP. According to their claim their algorithm performs better for medium size graph and is competitive for large sized graphs [14].

Jinkun Chen and coworkers proposed an approximate algorithm based on rough sets. They use a Boolean function with conjunction and disjunction logics [15]. Cai. S et al. in their work use an edge weighting local search technique for finding an approximate MVC [16]. Khan, I and coworkers proposed an algorithm that works by removal of nodes to find a maximum independent set yielding an approximate MVC [17]. Arstrand, M et al. formulated a deterministic distributed algorithm to solve the MVCP. The authors solved the problem for two approximate solutions of the MVC during

The literature survey indicates variety of results as far as complexity and accuracy of algorithms are concerned. Onak and Rubinfeld developed a randomized algorithm for maintaining an approximate maximum cardinality matching with a time complexity of

In particular, the present work is more suitable for two dimensional graphs with triangular grid structures. The two-dimensional triangular grid graphs are very common in telecommunications, in molecular biology, in configurational statistics of polymers and in various other fields [28–30]. We are proposing here a new way to find edges shared by such triangular grid structures and use these subgraphs to simplify the MVC for such graphs.

A graph can be represented as a matrix

Here

The set

From here onward let

Any vertex

A graph can be divided into a number of subgraphs such that;

One may construct these subgraphs

A solution for graph decomposition is proposed that is based on Lemma and Theorem proved below.

Lemma: An edge that has both of its vertices covered must belong to a Hamiltonian cycle or a path with odd number of edges.

Proof: A graph can be decomposed into a number of subgraphs that may be a Hamiltonian cycle or a path with odd or even number of edges. For even number of edges a Hamiltonian cycle or path does not require both vertices of any edge to be covered. For a Hamiltonian cycle with odd number of sides only one of the edges must have both vertices covered. For paths with odd number of sides both vertices of one of those edge may or may not require to be covered. Hence, if both vertices of an edge are covered that edge may either be an edge of a Hamiltonian cycle or a path with odd number of edges.

Theorem: The exact minimum vertex cover of a graph

Proof: For a given set of vertex cover there may exist at most two types of edges depending on the vertices being covered i.e., an edge with both of its vertices covered and an edge with a single vertex covered. The edge with both vertices covered essentially belongs to a subgraph of the form of a path or a Hamiltonian cycle with odd number of edges as proved in the Lemma. In case of two different set of vertex covers i.e., an optimum vertex cover and an approximate one, there can be at most five cases, which are listed below;

Case 1: An edge

Case 2: An edge

Case 3: An edge

Case 4: An edge

Case 5: An edge

For both of the sets the number of cases like case 3, 4 and 5 are the same i.e., such cases do not cause any difference on the cardinality of both sets. In cases 1, 2 and 3 at least one of the sets have its edges covered by both vertices. Since case 3 is the same for both the sets, the only two cases that can cause difference on the cardinality of both sets are case 1 and 2. For the approximate set the number of cases like case 2 is greater than or equal to the number of cases like case 1. This leads to the fact that a large number of cases like case 2 can increase cardinality of an approximate set compared to the optimal set. All edges that belong to subgraphs that are either paths or Hamiltonian cycles with odd number of edges are candidates of having both vertices in the vertex cover. By simply separating all such edges the optimization problem simplifies.

This leads to a conclusion that minimum vertex cover optimization depends only on optimization of subgraphs formed by Hamiltonian cycles or paths with odd number of sides.

Based on the Theorem a new approach is proposed to find

Our aim here is to find edges, which are common in more than one triangular Hamiltonian cycle. We refer such edges as shared edges. To find such shared edges in a large graph we are proposing a new approach. The new approach is based on decomposition of a graph

An element of matrix

An element-by-element multiplication (just corresponding element multiplication of two matrices) of matrix

For example,

Removal of any subset of vertices from a graph requires removal of the corresponding row or column from the edge matrix

Proposition:

Proof: Since

where

Let

Let

where

All elements in

Reduced matrix

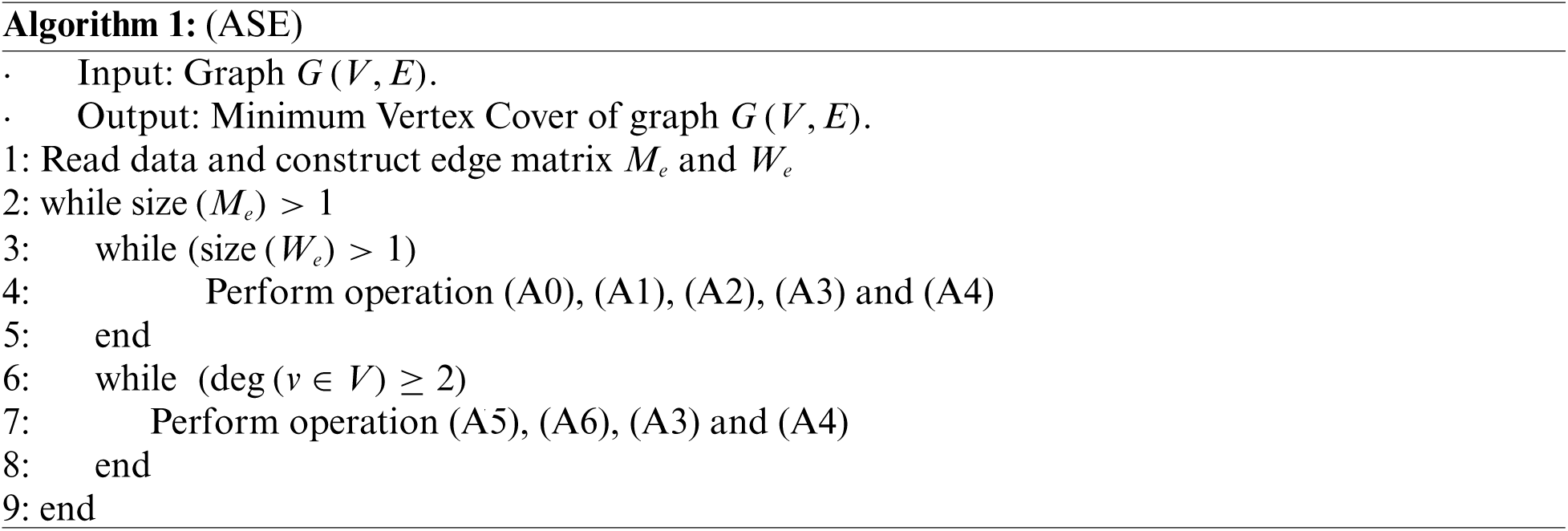

Algorithms

The decomposition of a graph G into

The proposed algorithms are described in the following section. Algorithm 1 and Algorithm 2 are abbreviated as ASE and ASER such that ‘A’ stands for Algorithm, ‘SE’ stands for ‘Shared Edges’ and ‘R’ stands for ‘Reduction Strategy’.

(A0) Evaluate

(A1) Find a vertex with highest degree from

(A2) Evaluate matrix F for the vertex found in step A1. Using Eq. (4) evaluate reduced matrix

(A3) Remove all leaves from the graph

(A4) Remove all isolated vertices from the graph

(A5) Find

(A6) Find the vertex u that has the maximum degree in present graph, cover the selected vertex u and remove from the graph.

Complexity

Total complexity of the algorithm is

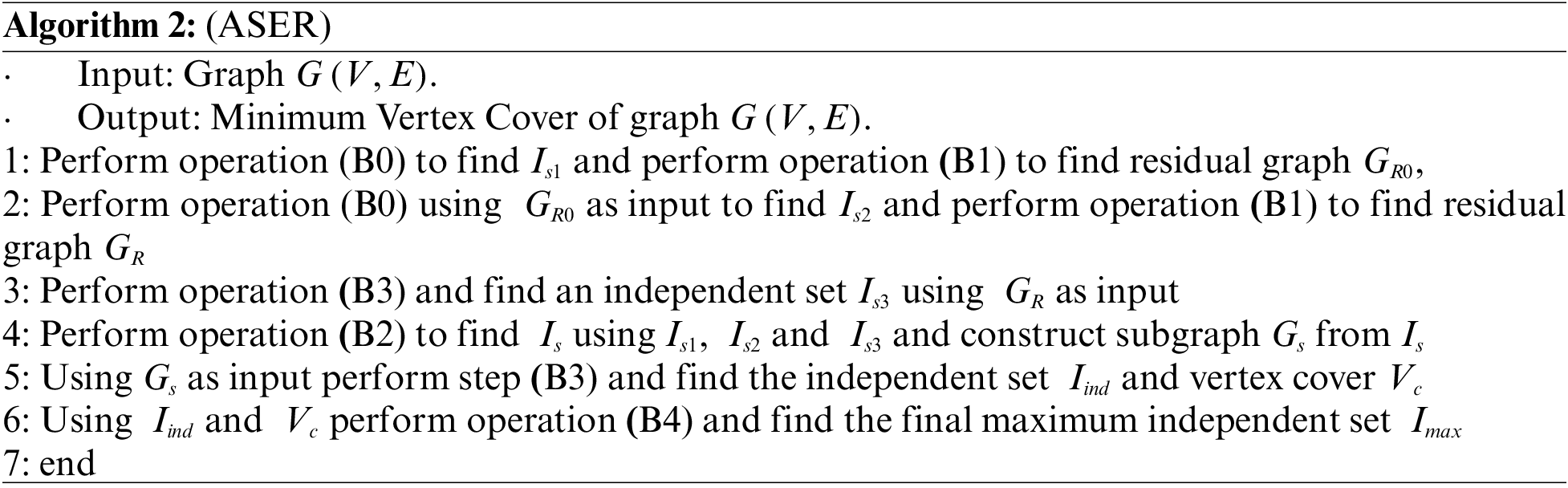

To reduce complexity of ASE a reduction strategy is proposed. The reduction strategy consists of splitting graph into two subgraphs and finding independent set of 1st subgraph and taking union of that independent set with 2nd subgraph and finding independent set of the union set. However, this graph splitting is not random. For splitting one can list the set of edges

Under normal circumstances the list of edges E may not provide reasonably large

(B0) Find an independent set

(B1) Construct residual subgraph

(B2) Construct a single set using three sets as;

(B3) Find maximum independent set of given subgraphs using algorithm 1

(B4) Find a subset

The low-cost algorithm simply selects and adds a vertex to an independent set followed by searching vertices that can be moved to the independent set. This algorithm has a complexity of

In this section both the algorithms are tested and analyzed for their accuracy and complexity for benchmark graphs taken from [31,32] and [33]. The simulations are performed on a computer with 1.61 GHz processor and 8.00 GB RAM using sequential programming.

The results from the 72 benchmark graphs referred above are organized in the form of three tables. Tabs. 1 and 2 contain optimum vertex cover and accuracy in the form of error ratios for the algorithms of respective graphs. Tab. 3 contains a comparison of results of the algorithm ASE with three well know algorithms. The error ratio is defined here as the value of minimum vertex cover for a given graph obtained from each algorithm divided by the optimum vertex cover

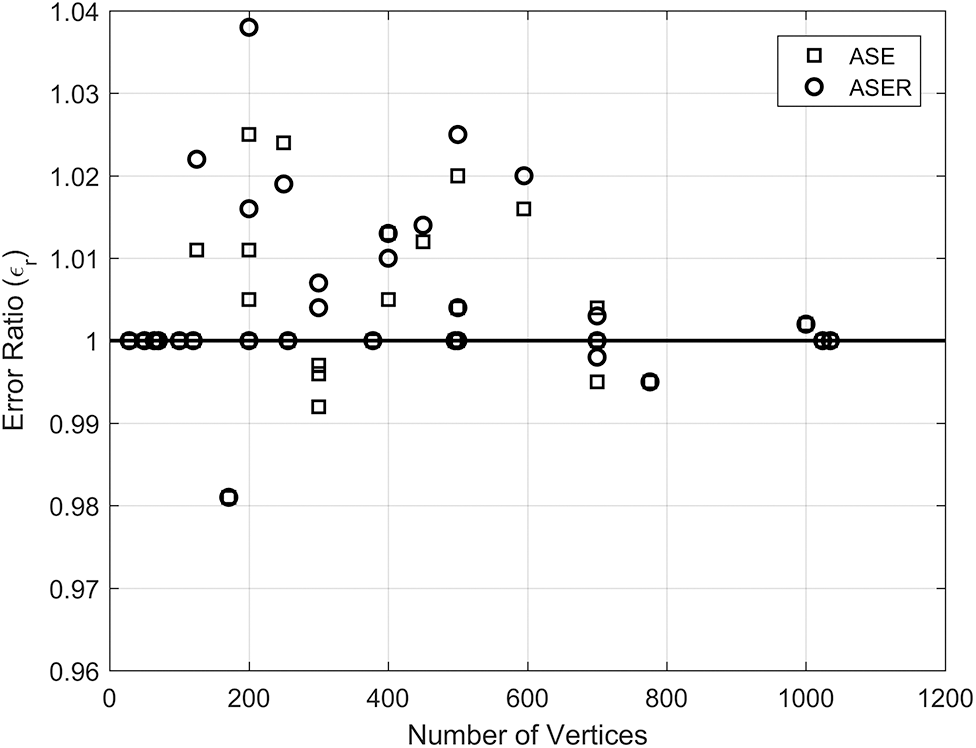

Fig. 1 shows the error ratio

Figure 1: Accuracy of the suggested algorithms, solid line represents reported optimum values

As can be seen from the Tabs. 1 and 2 that out of 72 instances ASE and ASER both give an error ratio

In ASE, since only edges

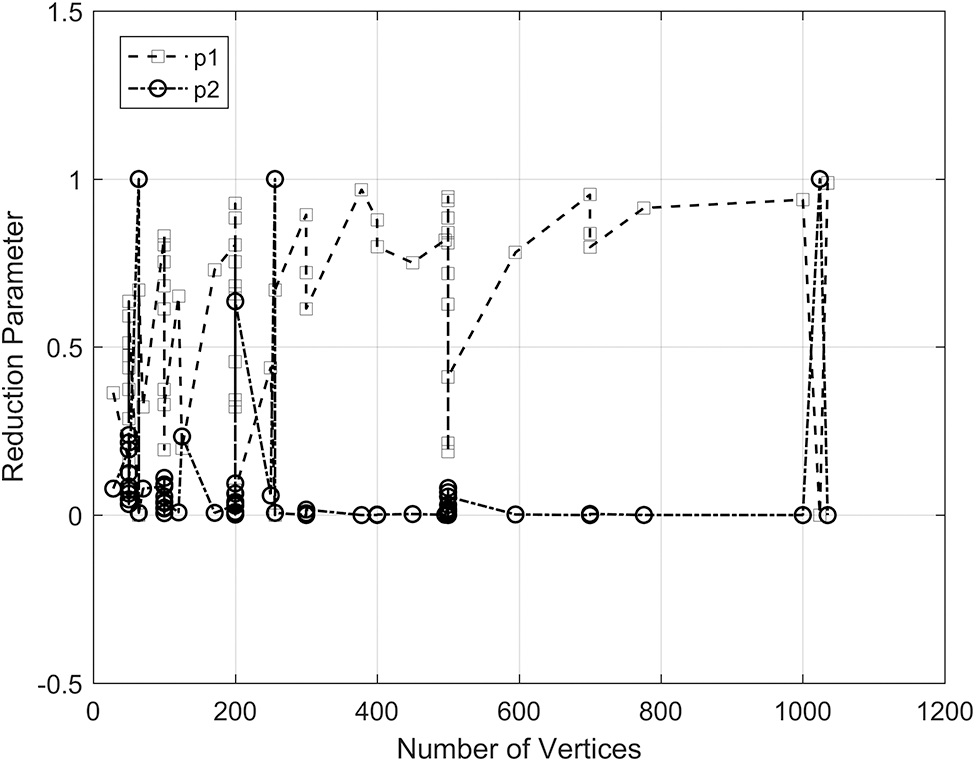

Supplementary data describes the complexity of the proposed algorithms and contains two parametric numbers or reduction parameters

The comparison between ASE and ASER is given in Fig. 2, which shows that if either

Figure 2: Reduction parameters p1 and p2 plotted against number of vertices

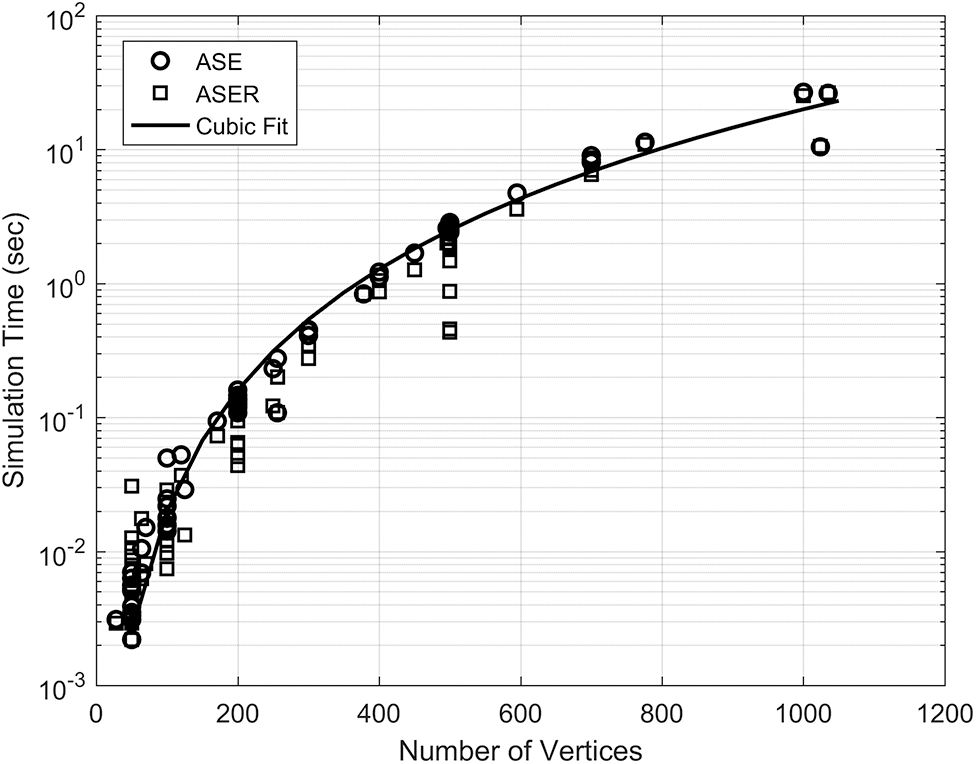

The simulation time for the proposed algorithms is plotted against the number of vertices in Fig. 3. A cubic fit function of the form

Figure 3: Simulation time vs. the number of vertices for the proposed algorithms

In Tabs. 1 and 2 the performance of the algorithm ASE is evaluated using 72 benchmark graphs. On 48 instances our algorithms yield optimal values. In Tab. 3 we have compared the results for 20 benchmark graphs for which ASE does not yield optimal values with three well known algorithms MDG (maximum degree greedy) [34], MVSA (modified vertex support algorithm) [35] and MtM (Min-to-Min) [36]. The average ratio error for these 20 benchmark graphs presented in the Tab. 3 for ASE, MDG, MVSA and MtM are, 1.005, 1.014, 1.015 and 1.012, respectively. This shows that the algorithm ASE clearly outperforms the three algorithms in comparison.

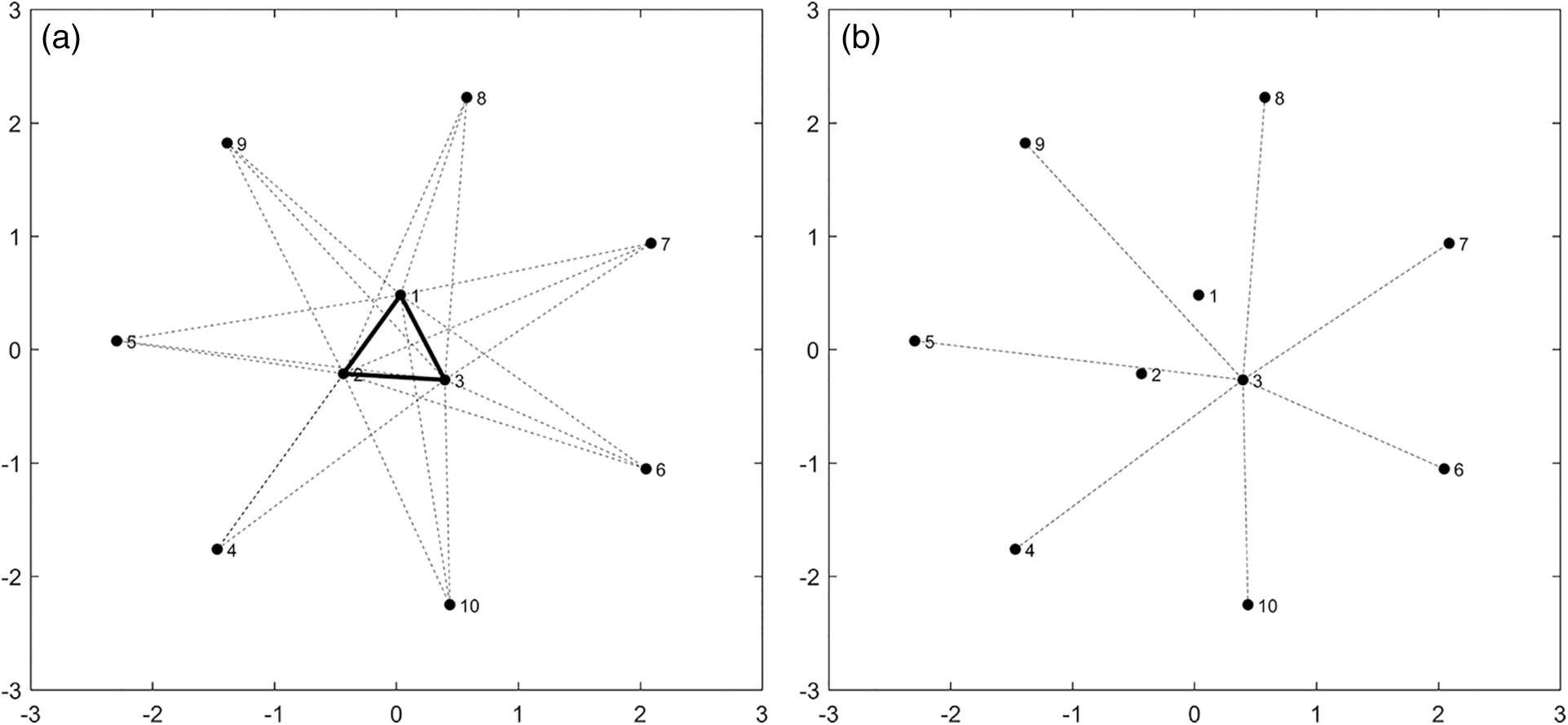

For step-by-step analysis of the algorithm a simple real-life example is selected. Crypto or digital currency market currently has a market capital of billions of US dollars. There are hundreds of crypto currencies with billions of USD daily volume. Market data analysis of these currencies is becoming harder and harder with growth in data. However, almost all of these currencies are paired with each other for trading. Therefore, any market fluctuation is coupled within these crypto currencies. In principle, one can represent these currencies and their trading pairs in the form of a graph and can find minimum vertex cover of the graph to select only few currencies for crypto market data analysis. We have selected ten crypto currencies and their trading pairs in order to simplify the problem. These crypto currencies and their trading pair are presented in the form of a graph is shown in Fig. 4a.

Figure 4: (a) Crypto currencies and their trading pair (b) Subgraph with no shared edge

The graph is decomposed in subgraphs with bold dark lines showing shared edges and dashed lines as the rest of the graph. The shared edge graph is simply a triangle in this case and each vertex has a degree 2 in the subgraph. A single vertex (in this case vertex 1) is covered, i.e.,

The proposed approach simplifies a graph to isolated paths or polygons (Hamiltonian cycles) by moving shared edges followed by shared vertices to the vertex cover. This process forms three subgraphs i.e., a subgraph containing shared edges, a subgraph with shared vertices and finally a simplified subgraph. The final simplified subgraph is either a single Hamilton cycle or path or a number of isolated Hamiltonian cycles or paths. The vertex cover of the simplified subgraph can be exactly found in a short time using maximum degree greedy approach. The accuracy of finding the first two subgraphs depends on the sequence of covering the vertices. The proposed strategies are capable to search for the sequence of selection of vertices to an approximate extent only. However, using this approach the problem can be broken successfully into three smaller problems, which are relatively easy to handle. The worst-case error ratio

The proposed approach may work even better if all the edges shared by subgraphs of the form

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. S. Cook, “The complexity of theorem proving procedure,” Proceedings of the Third Annual ACM Symposium on Theory of Computing, pp. 151–158, 1971. [Google Scholar]

2. Z. Ullah, M. Fayaz and S. H. Lee, “An efficient technique for optimality measurement of approximation algorithms,” International Journal of Modern Education & Computer Science, vol. 11, pp. 11, 2019. [Google Scholar]

3. L. Wang, S. Hu, M. Li and J. Zhou, “An exact algorithm for minimum vertex cover problem,” Mathematics, vol. 7, no. 7, pp. 603, 2019. [Google Scholar]

4. S. R. Balachandar and K. Kannan, “A Meta-heuristic algorithm for vertex covering problem based on gravity,” International Journal of Mathematical and Statistical Sciences, vol. 1, no. 3, pp. 130–136, 2009. [Google Scholar]

5. J. Chen and I. A. Kanj, “Constrained minimum vertex cover in bipartite graphs: Complexity and parameterized algorithms,” Journal of Computer and System Sciences, vol. 67, no. 4, pp. 833–847, 2003. [Google Scholar]

6. M. Fayaz, S. Arshad, A. S. Shah and A. Shah, “Approximate methods for minimum vertex cover fail to provide optimal results on small graph instances: A review,” International Journal of Control and Automation, vol. 11, no. 2, pp. 135–150, 2018. [Google Scholar]

7. Z. Jin-Hua and Z. Hai-Jun, “Statistical physics of hard combinatorial optimization: Vertex cover problem,” Chinese Physics B, vol. 23, no. 7, pp. 078901, 2014. [Google Scholar]

8. M. Javad-Kalbasi, K. Dabiri, S. Valaee and A. Sheikholeslami, “Digitally annealed solution for the vertex cover problem with application in cyber security,” in ICASSP 2019–2019 IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSPpp. 2642–2646, 2019. [Google Scholar]

9. D. Zhao, S. Yang, X. Han, S. Zhang and Z. Wang, “Dismantling and vertex cover of network through message passing,” IEEE Transactions on Circuits and Systems II: Express Briefs, vol. 67, no. 11, pp. 2732–2736, 2020. [Google Scholar]

10. J. Chen, K. Lei and C. Xiaochuan, “An approximation algorithm for the minimum vertex cover problem,” Procedia Engineering, vol. 137, pp. 180–185, 2016. [Google Scholar]

11. J. Gu and P. Guo, “PEAVC: An improved minimum vertex cover solver for massive sparse graphs,” Engineering Applications of Artificial Intelligence, vol. 104, pp. 104344, 2021. [Google Scholar]

12. C. Quan and P. Guo, “A local search method based on edge age strategy for minimum vertex cover problem in massive graphs,” Expert Systems with Applications, vol. 182, pp. 115185, 2021. [Google Scholar]

13. S. Cai, J. Lin and C. Luo, “Finding a small vertex cover in massive sparse graphs: Construct, local search, and preprocess,” Journal of Artificial Intelligence Research, vol. 59, pp. 463–494, 2017. [Google Scholar]

14. C. Luo, H. H. Hoos, S. Cai, Q. Lin, H. Zhang et al., “Local search with efficient automatic configuration for minimum vertex over,” in IJCAI, Macau, pp. 1297–1304, 2019. [Google Scholar]

15. J. Chen, Y. Lin, J. Li, G. Lin, Z. Ma et al., “A rough set method for the minimum vertex cover problem of graphs,” Applied Soft Computing, vol. 42, pp. 360–367, 2016. [Google Scholar]

16. S. Cai, K. Su and Q. Chen, “EWLS: A new local search for minimum vertex cover,” in Proc. of the AAAI Conf. on Artificial Intelligence, Atlanta, Georgia, USA, vol. 24, no. 1, 2010. [Google Scholar]

17. I. Khan and N. Riaz, “A new and fast approximation algorithm for vertex cover using a maximum independent set (VCUMI),” Operations Research and Decisions, vol. 25, no. 4, pp. 5–18, 2015. [Google Scholar]

18. M. Åstrand, P. Floréen, V. Polishchuk, J. Rybicki, J. Suomela et al., “A local 2-approximation algorithm for the vertex cover problem,” in Int. Symp. on Distributed Computing, Springer, Berlin, Heidelberg, pp. 191–205, 2009. [Google Scholar]

19. R. Bar-Yehuda and S. Even, “A Linear-time approximation algorithm for the weighted vertex cover problem,” Journal of Algorithms, vol. 2, no. 2, pp. 198–203, 1981. [Google Scholar]

20. H. Bhasin and M. Amini, “The applicability of genetic algorithm to vertex cover,” International Journal of Computer Applications, vol. 123, no. 17, pp. 29–34, 2015. [Google Scholar]

21. S. Balaji, V. Swaminathan and K. Kannan, “An effective algorithm for minimum weighted vertex cover problem,” Int. J. Comput. Math. Sci, vol. 4, pp. 34–38, 2010. [Google Scholar]

22. O. Kettani, F. Ramdani and B. Tadili, “A heuristic approach for the vertex cover problem,” International Journal of Computer Applications, vol. 82, no. 4, pp. 9–11, 2013. [Google Scholar]

23. X. Xu and J. Ma, “An efficient simulated annealing algorithm for the minimum vertex cover problem,” Neurocomputing, vol. 69, no. 7–9, pp. 913–916, 2006. [Google Scholar]

24. R. Li, S. Hu, Y. Wang and M. Yin, “A local search algorithm with tabu strategy and perturbation mechanism for generalized vertex cover problem,” Neural Computing and Applications, vol. 28, no. 7, pp. 1775–1785, 2017. [Google Scholar]

25. E. Halperin, “Improved approximation algorithms for the vertex cover problem in graphs and hypergraphs,” SIAM Journal on Computing, vol. 31, no. 5, pp. 1608–1623, 2002. [Google Scholar]

26. S. Cai, K. Su, C. Luo and A. Sattar, “NuMVC: An efficient local search algorithm for minimum vertex cover,” Journal of Artificial Intelligence Research, vol. 46, pp. 687–716, 2013. [Google Scholar]

27. K. Onak and R. Rubinfeld, “Maintaining a large matching and a small vertex cover,” in Proc. of the Forty-Second ACM Symp. on Theory of Computing, New York, USA, pp. 457–464, 2010. [Google Scholar]

28. V. S. Gordon, Y. L. Orlovich and F. Werner, “Hamiltonian properties of triangular grid graphs,” Discrete Mathematics, vol. 308, no. 24, pp. 6166–6188, 2008. [Google Scholar]

29. Y. Orlovich, G. Valery and F. Werner, “Cyclic properties of triangular grid graphs,” IFAC Proceedings, vol. 39, no. 3, pp. 149–154, 2006. [Google Scholar]

30. R. Jothi and J. Mary, “Cyclic structure of triangular grid graphs using SSP,” International Journal of Pure and Applied Mathematics, vol. 109, no. 9, pp. 46–53, 2016. [Google Scholar]

31. CLIQUE Benchmark Instances. Available online: https://github.com. [Google Scholar]

32. Benchmark graphs. Available online: http://networkrepository.com/dimacs.php. [Google Scholar]

33. Benchmark graphs. Available online: Index of/pub/challenge/graph/benchmarks/clique–DIMAC. [Google Scholar]

34. S. Gajurel and R. Bielefeld, “A simple NOVCA: Near optimal vertex cover algorithm,” Procedia Computer Science, vol. 9, pp. 747–753, 2012. [Google Scholar]

35. I. Khan and K. Hasham, “Modified vertex support algorithm: A new approach for approximation of minimum vertex cover,” Research Journal of Computer and Information Technology Sciences ISSN 2320, 6527, vol. 1, no. 6, pp. 1–6, 2013. [Google Scholar]

36. J. Haider and M. Fayaz, “A smart approximation algorithm for minimum vertex cover roblem based on Min-to-min (MtM) strategy,” (IJACSA) International Journal of Advanced Computer Science and Applications, vol. 11, no. 12, pp. 250–259, 2020. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |