Computers, Materials & Continua
DOI:10.32604/cmc.2022.027652

Article

Design of Teaching System of Industrial Robots Using Mixed Reality Technology

1 Zhejiang Dongfang Polytechnic, Wenzhou, 325000, China

2 Compugen Ltd., Toronto, M2J 4A6, Canada

*Corresponding Author: Zhou Li. Email: 2679392892@qq.com

Received: 23 January 2022; Accepted: 19 April 2022

Abstract: Traditional teaching and learning about industrial robots relies on abstract instructions, which are difficult for students to understand. Meanwhile, the use of practical training equipment raises safety issues. To address these problems, this paper develops an instructional system based on mixed-reality (MR) technology for teaching about industrial robots. The Siasun T6A-series robots were taken as a case study, and the Microsoft MR device HoloLens-2 was used as the instructional platform. First, the parameters of the robots were analyzed based on their structural drawings. Then, the robot modules were decomposed, and 1:1 three-dimensional (3D) digital reproductions were created in Maya. Next, a library of digital models of the robot components was established, and a 3D spatial operation interface for the virtual instructional system was created in Unity. Subsequently, a C# code framework was established to satisfy the requirements of interactive functions and data transmission, and the data were saved in JSON format. In this way, a key technique for understanding spatial structures and a variety of human-machine interactions were realized. Finally, an instructional system based on HoloLens-2 was established for understanding the structures and principles of robots. The results showed that the instructional system developed in this study provides realistic 3D visualizations and a natural, efficient approach to human-machine interaction. This system could effectively improve the efficiency of knowledge transfer and students’ motivation to learn.

Keywords: Mixed reality; space; interaction; instructional system

1 Introduction

In recent years, virtual reality (VR) and augmented reality (AR) have become increasingly mature, and they have been applied to various digital teaching and learning applications. Recently, with the launch of the Microsoft HoloLens mixed-reality (MR) device [1], MR technology has emerged and begun to be adopted for practical applications. In 2019, Microsoft launched HoloLens-2, which integrates hand tracking and built-in eye tracking, a further improvement in MR hardware. Currently, MR technology is often used in fields such as aerospace and shipbuilding [2]. However, there are few mature teaching and learning applications for industrial robots. Traditional teaching about industrial robots is usually delivered through lessons in which teachers use textbooks, pictures, and instructional videos [3]. The teaching content is abstract, making it difficult for students to understand the internal structures and principles of a specific robot and to master the skills needed for disassembly, assembly, and maintenance. Besides, because the internal structures of industrial robots are complicated, it is challenging to extract the key knowledge points for instruction. Moreover, traditional practical training on industrial robots may give rise to safety issues, such as injury from falling equipment and electric shock. In addition, traditional practical training causes severe equipment wear. For example, repeated disassembly and reassembly shortens the life of robots and affects their normal use, thus increasing the training cost. Furthermore, the training resources are insufficient to cover the needs of all students, because each component can be used by only one person or one team at a time.

With integrated MR technology, teaching and learning modes can present instructional content through both dynamic and static approaches. They give students a comprehensive, stereoscopic, and multi-angle understanding of the mechanical structures, as well as stereoscopic presentations of knowledge points that are complex, abstract, difficult to understand, or restricted in space and time. Because MR places no restrictions on the operating space, classroom models can be operated across a large spatial range and over long distances. With the latest MR technology, key knowledge points can be seamlessly integrated into real operating scenarios, facilitating the practical training process. Because the naked-eye view can be synchronized with the MR view, classroom instruction can be live-streamed and recorded for playback.

Compared with traditional VR technology, which supports only a single-person operating mode, MR supports multi-person interaction and thus allows team-based training and inter-team collaboration. Meanwhile, MR enables a broad range of interaction and feedback approaches, which provide multi-sensory, immersive observation and let students exercise initiative and autonomy during the learning process. Besides, MR embeds knowledge into interactions to improve the students’ cognitive competence and ability to understand spatial structures. In MR, integrated real-virtual visual scenarios can be created in which students use bodily motions and virtual tools to practice skills. In this way, the students’ thinking and practical skills are improved through active exploration and interactive communication [4].

MR technology is a further development of VR, and it combines the advantages of VR and AR. In MR, the real-time physical information about the real world is obtained through cameras, sensors, and positioners. Meanwhile, through the position-tracking software and spatial-mapping technology, the computer-generated virtual scenarios are then superimposed onto the real-world physical information to present an integrated real-virtual visualization to the user [5]. The application of MR technology to instructional design is an important development trend in integrating Internet technology into education, which will enrich and supplement the mainstream instruction forms.

In this study, to meet the needs of contemporary digital teaching and learning, an MR-based instructional system for teaching and learning about industrial robots (taking the Siasun T6A-series industrial robot as a case study) was designed and realized based on the latest MR technology. The Autodesk Maya application, three-dimensional (3D) animation technology, and the Unity engine were combined with a C# code framework to realize interactive functions and data transmission. The system runs on HoloLens-2, which serves as the teaching and learning platform.

2 Overall Design of MR Instructional System

Based on the characteristics of the MR technology, the overall design of the instructional system for teaching and learning about industrial robots consists of the following parts.

2.1 Establishing a Real-Virtual Integrated 3D Teaching and Learning Space

The real-virtual integrated teaching and learning space is based on the visualization ability of MR technology. MR recognizes and obtains spatial information from a real environment through sensors, and it integrates 3D virtual objects into the environment in real-time. In this way, the MR-based instructional system transforms the abstract textual information of instructional materials into visualizations in a 3D learning space. Meanwhile, the system simulates real-time interactions in a real-world learning space and provides abundant digital resources [6].

2.2 Reconstructing Complex Spatial Structures

The reconstruction of spatial structures reproduces the complex spatial structures of industrial robots accurately through 3D modeling. Also, the knowledge points of the course are integrated into the virtual reproductions. That is, the spatial structure reconstruction integrates abstract knowledge points into specific models and provides massive digital learning resources, including text, graphs, videos, and 3D models. In this way, the information is presented in a more contextualized and straightforward manner, thus reducing the cognitive load on the students. In addition, interaction cues are designed [7]. Virtual guidance, such as dialog boxes and voice prompts, is provided in various interactions to guide the students to learn through the instructional system. Based on this, the students can master the knowledge and learn skills more straightforwardly, and a more effective environment is provided for autonomous learning.

2.3 Providing Diversified Interaction Approaches

The system provides diversified interaction approaches, including auditory, haptic, and visual sensory experiences. The students can walk freely in the space and change their angle of observation. Meanwhile, the students can manipulate virtual objects with the assistance of virtual tools (such as magnifiers and scales) [8]. They can interact with the virtual objects through body motions, e.g., controlling virtual objects through different hand gestures [9]. Besides, the students can gain practical skills by using various virtual tools. The system also supports multi-person interactions, team-based training, and inter-team collaboration, helping the students to improve their teamwork and practical skills.

2.4 Building a Module for Managing the Entire Teaching and Learning Process

The teaching and learning process focuses on students’ active learning under the teachers’ guidance. The teacher assigns learning tasks according to the key and difficult points of the learning modules. Then, the teacher evaluates the students’ current level of thinking according to their use of the equipment and records their interests. Besides, the teacher provides supplementary explanations according to the students’ learning progress and asks questions during the use of the equipment. In this way, the students’ knowledge acquisition is ensured [10]. The naked-eye view and the MR view can be synchronized throughout the process, and the instructional content can be live-streamed or recorded for playback. The teacher can also analyze the students’ understanding of the intended knowledge and their ability to put it into practice based on their feedback and test results. The instructional content can then be adjusted promptly to enable a more targeted teaching and learning process [11].

3 Detailed Design of MR Instructional System

In this study, the instructional system was developed based on industrial robot technology, and the Siasun T6A-series industrial robots were selected as the study object. The system was designed based on the Microsoft HoloLens-2 head-mounted MR device, and it involves the Unity engine, the Visual Studio 2017 integrated development environment, the Autodesk Maya software platform, and Adobe multimedia design software. Besides, a C# code framework was established to satisfy the requirements for interactive functions and data transmission, and the system data were stored in JSON format. The implementation of the major functions of the MR-based instructional system includes the design of instructional content and functions, the establishment of a library of 3D models, user-interface creation, and the design of interactive functions.
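The paper does not publish the JSON schema used for the system data. As a minimal sketch, assuming a hypothetical record with the fields `name`, `description`, `position`, and `disassembly_order`, component data could be serialized and restored as shown below. Python is used here only to keep the illustration compact and self-contained; the system itself is written in C# for Unity, and none of these names come from the original implementation.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class ComponentRecord:
    # All field names are hypothetical; the paper does not give its schema.
    name: str
    description: str
    position: List[float]      # x, y, z placement in the scene
    disassembly_order: int     # step at which the part is removed


def save_components(records):
    """Serialize component records to a JSON string."""
    return json.dumps([asdict(r) for r in records], ensure_ascii=False, indent=2)


def load_components(text):
    """Rebuild component records from a JSON string."""
    return [ComponentRecord(**item) for item in json.loads(text)]


# Illustrative data only; these are not the actual T6A component names.
records = [
    ComponentRecord("base", "Fixed base of the arm", [0.0, 0.0, 0.0], 3),
    ComponentRecord("joint_1", "First rotary joint", [0.0, 0.2, 0.0], 2),
]
text = save_components(records)
restored = load_components(text)
```

Keeping the record flat and free of engine-specific types is one way to let the same file be read by both the authoring tools and the headset application.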

3.1 Instructional Content and Functions

The instructional content and functional modules of the industrial robot were designed based on the key/difficult knowledge points. They are described as follows.

3.1.1 Understanding and Mastering the Structures of the Components in the Siasun Industrial Robots

The 3D structures of the T6A-series industrial robots were visualized based on the analysis of their characteristics and structures. The visualizations help students to understand the detailed internal structures and principles of the components.

3.1.2 Simulating the Assembly and Disassembly Processes

The instructional system developed in this study enables students to perform simulation tasks in a realistic interactive environment and to assemble and disassemble the robot modules with virtual manipulation tools. In this way, the students’ understanding of the components is strengthened, and they can better master the relevant knowledge of the industrial robot. The system provides videos, text, graphics, and 3D animations [12] to guide the students to explore the underlying principles of the industrial robot, thereby improving their practical skills and task-specific research capabilities. Fig. 1 shows the functional design of the instructional system.

Figure 1: The framework of the system function

The functions of the instructional system are mainly provided by two modules: the structure cognition module and the contextual interaction module. The structure cognition module supports the following functions: the display of 3D models, extraction of components, display of information about the robots, and assembly and disassembly of various components. The models of the industrial robots can be placed anywhere in the real environment according to a user’s instructions. The user can manipulate a model by moving and rotating it for observation, assembling and disassembling it, and extracting individual components. The system dynamically displays descriptions and detailed explanations (including text, voice, and audio explanations) of the user-selected components. The contextual interaction module supports the following functions: virtual contextual dialogs, guidance prompts for manipulations, and photographic recording. These text-mediated and voice-mediated interactive functions enable students to learn independently and strengthen their understanding and memory of the instructional content.

3.2 Establishing a Library of Robot Component Models

The detailed component information was mainly obtained from accurate data on the Siasun T6A-series industrial robots. The entire industrial robot system was modeled based on the collected and sorted graphical, textual, and video data [13]. Three-dimensional modeling was performed in Maya. The modeling preserved the accuracy of the model dimensions, reduced the number of faces, and optimized the wire-frame structure. The resulting models were texture-mapped according to the defined UV coordinates, and the high- and low-poly models were stored separately.

3.2.1 High-precision Models of Real Robots

High-precision models enable the students to perceive the volume and mass of real objects in the physical environment and understand the details of their complex internal structures by comparing the real objects with the corresponding virtual models.

3.2.2 Low-precision Models of Real Robots

The use of low-precision models reduces system overhead and improves the response speed. Small models whose internal structures are not visible during display were replaced with low-precision models. In this way, system performance is improved without affecting the user experience.
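The replacement rule described above can be sketched as a simple selection function. This is only an illustration under stated assumptions: the size threshold `SMALL_PART_LIMIT_M`, the `ModelEntry` fields, and the part names are hypothetical, and the paper does not say how the choice between high- and low-precision models was implemented in Unity.

```python
from dataclasses import dataclass

SMALL_PART_LIMIT_M = 0.05  # hypothetical size threshold, in metres


@dataclass
class ModelEntry:
    name: str
    size_m: float             # largest dimension of the part
    internals_visible: bool   # True if the display exposes internal structure


def choose_variant(entry: ModelEntry) -> str:
    """Return 'low' for small parts whose internals stay hidden, else 'high'."""
    if entry.size_m < SMALL_PART_LIMIT_M and not entry.internals_visible:
        return "low"
    return "high"


# Illustrative library; part names are placeholders, not from the paper.
library = [
    ModelEntry("reducer", 0.12, True),
    ModelEntry("bolt_m6", 0.02, False),
    ModelEntry("encoder", 0.04, True),
]
variants = {e.name: choose_variant(e) for e in library}
```

Here only `bolt_m6` is mapped to the low-precision variant, because it is both small and never shown with its internals exposed.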

Finally, the models were optimized and integrated, and the 3D models, texture maps, animations, and other virtual information were sorted and saved as FBX files. The model resources and 3D animations, including materials and light modules, were configured in the Unity engine.

3.3 Design of Interactive Functions

The underlying control system and the relevant instructional applications created using the system development software were uploaded to HoloLens-2. The device runs these applications and captures the 3D structural information from the physical world, the position and posture information of the student, and the student’s instructions (gazes, hand gestures, and speech) for interactions through the built-in sensors [14]. Then, it projects a created virtual-real integrated scenario onto its holographic lens in real-time.

Specific functional modules were developed for the structure cognition module and the contextual interaction module. The interactive system based on HoloLens-2 was developed with the Unity 2019.3.12 engine, and Visual Studio 2017 on a 64-bit Windows 10 computer served as the development tool for the interaction prototype. The installation packages provided by Microsoft for developing MR applications on the HoloLens-2 platform were used. First, because the camera is used to track the position of the user’s head and render the graphics in real time in Unity, the configuration of the main camera in Unity was modified [15] to be compatible with HoloLens-2. After parameter matching and basic configuration, programs were written in C# to handle the spatial scan data and control human-machine interactions. Fig. 2 illustrates the key techniques for spatial cognition and human-machine interaction involved in system development.

Figure 2: The key technologies involved in interactive function design

3.3.1 Spatial Cognition Technology

Appropriately placing holograms requires correct recognition of the spatial information of the real world. The instructional system developed in this study uses the spatial mapping application programming interface (API) [16] in Unity to recognize the real environment, place virtual objects, and resolve the occlusion relationships between real and virtual objects. First, the SpatialPerception capability was enabled in Unity, and a SurfaceObserver object was initialized for the space in which spatial mapping was performed [17]. For each SurfaceObserver object, the size and shape of the space to be observed were specified for data collection [18]. The system converted the spatial mesh data obtained from scanning into planes, which were later used as the base surfaces on which virtual objects were created. This step is critical to identifying the occlusion relationships between real and virtual objects [19]. To enhance the user’s experience, text and voice prompts were built into the system to prompt the user to define the range to be scanned and the user’s current position. Additionally, the scanned region was visualized, and visual feedback was provided to the user by applying different textures to the spatial mapping mesh [20]. The appearance of a visible wire-frame in the view indicates that scanning is complete. Fig. 3 illustrates the interaction process of the spatial cognitive teaching mode.

Figure 3: Interaction process of spatial cognitive teaching mode
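The step of turning scanned mesh data into planes can be illustrated with a simplified stand-in. The sketch below is not the Unity spatial mapping API and not the authors' code: it assumes a y-up coordinate system, a hypothetical tilt tolerance `max_tilt_deg`, and a mesh given as plain triangles, and it estimates the height of a horizontal surface (such as a floor or tabletop) as the area-weighted mean height of the near-horizontal triangles.

```python
import math


def triangle_normal_and_area(a, b, c):
    """Return the unit normal and the area of triangle abc (points as 3-tuples)."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        return (0.0, 0.0, 0.0), 0.0  # degenerate triangle
    return (nx / length, ny / length, nz / length), 0.5 * length


def horizontal_plane_height(triangles, max_tilt_deg=10.0):
    """Area-weighted mean y of triangles whose normal is close to vertical.

    Returns None when the mesh contains no near-horizontal triangle.
    """
    cos_limit = math.cos(math.radians(max_tilt_deg))
    total_area = 0.0
    weighted_y = 0.0
    for a, b, c in triangles:
        normal, area = triangle_normal_and_area(a, b, c)
        if area > 0.0 and abs(normal[1]) >= cos_limit:
            weighted_y += area * (a[1] + b[1] + c[1]) / 3.0
            total_area += area
    return weighted_y / total_area if total_area > 0.0 else None


# Toy scan: two floor triangles 1.5 m below the headset and one wall triangle.
scan = [
    ((0.0, -1.5, 0.0), (1.0, -1.5, 0.0), (0.0, -1.5, 1.0)),
    ((1.0, -1.5, 0.0), (1.0, -1.5, 1.0), (0.0, -1.5, 1.0)),
    ((0.0, -1.5, 0.0), (1.0, -1.5, 0.0), (0.0, 0.0, 0.0)),
]
floor_y = horizontal_plane_height(scan)
```

A plane found this way could serve as the base surface on which a robot model is placed; the wall triangle is ignored because its normal is horizontal.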

3.3.2 Human-Machine Interaction Technology

The system provides three interaction approaches, which were designed based on the interactive functions of HoloLens-2: gaze, hand gesture recognition, and voice control [21]. When HoloLens-2 is worn on the user’s head, a ray of light is cast in the direction of the user’s gaze and may hit target objects in the field of view. When the user looks at an interactable object, its outline lights up and flashes. The outline returns to its normal state when the gaze moves away from the object. The API provided by HoloLens-2 enables the design of many hand gestures, including single click, double click, long press, and drag [22]. Meanwhile, GestureRecognizer allows hand gestures to be defined that trigger different events. Voice recognition is realized by using GrammarRecognizer to designate the target words to be recognized, and OnPhraseRecognized is used to trigger the corresponding events [23]. For example, the system is configured to allow voice control of the disassembly display of various modules. When the user selects the target object to be disassembled with the gaze ray and gives the voice instruction “chaijie” (disassemble), the system invokes the instruction code for module disassembly and presents the animation of the disassembly process. When an important event or object lies outside the space visible to the user during user-hologram interactions, prompts such as arrows, light tracers, virtual guides, pointers, and spatial audio direct the user to the important content. Fig. 4 illustrates the interaction process of the situational task teaching mode.

Figure 4: Interaction process of situational task teaching mode
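The combination of gaze selection and a voice command can be sketched as follows. This is an illustration only, written outside Unity: it assumes each interactable object is approximated by a hypothetical bounding sphere, uses a standard ray-sphere test to find the nearest gazed object, and dispatches a recognized phrase to a handler. The class `VoiceCommands`, the object names, and the handler are assumptions made for the example and are not taken from the system.

```python
import math


def gaze_hit_distance(origin, direction, center, radius):
    """Distance along a normalized gaze ray to a sphere, or None on a miss.

    Simplification: a ray that starts inside the sphere is treated as a miss.
    """
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    b = ox * direction[0] + oy * direction[1] + oz * direction[2]
    c = ox * ox + oy * oy + oz * oz - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    return t if t >= 0.0 else None


def gazed_object(origin, direction, objects):
    """Return the name of the nearest object hit by the gaze ray, or None."""
    best_name, best_t = None, None
    for name, (center, radius) in objects.items():
        t = gaze_hit_distance(origin, direction, center, radius)
        if t is not None and (best_t is None or t < best_t):
            best_name, best_t = name, t
    return best_name


class VoiceCommands:
    """Maps recognized phrases to handlers that act on the gazed object."""

    def __init__(self):
        self._handlers = {}

    def register(self, phrase, handler):
        self._handlers[phrase] = handler

    def on_phrase_recognized(self, phrase, target):
        handler = self._handlers.get(phrase)
        if handler is None or target is None:
            return None  # unknown phrase or nothing under the gaze
        return handler(target)


# Hypothetical scene: bounding spheres as (center, radius) in metres.
scene = {"joint_1": ((0.0, 0.0, 2.0), 0.3), "base": ((0.0, 0.0, 4.0), 0.5)}
commands = VoiceCommands()
commands.register("chaijie", lambda part: "disassemble:" + part)

target = gazed_object((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene)
result = commands.on_phrase_recognized("chaijie", target)
```

Requiring both a gazed target and a known phrase before any handler runs mirrors the confirm-then-speak sequence described above, so a stray utterance with nothing selected has no effect.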

During the development of the interactive functions, the system can be connected to HoloLens-2 by enabling Window > XR > Holographic Emulation in Unity [24]. In this way, HoloLens-2 can access the visualizations in Unity for real-time preview. The final applications were deployed to HoloLens-2 via a universal serial bus (USB) connection or remotely [25], and they were tested on the device. HoloLens-2 projects the environment visible to the user and the user interactions onto a computer screen, which allows teachers and students to view the interactions synchronously in the same learning space, thus facilitating bi-directional communication and collaboration. Besides, all classroom interactions were automatically saved to the computer so that they can be reviewed later. Fig. 5 illustrates the interface of the instructional system developed in this study.

Figure 5: The interface of the teaching system

Two kinds of tests were carried out: a scenario test and an abnormal test.

(1) Scenario test. The system was tested in the order of the normal program flow to check whether the interactive functions work as intended. Fig. 6 illustrates the test flow. The test results show that the current system modules conform to the business logic and that no bugs were found in any interactive function.

(2) Abnormal test. Following the business process, incorrect operations were performed to check whether the system raises exceptions. The test results show that the current system raises no exceptions under incorrect operation.

Figure 6: The test flow
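The difference between the two kinds of test can be illustrated on a toy model. The sketch below does not reproduce the test flow in Fig. 6: it assumes a hypothetical `DisassemblySession` that accepts parts only in a fixed order, and it shows a scenario test that follows the normal flow and an abnormal test that applies incorrect operations and checks that they are rejected without an exception.

```python
class DisassemblySession:
    """Tracks which parts have been removed; the required order is hypothetical."""

    def __init__(self, order):
        self._order = list(order)
        self._next = 0

    def remove(self, part):
        """Return True if the part is the next one allowed; otherwise leave state unchanged."""
        if self._next < len(self._order) and part == self._order[self._next]:
            self._next += 1
            return True
        return False

    @property
    def finished(self):
        return self._next == len(self._order)


ORDER = ["cover", "motor", "reducer"]  # placeholder part names


def scenario_test():
    """Follow the normal flow and expect every step to succeed."""
    session = DisassemblySession(ORDER)
    return all(session.remove(part) for part in ORDER) and session.finished


def abnormal_test():
    """Apply incorrect operations and expect them to be rejected cleanly."""
    session = DisassemblySession(ORDER)
    wrong_order_rejected = not session.remove("reducer")
    unknown_part_rejected = not session.remove("gearbox")
    still_usable = session.remove("cover")  # normal flow continues afterwards
    return wrong_order_rejected and unknown_part_rejected and still_usable


scenario_ok = scenario_test()
abnormal_ok = abnormal_test()
```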

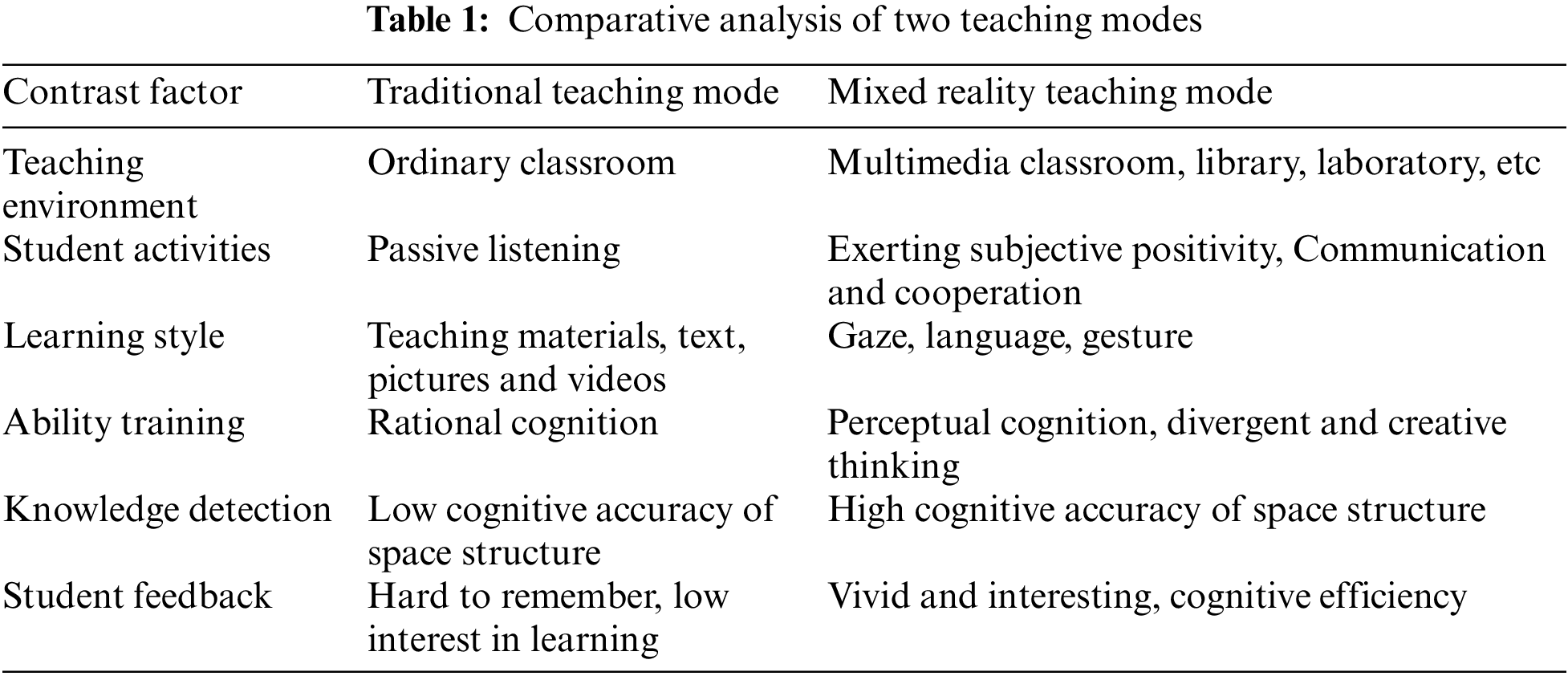

In traditional teaching and learning about industrial robots, it is difficult for students to understand the complex internal structures of robots. To address this issue, an MR-based instructional system was developed in this study for teaching and learning about industrial robots. Taking the Siasun T6A-series industrial robots as a case study, 3D digital reproductions were created in Maya and the interactive functions were developed in Unity, resulting in a HoloLens-2-based instructional system with which students can understand the structures and principles of the robots. Tab. 1 shows the results of a comparative analysis of the traditional teaching mode and the mixed-reality teaching mode.

This instructional system transforms information from traditional two-dimensional screens and books into realistic stereoscopic visualizations, which helps to strengthen the students’ understanding of the internal structures of industrial robots and facilitates the integration of theoretical and practical learning. The system provides a rich and diversified range of human-machine interactions that make the learning process more pleasurable. This improves the enthusiasm for learning and the self-study ability of students. With ongoing improvements in technology and in-depth research, MR technology will have broader and deeper future applications in the education field.

Acknowledgement: We would like to thank TopEdit (https://topeditsci.com) for providing linguistic assistance during the preparation of this manuscript.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. W. Fang, F. H. Zhang, V. S. Sheng and Y. W. Ding, “A method for improving CNN-based image recognition using DCGAN,” Computers, Materials & Continua, vol. 57, no. 1, pp. 167–178, 2018.

2. W. Fang, F. H. Zhang, V. S. Sheng and Y. W. Ding, “SCENT: A new precipitation nowcasting method based on sparse correspondence and deep neural network,” Neurocomputing, vol. 448, pp. 10–20, 2021.

3. M. Alkhatib, “An effective and secure quality assurance system for a computer science program,” Computer Systems Science and Engineering, vol. 41, no. 3, pp. 975–995, 2022.

4. Y. Wang, “Hybrid efficient convolution operators for visual tracking,” Journal on Artificial Intelligence, vol. 3, no. 2, pp. 63–72, 2021.

5. M. A. Nauman and M. Shoaib, “Identification of anomalous behavioral patterns in crowd scenes,” Computers, Materials & Continua, vol. 71, no. 1, pp. 925–939, 2022.

6. Y. Dai and Z. Luo, “Review of unsupervised person re-identification,” Journal of New Media, vol. 3, no. 4, pp. 129–136, 2021.

7. S. Mahmood, “Review of internet of things in different sectors: Recent advance, technologies, and challenges,” Journal of Internet of Things, vol. 3, no. 1, pp. 19–26, 2021.

8. S. W. Han and D. Y. Suh, “A 360-degree panoramic image inpainting network using a cube map,” Computers, Materials & Continua, vol. 66, no. 1, pp. 213–228, 2021.

9. R. Wazirali, “Aligning education with vision 2030 using augmented reality,” Computer Systems Science and Engineering, vol. 36, no. 2, pp. 339–351, 2021.

10. M. Younas, A. Shukri and M. Arshad, “Cloud-based knowledge management framework for decision making in higher education institutions,” Intelligent Automation & Soft Computing, vol. 31, no. 1, pp. 83–99, 2022.

11. S. Xia, “Application of Maya in film 3D animation design,” in 2011 3rd Int. Conf. on Computer Research and Development, Shanghai, China, pp. 357–360, 2011.

12. G. Vigueras and J. M. Orduña, “On the use of GPU for accelerating communication-aware mapping techniques,” The Computer Journal, vol. 59, no. 6, pp. 836–847, 2016.

13. A. Devon, H. Tim, A. Freeha, S. Kim, W. Derrick et al., “Education in the digital age: Learning experience in virtual and mixed realities,” Journal of Educational Computing Research, vol. 59, no. 5, pp. 795–816, 2021.

14. E. Nea, “The animated document: Animation’s dual indexicality in mixed realities,” Animation, vol. 15, no. 3, pp. 260–275, 2020.

15. P. Cegys and J. Weijdom, “Mixing realities: Reflections on presence and embodiment in intermedial performance design of blue hour VR,” Theatre and Performance Design, vol. 6, no. 2, pp. 81–101, 2020.

16. S. W. Volkow and A. C. Howland, “The case for mixed reality to improve performance,” Performance Improvement, vol. 57, no. 4, pp. 29–37, 2018.

17. M. Parveau and M. Adda, “3iVClass: A new classification method for virtual, augmented and mixed realities,” Procedia Computer Science, vol. 141, no. 1, pp. 263–270, 2018.

18. G. Guilherme, M. Pedro, C. Hugo, M. Miguel and B. Maximino, “Systematic review on realism research methodologies on immersive virtual, augmented and mixed realities,” IEEE Access, vol. 9, no. 1, pp. 89150–89161, 2021.

19. C. Sara, C. Fabrizio, C. Nadia, C. Simone, P. P. Domenico et al., “Hybrid simulation and planning platform for cryosurgery with Microsoft HoloLens,” Sensors, vol. 21, no. 13, pp. 4450–4450, 2021.

20. S. Keskin and T. Kocak, “GPU-based gigabit LDPC decoder,” IEEE Communications Letters, vol. 21, no. 8, pp. 1703–1706, 2017.

21. W. Lee and S. Hong, “28 GHz RF front-end structure using CG LNA as a switch,” IEEE Microwave and Wireless Components Letters, vol. 30, no. 1, pp. 94–97, 2020.

22. S. G. Maria, C. Alessandro, L. Alfredo and O. Francesco, “Augmented reality in industry 4.0 and future innovation programs,” Technologies, vol. 9, no. 2, pp. 33–33, 2021.

23. C. Valentina, G. Benedetta, G. Angelo and M. Danilo, “Enhancing qualities of consciousness during online learning via multisensory interactions,” Behavioral Sciences, vol. 11, no. 5, pp. 57–57, 2021.

24. H. D. Jing, X. Zhang, X. B. Liu, X. Y. Sun and X. B. Ma, “Research on emergency escape system of underground mine based on mixed reality technology,” Arabian Journal of Geosciences, vol. 14, no. 8, pp. 1–9, 2021.

25. Y. Z. Dan, Z. J. Shen, Y. Y. Zhu and L. Huang, “Using mixed reality (MR) to improve on-site design experience in community planning,” Applied Sciences, vol. 11, no. 7, pp. 3071–3071, 2021.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.