Computers, Materials & Continua
DOI:10.32604/cmc.2022.029640

Article

Research on the Design Method of Equipment in Service Assessment Subjects

Military Exercise and Training Center, Army Academy of Armored Forces, Beijing, 100072, China

*Corresponding Author: Yuhang Zhou. Email: zhouyuhang0907@foxmail.com

Received: 01 March 2022; Accepted: 01 April 2022

Abstract: Considering equipment activities such as combat readiness, training, exercises and management, this paper proposes that the design of equipment in-service assessment subjects should follow the principles of combination, phasing and operability. A design method for in-service assessment subjects based on the combined trial and training mode is proposed. Based on the actual use, management and training of the equipment and on the established indicator system, the army’s bottom-level equipment activity subjects and the bottom-level assessment indicators are mapped and analyzed. Through multiple rounds of iteration, the mapping relationship between in-service assessment indicators and military equipment activity subjects is established. Finally, the equipment activity subjects from which data reflecting the bottom-level assessment indicators can be collected are generated as in-service assessment subjects. The orthogonal test method is used to optimize the samples in the assessment subjects and form an in-service assessment implementation plan. Taking a certain type of armored infantry fighting vehicle as an example, the sample optimization design of the initially generated in-service assessment subjects is analyzed. The work provides methods and ideas for carrying out in-service assessment.

Keywords: Equipment; in-service assessment; subject design; mapping

1 Introduction

The equipment in-service assessment subject is the basic unit of, and an important basis for, the organization and implementation of in-service assessment. It is an in-service assessment activity carried out mainly in combination with military combat readiness, training, exercises and equipment management. Assessment indicators, military equipment activities, assessment data sources and other core contents must all be placed in correspondence with assessment subjects. The design of equipment in-service assessment subjects is therefore the key link in converting in-service assessment indicators into in-service assessment contents. By resolving the relationship between trial and training, scientific and feasible assessment subjects are formed [1–4]. This paper mainly studies combined in-service assessment, which does not set up assessment subjects separately but forms reasonable and feasible assessment subjects in combination with military equipment activity subjects. Existing research has not examined in depth what in-service assessment subjects based on the combination of trial and training are, what they contain, or how they should be combined. It is therefore urgent to clarify the internal relationship between in-service assessment and military training activities, and to establish the internal logic and method of in-service assessment subject design.

Research on subject design is mainly reflected in research on the optimal design of experimental samples. Optimal design of experimental samples refers to the planning and design of experimental factors, factor levels and combinations of factor levels, and to the concrete arrangement of the experimental subjects. A large number of design methods for factor level combinations have been studied, and methods such as orthogonal experimental design, uniform experimental design, sequential design and Bayesian design have been applied in the field of equipment test and evaluation. Cao Yuhua focuses on the basic principles and scope of application of the comprehensive test method, single factor test method, orthogonal experimental design method and uniform experimental design method, as well as their specific applications in the field of equipment testing [5]. Wu Xiaoyue studied the selection of equipment test variables and their levels in equipment statistical experimental design, focusing on the specific application of four methods: orthogonal experimental design, uniform experimental design, statistical verification experimental design and sequential experimental design. This work provides a reference for the selection of equipment in-service assessment variables and the design of their levels [6]. Chang Xianqi analyzed statistical design methods in weapon equipment experiments, focusing on the statistical problems encountered in experimental design, such as parameter estimation, hypothesis testing and regression analysis [7]. He Yingping analyzed statistical methods of experimental design in the field of polymer material testing, focusing on statistical inference, variance analysis, orthogonal experimental design and regression analysis, which is a useful reference for handling similar statistical problems [8].

2 Principles and Ideas for the Design of In-service Assessment Subjects

Combined in-service assessment subject design is an orderly test process carried out in combination with military equipment activities. Its purpose is to obtain collectable data that meet the evaluation requirements with a smaller test sample size, without affecting the normal equipment activities of the military. The design of combined in-service assessment subjects should therefore follow three principles:

1. Combination. Combined in-service assessment generally does not set assessment subjects separately, but is organized and implemented in combination with military combat readiness, training, exercises, equipment management and other activities. The design of assessment subjects should therefore be based on the idea of “integration of trial and training”, be closely combined with military equipment activities, and form scientific and reasonable assessment subjects through the mutual mapping between assessment indicators and military equipment activity subjects.

2. Phased. The equipment activities of the army include not only continuous activities (such as daily combat readiness, equipment management, etc.), but also phased activities (such as training, exercises, etc.). Therefore, the design of assessment subjects follows the phased principle and forms phased assessment subjects in combination with military equipment activities, so as to obtain relevant data on the basis of assessment subjects and complete in-service assessment tasks.

3. Operability. The design of in-service assessment subjects should consider factors such as the actual operation of the army and the demand for data collection. It should collect comprehensive evaluation data without affecting the process of army operations. The resulting in-service assessment subjects therefore need to be operable, so that in-service assessment can be implemented without affecting military equipment activities and the assessment subjects can be completed smoothly.

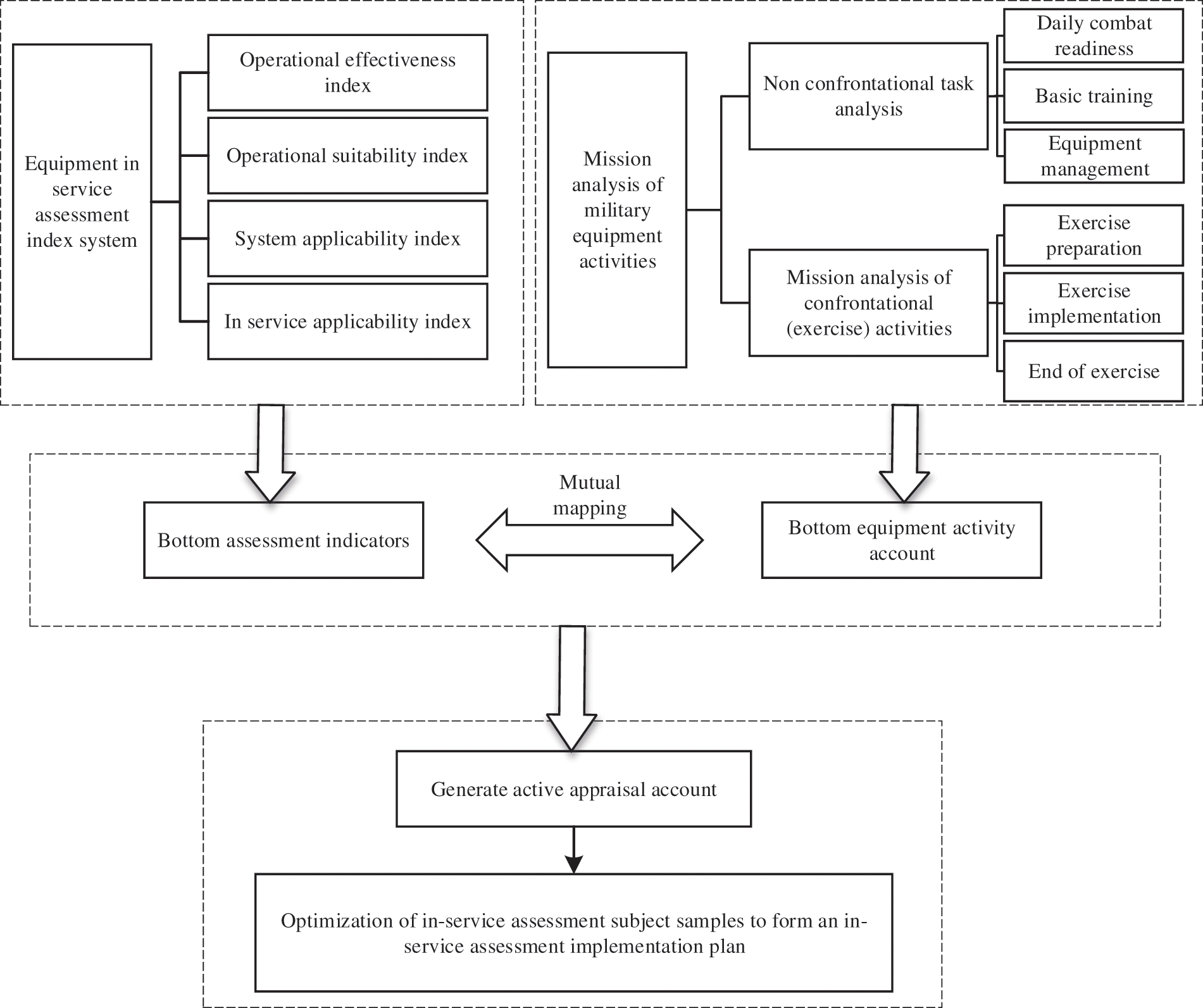

The general idea of the design of equipment in-service assessment subjects is as follows. First, the activities and tasks of a certain type of equipment are analyzed, including non-confrontational activities and tasks (such as daily combat readiness, basic training and equipment management) and confrontational (exercise) activities and tasks, to obtain the bottom-level equipment activity subjects of the army. Second, based on the constructed index system of operational effectiveness, operational applicability, system applicability and in-service applicability, the indicators are decomposed step by step from the key problems of in-service assessment down to the bottom-level assessment indicators [9–12]. Third, the bottom-level equipment activity subjects and bottom-level assessment indicators are mapped to each other, and the equipment activity subjects from which data reflecting the bottom-level assessment indicators can be collected are preliminarily generated as in-service assessment subjects. Finally, the samples in the assessment subjects are optimized using the orthogonal experimental design method to form a highly operable in-service assessment implementation scheme. The general idea of in-service assessment subject design is shown in Fig. 1.

Figure 1: Design ideas for in-service assessment subjects

3 Design Method of In-Service Assessment Subjects Based on the Combination of Trial and Training Mode

After equipment is fielded to the troops, a series of activities such as daily combat readiness, training, confrontation exercises and management must be carried out to keep the equipment in good condition and to improve the level of man-machine integration training and comprehensive support ability, and thereby to improve combat effectiveness. Military equipment activity is the carrier of combined in-service assessment and provides real and reliable original data for it. In-service assessment uses these data to obtain useful information about in-service equipment. The in-service assessment based on the combination of trial and training mode is therefore carried out in combination with military equipment activities.

3.1 Establish the Mapping Relationship Between In-service Assessment Indicators and Military Equipment Activity Subjects

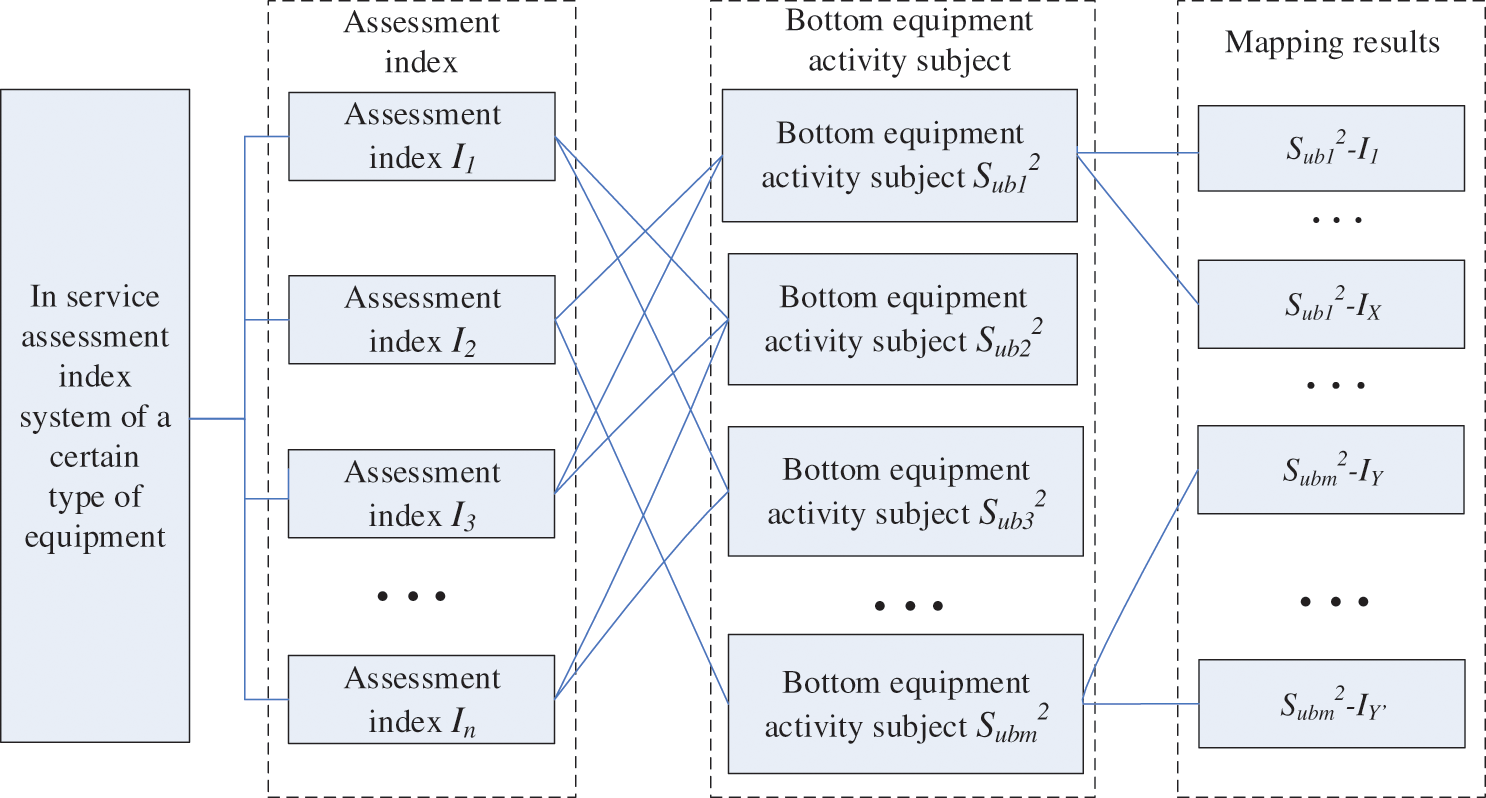

After the military equipment activity tasks have been analyzed and decomposed into multi-level military equipment activity subjects, the in-service assessment indicators must be mapped to the bottom-level military equipment activity subjects in order to determine the data collection sources and form the in-service assessment subjects. The mapping between assessment indicators and bottom-level equipment activity subjects may be one to many, many to one or one to one [13]. As shown in Fig. 2, the constructed assessment indicators are mapped one by one to the bottom-level equipment activity subjects. After the mapping is completed, it is checked whether the mapped indicators are clearly understood and easy to collect, and the indicators are further decomposed according to the content of the equipment activity subjects until a data set that is convenient to collect is formed. Finally, the equipment activity subjects that map to assessment indicators and allow data to be collected can be used as in-service assessment subjects [14–16].

Figure 2: The process of mapping between in-service assessment indicators and underlying equipment activities

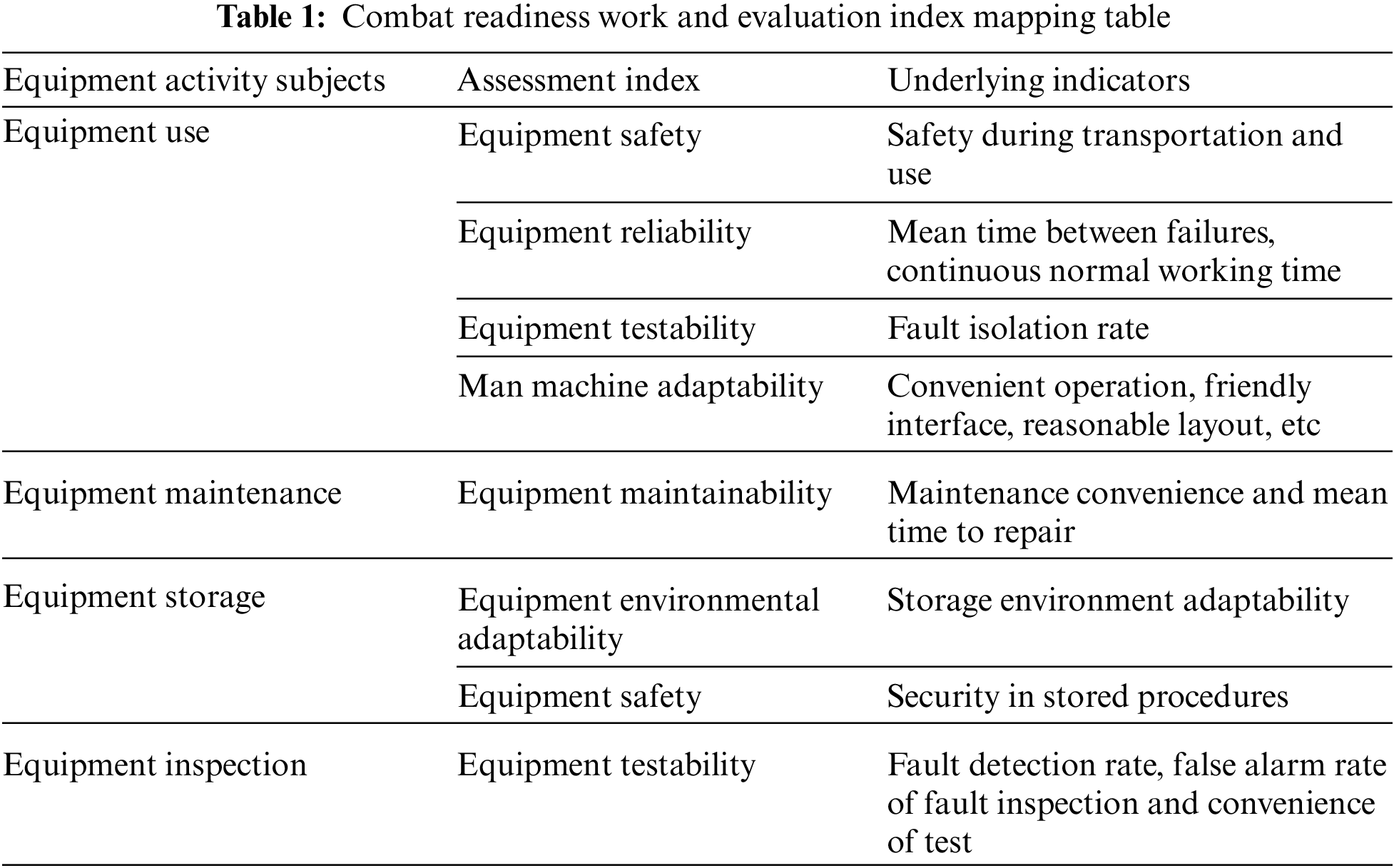

Consider, for example, the mapping relationship between combat readiness work and assessment indicators. Combat readiness work is mainly divided into four secondary equipment activity subjects: equipment use, equipment maintenance, equipment storage and equipment inspection. Given the characteristics of equipment combat readiness work, it mainly corresponds to the indicators for assessing in-service operational applicability, including environmental applicability, equipment safety, equipment testability, equipment maintainability, equipment reliability and man-machine adaptability. The specific mapping relationship is shown in Tab. 1.
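The mapping step can be illustrated with a minimal Python sketch. The subject and indicator names come from the text above, but the individual pairings are hypothetical and are not taken from Tab. 1. The sketch inverts an indicator-to-subject mapping to find which activity subjects can serve as data sources, and flags indicators that have no data source yet and would need further decomposition.

```python
from collections import defaultdict

# Hypothetical mapping: bottom-level assessment indicator -> activity subjects
# in which data for that indicator could be collected. The pairings are
# illustrative assumptions only and do not reproduce Tab. 1.
INDICATOR_TO_SUBJECTS = {
    "equipment reliability": ["equipment use", "equipment inspection"],  # one to many
    "equipment maintainability": ["equipment maintenance"],              # one to one
    "equipment testability": ["equipment maintenance"],                  # many to one
    "environmental applicability": ["equipment use", "equipment storage"],
    "man-machine adaptability": [],  # no data source yet
}


def invert_mapping(indicator_to_subjects):
    """Return activity subject -> sorted list of indicators it can supply data for."""
    subject_to_indicators = defaultdict(list)
    for indicator, subjects in indicator_to_subjects.items():
        for subject in subjects:
            subject_to_indicators[subject].append(indicator)
    return {s: sorted(i) for s, i in subject_to_indicators.items()}


def unmapped_indicators(indicator_to_subjects):
    """Indicators without a data source; candidates for further decomposition."""
    return sorted(i for i, s in indicator_to_subjects.items() if not s)


candidate_subjects = invert_mapping(INDICATOR_TO_SUBJECTS)
for subject, indicators in sorted(candidate_subjects.items()):
    print(f"{subject}: {', '.join(indicators)}")
print("needs further decomposition:", unmapped_indicators(INDICATOR_TO_SUBJECTS))
```

Every activity subject that appears as a key of `candidate_subjects` is a candidate in-service assessment subject, since at least one indicator can be collected from it.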

3.2 Generation of In-service Assessment Subjects

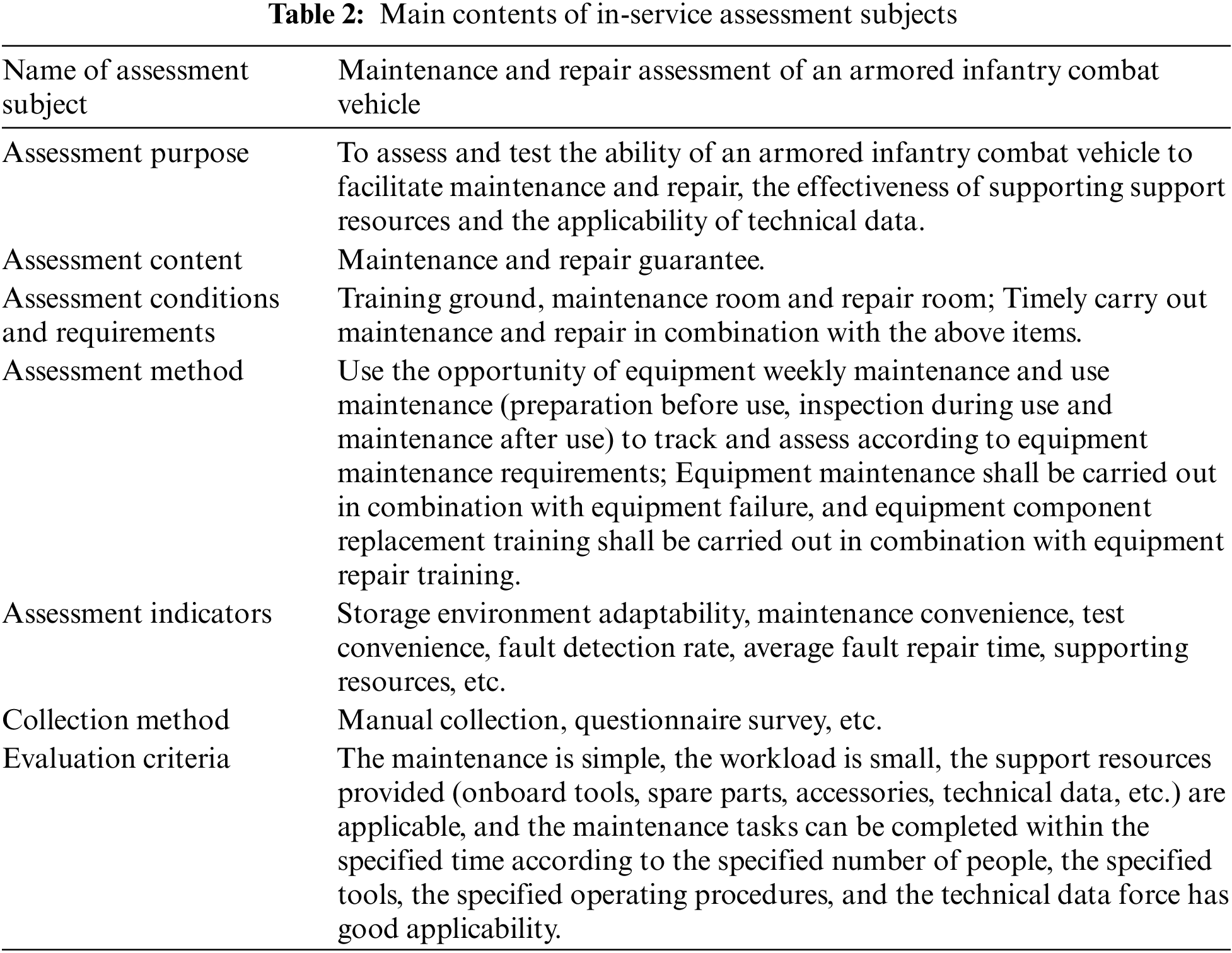

After the military equipment activities are decomposed into specific equipment activity subjects, the mapping relationship between the in-service assessment indicators and the bottom-level equipment activity subjects is determined by the mutual mapping method. The designers of the in-service assessment subjects then convert the equipment activity subjects into in-service assessment subjects, which are the basis on which the military implements the in-service assessment tasks. The main contents of an in-service assessment subject include the assessment subject name, purpose, content, assessment conditions and requirements, assessment methods, assessment indicators, data collection methods and evaluation standards. For example, the in-service assessment subjects generated for the maintenance and repair support of a certain type of armored infantry fighting vehicle are shown in Tab. 2.
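As a rough sketch, the content items listed above could be captured in a simple record type. The class name, field names and the sample values below are assumptions made for illustration; they do not reproduce Tab. 2.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class AssessmentSubject:
    """One in-service assessment subject; field names are hypothetical."""
    name: str
    purpose: str
    content: str
    conditions_and_requirements: str
    assessment_methods: List[str]
    assessment_indicators: List[str]
    data_collection_methods: List[str]
    evaluation_standards: str

    def missing_items(self) -> List[str]:
        """Names of content items that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Placeholder values only, to show how an incomplete subject is detected.
draft = AssessmentSubject(
    name="maintenance support assessment (illustrative)",
    purpose="collect maintainability data during routine maintenance",
    content="scheduled maintenance of the vehicle",
    conditions_and_requirements="carried out during normal unit maintenance",
    assessment_methods=["observation", "record review"],
    assessment_indicators=["equipment maintainability"],
    data_collection_methods=["maintenance log"],
    evaluation_standards="",  # not yet defined
)
print(draft.missing_items())
```

A designer could use such a check to confirm that every generated subject carries all eight content items before it is handed to the unit for implementation.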

4 Sample Optimization Design Based on Orthogonal Design

After the assessment subjects are initially generated, the factors that affect the collected values of the assessment indicators during in-service assessment activities (the assessment factors) can be analyzed based on the assessment indicators that correspond to the bottom-level assessment subjects, which lays the foundation for the optimization of assessment samples. After the assessment factors of the indicators corresponding to each assessment subject have been determined, the combinations of factor levels (the values of the assessment factors) must be studied further, so that each assessment indicator corresponds to one or more factor level combinations. Through the design of factor level combinations, the assessment subject samples are optimized to reduce the number and scale of assessments as far as possible, shorten the assessment cycle and form more specific, targeted and operable assessment subjects.

A large number of design methods for factor level combinations have been studied at home and abroad, such as orthogonal experimental design, uniform experimental design, block experimental design and Latin square experimental design [17–21]. The most commonly used methods are orthogonal experimental design and uniform experimental design. When the number of assessment factor levels is small, the orthogonal experimental design method is preferred; when there are many levels of assessment factors, the uniform experimental design method is preferred. Equipment in-service assessment is organized and implemented in combination with the army’s daily combat readiness, training, exercises and equipment management. The number of assessment factor levels is small, so the orthogonal experimental design method is preferred [22–25]. The general steps of the equipment in-service assessment sample optimization method based on orthogonal design are:

(1) Determine assessment factors and levels

The factors affecting the values of assessment indicators mainly come from two sources. The first is uncontrollable factors, which come from changes in the external environment of the equipment activity, including the terrain environment, weather environment and threat environment. The second is controllable factors, which come from the limitations of assessment methods and conditions, such as gun firing angle, firing state, target distance and target motion state. In equipment in-service assessment, in order to control the assessment scale, each assessment factor is usually assigned two levels.

(2) Select the appropriate orthogonal table

An orthogonal table is generally represented by

① Check the number of assessment factor levels. If the number of assessment factor levels is all 2 or all 3, choose

② Check whether the number of columns in the orthogonal table can accommodate all assessment factors and the interactions between them. Usually, each assessment factor occupies one column; the number of columns occupied by an interaction between assessment factors is related to the number of levels. If the number of levels is

③ Equipment in-service assessment is organized and implemented in combination with the army’s daily combat readiness, training, exercises and equipment management. The assessment scale needs to be controlled, so the smallest orthogonal table that meets the requirements is usually selected. The number of rows of the selected orthogonal table (the number of assessments) “n” is:

For example, a certain assessment has 4 factors and 3 levels. Without considering the interaction between assessment factors, the number of assessments is

(3) Orthogonal table header design

The header design arranges the assessment factors and their interactions on appropriate columns of the orthogonal table. There are two cases. If the interaction between assessment factors needs to be considered, the assessment factors with interactions are arranged first, and the assessment factors without interactions are then arranged on the remaining columns. If the interaction between assessment factors is not considered, the assessment factors can be arranged arbitrarily on the columns of the orthogonal table.

(4) Clarify the assessment scheme

The assessment is organized and implemented according to the assessment samples formed by the columns of the assessment factors. The numbers in each assessment factor column of the orthogonal table (excluding interaction columns) are replaced with the level values of that assessment factor, which yields the implementation scheme of the orthogonal assessment.
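The four steps above can be sketched in Python for the case mentioned in step (2), four factors at three levels with no interactions. Some assumptions should be stated plainly. The row bound used is the common textbook degrees-of-freedom rule, one plus the sum of (levels minus one) over all factors. The table is the standard nine-run array usually written L9(3^4) in the textbook notation L_n(m^k). The source's own notation and formula are not available here, and the factor names `A` to `D` with levels such as `A1` are placeholders.

```python
from collections import Counter
from itertools import combinations, product


def min_runs(levels, interaction_dof=0):
    """Lower bound on rows: 1 + sum of (levels - 1) per factor + interaction d.o.f."""
    return 1 + sum(m - 1 for m in levels) + interaction_dof


def l9_table():
    """Standard L9(3^4) array: columns a, b, a+b, a+2b (mod 3), levels coded 1..3."""
    return [
        [a + 1, b + 1, (a + b) % 3 + 1, (a + 2 * b) % 3 + 1]
        for a, b in product(range(3), repeat=2)
    ]


def is_orthogonal(table):
    """True if every pair of columns contains each level pair equally often."""
    columns = list(zip(*table))
    for c1, c2 in combinations(columns, 2):
        counts = Counter(zip(c1, c2))
        expected_pairs = len(set(c1)) * len(set(c2))
        if len(counts) != expected_pairs or len(set(counts.values())) != 1:
            return False
    return True


def build_scheme(table, factor_levels):
    """Header design without interactions: factor j is placed on column j,
    then each level number is replaced by that factor's level value."""
    names = list(factor_levels)
    return [
        {name: factor_levels[name][row[j] - 1] for j, name in enumerate(names)}
        for row in table
    ]


# Placeholder factors A..D, each with three placeholder level values.
FACTOR_LEVELS = {name: [f"{name}{i}" for i in (1, 2, 3)] for name in "ABCD"}

table = l9_table()
scheme = build_scheme(table, FACTOR_LEVELS)
print("minimum runs:", min_runs([3, 3, 3, 3]))
print("orthogonal:", is_orthogonal(table))
for sample in scheme:
    print(sample)
```

Under these assumptions the scheme contains 9 assessment samples, compared with 3^4 = 81 samples if every factor level combination were assessed.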

5 Example of Optimal Design of In-service Assessment Samples

Taking a certain type of armored infantry fighting vehicle as an example, this section analyzes the sample optimization design of in-service assessment subjects.

(1) Initially formed in-service assessment subjects

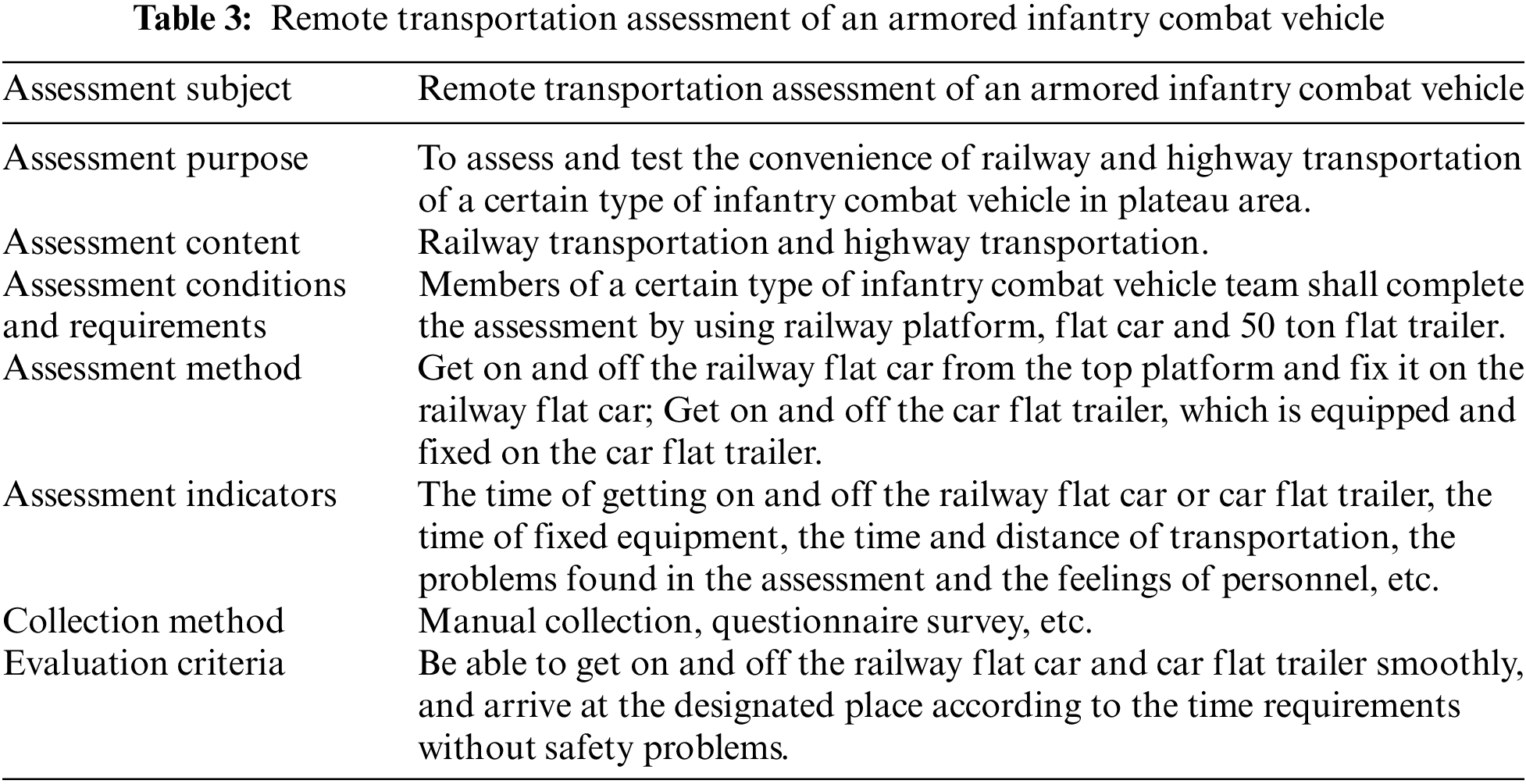

For example, during an exercise, the equipment activity subject of the armored infantry fighting vehicle (long-range tactical mobility) generates an in-service assessment subject (long-range transportation assessment). The specific content is shown in Tab. 3.

(2) Determine assessment factors

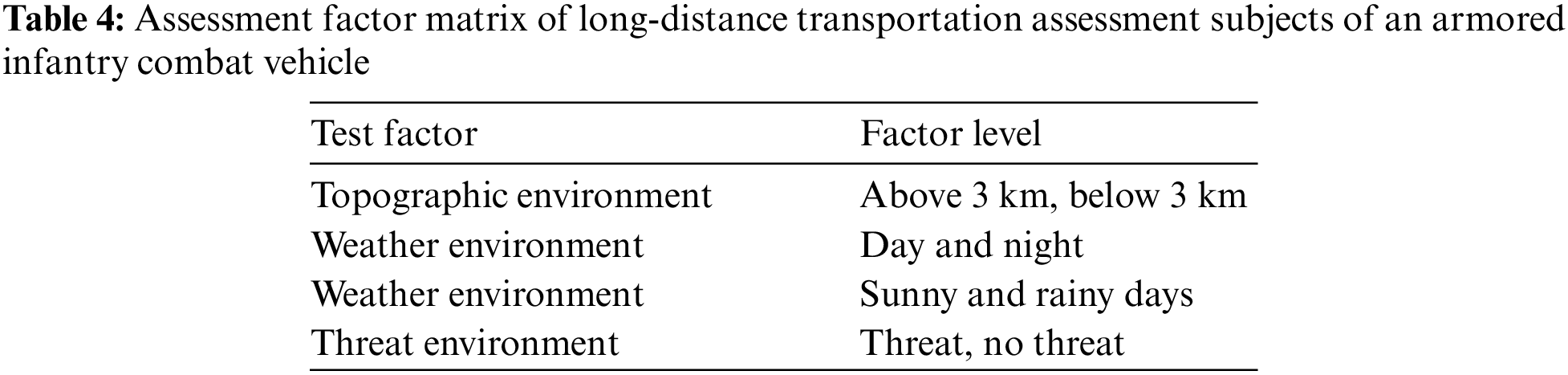

Many factors affect the values of the assessment indicators in the long-range transportation assessment subject of the armored infantry fighting vehicle. They mainly come from changes in the external environment, including the terrain environment, time-of-day environment, weather environment and threat environment. For instance, the time required to unload from the railway flatcar differs between day and night, so the impact of the day and night environment should be considered. The assessment factor matrix is shown in Tab. 4.

(3) Optimal design of assessment samples

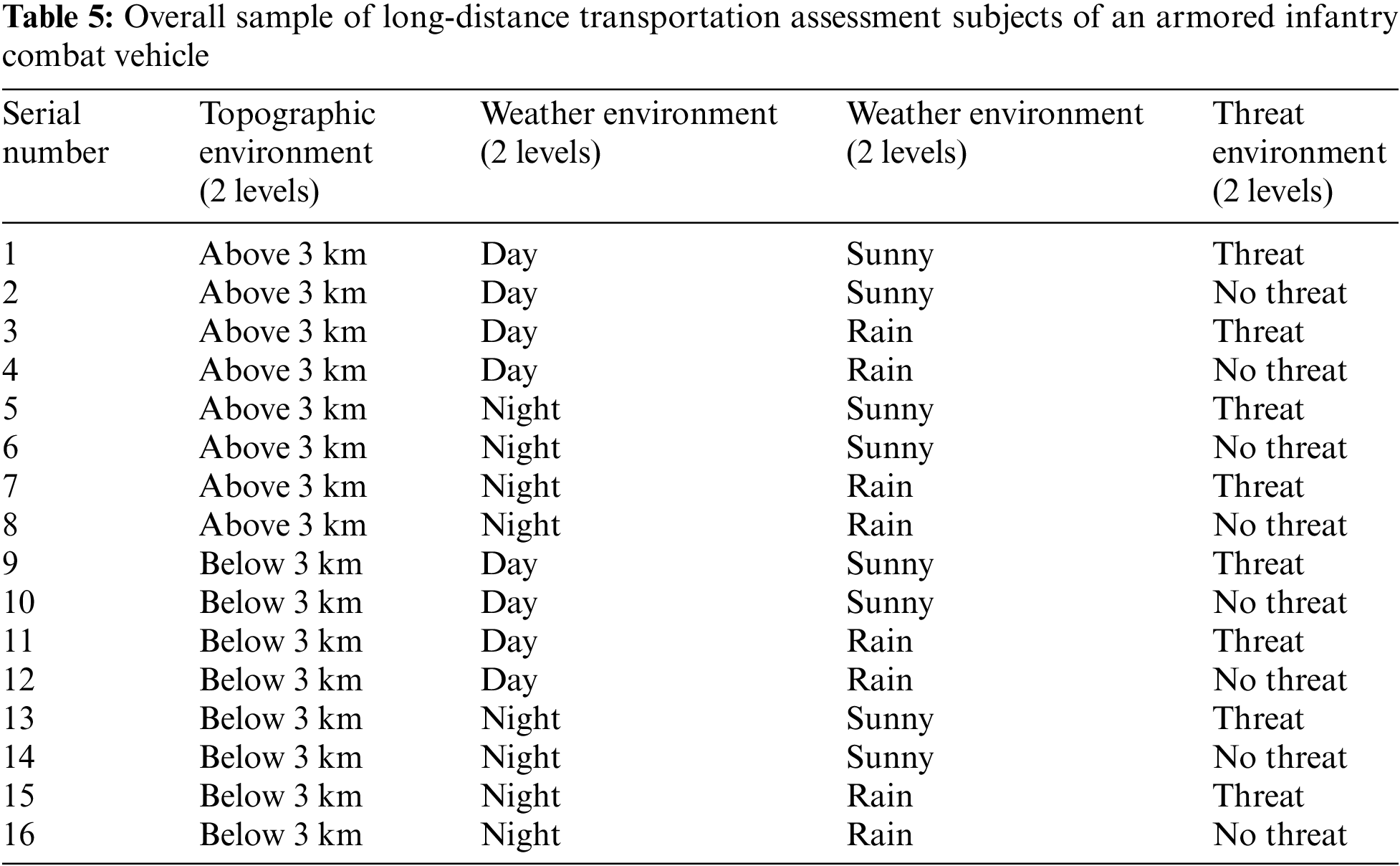

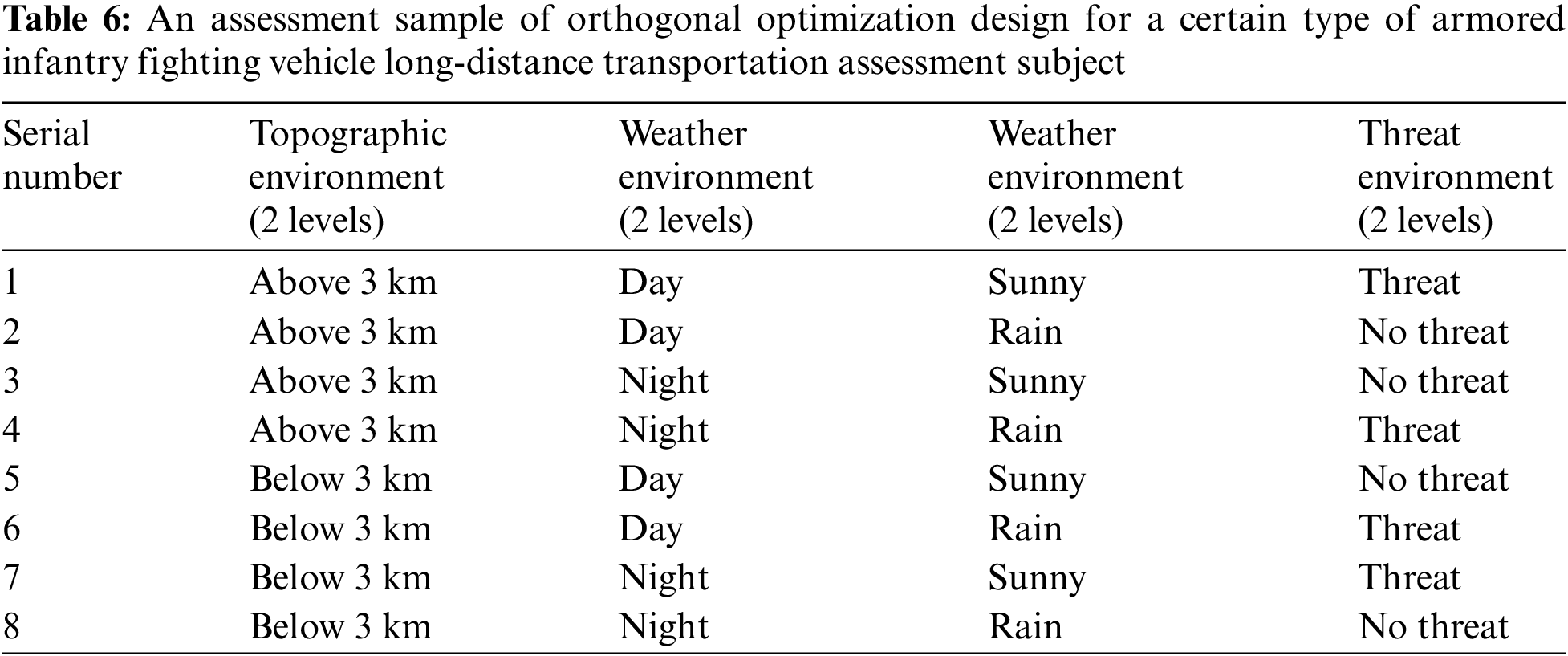

Taking the long-range transportation assessment subject of a certain type of armored infantry fighting vehicle as an example, the sample optimization design is carried out and the assessment sample points are selected. If all assessments are carried out, at least

The orthogonal experimental design method is used to optimize the design of the sample. After the
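The sample optimization in this section can be illustrated with a short Python sketch. It assumes that each of the four environmental factors named above takes two levels, as the method section suggests is usual. The level values are hypothetical and do not reproduce Tab. 4, and the counts printed below follow from these assumptions rather than from the source tables. The four factors are placed on columns a, b, c and a XOR b XOR c of the standard eight-run two-level array, usually written L8(2^7).

```python
from itertools import product

# Hypothetical two-level values for the four environmental factors.
FACTORS = {
    "terrain": ["plain", "mountain"],
    "time of day": ["day", "night"],
    "weather": ["clear", "rain"],
    "threat": ["none", "present"],
}


def l8_rows_for_four_factors():
    """Rows of L8(2^7) restricted to columns a, b, c, a^b^c (levels coded 0/1)."""
    return [(a, b, c, a ^ b ^ c) for a, b, c in product((0, 1), repeat=3)]


def assessment_samples(factors):
    """Replace the level codes in each row by the factor's level values."""
    names = list(factors)
    return [
        {name: factors[name][level] for name, level in zip(names, row)}
        for row in l8_rows_for_four_factors()
    ]


full_design = list(product(*FACTORS.values()))
samples = assessment_samples(FACTORS)
print(len(full_design), "samples in the full design")
print(len(samples), "samples in the orthogonal design")
for sample in samples:
    print(sample)
```

With four two-level factors, the full design has 16 samples and the orthogonal design has 8, while each pair of factors still sees every combination of its levels the same number of times.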

6 Conclusion

First, the principles and ideas of in-service assessment subject design are put forward, and the relationship between assessment indicators and equipment activity subjects is logically clarified. Second, the tasks of typical armored equipment activities in the army are decomposed to form multi-level equipment activity subjects. Then, a mapping relationship is established between these subjects and the assessment indicators; according to this mapping between the assessment indicators and the bottom-level equipment activity subjects, the equipment activity subjects from which data can be collected are preliminarily generated as in-service assessment subjects. Finally, the orthogonal test method is used to optimize the samples in the assessment subjects and form the implementation scheme of in-service assessment, which provides a reference for research on the design of equipment in-service assessment subjects.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Q. J. Meng, Y. K. Cao and H. J. Zhang, “The connotation and working methods of equipment in-service assessment,” Journal of Armored Forces Engineering Academy, vol. 31, no. 5, pp. 18–22, 2017.

2. R. S. Ju, X. Xu and S. Wang, “A new military conceptual modeling method,” Systems Engineering and Electronic Technology, vol. 13, no. 3, pp. 1751–1756, 2017.

3. O. Said and A. Tolba, “A reliable and scalable internet of military things architecture,” Computers, Materials & Continua, vol. 67, no. 3, pp. 3887–3906, 2021.

4. H. Y. Pang, J. Zhang and H. L. Guo, “Research on application requirements of army equipment combat test simulation,” 2019 China System Simulation and Virtual Reality Technology High Level Forum, vol. 16, no. 2, pp. 89–91, 2019.

5. Y. H. Cao and L. Z. Zhang, “Equipment system test and simulation,” in Equipment System Test Design, Beijing, China: National Defense Industry Press, pp. 18–58, 2016.

6. X. Y. Wu and Q. Liu, “Equipment test and evaluation,” in Equipment Statistical Experiment Design, Beijing, China: National Defense Industry Press, pp. 80–125, 2008.

7. X. Q. Chang, “Experimentation of conventional weapons and equipment,” in Test Population, Beijing, China: National Defense Industry Press, pp. 60–98, 2007.

8. Y. P. He, “Experimental design and analysis,” in Basic Statistical Concepts, Beijing, China: Chemical Industry Press, pp. 40–66, 2013.

9. M. Zhu and Q. W. Yang, “Construction and evaluation of in-service assessment index system of main combat equipment,” Command Control and Simulation, vol. 21, no. 2, pp. 55–59, 2020.

10. L. Wang, “Research on the construction method of weapon equipment combat test appraisal mission index,” Journal of the Academy of Equipment, vol. 26, no. 6, pp. 109–113, 2015.

11. Y. X. Xue and D. Zhou, “The establishment method of the appraisal index system for the combat test of weapons and equipment,” Journal of the Academy of Equipment, vol. 27, no. 4, pp. 102–107, 2016.

12. X. M. Luo, R. He and Y. L. Zhu, “Research on the basic theory of equipment combat test design and evaluation,” Journal of the Academy of Armament Forces Engineering, vol. 28, no. 6, pp. 1–6, 2014.

13. J. L. Wang and Q. S. Guo, “Research on the design method of weapons and equipment combat test items,” Journal of the Academy of Equipment, vol. 129, no. 1, pp. 129–133, 2016.

14. J. Mir, A. Mahmood and S. Khatoon, “Multi-level knowledge engineering approach for mapping implicit aspects to explicit aspects,” Computers, Materials & Continua, vol. 70, no. 2, pp. 3491–3509, 2022.

15. S. Kim, N. Moon, M. Hong, G. Park and Y. Choi, “Design of authoring tool for static and dynamic projection mapping,” Computers, Materials & Continua, vol. 66, no. 1, pp. 1–16, 2021.

16. Q. Liu, X. Xiang, J. Qin, Y. Tan, J. Tan et al., “Coverless steganography based on image retrieval of DenseNet features and DWT sequence mapping,” Knowledge-Based Systems, vol. 192, no. 2, pp. 105375–105389, 2020.

17. Y. X. Xu and W. Zeng, “Research on missile storage reliability based on Bayes theory,” Journal of Naval Aeronautical Engineering Institute, vol. 21, no. 6, pp. 672–674, 2016.

18. X. M. Yan, Y. Wen and C. L. Qiu, “Bayes estimation of range and dispersion group test,” Journal of Ballistics, vol. 18, no. 2, pp. 80–83, 2006.

19. S. F. Zhang, “The method of determining the optimal test in the assessment of the concentration of missile drop points,” Applied Probability and Statistics, vol. 18, no. 4, pp. 377–384, 2002.

20. Z. Y. Qian, Y. H. Cao and R. Y. Yan, “Research on equipment in-service assessment and evaluation based on data mining,” Ordnance Equipment Engineering Journal, vol. 41, no. 8, pp. 158–162, 2020.

21. X. P. Zhang, J. H. Zhang and H. G. Xie, “Optimal design of Bayes test appraisal of missile drop point dispersion,” Journal of Astronautics, vol. 23, no. 4, pp. 92–95, 2002.

22. C. Cheng and D. Lin, “Based on compressed sensing of orthogonal matching pursuit algorithm image recovery,” Journal of Internet of Things, vol. 2, no. 1, pp. 37–45, 2020.

23. X. Wu and Ge Mu, “Design principles of weapons and equipment combat experiments,” Journal of Ordnance Equipment Engineering, vol. 40, no. 1, pp. 24–27, 2019.

24. J. Z. Qin and X. H. Liao, “Analysis of equipment combat test design process,” Journal of Armored Forces Engineering College, vol. 32, no. 4, pp. 12–15, 2018.

25. Z. Q. Lin and G. Liu, “Application of orthogonal experimental design to improve the forming accuracy of U-shaped parts,” Journal of Mechanical Engineering, vol. 38, no. 3, pp. 83–88, 2002.

26. Q. N. Naveed, A. M. Aseere, A. Muhammad, S. Islam, M. Rafik et al., “Evaluating and ranking mobile learning factors using a multi-criterion decision-making (MCDM) approach,” Intelligent Automation & Soft Computing, vol. 29, no. 1, pp. 111–129, 2021.

27. C. Wang, H. Fu, H. Hsu, V. T. Nguyen, V. T. Nguyen et al., “A model for selecting a biomass furnace supplier based on qualitative and quantitative factors,” Computers, Materials & Continua, vol. 69, no. 2, pp. 2339–2353, 2021.

28. A. B. Abdulkareem, N. S. Sani, S. Sahran, Z. Abdi, A. Adam et al., “Predicting COVID-19 based on environmental factors with machine learning,” Intelligent Automation & Soft Computing, vol. 28, no. 2, pp. 305–320, 2021.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |