Computers, Materials & Continua, DOI: 10.32604/cmc.2022.028618

Article

Body Worn Sensors for Health Gaming and e-Learning in Virtual Reality

1Department of Computer Science, Air University, Islamabad, 44000, Pakistan

2Department of Computer Science and Software Engineering, Al Ain University, Al Ain, 15551, UAE

3Department of Computer Science, College of Computer, Qassim University, Buraydah, 51452, Saudi Arabia

4Department of Computer Engineering, Tech University of Korea, 237 Sangidaehak-ro, Siheung-si, Gyeonggi-do, 15073, Korea

*Corresponding Author: Jeongmin Park. Email: jmpark@tukorea.ac.kr

Received: 14 February 2022; Accepted: 05 May 2022

Abstract: Virtual reality is an emerging field worldwide. A common problem today is that people engage more with indoor technology than with outdoor activities. The proposed system therefore introduces a fitness solution that connects virtual reality with a gaming interface so that an individual can play first-person games. It is an efficient and cost-effective solution that lets people play outdoor games such as badminton and cricket while staying in their room. To track human movement, MPU6050 inertial sensors are connected to Bluetooth modules and an Arduino, which sends the sensor data to the game. The sensor data is then passed to a machine learning model, which detects the game played by the user. The detected game is operated by human gestures. A publicly available dataset named IM-Sporting Behaviors is initially used, which utilizes triaxial accelerometers attached to the subject’s wrist, knee, and below-neck regions to capture important aspects of human motion. The main objective is for the user to enjoy the game while simultaneously engaging in a sporting activity. The proposed system uses an artificial neural network classifier, which gives an accuracy of 88.9%. The system should be applicable in many settings, such as construction, offices and the educational sector. Extensive experimentation supports the validity of the proposed system.

Keywords: Artificial neural networks; bluetooth connection; inertial sensors; machine learning; virtual reality exergaming

In recent years, international statistics have shown obesity rising at an alarming rate. Obesity rates are as high as 38%, and 78% of these 38% can be considered overweight [1]. Some arguments hold that increased screen time is the main cause of obesity through two mechanisms: a lack of physical activity and poor eating habits [2]. A study of young American children found that 37% had very little physical activity, 65% had high screen time, and 26% had both [3]. The problem, then, is that children today have little interest in outdoor activities and instead focus on indoor technology such as mobile phones and television. According to studies, Americans spend considerable time on screens, mostly 4 to 5 h. Ultimately, people do not exercise or engage in physical activity as much as health care specialists recommend [4]. In short, more screen time contributes to an inactive lifestyle [5].

Virtual reality dates back to 1957, when Morton Heilig built the first virtual reality system, a device named Sensorama. The term ‘virtual reality’ was proposed later, in 1987, by researcher Jaron Lanier [6]. Virtual reality headsets are priced too high for ordinary users. For example, the Oculus Go has the highest price, at 545 USD, and the lowest-priced Oculus headset costs almost 249 USD [7]. Ordinary people therefore cannot afford such expensive headsets. Moreover, systems that offer a similar experience, such as the Xbox 360, PlayStation and Nintendo Wii, are also expensive and have technical issues. To make an impact, a new system is proposed that is comparatively less costly and more effective. The proposed system is applicable to healthcare and fitness and is intended to promote fitness in children who suffer from obesity and related problems.

• The model proposed relates health with gaming such that a person can do health exercises or play outdoor games in his room.

• The system is cost-effective and user-friendly as it designs a custom virtual reality experience with inertial sensors.

• It uses an accurate artificial neural networks classifier to predict the values.

The focus of our research is how a person who does not have the opportunity to exercise regularly can have the same experience without stepping out of the room. Acceleration is measured by our 6DOF (degrees of freedom) sensor, and the data is sent by a Bluetooth module to a computer, where it is tested with a machine learning model using a decision tree classifier. The game is then predicted, and the respective interface is loaded in the game designed in Unity. The user views it on the screen attached to a virtual reality headset, while the movements and activity recognition can simultaneously be seen on the PC. The reversible watermark embedding does not affect the robust watermark, which improves the robustness of the watermark [8]. Unfortunately, like digital images and videos, 3-D meshes can be easily modified, duplicated and redistributed by unauthorized users. Digital watermarking came up while trying to solve this problem [9].

The system we propose can have many other applications. Many head-mounted display virtual reality (HMD-VR) health games help patients recover and are used extensively for rehabilitation [10]. Moreover, virtual reality (VR) games have extensive applications in healthcare, including medical assessment, clinical care, preventive health and wellness [11]. Virtual reality gaming also has applications in education: applying games in education helps to increase students’ engagement and motivation, and first-person VR games can help keep students fit and healthy while having fun [12]. Research into applying virtual reality gaming systems to rehabilitation therapy has seen a revival. Virtual reality games have applications in the rehabilitation of many conditions, such as stroke and Parkinson’s disease [13].

Our contributions to the field are:

(1) The proposed system offers a cost-effective solution to a major present-day problem: obesity and health problems in children.

(2) Similar systems exist in the field of virtual reality, but they are very costly and ordinary people cannot afford them. The proposed system therefore provides a product with which people can remain fit while staying indoors and playing games for fun.

(3) We are developing a system with our own hardware components, so it will be a reliable solution.

(4) We integrate a simple, inexpensive VR device with our own system to keep it effective and affordable.

(5) We achieve higher accuracy than other state-of-the-art methods.

The article is organized as follows: Section 2 discusses work related to our research. Section 3 presents the system’s methodology and process. Section 4 presents the findings using graphical tools, Section 5 provides the discussion, and Section 6 gives the conclusion and future work.

The motivation for the proposed system is to provide a cost-effective solution to a major problem prevailing in the world: children are becoming lazier and less active. Studies have shown that overweight children and adolescents generally grow up to be overweight adults [14]. Therefore, obesity in childhood is an important risk factor for obesity and subsequent chronic diseases in later life [15]. In contrast, an active lifestyle in childhood should lead to health benefits in adulthood and is influenced by habits acquired in early life [16]. This is why the proposed system can play an important role in addressing this issue. The related work is divided into two subsections covering recently developed virtual reality exergaming systems: those based on sensors and those based on cameras.

2.1 Virtual Reality Exergaming with Sensors

In recent years, many applications have been produced using virtual reality gaming and sensors. Tsekleves et al. [17] suggested a system that combines a less expensive, customizable, off-the-shelf motion capture system (using bigger controllers for the game) with personalized video games adapted to the needs of Parkinson’s disease (PD) rehabilitation. To enable live biofeedback and game difficulty adjustment by the therapist, the recorded data is sent to the teacher’s VR platform online. Fitzgerald et al. [18] outlined the creation of a virtual reality computer game that directs an athlete through a sequence of prescribed rehabilitation activities. Athletes are prescribed workout programs by adequately trained professionals to avoid or treat musculoskeletal ailments while also attempting to increase physical performance. Mondragón Bernal et al. [19] developed and assessed a method for power substation operation training in which trainees examine and engage with realistic models in virtual environments using serious games. Building information modelling (BIM) of a 115 kV substation was used to create a scenario with high technical detail suited for professional training in the virtual reality (VR) simulator. Immersive 3D virtual environments and serious games, that is, video games intended for educational purposes, have become increasingly popular, and serious games have only recently been evaluated for healthcare teaching. Ma et al. [20] explored examples of what has been developed in terms of teaching models and evaluative methodologies, then discussed educational theories explaining why virtual simulations and serious games are important teaching tools, and finally suggested how to evaluate their value in an educational context. Virtual reality technologies are also becoming increasingly popular for simulating, analysing, and optimising the assembly process. Abidi et al. [21] described the creation of a haptic virtual reality platform for planning, performing, and evaluating virtual assembly. The system allows virtual components to be handled and interacted with in real time, and it includes several software packages, such as OpenHaptics, PhysX, and the OpenGL/GLUT libraries, to investigate the benefits and drawbacks of merging haptics with physically based modelling.

2.2 Virtual Reality Exergaming with Camera

Researchers have adopted various methodologies for camera-oriented virtual reality systems. Gerling et al. [22] proposed a method that used a Kinect v2 depth camera to analyze wheelchair movement and built two virtual reality games in Unity to make disabled people feel at ease. Their results were very positive: the immersive VR experience proved to be a great experience for disabled people. Xu et al. [23] designed Stomp Joy, a task-specific virtual reality game based on a depth camera, for post-stroke rehabilitation of the lower limbs. Sangmin et al. [24] used Unity3D to create VR games for the A-Visor and A-Camera. They demonstrated new self-made head-mounted VR controllers built from corrugated cardboard, Arduino, and sensors, all of which are easily available to do-it-yourself enthusiasts. Virtual reality (VR) is a component of the digital manufacturing process and can be employed at different stages of production. Another study expands the use of virtual reality in manufacturing by incorporating concepts and studies from training simulations into the evaluation of assembly training efficacy and training transfer [25]. Abidi et al. [26] evaluated and compared a virtual assembly training system for the fender assembly of the first Saudi Arabian car prototype. Three training settings were compared: Traditional Engineering, Computer Aided Design Environment, and Immersive Virtual Reality. The training settings were randomly allocated to fifteen students from King Saud University. Virtual reality makes industrial design, planning, and prototyping more efficient and cost-effective. Accordingly, Al-Ahmari et al. [27] focused on the development of a fully functioning virtual manufacturing assembly simulation system that addresses the problems associated with virtual reality settings. The suggested system creates an interactive workbench using a virtual environment for analyzing assembly decisions and teaching assembly procedures.

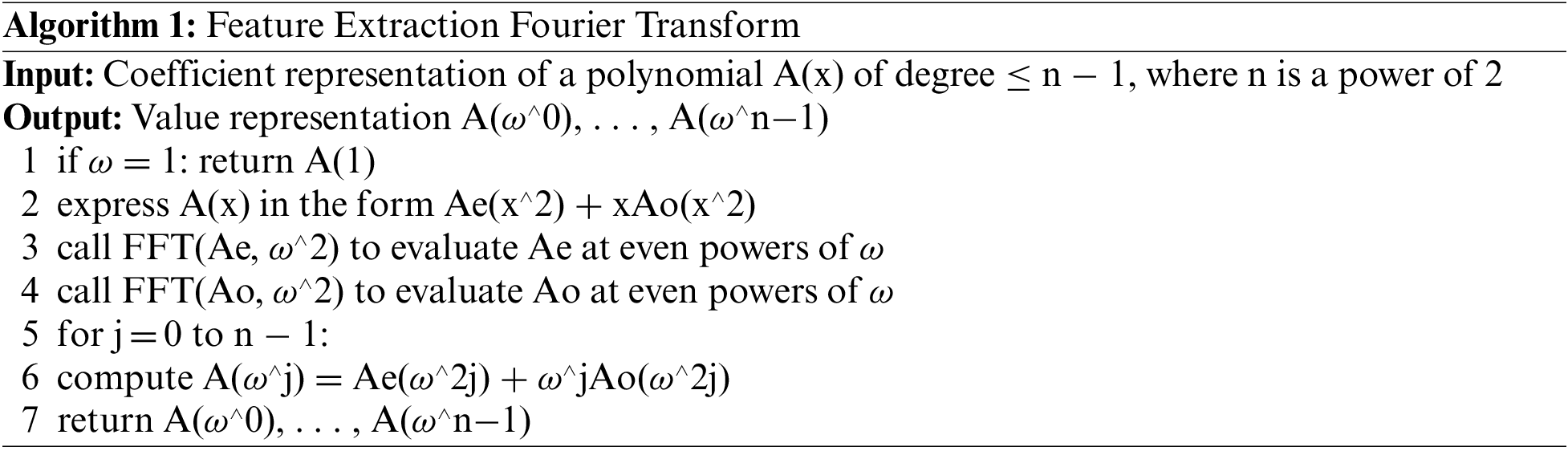

This section describes the proposed framework for active monitoring of the daily life of humans. Fig. 1 shows a general overview of the proposed system architecture. First, the dataset is preprocessed, and then its features are extracted. Next, the data is classified by an artificial neural network, which recognizes the gesture performed by the user. Finally, the predicted gesture is depicted in the virtual reality game, and the user can play the game in first person in the virtual reality world.

Figure 1: System architecture of the proposed system

3.1 Sensor Data Pre-Processing

The MPU6050 is a 6 degrees of freedom (DOF) sensor that contains a 3-axis accelerometer and a 3-axis gyroscope. After integration with the Arduino, it produces six values: three accelerometer axes and three gyroscope axes. This data is in raw format and is not calibrated, so we use a noise reduction model to generate accurate angles. Pre-processing is conducted after framing to increase data quality. Signal enhancement is employed, which entails filtering the data to identify undesired features and to remove irregularities such as irrelevancy, inconsistency, and repetition. The signal is then passed through a 3rd order median filter for significant noise artifact cancellation [28]. The median filter replaces each sample with the median of the signal values within a window of the required size. It is a non-linear filter that is used to remove speckle noise from a signal, and it outperforms the low-pass filter because it can reduce noise while preserving the original signal [29]. The median is computed as:

$$\operatorname{Med}(X) = \begin{cases} X\left[\frac{n+1}{2}\right], & n \text{ odd} \\ \frac{1}{2}\left(X\left[\frac{n}{2}\right] + X\left[\frac{n}{2}+1\right]\right), & n \text{ even} \end{cases}$$

where X is the ordered set of values in the dataset and n is the total number of values. Fig. 2 compares the filtered and unfiltered signals. The dotted line depicts the filtered wave, and the solid line shows the unfiltered data.

Figure 2: Comparison of filtered and unfiltered wave from motion sensor (a) wx (b) wy (c) wz
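The median filtering step can be illustrated with a minimal sketch. It assumes that "3rd order" means a sliding window of three samples and that the edges are padded by repeating the end samples; neither detail is stated in the text, and the sample values are hypothetical.

```python
from statistics import median

def median_filter(signal, window=3):
    """Sliding median filter; edges are padded by repeating the end samples."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Replicate the edge samples so the output has the same length as the input.
    padded = [signal[0]] * half + list(signal) + [signal[-1]] * half
    return [median(padded[i:i + window]) for i in range(len(signal))]

# Hypothetical gyroscope samples with a single spike at index 3.
raw = [0.10, 0.12, 0.11, 5.00, 0.13, 0.12, 0.14]
filtered = median_filter(raw, window=3)
print(filtered)
```

The spike at index 3 is replaced by a neighbouring value, while the slowly varying samples pass through almost unchanged.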

Fourier analysis is a technique for describing a function as a sum of periodic components and for recovering the signal from those components. When the signal and its Fourier transform (FT) are replaced with discretized counterparts, the result is the discrete Fourier transform (DFT). The Fourier transform is defined as follows [30]:

$$X(\omega) = \int_{-\infty}^{\infty} x(t)\, e^{-j\omega t}\, dt$$

where X(ω) is a function of frequency and x(t) is the signal in the time domain.

Figure 3: Fourier transform results for x-axis accelerometer
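As an illustration of the discrete Fourier transform applied to a sampled sensor trace, the following sketch computes a naive DFT with the standard library only. The test signal (a sine with two cycles over 16 samples) is a hypothetical stand-in for an accelerometer axis, and the function names are chosen for this example.

```python
import cmath
import math

def dft(samples):
    """Naive O(N^2) discrete Fourier transform of a real or complex sequence."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]

# Hypothetical accelerometer trace: a sine with 2 cycles over 16 samples.
n_samples = 16
signal = [math.sin(2 * math.pi * 2 * t / n_samples) for t in range(n_samples)]
magnitudes = [abs(c) for c in dft(signal)]

# Search only the non-redundant half of the spectrum of a real signal.
dominant_bin = max(range(n_samples // 2 + 1), key=lambda k: magnitudes[k])
print(dominant_bin)
```

For longer signals a fast Fourier transform would normally be used instead of this quadratic-time loop; the naive form is shown because it mirrors the definition directly.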

3.2.2 Feature Extraction by Random Forest

Feature extraction was done by identifying unimportant features and dropping them, using a random forest algorithm. The dataset contains three accelerometers, each with x, y and z components, and these features are ranked by the random forest algorithm. Random forest feature selection falls under embedded techniques, which combine the qualities of filter and wrapper methods and are implemented by algorithms that have built-in feature selection. The ranking of the features is shown in Fig. 4.

Figure 4: Feature importance plot

By this feature extraction method, important features are extracted and ultimately used to improve the accuracy of the model.
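The idea of ranking features by tree-based impurity decrease can be sketched as follows. This is a deliberately simplified stand-in, not the authors' implementation: instead of a full random forest it averages the Gini decrease of one-split trees (decision stumps) trained on bootstrap samples with a random feature subset. All function names and the toy data are hypothetical.

```python
import random

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split_gain(rows, labels, feature):
    """Largest weighted Gini decrease over all thresholds on one feature."""
    parent, n, best = gini(labels), len(rows), 0.0
    for threshold in sorted({r[feature] for r in rows}):
        left = [y for r, y in zip(rows, labels) if r[feature] <= threshold]
        right = [y for r, y in zip(rows, labels) if r[feature] > threshold]
        if not left or not right:
            continue
        child = (len(left) * gini(left) + len(right) * gini(right)) / n
        best = max(best, parent - child)
    return best

def stump_forest_importance(rows, labels, n_trees=50, max_features=2, seed=0):
    """Normalised impurity decrease per feature over bootstrap decision stumps."""
    rng = random.Random(seed)
    n_features = len(rows[0])
    totals = [0.0] * n_features
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # bootstrap sample
        sample_rows = [rows[i] for i in idx]
        sample_labels = [labels[i] for i in idx]
        candidates = rng.sample(range(n_features), max_features)
        gains = {f: best_split_gain(sample_rows, sample_labels, f) for f in candidates}
        winner = max(gains, key=gains.get)  # feature chosen for this stump
        totals[winner] += gains[winner]
    total = sum(totals)
    return [t / total if total else 0.0 for t in totals]

# Toy data: feature 0 separates the classes, features 1 and 2 carry no class information.
rows, labels = [], []
for i in range(40):
    label = "badminton" if i % 2 == 0 else "cycling"
    informative = (1.0 if label == "badminton" else 3.0) + 0.01 * i
    rows.append([informative, (i // 2) % 5, (i // 2) % 3])
    labels.append(label)

importance = stump_forest_importance(rows, labels)
print(importance)
```

Features with a low score would be candidates for removal before classification.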

3.3 Classification via Artificial Neural Networks

Artificial Neural Networks (ANNs) are a key deep learning algorithm. An ANN is a collection of connected units or nodes, called artificial neurons, that loosely mimic the neurons in a biological brain. Each connection, like a synapse in the human brain, can transmit a signal to other neurons. An artificial neuron receives a signal, processes it, and then transmits it to the neurons to which it is connected. The “signal” at a connection is a real number, and the output of each neuron is computed by a non-linear function of its inputs. Connections are referred to as edges. Neurons and edges typically have a weight that is adjusted as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is transmitted only if the aggregate signal exceeds it. Neurons are commonly arranged in layers, as shown in Fig. 5, and different layers may apply different transformations to their inputs. Signals travel from the first (input) layer to the last (output) layer, possibly after traversing the layers several times [31].

Figure 5: Artificial neural networks visual view

The most commonly used activation function in ANNs is the rectified linear unit (ReLU). It is calculated as:

$$f(x) = \max(0, x)$$

The function is zero if the input is less than zero; otherwise, its value equals the input. The softmax function is also used. For an input vector z with K components, it is given by:

$$\sigma(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \dots, K$$
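The two activation functions can be sketched in a tiny forward pass. The layer sizes, weights and input values below are hypothetical and chosen only so the arithmetic is easy to follow; applying ReLU in the hidden layer and softmax at the output is a common arrangement and is assumed here rather than taken from the paper.

```python
import math

def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Softmax with the maximum subtracted for numerical stability."""
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] holds the input weights of neuron j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

# Hypothetical network: 3 input features -> 2 hidden neurons -> 3 classes.
features = [0.5, -1.0, 2.0]
hidden = relu(dense(features, [[1.0, 0.0, 0.5], [-1.0, 1.0, 0.0]], [0.0, 0.0]))
probs = softmax(dense(hidden, [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], [0.0, 0.0, 0.0]))
predicted_class = probs.index(max(probs))
print(hidden, probs, predicted_class)
```

The softmax outputs are non-negative and sum to one, so they can be read as class probabilities, and the predicted class is the index of the largest value.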

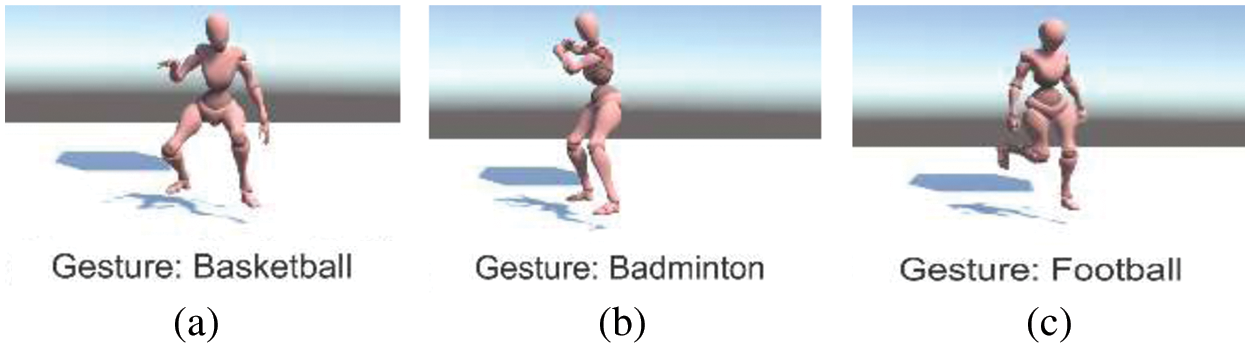

A graphical user interface is developed and viewed through a virtual reality headset. The model is implemented through an Arduino sketch, and the results are passed to the game, where the recognized gesture is displayed in virtual reality. The interface is built in Unity3D with C# scripts. For example, when the person performs a badminton gesture, the data is sensed by the inertial sensors and the machine learning model recognizes it. The recognized gesture is then reproduced in the game’s 3D environment, and the person can play the first-person game while wearing a sensor jacket. The visual representation is shown in Fig. 6.

Figure 6: Gaming interface for showing detected gestures (a) Basketball (b) Badminton (c) Football
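Before the sensed data reaches the recognition model, the raw MPU6050 readings have to be parsed and scaled on the PC side. The sketch below assumes a hypothetical comma-separated line format (`ax,ay,az,gx,gy,gz`) sent over the Bluetooth serial link, and it assumes the sensor runs at its ±2 g and ±250 °/s ranges, for which the datasheet sensitivities are 16384 LSB/g and 131 LSB/(°/s). The actual format and ranges used by the system are not stated in the text.

```python
ACCEL_LSB_PER_G = 16384.0   # MPU6050 sensitivity at the +/-2 g range (assumed range)
GYRO_LSB_PER_DPS = 131.0    # MPU6050 sensitivity at the +/-250 deg/s range (assumed range)

def parse_sample(line):
    """Convert one hypothetical 'ax,ay,az,gx,gy,gz' text line into physical units."""
    parts = line.strip().split(",")
    if len(parts) != 6:
        raise ValueError(f"expected 6 comma-separated values, got {len(parts)}")
    raw = [int(p) for p in parts]
    accel_g = tuple(v / ACCEL_LSB_PER_G for v in raw[:3])     # acceleration in g
    gyro_dps = tuple(v / GYRO_LSB_PER_DPS for v in raw[3:])   # angular rate in deg/s
    return accel_g, gyro_dps

# Hypothetical line as it might arrive from the serial port.
accel, gyro = parse_sample("0,0,16384,131,-262,0\n")
print(accel, gyro)
```

Malformed lines raise a `ValueError`, which lets the receiving loop skip corrupted packets instead of feeding them to the classifier.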

The model is to be connected to a graphical user interface made with Unity3D. A 3D virtual reality game will be built in Unity3D, and the model will be connected to an Arduino joined with the MPU6050. The game will be visible to the person on a screen attached to the virtual reality headset and will also be shown on the PC. Hence, the system will be deployed on a virtual reality headset with sensors attached to the person’s body.

This section gives a brief description of the dataset used in the proposed system.

The dataset used was IM-Sporting Behaviors by the Intelligent Media Centre, Air University. A description of the dataset and of the hardware used to collect it is provided in Tab. 1.

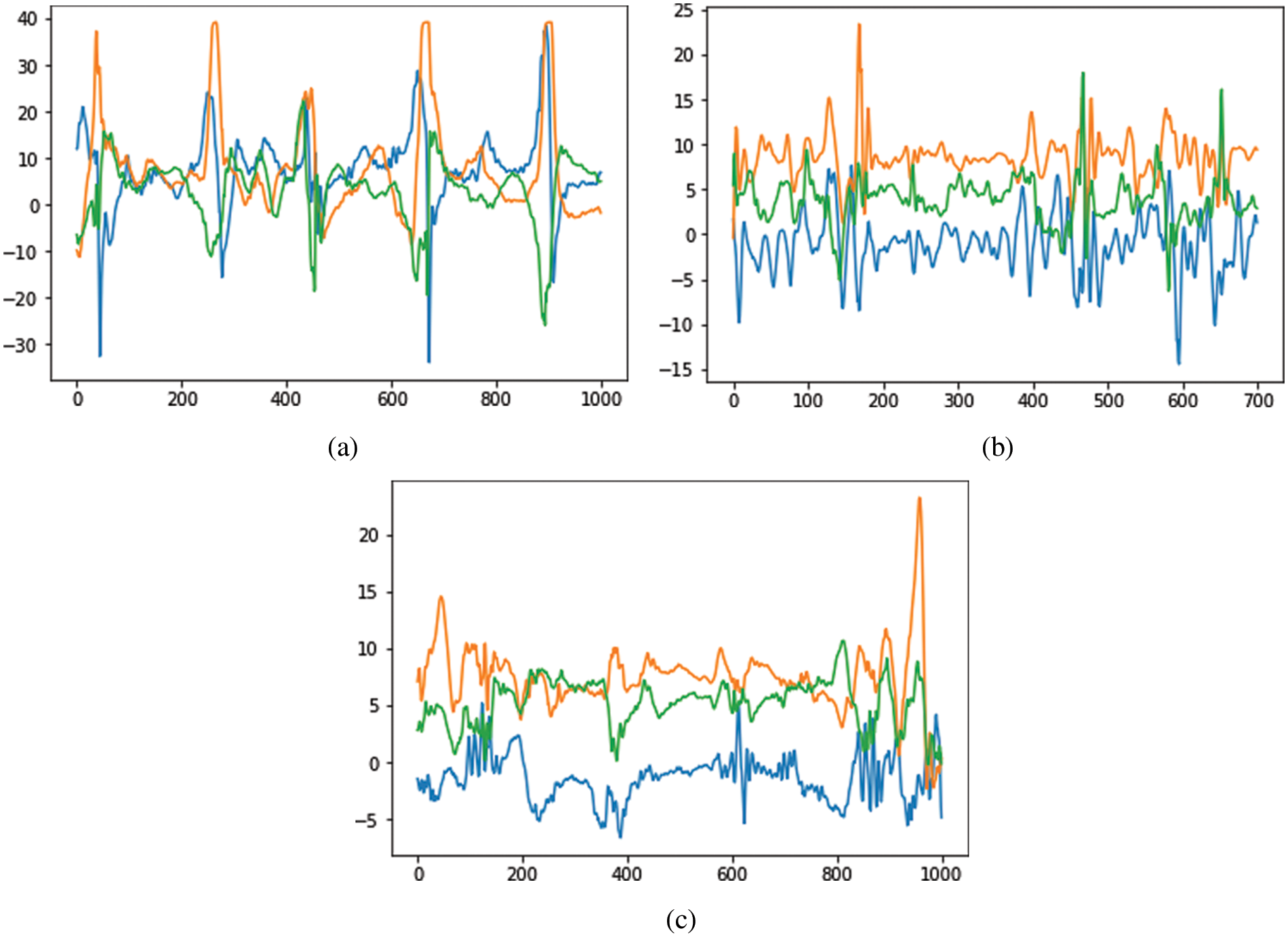

Fig. 7 below shows the plots of raw data of three accelerometers in x, y and z coordinates for badminton, table tennis and basketball behavior.

Figure 7: Raw data plots of three accelerometers showing (a) Badminton (b) Table tennis (c) Basketball

4.2 Experimental Settings and Results

The processing and experimentation were done in Python 3.9 in a Jupyter notebook. The classifier used for training and testing is the decision tree model. We used grid search with cross-validation (Grid Search CV) to tune the hyper-parameters. The hardware was an Arduino with an attached Bluetooth module, which sends the sensor data, and an MPU6050, from which we obtained the accelerometer values.
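Grid search with cross-validation can be sketched with the standard library alone. The example below is not the authors' setup: a k-nearest-neighbour classifier stands in for the model, the hyper-parameters `k` and `p`, the fold scheme and the toy data are all hypothetical, and a library routine would normally replace the hand-written loops.

```python
from itertools import product

def knn_predict(train_x, train_y, point, k, p):
    """Majority vote among the k nearest neighbours under an L_p distance."""
    def dist(a, b):
        return sum(abs(u - v) ** p for u, v in zip(a, b))
    nearest = sorted(range(len(train_x)), key=lambda i: dist(train_x[i], point))[:k]
    votes = {}
    for i in nearest:
        votes[train_y[i]] = votes.get(train_y[i], 0) + 1
    return max(votes, key=votes.get)

def cross_val_accuracy(xs, ys, folds, **params):
    """Accuracy pooled over interleaved folds (sample i belongs to fold i % folds)."""
    correct = 0
    for fold in range(folds):
        train = [i for i in range(len(xs)) if i % folds != fold]
        test = [i for i in range(len(xs)) if i % folds == fold]
        train_x = [xs[i] for i in train]
        train_y = [ys[i] for i in train]
        correct += sum(knn_predict(train_x, train_y, xs[i], **params) == ys[i] for i in test)
    return correct / len(xs)

def grid_search(xs, ys, param_grid, folds=4):
    """Try every parameter combination and keep the first one with the best score."""
    names = list(param_grid)
    best_params, best_score = None, -1.0
    for combo in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = cross_val_accuracy(xs, ys, folds, **params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Toy data: two well-separated clusters of hypothetical 2-D feature vectors.
xs, ys = [], []
for i in range(16):
    if (i // 4) % 2 == 0:
        xs.append((0.1 * i, 0.05 * i))
        ys.append("badminton")
    else:
        xs.append((10 + 0.1 * i, 10 - 0.05 * i))
        ys.append("cycling")

best_params, best_score = grid_search(xs, ys, {"k": [1, 3, 5], "p": [1, 2]})
print(best_params, best_score)
```

Because the toy clusters are perfectly separable, every combination reaches the same score and the first one in the grid is kept; on real sensor features the scores would differ and guide the choice.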

4.2.1 Experimental Results Over IMSporting Behavior Dataset

The data was used to train the artificial neural network classifier, with the label variable as the prediction target. The classifier detects which game the user is playing: badminton, cricket, table tennis, skipping or cycling. The accuracy on the IM-Sporting Behaviors dataset was 88.9%. Tab. 2 shows the confusion matrix with recognition accuracies over the classes of the IM-Sporting Behaviors dataset.
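A confusion matrix and the overall accuracy derived from it can be computed as in the following sketch. The class names and predictions are a small hypothetical example, not the reported results.

```python
def confusion_matrix(y_true, y_pred, classes):
    """matrix[i][j] counts samples of true class i that were predicted as class j."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

def accuracy(matrix):
    """Fraction of samples on the diagonal, i.e. correctly classified."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total

# Hypothetical labels for six test samples.
classes = ["badminton", "cycling", "skipping"]
y_true = ["badminton", "badminton", "cycling", "cycling", "skipping", "skipping"]
y_pred = ["badminton", "cycling", "cycling", "cycling", "skipping", "badminton"]

cm = confusion_matrix(y_true, y_pred, classes)
overall = accuracy(cm)
print(cm, overall)
```

Rows correspond to the true class and columns to the predicted class, so off-diagonal entries show which games are confused with each other.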

4.2.2 Precision, Recall and F1-Measure

Precision is the ratio of correct positive predictions to the total number of predicted positives, while recall, also called the true positive rate, is the ratio of correct positive predictions to the total number of actual positives [33]. The F1-score is the harmonic mean of precision and recall. The precision, recall and F1-score for the classes of the dataset are given in Tab. 3. The developed system is thus able to recognize each game with high precision.
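Per-class precision, recall and F1-score follow directly from a confusion matrix. The sketch below uses a small hypothetical matrix (rows are true classes, columns are predicted classes) rather than the values of Tab. 2 or Tab. 3.

```python
def per_class_metrics(matrix):
    """Return (precision, recall, f1) per class; matrix[i][j] = true i predicted as j."""
    n = len(matrix)
    results = []
    for c in range(n):
        tp = matrix[c][c]
        fp = sum(matrix[r][c] for r in range(n)) - tp   # predicted c, but another class
        fn = sum(matrix[c]) - tp                        # class c, but predicted otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results

# Hypothetical 3-class confusion matrix.
cm = [[1, 1, 0],
      [0, 2, 0],
      [1, 0, 1]]
metrics = per_class_metrics(cm)
for name, (p, r, f) in zip(["badminton", "cycling", "skipping"], metrics):
    print(f"{name}: precision={p:.3f} recall={r:.3f} f1={f:.3f}")
```

The guards against empty denominators return zero for a class that is never predicted or never present, which is one common convention among several.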

4.2.3 Comparison with Well-Known State-of-the Art Methods

Tab. 4 compares the classifier results with other state-of-the-art methods. Many systems similar to the proposed method have been built; the comparison covers systems developed on the same dataset. One system used the decision tree algorithm with GMM-based feature extraction and obtained an accuracy of 83% [34]. Another used the random forest algorithm and achieved an accuracy of 83.42% [35]. A third used LSVM classification with multi-fused feature extraction and achieved an accuracy of 80% [36]. Lastly, a system based on artificial neural networks achieved an accuracy of 85.66% [37]. The system proposed in this paper achieved an accuracy of 88.9%.

The proposed system has much significance in the fields of virtual reality and exergaming. Nowadays, inertial sensors play a vital role in indoor and outdoor applications [38]. When inertial sensors are used, they are attached to the body of the player [39]. In the proposed system, the inertial sensors detect the motion of the user, and the machine learning model then detects the activity. The detected game is then played in a virtual reality environment. This system has broad applications in fitness and gaming. With physical activity recognition applications, people suffering from different diseases can be treated in a more timely and effective manner [40]. Activity recognition with inertial sensors is a very cost-effective solution with wide applicability [41].

The proposed system is highly effective for users because they can have virtual gyms and grounds in their homes, which lets them play outdoor games in their rooms. Virtual reality is a vast field that gives a reality-like experience in an imaginary world [42]. A user stands in a room or any other place, wears the virtual reality headset with the hardware connected and a screen attached on which the game is played, and performs a gesture. Our system then detects the gesture, and the person starts playing the corresponding game in virtual reality. It will be an enjoyable experience for fun-loving people, mainly children, because they will be able to stay fit even during their screen time.

In this research paper, we have built a virtual reality gaming interface and connected it with everyday outdoor games so that a person can play these games within a room. The proposed system has many benefits; for example, it can improve the motivation of children and reduce obesity, laziness and anxiety. The sensors are attached to the body of the user, and the sensor data is sent to the PC by a Bluetooth module. The machine learning model tests the sensor data and predicts which activity the user has performed. The same activity is then performed by the avatar in Unity. The model accuracy is 88.9%. In the future, we will extend this system by adding special levels for disabled people so that they can also enjoy the gaming experience, which should ultimately have a positive impact on their physical and mental health. In conclusion, the proposed system is designed to be a cost-effective and engaging solution to many health issues in children as well as elders.

Funding Statement: This research was supported by a Grant (2021R1F1A1063634) of the Basic Science Research Program through the National Research Foundation (NRF) funded by the Ministry of Education, Republic of Korea.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. H. Taylor, “Healthy and unhealthy behavior and lifestyle trends: No significant change in 2011 in proportion of adults who are obese, smoke or wear seatbelts,” Harris Interactive, vol. 1, pp. 364–375, 2011.

2. L. D. Rosen, A. F. Lim, J. Felt, L. M. Carrier and N. A. Cheever, “Media and technology use predicts ill-being among children, preteens and teenagers independent of the negative health impacts of exercise and eating habits,” Computers in Human Behavior, vol. 35, pp. 364–375, 2014.

3. S. E. Anderson and C. D. Must, “Active play and screen time in US children aged 4 to 11 years in relation to sociodemographic and weight status characteristics: A nationally representative cross-sectional analysis,” BMC Public Health, vol. 8, pp. 366–378, 2008.

4. S. A. Bowman, “Television-viewing characteristics of adults: Correlations to eating practices and overweight and health status,” Preventing Chronic Disease, vol. 3, pp. 1–11, 2006.

5. R. Yao, T. Heath, A. Davies, T. Forsyth and N. Mitchell, “Oculus VR best practices guide,” Oculus VR, vol. 4, pp. 27–35, 2014.

6. B. Poetker, “The very real history of virtual reality (+A look ahead),” 2019. [Online]. Available: https://www.g2.com/articles/history-of-virtual-reality.

7. O. Ouyed and M. A. Said, “Group-of-features relevance in multinomial kernel logistic regression and application to human interaction recognition,” Expert Systems with Applications, vol. 148, pp. 1–22, 2020.

8. X. R. Zhang, W. F. Zhang, W. Sun, X. M. Sun and S. K. Jha, “A robust 3-D medical watermarking based on wavelet transform for data protection,” Computer Systems Science & Engineering, vol. 41, no. 3, pp. 1043–1056, 2022.

9. X. R. Zhang, X. Sun, X. M. Sun, W. Sun and S. K. Jha, “Robust reversible audio watermarking scheme for telemedicine and privacy protection,” Computers, Materials & Continua, vol. 71, no. 2, pp. 3035–3050, 2022.

10. G. Tao, B. Garrett, T. Taverner, E. Cordingley and C. Sun, “Immersive virtual reality health games: A narrative review of game design,” Journal of NeuroEngineering and Rehabilitation, vol. 18, no. 1, pp. 1–21, 2021. DOI: 10.1186/s12984-020-00801-3.

11. L. Jones, “VR and… better healthcare,” Engineering & Technology, vol. 11, no. 3, pp. 36–39, 2016.

12. P. Zikas, S. Kateros and P. Margarita, “Mixed reality serious games and gamification for smart education,” European Conf. on Games Based Learning, Paisley, United Kingdom, pp. 805–812, 2016.

13. M. Yates, A. Kelemen and C. Sik Lanyi, “Virtual reality gaming in the rehabilitation of the upper extremities post-stroke,” Brain Injury, vol. 30, no. 7, pp. 855–863, 2016.

14. J. J. Reilly, E. Methven and Z. C. McDowell, “Health consequences of obesity,” Archives of Disease in Childhood, vol. 88, no. 9, pp. 748–752, 2003.

15. M. K. Gebremariam, T. H. Totland and L. F. Andersen, “Stability and change in screen-based sedentary behaviours and associated factors among Norwegian children in the transition between childhood and adolescence,” BMC Public Health, vol. 12, pp. 1–9, article 104, 2012.

16. S. J. H. Biddle, N. Pearson, G. M. Ross and R. Braithwaite, “Tracking of sedentary behaviours of young people: A systematic review,” Preventive Medicine, vol. 51, no. 5, pp. 345–351, 2010.

17. I. Paraskevopoulos and E. Tsekleves, “Use of gaming sensors and customised exergames for Parkinson’s disease rehabilitation: A proposed virtual reality framework,” in Proc. of 5th Int. Conf. on Games and Virtual Worlds for Serious Applications (VS-GAMES), Poole, United Kingdom, vol. 4, pp. 1–5, 2013.

18. D. Fitzgerald, J. Foody, D. Kelly, T. Ward, C. Markham et al., “Development of a wearable motion capture suit and virtual reality biofeedback system for the instruction and analysis of sports rehabilitation exercises,” in Proc. of 29th Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society, Guadalajara, Mexico, pp. 4870–4874, 2007.

19. I. Mondragón Bernal, S. Valdivia, R. Muñoz, J. Aragón, R. García et al., “An immersive virtual reality training game for power substations evaluated in terms of usability and engagement,” Applied Sciences, vol. 12, no. 2, pp. 711, 2022.

20. M. Ma, L. Jain and P. Anderson, “Healthcare training enhancement through virtual reality and serious games,” Virtual, Augmented Reality and Serious Games for Healthcare, vol. 68, pp. 9–27, 2014.

21. M. Abidi, A. Ahmad, S. Darmoul and A. Al-Ahmari, “Haptics assisted virtual assembly,” IFAC-PapersOnLine, vol. 48, no. 3, pp. 100–105, 2015.

22. K. Gerling, P. Dickinson, K. Hicks, L. Mason, A. L. Simeone et al., “Virtual reality games for people using wheelchairs,” in Proc. of CHI Conf. on Human Factors in Computing Systems, New York, USA, pp. 1–11, 2020.

23. Y. Xu, M. Tong, W. Ming, Y. Lin, W. Mai et al., “A depth camera–based, task-specific virtual reality rehabilitation game for patients with stroke: Pilot usability study,” JMIR Serious Games, vol. 9, no. 1, pp. e20916, 2021.

24. P. Sangmin, A. Hojun, J. Junhyeong, K. Hyeonkyu, H. Sangsun et al., “A-Visor and A-Camera: Arduino-based cardboard head-mounted controllers for VR games,” in Proc. of IEEE Conf. on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, USA, pp. 434–435, 2021.

25. M. Abidi, A. Al-Ahmari, A. Ahmad, W. Ameen and H. Alkhalefah, “Assessment of virtual reality-based manufacturing assembly training system,” The International Journal of Advanced Manufacturing Technology, vol. 105, no. 9, pp. 3743–3759, 2019.

26. M. Abidi, A. Tamimi, A. Ahmari and E. Nasr, “Assessment and comparison of immersive virtual assembly training system,” International Journal of Rapid Manufacturing, vol. 3, no. 4, pp. 266, 2013.

27. A. Al-Ahmari, M. Abidi, A. Ahmad and S. Darmoul, “Development of a virtual manufacturing assembly simulation system,” Advances in Mechanical Engineering, vol. 8, no. 3, pp. 1–17, 2016.

28. I. Akhter, A. Jalal and K. Kim, “Adaptive pose estimation for gait event detection using context-aware model and hierarchical optimization,” Journal of Electrical Engineering & Technology, vol. 9, pp. 1–9, 2021.

29. A. Jalal, Y. Kim, S. Kamal, A. Farooq and D. Kim, “Human daily activity recognition with joints plus body features representation using kinect sensor,” in Proc. of Int. Conf. on Informatics, Electronics & Vision (ICIEV), Fukuoka, Japan, pp. 1–6, 2015.

30. E. Salah, K. Amine, K. Redouane and K. Fares, “A Fourier transform based audio watermarking algorithm,” Applied Acoustics, vol. 172, pp. 107652, 2021.

31. M. Gochoo, I. Akhter, A. Jalal and K. Kim, “Stochastic remote sensing event classification over adaptive posture estimation via multifused data and deep belief network,” Remote Sensing, vol. 13, no. 5, pp. 1–29, 2021.

32. A. Jalal, M. A. K. Quaid and A. S. Hasan, “Wearable sensor-based human behavior understanding and recognition in daily life for smart environments,” in Proc. of Int. Conf. on Frontiers of Information Technology (FIT), Islamabad, Pakistan, pp. 105–110, 2018.

33. Y. Ghadi, I. Akhter, M. Alarfaj, A. Jalal and K. Kim, “Syntactic model-based human body 3D reconstruction and event classification via association based features mining and deep learning,” PeerJ Computer Science, vol. 7, pp. e764, 2021.

34. A. Jalal, M. Batool and S. B. ud din Tahir, “Markerless sensors for physical health monitoring system using ecg and gmm feature extraction,” in Proc. of Conf. on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, pp. 340–345, 2021.

35. S. B. ud din Tahir, A. Jalal and M. Batool, “Wearable sensors for activity analysis using smo-based random forest over smart home and sports datasets,” in Proc. of 3rd Int. Conf. on Advancements in Computational Sciences (ICACS), Lahore, Pakistan, pp. 1–6, 2020.

36. W. Manahil, A. Jalal, M. Alarfaj, Y. Ghadi, S. Tamara et al., “An LSTM-based approach for understanding human interactions using hybrid feature descriptors over depth sensors,” IEEE Access, vol. 9, pp. 167434–167446, 2022.

37. S. ud din Tahir, A. Jalal and K. Kim, “Daily life log recognition based on automatic features for health care physical exercise via IMU sensors,” in Int. Bhurban Conf. on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, pp. 494–499, 2021.

38. S. B. ud din Tahir, A. Jalal and K. Kim, “Wearable inertial sensors for daily activity analysis based on adam optimization and the maximum entropy markov model,” Entropy, vol. 22, no. 5, pp. 1–19, 2020.

39. A. Jalal, Y.-H. Kim, Y.-J. Kim, S. Kamal and D. Kim, “Robust human activity recognition from depth video using spatiotemporal multi-fused features,” Pattern Recognition, vol. 61, pp. 295–308, 2017.

40. A. Jalal, M. Batool and K. Kim, “Stochastic recognition of physical activity and healthcare using tri-axial inertial wearable sensors,” Applied Sciences, vol. 10, no. 20, pp. 7122, 2020.

41. A. Jalal, M. A. K. Quaid, S. B. Tahir and K. Kim, “A study of accelerometer and gyroscope measurements in physical life-log activities detection systems,” Sensors, vol. 20, no. 22, pp. 6670, 2020.

42. A. Ahmed, A. Jalal and K. Kim, “Region and decision tree-based segmentations for multi-objects detection and classification in outdoor scenes,” in Proc. of Int. Conf. on Frontiers of Information Technology (FIT), Islamabad, Pakistan, pp. 209–214, 2019.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.