Computers, Materials & Continua DOI:10.32604/cmc.2022.030033

Article

Unconstrained Hand Dorsal Veins Image Database and Recognition System

¹Faculty of Science and Information Technology, Al-Zaytoonah University of Jordan, Amman, 11733, Jordan

²Faculty of Architecture and Design, Al-Zaytoonah University of Jordan, Amman, 11733, Jordan

*Corresponding Author: Mustafa M. Al Rifaee. Email: m.rifaee@zuj.edu.jo

Received: 17 March 2022; Accepted: 18 May 2022

Abstract: Hand veins can be used effectively in biometric recognition because they are internal organs that, in contrast to fingerprints, are robust to external influences such as dirt and paper cuts. Moreover, they form a rich, complex shape that is unique, even in identical twins, and allows a high degree of freedom. However, most currently employed hand-based biometric systems rely on touch-based devices to capture images of the desired quality. Since the start of the COVID-19 pandemic, such contact-based systems have become undesirable because of their possible role in spreading infection. Consequently, new contactless hand-based biometric recognition systems and databases are needed to keep up with rising hygiene awareness. One contribution of this research is a database of hand dorsal vein images captured contact-free with variations in capturing distance and rotation angle. This database consists of 1548 images collected from 86 participants aged 19 to 84 years. The other contribution is a novel geometrical feature extraction method based on the Curvelet transform, which extracts robust, rotation-invariant features from vein images. The database attributes and the vein recognition results are analyzed to demonstrate their efficacy.

Keywords: Biometric recognition; contactless hand biometrics; veins recognition; Curvelet transform; image segmentation; feature extraction

Hand vein recognition has gained increasing attention from biometric researchers over the last two decades because of the unique features of the vascular network, its high degree of freedom, the time invariance of its shape, and the difficulty of forging it [1].

A biometric recognition system consists of four main stages: image enhancement, segmentation, feature extraction, and matching [2]. The enhancement stage processes the image to reduce the effect of noise, especially in images captured in an unconstrained environment. The segmentation stage defines the Region of Interest (ROI) in the image, such as the iris ring in an eye image [3] or the vein region in a medical image [4,5]. Some systems, such as face recognition, may require multiple regions of interest [6]. Once the ROI is defined, the feature extraction stage extracts discriminating features from it, and the matching stage finally uses these features to identify the person.

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 presents the proposed image segmentation and feature extraction methods and describes in detail the acquisition of the hand dorsal vein images for the system's database. Section 4 discusses the implementation results, and Section 5 concludes the paper.

Many studies have addressed dorsal hand vein recognition, each testing its methods on a large number of images, and several dorsal vein databases are available for research purposes. The Wilches dorsal hand vein database, presented by Wilches-Bernal et al. [7], was collected in two sessions: the first collected 1104 images from 138 people, and the second collected 678 images from 113 people. Raghavendra et al. [8] created a dataset of 100 dorsal vein images collected from 50 individuals, using 940 nanometer (nm) Near Infrared (NIR) illumination of the hand dorsal area together with an ROI segmentation sensor.

The SAUS database [9], which contains 919 images acquired from 155 people, was captured using a low-cost vein finder with a 940 nm NIR light source. The creators of the VERA palm vein database [10,11] used a prototype capturing device developed by the Haute Ecole Spécialisée de Suisse Occidentale. This database contains 2200 images collected in two sessions from 110 people aged 18 to 60 years, for a total of 10 images of each hand of each person.

Although these are relatively new vein databases, their data are suited only to testing recognition methods designed for good-quality images captured in constrained environments. In contrast, this research presents the new Unconstrained Hand Dorsal Veins Database (UHDVD), which simulates an unconstrained biometric identification framework suitable for contactless and on-the-move conditions.

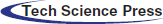

Most currently deployed palm vein recognition systems use a segmentation algorithm that relies on key points between the fingers to define the ROI, as seen in Fig. 1. These key points are used to build a coordinate system that defines a circular or rectangular vein area. The researchers in [12–14] followed the same process to locate the vein area: they detect the key points between the fingers and then place a geometrical shape perpendicular to the tangent line that passes through those key points.

Figure 1: Dorsal veins ROI key points
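As a minimal sketch of the key-point construction described above (not the exact procedure of [12–14]), the Python function below builds a rectangular ROI whose first side is the segment joining two finger-valley key points and which extends a chosen depth perpendicular to that segment. The function name `roi_from_key_points`, the `depth` parameter, and the choice of which side of the line the ROI lies on are illustrative assumptions; coordinates are (x, y) pixels.

```python
import numpy as np


def roi_from_key_points(p1, p2, depth):
    """Corners of a rectangular ROI built on two finger-valley key points (hypothetical helper).

    One side of the rectangle is the segment p1 -> p2; the rectangle extends
    `depth` pixels along the unit normal of that segment.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    direction = (p2 - p1) / np.linalg.norm(p2 - p1)   # unit vector along the key-point line
    normal = np.array([-direction[1], direction[0]])  # unit vector perpendicular to it
    offset = depth * normal                           # assumed: ROI lies on the +normal side
    return np.array([p1, p2, p2 + offset, p1 + offset])


corners = roi_from_key_points((0, 0), (10, 0), depth=5)
```

A caller could, for example, set `depth` to the distance between the key points so that the ROI scales with the hand size.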

Lee [14] presented a rotation-invariant segmentation method to locate the vein area in rotated palm images. The method defines a potential palm center point, from which a circle is gradually expanded in all directions. The intersections between the circle and the valleys between the fingers define the key points, which are finally aligned to a predefined orientation to obtain a rotation-invariant image. The method successfully segmented palm images rotated by 45°, 180°, 230°, and 350°. Yahya et al. [15] presented an automatic, size-invariant segmentation method that efficiently segments two-dimensional images without codifying them.
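The alignment step in Lee's method can be illustrated with a short sketch, assuming the predefined orientation is horizontal: the rotation angle is read from the line through two key points, and all points are rotated about the first key point to cancel it. The helper `align_key_points` and the horizontal target are assumptions for illustration, not the published implementation.

```python
import numpy as np


def align_key_points(points, k1, k2):
    """Rotate `points` about k1 so that the line k1 -> k2 becomes horizontal (hypothetical helper).

    Returns the rotated points and the removed rotation angle in degrees.
    """
    pts = np.asarray(points, dtype=float)
    k1 = np.asarray(k1, dtype=float)
    dx, dy = np.asarray(k2, dtype=float) - k1
    theta = np.arctan2(dy, dx)                 # current orientation of the key-point line
    c, s = np.cos(theta), np.sin(theta)
    rotation = np.array([[c, s], [-s, c]])     # rotation matrix for -theta
    aligned = (pts - k1) @ rotation.T          # translate to k1, then rotate
    return aligned, np.degrees(theta)


aligned, angle = align_key_points([(0, 0), (1, 1), (2, 0)], k1=(0, 0), k2=(1, 1))
```

Rotating the pixel grid itself (rather than point coordinates) by the same angle would give the aligned image.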

Castro-Ortega et al. [16] used geometrical moments to find the centroid of each dorsal image and then defined a bounding box around the centroid with dimensions equal to half the image size. The authors of [17] proposed a multi-level segmentation algorithm based on image binarization followed by contour detection to locate the key points between the fingers. Although these approaches could segment the vein area from clear hand dorsal images, they were less successful on images captured under unconstrained conditions.
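The centroid-plus-box idea attributed to Castro-Ortega et al. [16] can be sketched as follows, computing the centroid from the raw moments m00, m10, and m01 and placing a box of half the image size around it. Centering the box on the centroid and clamping it to the image are assumptions of this sketch; the input is treated as an intensity (or binary) weight map.

```python
import numpy as np


def centroid_bounding_box(image):
    """Centroid from raw geometric moments and a half-size box around it (hypothetical helper)."""
    img = np.asarray(image, dtype=float)
    rows, cols = np.indices(img.shape)
    m00 = img.sum()                              # zeroth-order moment
    cx = (cols * img).sum() / m00                # m10 / m00 (x = column)
    cy = (rows * img).sum() / m00                # m01 / m00 (y = row)
    h, w = img.shape[0] // 2, img.shape[1] // 2  # box size = image size / 2
    top = int(np.floor(cy - h / 2))              # assumed: box centred on the centroid
    left = int(np.floor(cx - w / 2))
    top = min(max(top, 0), img.shape[0] - h)     # clamp the box inside the image
    left = min(max(left, 0), img.shape[1] - w)
    return (cy, cx), (top, left, h, w)
```

The box is returned as (top, left, height, width), so the ROI is `image[top:top + h, left:left + w]`.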

Wu et al. [18] developed a geometry-based feature extraction technique that uses a directional filter bank followed by a minimum directional code (MDC) to extract line-based vein features. To reduce the noise caused by non-vein areas, they also used the directional filtering magnitude to detect and remove non-vein pixels.
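To make the directional-coding idea concrete, the sketch below uses a simplified filter bank of line-averaging kernels at `n_dirs` orientations. Because veins appear dark, each pixel's code is the index of the orientation with the minimum response, and pixels whose responses barely vary across orientations are flagged as non-vein. The kernel shape, number of directions, radius, and `min_magnitude` threshold are assumptions; Wu et al.'s actual filter design [18] is not reproduced here.

```python
import numpy as np
from scipy.ndimage import correlate


def line_kernel(angle_deg, radius):
    """Averaging kernel along a line through the kernel centre at `angle_deg`."""
    size = 2 * radius + 1
    kernel = np.zeros((size, size))
    t = np.arange(-radius, radius + 1)
    a = np.deg2rad(angle_deg)
    xs = np.rint(radius + t * np.cos(a)).astype(int)
    ys = np.rint(radius - t * np.sin(a)).astype(int)   # rows grow downwards in images
    kernel[ys, xs] = 1.0
    return kernel / kernel.sum()


def minimum_directional_code(image, n_dirs=8, radius=4, min_magnitude=0.1):
    """Simplified directional filter bank + minimum directional code (illustrative only)."""
    img = np.asarray(image, dtype=float)
    angles = [180.0 * k / n_dirs for k in range(n_dirs)]
    responses = np.stack([correlate(img, line_kernel(a, radius), mode="reflect") for a in angles])
    code = responses.argmin(axis=0)                         # dark veins: minimum follows the vein direction
    magnitude = responses.max(axis=0) - responses.min(axis=0)
    vein_mask = magnitude >= min_magnitude                  # flat response across directions -> non-vein
    return code, magnitude, vein_mask
```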

In [19], Otsu’s method was used to identify the hand area, and a region-property method was employed to calculate the parameters of a bounding box that encloses the vein pattern. Other researchers presented texture-based feature extraction methods that extract rich information from degraded vein images in which the vein pattern is partially occluded or scattered. Rahul et al. [20] used the Local Directional Texture Pattern method to extract directional and contrast information from contactless vein images captured in an unconstrained environment. Another, structure-based method uses a double-orientation code descriptor to extract discrete dominant orientation features from the vein image for use in the matching process [21]. Our proposed method for image segmentation and feature extraction is described in the next section.
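The Otsu-plus-bounding-box step of [19] can be approximated in a few lines. The sketch below computes Otsu's threshold by maximizing the between-class variance over an 8-bit histogram and then takes the extent of the foreground as the bounding box. It assumes an 8-bit input in which the hand is brighter than the background, and it replaces a full region-properties routine with this simple extent calculation.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu's threshold for an 8-bit image: maximise the between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # probability of the class at or below each level
    mu = np.cumsum(prob * np.arange(256))      # cumulative first moment
    mu_total = mu[-1]                          # global mean intensity
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    return int(np.argmax(np.nan_to_num(between)))


def hand_bounding_box(gray):
    """Bounding box (top, left, bottom, right) of pixels above the Otsu threshold."""
    mask = gray > otsu_threshold(gray)          # assumed: hand brighter than background
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return int(rows[0]), int(cols[0]), int(rows[-1]), int(cols[-1])
```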

This section presents the proposed method for contact-free dorsal hand vein recognition and the creation of the required database.

3.1 Dorsal Hand Veins Image Acquisition

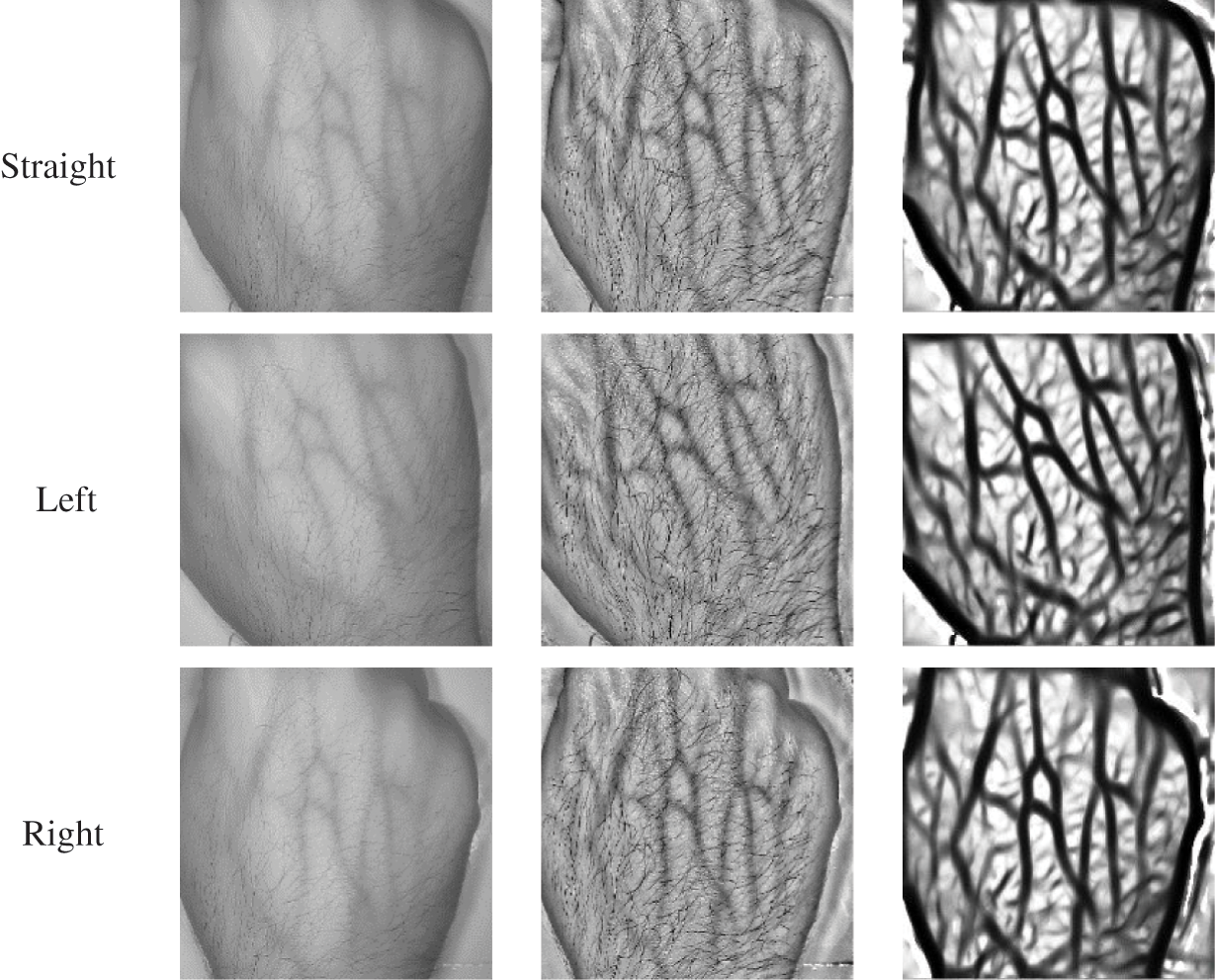

Unlike the currently available databases, UHDVD images were captured in an unconstrained environment, with heterogeneous characteristics that arise from the NIR light source. The light source was set to a comfortable 650 nm wavelength, since light with a wavelength of 900 nm or above can be hazardous to the human eye. Moreover, UHDVD was obtained through a multi-image session for each person with different hand orientations. Finally, because capturing was contactless, the distance between the camera lens and the captured hand varied. The acquisition device used for image capturing is the BIOBASE BKVD-1202L Veins Finder [22], shown in Fig. 2, with an NIR light source customized to the 650 nm wavelength. The Veins Finder uses a 5-megapixel camera with adjustable light intensity to produce images with a 480 × 600 resolution and is equipped with a Liquid Crystal Display (LCD) for image viewing. See Tab. 1 for a detailed list of the device parameters.

Figure 2: The BIOBASE BKVD-1202L veins finder

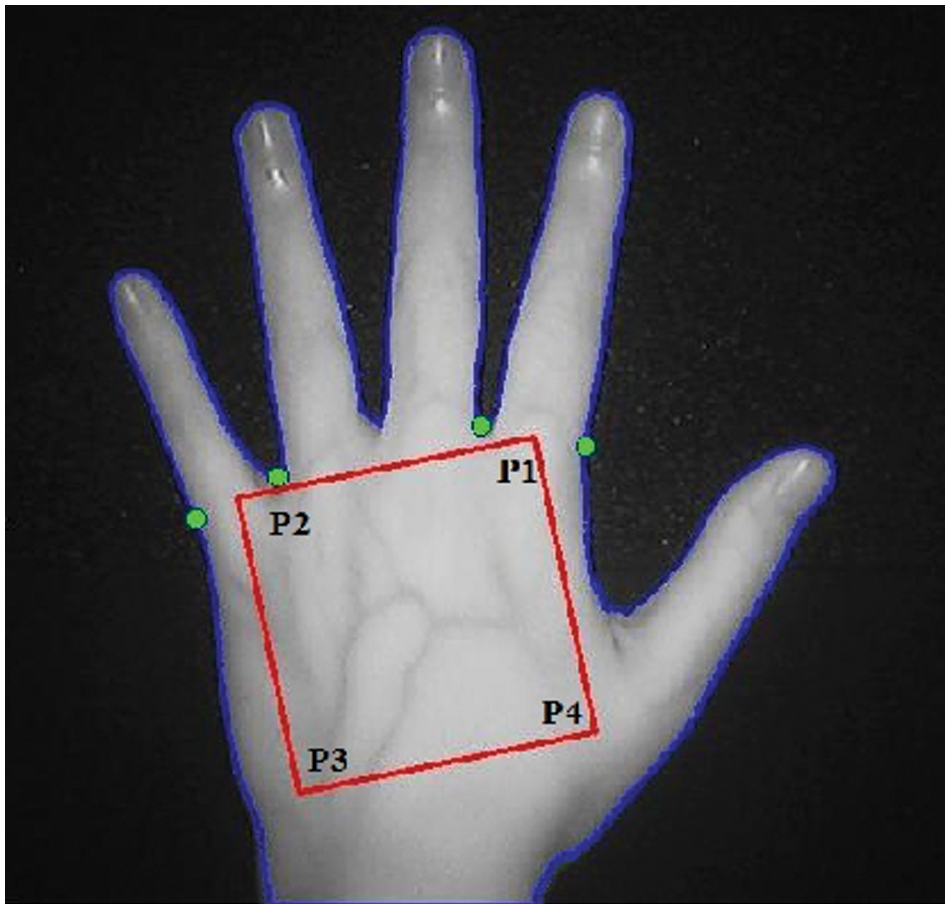

The UHDVD database contains 1548 images obtained from 86 people, with two sessions per person: one for the left hand and one for the right hand. Each session captured nine images covering three orientations (straight, left, and right), with three different light filter settings for each orientation. Fig. 3 shows a sample left-hand session, where the first image in each row is the basic NIR image and the second and third images were captured using the light filters supplied by the Veins Finder device. The participants were asked to hold their hands away from the device surface at any distance between 5 and 20 centimeters and to rotate them freely to the left and right, as seen in Fig. 4. This process produced images with different ROI sizes and rotation angles that simulate an unconstrained environment for recognizing an individual. These imaging conditions apply to deployments at various locations, such as airports, secure areas, and banks, as well as under pandemic conditions.

Figure 3: Sample images of a left-hand session

Figure 4: Image acquisition
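The database size follows directly from the capture protocol: 86 participants × 2 hands × 3 orientations × 3 light settings = 1548 images. The sketch below enumerates that grid; the light-mode labels are hypothetical placeholders and do not reflect the file naming of the published download [34].

```python
from itertools import product

PARTICIPANTS = 86
HANDS = ("left", "right")                              # one session per hand
ORIENTATIONS = ("straight", "left", "right")
LIGHT_MODES = ("basic_nir", "filter_a", "filter_b")    # hypothetical labels for the three light settings

images_per_session = len(ORIENTATIONS) * len(LIGHT_MODES)
index = list(product(range(1, PARTICIPANTS + 1), HANDS, ORIENTATIONS, LIGHT_MODES))

print(images_per_session, len(index))  # 9 1548
```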

The ability to detect the correct ROI and eliminate noise is essential to image recognition. Once the noise is eliminated and the vein image is correctly segmented, the enhanced, segmented image can be used in the feature extraction process. Experiments were conducted on the UHDVD database with varying rotation, capturing distance, and noise filtering parameters.

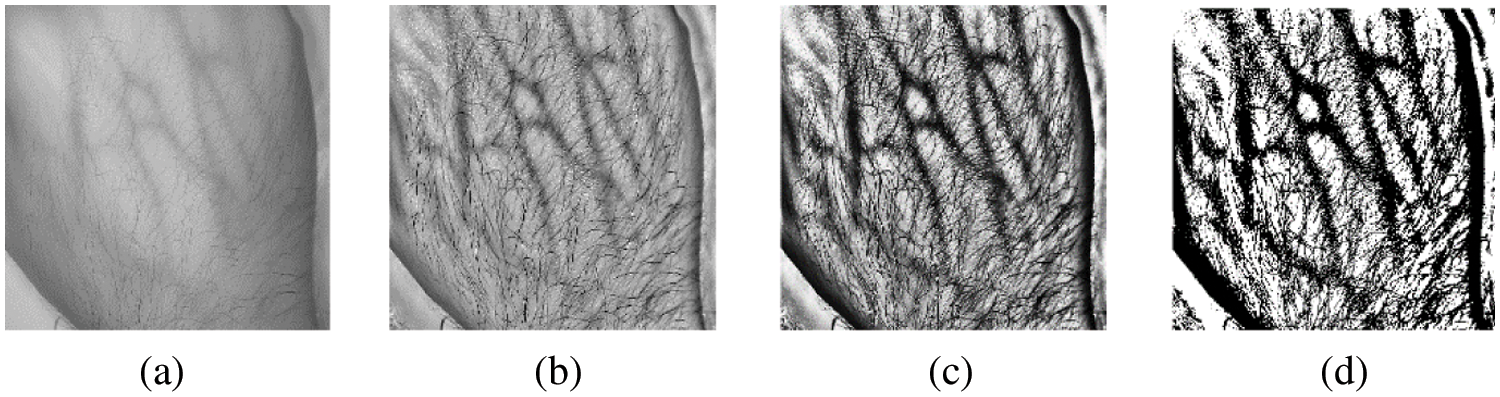

For image enhancement, three operations were applied: histogram equalization, erosion, and dilation. The enhancement method first converts the image from the Red Green Blue (RGB) format to grayscale to reduce the processing complexity, as seen in Fig. 5a. Then, as shown in Fig. 5b, a light-enhanced version of the original image is obtained by applying the device modes provided by the Veins Finder. The processed image is passed to a histogram equalization function [17], which stretches the intensity range when the global contrast of the image is confined to a narrow range of values; this increases the contrast of the vascular network and further reduces the noise, as seen in Fig. 5c. Finally, the equalized image is binarized with a threshold value of 0.6, as seen in Fig. 5d. Although many noise reduction methods are available [23], our experiments showed that histogram equalization of images filtered by the Veins Finder was effective in reducing the noise while aiding the segmentation process. Nonetheless, further noise reduction is applied after binarization, because the noise caused by hand hair and the gaps in the vein structure created by the capturing device must still be handled. For hair elimination, an erosion method [24] is applied to the binary image as in Eq. (1), where X is the binary image eroded by the structuring element B and $B_z$ denotes B translated by z:

$$X \ominus B = \{\, z \mid B_z \subseteq X \,\} \qquad (1)$$

Figure 5: A sample image going through the enhancement process
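A minimal NumPy sketch of the grayscale, histogram equalization, and binarization steps is given below. The BT.601 luma weights, the CDF-based equalization formula, and treating 0.6 as a threshold on intensities normalized to [0, 1] (with True marking brighter pixels) are assumptions. The device-specific light modes of Fig. 5b are not modeled, and the vein polarity may need to be inverted so that veins are the foreground before the morphological steps.

```python
import numpy as np


def to_gray(rgb):
    """RGB uint8 image (H, W, 3) -> grayscale uint8 using ITU-R BT.601 luma weights (assumed)."""
    weights = np.array([0.299, 0.587, 0.114])
    return np.clip(np.rint(rgb[..., :3].astype(float) @ weights), 0, 255).astype(np.uint8)


def equalize_histogram(gray):
    """CDF-based histogram equalization: stretches a narrow intensity range over 0-255."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]                       # CDF at the darkest occupied level
    denom = max(int(cdf[-1] - cdf_min), 1)          # guard against constant images
    lut = np.clip(np.rint((cdf - cdf_min) * 255.0 / denom), 0, 255).astype(np.uint8)
    return lut[gray]


def binarize(gray, threshold=0.6):
    """True where the intensity, normalised to [0, 1], exceeds `threshold`."""
    return gray.astype(float) / 255.0 > threshold
```

For example, the narrow 2 × 2 patch [[100, 101], [102, 103]] is stretched to [[0, 85], [170, 255]], and only its bottom row survives the 0.6 threshold.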

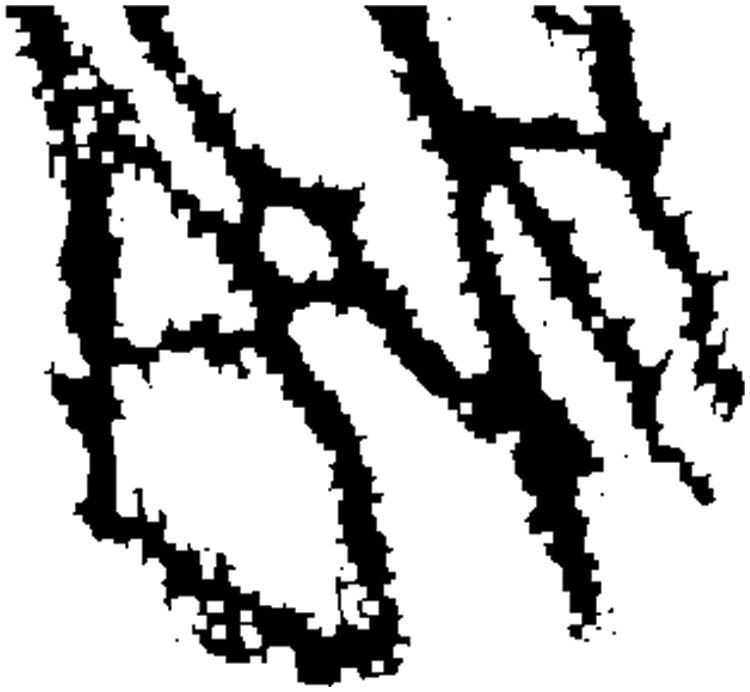

In the erosion process, the contents of an image are shrunk by the structuring element according to a given parameter, so narrow image elements, especially hair, are eliminated and the veins become more visible. However, erosion also widens the scattered gaps between the veins, which degrades the extracted features and may cause recognition failure. To overcome this problem, dilation is applied to the eroded image as in Eq. (2), where X is the image dilated by the structuring element B, $\hat{B}$ is the reflection of B, and $(\hat{B})_z$ is its translation by z:

$$X \oplus B = \{\, z \mid (\hat{B})_z \cap X \neq \emptyset \,\} \qquad (2)$$

Using rectangular structuring elements, the dilation fills the gaps in the vein curves. After dilation, a 20-pixel border is removed from each side of the image to eliminate edge effects, and the resulting image, shown in Fig. 6, is passed to the feature extraction phase.

Figure 6: Sample segmented image ready for feature extraction
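The erosion, dilation, and border-trimming steps can be sketched with `scipy.ndimage`. The 3 × 3 erosion element and the 5 × 3 rectangular dilation element are sizes assumed for illustration (the text does not state them), and the input is a boolean mask in which vein pixels are True.

```python
import numpy as np
from scipy.ndimage import binary_dilation, binary_erosion


def clean_vein_mask(mask, erode_size=3, dilate_shape=(5, 3), border=20):
    """Erode away thin structures such as hair, re-close gaps by dilation, then trim the border.

    `border` must be positive; it is the number of pixels dropped on every side.
    """
    eroded = binary_erosion(mask, structure=np.ones((erode_size, erode_size), dtype=bool))
    dilated = binary_dilation(eroded, structure=np.ones(dilate_shape, dtype=bool))  # rectangular element
    return dilated[border:-border, border:-border]
```

In the test case a one-pixel-wide "hair" disappears after erosion while a 20-pixel-thick band survives and is restored by dilation.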

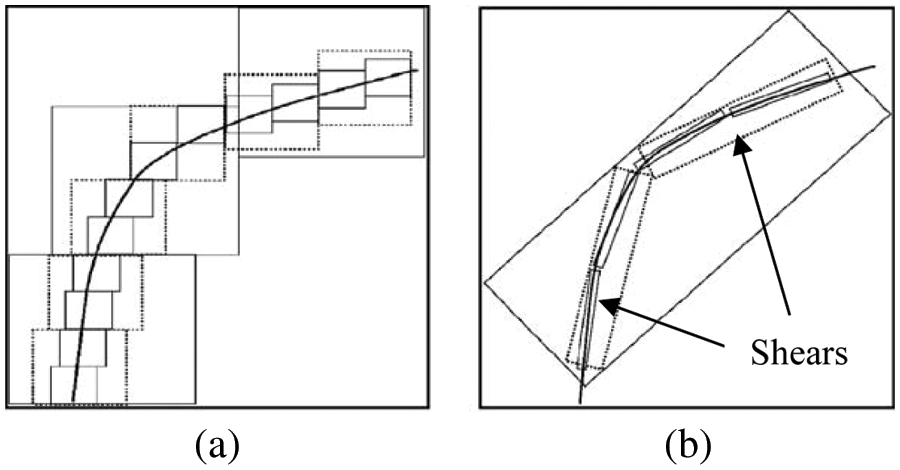

One of the main contributions of this research is the extraction of geometrical features from the hand vascular network. Fourier [25] and Wavelet-like [26] transforms require a large number of terms to represent curves with discontinuities. Texture-based transforms can extract features from images such as a vein network, but curve discontinuities are better represented by multidimensional geometrical feature extraction algorithms. Therefore, this paper uses the multidimensional Curvelet transform [27], which is implemented with the two-dimensional Fast Fourier Transform (2D FFT) and shearing. Fig. 7 shows how curves are processed by the Wavelet (Fig. 7a) and Curvelet (Fig. 7b) transforms. As seen in the figure, an oriented curve singularity is represented better by the Curvelet transform than by the Wavelet transform.

Figure 7: Two curve processing methods

3.4 Curvelet Algorithm Implementation

The Curvelet transform [27] computes the feature representation in four steps:

1. Apply the 2D FFT to the image to obtain its frequency representation.

2. Divide the frequency plane into shears (sheared objects).

3. Multiply each sheared object by its corresponding frequency window.

4. Apply the inverse 2D FFT to each windowed shear to obtain the Curvelet coefficients.
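The four steps above can be illustrated with a deliberately simplified NumPy sketch: it takes the 2D FFT, splits one frequency annulus into angular wedges, multiplies the spectrum by a hard indicator window per wedge, and inverse-transforms each wedge. This is not a Curvelet transform (it has no smooth windows, parabolic scaling, shearing, or wrapping); a faithful implementation should follow [27], for example through the CurveLab toolbox. The annulus limits and wedge count are assumptions.

```python
import numpy as np


def wedge_decomposition(image, n_angles=8, r_min=0.25, r_max=0.5):
    """Toy FFT -> wedge windows -> inverse FFT decomposition (NOT a real Curvelet transform)."""
    img = np.asarray(image, dtype=float)
    spectrum = np.fft.fft2(img)                                   # step 1: 2D FFT
    fy = np.fft.fftfreq(img.shape[0])[:, None]                    # frequency grid (cycles/pixel)
    fx = np.fft.fftfreq(img.shape[1])[None, :]
    radius = np.hypot(fx, fy)
    angle = np.mod(np.arctan2(fy, fx), np.pi)                     # treat f and -f as one direction
    wedge_index = np.minimum((angle * n_angles / np.pi).astype(int), n_angles - 1)
    annulus = (radius >= r_min) & (radius < r_max)
    wedges = []
    for k in range(n_angles):                                     # step 2: divide the plane into wedges
        window = annulus & (wedge_index == k)                     # step 3: multiply by a (hard) window
        wedges.append(np.fft.ifft2(spectrum * window).real)       # step 4: inverse 2D FFT
    remainder = np.fft.ifft2(spectrum * ~annulus).real            # frequencies outside the annulus
    return wedges, remainder
```

Because the indicator windows partition the spectrum, the wedge outputs plus the remainder sum back to the input, and a pattern that varies only along the x axis concentrates its energy in the 0-angle wedge.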

The number of scales in the Curvelet decomposition depends on the image size, as given by Eq. (4), where ⌈ ⌉ is the ceiling operation and the image size is N × N:

$$n_{\text{scales}} = \lceil \log_2(N) - 3 \rceil \qquad (4)$$

For example, a 512 × 512 image is divided into six scales. If the image is not square, its smaller dimension may be used as N.
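Assuming Eq. (4) takes the form ⌈log₂(N) − 3⌉, which matches the default scale count of the CurveLab reference implementation and the 512 × 512 → six scales example, the scale count can be computed as below. The helper name is hypothetical.

```python
import math


def curvelet_scales(height, width):
    """Number of Curvelet scales, ceil(log2(N) - 3), with N the smaller image dimension."""
    n = min(height, width)
    return math.ceil(math.log2(n) - 3)


print(curvelet_scales(512, 512), curvelet_scales(256, 256), curvelet_scales(480, 600))  # 6 5 6
```

At the device's native 480 × 600 resolution this gives six scales, while the 256 × 256 normalization used in the experiments gives five.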

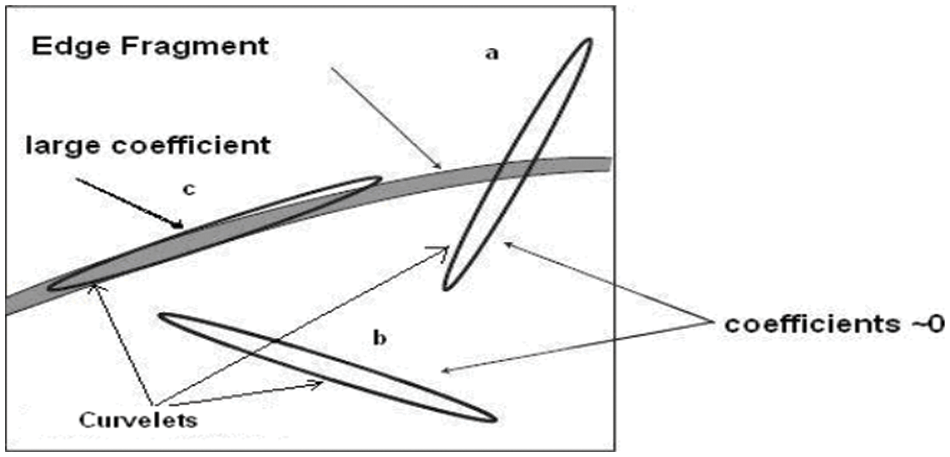

In our experiments, the vein images were normalized to 256 × 256 and decomposed over five scales to reduce the number of extracted Curvelet coefficients. The Curvelet coefficients with the highest values represent the curve discontinuities that fit inside a shear, as seen in Fig. 8. The 1024 largest values are extracted from each scale, forming 5 × 1024 = 5120 features for use in the matching process.

Figure 8: Curvelet coefficients
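The feature-vector construction can be sketched as follows, assuming the Curvelet coefficients are available as one list of wedge arrays per scale and reading the text as keeping the 1024 largest coefficient magnitudes per scale. Zero-padding scales with fewer than 1024 coefficients is an assumption, since the coarsest scale of a 256 × 256 image may hold fewer values. Sorting in ascending order, as the text describes for rotation handling, also makes the result independent of the order of the wedges.

```python
import numpy as np


def curvelet_feature_vector(scale_coeffs, per_scale=1024):
    """Concatenate the `per_scale` largest coefficient magnitudes of every scale, sorted ascending.

    `scale_coeffs` holds one list of wedge coefficient arrays per scale (assumed layout).
    """
    features = []
    for wedges in scale_coeffs:
        mags = np.concatenate([np.abs(np.ravel(w)) for w in wedges])
        if mags.size < per_scale:                      # assumed: zero-pad small (coarse) scales
            mags = np.pad(mags, (0, per_scale - mags.size))
        features.append(np.sort(mags)[-per_scale:])    # largest values, in ascending order
    return np.concatenate(features)
```

With five scales the result has 5 × 1024 = 5120 entries, and reordering the wedges within a scale leaves it unchanged.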

Many techniques are used for template matching in recognition systems, depending on the nature of the extracted features, and the Euclidean Distance (ED) is commonly used for distance calculation [28]. ED measures the dissimilarity between two templates, a and b, of feature size n by summing their squared differences, as in Eq. (5):

$$ED(a, b) = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2} \qquad (5)$$
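For reference, Eq. (5) in its standard form, $\sqrt{\sum_i (a_i - b_i)^2}$, is a one-line computation; the sketch below assumes both templates are equal-length feature vectors such as the 5120-element Curvelet vectors. Because every one of the n terms contributes, small per-feature deviations across thousands of features accumulate into a large distance, which is the behavior the modified distance below is meant to tame.

```python
import numpy as np


def euclidean_distance(a, b):
    """Eq. (5): square root of the summed squared feature differences."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sqrt(np.sum((a - b) ** 2)))


print(euclidean_distance([0, 0], [3, 4]))  # 5.0
```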

In an unconstrained environment, extracting the same features from an individual under varying imaging conditions is difficult, and the resulting distance is usually high and unpredictable. Therefore, a modified version of ED with a similarity margin m and feature size n was implemented as in Eq. (6), under the conditions of Eq. (7). Here, a Sim value of one indicates no match and zero indicates a complete match. In this paper, m = 5 and n = 5120 were used in Eq. (6), and the distance L0 remains in the range between 0 and 1.

To overcome the rotation problem, the Curvelet features of each scale were sorted in ascending order, so that the feature vector reflects the distribution of coefficient values rather than the order of the directional wedges in which they occur. Experimentally, a threshold of 0.28 gave the best results: if the distance L0 between two vein templates is less than the threshold, the templates are considered to belong to the same person; otherwise, there is no match.
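The exact form of Eqs. (6) and (7) is not given here, so the sketch below assumes one plausible reading consistent with the description: each feature contributes Sim = 0 when its absolute difference is within the margin m and Sim = 1 otherwise, and L0 is the mean of these values, so it lies in [0, 1]. The threshold rule (same person when L0 < 0.28) follows the text; the function names are hypothetical.

```python
import numpy as np


def margin_distance(a, b, m=5.0):
    """Assumed reading of Eqs. (6)-(7): share of features whose difference exceeds the margin m."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    sim = (np.abs(a - b) > m).astype(float)   # Sim = 1: feature does not match; 0: it matches
    return float(sim.mean())                  # L0, always in [0, 1]


def same_person(a, b, threshold=0.28, m=5.0):
    """Decision rule from the text: a match when L0 is below the threshold."""
    return margin_distance(a, b, m) < threshold
```

Unlike the plain Euclidean distance, a few large per-feature deviations cannot push L0 above 1, which keeps a single fixed threshold meaningful.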

4 Experimental Results and Analysis

The proposed recognition method was tested on the proposed UHDVD database as well as on the NCUT V1 database [29]. The NCUT database was chosen because it is well cited in the literature and is the best available database that meets the requirements of the proposed method. The NCUT experiments were applied to 2040 images captured from 102 people.

Tab. 2 shows the recognition rates of some of the best-known methods in the literature. Wang et al. [30] used image binarization for segmentation followed by the Scale-Invariant Feature Transform (SIFT) to extract features from the vein template. The weakness of their method lies in the selection of the vein region used in segmentation. On the other hand, the Label Switched Path (LSP) segmentation methods used in [29,31,32] improve the recognition accuracy. When tested on NCUT, the proposed method is less accurate than some other methods because it was designed to benefit from the extra features of the UHDVD database, which simulates an unconstrained capturing environment. However, because the UHDVD database is new, it offers no basis for comparison with previous works that did not use it. When tested on the 1548 images of the UHDVD database, the proposed method achieved a False Rejection Rate of only 1.83 and a False Acceptance Rate of zero with the 0.28 dissimilarity threshold. These low error rates demonstrate the high accuracy of the proposed system on the UHDVD database.
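The reported error rates can be computed from genuine and impostor comparison scores as sketched below. The decision rule (accept when the dissimilarity is below 0.28) follows the text; expressing the rates as percentages and the helper name are assumptions.

```python
import numpy as np


def error_rates(genuine, impostor, threshold=0.28):
    """FRR and FAR (in percent) for a dissimilarity score: accept when score < threshold."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    frr = 100.0 * np.mean(genuine >= threshold)   # genuine pairs wrongly rejected
    far = 100.0 * np.mean(impostor < threshold)   # impostor pairs wrongly accepted
    return float(frr), float(far)
```

Raising the threshold trades a lower FRR for a higher FAR, so the 0.28 value fixes one operating point on that trade-off.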

In this paper, we introduced the new UHDVD dorsal hand vein database, for which we created the image acquisition framework and made the database available for download [34], and we highlighted the main differences between UHDVD and other databases. We then proposed a novel segmentation method that uses image binarization and morphological erosion and dilation to search for local minima pixels in enhanced vein images to detect the ROI. Finally, a multi-resolution curvature feature extraction technique based on the Curvelet transform was applied to extract discriminating features from the vein patterns. The proposed segmentation and feature extraction method was tested on the NCUT and UHDVD databases. The results demonstrated its efficacy, with a high recognition rate, a low False Rejection Rate, and a zero False Acceptance Rate. Future work could consider multi-feature learning models with enhanced local attention, such as [35,36], to improve the recognition system.

Acknowledgement: The authors would like to express their sincere gratitude to the volunteers who participated in the imaging sessions of the UHDVD database.

Funding Statement: This research was funded by Al-Zaytoonah University of Jordan Grant Number (2020-2019/12/11).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. H. Wasmi, M. Al-Rifaee, A. Thunibat and B. Al-Mahadeen, “Comparison between proposed convolutional neural network and KNN for finger vein and palm print,” in Proc. of the 2021 Int. Conf. on Information Technology (ICIT 2021), Amman, Jordan, pp. 946–951, 2021.

2. D. R. Ibrahim, A. A. Tamimi and A. M. Abdalla, “Performance analysis of biometric recognition modalities,” in Proc. of the 8th Int. Conf. on Information Technology (ICIT 2017), Amman, Jordan, pp. 980–984, 2017.

3. M. Al-Rifaee, “Unconstrained iris recognition,” PhD Thesis, De Montfort University, Leicester, UK, 2014.

4. O. AlZoubi, M. A. Awad and A. M. Abdalla, “Automatic segmentation and detection system for varicocele in supine position,” IEEE Access, vol. 9, pp. 125393–125402, 2021.

5. A. Abdalla, M. A. Awad, O. AlZoubi and L. Al-Samrraie, “Automatic segmentation and detection system for varicocele using ultrasound images,” Computers, Materials and Continua, vol. 72, no. 1, pp. 797–814, 2022.

6. A. A. Tamimi, O. N. A. AL-Allaf and M. A. Alia, “Real-time group face-detection for an intelligent class-attendance system,” International Journal of Information Technology and Computer Science, vol. 7, no. 6, pp. 66–73, 2015.

7. F. Wilches-Bernal, B. Núñez-Álvares and P. Vizcaya, “A database of dorsal hand vein images,” 2020. [Online]. Available: https://arxiv.org/abs/2012.05383.

8. R. Raghavendra, J. Surbiryala and C. Busch, “Hand dorsal vein recognition: Sensor, algorithms and evaluation,” in Proc. of the 2015 IEEE Int. Conf. on Imaging Systems and Techniques (IST 2015), Macau, China, pp. 1–6, 2015.

9. M. Z. Yildiz and Ö. F. Boyraz, “Development of a low-cost microcomputer based vein imaging system,” Infrared Physics and Technology, vol. 98, pp. 27–35, 2019.

10. P. Tome and S. Marcel, “VERA palmvein dataset,” 2015. [Online]. Available: https://zenodo.org/record/4575454#.YiUu2-hBxhE.

11. P. Tome and S. Marcel, “On the vulnerability of palm vein recognition to spoofing attacks,” in Proc. of the 2015 Int. Conf. on Biometrics (ICB 2015), Phuket, Thailand, pp. 319–325, 2015.

12. Y. P. Lee, “Palm vein recognition based on a modified (2D)2 LDA,” Signal Image and Video Processing, vol. 9, no. 1, pp. 229–242, 2015.

13. W. Kang and Q. Wu, “Contactless palm vein recognition using a mutual foreground-based local binary pattern,” IEEE Transactions on Information Forensics and Security, vol. 9, no. 11, pp. 1974–1985, 2014.

14. J. C. Lee, “A novel biometric system based on palm vein image,” Pattern Recognition Letters, vol. 33, no. 12, pp. 1520–1528, 2012.

15. R. I. Yahya, S. M. Shamsuddin, S. I. Yahya, S. Hasan and B. Alsalibi, “Automatic 2D image segmentation using tissue-like p system,” International Journal of Advances in Soft Computing and its Applications, vol. 10, no. 1, pp. 36–54, 2018.

16. R. Castro-Ortega, C. Toxqui-Quitl, G. Cristóbal, J. V. Marcos, A. Padilla-Vivanco et al., “Analysis of the hand vein pattern for people recognition,” in Proc. of SPIE, San Diego, CA, USA, vol. 9599, 2015.

17. J. R. G. Neves and P. L. Correia, “Hand veins recognition system,” in Proc. of the 9th Int. Conf. on Computer Vision Theory and Applications (VISAPP 2014), Lisbon, Portugal, vol. 1, pp. 122–129, 2014.

18. K. S. Wu, J. C. Lee, T. M. Lo, K. C. Chang and C. P. Chang, “A secure palm vein recognition system,” Journal of Systems and Software, vol. 86, no. 11, pp. 2870–2876, 2013.

19. A. Singh, H. Goyal and A. K. Gautam, “Human identification based on hand dorsal vein pattern using BRISK & SURF algorithm,” International Journal of Engineering and Advanced Technology, vol. 9, no. 4, pp. 2168–2175, 2020.

20. R. C. Rahul, M. Cherian and C. M. Manu Mohan, “A novel MF-LDTP approach for contactless palm vein recognition,” in Proc. of the 2015 Int. Conf. on Computing and Network Communications (CoCoNet 2015), Trivandrum, Kerala, India, pp. 793–798, 2016.

21. L. Fei, Y. Xu, W. Tang and D. Zhang, “Double-orientation code and nonlinear matching scheme for palmprint recognition,” Pattern Recognition, vol. 49, no. C, pp. 89–101, 2016.

22. BIOBASE Meihua, 2022. [Online]. Available: http://www.meihuatrade.com.

23. A. M. Abdalla, M. S. Osman, H. AlShawabkah, O. Rumman and M. Mherat, “A review of nonlinear image-denoising techniques,” in Proc. of the 2018 Second World Conf. on Smart Trends in Systems, Security and Sustainability (World S4), London, UK, pp. 96–100, 2018.

24. E. R. Urbach and M. H. F. Wilkinson, “Efficient 2-D grayscale morphological transformations with arbitrary flat structuring elements,” IEEE Transactions on Image Processing, vol. 17, no. 1, pp. 1–8, 2008.

25. T. Tyagi and P. Sumathi, “Comprehensive performance evaluation of computationally efficient discrete Fourier transforms for frequency estimation,” IEEE Transactions on Instrumentation and Measurement, vol. 69, no. 5, pp. 2155–2163, 2020.

26. K. K. Singh and A. Singh, “Diagnosis of COVID-19 from chest X-ray images using wavelets-based depthwise convolution network,” Big Data Mining and Analytics, vol. 4, no. 2, pp. 84–93, 2021.

27. E. Candès, L. Demanet, D. Donoho and L. Ying, “Fast discrete curvelet transforms,” Multiscale Modeling and Simulation, vol. 5, no. 3, pp. 861–899, 2006.

28. T. E. Schouten and E. L. Van Den Broek, “Fast exact Euclidean distance (FEED): A new class of adaptable distance transforms,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 36, no. 11, pp. 2159–2172, 2014.

29. D. Huang, Y. Tang, Y. Wang, L. Chen and Y. Wang, “Hand-dorsa vein recognition by matching local features of multisource keypoints,” IEEE Transactions on Cybernetics, vol. 45, no. 9, pp. 1823–1837, 2015.

30. Y. Wang, Y. Fan, W. Liao, K. Li, L. K. Shark et al., “Hand vein recognition based on multiple keypoints sets,” in Proc. of the 2012 5th IAPR Int. Conf. on Biometrics (ICB 2012), New Delhi, India, pp. 367–371, 2012.

31. X. Zhu and D. Huang, “Hand dorsal vein recognition based on hierarchically structured texture and geometry features,” in Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 7701, Springer, Berlin, Heidelberg, Redondo Beach, CA, USA, pp. 157–164, 2012.

32. S. Zhao, Y. D. Wang and Y. H. Wang, “Biometric identification based on low-quality hand vein pattern images,” in Proc. of the 7th Int. Conf. on Machine Learning and Cybernetics (ICMLC), Kunming, China, vol. 2, pp. 1172–1177, 2008.

33. Y. Wang, K. Li, J. Cui, L. K. Shark and M. Varley, “Study of hand-dorsa vein recognition,” in Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 6215, Springer, Berlin, Heidelberg, Seoul, Korea, pp. 490–498, 2010.

34. M. M. Al Rifaee, M. M. Abdallah and M. I. Salah, “UHDVD hand veins dataset,” 2022. [Online]. Available: https://www.i-csrs.org/database/Veins_Database.rar.

35. W. Sun, G. Dai, X. Zhang, X. He and X. Chen, “TBE-Net: A three-branch embedding network with part-aware ability and feature complementary learning for vehicle re-identification,” IEEE Transactions on Intelligent Transportation Systems, pp. 1–13, 2021. https://doi.org/10.1109/TITS.2021.3130403.

36. W. Sun, X. Chen, X. Zhang, G. Dai, P. Chang et al., “A multi-feature learning model with enhanced local attention for vehicle re-identification,” Computers, Materials and Continua, vol. 69, no. 3, pp. 3549–3561, 2021.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.