Computer Systems Science & Engineering

DOI:10.32604/csse.2021.014154

Article

Motion-Blurred Image Restoration Based on Joint Invertibility of PSFs

1Qingdao Branch, Navy Aeronautical and Astronautical University, Qingdao, 266041, China

2Dazzle Color Flower Core Speech Training School, Qingdao, 266041, China

*Corresponding Author: Yuye Zhang. Email: amigo_yezi@sohu.com

Received: 02 September 2020; Accepted: 10 November 2020

Abstract: In restoring a single motion-blurred image, the PSF (Point Spread Function) is difficult to estimate and the image deconvolution is ill-posed, so a good recovery cannot be obtained. Considering that several different PSFs can be jointly invertible, which makes the restoration well-posed, we propose a motion-blurred image restoration method based on the joint invertibility of PSFs by means of computational photography. Firstly, we design an observation device composed of multiple cameras with the same parameters, which continuously shoot the moving target in the same field of view to obtain target images with the same background. The target images have the same brightness but different exposure times and different motion blur lengths. The blur PSFs of the target images are easy to estimate from the sequence of frames obtained by one camera. Then, according to the motion blur superposition feature of the target and its background, the complete blurred target images are extracted from the respective observed images. Finally, for the images of the same target with different PSFs, the restoration is solved iteratively and jointly over multiple images in the spatial domain. The experiments showed that the moving target observation device designed for this method has low hardware requirements, that the observed images are convenient to use for joint-PSF restoration, and that the restoration results maintained details well and had a lower signal-to-noise ratio (SNR).

Keywords: Motion-blurred image restoration; PSF invertibility; ill-posed problem; computational photography

1 Introduction

In recent years, researchers have introduced computational photography into the field of motion blur restoration, which has opened up a new line of thinking. Image restoration based on computational photography designs a new way of acquiring images so as to obtain information that is more conducive to restoration. In 2004, Ben-Ezra et al. [1] first proposed using high-speed video sensors to assist motion blur PSF estimation and developed a class of methods based on visual measurement technology, which places high requirements on the configuration and combination of imaging equipment. In 2006, Raskar et al. [2] proposed the CE (Coded Exposure) method, in which the shutter is rapidly opened and closed within the camera exposure time to obtain a reversible motion blur PSF, that is, a PSF with no zeros in the frequency domain, thus transforming the ill-posed problem of image restoration into a well-posed one. The idea of obtaining a reversible PSF by the CE method [3] has attracted extensive attention from researchers and has been further developed. However, the CE method requires complex shutter control, its local image restoration presupposes a uniform background, and manual PSF estimation and target segmentation are also required. In 2009, Agrawal et al. [4] used common cameras to record the same target by shooting continuously while changing the exposure time, obtained multiple motion-blurred target images with different PSFs, and introduced the concept of zero filling to construct a reversible PSF for multi-image joint restoration. This method needs only simple imaging equipment and overcomes the complex hardware design of the previous methods. However, it needs to change the exposure time continuously while the target is moving. For high-speed moving targets, the shape of the target image changes considerably between adjacent frames, which degrades the joint restoration.

Based on the idea of constructing a reversible PSF by combining multiple PSFs, this paper proposes using multiple image acquisition devices with the same device parameters to obtain target images with different motion blur lengths under the same background. In addition, because it is difficult to extract the target from a complex background and to separate it accurately from the blur superposition with the background, this paper proposes extracting the complete target image according to the motion blur superposition feature of the target and background, and then solving the restoration iteratively and jointly in the spatial domain.

2 Analysis of Ill-Posed Problem of Motion Blur Restoration and Joint Reversibility of Multi-PSFs

2.1 The Ill-Posed Problem of Motion Blur Restoration

Since image blurring is in fact the shift and superposition of image points during the exposure time, it can be described by the convolution model:

$$g = f \otimes h + n \tag{1}$$

where $g$ represents the blurred image, $f$ represents the clear image, $h$ represents the blur point spread function, $\otimes$ represents the convolution operation, and $n$ represents the noise.

The model is transformed to the frequency domain as

$$G = F H + N \tag{2}$$

in which $G$, $F$, $H$ and $N$ are respectively the Fourier transforms of the blurred image, clear image, point spread function and noise. Calculated in the frequency domain, the estimate of the clear image can be expressed as:

$$\hat{F} = \frac{G}{H} = F + \frac{N}{H} \tag{3}$$

However, the motion blur PSF has zeros in the frequency domain, so noise or interference in the image is easily over-amplified during restoration, which degrades the result; this is the ill-posed problem of image restoration. Computationally, it is caused by the irreversibility of the PSF. Physically, it is caused by the loss of high-frequency information in the imaging process. To transform the ill-posed problem into a well-posed one, the irreversible PSF must be replaced by a reversible one. Agrawal et al. [4] proposed using variable-exposure cameras to obtain multiple images of the same moving target with different PSFs, whose combination is reversible.
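
The spectral zeros behind this ill-posedness can be checked numerically. The following is a minimal one-dimensional sketch, assuming uniform linear motion so that the PSF is a normalized box; the 22-pixel blur length is borrowed from Experiment 1, while the signal length of 330 samples and the helper name `box_psf` are hypothetical choices made only for illustration.

```python
import numpy as np

def box_psf(length, n):
    """Uniform linear-motion PSF of `length` pixels, zero-padded to n samples."""
    h = np.zeros(n)
    h[:length] = 1.0 / length
    return h

n = 330                      # signal length (hypothetical)
h = box_psf(22, n)           # 22-pixel motion blur
H = np.fft.fft(h)

# Bins where |H| is numerically zero: the inverse filter 1/H is undefined there,
# so any noise at these frequencies cannot be divided out.
zero_bins = np.flatnonzero(np.abs(H) < 1e-9)
print(zero_bins)

# Largest noise gain of the inverse filter over the remaining bins.
gain = 1.0 / np.abs(H[np.abs(H) >= 1e-9])
print(gain.max())
```

With these numbers the zeros fall on every 15th frequency bin, which is where a single-image inverse filter loses information.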

2.2 Joint Reversibility of Irreversible PSF

Suppose that the same target is shot with varying exposure times to obtain $k$ images with different motion blur lengths [4,5]. Each image is restored by the frequency-domain filtering formula (3). Here, the deconvolution filter of the $i$-th image is denoted by $W_i$, $i = 1, \ldots, k$.

The optimal deconvolution filters $W_i$ can be obtained by minimizing the noise intensity, and because

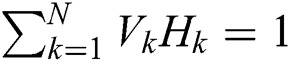

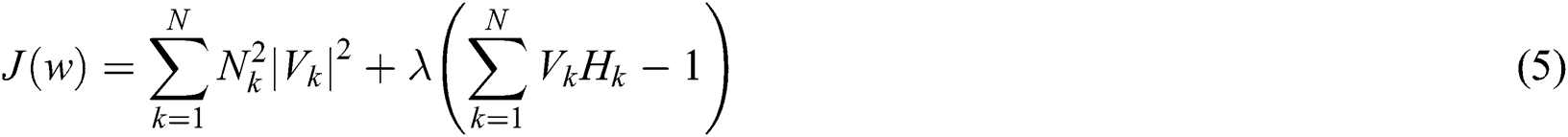

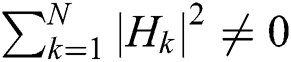

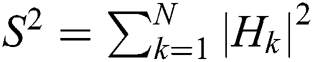

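Therefore, the Lagrange cost function can be constructed as follows:

$$J = \sum_{i=1}^{k} |W_i|^2 + \lambda \Big( 1 - \sum_{i=1}^{k} W_i H_i \Big) \tag{5}$$

where $H_i$ is the Fourier transform of the $i$-th PSF and $\lambda$ is the Lagrange multiplier. Minimizing $J$ by setting its derivative with respect to $W_i$ to zero, we get:

$$W_i = \frac{H_i^{*}}{\sum_{j=1}^{k} |H_j|^2} \tag{6}$$

where ${}^{*}$ denotes complex conjugation. The joint estimate of the clear image from multiple images is then obtained as follows: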

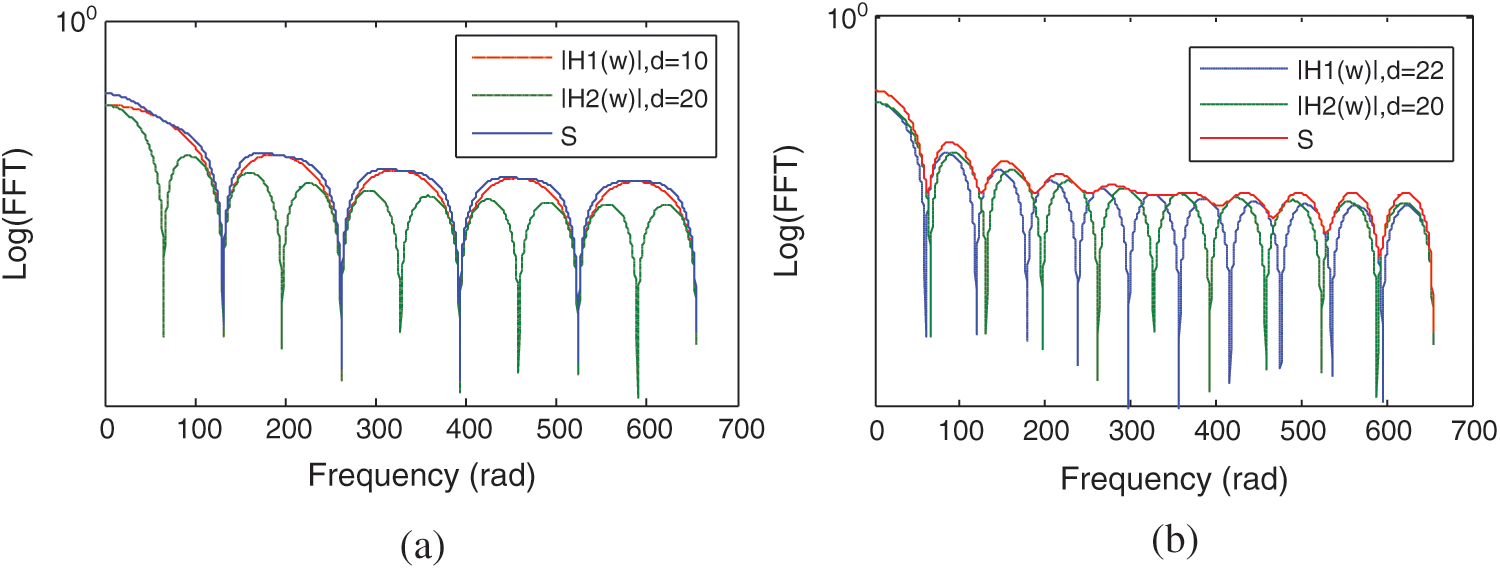
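
The joint estimate can be illustrated with a short one-dimensional sketch. It assumes the estimate has the common form in which each blurred spectrum is weighted by the conjugate PSF spectrum and divided by the summed squared moduli, and it uses circular convolution without noise so that the FFT model is exact. The blur lengths 15 and 22, the signal length 330, and the function names are hypothetical.

```python
import numpy as np

def box_psf(length, n):
    """Uniform linear-motion PSF of `length` pixels, zero-padded to n samples."""
    h = np.zeros(n)
    h[:length] = 1.0 / length
    return h

def joint_deconvolve(blurred, psfs):
    """Combine k blurred signals: F_hat = sum(conj(H_i) * G_i) / sum(|H_i|^2)."""
    G = [np.fft.fft(g) for g in blurred]
    H = [np.fft.fft(h) for h in psfs]
    num = sum(np.conj(Hi) * Gi for Hi, Gi in zip(H, G))
    den = sum(np.abs(Hi) ** 2 for Hi in H)
    return np.real(np.fft.ifft(num / den))

rng = np.random.default_rng(0)
n = 330
f = rng.random(n)                                   # synthetic sharp signal
psfs = [box_psf(15, n), box_psf(22, n)]             # no common spectral zero
blurred = [np.real(np.fft.ifft(np.fft.fft(f) * np.fft.fft(h))) for h in psfs]

f_hat = joint_deconvolve(blurred, psfs)
print(np.max(np.abs(f_hat - f)))                    # reconstruction error
```

Each PSF alone has spectral zeros on this grid, yet the summed denominator stays positive, so the division is well defined at every frequency.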

It can be seen from the above equation that, as long as $\sum_{i=1}^{k} |H_i|^2 \neq 0$, the ill-posedness of the recovery problem is removed. Fig. 1 shows the spectrum modulus curve $|H_i|$ of each single PSF and the sum of squared spectrum moduli $\sum_{i=1}^{k} |H_i|^2$ of all PSFs, here with $k = 2$. Comparing Figs. 1(a) and 1(b), it can be seen that each PSF has zeros or minima in the frequency domain. However, in Fig. 1(b), the two PSFs have no common zero at any frequency, so there is no zero in $\sum_{i=1}^{k} |H_i|^2$, that is, the joint reversibility of multiple PSFs is guaranteed. In Fig. 1(a), the motion blur length of one PSF is an integer multiple of the other, that is, the exposure times are integer multiples of each other. The two PSFs therefore share some minimum points in the frequency domain, and correspondingly $\sum_{i=1}^{k} |H_i|^2$ also has minimum points there. Therefore, when acquiring images with different exposure times, the exposure times should be close to each other rather than integer multiples of each other.

In addition, for a motion blur PSF, the longer the motion blur length, the more zeros or minimum points exist, which means that more information has been lost. To ensure the joint reversibility of the PSFs, multiple images with different exposure times need to be acquired. This can be interpreted as the blurred target images with different exposure times complementing each other in the restoration process. Theoretically, the higher the value of $k$, the better. However, considering the computational complexity and the cost of image acquisition, $k$ is usually between 2 and 4.

Figure 1: The curves of two PSFs’ frequency spectrum modulus and $\sum_{i=1}^{k} |H_i|^2$ ($L$ denotes motion blur length). (a) Case of multiple blur lengths, (b) Case of no multiple blur lengths
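
The two cases of Fig. 1 can be reproduced in spirit with a few lines. This is a sketch assuming box PSFs on a discrete frequency grid; the blur lengths (11 and 22 for the multiple case, 15 and 22 for the non-multiple case) and the grid size of 330 are hypothetical values picked so that the zeros land exactly on grid points.

```python
import numpy as np

def spectrum_energy(lengths, n):
    """Return the sum over PSFs of |H_i|^2 for box PSFs of the given blur lengths."""
    total = np.zeros(n)
    for length in lengths:
        h = np.zeros(n)
        h[:length] = 1.0 / length
        total += np.abs(np.fft.fft(h)) ** 2
    return total

n = 330
multiple = spectrum_energy([11, 22], n)       # one length is twice the other
non_multiple = spectrum_energy([15, 22], n)   # lengths share no common factor

# Shared zeros survive in the first case; the second stays strictly positive.
print(multiple.min(), non_multiple.min())
```

A minimum that is numerically zero in the first case means the joint filter still divides by zero there, which is why exposure times in a multiple relationship are avoided.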

3 Setting Up of Imaging Devices with Different Shutters

To obtain images of the same target with different motion blur lengths, the variable-exposure continuous acquisition proposed by Agrawal et al. has to keep changing the exposure time while continuously shooting the moving target. However, during the shooting interval the continuous movement of the target inevitably changes the shooting angle, so the shape of the target differs between images. As a result, when multiple observed images with different PSFs are combined for restoration, the error of the result is large.

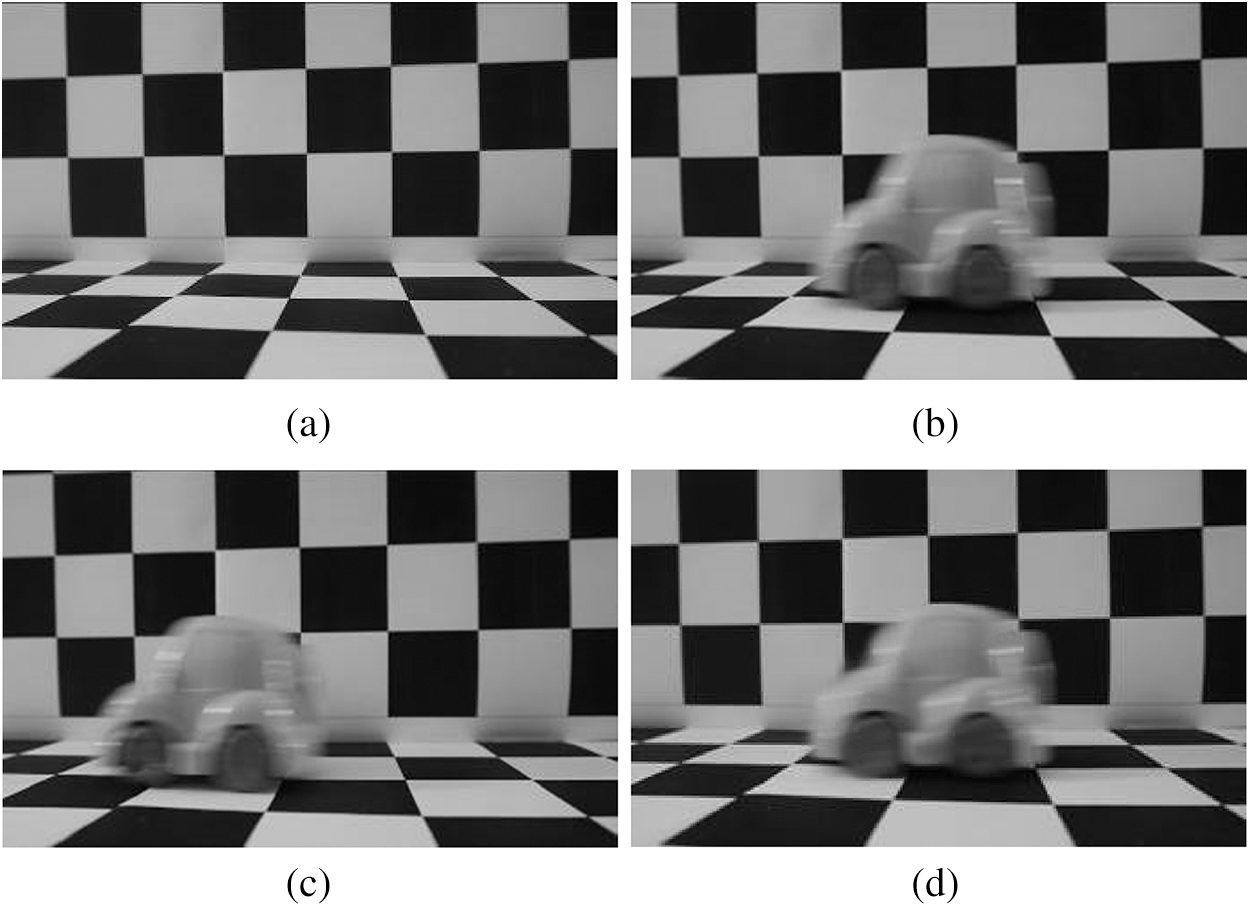

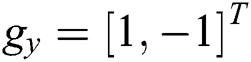

In this paper, multiple cameras are used to capture the moving target in the same field of view. The cameras are of the same model and are fixed close together. At a long shooting distance, the difference between images of the same target position taken by different cameras is extremely small; it can be kept within 1 pixel and thus ignored. The cameras are set to the same frame rate so that their shutters open at the same time, and to different exposure times, so that when the moving target passes through the specified field of view, the cameras obtain images of the moving target with different degrees of motion blur against the same static background. Fig. 2(a) shows the background image, and Figs. 2(b) and 2(c) show two images obtained by continuous shooting with variable exposure. The differences between the target images are obvious. With the device of this paper, however, the starting positions of the target in the observed images are the same, so the shapes of the two target images differ little, as shown in Figs. 2(b) and 2(d).

Figure 2: Observed images by different methods. (a) Background, (b) Observation 1, (c) Observation 2, (d) Observation 3

4 Estimation of Motion Blur PSF and Segmentation of Target Image

4.1 Motion Blur PSF Estimation

The images obtained at different exposure times have different motion blur lengths, so the motion blur PSF of each image must be estimated. The accuracy of traditional PSF estimation from a single blurred image is relatively low [6–9]. In this paper, by contrast, the target images continuously acquired by the same camera have the same motion blur PSF, and the acquisition times can be obtained directly from the camera system time. From the distance between the positions of the target in two such images relative to the background, it is then easy to calculate the motion speed $v$ of the target image (unit: pixel/s). The product of $v$ and the exposure time is the motion blur length $L$ (unit: pixels) of the target image, which gives the motion blur PSF.
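
The estimation step amounts to simple arithmetic, sketched below. The 6 fps frame rate and 40 ms exposure come from the experimental setup; the target positions, the 66-pixel displacement, the horizontal box-shaped PSF, and both function names are hypothetical and serve only to make the calculation concrete.

```python
import numpy as np

def estimate_blur_length(pos_a, pos_b, frame_interval_s, exposure_s):
    """Blur length in pixels from target positions (x, y) in two consecutive frames."""
    distance = np.hypot(pos_b[0] - pos_a[0], pos_b[1] - pos_a[1])
    speed = distance / frame_interval_s          # pixel/s
    return speed * exposure_s

def motion_psf(blur_length):
    """Uniform-motion PSF along the motion direction: a normalized row of ones."""
    taps = max(int(round(blur_length)), 1)
    return np.full(taps, 1.0 / taps)

# Hypothetical measurement: the target shifts 66 px between frames shot at 6 fps.
blur_length = estimate_blur_length((100, 200), (166, 200), 1 / 6, 0.040)
h = motion_psf(blur_length)
print(blur_length, h.size)
```

Because both frames come from the same camera, the same speed estimate can be reused for the other cameras by multiplying it with their own exposure times.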

4.2 Segmentation of Motion Blur Target Image

For the restoration of a moving target against a static background, the first step is to segment and extract the complete target image. For this target extraction problem, the CE method assumes that the background is uniform in gray scale, which makes it difficult to deal with complex backgrounds.

Agrawal et al. [4] used the local difference of the target image in consecutive frames to estimate the PSF and used a static image cutout method to extract the target area. During target segmentation, a preliminary deblurring step is used to improve the segmentation accuracy, but since the background information blended into the target is not removed, the segmentation result still contains a large error, which inevitably affects the restoration. Our method segments the target image according to the motion blur superposition feature.

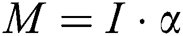

Let the observation image be $y$ (as shown in Fig. 2(b)) and the background image be $b$ (as shown in Fig. 2(a)). By performing an exclusive-or operation between $y$ and $b$, the mask image $m$ of the moving target in $y$ can be obtained (as shown in Fig. 3(a)), $m = y \oplus b$, where $\oplus$ represents the exclusive-or operation. The “target image” $y_m$ (as shown in Fig. 3(b)) on the black background is obtained by applying the mask to the observation image.

Figure 3: Images of mask operation. (a) Mask $m$, (b) Mask operation result
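
The mask operation can be sketched as follows. Note that this sketch swaps the exclusive-or of the text for a thresholded absolute difference, which is an assumption made here because real photographs rarely match the background bit for bit; the threshold of 10 gray levels, the tiny synthetic images, and the function names are all hypothetical.

```python
import numpy as np

def target_mask(observed, background, threshold=10):
    """Binary mask of pixels where the observation differs from the static background."""
    diff = np.abs(observed.astype(np.int32) - background.astype(np.int32))
    return diff > threshold

def apply_mask(observed, mask):
    """Keep observed pixels inside the mask; everything else becomes black."""
    return np.where(mask, observed, 0).astype(observed.dtype)

# Synthetic example: flat background with a brighter 2 x 3 target region.
background = np.full((4, 6), 50, dtype=np.uint8)
observed = background.copy()
observed[1:3, 2:5] = 200

mask = target_mask(observed, background)
target = apply_mask(observed, mask)
print(mask.sum())
```

The result still contains blended background along the motion direction, which is the reason a further separation step is needed.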

$y_m$ is not merely the motion blur image of the target. Because the target moves relative to the background during the exposure time, background pixels are mixed in and superposed at its boundaries along the motion direction. The pure motion blur target image $g$ therefore still has to be extracted. At present, most studies [10–14] refer to the “image matting” idea to describe the blur situation:

$$y_m = \alpha f + (1 - \alpha)\, b_m + n \tag{8}$$

where $f$ represents the clear target image, $b_m$ represents the background image obtained by applying the mask $m$ to the background $b$, and $\alpha$ represents the percentage of target image pixels at any position in the image $y_m$. The generation of $y_m$ is an imaging process in which the target pixels are continuously spread while background pixels change from occluded to exposed, or from exposed to occluded, during the exposure. Therefore, when the noise $n$ is ignored, combining with the image degradation model Eq. (1), Eq. (8) can be expressed as:

$$y_m = f \otimes h + (1 - \alpha)\, b_m \tag{9}$$

Since the target moves $L$ pixels relative to the background during the exposure time, there are $L$-pixel-wide areas where target and background pixels overlap on both sides of the target image, at its junction with the background along the direction of movement. The exposure time is very short, so the target can be considered to move uniformly in a straight line. Then, for any line of the image $y_m$ along the motion direction, the percentage $\alpha$ of the exposure time during which a background pixel is blocked by the target is:

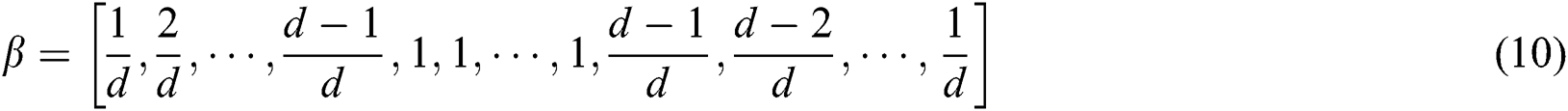

where the number of “1” entries equals the actual target image width of the line minus $L$ pixels.
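
The occlusion profile and the background removal can be sketched for one image line. The sketch assumes that the profile equals the binary support of the sharp target convolved with a box PSF, which yields linear ramps at both ends and a plateau of ones in between; the exact number of ones depends on the indexing convention, so it may differ by one from the count stated above. The widths, gray values, and function names are hypothetical.

```python
import numpy as np

def alpha_profile(target_width, blur_length):
    """Fraction of the exposure during which each pixel on the line is covered."""
    support = np.ones(target_width)
    h = np.full(blur_length, 1.0 / blur_length)
    return np.convolve(support, h)               # length: width + blur_length - 1

def remove_background(masked_line, background_line, alpha):
    """Subtract the background share (1 - alpha) * b from a masked observation line."""
    return masked_line - (1.0 - alpha) * background_line

alpha = alpha_profile(target_width=8, blur_length=4)

# Synthetic check: build a line from the blur model, then strip the background.
f_line = np.arange(1.0, 9.0)                     # sharp target line (hypothetical)
h = np.full(4, 0.25)
b_line = np.full(alpha.size, 50.0)               # background under the mask
y_line = np.convolve(f_line, h) + (1.0 - alpha) * b_line
g_line = remove_background(y_line, b_line, alpha)
print(alpha)
```

What remains in `g_line` is the blurred target alone, which is the quantity the restoration step takes as input.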

Therefore, by substituting Eq. (10) into Eq. (9) and applying the method of the above section to obtain the target images $y_m$ of the $k$ observation images, the motion blur target image $g_i$ can be extracted from each observation image.

5 Joint Solution of Image Restoration with Multiple PSFs

The extracted blurred images of the moving target $g_i$ can be restored by a spatial domain method. For example, for a single image, formula (1) can be written in the spatial domain as the product of a circulant matrix and a vector, which expresses the convolution:

$$g = A f + n \tag{11}$$

Here, $g$, $f$ and $n$ are converted into lexicographically ordered vectors, that is, the rows of the two-dimensional matrix are transposed one by one, starting with the first row, and stacked into a single column vector. $A$ is the circulant matrix of $h$. $g$ obtained by the above method is a local image within the observation image, and it contains the whole moving target without truncation, so it is a complete convolution. If the length of $f$ is n1 and the length of $h$ is n2, then the length of $g$ is n1 + n2 − 1.

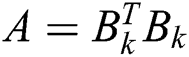

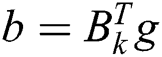

Eq. (11) can be transformed into the linear equation

$$C f = d \tag{12}$$

where $C = A^{T} A$ and $d = A^{T} g$, which is solved in the spatial domain with the conjugate gradient algorithm.
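
The matrix form of the convolution can be made concrete on one image line. This sketch builds a full-convolution (Toeplitz) matrix rather than a strictly circulant one, an assumption chosen here because it reproduces the stated output length n1 + n2 − 1; the sample values and the function name are hypothetical, and a direct solver stands in for the iterative one only to verify the normal equations.

```python
import numpy as np

def convolution_matrix(h, n1):
    """(n1 + n2 - 1) x n1 matrix A such that A @ f equals np.convolve(f, h)."""
    n2 = len(h)
    A = np.zeros((n1 + n2 - 1, n1))
    for j in range(n1):
        A[j:j + n2, j] = h                # column j holds h shifted down by j
    return A

f = np.arange(1.0, 7.0)                   # sharp line, n1 = 6 (hypothetical)
h = np.full(3, 1.0 / 3)                   # 3-pixel box PSF, n2 = 3
A = convolution_matrix(h, len(f))

g = A @ f                                 # blurred line, length n1 + n2 - 1 = 8
C, d = A.T @ A, A.T @ g                   # normal equations C f = d
f_rec = np.linalg.solve(C, d)             # direct solve, for checking only
print(g.shape, np.allclose(f_rec, f))
```

Because the blurred line is not truncated, the matrix has full column rank and the normal equations have a unique solution in the noise-free case.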

For the joint solution of $k$ images,

$$g_i = A_i f + n_i, \quad i = 1, \ldots, k \tag{13}$$

Accordingly, Eq. (12) is transformed into the following equation.

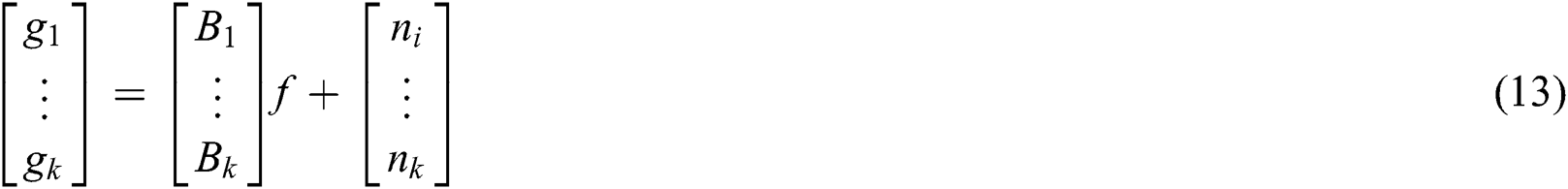

The sizes of the images $g_i$ are different, so they need to be aligned by zero padding in order to carry out the joint restoration. Although the joint solution over images with multiple PSFs reduces the ill-conditioning of the restoration, some errors are inevitably introduced when processing multiple images. To remove false ripples, prior knowledge of natural images, such as a Gaussian prior, must be used to regularize the restoration. The coefficient matrix $C$ in Eq. (12) is modified as

$$C = A^{T} A + \eta \left( D_x^{T} D_x + D_y^{T} D_y \right) \tag{15}$$

where $D_x$, $D_y$ are the convolution matrices of the differential filters $\delta_x$, $\delta_y$, and $\eta$ is the weight parameter. Eq. (14) is converted as

$$\Big( \sum_{i=1}^{k} A_i^{T} A_i + \eta \left( D_x^{T} D_x + D_y^{T} D_y \right) \Big) f = \sum_{i=1}^{k} A_i^{T} g_i \tag{16}$$

The conjugate gradient algorithm is used to solve the problem in the spatial domain.
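
A one-dimensional sketch of the regularized joint solution is given below. It assumes the joint system sums the normal equations of the individual images and adds a first-difference smoothness penalty; each image keeps its own full-convolution matrix, so no explicit zero padding is needed in this simplified setting. The blur lengths 5 and 7, the weight `eta`, the noise level, and all function names are hypothetical.

```python
import numpy as np

def convolution_matrix(h, n1):
    """(n1 + n2 - 1) x n1 matrix A such that A @ f equals np.convolve(f, h)."""
    n2 = len(h)
    A = np.zeros((n1 + n2 - 1, n1))
    for j in range(n1):
        A[j:j + n2, j] = h
    return A

def conjugate_gradient(C, d, iters=500, tol=1e-20):
    """Solve C x = d for a symmetric positive-definite matrix C."""
    x = np.zeros_like(d)
    r = d - C @ x
    p = r.copy()
    rs = r @ r
    for _ in range(iters):
        if rs < tol:                      # squared residual norm small enough
            break
        Cp = C @ p
        step = rs / (p @ Cp)
        x += step * p
        r -= step * Cp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

def joint_restore(blurred, psfs, n1, eta=1e-3):
    """Solve (sum A_i^T A_i + eta D^T D) f = sum A_i^T g_i for one image line."""
    D = np.diff(np.eye(n1), axis=0)       # first-difference matrix, (n1 - 1) x n1
    C = eta * (D.T @ D)
    d = np.zeros(n1)
    for g, h in zip(blurred, psfs):
        A = convolution_matrix(h, n1)
        C += A.T @ A
        d += A.T @ g
    return conjugate_gradient(C, d)

rng = np.random.default_rng(1)
f_true = rng.random(40)
psfs = [np.full(5, 1 / 5), np.full(7, 1 / 7)]
blurred = [np.convolve(f_true, h) + 0.001 * rng.standard_normal(40 + len(h) - 1)
           for h in psfs]
f_hat = joint_restore(blurred, psfs, n1=40)
print(np.max(np.abs(f_hat - f_true)))
```

Raising `eta` suppresses ripples more strongly at the cost of smoothing fine detail, so in practice the weight would be tuned to the noise level.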

This method has low requirements for hardware. It needs neither the expensive binocular stereoscopic cameras or coded cameras used in the CE method, nor the industrial-grade Flea2 camera used in the method of Agrawal et al. to change the exposure time continuously. Instead, it needs only several common digital cameras with a continuous shooting function, which are widely available on the market. The more cameras there are, the better the restoration will be. To verify the effect, only two D7000 cameras are used for the experiment in this paper. The cameras are fixed side by side and set to continuous shooting mode with the frame rate set to 6 fps (the maximum frame rate of the camera). The two cameras are set to different exposure times that are not integer multiples of each other. The exposure times are required to lie between the maximum and minimum values allowed by the shooting interval, so as to obtain well-exposed photos.

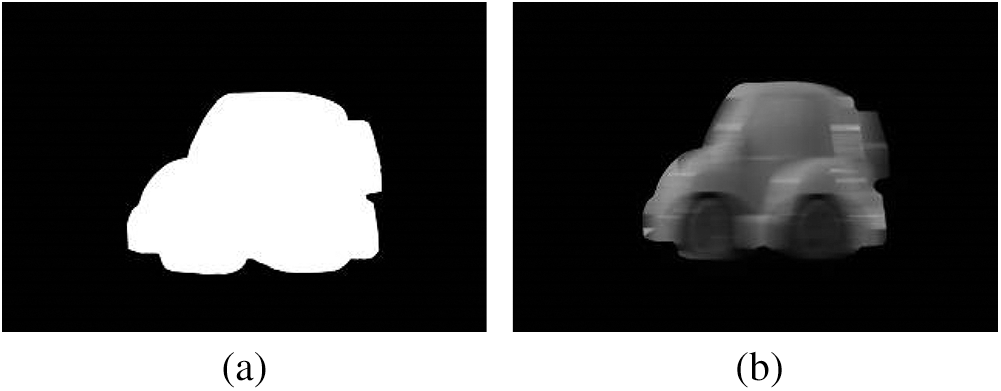

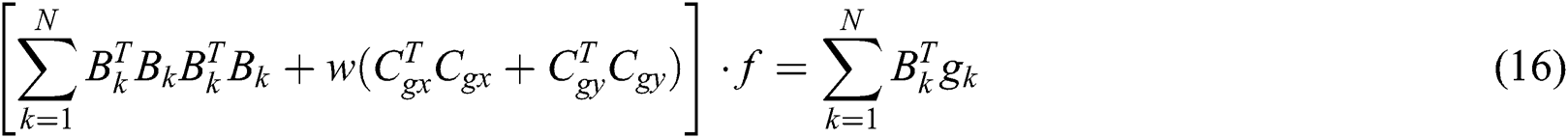

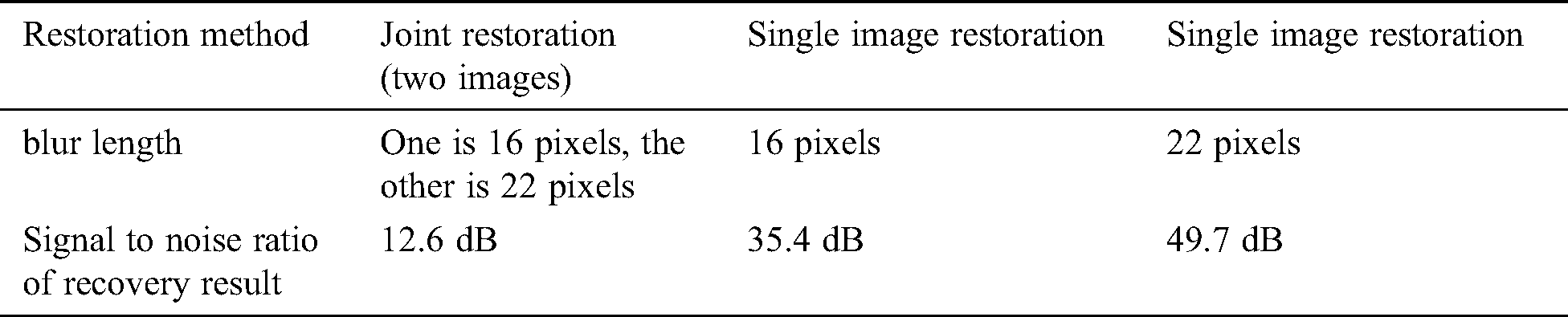

Experiment 1: Comparison of the results of joint restoration and single image restoration

The camera exposure times are 40 ms and 50 ms respectively, and the target is a toy car. Each camera obtained three images in the field of view, six in total. Two of them, obtained at the same time, are shown in Figs. 2(b) and 2(d). The steps of this method are as follows: (1) carry out the PSF estimation, which gives motion blur lengths of 16 and 22 pixels respectively; (2) extract the motion-blurred target in Figs. 2(b) and 2(d); (3) conduct joint spatial-domain restoration of the extracted images. The local effect of the joint restoration result is shown in Fig. 4(a). Spatial iterative restoration was also carried out for the two images separately, and the local effects of the results are shown in Figs. 4(b) and 4(c). The SNRs of the three images were 12.6 dB, 35.4 dB and 49.7 dB respectively. Observation of the images also shows that the edge details obtained by joint restoration are clear, while the restoration results of a single image still have some false ripples and noise points, even after regularized restoration, because of the ill-posed problem of restoration.

Figure 4: Joint restoration and single image restoration results. (a) Joint restoration result (12.6 dB), (b) Single image restoration result (35.4 dB), (c) Single image restoration result (49.7 dB)

It can be seen from Fig. 4 that the multi-image joint restoration method gives a better result than single image restoration. First of all, the comparison in Tab. 1 shows that, when the motion blur length is known, single image restoration works better the shorter the motion blur length is and worse the longer it is, while the joint restoration of multiple images of the same scene and target with different motion blur lengths has a much better effect. In addition, when the motion blur parameters are unknown, the proposed method can estimate them conveniently, whereas they are difficult to estimate from a single image.

Table 1: Comparison of multi image joint restoration and single image restoration

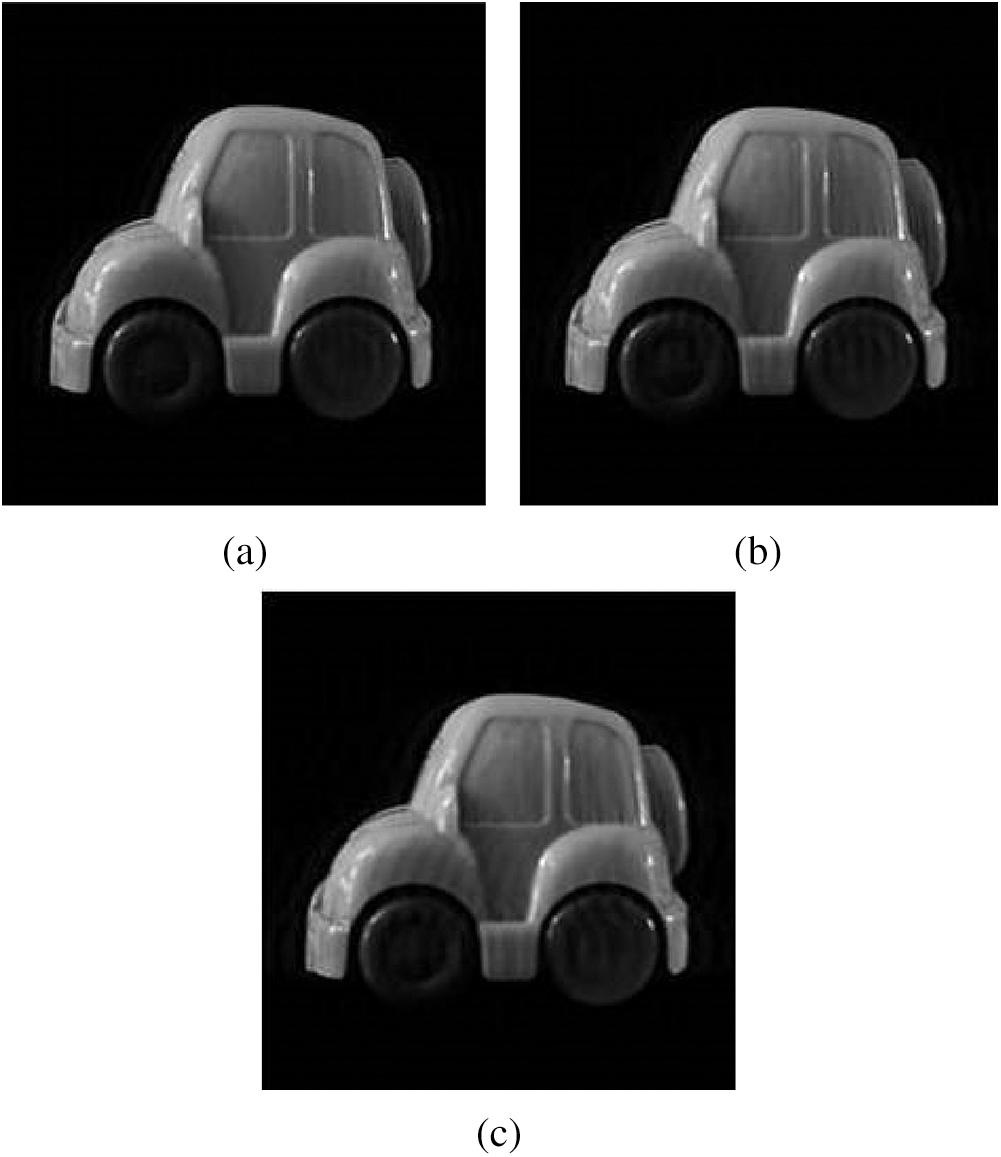

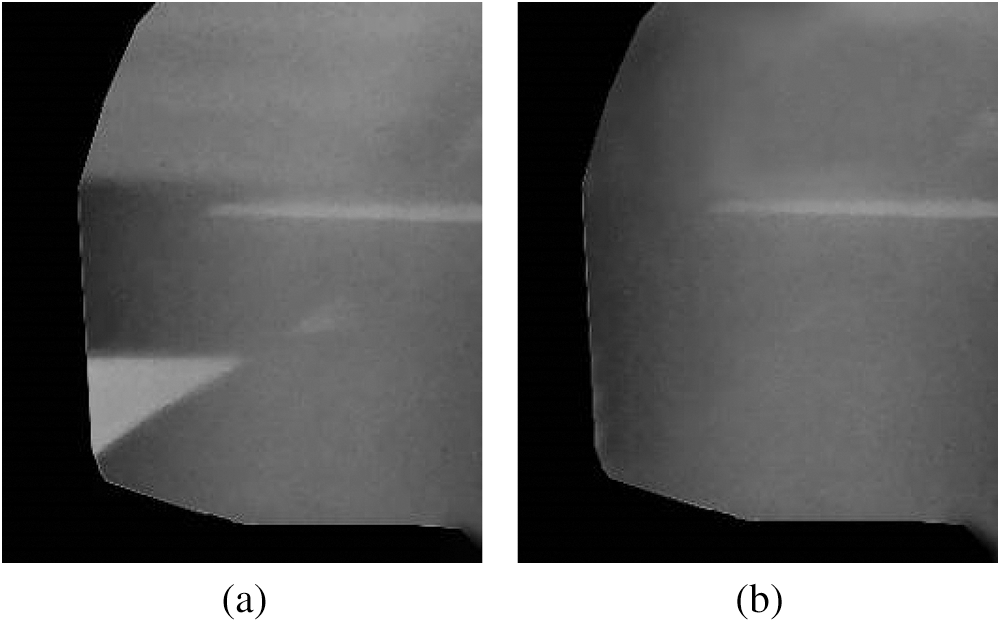

Experiment 2: Comparison of local effect between threshold segmentation and matting method in this paper

Comparing the local images of the target shown in Figs. 5(a) and 5(b), which were extracted by the threshold segmentation method and by our “image matting” method respectively, it can be found that, because the threshold segmentation method separates the background only roughly, background information (the black and white lattice) is mixed into the target image, which affects the restoration result. Therefore, for this kind of local image restoration, it is necessary to use the motion blur feature to separate and extract the target accurately.

Figure 5: The local image comparison of two segmentation methods. (a) Threshold segmentation, (b) Our method

Compared with the complicated imaging equipment configuration and photographic computation of the CE method, the imaging equipment configuration of this method is simple, and common digital cameras with a continuous shooting function suffice for target acquisition. In this method, multiple cameras open their shutters on the same field of view at the same time, which also avoids the differences in target shape caused by the variable-exposure continuous acquisition of Agrawal et al. [4]. At the same time, accurate PSF estimation is easy for the images obtained by this method, and the motion blur feature is used to extract the target, which improves the accuracy and quality of motion-blurred target restoration and is of high practical value.

Acknowledgement: I would like to express my gratitude to all my colleagues for their valuable instructions and suggestions on the manuscript.

Funding Statement: The author Yuye Zhang received the funding of Natural Science Foundation of Shandong Province (ZR2013F0025), www.sdnsf.gov.cn.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. M. Ben-Ezra and S. K. Nayar. (2004). “Motion-based motion deblurring,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 6, pp. 689–698.

2. R. Raskar, A. Agrawal and J. Tumblin. (2006). “Coded exposure photography: Motion deblurring using fluttered shutter,” in Proc. ACM SIGGRAPH 2006, Boston, MA, USA, pp. 795–804.

3. S. K. Xu, J. Zhang, D. Tu and G. H. Li. (2011). “A constant acceleration motion blur image deblurring based on hybrid coded exposure,” Journal of National University of Defense Technology, vol. 33, no. 6, pp. 78–83.

4. A. Agrawal, Y. Xu and R. Raskar. (2009). “Invertible motion blur in video,” in Proc. ACM SIGGRAPH 2009, New Orleans, LA, USA, pp. 1–8.

5. A. Rav-acha and S. Peleg. (2005). “Two motion-blurred images are better than one,” Pattern Recognition Letters, vol. 26, no. 3, pp. 311–317.

6. B. R. Kapuriya, D. Pradhan and R. Sharma. (2019). “Detection and restoration of multi-directional motion blurred objects,” Signal Image and Video Processing, vol. 13, no. 5, pp. 1001–1010.

7. H. Y. Hong, L. C. Li, X. H. Zhang, L. X. Yan and T. X. Zhang. (2013). “Versatile restoration and experimental verification for multi-wave band image of object detection,” Infrared and Laser Engineering, vol. 42, no. 1, pp. 251–255.

8. Y. Y. Zhang, X. D. Zhou and C. H. X. Wang. (2009). “Space-variant blurred image restoration based on pixel motion-blur character segmentation,” Optics and Precision Engineering, vol. 17, no. 5, pp. 1119–1125.

9. S. K. Xu. (2011). “Research on motion blur image deblurring technology based on computational photography,” Ph.D. dissertation, National University of Defense Technology, ChangSha.

10. C. Xu, Y. C. Liu, W. Y. Qiang and H. Z. Liu. (2008). “Image velocity measurement based on motion blurring effect,” Infrared and Laser Engineering, vol. 37, no. 4, pp. 625–650.

11. D. Zhao, H. W. Zhao and F. H. Yu. (2015). “Moving object image segmentation by dynamic multi-objective optimization,” Optics and Precision Engineering, vol. 23, no. 7, pp. 2109–2114.

12. X. Chen, Y. G. Wang and S. L. Peng. (2010). “Restoration of degraded image from partially-known mixed blur,” Journal of Computer-Aided Design & Computer Graphics, vol. 22, no. 2, pp. 272–276.

13. M. Zhu, H. Yang, B. G. He and J. F. Lu. (2013). “Image motion blurring restoration of joint gradient prediction and guided filter,” Chinese Optics, vol. 6, no. 6, pp. 850–855.

14. X. J. Ye, G. M. Cui, J. F. Zhao and L. Y. Zhu. (2020). “Motion blurred image restoration based on complementary sequence pair using fluttering shutter imaging,” Acta Photonica Sinica, vol. 49, no. 8, 810001.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |