DOI:10.32604/csse.2021.015261

Computer Systems Science & Engineering

Article

Exploring Students Engagement Towards the Learning Management System (LMS) Using Learning Analytics

1Department of Information Systems, Faculty of Computer Science & Information Technology, University of Malaya, Kuala Lumpur, 50603, Malaysia

2Department of Information Systems, College of Computer and Information Sciences, Shaqra University, Saudi Arabia

3School of Computer Science and Engineering, SCE, Taylor’s University, Subang Jaya, 47500, Malaysia

*Corresponding Author: Suraya Hamid. Email: suraya_hamid@um.edu.my

Received: 22 November 2020; Accepted: 17 December 2020

Abstract: Learning analytics is a rapidly evolving research discipline that uses insights generated from data analysis to support learners and to optimize both the learning process and the learning environment. This paper reviews students’ engagement levels in the Learning Management System (LMS) as revealed by learning analytics, students’ approaches to managing their studies, and the learning analytics methods available for analyzing student data. An extensive systematic literature review (SLR) was employed to select, sort and exclude articles from reputable sources. The findings show that most engagement in the LMS is driven by educators. We also discuss the engagement factors in the LMS, the causes of low engagement and ways of increasing engagement through the learning analytics approach. Besides recognizing learning analytics as an effective method for analyzing LMS data, this research highlights the integration of learning analytics techniques with LMS engagement in every institution as a direction for future research.

Keywords: Learning analytics; student engagement; learning management system; systematic literature review

In recent years, learning analytics has emerged as a promising area of research for tracing learners’ digital footprints and extracting useful knowledge about students’ learning progress. With increasing amounts of data available, and because the benefits of learning analytics can reach all stakeholders in education, such as students, teachers, leaders and policymakers, many higher learning institutions have started using new analytics models and the data stored in their Learning Management System (LMS) to reach their short- and long-term goals and objectives. Data produced by the LMS can not only be transformed into meaningful information but can also reflect learners’ interactions within the system, which can improve understanding of the user behaviours and characteristics that contribute to low LMS usage. However, data mining and analysis in higher education are still at an early stage [1]. The LMS was introduced as a technology that gives institutions a better means of encouraging student participation and engagement [2]. The trace and log data of LMSs and virtual learning environments (VLEs), which evolved from traditional learning methods, are now regarded as crucial learning analytics data sources [3]. Although a study by Nguyen et al. [4] revealed that most LMS interactions occurred between students, instructors and the institution’s resources, Jovanovic et al. [5] found low levels of LMS interaction and engagement among learners.

Zanjani et al. [6] and Emelyanova et al. [7] similarly described the LMS as a flexible platform and a secure learning environment for students with different lifestyles, a tool that can help students and lecturers improve learning and management processes. However, compared with traditional face-to-face learning, the LMS makes it more difficult for educators to gauge students’ behaviour and study patterns [8]. Despite this shortcoming, users’ participation in the LMS can still be used to gauge students’ actual learning behaviour, since it may offer a different and more personal learning experience than conventional classroom learning. Examining students’ log data can provide insights that support better decision-making and accountability to different stakeholders. Assessment based on learning data can also make the learning environment more organized as it shifts from a conventional to a web-based setting. Consequently, learning analytics can identify the relationship between log data and student behaviour [9]. Learning analytics can predict student performance, dropout rates, final marks and grades, and student retention in the LMS, and can support early warning systems for students throughout their university studies [10]. Applying learning analytics to university students also supports university administration and helps staff observe students’ development and their progress in their studies.

Student engagement in the LMS is an area whose status has yet to be fully uncovered. In this study, LMS engagement is examined as a predictor of student performance. Engagement concerns communicating and interacting with others, which are still relatively new influences on student behaviour. However, engagement and participation in the LMS tend to occur only when there is a push factor, such as the involvement of lecturers or teachers. Learning analytics allows us to analyse the status of students’ engagement on the LMS platform. For this reason, this paper conducts a systematic literature review of learning analytics as a way of gauging students’ level of engagement in the Learning Management System. Similar reviews have been conducted on learning dashboards [11], the benefits and challenges of learning analytics [12] and the practice of learning analytics and educational data mining [13]. Whereas those reviews focused on the benefits and challenges of using learning analytics for learning success, this systematic literature review focuses on student engagement factors identified through learning analytics, which is the main objective of this paper. Its significance lies in providing an understanding and classification of student engagement factors using learning analytics techniques.

Further, this paper elaborates on the factors that influence students’ engagement and interaction levels in the LMS. The following section explains the methods applied in conducting this systematic literature review, after which the results and the discussion of the findings are presented. Finally, we summarize the study’s limitations and offer recommendations for future work.

Since a systematic literature review (SLR) applies rigorous, explicit methodologies and guidelines to evaluate the literature related to a research topic [14], this research implemented the SLR methodology suggested by Kitchenham [14] and Kitchenham et al. [15] to investigate the factors that influence students’ engagement level in the Learning Management System. The SLR comprises several distinct activities grouped into input, process and output phases. The input phase is the planning of the systematic review, derived from the research questions. The process phase ensures the validity and reliability of the review by adhering to each prescribed step, namely the evaluation, the inclusion and exclusion criteria and the quality assessment stages, before the output phase concludes with a review report.

Identifying the need for the review - This study requires a comprehensive literature review of students’ engagement factors in the Learning Management System from the perspective of learning analytics; three research questions were therefore formulated to guide the literature review process.

Research questions - The research questions addressing the needs of this study are as follows:

• How do students manage their LMS engagement levels?

• What factors contribute to students’ engagement level in the LMS, as shown by learning analytics?

• What learning analytics methods are used to analyse students’ engagement levels?

In the second SLR stage, conducting the review, this article describes the primary scientific databases searched with the selected keywords and the inclusion and exclusion criteria used in this research.

2.3 Literature Search Procedure and Criteria Selection

The following keyword search strings were used:

• Student engagement AND learning management system

• Learning Analytics AND learning management system

• Student Engagement model in higher education

• Student Engagement AND learning analytics

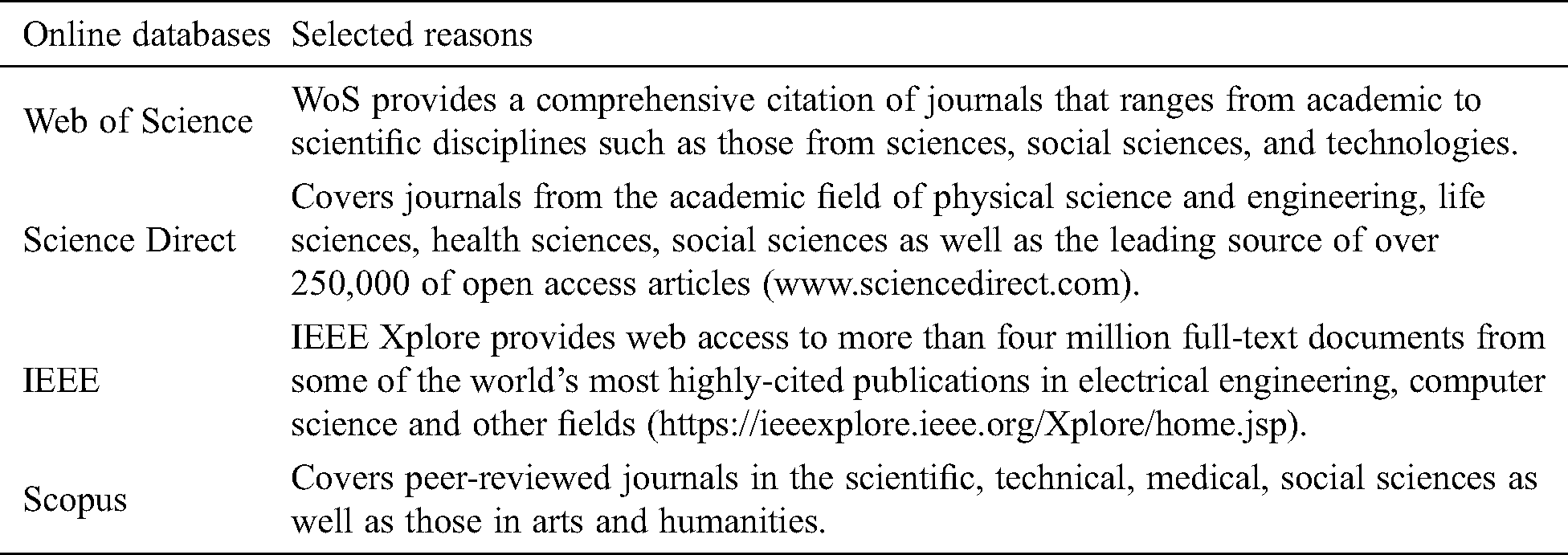

The online databases used in the systematic literature review, namely Web of Science, ScienceDirect, IEEE and Scopus (Tab. 1), were selected for the breadth of their article sources and for their credibility.

Table 1: Selected online databases

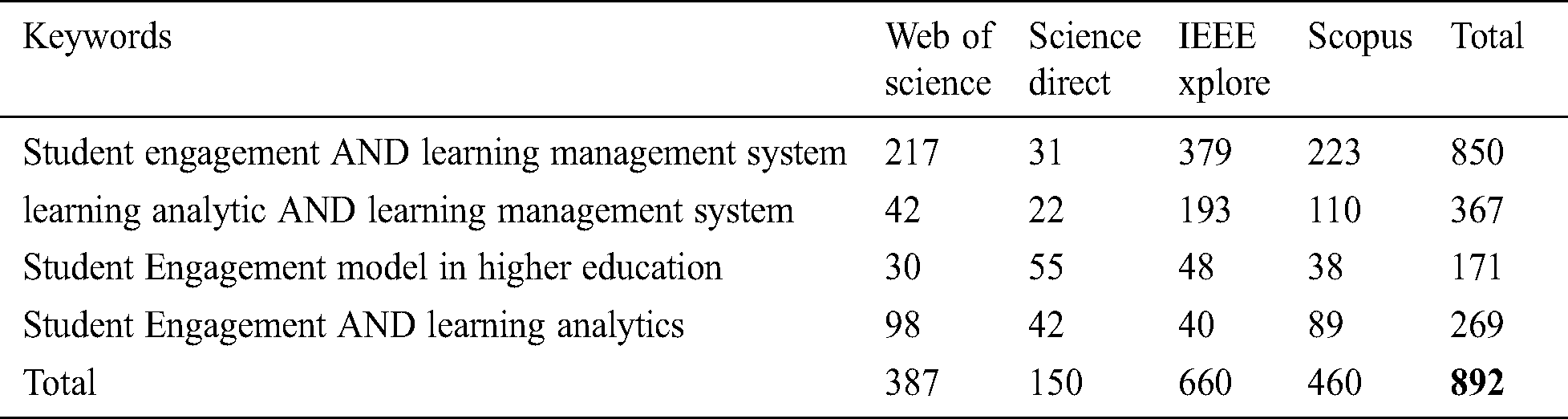

The inclusion criteria used to determine the relevant literature (journal papers and conference papers) were as follows:

• Studies related to the Learning Management System engagement model.

• Studies that examined LMS log data using learning analytics.

• Studies published between 2005 and 2019.

The exclusion criteria were as follows:

• Material unrelated or not pertinent to the research question and research field.

• Abstract-only papers and editorial papers.

Selection of primary sources - Tab. 2 shows the number of publications returned by each keyword search across the databases listed in Tab. 1. The ‘Student Engagement AND Learning Management System’ keywords returned a total of 850 articles and ‘Learning Analytics AND Learning Management System’ returned 367, while ‘Student Engagement Model in Learning Management System’ and ‘Student Engagement AND Learning Analytics’ returned around 171 and 269 articles respectively.
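The inclusion and exclusion criteria above amount to a filter over candidate records. The sketch below is illustrative only: the `Record` fields (`doc_type`, `abstract_only`, `on_topic`) are hypothetical, and `on_topic` stands in for the reviewers’ manual relevance judgement, which the text does not describe as automated.

```python
from dataclasses import dataclass


@dataclass
class Record:
    # Hypothetical bibliographic record; field names are illustrative only.
    title: str
    year: int
    doc_type: str        # e.g. "journal", "conference", "editorial"
    abstract_only: bool  # True if only an abstract is available
    on_topic: bool       # reviewer judgement: relevant to the research question and field


INCLUDED_TYPES = {"journal", "conference"}


def passes_screening(r: Record) -> bool:
    """Apply the inclusion and exclusion criteria described in the text."""
    if not 2005 <= r.year <= 2019:        # inclusion: published 2005-2019
        return False
    if r.doc_type not in INCLUDED_TYPES:  # journal/conference papers only (drops editorials)
        return False
    if r.abstract_only:                   # exclusion: abstract-only papers
        return False
    return r.on_topic                     # exclusion: unrelated to the research question/field


candidates = [
    Record("LMS log analytics study", 2017, "journal", False, True),
    Record("Editorial on e-learning", 2018, "editorial", False, True),
    Record("Early LMS survey", 2003, "journal", False, True),
    Record("Conference abstract", 2016, "conference", True, True),
    Record("Unrelated MOOC pricing paper", 2015, "journal", False, False),
]
kept = [r.title for r in candidates if passes_screening(r)]
print(kept)  # ['LMS log analytics study']
```

Keeping each criterion as a separate early return makes it easy to record which rule excluded a paper, which is useful when reporting the selection flow.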

Table 2: Number of publications that appeared from the search results

Fig. 1 shows the systematic literature review process across the four databases used in the structured keyword search.

Figure 1: The publication selection and collection flow

From these four databases, a total of 892 papers matching the keywords listed in the previous section were reduced to 127 (100 journal and 27 conference papers) after the inclusion and exclusion criteria were applied. Because the quality assessment step ensures the selection of higher-impact studies, 58 relevant papers (45 journal and 13 conference papers) were then shortlisted to produce a better systematic literature review output. The quality criteria were defined as follows:

• The study displays and discusses adequate findings and results.

• The study discusses and implements an online learning platform.

• The study involves a learning analytics approach.
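The counts reported for the selection flow can be checked with a few lines of arithmetic. The sketch also encodes the three quality criteria as a single check; treating them as jointly required (all-or-nothing) is an assumption, since the text does not say how partially satisfied criteria were scored. Note that the four search strings in Tab. 2 return 1,657 hits in total while the text reports 892 matched papers; the text does not explain the difference, and the sketch does not attempt to.

```python
# Figures reported in the text (Tab. 2 and the selection flow in Fig. 1).
search_hits = {
    "Student Engagement AND Learning Management System": 850,
    "Learning Analytics AND Learning Management System": 367,
    "Student Engagement Model in Learning Management System": 171,
    "Student Engagement AND Learning Analytics": 269,
}
funnel = [
    ("matched keywords", 892),
    ("after inclusion/exclusion", 127),
    ("after quality assessment", 58),
]
after_screening = {"journal": 100, "conference": 27}
after_quality = {"journal": 45, "conference": 13}

# The journal/conference splits should add up to the stage totals.
assert sum(after_screening.values()) == funnel[1][1]
assert sum(after_quality.values()) == funnel[2][1]

QUALITY_CRITERIA = (
    "findings and results adequately displayed and discussed",
    "online learning platform discussed and implemented",
    "learning analytics approach involved",
)


def meets_quality(checks: dict) -> bool:
    """Keep a paper only if every quality criterion is marked True (assumed all-or-nothing rule)."""
    return all(checks.get(criterion, False) for criterion in QUALITY_CRITERIA)


print("raw search hits:", sum(search_hits.values()))  # 1657
# Retention of each stage relative to the previous one.
for (stage, n), (_, previous) in zip(funnel[1:], funnel):
    print(f"{stage}: {n} ({n / previous:.1%} of previous stage)")
```

Run as written, the loop reports that about 14.2% of the matched papers survived screening and about 45.7% of those survived the quality assessment.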

This section discusses the results of the SLR process, organized into sub-sections by research question; each sub-section presents the findings that answer the corresponding question.

3.1 [RQ1]: How Do Students Manage LMS Engagement Levels?

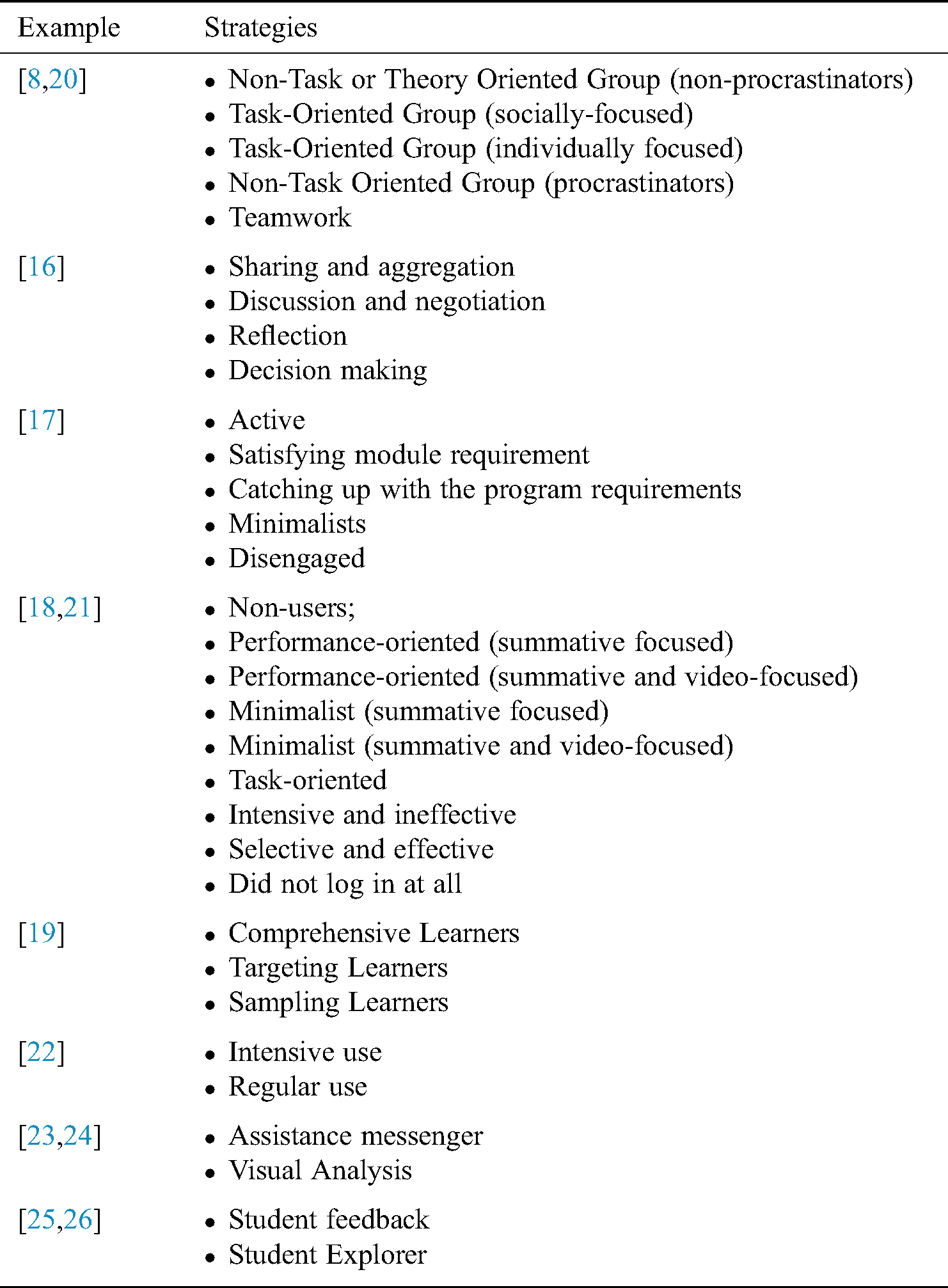

Students’ involvement in the LMS was gauged from the different levels of engagement, interaction and participation shown in Tab. 3. As the table shows, LMS interactions involve not only students and their peers but also their instructors and the system. Student-student interaction on the LMS platform arises from students communicating and participating in forums, whereas student-lecturer interaction depends on students’ motivation to communicate with their lecturers. Student-lecturer interaction is therefore a two-way communication in which both the student and the lecturer strive to connect through messages or online forums and discussions. Student-system interaction occurs when a student logs into the LMS and downloads a required file; interaction with system resources, such as downloading files and viewing pages after logging in, is likewise counted as student-system interaction. According to Wang et al. [16], LMS learning design can be divided into resource-sharing and aggregation strategies, reflected not only in course forums but also in post and blog discussions and in exchanges on platforms such as Twitter and Facebook, which facilitate more thorough decision-making. In another study, Mirriahi et al. [17] identified four clusters of students’ learning profiles from the behaviour logs of a video annotation tool. These profiles, namely intensive users, selective users, limited users and non-users, were distinguished by how intensively students used the various learning tools available within a learning management system.

Pardo et al. [18] likewise stated that information obtained from learning logs and the digital traces used in learning analytics not only provides students with timely, personalised feedback at scale but also enhances their satisfaction; the formative feedback provided was found to steer students towards a task-oriented strategy rather than the other strategies identified. Based on the usage patterns exhibited by most users, an intensive user is defined as one who spends more time sourcing and accessing additional required materials, while regular users spend the same amount of time on less significant materials. A comprehensive learner has a highly self-regulated learning profile, following the course structure and completing the course material on the online platform; a targeting learner aims only to pass the minimum course requirements and shows lower course engagement; and a sampling learner has a weakly self-regulated learning profile and typically does not complete the course [19].
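The three interaction types in Tab. 3 can be derived from raw LMS logs by mapping each event to a type and counting per student. This is a sketch under stated assumptions: the log schema (student id, action, role of the other party), the action names and the mapping rules are hypothetical, and real LMS exports use their own event vocabularies.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical LMS log rows: (student_id, action, role of the other party or None).
LOG = [
    ("s1", "forum_reply", "student"),
    ("s1", "message", "lecturer"),
    ("s1", "login", None),
    ("s1", "download", None),
    ("s2", "login", None),
    ("s2", "forum_post", "student"),
    ("s3", "login", None),
]

# Actions that involve only the student and the platform (illustrative list).
SYSTEM_ACTIONS = {"login", "download", "view"}


def interaction_type(action: str, other_role: Optional[str]) -> str:
    """Map one log event to an interaction type from Tab. 3 (hypothetical rules)."""
    if action in SYSTEM_ACTIONS:
        return "student-system"
    if other_role == "lecturer":
        return "student-lecturer"
    if other_role == "student":
        return "student-student"
    return "other"


def interaction_profile(log: Iterable[Tuple[str, str, Optional[str]]]) -> Dict[str, Counter]:
    """Count each student's events per interaction type."""
    per_student: Dict[str, Counter] = defaultdict(Counter)
    for student, action, other_role in log:
        per_student[student][interaction_type(action, other_role)] += 1
    return per_student


for student, counts in sorted(interaction_profile(LOG).items()):
    print(student, dict(counts))
```

Per-student counts of this kind are the sort of usage-intensity features from which profiles such as intensive, selective, limited and non-users could then be derived, for example by clustering.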

Table 3: Engagement strategies in LMS

3.2 [RQ2]: What Are the Contributing Factors to Students’ LMS Engagement Level, as Shown by Learning Analytics?

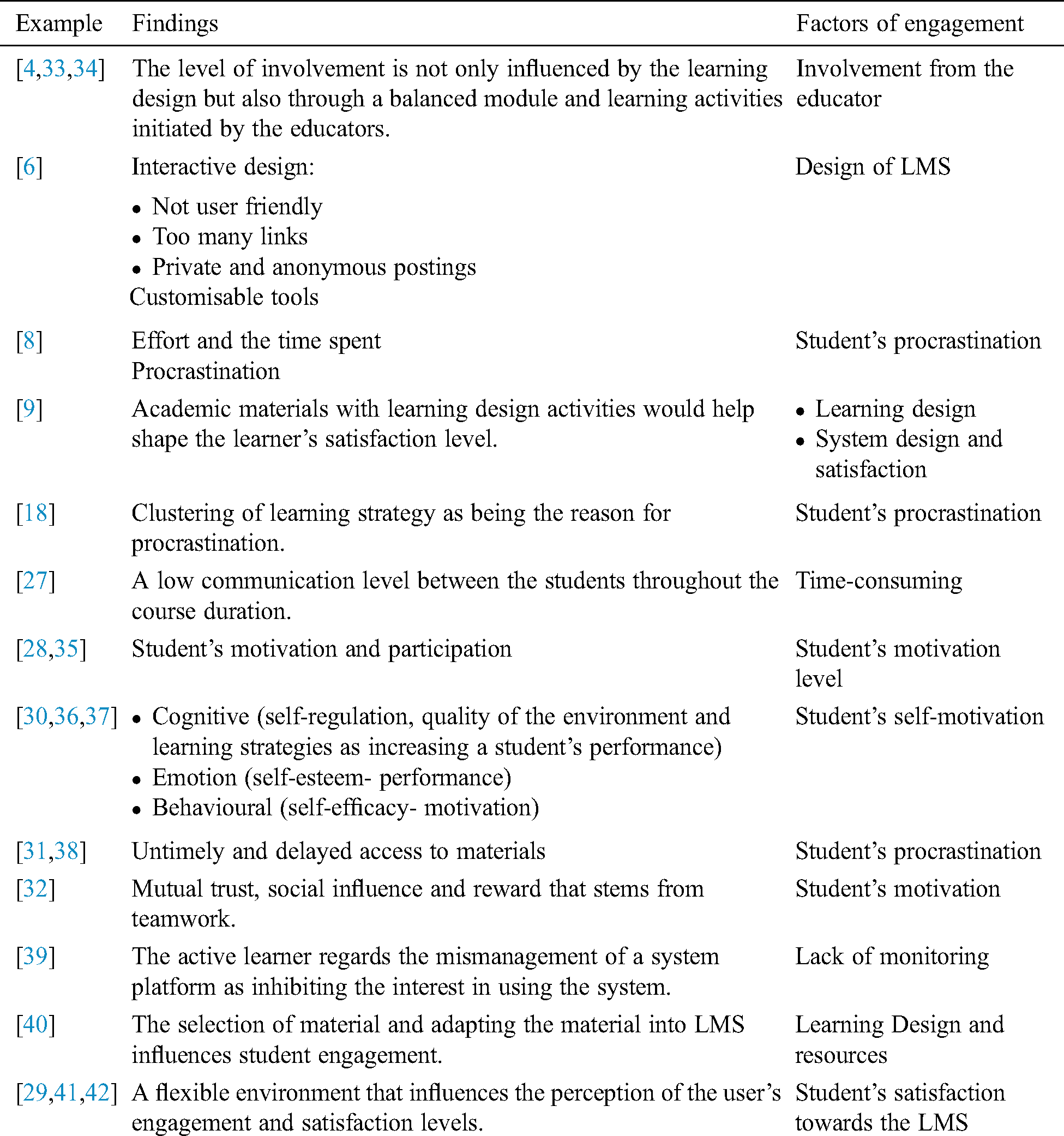

The factors that influence a student’s engagement level in the LMS can be divided into several themes, as shown in Tab. 4. These engagement factors are influenced by the motivation and rewards from using the LMS, students’ satisfaction with the system and its ease of use. Most users agreed that an interactive, user-friendly LMS interface would encourage them to complete their assigned tasks, whereas a dull, unattractive design would not only do the opposite but also diminish their interest in engaging in forum discussions [18]. Other reasons cited for low LMS engagement include a higher workload caused by a cumbersome system [27]. Because students show low self-motivation, the motivating factors for using the LMS lean towards the learning interface and the LMS design [6,28,29], and obsolete learning materials hinder students’ involvement in the LMS [28]. Higher LMS engagement is therefore perceived as being influenced not only by the system’s design and overall performance but also by the reward system for using the LMS and by lecturers’ contributions [29]. The LMS should thus be made more engaging, for example by offering rewards for a minimum level of system usage, since this can trigger students’ motivation [30] and consequently raise LMS participation [28].

Engagement in the LMS is also low because procrastinating students do not actively take part in it. Procrastination is the act of postponing and delaying the completion of an assignment [18]. The effort and time students must invest in the LMS are another reason for low engagement, since students do not wish to spend more time on the LMS [8]. Procrastination can also be a learning strategy adopted by students: such students do interact with the LMS, but their engagement is not active [18]. Untimely and delayed access to LMS materials is likewise associated with student procrastination [31].

Motivation is the student’s desire and enthusiasm to take part in an activity. Student motivation depends on three dimensions: cognitive (self-regulation and the quality of learning strategies), emotional (self-esteem, which relates to the student’s performance) and behavioural (the student’s self-efficacy); these are the sources of motivation [30]. Motivation is in turn influenced by participation in the LMS [28]. Students’ motivation is also shaped by the social environment, mutual trust within the learning system and the rewards they receive for participating in it [32]. Nguyen et al. [4] discussed instructor involvement in online learning design; their findings show that VLE engagement is not only substantially affected by the learning design but is particularly influenced by how educators, within and across modules, balance their learning design practices on a week-by-week basis. Incorporating continuous assessment into a module was found to increase student engagement with that module, as measured by interactions with the VLE. Assessment in the learning management system therefore generates greater engagement when the teacher structures the learning activities well. A summary of the findings and the LMS engagement factors is given in Tab. 4.
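The procrastination signal discussed above, delayed first access to LMS material, can be computed from timestamps. This is a sketch under stated assumptions: the one-week window, the 80% cut-off and the treatment of never-accessed material as procrastination are illustrative choices, not values taken from [31] or from the reviewed studies.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Optional


def access_delays(release: datetime,
                  first_access: Dict[str, Optional[datetime]]) -> Dict[str, Optional[float]]:
    """Hours between a material's release and each student's first access (None = never accessed)."""
    return {
        student: None if t is None else (t - release).total_seconds() / 3600
        for student, t in first_access.items()
    }


def flag_procrastinators(delays: Dict[str, Optional[float]], window_hours: float,
                         late_fraction: float = 0.8) -> List[str]:
    """Flag students who first open the material late in the window, or never open it.

    late_fraction=0.8 means "first opened after 80% of the window had elapsed"
    (a hypothetical cut-off).
    """
    cutoff = window_hours * late_fraction
    return sorted(s for s, h in delays.items() if h is None or h >= cutoff)


release = datetime(2020, 3, 2, 9, 0)
first_access = {
    "s1": release + timedelta(hours=2),
    "s2": release + timedelta(hours=150),
    "s3": None,  # never opened the material
}
delays = access_delays(release, first_access)
print(flag_procrastinators(delays, window_hours=168))  # ['s2', 's3']
```

Keeping the raw delays rather than only the flags lets the same data feed a continuous measure of procrastination if a fixed cut-off proves too coarse.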

Table 4: Factors influencing students’ LMS engagement levels, as shown by learning analytics

3.3 [RQ3]: What Are the Learning Analytics Methods Used for Analysing the Student’s Engagement Level?

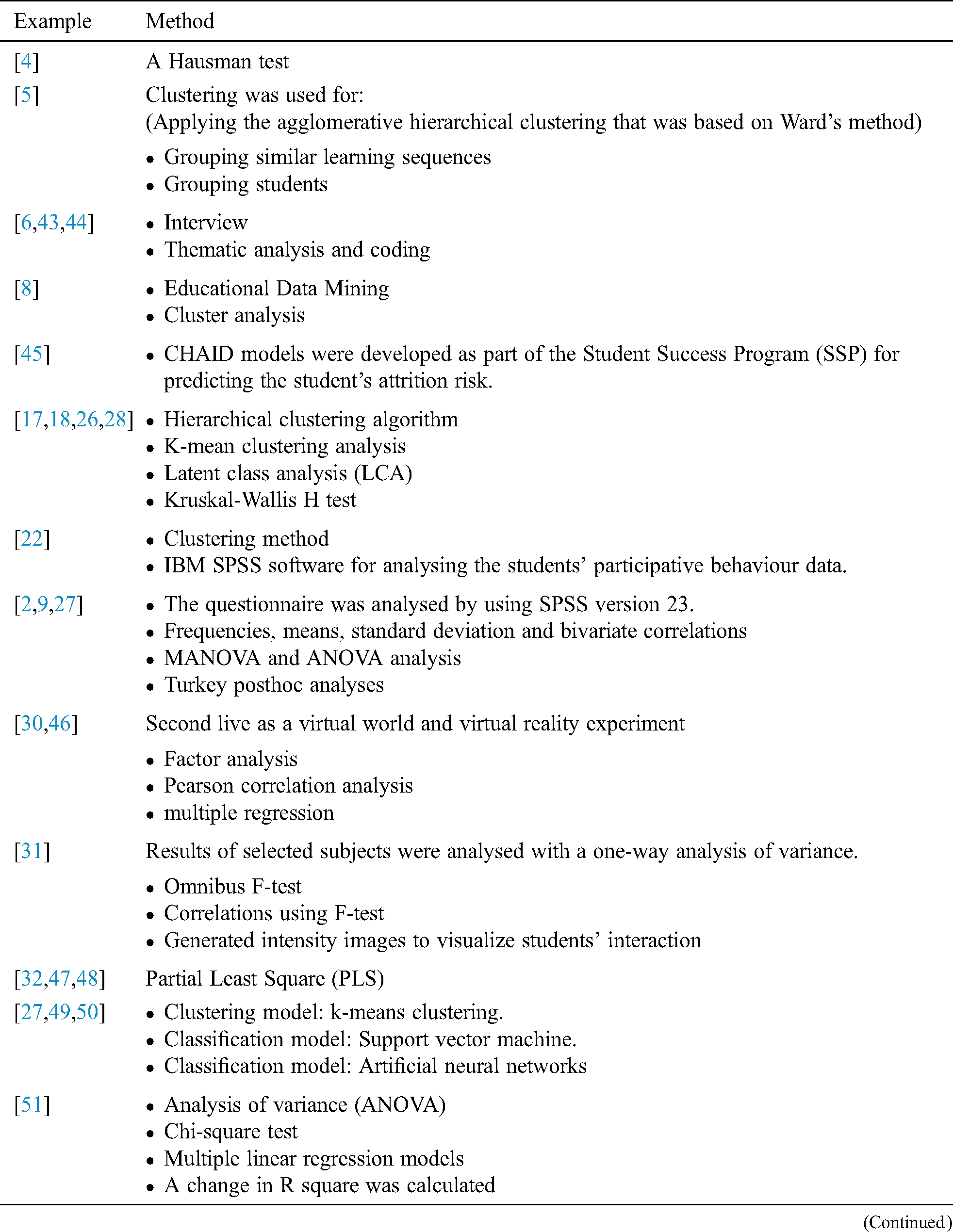

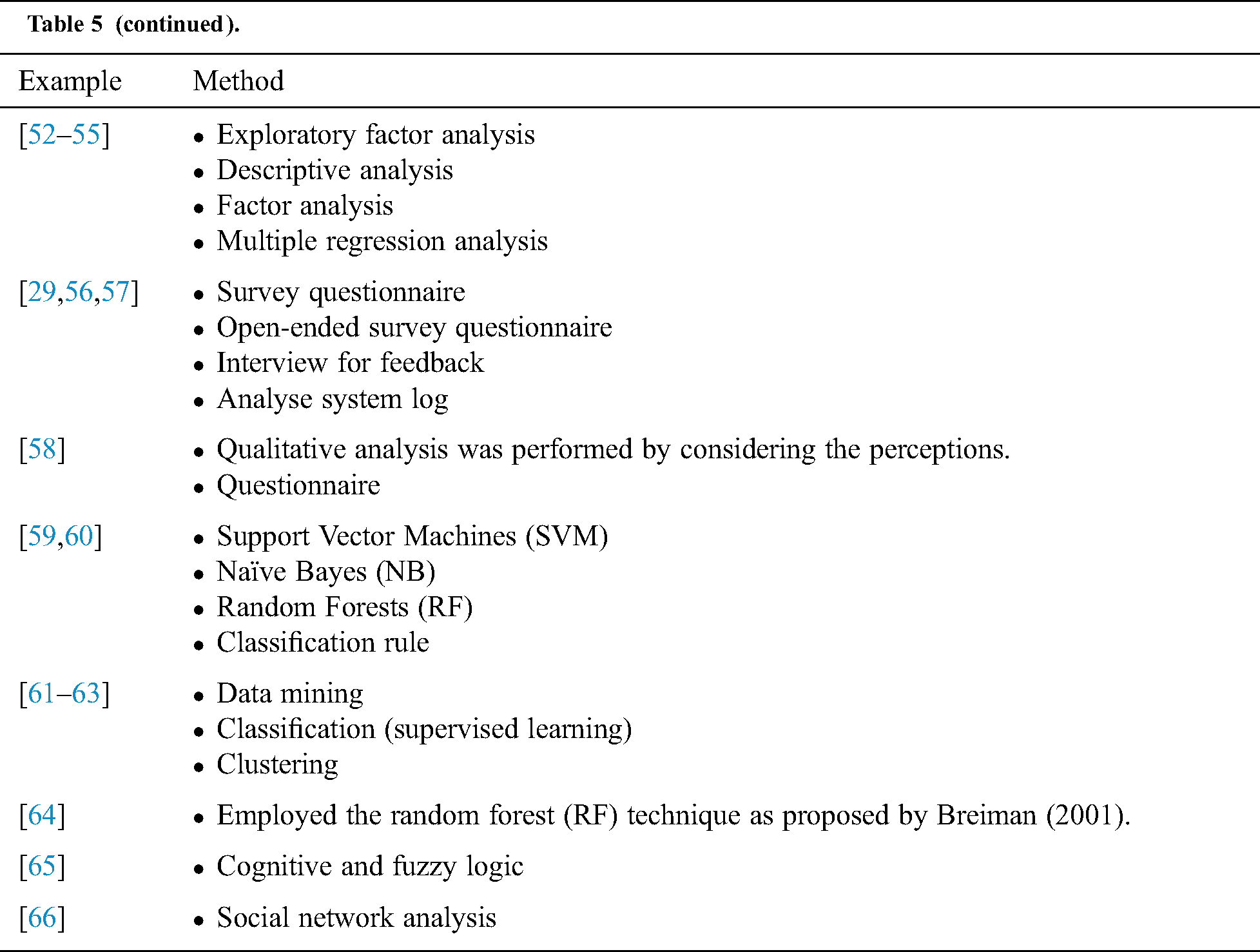

The learning analytics methods applied to LMS trace and log data can be classified as qualitative or quantitative: the former involves surveys, while the latter focuses on analysing, validating and clarifying the obtained data. The methods used by the researchers in the reviewed studies are summarized in Tab. 5. Qualitative methods have been used to determine whether the design of LMS elements affects user engagement [6], to test past theories in a different context [16] and to gather student feedback [29]; these serve as further means of predicting students’ LMS usage behaviour.

Table 5: Types of learning analytic methods

Tab. 5 shows the types of data that can be obtained from the log data of an online platform such as the LMS, or through qualitative methods. This implies that learning analytics is not bound to specific methods. The methods vary with the research aims and include data mining techniques: classification and clustering methods [8,17,22,27,28,67] are used to group the data and to relate variables, while ANOVA and MANOVA [2,27,51,68] are used together with multiple regression to establish correlations between the subjects of study. Besides descriptive analysis, which can be used to validate research results [52], some researchers also used partial least squares in their data analysis and to test developed models [32,47].
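As an illustration of the clustering methods mentioned above, the sketch below runs plain k-means (Lloyd’s algorithm) on two hypothetical per-student features, weekly logins and weekly forum posts. The reviewed studies may have used other clustering algorithms, feature sets or initialisation schemes; initialising with the first k points is a simplification chosen here for determinism, and in practice features would usually be standardised first.

```python
import math
from typing import List, Sequence, Tuple


def kmeans(points: Sequence[Tuple[float, ...]], k: int, iters: int = 20) -> List[int]:
    """Lloyd's k-means; returns a cluster label for each point.

    Initial centroids are simply the first k points (a deterministic simplification;
    k-means++ or several random restarts are more usual in practice).
    """
    centroids = [tuple(p) for p in points[:k]]
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            # Keep the old centroid if a cluster ends up empty.
            new_centroids.append(
                tuple(sum(dim) / len(members) for dim in zip(*members)) if members else centroids[c]
            )
        if new_centroids == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = new_centroids
    return labels


# Hypothetical per-student features: (logins per week, forum posts per week).
students = [(1, 0), (2, 1), (1, 1), (10, 6), (12, 7), (11, 5)]
print(kmeans(students, k=2))  # [0, 0, 0, 1, 1, 1]
```

The two resulting clusters separate low-activity from high-activity students; with more features and more clusters, the same procedure yields usage profiles of the kind reported in the reviewed studies.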

4 Challenges and Drawbacks of LMS

The major challenge in learning analytics research is the data. More robust results require more data, and the data volume is so large that specific methods and tools must be used to analyse it according to the researcher’s needs. Even though the LMS has brought advances to education, it has disadvantages: it may not record students’ emotions or the real situations they face during online learning. Complex LMS functions may confuse students and educators about how to use them; more systematic and simpler functions can improve the learning process and thus produce more realistic outcomes. In terms of students’ LMS usage, issues such as procrastination may occur, as students may wait until the last minute to submit assigned tasks.

Although studying students’ LMS engagement through learning analytics has yielded many achievements, this systematic review has limitations that point to the following directions for future research.

With most students in this era being digitally literate, classroom engagement has reached a low point, as lecturers compete against countless distractions from phones, tablets and laptops. More resources should therefore be introduced to enhance educational materials and make learning more enjoyable and effective; future researchers could explore apps that support classroom ‘gamification’, competitive scenarios and points-and-rewards systems.

Since privacy and security have become an increasing concern among students, steps should be taken to strengthen LMS security. Customizing system administration paths, or building more integrated learning environments that allow better security control of the LMS, could therefore be considered in future research.

Despite the successful use of machine learning algorithms in other application domains, this method has seen little use in predicting students’ LMS usage behaviour in the studies reviewed. Since machine learning algorithms can detect learners’ study patterns and behaviour through system automation, we suggest that future researchers apply machine learning to the LMS for a better assessment of students’ engagement levels.
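A minimal sketch of this suggested direction is a logistic regression, trained by batch gradient descent, that predicts a binary outcome (hypothetically labelled 1 = passed) from engagement features. The data, the choice of standardised (z-score) weekly logins and forum posts as features, and the hyperparameters are all invented for illustration; this is not a method taken from the reviewed studies, and a real study would need held-out validation.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                   lr: float = 0.5, epochs: int = 3000) -> Tuple[List[float], float]:
    """Fit weights w and bias b by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss w.r.t. the linear score is (prediction - label).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Predicted probability of the positive class for one student."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical standardised features: (weekly logins, weekly forum posts); label 1 = passed.
X = [(-1.0, -0.8), (-0.8, -1.0), (-1.2, -0.9), (1.0, 0.9), (0.9, 1.1), (1.1, 1.0)]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)
print([round(predict_proba(w, b, x), 3) for x in X])
```

Logistic regression is chosen here only because it is small enough to write out in full and its weights are directly interpretable as the direction in which each engagement feature shifts the predicted outcome.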

A comprehensive SLR was performed on engagement factors in the Learning Management System (LMS) to identify the factors influencing students’ LMS engagement, the intensity of that engagement and students’ engagement strategies. Several approaches can make the LMS learning environment more effective, such as educators adopting more active strategies towards students. Programme requirements must also be met, and educators need to focus on student performance as well as on distinctive tasks. This is important for increasing student engagement, since students’ motivation towards the LMS is the biggest challenge to address. LMS design, rewards and student satisfaction are critical factors of engagement. Many techniques can serve as learning analytics tools; these can help institutions identify students’ needs and map student requirements to institutional objectives. Since learning analytics and learning management systems form an active research area with a substantial number of publications, the engagement factors extracted through this SLR point to the need to further raise LMS engagement levels. Besides offering an overview of existing LMS research and of engagement in LMS environments, this research provides supplementary information on learning analytics methods, thereby supporting better learning analytics research.

Funding Statement: This research was supported by the University of Malaya, Bantuan Khas Penyelidikan under the research grant of BKS083-2017 and Fundamental Research Grant Scheme (FRGS) under Grant number FP112-2018A from the Ministry of Education Malaysia, Higher Education.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. H. Aldowah, H. Al-Samarraie and W. M. Fauzy. (2019). “Educational data mining and learning analytics for 21st century higher education: A review and synthesis,” Telematics and Informatics, vol. 37, pp. 13–49.

2. N. R. Aljohani, A. Daud, R. A. Abbasi, J. S. Alowibdi, M. Basheri et al. (2019). “An integrated framework for course adapted student learning analytics dashboard,” Computers in Human Behavior, vol. 92, pp. 679–690.

3. I. H. Jo, D. Kim and M. Yoon. (2015). “Constructing proxy variable to measure adult learners’ time management strategies in LMS,” Educational Technology and Society, vol. 18, no. 3, pp. 214–225.

4. Q. Nguyen, B. Rienties, L. Toetenel, R. Ferguson and D. Whitelock. (2017). “Examining the designs of computer-based assessment and its impact on student engagement, satisfaction, and pass rates,” Computers in Human Behavior, vol. 76, pp. 703–714.

5. J. Jovanović, D. Gašević, S. Dawson, A. Pardo and N. Mirriahi. (2017). “Learning analytics to unveil learning strategies in a flipped classroom,” Internet and Higher Education, vol. 33, pp. 74–85.

6. N. Zanjani, S. L. Edwards, S. Nykvist and S. Geva. (2017). “The important elements of LMS design that affect user engagement with e-learning tools within LMSs in the higher education sector,” Australasian Journal of Educational Technology, vol. 33, no. 1, pp. 19–31.

7. N. Emelyanova and E. Voronina. (2014). “Introducing a learning management system at a Russian university: Students’ and teachers’ perceptions,” International Review of Research in Open and Distance Learning, vol. 15, no. 1, pp. 272–289.

8. R. Cerezo, M. Sánchez-Santillán, M. P. Paule-Ruiz and J. C. Núñez. (2016). “Students’ LMS interaction patterns and their relationship with achievement: A case study in higher education,” Computers and Education, vol. 96, pp. 42–54.

9. B. Rienties and L. Toetenel. (2016). “The impact of learning design on student behaviour, satisfaction and performance: A cross-institutional comparison across 151 modules,” Computers in Human Behavior, vol. 60, pp. 333–341.

10. K. Casey and D. Azcona. (2017). “Utilizing student activity patterns to predict performance,” International Journal of Educational Technology in Higher Education, vol. 14, no. 1, pp. 12–15.

11. B. A. Schwendimann, M. J. Rodriguez-Triana, A. Vozniuk, L. P. Prieto, M. S. Boroujeni et al. (2017). “Perceiving learning at a glance: A systematic literature review of learning dashboard research,” IEEE Transactions on Learning Technologies, vol. 10, no. 1, pp. 30–41.

12. S. G. Nunn, J. T. Avella, M. Kebritchi and T. Kanai. (2016). “Learning analytics methods, benefits, and challenges in higher education: A systematic literature review,” Online Learning, vol. 20, no. 2, pp. 13–29.

13. Z. Papamitsiou and A. A. Economides. (2014). “Learning analytics and educational data mining in practice: A systemic literature review of empirical evidence,” Educational Technology and Society, vol. 17, no. 4, pp. 49–64.

14. B. Kitchenham. (2004). “Procedures for Performing Systematic Reviews,” Keele, UK, Keele University, vol. 33, pp. 12–15.

15. B. Kitchenham, O. Pearl Brereton, D. Budgen, M. Turner, J. Bailey et al. (2009). “Systematic literature reviews in software engineering - A systematic literature review,” Information and Software Technology, vol. 51, no. 1, pp. 7–15.

16. Z. Wang, T. Anderson, L. Chen and E. Barbera. (2017). “Interaction pattern analysis in cMOOCs based on the connectivist interaction and engagement framework,” British Journal of Educational Technology, vol. 48, no. 2, pp. 683–699.

17. N. Mirriahi, J. Jovanovic, S. Dawson, D. Gašević and A. Pardo. (2018). “Identifying engagement patterns with video annotation activities: A case study in professional development,” Australasian Journal of Educational Technology, vol. 34, no. 1, pp. 57–72.

18. A. Pardo, D. Gasevic, J. Jovanovic, S. Dawson and N. Mirriahi. (2019). “Exploring student interactions with preparation activities in a flipped classroom experience,” IEEE Transactions on Learning Technologies, vol. 12, no. 3, pp. 333–346.

19. J. Maldonado-Mahauad, M. Pérez-Sanagustín, R. F. Kizilcec, N. Morales and J. Munoz-Gama. (2018). “Mining theory-based patterns from Big data: Identifying self-regulated learning strategies in Massive Open Online Courses,” Computers in Human Behavior, vol. 80, pp. 179–196.

20. Á. Fidalgo-Blanco, M. L. Sein-Echaluce, F. J. García-Peñalvo and M. Á. Conde. (2015). “Using learning analytics to improve teamwork assessment,” Computers in Human Behavior, vol. 47, pp. 149–156.

21. A. F. Wise and Y. Cui. (2018). “Unpacking the relationship between discussion forum participation and learning in MOOCs,” in Proc. of the 8th Int. Conf. on Learning Analytics and Knowledge - LAK ’18, pp. 330–339.

22. Y. S. Su, T. J. Ding and C. F. Lai. (2017). “Analysis of students engagement and learning performance in a social community supported computer programming course,” Eurasia Journal of Mathematics, Science and Technology Education, vol. 13, no. 9, pp. 6189–6201. [Google Scholar]

23. H. He, Q. Zheng, D. Di and B. Dong. (2019). “How learner support services affect student engagement in online learning environments,” IEEE Access, vol. 7, pp. 49961–49973. [Google Scholar]

24. K. Mouri, H. Ogata and N. Uosaki. (2017). “Learning analytics in a seamless learning environment,” in ACM Int. Conf. Proc. Series, pp. 348–357. [Google Scholar]

25. P. D. Barua, X. Zhou, R. Gururajan and K. C. Chan. (2019). “Determination of factors influencing student engagement using a learning management system in a tertiary setting,” in Proc. - 2018 IEEE/WIC/ACM Int. Conf. on Web Intelligence, WI 2018, Santiago, Chile: IEEE, pp. 604–609. [Google Scholar]

26. S. Aguilar, S. Lonn and S. D. Teasley. (2014). “Perceptions and use of an early warning system during a higher education transition program,” in ACM International Conf. Proc. Series, pp. 113–117. [Google Scholar]

27. T. Soffer, T. Kahan and E. Livne. (2017). “E-assessment of online academic courses via students’ activities and perceptions,” Studies in Educational Evaluation, vol. 54, pp. 83–93.

28. L. Y. Li and C. C. Tsai. (2017). “Accessing online learning material: Quantitative behavior patterns and their effects on motivation and learning performance,” Computers and Education, vol. 114, pp. 286–297.

29. Y. Chen, Y. Wang, Kinshuk and N. S. Chen. (2014). “Is FLIP enough? or should we use the FLIPPED model instead?,” Computers and Education, vol. 79, pp. 16–27.

30. N. Pellas. (2014). “The influence of computer self-efficacy, metacognitive self-regulation and self-esteem on student engagement in online learning programs: Evidence from the virtual world of Second Life,” Computers in Human Behavior, vol. 35, pp. 157–170.

31. A. AlJarrah, M. K. Thomas and M. Shehab. (2018). “Investigating temporal access in a flipped classroom: procrastination persists,” International Journal of Educational Technology in Higher Education, vol. 15, no. 1, pp. 363.

32. X. Zhang, Y. Meng, P. Ordóñez de Pablos and Y. Sun. (2019). “Learning analytics in collaborative learning supported by Slack: From the perspective of engagement,” Computers in Human Behavior, vol. 92, pp. 625–633.

33. J. Ballard and P. I. Butler. (2016). “Learner enhanced technology: Can activity analytics support understanding engagement a measurable process?,” Journal of Applied Research in Higher Education, vol. 8, no. 1, pp. 18–43.

34. Q. Nguyen, M. Huptych and B. Rienties. (2018). “Linking students’ timing of engagement to learning design and academic performance,” in LAK’18: Int. Conf. on Learning Analytics and Knowledge, Sydney, NSW, Australia. ACM, New York, NY, USA, pp. 10.

35. C. Schumacher and D. Ifenthaler. (2018). “Features students really expect from learning analytics,” Computers in Human Behavior, vol. 78, pp. 397–407.

36. D. Gašević, A. Zouaq and R. Janzen. (2013). “‘Choose Your Classmates, Your GPA Is at Stake!’: The Association of Cross-Class Social Ties and Academic Performance,” American Behavioral Scientist, vol. 57, no. 10, pp. 1460–1479.

37. J. Broadbent. (2016). “Academic success is about self-efficacy rather than frequency of use of the learning management system,” Australasian Journal of Educational Technology, vol. 32, no. 4, pp. 38–49.

38. L. Agnihotri, A. Essa and R. Baker. (2017). “Impact of student choice of content adoption delay on course outcomes,” in ACM Int. Conf. Proc. Series, pp. 16–20.

39. S. H. Zhong, Y. Li, Y. Liu and Z. Wang. (2017). “A computational investigation of learning behaviors in MOOCs,” Computer Applications in Engineering Education, vol. 9999, pp. 1–13.

40. N. Kelly, M. Montenegro, C. Gonzalez, P. Clasing, A. Sandoval et al. (2017). “Combining event- and variable-centred approaches to institution-facing learning analytics at the unit of study level,” International Journal of Information and Learning Technology, vol. 34, no. 1, pp. 63–78.

41. M. Firat. (2016). “Determining the effects of LMS learning behaviors on academic achievement in a learning analytic perspective,” Journal of Information Technology Education: Research, vol. 15, pp. 75–87.

42. K. B. Ooi, J. J. Hew and V. H. Lee. (2018). “Could the mobile and social perspectives of mobile social learning platforms motivate learners to learn continuously?,” Computers and Education, vol. 120, pp. 127–145.

43. J. Aguilar and P. Valdiviezo-Díaz. (2017). “Learning analytic in a smart classroom to improve the eEducation,” in 2017 4th Int. Conf. on eDemocracy and eGovernment, ICEDEG 2017, Quito, Ecuador: IEEE, pp. 32–39.

44. B. Chen, Y. H. Chang, F. Ouyang and W. Zhou. (2018). “Fostering student engagement in online discussion through social learning analytics,” Internet and Higher Education, vol. 37, pp. 21–30.

45. E. Seidel and S. Kutieleh. (2017). “Using predictive analytics to target and improve first year student attrition,” Australian Journal of Education, vol. 61, no. 2, pp. 200–218.

46. D. Bhattacharjee, A. Paul, J. H. Kim and P. Karthigaikumar. (2018). “An immersive learning model using evolutionary learning,” Computers and Electrical Engineering, vol. 65, pp. 236–249.

47. F. H. Wang. (2017). “An exploration of online behaviour engagement and achievement in flipped classroom supported by learning management system,” Computers and Education, vol. 114, pp. 79–91.

48. R. S. Baragash and H. Al-Samarraie. (2018). “Blended learning: Investigating the influence of engagement in multiple learning delivery modes on students’ performance,” Telematics and Informatics, vol. 35, no. 7, pp. 2082–2098.

49. K. Castro-Wunsch, A. Ahadi and A. Petersen. (2017). “Evaluating neural networks as a method for identifying students in need of assistance,” in Proc. of the Conf. on Integrating Technology into Computer Science Education, ITiCSE, New York, NY, pp. 111–116.

50. J. Gardner and C. Brooks. (2018). “Coenrollment networks and their relationship to grades in undergraduate education,” in Proc. of the 8th Int. Conf. on Learning Analytics & Knowledge - LAK ’18, vol. 18, pp. 295–304.

51. D. Gašević, S. Dawson, T. Rogers and D. Gasevic. (2016). “Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success,” Internet and Higher Education, vol. 28, pp. 68–84.

52. S. Singh, R. Misra and S. Srivastava. (2017). “An empirical investigation of student’s motivation towards learning quantitative courses,” International Journal of Management Education, vol. 15, no. 2, pp. 47–59.

53. R. Abzug. (2015). “Predicting success in the undergraduate hybrid business ethics class: Conscientiousness directly measured,” Journal of Applied Research in Higher Education, vol. 7, no. 2, pp. 400–411.

54. R. A. Ellis, F. Han and A. Pardo. (2017). “Improving learning analytics - Combining observational and self-report data on student learning,” Educational Technology and Society, vol. 20, no. 3, pp. 158–169.

55. A. S. M. Niessen, R. R. Meijer and J. N. Tendeiro. (2016). “Predicting performance in higher education using proximal predictors,” PLoS One, vol. 11, no. 4, pp. e0153663.

56. M. Charalampidi and M. Hammond. (2016). “How do we know what is happening online?: A mixed methods approach to analysing online activity,” Interactive Technology and Smart Education, vol. 13, no. 4, pp. 274–288.

57. A. Shibani, S. Knight and S. Buckingham Shum. (2020). “Educator perspectives on learning analytics in classroom practice,” Internet and Higher Education, vol. 46, pp. 100730.

58. M. A. Conde, R. Colomo-Palacios, F. J. García-Peñalvo and X. Larrucea. (2018). “Teamwork assessment in the educational web of data: A learning analytics approach towards ISO 10018,” Telematics and Informatics, vol. 35, no. 3, pp. 551–563.

59. M. Hlosta, Z. Zdrahal and J. Zendulka. (2017). “Ouroboros: Early identification of at-risk students without models based on legacy data,” in ACM Int. Conf. Proc. Series, pp. 6–15.

60. Z. Papamitsiou and A. A. Economides. (2017). “Exhibiting achievement behavior during computer-based testing: What temporal trace data and personality traits tell us?,” Computers in Human Behavior, vol. 75, pp. 423–438.

61. M. C. S. Manzanares, R. M. Sánchez, C. I. García Osorio and J. F. Díez-Pastor. (2017). “How do B-learning and learning patterns influence learning outcomes?,” Frontiers in Psychology, vol. 8, pp. 1–13.

62. R. Asif, A. Merceron, S. A. Ali and N. G. Haider. (2017). “Analyzing undergraduate students’ performance using educational data mining,” Computers and Education, vol. 113, pp. 177–194.

63. C. Angeli, S. K. Howard, J. Ma, J. Yang and P. A. Kirschner. (2017). “Data mining in educational technology classroom research: Can it make a contribution?,” Computers and Education, vol. 113, pp. 226–242.

64. D. Kim, Y. Park, M. Yoon and I. H. Jo. (2016). “Toward evidence-based learning analytics: Using proxy variables to improve asynchronous online discussion environments,” Internet and Higher Education, vol. 30, pp. 30–43.

65. Q. Liu, R. Wu, E. Chen, G. Xu and Y. Su. (2018). “Fuzzy cognitive diagnosis for modelling examinee performance,” ACM Transactions on Intelligent Systems and Technology, vol. 9, no. 4, pp. 1–26.

66. S. K. Jan. (2018). “Identifying online communities of inquiry in higher education using social network analysis,” Research in Learning Technology, vol. 26, pp. 12–15.

67. Y. Y. Song and Y. Lu. (2015). “Decision tree methods: Applications for classification and prediction,” Shanghai Archives of Psychiatry, vol. 27, no. 2, pp. 130–135.

68. R. Tirado-Morueta, P. Maraver-López and Á. Hernando-Gómez. (2017). “Patterns of participation and social connections in online discussion forums,” Small Group Research, vol. 48, no. 6, pp. 639–664.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.