DOI:10.32604/csse.2022.021398

Computer Systems Science & Engineering

Article

An Effective and Secure Quality Assurance System for a Computer Science Program

Computer Science Department, College of Computer and Information Sciences, Imam Mohammad Ibn Saud Islamic University (IMSIU), Riyadh, Saudi Arabia

*Corresponding Author: Mohammad AlKhatib. Email: mohkhatib83@gmail.com

Received: 01 July 2021; Accepted: 05 August 2021

Abstract: Improving quality assurance (QA) processes and acquiring accreditation are top priorities for academic programs. Learning outcomes (LOs) assessment and continuous quality improvement are core components of a quality assurance system (QAS). Current assessment methods suffer from deficiencies in accuracy and reliability, and they lack well-organized processes for continuous improvement planning. Moreover, the lack of automation and integration in QA processes is a major obstacle to developing an efficient quality system. There is also a pressing need to adopt security protocols that provide the required security services to safeguard the valuable information processed by the QAS. This research proposes an effective methodology for LOs assessment and continuous improvement processes. The proposed approach yields more accurate and reliable LOs assessment results and provides a systematic way of using those results for continuous quality improvement. These systematic and well-specified QA processes were then used to model and implement an automated and secure QAS that performs quality-related processes efficiently. The proposed system adopts two security protocols that provide confidentiality, integrity, and authentication for quality data and reports. The security protocols also prevent source repudiation, which is important in a quality reporting system. This is achieved by implementing strong cryptographic algorithms. The QAS enables the efficient data collection and processing required for analysis and interpretation. It also lays the groundwork for datasets that can be used in future artificial intelligence (AI) research to support decision making and improve the quality of academic programs. The proposed approach was successfully implemented in a real case study of a computer science program.
The current study serves scientific programs struggling to achieve academic accreditation, and paves the way for fully automating and integrating QA processes and adopting modern AI and security technologies to develop an effective QAS.

Keywords: Quality assurance; information security; cryptographic algorithms; education programs

Recent decades have witnessed the increasing involvement of quality assurance systems (QAS) in different fields of business. This is due to the great benefits that can be accomplished by implementing effective quality assurance (QA) processes, including improved performance and outcomes, effective use of resources, and increased revenues [1]. Higher education organizations have realized the importance of adopting efficient QA processes in their education systems, for both programs and institutions. Almost all top-ranked academic institutions follow well-organized QA procedures that guarantee the continuous improvement of program quality and students' performance. This contributes to improving the quality of academic programs' outcomes and increasing alumni employment rates. Such institutions become a favorite destination for many students and governments all over the world [2].

In academic programs, the core functions of QA processes focus on assessing students' performance in achieving the predefined learning outcomes (LOs) and providing effective improvement plans at both the course and program levels. LOs are defined as the knowledge and skills that students acquire upon completing a certain academic program. Thus, achieving the intended LOs is a very important indicator for evaluating students' performance, and hence the quality of the academic program. In addition, LOs assessment results represent the most influential factor to be considered when establishing improvement plans for both programs and institutions [2–4]. Therefore, performing LOs assessment in an accurate and transparent manner, and adopting a systematic way of using the assessment results to develop continuous improvement plans, are the main concerns of a QAS and play a crucial role in judging a program's quality level. Unfortunately, the majority of previously proposed LOs assessment approaches rely on KPIs and estimated results to assess students' performance. For example, a student's achievement of a certain outcome is estimated on a scale from 1 to 5 according to his progress in particular task(s). Such an estimate may not accurately reflect actual students' performance; many factors may interfere with the results, including the personal characteristics of the assessor. Another deficiency of current assessment methodologies is that they use a sample of students and assessment methods to assess LOs achievement for an academic program. The sample may not be representative if it is not taken appropriately.
Other programs use alumni surveys as an indirect assessment of program learning outcomes (PLOs). This is not appropriate because alumni might be affected by many outside factors, such as the work environment, whereas assessment should focus on the knowledge and skills students have earned at the time of graduation. Again, this may affect the accuracy of the assessment results and improvement plans [5].

The absence of a well-defined and effective approach to LOs assessment and continuous improvement is a major obstacle to automating those processes and developing an intelligent, fully automated, and secure QAS in the field of education. The lack of automation affects data collection, normalization, and visualization. This prevents the use of state-of-the-art technologies in the fields of information security (IS) and artificial intelligence (AI) to improve the efficiency of the QAS, and hence the quality of the academic environment.

Specialized organizations such as the Accreditation Board for Engineering and Technology (ABET) provide guidelines on the QA processes that academic institutions in computer science and engineering should follow to satisfy quality requirements and obtain accreditation [6]. Although such processes have become relatively well known for ABET, they remain vague and inadequately investigated for emerging accreditation organizations, such as the National Center for Academic Accreditation and Assessment (NCAAA), which has recently attracted increasing interest in the Middle East, in particular in the Kingdom of Saudi Arabia. Given that relatively few research articles have investigated QA procedures for NCAAA accreditation, and for CS programs in particular, it has become necessary to provide a guide for the large number of academic programs seeking accreditation from NCAAA and ABET.

This article makes two main contributions that seek to close the above-mentioned gaps and develop an efficient QAS for academic programs. First, it introduces an effective and systematic LOs assessment methodology that ensures the accuracy and representativeness of the assessment results, and shows in detail how to use those results for continuous quality improvement at the different levels of the academic program. The proposed methodology was implemented in a real case study to verify its effectiveness. The research activities were performed within the context of the ABET and NCAAA accreditation processes for the Bachelor program in Computer Science (CS) at Imam Mohammad Ibn Saud Islamic University (IMSIU). The case study aims to help the program's QA staff develop hands-on skills related to accreditation procedures through a successful experience. It is worth mentioning that the academic program studied in this research has received full academic accreditation from both ABET and NCAAA.

The second contribution of this research is a well-designed, automated, and secure QAS that performs the relevant quality processes in an efficient and reliable manner. Several state-of-the-art cryptographic algorithms were used to develop effective security protocols that provide the required security services to protect the valuable information processed by the quality system.

Another important value of this research is that it represents a starting point toward the intelligent automation and integration of all QA processes in education, and it enables modern technologies to be used to improve decision making and the quality of academic programs. Note that using AI and IS technologies requires the efficient collection and processing of data in digital format beforehand.

The CS program in which the study was applied has official course and program specifications, which define the LOs at the course and program levels, respectively. All courses in the program curriculum, including their course learning outcomes (CLOs), are mapped to one or more PLOs. The achievement level of a particular PLO can be measured as the average achievement level of all CLOs aligned with that PLO in the courses used in the assessment process. Each course is assigned to a course coordinator who is responsible for managing course delivery and coordinating the relevant tasks among instructors of the same course. Every instructor prepares a course report (CR) showing the CLOs achievement and the course evaluation survey (CES) results for his own section(s), while the coordinator prepares the comprehensive course report (CCR) showing the overall CLOs assessment results at the course level, the improvement plans, and the survey analysis results. The quality assurance and development committee (QADC) manages almost all QA and accreditation tasks in the CS department.
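The averaging rule for PLO achievement described above can be written compactly. The symbols are introduced here only for illustration: $M_p$ is the set of (course, CLO) pairs aligned with PLO $p$ in the courses used for assessment, and $a_{c,k}$ is the achievement level (in percent) of CLO $k$ in course $c$. Reading "average" as an unweighted mean, as the text suggests:

$$
A_p = \frac{1}{\lvert M_p \rvert} \sum_{(c,k) \in M_p} a_{c,k}
$$

For instance, a hypothetical PLO aligned with three CLOs achieved at 60%, 70%, and 80% would have $A_p = (60 + 70 + 80)/3 = 70\%$.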

Section 2 of this article presents the literature review. The proposed LOs assessment methodology and QAS are introduced in Sections 3 and 4, respectively. Section 5 presents the results and discussion, and Section 6 concludes the article.

Researchers have conducted many studies to identify and standardize QA procedures and accreditation processes for academic programs in different fields of study [6–8]. In [9], the authors attempted to develop standards and QA procedures for bachelor programs in the emerging field of computer security. The common goal of these studies is to improve the quality of academic programs by following certain QA processes and complying with standards specified by accreditation organizations such as ABET. Some researchers studied the adequacy of the LOs proposed by ABET in the field of engineering and found that the LOs should be upgraded to include more of the skills engineers need when practicing their profession in real work environments [10]. The authors of [11] discussed different approaches to designing curriculum courses in electrical engineering in a way that enables students to better achieve the LOs proposed by ABET. Other researchers developed a generic model for preparing the self-study report and identifying weaknesses of academic programs according to ABET quality standards [12]. Some researchers shared successful experiences in achieving ABET accreditation by presenting the QA practices and continuous improvement plans their programs followed during the accreditation process [13,14]. Essa et al. [15] proposed a web-based application to organize and facilitate data collection for LOs assessment and the writing of the quality reports required for ABET accreditation. In [15], the main obstacles to achieving ABET accreditation were also presented and discussed. These obstacles include the lack of training and commitment, the workload of faculty members, and staff shortages. A collaborative project between the AlBaha School of Computing and Uppsala University in Sweden to develop and implement a QAS satisfying ABET requirements was presented in [16].
Harmanani [17] highlighted the importance of adopting an LOs-based teaching approach in academic programs. Researchers in [18] presented a framework showing the main QA processes and activities required to achieve ABET accreditation for an information systems program. Taleb et al. [19] proposed a holistic framework for both ABET and NCAAA accreditation that attempted to unify the main reports and procedures required by the two well-known accreditation bodies. The authors of [20] suggested an assessment methodology for ABET accreditation that uses KPIs and rubrics to assess the LOs attainment level. It relies on estimation to categorize students according to their LOs achievement level, and uses the alumni survey as an indirect assessment.

Researchers in [21] proposed a model, based on the Rasch measurement model, to measure students' performance in the final exam, with the aim of improving teaching and assessment methods at the course level. Another study [22] employed the Rasch model to evaluate the validity and quality of final exam questions and ensure that they are appropriate for measuring students' performance in achieving the course learning outcomes (CLOs). In [23], the authors investigated the potential positive impact of using a digital curriculum on the quality of learning and suggested several ways of implementing it. In [24], researchers highlighted many research gaps related to LOs assessment and QA processes.

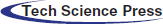

The proposed methodology is depicted in Fig. 1. Its starting point is the assessment preprocessing step, which receives inputs from several sources, including the program specification (PS), course specifications (CSs), QADC members, and faculty. During the preprocessing stage, the plans for evaluating the attainment of CLOs and PLOs are developed. In particular, the mapping matrix between PLOs and the courses that will be used in the assessment processes is established. All courses mapped to each PLO, from the third to the eighth level of study, are used in PLOs assessment. The rationale behind this selection is that courses at the third and fourth levels of study represent early checkpoints that allow students' performance to be assessed and timely corrective actions to be taken, thereby preventing possible deficiencies from propagating to later levels of study. The assessment methods used to measure students' achievement of each LO and the teaching strategies adopted to deliver the LOs are also specified at this stage. This stage is critical and must be performed carefully to ensure accurate and reliable results that reflect actual LOs achievement. Therefore, the inputs must be verified and taken from the official PS and CSs.

The outputs of the preprocessing stage include the PLOs-CLOs mapping scheme, in which every program-level LO is mapped to the associated CLOs of the courses used for assessment. Courses are mapped to the PLOs at the Introductory, Practiced, or Advanced level. The PLOs-CLOs mapping scheme is the cornerstone for evaluating students' performance. The proposed approach yields a more accurate assessment of PLOs, since it relies on students' actual grades in quizzes, labs, exams, projects, and other direct assessment methods. Moreover, it eliminates the need for specific KPIs and rubrics for each PLO, and thus reduces the complexity of the assessment processes. The majority of previous assessment approaches use estimated results and only selected advanced-level courses, which may compromise accuracy and fail to provide a realistic view of students' performance.

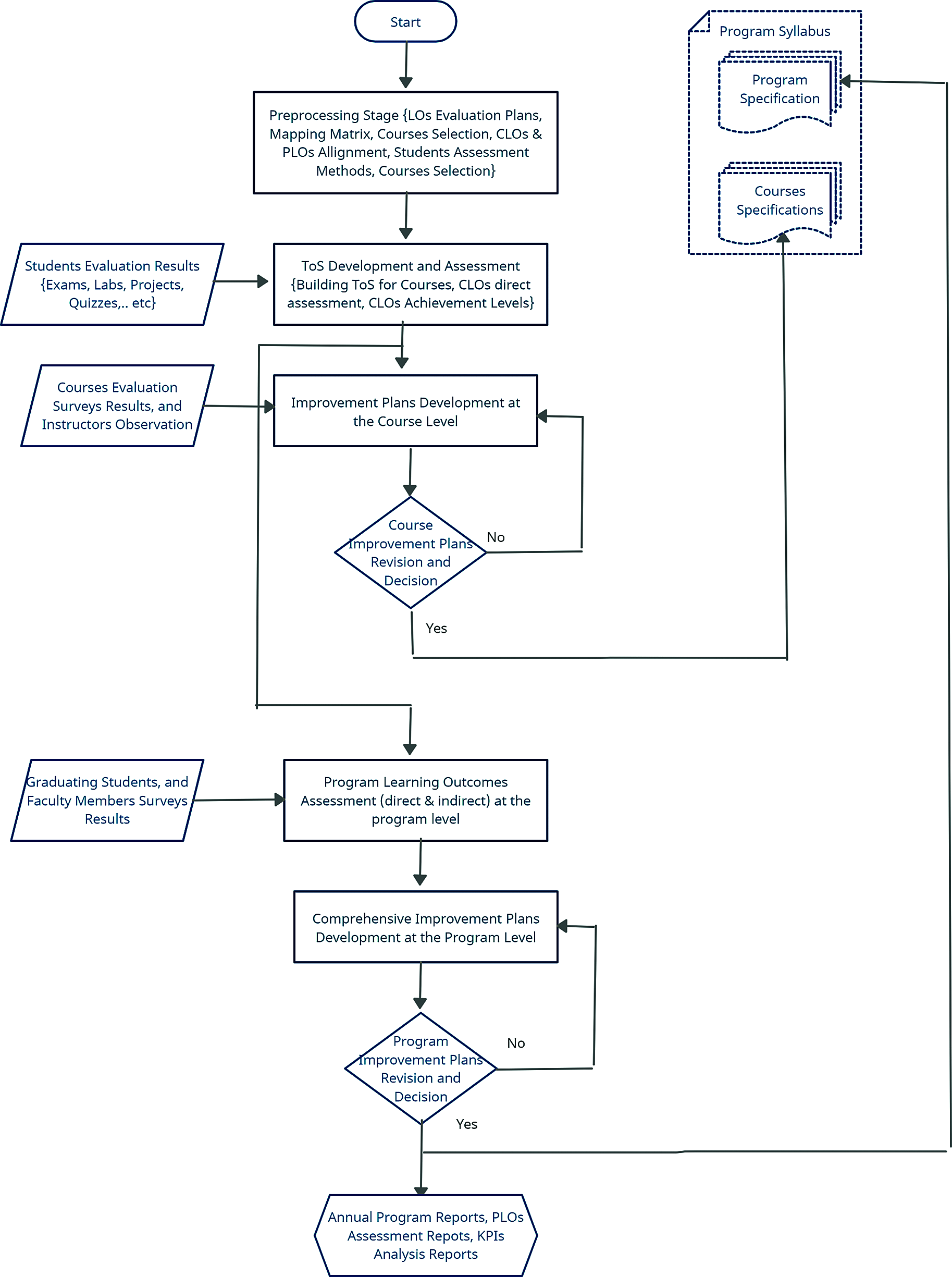

Tab. 1 presents a sample of the PLOs-CLOs mapping scheme for three PLOs, one under each learning domain. The sample shows three (out of eleven) PLOs and their associated courses. For each course, the CLOs mapped to a given PLO are listed in the last column. The complete mapping matrix includes all PLOs and their corresponding courses, and it is the input to the second stage.
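One way to hold the PLOs-CLOs mapping scheme in software is as a flat list of (PLO, course, CLO, level) rows that can be grouped per PLO. The sketch below is a minimal illustration under stated assumptions: the `Mapping` record, the `clos_by_level` helper, and all course codes and CLO identifiers are hypothetical placeholders, not the contents of Tab. 1.

```python
from collections import defaultdict
from typing import NamedTuple


class Mapping(NamedTuple):
    plo: str     # program learning outcome, e.g. "K1", "S1", "V1"
    course: str  # course code (hypothetical placeholders below)
    clo: str     # CLO identifier within the course, e.g. "2.1"
    level: str   # "I" (Introductory), "P" (Practiced), or "A" (Advanced)


# Hypothetical rows for illustration only; the real scheme comes from Tab. 1.
SCHEME = [
    Mapping("S1", "COURSE_A", "2.1", "I"),
    Mapping("S1", "COURSE_B", "2.3", "P"),
    Mapping("S1", "COURSE_C", "2.2", "A"),
    Mapping("K1", "COURSE_A", "1.1", "I"),
]

VALID_LEVELS = {"I", "P", "A"}


def clos_by_level(scheme, plo):
    """Group the (course, CLO) pairs aligned with one PLO by mapping level.

    Every row is checked for a valid level, so a malformed scheme is
    rejected even if the bad row belongs to a different PLO."""
    grouped = defaultdict(list)
    for row in scheme:
        if row.level not in VALID_LEVELS:
            raise ValueError(f"unknown mapping level {row.level!r} for {row.course}")
        if row.plo == plo:
            grouped[row.level].append((row.course, row.clo))
    return dict(grouped)
```

Here `clos_by_level(SCHEME, "S1")` returns one (course, CLO) pair at each of the I, P, and A levels; a PLO with no mapped courses yields an empty dictionary, which is an easy check that every PLO is covered before assessment starts.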

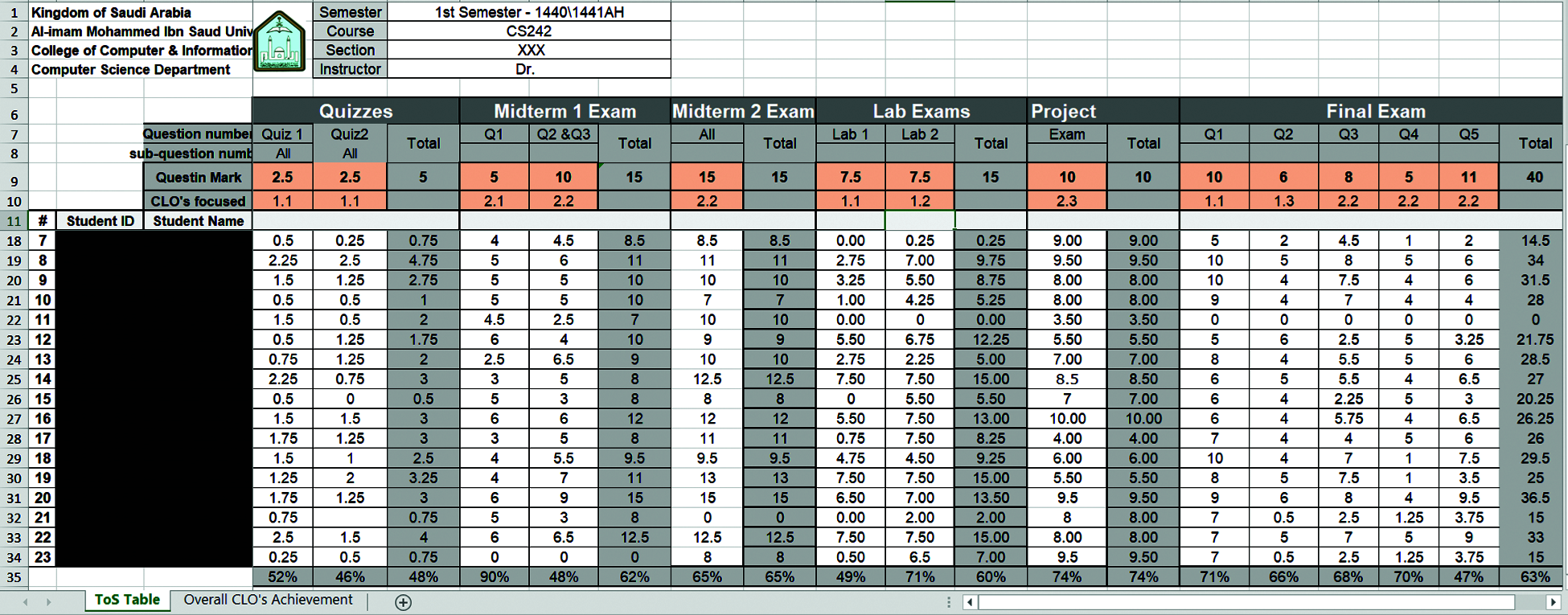

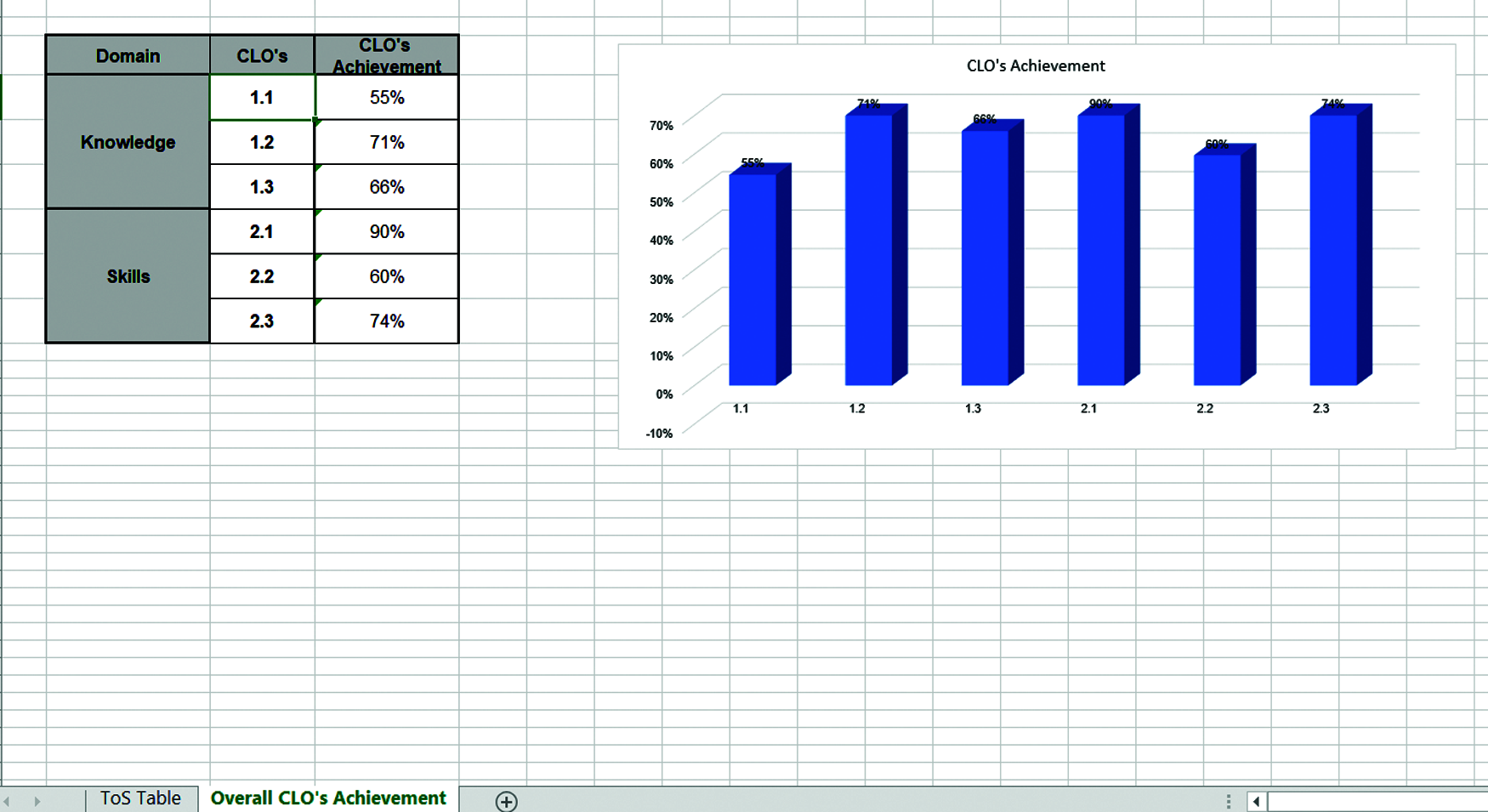

In the second stage, a table of specification (ToS) is developed for each course. The ToS is a useful tool for evaluating the achievement level of LOs at both the course and program levels, and is depicted in Figs. 2 and 3. It consists of two parts: the first contains details about the assessment methods, their associated CLOs, students' information and grades, and the total marks assigned to each assessment, whereas the second part automatically computes the average achievement of each CLO from the data entered in the first part and shows charts summarizing the CLOs achievement results.
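The computation performed by the second part of the ToS can be sketched in a few lines. This is a minimal sketch, not the spreadsheet's actual formulas: it assumes that the achievement on one assessment item is the section's mean score divided by the item's maximum marks, and that a CLO's overall achievement is the unweighted mean over the items that assess it (a marks-weighted mean would be an equally plausible choice). The function names `item_achievement` and `clo_achievement` are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def item_achievement(scores, max_marks):
    """Average achievement (%) of one section on one assessment item,
    e.g. one quiz or one final-exam question tied to a single CLO."""
    if max_marks <= 0 or not scores:
        raise ValueError("need at least one score and a positive maximum mark")
    return 100.0 * mean(scores) / max_marks


def clo_achievement(items, declared_clos=()):
    """items: iterable of (clo_id, max_marks, [student scores]) rows, one per
    assessment item, as entered in the first part of the ToS.
    Returns {clo_id: overall achievement %}, taken here (an assumption) as the
    unweighted mean of the per-item achievements for that CLO."""
    per_clo = defaultdict(list)
    for clo, max_marks, scores in items:
        per_clo[clo].append(item_achievement(scores, max_marks))
    # Every CLO in the course specification must be assessed at least once.
    missing = set(declared_clos) - set(per_clo)
    if missing:
        raise ValueError(f"CLOs never assessed: {sorted(missing)}")
    return {clo: round(mean(values), 1) for clo, values in per_clo.items()}


if __name__ == "__main__":
    # Hypothetical section of three students.
    items = [
        ("1.1", 10, [8, 6, 10]),    # Quiz 1
        ("1.1", 5, [4, 3, 5]),      # Quiz 2
        ("1.1", 20, [14, 10, 18]),  # Lab exam 1
        ("1.1", 10, [9, 5, 7]),     # Final exam, question 1
        ("2.3", 20, [20, 14, 17]),  # Course project
    ]
    print(clo_achievement(items, declared_clos=["1.1", "2.3"]))  # {'1.1': 75.0, '2.3': 85.0}
```

Passing the CLOs listed in the course specification as `declared_clos` turns the "each CLO must be assessed at least once" rule into an explicit check instead of a silent gap.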

The QADC develops an adjustable version of the ToS and sends it to the coordinators. At the beginning of each academic semester, the course coordinator, in collaboration with the course instructors, develops a customized ToS file for the course and shares it with all instructors teaching that course, so that each instructor can use it separately for his section. Thus, the ToS is customized for each course and filled in separately for each section of that course. As can be seen from Fig. 2, the instructor only needs to enter students' grades for each assessment method, and the ToS then calculates the average student achievement of the CLO for each assessment. For example, CLO#1.1 is assessed four times during the academic semester: in the first and second quizzes, the first lab exam, and finally the first question of the final exam. Other CLOs, such as CLO#2.3, are assessed only once, in the course project. Each CLO must be assessed at least once. After the instructors enter students' grades for all course assessments, the ToS automatically calculates the average achievement level of each CLO, as illustrated in Fig. 3. The coordinator uses an extended version of the ToS to compute the overall achievement level of the CLOs at the course level, across all sections of the course on both the male and female sides.
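The coordinator's course-level aggregation can be sketched as combining the per-section CLO results. The article does not state how sections are weighted, so this sketch assumes each section counts in proportion to its number of students; `course_clo_achievement` is a hypothetical helper name.

```python
def course_clo_achievement(sections):
    """sections: list of (n_students, {clo_id: achievement %}), one entry per
    section of the course (male and female sides alike).

    Returns {clo_id: course-level achievement %}. Sections are weighted by
    their number of students, which is an assumption of this sketch."""
    totals, counts = {}, {}
    for n_students, clo_results in sections:
        if n_students <= 0:
            raise ValueError("each section must have at least one student")
        for clo, value in clo_results.items():
            totals[clo] = totals.get(clo, 0.0) + n_students * value
            counts[clo] = counts.get(clo, 0) + n_students
    return {clo: round(totals[clo] / counts[clo], 1) for clo in totals}


if __name__ == "__main__":
    # Two hypothetical sections of the same course.
    sections = [
        (30, {"1.1": 75.0, "2.3": 85.0}),
        (20, {"1.1": 60.0, "2.3": 70.0}),
    ]
    print(course_clo_achievement(sections))  # {'1.1': 69.0, '2.3': 79.0}
```

If the program prefers every section to count equally regardless of size, replacing the weights with 1 turns this into a plain mean of the section results.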


Figure 1: LOs assessment methodology

Figure 2: First part of ToS showing actual students' grades and CLO achievement for each assessment

Figure 3: The second part of ToS presenting the overall achievement levels for all CLOs

In the third stage, improvement plans are developed at the course level. This stage is important to ensure that continuous quality improvement is maintained for every course taught in the program. The process of developing the improvement plans takes its inputs from the previous stage, namely the attainment levels of the CLOs, together with the indirect assessment results obtained from the CES. The section-wise CES and ToS results help the instructor determine the strengths and weaknesses of his own section(s), and hence contribute to effective improvement plans that draw on every course instructor's experience. The improvement plan suggested by each instructor is written in the CR and then submitted to the coordinator, who aggregates the direct and indirect assessment results of all sections, compiles the final results, and reports them in the CCR. The coordinator then develops a mutually agreed-upon course improvement plan. The preparation of this plan takes three inputs into account: the overall CLOs achievement results, the CES results, and the course instructors' opinions based on their experience of course delivery activities.

It should be highlighted that the ToS uses students' actual scores in the different assessment methods in order to produce more accurate and realistic results for students' performance in achieving the CLOs. Another strength of the proposed methodology is that the ToS and assessment methods are unified for each course and applied to all sections and students; no sampling technique is used. This contributes to improving the accuracy and reliability of the assessment results. The ToS represents an initial step toward fully automating the assessment and QA processes, which has been done through the proposed system. Once the course improvement plan is approved by the concerned committees in the department, the necessary actions are taken at the course and program levels to implement the plan in the next academic semester. In the proposed automated QAS, secure protocols are adopted to provide security services for the QA processes included in this methodology.

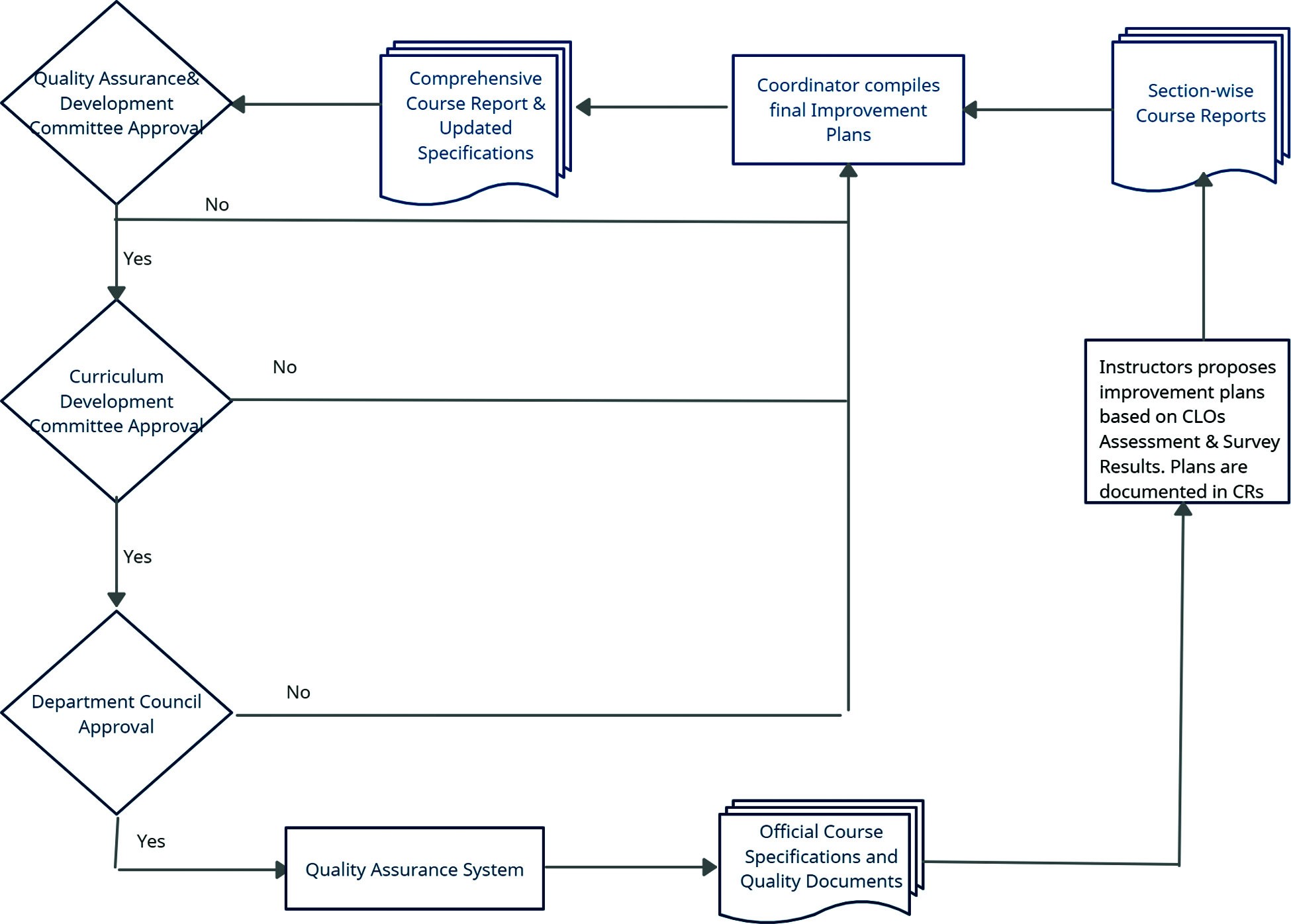

In the fourth stage, the proposed CLOs-based improvement plans are reviewed and approved by the concerned committees in the department. In particular, the proposed plans are first reviewed by the curriculum approval committee to make sure that the proposed changes to the course are scientifically valid and applicable within the program context. In the second review, the QADC double-checks the improvement plans to make sure they satisfy the quality standards for ABET and NCAAA accreditation. The plans are then submitted to the department council for final approval. Once the plan for a particular course is approved by the department, it is incorporated into the program curriculum, and changes, if necessary, are made to the specifications. Again, the system developed in this article allows these QA processes to be carried out automatically and securely.

The fifth stage of the proposed methodology carries out LOs assessment at the program level. It uses the results of the direct CLOs assessment as inputs to the PLOs assessment process, in which the overall average achievement level is calculated for each PLO. The assessment processes in this stage determine the achievement levels of male and female students separately, as well as the combined achievement level for each PLO. This allows students' performance to be analyzed accurately and reveals any variation in PLO achievement between male and female students. This technique is appropriate for academic institutions that have separate environments for male and female students.
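The program-level calculation can be sketched as follows: each PLO is the mean of its aligned CLO results, computed once from the male side's course results and once from the female side's. The article does not say how the combined figure is formed, so this sketch assumes a student-count-weighted mean of the two sides. The helper names, PLO mapping, and numbers are hypothetical.

```python
from statistics import mean


def plo_achievement(plo_map, clo_results):
    """plo_map: {plo: [(course, clo), ...]}; clo_results: {(course, clo): %}.
    Returns {plo: unweighted mean of its aligned CLO achievements}."""
    return {plo: round(mean([clo_results[pair] for pair in pairs]), 1)
            for plo, pairs in plo_map.items()}


def plo_by_gender(plo_map, male_results, female_results, n_male, n_female):
    """PLO achievement for each side, plus a combined figure weighted by the
    number of students on each side (an assumption of this sketch)."""
    male = plo_achievement(plo_map, male_results)
    female = plo_achievement(plo_map, female_results)
    total = n_male + n_female
    combined = {plo: round((n_male * male[plo] + n_female * female[plo]) / total, 1)
                for plo in plo_map}
    return {"male": male, "female": female, "combined": combined}


if __name__ == "__main__":
    plo_map = {"S1": [("COURSE_A", "2.1"), ("COURSE_B", "2.3")]}  # hypothetical
    male = {("COURSE_A", "2.1"): 72.0, ("COURSE_B", "2.3"): 64.0}
    female = {("COURSE_A", "2.1"): 80.0, ("COURSE_B", "2.3"): 70.0}
    print(plo_by_gender(plo_map, male, female, n_male=60, n_female=40))
    # {'male': {'S1': 68.0}, 'female': {'S1': 75.0}, 'combined': {'S1': 70.8}}
```

Keeping the two sides separate until the last step is what makes the gender comparison described above possible; the combined value is derived from them rather than computed independently.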

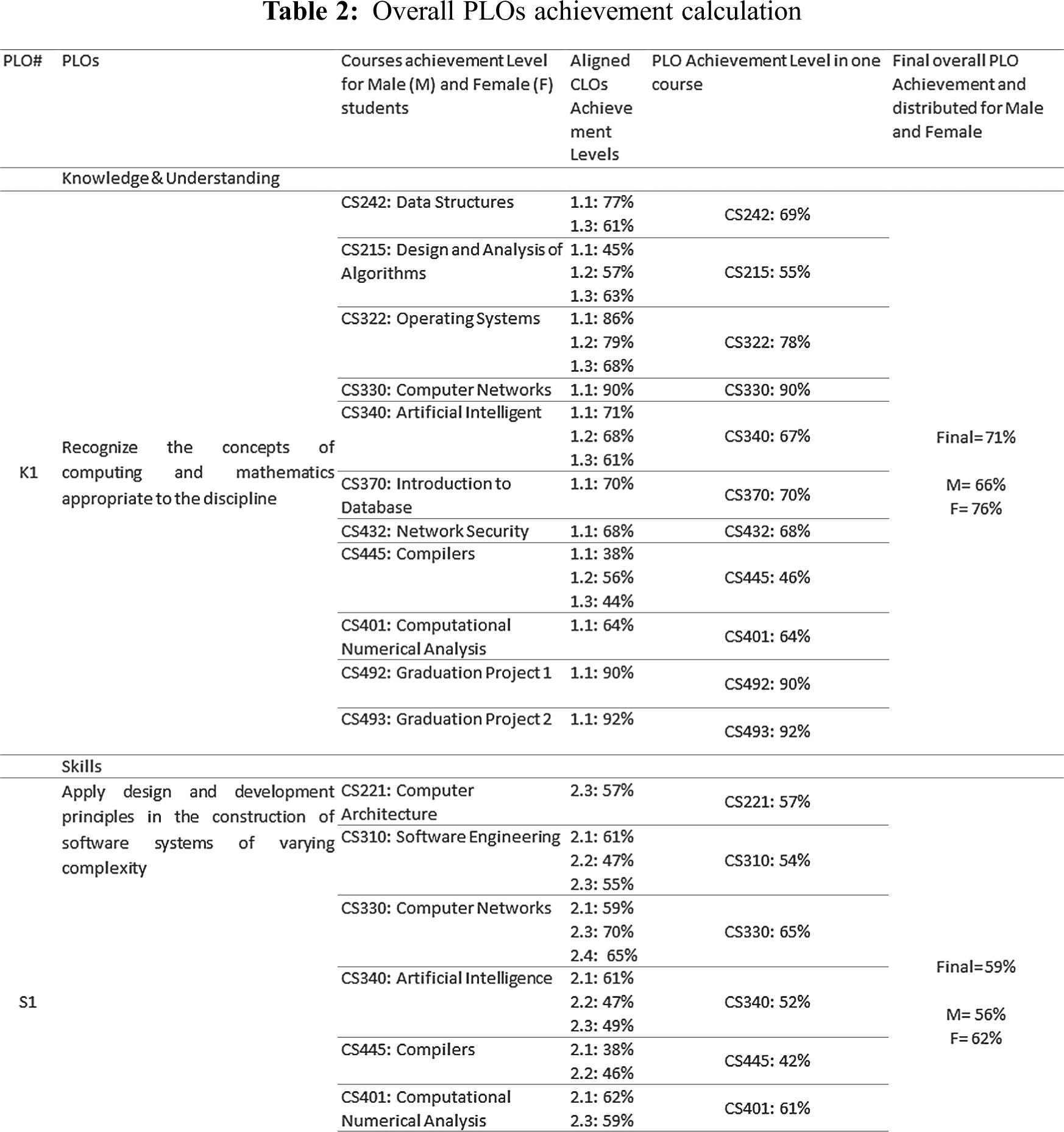

Tab. 2 presents an example of the overall PLOs achievement calculation. Three PLOs (K1, S1, and V1), with their associated CLOs and courses, are included in the table. The calculations show that K1 and V1 have very good results, whereas S1 has a low achievement level. It should be mentioned that, with the proposed methodology, PLOs assessment is performed at the end of every academic semester, which allows effective corrective actions to be developed and implemented in a timely manner.

The proposed methodology allows any weakness in students' performance toward achieving a PLO to be discovered and traced to its root cause. For example, students' performance in PLO#S1 is relatively low (59%), which represents a weakness. Students' performance can be analyzed to find the courses and CLOs that led to this weakness. In this case, the courses CS221, CS310, CS340, and CS445 are the sources of the weak performance in PLO#S1, as depicted in Tab. 2. Thus, the CRs of those courses should be reviewed carefully by the concerned committees within the department, and the improvement plans suggested by instructors should be considered to address the weakness and improve students' performance.
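The root-cause tracing described above amounts to listing the CLOs aligned with a weak PLO whose own achievement falls below a target. A minimal sketch follows; the 70% default target is a hypothetical threshold (the article does not state one), and `trace_weak_plo`, the course codes, and the values are illustrative only.

```python
def trace_weak_plo(plo, plo_map, clo_results, target=70.0):
    """Return (course, clo, achievement) for every CLO aligned with `plo` whose
    achievement is below `target`, weakest first.

    plo_map: {plo: [(course, clo), ...]}; clo_results: {(course, clo): %}.
    The default target of 70% is a hypothetical threshold."""
    weak = [(course, clo, clo_results[(course, clo)])
            for course, clo in plo_map[plo]
            if clo_results[(course, clo)] < target]
    return sorted(weak, key=lambda row: row[2])


if __name__ == "__main__":
    plo_map = {"S1": [("COURSE_A", "2.1"), ("COURSE_B", "2.3"), ("COURSE_C", "2.2")]}
    results = {("COURSE_A", "2.1"): 55.0,
               ("COURSE_B", "2.3"): 81.0,
               ("COURSE_C", "2.2"): 48.0}
    print(trace_weak_plo("S1", plo_map, results))
    # [('COURSE_C', '2.2', 48.0), ('COURSE_A', '2.1', 55.0)]
```

The returned list points directly at the CRs that the committees should review first.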

The fifth stage also receives inputs from secondary sources, namely the graduating-student and faculty surveys. These provide an indirect assessment of PLOs attainment, which gives a deeper understanding of students' performance from the different perspectives of stakeholders. Unlike other assessment approaches, the proposed methodology uses the graduating-student survey instead of alumni surveys.

The outputs of the fifth stage are the inputs to the sixth stage, in which comprehensive program-level improvement plans are developed in collaboration with the relevant committees, such as the QADC and the curriculum development committee. A considerable part of the planning effort focuses on improving students' performance in achieving the PLOs.

The seventh and final stage is the review of the proposed plans by the department council. If approved, the plans are documented in the annual program report (APR) and incorporated into the program and course specifications, feeding the first stage of the next round of the proposed methodology. The next section presents the design and implementation of the proposed secure and automated QAS.

4 Quality Assurance System Modeling

In this section, we present the design and implementation of the proposed QAS. The security protocols used to provide confidentiality, integrity, and authentication (CIA) and non-repudiation services are illustrated as well. Although some steps of the system design and implementation may seem straightforward, they are essential for effective data collection and processing, and hence for achieving the desired improvements. They also open the door to further use of technical advances in the fields of AI and IS to improve the quality of educational programs.

A conventional QAS should grant privileges to each type of user (e.g., instructors, coordinators, quality members, program development team, and manager) and allow them to perform certain tasks according to their roles. Each instructor views, enters, retrieves, and updates data related to his own section(s), while the coordinator can be granted access to all sections of one particular course so that he can extract the CCR. Quality members and program managers, on the other hand, are granted wider privileges in order to perform the quality tasks assigned to them. An important aspect of the proposed system is that it collects almost all data related to the program and students, such as evaluation results, surveys, statistics, corrective actions, and CLOs and PLOs results. This allows the LOs assessment processes and other QA tasks to be performed automatically and accurately, as discussed before. Moreover, collecting such a large and significant amount of data provides a great opportunity for future research and analysis using advanced AI and data mining techniques.
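The role-based privileges described in the preceding paragraph can be expressed as a single access-check function. This is a minimal sketch with assumed rules: instructors read and write only their own sections, coordinators reach every section of the courses they coordinate, and the quality, program development, and management roles get program-wide read access only. The `Role`, `User`, and `can_access` names are hypothetical, and the article does not specify write rights for the wider roles.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    INSTRUCTOR = "instructor"
    COORDINATOR = "coordinator"
    QUALITY_MEMBER = "quality_member"
    PROGRAM_DEVELOPMENT = "program_development"
    PROGRAM_MANAGER = "program_manager"


@dataclass
class User:
    name: str
    role: Role
    sections: set = field(default_factory=set)  # (course, section) pairs the user teaches
    courses: set = field(default_factory=set)   # courses the user coordinates


def can_access(user, action, course, section):
    """Return True if `user` may perform `action` ("read" or "write") on the
    data of one section of one course. The rules are assumptions of this sketch."""
    if action not in ("read", "write"):
        raise ValueError(f"unknown action {action!r}")
    if user.role is Role.INSTRUCTOR:
        return (course, section) in user.sections
    if user.role is Role.COORDINATOR:
        # Every section of a coordinated course, plus any section the
        # coordinator teaches personally.
        return course in user.courses or (course, section) in user.sections
    # Quality, program development, and management roles: program-wide
    # read access (assumed); write access is not granted here.
    return action == "read"
```

Centralizing the rule in one function keeps the privilege model auditable: a change in a committee's responsibilities becomes a one-line edit rather than scattered checks.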

In addition to the above-mentioned functionalities, the system maintains a high level of security through extensive use of cryptographic algorithms. It provides the main security services: confidentiality, integrity, authentication, and non-repudiation.

4.2 System Development Methodology

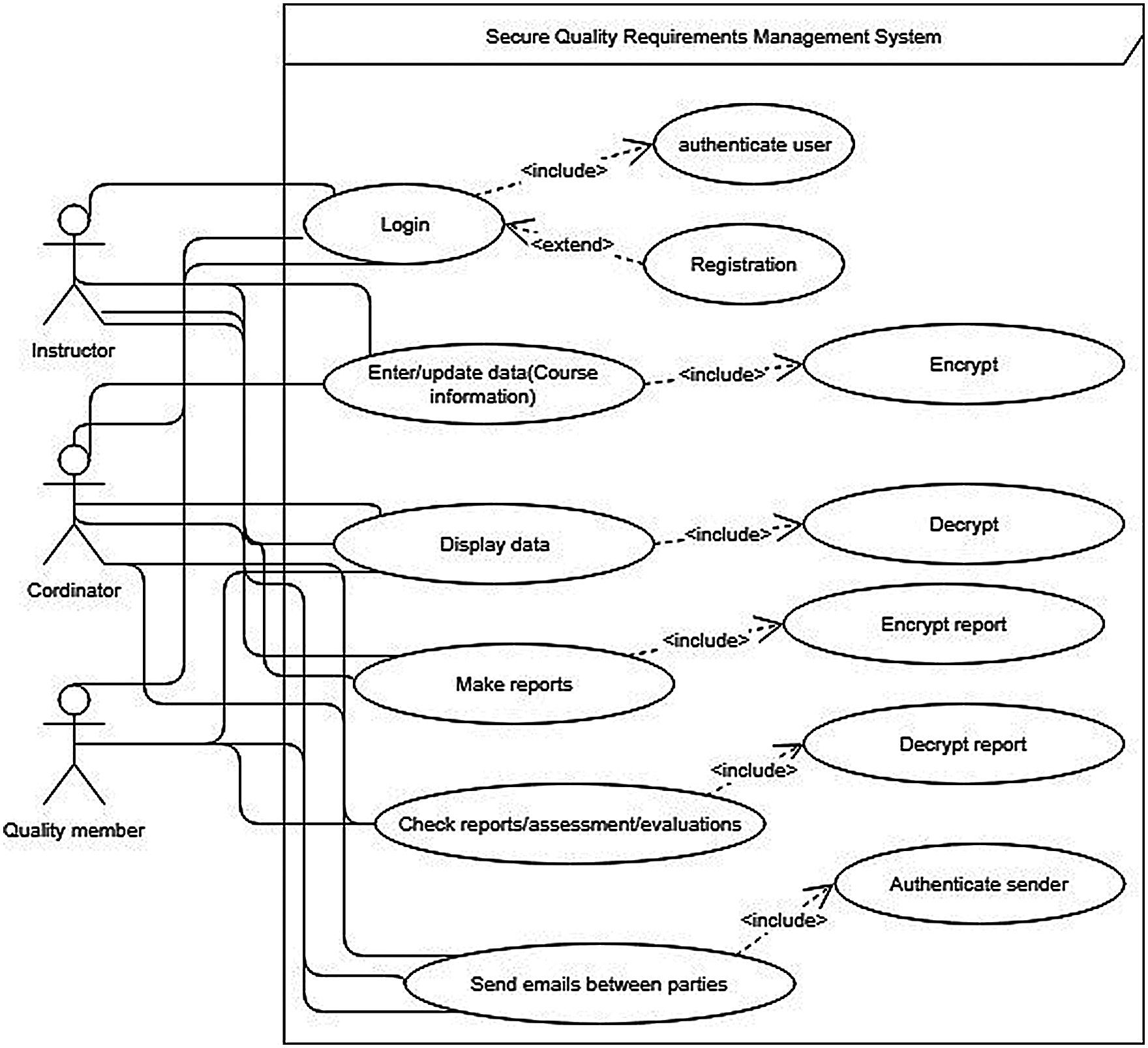

To satisfy the extensive and continuously changing requirements of the QAS development project, we adopted the Scrum methodology. It follows an effective iterative strategy during development that allows the team to continuously test the system's functionality, fix any deficiencies, and accommodate emerging requirements. After the main requirements were collected, the development process first addressed the most important priorities, namely LOs assessment and quality reports. Then, the use case diagram was developed, as shown in Fig. 4. It provides a clear view of the requirements and key functions.

Figure 4: Use case diagram of proposed QAS

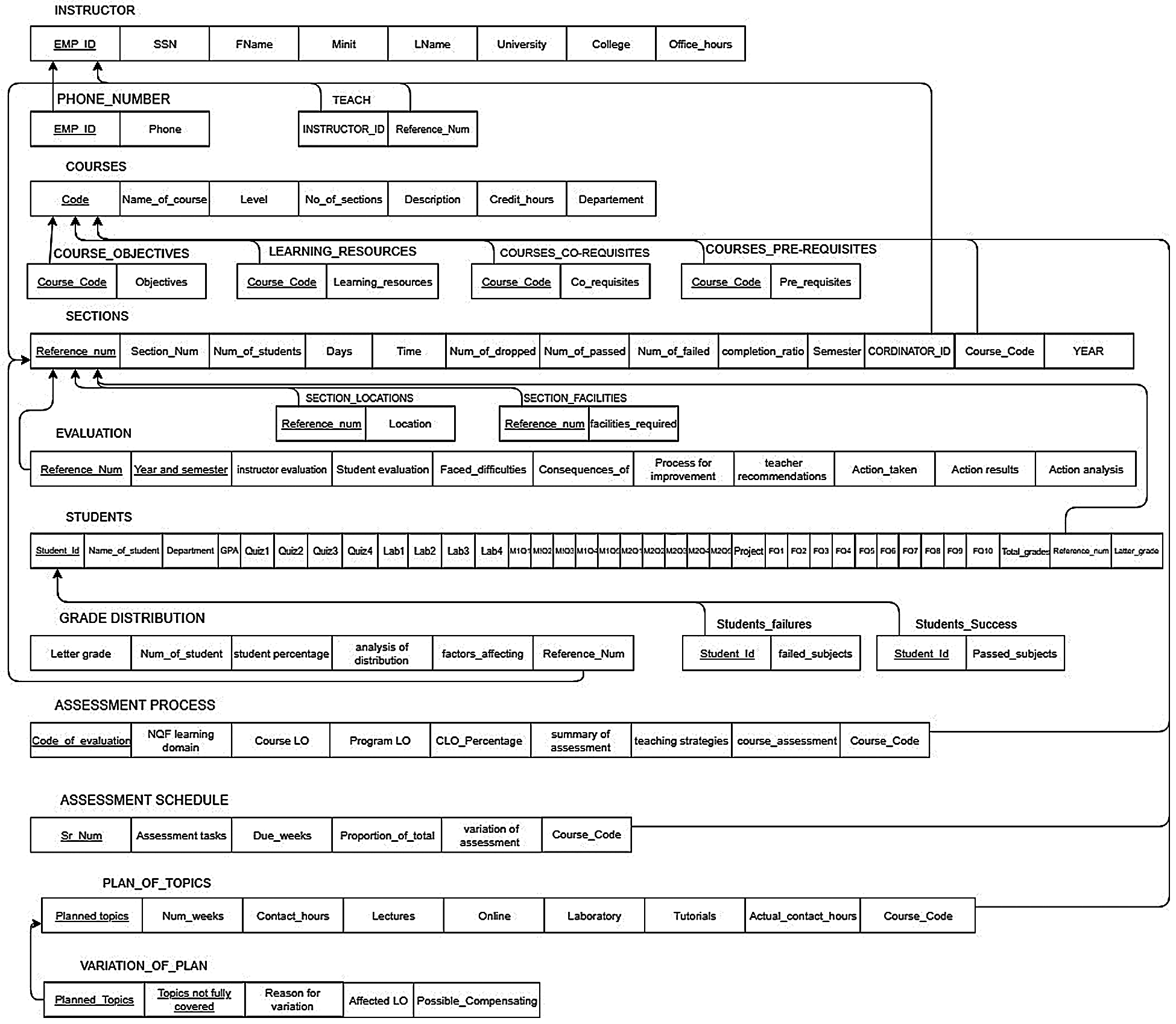

Prior to the implementation stage, the entity-relationship (ER) and database (DB) diagrams were developed, taking into account the major quality requirements. The main entities of the proposed system and its DB design are shown in Fig. 5. The importance of this design is that it was built on real case studies; thus, it reflects the functionalities actually required to manage QA processes efficiently. Each entity has a set of well-defined attributes that allows optimal data collection and processing. For example, the student table in Fig. 5 has a number of main and composite attributes (fields in the DB table) that encompass all the data required for the assessment processes. The composite attributes ensure that the system can handle students' results in all assessment methods. Moreover, each assessment is broken down into a number of questions that reflect the students' grade scheme presented in the ToS. This enables the system to assess LOs accurately at both the course and program levels. Collecting such a large and valuable amount of data is important to feed further intelligent automation that might be integrated with the system in the future.

Figure 5: The database diagram of the proposed QAS
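To make the question-level design concrete, the sketch below shows one possible relational layout in SQLite, with each assessment broken down into questions tagged by CLO and a query that derives CLO achievement for one section. It is a hypothetical schema, not the one in Fig. 5: the table and column names are assumptions, the article's composite student attributes are normalized here into a separate `grade` table, and CLO achievement is assumed to be the unweighted mean of per-question achievements.

```python
import sqlite3

# Hypothetical schema; names and normalization are assumptions of this sketch.
SCHEMA = """
CREATE TABLE student (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    gender TEXT CHECK (gender IN ('M', 'F'))
);
CREATE TABLE section (
    id INTEGER PRIMARY KEY,
    course_code TEXT NOT NULL,
    instructor TEXT NOT NULL
);
CREATE TABLE assessment (
    id INTEGER PRIMARY KEY,
    section_id INTEGER NOT NULL REFERENCES section(id),
    title TEXT NOT NULL                 -- e.g. 'Quiz 1', 'Final exam'
);
CREATE TABLE question (
    id INTEGER PRIMARY KEY,
    assessment_id INTEGER NOT NULL REFERENCES assessment(id),
    clo TEXT NOT NULL,                  -- CLO measured by this question
    max_marks REAL NOT NULL CHECK (max_marks > 0)
);
CREATE TABLE grade (
    student_id INTEGER NOT NULL REFERENCES student(id),
    question_id INTEGER NOT NULL REFERENCES question(id),
    score REAL NOT NULL,
    PRIMARY KEY (student_id, question_id)
);
"""

# Per-question achievement first, then the mean per CLO for one section.
CLO_QUERY = """
SELECT clo, ROUND(AVG(item_pct), 1) FROM (
    SELECT q.clo AS clo, 100.0 * AVG(g.score) / q.max_marks AS item_pct
    FROM question AS q
    JOIN assessment AS a ON a.id = q.assessment_id
    JOIN grade AS g ON g.question_id = q.id
    WHERE a.section_id = ?
    GROUP BY q.id
) AS items
GROUP BY clo ORDER BY clo
"""


def demo():
    con = sqlite3.connect(":memory:")
    con.executescript(SCHEMA)
    con.executemany("INSERT INTO student VALUES (?, ?, ?)",
                    [(1, "S-001", "M"), (2, "S-002", "M")])
    con.execute("INSERT INTO section VALUES (1, 'COURSE_A', 'Instructor X')")
    con.execute("INSERT INTO assessment VALUES (1, 1, 'Quiz 1')")
    con.executemany("INSERT INTO question VALUES (?, ?, ?, ?)",
                    [(1, 1, "1.1", 10), (2, 1, "1.2", 5)])
    con.executemany("INSERT INTO grade VALUES (?, ?, ?)",
                    [(1, 1, 8), (2, 1, 6), (1, 2, 5), (2, 2, 3)])
    rows = con.execute(CLO_QUERY, (1,)).fetchall()
    con.close()
    return rows


if __name__ == "__main__":
    print(demo())  # [('1.1', 70.0), ('1.2', 80.0)]
```

Storing grades per question, rather than per assessment, is what lets the same data answer both course-level and program-level LO queries without re-entry.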

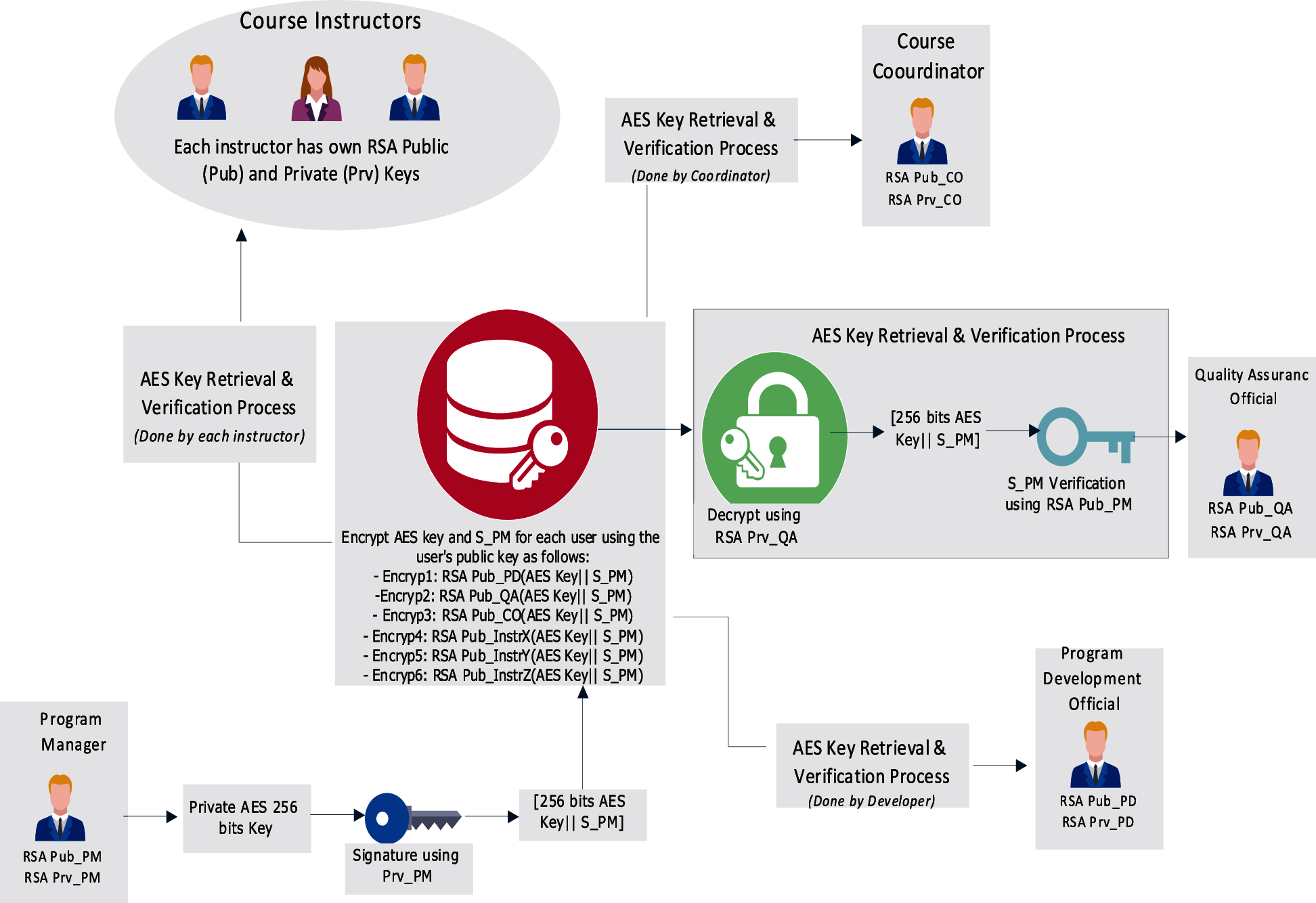

Data processed by the QAS and the related procedures are very important and valuable, and stakeholders must be able to trust QA data and reports. Therefore, high levels of security should be considered when developing a QAS. The proposed QAS uses cryptographic algorithms and well-defined protocols to provide and maintain the CIA security services and to prevent source repudiation. This improves the reliability of the quality reports handled by the system and ensures the transparency of QA processes and procedures. Two main security protocols were implemented: the secret-key sharing protocol and the quality data and reports security (QDRS) protocol, which are presented in Figs. 6 and 7, respectively.

4.4.1 The Key Sharing Protocol

The main goal of the secret-key sharing protocol is to ensure confidentiality and authentication. It enables the program manager (PM) or department head to generate a secret 256-bit key for AES encryption for each user and to share the keys with the intended parties in a secure and efficient manner.

The secret-key sharing protocol can be described in the following steps:

1. Each user generates, using a true random number generator (TRNG), his own RSA public (Pub) and private (Prv) key pair with a length of at least 1024 bits. The main users are course instructors, coordinators (CO), Quality Assurance (QA) officials, program development (PD) officials, and the program manager (PM) or department head. The public key might be published on the user's official webpage, whereas the private key must be kept secret and protected from unauthorized access.

2. The PM generates the secret 256-bit AES key and signs it with his own RSA private key (Prv_PM) to produce the signature S_PM. The signature guarantees that the key was really issued by the PM and has not been altered in an unauthorized way. This step can be repeated if the manager needs to provide different keys for different groups of users. For example, instructors of different courses might be given different keys, so that each instructor can encrypt and decrypt the data and reports relevant to his/her course(s).

3. The manager encrypts the AES private key and the signature (S_PM) using each user’s public RSA key. This encryption is repeated for each user in the system. For example, the key and signature of the QA official are encrypted using the Pub_QA.

4. Encrypted keys can be then shared with relevant parties.

5. Each party decrypt the encrypted message (AES Key || S_PM) to retrieve the key.

6. Eventually, each user verifies the signature using the manager’s public key (Pub_PM). If the verification’s result is true, this means that key is reliable and issued by the PM, otherwise, the user should contact the PM to re-send the key and the signature.
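As an illustration only, the six steps above can be sketched with the standard Java cryptography APIs (Java being the implementation language named in Section 4.5). The class `KeySharingSketch`, its methods, and the `Envelope` record are hypothetical names, not the system's actual code. Several choices are assumptions of this sketch: `SecureRandom` stands in for the TRNG of step 1; RSA keys are 2048 bits (above the stated 1024-bit minimum); S_PM is a SHA512withRSA signature over the raw AES key bytes, since the text does not name a hash for this step; and, because a single RSA block cannot hold the 32-byte AES key together with a full-length RSA signature, only the AES key is encrypted with RSA-OAEP while S_PM is encrypted under that AES key with AES-GCM, so both stay confidential.

```java
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.MessageDigest;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.SecureRandom;
import java.security.Signature;
import java.security.SignatureException;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical sketch of the secret-key sharing protocol (Fig. 6); JDK APIs only. */
public class KeySharingSketch {
    // CSPRNG used here in place of the TRNG required by step 1 (assumption).
    static final SecureRandom RNG = new SecureRandom();
    static final String RSA_MODE = "RSA/ECB/OAEPWithSHA-256AndMGF1Padding";

    /** What the PM sends to one user: RSA-OAEP(AES key) plus AES-GCM(S_PM). */
    record Envelope(byte[] wrappedKey, byte[] iv, byte[] encryptedSignature) {}

    // Step 1: a user's RSA key pair (2048 bits, above the 1024-bit minimum).
    static KeyPair newRsaKeyPair() throws GeneralSecurityException {
        KeyPairGenerator gen = KeyPairGenerator.getInstance("RSA");
        gen.initialize(2048, RNG);
        return gen.generateKeyPair();
    }

    // Steps 2-3: sign the AES key with Prv_PM, then encrypt key and S_PM for one user.
    static Envelope issueKey(SecretKey aesKey, PrivateKey prvPm, PublicKey pubUser)
            throws GeneralSecurityException {
        Signature signer = Signature.getInstance("SHA512withRSA");
        signer.initSign(prvPm);
        signer.update(aesKey.getEncoded());
        byte[] sPm = signer.sign();

        Cipher rsa = Cipher.getInstance(RSA_MODE);
        rsa.init(Cipher.ENCRYPT_MODE, pubUser);
        byte[] wrappedKey = rsa.doFinal(aesKey.getEncoded());

        byte[] iv = new byte[12];
        RNG.nextBytes(iv);
        Cipher gcm = Cipher.getInstance("AES/GCM/NoPadding");
        gcm.init(Cipher.ENCRYPT_MODE, aesKey, new GCMParameterSpec(128, iv));
        return new Envelope(wrappedKey, iv, gcm.doFinal(sPm));
    }

    // Steps 5-6: decrypt with Prv_user, then verify S_PM with Pub_PM.
    static SecretKey receiveKey(Envelope env, PrivateKey prvUser, PublicKey pubPm)
            throws GeneralSecurityException {
        Cipher rsa = Cipher.getInstance(RSA_MODE);
        rsa.init(Cipher.DECRYPT_MODE, prvUser);
        SecretKey aesKey = new SecretKeySpec(rsa.doFinal(env.wrappedKey()), "AES");

        Cipher gcm = Cipher.getInstance("AES/GCM/NoPadding");
        gcm.init(Cipher.DECRYPT_MODE, aesKey, new GCMParameterSpec(128, env.iv()));
        byte[] sPm = gcm.doFinal(env.encryptedSignature());

        Signature verifier = Signature.getInstance("SHA512withRSA");
        verifier.initVerify(pubPm);
        verifier.update(aesKey.getEncoded());
        if (!verifier.verify(sPm)) {
            throw new SignatureException("S_PM is invalid: ask the PM to re-send the key");
        }
        return aesKey;
    }

    public static void main(String[] args) throws Exception {
        KeyPair pm = newRsaKeyPair();
        KeyPair qa = newRsaKeyPair();                  // e.g., the QA official
        KeyGenerator kg = KeyGenerator.getInstance("AES");
        kg.init(256, RNG);                             // step 2: 256-bit AES key
        SecretKey key = kg.generateKey();

        Envelope env = issueKey(key, pm.getPrivate(), qa.getPublic()); // step 4: share env
        SecretKey received = receiveKey(env, qa.getPrivate(), pm.getPublic());
        System.out.println(MessageDigest.isEqual(key.getEncoded(), received.getEncoded()));
    }
}
```

Running `main` should print `true`. If the envelope is checked against the wrong Pub_PM, `receiveKey` throws `SignatureException`, which corresponds to the user asking the PM to re-send the key in step 6.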

In summary, the above protocol ensures that only authorized users who received a reliable key, verified as issued by the department head or PM, can access the data and quality reports. The next section presents and discusses the quality data and reports security (QDRS) protocol.

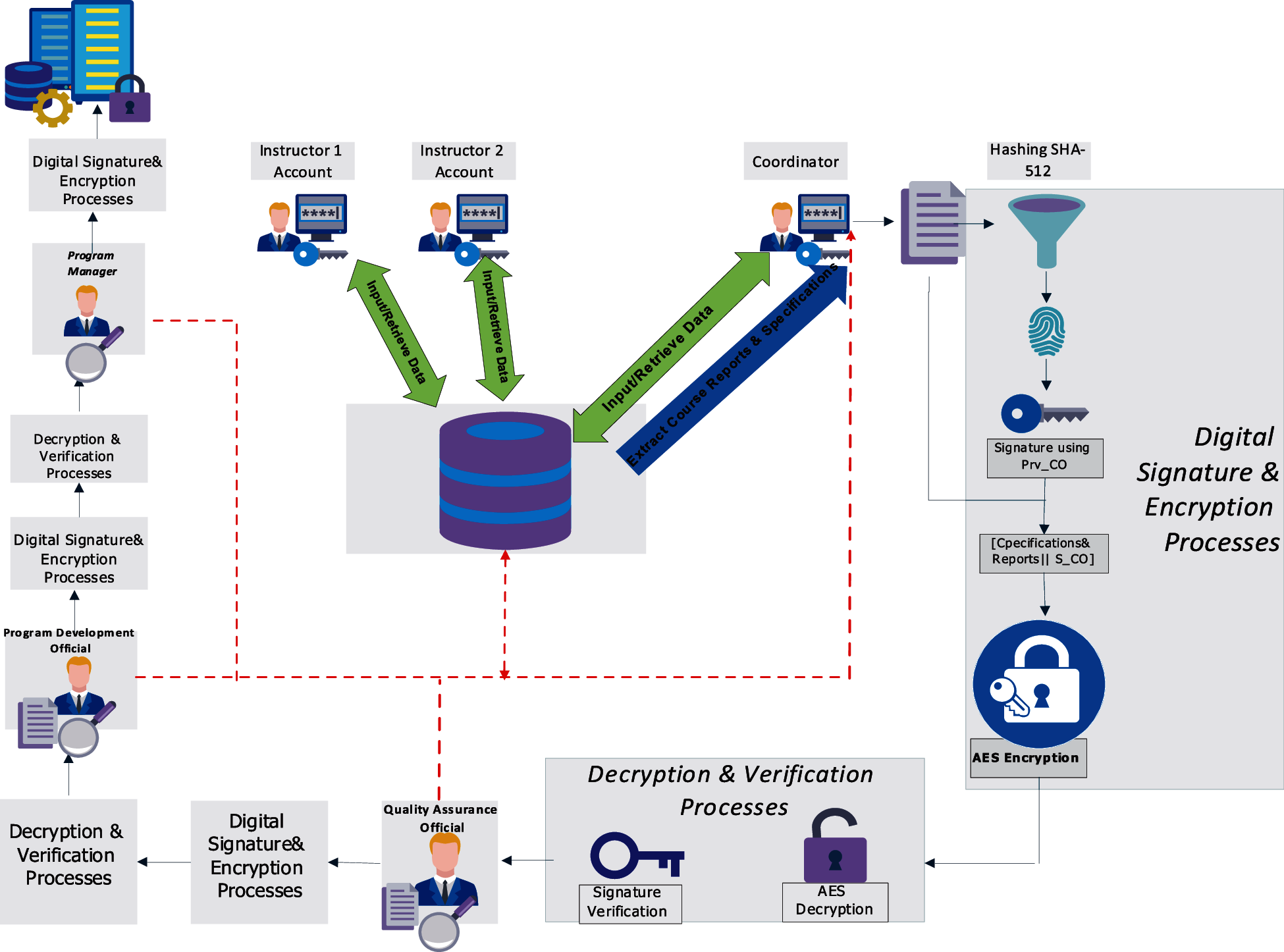

4.4.2 The Quality Data and Reports Security Protocol

The QDRS protocol aims to provide the CIA and non-repudiation security services. It enables the different parties (instructors, coordinators, QA members, PD members, and the PM) to process data and to exchange and store quality reports and related documents (including CSs and CRs) in a secure and reliable way. In other words, the protocol securely performs the continuous quality improvement processes presented in this study. The QDRS protocol can be described in the following steps:

1. Each instructor inputs and retrieves the data related to his/her own section(s) in the quality database. The data include students' grades, survey results, and course statistics. The instructor also enters the corrective actions, analysis, discussions, plans, and other parts of the CR for his/her section(s).

2. The coordinator then processes the data for all sections of the course and produces the CCR. The processing includes, for example, calculating the overall CLO achievement levels, the course survey results, and other items required for the CCR. The CCR should be mutually agreed on by all instructors of the course.

3. The coordinator uses the SHA-512 hash function to compute a digest (fingerprint) of the CCR, the updated CSs, and the other course documents.

4. The coordinator signs the digest with his own RSA private key (Prv_CO), generated in step 1 of the key sharing protocol. The result is the signature S_CO.

5. The coordinator encrypts the course documents and the signature S_CO using the shared AES secret key, which was securely distributed using the key sharing protocol. He then sends the encrypted documents [course documents || S_CO] to the QA official.

6. The QA official decrypts the documents using the shared AES key and verifies the signature to make sure that the reports and documents were sent by the coordinator and were not altered by an unauthorized party. This also prevents the source (CO) from denying responsibility for those documents.

7. After reviewing the documents and adding the necessary reports, the QA official performs steps 3–5 (using Prv_QA in step 4) and sends the encrypted documents to the PD official.

8. The PD official performs step 6 and reviews the documents. Before sending them to the manager, he performs steps 3–5 (using Prv_PD in step 4).

9. The manager likewise performs step 6, reviews the documents, and then performs steps 3–5 (using Prv_PM in step 4).

10. Finally, the documents are stored securely on the college servers. Once the manager approves them (i.e., they are digitally signed by the PM), the course documents, including any updated specifications, are considered approved, and the relevant parties can start implementing the improvement actions stated in the CRs.
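One hop of the QDRS protocol (steps 3–6, e.g., from the coordinator to the QA official) can be sketched in Java as follows. This is a minimal sketch under stated assumptions, not the system's implementation: `QdrsHopSketch`, `seal`, and `open` are hypothetical names; the course documents are treated as one byte array; `[documents || S]` is framed with a 4-byte length prefix so the receiver can split it; and AES-GCM, with a random 12-byte IV prepended to the ciphertext, is assumed as the AES mode, which the text does not specify. Step 4 signs the SHA-512 digest from step 3 using SHA512withRSA, which hashes its input once more internally; the receiver repeats the same computation, so verification is consistent.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.MessageDigest;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.SecureRandom;
import java.security.Signature;
import java.security.SignatureException;
import java.util.Arrays;
import javax.crypto.AEADBadTagException;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

/** Hypothetical sketch of one QDRS hop (steps 3-6 of Fig. 7). */
public class QdrsHopSketch {
    static final SecureRandom RNG = new SecureRandom();
    static final int IV_LEN = 12;

    // Steps 3-5: SHA-512 digest, RSA signature over it, AES-GCM over [docs || S].
    static byte[] seal(byte[] docs, PrivateKey prvSender, SecretKey aesKey)
            throws GeneralSecurityException {
        byte[] digest = MessageDigest.getInstance("SHA-512").digest(docs);   // step 3
        Signature signer = Signature.getInstance("SHA512withRSA");
        signer.initSign(prvSender);
        signer.update(digest);
        byte[] sig = signer.sign();                                          // step 4

        // Frame as [docs length | docs | sig] so the receiver can split it again.
        byte[] plain = ByteBuffer.allocate(4 + docs.length + sig.length)
                .putInt(docs.length).put(docs).put(sig).array();
        byte[] iv = new byte[IV_LEN];
        RNG.nextBytes(iv);
        Cipher gcm = Cipher.getInstance("AES/GCM/NoPadding");
        gcm.init(Cipher.ENCRYPT_MODE, aesKey, new GCMParameterSpec(128, iv));
        byte[] ct = gcm.doFinal(plain);                                      // step 5
        return ByteBuffer.allocate(IV_LEN + ct.length).put(iv).put(ct).array();
    }

    // Step 6: decrypt with the shared AES key, then verify the sender's signature.
    static byte[] open(byte[] sealed, PublicKey pubSender, SecretKey aesKey)
            throws GeneralSecurityException {
        Cipher gcm = Cipher.getInstance("AES/GCM/NoPadding");
        gcm.init(Cipher.DECRYPT_MODE, aesKey, new GCMParameterSpec(128, sealed, 0, IV_LEN));
        ByteBuffer plain = ByteBuffer.wrap(gcm.doFinal(sealed, IV_LEN, sealed.length - IV_LEN));
        byte[] docs = new byte[plain.getInt()];
        plain.get(docs);
        byte[] sig = new byte[plain.remaining()];
        plain.get(sig);

        Signature verifier = Signature.getInstance("SHA512withRSA");
        verifier.initVerify(pubSender);
        verifier.update(MessageDigest.getInstance("SHA-512").digest(docs));
        if (!verifier.verify(sig)) {
            throw new SignatureException("signature check failed: reject the documents");
        }
        return docs;
    }

    public static void main(String[] args) throws Exception {
        KeyPairGenerator rsa = KeyPairGenerator.getInstance("RSA");
        rsa.initialize(2048);
        KeyPair coordinator = rsa.generateKeyPair();
        KeyGenerator kg = KeyGenerator.getInstance("AES");
        kg.init(256);
        SecretKey shared = kg.generateKey();   // assumed already distributed via Fig. 6

        byte[] ccr = "CCR and updated CSs (placeholder)".getBytes(StandardCharsets.UTF_8);
        byte[] sealed = seal(ccr, coordinator.getPrivate(), shared);
        System.out.println(Arrays.equals(ccr, open(sealed, coordinator.getPublic(), shared)));

        sealed[sealed.length - 1] ^= 1;          // flip one bit of the GCM tag
        try {
            open(sealed, coordinator.getPublic(), shared);
        } catch (AEADBadTagException e) {
            System.out.println("tampering detected");
        }
    }
}
```

Steps 7–9 would repeat `seal` with the next sender's private key (Prv_QA, Prv_PD, Prv_PM) and `open` with the matching public key. Because GCM authenticates the ciphertext, the one-bit change in `main` is rejected with `AEADBadTagException` before the signature is checked; a valid ciphertext checked against the wrong sender's public key fails the signature step instead.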

The next section briefly describes the software implementation environment for the proposed QAS.

Figure 6: The key sharing protocol of the proposed QAS

Figure 7: The quality data and reports security protocol of the proposed QAS

4.5 Software Implementation Environment

The proposed QAS was implemented in the Java programming language, and MySQL was used to develop the database. Graphical user interfaces (GUIs) enable interaction between users and the system, and several cryptographic libraries were used to implement the aforementioned security protocols. The next section presents and discusses the results obtained by applying the proposed QAS.

This section discusses the results of implementing the proposed QA approach, including the benefits gained and the continuous quality improvement process. Assessment results and improvement plans can be categorized into course and program levels, which are illustrated in the following sections.

5.1 LOs Assessment Results and Improvement Plans at Course Level

A sample CLO assessment for one course, Data Structures (CS242), was presented in Fig. 2. The course has six LOs: three in the knowledge domain and three in the skills domain. The results show that students achieved good results in CLOs 1.2, 1.3, 2.1, and 2.3. The lowest achievement, 55%, was recorded for CLO 1.1, while the highest, 90%, was recorded for CLO 2.1. Students' performance in CLOs 1.1 and 2.2 needs careful analysis and investigation to identify weaknesses and enable further improvement. According to NCAAA standards, if an LO's achievement falls below 65%, improvement plans should be put in place to enhance students' performance and raise LO achievement.
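The NCAAA rule above amounts to a simple threshold check. The Java sketch below is hypothetical (`CloThresholdSketch` and `needingImprovement` are illustrative names) and flags every LO whose achievement percentage is strictly below 65%. Its usage includes only the two CS242 values stated in the text (CLO 1.1 at 55% and CLO 2.1 at 90%), since the remaining values appear only in Fig. 2.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper: flags LOs below the NCAAA minimum achievement level. */
public class CloThresholdSketch {
    static final double MIN_ACCEPTABLE_PCT = 65.0;  // minimum level stated in the text

    /** LOs whose achievement (%) is strictly below the minimum, in map iteration order. */
    static List<String> needingImprovement(Map<String, Double> achievementPct) {
        return achievementPct.entrySet().stream()
                .filter(e -> e.getValue() < MIN_ACCEPTABLE_PCT)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        Map<String, Double> cs242 = new LinkedHashMap<>();
        cs242.put("CLO 1.1", 55.0);  // lowest value reported for CS242
        cs242.put("CLO 2.1", 90.0);  // highest value reported for CS242
        System.out.println(needingImprovement(cs242));  // prints [CLO 1.1]
    }
}
```

The same check applies unchanged at the program level, where the inputs would be PLO achievement levels instead of CLO levels.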

Examples of improvement plans developed in response to the CLO assessment results include developing unified class and lab tutorials for the course and reorganizing the course assessment methods to include more lab assignments and exams. These actions contribute significantly to improving students' performance, especially in CLOs under the skills domain.

5.2 LOs Assessment Results and Improvement Plans at Program Level

Most current assessment methodologies develop KPIs and rubrics for each PLO and then assess each student's performance by assigning a score from 1 to 5 for each KPI. They also consider only a sample of students in selected sections. Such an approach does not give an accurate and comprehensive view of students' actual performance in achieving the LOs.

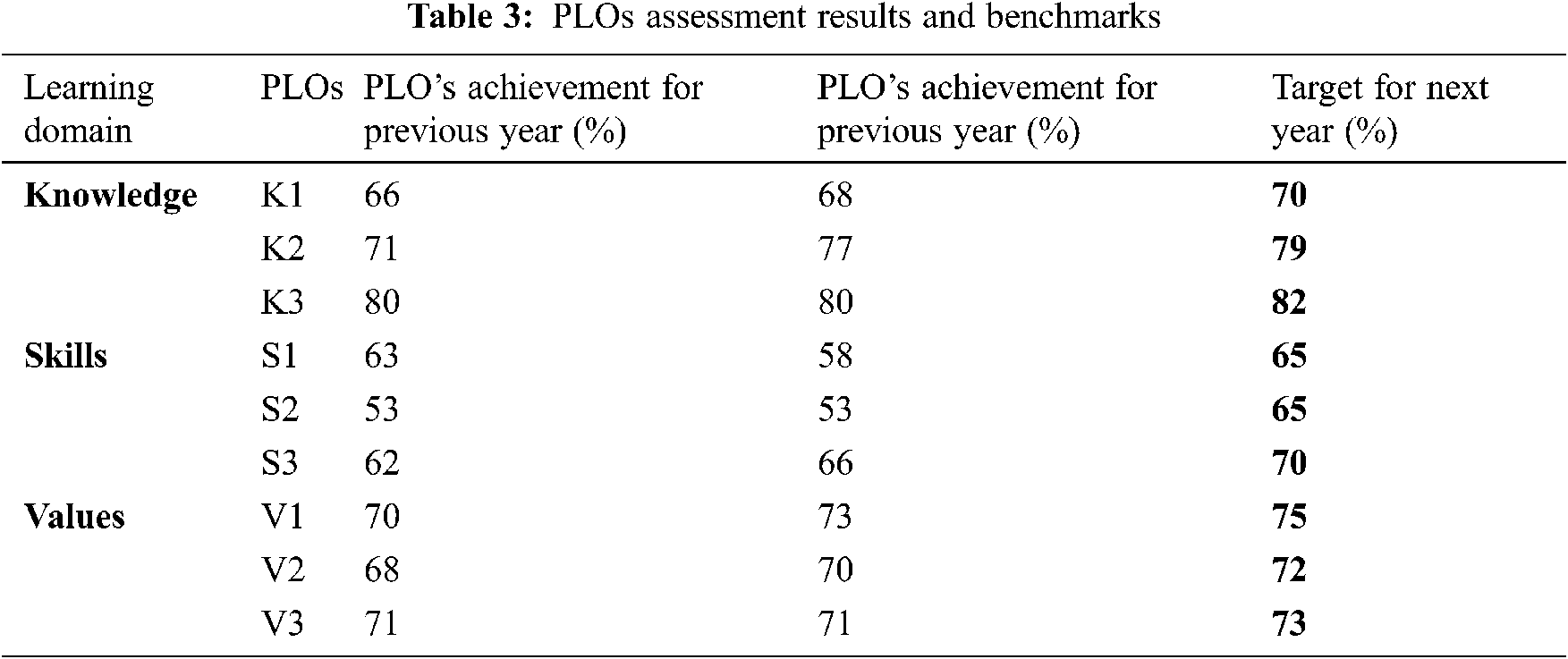

In our proposed assessment approach, the PLO achievement levels are calculated after obtaining the CLO achievement levels for all courses and their corresponding sections. Tab. 3 shows the PLO achievement results for one academic year. Based on these results, a number of corrective actions were developed, which will be included in the program's future improvement plans.

It can be observed from the table that students' performance in achieving PLOs S1 and S2 is below the minimum acceptable level of 65%. This indicates that uplifting students' skills related to these LOs should be a priority when developing the program improvement plans. The table also compares these results with those of the previous year and shows the new targets for next year.
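This section does not spell out how the CLO results from all courses and sections are combined into a PLO achievement level, so the following Java sketch assumes one plausible rule: each section's CLO result is mapped to a PLO, and the PLO achievement is the student-weighted mean of all results mapped to it. The `CloResult` record, the mapping, the weighting, and the sample records are all hypothetical and are not data from the study.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical sketch: aggregates section-level CLO results into PLO achievement levels. */
public class PloAggregationSketch {
    /** One CLO result for one section, already mapped to a PLO (assumed input format). */
    record CloResult(String course, String section, String clo, String plo,
                     double achievementPct, int students) {}

    /** Student-weighted mean CLO achievement per PLO (an assumed aggregation rule). */
    static Map<String, Double> ploAchievement(List<CloResult> results) {
        Map<String, double[]> totals = new TreeMap<>();  // PLO -> {weighted sum, students}
        for (CloResult r : results) {
            double[] t = totals.computeIfAbsent(r.plo(), k -> new double[2]);
            t[0] += r.achievementPct() * r.students();
            t[1] += r.students();
        }
        Map<String, Double> levels = new TreeMap<>();
        totals.forEach((plo, t) -> levels.put(plo, t[0] / t[1]));
        return levels;
    }

    public static void main(String[] args) {
        // Made-up records for illustration only; not results from the study.
        List<CloResult> results = List.of(
                new CloResult("COURSE-A", "01", "CLO 2.1", "S1", 60.0, 30),
                new CloResult("COURSE-A", "02", "CLO 2.1", "S1", 70.0, 10),
                new CloResult("COURSE-B", "01", "CLO 1.1", "K1", 80.0, 25));
        System.out.println(ploAchievement(results));  // prints {K1=80.0, S1=62.5}
    }
}
```

With these made-up records, S1 comes out at 62.5%, which would fall below the 65% minimum, while K1 reaches 80%. An unweighted mean is an equally plausible alternative; weighting by enrollment simply keeps large sections from being under-represented.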

To develop effective improvement plans, the course reports, including the CLO and indirect student assessment results, should be reviewed and taken into account, especially for courses with low achievement of S1 and S2. Examples of improvement plans developed from the analysis of the PLO assessment results include adopting research seminars, improving the program's curriculum, introducing more lab-oriented courses, and reorganizing the contact hours.

5.3 Indirect Assessment Results

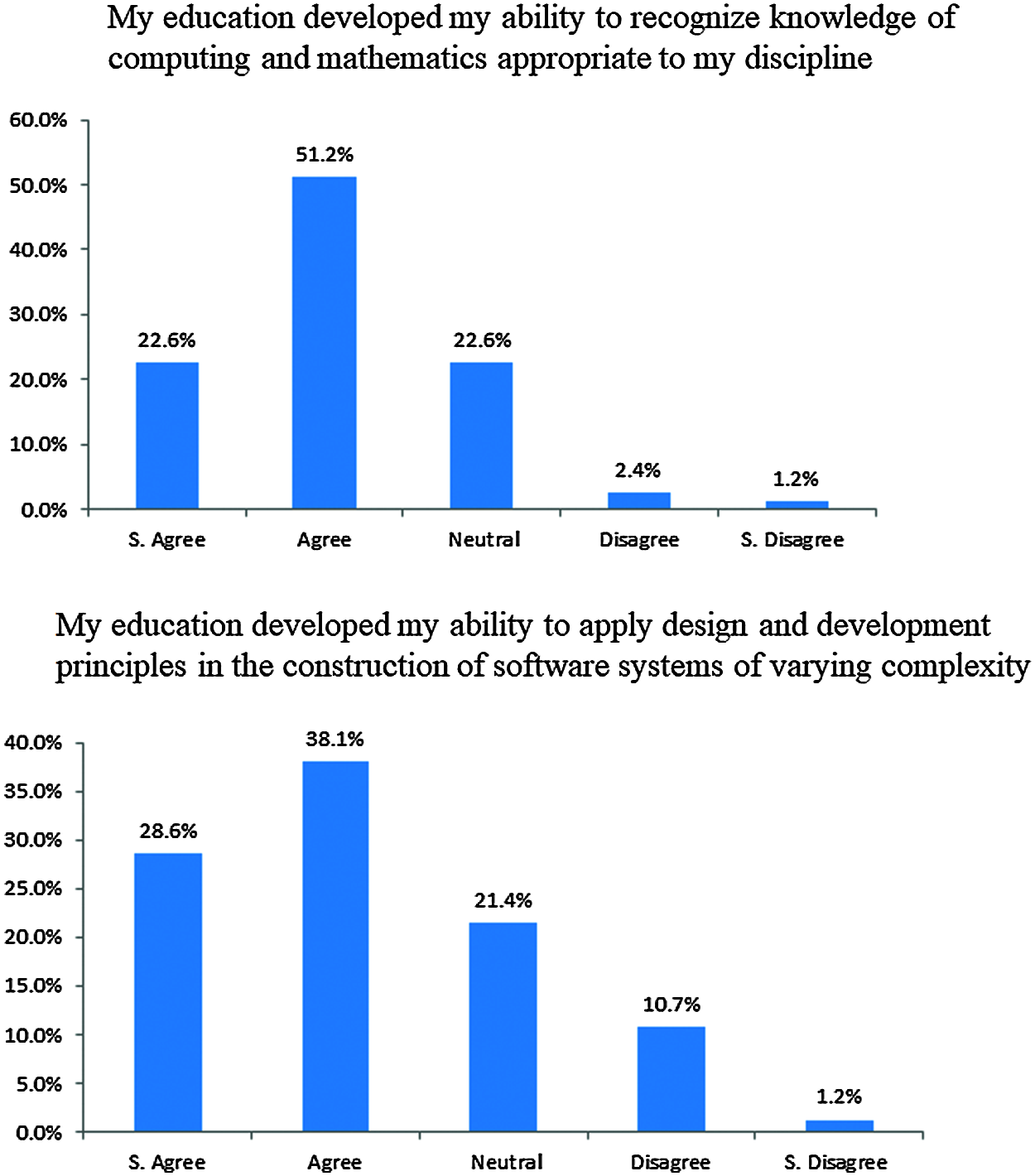

Indirect assessment methods are used to assess students' achievement of LOs and other relevant issues at both the course and program levels. At the course level, the CES indirect assessment is applied to each section to help instructors develop effective improvement plans for the course. The graduating-student survey is the second indirect assessment in the proposed approach. Unlike previous studies, which rely on alumni surveys, this methodology uses a survey that targets students at the point of graduation, with the aim of obtaining a more accurate and realistic view of students' performance. Students at this stage have not yet been influenced by outside factors such as the learning and experience gained from the work environment and other sources, and they are mature enough to give a realistic perception of the extent to which the PLOs are attained. Therefore, a graduating-student survey is a more appropriate indirect assessment of student outcomes than an alumni survey.

Fig. 8 shows the survey results for PLOs K1 and S1. It can be seen that 73.8% of students agreed that the education they received developed their ability to recognize knowledge of computing and mathematics, while 3.6% did not. This result is consistent with the direct assessment result for PLO K1, which is 68%; both results indicate that students have only barely reached a satisfactory level. For PLO S1, the indirect assessment result is 66.7%, compared with 63% for the direct PLO assessment using the ToS. Again, the two assessment results agree, which provides further evidence of the accuracy of the proposed assessment methodology.

Figure 8: PLO: K1 and S1 indirect assessment results at the program level
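The agreement percentages reported for the graduating-student survey are simple proportions of responses. Assuming a 5-point Likert scale in which levels 4 and 5 count as agreement and levels 1 and 2 as disagreement (the survey's actual scale is not given in this section), the Java sketch below computes both shares; the class name and the response counts in `main` are hypothetical.

```java
/** Hypothetical sketch: agreement shares from 5-point Likert response counts. */
public class SurveyAgreementSketch {
    /** counts[i] = responses at level i + 1 (1 = strongly disagree, 5 = strongly agree). */
    static int total(int[] counts) {
        int t = 0;
        for (int c : counts) {
            t += c;
        }
        return t;
    }

    /** Percentage of responses at levels 4 or 5 (assumed to mean "agree"). */
    static double percentAgree(int[] counts) {
        return 100.0 * (counts[3] + counts[4]) / total(counts);
    }

    /** Percentage of responses at levels 1 or 2 (assumed to mean "disagree"). */
    static double percentDisagree(int[] counts) {
        return 100.0 * (counts[0] + counts[1]) / total(counts);
    }

    public static void main(String[] args) {
        int[] counts = {1, 2, 7, 20, 10};  // made-up counts, not survey data from the study
        System.out.println(percentAgree(counts) + "% agree, "
                + percentDisagree(counts) + "% disagree");  // prints 75.0% agree, 7.5% disagree
    }
}
```

Neutral responses (level 3) count toward neither share, which is why the agree and disagree percentages in the text do not sum to 100%.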

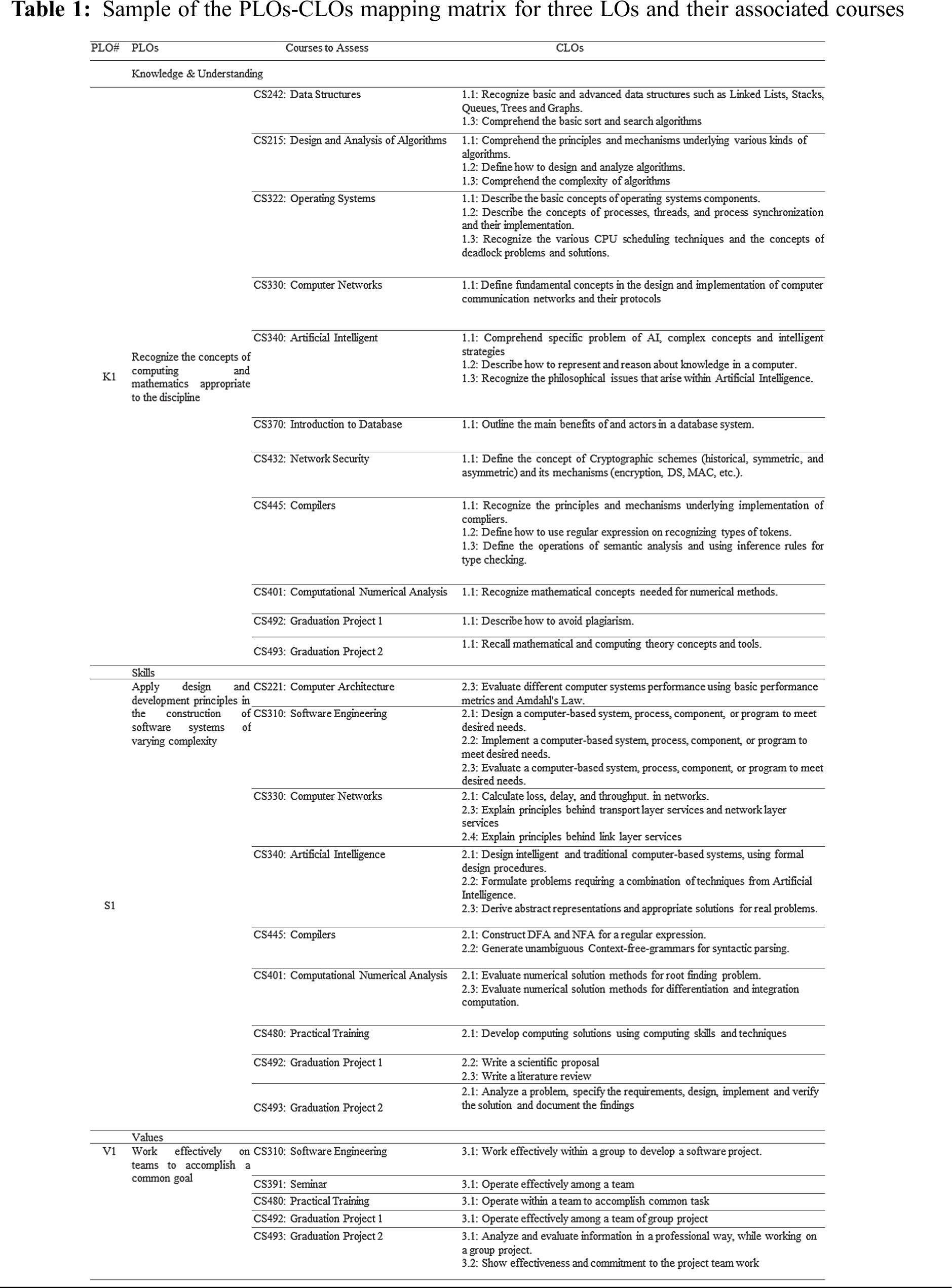

5.4 Continuous Improvement Processes

The proposed approach includes systematic processes for continuous quality improvement at both the course and program levels. The flowchart of the continuous improvement process is presented in Fig. 9; the process can be performed securely and automatically using the QAS proposed in Section 4. Examples of previously developed improvement plans include establishing a program-level committee to update the curriculum in line with recent advances in CS, and the department's decision to adopt more lab-oriented and research seminar courses. Another example is adding new courses on computer ethics to the program curriculum and increasing the penalties for fraud in graduation projects (GPs). This last recommendation aims to improve the attainment of PLO V2, which is related to the realization of ethical responsibilities.

Figure 9: Continuous quality improvement at the course level

Academic institutions seek to obtain accreditation and improve the quality of their academic programs. This requires an effective QAS that ensures the accuracy and reliability of LO assessment processes and enables the development of efficient quality improvement plans. To address the deficiencies of current QASs, a systematic LO assessment and continuous quality improvement approach was developed. The proposed approach ensures more accurate assessment results and provides well-defined processes and procedures for using them in continuous improvement. Such a systematic approach is indispensable as a first step toward fully automating and integrating the QA processes. In addition, this study can serve as a guide for academic programs that aspire to obtain accreditation.

This research also introduced an automated and secure QAS that reduces effort and performs the relevant QA tasks efficiently and safely. The proposed system uses two security protocols built on state-of-the-art cryptographic algorithms to provide confidentiality, integrity, and authentication services. The source repudiation problem is also eliminated through the use of the RSA digital signature algorithm. Furthermore, the system enables the efficient collection and processing of valuable data that can later be organized into datasets and used in future research on applying AI technologies to support decision making and improve the quality of academic programs.

Acknowledgement: The author thanks the Computer Science Department of the College of Computer and Information Sciences at Imam Mohammad Ibn Saud Islamic University for providing the data required for this research study.

Funding Statement: The author extends his appreciation to the Deanship of Scientific Research at Imam Mohammad Ibn Saud Islamic University for funding and supporting this work through the Graduate Student Research Support Program.

Conflicts of Interest: The author declares that he has no conflicts of interest to report regarding the present study.

1. K. Manghani, “Quality assurance: Importance of systems and standard operating procedures,” Perspectives in Clinical Research, vol. 2, no. 1, pp. 34–37, 2011.

2. D. Dill and M. Soo, “Academic quality, league tables, and public policy: A cross-national analysis of university ranking systems,” Journal of Higher Education, vol. 49, no. 4, pp. 495–533, 2005.

3. C. Pringle and M. Michel, “Assessment practices in AACSB-accredited business schools,” Journal of Education for Business, vol. 82, no. 4, pp. 202–211, 2007.

4. M. Dawood, K. A. Buragga, A. R. Khan and N. Zaman, “Rubric based assessment plan implementation for computer science program: A practical approach,” in Proc. IEEE Int. Conf. on Teaching, Assessment and Learning for Engineering, Kuta, Indonesia, pp. 551–555, 2013.

5. B. K. Jesiek and L. H. Jamieson, “The expansive (dis)integration of electrical engineering education,” IEEE Access, vol. 5, pp. 4561–4573, 2017.

6. S. Al-Yahya and M. Abdel-halim, “A successful experience of ABET accreditation of an electrical engineering program,” IEEE Transactions on Education, vol. 56, no. 2, pp. 165–173, 2013.

7. T. S. El-bawab, “Telecommunication engineering education: Making the case for a new multidisciplinary undergraduate field of study,” IEEE Communications Magazine, vol. 53, no. 11, pp. 35–39, 2015.

8. M. Garduno-Aparicio, J. Rodríguez-Reséndiz, G. Macias-Bobadilla and S. Thenozhi, “A multidisciplinary industrial robot approach for teaching mechatronics-related courses,” IEEE Transactions on Education, vol. 61, no. 1, pp. 55–62, 2018.

9. R. K. Raj and A. Parrish, “Toward standards in undergraduate cybersecurity education in 2018,” Computer, vol. 51, no. 2, pp. 72–75, 2018.

10. E. E. Koehn, “Engineering perceptions of ABET accreditation criteria,” Journal of Professional Issues in Engineering Education and Practice, vol. 123, no. 2, pp. 66–70, 1997.

11. R. M. Felder and R. Brent, “Designing and teaching courses to satisfy the ABET engineering criteria,” Journal of Engineering Education, vol. 92, no. 1, pp. 7–25, 2003.

12. C. Cook, P. Mathur and M. Visconti, “Assessment of CAC self-study report,” in Proc. 34th Annual Frontiers in Education, Savannah, Georgia, pp. 12–17, 2004.

13. F. D. McKenzie, R. R. Mielke and J. F. Leathrum, “A successful EAC-ABET accredited undergraduate program in modeling and simulation engineering,” in Proc. Winter Simulation Conf., California, USA, pp. 3538–3547, 2015.

14. A. A. Rabaa’i, A. R. Rababaah and S. A. Al-Maati, “Comprehensive guidelines for ABET accreditation of a computer science program: The case of the American University of Kuwait,” International Journal of Teaching and Case Studies, vol. 8, no. 2–3, pp. 151–191, 2017.

15. E. Essa, A. Dittrich, S. Dascalu and F. C. Harris Jr., “ACAT: A web-based software tool to facilitate course assessment for ABET accreditation,” in Proc. 7th Int. Conf. on Information Technology: New Generations, Nevada, USA, pp. 88–93, 2010.

16. A. Pears, A. Berglund, A. Nylen, N. Saleh and M. Shenify, “AccAB—processes and roles for accreditation of computing degrees at Al Baha University in Saudi Arabia,” in Proc. Int. Conf. on Interactive Collaborative Learning, Dubai, UAE, pp. 969–970, 2014.

17. H. M. Harmanani, “An outcome-based assessment process for accrediting computing programs,” European Journal of Engineering Education, vol. 42, no. 6, pp. 844–859, 2017.

18. W. Rashideh, O. A. Alshathry, S. Atawneh, H. A. Bazar and M. S. AbualRub, “A successful framework for the ABET accreditation of an information system program,” Intelligent Automation & Soft Computing, vol. 26, no. 6, pp. 1285–1307, 2020.

19. A. Taleb, A. Namoun and M. Benaida, “A holistic quality assurance framework to acquire national and international educational accreditation: The case of Saudi Arabia,” Journal of Engineering and Applied Sciences, vol. 14, no. 18, pp. 6685–6698, 2019.

20. A. Shafi, S. Saeed, Y. A. Bamarouf, S. Z. Iqbal, N. Min-Allah et al., “Student outcomes assessment methodology for ABET accreditation: A case study of computer science and computer information systems programs,” IEEE Access, vol. 7, pp. 13653–13667, 2019.

21. A. Talib, F. Alomary and H. Alwadi, “Assessment of student performance for course examination using Rasch measurement model: A case study of information technology fundamentals course,” Education Research International, vol. 2018, no. 3, pp. 1–8, 2018.

22. A. Talib, F. Alomary and H. Alwadi, “Utilization of Rasch measurement model in evaluating reliability, validity and quality of examination questions: A case study of information technology fundamentals course,” IPASJ International Journal of Information Technology (IIJIT), vol. 6, no. 5, pp. 22–28, 2018.

23. M. Alamri, N. Z. Jhanjhi and M. Humayun, “Digital curriculum importance for new era education,” in Employing Recent Technologies for Improved Digital Governance, Pennsylvania, USA: IGI Global, pp. 1–18, 2019.

24. H. E. Calderon, R. Vásquez, D. Aponte and M. D. Valle, “Successful accreditation of the electrical engineering program offered in two campuses at Caribbean University,” in Proc. IEEE Frontiers in Education Conf., Pennsylvania, USA, pp. 1–6, 2016.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |