DOI:10.32604/csse.2022.021927

Computer Systems Science & Engineering, DOI:10.32604/csse.2022.021927

Article

Developing Engagement in the Learning Management System Supported by Learning Analytics

1Department of Information System, Faculty of Computer Science and Information Technology, Universiti Malaya, Kuala Lumpur, Malaysia

2Faculty of Computing and Informatics, Universiti Malaysia Sabah, Sabah, Malaysia

3Department of Computing, School of Electrical Engineering and Computer Science (SEECS), National University of Sciences and Technology (NUST), Islamabad, Pakistan

*Corresponding Author: Muzaffar Hamzah. Email: muzaffar@ums.edu.my

Received: 19 July 2021; Accepted: 20 August 2021

Abstract: Learning analytics is an emerging technique for analysing student participation and engagement. The COVID-19 pandemic has significantly increased the role of learning management systems (LMSs). LMSs previously only complemented face-to-face teaching, something which was not possible between 2019 and 2020. To date, the existing body of literature on LMSs has not analysed learning in the context of the pandemic, where an LMS serves as the only interface between students and instructors. Productive results will remain elusive if the key factors that contribute towards engaging students in learning are not first identified. Therefore, this study aimed to perform an extensive literature review with which to design and develop a student engagement model for holistic involvement in an LMS. The required data was collected from an LMS that is currently utilised by a local Malaysian university. The model was validated by a panel of experts as well as through discussions with students. It is our hope that the results of this study will help other institutions of higher learning determine the factors of low engagement in their respective LMSs.

Keywords: Engagement analysis; learning analytics; learning management system; student engagement

The current COVID-19 pandemic has seen many schools, colleges, and universities adopt online learning. The world over, education systems first remained closed for a few months before eventually transitioning to online learning. To the best of our knowledge, the World Health Organization (WHO) does not advocate face-to-face education at the time of writing. Therefore, the role that Learning Management Systems (LMSs) play in education has grown considerably. LMSs were traditionally used only to complement face-to-face learning, whereby instructors would upload lecture-related content, conduct quizzes, and facilitate assignment submissions. However, the pandemic has driven the adoption of LMSs for online learning and teaching, with instructors conducting online classes and trying other effective methods to engage with their students. As such, the implementation of technology in the field of learning has changed traditional learning perspectives. As a platform for online learning, LMSs create a virtual world that connects educators to learners, thereby changing learning methods. Learning analytics is the measurement, collection, and analysis of learner-related data in order to understand and optimise learning. The main source of learner-related data analytics is trace data, a log that is derived from the LMS or virtual learning environment (VLE). As the traditional method of learning has evolved into a virtual learning system, the trace data that it produces can be derived from the electronic learning platform. Although the shift from traditional learning to online learning poses a challenge, analysing learning data can bring the learning environment forward. This is because learning analytics can not only identify the correlation between log data and student behaviour [1] but also predict student performance, drop-out rates, final marks and grades, as well as student retention in an LMS.
It also serves as an early warning system for university students throughout their studies [2]. Student engagement in LMSs is an emerging research area that warrants further exploration. Therefore, this study used student engagement in the LMS to predict student performance. One of the factors that affects student engagement is the lack of communication and interaction with others which, in turn, affects student behaviour. Engagement between lecturers and students is an important criterion in developing a healthy relationship not only in a physical classroom but in the virtual learning environment as well. This is because student responses during an activity can be indicative of their eagerness towards virtual learning as well as increase their participation in the classroom [3]. However, many factors contribute towards a lack of engagement in LMSs. Firat [4] discusses the effects of LMSs on engagement, motivation, and drop-out rates. Apart from learning achievements, the effect of support provided in LMSs should be investigated as well. The effects of the social web, interaction, and academic achievement all add value to the design and development of an effective engagement system. The existing student engagement model [5] uses conceptual engagement profiles as an engagement indicator. According to [6], the online behaviour engagement model on problem-solving does not focus on developing a holistic model for student engagement. Therefore, there exist gaps in the research that require further exploration, especially in the context of online learning during the COVID-19 pandemic. Based on a cluster analysis, the existing system showed that the low engagement cluster had more members in the online activity [7] than the institution anticipated [8]. An analysis of interactions between students, lecturers, systems, and content will provide a detectable learning pattern [9]. However, interactive activities that are the most effective do not yield many results [10].
A less than optimum usage of learning modules in an LMS results in low engagement in association and correspondence between students and lecturers. Therefore, this study developed a comprehensive model to increase student engagement for a superior learning experience. It sought to determine the engagement factors that could be derived from the new paradigm of participation during the COVID-19 pandemic. The objectives of this research were as follows:

RO1: To determine the factors that lead to low engagement in LMSs.

RO2: To develop an engagement model for LMSs using learning analytics.

RO3: To validate the proposed engagement model.

Learning analytics is the measurement, collection, analysis, and reporting of data about learners and their contexts in order to understand and enhance the learning experience. Institutions of higher learning continuously gather student-related data. Furthermore, the availability of data analytics, online systems, and learning ecosystems has resulted in large quantities of accessible data. The gathering of student-related data has provided valuable insight into the learning activities of students [11]. Learning analytics uses data mining techniques to process information and data sets with technology to deliver insights with which to develop the learning proficiency of students [12]. Furthermore, log data has been extensively used to predict scores and student performance [2,13–15]. Learner engagement has also been used as an indicator of student performance. Learning analytics serves as a useful tool for predicting dropout rates as well as an early warning system for students to avoid disciplinary actions for low scores [16,17]. Although academic failure is endemic in every institution, early warning systems can predict student performance and prompt universities to take action early to prevent students from failing by encouraging them to improve their performance. Multiple studies have examined student satisfaction towards LMSs [3,13,18]. The literature review indicated that students have a satisfactory acceptance of LMSs and VLEs. Furthermore, students were also willing to use LMS platforms to aid their university studies. Therefore, student feedback could be utilised to develop better online learning environments. Gašević et al. [19] found that learning analytics helped educators develop empathetic learning, improved the lecturing process, predicted learning results, supported intercessions, and aided decision-making. A study by Seidel et al. [20] on student attrition found that communication strategies helped improve student retention and reduce attrition.
Furthermore, Holmes [21] found that the introduction of weekly online tests increased student engagement in the LMS. The application of learning analytics in Malaysia is still in its infancy. West et al. [22] state that the adoption of learning analytics applications in Malaysia will take time to produce results in comparison to Australia or other countries. A study by Taib et al. [23] that aimed to understand pedagogy design approaches in the learning environment concluded that learning analytics in Malaysia needed more exploration, which can be done once the main issues of learning are first understood.

AlJarrah et al. [24] provide comprehensive data on user interaction on Moodle LMS course pages. The collected data was used to extrapolate student access outlines and, subsequently, to associate them with student performance. The study found that weaker students tended to postpone their studies because they had given up or their efforts had come to an end. The authors then investigated the effect of altered training methodologies on the learning behaviour, log information, and psychological burden of undergraduates. You et al. [25] demonstrated that undergraduates who procrastinated ultimately had less than ideal long-term maintenance or accomplishments. Additionally, submitting a task on time made a huge difference in making progress in the course. The study affirmed the significance of time spent on the board. Therefore, assessing student engagement on an LMS can enable the characterisation of a student’s work as well as the management and ability of a student to interact with lecturers and other students. Three types of interactions occur in an LMS: student-to-student, student-to-lecturer, and student-to-resource [26,27]. Pellas [28] found that self-regulation, environment quality, and learning strategies increase student performance, student emotion (self-esteem-performance), and student behaviour (self-efficacy-motivation), leading to self-motivation among students. Li et al. [29] found that student satisfaction level correlated with the quality of teaching and the learning materials. Therefore, student satisfaction is as important as the quality of the learning material. AlJarrah et al. [24] found that delayed access to materials caused procrastination among students. Nguyen et al. [30] found that the level of involvement was not only influenced by the learning design but also by a balanced module and learning activities initiated by the educator.
LMSs provide undergraduates from all walks of life an opportunity to concentrate free of impediments in a safe environment [31,32]. They can facilitate organising and managing course contents [32,33], submitting assignments, providing feedback to students, setting up groups, organising grades [31], and assessing students [32].

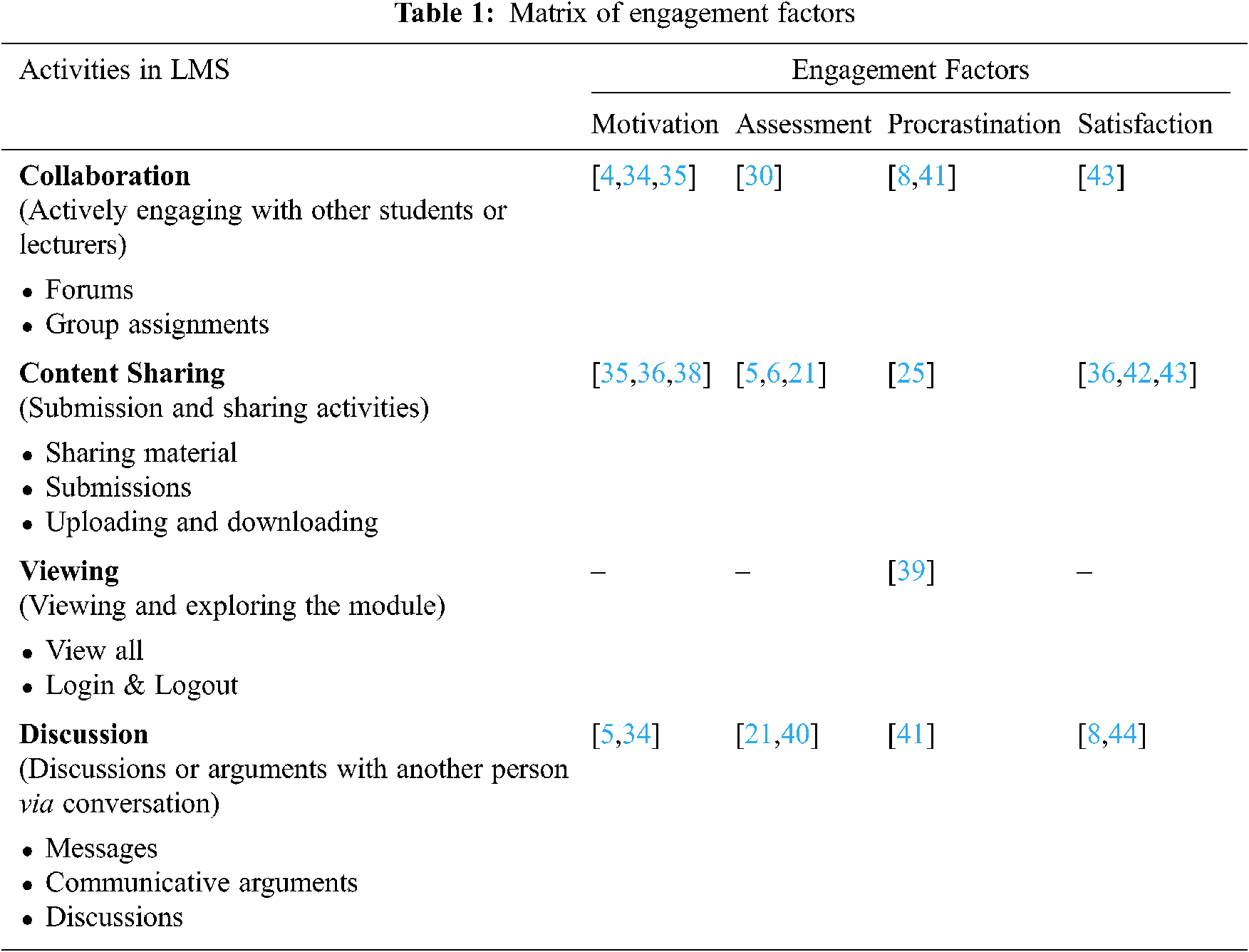

The methods of this study combined a synthesis of the literature review with data analysis. This section discusses the technique that was used to analyse the log data. The result, the matrix of engagement, can be seen in Tab. 1. Engagement factors were also determined alongside the activities in an LMS.

The data for this study was collected from a public university in Malaysia through the Centre of Information Technology. The dataset was referred to as the ‘University LMS’. The log data of one faculty member’s account was also collected. The analysis was performed on a computer programming course. The log data was collected from two consecutive sessions and analysed before comparing them. The total size of the log data was about 14,856 rows from the first session and 38,725 rows from the second session.

In order to achieve the first research objective, to determine the factors that lead to low engagement in LMSs, an extensive literature review was conducted to identify the factors of student engagement in LMSs.

1) Data Analysis Technique – To accomplish the second research objective, to develop an engagement model for LMSs using learning analytics, an initial engagement model was developed by combining the matrix of the engagement factors and the data analysis. The data was analysed using SAS® Enterprise Miner™ 14.1 (SAS® EM™ 14.1). An experiment was conducted to determine the significance of university LMS activity before data exploration was used to analyse the log data. A decision model was then used to determine the output of the engaged activity. The log data contained information on learner activity, such as engagement duration, participation in discussions and forums, assignment submissions, and educator response times in the university’s LMS.

2) Research Validation – In order to achieve the third research objective, to validate the proposed engagement model, three processes were carried out: 1. data validation, 2. expert validation, and 3. a focus group. The data was partitioned into training and validation partitions in SAS® EM™ 14.1. An initial student engagement model was developed by analysing the engagement activities. The model was then validated by a panel of experts that included lecturers and members of the faculty’s administration, followed by a focus group consisting of selected students to gauge the students’ perspective. This validation process assisted the development of the final student engagement model.
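The partitioning step can be illustrated outside SAS® EM™ 14.1 with a minimal Python sketch. It is not the procedure used in the study: the 70/30 ratio, the fixed seed, and the `row_id` records are assumptions introduced only for illustration, since the split proportions are not reported here.

```python
import random


def partition(rows, train_percent=70, seed=42):
    """Shuffle a copy of rows and split it into (training, validation) lists.

    train_percent and seed are illustrative assumptions, not values
    taken from the study.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    cut = len(shuffled) * train_percent // 100
    return shuffled[:cut], shuffled[cut:]


# Hypothetical stand-in for cleaned LMS log rows.
log_rows = [{"row_id": i} for i in range(10)]
training, validation = partition(log_rows)
print(len(training), len(validation))
```

Seeding the shuffle keeps the two partitions identical across runs, which makes later comparisons between training and validation results reproducible.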

The results of the research objectives are discussed in this section. The outcome of the extensive literature review was the engagement matrix. Two main techniques of data analysis, data exploration and a decision tree, were applied using SAS® EM™ 14.1. As mentioned earlier, the data was cleaned and processed prior to data analysis.

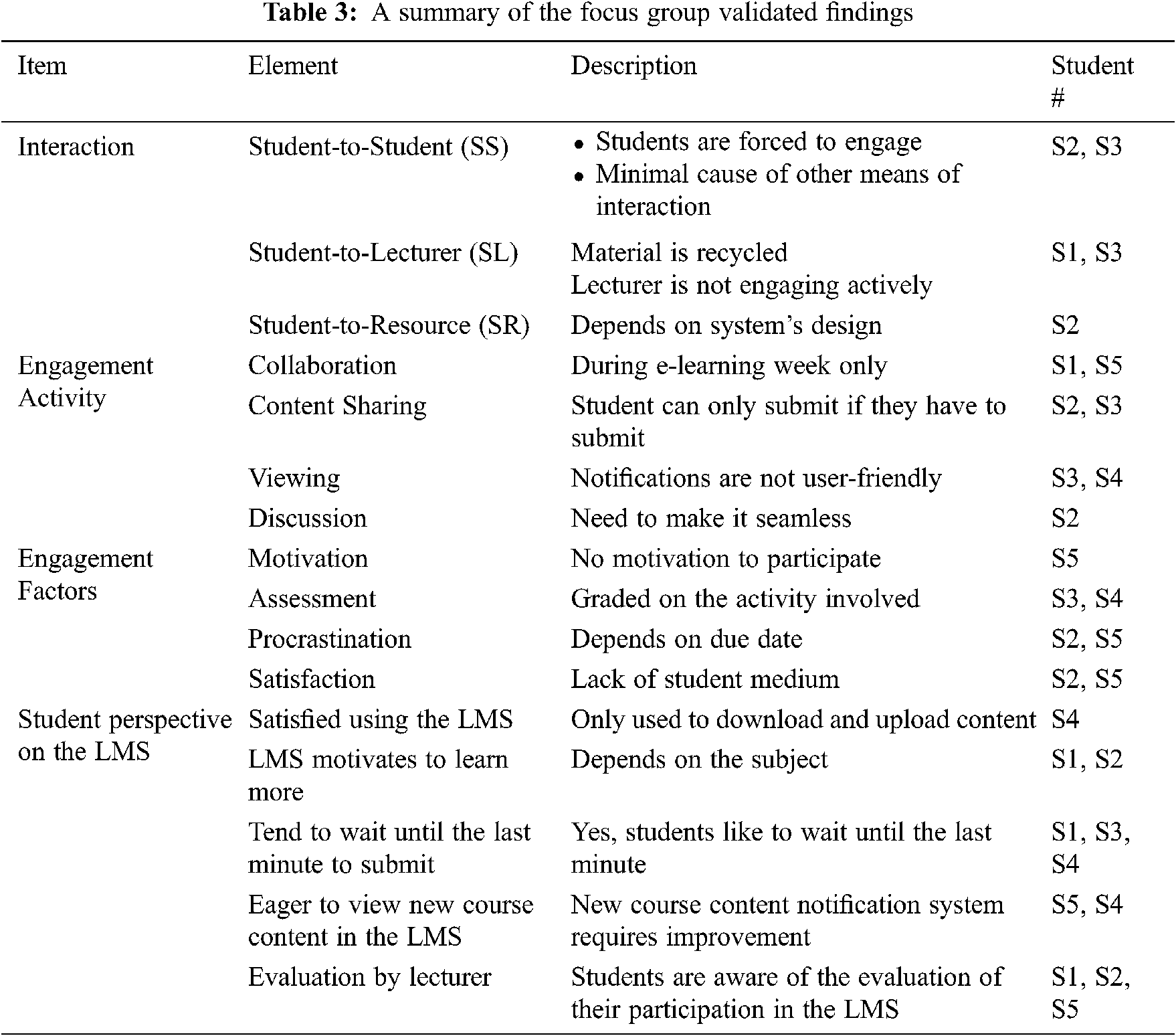

In order to develop an engagement model for LMSs using learning analytics, an initial engagement model was first developed by compiling the findings of our literature review. In summary, we found that existing engagement models were not holistic as they did not elicit engagement from every type of user. We also concluded that activities that led to higher engagement required a combination of content sharing, viewing, and discussion.

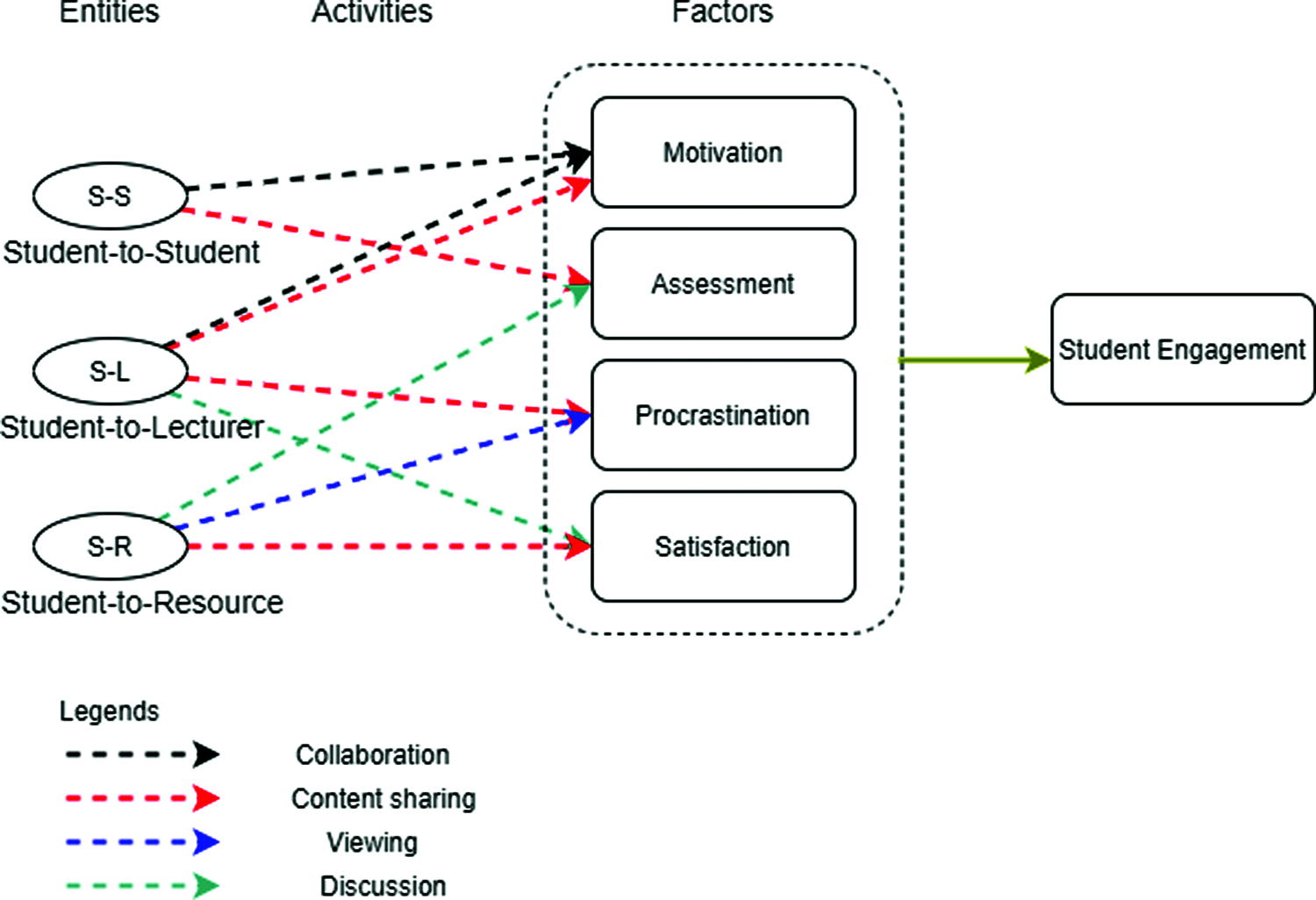

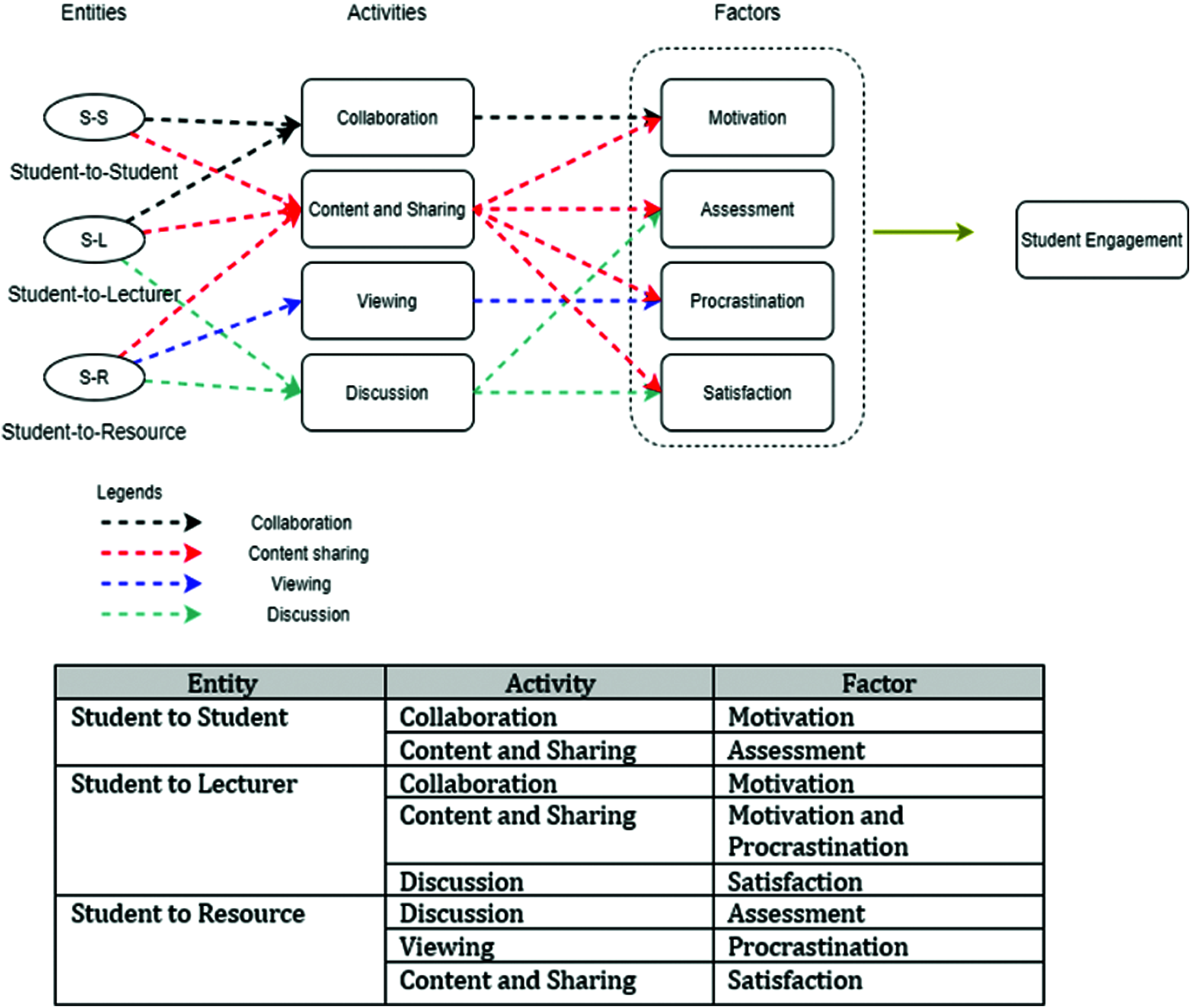

Figure 1: Initial student engagement model

Fig. 1 shows the initial engagement model for LMSs. As seen, three types of interaction between entities are required: 1. student-to-student (SS) interaction, 2. student-to-lecturer (SL) interaction, and 3. student-to-resource (SR) interaction. The types of activities are represented by colour-coded arrows: black for collaboration [4,34,35], red for content sharing [6,21,36–38], blue for viewing [39], and green for discussion [21,40,41]. Furthermore, an entity needed to be involved in an activity in order to lead to engagement factors. The initial engagement model focused on four engagement factors: 1. motivation [4,5,34,37,38], 2. procrastination [8,41], 3. satisfaction [36,42,43], and 4. assessment [5,6,21]. Every entity had respective activities that produced specific engagement factors. SS interaction required two activities: collaboration (activity) led to motivation (engagement factor) while content sharing (activity) led to assessment (engagement factor). SL interaction involved three engagement factors, motivation, procrastination, and satisfaction, with their respective activities. Lastly, SR interaction required discussion (activity) to lead to assessment (engagement factor), viewing (activity) to lead to procrastination (engagement factor), and content sharing (activity) to lead to satisfaction (engagement factor). Therefore, all these engagement factors suggest methods for better student engagement.
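One way to make the structure of the initial model concrete is to encode the stated entity, activity, and engagement factor links as a lookup table. The Python sketch below is only an illustration: the names `INITIAL_MODEL`, `SL_FACTORS`, and `factors_for` are hypothetical, and the SL entries are kept as a plain set of factors because the text does not state which activity pairs with which SL factor.

```python
# (entity, activity) -> engagement factor, as stated for the initial model.
INITIAL_MODEL = {
    ("SS", "collaboration"): "motivation",
    ("SS", "content sharing"): "assessment",
    ("SR", "discussion"): "assessment",
    ("SR", "viewing"): "procrastination",
    ("SR", "content sharing"): "satisfaction",
}

# The SL factors are named in the text, but their activity pairing is not,
# so no (activity -> factor) mapping is assumed for SL here.
SL_FACTORS = {"motivation", "procrastination", "satisfaction"}


def factors_for(entity):
    """Return the set of engagement factors linked to an interaction type."""
    if entity == "SL":
        return set(SL_FACTORS)
    return {factor for (e, _), factor in INITIAL_MODEL.items() if e == entity}


for entity in ("SS", "SL", "SR"):
    print(entity, sorted(factors_for(entity)))
```

Keeping the links in one table makes it straightforward to revise the model after validation, since only the affected entries change.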

The engagement matrix below addresses research objective 1, to determine the factors that lead to low engagement in LMSs. As previously mentioned, an extensive literature review was conducted to determine factors of student engagement in LMSs. The activities that were classified as leading to engagement were collaboration, content sharing, viewing, and discussion. Furthermore, the four engagement factors derived from the literature review were motivation, assessment, procrastination, and satisfaction.

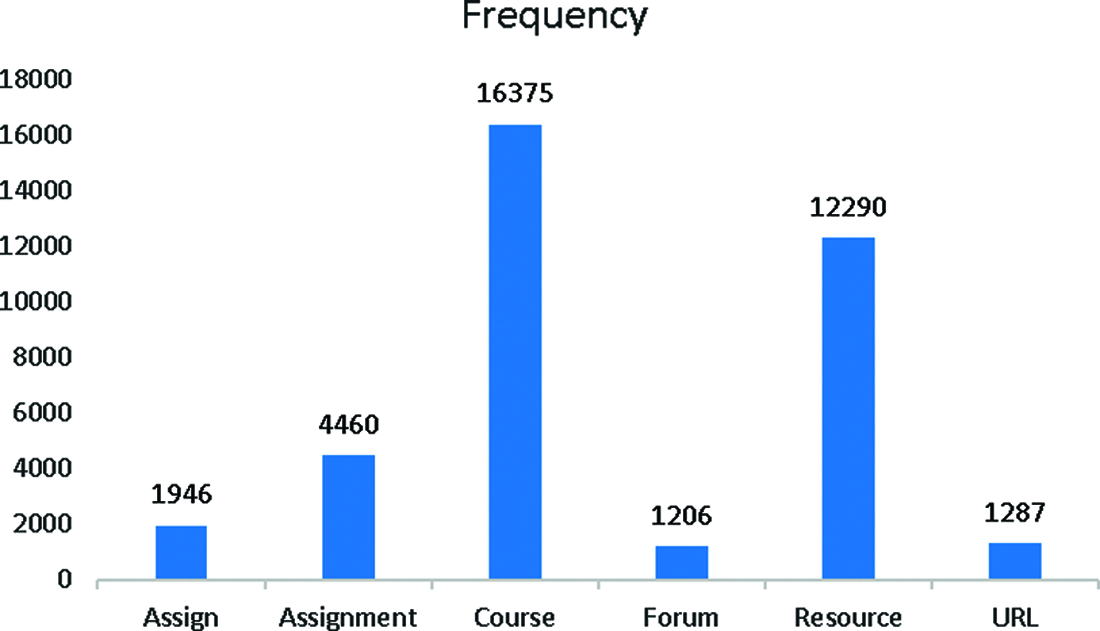

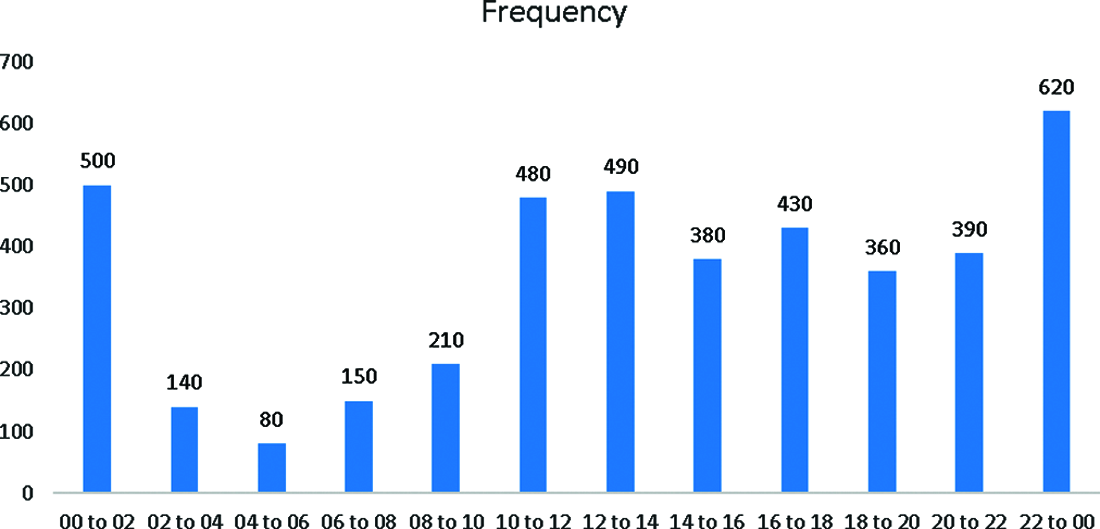

Data exploration refers to an exploratory analysis of the data, the results of which serve as an indicator of the data’s behaviour. Using the ‘Explore’ function in SAS® EM™ 14.1, the ‘Plot’ function was used to explore the available data and produce a chart with which to analyse the log data. Fig. 2 depicts how often the options available in the assignment module were used while Fig. 3 illustrates the number of times that the assignment module was used throughout a day. In this way, we were able to analyse when students used the assignment module. As seen in the histogram, the number of students who logged in to the assignment module increased at night. The frequency of log-ins was at its highest between 10.23 pm and 11.59 pm and between 00.00 am and 01.36 am. This enabled us to conclude that students tend to procrastinate, choosing to wait until the eleventh hour of a submission period to tender their assignments.
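The kind of time-of-day histogram described above can be approximated with a few lines of Python. This is a sketch under stated assumptions rather than the SAS® EM™ procedure: the timestamp format and the four sample timestamps are hypothetical, and hourly bins are used for simplicity.

```python
from collections import Counter
from datetime import datetime


def hourly_counts(timestamps, fmt="%Y-%m-%d %H:%M:%S"):
    """Count log entries per hour of day; returns a list of 24 integers."""
    counts = Counter(datetime.strptime(ts, fmt).hour for ts in timestamps)
    return [counts.get(hour, 0) for hour in range(24)]


# Hypothetical assignment-module timestamps, not taken from the study's log.
sample = [
    "2015-03-01 23:15:00",
    "2015-03-01 23:48:10",
    "2015-03-02 00:20:05",
    "2015-03-02 14:05:00",
]
counts = hourly_counts(sample)
busiest_hour = max(range(24), key=lambda hour: counts[hour])
print(busiest_hour, counts[busiest_hour])
```

A peak in the late-evening bins of such a histogram is the pattern that the study reads as last-minute submission behaviour.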

Figure 2: The usage frequency of the assignment module during the 14/15 academic session

Figure 3: The usage distribution throughout the day in the assignment module

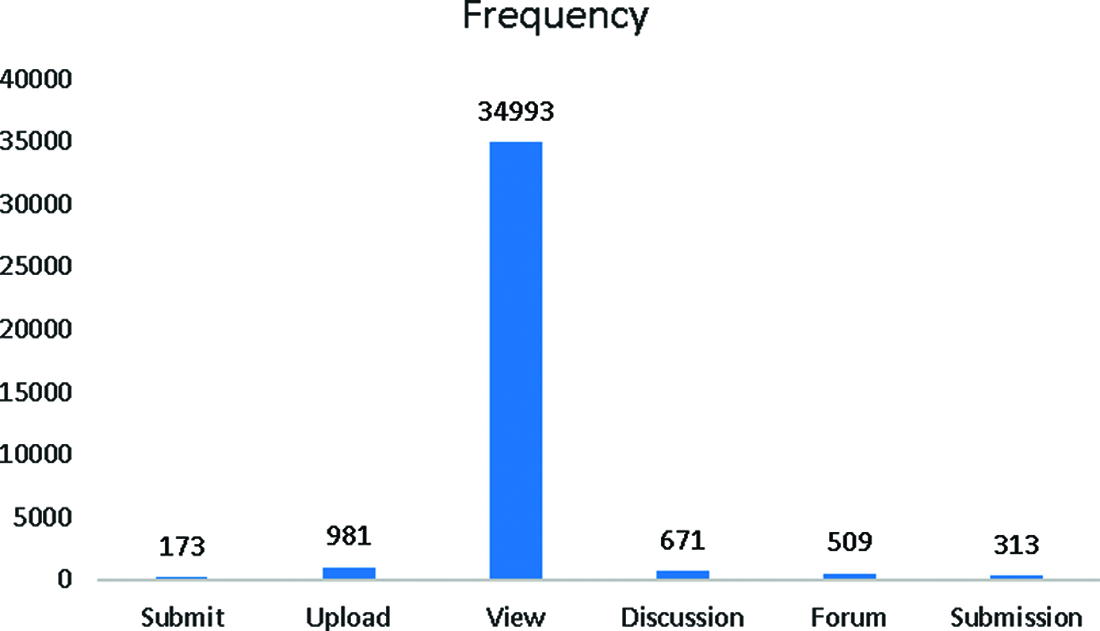

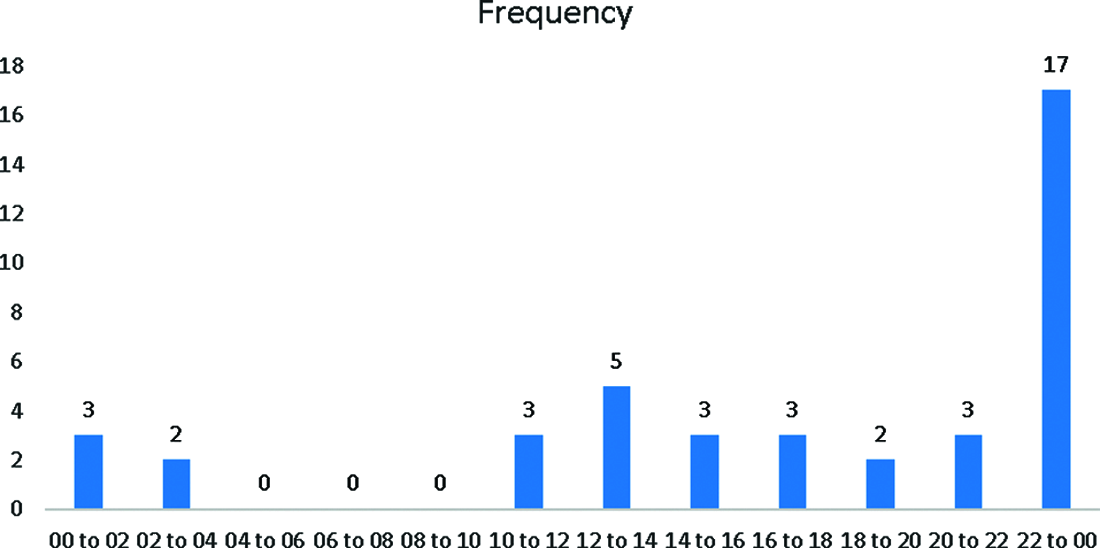

A further analysis of the ‘upload’ option and its timestamp was conducted. Figs. 4 and 5 present how often the sub-options available in the upload option were used and the number of times that the upload option was used throughout a day, respectively. As seen in Fig. 5, most of the assignments were uploaded after 9.35 pm, and uploads increased in frequency between 00.02 am and 2.24 am. This finding supports the findings of the assignment module, i.e., that the frequency of assignment uploads was at its highest at and after midnight (Malaysian time).
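Because the busy period for uploads runs past midnight, a simple start-to-end comparison of clock times is not enough to count the events inside it. The sketch below shows one way to handle a window that wraps around midnight. The window bounds reuse the 9.35 pm and 2.24 am times mentioned above, while the event tuples and function names are hypothetical.

```python
from datetime import time


def in_window(t, start, end):
    """True if clock time t lies in [start, end); the window may wrap midnight."""
    if start <= end:
        return start <= t < end
    return t >= start or t < end


def late_upload_share(events, start=time(21, 35), end=time(2, 24)):
    """Fraction of 'upload' events whose time falls inside the window."""
    uploads = [t for action, t in events if action == "upload"]
    if not uploads:
        return 0.0
    return sum(in_window(t, start, end) for t in uploads) / len(uploads)


# Hypothetical (action, time-of-day) pairs, not taken from the study's log.
events = [
    ("upload", time(23, 50)),
    ("upload", time(0, 30)),
    ("upload", time(10, 0)),
    ("view", time(23, 0)),
]
share = late_upload_share(events)
print(round(share, 2))
```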

Figure 4: The usage frequency of the upload sub-options during the 13/14 academic session

Figure 5: The usage distribution throughout the day in the upload module

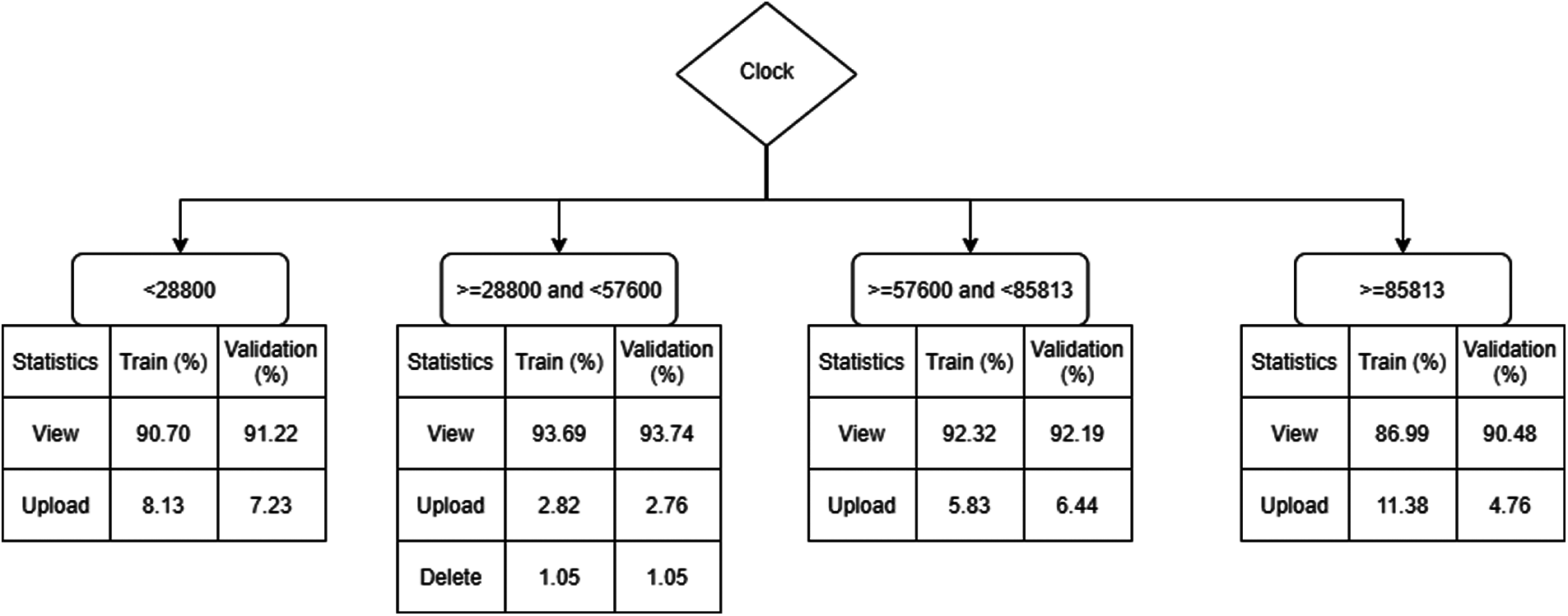

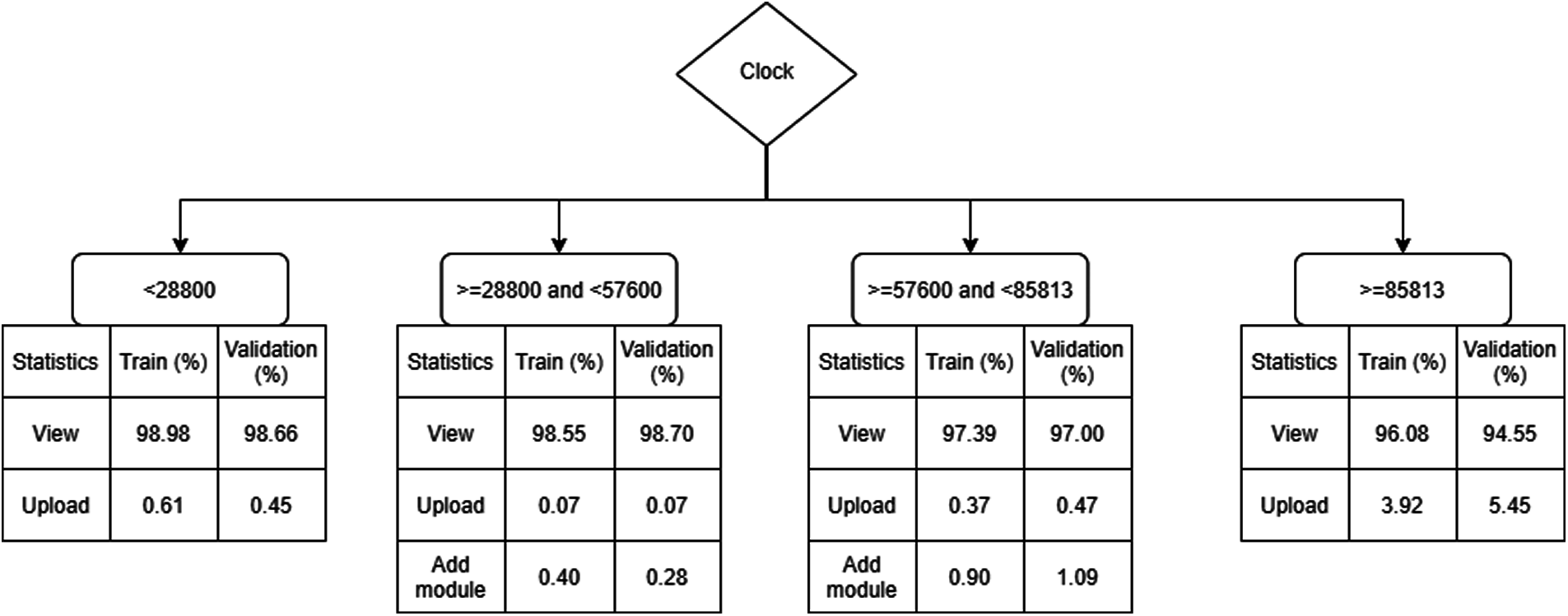

Decision tree methodology is a powerful predictive and explanatory modelling tool. Not only is a tree easy to understand and visualise, but building it is also quite interactive. Decision trees have been used for predictive purposes [16,20] and can also be used for data visualisation and classification [45]. Furthermore, they can be used to provide and support findings and to identify the root cause of the subject in question. The decision tree model of the assignment module provides an overview of its usage frequency. Fig. 6 depicts the decision tree of the usage frequency of the Programming 1 subject in the assignment module during the 14/15 academic session. Usage was expressed in the form of percentages as well as the total and time of actions (the split values correspond to seconds after midnight). As seen, the percentage of uploads increased at <28800, i.e., before 8 am. This was followed by ≥85813, i.e., from about 11.50 pm. This indicated that students tended to upload their assignments shortly before and after midnight. Therefore, the engagement factor that could be derived from this decision tree was that procrastination occurred in the LMS of the Programming 1 subject. Fig. 7 depicts the decision tree of the usage frequency of the Programming 1 subject in the assignment module during the 13/14 academic session. Usage was again expressed in the form of percentages as well as the total and time of actions. As seen, the percentage of uploads increased at ≥85813, i.e., from about 11.50 pm. This indicated that students tended to submit their assignments shortly before midnight. Therefore, the engagement factor that could be derived from this decision tree was again that procrastination occurred in the LMS of the Programming 1 subject.
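The split values in the trees read most naturally as seconds after midnight, since 28800 s is exactly 8 am. The Python sketch below converts such values to clock times and reports the share of uploads in each branch. It is an illustration only: the two thresholds come from the text, whereas the branch names and the sample events are hypothetical, and the sketch does not reproduce how SAS® EM™ chooses its splits.

```python
def seconds_to_clock(seconds):
    """Format seconds after midnight as HH:MM:SS."""
    hours, remainder = divmod(int(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def time_branch(seconds, early=28800, late=85813):
    """Assign an action time to one of the branches implied by the two splits."""
    if seconds < early:
        return "before_early_split"
    if seconds >= late:
        return "after_late_split"
    return "between_splits"


def upload_share_by_branch(events):
    """Share of 'upload' actions among all actions in each branch."""
    totals, uploads = {}, {}
    for seconds, action in events:
        branch = time_branch(seconds)
        totals[branch] = totals.get(branch, 0) + 1
        if action == "upload":
            uploads[branch] = uploads.get(branch, 0) + 1
    return {branch: uploads.get(branch, 0) / n for branch, n in totals.items()}


# Hypothetical (seconds after midnight, action) pairs for illustration.
events = [(3600, "upload"), (7200, "view"), (50000, "view"),
          (50000, "view"), (86000, "upload")]
shares = upload_share_by_branch(events)
print(seconds_to_clock(28800), seconds_to_clock(85813), shares)
```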

Figure 6: The usage frequency of the Programming 1 during the 14/15 academic session

Figure 7: The usage frequency of the Programming 1 during the 13/14 academic session

Determining the engagement factors was the first objective of this research. The model was developed by combining the initial model, the engagement factors, and the data analysis. These were the engagement factors involved in the LMS: LMS design, student-related procrastination, student-related motivation, student-related satisfaction, and educator-related involvement. LMS design plays an important role as students may find that the LMS is an interactive place for their study [36]. It also leads to student-related satisfaction whereby the learning system, which is designed by the educator or lecturer, determines the student engagement factor. Learning materials that support student involvement increase student-related satisfaction [1]. The LMS environment also affects student-related satisfaction as a flexible environment influences student engagement. Engagement within the LMS was low due to student-related procrastination. Procrastination is the act of delaying or postponing the completion of a task [25]. The amount of effort and time that students need to sacrifice to use the LMS is also a reason behind low engagement as students do not wish to spend more time in it [41]. When procrastination is the type of learning strategy employed, while it may require students to interact with the LMS, the type of engagement produced is inactive [46]. These untimely actions and simple delays in accessing LMS material are the reasons behind student-related procrastination [24]. Motivation is a student’s desire and interest in participating in an activity. Student-related motivation depends on three reliant cognitive dimensions: 1. self-regulation, i.e., the quality of the learning strategies, 2. emotion, i.e., self-esteem, and 3. self-efficacy, i.e., student performance and behaviour, which requires motivation [34].
Student-related motivation influences participation in an LMS [39] which, in turn, influences the social environment to develop mutual trust with the learning system and results in system engagement [35]. The involvement of an educator in online learning design shows that commitment to an LMS seven-days-a-week does not only substantially impact learning configuration but is also influenced by the educator’s insight and wisdom over modular balance in their learning plans and exercises [30]. Integrating an LMS into education shows that incorporating a constant e-assessment into a module increased the commitment of undergraduates to that module [21]. Therefore, LMS-based assessments boost engagement if the educator designs effective learning activities.

Figure 8: Student engagement model in an LMS

Fig. 8 depicts the student engagement model which was the product of the initial engagement model and the results of the data analysis. The entities of the interactions were derived from existing works. Student-to-student (SS) interaction occurs when there is communication between the students in the LMS [26,27]. Student-to-lecturer (SL) interaction occurs when students engage with the lecturer or vice versa. Student-to-resource (SR) interaction occurs when students interact directly with the system and its resources. Activities that transpire in the LMS were divided into four categories: 1. collaboration, i.e., actively engaging with other students or lecturers through forums or group assignments, 2. content sharing, i.e., sharing knowledge through assignments and discussions (sharing material, submissions, uploading, and downloading), 3. viewing, i.e., viewing or looking around the module by ‘viewing all’, logging in, and logging out, and 4. discussion, i.e., discussing or arguing with others through conversations, such as ‘messages and communicative’ arguments. An analysis of the data provided the factors that were merged with the engagement matrix. These engagement factors were: motivation, i.e., a student’s desire and interest to participate in an activity [5,34], assessment, i.e., learning evaluation by the lecturer(s) or the system [21,40], procrastination, i.e., the act of delaying or postponing something [8,41], and satisfaction, i.e., what the student wants from the LMS [36,42,43].
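The four activity categories lend themselves to a lookup from logged actions to categories, which can then be tallied per course or per student. The sketch below is a minimal illustration: the action strings are hypothetical labels loosely based on the examples given above, not the actual values recorded by the university’s LMS.

```python
from collections import Counter

# Hypothetical action labels mapped to the four activity categories.
ACTIVITY_OF_ACTION = {
    "forum post": "collaboration",
    "group assignment": "collaboration",
    "share material": "content sharing",
    "submit": "content sharing",
    "upload": "content sharing",
    "download": "content sharing",
    "view all": "viewing",
    "login": "viewing",
    "logout": "viewing",
    "message": "discussion",
}


def classify(action):
    """Map a logged action to its activity category, or 'unclassified'."""
    return ACTIVITY_OF_ACTION.get(action.strip().lower(), "unclassified")


def activity_totals(actions):
    """Count how many logged actions fall into each activity category."""
    return Counter(classify(action) for action in actions)


totals = activity_totals(["Login", "view all", "upload", "message", "quiz"])
print(dict(totals))
```

Actions that do not match any label are kept under 'unclassified' rather than dropped, so gaps in the mapping stay visible during analysis.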

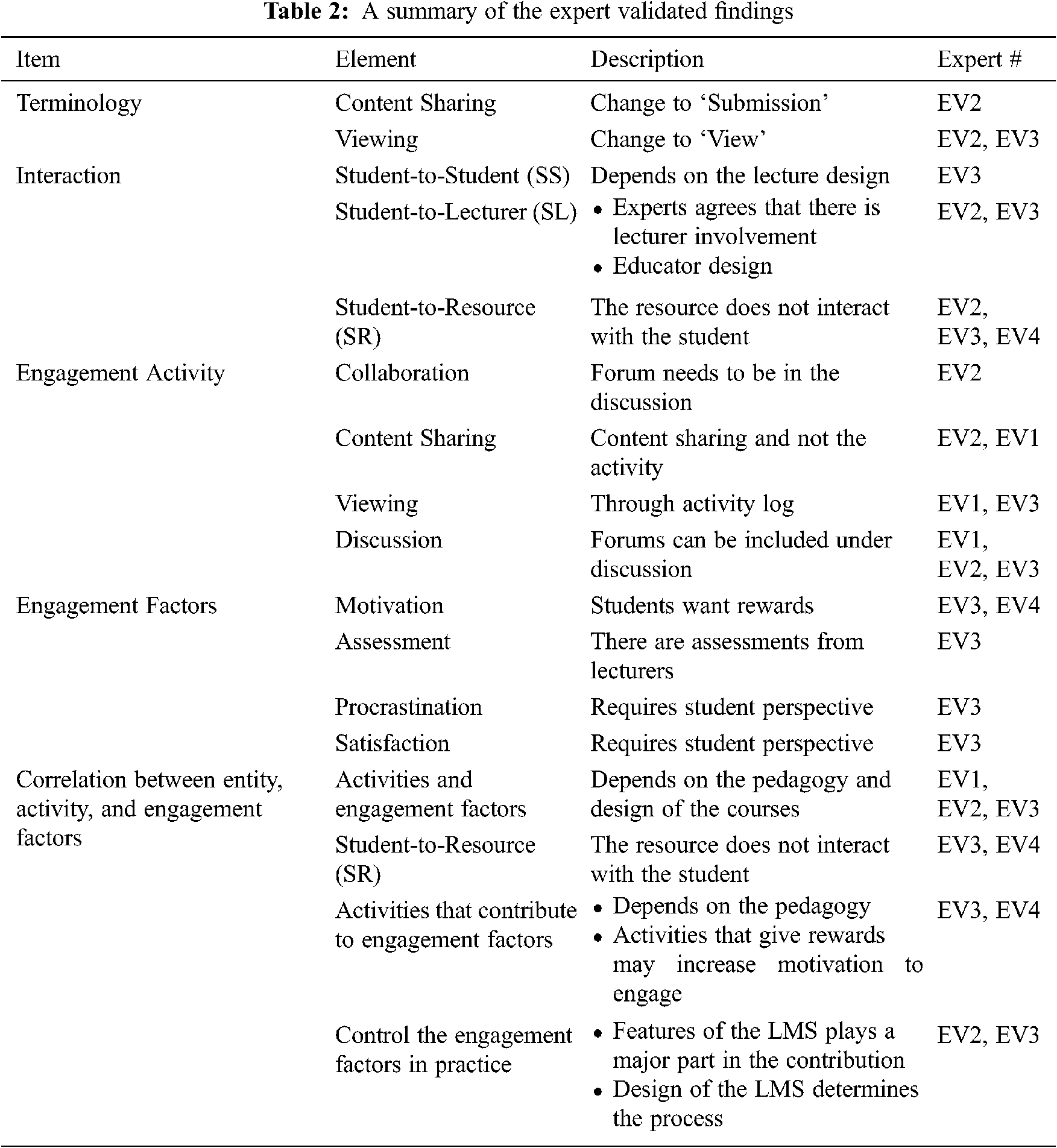

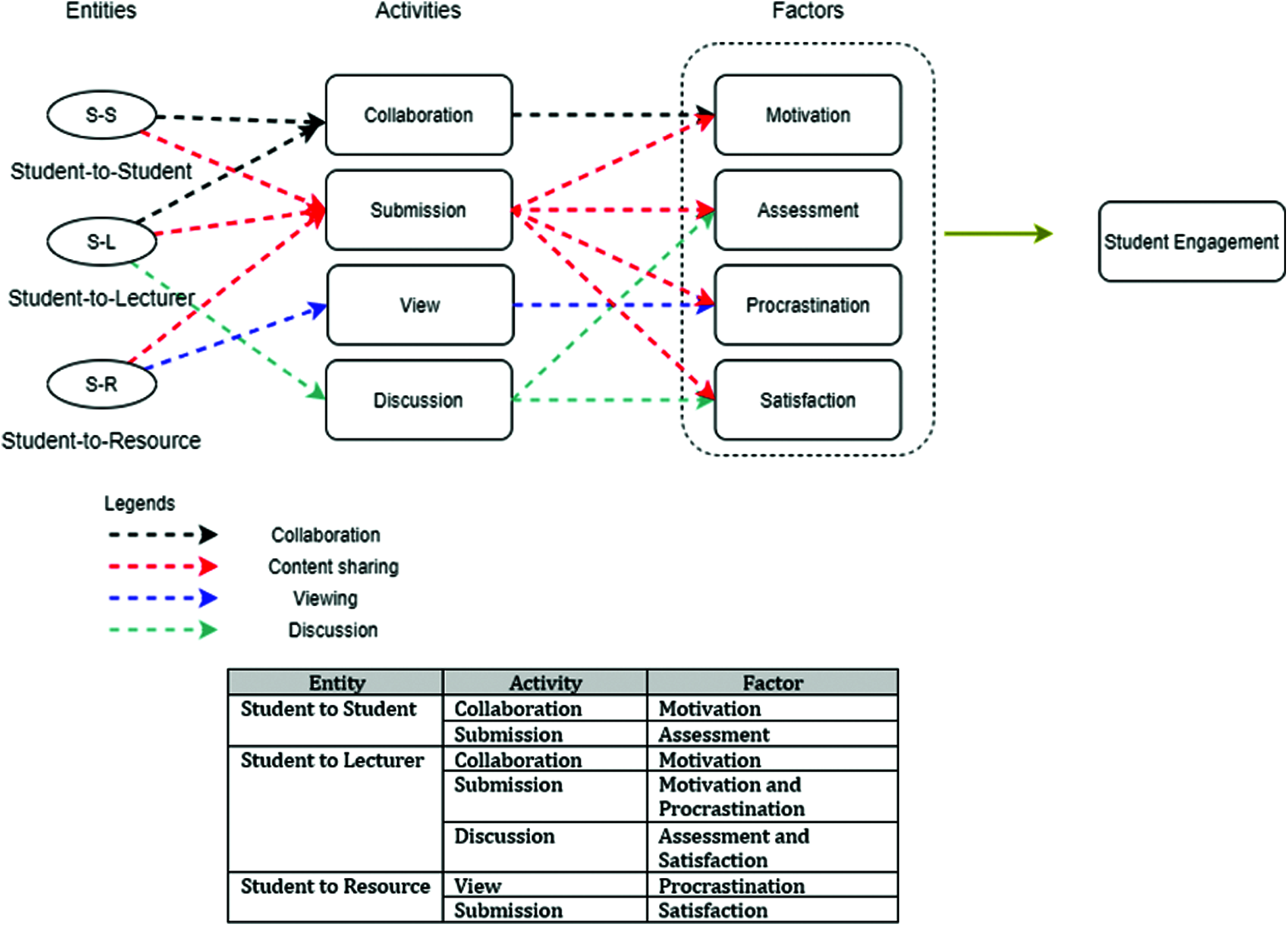

This section discusses the validation process of this research. Three stages of validation were executed: 1. data validation, where the result of the trained data sets was compared with that of the validated data sets, 2. expert validation, where a panel of experts was interviewed or answered a questionnaire, and 3. a focus group to validate the model from a student perspective. The ecosystem of the model was validated via an interview with the panel of experts which included lecturers and members of the faculty’s administration. A focus group was conducted using selected students to validate the model from the student perspective. After the validation process, the model was modified to fit the results of the validation. Several items were revised from the previously developed student engagement model. The results of the data analysis were validated by comparing the results of the final version of the model before subsequent validation by the student engagement model. Tabs. 2 and 3 summarise the findings of the expert panel and the student focus group, respectively.
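The data validation stage, comparing results on the training partition with those on the validation partition, can be sketched as a comparison of error rates. The example below is a hedged illustration and not the study's procedure: the rule, the labelled rows, and the function names are all hypothetical, and only the two time thresholds are borrowed from the decision trees.

```python
def misclassification_rate(rows, predict):
    """Fraction of (seconds, is_upload) rows where predict(seconds) is wrong."""
    if not rows:
        raise ValueError("rows must not be empty")
    return sum(predict(seconds) != label for seconds, label in rows) / len(rows)


def off_hours_rule(seconds, early=28800, late=85813):
    """Toy rule: guess 'upload' for actions before `early` or from `late` on."""
    return seconds < early or seconds >= late


# Hypothetical labelled rows: (seconds after midnight, action was an upload).
training = [(3600, True), (50000, False), (86000, True), (40000, True)]
validation = [(7200, True), (60000, False), (86100, False)]

train_rate = misclassification_rate(training, off_hours_rule)
valid_rate = misclassification_rate(validation, off_hours_rule)
gap = abs(train_rate - valid_rate)
print(train_rate, round(valid_rate, 3), round(gap, 3))
```

A small gap between the two rates suggests that a pattern found in the training partition also holds on unseen rows; a large gap would point to overfitting.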

Figure 9: The validated student engagement model

Fig. 9 shows the validated student engagement model for the LMS. Several improvements have been made to the model based on the validated research results. The description of what constitutes an ‘activity’ was updated to ensure that the activity data derived from the LMS was legitimate. Although ‘entity’ was validated, SR interactions are one-way as the resources of an LMS do not interact with the students. The four engagement factors were validated, and a few of them required a student perspective, which was obtained via the focus group session. The factors of motivation, procrastination, and satisfaction were validated from the student perspective as they involve students. With the objective of developing a student engagement model that boosts student engagement in the LMS, the LMS design of the lecturer was found to be the most important factor affecting student engagement. Fig. 9 shows the final output of the validation process.

5 Conclusion and Future Research

In conclusion, using learning analytics to develop a student engagement model is important in order to support effective teaching and learning among institutions of higher learning. Our goal was to develop an effective model that increased the quality of learning in higher education institutions. Malaysia has good prospects for implementing learning analytics in all learning platforms as it can improve student engagement and interaction. Learning analytics typically leverages the use of highly interactive learning environments, such as tutorials, games, and simulations, to produce a rich stream of interesting data visualisation patterns with which to determine behavioural trends among the youth. Furthermore, institutions of higher learning only realised the importance of learning management systems (LMSs) when they became more widely used during the COVID-19 pandemic. As such, online learning has quickly become a hot topic. Based on the result of the analysis, institutions need to attend to student-related motivation, student-related satisfaction, procrastination, and assessment as they shape student engagement. Pedagogy planning needs to be more thorough in order to increase student engagement in online classrooms as there are many distractions when students study from home. Furthermore, students require more guidance to achieve online learning objectives during these trying times. Universities and educational institutions can utilise the findings of this research to ensure the successful implementation of learning analytics. Furthermore, using learning analytics to analyse student engagement and interaction data will reveal the communication strength of an LMS. Stakeholders, such as lecturers, management, and administration, can use the student engagement model as a guideline to increase the use of LMSs for teaching and learning among Malaysian institutions of higher learning.
The proposed model can also help universities develop LMS sustainability by encouraging more student engagement as it is very helpful in measuring student engagement. Nevertheless, this study is not without its limitations. As the data set used in this analysis was collected from one public university in Malaysia, the findings of this study cannot be generalised for other countries. Furthermore, this research only utilised three data analysis techniques to determine engagement factors. Future studies may consider investigating other techniques that can be useful in analysing the data. Thus, this requires further exploration. Additionally, future investigations should consider using log data from when students first enter the university until they complete their studies in order to accurately gauge their growth in learning. Lastly, the findings of this study could be combined with the LMS data of another Malaysian university to determine the behaviour patterns of Malaysian students.

Funding Statement: This research was supported by the Universiti Malaya, Bantuan Khas Penyelidikan under the research grant of BKS083-2017.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. B. Rienties and L. Toetenel, “The impact of learning design on student behaviour, satisfaction and performance: A cross-institutional comparison across 151 modules,” Computers in Human Behavior, vol. 60, no. 2, pp. 333–341, 2016. [Google Scholar]

2. K. Casey and D. Azcona, “Utilizing student activity patterns to predict performance,” International Journal of Educational Technology in Higher Education, vol. 14, no. 1, pp. 23, 2017. [Google Scholar]

3. J. Son, J. D. Kim, H. S. Na and D. K. Baik, “A social learning management system supporting feedback for incorrect answers based on social network services,” Journal of Educational Technology & Society, vol. 19, no. 2, pp. 245–257, 2016. [Google Scholar]

4. M. Firat, “Determining the effects of LMS learning behaviors on academic achievement in a learning analytic perspective,” Journal of Information Technology Education: Research, vol. 15, no. 2016, pp. 75–87, 2016. [Google Scholar]

5. J. Ballard and P. I. Butler, “Learner enhanced technology: Can activity analytics support understanding engagement a measurable process?,” Journal of Applied Research in Higher Education, vol. 8, no. 1, pp. 18–43, 2016. [Google Scholar]

6. F. H. Wang, “An exploration of online behaviour engagement and achievement in flipped classroom supported by learning management system,” Computers & Education, vol. 114, no. 1, pp. 79–91, 2017. [Google Scholar]

7. V. Kovanovi´c, D. Gaˇsevi´c, S. oksimovi´c, M. Hatala and O. Adesope, “Analytics of communities of inquiry: Effects of learning technology use on cognitive presence in asynchronous online discussions,” Internet and Higher Education, vol. 27, no. 3, pp. 74–89, 2015. [Google Scholar]

8. Y. Park, J. H. Yu and I. H. Jo, “Clustering blended learning courses by online behavior data: A case study in a Korean higher education institute,” Internet and Higher Education, vol. 29, no. 1, pp. 1–11, 2016.

9. M. C. S. Manzanares, R. M. Sánchez, C. I. García Osorio and J. F. Díez-Pastor, “How do B-learning and learning patterns influence learning outcomes?,” Frontiers in Psychology, vol. 8, pp. 542, 2017.

10. V. A. Nguyen, “The impact of online learning activities on student learning outcome in blended learning course,” Journal of Information & Knowledge Management, vol. 16, no. 04, pp. 1750040, 2017.

11. W. Greller and H. Drachsler, “Translating learning into numbers: A generic framework for learning analytics,” Journal of Educational Technology & Society, vol. 15, no. 3, pp. 42–57, 2012.

12. R. A. Ellis, F. Han and A. Pardo, “Improving learning analytics - Combining observational and self-report data on student learning,” Educational Technology and Society, vol. 20, no. 3, pp. 158–169, 2017.

13. Y. J. Joo, N. Kim and N. H. Kim, “Factors predicting online university students’ use of a mobile learning management system (m-LMS),” Educational Technology Research and Development, vol. 64, no. 4, pp. 611–630, 2016.

14. R. Asif, A. Merceron, S. A. Ali and N. G. Haider, “Analyzing undergraduate students’ performance using educational data mining,” Computers & Education, vol. 113, no. 1, pp. 177–194, 2017.

15. R. Abzug, “Predicting success in the undergraduate hybrid business ethics class: Conscientiousness directly measured,” Journal of Applied Research in Higher Education, vol. 7, no. 2, pp. 400–411, 2015.

16. W. Xing and D. Du, “Dropout prediction in MOOCs: Using deep learning for personalized intervention,” Journal of Educational Computing Research, vol. 57, no. 3, pp. 547–570, 2019.

17. E. B. Costa, B. Fonseca, M. A. Santana, F. F. de Araújo and J. Rego, “Evaluating the effectiveness of educational data mining techniques for early prediction of students’ academic failure in introductory programming courses,” Computers in Human Behavior, vol. 73, pp. 247–256, 2017.

18. T. Bayrak and B. Akcam, “Understanding student perceptions of a web-based blended learning environment,” Journal of Applied Research in Higher Education, vol. 9, no. 4, pp. 577–597, 2017.

19. D. Gašević, S. Dawson, T. Rogers and D. Gasevic, “Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success,” Internet and Higher Education, vol. 28, no. 1, pp. 68–84, 2016.

20. E. Seidel and S. Kutieleh, “Using predictive analytics to target and improve first year student attrition,” Australian Journal of Education, vol. 61, no. 2, pp. 200–218, 2017.

21. N. Holmes, “Engaging with assessment: Increasing student engagement through continuous assessment,” Active Learning in Higher Education, vol. 19, no. 1, pp. 23–34, 2018.

22. D. West, Z. Tasir, A. Luzeckyj, K. S. Na, D. Toohey et al., “Learning analytics experience among academics in Australia and Malaysia: A comparison,” Australasian Journal of Educational Technology, vol. 34, no. 3, pp. 122–139, 2018.

23. T. M. Taib, K. M. Chuah and N. Abd Aziz, “Understanding pedagogical approaches of UNIMAS MOOCs in encouraging globalized learning community,” International Journal of Business and Society, vol. 18, no. S4, pp. 838–844, 2017.

24. A. AlJarrah, M. K. Thomas and M. Shehab, “Investigating temporal access in a flipped classroom: Procrastination persists,” International Journal of Educational Technology in Higher Education, vol. 15, no. 1, pp. 363, 2018.

25. J. W. You, “Examining the effect of academic procrastination on achievement using LMS data in e-learning,” Journal of Educational Technology & Society, vol. 18, no. 3, pp. 64–74, 2015.

26. S. Joksimović, D. Gašević, T. M. Loughin, V. Kovanović and M. Hatala, “Learning at distance: Effects of interaction traces on academic achievement,” Computers & Education, vol. 87, no. 2, pp. 204–217, 2015.

27. C. S. Cardak and K. Selvi, “Increasing teacher candidates’ ways of interaction and levels of learning through action research in a blended course,” Computers in Human Behavior, vol. 61, no. 2, pp. 488–506, 2016.

28. N. Pellas, “The influence of computer self-efficacy, metacognitive self-regulation and self-esteem on student engagement in online learning programs: Evidence from the virtual world of Second Life,” Computers in Human Behavior, vol. 35, no. 1, pp. 157–170, 2014.

29. N. Li, V. Marsh and B. Rienties, “Modelling and managing learner satisfaction: Use of learner feedback to enhance blended and online learning experience,” Decision Sciences Journal of Innovative Education, vol. 14, no. 2, pp. 216–242, 2016.

30. Q. Nguyen, B. Rienties, L. Toetenel, R. Ferguson and D. Whitelock, “Examining the designs of computer-based assessment and its impact on student engagement, satisfaction, and pass rates,” Computers in Human Behavior, vol. 76, pp. 703–714, 2017.

31. A. Chawdhry, K. Paullet and D. Benjamin, “Assessing blackboard: Improving online instructional delivery,” Information Systems Education Journal, vol. 9, no. 4, pp. 20, 2011.

32. A. Heirdsfield, S. Walker, M. Tambyah and D. Beutel, “Blackboard as an online learning environment: What do teacher education students and staff think?,” Australian Journal of Teacher Education, vol. 36, no. 7, pp. 1, 2011.

33. J. Squillante, L. Wise and T. Hartey, “Analyzing blackboard: Using a learning management system from the student perspective,” Mathematics and Computer Science Capstones, vol. 20, pp. 1–50, 2014.

34. B. Chen, Y. H. Chang, F. Ouyang and W. Zhou, “Fostering student engagement in online discussion through social learning analytics,” Internet and Higher Education, vol. 37, no. 1, pp. 21–30, 2018.

35. X. Zhang, Y. Meng, P. Ordóñez de Pablos and Y. Sun, “Learning analytics in collaborative learning supported by Slack: From the perspective of engagement,” Computers in Human Behavior, vol. 92, pp. 625–633, 2019.

36. N. Zanjani, S. L. Edwards, S. Nykvist and S. Geva, “The important elements of LMS design that affect user engagement with e-learning tools within LMSs in the higher education sector,” Australasian Journal of Educational Technology, vol. 33, no. 1, pp. 19–31, 2017.

37. J. Jovanović, D. Gašević, S. Dawson, A. Pardo and N. Mirriahi, “Learning analytics to unveil learning strategies in a flipped classroom,” Internet and Higher Education, vol. 33, no. 1, pp. 74–85, 2017.

38. N. Mirriahi, J. Jovanović, S. Dawson, D. Gašević and A. Pardo, “Identifying engagement patterns with video annotation activities: A case study in professional development,” Australasian Journal of Educational Technology, vol. 34, no. 1, pp. 57–72, 2018.

39. L. Y. Li and C. C. Tsai, “Accessing online learning material: Quantitative behavior patterns and their effects on motivation and learning performance,” Computers & Education, vol. 114, no. 3, pp. 286–297, 2017.

40. Á. Fidalgo-Blanco, M. L. Sein-Echaluce, F. J. García-Peñalvo and M. Á. Conde, “Using learning analytics to improve teamwork assessment,” Computers in Human Behavior, vol. 47, no. 2, pp. 149–156, 2015.

41. R. Cerezo, M. Sánchez-Santillán, M. P. Paule-Ruiz and J. C. Núñez, “Students’ LMS interaction patterns and their relationship with achievement: A case study in higher education,” Computers & Education, vol. 96, no. 4, pp. 42–54, 2016.

42. C. Schumacher and D. Ifenthaler, “Features students really expect from learning analytics,” Computers in Human Behavior, vol. 78, no. 1, pp. 397–407, 2018.

43. J. Maldonado-Mahauad, M. Pérez-Sanagustín, R. F. Kizilcec, N. Morales and J. Munoz-Gama, “Mining theory-based patterns from Big data: Identifying self-regulated learning strategies in Massive Open Online Courses,” Computers in Human Behavior, vol. 80, no. 3, pp. 179–196, 2018.

44. M. Charalampidi and M. Hammond, “How do we know what is happening online?: A mixed methods approach to analysing online activity,” Interactive Technology and Smart Education, vol. 13, no. 4, pp. 274–288, 2016.

45. Y. Y. Song and Y. Lu, “Decision tree methods: Applications for classification and prediction,” Shanghai Archives of Psychiatry, vol. 27, no. 2, pp. 130–135, 2015.

46. A. Pardo, D. Gašević, J. Jovanović, S. Dawson and N. Mirriahi, “Exploring student interactions with preparation activities in a flipped classroom experience,” IEEE Transactions on Learning Technologies, vol. 12, no. 3, pp. 333–346, 2019.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |