DOI:10.32604/csse.2022.023256

| Computer Systems Science & Engineering DOI:10.32604/csse.2022.023256 |  |

| Article |

Performance Analysis of Machine Learning Algorithms for Classifying Hand Motion-Based EEG Brain Signals

1Department of Computer Science, College of Applied Studies, King Saud University, Riyadh, 11495, Saudi Arabia

2Department of Computer Science and Engineering, Manipal University Jaipur, Jaipur, 303007, India

3Department of Computer Science, CHRIST (Deemed to be University), Bangalore, 560029, India

4Department of Computer Science and Engineering, CHRIST (Deemed to be University), Bangalore, 560074, India

5Information Systems Department, Imam Mohammad Ibn Saud Islamic University (IMSIU), Riyadh, 11432, Saudi Arabia

*Corresponding Author: Abdul Khader Jilani Saudagar. Email: aksaudagar@imamu.edu.sa

Received: 01 September 2021; Accepted: 12 October 2021

Abstract: Brain-computer interfaces (BCIs) record brain activity using electroencephalogram (EEG) headsets in the form of EEG signals; these signals can be recorded, processed and classified into different hand movements, which can be used to control other IoT devices. Classifying hand movements brings these algorithms one step closer to real-life use with EEG headsets. This paper uses different feature extraction techniques and sophisticated machine learning algorithms to classify hand movements from EEG brain signals in order to control prosthetic hands for amputees. Denoising and feature extraction of EEG signals are significant steps towards good classification accuracy. We saw a considerable increase in the performance of all the machine learning models when the moving average filter was applied to the raw EEG data. Feature extraction techniques like the fast Fourier transform (FFT) and continuous wavelet transform (CWT) were used in this study; three types of features were extracted, i.e., FFT features, CWT coefficients and CWT scalogram images. We trained and compared different machine learning (ML) models like logistic regression, random forest, k-nearest neighbors (KNN), light gradient boosting machine (GBM) and XG Boost on FFT and CWT features and deep learning (DL) models like VGG-16, DenseNet201 and ResNet50 on CWT scalogram images. XG Boost with FFT features gave the maximum accuracy of 88%.

Keywords: Machine learning; brain signal; hand motion recognition; brain-computer interface; convolutional neural networks

Amyotrophic lateral sclerosis (ALS) is a progressive disease of the nervous system that attacks nerve cells in the brain and disturbs muscle movement control. Its symptoms get worse over time. Currently, medical science has no effective treatment for this disease. Thus, it is highly desirable to detect it at an early stage. BCI enables patients with ALS and other neurological disorders to control prosthetic hands, wheelchairs, etc. [1]. Brain-controlled wheelchairs can improve the quality of life of an individual suffering from ALS. BCI has numerous other applications like controlling mouse cursors using imagined hand movements [2]. That study used only a single-channel EEG signal to control a mouse pointer: eye blinks switch between cursor movements such as linear displacement and spinning, and the attention level modulates the cursor’s speed. BCI can also be used to classify inner speech; Kumar and Scheme [3] proposed a deep spatio-temporal learning architecture with 1D convolutional neural networks (CNNs) and long short-term memory (LSTM) for the classification of imagined speech. There are two types of techniques to measure brain signals: invasive and non-invasive procedures. In the first, electrodes are placed within or on the surface of the cortex; in the second, electrodes are placed on the scalp. EEG is a non-invasive technique that measures brain signals using EEG headsets, which have electrodes placed on the scalp. The most challenging part is to extract brain commands from the brain signals, as these signals have a low signal-to-noise ratio (SNR). Feature extraction methods extract features from the raw brain signals, and machine learning algorithms are then used to classify them.

BCIs measure brain activity using different techniques, analyze it, extract essential features and convert those features into commands that can control output devices like prosthetic hands, wheelchairs, IoT devices, etc. [4]. After the brain signals are read, they are processed and features are extracted using feature extraction methods like FFT and CWT. Using these features, brain commands are extracted using sophisticated ML models [5]. Once the brain commands are obtained, they are directed towards the IoT devices that need to be controlled (in our case, prosthetic hands), and this is how the patient will be able to use BCI. The most challenging part of this project is extracting brain commands from EEG signals, as EEG signals have low SNR. Two types of noise come into the picture: external noise and internal (user-induced) noise [6]. This noise can be reduced using signal processing and feature extraction techniques [7].

This study used a publicly available EEG dataset with events like hand motions and compared different ML models like logistic regression, random forest, KNN, light GBM and XG Boost on features from the FFT and CWT feature extraction methods. In addition, some deep learning (DL) approaches like VGG-16, DenseNet201 and ResNet50 are also used here. This study used different metrics like precision, recall, F1-score, support and accuracy to compare these ML and DL models. To advance this research, we decided to use publicly available data from Kaggle, which contain events like hand movements. This dataset was chosen with the intention that the methods and machine learning algorithms used here can later be applied to a wheelchair control dataset. The dataset used in this study was already epoched and pre-processed; we applied a moving average filter as a processing technique and feature extraction methods like FFT and CWT. The main aim of this paper is to create brain-controlled interfaces for patients who have ALS. With the help of EEG headsets, patients will be able to control IoT devices, wheelchairs, etc.

Alam et al. [8], in 2021, used the power spectral density (PSD) feature extraction technique on the Graz BCI competition IV dataset 2b and observed a significant increase in classification performance. The classification was done between two classes of motor imagery: left-hand and right-hand movement. A linear discriminant analysis (LDA) classifier gave a Cohen’s kappa of 0.61 [8].

İşcan and Nikulin [9], in 2018, evaluated an SSVEP-based BCI under different perturbations, such as use in parallel with conversation; for some subjects the perturbations resulted in even better performance. For example, the decision tree gave excellent results (>95%) when compared with K-NN and naïve Bayes algorithms [9].

Al-Fahoum et al. [10], in 2014, compared different feature extraction methods like FFT, time-frequency distributions (TFD), wavelet transform (WT), eigenvector methods (EM) and auto-regressive method (ARM) based on performance for a specific EEG task [10].

Narayan [11], in 2021, applied different machine learning algorithms like support vector machine (SVM), LDA and multi-layer perceptron (MLP) classifiers to an EEG dataset acquired from 20 subjects. The data were pre-processed, followed by feature extraction and classification; SVM gave the best classification accuracy of 98.8% [11].

Lazarou et al. [12], in 2018, discussed EEG-based BCI systems for communication and rehabilitation of people with motor impairment, such as the TTD system, the Graz BCI system, web browsers, game applications, cursor movement systems, virtual environments, speller systems like P300 and control of external applications [12].

Chaurasiya et al. [13], in 2015, applied the SVM classification technique to obtain an accurate and quick solution for the detection of target characters linked with the P300 speller system for BCI. This system needs the least pre-processing and gives a considerable transfer rate, fitting online analysis [13].

Zhang et al. [14], in 2020, used a deep attention-based LSTM network to classify hand movements using EEG and deployed LSTM to identify left/right-hand movement [14]. In addition, LaRocco et al. [15] in 2020 detected drowsiness with EEG headsets.

Bilucaglia et al. [16] analyzed previously recorded EEG activity of healthy participants who were provided with emotional stimulation through high and low stimuli (auditory and visual). Their aim was to classify the pre-stimulus brain activity. The paper compared three classifiers, namely KNN, SVM and LDA, using temporal and spectral features. Bilucaglia et al. [16] conclude that temporal dynamic features give better performance in terms of accuracy; SVM with temporal features achieved 63.8% classification accuracy.

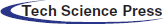

This study uses different feature extraction methods and machine learning models to predict the probability of fist motion from EEG records. The dataset consists of already epoched EEG data for 19 electrodes, which were processed using a moving average for noise removal; feature extraction methods like FFT [17] and CWT [18] were then applied. Two types of data were generated by the CWT feature extraction method, i.e., CWT coefficients as features and CWT signal spectrum (scalogram) images as features. Different machine learning models were trained on these features and compared. The overall workflow for EEG data analysis is shown in Fig. 1.

Figure 1: EEG data analysis process

3.1 Dataset Description and Visualization

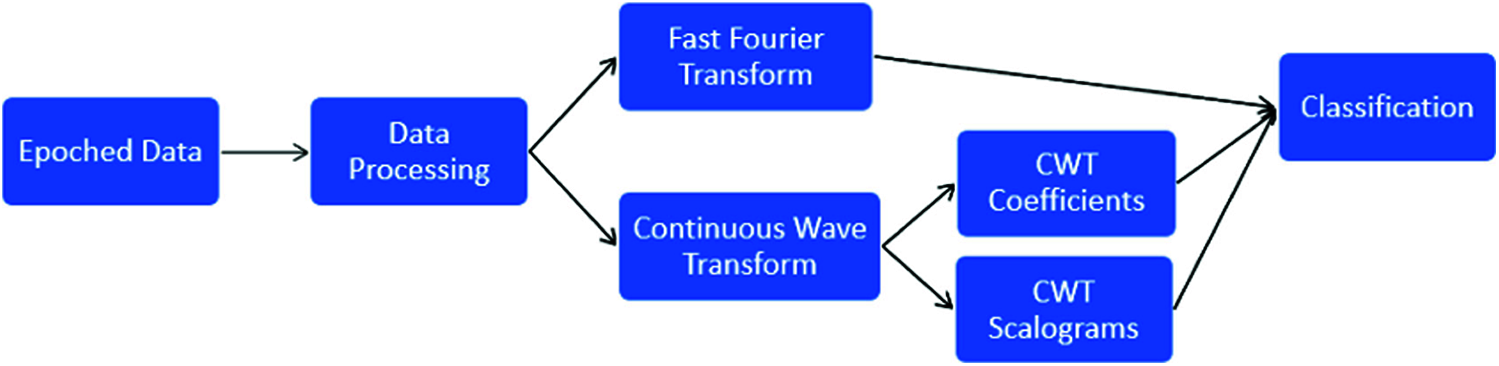

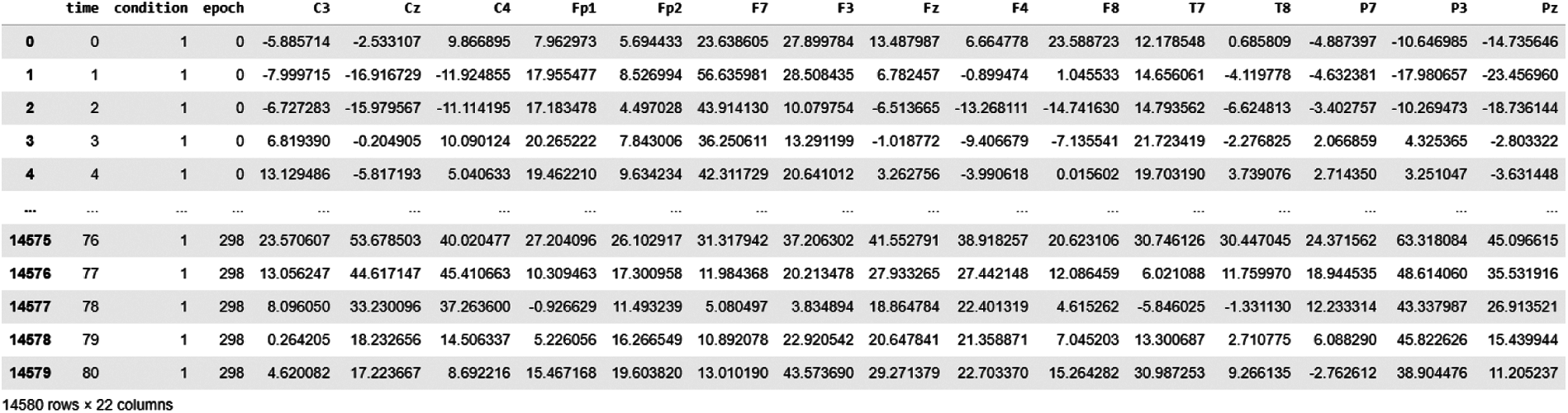

The dataset consists of already epoched EEG data for 19 electrodes, i.e., ‘C3’, ‘Cz’, ‘C4’, ‘Fp1’, ‘Fp2’, ‘F7’, ‘F3’, ‘Fz’, ‘F4’, ‘F8’, ‘T7’, ‘T8’, ‘P7’, ‘P3’, ‘Pz’, ‘P4’, ‘P8’, ‘O1’, ‘O2’. There are 180 epochs with three conditions, i.e., resting state (1) and hand movements (2 and 3). The headset follows the 10–20 system of electrode placement, which describes the location of the scalp electrodes, as shown in Fig. 2.

Figure 2: International 10–20 system for electrode placement [19]

The EEG data used in this research were taken from the BCI EEG data analysis (NEUROML2020 class competition) dataset [20].

A snapshot of the BCI EEG epoched signals is shown in Fig. 3; these recordings contain three events: event-1 (resting state) and event-2 and event-3 (hand motions). EEG headsets generally provide a continuous EEG signal, which is then pre-processed and split into epochs. The dataset used in this study is already epoched, so it has a column named epoch (holding the epoch number) and a corresponding column named condition (1, 2 or 3). Each epoch has 81 time points, as shown in the time column (0, 1, 2, 3, …, 80, 0, 1, 2, 3, …, 80, etc.).

Figure 3: Dataset snapshot of BCI EEG data analysis
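The epoch layout described above can be sketched as a reshape from a long table into a 3-D array. This is only an illustration: the random values stand in for the real recordings, and the assumption that rows are ordered by epoch and then by time (as the time column 0…80, 0…80 suggests) is ours.

```python
import numpy as np

n_epochs, n_times, n_channels = 180, 81, 19

rng = np.random.default_rng(0)
# Hypothetical long-format table: one row per (epoch, time) pair.
epoch_col = np.repeat(np.arange(n_epochs), n_times)  # 0,0,...,0,1,1,...
time_col = np.tile(np.arange(n_times), n_epochs)     # 0,1,...,80,0,1,...
values = rng.standard_normal((n_epochs * n_times, n_channels))

# Assuming rows are sorted by epoch and then time, a plain reshape
# gives an array indexed as epochs[epoch, time, channel].
epochs = values.reshape(n_epochs, n_times, n_channels)
```

With this layout, `epochs[k]` is the 81 x 19 block of readings for epoch `k`, which is a convenient unit for the per-epoch feature extraction steps that follow.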

The data were already pre-processed, though this was not mentioned in the EEG data description. Pre-processing of raw EEG data includes removal of the DC component, which is usually done before epoching. Specifically, we attempted to remove the DC component by calculating the mean and subtracting it from the EEG readings (data − mean); the mean turned out to be negligible, implying that the data had already been pre-processed.
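A minimal sketch of this DC check is given below. The synthetic zero-mean array is a stand-in for the real recordings, and the 5% tolerance used to call a mean "negligible" is an illustrative assumption, not a value from the study.

```python
import numpy as np

def dc_offset_report(eeg):
    """Return the per-channel mean (DC component) and the demeaned signal.

    eeg: array of shape (n_samples, n_channels).
    """
    channel_mean = eeg.mean(axis=0)
    demeaned = eeg - channel_mean
    return channel_mean, demeaned

rng = np.random.default_rng(0)
# Stand-in data: 180 epochs x 81 time points, 19 channels, zero mean.
raw = rng.normal(loc=0.0, scale=1.0, size=(180 * 81, 19))

channel_mean, demeaned = dc_offset_report(raw)

# Assumed criterion: the mean is "negligible" if it is below 5% of the
# channel's standard deviation.
already_centered = bool(np.all(np.abs(channel_mean) < 0.05 * raw.std(axis=0)))
```

If `already_centered` is true for every channel, subtracting the mean changes the data very little, which is the observation the paragraph above relies on.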

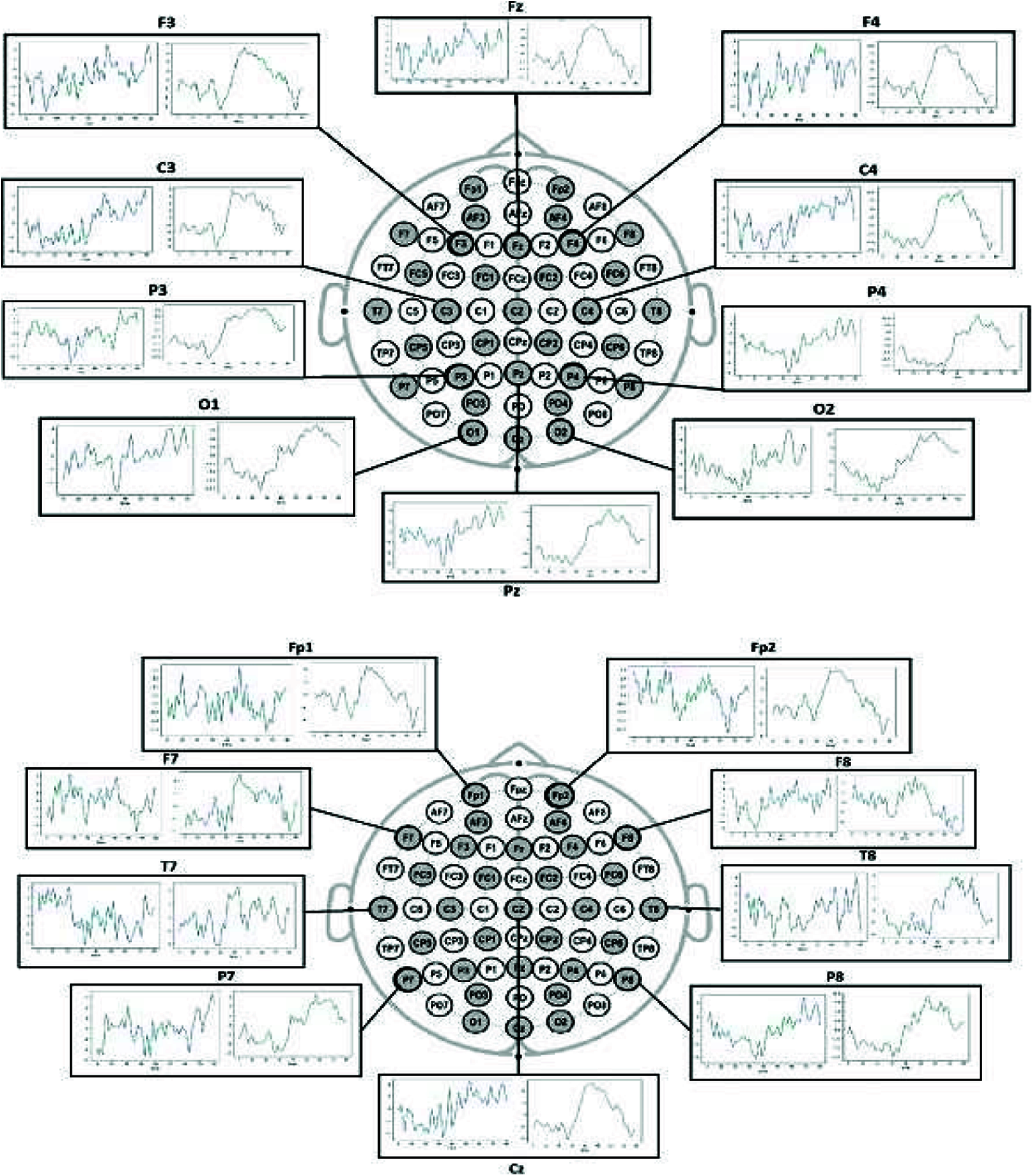

Here, the BCI EEG data were visualized for each electrode to differentiate between the conditions. The mean of all epochs for condition 1 was plotted for all 19 electrodes over the epoch period of 80 s; the epochs for the second and third conditions, representing hand motions, were grouped and plotted in the same way, as shown in Fig. 4. These visualizations distinguish the two conditions, i.e., hand movement and no hand movement (steady-state).

Figure 4: Left visualization is for condition 1 (steady-state) and right visualization is for condition-2 and 3 (hand motions)

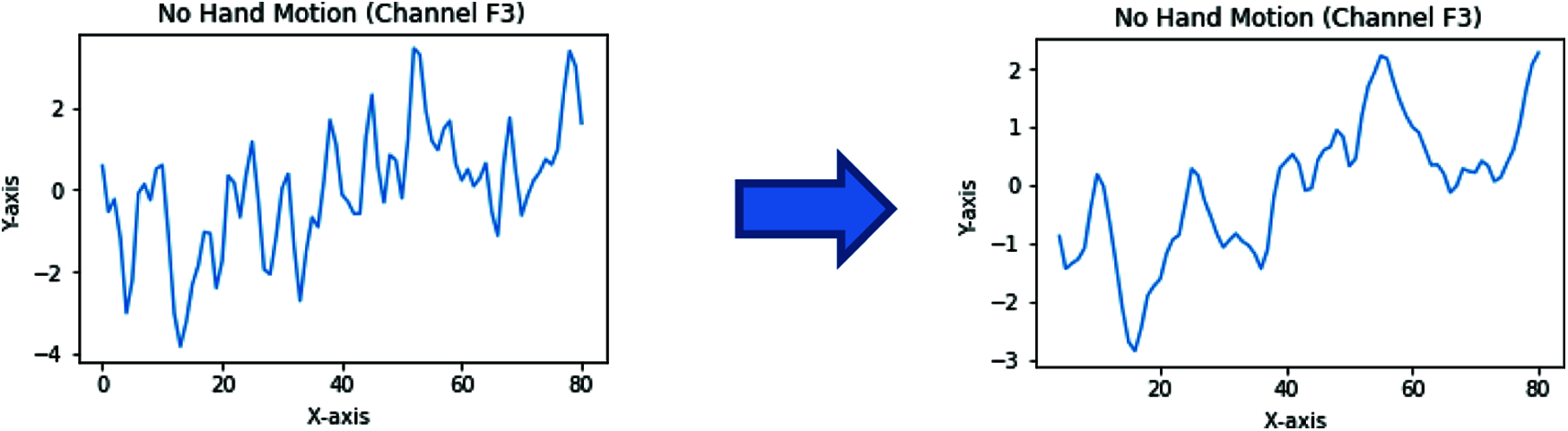

The moving average is one of the most common approaches used to capture significant trends in time series data. It is a type of finite impulse response (FIR) filter that analyzes a set of time-series data points by averaging successive subsets of the data.

EEG brain signals can also be seen as a time series; therefore, moving average is used to remove the EEG signals’ artifacts and noise, as shown in Fig. 5.

Figure 5: Moving average filter applied to an EEG signal
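The moving average filter can be sketched as a convolution with an equal-weight kernel. The window length of 5 samples and the synthetic test signal are assumptions made for illustration; the window size used in the study is not stated.

```python
import numpy as np

def moving_average(signal, window=5):
    """Smooth a 1-D signal with an equal-weight FIR kernel of length `window`."""
    kernel = np.ones(window) / window
    # mode="same" keeps the output the same length as the input;
    # samples near the edges are averaged with implicit zeros.
    return np.convolve(signal, kernel, mode="same")

rng = np.random.default_rng(0)
t = np.arange(81)                        # one epoch of 81 time points
clean = np.sin(2 * np.pi * t / 40.0)     # slow synthetic trend
noisy = clean + 0.5 * rng.standard_normal(t.size)

smoothed = moving_average(noisy, window=5)
```

A longer window removes more noise but also attenuates faster oscillations, so the window length trades noise suppression against loss of higher-frequency EEG content.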

Frequency-domain features were extracted from the raw EEG signals using FFT, a widely used feature extraction method [17].

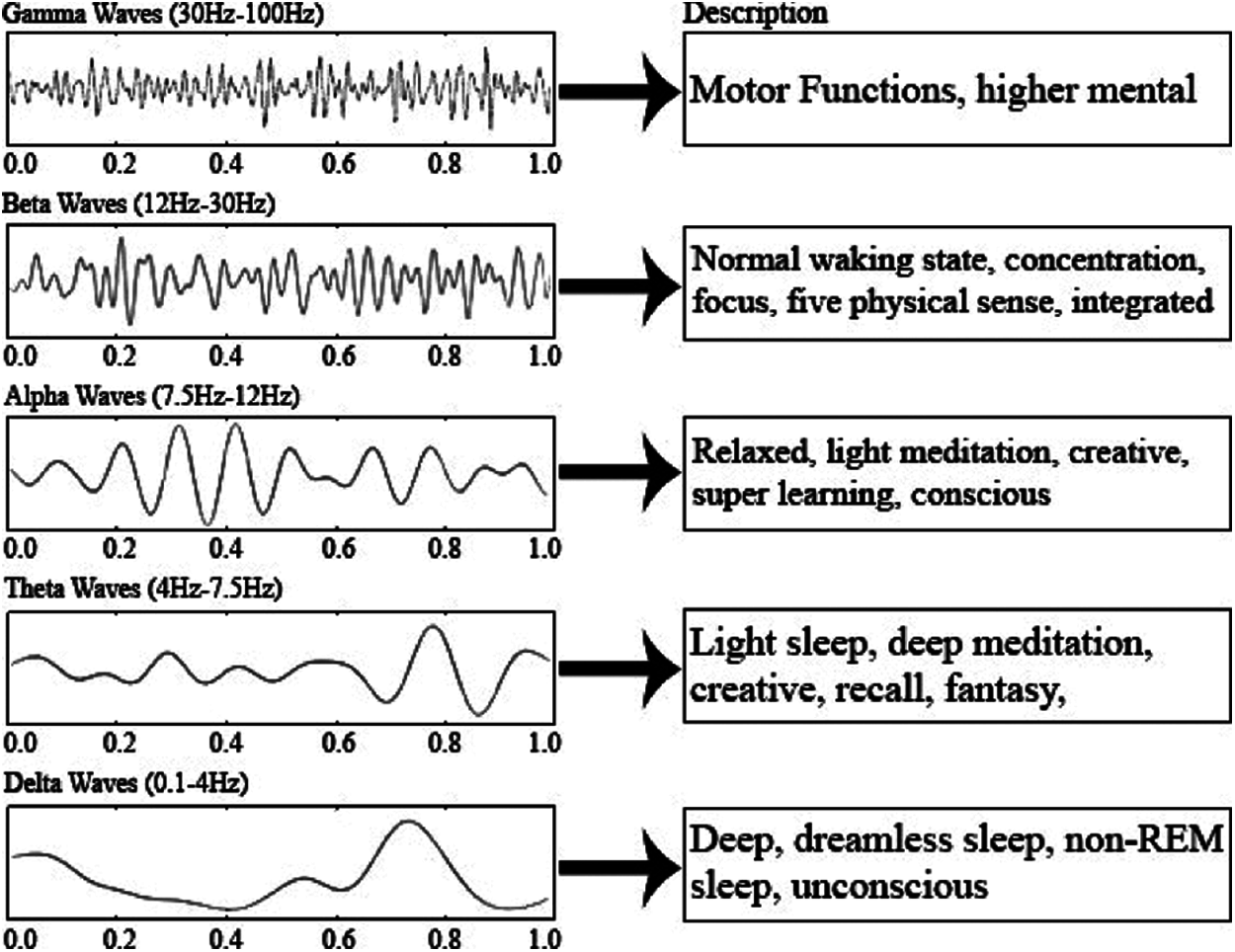

The considered dataset consists of EEG signals in the time domain, and any time-dependent signal can be decomposed into a collection of sinusoids, each representing a single frequency. FFT converts the signal from the time domain to the frequency domain. We can therefore extract all the frequencies (sine waves) of which the signal is composed (e.g., after performing the FFT, the raw EEG signal gave the frequencies 2 Hz, 2.3 Hz, 13 Hz and 20 Hz). There are several types of brain waves, as shown in Fig. 6.

Figure 6: Different brain signals and their range [21]
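As an illustration of how FFT recovers the component frequencies of a signal, the sketch below builds a synthetic signal from three sinusoids and reads the dominant frequencies back from its spectrum. The sampling rate of 128 Hz, the 4 s duration and the amplitudes are assumptions chosen so that each frequency falls exactly on an FFT bin; they are not properties of the dataset.

```python
import numpy as np

fs = 128.0                          # assumed sampling rate in Hz
t = np.arange(0, 4.0, 1.0 / fs)     # 4 s of samples, 0.25 Hz resolution
signal = (1.0 * np.sin(2 * np.pi * 2 * t)
          + 0.5 * np.sin(2 * np.pi * 13 * t)
          + 0.3 * np.sin(2 * np.pi * 20 * t))

# One-sided spectrum of a real signal and the matching frequency axis.
spectrum = np.fft.rfft(signal)
freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
amplitude = 2.0 * np.abs(spectrum) / signal.size

# The three largest peaks correspond to the three sinusoids.
dominant = np.sort(freqs[np.argsort(amplitude)[-3:]])
```

Here `dominant` holds the frequencies 2 Hz, 13 Hz and 20 Hz. A component such as 2.3 Hz that does not fall on a bin would spread over neighbouring bins, which is why real EEG spectra show broad peaks rather than isolated lines.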

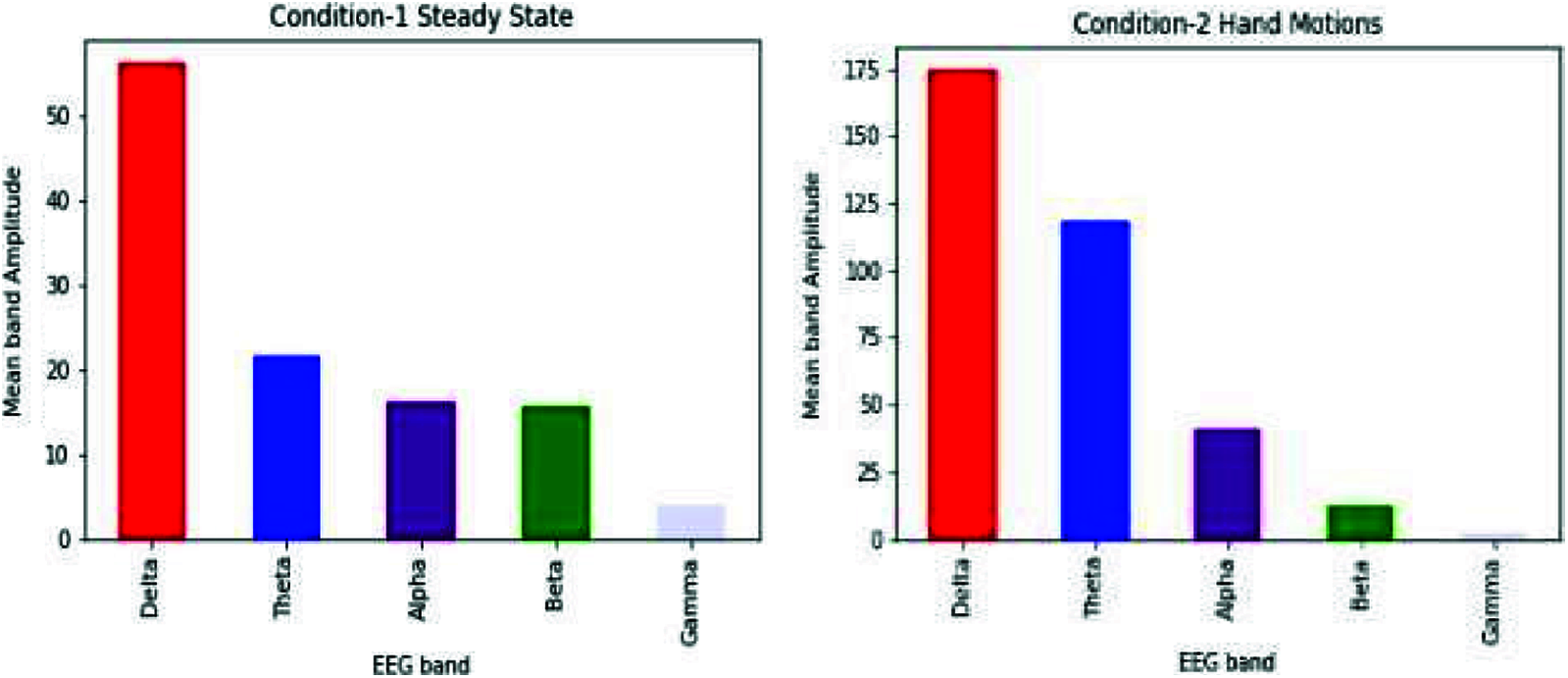

After applying FFT to the raw EEG, we can use the frequencies to determine which waves dominate a specific event. We compared the bands for both conditions (i.e., steady-state and hand motions).

As shown in Fig. 7, the results show an increase in the theta and alpha bands when the subject moved a hand. This suggests that the person goes from unconsciousness to consciousness when performing an action, i.e., hand motions.

Figure 7: Comparison of different bands of both the conditions (steady-state and hand movement) for the Cz electrode

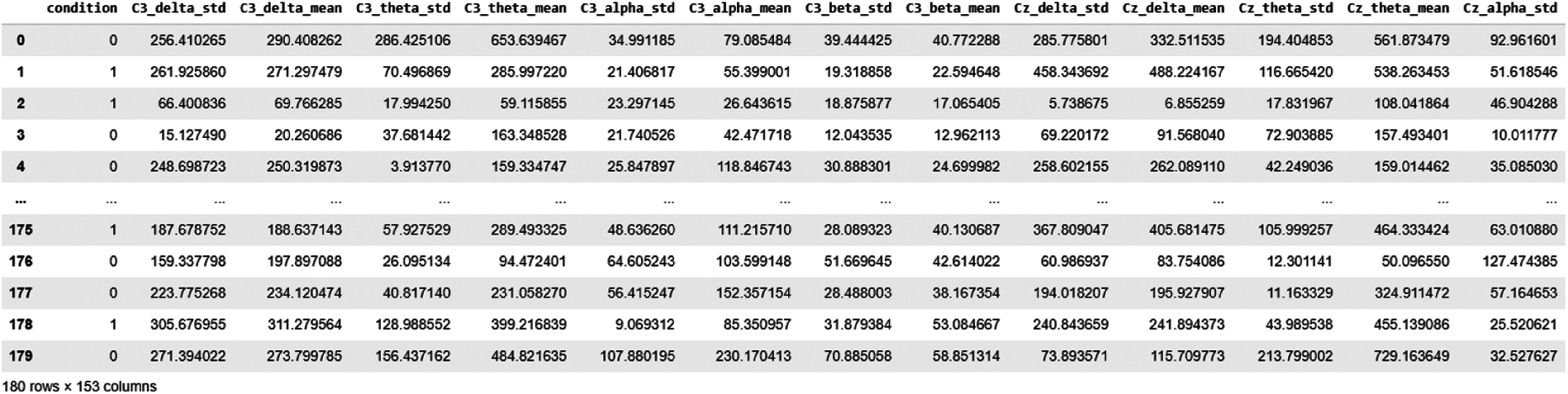

Using the FFT feature extraction method on the 180 epochs, we calculated delta, theta, alpha and beta EEG band values for each channel. For each band and channel, we took two statistics: the expected value (mean) and the standard deviation. This makes a total of 19 × 4 × 2 = 152 features, as shown in Fig. 8.

Figure 8: Data snapshot of FFT features
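The 19 × 4 × 2 feature layout can be sketched as follows. Several details are assumptions made only for illustration: the band edges (commonly quoted ranges), the sampling rate, the use of spectral amplitude within each band as the quantity whose mean and standard deviation are taken, and the random array standing in for the dataset.

```python
import numpy as np

# Commonly quoted band edges in Hz (assumed; the study's exact ranges
# are given only in its Fig. 6).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}

def fft_band_features(epoch, fs):
    """Return mean and std of spectral amplitude per band and channel.

    epoch: array of shape (n_times, n_channels).
    Output: 1-D vector of length n_channels * 4 bands * 2 statistics.
    """
    amplitude = np.abs(np.fft.rfft(epoch, axis=0))
    freqs = np.fft.rfftfreq(epoch.shape[0], d=1.0 / fs)
    features = []
    for low, high in BANDS.values():
        band = amplitude[(freqs >= low) & (freqs < high)]  # (bins, channels)
        features.append(band.mean(axis=0))
        features.append(band.std(axis=0))
    return np.concatenate(features)

rng = np.random.default_rng(0)
fs = 81.0  # assumed sampling rate, giving 1 Hz resolution for 81 samples
epochs = rng.standard_normal((180, 81, 19))  # stand-in for the dataset

X_fft = np.stack([fft_band_features(epoch, fs) for epoch in epochs])
```

`X_fft` has one row per epoch and 152 columns, matching the feature count stated above, and can be passed directly to a classifier together with the condition labels.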

3.4 CWT Coefficient Feature Extraction and Scalogram Images

The CWT feature extraction method uses inner products to measure the pattern match between the Morlet wavelet (ψ) and the EEG signal. CWT compares the EEG signal with shifted and compressed or stretched versions of the Morlet wavelet. For a scale parameter a > 0 and position b, the CWT is given by Eq. (2):

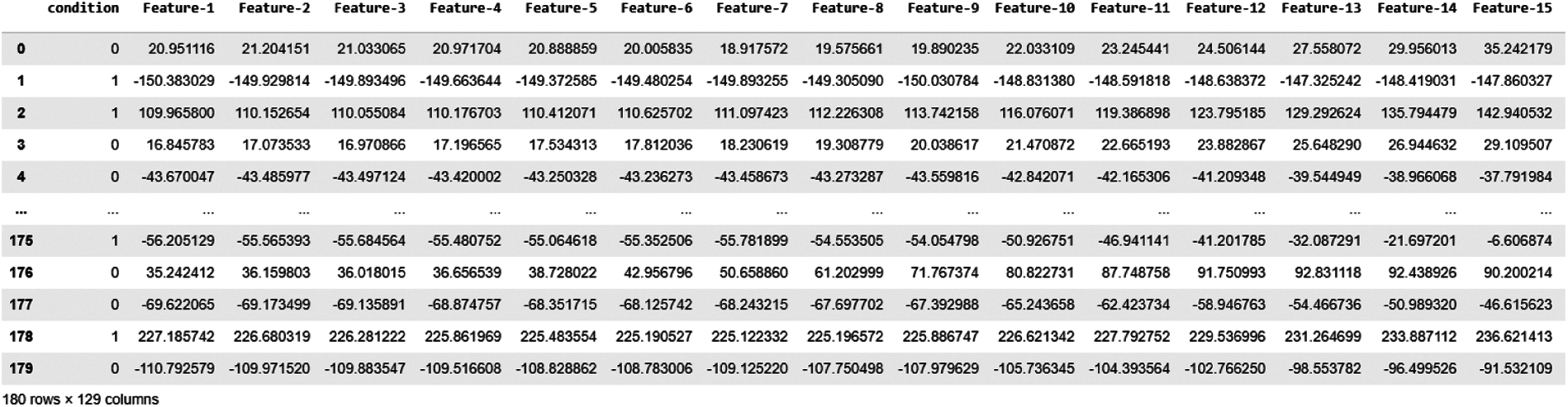
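For reference, the CWT is commonly written in the following standard form, where $x(t)$ denotes the EEG signal and $\psi^{*}$ the complex conjugate of the wavelet. The symbol names are our assumption and may differ from the notation used in Eq. (2).

```latex
C(a, b) = \frac{1}{\sqrt{a}} \int_{-\infty}^{\infty} x(t)\, \psi^{*}\!\left(\frac{t - b}{a}\right) dt,
\qquad a > 0
```

Each coefficient $C(a, b)$ is the inner product of the signal with the wavelet stretched by $a$ and shifted to position $b$; the factor $1/\sqrt{a}$ keeps the wavelet's energy the same at every scale.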

In this study, we trained our machine learning models on these CWT coefficients. We obtained 128 features from the CWT coefficients for each epoch, as shown in Fig. 9.

Figure 9: Data snapshot of CWT features

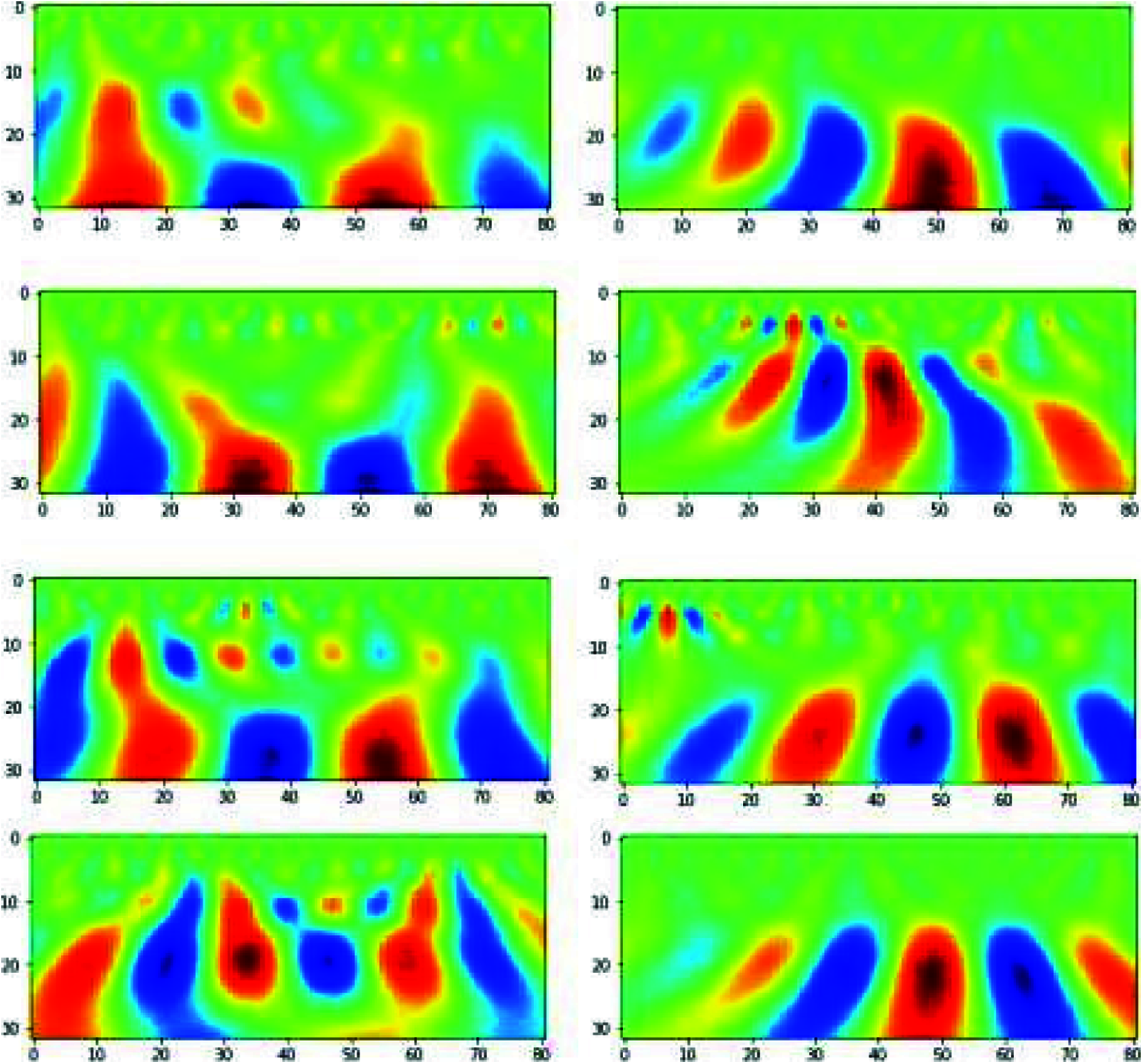

To apply CNN [22], we used scalogram images obtained from CWT coefficients, as shown in Fig. 10. These images are of dimension 32x81, corresponding to 32 CWT features and the 81 time points of an epoch. We extracted an image for each channel of each epoch, which generated a total of 19x180 = 3420 images.

Figure 10: Scalogram on the left side is for steady-state condition and scalogram on the right side is for hand movement conditions
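A scalogram of this size can be sketched with a small NumPy-only CWT. The real-valued Morlet wavelet with centre frequency parameter `w0 = 5`, the scales 1 to 32 and the random input signal are assumptions made for illustration; the study does not state its wavelet parameters or scale range.

```python
import numpy as np

def morlet(t, w0=5.0):
    """Real-valued Morlet wavelet: a cosine carrier under a Gaussian envelope."""
    return np.exp(-0.5 * t ** 2) * np.cos(w0 * t)

def cwt_morlet(signal, scales, w0=5.0):
    """Return CWT coefficients of shape (len(scales), len(signal))."""
    signal = np.asarray(signal, dtype=float)
    n = signal.size
    coeffs = np.empty((len(scales), n))
    for i, a in enumerate(scales):
        # Sample the scaled wavelet on +/- 4 scale units around its centre.
        half = int(np.ceil(4 * a))
        tau = np.arange(-half, half + 1)
        wavelet = morlet(tau / a, w0) / np.sqrt(a)
        # Inner product of the signal with the wavelet at every shift b,
        # computed as a convolution with the time-reversed wavelet.
        full = np.convolve(signal, wavelet[::-1], mode="full")
        coeffs[i] = full[half:half + n]
    return coeffs

scales = np.arange(1, 33)               # 32 scales (assumed range)
rng = np.random.default_rng(0)
epoch_channel = rng.standard_normal(81)  # stand-in for one channel of one epoch

scalogram = np.abs(cwt_morlet(epoch_channel, scales))  # shape (32, 81)
n_images = 19 * 180                      # one image per channel per epoch
```

The rows of `scalogram` correspond to scales and the columns to time points, so rendering it as an image gives the 32x81 picture described above; repeating this for every channel of every epoch yields 3420 images.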

This study applied five different classification models to FFT features and CWT features: logistic regression [23], random forest [24], KNN [25], light GBM [26] and XG Boost [27].

Light GBM, trained on FFT features and CWT features, has the following parameters: objective = binary, tree learner = data, number of leaves = 99, learning rate = 0.1, bagging fraction = 0.8, bagging freq = 1, feature fraction = 0.8, boosting type = gbdt and metric = binary logloss.

The random forest model trained on FFT features has criterion = entropy, min samples leaf = 5, min samples split = 2 and number of estimators = 700; for CWT features, it has criterion = gini, min samples leaf = 5, min samples split = 2 and number of estimators = 400.

XG Boost, which is trained on FFT features, has an objective of binary logistic with the number of estimators = 10 and the model trained on CWT features has the same objective but with the number of estimators = 20.

The KNN model has the following parameters for both FFT features and CWT features: number of neighbors = 2, leaf_size = 30, metric=‘minkowski’, p = 2 and weights=‘uniform’.
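The hyperparameters listed above can be collected as plain dictionaries. The key names follow the parameter names used by the LightGBM, scikit-learn and XGBoost Python packages; that these packages were used is our assumption, since the text cites the methods but does not name the implementations.

```python
# LightGBM settings, identical for FFT and CWT features.
lightgbm_params = {
    "objective": "binary",
    "tree_learner": "data",
    "num_leaves": 99,
    "learning_rate": 0.1,
    "bagging_fraction": 0.8,
    "bagging_freq": 1,
    "feature_fraction": 0.8,
    "boosting_type": "gbdt",
    "metric": "binary_logloss",
}

# Random forest settings differ between the two feature sets.
random_forest_params = {
    "fft": {"criterion": "entropy", "min_samples_leaf": 5,
            "min_samples_split": 2, "n_estimators": 700},
    "cwt": {"criterion": "gini", "min_samples_leaf": 5,
            "min_samples_split": 2, "n_estimators": 400},
}

# XG Boost: same objective, different number of estimators.
xgboost_params = {
    "fft": {"objective": "binary:logistic", "n_estimators": 10},
    "cwt": {"objective": "binary:logistic", "n_estimators": 20},
}

# KNN settings, identical for FFT and CWT features.
knn_params = {
    "n_neighbors": 2,
    "leaf_size": 30,
    "metric": "minkowski",
    "p": 2,
    "weights": "uniform",
}
```

Under that assumption, each dictionary could be unpacked into the corresponding estimator, for example `RandomForestClassifier(**random_forest_params["fft"])` or `KNeighborsClassifier(**knn_params)` in scikit-learn.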

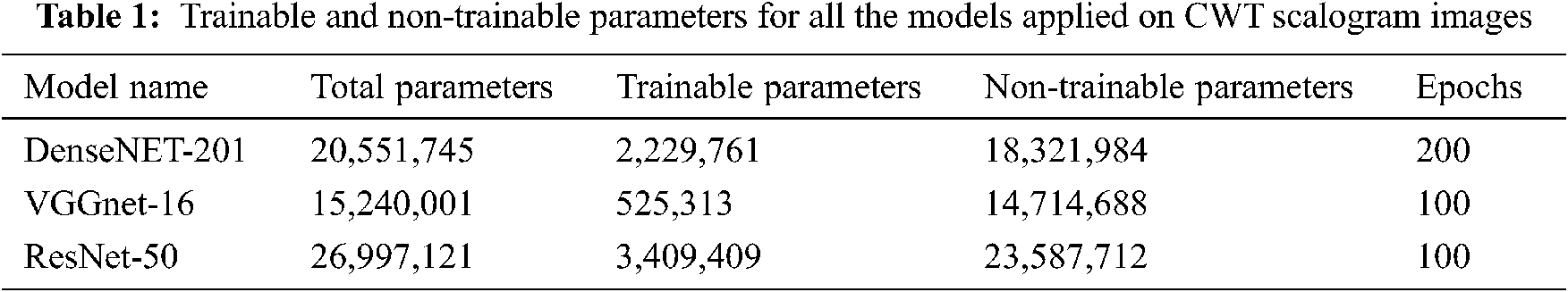

For classifying CWT scalogram images, pre-trained models like VGG-16, DenseNet201 and ResNet50 were used with ImageNet weights. Each model takes a 32x81x3 input, where 32 is the number of CWT features extracted and 81 is the number of time points. The image is passed through the pre-trained model and then through a dense layer of 512 nodes for VGG-16 and ResNet50, or through two dense layers of 512 nodes for DenseNet201; a dropout of 0.5 was applied to avoid overfitting. Tab. 1 lists the parameters of the considered models with the architecture mentioned in Section 2.5.

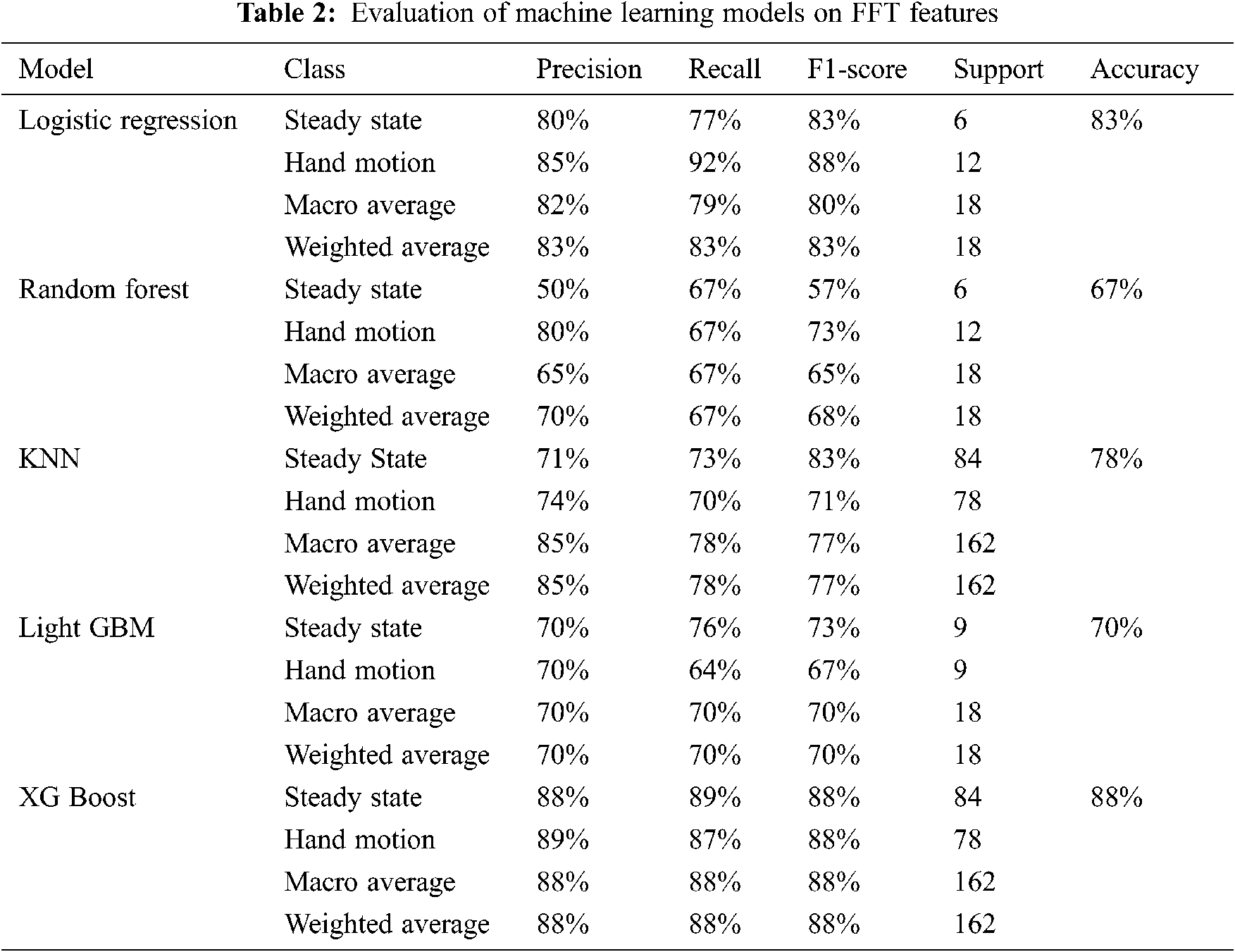

This study’s overall objective is to develop a robust and accurate workflow to predict hand motion and rest state. Our study presented the processing of EEG signals using the moving average method and two feature extraction techniques, i.e., FFT feature extraction and CWT feature extraction. Different machine learning models like random forest, logistic regression, KNN, Light GBM and XG Boost were trained on FFT features and CWT coefficients, and VGG-16, DenseNet201 and ResNet50 were trained on CWT scalogram images. Tab. 2 shows the metrics obtained for these ML models on FFT features.

Tab. 3 shows the accuracy obtained for different machine learning models on CWT.

Tab. 4 shows accuracy obtained for different deep learning models, i.e., VGG-16, DenseNet201 and ResNet50 for CWT scalogram images.
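For readers who want to reproduce the kind of metrics reported in these tables, the sketch below computes precision, recall, F1-score, support and accuracy for a binary problem. The label vectors are made-up examples, not results from the study.

```python
import numpy as np

def binary_metrics(y_true, y_pred, positive=1):
    """Compute precision, recall, F1, support and accuracy for one class."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_pred == positive) & (y_true == positive)))
    fp = int(np.sum((y_pred == positive) & (y_true != positive)))
    fn = int(np.sum((y_pred != positive) & (y_true == positive)))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "support": int(np.sum(y_true == positive)),  # true instances of the class
        "accuracy": float(np.mean(y_true == y_pred)),
    }

# Illustrative labels only: 1 = hand motion, 0 = resting state.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
report = binary_metrics(y_true, y_pred)
```

For these example labels, precision, recall and F1 are all 0.8, the support of the positive class is 5 and the accuracy is 0.75.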

In this study, different feature extraction methods and ML models were used to predict the probability of fist motion from EEG records. Feature extraction methods like FFT and CWT were applied to the dataset, and different machine learning models were trained on these features and compared. XG Boost and logistic regression performed well on FFT features, achieving 88% and 83% accuracy respectively, while XG Boost and KNN performed comparably on CWT features with 74.85% and 73.34%. For CWT scalogram images, ResNet50 performed better than VGG-16, giving an accuracy of 85%. This study shows that XG Boost trained on FFT features, with the moving average filter as the signal processing technique, gave the highest accuracy for the dataset, about 88%. To create a BCI for ALS patients, a large EEG dataset is needed; such a dataset can also be created in-house using EEG headsets. The work can be further extended towards brain-controlled wheelchairs for patients who have ALS or towards other BCI applications. Once brain commands have been detected, they can be directed towards IoT devices such as prosthetic hands, and this is how the patient will be able to use BCI.

Acknowledgement: The authors extend their appreciation to the Deanship of Scientific Research at King Saud University for funding this research.

Funding Statement: This work was supported by the Deanship of Scientific Research, King Saud University, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. T. J. La Vaque, “The history of EEG Hans Berger: Psychophysiologist. A historical vignette,” Journal of Neurotherapy, vol. 3, no. 2, pp. 1–9, 1999.

2. A. J. Molina-Cantero, J. A. Castro-García, F. Gómez-Bravo, R. López-Ahumada, R. Jiménez-Naharro et al., “Controlling a mouse pointer with a single-channel EEG sensor,” Sensors, vol. 21, no. 16, pp. 1–31, 2021.

3. P. Kumar and E. Scheme, “A deep spatio-temporal model for EEG-based imagined speech recognition,” in 2021 IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP), Toronto, Canada, pp. 995–999, 2021.

4. Electroencephalography-Wikipedia. Retrieved 20 July 2021, from https://en.wikipedia.org/wiki/Electroencephalography.

5. A. Lécuyer, F. Lotte, R. B. Reilly, R. Leeb, M. Hirose et al., “Brain-computer interfaces, virtual reality and videogames,” Computer, vol. 41, no. 10, pp. 66–72, 2008.

6. G. Repovš, “Dealing with noise in EEG recording and data analysis spoprijemanje s šumom pri zajemanju in analizi EEG signala,” Informatica Medica Slovenica, vol. 15, no. 1, pp. 18–25, 2010.

7. F. H. Helgesen, D. Nedregård and R. M. Hjørungdal, “Man/machine interaction through EEG,” Bachelor’s Thesis, 2015.

8. M. N. Alam, M. I. Ibrahimy and S. M. A. Motakabber, “Feature extraction of EEG signal by power spectral density for motor imagery based BCI,” in 2021 8th Int. Conf. on Computer and Communication Engineering (ICCCE), Kuala Lumpur, Malaysia, pp. 234–237, 2021.

9. Z. İşcan and V. V. Nikulin, “Steady state visual evoked potential (SSVEP) based brain-computer interface (BCI) performance under different perturbations,” PloS One, vol. 13, no. 1, pp. e0191673, 2018.

10. A. S. Al-Fahoum and A. A. Al-Fraihat, “Methods of EEG signal features extraction using linear analysis in frequency and time-frequency domains,” International Scholarly Research Notices, vol. 2014, pp. 1–7, 2014.

11. Y. Narayan, “Motor-imagery EEG signals classification using SVM, MLP and LDA classifiers,” Turkish Journal of Computer and Mathematics Education (TURCOMAT), vol. 12, no. 2, pp. 3339–3344, 2021.

12. I. Lazarou, S. Nikolopoulos, P. C. Petrantonakis, I. Kompatsiaris and M. Tsolaki, “EEG-Based brain–computer interfaces for communication and rehabilitation of people with motor impairment: A novel approach of the 21st century,” Frontiers in Human Neuroscience, vol. 12, no. 14, pp. 1–18, 2018.

13. R. K. Chaurasiya, N. D. Londhe and S. Ghosh, “An efficient p300 speller system for brain-computer interface,” in Proc. 2015 Int. Conf. on Signal Processing, Computing and Control (ISPCC), IEEE, Waknaghat, India, pp. 57–62, 2015.

14. G. Zhang, V. Davoodnia, A. Sepas-Moghaddam, Y. Zhang and A. Etemad, “Classification of hand movements from EEG using a deep attention-based LSTM network,” IEEE Sensors Journal, vol. 20, no. 6, pp. 3113–3122, 2019.

15. J. LaRocco, M. D. Le and D. G. Paeng, “A systemic review of available low-cost EEG headsets used for drowsiness detection,” Frontiers in Neuroinformatics, vol. 14, no. 553352, pp. 1–14, 2020.

16. M. Bilucaglia, G. M. Duma, G. Mento, L. Semenzato and P. E. Tressoldi, “Applying machine learning EEG signal classification to emotion–related brain anticipatory activity,” F1000 Research, vol. 9, no. 173, pp. 1–21, 2020.

17. C. V. Loan, Computational frameworks for the fast Fourier transform, University of Auckland, NZ: Society for Industrial and Applied Mathematics, SIAM Publications, 1992. [Online]. Available: https://epubs.siam.org/doi/book/10.1137/1.9781611970999.

18. J. Sadowsky, “Investigation of signal characteristics using the continuous wavelet transform,” Johns Hopkins APL Technical Digest, vol. 17, no. 3, pp. 258–269, 1996.

19. M. Ali, A. H. Mosa, F. Al Machot and K. Kyamakya, “EEG-Based emotion recognition approach for e healthcare applications,” in Proc. 2016 Eighth Int. Conf. on Ubiquitous and Future Networks (ICUFN), Vienna, Austria, pp. 946–950, 2016.

20. BCI EEG Data Analysis, “NEUROML2020 class competition,” 2020. [Online]. Available: https://www.kaggle.com/c/neuroml2020eeg/data.

21. W. Srimaharaj, S. Chaising, P. Temdee, P. Sittiprapaporn and R. Chaisricharoen, “Identification of L-Theanine acid effectiveness in oolong tea on human brain memorization and meditation,” ECTI Transactions on Computer and Information Technology (ECTI-CIT), vol. 13, no. 2, pp. 129–136, 2019.

22. I. Goodfellow, Y. Bengio and A. Courville, Deep learning. Cambridge, MA, USA: MIT Press, 2016. [Online]. Available: https://mitpress.mit.edu/books/deep-learning.

23. J. Tolles and W. J. Meurer, “Logistic regression: Relating patient characteristics to outcomes,” JAMA, vol. 316, no. 5, pp. 533–534, 2016.

24. T. K. Ho, “Random decision forests,” in Proc. 3rd Int. Conf. on Document Analysis and Recognition, Montreal, Canada, vol. 1, pp. 278–282, 1995.

25. N. S. Altman, “An introduction to kernel and nearest-neighbor nonparametric regression,” The American Statistician, vol. 46, no. 3, pp. 175–185, 1992.

26. G. Ke, Q. Meng, T. Finley, T. Wang, W. Chen et al., “Lightgbm: A highly efficient gradient boosting decision tree,” Advances in Neural Information Processing Systems, vol. 30, pp. 3146–3154, 2017.

27. T. Chen and C. Guestrin, “Xgboost: A scalable tree boosting system,” in Proc. 22nd ACM SIGKDD Int. Conf. on Knowledge Discovery and Data Mining, Montreal, Canada, pp. 785–794, 2016.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |