
| Computer Systems Science & Engineering DOI:10.32604/csse.2022.018520 |  |

| Article |

Early Diagnosis of Alzheimer’s Disease Based on Convolutional Neural Networks*

1College of Technological Innovation, Zayed University, Abu Dhabi Campus, FF2-0-056, UAE

2Computer Science Department, Community College, King Saud University, Riyadh, 11437, Saudi Arabia

3Nathan Campus, Griffith University, Brisbane, Australia

*Corresponding Author: Atif Mehmood. Email: atifed4151@yahoo.com

Received: 11 March 2021; Accepted: 29 September 2021

Abstract: Alzheimer’s disease (AD) is a neurodegenerative disorder and the most common cause of dementia in elderly people. The number of AD patients is increasing rapidly every year, and AD is the sixth leading cause of death in the USA. Magnetic resonance imaging (MRI) is the leading modality used for the diagnosis of AD. Deep learning-based approaches have produced impressive results in this domain. The early diagnosis of AD depends on the efficient use of a classification approach. To address this issue, this study proposes a system that uses two convolutional neural network (CNN)-based approaches for the automatic early diagnosis of AD. The proposed system uses segmented MRI scans. The input data comprise three classes: 110 normal control (NC), 110 mild cognitive impairment (MCI), and 105 AD subjects. The data are acquired from the ADNI database, and gray matter (GM) images obtained by segmenting the MRI scans are used for classification in the proposed models. The proposed approaches discriminate among NC, MCI, and AD. When both methods are tested on the segmented data samples, the highest classification accuracies on NC vs. AD are 95.33% and 89.87%, respectively. The better-performing method distinguishes NC vs. MCI and MCI vs. AD with classification accuracies of 90.74% and 86.69%, respectively. The experimental outcomes show that both CNN-based frameworks produce state-of-the-art accuracy on the test data.

Keywords: Alzheimer’s disease; neural networks; intelligent systems; gray matter

Alzheimer’s disease (AD) is a neurodegenerative illness that gradually destroys memory and leads to difficulties in performing daily tasks such as walking, speaking, and writing [1]. AD patients also have serious problems recognizing people, including family members [2]. AD is considered the most common type of dementia. At an early stage of AD, some biomarkers may become abnormal and cognitive decline occurs [3]. Over the course of the disease, the symptoms change and nerve cells are progressively damaged. Mild cognitive impairment (MCI) is the intermediate stage of AD, in which most people perform daily routine tasks independently, but in some cases they require assistance to manage daily life [4]. In the United States of America, AD is the sixth leading cause of death. According to Centers for Disease Control and Prevention (CDC) statistics, 122,019 people died of AD in 2018. Another study shows that death rates from heart disease, stroke, and prostate cancer have decreased, whereas deaths from AD have increased by 145%. The global cost of AD care is more than 600 billion US dollars [5].

Many recent studies show that the MCI stage lies between normal control (NC) and AD [6]. If more symptoms are observed in that stage, it is called late mild cognitive impairment (LMCI) or progressive MCI; if the symptoms remain stable, it is called stable mild cognitive impairment (sMCI) [7]. Doctors and practitioners are particularly cautious when symptoms worsen and a patient converts from one stage to another [8]. However, it is very difficult for researchers to determine exactly which symptoms mark the transition from one stage to another. Different medical imaging modalities play a key role in helping researchers track this transition. Among these modalities, positron emission tomography (PET) [9], magnetic resonance imaging (MRI), and computed tomography (CT) provide standard-format scans for experimental processing [10]. MRI is one of the most widely used imaging modalities in disease diagnosis and is a safe and powerful tool. Its high sensitivity helps detect disease early. MRI offers different sequences, each suited to different pathologies. MRI images are used more prominently than other modalities for AD classification [11]. In classification, a number of features are extracted from MRI images and used for AD prediction or MCI diagnosis. These features include gray and white matter intensities, cortical thickness, and cerebrospinal fluid (CSF) measures, which are used to predict the disease stage [12].

In AD classification problems, traditional machine learning-based approaches also produce good results. Among machine learning methods, the support vector machine is well known and commonly used in several studies [13]. However, machine learning-based approaches have some limitations, such as requiring highly trained personnel to process the data samples [14]. Furthermore, different modalities, especially MRI and PET images, highlight different regions associated with AD [15]. This study is motivated by the success of deep learning approaches in computer vision and image classification. In recent studies, deep learning, especially convolutional neural networks (CNNs), has achieved competitive results in pattern recognition and classification [16]. Nevertheless, there is still room for further exploration of these techniques, especially for AD classification in both binary and multiclass settings [17].

In this study, we propose two CNN-based models to classify NC, MCI, and AD. In our experiments, all data are segmented with the same procedure. We use three binary classification tasks for the AD stages. The contributions of this work are as follows.

• We propose a new computer-aided diagnosis system for the detection of AD at an early stage.

• This study is based on gray matter images, which are extracted from the MRI scans by segmentation.

In the medical domain, machine learning has played a key role in the data analysis of different diseases. The authors in [18] used a support vector machine (SVM)-based approach to classify AD vs. NC scans. Reference [19] developed a model combining SVM with downsized kernel principal component (DKPC) analysis for AD classification. Reference [20] introduced a machine learning-based method and attained classification performance of 86.10% to 92.10% on dementia and AD classes. Recently, however, deep learning-based approaches have produced promising results in object detection, classification, and segmentation. In particular, CNN-based techniques have been applied successfully in the biomedical domain.

Ieracitano et al. [21] introduced a customized CNN-based model for diagnosing dementia stages and attained an average accuracy of 89.8% on binary classification. These studies show that CNN-based models produce better results than traditional machine learning models. In [22], the researchers combined a sparse autoencoder (AE) and a 3D-CNN to classify NC, MCI, and AD. They used the ADNI dataset and attained classification results of 95.39%, 92.11%, 86.64%, and 89.47%, respectively, on four binary classification tasks. The authors in [23] introduced a multi-CNN approach for AD diagnosis. They extracted 3D patches from whole MRI scans and used a 3D-CNN to learn generic features automatically. In their experiments, they achieved 87.15% AD classification performance.

Reference [24] developed a CNN-based approach for the early diagnosis of AD stages. The researchers in [25] modified VGG-16 and fine-tuned it on the classification data. The authors in [26] developed a domain transfer learning-based model for MCI conversion prediction. They used both target and auxiliary domain data samples from different modalities. In their experiments, they achieved a prediction accuracy of 79.40% through domain transfer learning. Reference [27] developed classification models that predict the MCI stages. These models are based on two CNN architectures. Reference [28] developed a robust deep learning approach using the MRI and PET modalities. They used dropout to improve classification performance. They also used a multi-task learning strategy for the deep learning framework and compared the model’s performance with and without dropout. The experimental results showed a 5.9% improvement due to the dropout technique. The authors in [29] introduced two CNN-based models. They analyzed the performance of volumetric and multi-view CNNs on the classification task. Furthermore, they introduced multi-resolution filtering, which directly affects the classification results.

3.1 Data Acquisition and Pre-Processing Operations

In this study, we obtained the image data from the Alzheimer’s Disease Neuroimaging Initiative (ADNI), which is publicly available (http://adni.loni.usc.edu/data-samples/access-data/). The ADNI project started in 2004 under the supervision of Dr. Michael W. Weiner and is funded as a public-private partnership. It is part of a global research effort that supports the investigation and development of treatments to reduce the risk of AD and slow its progression [30]. Over 1000 scientific publications have used ADNI data in their experiments and produced valuable results that help doctors treat AD at an early stage. Across its four phases, the primary objective of the ADNI study has been to add more participants after investigation and evaluation, which supports further early-stage AD diagnosis. ADNI normally enrolls participants aged 55 to 90. In this study, a total of 325 T1-weighted MRI scans were used in the experiments. These subjects belong to three classes: 110 NC, 110 MCI, and 105 AD. The participants include males and females, with ages ranging from 65 to 90.

This study used a complete pre-processing pipeline to obtain the best-quality images for the experiments. Our proposed models are based on gray matter (GM) images, which are extracted by segmenting the MRI scans, as shown in Fig. 1. We used the SPM12 tool (https://www.fil.ion.ucl.ac.uk/spm/software/spm12/) for pre-processing and segmented each MRI scan into three major tissue classes, namely white matter (WM), GM, and cerebrospinal fluid (CSF). Furthermore, the ICBM space template was used for regularization of all data samples, and Montreal Neurological Institute (MNI) space was used for spatial normalization. Finally, a Gaussian filter was applied to smooth the entire dataset.

Figure 1: Data pre-processing results on whole brain MRI
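The effect of the Gaussian smoothing step can be illustrated with a minimal one-dimensional sketch. This is not the SPM12 implementation, which operates on 3D volumes; the function names, the kernel width (`sigma=1.0`, `radius=2`), and the toy intensity profile are assumptions chosen only for illustration.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_1d(signal, kernel):
    """Convolve a 1D signal with the kernel, replicating the edge values."""
    radius = len(kernel) // 2
    smoothed = []
    for i in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            # Clamp the index so samples beyond the border reuse the edge value.
            idx = min(max(i + k - radius, 0), len(signal) - 1)
            acc += weight * signal[idx]
        smoothed.append(acc)
    return smoothed


# Hypothetical intensity profile with a single isolated spike.
profile = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
kernel = gaussian_kernel(sigma=1.0, radius=2)
smoothed_profile = smooth_1d(profile, kernel)
```

In this toy case the spike is spread over its neighbors while the total intensity is preserved, which is the behavior smoothing is meant to provide before classification.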

3.2 Convolutional Neural Networks

Recently, neural network-based approaches have been widely used in computer vision, video classification, and medical image analysis, where they have produced the best results. A CNN is a type of artificial neural network inspired by the hierarchical model of the visual cortex [31]. A CNN has a multilayered structure that includes convolutional layers, pooling layers, activation functions, and fully connected layers. Depending on the requirements of the classification problem, several intermediate layers extract low-level features, which are then used to build high-level features [32]. This hierarchical structure allows the depth and breadth of the network to be increased. During training, the network receives feedback through the loss function. The key building block of a CNN is the convolutional layer, which is the most important component for extracting features from the input data. During training, it uses learnable filters, each of which extracts features from a small part of the image [33].
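To make the role of a filter concrete, the following minimal sketch slides a single 3 × 3 kernel over a small image with stride 1 and no padding. It is a plain-Python illustration rather than the Keras implementation used in this study; the function name `conv2d_valid`, the toy image, and the kernel values are assumptions chosen for illustration.

```python
def conv2d_valid(image, kernel):
    """Cross-correlate a 2D image with a 2D kernel (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum over the small image patch covered by the kernel.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
toy_image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical filter that responds to left-to-right intensity increases.
edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv2d_valid(toy_image, edge_kernel)
```

Here the kernel values are set by hand; in a trained CNN they are learned from the data through the loss function.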

The activation function is also a crucial component of deep learning models and affects the accuracy and efficiency of CNN-based models. In a neural network, each neuron is connected to the next layer, and each connection carries a weight. The activation function is a mathematical gate between the input feeding the current neuron and its output going to the next layer. This study used the rectified linear unit (ReLU), which replaces negative values with zero and directly affects training performance. Another important component of the CNN is the pooling layer [34]. It applies a non-linear down-sampling function, such as the maximum, to produce reduced feature maps. It also decreases the number of parameters, which helps prevent overfitting during training. The fully connected layers are connected to all the neurons in the previous layer. Finally, a softmax layer produces the classification results for the testing data samples [35].
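The three operations described above can be sketched in a few lines of plain Python. This is an illustrative sketch, not the code used in the experiments; the toy feature map and the two class scores are hypothetical values.

```python
import math


def relu(feature_map):
    """Replace negative activations with zero, keep the rest unchanged."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """2x2 max-pooling with stride 2 (assumes even height and width)."""
    pooled = []
    for i in range(0, len(feature_map), 2):
        row = []
        for j in range(0, len(feature_map[0]), 2):
            row.append(max(
                feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1],
            ))
        pooled.append(row)
    return pooled


def softmax(scores):
    """Turn raw class scores into probabilities that sum to one."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 4x4 feature map produced by a convolutional layer.
toy_map = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, 1.5, 0.0],
    [-0.5, -1.0, -2.0, -4.0],
    [3.0, 1.0, -1.0, 2.5],
]
pooled = max_pool_2x2(relu(toy_map))
probs = softmax([2.0, 0.5])  # hypothetical scores for a two-class task
```

The 4 × 4 map is reduced to 2 × 2 by pooling, and the softmax output assigns the larger probability to the class with the larger score.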

In this work, we propose two CNN-based models for the classification of AD stages. The proposed CNN-1 consists of six convolutional layers and six max-pooling layers. We applied a 3 × 3 kernel in five of the convolutional layers, whereas the second layer used a 5 × 5 kernel. The first convolutional layer applied 64 feature maps to the input images. The second layer used the same number of feature maps, but with the kernel size changed to 5 × 5, to extract low-level features. A pooling operation is applied at each stage after the activation function, which changes negative values to zero. The stride of the max-pooling is 2 × 2. Finally, two fully connected layers with 256 and 128 hidden units are applied in the proposed model. The details of the CNN-1 model are shown in Fig. 2.

Figure 2: The flow chart of the proposed CNN-1 model for AD classification (C = feature maps, Conv = convolution, MP = Max-pooling, FC = Fully Connected)
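As a rough check of how six 2 × 2 max-pooling stages shrink the feature maps, the sketch below traces the spatial size through the CNN-1 layer sequence described above. The input size of 128 × 128, the use of 'same' padding in the convolutions (so that only pooling changes the size), and the placement of one pooling layer after each convolution are assumptions; the text does not state them.

```python
# Kernel sizes of the six CNN-1 convolution layers as described in the text:
# 3x3 everywhere except the second layer, which uses 5x5.
CNN1_KERNELS = [3, 5, 3, 3, 3, 3]
POOL_STRIDE = 2  # 2x2 max-pooling with stride 2


def trace_spatial_size(input_side, kernels, pool_stride):
    """Return the feature-map side length after each conv + pool stage.

    Assumes 'same'-padded convolutions (size unchanged by the kernel)
    and one max-pooling layer after every convolution.
    """
    sizes = []
    side = input_side
    for _kernel in kernels:
        side = side // pool_stride  # pooling halves each spatial dimension
        sizes.append(side)
    return sizes


# Hypothetical input resolution; not reported in the text.
cnn1_sizes = trace_spatial_size(128, CNN1_KERNELS, POOL_STRIDE)
```

Under these assumptions, a 128 × 128 slice is reduced to 2 × 2 before the fully connected layers, and any input smaller than 64 pixels per side would vanish before the last pooling stage.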

The second proposed model is also a CNN, with different parameters, for classifying NC, MCI, and AD. Here, we used five convolutional layers with three max-pooling layers. In CNN-2, the same kernel size was applied in every convolutional layer. The first convolutional layer applied 64 feature maps, and the following layers used 32, 32, 16, and 8 feature maps, respectively. The max-pooling size is again 2 × 2, and the ReLU activation function is applied. Finally, for classification of the three classes, we used three fully connected layers, one with 256 and two with 128 hidden units, followed by the final softmax layer. The details of CNN-2 are shown in Fig. 3. Comparing the two architectures, we found that CNN-1 produced the more promising classification results.

Figure 3: The flow chart of the proposed CNN-2 model for AD classification (C = feature maps, Conv = convolution, MP = Max-pooling, FC = Fully Connected)
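The feature-map counts given for CNN-2 allow a quick estimate of the number of trainable parameters in its convolutional layers. The sketch below is a worked calculation under two assumptions not stated in the text: a 3 × 3 kernel in every layer and a single-channel (grayscale GM) input. The fully connected layers are omitted because their size depends on the unreported input resolution.

```python
CNN2_FEATURE_MAPS = [64, 32, 32, 16, 8]  # feature maps per conv layer (from the text)
KERNEL_SIZE = 3      # assumption: the text only says the kernel size is constant
INPUT_CHANNELS = 1   # assumption: single-channel gray matter slices


def conv_param_counts(in_channels, feature_maps, kernel_size):
    """Trainable parameters (weights + biases) of each convolution layer."""
    counts = []
    channels = in_channels
    for n_maps in feature_maps:
        weights = kernel_size * kernel_size * channels * n_maps
        biases = n_maps
        counts.append(weights + biases)
        channels = n_maps  # output maps become the next layer's input channels
    return counts


cnn2_counts = conv_param_counts(INPUT_CHANNELS, CNN2_FEATURE_MAPS, KERNEL_SIZE)
cnn2_total = sum(cnn2_counts)
```

With these assumptions the five layers hold 640, 18464, 9248, 4624, and 1160 parameters, about 34 thousand in total, so most of the convolutional capacity sits in the second layer.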

Classification of AD from MRI images is an important task that can help doctors diagnose AD at an early stage. The success of a diagnostic system depends on extracting useful features from the input data. MRI segmentation also plays a key role in the diagnosis of AD. In the proposed system, we used GM images to support the early diagnosis of AD. CNNs are capable of learning different features depending on the model design. Two other key factors in a CNN, the kernel size and the number of feature maps, determine how the convolutional layers capture useful features during training. Different CNN-based models therefore achieve different classification success rates. We evaluated the proposed CNN-based models on three binary classification tasks: NC vs. AD, NC vs. MCI, and MCI vs. AD. We split the data into 77% for training and 23% for testing. We used the Keras library to implement the proposed models on a Z800 workstation with Intel Xeon(R) @ 2.40 GHz × 2 and a 128 GB SSD. Finally, we measured the performance of the two proposed approaches in terms of sensitivity, specificity, and accuracy. These measures are defined using the numbers of true negatives (TN), true positives (TP), false negatives (FN), and false positives (FP). The details are given in the equations below.

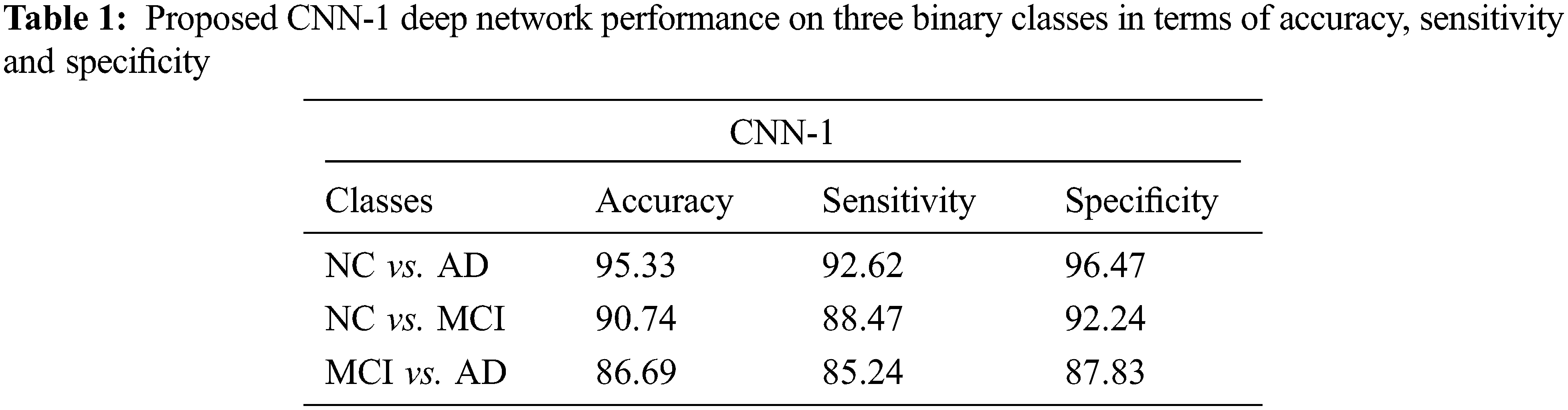
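For reference, the three measures are conventionally defined in terms of TP, TN, FP, and FN as follows. These are the standard definitions and are assumed to match the equations used in this study.

```latex
\begin{align}
\text{Sensitivity} &= \frac{TP}{TP + FN} \\
\text{Specificity} &= \frac{TN}{TN + FP} \\
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}
\end{align}
```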

4.1 Performance Evaluation of CNN-1

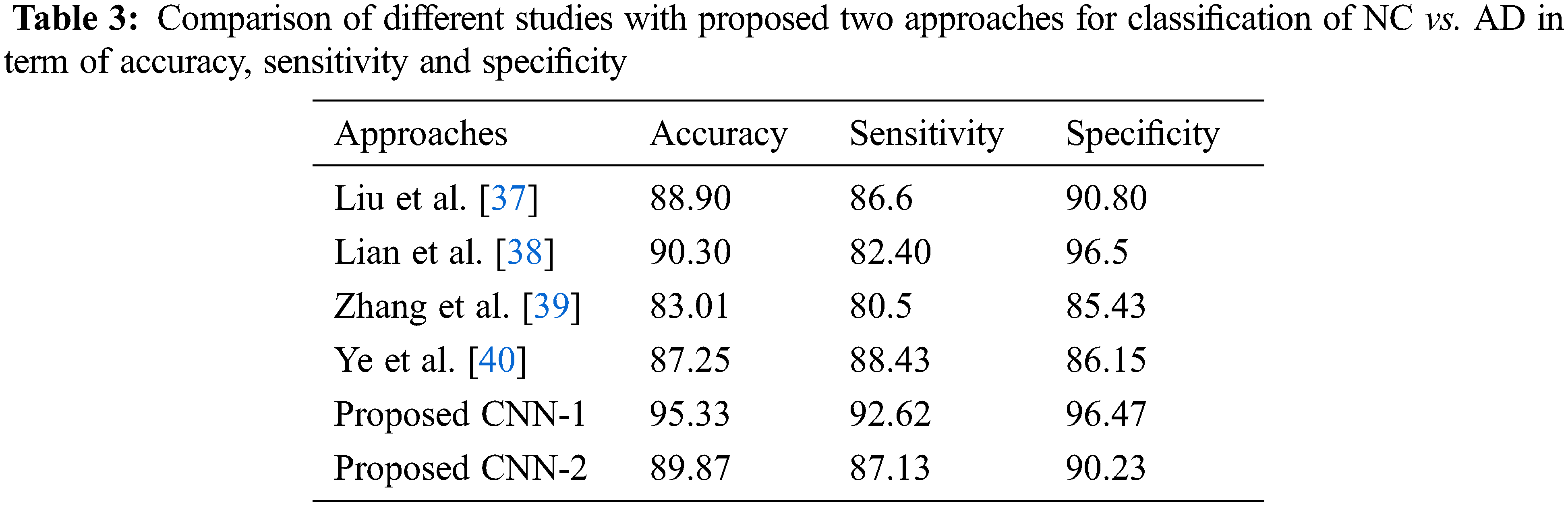

We tested our proposed model on three binary classification scenarios, namely NC vs. AD, NC vs. MCI, and MCI vs. AD, as shown in Tab. 1. This section reports the performance obtained by CNN-1. The model attained an accuracy of 95.33% on NC vs. AD (sensitivity 92.62% and specificity 96.47%). Furthermore, it achieved 90.74% accuracy on NC vs. MCI (sensitivity 88.47% and specificity 92.24%). Finally, the model also produced promising results on MCI vs. AD, with an accuracy of 86.69% (sensitivity 85.24% and specificity 87.83%).
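Values such as those reported here are derived from the confusion-matrix counts of each binary task. The following minimal sketch shows the calculation; the function name and the counts are hypothetical and are not the counts behind the reported results.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return (sensitivity, specificity, accuracy) as fractions in [0, 1]."""
    sensitivity = tp / (tp + fn)   # share of diseased subjects detected
    specificity = tn / (tn + fp)   # share of control subjects correctly cleared
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return sensitivity, specificity, accuracy


# Hypothetical confusion-matrix counts for a binary task such as NC vs. AD;
# these are NOT the counts behind the results reported in this paper.
sens, spec, acc = classification_metrics(tp=45, tn=48, fp=2, fn=5)
```

For these hypothetical counts the sensitivity is 0.90, the specificity is 0.96, and the accuracy is 0.93, which shows how accuracy lies between the two class-wise rates when the classes are balanced.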

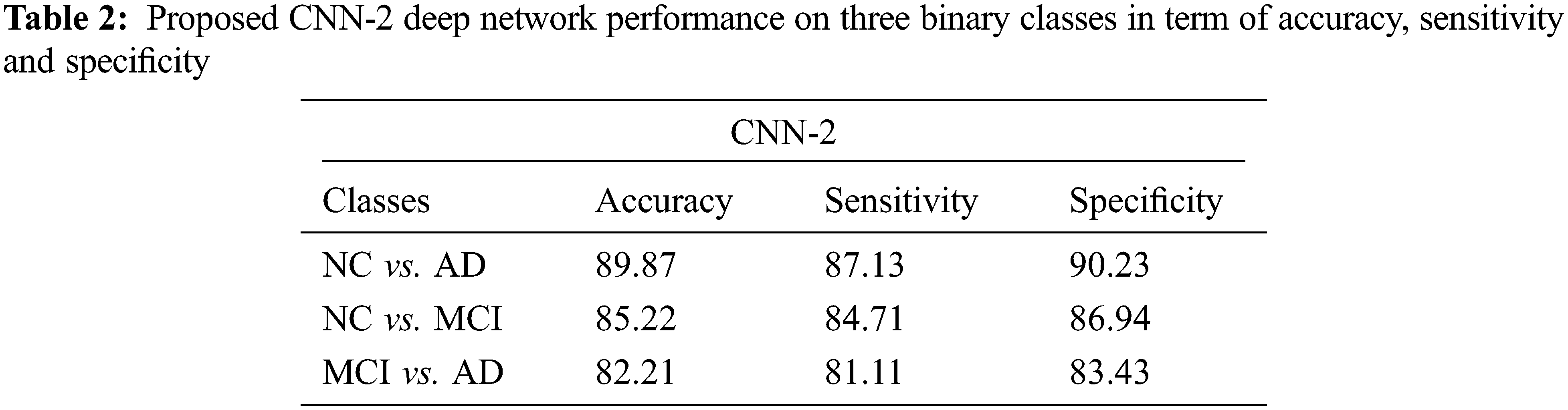

4.2 Performance Evaluation of CNN-2

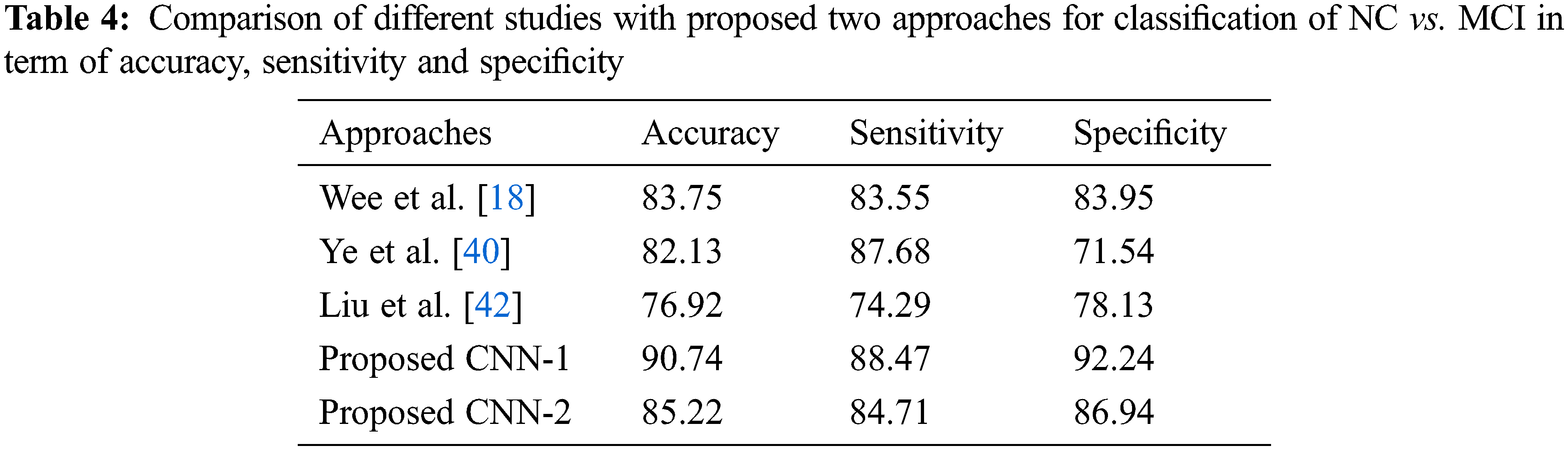

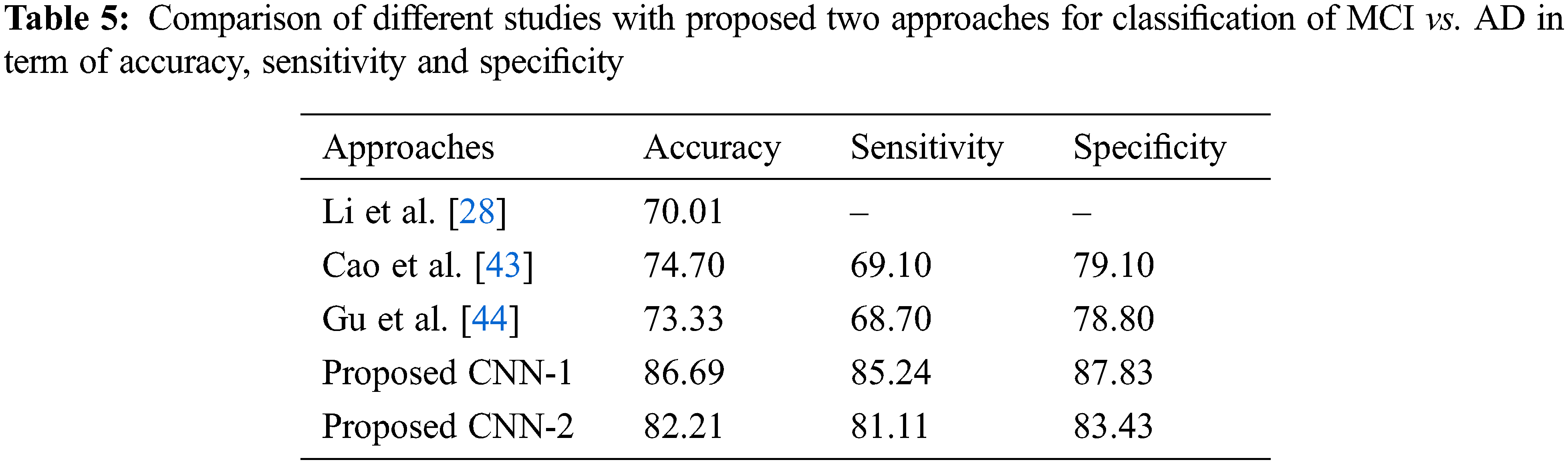

Tab. 2 shows the performance of CNN-2 on the three binary tasks NC vs. AD, NC vs. MCI, and MCI vs. AD. In this approach, we used different parameters, with a different number of convolutional layers and a different kernel size configuration from CNN-1. CNN-2 achieved an accuracy of 89.87% on NC vs. AD (sensitivity 87.13% and specificity 90.23%). On the second binary task, NC vs. MCI, it attained 85.22% accuracy (sensitivity 84.71% and specificity 86.94%). Finally, it achieved 82.21% accuracy on MCI vs. AD (sensitivity 81.11% and specificity 83.43%).

In many recent studies, researchers have used machine learning and deep learning-based techniques to diagnose AD at an early stage. Several computer-aided systems developed by researchers directly support the diagnosis of AD, and these systems are mostly based on deep learning [36]. Such approaches are widely used today, especially in computer vision, image classification, and artificial intelligence systems. In deep learning, CNN-based techniques in particular can process the input data samples without requiring manual feature extraction by experts. We proposed a system based on two models, CNN-1 and CNN-2. The results of the two models are assessed with three performance measures: accuracy, sensitivity, and specificity. In AD diagnosis, neuroimaging modalities play a key role in early detection, and MRI is one of the most prominent. Several factors favor this modality, including the availability of large numbers of MRI scans and the ease of producing annotated data samples after pre-processing.

In AD detection, MCI is an intermediate stage between normal control and AD. The early detection of MCI is especially useful for reducing the risk of dementia. Several CAD systems have been developed by researchers, especially in the field of aging research. In this work, we classified NC, MCI, and AD patients with two CNN-based approaches. In both, we used the GM images extracted by segmenting the MRI scans. In the experiments, the accuracies of the proposed models on NC vs. AD are 95.33% and 89.87%, which shows the effectiveness of the CNN-1 and CNN-2 models. Furthermore, we evaluated the developed models on the remaining two binary classification tasks.

Tab. 3 compares our classification results with those of different studies investigating the early diagnosis of AD. Our proposed approach outperforms popular deep networks in terms of accuracy on NC vs. AD, with a score of 95.33%, which is higher than that of the multi-model deep convolutional neural network (88.90%) [37]. The results also show a clear improvement over [38,39]. The comparison with multi-task feature selection (87.25%) likewise shows that the proposed algorithm improves the accuracy [40]. Furthermore, Tabs. 4 and 5 show the comparisons for NC vs. MCI and MCI vs. AD. Here, the proposed models produce promising results in terms of accuracy, sensitivity, and specificity. Both models achieved good results, but CNN-1 produced the best results on all binary classification tasks. This is because training deep neural network models on segmented data samples is prone to overfitting, which directly reduces the performance of CNN models in the classification and detection of AD.

We also examined different CNN-based models for NC, MCI, and AD classification that used some data without skull stripping. Secondly, predicting an intermediate stage such as MCI early is especially helpful for doctors in making an early diagnosis. Our proposed methods also produce better results for MCI classification [41-44]. This matters because MCI conversion is linked to a number of risk factors involved in the early conversion of MCI to AD within a short time. After segmentation, the gray matter images of the MRI scans show the difference between normal controls and AD patients, which makes them more suitable for researchers building accurate models.

We presented the early diagnosis of AD based on two CNN-based models. In both proposed approaches, we used different numbers of parameters and convolutional layers to extract useful features from the input samples. We used MRI images acquired from the ADNI database. The GM images were obtained by segmenting the MRI scans and were fed into the CNN models for experimental testing. The distinction between NC and MCI helps experts detect the early stage of MCI. We evaluated both proposed models on 325 ADNI subjects for three binary classification tasks. The experimental results show that the CNN-based framework demonstrates the best performance in terms of classification accuracy. In particular, the proposed method performs best on the classification of NC vs. AD, attaining an accuracy of 95.33%. Finally, the proposed CNN-based method shows convincing results for building automated systems for the early detection of AD.

*Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.usc.edu/). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wpcontent/uploads/howtoapply/ADNIAcknowledgementList.pdf.

Acknowledgement: The authors would like to thank the editors and reviewers for their review and recommendations.

Funding Statement: This work was supported by the Researchers Supporting Project (No. RSP-2021/395), King Saud University, Riyadh, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. K. Li, X. Luo, Q. Zeng, P. Huang, Z. Shen et al., “Gray matter structural covariance networks changes along the Alzheimer’s disease continuum,” NeuroImage: Clinical, vol. 23, no. 8, pp. 1–10, 2019.

2. M. N. Shahzad, M. Suleman, M. A. Ahmed, A. Riaz and K. Fatima, “Identifying the symptom severity in obsessive-compulsive disorder for classification and prediction: An artificial neural network approach,” Behavioral Neurology, vol. 22, no. 3, pp. 1–12, 2020.

3. P. J. Gallaway, H. Miyake, M. S. Buchowski, M. Shimada, Y. Yoshitake et al., “Physical activity: A viable way to reduce the risks of mild cognitive impairment, Alzheimer’s disease, and vascular dementia in older adults,” Brain Sciences, vol. 7, no. 2, pp. 1–21, 2017.

4. H. T. Gorji and N. Kaabouch, “A deep learning approach for diagnosis of mild cognitive impairment based on MRI images,” Brain Sciences, vol. 9, no. 9, pp. 1–13, 2019.

5. C. H. Lim and A. S. Mathuru, “Modeling Alzheimer’s and other age related human diseases in embryonic systems,” Journal of Developmental Biology, vol. 6, no. 1, pp. 1–27, 2017.

6. F. Ramzan, M. U. G. Khan, A. Rehmat, S. Iqbal, T. Saba et al., “A deep learning approach for automated diagnosis and multi-class classification of Alzheimer’s disease stages using resting-state fMRI and residual neural networks,” Journal of Medical Systems, vol. 44, no. 2, pp. 1710–1721, 2019.

7. C. Feng, P. Yang, T. Wang, F. Zhou, H. Hu et al., “Deep learning framework for Alzheimer’s disease diagnosis via 3D-CNN and FSBi-LSTM,” IEEE Access, vol. 7, pp. 63605–63618, 2019.

8. P. Forouzannezhad, A. Abbaspour, C. Li, M. Cabrerizo and M. Adjouadi, “A deep neural network approach for early diagnosis of mild cognitive impairment using multiple features,” in IEEE 17th Int. Conf. on Machine Learning and Applications (ICMLA), Orlando, USA, pp. 1–6, 2019.

9. F. Segovia, J. Ramirez, D. C. Barnes, D. S. Gonzalez, M. R. Gomez et al., “Multivariate analysis of dual-point amyloid PET intended to assist the diagnosis of Alzheimer’s disease,” Neurocomputing, vol. 417, pp. 1–9, 2020.

10. P. Bharati and A. Pramanik, “Deep learning techniques—R-CNN to mask R-CNN: A survey,” in Computational Intelligence in Pattern Recognition, Singapore, Springer, pp. 657–668, 2020.

11. A. Chaddad, C. Desrosiers and T. Niazi, “Deep radiomic analysis of MRI related to Alzheimer’s disease,” IEEE Access, vol. 6, pp. 58213–58221, 2018.

12. S. J. Teipel, C. D. Metzger, F. Brosseron, K. Buerger, K. Brueggen et al., “Multicenter resting state functional connectivity in prodromal and dementia stages of Alzheimer’s disease,” Journal of Alzheimer’s Disease, vol. 64, pp. 801–813, 2018.

13. B. Khagi, G. R. Kwon and R. Lama, “Comparative analysis of Alzheimer’s disease classification by CDR level using CNN, feature selection, and machine-learning techniques,” International Journal of Imaging Systems and Technology, vol. 17, pp. 1–14, 2019.

14. H. Suk, S. W. Lee and D. Shen, “Deep ensemble learning of sparse regression models for brain disease diagnosis,” Medical Image Analysis, vol. 37, pp. 101–113, 2017.

15. M. Liu, D. Cheng, K. Wang and Y. Wang, “Multi-modality cascaded convolutional neural networks for Alzheimer’s disease diagnosis,” Neuroinformatics, vol. 16, pp. 295–308, 2018.

16. A. Mehmood, M. Maqsood, M. Bashir and Y. Shuyuan, “A deep siamese convolution neural network for multi-class classification of Alzheimer disease,” Brain Sciences, vol. 10, no. 4, pp. 1–18, 2020.

17. A. Mehmood, S. Yang, Z. Feng, M. Wang, A. S. Ahmed et al., “A transfer learning approach for early diagnosis of Alzheimer’s disease on MRI images,” Neuroscience, vol. 460, no. 13, pp. 978–991, 2021.

18. C. Y. Wee, P. T. Yap and D. Shen, “Prediction of Alzheimer’s disease and mild cognitive impairment using cortical morphological patterns,” Human Brain Mapping, vol. 34, no. 9, pp. 3411–3425, 2013.

19. S. Neffati, K. Ben, I. Jaffel and B. O. Taouali, “An improved machine learning technique based on downsized KPCA for Alzheimer’s disease classification,” International Journal of Imaging Systems and Technology, vol. 29, no. 5, pp. 121–131, 2019.

20. J. P. Kim, J. Kim, Y. H. Park, S. B. Park, J. S. Lee et al., “Machine learning based hierarchical classification of frontotemporal dementia and Alzheimer’s disease,” NeuroImage: Clinical, vol. 23, no. 8, pp. 1–17, 2019.

21. C. Ieracitano, N. Mammone, A. Bramanti, A. Hussain and F. C. Morabito, “A convolutional neural network approach for classification of dementia stages based on 2D-spectral representation of EEG recordings,” Neurocomputing, vol. 323, pp. 96–107, 2019.

22. H. T. Gorji and J. Haddadnia, “A novel method for early diagnosis of Alzheimer’s disease based on pseudo zernike moment from structural MRI,” Neuroscience, vol. 305, no. 17, pp. 361–371, 2015.

23. A. A. AlZubi, A. Alarifi and M. Al-Maitha, “Deep brain simulation wearable IoT sensor device based Parkinson brain disorder detection using heuristic tubu optimized sequence modular neural network,” Measurement, vol. 161, no. 6, pp. 1135–1146, 2020.

24. R. R. Janghel and Y. K. Rathore, “Deep convolution neural network based system for early diagnosis of Alzheimer’s disease,” IRBM, vol. 1, no. 3, pp. 1–10, 2020.

25. R. Jain, N. Jain, A. Aggarwal and D. J. Hemanth, “Convolutional neural network based Alzheimer’s disease classification from magnetic resonance brain images,” Cognitive Systems Research, vol. 57, no. 8, pp. 147–159, 2019.

26. B. Cheng, M. Liu, D. Zhang, B. C. Munsell and D. Shen, “Domain transfer learning for MCI conversion prediction,” IEEE Transactions on Biomedical Engineering, vol. 62, no. 7, pp. 1805–1817, 2015.

27. C. Wu, S. Guo, Y. Hong, B. Xiao, Y. Wu et al., “Discrimination and conversion prediction of mild cognitive impairment using convolutional neural networks,” Quantitative Imaging in Medicine and Surgery, vol. 8, no. 4, pp. 992–1003, 2018.

28. F. Li, L. Tran, K. H. Thung, S. Ji, D. Shen et al., “A robust deep model for improved classification of AD/MCI patients,” IEEE Journal of Biomedical and Health Informatics, vol. 19, no. 8, pp. 1610–1616, 2015.

29. Q. R. Charles, H. Su, M. Niessner, A. Dai, M. Yan et al., “Volumetric and multi-view CNNs for object classification on 3D data,” in IEEE Int. Conf. on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, pp. 102–114, 2017.

30. C. R. Jack, M. A. Bernstein, N. C. Fox, P. Thompson, G. Alexander et al., “The Alzheimer’s disease neuroimaging initiative (ADNI): MRI methods,” Journal of Magnetic Resonance Imaging, vol. 27, no. 3, pp. 685–691, 2008.

31. A. Abugabah, A. A. AlZubi, M. Al-Maitha and A. Alarifi, “Brain epilepsy seizure detection using bio-inspired krill herd and artificial alga optimized neural network approaches,” Journal of Ambient Intelligence and Humanized Computing, vol. 12, no. 1, pp. 1–12, 2020.

32. H. Chen, D. Ni, J. Qin, S. Li, X. Yang et al., “Standard plane localization in fetal ultrasound via domain transferred deep neural networks,” IEEE Journal of Biomedical and Health Informatics, vol. 19, no. 5, pp. 1627–1636, 2015.

33. J. Young, M. Modat, M. J. Cardoso, A. Mendelson, D. Cash et al., “Accurate multimodal probabilistic prediction of conversion to Alzheimer’s disease in patients with mild cognitive impairment,” NeuroImage: Clinical, vol. 2, pp. 735–745, 2013.

34. S. H. Wang, P. Phillips, Y. Sui, B. Liu, M. Yang et al., “Classification of Alzheimer’s disease based on eight-layer convolutional neural network with leaky rectified linear unit and max pooling,” Journal of Medical Systems, vol. 42, no. 7, pp. 1816–1827, 2018.

35. S. Luo, X. Li and J. Li, “Automatic Alzheimer’s disease recognition from MRI data using deep learning method,” Journal of Applied Mathematics and Physics, vol. 5, no. 9, pp. 1892–1898, 2017.

36. R. Zemouri, N. Zerhouni and D. Racoceanu, “Deep learning in the biomedical applications: Recent and future status,” Applied Sciences, vol. 9, no. 8, pp. 1–16, 2019.

37. M. Liu, F. Liu, H. Yan, K. Wang, Y. Ma et al., “A multi-model deep convolutional neural network for automatic hippocampus segmentation and classification in Alzheimer’s disease,” Neuroimage, vol. 208, no. 7, pp. 653–662, 2020.

38. C. Lian, M. Liu, J. Zhang and D. Shen, “Hierarchical fully convolutional network for joint atrophy localization and Alzheimer’s disease diagnosis using structural MRI,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 4, pp. 880–893, 2020.

39. J. Zhang, Y. Gao, G. Yin, B. C. Munsell and D. Shen, “Detecting anatomical landmarks for fast Alzheimer’s disease diagnosis,” IEEE Transactions on Medical Imaging, vol. 35, no. 12, pp. 2524–2533, 2016.

40. T. Ye, C. Zu, B. Jie, D. Shen and D. Zhang, “Discriminative multi-task feature selection for multi-modality classification of Alzheimer’s disease,” Brain Imaging and Behavior, vol. 10, pp. 739–749, 2016.

41. H. Suk, C. Y. Wee, S. W. Lee and D. Shen, “State-space model with deep learning for functional dynamics estimation in resting-state fMRI,” Neuroimage, vol. 129, no. 10, pp. 292–307, 2016.

42. S. Liu, L. Sidong, W. Cai, S. Pujol, R. Kikinis et al., “Early diagnosis of Alzheimer’s disease with deep learning,” in IEEE 11th Int. Symp. on Biomedical Imaging (ISBI), Beijing, China, pp. 1015–1018, 2014.

43. A. Abugabah, N. Nizam and A. A. AlZubi, “Decentralized telemedicine framework for a smart healthcare ecosystem,” IEEE Access, vol. 8, pp. 166575–166588, 2020.

44. Q. Gu, Z. Li and J. Han, “Joint feature selection and subspace learning,” in Proc. of the 22nd Int. Joint Conf. on Artificial Intelligence, Barcelona, Spain, pp. 1294–1299, 2011.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |