Computer Systems Science & Engineering
DOI:10.32604/csse.2023.026358

Article

Early Skin Disease Identification Using Deep Neural Network

1Chitkara University Institute of Engineering and Technology, Chitkara University, Rajpura, Punjab, India

2School of Computer Science, University of Petroleum and Energy Studies, Dehradun, 248007, Uttarakhand, India

3Department of Computer Science, College of Computers and Information Technology, Taif University, Taif, Saudi Arabia

4Department of Systemics, University of Petroleum & Energy Studies, Dehradun, India

5ABES Institute of Technology, Ghaziabad, India

*Corresponding Author: Rajeev Tiwari. Email: errajeev.tiwari@gmail.com

Received: 23 December 2021; Accepted: 30 March 2022

Abstract: Skin lesion detection and classification is a prominent problem that is difficult even for highly skilled dermatologists and pathologists. Skin disease is one of the most common disorders, triggered by fungi, viruses, bacteria, allergies, etc. Skin diseases can be dangerous and may cause serious damage. They therefore need to be diagnosed at an early stage, but the diagnosis itself is complex and relies on advanced laser and photonic techniques. These advanced techniques impose a financial burden and carry other ill effects. Artificial intelligence techniques can therefore be used to detect and diagnose skin disease accurately at an early stage. Several techniques have been proposed for early detection of skin disease, but they fall short on accuracy. The primary goal of this paper is to classify and detect skin diseases and provide accurate information about them. To this end, the paper proposes a high-performance Convolution neural network (CNN) to classify and detect skin disease at an early stage. The methodology has three steps: first, the skin disease images are pre-processed with image processing techniques; second, the important features of the skin images are extracted; third, the pre-processed images are analyzed at different stages using a Deep Convolution Neural Network (DCNN). The proposed approach is simple and fast, achieves an accuracy of up to 98%, and is used to detect six different disease types.

Keywords: Convolution neural network (CNN); skin disease; deep learning (DL); image processing; artificial intelligence (AI)

Skin is the most sensitive part of the human body and contributes to its appearance. Skin disorders are extremely widespread and affect the appearance of the skin. The main causes of skin disease are fungi, allergies, bacteria, viruses, etc. These can change the texture and color of the skin and, at a later stage, lead to chronic conditions and infection. In 2013, the population of India affected by skin disease was reported to be approximately 15.1 crore, with a prevalence rate of 10%. According to estimates, 18.8 crore people were expected to be affected by skin disease by 2015. Skin diseases are broadly classified into three types, as given below:

a) Internal and External: Skin disorders that originate within the body, involving hormones and body glands, are known as internal; acne is an example. Skin disorders that develop and manifest on the surface of the skin, involving air pollution or sun exposure, are known as external; rashes are an example.

b) Chronic and acute: Skin diseases may be chronic, like psoriasis and atopic eczema, or uncommon, like Sweet syndrome and fuji disease.

c) Primary and Secondary: A skin disease may be either primary or secondary. Spots, pimples, plaque, discoloration, nodule, tumor, vesicle, pustule, cyst, and bulla are examples of primary skin disease, while crust, decay, excoriation, scale, ulcer, fissure, induration, atrophy, maceration, umbilication, and phyma are examples of secondary skin disease.

Some of these diseases take a long time to show symptoms and are difficult to detect. A lack of medical knowledge can allow the disease to become severe. In most cases, it is difficult to diagnose a skin disease with the naked eye. Dermatologists therefore recommend costly laboratory and laser tests to diagnose it properly. Some of these tests may themselves have harmful effects on human skin. To eliminate such expenses and the ill effects of these tests on the human body, an automated system is proposed in this paper to detect and classify skin disease.

It is therefore essential to detect skin disease early, reduce its impact, and stop it from spreading; otherwise it may lead to health problems. Most ordinary people are unaware of skin disease. In some cases, the disease shows symptoms only a month later, in its final stage, which may be due to a lack of knowledge about skin disorders. In some situations, even a skin specialist is unable to diagnose it, which leads to costly laboratory tests. This is the main motivation for developing a system that helps detect skin disease early based on skin texture. Images are one of the best sources for such systems.

In most cases, early detection and care are sufficient to fully cure the illness, but early identification of the type of ailment is critical. Examination with the naked eye has several disadvantages, including inconsistent precision, dependence on the human observer’s care, and so on. Computer-assisted techniques can study and track the subsurface structures of skin disease more effectively. This approach allows for a deeper understanding of rare diseases, their appearance, and their characteristics. Furthermore, dermatologists are in short supply, especially in rural areas, and consultations are expensive. In addition, the traditional approach can result in infection and pain. Disease should therefore be identified automatically from photographs in dermatology to provide precise, early, and objective diagnoses.

This leads to a skin disease diagnosis approach centered on computer vision. The diagnosis of these diseases can be aided by automated disease classification, which removes the hurdle of costly testing. Furthermore, automatic disease classification is more cost-effective than conventional diagnosis. Image segmentation (picture partitioning) is the process of splitting a digital image into several regions or objects and processing them separately, depending on the need and function. It streamlines analysis and classification. Pixels within the same region share the same attributes, whereas different clusters have different characteristics.

Various deep learning models have been proposed to address issues in different fields such as agriculture [1–7], weed growth [8–11], soil [12,13], object detection [14], and various robotics-based systems [15]. This paper tackles the skin disease problem with a deep learning model and image processing.

In this paper, the skin images are used to identify and classify skin disease. The main contributions of the paper are:

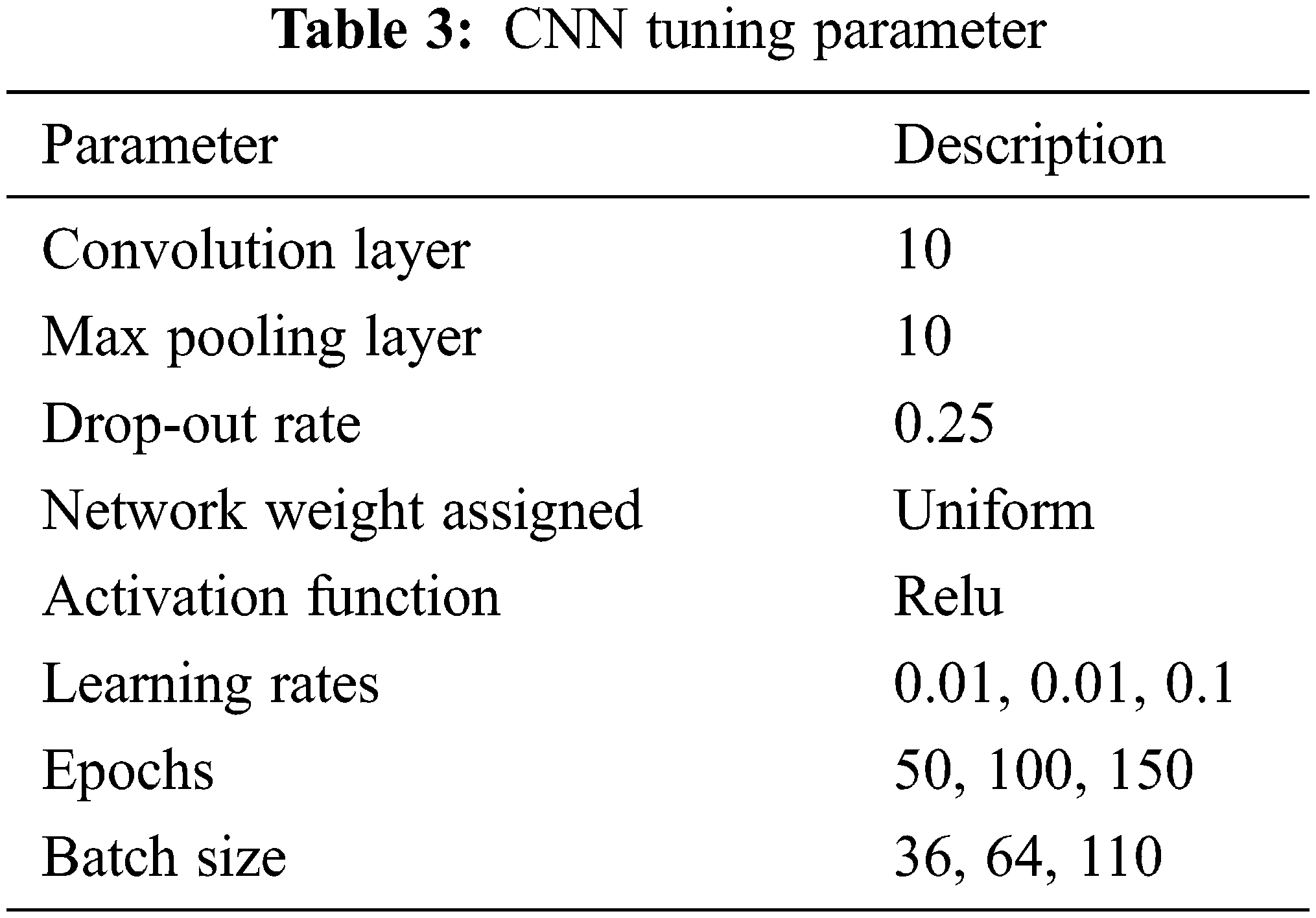

• In the proposed technique, the CNN is tuned with different hyper-parameter values to obtain better results.

• A vegetation/semantic segmentation is used to resolve the issues of conventional segmentation techniques.

• Vegetation segmentation is used to reduce the noise in the image and focus on the diseased region.

• The target skin disease is processed with the proposed method to enhance accuracy.

The rest of the paper is organized as follows: Section 2 reviews the state of the art. Section 3 explains the materials, methods, and process. Section 4 describes the proposed model and mathematical transformation. The results and discussion are presented in Section 5. Section 6 presents the conclusion and future work.

Researchers have proposed many artificial intelligence (AI)-based techniques and methods to identify and classify skin diseases, which are discussed in this section. The skin disease recognition and classification techniques are given below:

A two-stage system is proposed in [16] to detect skin disease using color images: first, the infected skin is detected from the color image by applying k-means clustering with color gradient techniques. Second, an Artificial Neural Network (ANN) is used to classify the disease.

In [17], image features are extracted after pre-processing the image, and these features are used to predict the type of disease. The accuracy of the technique depends on the number of extracted features. These features are fed to a feed-forward ANN for training and testing. In total, nine different diseases are classified with an accuracy of 90%.

In [18], the lesion and the background are separated using different segmentation methods, and after segmentation the lesion is processed with image processing techniques. After experiments, the authors conclude that multilevel thresholding has a better accuracy rate.

A specialized algorithm is applied to a given image dataset to detect diseases of dark skin [19]. The support vector machine is used in [20,21] to detect skin disease. Computer vision and machine learning techniques are combined to detect skin disease with a 95% accuracy rate. An underlying deep learning technique with unique visual features is applied in [22,23] to diagnose skin diseases.

In [24–27], an ANN is applied to the image dataset to diagnose skin disease. Segmentation based on two feature sets is employed with the Sobel operator in [28] to diagnose three different skin diseases. A deep neural network is used in [29,30] to classify four different skin diseases. In this work, the GoogleNet Inception V3 package is used to classify the images, attaining an accuracy of 86%.

A rule-based expert system is developed in [31] that uses forward chaining with a depth-first search algorithm. Image processing and an ANN are used in [32] to detect skin disease; some histopathological attributes are also combined with image processing for accurate results. A data mining technique is combined with a skin disease detection system to enhance its accuracy through attribute selection [33].

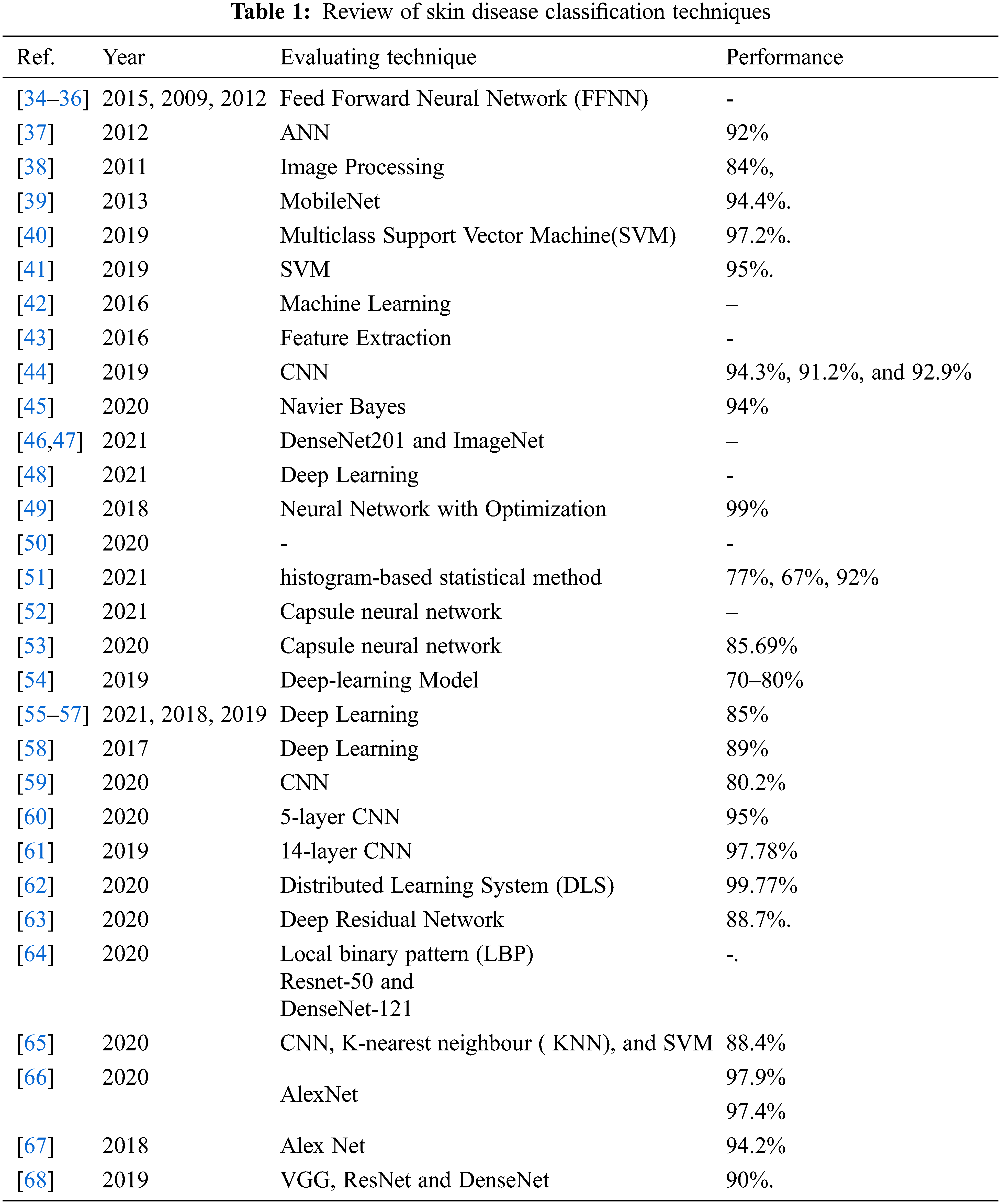

A review of different skin disease classification techniques with their accuracy is given in Tab. 1.

The next section of the paper is concerned with the dataset and proposed methodology.

This section presents the dataset of skin images used to validate the approach against state-of-the-art methods and models.

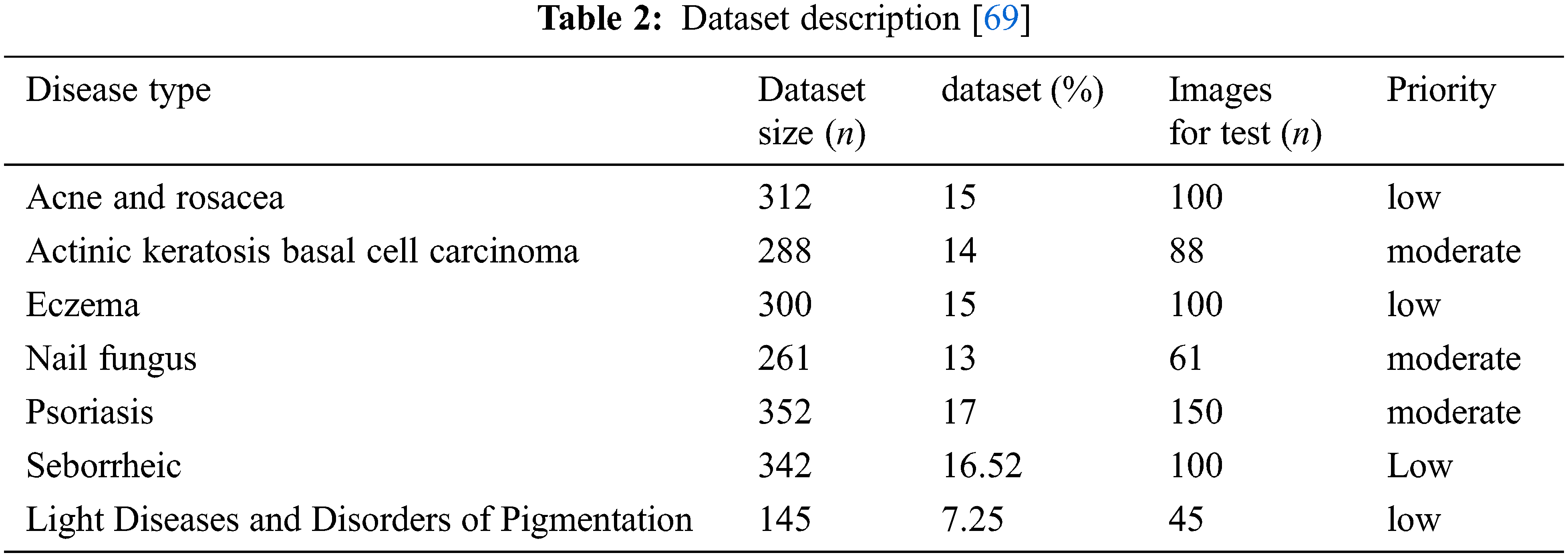

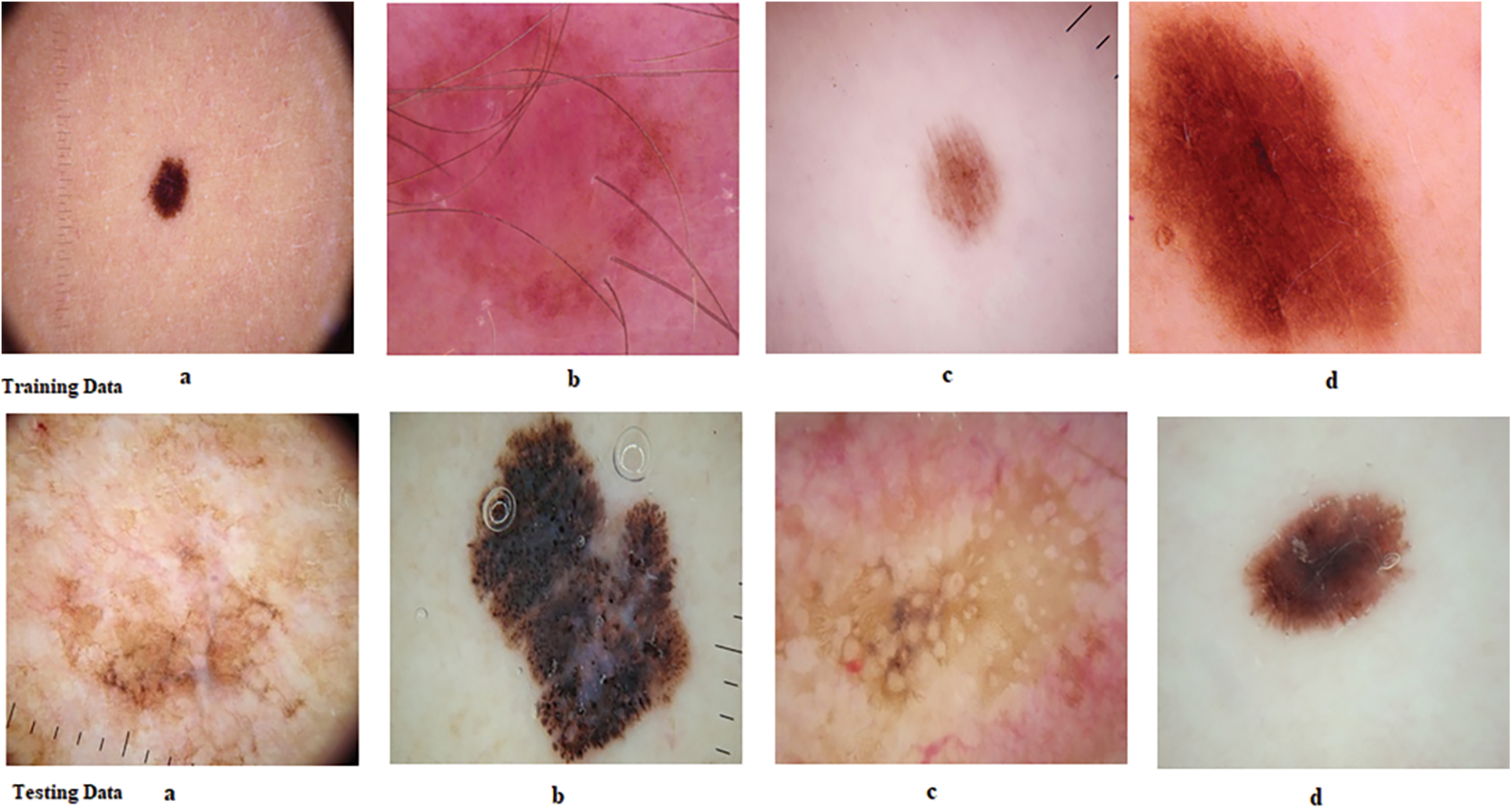

The dataset consists of around 2000 skin disease pictures collected from the standardized Dermnet dataset [69], comprising 1800 training pictures and 200 test pictures, as shown in Tab. 2 and Fig. 1. The dataset comprises distinct classes of disease: several classes of infected skin and one stable class, as given in the list:

Figure 1: Sample skin diseases

Fig. 1 shows reference images of skin diseases from the dataset. The experiment is performed with 2000 sample images. About 1600 images are used to train the model and about 400 images are used to test it. The experiment was performed with different training and testing ratios, but only the results for the 80:20 ratio are shown in this paper.
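As an illustration of the 80:20 ratio, a split of 2000 images yields 1600 training and 400 test images. The following is a minimal sketch, not the authors' code; the function name `split_dataset`, the fixed random seed, and the file names are hypothetical, and a plain random shuffle is assumed (a per-class stratified split may be preferable in practice).

```python
import random

def split_dataset(items, train_fraction=0.8, seed=42):
    """Shuffle a copy of `items` and split it into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]

# Hypothetical file names standing in for the 2000 sample images.
image_ids = [f"img_{i:04d}.jpg" for i in range(2000)]
train_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(test_ids))  # 1600 400
```

Changing `train_fraction` reproduces the other training and testing ratios mentioned in the text.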

The next section deals with the proposed methodology, mathematical model, and detection algorithm:

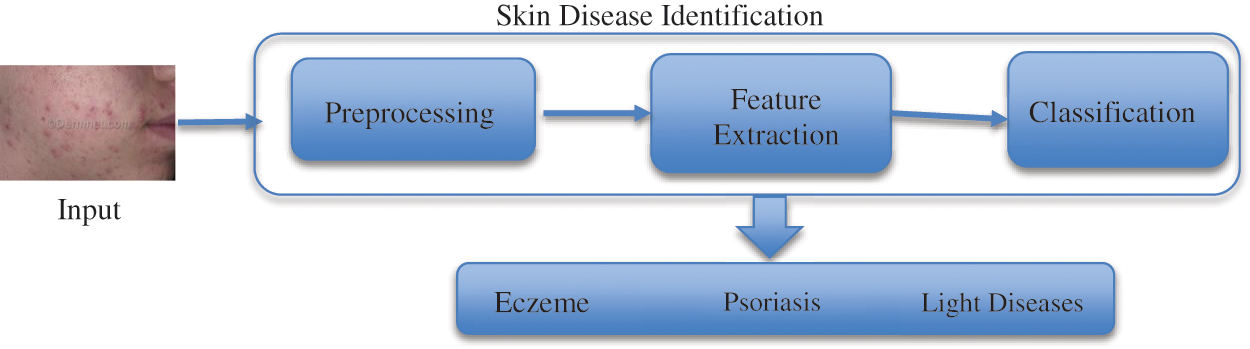

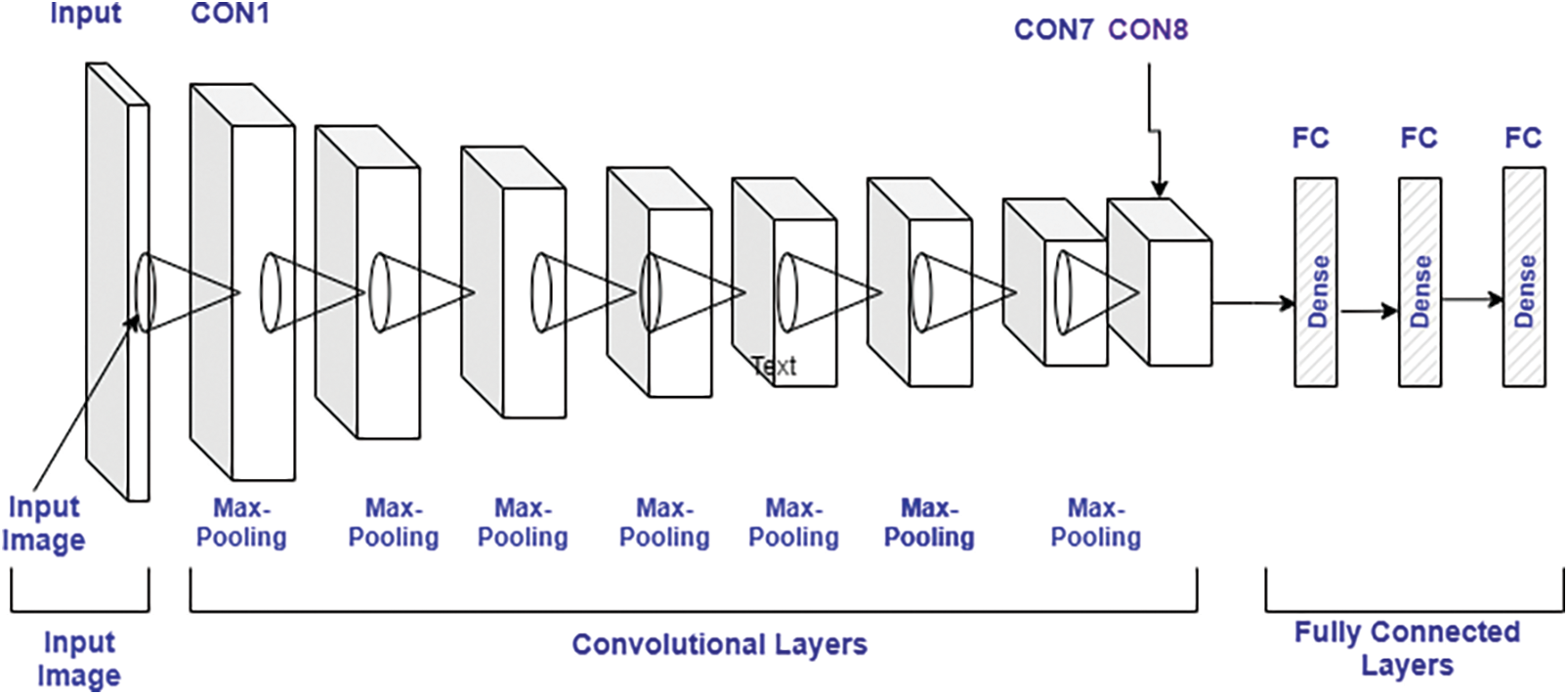

The disease detection methodology starts with a first phase of data pre-processing and labeling. In the second phase, the pre-processed data is classified using a Convolution neural network. Fig. 2 lays out the complete process of disease classification and detection. The CNN is tuned on hyperparameters that depend on the training of the proposed network and the number of hidden layers. In this paper, the hyperparameters are chosen based on the network used to classify the images. The complete Convolution neural network (CNN) layout is shown in Fig. 3 and its description is given in Tab. 3.

Figure 2: Proposed methodology

Figure 3: CNN model architecture

4.1 Image Pre-Processing and Labelling

This is the initial phase, in which the raw image dataset is pre-processed to remove noise before it is fed to the Convolution neural network classifier. A model must account for the structure of both the network and the dataset to generate good outcomes. The dataset is therefore pre-processed first to obtain appropriate image features that the model can use to diagnose or predict accurately. In pre-processing, the size of each image is first normalized to the required 256 × 256 pixels. The image size is defined during network design and acts as the input node; the same size is used throughout training to achieve consistent results. Python libraries are used to perform this task. Second, all images are converted to greyscale.

The pre-processing stage is treated as the phase that extracts the image features used to train the model; these features underpin accurate prediction. After pre-processing, the data is labeled with the correct acronym. The data is then segregated into different classes, which can be used for testing.
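The two pre-processing steps (resizing to 256 × 256 and greyscale conversion) can be sketched in plain Python. This is an illustrative sketch only: the text does not say which library or interpolation was used, so nearest-neighbour sampling and the common luma weights 0.299, 0.587, 0.114 are assumptions, and the function names are hypothetical. In practice an image library would normally do this work.

```python
def to_grey(rgb_image):
    """Convert a nested list of (r, g, b) pixels to grey levels in 0..255."""
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in rgb_image]

def resize_nearest(image, out_h=256, out_w=256):
    """Resize a 2-D nested list using nearest-neighbour sampling (assumed)."""
    in_h, in_w = len(image), len(image[0])
    return [[image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]

def preprocess(rgb_image, size=256):
    """Greyscale first, then normalize the size to size x size pixels."""
    return resize_nearest(to_grey(rgb_image), size, size)

# Synthetic 4 x 6 pure-red image standing in for a skin photograph.
sample = [[(255, 0, 0)] * 6 for _ in range(4)]
out = preprocess(sample)
print(len(out), len(out[0]), out[0][0])  # 256 256 76
```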

4.2 Mathematical Representation of Classification

Here, a Convolution neural network (CNN), one of the most prominent technologies at present, is used for classification. The model is trained with the features extracted in the previous phase. In the CNN, the image dataset is processed in different layers, and each layer has the following sub-layers:

a) Convolutional Layer

The main operation in the convolutional layer is convolution, in which the input image is convolved with an m × m filter to generate the output feature maps. The output of the convolutional layer is expressed by Eq. (1)

where,

An: Output feature maps,

Ln: Input maps,

Mkn: Kernel of convolution,

Cn: Bias term.

The size of the final feature map is expressed by Eq. (2)

where,

N: output height/length,

X: input height/length,

M: filter size,

Y: padding,

T: stride.

Here, padding can be used to preserve the size of the output. The padding is expressed by Eq. (3):

where, M: filter size.
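Eqs. (1)–(3) are not legible in this text, so the sketch below assumes their standard forms: each output value is the sum of kernel-weighted input values plus a bias, the output size is $N = (X - M + 2Y)/T + 1$, and size-preserving ("same") padding for an odd filter at stride 1 is $Y = (M - 1)/2$. These forms match the symbols defined above but are assumptions, as are the function names; a single input channel is used and the activation is omitted.

```python
def output_size(x, m, y=0, t=1):
    """Assumed Eq. (2): N = (X - M + 2Y) / T + 1, with floor division."""
    return (x - m + 2 * y) // t + 1

def same_padding(m):
    """Assumed Eq. (3): Y = (M - 1) / 2 for an odd filter size M, stride 1."""
    return (m - 1) // 2

def conv2d(image, kernel, bias=0.0, pad=0, stride=1):
    """Single-channel convolution (cross-correlation form) plus a bias term."""
    m = len(kernel)
    h, w = len(image), len(image[0])
    # Zero-pad the input on all four sides.
    padded = [[0.0] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    for i in range(h):
        for j in range(w):
            padded[i + pad][j + pad] = image[i][j]
    out = []
    for i in range(output_size(h, m, pad, stride)):
        row = []
        for j in range(output_size(w, m, pad, stride)):
            acc = bias
            for a in range(m):
                for b in range(m):
                    acc += padded[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out

print(output_size(256, 3))                   # 254
print(output_size(256, 3, same_padding(3)))  # 256
```

With a 256 × 256 input and a 3 × 3 filter, the unpadded output shrinks to 254 × 254, while a padding of 1 keeps it at 256 × 256.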

• ReLU Layer: This layer, also known as the activation layer, plays an important role in the CNN. It follows the convolution layer, whose output is the input to the ReLU. This layer introduces non-linearity into the convolutional process, so each convolutional layer is associated with a ReLU layer. Its main task is to threshold the activations by setting all negative values to zero, which is given by f(p) = max(0, p). This layer helps the system learn quickly and mitigates gradient problems. The ReLU activation function is well suited to multiclass classification.

• Max-Pooling Layer: This layer produces a reduced-size output by taking the maximum element of each block. It also helps control overfitting and involves no learning.

• Dropout Layer: This layer drops input elements at random with a given probability, and this process is applied only during the training phase.

• Batch Normalization Layer: This layer sits between the convolutional and ReLU layers. It is used to increase training speed and reduce sensitivity. It normalizes the activations using four operations (subtraction, division, shifting, and scaling): first, the mean is subtracted from the activation and the result is divided by the standard deviation; the result is then shifted by α and scaled by Θ. The batch-normalized output, Bk, is expressed by Eqs. (4)–(7),

where,

ε: constant,

UD: mini-batch mean,

σD2: mini-batch variance.
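The ReLU, max-pooling, and batch normalization operations described above can be sketched as follows. Eqs. (4)–(7) are not legible in this text, so the usual batch normalization form is assumed: $B_k = \Theta \, (x_k - U_D) / \sqrt{\sigma_D^2 + \varepsilon} + \alpha$, where $x_k$ is a hypothetical symbol for the input activation. The function names and the default pooling size of 2 are likewise assumptions.

```python
import math

def relu(values):
    """f(p) = max(0, p), applied element-wise."""
    return [max(0.0, v) for v in values]

def max_pool2d(fmap, size=2):
    """Keep the maximum of each non-overlapping size x size block."""
    h, w = len(fmap), len(fmap[0])
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, w - size + 1, size)]
            for i in range(0, h - size + 1, size)]

def batch_norm(batch, scale=1.0, shift=0.0, eps=1e-5):
    """Normalize a mini-batch of scalar activations, then scale and shift."""
    mean = sum(batch) / len(batch)                          # mini-batch mean
    var = sum((x - mean) ** 2 for x in batch) / len(batch)  # mini-batch variance
    return [scale * (x - mean) / math.sqrt(var + eps) + shift for x in batch]

print(relu([-2.0, 0.0, 3.5]))  # [0.0, 0.0, 3.5]
print(max_pool2d([[1, 2, 5, 6],
                  [3, 4, 7, 8],
                  [9, 1, 2, 3],
                  [4, 5, 6, 7]]))  # [[4, 8], [9, 7]]
```

After `batch_norm` with the default scale and shift, the outputs have a mean of approximately zero and a variance close to one, which is the property that makes training less sensitive to the scale of the inputs.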

b) Fully Connected Layer

Here, each neuron of this layer is linked to the neurons of the previous layer and produces a vector whose dimension equals the number of classes.

c) Output Layer

This layer combines softmax and classification. The softmax is first used to produce a probability distribution over the classes, and the classification is then carried out by the network. The softmax is defined by Eq. (8)

where, 0≤ P(vr|A θ)

written as follows, where

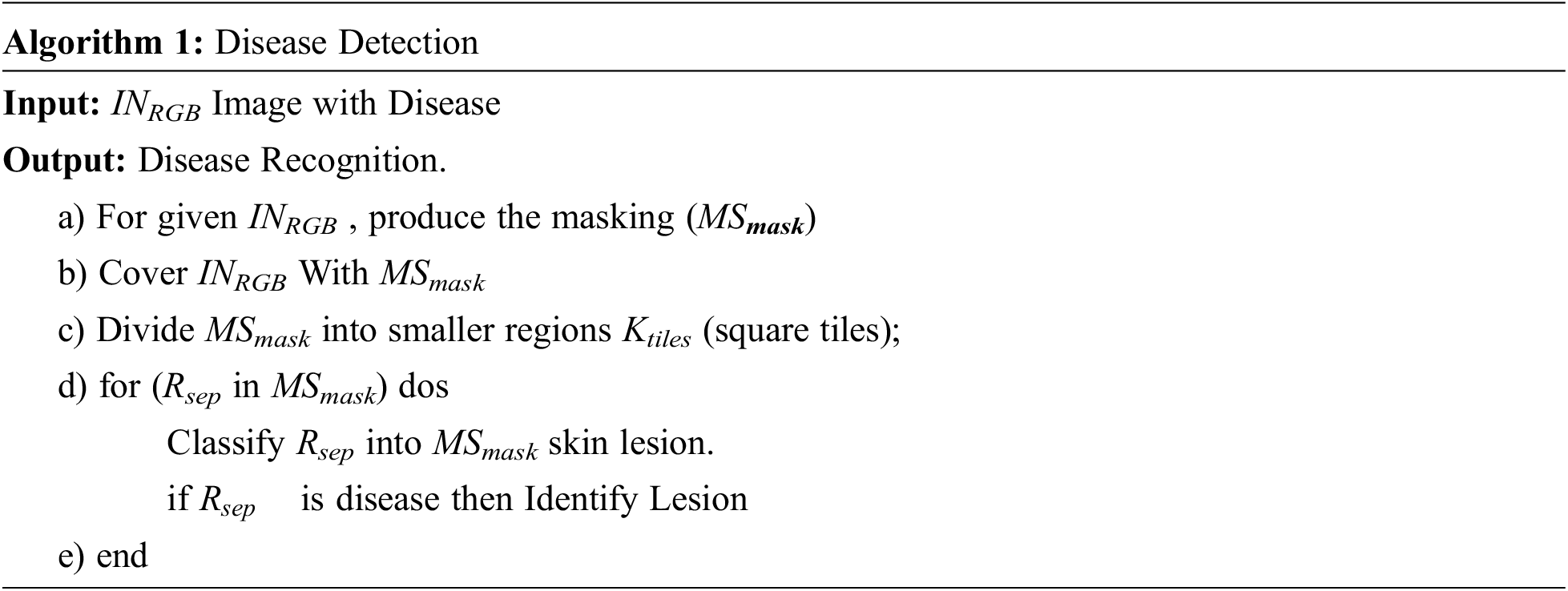
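The softmax step of the output layer can be sketched as below, assuming the standard definition in which the probability of class r is $e^{a_r} / \sum_j e^{a_j}$ for class scores $a_j$ (the symbol $a$ and the function names are assumptions, since Eq. (8) is not legible here). The six example scores are made up and only mirror the six disease types mentioned in the abstract.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(scores):
    """Return (index of the most probable class, class probabilities)."""
    probs = softmax(scores)
    return max(range(len(probs)), key=probs.__getitem__), probs

# Hypothetical scores from the fully connected layer for six classes.
label, probs = predict([2.0, 1.0, 0.1, -1.0, 0.5, 0.0])
print(label, round(sum(probs), 6))  # 0 1.0
```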

4.3 Skin Lesion Detection Algorithm

The algorithm starts with an input image INRGB. INRGB is then segmented into a mask MSmask, which is further partitioned into several regions Rsep. Afterward, the Region of Interest (RoI) is chosen and used to identify the skin disease. The proposed algorithm is given below:
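The pipeline described above (INRGB to MSmask to Rsep to RoI) can be illustrated with a minimal sketch. This is not the authors' algorithm: the brightness threshold used to build the mask, the use of 4-connected components to separate regions, and the choice of the largest region as the RoI are all assumptions made for illustration, and every function name is hypothetical.

```python
from collections import deque

def segment_mask(rgb_image, threshold=100):
    """MSmask: 1 where the pixel is darker than `threshold` (assumed rule)."""
    return [[1 if (r + g + b) / 3 < threshold else 0 for (r, g, b) in row]
            for row in rgb_image]

def separate_regions(mask):
    """Rsep: 4-connected components of the mask, each a list of (row, col)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for i in range(h):
        for j in range(w):
            if mask[i][j] and not seen[i][j]:
                queue, region = deque([(i, j)]), []
                seen[i][j] = True
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    region.append((y, x))
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                regions.append(region)
    return regions

def region_of_interest(regions):
    """RoI: bounding box (top, left, bottom, right) of the largest region."""
    if not regions:
        return None
    largest = max(regions, key=len)
    ys = [y for y, _ in largest]
    xs = [x for _, x in largest]
    return min(ys), min(xs), max(ys), max(xs)

# Synthetic 5 x 6 image: light "skin" with a 2 x 2 dark patch and one stray pixel.
SKIN, LESION = (220, 180, 160), (90, 40, 30)
image = [[SKIN] * 6 for _ in range(5)]
for y, x in [(1, 1), (1, 2), (2, 1), (2, 2), (4, 5)]:
    image[y][x] = LESION
roi = region_of_interest(separate_regions(segment_mask(image)))
print(roi)  # (1, 1, 2, 2)
```

The cropped RoI would then be pre-processed and passed to the CNN classifier; the stray dark pixel is ignored because it forms a smaller region.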

The next section discusses the result analysis and their interpretation.

5 Result Analysis and Discussion

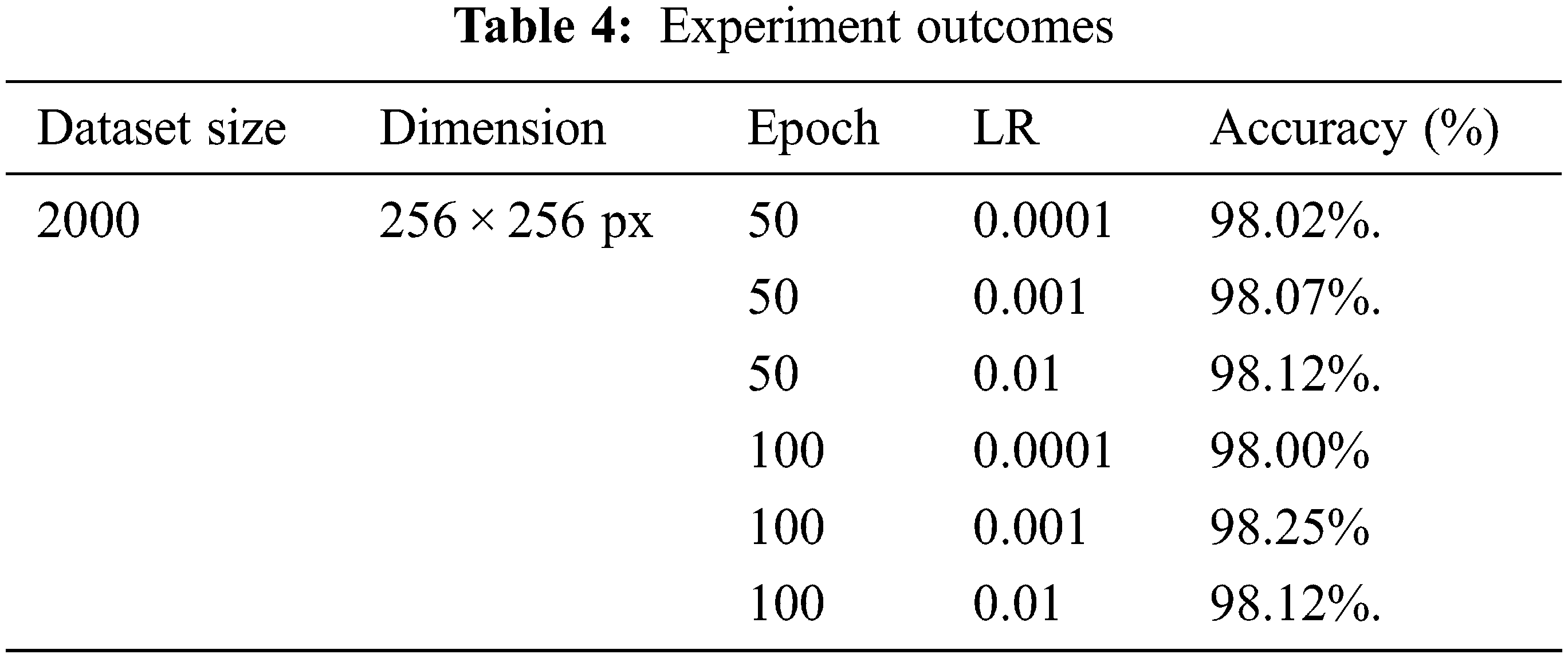

The research is carried out on a Colab-based Graphics Processing Unit (GPU), and the Python-based Keras library is used to implement the complete approach. Experiments are performed by varying the batch size, number of epochs, and learning rate. The experiment is performed with two epoch counts, i.e., 50 and 100, and three learning rates, i.e., 0.01, 0.001, and 0.0001. The model performance is measured with different performance indicators such as accuracy, precision, recall, and F1-Score. The results are laid out below:

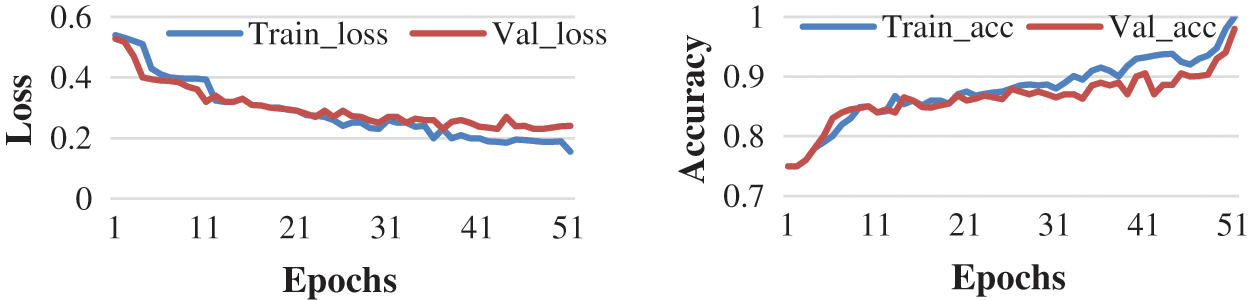

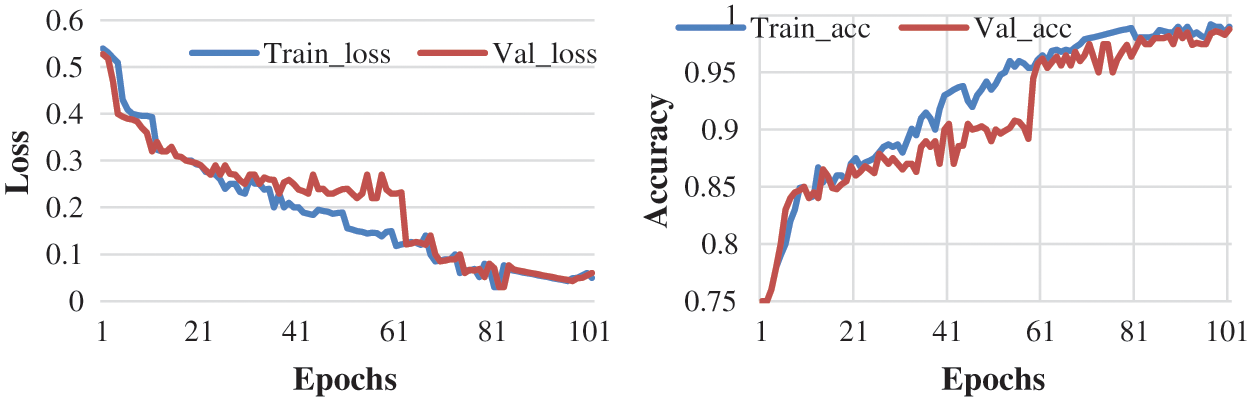

The objective here is to observe the effect of the number of epochs on model performance. An epoch is one complete presentation of the data set to the learning machine. In this research, the experiment is conducted with 50 and 100 epochs. Fig. 4 shows the test with 50 epochs and Fig. 5 shows the test with 100 epochs, both with a learning rate of 0.0001. They show accuracy rates of 98.02% and 98%, respectively.

Figure 4: Accuracy and loss with learning rate 0.0001

Figure 5: Accuracy and loss with learning rate 0.0001

Based on this investigation, it is reasonable to assume that more epochs provide a higher percentage of correct predictions. However, the larger the number of epochs, the longer the training step takes.

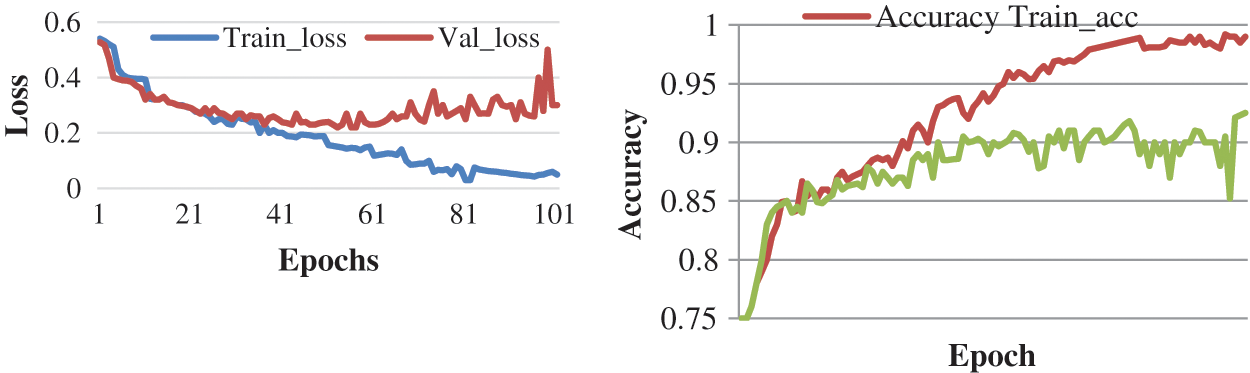

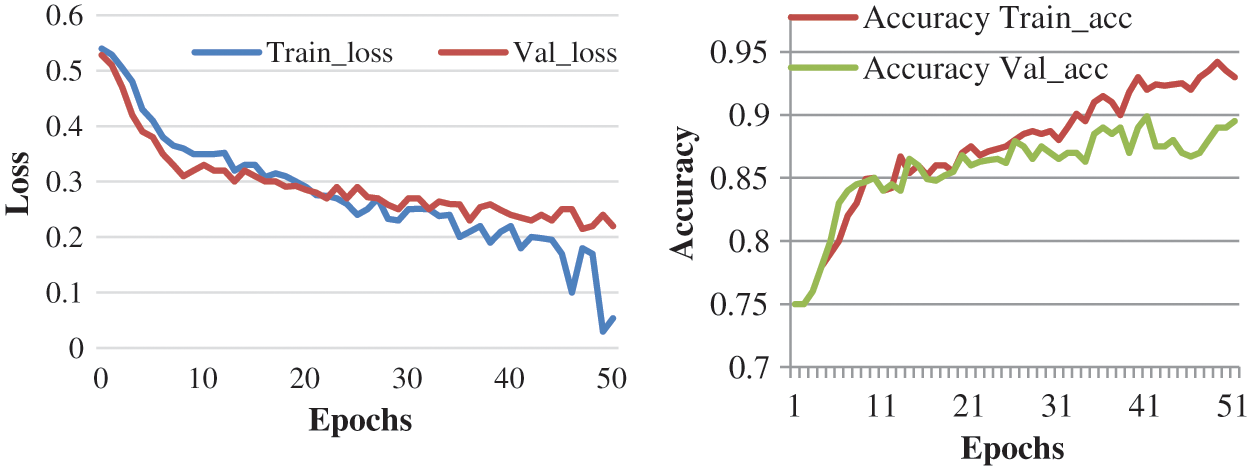

Here, the experiment is conducted with 50 and 100 epochs. Fig. 6 shows the test with 50 epochs and Fig. 7 shows the test with 100 epochs, both with a learning rate of 0.001. They show accuracy rates of 98.07% and 98.25%, respectively.

Figure 6: Accuracy and loss with learning rate 0.001

Figure 7: Accuracy and loss with learning rate 0.001

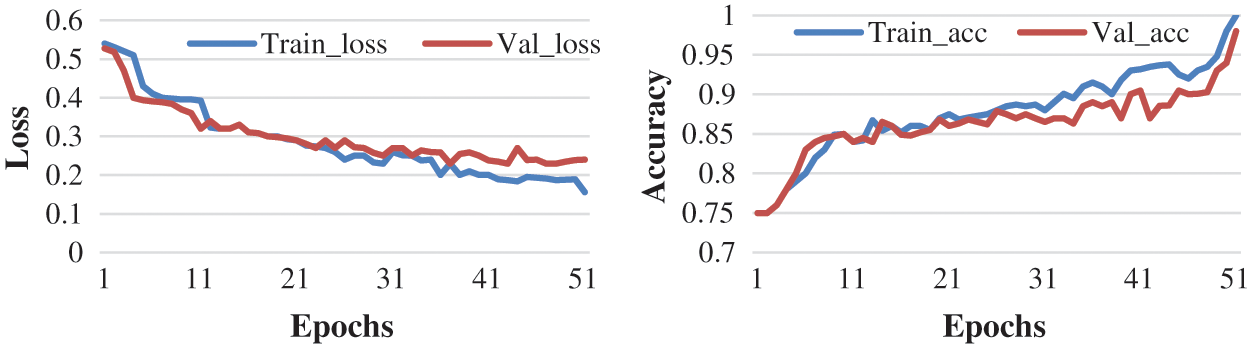

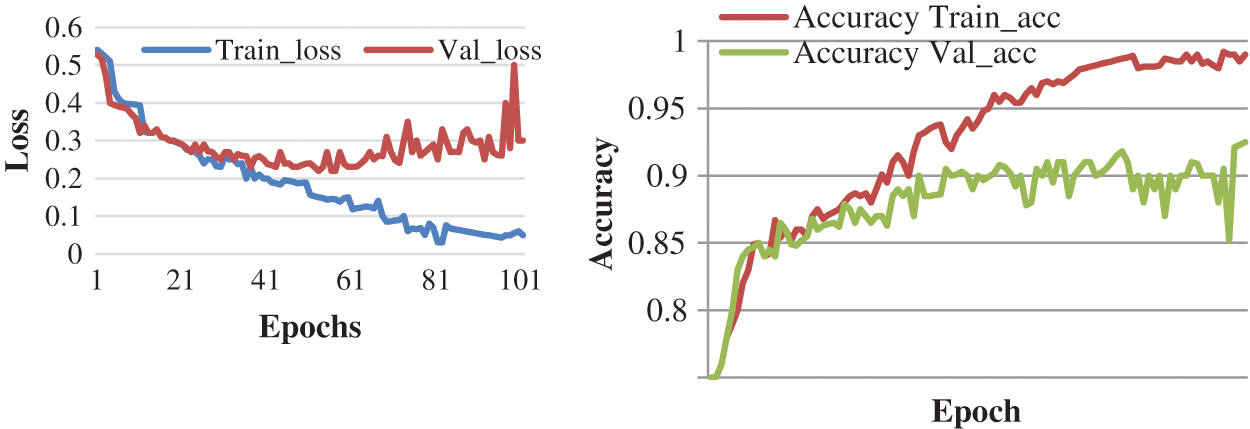

Here, the experiment is conducted with 50 and 100 epochs. Fig. 8 shows the test with 50 epochs and Fig. 9 shows the test with 100 epochs, both with a learning rate of 0.01. They show accuracy rates of 98.12% and 98.12%, respectively.

Figure 8: Accuracy and loss with learning rate 0.01

Figure 9: Accuracy and loss with learning rate 0.01

Based on the assessment performed, a more precise result can be obtained with a higher learning rate. Tab. 4 presents the result analysis based on the experiments.
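The accuracies quoted in the text for Figs. 4–9 can be collected in a small lookup table to compare settings. The sketch below uses only those six reported values; the helper names are hypothetical, and the table is a convenience for reading the results rather than a reproduction of Tab. 4.

```python
# Accuracy (%) reported in the text for each (learning rate, epochs) setting.
reported_accuracy = {
    (0.0001, 50): 98.02,
    (0.0001, 100): 98.00,
    (0.001, 50): 98.07,
    (0.001, 100): 98.25,
    (0.01, 50): 98.12,
    (0.01, 100): 98.12,
}

def best_setting(results):
    """Return the (learning_rate, epochs) pair with the highest accuracy."""
    return max(results, key=results.get)

def mean_by_learning_rate(results):
    """Average the accuracy over epoch counts for each learning rate."""
    grouped = {}
    for (lr, _epochs), acc in results.items():
        grouped.setdefault(lr, []).append(acc)
    return {lr: sum(v) / len(v) for lr, v in grouped.items()}

print(best_setting(reported_accuracy))
for lr, acc in sorted(mean_by_learning_rate(reported_accuracy).items()):
    print(f"lr={lr}: mean accuracy {acc:.2f}%")
```

All six settings fall within a quarter of a percentage point of each other, so differences between them should be read with caution.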

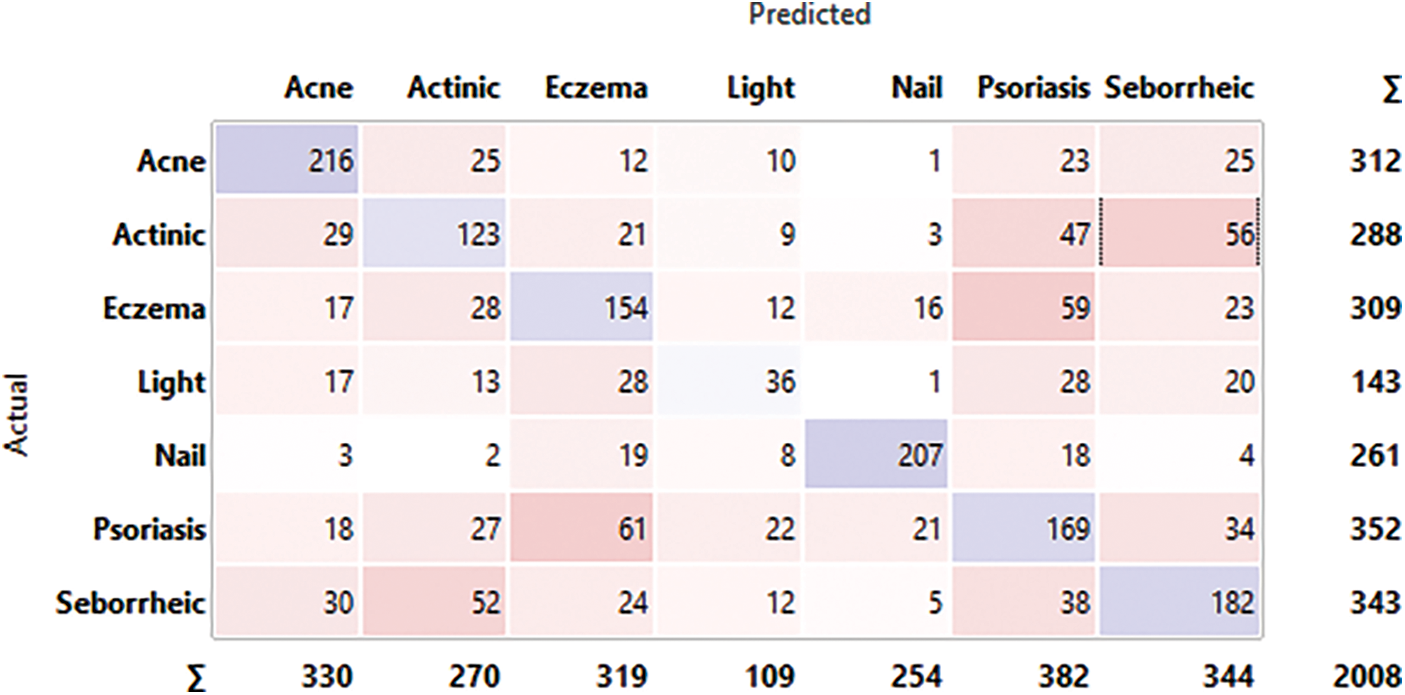

The confusion matrix and linear distribution for the proposed technique are shown in Fig. 10:

Figure 10: Confusion matrix
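Accuracy, precision, recall, and F1-Score can all be derived from a confusion matrix. The sketch below shows the standard per-class computation; the 3 × 3 matrix is a made-up example, not the matrix of Fig. 10, and the function name is hypothetical.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    metrics = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, true class differs
        fn = sum(cm[c]) - tp                       # true c, predicted otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append((precision, recall, f1))
    return accuracy, metrics

# Hypothetical confusion matrix for three classes (rows: true, columns: predicted).
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 10]]
accuracy, metrics = per_class_metrics(cm)
print(f"accuracy = {accuracy:.2f}")
for c, (p, r, f1) in enumerate(metrics):
    print(f"class {c}: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```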

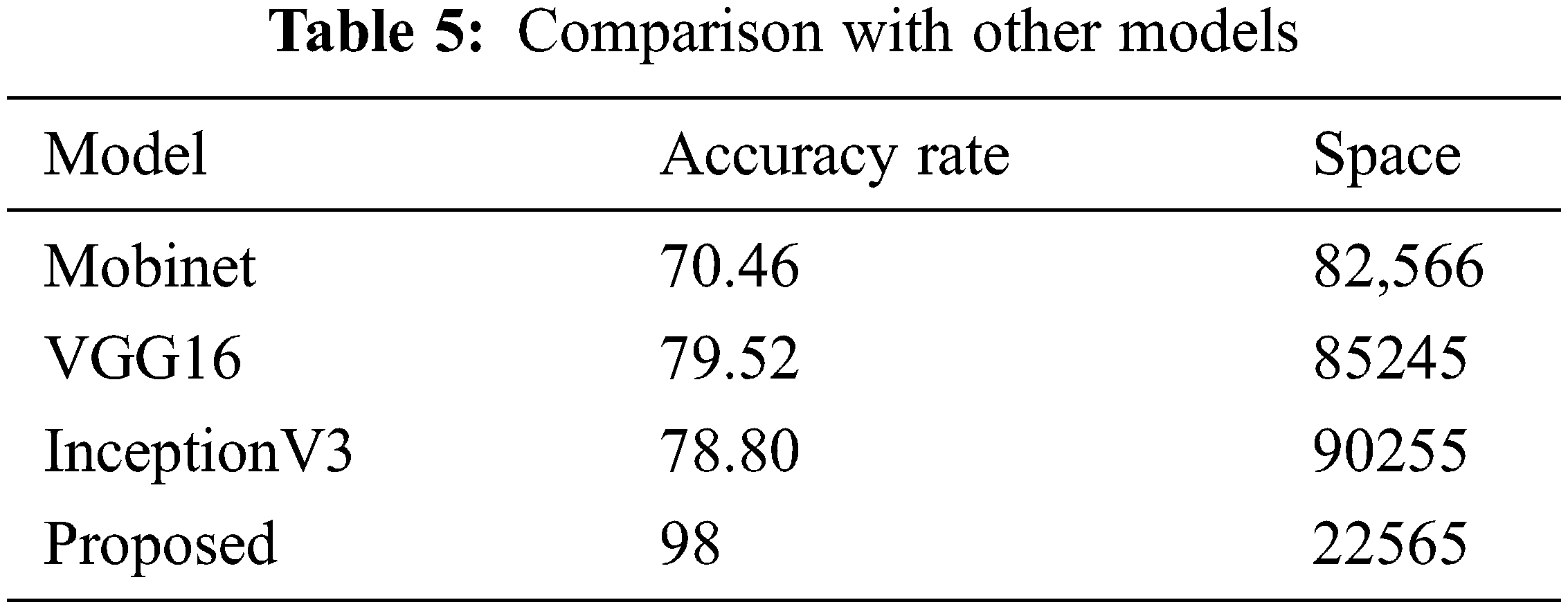

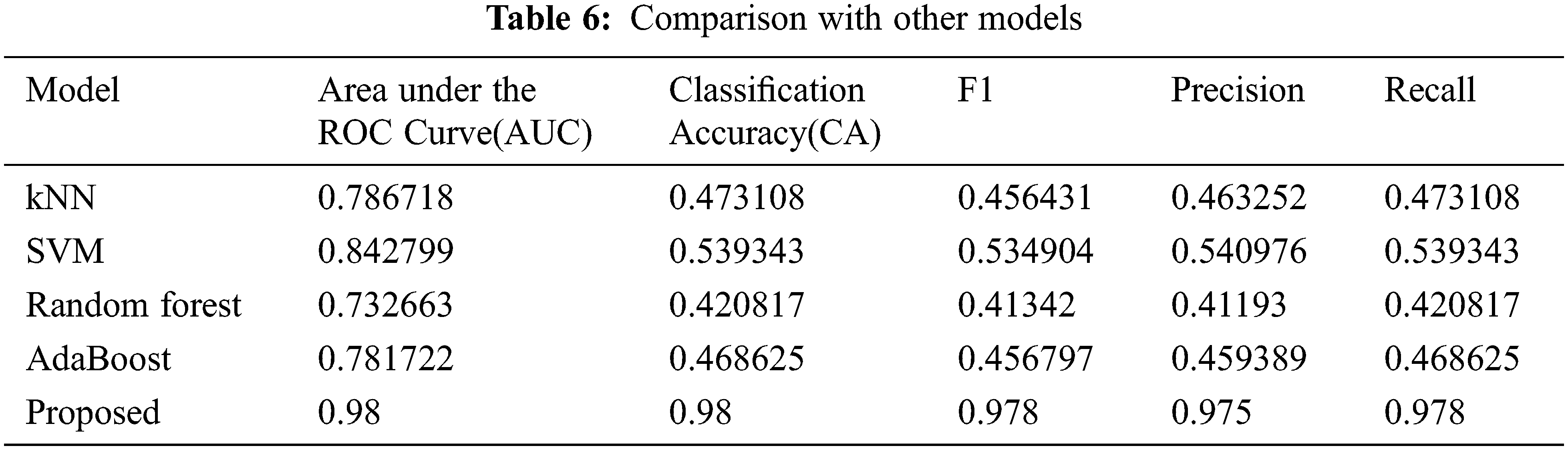

The proposed model is compared with other models, and the results are presented in Tabs. 5 and 6. Tab. 5 and Fig. 11 describe the comparison of the proposed model with other deep learning models. This shows that the proposed model has better accuracy than the other models. The space complexity of the proposed approach is the lowest among the compared models.

Figure 11: Proposed model comparison with state-of-the-art models

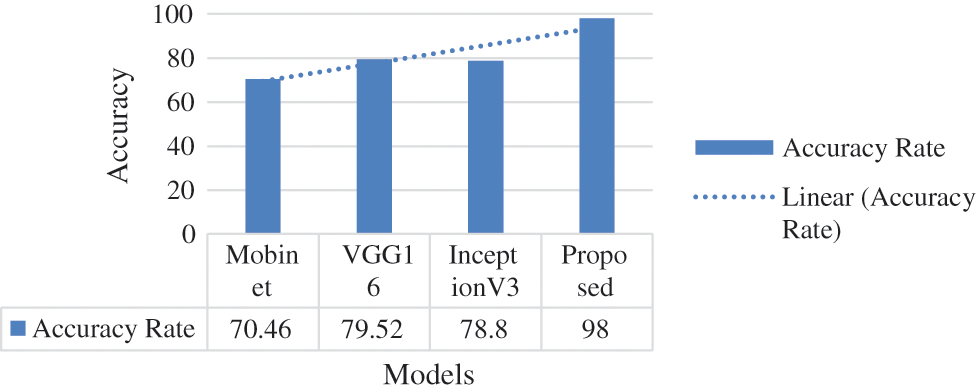

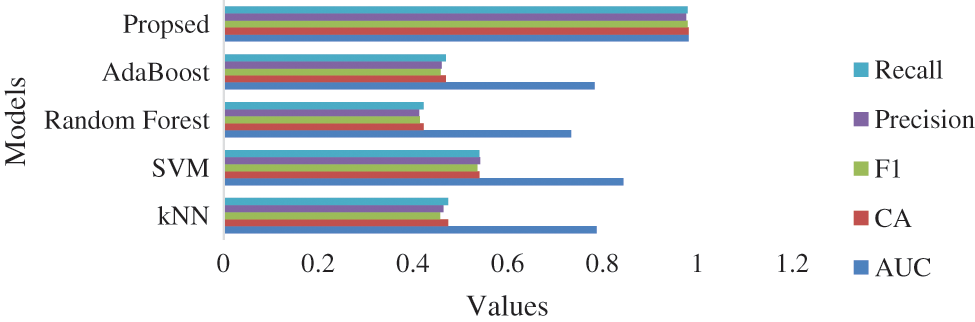

Tab. 6 and Fig. 12 show that the proposed model performs better than other models in terms of accuracy, F1-Score, precision, and recall.

Figure 12: Proposed model comparison with state-of-the-art models

The conclusion and future work are described in Section 6.

In the past few years, skin disease has been the cause of many casualties. The severity of skin disease cannot be detected with the naked eye and therefore requires a complex, costly, and long process. Automated skin disease recognition plays a crucial role in diagnosis and reduces the death rate. AI-based techniques were therefore developed to diagnose skin disease with maximum accuracy, which can help reduce the spread of skin disease. Still, dermatologists face issues with the accuracy of the available techniques. The disease detection process should be mature enough to identify the disease accurately. In this paper, a skin disease detection system is proposed to accurately diagnose skin diseases. Image processing with a neural network helps to automatically screen and diagnose skin diseases at a preliminary stage. The approach has three steps: first, the skin disease images are pre-processed; second, the important features of the images are extracted; third, the pre-processed images are analyzed at different stages of the CNN model. The proposed approach is simple and fast and achieves an accuracy of up to 98% across different disease types. In the future, the plan is to develop a mobile application that takes skin images as input and provides complete details of the disease based on the analysis.

Funding Statement: This work was supported by Taif University Researchers Supporting Project Number (TURSP-2020/114), Taif University, Taif, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present research.

1. T. R. Gadekallu, D. S. Rajput, M. Reddy, K. Lakshmanna, S. Bhattacharya et al., “A novel PCA–whale optimization-based deep neural network model for classification of tomato plant diseases using GPU,” Journal of Real-Time Image Processing, vol. 18, no. 4, pp. 1383–1396, 2021.

2. P. Kaur and V. Gautam, “Plant biotic disease identification and classification based on leaf image: A review,” in Proc. of 3rd Int. Conf. on Computing Informatics and Network (ICCIN), pp. 597–610, Springer Singapore, 2021.

3. P. Kaur and V. Gautam, “Research patterns and trends in classification of biotic and abiotic stress in plant leaf,” Materials Today: Proceedings, vol. 45, pp. 4377–4382, 2021.

4. N. K. Trivedi, V. Gautam, A. Anand, H. M. Aljahdali, S. G. Villar et al., “Early detection and classification of tomato leaf disease using high-performance deep neural network,” Sensors, vol. 21, no. 23, pp. 7987, 2021.

5. P. Kaur and V. Gautam, “Plant biotic disease identification and classification based on leaf image: A review,” in Proc. of 3rd Int. Conf. on Computing Informatics and Networks, Delhi, India, pp. 597–610, 2021.

6. P. Kaur, S. Harnal, R. Tiwari, S. Upadhyay, S. Bhatia et al., “Recognition of leaf disease using hybrid convolutional neural network by applying feature reduction,” Sensors, vol. 22, no. 2, pp. 1–16, 2022.

7. V. Gautam and J. Rani, “Smart solution for leaf stress detection and classification a research pattern,” Materials Today: Proceedings, vol. 2022, pp. 1–14, 2022 (Accepted).

8. A. M. Mishra and V. Gautam, “Weed species identification in different crops using precision weed management: A review,” in Proc. of CEUR Workshop, Kurukshetra, India, pp. 180–194, 2021.

9. A. M. Mishra and V. Gautam, “Monocots and dicot weeds growth phases using deep convolutional neural network,” Solid State Technology, vol. 63, no. 5, pp. 1–9, 2021.

10. A. M. Mishra, Y. Shahare and V. Gautam, “Analysis of weed growth in rabi crop agriculture using deep convolutional neural networks,” Journal of Physics: Conference Series, vol. 2070, no. 1, pp. 012101, 2021.

11. A. M. Mishra, S. Harnal, K. Mohiuddin, V. Gautam, O. A. Nasr et al., “A deep learning-based novel approach for weed growth estimation,” Intelligent Automation and Soft Computing, vol. 31, no. 2, pp. 1157–1172, 2022.

12. Y. Shahare and V. Gautam, “Soil nutrient assessment and crop estimation with machine learning method: A survey,” in Proc. Cyber Intelligence and Information Retrieval, Kolkata, West Bengal, India, pp. 253–266, 2022.

13. Y. Shahare and V. Gautam, “Improving and prediction of efficient soil fertility by classification and regression techniques,” Solid State Technology, vol. 63, no. 5, pp. 9571–9580, 2020.

14. T. R. Gadekallu, M. Alazab, R. Kaluri, P. K. R. Maddikunta, S. Bhattacharya et al., “Hand gesture classification using a novel CNN-crow search algorithm,” Complex & Intelligent Systems, vol. 7, no. 4, pp. 1855–1868, 2021.

15. P. N. Srinivasu, A. K. Bhoi, R. H. Jhaveri, G. T. Reddy and M. Bilal, “Probabilistic deep q network for real-time path planning in censorious robotic procedures using force sensors,” Journal of Real-Time Image Processing, vol. 18, no. 5, pp. 1773–1785, 2021.

16. M. S. Arifin, M. G. Kibria, A. Firoze, M. A. Amini and H. Yan, “Dermatological disease diagnosis using color-skin images,” in Proc. of International Conf. on Machine Learning and Cybernetics, Xi’an, China, vol. 5, pp. 1675–1680, 2012.

17. R. Yasir, M. A. Rahman and N. Ahmed, “Dermatological disease detection using image processing and artificial neural network,” in Proc. of Int. Conf. on Electrical and Computer Engineering, Dhaka, Bangladesh, pp. 687–690, 2014.

18. A. Santy and R. Joseph, “Segmentation methods for computer aided melanoma detection,” in Proc. of Global Conf. on Communication Technologies, Thuckalay, India, pp. 490–493, 2015.

19. V. Zeljkovic, C. Druzgalski, S. Bojic-Minic, C. Tameze and P. Mayorga, “Supplemental melanoma diagnosis for darker skin complexion gradients,” in Proc. of Pan American Health Care Exchanges, Santiago, Chile, pp. 1–8, 2015.

20. R. Suganya, “An automated computer aided diagnosis of skin disease detection and classification for dermoscopy images,” in Proc. of Int. Conf. on Recent Trends in Information Technology, Chennai, India, pp. 1–5, 2016.

21. M. N. Alam, T. T. K. Munia, K. Tavakolian, F. Vasefi, N. MacKinnon et al., “Automatic detection and severity measurement of eczema using image processing,” in Proc. of Annual Int. Conf. of the IEEE Engineering in Medicine and Biology Society (EMBC) IEEE, Florida, USA, pp. 1365–1368, 2016.

22. D. D. Miller and E. W. Brown, “Artificial intelligence in medical practice: The question to the answer,” The American Journal of Medicine, vol. 131, no. 2, pp. 129–133, 2018.

23. S. Jha and E. J. Topol, “Adapting to artificial intelligence: Radiologists and pathologists as information specialists,” Journal of the American Medical Association (JAMA), vol. 316, no. 22, pp. 2353–2354, 2016.

24. L. C. D. Guzman, R. P. Maglaque, V. M. B. Torres, S. P. A. Zapido and M. I. I. O. Cordel, “Design and evaluation of a multi-model, multi-level artificial neural network for eczema skin disease detection,” in Proc. of 3rd Int. Conf. on Artificial Intelligence, Modelling and Simulation (AIMS), Sabah, Malaysia, pp. 42–47, 2015.

25. R. M. Aragon, W. R. Tibayan, A. Alvisor, P. G. Palisoc, G. Terte et al., “Detection of circulatory diseases through fingernails using artificial neural network,” Journal of Telecommunication, Electronic and Computer Engineering (JTEC), vol. 10, no. 1–4, pp. 181–188, 2018.

26. J. Velasco, J. Rojas, J. P. M. Ramos, H. M. Muaña and K. L. Salazar, “Health evaluation device using tongue analysis based on sequential image analysis,” International Journal of Advanced Trends in Computer Science and Engineering, vol. 8, no. 3, pp. 451–457, 2019.

27. P. S. Ramesh, “Analysis of various methods for diagnosing Alzheimer disease and their performances,” International Journal of Advanced Trends in Computer Science and Engineering, vol. 8, no. 3, pp. 755–757, 2019.

28. A. R. Bayot, L. Ann, M. S. Niño, A. D. Santiago, M. Tomelden et al., “Malignancy detection of candidate for basal cell carcinoma using image processing and artificial neural network,” De La Salle University (DLSU) Engineering e-Journal, vol. 1, no. 1, pp. 70–79, 2007.

29. N. S. A. ALEnezi, “A model for classification of skin disease using image processing techniques and neural network,” in Proc. of Int. Learning & Technology Conference, Jeddah, Saudi Arabia, pp. 106–112, 2017.

30. H. Alquran, I. A. Qasmieh, A. M. Alqudah, S. Alhammouri and E. Alawneh, “The melanoma skin cancer detection and classification using support vector machine,” in Proc. of IEEE Jordan Conf. on Applied Electrical Engineering and Computing Technologies (AEECT), Aqaba, Jordan, pp. 1–5, 2017.

31. X. Zhang, S. Wang, J. Liu and C. Tao, “Computer-aided diagnosis of four common cutaneous diseases using deep learning algorithm,” in Proc. of IEEE Int. Conf. on Bioinformatics and Biomedicine (BIBM), Kansas City, MO, USA, pp. 1304–1306, 2017.

32. M. Z. Asghar, M. J. Asghar, S. M. Saqib, B. Ahmad, S. Ahmad et al., “Diagnosis of skin diseases using online expert system,” International Journal of Computer Science and Information Security, vol. 9, no. 6, pp. 323–325, 2011.

33. R. Yasir, M. A. Rahmana and N. Ahmed, “Dermatological disease detection using image processing and artificial neural network,” International Journal for Research in Applied Science & Engineering Technology (IJRASET), vol. 8, no. 9, pp. 1074–1079, 2014.

34. A. Naser, S. Samy and A. N. Akkila, “A proposed expert system for diagnosis of skin diseases,” International Journal of Applied Research, vol. 4, no. 12, pp. 168–1693, 2015.

35. L. G. Kabari and F. S. Bakpo, “Diagnosing skin diseases using an artificial neural network,” in Proc. of Int. Conf. on Adaptive Science & Technology, Accra, Ghana, pp. 187–191, 2009.

36. A. M. Shamsul, M. G. Kibria, A. Firoze, M. A. Amini and H. Yan, “Dermatological disease diagnosis using colour skin images,” in Proc. of Int. Conf. on Machine Learning and Cybernetics, Xi’an, China, vol. 5, pp. 1675–1680, 2012.

37. A. Rahman, Shuzlina, A. K. Norhan, M. Yusoff, A. Mohamed and S. Mutalib, “Dermatology diagnosis with feature selection methods and artificial neural network,” in Proc. of IEEE EMBS Int. Conf. on Biomedical Engineering and Sciences, Langkawi, Malaysia, pp. 371–376, 2012.

38. T. Florence, E. Mwebaze and F. Kiwanuka, “An image-based diagnosis of virus and bacterial skin infections,” in Proc. of Int. Conf. on Complications in Interventional Radiology, Vienna, Austria, pp. 1–7, 2011.

39. D. A. Okuboyejo, O. O. Olugbara and S. A. Odunaike, “Automating skin disease diagnosis using image classification,” in Proc. of World Congress on Engineering and Computer Science, San Francisco, USA, vol. 2, pp. 850–854, 2013.

40. J. Velasco, P. Cherry, W. A. Jean, A. Jonathan, S. C. John et al., “A Smartphone-based skin disease classification using mobilenet,” International Journal of Advanced Trends in Computer Science and Engineering, vol. 8, no. 10, pp. 2–8, 2019.

41. A. N. S. ALKolifi, “A method of skin disease detection using image processing and machine learning,” Procedia Computer Science, vol. 163, pp. 85–92, 2019.

42. K. V. Bannihatti, S. S. Kumar and V. Saboo, “Dermatological disease detection using image processing and machine learning,” in Proc. of Third Int. Conf. on Artificial Intelligence and Pattern Recognition (AIPR), Lodz, Poland, pp. 88–93, 2016.

43. S. Kolkur and D. R. Kalbande, “Survey of texture-based feature extraction for skin disease detection,” in Proc. of Int. Conf. ICT Business, Indore, India, pp. 1–6, 2016.

44. S. Akyeramfo-Sam, A. A. Philip, D. Yeboah, N. C. Nartey and I. K. Nti, “A Web-based skin disease diagnosis using convolution neural networks,” International Journal Information Technology and Computer Science, vol. 11, no. 11, pp. 54–60, 2019.

45. V. R. Balaji and S. T. Suganthi, “Skin disease detection and segmentation using dynamic graph cut algorithm and classification through naive Bayes classifier,” Measurement Journal, vol. 163, no. 15, pp. 107922, 2020.

46. S. S. Mohammed and J. M. Al-Tuwaijari, “Skin disease classification system based on machine learning technique: A survey,” IOP Conference Series: Materials Science and Engineering, vol. 1076, no. 1, pp. 012045, 2021.

47. J. Steppan and S. Hanke, “Analysis of skin lesion images with deep learning,” arXiv preprint arXiv:2101.03814, pp. 1–8, 2021.

48. E. Goceri, “Deep learning-based classification of facial dermatological disorders,” Computers in Biology and Medicine, vol. 128, pp. 104–118, 2021.

49. M. Monisha, A. Suresh and M. R. Rashmi, “Artificial intelligence-based skin classification using gmm,” Journal of Medical Systems, vol. 43, no. 1, pp. 1–8, 2018.

50. F. Liu, J. Yan, W. Wang, J. Liu, J. Li et al., “Scalable skin disease multi-classification recognition system,” Computers, Materials & Continua, vol. 62, no. 2, pp. 801–816, 2020.

51. E. Goceri, “Automated skin cancer detection: Where we are and the way to the future,” in Proc. of Int. Conf. on Telecommunications and Signal Processing (TSP), Europe, Middle East and Africa, pp. 48–51, 2021.

52. R. Javed, M. S. M. Rahim, T. Saba, S. M. Fati, A. Rehman et al., “Statistical histogram decision based contrast categorization of skin disease datasets dermoscopic images,” Computers, Materials & Continua, vol. 67, no. 2, pp. 2337–2352, 2021.

53. T. Vijayakumar, “Comparative study of capsule neural network in various applications,” Journal of Artificial Intelligence, vol. 1, no. 1, pp. 19–27, 2021.

54. E. Göçeri, “Convolution neural network-based desktop applications to classify dermatological diseases,” in Proc. of Int. Conf. on Image Processing, Applications and Systems (IPAS), Genova, Italy, IEEE, pp. 138–143, 2020. [Google Scholar]

55. E. Goceri, “Skin disease diagnosis from photographs using deep learning,” in Proc. of ECCOMAS Thematic Conf. on Computational Vision and Medical Image Processing, Porto, Portugal, pp. 239–246, 2019. [Google Scholar]

56. P. Delisle, B. A. Robitaille, C. Desrosiersand and H. Lombert, “Realistic image normalization for multi-domain segmentation,” Medical Image Analysis, vol. 74, pp. 102191, 2021. [Google Scholar]

57. E. Goceri, “Fully automated and adaptive intensity normalization using statistical features for brain MR images,” Celal Bayar University Journal of Science, vol. 14, no. 1, pp. 125–134, 2018. [Google Scholar]

58. Y. H. Chang, K. Chin, G. Thibault, J. Eng, E. Burlingame et al., “RESTORE: Robust intensity normalization method for multiplexed imaging,” Communications Biology, vol. 3, no. 1, pp. 1–9, 2019. [Google Scholar]

59. E. Goceri, “Intensity normalization in brain mr images using spatially varying distribution matching,” in Proc. of Int. Conf. on Computer Graphics, Visualization, Computer Vision and Image Processing (CGVCVIP 2017), Roorkee, India, pp. 300–304, 2017. [Google Scholar]

60. S. Subha, D. J. W. Wise, S. Srinivasan, M. Preethamand and B. Soundarlingam, “Detection and differentiation of skin cancer from rashes,” in Proc. of 2020 Int. Conf. on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, pp. 389–393, 2020. [Google Scholar]

61. T. Alkarakatly, S. Eidhah, M. Al-Sarawani, A. Al-Sobhiand and M. Bilal, “Skin lesions identification using deep convolutional neural network,” in Proc. 2019 Int. Conf. on Advances in the Emerging Computing Technologies (AECT), Al Madinah Al Munawwarah, Saudi Arabi, pp. 1–5, 2020. [Google Scholar]

62. A. Mohamed, W. A. Mohamedand and A. H. Zekry, “Deep learning can improve early skin cancer detection,” International Journal of Electronics and Telecommunications, vol. 65, no. 3, pp. 507–512, 2019. [Google Scholar]

63. M. A. Kadampur and S. Al Riyaee, “Skin cancer detection: Applying a deep learning-based model driven architecture in the cloud for classifying dermal cell images,” Informatics in Medicine Unlocked, vol. 18, pp. 100282–100288, 2020. [Google Scholar]

64. B. Vinay, P. J. Shah, V. Shekarand and H. Vanamala, “Detection of melanoma using deep learning techniques,” in Proc. 2020 Int. Conf. on Computation, Automation and Knowledge Management (ICCAKM), noida,India, pp. 391–394, 2020. [Google Scholar]

65. F. Xiao and Q. Wu, “Visual saliency based global–local feature representation for skin cancer classification,” IET Image Processing, vol. 14, no. 10, pp. 2140–2148, 2020. [Google Scholar]

66. J. Daghrir, L. Tlig, M. Bouchouichaand and M. Sayadi, “Melanoma skin cancer detection using deep learning and classical machine learning techniques: A hybrid approach,” in Proc.2020 5th Int. Conf. on Advanced Technologies for Signal and Image Processing (ATSIP), Sfax, Tunisia, pp. 1–5, 2020. [Google Scholar]

67. R. Ashraf, S. Afzal, A. U. Rehman, S. Gul and J. Baber et al., “Region-of-interest based transfer learning assisted framework for skin cancer detection,” IEEE Access, vol. 8, pp. 147858–147871, 2020. [Google Scholar]

68. U. -O. Dorj, K. -K. Lee, J. -Y. Choiand and M. Lee, “The skin cancer classification using deep convolutional neural network,” Multimedia Tools and Applications, vol. 77, no. 8, pp. 9909–9924, 2018. [Google Scholar]

69. M. M. I. Rahi, F. T. Khan, M. T. Mahtab, A. A. Ullah, M. G. R. Alam et al., “Detection of skin cancer using deep neural networks,” in Proc. 2019 IEEE Asia-Pacific Conf. on Computer Science and Data Engineering (CSDE), Melbourne, Australia, pp. 1–7, 2019. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |