Intelligent Automation & Soft Computing
DOI:10.32604/iasc.2020.012159

Article

Multi-Focus Image Region Fusion and Registration Algorithm with Multi-Scale Wavelet

1Guangdong Polytechnic of Science and Technology, Guangzhou, 510663, China

2Hunan Agricultural University, Changsha, 410125, China

3Shenzhen Intelligent Sichuang Technology Co., Ltd., Shenzhen, 518015, China

*Corresponding Author: Hai Liu. Email: liuhaigpst@gmail.com

Received: 17 June 2020; Accepted: 29 July 2020

Abstract: To address the poor brightness control and low registration accuracy of traditional multi-focus image registration, a wavelet multi-scale method for multi-focus image region fusion and registration is proposed. The multi-scale Retinex algorithm is used to enhance the image, wavelet decomposition with similarity analysis is used for image interpolation, and the empirical mode decomposition (EMD) method is used to decompose the multi-focus image. Finally, the image is reconstructed and the multi-focus image registration is realized. A comparative experiment was designed to evaluate the multi-focus image fusion registration performance of different methods. Experimental results show that the proposed method keeps the image brightness within a reasonable range, that the root-mean-square error of the proposed image region fusion registration algorithm is less than 5, and that the image registration accuracy is high. The method can therefore achieve multi-focus image region fusion registration.

Keywords: Multi-scale; multi-focus image; fusion registration; wavelet decomposition

1 Introduction

Once the focus of an optical imaging system is fixed, only the points in space that lie within the depth of field are imaged clearly. In practice, the objects in a scene lie at different distances from the imaging lens, so a single image cannot be sharp for all of them. To obtain a clear image of the full scene, it is necessary to focus on the different objects in turn, capture an image for each, and fuse these images together. This is multi-focus image fusion. Image fusion is an important branch of information fusion that combines sensor technology, image processing, signal processing, computing, and artificial intelligence [1]. It synthesizes the images or image sequences of a specific scene, acquired by two or more sensors at the same time or at different times, to generate a new interpretation of the scene [2]. Image fusion exploits the complementary strengths of the individual sensors and gives a more complete description of the target or scene, which helps to locate, identify, and explain physical phenomena and events [3]. Its main advantages are improved system stability and reliability, improved spatial resolution, and reduced performance requirements for any single sensor [4]. In the medical field, the technology can be used to fuse multi-modal medical images to improve the accuracy of diagnosis and treatment. In the military field, it can be used for situation estimation, tactical reconnaissance, and identification. In remote sensing, it can be used to interpret and classify aerial and satellite images [5,6]. Registration is an important part of image fusion. Current image fusion registration algorithms cannot keep the brightness of the image within a reasonable range, and their registration accuracy is low [7].

Jia [8] proposed a high-precision image registration method. Image edge lines are extracted with the SUSAN algorithm and, according to the geometric center of gravity, divided into closed and non-closed edge lines. The edge features of the image are extracted by calculating the extreme points of the image edge and are described with the OLB descriptor. Digital image registration is then achieved according to the Hamming distance. However, the algorithm cannot keep the brightness of the image within a reasonable range. Li et al. [9] proposed a multi-band image fusion registration method in which the optimal fusion performance is obtained by finding the optimal registration parameters. A definition index based on the region of interest of the human eye is used as the evaluation function to improve the registration process, and the joint optimization is solved with a particle swarm optimization algorithm to realize image registration. However, the accuracy of the image registration is low. Hou et al. [10] proposed a SURF-based image registration and fusion algorithm. The distance measure function used for rough matching in the SURF algorithm is improved to increase the registration speed, image registration is achieved with the RANSAC algorithm, and wavelet transform fusion is applied to the registered images. However, the algorithm cannot control the brightness of the image reasonably. Chen et al. [11] proposed an image fusion registration algorithm based on FFST and the contrast of directional characteristics. In this algorithm, a sparsity constraint is applied in the optimization function of the basic nonnegative matrix decomposition, the high-frequency subband coefficients are selected by the contrast of joint directional characteristics, and image registration is obtained with the fast finite shear wave inverse transform. However, the algorithm cannot complete the image registration accurately.

To address the problems of the current methods, a multi-focus image region fusion and registration algorithm with multi-scale wavelet is proposed in this paper. The paper is organized as follows.

1. The multi-scale Retinex algorithm is used to enhance the image and improve the recognition rate of the image.

2. Image interpolation is performed with the wavelet transform and a similarity technique. The EMD method is used for image decomposition, and the multi-focus image is reconstructed with different weighting coefficients to achieve image registration.

3. Experimental results and analysis. The overall effect of the proposed algorithm is verified in two respects: the image brightness and the root-mean-square error of image interpolation.

4. Conclusions. The research works are summarized, and the future development direction is presented.

2 Image Enhancement with Multi-Scale Retinex Algorithm

The purpose of image enhancement is to improve image quality through image processing. To improve the recognition rate of the image and the accuracy of image registration, the current image enhancement algorithm is improved [12]. The input color image is decomposed into three grayscale images, one for each color channel. Each grayscale image is processed with a Gaussian function as the environment function. The environment function is defined in terms of the standard deviation of the Gaussian function (Eq. (1)) and satisfies a normalization condition (Eq. (2)).

The multi-scale Retinex algorithm can compress the dynamic range of the image and ensure the color consistency of the image [9]. The algorithm is defined per spectrum band: the subscript denotes the spectrum band, and the remaining quantities are the number of spectrum bands, the grayscale image, the color image, the weight coefficient associated with each environment function, and the number of environment functions. A different standard deviation is selected for each environment function to control the scale of its range. The result is the multi-scale Retinex output for each channel of the color image. The standard deviations at the different scales are obtained by using Eqs. (1) and (2).

The offset method is then used to modify the pixels of the output image. The modification is controlled by a gain coefficient, an offset, and an image enhancement control coefficient. The modified gray values are mapped to the gray range of the display by using Eq. (5), which relates the output image to the maximum and minimum gray values of the modified image, to obtain the enhanced image.

On the basis of the Gaussian function as the environment function, the multi-scale Retinex algorithm is used for image enhancement. The offset method is used to modify the pixels of the output image, and the modified result is mapped to the grayscale of the display to obtain the enhanced image.
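The equations of this section are not reproduced here, so the following is only a minimal, dependency-free sketch of a common textbook form of multi-scale Retinex, not necessarily the authors' exact formulation. It assumes a Gaussian surround normalised to sum to one, equal weights over three scales, and a plain gain/offset correction followed by a linear stretch to the display range. The function names, the default scales `(1.0, 2.0, 4.0)`, and the omission of a separate enhancement control coefficient are all assumptions made for illustration.

```python
import math

def gaussian_kernel(sigma):
    """1-D Gaussian kernel, normalised so that its weights sum to 1."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(t * t) / (2.0 * sigma * sigma))
               for t in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(image, sigma):
    """Separable Gaussian blur with edge replication (image = list of rows)."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    height, width = len(image), len(image[0])

    def clamp(v, hi):
        return min(max(v, 0), hi)

    # Horizontal pass, then vertical pass.
    rows = [[sum(kernel[k + radius] * image[y][clamp(x + k, width - 1)]
                 for k in range(-radius, radius + 1))
             for x in range(width)] for y in range(height)]
    return [[sum(kernel[k + radius] * rows[clamp(y + k, height - 1)][x]
                 for k in range(-radius, radius + 1))
             for x in range(width)] for y in range(height)]

def multi_scale_retinex(channel, sigmas=(1.0, 2.0, 4.0), weights=None):
    """Weighted sum over scales of log(I) - log(Gaussian * I) for one channel."""
    weights = weights or [1.0 / len(sigmas)] * len(sigmas)
    height, width = len(channel), len(channel[0])
    shifted = [[p + 1.0 for p in row] for row in channel]  # avoid log(0)
    out = [[0.0] * width for _ in range(height)]
    for sigma, w in zip(sigmas, weights):
        smooth = blur(shifted, sigma)
        for y in range(height):
            for x in range(width):
                out[y][x] += w * (math.log(shifted[y][x]) - math.log(smooth[y][x]))
    return out

def stretch_to_display(values, gain=1.0, offset=0.0, top=255.0):
    """Apply gain and offset, then map [min, max] linearly onto [0, top]."""
    adjusted = [[gain * v + offset for v in row] for row in values]
    lo = min(min(row) for row in adjusted)
    hi = max(max(row) for row in adjusted)
    if hi == lo:
        return [[0.0 for _ in row] for row in adjusted]
    return [[top * (v - lo) / (hi - lo) for v in row] for row in adjusted]
```

For a color image, each of the three channels would be passed through `multi_scale_retinex` and `stretch_to_display` separately. A practical implementation would normally use an array library rather than nested lists.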

3 Image Region Fusion Registration

3.1 Image Interpolation after Enhancement

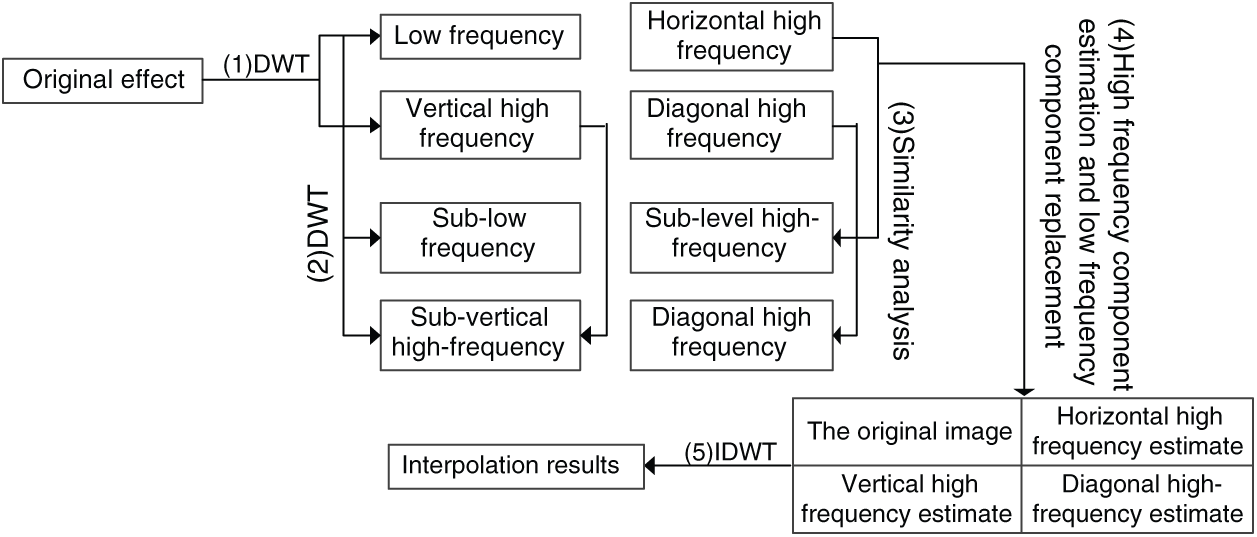

Image interpolation is a preprocessing step for image registration and fusion [13–15]. Wavelet decomposition of the original image is carried out, which yields the low-frequency approximate component and the components in the horizontal, vertical, and diagonal directions. A further wavelet decomposition is then implemented to obtain the components of the next layer. A similarity analysis is carried out between the layers, and the resulting similarity parameters are used to estimate the high-frequency components of the interpolated result. The original image is used as the low-frequency component of the interpolation result, the four components are combined by the inverse wavelet transform, and the final interpolation result is obtained.

The procedure, including the discrete wavelet transform steps, is illustrated in Fig. 1. For Step (3), the similarity analysis between two adjacent layers of the same direction after wavelet decomposition is as follows.

Figure 1: Image interpolation based on wavelet transform and similarity

The components being compared in the two adjacent layers are first brought to the same size. The similarity between corresponding rows of the two components is then calculated element by element. Eq. (7) is solved for the value that minimizes the objective function, which gives the similarity coefficient between the two rows. The interpolation result of the horizontal component is then obtained row by row from this similarity coefficient.

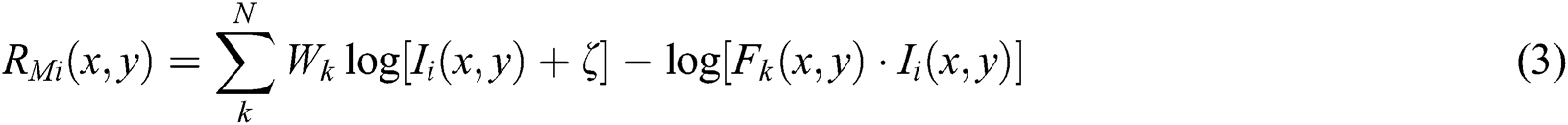
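The interpolation procedure of this section can be sketched as follows. This is a hedged illustration, not the authors' implementation: it assumes the Haar wavelet, takes a least-squares scale factor as the row similarity coefficient (the objective function of Eq. (7) is not reproduced here, so this choice is a stand-in), and all function names are hypothetical. The factor of 2 in `upsample_with_details` compensates for the gain of the orthonormal Haar low-pass band.

```python
def haar_dwt2(image):
    """One-level 2-D Haar transform of an even-sized image (list of rows).

    Returns (approx, horiz, vert, diag), each half the size of the input.
    """
    approx, horiz, vert, diag = [], [], [], []
    for y in range(0, len(image), 2):
        ra, rh, rv, rd = [], [], [], []
        for x in range(0, len(image[0]), 2):
            a, b = image[y][x], image[y][x + 1]
            c, d = image[y + 1][x], image[y + 1][x + 1]
            ra.append((a + b + c + d) / 2.0)  # local average (low frequency)
            rh.append((a + b - c - d) / 2.0)  # difference between the two rows
            rv.append((a - b + c - d) / 2.0)  # difference between the two columns
            rd.append((a - b - c + d) / 2.0)  # diagonal difference
        approx.append(ra)
        horiz.append(rh)
        vert.append(rv)
        diag.append(rd)
    return approx, horiz, vert, diag

def haar_idwt2(approx, horiz, vert, diag):
    """Inverse of haar_dwt2: rebuilds the double-size image."""
    out = []
    for ra, rh, rv, rd in zip(approx, horiz, vert, diag):
        top, bottom = [], []
        for s, h, v, d in zip(ra, rh, rv, rd):
            top += [(s + h + v + d) / 2.0, (s + h - v - d) / 2.0]
            bottom += [(s - h + v - d) / 2.0, (s - h - v + d) / 2.0]
        out += [top, bottom]
    return out

def similarity_coefficient(row_a, row_b):
    """Scale s that minimises sum((row_a[i] - s * row_b[i]) ** 2)."""
    denom = sum(v * v for v in row_b)
    if denom == 0:
        return 0.0
    return sum(u * v for u, v in zip(row_a, row_b)) / denom

def upsample_with_details(image, horiz, vert, diag):
    """Use `image` as the low-frequency band and invert the transform."""
    approx = [[2.0 * p for p in row] for row in image]
    return haar_idwt2(approx, horiz, vert, diag)
```

With all-zero detail bands, `upsample_with_details` simply replicates each pixel into a 2×2 block. Supplying detail bands estimated through the similarity coefficients is what would add high-frequency content to the enlarged image.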

3.2 EMD-Based Image Registration

Image decomposition is implemented with EMD. Different weighting coefficients are used to reconstruct the multi-focus image according to the complexity of each layer, which achieves the region fusion registration of the multi-focus image.

By using EMD, the multi-focus image is decomposed into several layers of intrinsic mode function (IMF) components and a remnant. The image is enlarged with a combined interpolation on each layer: the IMF component of each layer of the enlarged image and the remnant of the enlarged image are formed from the enlarged results obtained with bilinear interpolation and bi-cubic interpolation, combined through a weighting coefficient. The enlarged image is then reconstructed from the enlarged mode functions.

The weighting coefficient determines the weight of the bi-cubic interpolation. For images with high complexity, the weighting coefficient should be set to a larger value. For a flat, slowly varying image, a smaller value should be taken. Because the difference between images is large, a single weighting coefficient applied to the whole image rarely achieves the desired effect. Instead, a different weighting coefficient is used for each layer according to its complexity, and the enlarged image is reconstructed from the EMD layers after the combined interpolation. The variance reflects the complexity of the image, so the weighting coefficient of each layer is determined by the share of that layer's variance in the sum of the variances of all layers. The last layer after EMD decomposition is the remnant, which is usually monotonous and flat and can therefore be bi-linearly interpolated. The other layers are interpolated in combination according to their weighting coefficients.

Given the wavelet decomposition coefficients of the two images to be registered, the image registration is achieved from these coefficients.
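The variance-based weighting of the EMD layers can be sketched as follows. This is a minimal illustration under stated assumptions: the layers are taken as already computed and already enlarged, the blend is assumed to be `weight * bicubic + (1 - weight) * bilinear`, and the reconstruction is the usual EMD sum of the IMF layers and the remnant. The function names are hypothetical.

```python
from statistics import pvariance

def layer_weights(layers):
    """Weight of each layer = its variance / sum of the variances of all layers."""
    variances = [pvariance([float(p) for row in layer for p in row])
                 for layer in layers]
    total = sum(variances)
    if total == 0:
        return [0.0] * len(layers)
    return [v / total for v in variances]

def blend(bilinear, bicubic, weight):
    """Combine two enlargements of one layer; `weight` is the bi-cubic share."""
    return [[weight * c + (1.0 - weight) * l for l, c in zip(row_l, row_c)]
            for row_l, row_c in zip(bilinear, bicubic)]

def reconstruct(enlarged_layers):
    """Sum the enlarged layers (IMF components plus remnant) pixel by pixel."""
    height, width = len(enlarged_layers[0]), len(enlarged_layers[0][0])
    return [[sum(layer[y][x] for layer in enlarged_layers)
             for x in range(width)] for y in range(height)]
```

A layer with a large variance receives a weight close to 1 and is therefore dominated by its bi-cubic enlargement, while a nearly flat layer falls back towards the bilinear result, which matches the rule described above.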

4 Experimental Results and Analysis

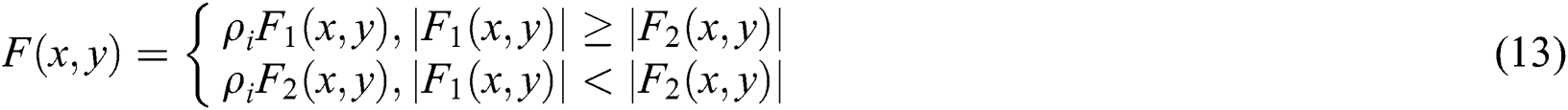

Experiments are carried out to verify the effectiveness of the proposed algorithm. The experiment platform is Simulink. The proposed wavelet multi-scale multi-focus image region fusion and registration algorithm, the multi-band image fusion and registration method, and the SURF-based image registration and fusion algorithm are applied to color image enhancement, and the gray histogram of the enhanced image is obtained for each of the three methods. The results are shown in Fig. 2.

Figure 2: Gray histograms of three image fusion and registration methods. (a) Multi-band image fusion and registration method. (b) SURF-based image registration and fusion algorithm. (c) Image region fusion registration algorithm based on wavelet multi-scale

Figs. 2a and 2b show that the gray distributions of the multi-band image fusion and registration method and of the SURF-based image registration and fusion algorithm lie in a narrow interval. The brightness in the middle and rear portions of the histogram is higher and is concentrated far from the origin of the coordinate axis, so the image enhancement result is poor. Fig. 2c shows that, after enhancement with the proposed method, the dynamic range of the gray values is clearly increased and the brightness of the image is also enhanced. The brightness of the image is kept within a reasonable range, and the image enhancement effect is better.
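The comparison above rests on gray histograms and on how wide a range of gray levels each method occupies. A minimal sketch of both quantities is given below, assuming 8-bit gray levels. The helper names are hypothetical and are not taken from the experiment platform.

```python
def gray_histogram(image, levels=256):
    """Count how many pixels fall on each gray level (values are clipped)."""
    counts = [0] * levels
    for row in image:
        for p in row:
            counts[min(max(int(p), 0), levels - 1)] += 1
    return counts

def occupied_range(counts):
    """Lowest and highest gray levels that actually occur, or None if empty."""
    used = [level for level, count in enumerate(counts) if count > 0]
    return (used[0], used[-1]) if used else None
```

A narrow occupied range corresponds to the concentrated distributions described for the two reference methods, and a wide one to the increased dynamic range described for the proposed method.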

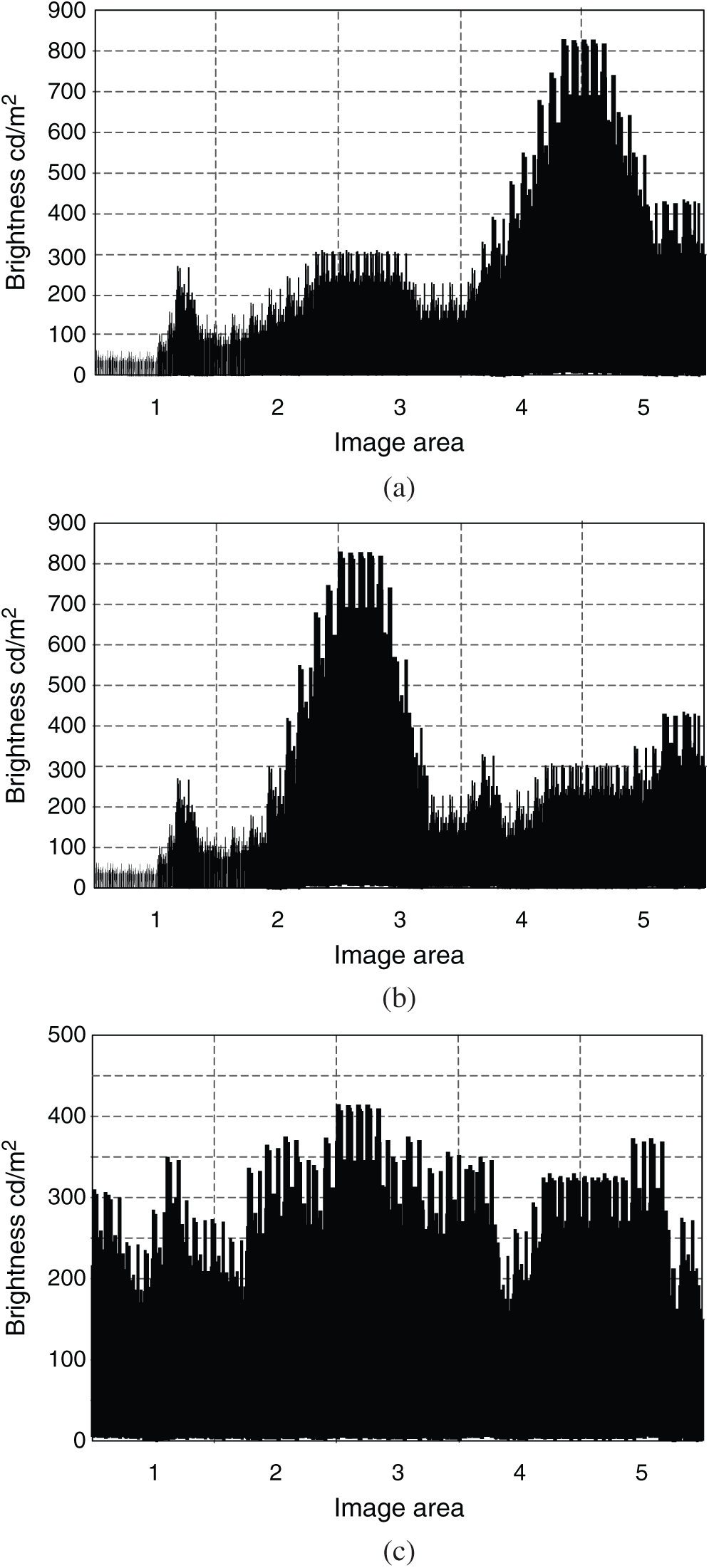

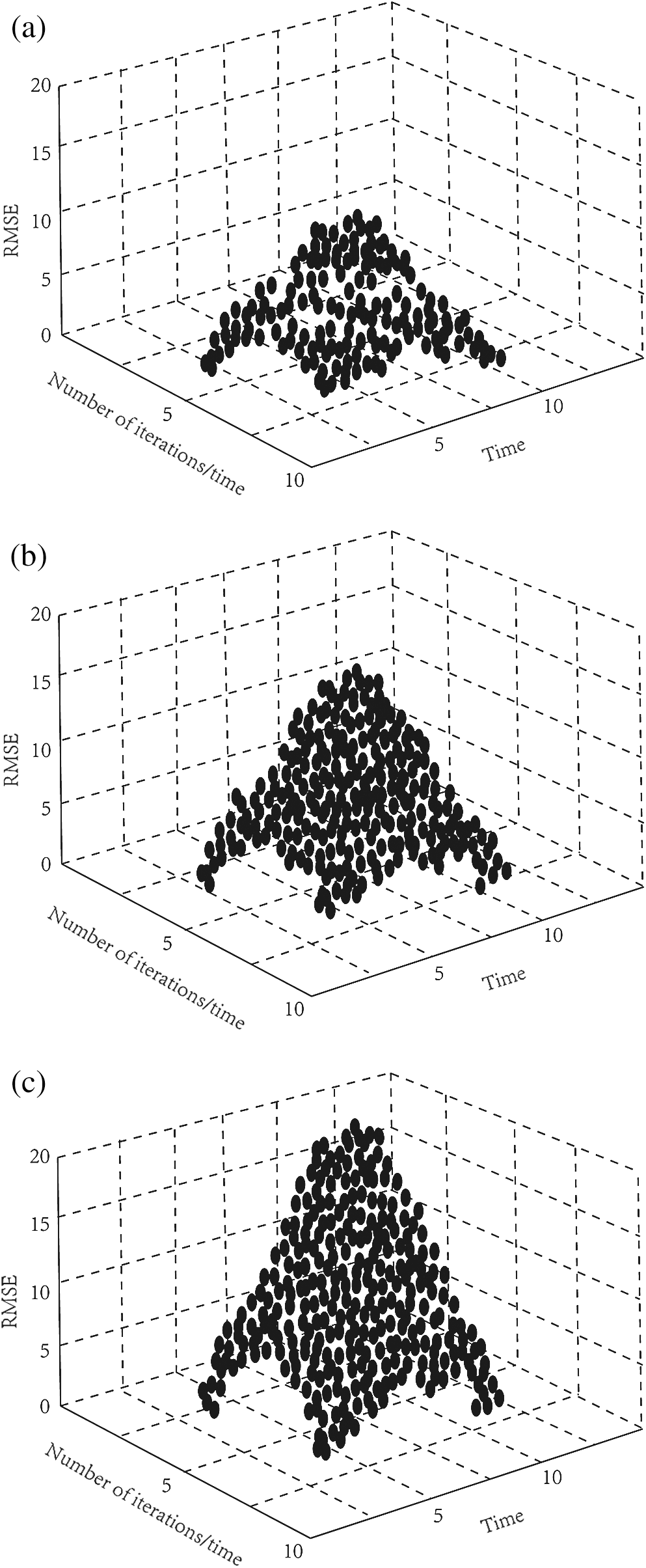

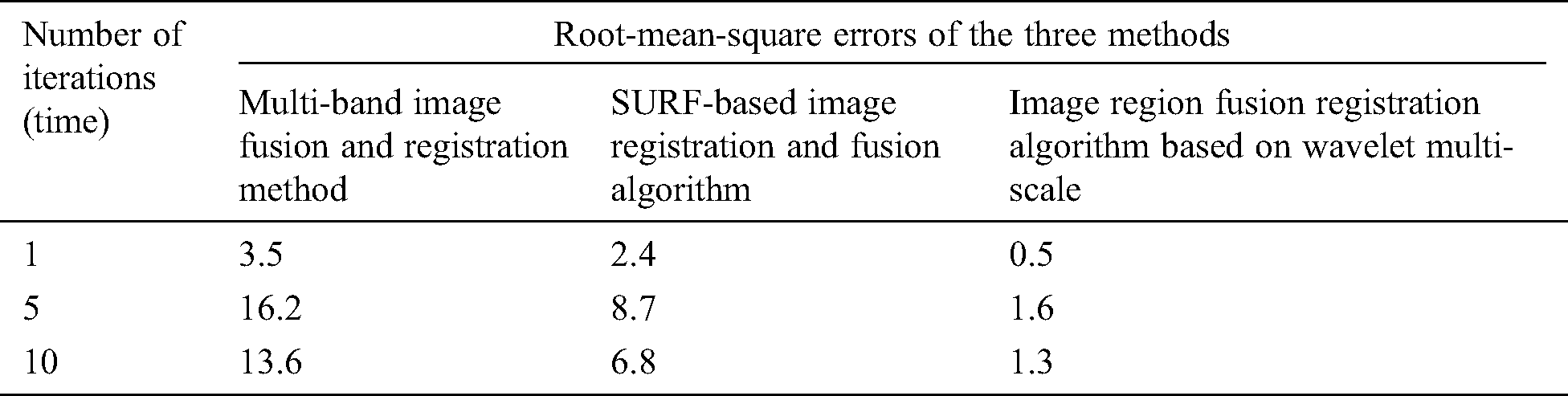

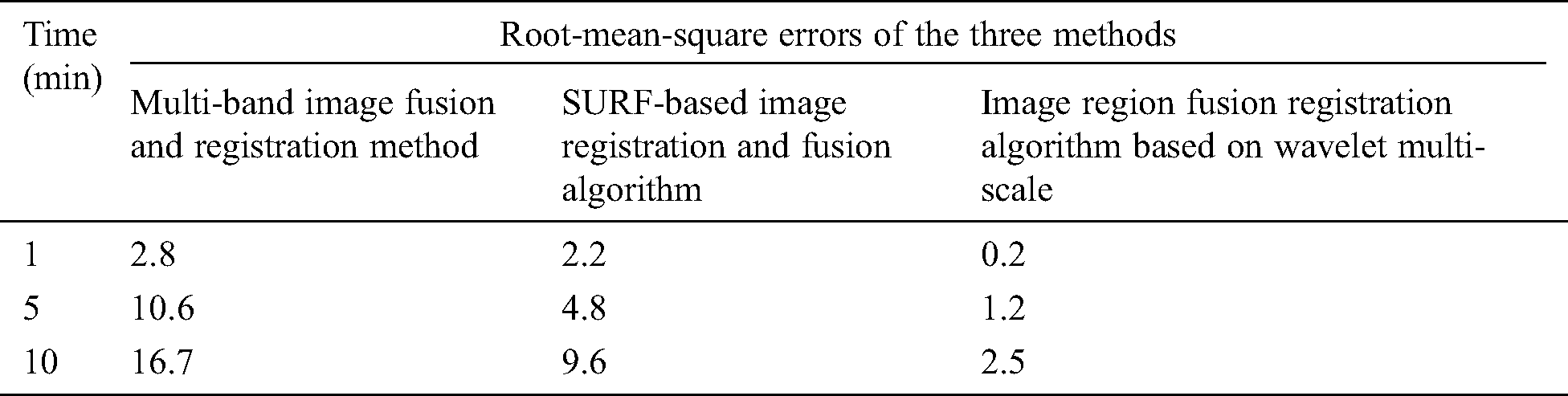

The root-mean-square errors of the three methods are compared. A smaller root-mean-square error indicates higher image registration accuracy and a better image fusion result. The results are shown in Fig. 3 and Tabs. 1 and 2.

Figure 3: Root-mean-square errors of the three methods. (a) Multi-band image fusion and registration method. (b) SURF-based image registration and fusion algorithm. (c) Image region fusion registration algorithm based on wavelet multi-scale

Table 1: Root mean square error of three methods under different iteration times

Table 2: Root mean square error of three methods at different time

Tabs. 1 and 2 and Fig. 3 show that the root-mean-square error of the multi-band image fusion and registration method reaches up to 15, that of the SURF-based image registration and fusion algorithm is about 10, and that of the proposed image region fusion registration algorithm based on wavelet multi-scale is below 5. The comparison shows that the proposed method has the lowest root-mean-square error and therefore the highest registration accuracy of the three methods.
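For reference, the root-mean-square error used as the accuracy measure can be computed as in the following sketch. It assumes two grayscale images of identical size stored as lists of rows. The function name is illustrative, and which image pair the experiments actually compared is not specified here.

```python
import math

def rmse(reference, registered):
    """Root-mean-square error between two equally sized grayscale images."""
    if len(reference) != len(registered) or any(
            len(a) != len(b) for a, b in zip(reference, registered)):
        raise ValueError("images must have the same shape")
    squared = [(a - b) ** 2
               for row_a, row_b in zip(reference, registered)
               for a, b in zip(row_a, row_b)]
    return math.sqrt(sum(squared) / len(squared))
```

Identical images give an error of 0, and a uniform difference of 3 gray levels at every pixel gives an error of exactly 3.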

5 Conclusions

Image fusion is a new research direction that involves many fields, such as information fusion, sensors, and image processing. Image registration is the basis of image fusion. Current image registration methods cannot keep the brightness of the image within a reasonable range during image enhancement, and their registration accuracy is low. In this paper, a multi-focus image region fusion and registration algorithm with multi-scale wavelet is proposed. The first step is image enhancement, the second step is image interpolation, and the third step is image registration.

Because of the variety of image sensors and the different application environments, many problems remain for further research.

1. Accurate registration of the source images is very important for image fusion, because the accuracy of registration directly affects the quality of the final fused image. Most current image fusion algorithms assume that the source images have already been strictly registered, so the effect of the fusion algorithm cannot be fully reflected. Research on image registration has progressed, but it is still far from meeting application requirements, especially for image registration under the non-ideal conditions of heterogeneous sensors and for real-time registration of video sequences.

2. In image fusion based on multi-scale analysis, different analysis tools produce different fusion results. How to select an appropriate analysis tool for a given fusion task remains an open question.

3. Image fusion technology involves many fields of image processing. It is necessary to strengthen the connection between fusion algorithms and new theories in various fields, such as compressive sensing and super-resolution technology. How to combine new mathematical methods, such as fuzzy clustering and neural network technology, with multi-scale analysis tools and with the characteristics of human vision, in order to develop a more complete and reasonable fusion strategy and to achieve intelligent and adaptive fusion, is a direction worth researching in depth.

Funding Statement: Characteristic Innovation Projects of Ordinary Universities in Guangdong Province, Project Number: 2019GKTSCX029.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. K. Ma, H. Li, H. Yong, Z. Wang, D. Meng et al. (2019). “Robust multi-exposure image fusion: A structural patch decomposition approach,” IEEE Transactions on Image Processing, vol. 26, no. 5, pp. 2519–2532.

2. K. K. Brock, S. Mutic, T. R. Mcnutt, H. Li and M. L. Kessler. (2017). “Use of image registration and fusion algorithms and techniques in radiotherapy: Report of the AAPM radiation therapy committee task group no. 132,” Medical Physics, vol. 44, no. 7, pp. e43–e76.

3. F. Werner, C. Jung, M. Hofmann, R. Werner, J. Salamon et al. (2016). “Geometry planning and image registration in magnetic particle imaging using bimodal fiducial markers,” Medical Physics, vol. 43, no. 6, pp. 2884–2893.

4. M. S. Robertson, X. Liu, W. Plishker, G. F. Zaki, P. K. Vyas et al. (2016). “Software-based PET-MR image co-registration: Combined PET-MRI for the rest of us,” Pediatric Radiology, vol. 46, no. 11, pp. 1–10.

5. D. Guo, J. Yan and X. Qu. (2015). “High quality multi-focus image fusion using self-similarity and depth information,” Optics Communications, vol. 338, no. 338, pp. 138–144.

6. R. V. D. Plas, J. Yang, J. Spraggins and R. M. Caprioli. (2015). “Image fusion of mass spectrometry and microscopy: A multimodality paradigm for molecular tissue mapping,” Nature Methods, vol. 12, no. 4, pp. 366.

7. M. Ghahremani and H. Ghassemian. (2019). “Remote-sensing image fusion based on curvelets and ICA,” International Journal of Remote Sensing, vol. 36, no. 16, pp. 4131–4143.

8. Y. M. Jia. (2018). “Research of network digital image edge and high precision matching method,” Computer Simulation, vol. 34, no. 8, pp. 251–254.

9. Y. J. Li, J. J. Zhang, B. K. Chang, Y. Qian and L. Liu. (2016). “Remote multiband infrared image fusion system and registration method,” Infrared and Laser Engineering, vol. 45, no. 5, pp. 276–282.

10. X. Hou, X. H. Zhou, Q. H. Tang and A. X. Wang. (2016). “Registration and fusion of multi-beam echo sounder and side scan sonar image based on the revised SURF method,” Marine Science Bulletin, vol. 35, no. 1, pp. 38–45.

11. Q. J. Chen, B. Z. Wei, Y. Z. Chai and Y. B. Zhang. (2016). “Fusion algorithm based on FFST and directional characteristics contrast,” Laser & Infrared, vol. 46, no. 7, pp. 890–895.

12. C. H. Son and X. P. Zhang. (2016). “Layer-based approach for image pair fusion,” IEEE Transactions on Image Processing, vol. 25, no. 6, pp. 2866–2881.

13. Y. Li, F. Li, B. Bai and Q. Shen. (2019). “Image fusion via nonlocal sparse K-SVD dictionary learning,” Applied Optics, vol. 55, no. 7, pp. pp.–1814.

14. A. O. Karali, S. Cakir and T. Aytaç. (2015). “Multiscale contrast direction adaptive image fusion technique for MWIR-LWIR image pairs and LWIR multi-focus infrared images,” Applied Optics, vol. 54, no. 13, pp. 4172–4179.

15. P. Ke, C. Jung and Y. Fang. (2019). “Perceptual multi-exposure image fusion with overall image quality index and local saturation,” Multimedia Systems, vol. 23, no. 2, pp. 239–250.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.