DOI:10.32604/iasc.2022.019538

Intelligent Automation & Soft Computing

Article

Dynamic Feature Subset Selection for Occluded Face Recognition

Department of Computer Science, Faculty of Computing and Information Technology, King Abdulaziz University, Jeddah, 21589, KSA

*Corresponding Author: Najlaa Hindi Alsaedi. Email: Nalsaedi0024@stu.kau.edu.sa

Received: 16 April 2021; Accepted: 08 June 2021

Abstract: Accurate recognition of person identity is a critical task in civil society for various applications and needs. There are several well-established biometric modalities that can be used for recognition, such as face, voice, fingerprint, and iris. Recently, face images have been widely used for person recognition, since the human face is the most natural and user-friendly recognition modality. However, in real-life applications, several factors may degrade recognition performance, such as partial face occlusion, pose, illumination conditions, and facial expressions. In this paper, we propose two dynamic feature subset selection (DFSS) methods to achieve better recognition of occluded faces. The proposed DFSS methods are based on resilient algorithms that attempt to minimize the negative influence of confusing and low-quality features extracted from occluded areas, either by excluding them or by reducing their weights. Principal Component Analysis and Gabor filtering based approaches are first used for face feature extraction, and the proposed DFSS methods are then dynamically applied. This leads to a more effective feature representation and improved recognition performance. To validate their effectiveness, multiple experiments are conducted and the performance of different methods is compared. The experimental work is carried out using the AR database and the Extended Yale Face Database B. The face identification and verification results show that both proposed DFSS methods outperform the standard (static) use of the full feature set with equal feature weights.

Keywords: Biometrics; dynamic feature subset selection; feature subset selection; identification; occluded face recognition; quality-based recognition; support vector machine; verification

Biometrics has long been known as a robust approach for person recognition, using various physiological or behavioral traits such as face, voice, fingerprint, iris, and gait [1]. Most existing real-world biometric systems are based on unimodal biometric recognition, which uses a single biometric modality. That modality must be accurately enrolled in a database to train the algorithm, and the recaptured probe (test) samples must be of sufficiently acceptable and usable quality for recognition to succeed. Thus, such biometric systems may still suffer from several limitations, especially when unexpected or uncontrolled test or query data are used to probe the system, for example data affected by occlusions, variations, noise, and low quality [2,3].

Face recognition is deemed the most intuitively natural, user-friendly, and non-intrusive biometric measure for recognizing a person, and it has been employed in daily life for several purposes, such as identification, re-identification, authentication, and retrieval. Recently, face recognition techniques have achieved high performance on some public databases [4,5]. However, in most real-life applications, face recognition accuracy may be degraded by inevitable factors such as occlusion, facial expressions, pose, and illumination [4,6]. Face occlusion is a common and challenging problem that has been studied extensively in recent years [4,7–12]. Nowadays, the global coronavirus (COVID-19) pandemic obliges billions of people worldwide to wear face masks, in many cases as a legal requirement. Hence, the widespread presence of masked faces has become a serious concern and a real challenge for face biometric systems performing person identification or verification (authentication). For instance, several security-related face recognition issues have escalated in many regions after dozens of crimes were committed by criminals taking advantage of COVID-19 face-covering rules.

Fig. 1 shows surveillance footage, released by the FBI and Naperville police, of a bank robbery in January 2021, in which two suspects inconspicuously wear the required COVID-19 masks. Human faces can be partially covered by natural occlusions such as a beard, hair, and other body parts like hands, or by intentional occlusions with objects such as masks, sunglasses, and scarves [13], as shown in Fig. 2. When a face is occluded or obscured, standard feature extraction and selection methods may ignore the likely negative effects of the occlusion: they give occluded areas no special treatment or adaptive processing, and they may not even be able to perceive such occlusions or detect them as abnormal cases. This, in turn, may severely degrade the overall system performance. Several previous studies have investigated local feature extraction methods to address the recognition of partially occluded face images [7,14,15].

Figure 1: Two suspects with occluded faces by legal masks [ABC 7 Chicago]

Figure 2: Examples of normal face image and partially occluded face image

A method for partially occluded face recognition was proposed by Jianxin et al. [7] based on block-wise Histogram of Oriented Gradient (HOG) and Local Binary Pattern (LBP) features combined with sparse representation. Their algorithm segments the face image into blocks and extracts HOG and LBP features from each block to obtain joint HOG-LBP features per block. Classification and identification are then performed using the sparse representation reconstruction residual. This algorithm achieved a better recognition rate and greater robustness than other traditional algorithms.

In Li et al. [12], two different approaches for masked face recognition were proposed. The first approach was attention-based: an attention module is adopted to focus on the area around the eyes, and it showed superior performance on masked face recognition over the other attention modules in their comparison. The second approach was cropping-based: they explored the optimal cropping for each case in masked face recognition, and their experimental results for this approach show improved recognition performance. In Mi et al. [15], partially occluded face images were recognized by dividing the images into several blocks and using an indicator based on a linear regression technique to determine whether each block is occluded. The unoccluded blocks are then used in a sparse representation-based classifier (SRC) to identify unknown faces. The method proposed in Sharma et al. [14] for partially occluded face recognition divides each image into sub-images and detects occluded regions using eigenfaces; Gabor filters are then used to extract features from the unoccluded sub-images, and matching is performed only on those sub-images. Wu et al. [11] proposed a facial attitude estimation algorithm for partially occluded faces based on pyramid HOG features. Their method divides a detected face horizontally into two sub-regions and predicts the existence of occlusion in each sub-region individually. Finally, pyramid HOG features are extracted from the non-occluded sub-regions and used with a Support Vector Machine (SVM) classifier. Song et al. [16] developed an occluded face recognition approach based on a Pairwise Differential Siamese Network (PDSN). A mask dictionary is first established using the differences between the top convolutional features of occluded and non-occluded face pairs, which indicates the correspondence between occluded facial areas and damaged feature elements. The experimental results on synthesized and realistic occluded face datasets show that their approach performs well relative to state-of-the-art results. However, its requirement for paired images is difficult to satisfy in real-life applications [12].

It can be concluded from most earlier studies that recognition accuracy can be enhanced when the occlusion is detected and eliminated before classification [11,14–17]. Hence, in this research, unlike most existing occluded face recognition approaches, we propose two dynamic, quality-based feature subset selection methods that either exclude or minimize the undesirable effects of face occlusions. As such, this research explores feature subset selection techniques capable of dynamically selecting an optimal subset of the most effective features and dynamically enforcing feature vector resizing or feature reweighting. This may lead to an efficient representation of an input face image sample, an effective reduction of face feature dimensionality, and improved face recognition performance.

The main contributions of this research are:

• A new proposed dynamic feature subset selection method, with dynamic feature vector size, based on feature quality analysis and assessment of probe samples.

• A new proposed dynamic feature subset selection method, with dynamic weighting for a feature subset, based on feature quality analysis and assessment of probe samples.

• An investigation of the effects and performance of the proposed dynamic feature subset selection methods in a variety of occluded face biometric recognition tasks.

• Performance comparisons between the proposed dynamic feature subset selection approaches and their standard counterparts using the standard feature vector size and equal feature weights.

The rest of this paper is organized as follows. Section 2 presents the quality analysis and assessment method, followed by a description of the two proposed dynamic feature subset selection approaches. The research methodology, including brief background on the databases, the feature extraction methods, the preprocessing of face images, and the classifier used in our experiments, is described in Section 3. Section 4 presents the experiments and results. Finally, Section 5 concludes the paper.

2 Proposed Occluded Face Recognition Method

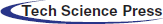

In this research, we propose two Dynamic Feature Subset Selection (DFSS) methods, based on the appearance quality of the probe image, to improve recognition performance and robustness against various forms of face occlusion. Fig. 3 gives an overview of the proposed DFSS approach for enhanced occluded face identification and verification. In addition to the standard processes, training is followed by an extra step in which the dynamic selection is applied to the training data to reconstruct the templates according to the dynamic size or weights decided by the quality-based analysis and assessment in the test phase. Consequently, the first step in testing is to analyze and assess the probe image quality to determine whether it contains any occlusion; the proposed DFSS is then applied accordingly.

Figure 3: Overview of the proposed DFSS approach for face recognition

The first DFSS method is based on a dynamic size for the biometric feature vector, changing the number of selected features according to the probe image quality, whilst the second DFSS method applies dynamic weights to the features affected by occlusion in the probe image. Note that both DFSS methods initially handle the training data in the same manner as standard face biometric feature extraction methods; dynamic processing is triggered only when occlusions are detected. The dynamic feature selection process is entirely based on the quality assessment of the probe (test) image: it is first applied to the probe image, and the face templates of the training images are then reconstructed accordingly before matching.

2.1 Quality Analysis and Assessment

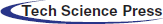

A biometric sample is deemed of good quality if “it is suitable for automated matching” [3]; face quality can be affected by occlusion, illumination, pose, aging, and expression [3,18]. In this research, face occlusion is the main quality attribute considered in the proposed DFSS approach. Therefore, we classify face images into four occlusion categories: normal face image (no occlusion); face with sunglasses occlusion (the eye area is occluded by sunglasses or another object); face with scarf occlusion (the lower face, including the mouth and possibly the nose, is occluded by a scarf, mask, or other object); and face with vertical side occlusion (one side or half of the face is vertically occluded by hair or an object). A normal face image is considered a high-quality image, so the whole extracted feature set is used as in the standard approach. In contrast, an image of a face with sunglasses, scarf, or side occlusion is considered a low-quality image, and DFSS resizing or reweighting is applied.

To detect the existence of occlusion and assess probe image quality, we use a Support Vector Machine (SVM) classifier, as it is often used for occlusion classification and provides prompt and accurate results [12,19,20]. The SVM classifier was trained to classify images into one of the four aforementioned categories (i.e., normal face image, image with sunglasses occlusion, image with scarf occlusion, and image with side occlusion). When a face image is classified as an image with sunglasses, scarf, or side occlusion, the occluded area is determined using the watershed algorithm, an automatic and unsupervised region segmentation algorithm. It is based on representing a monochrome image as a 3D topographic relief, where the intensity of each pixel represents the elevation of the corresponding position. The topography is flooded with water, and watersheds are the lines dividing the different regions [21]. For simplicity, the monochrome image is represented by a function $f: D \rightarrow \mathbb{N}$ that maps each pixel $p$ in the image domain $D \subset \mathbb{Z}^2$ to its intensity $f(p)$, i.e., the elevation at $p$.

The expected output of the watershed algorithm is a partition of the image composed of regions (sets of connected pixels belonging to the same local minimum) and watershed pixels (the borders between the regions). Fig. 4 shows an example of each of the four categories of occlusion detected using the watershed algorithm to segment occlusions in data samples of occluded face images.
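The flooding idea can be illustrated with a minimal marker-controlled watershed. This is a sketch under stated assumptions, not the authors' implementation: it follows Meyer's priority-flood variant with 4-connectivity, the function name `watershed_flood` is hypothetical, and the paper does not specify how seed markers are placed on a face image or whether raw intensity or gradient magnitude is flooded.

```python
import heapq
import numpy as np

NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))  # 4-connectivity


def watershed_flood(elevation, markers):
    """Marker-controlled watershed (Meyer-style priority flooding).

    elevation: 2D array, e.g. pixel intensities or gradient magnitudes.
    markers:   2D int array; >0 marks seed regions, 0 marks unlabelled pixels.
    Returns a label array in which -1 marks watershed (border) pixels.
    """
    labels = np.array(markers, dtype=int)
    rows, cols = labels.shape
    heap, counter = [], 0

    def inside(r, c):
        return 0 <= r < rows and 0 <= c < cols

    def enqueue_neighbours(r, c):
        nonlocal counter
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if inside(nr, nc) and labels[nr, nc] == 0:
                labels[nr, nc] = -2  # mark as queued so it is pushed only once
                # counter breaks ties so equal elevations are processed FIFO
                heapq.heappush(heap, (elevation[nr, nc], counter, nr, nc))
                counter += 1

    for r, c in zip(*np.nonzero(labels > 0)):
        enqueue_neighbours(r, c)

    while heap:  # always flood from the lowest queued pixel
        _, _, r, c = heapq.heappop(heap)
        basins = {labels[r + dr, c + dc] for dr, dc in NEIGHBOURS
                  if inside(r + dr, c + dc) and labels[r + dr, c + dc] > 0}
        if len(basins) == 1:
            labels[r, c] = basins.pop()  # reached by a single basin
            enqueue_neighbours(r, c)
        else:
            labels[r, c] = -1  # two basins meet: watershed line
    return labels
```

On a one-row relief such as `[0, 1, 2, 3, 2, 1, 0]` with seeds at both ends, the two basins meet at the peak, which is labelled -1. In practice a library routine such as scikit-image's `skimage.segmentation.watershed` would normally be used instead.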

Figure 4: Examples of low-quality occluded face images; in each pair, the left image is the original and the right image shows the detected and segmented occlusion

2.2 Dynamic Feature Subset Selection (DFSS)

2.2.1 Dynamic Size for Feature Vectors

The first proposed feature subset selection method adopts a dynamic feature vector size after the standard feature selection phase, where face features are initially extracted in this research using either Principal Component Analysis (PCA) or Gabor filters. This is achieved by reducing the number of features when a given probe (test) sample is assessed as a low-quality face image, which implies occluded areas and confusing features. Thus, only the effective, significant features, excluding occluded ones, are used to compose a reduced-size feature vector for matching and recognition. For a low-quality probe face image, the quality assessment phase reports the detected occlusion as belonging to the sunglasses, scarf, or side category. Consequently, the feature vector extracted using Gabor filters is reduced by PCA and limited to the most relevant high-quality unoccluded features, whereas the feature set extracted using PCA is further reduced to the most relevant high-quality features by selecting the top-k principal components.
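A minimal sketch of the dynamic-size idea, assuming the quality assessment step already yields a per-feature boolean occlusion mask for the probe (the paper does not specify this representation). The helper names `dynamic_size_select` and `top_k_components` are hypothetical. The key point is that the subset chosen for the probe is applied identically to every training template before matching.

```python
import numpy as np


def dynamic_size_select(probe_vec, occluded_mask, train_matrix):
    """Keep only the features not flagged as occluded in the probe.

    probe_vec:     1D feature vector of the probe (length D).
    occluded_mask: 1D boolean array (length D), True where the feature
                   comes from an occluded region of the probe image.
    train_matrix:  2D array (n_train x D) of enrolled templates.
    Returns the reduced probe vector and the training templates rebuilt
    with the same feature subset, so both live in the same space.
    """
    keep = ~np.asarray(occluded_mask, dtype=bool)
    return probe_vec[keep], train_matrix[:, keep]


def top_k_components(pca_probe, pca_train, k):
    """For PCA features (ordered by decreasing eigenvalue), keep the first k."""
    return pca_probe[:k], pca_train[:, :k]
```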

2.2.2 Dynamic Weighting for Selected Features

The second proposed feature selection method uses the whole extracted feature vector and applies dynamic weighting to the detected low-quality features. This method adopts a fixed-size feature vector for both high-quality and low-quality images, but reduces the range of feature values according to feature quality.

After segmentation, the features extracted from occluded pixels are scaled to a smaller range than those of the remaining face pixels by multiplying them, after normalization, by a weight value in the interval (0, 1). Face features inferred from unoccluded face pixels are used after normalization without any change to their values. On the other hand, when a probe image is assessed as a high-quality image with no occlusions, the standard feature vector is used as it is after normalization. This DFSS method is summarized in Algorithm 2.
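The reweighting step can be sketched as follows. The min-max normalization, the per-feature occlusion mask, the function names, and the default weight of 0.3 are all illustrative assumptions; the paper only states that occluded features are multiplied by a weight in (0, 1) after normalization.

```python
import numpy as np


def min_max_normalize(vec):
    """Scale a feature vector to [0, 1] (assumed normalization scheme)."""
    lo, hi = vec.min(), vec.max()
    if hi == lo:
        return np.zeros_like(vec, dtype=float)
    return (vec - lo) / (hi - lo)


def dynamic_weight(vec, occluded_mask, weight=0.3):
    """Normalize, then shrink the features flagged as occluded by `weight`."""
    if not 0.0 < weight < 1.0:
        raise ValueError("weight must lie strictly between 0 and 1")
    out = min_max_normalize(np.asarray(vec, dtype=float))
    out[np.asarray(occluded_mask, dtype=bool)] *= weight  # unoccluded features unchanged
    return out
```

For a high-quality probe the mask is all `False`, so the vector is only normalized. To rebuild the training templates, the same function would be applied to each template using the probe's mask, keeping the vector length fixed.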

In this research, two different databases are used for the experimental work: the AR database [22] and the Extended Yale Face Database B [23,24]. Using these different face image databases makes it possible to evaluate and compare the recognition performance of the proposed DFSS techniques from different aspects and on both real and synthesized occlusions.

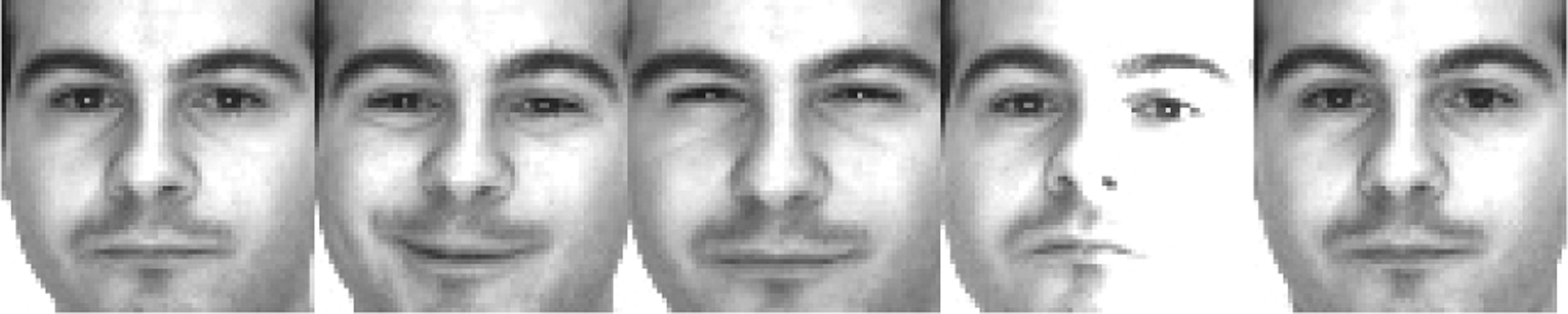

The AR database is one of the very few databases containing several face images with real occlusions for each person. Therefore, it has been widely used as a standard database for evaluating the recognition performance of various algorithms on occluded faces [12,25,26]. It contains images of 126 distinct persons (70 males and 56 females), with 26 different images per person showing variations in illumination conditions, expressions, and occlusions. Fig. 5 shows a number of AR database face samples used for training.

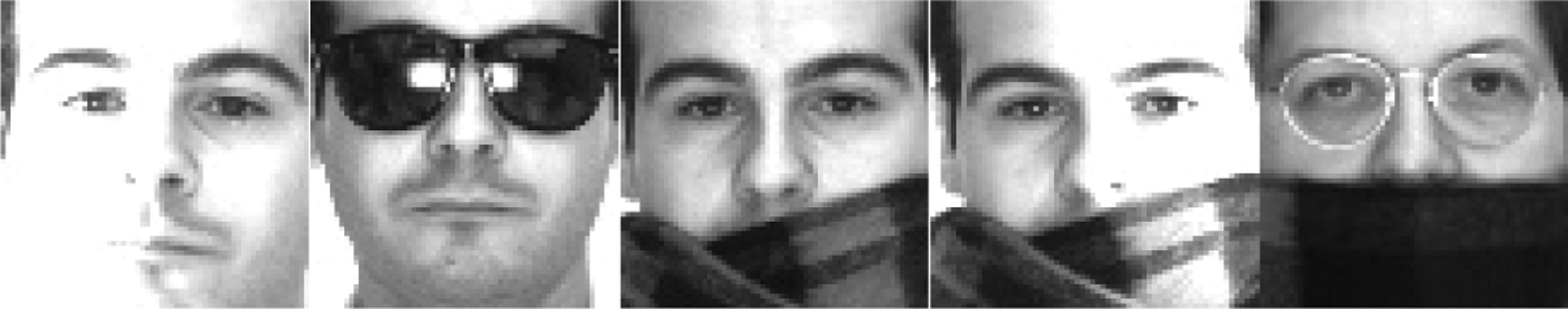

The Extended Yale B database has been commonly used to evaluate the recognition performance of different algorithms under synthesized occlusions [12,25,26]. It consists of 16128 images of 28 distinct persons under nine different poses and 64 illumination conditions. Fig. 6 presents a few samples of the Extended Yale Face Database B used for training.

Figure 5: Face data samples of AR database used for training

Figure 6: Face data samples of Extended Yale database B used for training

Figs. 7 and 8 show some face probe samples used for testing from the AR and Extended Yale B databases, respectively. In our experiments, a synthesized occlusion is added to a number of test images, emulating possible real face occlusions such as masks or scarves, as shown in Fig. 8, to allow extended testing and performance evaluation of the proposed methods across diverse occluded face identification and verification scenarios.

For training our model on each database, a maximum of 14 different unoccluded face images per person, with different illumination conditions, facial expressions, and poses, were used. All experiments were conducted using probe images different from those used for training; most of them contain real or synthetic face occlusions in addition to various expressions and illumination conditions.

Figure 7: Face data samples of AR database images used in testing with variant illumination conditions, real occlusions, and expressions

Figure 8: Face data samples of Extended Yale database B images used in testing with variant illumination conditions and synthesized occlusion

As a preprocessing step to prepare and normalize face images with different properties and from different databases, we performed face detection on each image sample using a Haar cascade classifier, since we only need to focus on the cropped face image as the area of interest. The Haar cascade classifier is a cascade of weak classifiers that determines whether the preprocessed image contains a face and, if so, detects the position and size of the face in the image. It is based on learning and using various groups of Haar features, like the ones shown in Fig. 9, to detect the target object, which is the human face in this context. Each feature produces a numerical value calculated by subtracting the sum of the pixel intensities under the light rectangle from the sum of the pixel intensities under the dark rectangle [27]. Using thresholds determined from the training samples, the Haar features classify the presence of a face in the processed image as positive or negative [28]. The output of the Haar cascade classifier indicates the position and size of a detected face. After the face is detected, we crop out the non-face (background) parts of the image and keep a square image area containing only the face; this cropped face image is then resized to 64 × 64 pixels. Figs. 10 and 11 show a face image sample before and after preprocessing.
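To make the Haar feature computation concrete, the sketch below evaluates a single two-rectangle Haar-like feature with an integral image, as in the Viola-Jones detector. It does not reproduce a trained cascade; the function names and the choice of the left half as the light rectangle are assumptions for illustration.

```python
import numpy as np


def integral_image(img):
    """ii[r, c] = sum of img[:r, :c]; a zero row/column is prepended."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return ii


def rect_sum(ii, r, c, h, w):
    """Sum of img[r:r+h, c:c+w] using only four look-ups."""
    return ii[r + h, c + w] - ii[r, c + w] - ii[r + h, c] + ii[r, c]


def two_rect_feature(ii, r, c, h, w):
    """Left half = light rectangle, right half = dark rectangle (w even).

    Feature value = sum under dark rectangle - sum under light rectangle.
    """
    half = w // 2
    light = rect_sum(ii, r, c, h, half)
    dark = rect_sum(ii, r, c + half, h, half)
    return dark - light
```

Because every rectangle sum costs four look-ups regardless of its size, thousands of such features can be evaluated quickly at every window position, which is what makes the cascade practical.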

Figure 9: Example of Haar features

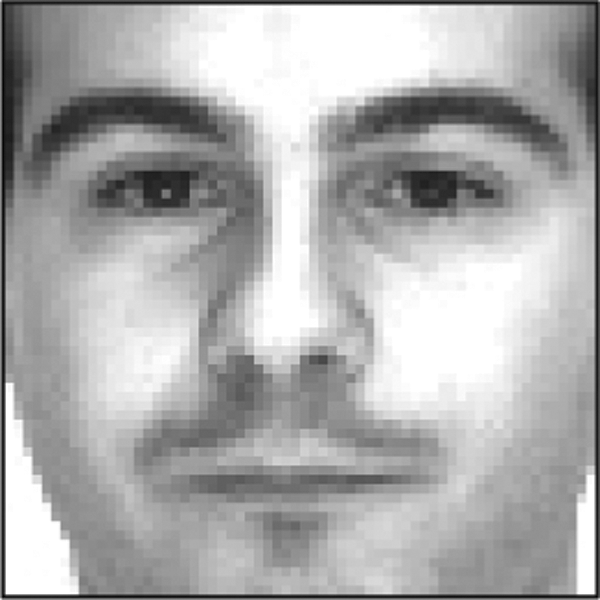

Figure 10: Face data sample before preprocessing

Figure 11: Face data sample after preprocessing
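The crop-and-resize step that follows detection can be sketched as below. The `(x, y, w, h)` box format, centring the square on the detected box, nearest-neighbour resampling, and the function name are assumptions; the paper only states that a square face region is kept and resized to 64 × 64 pixels. A real pipeline would typically use an interpolating resize from an image library.

```python
import numpy as np


def crop_square_and_resize(img, box, size=64):
    """Crop a square face region around a detection box and resize it.

    box: (x, y, w, h) with x = column and y = row of the top-left corner.
    The square side is max(w, h), centred on the box, shifted to stay
    inside the image. Resizing uses nearest-neighbour sampling.
    """
    x, y, w, h = box
    side = max(w, h)
    cx, cy = x + w // 2, y + h // 2
    top = max(0, min(cy - side // 2, img.shape[0] - side))
    left = max(0, min(cx - side // 2, img.shape[1] - side))
    face = img[top:top + side, left:left + side]
    # Map each output row/column to a source row/column (nearest neighbour)
    rows = np.arange(size) * face.shape[0] // size
    cols = np.arange(size) * face.shape[1] // size
    return face[rows[:, None], cols[None, :]]
```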

In this research study, a number of experiments of occluded face recognition are conducted for performance evaluation and comparison purposes using Gabor-based and PCA-based feature extraction methods, for either composing standard biometric feature vector (as a baseline for performance comparison) or deriving improved biometric feature vectors via the proposed DFSS approach.

Gabor filters have been extensively used for feature extraction due to their ability to analyze the visual appearance of an image and extract discriminative feature vectors [29]. They have been shown to be useful in several biometric applications, such as face detection and recognition, iris recognition, and fingerprint recognition [30]. Gabor filters have achieved high face recognition accuracy in many studies, as reviewed in Zeng et al. [25], even when faces were partially occluded. A 2D Gabor filter can be mathematically represented in the frequency domain as follows:

$$\Psi(u, v) = \exp\left(-\frac{\pi^{2}}{f^{2}}\left(\gamma^{2}\left(u' - f\right)^{2} + \eta^{2} v'^{2}\right)\right)$$

where

$$u' = u\cos\theta + v\sin\theta, \qquad v' = -u\sin\theta + v\cos\theta$$

and u and v are the variable pair of the filter frequency, f refers to the central frequency of the filter, θ refers to the rotation angle of both the Gaussian major axis and the plane wave, γ refers to the sharpness along the major axis, and η refers to the sharpness along the minor axis (perpendicular to the wave) [14,29].

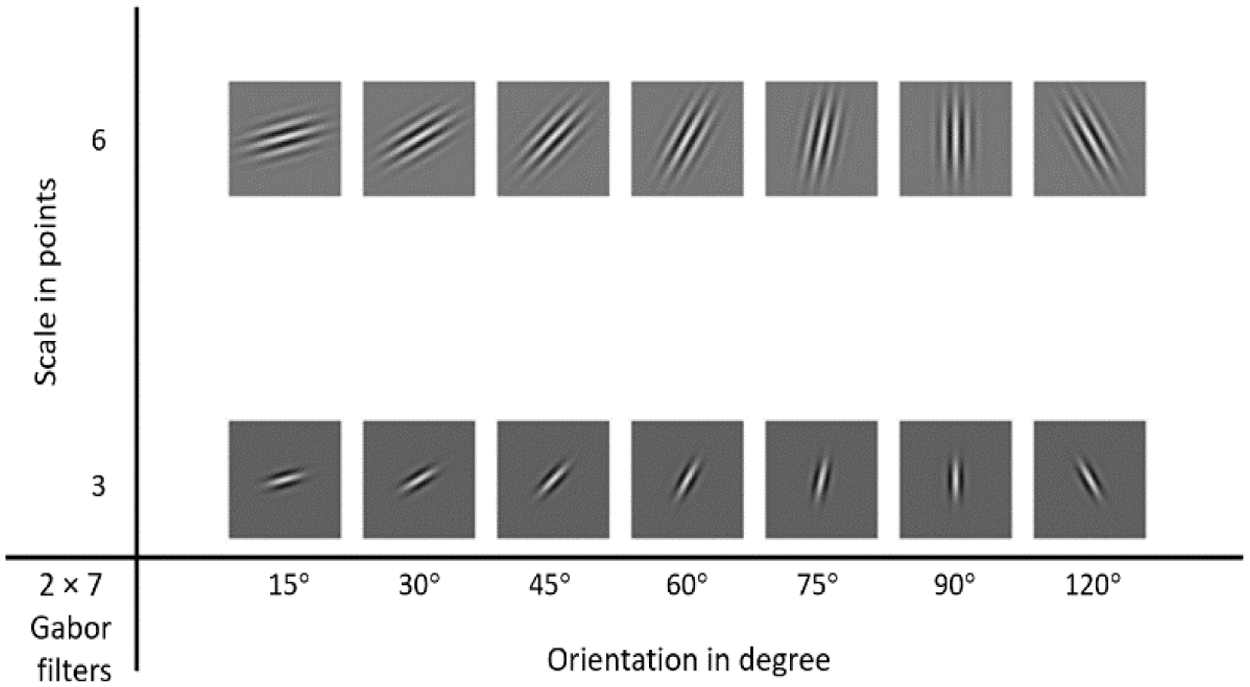

In our experiments, the Gabor-based feature extraction technique uses 14 different Gabor filters, with two different scales (3 and 6) and seven different rotation angles θ (15°, 30°, 45°, 60°, 75°, 90°, and 120°), as shown in the spatial domain in Fig. 12. Furthermore, Fig. 13 shows the 14 face images resulting from applying these 14 Gabor filters to a sample face image.
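A possible NumPy sketch of the 14-filter bank, built directly in the frequency domain using the normalized Gabor form Ψ(u, v) = exp(−(π²/f²)(γ²(u′ − f)² + η²v′²)), with u′ and v′ the rotated frequency coordinates. The mapping of the paper's scales 3 and 6 to central frequencies f = 1/3 and f = 1/6 cycles per pixel, the values γ = η = 1, and the function names are hypothetical choices, since the paper does not state them.

```python
import numpy as np


def gabor_freq(shape, f, theta, gamma=1.0, eta=1.0):
    """Frequency-domain Gabor filter Psi(u, v) sampled on the FFT grid."""
    rows, cols = shape
    u = np.fft.fftfreq(cols)[None, :]  # horizontal frequencies (cycles/pixel)
    v = np.fft.fftfreq(rows)[:, None]  # vertical frequencies
    u_rot = u * np.cos(theta) + v * np.sin(theta)
    v_rot = -u * np.sin(theta) + v * np.cos(theta)
    return np.exp(-(np.pi ** 2 / f ** 2)
                  * (gamma ** 2 * (u_rot - f) ** 2 + eta ** 2 * v_rot ** 2))


def gabor_bank_responses(img, scales=(3, 6),
                         angles_deg=(15, 30, 45, 60, 75, 90, 120)):
    """Magnitude responses of 2 x 7 = 14 filters, assuming f = 1 / scale."""
    spectrum = np.fft.fft2(img)
    responses = []
    for s in scales:
        for a in angles_deg:
            psi = gabor_freq(img.shape, f=1.0 / s, theta=np.deg2rad(a))
            # Filtering is a pointwise product in the frequency domain
            responses.append(np.abs(np.fft.ifft2(spectrum * psi)))
    return np.stack(responses)  # shape: (14, rows, cols)
```

The filter peaks with value 1 at (u, v) = (f cos θ, f sin θ), so each filter passes a band of frequencies around one orientation and scale.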

Because of the large number of Gabor features extracted by all 14 filters from a normalized image of 64 × 64 pixels, an effective dimensionality reduction method proposed in Jaha [30] was first applied to the whole set of extracted Gabor features. This method operates on the 14 resulting filtered images, concatenated in the same positions as shown in Fig. 13. In the single large image comprising all 14 concatenated images, the rows are divided into groups of d consecutive rows and the columns into groups of d consecutive columns, and the dimensionality is reduced by removing the d-th row from each row group and the d-th column from each column group. In our experiments, the level of dimensionality reduction was set to d = 2.
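The row/column removal can be sketched as follows, assuming the 14 responses are tiled as a 2 × 7 grid (the exact layout of Fig. 13 is not given in the text). Both helper names are hypothetical. With d = 2, every second row and column is dropped, so a 128 × 448 mosaic of 64 × 64 responses shrinks to 64 × 224, a quarter of the original number of features.

```python
import numpy as np


def tile_responses(responses, n_rows=2):
    """Concatenate filter responses into one mosaic with n_rows rows of tiles."""
    per_row = len(responses) // n_rows
    return np.vstack([np.hstack(list(responses[i * per_row:(i + 1) * per_row]))
                      for i in range(n_rows)])


def remove_every_dth(mat, d=2):
    """Drop the d-th row of every group of d rows, and likewise for columns."""
    keep_rows = (np.arange(mat.shape[0]) % d) != d - 1
    keep_cols = (np.arange(mat.shape[1]) % d) != d - 1
    return mat[np.ix_(keep_rows, keep_cols)]
```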

Figure 12: The 14 created and used Gabor filters for face feature extraction

Figure 13: A sample of face image after filtering with the 14 Gabor filters

PCA is a feature extraction and reduction method that transforms the original data into a new set of data that can be represented in a lower dimension [31]. In the transformed data, the first few components carry the most relevant information and may reveal the most significant characteristics of the data that were obscured before the transformation [31]. This is done by creating a set S = {s_1, s_2, …, s_M} containing M feature vectors of size N, where M is the number of images and N = image height × image width. The mean image m is then obtained as:

$$m = \frac{1}{M}\sum_{i=1}^{M} s_i$$

and the difference of each input image from the mean is:

$$x_i = s_i - m, \quad i = 1, 2, \ldots, M$$

The resultant differences of all input images are arranged as a matrix X = [x_1, x_2, …, x_M]^T of dimension M × N. Then a covariance matrix is computed as:

$$C = \frac{1}{M} X^{T} X = \frac{1}{M}\sum_{i=1}^{M} x_i x_i^{T}$$

After that, the eigenvectors and eigenvalues are obtained by solving:

$$C u_j = \lambda_j u_j$$

Here, λ_j is the j-th eigenvalue, where j = 1, 2, …, N, and u_j refers to the corresponding eigenvector of the covariance matrix C.
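The eigenface computation above maps directly onto a few NumPy calls. This sketch (with hypothetical function names) forms the N × N covariance explicitly for clarity; for 64 × 64 images (N = 4096) that is feasible, although eigenface implementations often diagonalize the smaller M × M matrix X Xᵀ instead when M < N.

```python
import numpy as np


def pca_eigenfaces(S, k):
    """S: M x N matrix with one flattened training image per row.

    Returns the mean image m, the k largest eigenvalues, and the
    corresponding eigenvectors u_j as the columns of an N x k matrix.
    """
    M = S.shape[0]
    m = S.mean(axis=0)                      # mean image
    X = S - m                               # difference images, M x N
    C = X.T @ X / M                         # N x N covariance matrix
    eigvals, eigvecs = np.linalg.eigh(C)    # ascending order for symmetric C
    order = np.argsort(eigvals)[::-1][:k]   # keep the k largest
    return m, eigvals[order], eigvecs[:, order]


def project(images, m, U):
    """Map flattened images onto the k principal components."""
    return (images - m) @ U
```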

In our experiments, the PCA-based approach was used as the second feature extraction technique, with 500 principal components in the standard feature set; note that no occluded face images are involved in the PCA analysis for training purposes. Moreover, PCA is used for feature reduction in the first DFSS method (Algorithm 1, dynamic size feature vector) to reduce the size of the feature vector of a low-quality image. The first 15 of the 500 eigenfaces derived from our experimental training data are shown in Fig. 14.

Figure 14: The first 15 eigenfaces derived from experimental training data

To analyze the validity and viability of the proposed dynamic feature selection algorithms more extensively, we train and use an SVM-based classifier to evaluate the proposed occluded face recognition approach. We take advantage of the SVM as a powerful supervised machine learning technique suited to our experimental work: it is based on statistical learning theory and can be effectively trained to classify face biometric data by determining an optimal set of support vectors, which are labeled face training samples selected to form a discriminative SVM classifier.

The main objective of an SVM is to find an optimal hyperplane that separates the data with a maximum margin [34]. SVMs can separate linear and non-linear data by applying different kernel functions, such as the linear function, the Radial Basis Function (RBF), and the polynomial function. The separation can be tuned by the regularization parameter C, which controls the softness of the margin. A smaller value of C yields a softer margin, allowing some classification errors in order to maximize the separation margin, whereas a larger value of C makes the SVM fit the training data more closely at the cost of a narrower margin [35].
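The role of C can be seen in the soft-margin objective ½‖w‖² + C Σᵢ max(0, 1 − yᵢ(w·xᵢ + b)). The sketch below minimizes it with plain full-batch subgradient descent; this solver is an illustrative choice made for brevity (it is not what the authors used, and production SVM libraries use dedicated solvers), and the learning rate, epoch count, and function names are arbitrary assumptions.

```python
import numpy as np


def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=500):
    """Soft-margin linear SVM via subgradient descent; labels in {-1, +1}.

    Minimizes 0.5 * ||w||^2 + C * sum(max(0, 1 - y_i * (w . x_i + b))).
    """
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        viol = margins < 1  # points inside the margin or misclassified
        # Only margin violators contribute to the hinge-loss subgradient
        grad_w = w - C * (y[viol][:, None] * X[viol]).sum(axis=0)
        grad_b = -C * y[viol].sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(X, w, b):
    return np.where(X @ w + b >= 0, 1, -1)
```

Raising C increases the penalty on each margin violation relative to the ‖w‖² term, which pushes the solution towards fitting the training data more tightly.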

In this research, the experiments were conducted using a soft-margin SVM with a linear kernel. In each experiment, a corresponding SVM classifier is trained and tested using three different selections of PCA or Gabor features. The first selection consists of the whole set of extracted features, forming a standard feature vector that serves as the baseline for performance comparison (referred to as ‘Standard features’). The second selection comprises an unoccluded feature subset derived using the first DFSS method of dynamic feature vector size (referred to as ‘Dynamic size’). The third selection comprises the whole set of extracted features with reduced weights for occluded features, obtained using the second DFSS method of dynamic weighting for a feature subset (referred to as ‘Dynamic weight’). To investigate and compare variations in recognition performance, the same experiments were carried out separately on the AR and Extended Yale B databases.

4.1 Feature Significance Analysis

We evaluated and compared the significance (importance) of the features in the standard feature set and in the dynamically selected feature subsets by comparing the SVM feature weights. Feature selection using the SVM weight vector has been used extensively in previous studies to explore feature significance [36–39]. A linear SVM classifier learns a weight vector from the training data, assigning each feature a weight whose magnitude, once normalized, lies in the interval [0,1]. This magnitude represents the importance of the feature to the classifier [36]. If the weight is 0, the corresponding feature is considered redundant and can be removed; otherwise, the feature is non-redundant, and the larger the weight magnitude, the more important the feature is for classification [40], as shown in the linear SVM decision function:

$$f(\mathbf{x}) = \operatorname{sign}\left(\mathbf{w}^{T}\mathbf{x} + b\right) = \operatorname{sign}\left(\sum_{i} w_i x_i + b\right)$$

where x_i is a feature value, w_i is its corresponding weight, and b is the bias term. Hence, features with higher absolute weight values contribute more to the classification result. Figs. 15 and 16 show the feature weights for standard features against dynamic size/weight features. The weights indicate that the DFSS methods not only improve classification performance, but also increase the efficacy of the dynamically selected features and emphasize their importance for successful classification.
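Assuming a trained linear SVM with weight vector w and bias b, the decision rule and the |w|-based importance ranking used in this analysis can be sketched as follows. Scaling magnitudes by the largest one to obtain values in [0, 1] is an assumption, since the paper does not state its normalization; the function names are hypothetical.

```python
import numpy as np


def decision_function(x, w, b):
    """Signed score w . x + b; the predicted class is its sign."""
    return float(np.dot(w, x) + b)


def feature_importance(w):
    """Rank features by |w_i|, scaled to [0, 1] by the largest magnitude."""
    mag = np.abs(np.asarray(w, dtype=float))
    scaled = mag / mag.max() if mag.max() > 0 else mag
    ranking = np.argsort(-scaled, kind="stable")  # most important first
    return scaled, ranking
```

A negative weight such as -2.0 ranks as the most important feature here, because only the magnitude of w_i determines how strongly x_i moves the decision score.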

Figure 15: Feature weight (importance) of the first 30 features of Gabor-based ‘Standard features’ against proposed (a) ‘Dynamic size’ and (b) ‘Dynamic weight’ features

Figure 16: Feature weight (importance) of the first 30 features of PCA-based ‘Standard features’ against proposed (a) ‘Dynamic size’ and (b) ‘Dynamic weight’ features

To demonstrate the effectiveness of the proposed dynamic feature subset selection methods, experiments were conducted and the results were compared for face identification and verification using different feature extraction and selection methods. These experiments were carried out in the same way on each database using the three aforementioned feature selections, ‘Standard features’, ‘Dynamic size’, and ‘Dynamic weight’, all initially extracted using either PCA or Gabor filtering based approaches.

In all experiments, the same 64 × 64 pixel training images were used for each person, and all training images were of high quality (i.e., no face occlusions), whereas the test set consisted of about 80% low-quality (occluded) face images and only about 20% high-quality (unoccluded) face images.

4.2.1 Face Identification Performance

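The performance of each identification method is evaluated using several measures, including accuracy, precision, recall, and F1 score, which are calculated by the following equations, where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$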
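A pure-Python sketch of these measures for multi-class identification, using the hypothetical function name `classification_metrics`. The paper does not say how per-class precision, recall, and F1 are averaged, so support-weighted averaging is assumed here. With this averaging, recall always equals accuracy, because the class weights n_c/n cancel the per-class denominators TP_c + FN_c = n_c.

```python
from collections import Counter


def classification_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1 score."""
    n = len(y_true)
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)  # number of true samples per class
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / n
    precision = recall = f1 = 0.0
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        p_c = tp / (tp + fp) if tp + fp else 0.0
        r_c = tp / (tp + fn) if tp + fn else 0.0
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        weight = support[c] / n  # classes absent from y_true get weight 0
        precision += weight * p_c
        recall += weight * r_c
        f1 += weight * f_c
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```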

By comparing the results of the different experiments across all possible combinations (each composed of a feature extraction approach, a feature selection method, and a database), it can be observed that both DFSS methods improved identification performance on both databases on all measures (accuracy, precision, recall, and F1 score) over the baseline methods that use the ‘Standard features’ of Gabor or PCA, as shown in Tab. 1. With respect to accuracy, for example, dynamic size/weight selection achieves a significant improvement ranging from about 3% to 7% for Gabor-based features and from around 6% to 13% for PCA-based features.

The reported identification performance in Tab. 1 shows that the ‘Dynamic size’ method achieves better performance than the ‘Dynamic weight’ method when used with Gabor-based features, whereas the ‘Dynamic weight’ method attains the higher performance when used with PCA-based features.

• Improved Gabor-based Face Identification

For experiments that use Gabor filter features, the first proposed DFSS method, ‘Dynamic size’, improved on the full set of ‘Standard features’ extracted using Gabor filters on the AR database by 4% in terms of accuracy and recall, and by 3% in terms of precision and F1 score.

The second proposed DFSS method, ‘Dynamic weight’, improved on the standard Gabor method that uses the whole feature set on the AR data by 3% in terms of accuracy and recall, 1% in terms of precision, and 2% in terms of F1 score, as illustrated in Tab. 1 and Fig. 17.

Figure 17: Face identification performance of two proposed DFSS methods vs. standard method using Gabor features on AR database (a), and Extended Yale B database (b)

The same experiments were repeated on the Extended Yale B database, using a test subset in which the great majority of probe samples had various synthesized face occlusion forms added. Here both proposed DFSS methods achieved similarly improved accuracy and recall, outperforming their standard counterpart by 7%, as can be observed in Tab. 1 and Fig. 17.
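The synthesized occlusion forms themselves are not described in this section. Purely as an illustration, a hypothetical helper `add_block_occlusion` that overwrites a rectangular region of a 64 × 64 grayscale probe (stored as a list of rows) is sketched below; the occluder shape, position and fill value are assumptions, not the procedure used for the Extended Yale B probes.

```python
def add_block_occlusion(image, top, left, block_h, block_w, fill=0):
    """Return a copy of `image` with a block_h x block_w rectangle set to `fill`.

    `image` is a grayscale image given as a list of equal-length rows.
    Hypothetical illustration of a synthesized occlusion, not the paper's method.
    """
    h, w = len(image), len(image[0])
    if not (0 <= top and top + block_h <= h and 0 <= left and left + block_w <= w):
        raise ValueError("occluder must lie inside the image")
    occluded = [row[:] for row in image]  # copy rows so the original probe is unchanged
    for r in range(top, top + block_h):
        for c in range(left, left + block_w):
            occluded[r][c] = fill
    return occluded


probe = [[128] * 64 for _ in range(64)]              # flat dummy 64 x 64 probe
masked = add_block_occlusion(probe, 32, 0, 32, 64)   # blank the lower half
```

Blanking the lower half, as in the usage line, is only a crude stand-in for a face mask; random occluder positions could be drawn with `random.randint` before calling the helper.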

• Improved PCA-based Face Identification

For experiments using PCA-based features, the ‘Dynamic size’ method achieved better recognition results than ‘Standard features’ in accuracy, precision, recall, and F1 score on both the AR and Extended Yale B databases. When tested on the realistic occlusion forms of the AR data, it reached 88.6% accuracy and recall, 86.6% precision, and 87.6% F1 score, as can be observed in Fig. 18. On the subset of synthesized occluded faces from the Extended Yale B database, it improved accuracy and recall by 5.8%, F1 score by 5.7%, and precision by 5.6%, as also shown in Fig. 18.
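If F1 is taken as the harmonic mean of precision and recall, the AR figures for ‘Dynamic size’ with PCA-based features are mutually consistent:

$$
F_1 = \frac{2 \times 0.866 \times 0.886}{0.866 + 0.886} = \frac{1.534552}{1.752} \approx 0.876,
$$

which agrees with the reported F1 score of 87.6%.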

The ‘Dynamic weight’ method with PCA-based features achieved the best overall results in accuracy, precision, recall, and F1 score, with accuracy reaching 95.7% on AR images. On the AR database it outperformed the PCA ‘Standard features’ by 12.8% in accuracy, 10.8% in precision, 12.8% in recall, and 11.8% in F1 score. On the Extended Yale B data it offered the same improvement as ‘Dynamic size’, exceeding the standard features by almost 6% in accuracy.

Figure 18: Face identification performance of two proposed DFSS methods vs. standard method using PCA features on AR database (a), and Extended Yale B database (b)

4.2.2 Face Verification Performance

For verification, we evaluated and compared the performance of both the ‘Standard features’ and the dynamically selected features using Receiver Operating Characteristic (ROC) analysis, as illustrated in Figs. 19 and 20. The SVM was used to compute the likelihood as the conditional probability of each sample x [41] as follows:
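One widely used way to turn an SVM decision value f(x) into a conditional probability is Platt's sigmoid, P(y = 1 | x) = 1 / (1 + exp(A·f(x) + B)). This form is an assumption here, not necessarily the formula used in the paper. A minimal sketch, with a hypothetical function name and the parameters A and B assumed to be already fitted:

```python
import math


def platt_probability(decision_value, a, b):
    """Map an SVM decision value f(x) to P(y = 1 | x) using Platt's sigmoid.

    `a` and `b` are assumed to be fitted beforehand (Platt's method fits them by
    maximum likelihood on held-out decision values); `a` is normally negative,
    so that a larger f(x) gives a higher probability.
    """
    z = a * decision_value + b
    # Numerically stable evaluation of 1 / (1 + exp(z)) that avoids overflow.
    if z >= 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))
```

In scikit-learn, `SVC(probability=True)` applies Platt scaling internally and exposes the calibrated values through `predict_proba`.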

In addition, the Equal Error Rate (EER) and the Area Under the Curve (AUC) were derived from the ROC analysis to compare verification performance, as reported in Tab. 2.
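The following sketch shows how AUC and EER figures of this kind can be obtained from verification scores. It assumes that a higher score means a more likely genuine match. The function names are hypothetical, and the code is pure Python to stay self-contained. The EER is approximated at the ROC point where the false positive rate and the false negative rate are closest, rather than interpolated.

```python
def roc_points(genuine, impostor):
    """ROC points (FPR, TPR) from sweeping a threshold over all observed scores.

    genuine: scores of same-identity comparisons; impostor: scores of
    different-identity comparisons. A comparison is accepted if score >= threshold.
    """
    thresholds = sorted(set(genuine) | set(impostor), reverse=True)
    points = [(0.0, 0.0)]  # threshold above every score: nothing accepted
    for t in thresholds:
        tpr = sum(s >= t for s in genuine) / len(genuine)
        fpr = sum(s >= t for s in impostor) / len(impostor)
        points.append((fpr, tpr))
    return points  # the lowest threshold accepts everything: (1.0, 1.0)


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def eer(points):
    """Approximate EER at the ROC point where FPR is closest to FNR = 1 - TPR."""
    fpr, tpr = min(points, key=lambda p: abs(p[0] - (1 - p[1])))
    return (fpr + (1 - tpr)) / 2


pts = roc_points(genuine=[0.9, 0.8, 0.7, 0.4], impostor=[0.6, 0.3, 0.2, 0.1])
```

For these toy scores, 15 of the 16 genuine/impostor pairs are ranked correctly, so the trapezoidal AUC is 0.9375. The EER is 0.25, reached at the threshold where one impostor is accepted and one genuine score is rejected.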

Both proposed methods improve verification performance over the standard features of both the PCA-based and Gabor-based approaches, raising the true positive rate and lowering the false positive rate, as illustrated in Fig. 19 for Gabor-based features and Fig. 20 for PCA-based features. The reported AUC and EER results confirm this advantage of the dynamically selected features: both dynamic selection algorithms, ‘Dynamic size’ and ‘Dynamic weight’, yield higher AUC values and lower EER values.

Figure 19: Face verification ROC performance of Gabor-based standard features vs. proposed dynamic size/weight on AR database (a), and Extended Yale B database (b)

In both identification and verification, the first proposed method (‘Dynamic size’) performs better with Gabor-based features, whereas the second (‘Dynamic weight’) yields a greater improvement with PCA-based features.

Figure 20: Face verification ROC performance of PCA-based standard features vs. proposed dynamic size/weight on AR database (a), and Extended Yale B database (b)

Developing more reliable face biometric systems for occluded face identification and verification has become an increasingly urgent need, given the widespread face-mask occlusion accompanying the COVID-19 pandemic. The ability to dynamically detect occlusions and adapt the extracted features offers advantages over traditional standard approaches and makes face recognition more robust to the changes and missing information caused by face occlusions.

In this work, we proposed two dynamic feature subset selection (DFSS) methods to improve face recognition performance on occluded face images. The first DFSS method uses a dynamic feature vector size, excluding the low-quality features extracted from occluded face regions. The second DFSS method keeps the size of the extracted standard feature vector but assigns different weight ranges to the features according to their quality with respect to occlusion. This minimizes the negative effect of low-quality (occluded) features and maximizes the positive effect of the high-quality (unoccluded) features that contribute most to the face recognition task.
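The difference between the two strategies can be illustrated on a feature vector in which every entry carries a quality score q in [0, 1], with 1 meaning unoccluded. The sketch below is hypothetical and does not reproduce the paper's method. The quality scores, the 0.5 exclusion threshold and the linear weighting from `min_weight` to 1 are all assumptions chosen for illustration, not the paper's weight ranges.

```python
def dynamic_size(features, quality, threshold=0.5):
    """'Dynamic size' sketch: exclude features whose quality is below threshold.

    Returns the reduced vector and the kept indices; gallery vectors would have
    to be reduced with the same indices before matching.
    """
    if len(features) != len(quality):
        raise ValueError("features and quality must have the same length")
    kept = [i for i, q in enumerate(quality) if q >= threshold]
    return [features[i] for i in kept], kept


def dynamic_weight(features, quality, min_weight=0.1):
    """'Dynamic weight' sketch: keep every feature, but scale it by a weight that
    grows linearly with quality, from min_weight (fully occluded) to 1.0."""
    if len(features) != len(quality):
        raise ValueError("features and quality must have the same length")
    return [f * (min_weight + (1.0 - min_weight) * q)
            for f, q in zip(features, quality)]


feats = [2.0, 4.0, 6.0, 8.0]
qual = [1.0, 0.0, 1.0, 0.2]   # the second and fourth features come from occluded areas
reduced, kept = dynamic_size(feats, qual)
weighted = dynamic_weight(feats, qual)
```

In this toy case the first function shrinks the vector to the two unoccluded entries. The second keeps all four entries and damps the occluded ones, so the vector length stays the same, as described for the second DFSS method.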

The face identification and verification results of both proposed DFSS methods, which enforce a dynamic feature size or weight, show a clear improvement over standard features. The first DFSS method, ‘Dynamic size’, offered the largest improvement when used with Gabor-based features, whereas the second, ‘Dynamic weight’, gave the larger improvement with PCA-based features, in both identification and verification.

Acknowledgement: The authors would like to thank King Abdulaziz University Scientific Endowment for funding the research reported in this paper.

Funding Statement: This research was supported and funded by KAU Scientific Endowment, King Abdulaziz University, Jeddah, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. M. Deriche, “Trends and challenges in mono and multi biometrics,” in 2008 First Workshops on Image Processing Theory, Tools and Applications, Sousse, pp. 1–9, 2008.

2. K. Juneja and C. Rana, “An extensive study on traditional-to-recent transformation on face recognition system,” Wireless Personal Communications, vol. 118, pp. 1–54, 2021.

3. S. Bharadwaj, M. Vatsa and R. Singh, “Biometric quality: A review of fingerprint, iris, and face,” EURASIP Journal on Image and Video Processing, vol. 2014, no. 1, pp. 1–28, 2014.

4. W. Wan and J. Chen, “Occlusion robust face recognition based on mask learning,” in 2017 IEEE Int. Conf. on Image Processing, Beijing, China, pp. 3795–3799, 2017.

5. M. Zou, M. You and T. Akashi, “Reconstruction of partially occluded facial image for classification,” IEEJ Transactions on Electrical and Electronic Engineering, vol. 16, no. 4, pp. 600–608, 2021.

6. C. Shirley, N. R. Mohan and B. Chitra, “Gravitational search-based optimal deep neural network for occluded face recognition system in videos,” Multidimensional Systems and Signal Processing, vol. 32, no. 1, pp. 189–215, 2021.

7. Z. Jianxin and W. Junyong, “Local occluded face recognition based on HOG-LBP and sparse representation,” in IEEE Int. Conf. on Artificial Intelligence and Computer Applications, Dalian, China, 2020.

8. J. Madarkar and P. Sharma, “Occluded face recognition using noncoherent dictionary,” Journal of Intelligent & Fuzzy Systems, vol. 38, no. 5, pp. 1–13, 2020.

9. J. Jang, H. Yoon and J. Kim, “Improvement of identity recognition with occlusion detection-based feature selection,” Electronics, vol. 10, no. 2, pp. 167, 2021.

10. M. Koc, “A novel partition selection method for modular face recognition approaches on occlusion problem,” Machine Vision and Applications, vol. 32, no. 1, pp. 1–11, 2021.

11. J. Wu, Z. Shang, K. Wang, J. Zhai, Y. Wang et al., “Partially occluded head posture estimation for 2D images using pyramid HoG features,” in 2019 IEEE Int. Conf. on Multimedia & Expo Workshops, Shanghai, China, pp. 507–512, 2019.

12. Y. Li, K. Guo, Y. Lu and L. Liu, “Cropping and attention based approach for masked face recognition,” Applied Intelligence, vol. 51, pp. 1–14, 2021.

13. K. Bommidi and S. Sundaramurthy, “A compressed string matching algorithm for face recognition with partial occlusion,” Multimedia Systems, vol. 27, no. 2, pp. 191–203, 2021.

14. M. Sharma, S. Prakash and P. Gupta, “An efficient partial occluded face recognition system,” Neurocomputing, vol. 116, no. 12, pp. 231–241, 2013.

15. J. X. Mi, D. Lei and J. Gui, “A novel method for recognizing face with partial occlusion via sparse representation,” Optik, vol. 124, no. 24, pp. 6786–6789, 2013.

16. L. Song, D. Gong, Z. Li, C. Liu and W. Liu, “Occlusion robust face recognition based on mask learning with pairwise differential Siamese network,” in Proc. of the IEEE/CVF Int. Conf. on Computer Vision, Seoul, Korea, pp. 773–782, 2019.

17. S. Park, H. Lee, J. H. Yoo, G. Kim and S. Kim, “Partially occluded facial image retrieval based on a similarity measurement,” Mathematical Problems in Engineering, vol. 2015, no. 1, pp. 1–11, 2015.

18. M. Gomez-Barrero, P. Drozdowski, C. Rathgeb, J. Patino, M. Todisco et al., “Biometrics in the era of COVID-19: Challenges and opportunities,” arXiv preprint arXiv: 2102.09258, 2021.

19. R. Min, A. Hadid and J. L. Dugelay, “Efficient detection of occlusion prior to robust face recognition,” Scientific World Journal, vol. 2014, no. 3, pp. 1–10, 2014.

20. G. N. Priya and R. W. Banu, “Occlusion invariant face recognition using mean based weight matrix and support vector machine,” Sadhana, vol. 39, no. 2, pp. 303–315, 2014.

21. A. S. Kornilov and I. V. Safonov, “An overview of watershed algorithm implementations in open source libraries,” Journal of Imaging, vol. 4, no. 10, pp. 123, 2018.

22. A. M. Martinez, “The AR face database,” CVC Technical Report 24, 1998.

23. A. S. Georghiades, P. N. Belhumeur and D. J. Kriegman, “From few to many: Illumination cone models for face recognition under variable lighting and pose,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, no. 6, pp. 643–660, 2001.

24. K. C. Lee, J. Ho and D. J. Kriegman, “Acquiring linear subspaces for face recognition under variable lighting,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 5, pp. 684–698, 2005.

25. D. Zeng, R. Veldhuis and L. Spreeuwers, “A survey of face recognition techniques under occlusion,” arXiv preprint arXiv: 2006.11366, 2020.

26. W. Zheng, C. Gou and F. Y. Wang, “A novel approach inspired by optic nerve characteristics for few-shot occluded face recognition,” Neurocomputing, vol. 376, no. 4, pp. 25–41, 2020.

27. A. Priadana and M. Habibi, “Face detection using Haar cascades to filter selfie face image on Instagram,” in 2019 Int. Conf. of Artificial Intelligence and Information Technology, Yogyakarta, Indonesia, pp. 6–9, 2019.

28. A. Obukhov, “Haar classifiers for object detection with Cuda,” in GPU Computing Gems Emerald Edition. Burlington, USA: Elsevier, pp. 517–544, 2011.

29. E. S. Jaha, “Augmenting Gabor-based face recognition with global soft biometrics,” in 2019 7th Int. Sym. on Digital Forensics and Security, Kerala, India, pp. 1–5, 2019.

30. E. S. Jaha, “Efficient Gabor-based recognition for handwritten Arabic-Indic digits,” International Journal of Advanced Computer Science and Applications, vol. 10, no. 1, pp. 112–120, 2019.

31. R. Lionnie and M. Alaydrus, “Biometric identification system based on principal component analysis,” in 2016 12th Int. Conf. on Mathematics, Statistics, and Their Applications (ICMSA), Banda Aceh, Indonesia, pp. 59–63, 2016.

32. H. M. Ebied, “Feature extraction using PCA and Kernel-PCA for face recognition,” in 2012 8th Int. Conf. on Informatics and Systems (INFOS), Giza, Egypt, pp. 72–77, 2012.

33. H. M. Maw, K. Z. Lin and M. T. Mon, “Evaluation of face recognition techniques for facial expression analysis,” in Int. Conf. on Intelligent Computing, Communication & Convergence (ICCC-2015), Odisha, India, 2015.

34. Z. Rustam and S. Kharis, “Comparison of support vector machine recursive feature elimination and kernel function as feature selection using support vector machine for lung cancer classification,” Journal of Physics: Conference Series, vol. 1442, no. 1, pp. 12027, 2020.

35. M. Mohammadi, T. A. Rashid, S. H. T. Karim, A. H. M. Aldalwie, Q. T. Tho et al., “A comprehensive survey and taxonomy of the SVM-based intrusion detection systems,” Journal of Network and Computer Applications, vol. 178, no. 4, pp. 102983, 2021.

36. E. E. Bron, M. Smits, W. J. Niessen and S. Klein, “Feature selection based on the SVM weight vector for classification of dementia,” IEEE Journal of Biomedical and Health Informatics, vol. 19, no. 5, pp. 1617–1626, 2015.

37. A. Rakotomamonjy, “Variable selection using SVM-based criteria,” Journal of Machine Learning Research, vol. 3, pp. 1357–1370, 2003.

38. S. Hao, J. Hu, S. Liu, T. Song, J. Guo et al., “Improved SVM method for internet traffic classification based on feature weight learning,” in 2015 Int. Conf. on Control, Automation and Information Sciences, Changshu, China, pp. 102–106, 2015.

39. D. Mladenić, J. Brank, M. Grobelnik and N. Milic-Frayling, “Feature selection using linear classifier weights: Interaction with classification models,” in Proc. of the 27th Annual Int. ACM SIGIR Conf. on Research and Development in Information Retrieval, Xi’an, China, pp. 234–241, 2004.

40. X. Wang and Q. He, “Enhancing generalization capability of SVM classifiers with feature weight adjustment,” in Int. Conf. on Knowledge-Based and Intelligent Information and Engineering Systems, Wellington, New Zealand, pp. 1037–1043, 2004.

41. M. Sokolova, N. Japkowicz and S. Szpakowicz, “Beyond accuracy, F-score and ROC: A family of discriminant measures for performance evaluation,” in Australasian Joint Conf. on Artificial Intelligence, Cairns, Australia, pp. 1015–1021, 2006.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |