DOI:10.32604/iasc.2022.020365

Intelligent Automation & Soft Computing

Article

Application of XR-Based Virtuality-Reality Coexisting Course

1Shenzhen Polytechnic, Shenzhen, 518000, China

2The University of Chicago, Chicago, 60637, United States

*Corresponding Author: Chun Xu. Email: zxuchun@szpt.edu.cn

Received: 20 May 2021; Accepted: 21 June 2021

Abstract: Significant advances in emerging technologies such as fifth-generation mobile networks (5G), extended reality (XR), and artificial intelligence (AI) enable extensive three-dimensional (3D) experience and interaction. Vivid, dynamic 3D virtual displays and immersive experiences will become the new normal in the near future. The XR-based virtuality-reality co-existing classroom goes beyond the limitations of Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR). XR also integrates the digital and physical worlds and thereby creates a smart classroom in which virtuality and reality co-exist. In this paper, we present an application of XR that enables human-environment interaction. By combining theoretical explanation with a practice platform, and the virtual with the real, we use XR to construct a new type of class. We develop teaching methods based on digital enabling, a building information model library, and offline XR immersive teaching, and design a mixed teaching method that combines the virtual and the real and is available in both online and offline formats. The developed system shows students the working scene as it appears in practice. We take the steel structure construction technology course in civil engineering and architecture as an example, to help learners develop their theoretical understanding and practical skills. We also discuss the application and development of the virtuality-reality co-existing class. This work shows the feasibility and significance of new learning methods and of the reconstruction and transformation of learning spaces empowered by new technologies in the digital era.

Keywords: XR (extended reality); virtuality-reality co-existing; curriculum design

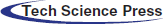

The Cone of Learning (see Fig. 1) was proposed by the National Training Laboratories, Maine, USA [1]. According to this model, after two weeks learners retain 70% of the knowledge acquired through practice (i.e., the average learning retention rate). The model also indicates that learners who immediately teach what they have learned to others retain up to 90% of the learning material. This corroborates the importance of practice.

Nevertheless, in some disciplines, practicing the taught material is not easily attainable. For instance, in traditional medical education, which relies mainly on animal specimens, cadavers, and teaching aids, the main issues are insufficient resources and high risks to patient safety [2]. Another example is aircraft design and operation, where, without physical observation, it is difficult for students to master the subject using only conventional multimedia such as pictures, animations, and videos [3]. Similarly, many civil engineering concepts cannot be demonstrated on site because of the long construction cycle and the extent of hidden works (e.g., pile foundation works, slope supporting works), especially as some of these operations are high-risk. Hence, in many cases, students are unable to gain experience with complex and high-risk experiments that are closely tied to actual projects (e.g., steel structure installation) [4].

To address the above issues, technological solutions are urgently needed that simulate actual scenes and support the construction of virtuality-reality co-existing classrooms. Such a classroom significantly improves the learners’ experience and meets the surging demand for diversified learning scenarios. In 2019, China Digital Library and Filchuang Technology Co., Ltd. cooperated to develop the “XR Global Digital Content Center” in Shanghai to integrate the virtual and real worlds through extended reality (XR). Such technologies offer further possibilities in education, creating learning experiences that are otherwise hard to realize.

In this paper, we introduce a mixed teaching method that combines the virtual and the real and is available both online and offline. It is based on improving the teaching method through digital enabling, using a building information model library as the teaching carrier, and offline XR immersive teaching. The steel structure construction technology course in civil engineering and architecture is used as an example to show how XR restores the real working scenario and helps learners better understand the concepts and apply their knowledge in practice.

Figure 1: Cone of Learning (National Training Laboratories)

2 Development of Virtual Reality Technology

In 1956, M. Heilig developed a motorcycle simulator, Sensorama, with a three-dimensional display, stereo sound, and the ability to generate vibration. His 1962 patent “Sensorama Simulator” includes some basic ideas of VR technology [5]. In 1973, Krueger proposed the term “Artificial Reality”, an early term for VR [6]. Since then, research on virtual reality technology has developed significantly. VR is a technology that provides an immersive experience in an interactive three-dimensional environment generated by a computer. It is often based on the integration of computer graphics with various display and control interface devices.

According to Virtual Reality Technology [3] by Grigore Burdea and Philippe Coiffet, virtual reality has three characteristics: Immersion, Interactivity, and Imagination. These are also referred to as the “3I” characteristics of VR. VR presents users with a virtual situation and allows them to interact with the objects in it to develop an immersive experience. Nevertheless, the immersive features of VR technology separate users from their actual surroundings, which limits their mobility [7].

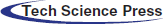

In 1997, Ronald Azuma, a professor at the University of North Carolina, defined Augmented Reality (AR) in a paper titled “A Survey of Augmented Reality” [2]. By his definition, AR is a technology that tracks position and viewing angle relative to the real world in real time and combines the real view with corresponding virtual images and 3D objects. By superimposing virtual objects and information (e.g., objects, images, video, voice, maps) on real space, an AR system has the following three characteristics: virtuality-reality co-existence, real-time interaction, and three-dimensional registration. As an extension of AR, mixed reality (MR) was then proposed by Mann, a professor at the University of Toronto [8], and refers to the digitalization of the natural world. In other words, MR results in a new environment created through integration with the virtual world. In MR, objects of the natural world may coexist and interact with virtual objects. In 1994, Paul Milgram and Fumio Kishino proposed the real-virtual continuum model [9] (see Fig. 2), which takes the real and virtual environments as the two ends of a continuous system. In this model, the element closest to the real environment is called Augmented Reality, and the element closest to the virtual environment is called Augmented Virtuality.

“Extended reality” or “cross reality”, also known as “XR” or “ER”, refers to a real-virtual environment for human-machine interaction generated by computers and wearable devices. XR can be regarded as an umbrella term covering VR, AR, MR, and other immersive technologies that may emerge with technological progress. The “X” can stand for V(R), A(R), or M(R), or for any point on the “virtuality-reality continuum” model. XR therefore covers all hardware, software, methods, and immersive experiences that integrate the physical and virtual worlds [10].

Figure 2: Reality-virtuality continuum
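The ordering of the continuum in Fig. 2 can be made concrete with a small sketch. The four landmark names follow the description above; the integer positions, the class name `ContinuumStage`, and the helper functions are illustrative assumptions, and the rule that mixed reality spans everything strictly between the two ends is taken from Milgram and Kishino's taxonomy [9] rather than from this text.

```python
from enum import IntEnum


class ContinuumStage(IntEnum):
    """Landmarks on the reality-virtuality continuum, ordered from real to virtual."""
    REAL_ENVIRONMENT = 0
    AUGMENTED_REALITY = 1
    AUGMENTED_VIRTUALITY = 2
    VIRTUAL_ENVIRONMENT = 3


def is_mixed_reality(stage: ContinuumStage) -> bool:
    """True for any stage strictly between the two ends of the continuum."""
    return ContinuumStage.REAL_ENVIRONMENT < stage < ContinuumStage.VIRTUAL_ENVIRONMENT


def nearest_end(stage: ContinuumStage) -> ContinuumStage:
    """Return the end of the continuum that a stage lies closest to."""
    # Positions are illustrative integers, so the midpoint is 1.5.
    midpoint = (ContinuumStage.REAL_ENVIRONMENT + ContinuumStage.VIRTUAL_ENVIRONMENT) / 2
    if stage < midpoint:
        return ContinuumStage.REAL_ENVIRONMENT
    return ContinuumStage.VIRTUAL_ENVIRONMENT
```

In this sketch, Augmented Reality resolves to the real end and Augmented Virtuality to the virtual end, which mirrors how the model places them.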

In XR, physical objects are integrated with simulated situations to generate an experience in both reality and virtuality. According to [11], XR has the following four characteristics: context-awareness, feeling substitution, intuitive interaction, and reality editing. Ideally, in XR, the virtual objects in the computer can interact and communicate with the operators and also with real objects.

In 1991, Wyckoff and Mann developed a head-mounted display device able to present XR visual pictures. Their device combined high-dynamic-range image processing techniques with VR and AR technologies to improve the sensory abilities [12]. Besides expanding and augmenting the real environment, XR technology lets users maintain their perception of the surrounding scene. It adds virtual digital information to the real world and introduces real-world objects into the virtual environment.

Human-environment interactive XR technology constructs a new virtuality-reality co-existing classroom by combining theoretical explanation and practice platforms. This approach offers safety, vivid reproduction of scenes, lower cost than the actual scenes, and repeatable operation and training. The integration of teaching, learning, and practice enables the implementation of Piaget’s concept. Such technology also supports “moving the laboratory to the classroom” and constructivist learning theory, which holds that “learning is an experience of a real-world situation” [13].

3 Technical Framework of XR-Based Virtuality-Reality Co-Existing Classroom

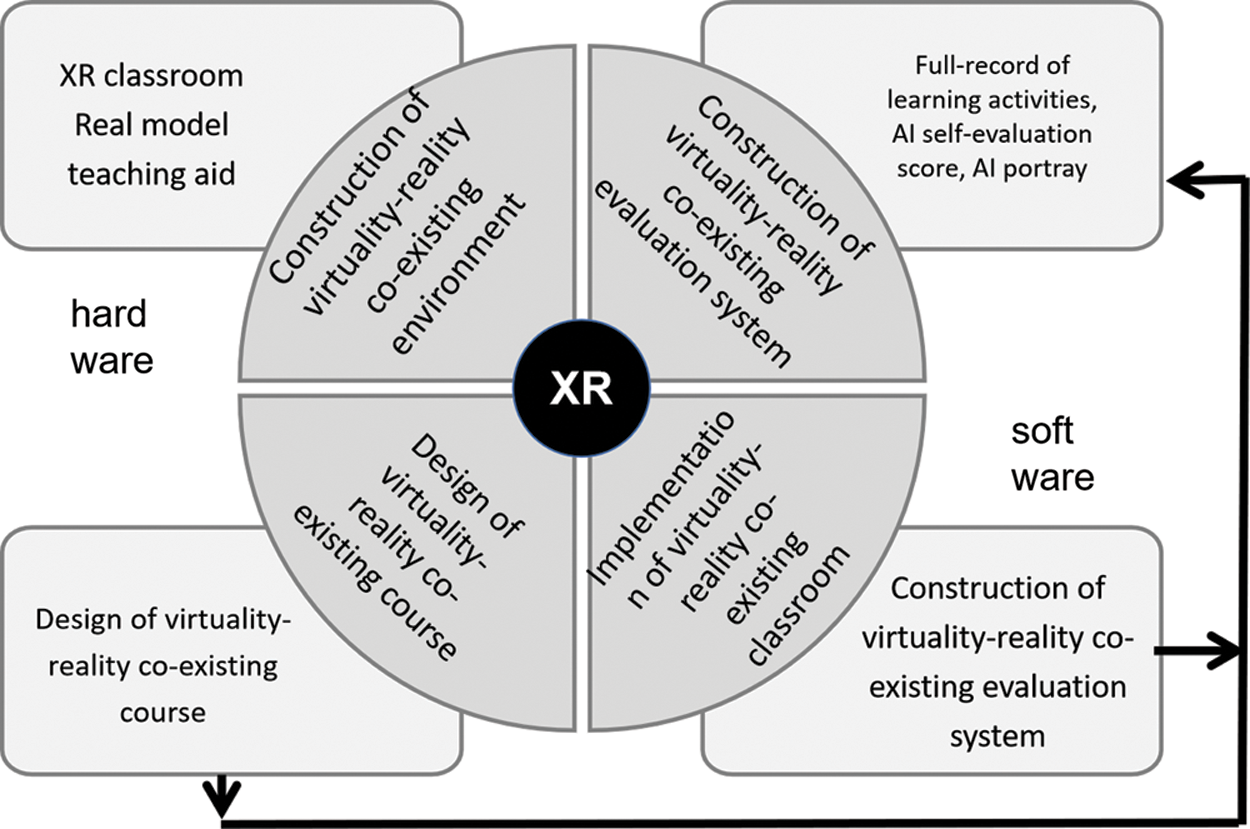

Development of the virtuality-reality co-existing classroom (Fig. 3) includes the construction of both hardware and software conditions. The objective of the hardware design is to implement the virtuality-reality co-existing environment. The objective of the software development is to construct the teaching design of the virtuality-reality co-existing classroom. This includes the design of a virtuality-reality co-existing course, the implementation of a virtuality-reality co-existing classroom, and the construction of a virtuality-reality co-existing evaluation system.

Figure 3: The technical framework of the XR-based virtuality-reality co-existing classroom

3.1 Virtuality-Reality Coexist Environment

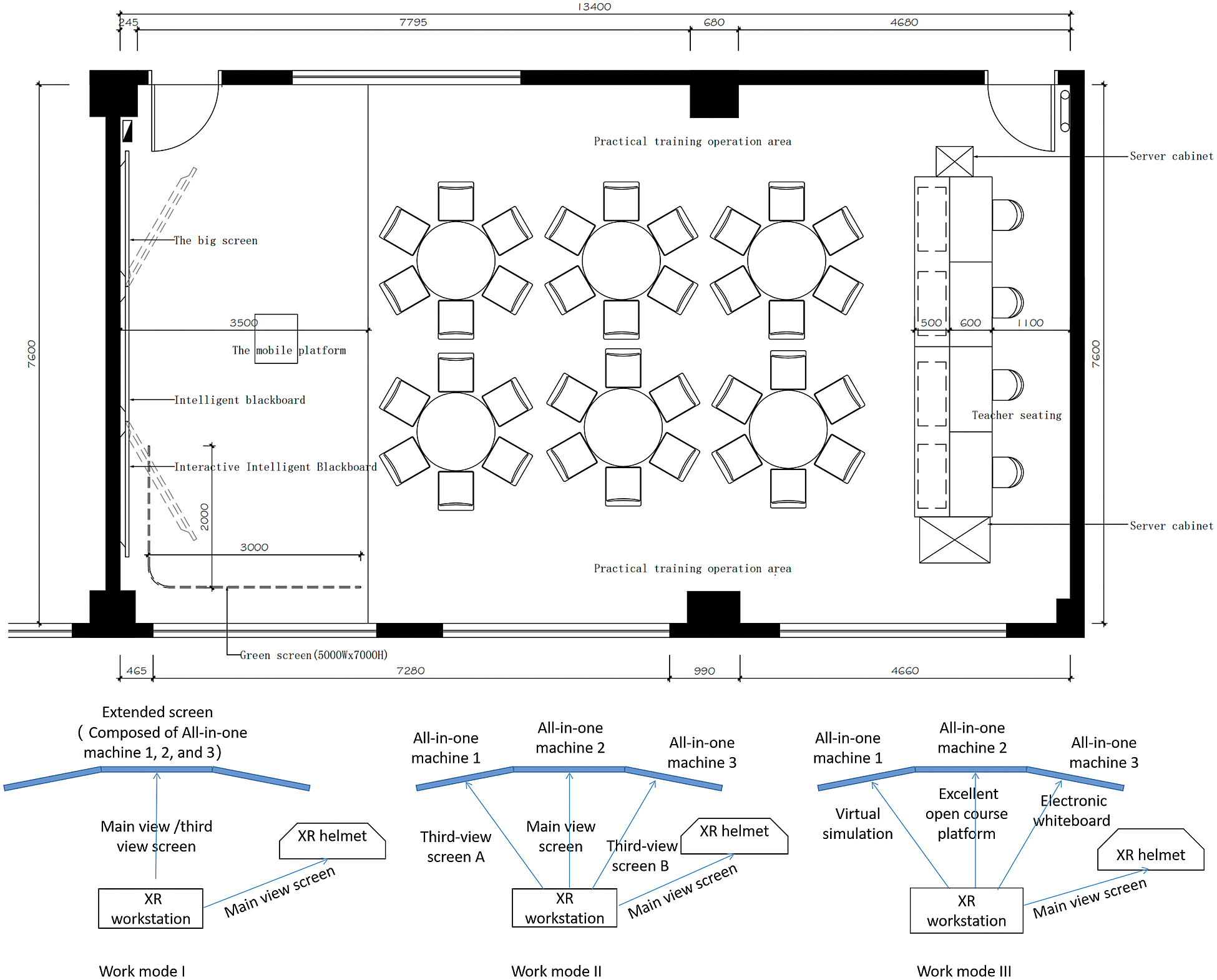

This component covers the in-depth integration of virtuality and reality to create unlimited interactions. Combining teaching resources, communication technologies, and mobile terminals makes multi-scene ubiquitous learning possible [14]. This component also implements dynamic real-time transmission and verification, and goes beyond the boundary of virtuality-reality interaction by adding a sensing system. It further generates the virtual content and integrates it with reality through the digital twin (i.e., the generation or collection of digital data representing a physical object). This creates unlimited interaction and provides a highly immersive learning scenario [15]. The arrangement of the XR classroom is shown in Fig. 4.

Figure 4: The arrangement of the XR classroom and its working principles

3.2 Teaching Design of the Virtuality-Reality Co-Existence

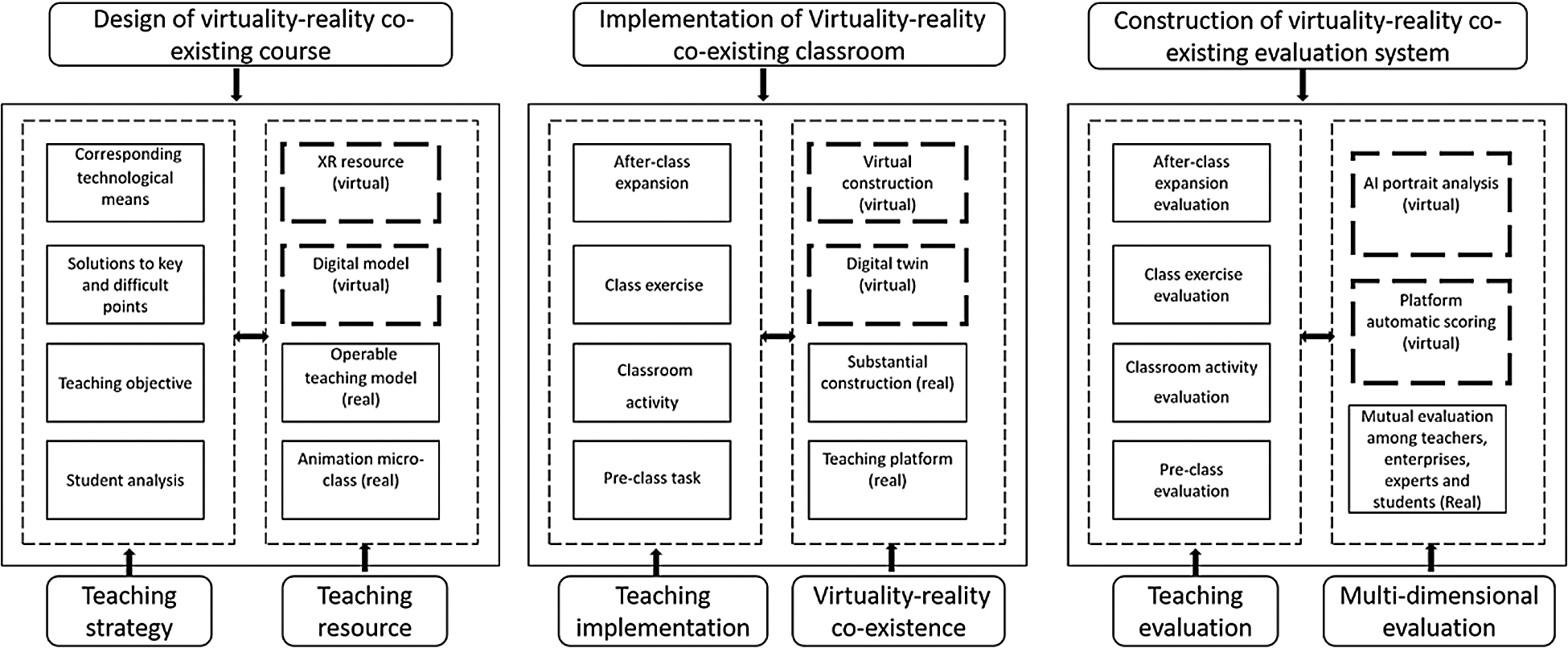

As shown in Fig. 5, the course development includes the following tasks:

i. Design of the virtuality-reality co-existing course: The teaching design stage mainly comprises two tasks: teaching strategy design and construction of the teaching resources. Teaching strategy design uses the talent training program and an analysis of the learning situation to determine the teaching objectives and the difficult points in teaching. It then optimizes the teaching process and the application of the available technologies and methods. Teaching resource construction includes the allocation of physical resources (e.g., animated micro classes and teaching aid models), digital models, and XR resources.

ii. Implementation of the virtuality-reality co-existing classroom: In teaching implementation, XR is integrated into the entire teaching process before, during, and after the class. The virtual-real, 3D XR classroom highlights the value of emotional experience and social interaction through real-time interaction. This encourages the initiative of both teachers and students as far as possible. Furthermore, the teachers and students are immersed in vivid classroom activities, which significantly improves the learning experience [16].

iii. Development of the virtuality-reality co-existing evaluation system: In the teaching evaluation stage, an individualized electronic learning portfolio is generated for each learner from the complete record of classroom activities. The teaching is then dynamically adjusted according to the overall and individual portrait analysis so that students can be taught according to their aptitude.

Figure 5: The course development tasks
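The evaluation task in item iii can be illustrated with a minimal sketch of an electronic learning portfolio. The paper does not describe the data model, so the class names, the score scale from 0 to 1, the knowledge-point labels, and the 0.6 threshold below are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class ActivityRecord:
    knowledge_point: str  # hypothetical label for what the activity assessed
    score: float          # assumed scale: fraction correct in [0, 1]


@dataclass
class LearningPortfolio:
    learner_id: str
    records: list = field(default_factory=list)

    def add(self, knowledge_point: str, score: float) -> None:
        """Record the outcome of one classroom activity."""
        self.records.append(ActivityRecord(knowledge_point, score))

    def mastery(self) -> dict:
        """Individual portrait: average score per knowledge point."""
        grouped = defaultdict(list)
        for record in self.records:
            grouped[record.knowledge_point].append(record.score)
        return {kp: mean(scores) for kp, scores in grouped.items()}

    def weak_points(self, threshold: float = 0.6) -> list:
        """Knowledge points whose average falls below an assumed threshold."""
        return sorted(kp for kp, m in self.mastery().items() if m < threshold)


def class_portrait(portfolios) -> dict:
    """Overall portrait: mean mastery per knowledge point across learners."""
    grouped = defaultdict(list)
    for portfolio in portfolios:
        for kp, m in portfolio.mastery().items():
            grouped[kp].append(m)
    return {kp: mean(values) for kp, values in grouped.items()}
```

A teacher-facing tool could use `weak_points` to adjust tasks for an individual learner and `class_portrait` to decide which topics to revisit with the whole class.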

4 Practical Application of XR Technology in Higher Vocational Education

We consider a civil engineering course, “steel structure construction technology”, to illustrate the practical application of VR technology in higher vocational education. Due to the complexity of the process, it is almost impossible to carry out steel structure installation in a lab or a classroom so that students can gain experience with actual structures. It is therefore challenging for students to master the topic and to understand the real situation on construction sites. Because of the safety risks in construction and the severe shortage of on-site training staff, it is also often difficult to arrange on-site practice for the students. Developing a virtual training system based on VR technology is therefore an ideal and affordable way to address these issues.

This course is composed of three modules: Module 1: Steel Structure Recognition, Module 2: Steel Structure BIM Modeling, and Module 3: Steel Structure Fabrication and Installation. In the XR classroom, the hardware is already available, and we therefore focus on developing the software.

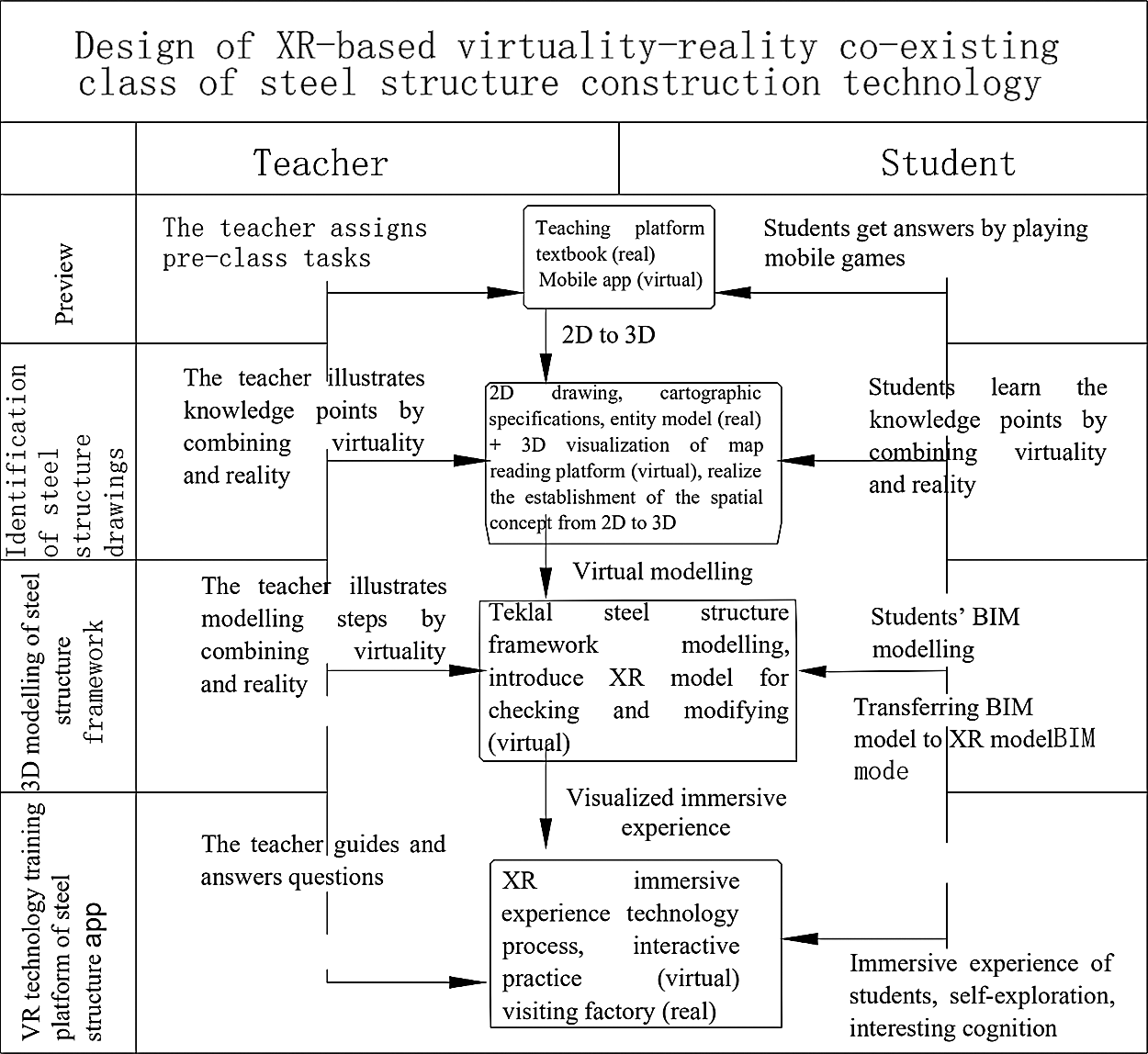

From the students’ perspective, it is essential to work with real engineering cases and to analyze them carefully. One challenge is to design customized teaching aid models that can be used in step with classroom teaching for work that cannot be demonstrated on-site. XR resources are then developed to resolve the difficult points of the course (see Fig. 6). In the following, we elaborate on the design steps.

Figure 6: The course design flowchart

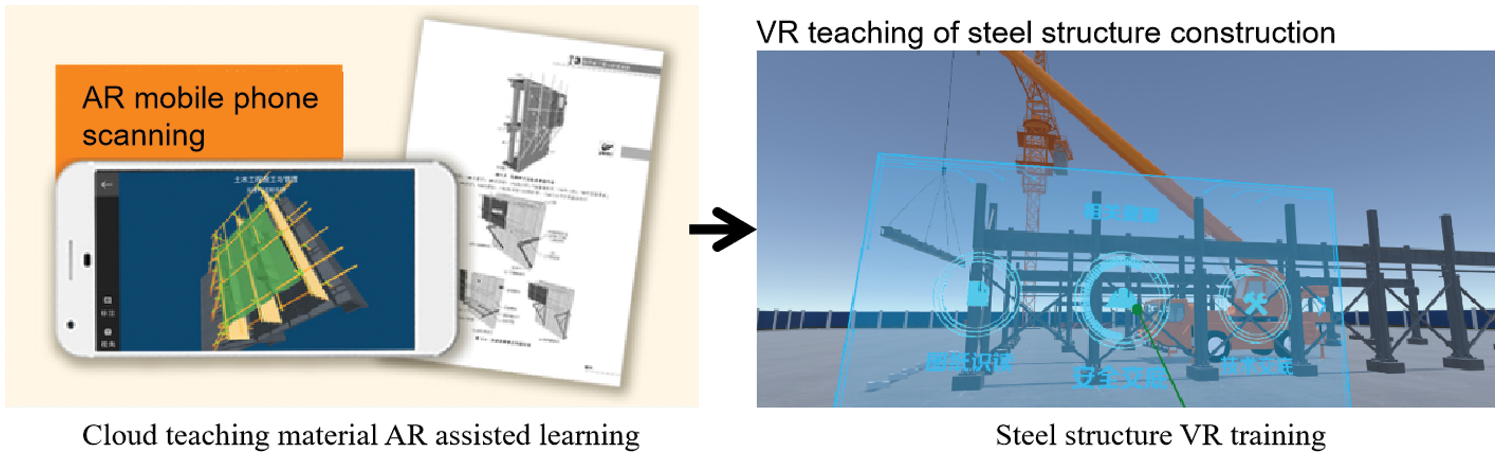

(1) Development of cloud teaching materials using AR technology: These materials assist reading, simulate real scenes, and address the difficulty that theory and practice, and likewise two-dimensional drawings and three-dimensional real scenes, do not correspond well.

(2) Addressing the teaching difficulties in module 1, steel structure drawing recognition: The main objectives here are to facilitate the training of spatial thinking and to develop the “Steel Structure 3D Simulation Recognition Training Platform”. We use detailed drawings of common steel structure joints as the two-dimensional teaching material. The three-dimensional model is then generated and displayed. The system is equipped with professional voice-over explanations of the joints and simulated on-site teaching by experts. We also provide 3D models that can be split easily and assembled step by step, with support for one-click quick assembly and restoration of the overall components.

The platform also supports dynamic display of the sectioning process of the joints and intelligent matching of the joint details to the section position. The same interface shows a two-dimensional diagram of the current section, which can be zoomed in and out and moved. The joint member dimensions are displayed in three-dimensional space with a strong stereoscopic effect and correspond precisely to their matching positions. The joint members can also be viewed from all directions, and all joints can be zoomed, moved, and rotated through 360 degrees, which is convenient for learning. After the students complete the exercises in the training platform, it automatically produces a score and a portrait analysis.

(3) To support model error checking and to address the teaching difficulty of steel structure BIM modeling in module 2, the “one-click VR conversion” software is developed. The software converts the 3D model created by the students so that it can be imported into the VR system, checked, and then modified. Through these steps, virtual construction is performed.

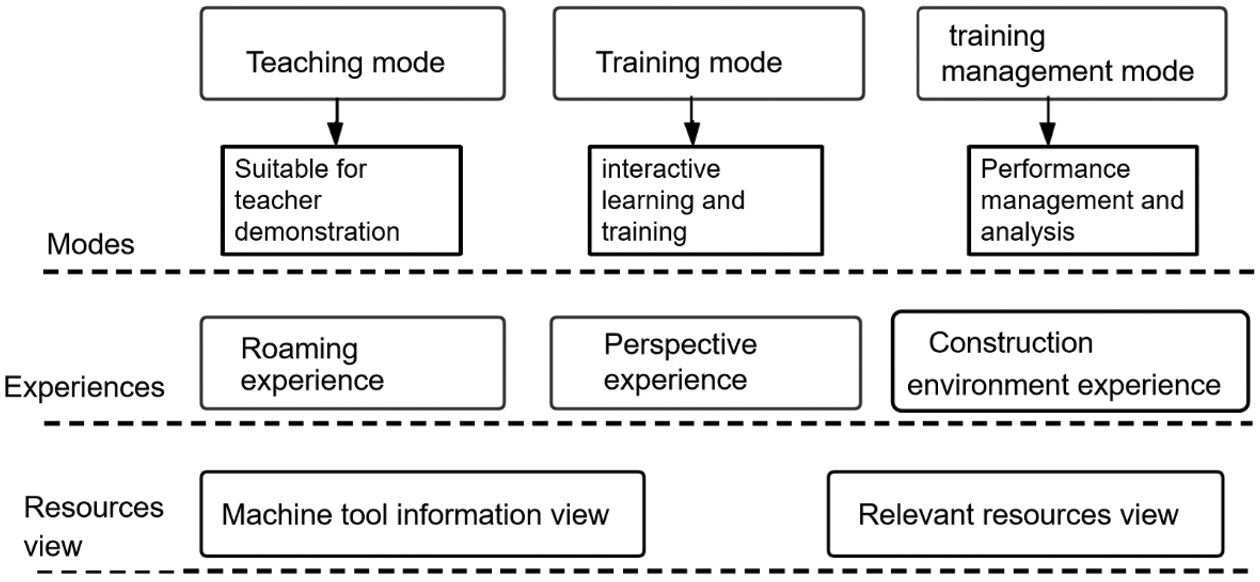

(4) To address the lack of an intuitive sense of the construction and installation processes, and the difficulty of steel structure production and installation in module 3, the “steel structure VR training platform” is developed. This platform has three modes: the teaching mode, the training mode, and the training management mode [17]. It also provides three kinds of simulated experience: the roaming experience, the perspective experience, and the construction environment experience. Finally, it offers two kinds of resource viewing: construction equipment viewing and related resources viewing (see Fig. 7).

Figure 7: Functional architecture diagram of the steel structure VR training platforms

i. Three operational modes:

Teaching mode: In this mode, the construction of the steel structure hoisting project can be played and paused. This allows focused observation and learning, and is suitable for teaching demonstrations by the teachers.

Practical training mode: This mode is for interactive learning and training in the VR environment. It sets practical training questions based on the knowledge points to be mastered. After answering the questions, the student can progress to the next process. The number and content of the questions can be edited.

Training management mode: This mode is accessible through a unified management interface. Its main functions include managing the VR equipment terminals, the students’ accounts, the training course data resource packages, and the students’ VR training task completion status and assessment scores. This mode also analyzes knowledge blind spots and feeds them back.

ii. Three experiences:

Roaming experience: The user can roam and walk around the scene. There are two ways to move: walking physically, and moving by pointing the ray of the handheld controller.

Perspective experience: The user can choose a viewing height and interact with the scene from the corresponding height.

Construction environment experience: The user can adjust the lighting brightness and experience the construction environment at different times of day.

iii. The two resources:

Machine tool information view: The user can check the XR model of the machine tool used in the construction process.

Relevant resources view: The user can view drawings, safety and technical instructions, plan reviews, project summaries, and other relevant materials.
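The practical training mode described above, in which a student must answer a question before moving on to the next process, could be sketched as a simple gated sequence. This is only an illustration under stated assumptions: the class names, the exact-match answer check, and the placeholder steps and questions are hypothetical and are not taken from the platform.

```python
from dataclasses import dataclass


@dataclass
class Checkpoint:
    step: str      # name of a construction process (placeholder values below)
    question: str  # editable training question tied to a knowledge point
    answer: str    # expected answer, compared case-insensitively


class TrainingSession:
    def __init__(self, checkpoints):
        self.checkpoints = list(checkpoints)  # number and content are editable
        self.position = 0
        self.attempts = 0

    @property
    def finished(self) -> bool:
        return self.position >= len(self.checkpoints)

    def current_step(self):
        """Name of the process the student is currently on, or None when done."""
        return None if self.finished else self.checkpoints[self.position].step

    def submit(self, answer: str) -> bool:
        """Advance to the next process only when the answer is correct."""
        if self.finished:
            raise RuntimeError("training already completed")
        self.attempts += 1
        expected = self.checkpoints[self.position].answer
        correct = answer.strip().lower() == expected.lower()
        if correct:
            self.position += 1
        return correct


# Placeholder content; real questions would come from the course question bank.
session = TrainingSession([
    Checkpoint("process 1", "question 1", "a"),
    Checkpoint("process 2", "question 2", "b"),
])
```

The `attempts` counter is the kind of record a management mode could collect to report completion status and to locate knowledge blind spots.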

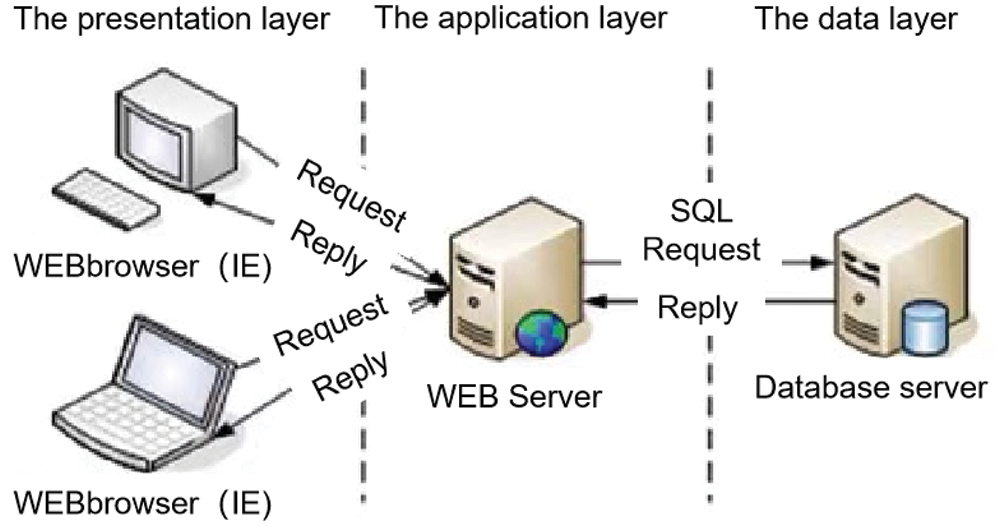

The system uses a B/S (browser/server) architecture, in which the core of the system’s functionality is implemented on the server. The client only needs a browser, e.g., Netscape Navigator or Internet Explorer, while the server hosts database tools such as SQL Server, Oracle, or MySQL. The browser interacts with the database through the Web Server (Fig. 8).

Figure 8: The B/S system architecture
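The browser/server split in Fig. 8 can be illustrated with a minimal sketch using only the Python standard library. It is not the platform's implementation: SQLite stands in for the server-side database, the `scores` table and its rows are made up, and the function names are hypothetical. The point is only that the browser sends a request, and all query logic runs behind the web server.

```python
import json
import sqlite3
from urllib.parse import parse_qs


def make_database():
    """Create an in-memory database standing in for the server-side DBMS."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE scores (student TEXT, module INTEGER, score REAL)")
    db.executemany(
        "INSERT INTO scores VALUES (?, ?, ?)",
        [("s001", 1, 85.0), ("s001", 2, 78.5), ("s002", 1, 90.0)],  # made-up rows
    )
    db.commit()
    return db


def make_app(db):
    """Build a WSGI application; the database is only reachable through it."""
    def app(environ, start_response):
        query = parse_qs(environ.get("QUERY_STRING", ""))
        student = query.get("student", [""])[0]
        rows = db.execute(
            "SELECT module, score FROM scores WHERE student = ? ORDER BY module",
            (student,),  # parameterized to keep user input out of the SQL text
        ).fetchall()
        body = json.dumps({"student": student, "scores": rows}).encode("utf-8")
        start_response("200 OK", [("Content-Type", "application/json"),
                                  ("Content-Length", str(len(body)))])
        return [body]
    return app


def serve(port=8000):
    """Optionally serve the app locally; the client then only needs a browser."""
    from wsgiref.simple_server import make_server
    with make_server("127.0.0.1", port, make_app(make_database())) as server:
        server.serve_forever()
```

Calling `serve()` and opening `http://127.0.0.1:8000/?student=s001` in any browser would return that student's rows as JSON, with no software installed on the client beyond the browser.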

4.3 Teaching Application Examples

The steel structure frame is the classroom teaching task, and the steel structure roof truss is the after-school extension task. The teaching modules include drawing recognition, three-dimensional modeling, and steel structure production and installation. Both cases are based on actual projects. The advantage is that the teaching can be organized around the actual work process, which motivates the students to learn. The main disadvantage is that fewer joints are covered, which is not conducive to extensive learning of the knowledge points. We therefore add different types of joints to the project. To make the selected knowledge points closer to actual projects, the joint detail drawings are taken from the national standard atlas.

Module 1 is the learning stage for steel structure drawings. To help students read two-dimensional drawings faster, we developed web-based steel structure drawing training software and set up simulation training on the MOOC platform, which supports the shift from two-dimensional planar thinking to three-dimensional spatial thinking (Fig. 9). We further set up a question bank, and the students complete the test through the MOOC platform.

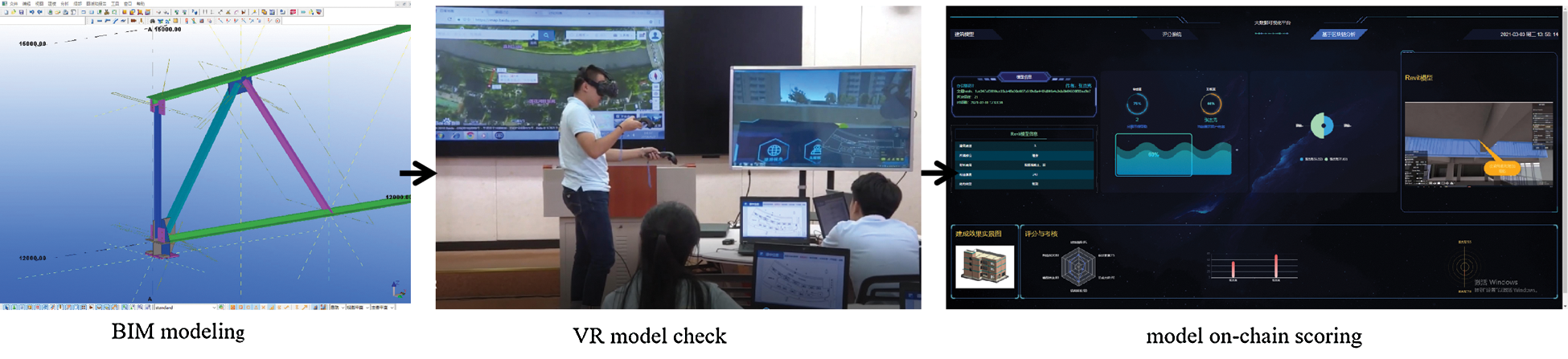

Figure 9: Classroom teaching process in Project 1

Module 2 is steel structure BIM modeling. In this project, the student performs BIM modeling according to the drawings. The built model is then transformed into a VR model through the designed software. The model is checked in the virtual world and, if required, returned to the student for modification. The modified model is then uploaded to the blockchain, through which it is scored by the teacher (Fig. 10). In summary, the classroom teaching and training process for Module 2 includes digital modeling, VR model checking, and model submission to the blockchain.

Figure 10: Classroom teaching process in Project 2
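The paper does not say which blockchain is used or how submissions are stored on it. As a rough illustration of why a chained record suits submission and scoring, the following sketch keeps an append-only hash chain in memory: each block stores the hash of the previous one, so a later change to a submitted model hash or a score becomes detectable. The class `SubmissionLedger`, its methods, and the block fields are all assumptions made for this example, and the sketch omits everything that makes a real blockchain distributed.

```python
import hashlib
import json


class SubmissionLedger:
    """Append-only hash chain of model submissions and scores (illustrative only)."""

    GENESIS = "0" * 64  # assumed placeholder for the first block's predecessor

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _digest(payload: dict) -> str:
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def _append(self, record: dict) -> int:
        prev_hash = self.blocks[-1]["hash"] if self.blocks else self.GENESIS
        block = dict(record, index=len(self.blocks), prev_hash=prev_hash)
        block["hash"] = self._digest(block)  # hash covers every other field
        self.blocks.append(block)
        return block["index"]

    def submit_model(self, student: str, model_bytes: bytes) -> int:
        """Record a fingerprint of the student's modified model."""
        model_hash = hashlib.sha256(model_bytes).hexdigest()
        return self._append({"type": "submission", "student": student,
                             "model_hash": model_hash})

    def score_model(self, submission_index: int, score: float) -> int:
        """Record the teacher's score as a new block that references the submission."""
        if self.blocks[submission_index]["type"] != "submission":
            raise ValueError("can only score a submission block")
        return self._append({"type": "score", "submission": submission_index,
                             "score": score})

    def verify(self) -> bool:
        """Recompute every hash and link; False if any block was altered."""
        prev_hash = self.GENESIS
        for block in self.blocks:
            body = {k: v for k, v in block.items() if k != "hash"}
            if block["prev_hash"] != prev_hash or self._digest(body) != block["hash"]:
                return False
            prev_hash = block["hash"]
        return True
```

Scores are appended as separate blocks rather than written into the submission block, because editing an existing block would invalidate its hash.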

Module 3 covers the production and installation of steel structures. In this project, the students can view the steel structure VR training platform, BIM data, videos, and materials in real time in the VR environment. Through simulation, advanced lighting-effect rendering, and rich functional interaction, the students can watch realistic construction scenes built from the digital model, as well as a virtual reproduction of the construction process (see Fig. 11).

Figure 11: Classroom teaching process in Project 3

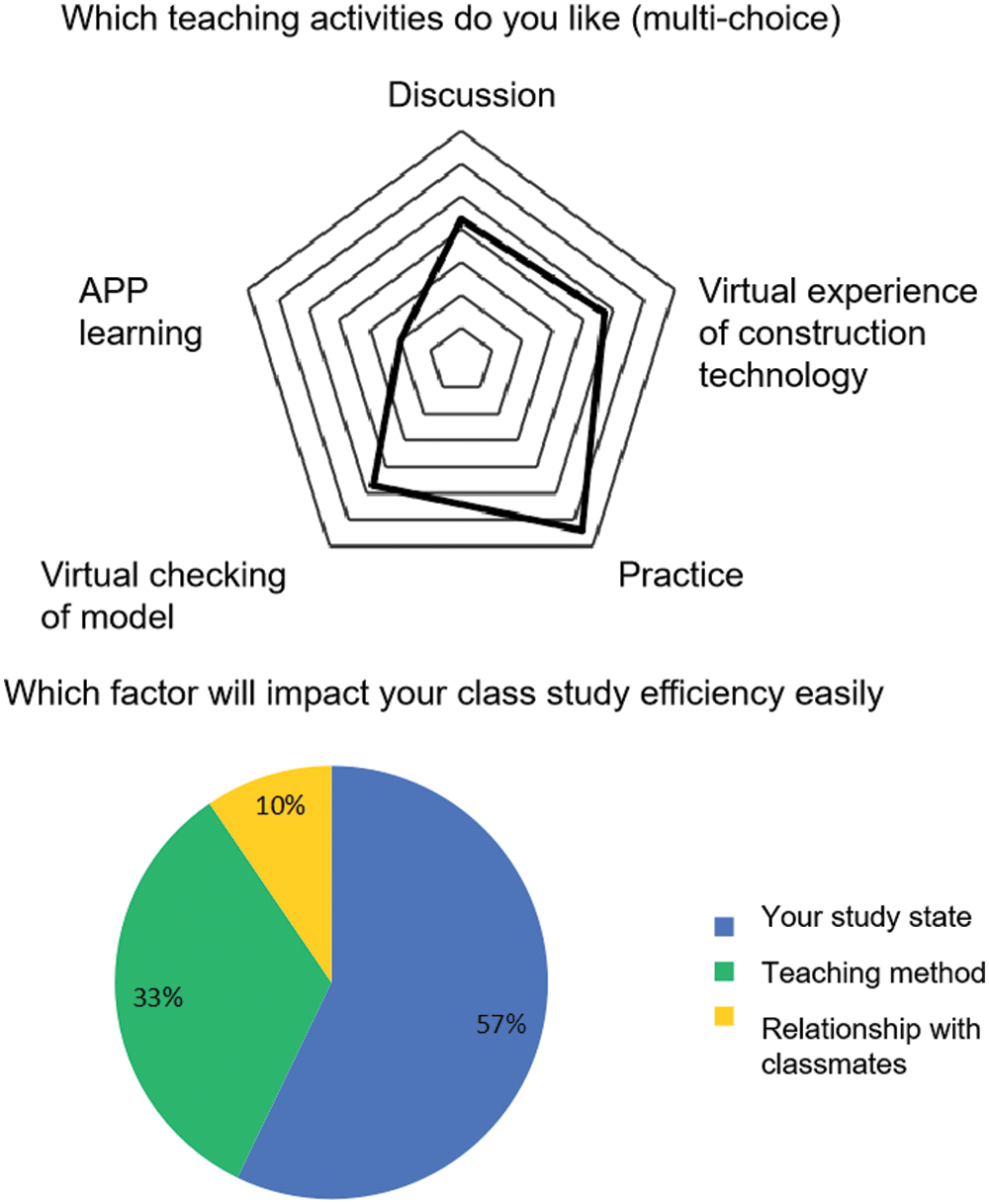

After completing the steel structure construction technology course, the students were asked to fill in an online questionnaire. The seventy-two completed questionnaires indicate that the students liked the practical training the most (Fig. 12), followed by the virtual model inspection and the virtual process experience. The students also believe that their learning efficiency is greatly affected by the learning method and the teacher’s training approach (Fig. 12). Analysis of the students’ answers and of the completed extension homework indicates that, through the course, they learned to read the construction drawings skillfully and to build the detailed design model of the steel structure. Nevertheless, their accuracy and speed of completion need to be improved. Overall, the survey results suggest that the virtuality-reality co-existing classroom is suitable for this course.

Figure 12: Students’ feedback on the teaching of a virtuality-reality co-existing class of steel structure construction technology

5.1 Case Improvement and Generalization

1. In this case, the knowledge of steel structure drawing, modeling, production, and installation has been taught through three modules. However, due to the limited class time, the mechanical analysis of the connection joints is not covered. Therefore, a simulation experiment on steel structure joints should be developed, the experimental outcomes should be visualized, and the analysis of the joints should be conducted visually and in reality, so that the obscure mechanical principles are explained through vivid graphics.

2. VR requires special glasses and handheld controllers. Therefore, virtual simulation training courses are not yet practical on the online MOOC platform. Technological developments are expected to make such a system more widely usable in the near future.

3. This case is based on the XR teaching mode, which combines virtual and real environments, exposes the students to both theory and practice, and helps them understand diagrams, models, manufacturing processes, and installation methods. It can be extended to the study of reinforced concrete structures, masonry structures, wood structures, and decoration engineering in civil engineering, and also to other engineering topics.

In this paper, we outlined the system framework of the virtuality-reality co-existing classroom based on XR technology. We discussed the steps involved, including developing the XR-based environment, curriculum design, classroom implementation, and the evaluation system. The digital, multi-scene, and multi-resource characteristics of the virtuality-reality co-existing curriculum satisfy the demand for digital transformation and diversified learning and open up many possibilities for future classrooms. The main conclusions are as follows.

1. Technology promotes changes in classroom teaching from the perspectives of application scenarios and learners’ demands. XR-based virtuality-reality co-existing classroom teaching is a driving force for the innovation and reform of classroom-based education.

2. The complex virtual environment created in a virtuality-reality co-existing classroom facilitates the implementation of digital twins and digital twin learning spaces. Data provenance and scientific evaluation can also enrich and extend the evaluation dimensions, making a personalized teaching environment possible.

3. Labs with fixed seats and partition walls cannot adapt to the demands of XR-based virtuality-reality co-existing classrooms. Therefore, smart classrooms with XR equipment are required, in which seats can be moved and space is divided for physical assembly and virtual construction.

4. The operating mechanism, framework, functions, teaching contents, and teaching design of the XR-based virtuality-reality co-existing classroom need further research and development. Such research can provide practical results for teaching reform in the digital age, which affects the methods of study, the study process, and evaluation.

The development of 5G and front-projected holographic displays [18], together with the superposition of XR, artificial intelligence (AI), holographic technology, and virtual reality, collectively enables new learning spaces [19]. In such learning spaces, virtual reality enables automation and intelligence.

Acknowledgement: The authors would like to express their gratitude to EditSprings (https://www.editsprings.com/) for the expert linguistic services provided.

Funding Statement: This work was supported by the Teaching Reform Fund of the Ministry of Education Science and Technology Development Center (2018B01003) and the Education and Science Programming Project of Shenzhen City (dwzz19013).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. A. Abayomi-Alli, S. Misra, L. Fernández-Sanz, O. Abayomi-Alli and A. Edun, “Genetic algorithm and tabu search memory with course sandwiching (GATS_CS) for university examination timetabling,” Intelligent Automation and Soft Computing, vol. 26, no. 3, pp. 385–396, 2020.

2. R. T. Azuma, “A survey of augmented reality,” Presence: Teleoperators & Virtual Environments, vol. 6, no. 4, pp. 355–385, 1997.

3. G. C. Burdea and P. Coiffet, “VR programming,” in Virtual Reality Technology, 2nd edition, vol. 1. New Jersey: Wiley-IEEE Press, pp. 210–215, 2003.

4. S. Cai, P. Wang, Y. Yang and S. Liu, “Overview of the educational application of augmented reality (AR) technology,” Distance Education Magazine, vol. 34, no. 5, pp. 27–40, 2016.

5. M. Heilig, “Sensorama simulator (U.S. Patent No. 3,050,870),” US Patent and Trademark Office, 1962. [Online]. Available: https://patents.google.com/patent/US3050870A/en.

6. M. W. Krueger and S. Wilson, “VIDEOPLACE: A report from the artificial reality laboratory,” Leonardo, vol. 18, no. 3, pp. 145–151, 1985.

7. J. Radianti, T. A. Majchrzak, J. Fromm and I. Wohlgenannt, “A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda,” Computers & Education, vol. 147, pp. 103778, 2020.

8. S. Mann, J. C. Havens, J. Iorio, Y. Yuan and T. Furness, “All reality: Values, taxonomy, and continuum, for virtual, augmented, extended/mixed (X), mediated (X,Y), and multimediated reality/intelligence,” in Augmented World Expo Conf., Santa Clara, California, USA, 2018.

9. P. Milgram and F. Kishino, “A taxonomy of mixed reality visual displays,” IEICE Transactions on Information and Systems, vol. 77, no. 12, pp. 1321–1329, 1994.

10. J. Ratcliffe, F. Soave, N. Bryan-Kinns, L. Tokarchuk and I. Farkhatdinov, “Extended reality (XR) remote research: A survey of drawbacks and opportunities,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, pp. 1–13, 2021.

11. S. Mann, T. Furness, Y. Yuan, J. Iorio and Z. Wang, “All reality: Virtual, augmented, mixed (X), mediated (X,Y), and multimediated reality,” arXiv preprint, arXiv:1804.08386, 2018.

12. S. Mann, “Using the device as a reality mediator,” in Intelligent Image Processing, 1st edition, New Jersey: Wiley-IEEE Press, pp. 99–105, 2002.

13. Z. Fadeeva, Y. Mochizuki, K. Brundiers, A. Wiek and C. L. Redman, “Real-world learning opportunities in sustainability: From classroom into the real world,” International Journal of Sustainability in Higher Education, vol. 11, no. 4, pp. 308–324, 2010.

14. Q. Wang, F. Zhu, Y. Leng, Y. Ren and J. Xia, “Ensuring readability of electronic records based on virtualization technology in cloud storage,” Journal on Internet of Things, vol. 1, no. 1, p. 33, 2019.

15. M. Grieves, “Digital twin: Manufacturing excellence through virtual factory replication,” White Paper, vol. 1, pp. 1–7, 2014.

16. E. Hu-Au and J. J. Lee, “Virtual reality in education: A tool for learning in the experience age,” International Journal of Innovation in Education, vol. 4, no. 4, pp. 215–226, 2017.

17. Q. Zang, “Using the learning pyramid theory to improve high school mathematics teaching,” Mathematics Teaching, vol. 2, no. 1, pp. 8–9, 2011.

18. H. Lin, S. Xie and Y. Luo, “Construction of a teaching support system based on 5G communication technology,” in Advances in Computer, Communication and Computational Sciences. New York: Springer, pp. 179–186, 2021.

19. J. A. Fisher, “Designing lived space: Community engagement practices in rooted AR,” in Augmented and Mixed Reality for Communities, 1st edition, vol. 5. Florida: CRC Press, 2021.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.