DOI:10.32604/iasc.2022.022241

Intelligent Automation & Soft Computing

| Article |

Abnormality Identification in Video Surveillance System using DCT

1Centre for Cyber Physical Systems, School of Computer Science and Engineering, Vellore Institute of Technology (VIT), Chennai, 600127, India

2Department of Computer Science and Engineering, SRM Institute of Science and Technology, Vadapalani Campus, Chennai, 600026, India

3Department of Computer Science and Engineering, SRM Institute of Science and Technology, Kattankulathur, Chennai, 603203, India

4School of Computer Science and Engineering, Vellore Institute of Technology (VIT), Chennai, 600127, India

5Department of Information Technology, M. Kumarasamy College of Engineering, Karur, 639113, India

6Department of Computer Science and Engineering, KCG College of Technology, Chennai, 600097, India

7Department of Computer Science and Engineering, Saveetha School of Engineering, SIMATS, Chennai, 602105, India

*Corresponding Author: A. Balasundaram. Email: balasundaram.a@vit.ac.in

Received: 01 August 2021; Accepted: 02 September 2021

Abstract: Video surveillance plays a vital role in observing the activities that take place in secured and unsecured environments. The main aim of deploying a surveillance system is to spot abnormalities in specific areas such as airports, military zones, forests and other remote areas. This paper presents a new block-based strategy that identifies unusual circumstances by examining pixel-wise frame movement instead of following the standard object-based approaches. The density and the speed of the movement are extracted using optical flow. The proposed strategy recognizes unusual movement and differences by using discrete cosine transform coefficients. Our goal is a simple block-based Discrete Cosine Transform (DCT) strategy that supports real-time abnormality detection. The proposed approach has been evaluated on an airport dataset, and the unusual events detected in it are evaluated and reported.

Keywords: Abnormality detection; anomaly detection; DCT strategy; video surveillance

In most existing video surveillance systems, only objects that are in motion are identified and tracked. The actions that lead to the movement of an object are not tracked. However, it is equally important to track a person’s movement in order to detect abnormal activities. Moreover, most surveillance systems merely record the scene, and abnormal events are classified through human intervention, with security personnel identifying them manually. This manual intervention should be removed: the surveillance system should be intelligent enough to recognize abnormal events on its own and report them to the concerned authorities automatically. In the first phase, the proposed system recognizes the regions that have been subject to change. In the second phase, the system computes the relevant data pertaining to the changes, including the speed of motion, the acceleration and the route of movement, and accordingly provides a representation of the current state of the object. In the last stage, the video is examined by comparing the state constraints with pre-standardized constraints, which yields the details of the unusual activities [1,2]. Using a fixed criterion, the outline, the movement and additional data pertaining to the objects are extracted from the image series. The intermediate state representation and replication is performed using a Hidden Markov Model (HMM). The state representation retrieved from the image series is compared with a standard action model [3,4], and if the resulting values do not match, the action is deemed anomalous. However, the occurrence of unusual actions disrupts the organized learning process. An extensive range of unusual actions cannot be stated easily because of the intricacy of their occurrence and movements, as described in [5,6].

In [7,8], the input video is separated into sections according to certain rules, and attributes are extracted from every sub-section. Each sub-section is represented by a vector. A grouping technique and similarity measures are applied to those vectors, and a sub-video is deemed irregular only if it shows very low similarity. In real time, it is very complex to recognize unusual actions. To detect irregular behavior such as burglary, fighting and chasing, Jian-hao and Li [9] proposed a technique that identifies actions based on the disorder of the speed and the direction of movement. However, this technique cannot differentiate between the three unusual actions. Cheng et al. [10] proposed a method that identifies cyclic activities and distinguishes the cyclic motion of a flexible moving object, for instance to find human running behavior. A descriptor derived from the cyclic action representation is used to recognize the running behavior. To satisfy the real-time requirements of a surveillance system, this paper proposes a technique that identifies unusual running actions in surveillance video in accordance with spatio-temporal constraints. First, the foreground objects are extracted from the video segments using a Gaussian mixture representation together with frame subtraction [11,12]. The input images are converted into binary images. Nonlinear structures are involved in the foreground object detection algorithm [13–15].

Although various strategies and object handling methods are used in practice to support tracking in crowded areas, tracking scenes in a crowded area raises more difficulties than tracking small sequences. For instance, it is highly difficult to recognize a targeted object in a crowded area because of the size of the object and other factors such as occlusion and the relative movement of other objects. To overcome these difficulties, various solutions have been proposed in which researchers track each unit of the targeted object. Some researchers have proposed algorithms based on removing the foreground. A plan for recognizing and observing the temporal strategy for a crowded area is presented. Initially, various attributes are used to recover the content of each of the leading frames involved in the operation. Once every object is identified, the Gaussian Mixture Model (GMM) is used. In this section, we describe the recognition of unusual behavior in a wider sense, for instance, the unexpected actions of a person. Researchers have tried to extend several methods that are commonly used for video surveillance. Unexpected changes in the scene, such as changes in lighting or weather, and difficulties such as identifying the action are addressed using the GMM. Individual events are identified in the sequence by identifying the action of every person. Then the “vision.BlobAnalysis” object is used to analyze the individual objects. Blob analysis is instrumental in identifying individual objects. Before blob analysis is performed, the objects are segmented from the background using the GMM, and morphological operations are then applied to remove noise and extract the boxes containing the connected components.

3 Problem Statement and Motivation

Even though several trajectory-based techniques work well in various applications, they have some primary drawbacks. First, executing the process as a pipeline may result in a fragile design in which errors percolate through the subsequent phases. Second, the system tracks numerous objects and needs complex algorithms that are very computation intensive; as a result, multi-object tracking is not always effective in crowded regions. In this task, the feature and color data of surveillance video are poor. Third, tracking is effective for rigid objects such as vehicles and trains, but it is not suitable for shapeless motion such as waves on water or trees shaking in the wind. To overcome these disadvantages, some authors have recently proposed learning techniques that rely on features other than motion trajectories. In such cases, object tracking is not required and pixel-level attributes are considered instead. The primary objective of this paper is to monitor the actions of objects and classify them effectively as normal or abnormal events.

To protect people, it is essential to examine their behavior and determine whether their actions are usual or unusual. Several keywords are used across research works to denote similar notions (abnormal, exceptional, different, exciting, doubtful and irregular). An abnormality can be defined as a measurement whose probability under normal conditions is less than a threshold value. Atypical behavior refers to an action that has not been observed earlier; unusual actions are considered abnormal. Human activity becomes abnormal when people engage in deviant activities such as mugging, robbery and other criminal acts. To differentiate abnormal from normal activities (running), two definitions are given here. Definition-1 describes the normal activity behavior of a human and Definition-2 describes the abnormal activity behavior.

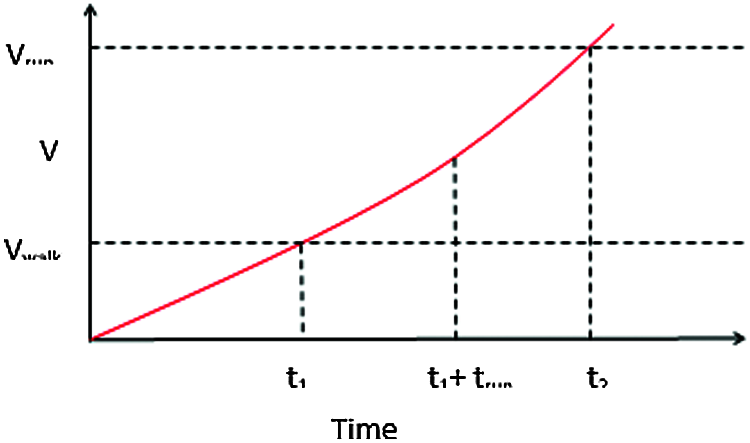

Definition-1: In the normal state, a human accelerates slowly and gradually from a starting level to a higher level of speed over time. If the moving speed of the object exceeds the normal speed only after a long interval of time, the behavior is defined as normal activity; it is represented in Fig. 1 and in Eq. (1).

Figure 1: Normal human movement

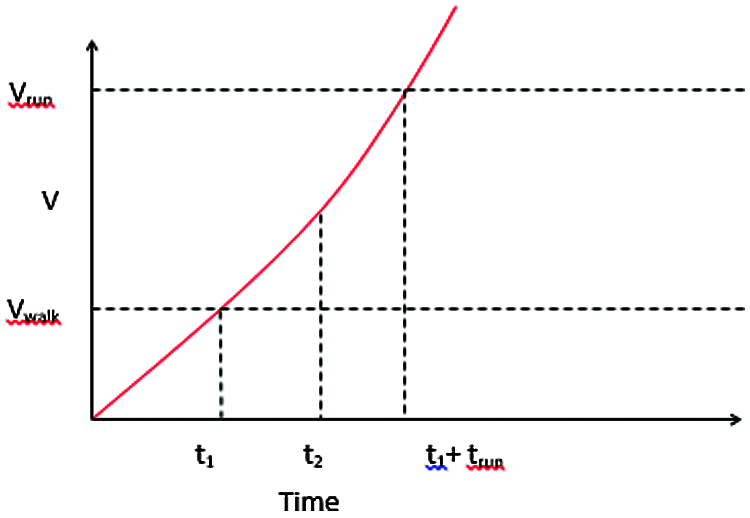

Definition-2: A change in the speed of a human object from the normal state to the speedy state with high and sudden acceleration represents abnormal activity. If the speed of the speedy state exceeds that of the normal state within a short interval of time, the behavior is defined as abnormal activity, which is depicted in Fig. 2 and in Eq. (2).

The normal state represents the walking state of the human and the speedy state represents the running state. The running state can also be riding a cycle or driving a car, a van, etc. V0 is the initial velocity, V_normal is the velocity of the walking state, V_speedy is the velocity of the running state and t is the time taken for the walking or running state. The steeper the slope of velocity over time, the higher the chance of a potential anomaly.

Figure 2: Abnormal human movement

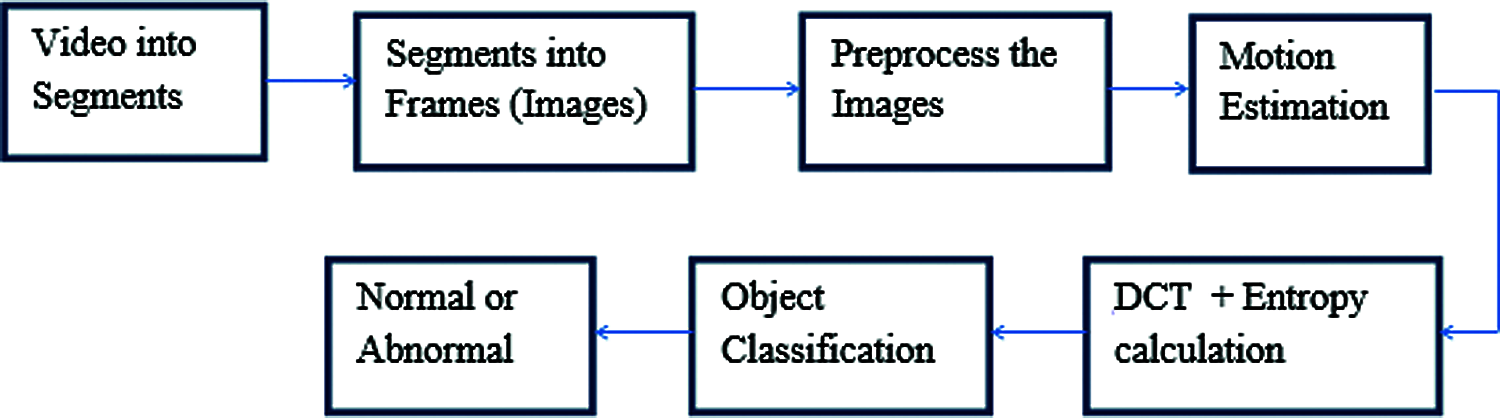
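Definitions 1 and 2 separate normal from abnormal movement by how steeply the velocity rises over time. A minimal Python sketch of that idea follows. It assumes a constant-acceleration approximation, and the function names, the `max_slope` threshold and the sample values are hypothetical; they are not taken from Eqs. (1) and (2).

```python
def acceleration(v_start, v_end, duration):
    """Average acceleration, i.e. the slope of the velocity-time line."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    return (v_end - v_start) / duration


def is_abnormal(v_start, v_end, duration, max_slope):
    """Flag a speed change as abnormal when its slope exceeds max_slope."""
    return acceleration(v_start, v_end, duration) > max_slope


# Hypothetical values (m/s and s), chosen only to illustrate the rule.
gradual = is_abnormal(0.0, 1.5, 10.0, max_slope=1.0)  # slow build-up over 10 s
sudden = is_abnormal(1.5, 6.0, 1.5, max_slope=1.0)    # abrupt speed-up in 1.5 s
print(gradual, sudden)
```

In practice the slope threshold would have to be tuned to the scene, since the apparent speed in pixels depends on camera placement.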

A general model, as shown in Fig. 3, is designed in accordance with the speed characteristics learned from motion at the pixel level. It leads to an image that expresses the motion in the sequence. The data content of this image is then examined in the frequency domain by calculating the DCT (Discrete Cosine Transform) coefficients over the restricted motion area. The behavior of the objects must be examined effectively in every frame. Understanding motion involves examining and identifying motion patterns and describing them at a high level. To decide whether events are usual or unusual with respect to their behavior characteristics, the model compares every segment with the median value over time. The DCT is adopted because of the real-time requirement, since it can be computed efficiently.

In accordance with the motion characteristics, unusual events are recognized and extracted by a motion estimation method. Within an image sequence, motion estimation aims to identify the areas corresponding to moving objects such as cars and human beings. The features are based on pixel-wise optical flow, which is one of the most common methods for capturing motion [16]. In computer vision, the calculation of optical flow is known to be imprecise, especially when applied to noisy data. To mitigate this drawback, the optical flow is computed with the Lucas-Kanade algorithm [17] in every frame. When optical flow is used for motion estimation, it relies on the flow vectors of moving objects, so the displacement of every pixel from image to image is represented explicitly by a vector [18]. The optical flow outputs the displacement of every pixel in both the vertical and the horizontal direction. To obtain the motion magnitude, the two displacements are combined. To process these motion vectors, the pixel values are replaced by the computed motion magnitude. Every frame is also partitioned into segments. During abnormal activities, the moving pattern and the energy of the objects change; hence the motion vectors of the image and its objects differ from those of normal activity.
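The two steps at the end of this paragraph, combining the horizontal and vertical displacements into a magnitude map and partitioning the frame into segments, can be sketched in plain Python as follows. The helper names and the toy flow field are assumptions made for illustration; a real system would take the displacements from a Lucas-Kanade implementation.

```python
import math


def motion_magnitude(flow_x, flow_y):
    """Combine per-pixel horizontal and vertical displacements into a magnitude map."""
    return [
        [math.hypot(dx, dy) for dx, dy in zip(row_x, row_y)]
        for row_x, row_y in zip(flow_x, flow_y)
    ]


def split_into_blocks(image, rows, cols):
    """Partition a 2-D list into rows x cols blocks, returned in row-major order.

    Assumes the image height and width are divisible by rows and cols.
    """
    height, width = len(image), len(image[0])
    bh, bw = height // rows, width // cols
    blocks = []
    for r in range(rows):
        for c in range(cols):
            block = [line[c * bw:(c + 1) * bw] for line in image[r * bh:(r + 1) * bh]]
            blocks.append(block)
    return blocks


# Toy 2 x 4 flow field standing in for real optical flow output.
flow_x = [[3.0, 0.0, 0.0, 0.0], [0.0, 0.0, 6.0, 0.0]]
flow_y = [[4.0, 0.0, 0.0, 0.0], [0.0, 0.0, 8.0, 0.0]]
magnitude = motion_magnitude(flow_x, flow_y)
left, right = split_into_blocks(magnitude, rows=1, cols=2)
```

Here `left` and `right` are the two halves of the magnitude map; calling `split_into_blocks` with `rows=2, cols=2` on a full frame would give four quadrants.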

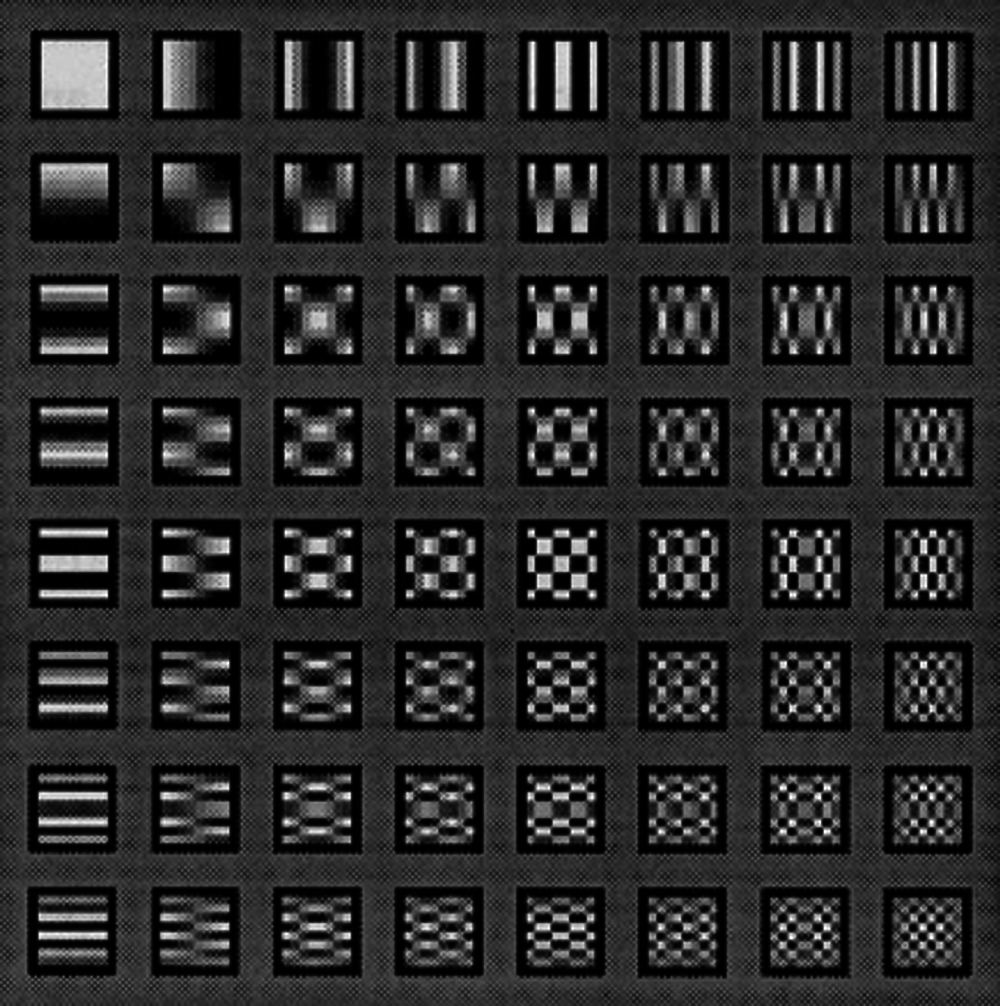

Discrete Cosine Transform (DCT) is used to separate the image into parts of differing significance with respect to visual quality. The DCT is analogous to the Fourier transform in that the image is transformed from the spatial domain to the frequency domain. It is convenient to describe the DCT as a set of basis functions that are known for a given input array size. Fig. 3 shows the 8 × 8 case. Though the DCT is similar to the Fourier transform, it achieves the approximation with fewer coefficients. The one-dimensional DCT of n data items is given by Eq. (3):

The two-dimensional DCT of an N × M image is given by Eq. (4):
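As a hedged illustration of the one- and two-dimensional transforms, the sketch below implements the common DCT-II in plain Python. The orthonormal scaling factors are an assumption, since normalization conventions differ between texts, and the names `dct_1d` and `dct_2d` are illustrative. The direct summation is quadratic in the signal length; a production system would use a fast library routine instead.

```python
import math


def dct_1d(signal):
    """Orthonormal DCT-II of a 1-D sequence, computed by direct summation."""
    n = len(signal)
    result = []
    for k in range(n):
        # Scaling that makes the transform orthonormal (assumed convention).
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        total = sum(
            x * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
            for i, x in enumerate(signal)
        )
        result.append(scale * total)
    return result


def dct_2d(block):
    """Separable 2-D DCT-II: transform every row, then every column."""
    rows = [dct_1d(row) for row in block]
    cols = [dct_1d(list(col)) for col in zip(*rows)]
    # Transpose back so that result[u][v] uses u for vertical frequency.
    return [list(row) for row in zip(*cols)]


# A flat 4 x 4 block: all energy should land in the DC coefficient.
flat = [[10.0] * 4 for _ in range(4)]
coeffs = dct_2d(flat)
```

For the flat block, only `coeffs[0][0]` is non-zero, which reflects the energy compaction property the text relies on.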

Figure 3: 8 × 8 DCT basis function

The DCT is applied to every segment because it provides a compact representation of the signal’s energy. Finally, the DCT coefficients are used to measure the information content of the segment [19]. The entropy, as defined in [20], is shown in Eq. (5).

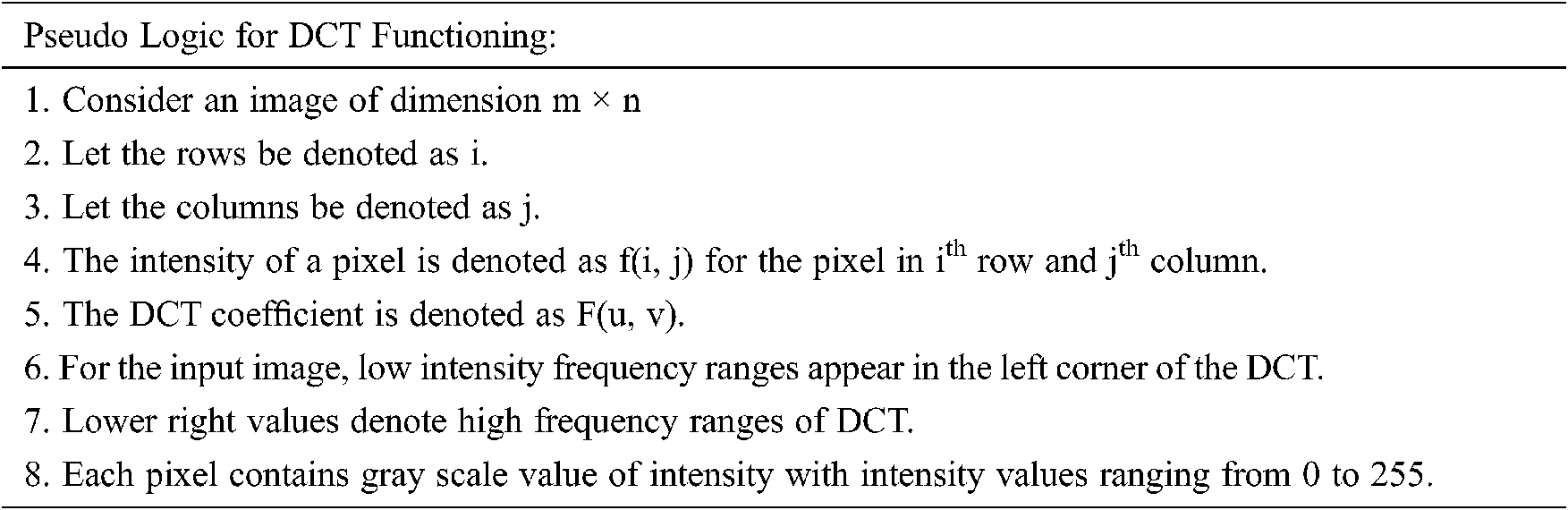

N is the image size and p is the probability of the motion magnitude value at a given pixel position. The number of histogram bins depends on the nature of the image; since the motion magnitude per pixel forms a grey-scale image, 256 bins are used, matching the number of grey levels. To decide whether the motion is usual or unusual, the entropy value is compared with a threshold value observed earlier in the video. Entropy is a measure of the uncertainty associated with a random variable. The threshold is derived from the median of the entropies computed over the initial 500 frames of the video. We therefore assume that no unusual action happens in the first 500 frames of the video sequence. This procedure is repeated regularly along the video sequence, with unusual actions filtered out by means of the median value. The basic functioning of DCT is shown below:

Median filtering is limited to certain intervals in order to keep the overall computational complexity manageable. An unusual event is flagged when the entropy of the current frame within a block is higher than the threshold value. A different strategy [9] uses a statistic that describes how well organized the optical flow vectors are within the frame. The metric used in that algorithm is the scalar product of the normalized values of the following features: variation in direction, variation in motion magnitude and the direction histogram. The resulting metric is compared with a threshold that is set manually. Fig. 4 depicts the system model of the block processing procedure during an active sequence.

Figure 4: DCT based abnormal activity detection in surveillance video

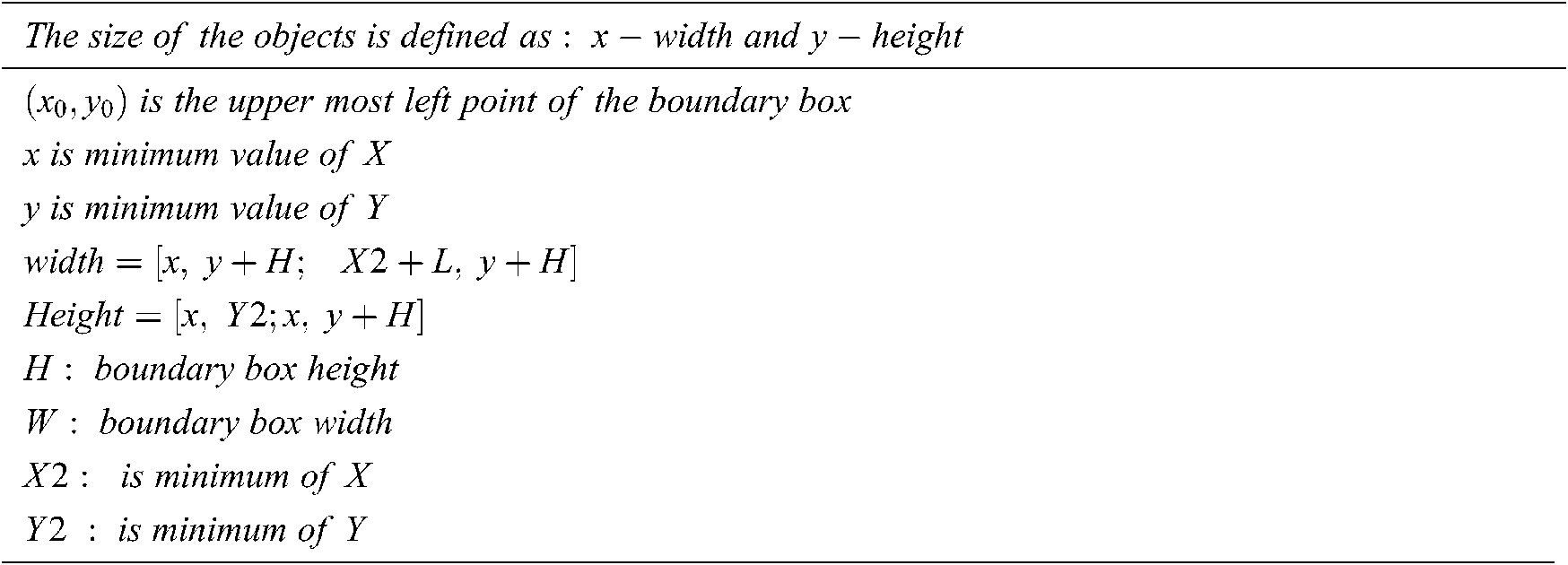
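The entropy measure used in this pipeline can be illustrated with a short Python sketch. It assumes the usual Shannon form with a base-2 logarithm over a 256-bin histogram of values in the range 0 to 255; the base, the value range and the function names are assumptions rather than details confirmed by Eq. (5).

```python
import math


def histogram(values, bins=256, max_value=255.0):
    """Count values in [0, max_value] into equally wide bins."""
    counts = [0] * bins
    for v in values:
        # Clamp the top edge so that max_value falls into the last bin.
        index = min(int(v / max_value * bins), bins - 1)
        counts[index] += 1
    return counts


def shannon_entropy(counts):
    """Entropy in bits of the distribution described by histogram counts."""
    total = sum(counts)
    entropy = 0.0
    for count in counts:
        if count:  # empty bins contribute nothing
            p = count / total
            entropy -= p * math.log2(p)
    return entropy


uniform = shannon_entropy(histogram([0, 85, 170, 255]))  # four equally likely levels
constant = shannon_entropy(histogram([128] * 16))        # a single level
```

A block with evenly spread values yields a higher entropy than a block with a single value, which is the property the threshold test exploits.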

In earlier research works, individual objects are obtained after background subtraction and then compared with other objects or with features of the objects in ground truth images. In this paper, to increase accuracy, DCT block-based comparison is used instead, and the entropy values of the objects are compared directly. The entropy is used because it captures important information about the image. To identify abnormal objects and show them visually, a bounding box is drawn on the image around each such object. In general, each pixel is characterized by its intensity in RGB color space, and the probability is computed from the observed values of every pixel. For every connected component, the bounding boxes are found with the “vision.BlobAnalysis” object, following the motion of individuals. Blob analysis identifies the regions of connected pixels in an image. The bounding rectangle is created by following the motion of an individual; the location of every person is found throughout the motion from the bounding box output (BBOX), which can be obtained using the following pseudocode.
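As an illustration of the bounding-box step, the Python sketch below labels connected foreground regions in a binary mask and returns one box per region. It is a sketch written for this explanation, not the authors’ pseudocode or the MATLAB `vision.BlobAnalysis` implementation; the 4-connectivity, the zero-based `(x, y, width, height)` layout and the function name are assumptions.

```python
from collections import deque


def bounding_boxes(mask):
    """Return (x, y, width, height) for each 4-connected region of 1s in a binary mask."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first search over the connected foreground pixels.
            queue = deque([(x, y)])
            seen[y][x] = True
            min_x = max_x = x
            min_y = max_y = y
            while queue:
                cx, cy = queue.popleft()
                min_x, max_x = min(min_x, cx), max(max_x, cx)
                min_y, max_y = min(min_y, cy), max(max_y, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    inside = 0 <= nx < width and 0 <= ny < height
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((min_x, min_y, max_x - min_x + 1, max_y - min_y + 1))
    return boxes


# Toy foreground mask with two separate blobs.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
boxes = bounding_boxes(mask)
```

The mask would normally come from GMM background subtraction followed by morphological clean-up, as described earlier in the paper.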

In this research work, pedestrian surveillance videos are used in which various unusual circumstances are staged with the help of many volunteers. The unusual circumstances consist of volunteers suddenly dancing, running and pushing in a crowded place. Overall, six kinds of abnormal circumstances take place in 12000 frames of video. The video resolution is 720 × 576 at 29 frames per second, which is the spatial resolution of the original video frames. The frame rate is kept uniform across the experiment to ensure that there is no delay in processing frames. The experiment was conducted in an outdoor environment under different conditions such as rain, dim light and shade, and the performance of the system was evaluated.

The proposed strategy and its output are compared and evaluated against contemporary works. As part of the current research work, each frame is sub-divided into four quadrants or segments; the number of segments per frame is customizable. The entropy of the DCT coefficients is computed for every segment, and the median over the first 500 frames is computed. In this analysis, the threshold is set to three times the median entropy in order to flag abnormal events. If any anomaly or unusual event is present, an indicator is raised and an alert is generated. Fig. 5 shows the collection of frames extracted from the segmented video. The duration of the segmented video is one minute and is customizable.

Figure 5: Frame sequence of a segmented video

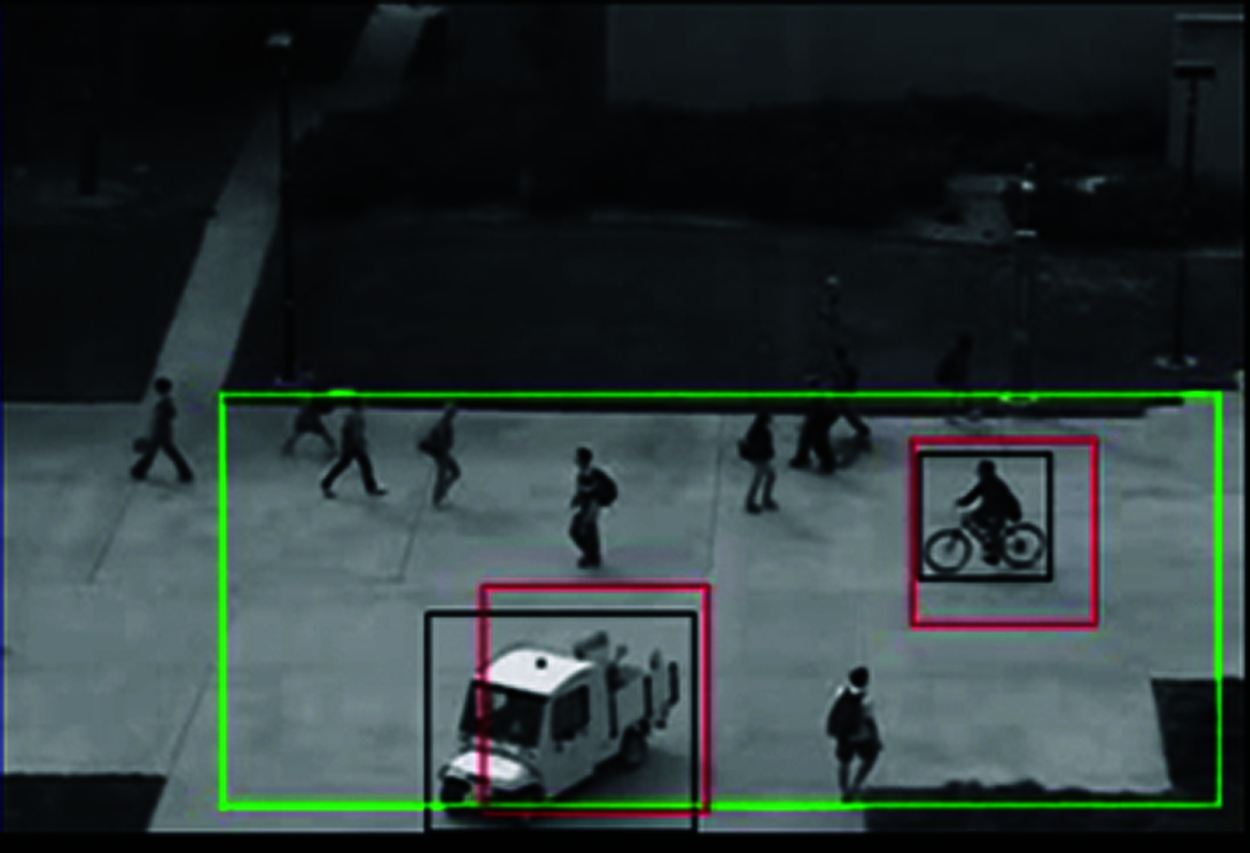
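The thresholding rule described above (median entropy over a calibration window, multiplied by three) can be sketched as follows. Keeping a separate threshold for each quadrant is an assumption, as are the function names and the sample entropies; three calibration frames stand in for the 500 used in the paper.

```python
from statistics import median


def calibrate_threshold(entropies, factor=3.0):
    """Threshold for one block: factor times the median calibration entropy."""
    return factor * median(entropies)


def flag_blocks(block_entropies, thresholds):
    """Return the indices of blocks whose current entropy exceeds their threshold."""
    return [
        i for i, (value, limit) in enumerate(zip(block_entropies, thresholds))
        if value > limit
    ]


# Hypothetical per-quadrant entropies, one row per calibration frame.
calibration = [
    [1.0, 1.2, 0.9, 1.1],
    [1.1, 1.0, 1.0, 1.2],
    [0.9, 1.1, 1.1, 1.0],
]
per_block = list(zip(*calibration))  # regroup the values by quadrant
thresholds = [calibrate_threshold(history) for history in per_block]
alerts = flag_blocks([1.2, 3.9, 1.0, 2.0], thresholds)
```

With these values only the second quadrant is flagged, so an alert can be tied to the part of the frame where the unusual motion occurs.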

The number of frames extracted from a segmented video is 95. Initially, all images are assumed to be normal and the process is started. For each frame, following object detection, the entropy is calculated from the DCT values and compared with the threshold levels previously computed from ground truth images and stored in a database. The abnormal activities identified from the sequence of input frames are shown in Figs. 6–8: a human riding a cycle (Fig. 6), a van and a cycle being driven (Fig. 7), and cycling and skating (Fig. 8). The variation of the entropy and the matching with the ground truth objects help to classify the objects as normal or abnormal.

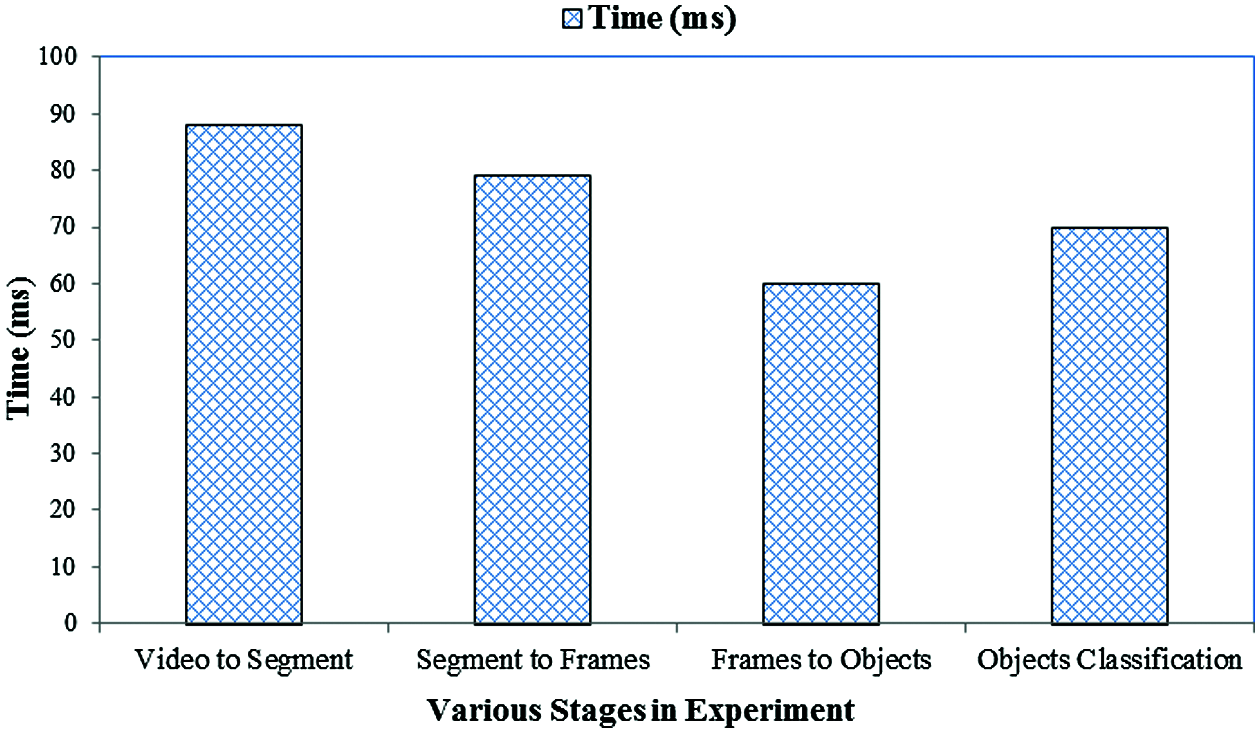

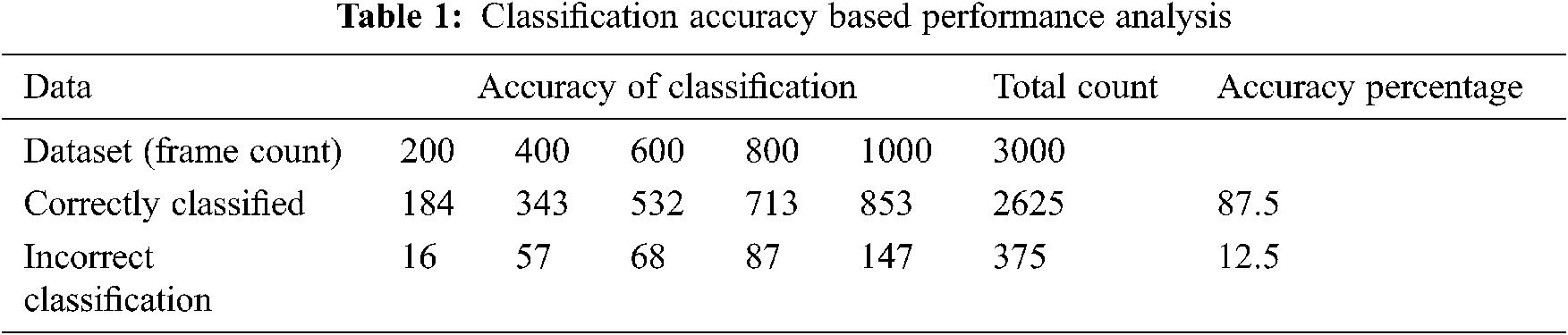

The performance of the proposed approach is also evaluated in terms of computation time and classification accuracy. The focus of the work is on enhancing classification accuracy rather than on speedup. No major latency issues were observed in anomaly detection. Since the video frames are scanned one immediately after another, any occlusion observed in a frame gets cleared in the next frame. The time taken at the various stages of the pipeline is measured, and the results are shown in Fig. 9. The average time taken by the entire system model to detect unusual actions is 74.35 ms, inclusive of all intermediate stages. The accuracy results are given in Tab. 1. Classification accuracy is a good indicator of whether anomalies are classified without false alarms; hence it is used as a performance measure in this work. The results show a classification accuracy of 87.5%.

Figure 6: Human riding bicycle in pedestrian lane detected as anomaly and highlighted in red

Figure 7: A bicycle and truck driven in pedestrian lane detected as anomaly and highlighted in red

Figure 8: Bicycle riding and skating in pedestrian lane detected as anomaly and highlighted in red

Figure 9: Time Complexity analysis across various phases of conducting the work

In this research work, a motion-based algorithm is developed to identify unusual events in surveillance video recorded in public places. The major focus was on establishing attributes that are useful for classifying actions, together with a threshold that is updated automatically, in order to recognize unusual events. Discrete Cosine Transform (DCT) was used to analyze the anomalous pattern. The entropy of the DCT of the motion magnitude is a consistent measure for deciding whether the current action in the video is usual or unusual. Since the proposed technique is block-based, the part of the frame in which the unusual event occurs can be located. An additional benefit of using blocks is the possibility of parallel processing in a real-time implementation, as every block can be processed independently. On the airport surveillance video, five abnormal events were identified while false alarms were avoided. The classification accuracy was 87.5%. The obtained results indicate that the proposed approach is effective for anomaly detection and recognition.

This framework does not depend on the kind of scene, since it is generic. As part of future work, further exploration of DCT-related attributes for identifying abnormalities is planned. Moreover, this approach can be tested in varying scenarios and its performance can be observed. The approach can also be scaled to a larger volume of data and applied across different domains to detect various types of anomalies. Since the computation time is high and the accuracy is comparatively low, both should be improved by computing error models for the frames and the objects. Future work will also verify the results on more benchmark and custom datasets and integrate the model into a sophisticated framework that can be deployed for smart surveillance.

Acknowledgement: The authors wish to express their thanks to one and all who supported them during this work.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. M. A. Geng-yu and L. I. N. Xue-yin, “Unexpected human behavior recognition in image sequences using multiple features,” in Proc. of the Twentieth Int. Conf. on Pattern Recognition, Istanbul, Turkey, pp. 368–371, 2010.

2. K. Sudo, T. Osawa, K. Wakabayashi and H. Koike, “Detecting the degree of anomaly in security video,” in Proc. of Int. Association of Pattern Recognition, Tokyo, Japan, pp. 3–7, 2007.

3. D. Matern, A. P. Condurache and A. Mertins, “Linear prediction based mixture models for event detection in video sequences,” Berlin, Heidelberg, Germany: Springer, 2011. [Online]. Available: https://link.springer.com/chapter/10.1007/978-3-642-21257-4_4.

4. P. L. M. Bouttefroy, A. Beghdadi, A. Bouzerdoum and S. L. Phung, “Markov random fields for abnormal behavior detection on highways,” in Proc. of the Second European Workshop on Visual Information Processing, Paris, France, pp. 149–154, 2010.

5. M. Li and W. Zhao, “Visiting power laws in cyber-physical networking systems,” Mathematical Problems in Engineering, vol. 2012, no. 1, pp. 1–13, 2012.

6. M. Li, C. Cattani and S. Y. Chen, “Viewing sea level by a one-dimensional random function with long memory,” Mathematical Problems in Engineering, vol. 2011, no. 1, pp. 1–13, 2011.

7. D. Zhang, D. Gatica-Perez, S. Bengio and I. McCowan, “Semi-supervised adapted HMMs for unusual event detection,” in Proc. of the IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, San Diego, CA, USA, pp. 611–618, 2005.

8. V. Reddy, C. Sanderson and B. C. Lovell, “Improved anomaly detection in crowded scenes via cell-based analysis of foreground speed, size and texture,” in Proc. of the IEEE Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, pp. 55–61, 2011.

9. D. U. Jian-hao and X. U. Li, “Abnormal behavior detection based on regional optical flow,” Journal of Zhejiang University, vol. 45, no. 7, pp. 1161–1166, 2011.

10. F. Cheng, W. J. Christmas and J. Kittler, “Detection and description of human running behaviour in sports video multimedia database,” in Proc. of the Eleventh Int. Conf. on Image Analysis and Processing, Palermo, Italy, pp. 366–371, 2001.

11. L. Xin, L. Hui, Q. Zhen-ping and G. Xu-tao, “Adaptive background modeling based on mixture Gaussian model,” Journal of Image and Graphics, vol. 13, no. 4, pp. 729–734, 2008.

12. J. Chen, Q. Lin and Q. Hu, “Application of novel clonal algorithm in multi-objective optimization,” International Journal of Information Technology and Decision Making, vol. 9, no. 2, pp. 239–266, 2010.

13. Z. Liao, S. Hu, D. Sun and W. Chen, “Enclosed laplacian operator of nonlinear anisotropic diffusion to preserve singularities and delete isolated points in image smoothing,” Mathematical Problems in Engineering, vol. 2011, no. 1, pp. 1–15, 2011.

14. S. Hu, Z. Liao, D. Sun and W. Chen, “A numerical method for preserving curve edges in nonlinear anisotropic smoothing,” Mathematical Problems in Engineering, vol. 2011, no. 1, pp. 1–14, 2011.

15. Z. Liao, S. Hu and W. Chen, “Determining neighborhoods of image pixels automatically for adaptive image denoising using nonlinear time series analysis,” Mathematical Problems in Engineering, vol. 2010, no. 1, pp. 1–14, 2010.

16. A. A. Efros, A. C. Berg, G. Mori and J. Malik, “Recognizing action at a distance,” in Proc. of Int. Conf. of Computer Vision, Nice, France, pp. 13–16, 2003.

17. B. D. Lucas and T. Kanade, “An iterative image registration technique with an application to stereo vision,” in Proc. of Int. Joint Conf. on Artificial Intelligence, Vancouver BC, Canada, pp. 674–679, 1981.

18. A. Wali and A. M. Alimi, “Event detection from video surveillance data based on optical flow histogram and high-level feature extraction,” in IEEE Int. Workshop on Database and Expert Systems Application, Linz, Austria, pp. 221–225, 2009.

19. R. C. Gonzalez, R. E. Woods and S. L. Eddins, Digital Image Processing using MATLAB, 2nd ed., Gatesmark Publishing, Knoxville, TN, United States of America, 2009.

20. M. Li, C. Cattani and S. Y. Chen, “Viewing sea level by a one-dimensional random function with long memory,” Mathematical Problems in Engineering, vol. 2011, no. 1, pp. 1–13, 2011.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.