DOI:10.32604/iasc.2022.023953

| Intelligent Automation & Soft Computing DOI:10.32604/iasc.2022.023953 |  |

| Article |

Moving Object Detection and Tracking Algorithm Using Hybrid Decomposition Parallel Processing

1Department of Computer Science and Engineering, Ramco Institute of Technology, Rajapalayam, 626117, India

2Department of Computer Science and Engineering, R.M.K. College of Engineering and Technology, Chennai, 601206, India

3Department of Computer Science and Engineering, Veltech Rangarajan Dr Sagunthala R&D Institute of Science and Technology, Chennai, 600062, India

4School of Computer Science and Engineering, Vellore Institute of Technology, Vellore, 632014, Tamilnadu, India

*Corresponding Author: M. Gomathy Nayagam. Email: m.g.nayagam@gmail.com

Received: 28 September 2021; Accepted: 17 December 2021

Abstract: Moving object detection, classification and tracking are more crucial and challenging task in most of the computer vision and machine vision applications such as robot navigation, human behavior analysis, traffic flow analysis and etc. However, most of object detection and tracking algorithms are not suitable for real time processing and causes slower processing speed due to the processing and analyzing of high resolution video from high-end multiple cameras. It requires more computation and storage. To address the aforementioned problem, this paper proposes a way of parallel processing of temporal frame differencing algorithm for object detection and contour tracking using the mixture of functional and domain decomposition parallel processing techniques. It has two main contributions. First, steps of frame differencing are parallelized using functional decomposition technique. Second, the processing of frames in each steps of frame differencing is again parallelized using domain decomposition technique. Finally, the performance is evaluated in Aneka Private Cloud platform and which yields to detect and track the object very swiftly and accurately.

Keywords: Temporal frame differencing based object detection; functional and domain decomposition parallel processing techniques contour tracking and cloud computing

In automated video surveillance system [1], the task of detection and tracking of moving object is a important part and it can be done be different approaches. But it does not have common approaches for all applications. Background subtraction is one of the most popular and robust method for moving object detection [2,3]. But there is a challenge in background subtraction due to dynamic background, illumination changes and etc. [4]. The temporal difference method is used in [5] where they proposed reliable foreground segmentation algorithm which combines temporal image analysis with reference background image. It is suitable to adapt for background change in illumination. In [6], they extract moving object based on temporal differencing mechanism and they used Normalized Cross Correlation (NCC) technique to remove Ghost and shadow effect during temporal frame differencing mechanism. Smart cameras that perform real time video analysis are recent trends in surveillance system [7].

Due to the amount of data that needs to be processed for providing high accuracy in real time video surveillance systems, most of the algorithms are having difficult and time consuming characteristics in nature. If we perform video analysis in serial processor, it requires more computation time and uses significant amount of energy which bounds the application of such algorithm in live video analysis.

GPU are popular platform for parallel processing of objection and tracking [8] using software such as openCL and CUDA. Due to the physical constraints and high energy consumption of GPU, there is a need to modify the algorithm for the particular embedded GPU chipset. Otherwise, it results in suboptimal performance in such GPU chipsets.

Another way is the implementation of parallel algorithm on embedded systems which is based on digital signal processor (DSP) and field programmable gate arrays (FPGA) [9]. Since, this system have low number of computing units, this solution is suitable only for low video resolution and low frame rate video processing.

Cloud computing is one of the platform for implementing parallelization of moving object detection and tracking algorithm without any modification for optimization and also does not require any specialized hardware. So, implementing parallelization of moving object detection and tracking algorithm in cloud platform is more efficient and cost effective [10–12].

In this paper, parallel version of temporal frame difference based moving object detection with contour tracking is presented. In order to achieve functional decomposition, there are three master threads are created in this proposed work. First one is responsible for motion detection using temporal frame differencing, second one is responsible for noise removal in foreground image and third one is responsible for blob detection and track the individual objects. The moving object detection thread is again divided into child thread for inherent parallelization of pixel by pixel subtraction operation. The work will outperforms to detect multiple objects at same time with high speed with good accuracy.

Remaining of the paper is organized as follows: Section 2 surveys the relevant work done using cloud computing and other technologies. Section 3 describes the proposed work. Section 4 describes results and performance evaluation. Section 5 discusses conclusion and future work.

The proposed cloud computing based rapid object detection and tracking system is elucidated in this section.

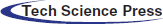

The main aim of this article is to present cloud computing based rapid object detection and tracking system. Most of the object detection and tracking algorithm requires more computational and storage for processing video. The cloud computing will provide environment which has more computational and storage requirements to process the video for detecting and tracking object in it with very rapidly. The following Fig. 1 shows the architecture of cloud based moving object detection and tracking system in video. The proposed system assumes the camera is fixed in the particular surveillance area with fairly wide angle of view.

Figure 1: Architecture of cloud based moving object detection and tracking system

The frequently captured video file is transferred to cloud environment through GUI and cloud manager where video are sliced into frames and each frames are considered as a work unit of cloud environment. Each of the work unit is distributed to executor by using cloud scheduler. The system components of proposed system architecture includes: video capture, motion detection, Image Filtering, Blob detection, contour detection, updatation and tracking.

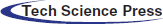

In order to parallelize these components, the proposed system architecture uses functional decomposition based parallelism. Hence, each functional component of temporal object detection and tracking algorithms are executed parallelly by the worker node. Again, these functional decomposed components are internally parallelized using inherent sequential parallelizing mechanism which is executed by the individual workers nodes. The Fig. 2 shows the functional decomposition based parallelizing mechanism to execute the functional components of object detection and tracking algorithm parallely.

Figure 2: Functional decomposition based parallel execution for temporal frame differencing

The input can be taken in two ways using camera or giving path for stored video file which are captured from camera sensors. Live video streams need to be processed. Once the video captured which are converted in to frames and processed. Frames in videos are sequence of images which is a representation of two dimensional images as a finite set of digital values called pixel [13–15] in digital computer. Digital images are represented as 2D matrices which contains finite set of elements px, y which is the color information for the corresponding pixel in the image at the given location x, y.

2.3 Motion Detection Using Temporal Frame Differencing

In this work, detection of moving object using temporal frames differencing [16] which is the simplest background subtraction techniques. Also, it is a non-recursive method; hence there is no need to maintain the history of frames in buffer. So, memory requirement for this algorithm is low compared to other existing algorithms. In this method, current frame’s pixel values are subtracted with previous frames pixel value. Based on the thresholding value, moving region of the image is extracted and identifies the both foreground and background [17]. The resultant of absolute pixel value is zero indicates, there is no moving object or non-zero value of this pixel value indicates there was a moving object in frames.

According to the method describe above, the difference between the frame at t and frame at t−1 is determined as follows [18,19]:

For adopting the changes in background illumination [19,20], the reference image is updated at each iteration as follows:

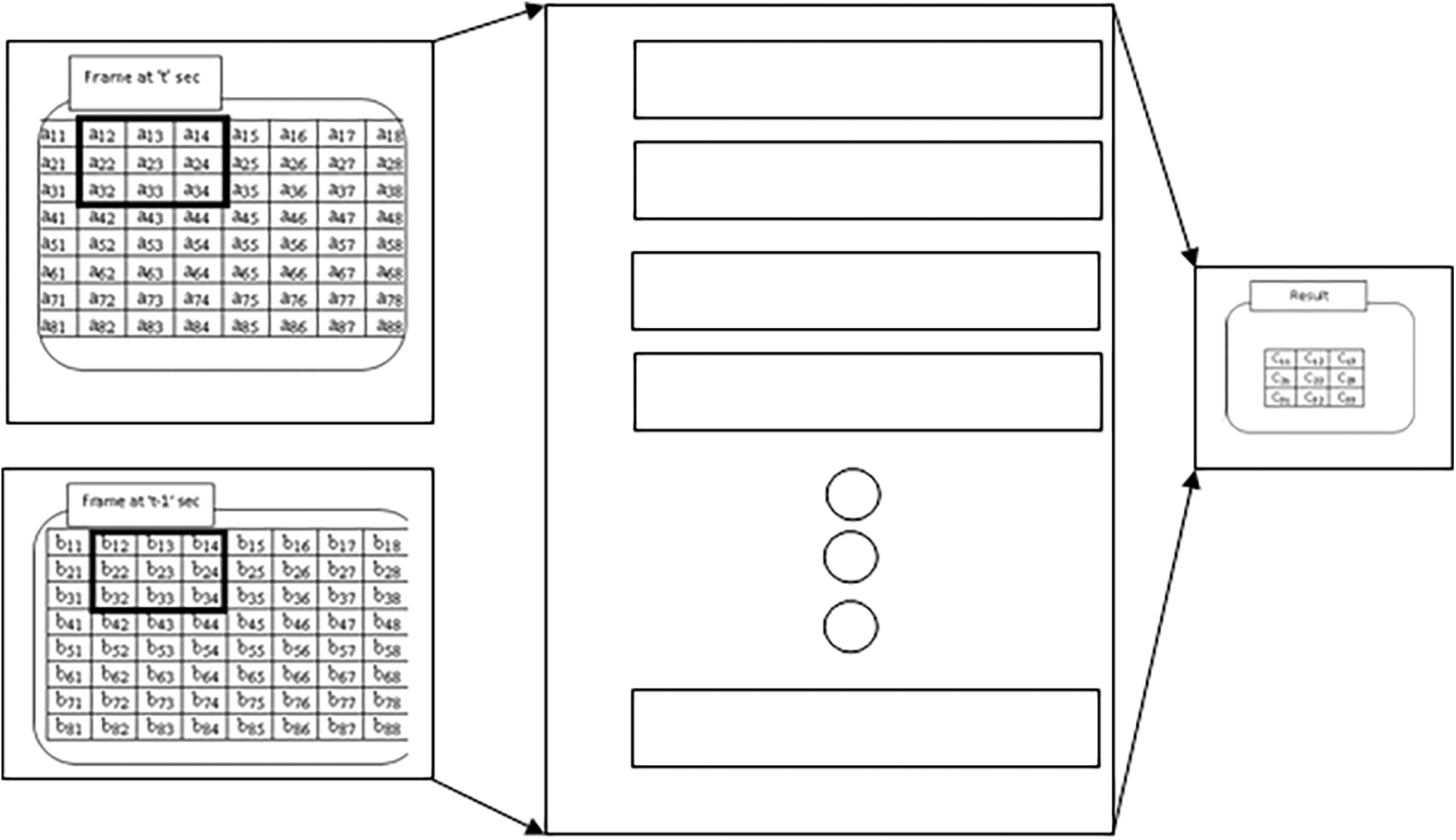

The pixel value of frames/image is represented as a matrix and matrix subtraction operations carried out for motion detection. The size of image is divided into smaller kernel like N × N matrix and each kernel of adjacent frame pixels are created as a thread which are executed in different cloud executor using inherently sequential decomposition mechanism. The Fig. 3 shows the inherently sequential based functional domain decomposition for temporal frame differencing.

Figure 3: Inherently sequential based functional domain decomposition for temporal frame differencing

Some of the background frames have spurious movement of object. It leads to mistaken in detect and track the object. So, this noise causing elements have to be filtered. For this purpose, this work used two basic morphological operations viz erosion and dilation. The basic effect of dilation mathematical morphological operation is to gradually increase the image boundary of region of foreground pixels whereas erosion mathematical morphological operation gradually shrinks the background [20]. Hence, it removes the noises.

The erosion of binary image f by a structuring element s is denoted by fθs which produces g=fθs. It reduces the size of regions of interest.

If

The dilation of an image f by a structuring element s is denoted by f

If

The number of iteration for each erode operation is depends on how much noise presents in the video frame. So, here we applied dilation operation applied on the output of the erosion operation. These operations remove noise, isolation of individual elements and distinct element in an image and finding intensity holes in an image. Both operations take two pieces of data as input. One is the image which is to be dilated and another one is set of coordinate points known as structuring elements/kernel with the size of N × N. Each N × N size of frames is processed by different worker nodes of cloud environment using functional domain decomposition approach.

It is a mathematical step to detect specific region based on some property in digital image. Blob made up of pixels having specific property in common and pixels which are combined together if they share common neighborhood pixels that have values greater than the threshold are the property of interest. After absolute differencing and noise removal applied in each video frames, the output of the pixel value is greater than binary threshold which is eligible to form a blob. All the adjacent neighbor of each such pixels are tested for eligibility and, if they satisfy, all those pixels are combined together to form blobs. In order to blob created by spurious movement, which are considerably in small, the size of detecting blob is limited by choosing blob threshold. By default, in this work the threshold value for blob detection is 15 and also applied smooth Gaussian filter with small kernel size (i.e., 3 × 3). Functionally decomposed kernels are processed by different worker node as individual thread. The output of this module is given as the feedback to detection system for learning.

2.6 Contour Detection and Tracking

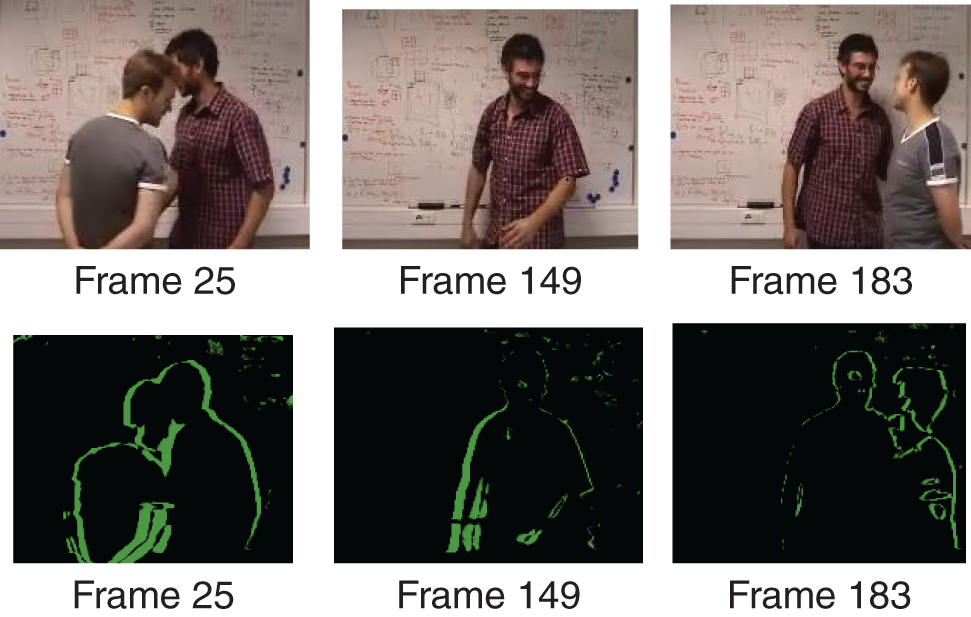

Contour is the boundary of object pixel above the threshold value. It is edge based features which are insensitive to illumination change. Contour analysis output uses a linked list which consists of detected blobs. All the promising contours are found and reconstructed a full hierarchy of nested contour by using RETR_TREE Mode. The end points which are necessary for drawing the contour are extracted by using CHAIN_APPROX_SIMPLE method. In this work, once contour is identified, each contour is tracked separately with individual thread of different worker nodes in cloud environment. Fig. 4 shows the sample video frames and corresponding detect contour in the frame.

Figure 4: Sample capture video frame and its corresponding contour detected frames

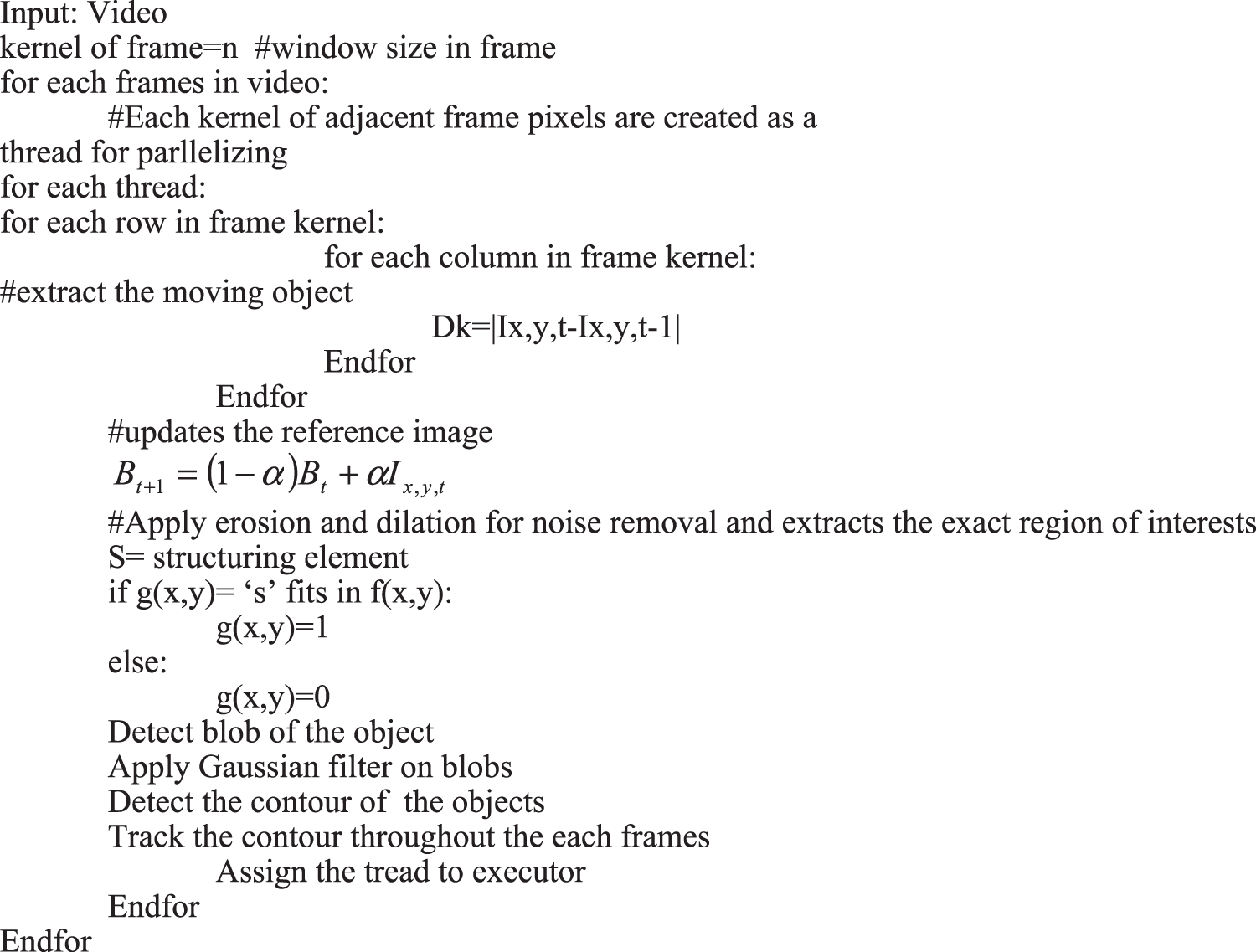

The proposed algorithm is shown in the Fig. 5.

Figure 5: Pseudo code of proposed algorithm

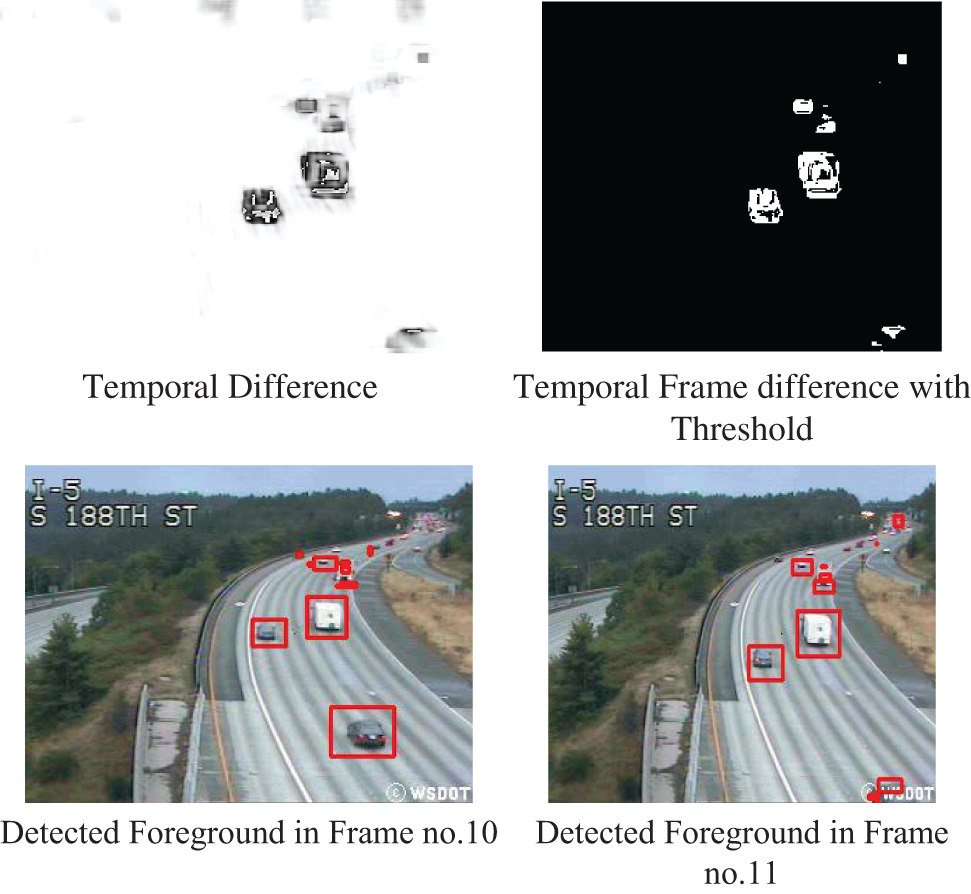

The following Fig. 6 shows the temporal difference between contiguous frames and extracted foreground object using threshold, detected foreground objects.

Figure 6: Temporal frame difference, with threshold output and detected foreground in contiguous frame

We have run some tests in order to compare the performance of different execution. We set the different tests by varying the following parameters:

• Number of Video Frames

• Frame rate

• Video file size

• Video Resolution

• Kernel Dimension for processing

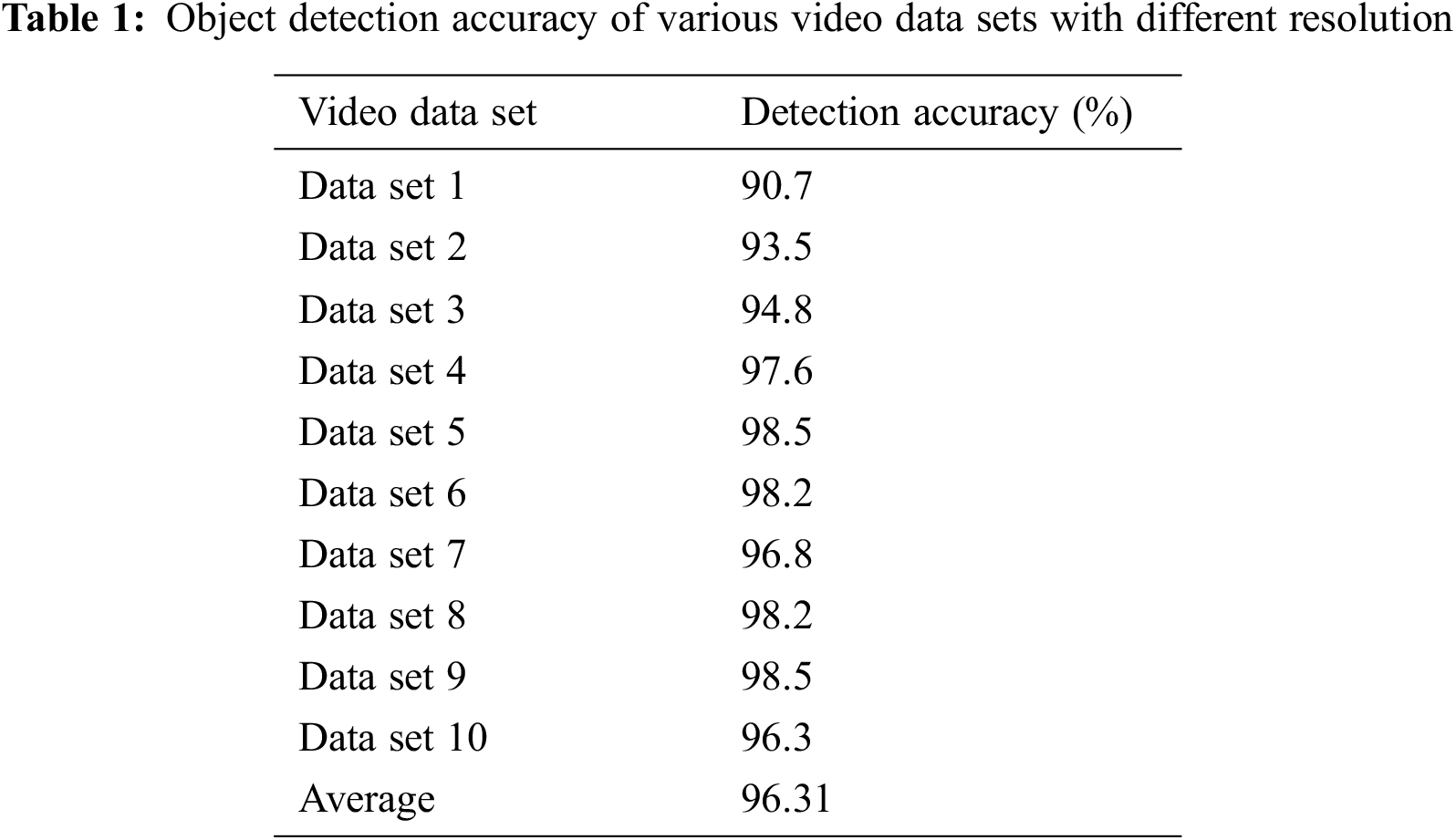

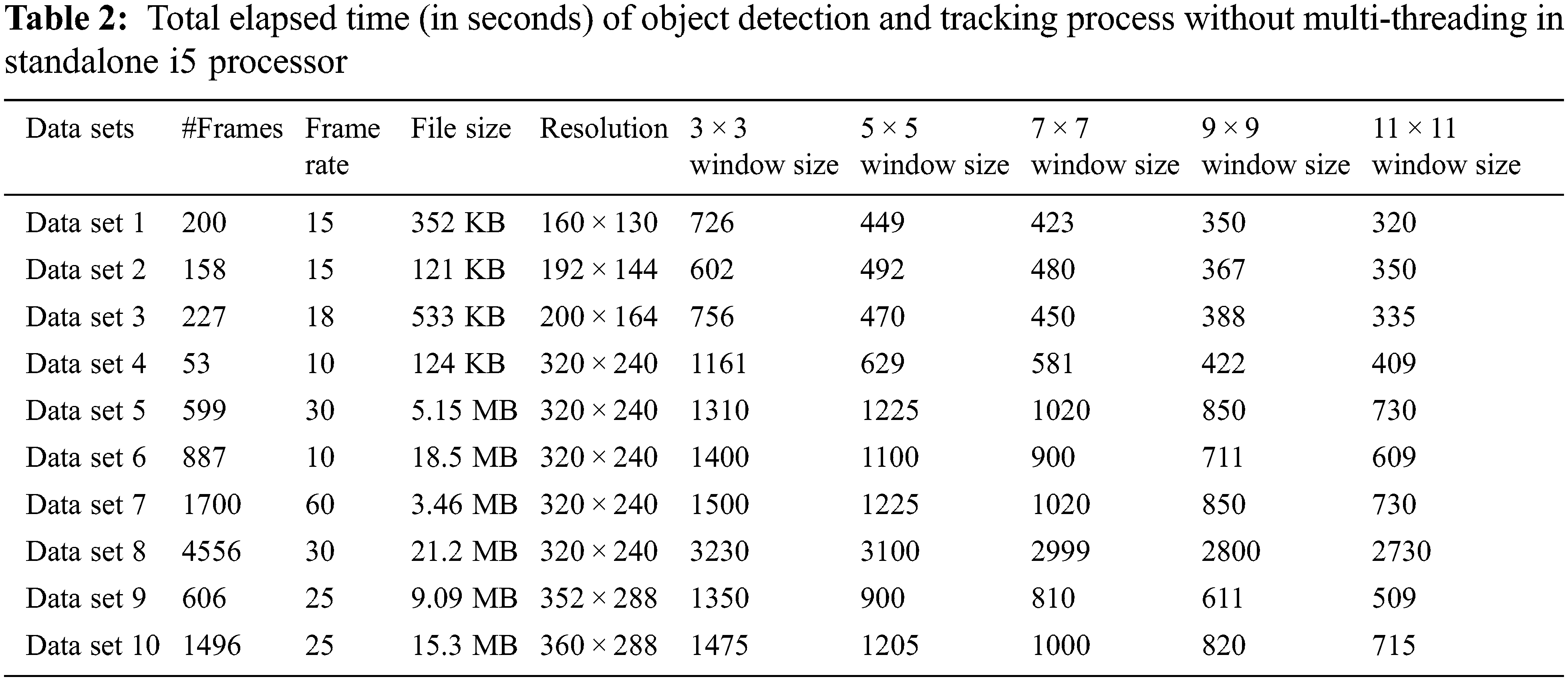

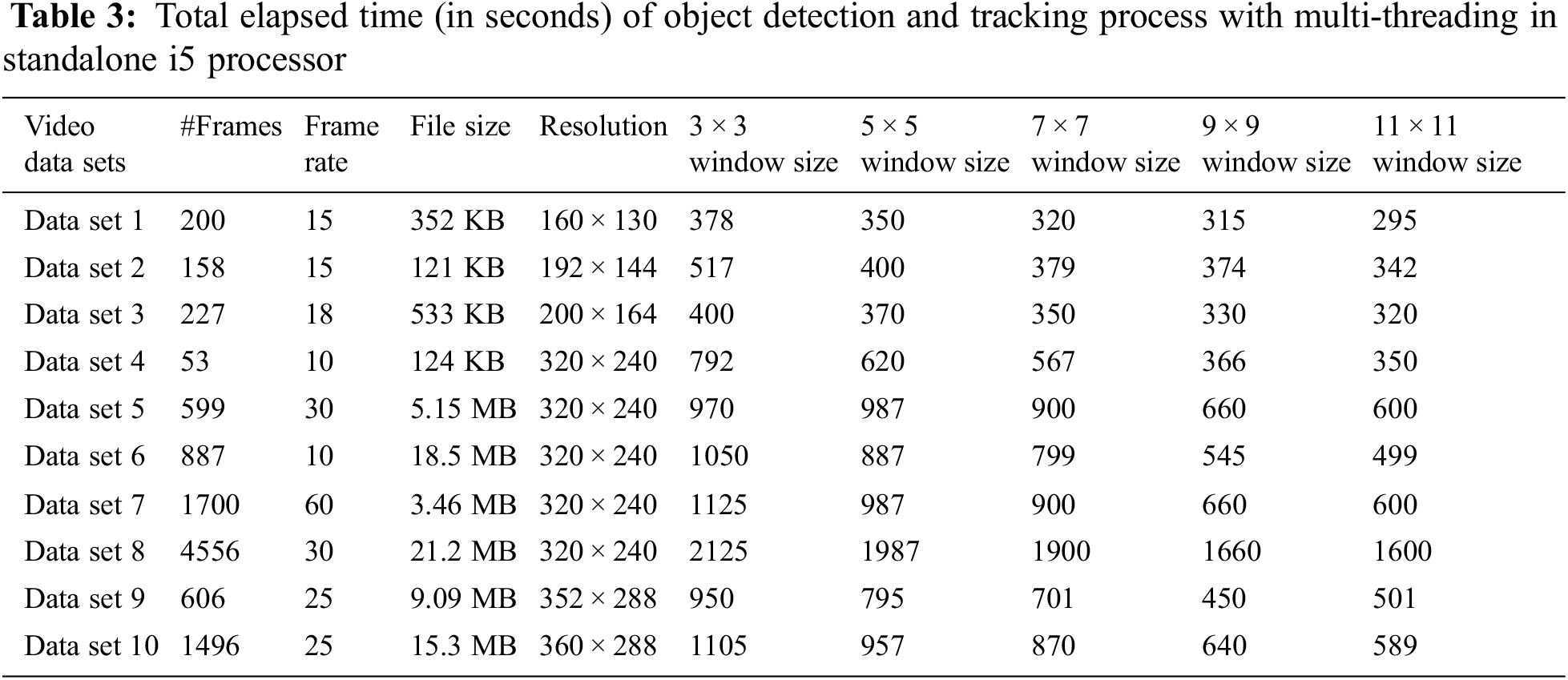

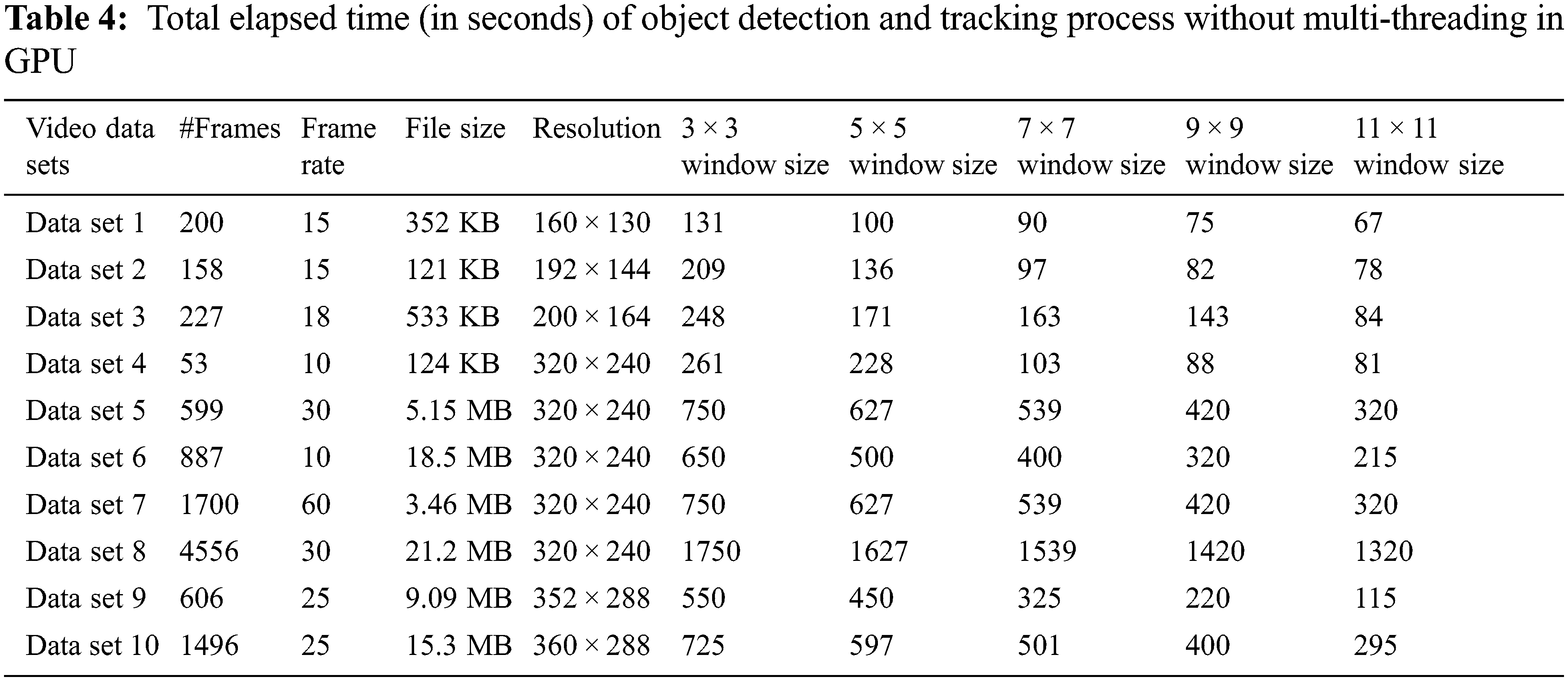

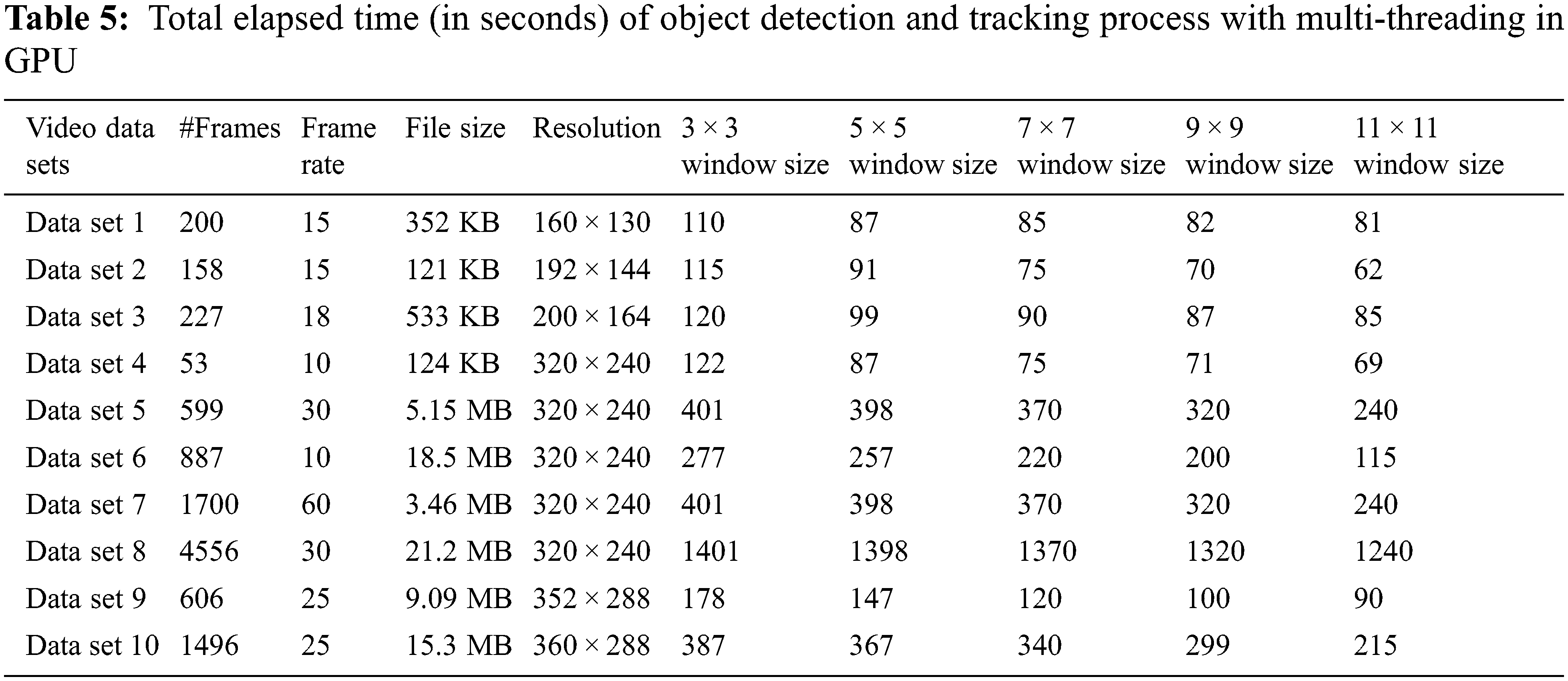

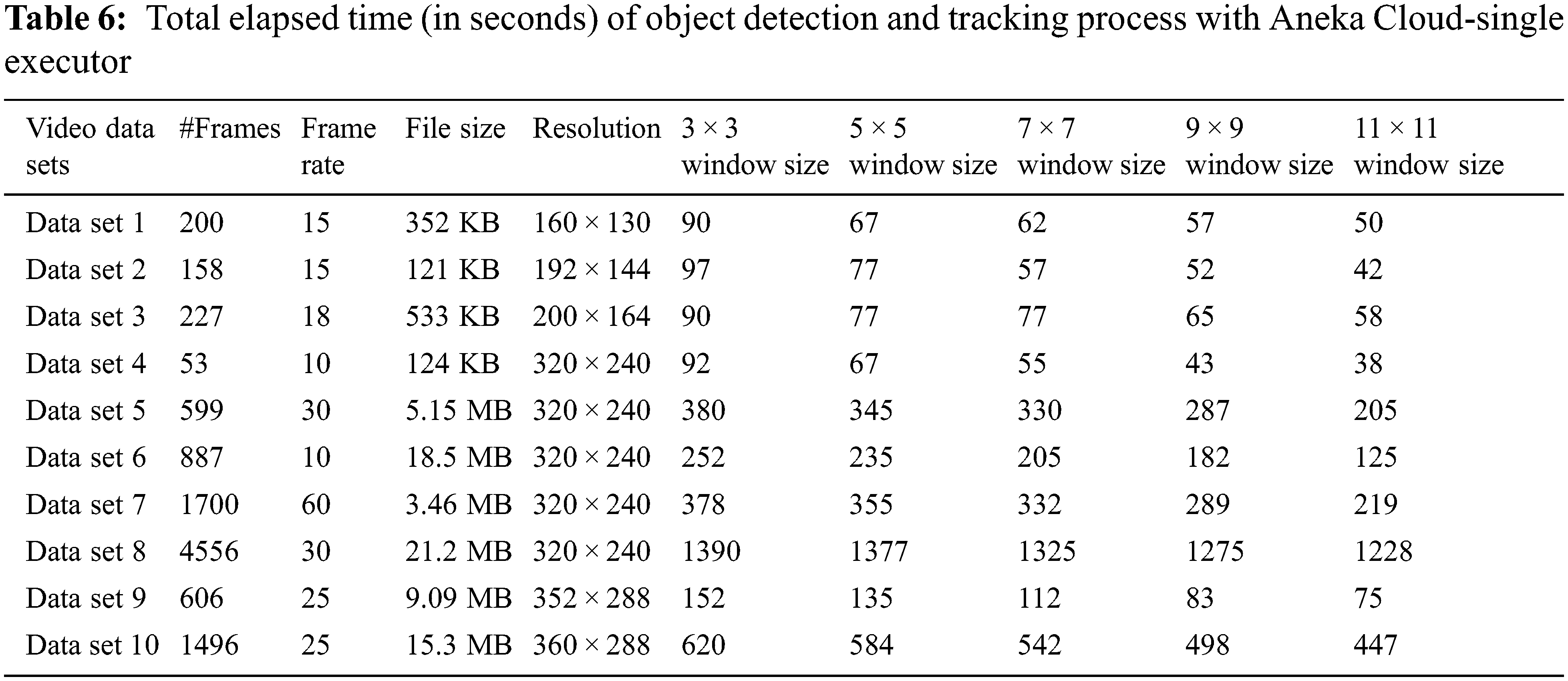

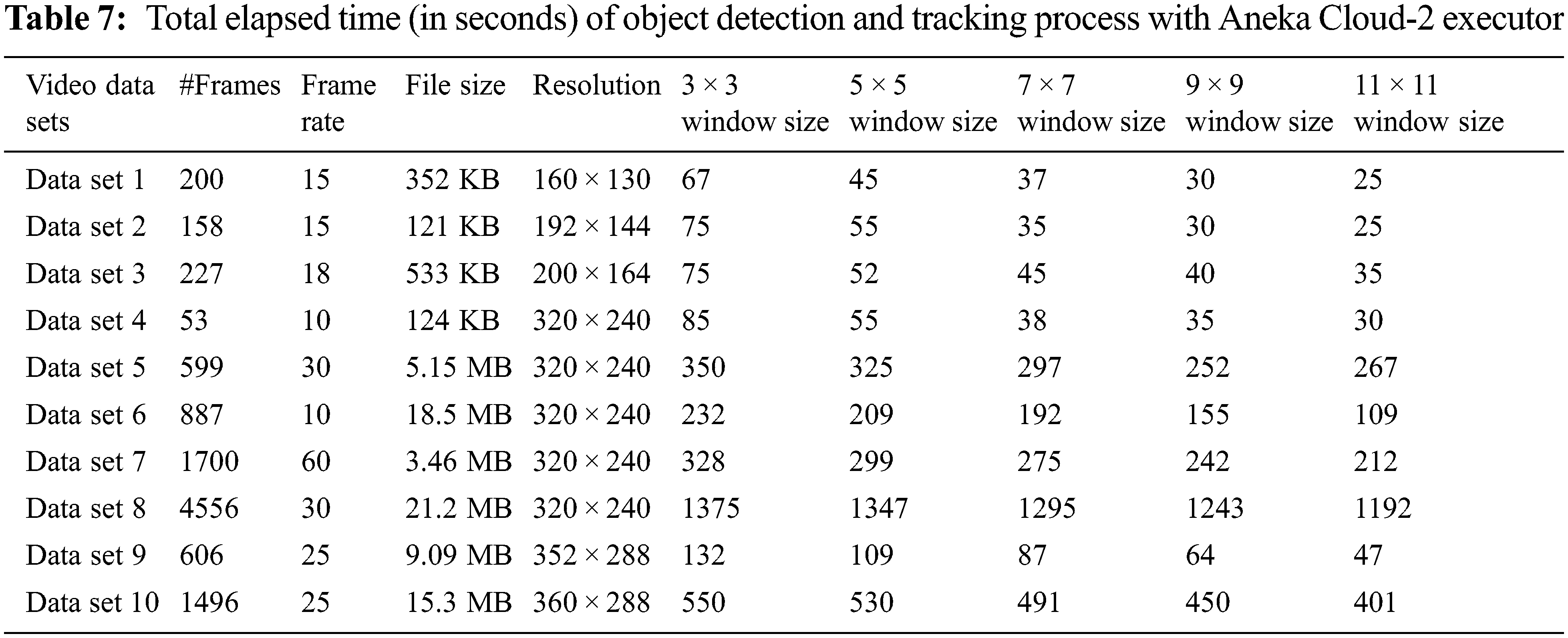

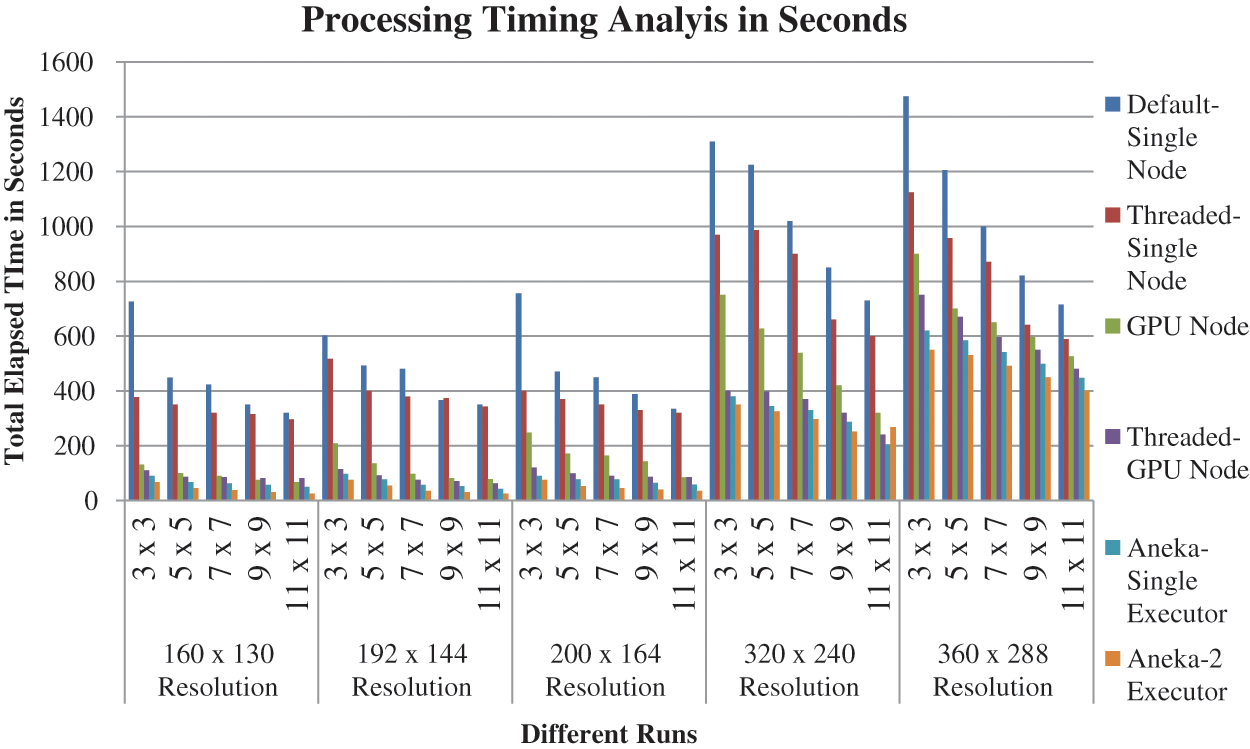

The performance of the proposed approach is evaluated in local standalone machine with Intel® Core™ i5-3380M CPU@ 2.90 Ghz with 4 GB RAM, GPU Node( NIVIDIA GeForce210 with 4 GB Ram) and Aneka Cloud composed of 3 nodes–1 manager and 2 executor where each arehaving the configuration of Intel Core i5 processor systems with 8 GB RAM. The entiremachines in the test were running in Windows 7 professional SP1 and .Net Framework 2.0 with EMGUCV. With the intention of checking the proposed approach, it is tested over several videos which are chosen from the CAVIAR and VISIOR data set. The accuracy of object detection is shown in Tab. 1 and also Tabs. 2–7 shows the total elapsed time of object detection and tracking in various video with varying kernel dimension for processing without multi-threading in standalone i5 processor, with multi-threading in standalone i5 processor, without multi-threaded GPU node, with multi-threaded GPU node, Aneka Cloud with single executor and Aneka Cloud with 2 Executor.

The data represented in the Tab. 1 show that object detection accuracy of various video data sets with different resolution which is calculated by the following formula.

where Tp, Tn are the true positive and true negative rates.

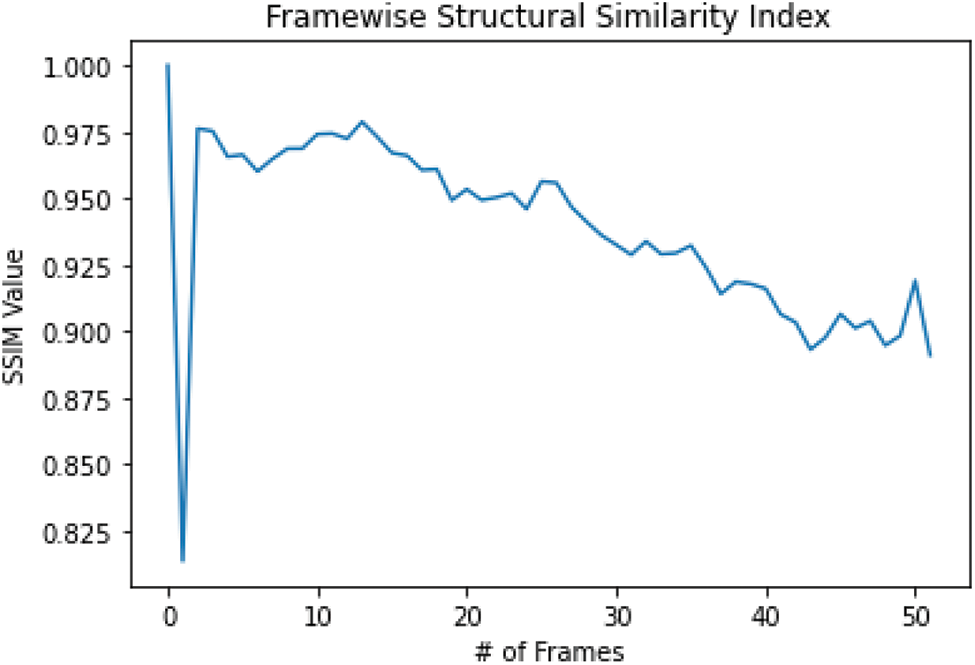

MSE is one of the measurements for identifying similarity between frames. If we are using MSE is only the measurement of identifying similarity between frames, it runs into problem. Hence in this work, we used Structural similarity index developed in [21].

SSIM attempts to model the perceived change in the structural information of the image. It compares two window rather than compare entire image in MSE. SSIM value can vary between −1 to 1 where 1 indicates perfect similarity. The Fig. 7 shows the SSIM analysis between each frame. Here, SSIM is varied from 0.825 to 1. This results shown that, there is a change of luminance and/or contrast. But it will not the affect detection accuracy of foreground using temporal frame differencing algorithm.

Figure 7: Frame wise SSIM analysis

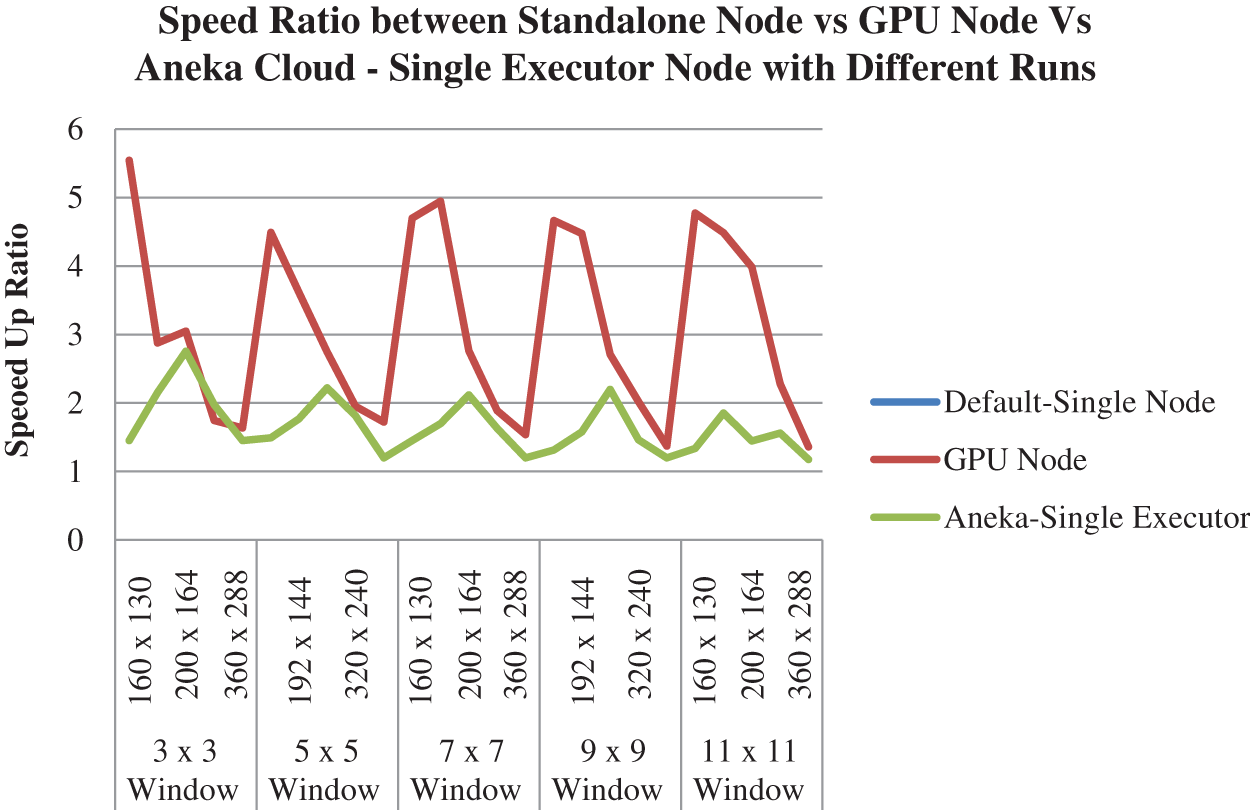

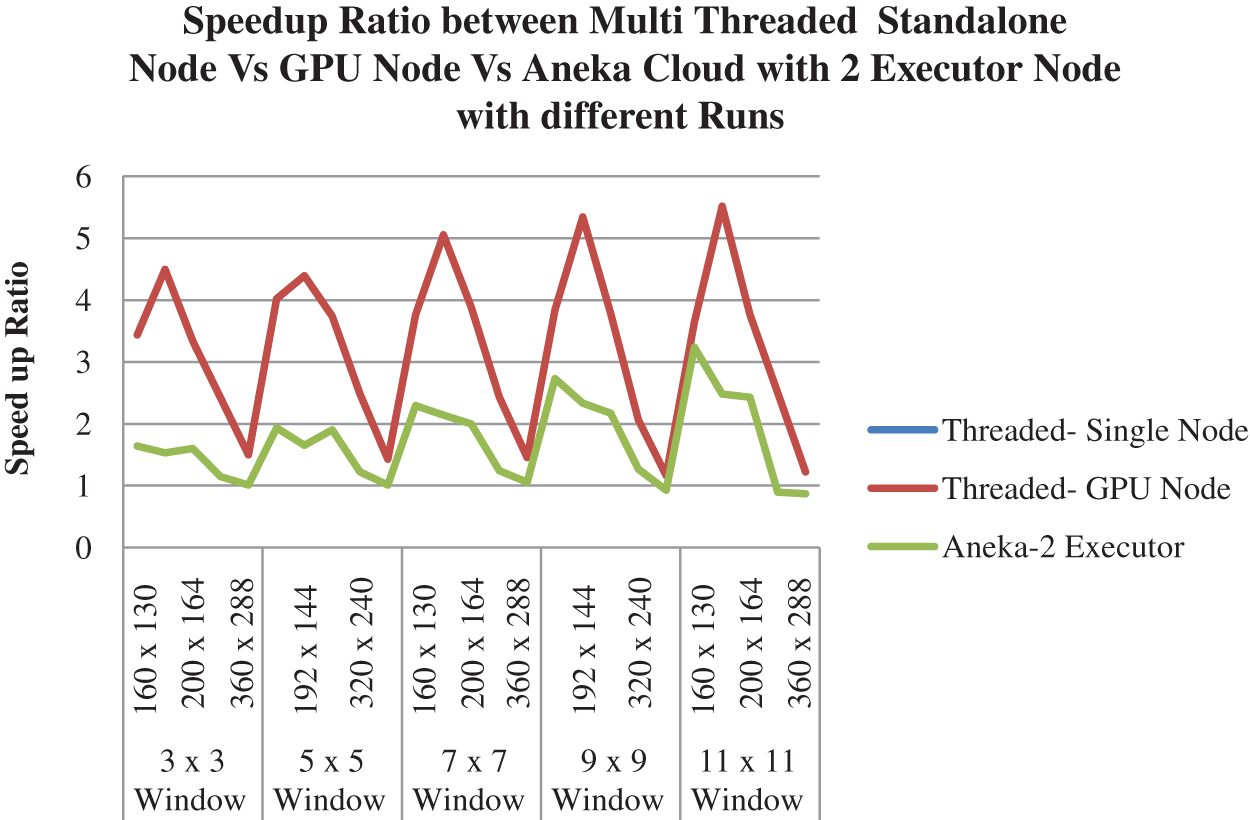

The efficiency of the proposed approach is tested in terms of total elapsed time of object detection and tracking process and speedup ratio between Standalone system vs. GPU node vs. Aneka Cloud platform with and without multi-threading by varying kernel size of video frames are given in Tab. 2.

Fig. 8 shows histogram of processing time for object detection and tracking. It shown that object detection and tracking in private cloud environment (i.e., Aneka with 2 executor node) will outperform other parallel processing technique with hybrid decomposition technique.

Figure 8: Processing time histogram of object detection and tracking

Figs. 9 and 10 shows the results of speedup ratio between standalone node vs. GPU node vs. Aneka Cloud single and 2 Executors with and without multi-threading. From the results, it is observed that Aneka cloud platform always gives better performance even though which is having communication overhead between nodes for detecting and tracking the objects in video without compromising detection accuracy and also without investing any specialized hardware platform.

Figure 9: Speedup ratio between standalone node vs. GPU node vs. Aneka Cloud with single executor without multi-threading

Figure 10: Speedup ratio between standalone node vs. GPU node vs. Aneka Cloud with 2 executor with multi-threading

This work proposed a cloud computing based rapid object detection and tracking algorithm for video solution applied with temporal frame differencing and contour matching techniques using functional decomposition technique. In this work, each functional component of temporal object detection and tracking algorithms are executed parallelly by the worker node. Again, these functional decomposed components are internally parallelized using inherent sequential parallelizing mechanism which is executed by the individual workers nodes of Aneka cloud environment. The performance of proposed approach is compared and evaluated with standalone machine, GPU node and Aneka Cloud environment. The performance of cloud computing based object detection and tracking outperforms well compare to other platform without investing any specialized hardware architecture. In future, this work is plan to extend by extracting the various features (like LBP, HOG) of detected blobs and improve the accuracy of detection and tracking of blobs and improve the computation as a service for very swift object detection and tracking algorithm through the design of application level task scheduler for cloud environment. This work more suitable for automation field. We plan to extend this work in real time both single and multiple camera whereas currently we used to test on only stored videos. Also, design the storage cloud architecture for video processing and surveillance application.

Acknowledgement: I am extremely thankful to Management, Principal, Head of the department of Computer Science and Engineering, Ramco Institute of Technology, Rajaplayam for providing me laboratory facility in RIT-AI Research Centre to carry out this work in successful way.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. A. Czyzewski, G. Szwoch, P. Dalka, P. Szczuko, A. Ciarkowski et al., “Multi-stage video analysis framework. Video surveillance,” in Intech Open Book Series, Intech Open Ltd., United Kindgdom, vol. 9, pp. 147–172, 2011. [Google Scholar]

2. H. S. Parekh, D. G. Thakore and U. K. Jaliya, “A survey on object detection and tracking methods,” International Journal of Innovative Research in Computer and Communication Engineering, vol. 2, no. 2, pp. 2970–2979, 2014. [Google Scholar]

3. M. Gomathynayagam and K. Ramar, “A survey on real time object detection and tracking algorithms,” International Journal of Applied Engineering Research, vol. 10, no. 5, pp. 8290–8297, 2015. [Google Scholar]

4. M. Álvarez and M. Lopez, “Road detection based on illuminant invariance,” IEEE Transactions on Intelligent Transportation Systems, vol. 12, no. 2, pp. 184–193, 2011. [Google Scholar]

5. P. Spangnolo, T. D. Orazio, M. Leo and A. Distante, “Moving object segmentation by background subtraction and temporal analysis,” Image and Vision Computing, vol. 24, no. 5, pp. 411–423, 2006. [Google Scholar]

6. W. Zhang, P. Xu, L. Duan, W. Gong, X. Liu et al., “Towards a high speed video cloud based on batch processing integrated with fast processing,” in Proc. Int. Conf. of Identification, Information and Knowledge in the Internet of Things (IIKI), Beijing, China, pp. 28–33, 2014. [Google Scholar]

7. D. Melpignano, L. Benini, E. Flamand, B. Jego, T. Lepley et al., “Platform 2012, a many-core computing accelerator for embedded socs: Performance evaluation of visual analytics applications,” in Proc. 49th Annual Design Automation Conf., San Francisco, California, pp. 1137, 2012. [Google Scholar]

8. S. Solehah, S. Nizamyaakob, Z. Kadim and H. HockWoon,“Extraction of moving object using frame differencing, ghost and shadow removal,” in Proc. 5th IEEE Int. Conf. on Intelligent Systems, Modeling and Simulation, Malaysia, pp. 229–234, 2014. [Google Scholar]

9. J. Parsola, D. Gangodkar and A. Mittal, “Efficient storage and processing of video data for moving object detection using hadoop/map reduce,” in Proc. Int. Conf. on Signal, Networks, Computing and Systems, Springer Lecture Notes in Electrical Engineering, India, vol. 395, pp. 137–147, 2017. [Google Scholar]

10. S. K. Maharana, P. Ganeshprabhakar and A. Bhati, “A study of computing for retinal image processing through matlab,” International Journal of Cloud Applications and Computing, vol. 2, no. 2, pp. 59–69, 2012. [Google Scholar]

11. M. Gomathynayagam and K. Ramar, “Reliable object recognition system for cloud video data based on ldp feature,” Elsevier Journal of Computer Communications, vol. 149, pp. 343–349, 2020. [Google Scholar]

12. K. A. Joshi and D. G. Thakore, “A survey on moving object detection and tracking in video surveillance system,” International Journal of Soft Computing and Engineering (IJSCE), ISSN, vol. 2, no. 3, pp. 2231–2307, 2012. [Google Scholar]

13. M. Zhu, S. Shuifa, S. Han and H. Shen, “Comparison of moving object detection algorithms,” World Automation Congress (WAC), Mexico, vol. 1, pp. 35–38, 2012. [Google Scholar]

14. R. Radke, S. Andra, O. Al-Kofahi and B. Roysam, “Image change detection algorithms: A systematic survey,” IEEE Trans. Image Processing, vol. 14, pp. 294–307, 2005. [Google Scholar]

15. S. Shih-Wei, F. Yu-Chiang, F. Huang and H. Mark Liao, “Moving foreground object detection via robust sift trajectories,” Journal of Visual Communication Image, vol. 24, pp. 232–243, 2012. [Google Scholar]

16. L. Dawei, X. Lihong and D. Goodman, “Illumination-robust foreground detection in a video surveillance system,” IEEE Trans. on Circuits and System for Video Technology, vol. 23, no. 10, pp. 1637–1650, 2013. [Google Scholar]

17. M. Gomathynayagam and K. Ramar, “A design of hybrid workflow model for real time object detection using temporal frame differencing algorithm: A cloud computing approach,” Asian Journal of Research in Social Science and Humanities, vol. 6, no. 10, pp. 2099–2113, 2016. [Google Scholar]

18. C. Vecchiola, K. Nadiminti and R. Buyya, “Image filtering on. Net-based desktop grid,” in. Proc. 6th Int. Conf. on Grid and Cooperative Computing (GCC 2007, IEEE CS Press, Los Alamitos, CA, USA), Urumchi, Xinjiang, China, pp. 16–18, 2007. [Google Scholar]

19. R. Cucchiara, C. Grana, A. Prati and R. Vezzani, “Probabilistic posture classification for human-behavior analysis,” IEEE Transactions on Systems, vol. 35, no. 1, pp. 42–54, 2005. [Google Scholar]

20. J. Heikkila and O. Silven, “A real-time system for monitoring of cyclists and pedestrians,” in Proc. Second IEEE Workshop on Visual Surveillance, USA, pp. 74–81, 1999. [Google Scholar]

21. Z. Wang, A. C. Bovik, H. R. Sheikh and E. P. Simoncelli, “Image quality assessment: From error visibility to structural similarity,” IEEE Transaction on Image Processing, vol. 13, no. 4, pp. 1–14, 2004. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |