Intelligent Automation & Soft Computing
DOI:10.32604/iasc.2022.023605

Article

Dark and Bright Channel Priors for Haze Removal in Day and Night Images

SRM Institute of Science and Technology, Chennai, 603203, India

*Corresponding Author: A. Ruhan Bevi. Email: ruhanb@srmist.edu.in

Received: 14 September 2021; Accepted: 10 November 2021

Abstract: Removing noise from images is important because a clear, denoised image is essential for most applications. In this article, a modified haze removal algorithm is developed by combining the dark channel prior with multi-scale retinex theory. The combined dark channel prior (DCP) and bright channel prior (BCP), together with the multi-scale retinex (MSR) algorithm, are used to dynamically optimize the transmission map and thereby improve visibility. The proposed algorithm denoises images effectively by exploiting the properties of retinex theory. The proposed method removes haze from an image scene by estimating the atmospheric light and adjusting the transmittance parameter. The coarse transmittance is refined using edge-preserving median filtering. Experimental results show that the proposed technique enhances the quality of images degraded by foggy weather during the day or at night. The results also show that the proposed algorithm improves image quality considerably in terms of PSNR and SSIM.

Keywords: Dehazing; dark channel prior; bright channel prior; multi-scale retinex; atmospheric light estimate; transmission estimate

1 Introduction

During the past decade, significant research has been carried out on enhancing the quality of hazy and foggy images. Haze occurs due to the scattering of light by microscopic water droplets. For outdoor environments, the reduced visibility and contrast of images captured in foggy weather pose a great challenge for image enhancement. Image quality is mainly affected by small aerosols in the atmosphere, which scatter the light reflected by an object in the scene before it reaches the sensor. This leads to poor contrast and a loss of depth detail. Image dehazing is desirable in feature extraction applications such as digital video surveillance, aerial survey and driverless cars, where it enhances scene visibility and corrects the colour shift.

Noise present in an image degrades the per-pixel image values, and hence robustness and efficiency. The number of usable pixels in a frame can be increased by removing noise from the video. The proposed work deals with the removal of haze caused by atmospheric scattering in order to gain high visibility. This reduces the trade-off between contrast and saturation of images while preserving the quality of the recovered image. Various techniques based on the dark channel prior (DCP) have been discussed that find solutions using only a single hazy input image. Even though these techniques are very effective and have been widely used on daylight hazy scenes, they perform poorly on night-time hazy scenes. Since several light-emitting objects cause non-uniform brightness, haze removal from images captured at night becomes intricate. Consequently, only limited research has been carried out on night-time haze removal.

Dehazing algorithms based on the dark channel prior are discussed in [1–4]. Berman et al. [5] proposed a dehazing algorithm that uses haze-lines to estimate atmospheric light. Fattal [6] suggested the colour-lines method for haze removal. Meng et al. [7] proposed an L1 norm-based regularization technique for dehazing. In various retinex methods [8–10], retinex properties such as the illumination and reflectance components of images are used to compute parameters such as the air-light estimate, and the rough depth of the scene is analyzed. Unlike dehazing of day images, haze removal for night-time images [11–14] processes low-illumination images with both bright and dark channel priors, thereby modifying the transmission estimation with a local air-light component that generates a maximum reflectance. The ambient light is estimated on small local patches, where the non-uniform illumination originates from several artificial sources. Combining multi-scale retinex and the dark channel prior [15] yields a recovered output image of good quality but with a poor transmission map. Learning-based dehazing is discussed in [16] for an end-to-end process of haze removal. Li et al. [17] proposed a haze removal method for images captured at night that eliminates the glow from light sources. Although the authors in [18] claimed that their technique performs well for global enhancement, it lacks local enhancement at the edges and boundaries of the image.

A modified DCP [19] overcomes the artifacts generated in single image dehazing and was compared with the DCP in real time. Ancuti et al. [20] discussed how to overcome the problem of selecting the patch size in fusion-based dehazing, using more samples of inverted images and a Laplacian decomposition. Chung et al. [21] proposed an image restoration method using the dark channel prior and white-patch retinex to obtain fast and effective haze removal. Liu et al. [22] proposed an algorithm based on coarse detection and an artificial neural network (ANN) for recognition in remote sensing images. The scene radiance structure [23] can be recovered from hazy scenes using multiple images and by applying spatial information. Koschmieder [24] discussed the scattering model of hazy images. A minimum volume ellipsoidal approximation method [25] is inaccurate on pixels that represent bright elements in the scene.

In this work, a novel haze removal technique is introduced by merging the DCP and BCP algorithms with the MSR method. It enhances the contrast of images captured during the day and at night under foggy conditions. This is achieved by computing the ambient light value, then estimating the transmission map using median filters, and finally processing the result with the multi-scale retinex method in HSV space to obtain better enhancement.

2 Proposed Day and Night Image Dehazing Algorithm

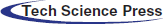

Light plays an essential role in haze removal, and the choice of illumination and reflectance is a challenging problem. The new dark channel prior (DCP) value is obtained by first estimating 0.1% of the dark pixels in the dark channel, which are present mostly in the opaque and hazy portion of the scene. The top 0.1% of the bright pixels in the bright channel, which are present in the brightest portion of the image, form the bright channel prior (BCP). The overall average of the dark and bright channel pixel values is combined with the retinex component to generate the atmospheric light estimate and the transmission estimate, as shown in Fig. 1.

Figure 1: Flow chart for proposed haze removal technique in both day and night images

Haze removal is performed through the following steps: atmospheric light estimation, transmission map estimation, radiance computation, multi-scale retinex (MSR) computation and haze-free image reconstruction.

Our work uses Koschmieder's optical atmospheric attenuation model to represent the hazy image as

$$I(x) = R(x)\,\tau(x) + A\,\bigl(1 - \tau(x)\bigr) \tag{1}$$

where I represents the observed image, A is the atmospheric illumination, R is the haze-free image and τ is the transmittance parameter.

The purpose of image enhancement is to restore the radiance R of the image I by eliminating haze. The product R(x)·τ(x) in Eq. (1) is the scene irradiance and the subsequent term is the air-light. The air-light represents the amount of light from the surface of the photographed object that is scattered by aerosols floating in the air before reaching the camera, as in Fig. 2. The transmission map is given by

$$\tau(x) = e^{-\beta\, d(x)}$$

where β is the scattering parameter and d(x) is the distance between the camera and the object.
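The scattering model and the transmission relation described above can be illustrated with a short NumPy sketch. It assumes the standard forms I = R·τ + A(1 − τ) and τ = exp(−β·d); the function names, the 2 × 2 toy scene and the values of A and β are illustrative assumptions, not values from this work.

```python
import numpy as np

def transmission_from_depth(depth, beta):
    """Assumed form tau(x) = exp(-beta * d(x)) for a per-pixel depth map."""
    return np.exp(-beta * np.asarray(depth, dtype=np.float64))

def add_haze(radiance, atmospheric_light, tau):
    """Assumed scattering model: I = R * tau + A * (1 - tau)."""
    tau3 = tau[..., np.newaxis]  # broadcast the map over the colour channels
    return radiance * tau3 + atmospheric_light * (1.0 - tau3)

# Hypothetical 2x2 scene: uniform dark-grey radiance, depth grows to the right.
radiance = np.full((2, 2, 3), 0.2)
depth = np.array([[0.0, 1.0], [0.0, 1.0]])
tau = transmission_from_depth(depth, beta=1.0)
hazy = add_haze(radiance, atmospheric_light=0.9, tau=tau)
```

In this toy scene the pixels at zero depth keep their radiance, while the more distant pixels are pulled towards the atmospheric light, which is the loss of contrast that dehazing tries to invert.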

Figure 2: Atmospheric scattering model

2.1 Dark Channel and Bright Channel Prior Estimation

Restoring the haze-free image from Eq. (1) requires the transmittance τ and the atmospheric light A. To evaluate the transmittance, we use the DCP technique, which states that in haze-free non-sky outdoor images, at least one of the three red-green-blue (RGB) colour channels has some pixels with very low intensity [1], with values close to zero, i.e.,

$$R^{dark}(x) \rightarrow 0$$

Hence the dark channel of a given image is defined as

$$I^{dark}(x) = \min_{y \in \Omega(x)} \Bigl( \min_{C \in \{r,g,b\}} I^{C}(y) \Bigr)$$

where $I^{dark}$ is the dark channel of I(x), $I^{C}$ is a colour channel of I(x), and Ω(x) is a small patch surrounding the pixel x. Similarly, in an image, at least one colour channel has a few pixels that are bright, with intensity values very close to unity. In this work, the bright channel $R^{bright}$ is characterized as follows:

$$R^{bright}(x) \rightarrow 1$$

So the bright channel of a given image is defined as

$$I^{bright}(x) = \max_{y \in \Omega(x)} \Bigl( \max_{C \in \{r,g,b\}} I^{C}(y) \Bigr)$$

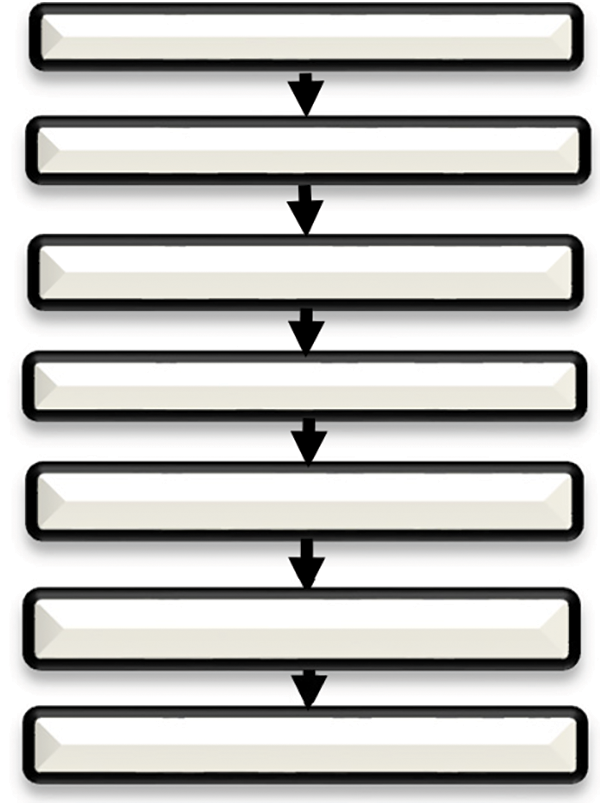

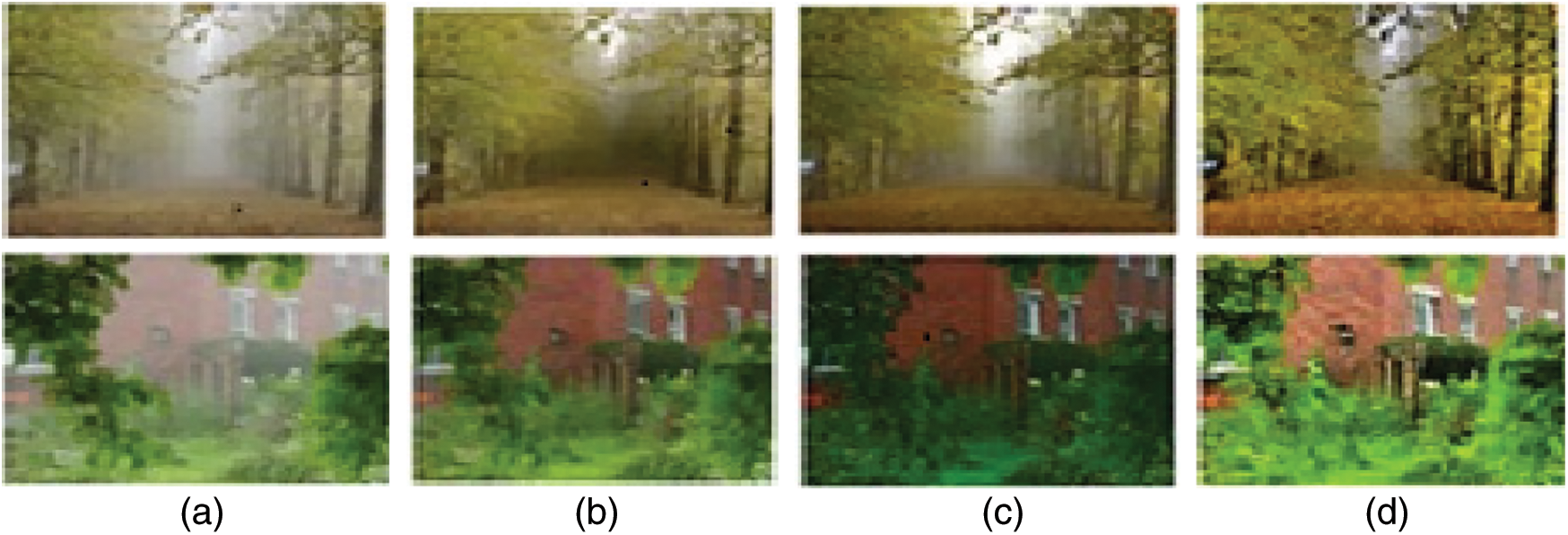

The experimental results of the dark channel prior (DCP) and bright channel prior (BCP) for different hazy input images are shown in Fig. 3. The presence of strong shadows and of colourful or dark objects in the hazy image causes variation in the dark channel as well as the bright channel values.

Figure 3: DCP and BCP of hazy daytime images (a) DCP of forest (b) BCP of forest (c) DCP of building (d) BCP of building (e) DCP of swan and (f) BCP of swan
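As a minimal sketch of the dark and bright channels described in this subsection, the code below takes the per-pixel minimum (or maximum) over the RGB channels and then the minimum (or maximum) over a square patch Ω(x). The helper names, the edge padding at the image border and the small test image are assumptions made for illustration.

```python
import numpy as np

def patch_extreme(channel, patch, reducer):
    """Apply reducer (np.min or np.max) over a patch x patch window at each pixel."""
    pad = patch // 2
    padded = np.pad(channel, pad, mode="edge")  # assumption: replicate borders
    height, width = channel.shape
    out = np.empty((height, width), dtype=np.float64)
    for i in range(height):
        for j in range(width):
            out[i, j] = reducer(padded[i:i + patch, j:j + patch])
    return out

def dark_channel(image, patch=3):
    """Minimum over colour channels, then minimum over the local patch."""
    return patch_extreme(image.min(axis=2), patch, np.min)

def bright_channel(image, patch=3):
    """Maximum over colour channels, then maximum over the local patch."""
    return patch_extreme(image.max(axis=2), patch, np.max)

# Hypothetical 5x5 grey image with one coloured pixel in the centre.
image = np.full((5, 5, 3), 0.5)
image[2, 2] = [0.1, 0.9, 0.5]
dark = dark_channel(image, patch=3)
bright = bright_channel(image, patch=3)
```

The explicit double loop keeps the sketch short and readable. A production implementation would normally use a dedicated minimum or maximum filter for speed.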

2.2 Atmospheric Light Estimation

The atmospheric light A is obtained from the severely blurred region, whereas the points with the brightest intensity are usually found on white objects that appear in the real image. This condition is refined for the computation of atmospheric light. The proposed algorithm includes the following steps:

i) Select the top 0.1% of the brightest points from the dark channel $I^{dark}$, which are found in the opaque part of the image (non-sky regions).

ii) Select the top 0.1% of the brightest pixels from the bright channel $I^{bright}$, which are found in the bright portion of the image.

iii) Calculate the new estimate A′ as the average of $I^{dark}$ and $I^{bright}$.

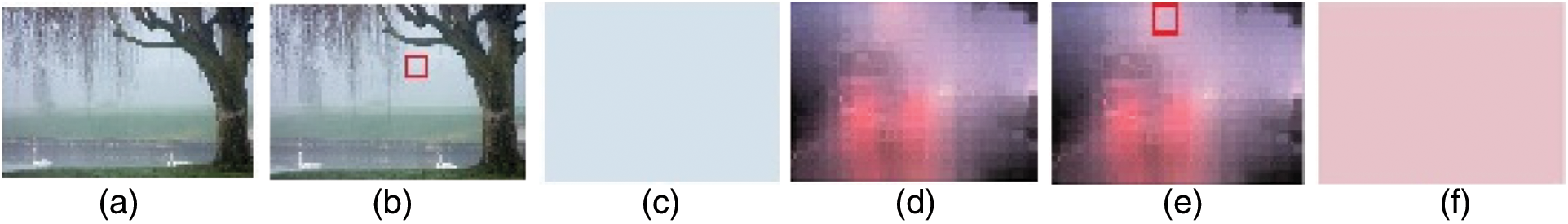

Hence A′ is the new atmospheric light estimated from the image after the DCP and BCP are combined with the input image. The estimation of atmospheric light is shown in Fig. 4.

Figure 4: Atmospheric light estimation (a) Daytime input image (b) position of atmospheric light (c) Atmospheric light (d) Night input image (e) position of atmospheric light and (f) Atmospheric light

The bright channel estimates the depth, or atmospheric veil, of the input image and hence improves the atmospheric light estimation. Moreover, the average intensity of the pixels of the channels $I^{dark}$ and $I^{bright}$ in the input image is chosen as the atmospheric light A′. At night, the atmospheric light due to various light sources or moonlight is neither as bright nor as constant as in the daytime. In such a scenario, choosing only the bright points as atmospheric light might produce noise. To eliminate such problems, we use the average value of the $I^{dark}$ and $I^{bright}$ channels for the estimation.
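The three estimation steps can be sketched as follows. The text does not spell out exactly which values are averaged, so this sketch makes an explicit assumption: the input-image colours at the top 0.1% locations of each channel map are averaged, and A′ is the mean of the two resulting colours. The function names and the toy data are hypothetical.

```python
import numpy as np

def top_fraction_mean(image, channel_map, fraction=0.001):
    """Mean input colour at the brightest `fraction` of entries in channel_map."""
    flat = channel_map.ravel()
    count = max(1, int(round(flat.size * fraction)))  # keep at least one pixel
    idx = np.argsort(flat)[-count:]                   # indices of the brightest entries
    return image.reshape(-1, image.shape[2])[idx].mean(axis=0)

def estimate_atmospheric_light(image, dark, bright, fraction=0.001):
    """Assumed reading of steps i) to iii): average the two top-0.1% colours."""
    a_dark = top_fraction_mean(image, dark, fraction)
    a_bright = top_fraction_mean(image, bright, fraction)
    return 0.5 * (a_dark + a_bright)

# Hypothetical 4x4 image with a white-ish pixel and a saturated orange pixel.
image = np.zeros((4, 4, 3))
image[0, 0] = [0.8, 0.8, 0.8]
image[3, 3] = [1.0, 0.6, 0.2]
dark = image.min(axis=2)    # per-pixel dark channel (patch size 1 for brevity)
bright = image.max(axis=2)  # per-pixel bright channel
a_prime = estimate_atmospheric_light(image, dark, bright)
```

Averaging the two candidates tempers the influence of isolated light sources, which matches the motivation given above for night-time scenes.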

The derived atmospheric light estimate A′ is used to evaluate the transmission map, which is assumed constant within the local patch Ω(x). This is done by dividing both sides of Eq. (1) by A′ and applying the minimum operator over the RGB channels and the local patch, which gives

$$\min_{y \in \Omega(x)} \Bigl( \min_{C} \frac{I^{C}(y)}{A'^{C}} \Bigr) = \tau(x)\, \min_{y \in \Omega(x)} \Bigl( \min_{C} \frac{R^{C}(y)}{A'^{C}} \Bigr) + 1 - \tau(x)$$

The value of $R^{C}(x)$ reduces to zero inside the haze-free local patch and $A'^{C}$ is invariably positive. The new value $A'^{C}$ handles both sky and other regions in the image. The transmittance of the sky portion, or of regions with uniform intensity, tends to zero. To avoid this, a constant ω is introduced. Finally, the transmission is computed as

$$\tau(x) = 1 - \omega \min_{y \in \Omega(x)} \Bigl( \min_{C} \frac{I^{C}(y)}{A'^{C}} \Bigr)$$

where 0 ≤ ω ≤ 1 and the patch size is Ω(x) = 20 × 20. The refined transmission is then computed using a median filter.
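A minimal sketch of the transmission estimate and its median refinement is given below. It assumes the usual dark-channel form τ = 1 − ω · min over the patch and channels of I/A′. The value ω = 0.95, the 3 × 3 median window and the function names are illustrative assumptions, since the text only states 0 ≤ ω ≤ 1 and a 20 × 20 patch.

```python
import numpy as np

def coarse_transmission(image, atmospheric_light, omega=0.95, patch=20):
    """Assumed form: tau = 1 - omega * min_patch(min_channel(I / A'))."""
    min_rgb = (image / atmospheric_light).min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    height, width = min_rgb.shape
    dark = np.empty_like(min_rgb)
    for i in range(height):
        for j in range(width):
            dark[i, j] = padded[i:i + patch, j:j + patch].min()
    return 1.0 - omega * dark

def median_filter(values, size=3):
    """Median over a size x size window (odd size), with replicated borders."""
    pad = size // 2
    padded = np.pad(values, pad, mode="edge")
    height, width = values.shape
    shifted = [padded[i:i + height, j:j + width]
               for i in range(size) for j in range(size)]
    return np.median(np.stack(shifted, axis=0), axis=0)

# Hypothetical uniform hazy image and atmospheric light.
image = np.full((6, 6, 3), 0.45)
a_prime = np.array([0.9, 0.9, 0.9])
tau = coarse_transmission(image, a_prime, omega=0.95, patch=3)
noisy = tau.copy()
noisy[3, 3] = 1.0                      # isolated outlier in the coarse map
refined = median_filter(noisy, size=3)
```

The median filter removes the isolated outlier while leaving the surrounding values unchanged, which is the edge-preserving behaviour the refinement step relies on.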

After evaluating A′ and τ(x) from I(x), the haze-free image R(x) can be recovered from the optical scattering model of Eq. (1). Since the scene irradiance R(x)·τ(x) can be approximately zero when τ(x) is very small, τ(x) is bounded below by a minimum value $t_0$, which is set to 0.1. Hence, the restored radiance R(x) is evaluated as

$$R(x) = \frac{I(x) - A'}{\max\bigl(\tau(x),\, t_0\bigr)} + A'$$

Hence this method, using the new estimate of atmospheric light A′, performs well on images captured during both day and night.
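The recovery step can be sketched by inverting the scattering model with the lower bound on the transmission. The sketch assumes the form R = (I − A′) / max(τ, t0) + A′ with t0 = 0.1 as stated above. The final clipping to [0, 1] and the function name are additions made here for illustration.

```python
import numpy as np

def recover_radiance(hazy, atmospheric_light, tau, t0=0.1):
    """Invert I = R * tau + A' * (1 - tau), with tau bounded below by t0."""
    tau_bounded = np.maximum(tau, t0)[..., np.newaxis]
    radiance = (hazy - atmospheric_light) / tau_bounded + atmospheric_light
    return np.clip(radiance, 0.0, 1.0)  # assumption: intensities live in [0, 1]

# Hypothetical round trip: haze a known radiance, then recover it.
true_radiance = np.full((2, 2, 3), 0.2)
a_prime = 0.9
tau = np.array([[0.5, 0.5], [0.5, 0.01]])
hazy = true_radiance * tau[..., np.newaxis] + a_prime * (1.0 - tau[..., np.newaxis])
restored = recover_radiance(hazy, a_prime, tau)
```

Where τ is well above t0 the radiance is recovered exactly. At the pixel with τ = 0.01 the bound takes effect, so the result stays finite but remains biased towards the atmospheric light.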

Finally, the generated radiance is processed by the MSR algorithm. Retinex-based image restoration achieves dynamic range compression and colour brightness, and reduces artifacts and halo effects. The retinex algorithm decomposes the image I into two components: the reflectance part J and the illuminance part S. This decomposition helps to eliminate halos and artifacts, enrich image edges and correct colour shifts. So, each pixel (x, y) of the image I can be represented as in [8]

$$I(x, y) = J(x, y) \cdot S(x, y)$$

MSR algorithms blend the supremacy of the preserving edges, edge enrichment and hybrid of brightness and color restoration. The luminance module of the haze image is processed in HSI color space and then performs linear reconstructions. Commonly, the MSR principle is used to process the brightness component and to obtain the reflectance component. It is represented as weighted single scale retinex [10] for different scales of Gaussian surround function

where Jn is the reflectance, M represents the count of distinct scales (assume M = 3), wkis the weight of scale, the term (In* Γk) is the probability distribution of illumination S(x, y) and * is convolution operator. Then, the kth gaussian surround function Γk, with scale factor ci. is

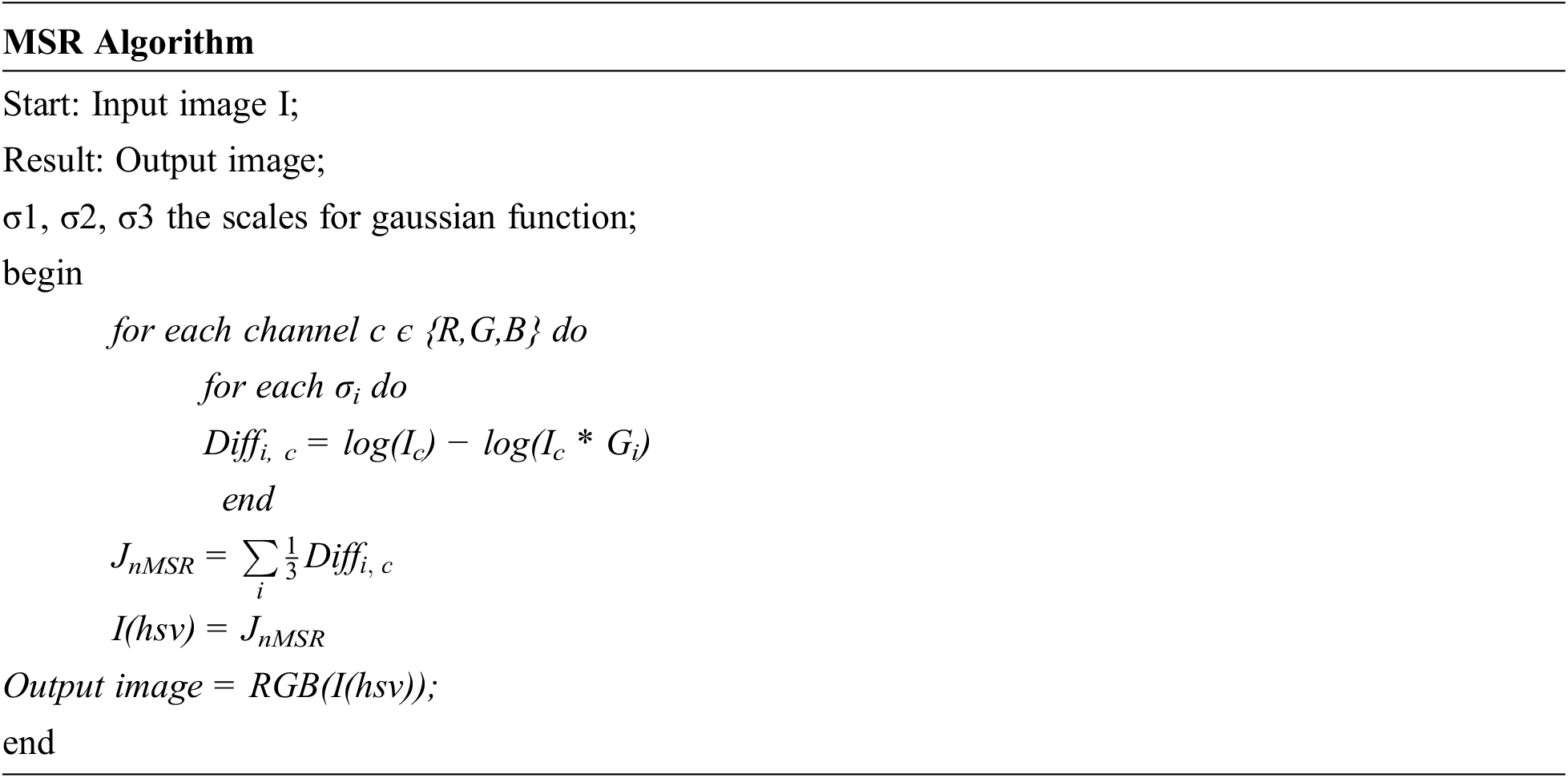

where Ci represents scale factor, it should be selected in some special range. The pseudo code for multi-scale retinex is furnished below:

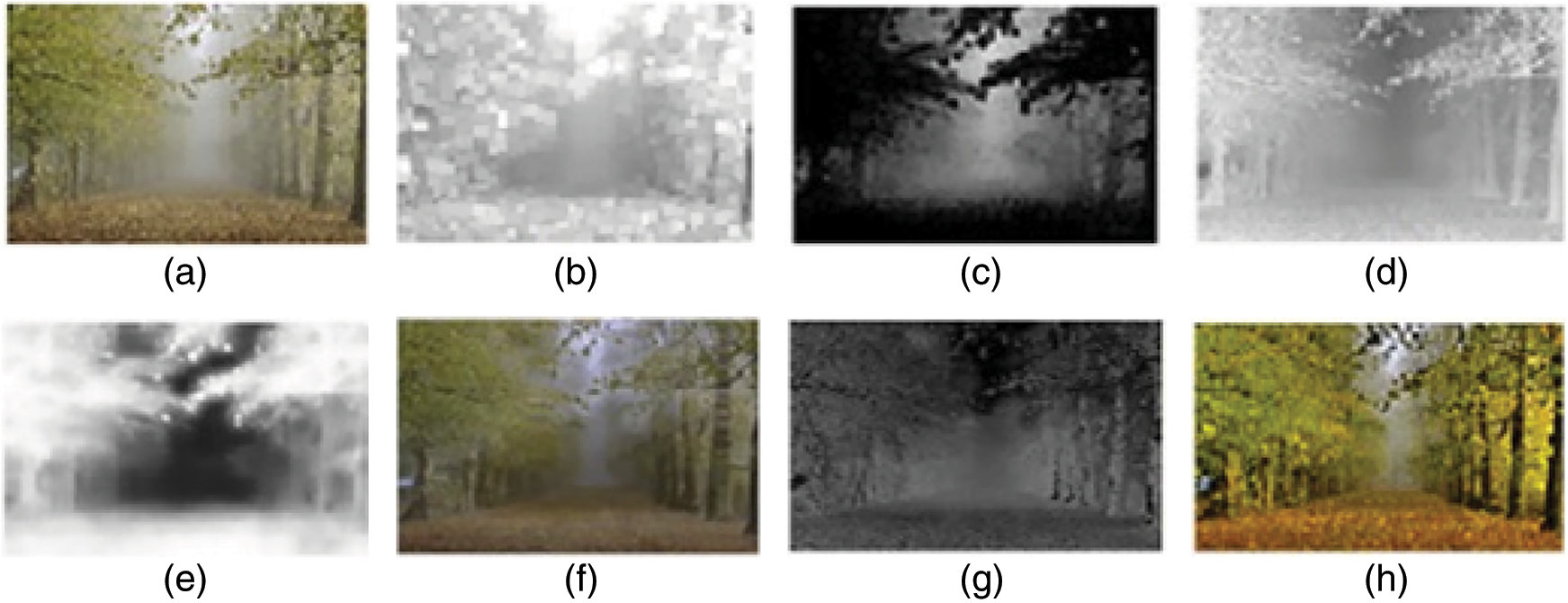

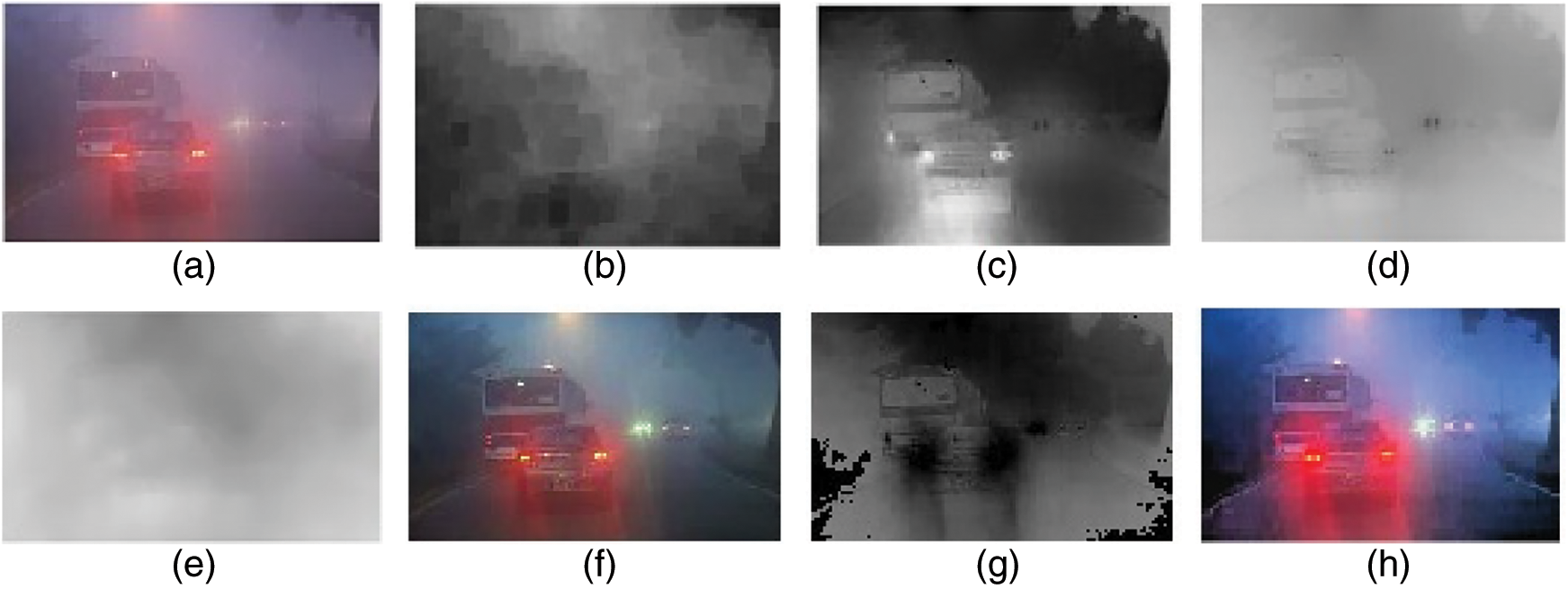

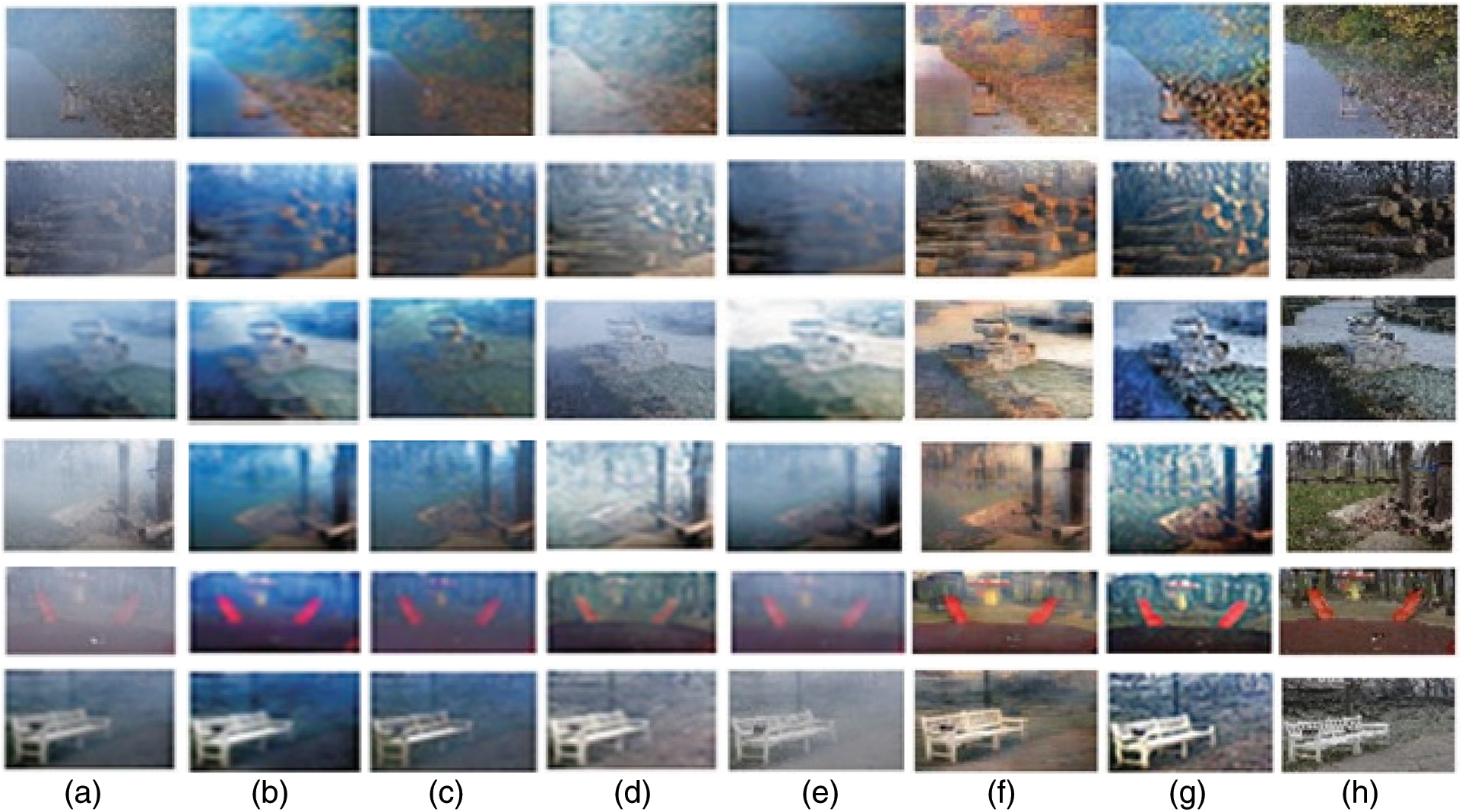
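The multi-scale retinex computation described above can also be sketched in NumPy. The sketch assumes a normalized Gaussian surround of the form exp(−(x² + y²)/c²), equal weights, and a weighted sum of log(I) − log(I * Γ) over the scales. The default scale values, the kernel radius of 3c, the small offset `eps` and all function names are illustrative assumptions rather than the settings used in this work.

```python
import numpy as np

def gaussian_kernel_1d(scale):
    """1-D factor of the separable surround exp(-(x^2 + y^2) / c^2), normalized."""
    radius = max(1, int(3 * scale))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (scale ** 2))
    return kernel / kernel.sum()

def gaussian_blur(channel, scale):
    """Separable Gaussian convolution with replicated borders."""
    kernel = gaussian_kernel_1d(scale)
    radius = kernel.size // 2
    padded = np.pad(channel, radius, mode="edge")
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def multi_scale_retinex(channel, scales=(15, 80, 250), weights=None, eps=1e-6):
    """Weighted sum over scales of log(I) - log(I * Gamma_k)."""
    if weights is None:
        weights = [1.0 / len(scales)] * len(scales)  # assumption: equal weights
    log_input = np.log(channel + eps)
    output = np.zeros(channel.shape, dtype=np.float64)
    for weight, scale in zip(weights, scales):
        illumination = gaussian_blur(channel, scale)
        output += weight * (log_input - np.log(illumination + eps))
    return output

# Hypothetical 9x9 brightness channel with one bright pixel in the centre.
channel = np.full((9, 9), 0.5)
channel[4, 4] = 0.9
retinex = multi_scale_retinex(channel, scales=(1, 2, 3))
flat = multi_scale_retinex(np.full((9, 9), 0.5), scales=(1, 2, 3))
```

A uniform region yields a response of zero, while a pixel brighter than its surround yields a positive response. This is how the retinex output emphasises local contrast independently of the overall illumination level.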

Figs. 5 and 6 show the day-time and night-time image analysis of the proposed work. Starting from the hazy input image, the bright and dark channel priors are computed, followed by the transmission estimate and the refined transmission. From these, the radiance is computed and processed by the MSR algorithm, which extracts the retinex component, and finally the dehazed output is generated.

Figure 5: Day time image analysis (a) Hazy input image (b) Bright channel prior (c) Dark channel prior (d) transmission estimation (e) refined transmission (f) Radiance (g) Retinex (h) Dehazed output image

Figure 6: Night time image analysis (a) Hazy input image (b) Dark channel prior (c) Bright channel prior (d) transmission estimation (e) refined transmission (f) Radiance (g) Retinex (h) Dehazed output image

The human visual system is more sensitive to brightness than to colour. Performing luminance correction directly in RGB space often distorts the image colour, because it is difficult to enhance all channels in the correct ratio. In HSV space, however, the hue, saturation and intensity components are independent, so manipulating the intensity values has no direct impact on the colour of the image. Finally, the retinex output $J_n$ is combined with the input image in HSV space and the result is converted to generate a haze-free enhanced output image in RGB space.
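The independence of the brightness component can be checked with a small sketch that uses Python's standard `colorsys` module. It only illustrates that scaling the V component leaves hue and saturation unchanged. It is not the combination rule used in this work, and the function name and gain value are hypothetical.

```python
import colorsys

def scale_value_channel(rgb_pixels, gain):
    """Scale only V in HSV for a list of (r, g, b) tuples with values in [0, 1]."""
    result = []
    for r, g, b in rgb_pixels:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        # Hue and saturation are passed through untouched; V is capped at 1.
        result.append(colorsys.hsv_to_rgb(h, s, min(1.0, v * gain)))
    return result

# Hypothetical dark orange pixel, brightened by a factor of two.
original = (0.2, 0.1, 0.05)
brightened = scale_value_channel([original], gain=2.0)[0]
hue_before = colorsys.rgb_to_hsv(*original)[0]
hue_after = colorsys.rgb_to_hsv(*brightened)[0]
```

Because only V changes, the three RGB values are scaled by the same factor and their ratio, and therefore the perceived colour, is preserved.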

3 Results and Discussion

The results obtained by the proposed work are analyzed qualitatively on standard datasets for day and night images and compared with existing works. Quantitative measurements using the PSNR and SSIM metrics are also performed on O-HAZE, an outdoor hazy dataset of day images, and compared with those of existing works.

3.1 Haze Removal Analysis of Daytime Images

In this work, the proposed method is implemented in MATLAB R2020b and simulated using the O-HAZE dataset. O-HAZE is a real-world dataset that comprises 45 ground-truth images and their corresponding hazy images, captured under real haze conditions.

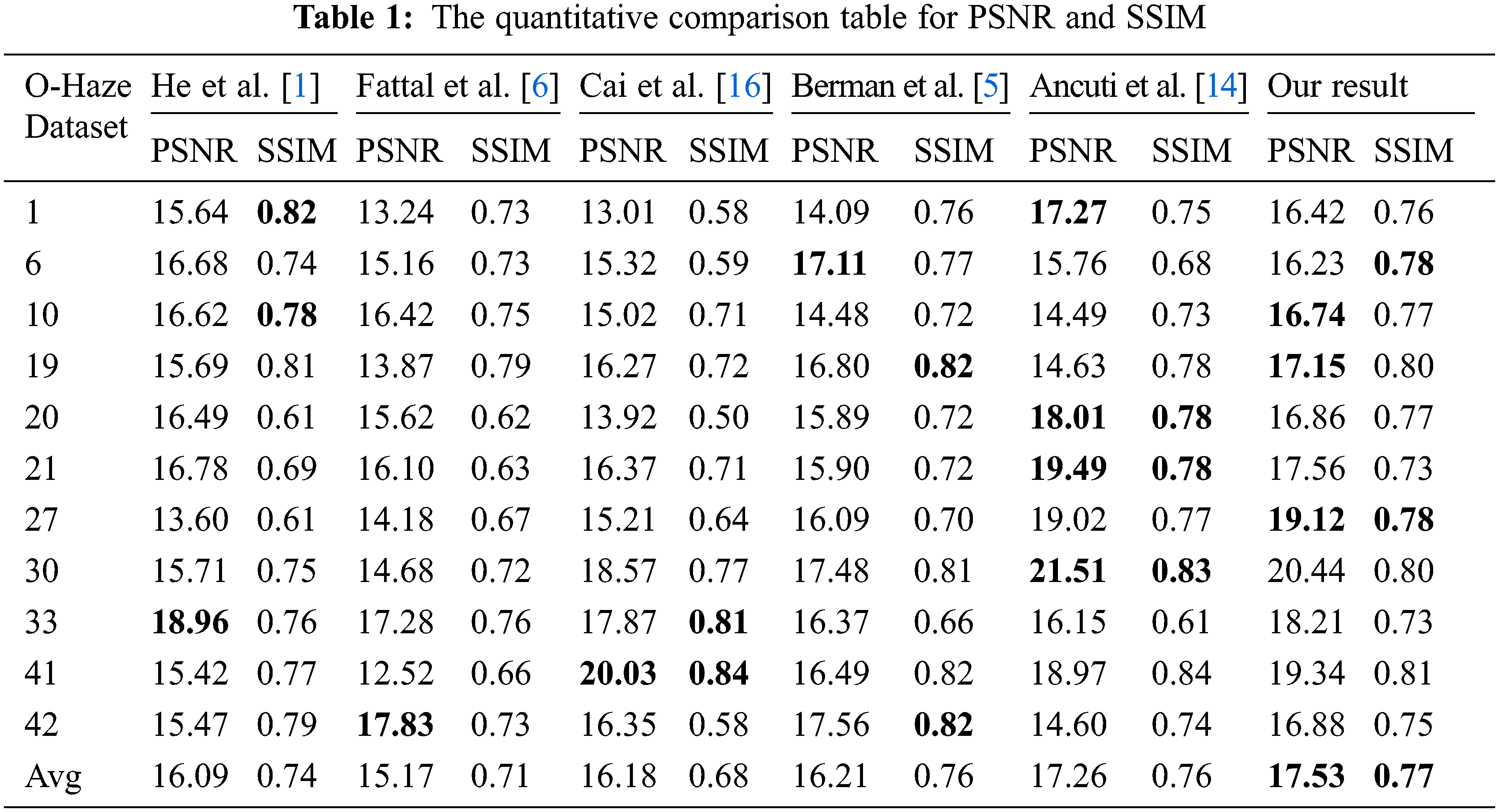

The results of the proposed work are analyzed qualitatively using standard daytime datasets and compared with the existing algorithms of He et al. [1] and Li et al. [4], as shown in Fig. 7. We used randomly selected hazy images from the O-HAZE dataset for the quantitative analysis, measuring the PSNR and SSIM metrics. Fig. 8 compares the output images of the proposed method with those of existing methods.

Figure 7: Comparison of output images for daytime haze images. (a) Input Images (b) He et al. [1] (c) Li et al. [4] and (d) Proposed method

Figure 8: Results on O-HAZE daytime dataset samples (a) Hazy input image (b) He et al. [1] (c) Fattal [6] (d) Cai et al. [16] (e) Berman et al. [5] (f) Ancuti et al. [14] (g) Our result and (h) Ground truth

Peak signal-to-noise ratio (PSNR) is expressed in terms of the mean square error and is used to measure the quality of reconstruction of the hazy image. Another parameter frequently used to measure image quality is the structural similarity index (SSIM). The quantitative comparison of PSNR and SSIM values is provided in Tab. 1. We used O-HAZE dataset images of size 800 × 600, as done in [13], and we used the result values reported in [13] for comparison with our results.
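For reference, the two metrics can be sketched as follows. PSNR follows its usual definition from the mean square error. The SSIM shown here is a simplified single-window (global) variant, whereas the standard SSIM averages the same expression over local windows, so its values will generally differ from those of a full implementation. The function names and the constants 0.01 and 0.03 follow common practice and are assumptions here.

```python
import numpy as np

def psnr(reference, test, peak=1.0):
    """Peak signal-to-noise ratio in dB, computed from the mean square error."""
    diff = np.asarray(reference, dtype=np.float64) - np.asarray(test, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)

def global_ssim(reference, test, peak=1.0):
    """Simplified SSIM using one window that covers the whole image."""
    x = np.asarray(reference, dtype=np.float64)
    y = np.asarray(test, dtype=np.float64)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    cov = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (x.var() + y.var() + c2)
    return numerator / denominator

# Hypothetical ground truth and a dehazed result with a constant offset of 0.1.
ground_truth = np.linspace(0.0, 0.8, 16).reshape(4, 4)
dehazed = ground_truth + 0.1
score_psnr = psnr(ground_truth, dehazed)
score_ssim = global_ssim(ground_truth, dehazed)
```

Both functions expect images scaled to [0, peak]. For 8-bit images, `peak=255` would be passed instead.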

A few samples of the O-HAZE dataset are considered for this work and analyzed quantitatively. The dehazed output image is compared with the haze-free ground truth for PSNR and SSIM evaluation. The highest value for each image is highlighted in bold. For the image OH_06, the SSIM of our algorithm is 19% and 9% higher than those of Cai et al. [16] and Ancuti et al. [14], respectively. For the image OH_10, the PSNR of the proposed work is 14% higher than that of Berman et al. [5] and 11% higher than that of Cai et al. [16]. The average value of each metric for our results is higher than that of the other existing methods discussed above. Hence it is inferred that the proposed work performs better for daytime haze removal than the existing works. A good dehazing method reproduces the fine details of the scene, so that the output image closely resembles the ground truth and has a higher SSIM value. Similarly, a higher PSNR indicates better haze removal and restoration of a high-contrast image, so that the dehazed output image has enriched visibility.

3.2 Dehazing Evaluation of Night Images

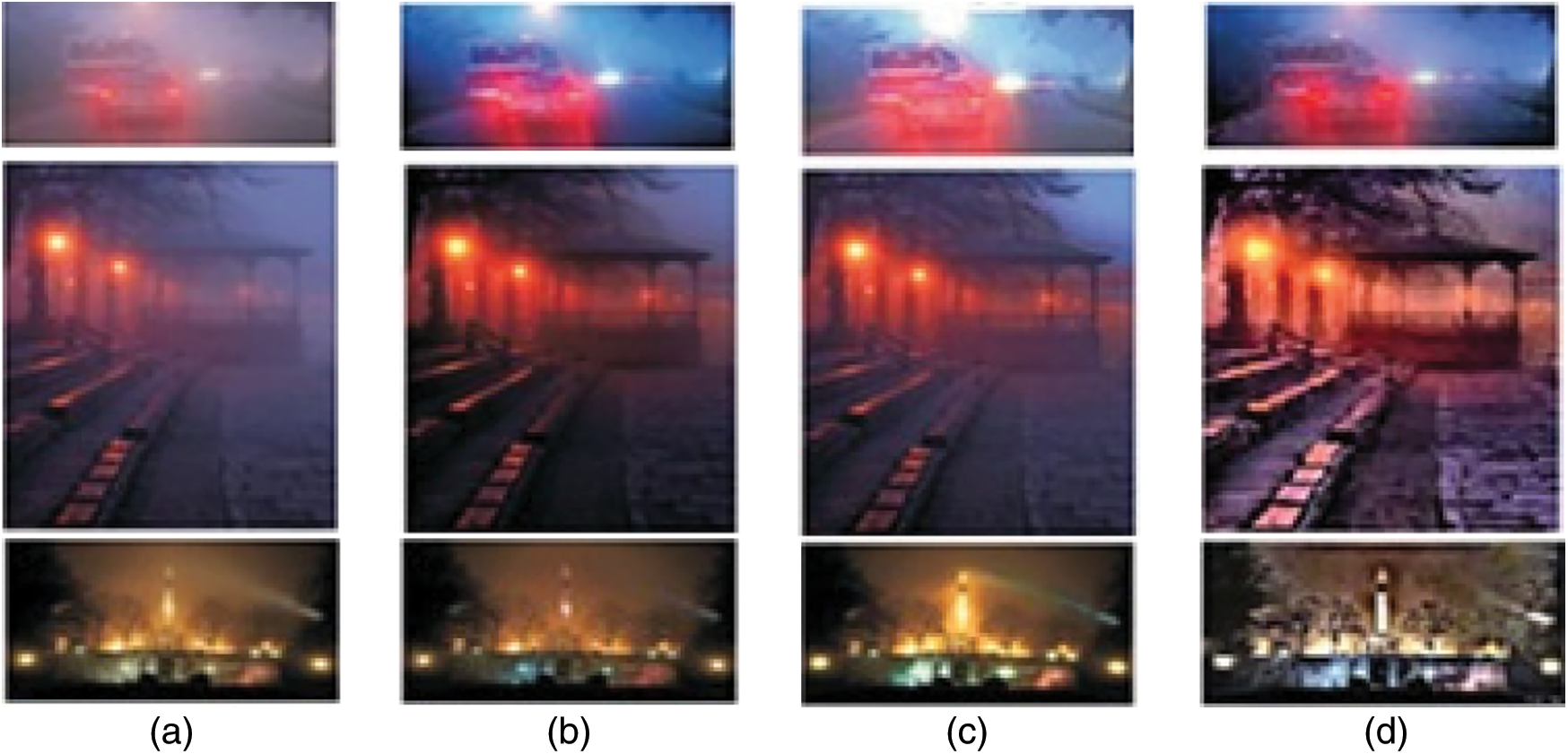

Haze removal is also performed on night-time images with the proposed algorithm. The results are compared with existing methods and presented below.

Fig. 9 shows the results for night-time hazy images. We examined our work on the datasets used in [13], which consist of a variety of high-quality images of hazy night scenes. We compared our results with existing day and night-time haze removal techniques. The excess glow around light sources due to saturation, and the colour distortion artifacts, are reduced. This shows that our method performs well compared with other works.

Figure 9: Comparison of night-time results. (a) Input image (b) He et al. [1] (c) Li et al. [4] and (d) Our results

4 Conclusion

In conclusion, we have developed a novel technique to enhance both day and night images affected by haze. The experimental results show that the proposed algorithm effectively removes haze from images and enhances brightness and visibility. At night, the illumination originates from several artificial sources that differ in colour and have non-uniform intensity, which may lead to artifacts. The proposed algorithm combines DCP and BCP with MSR for radiance optimization and reduces the excess smoothing and artifacts that are present in existing methods. We evaluated the effectiveness of the proposed method quantitatively against existing techniques using the PSNR and SSIM metrics. The average value of each metric for our results is higher than that of the existing algorithms discussed above, and hence we infer that our work performs better. In future work, we will focus on eliminating artifacts while enhancing contrast, and on reducing the computation time.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. K. He, J. Sun and X. Tang, “Single image haze removal using dark channel prior,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 12, pp. 2341–2353, 2011.

2. J. B. Wang, N. He, L. L. Zhang and K. Lu, “Single image dehazing with a physical model and dark channel prior,” Neurocomputing, vol. 149, pp. 718–728, 2015.

3. K. He, J. Sun and X. Tang, “Single image haze removal using dark channel prior,” in Proc. IEEE Computer Vision and Pattern Recognition, Florida, USA, pp. 1956–1963, 2009.

4. B. Li, S. Wang, J. Zheng and L. Zheng, “Single image haze removal using content-adaptive dark channel and post enhancement,” IET Computer Vision, vol. 8, no. 2, pp. 131–140, 2014.

5. D. Berman, T. Treibitz and S. Avidan, “Single image dehazing using haze-lines,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 3, pp. 720–734, 2020.

6. R. Fattal, “Dehazing using color-lines,” ACM Transactions on Graphics, vol. 34, no. 1, pp. 1–14, 2014.

7. G. Meng, Y. Wang, J. Duan, S. Xiang and C. Pan, “Efficient image dehazing with boundary constraint and contextual regularization,” in Proc. of IEEE Int. Conf. Computer Vision (ICCV 2013), Sydney, Australia, pp. 617–624, 2013.

8. D. J. Jobson, Z. U. Rahman and G. A. Woodell, “A multiscale retinex for bridging the gap between color images and the human observation of scenes,” IEEE Transactions on Image Processing, vol. 6, no. 7, pp. 965–976, 1997.

9. J. Wang, K. Lu, J. Xue, N. He and L. Shao, “Single image dehazing based on the physical model and MSRCR algorithm,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 28, no. 9, pp. 2190–2199, 2018.

10. B. Xie, F. Guo and Z. Cai, “Improved single image dehazing using dark channel prior and multi-scale retinex,” in Proc. Int. Conf. on Intelligent System Design and Engineering Application Proceedings, China, pp. 848–851, 2010.

11. J. Zhang, Y. Cao, S. Fang, Y. Kang and C. W. Chen, “Fast haze removal for nighttime image using maximum reflectance prior,” in Proc. IEEE Conf. on Computer Vision Pattern Recognition (CVPR), Hawaii, USA, pp. 7418–7426, 2017.

12. S. C. Pei and T. Y. Lee, “Nighttime haze removal using color transfer pre-processing and dark channel prior,” in Proc. 19th IEEE Int. Conf. on Image Processing, Orlando, Florida, pp. 957–960, 2012.

13. C. Ancuti, C. O. Ancuti, C. De Vleeschouwer and A. C. Bovik, “Day and night-time dehazing by local airlight estimation,” IEEE Transactions on Image Processing, vol. 29, pp. 6264–6275, 2020.

14. C. Ancuti, C. O. Ancuti, C. De Vleeschouwer and A. C. Bovik, “Nighttime dehazing by fusion,” in Proc. IEEE Int. Conf. on Image Processing (ICIP), pp. 2256–2260, 2016.

15. Z. Shi, M. Zhu, B. Guo, M. Zhao and C. Zhang, “Nighttime low illumination image enhancement with single image using bright/dark channel prior,” EURASIP Journal on Image and Video Processing, vol. 1, no. 13, pp. 1–15, 2018.

16. B. Cai, X. Xu, K. Jia, C. Qing and D. Tao, “Dehazenet: An end-to-end system for single image haze removal,” IEEE Transactions on Image Processing, vol. 25, no. 11, pp. 5187–5198, 2016.

17. Y. Li, R. T. Tan and M. S. Brown, “Nighttime haze removal with glow and multiple light colors,” in Proc. IEEE Int. Conf. on Computer Vision (ICCV), Santiago, Chile, pp. 226–234, 2015.

18. H. M. Qassim, N. M. Basheer and M. N. Farhan, “Brightness preserving enhancement for dental digital x-ray images based on entropy and histogram analysis,” Journal of Applied Science and Engineering, vol. 22, no. 1, pp. 187–194, 2019.

19. Y. Wang, H. Wang, C. Yin and M. Dai, “Biologically inspired image enhancement based on retinex,” Neurocomputing, vol. 177, pp. 373–384, 2016.

20. C. O. Ancuti and C. Ancuti, “Single image dehazing by multi-scale fusion,” IEEE Transactions on Image Processing, vol. 22, no. 8, pp. 3271–3282, 2013.

21. Y. L. Chung, H. Y. Chung and Y. S. Chen, “A study of single image haze removal using a novel white-patch retinex-based improved dark channel prior algorithm,” Intelligent Automation and Soft Computing, vol. 26, no. 2, pp. 367–383, 2020.

22. C. Liu, X. Wu, B. Mo and Y. Zhang, “Multi-phase oil tank recognition for high resolution remote sensing images,” Intelligent Automation and Soft Computing, vol. 24, no. 3, pp. 671–678, 2018.

23. T. Pal, “Visibility enhancement of fog degraded image sequences on SAMEER TU dataset using dark channel strategy,” in Proc. 9th Int. Conf. on Computing, Communication and Networking Technologies, Bangalore, India, pp. 1–6, 2018.

24. H. Koschmieder, “Theorie der horizontalen sichtweite, in beitrage zur physik der freien atmosphare,” Munich, Germany: Keim Nemnich, pp. 33–53, 1924.

25. R. Tan, “Visibility in bad weather from a single image,” in Proc. IEEE Conf. on Computer Vision Pattern Recognition (CVPR), Anchorage, Alaska, USA, pp. 1–8, 2008.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.