DOI:10.32604/iasc.2022.024840

Intelligent Automation & Soft Computing

Article

Object Detection Learning for Intelligent Self Automated Vehicles

1Department of Computer Science, Air University, Islamabad, 44000, Pakistan

2Department of Computer Science, College of Computer, Qassim University, Buraydah, 51452, Saudi Arabia

3Department of Computer Science and Software Engineering, Al Ain University, Al Ain, 15551, UAE

4Department of Humanities and Social Science, Al Ain University, Al Ain, 15551, UAE

*Corresponding Author: Suliman A. Alsuhibany. Email: salsuhibany@qu.edu.sa

Received: 01 November 2021; Accepted: 24 January 2022

Abstract: Robotics is part of today's technology and makes everyday human life simpler. We support this cause with a smart city project based on artificial intelligence, image processing, and some hardware such as robotics. In particular, we propose a self-automated device (i.e., an autonomous car) that performs actions and makes decisions using its own intelligence with the help of sensors. Sensors are key components for developing and upgrading all forms of autonomous cars, since they provide the information required to perceive the surrounding environment and consequently support the decision-making process. In our device, we used ultrasonic and camera sensors to make the device autonomous and to help it detect and recognize objects. The future is shifting toward autonomous vehicles, which are expected to be the driverless, efficient, and crash-avoiding smart city vehicles of the future. The proposed device is therefore capable of detecting objects for smooth and efficient operation that avoids crashes and collisions. It can also calculate the distance of an object from the autonomous car and make the corresponding decisions. Furthermore, the device can be tracked by continuously sending its location to a mobile device through IoT using a GPS module and a Wi-Fi module. Additionally, the device can be controlled by voice using Bluetooth.

Keywords: Autonomous device; crash avoiding; sensors; trackable; voice control

The current digital era involves data communicated in the form of signals [1], images [2], and other sources [3]. To secure such data, we must adopt cryptography [4] and network security, which ensure that data are communicated safely [5]. The data can then be utilized by applying suitable algorithms [6] and procedures [7]. In the real world, thousands of people die every day or become physically disabled in accidents caused by reckless driving or poor road conditions. In the last few years, the number of traffic accidents has doubled or tripled as the world population has grown. According to the “World report on road traffic injury prevention” of the World Health Organization [8], road traffic accidents were the ninth leading cause of death around two decades ago and have now risen to the third leading cause worldwide. Although developed countries have reduced this death rate by up to 30% by improving road safety infrastructure and adopting smart technologies, the problem keeps worsening in low- and middle-income countries as their populations grow. According to the WHO report, the predicted road traffic fatalities for 156 countries were 542 thousand in 1990, 723 thousand in 2000 and 957 thousand in 2010, rising to 1204 thousand in 2020, a change of about 67% between 2000 and 2020. These trends have pushed us to adopt the advanced digital technologies [9,10] of the current era and to replace the traditional transportation system with autonomous vehicles, since most traffic accidents are caused by human error. According to statistics, the number of lives claimed by traffic accidents each year is likely to double in the next 10 years [11]. Considerable research on autonomous vehicles has been carried out in the last few years, in both academia and industry.
An autonomous car, as a smart system [12], can be regarded as a system that perceives its environment and the objects in front of it with the help of a variety of sensors, decides which route to take to its destination, and drives itself.

Object detection is a fundamental requirement of autonomous cars, and it is possible only if the car can perceive environmental data and make intelligent decisions based on it [13]. The critical function of object detection is to extract spatial information through sensor perception. The area explored around the autonomous car depends on the field of view of the sensor used for detection [14]. Objects are of two types: (i) positive objects, which stand above the ground with a positive height, and (ii) negative objects, which lie below the ground with a negative height (e.g., holes). This paper focuses on positive object detection and local object avoidance [15].

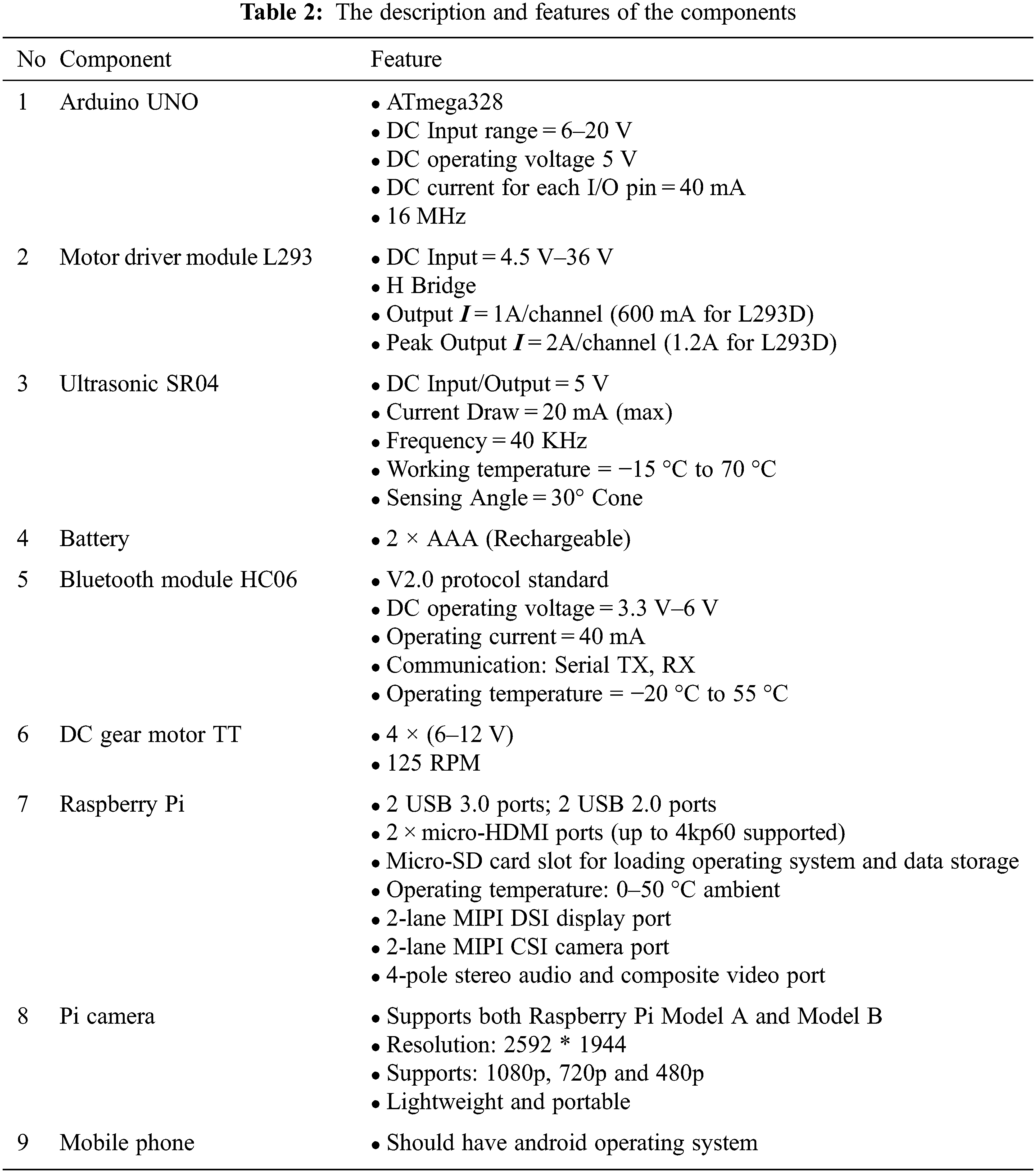

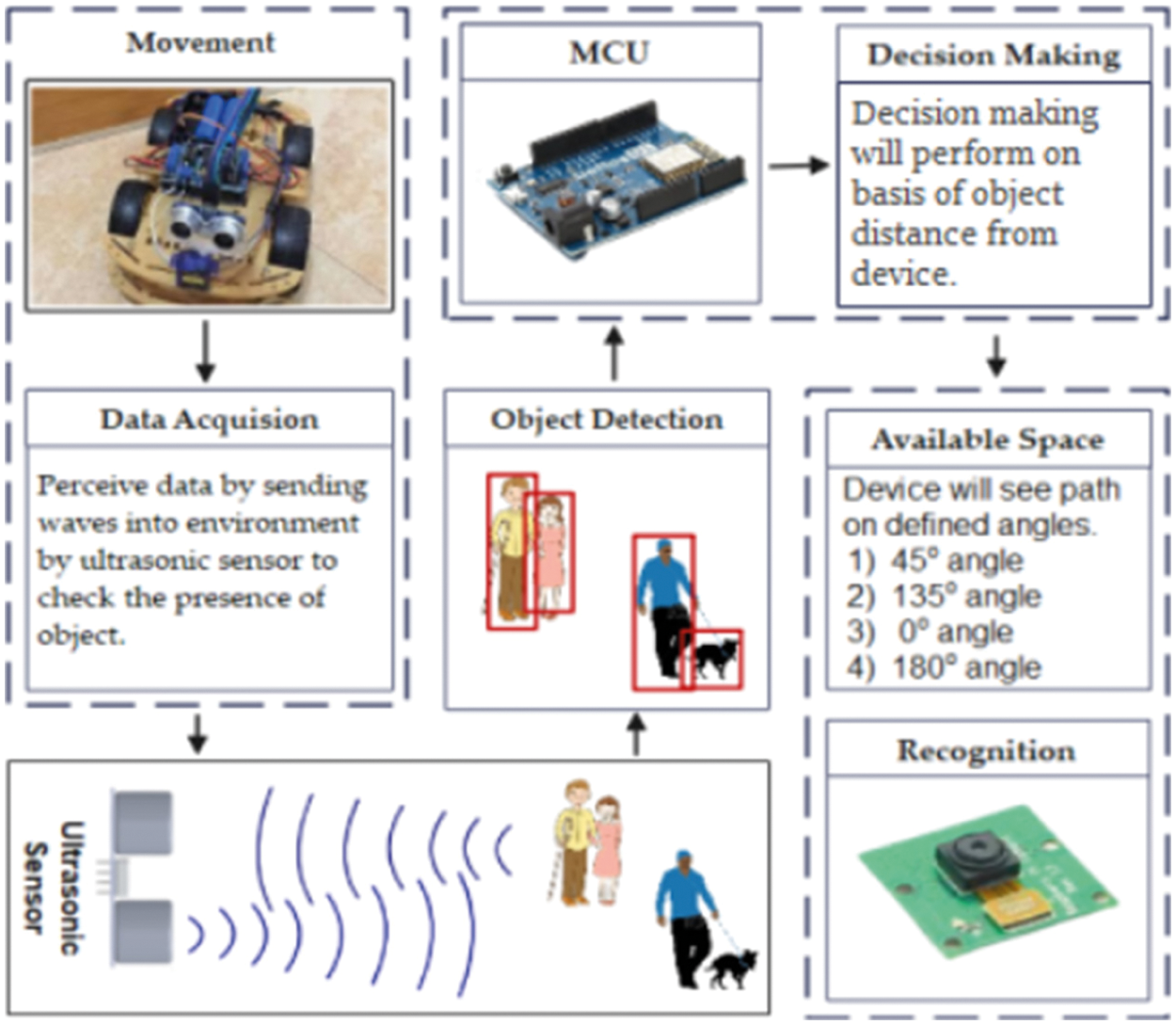

The autonomous car is a smart city project that uses different categories of sensors. The device is based on artificial intelligence, image processing and some hardware (robotics). For image recognition and detection, a dedicated camera is used, and the data set consists of images. The autonomous system can also be moved by voice, using defined commands such as right, left, forward and backward. It can detect and recognize specific types of objects that come in its way. Many categories of sensors with unique features and qualities are available, but for this project we chose the ultrasonic sensor SR04, a pi-camera, DC gear motors of 6–12 V, the micro-controller ATmega328, connecting wires and LEDs to obtain better object recognition results. The details of every component are given in Tab. 2. For software, the C or Python programming language can be used with the TensorFlow library. To connect the software and hardware, we use Arduino and Raspberry Pi.

The main contributions of the proposed system are as follows:

• We used the Arduino micro-controller ATmega328 (cheaper than other controllers) as the brain of this project, because it provides the signals from which the device decides whether an obstacle is present. For recognizing objects, a pi-camera with a Raspberry Pi processing unit is used.

• Detecting objects at a distance of 2 to 400 cm from the device/autonomous car with an ultrasonic sensor (SR04), which offers excellent non-contact detection over a range of 2 to 400 cm (about 1 inch to 13 feet) with high accuracy and stable readings.

• Overcoming the problems of previous related projects: irrational (abnormal) behavior when obstacles surround the device on all sides, such as moving in circles in a closed room, as well as high computational complexity, low accuracy and weak security.

• Making the system trackable via GPS, which reports its current location, and adding a voice control feature.

• Night-vision capability for object detection, with cross-platform streaming of the recognized objects.

This article is structured as follows: Section 2 describes related work. Section 3 gives a detailed overview of the proposed model. In Section 4, the performance of the proposed model is assessed experimentally. Finally, Section 5 summarizes the paper and outlines future directions.

Extensive research has been done in the field of autonomous car systems. Many autonomous cars have recently been developed to detect objects [16], but many of them have high computational complexity. Moreover, these devices achieve lower accuracy, are less secure, and behave abnormally when obstacles surround them on all sides, for example by moving in circles in a closed room.

Previous autonomous car projects were built for object detection [17], but their behavior is not rational and their accuracy suffers because of long computation times. As a result, the device sometimes gets stuck in a loop and does not make the required decision in time, which affects its operation. Our aim is therefore to keep the computation time as short as possible while maintaining rational behavior, so that accuracy and on-time decision making improve. Furthermore, our device can be controlled by voice and can recognize objects. Dubbelman et al. [18] proposed a statistical method for finding distances using stereo vision. The strength of this work is its practical approach, which is used by many robotics and real-time car manufacturing companies, because stereo vision can estimate distance and allocate paths both by day and at night. We selected this paper because it will help relate our future camera-based object detection work to our research, and it also supports our night vision feature. In [19], the authors described three different levels for reaching vehicle autonomy. The strength of this paper is its grid-based approach to object detection, which generates smooth and controlled object avoidance trajectories that help achieve accurate results. We chose this paper because it is closely related to our object detection functionality, although its approach is very costly. In [20], the authors designed a system composed of two sensors (infrared and ultrasonic), an Arduino micro-controller and a DC gear motor. The ultrasonic and infrared sensors detect objects in the robot's path and send signals to the micro-controller, which redirects the robot to move in another direction.
The main strength of this paper is its low cost, with which maximum results can be achieved. In [21], the authors presented different levels of automated driving functions according to the SAE standard and derived the requirements on sensor technologies. Advanced technologies for object detection and identification, as well as systems under development, are then presented, discussed and evaluated for their suitability for automotive application. However, this work relies on the very expensive LADAR approach. In [22], the authors proposed a driverless vehicle equipped with an integrated GPS module capable of guiding the vehicle from one point to another without human intervention; an Arduino micro-controller redirects the robot to move in an alternate direction. The main strength of this approach is its low cost and high ranging capability, with which maximum results can be achieved. In [23], a system is designed for building an autonomous car for object detection; the work covers the complete working of the components, connectivity problems, limitations, and everything needed to develop the device. In [24], the author described three main types of object recognition techniques based on machine vision. In [25], the proposed object detection system uses an ultrasonic sensor, which plays a vital role in object detection, mounted on a DC motor that rotates continuously through 360 degrees. A problem arises if an object appears in front of the device at a moment when the sensor's ultrasonic waves are being emitted in another direction (i.e., left, back, or right); a collision may then occur.
In [26], the device moves backward when it detects an object in its way, then looks left and right to choose a direction and move onward. However, while it moves backward there may be an object behind the device, which can cause a collision. Moreover, in the dark, object recognition cannot be performed because of poor lighting, which yields poor results.

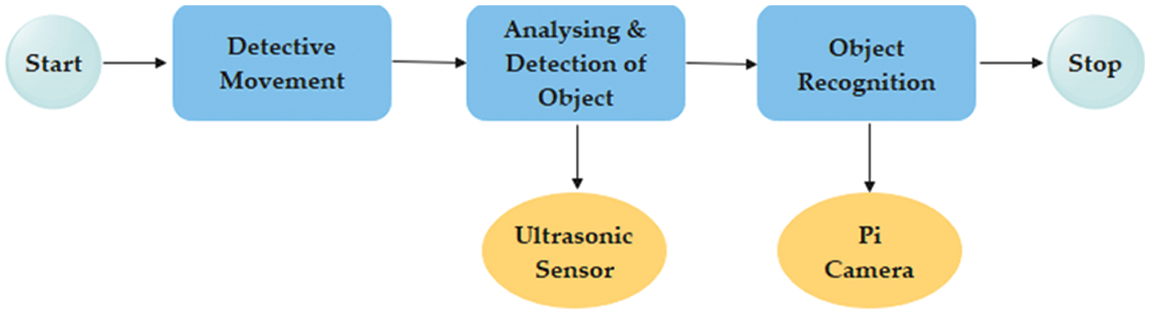

The methodology of this project lies in fulfilling the fundamental requirements of the autonomous car: data acquisition, object availability, data pre-processing, object detection, object recognition, voice control, and decision making. First, the device perceives environmental data with the help of the ultrasonic sensor to check whether objects are present. Data pre-processing, which covers observing the condition of the environment, reducing noise and saving computational power, is necessary for detecting an object in the environment. Then, the raw data acquired by the sensor, which detects objects by transmitting ultrasonic waves into the environment, is delivered to the micro-controller unit (MCU), which estimates the distance of an object from the device when one is present. The information delivered to the micro-controller is used for further processing and decision making. For object recognition, the information passes through recognition algorithms and techniques; to control the device by voice, specified keywords are delivered to the micro-controller in signal form through the Android operating system. Finally, the device makes a decision based on the processed (filtered) data. Fig. 1 shows the design methodology of the autonomous car.

Figure 1: Design system methodology of the autonomous car for object detection

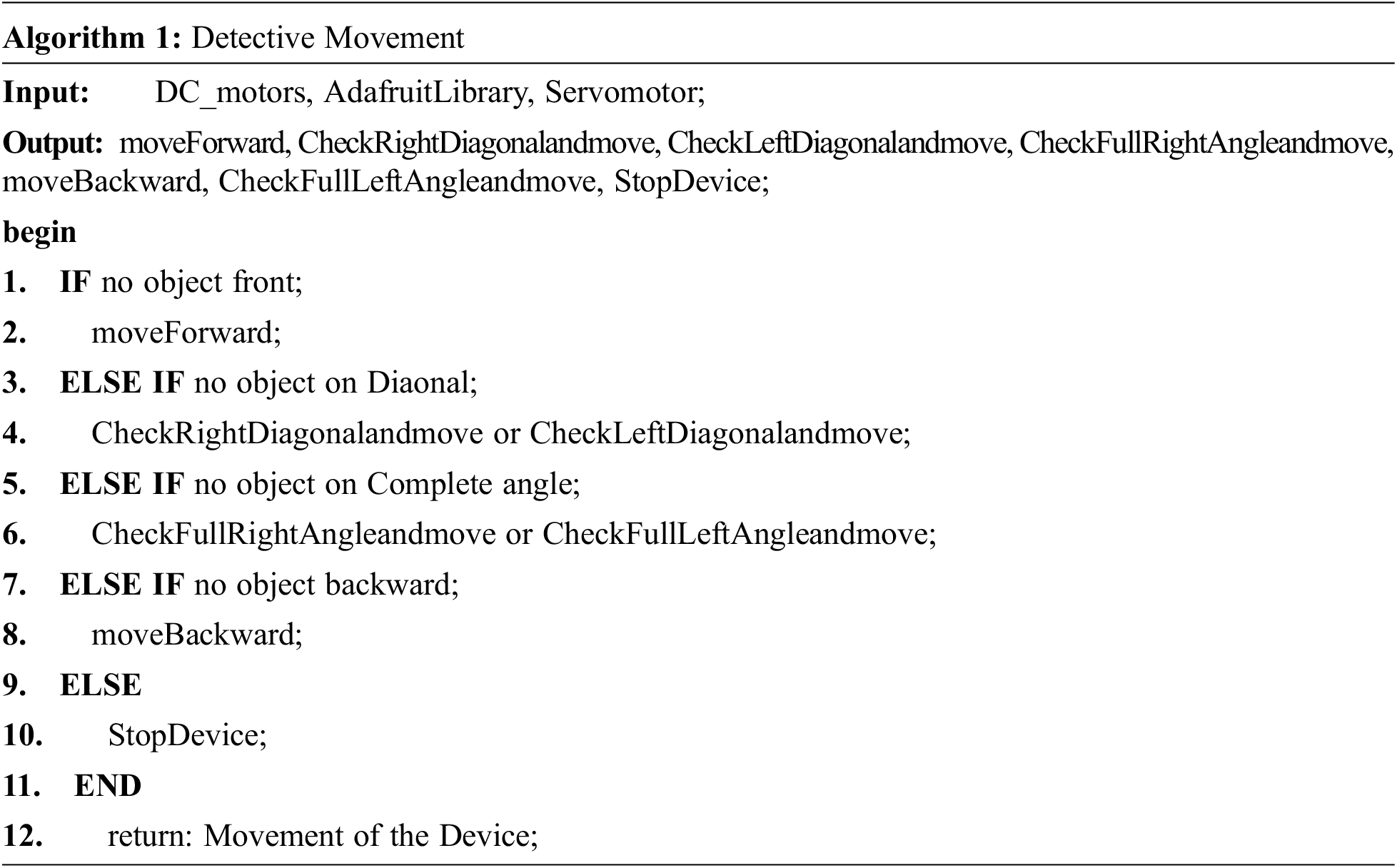
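The pre-processing step above mentions noise reduction without specifying a method. As a minimal sketch, assuming a median filter over a short burst of consecutive ultrasonic readings (an illustrative choice, not necessarily the authors' technique), the object-availability check and noise reduction could be combined as follows; `filter_readings` is a hypothetical name:

```python
from statistics import median

# Working range of the ultrasonic sensor as quoted in the text (cm).
MIN_RANGE_CM = 2.0
MAX_RANGE_CM = 400.0


def filter_readings(readings_cm):
    """Noise reduction for one burst of ultrasonic readings.

    Readings outside the sensor's working range (e.g. 0 for a missed echo)
    are discarded, and the median of the remaining values is returned.
    Returns None when no reading is valid, which the caller can interpret
    as "no object available in range".
    """
    valid = [r for r in readings_cm if MIN_RANGE_CM <= r <= MAX_RANGE_CM]
    if not valid:
        return None
    return median(valid)


if __name__ == "__main__":
    # Five consecutive readings: one missed echo (0.0) and one outlier (399.9).
    print(filter_readings([52.1, 51.8, 399.9, 52.4, 0.0]))
```

The median is used here because a single spurious echo shifts it far less than it would shift a mean.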

The movement of the device depends on whether the route is clear. The ultrasonic sensor first acquires data from the environment in front of the autonomous car, within a range of 2 to 400 cm, to check for the presence of objects of different types; if it finds no object, the device starts moving forward. The device moves in five different directions: 90° (forward movement), 45° (right diagonal movement), 135° (left diagonal movement), 0° (full right movement) and 180° (full left movement). Algorithm 1 shows the procedure for moving the device, and Fig. 2 shows the flow of device movement. The motion of the device is defined in Eq. (1):


Figure 2: The flow of device movement for analyzing & detecting the objects

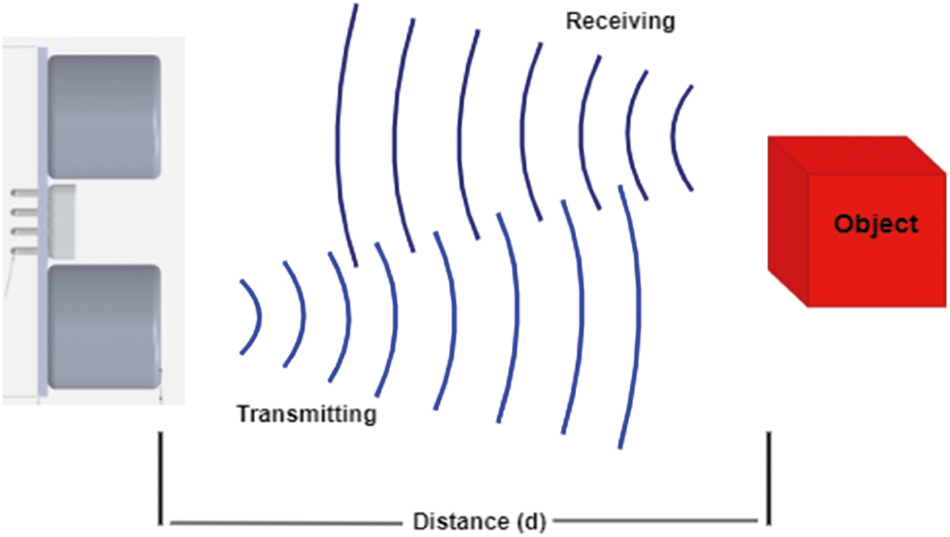

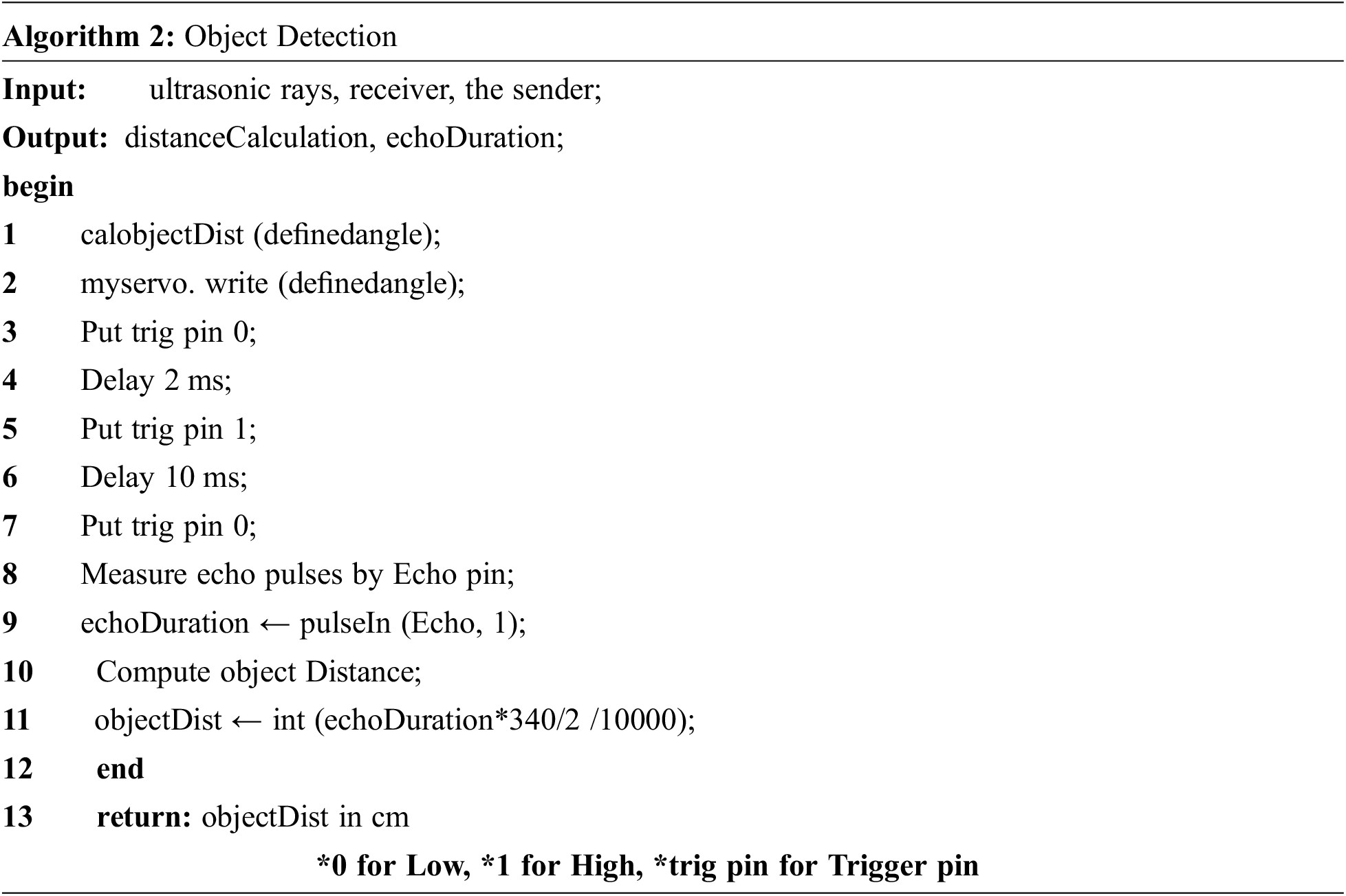
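The five headings above can be turned into a simple decision rule. The following is a minimal sketch, not the authors' Algorithm 1: it assumes the servo-mounted sensor has already produced one distance per heading, and the preference order, the 30 cm clearance threshold and the "stop" fallback are hypothetical choices made only for illustration:

```python
# Five headings described in the text: servo angle (degrees) -> movement.
MOVES = {
    90: "forward",
    45: "right diagonal",
    135: "left diagonal",
    0: "full right",
    180: "full left",
}

# Hypothetical preference order: keep going straight when the route is
# clear, otherwise try the smallest deviation from the forward heading.
PRIORITY = (90, 45, 135, 0, 180)

# Hypothetical clearance threshold; the paper does not state its value.
SAFE_DISTANCE_CM = 30.0


def choose_move(scan_cm, safe_cm=SAFE_DISTANCE_CM):
    """Choose a movement from a scan mapping angle -> distance in cm.

    A distance of None (nothing within range) counts as a clear route.
    Angles absent from the scan are treated as unknown and skipped.
    Returns "stop" (a hypothetical fallback) if no heading is clear.
    """
    for angle in PRIORITY:
        if angle not in scan_cm:
            continue
        distance = scan_cm[angle]
        if distance is None or distance > safe_cm:
            return MOVES[angle]
    return "stop"


if __name__ == "__main__":
    scan = {90: 12.0, 45: 18.5, 135: 80.0, 0: 200.0, 180: None}
    print(choose_move(scan))
```

With the sample scan, the forward and right-diagonal headings are blocked, so the sketch selects the left diagonal. A fixed priority list keeps the per-step computation constant, in line with the paper's aim of short decision times.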

Object detection is performed by the ultrasonic sensor, which emits ultrasonic waves into the environment with a maximum deviation angle of about 30°; the waves are reflected back to the sensor after hitting an object placed in front of the device at a distance of 2–400 cm. The distance of a detected object from the device is calculated using Eqs. (2) and (3):

where d is the measured distance, c is the speed of sound in air, and Tf is the time interval between transmitting the sound wave and receiving its echo. The sound wave produced by the sensor travels at speed c to the object, is reflected, and returns to the sensor, so the product of c and Tf covers the complete round trip; half of it corresponds to the one-way path from the sensor to the object. The detection of objects by the ultrasonic sensor is illustrated in Fig. 3.

Figure 3: Object detection using the ultrasonic sensor at distance d

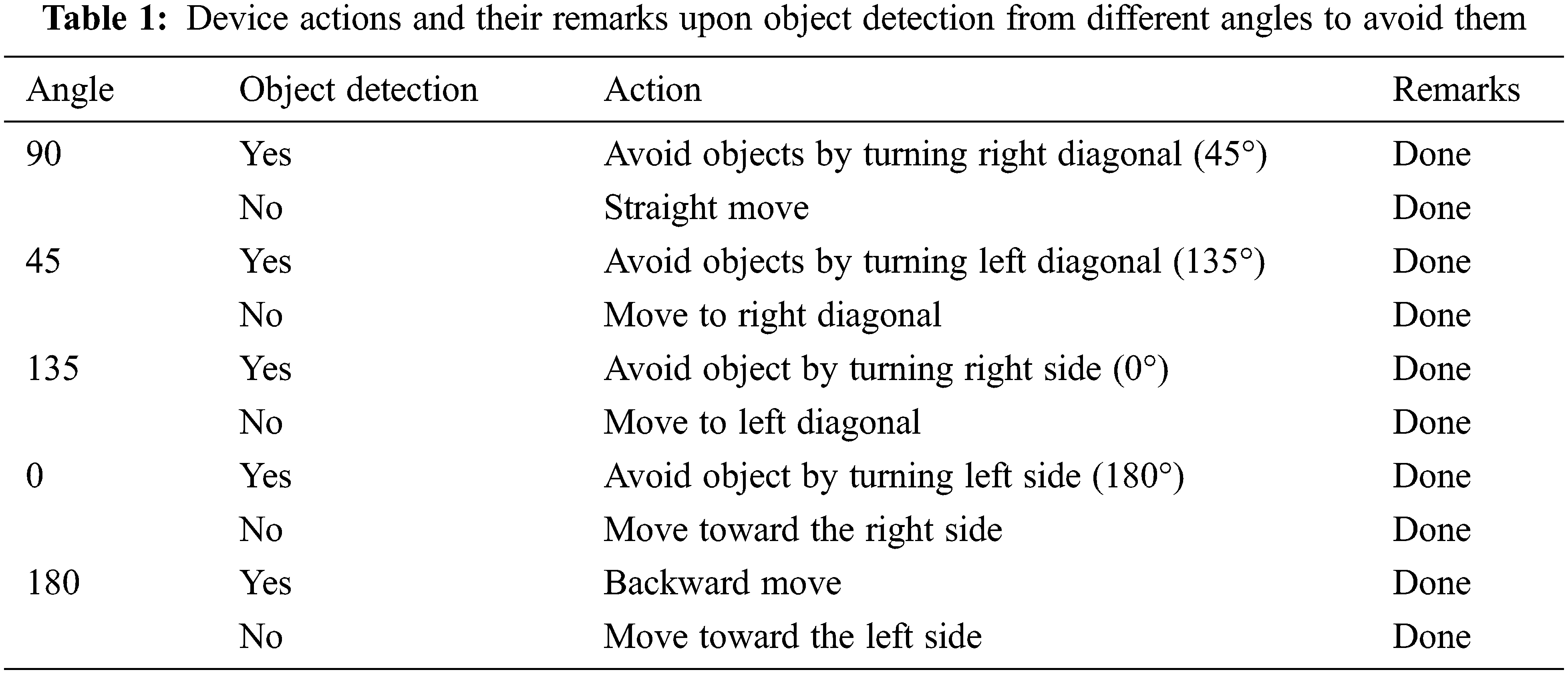
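Eqs. (2) and (3) are not reproduced in this text, but the definitions above imply the one-way distance d = c·Tf/2. For example, with c ≈ 343 m/s (an assumed value for dry air at about 20 °C), an echo time of Tf = 5831 µs gives d ≈ 0.0343 cm/µs × 5831 µs / 2 ≈ 100 cm. A minimal sketch of this calculation together with the 2–400 cm range check, using hypothetical function names:

```python
# Approximate speed of sound in dry air at about 20 °C (assumed value).
SPEED_OF_SOUND_M_S = 343.0

MIN_RANGE_CM = 2.0
MAX_RANGE_CM = 400.0


def echo_to_distance_cm(t_f_us, c_m_s=SPEED_OF_SOUND_M_S):
    """One-way distance d = c * Tf / 2.

    t_f_us is the round-trip echo time Tf in microseconds; halving the
    product c * Tf keeps only the sensor-to-object leg of the path.
    """
    c_cm_per_us = c_m_s * 100.0 / 1_000_000.0  # m/s -> cm/us
    return c_cm_per_us * t_f_us / 2.0


def detect_object(t_f_us, c_m_s=SPEED_OF_SOUND_M_S):
    """Return the distance in cm if it lies in the 2-400 cm range, else None."""
    d = echo_to_distance_cm(t_f_us, c_m_s)
    return d if MIN_RANGE_CM <= d <= MAX_RANGE_CM else None


if __name__ == "__main__":
    print(detect_object(5831))   # about 100 cm
    print(detect_object(30000))  # beyond 400 cm -> None
```

Passing c as a parameter makes it easy to substitute a temperature-corrected speed of sound if higher accuracy is needed.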

The device has two modes: (i) autonomous mode and (ii) voice control mode. In autonomous mode, the device makes its own decisions when it detects an object, whereas in voice control mode it acts on specified voice commands given through the Android operating system using Google Assistant, while still detecting and avoiding objects. Tab. 1 shows all the specified direction angles followed by the autonomous car when it detects an object, together with the corresponding actions it performs.

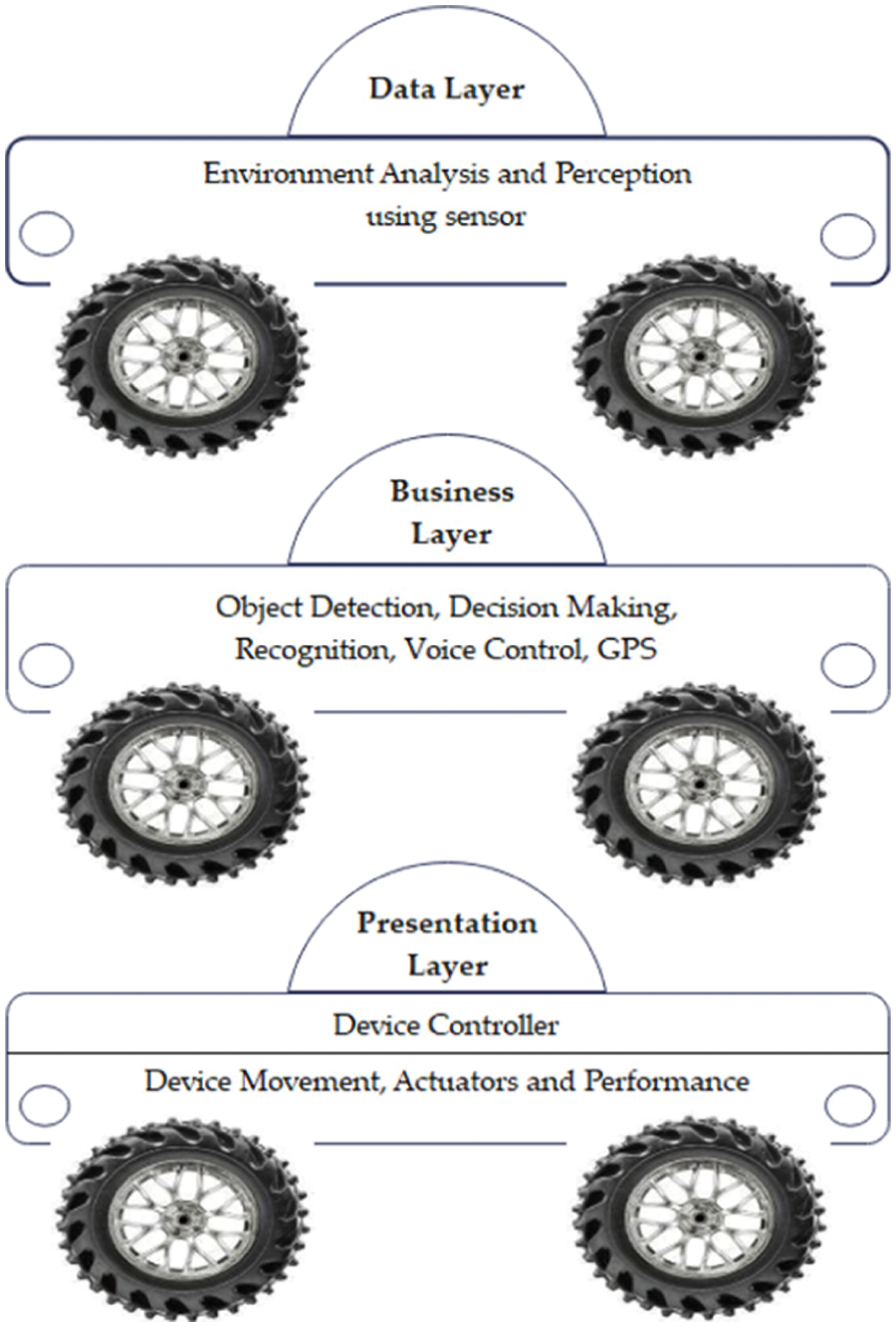

The autonomous car for object detection is based on a three-tier architecture consisting of a ‘data layer’, a ‘business layer’ and a ‘presentation layer’. Each layer plays a distinct role, as described briefly below.

The data layer is the first layer of the architecture and is composed of environment modeling and localization. It relies on a camera, GPS, Bluetooth, and ultrasonic and infrared sensors.

Next, the business layer consists of the Arduino and Raspberry Pi, which make decisions: they detect and recognize objects and handle voice control and GPS-based decisions using the information delivered by the data layer.

Finally, the presentation layer has a controller dedicated to carrying out the decisions taken by the business layer by commanding the vehicle's actuators. Effectively analyzing and perceiving the environment is mandatory for an autonomous car, because it provides the locations of static and dynamic objects as well as the types of objects the device will face. Based on this information, the device can take different decisions in different scenarios, as defined by the logic of the business layer. The device then moves and acts according to the decision made by that layer. The 3-tier architecture of the device is shown in Fig. 4.

Figure 4: 3-Tier architecture design of autonomous car

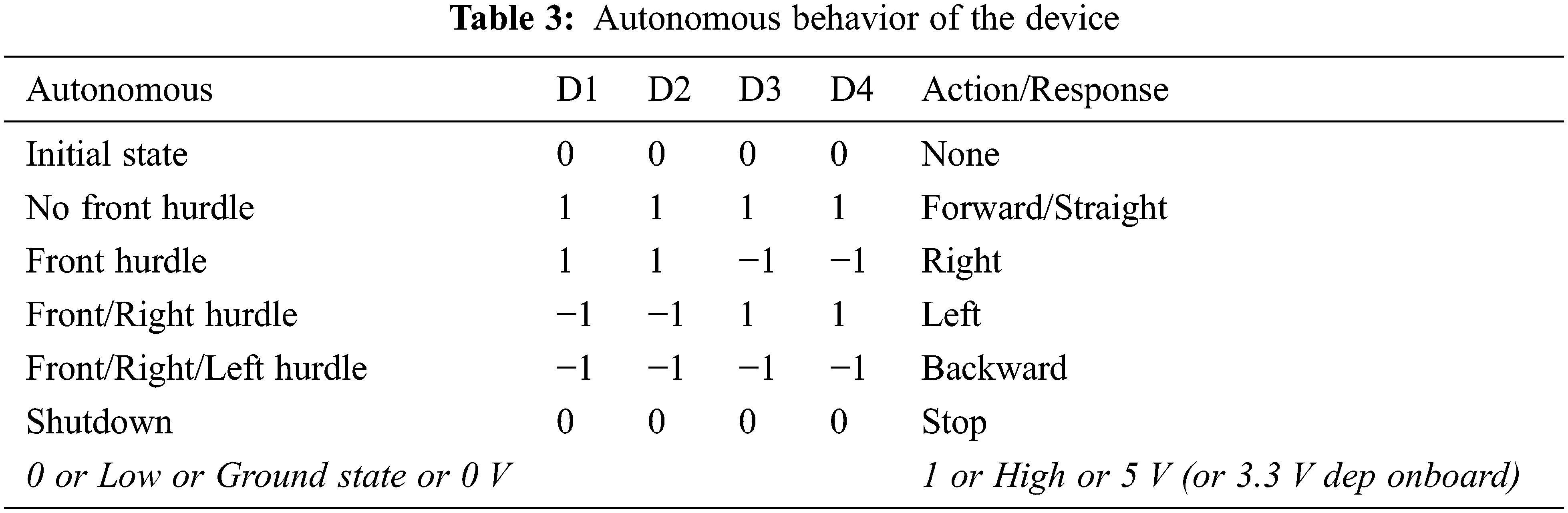
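To make the division of responsibilities concrete, the three layers can be viewed as a pipeline: perception data in, a decision in the middle, actuator commands out. The following is a minimal sketch under stated assumptions: the field names, the 20 cm stop threshold, the "turn right to avoid" policy and the motor table are all hypothetical, and the real business layer runs on the Arduino and Raspberry Pi rather than in a single Python process:

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Perception:
    """Data layer output; field names are illustrative assumptions."""
    front_distance_cm: Optional[float]   # None: nothing detected in range
    voice_command: Optional[str] = None  # e.g. "left", relayed from the phone
    location: Optional[Tuple[float, float]] = None  # (lat, lon) from GPS


def data_layer(distance_cm, voice=None, location=None):
    """Package raw sensor values for the business layer."""
    return Perception(distance_cm, voice, location)


def business_layer(p: Perception, stop_cm: float = 20.0) -> str:
    """Decide on an action; obstacle avoidance overrides voice commands.

    stop_cm is a hypothetical threshold, and turning right to avoid an
    obstacle is a hypothetical policy used only for illustration.
    """
    if p.front_distance_cm is not None and p.front_distance_cm <= stop_cm:
        return "right"
    if p.voice_command is not None:
        return p.voice_command
    return "forward"


# Presentation layer: map each decision to (left motor, right motor)
# directions, where 1 = forward, -1 = reverse, 0 = off.
MOTOR_TABLE = {
    "forward": (1, 1),
    "backward": (-1, -1),
    "left": (-1, 1),
    "right": (1, -1),
    "stop": (0, 0),
}


def presentation_layer(decision: str) -> Tuple[int, int]:
    """Turn a decision into actuator commands; unknown decisions stop the car."""
    return MOTOR_TABLE.get(decision, (0, 0))


if __name__ == "__main__":
    for reading, voice in [(150.0, None), (150.0, "left"), (15.0, "forward")]:
        decision = business_layer(data_layer(reading, voice))
        print(reading, voice, decision, presentation_layer(decision))
```

Letting obstacle avoidance override voice commands mirrors the text's statement that, in voice control mode, the device still detects and avoids objects.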

The autonomous car for object detection is a self-decision-making device that moves and makes decisions using its own intelligence. The device acts on the data provided by the sensor to the micro-controller in order to avoid obstacles. The device changes direction automatically when the minimum distance between the device and the object is reached. When an object comes in the way of the device, the device not only avoids it but also recognizes it by applying a recognition algorithm; the camera is the component that performs the recognition task. The autonomous behavior of the device follows the scenarios listed in Tab. 3. The roles of the components used to build the autonomous car and their collaboration with each other are briefly described below:

• The first component is the micro-controller ATmega328, which is the main brain of the device and is responsible for communication between software and hardware.

• The Motor Driver Shield is a current-amplifying module that receives the low-current signal from the controller and converts it into a high-current signal that drives the motors.

• The ultrasonic sensor has two main purposes: first, to detect objects and measure (collect) their distance from the device; second, to support the control mechanism through specific actions.

• The Bluetooth module HC06 is used for short-range wireless data communication between two micro-controllers.

• The camera captures image data and is used to recognize the type of objects.

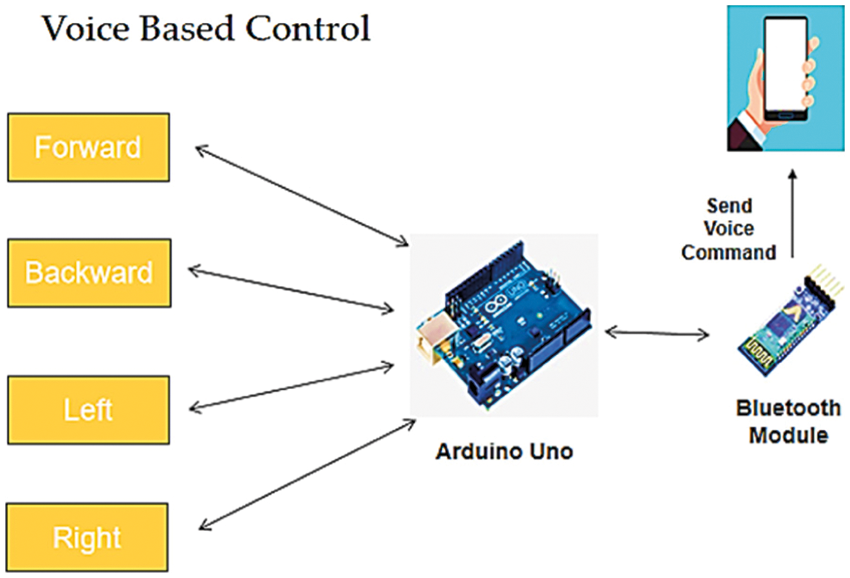

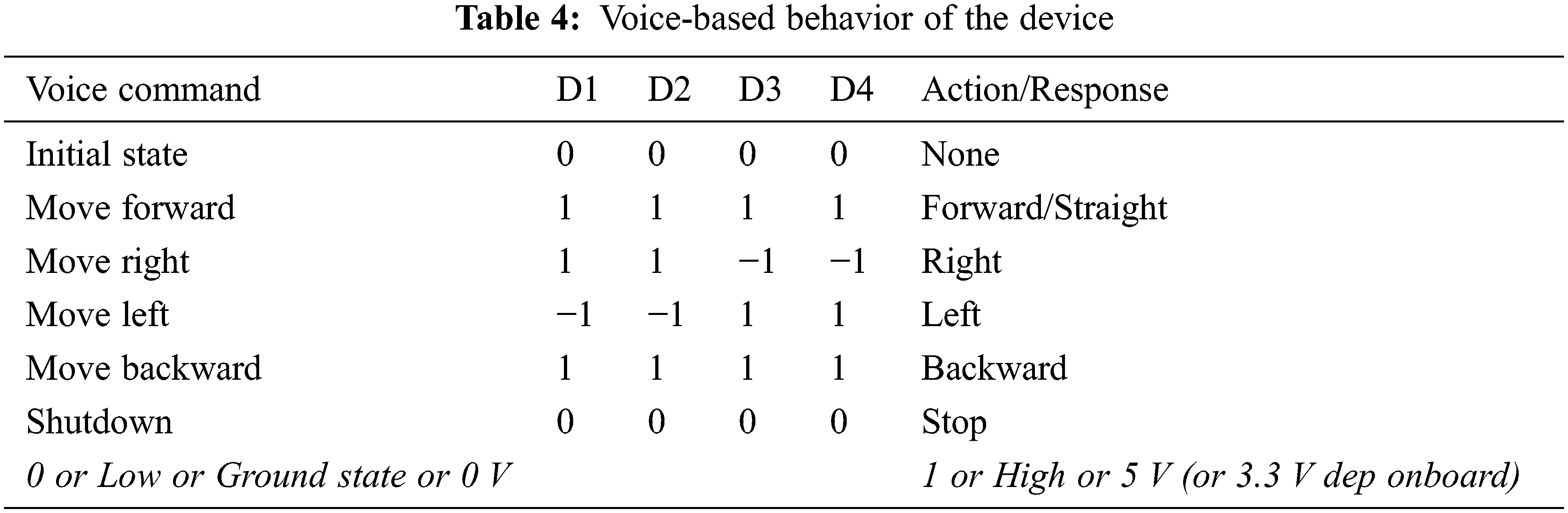

The autonomous car can also be controlled from an Android mobile phone with the help of a Bluetooth module. The user only needs to give voice commands to the Android phone to control the device's actions, such as moving forward, backward, left and right. The Android phone thus acts as the transmitting device that sends signals to the autonomous car, and a Bluetooth module placed in the autonomous car serves as the receiver. The phone forwards the commands to the autonomous car via its built-in Bluetooth module so that the car moves in the specified direction: forward, backward, turn left, turn right, or stop. The concept of voice-based control of the device is shown in Fig. 5, and the operating scenarios for the voice-based behavior of the device are listed in Tab. 4.

Figure 5: Voice-based control of the device
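In this setup, speech recognition runs on the phone (Google Assistant in the text) and only a short command needs to travel over Bluetooth to the HC06 module. A minimal sketch of that mapping, assuming a hypothetical one-byte code per command (the paper does not specify the actual encoding):

```python
# Hypothetical one-byte protocol between the phone and the car's Bluetooth
# module; the paper does not specify the actual encoding.
COMMANDS = {
    "forward": b"F",
    "backward": b"B",
    "left": b"L",
    "right": b"R",
    "stop": b"S",
}
ACTIONS = {code: word for word, code in COMMANDS.items()}


def encode_voice(transcript: str):
    """Phone side: map recognized speech to a command byte, or None.

    The first keyword found wins, so "turn left" -> b"L".
    """
    for word in transcript.lower().split():
        word = word.strip(".,!?")
        if word in COMMANDS:
            return COMMANDS[word]
    return None


def decode_command(code: bytes) -> str:
    """Car side: map a received byte to an action; unknown bytes mean stop."""
    return ACTIONS.get(code, "stop")


if __name__ == "__main__":
    for phrase in ["Move forward", "turn LEFT!", "hello there"]:
        code = encode_voice(phrase)
        print(phrase, code, decode_command(code) if code else "ignored")
```

Treating unknown bytes as "stop" is a conservative design choice: a corrupted or unexpected message halts the car instead of moving it.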

The ultrasonic sensor mounted on the servo motor detects the presence of an object in front of it and measures its distance from the device, which helps the device act before colliding with it. Once an object has been detected, the camera recognizes it and highlights it by drawing a rectangle around it with the help of recognition techniques.
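The text states that camera recognition follows ultrasonic detection and that the recognized object is highlighted with a rectangle. A minimal sketch of that control flow follows. It assumes the recognizer (for example a TensorFlow model, which is not shown) is wrapped in a hypothetical callable that returns labels with bounding boxes, and uses a tiny grayscale image stored as a list of rows. The 100 cm trigger distance is also hypothetical:

```python
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
Detection = Tuple[str, Box]      # (label, bounding box)


def recognize_if_detected(distance_cm: Optional[float], frame,
                          recognizer: Callable[[object], List[Detection]],
                          trigger_cm: float = 100.0) -> List[Detection]:
    """Call the camera recognizer only when the ultrasonic sensor reports
    an object within trigger_cm (a hypothetical threshold)."""
    if distance_cm is None or distance_cm > trigger_cm:
        return []
    return recognizer(frame)


def draw_box(frame: List[List[int]], box: Box, value: int = 255) -> None:
    """Highlight a detection by drawing its rectangle outline in place."""
    x0, y0, x1, y1 = box
    for x in range(x0, x1 + 1):
        frame[y0][x] = value
        frame[y1][x] = value
    for y in range(y0, y1 + 1):
        frame[y][x0] = value
        frame[y][x1] = value


def fake_recognizer(img) -> List[Detection]:
    """Stand-in for a real model: always reports one object."""
    return [("box", (1, 1, 4, 3))]


if __name__ == "__main__":
    frame = [[0] * 6 for _ in range(5)]  # tiny 6x5 grayscale image
    for label, box in recognize_if_detected(40.0, frame, fake_recognizer):
        draw_box(frame, box)
        print(label, box)
    for row in frame:
        print(row)
```

Running recognition only after the inexpensive ultrasonic check fires is one way to save the computational effort that the paper aims to reduce.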

4 Experimental Results and Analysis

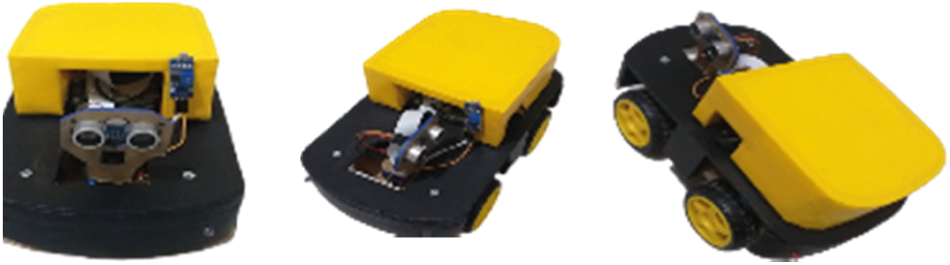

The proposed design of the autonomous car for object detection is shown in Fig. 6.

Figure 6: Design of autonomous car for object detection

A few example images of the autonomous car for object detection are shown in Fig. 7.

Figure 7: Few examples of autonomous cars for object detection

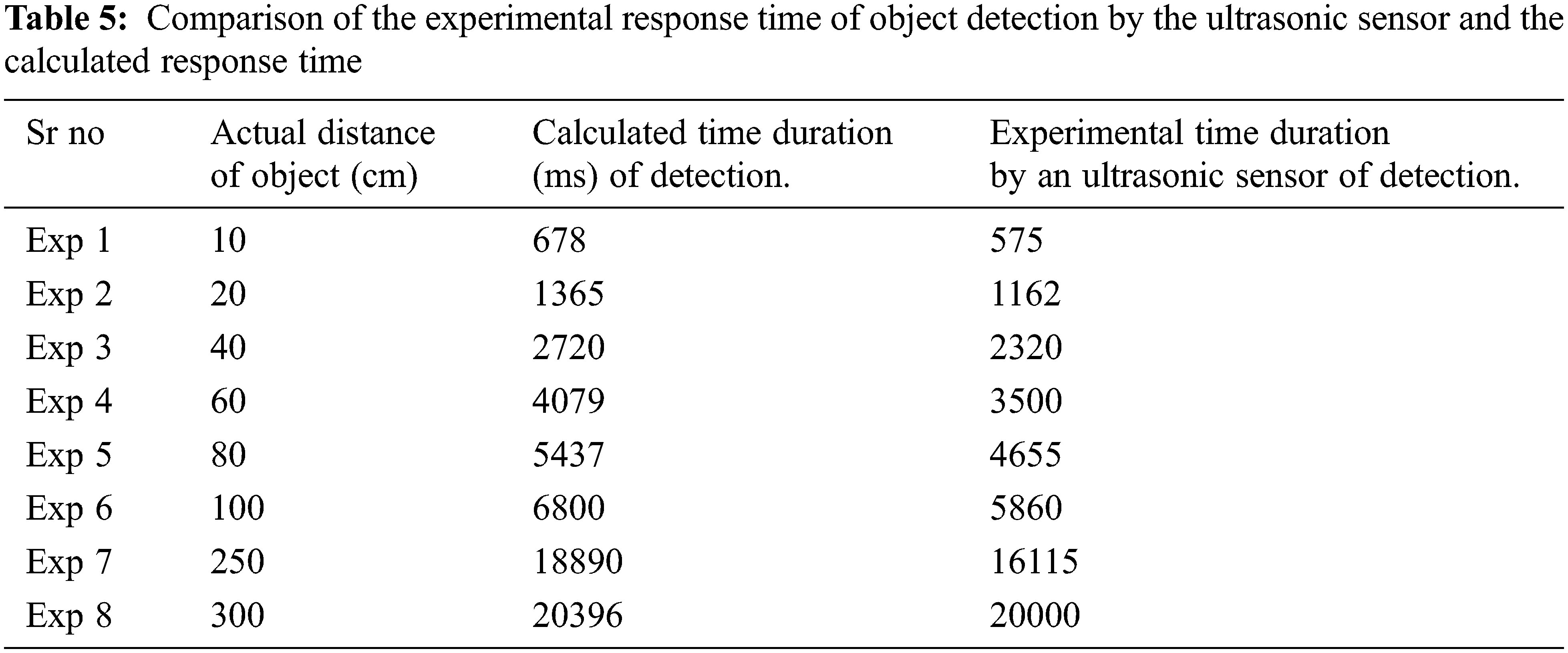

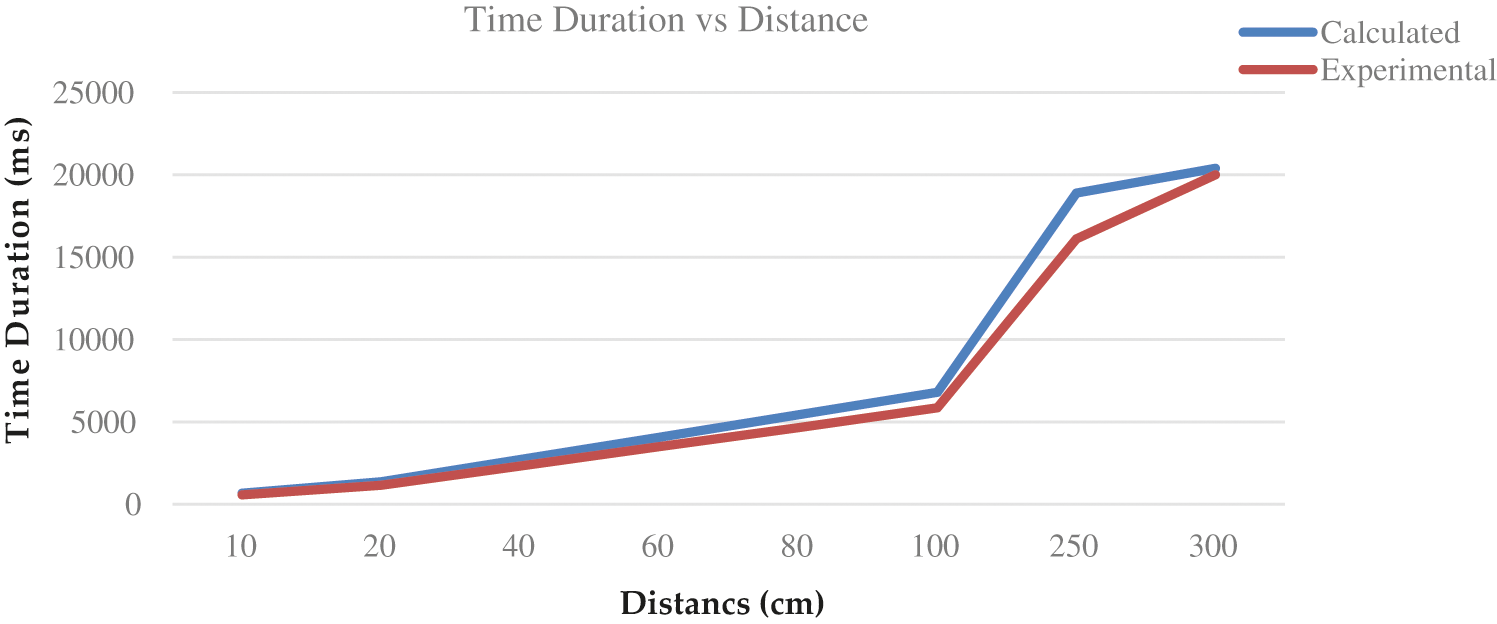

4.1.1 Experiment 1: Analysis of Ultrasonic Performance

In this experiment, we estimated the response time of object detection with the ultrasonic sensor by placing objects at different distances from the autonomous car, and compared the results with the calculated time duration to assess the accuracy of the experimental results. The results are given in Tab. 5. They show that the time required for object detection increases gradually as the object distance increases.

The ‘Time Duration vs. Distance’ graph gives a clearer visualization and comparison of the calculated and experimental performance of object detection by the ultrasonic sensor. The object detection comparison can be seen in Fig. 8.

Figure 8: Comparison between the experimental and calculated response time of ultrasonic detection
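A calculated response time of this kind can be obtained by inverting the distance relation d = c·Tf/2, i.e. Tf = 2d/c. A minimal sketch, assuming c = 343 m/s and that the calculated value covers only the acoustic time of flight (any processing latency on the micro-controller would appear only in the experimental values). For 100 cm it gives 2 × 1 m / 343 m/s ≈ 5.83 ms:

```python
# Approximate speed of sound in air at about 20 °C (assumed value).
SPEED_OF_SOUND_M_S = 343.0


def calculated_time_ms(distance_cm, c_m_s=SPEED_OF_SOUND_M_S):
    """Round-trip time of flight t = 2d / c in milliseconds."""
    return 2.0 * (distance_cm / 100.0) / c_m_s * 1000.0


def percent_error(measured_ms, calculated_ms):
    """Relative deviation (%) of a measured time from the calculated one."""
    return abs(measured_ms - calculated_ms) / calculated_ms * 100.0


if __name__ == "__main__":
    for d in (2, 50, 100, 200, 400):
        print(f"{d:>4} cm -> {calculated_time_ms(d):.3f} ms")
```

Comparing each experimental entry of Tab. 5 with `calculated_time_ms` through `percent_error` would quantify the agreement plotted in Fig. 8.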

4.1.2 Experiment 2: Object Detection and Avoiding

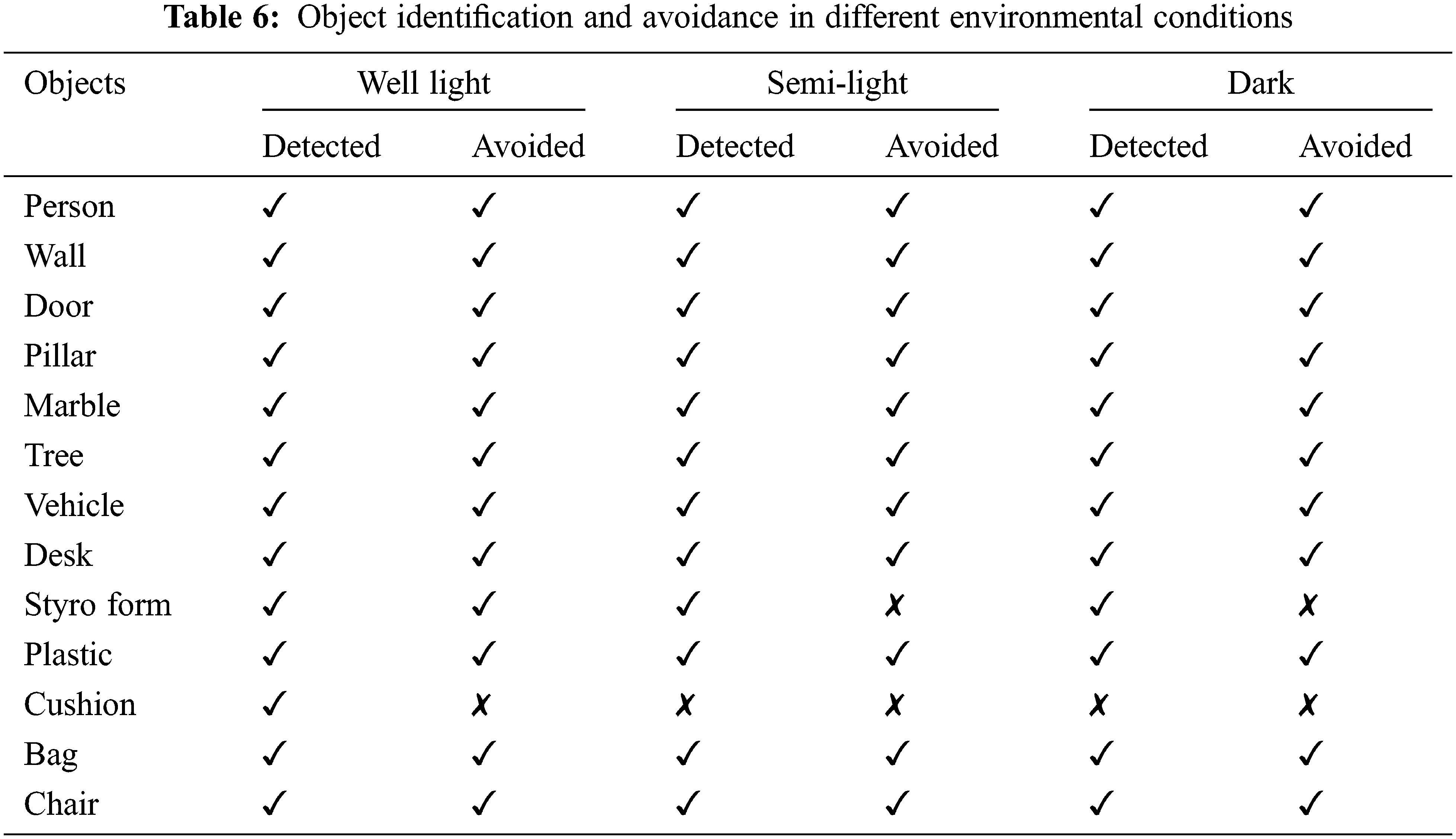

Here we analyze the performance of the device by detecting objects and then avoiding them in different environments. Tab. 6 shows the complete behavior of the device for different categories of objects in three environments: ‘Well Light’, ‘Semi-Light’ and ‘Dark’.

4.1.3 Experiment 3: Device Performance Accuracy

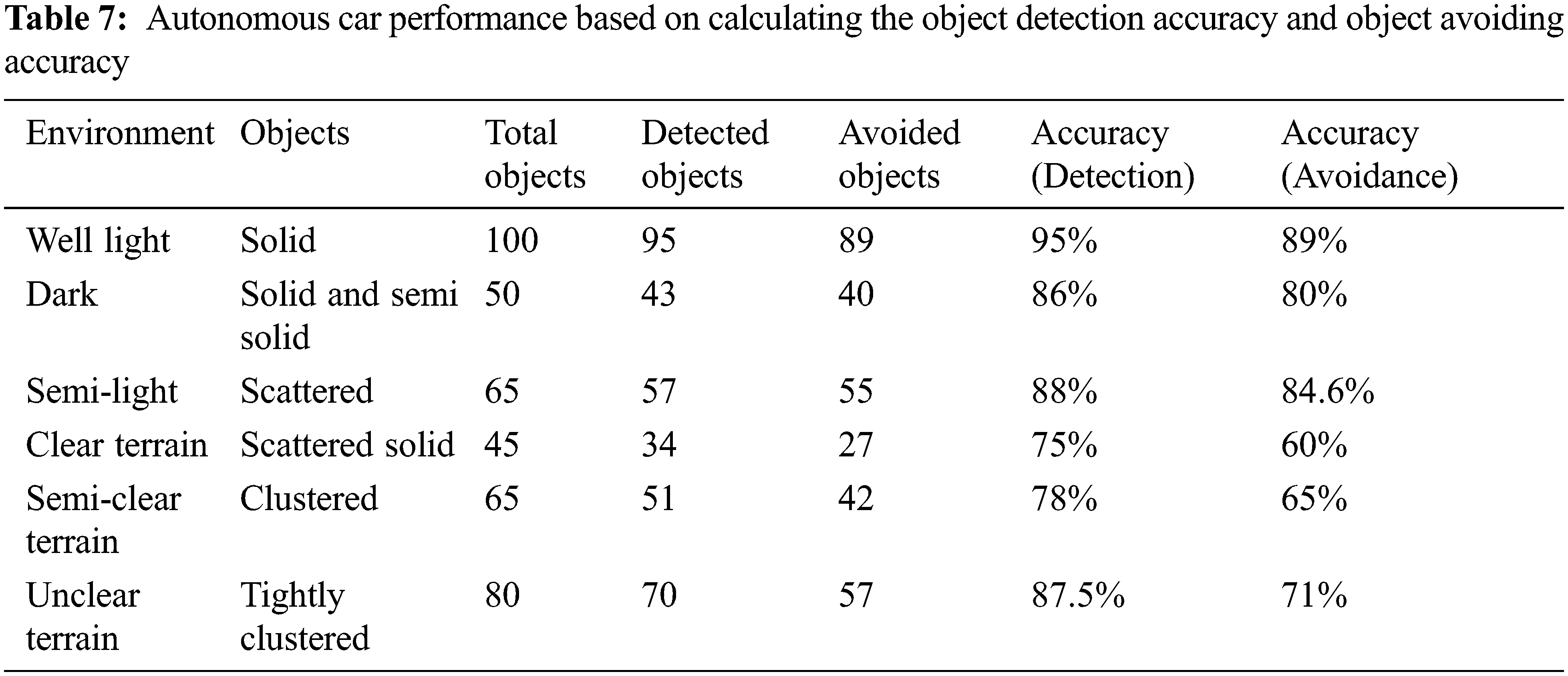

In Experiment 3, we compute the accuracy of the device in detecting and avoiding objects over several tests. The performance of the autonomous car in detecting and avoiding objects is estimated with simple accuracy equations: the accuracy of object detection is defined in Eq. (4) and the accuracy of object avoidance in Eq. (5):
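Eqs. (4) and (5) are not reproduced in this text. Assuming they take the usual ratio form, Accuracy = (number of successful trials / total number of trials) × 100%, applied separately to detection and to avoidance, the per-condition figures could be computed as follows. The input layout of the hypothetical `summarize` helper is an illustrative choice:

```python
def accuracy_percent(successes: int, trials: int) -> float:
    """Accuracy = successful trials / total trials x 100."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    return 100.0 * successes / trials


def summarize(results):
    """results maps a condition name to (detected, avoided, trials) counts.

    Returns condition -> (detection accuracy %, avoidance accuracy %).
    """
    return {
        condition: (accuracy_percent(det, n), accuracy_percent(avo, n))
        for condition, (det, avo, n) in results.items()
    }
```

Keying the results by condition name (for example ‘well light’ or ‘unclear terrain’) matches the test conditions listed in Tab. 7 and produces the two series plotted in Fig. 9.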

The performance of the autonomous car is tested under different environmental factors such as ‘well light’, ‘semi-light’ and ‘dark’ conditions and similarly under ‘clear terrain’, ‘semi-clear terrain’ and ‘unclear terrain’ conditions. The test cases can be seen in Tab. 7.

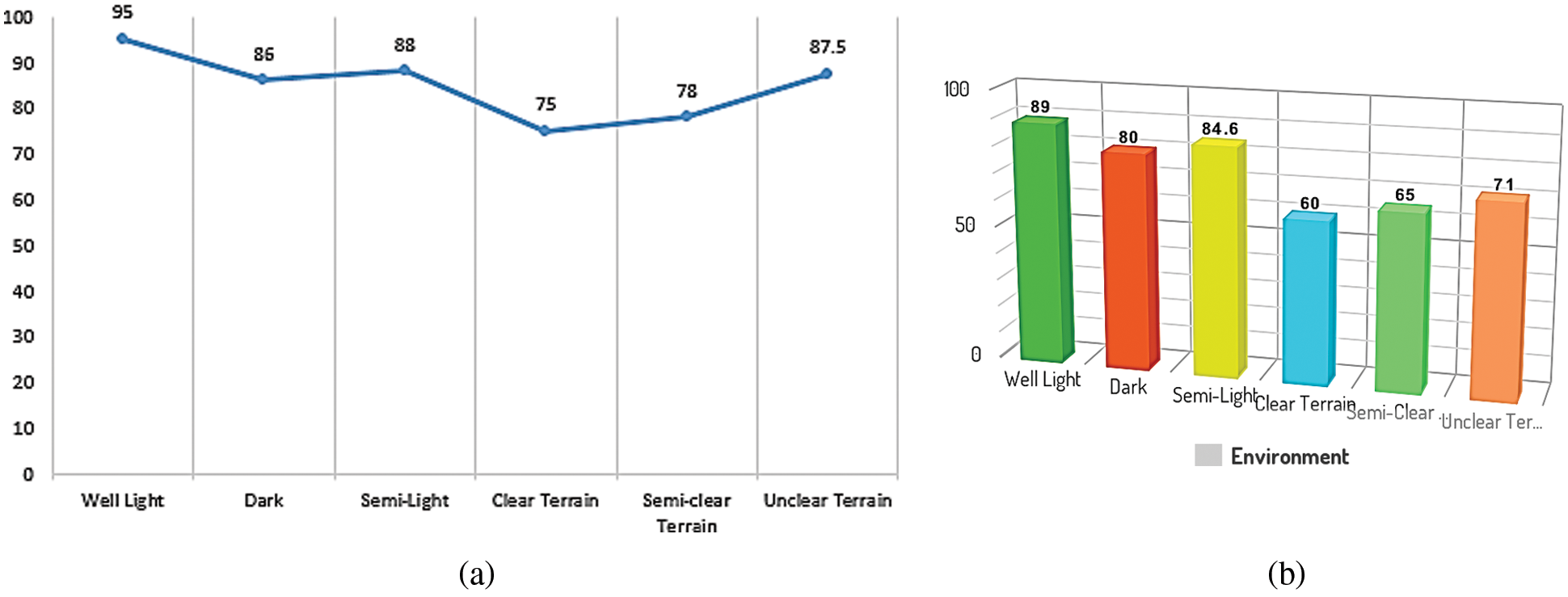

The accuracy graphs for object detection and object avoidance in different environments give a clearer visualization of the device's performance. The object detection accuracy graph is shown in Fig. 9a and the object avoidance accuracy graph in Fig. 9b.

Figure 9: (a) Object detection accuracy in different environments. (b) Object avoidance accuracy in different environments

In conclusion, we have designed and implemented a device that detects and recognizes objects for smooth and efficient operation, avoiding accidents and collisions. The device consists of the following components: an Arduino UNO micro-controller (ATmega328), a servo motor, a motor driver shield, a Raspberry Pi, a Bluetooth module, an ultrasonic sensor, DC motors and a pi-camera. These components made it possible to build the autonomous device. In many countries of the region [27], such as India, Thailand, Indonesia and Vietnam, transport systems and the driving experience are affected by the lack of a regulatory environment, advanced technology and infrastructure, as well as by poor compliance with traffic laws and safe driving practices. The test and evaluation results of the proposed device on various objects in different environments showed good performance in terms of detection accuracy, and the device avoids objects very well. The proposed device is also able to recognize objects in front of it, and all of this is achieved at very low cost.

In future work, we will extend this system toward a full autonomous vehicle or delivery system, motivated by statistics indicating that the number of lives claimed by traffic accidents each year is likely to double within the next ten years.

Acknowledgement: We are thankful to Dr. Shaharyar Kamal for his consistent assessment in experimental evaluations.

Funding Statement: The researchers would like to thank the Deanship of Scientific Research, Qassim University for funding the publication of this project.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. S. Tahir, A. Jalal and K. Kim, “Wearable inertial sensors for daily activity analysis based on Adam optimization and the maximum entropy markov model,” Entropy, vol. 22, no. 5, pp. 1–19, 2020.

2. S. Tahir, A. Jalal and M. Batool, “Wearable sensors for activity analysis using smo-based random forest over smart home and sports datasets,” in Proc. Conf. on Advancements in Computational Sciences (ICACS), Lahore, Pakistan, pp. 1–6, 2020.

3. A. Jalal, I. Akhtar and K. Kim, “Human posture estimation and sustainable events classification via pseudo-2D stick model and K-ary tree hashing,” Sustainability, vol. 12, no. 23, pp. 9814, 2020.

4. M. A. Rehman, H. Raza and I. Akhter, “Security enhancement of hill cipher by using non-square matrix approach,” in Proc. Conf. on Knowledge and Innovation in Engineering, Science and Technology, Berlin, Germany, pp. 1–7, 2018.

5. A. Jalal, M. Batool and S. B. Tahir, “Markerless sensors for physical health monitoring system using ECG and GMM feature extraction,” in Proc. Int. Bhurban Conf. on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, pp. 340–345, 2021.

6. A. Jalal, N. Khalid and K. Kim, “Automatic recognition of human interaction via hybrid descriptors and maximum entropy markov model using depth sensors,” Entropy, vol. 22, no. 8, pp. 1–33, 2020.

7. M. Gochoo, S. Tahir, A. Jalal and K. Kim, “Monitoring real-time personal locomotion behaviors over smart indoor-outdoor environments via body-worn sensors,” IEEE Access, vol. 9, pp. 70556–70570, 2021.

8. M. Peden, R. Scurfield, D. Sleet, C. Mathers, E. Jarawan et al., “World report on road traffic injury prevention,” World Health Organization, vol. 274, pp. 1–217, 2004.

9. N. Khalid, M. Gochoo, A. Jalal and K. Kim, “Modeling two-person segmentation and locomotion for stereoscopic action identification: A sustainable video surveillance system,” Sustainability, vol. 13, no. 2, pp. 970, 2021.

10. I. Akhter, A. Jalal and K. Kim, “Adaptive pose estimation for gait event detection using context-aware model and hierarchical optimization,” Journal of Electrical Engineering & Technology, vol. 16, pp. 1–9, 2021.

11. M. Gochoo, I. Akhter, A. Jalal and K. Kim, “Stochastic remote sensing event classification over adaptive posture estimation via multifused data and deep belief network,” Remote Sensing, vol. 13, no. 5, pp. 1–29, 2021.

12. I. Akhter, A. Jalal and K. Kim, “Pose estimation and detection for event recognition using sense-aware features and adaboost classifier,” in Proc. Conf. on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, pp. 500–505, 2021.

13. E. Godwin, A. Y. Idris and A. O. Julian, “Obstacle avoidance and navigation robotic vehicle using proximity and ultrasonic sensor arduino controller,” Int. Journal of Innovative Information Systems & Technology Research, vol. 9, pp. 62–69, 2021.

14. S. H. Ahmed, “Autonomous self-driven vehicle,” B.S. thesis, Dept. CSE, Daffodil Int, Dhaka, Bangladesh, 2018.

15. A. K. Gamidi, S. R. Ch, A. Yalla and R. A. Issac, “Obstacle avoiding robot using infrared and ultrasonic sensor,” Int. Journal of Emerging Technologies in Engineering Research (IJETER), vol. 6, no. 10, pp. 60–63, 2018.

16. M. V. Rajasekhar and A. K. Jaswal, “Autonomous vehicles: The future of automobiles,” in Proc. IEEE Int. Transportation Electrification Conf. (ITEC), Dearborn, Michigan, USA, pp. 1–6, 2015.

17. S. Sachdev, J. Macwan, C. Patel and N. Doshi, “Voice-controlled autonomous vehicle using IoT,” Procedia Computer Science, vol. 160, pp. 712–717, 2019.

18. G. Dubbelman, W. Mark, J. C. Heuvel and F. Groen, “Obstacle detection during day and night conditions using stereo vision,” in Proc. IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, San Diego, CA, United States, pp. 109–116, 2007.

19. H. Laghmara, M. Boudali, T. Laurain, J. Ledy, R. Orjuela et al., “Obstacle avoidance, path planning and control for autonomous vehicles,” in Proc. Conf. on Intelligent Vehicles Symp., Paris, France, pp. 529–534, 2019.

20. K. S. Alli, M. Onibonoje, A. Oluwole, M. Ogunlade, A. Mmonyi et al., “Development of an arduino-based obstacle avoidance robotic system for an unmanned vehicle,” ARPN Journal of Engineering and Applied Sciences, vol. 13, no. 3, pp. 1–7, 2018.

21. M. Hirz and B. Walzel, “Sensor and object recognition technologies for self-driving cars,” Computer-Aided Design and Applications, vol. 15, no. 4, pp. 501–508, 2018.

22. S. Campbell, N. O’Mahony, L. Krpalcova, D. Riordan, J. Walsh et al., “Sensor technology in autonomous vehicles: A review,” in Proc. Irish Signals and Systems Conf. (ISSC), Belfast, United Kingdom, pp. 1–4, 2018.

23. D. Ghorpade, A. D. Thakare and S. Doiphode, “Obstacle detection and avoidance algorithm for autonomous mobile robot using 2D LiDAR,” in Proc. Int. Conf. on Computing, Communication, Control and Automation (ICCUBEA), Pune, India, pp. 1–6, 2017.

24. D. K. Prasad, “Survey of the problem of object detection in real images,” International Journal of Image Processing, vol. 6, no. 6, pp. 441, 2012.

25. G. Arun, M. Arulselvan, P. Elangkumaran, S. Keerthivarman and J. Vijaya, “Object detection using ultrasonic sensor,” International Journal of Innovative Technology and Exploring Engineering, vol. 8, pp. 207–209, 2020.

26. R. Rangesh, R. Vipin, R. Rajesh and R. Yokesh, “Arduino based obstacle avoidance robot for live video transmission and surveillance,” International Research Journal of Engineering and Technology (IRJET), vol. 7, pp. 1701–1704, 2020.

27. M. Daily, S. Medasani, R. Behringer and M. Trivedi, “Self-driving cars,” Computer, vol. 50, pp. 18–23, 2017.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |