DOI:10.32604/iasc.2023.029078

Intelligent Automation & Soft Computing

| Article |

A Deep Trash Classification Model on Raspberry Pi 4

1Ho Chi Minh City University of Foreign Languages and Information Technology, Ho Chi Minh City, 700000, Vietnam

2International University, Ho Chi Minh City, Vietnam-Vietnam National University, Ho Chi Minh City, 70000, Vietnam

3Faculty of Information Technology, Haiphong University, Haiphong, 180000, Vietnam

4Department of Computer Science and Information Technology, University of Lahore, Lahore, Pakistan

*Corresponding Author: Kha Tu Huynh. Email: hktu@hcmiu.edu.vn

Received: 24 February 2022; Accepted: 03 May 2022

Abstract: Environmental pollution has had substantial impacts on human life, and trash is one of the main sources of such pollution in most countries. Trash classification from a collection of trash images can limit the overloading of garbage disposal systems and efficiently promote recycling activities; thus, development of such a classification system is topical and urgent. This paper proposes an effective trash classification system that relies on a classification module embedded in a hardware setup to classify trash in real time. An image dataset is first augmented to enhance the images before classifying them as either inorganic or organic trash. The deep learning–based ResNet-50 model, a 50-layer variant of the ResNet architecture, is used to classify trash from the dataset of trash images. The experimental results, obtained both on the dataset and in real time, show that ResNet-50 achieves an average accuracy of 96%, higher than that of related models. Moreover, integrating the classification module into a Raspberry Pi computer, which controls the trash bin slide so that garbage falls into the appropriate bin for inorganic or organic waste, creates a complete trash classification system. These results demonstrate the efficiency and high applicability of the proposed system.

Keywords: Trash classification; ResNet; Raspberry Pi; Internet of Things (IoT); deep learning

We live in a modern, dynamic period marked by the rapid development of industry, technology, and services. The amount of trash we produce is increasing due to rising demand for products, which further causes environmental pollution and contributes to climate change. Countries such as the US, Canada, Japan, and China have well-developed trash-sorting systems in which different types of trash (e.g., wrapping paper, plastic shells, plastic bottles, rubber, and leather) are placed in separate bins, reflecting comprehensive and synchronous trash classification processes. In most other countries, however, trash classification has not been given adequate attention. It is necessary to familiarize people in these countries with trash classification to facilitate the garbage disposal process and limit the extent to which trash harms the environment.

More than 3.5 million tons of waste are generated daily around the world. Environmental scientists consider this a large amount and predict that it will rise in the near future. By the end of the 21st century, the amount of waste generated every day could reach 11 million tons [1], which may overload garbage disposal processes if the garbage is not appropriately classified; misclassification is one of the main causes of environmental pollution. Thus, there is an urgent need for garbage classification solutions that can be deployed as practical actions or as applied, maintained scientific products. To this end, researchers can draw on image processing, computer vision, and deep learning tools to build neural network models that analyze and classify trash as organic or inorganic.

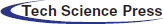

The classification problem has always received a lot of research interest [2,3]. According to statistics from IEEE Xplore, nearly 100 scientific publications related to garbage classification from images have been published in the last five years, and this number has increased rapidly in the last two years in particular (see Fig. 1). Among the proposed solutions, the majority use neural networks. This paper summarizes the related studies, analyzes outstanding models, and proposes an improved model with convincing and highly applicable experimental results.

Figure 1: Number of publications related to trash classification on IEEE Xplore in the last five years (search keywords: “Waste classification”, “Garbage classification”, and “Trash classification”)

The remainder of the paper is organized as follows. Section 2 reviews the related research and defines problem statements. Section 3 proposes an automatic trash classification system, and Section 4 describes our experiments and discussion. Finally, Section 5 presents our conclusion and introduces directions for future research.

Garbage classification is a topic of concern for researchers and industry alike. A deep learning model is suggested in [4] to classify 5940 images as organic, inorganic, or medical waste. The proposed system, DNN-TC, improved upon the ResNet model and achieved 94% accuracy in classifying the Trashnet dataset [5]. The authors of [6] suggested using location- and time-based traffic intensity to improve image classification. They developed the ThanosNet model to classify five types of garbage in images (paper, tetra pak, landfill, plastic, and cans), which proved effective in classifying the ISBNet dataset. ScrapNet [7], MobileNet [8], SqueezeNet [9], PublicGarbageNet [10], and WasNet [11] are, like ThanosNet, deep learning models that classify garbage with high accuracy. Another study combined the AlphaTrash machine with the Inception-V1 convolutional neural network (CNN) model to create a garbage classification system with an accuracy of 94% and a classification time of 4.2 s per image [12]. You only look once (YOLO) is another CNN model for object detection, recognition, and classification. It combines convolutional layers, which extract image features, with fully connected layers, which predict the probability and coordinates of the object. YOLO’s performance in object recognition has improved over many versions, and the model is now widely used in garbage classification. Specifically, the effectiveness of YOLOv3 for trash classification has been reported in publications such as [13–15], and YOLOv5 appears in works such as [16,17].

The development of the internet and the need to connect people and objects in real time result in a trend of connected networks that allow computers and humans to interface and enable objects such as cars, turbines, machines, and transportation systems to exchange information. This connected network is called the Internet of Things (IoT) and may be illustrated by a smart oven that can communicate with a washing machine. Many effective systems have been developed based on the IoT, such as [18] and [19] for COVID-19 disease diagnosis and detection and [20] for modeling the mosquito release ecosystem in a heterogeneous atmosphere.

The combination of artificial intelligence (AI), specifically machine learning and deep learning, with the IoT is an attractive solution for building a garbage classification system [21–23]. However, complete systems of this kind remain scarce in the extant literature, and many studies have failed to consider specific system needs or to ensure wide applicability of the systems. Therefore, building specific, professional systems that integrate CNN-based models and the IoT is one feasible state-of-the-art solution.

A trash classification system should follow these specific requirements:

– A trash image from a dataset or collected in real time should be classified as inorganic or organic.

– For real-time trash sorting, a piece of trash thrown in the bin should be automatically moved to the appropriate location (i.e., the inorganic or organic portion of the bin).

– The solution should be efficient and highly applicable, especially to Vietnam, where diverse sources of waste and environmental pollution have reached a high warning level and where trash sorting is one of the key projects receiving government investment.

Our work addresses these requirements by:

– Researching and proposing a model to classify the trash images with high accuracy.

– Embedding the model in high-performance hardware to effectively classify and sort trash thrown into a recycling bin.

– Evaluating the trash classification and comparing its performance to related models.

3 The Automatic Trash Classification System

Our system is designed to carry out the following functions:

– Capture an object and send it to the system.

– Classify the captured image as organic or inorganic.

– Move the object to the corresponding trash bin.

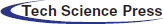

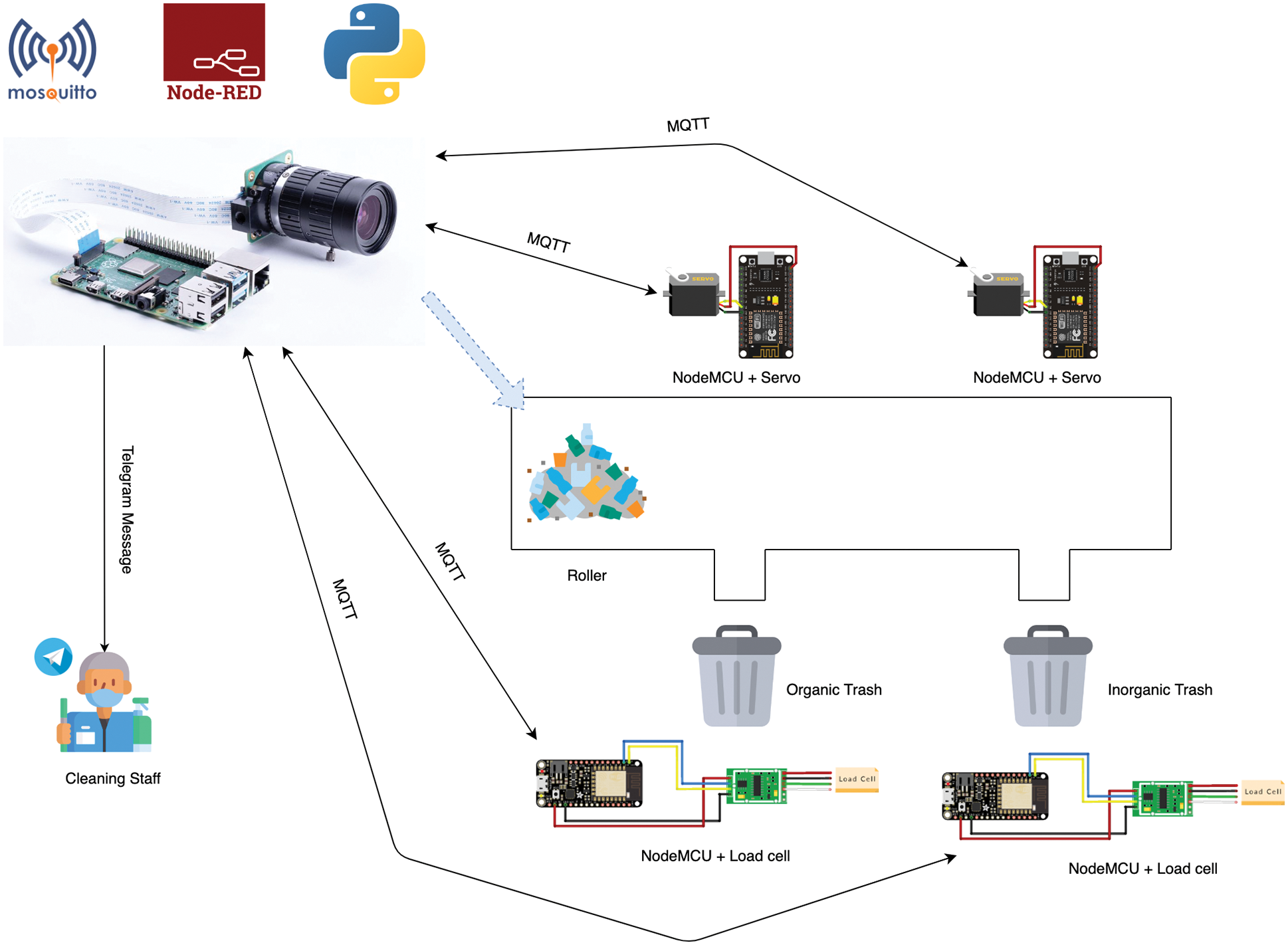

To realize these functions, the system is composed of two main components:

1. Trash classification model

2. Camera module and servo motor

In this paper, the ResNet-50 model is used for organic/inorganic waste classification and the 8 GB Raspberry Pi 4 model B controls the camera, which captures images, and the servo motor, which in turn moves the garbage to the corresponding bin after receiving the results from the trash classifier engine.

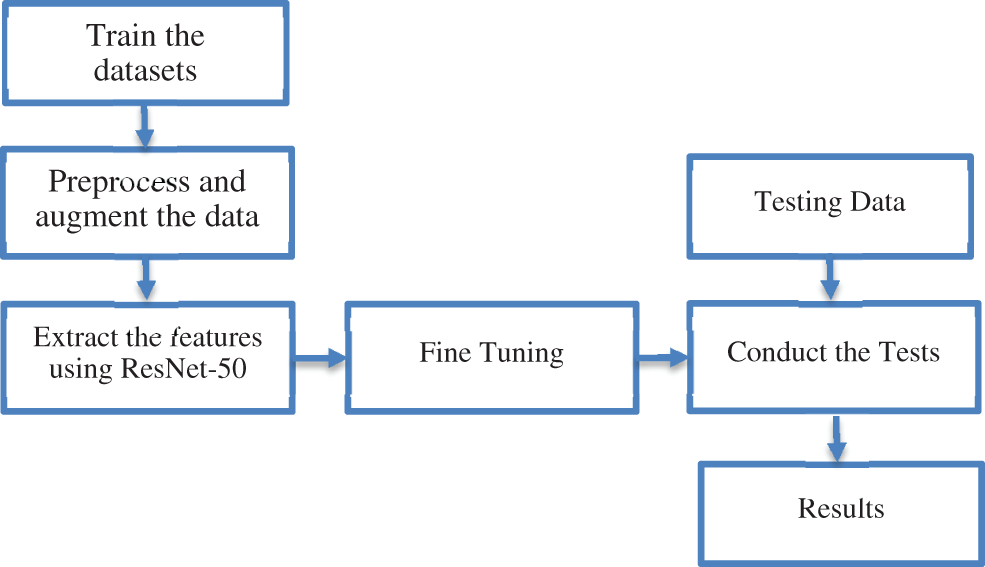

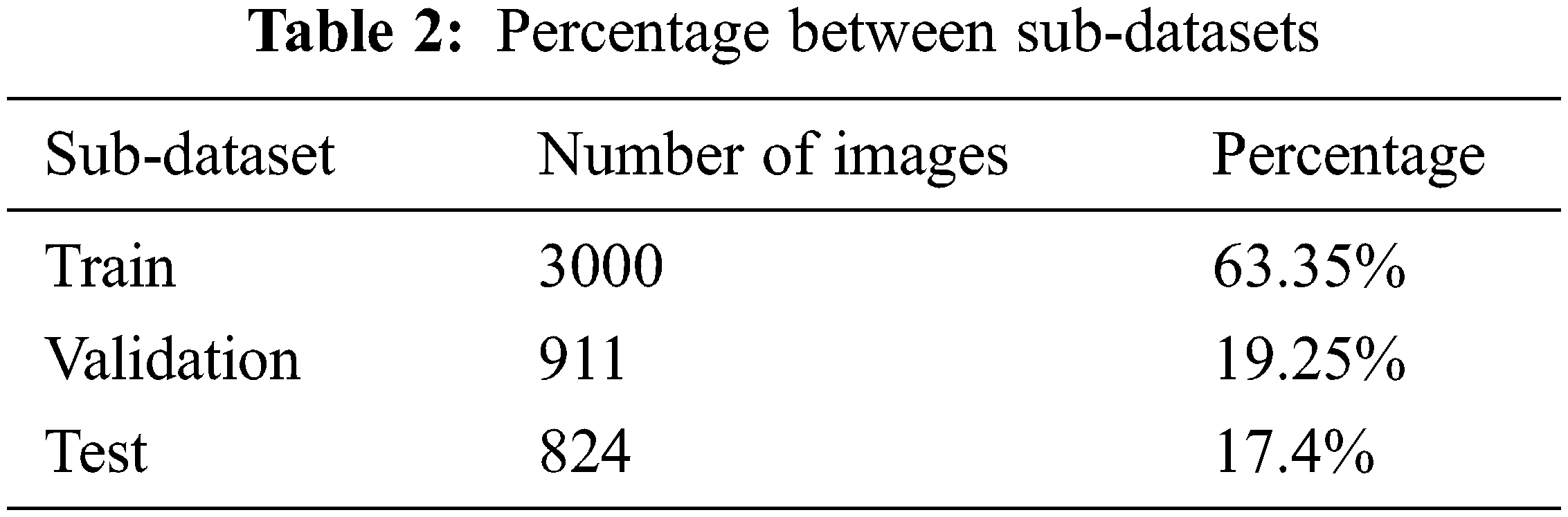

The structure of the proposed system is presented in Fig. 2.

Figure 2: Structure of the proposed system

The trash classifier is a key component of the system that determines the efficiency of the application. We adopted ResNet-50, a CNN-based deep transfer learning model, to build the classification module. Fig. 3 presents the procedure for building the trash classification system using ResNet-50. The procedure starts with a training process that applies ResNet-50’s pre-trained weights and optimizes the model parameters, and it ends with testing. Characteristics of ResNet-50, datasets, and the experimental environment are detailed in Subsections 3.1.1, 3.1.2, and 3.1.3, respectively.

Figure 3: Steps to build the trash classification system using ResNet-50

ResNet (residual network) [24] was introduced in 2015 and won several competitions that year, including the ILSVRC 2015 classification task with a top-5 error rate of only 3.57%, as well as the ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation tasks of the ILSVRC and COCO 2015 competitions. Many versions of the ResNet architecture are available with different numbers of layers, such as ResNet-18, ResNet-34, and ResNet-50, in which the numeral denotes the number of layers. ResNet is a family of deep CNNs whose residual design allows networks ranging from tens to over a thousand convolutional layers to be trained; these layers automatically learn low- to high-level features to improve classification or recognition accuracy.

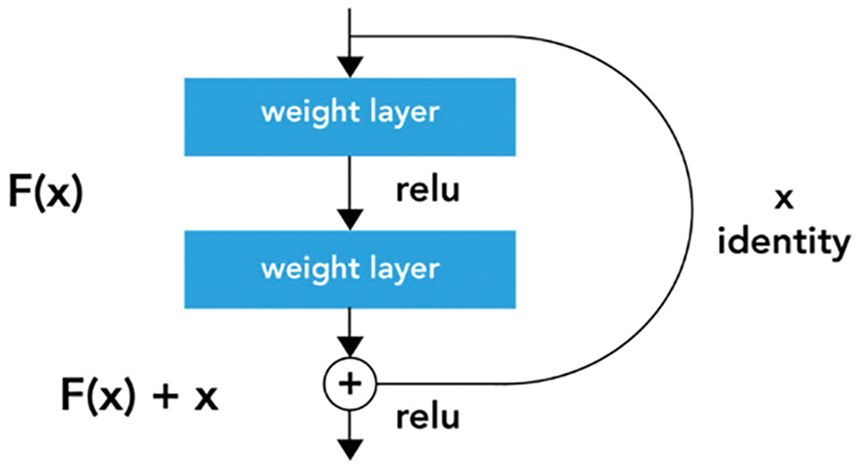

ResNet uses a deep residual architecture to mitigate the vanishing gradient problem [25]. A shortcut connection that skips one or more layers is an efficient remedy for vanishing gradients. The skipped layers together with this connection form a residual block, as shown in Fig. 4. The residual block performs the following: feed the input x forward through the Conv-MaxPooling-Conv layers to obtain F(x), then add x to F(x) so that H(x) = F(x) + x, where H(x) is the desired mapping. Adding the features from the previous layers in this way improves the learning process.

Figure 4: Residual block
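The residual mapping H(x) = F(x) + x can be illustrated with a minimal sketch. It is not the ResNet-50 implementation: the toy `linear` layers stand in for convolutions, a ReLU is assumed between them, and all names and weights are hypothetical. It only shows that the shortcut adds the input back to the output of the residual branch.

```python
from typing import List

Vector = List[float]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU."""
    return [max(0.0, a) for a in v]


def linear(v: Vector, weights: List[Vector]) -> Vector:
    """Toy stand-in for a convolution: a plain matrix-vector product."""
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def residual_block(x: Vector, w1: List[Vector], w2: List[Vector]) -> Vector:
    """Compute H(x) = F(x) + x, where F is two toy layers with a ReLU between."""
    f_x = linear(relu(linear(x, w1)), w2)   # residual branch F(x)
    return [f + a for f, a in zip(f_x, x)]  # shortcut adds the input back


x = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]

# With all-zero weights, F(x) = 0 and the block reduces to the identity map.
print(residual_block(x, zeros, zeros))        # [1.0, -2.0, 3.0]
print(residual_block(x, identity, identity))  # [2.0, -2.0, 6.0]
```

The first call shows why the shortcut helps very deep networks: even when the residual branch contributes nothing, the input still passes through unchanged.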

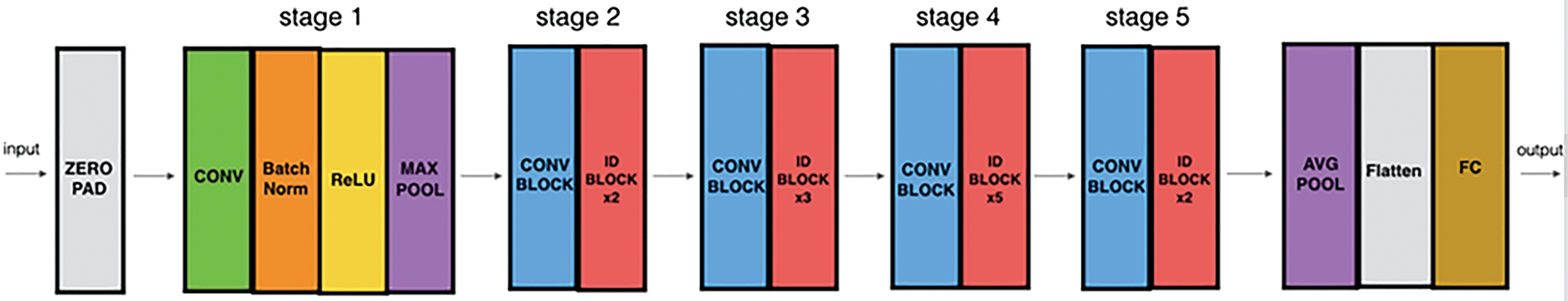

A diagram showing the detailed implementation of ResNet is given in Fig. 5 [24].

Figure 5: ResNet-50 architecture

As shown in Fig. 5 [24], the ResNet-50 architecture includes the following:

• Zero-padding. The zero-padding layer pads the input with a border of (3,3).

• Five stages.

– Stage 1 consists of Convolution (Conv1) with 64 filters, shape (7,7), stride (2,2), BatchNorm, a ReLU function, and MaxPooling (3,3).

– Stages 2, 3, 4, and 5 each comprise a convolutional block (CONV Block) followed by identity blocks (ID Block). The stages differ in their numbers of convolutional filters and ID Blocks. The three convolutions of the CONV Block at Stage 2 use 64, 64, and 256 filters, and these numbers double at each subsequent stage; the number of ID Blocks at each stage is shown in Fig. 5.

• 2D average pooling layer. The average pooling layer has the size (2, 2).

• Flatten layer.

• Fully connected layer. A softmax activation is applied in the fully connected layer to normalize the input vector of the real numbers to a probability distribution before labeling the image.
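The softmax step in the final layer can be sketched as follows. This is an illustration under stated assumptions, not the paper's code: the class order in `LABELS` and the two scores are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Map real-valued scores to probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


LABELS = ["inorganic", "organic"]  # assumed class order
scores = [0.4, 2.1]                # hypothetical outputs of the last layer

probs = softmax(scores)
predicted = LABELS[probs.index(max(probs))]
print(predicted, [round(p, 3) for p in probs])
```

The image receives the label with the largest probability, here `organic`.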

In the system, we collect data consisting of waste (e.g., from daily life, around the house, and on the road) and garbage at the market (e.g., vegetables, cabbage, plastic bottles, and plants). Our dataset includes 4735 images of different sizes, labeled as organic or inorganic by two experts. To assess consensus between the two labelers, we apply Cohen’s kappa coefficient [26], as in Eq. (1):

$$\kappa = \frac{\Pr(a) - \Pr(e)}{1 - \Pr(e)} \tag{1}$$

where Pr(a) is the agreement observed between the two labelers and Pr(e) is the hypothetical probability of agreement by chance. The resulting Cohen’s kappa coefficient of the data labeling process is 0.96, which indicates a very high consensus in the data labeling process.
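As a worked illustration of the kappa statistic, the sketch below computes it from two label lists using the standard definition κ = (Pr(a) − Pr(e)) / (1 − Pr(e)). The function name and the ten example labels are hypothetical and are not the paper's data.

```python
from collections import Counter
from typing import Sequence


def cohen_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty item list")
    n = len(rater_a)
    # Pr(a): fraction of items on which the two raters agree.
    pr_a = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Pr(e): chance agreement from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    pr_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if pr_e == 1.0:  # both raters used one identical label for every item
        return 1.0
    return (pr_a - pr_e) / (1.0 - pr_e)


# Hypothetical labels for 10 images (not the paper's data).
expert_1 = ["org"] * 5 + ["inorg"] * 5
expert_2 = ["org"] * 4 + ["inorg"] * 6
print(round(cohen_kappa(expert_1, expert_2), 3))  # 0.8
```

In this example the raters agree on 9 of 10 images (Pr(a) = 0.9) and chance agreement is Pr(e) = 0.5, so κ = 0.4 / 0.5 = 0.8.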

Next, the dataset is split into three sub-datasets for training, testing, and validation.
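One way to produce such a split while keeping the organic/inorganic balance in each subset is sketched below. The 70/15/15 percentages, the file names, and the function name are assumptions for illustration only; the split actually used is the one reported in the paper's tables.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (image path, label)


def stratified_split(
    samples: List[Sample],
    percents: Tuple[int, int, int] = (70, 15, 15),  # assumed train/val/test split
    seed: int = 0,
) -> Dict[str, List[Sample]]:
    """Split samples into train/validation/test, preserving class balance."""
    by_label: Dict[str, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    splits: Dict[str, List[Sample]] = {"train": [], "validation": [], "test": []}
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        n_train = len(items) * percents[0] // 100
        n_val = len(items) * percents[1] // 100
        splits["train"] += items[:n_train]
        splits["validation"] += items[n_train:n_train + n_val]
        splits["test"] += items[n_train + n_val:]  # remainder goes to test
    return splits


# Hypothetical file names, 20 images per class.
samples = [(f"organic_{i}.jpg", "organic") for i in range(20)]
samples += [(f"inorganic_{i}.jpg", "inorganic") for i in range(20)]
splits = stratified_split(samples)
print({name: len(items) for name, items in splits.items()})
# {'train': 28, 'validation': 6, 'test': 6}
```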

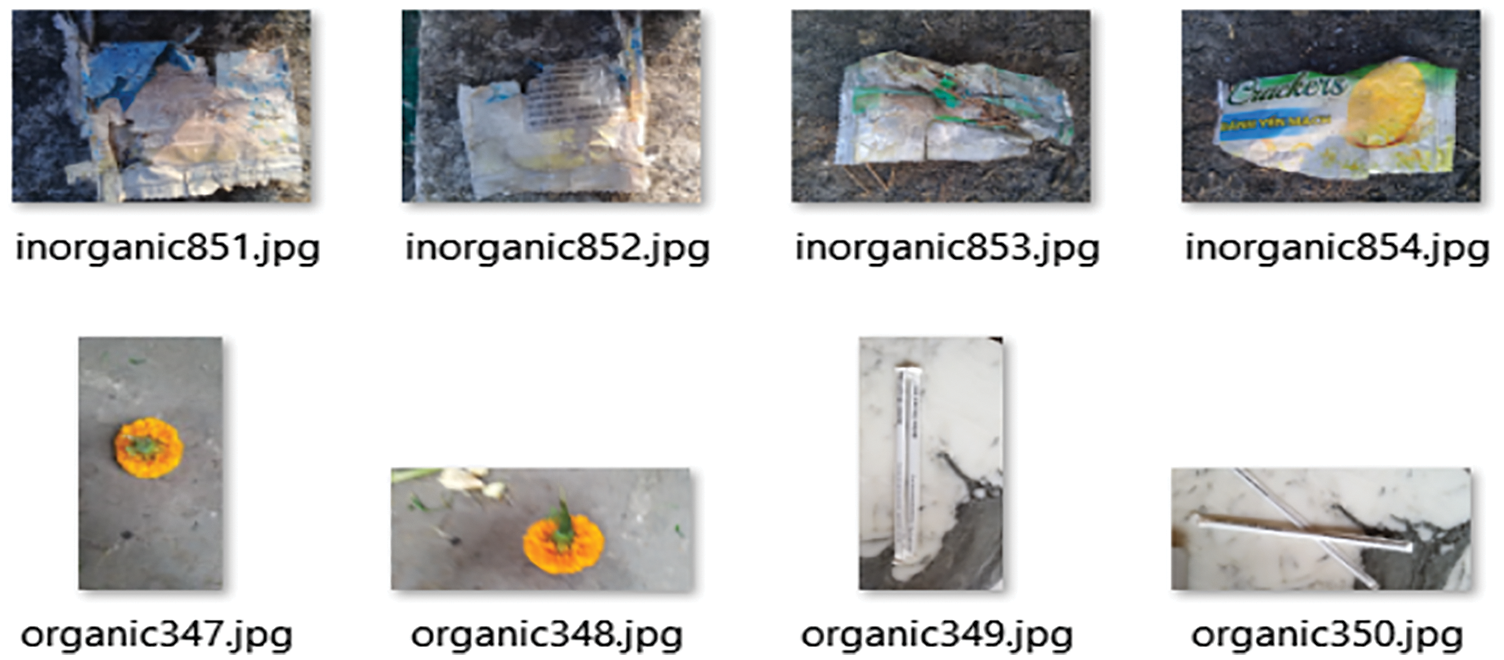

The images are resized to 224 × 224 pixels and saved with the corresponding label in the classified folders. Fig. 6 shows some of the 224 × 224 images from two trash folders.

Figure 6: Some images from the dataset

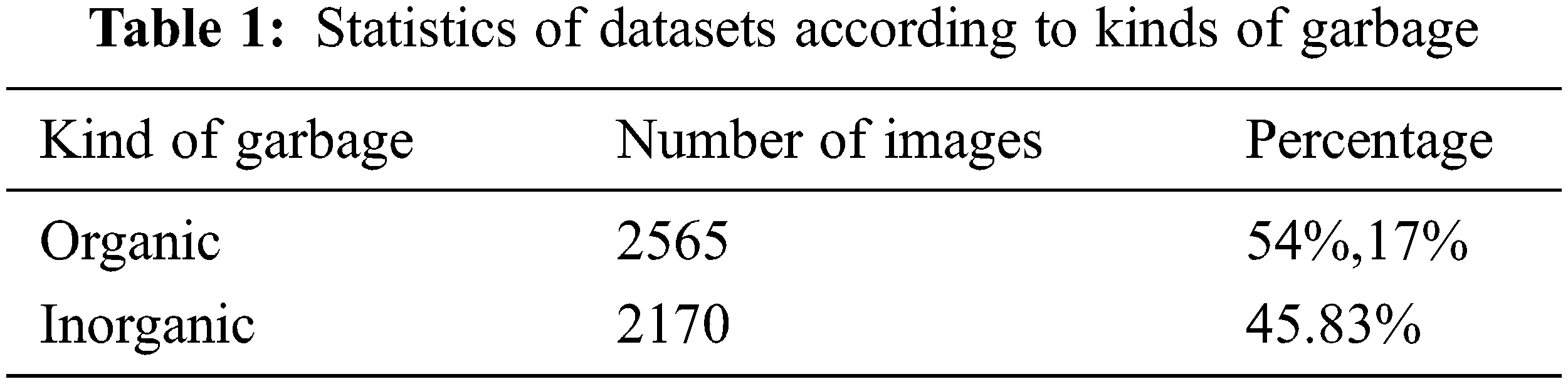

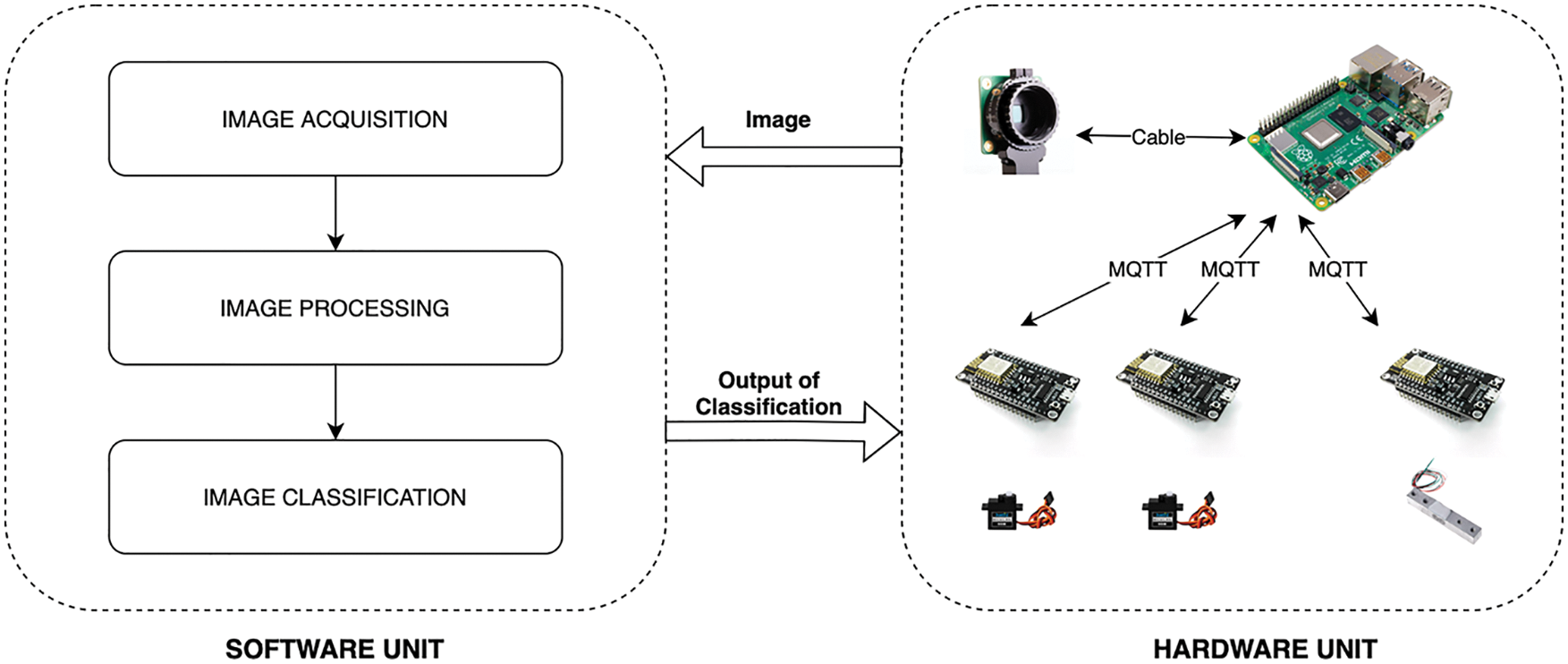

The dataset statistics by class (inorganic vs. organic) and the proportions of the sub-datasets are listed in Tabs. 1 and 2, respectively.

The system uses the Raspberry Pi 4 Model B (8 GB version) board with the 64-bit Raspberry Pi OS installed. The board is connected to the Raspberry Pi High Quality Camera module and runs the Node-RED service and Eclipse Mosquitto (an MQTT broker). The sensors are controlled by a NodeMCU microcontroller that communicates with the Raspberry Pi via the MQTT protocol.

When the user throws the trash into the recycle bin, the system takes a photo and then transfers it to the software module for processing and classification. Based on the classifier’s result, Raspberry Pi controls the servo motor to adjust the slide so the trash falls into the correct position for the organic or inorganic compartment.

In addition, the weight is periodically measured by sensors. When the waste weight reaches the limit defined on Node-RED, the system sends a message via Telegram to the sanitation staff. The operation mechanism of the hardware module is presented in Fig. 7.

Figure 7: Operation mechanism of the hardware module
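The decision logic of this mechanism can be sketched in plain Python. This is not the deployed code, which runs through Node-RED, MQTT, and the servo driver. The slide angles, the weight limit, and both function names are hypothetical, since the text does not specify them.

```python
from typing import Optional

# Hypothetical values: the paper gives neither the slide angles nor the limit.
SLIDE_ANGLE_DEG = {"organic": 45, "inorganic": 135}
WEIGHT_LIMIT_KG = 5.0


def slide_angle(label: str) -> int:
    """Return the servo angle that tilts the slide toward the right compartment."""
    try:
        return SLIDE_ANGLE_DEG[label]
    except KeyError:
        raise ValueError(f"unknown class label: {label!r}") from None


def full_bin_alert(weight_kg: float, limit_kg: float = WEIGHT_LIMIT_KG) -> Optional[str]:
    """Return an alert text once the measured weight reaches the limit, else None."""
    if weight_kg >= limit_kg:
        return f"Bin is full: {weight_kg:.1f} kg (limit {limit_kg:.1f} kg)"
    return None


print(slide_angle("organic"))  # 45
print(full_bin_alert(3.2))     # None
print(full_bin_alert(5.4))     # Bin is full: 5.4 kg (limit 5.0 kg)
```

In a real deployment the returned angle would be passed to the servo driver and the alert text to the messaging service; both are left out here to keep the sketch self-contained.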

Raspberry Pi is chosen for our system because of its advantages, such as the following:

– SoC and high configuration

• Broadcom BCM2711: quad-core, 64-bit Cortex-A72 (ARM v8)

• RAM: 8 GB LPDDR4

• 40-pin GPIO header, which is easily integrated with other devices (e.g., servo, sensors, LED, and buzzer)

– Affordable (∼$100 for the highest configuration)

– Small size with integrated wireless connections (Bluetooth and Wi-Fi); only one power supply wire is required

– Numerous connection ports and many official Raspberry Pi accessories available (e.g., adapter, cooling fan, and camera)

– A Debian-based Linux operating system, which is highly compatible with Python, allowing for easy integration with machine learning and deep learning models.

The system consists of two parts, as shown in Fig. 8 and described below:

– The hardware unit includes a Raspberry Pi Camera with an image acquisition function, Raspberry Pi board, servo motor, and trash bin. This unit receives the results from the software unit and puts each piece of garbage in the correct location.

– The software unit, developed in Python 3 language, is responsible for receiving, processing, and classifying trash images.

Figure 8: Complete system of the hardware module

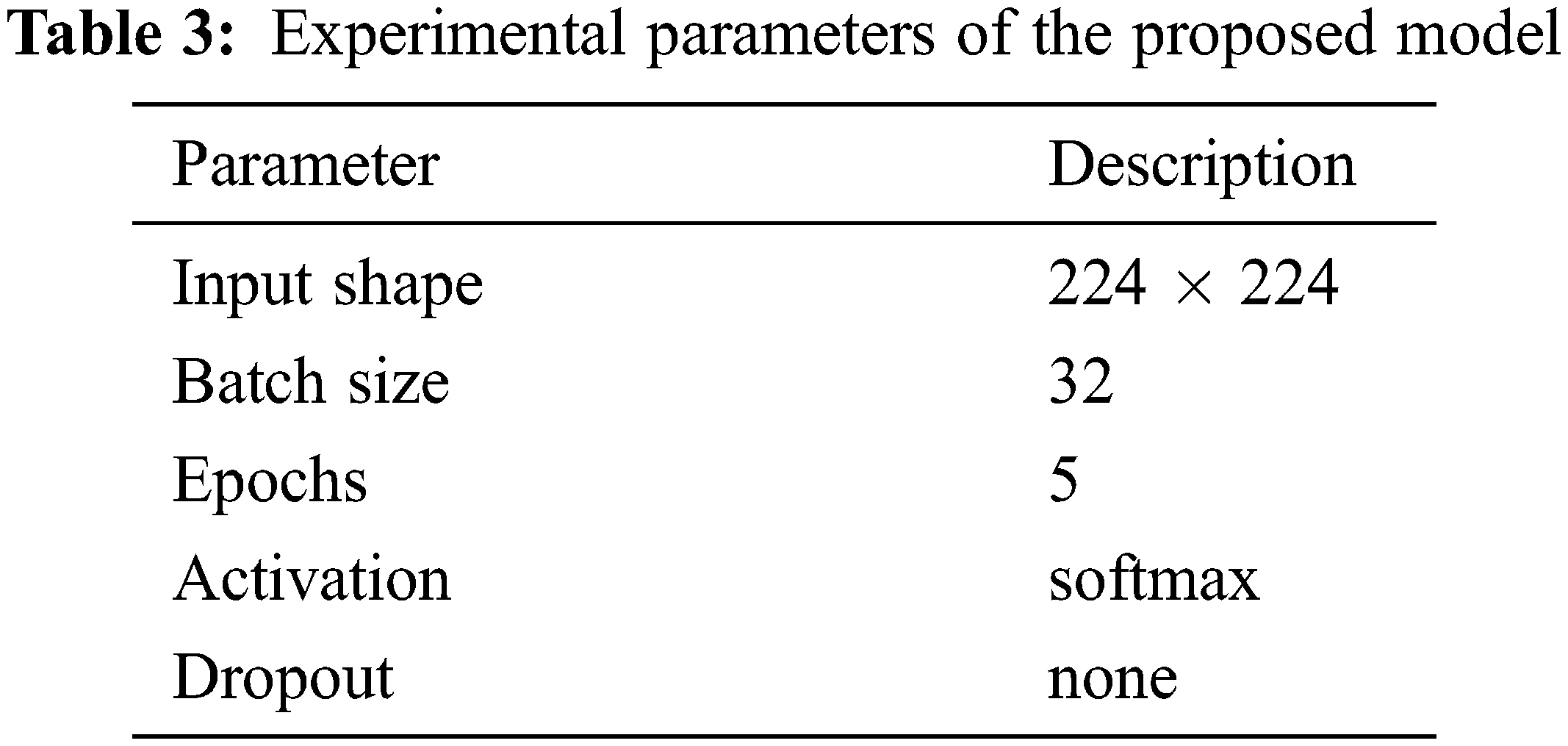

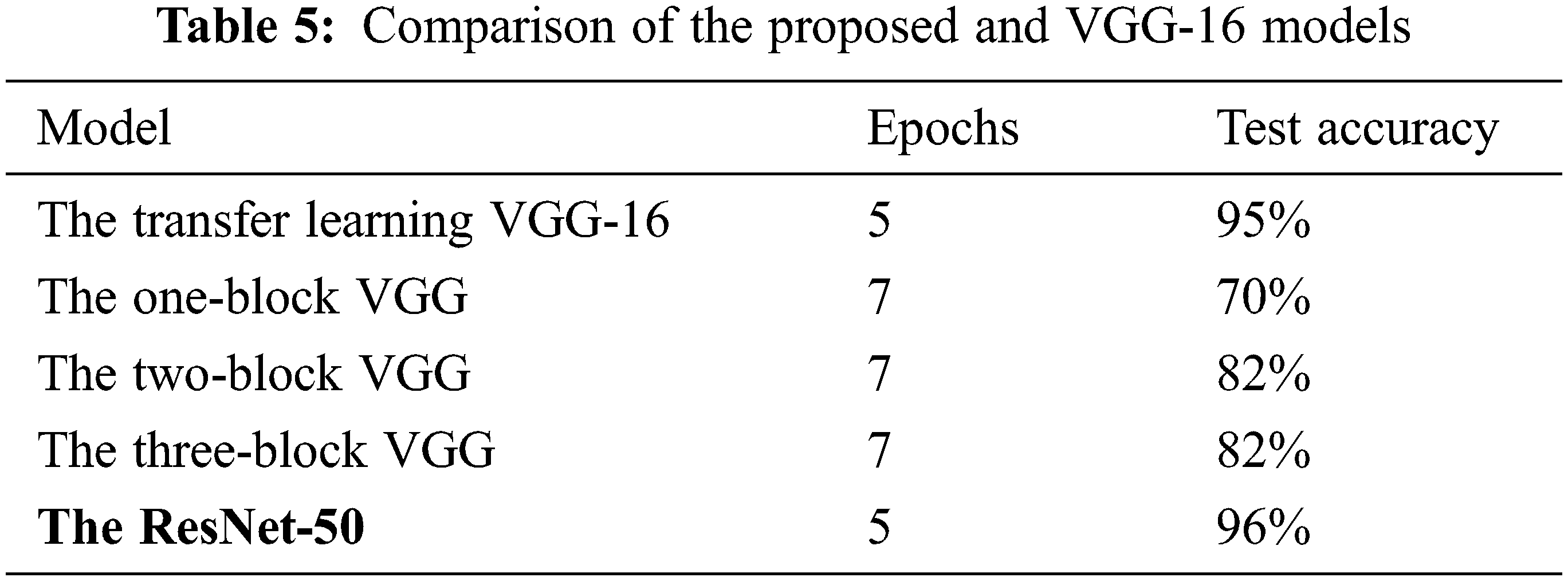

The experiments are run on a Lenovo Y520 computer with a Core i7 CPU (2.80 GHz), an NVIDIA GeForce GTX 1050 4 GB GPU, and 16 GB of RAM. Starting from the pre-trained weights, the model is fine-tuned after its final fully connected layers are replaced. The ResNet-50 model is optimized by the stochastic gradient descent method with a learning rate of 0.0001. Tab. 3 shows the experimental parameters of the proposed ResNet-50 model.
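For reference, a plain stochastic gradient descent step with the stated learning rate can be written as below. The symbols are notation assumed here rather than taken from the paper: θ_t denotes the trainable weights at step t, B_t a mini-batch, and L the classification loss. Momentum and weight decay are omitted because the text does not mention them.

```latex
\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} L(\theta_t; B_t), \qquad \eta = 10^{-4}
```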

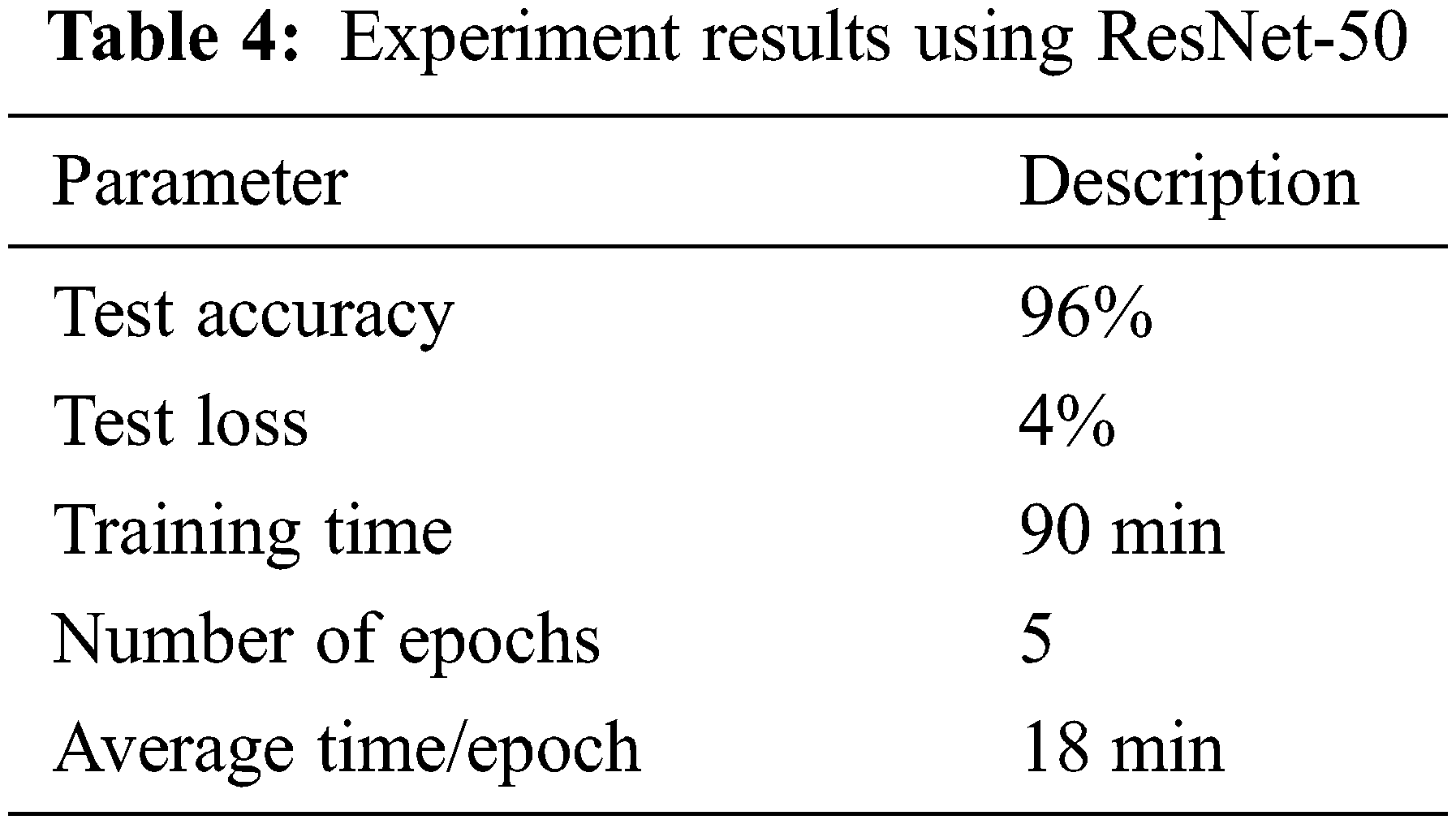

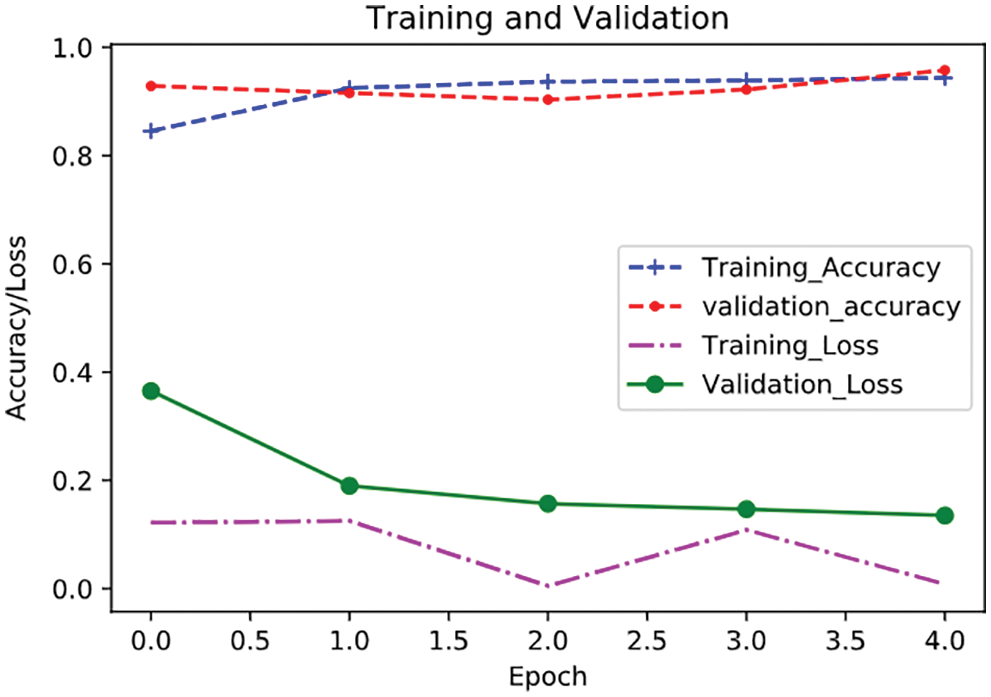

We conduct experiments with a classifier based on ResNet-50 and compare our model with state-of-the-art models, such as VGG networks. Experimental results using ResNet-50 are shown in Tab. 4, and the training and validation results using ResNet-50 are shown in Fig. 9.

Figure 9: Training and validation using ResNet-50

Training the model took 90 min for five epochs, an average of 18 min per epoch. The trained model reaches 96% accuracy.

We use VGG-16 [27] and its variants for evaluation comparisons. VGG-16 is pre-trained on the very large ImageNet database [28] and typically gives high accuracy even when the target dataset is small. Models participating in the experiment include the following:

– The one-block VGG model has a single CNN layer with 32 filters followed by a max pooling layer.

– Additional models extend the one-block VGG model: each adds one block to the previous model, with twice as many filters as the preceding block. In other words, the two-block VGG model keeps the first block and adds a second block of 64 filters. The same rule is applied for the three-block VGG model, which keeps the first two blocks and adds a third block of 128 filters.

– The transfer learning VGG-16 includes all layers of the pre-trained VGG-16, which are first transferred to our model. The last fully connected layers are then adjusted to classify the garbage, and the weights of the convolutional filters are updated on the training data. The multilayer perceptron (MLP) classifiers are trained on the features obtained from the fine-tuned CNN, and their output is the final classification.
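The doubling rule for the one-, two-, and three-block VGG baselines can be sketched as a small helper. The function name is hypothetical, and only the filter counts are modeled, not the layers themselves.

```python
from typing import List


def vgg_block_filters(num_blocks: int, base_filters: int = 32) -> List[int]:
    """Filters per block: start at base_filters and double with each block."""
    if num_blocks < 1:
        raise ValueError("num_blocks must be at least 1")
    return [base_filters * 2 ** i for i in range(num_blocks)]


for n in (1, 2, 3):
    print(n, vgg_block_filters(n))
# 1 [32]
# 2 [32, 64]
# 3 [32, 64, 128]
```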

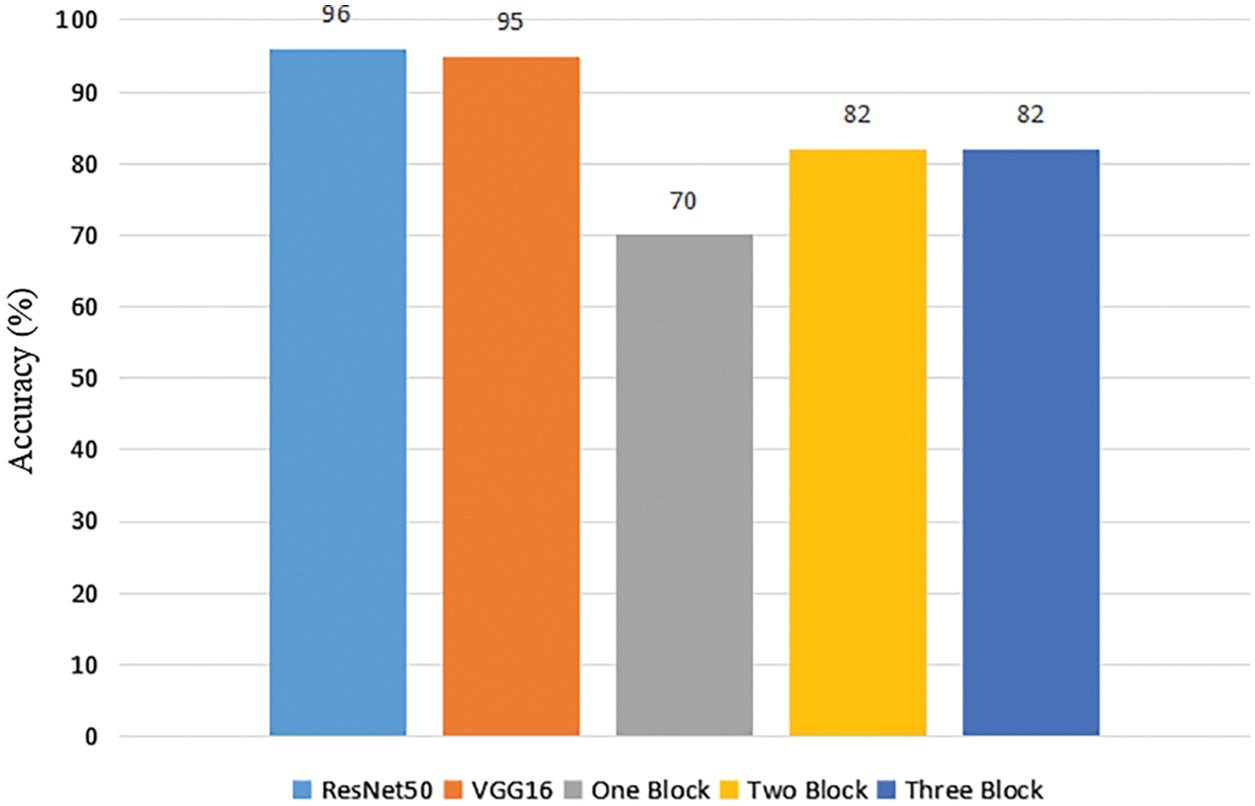

Comparison results with other models are shown in Tab. 5 and Fig. 10.

Figure 10: Accuracy of the proposed model and VGG-16 models

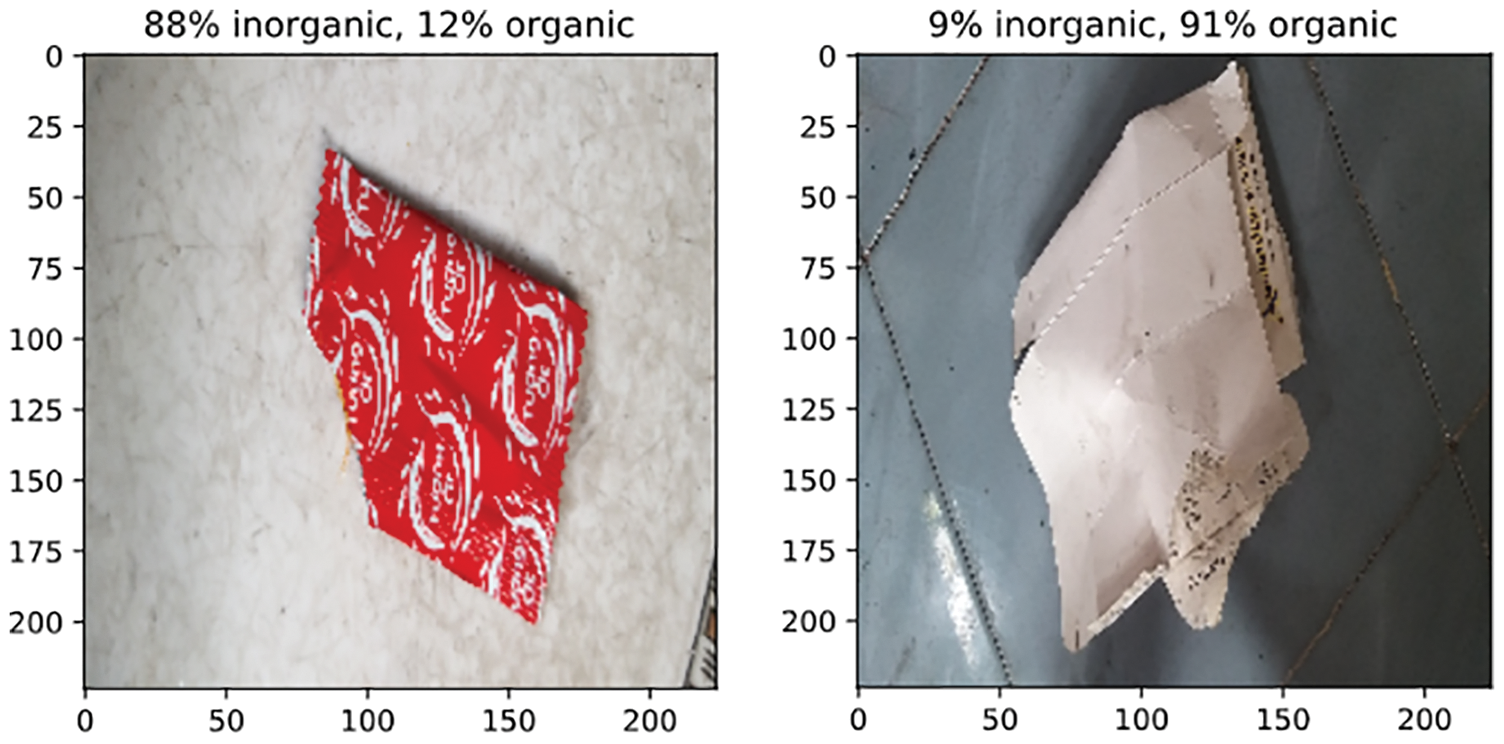

Among the models evaluated on the same dataset, ResNet-50 has the highest accuracy, 96%. Some real-time examples of successful trash classification are shown in Fig. 11.

Figure 11: Simulation results from images in the dataset

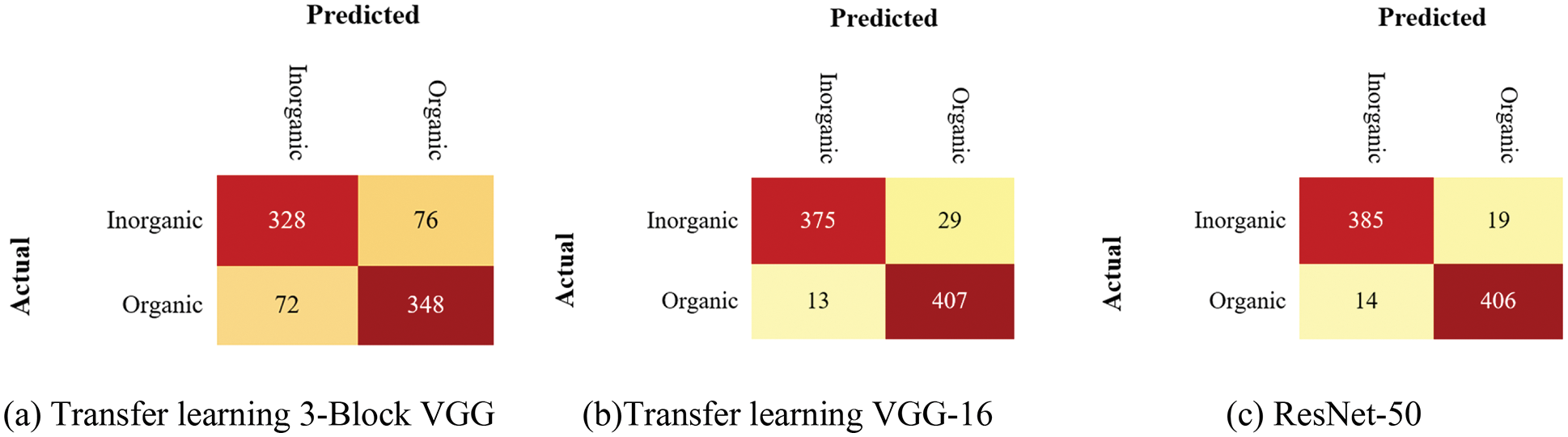

We further analyze the three models with the highest accuracies: three-block VGG, VGG-16, and ResNet-50. Fig. 12 shows that the transfer-learning models, VGG-16 and ResNet-50, achieve higher accuracies. In particular, compared with VGG-16, ResNet-50 significantly improves the correct recognition of inorganic images.

Figure 12: Confusion matrices of the three best experimental models
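To show how a binary confusion matrix translates into summary scores, the sketch below derives accuracy, precision, and recall from its four cells. The counts are hypothetical and are not read from Fig. 12; "inorganic" is assumed to be the positive class.

```python
from typing import Dict


def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision, and recall from the four confusion-matrix cells."""
    total = tp + fn + fp + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),  # of images predicted positive, share correct
        "recall": tp / (tp + fn),     # of truly positive images, share found
    }


# Hypothetical counts with "inorganic" as the positive class (not the paper's data).
metrics = binary_metrics(tp=90, fn=10, fp=5, tn=95)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
# accuracy: 0.925
# precision: 0.947
# recall: 0.900
```

Recall on the inorganic class is the quantity that reflects how well inorganic images are recognized, which is the aspect the comparison with VGG-16 highlights.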

Although our paper has some limitations, including a small dataset, binary classification system, and limited experiments and comparisons, the obtained results demonstrate the positive contributions of our system:

– The dataset, which we collect ourselves, is comprised of unique data from Vietnam and is highly applicable to reality.

– Although the training set is small, it is enough for the system to achieve high accuracy because ResNet is a state-of-the-art, pre-trained deep learning model. The system can therefore be applied to real-life trash classification.

In addition, security should be considered when IoT applications are deployed. Smart devices continuously interact with each other using various wireless communication protocols. While this enables flexible IoT deployments, the communication also risks exposing security vulnerabilities and opening channels to malicious actors, which can cause unintended data leaks. An IoT security strategy reduces these vulnerabilities through measures such as device identity management, encryption, access control, and coverless information hiding techniques [29,30].

This paper proposed an automatic classification system that combines AI and the IoT. Using the ResNet-50 model, we successfully built a high-accuracy classifier with a transfer learning approach. This classification module was then integrated with the IoT components to create a complete and intelligent system capable of automatic garbage classification. Our future work will focus on building a multi-class classifier and upgrading the functionality of the IoT components so that they can, for example, automatically detect the fullness of the trash bin and determine the weight of different types of garbage.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. K. V. Lohuizen, “The world produces more than 3.5 million tons of garbage a day - and that figure is growing,” Washington Post, 2017. [Online]. Available: https://www.washingtonpost.com/graphics/2017/world/global-waste/. [Accessed: 13-Feb-2022].

2. K. K. Nagwanshi, “Learning classifier system,” in Modern Optimization Methods for Science, Engineering and Technology, pp. 1–l, IOP Publishing, United Kingdom, 2019.

3. H. M. Khan, J. N. Boodoo, W. Dullull, S. Nathire, X. Gao et al., “Multi-class classification of breast cancer abnormalities using deep convolutional neural network (CNN),” PLoS ONE, vol. 16, no. 8, pp. e0256500, 2021. https://doi.org/10.1371/journal.pone.0256500.

4. A. H. Vo, H. S. Le, M. T. Vo and T. Le, “A novel framework for trash classification using deep transfer learning,” IEEE Access, vol. 7, pp. 178631–178639, 2019.

5. G. Thung and M. Yang, “Classification of trash for recyclability status,” in CS229 Project Report, Leland Stanford Junior University, San Francisco, CA, USA, pp. 1–6, 2016. https://cs229.stanford.edu/proj2016/report/ThungYang-ClassificationOfTrashForRecyclabilityStatus-report.pdf

6. A. Sun and H. Xiao, “ThanosNet: A novel trash classification method using metadata,” in Proc. of the IEEE Int. Conf. on Big Data (Big Data 2020), Atlanta, GA, USA, 2020, pp. 1394–1401.

7. A. Masand, S. Chauhan, M. Jangid, R. Kumar and S. Roy, “ScrapNet: An efficient approach to trash classification,” IEEE Access, vol. 9, pp. 130947–130958, 2021.

8. S. L. Rabano, M. K. Cabatuan, E. Sybingco, E. P. Dadios and E. J. Calilung, “Common garbage classification using mobilenet,” in Proc. of the 2018 IEEE 10th Int. Conf. on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio City, Philippines, pp. 1–4, 2018.

9. W. Zhi, L. Gao and Z. Zhu, “Garbage classification and recognition based on SqueezeNet,” in Proc. of the 2020 3rd World Conf. on Mechanical Engineering and Intelligent Manufacturing (WCMEIM 2020), Shanghai, China, pp. 122–125, 2020.

10. M. Zeng, X. Lu, W. Xu, T. Zhou and Y. Liu, “PublicGarbageNet: A deep learning framework for public garbage classification,” in Proc. of the Chinese Control Conf. (CCC 2020), Shenyang, China, 2020, pp. 7200–7205.

11. Z. Yang and D. Li, “WasNet: A neural network-based garbage collection management system,” IEEE Access, vol. 8, pp. 103984–103993, 2020.

12. P. Tiyajamorn, P. Lorprasertkul, R. Assabumrungrat, W. Poomarin and R. Chancharoen, “Automatic trash classification using convolutional neural network machine learning,” in Proc. of the IEEE 2019 9th Int. Conf. on Cybernetics and Intelligent Systems and Robotics, Automation and Mechatronics (CIS and RAM 2019), Bangkok, Thailand, pp. 71–76, 2019.

13. S. Kumar, D. Yadav, H. Gupta, O. P. Verma, I. A. Ansari et al., “A novel YOLOv3 algorithm-based deep learning approach for waste segregation: Towards smart waste management,” Electronics, vol. 10, no. 1, pp. 14, 2020.

14. J. Xiong, W. Cui, W. Zhang and X. Zhang, “YOLOv3-darknet with adaptive clustering anchor box for intelligent dry and wet garbage identification and classification,” in Proc. of the 11th Int. Conf. on Intelligent Human-Machine Systems and Cybernetics (IHMSC 2019), Nanjing, China, vol. 2, pp. 80–84, 2019.

15. G. Niu, J. Li, S. Guo, M. O. Pun, L. Hou et al., “SuperDock: A deep learning-based automated floating trash monitoring system,” in Proc. of the IEEE Int. Conf. on Robotics and Biomimetics (ROBIO 2019), Yunnan, China, pp. 1035–1040, 2019.

16. Z. Wu, D. Zhang, Y. Shao, X. Zhang, Y. Feng et al., “Using YOLOv5 for garbage classification,” in Proc. of the 4th Int. Conf. on Pattern Recognition and Artificial Intelligence (PRAI 2021), Chengdu, China, pp. 35–38, 2021.

17. G. Yang, J. Jin, Q. Lei, Y. Wang, J. Sun et al., “Garbage classification system with YOLOV5 based on image recognition,” in Proc. of the IEEE 6th Int. Conf. on Signal and Image Processing (ICSIP), Nanjing, China, pp. 11–18, 2021.

18. D. N. Le, V. S. Parvathy, D. Gupta, A. Khanna, J. J. P. C. Rodrigues et al., “IoT enabled depthwise separable convolution neural network with deep support vector machine for COVID-19 diagnosis and classification,” International Journal of Machine Learning and Cybernetics, vol. 12, no. 11, pp. 3235–3248, 2021.

19. K. H. Abdulkareem, M. A. Mohammed, A. Salim, M. Arif, O. Geman et al., “Realizing an effective COVID-19 diagnosis system based on machine learning and iot in smart hospital environment,” IEEE Internet of Things Journal, vol. 8, no. 21, pp. 15919–15928, 2021.

20. Z. Sabir, K. Nisar, M. A. Z. Raja, M. R. Haque, M. Umar et al., “IoT technology enabled heuristic model with morlet wavelet neural network for numerical treatment of heterogeneous mosquito release ecosystem,” IEEE Access, vol. 9, pp. 132897–132913, 2021.

21. M. G. Mallikarjuna, S. Yadav, A. Shanmugam, V. Hima and N. Suresh, “Waste classification and segregation: Machine learning and IoT approach,” in Proc. of the 2nd Int. Conf. on Intelligent Engineering and Management (ICIEM 2021), London, United Kingdom, pp. 233–238, 2021.

22. D. Zhaojie, Z. Chenjie, W. Jiajie, Q. Yifan and C. Gang, “Garbage classification system based on AI and IoT,” in Proc. of the 15th Int. Conf. on Computer Science and Education (ICCSE 2020), Delft, Netherlands, pp. 349–352, 2020.

23. H. Sohail, S. Ullah, A. Khan, O. B. Samin and M. Omar, “Intelligent trash bin (ITB) with trash collection efficiency optimization using iot sensing,” in Proc. of the 8th Int. Conf. Information Communication Technology (ICICT 2019), Sindh, Pakistan, pp. 48–53, 2019.

24. K. He, X. Zhang, S. Ren and J. Sun, “Deep residual learning for image recognition,” in Proc. of the IEEE Computer Society Conf. on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, pp. 770–778, 2016.

25. H. H. Tan and K. H. Lim, “Vanishing gradient mitigation with deep learning neural network optimization,” in Proc. of the 7th Int. Conf. on Smart Computing and Communications (ICSCC 2019), Sarawak, Malaysia, pp. 1–4, 2019.

26. M. L. McHugh, “Interrater reliability: The kappa statistic,” Biochemia Medica, vol. 22, no. 3, pp. 276–282, 2012.

27. K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in Proc. of the 3rd Int. Conf. on Learning Representations (ICLR 2015), San Diego, CA, USA, pp. 1–14, 2015.

28. J. Deng, W. Dong, R. Socher, L. -J. Li, K. Li et al., “ImageNet: A large-scale hierarchical image database,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, pp. 248–255, 2009.

29. X. R. Zhang, W. F. Zhang, W. Sun, X. M. Sun and S. K. Jha, “A robust 3-D medical watermarking based on wavelet transform for data protection,” Computer Systems Science & Engineering, vol. 41, no. 3, pp. 1043–1056, 2022.

30. X. R. Zhang, X. Sun, X. M. Sun, W. Sun and S. K. Jha, “Robust reversible audio watermarking scheme for telemedicine and privacy protection,” Computers, Materials & Continua, vol. 71, no. 2, pp. 3035–3050, 2022.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |