Open Access

REVIEW

Deep Learning Applied to Computational Mechanics: A Comprehensive Review, State of the Art, and the Classics

1 Aerospace Engineering, University of Illinois at Urbana-Champaign, Champaign, IL 61801, USA

2 Institute of Technical Mechanics, Johannes Kepler University, Linz, A-4040, Austria

* Corresponding Author: Loc Vu-Quoc. Email:

Computer Modeling in Engineering & Sciences 2023, 137(2), 1069-1343. https://doi.org/10.32604/cmes.2023.028130

Received 01 December 2022; Accepted 01 March 2023; Issue published 26 June 2023

Abstract

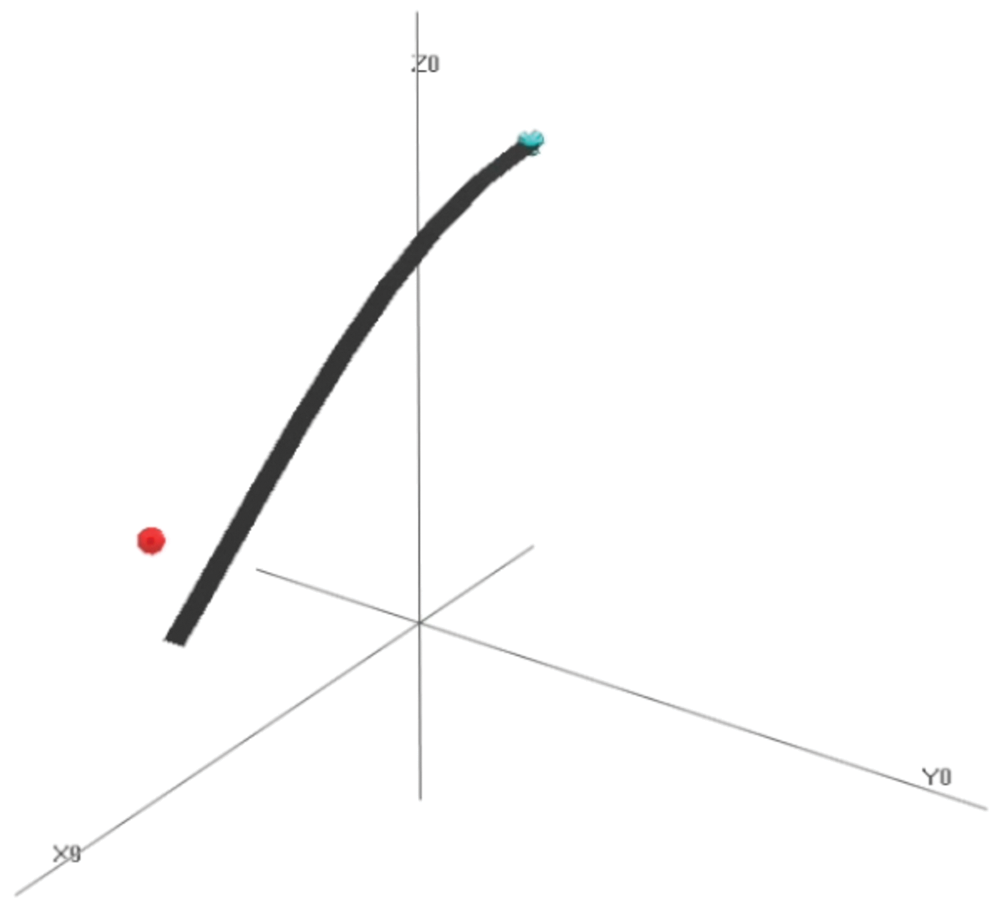

Three recent breakthroughs due to AI in arts and science serve as motivation: an award-winning digital image, protein folding, and fast matrix multiplication. Many recent developments in artificial neural networks, particularly deep learning (DL), applied and relevant to computational mechanics (solids, fluids, finite-element technology) are reviewed in detail. Both hybrid and pure machine learning (ML) methods are discussed. Hybrid methods combine traditional PDE discretizations with ML methods either (1) to help model complex nonlinear constitutive relations, (2) to nonlinearly reduce the model order for efficient simulation (turbulence), or (3) to accelerate the simulation by predicting certain components in the traditional integration methods. Here, methods (1) and (2) relied on the Long Short-Term Memory (LSTM) architecture, with method (3) relying on convolutional neural networks. Pure ML methods to solve (nonlinear) PDEs are represented by Physics-Informed Neural Network (PINN) methods, which could be combined with an attention mechanism to address discontinuous solutions. Both LSTM and attention architectures, together with modern optimizers and classic optimizers generalized to include stochasticity for DL networks, are extensively reviewed. Kernel machines, including Gaussian processes, are covered in sufficient depth for more advanced works such as shallow networks with infinite width. Not addressing experts only, the review assumes readers familiar with computational mechanics, but not with DL, whose concepts and applications are built up from the basics, aiming at bringing first-time learners quickly to the forefront of research. The history and limitations of AI are recounted and discussed, with particular attention to pointing out misstatements or misconceptions of the classics, even in well-known references. Positioning and pointing control of a large-deformable beam is given as an example.

TABLE OF CONTENTS

1 Opening remarks and organization

2 Deep Learning, resurgence of Artificial Intelligence

2.1 Handwritten equation to LaTeX code, image recognition

2.2 Artificial intelligence, machine learning, deep learning

2.3 Motivation, applications to mechanics

2.3.1 Enhanced numerical quadrature for finite elements

2.3.2 Solid mechanics, multiscale modeling

2.3.3 Fluid mechanics, reduced-order model for turbulence

3 Computational mechanics, neuroscience, deep learning

4 Statics, feedforward networks

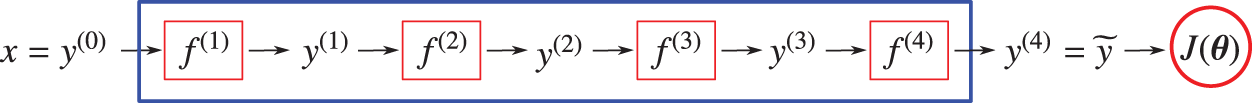

4.3 Big picture, composition of concepts

4.3.1 Graphical representation, block diagrams

4.4 Network layer, detailed construct

4.4.1 Linear combination of inputs and biases

4.4.3 Graphical representation, block diagrams

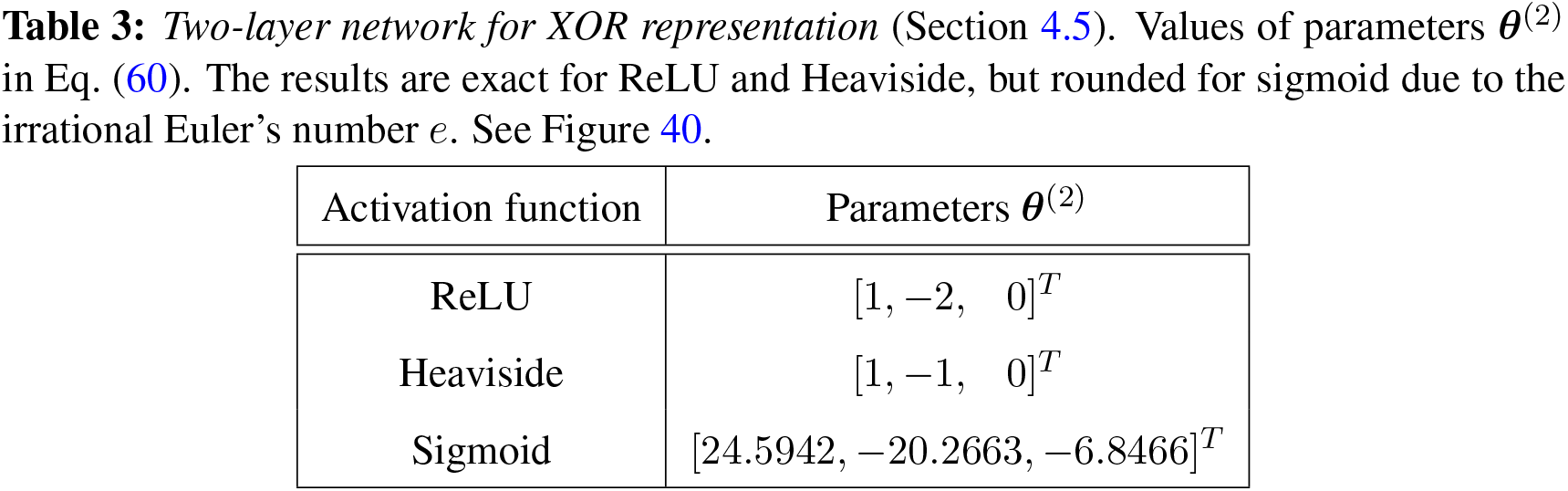

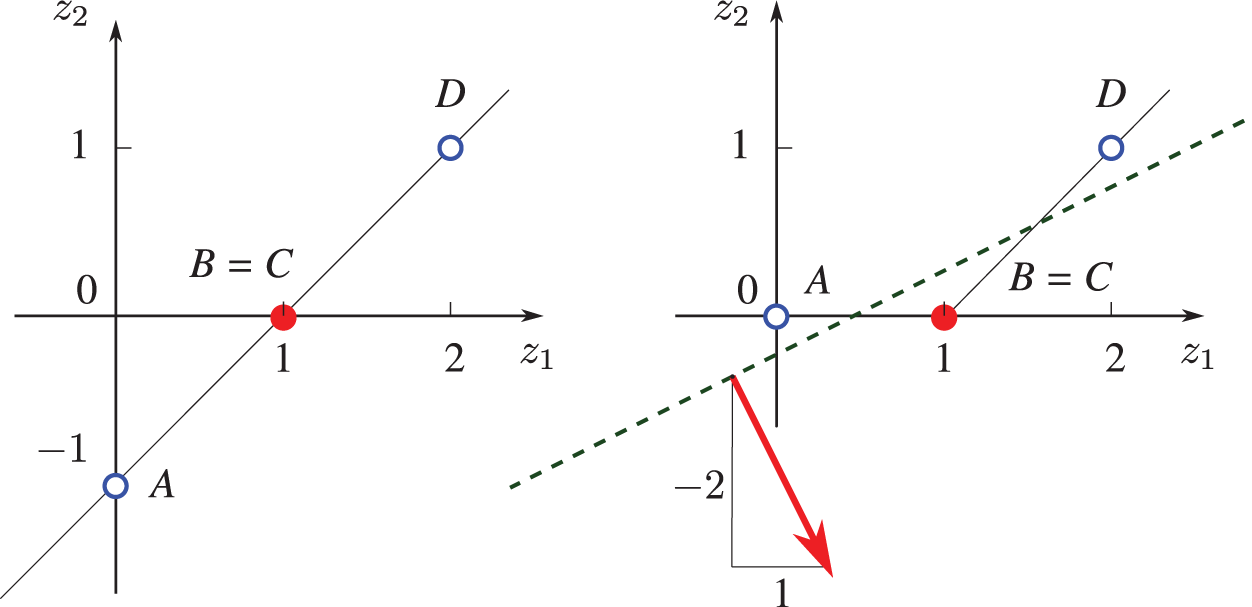

4.5 Representing XOR function with two-layer network

4.6 What is “deep” in “deep networks” ? Size, architecture

5.1 Cost (loss, error) function

5.1.2 Maximum likelihood (probability cost)

5.1.3 Classification loss function

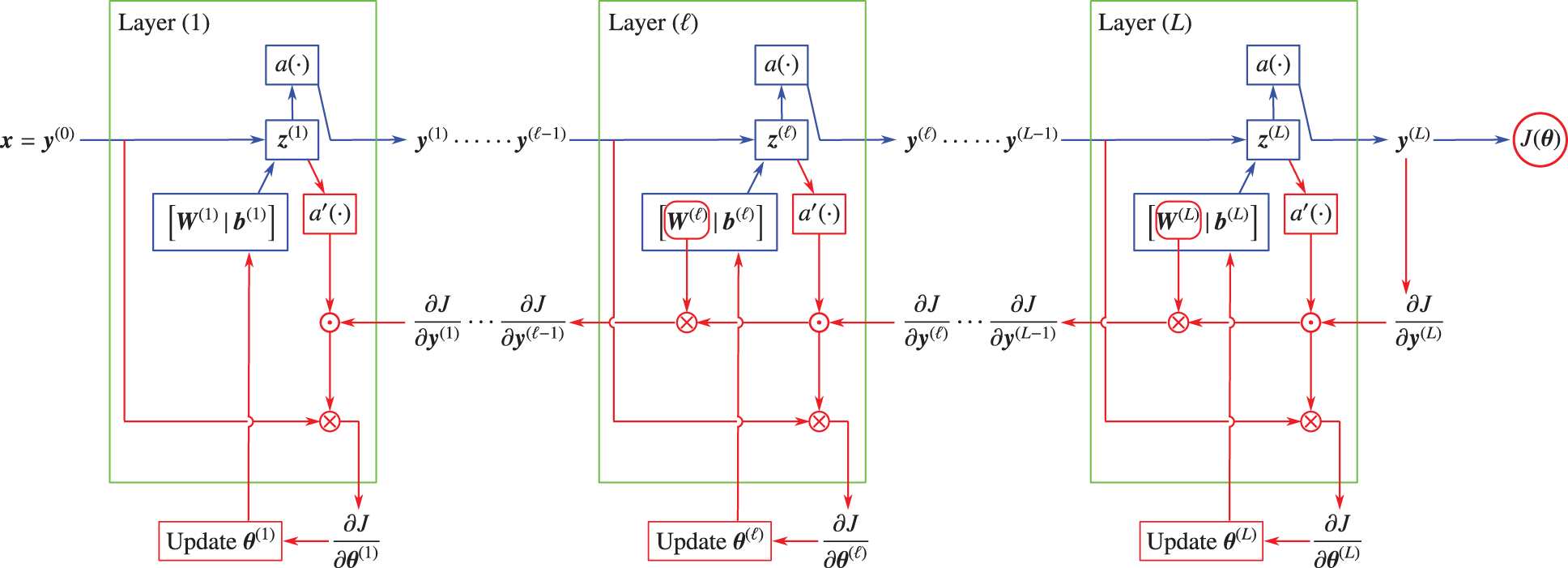

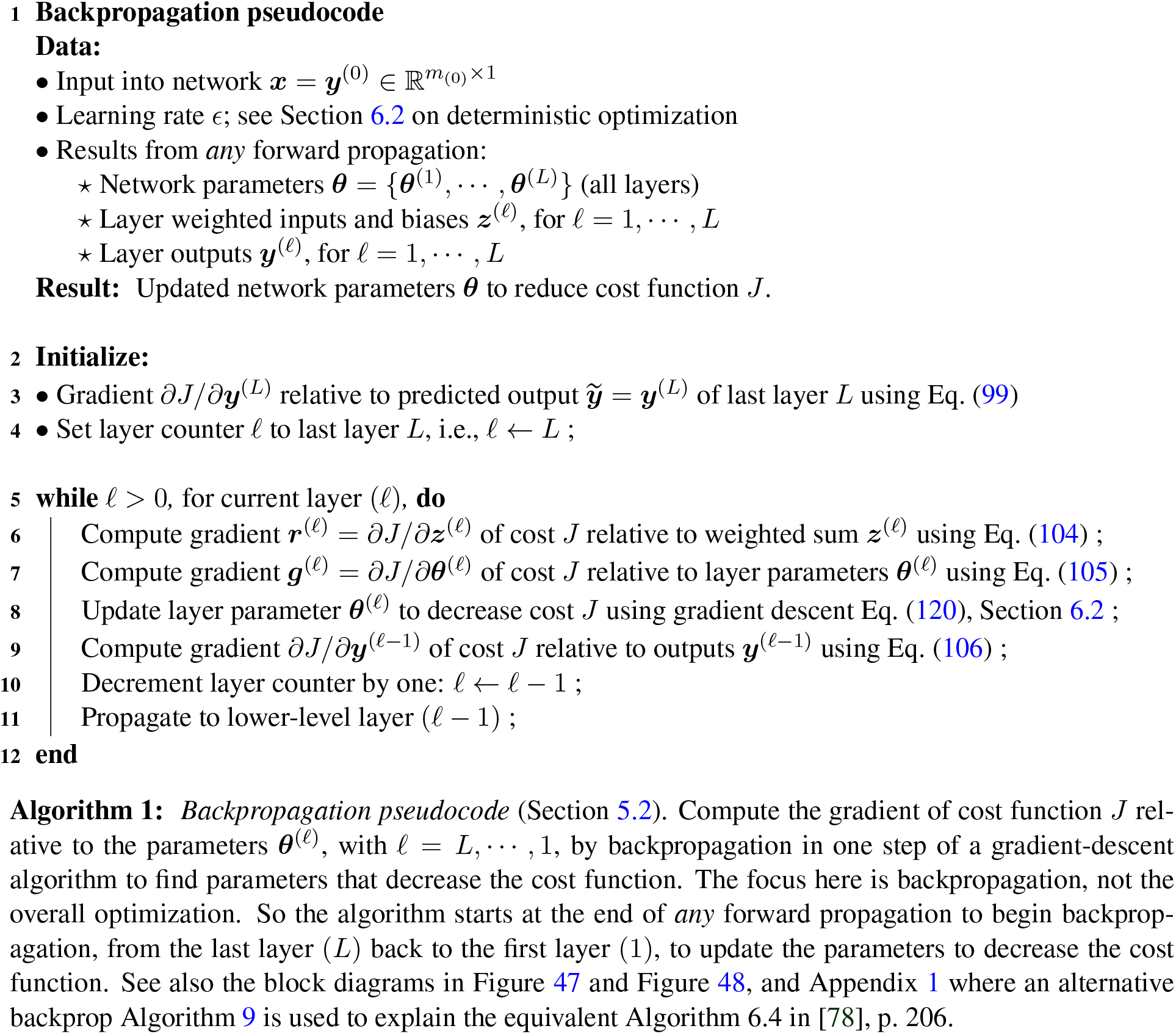

5.2 Gradient of cost function by backpropagation

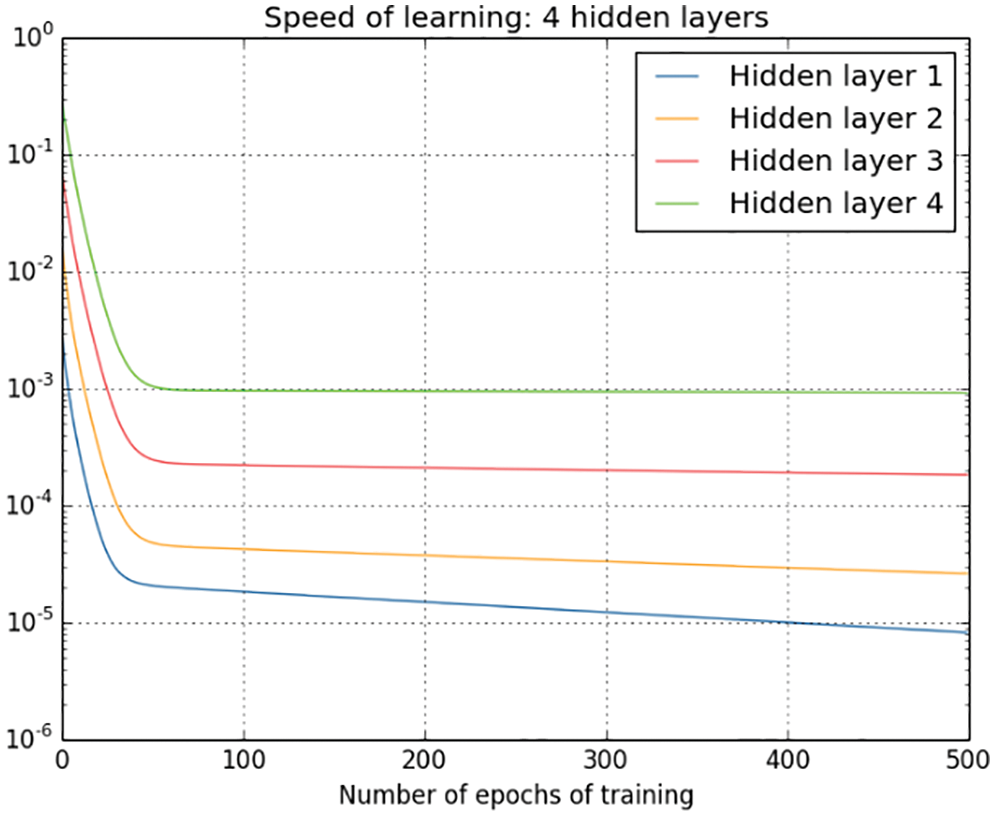

5.3 Vanishing and exploding gradients

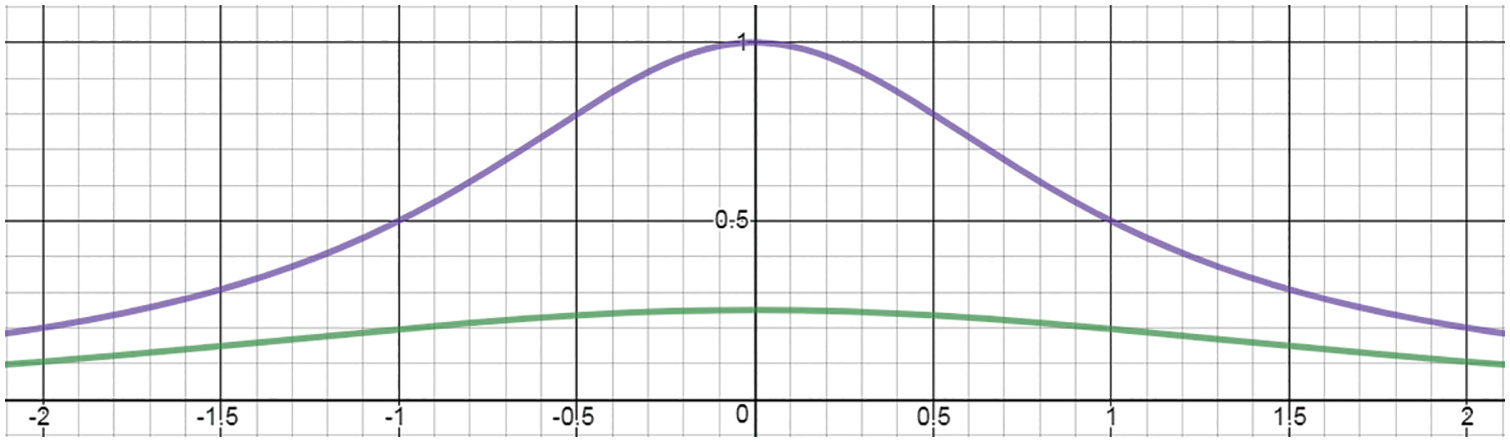

5.3.1 Logistic sigmoid and hyperbolic tangent

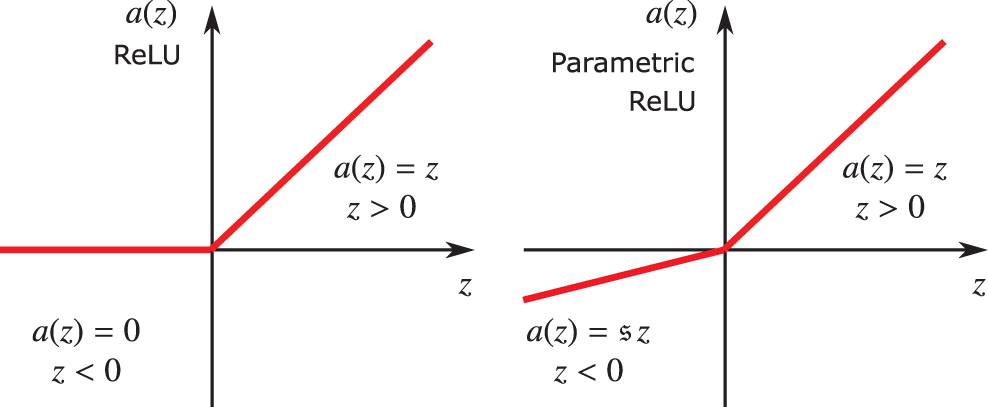

5.3.2 Rectified linear function (ReLU)

5.3.3 Parametric rectified linear unit (PReLU)

6 Network training, optimization methods

6.1 Training set, validation set, test set, stopping criteria

6.2 Deterministic optimization, full batch

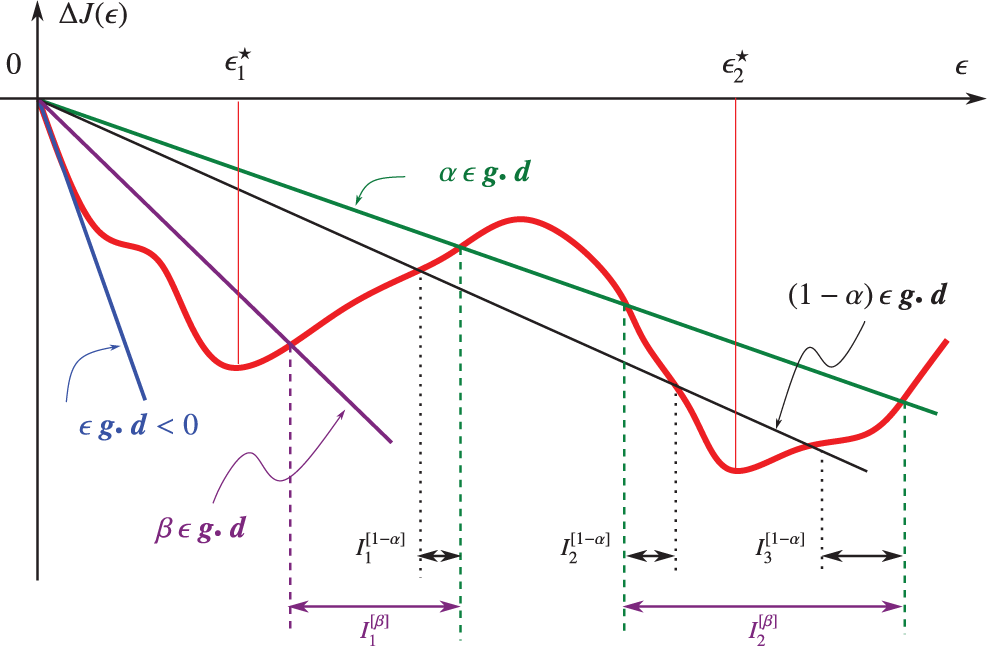

6.2.2 Inexact line-search, Goldstein’s rule

6.2.3 Inexact line-search, Armijo’s rule

6.2.4 Inexact line-search, Wolfe’s rule

6.3 Stochastic gradient-descent (1st-order) methods

6.3.1 Standard SGD, minibatch, fixed learning-rate schedule

6.3.2 Momentum and fast (accelerated) gradient

6.3.3 Initial-step-length tuning

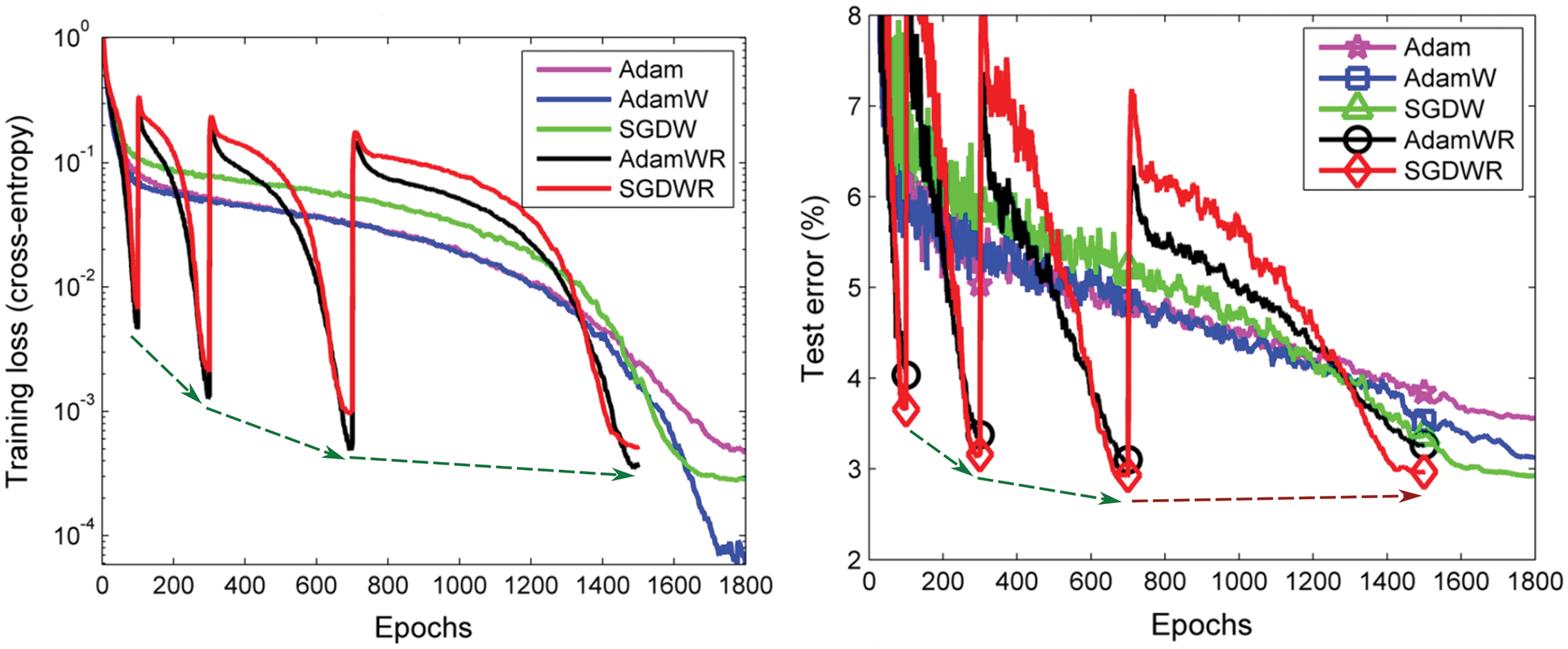

6.3.4 Step-length decay, annealing and cyclic annealing

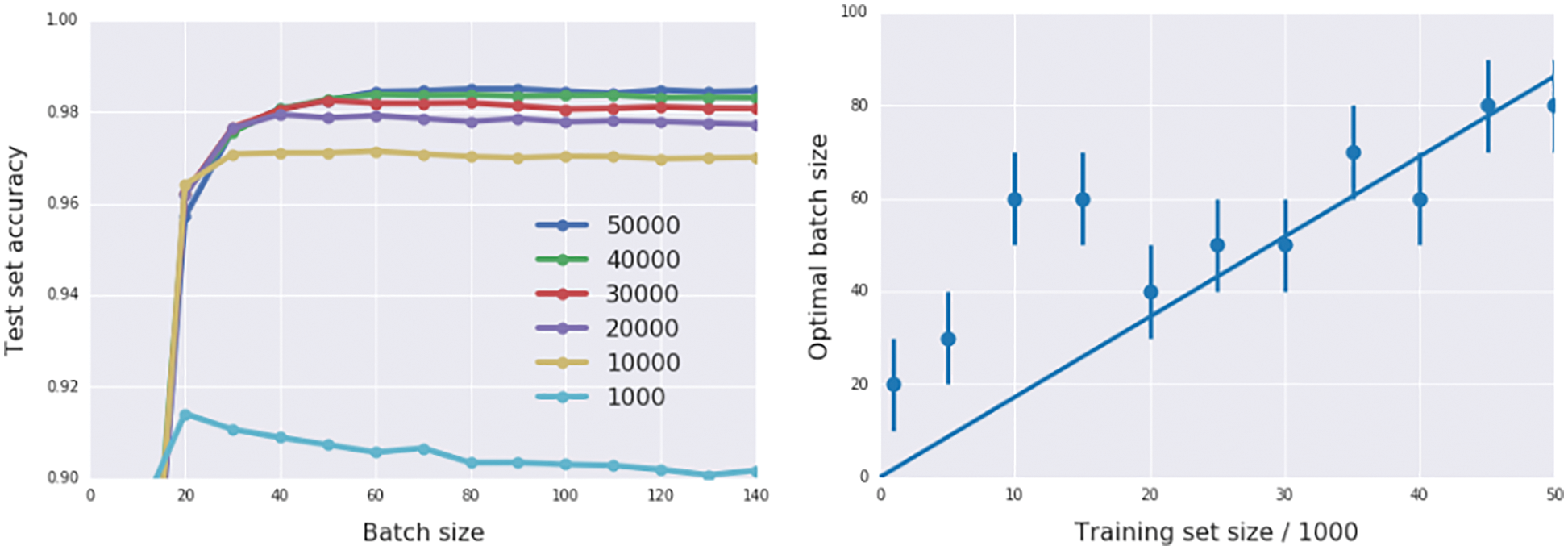

6.3.5 Minibatch-size increase, fixed step length, equivalent annealing

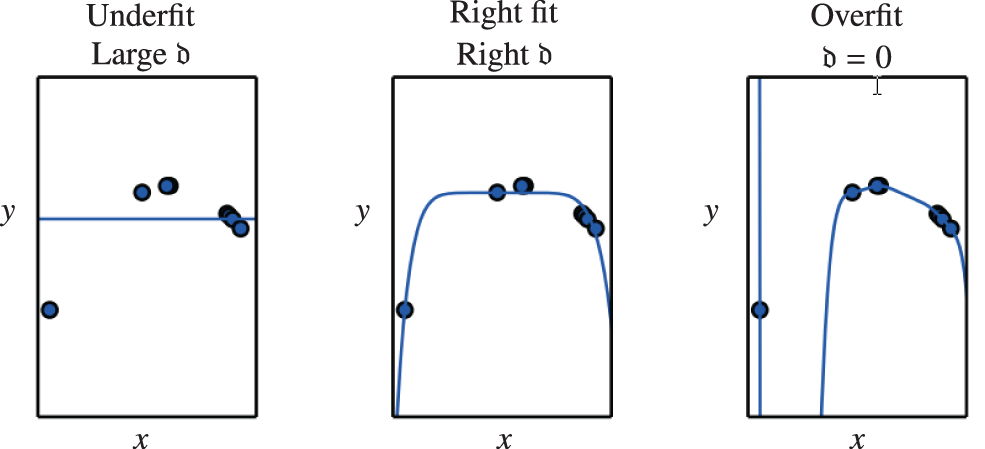

6.3.6 Weight decay, avoiding overfit

6.3.7 Combining all add-on tricks

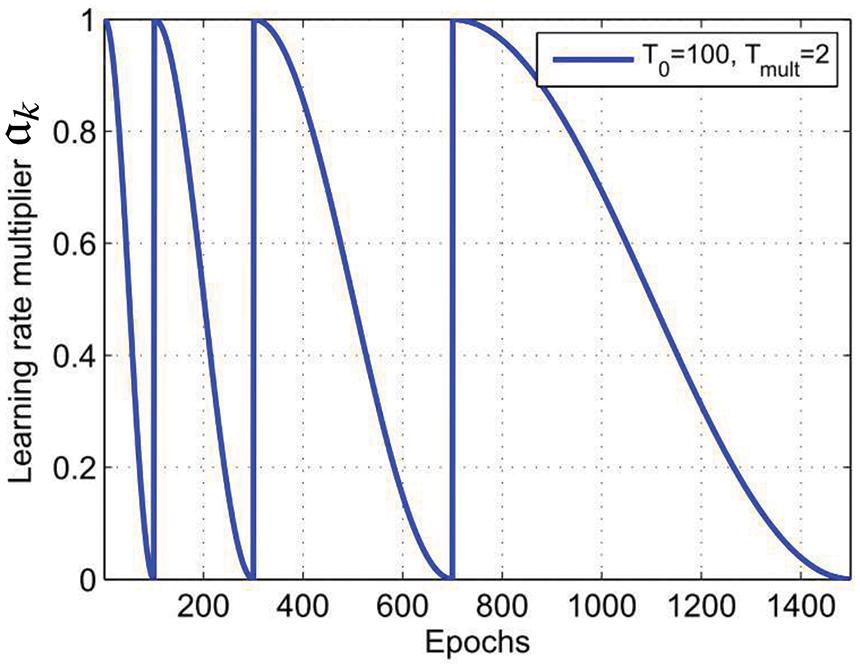

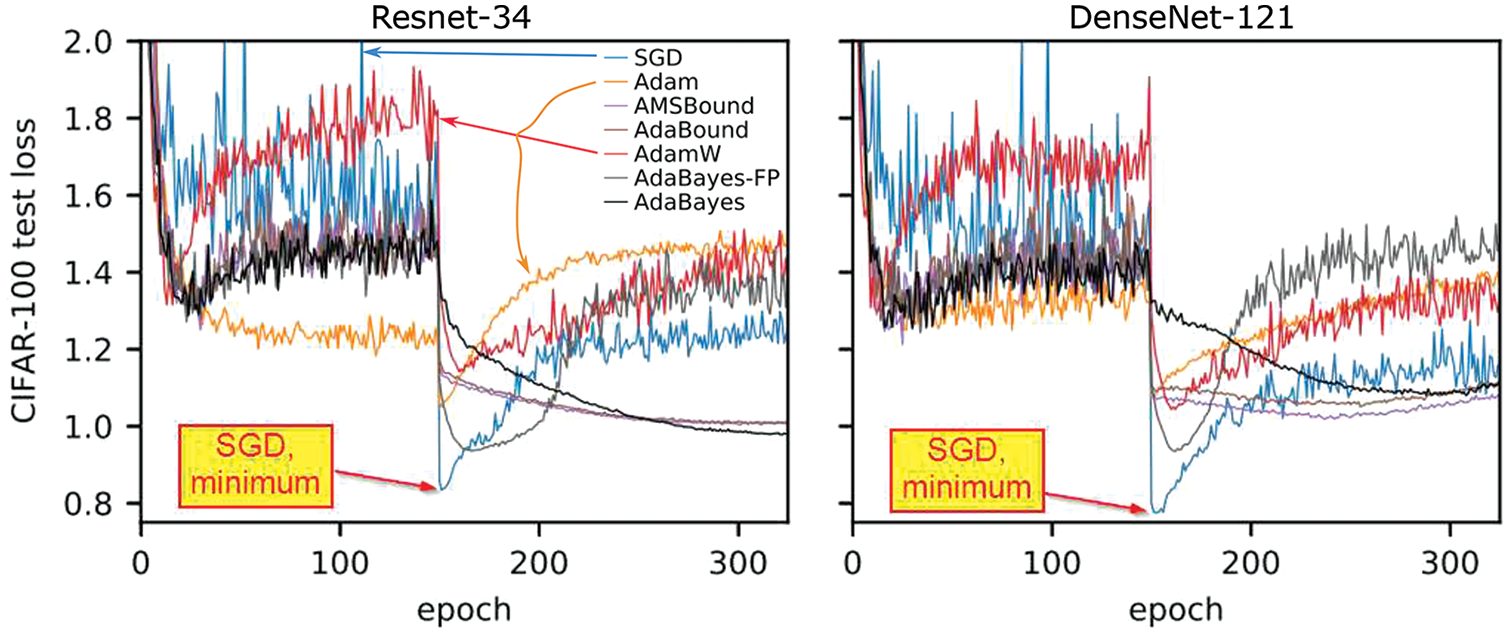

6.5 Adaptive methods: Adam, variants, criticism

6.5.1 Unified adaptive learning-rate pseudocode

6.5.2 AdaGrad: Adaptive Gradient

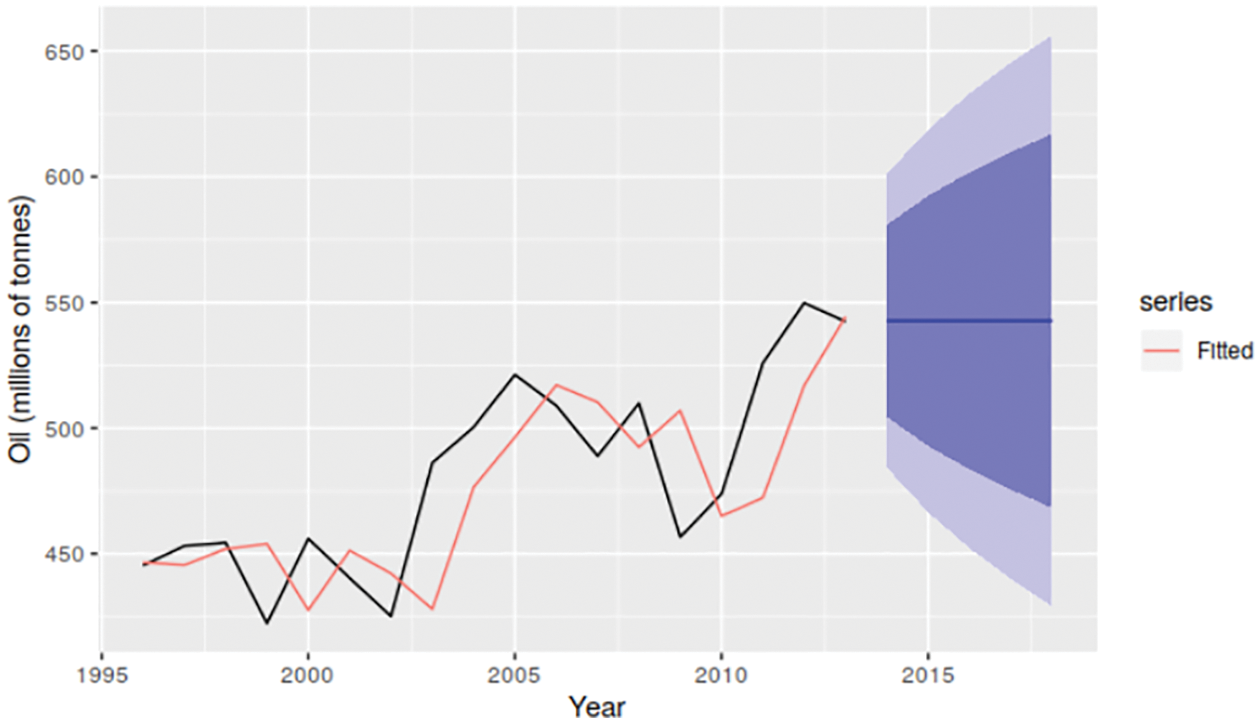

6.5.3 Forecasting time series, exponential smoothing

6.5.4 RMSProp: Root Mean Square Propagation

6.5.5 AdaDelta: Adaptive Delta (parameter increment)

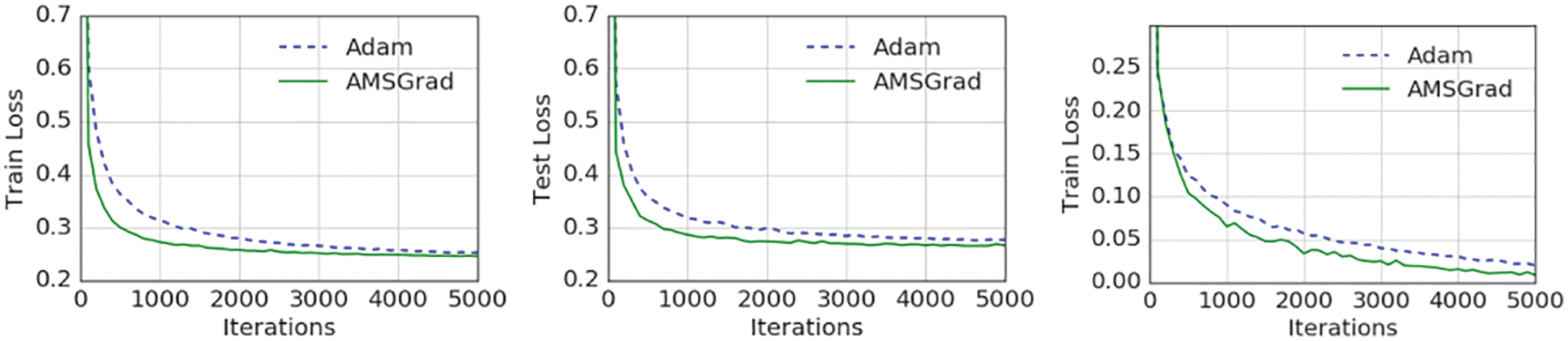

6.5.7 AMSGrad: Adaptive Moment Smoothed Gradient

6.5.8 AdamX and Nostalgic Adam

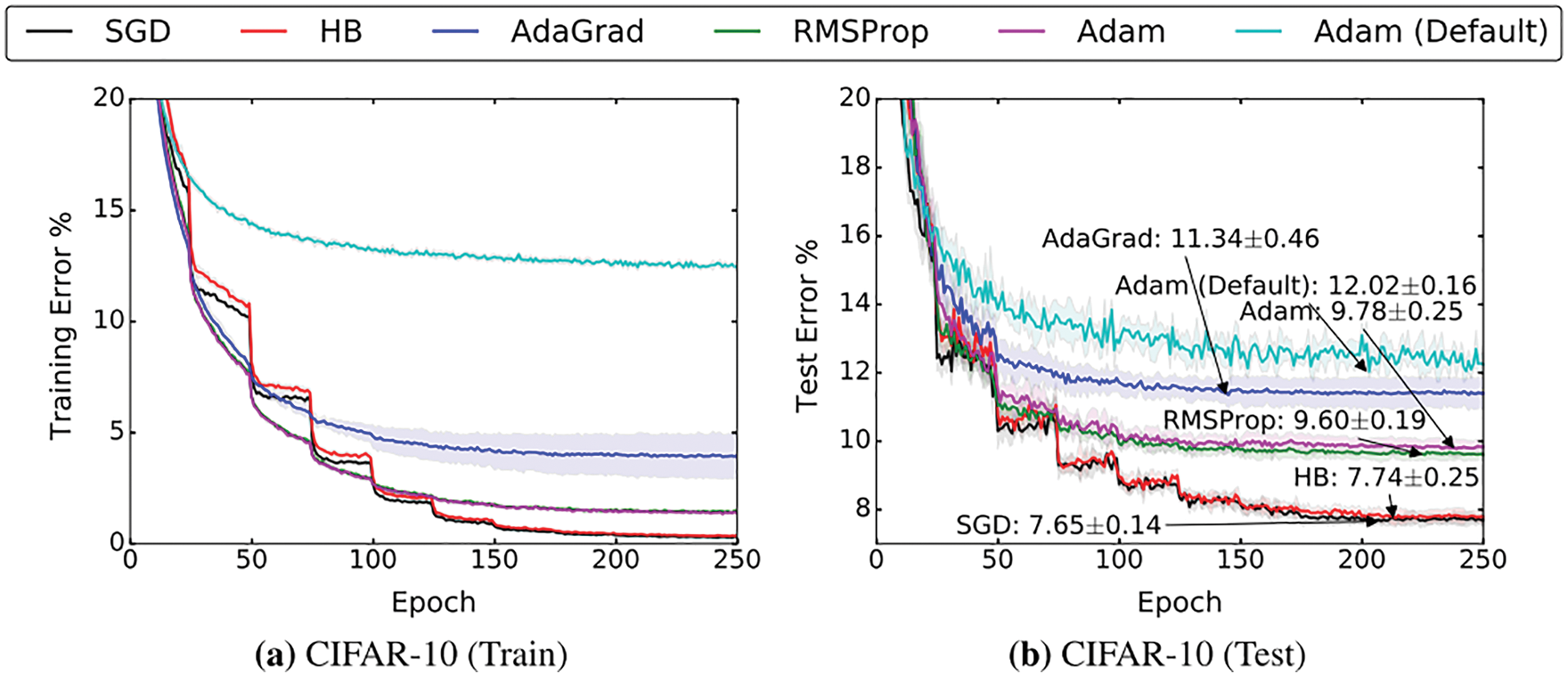

6.5.9 Criticism of adaptive methods, resurgence of SGD

6.5.10 AdamW: Adaptive moment with weight decay

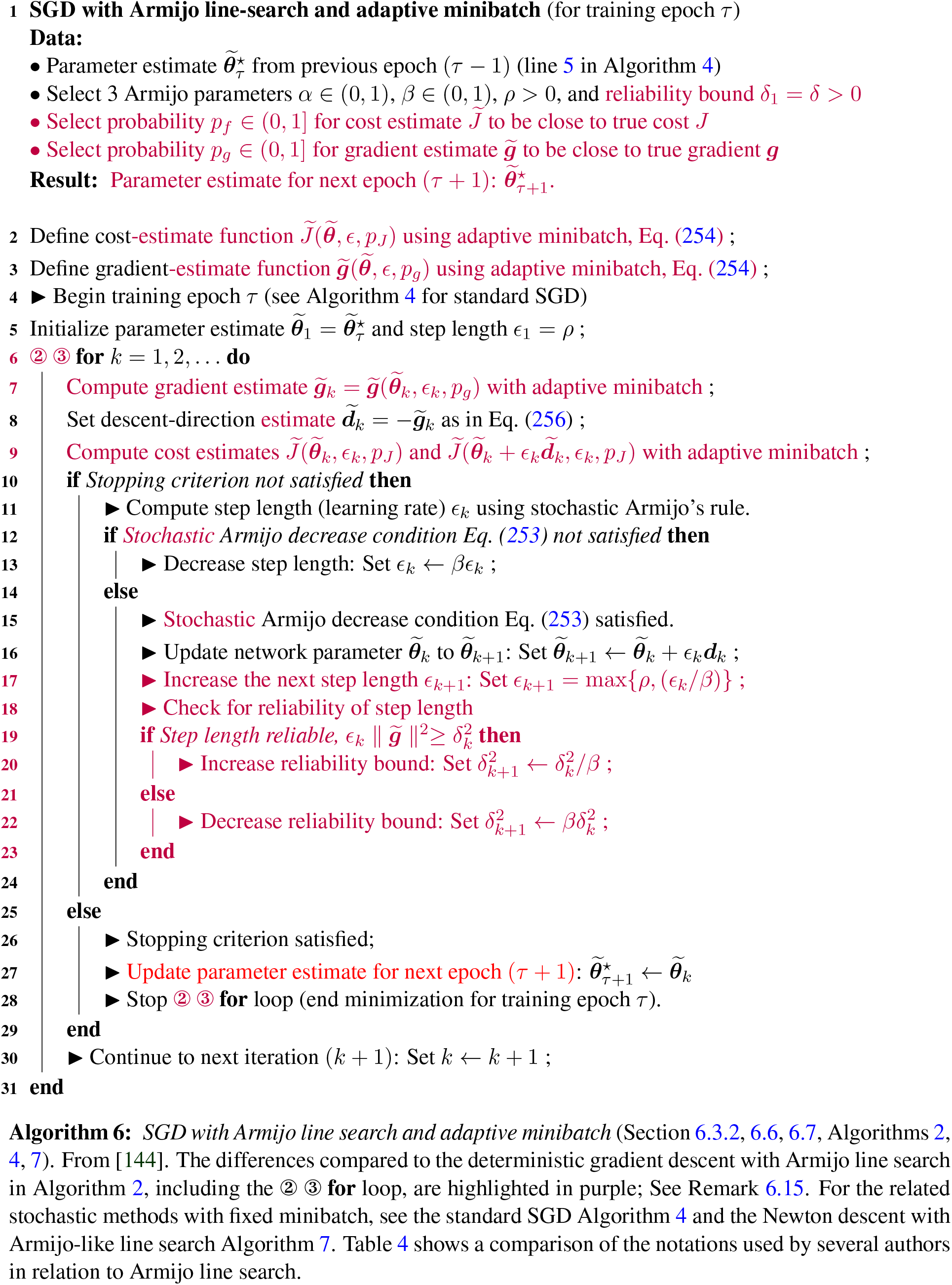

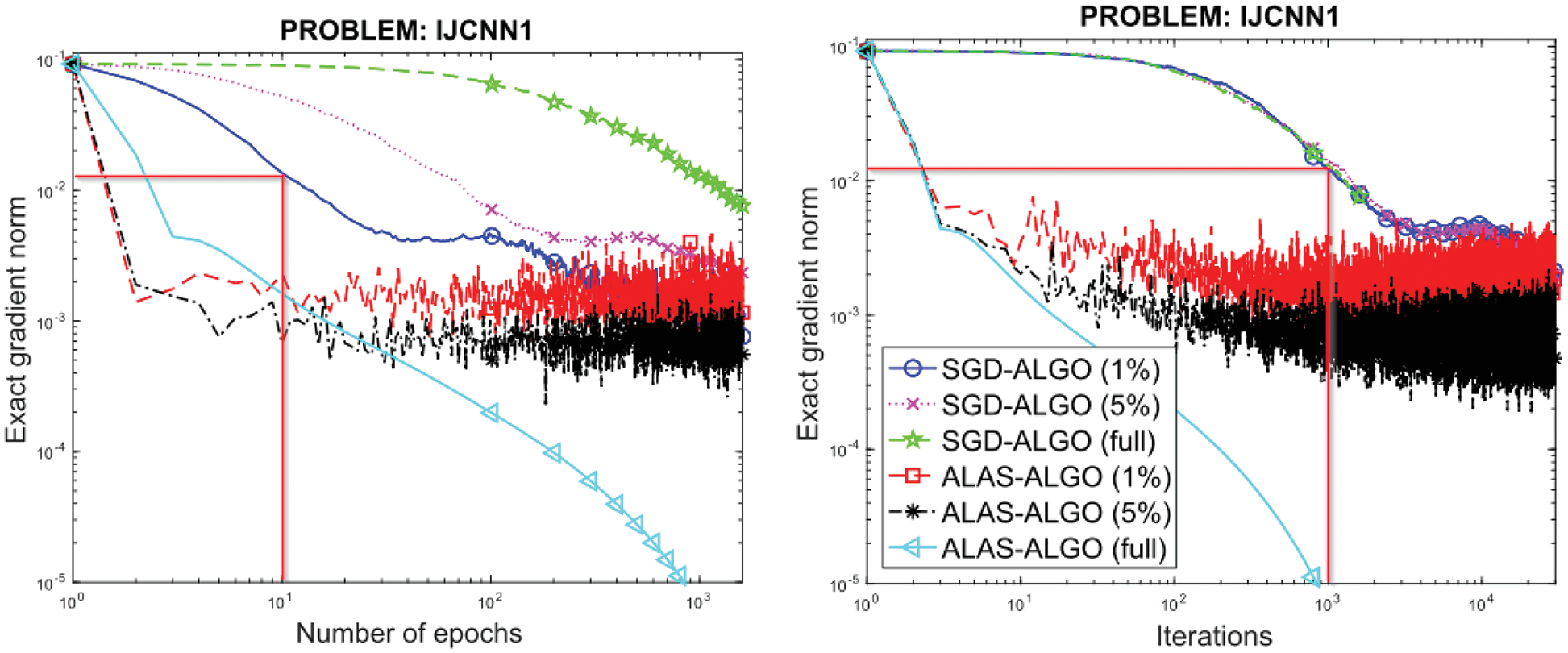

6.6 SGD with Armijo line search and adaptive minibatch

6.7 Stochastic Newton method with 2nd-order line search

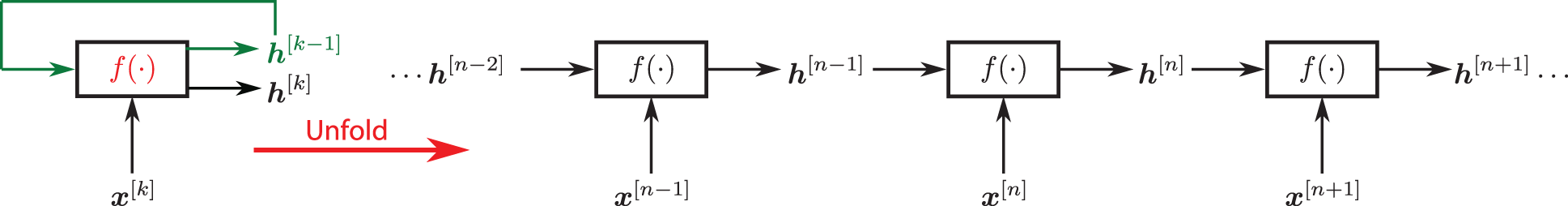

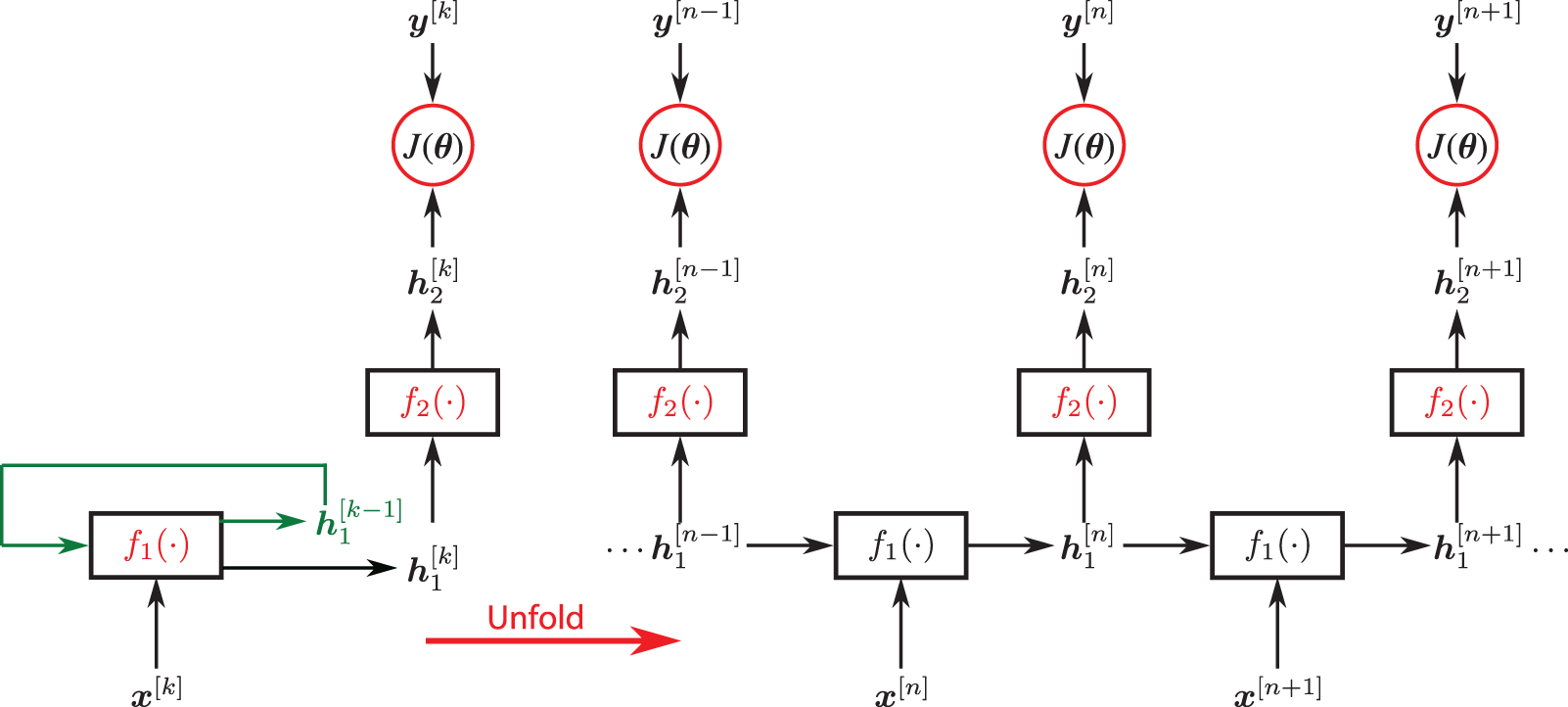

7 Dynamics, sequential data, sequence modeling

7.1 Recurrent Neural Networks (RNNs)

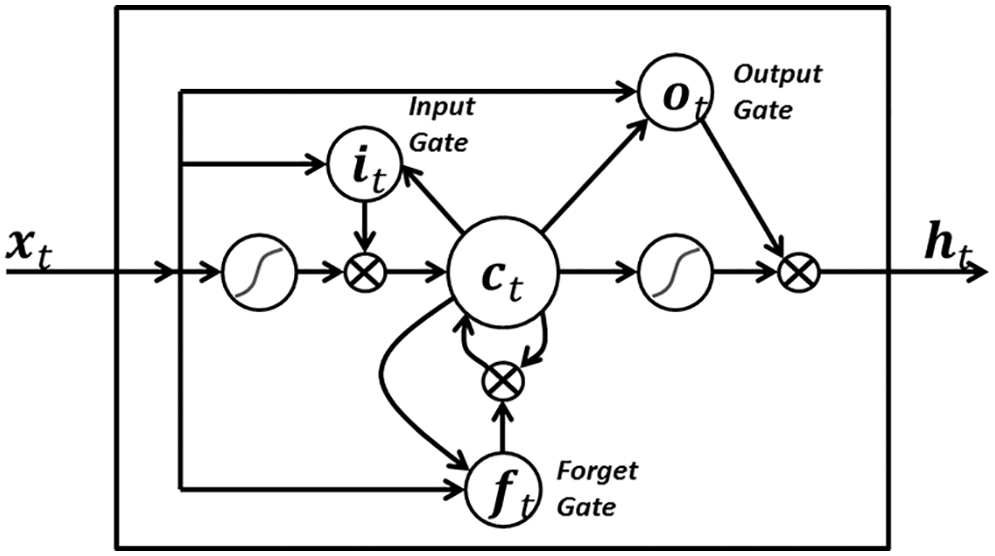

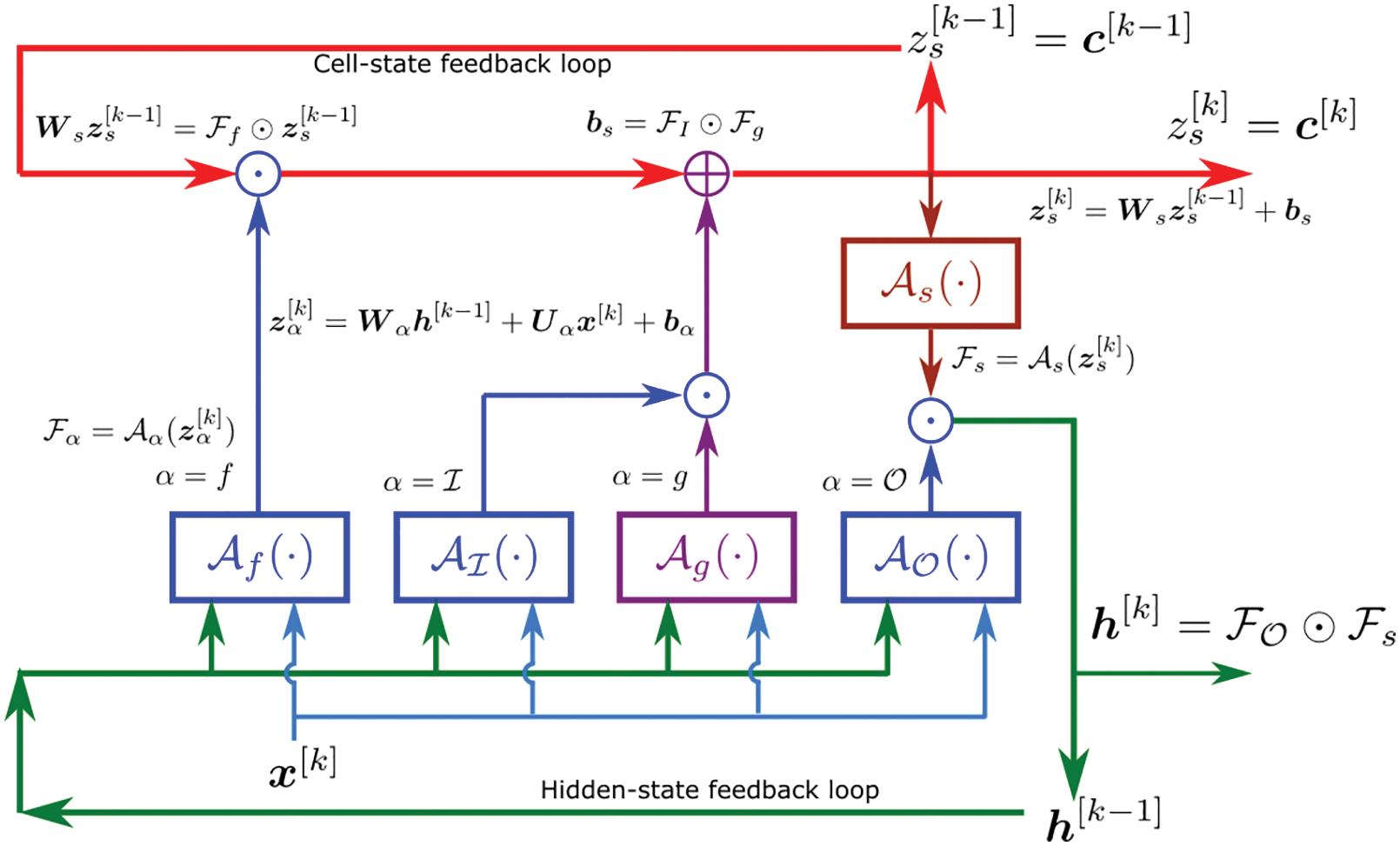

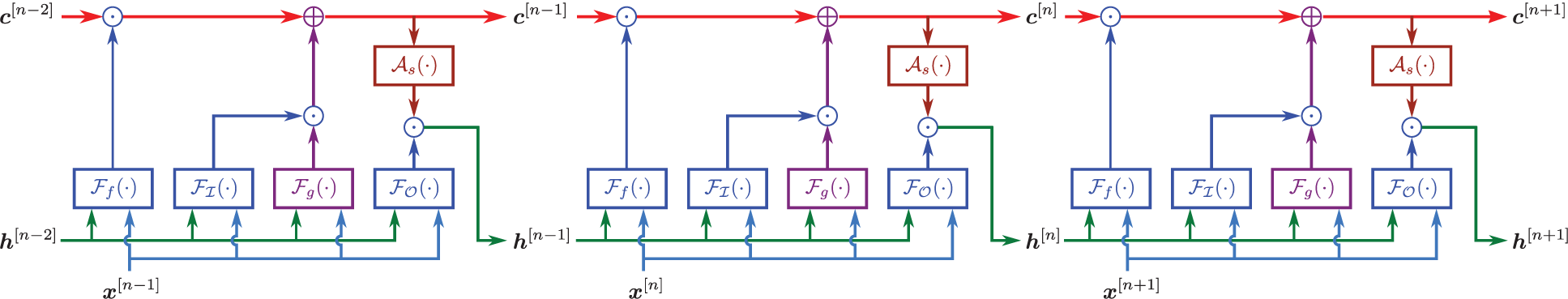

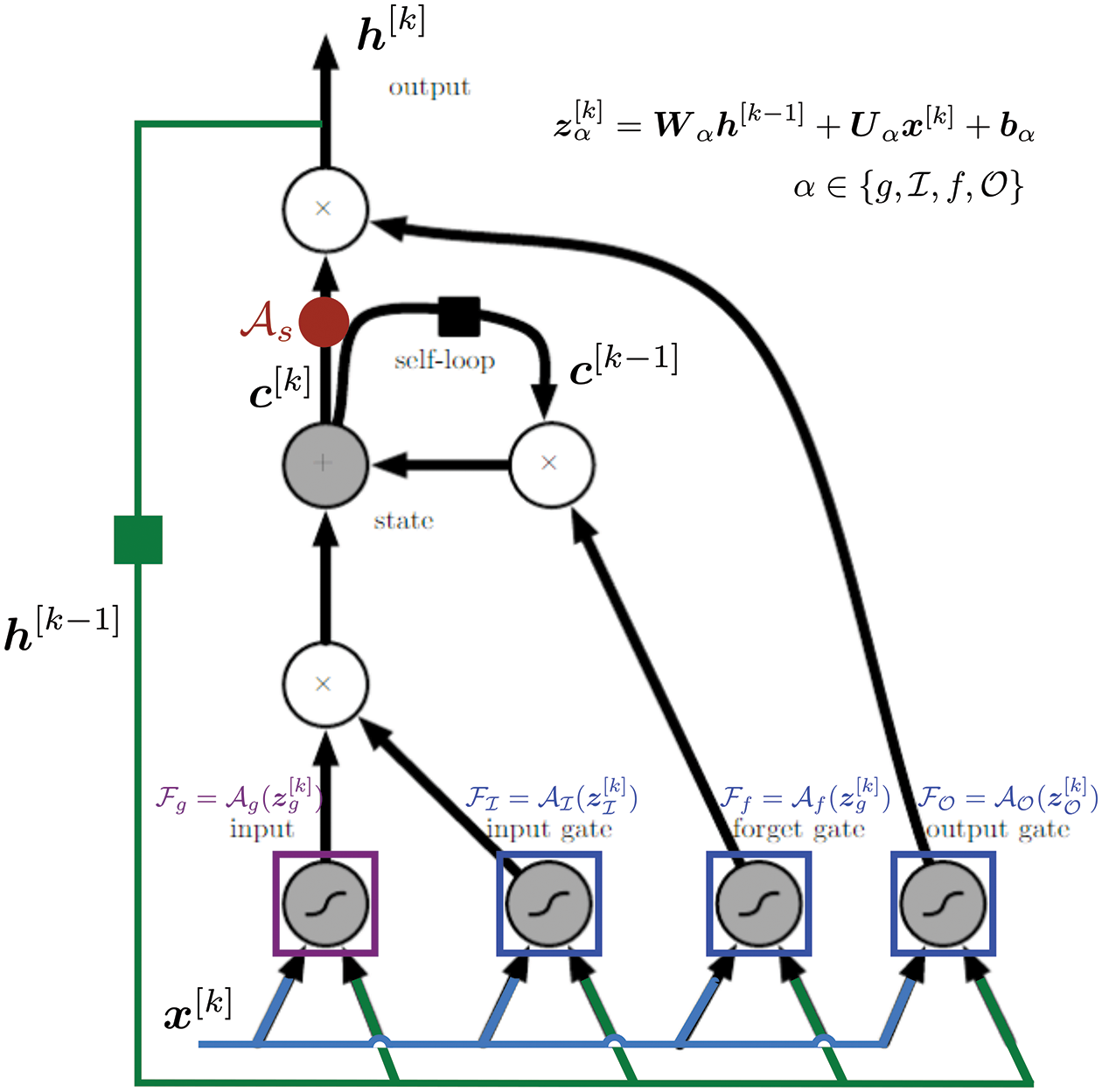

7.2 Long Short-Term Memory (LSTM) unit

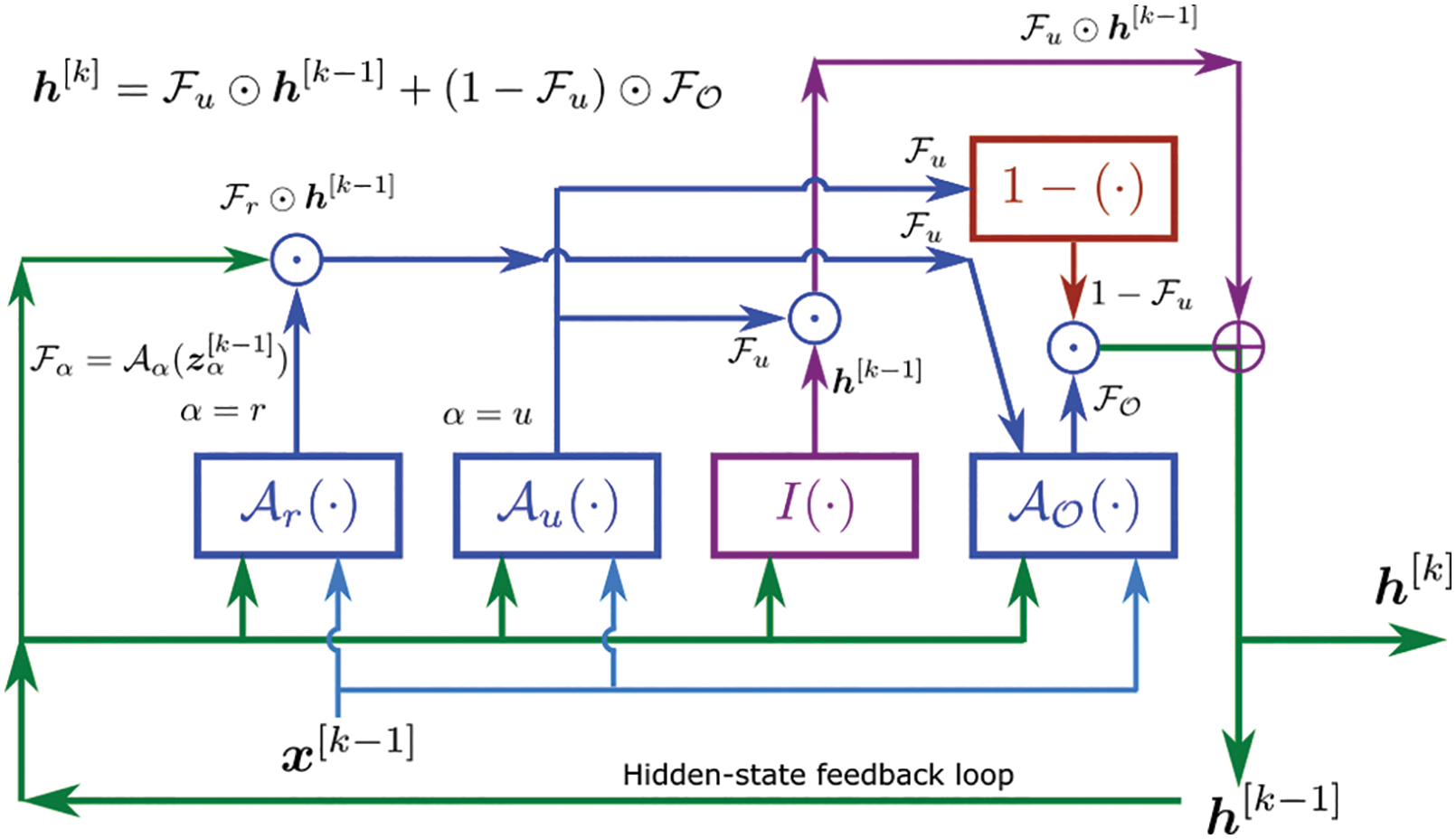

7.3 Gated Recurrent Unit (GRU)

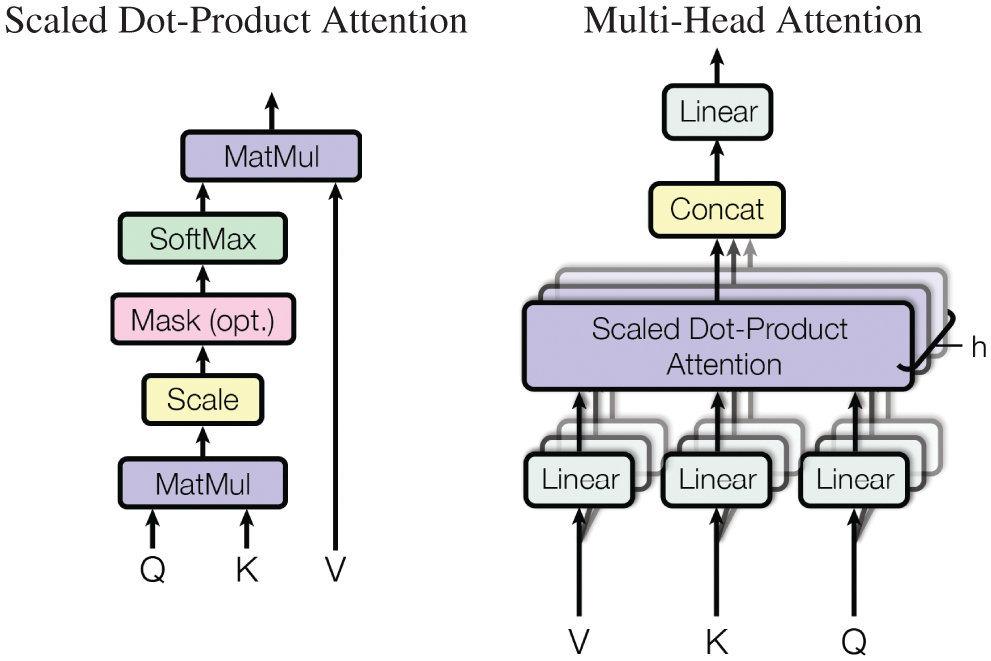

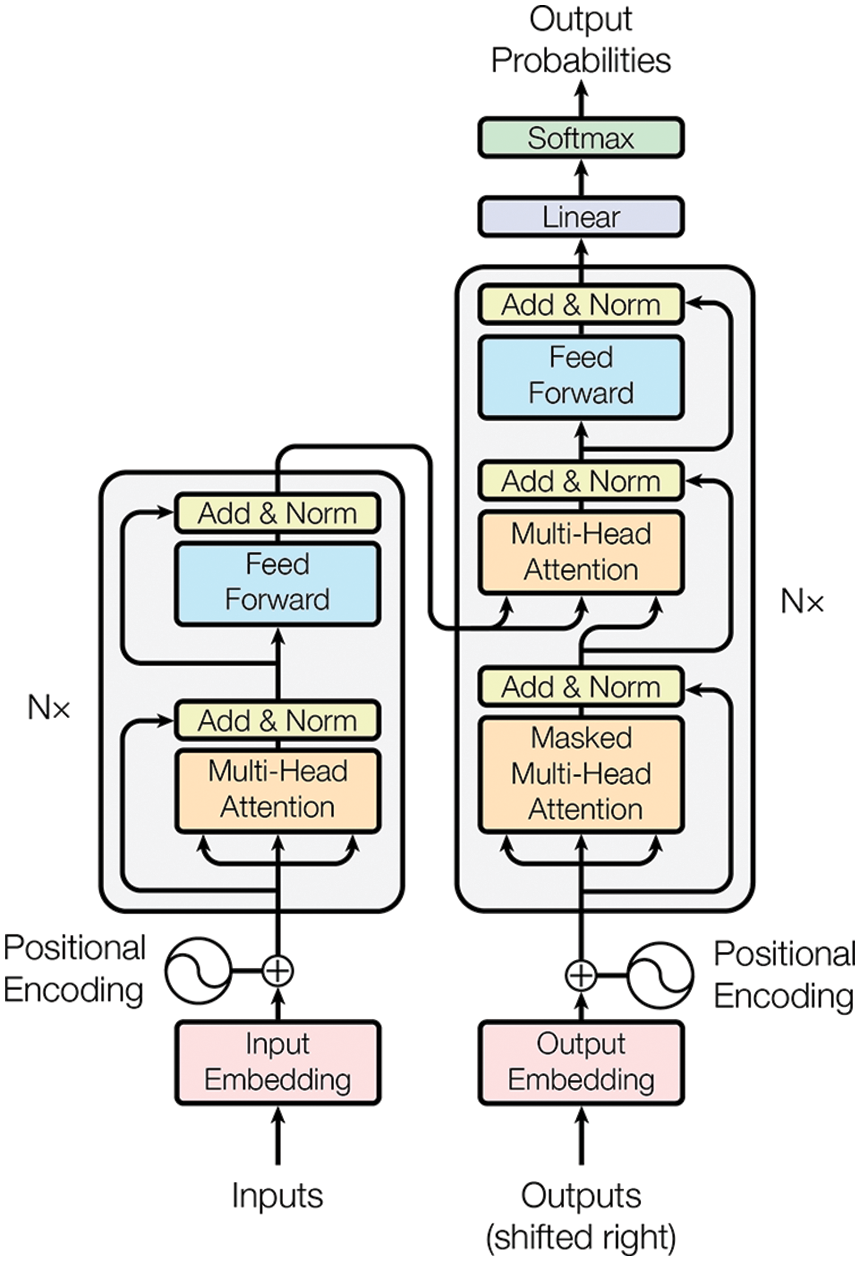

7.4 Sequence modeling, attention mechanisms, Transformer

7.4.1 Sequence modeling, encoder-decoder

7.4.3 Transformer architecture

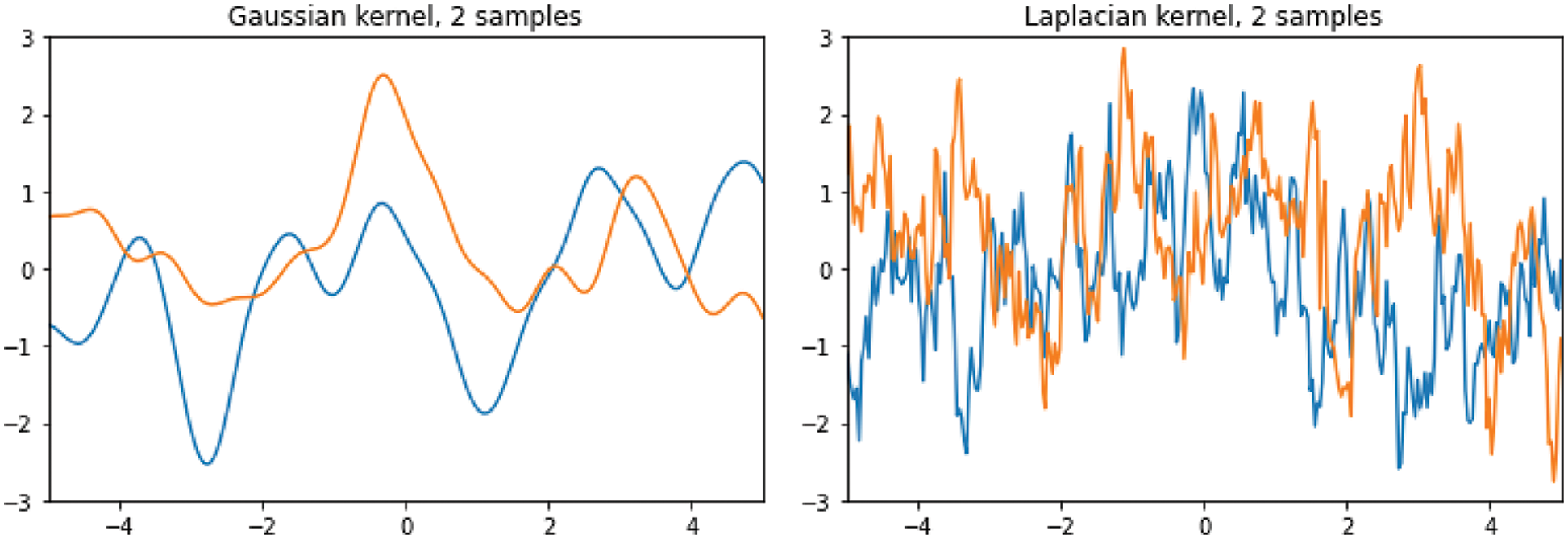

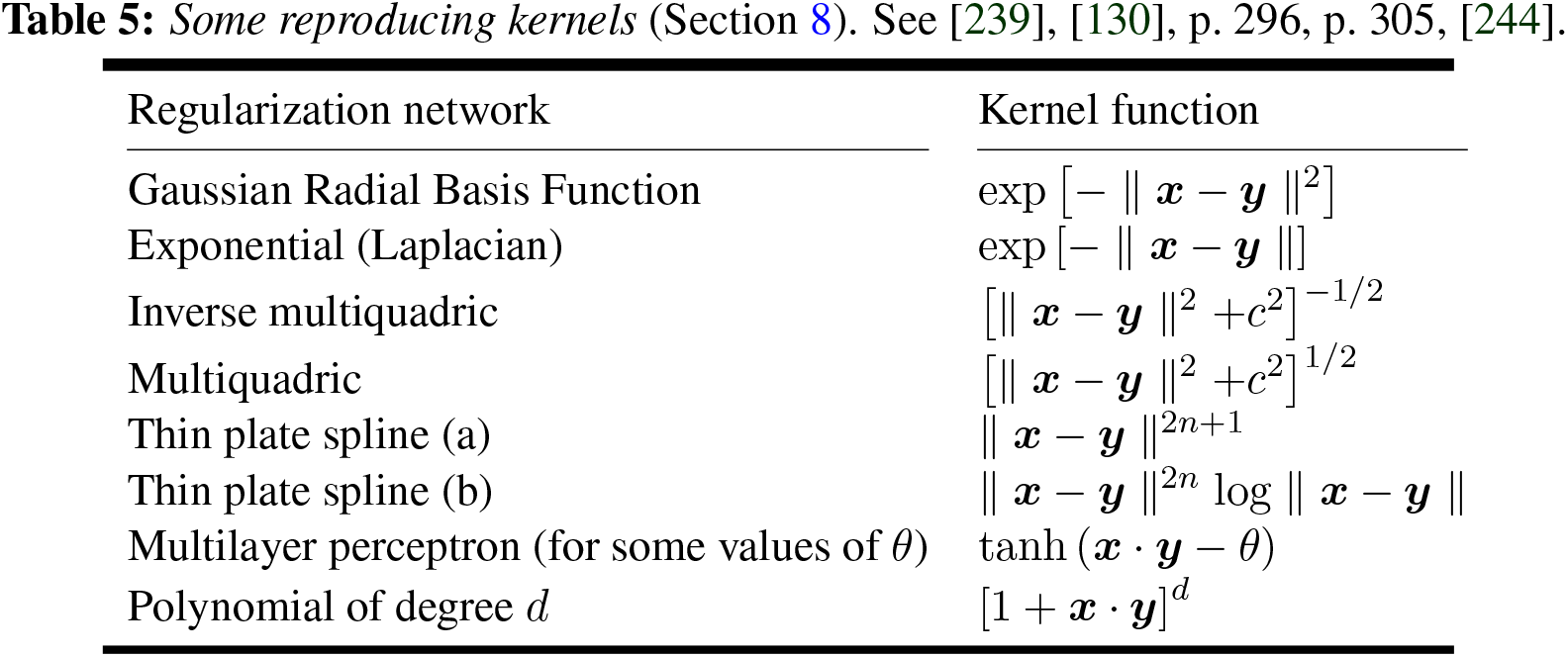

8 Kernel machines (methods, learning)

8.1 Reproducing kernel: General theory

8.2 Exponential functions as reproducing kernels

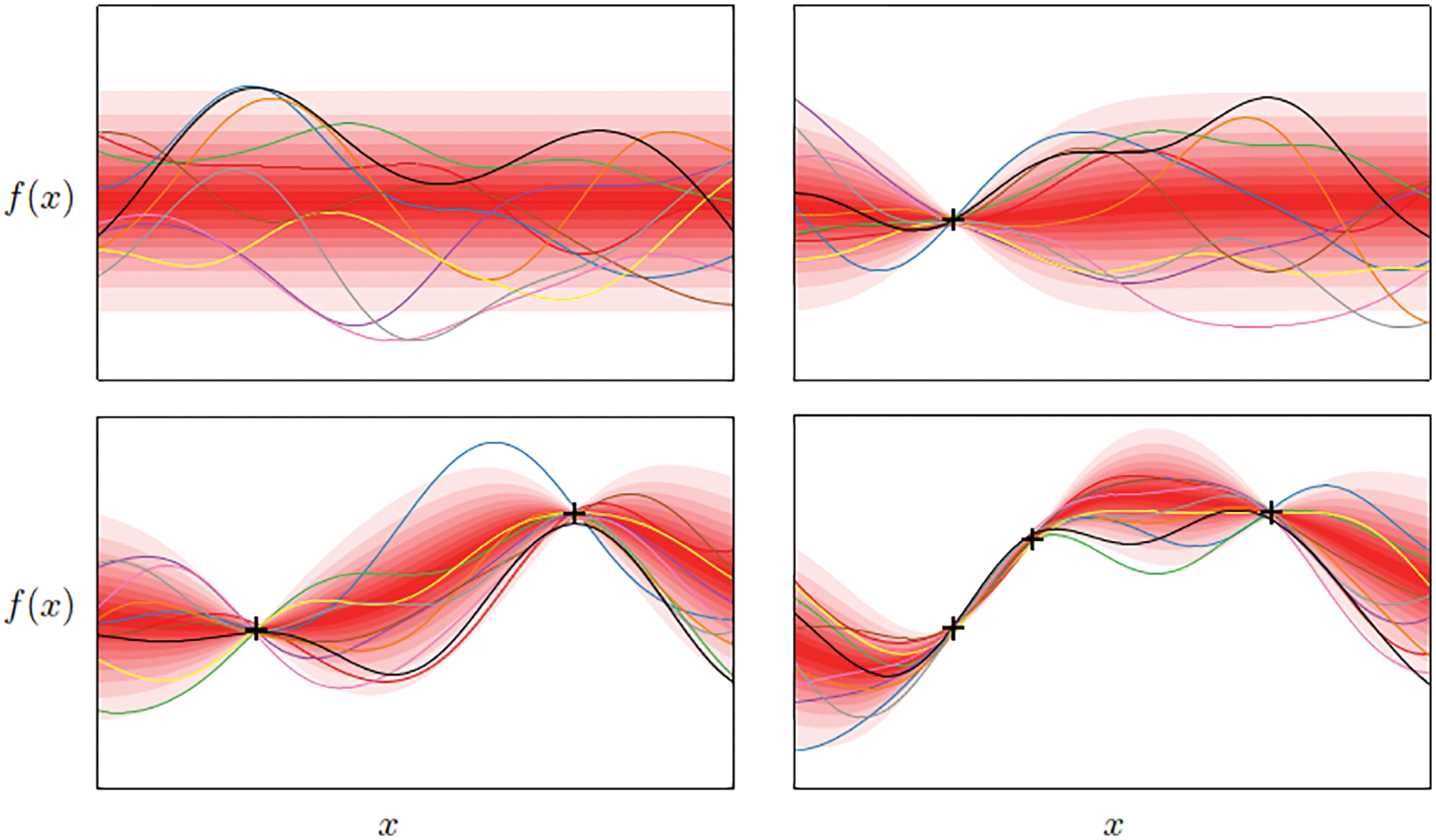

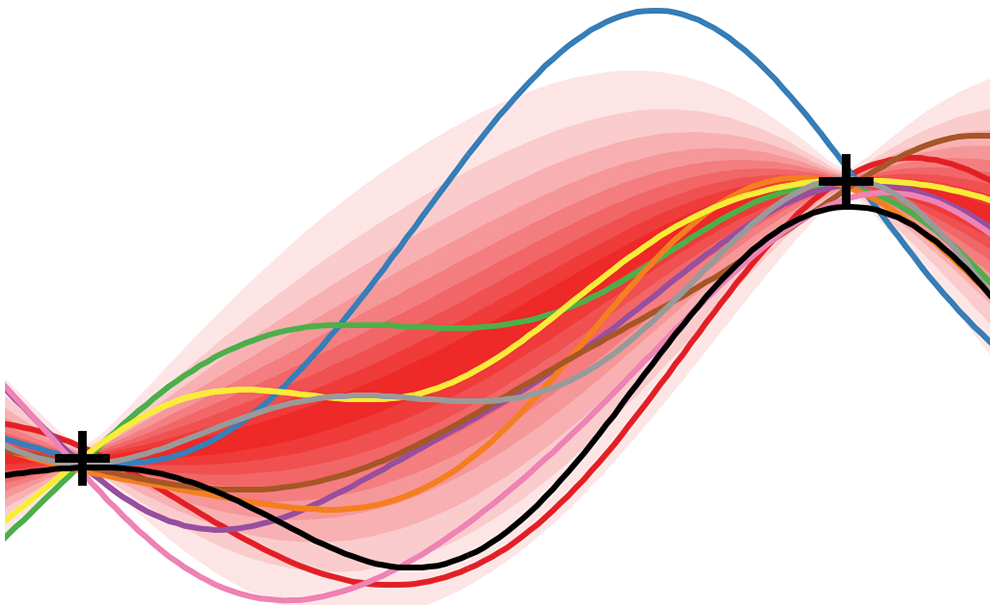

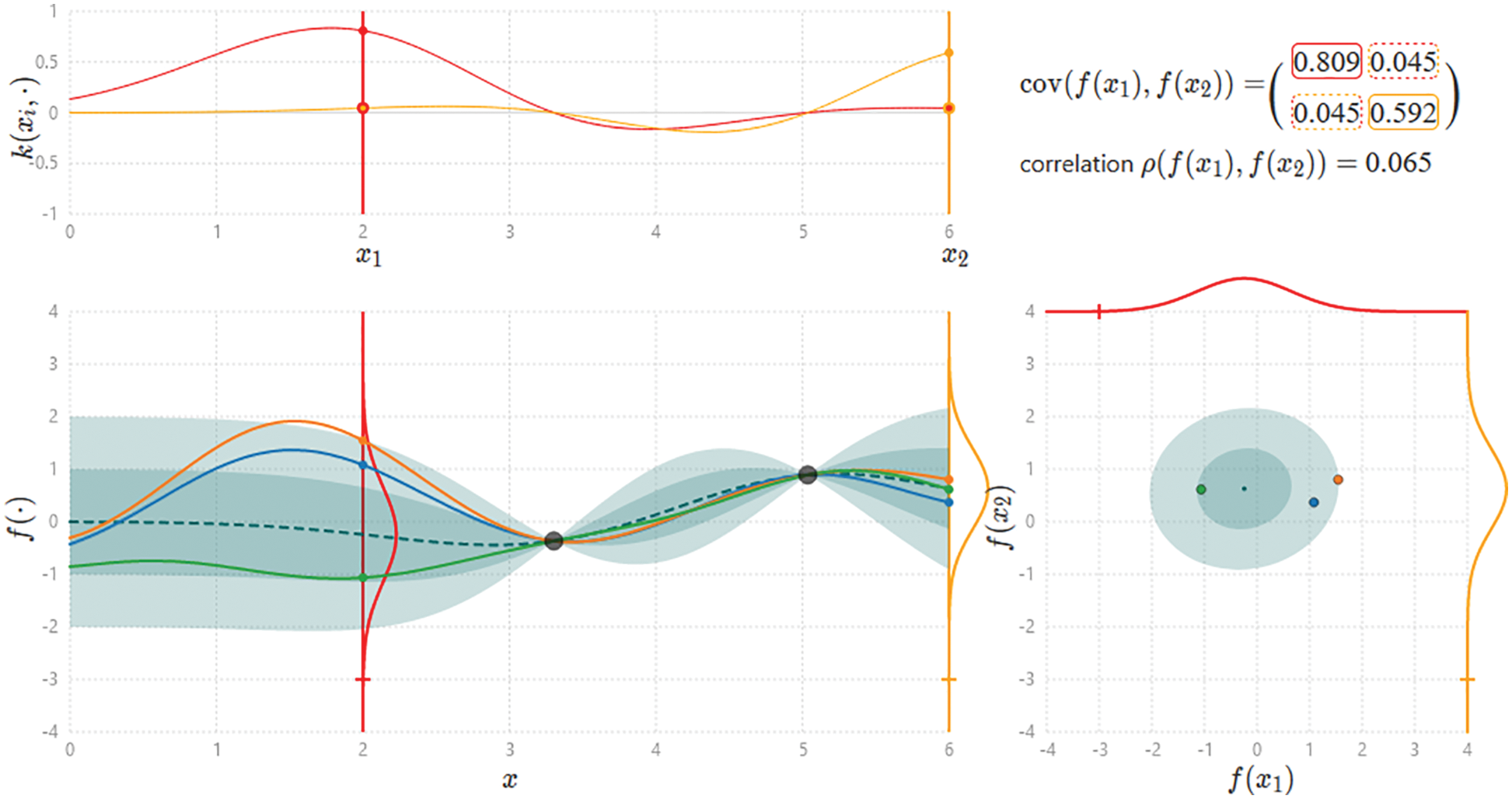

8.3.1 Gaussian-process priors and sampling

8.3.2 Gaussian-process posteriors and sampling

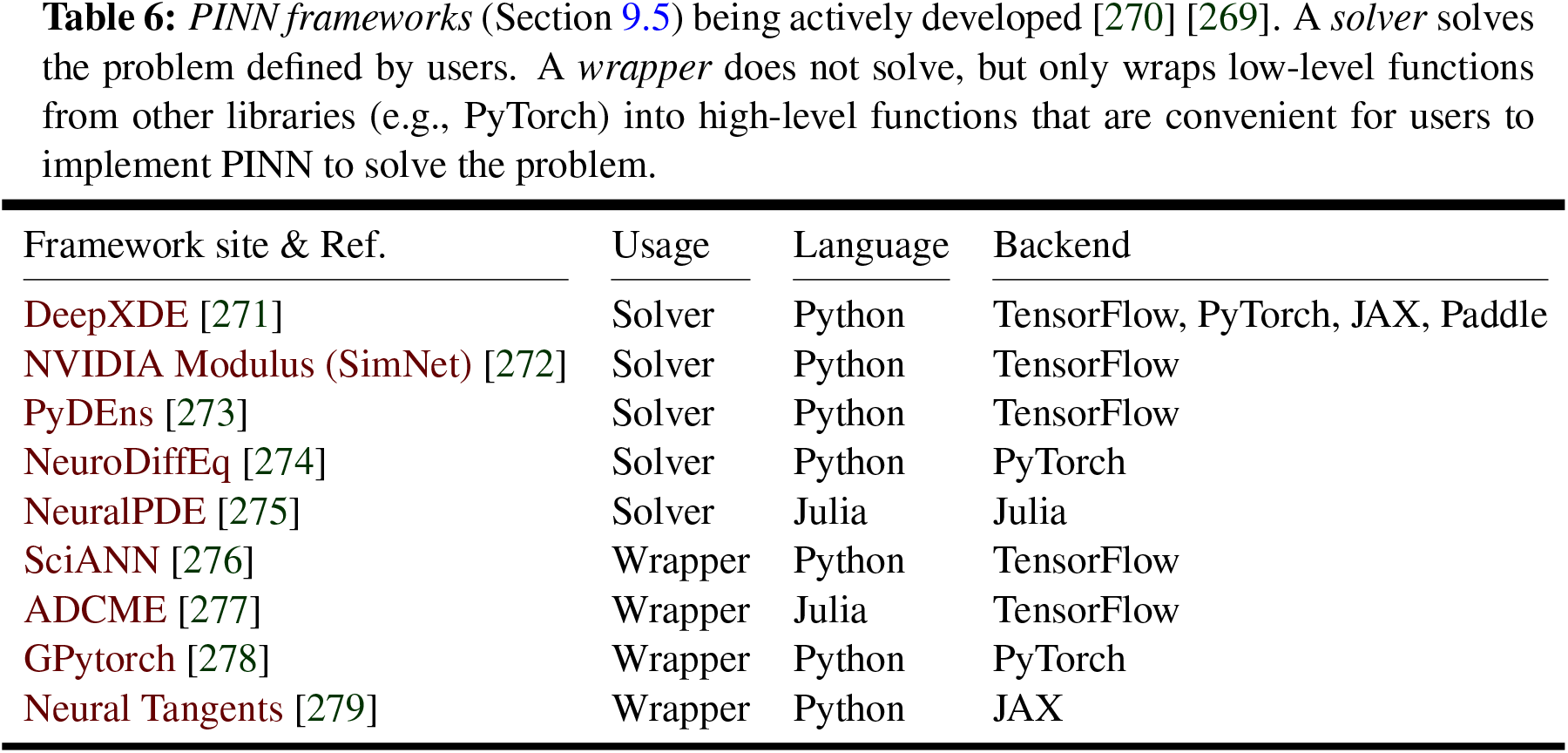

9 Deep-learning libraries, frameworks, platforms

9.4 Leveraging DL-frameworks for scientific computing

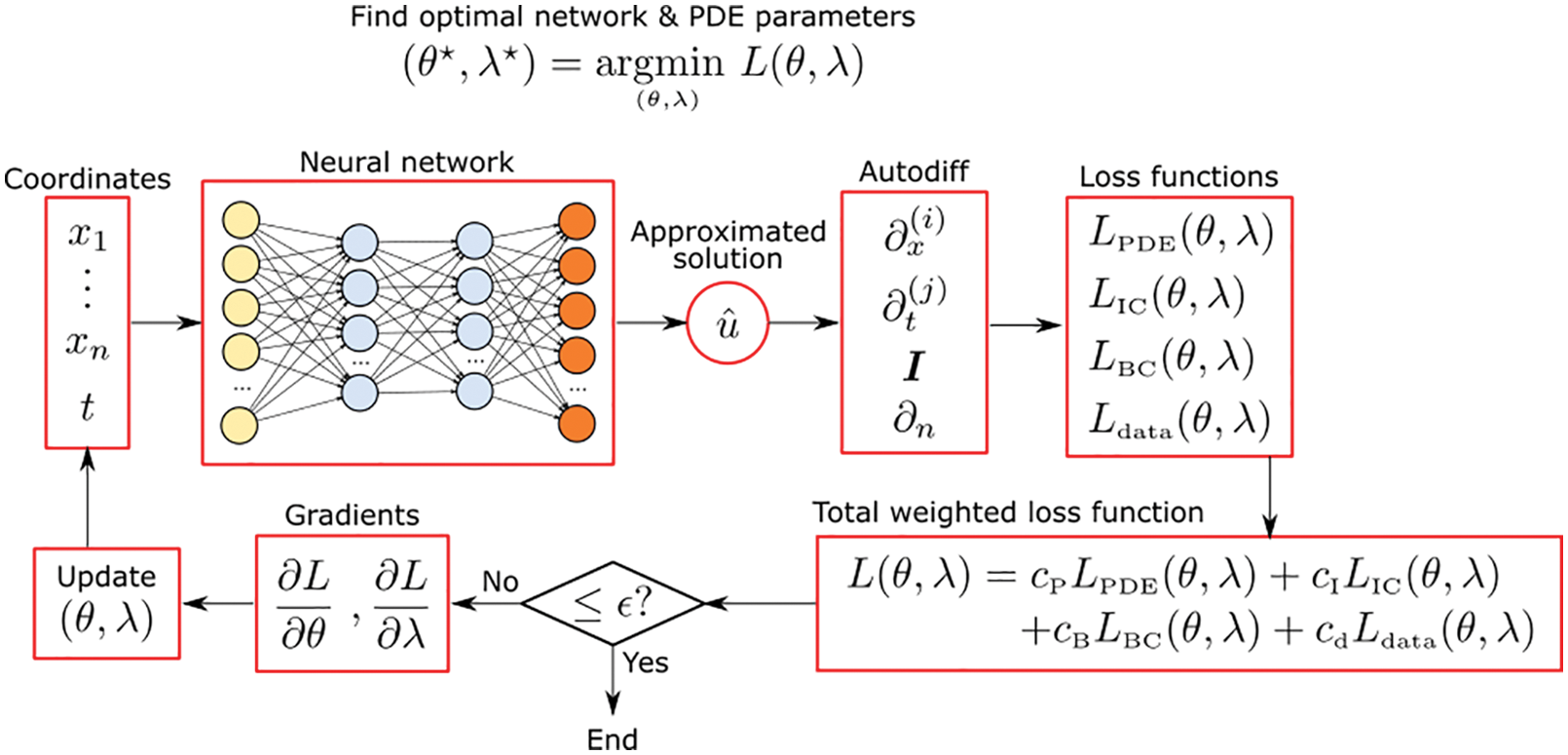

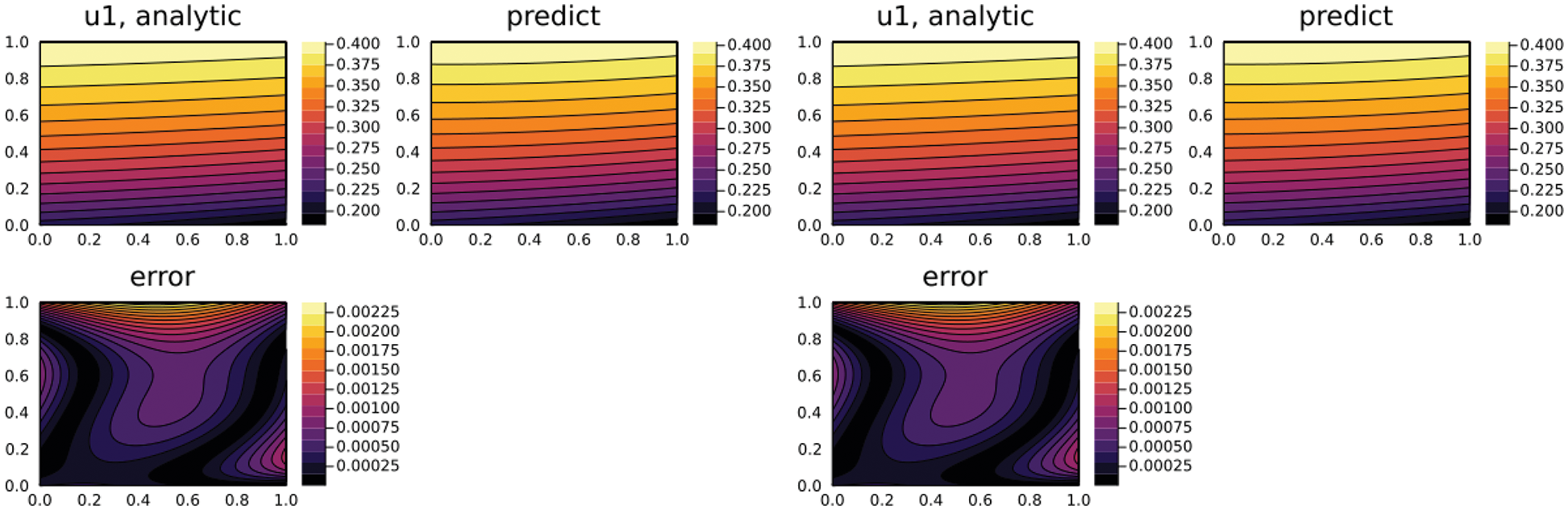

9.5 Physics-Informed Neural Network (PINN) frameworks

10 Application 1: Enhanced numerical quadrature for finite elements

10.1 Two methods of quadrature, 1-D example

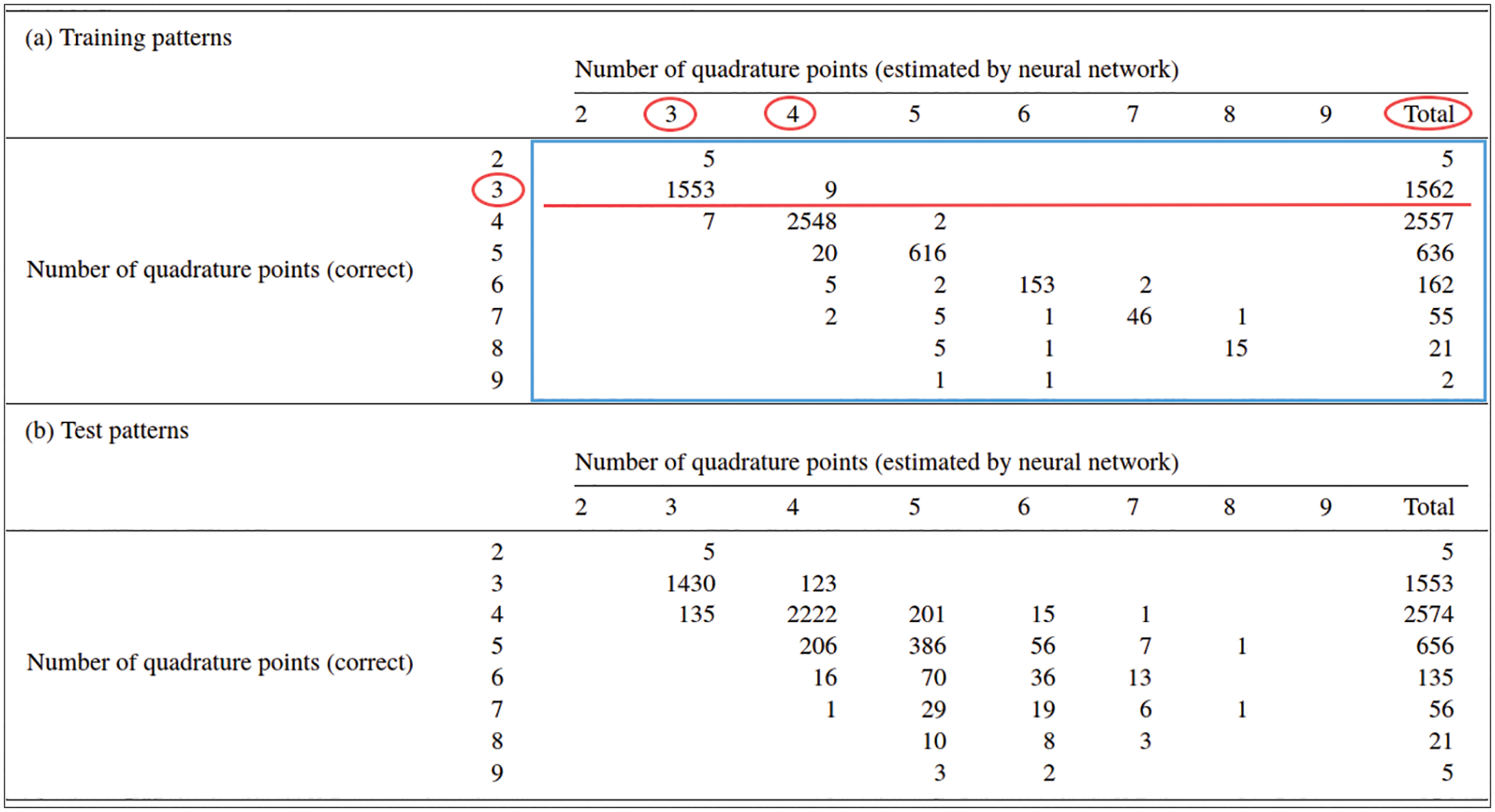

10.2 Application 1.1: Method 1, Optimal number of integration points

10.2.1 Method 1, feasibility study

10.2.2 Method 1, training phase

10.2.3 Method 1, application phase

10.3 Application 1.2: Method 2, optimal quadrature weights

10.3.1 Method 2, feasibility study

10.3.2 Method 2, training phase

10.3.3 Method 2, application phase

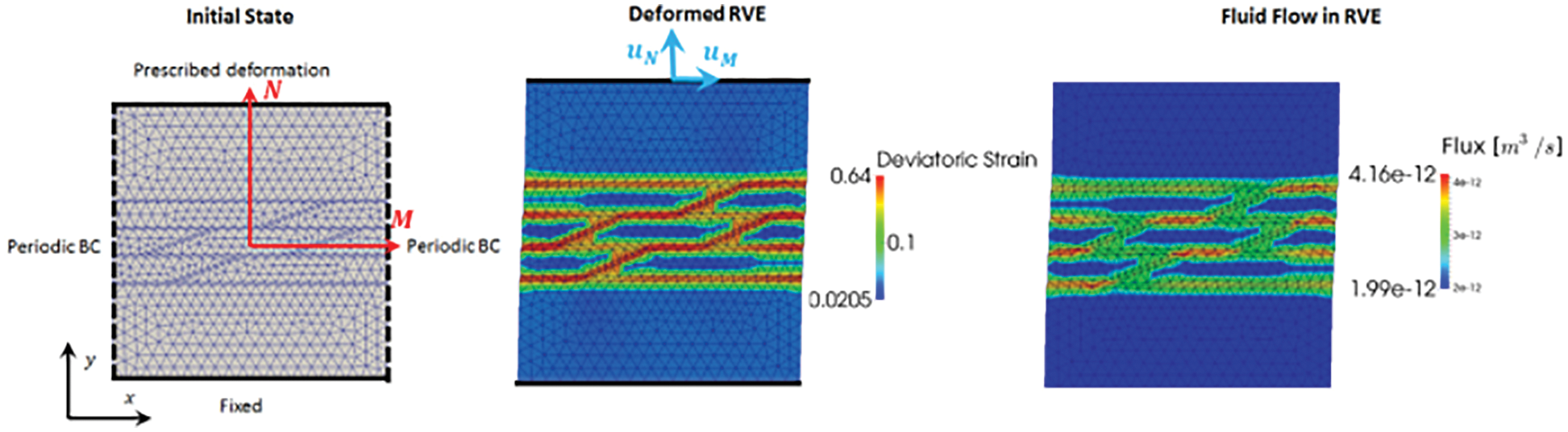

11 Application 2: Solid mechanics, multi-scale, multi-physics

11.2 Data-driven constitutive modeling, deep learning

11.3 Multiscale multiphysics problem: Porous media

11.3.1 Recurrent neural networks for scale bridging

11.3.2 Microstructure and principal direction data

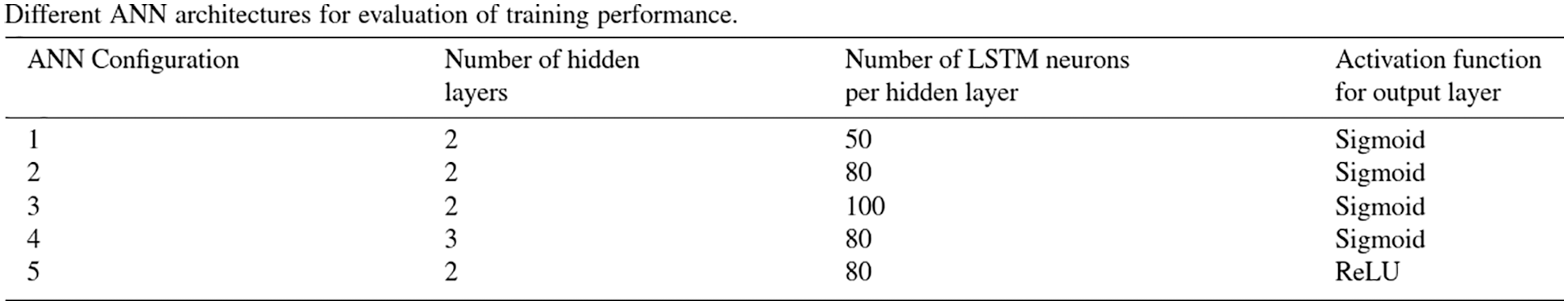

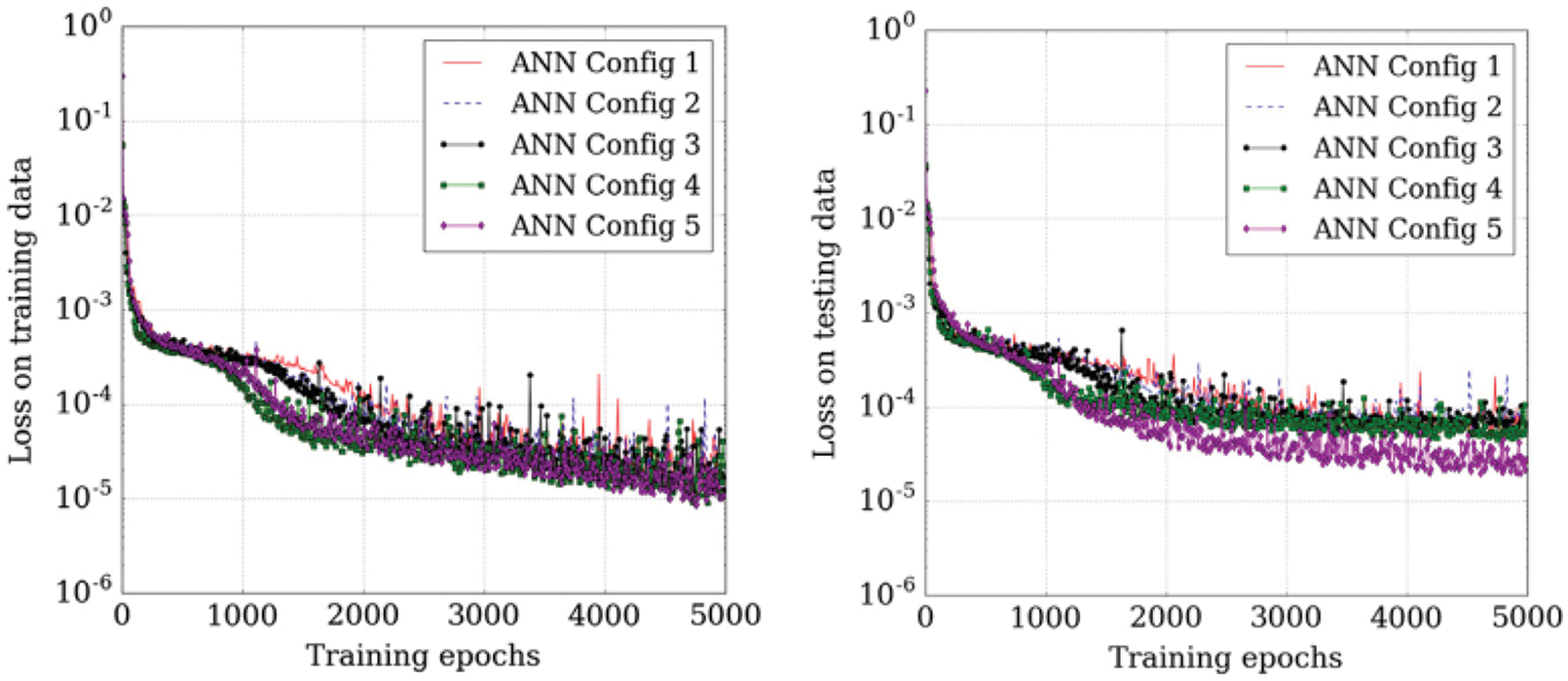

11.3.3 Optimal RNN-LSTM architecture

11.3.4 Dual-porosity dual-permeability governing equations

11.3.5 Embedded strong discontinuities, traction-separation law

12 Application 3: Fluids, turbulence, reduced-order models

12.1 Proper orthogonal decomposition (POD)

12.2 POD with LSTM-Reduced-Order-Model

12.2.1 Goal for using neural network

12.2.2 Data generation, training and testing procedure

12.3 Memory effects of POD coefficients on LSTM models

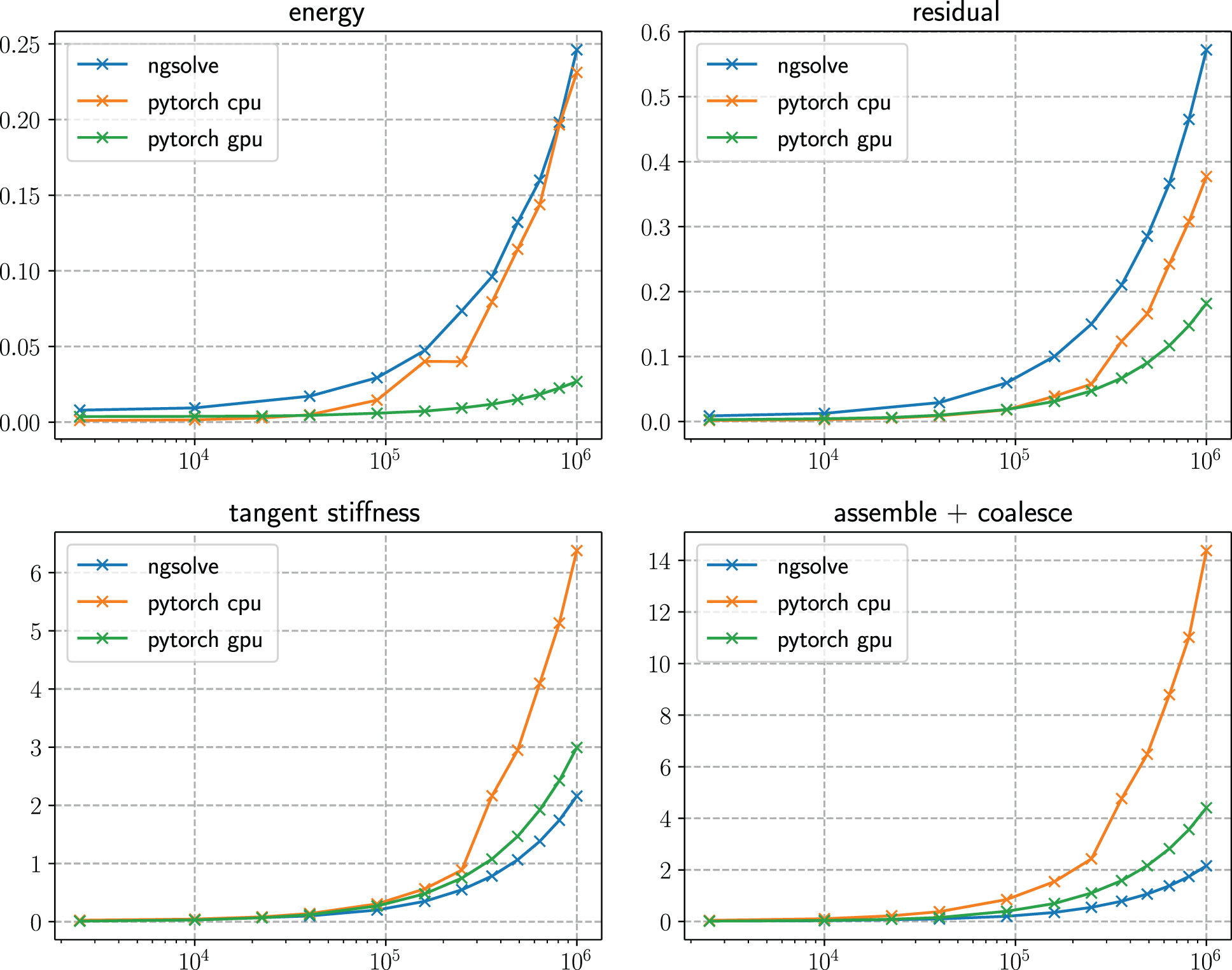

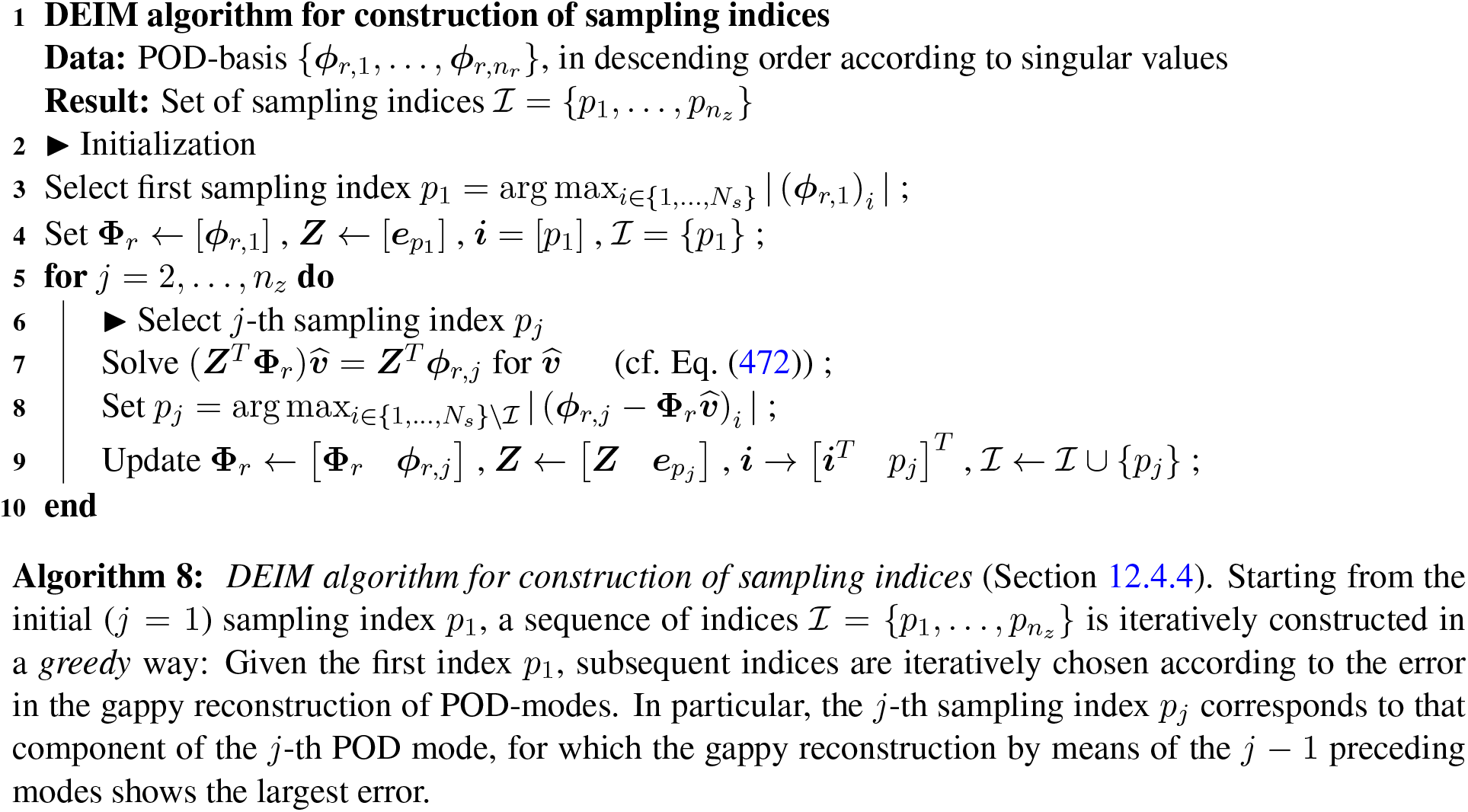

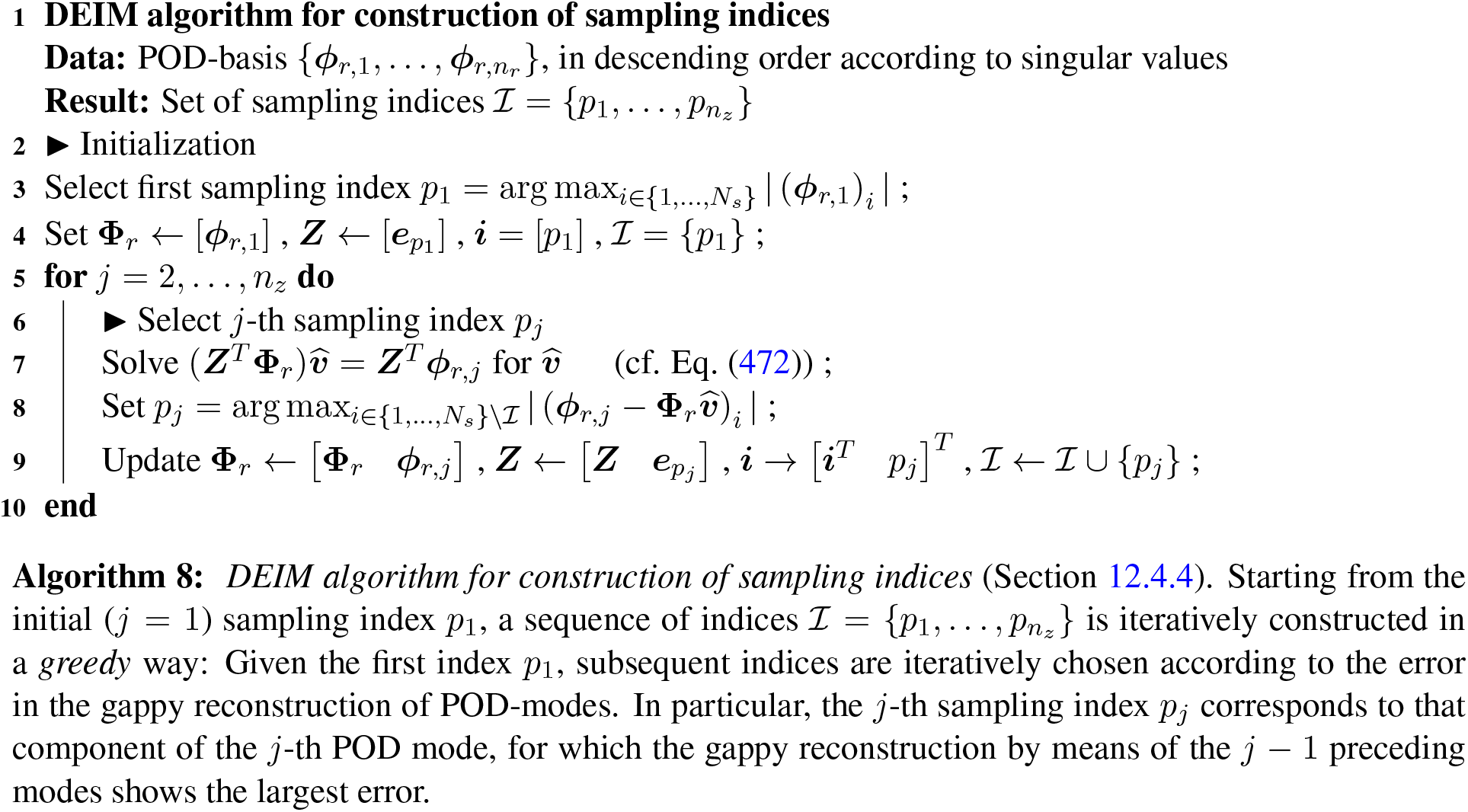

12.4 Reduced order models and hyper-reduction

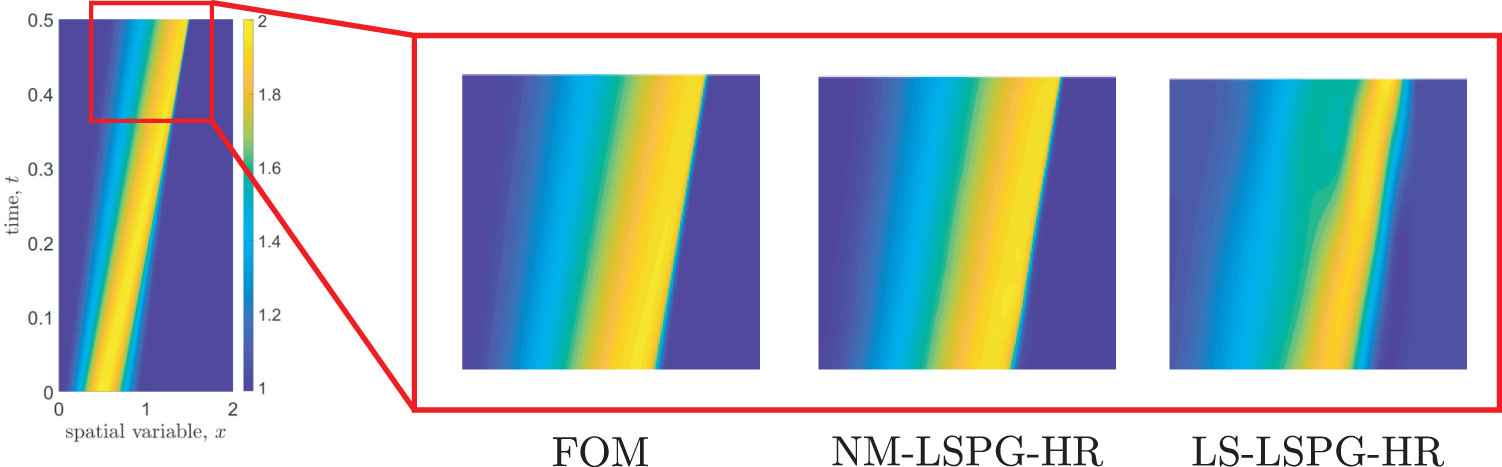

12.4.1 Motivating example: 1D Burger’s equation

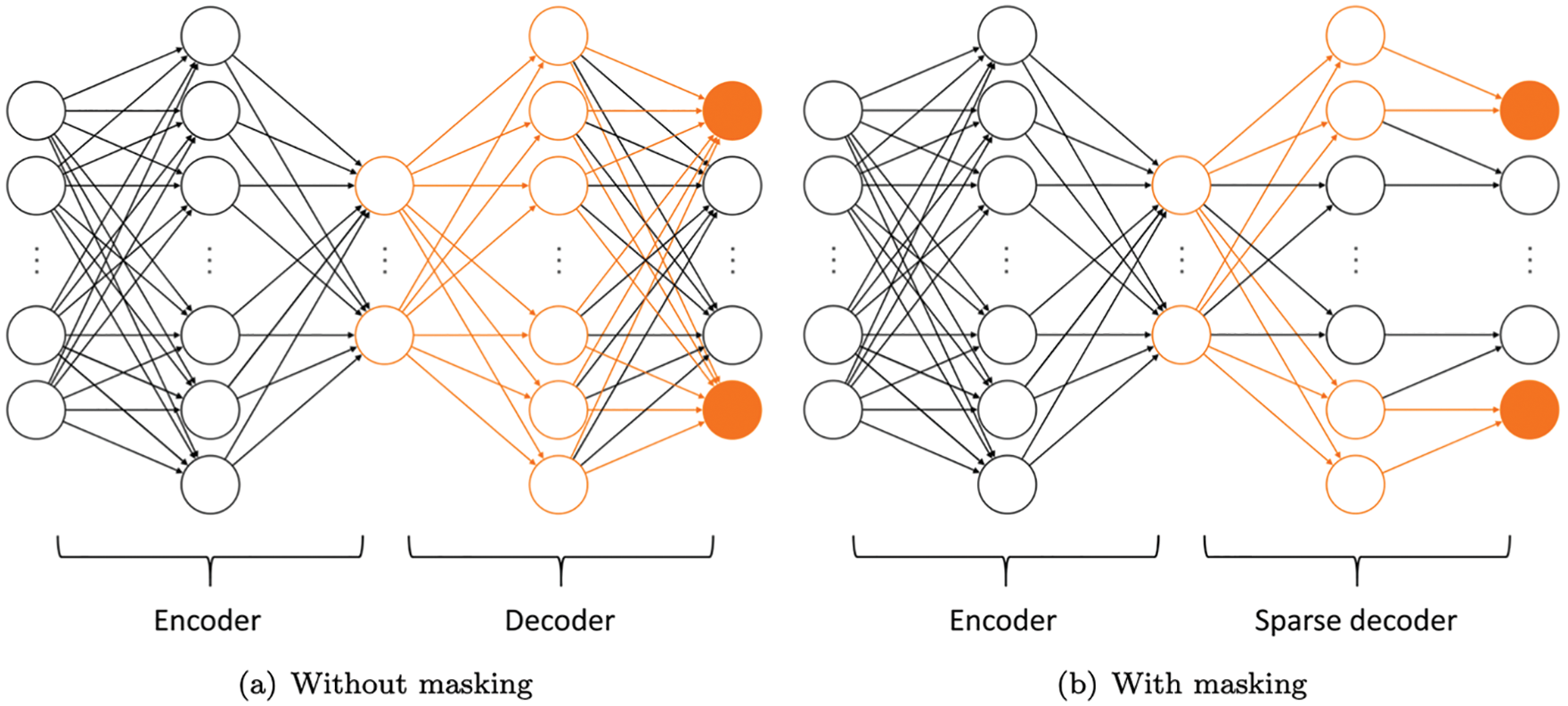

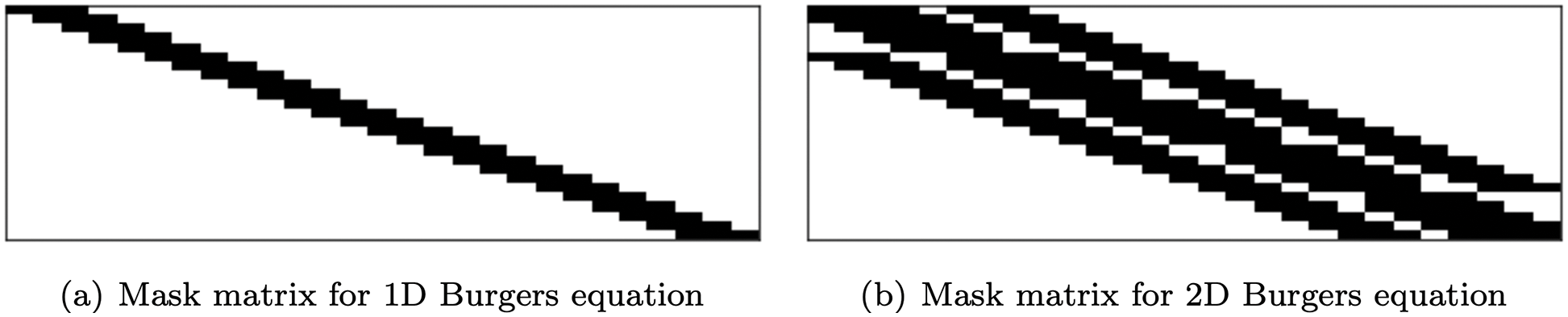

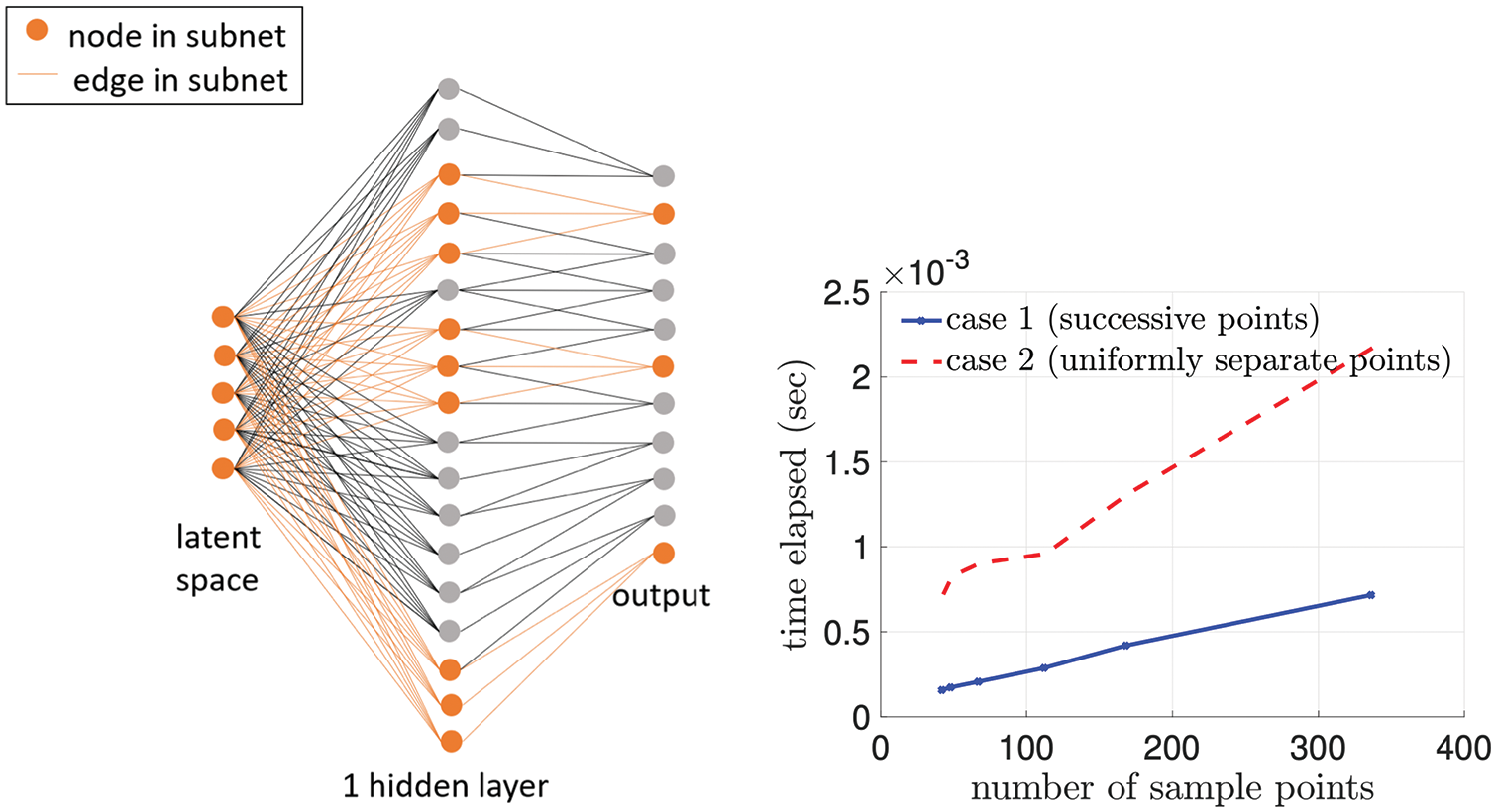

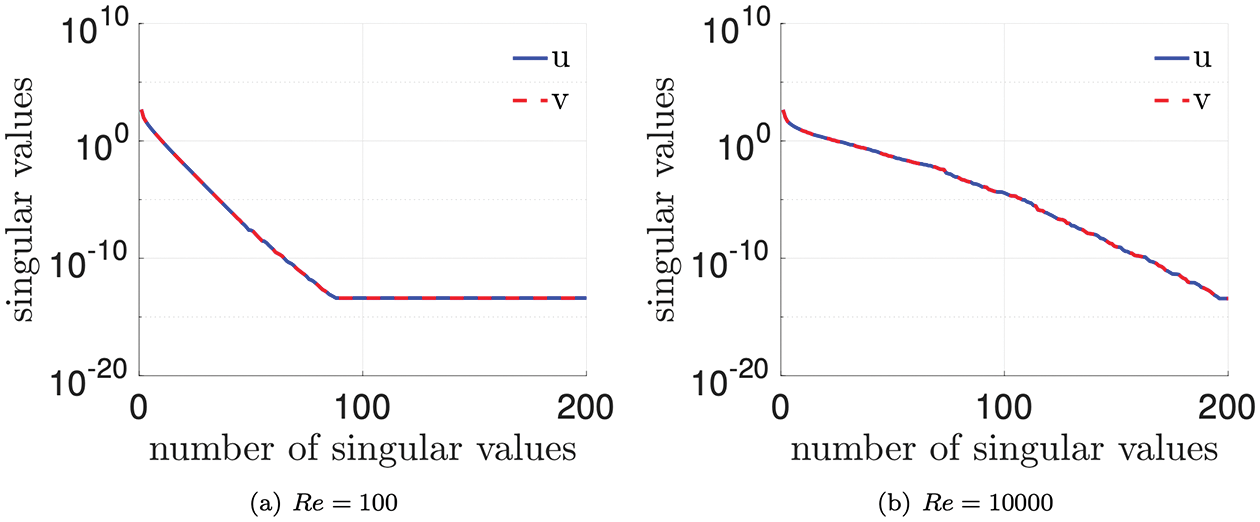

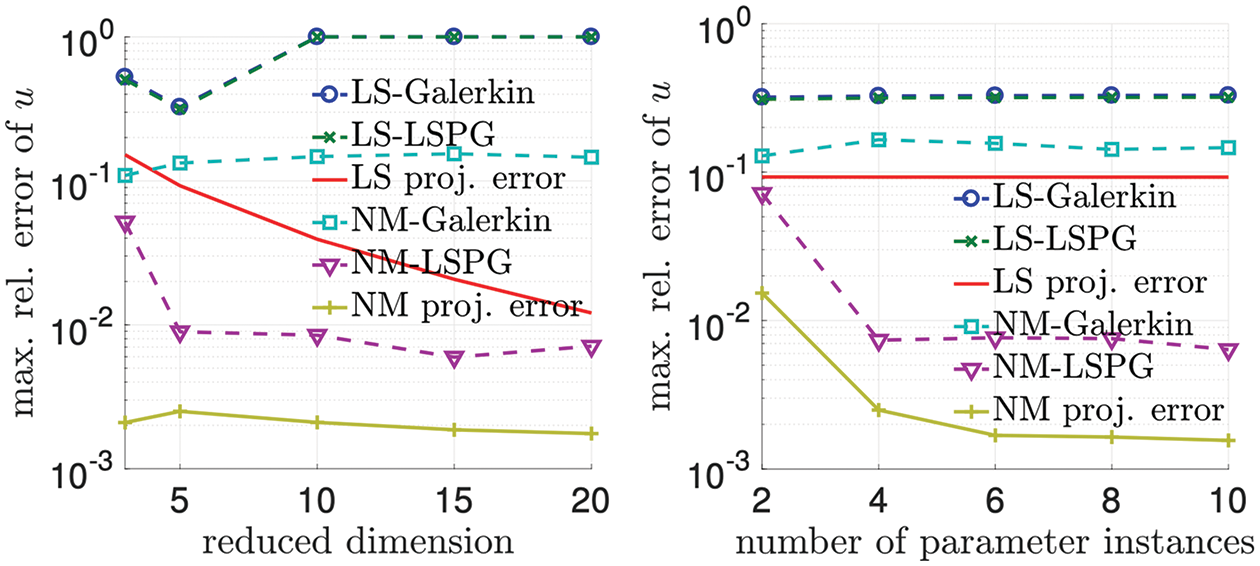

12.4.2 Nonlinear manifold-based (hyper-)reduction

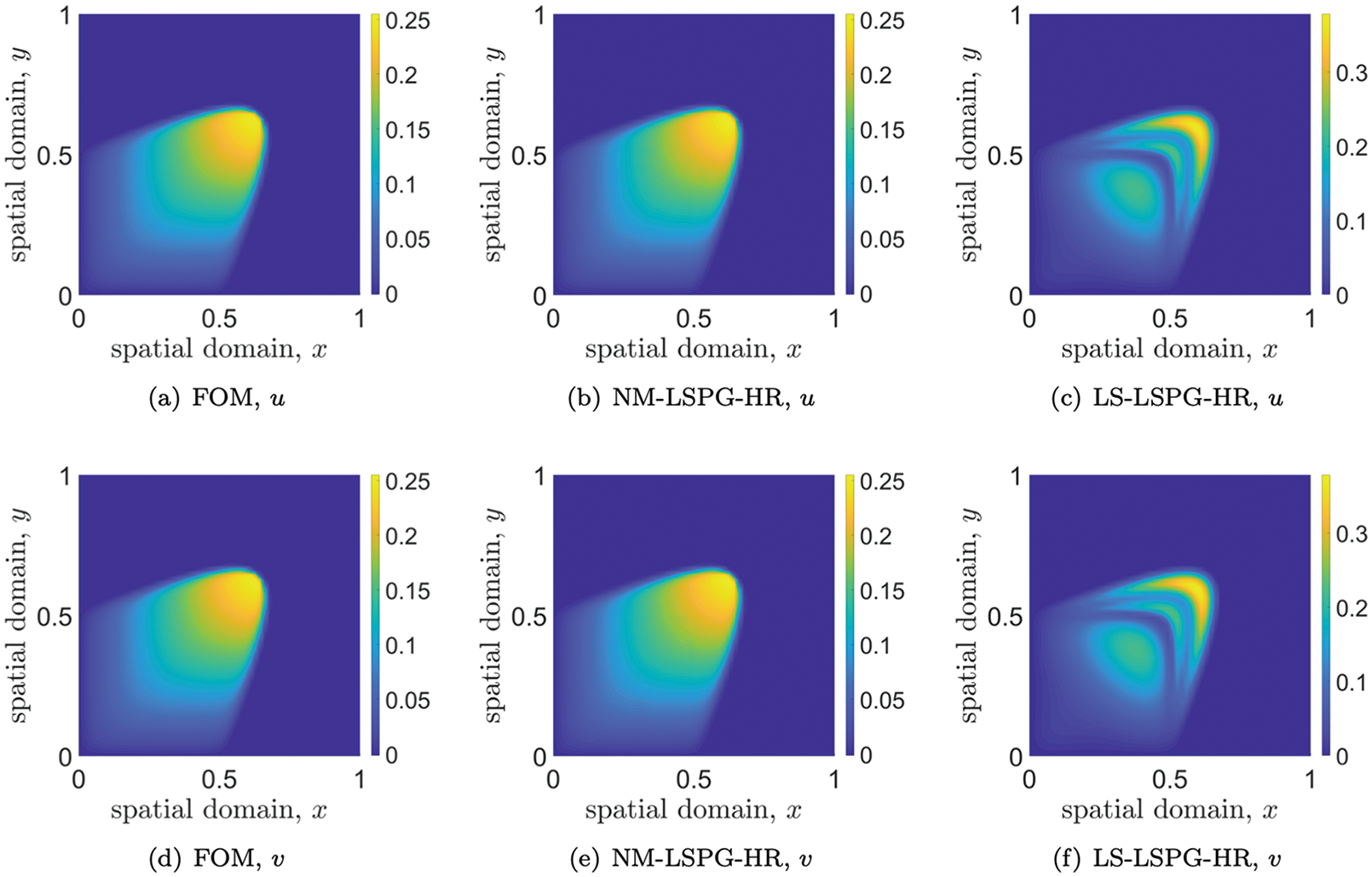

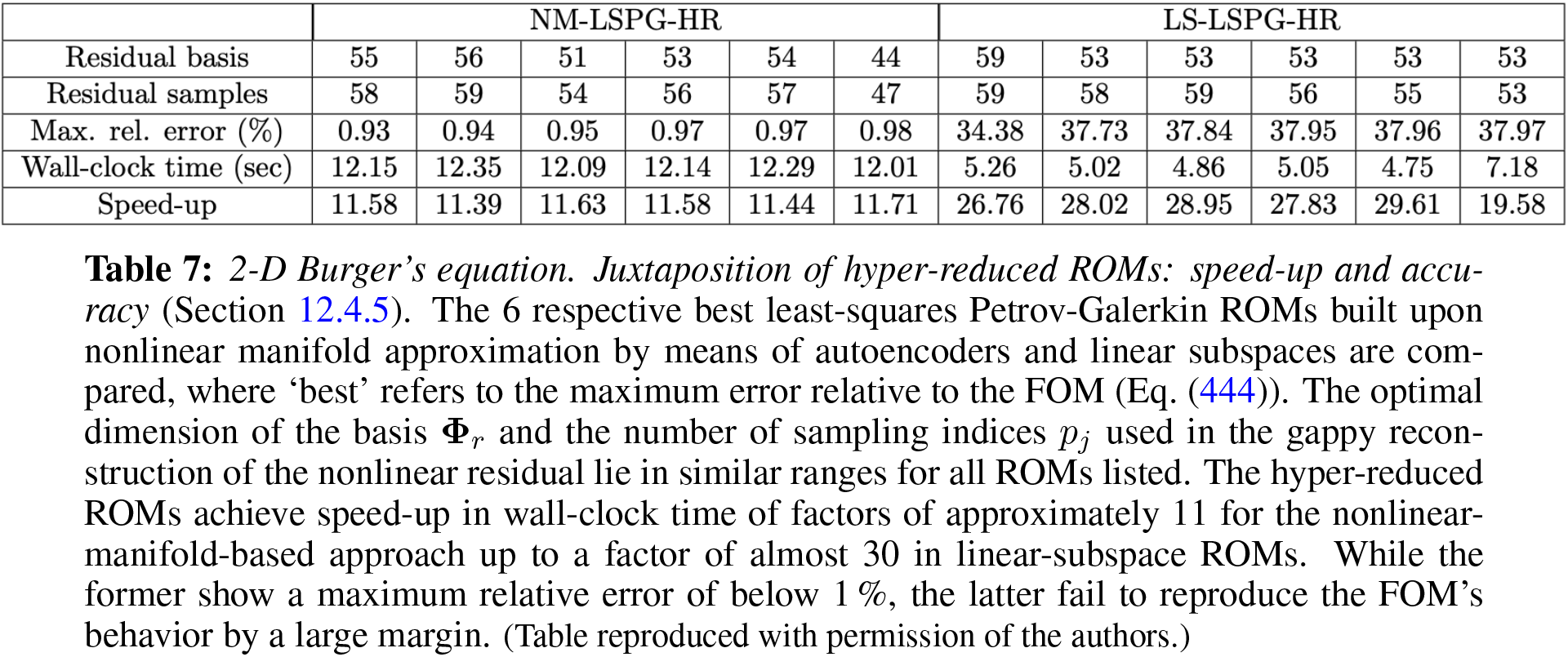

12.4.5 Numerical example: 2D Burger’s equation

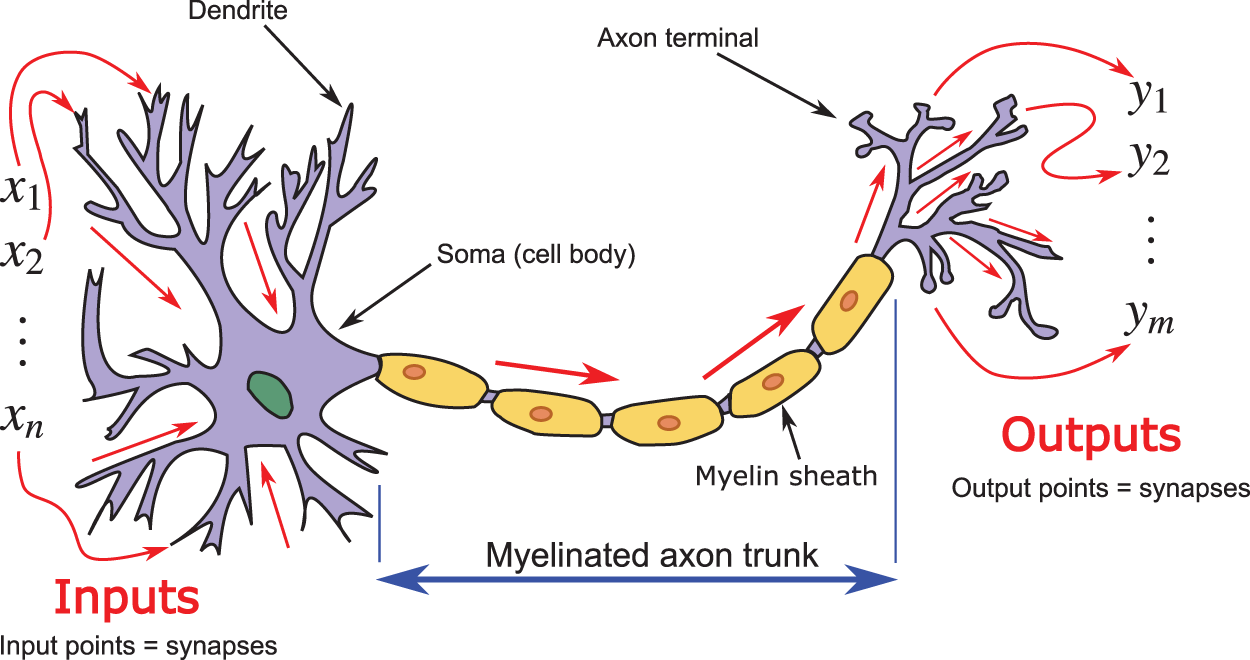

13.1 Early inspiration from biological neurons

13.2 Spatial / temporal combination of inputs, weights, biases

13.2.1 Static, comparing modern to classic literature

13.2.2 Dynamic, time dependence, Volterra series

13.3.2 Rectified linear unit (ReLU)

13.4 Back-propagation, automatic differentiation

13.4.2 Automatic differentiation

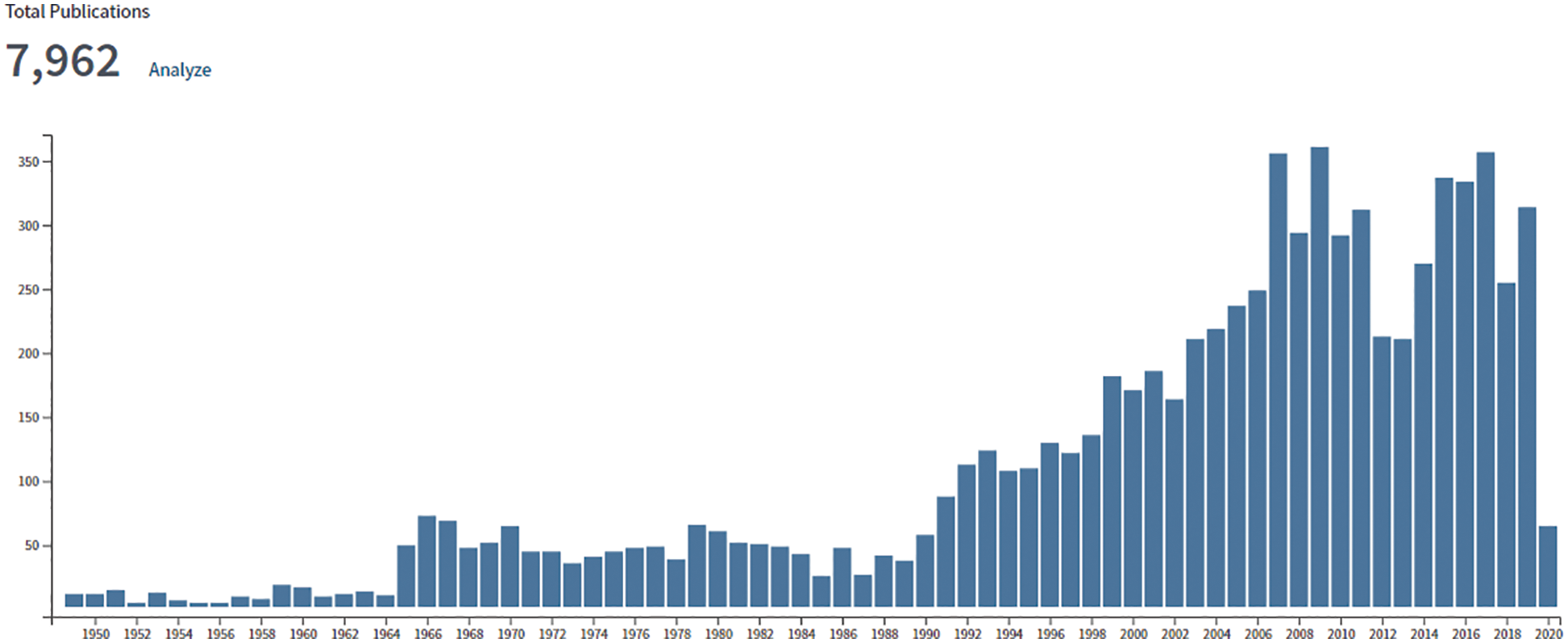

13.5 Resurgence of AI and current state

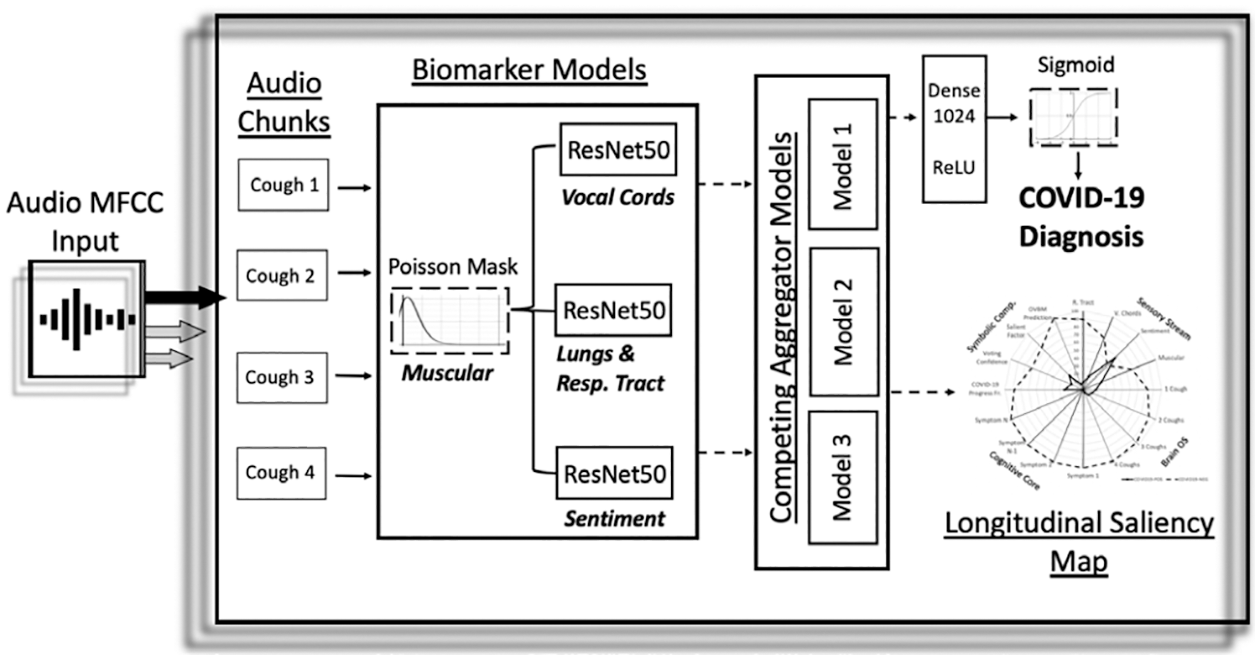

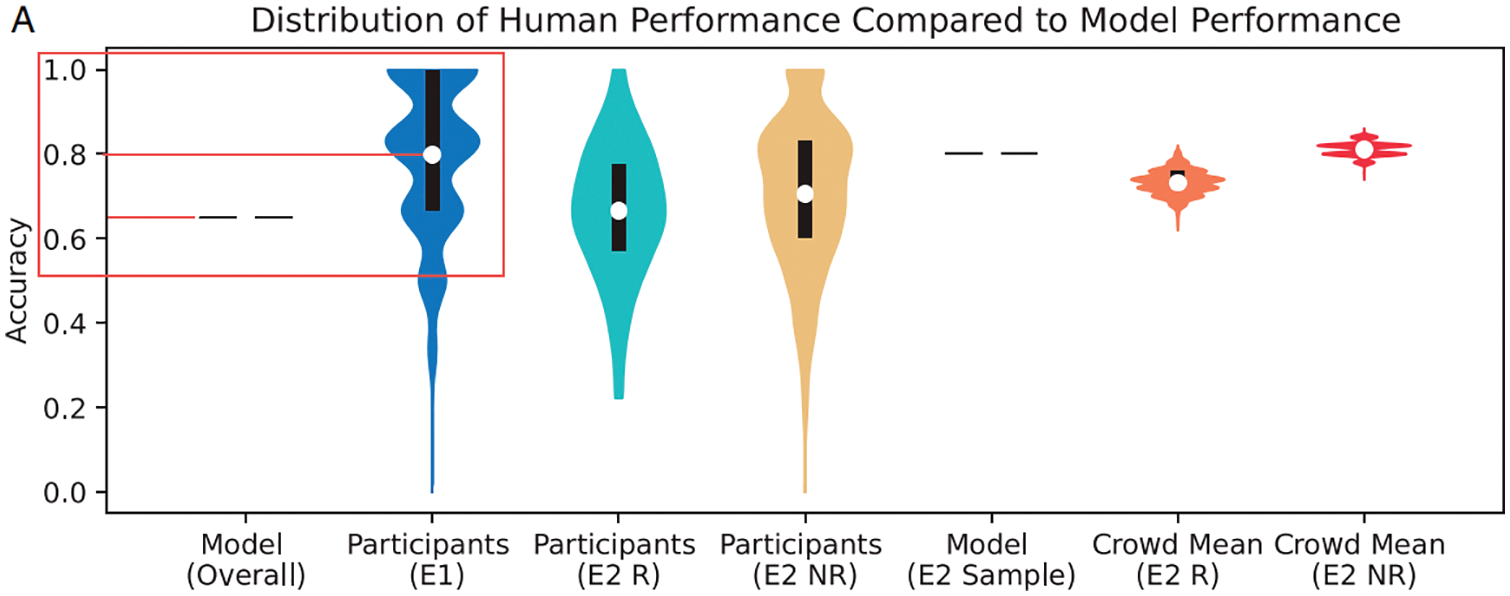

13.5.1 COVID-19 machine-learning diagnostics and prognostics

13.5.2 Additional applications of deep learning

14 Closure: Limitations and danger of AI

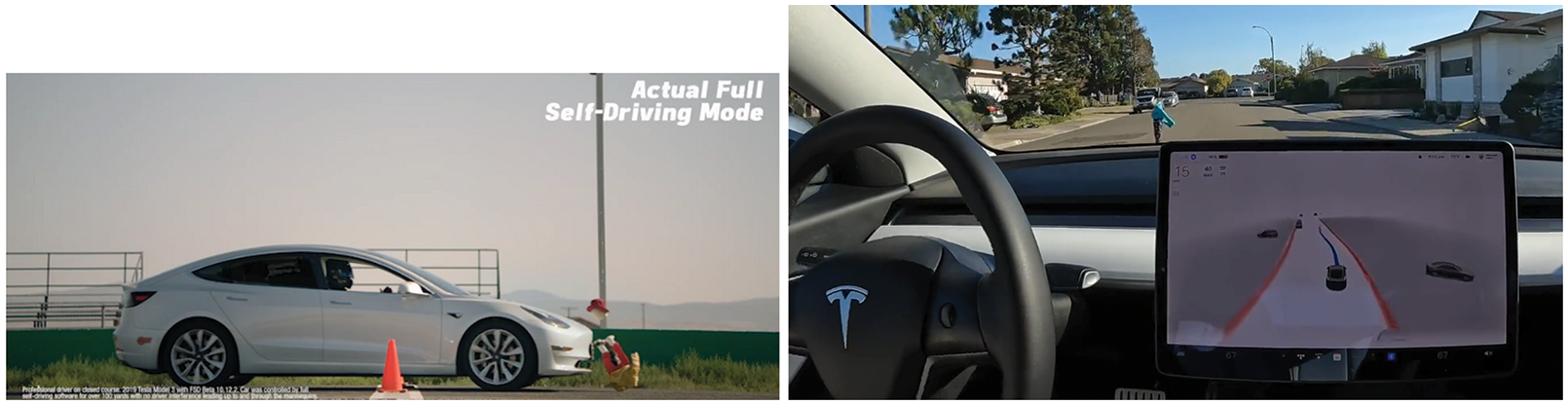

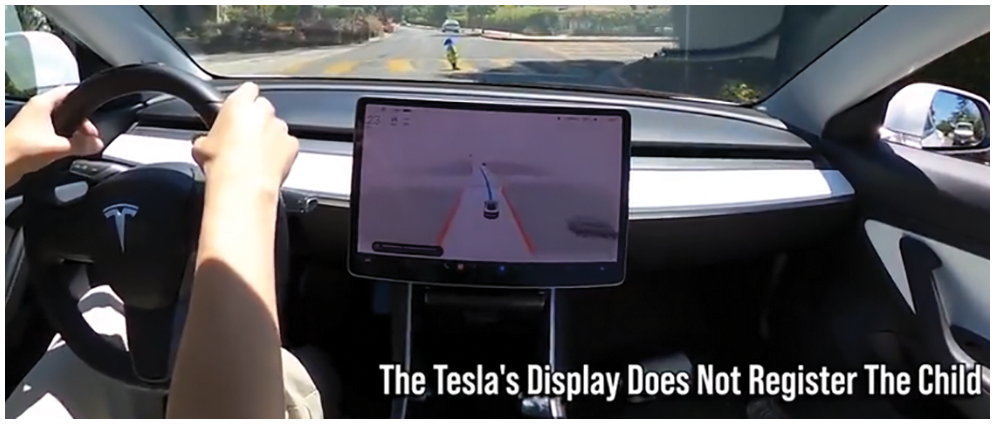

14.1 Driverless cars, crewless ships, “not any time soon”

14.2 Lack of understanding on why deep learning worked

14.4 Threat to democracy and privacy

14.4.2 Facial recognition nightmare

14.5 AI cannot tackle controversial human problems

14.6 So what’s new? Learning to think like babies

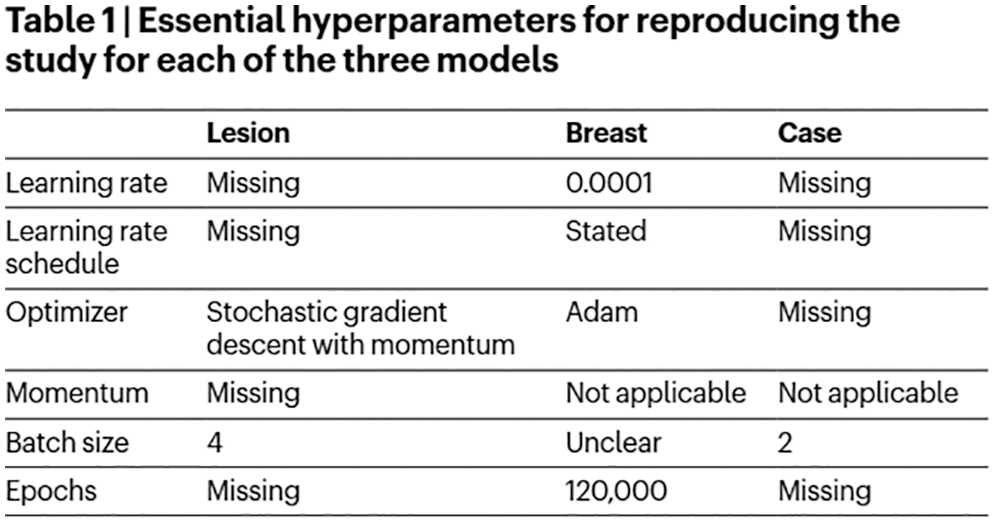

14.7 Lack of transparency and irreproducibility of results

1 Backprop pseudocodes, notation comparison

3 Conditional Gaussian distribution

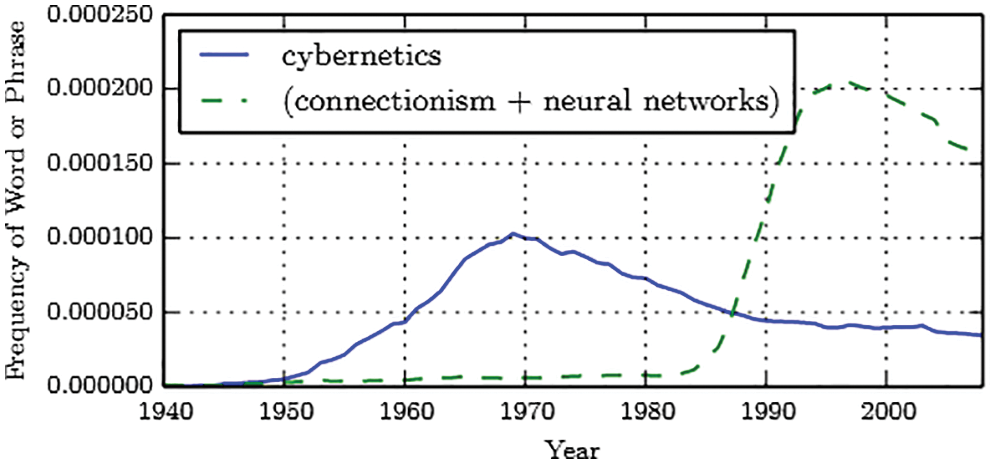

4 The ups and downs of AI, cybernetics

1 Opening remarks and organization

Breakthroughs due to AI in arts and science. On 2022.08.29, Figure 1, an image generated by the AI software Midjourney, became one of the first of its kind to win first place in an art contest.1 The image author signed his entry to the contest as “Jason M. Allen via Midjourney,” indicating that the submitted digital art was not created by him in the traditional way, but under his text commands to an AI software. Artists not using AI software—such as Midjourney, DALL.E 2, Stable Diffusion—were not happy [4].

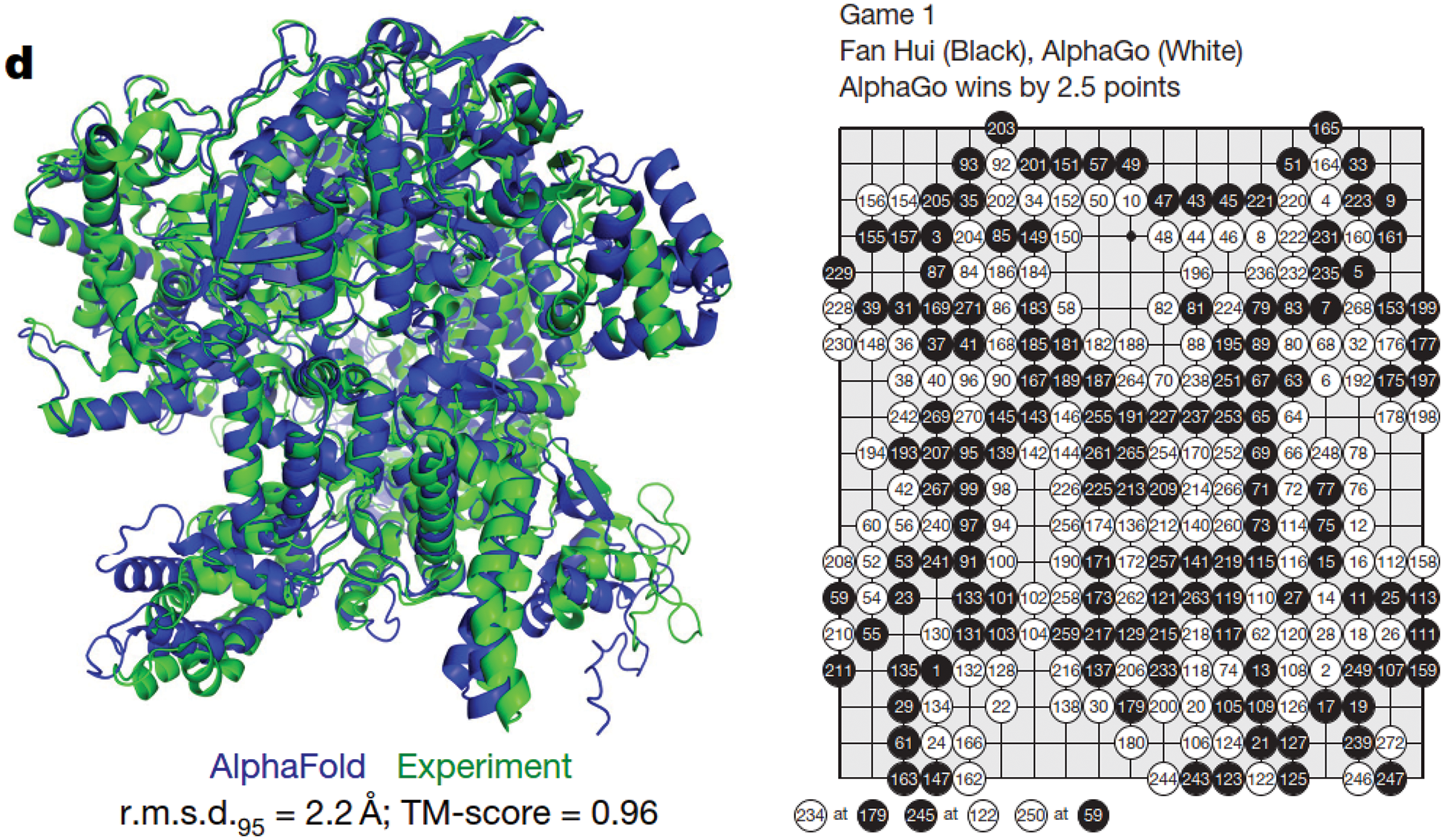

In 2021, an AI software achieved a feat that human researchers had not been able to accomplish in the previous 50 years: predicting protein structures quickly and on a large scale. This feat was named the scientific breakthrough of the year; Figure 2, left. In 2016, another AI software beat the world grandmaster in the game of Go, which is described as the most complex game that humans ever created; Figure 2, right.

On 2022.10.05, DeepMind published a paper on breaking a 50-year record of fast matrix multiplication by reducing the number of multiplications in multiplying two

Since the preprint of this paper was posted on the arXiv in Dec 2022 [9], there has been considerable excitement and concern about ChatGPT, a large language-model chatbot that can interact with humans in a conversational way, which would be incorporated into Microsoft Bing to make web “search interesting again, after years of stagnation and stasis” [10], whose author wrote “I’m going to do something I thought I’d never do: I’m switching my desktop computer’s default search engine to Bing. And Google, my default source of information for my entire adult life, is going to have to fight to get me back.” Google would release its own answer to ChatGPT called “Bard” [11]. The race is on.

Figure 1: AI-generated image won contest in the category of Digital Arts, Emerging Artists, on 2022.08.29 (Section 1). “Théâtre D’opéra Spatial” (Space Opera Theater) by “Jason M. Allen via Midjourney”, which is “an artificial intelligence program that turns lines of text into hyper-realistic graphics” [4]. Colorado State Fair, 2022 Fine Arts First, Second & Third. (Permission of Jason M. Allen, CEO, Incarnate Games)

Audience. This review paper is written by mechanics practitioners for mechanics practitioners, who may or may not be familiar with neural networks and deep learning. We thus assume that the readers are familiar with continuum mechanics and numerical methods such as the finite element method. Accordingly, unlike typical computer-science papers on deep learning, notation and convention of tensor analysis familiar to practitioners of mechanics are used here whenever possible.3

For readers not familiar with deep learning, unlike many other review papers, this review paper is not just a summary of papers in the literature for people who already have some familiarity with this topic,4 particularly papers on deep-learning neural networks, but contains also a tutorial on this topic aiming at bringing first-time learners (including students) quickly up-to-date with modern issues and applications of deep learning, especially to computational mechanics.5 As a result, this review paper is a convenient “one-stop shopping” that provides the necessary fundamental information, with clarification of potentially confusing points, for first-time learners to quickly acquire a general understanding of the field that would facilitate deeper study and application to computational mechanics.

Figure 2: Breakthroughs in AI (Section 2). Left: The journal Science 2021 Breakthrough of the Year. Protein folded 3-D shape produced by the AI software AlphaFold compared to experiment with high accuracy [5]. The AlphaFold Protein Structure Database contains more than 200 million protein structure predictions, a holy grail sought after in the last 50 years. Right: The AI software AlphaGo, a runner-up in the journal Science 2016 Breakthrough of the Year, beat the European Go champion Fan Hui five games to zero in 2015 [6], and then went on to defeat the world Go grandmaster Lee Sedol in 2016 [7]. (Permission by Nature.)

Deep-learning software libraries. Just as a large number of software packages are available in different subfields of computational mechanics, there are many excellent deep-learning libraries ready for use in applications; see Section 9, in which some examples of the use of these libraries in engineering applications are provided with the associated computer code. Similar to learning finite-element formulations versus learning how to run finite-element codes, our focus here is to discuss various algorithmic aspects of deep learning and their applications in computational mechanics, rather than how to use deep-learning libraries in applications. We agree with the view that “a solid understanding of the core principles of neural networks and deep learning” would provide “insights that will still be relevant years from now” [21], and that would not be obtained from just learning to run some hot libraries.

Readers already familiar with neural networks may find the presentation refreshing,6 and even find new information on neural networks, depending on how they used deep learning, or when they stopped working in this area due to the waning wave of connectionism and the new wave of deep learning.7 If not, readers can skip these sections to go directly to the sections on applications of deep learning to computational mechanics.

Applications of deep learning in computational mechanics. We select some recent papers on applications of deep learning to computational mechanics to review in detail, in a way that readers would also understand the computational-mechanics content well enough without having to read through the original papers:

• Fully-connected feedforward neural networks were employed to make element-matrix integration more efficient, while retaining the accuracy of the traditional Gauss-Legendre quadrature [38];8

• Recurrent neural network (RNN) with Long Short-Term Memory (LSTM) units9 was applied to multiple-scale, multi-physics problems in solid mechanics [25];

• RNNs with LSTM units were employed to obtain a reduced-order model for turbulence in fluids based on the proper orthogonal decomposition (POD), a classic linear projection method also known as principal component analysis (PCA) [26]. More recent nonlinear-manifold model-order reduction methods, incorporating encoder / decoder and hyper-reduction of dimensionality using gappy (incomplete) data, were introduced, e.g., [47] [48].
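As a point of reference for the first item above, the sketch below applies the classical Gauss-Legendre rule with two and three integration points to a simple polynomial on [-1, 1]. It only illustrates why the number of integration points matters for accuracy and cost; it does not reproduce the network-based method of [38]. The names `GAUSS_LEGENDRE`, `gauss_legendre`, and `integrand` are hypothetical and chosen here for illustration.

```python
import math

# Standard tabulated Gauss-Legendre nodes and weights on [-1, 1].
# An n-point rule integrates polynomials up to degree 2n - 1 exactly.
GAUSS_LEGENDRE = {
    1: ([0.0], [2.0]),
    2: ([-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)], [1.0, 1.0]),
    3: ([-math.sqrt(0.6), 0.0, math.sqrt(0.6)], [5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0]),
}


def gauss_legendre(f, n_points):
    """Approximate the integral of f over [-1, 1] with an n-point rule."""
    nodes, weights = GAUSS_LEGENDRE[n_points]
    return sum(w * f(x) for x, w in zip(nodes, weights))


def integrand(x):
    """Illustrative degree-4 polynomial; exact integral on [-1, 1] is 16/15."""
    return x**4 + x**2


exact = 16.0 / 15.0
two_point = gauss_legendre(integrand, 2)    # degree 4 > 3: not exact
three_point = gauss_legendre(integrand, 3)  # degree 4 <= 5: exact up to round-off

print(f"2 points: {two_point:.6f}, error {abs(two_point - exact):.2e}")
print(f"3 points: {three_point:.6f}, error {abs(three_point - exact):.2e}")
```

With too few points the rule is cheap but inaccurate, and with more points than needed it is accurate but wasteful, which is the trade-off a learned selection of integration points would aim to balance.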

Organization of contents. Our review of each of the above papers is divided into two parts. The first part is to summarize the main results and to identify the concepts of deep learning used in the paper, expected to be new for first-time learners, for subsequent elaboration. The second part is to explain in detail how these deep-learning concepts were used to produce the results.

The results of deep-learning numerical integration [38] are presented in Section 2.3.1, where the deep-learning concepts employed are identified and listed, whereas the details of the formulation in [38] are discussed in Section 10. Similarly, the results and additional deep-learning concepts used in a multi-scale, multi-physics problem of geomechanics [25] are presented in Section 2.3.2, whereas the details of this formulation are discussed in Section 11. Finally, the results and additional deep-learning concepts used in turbulent fluid simulation with proper orthogonal decomposition [26] are presented in Section 2.3.3, whereas the details of this formulation, together with the nonlinear-manifold model-order reduction [47] [48], are discussed in Section 12.

All of the deep-learning concepts identified from the above selected papers for in-depth study are subsequently explained in detail in Sections 3 to 7, and then further in Section 13 on “Historical perspective”.

The parallelism between computational mechanics, neuroscience, and deep learning is summarized in Section 3, which would put computational-mechanics first-time learners at ease, before delving into the details of deep-learning concepts.

Both time-independent (static) and time-dependent (dynamic) problems are discussed. The architecture of (static, time-independent) feedforward multilayer neural networks in Section 4 is expounded in detail, with first-time learners in mind, without assuming prior knowledge, and where experts may find a refreshing presentation and even new information.

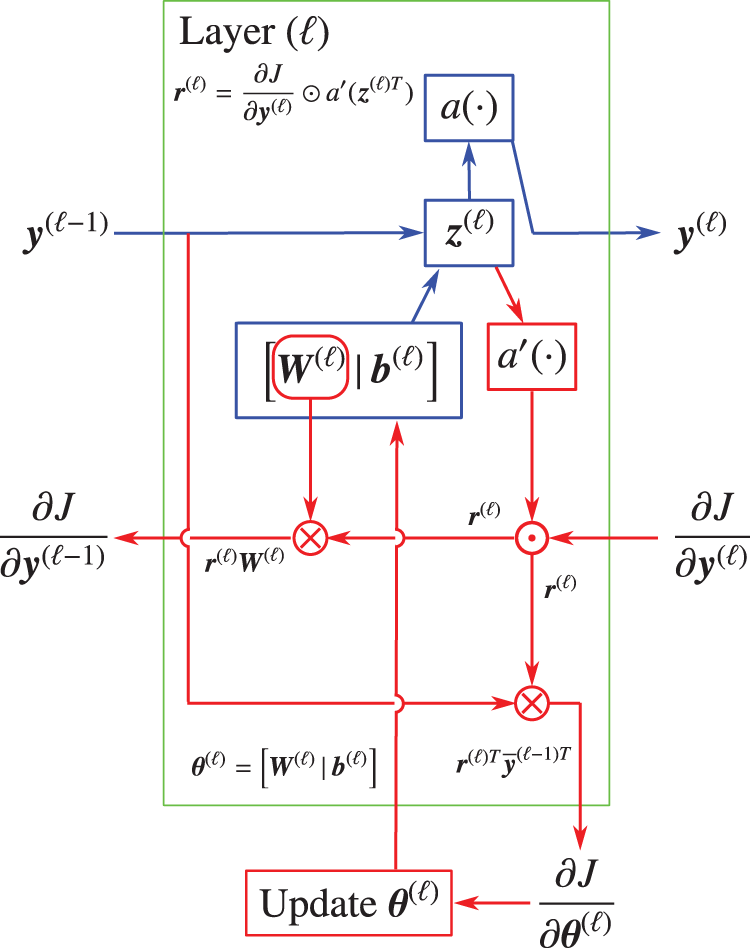

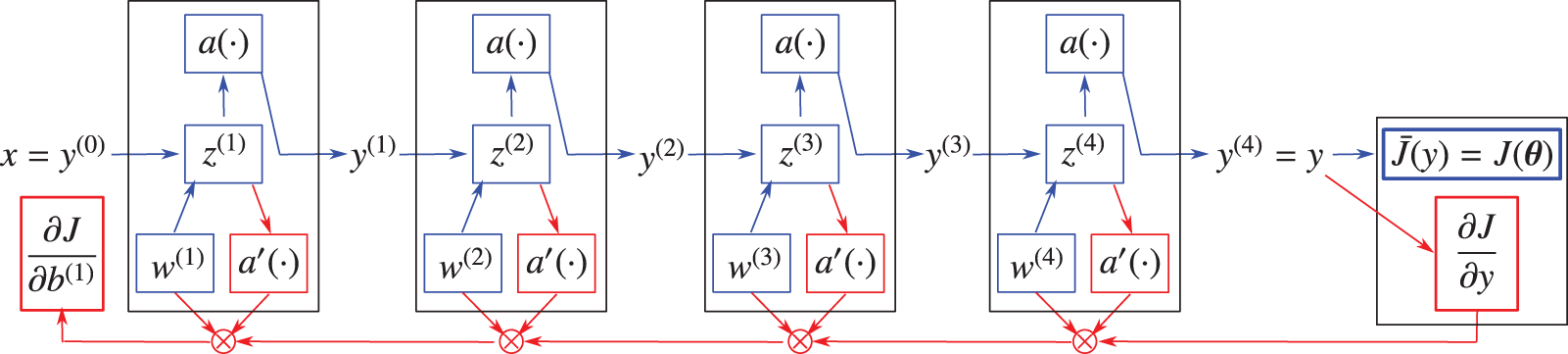

Backpropagation, explained in Section 5, is an important method to compute the gradient of the cost function relative to the network parameters for use as a descent direction to decrease the cost function for network training.
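To make the role of backpropagation concrete, the following minimal sketch computes the gradient of a squared-error cost for a tiny network (one tanh hidden neuron, one linear output) by the chain rule, and checks it against central finite differences. The network size, the parameter values, and all function names are assumptions made for illustration; the notation of Section 5 is not reproduced here.

```python
import math


def forward(params, x):
    """Tiny network: one tanh hidden neuron followed by a linear output."""
    w1, b1, w2, b2 = params
    hidden = math.tanh(w1 * x + b1)
    output = w2 * hidden + b2
    return hidden, output


def cost(params, x, target):
    """Squared-error cost J = (output - target)^2 / 2 for one example."""
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2


def backprop_gradient(params, x, target):
    """Gradient [dJ/dw1, dJ/db1, dJ/dw2, dJ/db2] by the chain rule."""
    _, _, w2, _ = params
    hidden, output = forward(params, x)
    delta_output = output - target                          # dJ/d(output)
    delta_hidden = delta_output * w2 * (1.0 - hidden**2)    # back through tanh
    return [delta_hidden * x, delta_hidden, delta_output * hidden, delta_output]


def finite_difference_gradient(params, x, target, eps=1e-6):
    """Central-difference approximation, used only as a check."""
    gradient = []
    for k in range(len(params)):
        plus, minus = list(params), list(params)
        plus[k] += eps
        minus[k] -= eps
        gradient.append((cost(plus, x, target) - cost(minus, x, target)) / (2.0 * eps))
    return gradient


params = [0.5, -0.3, 1.2, 0.1]  # hypothetical values of w1, b1, w2, b2
grad_bp = backprop_gradient(params, x=0.8, target=1.0)
grad_fd = finite_difference_gradient(params, x=0.8, target=1.0)
print(max(abs(a - b) for a, b in zip(grad_bp, grad_fd)))
```

The negative of `grad_bp` is the descent direction used to decrease the cost. Backpropagation obtains all components in one backward sweep, whereas the finite-difference check needs two cost evaluations per parameter.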

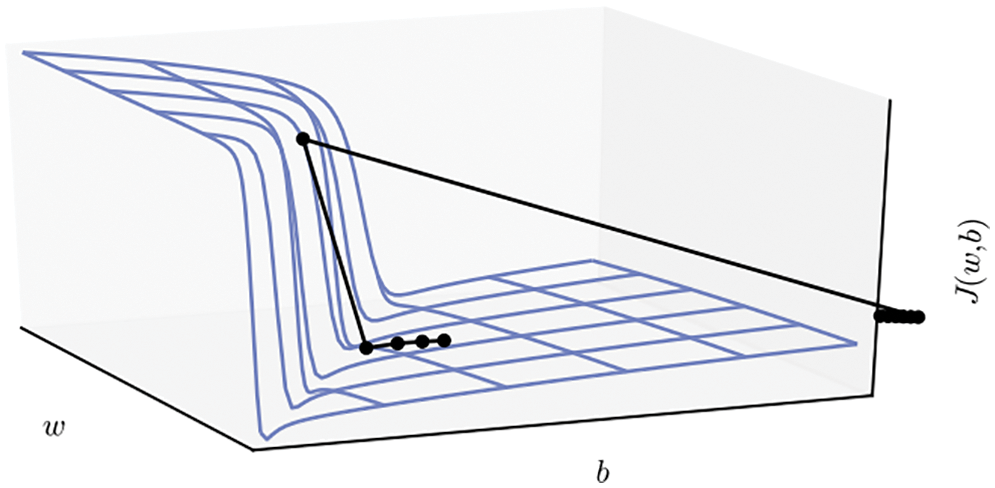

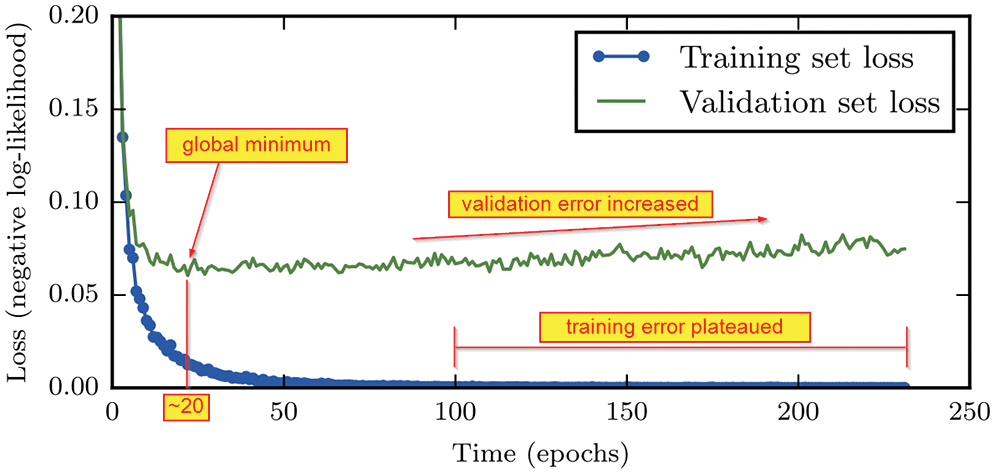

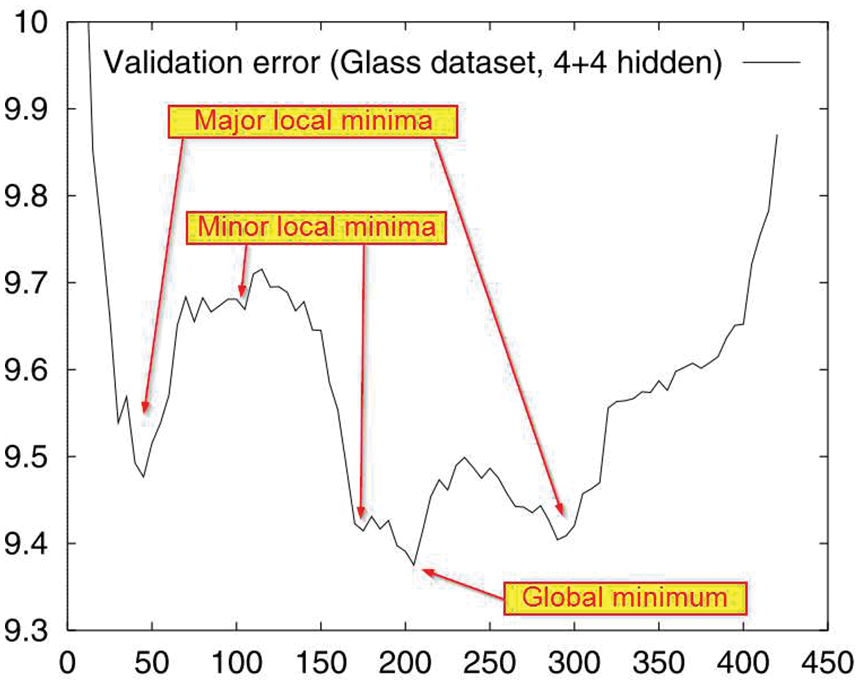

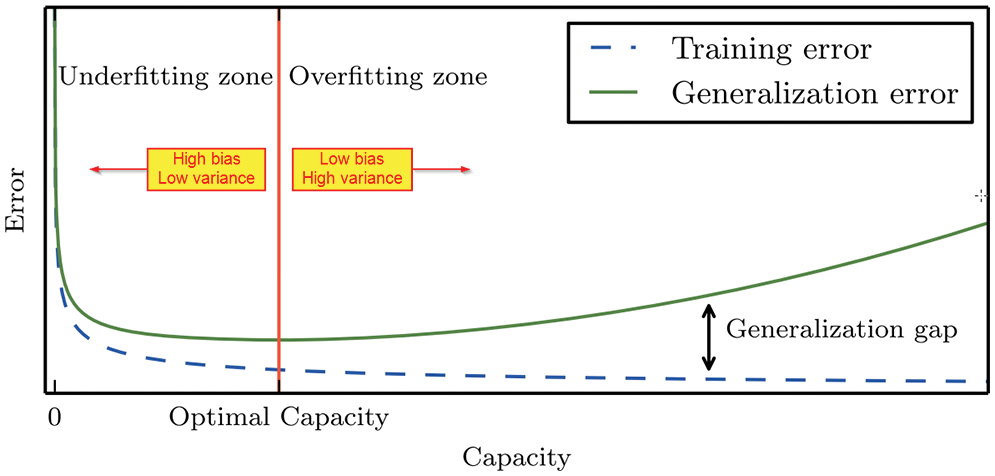

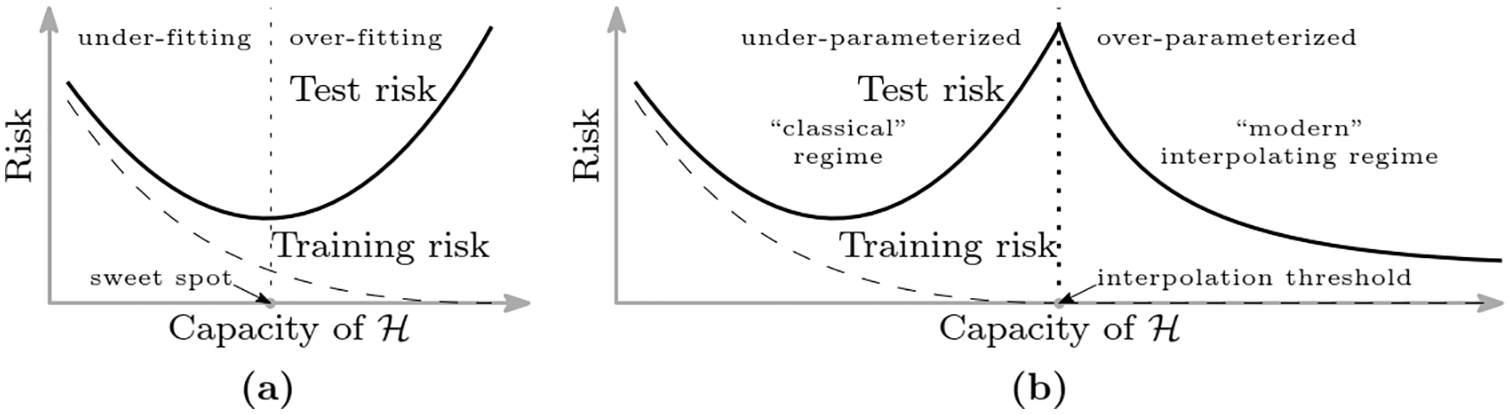

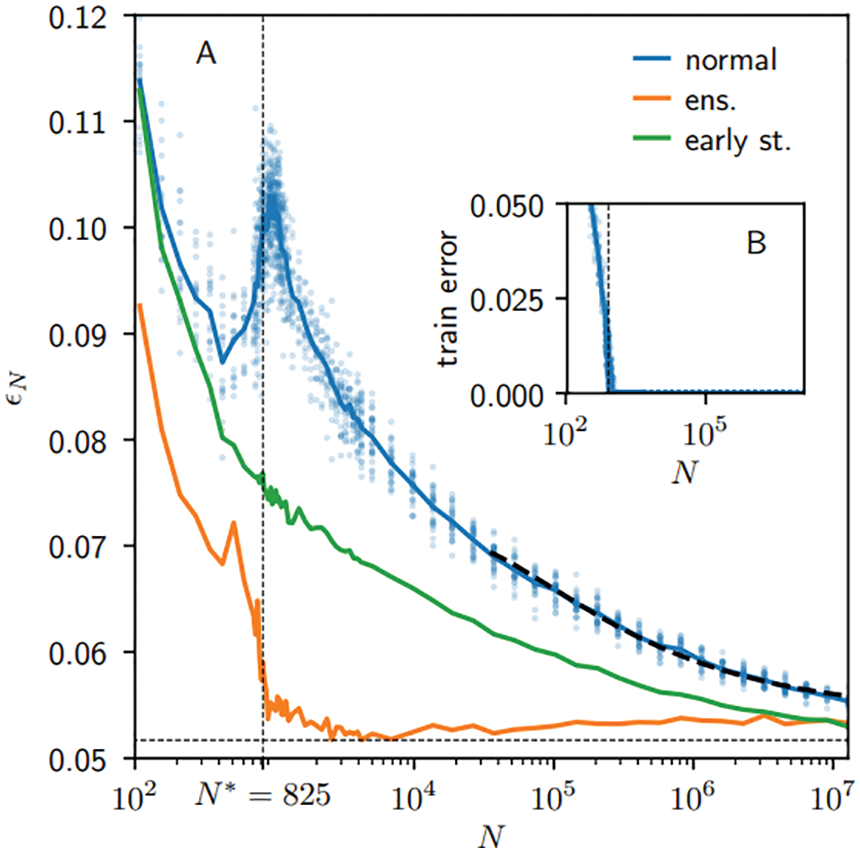

For training networks—i.e., finding optimal parameters that yield low training error and lowest validation error—both classic deterministic optimization methods (using full batch) and stochastic optimization methods (using minibatches) are reviewed in detail, and at times even derived, in Section 6, which would be useful for both first-time learners and experts alike.

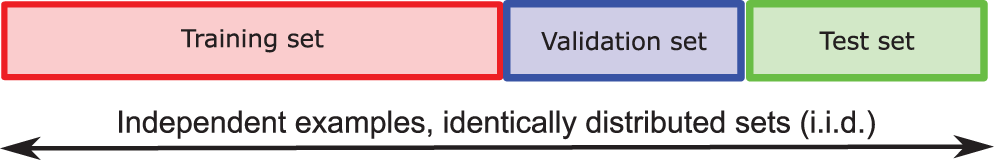

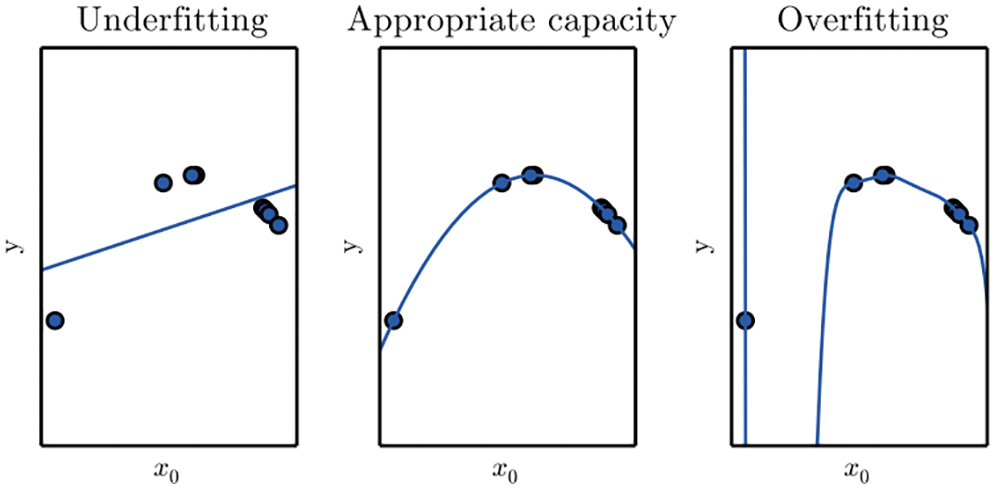

The examples used in training a network form the training set, which is complemented by the validation set (to determine when to stop the optimization iterations) and the test set (to see whether the resulting network could work on examples never seen before); see Section 6.1.
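The roles of the three sets can be sketched as follows, assuming a random 70/15/15 split and a simple "patience" stopping rule. Both the split fractions and the patience rule are illustrative assumptions, not the criteria of Section 6.1, and the function names are hypothetical.

```python
import random


def split_indices(n_examples, frac_train=0.7, frac_val=0.15, seed=0):
    """Shuffle example indices and split them into training, validation, test."""
    indices = list(range(n_examples))
    random.Random(seed).shuffle(indices)
    n_train = round(frac_train * n_examples)
    n_val = round(frac_val * n_examples)
    train = indices[:n_train]
    validation = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, validation, test


def early_stopping_epoch(validation_errors, patience=3):
    """Return the epoch with the lowest validation error seen before the
    error failed to improve for `patience` consecutive epochs."""
    best_epoch, best_error = 0, float("inf")
    for epoch, error in enumerate(validation_errors):
        if error < best_error:
            best_epoch, best_error = epoch, error
        elif epoch - best_epoch >= patience:
            break  # stop the optimization iterations here
    return best_epoch


train, validation, test = split_indices(100)

# Hypothetical validation-error history: it decreases, then rises (overfitting).
history = [0.90, 0.60, 0.45, 0.40, 0.42, 0.47, 0.55, 0.30]
stop_at = early_stopping_epoch(history, patience=3)
print(len(train), len(validation), len(test), stop_at)
```

The parameters saved at epoch `stop_at` would then be evaluated once on the test set, whose examples played no part in either the parameter updates or the stopping decision.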

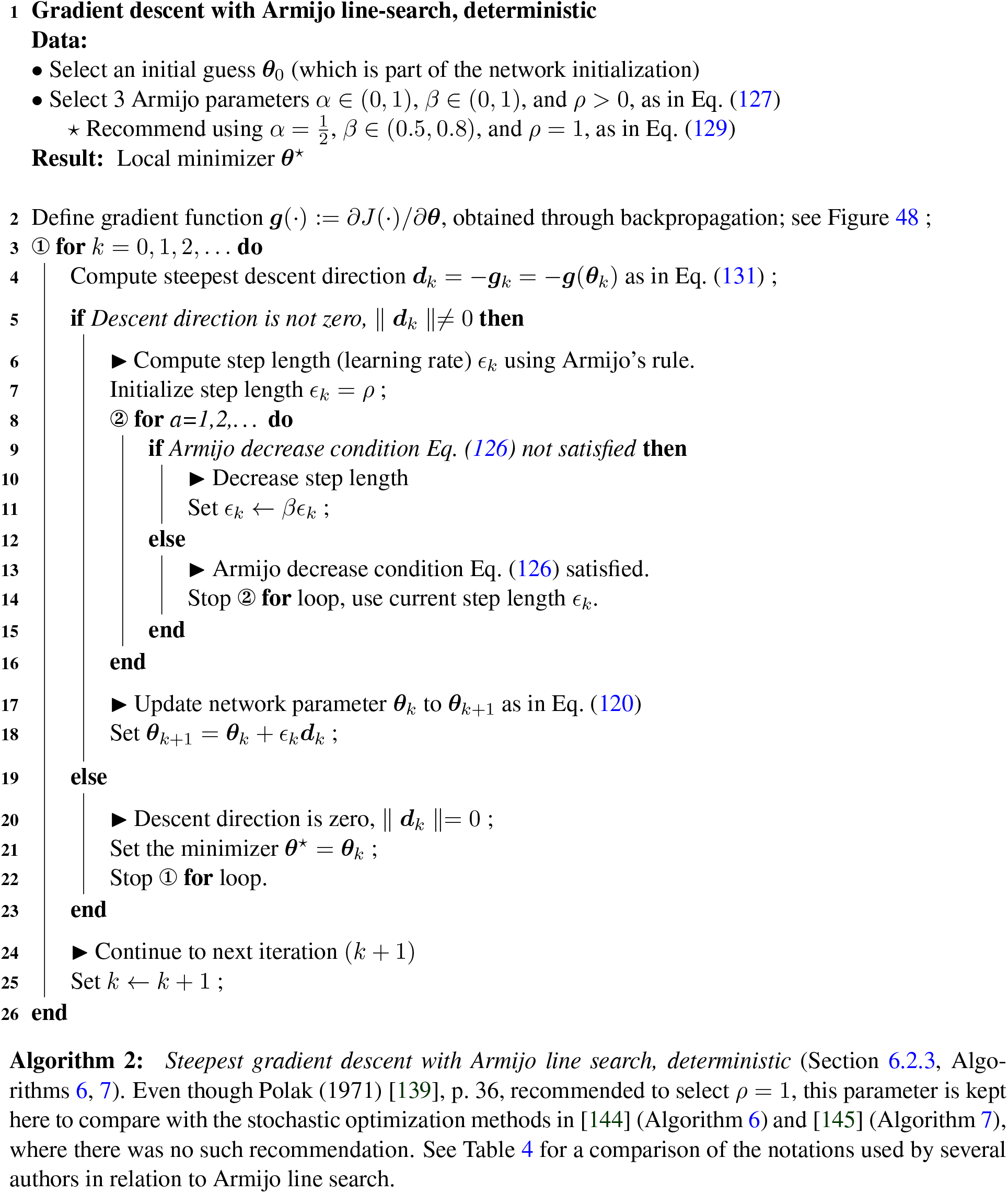

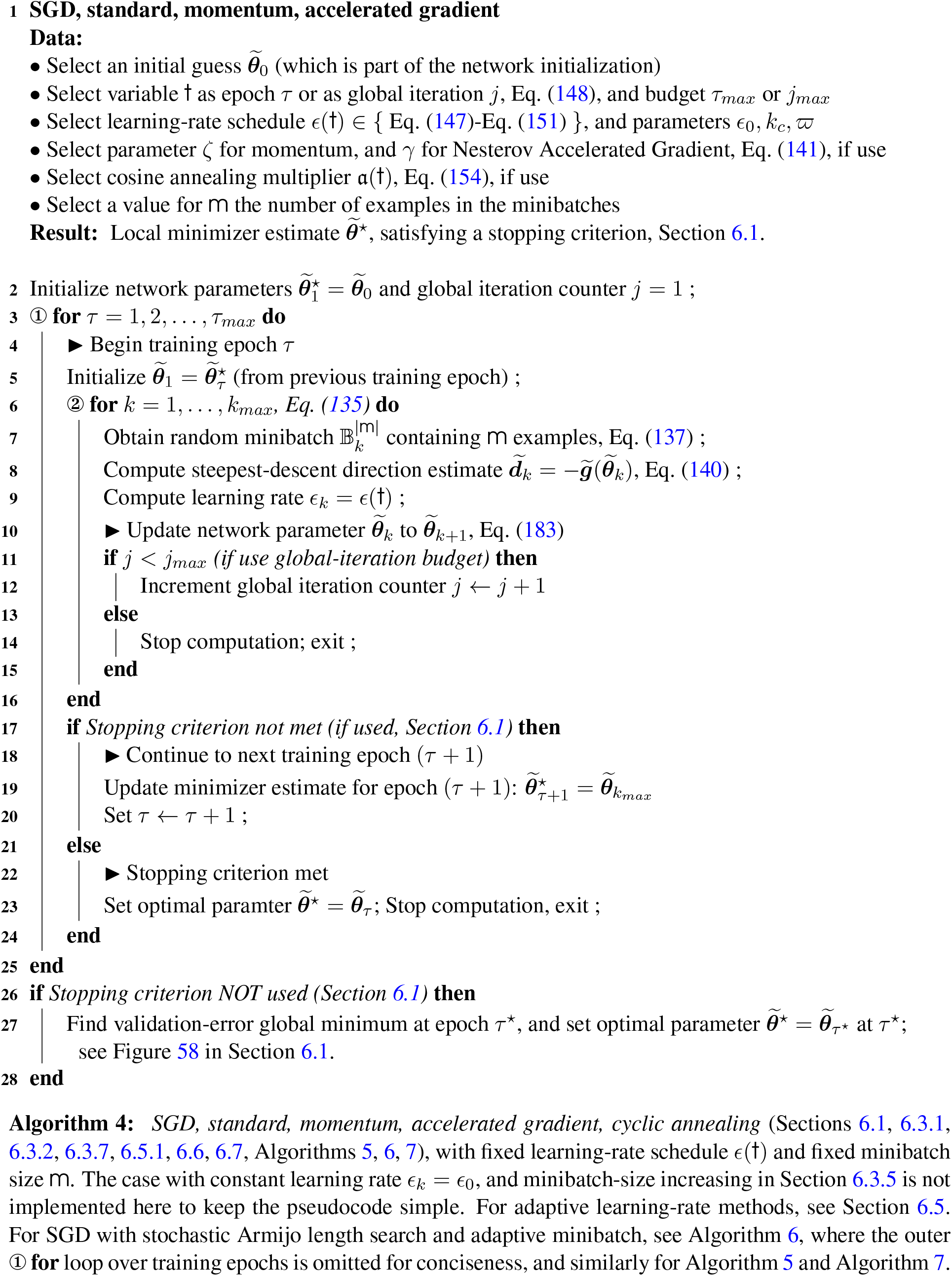

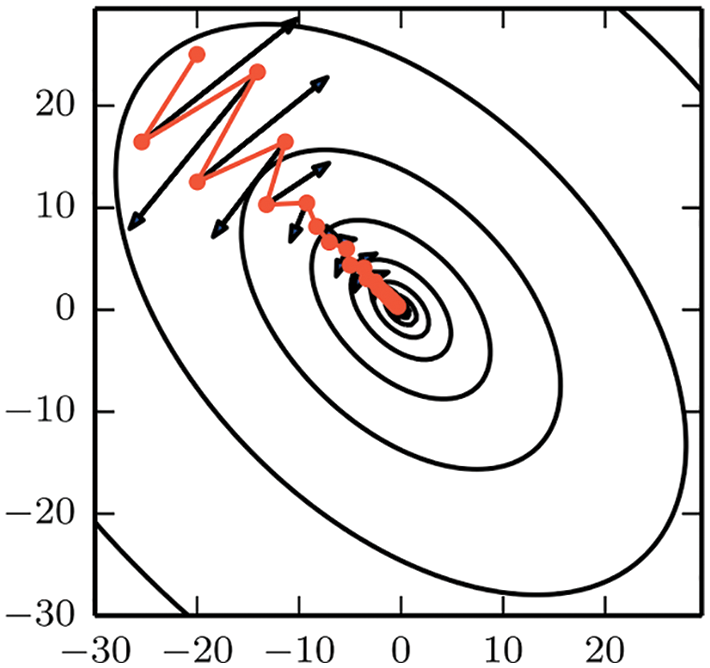

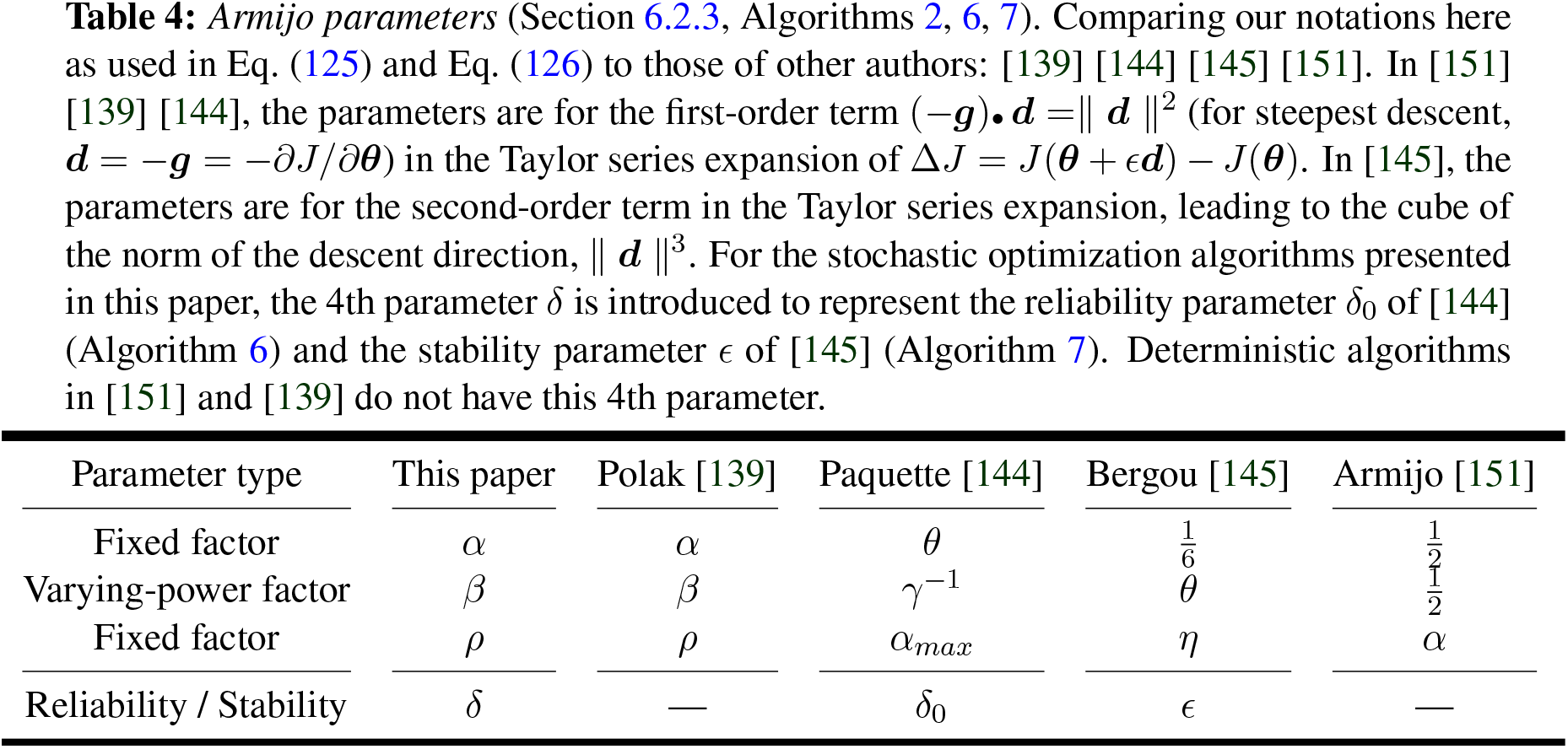

Deterministic gradient descent with classical line-search methods, such as Armijo’s rule (Section 6.2), was generalized to add stochasticity. Detailed pseudocodes for these methods are provided. The classic stochastic gradient descent (SGD) by Robbins & Monro (1951) [49] (Section 6.3, Section 6.3.1), with add-on tricks such as momentum by Polyak (1964) [3] and fast (accelerated) gradient by Nesterov (1983 [50], 2018 [51]) (Section 6.3.2), step-length decay (Section 6.3.4), cyclic annealing (Section 6.3.4), minibatch-size increase (Section 6.3.5), and weight decay (Section 6.3.6), is presented, often with detailed derivations.
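A minimal sketch of minibatch SGD with a momentum term is given below for a one-parameter least-squares fit. It assumes one common form of the momentum update (a velocity accumulating past gradients) and a fixed step length; the pseudocodes of Section 6.3 may use different notation and conventions. The data, parameter values, and the function name `sgd_momentum` are hypothetical.

```python
import random


def sgd_momentum(xs, ys, step_length=0.1, momentum=0.5,
                 batch_size=4, n_epochs=100, seed=0):
    """Fit y = w * x by minibatch SGD with a momentum (velocity) term."""
    rng = random.Random(seed)
    w, velocity = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(n_epochs):
        rng.shuffle(indices)  # new random minibatches every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over the minibatch only.
            gradient = sum(2.0 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            velocity = momentum * velocity - step_length * gradient
            w += velocity
    return w


# Noise-free synthetic data generated with the slope w = 3.
xs = [-1.0 + 0.125 * k for k in range(17)]
ys = [3.0 * x for x in xs]

w_plain = sgd_momentum(xs, ys, momentum=0.0)      # standard SGD
w_momentum = sgd_momentum(xs, ys, momentum=0.5)   # SGD with momentum
print(w_plain, w_momentum)
```

Setting `momentum=0.0` recovers standard SGD with a fixed step length. Step-length decay or minibatch-size increase would replace the constants `step_length` and `batch_size` by schedules depending on the epoch.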

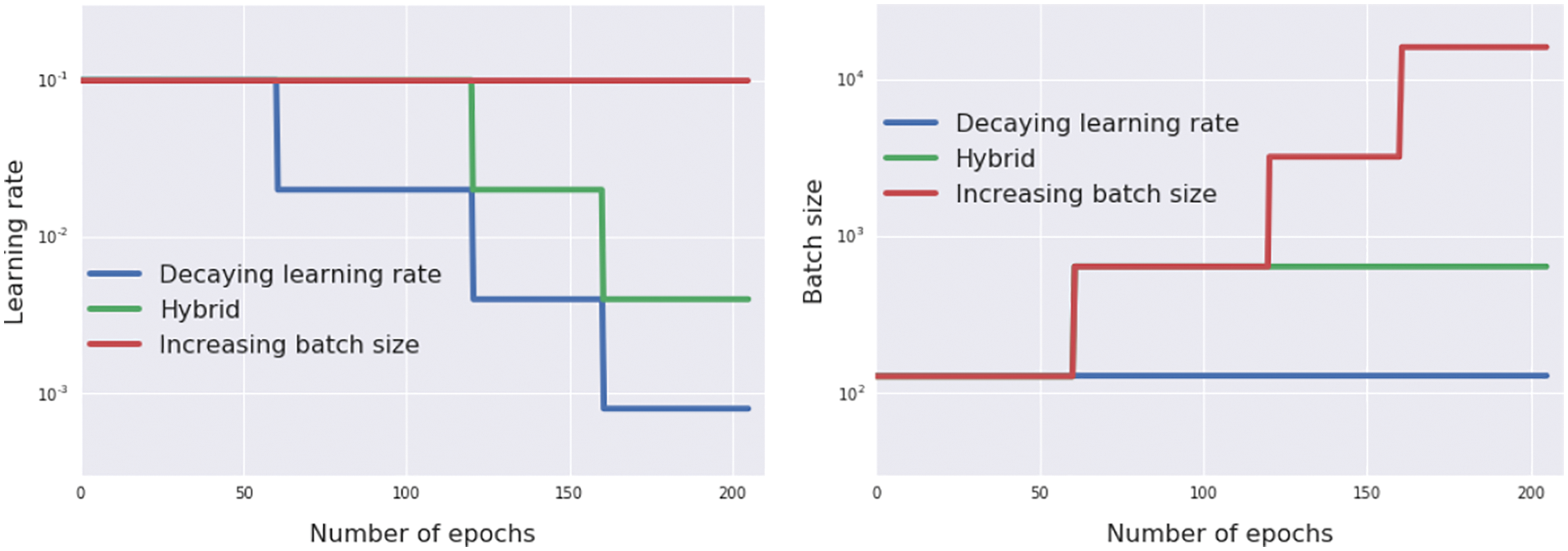

Step-length decay is shown to be equivalent to simulated annealing using a stochastic differential equation equivalent to the discrete parameter update. A consequence is that the minibatch size can be increased, instead of decaying the step length (Section 6.3.5). In particular, we obtain a new result for minibatch-size increase.

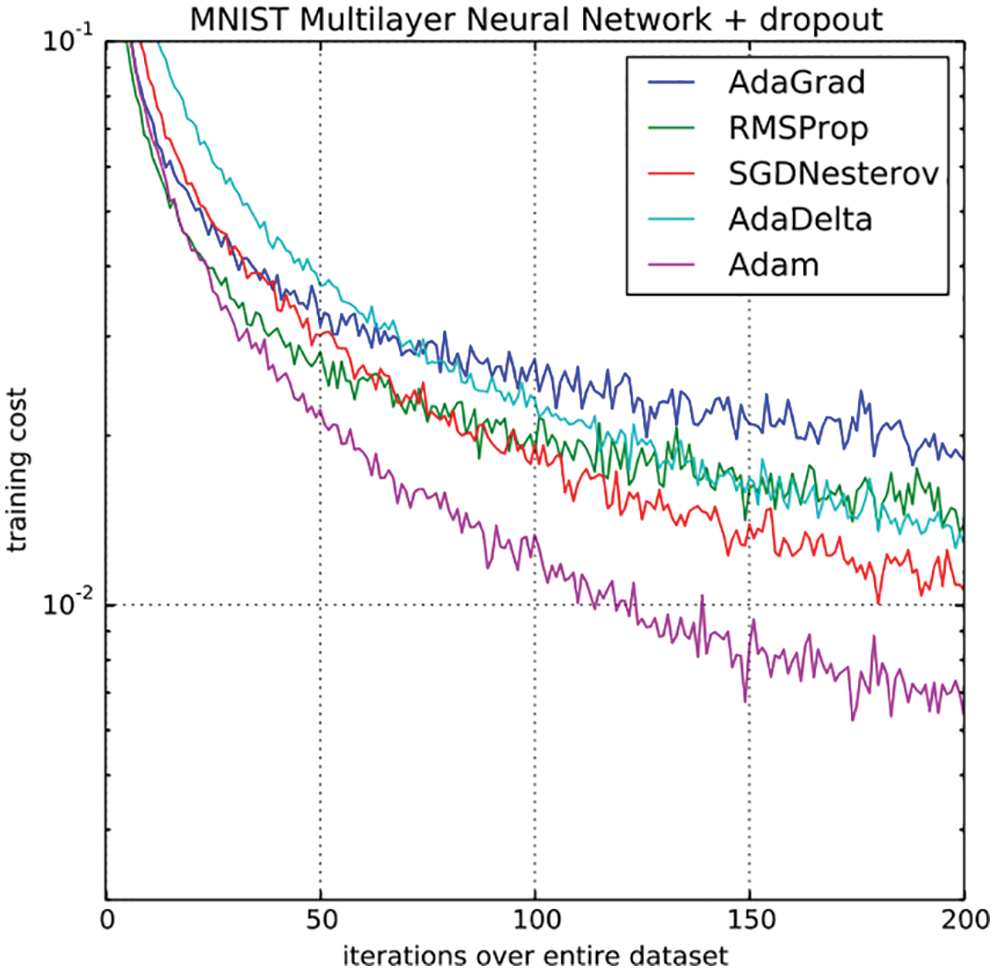

In Section 6.5, highly popular adaptive step-length (learning-rate) methods are discussed, first in a unified manner in Section 6.5.1, followed by the first paper on AdaGrad [52] (Section 6.5.2).

Overlooked in (or unknown to) other review papers and even well-known books on deep learning, exponential smoothing of time series, which originated in the field of forecasting in the 1950s and is the key technique of adaptive methods, is carefully explained in Section 6.5.3.
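The basic recurrence of simple exponential smoothing can be sketched as below, in which each smoothed value is a weighted average of the previous smoothed value and the new observation. The smoothing parameter name `beta`, the initialization with the first observation, and the sample series are assumptions made for illustration; adaptive methods typically apply such a recurrence to gradients or squared gradients, with their own initialization and notation.

```python
def exponential_smoothing(series, beta):
    """Simple exponential smoothing: s[t] = beta * s[t-1] + (1 - beta) * x[t].

    The first smoothed value is set to the first observation (an assumption;
    other initializations, such as zero, are also in use).
    """
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must lie in [0, 1)")
    smoothed = [series[0]]
    for value in series[1:]:
        smoothed.append(beta * smoothed[-1] + (1.0 - beta) * value)
    return smoothed


# Hypothetical oscillating series, e.g. squared gradient components.
squared_gradients = [4.0, 0.0, 4.0, 0.0]
running_average = exponential_smoothing(squared_gradients, beta=0.5)
print(running_average)  # [4.0, 2.0, 3.0, 1.5]
```

Unrolling the recurrence shows that an observation made k steps earlier is weighted by a factor proportional to `beta**k`, so that a value of `beta` closer to 1 gives a longer memory and a smoother running average.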

The first adaptive methods that employed exponential smoothing were RMSProp [53] (Section 6.5.4) and AdaDelta [54] (Section 6.5.5), both introduced at about the same time, followed by the “immensely successful” Adam (Section 6.5.6) and its variants (Sections 6.5.7 and 6.5.8).

Particular attention is then given to a recent criticism of adaptive methods in [55], revealing their marginal value for generalization, compared to the good old SGD with effective initial step-length tuning and step-length decay (Section 6.5.9). The results were confirmed in three recent independent papers, among which is the recent AdamW adaptive method in [56] (Section 6.5.10).

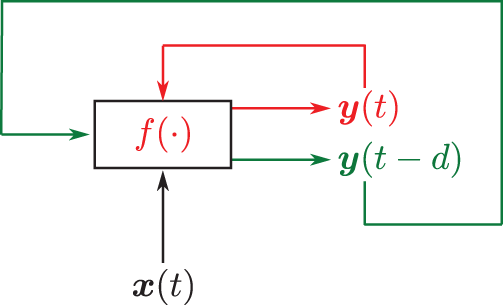

Dynamics, sequential data, and sequence modeling are the subjects of Section 7. Discrete time-dependent problems, as a sequence of data, can be modeled with recurrent neural networks discussed in Section 7.1, using the 1997 classic architecture such as Long Short-Term Memory (LSTM) in Section 7.2, but also the recent 2017-18 architectures such as the Transformer introduced in [31] (Section 7.4.3), based on the concept of attention [57]. Continuous recurrent neural networks originally developed in neuroscience to model the brain, and the connection to their discrete counterparts in deep learning, are also discussed in detail; see [19] and Section 13.2.2 on “Dynamic, time dependence, Volterra series”.

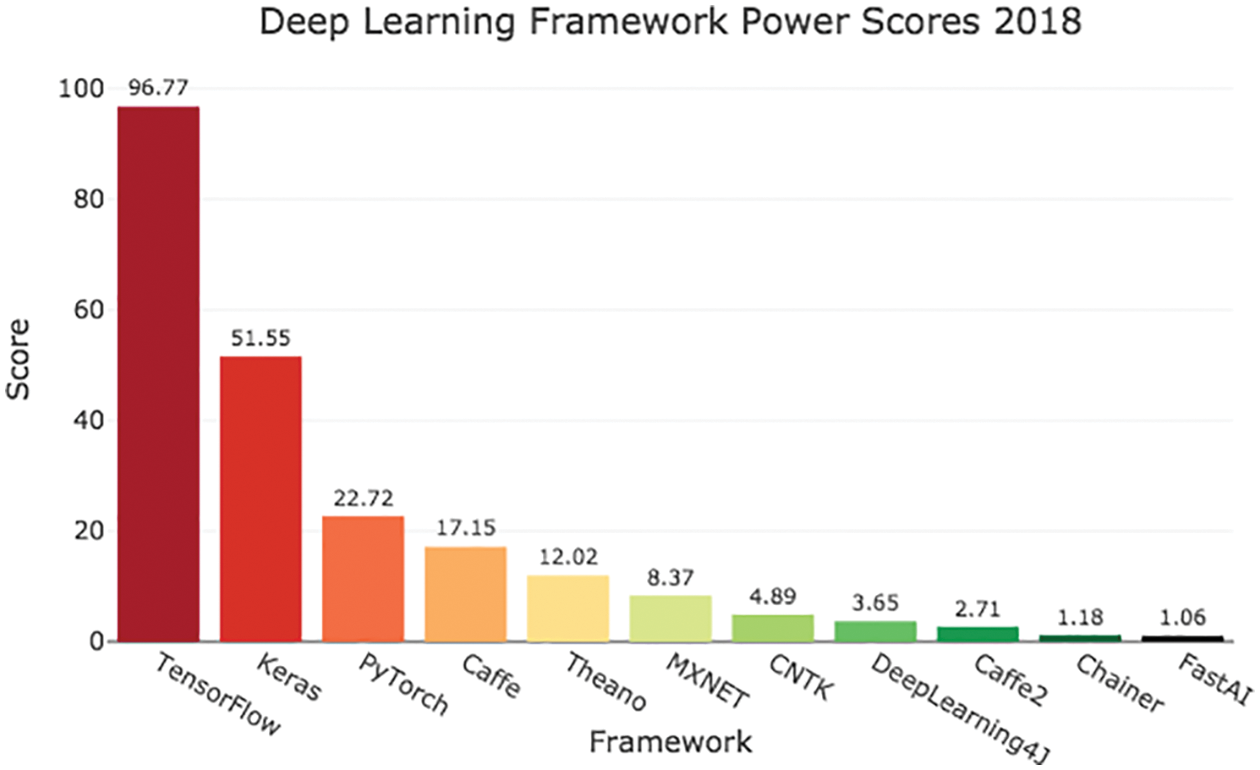

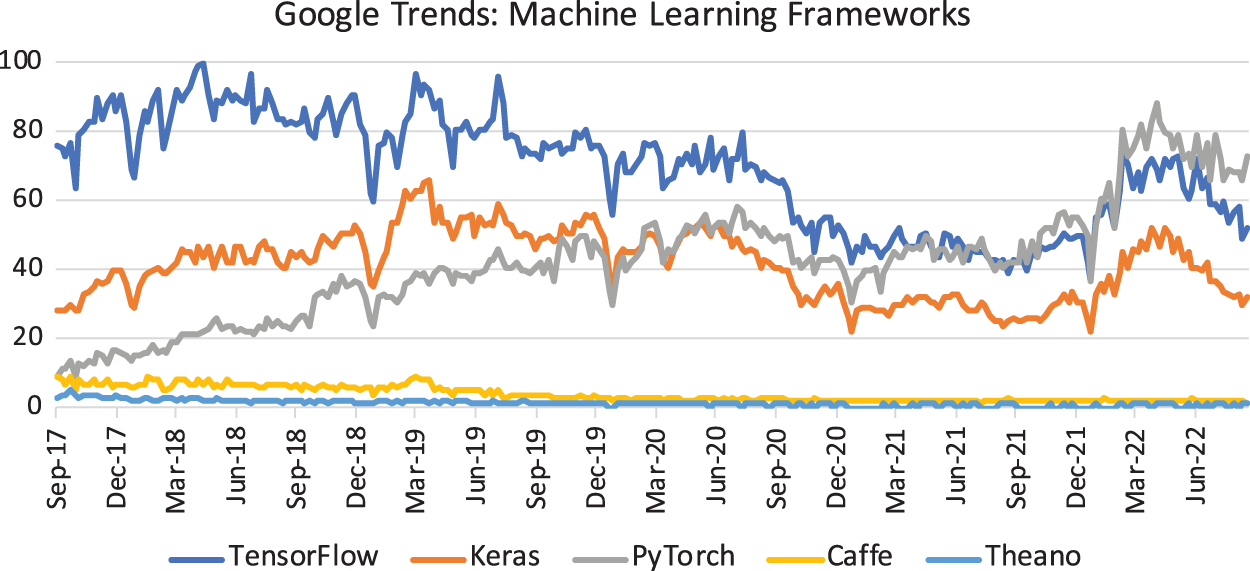

The features of several popular, open-source deep-learning frameworks and libraries—such as TensorFlow, Keras, PyTorch, etc.—are summarized in Section 9.

As mentioned above, detailed formulations of deep learning applied to computational mechanics in [38] [25] [26] [47] [48] are reviewed in Sections 10, 11, 12.

History of AI, limitations, danger, and the classics. Finally, a broader historical perspective of deep learning, machine learning, and artificial intelligence is discussed in Section 13, ending with comments on the geopolitics, limitations, and (identified-and-proven, not just speculated) danger of artificial intelligence in Section 14.

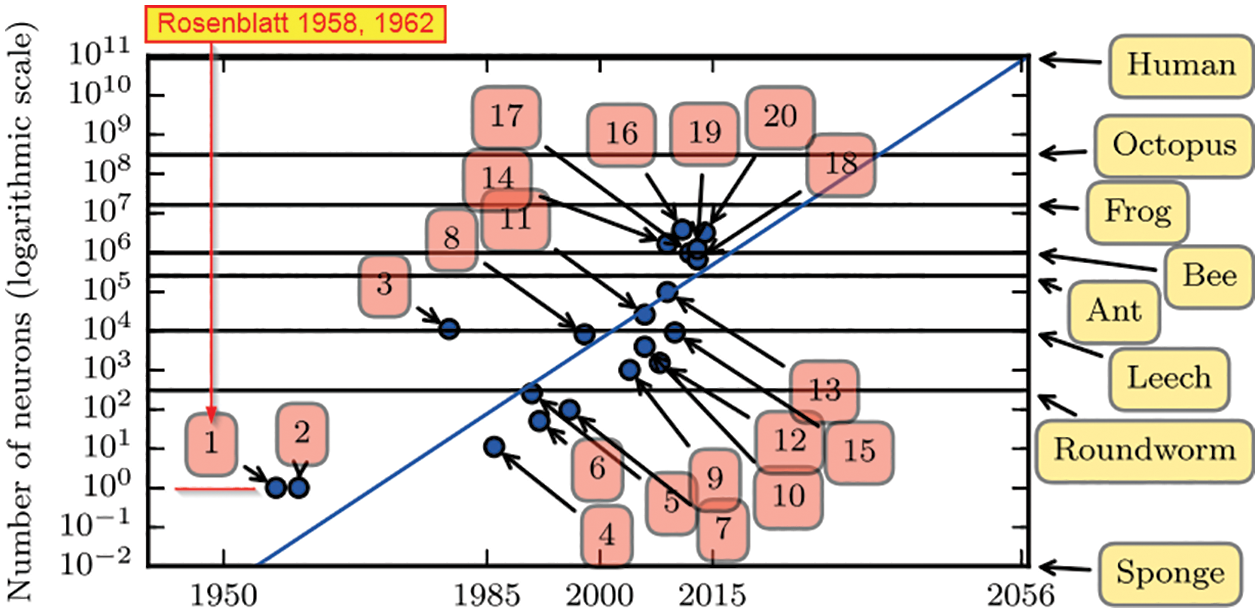

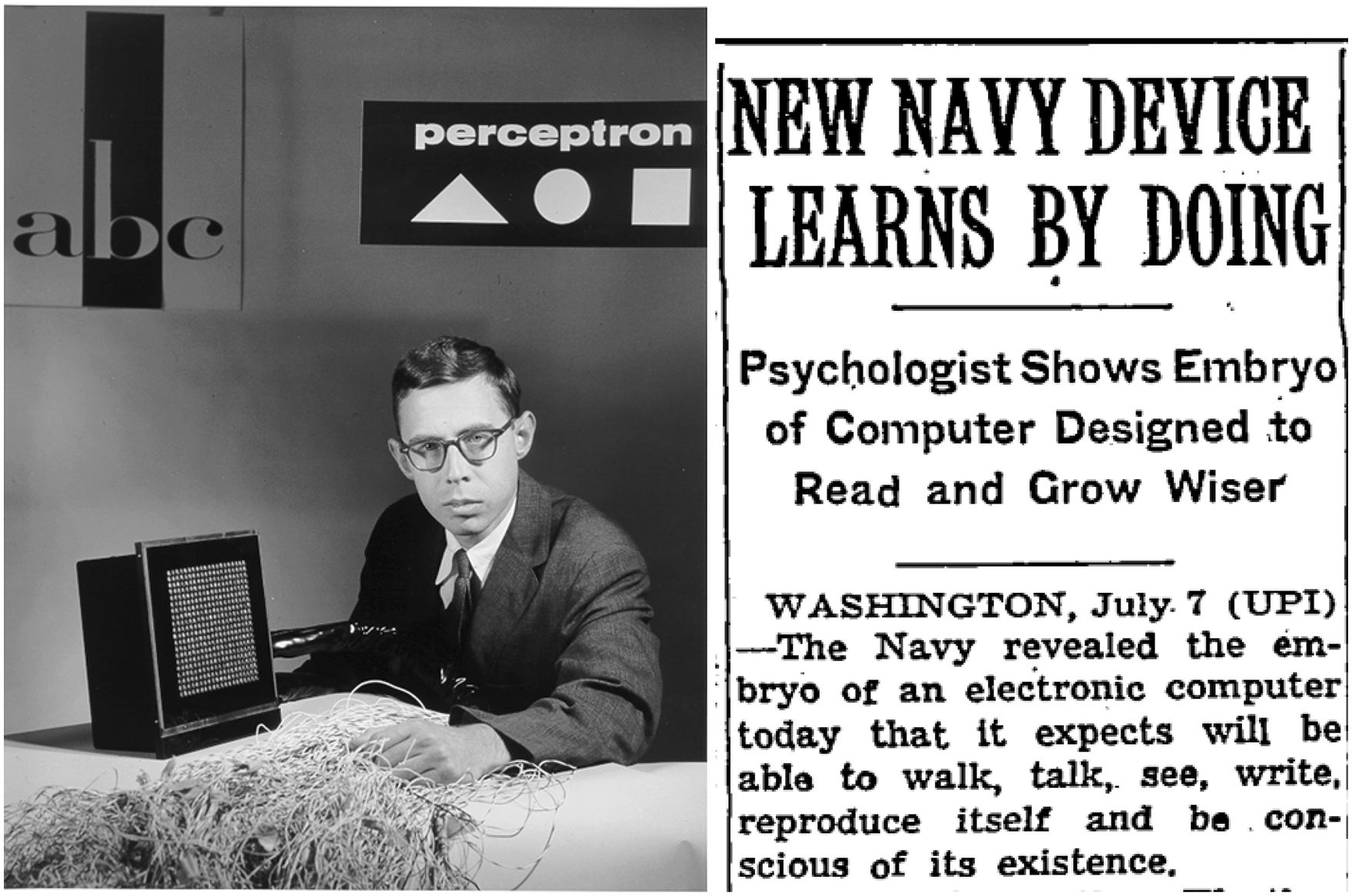

A rare feature is a detailed review of some important classics to connect them to the relevant concepts in modern literature, sometimes revealing misunderstandings in recent works, likely due to a lack of verification of the assertions made against the corresponding classics. For example, the first artificial neural network, conceived by Rosenblatt (1957) [1], (1962) [2], had 1000 neurons, but was reported as having a single neuron (Figure 42). Going beyond probabilistic analysis, Rosenblatt even built the Mark I computer to implement his 1000-neuron network (Figure 133, Sections 13.2 and 13.2.1). Another example is the “heavy ball” method, for which everyone referred to Polyak (1964) [3], who more precisely called it the “small heavy sphere” method (Remark 6.6). Others were quick to dismiss classical deterministic line-search methods that have been generalized to add stochasticity for network training (Remark 6.4). Unintended misrepresentation of the classics would mislead first-time learners, and unfortunately even seasoned researchers who used second-hand information from others, without checking the original classics themselves.

The use of Volterra series to model the nonlinear behavior of a neuron in terms of input and output firing rates, leading to continuous recurrent neural networks, is examined in detail. The linear term of the Volterra series is a convolution integral that provides a theoretical foundation for the use of a linear combination of inputs to a neuron, with weights and biases [19]; see Section 13.2.2.

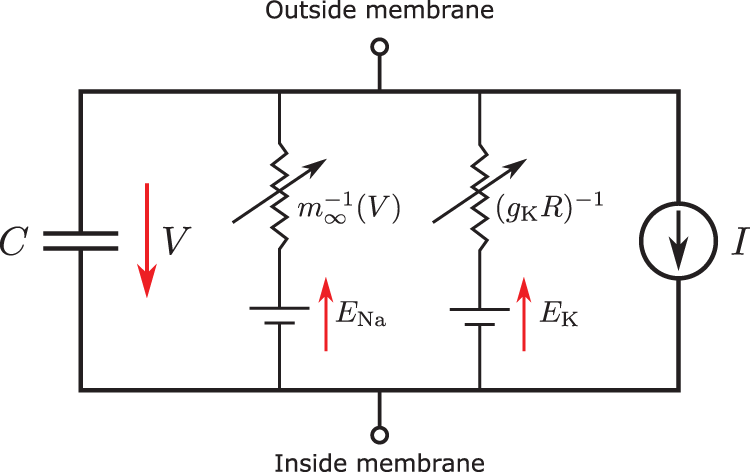

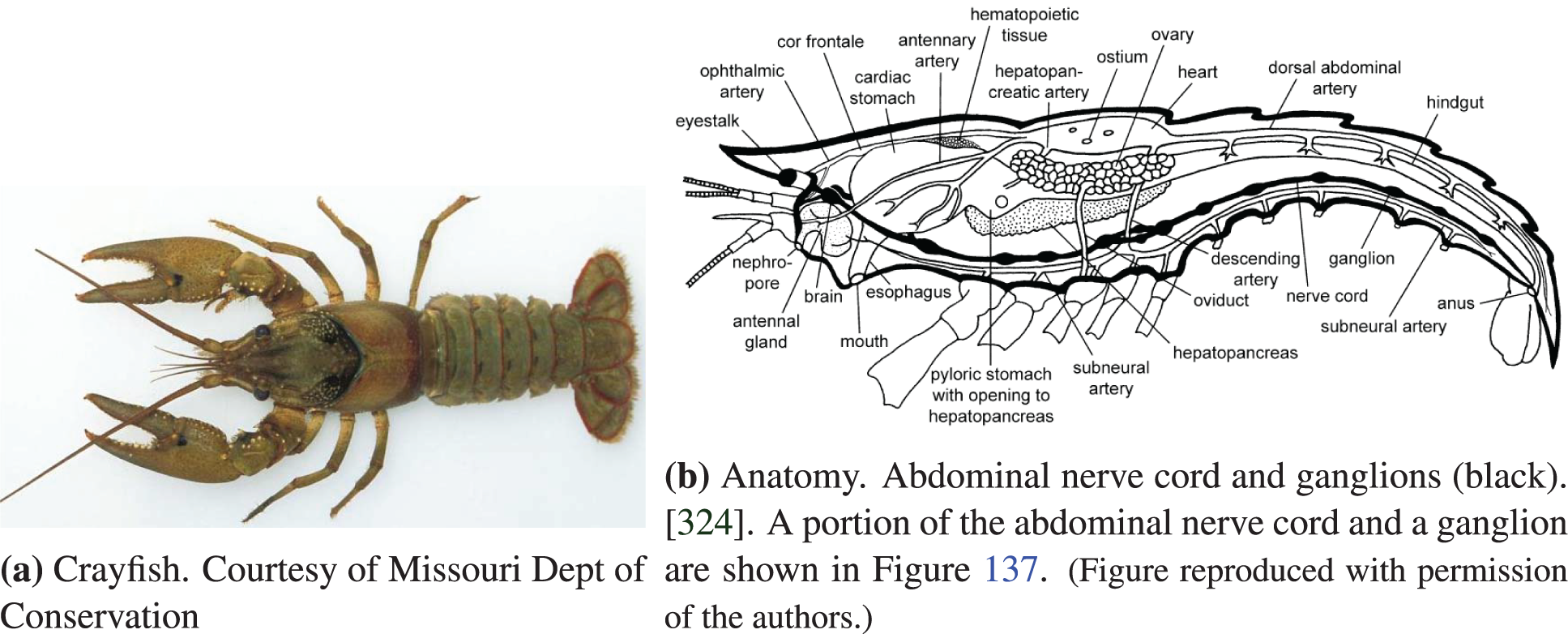

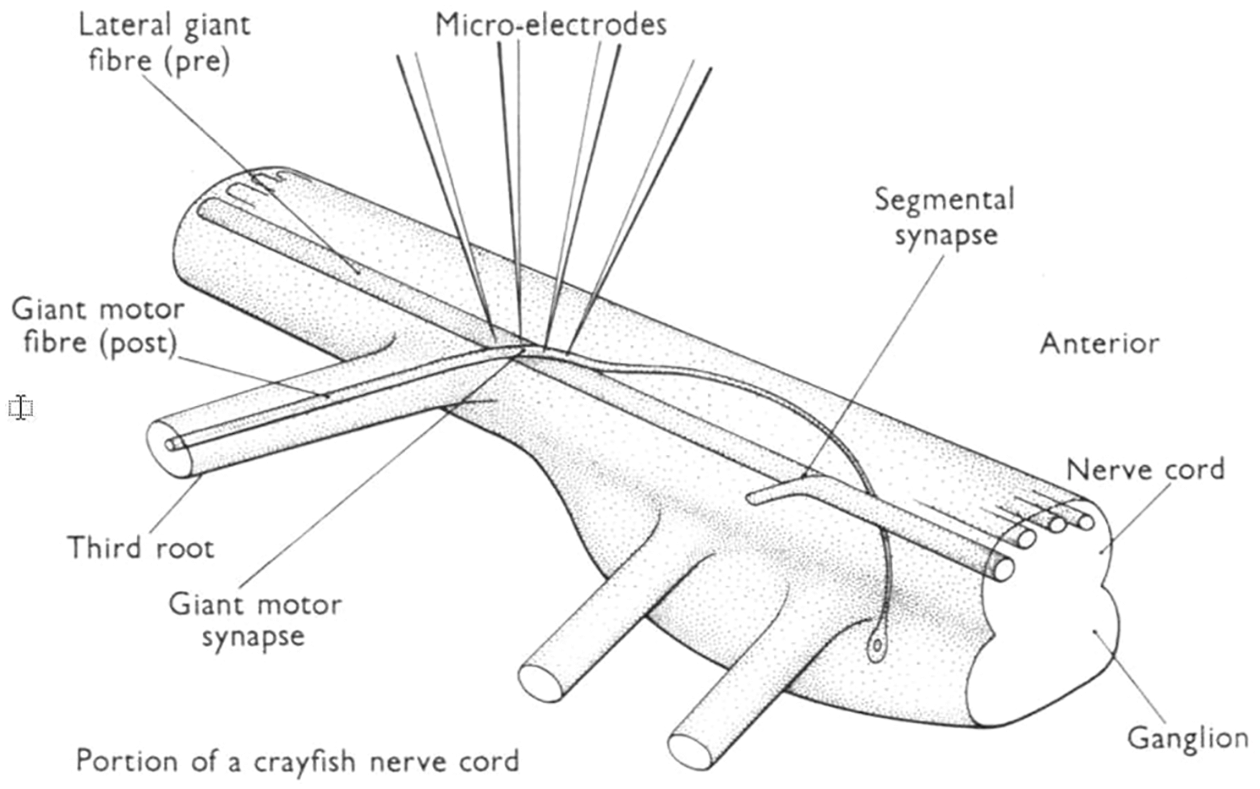

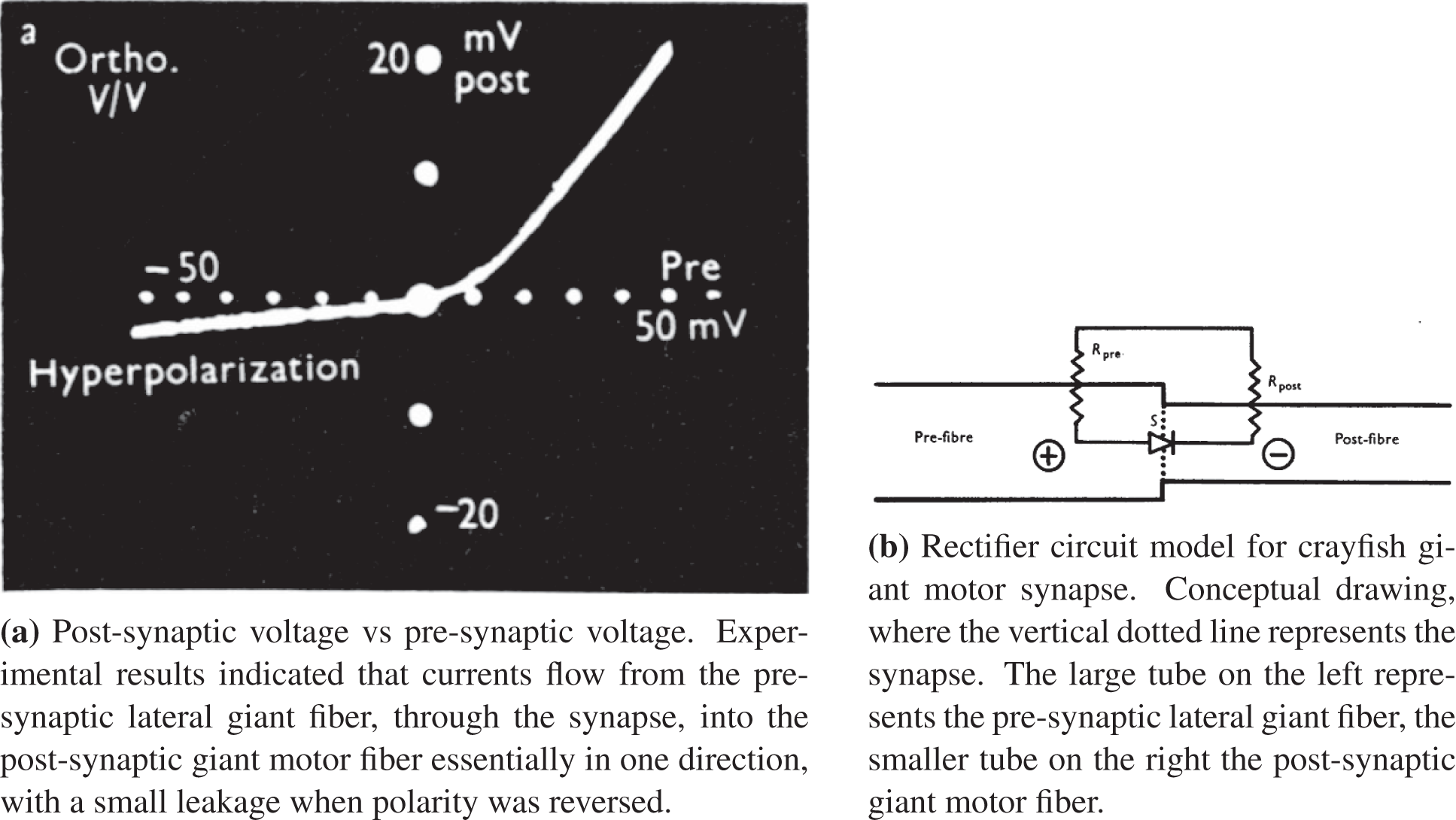

The experiments in the 1950s by Furshpan et al. [58] [59] that revealed the rectified linear behavior in the neuronal axon, modeled as a circuit with a diode, together with the use of the rectified linear activation function in neural networks in neuroscience years before its adoption in deep-learning networks, are reviewed in Section 13.3.2.

Reference hypertext links and Internet archive. For the convenience of the readers, whenever we refer to an online article, we provide both the link to original website, and if possible, also the link to its archived version in the Internet Archive. For example, we included in the bibliography entry of Ref. [60] the links to both the Original website and the Internet archive.10

2 Deep Learning, resurgence of Artificial Intelligence

In Dec 2021, the journal Science named, as its “2021 Breakthrough of the Year,” the development of the AI software AlphaFold and its amazing feat of predicting a large number of protein structures [64]. “For nearly 50 years, scientists have struggled to solve one of nature’s most perplexing challenges—predicting the complex 3D shape a string of amino acids will twist and fold into as it becomes a fully functional protein. This year, scientists have shown that artificial intelligence (AI)-driven software can achieve this long-standing goal and predict accurate protein structures by the thousands and at a fraction of the time and cost involved with previous methods” [64].

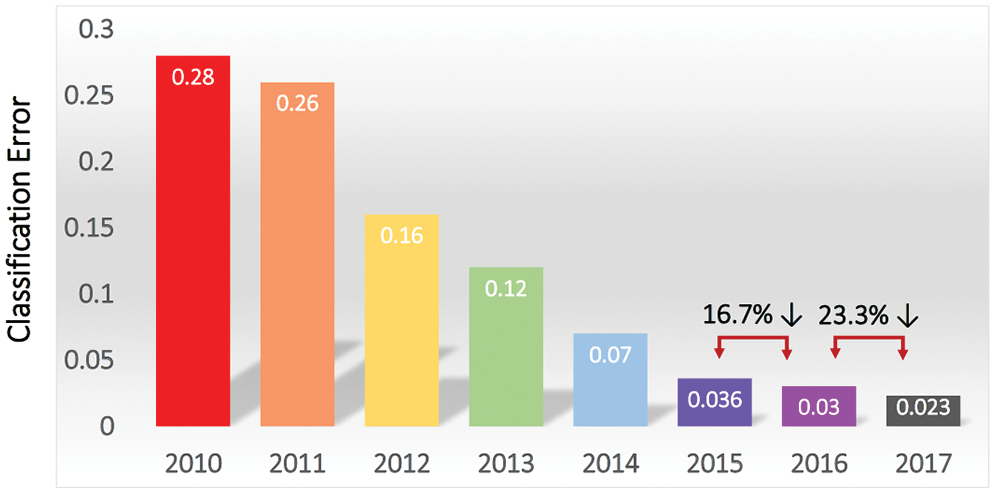

Figure 3: ImageNet competitions (Section 2). Top (smallest) classification error rate versus competition year. A sharp decrease in error rate in 2012 sparked a resurgence in AI interest and research [13]. By 2015, the top classification error rate surpassed human classification error rate of 5.1% with Parametric Rectified Linear Unit [61]; see Section 5.3.3 and also [62]. Figure from [63]. (Figure reproduced with permission of the authors.)

The 3-D shape of a protein, obtained by folding a linear chain of amino acids, determines how this protein would interact with other molecules, and thus establishes its biological functions [64]. There are some 200 million proteins, the building blocks of life, in all living creatures, and 400,000 in the human body [64]. The AlphaFold Protein Structure Database already contained “over 200 million protein structure predictions.”11 For comparison, there were only about 190 thousand protein structures obtained through experiments as of 2022.07.28 [65]. “Some of AlphaFold’s predictions were on par with very good experimental models [Figure 2, left], and potentially precise enough to detail atomic features useful for drug design, such as the active site of an enzyme” [66]. The influence of this software and its developers “would be epochal.”

On the 2019 new-year day, The Guardian [67] reported the most recent breakthrough in AI, published less than a month before on 2018 Dec 07 in the journal Science in [68] on the development of the software AlphaZero, based on deep reinforcement learning (a combination of deep learning and reinforcement learning), that can teach itself through self-play, and then “convincingly defeated a world champion program in the games of chess, shogi (Japanese chess), as well as Go”; see Figure 2, right.

Go is the most complex game that mankind ever created, with more combinations of possible moves than chess, and even than the number of atoms in the observable universe.12 It is “the most challenging of classic games for artificial intelligence [AI] owing to its enormous search space and the difficulty of evaluating board positions and moves” [6].

This breakthrough is the crowning achievement in a string of astounding successes of deep learning (and reinforcement learning) in taking on this difficult challenge for AI.13 The success of this recent breakthrough prompted an AI expert to declare closed the multidecade-long, arduous chapter of AI research to conquer immensely complex games such as chess, shogi, and Go, and to suggest that AI researchers consider a new generation of games to provide the next set of challenges [73].

In its long history, AI research went through several cycles of ups and downs, in and out of fashion, as described in [74], ‘Why artificial intelligence is enjoying a renaissance’ (see also Section 13 on historical perspective):

“THE TERM “artificial intelligence” has been associated with hubris and disappointment since its earliest days. It was coined in a research proposal from 1956, which imagined that significant progress could be made in getting machines to “solve kinds of problems now reserved for humans if a carefully selected group of scientists work on it together for a summer”. That proved to be rather optimistic, to say the least, and despite occasional bursts of progress and enthusiasm in the decades that followed, AI research became notorious for promising much more than it could deliver. Researchers mostly ended up avoiding the term altogether, preferring to talk instead about “expert systems” or “neural networks”. But in the past couple of years there has been a dramatic turnaround. Suddenly AI systems are achieving impressive results in a range of tasks, and people are once again using the term without embarrassment.”

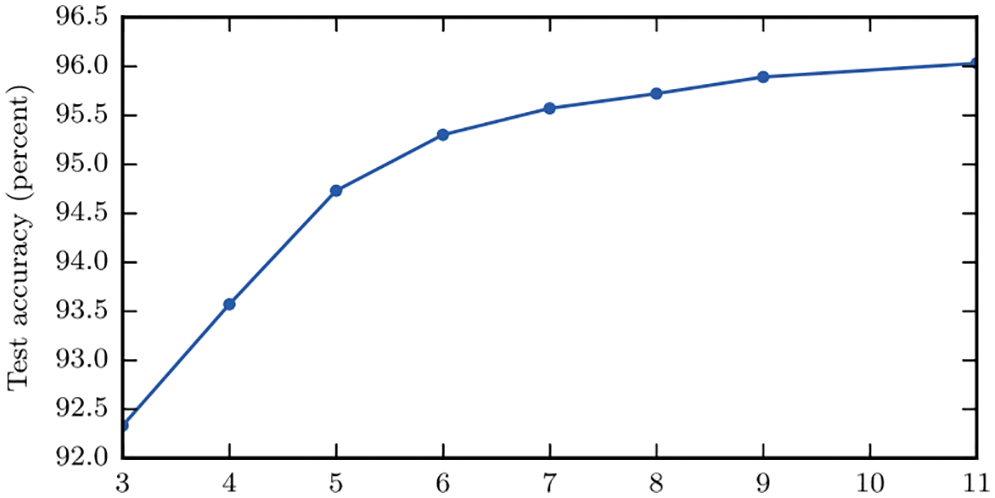

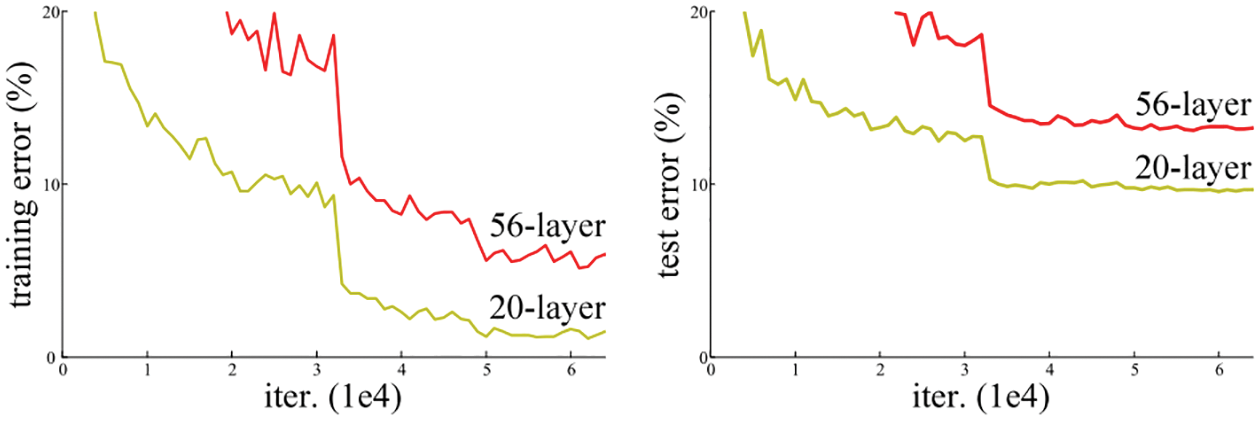

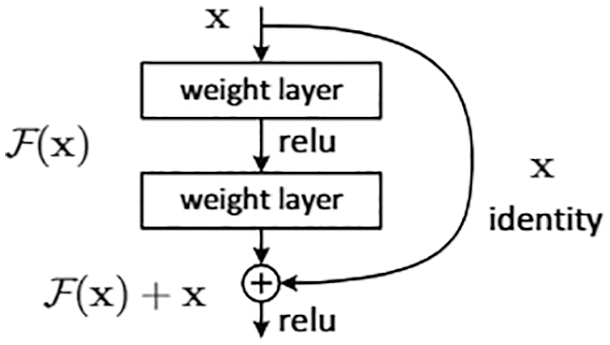

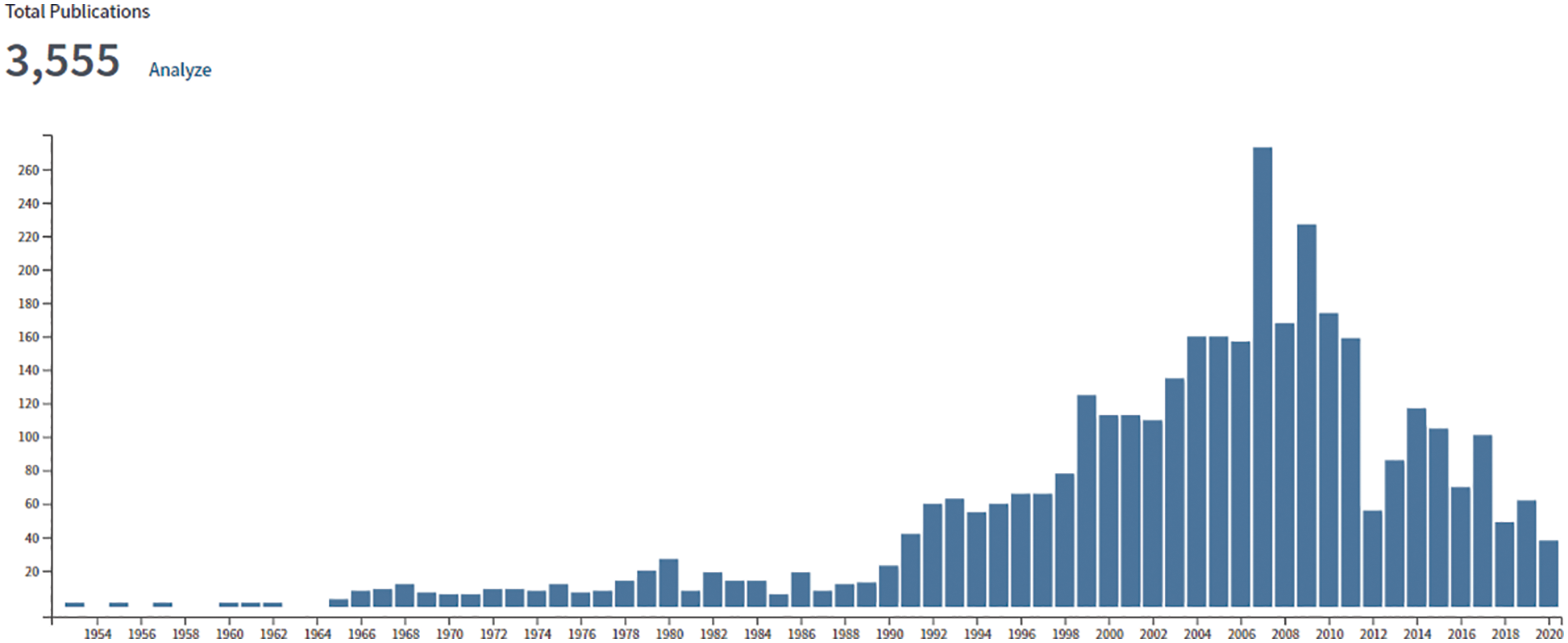

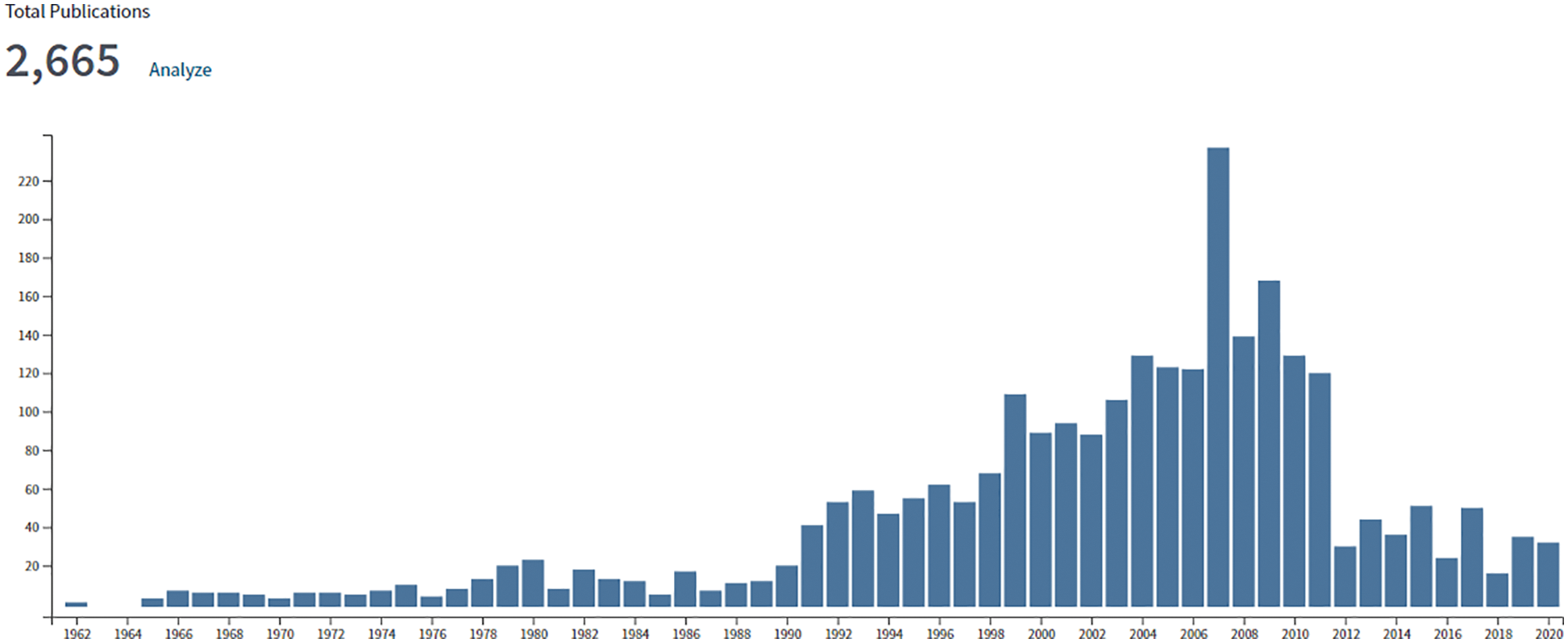

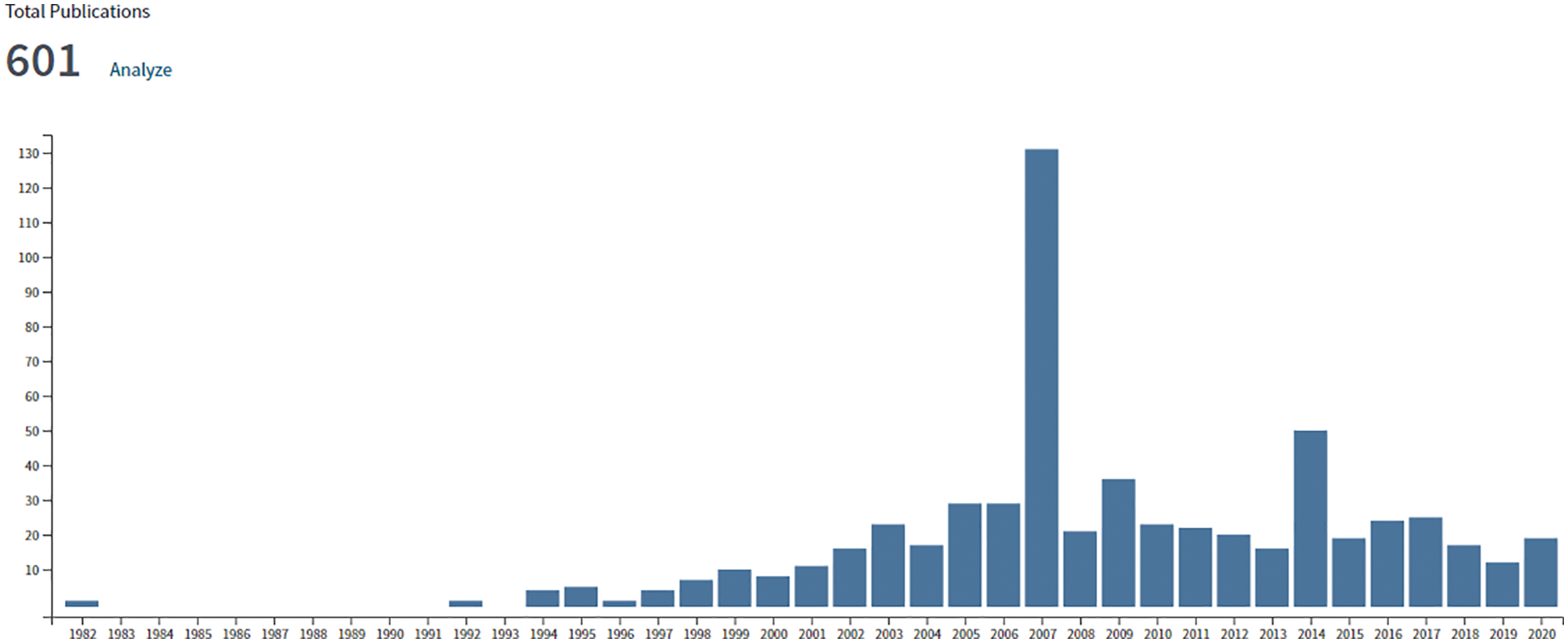

The recent resurgence of enthusiasm for AI research and applications dates only from 2012, with the spectacular success of cutting the error rate in image classification in the ImageNet competition by more than a third,14 going from 26% down to 16%; Figure 3 [63]. In 2015, the deep-learning error rate of 3.6% was smaller than the human-level error rate of 5.1%,15 and then decreased further to 2.3% by 2017, i.e., to less than half of the human-level error rate.

The 2012 success16 of a deep-learning application, which brought renewed interest in AI research out of its recurrent doldrums known as “AI winters”,17 is due to the following reasons:

• Availability of much larger datasets for training deep neural networks (find optimized parameters). It is possible to say that without ImageNet, there would be no spectacular success in 2012, and thus no resurgence of AI. Once the importance of having large datasets to develop versatile, working deep networks was realized, many more large datasets have been developed. See, e.g., [60].

• Emergence of more powerful computers than in the 1990s, e.g., the graphical processing unit (or GPU), “which packs thousands of relatively simple processing cores on a single chip” for use to process and display complex imagery, and to provide fast actions in today’s video games [77].

• Advanced software infrastructure (libraries) that facilitates faster development of deep-learning applications, e.g., TensorFlow, PyTorch, Keras, MXNet, etc. [78], p. 25. See Section 9 on some reviews and rankings of deep-learning libraries.

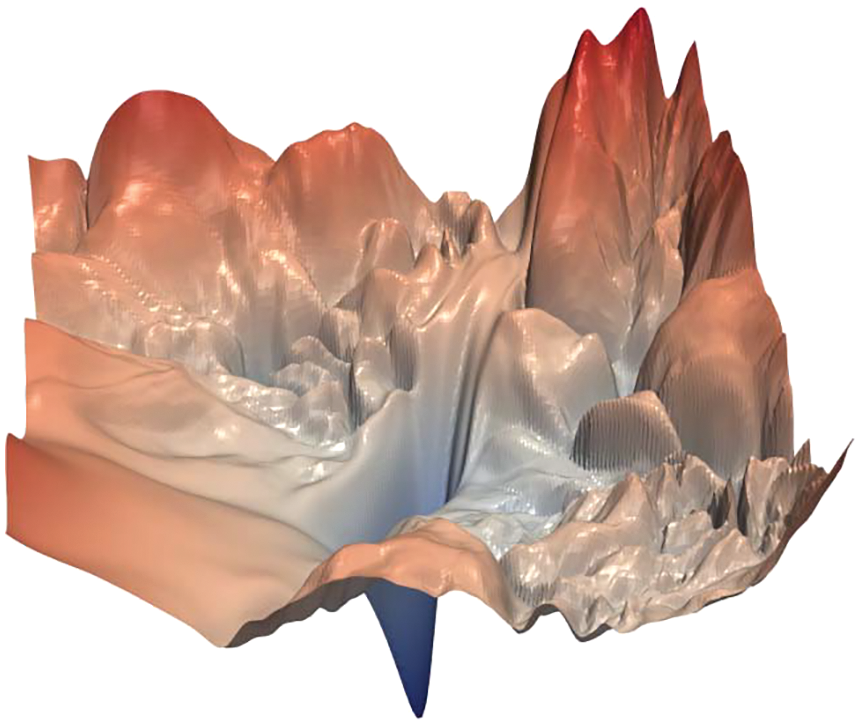

• Larger neural networks and better training techniques (i.e., optimizing network parameters) that were not available in the 1980s. Today’s much larger networks, which can solve once intractable / difficult problems, are “one of the most important trends in the history of deep learning”, but are still much smaller than the nervous system of a frog [78], p. 21; see also Section 4.6. A 2006 breakthrough, ushering in the dawn of a new wave of AI research and interest, has allowed for efficient training of deeper neural networks [78], p. 18.18 The training of large-scale deep neural networks, which frequently involves highly nonlinear and non-convex optimization problems with many local minima, owes its success to the use of the stochastic gradient-descent method first introduced in the 1950s [80].

• Successful applications to difficult, complex problems that help people in their everyday lives, e.g., image recognition, speech translation, etc.

Section 13 provides a historical perspective on the development of AI, with additional details on current and future applications.

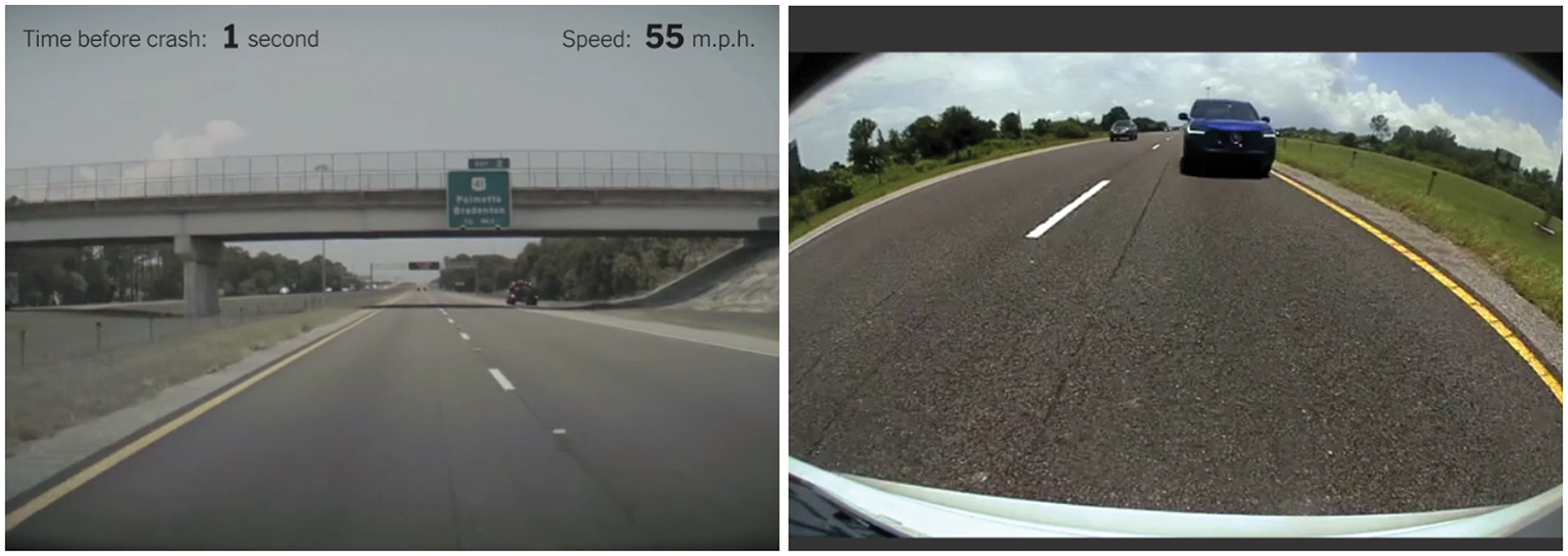

It was, however, disappointing that despite the above-mentioned exciting outcomes of AI, during the Covid-19 pandemic beginning in 2020,23 none of the hundreds of AI systems developed for Covid-19 diagnosis were usable for clinical applications; see Section 13.5.1. As of June 2022, the Tesla electric vehicle autopilot system is under increased scrutiny by the National Highway Traffic Safety Administration as there were “16 crashes into emergency vehicles and trucks with warning signs, causing 15 injuries and one death.”24 In addition, there are many limitations and dangers in the current state of the art of AI; see Section 14.

2.1 Handwritten equation to LaTeX code, image recognition

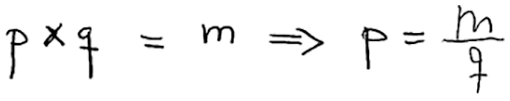

An image-recognition software tool useful for computational mechanicists is Mathpix Snip,25 which recognizes hand-written math equations and transforms them into LaTeX code. For example, Mathpix Snip transforms the hand-written equation below by an 11-year-old pupil:

Figure 4: Handwritten equation 1 (Section 2.1)

into this LaTeX code “p \times q = m \Rightarrow p = \frac { m } { q }” to yield the equation image:

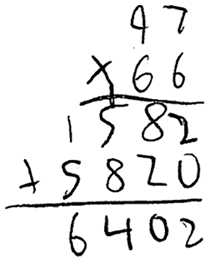

Another example is the hand-written multiplication work below by the same pupil:

Figure 5: Handwritten equation 2 (Section 2.1). Hand-written multiplication work of an eleven-year-old pupil.

that Mathpix Snip transformed into the equation image below:26

2.2 Artificial intelligence, machine learning, deep learning

We want to immediately clarify the meaning of the terminologies “Artificial Intelligence” (AI), “Machine Learning” (ML), and “Deep Learning” (DL), since their casual use could be confusing for first-time learners.

For example, it was stated in a review of primarily two computer-science topics called “Neural Networks” (NNs) and “Support Vector Machines” (SVMs) and a physics topic that [85]:27

“The respective underlying fields of basic research—quantum information versus machine learning (ML) and artificial intelligence (AI)—have their own specific questions and challenges, which have hitherto been investigated largely independently.”

Questions would immediately arise in the mind of first-time learners: Are ML and AI two different fields, or the same fields with different names? If one field is a subset of the other, then would it be more general to just refer to the larger set? On the other hand, would it be more specific to just refer to the subset?

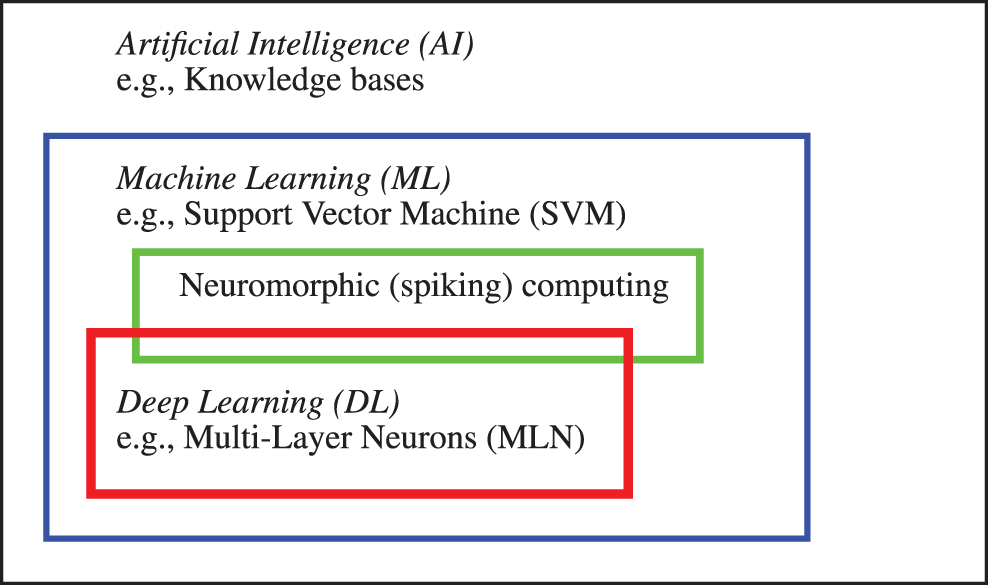

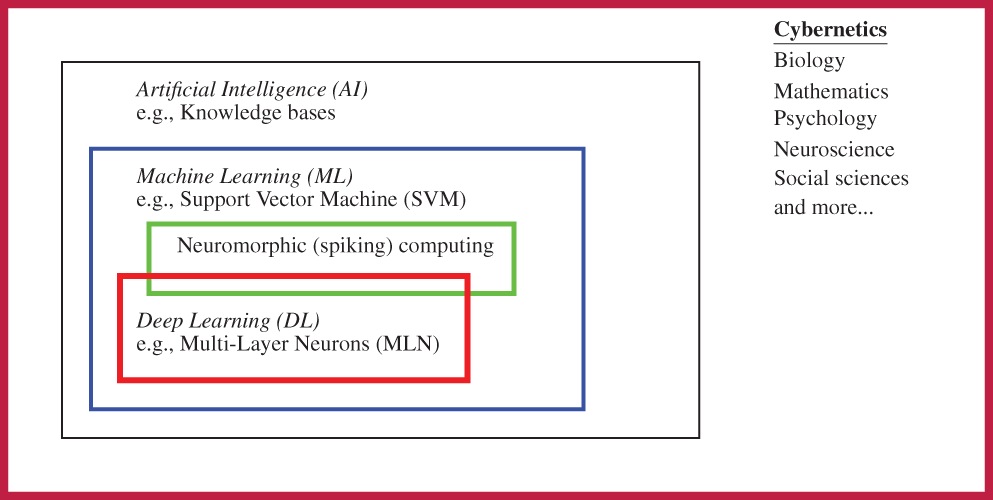

In fact, Deep Learning is a subset of methods inside a larger set of methods known as Machine Learning, which in itself is a subset of methods generally known as Artificial Intelligence. In other words, Deep Learning is Machine Learning, which is Artificial Intelligence; [78], p. 9.28 On the other hand, Artificial Intelligence is not necessarily Machine Learning, which in itself is not necessarily Deep Learning.

The review in [85] was restricted to Neural Networks (which could be deep or shallow)29 and Support Vector Machine (which is Machine Learning, but not Deep Learning); see Figure 6. Deep Learning can be thought of as multiple levels of composition, going from simpler (less abstract) concepts (or representations) to more complex (abstract) concepts (or representations).30

Based on the above relationship between AI, ML, and DL, it would be much clearer if the phrase “machine learning (ML) and artificial intelligence (AI)” in both the title of [85] and the original sentence quoted above is replaced by the phrase “machine learning (ML)” to be more specific, since the authors mainly reviewed Multi-Layer Neural (MLN) networks (deep learning, and thus machine learning) and Support Vector Machine (machine learning).31 MultiLayer Neural (MLN) network is also known as MultiLayer Perceptron (MLP).32 Both MLN networks and SVMs are considered as artificial intelligence, which in itself is too broad and thus not specific enough.

Figure 6: Artificial intelligence and subfields (Section 2.2). Three classes of methods, namely Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning (DL), and their relationship, with an example of a method in each class. A knowledge-base method is an AI method, but is neither an ML method, nor a DL method. Support Vector Machine and spiking computing are ML methods, and thus AI methods, but not DL methods. Multi-Layer Neural (MLN) network is a DL method, and is thus both an ML method and an AI method. See also Figure 158 in Appendix 4 on Cybernetics, which encompassed all of the above three classes.

Another reason for simplifying the title in [85] is that the authors did not consider using any other AI methods, except for two specific ML methods, even though they discussed AI in the general historical context.

The engine of neuromorphic computing, also known as spiking computing, is a hardware network built into the IBM TrueNorth chip, which contains “1 million programmable spiking neurons and 256 million configurable synapses”,33 and consumes “extremely low power” [87]. Despite the apparent difference from the software approach of deep computing, a neuromorphic chip could implement deep-learning networks, and thus the difference was not fundamental [88]. There is thus an overlap between neuromorphic computing and deep learning, as shown in Figure 6, instead of two disconnected subfields of machine learning as reported in [20].34

2.3 Motivation, applications to mechanics

As motivation, we present in this section the results in three recent papers in computational mechanics, mentioned in the Opening Remarks in Section 1, and identify some deep-learning fundamental concepts (in italics) employed in these papers, together with the corresponding sections in the present paper where these concepts are explained in detail. First-time learners of deep learning will likely find these fundamental concepts described by obscure technical jargon, whose meaning will be explained in detail in the identified subsequent sections. Experts of deep learning would understand how deep learning is applied to computational mechanics.

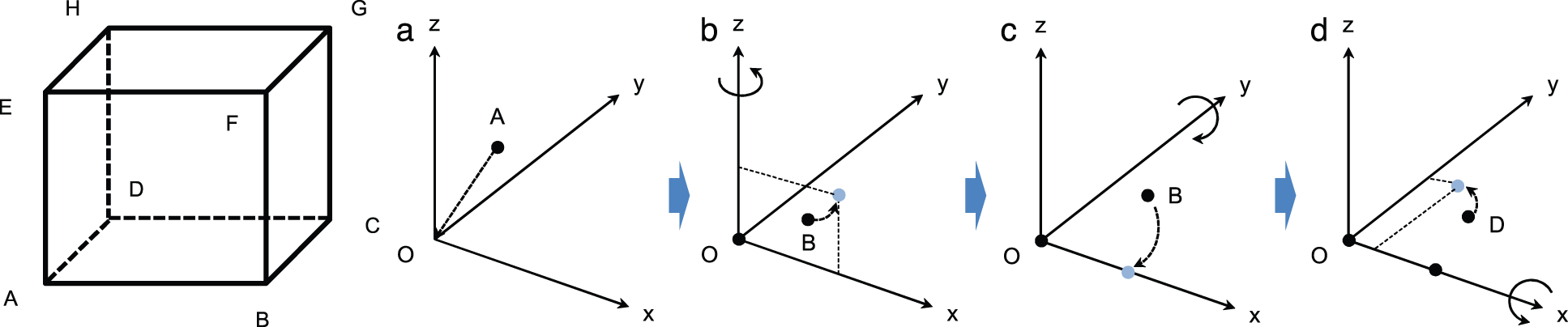

Figure 7: Feedforward neural network (Section 2.3.1). A feedforward neural network in [38], rotated clockwise by 90 degrees to compare to its equivalent in Figure 23 and Figure 35 further below. All terminologies and fundamental concepts will be explained in detail in subsequent sections as listed. See Section 4.1.1 for a top-down explanation and Section 4.1.2 for bottom-up explanation. This figure of a network could be confusing to first-time learners, as already indicated in Footnote 5. (Figure reproduced with permission of the authors.)

2.3.1 Enhanced numerical quadrature for finite elements

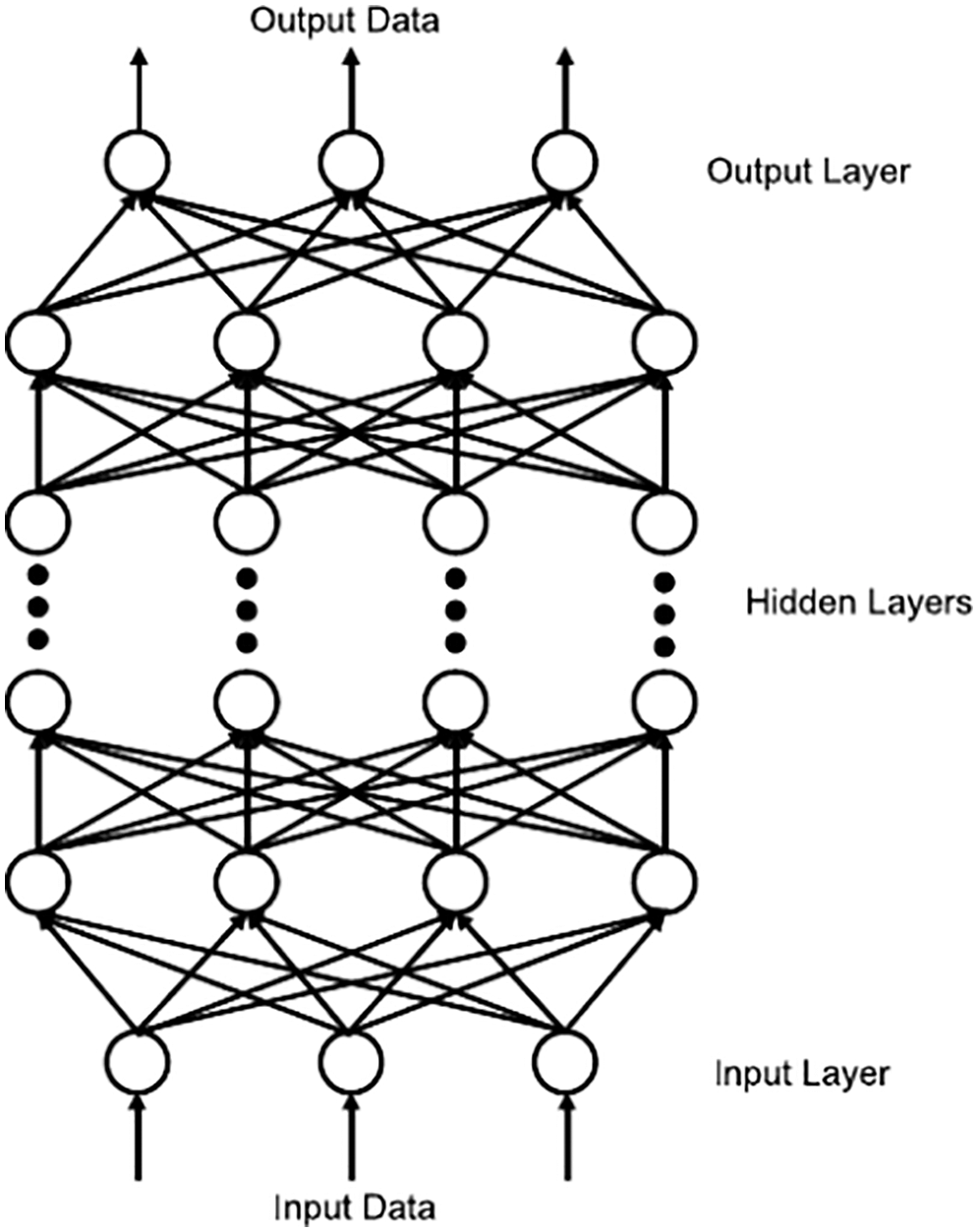

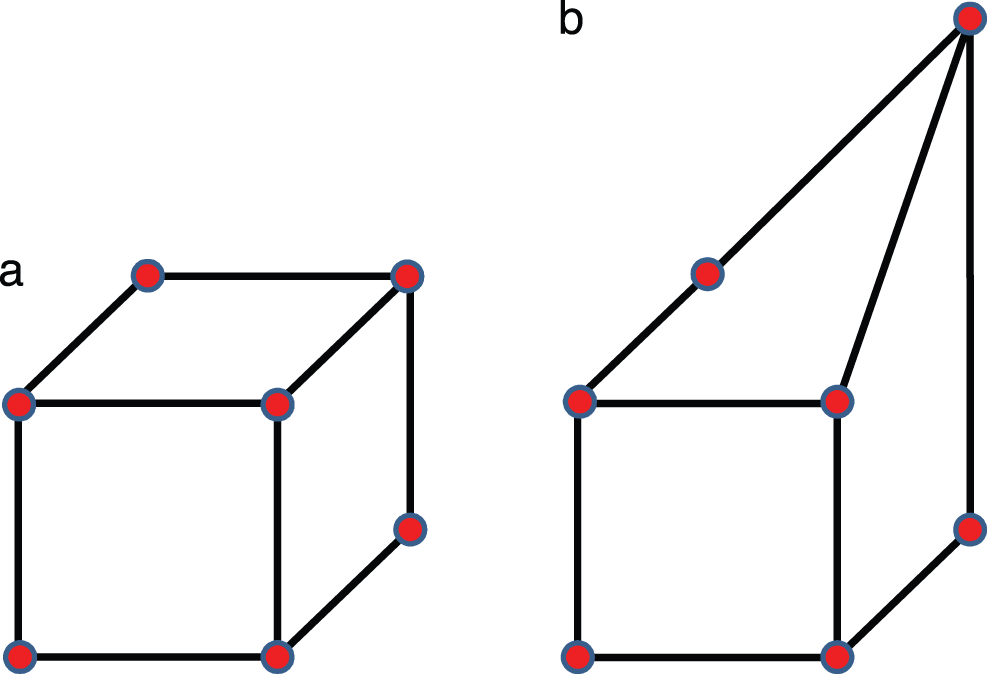

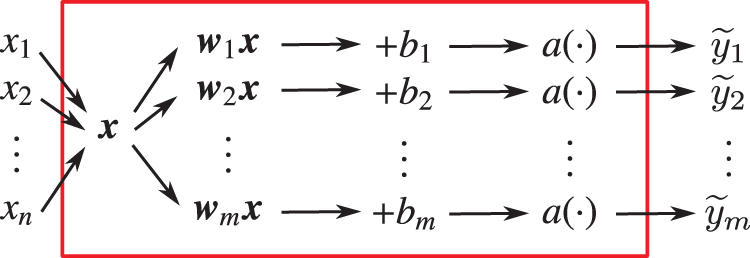

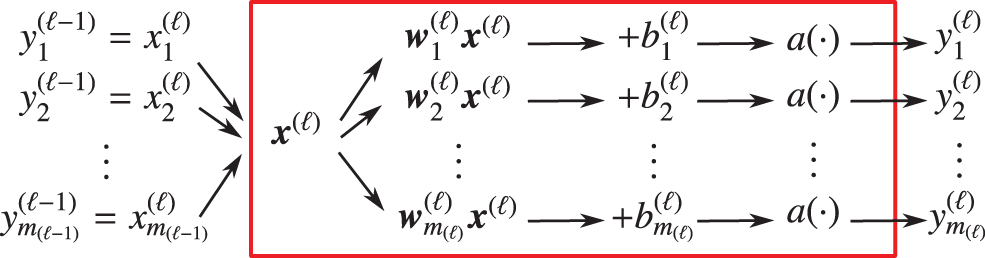

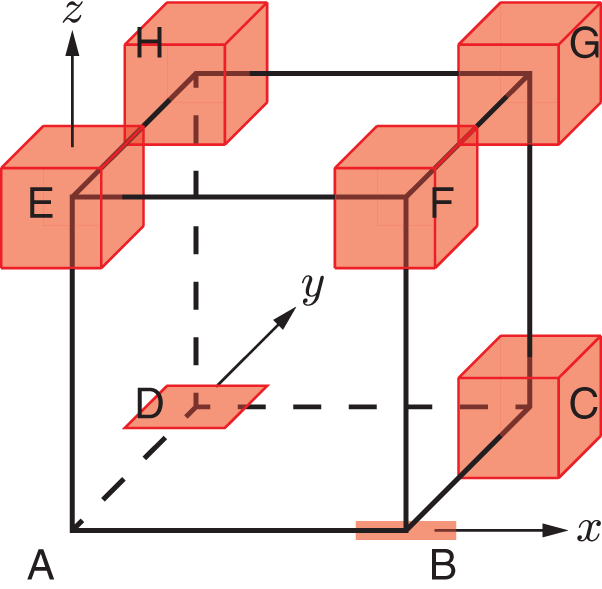

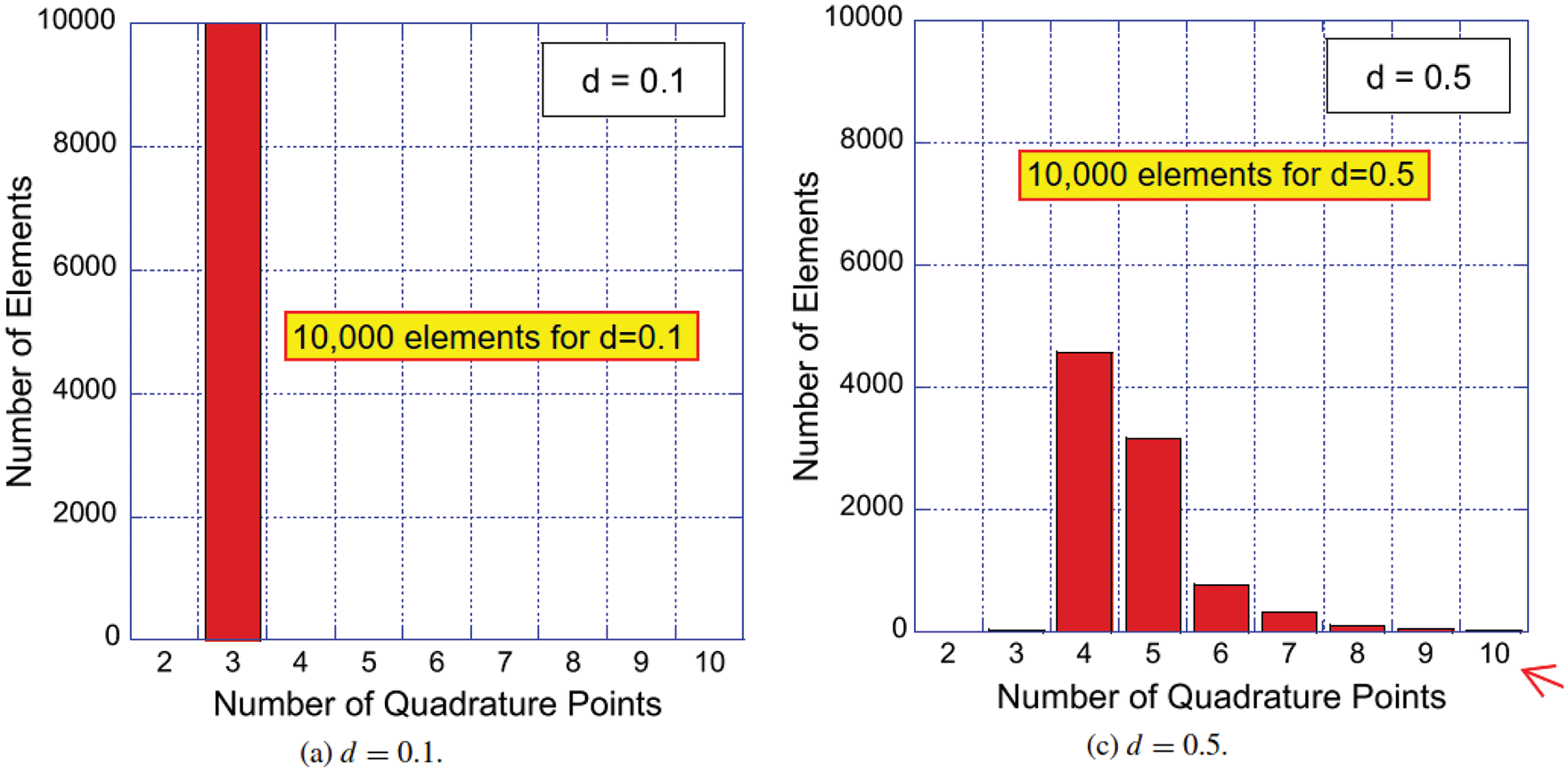

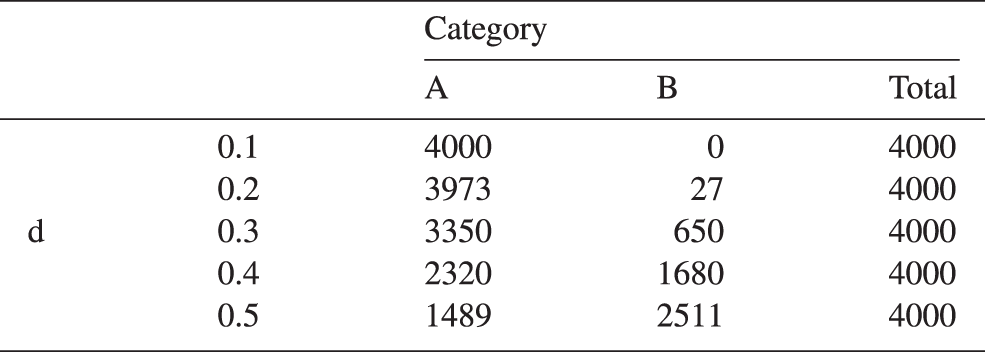

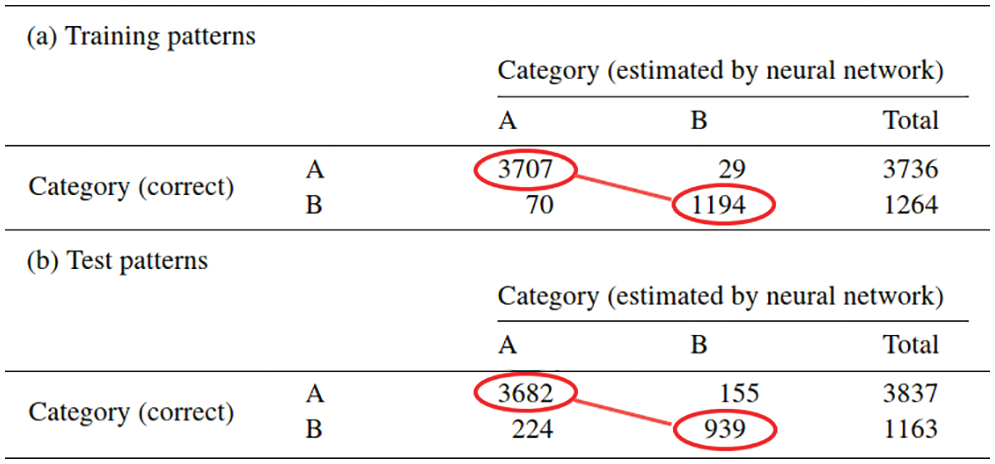

To integrate efficiently and accurately the element matrices in a general finite element mesh of 3-D hexahedral elements (including distorted elements), the power of Deep Learning was harnessed in two applications of feedforward MultiLayer Neural networks (MLN,35 Figures 7-8, Section 4) [38]:

(1) Application 1.1: For each element (particularly distorted elements), find the number of integration points that provides accurate integration within a given error tolerance. Section 10.2 contains the details.

(2) Application 1.2: Uniformly use

To train37 the networks—i.e., to optimize the network parameters (weights and biases, Figure 8) to minimize some loss (cost, error) function (Sections 5.1, 6)—up to 20000 randomly distorted hexahedrals were generated by displacing nodes from a regularly shaped element [38], see Figure 9. For each distorted shape, the following are determined: (1) the minimum number of integration points required to reach a prescribed accuracy, and (2) corrections to the quadrature weights by trying one million randomly generated sets of correction factors, among which the best one was retained.
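
The data-generation step described above relies on two ingredients: displacing the nodes of a regular hexahedron at random, and evaluating element integrals by Gauss-Legendre quadrature with a chosen number of points and, optionally, corrected weights. The following is only a minimal sketch under stated assumptions, not the procedure of [38]: it assumes an 8-node trilinear hexahedron, uses the element volume (the integral of the Jacobian determinant) as a stand-in integrand, and the names `hex_volume`, `distort`, `amplitude`, and `corrections` are hypothetical. For this particular integrand the 2 × 2 × 2 rule is already exact, because the Jacobian determinant of a trilinear element is a polynomial of degree at most two in each natural coordinate; the element matrices integrated in [38] do not share this property on distorted elements.

```python
import numpy as np

# Natural coordinates of the 8 nodes of the reference cube [-1, 1]^3.
REF_NODES = np.array([[-1, -1, -1], [1, -1, -1], [1, 1, -1], [-1, 1, -1],
                      [-1, -1, 1], [1, -1, 1], [1, 1, 1], [-1, 1, 1]], dtype=float)

def shape_gradients(xi):
    """Derivatives of the 8 trilinear shape functions w.r.t. (xi, eta, zeta); shape (8, 3)."""
    s = 1.0 + REF_NODES * xi  # row a holds (1 + xi_a*xi, 1 + eta_a*eta, 1 + zeta_a*zeta)
    grads = np.empty((8, 3))
    grads[:, 0] = REF_NODES[:, 0] * s[:, 1] * s[:, 2] / 8.0
    grads[:, 1] = REF_NODES[:, 1] * s[:, 0] * s[:, 2] / 8.0
    grads[:, 2] = REF_NODES[:, 2] * s[:, 0] * s[:, 1] / 8.0
    return grads

def hex_volume(nodes, n_points=2, corrections=None):
    """Integrate det(J) over the element with n_points**3 Gauss-Legendre points.

    corrections: optional multipliers of the quadrature weights, one per
    integration point (hypothetical stand-in for corrected weights).
    """
    pts, wts = np.polynomial.legendre.leggauss(n_points)
    if corrections is None:
        corrections = np.ones(n_points ** 3)
    volume, k = 0.0, 0
    for i in range(n_points):
        for j in range(n_points):
            for m in range(n_points):
                xi = np.array([pts[i], pts[j], pts[m]])
                jacobian = nodes.T @ shape_gradients(xi)  # 3x3 matrix dx/dxi
                weight = wts[i] * wts[j] * wts[m] * corrections[k]
                volume += weight * np.linalg.det(jacobian)
                k += 1
    return volume

def distort(nodes, amplitude, rng):
    """Displace every node by a uniform random vector in [-amplitude, amplitude]^3."""
    return nodes + rng.uniform(-amplitude, amplitude, size=nodes.shape)

rng = np.random.default_rng(0)
distorted = distort(REF_NODES, amplitude=0.3, rng=rng)
print(hex_volume(REF_NODES), hex_volume(distorted, 2), hex_volume(distorted, 3))
```

Replacing the integrand `np.linalg.det(jacobian)` by an entry of an element matrix would give an integral whose accuracy depends on `n_points` and on the element distortion, which is the situation the two applications address.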

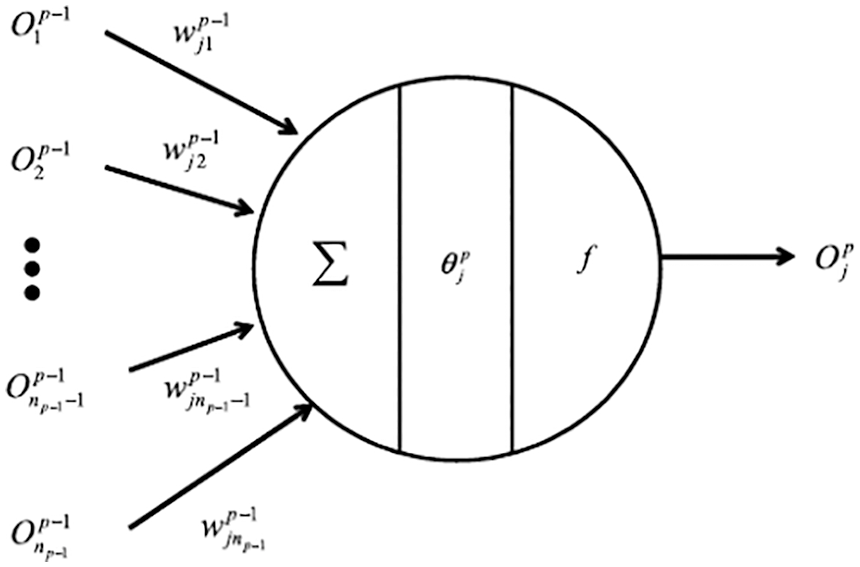

Figure 8: Artificial neuron (Section 2.3.1). A neuron with its multiple inputs

Figure 9: Cube and distorted cube elements (Section 2.3.1). Regular and distorted linear hexahedral elements [38]. (Figure reproduced with permission of the authors.)

While Application 1.1 used one fully-connected (Section 4.6.1) feedforward neural network (Section 4), Application 1.2 relied on two neural networks: The first neural network was a classifier that took the element shape (18 normalized nodal coordinates) as input and estimated whether or not the numerical integration (quadrature) could be improved by adjusting the quadrature weights for the given element (one output), i.e., the network classifier only produced two outcomes, yes or no. If an error reduction was possible, a second neural network performed regression to predict the corrected quadrature weights (eight outputs for

To train the classifier network, 10,000 element shapes were selected from the prepared dataset of 20,000 hexahedrals, which were divided into a training set and a validation set (Section 6.1) of 5000 elements each.38

To train the second regression network, 10,000 element shapes were selected for which quadrature could be improved by adjusting the quadrature weights [38].

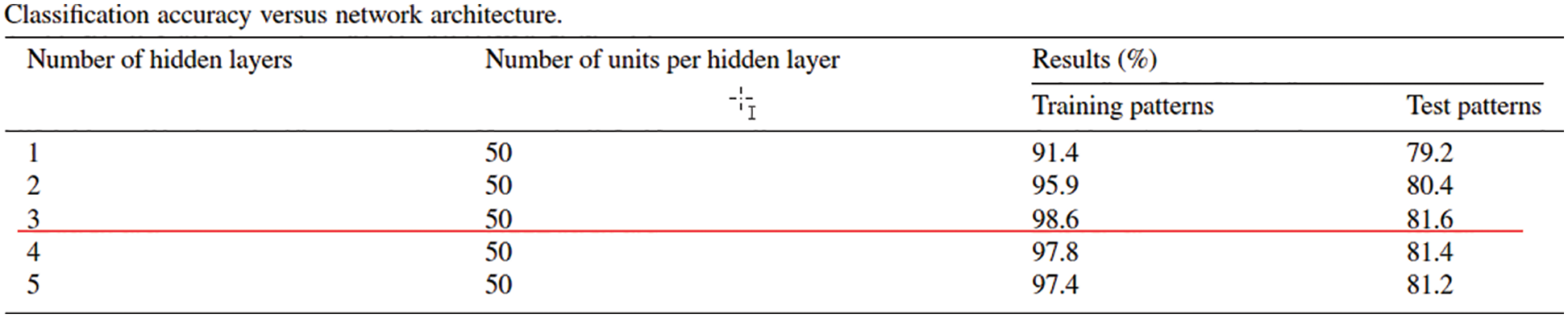

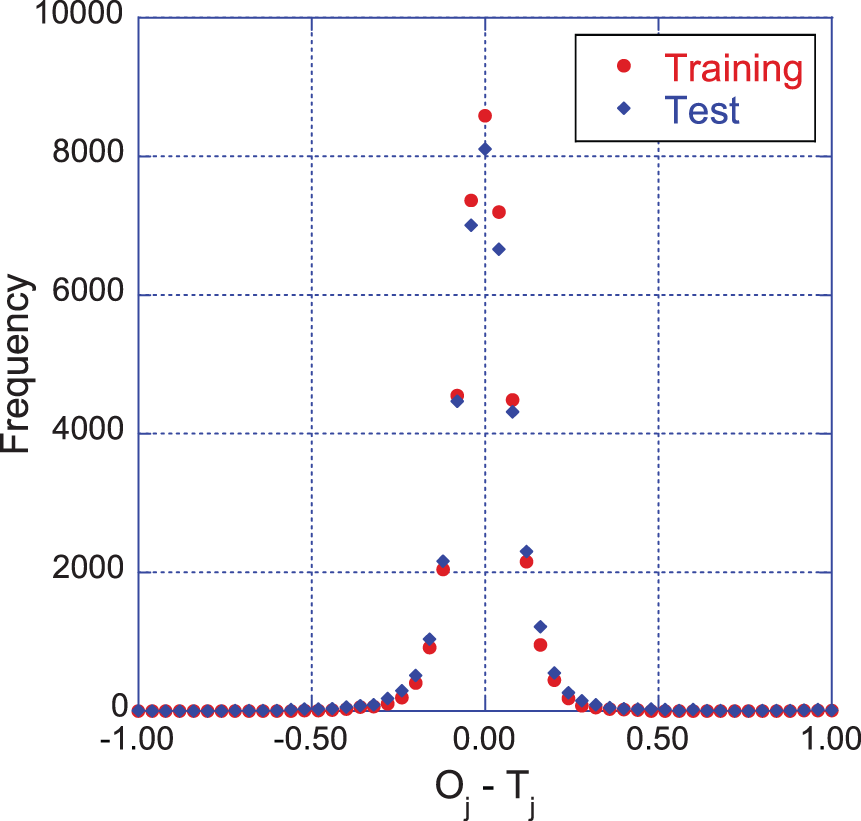

Again, the training set and the test set comprised 5000 elements each. The parameters of the neural networks (weights, biases, Figure 8, Section 4.4) were optimized (trained) using a gradient descent method (Section 6) that minimizes a loss function (Section 5.1), whose gradients with respect to the parameters are computed using backpropagation (Section 5).

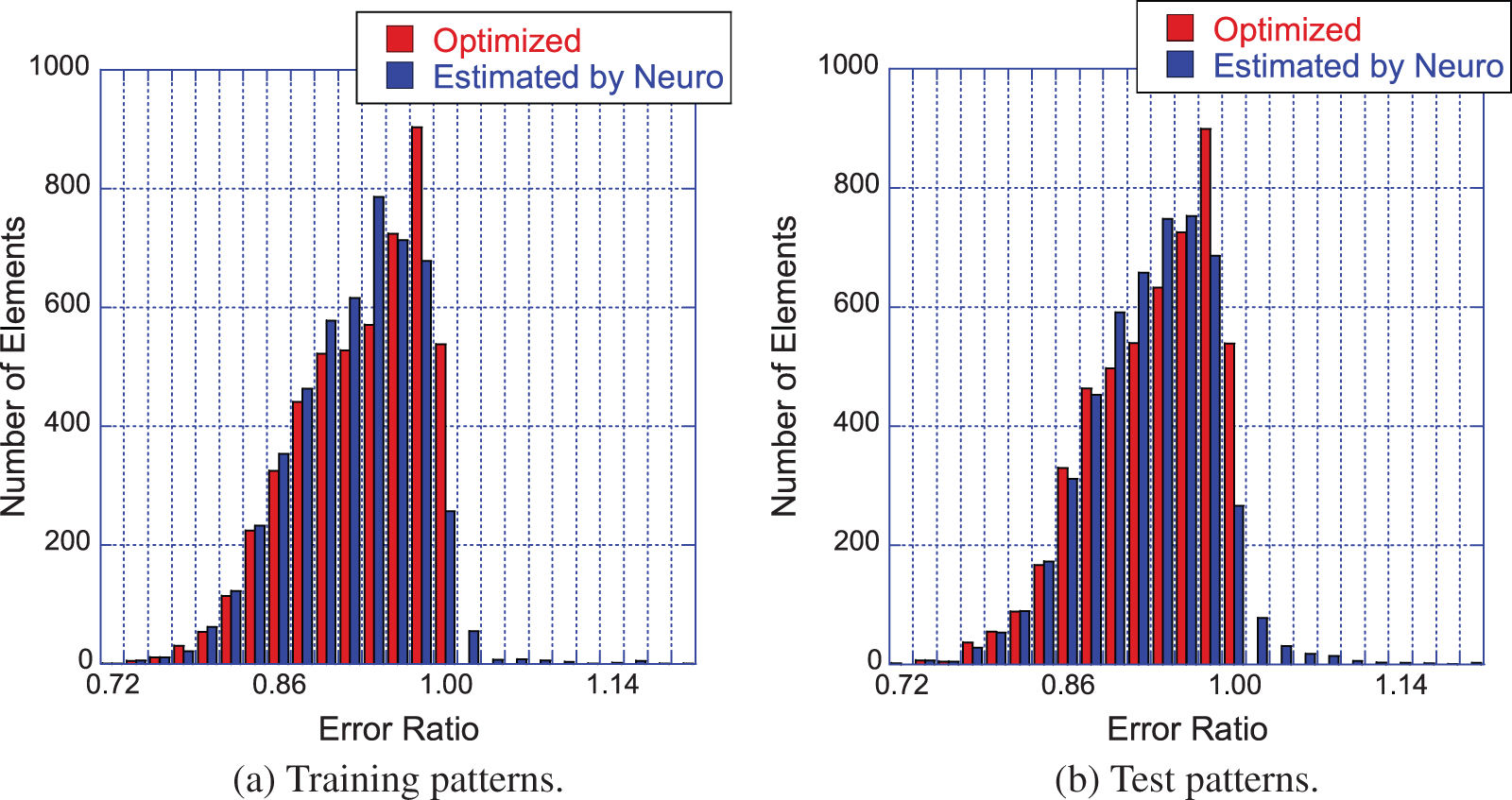

Figure 10: Effectiveness of quadrature weight prediction (Section 2.3.1). Subfigure (a): Distribution of error-reduction ratio

The best results were obtained from a classifier with four hidden layers (Figure 7, Section 4.3, Remark 4.2) with 30 neurons (Figure 8, Figure 36, Section 4.4.3) each and a regression network that had a depth of five hidden layers, where each layer was 50 neurons wide, Figure 7. The results were obtained using the logistic sigmoid function (Figure 30) as activation function (Section 4.4.2) because of the existing software, even though the rectified linear function (Figure 24) was more efficient and yielded comparable accuracy on a few test cases.39
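
The two-network arrangement with the layer sizes quoted above can be sketched as a forward pass through fully-connected layers with logistic sigmoid activations. This is a minimal sketch with random, untrained parameters, not the implementation of [38]; the helper names `init_network` and `forward`, the weight initialization, the 0.5 decision threshold, and the linear output layer of the regression network are assumptions made here for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_network(sizes, rng):
    """Random (untrained) weights and zero biases for layer sizes [n_in, ..., n_out]."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), size=(n, m)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x, output_activation=None):
    """Compose the layers: each hidden layer is an affine map followed by a sigmoid."""
    y = x
    for W, b in params[:-1]:
        y = sigmoid(W @ y + b)
    W, b = params[-1]
    z = W @ y + b
    return z if output_activation is None else output_activation(z)

rng = np.random.default_rng(0)
# Classifier: 18 inputs, four hidden layers of 30 neurons, one output.
classifier = init_network([18, 30, 30, 30, 30, 1], rng)
# Regression network: 18 inputs, five hidden layers of 50 neurons, eight outputs.
regressor = init_network([18, 50, 50, 50, 50, 50, 8], rng)

x = rng.uniform(-1.0, 1.0, size=18)      # placeholder for normalized nodal coordinates
p = forward(classifier, x, sigmoid)      # interpreted as "can the quadrature be improved?"
if p[0] > 0.5:                           # assumed decision threshold
    weights = forward(regressor, x)      # eight predicted quadrature weights
```

With trained parameters in place of the random ones, the same two calls would reproduce the classify-then-correct logic described in the text.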

To quantify the effectiveness of the approach in [38], an error-reduction ratio was introduced, i.e., the quotient of the quadrature error with quadrature weights predicted by the neural network and the error obtained with the standard quadrature weights of Gauss-Legendre quadrature with

For most element shapes of both the training set (a) and the test set (b), each of which comprised 5000 elements, the blue bars in Figure 10 indicate an error ratio below one, i.e., the quadrature weight correction effectively improved the accuracy of numerical quadrature.
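
As a small worked illustration of the error-reduction ratio, the quotient and the share of elements with a ratio below one can be computed as follows. The error values are hypothetical placeholders, not data from [38], and the function names are chosen here for illustration only.

```python
def error_reduction_ratio(error_predicted, error_standard):
    """Quadrature error with network-predicted weights divided by the error with standard weights."""
    return error_predicted / error_standard

def fraction_improved(ratios):
    """Share of elements whose ratio is below one, i.e., whose quadrature error decreased."""
    return sum(r < 1.0 for r in ratios) / len(ratios)

# Hypothetical (error with predicted weights, error with standard weights) pairs.
errors = [(0.5e-3, 1.0e-3), (0.8e-3, 1.0e-3), (1.2e-3, 1.0e-3), (0.9e-3, 1.0e-3)]
ratios = [error_reduction_ratio(p, s) for p, s in errors]
print(fraction_improved(ratios))
```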

Readers familiar with Deep Learning and neural networks can go directly to Section 10, where the details of the formulations in [38] are presented. Other sections are also of interest, such as classic and state-of-the-art optimization methods in Section 6, attention and transformer unit in Section 7, historical perspective in Section 13, and limitations and dangers of AI in Section 14.

Readers not familiar with Deep Learning and neural networks will find below a list of the concepts that will be explained in subsequent sections. To facilitate the reading, we also provide the section number (and the link to jump to) for each concept.

Deep-learning concepts to explain and explore:

(1) Feedforward neural network (Figure 7): Figure 23 and Figure 35, Section 4

(2) Neuron (Figure 8): Figure 36 in Section 4.4.4 (artificial neuron), and Figure 131 in Section 13.1 (biological neuron)

(3) Inputs, output, hidden layers, Section 4.3

(4) Network depth and width: Section 4.3

(5) Parameters, weights, biases: Section 4.4.1

(6) Activation functions: Section 4.4.2

(7) What is “deep” in “deep networks”? Size, architecture: Section 4.6.1, Section 4.6.2

(8) Backpropagation, computation of gradient: Section 5

(9) Loss (cost, error) function, Section 5.1

(10) Training, optimization, stochastic gradient descent: Section 6

(11) Training error, validation error, test (or generalization) error: Section 6.1

This list is continued further below in Section 2.3.2. Details of the formulation in [38] are discussed in Section 10.

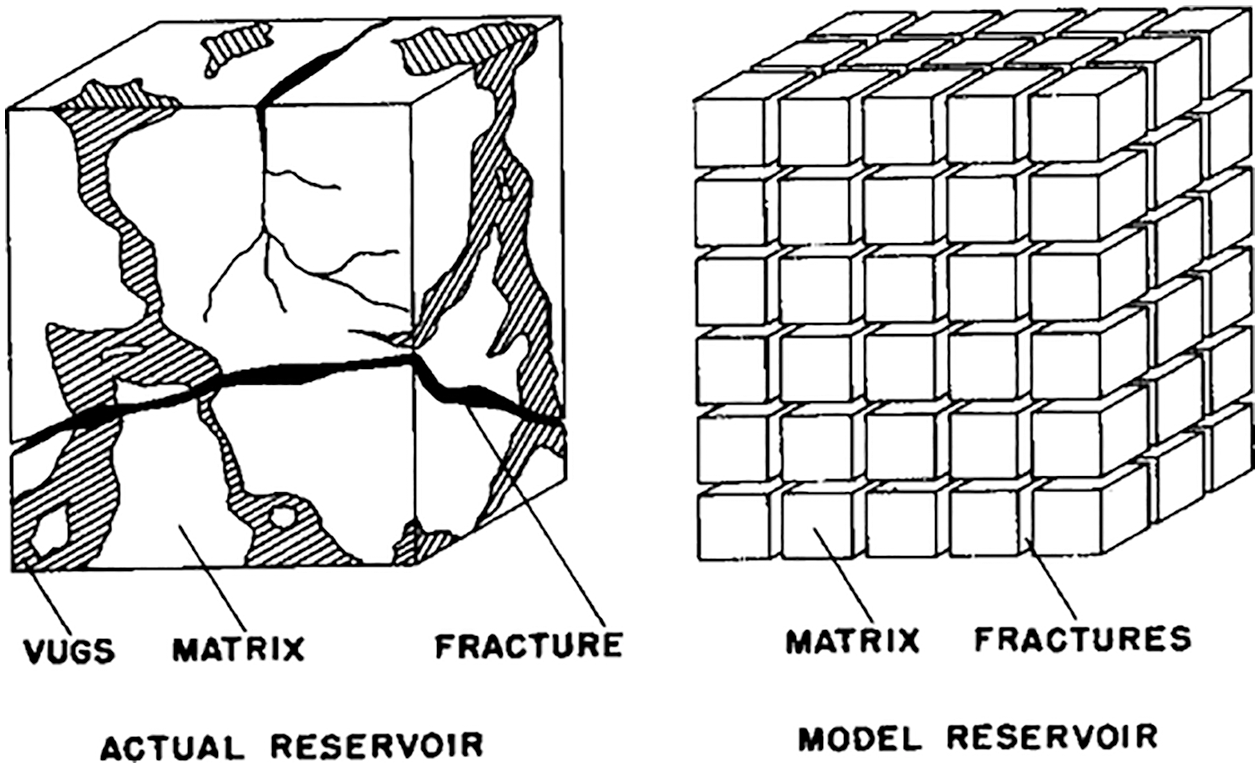

Figure 11: Dual-porosity single-permeability medium (Section 2.3.2). Left: Actual reservoir. Dual (or double) porosity indicates the presence of two types of porosity in naturally-fractured reservoirs (e.g., of oil): (1) Primary porosity in the matrix (e.g., voids in sands) with low permeability, within which fluid does not flow, (2) Secondary porosity due to fractures and vugs (cavities in rocks) with high (anisotropic) permeability, within which fluid flows. Fluid exchange is permitted between the matrix and the fractures, but not between the matrix blocks (sugar cubes), of which the permeability is much smaller than in the fractures. Right: Model reservoir, idealization. The primary porosity is an array of cubes of homogeneous, isotropic material. The secondary porosity is an “orthogonal system of continuous, uniform fractures”, oriented along the principal axes of anisotropic permeability [89]. (Figure reproduced with permission of the publisher SPE.)

2.3.2 Solid mechanics, multiscale modeling

One way that deep learning can be used in solid mechanics is to model complex, nonlinear constitutive behavior of materials. In single physics, balance of linear momentum and strain-displacement relation are considered as definitions or “universal principles”, leaving the constitutive law, or stress-strain relation, to a large number of models that have limitations, no matter how advanced [91]. Deep learning can help model complex constitutive behaviors in ways that traditional phenomenological models could not; see Figure 105.

Deep recurrent neural networks (RNNs) (Section 7.1) were used as a scale-bridging method to efficiently simulate multiscale problems in hydromechanics, specifically plasticity in porous media with dual porosity and dual permeability [25].40

The dual-porosity single-permeability (DPSP) model was first introduced for use in oil-reservoir simulation [89], Figure 11, where the fracture system was the main flow path for the fluid (e.g., two phase oil-water mixture, one-phase oil-solvent mixture). Fluid exchange is permitted between the rock matrix and the fracture system, but not between the matrix blocks. In the DPSP model, the fracture system and the rock matrix, each has its own porosity, with values not differing from each other by a large factor. On the contrary, the permeability of the fracture system is much larger than that in the rock matrix, and thus the system is considered as having only a single permeability. When the permeability of the fracture system and that of the rock matrix do not differ by a large factor, then both permeabilities are included in the more general dual-porosity dual-permeability (DPDP) model [94].

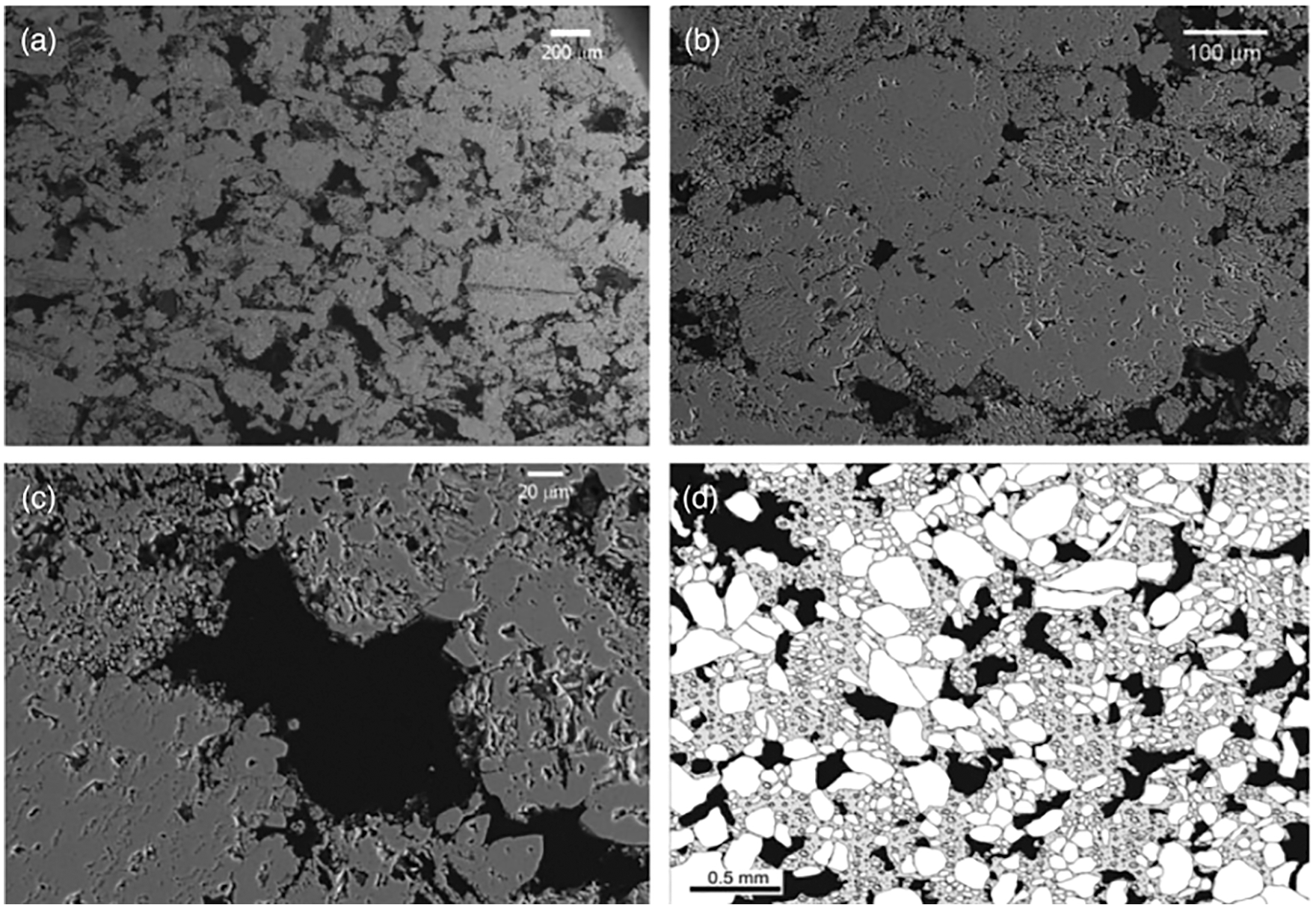

Figure 12: Pore structure of Majella limestone, dual porosity (Section 2.3.2), a carbonate rock with high total porosity at 30%. Backscattered SEM images of Majella limestone: (a)-(c) sequence of zoomed-ins; (d) zoomed-out. (a) The larger macropores (dark areas) have dimensions comparable to the grains (allochems), having an average diameter of 54

Since 60% of the world’s oil reserve and 40% of the world’s gas reserve are held in carbonate rocks, there has been a clear interest in developing an understanding of the mechanical behavior of carbonate rocks such as limestones, ranging from the lowest porosity (Solenhofen at 3%) to high porosity (e.g., Majella at 30%). Chalk (Lixhe) is a carbonate rock with the highest porosity at 42.8%. Carbonate rock reservoirs are also considered for storing carbon dioxide and nuclear waste [95] [93].

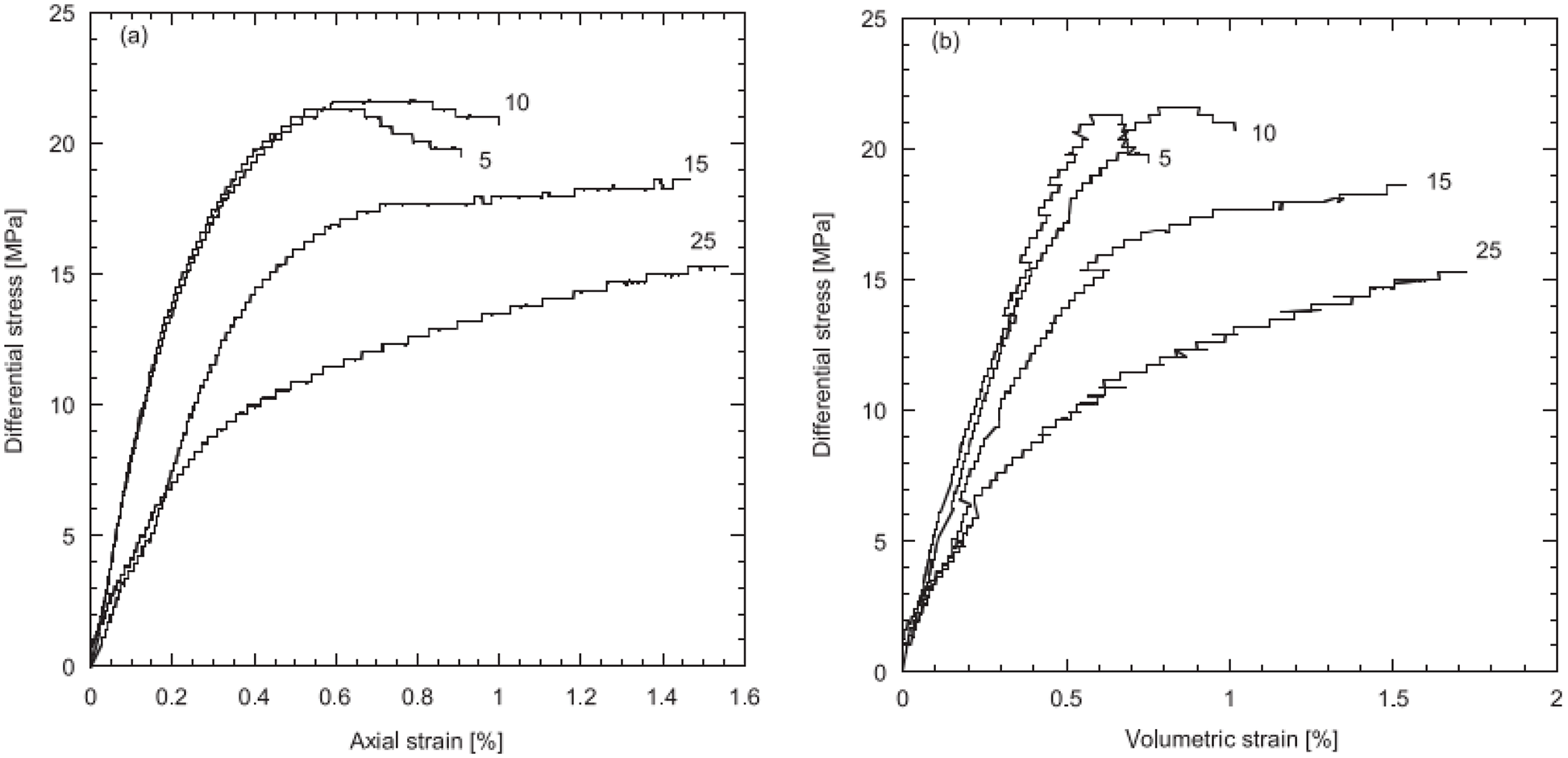

In oil-reservoir simulations in which the primary interest is the flow of oil, water, and solvent, the porosity (and pore size) within each domain (rock matrix or fracture system) is treated as constant and homogeneous [94] [96].41 On the other hand, under mechanical stress, the pore size would change, cracks and other defects would close, leading to a change in the porosity in carbonate rocks. Indeed, “at small stresses, experimental mechanical deformation of carbonate rock is usually characterized by a non-linear stress-strain relationship, interpreted to be related to the closure of cracks, pores, and other defects. The non-linear stress-strain relationship can be related to the amount of cracks and various type of pores” [95], p. 202. Once the pores and cracks are closed, the stress-strain relation becomes linear, at different stress stages, depending on the initial porosity and the geometry of the pore space [95].

Figure 13: Majella limestone, nonlinear stress-strain relations (Section 2.3.2). Differential stress (i.e., the difference between the largest principal stress and the smallest one) vs axial strain (left) and vs volumetric strain (right) [90]. See Remark 11.7, Section 11.3.4, and Remark 11.10, Section 11.3.5. (Figure reproduced with permission of the authors.)

Moreover, pores have different sizes, and can be classified into different pore sub-systems. For the Majella limestone in Figure 12 with total porosity at 30%, its pore space can be partitioned into two subsystems (and thus dual porosity), the macropores with macroporosity at 11.4% and the micropores with microporosity at 19.6%. Thus the meaning of dual-porosity as used in [25] is different from that in oil-reservoir simulation. Also characteristic of porous rocks such as the Majella limestone is the non-linear stress-strain relation observed in experiments, Figure 13, due to the changing size, and collapse, of the pores.

Likewise, the meaning of “dual permeability” is different in [25] in the sense that “one does not seek to obtain a single effective permeability for the entire pore space”. Even though it was not explicitly spelled out,42 it appears that each of the two pore sub-systems would have its own permeability, and that fluid is allowed to exchange between the two pore sub-systems, similar to the fluid exchange between the rock matrix and the fracture system in the DPSP and DPDP models in oil-reservoir simulation [94].

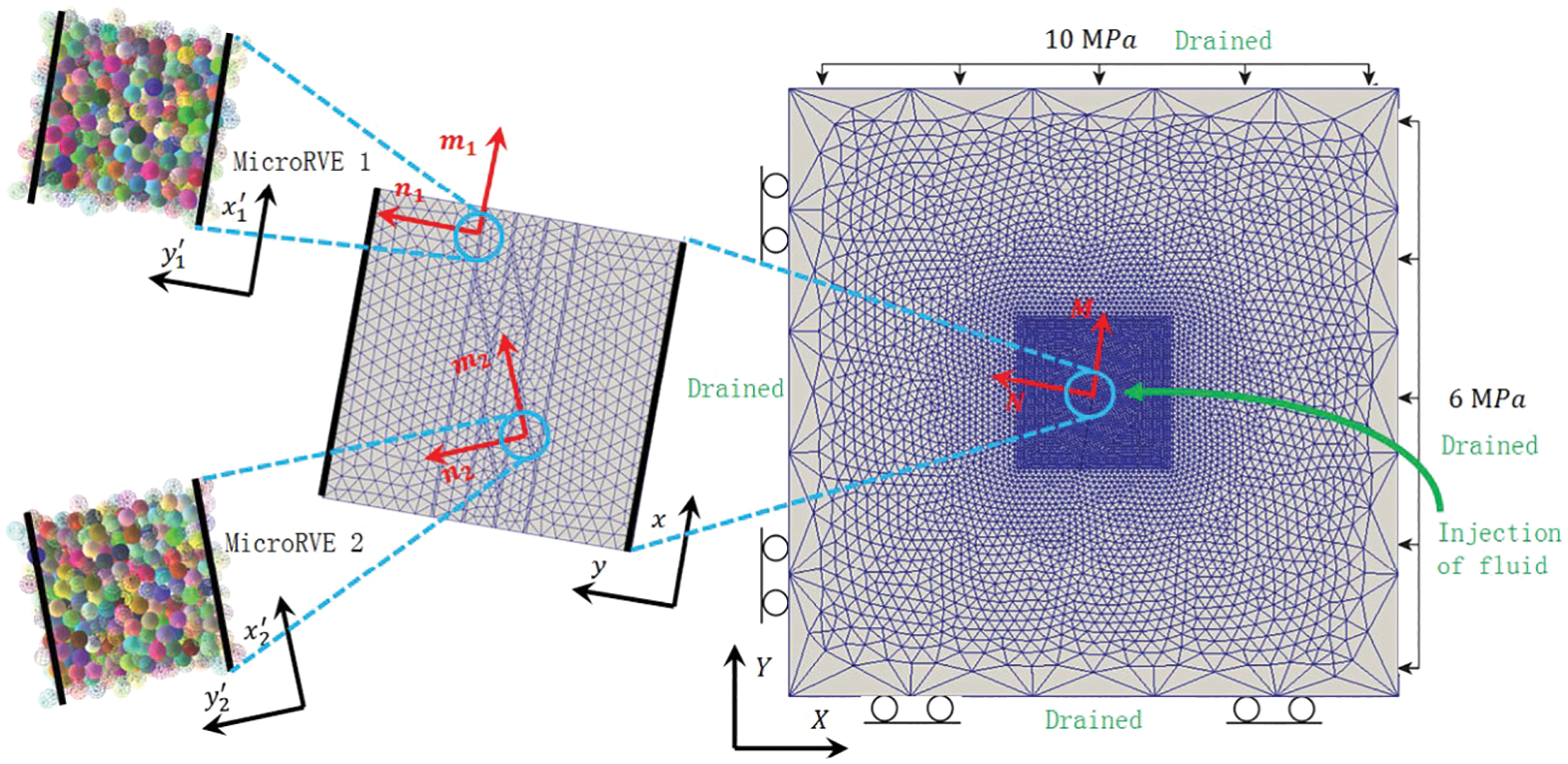

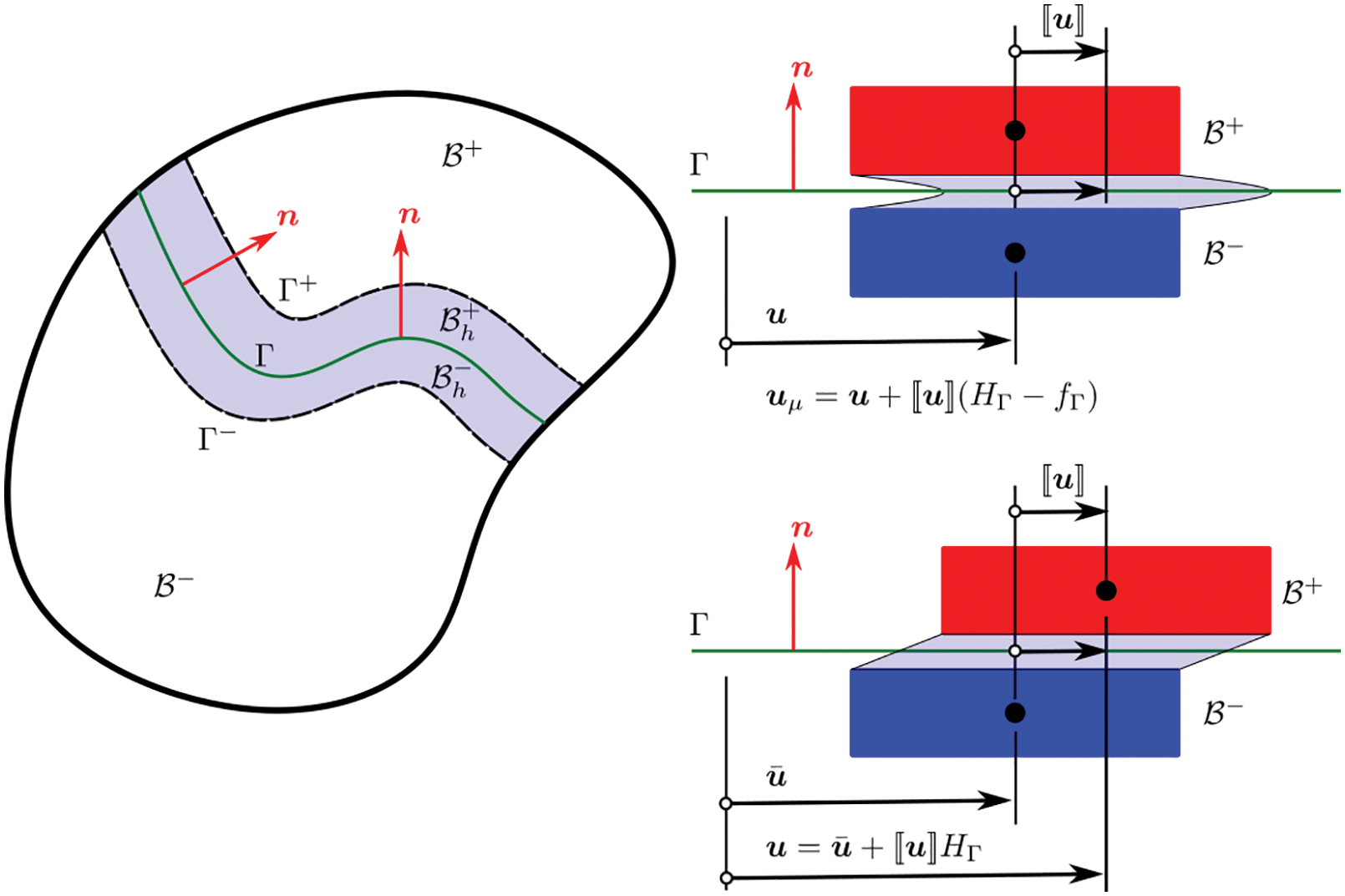

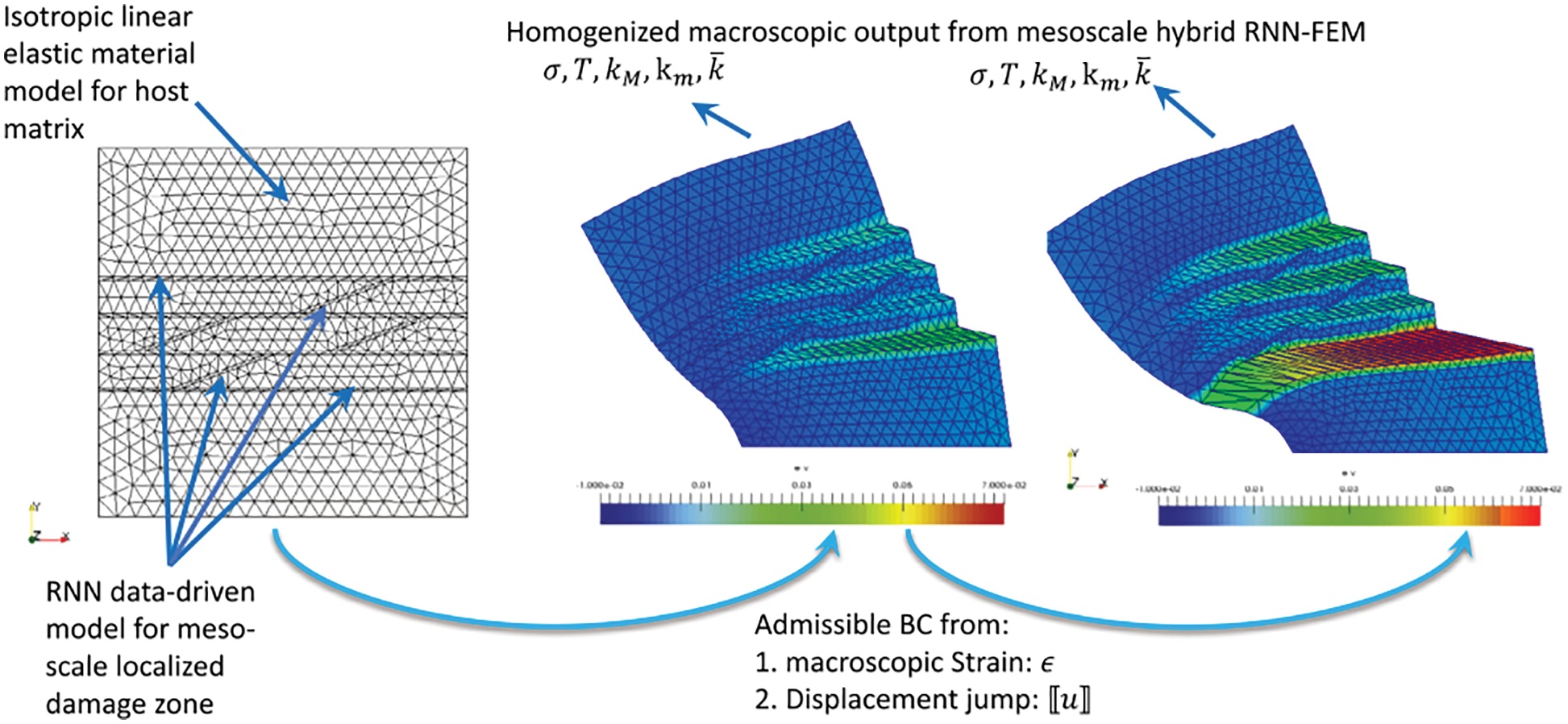

In the problem investigated in [25], the presence of localized discontinuities demands three scales—microscale (

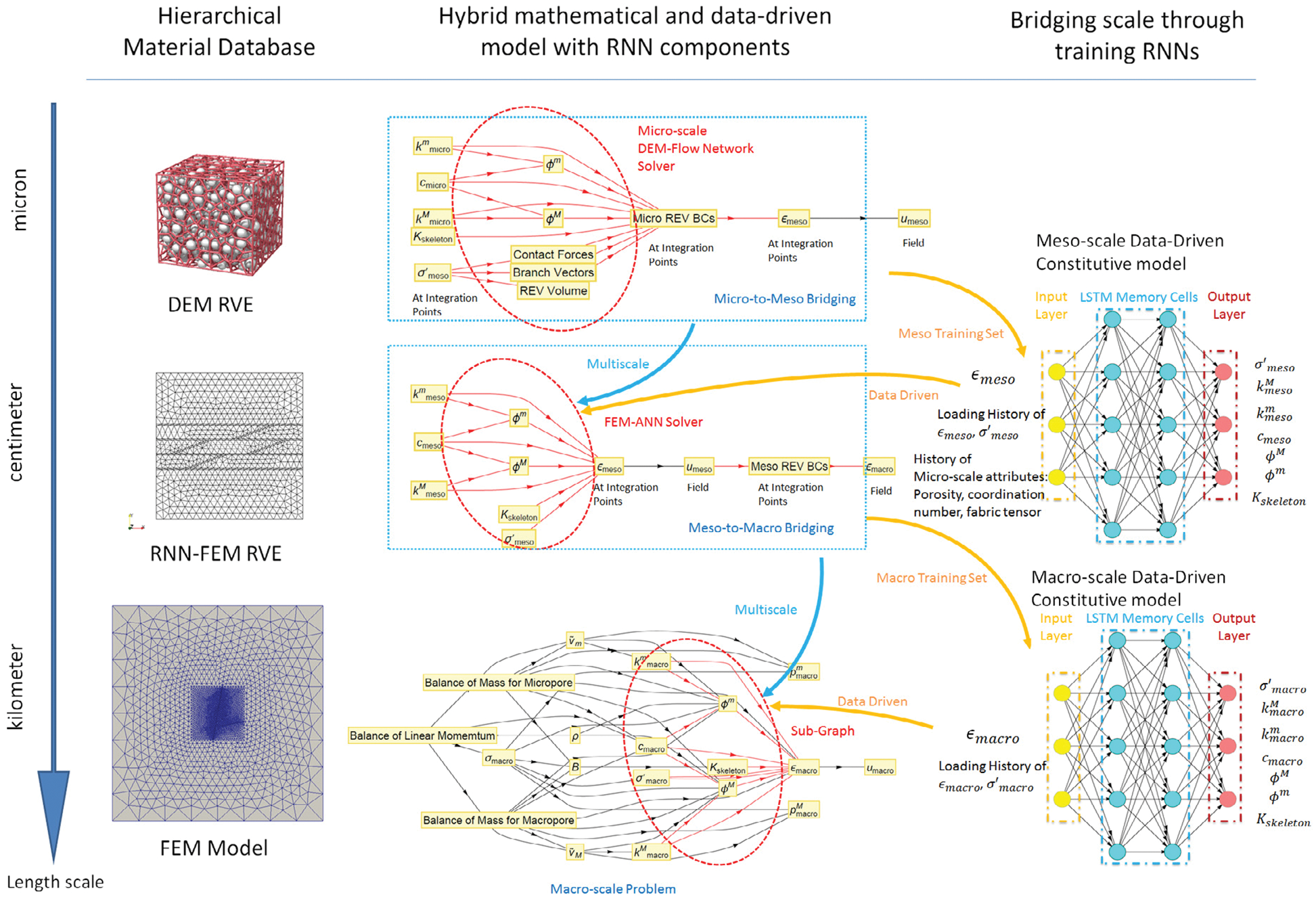

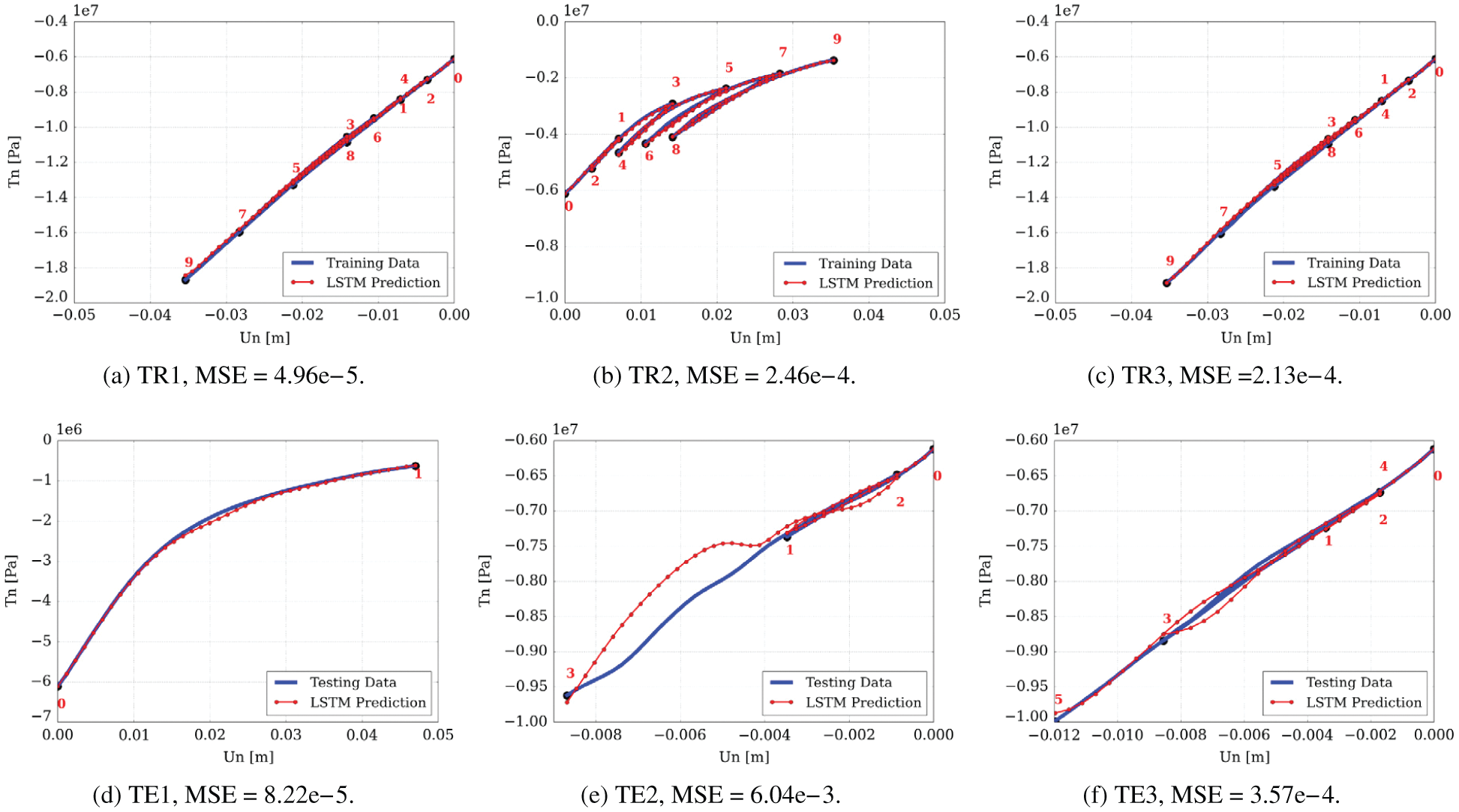

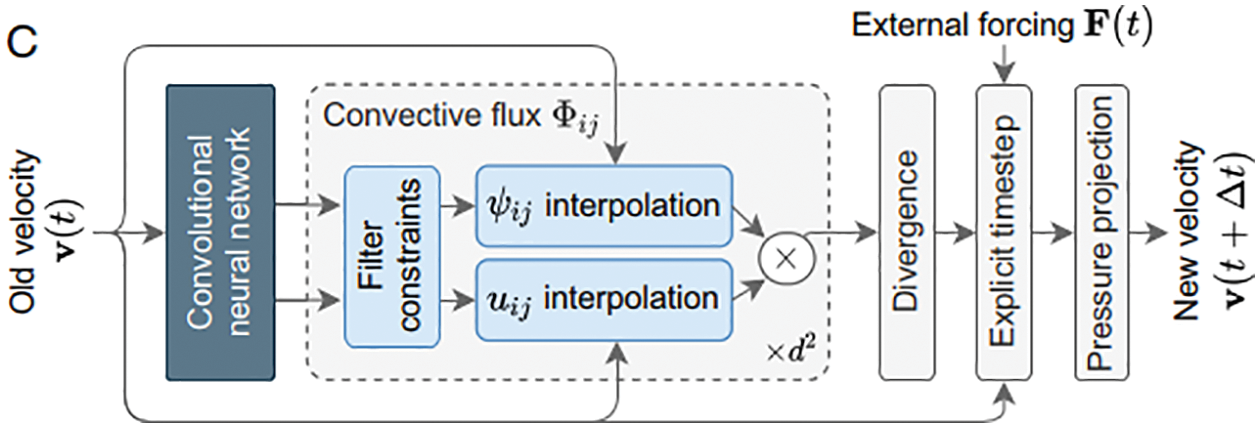

Instead of coupling multiple simulation models online, two (adjacent) scales were linked by a neural network that was trained offline using data generated by simulations on the smaller scale [25]. The trained network subsequently served as a surrogate model in online simulations on the larger scale. With three scales being considered, two recurrent neural networks (RNNs) with Long Short-Term Memory (LSTM) units were employed consecutively:

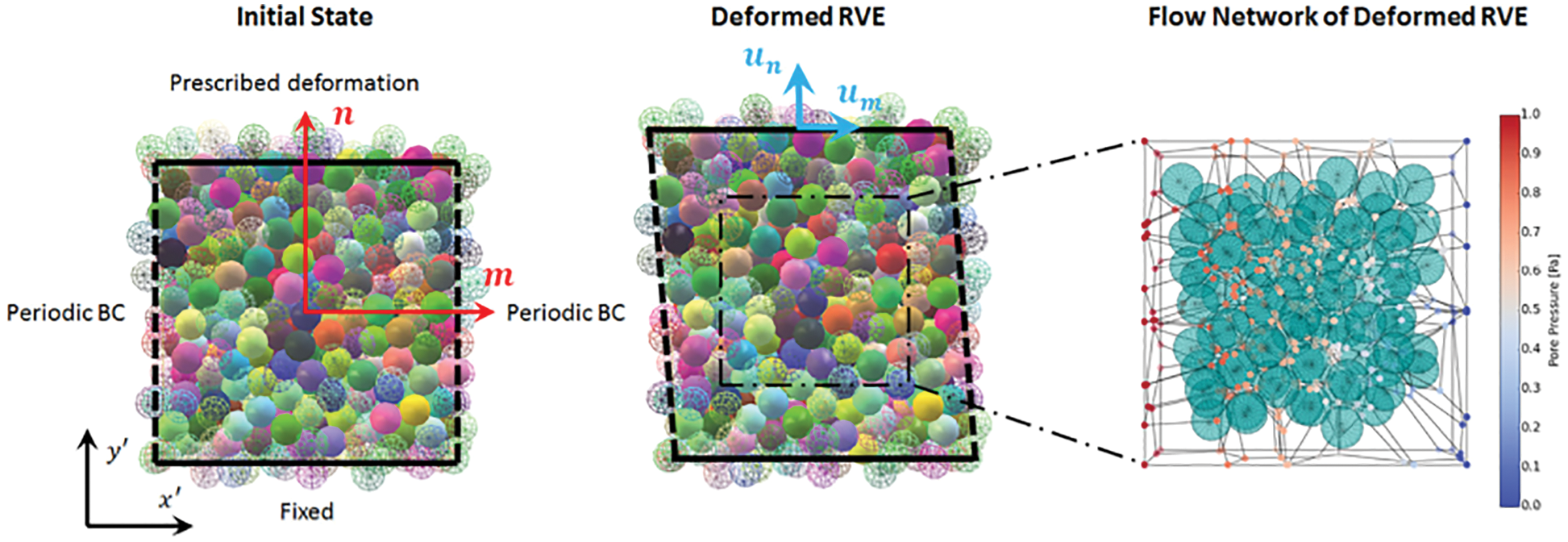

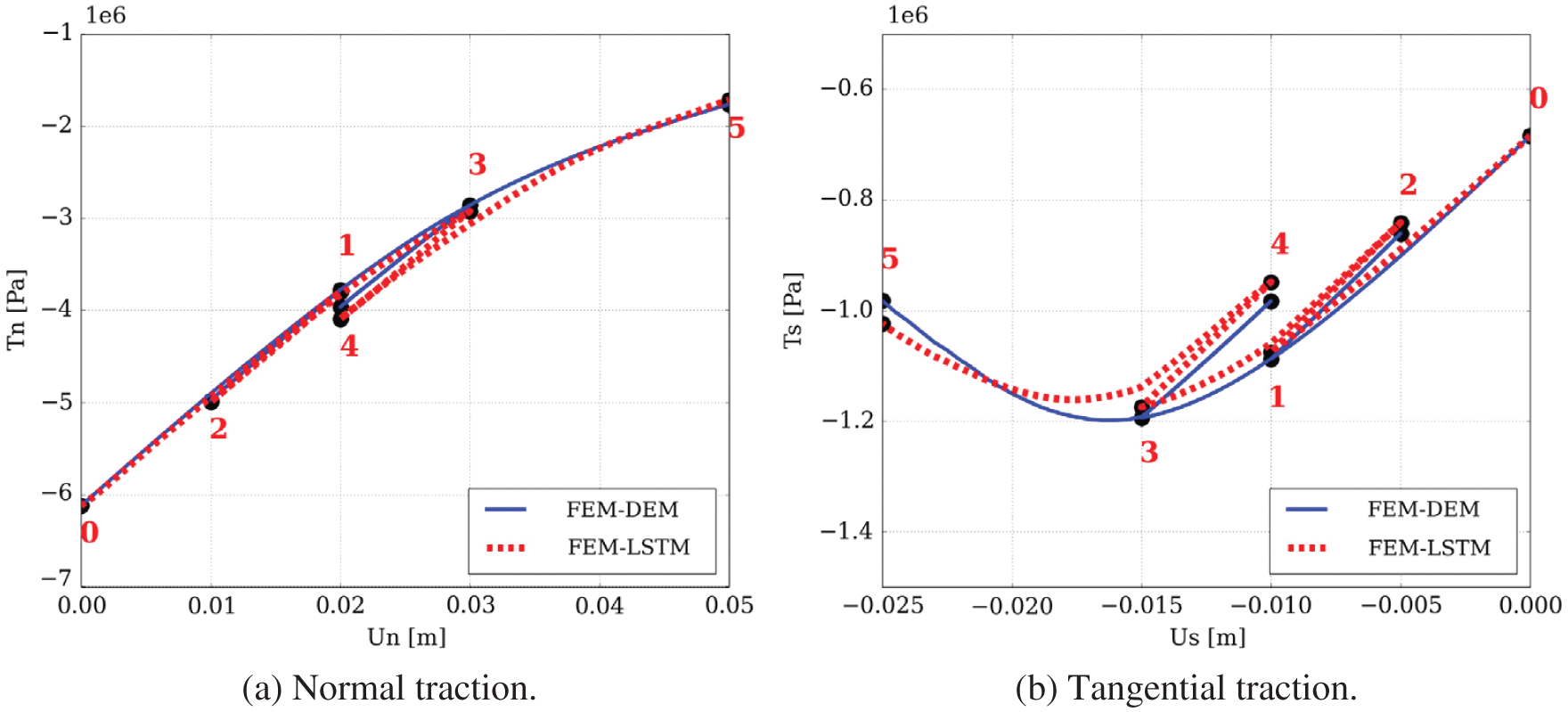

(1) Mesoscale RNN with LSTM units: On the microscopic scale, a representative volume element (RVE) was an assembly of discrete-element particles, subjected to a large variety of representative loading paths to generate training data for the supervised learning of the mesoscale RNN with LSTM units, a neural network that was referred to as “Mesoscale data-driven constitutive model” [25] (Figure 14). Homogenizing the results of the DEM-flow model provided constitutive equations for the traction-separation law and the evolution of anisotropic permeabilities in damaged regions.

(2) Macroscale RNN with LSTM units: The mesoscale RVE (middle row in Figure 14), in turn, was a finite-element model of a porous material with embedded strong discontinuities equivalent to the fracture system in oil-reservoir simulation in Figure 11. The host matrix of the RVE was represented by an isotropic linearly elastic solid. In localized fracture zones within, the traction-separation law and the hydraulic response were provided by the mesoscale RNN with LSTM units developed above. Training data for the macroscale RNN with LSTM units (a network referred to as “Macroscale data-driven constitutive model” [25]) was generated by computing the (homogenized) response of the mesoscale RVE to various loadings. In macroscopic simulations, the macroscale RNN with LSTM units provided the constitutive response at a sealing fault that represented a strong discontinuity.

Figure 14: Hierarchy of a multi-scale multi-physics poromechanics problem for fluid-infiltrating media [25] (Sections 2.3.2, 11.1, 11.3.1, 11.3.3, 11.3.5). Microscale (

Figure 15: LSTM variant with “peephole” connections, block diagram (Sections 2.3.2, 7.2).43 Unlike the original LSTM unit (see Section 7.2), both the input gate and the forget gate in an LSTM unit with peephole connections receive the cell state as input. The above figure from Wikipedia, version 22:56, 4 October 2015, is identical to Figure 10 in [25], whose authors erroneously used this figure without mentioning the source, but where the original LSTM unit without “peepholes” was actually used, and with the detailed block diagram in Figure 81, Section 7.2. See also Figure 82 and Figure 117 for the original LSTM unit applied to fluid mechanics. (CC-BY-SA 4.0)

Path-dependence is a common characteristic feature of the constitutive models that are often realized as neural networks; see, e.g., [23]. For this reason, it was decided to employ RNNs with LSTM units, which could mimic internal variables and the corresponding evolution equations that were intrinsic to path-dependent material behavior [25]. These authors chose a neural network with a depth of two hidden layers and 80 LSTM units per layer, which had proved to be a good compromise between performance and training effort. After each hidden layer, a dropout layer with a dropout rate of 0.2 was introduced to reduce overfitting on noisy data, but yielded only minor effects, as reported in [25]. The output layer was a fully-connected layer with a logistic sigmoid as activation function.
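
The architecture described above (two hidden layers of 80 LSTM units, a dropout layer with rate 0.2 after each hidden layer, and a fully-connected sigmoid output layer) can be sketched as a forward pass. This is a minimal sketch under stated assumptions, not the model of [25]: the parameters are random and untrained, the LSTM unit is the common variant with input, forget, and output gates and no peephole connections, and the input size, output size, and sequence length (`n_in`, `n_out`, `n_steps`) are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_lstm(n_in, n_units, rng):
    """Random (untrained) parameters; gate blocks are stacked as [input, forget, output, candidate]."""
    scale = 1.0 / np.sqrt(n_units)
    return {"W": rng.uniform(-scale, scale, (4 * n_units, n_in)),
            "U": rng.uniform(-scale, scale, (4 * n_units, n_units)),
            "b": np.zeros(4 * n_units)}

def lstm_layer(p, xs):
    """Run one LSTM layer over a sequence xs of shape (n_steps, n_in)."""
    n = p["U"].shape[1]
    h, c = np.zeros(n), np.zeros(n)  # hidden state and cell state play the role of internal variables
    outputs = []
    for x in xs:
        z = p["W"] @ x + p["U"] @ h + p["b"]
        i, f, o = sigmoid(z[:n]), sigmoid(z[n:2 * n]), sigmoid(z[2 * n:3 * n])
        g = np.tanh(z[3 * n:])       # candidate cell state
        c = f * c + i * g            # evolution of the cell state
        h = o * np.tanh(c)
        outputs.append(h)
    return np.array(outputs)

def dropout(h, rate, rng, training):
    """Inverted dropout: zero a random fraction `rate` of entries during training only."""
    if not training:
        return h
    keep = rng.random(h.shape) >= rate
    return h * keep / (1.0 - rate)

def predict(layers, out, xs, rng, rate=0.2, training=False):
    h = xs
    for p in layers:
        h = dropout(lstm_layer(p, h), rate, rng, training)
    W, b = out
    return sigmoid(h @ W.T + b)      # fully-connected sigmoid output layer

rng = np.random.default_rng(0)
n_in, n_units, n_out, n_steps = 4, 80, 2, 10  # n_in, n_out, n_steps are hypothetical
layers = [init_lstm(n_in, n_units, rng), init_lstm(n_units, n_units, rng)]
out = (rng.normal(0.0, 0.1, (n_out, n_units)), np.zeros(n_out))
xs = rng.standard_normal((n_steps, n_in))     # placeholder loading history
y = predict(layers, out, xs, rng)
```

Because the output at each step depends on the hidden and cell states carried over from earlier steps, the same input at two different times can yield different outputs, which is the path-dependence the text refers to.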

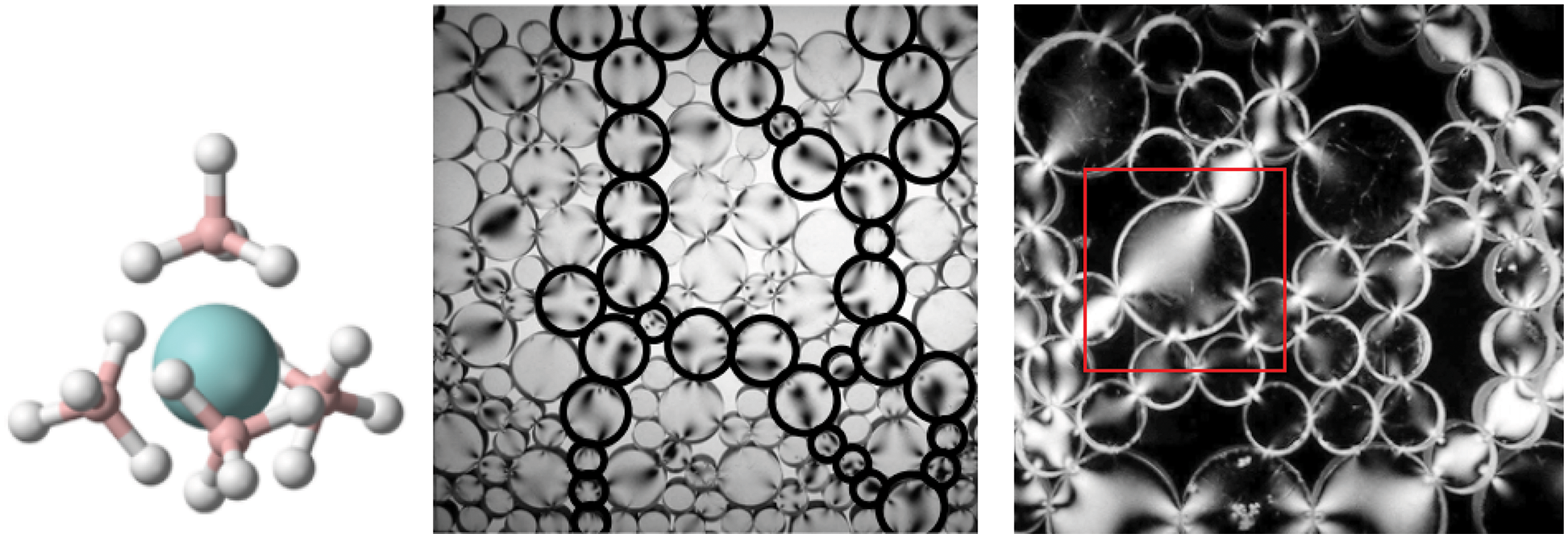

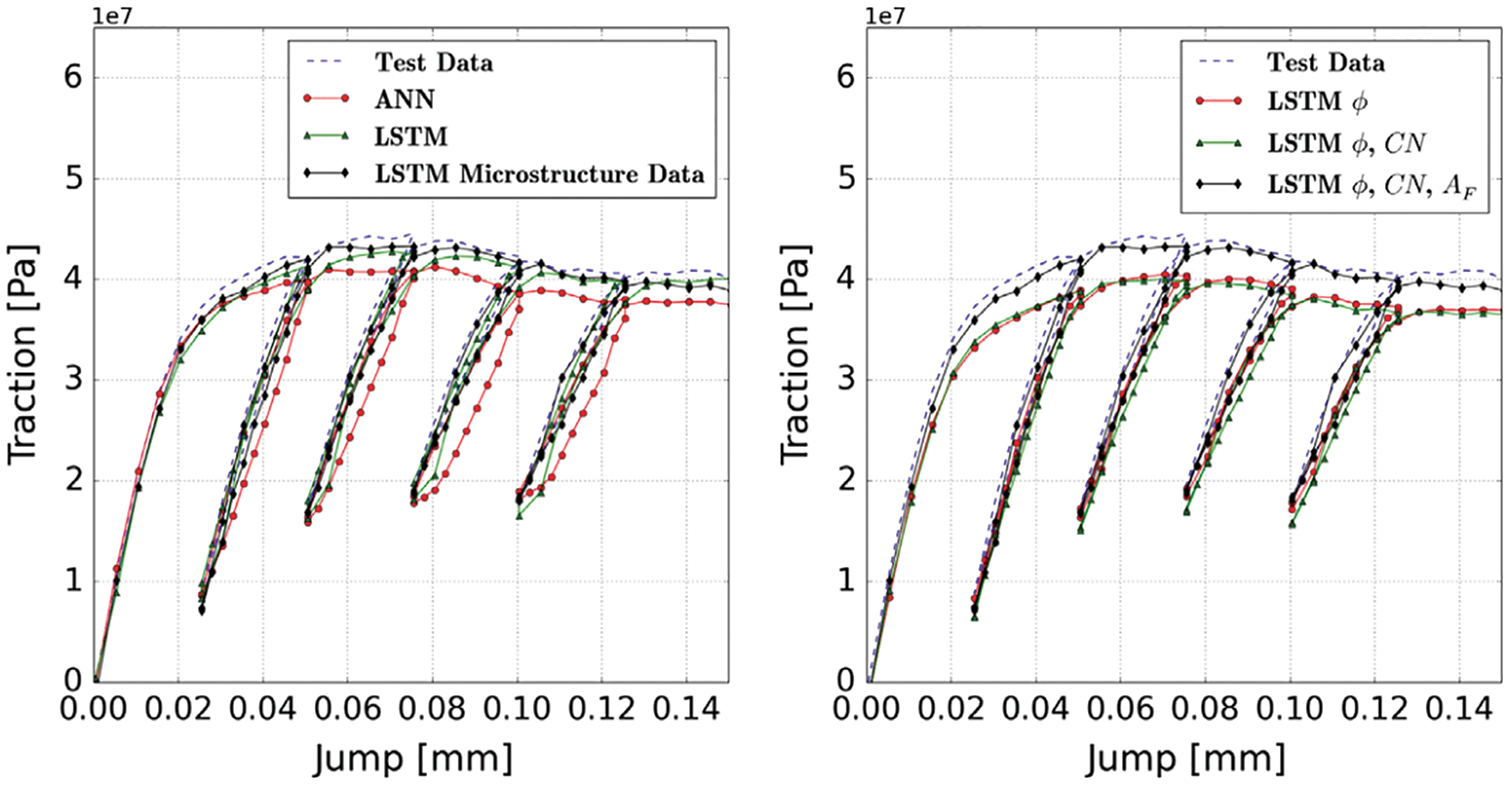

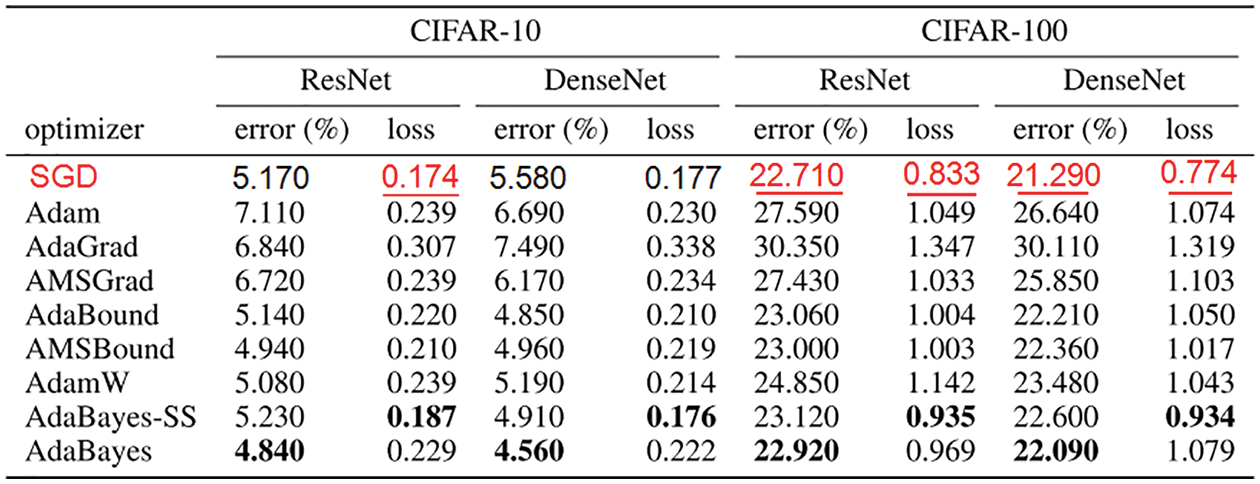

An important observation is that including micro-structural data—the porosity

Figure 16: Coordination number CN (Section 2.3.2, 11.3.2). (a) Chemistry. Number of bonds to the central atom. Uranium borohydride U(BH4)4 has CN = 12 hydrogen bonds to uranium. (b, c) Photoelastic discs showing number of contact points (coordination number) on a particle. (b) Random packing and force chains, different force directions along principal chains and in secondary particles. (c) Arches around large pores, precarious stability around pores. The coordination number for the large disc (particle) in red square is 5, but only 4 of those had nonzero contact forces based on the bright areas showing stress action. Figures (b, c) also provide a visualization of the “flow” of the contact normals, and thus the fabric tensor [98]. See also Figure 17. (Figure reproduced with permission of the author.)

Figure 17 illustrates the importance of incorporating microstructure data, particularly the fabric tensor, in network training to improve prediction accuracy.

Deep-learning concepts to explain and explore: (continued from above in Section 2.3.1)

(12) Recurrent neural network (RNN), Section 7.1

(13) Long Short-Term Memory (LSTM), Section 7.2

(14) Attention and Transformer, Section 7.4.3

(15) Dropout layer and dropout rate,45 which had minor effects in the particular work reported in [25], and thus will not be covered here. See [78], p. 251, Section 7.12.

Details of the formulation in [25] are discussed in Section 11.

2.3.3 Fluid mechanics, reduced-order model for turbulence

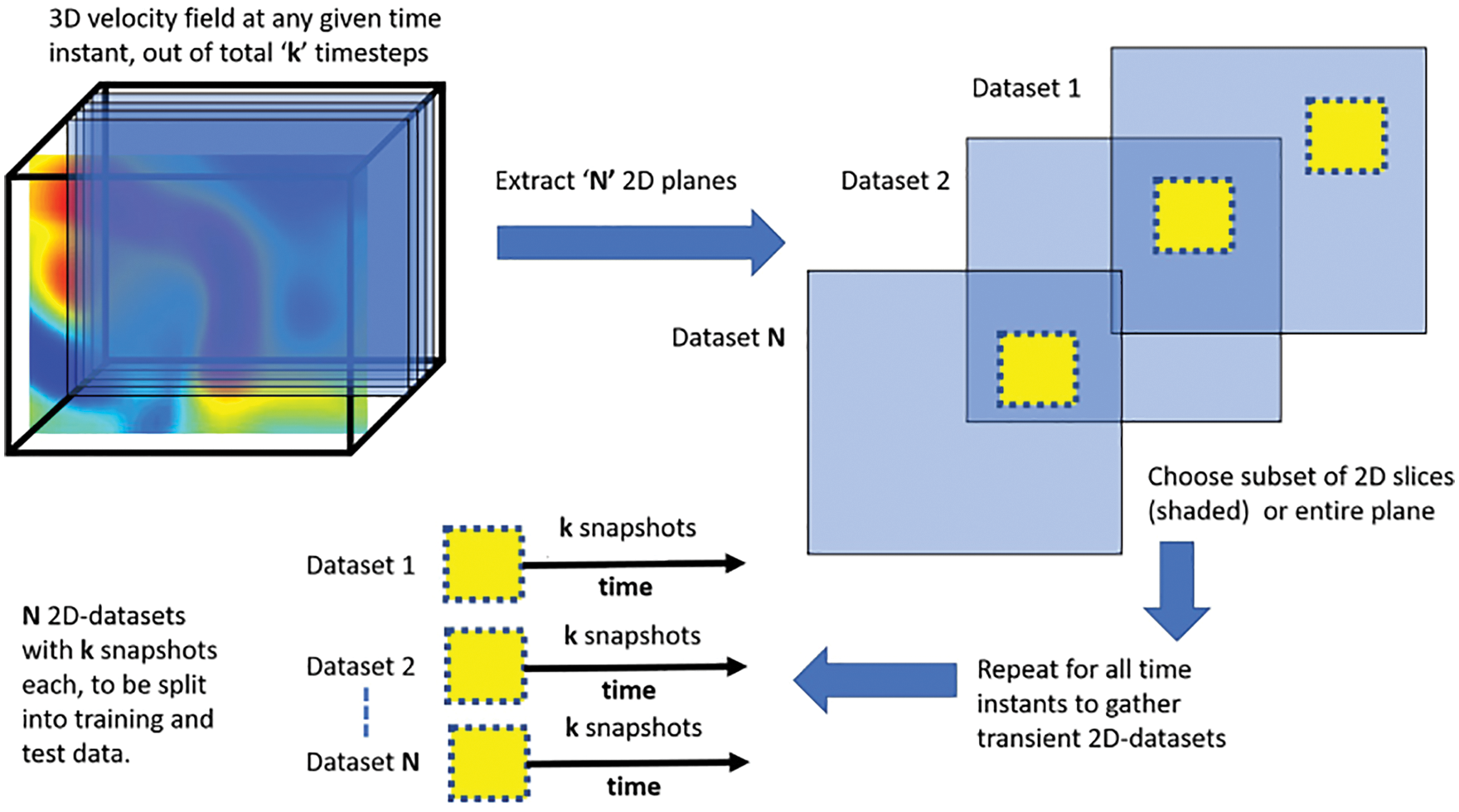

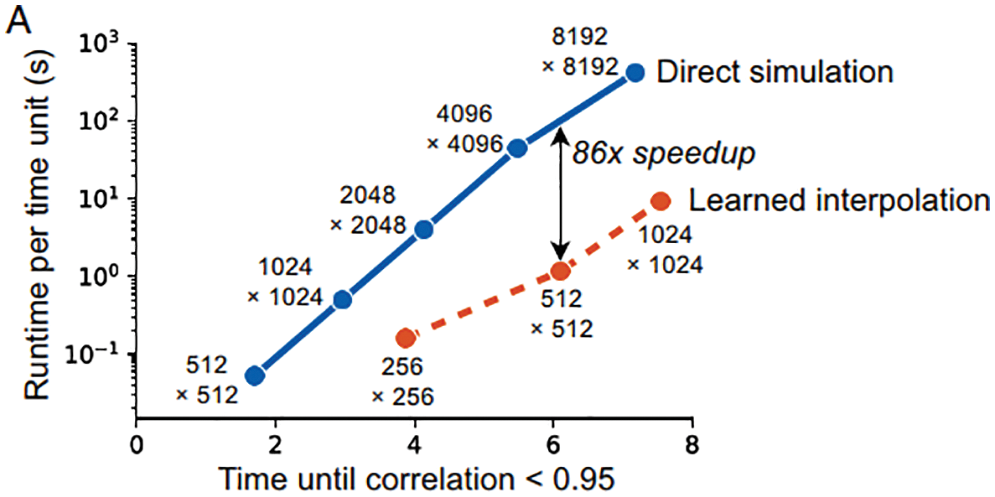

The accurate simulation of turbulence in fluid flows ranks among the most demanding tasks in computational mechanics. Owing to both the spatial and the temporal resolution, transient analysis of turbulence by means of high-fidelity methods such as Large Eddy Simulation (LES) or direct numerical simulation (DNS) involves millions of unknowns even for simple domains.

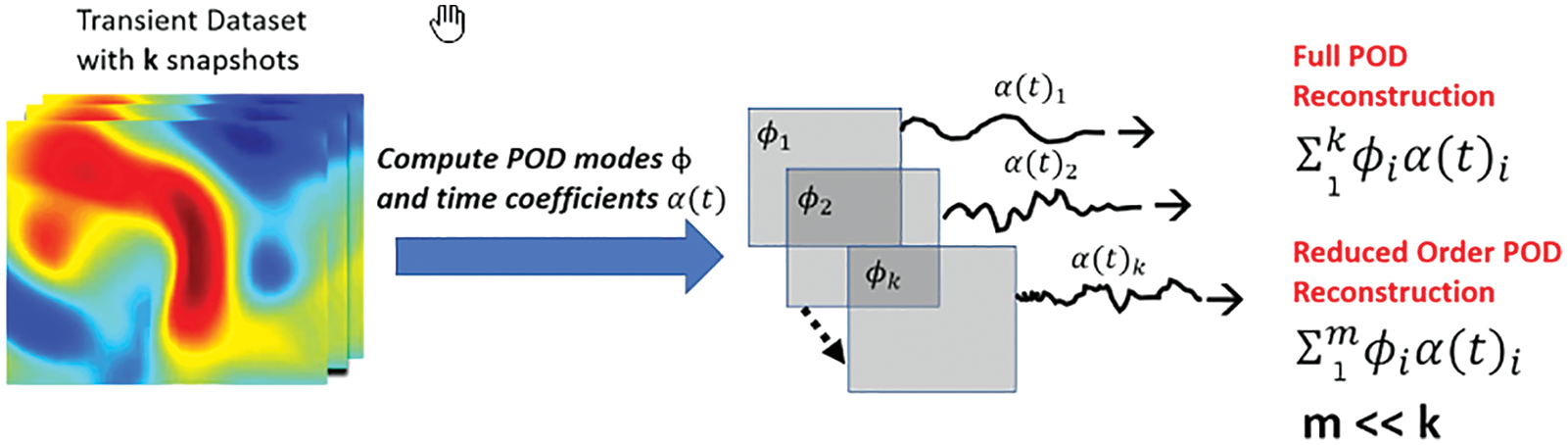

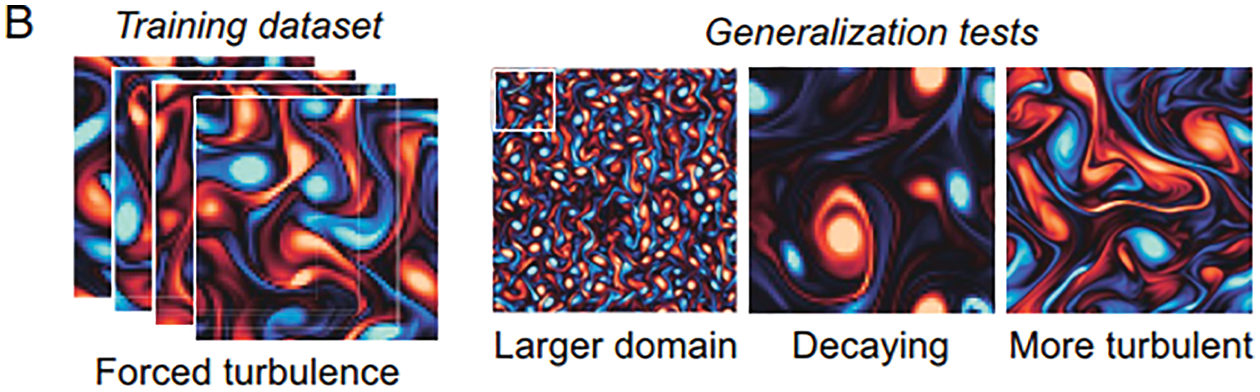

To simulate complex geometries over larger time periods, reduced-order models (ROMs) that can capture the key features of turbulent flows within a low-dimensional approximation space need to be resorted to. Proper Orthogonal Decomposition (POD) is a common data-driven approach to construct an orthogonal basis

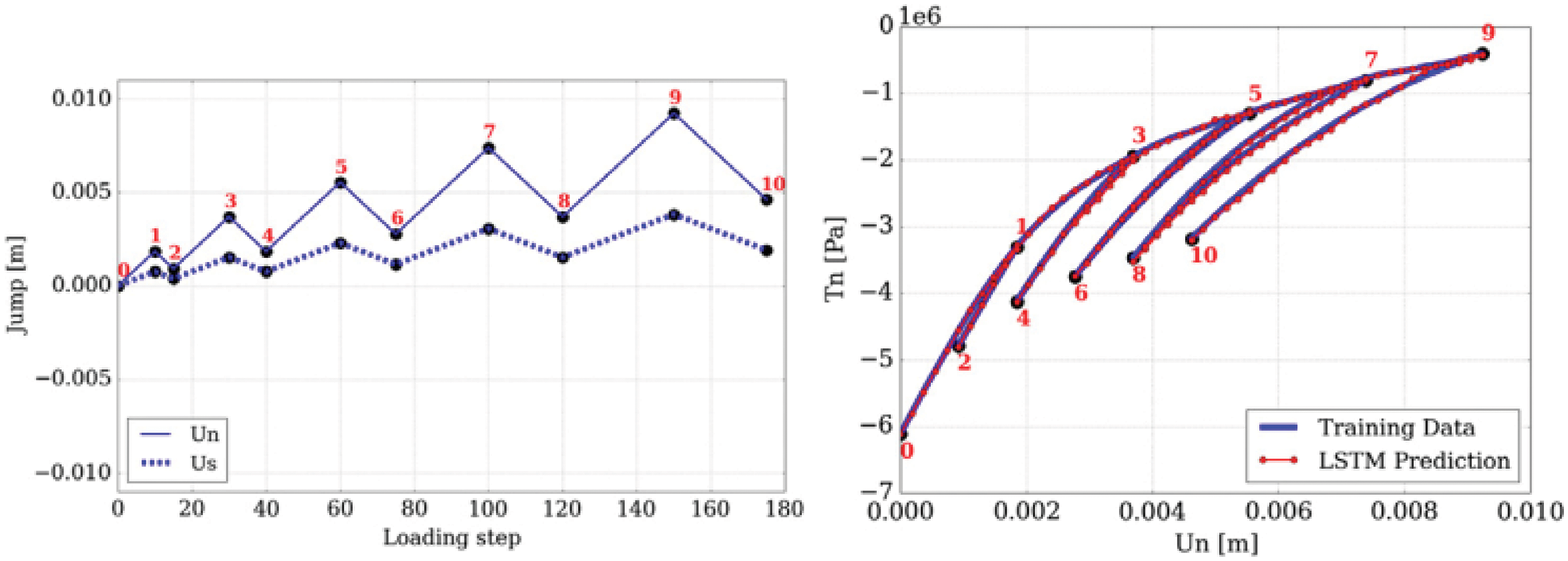

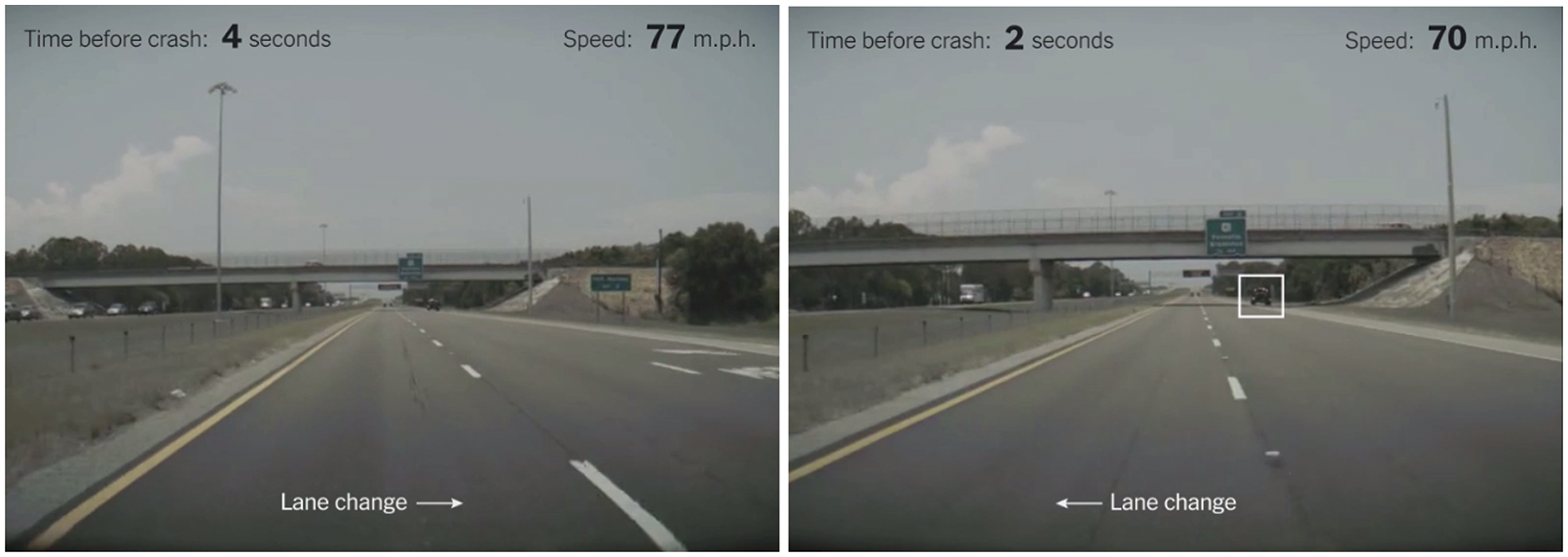

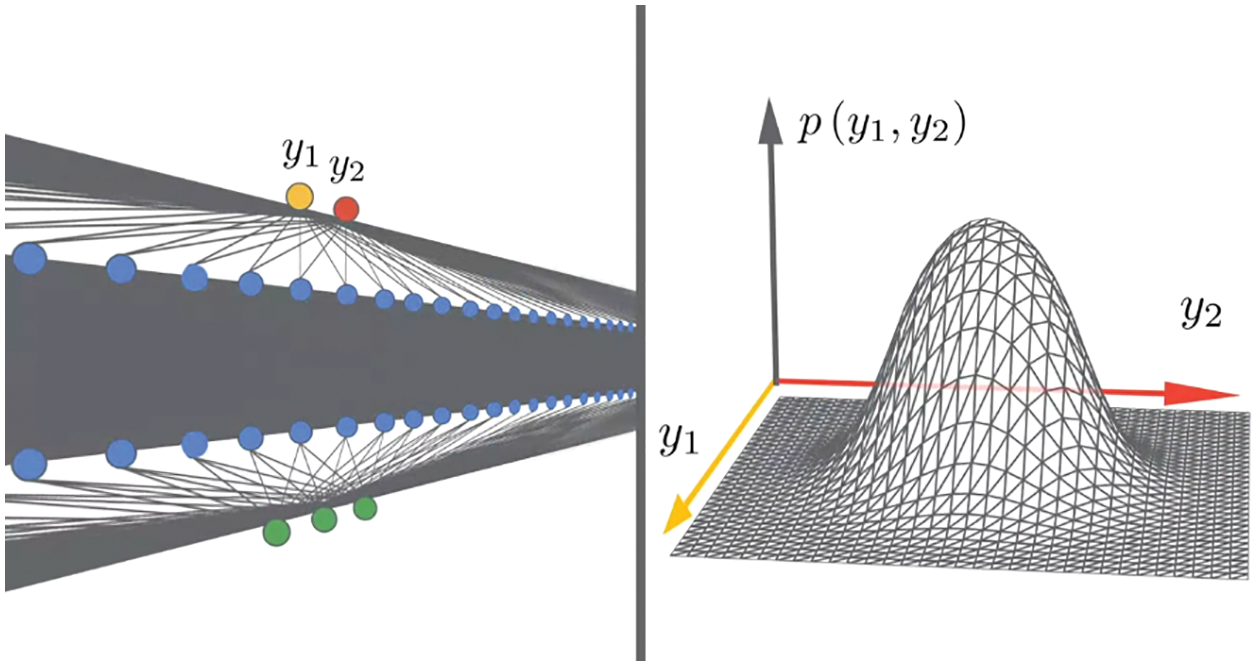

Figure 17: Network with LSTM and microstructure data (porosity

where

where

In a Galerkin-Projection (GP) approach to reduced-order modeling, a small subset of dominant modes forms a basis onto which high-dimensional differential equations are projected to obtain a set of lower-dimensional differential equations for cost-efficient computational analysis.

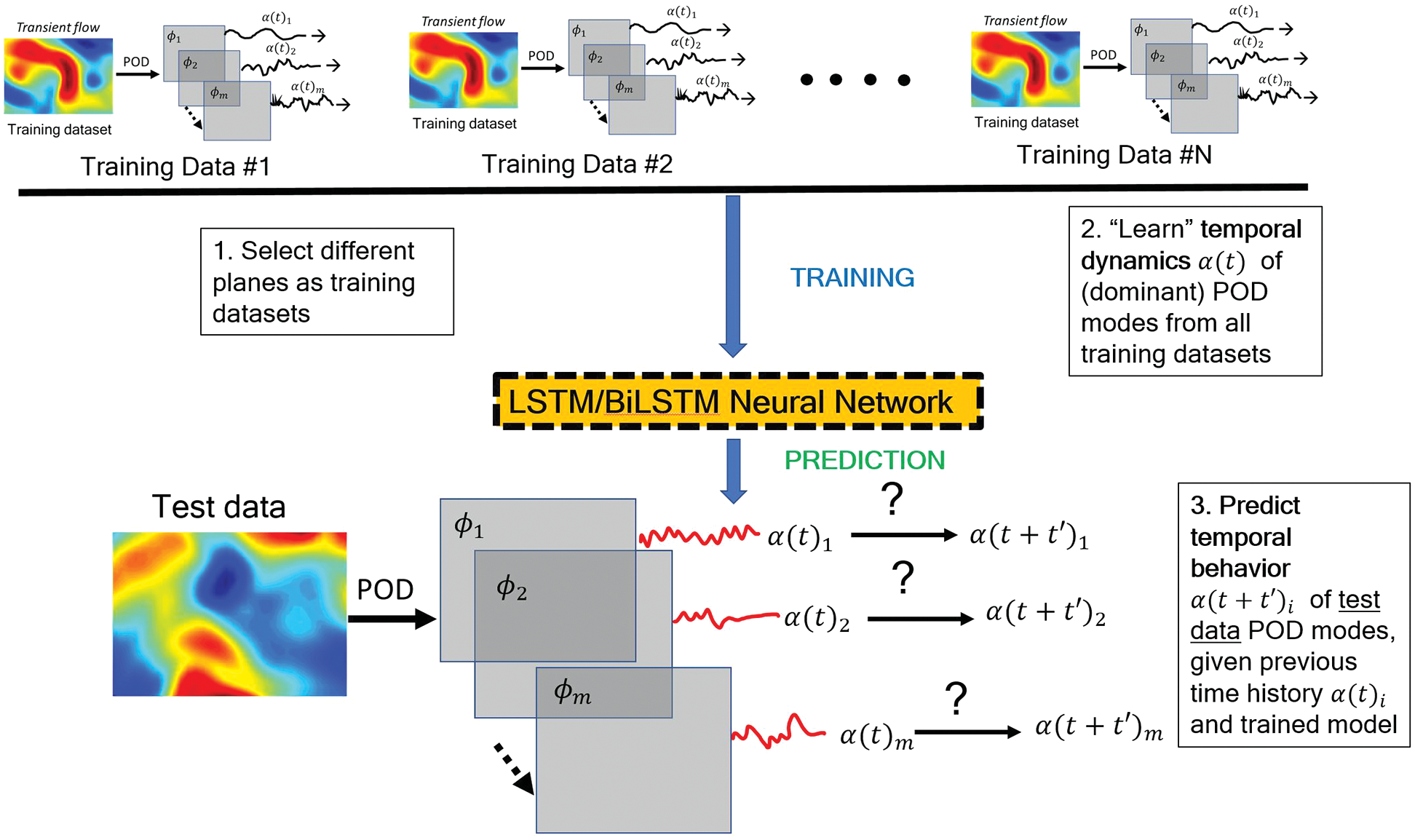

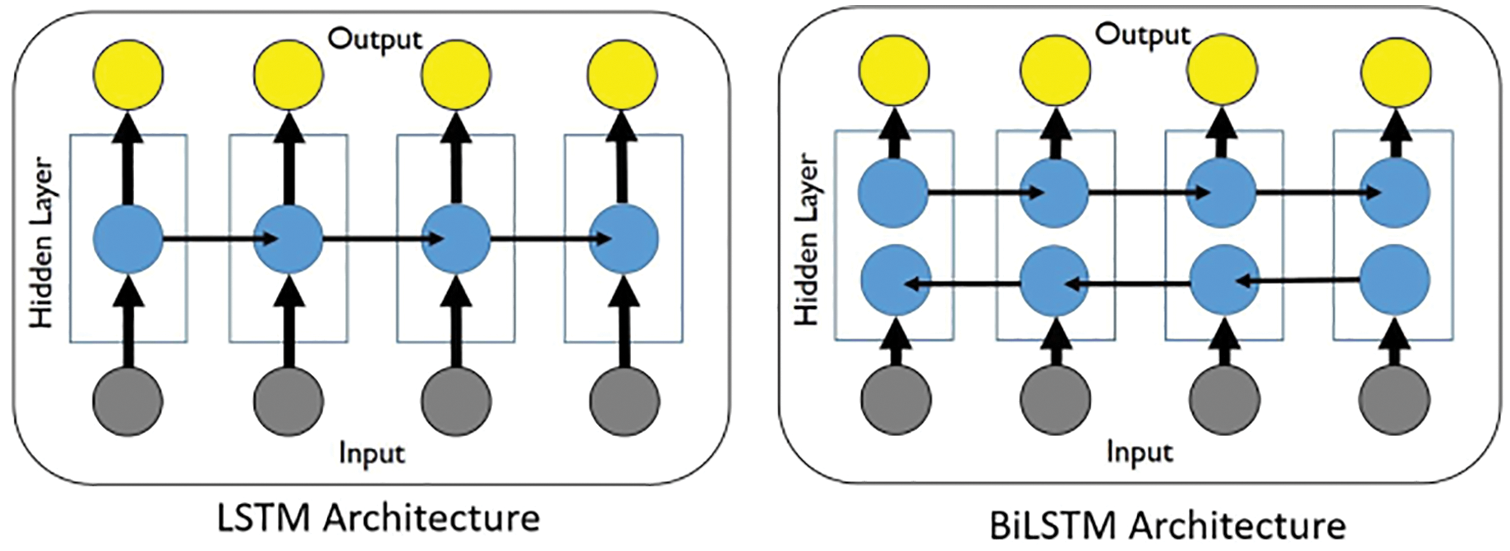

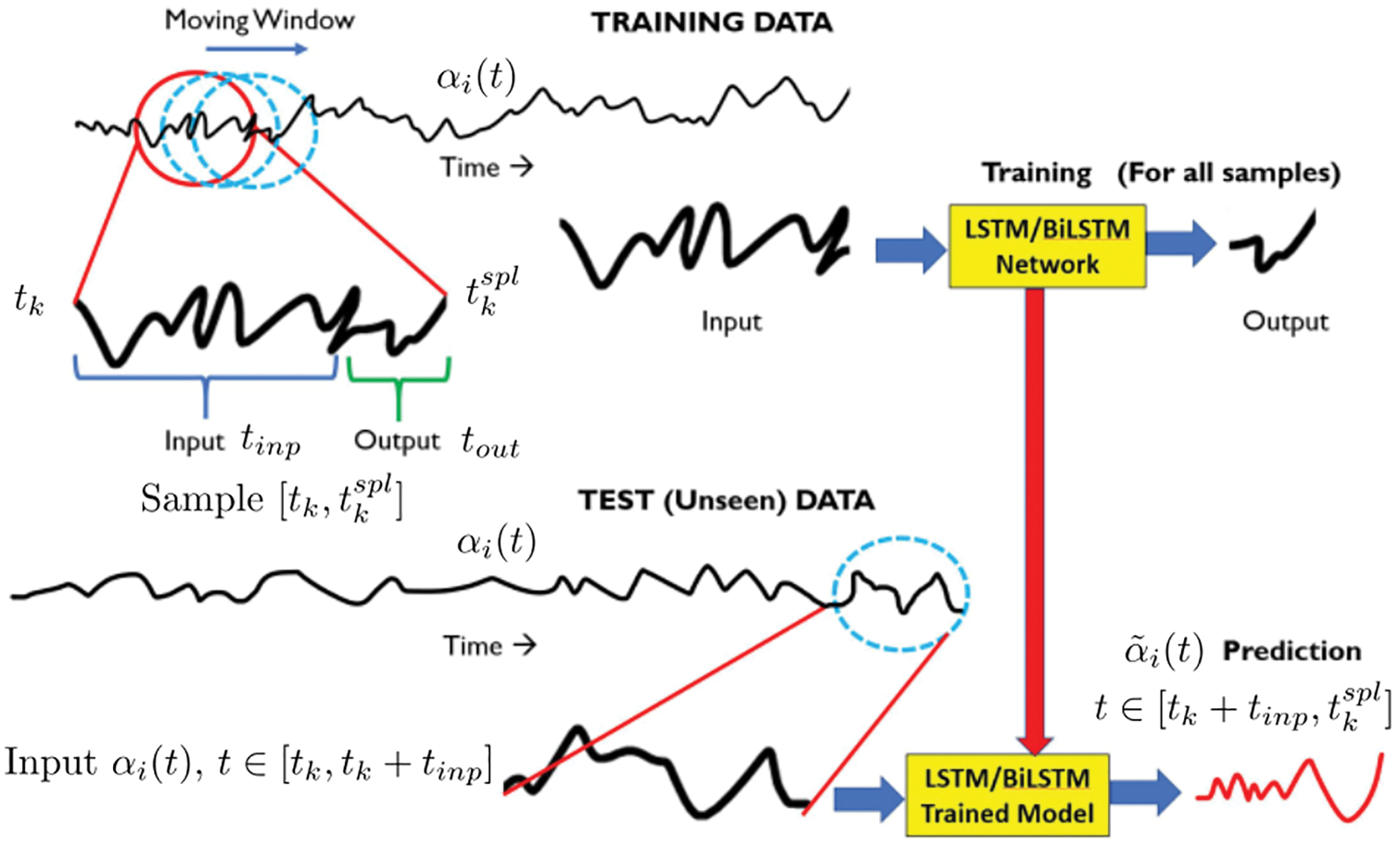

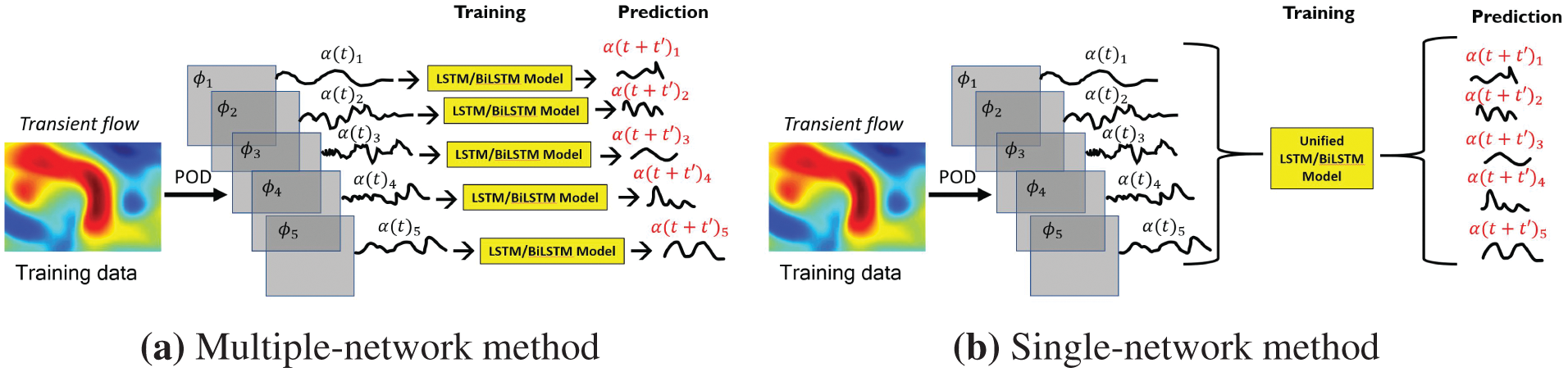

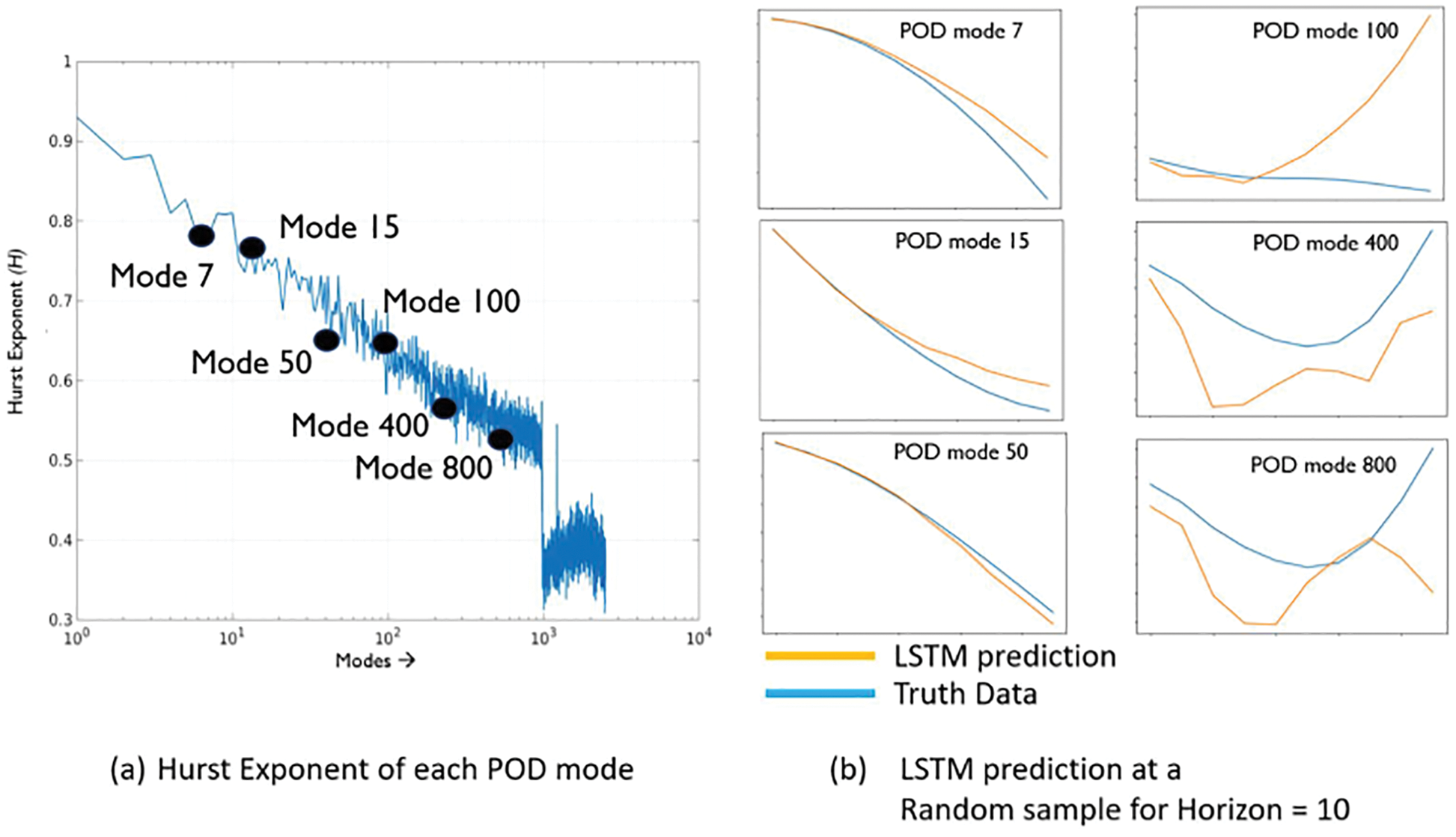

Instead of using GP, RNNs (Recurrent Neural Networks) were used in [26] to predict the evolution of fluid flows, specifically the coefficients of the dominant POD modes, rather than solving differential equations. For this purpose, their LSTM-ROM (Long Short-Term Memory - Reduced Order Model) approach combined concepts of ROM based on POD with deep-learning neural networks using either the original LSTM units, Figure 117 (left) [24], or the bidirectional LSTM (BiLSTM), Figure 117 (right) [104], the internal states of which were well-suited for the modeling of dynamical systems.
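
The quantities that the RNNs are asked to predict, i.e., the time-dependent coefficients of the dominant POD modes, can be illustrated with a small sketch. It assumes that the POD basis is obtained from a singular value decomposition of a snapshot matrix whose columns are the states at successive times; the synthetic snapshot data, the choice of two retained modes, and the absence of any mean subtraction are assumptions made here and are not taken from [26].

```python
import numpy as np

def pod_basis(snapshots, r):
    """snapshots: array (n_dof, n_time). Return the r dominant modes, their coefficients, singular values."""
    U, s, Vt = np.linalg.svd(snapshots, full_matrices=False)
    modes = U[:, :r]                 # orthonormal spatial modes
    coeffs = modes.T @ snapshots     # time-dependent coefficients, shape (r, n_time)
    return modes, coeffs, s

# Synthetic snapshots: two space-time structures plus a small amount of noise.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 2.0 * np.pi, 200)
t = np.linspace(0.0, 10.0, 100)
snapshots = (np.outer(np.sin(x), np.cos(t))
             + 0.5 * np.outer(np.sin(2.0 * x), np.sin(3.0 * t))
             + 0.01 * rng.standard_normal((200, 100)))

modes, coeffs, s = pod_basis(snapshots, r=2)
reconstruction = modes @ coeffs      # rank-2 approximation of the snapshots
rel_error = np.linalg.norm(snapshots - reconstruction) / np.linalg.norm(snapshots)
print(coeffs.shape, rel_error)
```

Each row of `coeffs` is a time series; a Galerkin projection would evolve these coefficients by solving projected differential equations, whereas a recurrent network is trained to predict them directly from their past values.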

Figure 18: Reduced-order POD basis (Sections 2.3.3, 12.1). For each dataset (also Figure 116), which contained

To obtain training/testing data, which were crucial to train/test neural networks, the data from transient 3-D Direct Numerical Simulations (DNS) of two physical problems, as provided by the Johns Hopkins turbulence database [105], were used [26]: (1) The Forced Isotropic Turbulence (ISO) and (2) The Magnetohydrodynamic Turbulence (MHD).

To generate training data for LSTM/BiLSTM networks, the 3-D turbulent fluid flow domain of each physical problem was decomposed into five equidistant 2-D planes (slices), with one additional equidistant 2-D plane serving to generate testing data (Section 12, Figure 116, Remark 12.1). For the same subregion in each of those 2-D planes, POD was applied on the

(1) Multiple-network method: Use an RNN for each coefficient of the dominant POD modes

(2) Single-network method: Use a single RNN for all coefficients of the dominant POD modes

For both methods, variants with the original LSTM units or the BiLSTM units were implemented. Each of the employed RNNs had a single hidden layer.
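
The difference between the two methods shows up in how the training data are arranged. The sketch below assumes a common sliding-window formulation of time-series prediction (a window of past values as input, the next value as target); this formulation, the window length, and the placeholder coefficient values are assumptions made here for illustration and are not taken from [26].

```python
def sliding_windows(series, window):
    """Split a sequence of feature lists into (input window, next step) pairs."""
    n_samples = len(series) - window
    inputs = [series[k:k + window] for k in range(n_samples)]
    targets = [series[k + window] for k in range(n_samples)]
    return inputs, targets

# Hypothetical coefficient histories: r modes, T time steps (placeholder values).
r, T, window = 5, 20, 4
coeffs = [[float(i + 1) * k for k in range(T)] for i in range(r)]

# Multiple-network method: one dataset (and one RNN) per POD coefficient, one feature per step.
per_mode = [sliding_windows([[a] for a in coeffs[i]], window) for i in range(r)]

# Single-network method: one dataset whose features at each step are all r coefficients.
stacked = [[coeffs[i][k] for i in range(r)] for k in range(T)]
all_modes = sliding_windows(stacked, window)
```

In the first arrangement each network sees only the history of its own coefficient; in the second, a single network can exploit correlations among the coefficients at the cost of a larger input and output dimension.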

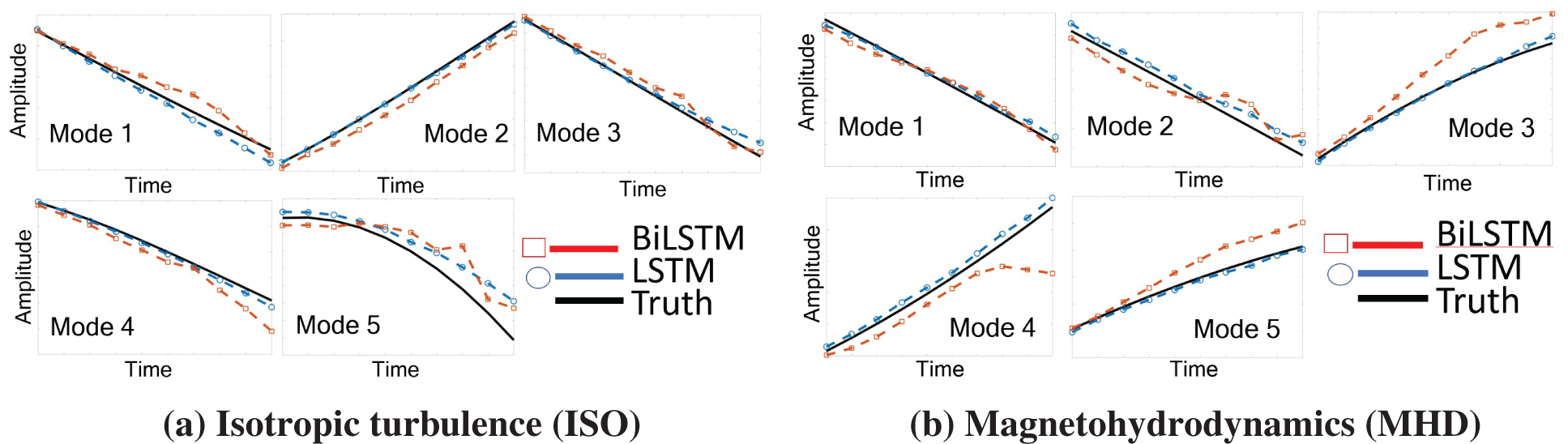

Demonstrative results for the prediction capabilities of both the original LSTM and the BiLSTM networks are illustrated in Figure 20. Contrary to the authors’ expectation, networks with the original LSTM units performed better than those using BiLSTM units in both physical problems of isotropic turbulence (ISO) (Figure 20a) and magnetohydrodynamics (MHD) (Figure 20b) [26].

Details of the formulation in [26] are discussed in Section 12.

Figure 19: Deep-learning LSTM/BiLSTM Reduced Order Model (Sections 2.3.3, 12.2). See Figure 18 for POD reduced-order basis. The time-dependent coefficients

3 Computational mechanics, neuroscience, deep learning

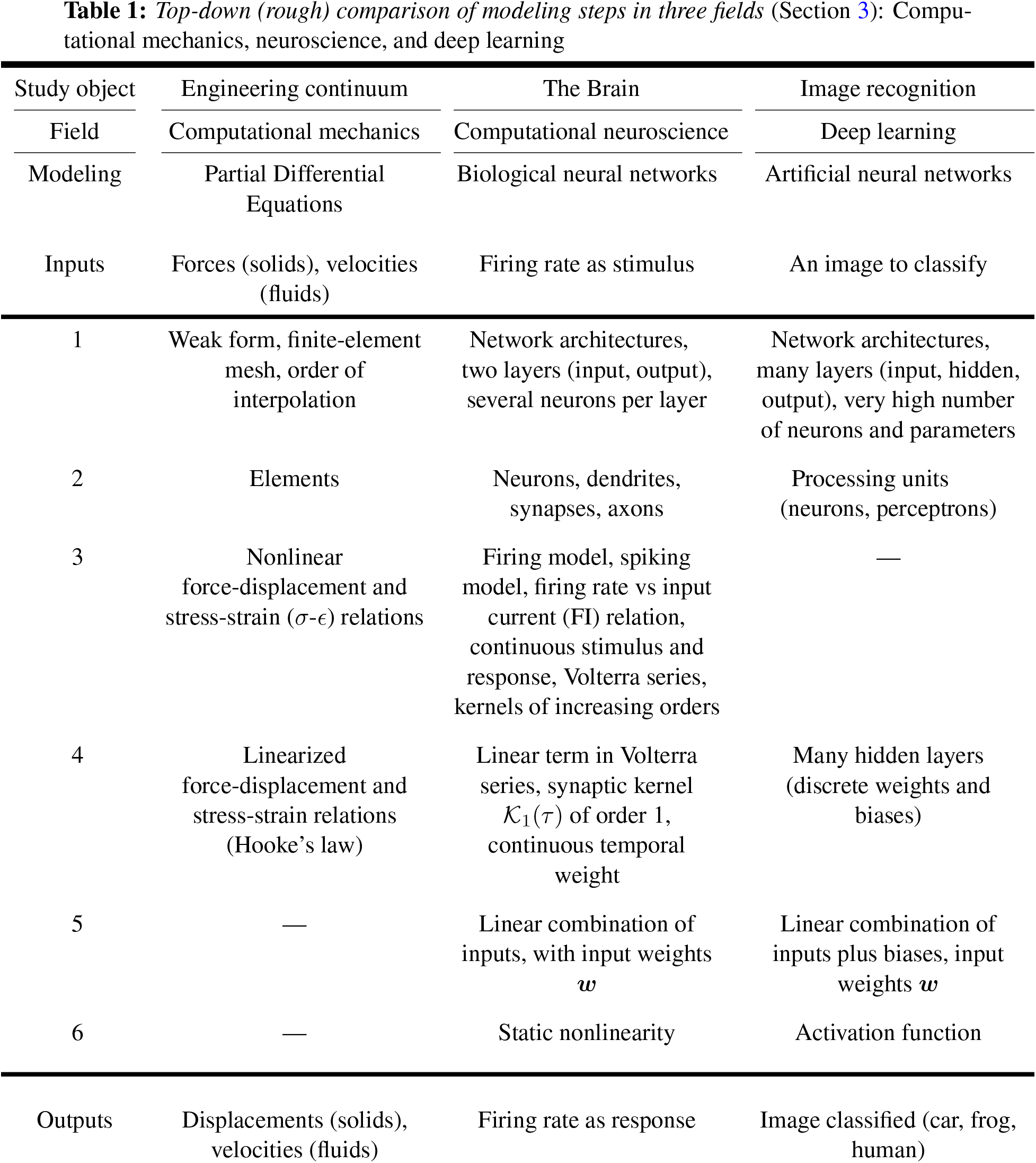

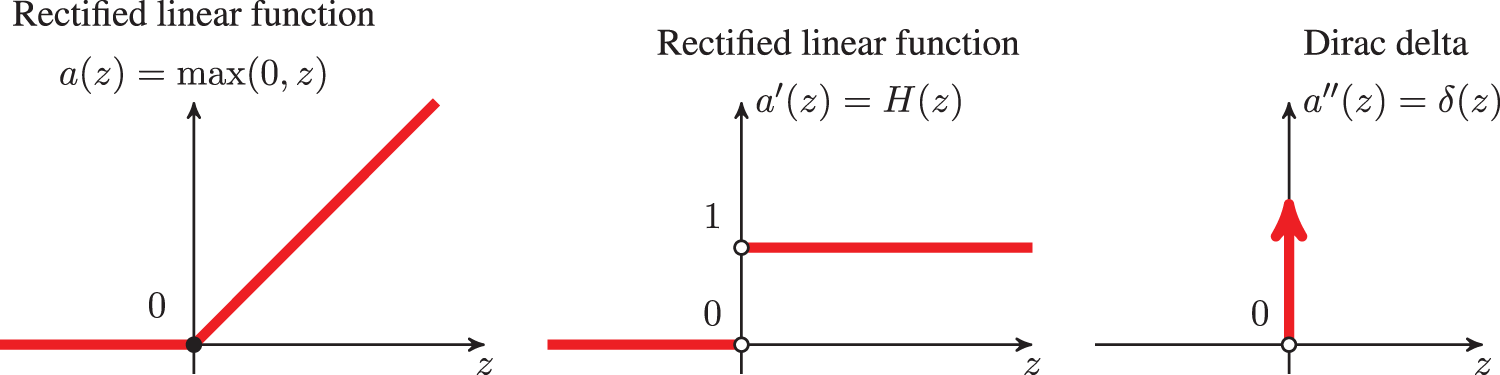

Table 1 below presents a rough comparison that shows the parallelism in the modeling steps in three fields: Computational mechanics, neuroscience, and deep learning, which was heavily indebted to neuroscience until it reached a more mature state, and then took on its own development.

We assume that readers are familiar with the concepts listed in the second column on “Computational mechanics”, and briefly explain some key concepts in the third column on “Neuroscience” to connect to the fourth column “Deep learning”, which is explained in detail in subsequent sections.

See Section 13.2 for more details on the theoretical foundation based on Volterra series for the spatial and temporal combinations of inputs, weights, and biases, widely used in artificial neural networks or multilayer perceptrons.

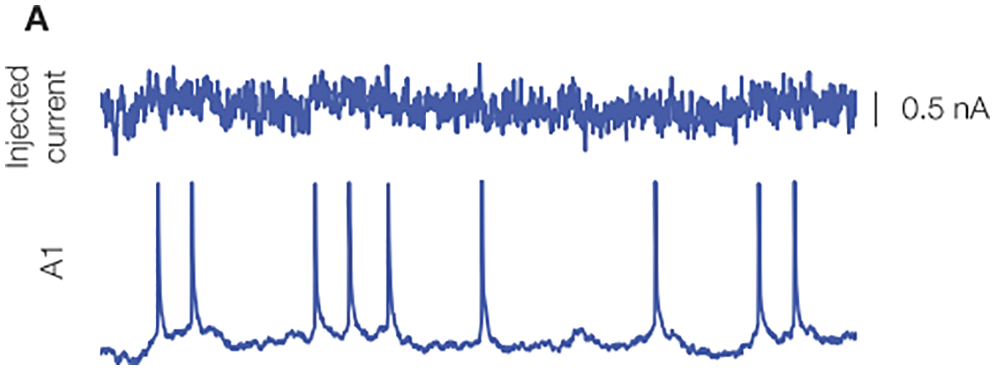

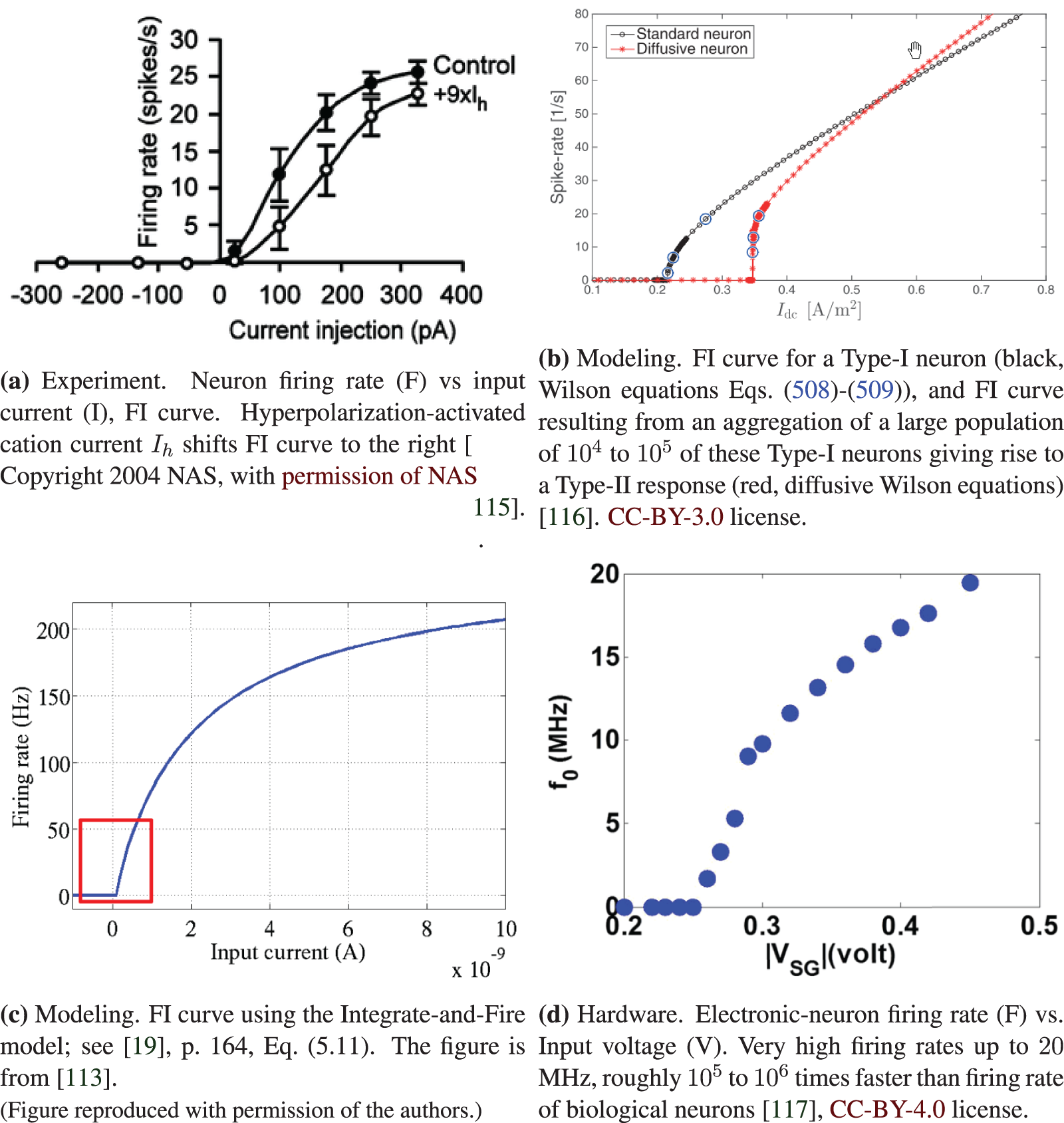

Neuron spiking response such as shown in Figure 21 can be modelled accurately using a model such as “Integrate-and-Fire”. The firing-rate response

where

where

Figure 20: Prediction results and errors for LSTM/BiLSTM networks (Sections 2.3.3, 12.3). Coefficients αi(t), for i = 1, . . . , 5, of dominant POD modes. LSTM had smaller errors compared to BiLSTM for both physical problems (ISO and MHD).

Figure 21: Biological neuron response to stimulus, experimental result (Section 3). An oscillating current was injected into the neuron (top), and neuron spiking response was recorded (below) [106], with permission from the authors and Frontiers Media SA. A spike, also called an action potential, is an electrical potential pulse across the cell membrane of about 100 millivolts that lasts about 1 millisecond. “Neurons represent and transmit information by firing sequences of spikes in various temporal patterns” [19], pp. 3-4.

It will be seen in Section 13.2.2 on “Dynamics, time dependence, Volterra series” that the convolution integral in Eq. (5) corresponds to the linear part of the Volterra series of nonlinear response of a biological neuron in terms of the stimulus, Eq. (497), which in turn provides the theoretical foundation for taking the linear combination of inputs, weights, and biases for an artificial neuron in a multilayer neural network, as represented by Eq. (26).

The Integrate-and-Fire model for biological neuron provides a motivation for the use of the rectified linear units (ReLU) as activation function in multilayer neural networks (or perceptrons); see Figure 28.
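
One way to see this motivation is to compute the firing rate of a leaky integrate-and-fire neuron under a constant input current. The sketch below is an illustration only and is not the formulation used in this paper: it assumes the membrane equation tau dV/dt = -V + R I with reset to zero after each spike and no refractory period, for which the time to reach the threshold has a closed form; the parameter values are hypothetical.

```python
import math

def lif_firing_rate(current, tau=0.01, resistance=1.0, v_threshold=1.0):
    """Firing rate (spikes per unit time) of a leaky integrate-and-fire neuron at constant current."""
    drive = resistance * current
    if drive <= v_threshold:
        return 0.0                   # the membrane potential never reaches the threshold
    period = tau * math.log(drive / (drive - v_threshold))
    return 1.0 / period

def relu(z):
    return max(0.0, z)

for current in (0.5, 1.5, 5.0, 20.0, 100.0):
    # For large inputs the rate approaches a straight line in the current.
    asymptote = relu(current - 0.5) / 0.01
    print(current, lif_firing_rate(current), asymptote)
```

The rate is zero below a threshold input and grows approximately linearly for large inputs, which is the qualitative shape of a rectified linear function.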

Eq. (5) is also related to the exponential smoothing technique used in forecasting and applied to stochastic optimization methods to train multilayer neural networks; see Section 6.5.3 on “Forecasting time series, exponential smoothing”.
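
The connection between exponential smoothing and a convolution can be made concrete in discrete form: the recursive update is identical to a weighted sum of past inputs with exponentially decaying weights. The sketch below uses one common convention, s_n = beta s_{n-1} + (1 - beta) x_n with s_0 = x_0; the symbol `beta` and this convention are assumptions made here and may differ from the notation of Section 6.5.3.

```python
def exponential_smoothing(xs, beta):
    """Recursive form: s_0 = x_0, s_n = beta * s_{n-1} + (1 - beta) * x_n."""
    s = xs[0]
    out = [s]
    for x in xs[1:]:
        s = beta * s + (1.0 - beta) * x
        out.append(s)
    return out

def as_weighted_sum(xs, beta):
    """Same result written as a discrete convolution with an exponentially decaying kernel."""
    out = []
    for n in range(len(xs)):
        total = beta ** n * xs[0]
        for k in range(1, n + 1):
            total += (1.0 - beta) * beta ** (n - k) * xs[k]
        out.append(total)
    return out

xs = [1.0, 2.0, 0.5, 3.0, 2.5]
recursive = exponential_smoothing(xs, beta=0.9)
convolved = as_weighted_sum(xs, beta=0.9)
print(max(abs(a - b) for a, b in zip(recursive, convolved)))
```

The weight attached to an input decays geometrically with its age, which is the discrete counterpart of an exponentially decaying kernel inside a convolution integral.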

4 Statics, feedforward networks

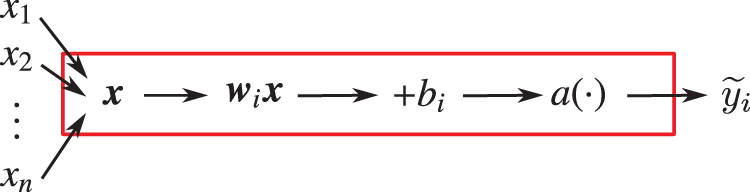

We examine in detail the forward propagation in feedforward networks, in which the function mappings flow48 in only one forward direction, from input to output.

There are two ways to present the concept of deep-learning neural networks: The top-down approach versus the bottom-up approach.

The top-down approach starts by giving up-front the mathematical big picture of what a neural network is, with the big-picture (high level) graphical representation, then gradually goes down to the detailed specifics of a processing unit (often referred to as an artificial neuron) and its low-level graphical representation. A definite advantage of this top-down approach is that readers new to the field immediately have the big picture in mind, before going down to the nitty-gritty details, and thus tend not to get lost. An excellent reference for the top-down approach is [78], and there are not many such references.

Specifically, for a multilayer feedforward network, by top-down, we mean starting from a general description in Eq. (18) and going down to the detailed construct of a neuron through a weighted sum with bias in Eq. (26) and then a nonlinear activation function in Eq. (35).

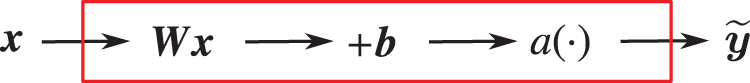

In terms of block diagrams, we begin our top-down descent from the big picture of the overall multilayer neural network with

The bottom-up approach typically starts with a biological neuron (see Figure 131 in Section 13.1 below), then introduces an artificial neuron that looks similar to the biological neuron (compare Figure 8 to Figure 131), with multiple inputs and a single output, which becomes an input to each of a multitude of other artificial neurons; see, e.g., [23] [21] [38] [20].49 Even though Figure 7, which preceded Figure 8 in [38], showed a network, its information content is not the same as that of Figure 23.

Unfamiliar readers, when looking at the graphical representation of an artificial neural network (see, e.g., Figure 7), could be misled into thinking in terms of electrical (or fluid-flow) networks, in which Kirchhoff’s law applies at the junction where the output is split into different directions to go toward other artificial neurons. The big picture is not clear at the outset, and could be confusing to readers new to the field, who would take some time to understand; see also Footnote 5. By contrast, Figure 23 clearly shows a multilevel function composition, assuming that first-time learners are familiar with this basic mathematical concept.

In mechanics and physics, tensors are intrinsic geometrical objects, which can be represented by infinitely many matrices of components, depending on the coordinate systems.50 Vectors are tensors of order 1. For this reason, we use neither the name “vector” for a column matrix, nor the name “tensor” for an array with more than two indices.51 All arrays are matrices.

The matrix notation used here can follow either (1) the Matlab / Octave code syntax, or (2) the more compact component convention for tensors in mechanics.

Using Matlab / Octave code syntax, the inputs to a network (to be defined soon) are gathered in an

where the commas are separators for matrix elements in a row, and the semicolons are separators for rows. For matrix transpose, we stick to the standard notation using the superscript “

Using the component convention for tensors in mechanics,53 the coefficients of a

are arranged according to the following convention for the free indices

(1) In case both indices are subscripts, then the left subscript (index

(2) In case one index is a superscript, and the other index is a subscript, then the superscript (upper index

With this convention (lower index designates column index, while upper index designates row index), the coefficients of array

Instead of automatically associating any matrix variable such as

Consider the Jacobian matrix

where

where implicitly

are the spaces of column matrices. Then, using the chain rule

where the summation convention on the repeated indices

Consider the scalar function

with

Now consider this particular scalar function below:57

Then the gradients of

4.3 Big picture, composition of concepts

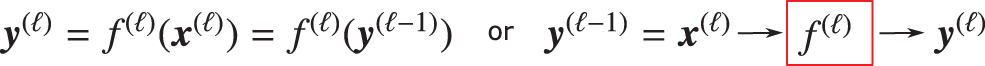

A fully-connected feedforward network is a chain of successive applications of functions

or breaking Eq. (18) down, step by step, from inputs to outputs:59

Remark 4.1. The notation

The quantities associated with layer

are the predicted outputs from the previous layer

are the inputs to the subsequent layer

Remark 4.2. The output for layer

and can be used interchangeably. In the current Section 4 on “Statics, feedforward networks”, the notation

The above chain in Eq. (18) (see also Eq. (23) and Figure 23) is referred to as “multiple levels of composition” that characterizes modern deep learning, which no longer attempts to mimic the working of the brain from the neuroscientific perspective.60 Besides, a complete understanding of how the brain functions remains remote.61
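The idea of a network as a chain of successive function applications can be illustrated with a minimal sketch. The three layer functions `f1`, `f2`, `f3` and the helper `compose` below are hypothetical placeholders chosen only for illustration; they are not the layers defined in Eq. (18).

```python
from functools import reduce

def compose(*layers):
    """Return the map x -> layers[-1](... layers[1](layers[0](x)) ...)."""
    return lambda x: reduce(lambda y, f: f(y), layers, x)

# Three hypothetical "layers" acting on a list of numbers (illustrative only).
f1 = lambda y: [2.0 * v for v in y]              # scale every entry
f2 = lambda y: [max(0.0, v - 1.0) for v in y]    # shift, then rectify
f3 = lambda y: [sum(y)]                          # reduce to a single output

network = compose(f1, f2, f3)   # input flows through f1, then f2, then f3
y_out = network([0.25, 1.0, 2.0])
print(y_out)
```

The output of each function is the only input of the next one, which is the sense in which information flows in one forward direction.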

4.3.1 Graphical representation, block diagrams

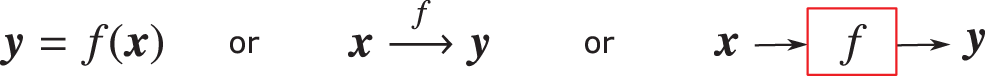

A function can be graphically represented as in Figure 22.

Figure 22: Function mapping, graphical representation (Section 4.3.1):

The multiple levels of compositions in Eq. (18) can then be represented by

revealing the structure of the feedforward network as a multilevel composition of functions (or chain-based network) in which the output

Figure 23: Feedforward network (Sections 4.3.1, 4.4.4): Multilevel composition in feedforward network with

Remark 4.3. Layer definitions, action layers, state layers. In Eq. (23) and in Figure 23, an action layer is defined by the action, i.e., the function

4.4 Network layer, detailed construct

4.4.1 Linear combination of inputs and biases

First, an affine transformation on the inputs (see Eq. (26)) is carried out, in which the coefficients of the inputs are called the weights, and the constants are called the biases. The output

The column matrix

is a linear combination of the inputs in

where the

and the

Both the weights and the biases are collectively known as the network parameters, defined in the following matrices for layer

For simplicity and convenience, the set of all parameters in the network is denoted by

Note that the set

Similar to the definition of the parameter matrix

with

The total number of parameters of a fully-connected feedforward network is then

But why use a linear (additive) combination (or superposition) of inputs with weights, plus biases, as expressed in Eq. (26)? See Section 13.2.
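The weighted sum with bias, and the resulting parameter count, can be sketched as follows. The function names `affine` and `count_parameters`, and the numerical values, are assumptions made for illustration. The count assumes that a fully-connected layer with `m_in` inputs and `m_out` outputs carries `m_out * m_in` weights and `m_out` biases.

```python
def affine(W, b, y):
    """Return z = W y + b, with W a list of rows, b the biases, y the inputs."""
    return [sum(w_ij * y_j for w_ij, y_j in zip(row, y)) + b_i
            for row, b_i in zip(W, b)]

def count_parameters(widths):
    """Weights plus biases for layer widths [m_0, m_1, ..., m_L]."""
    return sum(m_out * (m_in + 1) for m_in, m_out in zip(widths, widths[1:]))

# Hypothetical layer with 3 inputs and 2 outputs.
W = [[1.0, -2.0, 0.5],
     [0.0,  3.0, 1.0]]
b = [0.5, -1.0]
y = [2.0, 1.0, 4.0]

z = affine(W, b, y)                      # one entry per output neuron
n_params = count_parameters([3, 2, 1])   # 2*(3+1) + 1*(2+1)
print(z, n_params)
```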

An activation function

Without the activation function, the neural network is simply a linear regression, and cannot learn and perform complex tasks, such as image classification, language translation, guiding a driver-less car, etc. See Figure 32 for the block diagram of a one-layer network.

An example is a linear one-layer network, without activation function, being unable to represent the seemingly simple XOR (exclusive-or) function, which brought down the first wave of AI (cybernetics), and that is described in Section 4.5.
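A short numerical check makes the role of the activation function concrete: without it, stacking layers gains nothing, since two successive affine maps collapse into a single affine map. The matrices below are arbitrary values assumed for illustration.

```python
def matvec(A, x):
    """Matrix-vector product, A given as a list of rows."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]

def matmul(A, B):
    """Matrix-matrix product A B."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def affine(W, b, x):
    """Return W x + b."""
    return [z + b_i for z, b_i in zip(matvec(W, x), b)]

# Two hypothetical layers with no activation function in between.
W1, b1 = [[1.0, 2.0], [3.0, 4.0]], [1.0, -1.0]
W2, b2 = [[0.5, -1.0]], [2.0]

# Equivalent single layer: W = W2 W1 and b = W2 b1 + b2.
W = matmul(W2, W1)
b = [v + c for v, c in zip(matvec(W2, b1), b2)]

x = [0.5, -2.0]
two_layers = affine(W2, b2, affine(W1, b1, x))
one_layer = affine(W, b, x)
print(two_layers, one_layer)
```

Both results agree, so the two-layer linear network has no more representational power than one linear layer.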

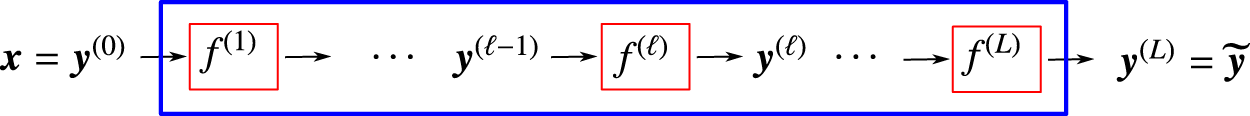

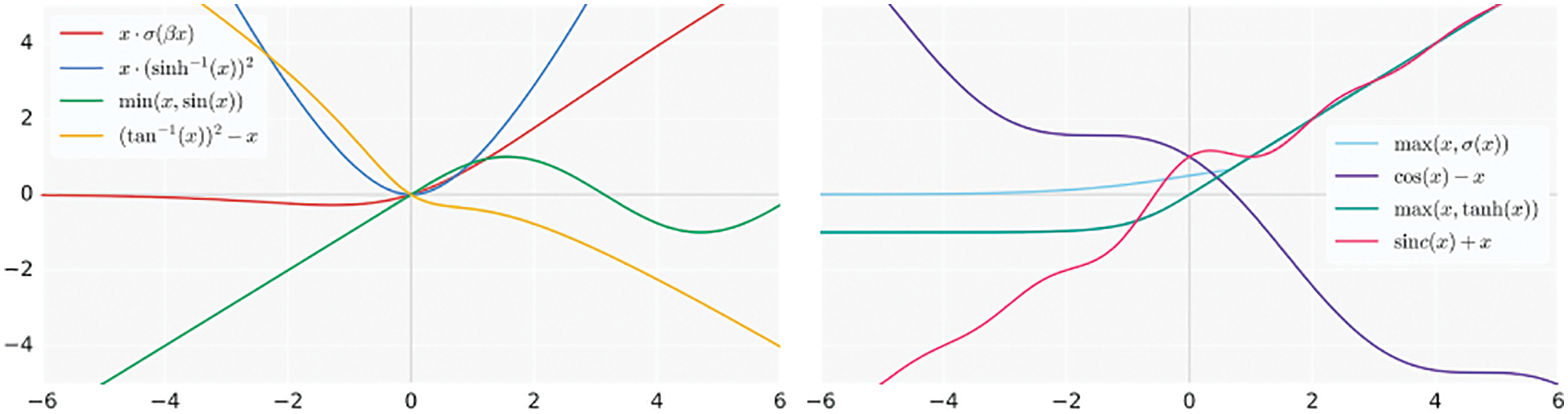

Rectified linear units (ReLU). Nowadays, for the choice of activation function

and depicted in Figure 24, for which the processing unit is called the rectified linear unit (ReLU),68 which was demonstrated to be superior to other activation functions in many problems.69 Therefore, in this section, we discuss in detail the rectified linear function, with careful explanation and motivation. It is important to note that ReLU is superior for large network size, and may have about the same, or less, accuracy than the older logistic sigmoid function for “very small” networks, while requiring less computational effort.70

Figure 24: Activation function (Section 4.4.2): Rectified linear function and its derivatives. See also Section 5.3.3 and Figure 54 for Parametric ReLU that helped surpass human level performance in ImageNet competition for the first time in 2015, Figure 3 [61]. See also Figure 26 for a halfwave rectifier.

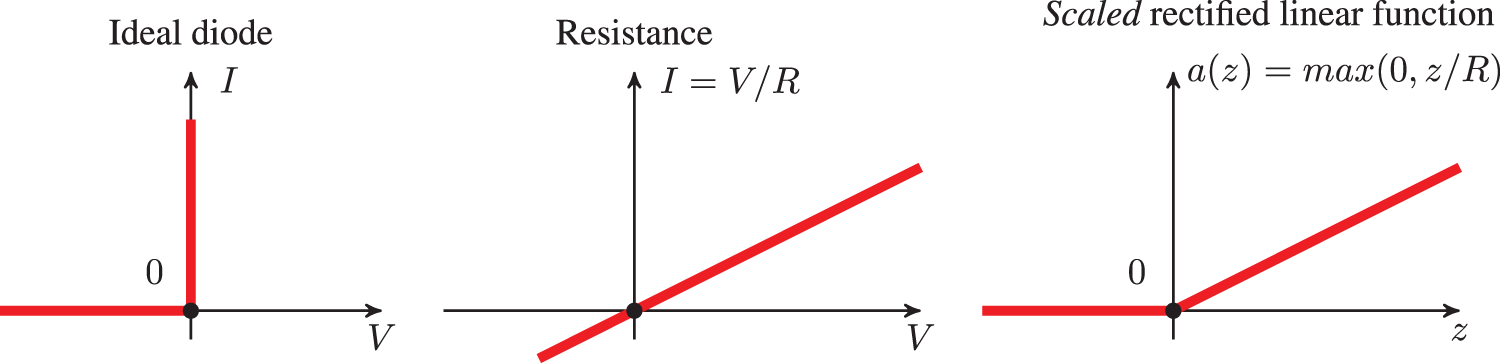

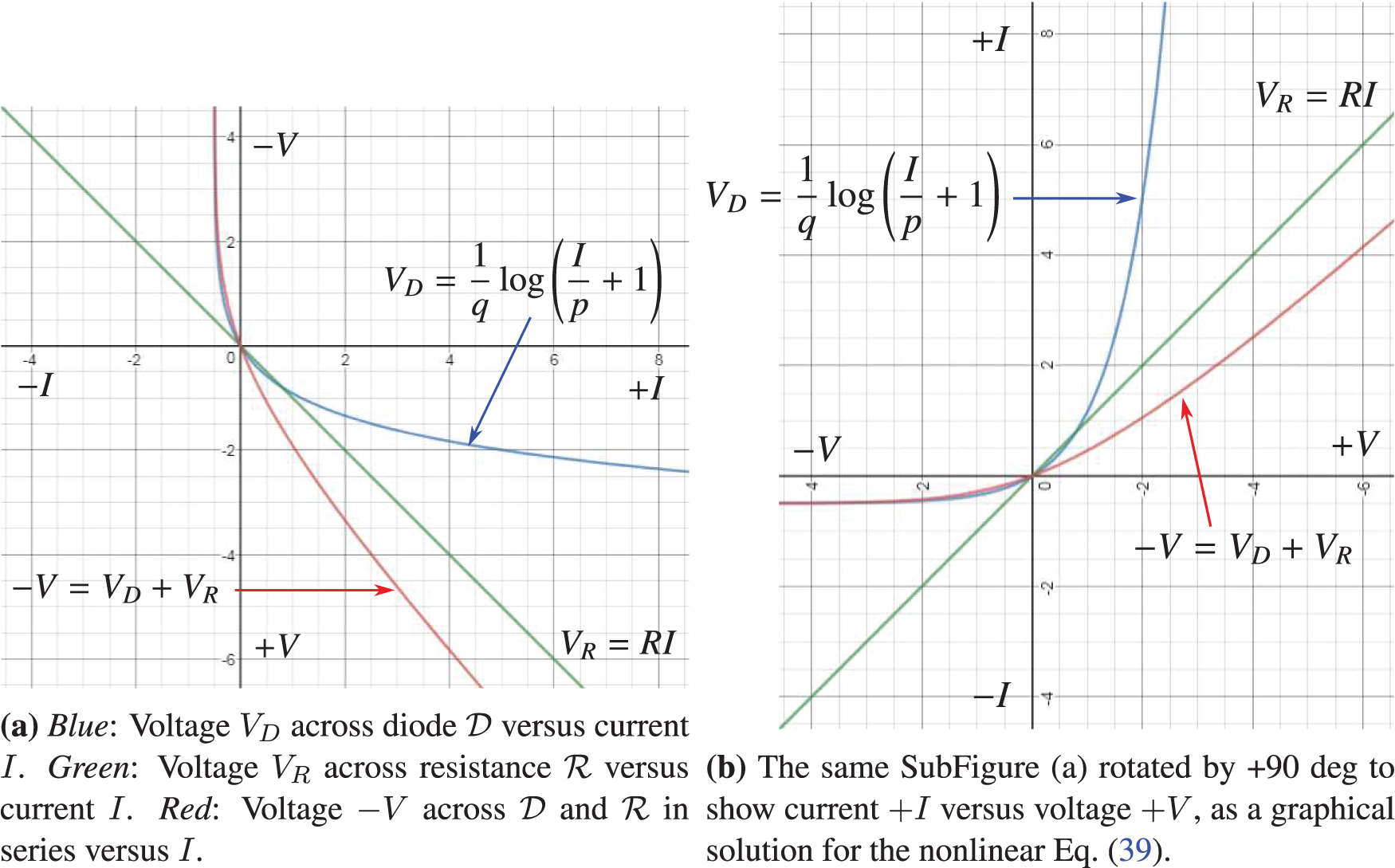

To transform an alternating current into a direct current, the first step is to rectify the alternating current by eliminating its negative parts; hence the meaning of the adjective “rectified” in rectified linear unit (ReLU). Figure 25 shows the current-voltage relation for an ideal diode, for a resistance in series with the diode, and for the resulting ReLU function that rectifies an alternating current as input into the halfwave rectifier circuit in Figure 26, resulting in a halfwave current as output.

Figure 25: Current I versus voltage V (Section 4.4.2): Ideal diode, resistance, scaled rectified linear function as activation (transfer) function for the ideal diode and resistance in series. (Figure plotted with R = 2.) See also Figure 26 for a halfwave rectifier.

Mathematically, a periodic function remains periodic after passing through a (nonlinear) rectifier (active function):

where

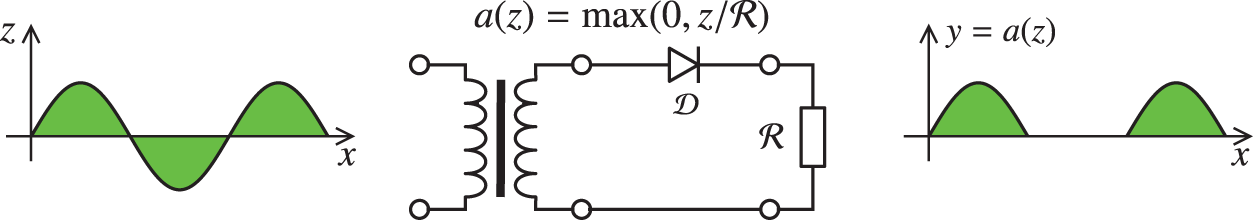

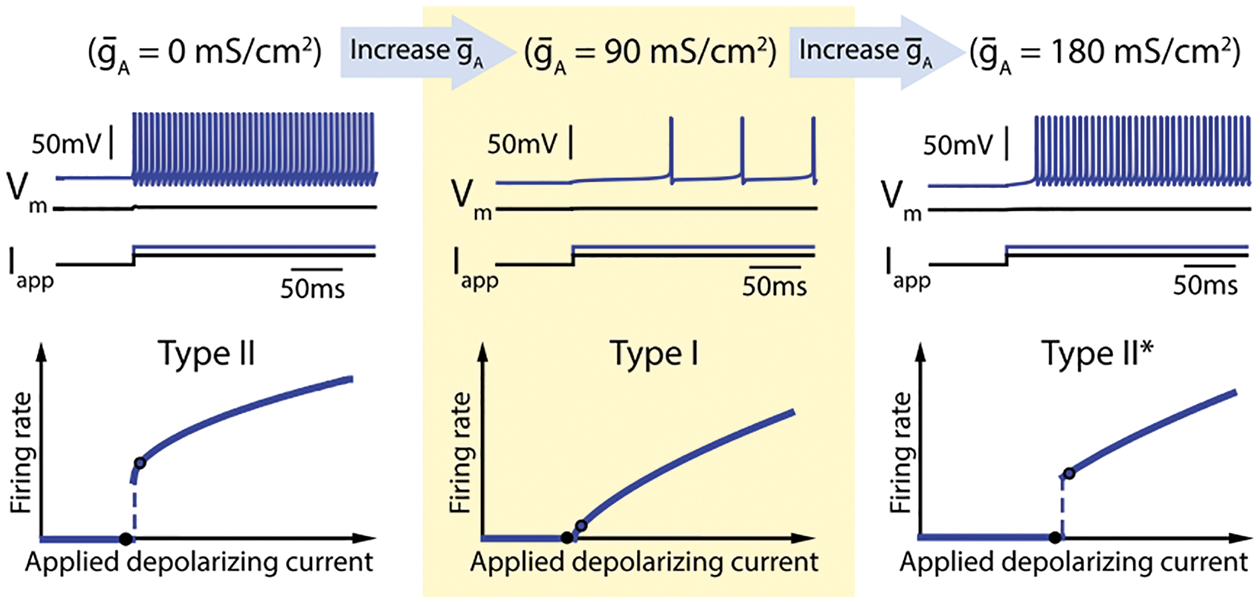

Biological neurons encode and transmit information over long distances by generating (firing) electrical pulses called action potentials or spikes with a wide range of frequencies [19], p. 1; see Figure 27. “To reliably encode a wide range of signals, neurons need to achieve a broad range of firing frequencies and to move smoothly between low and high firing rates” [114]. From the neuroscientific standpoint, the rectified linear function could be motivated as an idealization of the “Type I” relation between the firing rate (F) of a biological neuron and the input current (I), called the FI curve. Figure 27 describes three types of FI curves, with Type I in the middle subfigure, where there is a continuous increase in the firing rate with increase in input current.

Figure 26: Halfwave rectifier circuit (Section 4.4.2), with a primary alternating current

The Shockley equation for a current

With the voltage across the resistance being

which is plotted in Figure 29. The rectified linear function could be seen from Figure 29 as a very rough approximation of the current-voltage relation in a halfwave rectifier circuit in Figure 26, in which a diode and a resistance are in series. In the Shockley model, the diode is leaky in the sense that there is a small amount of current flow when the polarity is reversed, unlike the case of an ideal diode or ReLU (Figure 24), and is better modeled by the Leaky ReLU activation function, in which there is a small positive (instead of just flat zero) slope for negative

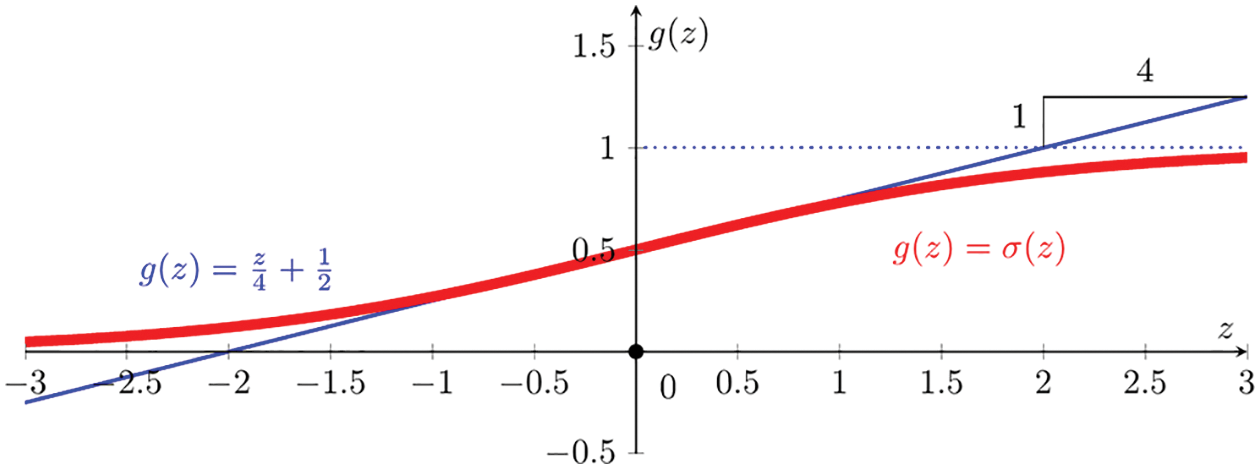

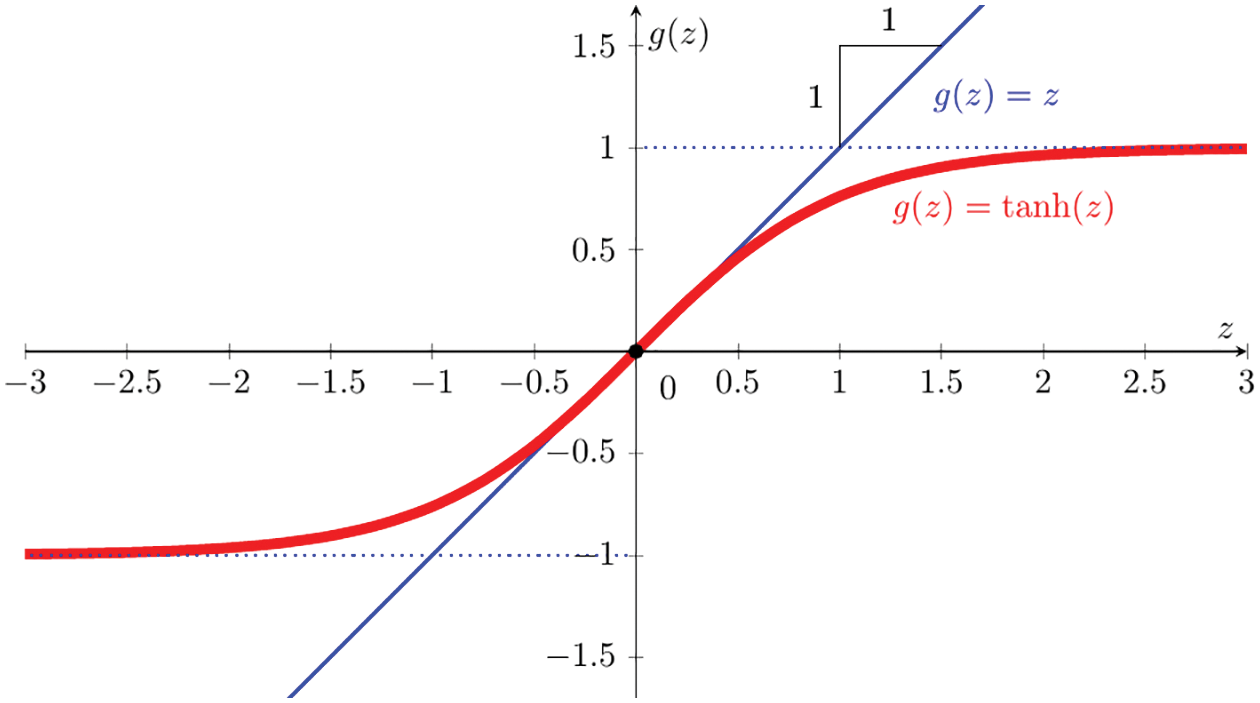

Prior to the introduction of ReLU in deep learning in 2011 (ReLU had long been widely used in neuroscience as an activation function before then),71 the state-of-the-art for deep-learning activation function was the hyperbolic tangent (Figure 31), which performed better than the widely used, and much older, sigmoid function72 (Figure 30); see [113], in which it was reported that

Figure 27: FI curves (Sections 4.4.2, 13.2.2). Firing rate frequency (F) versus applied depolarizing current (I), thus FI curves. Three types of FI curves. The time histories of voltage

“While logistic sigmoid neurons are more biologically plausible than hyperbolic tangent neurons, the latter work better for training multilayer neural networks. Rectifying neurons are an even better model of biological neurons and yield equal or better performance than hyperbolic tangent networks in spite of the hard non-linearity and non-differentiability at zero.”

The hard non-linearity of ReLU is localized at zero, but otherwise ReLU is a very simple function—identity map for positive argument, zero for negative argument—making it highly efficient for computation.
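The simplicity of the rectified linear function and of its derivative can be seen in a minimal sketch. The value assigned to the derivative at exactly zero, the `slope` parameter of the Leaky ReLU, and the sampling of the sinusoid are assumptions made for illustration only.

```python
import math

def relu(z):
    """Identity for positive argument, zero otherwise."""
    return max(0.0, z)

def relu_grad(z):
    """Derivative: 1 for z > 0, 0 for z < 0; the value at z == 0 is a
    convention (0 is chosen here)."""
    return 1.0 if z > 0.0 else 0.0

def leaky_relu(z, slope=0.01):
    """Leaky variant: small positive slope (hypothetical value) for z <= 0."""
    return z if z > 0.0 else slope * z

# Halfwave rectification of a sinusoid sampled over two periods:
# the negative half-waves are removed, and the result stays periodic.
period = 2.0 * math.pi
ts = [k * period / 8 for k in range(16)]
rectified = [relu(math.sin(t)) for t in ts]
print([round(r, 3) for r in rectified])
```

Both the function and its derivative need only a comparison with zero, with no exponential to evaluate, unlike the logistic sigmoid or the hyperbolic tangent.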

Also, due to errors in numerical computation, it is rare to hit exactly zero, where there is a hard non-linearity in ReLU:

“In the case of

Figure 28: FI or FV curves (Sections 3, 4.4.2, 13.2.2). Neuron firing rate (F) versus input current (I) (FI curves, a,b,c) or voltage (V). The Integrate-and-Fire model in SubFigure (c) can be used to replace the sigmoid function to fit the experimental data points in SubFigure (a). The ReLU function in Figure 24 can be used to approximate the region of the FI curve just beyond the current or voltage thresholds, as indicated in the red rectangle in SubFigure (c). Despite the advanced mathematics employed to produce the Type-II FI curve of a large number of Type-I neurons, as shown in SubFigure (b), it is not clear whether a similar result would be obtained if the single neuron displays a behavior as in Figure 27, with transition from Type II to Type I to Type II* in a single neuron. See Section 13.2.2 on “Dynamics, time dependence, Volterra series” for more discussion on Wilson’s equations Eqs. (508)-(509) [118]. On the other hand, for deep-learning networks, the above results are more than sufficient to motivate the use of ReLU, which has deep roots in neuroscience.

Figure 29: Halfwave rectifier (Sections 4.4.2, 5.3.2). Current I versus voltage V [red line in SubFigure (b)] in the halfwave rectifier circuit of Figure 26, for which the ReLU function in Figure 24 is a gross approximation. SubFigure (a) was plotted with p = 0.5, q = −1.2, R = 1. See also Figure 138 for the synaptic response of crayfish similar to the red line in SubFigure (b).

Thus, in addition to the ability to train deep networks, another advantage of using ReLU is the high efficiency in computing both the layer outputs and the gradients for use in optimizing the parameters (weights and biases) to lower cost or loss, i.e., training; see Section 6 on Training, and in particular Section 6.3 on Stochastic Gradient Descent.

The activation function ReLU approximates how biological neurons work more closely than other activation functions (e.g., logistic sigmoid, tanh, etc.), as was established through experiments some sixty years ago, and had been used in neuroscience long (at least ten years) before being adopted in deep learning in 2011. Its use in deep learning is a clear influence from neuroscience; see Section 13.3 on the history of activation functions, and Section 13.3.2 on the history of the rectified linear function.

Deep-learning networks using ReLU mimic biological neural networks in the brain through a trade-off between two competing properties [113]:

(1) Sparsity. Only 1% to 4% of brain neurons are active at any one point in time. Sparsity saves brain energy. In deep networks, “rectifying non-linearity gives rise to real zeros of activations and thus truly sparse representations.” Sparsity provides representation robustness in that the non-zero features73 would have small changes for small changes of the data.

(2) Distributivity. Each feature of the data is represented distributively by many inputs, and each input is involved in distributively representing many features. Distributed representation is a key concept dating back to the revival of connectionism with [119] [120] and others; see Section 13.2.1.
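The "real zeros" produced by a rectifying nonlinearity can be observed in a small numerical experiment. The zero-mean Gaussian pre-activations below are an assumption made purely for illustration; they yield roughly half of the activations at zero, not the 1% to 4% quoted for the brain, and the actual fraction in a trained network depends on its weights and biases.

```python
import math
import random

random.seed(0)  # fixed seed so that the sketch is reproducible
pre_activations = [random.gauss(0.0, 1.0) for _ in range(10_000)]

relu_out = [max(0.0, z) for z in pre_activations]
tanh_out = [math.tanh(z) for z in pre_activations]

# Fraction of activations that are exactly zero under each nonlinearity.
frac_zero_relu = sum(a == 0.0 for a in relu_out) / len(relu_out)
frac_zero_tanh = sum(a == 0.0 for a in tanh_out) / len(tanh_out)
print(frac_zero_relu, frac_zero_tanh)
```

Under this assumption, the hyperbolic tangent gives small but nonzero values where ReLU gives exact zeros, which is the sense in which the ReLU representation is truly sparse.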

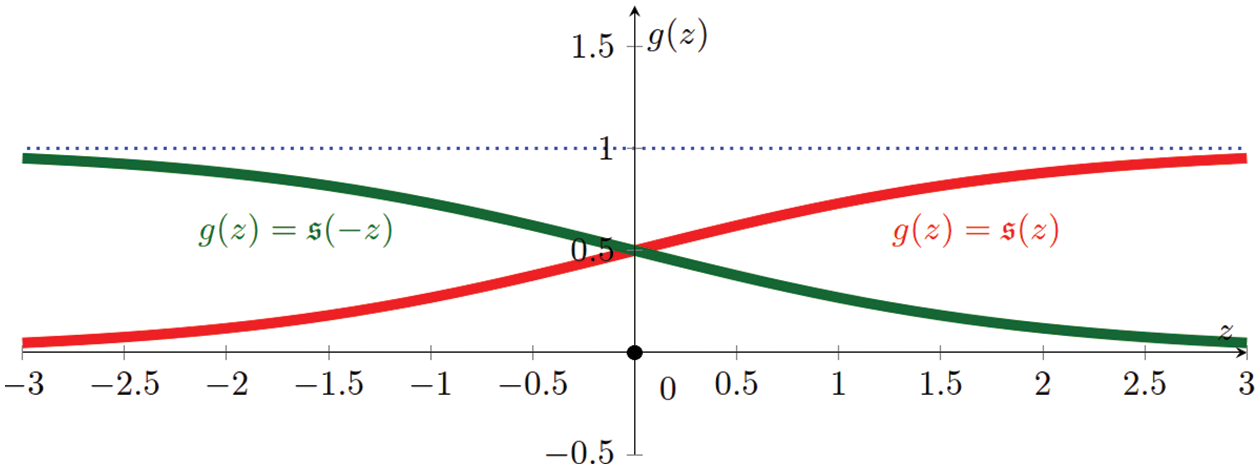

Figure 30: Logistic sigmoid function (Sections 4.4.2, 5.1.3, 5.3.1, 13.3.3):

Figure 31: Hyperbolic tangent function (Section 4.4.2):

4.4.3 Graphical representation, block diagrams

The block diagram for a one-layer network is given in Figure 32, with more details in terms of the number of inputs and of outputs given in Figure 33.

Figure 32: One-layer network (Section 4.4.3) representing the relation between the predicted output

For a multilayer neural network with

Figure 33: One-layer network (Section 4.4.3) in Figure 32: Lower level details, with

Figure 34: Input-to-output mapping (Sections 4.4.3, 4.4.4): Layer

Figure 35: Low-level details of layer

And finally, we now complete our top-down descent from the big picture of the overall multilayer neural network with

Figure 36: Artificial neuron (Sections 2.3.1, 4.4.4, 13.1), row

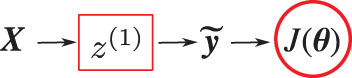

4.5 Representing XOR function with two-layer network

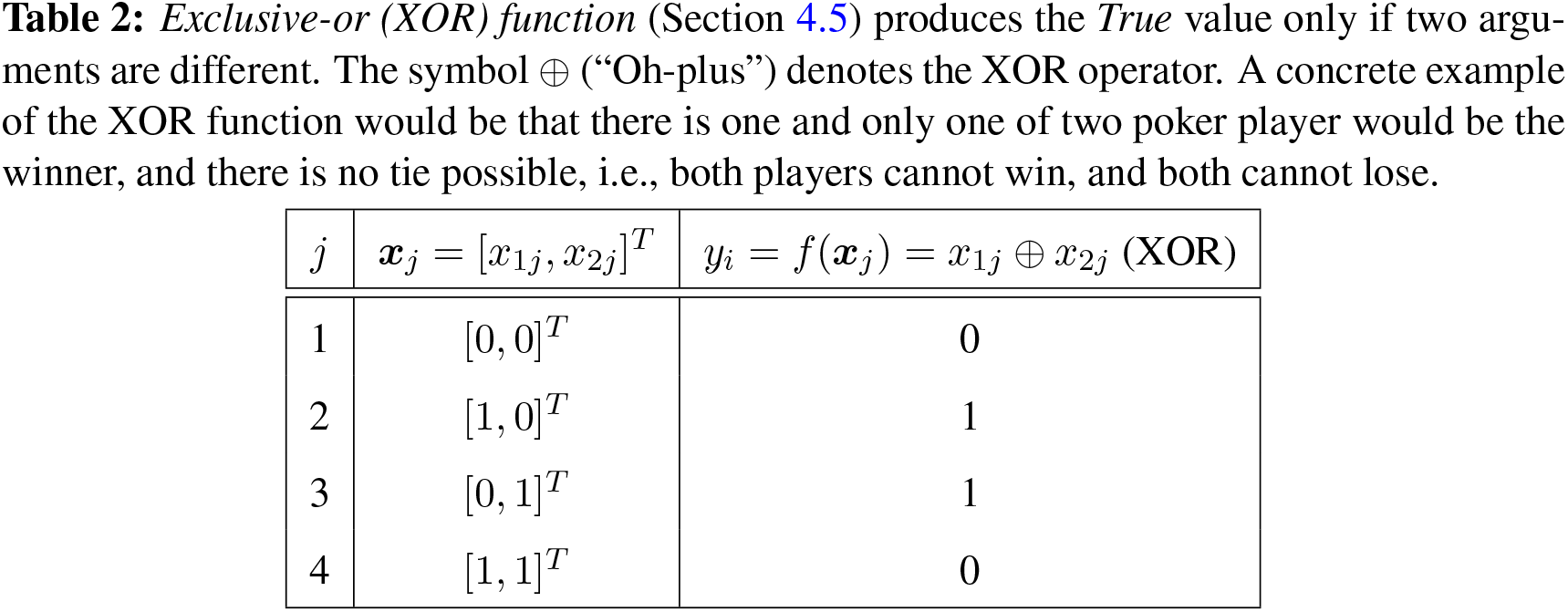

The XOR (exclusive-or) function played an important role in bringing down the first wave of AI, known as the cybernetics wave ([78], p. 14) since it was shown in [121] that Rosenblatt’s perceptron (1958 [119], 1962 [120] [2]) could not represent the XOR function, defined in Table 2:

The dataset or design matrix74

An approximation (or prediction) for the XOR function

We begin with a one-layer network to show that it cannot represent the XOR function,75 then move on to a two-layer network, which can.

Consider the following one-layer network,76 in which the output

with the following matrices

since it is written in [78], p. 14:

“Model based on the

First-time learners, who have not seen the definition of Rosenblatt’s (1958) perceptron [119], could mistake Eq. (43) for the perceptron, because Eq. (43) is only a linear unit (a single neuron) and does not have a (nonlinear) activation function. The Rosenblatt perceptron was not a linear model and, more importantly, was a network with many neurons.77 A neuron in the Rosenblatt perceptron is Eq. (489) in Section 13.2, with the Heaviside (nonlinear step) function as activation function; see Figure 132.

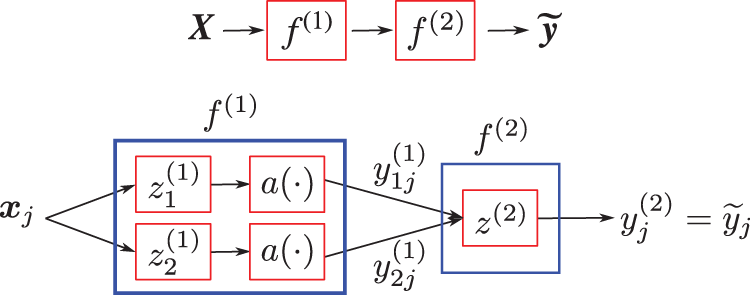

Figure 37: Representing XOR function (Sections 4.5, 13.2). This one-layer network (which is not the Rosenblatt perceptron in Figure 132) cannot perform this task. For each input matrix

The MSE cost function in Eq. (42) becomes

Setting the gradient of the cost function in Eq. (46) to zero and solving the resulting equations, we obtain the weights and the bias:

from which the predicted output

and thus this one-layer network cannot represent the XOR function. Eqs. (48) are called the “normal” equations.78
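The failure of the linear one-layer model can be reproduced with a short sketch that solves the normal equations for the four XOR points. The arrangement of the design matrix (two inputs followed by a column of ones for the bias) and the helper `solve` are assumptions made for illustration, and may differ in layout from Eqs. (43)-(48).

```python
def solve(M, rhs):
    """Solve M x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [r] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x

# XOR data: rows are (x1, x2, 1), the last column multiplying the bias.
A = [[0.0, 0.0, 1.0], [0.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
y = [0.0, 1.0, 1.0, 0.0]

# Normal equations (A^T A) theta = A^T y for the least-squares (MSE) fit.
AtA = [[sum(r[i] * r[j] for r in A) for j in range(3)] for i in range(3)]
Aty = [sum(r[i] * t for r, t in zip(A, y)) for i in range(3)]
w1, w2, bias = solve(AtA, Aty)

predictions = [w1 * r[0] + w2 * r[1] + bias for r in A]
print(w1, w2, bias, predictions)
```

The least-squares weights vanish and the bias is one half, so the model predicts 0.5 for every input, whatever the value of the XOR function.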

The four points in Table 2 are not linearly separable, i.e., there is no straight line that separates these four points such that the value of the XOR function is zero for two points on one side of the line, and one for the two points on the other side of the line. One layer could not represent the XOR function, as shown above. Rosenblatt (1958) [119] wrote:

“It has, in fact, been widely conceded by psychologists that there is little point in trying to ‘disprove’ any of the major learning theories in use today, since by extension, or a change in parameters, they have all proved capable of adapting to any specific empirical data. In considering this approach, one is reminded of a remark attributed to Kistiakowsky, that ‘given seven parameters, I could fit an elephant.’ ”

So we now add a second layer, and thus more parameters, in the hope of being able to represent the XOR function, as shown in Figure 38.79
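That two layers suffice can be checked with a minimal sketch using one hand-constructed set of parameters with a ReLU hidden layer. The values of `W`, `c`, `w`, and `b` below are an assumption (a commonly quoted textbook solution), and are not necessarily the parameters shown in Figure 38.

```python
def relu(z):
    return max(0.0, z)

# Assumed parameters: hidden layer h = relu(W x + c), output y = w . h + b.
W = [[1.0, 1.0],
     [1.0, 1.0]]
c = [0.0, -1.0]
w = [1.0, -2.0]
b = 0.0

def two_layer_xor(x):
    """Forward pass through the hidden ReLU layer, then the linear output."""
    h = [relu(sum(W_ij * x_j for W_ij, x_j in zip(row, x)) + c_i)
         for row, c_i in zip(W, c)]
    return sum(w_i * h_i for w_i, h_i in zip(w, h)) + b

inputs = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
outputs = [two_layer_xor(x) for x in inputs]
print(outputs)
```

The hidden layer maps the inputs (0, 1) and (1, 0) to the same point, after which the four points become linearly separable and a linear output layer reproduces the XOR values exactly.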

Figure 38: Representing XOR function (Section 4.5). This two-layer network can perform this task. The four points in the design matrix