Open Access

ARTICLE

A Time-Varying Parameter Estimation Method for Physiological Models Based on Physical Information Neural Networks

1 College of Information and Electrical Engineering, China Agricultural University, Beijing, 100083, China

2 Key Laboratory of Agricultural Information Acquisition Technology (Beijing), Ministry of Agriculture, Beijing, 100083, China

3 Key Laboratory of Modern Precision Agriculture System Integration Research, Ministry of Education, Beijing, 100083, China

* Corresponding Author: Lan Huang. Email:

Computer Modeling in Engineering & Sciences 2023, 137(3), 2243-2265. https://doi.org/10.32604/cmes.2023.028101

Received 29 November 2022; Accepted 23 March 2023; Issue published 03 August 2023

Abstract

In the establishment of differential equations, determining time-varying parameters is a difficult problem, especially for equations related to life activities. We therefore propose a new framework, named BioE-PINN, based on a physics-informed neural network, that obtains the time-varying parameters of differential equations. In the proposed framework, learnable factors and scale parameters are used to implement adaptive activation functions, and hard constraints and loss function weights are added to the neural network output to speed up training convergence and improve the accuracy of physics-informed neural networks. Taking the electrophysiological differential equation as an example, the characteristic parameters of ion channel and pump kinetics are determined using BioE-PINN. When the differential equation is solved numerically with the parameters predicted by BioE-PINN, the Root Mean Square Error (RMSE) is between 0.01 and 0.3 and the Pearson coefficient is above 0.87, which verifies the effectiveness and accuracy of BioE-PINN. Moreover, real measured membrane potential data from animals and plants are employed to determine the parameters of the electrophysiological equations, with an RMSE of 0.02-0.2 and a Pearson coefficient above 0.85. In conclusion, this framework can be applied not only to parameter determination for differential equations of physiological processes but also to the prediction of time-varying parameters of equations in other fields.

Differential equations describing dynamical processes are widely utilized in physics [1], engineering [2], medicine [3], biology [4], physiology, and other fields. Practical applications of differential equations may also involve solving the inverse problem: when the boundary conditions and some parameters of the equation are unknown, accurate model parameters are recovered from measured data. Physiological processes are often described by differential equations [5]. For example, the reactions of enzymes and transporters are described by metabolic equations to reveal the mechanism leading to diabetes [6]; the change of drug concentration in body compartments is studied with uncertainty differential equations [7]; and the kinetic relationship between membrane voltage and ion channels is described by electrophysiological equations, namely the Hodgkin-Huxley (HH) equation [8]. Such equations can not only describe the electrical activity of neurons but also guide the construction of artificial neural networks [9]. The most critical and basic step in establishing the equation is the determination of time-varying parameters, such as the switch rate parameters and conductivity of ion channels. In general, these parameters are determined experimentally, usually by voltage clamp or patch clamp experiments, which involve complicated steps, are difficult to perform, and demand considerable experimental skill [10]. These facts motivate us to search for faster ways to estimate the time-varying parameters of electrophysiological equations, although this task presents the same challenges of time-varying parameter prediction as other differential equations [11].

In recent years, Physics-Informed Neural Networks (PINN) have achieved good performance in solving complex differential equations [12–17], becoming an alternative simple method for tackling many problems in computational science and engineering. In particular, PINN can efficiently address the forward and inverse problems of nonlinear ordinary differential equations without the need for grids in finite difference methods. Since conventional neural network models require labeled or large amounts of data, it is difficult to solve the inverse problem using this method. In addition, PINN is different from algorithms such as BP neural network [18] and semi-supervised learning [19,20] which are considered “black boxes”. PINN can integrate all given information (such as electrophysiological equations, experimental data, and initial conditions) into the loss function of the neural network, thereby recasting the original problem into an equivalent optimization problem.

How to predict time-varying parameters in differential equations is the problem we address here. Traditional parameter prediction methods are usually based on linear and time-invariant premises, which makes them difficult to apply to the time-varying parameter determination of general nonlinear systems. Although PINN allows us to obtain time-varying parameters, conventional PINN converges slowly when dealing with inverse problems of complex differential equations. In addition, the values of membrane potential and switch rate in HH are not of the same order of magnitude, which leads to long training times. In summary, existing PINN cannot predict the time-varying parameters of HH accurately. For this purpose, this paper proposes a novel time-varying parameter estimation framework for electrophysiological models based on physics-informed neural networks, named BioE-PINN. This framework offers a total loss function based on the Hodgkin-Huxley equation, observed data errors, and hard constraints on initial conditions. After modifying the ion channel switch rate equation, the training time of the model can be reduced and its prediction accuracy improved. Furthermore, the stimulation currents that evoke membrane potentials can be predicted.

The contributions of this paper are summarized as follows:

1. We propose a new PINN-based framework for time-varying parameter estimation in electrophysiological differential equations that combines physical prior knowledge of bioelectrodynamics with advanced deep learning. The loss function of the neural network is designed not only to fit the observations (i.e., data-driven losses), but also to take into account the underlying physics (i.e., physics-based losses).

2. We employ hard constraints to accommodate differences between orders of magnitude and adaptive activation functions to accelerate the convergence of BioE-PINN.

3. We verify the effectiveness of the model with simulation experiments and real experimental data, using membrane potential to predict the conductivity and switch rate parameters of ion channels and pumps of electrophysiological equations.

The rest of this paper is organized as follows. Section 2 reviews the related work of PINN in solving the inverse problem. Section 3 discusses in depth the details of our proposed method. Section 4 presents the experiments and results. Section 5 shows the conclusion and outlook.

Parameter inference from observational data is a core problem in many fields and quite an important research direction in process modeling. Traditional parameter prediction methods are usually based on linearity and time-invariance, but the parameter determination of general nonlinear systems is not easy to achieve. The neural network has the advantages of strong approximation ability, a high degree of nonlinearity, strong self-learning, and self-adaptation, and has been widely exploited in the parameter determination of nonlinear systems.

In recent years, with the further development of deep learning and computing resources, a deep learning algorithm framework called the Physics-informed Neural Network has been proposed [21]. It mainly employs automatic differentiation techniques to solve forward and inverse problems of ordinary differential equations. PINN can be trained with a small amount of data and has better generalization performance while maintaining accuracy [21]. The emergence of PINN has triggered a large body of follow-up research. Some of this research focuses on improving the accuracy and efficiency of PINN; for example, adaptive activation functions can be applied to improve the performance of PINN in solving differential equations. This method introduces a scaling factor and a learnable parameter into the activation function of the neural network, and the factor and the parameters of the neural network are updated synchronously during training [22]. Residual points in PINN are usually randomly distributed among grid points on the domain, which may result in a large amount of data being required to train the model. To address this problem, gPINNs embed the gradient information of the physical equations into the loss of the neural network to achieve better accuracy while using less data [23].

In PINN, multiple loss terms appear, corresponding to the residuals, initial values, and boundary conditions of the equation, and it is critical to balance these different loss terms. By reviewing the latest literature, we found that the imbalance of loss functions in PINNs can be addressed by employing meta-learning techniques, which have been applied to discontinuous function approximation problems with different frequencies and to advection equations with different initial conditions and discontinuous solutions [24].

In addition, researchers have developed a large number of software libraries designed for PINNs, most of which are based on Python, such as DeepXDE [25], SimNet [26], NeuroDiffEq [27], and SciANN [28]. The development of these software libraries facilitates the popularization and application of PINN. We introduce the application of PINN in parameter prediction below.

The A-PINN framework uses a multi-output neural network to simultaneously represent the main variables and equation integrals in nonlinear integrodifferential equations, resolving integral discretization and truncation errors. Since A-PINN does not set fixed grids and nodes, unknown parameters can be discovered without interpolation [29]. Inferring compressibility and stiffness parameters from a material’s ultrasonic data encounters an ill-posed inverse problem. Shukla et al. implement the in-plane and out-of-plane elastic wave equations by using two neural networks in PINN and employing adaptive activation functions to accelerate their convergence [30]. Another advantage of PINN is to accelerate industrial production processes. For example, FlowDNN is 14,000 times faster than traditional methods in computational fluid dynamics simulation. It directly predicts flow fields by giving fluid constraints and initial shapes, and utilizes graphics processing units to speed up the training process of neural networks [31]. XPINNs solve the ill-posed inverse problems in designing dedicated high-speed aircraft, and the model is able to predict parameters such as air density and pressure based on observations. The main advantage of XPINNs is the decomposition of the spatiotemporal domain, which introduces a generalization trade-off. It decomposes complex solutions of differential equations into simple parts, thereby reducing the complexity required for network learning in various subdomains and improving generalization. Compared to traditional methods, this decomposition results in less training data available in each subdomain and less time required for training [32].

PINN also has applications in the parameter determination of electrophysiological equations. For example, to capture multi-channel data related to cardiac electrical activity from high-dimensional body sensor data, a modified P-DL framework based on PINN is proposed. The framework adds a balancing metric to optimize the model training process. P-DL assists medical scientists in monitoring and detecting abnormal cardiac conditions, handling the inverse ECG modeling problem, that is, predicting spatiotemporal electrodynamics in the heart from electrical potential [33]. To aid in the treatment and diagnosis of cardiac arrhythmias, Herrero Martin et al. proposed a tool called EP-PINNs, which applies PINN to cardiac action potential simulations. The tool can predict precise action potential and duration parameters using sparse action potential data [34]. EP-PINNs are based on the Aliev-Panfilov electrophysiological model, which has the advantage of modeling action potentials without considering ion channels. However, ion channels play an important role in life activities. For example, calcium ion channels have an effect on human blood pressure, and doctors use calcium ion channel blockers as the first choice for antihypertensive drugs [35]. Therefore, predicting the kinetic parameters of ion channels and pumps is a problem that needs to be solved.

The above studies show that PINN has been continuously improved since its original proposal and has been applied to differential equation solving and predicting constant parameters. The above also provides a feasible indication for applying PINN to the time-varying parameter estimation method of physiological models in this study.

In this section, we propose a physics-based neural network to estimate parameters in electrophysiological equations. Compared with traditional PINN, our proposed BioE-PINN contains hard constraints and an adaptive activation function method, which can improve the accuracy of PINN.

3.1 Physiological Equations of Cell Membrane Potential

The core mathematical framework for current biophysical-based neural modeling was developed by Hodgkin and Huxley. It is one of the most successful mathematical models of complex biological processes that have been proposed so far [36]. The cell membrane potential equations proposed in this paper are improved on the basis of the Hodgkin-Huxley (HH) equation, which can be applied to plant electrical signal modeling:

where V represents the membrane potential.

where

When using PINN to predict the switch rate, it is first necessary to construct a feedforward neural network to approximate the electrical signal or rate. A feedforward neural network consists of an input layer, multiple hidden layers, and an output layer. Information is passed layer by layer in one direction. The value of any neuron in the network is calculated by the sum of the products of the weights and outputs of the previous layer, and then activated by a specific activation function. The relationship between two adjacent layers is usually described by the following equation:

where
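The layer-to-layer relationship described above, a weighted sum of the previous layer's outputs followed by an activation, can be illustrated with a minimal sketch. This is not the authors' implementation (they report using PyTorch); the layer sizes, the `tanh` activation, the random weights, and the helper names `dense_layer` and `forward` are assumptions chosen only for illustration.

```python
import math
import random

def dense_layer(inputs, weights, biases, activation):
    """One layer: activation(sum_i W[j][i] * x_i + b_j) for every neuron j."""
    outputs = []
    for weight_row, bias in zip(weights, biases):
        pre_activation = sum(w * x for w, x in zip(weight_row, inputs)) + bias
        outputs.append(activation(pre_activation))
    return outputs

def forward(t, layers):
    """Pass the scalar time t through the layers, one direction only."""
    signal = [t]
    for weights, biases, activation in layers:
        signal = dense_layer(signal, weights, biases, activation)
    return signal

def identity(z):
    return z

# Hypothetical 1-4-1 network: time in, one approximated quantity out.
rng = random.Random(0)
hidden = 4
layers = [
    ([[rng.uniform(-1.0, 1.0)] for _ in range(hidden)], [0.0] * hidden, math.tanh),
    ([[rng.uniform(-1.0, 1.0) for _ in range(hidden)]], [0.0], identity),
]
prediction = forward(0.5, layers)
```

In a PINN the same forward pass is evaluated at many time points, and its output is then substituted into the governing equation.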

This paper aims to solve the inverse problem of membrane potential

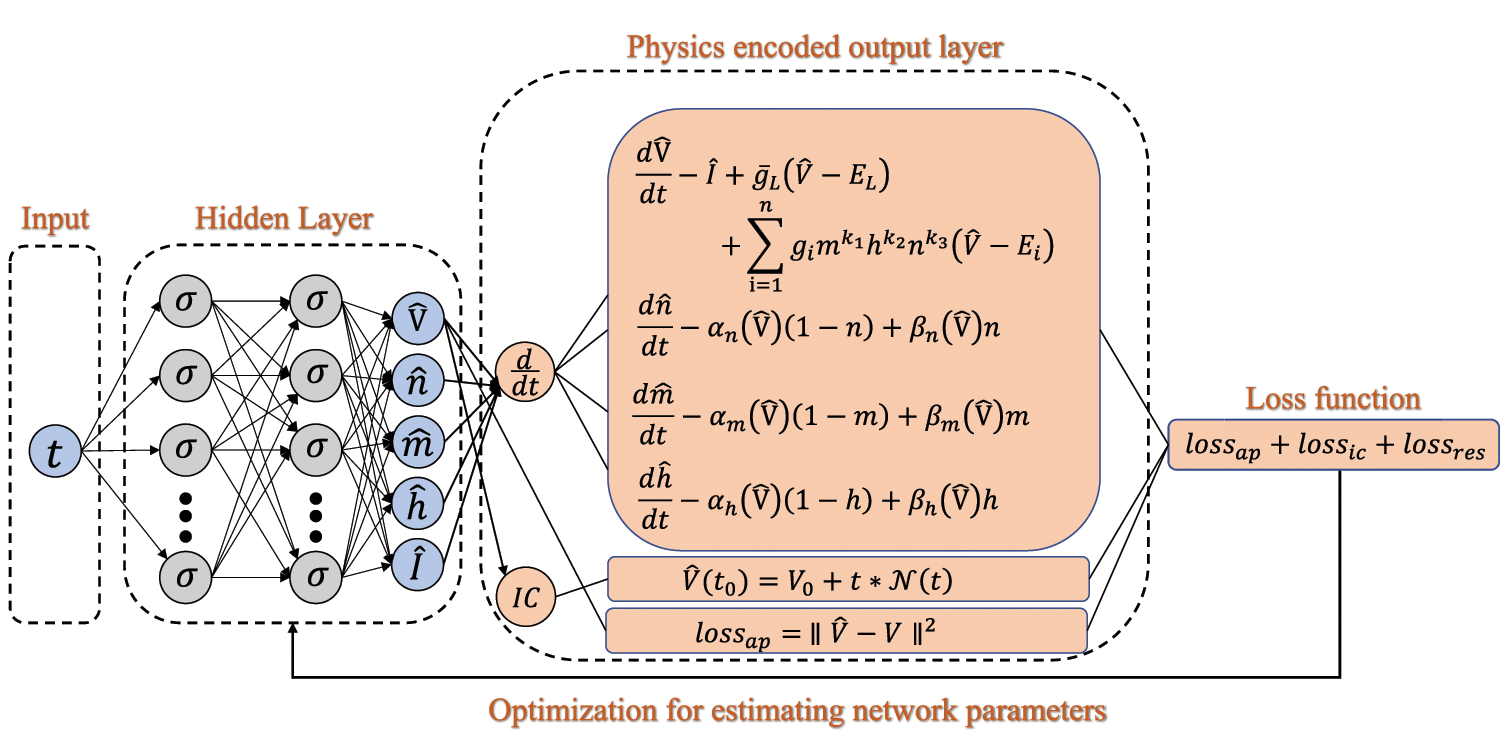

Fig. 1 demonstrates the proposed BioE-PINN. The solution to the inverse problem is parameterized by a trained DNN that satisfies not only the laws of physics but also data-driven constraints on membrane potential. Specifically, we estimate the time map of membrane potential as:

Figure 1: Schematic of the BioE-PINN for switch rates prediction

where

where the weights of data-driven loss and physics-based loss are rescaled by

The data-driven loss function is designed to solve the inverse problem. In order to infer the parameters in the differential equation based on the membrane potential, the predicted value of the neural network needs to be close to the real membrane potential. We define the data-driven loss:

where

Physiologically based constraints will be implemented by minimizing the magnitudes of

where

To calculate the loss function during DNN training, it is necessary to obtain the derivatives of
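How a data-driven term and a physics-based term combine into one weighted objective can be sketched as follows. This is a simplified stand-in under stated assumptions: the physics residual is taken from a passive leak equation, C dV/dt = I - g_L (V - E_L), rather than the full electrophysiological model, derivatives are approximated by central differences instead of automatic differentiation, and the weights `w_data` and `w_phys` as well as all numerical values are hypothetical.

```python
import math

def data_loss(v_pred, v_obs):
    """Mean squared error between predicted and observed membrane potential."""
    return sum((p - o) ** 2 for p, o in zip(v_pred, v_obs)) / len(v_obs)

def leak_residuals(t, v_pred, i_stim, c_m=1.0, g_leak=0.3, e_leak=-54.4):
    """Residual of C dV/dt = I - g_L (V - E_L) at interior time points."""
    residuals = []
    for k in range(1, len(t) - 1):
        # Central difference as a stand-in for automatic differentiation.
        dv_dt = (v_pred[k + 1] - v_pred[k - 1]) / (t[k + 1] - t[k - 1])
        residuals.append(c_m * dv_dt - (i_stim[k] - g_leak * (v_pred[k] - e_leak)))
    return residuals

def physics_loss(residuals):
    """Mean squared equation residual."""
    return sum(r * r for r in residuals) / len(residuals)

def total_loss(t, v_pred, v_obs, i_stim, w_data=1.0, w_phys=1.0):
    """Weighted sum of the data-driven and physics-based terms."""
    return (w_data * data_loss(v_pred, v_obs)
            + w_phys * physics_loss(leak_residuals(t, v_pred, i_stim)))

# Toy check: the exact solution of the leak equation with zero stimulus
# gives a near-zero loss, while a shifted curve is penalised by both terms.
t = [0.01 * k for k in range(201)]
i_stim = [0.0] * len(t)
v_exact = [-54.4 + (-65.0 + 54.4) * math.exp(-0.3 * tk) for tk in t]
v_shifted = [v + 1.0 for v in v_exact]
loss_exact = total_loss(t, v_exact, v_exact, i_stim)
loss_shifted = total_loss(t, v_shifted, v_exact, i_stim)
```

Raising `w_phys` relative to `w_data` pushes the fit toward satisfying the equation, while the opposite choice favours matching the measurements.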

When conventional PINN solves time-dependent ordinary differential equations or inversion of parameters, the constraints of initial conditions are mainly in the form of soft constraints, which will affect the calculation accuracy to a certain extent [38]. To this end, we propose hard constraints so that the output of the network automatically satisfies the initial conditions. Specifically, we define the residuals of the initial conditions as:

Hard constraints based on initial conditions will be achieved by minimizing the size of
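One common way to make a network output satisfy an initial condition by construction is to wrap the raw output in a transformation that collapses to the known initial value at the initial time. The sketch below shows that general idea only; the specific form V0 + (1 - exp(-(t - t0))) N(t) and the function name are assumptions and may differ from the construction used in BioE-PINN.

```python
import math

def hard_constrained_output(t, raw_output, initial_value, t0=0.0):
    """Return initial_value + (1 - exp(-(t - t0))) * raw_output.

    At t = t0 the multiplier is exactly zero, so the result equals
    initial_value whatever the network predicts.
    """
    return initial_value + (1.0 - math.exp(-(t - t0))) * raw_output

# The initial condition holds for any raw network output (values hypothetical).
v_start = hard_constrained_output(0.0, raw_output=123.4, initial_value=-65.0)
v_later = hard_constrained_output(1.0, raw_output=10.0, initial_value=-65.0)
```

Because the initial value is built into the output, no separate penalty term is needed to enforce it at the initial time.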

3.3 Adaptive Activation Functions

Commonly used activation functions in neurons include Sigmoid, Logistic, tanh, sin, and cos, as well as ReLU and its variants such as ELU, Leaky ReLU, and PReLU. The purpose of introducing the activation function is to enable the neural network to express nonlinearity. The activation function plays an important role in the training process of the neural network, because optimizing the network parameters depends on the gradient of the loss function, and computing that gradient depends on the differentiability of the activation function.

The size of a neural network is related to its ability to express the complexity of a problem. Complex problems require a deep network, which is difficult to train. In most cases, choosing an appropriate architecture based on the experience of the rest of the scholars requires a large number of experimental approaches. This paper considers tuning the network structure for optimal performance. To this end, a learnable parameter

The learnable parameter

The learnable parameter

The learning rate has a dramatic impact when searching for a global minimum. Larger learning rates may skip the global minimum, while smaller learning rates increase computational cost. In the training process of PINN, a small learning rate is generally used, which makes the convergence slow. In order to speed up the convergence, a scaling factor
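The idea of an activation with a trainable slope and a fixed scaling factor can be sketched as below, in the spirit of the adaptive activation functions cited in the related work. The base function `tanh`, the value n = 10, and the initial choice a = 1/n (so that the function starts out as the ordinary activation) are assumptions for illustration, not settings taken from this paper.

```python
import math

def adaptive_tanh(x, a, n=10.0):
    """Activation tanh(n * a * x): n is a fixed scale, a is trainable."""
    return math.tanh(n * a * x)

def adaptive_tanh_grad_a(x, a, n=10.0):
    """Derivative with respect to a: n * x * (1 - tanh(n * a * x) ** 2)."""
    return n * x * (1.0 - math.tanh(n * a * x) ** 2)

# With a = 1/n the adaptive activation coincides with plain tanh.
baseline = adaptive_tanh(0.3, a=0.1, n=10.0)

# The scale n multiplies the gradient seen by a, so one optimizer step
# on a changes the effective slope n * a by n times as much.
gradient = adaptive_tanh_grad_a(0.3, a=0.1, n=10.0)
```

Since `a` receives gradients like any other weight, it can be updated by the same optimizer during training.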

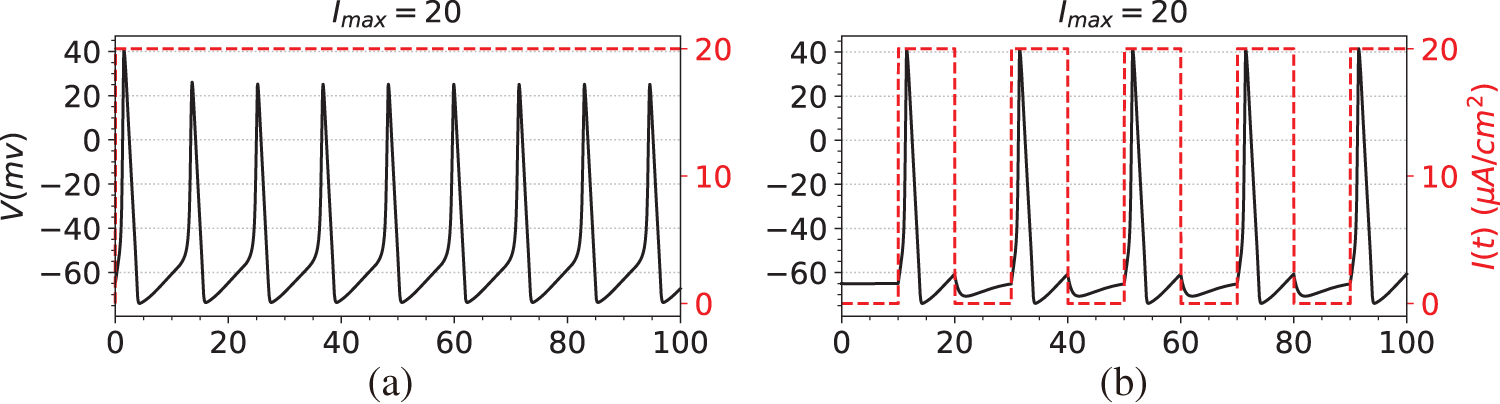

After establishing the PINN network for estimating the time-varying parameters of the electrophysiological equation, combined with the improved adaptive activation function algorithm in this paper, the prediction of the switching rate parameters in the electrophysiological equation can be realized. The algorithm is summarized as follows:

The main factors affecting the time complexity of the BioE-PINN algorithm are the number of iterations

In order to evaluate the error between predicted and observed values, the following indicators are used: the Pearson correlation coefficient (SPCC) and the Root Mean Square Error (RMSE). In addition, relative errors are employed to evaluate the effect of parameter prediction.

SPCC is used to measure the correlation between two variables, especially shape similarity. RMSE reflects the degree of difference between the predicted value and the real value, and a smaller RMSE means that the accuracy of the predicted value is higher.
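The evaluation indicators can be computed as in the following sketch. It assumes the correlation coefficient is the Pearson coefficient reported in the abstract and results, and that the relative error is |predicted - true| / |true|; the function names are illustrative.

```python
import math

def rmse(predicted, observed):
    """Root mean square error between two equally long sequences."""
    n = len(observed)
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / n)

def pearson(predicted, observed):
    """Pearson correlation coefficient (shape similarity of two curves)."""
    n = len(observed)
    mean_p = sum(predicted) / n
    mean_o = sum(observed) / n
    covariance = sum((p - mean_p) * (o - mean_o) for p, o in zip(predicted, observed))
    spread_p = math.sqrt(sum((p - mean_p) ** 2 for p in predicted))
    spread_o = math.sqrt(sum((o - mean_o) ** 2 for o in observed))
    return covariance / (spread_p * spread_o)

def relative_error(predicted_value, true_value):
    """Relative error of a single predicted parameter."""
    return abs(predicted_value - true_value) / abs(true_value)
```

A curve that is scaled or offset relative to the reference still has a correlation of 1 but a non-zero RMSE, which is why the two indicators are reported together.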

In this section, we first use conventional PINN and BioE-PINN to predict the parameters of HH. Specifically, we obtain the observation data through simulation, determine the HH parameters according to the observation data and compare them with the real parameters. Subsequently, we conduct experiments on the inference of electrophysiological equation parameters in animals as well as plants, and solve the HH equation according to the parameters determined by BioE-PINN. To demonstrate the effectiveness of the proposed method, various comparisons are performed. In this study, we implement BioE-PINN with the PyTorch [41] framework. The weight of DNN is initialized by Glorot [42] and optimized by Adam [43]. It is found through experiments that the optimal value of the learning rate is 0.0005, and the activation function is ReLU.
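For readers unfamiliar with the two training ingredients named above, the following dependency-free sketch shows Glorot (Xavier) uniform initialization and a single Adam update for one scalar parameter. The paper uses PyTorch for both; the learning rate 0.0005 is the value reported above, while the Adam coefficients (0.9, 0.999, 1e-8) are the commonly used defaults and are assumed here, since the paper does not state them.

```python
import math
import random

def glorot_uniform(fan_in, fan_out, rng):
    """Weights drawn uniformly from [-limit, limit], limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]

def adam_step(param, grad, state, lr=0.0005, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; state holds the step count and both moment estimates."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1.0 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1.0 - beta2) * grad * grad
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])  # bias-corrected mean
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])  # bias-corrected variance
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)

rng = random.Random(0)
weights = glorot_uniform(fan_in=1, fan_out=8, rng=rng)

state = {"t": 0, "m": 0.0, "v": 0.0}
updated = adam_step(param=1.0, grad=2.0, state=state)
```

On the first step the bias correction makes the update size close to the learning rate itself, independent of the gradient magnitude.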

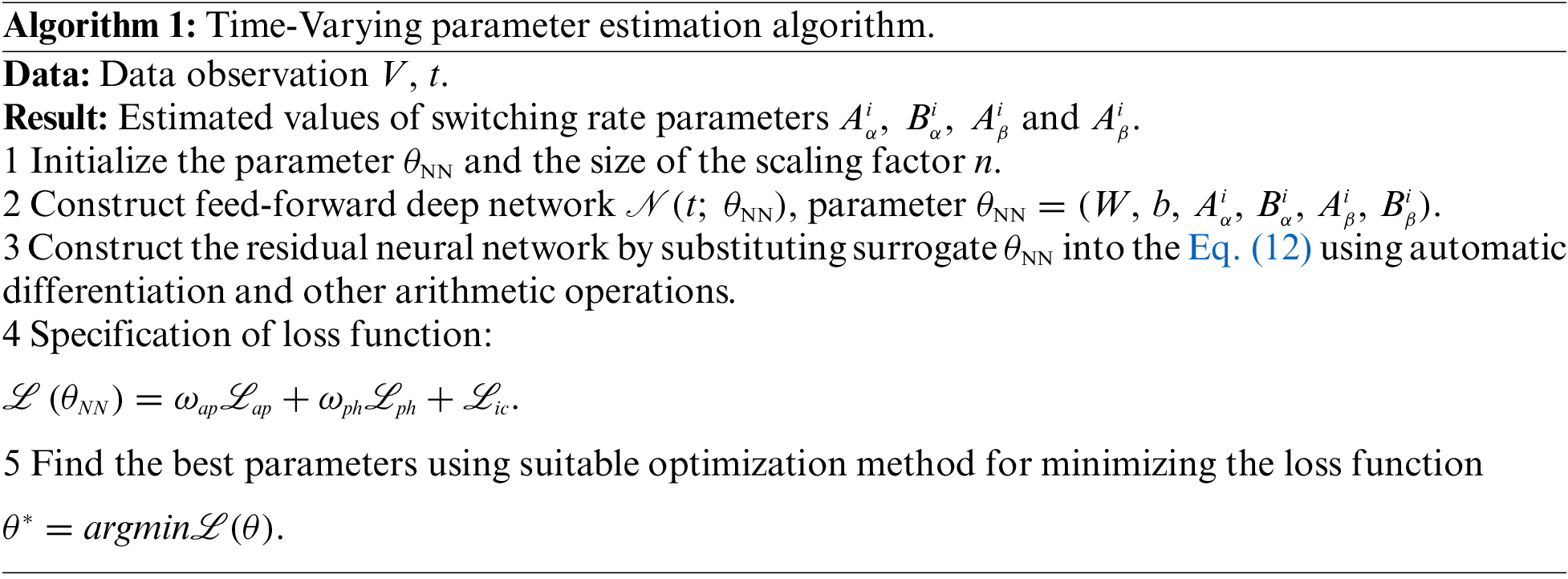

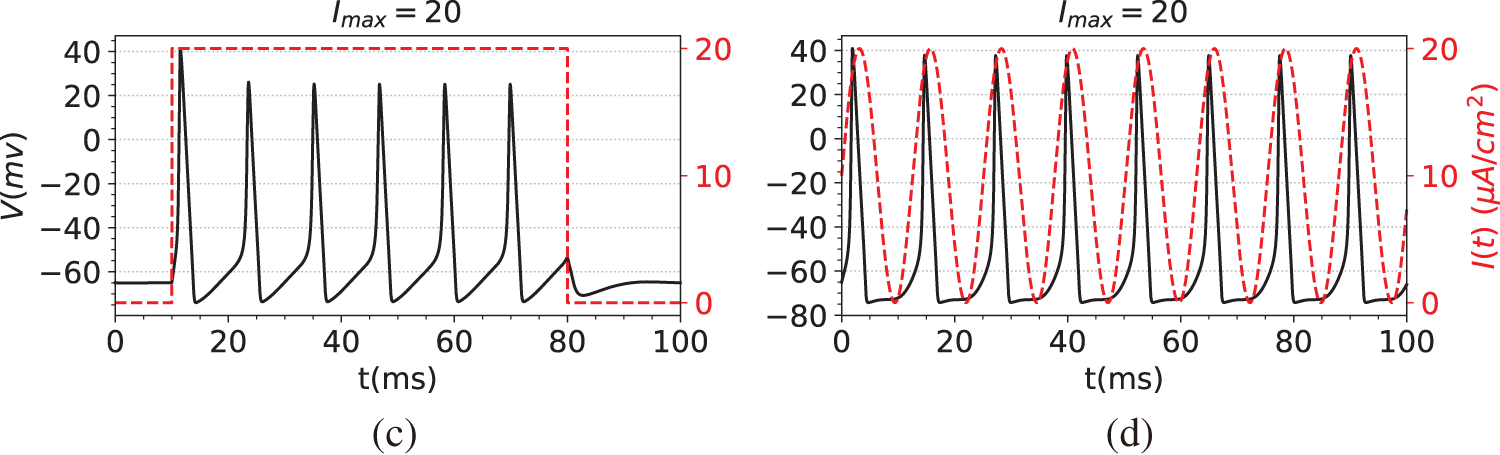

The first step in the simulation experiment is to generate observation data, namely the membrane potential V. Specifically, we first compute the numerical solutions of Eqs. (29)–(32) using the solver SciPy [44], obtaining data for both the membrane potential V and the gating variables

Figure 2: The dataset used in the simulation experiments. The black line is the membrane potential. The red line is the stimulating current. (a) Membrane potential induced by constant current stimulation. (b) Membrane potential induced by short pulse current stimulation. (c) Membrane potential induced by long-pulse current stimulation. (d) Membrane potential induced by sine function current stimulation

(a) A constant current, where

(b) A step function with a long pulse, such as

(c) A multi-pulse pulsing step function, such as

(d) A sine function, such as

where
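Generating synthetic observations of this kind can be sketched as follows. The sketch integrates the classical textbook Hodgkin-Huxley model with forward Euler; it is not the modified equations of this paper, it does not use the SciPy solver mentioned above, and the stimulus amplitudes, timings, and parameter values are the standard textbook ones or hypothetical choices.

```python
import math

def constant_current(t, amplitude=10.0):
    return amplitude

def pulse_current(t, amplitude=10.0, start=5.0, width=1.0):
    return amplitude if start <= t < start + width else 0.0

def sine_current(t, amplitude=10.0, period=10.0):
    return amplitude * math.sin(2.0 * math.pi * t / period)

def _ratio(x, y):
    """x / (1 - exp(-x / y)), with its limit y used near x = 0."""
    return y if abs(x) < 1e-7 else x / (1.0 - math.exp(-x / y))

def rates(v):
    """Classical HH opening (alpha) and closing (beta) rates, v in mV."""
    a_m = 0.1 * _ratio(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _ratio(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n

def simulate_hh(stimulus, t_end=50.0, dt=0.01, c_m=1.0,
                g_na=120.0, g_k=36.0, g_l=0.3,
                e_na=50.0, e_k=-77.0, e_l=-54.387):
    """Forward-Euler integration; returns times, voltages, and (m, h, n) gates."""
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    times, volts, gates = [], [], []
    for k in range(int(round(t_end / dt))):
        t = k * dt
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        i_ion = (g_na * m ** 3 * h * (v - e_na)
                 + g_k * n ** 4 * (v - e_k)
                 + g_l * (v - e_l))
        dv = (stimulus(t) - i_ion) / c_m
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        v += dt * dv
        times.append(t)
        volts.append(v)
        gates.append((m, h, n))
    return times, volts, gates

times, volts, gates = simulate_hh(constant_current)
```

The returned voltage trace plays the role of the observation data, and the gate trajectories serve as the reference against which predicted parameters can be compared.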

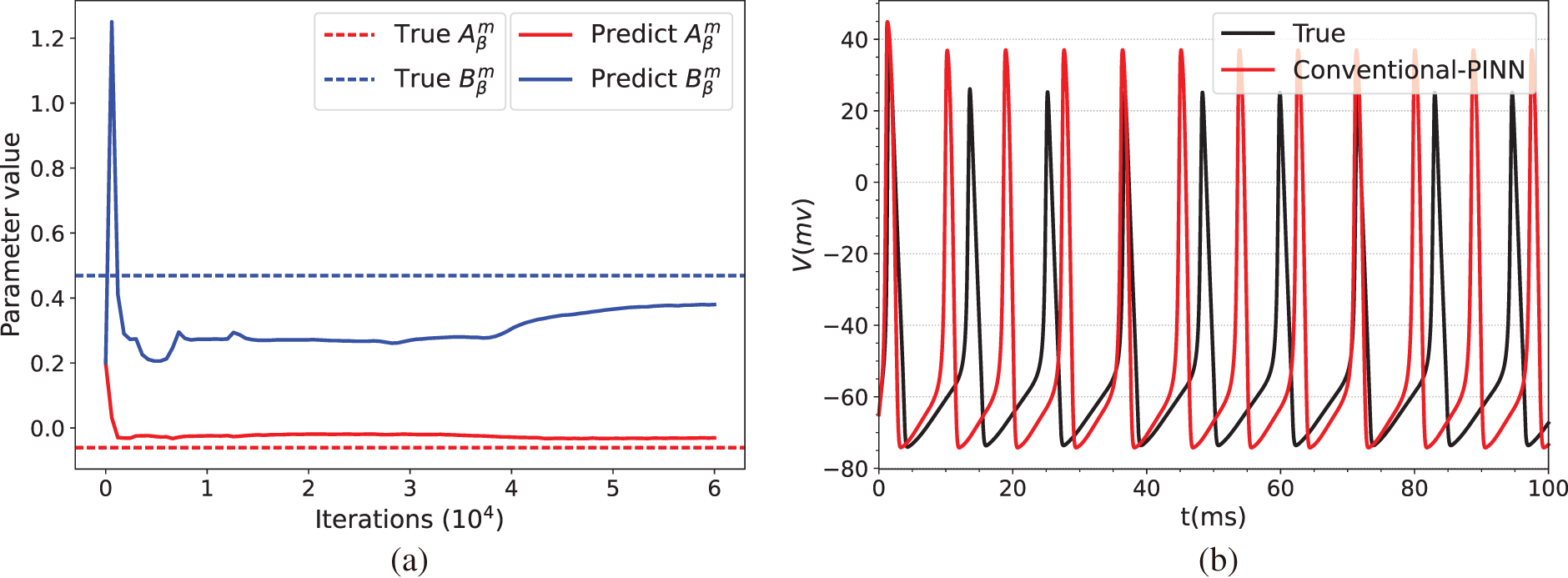

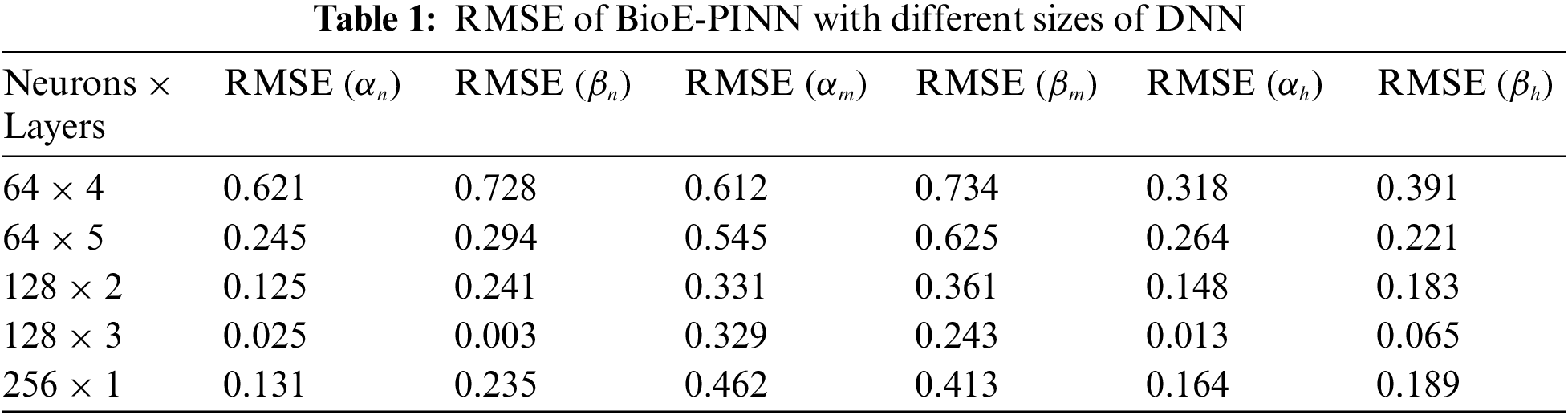

Fig. 3 illustrates the prediction results of the parameters of the HH equation under constant current stimulation given by the conventional PINN (top row) and BioE-PINN (middle row) models. The first column presents the predicted results of

Figure 3: Prediction results under constant current stimulation. (a) and (c) show the parameter prediction results of Conventional-PINN and BioE-PINN, respectively. (b) and (d) present the results of solving the HH equation according to the predicted parameters. (e) shows the loss function change curve. (f) presents the change curve of the learnable parameter

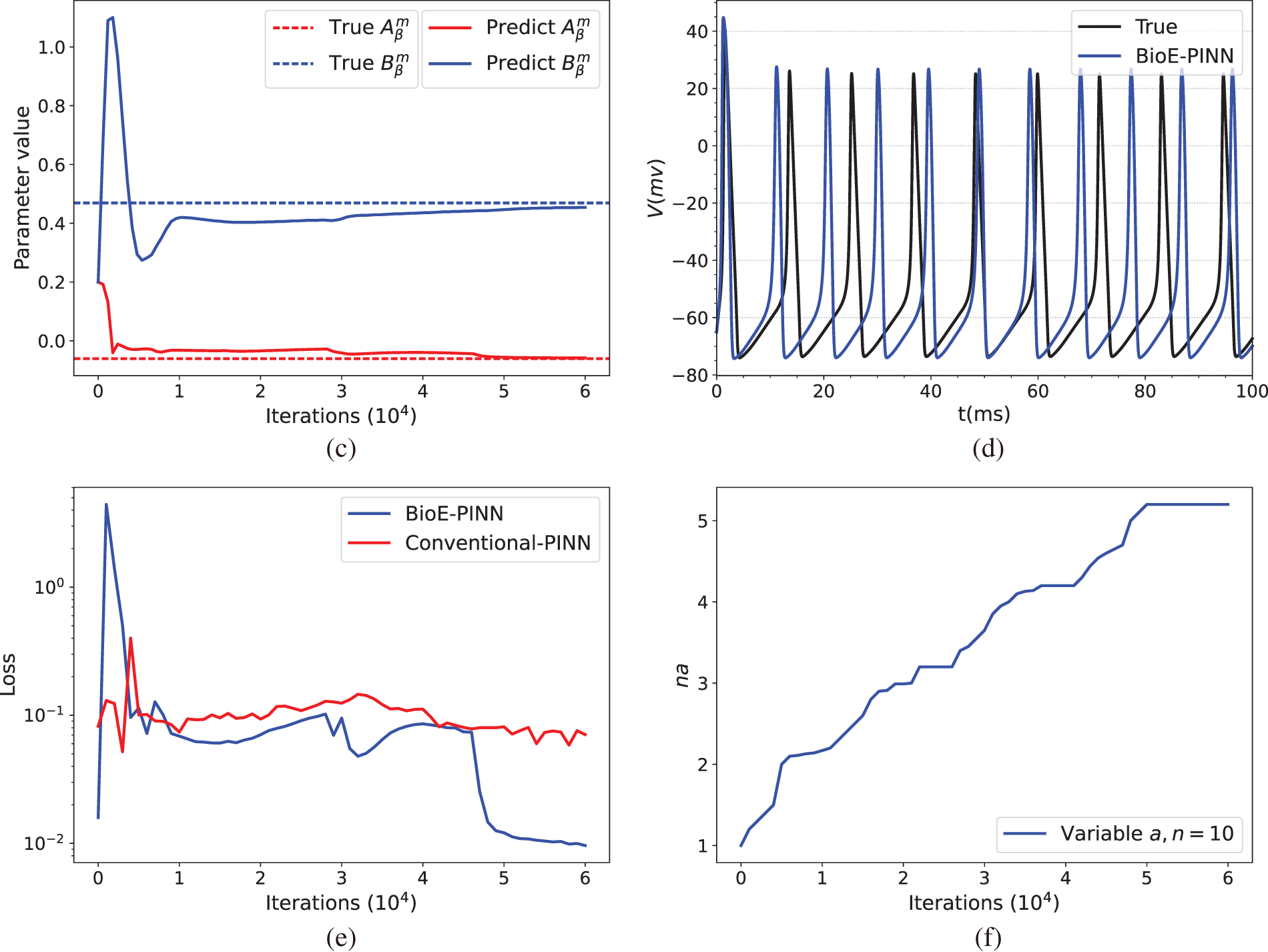

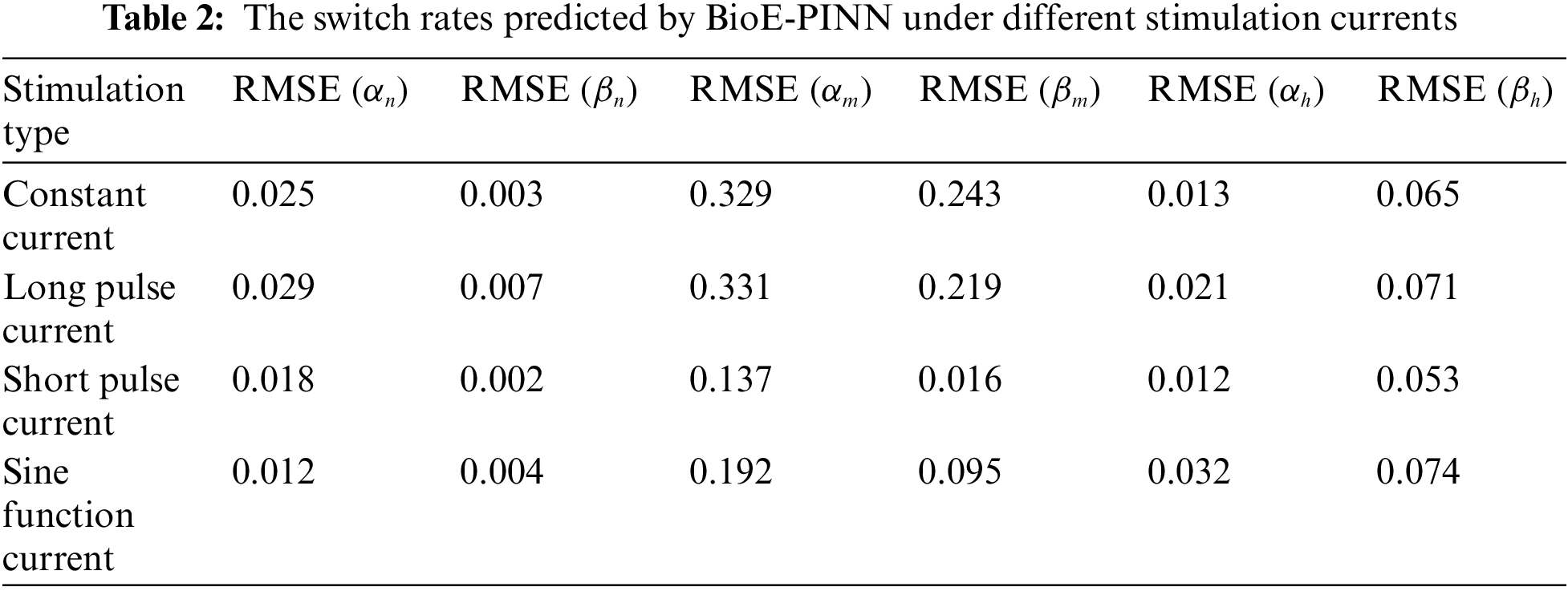

We briefly discuss the sensitivity of BioE-PINN to the number of neurons and layers of DNN models. The performance is found to be sensitive to the size of the DNN (neurons

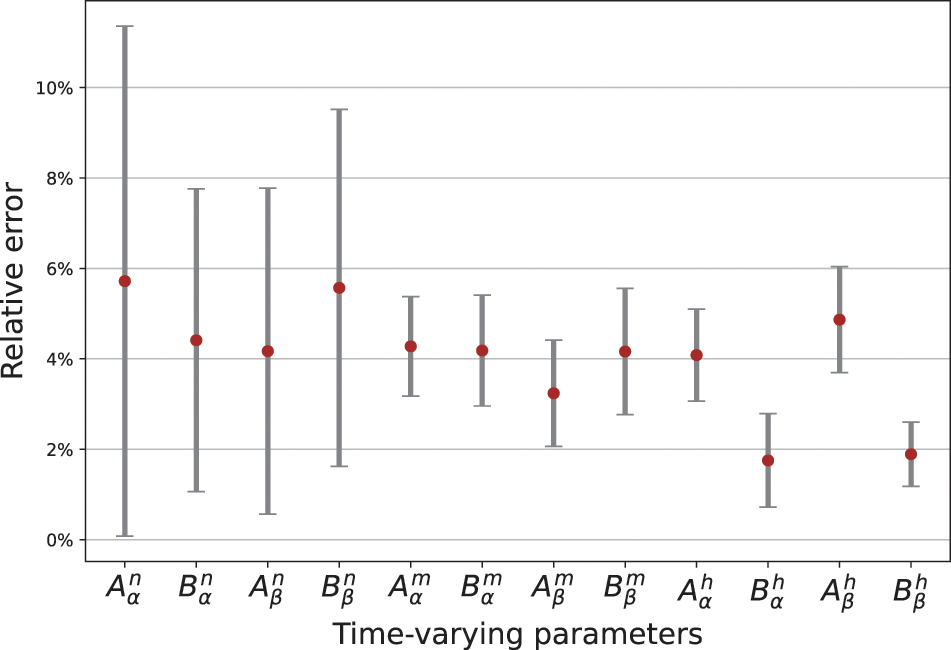

The optimal structure of BioE-PINN is selected to predict the switch rate under different stimulation currents, and the results are shown in Table 2. It can be found that the predictive results of sinusoidal stimulation and short pulse stimulation are better than constant current stimulation and long pulse stimulation. Fig. 4 shows the relative error of the parameter prediction experiments. The black line in the figure reflects the fluctuation range of the error, and the red point is the average value. We can see that the average relative error of the 12 parameters is below 6%, reflecting the high stability of the model.

Figure 4: Relative error of parameters predicted by BioE-PINN. 10 experiments are performed, and the red dots are the average error values of the 10 experiments

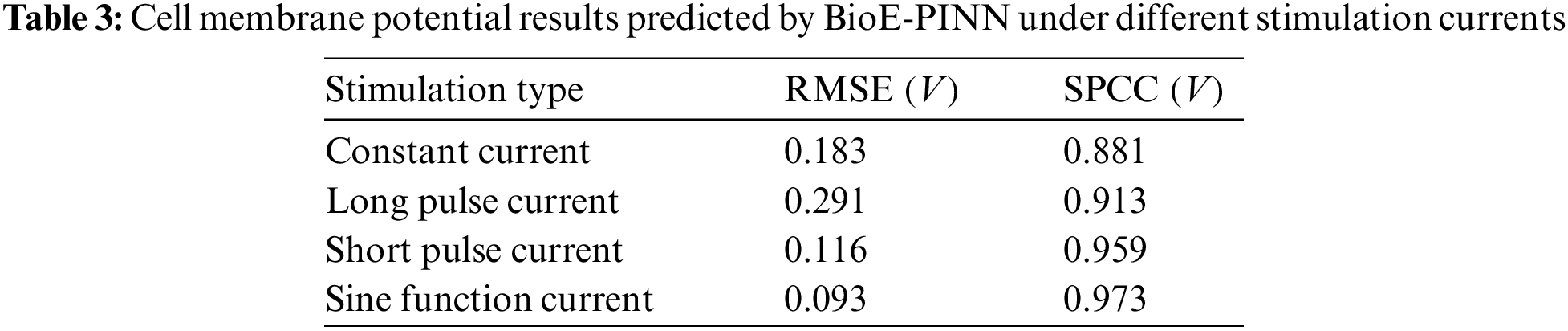

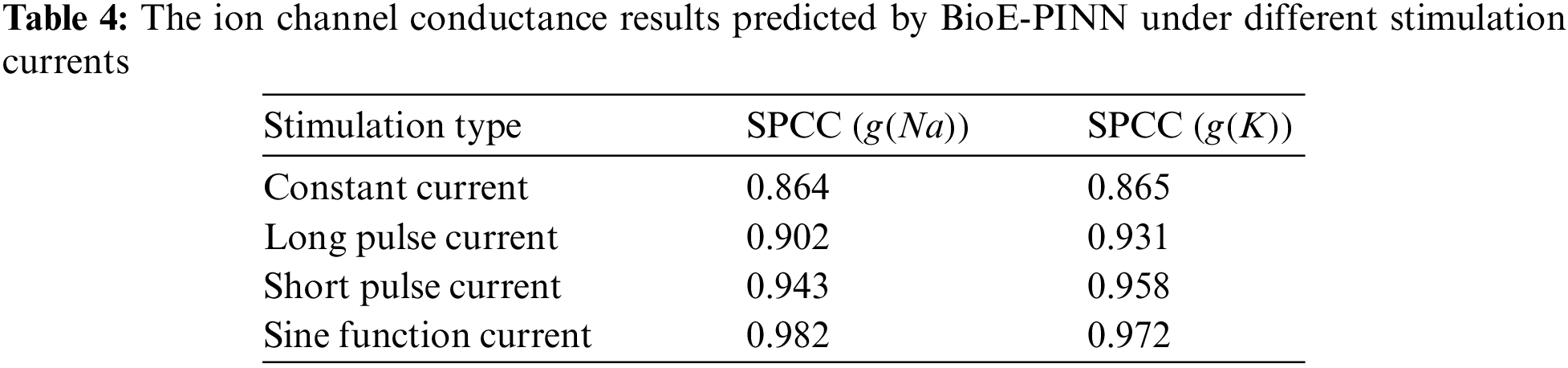

Tables 3 and 4 show the predicted results of membrane potential and channel conductance. In detail, we solve the HH equation according to the parameters determined by BioE-PINN, and we can obtain the change curve of the membrane potential and the conductance of the ion channel, and calculate the respective RMSE and SPCC. Compared with RMSE, SPCC can reflect the changing trend of the curve. The larger the value of SPCC, the more similar the predicted value is to the real value, which can better reflect the physiological change process.

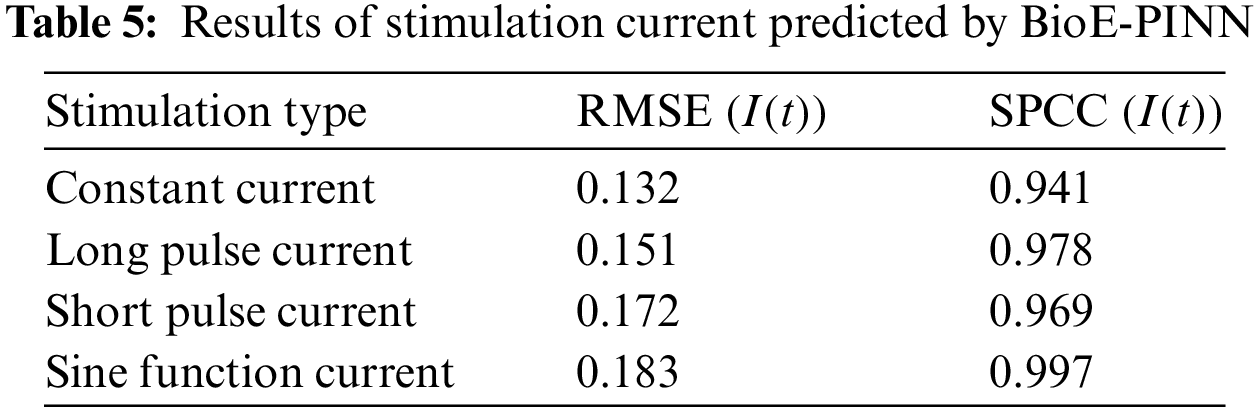

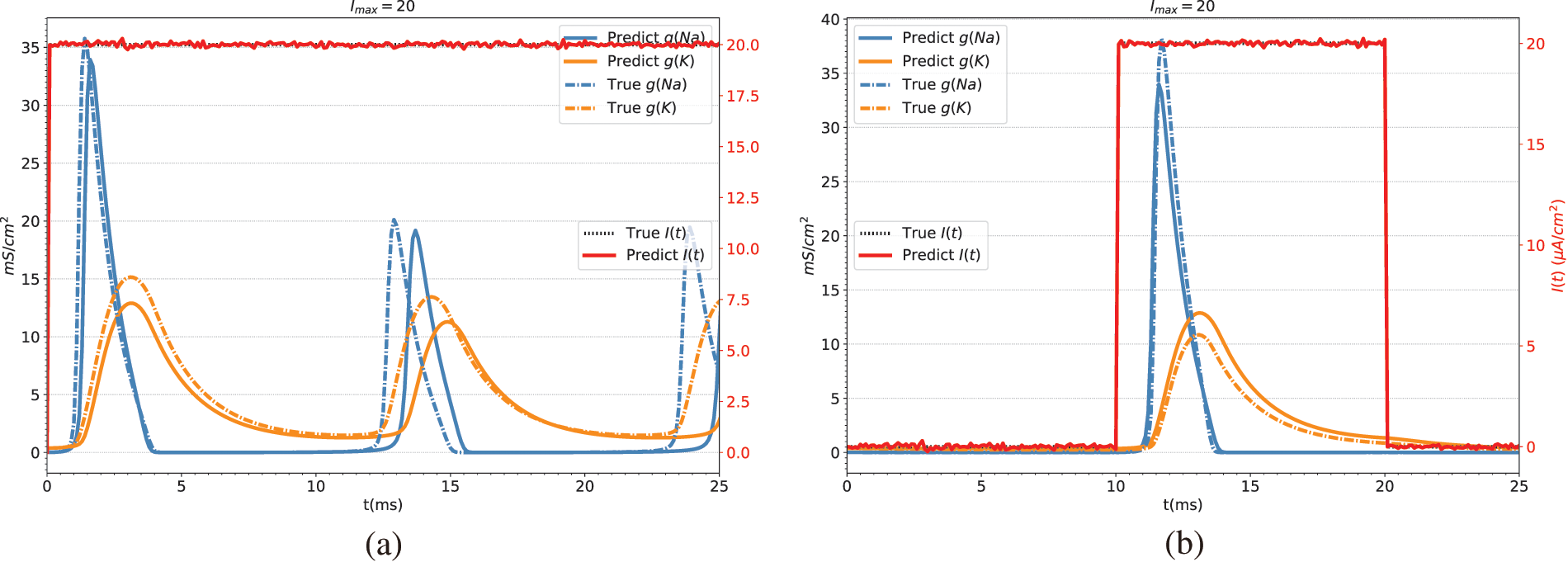

Table 5 shows the predicted results of the stimulation current. In this study, the prediction of the stimulation current is achieved through the fully connected layer. Fig. 5 shows the conductance and stimulation current predicted by BioE-PINN. It can be seen from the figure that the predicted change curve is basically consistent with the real value.

Figure 5: Conductance and stimulation current predicted by BioE-PINN. The red lines are the predicted stimulation currents. The blue and orange lines are the predicted sodium ion conductance and potassium ion conductance. The dotted line is the real value. (a) Predicted results of constant current stimulation. (b) Prediction results of short pulse current stimulation

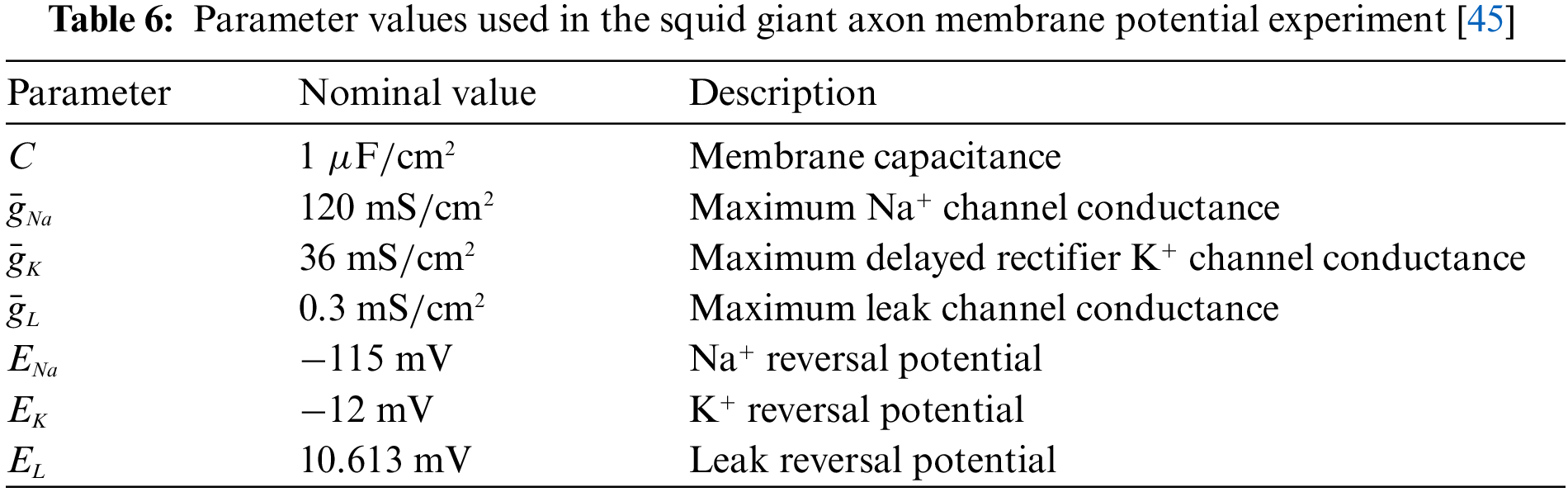

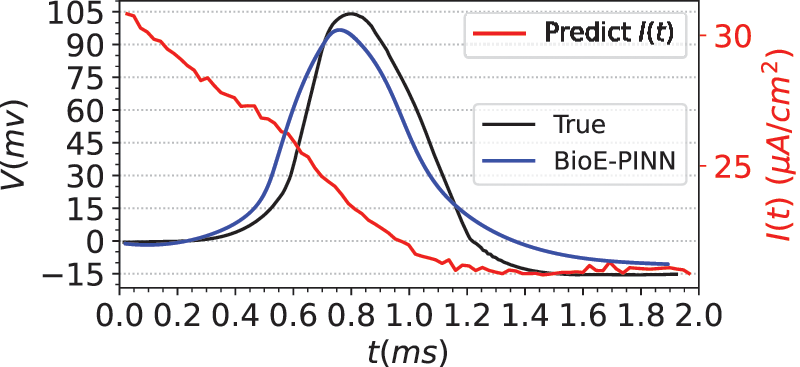

4.2 Squid Giant Axon Membrane Potential Experiment

The squid giant axon is part of the squid’s jet propulsion control system. In 1952, after experiments on giant axons, Hodgkin and Huxley made assumptions about the dynamic characteristics of ion channels and uncovered the ion current mechanism of action potentials for the first time. Therefore, the BioE-PINN is also validated with the membrane potential data of the Squid giant axon and applied to predict the time-varying parameters and stimulation currents of the HH equation.

Specifically, we first use Eqs. (29)–(32) to construct the loss function (Eq. (10)). We then generate a time series t as the input of BioE-PINN; the length of t is 200, and its range is [0, 2]. In addition, the membrane potential recorded in the experiment is used to calculate the data-driven loss (Eq. (11)), and the parameter settings in the equation are shown in Table 6.

Eq. (34) gives the ion channel dynamics characteristics predicted by BioE-PINN. The predicted parameters are used to compute the numerical solution of Eq. (29), and the results are shown in Fig. 6. Fig. 6 presents the measured membrane potential (black line), predicted membrane potential (blue line), and predicted stimulation current (red line). It can be seen from the figure that our method can predict action potentials effectively: the Pearson coefficient and RMSE between the predicted and real values are 0.89 and 0.18, respectively. Based on the experimental results, we found that the stimulation current changes over time, decreasing rapidly during the depolarization phase of the electrical signal.

Figure 6: Prediction results of squid giant axon membrane potential. The real data is from Fig. 15C in the literature [45]. The blue line is the predicted electrical signal. The red line is the predicted stimulation current

Fig. 7 illustrates the predicted ion channel conductance. Fig. 7a is the conductance change curve predicted by BioE-PINN, and Fig. 7b is the experimentally recorded change curve of the sodium ion channel conductance from the literature [45]. Comparing the two shows that the predicted trend of sodium conductance is close to the experimental one: it rises rapidly in the depolarization stage.

Figure 7: Giant squid axon conductance predicted by BioE-PINN. (a) Result of model prediction. The blue and orange lines in the figure are the predicted potassium ion conductance and sodium ion conductance, namely

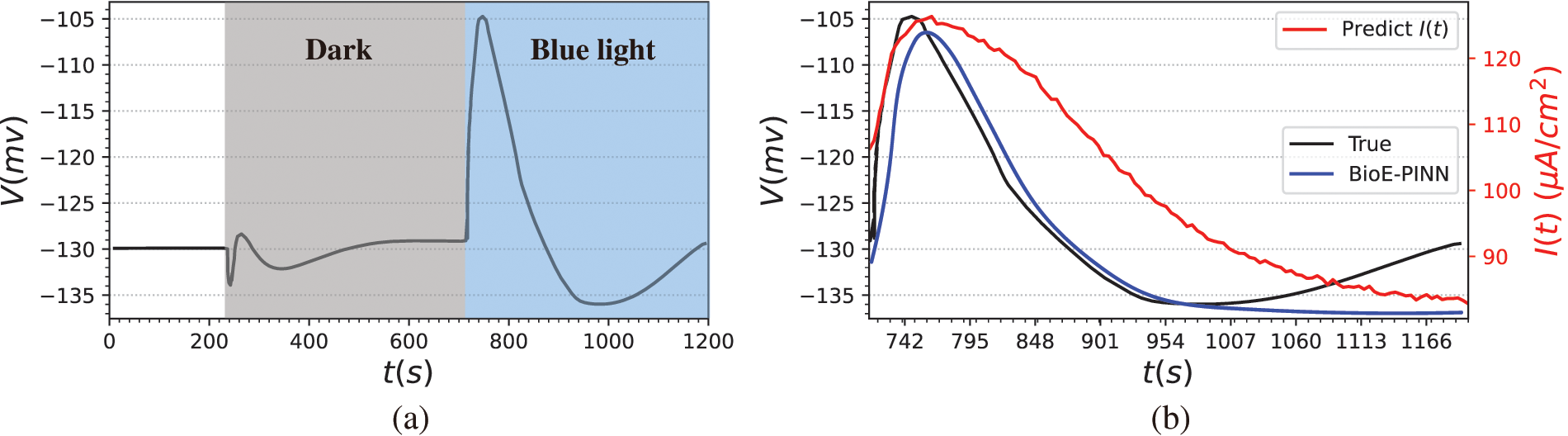

4.3 Plant Cell Membrane Potential Experiment

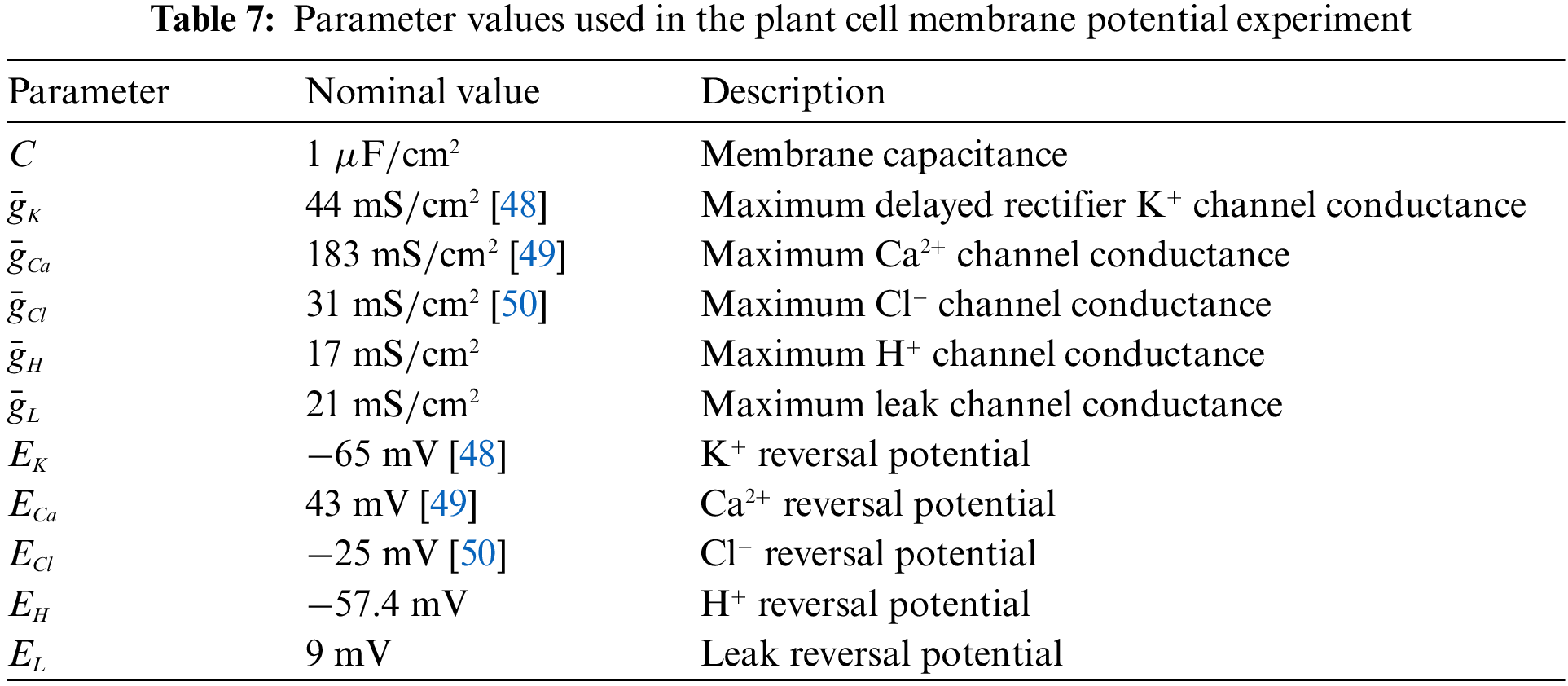

The PINN-based framework can be used to solve the HH equation and determine the parameters in the equation, which allows us to apply this method to the modeling of plant electrical signals. Plant electrical signaling is a rather complex process, and the ion channels involved in its formation have not been fully discovered. Therefore, it is of great significance to help biologists discover the dynamic characteristics of plant ion channels using computational methods. At present, scientists have found potassium, calcium, chloride, and hydrogen ions in the cell membranes of higher plants. Based on previous studies [46], this paper extends the HH equation to describe the membrane potential of Arabidopsis mesophyll cells, as in Eq. (35), with the parameters of the equation set as in Table 7. To be more specific, we empirically determined the membrane potential model of Arabidopsis mesophyll cells through trial and error.

The Arabidopsis mesophyll cell membrane potential data in Fig. 3b in the literature [47] are exploited to conduct experiments, shown in the first column of Fig. 8, which record the cellular electrical activity of mesophyll cells stimulated by blue light. In detail, we first determine the stimulation current I(t) and time-varying parameters in the mesophyll cell membrane potential model using BioE-PINN, and then solve Eq. (35) using the predicted parameters.

Figure 8: The mesophyll cell membrane potential of Arabidopsis induced by blue light. (a) Raw data from the literature. 713–1200 s stimulated by blue light. (b) Prediction results of BioE-PINN. The black lines are the real electrical signal values, the blue lines are the predicted electrical signals, and the red lines are the predicted stimulus currents

The second column of Fig. 8 shows the measured membrane potential (black line), predicted membrane potential (blue line), and predicted stimulation current (red line). It can be found from the figure that in the depolarization stage, the predicted electrical signal changes slowly and fails to reach the same extreme value as the measured data quickly. From the evaluation indicators, the Pearson coefficient and RMSE of the predicted value and the real value are 0.86 and 0.2, respectively. We believe that the predicted parameters can better reflect the changes of plant membrane potential. The stimulating current reflects the change process of all charged substances in the cells after the plant is stimulated, and the current generated by these substances further induces changes in the membrane potential of the mesophyll cell. According to the experimental phenomenon, it is speculated that the light intensity of this biological experiment does not reach the threshold for generating action potentials, or it is a subthreshold local potential, since we do not predict the continuous AP signal based on the experimental results.

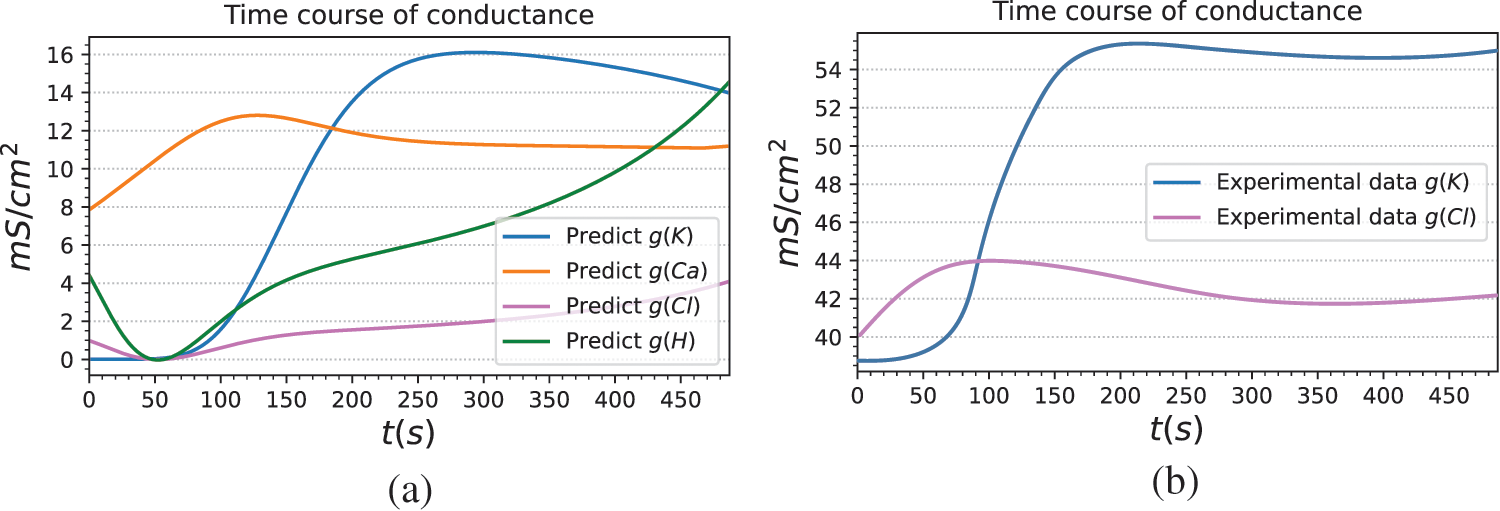

The current mechanism of ions involved in the formation of electrical signals in plants needs to be further studied. There are many mechanistic models that cause plant electrical activity, but each parameter of a model often comes from different plants [51,52]. For the description of plant electrical phenomena, its generalization needs to be improved. Our method models plant electrical signals based on reasonable assumptions, and the prediction results show that the model can well predict the light-induced membrane potential. To further demonstrate the interpretability and reliability of the model, the predicted conductivities of ion channels are analyzed, namely

To further verify the reliability of the model, we derived the conductance change curves of potassium and chloride channels from patch-clamp data extracted from the literature. Specifically, the whole-cell voltage clamp data in [53] and [54] are employed to obtain the current values under different clamping voltages.
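A standard way to turn clamp currents into conductances is the Ohmic relation I = g (V - E), which gives the chord conductance g = I / (V - E) at each clamp voltage. The sketch below assumes that relation; it is not necessarily the exact derivation used in this paper, and the reversal potential and the current values are hypothetical numbers, not data from the cited literature.

```python
def chord_conductance(current, clamp_voltage, reversal_potential):
    """Conductance from I = g * (V - E); undefined at the reversal potential."""
    driving_force = clamp_voltage - reversal_potential
    if abs(driving_force) < 1e-9:
        raise ValueError("clamp voltage equals the reversal potential")
    return current / driving_force

# Hypothetical whole-cell readings: (clamp voltage in mV, current in pA).
readings = [(-40.0, 20.0), (0.0, 120.0), (40.0, 300.0)]
reversal = -80.0  # hypothetical reversal potential in mV
conductances = [chord_conductance(i, v, reversal) for v, i in readings]
```

With currents in pA and voltages in mV, the resulting conductances are in nS.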

where

When the membrane potential changes, it can be obtained from Eq. (37):

We bring Eqs. (39) into (36) to obtain Eq. (40), and then use the conductance data at each clamp potential to calculate their respective

where

The above equation is applied to find the switch rate of the activation factor

Figure 9: Ion channel and proton pump conductance predicted by BioE-PINN. (a) The ion channel and proton pump conductance curves of Arabidopsis mesophyll cells predicted by BioE-PINN. (b) Whole-cell voltage-clamp data from literature [53] and literature [54] are used, and the conductance curves of potassium and chloride channels are manually derived according to Eq. (35)

where

In this paper, we developed a framework for identifying the time-varying parameters of physiological equations based on an improved PINN, which integrates the electrophysiological equations into the loss function of the neural network and adds hard constraints to make the predicted output of the neural network conform to physical laws. The difference between our framework and traditional PINN is that we add an adaptive activation function to improve the accuracy of the model and speed up convergence. Simulation experiments show that BioE-PINN can use membrane potential to predict the dynamic characteristics of ion channels and pumps, such as gating variables, conductivity, and other parameters. We also verified the effectiveness and accuracy of the model with real electrical signal data from animals and plants. Future work may consider modeling more complex physiological equations. In addition, how to extend BioE-PINN to other fields of application is also worth studying.

Funding Statement: This work was supported by the National Natural Science Foundation of China under Grants 62271488 and 61571443.

Conflicts of Interest: The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Akinfe, K. T., Loyinmi, A. C. (2022). The implementation of an improved differential transform scheme on the Schrödinger equation governing wave-particle duality in quantum physics and optics. Results in Physics, 40(1), 105806. https://doi.org/10.1016/j.rinp.2022.105806

2. Fareed, A. F., Semary, M. S., Hassan, H. N. (2022). Two semi-analytical approaches to approximate the solution of stochastic ordinary differential equations with two enormous engineering applications. Alexandria Engineering Journal, 61(12), 11935–11945. https://doi.org/10.1016/j.aej.2022.05.054

3. Amigo, J. M., Small, M. (2017). Mathematical methods in medicine: Neuroscience, cardiology and pathology. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 375(2096), 20170016. https://doi.org/10.1098/rsta.2017.0016

4. Lagergren, J. H., Nardini, J. T., Michael Lavigne, G., Rutter, E. M., Flores, K. B. (2020). Learning partial differential equations for biological transport models from noisy spatio-temporal data. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 476(2234), 20190800. https://doi.org/10.1098/rspa.2019.0800

5. Higgins, J. P. (2002). Nonlinear systems in medicine. The Yale Journal of Biology and Medicine, 75(5–6), 247–260.

6. Metwally, A. S., El-Sheikh, S. M. A., Galal, A. A. A. (2022). The impact of diabetes mellitus on the pharmacokinetics of rifampicin among tuberculosis patients: A systematic review and meta-analysis study. Diabetes & Metabolic Syndrome: Clinical Research & Reviews, 16(2), 102410. https://doi.org/10.1016/j.dsx.2022.102410

7. Cheng, V., Abdul-Aziz, M. H., Burrows, F., Buscher, H., Cho, Y. J. et al. (2022). Population pharmacokinetics and dosing simulations of ceftriaxone in critically ill patients receiving extracorporeal membrane oxygenation (an ASAP ECMO study). Clinical Pharmacokinetics, 61(6), 847–856. https://doi.org/10.1007/s40262-021-01106-x

8. Hodgkin, A. L., Huxley, A. F. (1952). The dual effect of membrane potential on sodium conductance in the giant axon of Loligo. The Journal of Physiology, 116(4), 497–506. https://doi.org/10.1113/jphysiol.1952.sp004719

9. Wang, J., Li, T. F., Sun, C., Yan, R. Q., Chen, X. F. (2022). Improved spiking neural network for intershaft bearing fault diagnosis. Journal of Manufacturing Systems, 65(1), 208–219. https://doi.org/10.1016/j.jmsy.2022.09.003

10. Lei, C. L., Mirams, G. R. (2021). Neural network differential equations for ion channel modelling. Frontiers in Physiology, 12(1), 708944. https://doi.org/10.3389/fphys.2021.708944

11. Türkyilmazoğlu, M. (2022). An efficient computational method for differential equations of fractional type. Computer Modeling in Engineering & Sciences, 133(1), 47–65. https://doi.org/10.32604/cmes.2022.020781

12. Blechschmidt, J., Ernst, O. G. (2021). Three ways to solve partial differential equations with neural networks-a review. GAMM-Mitteilungen, 44(2), e202100006. https://doi.org/10.1002/gamm.202100006

13. Karniadakis, G. E., Kevrekidis, I. G., Lu, L., Perdikaris, P., Wang, S. et al. (2021). Physics-informed machine learning. Nature Reviews Physics, 3(6), 422–440. https://doi.org/10.1038/s42254-021-00314-5

14. Roy, A. M., Bose, R., Sundararaghavan, V., Arróyave, R. (2023). Deep learning-accelerated computational framework based on physics informed neural network for the solution of linear elasticity. Neural Networks, 162(1), 472–489. https://doi.org/10.1016/j.neunet.2023.03.014

15. Pang, H., Wu, L., Liu, J., Liu, X., Liu, K. (2023). Physics-informed neural network approach for heat generation rate estimation of lithium-ion battery under various driving conditions. Journal of Energy Chemistry, 78(1), 1–12. https://doi.org/10.1016/j.jechem.2022.11.036

16. Herrero, M. C., Oved, A., Bharath, A. A., Varela, M. (2022). EP-PINNs: Cardiac electrophysiology characterisation using physics-informed neural networks. Frontiers in Cardiovascular Medicine, 8(1), 768419. https://doi.org/10.3389/fcvm.2021.768419

17. Psaros, A. F., Kawaguchi, K., Karniadakis, G. E. (2022). Meta-learning PINN loss functions. Journal of Computational Physics, 458(1), 111121. https://doi.org/10.1016/j.jcp.2022.111121

18. Qin, X., Liu, Z., Liu, Y., Liu, S., Yang, B. et al. (2022). User OCEAN personality model construction method using a BP neural network. Electronics, 11(19), 3022. https://doi.org/10.3390/electronics11193022

19. Lu, S., Guo, J., Liu, S., Yang, B., Liu, M. et al. (2022). An improved algorithm of drift compensation for olfactory sensors. Applied Sciences, 12(19), 9529. https://doi.org/10.3390/app12199529

20. Dang, W., Guo, J., Liu, M., Liu, S., Yang, B. et al. (2022). A semi-supervised extreme learning machine algorithm based on the new weighted kernel for machine smell. Applied Sciences, 12(18), 9213. https://doi.org/10.3390/app12189213

21. Raissi, M., Perdikaris, P., Karniadakis, G. E. (2019). Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378, 686–707. https://doi.org/10.1016/j.jcp.2018.10.045

22. Jagtap, A. D., Kawaguchi, K., Karniadakis, G. E. (2020). Adaptive activation functions accelerate convergence in deep and physics-informed neural networks. Journal of Computational Physics, 404(4), 109136. https://doi.org/10.1016/j.jcp.2019.109136

23. Yu, J., Lu, L., Meng, X., Karniadakis, G. E. (2022). Gradient-enhanced physics-informed neural networks for forward and inverse PDE problems. Computer Methods in Applied Mechanics and Engineering, 393(6), 114823. https://doi.org/10.1016/j.cma.2022.114823

24. Wu, C., Zhu, M., Tan, Q., Kartha, Y., Lu, L. (2023). A comprehensive study of non-adaptive and residual-based adaptive sampling for physics-informed neural networks. Computer Methods in Applied Mechanics and Engineering, 403(1), 115671. https://doi.org/10.1016/j.cma.2022.115671

25. Lu, L., Meng, X., Mao, Z., Karniadakis, G. E. (2021). DeepXDE: A deep learning library for solving differential equations. SIAM Review, 63(1), 208–228. https://doi.org/10.1137/19M1274067

26. Hennigh, O., Narasimhan, S., Nabian, M. A., Subramaniam, A., Tangsali, K. et al. (2021). NVIDIA SIMNET: An AI-accelerated multi-physics simulation framework. International Conference on Computational Science, Poland, Springer.

27. Chen, F., Sondak, D., Protopapas, P., Mattheakis, M., Liu, S. et al. (2020). NeuroDiffEq: A Python package for solving differential equations with neural networks. Journal of Open Source Software, 5(46), 1931. https://doi.org/10.21105/joss.01931

28. Haghighat, E., Juanes, R. (2021). SciANN: A Keras/Tensorflow wrapper for scientific computations and physics-informed deep learning using artificial neural networks. Computer Methods in Applied Mechanics and Engineering, 373(7553), 113552. https://doi.org/10.1016/j.cma.2020.113552

29. Yuan, L., Ni, Y. Q., Deng, X. Y., Hao, S. (2022). A-PINN: Auxiliary physics informed neural networks for forward and inverse problems of nonlinear integro-differential equations. Journal of Computational Physics, 462, 111260. https://doi.org/10.1016/j.jcp.2022.111260

30. Shukla, K., Jagtap, A. D., Blackshire, J. L., Sparkman, D., Karniadakis, G. E. (2021). A physics-informed neural network for quantifying the microstructural properties of polycrystalline nickel using ultrasound data: A promising approach for solving inverse problems. IEEE Signal Processing Magazine, 39(1), 68–77. https://doi.org/10.1109/MSP.2021.3118904

31. Chen, D., Gao, X., Xu, C., Wang, S., Chen, S. et al. (2022). FlowDNN: A physics-informed deep neural network for fast and accurate flow prediction. Frontiers of Information Technology & Electronic Engineering, 23(2), 207–219. https://doi.org/10.1631/FITEE.2000435

32. Jagtap, A. D., Mao, Z., Adams, N., Karniadakis, G. E. (2022). Physics-informed neural networks for inverse problems in supersonic flows. Journal of Computational Physics, 466(1), 111402. https://doi.org/10.1016/j.jcp.2022.111402

33. Xie, J., Yao, B. (2022). Physics-constrained deep learning for robust inverse ECG modeling. IEEE Transactions on Automation Science and Engineering, 20(1), 151–166. https://doi.org/10.1109/TASE.2022.3144347

34. Herrero Martin, C., Oved, A., Chowdhury, R. A., Ullmann, E., Peters, N. S. et al. (2022). EP-PINNs: Cardiac electrophysiology characterisation using physics-informed neural networks. Frontiers in Cardiovascular Medicine, 2179, 768419. https://doi.org/10.3389/fcvm.2021.768419

35. Jariwala, P., Jadhav, K. (2022). The safety and efficacy of efonidipine, an L- and T-type dual calcium channel blocker, on heart rate and blood pressure in Indian patients with mild-to-moderate essential hypertension. European Heart Journal, 43(1), 143–849. https://doi.org/10.1093/eurheartj/ehab849.143

36. Liu, C., Liu, X., Liu, S. (2014). Bifurcation analysis of a Morris–Lecar neuron model. Biological Cybernetics, 108(1), 75–84. https://doi.org/10.1007/s00422-013-0580-4

37. Lei, C. L., Mirams, G. R. (2021). Neural network differential equations for ion channel modelling. Frontiers in Physiology, 12, 708944. https://doi.org/10.3389/fphys.2021.708944

38. Ji, W., Qiu, W., Shi, Z., Pan, S., Deng, S. (2021). Stiff-PINN: Physics-informed neural network for stiff chemical kinetics. The Journal of Physical Chemistry A, 125(36), 8098–8106. https://doi.org/10.1021/acs.jpca.1c05102

39. Bienstock, D., Muñoz, G., Pokutta, S. (2018). Principled deep neural network training through linear programming. arXiv preprint arXiv:1810.03218.

40. Zhang, M., Hibi, K., Inoue, J. (2023). GPU-accelerated artificial neural network potential for molecular dynamics simulation. Computer Physics Communications, 108655. https://doi.org/10.1016/j.cpc.2022.108655

41. Paszke, A., Gross, S., Chintala, S., Chanan, G., Yang, E. et al. (2017). Automatic differentiation in PyTorch. NIPS 2017 Autodiff Workshop: The Future of Gradient-Based Machine Learning Software and Techniques, Long Beach, CA, USA.

42. Glorot, X., Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics. JMLR Workshop and Conference Proceedings, pp. 249–256. Chia Laguna Resort, Sardinia, Italy.

43. Kingma, D. P., Ba, J. (2014). Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

44. Virtanen, P., Gommers, R., Oliphant, T. E., Haberland, M., Reddy, T. et al. (2020). SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nature Methods, 17(3), 261–272. https://doi.org/10.1038/s41592-019-0686-2

45. Hodgkin, A. L., Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. The Journal of Physiology, 117(4), 500–544. https://doi.org/10.1113/jphysiol.1952.sp004764

46. Wang, Z., Huang, L., Yan, X., Wang, C., Xu, Z. et al. (2007). A theory model for description of the electrical signals in plant part I. International Conference on Computer and Computing Technologies in Agriculture, Wuyishan, China, Springer.

47. Marten, I., Deeken, R., Hedrich, R., Roelfsema, M. (2010). Light-induced modification of plant plasma membrane ion transport. Plant Biology, 12, 64–79. https://doi.org/10.1111/j.1438-8677.2010.00384.x

48. Spalding, E. P., Slayman, C. L., Goldsmith, M. H. M., Gradmann, D., Bertl, A. (1992). Ion channels in Arabidopsis plasma membrane: Transport characteristics and involvement in light-induced voltage changes. Plant Physiology, 99(1), 96–102.

49. de Angeli, A., Moran, O., Wege, S., Filleur, S., Ephritikhine, G. et al. (2009). ATP binding to the C terminus of the Arabidopsis thaliana nitrate/proton antiporter, AtCLCa, regulates nitrate transport into plant vacuoles. Journal of Biological Chemistry, 284(39), 26526–26532.

50. Lew, R. R. (1991). Substrate regulation of single potassium and chloride ion channels in Arabidopsis plasma membrane. Plant Physiology, 95(2), 642–647.

51. Vodeneev, V., Orlova, A., Morozova, E., Orlova, L., Akinchits, E. et al. (2012). The mechanism of propagation of variation potentials in wheat leaves. Journal of Plant Physiology, 169(10), 949–954.

52. Sukhova, E., Akinchits, E., Sukhov, V. (2017). Mathematical models of electrical activity in plants. The Journal of Membrane Biology, 250(5), 407–423.

53. Reintanz, B., Szyroki, A., Ivashikina, N., Ache, P., Godde, M. et al. (2002). AtKC1, a silent Arabidopsis potassium channel α-subunit modulates root hair K+ influx. Proceedings of the National Academy of Sciences, 99(6), 4079–4084.

54. Qi, Z., Kishigami, A., Nakagawa, Y., Iida, H., Sokabe, M. (2004). A mechanosensitive anion channel in Arabidopsis thaliana mesophyll cells. Plant and Cell Physiology, 45(11), 1704–1708.


Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
