Open Access

Open Access

REVIEW

Unveiling Effective Heuristic Strategies: A Review of Cross-Domain Heuristic Search Challenge Algorithms

1 Faculty of Information Science and Technology, Universiti Kebangsaan Malaysia, Bangi, 43600, Selangor, Malaysia

2 Center for Artificial Intelligence Technology, Universiti Kebangsaan Malaysia, Bangi, 43600, Selangor, Malaysia

3 Faculty of Medicine, Universiti Kebangsaan Malaysia Medical Centre, Cheras, 56000, Kuala Lumpur, Malaysia

4 School of Engineering and Computing, MILA University, Nilai, 71800, Negeri Sembilan, Malaysia

5 School of Computer Science, University of Nottingham-Malaysia Campus, Semenyih, 43500, Selangor, Malaysia

* Corresponding Author: Mohamad Khairulamirin Md Razali. Email:

(This article belongs to the Special Issue: Algorithms, Models, and Applications of Fuzzy Optimization and Decision Making)

Computer Modeling in Engineering & Sciences 2025, 142(2), 1233-1288. https://doi.org/10.32604/cmes.2025.060481

Received 02 November 2024; Accepted 27 December 2024; Issue published 27 January 2025

Abstract

The Cross-domain Heuristic Search Challenge (CHeSC) is a competition focused on creating efficient search algorithms adaptable to diverse problem domains. Selection hyper-heuristics are a class of algorithms that dynamically choose heuristics during the search process. Numerous selection hyper-heuristics have different implementation strategies. However, comparisons between them are lacking in the literature, and previous works have not highlighted the beneficial and detrimental implementation methods of different components. The question is how to effectively employ them to produce an efficient search heuristic. Furthermore, the algorithms that competed in the inaugural CHeSC have not been collectively reviewed. This work conducts a review analysis of the top twenty competitors from this competition to identify effective and ineffective strategies influencing algorithmic performance. A summary of the main characteristics and classification of the algorithms is presented. The analysis underlines efficient and inefficient methods in eight key components, including search points, search phases, heuristic selection, move acceptance, feedback, Tabu mechanism, restart mechanism, and low-level heuristic parameter control. This review analyzes the components referencing the competition’s final leaderboard and discusses future research directions for these components. The effective approaches, identified as having the highest quality index, are mixed search point, iterated search phases, relay hybridization selection, threshold acceptance, mixed learning, Tabu heuristics, stochastic restart, and dynamic parameters. Findings are also compared with recent trends in hyper-heuristics. This work enhances the understanding of selection hyper-heuristics, offering valuable insights for researchers and practitioners aiming to develop effective search algorithms for diverse problem domains.Keywords

Hyper-heuristics are one of the three forms of heuristics as described by Pillay et al. [1]. The other two types are low-level heuristics (often just referred to as heuristics) and metaheuristics. Low-level heuristics make direct changes to the solution, whereas metaheuristics are higher-level methods that guide the search using one or more low-level heuristics [2]. Hyper-heuristics combine multiple low-level heuristics into a framework that explores the heuristic space [3].

Hyper-heuristics can be classified into four categories: selection constructive, selection perturbative, generation constructive or generation perturbative [4]. Selection hyper-heuristics select an existing low-level heuristic (either constructive or perturbative), whereas generation hyper-heuristics evolve a new low-level heuristic at each point of the optimization. Constructive low-level heuristics build a complete solution incrementally, whereas perturbative low-level heuristics are used to iteratively improve an already complete solution, which might be infeasible.

By exploring the heuristic search space, hyper-heuristics achieve a higher level of generality [5]. A method with greater generality can address numerous problem instances, as opposed to tailor-made methodologies that perform well on certain instances but poorly on others [6]. To evaluate the generality of hyper-heuristics, the HyFlex framework was developed [7]. HyFlex provides multiple problem instances with hidden instance-specific low-level heuristics. Users of the framework simply need to design the high-level strategy that decides which low-level heuristic to call at a given decision point. The framework has been used in various research studies and competitions, including the Cross-domain Heuristic Search Challenge (CHeSC) [8]. The inaugural CHeSC drew researchers worldwide to create highly general hyper-heuristics. The final competition includes 20 selection hyper-heuristics of various algorithmic designs. These algorithms have been extensively studied post-competition and remain significant benchmarks in hyper-heuristic research.

One of the main issues in selection hyper-heuristics is the lack of concrete study on which hyper-heuristic components are most crucial to search performance. Hyper-heuristics encompass numerous components, such as feedback, low-level heuristics, solutions (or search points), objectives, move acceptance, and parameter settings [3]. Many hyper-heuristic algorithms have been introduced, each with different features and strategies. Burke et al. [9] reviewed various hyper-heuristic algorithms, categorizing them into selection and generation types. Drake et al. [3] also identified various selection hyper-heuristics with different implementations in components such as feedback mechanisms, move acceptance, and parameter setting. Sánchez et al. [2] examined various hyper-heuristics implemented to solve combinatorial optimization problems. However, existing reviews only conducted comparisons between the algorithms limited to the nature of the heuristic space [9], abstract level [3], or problems addressed [2]. Furthermore, each component of selection hyper-heuristics has different implementation methods [3]. For example, there are three classes of move acceptance: stochastic, non-stochastic basic, and non-stochastic threshold [10]. Comparing performance across different implementations can help in identifying the most effective methods.

Nevertheless, there is a notable absence of a comprehensive analysis on selection hyper-heuristic components. Numerous studies have focused on heuristic selection and move acceptance [10–14], neglecting other crucial components such as feedback mechanisms [15,16], diversification strategies such as Tabu mechanism [16] and search restart [17], as well as parameter settings [16,18]. These overlooked components significantly influence algorithmic performance, with feedback mechanisms enabling adaptive strategies [3,9], diversification techniques promoting exploration of the search space [19–21], and appropriate parameter selection enhancing overall performance [3]. Identifying the best implementation methods for all components can lead to the development of a more effective algorithm.

To address these gaps in the literature, a comprehensive analysis of existing algorithms is needed. The CHeSC algorithms present an ideal case study for addressing these gaps. They remain relevant as they continue to serve as benchmarks in recent studies such as [22–25]. Their use of a standardized framework allows for direct comparisons by ensuring consistent experimental conditions. Additionally, the availability of their design documentation and source codes facilitates in-depth analysis, which is not feasible for more recent HyFlex algorithms. Despite their significance, no comprehensive analysis of the competing algorithms has been conducted. Drake et al. [3] provided a summary and a limited classification of only ten out of the 20 competing algorithms. Furthermore, most algorithms were only inspected by their creators, potentially resulting in a biased analysis. Only the winning algorithm was examined using a complexity analysis to identify features that do not necessarily contribute to algorithmic performance, conducted by Adriaensen et al. [17]. While their analysis method is undoubtedly effective, we contend that it may be too complex to apply to the other 19 algorithms. Moreover, given their diverse algorithmic designs, there is a need to compare the performance of different implementation methods among these algorithms, which remains unexplored.

In this work, a brief summary of ten competing algorithms that were not reviewed in Drake et al. [3] is provided using the information gained from their source codes, design documentation, and extended abstracts. The design documentation offers explanations from the algorithm designers on how their algorithm operates. Then, this study employs a review analysis to investigate eight key components of selection hyper-heuristics among the top twenty competitors from CHeSC: search points, search phases, heuristic selection, move acceptance, feedback, Tabu mechanism, restart mechanism, and low-level heuristic parameters. The review analysis primarily focuses on these components, as they constitute the key components of a selection hyper-heuristic algorithm [26,27]. Within hyper-heuristics, the role of the heuristic selection mechanism is to choose specific low-level heuristics to be employed [28]. The resulting solution then undergoes the move acceptance process to decide whether it should be accepted or rejected. Besides, certain algorithms can learn from feedback to adapt their strategies [3,9]. Tabu and restart mechanisms can promote diversification when the search gets stuck at local optima. Tabu mechanism encourages exploration and avoids short-term cycling by ignoring previously visited solutions [21]. Restart mechanism can move the search to a different region in the search space to escape local optima [19,20]. Hyper-heuristic algorithms may have several parameters that necessitate control within heuristic selection, move acceptance, or low-level heuristics [3]. The selection of appropriate parameter values is essential for achieving increased performance.

This work offers a simpler analysis of all competing algorithms from CHeSC. The main contribution of this study is the identification of effective implementation methods for key components of selection hyper-heuristics by finding the similarities among the top-ranking algorithms. Ineffective strategies are recognized by detecting the similarities among the bottom-ranking algorithms. To achieve this, participating algorithms are categorized based on their similarities in implementation methods for each algorithmic component. The performance of different methods is compared using the average ranking of algorithms employing the same strategy, derived from the final leaderboard of the competition. The algorithms are not subjected to additional execution or re-ranking in this study. Instead, the study relies on the results and rankings provided by the competition organizers.

This study is intended for designers of selection hyper-heuristic algorithms. Although our review focuses on CHeSC algorithms, we ensure the relevance of our findings by relating them to trends observed in recent algorithms. This approach enables our analysis to reflect progress in the field while offering valuable insights for the development of future algorithms. The effective implementation methods identified in this study can serve as focal points for future research aimed at enhancing search performance. By concentrating on these methods, researchers can refine algorithms using proven strategies. The findings can also aid future algorithm designers in creating effective algorithms for solving various optimization problems. The research questions (RQ) of the study are as follows:

RQ1: What are the main characteristics of the proposed algorithms for CHeSC competition?

RQ2: What are the good and bad practices for key components of selection hyper-heuristics, including search points, search phases, heuristic selection, move acceptance, feedback, Tabu mechanism, restart mechanism, and low-level heuristic parameters, observed in the CHeSC algorithms?

RQ3: How do the results obtained from the analysis of the CHeSC algorithms align with the trends identified in recently introduced hyper-heuristics?

RQ4: What are the recommendations for future studies related to selection hyper-heuristics?

The rest of the paper is structured as follows: Section 2 describes related works on core components of selection hyper-heuristic and CHeSC. The methodology for the analysis is described in Section 3. The following sections are structured to answer each research question. The HyFlex framework and the main characteristics of the participating algorithms are summarized in Section 4 (RQ1). Section 5 discusses the classification and implementation of key components of selection hyper-heuristics for the CHeSC algorithms (RQ2). A comparison between the findings from the review with the trends observed in recent algorithms is included in Section 6 (RQ3). Section 7 highlights future research recommendations related to selection hyper-heuristics based on the review findings (RQ4). Finally, Section 8 summarizes the study, discusses limitations and provides suggestions for future work.

The core components of selection hyper-heuristic frameworks are heuristic selection, move acceptance, and a set of low-level heuristics [11]. Within this framework, the selection mechanism determines which low-level heuristic to apply to a working solution, whereas move acceptance establishes whether the resulting solution will replace the existing one. Hyper-heuristics have been explored in a wide array of optimization problems. Related works have been reviewed in various contexts, as summarized in Table 1.

The primary components of selection hyper-heuristics, namely heuristic selection and move acceptance, have been subject to extensive analysis in the literature. Ozcan et al. [11] conducted tests using different combinations from seven heuristic selection methods and five move acceptance methods in solving benchmark functions. Results revealed that the heuristic selection methods exhibit minimal performance differences. In contrast, move acceptance methods significantly affect algorithm performance, with the Improving and Equal strategy proving to be the most effective. Kiraz et al. [13] investigated 35 combinations of five heuristic selection methods and seven move acceptance methods to solve problems in a dynamic environment. Their findings indicated that heuristic selection methods with learning capabilities excel in dynamic settings. Conversely, move acceptance methods with parameters, such as Simulated Annealing (SA), yield poor results, whereas accepting all moves is the least effective. Misir et al. [33] evaluated the generality of different heuristic selection and move acceptance configurations in solving problems with different low-level heuristics sets. Their analysis underscores that the performance of heuristic selection is also influenced by other algorithmic components and runtime. Move acceptance was found to impact performance, but it is essential to ensure compatibility with the chosen heuristic selection method. The set of low-level heuristics employed also affects performance, as they possess varying improvement characteristics that can be leveraged best by different selection strategies.

Zamli et al. [12] assessed the performance of four heuristic selection and move acceptance methods, including the newly developed Fuzzy Inference Selection (FIS), in solving the t-way test generation problem. The results showed that FIS outperforms the Exponential Monte Carlo with counter (EMCQ) and Choice Function (CF), albeit it exhibits slower execution times. Castro et al. [14] focused on the Multi-objective Particle Swarm Optimization Hyper-heuristic, examining four heuristic selection methods, including CF, multi-armed bandit, roulette-based and random approaches. Roulette-based selection emerged as the most effective across 30 instances of multi-objective benchmark problems. Random selection lags behind the other methods, emphasizing the advantages of non-arbitrary selection strategies. Jackson et al. [10] established a taxonomy for move acceptance methods and conducted an empirical study comparing well-known methods. SA was found to be the most effective approach in the context of cross-domain search. Furthermore, they confirmed the contribution of move acceptance methods to algorithmic performance.

In addition to heuristic selection and move acceptance, other components of selection hyper-heuristics impact algorithmic performance, as evidenced in the literature. Several studies explored the role of learning mechanisms in heuristic algorithms. Yates et al. [15] investigated the effect of learning effective heuristic sequences from a set of training instances on the performance of solving cross-domain instances. They showed that offline learning enhances the performance of a sequence-based hyper-heuristic and outperforms the online learning approach. Alanazi et al. [34] compared four approaches for controlling selection probabilities in solving a well-known runtime analysis function. They concluded that learning schemes do not consistently improve performance. Further analysis has shown that the effectiveness of learning schemes is most prominent when there are significant performance variations among the low-level heuristics. This aligns with the findings of Misir et al. [33] that underscore the impact of the set of available low-level heuristics.

Misir et al. [16] investigated whether a learning strategy combined with a Tabu low-level heuristic mechanism can improve algorithmic performance. Experiments on the home care scheduling problem, a variant of VRP, demonstrated that the proposed strategy led to improved outcomes. An additional analysis was conducted to inspect the impact of Tabu duration. The analysis revealed that an optimal range of values exists, and deviating from this range can significantly hinder performance. These findings underscore the importance of carefully tuning algorithmic parameter settings to achieve performance enhancements. This principle is further substantiated by research conducted by Lissovoi et al. [18]. In their study, the impact of using static vs. adaptive values for the learning period (τ) parameter in a random gradient hyper-heuristic algorithm was explored. The comparative analyses on standard unimodal benchmark functions indicated that adaptive parameter values have the potential to enhance algorithmic performance. Furthermore, the research revealed that the optimal parameter value can vary across different problem instances.

Misir et al. [16]’s evaluation has also highlighted the influence of the Tabu mechanism, particularly involving low-level heuristics, on algorithmic performance. The Tabu list, also referred to as a prohibition list, was identified as one of the diversification strategies by Sarhani et al. [35], along with search restart and randomization. Other diversification strategies have also been identified as influential to algorithmic performance. Adriaensen et al. [17] conducted an Accidental Complexity Analysis on the winner of the CHeSC 2011 competition, AdapHH. The analysis delved into the performance contributions of each sub-mechanism within the algorithm. The results of the analysis indicated that the restart mechanism incorporated within the algorithm’s threshold acceptance criterion significantly contributed to its overall performance. However, the Tabu mechanism within the algorithm did not make a substantial contribution to performance. This underlines the importance of understanding how different algorithmic components can impact each other’s performance. Table 2 summarizes the algorithmic components examined in previous studies, emphasizing the novelty of our work in offering a comprehensive review.

The CHeSC 2011 algorithms continue to serve as benchmarks in numerous recent publications, as highlighted in [3]. The selection hyper-heuristics included in the CHeSC 2011 competition have been discussed, analyzed and utilized as benchmarks in other research publications. Burke et al. [9] provided an overview of hyper-heuristics covering the early approaches, classifications, learning components, and research trends in the field. Four algorithms that competed in CHeSC 2011 were included in the survey. Drake et al. [3] focused on extended classification, benchmark frameworks, cross-domain search, and problem domains in selection hyper-heuristics. HyFlex framework and CHeSC 2011 were extensively discussed, and ten of the competing algorithms were described. However, both reviews only provided summaries and lacked an in-depth analysis and comparison. In contrast, Van Onsem et al. [32] produced a report of the CHeSC algorithms, highlighting core concepts such as iterated local search, reinforcement learning, and Tabu search. They concluded that an algorithm’s performance relies on combining various techniques, and no single technique used independently leads to large performance gains.

Adriaensen et al. [36] conducted a comparative study to assess the generality of two CHeSC 2011 algorithms (AdapHH and EPH) in solving three additional problem domains: 0–1 knapsack problem, quadratic assignment problem and max-cut problem. Their experiments showed that AdapHH, the winner of CHeSC 2011, exhibits superior generality and consistency even when considering the extended set of problem domains. The authors also pointed out that AdapHH’s shortcoming is in its complexity, which they examined in detail through the complexity analysis in Adriaensen et al. [17]. The analysis identifies complex algorithmic components that can be eliminated with minimal performance loss to reduce the overall complexity. A less complex variant of the algorithm, Lean-GIHH, is proposed from the analytical results. Drake et al. [37] investigated the crossover control mechanism by substituting the one in AdapHH with another from the literature and using AdapHH’s in a Modified Choice Function-All Moves hyper-heuristic. They concluded that the control strategy has no impact on the algorithm’s performance. Notably, AdapHH has received most of the attention in terms of analysis and comparison, leaving other algorithms overlooked.

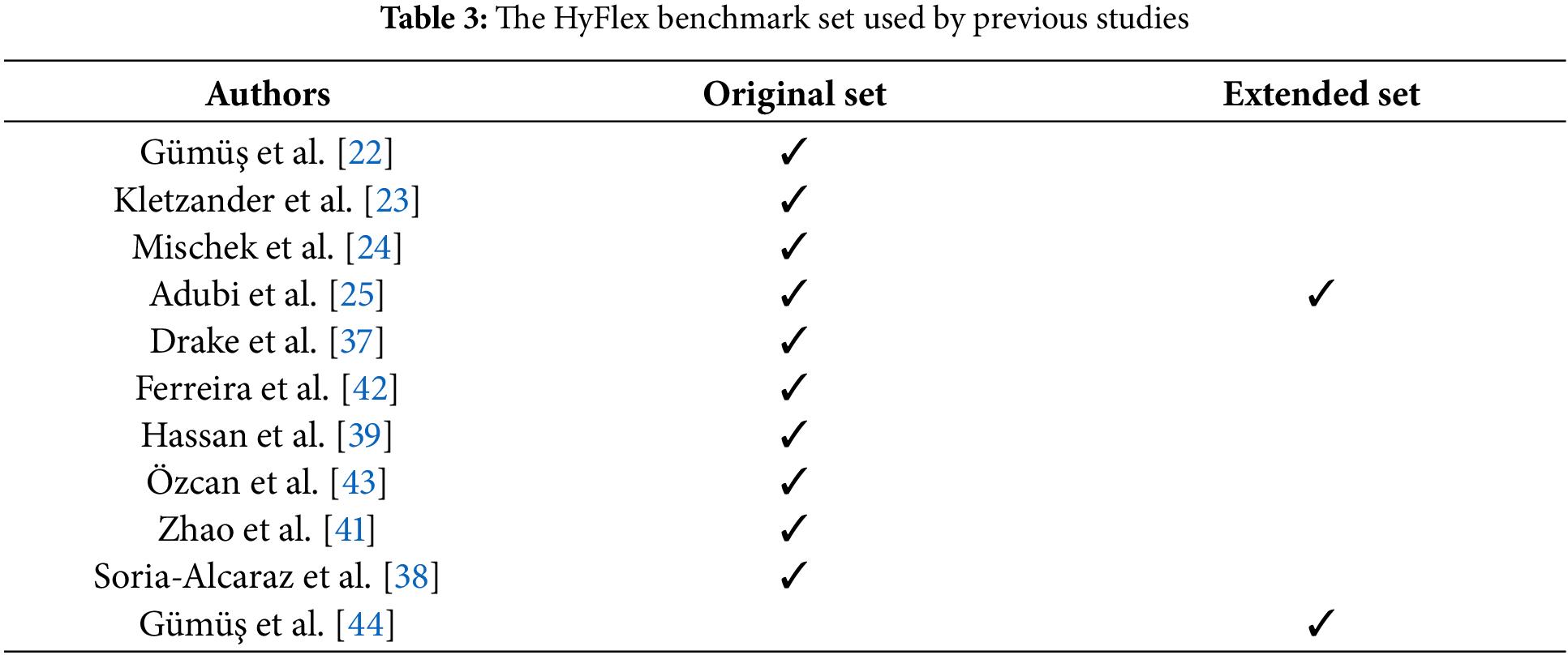

The algorithms from CHeSC 2011 have also served as benchmarks in other studies. Numerous works utilize the HyFlex framework to compare their proposed algorithms against CHeSC 2011 algorithms, either with the original problem domains or the extended ones. Table 3 lists the benchmark set used in studies that evaluated algorithms using the HyFlex framework. For a comprehensive summary of works utilizing the HyFlex framework before 2020, refer to Drake et al. [3]. Soria-Alcaraz et al. [38] compared their iterated local search hyper-heuristic to the CHeSC 2011 contestants exclusively on vehicle routing problems. Their algorithm achieved a third-placed ranking among the competitors. Hassan et al. [39] integrated a dynamic heuristic set selection (DHSS) into the Fair-Share Iterated Local Search (FS-ILS) hyper-heuristic [40], which surpassed the performance of all CHeSC 2011 algorithms. The comparison, using the original HyFlex problem domains, showed that DHSS improved FS-ILS. Zhao et al. [41] introduced a hyper-heuristic algorithm that incorporates multi-armed bandit, relay hybridization and a genetic algorithm. The algorithm produced highly competitive results against the top five CHeSC 2011 algorithms in most problem instances and generalized well across the original domains.

In [25], an evolutionary algorithm-based iterated local search (EA-ILS) hyper-heuristic was presented, featuring a novel mutation operator. The study compared the algorithm’s performance in solving all 30 instances of the extended HyFlex problems against seven hyper-heuristics, which included two from CHeSC 2011. The algorithm demonstrated superior generalization compared to other algorithms. Additionally, when applied to address the original HyFlex set, EA-ILS outperformed all algorithms from the competition. Mischek et al. [24] proposed a reinforcement learning approach incorporating different state space representations and an intelligent reset mechanism. The algorithm would have come in second place, beating other reinforcement learning-based algorithms in the competition. Kletzander et al. [23]' large state reinforcement learning-based algorithm managed to outperform all 20 competitors from CHeSC 2011. Gümüş et al. [22] proposed using F-Race as a parameter tuning method for a steady-state memetic algorithm (SSMA) to investigate its efficacy in cross-domain search. They compared the performance of SSMA without tuning [43] with SSMA tuned using the F-Race or Taguchi method in the CHeSC 2011 competition setup. The tuned SSMA ranked higher (fourth place) compared to the untuned variant (22nd of 25 algorithms).

This study conducts a review analysis of the top 20 algorithms that competed in CHeSC 2011, aiming to examine their algorithmic designs. These algorithms are chosen as they continue to be utilized as benchmarks in recent studies such as [22–25], highlighting their relevance. Furthermore, the algorithms utilize the same framework, i.e., the HyFlex framework, streamlining the analysis process. The source codes and supporting documentation for the algorithms are also readily available, enabling us to easily identify the implementation methods for the algorithmic components examined.

More recent selection hyper-heuristics utilizing the HyFlex framework as highlighted in Section 2. However, we excluded these algorithms from our review analysis due to the unavailability of their source codes and to limit the scope of our study. Nevertheless, we provide a brief comparison of the analysis findings from the CHeSC 2011 algorithms to the trends observed in recent algorithms in Section 6. The inclusion and exclusion criteria for the review analysis in this study are summarized in Table 4.

This study examines eight key components of selection hyper-heuristics: search points, search phases heuristic selection, move acceptance, feedback, Tabu mechanism, restart mechanism, and low-level heuristic parameter control. The algorithmic attributes for each component are categorized based on existing classification where available. Otherwise, the attributes are classified based on their similarities. The implementation methods of each algorithm from CHeSC 2011 are identified from the source codes and supporting documentation. We adapt the quality index as in [45,46] to evaluate the performance of the different implementation methods. Quality index

Figure 1: Components of selection hyper-heuristics discussed in this study

The quality index analysis relies on the rankings within the leaderboard, which are determined by the performance metric used to rank the algorithms. In the HyFlex framework, algorithms are ranked using a scoring system inspired by Formula 1 (F1) racing, based on the median values among 31 runs. Recent research has introduced two performance metrics: average rank (

Additionally, statistical tests are conducted to validate the findings. Due to the unavailability of individual algorithmic run results, the tests rely on the normalized median objective function values of 30 HyFlex problem instances, following the approach by Di Gaspero et al. [48]. The normalization is performed using using the formula

4 Main Characteristics of Cross-Domain Heuristic Search Challenge (CHeSC) 2011 Algorithms

CHeSC 2011 competition uses the HyFlex framework to evaluate the competing algorithms based on their ability to find high-quality solutions within given time limits. The HyFlex framework is a Java program that provides a set of problem instances from different domains with varying complexity [7]. There are two distinct sets of problem instances within the framework. The original set consists of 30 instances, encompassing problems from six distinct domains, each comprising five instances. These instances pertain to problems within the field of combinatorial optimization. During the competition, participants are provided with only four problem domains, these being the Boolean satisfiability (SAT) [55], one-dimensional bin packing (BP) [56], personnel scheduling (PS) [57], and permutation flowshop (PFS) [58]. The two hidden domains are the travelling salesman problem (TSP) [59] and the vehicle routing problem (VRP) [60]. The HyFlex instances set was later expanded with three additional domains by Adriaensen et al. [36]: 0–1 Knapsack (KP) [61], Quadratic Assignment (QAP) [62], and Max-Cut Problem (MaxCut) [63]. Each algorithm underwent 31 executions, each with a time limit of 10 min. The competing algorithms were ranked based on the median solution quality achieved in these runs, and the F1 scoring system was employed to assign a score. The scores were then aggregated and used to determine the final rankings on the leaderboard across various problem domains. Readers are referred to the original works by Burke et al. [8] and Ochoa et al. [7] for more information. Table 5 shows the final leaderboard of the CHeSC 2011 competition.

Two notable features of HyFlex that will be discussed throughout this paper are the classes of low-level heuristics and the heuristic parameters. Low-level heuristics are classified into different types: mutational, ruin-recreate, local search, and crossover. Additionally, the behaviour of the low-level heuristics can be controlled using two parameters, namely depth of search (DOS) and intensity of mutation (IM). DOS only applies to local search heuristics, whereas IM is for mutational and ruin-recreate heuristics, both of which specify how much the solution can change. Both parameters have a default value of 0.2 and can increase up to 1.0.

Several algorithms from the competition have been reviewed in Drake et al. [3], including AdapHH [45], VNS-TW [64], ML, PHunter [65], EPH [66], HAHA [67], NAHH [68], ISEA [69], GenHive [70], and AVEG-Nep [48]. Readers are referred to their work for the explanation of those algorithms. The remaining algorithms are examined using their source codes, design documentation, and extended abstracts available at https://github.com/seage/hyflex/tree/master/hyflex-hyperheuristics (accessed on 25 December 2024). The following provides a description of the main characteristics of each algorithm, presented in the order of their rankings in the competition.

KSATS-HH: Achieving a ninth position in the competition, the algorithm demonstrated good performance in solving SAT and VRP problems, while it struggled with PFS and TSP. The algorithm is a hybrid approach that combines reinforcement learning, Tabu search, and simulated annealing. Heuristic selection follows a tournament selection of size two, where heuristics are chosen based on their ranks. Reinforcement learning adjusts the heuristic ranks, increases if heuristic improves the working solution and decreases if it fails. A Tabu mechanism is used, where heuristics that fail to improve the solution are added to the Tabu list with a tenure of seven rounds. Simulated annealing is employed for move acceptance, considering the fitness change relative to the best solution. The cooling schedule resets when the maximum iterations are exceeded, with this value influenced by heuristics’ execution time.

HAEA: Producing the second-best results in solving VRP, contributing to an overall tenth position for the Hybrid Adaptive Evolutionary Algorithm Hyper Heuristic (HAEA). Low-level heuristics are divided into two subsets: one includes a mix of local search and other types of heuristics, whereas the other comprises the remaining local search heuristics. Heuristics from both subsets are applied iteratively, ensuring that a local search heuristic is the last to be applied. Heuristics are selected based on their probabilities, which increase or decrease depending on whether they improve the working solution, employing the roulette wheel strategy. The move acceptance strategy only accepts improving solutions. If a non-improving solution is found, the heuristic receives a penalty (decrease in selection probability), IM and DOS values are adjusted, and the soft replacement policy is applied to assess if a search restart is needed. The soft replacement policy applies local search heuristics using default parameter values and triggers a search restart if the range of DOS has been tested. Upon a search restart, the current solution is changed using random heuristics from the two subsets, and the subsets are updated through random permutation. Additionally, heuristic parameters are adjusted when no improvement is seen, and resets when a maximum value is reached. The value of IM is inversely related to the DOS value, that is, if IM = 0.4, then DOS = 0.6.

ACO-HH: The algorithm performed third best for BP instances and finished eleventh in the competition. It adopted an Ant Colony Optimization (ACO) approach tailored for selection hyper-heuristics [71]. The algorithm employs ants that traverse a path, applying a low-level heuristic at each step. All ants start from the same solution, which is the best solution from the previous iteration. For each iteration, 30 ants search independently by applying low-level heuristics five times. This approach means that the algorithm maintains a population of solutions. Heuristics are selected according to both the pheromone and heuristic information of ACO. The pheromone factor considers the average improvement obtained by a complete path solution, whereas the heuristic information factor considers the average improvement obtained for the component. Heuristics are selection based on their probabilities, following the roulette wheel approach. The algorithm also maintains a different selection probability for the heuristic parameter values. In each application, the heuristic information and its parameter value are updated to reward or penalize the heuristic.

DynILS: This algorithm performed better on BP and TSP than on other problems. DynILS is a dynamic iterated local search that selects perturbative heuristics and their IM value. At each iteration, one perturbative (mutational or ruin-recreate) and one local search heuristic are applied to the incumbent solution, indicating a single-point and iterated search phases approach. Heuristics are selected using the roulette wheel strategy, where the selection probabilities of these heuristics are adjusted based on whether improvement is achieved or not. Heuristic performance is measure by their normalized fitness delta relative to their possible values. The incumbent solution is only replaced when an improvement is found. Additionally, the heuristic parameter values are rewarded or penalized according to their performance. No Tabu or restart mechanisms are employed within the algorithm.

SA-ILS: SA-ILS obtained most of its total score from the personnel scheduling problem, ranking fourth. The algorithm employs two strategies: Iterated Local Search Hyper-heuristic (ILSHH) and Simulated Annealing Hyper-heuristic (SAHH), chosen based on the average execution time of local search heuristics for each problem instance. Before initiating the search process, each local search heuristic once in a random order, and the results guide the choice of strategy and heuristic parameters. For instances with extended execution times, ILSHH is employed, whereas SAHH is utilized for instances with shorter execution times. ILSHH applies one random perturbative heuristic followed by all local search heuristics in a random sequence. The acceptance strategy allows a non-improving solution to be accepted after seven consecutive iterations without improvement. For SAHH, a random local search heuristic is used until no improvements are observed for seven consecutive iterations. The final solution is accepted only if it improves; otherwise, a random perturbative heuristic is applied. Besides, the low-level heuristic parameters differ based on the algorithm: ILSHH uses IM and DOS values of 0.4, whereas SAHH uses IM of 0.8 and DOS of 0.6. These parameters remain constant throughout search process.

XCJ: XCJ (eXplore-Climb-Jump) is a hill climbing-based selection hyper-heuristic that performed best on BP compared to other problem domains. The low-level heuristics are classified into explore heuristics (crossover and ruin-recreate) and exploit heuristics (local search and mutational). The algorithm applies each explore heuristic to the current best solution, generating multiple solutions. Subsequently, each solution undergoes a sequential application of all exploit heuristics, starting from local search to mutational heuristics, until ten consecutive non-improving iterations. After each application of exploit heuristic, the resulting solution is compared to the best solution. An improving solution is always accepted, whereas a worsening solution is accepted only if the fitness delta is less than 0.2. The fitness delta is calculated as one minus the fitness before divided by the fitness after application. The algorithm does not include Tabu mechanism, restart mechanism, or heuristic parameter control.

GISS: Generic Iterative Simulated-Annealing Search (GISS) applies a random heuristic and utilizing simulated annealing move acceptance at each iteration. Heuristics are selected without considering their performance. The move acceptance allows non-worsening solutions, where the simulated annealing temperature is influenced by the heuristic’s execution time and fitness delta. The algorithm incorporates a restart mechanism to reinitialize move acceptance parameters, heuristic parameters, and the working solution. The search restart is triggered when the number of non-accepted iterations exceeds a threshold, calculated as the number of heuristics for the problem instance multiplied by two. This threshold doubles if the current solution is within 1.5 times of the best solution. Upon restart, the temperature resets to its initial value. Heuristic parameter values may be modified, with IM and DOS increased by 1.1 times with a probability of 0.2, and the working solution is updated by applying randomly selected ruin-recreate then mutation heuristics. The algorithm achieves moderate performance for PS and VRP.

SelfSearch: This population-based algorithm adapts its strategy, either explorative or exploitative, depending on the number of expected generations (or iterations) remaining before reaching the time limit. Before initiating the search, each heuristic is applied once to calculate the expected number of generations, based on their execution times and population size. Afterwards, the expected generations are updated after each generation. The two strategies differ in their heuristic selection, search restart methods, and heuristic parameter values to either promote exploration or exploitation. Heuristic selection follows the roulette wheel strategy, which depends on feedback from factors including fitness delta, execution time, usage frequency, frequency of repetitions, and frequency of non-improvements. Besides, the Adaptive Pursuit approach dictates that the explorative strategy prioritizes heuristics with lower frequency of repetition and higher improvement rate, whereas the exploitative strategy only prioritizes higher improvement rate. The selected heuristic is applied to all individuals in the population of solutions. Move acceptance is incorporated into the population update, which follows elitist survival selection while avoiding duplicate solutions. A restart mechanism is triggered when the number of consecutive non-improvement iterations exceeds a threshold, determined by the expected number of generations. Different restart actions are applied depending on the remaining number of generations. During the first half of the search, the DOS value is increased, a ruin-recreate heuristic is applied when DOS reached 1.0 and the current heuristic is applied to all solutions in the population. In the final half of the search, the IM value is increased, a ruin-recreate heuristic is applied when IM reached 1.0, and the heuristic with the highest improvement rate is applied to all solutions in the population. Additionally, different heuristic parameter values are used based on the strategy employed. For the explorative strategy, high IM and low DOS values are used, with IM increasing proportional to the number of local optima reached after a restart. For the exploitative strategy, low IM and high DOS values are used, with DOS increasing proportional to the number of local optima reached after a restart. Tabu mechanism is not incorporated in this algorithm. SelfSearch performed fairly in PS and TSP.

MCHH-S: MCHH-S, a single objective variant of the Markov chain Hyper-heuristic [72], performed well only on SAT. The algorithm utilizes a Markov chain for heuristic selection, considering heuristics’ quality scores derived from fitness delta and execution time. Each iteration involves applying a heuristic to one solution from a population of solutions, updating the quality score and deciding to accept or reject the resulting solution. The quality score is calculated as fitness delta multiplied by one minus the time taken by the heuristic over the maximum time. Improving moves are always accepted, whereas non-improving moves have a gradually increasing probability of acceptance, proportional to the number of consecutive non-accepted iterations, reaching a 100% acceptance chance after five non-accepted iterations. Upon acceptance, a different heuristic (of any type) and solution is selected for the next iteration. Otherwise, a local search heuristic is applied to the current solution in the next cycle. This algorithm does not involve Tabu mechanism, restart mechanism, or heuristic parameter control.

Ant-Q: Ant-Q is a hybridization between an Ant system and Q-learning. In this algorithm, two ants apply heuristics repeatedly, for a total of

5 Effective and Ineffective Strategies among CHeSC 2011 Algorithms

We classify the search point, search phases, heuristic selection methods, move acceptance, feedback, Tabu mechanism, restart mechanism, and low-level heuristic parameters for the CHeSC 2011 competing algorithms as presented in Table 6. Then, the performance of each implementation method is discussed.

Drake et al. [3] classified search points of selection hyper-heuristics into single, population, or mixed. The single-point search involves a continuous process of heuristic selection and move acceptance until termination, applied to a single solution [9]. Population-based (or multi-point) search applies the same process to multiple solutions, whereas mixed-point search combines single and population-based searches sequentially [3].

We illustrate the frequency and average quality index of the search point (see Fig. 2). It shows that the number of algorithms based on single and multi-point searches is equal, with nine each. Mixed-point search is only implemented by two algorithms which produce the highest average quality index. The average quality index for single-point search is slightly higher than for multi-point search algorithms, with 11 and 8.56, respectively. Among the top five performers, Table 6 indicates the distribution among the three approaches is equal with two single-point, two multi-point, and one mixed-point algorithm. Although mixed-point search gives a higher average quality index, its performance gains over the other approaches could not be confirmed as only two algorithms employ this strategy. On the other hand, it can be observed that a single-point search is better than a multi-point search.

Figure 2: Algorithm count and average quality index for search point

Statistical tests revealed a non-normal distribution in the sample for the mixed-point category, necessitating the use of non-parametric tests. The Friedman test identified a significant difference among the groups with a p-value of 0.0022. Subsequent post-hoc Wilcoxon signed-rank tests confirmed significant differences across all pairings, validating the performance variations among the implementation strategies.

Fig. 3 presents the boxplot of normalized median values across 30 HyFlex problem instances, averaged among each group’s algorithms. Lower values indicate better final solutions, whereas a smaller range signifies greater reliability as there is a reduced performance variability across different problem instances. The mixed-point approach demonstrates the best performance but exhibits higher variability.

Figure 3: Boxplot of normalized median values for search point

Fig. 4 displays the average quality index using rankings in individual problem domains in the HyFlex framework. Algorithms employing mixed-point search achieved the highest average quality index in five out of six domains, except for BP, where single-point search is the preferred strategy. Multi-point search was outperformed by single-point search in all domains. Upon further inspection, we found that BP instances are sensitive, where every heuristic application tends to yield an improvement. This observation aligns with findings by Kheiri et al. [73], who emphasized that exploring new search regions significantly enhances performance in BP instances. In time-constrained settings, the single-point approach is advantageous over multi- or mixed-point approaches, as it enables more frequent heuristic applications to a single solution. In contrast, the other approaches must apply heuristics to multiple solutions, selecting the best one for the next iteration while discarding the rest, which may reduce efficiency.

Figure 4: Average quality index in individual problem domains for search points

The single-point search algorithms can further be classified into three different strategies. The first strategy is applying single or multiple heuristics to a single solution. A single heuristic is selected using roulette wheel selection (AdapHH), tournament selection (KSATS-HH), and random selection (GISS). The application of multiple heuristics can be perturbative followed by local search heuristics (HAEA, DynILS, SA-ILS, ML) or relay hybridization (AdapHH). HAEA and DynILS apply only one local search heuristic, whereas SA-ILS and ML apply all local search heuristics after the perturbative one. Relay hybridization in AdapHH chooses a heuristic from a list of efficient heuristics at each step. Adriaensen et al. [17] demonstrated that the combination of single selection and relay hybridization outperformed the variants utilizing only one component. Also, relay hybridization contributes most to the performance.

AVEG-Nep conducts parallel independent searches from three different solutions, which is considered a single-point search supported by Drake et al. [3]. A heuristic type is chosen by reinforcement learning, then one random heuristic is selected from the chosen type to be applied to the solutions. Another strategy is to use multiple algorithm schemata. NAHH runs multiple algorithmic schemata in a race until the best one emerges. Each schema is parametric and contains different heuristic parameter values, acceptance probability, mutation probability, and restart probability. Six algorithmic schemata were included: randomized iterative improvement, probabilistic iterative improvement, variable neighbourhood descent, iterated local search, simulated annealing, and iterated greedy.

Most multi-point search algorithms utilize a population of solutions (VNS-TW, PHunter, EPH, HAHA, ACO-HH, GenHive, XCJ, SelfSearch, MCHH-S, and Ant-Q) in three different ways. The first approach is to maintain a population of solutions where only a single solution is chosen as the working solution for the iteration (VNS-TW, GenHive). VNS-TW selects a solution using tournament selection, which then undergoes shaking and local search stages. The new solution replaces the original solution if an improvement is found. Otherwise, a worse solution from the population is selected for replacement. GenHive conducts searches on a population of solutions, where at each iteration, only the best solution is improved with heuristic sequences. Newly produced solutions always replace the old solution in the population.

The second approach employs search from multiple solutions. In PHunter, EPH, SelfSearch, and MCHH-S, heuristics are applied to different solutions in the population. The population is updated according to the move acceptance strategy. PHunter applies heuristics to different solutions and updates the population upon improvement. EPH applies different heuristic sequences to different solutions. Each resulting solution is compared to the population and placed into the population when it has improved or has a unique fitness value. MCHH-S applies a heuristic to a single solution until the solution is accepted. Once accepted, a different heuristic is applied to a different solution. Conversely, in SelfSearch, all solutions in the population are improved using a heuristic and the population is updated using elitist survival selection. Since SelfSearch ranked poorly in the competition, elitism in population update is undesirable.

The third approach uses multiple solutions derived from a single solution for either local search (HAHA, XCJ) or evaluation (ACO-HH, Ant-Q) on each of these solutions. HAHA follows a mixed-point search, where multiple solutions are generated by applying perturbative heuristics to the solution from the single-point search phase. Each solution is then applied with a local search heuristic. In XCJ, each perturbative heuristic is applied to the current best solution to produce multiple solutions. Then, local search heuristics are used to improve the solutions. ACO-HH and Ant-Q produce multiple solutions for evaluation. Both algorithms follow the strategies from ACO, modified for selection hyper-heuristics [71]. ACO-HH applies heuristics chosen by the Ant system to the previous iteration’s best solution at each iteration. In contrast, Ant-Q only uses the Ant system and Q-learning to determine the first heuristic, with subsequent heuristics chosen randomly. Each heuristic is applied to the best solution among the ones kept by agents.

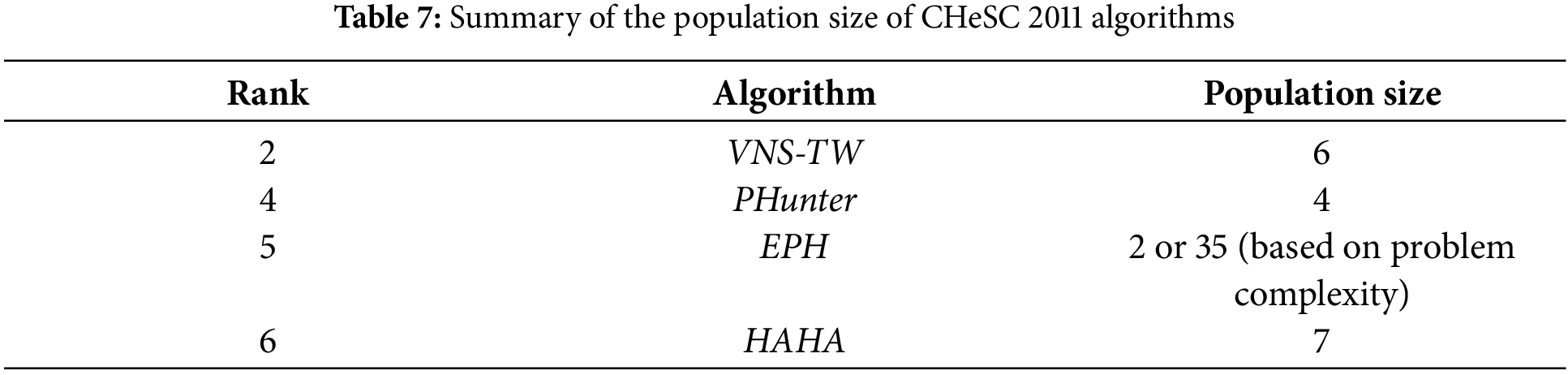

Agushaka et al. [74] noted the importance of finding the right balance between population size and algorithmic iterations to guarantee optimality. Moreover, Malan et al. [75] found that an algorithm with the same number of function evaluations but different population sizes leads to a significant performance difference. Table 7 summarizes the population sizes of the multi-point search algorithms. It shows that most algorithms limit the number of solutions stored to seven. Only ACO-HH has a large population, but we argue that the performance is maintained since the heuristic reward is updated after each application. EPH only use a large population for problem instances with short heuristic execution times, which we think is the best strategy to achieve greater generality. Two algorithms determine the population size based on the number of heuristics (XCJ and Ant-Q).

A population of heuristic sequences are included in certain algorithms from the competition. A heuristic sequence consists of multiple heuristics to be applied successively to the domain solution. Two algorithms that maintain a population of heuristic sequences, EPH and GenHive, are compared in Table 8. Since EPH outperformed GenHive, several conclusions can be noted. Firstly, an algorithm with predefined sequences of perturbative, followed by local search, heuristics outperforms an algorithm without any rules. A dynamic heuristic sequence length and population size may better suit different problem instances than a fixed one. Next, evolving heuristic parameters alongside the heuristic sequences (co-evolution) presents performance gains. Crossover among heuristic sequences may not lead to a better final solution, and elitism may degrade algorithmic performance compared to evolving every heuristic sequence in the population. A population updated using tournament selection could outperform a population that always accepts offspring. Deploying heuristic sequences to multiple solutions enhances search performance compared to applying the sequences to the same solution. ISEA maintains a population of action sequences which are a series of actions to be applied to a heuristic sequence. The action sequences include adding, removing, moving, swapping, or changing heuristics. The action sequences evolved through crossover and mutation, with an elitist population update strategy. Contrary to the standard evolutionary process, multiple offspring (up to 200) are produced at each generation using the same domain solution.

Population-based algorithms have demonstrated their superiority in achieving global optima [76]. Nevertheless, single-point search strategies can achieve comparable performance by adapting population-based features, such as utilizing multiple starting points [77] or hybridizing with single-point-based algorithms [78]. Within CHeSC 2011 algorithms, VNS-TW utilizes a population of solutions that is reduced to a single solution after 50% of the time limit or when the search stagnates. The search in HAHA is divided into serial and parallel search phases. Parallel search applies perturbative heuristics to produce multiple solutions. Then, one non-Tabu solution is chosen for serial search where local search heuristics are applied to the solution until none of them can improve any further.

The HyFlex framework provides four types of low-level heuristics for each problem instance: mutational, ruin-recreate, local search, and crossover heuristics [7]. Mutational, ruin-recreate, and crossover heuristics are often grouped into perturbative heuristics, including in VNS-TW and ML. Algorithm designers can employ an iterated search strategy where heuristics are applied iteratively between perturbative and local search. Categorizing low-level heuristics or operators by their search capabilities enables control between exploration and exploitation [79]. Algorithms proposed for CHeSC 2011 competition follow one of three strategies for the sequence of heuristic applications: iterated, optional iterated or non-iterated (see categorization in Table 6). Iterated search alternates between phases of applying perturbative and local search heuristics, where some algorithms may only enforce the strategy optionally. Other algorithms may allow any type of heuristics to be applied at any point of the search (non-iterated).

We analyze the search phases by tallying the number of algorithms for each strategy and calculating their average quality index (see Fig. 5). It shows that most algorithms employ the iterated search strategy (10 algorithms), followed by non-iterated search (eight algorithms). The iterated strategy increases algorithm performance, as evidenced by the higher average quality index and six of the top seven algorithms following this strategy (see Table 6). Algorithms with optional iterated search scored marginally lower than ones employing the iterated search strategy, but only two algorithms implemented this strategy. Repeated measure ANOVA confirmed a significant difference among the groups (p = 4.7969E−16). Post-hoc Tukey’s tests showed significant differences across all pairings, with p-values lower than 0.0001. Additionally, the boxplot in Fig. 6 displays normalized median values for different search phases, revealing that the iterated approach has the smallest interquartile range, reflecting higher reliability.

Figure 5: Algorithm count and average quality index for search phases

Figure 6: Boxplot of normalized median values for search phases

The average quality index for iterated search phases in individual domains is consistently high compared to the non-iterated strategy (see Fig. 7), with large differences observed in PS, PFS, TSP, and VRP instances. Notably, both TSP and VRP problems are routing and optimization problems. They often share characteristics of having multiple optima and benefit from similar exploratory strategies for effective solution refinement. PS instances are also characterized as having multiple optimum points [80]. This suggests that iterated search is effective for multi-modal problems. Meanwhile, the optional iterated approach performed well in five domains, except for SAT.

Figure 7: Average quality index in individual problem domains for search phases

The usage of perturbative and local search heuristics can be described as static or dynamic. Most algorithms impose that perturbative heuristics must be followed by the local search heuristics at each iteration. For the iterated search strategy, VNS-TW and ML continue the search until reaching a local optimum using local search heuristics after applying one perturbative heuristic. DynILS and ILSHH of SA-ILS follow one perturbative heuristic with one and all local search heuristics, respectively. PHunter uses perturbative heuristics to escape local optima before improving the solution using local search heuristics with a high DOS. EPH applies every local search heuristic either by a single application or Variable Neighbourhood Descent after the perturbative heuristics. XCJ produce multiple solutions using perturbative heuristics before applying all local search heuristics in sequence. Each step in NAHH involves applying ruin-recreate heuristics followed by local search heuristics. A mutational heuristic is then applied to the resulting solution with a probability.

Meanwhile, HAHA and SAHH of SA-ILS employ the phases in reverse to diversify when no improvement is obtained from the local search heuristics. In the optional iterated search strategy, HAEA and ISEA require starting and ending each iteration’s heuristic sequence with a local search heuristic, but there are no restrictions for the positions in between. Only SelfSearch switches between the search phases dynamically based on the expected number of iterations remaining. For each phase, different heuristic selection rules and parameter values are utilized. Since the algorithm ranked poorly, this feature appears degrading performance.

5.3 Heuristic Selection Methods

Five strategies for selecting heuristics are identified: random, roulette wheel, tournament selection, relay hybridization and sequential (refer to Table 6). Random selection is utilized by SA-ILS, GISS, and VNS-TW in the local search phase and NAHH for the selected heuristic type. Roulette wheel selection based on heuristic performance is the most used strategy, employed by 11 competitors (AdapHH, ML, PHunter, HAHA, HAEA, ACO-HH, DynILS, AVEG-Nep, SelfSearch, MCHH-S, Ant-Q). ACO-HH and Ant-Q obey the principle of Ant systems where selection probabilities are also influenced by pheromone evaporation. AVEG-Nep uses a roulette wheel to select only the heuristic type, and a random heuristic of the type is applied to the solution.

Tournament selection is only used in KSATS-HH using a tournament of size two. AdapHH implements relay hybridization to select heuristic pairings. Relay hybridization involves multiple heuristics working together in a sequence, where the output of one serves as the input for the next heuristic [81]. In AdapHH, the first heuristic is selected by the roulette wheel, and the second is chosen from known good heuristics for the first. XCJ applies all local search heuristics in sequence. On the other hand, EPH, ISEA, and GenHive incorporate heuristic sequences modified using genetic operators or action sequences, negating the need for heuristic selection.

Fig. 8 illustrates the frequency distribution for heuristic selection methods and their average quality index. It suggests that relay hybridization is the most effective heuristic selection strategy, whereas sequential is the least effective. Since these strategies only exist in one algorithm, a deeper inspection is necessary. Kheiri et al. [82] demonstrated the effectiveness of relay hybridization in the HyFlex framework. Zhao et al. [41] also highlighted its capability to form effective heuristics by pairing existing ones. Lepagnot et al. [83] obtained good results using relay hybridization to combine three different metaheuristics, although it may be less effective for simpler problems. The quality index averages indicate a marginally better value for the roulette wheel strategy (10.0) compared to random selection (9.8). We observed high variability between the methods implemented by the random selection algorithms. The use of random selection in VNS-TW and NAHH does not negatively impact search performance as it is used on a small heuristic subset compared to SA-ILS and GISS. Roulette wheel selection was implemented by more algorithms in the top five positions.

Figure 8: Algorithm count and average quality index of heuristic selection methods

Non-parametric statistical tests indicated significant differences among the groups (p = 4.0908E−10). Pairwise comparisons revealed that the top performer, relay hybridization, has significant difference to all other strategies. Interestingly, the sequential approach, which had the lowest average quality index, did not show significant differences with other strategies, whereas other strategies exhibited differences among themselves. The no-selection approach (ranked second) showed no significant difference from tournament selection (ranked third) (p = 0.5999) but was statistically different to both roulette wheel (ranked fourth) (p-value = 3.7243E−05) and random approach (ranked fifth) (p-value = 0.0030). Tournament selection was significantly different from the random approach (p = 0.0350) but not from roulette wheel (p = 0.0571). Roulette wheel selection had no significant difference from the random approach (p = 0.7036).

Fig. 9 illustrates the distribution of normalized median values for six heuristic selection approaches. Relay hybridization demonstrates the best performance but includes multiple high-value outliers. The roulette wheel approach has a narrower interquartile range and a smaller overall range between minimum and maximum values compared to other approaches.

Figure 9: Boxplot of normalized median values for heuristic selection methods

Relay hybridization achieved the highest quality index in four domains: SAT, BP, PFS, and TSP (refer to Fig. 10). The best performers in PS are algorithms that do not employ heuristic selection, relying instead on evolutionary processes to improve solutions. Tournament selection is marginally the best approach for VRP and performs well for BP and TSP. Between the roulette wheel and random selection, the roulette wheel is better in BP, PFS, TSP, and VRP, while random selection is better for SAT and PS. These observations are supported by multiple researchers, noting that search feedback is non-essential for solving SAT [23] and PS instances [73]. Sequential heuristic selection is the worst strategy for the four domains but offers better performance for SAT and BP.

Figure 10: Average quality index in individual problem domains for heuristic selection methods

Move acceptance involves determining whether to accept or reject the outcome of a heuristic application [3]. Move acceptance methods can be broken down into stochastic and non-stochastic methods [10]. Stochastic methods accept a solution with a given probability. In contrast, non-stochastic methods make deterministic decisions about candidate solutions. Within the non-stochastic methods, it can be further divided into basic or threshold methods. Non-stochastic basic utilizes the objective function value of previous solutions, whereas non-stochastic threshold relies on a predetermined value as the criterion for the acceptance threshold. Contrarily, several algorithms deviate from the conventional hyper-heuristic structure by omitting a move acceptance strategy. The categorization of heuristic selection techniques for the participating algorithms can be found in Table 6.

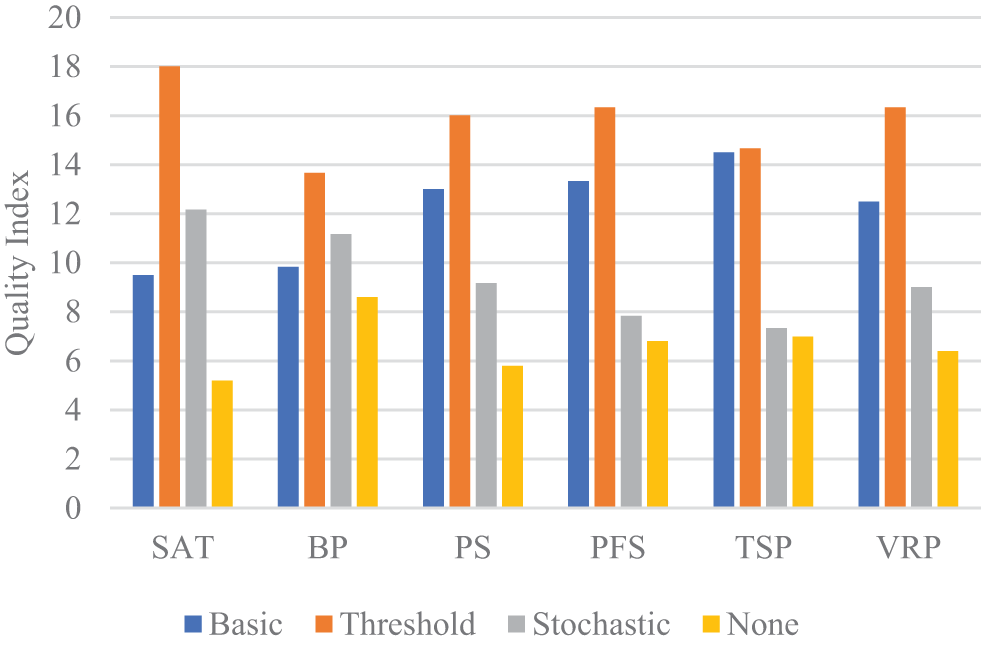

Fig. 11 presents the distribution of algorithm count and the average quality index for each move acceptance method. It shows that most algorithms implemented non-stochastic basic and stochastic move acceptance methods (six algorithms each). Non-stochastic threshold move acceptance was the least frequently employed technique (three algorithms), yet it yielded the highest average quality index. Conversely, algorithms lacking a move acceptance have the lowest quality index. Friedman test demonstrated a significant difference among the groups (p = 4.8205E−12). Post-hoc comparisons showed significant differences in nearly all pairings, except between basic and stochastic move acceptance (p = 0.1414). Furthermore, the boxplot of normalized median values for move acceptance shows lower variability for the basic and threshold approaches (refer to Fig. 12).

Figure 11: Algorithm count and average quality index of move acceptance

Figure 12: Boxplot of normalized median values for move acceptance

Fig. 13 compares the average quality index across problem domains. Threshold move acceptance obtained the best quality index in all domains, whereas not employing move acceptance led to the worst quality index. Non-stochastic move acceptance methods performed significantly better in PS, PFS, TSP, and VRP, indicating a preference for systematic mechanisms. Stochastic move acceptance outperformed non-stochastic basic move acceptance on SAT and BP, suggesting that some degree of randomness is beneficial in these domains. BP instances exhibit sensitivity in objective function changes, leading to all moves being accepted regardless of improvement magnitudes. For SAT instances, escaping local optima is important for better performance. Acceptance criteria that prioritize diversification, such as those integrated with restart mechanisms, are more effective [36,82]. The results suggest that incorporating a move acceptance can enhance search performance, particularly the non-stochastic threshold method.

Figure 13: Average quality index in individual problem domains for move acceptance

The two approaches of non-stochastic basic methods are to accept only improving (PHunter, EPH, HAEA, DynILS, and SA-ILS) or accept improving and equal solutions (VNS-TW). Most algorithms accept only improving candidate solutions, including. Notably, EPH follows a unique approach by replacing a solution in its population with only candidate solutions that differ from other solutions within the population.

The non-stochastic threshold method is implemented by the winning algorithm, AdapHH, which compares the candidate solution against the previous best solutions kept in a list. Meanwhile, ML accepts a non-improving solution only if 120 consecutive iterations without improvement have been reached. HAHA rejects worsening solutions unless five consecutive worsening iterations have occurred and a second of execution time has passed since the last acceptance of a candidate solution.

A well-known stochastic move acceptance method is SA [84], which has been used in three algorithms (NAHH, KSATS-HH, and GISS). In NAHH, the move acceptance strategy is different for each algorithmic schemata, with one of them involving SA. NAHH also employs schemata that directly apply probabilities to accept a worsening candidate solution, which is also utilized in ISEA, XCJ, and MCHH-S.

Several algorithms, including ACO-HH, GenHive, AVEG-Nep, SelfSearch, and Ant-Q, do not incorporate a move acceptance strategy. In these algorithms, the solutions are updated every time heuristics are applied. Notably, Di Gaspero et al. [48] highlighted the trust in the reinforcement learning process within AVEG-Nep, leading to the acceptance of every candidate solution, including the worsening ones.

Burke et al. [4] classified hyper-heuristics based on the source of feedback during learning. An algorithm is considered a learning one when it uses feedback from the search process. Learning algorithms process feedback during the search process, influencing the subsequent decisions made at the hyper-heuristic level. Algorithms that do not learn from feedback are considered non-learning ones. Learning can be further divided into online and offline learning. In online learning, the feedback is taken while the algorithm is in the process of solving the problem, whereas offline learning involves collecting knowledge from a set of training instances that are expected to be able to generalize for solving the problem. Drake et al. [3] then extended this taxonomy by adding another category, mixed learning, which combines both offline and online learning approaches.

We classify the algorithms that competed in CHeSC 2011 based on their nature of feedback in Table 6. Fig. 14 shows that most algorithms employ the online learning approach (12 algorithms), followed by the offline learning approach (four algorithms). Notably, the three highest-ranked algorithms implement online learning. However, the bottom three algorithms are also implementing online learning, which causes the average quality index for online learning to be lower than for the offline approach. Upon further investigation, we found no major differences between the online learning strategies utilized by the top three and the bottom three algorithms. In both cases, the performance of heuristics is measured in terms of the solution fitness or fitness improvement to help make decisions in heuristic selection. This could indicate that other features of the algorithm contribute to the performance of the better algorithms. Yates et al. [15] have also observed that offline learning outperforms online learning. Mixed learning has the highest quality index, albeit only one algorithm followed the approach. Three algorithms did not have any element of learning, where they are placed among the worst ten at rank 12, 15, and 17. The significance of learning mechanisms is evident as numerous prior studies have emphasized the notable improvements linked to the inclusion of learning mechanisms [12,13,16]. Statistical tests validated significant differences, with the Friedman test yielding a p-value of 1.8115E−10. Post-hoc Wilcoxon signed-rank tests further validated significant differences across all pairings. In terms of variability, the mixed learning approach exhibits higher variability, as indicated by a larger range of normalized median values in Fig. 15.

Figure 14: Algorithm count and average quality index for nature of feedback

Figure 15: Boxplot of normalized median values for feedback

Regarding the adaptability to different environments, learning approaches are less critical for SAT instances, where algorithm performance relies more on escaping local optima [23]. The slow execution of low-level heuristics in PS and VRP instances limited the benefits of feedback mechanisms, making adaptive or greedy approaches more suitable [73]. Conversely, for PFS instances, feedback mechanisms are crucial in enhancing heuristic effectiveness and guiding the search toward high-quality solutions [23,82]. Overall, learning approaches are well-suited for problems with fast low-level heuristics and large solution space.

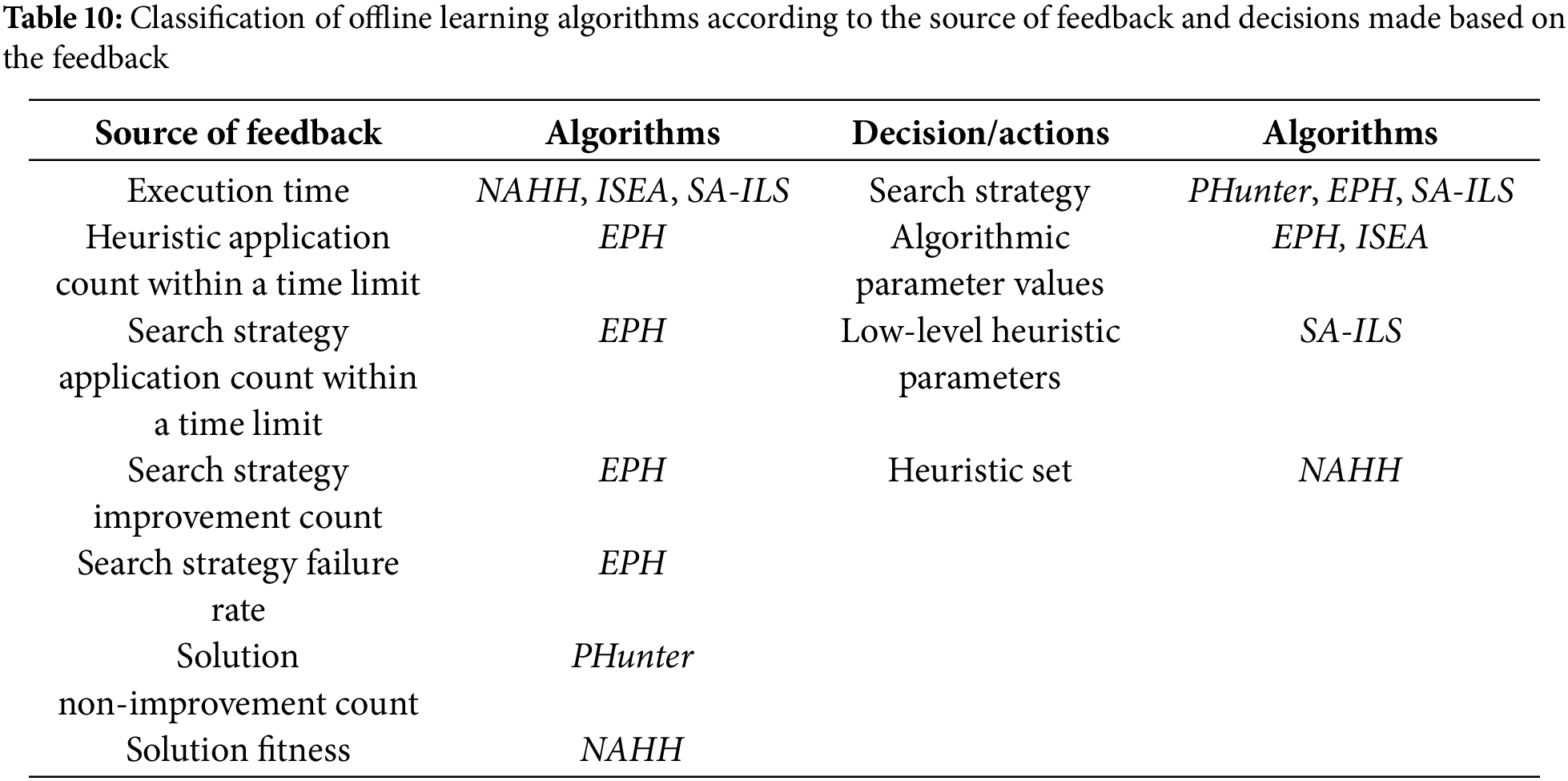

Next, we analyze both online and offline learning in terms of the source of feedback (what is measured) and the decisions (or actions) made based on the feedback in Tables 9 and 10. Algorithms with mixed learning are included in both analyses since mixed learning is a combination of online and offline learning. The results show that algorithms with online learning most frequently measure the improvement count (whether the low-level heuristic applied has improved the solution or not), followed by the value of the improvement, with seven and six algorithms, respectively. The execution time of the algorithm (time spent or time left before the time limit) is considered in four algorithms, which influences the heuristic selection and search strategy. VNS-TW checks whether the same solution is produced to disable the low-level heuristic applied. SelfSearch measures low-level heuristics performance in multiple metrics, including the number of the same solution it has produced, the number of non-improvement applications and the total number of applications for the heuristic.

The feedback from the search process is most often used to make decisions in heuristic selection, implemented by 11 algorithms. Two other decisions are within the nature of heuristic selection, which are heuristic order and heuristic type. VNS-TW and HAHA use feedback information to order a set of low-level heuristics to be applied sequentially. Meanwhile, AVEG-Nep determines the type of low-level heuristic (between the four provided in the HyFlex framework) to be applied before randomly choosing one from the selected type. AdapHH alters the low-level heuristic set available for one phase of the search based on the heuristic performance in the previous phase. Finally, SelfSearch controls the search strategy, whether to be more explorative or more exploitative, based on the time left before the time limit is exceeded.

In the context of CHeSC 2011, we observed offline learning as the process of assessing the difficulty of the problem instance. This is achieved by applying the low-level heuristics provided for the selected problem instance before entering the main search process (either loop or other process). Afterwards, feedback from the learning process is used to make some decisions. Among the 20 competitors, offline learning is observed in five algorithms (PHunter, EPH, NAHH, ISEA, and SA-ILS). Most of these are placed in the top half of the competition leaderboard, with SA-ILS being the exception at rank 14.

Our analysis of the source of feedback for offline learning algorithms has shown that most algorithms measure the execution time of the low-level heuristics. Compared to online learning, there is less emphasis on the solution fitness after the heuristic application. Besides, execution time is measured related to the execution time of low-level heuristics instead of the time spent or time left before the time limit. EPH also gauges the execution time of low-level heuristics, but it does so by counting how many times a heuristic is applied within a specified time limit. Moreover, the algorithm tests a local search strategy, namely Variable Neighbourhood Descent (VND), by observing the number of applications within a time limit. The improvement and failure rate of the executions are then measured to influence the decision on which local search strategy to implement in the main phase of the algorithm. PHunter also tests different search strategies in a rehearsal run, which records the number of non-improvement solutions found throughout. Finally, besides execution time, NAHH also considers the solution quality obtained after heuristic application to determine the quality of the particular low-level heuristic.

The feedback from offline learning is most frequently used to determine the search strategy to employ (three algorithms), followed by setting the algorithmic parameter values such as population size (two algorithms). EPH will avoid VND for instances with a low number of applications within the offline learning phase. A single heuristic application strategy will be used in such cases. SA-ILS have two different strategies for local search (SA or iterated local search), determined by the average heuristic execution time. Meanwhile, EPH uses a smaller population when the low-level heuristic has a long execution time and a bigger population when the execution time is shorter. In ISEA, chromosome length and population size are among the parameters adjusted based on the low-level heuristic execution time. Another type of parameter is the one for the low-level heuristics, namely IM and DOS. SA-ILS uses high IM and DOS values for instances with computationally inexpensive low-level heuristics, whereas lower values are used for harder (longer time to solve) instances. NAHH uses the knowledge gathered from the offline learning phase to remove low-level heuristics that are dominated by other ones from being used during the search process.

Only one algorithm (PHunter) uses mixed learning, and it placed fourth in the competition. Their online learning component is similar to other algorithms, where the low-level heuristic performance is measured in terms of solution improvement. Weights are assigned to the heuristics, which will guide heuristic selection. Meanwhile, the offline learning part resembles EPH and SA-ILS, where they test out different local search strategies to be implemented by the main search process. Since the algorithm is placed in the top five of the competition, we argue that mixed learning brings a positive impact on the algorithmic performance. Furthermore, we contend that offline learning is important as different instances have different low-level heuristics with varying execution times, which will affect the search process. This is reasonable as it has been found that parameter adaptation in cross-domain search can increase performance [10], and offline learning is capable of adjusting algorithmic parameters according to the instance difficulty.

The main mechanism of Tabu search [85] is storing information related to the search process [86]. It features a memory mechanism called Tabu list, which stores either solutions or move operators already encountered during the search to avoid cycling to them. Information from the Tabu list can be used to promote diversification by exploring new unvisited areas of the solution space [87]. Talbi [86] gives three representations for the Tabu list, which are visited solutions, moves attributes, and solution attributes. The basic approach in implementing the Tabu mechanism is by storing solutions visited throughout the search process. However, this is computationally expensive and impractical for problems with a large solution space. A simpler approach is to store moves or solution attributes instead, with the former being the most popular of all approaches.

Among the CHeSC 2011 algorithms, we identified two approaches in implementing the Tabu mechanism, which are storing solutions and low-level heuristics. In most cases, the Tabu mechanism with solutions stores domain solutions to ensure it is not revisited, whereas the Tabu mechanism with heuristics prevents ineffective heuristics from being utilized. Besides, several algorithms implement both approaches since they are not mutually exclusive. None of the algorithms implemented a Tabu mechanism based on solution attributes. This omission could be attributed to the fact that the HyFlex framework conceals domain knowledge.