Open Access

ARTICLE

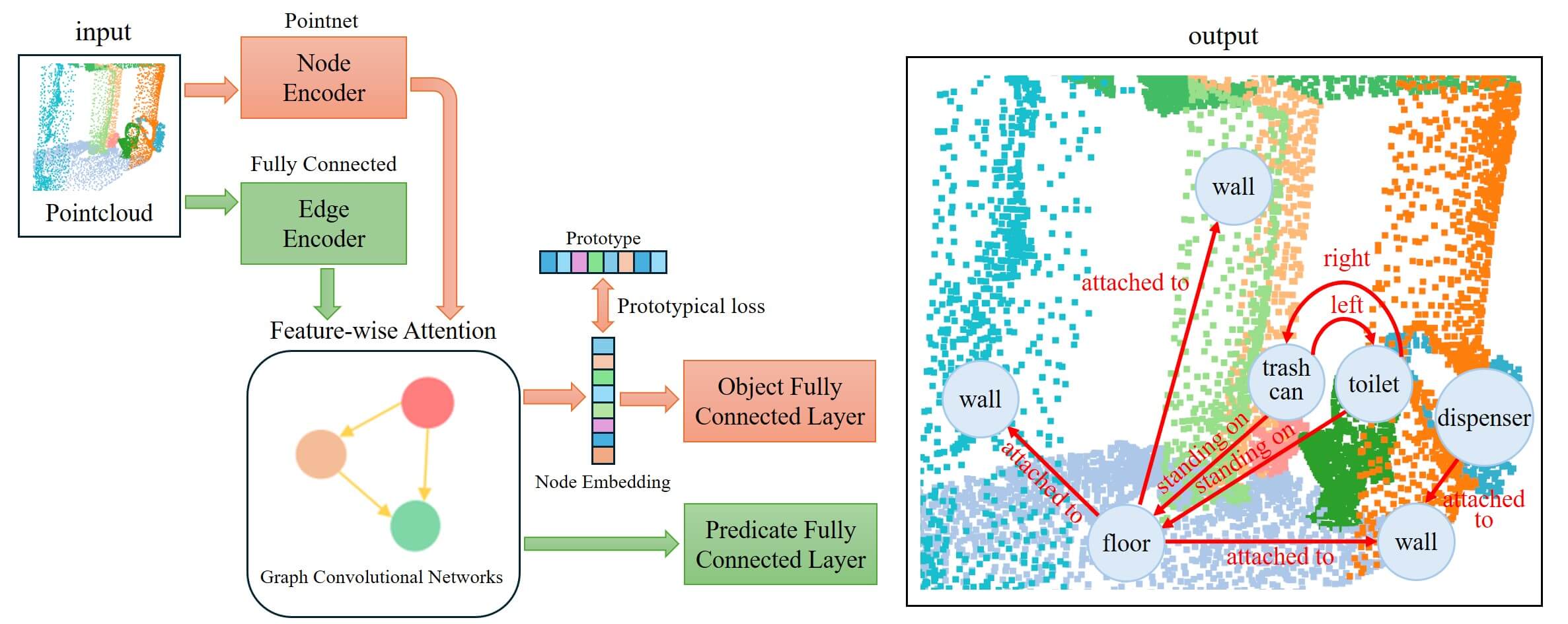

Fusion Prototypical Network for 3D Scene Graph Prediction

Department of Computer Science and Engineering, Gyeongsang National University, Jinju-si, 52828, Republic of Korea

* Corresponding Author: Suwon Lee. Email:

Computer Modeling in Engineering & Sciences 2025, 143(3), 2991-3003. https://doi.org/10.32604/cmes.2025.064789

Received 24 February 2025; Accepted 21 May 2025; Issue published 30 June 2025

Abstract

Scene graph prediction has emerged as a critical task in computer vision. It transforms complex visual scenes into structured representations by identifying objects, their attributes, and the relationships among them. Extending this task to 3D semantic scene graph (3DSSG) prediction adds a layer of complexity, because point-cloud data must be processed to accurately capture the spatial and volumetric characteristics of a scene. A significant challenge in 3DSSG is the long-tailed distribution of object and relationship labels: certain classes are severely underrepresented, which leads to suboptimal performance on these rare categories. To address this, we propose a fusion prototypical network (FPN), which combines conventional neural networks for 3DSSG with a Prototypical Network. The former are known for their ability to handle complex scene graph predictions, while the latter excels in few-shot learning scenarios. This fusion enhances overall prediction accuracy and substantially improves the handling of underrepresented labels. Through extensive experiments on the 3DSSG dataset, we demonstrate that the FPN achieves state-of-the-art performance in 3D scene graph prediction as a single model and effectively mitigates the impact of the long-tailed distribution, providing a more balanced and comprehensive understanding of complex 3D environments.
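As background for the few-shot component mentioned in the abstract, the following is a minimal sketch of the standard Prototypical Network classification rule: each class prototype is the mean of its support embeddings, and a query is scored by a softmax over negative squared Euclidean distances to the prototypes. It is not the authors' FPN and does not show how the fusion with a conventional 3DSSG network is performed. The function names (`class_prototypes`, `prototype_probs`), the 2-D embeddings, and the labels are hypothetical, chosen only for illustration.

```python
import math

def class_prototypes(embeddings, labels):
    """Mean embedding per class, computed from a labelled support set."""
    sums, counts = {}, {}
    for vec, label in zip(embeddings, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}

def prototype_probs(query, prototypes):
    """Softmax over negative squared Euclidean distances to each prototype."""
    logits = {c: -sum((q - p) ** 2 for q, p in zip(query, proto))
              for c, proto in prototypes.items()}
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {c: math.exp(z - peak) for c, z in logits.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}

# Toy, made-up embeddings: a frequent class and a rare class with one sample.
support = [[0.0, 0.0], [0.2, 0.0], [0.1, 0.3], [2.0, 2.0]]
support_labels = ["chair", "chair", "chair", "lamp"]

protos = class_prototypes(support, support_labels)
probs = prototype_probs([1.8, 2.1], protos)
predicted = max(probs, key=probs.get)
```

Because a prototype can be formed from a single support sample, the rare class still gets a usable decision region, which is the property that makes this family of methods attractive for long-tailed label distributions.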

Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
