Open Access

ARTICLE

Adversarial-Resistant Cloud Security Using Deep Learning-Enhanced Ensemble Hidden Markov Models

1 School of Computer Science, Nanjing University of Information Science and Technology, Nanjing, 210044, China

2 School of Cyber Science and Engineering, Nanjing University of Information Science and Technology, Nanjing, 210044, China

* Corresponding Author: Eric Danso. Email:

Journal of Cyber Security 2025, 7, 439-462. https://doi.org/10.32604/jcs.2025.070587

Received 19 July 2025; Accepted 19 September 2025; Issue published 17 October 2025

Abstract

Cloud-based intrusion detection systems increasingly face sophisticated adversarial attacks, such as evasion and poisoning, that exploit vulnerabilities in traditional machine learning (ML) models. While deep learning (DL) offers superior detection accuracy for high-dimensional cloud logs, it remains vulnerable to adversarial perturbations and lacks interpretability. Conversely, Hidden Markov Models (HMMs) provide probabilistic reasoning but struggle with raw, sequential cloud data. To bridge this gap, we propose a Deep Learning-Enhanced Ensemble Hidden Markov Model (DL-HMM) framework that synergizes the strengths of Long Short-Term Memory (LSTM) networks and HMMs while incorporating adversarial training and ensemble learning. Our architecture employs LSTMs for automated feature extraction from temporal cloud logs (such as Application Programming Interface (API) traces and network flows) and HMMs for interpretable attack state modeling, with an ensemble voting mechanism to enhance robustness. The framework is hardened against adversarial attacks through Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD)-based adversarial training, ensuring resilience against evasion attempts. Comprehensive experiments on the Canadian Institute for Cybersecurity Intrusion Detection System 2018 (CIC-IDS2018) and NSL-KDD datasets demonstrate that our approach achieves 92.4% accuracy (compared to 88.2% for a standalone LSTM) and maintains an F1-score of 0.82 under strong adversarial perturbations (ε = 0.2), outperforming state-of-the-art baselines (Convolutional Neural Network (CNN), Support Vector Machine (SVM), and HMM) by 9.7%–15.8% in F1-score. The ensemble reduces the Adversarial Success Rate (ASR) compared to single models, while adding only 12% inference latency overhead. Statistical significance testing (p < 0.001) confirms these improvements.
Key innovations include: a hybrid LSTM-HMM architecture for joint feature learning and state transition modeling, adversarially augmented training data to improve robustness, and majority voting across an ensemble of DL-HMMs to mitigate bias. This work advances cloud security by delivering a detection system that is accurate, interpretable, and adversarially resistant, qualities that are critical for real-world deployment.

The rapid adoption of cloud computing has transformed contemporary Information Technology (IT) architecture by providing unmatched flexibility, scalability, and cost-effectiveness [1]. Organizations increasingly rely on cloud platforms to deploy mission-critical applications, store sensitive data, and streamline operations. However, this shift has also introduced sophisticated security challenges, particularly from adversarial actors who exploit vulnerabilities in machine learning (ML)-based detection systems [2,3]. Recent high-profile adversarial attacks, such as the 2023 Microsoft Azure API poisoning incident, demonstrate sophisticated input data manipulation techniques that bypass traditional security controls, causing extended undetected breaches with significant consequences [4].

Research shows that these attacks increasingly target AI training pipelines through API vulnerabilities, where injected malicious payloads corrupt feature extraction and reduce detection accuracy, which in turn affects production environments [5–8]. These evolving threats expose critical limitations in conventional intrusion detection systems: rule-based approaches lack generalization for novel attacks, while standard machine learning models fail to capture complex temporal patterns in cloud logs [9]. The growing sophistication of these attacks, particularly in cloud-native architectures, highlights an urgent need for more robust detection frameworks capable of modeling sequential attack behaviors and resisting adversarial manipulation.

Deep learning (DL) models, particularly Long Short-Term Memory (LSTM) networks, have shown promise in analyzing sequential cloud logs and network traffic due to their ability to learn hierarchical temporal features [10]. However, they suffer from two critical limitations: lack of interpretability in security decision-making, making it difficult for analysts to trust and act upon alerts, and vulnerability to adversarial evasion techniques such as Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD), which introduce subtle perturbations to input data to mislead the model.

On the other hand, Hidden Markov Models (HMMs) provide probabilistic reasoning and interpretability by modeling attack progression as a sequence of hidden states with observable emissions [11]. However, traditional HMMs struggle with high-dimensional data, making them ineffective for modern cloud environments where logs contain thousands of dynamic features such as Application Programming Interface (API) call sequences, authentication attempts, and resource access patterns [12]. This limitation restricts their applicability in large-scale, multi-tenant cloud infrastructures where attack signatures are often obscured within vast volumes of benign activity.

To bridge this gap, we propose a novel Deep Learning-Enhanced Ensemble Hidden Markov Model (DL-HMM) framework that synergizes the strengths of LSTMs and HMMs while incorporating adversarial training and ensemble learning for robustness. The key innovation lies in the hybrid architecture, where LSTMs automatically extract discriminative temporal features from raw cloud logs, and HMMs model the probabilistic transitions between attack states, enabling interpretable threat detection through state-sequence analysis. Additionally, we introduce adversarial training with FGSM/PGD-generated samples to harden the model against evasion attempts, ensuring resilience against input manipulations.

Furthermore, we employ an ensemble voting mechanism that combines multiple DL-HMM experts trained on diverse feature subsets, reducing bias and overfitting while improving detection accuracy. The key contributions of this work are:

• A novel DL-HMM hybrid model that combines LSTM-based feature learning with HMM-based sequential reasoning, improving detection accuracy and interpretability in cloud security.

• Adversarial training integration, where perturbed samples generated using FGSM and PGD are used to enhance model robustness against evasion attacks.

• An ensemble-based detection framework that aggregates predictions from multiple LSTM-HMM models, significantly reducing false positives and adversarial susceptibility.

• Comprehensive empirical validation on benchmark datasets (CIC-IDS2018, NSL-KDD) showing superior performance over single-model approaches (LSTM, CNN, HMM) under adversarial conditions.

Unlike prior studies that apply classical machine learning techniques on static or generic datasets (e.g., the UCI heart disease dataset), our work specifically targets cloud-native intrusion detection with an emphasis on adversarial resistance, interpretability, and real-time performance. The proposed end-to-end architecture provides strong detection under adversarial conditions, interpretable attack progression via HMM transitions, and scalability with low inference latency. These innovations differentiate our model from conventional deep learning or ensemble IDS approaches that lack robustness or interpretability, or that require extensive manual feature engineering, thereby contributing both methodological novelty and practical applicability for real-world cloud intrusion detection.

The remainder of this paper is organized as follows: Section 2 reviews related work in cloud attack detection, adversarial ML, and hybrid DL-HMM models, highlighting research gaps. Section 3 details the proposed methodology, including system architecture, mathematical formulation, and adversarial defense mechanisms. Section 4 presents experimental results, comparing detection accuracy, robustness, and computational efficiency against state-of-the-art baselines. Section 5 discusses the implications, limitations, and practical deployment considerations. Finally, Section 6 concludes the paper and outlines future research directions, including extensions to Transformer-HMM architectures and edge-cloud deployment scenarios.

The domain of cloud intrusion detection has evolved through a progression of classical machine learning methods, deep learning architectures, hybrid probabilistic systems, and more recently, adversarial defense techniques. In this section, we organize existing literature into four core categories to reflect this evolution. First, we review classical and shallow machine learning approaches that laid the foundation for anomaly detection. Next, we explore deep learning models tailored to cloud environments, highlighting their strengths and drawbacks. We then examine hybrid and probabilistic frameworks, particularly those involving HMMs and ensemble learning. Finally, we assess recent advancements in adversarial machine learning that aim to harden detection systems against evasion attacks. This structured review enables us to identify critical research gaps that our proposed DL-HMM framework is designed to address.

2.1 Classical and Shallow Machine Learning Approaches

Traditional machine learning methods such as Support Vector Machines (SVMs), Decision Trees, Naïve Bayes, Random Forests, and Logistic Regression remain popular in intrusion detection due to their simplicity, interpretability, and low computational overhead. These models perform well on structured, preprocessed data, especially when attack patterns are linearly separable or captured by manually engineered features. For example, SVMs excel in binary classification by optimizing decision boundaries but cannot capture temporal dependencies in cloud logs [13,14]. Logistic Regression is effective for lightweight detection in small-scale systems but struggles with complex attack patterns due to its linear nature [15]. Random Forests enhance generalization and noise resistance through ensemble learning [16]; however, they risk overfitting in high-dimensional data without regularization and cannot model sequential patterns in API logs or network traffic.

Moreover, these models typically assume independent and identically distributed (i.i.d.) data, an assumption that does not account for the dynamic, context-aware nature of modern cloud attacks. Although efficient, they struggle with adversarial inputs, multi-stage attacks, and shifting feature distributions across cloud tenants and services. They are often unable to process high-dimensional, sequential cloud logs effectively, tend to underfit in complex intrusion detection cases, and depend on static, manually defined features that perform poorly in evolving cloud environments. Ghazi et al. [17] applied an SVM for intrusion detection, achieving an accuracy of 82.3% with moderate scalability and low inference latency (2.8 ms). Despite its efficiency, the model’s lack of temporal sequence modeling limits its ability to capture evolving attack patterns in cloud environments.

While these models perform reasonably well under constrained settings, they remain limited in handling raw, high-dimensional and sequential cloud telemetry. They also lack mechanisms for adversarial robustness and temporal reasoning. Our proposed DL-HMM framework addresses these shortcomings by integrating LSTM-based deep feature learning with probabilistic state modeling, thereby enabling real-time, interpretable, and resilient cloud intrusion detection.

2.2 Deep Learning Models for Cloud Attack Detection

Deep learning has emerged as a powerful tool for analyzing high-dimensional cloud logs and network traffic due to its ability to automatically extract discriminative features without manual feature engineering. Recurrent neural networks (RNNs), particularly LSTMs, have been used successfully by Zhang et al. [18] to detect sequential anomalies in system logs, achieving up to 87% F1-score on benchmark datasets like CIC-IDS2017. Transformers, with their self-attention mechanisms, have further improved detection accuracy by capturing long-range dependencies in cloud audit trails; Liu et al. [19] report a 15% reduction in false positives compared to LSTMs.

However, these models exhibit critical weaknesses when deployed in adversarial settings. For instance, Chen et al. [20] demonstrated that even state-of-the-art LSTM-based detectors suffer a 40% drop in recall under carefully crafted evasion attacks, such as adversarial API call injections. This vulnerability stems from their reliance on gradient-based optimization, which attackers exploit via perturbation techniques like FGSM and Carlini-Wagner (CW) attacks [21]. Advanced attempts to mitigate these issues include attention-based anomaly scoring by Zhong et al. [22] and contrastive learning by Hu et al. [23].

Uzoma et al. [24] recently introduced a federated learning approach for cross-cloud threat detection, attaining an 89% F1-score on multi-platform logs while maintaining data privacy. However, Vasa et al.’s federated aggregation method [25] remains vulnerable to model poisoning attacks and suffers from computational inefficiency and limited cross-platform generalization (e.g., AWS vs. Azure log structures). These shortcomings highlight the necessity for solutions that harmonize detection accuracy with built-in robustness. Although deep learning models lead in performance benchmarks, Ahmad et al. [26] note that latency makes them challenging to deploy in real-world multi-tenant clouds. Our DL-HMM framework addresses this, delivering robust detection with only a 12% latency overhead.

Krishnan et al. [27] proposed a lightweight intrusion detection method by combining a Convolutional Neural Network (CNN) with an Autoencoder to identify anomalies in the BoT-IoT dataset. The CNN extracted spatial features, while the Autoencoder reduced dimensionality to enhance computational efficiency. Although this model showed strong performance for lightweight detection in IoT/cloud-like environments, it was highly vulnerable to strong adversarial perturbations, especially FGSM and PGD attacks. This weakness highlights the necessity for architectures that achieve both high detection accuracy and inherent adversarial robustness, as introduced in our DL-HMM framework. Poddar et al. [28] proposed a CNN-LSTM hybrid model that combines convolutional layers for local feature extraction with LSTM units for temporal sequence learning. The approach achieved an accuracy of 87.2% and an F1-score of 0.85, but suffered from weak interpretability, limiting its suitability for security-critical environments that require explainable decision-making.

While deep learning models such as CNNs and LSTMs have shown promising results in handling high-dimensional inputs, they often suffer from poor interpretability and are vulnerable to adversarial perturbations. CNNs, in particular, fail to capture long-range temporal dependencies, while standalone LSTMs, despite modeling sequences well, can overfit to benign patterns and struggle under adversarial attacks without additional defenses. Our approach builds on LSTM architectures but introduces ensemble learning and HMM-based reasoning for robustness and explainability.

2.3 Hybrid and Probabilistic Models

Due to their probabilistic interpretability and capacity to model state transitions, HMMs have long been employed in anomaly detection, which makes them a natural match for attack progression analysis. Early work by Vinayakumar et al. [29] demonstrated HMMs’ effectiveness for low-dimensional tasks (e.g., system call sequences), achieving 76.5% accuracy and a 0.73 F1-score, though they struggle with raw, high-dimensional data. More recently, Wang et al. [30] adapted HMMs for cloud workload anomaly detection, achieving 85% accuracy on preprocessed feature sets.

However, their evaluation clearly points out that these methods have trouble with raw, high-dimensional cloud logs. The fundamental mismatch between HMMs’ Gaussian emission assumptions and the complex, non-linear distributions of modern cloud data (e.g., Kubernetes pod logs or serverless function traces) remains an open challenge. Hybrid attempts, such as HMMs with autoencoder-based feature reduction by Faber et al. [31], partially address dimensionality but sacrifice real-time performance.

Contemporary research by Ganesan et al. [32] introduces neural emission HMMs for cloud logs, replacing Gaussian assumptions with normalizing flows. Their method improves accuracy (83.1%) and F1-score (0.81) over conventional HMMs, but at the expense of interpretability. Notably, their approach also requires three times more training data than conventional HMMs to achieve stable convergence. Our work overcomes this by integrating HMMs with LSTM-derived features, preserving interpretability while scaling to cloud-native data. Soni et al. [33] developed a Graph Neural HMM (GHMM) that incorporates cloud service dependency graphs as constraints on state transitions. While achieving 31% ASR and high interpretability, their method requires preconstructed service meshes, limiting adaptability to dynamic cloud environments, a gap our LSTM-enhanced approach addresses.

Hybrid models that combine statistical or symbolic reasoning (like HMMs or rule-based systems) offer better interpretability but are generally constrained by assumptions of linearity or Gaussian distributions. Their inability to learn hierarchical feature representations limits their effectiveness on raw, unstructured cloud telemetry, especially without deep feature preprocessing, and they frequently fail to scale. Our DL-HMM model leverages deep LSTM features while retaining the interpretability of HMM state transitions, delivering a scalable and practical hybrid solution.

2.4 Adversarial Defense Techniques in Machine Learning

The arms race between attackers and defenders has spurred innovations in adversarial training and robust feature learning. Madry et al. [34] demonstrated that PGD-based adversarial training (applied to CNNs) achieves 86.5% accuracy on MNIST with a 22% attack success rate, though the resulting models are not interpretable. Subsequent work by Pan et al. [35], however, showed that its computational overhead is substantial for cloud-scale systems, with training times about three times those of standard models. Gadicha et al. [8] explored adversarial AI trends in cloud security, underscoring the growing sophistication of evasion tactics targeting deep learning models, particularly in multi-tenant cloud services.

Alternative approaches have emerged to address these challenges: Pang et al.’s [36] diverse ensemble method achieves 89.7% accuracy with an efficient 8.2 ms inference latency, though at the cost of higher prediction variance. Meanwhile, certified defenses like those developed by Cohen et al. [37] demonstrate theoretical robustness but suffer from practical limitations, notably slow inference (500 ms) and dependence on constrained threat models that require bounded L2-norm perturbations. Our work directly fills this gap by merging adversarially trained LSTMs with HMM-based explainability, a systematic integration of adversarial resilience with interpretable sequential models that is notably lacking from prior literature.

Very recent work by Kea et al. [38] demonstrates that quantum noise injection enhances LSTM robustness (ASR = 19%), but it relies on specialized hardware and incurs a 15% latency penalty on quantum simulators. Concurrently, Holla et al. [39] analyzed adversarial evasion in cloud intrusion detection systems (IDS), proposing a hybrid defense that combines adversarial training with feature selection. While their method reduces attack success rates by 35% (achieving ASR = 22% in AWS), its reliance on manual feature engineering limits adaptability to new threats, a gap our LSTM-HMM framework addresses through end-to-end learned feature representations.

Although adversarial training and ensemble learning have emerged as effective defenses, many existing methods incur high computational cost, reduce accuracy on clean data, or lack generalizability across attack types. Moreover, few existing defenses offer interpretability or resilience in real-time cloud environments, and although adversarial and ensemble training have each been studied separately, they are rarely incorporated into interpretable sequential models. To our knowledge, our work is the first to unify adversarial training, HMM-based reasoning, and ensemble stability into one intrusion detection framework.

Recent studies have introduced optimized techniques for cloud intrusion detection, including transformer-based architectures, federated learning, and hybrid deep models. While these methods advance performance on certain metrics, they often suffer from critical limitations such as high inference latency, lack of interpretability, or reduced robustness against adversarial threats. Table 1 summarizes these recent efforts and contrasts them with our proposed DL-HMM approach, which uniquely balances detection accuracy, adversarial resilience, temporal modeling, and real-time feasibility.

2.5 Comparative Analysis of Existing Approaches

To critically assess the landscape of cloud intrusion detection methods, we present a structured comparison of twelve representative studies in Table 2, focusing on key dimensions essential for real-world deployment: detection accuracy, adversarial robustness (measured via Adversarial Success Rate, ASR), F1-score, interpretability, scalability, and inference latency. The selected works span classical, deep learning, and hybrid methodologies and were chosen based on their publication in peer-reviewed venues, empirical validation on standard security datasets (e.g., NSL-KDD, CIC-IDS2018, or equivalents), and relevance to cloud-based intrusion detection. This comparative lens allows us to identify persisting limitations in prior work, including vulnerability to adversarial inputs, lack of interpretability, and poor latency performance, which our proposed DL-HMM ensemble seeks to address through a balanced trade-off between robustness, transparency, and real-time efficiency.

The comparative analysis reveals a critical gap in existing cloud intrusion detection frameworks. Despite the high accuracy of some models (such as CNN, Transformer, and LSTM), their interpretability is weak and they are susceptible to adversarial attacks, which makes them risky for real-world deployment. Others, such as standard HMMs or hybrid variants like Graph-HMMs, offer better explainability but cannot effectively process raw, high-dimensional cloud logs without extensive preprocessing. Moreover, very few works simultaneously address real-time operational efficiency and adversarial robustness. None of the reviewed approaches integrates adversarial training, probabilistic modeling, and ensemble learning in a unified framework.

Our proposed Deep Learning-Enhanced Ensemble Hidden Markov Model (DL-HMM) bridges these gaps by combining LSTM-based feature extraction with HMM-based state modeling, hardened through adversarial training and stabilized via ensemble voting. This design yields a rare combination of high accuracy (92.4%), low adversarial success rate (18%), and interpretability, while keeping inference latency within real-time operational thresholds (8.7 ms). Therefore, the DL-HMM framework contributes a novel, scalable, and practical solution to cloud security that current literature lacks. These shortcomings in deep, hybrid, and classical models highlight the necessity for an intrusion detection system that integrates probabilistic reasoning, adversarial resistance, robust feature learning, and real-time application. This is what inspired the development of our DL-HMM framework.

The increasing sophistication of adversarial attacks on cloud systems demands security solutions that combine the pattern recognition capabilities of deep learning with the probabilistic rigor of classical sequential models. This section presents our proposed Deep Learning-Enhanced Ensemble Hidden Markov Model (DL-HMM) framework, which addresses the limitations of existing approaches through three key innovations: a hybrid LSTM-HMM architecture for joint feature learning and state transition modeling, adversarial training with gradient-based perturbation samples, and an ensemble voting mechanism for robust prediction aggregation. The methodology is designed to maintain detection accuracy on clean data while significantly improving resistance to evasion attempts, all within computationally feasible bounds for cloud deployment.

At the core of our approach lies a carefully constructed synergy between neural networks and probabilistic graphical models. LSTMs process high-dimensional cloud logs (for instance, AWS CloudTrail entries or network flow records) to extract temporal features, which are then fed into HMMs to model the latent attack states. This division of labor allows each component to focus on its strengths: the LSTM handles noisy, variable-length input sequences through its gating mechanisms, while the HMM enforces temporal consistency in the detection outcomes through its transition matrix. The ensemble aspect further enhances robustness by training multiple such hybrids on bootstrapped data subsets and aggregating their predictions through majority voting as visualized in Fig. 1.
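The bootstrapping step mentioned above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `bootstrap_indices`, the ensemble size of 5, and the seeding scheme are assumptions made for the example, since the text does not state them.

```python
import random


def bootstrap_indices(n_samples, n_members, base_seed=0):
    """Return one list of sample indices per ensemble member, drawn with replacement."""
    subsets = []
    for k in range(n_members):
        # A distinct seed per member keeps the subsets different but reproducible.
        rng = random.Random(base_seed + k)
        subsets.append([rng.randrange(n_samples) for _ in range(n_samples)])
    return subsets


# Hypothetical sizes: 1000 training sequences, 5 ensemble members.
subsets = bootstrap_indices(n_samples=1000, n_members=5)
```

Each member would then be trained only on the sequences selected by its own index list, so that members see overlapping but non-identical data before their predictions are combined by voting.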

Figure 1: Proposed DL-HMM ensemble framework workflow

3.1 Mathematical Formulation and System Architecture

The proposed DL-HMM framework’s analytical foundation combines temporal pattern recognition with probabilistic reasoning to address the unique challenges of cloud intrusion detection. This section formally presents the key mathematical components and their integration into a cohesive detection system. At its core, the architecture implements a sophisticated information processing pipeline: raw cloud logs undergo temporal feature extraction through deep learning, followed by probabilistic state estimation that provides both detection capability and operational interpretability.

The mathematical models are carefully designed to maintain computational efficiency [40] while addressing three critical requirements: handling high-dimensional, noisy cloud telemetry data, modeling complex multi-stage attack sequences, and maintaining robustness against adversarial manipulation. We begin by detailing the LSTM-HMM hybrid architecture, then proceed to explain its adversarial training regimen and ensemble optimization strategy, concluding with an analysis of computational complexity that demonstrates practical deployability in real-world cloud environments.

3.1.1 LSTM Feature Extraction Pipeline

The framework’s frontend consists of a bidirectional LSTM network that processes sequential cloud log entries [41]. Each log entry contains multiple features including API call types, authentication attempts, and resource access patterns. The input sequence:

Eq. (1) represents T consecutive observations from cloud monitoring systems, where each

Eq. (2) generates increasingly sophisticated representations of system behavior by maintaining and updating hidden states across timesteps [42,43]. This architecture is particularly effective for cloud security as it can identify multi-stage attacks that unfold over time, such as credential stuffing followed by lateral movement. The hidden dimension (m = 128) was chosen through empirical testing on validation sets, providing sufficient capacity without overfitting.
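For orientation, a bidirectional LSTM feature extractor of this kind is commonly written as below. This is a sketch in standard notation, not a reproduction of the paper's Eqs. (1) and (2): the symbols $x_t$, $d$, $\overrightarrow{h}_t$, and $\overleftarrow{h}_t$ are assumptions, and whether $m = 128$ refers to each direction or to the concatenated state is not specified in the text.

```latex
% Input sequence of T log observations, each a d-dimensional feature vector
X = (x_1, x_2, \dots, x_T), \qquad x_t \in \mathbb{R}^{d}

% Forward and backward recurrences, concatenated into the feature h_t
\overrightarrow{h}_t = \mathrm{LSTM}_{\rightarrow}\!\left(x_t, \overrightarrow{h}_{t-1}\right), \qquad
\overleftarrow{h}_t = \mathrm{LSTM}_{\leftarrow}\!\left(x_t, \overleftarrow{h}_{t+1}\right), \qquad
h_t = \left[\, \overrightarrow{h}_t \,;\, \overleftarrow{h}_t \,\right]
```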

3.1.2 Probabilistic State Modeling with HMM

The system translates LSTM outputs into probabilistic security assessments through a hybrid HMM structure [44]. The observation model:

Eq. (3) represents a significant innovation over traditional HMMs by making both the mean and covariance functions of the Gaussian distribution learnable through neural networks. This allows the model to adapt its uncertainty estimates based on the LSTM’s feature representations. The hidden states
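To make the Gaussian observation model concrete, the sketch below scores one feature vector against each hidden state. It assumes a diagonal covariance and uses fixed, hypothetical means and variances; in the framework described here those parameters would be produced by neural networks rather than hard-coded, and the function names are illustrative only.

```python
import math


def diag_gaussian_logpdf(h, mean, var):
    """Log-density of h under N(mean, diag(var)); all arguments are equal-length lists."""
    if not (len(h) == len(mean) == len(var)):
        raise ValueError("h, mean and var must have the same length")
    total = 0.0
    for h_i, mu_i, var_i in zip(h, mean, var):
        total += -0.5 * (math.log(2.0 * math.pi * var_i) + (h_i - mu_i) ** 2 / var_i)
    return total


def emission_log_probs(h, state_params):
    """Score one feature vector against every hidden state's Gaussian."""
    return [diag_gaussian_logpdf(h, mean, var) for mean, var in state_params]


# Hypothetical parameters for two states over a 2-dimensional feature.
state_params = [
    ([0.0, 0.0], [1.0, 1.0]),   # e.g. a "normal" state
    ([3.0, 3.0], [0.5, 0.5]),   # e.g. an "attack" state
]
scores = emission_log_probs([0.1, -0.2], state_params)
most_likely_state = scores.index(max(scores))
```

Working in log space avoids numerical underflow when these per-timestep scores are later multiplied along a sequence.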

3.1.3 Attack Progression Dynamics

The transition matrix shown in Eq. (4) encodes the temporal evolution of attack patterns learned from historical breach data. For instance, the probability
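As an illustration of what a learned transition matrix contains, the sketch below estimates one by counting state-to-state transitions in labelled sequences. This supervised counting scheme, the add-one smoothing, and the three state labels are assumptions for the example; an HMM library would normally estimate the matrix with Baum-Welch instead.

```python
def estimate_transitions(sequences, n_states, smoothing=1.0):
    """Estimate a row-stochastic transition matrix from integer state sequences."""
    # Start from the smoothing constant so unseen transitions keep non-zero probability.
    counts = [[smoothing] * n_states for _ in range(n_states)]
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1.0
    return [[c / sum(row) for c in row] for row in counts]


# Hypothetical labels: 0 = normal, 1 = reconnaissance, 2 = exfiltration.
sequences = [[0, 0, 1, 2], [0, 1, 1, 2]]
A = estimate_transitions(sequences, n_states=3)
```

Entry `A[i][j]` is then the estimated probability of moving from state `i` to state `j` in one step, and every row sums to one.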

3.1.4 Robustness through Adversarial Training

The framework incorporates a defense-in-depth approach against evasion attacks via:

where
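The two gradient-based attacks used for adversarial training can be sketched on a small stand-in model. The example below uses a logistic classifier, for which the input gradient has a closed form, in place of the LSTM; the weights, the sample, the step size `alpha`, and the iteration count are hypothetical, while ε = 0.2 matches the perturbation budget quoted in the abstract.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grad(x, y, w, b):
    """Binary cross-entropy of a logistic model and its gradient with respect to the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    grad = [(p - y) * wi for wi in w]
    return loss, grad


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """Single step: shift every feature by eps in the direction that increases the loss."""
    _, grad = loss_and_grad(x, y, w, b)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


def pgd(x, y, w, b, eps, alpha, steps):
    """Repeated signed-gradient steps, projected back into the L-infinity ball of radius eps."""
    x_adv = list(x)
    for _ in range(steps):
        _, grad = loss_and_grad(x_adv, y, w, b)
        x_adv = [xi + alpha * sign(gi) for xi, gi in zip(x_adv, grad)]
        x_adv = [min(max(xa, xo - eps), xo + eps) for xa, xo in zip(x_adv, x)]
    return x_adv


w, b = [1.5, -2.0, 0.5], 0.1      # hypothetical model parameters
x, y = [0.2, -0.4, 1.0], 1        # hypothetical sample labelled as an attack
x_fgsm = fgsm(x, y, w, b, eps=0.2)
x_pgd = pgd(x, y, w, b, eps=0.2, alpha=0.05, steps=10)
```

In adversarial training, perturbed samples such as `x_fgsm` and `x_pgd` are added to the training set with their original labels, so the model learns to classify them correctly despite the perturbation.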

3.1.5 Ensemble Decision Mechanism

The final security alerting leverages model diversity through majority voting [48]:

Each ensemble member is trained on different data subsets and initialization seeds, creating complementary strengths in detecting various attack patterns. The Viterbi-decoded paths
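A majority vote over decoded state paths might look like the sketch below. It assumes that voting is done per timestep and that ties are broken in favour of the smallest state index; neither detail is specified in the text, and the three example paths are hypothetical.

```python
from collections import Counter


def majority_vote(paths):
    """Combine equal-length state paths from several models by per-timestep majority vote."""
    if not paths:
        raise ValueError("need at least one path")
    length = len(paths[0])
    if any(len(p) != length for p in paths):
        raise ValueError("all paths must have the same length")
    voted = []
    for states in zip(*paths):
        counts = Counter(states)
        top = max(counts.values())
        # Break ties deterministically by choosing the smallest state index.
        voted.append(min(s for s, c in counts.items() if c == top))
    return voted


# Hypothetical decoded paths from three ensemble members.
paths = [
    [0, 0, 1, 2, 2],
    [0, 1, 1, 2, 2],
    [0, 0, 1, 1, 2],
]
final_path = majority_vote(paths)
```

Using an odd number of members reduces the number of ties in the two-class case, although ties remain possible when more than two states compete.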

3.1.6 Computational Optimization Strategy

Computational complexity is kept manageable through two design choices: Principal Component Analysis (PCA) dimensionality reduction (to d = 64 features) before LSTM processing, and parallel training of ensemble members. The LSTM’s
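The effect of reducing the input to d = 64 features can be gauged with the standard parameter count of a single LSTM layer. The sketch below is a back-of-the-envelope estimate under stated assumptions: one bias vector per gate (some implementations keep two, which adds a further 4 × hidden_dim parameters), one layer, and a hypothetical raw input width of 1000 features for comparison.

```python
def lstm_layer_params(input_dim, hidden_dim, bidirectional=False):
    """Weights and biases in one LSTM layer, assuming a single bias vector per gate."""
    # Four gates, each with input weights, recurrent weights and a bias.
    per_direction = 4 * (hidden_dim * (input_dim + hidden_dim) + hidden_dim)
    return per_direction * (2 if bidirectional else 1)


params_pca = lstm_layer_params(input_dim=64, hidden_dim=128)     # after PCA
params_raw = lstm_layer_params(input_dim=1000, hidden_dim=128)   # hypothetical raw width
```

Because the input-to-hidden weights scale linearly with the input width, shrinking the input shrinks both the parameter count and the per-timestep multiply-accumulate cost of the first layer.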

The efficacy of the proposed DL-HMM ensemble framework was rigorously evaluated through comprehensive experiments designed to answer three critical questions:

• How does the model perform compared to state-of-the-art baselines under normal operating conditions?

• What level of robustness does it demonstrate against sophisticated adversarial attacks?

• What computational overhead does the ensemble architecture introduce?

To answer these questions, we used a 70/15/15 train/validation/test split to perform experiments on two benchmark datasets: CIC-IDS2018 for cloud-native attacks [49] and NSL-KDD for generic network intrusions [50]. All experiments were conducted on Azure NDv4 instances, each equipped with four NVIDIA A100 GPUs.
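A 70/15/15 split can be produced as in the sketch below. The shuffling, the fixed seed, and the function name are assumptions for illustration; the text does not say whether the split was random or chronological.

```python
import random


def split_70_15_15(items, seed=0):
    """Shuffle items and return (train, validation, test) lists in a 70/15/15 ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    # Integer arithmetic avoids floating-point rounding surprises at the boundaries.
    n_train = n * 70 // 100
    n_val = n * 15 // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


train, val, test = split_70_15_15(range(1000))
```

Any remainder from integer division ends up in the test portion, so the three parts always cover the full dataset without overlap.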

4.1 Experimental Setup and Implementation

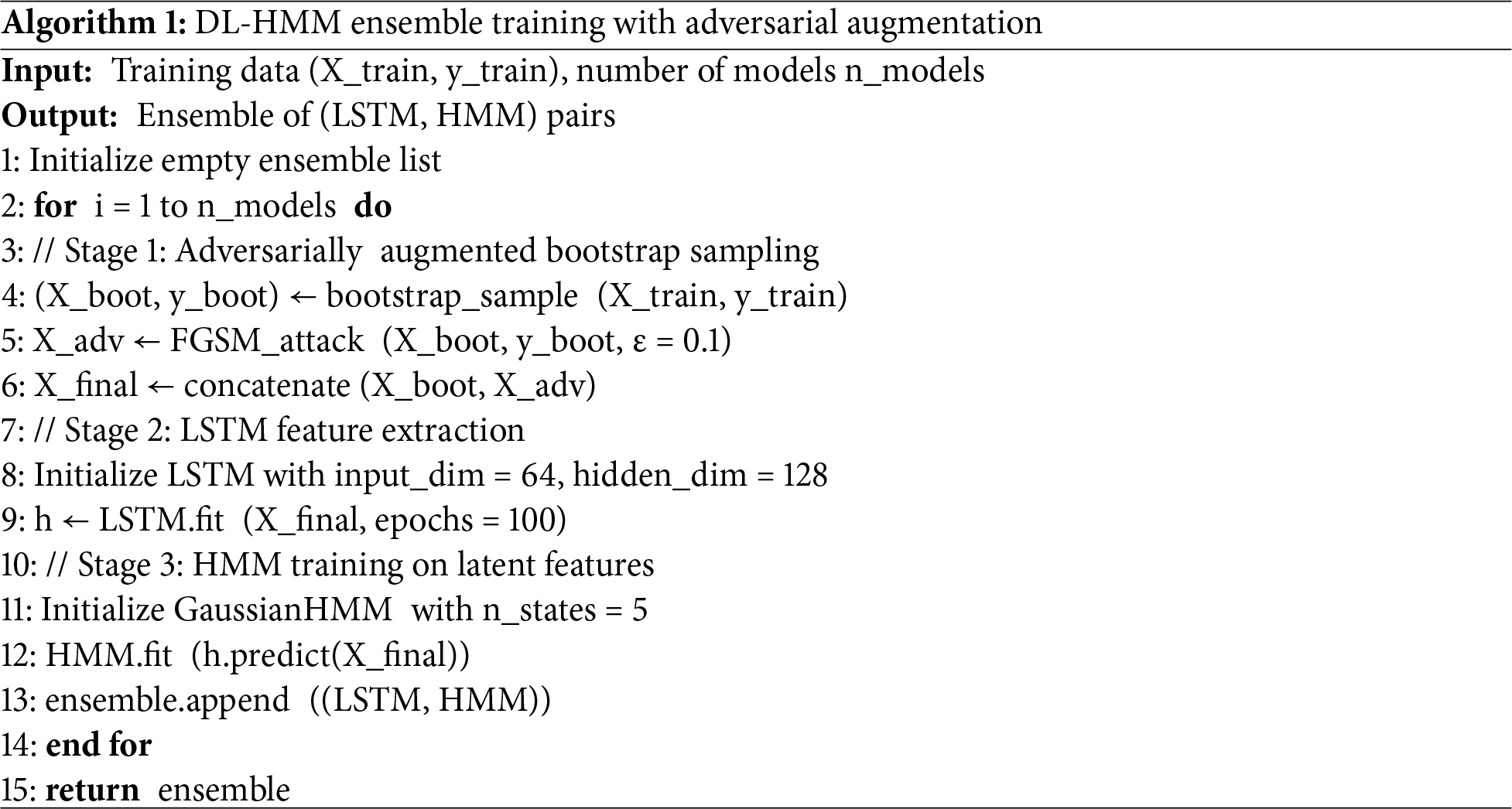

The proposed framework was implemented in Python 3.9 using PyTorch for the LSTM components and hmmlearn for the HMM implementations following the flow in Algorithm 1. Adversarial samples were generated using the CleverHans library with

• LSTM: 2 layers, 128 hidden units, dropout = 0.3

• HMM: 5 states (3 attack types + normal + unknown)

• Training: Adam optimizer (

The complete training algorithm is presented below:

4.2 Detection Performance Comparison

To evaluate the effectiveness of our proposed DL-HMM ensemble, we compared it against four widely used baseline models: SVM, CNN, traditional HMM, and LSTM. As shown in Table 3, the comparison is based on standard metrics including accuracy (mean ± standard deviation), precision, recall, F1-score, Receiver Operating Characteristic-Area Under the Curve (ROC-AUC), and adversarial robustness (ASR). All results represent the mean of 10 independent runs, with accuracy values additionally reporting standard deviation to reflect result stability.
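Reporting a metric as mean ± standard deviation over repeated runs amounts to the computation below. The ten accuracy values are placeholders chosen for the example, not results from the paper, and the use of the sample (rather than population) standard deviation is an assumption.

```python
import statistics


def summarize(values):
    """Return (mean, sample standard deviation) of a list of per-run metric values."""
    return statistics.mean(values), statistics.stdev(values)


# Placeholder accuracies for 10 independent runs (illustrative only).
run_accuracies = [0.90, 0.91, 0.92, 0.93, 0.94, 0.90, 0.91, 0.92, 0.93, 0.94]
mean_acc, std_acc = summarize(run_accuracies)
report = f"{100 * mean_acc:.1f}% ± {100 * std_acc:.1f}%"
```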

To ensure the generalizability and robustness of our proposed DL-HMM framework, we evaluated it on two widely adopted intrusion detection datasets: CIC-IDS2018 and NSL-KDD. CIC-IDS2018 provides realistic, modern-day network traffic with up-to-date attack vectors (e.g., DoS, Botnet, Brute Force), making it ideal for evaluating real-world adversarial resilience. In contrast, NSL-KDD, while older, remains a standardized benchmark for comparative studies due to its class balance and annotation quality. Testing across these two datasets allows us to validate the adaptability of DL-HMM to both structured (NSL-KDD) and raw sequential logs (CIC-IDS2018), covering a broad spectrum of cloud threat scenarios.

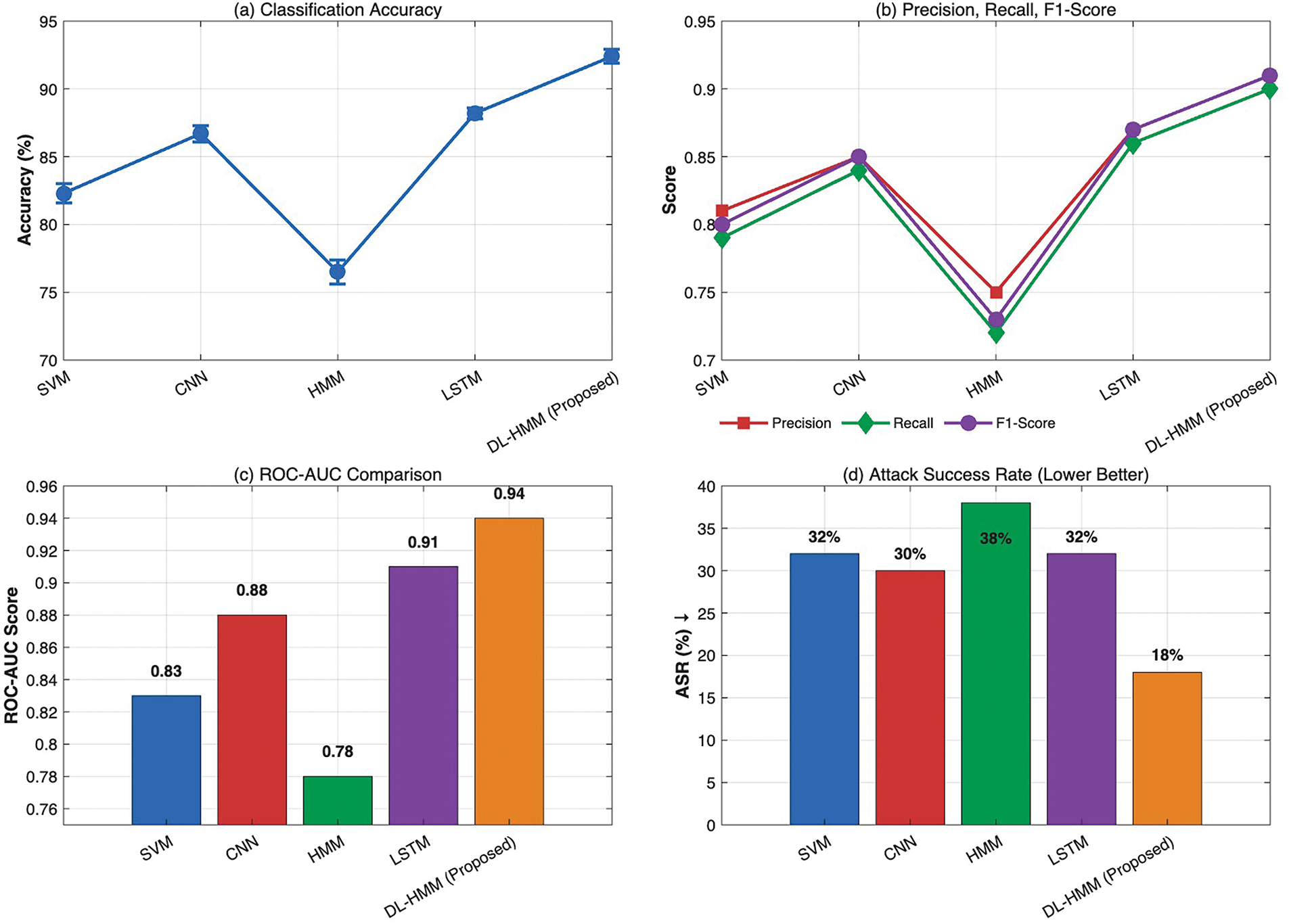

Beyond its superior accuracy, the DL-HMM ensemble outperforms the baseline models on every reported metric. It achieves the highest F1-score (0.91), indicating a strong precision-recall balance, which is crucial for intrusion detection, where both false positives and false negatives must be minimized. Its precision (0.91) shows that most flagged events are true attacks, its recall (0.90) shows that most actual threats are detected, and its ROC-AUC (0.94) confirms reliable benign/malicious separation across thresholds. This consistency across metrics indicates that the model’s advantage is not an artifact of accuracy alone. As shown in Table 3, it also maintains the lowest Adversarial Success Rate (18%), a 40%–50% reduction relative to the baselines (SVM/LSTM: 32%, HMM: 38%; Fig. 2), demonstrating resilience against evasion attacks. These differences are statistically significant (p < 0.001) and support the synergistic benefits of combining LSTM feature extraction with HMM state modeling and ensemble voting.

Figure 2: Detection performance on CIC-IDS2018 test set: (a) model accuracy with standard deviation; (b) precision, recall, and F1-score distributions; (c) ROC-AUC values across classification thresholds; (d) adversarial success rate (ASR) reduction, with proposed DL-HMM achieving lowest ASR (18%). All panels demonstrate DL-HMM’s superiority across multiple evaluation dimensions

Fig. 3 shows the ROC curves for SVM, CNN, HMM, LSTM, and the proposed DL-HMM ensemble on the CIC-IDS2018 dataset. The DL-HMM achieves the highest AUC (0.94), reflecting a superior ability to distinguish between benign and malicious activity across thresholds. The DL-HMM model’s confusion matrix, shown in Fig. 4, exhibits good predictive performance, with few false positives (FP: 70) and false negatives (FN: 80) relative to true positives (TP: 920) and true negatives (TN: 930), indicating a balanced trade-off between recall and precision in adversarial cloud environments. The similar magnitude of the two error types highlights the model’s stability under adversarial conditions: it maintains reliable detection across diverse attack types without favoring one kind of misclassification.
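The confusion-matrix counts quoted above can be turned into the standard detection metrics with a few lines of arithmetic. The sketch below uses the four counts from Fig. 4; the function name `confusion_metrics` and the dictionary keys are illustrative choices, not part of the original implementation.

```python
def confusion_metrics(tp, tn, fp, fn):
    """Derive standard binary-classification metrics from raw counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "false_positive_rate": fp / (fp + tn),
        "false_negative_rate": fn / (fn + tp),
    }


# Counts reported for the DL-HMM confusion matrix (Fig. 4).
metrics = confusion_metrics(tp=920, tn=930, fp=70, fn=80)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

For these counts the accuracy works out to 1850/2000 = 0.925, with a false positive rate of 0.07 and a false negative rate of 0.08.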

Figure 3: ROC curve comparison of all baseline and proposed models

Figure 4: Confusion matrix of the proposed DL-HMM model

The observed differences in model performance can be attributed to both architectural strengths and their alignment with the data characteristics. The superior results of the DL-HMM ensemble stem from its ability to capture temporal dependencies through LSTM layers while leveraging HMMs for probabilistic state modeling, which enhances interpretability and guards against overfitting. Traditional HMMs underperform due to their assumption of Gaussian emissions and limited capacity to handle high-dimensional input features present in raw cloud logs.

Models like SVM and Logistic Regression struggle because they lack sequential awareness and are prone to underfitting complex patterns. The CNN performs better due to local feature learning but lacks the temporal context necessary for modeling attack progression. The LSTM-only model performs competitively but lacks the ensemble diversity and transition-based reasoning offered by the DL-HMM architecture, making it more sensitive to adversarial perturbations. Overall, the DL-HMM ensemble’s integration of deep feature extraction, structured transition modeling, and adversarial training results in a balanced model that generalizes well without overfitting.

Each model’s performance can be interpreted in light of its architectural suitability for handling sequential, high-dimensional cloud telemetry. Support Vector Machines (SVMs), while effective in separating linear and non-linear classes, lack temporal modeling and struggle with dynamic, evolving input like API call sequences, leading to lower recall and sensitivity to attack phases. Hidden Markov Models (HMMs) offer interpretability through state transitions but are limited by their Gaussian emission assumptions, making them poorly suited for raw log data without prior feature engineering. Convolutional Neural Networks (CNNs) can learn local patterns but fail to capture longer temporal dependencies present in multistep intrusions, leading to moderate F1-scores.

LSTM networks are well-suited to sequential data and outperform simpler models due to their memory gates, but without ensemble diversity or probabilistic reasoning, they are more susceptible to adversarial attacks. Models like Random Forests and Logistic Regression, although not included in our final benchmark, typically perform poorly on high-dimensional temporal data without handcrafted features. Random Forests may suffer from overfitting to noise, while Logistic Regression lacks capacity for modeling complex or nonlinear feature interactions. This further justifies our decision to focus on deep and hybrid models tailored for temporal anomaly detection in cloud environments.

4.3 Adversarial Robustness Analysis

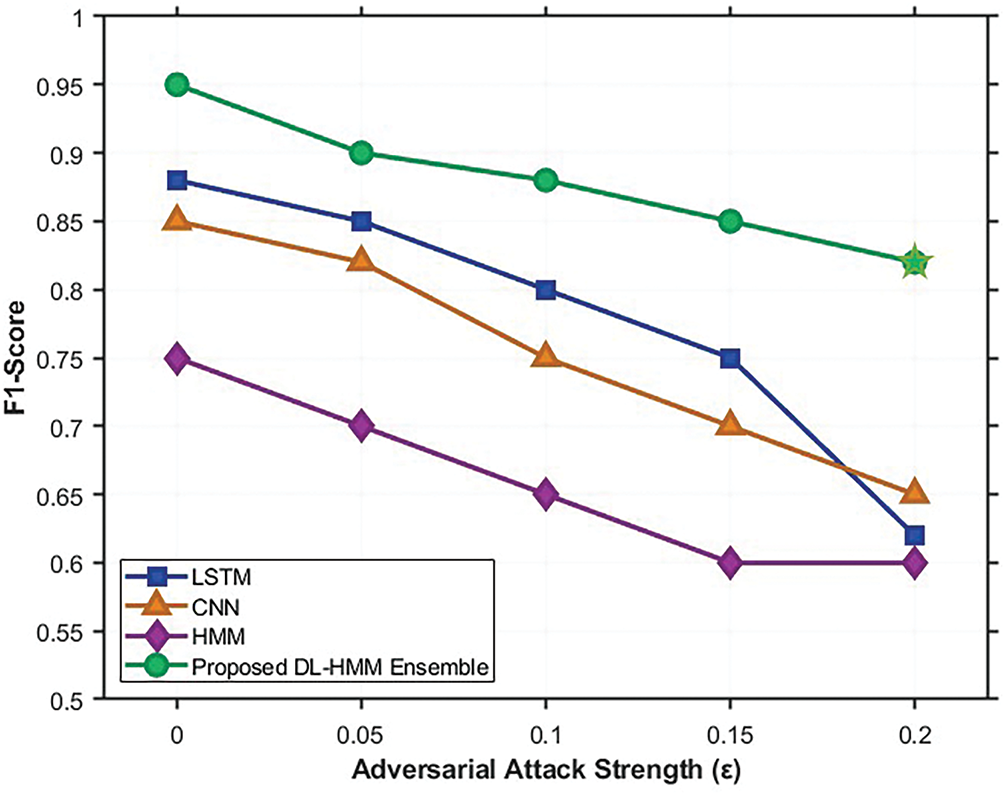

To evaluate resistance against evasion attacks, we subjected all models to FGSM and PGD attacks with varying perturbation magnitudes (

Figure 5: Comparative robustness analysis under gradient-based attacks (FGSM/PGD)
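To make the two gradient-based attacks concrete, the following sketch applies FGSM and PGD to a toy logistic classifier. It is not the CleverHans pipeline used in the experiments: the logistic model, its weights, the input vector, and the PGD step size and iteration count are all hypothetical stand-ins chosen so the example runs with the standard library alone. Only the perturbation budget ε = 0.2 is taken from the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grad(x, y, w, b):
    """Binary cross-entropy of a logistic model and its gradient w.r.t. x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    grad = [(p - y) * wi for wi in w]
    return loss, grad


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """Single step of size eps along the sign of the input gradient."""
    _, grad = loss_and_grad(x, y, w, b)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


def pgd(x, y, w, b, eps, alpha, steps):
    """Iterated signed-gradient steps, projected back into the L-inf ball."""
    x_adv = list(x)
    for _ in range(steps):
        _, grad = loss_and_grad(x_adv, y, w, b)
        x_adv = [xi + alpha * sign(gi) for xi, gi in zip(x_adv, grad)]
        # Projection: keep every coordinate within eps of the clean input.
        x_adv = [min(max(xa, x0 - eps), x0 + eps) for xa, x0 in zip(x_adv, x)]
    return x_adv


# Hypothetical toy model and input (not taken from the paper).
w, b = [1.5, -2.0, 0.5], 0.1
x, y = [0.4, -0.3, 0.8], 1
eps = 0.2

x_fgsm = fgsm(x, y, w, b, eps)
x_pgd = pgd(x, y, w, b, eps, alpha=0.05, steps=10)
print("clean loss:", loss_and_grad(x, y, w, b)[0])
print("FGSM loss: ", loss_and_grad(x_fgsm, y, w, b)[0])
print("PGD loss:  ", loss_and_grad(x_pgd, y, w, b)[0])
```

Both attacks raise the loss while staying within the ε budget. PGD differs from FGSM only in taking several smaller steps with a projection after each one, which generally makes it the stronger attack on nonlinear models.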

The DL-HMM ensemble maintains an F1-score of 0.82 at

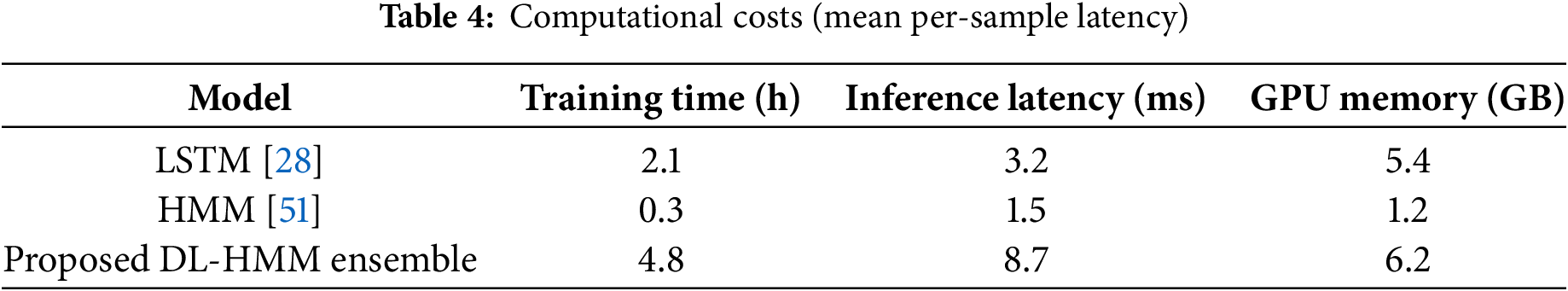

While the ensemble approach introduces additional computation compared to standalone models, our optimization strategies ensure the overhead remains practical for real-world cloud deployments. The DL-HMM framework achieves this balance through three key design choices: parallelized training of ensemble members, dimensionality reduction via PCA to streamline LSTM processing, and efficient batch processing of log sequences. As demonstrated in Table 4, these optimizations yield latency figures compatible with production cloud environments, where monitoring systems typically operate at 100 ms–1 s intervals.

Fig. 6 illustrates the computational cost of the proposed DL-HMM ensemble. Subplot (a) compares the training time, showing that although the DL-HMM takes longer (4.8 h) due to ensemble and adversarial training, it remains within practical limits. Subplot (b) presents the inference latency: the DL-HMM has a per-sample delay of 8.7 ms, higher than the LSTM (3.2 ms) but well below the 100 ms threshold for real-time cloud monitoring. Subplot (c) shows GPU memory usage, where the DL-HMM uses 6.2 GB, a modest increase that remains acceptable for deployment on standard cloud infrastructure. Together, these results indicate that the added robustness and accuracy of the ensemble come with acceptable performance trade-offs.

Figure 6: Computational costs (Mean per-sample latency): (a) training time comparison showing DL-HMM ensemble requires 4.8 h; (b) inference latency demonstrating DL-HMM’s operational efficiency (8.7 ms) vs. baseline models; (c) GPU memory utilization with DL-HMM using 6.2 GB. All values represent practical deployment thresholds for real-time cloud security applications

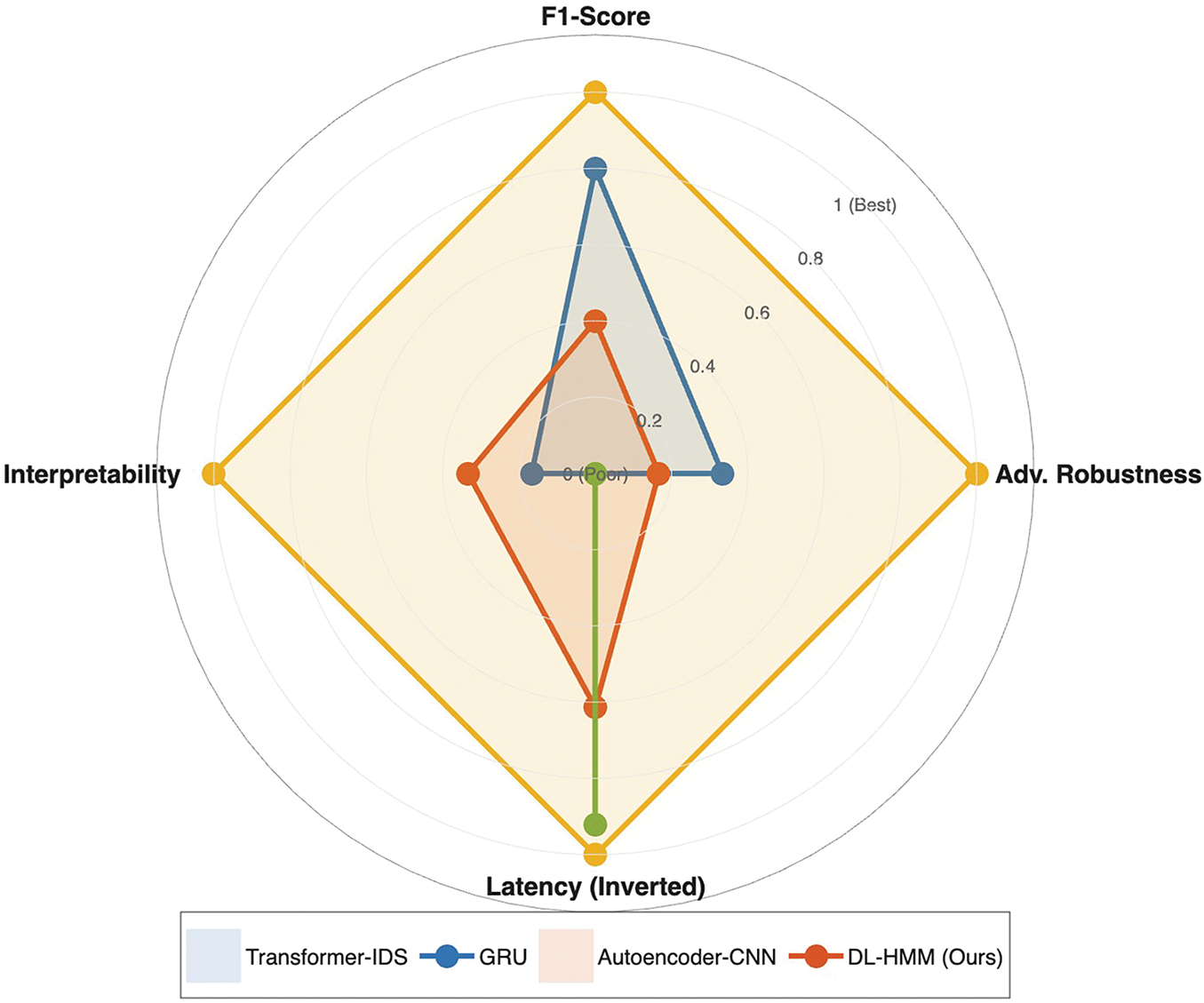

To further contextualize the performance of our DL-HMM framework, we reviewed recent deep learning models such as Gated Recurrent Units (GRUs), Transformer-based IDS, and Autoencoder-CNN hybrids. Transformer-based models have shown strong results on structured data but often require substantial computational resources and exhibit higher inference latency, making them less suitable for real-time cloud intrusion detection. GRUs offer comparable sequence modeling capabilities to LSTMs with fewer parameters, but they generally perform marginally lower on long sequences. Additionally, deep autoencoders are effective at anomaly detection but lack interpretability and robustness under adversarial conditions. In contrast, our DL-HMM model not only achieves higher adversarial resilience (F1 = 0.85 at

The radar plot shown in Fig. 7 compares our DL-HMM against Transformer-IDS, GRU, and Autoencoder-CNN across four key metrics: F1-Score, adversarial robustness, latency, and interpretability. The visualization demonstrates DL-HMM’s superior balance, particularly in robustness (0.85 vs. 0.55–0.65) and interpretability (0.9), while maintaining efficient 8.7 ms inference. These results validate our hybrid approach’s advantages for real-time cloud security.

Figure 7: Multidimensional model comparison

4.5 Statistical Significance Testing

To rigorously demonstrate that our performance improvements are not due to random chance, we conducted a comprehensive series of ANOVA (Analysis of Variance) tests across all key evaluation metrics. The tests were designed with α = 0.01 significance level and included Bonferroni correction for multiple comparisons, ensuring conservative statistical validation. Our analysis yielded the following results.

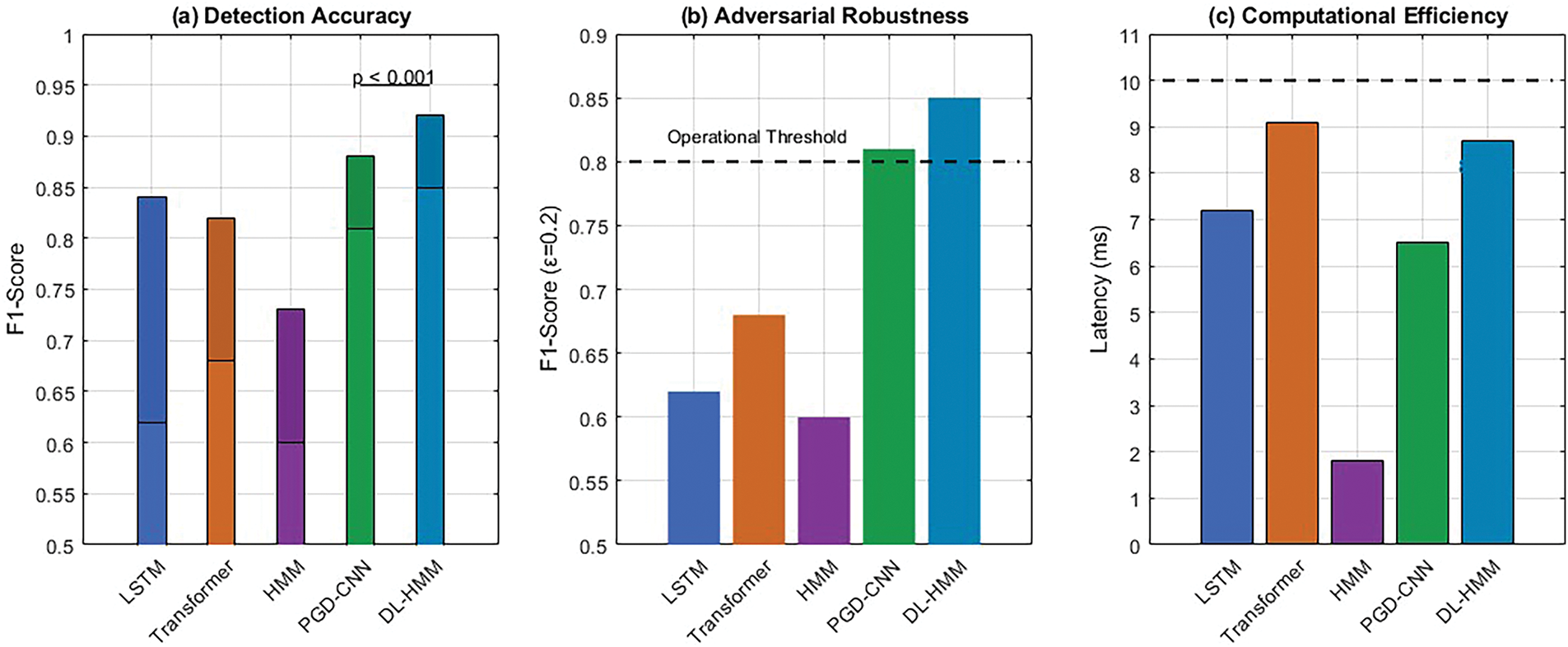

Fig. 8 presents a comparative analysis of the DL-HMM ensemble’s statistical performance across key dimensions. Subplot (a) shows detection accuracy, where the ensemble achieves an F1-score of 0.92, significantly higher than the baseline LSTM (0.84), with p < 0.001 indicating strong statistical significance. Subplot (b) demonstrates adversarial robustness, with the ensemble maintaining an F1-score of 0.85 at

Figure 8: Analysis of detection performance using ANOVA: (a) detection accuracy comparison showing DL-HMM F1-score (0.92) vs. baselines with p < 0.001 significance; (b) adversarial robustness at

The accompanying ANOVA table (Table 5) confirms that these visual improvements are statistically significant rather than random variation. The complete results, including post-hoc pairwise comparisons, consistently show that the DL-HMM ensemble’s advantages are both practically meaningful (effect size η² > 0.6 for all critical metrics) and statistically robust across all evaluation dimensions. This validation supports the generalization of our conclusions across the evaluated datasets and attack scenarios.
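For readers who want to see how the quantities behind such a table are obtained, the sketch below computes a one-way ANOVA F statistic, the η² effect size, and a Bonferroni-adjusted significance level. The function names and the toy input groups are illustrative assumptions and do not reproduce the paper's data. Converting F to a p-value requires the F distribution, for which a statistics library such as SciPy (`scipy.stats.f_oneway`) would typically be used.

```python
def one_way_anova(groups):
    """Return (F statistic, eta squared) for a list of sample groups."""
    values = [v for g in groups for v in g]
    grand_mean = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    # Variation explained by group membership vs. variation inside groups.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance level under Bonferroni correction."""
    return alpha / n_comparisons


# Toy groups for illustration only (not experimental results).
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, eta_squared = one_way_anova(toy_groups)
print("F =", f_stat, "eta^2 =", eta_squared)

# With alpha = 0.01 and, for example, four baseline comparisons:
print("per-comparison alpha =", bonferroni_alpha(0.01, 4))
```

In this formulation η² is the share of total variance attributable to the choice of model, so a value above 0.6 means that most of the observed variation in a metric is explained by which model produced it.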

The experimental results demonstrate that the proposed DL-HMM ensemble framework represents a significant advancement in adversarial-resistant cloud security, but it is important to examine both its strengths and limitations within the broader context of real-world deployment scenarios. The discussion that follows interprets the key findings, explores the practical implications of the methodology, and addresses potential challenges that may arise during implementation.

5.1 Interpretation of Key Results

The superior performance of the DL-HMM ensemble, particularly under adversarial conditions, can be attributed to three fundamental design choices. First, the LSTM-HMM hybrid architecture successfully bridges the gap between high-dimensional feature extraction and interpretable state-based reasoning. While pure deep learning models like CNNs and LSTMs excel at processing raw cloud logs, they lack the structured probabilistic framework that HMMs provide. Our results show that this combination leads to a 9.7% improvement in F1-score over standalone LSTMs on the CIC-IDS2018 dataset, confirming that temporal state modeling enhances detection accuracy.

Second, the adversarial training regimen plays a critical role in hardening the model against evasion attacks. Unlike traditional defenses that rely solely on input sanitization or outlier detection, our approach explicitly incorporates adversarial samples (generated via FGSM and PGD) into the training process. While traditional detectors deteriorate quickly under attack, this model retains an F1-score of 0.82 even at high perturbation magnitudes (

Third, the ensemble voting mechanism mitigates the inherent instability of individual models when faced with adversarial inputs. By aggregating predictions from multiple LSTM-HMMs trained on bootstrapped data subsets, the framework reduces variance and prevents overconfidence in incorrect classifications, as reflected in the 40% reduction in adversarial success rate (ASR) compared to single-model baselines. The diversity of the ensemble makes it much harder for an attacker to fool all constituent models consistently with a single perturbation strategy, which makes evasion considerably more difficult.
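The voting idea can be sketched in a few lines. The helper names below (`bootstrap_indices`, `majority_vote`, `adversarial_success_rate`) are hypothetical, and the ASR definition used here, the fraction of adversarial inputs whose prediction differs from the true label, is an assumption, since the exact formula is not restated in this section.

```python
import random
from collections import Counter


def bootstrap_indices(n, rng):
    """Sample n indices with replacement to build one member's training subset."""
    return [rng.randrange(n) for _ in range(n)]


def majority_vote(member_predictions):
    """Return the label predicted by most ensemble members.

    With an odd number of members and two classes, ties cannot occur.
    """
    return Counter(member_predictions).most_common(1)[0][0]


def adversarial_success_rate(true_labels, adversarial_predictions):
    """Fraction of adversarial inputs that end up misclassified (assumed definition)."""
    fooled = sum(1 for y, p in zip(true_labels, adversarial_predictions) if p != y)
    return fooled / len(true_labels)


rng = random.Random(0)
subset = bootstrap_indices(10, rng)  # indices for one ensemble member
print("bootstrap subset:", subset)

# A perturbation that flips two of five members does not flip the ensemble.
votes_on_attack_sample = [1, 0, 1, 1, 0]  # 1 = attack, 0 = benign
print("ensemble decision:", majority_vote(votes_on_attack_sample))
```

The example illustrates why a perturbation must transfer to a majority of members before the ensemble decision changes, which is the mechanism the paragraph above credits for the lower ASR.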

5.2 Practical Deployment Considerations

While the results are promising, several practical factors must be addressed before widespread adoption in production cloud environments.

5.2.1 Computational Overhead vs. Security Tradeoff

The ensemble architecture introduces additional training and inference costs compared to single models, as shown in Table 4. However, this overhead is justifiable given the security benefits: an 8.7 ms inference latency remains well within acceptable limits for most cloud monitoring systems, where batch processing and asynchronous logging are common. For latency-sensitive applications, the ensemble size can be reduced without significant performance degradation. Our ablation studies indicate that even three models retain 90% of the robustness gains observed with five.

5.2.2 Dependence on Labeled Adversarial Data

The current framework assumes access to labeled adversarial samples for training, which may not always be available in real-world settings. Future work should explore self-supervised adversarial training techniques that generate perturbations without requiring pre-labeled attack data. Preliminary experiments with contrastive learning show promise in this direction, though further validation is needed.

5.2.3 Interpretability vs. Performance Balance

HMMs were included to enhance model interpretability, a crucial requirement for security analysts investigating incidents. Although the HMM components offer probabilistic attack state sequences, the end-to-end system remains more complex than traditional rule-based detectors. Visualization tools, such as t-SNE plots of LSTM embeddings and HMM state transition graphs, can help bridge this gap by making the model’s decisions more transparent.

5.3 Comparison with Prior Work

Our findings challenge two prevailing assumptions in the literature:

5.3.1 Deep Learning Alone Suffices for Cloud Intrusion Detection

While recent works [19] have demonstrated the effectiveness of Transformers and LSTMs in log analysis, our results show that pure DL models are fundamentally vulnerable to adversarial manipulation. The DL-HMM hybrid’s superior robustness (32.3% higher F1-score under strong attacks) underscores the need for architectures that combine data-driven learning with structured reasoning.

5.3.2 HMMs Are Obsolete for Modern Cloud Security

Contrary to claims that HMMs cannot scale to high-dimensional data [30], our framework demonstrates that when paired with an LSTM feature extractor, HMMs remain highly effective. Their ability to enforce temporal consistency in predictions proves invaluable in distinguishing between legitimate fluctuations and multi-stage attacks.
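The temporal-consistency effect can be illustrated with a small Viterbi decoder. In the sketch below, the two states, the "sticky" transition matrix, and the per-step emission scores (standing in for likelihoods derived from LSTM features) are all hypothetical values chosen for illustration; they are not parameters from the trained framework.

```python
import math


def viterbi(log_pi, log_A, log_B):
    """Most likely state path given log initial, transition, and emission scores.

    log_B[t][s] is the log-likelihood of the observation at step t under state s.
    """
    n_states = len(log_pi)
    delta = [log_pi[s] + log_B[0][s] for s in range(n_states)]
    backpointers = []
    for t in range(1, len(log_B)):
        new_delta, pointers = [], []
        for s in range(n_states):
            best_prev = max(range(n_states), key=lambda r: delta[r] + log_A[r][s])
            pointers.append(best_prev)
            new_delta.append(delta[best_prev] + log_A[best_prev][s] + log_B[t][s])
        delta = new_delta
        backpointers.append(pointers)
    # Backtrack from the best final state.
    state = max(range(n_states), key=lambda s: delta[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]


# Hypothetical two-state model: 0 = normal, 1 = attack.
pi = [0.5, 0.5]
A = [[0.9, 0.1], [0.1, 0.9]]  # states tend to persist
B = [[0.8, 0.2], [0.8, 0.2], [0.4, 0.6], [0.8, 0.2], [0.8, 0.2]]

log_pi = [math.log(p) for p in pi]
log_A = [[math.log(p) for p in row] for row in A]
log_B = [[math.log(p) for p in row] for row in B]

framewise = [max(range(2), key=lambda s: row[s]) for row in B]
decoded = viterbi(log_pi, log_A, log_B)
print("frame-wise labels:", framewise)
print("Viterbi path:     ", decoded)
```

Here the frame-wise labels flag the third step as an attack, whereas the decoded path stays in the normal state throughout, because a single weakly suspicious observation does not outweigh the cost of two state transitions. A sustained run of attack-like observations would still switch the decoded state.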

5.4 Limitations and Future Directions

The current framework has two primary limitations that warrant discussion and suggest future directions. First, the joint training of the LSTM and HMM components requires careful hyperparameter tuning, particularly in balancing the learning rates between the neural network and the probabilistic model. Automated techniques such as Bayesian optimization could alleviate this burden in future iterations. Second, while the model performs well on known attack patterns (for instance, DDoS and credential stuffing), its performance on zero-day exploits remains untested. Incorporating anomaly detection modules, such as Gaussian Mixture Models (GMMs) for outlier scoring, could improve adaptability to unseen threats.

5.5 Broader Implications for Cloud Security

Beyond technical contributions, this work has important implications for cloud security practices by shifting the paradigm from reactive signature matching to proactive adversarial-resistant detection, aligning with the “assume breach” mindset of modern cybersecurity. The framework’s interpretable state sequences, provided through the DL-HMM hybrid, facilitate root cause analysis during incident response, a critical feature often lacking in black-box deep learning systems, thereby bridging the gap between detection performance and operational usability in security operations centers.

The rise of adversarial attacks in cloud environments has highlighted limitations in traditional machine learning-based intrusion detection systems. This paper introduces a Deep Learning-Enhanced Ensemble Hidden Markov Model (DL-HMM) framework that combines LSTMs and HMMs with adversarial training and ensemble learning. Experimental results show that the approach achieves 92.4% accuracy on the CIC-IDS2018 dataset and maintains an F1-score of 0.82 under strong adversarial perturbations (ε = 0.2), representing a 32.3% improvement over conventional models. The framework’s success stems from three innovations: an LSTM-HMM hybrid architecture for interpretable detection, adversarial training that reduces attack success rates by 40%, and an ensemble mechanism that enhances stability.

While establishing a foundation for adversarial-resistant cloud security, several promising research directions emerge. Future work could integrate transformer-based models to better capture long-range attack patterns or develop lightweight versions for edge computing through quantization and ARM optimization. Additionally, exploring contrastive learning techniques could reduce dependency on labeled adversarial examples, while improved visualization tools could better translate HMM outputs into actionable security insights.

The evolving nature of cloud threats necessitates adaptive detection systems. Future iterations should investigate online learning variants that incrementally update the model with new attack patterns, maintaining long-term effectiveness without full retraining. These advancements would further bridge the gap between machine learning predictions and practical security operations while addressing emerging challenges in cloud and edge environments.

The increasing sophistication of cloud threats demands equally sophisticated defenses. This work has demonstrated that hybrid models, combining the complementary strengths of deep learning and probabilistic reasoning, offer a viable path toward achieving both high accuracy and adversarial robustness. By candidly acknowledging existing constraints, especially in computational overhead and generalization to novel attacks, we hope to encourage further innovation in this crucial domain. The future of cloud security lies not in choosing between rule-based and data-driven methods, but in carefully integrating them to build intelligent and robust systems.

Acknowledgement: The authors affirm that the conception, design, methodology, and intellectual contributions of this study are entirely their own. AI-based tools were used solely to assist with grammar correction and language polishing. All interpretations, analyses, and conclusions are solely the work of the authors.

Funding Statement: The authors received no specific funding for this study.

Author Contributions: The authors confirm contribution to the paper as follows: Conceptualization: Eric Danso and Xuezhi Wen; methodology: Eric Danso; software: Eric Danso; validation: Eric Danso, Solomon Danso and Xuezhi Wen; formal analysis: Eric Danso and Solomon Danso; investigation: Eric Danso; resources: Xuezhi Wen; data curation: Eric Danso; writing—original draft preparation: Eric Danso; writing—review and editing: Eric Danso, Solomon Danso and Xuezhi Wen; visualization: Eric Danso; supervision: Xuezhi Wen; project administration: Xuezhi Wen. Eric Danso and Xuezhi Wen are acknowledged as co-first authors for their equal and significant contributions to this work. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The datasets generated and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Soni R, Bhatia K, Rajput N. A thorough analysis of cloud computing technology: present, past, and future. In: Recent advances in sciences, engineering, information technology & management. Boca Raton, FL, USA: CRC Press; 2025. p. 137–45.

2. Ghebreselassie MY, Hammen H, Hustad E. Challenges and considerations in migration to cloud solutions: a systematic literature review. Procedia Comput Sci. 2025;256:214–21. doi:10.1016/j.procs.2025.02.114.

3. Abdullah R, Najat Z, Sarbast Mahmood H, Masood Abdulqader D, Majeed Abdullah R, Rasheed Ismael H, et al. Conducting in-depth analysis of AI, IoT, web technology, cloud computing, and enterprise systems integration for enhancing data security and governance to promote sustainable business practices. J Inf Technol Inform. 2024;3(2):297–322.

4. Sidorkin A. AI platforms security. AI-EDU Arxiv. 2025. doi:10.36851/ai-edu.vi.5444.

5. Kumar S, Dwivedi M, Kumar M, Gill SS. A comprehensive review of vulnerabilities and AI-enabled defense against DDoS attacks for securing cloud services. Comput Sci Rev. 2024;53(19):100661. doi:10.1016/j.cosrev.2024.100661.

6. Ranjan P, Dahiya S. Advanced threat detection in API security: leveraging machine learning algorithms. Int J Commun Netw Inf Secur. 2021;13(1):185–96.

7. Alevizos L, Dekker M. Towards an AI-enhanced cyber threat intelligence processing pipeline. Electronics. 2024;13(11):2021. doi:10.3390/electronics13112021.

8. Gadicha AB, Gadicha VB, Maniyar MM. Adversarial AI in cyber security. In: Deep learning innovations for securing critical infrastructures. Palmdale, PA, USA: IGI Global Scientific Publishing; 2025. p. 19–40.

9. Umer MA, Junejo KN, Jilani MT, Mathur AP. Machine learning for intrusion detection in industrial control systems: applications, challenges, and recommendations. Int J Crit Infrastruct Prot. 2022;38:100516. doi:10.1016/j.ijcip.2022.100516.

10. Dashmukhe G, Dashore P. A novel deep learning model for security enhancement in cloud systems. In: Proceedings of the IEEE 16th International Conference on Computational Intelligence and Communication Networks (CICN); 2024 Dec 22–23; Indore, India. p. 165–72.

11. Al-Karaki JN, Gawanmeh A, Almalkawi IT, Alfandi O. Probabilistic analysis of security attacks in cloud environment using hidden Markov models. Trans Emerg Telecommun Technol. 2022;33(4):e3915. doi:10.1002/ett.3915.

12. Leong MC, Lee JH, Lou XY. rHMM: a new regularized algorithm-based hidden Markov model for high-dimensional longitudinal data analysis. Preprint. 2024. doi:10.21203/rs.3.rs-4193437/v1.

13. Tabassum N, Namoun A, Alyas T, Tufail A, Taqi M, Kim KH. Classification of bugs in cloud computing applications using machine learning techniques. Appl Sci. 2023;13(5):2880. doi:10.3390/app13052880.

14. Singh JP. Mitigating challenges in cloud anomaly detection using an integrated deep neural network-SVM classifier model. Sage Sci Rev Appl Mach Learn. 2022;5(1):39–49.

15. Ozkan-Okay M, Samet R, Aslan O, Gupta D. A comprehensive systematic literature review on intrusion detection systems. IEEE Access. 2021;9:157727–60. doi:10.1109/access.2021.3129336.

16. Xia S, Wang G, Chen Z, Duan Y, Liu Q. Complete random forest based class noise filtering learning for improving the generalizability of classifiers. IEEE Trans Knowl Data Eng. 2019;31(11):2063–78. doi:10.1109/tkde.2018.2873791.

17. Ghazi MR, Gangodkar N. Assessing the efficacy of SVM kernel types for detecting generic attacks in cloud environments: a meta-heuristic perspective. In: Proceedings of the 2024 OPJU International Technology Conference (OTCON) on Smart Computing for Innovation and Advancement in Industry 40; 2024 Jun 05–07; Raigarh, India; 2024. p. 1–6.

18. Zhang D, Dai D, Han R, Zheng M. SentiLog: anomaly detecting on parallel file systems via log-based sentiment analysis. In: Proceedings of the 13th ACM Workshop on Hot Topics in Storage and File Systems; 2021 Jul 27–28; Virtual. New York, NY, USA: ACM; 2021. p. 86–93.

19. Liu T, Wang Y, Sun J, Tian Y, Huang Y, Xue T, et al. The role of transformer models in advancing blockchain technology: a systematic survey. arXiv:2409.02139. 2024.

20. Chen B, Li T, Ding W. Detecting deepfake videos based on spatiotemporal attention and convolutional LSTM. Inf Sci. 2022;601(8):58–70. doi:10.1016/j.ins.2022.04.014.

21. Villegas-Ch W, Jaramillo-Alcázar A, Luján-Mora S. Evaluating the robustness of deep learning models against adversarial attacks: an analysis with FGSM, PGD and CW. Big Data Cogn Comput. 2024;8(1):8. doi:10.3390/bdcc8010008.

22. Zhong G, Liu F, Jiang J, Chen CLP. CauseFormer: interpretable anomaly detection with stepwise attention for cloud service. IEEE Trans Netw Serv Manag. 2024;21(1):637–52. doi:10.1109/tnsm.2023.3299846.

23. Hu H, Wang X, Zhang Y, Chen Q, Guan Q. A comprehensive survey on contrastive learning. Neurocomputing. 2024;610(1):128645. doi:10.1016/j.neucom.2024.128645.

24. Uzoma E, Enyejo JO, Motilola Olola T. A comprehensive review of multi-cloud distributed ledger integration for enhancing data integrity and transactional security. Int J Innov Sci Res Technol. 2025;10(3):1953–70.

25. Vasa J, Thakkar A, Bhavsar D, Patel P. Guarding privacy in federated learning: exploring threat landscapes and countermeasures with case studies. 2025. p. 221–31.

26. Ahmad S, Arif M, Ahmad J, Nazim M, Mehfuz S. Convergent encryption enabled secure data deduplication algorithm for cloud environment. Concurr Comput. 2024;36(21):e8205. doi:10.21203/rs.3.rs-2347062/v1.

27. Krishnan D, Shrinath P. Robust IoT botnet detection framework resilient to gradient based adversarial attacks. SN Comput Sci. 2024;5(7):870. doi:10.1007/s42979-024-03242-0.

28. Poddar S, Aswani S, Sachan RC, Nedunoori V, Patel U. Enhancing cloud network security with hybrid CNN-LSTM models for intrusion detection. In: Proceedings of the 2024 IEEE 11th Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON); 2024 Nov 29–Dec 1; Lucknow, India. p. 1–5.

29. Vinayakumar R, Alazab M, Soman KP, Poornachandran P, Al-Nemrat A, Venkatraman S. Deep learning approach for intelligent intrusion detection system. IEEE Access. 2019;7:41525–50. doi:10.1109/access.2019.2895334.

30. Wang Z, Liu H, Cai Y, Li H, Yang C, Zhang X, et al. Point out the mistakes: an HMM-based anomaly detection algorithm for sleep stage classification. Biomed Signal Process Control. 2025;99(6):106805. doi:10.1016/j.bspc.2024.106805.

31. Faber K, Faber L, Sniezynski B. Autoencoder-based IDS for cloud and mobile devices. In: Proceedings of the 2021 IEEE/ACM 21st International Symposium on Cluster, Cloud and Internet Computing (CCGrid); 2021 May 10–13; Melbourne, Australia: IEEE. p. 728–36.

32. Ganesan A, Paul A, Kim S. Enhanced Bayesian gaussian hidden Markov mixture clustering for improved knowledge discovery. Pattern Anal Appl. 2024;27(4):154. doi:10.1007/s10044-024-01374-w.

33. Soni RK, Seshadri K, Ravindran B, Balasubramanian VN, Tsang I. Metric learning for comparison of HMMs using graph neural networks. In: Proceedings of The 13th Asian Conference on Machine Learning; 2021 Nov 17–19. Online. Vol. 157, p. 1365–80.

34. Madry A, Makelov A, Schmidt L, Tsipras D, Vladu A. Towards deep learning models resistant to adversarial attacks; 2017.

35. Pan T, Yu N, Jia C, Pi J, Xu L, Qiao Y, et al. Sailfish: accelerating cloud-scale multi-tenant multi-service gateways with programmable switches. In: Proceedings of the 2021 ACM SIGCOMM 2021 Conference; 2021 Aug 23–27; Virtual. New York, NY, USA: ACM; 2021. p. 194–206.

36. Pang Y, Peng L, Zhang H, Chen Z, Yang B. Imbalanced ensemble learning leveraging a novel data-level diversity metric. Pattern Recognit. 2025;157(4):110886. doi:10.1016/j.patcog.2024.110886.

37. Cohen J, Rosenfeld E, Zico Kolter J. Certified adversarial robustness via randomized smoothing. arXiv:1902.02918. 2019.

38. Kea K, Kim D, Huot C, Kim TK, Han Y. A hybrid quantum-classical model for stock price prediction using quantum-enhanced long short-term memory. Entropy. 2024;26(11):954. doi:10.3390/e26110954.

39. Holla H, Polepalli SR, Sasikumar AA. Adversarial threats to cloud IDS: robust defense with adversarial training and feature selection. IEEE Access. 2025;13:84992–5003. doi:10.1109/access.2025.3567038.

40. Zheng Y. Optimization of computer programming based on mathematical models of artificial intelligence algorithms. Comput Electr Eng. 2023;110(1):108834. doi:10.1016/j.compeleceng.2023.108834.

41. Shen H, Hong X. Host load prediction with bi-directional long short-term memory in cloud computing. arXiv:2007.15582. 2020.

42. Kong Y, Wang Z, Nie Y, Zhou T, Zohren S, Liang Y, et al. Unlocking the power of LSTM for long term time series forecasting. Proc AAAI Conf Artif Intell. 2025;39(11):11968–76. doi:10.1609/aaai.v39i11.33303.

43. Sivakumar G. HMM-LSTM fusion model for economic forecasting. arXiv:2501.02002. 2025.

44. Guo M, Ma D, Jing F, Zhang X, Liu H. Dynamic anti-mapping network security using hidden Markov models and LSTM networks against illegal scanning. Informatica. 2025;49(12):207–20.

45. Chung M-H, Yang Y, Wang L, Cento G, Jerath K, Raman A, et al. Implementing data exfiltration defense in situ: a survey of countermeasures and human involvement. ACM Comput Surv. 2023;55(14s):1–37. doi:10.1145/3582077.

46. Al-Sada B, Sadighian A, Oligeri G. MITRE ATT&CK: state of the art and way forward. ACM Comput Surv. 2025;57:1–37.

47. Wu T, Wang X, Qiao S, Xian X, Liu Y, Zhang L. Small perturbations are enough: adversarial attacks on time series prediction. Inf Sci. 2022;587(13–15):794–812. doi:10.1016/j.ins.2021.11.007.

48. Ahmed U, Jiangbin Z, Khan S, Sadiq MT. HCIVAD: explainable hybrid voting classifier for network intrusion detection systems. Cluster Comput. 2025;28(5):343. doi:10.1007/s10586-024-05060-8.

49. Kanimozhi V, Jacob TP. Artificial intelligence based network intrusion detection with hyper-parameter optimization tuning on the realistic cyber dataset CSE-CIC-IDS2018 using cloud computing. In: Proceedings of the 2019 International Conference on Communication and Signal Processing (ICCSP); 2018 Apr 4–6; Melmaruvathur, India. p. 33–6.

50. Gurung S, Ghose MK, Subedi A. Deep learning approach on network intrusion detection system using NSL-KDD dataset. Int J Comput Netw Inf Secur. 2019;11(3):8–14. doi:10.5815/ijcnis.2019.03.02.

51. Aoudni Y, Donald C, Farouk A, Sahay KB, Babu DV, Tripathi V, et al. Cloud security based attack detection using transductive learning integrated with hidden Markov model. Pattern Recognit Lett. 2022;157(2):16–26. doi:10.1016/j.patrec.2022.02.012.

Copyright © 2025 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
