Open Access

ARTICLE

Adaptive Consistent Management to Prevent System Collapse on Shared Object Manipulation in Mixed Reality

1 Department of Game Software, Hoseo University, Asan-si, Korea

2 Department of Artificial Intelligence and Data Science, Korea Military Academy, Seoul, Korea

* Corresponding Author: Hyun Kwon. Email:

Computers, Materials & Continua 2023, 75(1), 2025-2042. https://doi.org/10.32604/cmc.2023.036051

Received 15 September 2022; Accepted 08 December 2022; Issue published 06 February 2023

Abstract

A concurrency control mechanism for collaborative work is a key element in a mixed reality environment. However, conventional locking mechanisms restrict potential tasks or the support of non-owners, thus increasing the working time because of waiting to avoid conflicts. Herein, we propose an adaptive concurrency control approach that can reduce conflicts and work time. We classify shared object manipulation in mixed reality into detailed goals and tasks. Then, we model the relationships among goal, task, and ownership. As the collaborative work progresses, the proposed system adapts the different concurrency control mechanisms of shared object manipulation according to the modeling of goal–task–ownership. With the proposed concurrency control scheme, users can hold shared objects and move and rotate them together in a mixed reality environment similar to real industrial sites. Additionally, this system uses MS Hololens and Myo sensors to recognize inputs from a user and presents the results in a mixed reality environment. The proposed method is applied to install an air conditioner as a case study. Experimental results and user studies show that, compared with the conventional approach, the proposed method reduced the number of conflicts, waiting time, and total working time.

Mixed reality (MR) is an environment that can be flexibly changed according to the purpose of a service that provides both virtual reality (VR) and augmented reality (AR) environments. Because of the rapid development of device technology, including head-mounted displays (HMDs) and electromyography (EMG) sensors, MR environments have been widely used in various applications such as games, entertainment, and industrial fields [1,2]. Through a recognition device interlocked with an HMD, a user can perform various tasks such as selection, release, 3D position change, rotation, size change, color change, and simulation of objects in an MR environment. To address challenges because of COVID-19 restrictions, network-based collaborative work has become essential. Collaborative work in MR can enable users to perform collaborative tasks in the same space or can augment the appearances of remotely distant users in the same space and augment shared objects to provide collaborative work that transcends time and space [3]. Thus, various studies have been conducted to provide collaborative work in MR environments [3–9].

However, if users perform the same work on a shared object at the same time, a conflict may occur in which the state of the shared object changes suddenly. If such conflicts occur repeatedly, the consistency of the collaborative work among participants becomes problematic, and the work has to be repeated. Consequently, the work time is prolonged, and the satisfaction of the participants diminishes [10–12]. To address this situation, a concurrency control approach is used that grants ownership to the first user working on the shared object and prevents other users from accessing it until the owner’s work is completed [13–16]. This method has the advantage of preventing conflicts on shared objects. However, it restricts the tasks and support that non-owners could otherwise provide, and the resulting waiting increases the working time. To address this issue, task-based concurrency control methods have been proposed, wherein the work on shared objects is subdivided so that only the owner can perform certain work, such as moving the object, while other users can perform other tasks, such as painting, together with the owner [17,18]. However, these methods cannot be applied flexibly when the work on the same shared object changes depending on the purpose, because the ownership of tasks for shared objects is fixed.

This study proposes an adaptive concurrency control approach for shared objects in MR environments. While users work on shared objects, the ownership of the subdivided tasks is changed so that control over the shared objects fits the goal being pursued. The proposed method models the goal–task–ownership relationships for the manipulation of shared objects. As the collaborative work progresses, the proposed system adapts the different concurrency control mechanisms of shared object manipulation according to the modeling of goal–task–ownership. For example, a concurrency control mechanism is not necessary when two users are moving a shared object in an MR environment. However, after the moving state, the owner may want to restrict specific tasks of the non-owner to focus on assembling small parts of the shared object. Finally, the non-owner may support the owner’s task, for example by changing the visual appearance of the shared object or testing it. With the proposed system, the detailed concurrency control mechanisms of the shared object are adapted when the state of the goal changes.

The proposed system was applied to the collaborative installation of an air conditioner in an MR environment. Because the interactions of users in MR are similar to those in the real world, a sensor-based hand and upper body motion recognition method is applied to recognize the actions of users. For this purpose, we used MS Hololens [19] and Myo sensors [20,21].

The main contributions of this study are as follows:

• The proposed system provides an adaptive concurrency control mechanism that enables users to significantly change the ownership rules for tasks while they are performing collaborative work to achieve certain goals.

• The proposed system provides a sensor-based hand and upper body motion recognition method to recognize inputs and provide appropriate interactions because an MR environment has the potential to provide natural behaviors of users in the real world.

2.1 Interactions in MR Environment

MR environments visualize virtual objects, show them to users through HMDs, and provide interactions with the user. In addition, MR environments provide a solution of VR or AR environments to users according to the purpose of the provided application [1,2]. MR environments are employed in diverse fields such as education, office environments, industrial fields, and medical simulation education [3]. One of the most important considerations in MR environments is that interactions with 3D virtual objects expressed in MR should be simplified and convenient for users. Specifically, if the interactions that enable users to perform specific tasks in MR are easily and conveniently provided, users can be immersed in the MR environment while feeling high levels of satisfaction and amusement, which can eventually lead to improvement in work productivity [4–7]. Interaction methods for objects in the existing MR have been appropriately studied depending on the type of devices users use.

To manipulate objects in an MR environment, the user can use various interfaces and interactions. The first method enables users to manipulate objects through finger touches using the sensor on the HMD. Although this method is relatively simple for manipulating the 2D UI visible in the user’s field of vision, 3D objects, which are important in MR environments, cannot easily be manipulated using this method [18]. The second method manipulates 3D objects with a dedicated interface such as a controller provided by the HMD. This method provides high accuracy and is the most widely used [19–22]. However, it is primarily specialized for VR equipment. In an MR environment, it causes fatigue because the user holds a controller in each hand to manipulate objects. Moreover, it causes a feeling of disjunction because the hand movement differs from that used with a real object. The third method recognizes the motions of the hand and upper body so that the user grabs and manipulates a virtual object as if it were an actual object. Unlike the other interaction methods, this method does not need to be learned separately because users grab and manipulate virtual objects with the same motions they use for actual objects [23].

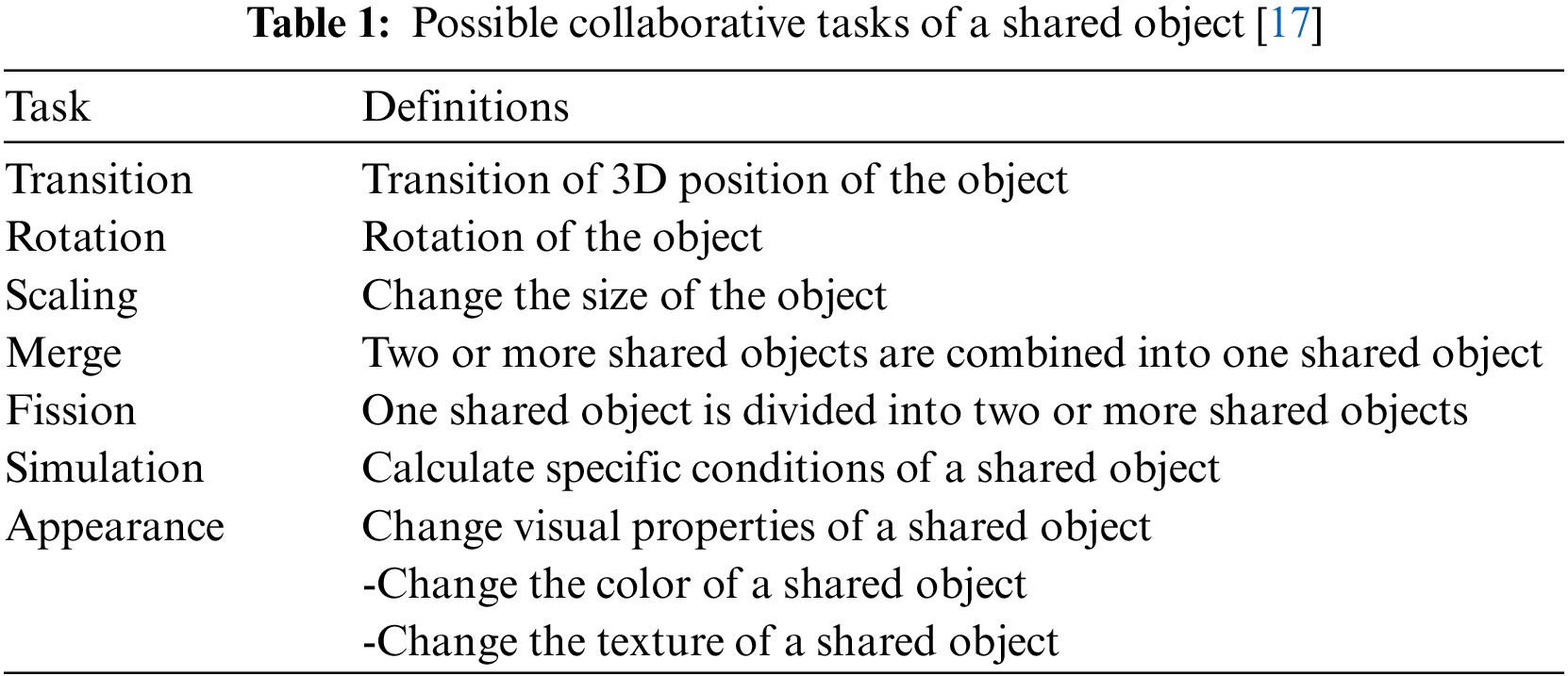

In an MR environment, users can interact with 3D objects using the interaction methods. Interactions with objects can be concretely classified into several tasks depending on the movement of the 3D position of the object, rotation, size transformation of the object, changes in the appearance of the object, simulations of objects, and combining and disassembling the objects [17,18]. Table 1 shows the descriptions of the interaction methods responsible for the manipulation of objects in an MR environment.

2.2 Concurrency Control on Shared Object

When two or more users perform collaborative work in an MR environment, 3D objects must be synchronized. That is, if a user executes one of the tasks mentioned in Table 1 with a 3D object, the execution process and results should be visualized in real-time by the other participants in the collaborative work. Moreover, the results should be applied to all the users concurrently. An object that can be synchronized as such is defined as a shared object.

In an MR environment, when interactions with a shared object are conducted simultaneously by multiple users, conflicts may occur. That is, the result of a task on the object can be changed by the interactions of other users before the interaction of a user is completed. Consequently, the consistency of the tasks that had been worked on is affected. Concurrency control approaches intended to address these challenges are classified into three types. The first type is an optimistic method that allows all users to interact with a shared object. However, if any conflict occurs among the users, this method recognizes only the work of the first user [12]. Although users without ownership can access the shared object through this method, their work is canceled. The second type is a pessimistic concurrency control approach. In this method, users must first acquire ownership to perform a specific interaction with a shared object, and only users with ownership can interact with shared objects [13–15]. This method has the advantage that the management of conflicts is simple. However, users who do not have ownership cannot easily participate in other work on the synchronized shared object. The third type is a prediction-based method, which analyzes the behaviors of participants in MR and allocates ownership in advance. In systems such as PaRADAE, this method was proposed to give authority to the user most likely to own the shared object first, considering the location, movement direction, and speed of the users participating in the collaborative work [16]. Although this method has the advantage of providing ownership in advance by predicting the user’s behavior, it has the disadvantage that, once authority is granted, the work still operates in a pessimistic manner. Otto et al. proposed shared object manipulation methods for lifting and moving heavy objects in a VR environment [11]. When two users are lifting and moving a heavy object, their lifting forces are accumulated. Although such shared object manipulation does not require conventional concurrency control mechanisms, it may still require a special concurrency control mechanism when the directions of the inputs differ.
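The difference between the optimistic and pessimistic approaches can be illustrated with a minimal Python sketch. The class and method names (`PessimisticObject`, `OptimisticObject`, `acquire`, `submit`, `resolve`) are hypothetical and are not taken from the cited systems. The sketch only assumes that a pessimistic scheme rejects non-owners before a task is executed, whereas an optimistic scheme accepts every request and keeps only the first one when a conflict is detected.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


class PessimisticObject:
    """Shared object that requires ownership before any interaction."""

    def __init__(self) -> None:
        self.owner: Optional[str] = None
        self.position: Vec3 = (0.0, 0.0, 0.0)

    def acquire(self, user: str) -> bool:
        # Ownership goes to the first requester and is held until release.
        if self.owner is None:
            self.owner = user
        return self.owner == user

    def release(self, user: str) -> None:
        if self.owner == user:
            self.owner = None

    def move(self, user: str, position: Vec3) -> bool:
        # Non-owners are rejected before the task is executed.
        if self.owner != user:
            return False
        self.position = position
        return True


class OptimisticObject:
    """Shared object that accepts all requests and resolves conflicts afterwards."""

    def __init__(self) -> None:
        self.position: Vec3 = (0.0, 0.0, 0.0)
        self.pending: List[Tuple[str, Vec3]] = []  # requests in arrival order

    def submit(self, user: str, position: Vec3) -> None:
        self.pending.append((user, position))

    def resolve(self) -> List[str]:
        # Only the first user's work is kept; the users whose work is
        # canceled are returned so that they can be notified.
        if not self.pending:
            return []
        _, first_position = self.pending[0]
        self.position = first_position
        canceled = [user for user, _ in self.pending[1:]]
        self.pending = []
        return canceled
```

In the pessimistic case the non-owner learns immediately that the request is refused; in the optimistic case the non-owner's work is accepted first and canceled later, which corresponds to the sudden change of state described above.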

As collaborative work environments have become more complex and diversified, methods to divide ownerships into small pieces and provide the divided ownerships according to individual interactions rather than providing the ownership of shared objects per se to users have been proposed. Lee et al. proposed a method to allow other users to access and perform other interactions such as painting the relevant object when the owner of the object is holding the object [17]. These methods can eliminate the parts where the work of multiple users is restricted while reducing the parts where conflicts occur.

Moreover, collaborative work in industrial fields is highly complex in an MR environment. For example, multiple users can perform collaborative work to build an office [3], install facilities such as air conditioners together, or perform simulations [24]. Therefore, even in the case of interactions with the same object, the authority of ownership or the concurrency control approach should be adjusted depending on the situation.

3.1 Goal–Task–Ownership Relationship Model

Fig. 1 shows Norman’s action model, in which a user’s interaction with a system is organized into seven stages [25]. First, the user sets goals for using the system and plans actions to achieve them. The actions are specified in the order in which they will be performed through the system’s interface, and they are executed when the user converts this specification into actual actions. After the user performs the actions, the goal of the user is achieved as the system interprets the user’s actions and updates its state.

Figure 1: Norman’s seven stages of action model

Although users have one goal when performing collaborative work in an MR environment, diverse concurrency control approaches to the interaction methods of users may occur. For example, when two users conduct collaborative work to move an air conditioner, the ownership of the movement of the shared object can be processed so that two or more users can operate it. Thereafter, while fixing other parts through additional screws to the air conditioner in a situation where one user is holding the air conditioner after it has moved, only the user who is holding the object has the authority to move the object. Moreover, the authority to assemble the object can be provided to the other users.

To provide users with collaborative work on shared objects in an MR environment, the characteristics of the user action model are applied in this study to identify users’ intentions to achieve a specific goal. Further, a goal–task–ownership (GTO) model is defined accordingly so that the ownership of users’ actions can be divided or held jointly. First, concerning the modeling terms, an application program involves a goal that a user wants to achieve and detailed goals that must be achieved to reach it. These are classified as goals and sub-goals, respectively. Actions that appear as users recognize what must be performed to achieve the sub-goals are defined as tasks. In addition, the part that sets the ownership of the tasks is defined as “Ownership.”

Fig. 2 shows a schematization of the process of installing the air conditioning equipment. The process of installing the air conditioner consists of first the movement of the user who carries the air conditioner to the designated location followed by the process of installing the air conditioner and testing the operation of the air conditioner. In this process, the relationships among the user’s “Goals,” “Sub-goals,” “Tasks,” and “Ownership” can be modeled.

Figure 2: Goal–task–ownership modeling

Fig. 3 explains the tasks that correspond to the sub-goals in the three stages of movement, installation, and test of the air conditioner and changes in the appropriate ownership. Fig. 3A is the process of moving the air conditioner first; all users who hold the air conditioner can participate in the movement of the air conditioner. In this case, no restriction is set on movement according to ownership. However, an algorithm for the movement of the air conditioner similar to the real world is required according to the users’ movement inputs. Thereafter, when users have completed the movement of the air conditioner, the process of assembling the air conditioner is necessary. As shown in Fig. 3B, in this process, the person in charge of the micro-movements of the air conditioner assembles attached devices to the air conditioner. In this case, only the ownership of the user in charge of the micro-movements of the air conditioner is validated, and the process of assembling attached devices is performed when the owner of the air conditioner permits. Fig. 3C shows the process of testing the air conditioner after assembly is complete in which adjustments, such as movement, are performed while the air conditioner is tested. In this case, relevant parts can be performed according to the permission of the user with ownership.

Figure 3: Adaptation of ownership and tasks according to the sub-goals (A) moving to location sub-goal, (B) assembling parts, and (C) testing

3.2 Adaptive Concurrency Control Scheme in MR

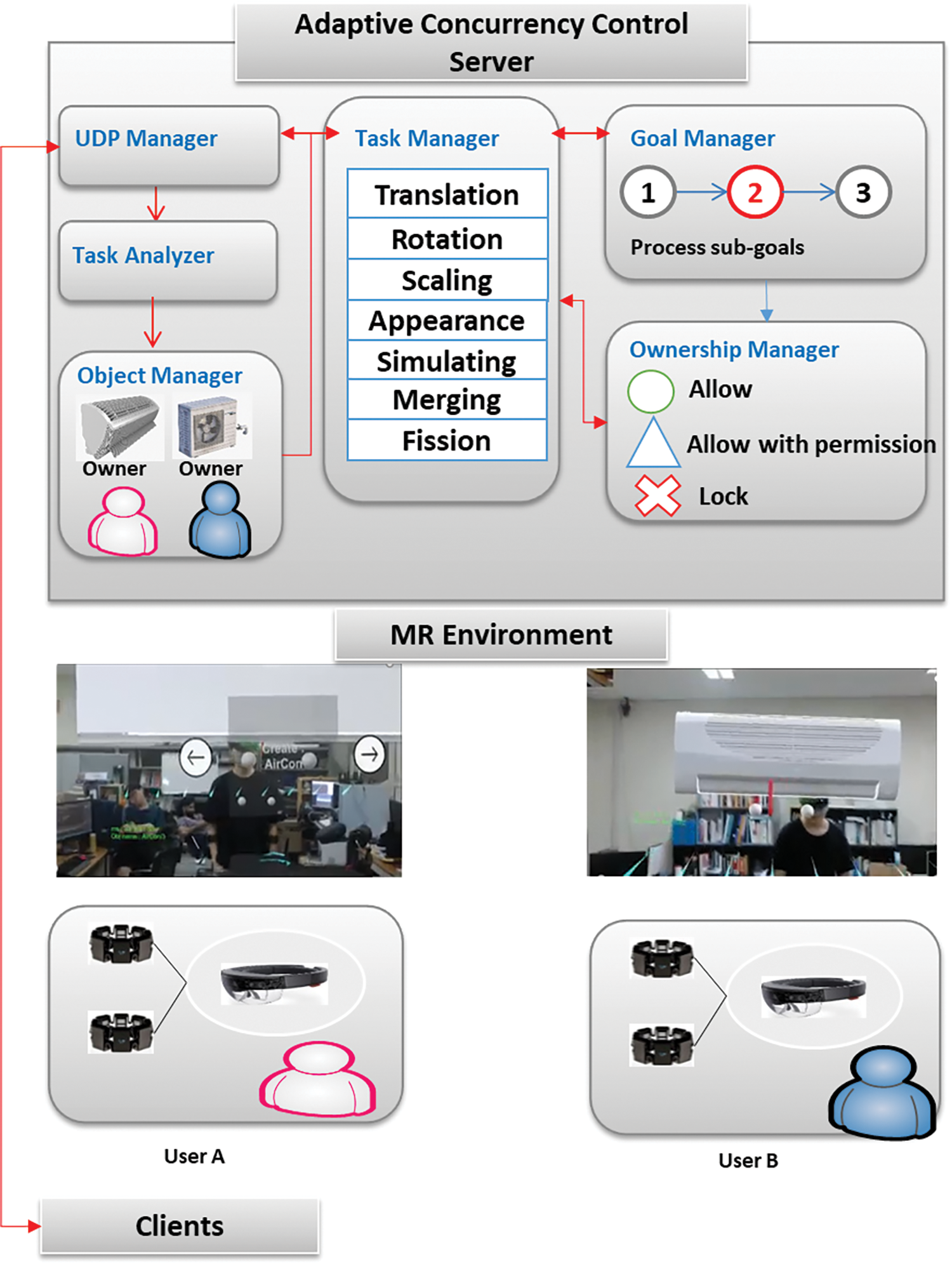

In this study, the collaborative work performed in an MR environment is classified into Goal, Sub-Goal, and Task, and a collaborative work platform is proposed that adapts the ownership management of each task to the progress of the tasks and sub-goals. As shown in Fig. 4, the proposed platform consists of a client that visualizes the MR environment on MS Hololens and a server in charge of collaborative work. The platform follows a thin client structure: when users perform collaborative work in an MR environment, their motion and movement information is transmitted to the server over UDP, all calculations are conducted on the server, and the results are transmitted back to the client.

Figure 4: Proposed system scheme

The server passes the UDP data received from the client to a task analyzer, which extracts the tasks performed by users. Based on this information, the object manager, which manages the shared objects currently being worked on, selects the relevant shared object and identifies its owner, and the task manager processes the user’s task input. At this time, the goal manager identifies the goals of the participants in the current collaborative work and the progress of the sub-goals toward those goals. The ownership manager then controls the concurrency of the collaborative work according to the ownership policy set for the tasks in the sub-goal currently in progress, and the results are reported to the user. Consequently, even for the same task, the ownership policy set for the sub-goal in progress determines whether a non-owner may perform the task freely, may perform it with the owner’s permission, or may not access it (lock).

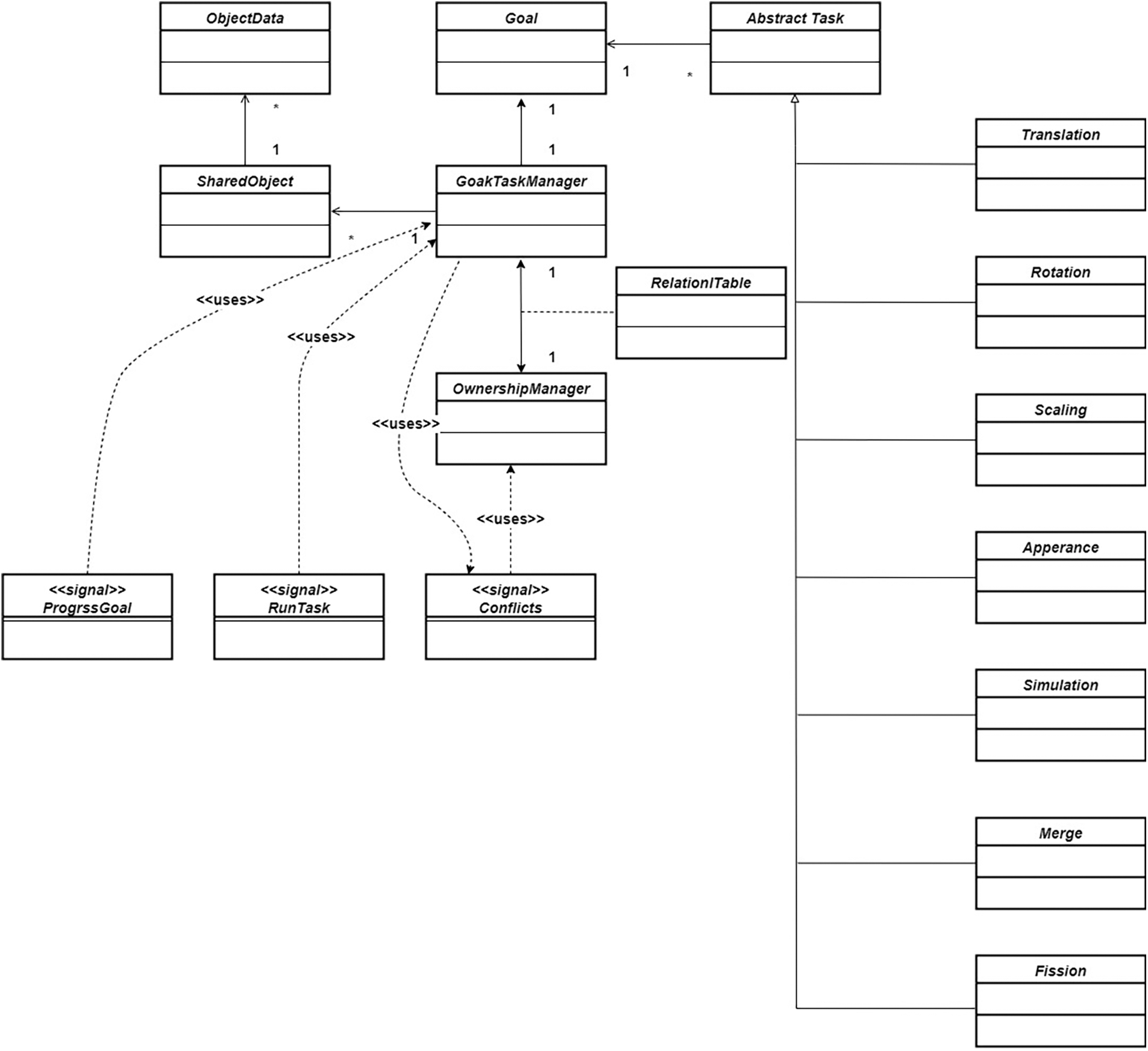

Fig. 5 illustrates a class diagram of the proposed scheme. GoalTaskManager analyzes a task requested by a user for a shared object and determines the current state of the goal. The ownership of the requested task is then managed by OwnershipManager, which is in charge of permitting or rejecting the requested task on a SharedObject through RelationTable. If the requested task is set to “Lock,” meaning that the owner does not allow any tasks, OwnershipManager rejects the task. If the task is set to “Allow,” OwnershipManager allows the task, and the requested information is delivered to SharedObject. If the requested task is set to “Allow with Permission,” the task is performed when the owner of the shared object accepts a permission request. The proposed system handles requests delivered to multiple shared objects using the SharedObject class.

Figure 5: Class diagram
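The three outcomes handled by OwnershipManager can be sketched in a few lines of Python. This is only an illustration of the decision described above, not the authors' implementation: the `Policy` enum, the `decide` function, and the `ask_owner` callback are hypothetical names, and the rule that the owner may always perform a task is an assumption of the sketch.

```python
from enum import Enum
from typing import Callable


class Policy(Enum):
    ALLOW = "Allow"
    ALLOW_WITH_PERMISSION = "Allow with Permission"
    LOCK = "Lock"


def decide(user: str, owner: str, policy: Policy,
           ask_owner: Callable[[str], bool]) -> bool:
    """Return True if the requested task may be delivered to the shared object."""
    if user == owner:
        # Assumption of this sketch: the owner is never restricted.
        return True
    if policy is Policy.LOCK:
        return False
    if policy is Policy.ALLOW:
        return True
    # "Allow with Permission": defer to the owner, who answers the request
    # (by voice or menu selection in the described system).
    return ask_owner(user)
```

For example, `decide("B", "A", Policy.ALLOW_WITH_PERMISSION, lambda requester: True)` models a non-owner B whose request is accepted by owner A.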

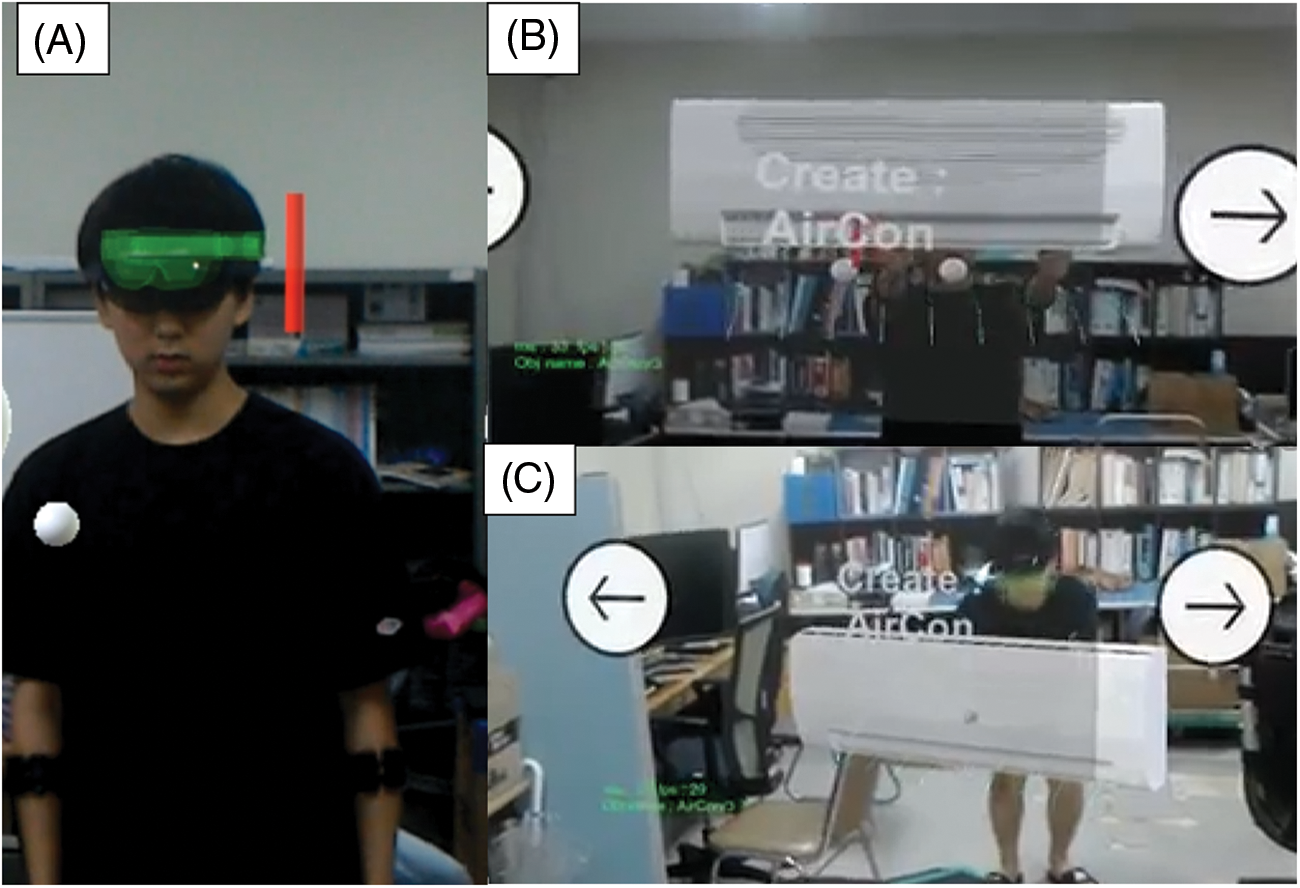

For the concurrency control of shared objects proposed in this study, users manipulate objects directly with their hands through upper body motion recognition. Thus, the proposed system is designed for users who wear MS Hololens and, on both arms, Myo devices, which are EMG sensors. MS Hololens receives voice input from the user, tracks the user’s 3D position as the user moves in the MR environment, and extracts the rotation of the user’s head. Myo devices, which combine EMG and IMU sensors, are used to recognize the various tasks that a user performs on 3D shared objects while moving in the MR environment. When the user wears the Myo sensors, the virtual body from the head to both arms follows the movement of the individual joints, as with real arms. Moreover, shared objects can be selected and manipulated through the recognized hand. Fig. 6 shows the user holding the 3D air conditioner, a shared object, and the user moving while wearing the MS Hololens and Myo sensors.

Figure 6: Client in MR. (A) User initializes with MS Hololens and two Myos. The proposed system tracks upper body information and converts it to 3D position, (B) before placing an air conditioner, and (C) after placing an air conditioner

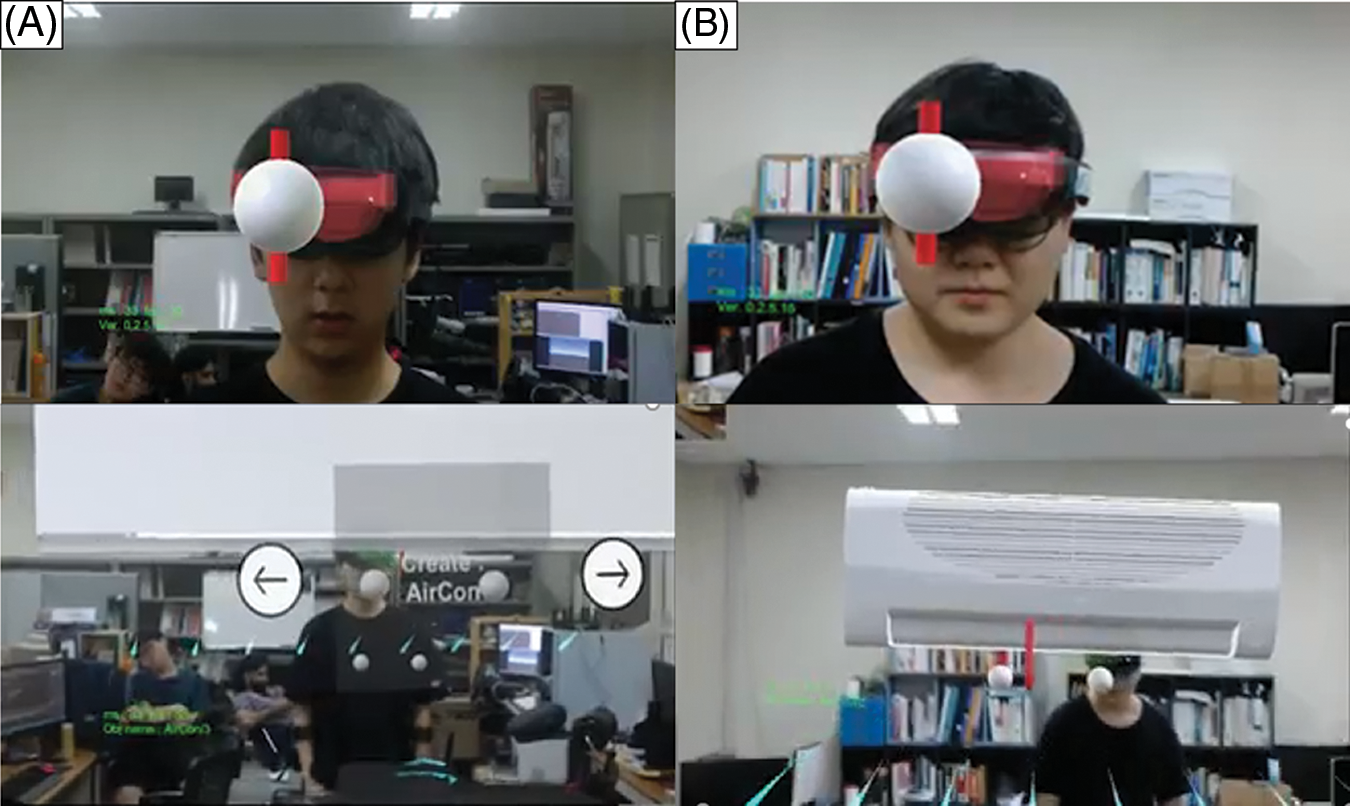

To manipulate other tasks, the speech recognition function of MS Hololens can be used, or the menu using ray casting can be clicked to visualize the current progress in the goal of the object and the information on other available tasks. Moreover, the relevant tasks can be selected through voice or ray casting to perform other tasks. Fig. 7 shows the process of test simulation conducted after two users installed the air conditioner in an MR environment.

Figure 7: Collaborative testing of air conditioner in different views of participants in real-time. (A) Viewpoint of user A and (B) viewpoint of user B

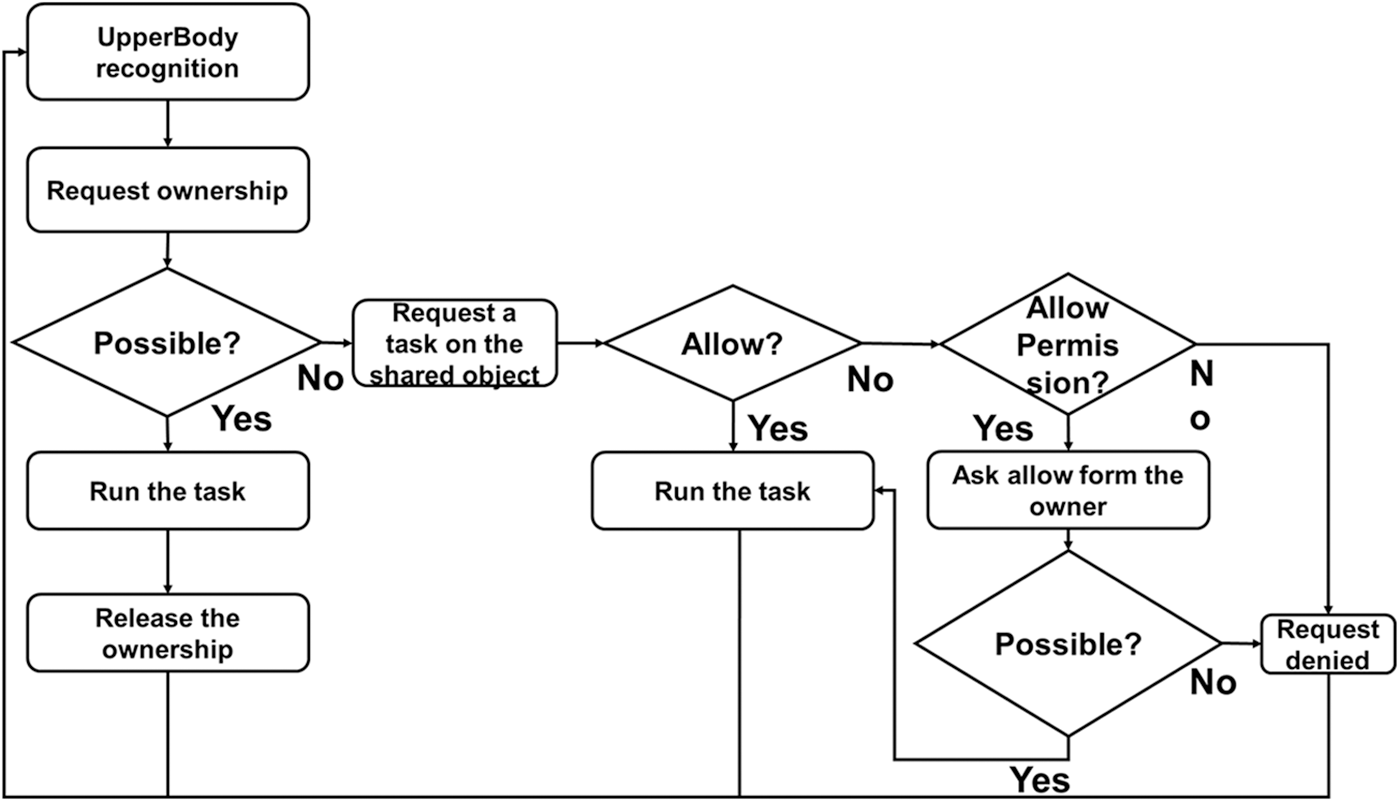

When two or more users perform collaborative work, the ownership of the object is judged. If they are not the owner, three types of concurrency controls are performed on the task according to the state of the goal of the current collaborative work, as shown in Fig. 8.

Figure 8: Overall process adaptive concurrency control

First, users can grab, move, and rotate a shared object. However, when two or more users move or rotate a shared object while holding it, a conflict can occur in which the position or rotation is determined by the movement of only one user. To prevent this, a concurrency control algorithm for the movement and rotation of the object is provided, as shown in the following equations. When two users grab an object, the owner is denoted PA and the non-owner PB, in the order in which the users hold the object, and the middle point PC is obtained using Eq. (1). Thereafter, when the users move, the position of PC is updated by calculating the displacement of the users’ movement using Eq. (2). Rotation is controlled in a similar way: because conflicts occur when the rotation value of one person’s hand is used, the rotation is derived from the approximate direction given by the positions of both users’ hands. In this study, the direction of owner A is assumed to take the initiative. Therefore, the direction for the rotation of C, the middle point of the shared object, is the unit vector obtained by normalizing the vector that results from subtracting B from A, as shown in Eq. (3). Through the final algorithm shown in Eq. (4), users who manipulate the shared object can rotate or move it by moving their hands while holding it.
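The geometric idea in this paragraph can be sketched in Python as follows. The sketch follows only the prose description (the midpoint of the two hands, and a normalized direction from the non-owner's hand to the owner's hand); it is not a transcription of Eqs. (1)–(4), and the function names `midpoint`, `direction`, and `update_pose` are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def midpoint(p_a: Vec3, p_b: Vec3) -> Vec3:
    """Middle point P_C between the owner's hand P_A and the non-owner's hand P_B."""
    return tuple((a + b) / 2.0 for a, b in zip(p_a, p_b))


def direction(p_a: Vec3, p_b: Vec3) -> Vec3:
    """Unit vector along P_A - P_B, so the owner's side leads the rotation."""
    diff = tuple(a - b for a, b in zip(p_a, p_b))
    length = math.sqrt(sum(c * c for c in diff))
    if length == 0.0:
        raise ValueError("hands coincide; direction is undefined")
    return tuple(c / length for c in diff)


def update_pose(p_a: Vec3, p_b: Vec3) -> Tuple[Vec3, Vec3]:
    """Recompute the object's position and facing direction from both hands."""
    return midpoint(p_a, p_b), direction(p_a, p_b)
```

Because the pose is recomputed from both hand positions on every update, neither user's input overwrites the other's: if both hands move by the same displacement, the midpoint moves by that displacement and the direction is unchanged.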

Second, with the consent of the owner, tasks such as simulations of shared objects can be performed. In this study, consent is considered to have been obtained when the owner grants permission through the speech recognition of MS Hololens or clicks the relevant menu item through ray casting. Third, the task is locked, and the non-owner’s work is blocked.

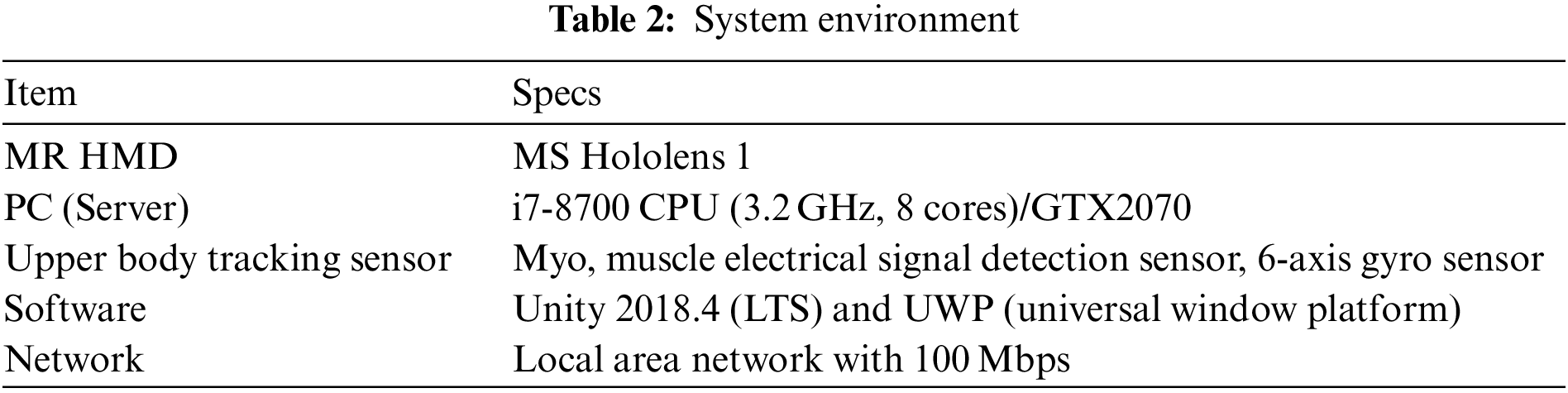

In this study, collaboration content for air conditioning systems was developed. Table 2 describes the system used in the experimental environment. To evaluate the performance of the proposed system, users with HMD experience were selected so that unfamiliarity with the device itself would not interfere with their experience of the experimental content. Accordingly, 30 users aged between 20 and 37 years who had experience with HMDs were selected. The participants consisted of 20 users in their 20s and 10 users in their 30s, of which 16 were male and 14 were female. The selected users received basic training on the proposed system and on the air conditioning system process for 20 min. Subsequently, the users were randomly divided into three groups, User1, User2, and User3, of 10 users each. Within each group, users were randomly matched in pairs and instructed to perform the air conditioning training process.

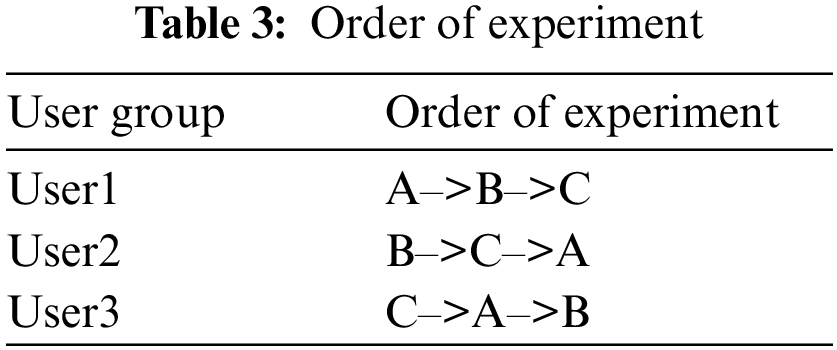

This study compared three concurrency control approaches, including the proposed method. The first, Method A, blocks the tasks of non-owners until the owner releases ownership. The second, Method B, is a task-based concurrency control approach in which shared objects have owners and permission from the owner must be obtained every time a task is performed. The third, Method C, is the GTO-based adaptive concurrency control approach proposed in this study. Users in the individual groups experimented in the order described in Table 3 to avoid the learning effect.

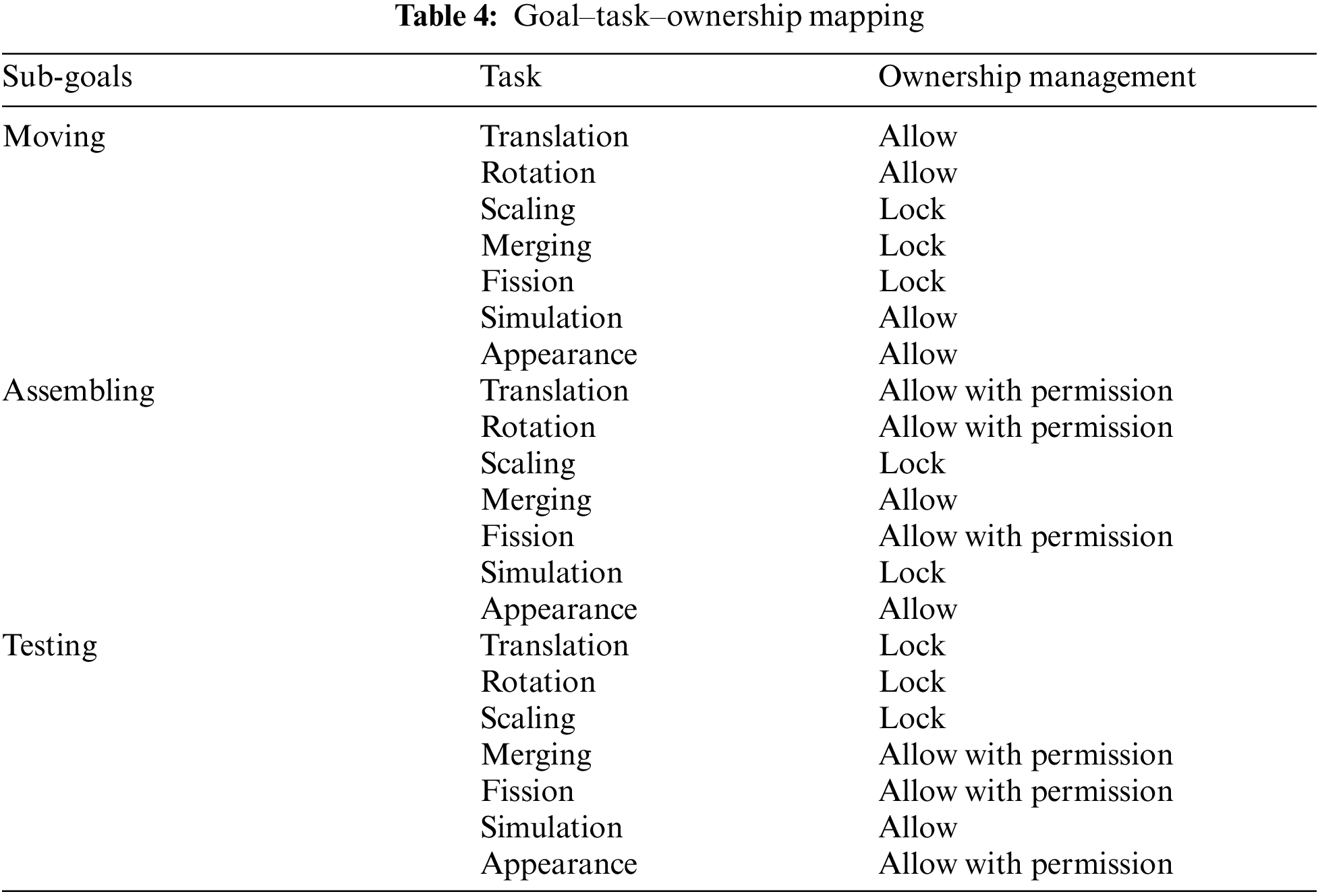

Method C requires the GTO relationships to be defined. Thus, based on the literature [25] related to air conditioning system construction and questionnaire surveys with experts, the installation of an air conditioning system was classified into three stages, the tasks used in each stage were defined, and the concurrency control approaches for non-owners suitable for those tasks were mapped. The result is defined in Table 4. In the first stage, the moving goal stage, two users move the air conditioner to the location where it should be installed. In this case, users can work together to move or rotate the air conditioner. Thus, users can learn in the MR environment the suitable posture to hold, move, and rotate the air conditioner. Here, even users without ownership can participate. Notably, when mistakes are made during the collaborative work, such as dropping the air conditioner or unintentionally ramming it against a real wall, the damage to the air conditioner is simulated and visualized.

The second stage constitutes assembling the accessories of the air conditioning system. In this case, the user with the ownership primarily fixes the air conditioner, and other users assemble accessories such as filters and hoses for the air conditioner. Users without ownership can join accessories without the permission of the owner. However, if they wish to disassemble the accessories because of a wrong connection or adjust the location or direction of the air conditioner, they should obtain permission from the owner to perform the collaborative work.

The third involves starting the air conditioner and checking it upon completion. At this stage, users without ownership can access the simulation to turn on and view the wind of the air conditioner without the owner’s permission. However, disassembling and assembling tasks can be performed only with the owner’s permission.
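The stage-dependent rules described in the three paragraphs above can be written down as a small lookup table. The following Python sketch encodes only the rules stated in the prose; the stage and task identifiers are hypothetical labels, and the choice to lock any task that is not listed for a stage is an assumption of the sketch rather than something stated in the text.

```python
ALLOW = "Allow"
PERMISSION = "Allow with Permission"
LOCK = "Lock"

# Policy applied to a NON-OWNER, keyed by sub-goal (stage) and task.
RELATION_TABLE = {
    "moving": {
        "move": ALLOW,
        "rotate": ALLOW,
    },
    "assembling": {
        "assemble": ALLOW,          # joining accessories needs no permission
        "disassemble": PERMISSION,  # undoing a wrong connection
        "move": PERMISSION,         # adjusting the location
        "rotate": PERMISSION,       # adjusting the direction
    },
    "testing": {
        "simulate": ALLOW,          # turning on and viewing the wind
        "assemble": PERMISSION,
        "disassemble": PERMISSION,
    },
}


def non_owner_policy(sub_goal: str, task: str) -> str:
    """Look up the policy for a non-owner; unlisted tasks default to LOCK (assumption)."""
    return RELATION_TABLE.get(sub_goal, {}).get(task, LOCK)
```

The same task can therefore receive a different answer as the work progresses: `non_owner_policy("moving", "move")` yields `"Allow"`, whereas `non_owner_policy("assembling", "move")` yields `"Allow with Permission"`.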

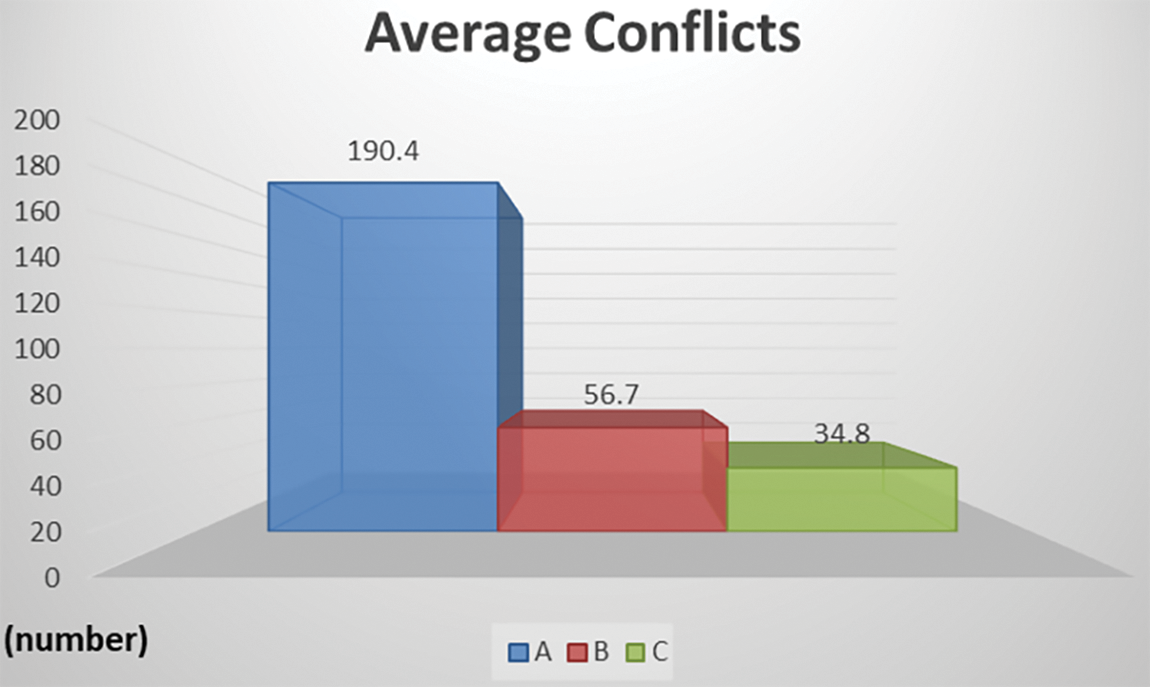

Users who participated in the experiment completed the processes for air conditioner movement, installation, and testing. The method proposed in this study for jointly moving or rotating the air conditioner was applied in common to all experimental methods. During the experiment, the number of conflicts in the users’ collaborative work was measured. As shown in Fig. 9, conflicts occurred most often when Method A was used, and Methods B and C showed fewer conflicts than Method A because they divided ownership into smaller tasks. Although all three concurrency control mechanisms blocked the conflicts, the participants who were blocked were surprised. Compared with the conventional methods, the proposed Method C exhibited the smallest number of conflicts because the proposed system notified participants of changes in goals, so they recognized the changes in the ownership strategy. Moreover, they communicated with each other during the experiments.

Figure 9: Results of average conflicts during collaborative work in MR

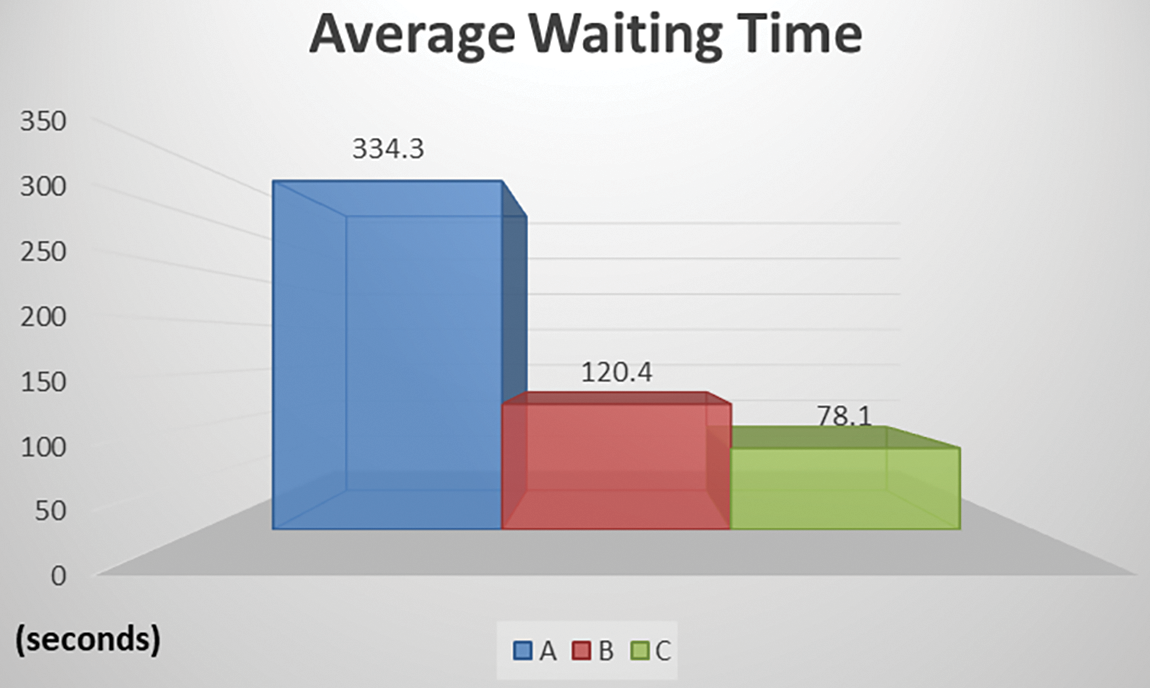

Fig. 10 shows the results of the average waiting time, which is calculated when a non-owner is waiting for the owner’s task. The waiting time was affected by the number of conflicts because non-owners could not perform any tasks on the shared objects and therefore waited until they could access the shared object. The proposed scheme, Method C, exhibited the shortest waiting time.

Figure 10: Results of waiting time during collaborative work in MR

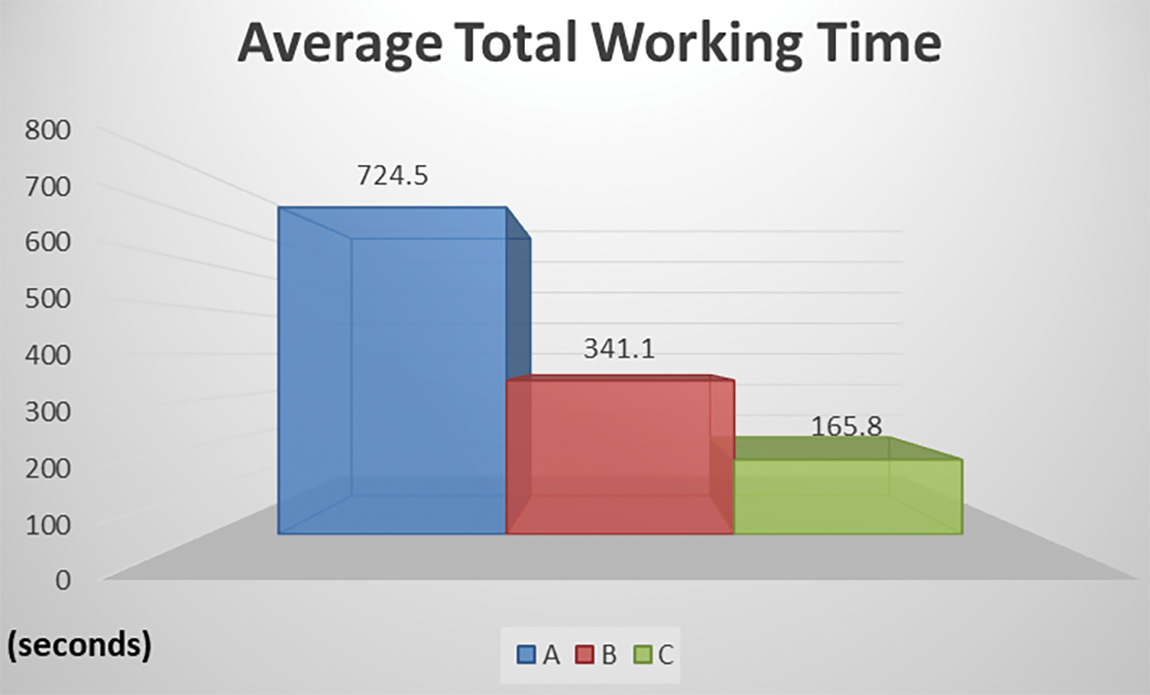

The average completion times of the participants are shown in Fig. 11. Users spent the longest time with Method A, at an average of 724.5 s. This was because conflicts interrupted the consistency of the information on the work, and considerable time was required to recover consistency. With Method B, 341.1 s were required. In this case, time was spent because users without ownership had to request permission from the owner every time they performed a task, and the owner had to accept every request. The proposed method, Method C, exhibited the fastest task completion, with an average of 165.8 s.

Figure 11: Results of working time during collaborative work in MR

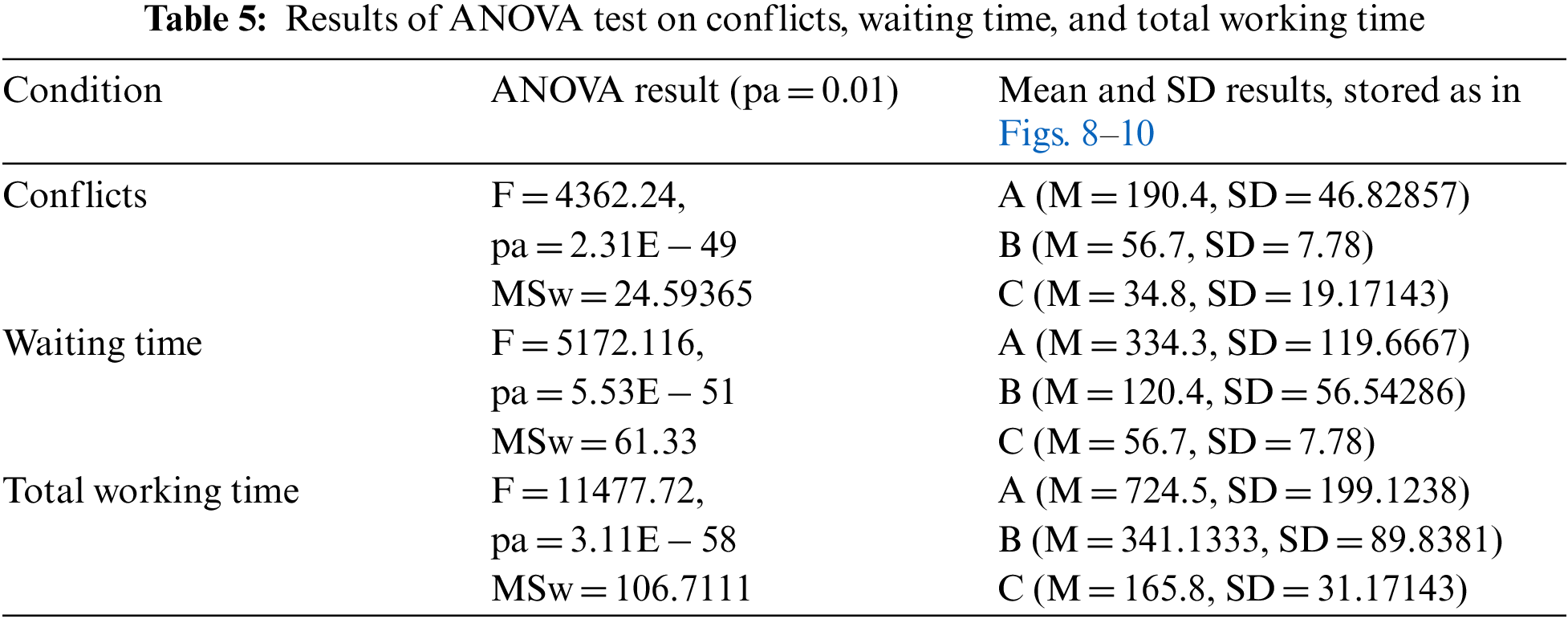

We conducted an analysis of variance (ANOVA) test on the performance evaluations for statistical analysis, as described in Table 5. A conflict is recorded when a non-owner requests a specific task on a shared object while the owner is performing a task. According to the results of the ANOVA test, the difference in the number of conflicts among the concurrency control mechanisms is statistically significant (p = 2.31E-49 < 0.01). As the waiting time is affected by the number of conflicts, the proposed method exhibited the lowest waiting time, and the difference in waiting time is also statistically significant (p = 5.53E-51 < 0.01). The waiting time and number of conflicts influenced the total working time for completing shared object manipulation in the experiments. The total working times among the concurrency control mechanisms also differed significantly (p = 3.11E-58 < 0.01), as described in Table 5. Thus, the proposed concurrency control method can reduce the number of conflicts as well as the waiting and working times of shared object manipulation in MR environments.

After the experiment, the participants were interviewed to qualitatively evaluate the proposed system. When a question concerning the most uncomfortable concurrency control approach was asked, participants indicated that Method A was the most inconvenient. There were inconveniences when the consistency of collaborative work was interrupted because of other users’ work. Although the precautions for this experimental method were earlier explained, some participants thought it was an error of the system. Additionally, some users indicated inconveniences in Method B. Users with ownership complained of the inconvenience of having to grant permission to each task requested by a non-owner during collaborative work, whereas those without ownership felt inconveniences as they had to request ownership every time they performed a task. Moreover, they had to watch work when their work was not permitted. Users generally evaluated Method C, the proposed method, as being satisfactory and efficient in that the concurrency control approach to tasks was changed according to sub-goals. However, some users were unfamiliar with both the air conditioner and air conditioning system. Consequently, they wrongly manipulated the system without recognizing changes in the concurrency control approach to tasks because of changes in the sub-goal and proposed that the guidance UI for relevant parts should be improved.

This study proposed an adaptive concurrency control mechanism based on a goal–task–ownership model to enable users to perform collaborative tasks in an MR environment. To this end, the proposed system classifies collaborative tasks in an MR environment into detailed goals and tasks, and maps the classified goals and tasks to appropriate ownership strategies. Using the goal–task–ownership model, the proposed system adapts the ownership strategy of a shared object as the detailed goals change.
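The idea of switching ownership strategies by goal can be illustrated with a minimal sketch. The goal names, task names, strategy labels, and the table `GOAL_TASK_STRATEGY` are hypothetical and do not reproduce the mapping used by the proposed system. The sketch also assumes that a conflict is counted only when a non-owner's request is refused while the owner is busy.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    EXCLUSIVE = "exclusive"  # only the owner may perform the task
    SHARED = "shared"        # owner and non-owners may act together


# Hypothetical goal -> task -> strategy table (an assumption for illustration).
GOAL_TASK_STRATEGY = {
    "move": {"translate": Strategy.SHARED, "rotate": Strategy.SHARED},
    "install": {"translate": Strategy.EXCLUSIVE, "rotate": Strategy.EXCLUSIVE},
}


@dataclass
class SharedObject:
    owner: str
    goal: str                 # current sub-goal of the collaboration
    owner_busy: bool = False  # True while the owner is performing a task
    conflicts: int = 0

    def request(self, user: str, task: str) -> bool:
        """Return True if `user` may perform `task` under the current goal."""
        strategy = GOAL_TASK_STRATEGY[self.goal][task]
        if user == self.owner or strategy is Strategy.SHARED:
            return True
        # A refused non-owner request made while the owner works is a conflict.
        if self.owner_busy:
            self.conflicts += 1
        return False


# Usage: the same request is granted or refused depending on the sub-goal.
unit = SharedObject(owner="user1", goal="move", owner_busy=True)
print(unit.request("user2", "rotate"))  # granted under the shared strategy
unit.goal = "install"
print(unit.request("user2", "rotate"))  # refused and counted as a conflict
```

Keeping the strategy in a lookup table means that changing the sub-goal is enough to change how requests are resolved, without modifying the request logic itself.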

To support collaborative tasks on shared objects in an MR environment, the system uses MS Hololens and Myo devices. Users could perform collaborative tasks on a shared object using both hands and upper-body motions. The proposed concurrency control method was implemented and applied to the installation of an air conditioner system, which could be one of the most promising applications of our approach. To verify the proposed method, two users were asked to perform the movement, installation, and testing of a virtual air conditioner in an MR environment as collaborative work. The experimental results showed that, compared with conventional concurrency control mechanisms, the proposed method reduced the number of conflicts. Notably, the number of conflicts affected both the waiting and total working times. According to the user studies, the participants readily adopted the proposed adaptation of concurrency control for shared objects and the use of voice communication to request permission for shared objects in an MR environment.

In future research, we will study appropriate UIs for collaborative work in MR environments. We also plan to apply a deep learning-based motion recognition method so that the system can support natural collaborative work even when no devices are attached to the body.

Acknowledgement: The authors thank HyungJun Cho and DongHyun Kim (Hoseo University) for their hard work in supporting the experiments of the proposed system.

Funding Statement: These results were supported by “Regional Innovation Strategy (RIS)” through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (MOE) (2021RIS-004).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. M. Speicher, B. D. Hall and M. Nebeling, “What is mixed reality?,” in Proc. CHI Conf., Glasgow, Scotland, UK, pp. 1–15, 2019. [Google Scholar]

2. M. Funk, M. Kritzler and F. Michahelles, “Hololens is more than air tap: Natural and intuitive interaction with holograms,” in Proc. IoT’17, Linz, Austria, pp. 1–2, 2017. [Google Scholar]

3. J. Lee and S. J. Park, “Extended reality-based simultaneous multi presence for remote cooperative work,” Journal of Computer and Information, vol. 26, no. 5, pp. 23–30, 2021. [Google Scholar]

4. E. Maye Culpa, J. I. Mendoza, J. G. Ramirez, A. L. Yap and E. Fabian et al., “A cloud-linked ambient air quality monitoring apparatus for gaseous pollutants in urban areas,” Journal of Internet Services and Information Security (JISIS), vol. 11, no. 1, pp. 64–79, 2021. [Google Scholar]

5. B. Aziz, “A note on the problem of semantic interpretation agreement in steganographic communications,” Journal of Internet Services and Information Security (JISIS), vol. 11, no. 3, pp. 47–57, 2021. [Google Scholar]

6. A. Borchert and M. Heisel, “Conflict identification and resolution for trust-related requirements elicitation a goal modeling approach,” Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications (JoWUA), vol. 12, no. 1, pp. 111–131, 2021. [Google Scholar]

7. M. Komisarek, M. Pawlicki, R. Kozik and M. Choraś, “Machine learning based approach to anomaly and cyberattack detection in streamed network traffic data,” Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications (JoWUA), vol. 12, no. 1, pp. 3–19, 2021. [Google Scholar]

8. D. Pöhn and W. Hommel, “Universal identity and access management framework,” Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications (JoWUA), vol. 12, no. 1, pp. 64–84, 2021. [Google Scholar]

9. C. Joslin, D. T. Giacomo and N. Magnenat-Thalmann, “Collaborative virtual environments: From birth to standardization,” IEEE Communications Magazine, vol. 42, no. 4, pp. 28–33, 2004. [Google Scholar]

10. D. Roberts, R. Wolff, O. Otto and A. Steed, “Constructing a gazebo: Supporting team work in a tightly coupled distributed task in virtual reality,” Presence: Teleoperators & Virtual Environments, vol. 12, no. 6, pp. 644–657, 2003. [Google Scholar]

11. O. Otto, D. Roberts and R. Wolff, “A review on effective closely-coupled collaboration using immersive CVE’s,” in Proc. 2006 ACM Int. Conf. on Virtual Reality Continuum and its Applications (VRCIA’06), Hong Kong, China, pp. 145–154, 2006. [Google Scholar]

12. Q. Abbas, H. Shafiq, I. Ahmad and S. Tharanidharan, “Concurrency control in distributed database system,” in Proc. 2016 Int. Conf. on Computer Communication and Informatics (ICCCI), Coimbatore, India, pp. 1–4, 2016. [Google Scholar]

13. D. J. Roberts and P. M. Sharkey, “Maximizing concurrency and scalability in a consistent, causal, distributed virtual reality system, whilst minimizing the effect of network delays,” in Proc. 6th Workshop on Enabling Technologies, Infrastructures for Collaborative Enterprise (WETICE’97), Cambridge, MA, USA, pp. 161–166, 1997. [Google Scholar]

14. U. J. Sung, J. H. Yang and K. Y. Wohn, “Concurrency control in CIAO,” in Proc. 1999 IEEE Virtual Reality Conf. (VR’99), Houston, TX, USA, pp. 22–28, 1999. [Google Scholar]

15. A. Steed and M. F. Oliveira, “Ownership,” in Networked Graphics: Building Networked Games and Virtual Environments, 1st ed., vol. 1, San Francisco, CA, USA: Elsevier, pp. 266–271, 2009. [Google Scholar]

16. J. Yang, “Scalable prediction based concurrency control for distributed virtual environments,” in Proc. IEEE Virtual Reality 2000 Conf., New Brunswick, NJ, USA, p. 151, 2000. [Google Scholar]

17. J. Lee, M. Lim, H. S. Kim and J. I. Kim, “Supporting fine-grained concurrent tasks and personal workspaces for a hybrid concurrency control mechanism in a networked virtual environment,” Presence: Teleoperators & Virtual Environments, vol. 21, no. 4, pp. 452–469, 2012. [Google Scholar]

18. J. Lee, M. Lim, S. J. Park, H. S. Kim and H. Ko et al., “Approximate resolution of asynchronous conflicts among sequential collaborations in dynamic virtual environments,” Computer Animation & Virtual Worlds, vol. 27, no. 2, pp. 163–180, 2016. [Google Scholar]

19. K. C. Mathi, “Augment HoloLens’ body recognition and tracking capabilities using Kinect,” Ph.D. Dissertation, Wright State University, USA, 2016. [Google Scholar]

20. T. Mulling and M. Sathiyanarayanan, “Characteristics of hand gesture navigation: A case study using a wearable device (MYO),” in Proc. British HCI’15, Lincoln, Lincolnshire, UK, pp. 283–284, 2015. [Google Scholar]

21. S. J. Park, “A study on sensor-based upper full-body motion tracking on hololens,” Journal of the Korea Society of Computer and Information, vol. 26, no. 4, pp. 39–46, 2021. [Google Scholar]

22. P. P. Desai, P. N. Desai, K. D. Ajmera and K. A. Mehta, “Review paper on oculus rift-a virtual reality headset,” International Journal of Engineering Trends and Technology, vol. 13, no. 4, pp. 175–179, 2014. [Google Scholar]

23. L. Hui, J. Yuan, D. Thalmann and N. M. Thalmann, “AR in hand: Egocentric palm pose tracking and gesture recognition for augmented reality applications,” in Proc. ACM MM’ 15, Brisbane, Australia, pp. 743–744, 2015. [Google Scholar]

24. R. Lathia and J. Mistry, “Process of designing efficient, emission free HVAC systems with its components for 1000 seats auditorium,” Pacific Science Review A: Natural Science and Engineering, vol. 18, no. 2, pp. 109–122, 2016. [Google Scholar]

25. D. A. Norman, “Psychology of everyday action,” in The Design of Everyday Things, New York: Basic Books, 1988. [Google Scholar]

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
