Open Access

ARTICLE

Deep Transfer Learning-Enabled Activity Identification and Fall Detection for Disabled People

1 Department of Computer Science, College of Science & Art at Mahayil, King Khalid University, Saudi Arabia

2 Faculty of Arts and Science, Najran University, Sharourah, Saudi Arabia

3 Department of Computer Sciences, College of Computer and Information Sciences, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

4 Department of Industrial Engineering, College of Engineering at Alqunfudah, Umm Al-Qura University, Saudi Arabia

5 Department of Information Systems, College of Computer and Information Sciences, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

6 Department of Computer Science, College of Applied Sciences, King Khalid University, Muhayil, 63772, Saudi Arabia

7 Department of Digital Media, Faculty of Computers and Information Technology, Future University in Egypt, New Cairo, 11835, Egypt

8 Department of Computer and Self Development, Preparatory Year Deanship, Prince Sattam bin Abdulaziz University, AlKharj, Saudi Arabia

* Corresponding Author: Manar Ahmed Hamza. Email:

Computers, Materials & Continua 2023, 75(2), 3239-3255. https://doi.org/10.32604/cmc.2023.034037

Received 05 July 2022; Accepted 13 October 2022; Issue published 31 March 2023

Abstract

The human motion data collected using wearables such as smartwatches can be used for activity recognition and emergency event detection. This is especially applicable to elderly or disabled people who live independently in their homes. These sensors produce a huge volume of physical activity data that requires real-time recognition, especially during emergencies. Falling is one of the most serious problems confronting older people and people with movement disabilities. Numerous techniques have been introduced previously, and a few of them used webcams to monitor the activity of elderly or disabled people. However, such systems incur high installation and operating costs, and the technology is applicable only to indoor environments. Currently, commercial wearables use a wireless emergency transmitter that produces a number of false alarms and restricts the user's movements. Against this background, the current study develops an Improved Whale Optimization with Deep Learning-Enabled Fall Detection for Disabled People (IWODL-FDDP) model. The presented IWODL-FDDP model aims to identify fall events to assist disabled people. It applies an image filtering approach to pre-process the images. Next, the EfficientNet-B0 model is utilized to generate valuable feature vector sets. The Bidirectional Long Short-Term Memory (BiLSTM) model is then used for the recognition and classification of fall events. Finally, the IWO method is leveraged to fine-tune the hyperparameters of the BiLSTM method, which constitutes the novelty of the work. The experimental analysis established the superior performance of the proposed IWODL-FDDP method, with a maximum accuracy of 97.02%.

The World Health Organization (WHO) reports an estimated 684,000 fatal falls, and adults aged above 60 years suffer the greatest number of them. Falls are the second most common cause of unintentional injury death, after road traffic injuries [1]. Across the globe, falls are regarded as a public health concern for elderly people. Around 80% of fatal falls occur in low- and middle-income countries [2]. Fall-induced injuries among elderly individuals have far-reaching impacts on their health as well as on societal and healthcare institutions. In general, these emergencies occur without warning, so it is critical to detect them as early as possible. Consequently, Fall Detection (FD) techniques have been developed, and they are life-savers in medical alert systems. In a medical emergency such as a fall, in which the individual cannot reach the assistance button, the automated FD feature of the medical alert system can summon help so that the victim receives appropriate care [3].

A fall can dramatically alter the independent life of a disabled individual [4]. Depending on their health status, nearly 10% of elderly people who fall are severely injured. In some fatal fall events, the affected individual dies because immediate support is not available [5]. A reliable FD technique is therefore required to avoid the serious consequences of a fall. The wrist-worn recognition system is one of the conventional techniques used for fall detection; it measures the acceleration force of the wearer [6]. Wrist-worn devices are increasingly used across the globe, and they have attracted much attention for their computing capability and their use of Artificial Intelligence (AI) techniques [7]. Older adults generally find these devices easy to use, although they have some concerns about privacy and, at times, about understanding the functions of the devices. Mobile FD systems can be evaluated in considerable detail, since live information about the falls of older adults can be retrieved [8,9].

Advanced AI techniques enable FD systems to detect and predict falls with the help of established datasets and data trends. Sensors collect data on several fall parameters using various data collection mechanisms [8]. Machine Learning (ML) approaches are then exploited to categorize or identify falls according to the requirements of the application [10]. Deep Learning (DL) approaches are highly helpful for FD and other detection activities, especially in sensor fusion and visual detectors [11]. Deep Reinforcement Learning (DRL) is one research direction in FD; it draws on psychological and neuroscientific models of human behavior. It also adapts its suggestions to changing environments and enhances user performance [12,13]. DRL integrates deep learning and Reinforcement Learning (RL) to make recognition more robust and accurate in changing environments [14].

Kerdjidj et al. [15] suggested an effective automatic fall detection system that can also detect various Activities of Daily Living (ADL). The system relied upon wearable Shimmer devices that transmit inertial signals to a computer over a wireless connection. Its main objectives were to reduce the size of the transferred data and the power consumed, and a Compressive Sensing (CS) technique was applied to achieve these objectives. Casilari et al. [16] proposed and validated a convolutional Deep Neural Network (DNN) that identifies falls from measurements gathered by a portable tri-axial accelerometer. Compared with other works, this study used a more extensive collection of public datasets, containing traces collected from different groups of volunteers who mimicked falls and ADLs.

Ramón et al. [17] developed a wearable FD System (FDS) based on a body-area network. The FDS had four nodes with Bluetooth wireless interfaces and inertial sensors. The signals produced by the nodes were transmitted to a smartphone that concurrently served as another sensing point. Unlike the many FDSs proposed in single-sensor studies, this study used a multi-sensor prototype to investigate how the positions and the number of sensors affect the efficiency of FD decisions. de Quadros et al. [18] developed and evaluated a wrist-worn fall detection solution. Various sensors (i.e., magnetometer, accelerometer and gyroscope), signals (i.e., displacement, acceleration and velocity) and direction components (vertical and non-vertical) were compiled, and a complete set of threshold-based and ML techniques was evaluated to identify the optimal fall detection technique. In [19], a weighted multi-stream Convolutional Neural Network (CNN) model was suggested that exploits rich multi-modal data collected using cameras. This technique automatically identified fall events and raised help requests to the respective caregivers. The contributions of that study were three-fold, and its novel architecture had four individual CNN streams, one for each modality.

The current study develops an Improved Whale Optimization with Deep Learning-Enabled Fall Detection for Disabled People (IWODL-FDDP) model. The aim of the presented IWODL-FDDP method is to identify fall events to assist disabled people. At first, the IWODL-FDDP method applies an image filtering approach to pre-process the images. Next, the EfficientNet-B0 model is utilized to generate valuable feature vector sets. A Bidirectional Long Short-Term Memory (BiLSTM) model is then leveraged for the recognition and classification of fall events. Finally, the IWO technique is employed to fine-tune the hyperparameters of the BiLSTM method. The experimental results confirmed the better performance of the proposed IWODL-FDDP model over recent approaches.

In this study, a novel IWODL-FDDP method is developed for the identification of falls to assist disabled people. The presented IWODL-FDDP model follows three major processes: pre-processing, feature extraction and fall classification. Initially, the IWODL-FDDP model applies the image filtering approach to pre-process the images. Next, the EfficientNet-B0 model is utilized to generate a valuable feature vector set. Then, the BiLSTM model, with hyperparameters tuned by IWO, is leveraged for the recognition and classification of fall events. Fig. 1 depicts the block diagram of the IWODL-FDDP system.

Figure 1: Block diagram of the IWODL-FDDP algorithm
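The text does not specify which image filter is used in the pre-processing step. Purely as an illustration, the sketch below applies a 3 × 3 median filter, a common choice for suppressing impulse noise in video frames, to a grayscale frame stored as a list of rows. The filter type, the window size and the replicate-edge border handling are assumptions, not details taken from the IWODL-FDDP model, and the function name `median_filter_3x3` is hypothetical.

```python
from statistics import median


def median_filter_3x3(img):
    """Apply a 3x3 median filter to a 2-D grayscale image (list of rows).

    Border pixels are handled by clamping coordinates to the image edge
    (replicate padding), so the output has the same size as the input.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = []
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp neighbour coordinates to stay inside the image.
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    window.append(img[yy][xx])
            # Median of 9 values: robust to a single outlier pixel.
            out[y][x] = median(window)
    return out
```

For example, a 5 × 5 frame of constant intensity 10 with a single 255-valued pixel in the centre comes back as a uniform frame of 10s, because the outlier never forms the majority of any window.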

In recent times, the CNN approach has been widely applied in both object detection and image processing applications. The robustness of CNN approaches lies in their shared weights [20]. Weight sharing substantially reduces the number of free (learnable) parameters, which in turn reduces the memory required to run the network. It also makes it feasible to train larger and more powerful networks. The CNN model includes four types of layers: the convolution layer, the normalization layer, the pooling layer and the Fully-Connected (FC) layer. In each layer, the

In Eq. (1), a discrete convolutional operator is denoted by ‘

Finally, after the convolution and max-pooling layers, the high-level reasoning in the Neural Network (NN) is performed by the FC layer. Unlike the convolution layers, the FC layer does not share weights. The CNN model is generally trained end-to-end under supervision. Weight sharing substantially reduces the number of weight parameters, and the translation invariance of the learned features benefits the end-to-end training of the CNN model.
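Eq. (1) did not survive extraction, so the following is only a generic sketch of a convolution layer followed by pooling, not a reconstruction of the paper's equation. `conv2d_valid` slides one shared kernel over the image, computing cross-correlation as most deep learning frameworks do for their "convolution" layers, so a 3 × 3 kernel contributes 9 weights however large the image is. A ReLU activation and non-overlapping max pooling follow; normalization and FC layers are omitted, and all function names are illustrative.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """2-D 'valid' cross-correlation, as used in CNN convolution layers.

    The same kernel (shared weights) is applied at every spatial position.
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    out = []
    for y in range(h - kh + 1):
        row = []
        for x in range(w - kw + 1):
            s = bias
            for i in range(kh):
                for j in range(kw):
                    s += image[y + i][x + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out


def relu(fmap):
    """Element-wise ReLU activation on a 2-D feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    h, w = len(fmap) // size, len(fmap[0]) // size
    return [[max(fmap[y * size + i][x * size + j]
                 for i in range(size) for j in range(size))
             for x in range(w)]
            for y in range(h)]
```

For instance, convolving [[1, 2, 3], [4, 5, 6], [7, 8, 9]] with the kernel [[1, 0], [0, 1]] yields [[6, 8], [12, 14]], and 2 × 2 max pooling reduces that map to [[14]].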

In this study, the EfficientNet-B0 model is utilized to generate a useful set of feature vectors. EfficientNet DNNs are used to build image recognition architectures. The EfficientNet-b0 framework is trained on over a million images from the ImageNet database [21]. The EfficientNet family uses a compound scaling method with fixed ratios for all three dimensions of a network to increase both speed and precision. The outcomes demonstrate that balancing network resolution, depth and width enhances the efficacy of the output. A total of 18 convolutional layers are used, i.e., the depth is 18. All the layers have a kernel size of 3 × 3 or 5 × 5. The size of the input images is 224 × 224 × 3 pixels, where '3' signifies the RGB colour channels. The resolution of the subsequent layers is reduced to minimize the size of the feature vectors, while their width is increased to enhance the accuracy. The second convolutional layer has a width of 16 filters, whereas the subsequent convolutional layers have a width of 24 filters. The final layer, i.e., the FC layer, has the maximum number of filters, i.e., 1,280. In CNNs in general, kernels of different sizes are used, such as 3 × 3, 5 × 5 and 7 × 7. Larger kernels can improve the model's performance: they help capture high-resolution patterns, whereas small kernels support the extraction of low-resolution patterns. During training and testing, this study used the 18-layer EfficientNet-b0 baseline. The EfficientNet family has distinct configurations, b1, b2, …, b7, in addition to the base EfficientNet-b0. EfficientNet-b0 is the smallest network in the family and is utilized in this study.
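The compound scaling rule mentioned above can be made concrete. In the original EfficientNet work (Tan and Le, 2019), depth, width and input resolution are scaled as α^φ, β^φ and γ^φ for a single coefficient φ, with α = 1.2, β = 1.1 and γ = 1.15 found by grid search so that α·β²·γ² ≈ 2; each unit increase of φ therefore roughly doubles the FLOPs. The sketch below only evaluates these multipliers relative to b0 (224-pixel input); the released b1 to b7 configurations use their own rounded coefficients, so it should be read as an approximation, and the function names are illustrative.

```python
# EfficientNet compound scaling: one coefficient phi scales depth, width
# and input resolution together. Constants as reported by Tan & Le (2019).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi, base_depth=1.0, base_width=1.0, base_resolution=224):
    """Return (depth multiplier, width multiplier, input resolution) for phi."""
    depth = base_depth * ALPHA ** phi
    width = base_width * BETA ** phi
    resolution = base_resolution * GAMMA ** phi
    return depth, width, resolution


def flops_multiplier(phi):
    """Approximate FLOPs growth: FLOPs scale with depth * width^2 * resolution^2."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
```

With φ = 0 the function returns the b0 baseline (1.0, 1.0, 224.0), and `flops_multiplier(1)` is about 1.92, close to the intended factor of 2.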

2.2 Fall Detection and Classification

Next, the BiLSTM model is employed for the recognition and classification of falls. The Long Short-Term Memory (LSTM) model maintains memory cells and their states to overcome the long-term dependency issue found in the Recurrent Neural Network (RNN) model [22]. The long-term dependency issue is the exploding or vanishing gradient problem caused by repeated matrix multiplications when an RNN computes the connection between distant nodes. The following equations describe how the LSTM network is updated in one time step.

Here,
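The update equations did not survive extraction. As a minimal sketch of the standard LSTM cell (forget, input and output gates plus a tanh candidate state), one time step can be written in plain Python as follows. This is the textbook formulation, not necessarily the exact variant or parameterization used in the paper, and the parameter layout `p` and the helper names are assumptions made for illustration.

```python
import math


def _sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def _affine(W, U, b, x, h):
    """Compute W·x + U·h + b for list-based matrices and vectors."""
    return [sum(wi * xi for wi, xi in zip(W[r], x))
            + sum(ui * hi for ui, hi in zip(U[r], h)) + b[r]
            for r in range(len(b))]


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a standard LSTM cell.

    p maps the gate names 'f', 'i', 'o', 'g' to (W, U, b) tuples
    (hypothetical layout). Returns the new hidden state h_t and cell state c_t.
    """
    f = [_sigmoid(v) for v in _affine(*p['f'], x, h_prev)]   # forget gate
    i = [_sigmoid(v) for v in _affine(*p['i'], x, h_prev)]   # input gate
    o = [_sigmoid(v) for v in _affine(*p['o'], x, h_prev)]   # output gate
    g = [math.tanh(v) for v in _affine(*p['g'], x, h_prev)]  # candidate state
    # New cell state: keep part of the old memory, add gated new content.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # New hidden state: gated, squashed view of the cell state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

Processing a whole sequence means calling `lstm_step` once per frame feature vector and feeding the returned h and c back in. With all weights and biases set to zero, every gate equals 0.5, so a previous cell state of 2.0 is halved to 1.0.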

The Bidirectional LSTM (Bi-LSTM) network extends its LSTM predecessor by adding a backward Hidden Layer (HL),

Here, h e implies the HL of the present cell,

Figure 2: Framework of the BiLSTM technique
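The bidirectional idea can be sketched independently of the cell internals: one recurrent pass reads the sequence left to right, a second pass with its own parameters reads it right to left, and the two hidden states at each position are concatenated. Here `step_fwd` and `step_bwd` are hypothetical placeholders for the forward and backward LSTM cells, i.e., any function `step(x, h, c) -> (h, c)`. Concatenation is assumed as the merge rule; it is the common default but is not stated in the text.

```python
def run_direction(step, xs, h0, c0):
    """Run a recurrent cell over xs and collect the hidden state at every step."""
    h, c, hs = h0, c0, []
    for x in xs:
        h, c = step(x, h, c)
        hs.append(h)
    return hs


def bidirectional(step_fwd, step_bwd, xs, h0, c0):
    """Bidirectional wrapper: one pass left-to-right, one pass right-to-left.

    The backward outputs are re-reversed so that position t pairs the forward
    state (context from x_1..x_t) with the backward state (context from x_t..x_T).
    """
    fwd = run_direction(step_fwd, xs, h0, c0)
    bwd = run_direction(step_bwd, list(reversed(xs)), h0, c0)[::-1]
    return [hf + hb for hf, hb in zip(fwd, bwd)]  # list concatenation
```

With a toy cell that returns `([h[0] + x], c)`, calling `bidirectional` on [1, 2, 3] with h0 = [0] returns [[1, 6], [3, 5], [6, 3]]: each position pairs the running sum from the left with the running sum from the right.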

2.3 IWO Based Hyperparameter Optimization

Finally, the IWO method is employed to fine-tune the hyperparameters of the BiLSTM algorithm. The Whale Optimization Algorithm (WOA) is a metaheuristic algorithm [23] inspired by the hunting behavior of humpback whales. Their attack on prey is known as the bubble-net feeding method, in which bubbles are formed along a circle around the prey. It has two phases: encircling the prey and attacking the prey. The hunting activity of the whales is peculiar and follows two techniques. The first one is the upward spiral, in which the whales dive 12

Let X, Y be the coefficient vectors, k represent the current iteration,

The bubble-net attack of humpback whales follows two strategies: shrinking encircling and spiral updating. Both strategies are used to attack the prey, and each is chosen with a probability of 50%. In the shrinking-encircling strategy, the value of 'x' decreases linearly from 2 to 0, and X is directly proportional to it, as shown in Eq. (14). X refers to an

If

In this search stage, each whale (i.e., search agent) searches globally for a better solution (prey) and changes its location according to the positions of the other whales. To force a search agent to move far away from the other whales, X must be greater than 1 or less than −1. The random search is formulated using the following expression.
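The three WOA moves described above (shrinking encircling, spiral updating and random search) can be sketched on a toy minimization problem as follows. This is the basic WOA only, without the quasi-oppositional learning that turns it into IWO, and it is not the authors' code. The spiral constant b = 1, drawing l uniformly from [−1, 1], using one scalar A and C per whale, clipping positions to the bounds and the name `woa_minimize` are all assumptions; A and C appear to correspond to the coefficients written X and Y in the text, and a to the value 'x' that shrinks from 2 to 0.

```python
import math
import random


def woa_minimize(f, dim, lb, ub, n_whales=20, iters=100, b=1.0, seed=0):
    """Basic Whale Optimization Algorithm sketch (minimization)."""
    rng = random.Random(seed)

    def clip(v):
        return min(max(v, lb), ub)

    X = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_whales)]
    best = min(X, key=f)[:]
    best_fit = f(best)
    for t in range(iters):
        a = 2.0 - 2.0 * t / iters  # decreases linearly from 2 to 0
        for k in range(n_whales):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            p, l = rng.random(), rng.uniform(-1.0, 1.0)
            x = X[k]
            if p < 0.5:
                # |A| < 1: shrink around the best whale (exploitation);
                # |A| >= 1: move relative to a random whale (exploration).
                ref = best if abs(A) < 1 else X[rng.randrange(n_whales)]
                new = [ref[j] - A * abs(C * ref[j] - x[j]) for j in range(dim)]
            else:
                # Spiral (bubble-net) update around the best whale.
                new = [abs(best[j] - x[j]) * math.exp(b * l)
                       * math.cos(2 * math.pi * l) + best[j]
                       for j in range(dim)]
            X[k] = [clip(v) for v in new]
            fit = f(X[k])
            if fit < best_fit:  # keep the best solution found so far
                best, best_fit = X[k][:], fit
    return best, best_fit
```

To tune the BiLSTM, the toy objective would be replaced by a function that trains the network with a candidate hyperparameter vector and returns its validation error.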

The IWO algorithm is derived by combining Quasi-Oppositional-Based Learning (QOBL) with WOA. Tizhoosh developed the OBL method [24], stating that an opposite number is more likely than a random number to lie close to the solution. When OBL is implemented in conventional evolutionary algorithms, it increases the coverage of the solution space, which in turn results in rapid convergence and high accuracy. Applications of OBL in soft computing include Oppositional Differential Evolution (ODE), Oppositional Particle Swarm Optimization (OPSO) and Oppositional Biogeography-Based Optimization (OBBO). In this study, the QOBL method was integrated with the elementary WOA technique to increase the chance of finding solutions near the global optimum. First, the idea of quasi-opposition-based optimization is presented; it is then applied to accelerate the convergence of the WOA algorithm. The QOBL concept is utilized in both population initialization and generation jumping. The OBL method is defined as follows.

Opposite number: Consider

Opposite point: Given that

Quasi-opposite number: Assume x as a real number in the range of

where c is demonstrated below:

Quasi-opposite point: Here, x represents a real number that lies in the range of

where

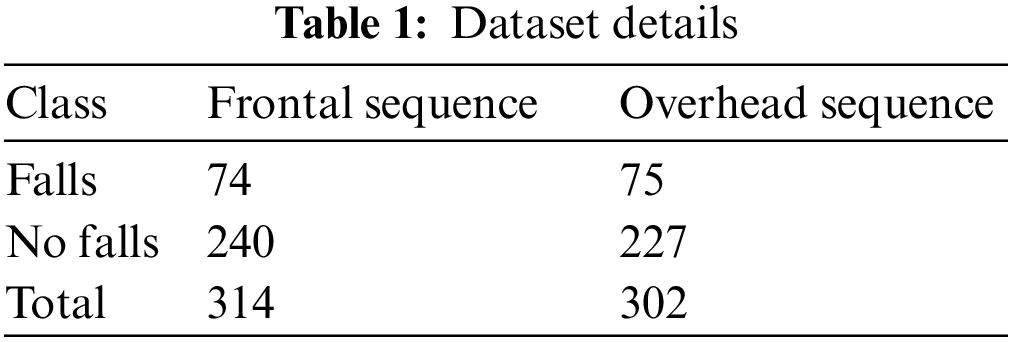

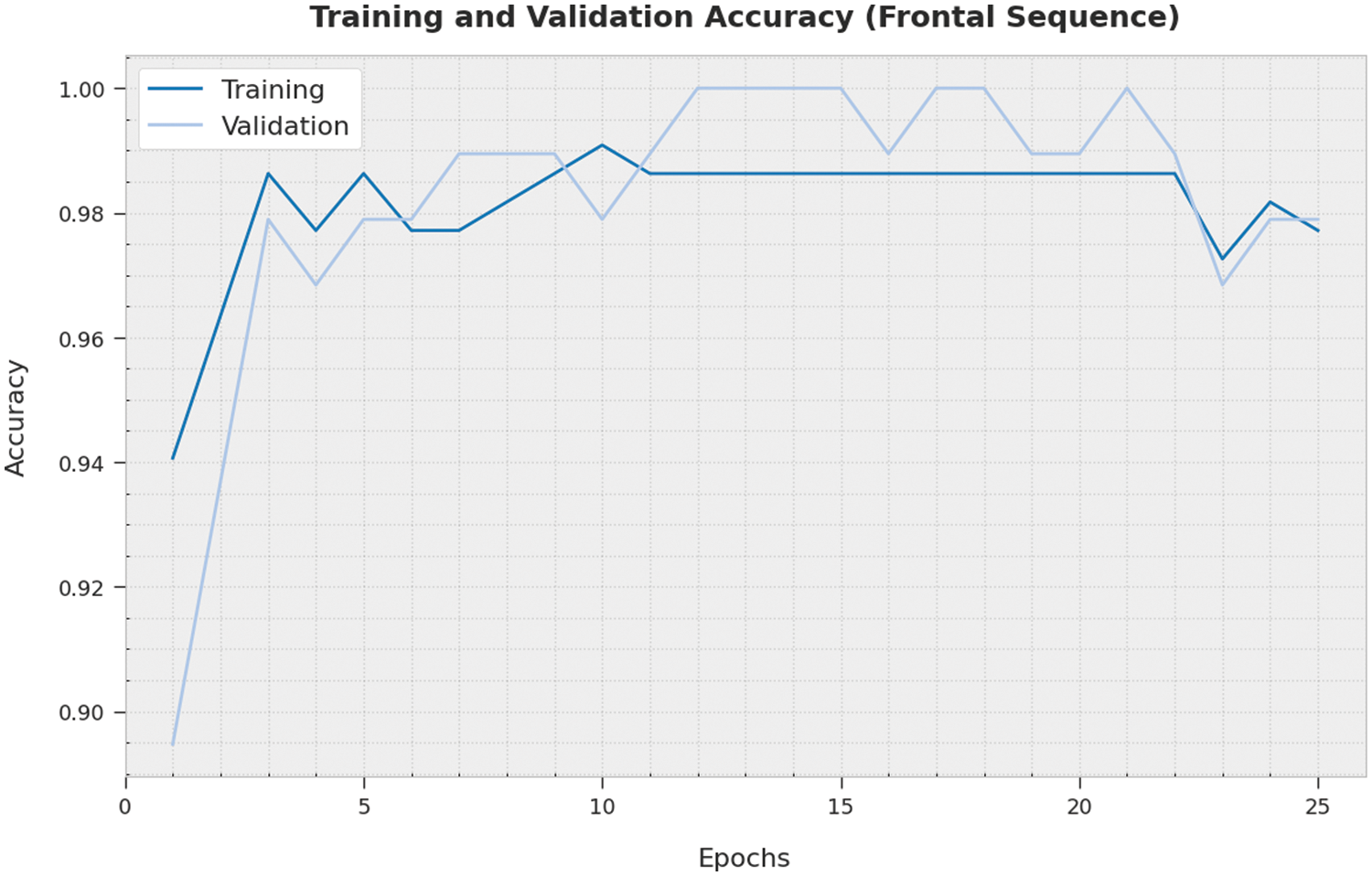
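The opposite and quasi-opposite definitions introduced above can be illustrated using the standard formulation from the QOBL literature: for x in [a, b], the opposite number is a + b − x, and the quasi-opposite number is drawn uniformly between the interval centre c = (a + b)/2 and that opposite number. `qobl_init` sketches quasi-oppositional population initialization for a minimization problem. The function names are hypothetical, and generation jumping (applying the same step during the iterations with some jumping rate) is not shown.

```python
import random


def opposite(x, a, b):
    """Opposite number of x in the interval [a, b]."""
    return a + b - x


def quasi_opposite(x, a, b, rng):
    """Quasi-opposite number: uniform random point between the interval
    centre c = (a + b) / 2 and the opposite number a + b - x."""
    c = (a + b) / 2.0
    xo = opposite(x, a, b)
    return rng.uniform(min(c, xo), max(c, xo))


def qobl_init(f, n, dim, lb, ub, seed=0):
    """Quasi-oppositional initialization: build a random population and its
    quasi-opposite population, then keep the n fittest of the union."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    qo = [[quasi_opposite(v, lb, ub, rng) for v in x] for x in pop]
    return sorted(pop + qo, key=f)[:n]  # ascending objective = best first
```

The returned population can then seed the WOA loop in place of a purely random initialization.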

The proposed model was simulated using Python 3.6.5 and was validated on the Multiple Cameras Fall dataset with frontal sequences [25] and the UR Fall Detection (URFD) dataset with overhead sequences (available at http://fenix.univ.rzeszow.pl/~mkepski/ds/uf.html). Table 1 shows the details of the two datasets.

Fig. 3 shows the confusion matrices generated by the proposed IWODL-FDDP model on the frontal sequences. On run-1, the proposed IWODL-FDDP model identified 71 samples as fall events and 237 samples as no-fall events. On run-3, it classified 74 samples under fall events and 238 samples under no-fall events, and on run-5, it categorized 73 samples under fall events and 238 samples under no-fall events.

Figure 3: Confusion matrices of the IWODL-FDDP approach under frontal sequence dataset (a) Run-1, (b) Run-2, (c) Run-3, (d) Run-4, and (e) Run-5

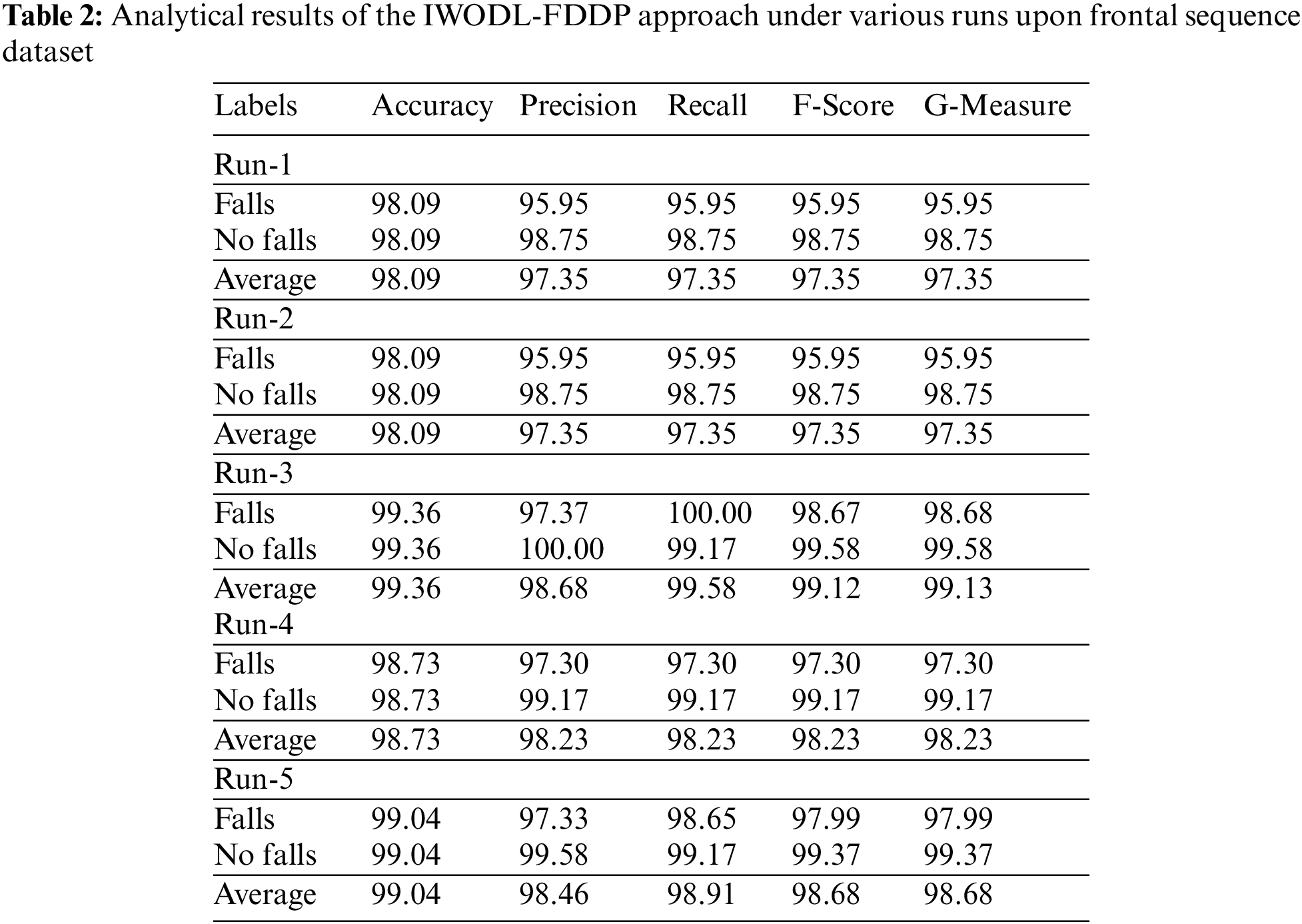
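For reference, the usual binary metrics can be computed from the four cells of a 2 × 2 confusion matrix as sketched below, treating "fall" as the positive class. `macro_average` averages each metric over the fall and no-fall classes; whether the "average" values reported for each run use exactly this macro averaging is an assumption, and the function names are illustrative.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall (sensitivity), specificity and F1
    for the positive class of a 2x2 confusion matrix."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": (tp + tn) / total, "precision": precision,
            "recall": recall, "specificity": specificity, "f1": f1}


def macro_average(tp, fp, fn, tn):
    """Average each metric over the 'fall' and 'no fall' classes."""
    fall = binary_metrics(tp, fp, fn, tn)
    # For the 'no fall' class the roles of the cells swap:
    # its TP is tn, its FP is fn, its FN is fp and its TN is tp.
    no_fall = binary_metrics(tn, fn, fp, tp)
    return {k: (fall[k] + no_fall[k]) / 2 for k in fall}
```

The counts passed to these functions should come from the confusion matrices themselves; the sketch does not reproduce any of the paper's numbers.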

The fall detection outcomes of the proposed IWODL-FDDP model across different runs on the frontal sequence data are given in Table 2 and Fig. 4. The experimental values indicate that the proposed IWODL-FDDP model achieved its maximum performance in each run. For instance, on run-1, the proposed IWODL-FDDP model offered an average

Figure 4: Analytical results of the IWODL-FDDP approach on frontal sequence dataset

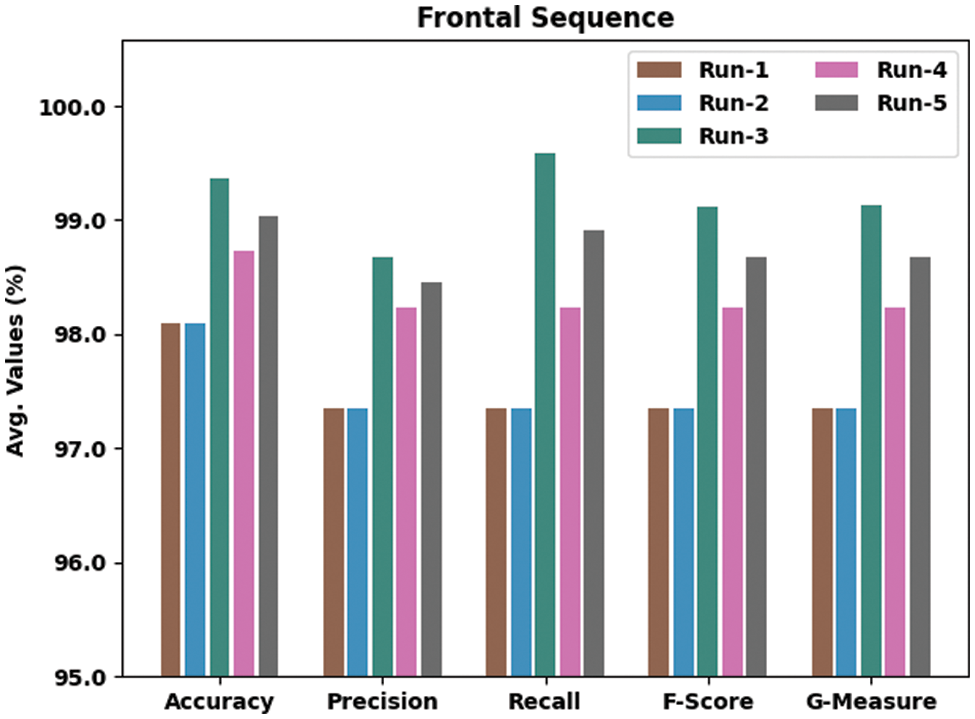

Both the Training Accuracy (TA) and Validation Accuracy (VA) values obtained by the proposed IWODL-FDDP technique on the frontal sequence dataset are illustrated in Fig. 5. The experimental outcomes indicate that the proposed IWODL-FDDP method reached high TA and VA values, with the VA values higher than the TA values.

Figure 5: TA and VA analyses results of the IWODL-FDDP approach on frontal sequence dataset

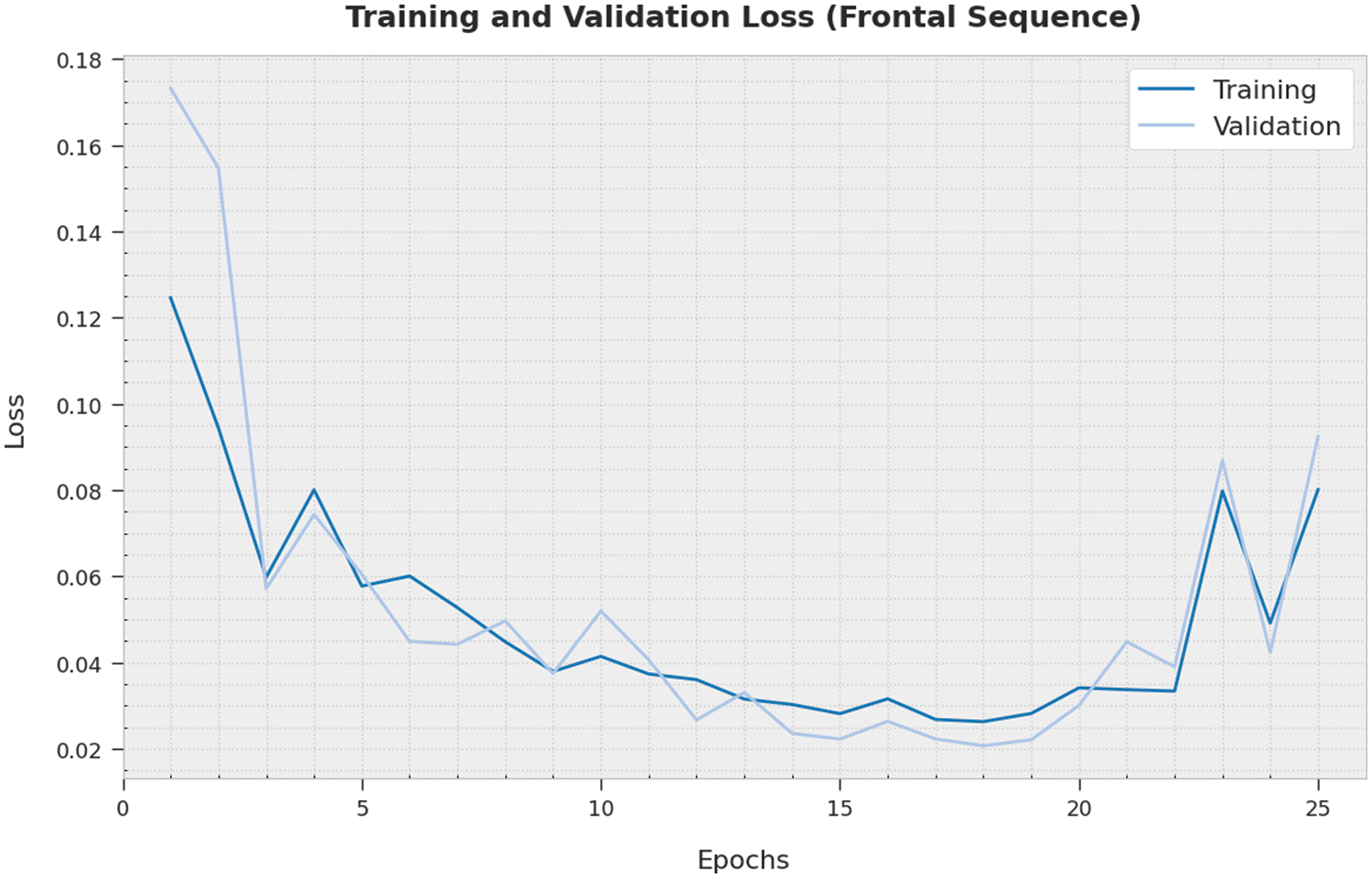

Both the Training Loss (TL) and Validation Loss (VL) values obtained by the proposed IWODL-FDDP technique on the frontal sequence dataset are portrayed in Fig. 6. The experimental outcomes indicate that the proposed IWODL-FDDP methodology achieved low TL and VL values, with the VL values lower than the TL values.

Figure 6: TL and VL analyses results of the IWODL-FDDP approach on frontal sequence dataset

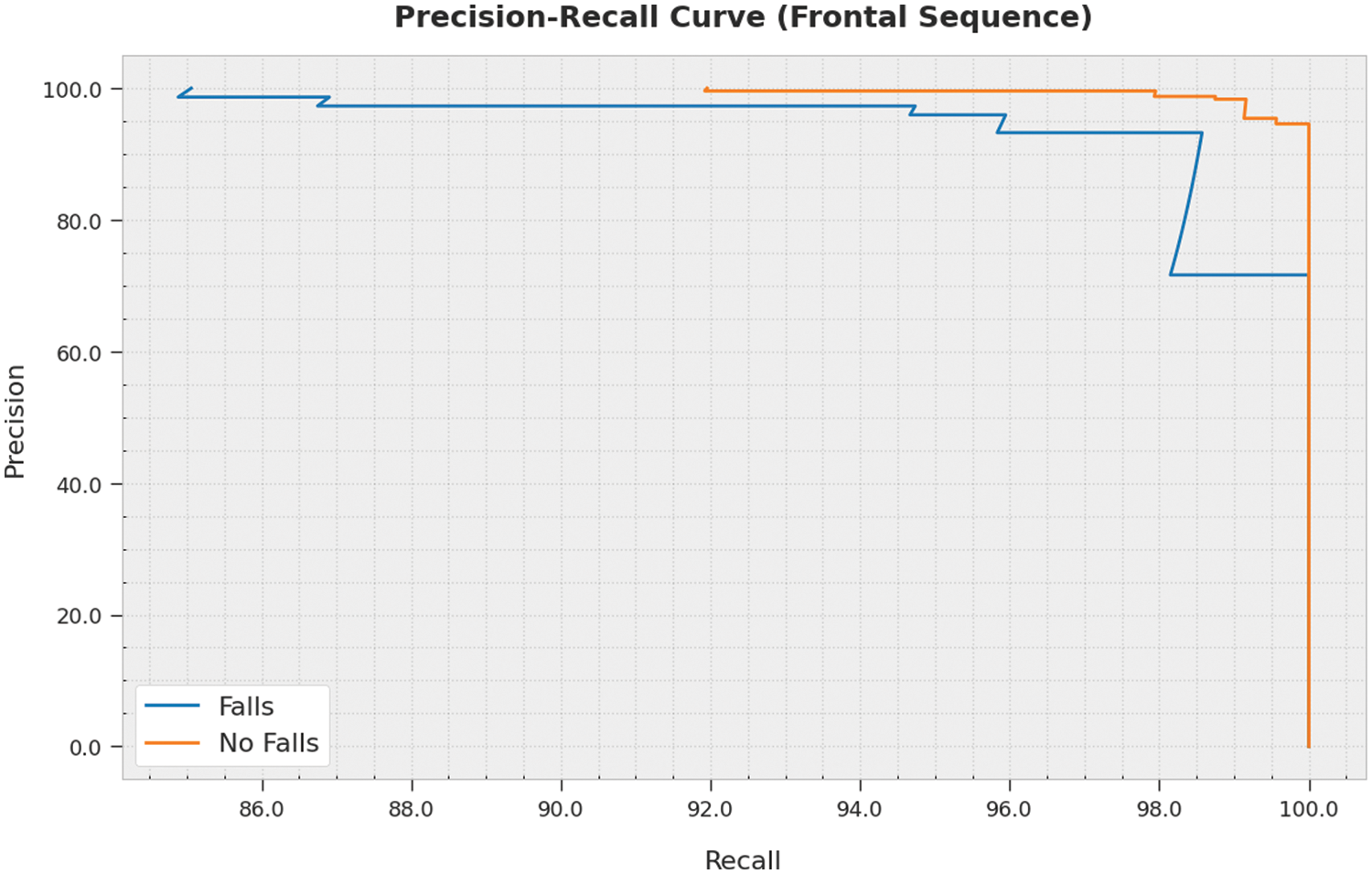

A precision-recall analysis was conducted on the IWODL-FDDP algorithm using the frontal sequence dataset, and the results are shown in Fig. 7. The figure shows that the proposed IWODL-FDDP technique achieved high precision-recall values for all classes.

Figure 7: Precision-recall analysis results of the IWODL-FDDP approach on frontal sequence dataset

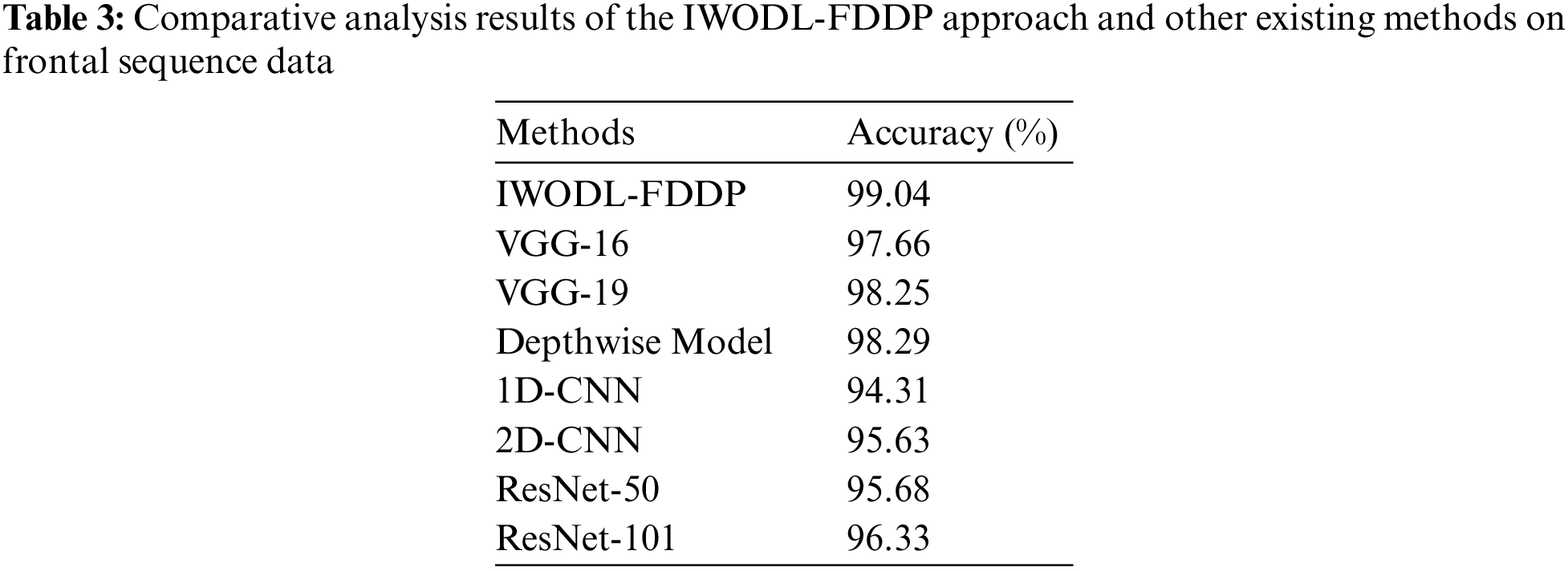
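A precision-recall curve such as the one in Fig. 7 is obtained by sweeping a decision threshold over the classifier's scores. The sketch below assumes a binary fall/no-fall setting with one score per sample (for example, a predicted fall probability) and returns (threshold, precision, recall) at each distinct score; for more classes, the same computation would be repeated one-vs-rest. It assumes at least one positive sample, and the function name is illustrative.

```python
def precision_recall_curve(scores, labels):
    """Precision and recall at every distinct score threshold.

    scores: predicted probability of the positive ('fall') class.
    labels: 1 for fall, 0 for no fall. A sample is predicted positive
    when its score is >= the threshold.
    """
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    total_pos = sum(labels)
    points, tp, fp = [], 0, 0
    for idx, (s, y) in enumerate(pairs):
        tp += y
        fp += 1 - y
        # Emit a point only after the last sample sharing this score,
        # so tied scores are treated as one threshold.
        if idx + 1 == len(pairs) or pairs[idx + 1][0] != s:
            points.append((s, tp / (tp + fp), tp / total_pos))
    return points
```

For scores [0.9, 0.8, 0.4, 0.3] with labels [1, 0, 1, 0], the curve starts at (0.9, 1.0, 0.5) and ends at (0.3, 0.5, 1.0).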

A comparative examination was conducted between the proposed IWODL-FDDP model and other existing models on frontal sequence data and the results are shown in Table 3 and Fig. 8. The results imply that the proposed IWODL-FDDP model accomplished excellent performance with an

Figure 8: Comparative analysis results of the IWODL-FDDP approach on frontal sequence data

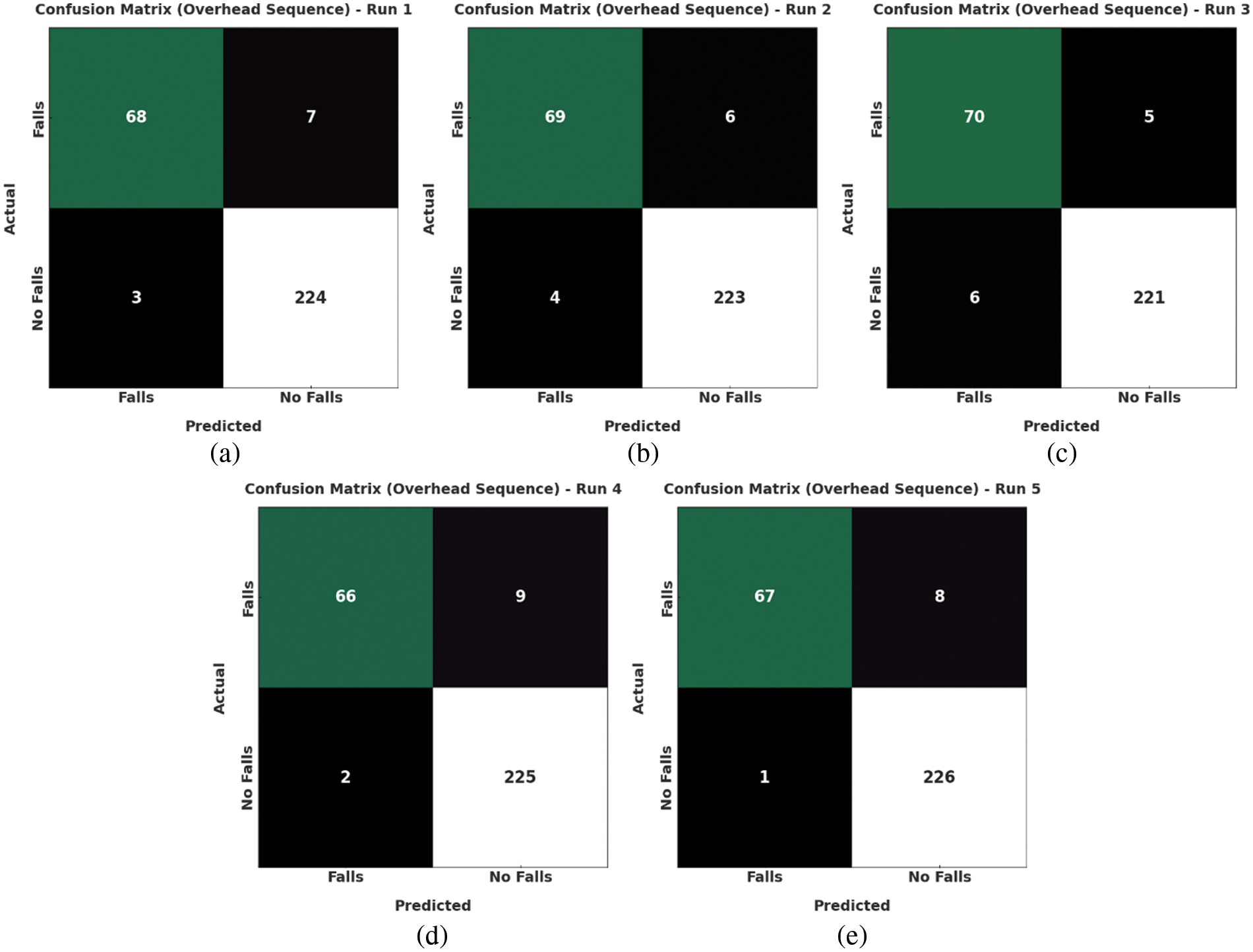

Fig. 9 portrays the confusion matrices generated by the IWODL-FDDP method on the overhead sequences. On run-1, the proposed IWODL-FDDP method identified 71 samples as fall events and 237 samples as no-fall events. On run-3, it classified 74 samples under fall events and 238 samples under no-fall events, and on run-5, it identified 73 samples as fall events and 238 samples as no-fall events.

Figure 9: Confusion matrices of the IWODL-FDDP approach under overhead sequence dataset (a) Run-1, (b) Run-2, (c) Run-3, (d) Run-4, and (e) Run-5

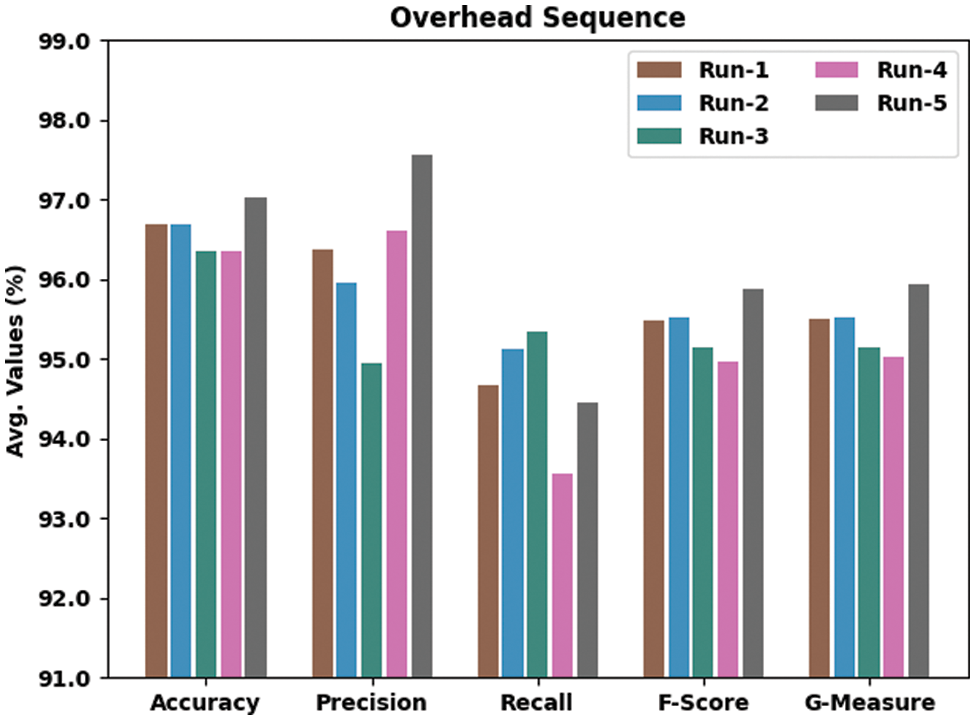

The fall detection outcomes of the proposed IWODL-FDDP technique across different runs on the overhead sequence data are shown in Table 4 and Fig. 10. The experimental values indicate that the proposed IWODL-FDDP approach achieved its maximum performance in each run. For example, on run-1, the proposed IWODL-FDDP methodology rendered an average

Figure 10: Analytical results of the IWODL-FDDP approach on overhead sequence dataset

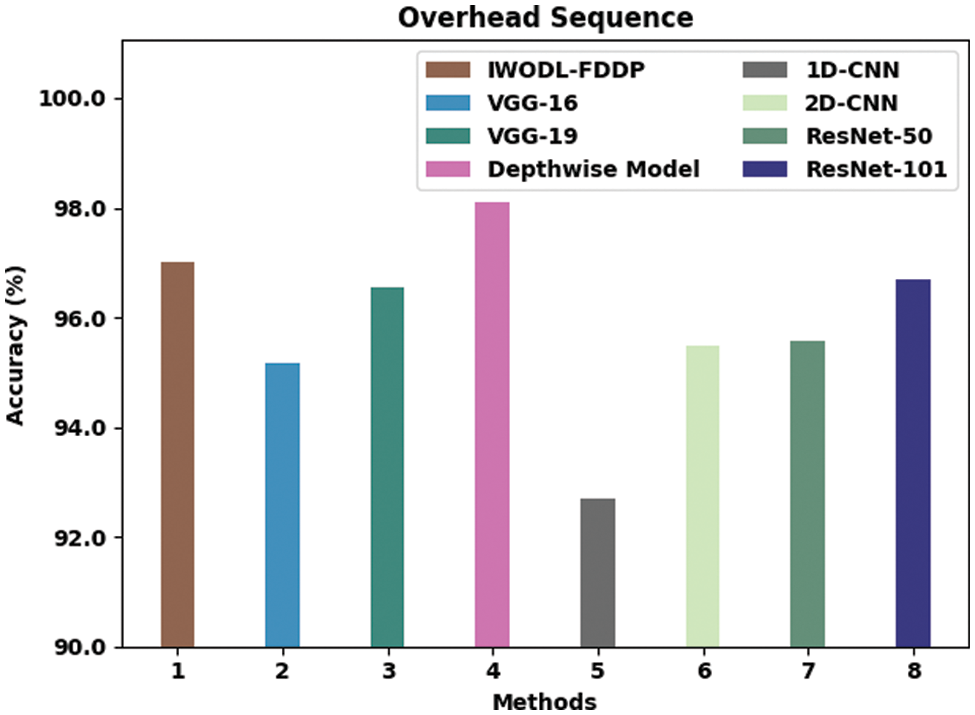

A comparative analysis was conducted between the proposed IWODL-FDDP method and other existing models on the overhead sequence data, and the results are shown in Table 5 and Fig. 11. The results indicate that the proposed IWODL-FDDP methodology accomplished enhanced performance with an

Figure 11: Comparative analysis results of the IWODL-FDDP approach on overhead sequence data

In this study, a new IWODL-FDDP algorithm has been developed for the identification and classification of fall events to assist disabled people. The presented IWODL-FDDP model follows three major processes: pre-processing, feature extraction and fall classification. Initially, the proposed IWODL-FDDP model applies an image filtering approach to pre-process the images. Next, the EfficientNet-B0 model is utilized to generate a useful feature vector set. Then, the BiLSTM model is leveraged for the recognition and classification of fall events. Finally, the IWO method is employed to fine-tune the hyperparameters of the BiLSTM method. The experimental analysis confirmed the superiority of the proposed IWODL-FDDP method over recent approaches. In the future, the proposed method can be integrated into smartphone applications to detect falls in real time.

Funding Statement: The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through Large Groups Project under grant number (158/43). Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R77), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. The authors would like to thank the Deanship of Scientific Research at Umm Al-Qura University for supporting this work by Grant Code: (22UQU4310373DSR52).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. F. Lezzar, D. Benmerzoug and I. Kitouni, “Camera-based fall detection system for the elderly with occlusion recognition,” Applied Medical Informatics, vol. 42, no. 3, pp. 169–179, 2020.

2. F. Hussain, F. Hussain, M. E. ul. Haq and M. A. Azam, “Activity-aware fall detection and recognition based on wearable sensors,” IEEE Sensors Journal, vol. 19, no. 12, pp. 4528–4536, 2019.

3. P. Vallabh and R. Malekian, “Fall detection monitoring systems: A comprehensive review,” Journal of Ambient Intelligence and Humanized Computing, vol. 9, no. 6, pp. 1809–1833, 2018.

4. L. M. Villaseñor, H. Ponce, J. Brieva, E. M. Albor, J. N. Martínez et al., “UP-Fall detection dataset: A multimodal approach,” Sensors, vol. 19, no. 9, pp. 1988, 2019.

5. Y. Nizam, M. Mohd and M. Jamil, “Development of a user-adaptable human fall detection based on fall risk levels using depth sensor,” Sensors, vol. 18, no. 7, pp. 2260, 2018.

6. S. A. Qureshi, S. E. Raza, L. Hussain, A. A. Malibari, M. K. Nour et al., “Intelligent ultra-light deep learning model for multi-class brain tumor detection,” Applied Sciences, vol. 12, no. 8, pp. 1–22, 2022.

7. A. S. Almasoud, S. B. Haj Hassine, F. N. Al-Wesabi, M. K. Nour, A. M. Hilal et al., “Automated multi-document biomedical text summarization using deep learning model,” Computers, Materials & Continua, vol. 71, no. 3, pp. 5799–5815, 2022.

8. T. Xu, Y. Zhou and J. Zhu, “New advances and challenges of fall detection systems: A survey,” Applied Sciences, vol. 8, no. 3, pp. 418, 2018.

9. A. Mustafa Hilal, I. Issaoui, M. Obayya, F. N. Al-Wesabi, N. Nemri et al., “Modeling of explainable artificial intelligence for biomedical mental disorder diagnosis,” Computers, Materials & Continua, vol. 71, no. 2, pp. 3853–3867, 2022.

10. W. Chen, Z. Jiang, H. Guo and X. Ni, “Fall detection based on key points of human-skeleton using openpose,” Symmetry, vol. 12, no. 5, pp. 744, 2020.

11. M. Al Duhayyim, H. M. Alshahrani, F. N. Al-Wesabi, M. A. Al-Hagery, A. M. Hilal et al., “Intelligent machine learning based EEG signal classification model,” Computers, Materials & Continua, vol. 71, no. 1, pp. 1821–1835, 2022.

12. I. Pang, Y. Okubo, D. Sturnieks, S. R. Lord and M. A. Brodie, “Detection of near falls using wearable devices: A systematic review,” Journal of Geriatric Physical Therapy, vol. 42, no. 1, pp. 48–56, 2019.

13. M. Baig, S. Afifi, H. G. Hosseini and F. Mirza, “A systematic review of wearable sensors and IoT-based monitoring applications for older adults—A focus on ageing population and independent living,” Journal of Medical Systems, vol. 43, no. 8, Art. no. 233, pp. 1–13, 2019.

14. S. A. Shah and F. Fioranelli, “RF sensing technologies for assisted daily living in healthcare: A comprehensive review,” IEEE Aerospace and Electronic Systems Magazine, vol. 34, no. 11, pp. 26–44, 2019.

15. O. Kerdjidj, N. Ramzan, K. Ghanem, A. Amira and F. Chouireb, “Fall detection and human activity classification using wearable sensors and compressed sensing,” Journal of Ambient Intelligence and Humanized Computing, vol. 11, no. 1, pp. 349–361, 2020.

16. E. Casilari, R. L. Rivera and F. G. Lagos, “A study on the application of convolutional neural networks to fall detection evaluated with multiple public datasets,” Sensors, vol. 20, no. 5, pp. 1466, 2020.

17. J. S. Ramón, E. Casilari and J. C. García, “Analysis of a smartphone-based architecture with multiple mobility sensors for fall detection with supervised learning,” Sensors, vol. 18, no. 4, pp. 1155, 2018.

18. T. de Quadros, A. E. Lazzaretti and F. K. Schneider, “A movement decomposition and machine learning-based fall detection system using wrist wearable device,” IEEE Sensors Journal, vol. 18, no. 12, pp. 5082–5089, 2018.

19. C. Khraief, F. Benzarti and H. Amiri, “Elderly fall detection based on multi-stream deep convolutional networks,” Multimedia Tools and Applications, vol. 79, no. 27–28, pp. 19537–19560, 2020.

20. C. Dong, C. C. Loy, K. He and X. Tang, “Image super-resolution using deep convolutional networks,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 2, pp. 295–307, 2016.

21. E. Luz, P. Silva, R. Silva, L. Silva, J. Guimarães et al., “Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images,” Research on Biomedical Engineering, vol. 38, no. 1, pp. 149–162, 2021.

22. M. Gao, G. Shi and S. Li, “Online prediction of ship behavior with automatic identification system sensor data using bidirectional long short-term memory recurrent neural network,” Sensors, vol. 18, no. 12, pp. 4211, 2018.

23. J. Nasiri and F. Khiyabani, “A whale optimization algorithm (WOA) approach for clustering,” Cogent Mathematics & Statistics, vol. 5, no. 1, pp. 1483565, 2018.

24. S. Y. Park and J. J. Lee, “Stochastic opposition-based learning using a beta distribution in differential evolution,” IEEE Transactions on Cybernetics, vol. 46, no. 10, pp. 2184–2194, 2016.

25. E. Auvinet, C. Rougier, J. Meunier, A. S. Arnaud and J. Rousseau, “Multiple cameras fall dataset,” DIRO-Université de Montréal, Montreal, QC, Canada, Tech. Rep. 1350, 2010.


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
