Open Access

ARTICLE

Parameter-Tuned Deep Learning-Enabled Activity Recognition for Disabled People

Department of Computer Science, College of Sciences and Humanities-Aflaj, Prince Sattam Bin Abdulaziz University, Al-Aflaj, 16273, Saudi Arabia

* Corresponding Author: Mesfer Al Duhayyim. Email:

Computers, Materials & Continua 2023, 75(3), 6287-6303. https://doi.org/10.32604/cmc.2023.033045

Received 06 June 2022; Accepted 07 July 2022; Issue published 29 April 2023

Abstract

Elderly or disabled people can be supported by a human activity recognition (HAR) system that monitors their activity patterns and intervenes when their behavior changes or a critical event occurs. An automated HAR system could help these persons live more independently. Providing appropriate and accurate data about the activity is the most crucial computational task in an activity recognition system. With the fast development of neural networks, computing, and machine learning algorithms, HAR systems based on wearable sensors have gained popularity in several areas, such as medical services, smart homes, human-computer interaction, security systems, healthcare for the elderly, industrial mechanization, robot monitoring, athlete training monitoring, and rehabilitation. In this view, this study develops an improved pelican optimization with deep transfer learning enabled HAR (IPODTL-HAR) system for disabled persons. The major goal of the IPODTL-HAR method is to recognize the human activities of disabled persons and improve their quality of living. The presented IPODTL-HAR model first applies data pre-processing to improve the quality of the data. Next, the EfficientNet model is applied to derive a useful set of feature vectors, and its hyperparameters are adjusted using the Nadam optimizer. Finally, the IPO with deep belief network (DBN) model is utilized for the recognition and classification of human activities. The Nadam optimizer and the IPO algorithm help tune the hyperparameters of the EfficientNet and DBN models, respectively. The IPODTL-HAR method is validated experimentally on a benchmark dataset. An extensive comparison study highlights the improvement of the IPODTL-HAR model over recent state-of-the-art HAR approaches in terms of different measures.

Human Activity Recognition (HAR) has recently emerged as an effective method to assist disabled persons. Owing to the availability of accelerometers and other sensors, low energy utilization, minimal cost, and developments in artificial intelligence (AI), computer vision (CV), the Internet of Things (IoT), and machine learning (ML), several applications have been built around human-centered design monitoring for recognizing, detecting, and categorizing human behaviors, and researchers have offered numerous techniques on this topic [1]. HAR has become a necessary tool for monitoring a person's dynamics, and it can be built with ML techniques. HAR refers to a technique that automatically analyzes and recognizes human activities from data obtained from wearable devices and smartphone sensors, such as accelerometers, gyroscopes, location, time, and other environmental sensors [2]. When combined with other technologies, such as IoT, it is used in a wide range of application areas, such as sports, industry, and healthcare. A World Health Organization (WHO) survey stated that nearly 650 million working-age individuals across the globe were disabled [3]. In addition, there are over six million people with disabilities in Indonesia, according to research carried out by Survei Sosial Ekonomi Nasional (Susenas) in 2012. There are presently inadequate amenities to meet the needs of people with disabilities [4]. One such need is a companion to monitor their actions. Fig. 1 depicts the role of AI in assisting disabled people.

Figure 1: Role of AI in disabled people

In recent times, smartphones equipped with various embedded sensors, such as gyroscopes and accelerometers, have been used as an alternative platform for HAR [5]. A smartphone-based HAR mechanism is an ML method that is deployed on the subject’s smartphone and continuously detects his or her actions while the smartphone is attached to a part of the body [6]. This approach takes advantage of current smartphone computing resources to build a real-time mechanism. In general, such systems are developed in four basic steps: data collection, windowing, feature extraction, and classification. Feature extraction can be regarded as the most critical step, as it determines the model’s overall performance [7]. This step is carried out using either conventional ML or deep learning (DL) techniques. In classical ML techniques, field experts manually extract handcrafted or heuristic features in the time and frequency domains. Time domain features include the standard deviation, mean, max, min, correlation, and so on. Frequency domain features include time between peaks, energy, entropy, and many more. However, handcrafted features have certain restrictions in both domains. Firstly, they depend on human experience and field knowledge [8]. This knowledge can be helpful for some problems with particular settings; however, it cannot be generalized to the same problem with different settings [9]. Moreover, human experience is typically used to extract only shallow features, such as statistical data, which fail to distinguish between activities with approximately similar patterns (like standing and sitting in HAR). Numerous studies have employed conventional ML techniques to build smartphone-based HAR [10]. To overcome these limitations, DL techniques can be utilized. In DL techniques, the features are learned automatically using more than one hidden layer rather than extracted manually by a field specialist.
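The windowing and handcrafted feature steps described above can be illustrated with a minimal sketch. It assumes a single synthetic accelerometer axis sampled at 50 Hz; the function names, window length, and step size are illustrative choices rather than settings taken from this study.

```python
import numpy as np

def sliding_windows(signal, window_size, step):
    """Split a 1-D signal into overlapping fixed-length windows."""
    starts = range(0, len(signal) - window_size + 1, step)
    return np.stack([signal[s:s + window_size] for s in starts])

def handcrafted_features(window):
    """Return a few time- and frequency-domain statistics for one window."""
    centered = window - window.mean()
    power = np.abs(np.fft.rfft(centered)) ** 2       # power spectrum
    energy = power.sum() / len(window)               # spectral energy
    p = power / (power.sum() + 1e-12)                # normalised spectrum
    entropy = -np.sum(p * np.log2(p + 1e-12))        # spectral entropy
    return np.array([window.mean(), window.std(),
                     window.min(), window.max(), energy, entropy])

# Synthetic stand-in for one accelerometer axis (assumed 50 Hz sampling).
rng = np.random.default_rng(0)
t = np.arange(512) / 50.0
accel_x = np.sin(2 * np.pi * 2.0 * t) + 0.1 * rng.standard_normal(t.size)

windows = sliding_windows(accel_x, window_size=128, step=64)
features = np.apply_along_axis(handcrafted_features, 1, windows)
print(windows.shape, features.shape)
```

Each row of `features` is the kind of shallow statistical descriptor that a classical ML classifier would consume, which is what the DL approach replaces with learned representations.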

Yadav et al. [11] developed an efficient activity recognition and fall detection system (ARFDNet). Here, the raw RGB video is passed to a pose estimation system to extract skeleton features. The skeleton coordinates are then preprocessed and fed in a sliding-window manner to a specially designed network of convolutional neural network (CNN) and gated recurrent unit (GRU) layers to learn the spatio-temporal dynamics present in the data. In [12], the authors proposed Wi-Sense, a HAR scheme that uses a CNN to identify human activity on the basis of an environment-independent fingerprint derived from the Wi-Fi channel state information (CSI). Firstly, Wi-Sense captures the CSI through a typical Wi-Fi network interface card. Wi-Sense then employs the CSI ratio model to reduce the noise and the effect of the phase offset. Additionally, it employs principal component analysis (PCA) to remove data redundancy. Basly et al. [13] developed a deep temporal residual system for HAR that aims to improve spatio-temporal features and thereby enhance system performance. They adopted a deep residual convolutional neural network (RCN) to preserve discriminative visual features related to appearance and a long short-term memory neural network (LSTM-NN) to capture the long-term temporal evolution of actions.

In [14], different textural characteristics, such as point features, the grey-level co-occurrence matrix, and local binary pattern accelerated powerful features, are retrieved from the video activity, and classifiers such as k-nearest neighbor (KNN), probabilistic neural network (PNN), and support vector machine (SVM), as well as the presented classifiers, are utilized to classify the activity. In [15], an architecture was proposed and tested to construct a precision-centric HAR model by examining the information obtained from a Personalized Positions Detection Scheme (PPDS) and an Environment Monitoring Scheme (EMS) using ML techniques, namely AdaBoost, SVM, and PNN. Furthermore, the presented technique employs Dempster-Shafer Theory (DST)-based sensor data fusion, thus improving overall HAR performance.

This study develops an improved pelican optimization with deep transfer learning enabled HAR (IPODTL-HAR) system for disabled persons. The major goal of the IPODTL-HAR method is to recognize the human activities of disabled persons and improve their quality of living. The presented IPODTL-HAR model first applies data pre-processing to improve the quality of the data. Next, the EfficientNet model is applied to derive a useful set of feature vectors, and its hyperparameters are adjusted using the Nadam optimizer. Finally, the IPO with deep belief network (DBN) technique is utilized for the recognition and classification of human activities. The Nadam optimizer and the IPO algorithm help tune the hyperparameters of the EfficientNet and DBN models, respectively. The IPODTL-HAR method is validated experimentally on a benchmark dataset.

The rest of the paper is organized as follows. Section 2 introduces the proposed model and Section 3 discusses the performance validation. Finally, Section 4 concludes the study.

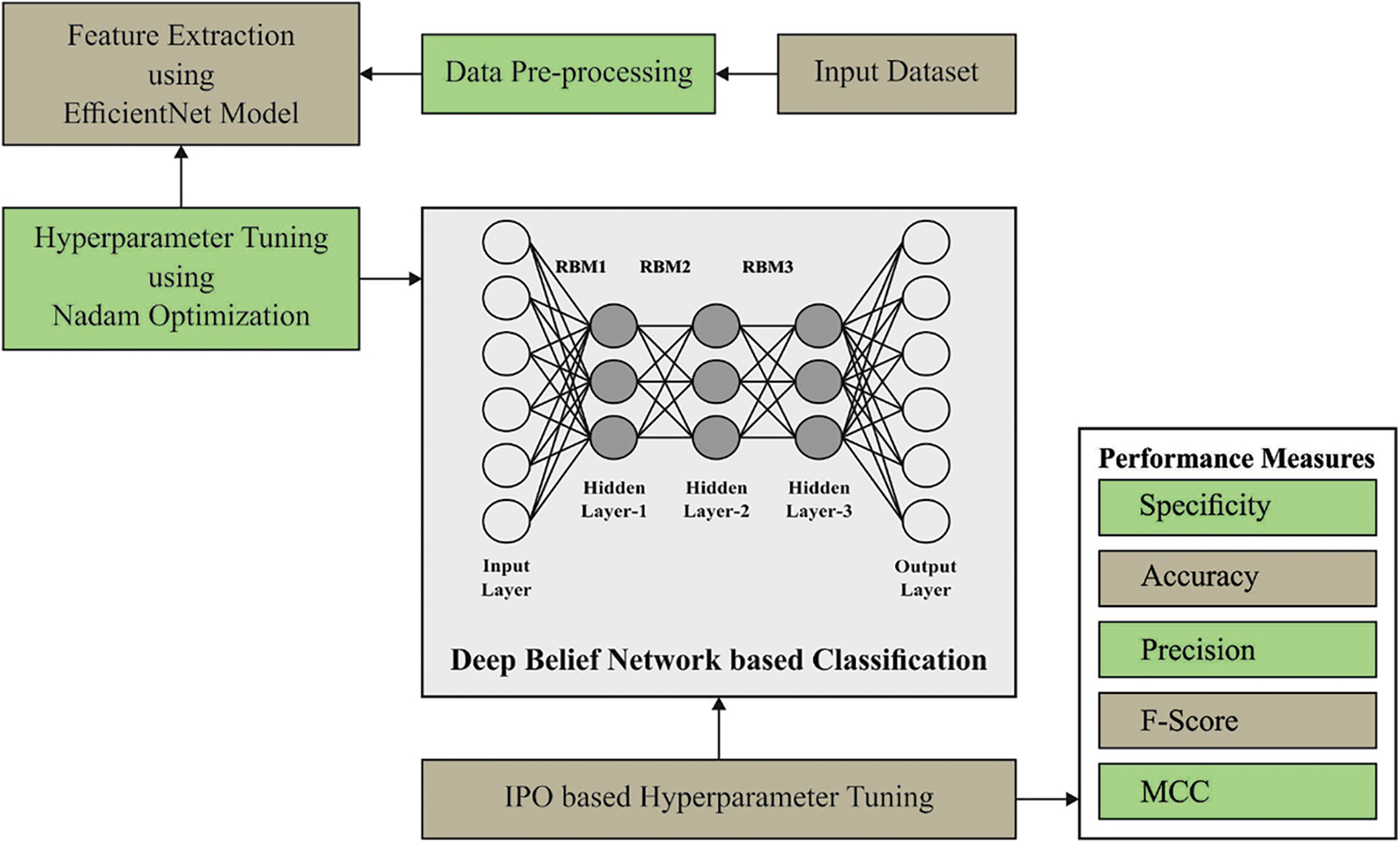

In this study, a new IPODTL-HAR model is introduced to recognize the human activities of disabled persons and improve their quality of living. The presented IPODTL-HAR model first applies data pre-processing to improve the data quality. Next, the EfficientNet method is applied to derive a useful set of feature vectors, and its hyperparameters are adjusted using the Nadam optimizer. Finally, the IPO with DBN model is utilized for the recognition and classification of human activities. Fig. 2 shows the block diagram of the IPODTL-HAR approach.

Figure 2: Block diagram of IPODTL-HAR approach

2.1 Feature Extraction: EfficientNet Model

In this study, the EfficientNet model is utilized to produce a collection of feature vectors. Transfer learning is faster than training a model from scratch, overcomes data limitations such as those in healthcare, increases performance, and is computationally inexpensive [16]. In this work, the pre-trained convolutional neural network (CNN) model that is fine-tuned is EfficientNet. In 2019, Google proposed a multi-dimensional compound model scaling mechanism (the EfficientNet family of networks) that has received considerable interest in academia. To explore a model scaling mechanism that balances accuracy and speed, the EfficientNet family simultaneously scales three dimensions of the network: depth, width, and resolution. The input is a three-channel color image with height H and width W. The network can improve its efficiency in three ways: by varying the number of channels in the convolutional layers (width), by proportionally reducing or enlarging the size of the input images (resolution), and by increasing or decreasing the number of network layers (depth) so that it learns more explicit feature information. In EfficientNet, a compound coefficient is used to scale these three dimensions concurrently, thus enhancing the total network performance.
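The compound scaling idea can be sketched numerically. The example below uses the base coefficients reported in the original EfficientNet paper (depth 1.2, width 1.1, resolution 1.15); the function names and the 224-pixel base resolution are assumptions for illustration, and the released EfficientNet variants use hand-adjusted resolutions that differ from these nominal values.

```python
# Base coefficients reported for EfficientNet in the original paper.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution

def compound_scale(phi, base_depth=1.0, base_width=1.0, base_resolution=224):
    """Scale depth, width and resolution together with one coefficient phi."""
    depth = base_depth * ALPHA ** phi
    width = base_width * BETA ** phi
    resolution = int(round(base_resolution * GAMMA ** phi))
    return depth, width, resolution

def flops_multiplier(phi):
    """Approximate FLOPs growth, proportional to depth * width^2 * resolution^2."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi

for phi in range(4):
    print(phi, compound_scale(phi), round(flops_multiplier(phi), 2))
```

Because the product of the depth coefficient and the squared width and resolution coefficients is close to 2, each unit increase of the compound coefficient roughly doubles the computational cost.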

For hyperparameter tuning of the EfficientNet model, the Nadam optimizer is utilized. The Nadam optimizer incorporates Nesterov acceleration into adaptive moment estimation (Adam) [17]. The great advantage of this technique is that it takes a more accurate step in the gradient direction by updating the parameters with the momentum step before the gradient is computed, as demonstrated in the following equation:

where
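As a point of reference, one widely used statement of the Nadam update is given below. The notation is an assumption and may differ from the author's: $g_t$ is the gradient at step $t$, $m_t$ and $v_t$ are the first and second moment estimates, $\beta_1$ and $\beta_2$ are their decay rates, $\eta$ is the learning rate, and $\epsilon$ is a small constant for numerical stability.

```latex
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t, \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^{2}, \\
\hat{m}_t &= \frac{m_t}{1-\beta_1^{t}}, \qquad
\hat{v}_t = \frac{v_t}{1-\beta_2^{t}}, \\
\theta_{t+1} &= \theta_t - \frac{\eta}{\sqrt{\hat{v}_t}+\epsilon}
\left(\beta_1 \hat{m}_t + \frac{(1-\beta_1)\, g_t}{1-\beta_1^{t}}\right).
\end{aligned}
```

Compared with Adam, the only change is in the last line, where the look-ahead term $\beta_1 \hat{m}_t$ is combined with the current gradient instead of using $\hat{m}_t$ alone.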

2.2 Activity Classification: DBN Model

For the recognition and classification of human activities, the DBN technique is utilized. A DBN is a neural network composed of multiple restricted Boltzmann machines (RBMs) [18]. The basic RBM unit is an energy-based model.

The energy of RBM is defined using state

where,

The marginal likelihood distribution of

If

From the above equation, the activation function is denoted by the term
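For orientation, the standard textbook form of a binary RBM is summarized below. The symbols are assumptions and need not match the author's notation: $v_i$ and $h_j$ are visible and hidden units, $a_i$ and $b_j$ their biases, $w_{ij}$ the connection weights, and $\sigma$ the sigmoid activation function.

```latex
\begin{aligned}
E(v,h) &= -\sum_{i} a_i v_i - \sum_{j} b_j h_j - \sum_{i}\sum_{j} v_i\, w_{ij}\, h_j, \\
P(v) &= \frac{1}{Z}\sum_{h} e^{-E(v,h)}, \qquad Z = \sum_{v}\sum_{h} e^{-E(v,h)}, \\
P(h_j = 1 \mid v) &= \sigma\!\Big(b_j + \sum_{i} v_i\, w_{ij}\Big), \qquad
P(v_i = 1 \mid h) = \sigma\!\Big(a_i + \sum_{j} w_{ij}\, h_j\Big), \\
\sigma(x) &= \frac{1}{1+e^{-x}}.
\end{aligned}
```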

DBN feature extraction is a layer-wise learning mechanism over multiple RBMs, involving forward learning and reverse reconstruction. A DBN maps complicated signals to outputs and has good feature extraction capability.

A DBN addresses the optimization problem of deep neural networks through layer-wise training, which gives the network good initial weights, so that the network can reach the optimum solution once it is fine-tuned.
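The layer-wise training described above can be sketched with a small NumPy example. This is a minimal illustration assuming binary RBMs trained with one step of contrastive divergence (CD-1) on random toy data; the class and function names, layer sizes, and learning rate are hypothetical and are not the configuration used in this study.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class RBM:
    def __init__(self, n_visible, n_hidden, rng):
        self.W = 0.01 * rng.standard_normal((n_visible, n_hidden))
        self.a = np.zeros(n_visible)   # visible biases
        self.b = np.zeros(n_hidden)    # hidden biases
        self.rng = rng

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.a)

    def cd1_step(self, v0, lr=0.1):
        """One CD-1 update on a batch; returns the reconstruction error."""
        ph0 = self.hidden_probs(v0)                          # forward learning
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)
        v1 = self.visible_probs(h0)                          # reverse reconstruction
        ph1 = self.hidden_probs(v1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ ph0 - v1.T @ ph1) / n
        self.a += lr * (v0 - v1).mean(axis=0)
        self.b += lr * (ph0 - ph1).mean(axis=0)
        return float(np.mean((v0 - v1) ** 2))

def pretrain_dbn(data, layer_sizes, epochs=20, seed=0):
    """Greedy layer-wise pre-training: each RBM learns from the layer below."""
    rng = np.random.default_rng(seed)
    rbms, layer_input = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(layer_input.shape[1], n_hidden, rng)
        for _ in range(epochs):
            rbm.cd1_step(layer_input)
        rbms.append(rbm)
        layer_input = rbm.hidden_probs(layer_input)  # features for next layer
    return rbms, layer_input

# Random binary toy data standing in for extracted feature vectors.
toy = (np.random.default_rng(1).random((64, 12)) < 0.5).astype(float)
rbms, top_features = pretrain_dbn(toy, layer_sizes=[8, 4])
print(top_features.shape)
```

The weights obtained this way would serve as the initial weights of the deep network, which is then fine-tuned with labels for classification.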

2.3 Hyperparameter Tuning: IPO Algorithm

To tune the hyperparameters related to the DBN technique, the IPO algorithm is utilized. The PO algorithm is a recent metaheuristic optimization technique inspired by the hunting behavior of pelicans [19]. Fast convergence, simple calculation, and few adjustment parameters are the benefits of the algorithm. Pelicans are found in warm waters and primarily live in swamps, lakes, rivers, and coasts. Pelicans generally live in flocks and are good at swimming and flying. They have excellent observation skills and sharp eyesight in flight, and they mainly feed on fish. When pelicans find a school of fish, they arrange themselves in a

(1) Initialization: Assume that there exist

In Eq. (9),

In Eq. (10),

(2) Moving towards prey: Here, the pelican locates the prey and rushes toward it from a higher altitude. The random distribution of prey in the search space increases the exploration capability, and the update of the pelicans’ positions at every iteration is determined as follows

In Eq. (11), the current iteration number is represented as

(3) Winging on the water surface: After the pelicans reach the water surface, they spread their wings over the water to drive the fish upward and then collect the prey in their throat pouches. The mathematical formulation of this hunting behavior is given in the following

In Eq. (12), existing amount of iterations is represented by
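The three steps above (initialization, moving towards prey, winging on the water surface) can be sketched as follows. This is a minimal illustration that follows the commonly cited formulation of the basic pelican optimization algorithm, which may differ in detail from the equations used by the author; the constant 0.2, the random factor taking the value 1 or 2, the population size, and the sphere test function are all assumptions.

```python
import random

def pelican_optimize(objective, lower, upper, dim,
                     n_pelicans=20, max_iter=100, seed=0):
    """Minimise `objective` over a box with a basic pelican optimization loop."""
    rng = random.Random(seed)
    clip = lambda x: min(max(x, lower), upper)
    # (1) Initialization: uniform random positions inside the bounds.
    pop = [[rng.uniform(lower, upper) for _ in range(dim)]
           for _ in range(n_pelicans)]
    fit = [objective(p) for p in pop]
    for t in range(1, max_iter + 1):
        # The prey is placed at random in the search space each iteration.
        prey = [rng.uniform(lower, upper) for _ in range(dim)]
        prey_fit = objective(prey)
        for i in range(n_pelicans):
            # (2) Moving towards prey (exploration).
            factor = rng.choice((1, 2))
            if prey_fit < fit[i]:
                cand = [clip(x + rng.random() * (p - factor * x))
                        for x, p in zip(pop[i], prey)]
            else:
                cand = [clip(x + rng.random() * (x - p))
                        for x, p in zip(pop[i], prey)]
            cand_fit = objective(cand)
            if cand_fit < fit[i]:            # keep only improvements
                pop[i], fit[i] = cand, cand_fit
            # (3) Winging on the water surface (exploitation):
            # a local search whose radius shrinks as iterations proceed.
            radius = 0.2 * (1 - t / max_iter)
            cand = [clip(x + radius * (2 * rng.random() - 1) * x)
                    for x in pop[i]]
            cand_fit = objective(cand)
            if cand_fit < fit[i]:
                pop[i], fit[i] = cand, cand_fit
    best = min(range(n_pelicans), key=lambda i: fit[i])
    return pop[best], fit[best]

def sphere(x):
    """Toy objective with its minimum at the origin."""
    return sum(v * v for v in x)

best_x, best_f = pelican_optimize(sphere, -5.0, 5.0, dim=3)
print(best_x, best_f)
```

Because a candidate replaces a pelican only when it improves the objective, the best fitness never gets worse from one iteration to the next.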

The PO approach is enhanced to further improve its optimization efficacy. The specific improvement strategies are as follows.

(1) Initialization strategy: The Tent chaotic map is used in place of random generation to initialize the pelicans. With the Tent chaotic mapping, Eq. (10) is rewritten as:

From the expression,

In this phase, the pelican position can be initialized by the Tent chaotic map that assists in improving the global search performance.

(2) Moving towards prey: In the phase, the dynamic weight factor
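The Tent chaotic initialization strategy in item (1) can be sketched as follows. The example uses a skewed Tent map with breakpoint 0.7 and seed value 0.37; both values, and the function names, are assumptions chosen for illustration, and the exact map used by the author may differ.

```python
def tent_sequence(length, z0=0.37, a=0.7):
    """Generate a chaotic sequence in [0, 1] with a skewed Tent map."""
    values, z = [], z0
    for _ in range(length):
        z = z / a if z < a else (1.0 - z) / (1.0 - a)
        values.append(z)
    return values

def tent_initialize(n_pelicans, dim, lower, upper, z0=0.37):
    """Map the chaotic sequence onto the search bounds, one value per coordinate."""
    chaos = tent_sequence(n_pelicans * dim, z0=z0)
    return [[lower + chaos[i * dim + j] * (upper - lower) for j in range(dim)]
            for i in range(n_pelicans)]

population = tent_initialize(n_pelicans=5, dim=3, lower=-5.0, upper=5.0)
for pelican in population:
    print([round(x, 3) for x in pelican])
```

The resulting population can replace the uniform random initialization of the basic algorithm, with the aim of spreading the initial positions more evenly over the search space.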

The IPO approach derives a fitness function to attain improved classifier outcomes. It determines a positive integer that denotes the quality of the candidate solutions. In this paper, minimization of the classifier error rate is taken as the fitness function, as shown in Eq. (16).
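A fitness function of this kind can be sketched as the percentage of misclassified samples. The function name and the toy labels below are illustrative assumptions, not data from this study.

```python
def classifier_error_rate(y_true, y_pred):
    """Fitness to minimise: percentage of misclassified samples."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return 100.0 * wrong / len(y_true)

# Toy labels: 2 of the 8 predictions are wrong.
y_true = [0, 1, 2, 2, 1, 0, 3, 3]
y_pred = [0, 1, 2, 1, 1, 0, 3, 2]
fitness = classifier_error_rate(y_true, y_pred)
print(fitness)  # 25.0
```

In a tuning loop, each candidate hyperparameter set would be scored by training the classifier and passing its predictions on held-out data to this function, with lower values indicating better candidates.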

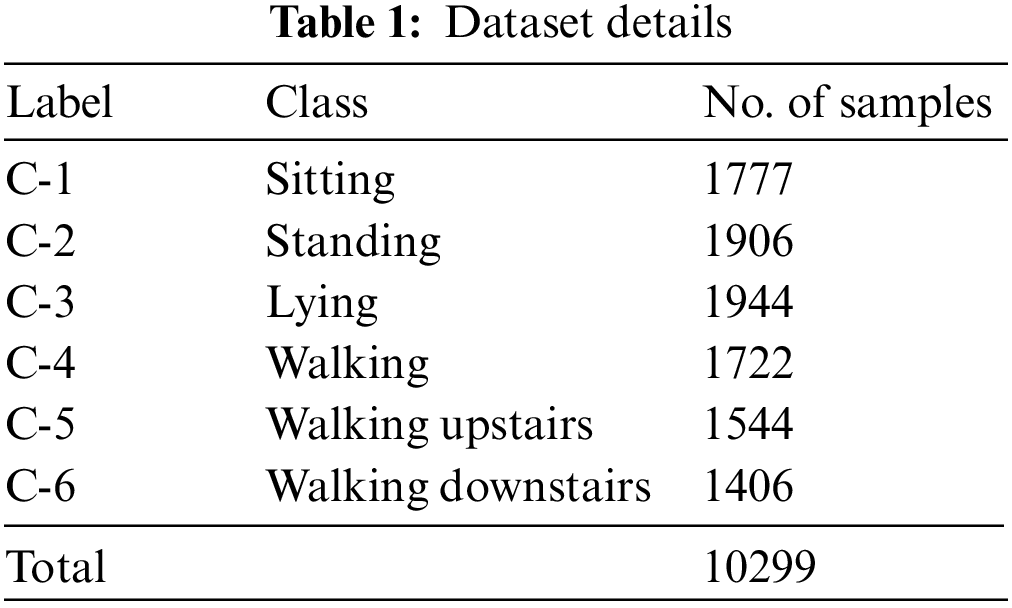

This section examines the activity recognition performance of the IPODTL-HAR model using a dataset comprising 10299 samples. The dataset includes samples under six class labels, as given in Table 1. The IPODTL-HAR method is simulated using Python 3.6.5.

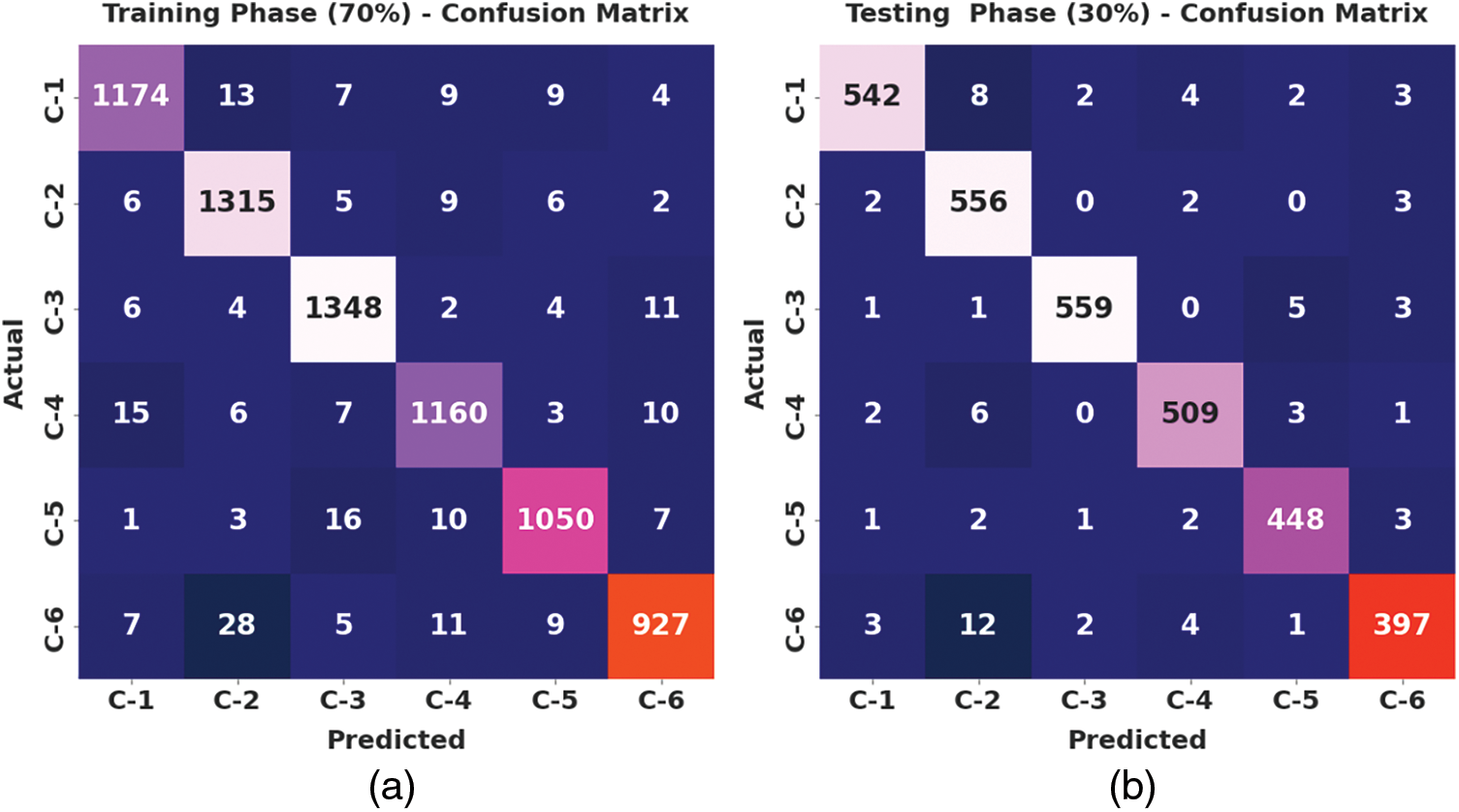

Fig. 3 demonstrates the confusion matrix created by the IPODTL-HAR model on 70% of TR data and 30% of TS data. On 70% of TR data, the IPODTL-HAR model has recognized 1174 samples under C-1 class, 1315 samples under C-2 class, 1348 samples under C-3 class, 1160 samples under C-4 class, 1050 samples under C-5 class, and 927 samples under C-6 class. Also, on 30% of TS data, the IPODTL-HAR method has recognized 542 samples under C-1 class, 556 samples under C-2 class, 559 samples under C-3 class, 509 samples under C-4 class, 448 samples under C-5 class, and 397 samples under C-6 class.

Figure 3: Confusion matrices of IPODTL-HAR approach (a) 70% of TR data and (b) 30% of TS data
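Per-class measures can be derived from a confusion matrix as sketched below. The 3-class matrix is a made-up toy example and does not reproduce the values in Fig. 3; the function name and the choice of measures are assumptions for illustration.

```python
def per_class_metrics(cm):
    """Compute accuracy, precision, recall and F-score for each class.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k predicted as others
        fp = sum(cm[r][k] for r in range(n)) - tp  # others predicted as class k
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f_score = (2 * precision * recall / (precision + recall)
                   if precision + recall else 0.0)
        results.append({"accuracy": (tp + tn) / total,
                        "precision": precision,
                        "recall": recall,
                        "f_score": f_score})
    return results

# Toy 3-class confusion matrix (rows: true class, columns: predicted class).
toy_cm = [[8, 1, 1],
          [2, 7, 1],
          [0, 1, 9]]
metrics = per_class_metrics(toy_cm)
for label, m in enumerate(metrics, start=1):
    print(f"C-{label}", {k: round(v, 3) for k, v in m.items()})
```

Averaging each measure over the classes gives the kind of summary value reported per training and testing split.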

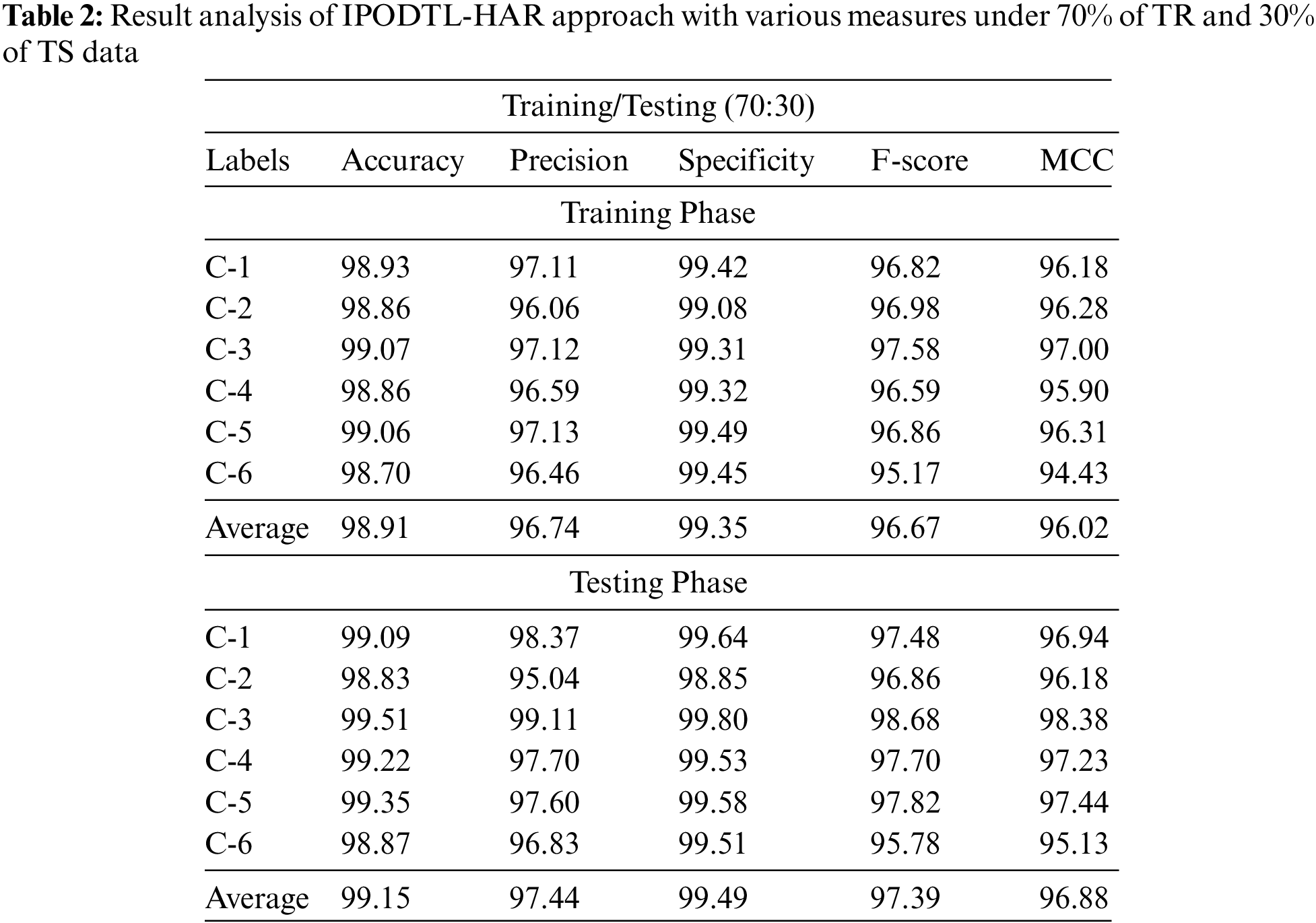

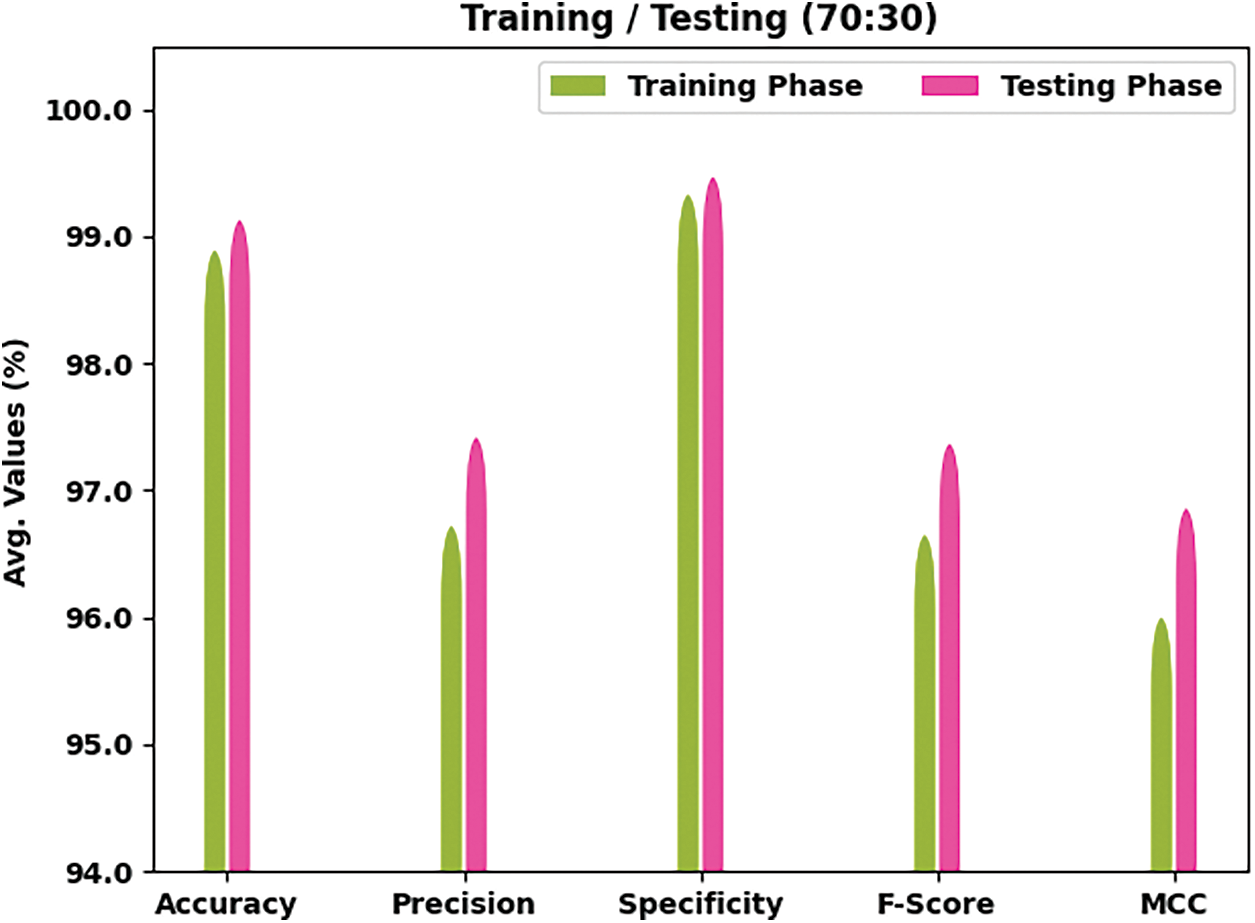

Table 2 and Fig. 4 report the overall HAR outcomes of the IPODTL-HAR model on the applied data. The results inferred that the IPODTL-HAR method has shown enhanced results under all cases. For example, on 70% of TR data, the IPODTL-HAR model has resulted to average

Figure 4: Result analysis of IPODTL-HAR approach under 70% of TR and 30% of TS data

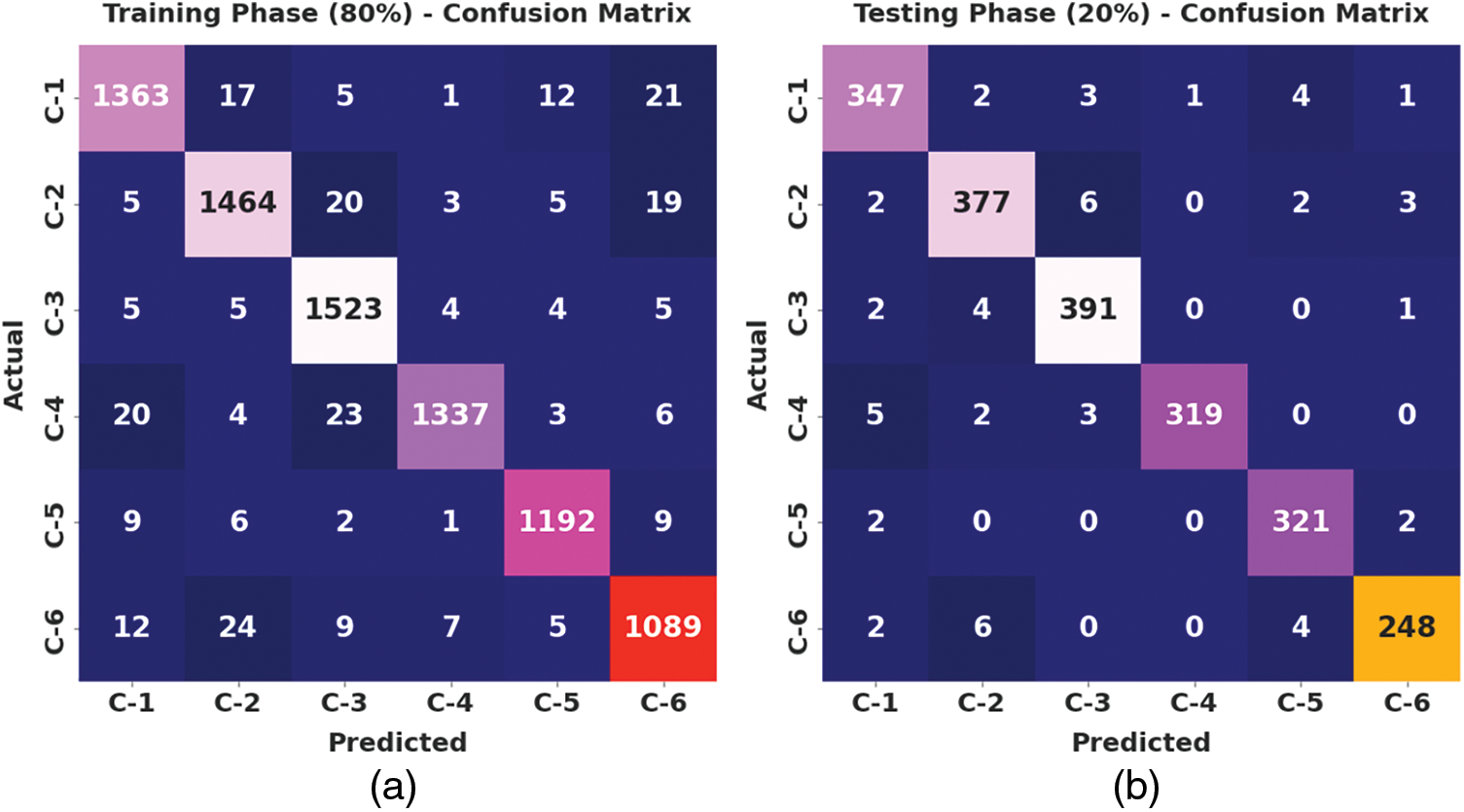

Fig. 5 illustrates the confusion matrix created by the IPODTL-HAR method on 80% of TR data and 20% of TS data. On 80% of TR data, the IPODTL-HAR approach has recognized 1363 samples under C-1 class, 1464 samples under C-2 class, 1523 samples under C-3 class, 1337 samples under C-4 class, 1192 samples under C-5 class, and 1089 samples under C-6 class. Additionally, on 20% of TS data, the IPODTL-HAR method has recognized 347 samples under C-1 class, 377 samples under C-2 class, 391 samples under C-3 class, 319 samples under C-4 class, 321 samples under C-5 class, and 248 samples under C-6 class.

Figure 5: Confusion matrices of IPODTL-HAR approach (a) 80% of TR data and (b) 20% of TS data

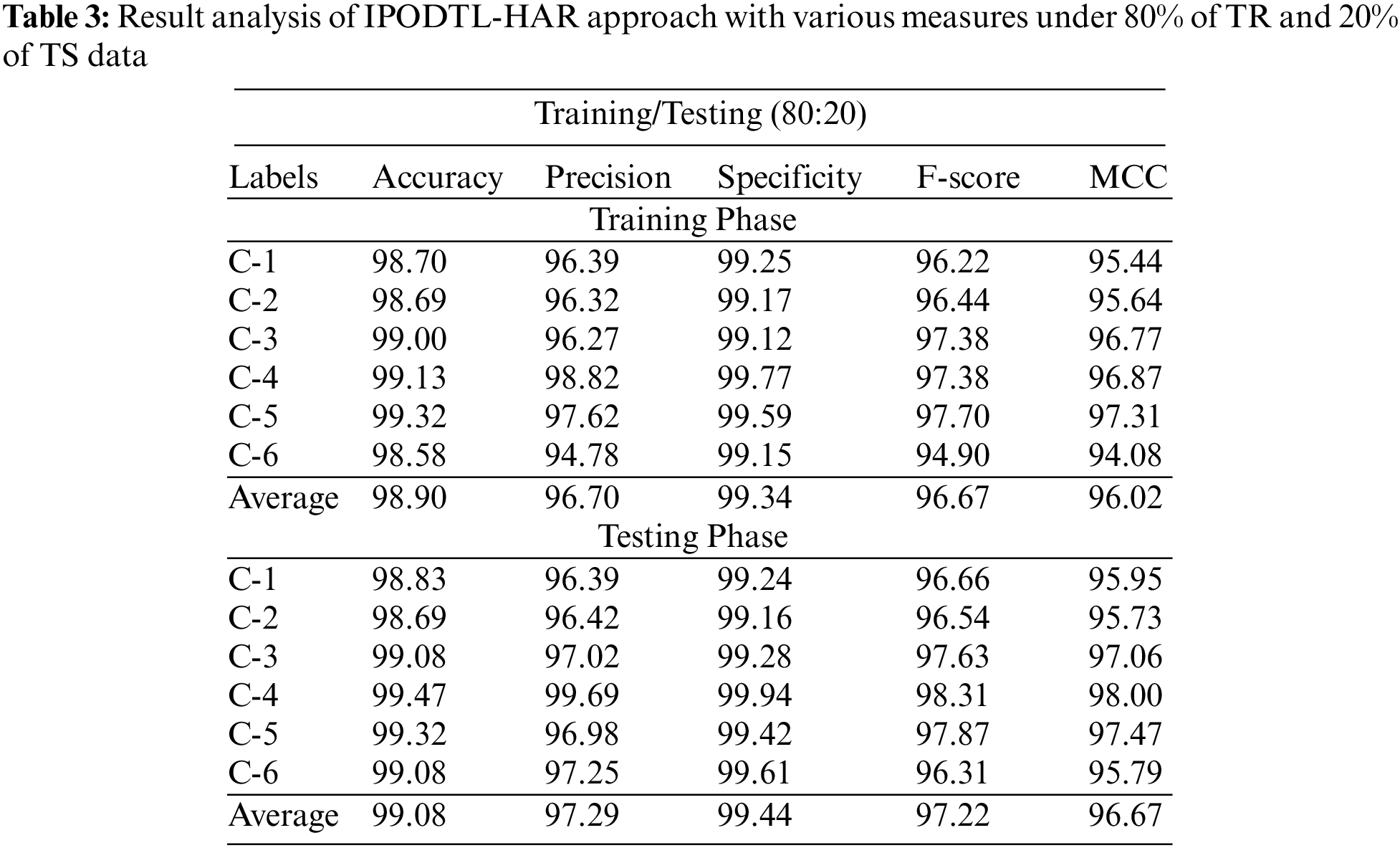

Table 3 and Fig. 6 signify the overall HAR outcomes of the IPODTL-HAR method on the applied data. The results indicated the IPODTL-HAR method has displayed improvised results under all cases. For instance, on 80% of TR data, the IPODTL-HAR algorithm has resulted to average

Figure 6: Result analysis of IPODTL-HAR approach under 80% of TR and 20% of TS data

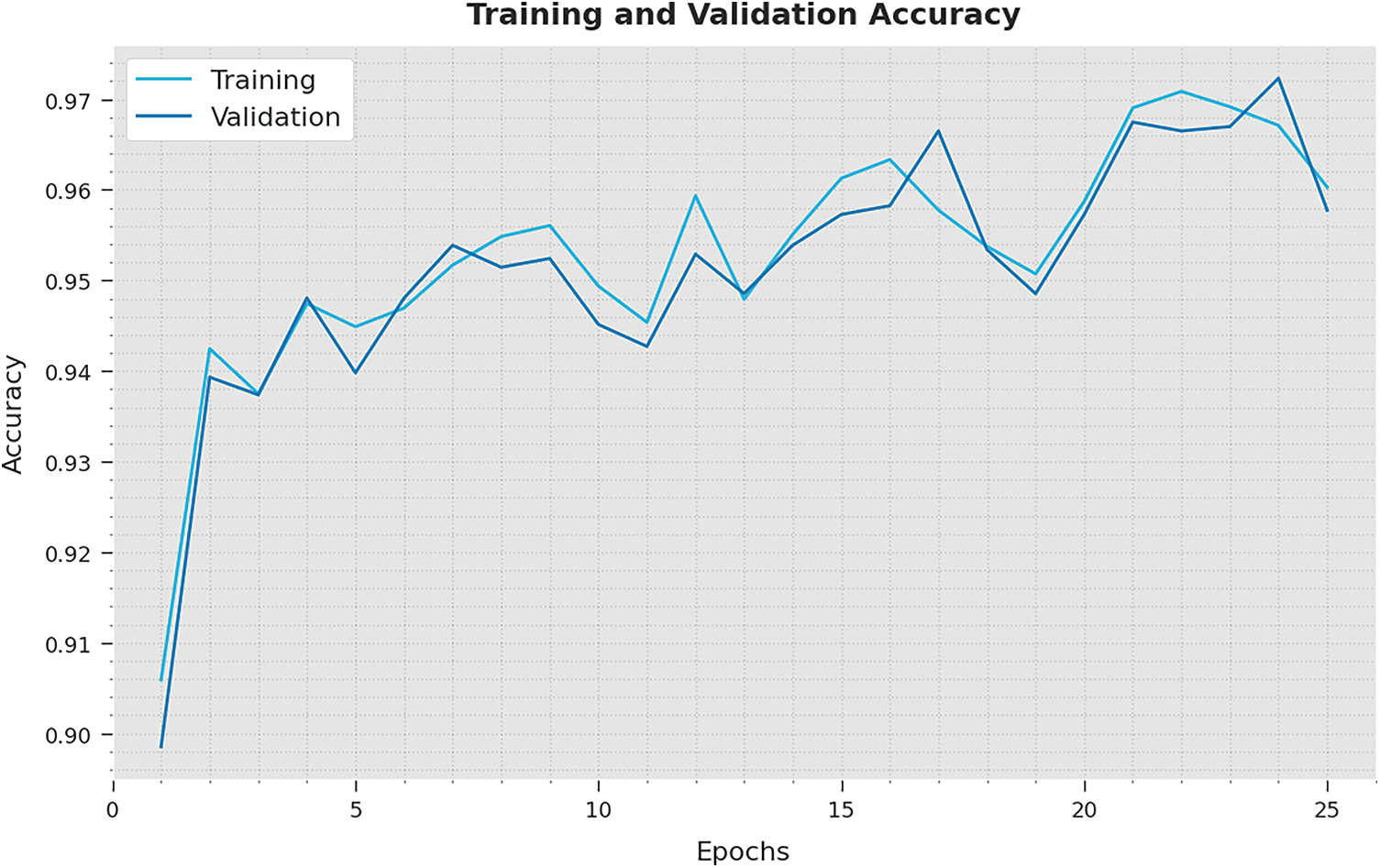

The training accuracy (TA) and validation accuracy (VA) attained by the IPODTL-HAR algorithm on the test dataset are illustrated in Fig. 7. The experimental outcome shows that the IPODTL-HAR approach has reached maximal values of TA and VA. Specifically, the VA is higher than the TA.

Figure 7: TA and VA analysis of IPODTL-HAR methodology

The training loss (TL) and validation loss (VL) reached by the IPODTL-HAR method on the test dataset are shown in Fig. 8. The experimental outcome implies that the IPODTL-HAR technique has achieved the lowest values of TL and VL. In particular, the VL is lower than the TL.

Figure 8: TL and VL analysis of IPODTL-HAR methodology

A precision-recall analysis of the IPODTL-HAR approach on the test dataset is illustrated in Fig. 9. The figure shows that the IPODTL-HAR technique has achieved enhanced precision-recall values under all classes.

Figure 9: Precision-recall curve analysis of IPODTL-HAR methodology

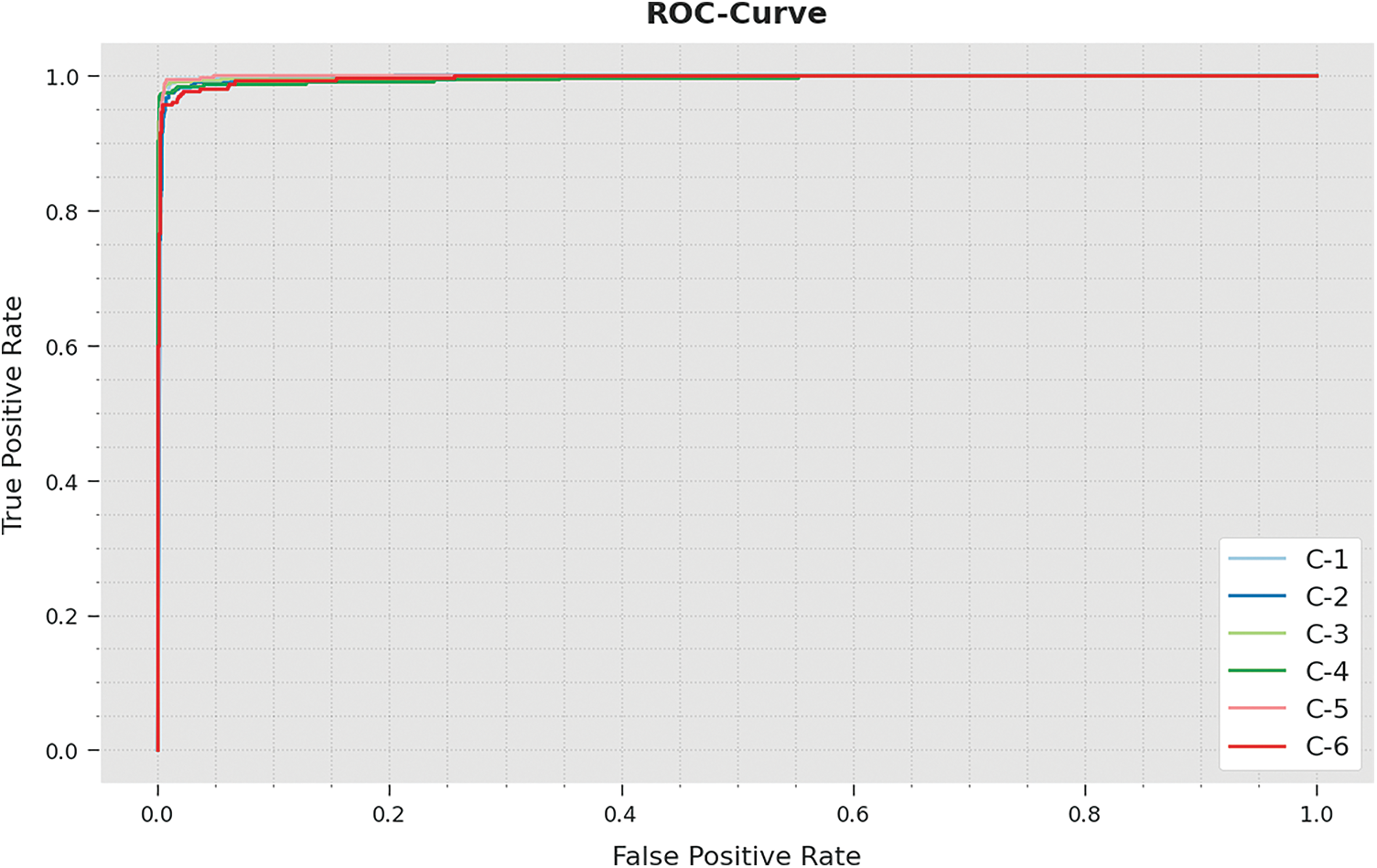

A brief ROC analysis of the IPODTL-HAR method on the test dataset is shown in Fig. 10. The results indicate that the IPODTL-HAR approach is able to categorize the distinct classes in the test dataset.

Figure 10: ROC curve analysis of IPODTL-HAR methodology

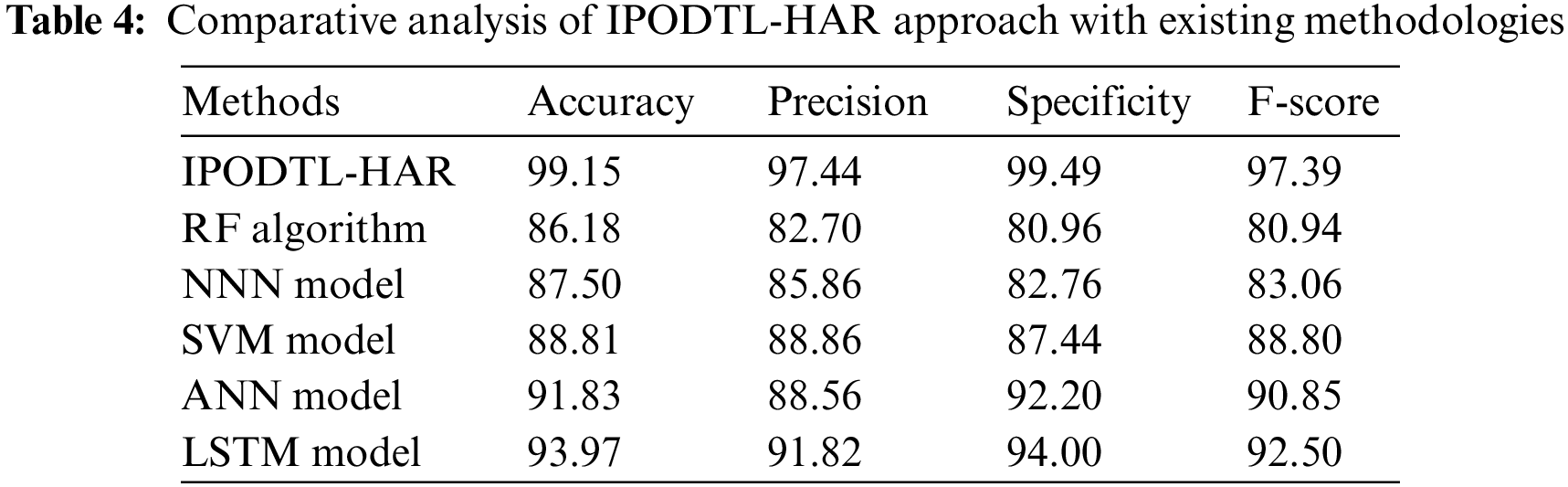

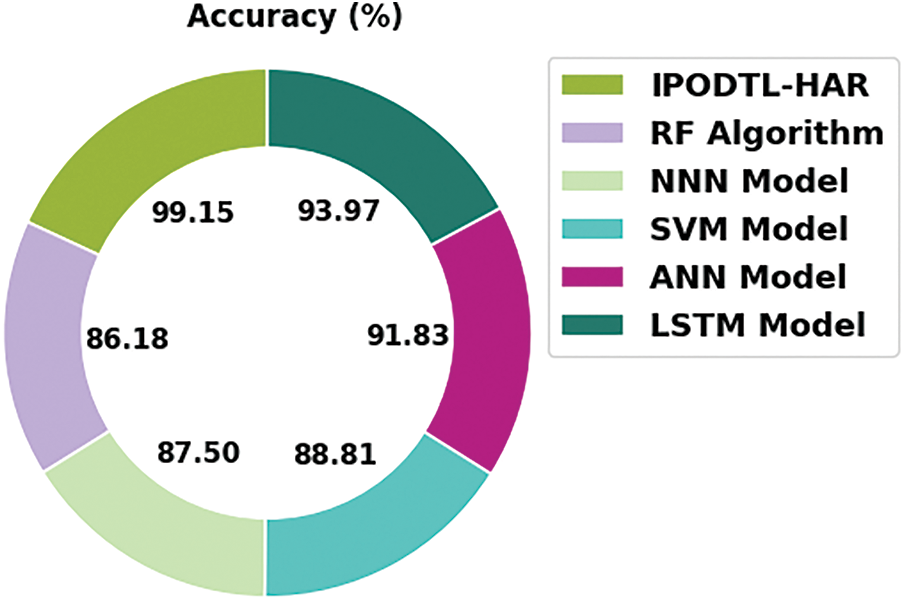

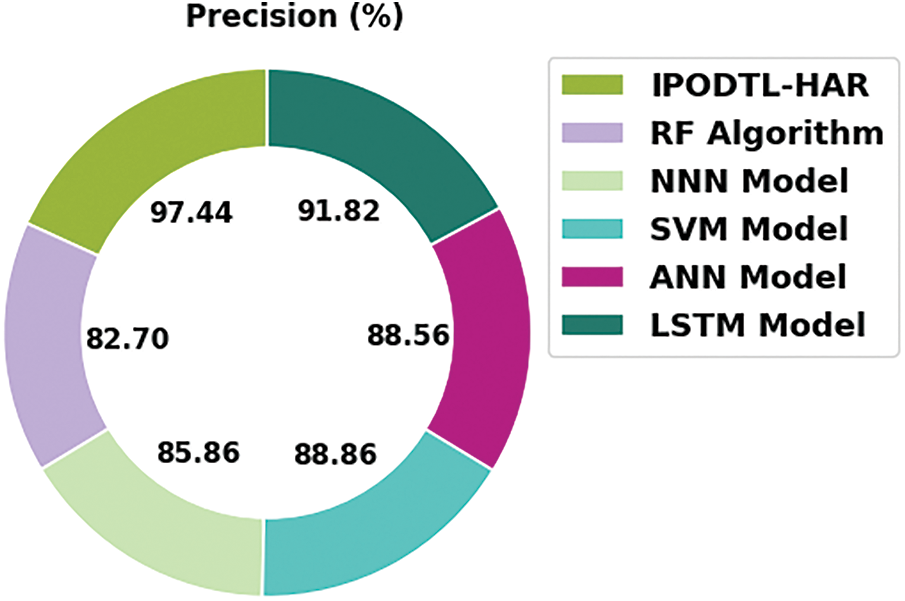

To exhibit the better performance of the IPODTL-HAR model, a comparison study with recent models is made in Table 4 [20,21]. Fig. 11 provides a comprehensive

Figure 11:

Fig. 12 presents a brief

Figure 12:

Fig. 13 provides a detailed

Figure 13:

Fig. 14 offers a comprehensive

Figure 14:

From the detailed results and discussion, it is evident that the IPODTL-HAR model has demonstrated superior performance over the other models.

In this study, a new IPODTL-HAR model was introduced to recognize the human activities of disabled persons and improve their quality of living. The presented IPODTL-HAR model first applies data pre-processing to enhance the data quality. Next, the EfficientNet method is applied to derive a useful set of feature vectors, and its hyperparameters are adjusted using the Nadam optimizer. Finally, the IPO with deep belief network (DBN) model is utilized for the recognition and classification of human activities. The Nadam optimizer and the IPO algorithm help tune the hyperparameters of the EfficientNet and DBN models, respectively. The IPODTL-HAR technique was validated experimentally on a benchmark dataset. An extensive comparison study highlighted the improvement of the IPODTL-HAR method over recent state-of-the-art HAR approaches in terms of different measures. In future, the proposed model can be employed in a real-time environment.

Funding Statement: The author received no specific funding for this study.

Conflicts of Interest: The author declares that there are no conflicts of interest to report regarding the present study.

References

1. A. B. Jayo, X. Cantero, A. Almeida, L. Fasano, T. Montanaro et al., “Location based indoor and outdoor lightweight activity recognition system,” Electronics, vol. 11, no. 3, pp. 360, 2022.

2. M. O. Raza, N. Pathan, A. Umar and R. Bux, “Activity recognition and creation of web service for activity recognition using mobile sensor data using azure machine learning studio,” Review of Computer Engineering Research, vol. 8, no. 1, pp. 1–7, 2021.

3. H. Raeis, M. Kazemi and S. Shirmohammadi, “Human activity recognition with device-free sensors for well-being assessment in smart homes,” IEEE Instrumentation & Measurement Magazine, vol. 24, no. 6, pp. 46–57, 2021.

4. S. K. Yadav, K. Tiwari, H. M. Pandey and S. A. Akbar, “A review of multimodal human activity recognition with special emphasis on classification, applications, challenges and future directions,” Knowledge-Based Systems, vol. 223, no. 8, pp. 106970, 2021.

5. M. Z. Uddin and A. Soylu, “Human activity recognition using wearable sensors, discriminant analysis, and long short-term memory-based neural structured learning,” Scientific Reports, vol. 11, no. 1, pp. 16455, 2021.

6. A. Vijayvargiya, V. Gupta, R. Kumar, N. Dey and J. M. R. S. Tavares, “A hybrid WD-EEMD sEMG feature extraction technique for lower limb activity recognition,” IEEE Sensors Journal, vol. 21, no. 18, pp. 20431–20439, 2021.

7. S. Mekruksavanich and A. Jitpattanakul, “Deep convolutional neural network with RNNs for complex activity recognition using wrist-worn wearable sensor data,” Electronics, vol. 10, no. 14, pp. 1685, 2021.

8. I. Bustoni, I. Hidayatulloh, A. Ningtyas, A. Purwaningsih and S. Azhari, “Classification methods performance on human activity recognition,” Journal of Physics: Conference Series, vol. 1456, no. 1, pp. 12027, 2020.

9. D. Sehrawat and N. S. Gill, “IoT based human activity recognition system using smart sensors,” Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 516–522, 2020.

10. A. S. A. Sukor, A. Zakaria, N. A. Rahim, L. M. Kamarudin, R. Setchi et al., “A hybrid approach of knowledge-driven and data-driven reasoning for activity recognition in smart homes,” Journal of Intelligent & Fuzzy Systems, vol. 36, no. 5, pp. 4177–4188, 2019.

11. S. K. Yadav, A. Luthra, K. Tiwari, H. M. Pandey and S. A. Akbar, “ARFDNet: An efficient activity recognition & fall detection system using latent feature pooling,” Knowledge-Based Systems, vol. 239, no. 7, pp. 107948, 2022.

12. M. Muaaz, A. Chelli, M. W. Gerdes and M. Pätzold, “Wi-Sense: A passive human activity recognition system using Wi-Fi and convolutional neural network and its integration in health information systems,” Annals of Telecommunications, vol. 77, no. 3–4, pp. 163–175, 2022.

13. H. Basly, W. Ouarda, F. E. Sayadi, B. Ouni and A. M. Alimi, “DTR-HAR: Deep temporal residual representation for human activity recognition,” The Visual Computer, vol. 38, no. 3, pp. 993–1013, 2022.

14. K. P. S. Kumar and R. Bhavani, “Human activity recognition in egocentric video using PNN, SVM, kNN and SVM+kNN classifiers,” Cluster Computing, vol. 22, no. S5, pp. 10577–10586, 2019.

15. V. Venkatesh, P. Raj, K. Kannan and P. Balakrishnan, “Precision centric framework for activity recognition using Dempster Shaffer theory and information fusion algorithm in smart environment,” Journal of Intelligent & Fuzzy Systems, vol. 36, no. 3, pp. 2117–2124, 2019.

16. J. Wang, Q. Liu, H. Xie, Z. Yang and H. Zhou, “Boosted EfficientNet: detection of lymph node metastases in breast cancer using convolutional neural networks,” Cancers, vol. 13, no. 4, pp. 661, 2021.

17. S. H. Haji and A. M. Abdulazeez, “Comparison of optimization techniques based on gradient descent algorithm: A review,” PalArch’s Journal of Archaeology of Egypt/Egyptology, vol. 18, no. 4, pp. 2715–2743, 2021.

18. X. Z. Wang, T. Zhang and R. Wang, “Noniterative deep learning: incorporating restricted Boltzmann machine into multilayer random weight neural networks,” IEEE Transactions on Systems, Man, and Cybernetics, vol. 49, no. 7, pp. 1299–1308, 2019.

19. W. Tuerxun, C. Xu, M. Haderbieke, L. Guo and Z. Cheng, “A wind turbine fault classification model using broad learning system optimized by improved pelican optimization algorithm,” Machines, vol. 10, no. 5, pp. 407, 2022.

20. A. Hayat, M. Dias, B. Bhuyan and R. Tomar, “Human activity recognition for elderly people using machine and deep learning approaches,” Information, vol. 13, no. 6, pp. 275, 2022.

21. T. H. Tan, J. Y. Wu, S. H. Liu and M. Gochoo, “Human activity recognition using an ensemble learning algorithm with smartphone sensor data,” Electronics, vol. 11, no. 3, pp. 322, 2022.

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
