Open Access

Open Access

REVIEW

Evolution and Prospects of Foundation Models: From Large Language Models to Large Multimodal Models

1 College of Mathematics and Computer Science, Zhejiang A&F University, Hangzhou, 311300, China

2 Computer Science Department, Community College, King Saud University, Riyadh, 11437, Saudi Arabia

3 Mathematics and Computer Science Department, Faculty of Science, Menofia University, Shebin El Kom, Menoufia Governorate, 32511, Egypt

4 School of Computer Science, Shenyang Aerospace University, Shenyang, 110136, China

5 Graduate School of Science and Engineering, Hosei University, Tokyo, 184-8584, Japan

* Corresponding Authors: Keping Yu. Email: ; Hailin Feng. Email:

Computers, Materials & Continua 2024, 80(2), 1753-1808. https://doi.org/10.32604/cmc.2024.052618

Received 09 April 2024; Accepted 09 July 2024; Issue published 15 August 2024

Abstract

Since the 1950s, when the Turing Test was introduced, there has been notable progress in machine language intelligence. Language modeling, crucial for AI development, has evolved from statistical to neural models over the last two decades. Recently, transformer-based Pre-trained Language Models (PLM) have excelled in Natural Language Processing (NLP) tasks by leveraging large-scale training corpora. Increasing the scale of these models enhances performance significantly, introducing abilities like context learning that smaller models lack. The advancement in Large Language Models, exemplified by the development of ChatGPT, has made significant impacts both academically and industrially, capturing widespread societal interest. This survey provides an overview of the development and prospects from Large Language Models (LLM) to Large Multimodal Models (LMM). It first discusses the contributions and technological advancements of LLMs in the field of natural language processing, especially in text generation and language understanding. Then, it turns to the discussion of LMMs, which integrates various data modalities such as text, images, and sound, demonstrating advanced capabilities in understanding and generating cross-modal content, paving new pathways for the adaptability and flexibility of AI systems. Finally, the survey highlights the prospects of LMMs in terms of technological development and application potential, while also pointing out challenges in data integration, cross-modal understanding accuracy, providing a comprehensive perspective on the latest developments in this field.Keywords

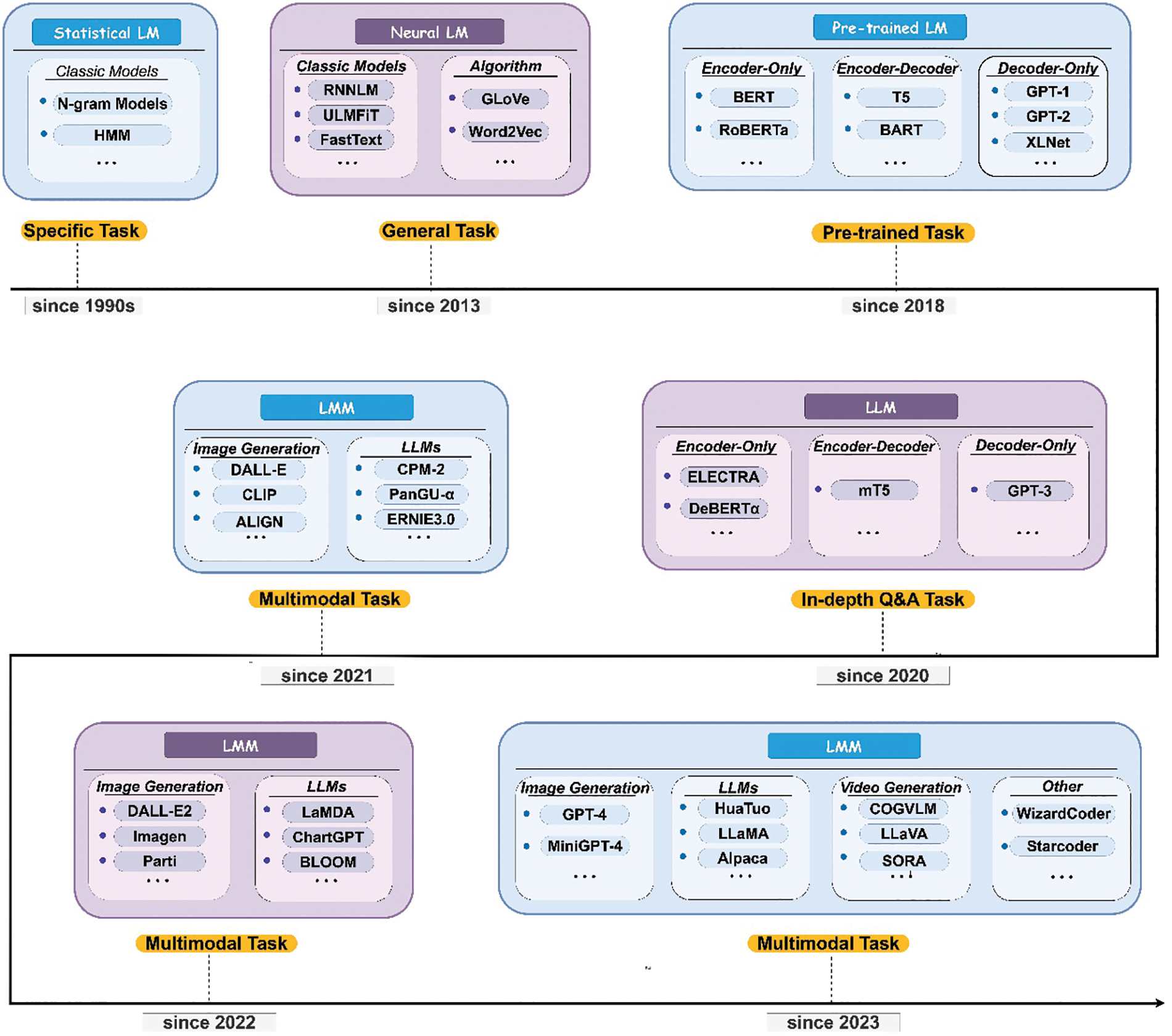

Language serves as a foundational element in human communication and expression, as well as in the interaction between humans and machines, necessitating the development of generalized models to empower machines with the ability to perform complex linguistic tasks. The need for generalized models stems from the growing demand for machines to handle complex language tasks, including translation, summarization, information retrieval, conversational interactions [1], etc. This necessity is rooted in the intrinsic human capability to communicate and express thoughts. Language is a prominent ability in human beings to express and communicate, which develops in early childhood and evolves over a lifetime [2,3]. Unlike humans, machines lack the innate ability to comprehend and generate human language, a gap that can only be bridged through the deployment of sophisticated artificial intelligence (AI) algorithms. It has been a longstanding research challenge to achieve this goal, to enable machines to read, write, and communicate like human [4]. Addressing this challenge, the field of language modeling aims to advance machine language intelligence by focusing on the generative likelihood of sequences of words, thereby enabling the prediction of future or missing tokens [5]. This pursuit has been a focal point of research, evolving through four significant stages, each marking a progressive step towards enabling machines to read, write, and communicate with human-like proficiency.

Building upon these four stages, the development of LMMs emerges as a pivotal fifth stage in the evolution of artificial intelligence.

LMMs mark a significant advancement in AI by integrating multisensory skills like visual understanding and auditory processing with the linguistic capabilities of LLMs. This approach not only leverages the dominant role of vision but also emphasizes the importance of other modalities such as sound, enhancing AI systems to be more adept and versatile. By incorporating a broader range of sensory inputs, LMMs aim to achieve a more powerful form of general intelligence, capable of efficiently performing a wider array of tasks. The five developmental stages are detailed as follows.

1. Statistical Language Models (SLM)

SLMs are a type of language model that uses statistical methods to predict the probability of a sequence of words in a language. These models are based on the assumption that the likelihood of a word occurring in a text depends on the words that precede it. SLMs [6–9] analyze large corpora of text to learn word occurrence patterns and relationships. SLMs have been fundamental in various NLP [10] like speech recognition, text prediction, and machine translation before the rise of more advanced neural network-based models. Therefore, the specially proposed principles and methods [11] are used to alleviate the problems encountered in information retrieval challenges.

2. Neural Language Models (NLM)

NLMs are a type of language model that uses neural networks, especially deep learning techniques, to understand and generate human language. Unlike SLMs that rely on counts and probabilities of sequences of words, neural models use layers of artificial neurons to process and learn from large amounts of text data. These models capture complex patterns and dependencies in language, allowing for more accurate and contextually relevant language generation and understanding. Examples include Recurrent Neural Networks (RNN), Long Short-Term Memory networks (LSTM), and transformer models like Generative Pre-trained Transformer (GPT). They are widely used in applications like machine translation, text generation, and speech recognition.

3. Pre-Trained Language Models (PLM)

PLMs are a category of language model that have been previously trained on large datasets before being used for specific tasks. This pre-training involves learning from vast amounts of text data to understand the structure, nuances, and complexities of a language. Once pre-trained, these models can be fine-tuned with additional data specific to a particular application or task, such as text classification, question answering, or language translation. Examples of PLMs include Bidirectional Encoder Representations from Transformers (BERT) [12], GPT, and Text-to-Text Transfer Transformer (T5) [13]. These models have revolutionized the field of NLP by providing a strong foundational understanding of language, which can be adapted to a wide range of language-related tasks.

4. Large Language Models (LLM)

Artificial Intelligence, particularly generative AI, has garnered widespread attention for its capacity to produce lifelike outputs [14]. LLMs are complex computational systems in the field of artificial intelligence, particularly NLP. They are typically constructed using deep learning techniques, often leveraging Transformer architectures. These models are characterized by their vast number of parameters, often in the billions, which enable them to capture a wide range of linguistic nuances and contextual variations. LLMs are trained on extensive corpora of text, allowing them to generate, comprehend, and interact using human language with a high degree of proficiency. Their capabilities include but are not limited to text generation, language translation, summarization, and question-answering. These models have significantly advanced the frontiers of NLP, offering more sophisticated and context-aware language applications.

5. Large Multimodal Models (LMM)

LMMs are advanced artificial intelligence systems capable of processing and understanding multiple types of data inputs. In addition to standard text-based applications, LLMs are expanding their capabilities to engage with various forms of media, such as images [15,16], videos [17,18] and audio files [19,20] among others. They are multimodal because they can integrate and interpret information from these varied modes simultaneously. These models leverage large-scale datasets and sophisticated neural network architectures to learn complex patterns across different data types. This ability allows them to perform tasks like image captioning, where they generate descriptive text for images, or answer questions based on a combination of text and visual information.

The emergence of LLMs has revolutionized our ability to process and generate human-like text, thereby enhancing applications in numerous fields such as automated customer support, content creation, and language translation, and opening up new possibilities for human-computer interaction. As we advance beyond the realm of pure text-based interactions, the field is witnessing the rise of LMMs. These sophisticated models are pioneering the integration of multisensory data, notably visual and auditory inputs, to better emulate the comprehensive sensory experiences that are central to human cognition. In the field of computer vision, efforts are being made to create vision-language models akin to ChatGPT, aiming to enhance multimodal dialogue capabilities [21–24]. GPT-4 [25] has already taken strides in this direction by accommodating multimodal inputs and incorporating visual data. This progression can be charted through the development of attention mechanisms in LLMs, which have been instrumental in improving contextual understanding. Attention has evolved from a fundamental concept to more complex types and variations, each with its own optimization challenges. Building upon this, the architecture of LMMs incorporates these advanced attention frameworks to process and synthesize information across multiple modalities. Concurrently, the field grapples with open issues such as contextual understanding inherent limitations, the challenges of ambiguity and vagueness in language, and the phenomenon of catastrophic forgetting. Foundational LLMs sometimes misinterpret instructions and “hallucinate” facts, undermining their practical effectiveness [26]. Therefore, there is a focus on correcting hallucinations and enhancing cognitive abilities within these models, which is critical to their reliability and effectiveness. Addressing these challenges requires innovative training data and methods tailored to LLMs and LMMs alike, aiming to refine internal and external reasoning processes. This fine-tuning is essential for achieving accuracy in reasoning, enabling these models to make informed decisions based on a combination of learned knowledge and real-time sensory input. The practical applications of LLMs and LMMs are expansive and transformative, particularly in sectors like healthcare, where they can interpret patient data to inform diagnoses and treatments, and in finance, where they can analyze market trends for more accurate forecasting. In robotics, LMMs facilitate more natural human-robot interactions and enable machines to navigate and interact with external environments more effectively. In sum, the journey from LLMs to LMMs is not merely an incremental step but a significant leap towards creating AI systems that can understand and interact with the world in a manner akin to human intelligence. The eventual integration of these models into real-world applications promises to enhance the efficacy and sophistication of AI technologies across the board.

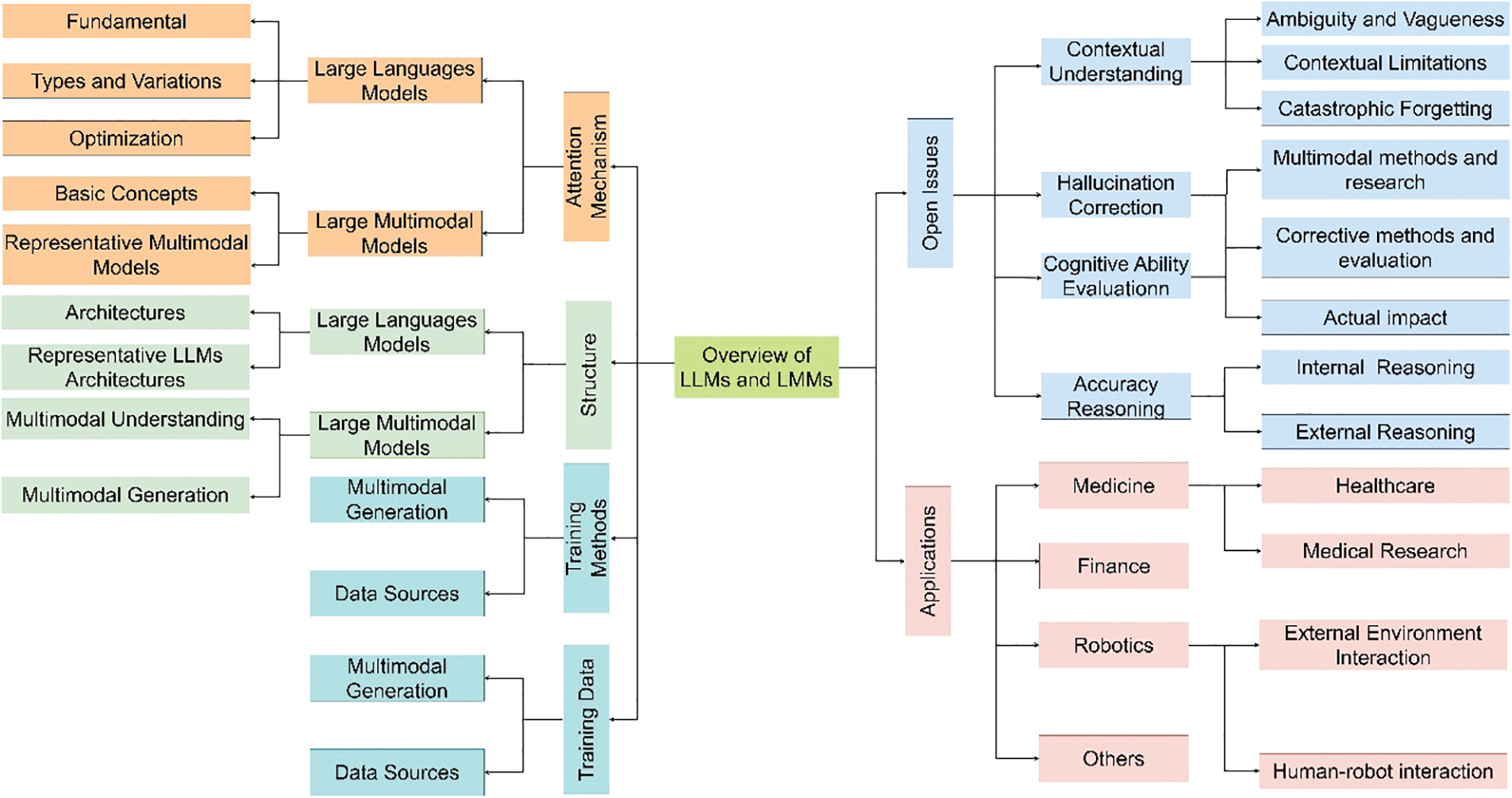

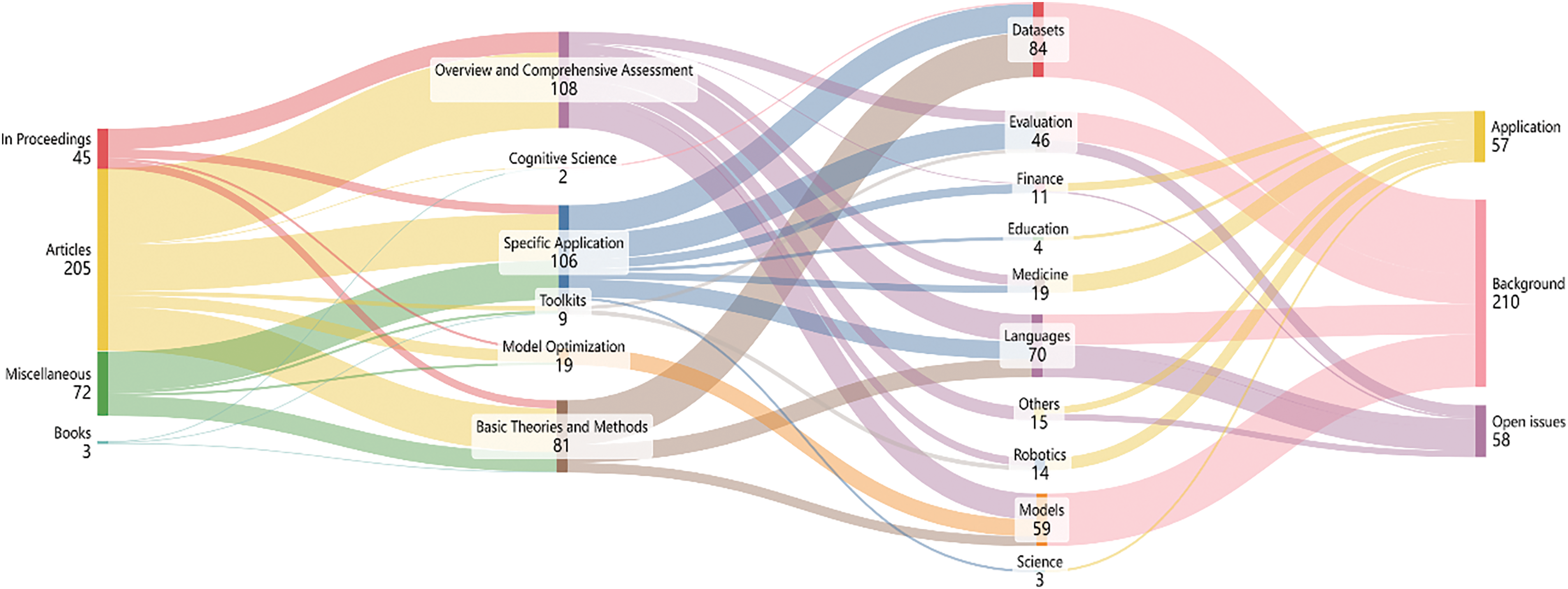

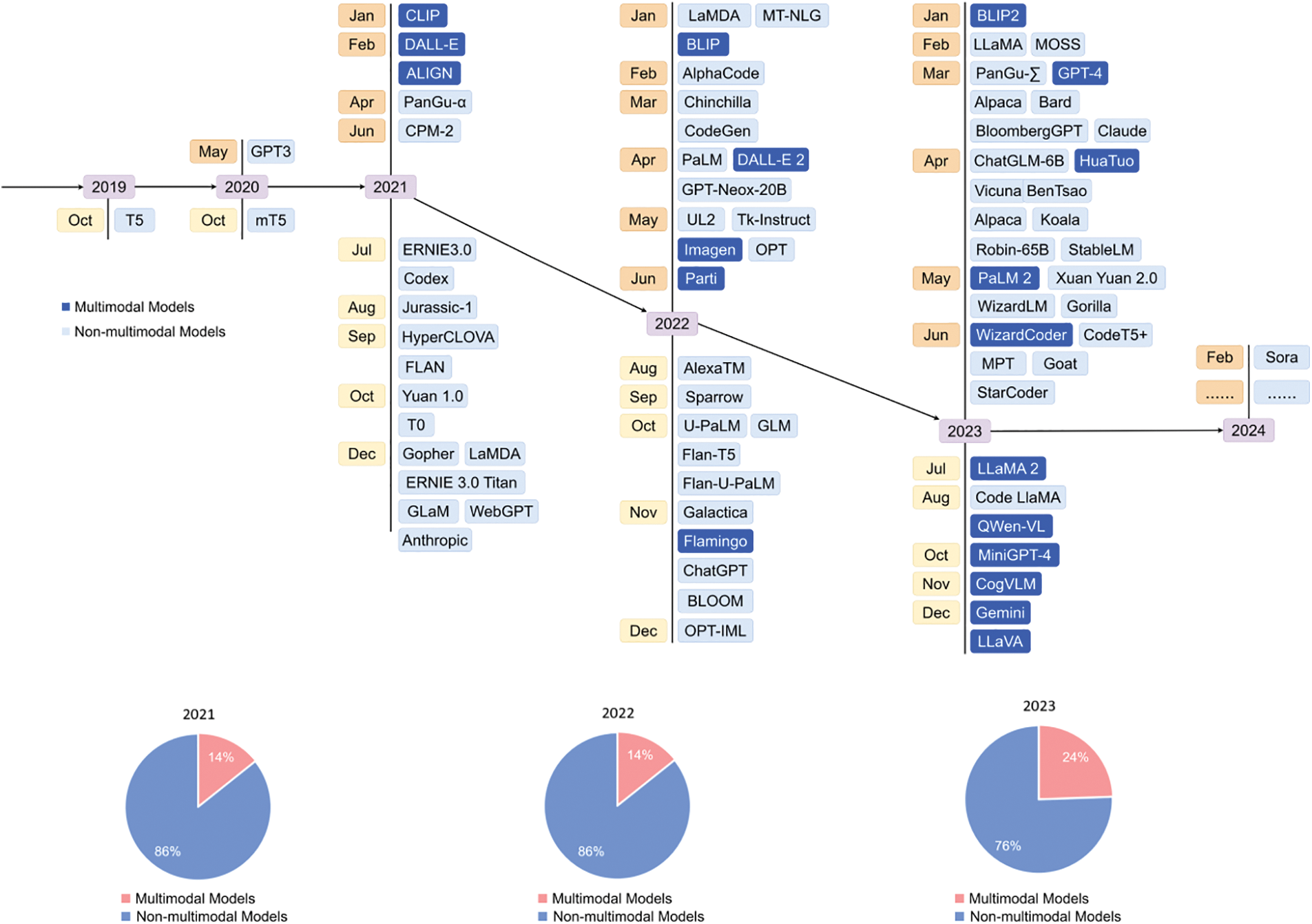

This review and its subsequent exposition aim to detail the current landscape and future direction of LLMs and LMMs, exploring the nuanced details of these models and their transformative potential in multimodal AI. The structure and content of the article are as shown in Figs. 1 and 2. Fig. 1provides a broad overview of LLMs and LMMs in six areas: 1. Attention Mechanism 2. Structure 3. Training Methods 4. Training Data 5. Open Issues 6. Applications. Through the Sankey diagram, Fig. 2 counts 325 documents, including 45 in proceedings, 72 other articles, 205 articles, and 3 books. Fig. 3 illustrates the timeline of model proposals from 2019 to mid-2023, with dark blue indicating multi-modal models. The pie chart depicts the proportion of multimodal and non-multimodal models from 2021 to 2023. It is evident from the picture that the development and application of multimodal models are becoming increasingly recognized and embraced by the public.

Figure 1: Overview of LLMs and LMMs

Figure 2: Literature source sankey diagram

Figure 3: Multimodal model growth from 2019 to 2024

Adhering to the hierarchical structure of the outline, with a focus on large language models and multimodal models as primary keywords, we meticulously curated a collection of representative documents. These documents, numbering approximately 325 in total, span multiple fields and were selected based on criteria such as research background, unresolved issues, and practical applications.

The main contributions of this paper are as follows:

• We offer an examination of the evolution and future potential spanning from LLMs to LMMs. Initially, we delve into the contributions and technological strides of LLMs within the realm of NLP, particularly in the arenas of text generation and linguistic comprehension.

• Subsequently, we transition to an exploration of LMMs, amalgamating diverse data modalities such as textual, visual, and auditory inputs, thereby show-casing sophisticated proficiencies in cross-modal comprehension and content generation. This pioneering integration opens avenues for enhanced adaptability and versatility within AI systems.

• We have elucidated the prospects of LMMs in terms of technological advancement and application potential, while also revealing the main challenges related to data integration, cross-modal understanding accuracy, and the current landscape. Finally, in specific application domains, we not only detailed the differences between LLMs and LMMs but also highlighted the necessity of their transformation in concrete applications.

LLMs, like GPT-3 [27], PaLM [28], Galactica [29], and LLaMA [30], are transformer-based models with hundreds of billions of parameters, trained on vast text datasets [31]. These models are adept at comprehending natural language and executing complex tasks through text generation. This section provides an overview of LLMs, covering attention mechanisms, model architecture, and training data and methods for a concise understanding of their operation.

Attention in LLMs is fundamental for processing and understanding complex language structures. It operates by focusing on specific parts of the input data, thereby discerning relevant context and relationships within the text. Various types and variations of attention exist, such as self-attention and multi-head attention, each offering unique advantages in handling different understanding. Optimization of attention mechanisms, especially in large models, involves techniques like sparse attention to manage computational efficiency and memory usage, crucial for scaling LLMs for more extensive and intricate datasets. These mechanisms collectively enhance the LLMs ability to generate coherent, contextually relevant responses, making them versatile in numerous language processing applications.

We will delve into the concept of Attention in LLMs from three distinct perspectives: the fundamental principles underlying attention mechanisms, the types and variations that exist within these models, and the optimization strategies employed to enhance their efficiency and effectiveness.

The attention mechanism plays a pivotal role within the Transformer framework, facilitating inter-token interactions across sequences and deriving representations for both input and output sequences. When processing sequential data, the attention mechanism simulates the human attention process by endowing the model with varying degrees of attention to different parts. This is akin to how humans allocate attention when processing information, allowing the model to selectively focus on specific parts of the sequence, rather than treating all information equally. In the attention mechanism, the model calculates weights based on different parts of the input data to indicate which parts the model should pay more attention to. These weights determine which positions in the input sequence should be considered when calculating the output, enabling the model to simulate selective attention similar to that of humans when processing sequential data.

In summary, the attention mechanism simulates the human attention process by dynamically adjusting attention to different parts, enabling the model to better comprehend and process sequential data. This contributes to the improvement of performance in models for tasks such as NLP and machine translation.

1. Self-Attention

Self-attention [32] within the Transformer model is characterized as a mechanism that calculates a position response in a sequence by considering all positions, assigning importance through learned patterns. Unlike models that process sequences sequentially, this allows for parallel processing, enhancing training efficiency. It adeptly handles long-range dependencies, crucial in translation where contextual comprehension is key. By evaluating interrelations across an entire input sequence, self-attention significantly improves the model ability to interpret intricate patterns and dependencies, a distinct advantage in complex task handling. Self-attention parallel processing capability and proficiency in capturing long-range dependencies offer a significant advantage over traditional models like RNN and convolutional neural networks (CNN). Particularly in NLP, where understanding long-distance relationships in data is crucial, self-attention proves more effective. This mechanism is extensively utilized in the Transformer model, now a dominant framework in various NLP tasks, including machine translation, text generation, and classification. The transformer reliance on self-attention, avoiding RNN or CNN architectures, markedly enhances its ability to handle extended sequences, driving breakthroughs in performance across multiple applications.

2. Cross Attention

Cross-attention, a pivotal concept in deep learning and NLP, denotes an advanced attention mechanism. This mechanism is instrumental in enabling models to intricately associate and assign weights to elements across two distinct sequences. A prime example is in machine translation, where it bridges the source and target language components. Essentially, cross-attention empowers a model to integrate and process information from one sequence while attentively considering another sequence context. Predominantly utilized in sequence-to-sequence models, which are prevalent in machine translation, text summarization, and question-answering systems, cross-attention plays a crucial role. It facilitates the model ability to decode and interrelate the intricacies between an input sequence (like a segment of text) and its corresponding output sequence (such as a translated phrase or an answer). Delving into specifics, the cross-attention mechanism leverages insights from one sequence (for instance, the output from an encoder) to attentively navigate and emphasize particular segments of another sequence (like input to a decoder). This capability is key to grasping and handling the multifaceted dependencies existing between sequences, thereby significantly boosting precision and efficiency of the model in complex tasks. In the realm of machine translation, for instance, cross-attention enables the model to meticulously concentrate on specific fragments of the source language sentence, ensuring a more accurate and contextual translation into the target language. Overall, cross-attention stands as a cornerstone technique in deep learning, essential for the nuanced understanding and processing of complex inter-sequential relationships.

3. Full Attention

Full attention in the context of neural network architectures, particularly Transformers, refers to a mechanism where each element in a sequence attends to every other element. Unlike sparse attention which selectively focuses on certain parts of the sequence, full attention involves calculating attention scores between all pairs of elements in the input sequence. This approach, while computationally intensive due to its quadratic complexity, provides a comprehensive understanding of the relationships within the data, making it highly effective for tasks requiring deep contextual understanding. However, its computational cost limits its scalability, especially for very long sequences.

4. Sparse Attention

Sparse attention, as detailed in the work [33], revolutionizes the efficiency of Transformer models for extensive sequences. By introducing sparse factorizations into the attention matrix, this approach transforms the computational complexity from quadratic to nearly linear. This reduction is accomplished through a strategic decomposition of the full attention mechanism into more manageable operations that closely emulate dense attention, but with significantly reduced computational demands. Demonstrating its robustness, the sparse Transformer excels in processing a wide range of data types, including text, images, and audio, thereby setting new performance benchmarks in density modeling for complex datasets like Enwik8 and CIFAR-10. Notably, its design allows for the handling of sequences up to a million steps in length, a feat that significantly surpasses the capabilities of standard Transformer models. This advancement not only enhances the efficiency of processing lengthy sequences but also opens new avenues for complex sequence modeling tasks.

5. Multi-Query/Grouped-Query Attention

Multi-query attention is a variant of the traditional attention mechanism commonly used in neural network architectures. In this approach, instead of computing attention with a single query per input element, multiple queries are used simultaneously for each element [34]. This allows the model to capture a wider range of relationships and interactions within the data. Multi-query attention can provide a richer and more nuanced understanding of the input, as it enables the model to attend to different aspects or features of the data in parallel, enhancing its ability to learn complex patterns and dependencies.

6. Flash Attention

Flash attention [35] is a technique in neural network architecture that optimizes the efficiency of attention mechanisms, specifically in Transformers. It focuses on improving the speed and reducing memory usage during the attention calculation process. This is achieved by enhancing the handling of memory read and write operations, particularly in GPUs. As a result, flash attention can operate significantly faster than traditional attention mechanisms, while also being more memory efficient. This makes it particularly advantageous for tasks involving large-scale data processing or when operating under memory constraints. It employs a tiling strategy to minimize memory transactions between different GPU memory levels, leading to faster and more memory-efficient exact attention computation. Notably, flash attention design allows it to perform computations up to several times faster than traditional attention methods, with substantial memory savings, marking a significant advancement in handling large-scale data in neural networks. FlashAttention has been implemented as a fused kernel within CUDA and is now integrated into prominent frameworks including PyTorch [36], DeepSpeed [37], and Megatron-LM [38]. This integration signifies its practical applicability and enhancement of these platforms, providing a more efficient and memory-effective approach to attention computation in large-scale neural network models.

FlashAttention-2 [39], as an evolution of the original FlashAttention, significantly advances the efficiency of attention processing in Transformers. It optimizes GPU utilization by refining the algorithm for more effective work partitioning. The enhancement involves minimizing non-matrix multiplication operations and enabling parallel processing of attention, even for individual heads, across various GPU thread blocks. Moreover, it introduces a more balanced distribution of computational tasks within thread blocks. These strategic improvements result in FlashAttention-2 achieving approximately double the speed of its predecessor, nearing the efficiency of optimized matrix multiplication operations, thereby marking a substantial leap in executing large-scale Transformer models efficiently.

7. PagedAttention

PagedAttention is an advanced technique in neural net-work architecture, specifically designed to address the limitations of conventional attention mechanisms in handling long sequences. It operates by dividing the computation into smaller, more manageable segments or pages, thereby reducing the memory footprint and computational load. This approach allows for efficient processing of long sequences that would otherwise be challenging or infeasible with standard attention models, making it particularly useful in large-scale NLP and other data-intensive applications. The PagedAttention method has been developed to optimize the use of memory and augment the processing capacity of LLMs in operational environments [40].

In the realm of neural network models, particularly in NLP, attention mechanisms have emerged as a pivotal innovation, enhancing model accuracy and contextual understanding. These mechanisms enable models to selectively concentrate on relevant segments of input data, effectively capturing intricate dependencies and relations. This targeted focus significantly bolsters performance in tasks such as language translation and summarization.

However, with the growing scale of models and data, traditional attention mechanisms often grapple with increased computational demands, affecting training speed and efficiency. To address these challenges, optimization techniques like sparse attention have been developed. Sparse attention streamlines the process by selectively focusing on crucial data points, thus reducing the computational burden. This selective approach not only expedites the training process but also scales more adeptly with larger datasets.

The incorporation of sparse attention is crucial in managing the escalating complexities and sizes of datasets in advanced deep learning applications. By optimizing attention mechanisms, we can build models that are not only more accurate but also faster and more scalable, catering to the demanding requirements of modern machine learning tasks. These advancements are integral to pushing the boundaries of what neural network models can achieve, particularly in processing and understanding large-scale, complex data structures.

Multimodal learning in computer science involves integrating data from various modalities, such as text, images, and sound, to create models that understand and process information more holistically. This approach is crucial for tasks requiring an understanding across multiple data types. For instance, in a scenario where both visual cues from images and descriptive cues from text are essential, multi-modal learning enables the model to combine these distinct types of information to generate a more accurate and comprehensive understanding. This is particularly important in complex data interpretation and decision-making tasks, where relying on a single modality might lead to incomplete or biased conclusions. Multimodal learning, therefore, plays a vital role in enhancing the depth and breadth of data analysis and interpretation in AI applications.

In contemporary multimodal research, image-text conversion and matching represent a pivotal area, involving the precise alignment of visual content with textual descriptions. Attention mechanisms play an essential role in this process. By incorporating cross-modal attention mechanisms, LMMs are able to focus on parts of the image that are closely related to the text descriptions, thereby achieving more accurate local alignments. Additionally, these models utilize global attention to integrate the semantic information of the entire image and text, ensuring overall semantic consistency. Xu et al. [41] proposed a novel multimodal model named Cross-modal Attention with Semantic Consistency (CASC). It employs an innovative attention mechanism to integrate both local and global matching strategies. Through finely-tuned cross-modal attention, it achieves granular local alignment, while the use of multi-label prediction ensures the consistency of global semantics. This strategy of combining local and global perspectives through attention not only enhances the accuracy of image-text matching but also significantly improves the model’s ability to handle complex multimodal information.

Cai et al. [42] proposed a graph-attention based multimodal fusion network for enhancing the joint classification of hyperspectral images and LiDAR data. It includes an HSI-LiDAR feature extractor, a graph-attention fusion module, and a classification module. The fusion module constructs an undirected weighted graph with modality-specific tokens to address long-distance dependencies and explore deep semantic relationships, which are then classified by two fully connected layers.

In modern AI research, multimodal models integrate diverse data like text, images, and sound using cross-modal attention mechanisms. These mechanisms allow models to focus on relevant information across modalities. For example, in image-text matching tasks, they enable identification of key text elements and their alignment with corresponding visual details. This enhances data processing accuracy and improves the model’s adaptability and problem-solving capabilities in complex scenarios, proving essential for multimodal tasks.

2.2.2 Representative Multimodal Models

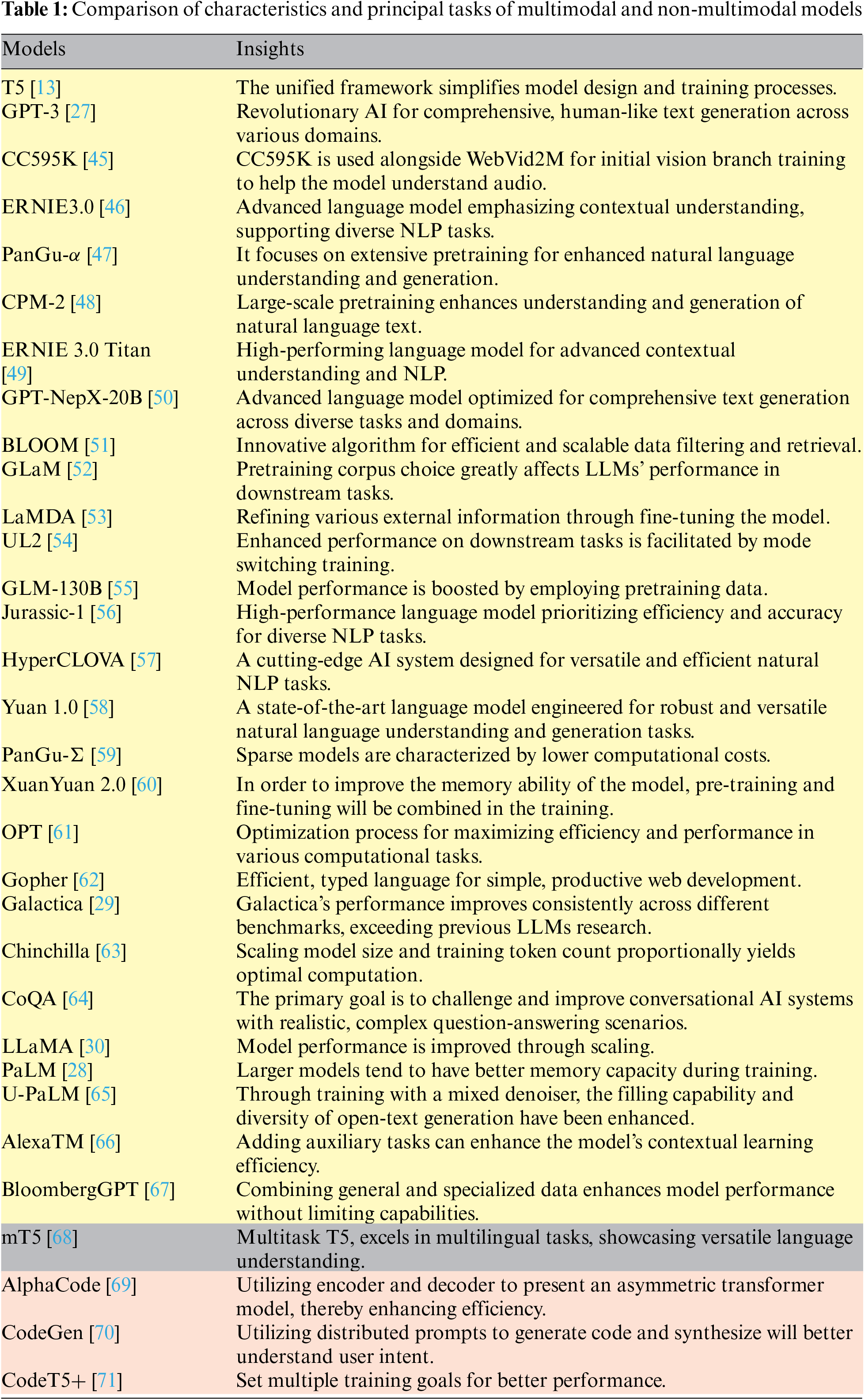

This section offers a comprehensive exploration of how advanced AI models integrate varied data types like text, images, and audio. These multimodal models, adept at processing and interpreting multi-sensory information, facilitate a nuanced understanding of complex data sets. The introduction outlines the architecture of these models, their data fusion techniques, and diverse applications in fields such as NLP, computer vision, and human-computer interaction, setting the groundwork for appreciating their interdisciplinary and technological complexity. It has been shown that CLIP-NAV [43] explores an innovative approach to Vision-and-Language Navigation (VLN) using the CLIP model for zero-shot navigation. The focus is on improving VLN in diverse and previously unseen environments without dataset-specific fine-tuning. The study leverages CLIP strengths in language grounding and object recognition to navigate based on natural language instructions. The results demonstrate that this approach can surpass existing supervised baselines in navigation tasks, highlighting the potential of CLIP in generalizing better across different environments for VLN tasks. This research marks a significant stride in the field of autonomous navigation using language models. In addition, RoboCLIP [44] addresses the challenge of efficiently teaching robots to perform tasks with minimal demonstrations. The method utilizes a single demonstration, which can be a video or textual description, to generate rewards for online reinforcement learning. This approach eliminates the need for labor-intensive reward function designs and allows for the use of demonstrations from different domains, such as human videos. RoboCLIP use of pretrained Video-and-Language Models (VLMs) without fine-tuning represents a significant advancement in enabling robots to learn tasks effectively with limited data. Table 1 provides an extensive overview of frequently used non-multimodal and multimodal models, delineating their distinct characteristics and primary functions. It utilizes a color-coding system where non-multimodal models are differentiated as follows: transformer-based models are highlighted in yellow, code generation models in pink, and multilingual or cross-language models in gray. This color coding helps to clearly understand each model type and its functionalities.

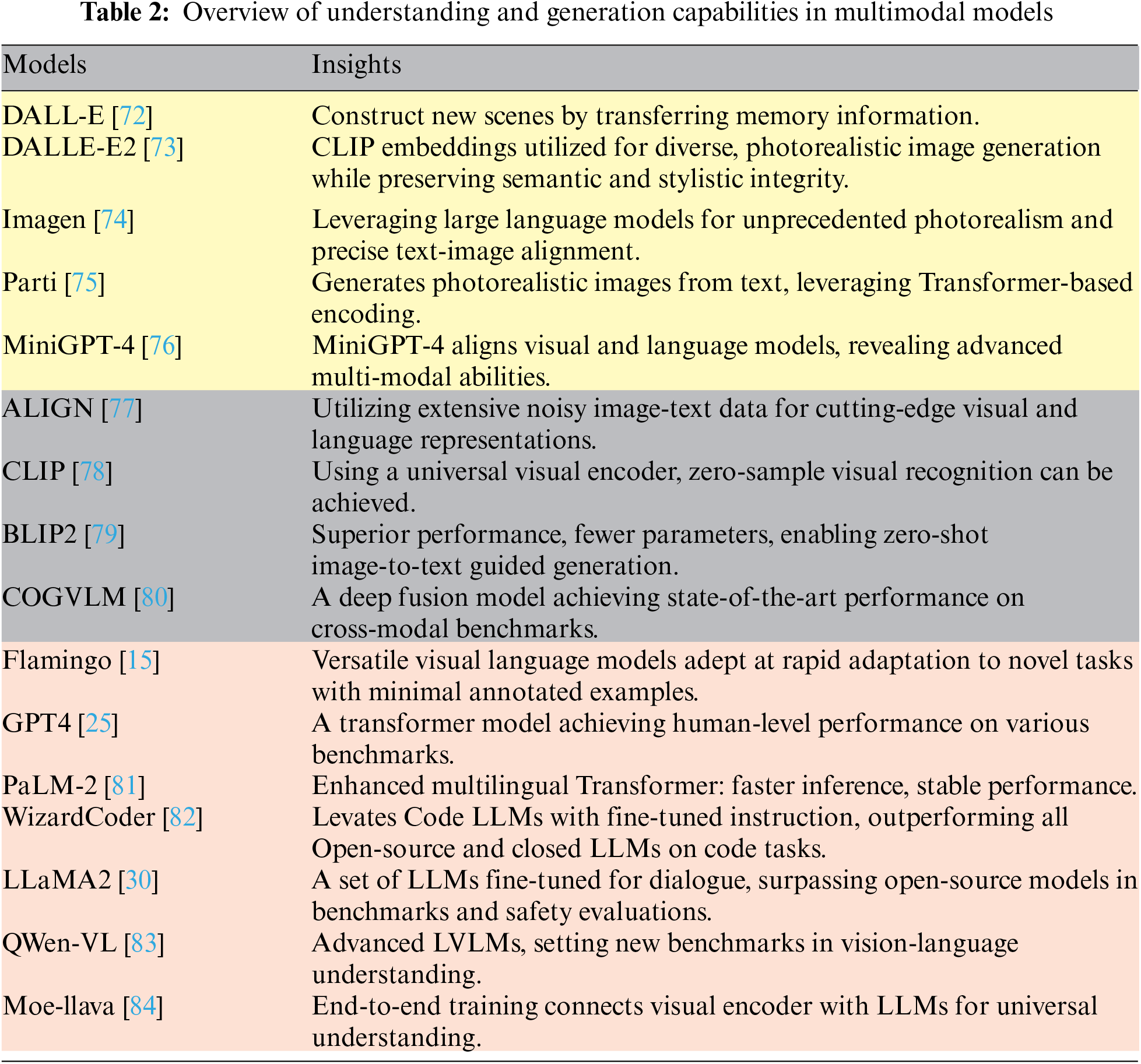

In contrast, Table 2 focuses exclusively on multimodal models. It categorizes these models based on their capabilities with a similar color-coding system: models capable of generating images from textual descriptions and understanding the relationship between images and text are marked with yellow; those that demonstrate a comprehensive grasp of multimodal data are in pink; and models that offer a holistic approach to both multimodal comprehension and generative abilities are designated with gray.

In this discussion, we explore the diverse variants of Transformer architectures, which stem from variations in how attention mechanisms are applied and how transformer blocks are interconnected.

1. Encoder-Only

In the landscape of NLP, the advent of the encoder-only architecture signifies a pivotal development. This architecture departs from traditional sequential processing by employing the Transformer encoder to generate rich, contextual representations of input text. The innovation lies in its ability to grasp the nuances of language through bidirectional context, making it adept across a spectrum of NLP tasks, including but not limited to text classification, entity recognition, and sentiment analysis. The encoder-only model capacity for pre-training on extensive corpora before fine-tuning for specific tasks has set a new standard for understanding and processing human language, thus reshaping the methodologies employed in NLP research and applications.

2. Encoder-Decoder

The encoder-decoder architecture, a cornerstone in the field of NLP, has been instrumental in advancing machine translation, text summarization, and question answering systems. This framework, as detailed by Sutskever et al. [85], employs a dual-component approach where the encoder processes the input sequence to a fixed-length vector representation, which the decoder then uses to generate the target sequence. This separation allows the model to handle variable-length inputs and outputs, enabling a more flexible and accurate translation of complex language structures. The architecture efficacy in capturing long-distance dependencies and its adaptability to various sequential tasks have catalyzed significant innovations in NLP, making it a fundamental model for researchers and practitioners alike.

3. Decoder-Only

The decoder-only architecture, notably pioneered by models such as GPT [86], represents a transformative approach in the realm of NLP, particularly in text generation tasks. This architecture, eschewing the encoder component, focuses solely on the decoder to predict the next token in a sequence based on the preceding ones. It leverages an autoregressive model that processes text in a sequential manner, ensuring that each prediction is contingent upon the tokens that came before it. This design facilitates the generation of coherent and contextually relevant text, making decoder-only models particularly adept at tasks such as story generation, creative writing, and more. The effectiveness of this architecture has been demonstrated across various domains, showcasing its versatility and power in capturing the nuances of human language.

Causal Decoder: The primary goal of a LLMs is to forecast the subsequent token given the preceding sequence of tokens. Although incorporating additional context from an encoder can enhance the relevance of predictions, empirical evidence suggests that LLMs can still excel without an encoder [87], relying solely on a decoder. This approach mirrors the decoder component of the traditional encoder decoder architecture, where the flow of information is unidirectional, meaning the prediction of any token tk is contingent upon the sequence of tokens leading up to and including tk−1. This decoder-only configuration has become the most prevalent variant among cutting-edge LLMs.

Prefix Decoder: In encoder-decoder architectures, causal masked attention allows the encoder to utilize self-attention to consider every token within a sentence, enabling it to access tokens from tk+1 to tn as well as those from t1 to tk−1 when computing the representation for tk. However, omitting the encoder in favor of a decoder-only model removes this comprehensive attention capability. A modification in decoder-only setups involves altering the masking strategy from strictly causal to permitting full visibility for certain segments of the input sequence, thereby adjusting the scope of attention and potentially enhancing model flexibility and understanding [1].

2.3.2 Representative LLMs Architectures

In the evolving landscape of NLP, various model architectures have significantly advanced the field. The T5 [13] and BART [88] models, both embodying the encoder-decoder architecture, have redefined versatility in NLP tasks. T5 converts all NLP problems into a unified text-to-text format, leveraging a comprehensive Transformer architecture for both encoding and decoding phases. Similarly, BART integrates the bidirectional encoding capabilities of BERT with the autoregressive decoding prowess of GPT, enhancing performance across a range of generative and comprehension tasks. On the other hand, the encoder-only architecture is exemplified by models such as RoBERTa [89] and ALBERT [90]. RoBERTa refines the BERT framework through optimized pre-training techniques, achieving superior results on benchmark tasks. ALBERT reduces model size and increases training speed without compromising performance, illustrating the efficiency of architecture optimization. XLNet [91] and TransformerXL [92] explore the realm of Causal Decoder architecture. XLNet integrates the best of Transformer self-attention with autoregressive language modeling, capturing bidirectional context in text sequences through permutation language modeling. Transformer-XL introduces a novel recurrence mechanism to handle longer text sequences, effectively capturing long-range dependencies. Besides, ELECTRA [93] represents a unique take on the encoder-only architecture, introducing a novel pre-training task that distinguishes between real and artificially replaced tokens to train the model more efficiently. These architectures, through their innovative designs and applications, underscore the dynamic and rapidly advancing nature of machine learning research in NLP, each contributing unique insights and capabilities to the domain.

2.4.1 Multimodal Understanding

1. Modality encoder

Modality encoder is tasked with encoding inputs from diverse modalities to obtain corresponding features [94]. Fig. 4 depicts iconic models and notable representatives at various junctures in time, showcasing the evolving landscape of tasks undertaken by these models over different epochs. As science and technology progress, the pervasive adoption of large language models and multi-modal languages across diverse domains is poised to proliferate, facilitating the execution of a myriad of distinct tasks.

Figure 4: Images show evolving model tasks; multimodal models’ adoption grows

Visual Modality: For image processing, four notable encoders are often considered. NFNet-F6 [95] represents a modern take on the traditional ResNet architecture, eliminating the need for normalization layers. It introduces an adaptive gradient clipping technique that enhances training on highly augmented datasets, achieving state-of-the-art (SOTA) results in image recognition. Vision Transformer (ViT) [96] brings the Transformer architecture, originally designed for NLP, to the realm of images. By dividing images into patches and applying linear projections, ViT processes these through multiple Transformer blocks, enabling deep understanding of visual content. CLIP ViT [78] bridges the gap between textual and visual data. It pairs a vision Transformer with a text encoder, leveraging contrastive learning from a vast corpus of text-image pairs. This approach significantly enhances the model’s ability to understand and generate content relevant to both domains. Eva-CLIP ViT [97] focuses on refining the extensive training process of its predecessor, CLIP. It aims to stabilize training and optimize performance, making the development of multimodal models more efficient and effective. For video content [98], a uniform sampling strategy can extract 5 frames from each video, applying similar preprocessing techniques as those used for images to ensure consistency in encoding and analysis across different media types.

Audio Modality: CFormer [99], HuBERT [100], BEATs [101], and Whisper [102] are key models for encoding audio, each with distinct mechanisms. CFormer integrates the CIF alignment method and a Transformer for audio feature extraction. HuBERT, inspired BERT [12], employs self-supervised learning to predict hidden speech units. BEATs focus on learning bidirectional encoder representations from audio via Transformers, showcasing advancements in audio processing.

2. LLMs backbone

LLMs backbone, as the central elements of LLMs, adopts key features such as zero-shot generalization, fewshot ICL (In-Context Learning), chain-of-thought (CoT), and adherence to instructions. The backbone of these LLMs manages to process and interpret representations across different modalities, facilitating semantic comprehension, logical reasoning, and input-based decision-making. In LMMs, frequently utilized LLMs encompass ChatGLM [55], FlanT5 [103], Qwen [83], Chinchilla [63], OPT [61], PaLM [28], LLaMA [30] and Vicuna [104], among others.

The Modality Generator MGX plays a crucial role in generating outputs across different modalities. For this purpose, it often employs readily available Latent Diffusion Models (LDMs) [105], such as Stable Diffusion [106] for crafting images, Zeroscope [107] for creating videos, and AudioLDM-2 [108] for producing audio. The conditional inputs for this denoising process, which facilitates the creation of multimodal content, are provided by the features, as determined by the Output Projector. Hou et al. [109] developed a methodology that integrates image inputs and prompt engineering in LMMs to solve parson’s problems, a kind of visual programming challenge.

2.5 Training Methods, Training Data and Testing

LLMs undergo a comprehensive development process encompassing pre-training, fine-tuning, and alignment to ensure their efficacy and ethical application across diverse tasks. Initially, pre-training equips LLMs with a broad understanding of language by learning from extensive text corpora, enabling them to capture complex linguistic patterns and knowledge. Subsequently, fine-tuning adjusts these pretrained models to specific tasks or domains, enhancing their performance on particular applications through targeted training on smaller, task-specific datasets. Finally, the alignment phase involves refining the models to adhere to human values and ethical standards, often employing techniques like Reinforcement Learning from Human Feedback (RLHF).

LMMs have significantly advanced the field of NLP by integrating text with other data modalities, such as images and videos. Presently, the development of LMMs follows three primary approaches: pretraining, instruction tuning, and prompting. In the forthcoming discussion, we will delve into these key strategies in greater detail.

1. Pre-Training

The pre-training process of LLMs is a pivotal step where models are exposed to vast amounts of textual data, enabling them to learn complex patterns, grammar, and contextual cues. Techniques such as Masked Language Modeling (MLM), prominently featured in BERT [12], involve obscuring parts of the text to challenge the model to infer the missing words using surrounding context. On the other hand, models like GPT leverage an autoregressive approach, predicting the next word in a sequence based on the words that precede it. This extensive pre-training phase allows LLMs to acquire a deep, nuanced understanding of language, facilitating their effectiveness across a broad spectrum of NLP tasks. The acquired knowledge enables these models to excel in applications ranging from text generation and summarization to question answering and translation, significantly advancing the field of AI and its capabilities in understanding and generating human language.

In the realm of LMMs, a significant trend is the integration of multiple modalities through end-to-end unified models. For example, MiniGPT-4 [76] leverages a pretrained and frozen ViT [98] alongside Q-Former and Vicuna LLMs [108], requiring only a linear projection layer for aligning vision and language modalities. Similarly, BLIP2 [79] introduces a dual-phase approach for vision-language modality alignment, starting with representation learning from a static visual encoder and progressing to vision-to-language generative learning facilitated by a static LLMs for zero-shot image-to-text tasks. Flamingo [15] further exemplifies this stream by utilizing gated cross attention mechanisms to merge inputs from a pre-trained visual encoder and an LLMs, effectively bridging the gap between visual and linguistic data.

2. Fine-Tuning

Instruction tuning of LLMs is a process designed to enhance models’ ability to comprehend and execute textual instructions. This method involves training LLMs on datasets comprised of instructional prompts paired with corresponding outputs, thereby teaching the models to follow explicit directives. Such an approach significantly improves the model versatility, enabling it to perform a broad array of tasks as directed by user inputs. A prime example of this is GPT-3 [27], which has undergone instruction tuning to better understand and respond to natural language instructions. This enhancement allows GPT-3 to generate text that is not only relevant and coherent but also aligned with the specific instructions provided, showcasing its improved capacity for tasks ranging from content creation to answering complex queries. The success of instruction tuning in GPT-3 highlights its potential to make LLMs more interactive and adaptable, marking a significant advancement in the field of artificial intelligence and NLP.

Building on the concept of instruction tuning [107] for NLP tasks [110,111] researchers have expanded the scope to include fine-tuning pre-trained LLMs with multimodal instructions. This advancement enables the transformation of LLMs into multimodal chatbots [76,16,112] and task solvers [113–115] with notable examples being MiniGPT-4, BLIP2, and Flamingo for chatbots, and other models such as LaVIN and LLaMA Adapter focusing on task solving. A critical aspect of enhancing these LMMs involves gathering data that follows multimodal instructions for finetuning [116]. To overcome challenges associated with data collection, strategies such as benchmark adaptation [117–119] self-instruction [120–122], and hybrid composition [123,115] have been adopted. Furthermore, to bridge the modality gap, a learnable interface connects different modalities from frozen pre-trained models, aiming for parameter-efficient tuning. For instance, LaVIN [123] and LLaMA Adapter [124] have introduced transformer-based and modality-mixing adapter modules, respectively, for efficient training. In contrast, expert models like VideoChatText [17] leverage specialized models such as Whisper [102] for speech recognition, converting multimodal inputs directly into language, thereby facilitating comprehension by subsequent LLMs.

3. Alignment

The concept of alignment pertains to the process of aligning the model outputs with human values, intentions, and ethical standards. This involves training methodologies and evaluation strategies designed to ensure that LLMs behave in ways that are beneficial and non-harmful. Wei et al. [125] introduce techniques for aligning LLMs through iterative processes involving human feedback, where models are fine-tuned based on evaluations of their outputs against desired ethical and moral criteria. Furthermore, Ouyang et al. [110] explore alignment through RLHF, a method where models are adjusted based on direct human input on the appropriateness and alignment of generated content. These processes aim to mitigate risks associated with LLMs generating biased, misleading, or harmful content, ensuring their utility and safety in real-world applications.

4. Prompting

Prompting techniques, in contrast to fine-tuning, offer a way to guide Mega LMMs using context or instructions without changing their parameters, reducing the need for vast multimodal datasets. This method is particularly useful for multimodal CoT tasks, allowing models to generate reasoning and answers from multimodal inputs. Examples include CoT-PT [126], which uses prompt tuning and visual biases for implicit reasoning, and Multimodal-CoT, employing a two-step process combining rationale generation and answer deduction. This approach also facilitates breaking down complex tasks into simpler sub-tasks through multimodal prompts [24,127], demonstrating the effectiveness and adaptability of prompting in multimodal learning.

2.5.2 Data Sources and Evaluation

1. Datasets for LLMs

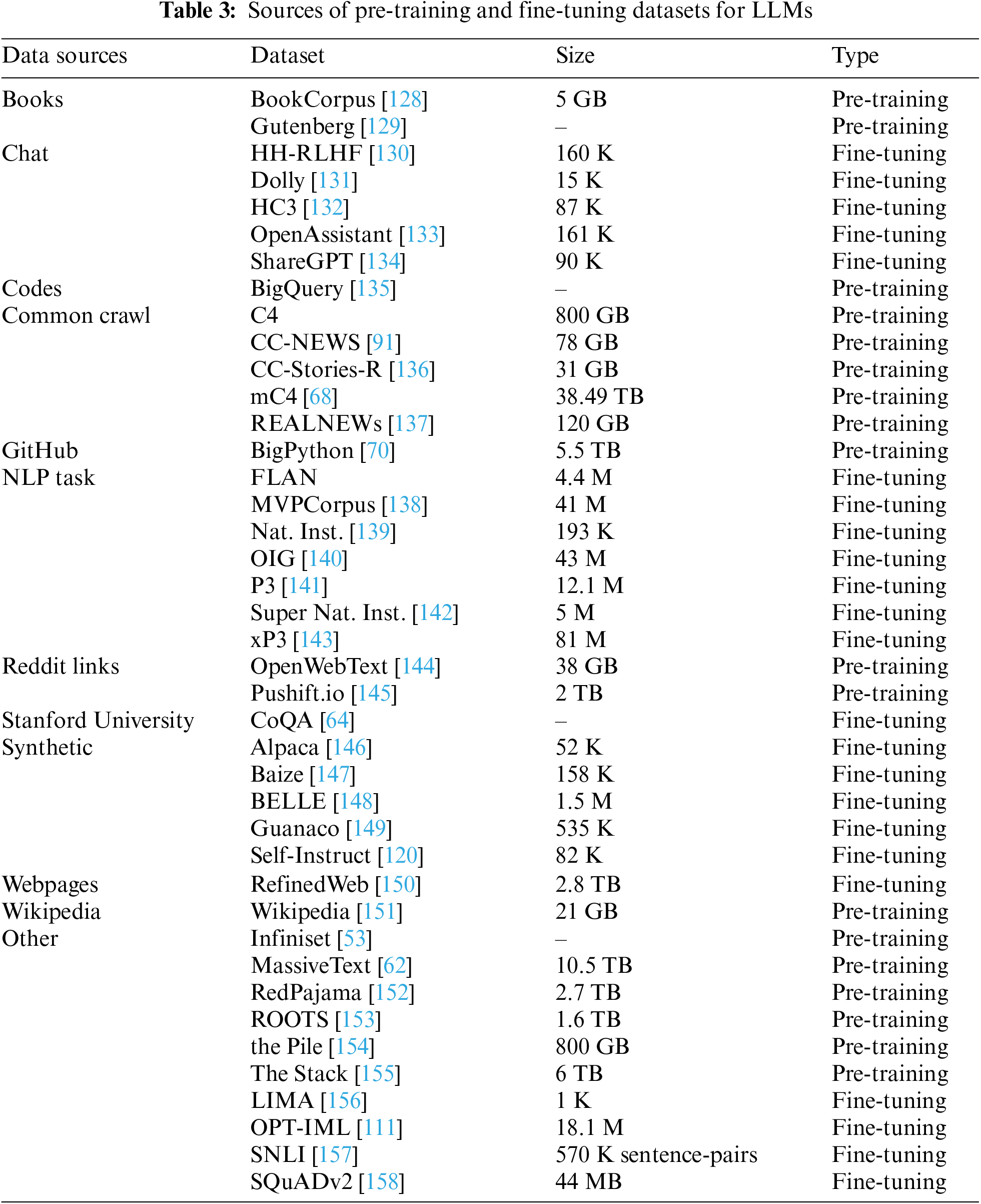

LLMs derive their prowess from meticulously curated datasets, which are essential for their pre-training and finetuning. The creation of such datasets is a demanding task, demanding both breadth and depth of high-quality data. Researchers have identified an array of key data sources integral for LLMs training, as summarized in Table 2. This selection includes literature, dialogues, code from GitHub, comprehensive common crawl data, domain-specific datasets from NLP tasks, academic content from Stanford University, and discussions from Reddit. Additionally, synthetic data and the expansive knowledge from Wikipedia are harnessed, providing LLMs with a rich, varied linguistic and conceptual landscape to learn from, thereby enhancing their applicability across a myriad of tasks. The specific attributes of these datasets are systematically detailed in Table 3.

Pre-Training Datasets: Pre-training datasets serve as the foundation for the development of LLMs, and their diversity is crucial for the comprehensive understanding these models achieve. For instance, the extensive common crawl dataset captures a wide snapshot of the web, enabling models to learn from a myriad of topics and writing styles. Similarly, literary works included in datasets provide nuanced language and complex narrative structures that aid in understanding more sophisticated language use. GitHub repositories contribute technical and programming language data, essential for specialized tasks as discussed by Zhao et al. [105]. Meanwhile, the Reddit dataset, with its conversational and often informal text, offers insights into colloquial language. These datasets, among others, are instrumental in pre-training LLMs, equipping them with the breadth of knowledge necessary to understand and generate humanlike text.

Instruction-Tuning Datasets: Following the pre-training phase, instruction tuning, also known as supervised finetuning, plays a crucial role in amplifying or eliciting particular competencies in LLMs. We delve into a selection of prominent datasets employed for instruction tuning, which we have organized into three principal categories according to how the instruction instances are formatted. These categories encompass datasets oriented towards NLP tasks, datasets derived from everyday conversational interactions, and artificially generated, or synthetic, datasets.

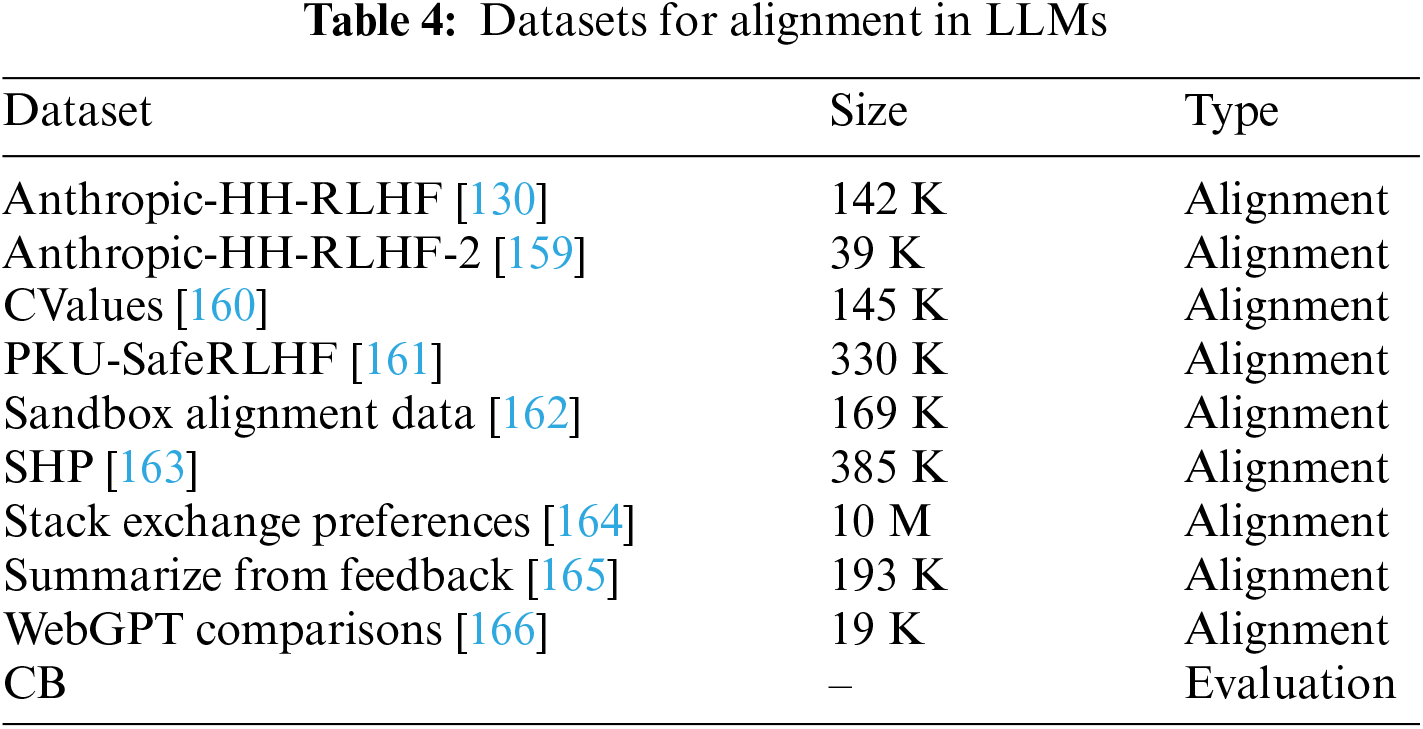

Alignment Datasets: Beyond instruction tuning, crafting datasets that ensure LLMs align with human ethical standards, such as helpfulness, truthfulness, and nonmaleficence, is crucial. This section presents a suite of key datasets employed for alignment tuning. The statistical table for the specific datasets used for pre-training data and instruction tuning data of LLMs can be found in Table 4.

2. Datasets for LMMs

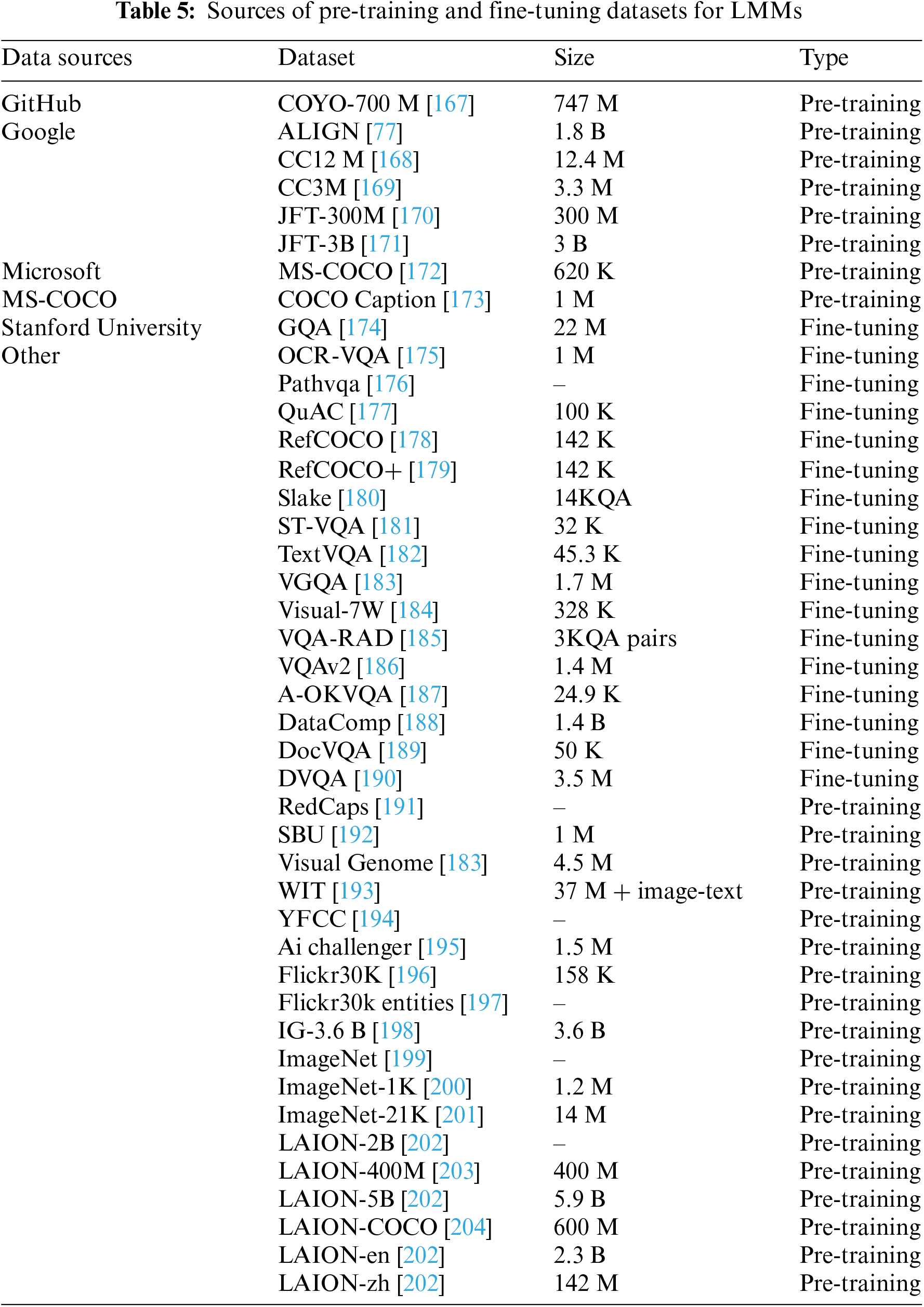

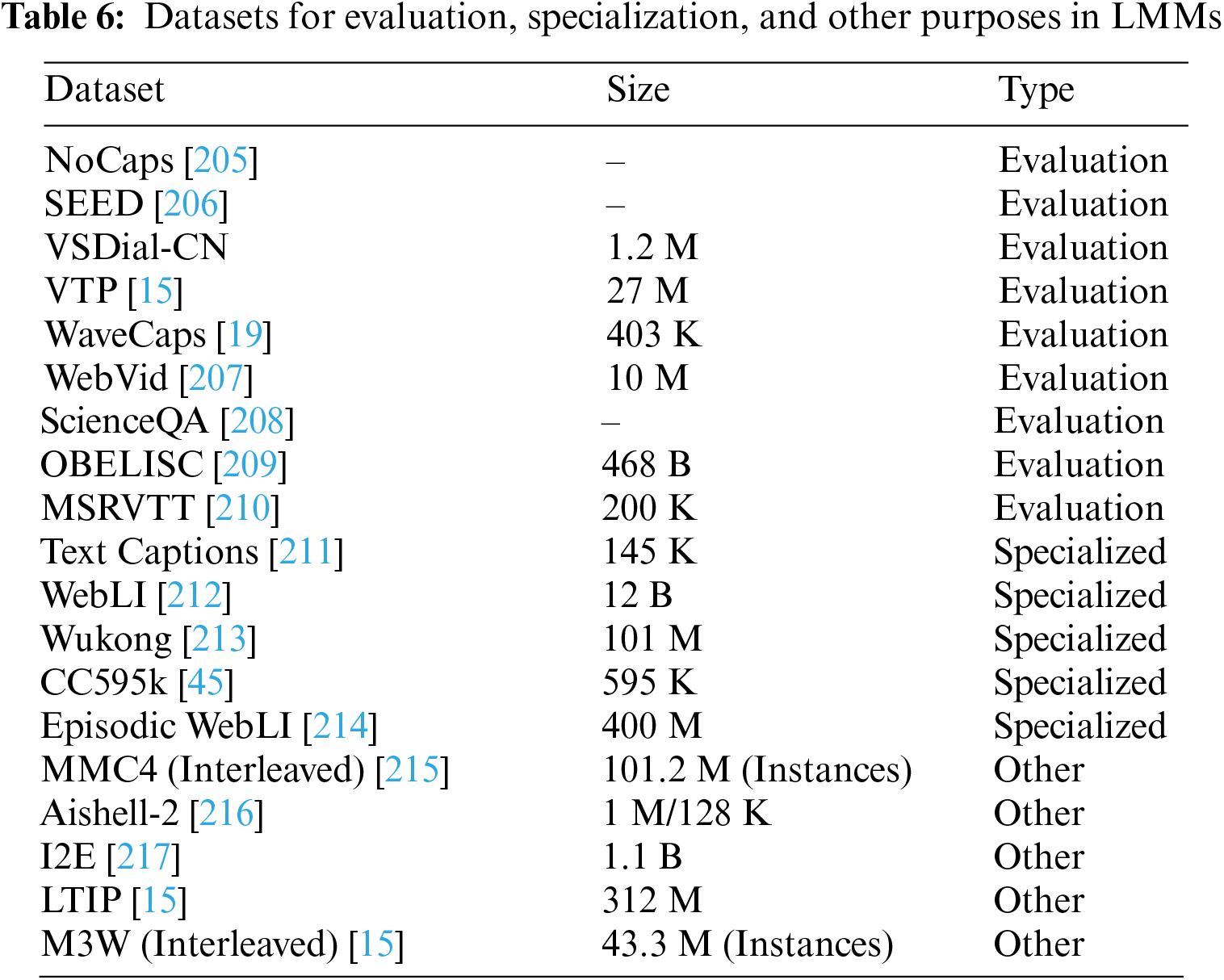

LMMs leverage vast and varied datasets for pre-training, encompassing image, text, and sometimes audio-visual content to understand and generate across modalities. Pretraining on datasets and BooksCorpus [128] for text allows LMMs to acquire foundational knowledge. Instruction tuning datasets then tailor these models for specific tasks; for instance, the visual question answering dataset guides models on how to respond accurately to queries about visual content. Such comprehensive training enables MM-LMs to perform complex tasks like image captioning and visual reasoning, bridging the gap between human and machine perception. Evaluating LMMs encompasses measuring their proficiency in tasks combining text and visual inputs. This involves specialized benchmarks, aiming to quantify the models understanding and generative capabilities across modalities. These evaluations are crucial for gauging how well MMLMs can mimic human-like understanding in diverse scenarios. For a detailed overview of the datasets employed in these evaluations, refer to Table 5 and Table 6.

Addressing diversity and mitigating potential biases in large datasets are essential tasks in the development of AI models. The following strategies are implemented to ensure that the data used does not perpetuate or amplify bias:

Fairness Metrics: Various fairness metrics are used to evaluate AI models to ensure they do not favor one group over another [218]. These metrics like ImageNet [219] for images help in understanding and quantifying any disparities in model performance across different groups defined by attributes like age, gender, ethnicity, etc.

Bias Detection and Mitigation: Specialized tools and methodologies are used to detect and quantify biases in datasets. Once identified, strategies such as re-sampling the data, weighting, or modifying the data processing techniques are employed to mitigate these biases.

Regular Audits: Periodic audits of the AI models and their training data help in identifying and addressing any emergent biases or issues in performance. These audits are crucial for maintaining the integrity and fairness of the model over time.

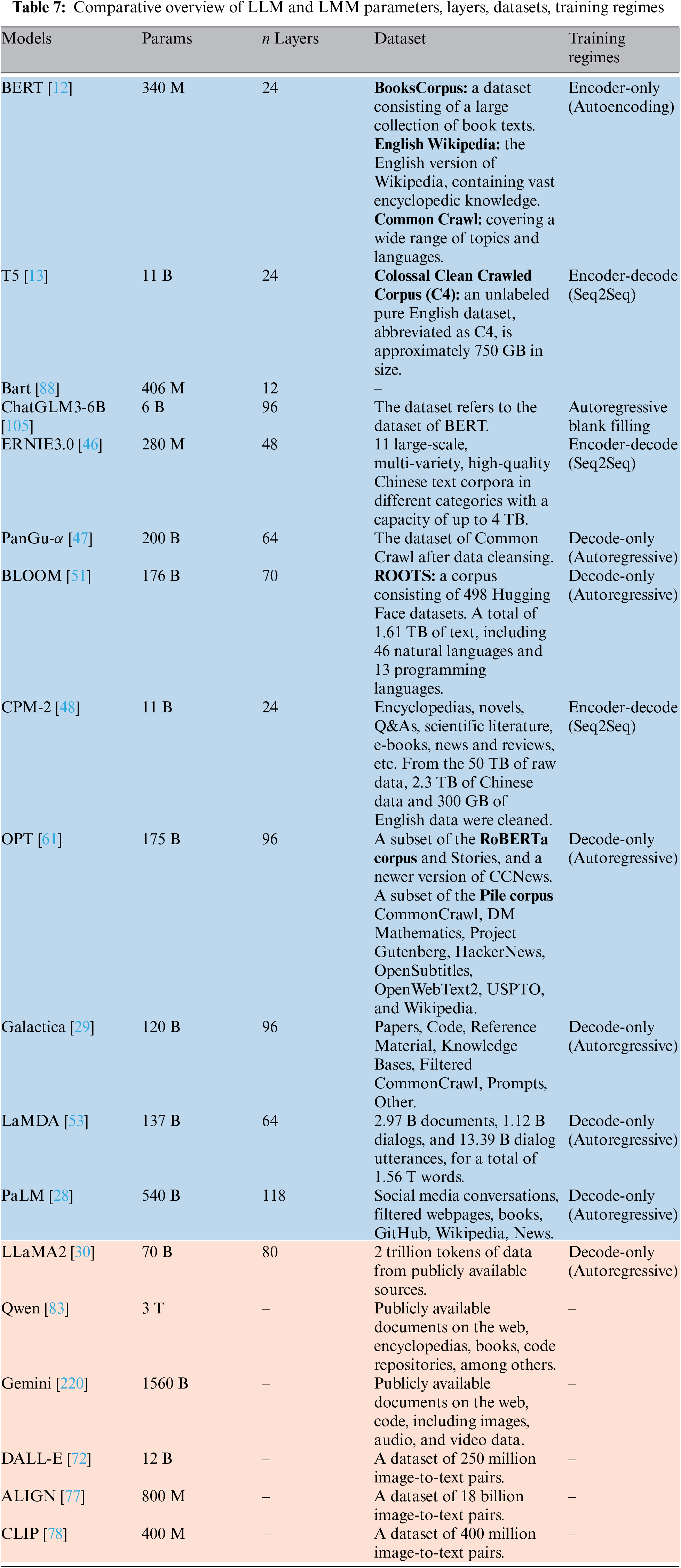

Each row within the Table 7 meticulously outlines the model’s name, parameter count, number of layers, dataset descriptions, and their respective training strategies, including autoencoding methods, autoregressive methods and sequence-to-sequence (Seq2Seq) encoding-decoding methods. A comparative overview of several large models, including parameter sizes, layers, datasets, and training regimes (the “-” indicates that for multimodal models, due to their unique architectures and methods of integrating various types of data, certain details such as the number of layers or training strategies are not readily classifiable or applicable, hence these fields are left blank). The blue bottom represents the LLMs, and the red bottom represents the LMMs. This comprehensive summary facilitates a deeper understanding of the diversity and scale of contemporary language models, as well as the complexities associated with their data processing and learning mechanisms.

In summary, dealing with diversity and potential bias in large datasets is a critical task in AI model development. To ensure that the data used does not convey or amplify these biases, the RD team uses a variety of strategies. First, they conduct a data review to identify and correct biased data. Second, enhance the diversity of the dataset by sourcing data from a variety of origins to guarantee equitable representation across different demographics. In addition, developers will use algorithm review and testing to ensure that the model’s decision-making process is fair and unbiased. These methods work together to help build more unbiased and reliable AI systems.

LLMs typically utilize deep neural network architectures, such as the Transformer architecture, which comprises a multi-layer self-attention mechanism and a feed-forward neural network. Each layer processes input data through encoding and decoding steps. The number of layers directly influences the model’s complexity and performance. For instance, GPT-3 [27], with its 175 billion parameters, features a 96-layer Transformer architecture. In contrast, multimodal models handle not only text data but also integrate diverse types of data like images, audio, and video. These models necessitate specialized layer structures to process and fuse multiple data modalities. For example, the CLIP [78] model combines images and text to learn cross-modal representations through parallel visual and text Transformers.

The parameter scales of both LLMs and LMMs are immense. Increasing parameter size generally enhances performance but also escalates the demand for computational resources and training complexity. Training LLMs typically depends on vast text datasets collected from the internet, including books, articles, and website content. For instance, BERT [12], which possesses 340 million parameters, utilize datasets like the BooksCorpus and English Wikipedia.

Similarly, LMMs often possess large parameter sizes due to the simultaneous processing and fusion of multiple data modalities. To be specific, LLaMA2 [30] boast significantly higher parameters, approximately 70 billion, harnessing an extensive amount of data—2 trillion tokens—from publicly available sources, and are trained using a decode-only approach.

LMMs require datasets that encompass various data types such as images and text. For instance, Gemini [220], which holds 1560 billion parameters and leverages public documents across diverse formats, including images and audio.

3. Evaluation

In the evaluation strategy of LLMs and LMMs, three pivotal methodologies have emerged to assess their performance and capabilities comprehensively: the benchmark-based approach, the human-based approach, and the model-based approach. Each of these methods offers distinct advantages and inherent limitations, often necessitating their combined application for a thorough appraisal of an LLMs’ and LMMs’ proficiency.

Benchmark-based approach: In evaluating LLMs, segmenting benchmarks into knowledge-centric and reasoning-centric categories. Knowledge benchmarks like MMLU [221] and CEval [222] are designed to measure the models’ grasp of factual information, while reasoning benchmarks such as GSM8K [223], BBH [224], and MATH [225] evaluate their ability to engage in complex problem-solving. The evaluation process entails generating responses from LLMs to structured prompts and then employing a set of rules to predict answers from these responses. The accuracy of the models is quantified by comparing these predictions to the correct answers. In the realm of LMMs, there has been a concerted effort to develop benchmarks tailored to their unique capabilities. Notably, Fu et al. [226] developed the MME benchmark, a suite that encompasses 14 distinct perceptual and cognitive tasks, with each instruction-answer pair meticulously crafted to prevent data leakage. Additionally, the LAMM-Benchmark [227] was introduced for the quantitative assessment of LLMs across a spectrum of 2D and 3D visual tasks. Video-ChatGPT [228] has also contributed to this space by presenting a framework for evaluating video-based conversational models, including assessments of video-based generative performance and zero-shot question-answering capabilities. These advancements in benchmarking are crucial for the thorough evaluation and continuous refinement of LLMs and LMMs.

Human-based approach: The human-based approach to evaluating LLMs is pivotal for assessing real-world applicability, including alignment with human values and tool manipulation. This method uses open-ended questions, with human evaluators judging the quality of LLM responses. Employing techniques like pairwise comparison and single-answer grading, evaluations can range from direct answer scoring in HELM [229] for tasks like summarization to comparative feedback in Chatbot Arena’s [230] crowdsourced conversations. This nuanced assessment is essential for tasks requiring humanlike judgment and creativity, providing a comprehensive view of LLMs capabilities. Evaluating LMMs as chatbots involves open-ended interactions, challenging traditional scoring methods. Assessment strategies include manual scoring by humans on specific performance dimensions. While manual scoring is insightful, it is labor-intensive.

Model-based approach: The model-based approach offers a promising solution to labor-intensive problems. GPT scoring, leveraging models such as GPT-4, is utilized to evaluate responses based on criteria like helpfulness and accuracy. However, this method encounters limitations due to the non-public availability of multimodal interfaces, potentially impacting the accuracy of performance benchmarks. Furthermore, case studies are conducted to provide a more detailed analysis, particularly beneficial for complex tasks requiring sophisticated human-like decision-making. In evaluating LLMs, additional models or algorithms are employed to assess their performance, revealing both intrinsic capabilities and shortcomings. To mitigate the high cost associated with human evaluation, surrogate LLMs like ChatGPT and GPT-4 are utilized. Platforms such as AlpacaEval [231] and MT-bench [232] employ these surrogate LLMs for comparative analysis. While these closed-source LLMs exhibit high concordance with human assessments, concerns persist regarding access and data security. Recent endeavors have concentrated on fine-tuning open-source LLMs, such as Vicuna [104], to function as evaluators, thereby narrowing the performance gap with proprietary models.

2.6 Emergent Abilities of LLMs

Wei et al. [233] studied the emergence abilities of large-scale language models, a phenomenon does not present in smaller models. As these models increase in size, they develop new, unpredictable capabilities that surpass the performance of smaller models, akin to phase transitions in physics [234]. While emergent abilities can be task-specific [62], the emphasis here is on versatile abilities that enhance performance across diverse tasks. This part introduces three principal emergent abilities identified in LLMs, alongside models that demonstrate such capabilities [235].

2.6.1 In-Context Learning (ICL)

ICL was notably defined in the context of GPT-3 [27], illustrating that when provided with natural language instructions and/or task demonstrations, the model can generate accurate outputs for test instances by completing input text sequences, without necessitating further training or adjustments. This capability, particularly pronounced in the GPT-3 model with 175 billion parameters, was not as evident in earlier iterations such as GPT-1 and GPT-2. However, the effectiveness of ICL varies with the nature of the task at hand. For instance, GPT-3, 13 billion parameter variant demonstrates proficiency in arithmetic tasks, like 3-digit addition and subtraction, whereas the more extensive 175 billion parameter model struggles with tasks like Persian question answering.

Instruction tuning, which involves fine-tuning LLMs with a diverse set of tasks described through natural language, has proven effective in enhancing the model’s ability to tackle novel tasks also presented in instructional form. This technique allows LLMs to understand and execute instructions for new tasks without relying on explicit examples [116,144,223], thereby broadening their generalization capabilities. Research indicates that the LaMDA-PT model [53], after undergoing instruction tuning, began to markedly surpass its untuned counterpart in performing unseen tasks at a threshold of 68 billion parameters, a benchmark not met by models sized 8 billion parameters or less. Further studies have identified that for PaLM [28] to excel across a range of tasks as measured by four evaluation benchmarks (namely MMLU, BBH, TyDiQA, and MGSM), a minimum model size of 62 billion parameters is necessary, although smaller models may still be adequate for more specific tasks, such as those in MMLU [107].

Zhou et al. [236] proposed a novel NLP technique, “From Simple to Complex Prompting”, which breaks down complex problems into simpler sub-problems, starting with the easiest and progressively tackling to more challenging ones. This method is particularly effective for complex issues, outperforming traditional methods. The CoT prompting strategy allows LLMs to address tasks through a prompting mechanism that incorporates intermediate reasoning steps towards the final solution [31,237].

This section embarks on an exploration of the yet unresolved complexities inherent in LLMs and LMMs. These advanced computational systems, while demonstrating unprecedented abilities in processing and generating human-like text and multimedia content, still grapple with significant challenges. We delve into the intricate nuances of these models, examining the limitations that hinder their full potential. Key areas of focus include the ongoing struggle with understanding and replicating nuanced human context, the management of inherent biases in training data, and the challenges in achieving true semantic understanding.

Contextual understanding is a hallmark capability of both LLMs and LMMs, revolutionizing the way information is processed and interpreted across various domains. LLMs excel in comprehending and generating text within specific contexts, discerning nuances and subtleties to produce coherent and contextually appropriate responses. Similarly, LMMs extend this prowess by incorporating diverse modalities such as images, audio, and text, allowing for a richer understanding of complex scenarios. Whether it’s analyzing textual documents or interpreting visual cues alongside linguistic context, both LLMs and LMMs demonstrate a remarkable ability to grasp and interpret the intricate interplay of contextual factors, thereby advancing research, problem-solving, and decision-making across diverse fields.

In the realm of advanced computational linguistics, both LLMs and LMMs for Matching encounter a critical challenge known as contextual limitations. Chen et al. [238] introduced Position Interpolation (PI) as a solution to the limitations posed by insufficient context window sizes in models. The essence of this approach lies in avoiding extrapolation. Instead, it focuses on reducing position indices by aligning the maximum position index with the context window upper limit, as set during pre-training. This alignment of the position index range and relative distances before and after expansion mitigates the effects of expanding the context window on attention score calculation. Consequently, it enhances the model adaptability while preserving the quality associated with the original context window size. Exploration of methodologies to enrich contextual reasoning capabilities is imperative, facilitating models to deduce implicit information and formulate nuanced predictions grounded in comprehensive contexts. This endeavor may entail delving into sophisticated techniques, such as integrating external knowledge reservoirs or harnessing multi- hop reasoning mechanisms.

This section discusses the often-encountered issues of ambiguity and vagueness in contextual understanding, analyzing relevant research and proposing strategies to address them. As an illustration, Chuang et al. [239] proposed a new decoding method called Decoding by Contrasting Layers (DoLa). This method seeks to enhance the extraction of factual knowledge embedded within LLMs without relying on external information retrieval or additional fine-tuning. Capitalizing on the observation that factual knowledge in LLMs is often confined to specific transformer layers, DoLa derives the next label distribution by comparing the logarithmic differences obtained through projecting the front and back layers into the vocabulary space. Concretely, it involves subtracting the logarithmic probability of the output from the mature layer from the output of the immature layer. This resultant distribution is then employed as the prediction for the next word, with the overarching goal of minimizing ambiguity and addressing other related challenges. As another important aspect, unlike enhancing the extraction of internal knowledge in LLMs, extracting valuable evidence from the external world allows for answering questions based on the gathered evidence [240–243]. Specially, LLM-Augmenter [244] are proposed to enhance the performance of LLMs. In contrast to a standalone LLM, it introduces a set of plug-and-play modules, enabling the LLMs to leverage external knowledge for generating more accurate and information-rich responses. The system continuously optimizes the LLMs prompts based on feedback generated by utility functions, enhancing the quality of the models’ responses. In a range of settings, including task-focused conversations and broad-spectrum query response systems, the LLM-Augmenter efficiently minimizes the generation of spurious outputs by the LLMs, all the while preserving the response coherence and richness of information. Furthermore, based on a multimodal LLMs framework, Qi et al. [245] introduced a systematic approach to probing multimodal LLMs using diverse prompts to understand how prompt content influences model comprehension. It aims to explore the model’s capability through different prompt inputs and assess contextual understanding abilities with a series of probing experiments. Existing research has explored the inconsistency between vision and language. For instance, Khattak et al. [246] proposed a novel method addressing the inconsistency between visual and language representations in pre-trained visual language models like CLIP. It enhances collaborative learning by integrating multimodal prompts into both vision and language branches, thereby aligning their outputs. The method employs cross-entropy loss for training and has been evaluated across 11 recognition datasets, consistently outperforming existing methods.

Future work on ambiguity and vagueness entails several pivotal avenues for advancement in natural language understanding [247]. Primarily, researchers aim to develop robust algorithms capable of effectively disambiguating ambiguous terms and resolving vague expressions within textual contexts. This involves exploring novel techniques such as context-aware word sense disambiguation and probabilistic modeling of vague language.

Additionally, further investigation is warranted to enhance the capacity of models to handle inherent ambiguities and vagueness in human language. This could involve the development of advanced machine learning approaches that integrate contextual information and domain knowledge to make more informed interpretations of ambiguous or vague statements.

Catastrophic forgetting in LLMs and LMMs is a critical challenge. It arises when these models, after being trained or fine-tuned on new data or tasks, tend to forget the knowledge they previously acquired. This issue occurs because the neural network weights, which are adjusted to improve performance on new tasks, might overwrite or weaken the weights essential for earlier tasks. This problem is especially acute in LLMs and LMMs due to their intricate structure and the vast variety of language data they process. It significantly hinders their ability to consistently perform across different tasks, particularly in dynamic settings that demand continuous learning and adaptation.

In particular, Mitra et al. [248] proposed a novel approach for improving the performance of LMMs in vision-language tasks, named Compositional Chain-of-Thought (CCoT) to address the issues of the forgetting of pre-training objectives. CCoT operates in two primary steps. First, a scene graph is generated using an LMM, which involves creating a structured representation of the visual scene. The second step involves using the generated scene graph as part of a prompt in conjunction with the original image and task prompt. Additionally, by incorporating scene graphs, CCoT allows for a more organized and comprehensive processing of visual information. In order to overcome the same problem in the field of image reasoning, BenchLMM [249] is first utilized to assess the performance of LMMs across various visual styles, addressing the issue of performance degradation under non-standard visual effects. After image processing, PixelLM [250] excels in creating detailed object masks, addressing a key shortfall in multimodal systems. Its core includes a novel lightweight pixel decoder and segmentation codebooks, streamlining the transformation of visual features into precise masks. This innovation enhances task efficiency and applicability in areas like image editing and autonomous driving. Additionally, the mechanism for target refinement loss in the model improves discrimination of overlapping objectives, thus refining mask quality.

Besides the above methods, Liu et al. [251] introduced the DEJAVU system to improve the efficiency of LLMs during inference, addressing the high computational cost issue without sacrificing contextual learning abilities. Unlike existing methods that require costly retraining or reduce LLMs contextual capabilities, DEJAVU dynamically forecasts contextual sparsity based on input data for each layer, combined with asynchronous processing hardware implementation. This approach significantly reduces inference latency, outperforming prevalent systems like FasterTransformer and hugging face implementations.

Future research on catastrophic forgetting encompasses several critical areas aimed at mitigating this phenomenon and enhancing the robustness of neural networks in continual learning scenarios. Researchers are exploring methods to design neural architectures that are more resistant to catastrophic forgetting, such as incorporating mechanisms to selectively retain important information from previous tasks while learning new ones.

To be specific, there is a need to develop more effective rehearsal-based learning techniques, where models actively revisit and train on past data to prevent forgetting. This may involve investigating strategies for prioritizing and sampling past experiences in a way that maximally benefits learning on new tasks.

3.2 Hallucination Correction and Cognitive Ability Evaluation

LLMs and LMMs, while being marvels of modern computational linguistics, are not exempt from an intriguing phenomenon known as “hallucinations”—where outputs generated by the model are either factually incorrect or nonsensical. This phenomenon primarily arises from several core issues. First and foremost, the quality and scope of the training data play a pivotal role. Secondly, the model limitations in understanding context led to hallucinations. Additionally, the challenge of reasoning and common sense is also apparent. LLMs, adept at pattern recognition and language generation, sometimes falter in tasks requiring logical reasoning or common-sense knowledge, resulting in responses that seem plausible but are fundamentally flawed. Another contributing factor is the inherent limitations of the model architectures and algorithms.

3.2.1 Corrective Methods and Evaluation

In the evolving landscape of artificial intelligence, the phenomena of “hallucination” in LLMs and LMMs present a unique set of challenges and opportunities. This section of the paper delves into the intricate world of hallucination correction within these advanced AI systems. It explores the mechanisms through which these models occasionally generate misleading or factually incorrect information, often in response to complex or ambiguous prompts. The focus then shifts to the evaluation of cognitive abilities in AI, scrutinizing how these systems understand, process, and respond to diverse information. By dissecting the underpinnings of hallucination and assessing the cognitive competencies of these models, this paper aims to shed light on the path forward in refining AI for more accurate, reliable, and contextually aware responses.

In terms of zero resource illusion recognition, SelfCheckGPT [252] is proposed to achieve zero-resource black-box hallucination identification within generative LLMs. The fundamental principle asserts that a language model, once it comprehends a specific concept, is expected to produce responses through random sampling. These responses should not only resemble each other but also uphold consistent truths.

Conversely, for hallucinated content, randomly selected replies are prone to divergence and contradictions. The research findings indicate that SelfCheckGPT effectively detects both non-factual and factual sentences and ranks the authenticity of the content. Compared to gray-box methods, this approach demonstrates superior performance in sentence-level hallucination detection and paragraph-level authenticity assessment.

Furthermore, Friel et al. [253] proposed the innovative Chain-Poll methodology and the RealHall benchmark suite as powerful tools for evaluating and solving the hallucinogen difficulty in LLMs outcomes, making a comprehensive and impactful contribution to the field of hallucinogen detection in LLM-generated texts. To be specific, the RealHall benchmark suite has been designed to address the limitations of previous hallucination detection efforts. ChainPoll is designed to detect both open and closed domain hallucinations, thus demonstrating its versatility. Performance tests conducted in this thesis show that ChainPoll outperforms a range of published alternatives, including SelfCheckGPT [252], GPTScore [254], G-Eval [255], and TRUE [256]. ChainPoll proves to be not only more accurate, but also faster, more cost-effective, and equally good at detecting both open and closed domain illusions.