Open Access

ARTICLE

A Novel Dynamic Residual Self-Attention Transfer Adaptive Learning Fusion Approach for Brain Tumor Diagnosis

1 Electrical Engineering Department, Faculty of Engineering at Rabigh, King Abdulaziz University, Jeddah, 21981, Saudi Arabia

2 Electrical Engineering Department, College of Engineering, Northern Border University, Arar, 91431, Saudi Arabia

* Corresponding Author: Ahmed A. Alsheikhy. Email:

(This article belongs to the Special Issue: Advancements in Machine Learning and Artificial Intelligence for Pattern Detection and Predictive Analytics in Healthcare)

Computers, Materials & Continua 2025, 82(3), 4161-4179. https://doi.org/10.32604/cmc.2025.061497

Received 26 November 2024; Accepted 31 January 2025; Issue published 06 March 2025

Abstract

A healthy brain is vital to every person since the brain controls every movement and emotion. Sometimes, brain cells grow abnormally and become uncontrollable and cancerous; these cancerous growths are called brain tumors. The lives of diagnosed patients depend largely on early diagnosis, which enables suitable treatment plans. Nowadays, physicians and radiologists rely on Magnetic Resonance Imaging (MRI) scans for their clinical evaluations of brain tumors. These evaluations are time-consuming and expensive, and they require highly skilled experts to provide an accurate diagnosis. Scholars and industry have recently partnered to implement automatic solutions that diagnose the disease with high accuracy, and because of their accuracy, deep-learning (DL) methodologies underpin several of these solutions. These techniques have become important because of their role in the diagnosis process, which includes identification and classification. Therefore, there is a need for a solid and robust deep-learning-based approach to diagnose brain tumors. The purpose of this study is to develop an intelligent automatic framework for brain tumor diagnosis. The proposed solution is based on a novel dense dynamic residual self-attention transfer adaptive learning fusion approach (NDDRSATALFA), built on two implemented deep-learning networks, VGG19 and UNET, to identify and classify brain tumors. In addition, this solution applies a transfer learning approach to exchange extracted features and data between the two neural networks. The presented framework is trained, validated, and tested on six public MRI datasets to detect brain tumors and categorize them into three classes: glioma, meningioma, and pituitary. The proposed framework yielded remarkable results on various performance indicators: 99.32% accuracy, 98.74% sensitivity, 98.89% specificity, 99.01% Dice, 98.93% Area Under the Curve (AUC), and 99.81% F1-score.
In addition, a comparative analysis with recent state-of-the-art methods was performed, and it shows that NDDRSATALFA offers a high level of reliability for the timely identification of diverse brain tumors. Moreover, healthcare providers can apply this framework to assist radiologists, pathologists, and physicians in their evaluations. The attained outcomes open doors for advanced automatic solutions that improve clinical evaluations and support reasonable treatment plans.

Various illnesses, such as cancer, can pose serious risks to our lives [1]. Physicians use the term cancer for any uncontrolled growth of cells inside any part of the body [2–5]. In the United States, medical reports expected nearly 2 million new cancer cases in 2024 [1], with an estimated number of cancer deaths exceeding 600,000 [1]. In 2023, reports estimated that more than 94,000 new cases of brain tumors would be diagnosed in the United States [1]. Physicians and pathologists have classified identified brain tumors into four main groups: Benign, Glioma, Meningioma, and Pituitary [6–10]. The last three types are known as Malignant due to their growth and effect on health [11–14]. The benign type normally grows more slowly than the other types. In addition, location, size, and stage of development are considered the key factors in determining which group an identified potential tumor mass belongs to [14–18].

Brain tumors have a substantial impact on an individual's life since the brain controls the nerves, the spinal cord, and movement. Pituitary tumors occur within the pituitary gland, which is located at the center of the brain [2–6]. These tumors affect hormone production, cause severe headaches, and disrupt balance [16,18–20]. Glioma tumors arise in the glial cells, which is why they are called gliomas [2]. They range from low-grade to highly aggressive tumors and affect neurons [2]. In addition, the Glioma type includes several subtypes: astrocytomas, oligodendrogliomas, and ependymomas [2]. Meningioma arises from the protective layers around the brain and the spinal cord. Initially, this type is benign; over time, it becomes Malignant when the affected tissues and cells put excessive pressure on adjacent ones [2]. Typical treatment plans for these tumors include surgery, chemotherapy, and radiation [2]. Fig. 1 illustrates the three main types of Malignant brain tumors found in [2]: Fig. 1a represents the Pituitary tumor, Fig. 1b the Meningioma tumor, and Fig. 1c the Glioma tumor.

Figure 1: The main Malignant types of brain tumors

Physicians have identified various symptoms in patients who were positively diagnosed with brain tumors. However, these symptoms vary from one patient to another, and they can also accompany other diseases [2]. The detected symptoms of brain tumors are 1) headaches, which are the symptom shared by all diagnosed patients, although no specific headache pattern confirms that the disease is a brain tumor [19–22]; 2) seizures, which occur in patients before a positive diagnosis, although seizures can also result from high fever, trauma, stroke, and epilepsy; 3) nausea and vomiting; 4) hearing and vision issues; 5) a strange feeling in the head; 6) weakness in the arms and legs; and 7) neuromuscular problems and cognitive changes, such as memory problems, an inability to focus, or difficulty finding the right words. Pathologists and physicians use numerous techniques to diagnose brain tumors, and these methods are either invasive or non-invasive [2–5,23–26]. MRI scans, positron emission tomography, and computed tomography are the most common methods due to their safety for patients; they are non-invasive, while a biopsy is invasive. MRI scans provide rich and valuable information regarding the location, shape, size, and development of brain tumors. However, manual interpretation requires high skill and expertise, and it is time-consuming and prone to errors [2–4]. MRI is an essential technique since it gives valuable insights into the anatomy and pathology of the brain. Unfortunately, interpreting MRI data for brain tumor identification poses substantial challenges because high skill is needed for an accurate diagnosis. Advances in the use of medical images have started a revolution in brain tumor diagnosis, specifically in tumor identification.
It is critical to identify brain tumors early to combat them and plan treatment. For the challenges and reasons stated earlier, there is a strong need for new solutions that enable early detection and improve decision-making in the medical field. Artificial intelligence (AI) solutions, specifically deep-learning-based ones, have recently gained considerable attention because of their high performance and accuracy in diagnosing different diseases. Various AI-based methods have been developed that noticeably improve the detection and categorization of brain tumors, which makes these approaches suitable for analyzing complex patterns in tumor data that may be hidden to the human eye. Moreover, AI solutions learn from extracted characteristics and patterns to make better predictions [2,3].

Applying AI techniques to brain tumor identification and classification is still an emerging area of interest, despite the ongoing revolution in the medical field. Because tumors can vary widely in appearance within the brain, there is a significant need for a practical approach that is both accurate and cost-effective. Therefore, this study proposes an AI-based solution to identify and classify brain tumors properly and effectively. This solution is a novel dense dynamic residual self-attention transfer adaptive learning fusion approach (NDDRSATALFA). The transfer learning approach is applied to tune and refine the extracted characteristics. The proposed method identifies tumor masses and classifies them into four types: benign, pituitary, glioma, and meningioma.

1.2 Motivations and Contributions

The Government of Saudi Arabia has launched a program called Saudi Vision 2030, which is based on three main pillars: the economy, society, and the nation [27]. This program aims to diversify the economy, culture, and society [27]. Saudi Vision 2030 includes twelve initiatives, one of which is dedicated to the health sector: the Health Transformation Program [27]. The objectives of this program are to drive the digital transformation of the health sector, deploy digital health solutions at healthcare providers, and improve the quality of life for all people in the country. This study stems from the need for accurate and highly dependable digital solutions and aims to contribute to this promising vision. It presents an innovative framework that surpasses other state-of-the-art methods in brain tumor detection and classification. The key contributions of this research are as follows:

A. Developing an automatic framework, NDDRSATALFA, for brain tumor detection and classification.

B. Implementing two dedicated deep-learning neural networks, VGG19 and UNET, and applying a transfer learning module to both networks.

C. Implementing a novel dense dynamic residual self-attention network (NDDRSAN) to support both neural networks and achieve high performance.

D. Improving the sensitivity of identifying tumor masses at an early stage in terms of their locations and shapes.

E. Enhancing the capability to detect masses despite their various appearances using the dedicated NDDRSAN module.

F. Providing a framework that generalizes to the categorization of tumor masses.

The rest of this paper is structured as follows: the literature review is presented in Section 1.3, Section 2 explains the proposed approach in detail, Section 3 presents the performance evaluation and discussion, and Section 4 concludes the paper.

Recently, deep-learning methods have achieved remarkable performance in brain tumor segmentation, identification, and classification, improving accuracy and reducing manual errors. Motivated by these results, researchers from different disciplines have further explored this area. This subsection reviews state-of-the-art works that segment, recognize, and categorize brain tumors using deep-learning approaches.

Pacal et al. in [1] presented a new method to classify brain tumors based on the EfficientNetV2 architecture, a global attention module (GAM), efficient channel attention (ECA), and Grad-CAM visualization. The objectives of this solution were to categorize brain tumors, improve the model's ability to focus on salient characteristics within complex MRI scans, and increase accuracy. The authors evaluated their solution on a large public dataset and achieved a remarkable 99.76% accuracy. This dataset was obtained from Kaggle and contained 7023 MRI scans of healthy subjects and patients with brain tumors, covering four classes: Pituitary tumor, Glioma, Meningioma, and no tumor. The developed model was divided into eight stages with different numbers of layers: the first and last stages had one layer each, the second stage contained two layers, the next two stages had three layers each, the fifth stage included four layers, and the sixth and seventh stages contained six and twelve layers, respectively. The MRI scans were resized from 512 × 512 pixels to 224 × 224 pixels to reduce computation, minimize resource allocation, and shorten execution times. In addition, various data augmentation methods were applied to avoid overfitting and make the model more robust. The model was evaluated using four performance indicators: accuracy, precision, sensitivity, and F1-score. The authors trained, validated, and tested their approach on a Linux machine with an Intel Core i9-9900X processor at 3.50 GHz, 11 GB of RAM, and 4352 CUDA cores. PyTorch was the deep-learning framework used in [1]. The authors identified two limitations of their model: the size and diversity of the applied dataset, and the massive computational resources needed to achieve good results. Readers can refer to [1] for additional information.
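To make the channel-attention idea in [1] concrete, the following is a minimal NumPy sketch of the generic ECA operation: global average pooling per channel, a 1D convolution across neighbouring channels, and a sigmoid gate that rescales each channel. It is not the code of [1]; the kernel size of 3 and its weights are illustrative assumptions (in a trained network they are learned), and GAM, EfficientNetV2, and Grad-CAM are not shown.

```python
import numpy as np


def eca(feature_map: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Efficient channel attention (ECA) on a (C, H, W) feature map.

    `kernel` holds odd-length 1D weights shared by all channels;
    in a trained network these weights are learned (assumed fixed here).
    """
    channels = feature_map.shape[0]
    k = kernel.shape[0]
    # 1) Global average pooling: one descriptor per channel, shape (C,)
    descriptor = feature_map.mean(axis=(1, 2))
    # 2) 1D convolution across neighbouring channels (zero padding keeps length C)
    padded = np.pad(descriptor, k // 2)
    mixed = np.array([padded[i:i + k] @ kernel for i in range(channels)])
    # 3) Sigmoid gate in (0, 1) for every channel
    gates = 1.0 / (1.0 + np.exp(-mixed))
    # 4) Rescale each channel by its gate
    return feature_map * gates[:, None, None]


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 4, 4))  # 8 channels on a 4 x 4 spatial grid
out = eca(x, kernel=np.array([0.2, 0.5, 0.3]))
print(out.shape)  # (8, 4, 4)
```

Because every gate lies strictly between 0 and 1, ECA can only attenuate channels relative to one another; it adds just k parameters instead of a fully connected bottleneck.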

In [2], the authors introduced a deep-learning-based model to classify brain tumors using convolutional neural networks (CNNs). This model had a sequential CNN structure of convolutional, max-pooling, dropout, and dense layers. The model reached 98% accuracy on the testing set, with an F1-score between 97% and 98%. The dataset was collected from three sources, Figshare, SARTAJ, and Br35H, and all images were MRI scans. As in [1], the dataset had four classes of MRI scans: Pituitary tumor, Glioma, Meningioma, and no tumor. The authors split the MRI scans into training, validation, and testing sets. Each scan was rescaled to 150 × 150 pixels so that all inputs had the same spatial dimensions and was converted from RGB to grayscale. This conversion reduced the number of channels from three to one, lowering the computational complexity and the required resources. In addition, some augmentation methods were used to avoid overfitting and increase the robustness of the system's predictions. The approach had some limitations: the need for high computational resources, as reported by the authors, dependency on the applied dataset, and performance that varied when different modalities were used.

Musthafa et al. in [3] presented a deep-learning-based approach using ResNet50 and the Grad-CAM tool to identify brain tumors. A dataset of MRI scans was used for training, validation, and testing. This dataset was downloaded from Kaggle and contained 253 MRI scans in different formats, labeled as tumor or no tumor; no information about specific tumor types was provided. The authors achieved 98.52% accuracy. The method used augmentation to avoid overfitting, which increased the number of MRI scans from 253 to more than 1500 images. ResNet50, which contains 50 layers, was trained in Python for 10 epochs with a batch size of 16 and a dynamic learning rate. Readers can refer to [3] for additional information.

Raza et al. in [4] presented a new method based on neural networks and a stacking algorithm to classify brain tumors. The method applied various CNNs to T1 MRI scans to extract features and fed them into a single-layer stacking algorithm, which combined different classifiers to produce predictions. Two public datasets were used to test the approach and evaluate its performance. The authors used nine deep-learning architectures: VGG16, VGG19, DenseNet121, InceptionV3, MobileNet, NASNetMobile, MobileNetV2, DenseNet169, and InceptionResNetV2; the classifiers were K-Nearest Neighbors (KNN), NuSVC, AdaBoost, SVM, Random Forest (RF), XGBoost, LightGBM, and Multilayer Perceptron (MLP). In addition, principal component analysis (PCA) was used to reduce the dimensions of the obtained feature maps. The method started by cropping unneeded regions and resizing inputs to 299 × 299 pixels for InceptionV3 and to 224 × 224 pixels for the remaining networks. Data augmentation was applied to one dataset only to avoid overfitting, increasing its number of MRI scans from 253 to 977. The augmentation methods were left and right mirroring, flipping an image around its horizontal and vertical axes, rotating the image by 15 degrees, and adding noise. The method extracted nearly 2000 features from both datasets and reduced their dimensions with PCA. This approach attained 96.69% average accuracy. Unfortunately, the datasets provided only two labels: tumor or no tumor.
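The PCA step used in [4] to shrink roughly 2000 deep features can be sketched with a singular value decomposition. This is a minimal illustration under stated assumptions: random numbers stand in for CNN features, the choice of 50 retained components is hypothetical (the number used in [4] is not given here), and the stacked classifiers are not shown.

```python
import numpy as np


def pca_reduce(features: np.ndarray, n_components: int) -> np.ndarray:
    """Project an (n_samples, n_features) matrix onto its top principal components."""
    # Centre each feature so that the principal directions pass through the mean
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(42)
deep_features = rng.standard_normal((120, 2000))  # stand-in: 120 scans x 2000 CNN features
reduced = pca_reduce(deep_features, n_components=50)  # 50 components is an assumption
print(reduced.shape)  # (120, 50)
```

The projected columns are uncorrelated and ordered by decreasing variance, so the classifiers downstream receive the most informative directions first.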

The authors in [5] implemented an approach based on an improved fuzzy factor fuzzy local information C-means (IFF-FLICM) algorithm, a modified harmony search and sine cosine algorithm (MHS-SCA), and an extreme learning machine (ELM) to identify brain tumors in MRI scans and classify them into suitable groups. A single dataset of 255 MRI scans was used to train and test the method. It attained 98.78%, 99.23%, and 99.12% sensitivity, specificity, and accuracy, respectively, with a run time of 23,248.7 s. This approach categorized tumor masses as either Benign or Malignant. Initially, all MRI scans were resized to a suitable size, and features were extracted using a Mexican hat wavelet feature extractor. The resulting weights were adjusted and optimized by the developed MHS-SCA method over 1000 iterations. Unfortunately, the method was tested only on a small dataset, so there is no guarantee that it would perform as well on a larger one. In addition, it cannot classify Malignant tumors into their actual types, and its execution time is very long, as stated earlier. Interested readers are encouraged to read [5] for additional information.
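For readers unfamiliar with the Mexican hat wavelet mentioned in [5], the sketch below evaluates its standard 1D (Ricker) form and samples it into a discrete filter. The scale σ = 1 and the sampling grid are assumptions, since the parameters used in [5] are not stated here; image feature extraction would typically use a 2D variant, which is not shown.

```python
import math


def mexican_hat(t: float, sigma: float = 1.0) -> float:
    """Mexican hat (Ricker) wavelet: normalized negative second derivative of a Gaussian."""
    norm = 2.0 / (math.sqrt(3.0 * sigma) * math.pi ** 0.25)
    ratio = (t / sigma) ** 2
    return norm * (1.0 - ratio) * math.exp(-ratio / 2.0)


# Sample a discrete 1D filter on [-5, 5] with step 0.1 (sigma = 1, assumed)
step = 0.1
kernel = [mexican_hat(i * step) for i in range(-50, 51)]
print(kernel[50])  # peak value at t = 0
```

The wavelet is positive near the centre, crosses zero at t = ±σ, and has negative side lobes, so its samples sum to approximately zero; filtering with it responds to blob-like intensity changes rather than to uniform brightness.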

Alsheikhy et al. in [13] presented a deep-learning-based approach using VGG19, UNET, and several tools to recognize brain tumors and label them as Benign or Malignant. The applied tools were 1) an image segmentation technique, 2) a Support Vector Machine (SVM), 3) a Discrete Wavelet Transform (DWT), and 4) Principal Component Analysis (PCA). The authors evaluated their method on a single publicly available dataset from Kaggle and reached 99.2% accuracy. The method was tested on the MATLAB platform. Readers can refer to [13] for additional information.

This study investigates a new knowledge-based framework to diagnose brain tumors. The framework mainly uses two newly implemented deep-learning neural networks along with other methodologies, and six public datasets are applied to it. This section is split into several subsections: the next subsection provides brief background on the self-attention network and the novel residual self-attention network, followed by a detailed explanation of the presented framework.

The Self-Attention Network Scheme (SANS) has been widely deployed in various fields, such as medicine [28]. This scheme has achieved remarkable performance in image segmentation and classification, which is why it is used in this research. Deploying SANS in this study improves attention to the significant characteristics, which in turn enhances the framework's performance. SANS works on three input elements: Query (Q), Key (K), and Value (V). The similarity between each pair of Q and K is computed using the Cosine Similarity Method (CSM) [28], $\cos(q, k) = \frac{q \cdot k}{\lVert q \rVert \lVert k \rVert}$. CSM generates values in the range [−1, 1], where 1 indicates the highest similarity, 0 indicates no similarity, and −1 indicates opposite vectors. Fig. 2 demonstrates a general block diagram of SANS.
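The following minimal NumPy sketch shows self-attention whose query-key scores are cosine similarities, as described for SANS. The text does not say how similarities are turned into weights, so the row-wise softmax and the temperature of 0.1 are assumptions, and the random projection matrices stand in for learned ones. Note that the standard Transformer formulation uses scaled dot products rather than cosine similarity.

```python
import numpy as np


def cosine_self_attention(x, w_q, w_k, w_v, temperature=0.1):
    """Self-attention in which Q-K scores are cosine similarities in [-1, 1].

    x: (n_tokens, d_model); w_q, w_k, w_v: (d_model, d_head) projection matrices.
    Returns the attended values, the similarity matrix, and the attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # L2-normalise rows so that the dot product q_i . k_j equals cos(q_i, k_j)
    q_hat = q / np.linalg.norm(q, axis=1, keepdims=True)
    k_hat = k / np.linalg.norm(k, axis=1, keepdims=True)
    similarity = q_hat @ k_hat.T  # (n_tokens, n_tokens)
    # Row-wise softmax (assumed) turns similarities into weights that sum to 1
    logits = similarity / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, similarity, weights


rng = np.random.default_rng(0)
x = rng.standard_normal((6, 16))  # 6 feature vectors ("tokens")
w_q, w_k, w_v = (rng.standard_normal((16, 8)) for _ in range(3))
out, sim, attn = cosine_self_attention(x, w_q, w_k, w_v)
print(out.shape)  # (6, 8)
```

Because cosine scores are bounded, the temperature controls how sharply attention concentrates on the most similar keys.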

Figure 2: The typical structure of SANS

In this research, a new Residual SANS, abbreviated as RSANS, is implemented. Residual means that two types of learning exist: global and local. Global Residual Learning (GRL) learns only the residual between the inputs and outputs, while Local Residual Learning (LRL) represents residual learning applied in a stacked scheme. This scheme aims to learn as much information as possible, so the proposed model learns rich contextual and texture details of the processed data. Unlike the typical SANS with three components, RSANS encompasses five: 1) Query (Q), 2) Key (K), 3) Value (V), 4) Location (L), and 5) Code (C). The first three elements are as in the regular scheme, where Q represents the detected potentially infected cells, K denotes our findings, and V refers to the numerical representations of these detected cells. The fourth component (L) denotes the potential locations of tumor masses in the segmented images and takes a value from 1 to 4, where 1 denotes the upper left, 2 the upper right, 3 the bottom right, and 4 the remaining part. These values are passed from the segmentation network. The last element (C) holds the value(s) of the four subregions, where 1 denotes the upper right, 2 the upper left, 3 the bottom left, and 4 the bottom right. Fig. 3 depicts the internal architecture of the implemented RSANS module, which is located between the two U-shaped networks; GAP stands for Global Average Pooling.
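The Location (L) and Code (C) elements can be illustrated with a small helper that maps a tumor mass to both numberings. This is a hypothetical sketch: the helper names are illustrative, and it assumes the quadrant is decided by the centroid of a segmented mass relative to the image midlines and that "the remaining part" of the L numbering is the bottom-left quadrant; the text does not specify how the segmentation network assigns these values.

```python
def quadrant(row: float, col: float, height: int, width: int) -> str:
    """Quadrant containing a point, splitting the image at its midlines (assumed)."""
    vertical = "upper" if row < height / 2 else "bottom"
    horizontal = "left" if col < width / 2 else "right"
    return f"{vertical} {horizontal}"


# Numbering of the Location (L) element as described for RSANS;
# "the remaining part" is interpreted here as the bottom-left quadrant.
LOCATION_CODE = {"upper left": 1, "upper right": 2, "bottom right": 3, "bottom left": 4}
# Numbering of the Code (C) element, which the text orders differently.
SUBREGION_CODE = {"upper right": 1, "upper left": 2, "bottom left": 3, "bottom right": 4}


def encode_mass(centroid, image_shape):
    """Return hypothetical (L, C) values for a tumor mass with centroid (row, col)."""
    q = quadrant(*centroid, *image_shape)
    return LOCATION_CODE[q], SUBREGION_CODE[q]


# A mass centred at row 40, column 180 of a 224 x 224 scan lies in the upper right
print(encode_mass((40, 180), (224, 224)))  # (2, 1)
```

Keeping the two numberings in separate lookup tables makes it explicit that the same quadrant receives different values in L and C.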

Figure 3: The architecture of the designed RSANS module

The proposed framework is implemented in several stages to make full use of the deep-learning process, which improves the accuracy of its predictions. These stages comprise dataset preparation, data preprocessing, deep-learning-based feature extraction, and, lastly, evaluation of the framework's performance using various performance indicators, which are described later. These phases are designed to ensure high performance and to provide insight into the framework's ability to support accurate decision-making. Fig. 4 depicts a high-level overview of the proposed solution.

Figure 4: High-level overview of the proposed framework

In this research, six public datasets are applied. They are divided into training (70%), validation (10%), and testing (20%) sets; Section 3 provides additional details about these datasets. Meticulous preprocessing is fundamental to achieving high performance in brain tumor identification, classification, and analysis, as it optimizes the use of the applied datasets across the training, validation, and testing sets. As demonstrated in Fig. 4, this stage contains six operations. Initially, noise is removed using built-in MATLAB filters, which eliminates corruption or extraneous elements that may lead to misleading features. Then, every MRI scan is resized to a standard dimension of 224 × 224 pixels to keep all inputs homogeneous and ease feature extraction, and all inputs are converted from RGB to grayscale since RGB images are more prone to noise than grayscale images. After that, data augmentation tools are deployed to enrich the applied datasets, since the heterogeneity and intricacy of brain tumor presentations make diagnosis challenging. These tools apply transformations that produce variations resembling real scenarios: rotation, horizontal and vertical flipping, and zooming in and out. Lastly, normalization is applied to boost the framework's sensitivity to pathological structural differences, thereby strengthening its discriminative capacity.
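The preprocessing steps above can be sketched in Python as follows. This is not the authors' MATLAB pipeline: the 3 × 3 median filter stands in for the unnamed MATLAB noise filters, nearest-neighbour resizing and min-max normalization to [0, 1] are assumptions, rotation is limited to 90 degrees, and zooming is omitted. The grayscale weights are those documented for MATLAB's `rgb2gray`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Luminance weights documented for MATLAB's rgb2gray (ITU-R BT.601)
GRAY_WEIGHTS = np.array([0.2989, 0.5870, 0.1140])


def median_denoise(img: np.ndarray) -> np.ndarray:
    """3 x 3 median filter (assumed) applied to each channel of an (H, W, C) image."""
    padded = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="edge")
    windows = sliding_window_view(padded, (3, 3), axis=(0, 1))  # (H, W, C, 3, 3)
    return np.median(windows, axis=(-2, -1))


def resize_nearest(img: np.ndarray, size: int = 224) -> np.ndarray:
    """Nearest-neighbour resize of the first two axes to size x size."""
    rows = np.arange(size) * img.shape[0] // size
    cols = np.arange(size) * img.shape[1] // size
    return img[rows[:, None], cols[None, :]]


def preprocess(rgb: np.ndarray) -> np.ndarray:
    """Denoise -> resize to 224 x 224 -> grayscale -> min-max normalise to [0, 1]."""
    gray = resize_nearest(median_denoise(rgb)) @ GRAY_WEIGHTS
    lo, hi = gray.min(), gray.max()
    return (gray - lo) / (hi - lo) if hi > lo else np.zeros_like(gray)


def augment(img: np.ndarray) -> list:
    """Rotation and horizontal/vertical flips (zooming is omitted here)."""
    return [img, np.rot90(img), np.fliplr(img), np.flipud(img)]


def split_indices(n: int, seed: int = 0):
    """Shuffle n sample indices and split them 70/10/20 into train/val/test."""
    idx = np.random.default_rng(seed).permutation(n)
    n_train, n_val = round(0.7 * n), round(0.1 * n)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


scan = np.random.default_rng(1).integers(0, 256, size=(256, 256, 3))  # synthetic RGB scan
x = preprocess(scan)
train, val, test = split_indices(1000)
print(x.shape, len(train), len(val), len(test))  # (224, 224) 700 100 200
```

Splitting indices before augmenting makes it straightforward to augment only the training images, so that transformed copies of a test scan never leak into training.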

The presented solution turns raw MRI data into useful numerical information, referred to as the extracted features. In this research, 24 kinds of characteristics are extracted, such as location, shape, size, area, mean, standard deviation, correlation, and variance. The filters in the convolutional layers of the VGG19 network are slid over the input 6 pixels at a time using a stride value of 2. Zero padding is applied to preserve the original image size during feature extraction and to prevent details at the borders from being lost. The initial extracted features are forwarded to the segmentation module, which segments each input by placing boundaries around every potential tumor mass; these masses are called Regions of Interest (RoIs). The final features are then extracted from all RoIs and sent to the developed RSANS network, which tunes all features and reduces their dimensions with the help of Principal Component Analysis (PCA). The resulting four vectors each have a size of 1 × 24 (1 row and 24 columns) and correspond to the four classes of potential masses: Pituitary, Glioma, Meningioma, and no tumor. In the presented framework, the total number of extracted features is nearly 1,834,567. All required features are flattened and normalized in the classification network. The proposed framework uses cross-entropy as its loss function, and the choice of this loss function and the Adam optimizer has a significant impact on the attained accuracy. The cross-entropy loss evaluates the approach's performance by comparing the predicted probability distributions with the actual labels and imposing penalties for deviations.
5-fold cross-validation is applied in this study. Training lasted nearly 26 h, and testing lasted nearly 9 h.
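The cross-entropy loss and the 5-fold cross-validation described above can be sketched in Python (the paper itself uses MATLAB). The example shows that the loss is small when the true class receives high probability and large when it does not. Shuffling with a fixed seed and round-robin fold assignment are assumptions, since the text does not say whether the folds were stratified by class; the Adam optimizer is not shown.

```python
import math
import random

CLASSES = ["Pituitary", "Glioma", "Meningioma", "no tumor"]


def cross_entropy(probs, label):
    """Categorical cross-entropy for one sample: -log of the true class probability."""
    return -math.log(max(probs[label], 1e-12))  # clip to avoid log(0)


def k_fold_splits(n, k=5, seed=0):
    """Yield (train, validation) index lists; each index is validated exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


probs = [0.90, 0.05, 0.03, 0.02]  # softmax output for one scan
good = cross_entropy(probs, CLASSES.index("Pituitary"))  # true class predicted
bad = cross_entropy(probs, CLASSES.index("Glioma"))  # true class missed
print(round(good, 4), round(bad, 4))  # 0.1054 2.9957

splits = list(k_fold_splits(12, k=5))
```

Averaging the loss over a mini-batch gives the quantity the optimizer minimizes, while the five folds each hold out a different fifth of the data for validation.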

In deep-learning applications, choosing suitable performance indicators is crucial to assess the proposed approach's performance. In this study, a wide range of indicators was chosen, each providing useful insights into recognition and categorization. The computed metrics provide diverse quantitative and qualitative analyses and are as follows:

1) True Positive (TP).

2) False Positive (FP).

3) True Negative (TN).

4) False Negative (FN).

5) Precision (Pci) is defined as follows:

$$Pci = \frac{TP}{TP + FP} \tag{1}$$

6) Sensitivity (Sen) is defined as follows:

$$Sen = \frac{TP}{TP + FN} \tag{2}$$

7) Accuracy (Acy) is calculated as follows:

$$Acy = \frac{TP + TN}{TP + TN + FP + FN} \tag{3}$$

8) Specificity (Spe) is computed via Eq. (4):

$$Spe = \frac{TN}{TN + FP} \tag{4}$$

9) F1-score is defined as follows:

$$F1 = \frac{2 \times Pci \times Sen}{Pci + Sen} \tag{5}$$

10) Dice (Dce) is defined as follows:

$$Dce = \frac{2 \times TP}{2 \times TP + FP + FN} \tag{6}$$

11) Area Under the Curve (AUC) measures how well the proposed algorithm distinguishes between positive and negative instances; the higher the AUC value, the better the model.
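The indicators listed above can be computed from predicted and actual labels with a few lines of Python. This sketch assumes one-vs-rest counting for the multi-class setting (the text does not state how counts are aggregated across classes), uses made-up toy labels, and computes AUC with the pairwise (Mann-Whitney) formulation, which equals the area under the ROC curve.

```python
def confusion_counts(y_true, y_pred, positive):
    """One-vs-rest TP, FP, TN, FN counts for the class `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, fp, tn, fn


def ratio(num, den):
    """Safe division that returns 0.0 for an empty denominator."""
    return num / den if den else 0.0


def metrics(tp, fp, tn, fn):
    """Precision, sensitivity, accuracy, specificity, F1-score, and Dice from counts."""
    pci = ratio(tp, tp + fp)
    sen = ratio(tp, tp + fn)
    return {
        "precision": pci,
        "sensitivity": sen,
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "specificity": ratio(tn, tn + fp),
        "f1": ratio(2 * pci * sen, pci + sen),
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
    }


def auc(pos_scores, neg_scores):
    """AUC as the probability that a random positive outscores a random negative."""
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos_scores for n in neg_scores)
    return wins / (len(pos_scores) * len(neg_scores))


# Toy labels (illustrative only)
y_true = ["Glioma", "Glioma", "Meningioma", "Pituitary", "no tumor", "Glioma"]
y_pred = ["Glioma", "Meningioma", "Meningioma", "Glioma", "Glioma", "Glioma"]
counts = confusion_counts(y_true, y_pred, positive="Glioma")
print(counts)  # (2, 2, 1, 1)
scores = metrics(*counts)
```

Repeating the one-vs-rest computation for each class and averaging the results gives macro-averaged indicators for the four-class problem.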

This section provides detailed information about the conducted experiments and a careful analysis of the obtained outcomes. It describes the experimental settings, explains the qualitative and quantitative results of the proposed framework, and clarifies how the experimental process was conducted. Lastly, a comparative evaluation against reported state-of-the-art approaches is provided to benchmark the presented framework. The deep-learning framework was designed to recognize and classify brain tumors, and it was trained, validated, and tested in a rigorous computational environment.

In this study, we used a host machine with the following configuration: Windows 11 Pro (version 23H2), a 64-bit Intel(R) Core i7-8550U processor at 1.99 GHz with 8 cores, Intel(R) UHD Graphics 620, and 16 GB of RAM. All experiments were carried out on MATLAB R2017b, which was used as the programming platform. Additionally, the following settings were applied to the presented scheme to achieve its findings: 1) Leaky ReLU, ReLU, and Sigmoid activation functions; 2) 130 neurons in every convolutional layer; 3) a decay value of 0.35; 4) batch sizes of 10 and 16; 5) Dropout and Stride values of 0.5 and 2, respectively; 6) an initial learning rate of 0.001, which was updated regularly during training to attain better results; and 7) 75 epochs and 30,000 iterations. Furthermore, an early-stopping criterion was applied to prevent overfitting.
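The text does not specify how the learning rate was "updated regularly", how the 0.35 decay value was applied (it could also denote weight decay), or which early-stopping rule was used. One common reading is sketched below: a step schedule that multiplies the initial rate of 0.001 by 0.35 at fixed intervals, similar in spirit to MATLAB's piecewise learning-rate schedule with a drop factor, plus patience-based early stopping on validation loss. The drop period of 10 epochs and the patience of 3 epochs are hypothetical.

```python
def piecewise_lr(epoch, initial=1e-3, drop_factor=0.35, drop_period=10):
    """Step decay: multiply the learning rate by drop_factor every drop_period epochs."""
    return initial * drop_factor ** (epoch // drop_period)


class EarlyStopping:
    """Stop when validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


print([piecewise_lr(e) for e in (0, 10, 20)])
stopper = EarlyStopping(patience=3)
val_losses = [1.00, 0.80, 0.70, 0.72, 0.71, 0.75, 0.69]  # illustrative values
stop_epoch = next(e for e, loss in enumerate(val_losses) if stopper.step(loss))
print(stop_epoch)  # 5
```

With patience 3, training halts after three consecutive epochs without improving on the best loss of 0.70, before the later improvement to 0.69 is seen; a larger patience trades training time for robustness to noisy validation curves.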

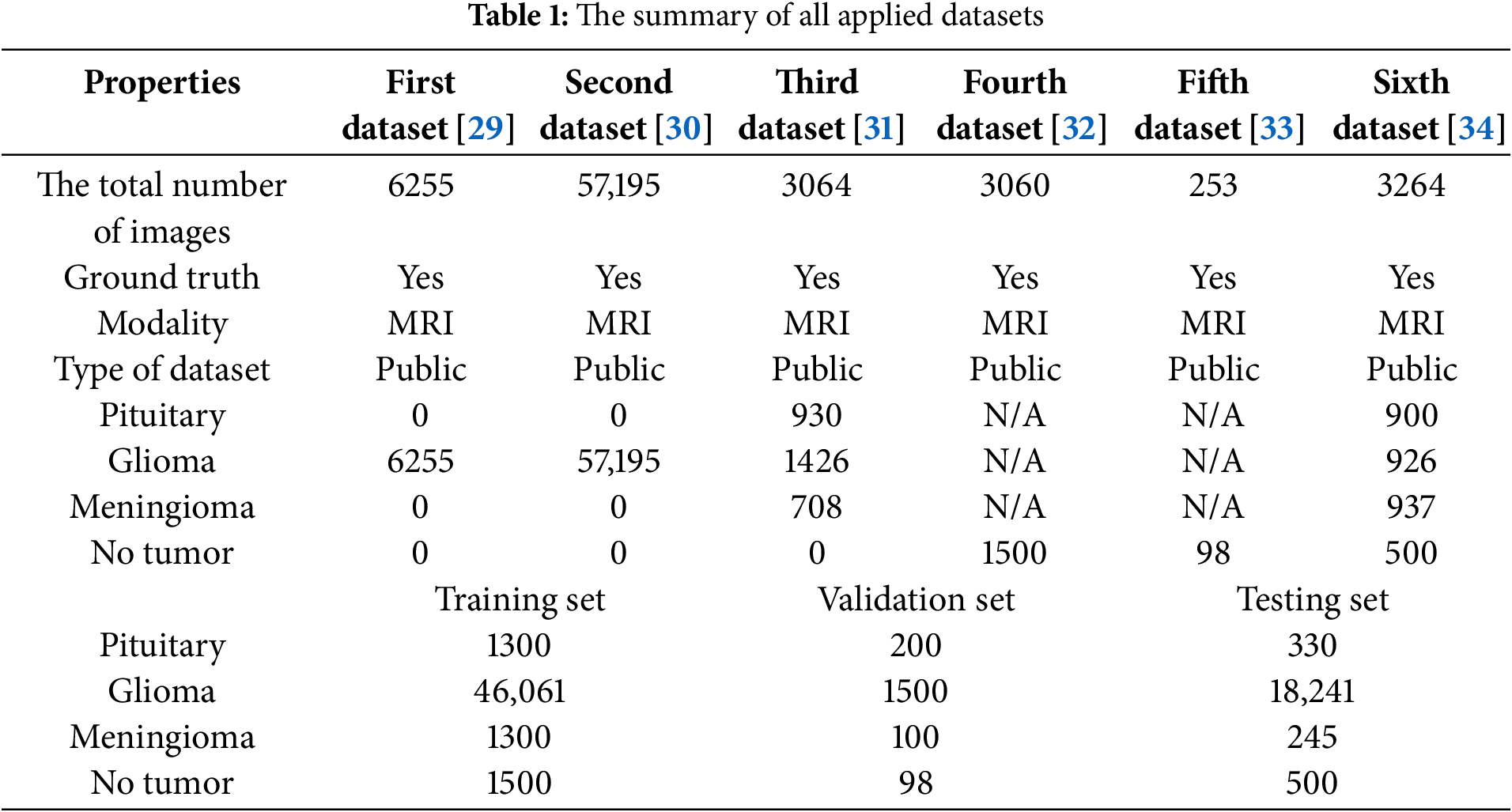

In this study, we applied several public datasets, including BraTS (Brain Tumor Segmentation challenge) datasets, which are public medical MRI collections used for developing brain tumor identification and classification algorithms. This research applies six public benchmark datasets from the Kaggle and Figshare websites. The first dataset [29] was created for the RSNA-ASNR-MICCAI Brain Tumor Segmentation (BraTS) Challenge 2021, and its approximate size is 12.4 GB. It comprises 6255 MRIs of the Glioma type in various formats: T1, post-contrast T1-weighted (T1Gd), T2-weighted (T2), and T2 Fluid Attenuated Inversion Recovery (T2-FLAIR) volumes. The second dataset [30] contains the same MRI formats as the first, and its size is 7 GB; it contains 57,195 MRI scans of Glioma only. The third dataset [31] encompasses different types of brain tumors with a total size of 900 MB. It contains 3064 T1-weighted contrast-enhanced MRI scans: 1) 708 meningioma, 2) 1426 glioma, and 3) 930 pituitary. This set was collected from 233 patients [31]. The fourth dataset [32] includes 3060 MRI scans with and without tumors, and its size is 88 MB; unfortunately, no details about the tumor types were provided for this set. The fifth dataset [33] contains 253 MRIs with and without tumors, with no information regarding tumor types, and its size is 16 MB. The last dataset [34], of size 91 MB, encompasses various brain tumor types with a total of 3264 MRI scans. The reported types and numbers of tumors are 937 Meningioma, 900 Pituitary, and 926 Glioma, and the number of no-tumor MRI scans is 500. Table 1 shows the breakdown of all applied datasets. Notably, Table 1 shows that the class distribution is imbalanced in all three sets (training, validation, and testing), so the proposed scheme must handle imbalanced data.
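The imbalance mentioned above can be quantified directly from the reported counts. The sketch below uses the class counts of the third dataset [31] to compute class shares and the ratio between the largest and smallest classes. It also shows inverse-frequency class weights, one common way to counter imbalance in a weighted loss; the text does not state that the proposed scheme uses such weights.

```python
def class_shares(counts):
    """Fraction of samples in each class."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def imbalance_ratio(counts):
    """Size of the largest class divided by the size of the smallest class."""
    return max(counts.values()) / min(counts.values())


def inverse_frequency_weights(counts):
    """Weights N / (K * n_c): rarer classes get proportionally larger weights."""
    total, k = sum(counts.values()), len(counts)
    return {name: total / (k * n) for name, n in counts.items()}


# Class counts reported for the third dataset [31]
dataset_31 = {"meningioma": 708, "glioma": 1426, "pituitary": 930}
shares = class_shares(dataset_31)
print({name: round(s, 3) for name, s in shares.items()})
print(round(imbalance_ratio(dataset_31), 2))  # 2.01
weights = inverse_frequency_weights(dataset_31)
```

Glioma scans outnumber meningioma scans by about two to one in this dataset, so an unweighted loss would be dominated by the glioma class.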

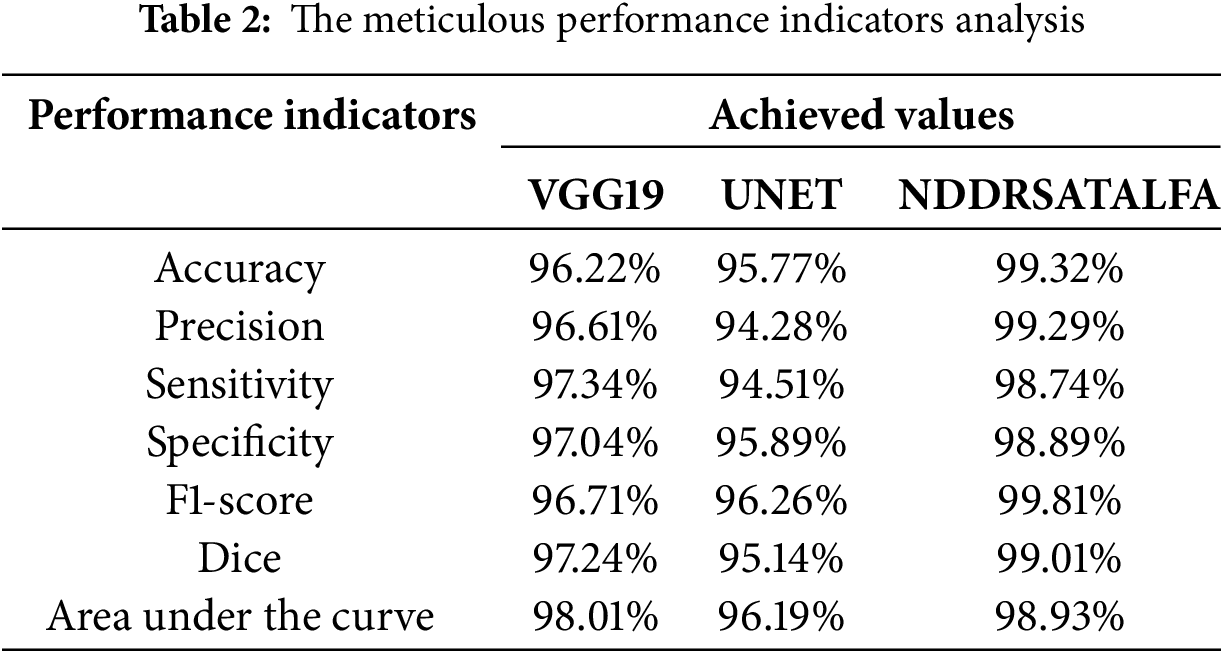

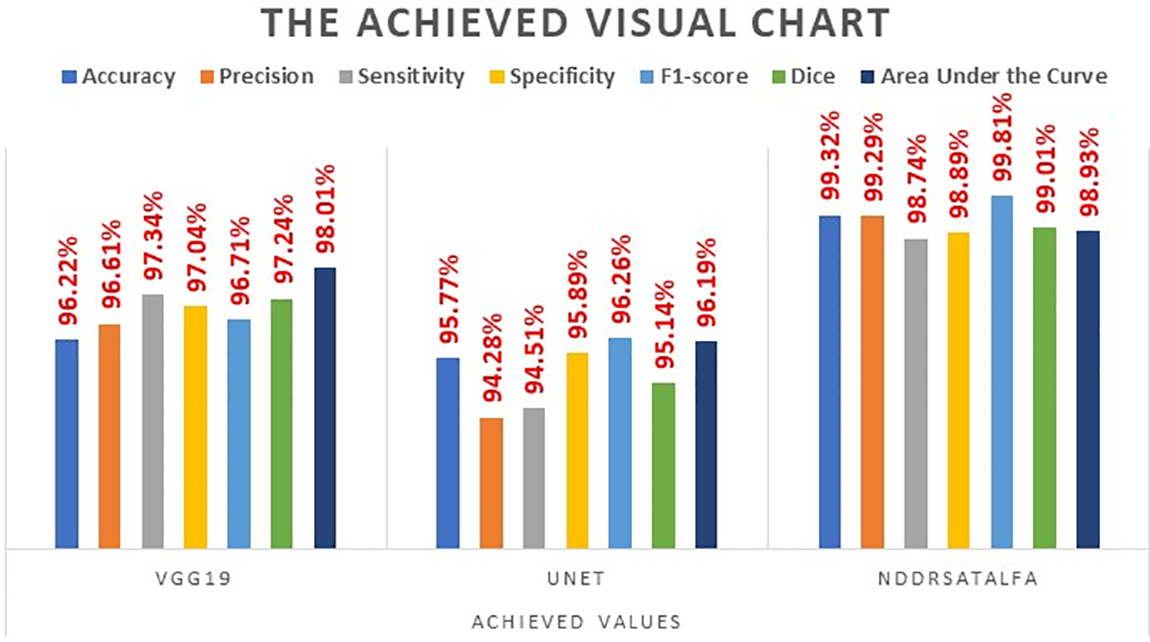

This subsection provides a careful evaluation of the proposed solution for brain tumor diagnosis. It discusses the performance indicators described in Section 2 and the corresponding outcomes, focusing mainly on the framework's usefulness, robustness, and consistency in categorizing all applied MRI scans into the four classes stated earlier. Accuracy, precision, sensitivity, specificity, and F1-score offer a clear view of the framework's capability to recognize brain tumors and categorize them into the right classes. In addition, the confusion matrix provides a comprehensive view of the performance achieved for all classes, and Receiver Operating Characteristic (ROC) charts and Area Under the Curve (AUC) values help assess the discriminative capability of the proposed approach. In this research, the two developed neural networks, VGG19 and UNET, were evaluated separately, and their average results were compared against NDDRSATALFA, as listed in Table 2. These results were attained after 30,000 iterations for all models, using the same learning rate of 0.01 for all models, and were achieved on the training set. Fig. 5 depicts a visual chart of all outcomes for the three examined networks.

Figure 5: The visualization chart of the evaluation report

A detailed investigation of the presented framework showed that the VGG19 network reached an acceptable level of performance. In particular, VGG19 reached 96.22% accuracy with a precision of 96.61%. It also achieved a sensitivity of 97.34%, meaning that it correctly detected 97.34% of the positive instances, and a specificity of 97.04%, meaning that it correctly identified 97.04% of the negative instances. Its F1-score was 96.71%. These outcomes highlight the usefulness and trustworthiness of VGG19 for the investigated problem. The second developed network, UNET, performed slightly worse, reaching 95.77% accuracy, 94.51% sensitivity, 95.89% specificity, and an F1-score of 96.26%.
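As a quick arithmetic check, the F1-score of a single confusion matrix is the harmonic mean of precision and sensitivity, F1 = 2PR / (P + R). Plugging in the VGG19 precision (96.61%) and sensitivity (97.34%) gives about 96.97%, slightly above the reported 96.71%. Because Table 2 lists average results, such a gap is expected: the mean of several F1-scores need not equal the F1-score of the mean precision and sensitivity. The sketch below illustrates this with a hypothetical two-class example; only the two VGG19 percentages come from the text.

```python
def harmonic_f1(precision: float, sensitivity: float) -> float:
    """F1 = 2PR / (P + R): the harmonic mean of precision and sensitivity."""
    return 2 * precision * sensitivity / (precision + sensitivity)


# Reported VGG19 averages from the text (percent).
vgg19_f1_from_averages = harmonic_f1(96.61, 97.34)

# Hypothetical per-class (precision, sensitivity) pairs.
per_class = [(0.99, 0.90), (0.90, 0.99)]
mean_of_f1 = sum(harmonic_f1(p, r) for p, r in per_class) / len(per_class)
mean_p = sum(p for p, _ in per_class) / len(per_class)
mean_r = sum(r for _, r in per_class) / len(per_class)
f1_of_means = harmonic_f1(mean_p, mean_r)

print(f"VGG19 F1 from averaged P and R: {vgg19_f1_from_averages:.2f}%")
print(f"mean of per-class F1 = {mean_of_f1:.4f}, F1 of mean P and R = {f1_of_means:.4f}")
```

The harmonic mean penalizes the imbalance between P and R within each class, so averaging F1-scores gives a lower value than applying F1 to already-averaged P and R.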

For UNET, the Dice and AUC values were 95.14% and 96.16%, respectively. The proposed framework, NDDRSATALFA, outperformed both networks, which reflects the benefit of applying transfer learning and the dedicated residual self-attention network. It achieved 99.32% accuracy, 98.74% sensitivity, 98.89% specificity, 99.81% F1-score, and 99.01% Dice, with an AUC of 98.93%. As the graphical analysis in Fig. 5 illustrates, NDDRSATALFA outperformed the other two networks on every indicator considered. These outcomes highlight the effectiveness and consistency of the presented framework in helping pathologists, radiologists, and physicians identify brain tumors precisely, and show its value as a diagnostic aid. The proposed framework has the potential to increase the accuracy of diagnostic tools significantly and to improve patient outcomes in neurology. Accuracy reached its highest value with NDDRSATALFA, indicating that the approach succeeds both in recognizing the presence or absence of brain tumors and in categorizing the detected tumor masses by type. The loss function applied in this study, cross-entropy, which measures the difference between the predicted and actual labels, was minimized effectively. This suggests that the framework's predictions were close to the actual labels.
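A minimal sketch of the categorical cross-entropy loss mentioned above, for the four classes used in this study. It assumes the network emits one raw score (logit) per class that a softmax turns into probabilities; the article does not specify these details, so the code illustrates the loss itself rather than the authors' training code.

```python
import math
from typing import List, Sequence

CLASSES = ("glioma", "meningioma", "pituitary", "no tumor")


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)                      # shift by the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(probs: Sequence[float], true_index: int, eps: float = 1e-12) -> float:
    """Cross-entropy of one sample with a one-hot label: -log p(true class)."""
    return -math.log(max(probs[true_index], eps))


def mean_cross_entropy(batch_logits: Sequence[Sequence[float]],
                       labels: Sequence[int]) -> float:
    """Average loss over a batch, the quantity minimized during training."""
    losses = [cross_entropy(softmax(z), y) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    label = CLASSES.index("glioma")
    # Uniform, mildly confident, and very confident predictions for a glioma scan.
    for logits in ([0.0, 0.0, 0.0, 0.0], [2.0, 0.5, 0.0, 0.0], [6.0, 0.0, 0.0, 0.0]):
        print(logits, round(cross_entropy(softmax(logits), label), 4))
```

The loss equals ln 4 for a uniform guess over four classes and approaches zero as the probability of the true class approaches one, which is what "predictions close to the actual labels" means in terms of this loss.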

This research uses two tools to evaluate the classification stage of the presented framework: Receiver Operating Characteristic (ROC) curves and the Area Under the Curve (AUC). Fig. 6 shows the ROC curves obtained for the three main tumor types: glioma, meningioma, and pituitary. Together, these curves indicate that NDDRSATALFA performs very well in assigning these tumors to their classes. NDDRSATALFA achieved AUC values between 98.1% and 99.87% in most of the experiments performed. These outcomes suggest that its predictions reliably separate all investigated types, and such separation is critical in diagnostic systems. The step-like zigzags in these curves arise from the discrete decision thresholds used in the experiments. Each type has its own threshold values according to the extracted features. These thresholds control the balance between sensitivity and specificity: lowering a threshold raises sensitivity, so fewer tumors are missed, at the cost of specificity, and raising it does the opposite. Additionally, the confidence interval obtained by the proposed scheme, as illustrated in Fig. 6, lies between 94.74% and 99.32%.
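The ROC curve and AUC for one tumor type can be computed one-vs-rest: the scores for that type are compared against a threshold swept over every distinct score value, which is also why empirical ROC curves look step-like. The Python sketch below is illustrative only; the scores and labels are hypothetical, and it checks the trapezoidal area under the curve against the equivalent pairwise (Mann-Whitney) formulation of AUC.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (false-positive rate, true-positive rate)


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """Sweep the threshold over every distinct score, strictest first.

    A sample is called positive when its score is >= the threshold, so each
    step moves the curve up (new true positives) or right (new false positives).
    """
    pos = sum(labels)
    neg = len(labels) - pos
    points: List[Point] = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc_trapezoid(points: Sequence[Point]) -> float:
    """Area under the piecewise-linear ROC curve (trapezoidal rule)."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def auc_pairwise(scores: Sequence[float], labels: Sequence[int]) -> float:
    """P(random positive scores higher than random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical one-vs-rest data: 1 = glioma, 0 = any other class.
    scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
    labels = [1, 1, 0, 1, 0, 0, 1, 0]
    pts = roc_points(scores, labels)
    print("ROC points:", pts)
    print("AUC (trapezoid):", auc_trapezoid(pts))
    print("AUC (pairwise):", auc_pairwise(scores, labels))
```

For a multiclass model, the same computation is repeated once per tumor type, with that type as the positive class and all others as negatives.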

Figure 6: The ROC visualization of the testing set

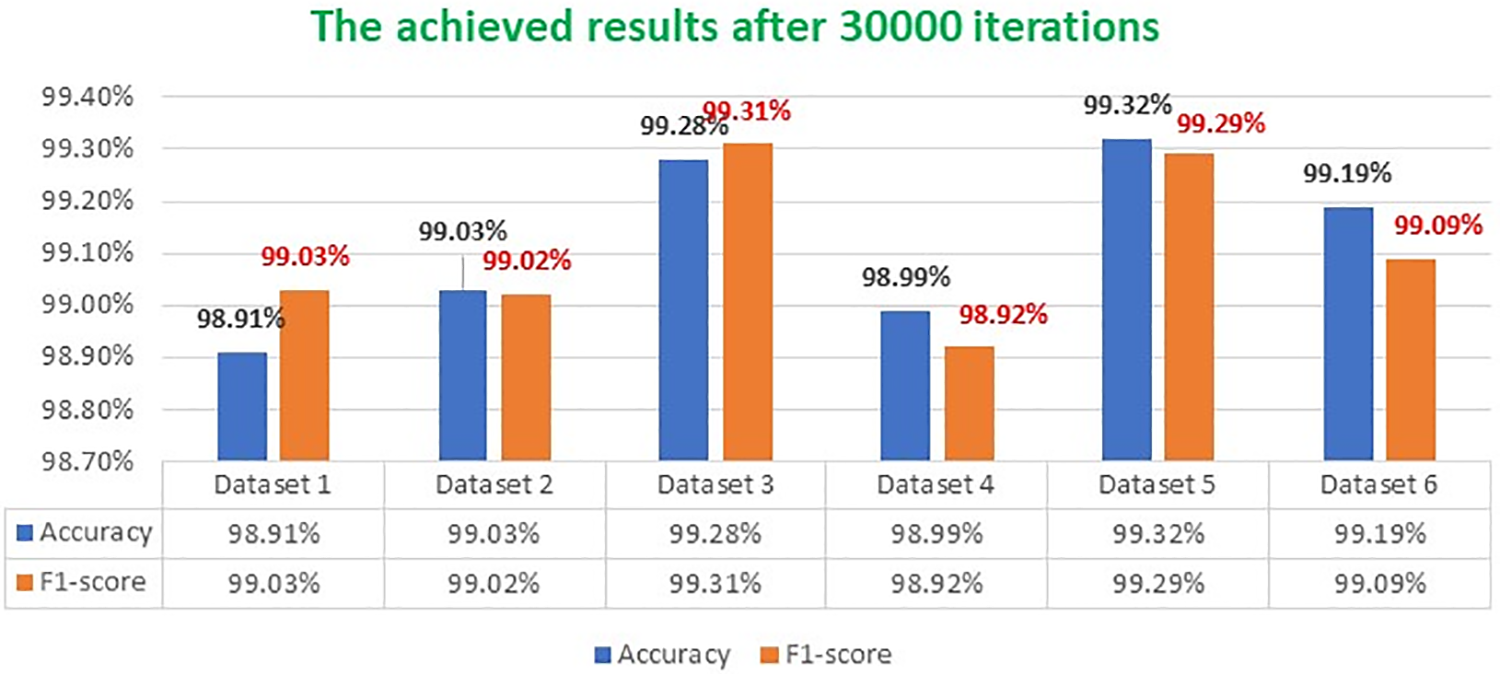

Fig. 7 illustrates samples of the three tumor types together with their segmentation outputs from the proposed framework. Each tumor is segmented in a different color, and its outline is drawn. In addition, Fig. 8 depicts the accuracy and F1-score obtained on each applied dataset during the training stage after 30,000 iterations. Accuracy and F1-score ranged between 98.9% and 99.32%. The accuracies obtained on the first and fourth datasets were 98.91% and 98.99%, respectively, while the lowest F1-score, 98.92%, was obtained on the fourth dataset. On the other datasets, both indicators exceeded 99%, which implies that the presented scheme performs well regardless of the tumor type or the dataset it comes from. Furthermore, we analyzed NDDRSATALFA under different thresholds, recording its accuracy, F1-score, and Dice after 30,000 iterations for each threshold. The threshold values were 0.2, 0.4, 0.6, and 0.8, and the results are illustrated in Fig. 9. The chart shows that all performance indicators improved each time the threshold value was increased.
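The threshold analysis can be illustrated on a segmentation output: a per-pixel tumor probability map is binarized at each of the threshold values listed above and compared with the ground-truth mask using Dice and pixel accuracy. This is a sketch under assumptions: the text does not state exactly what the thresholds are applied to, and the 4x4 maps and function names are hypothetical.

```python
from typing import List

Mask = List[List[int]]
THRESHOLDS = (0.2, 0.4, 0.6, 0.8)  # threshold values listed in the text

# Hypothetical 4x4 tumor-probability map and ground-truth mask (1 = tumor pixel).
PROB_MAP = [[0.1, 0.3, 0.1, 0.0],
            [0.2, 0.9, 0.7, 0.5],
            [0.1, 0.8, 0.5, 0.1],
            [0.0, 0.1, 0.3, 0.1]]
TRUTH = [[0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]


def binarize(prob_map: List[List[float]], threshold: float) -> Mask:
    """Mark a pixel as tumor (1) when its probability is >= threshold."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


def dice(pred: Mask, truth: Mask) -> float:
    """Dice = 2|A & B| / (|A| + |B|); defined as 1.0 when both masks are empty."""
    inter = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    size = sum(map(sum, pred)) + sum(map(sum, truth))
    return 1.0 if size == 0 else 2 * inter / size


def pixel_accuracy(pred: Mask, truth: Mask) -> float:
    """Fraction of pixels whose predicted label matches the ground truth."""
    pairs = [(p, t) for pr, tr in zip(pred, truth) for p, t in zip(pr, tr)]
    return sum(p == t for p, t in pairs) / len(pairs)


if __name__ == "__main__":
    for t in THRESHOLDS:
        mask = binarize(PROB_MAP, t)
        print(f"t={t}: Dice={dice(mask, TRUTH):.3f}, "
              f"pixel accuracy={pixel_accuracy(mask, TRUTH):.3f}")
```

In this toy map, Dice peaks at an intermediate threshold; the trend reported in Fig. 9 depends on the authors' model and data, which this sketch does not reproduce.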

Figure 7: Segmentation outputs

Figure 8: Attained accuracy and F1-score rates for every dataset

Figure 9: The achieved outputs under different thresholds

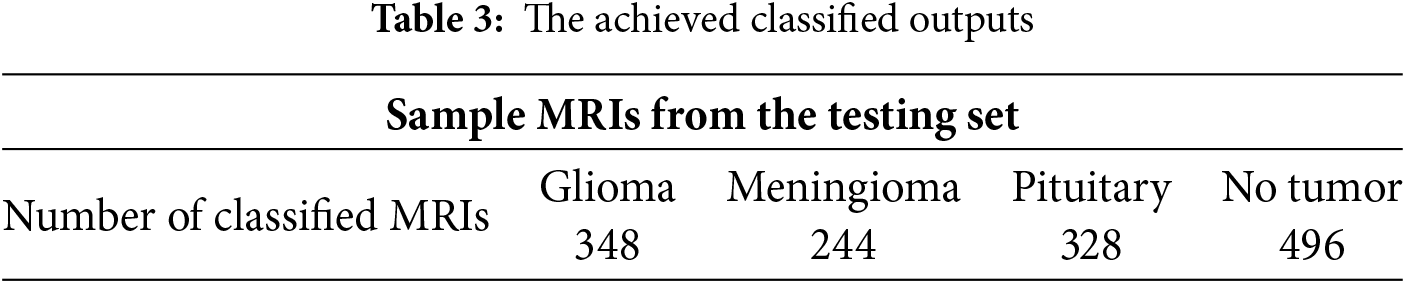

We conducted various experiments to segment and classify all MRIs in the testing set. Table 3 tabulates the results for a sample taken from the testing set, consisting of 350 glioma, 245 meningioma, 330 pituitary, and 500 no-tumor MRIs. NDDRSATALFA correctly classified 348 glioma, 244 meningioma, 328 pituitary, and 496 no-tumor MRIs. In total, NDDRSATALFA correctly categorized 1416 of the 1425 MRIs, which corresponds to an accuracy of 99.37%.
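The accuracy reported for the Table 3 sample follows directly from the per-class counts above. The short Python check below reproduces it and also gives per-class recall (the fraction of each class classified correctly); only the counts come from the text, and the dictionary layout is our own.

```python
# Per-class counts for the Table 3 test sample: (samples, correctly classified).
TABLE3 = {
    "glioma": (350, 348),
    "meningioma": (245, 244),
    "pituitary": (330, 328),
    "no tumor": (500, 496),
}

total = sum(n for n, _ in TABLE3.values())
correct = sum(c for _, c in TABLE3.values())
accuracy = correct / total
per_class_recall = {name: c / n for name, (n, c) in TABLE3.items()}

print(f"{correct}/{total} correct -> accuracy {accuracy:.2%}")
for name, recall in per_class_recall.items():
    print(f"{name:<11} recall {recall:.2%}")
```

This prints an accuracy of 99.37%. Overall accuracy is the sample-weighted mean of the per-class recalls, so the large no-tumor group (496 of 500 correct) pulls it slightly down.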

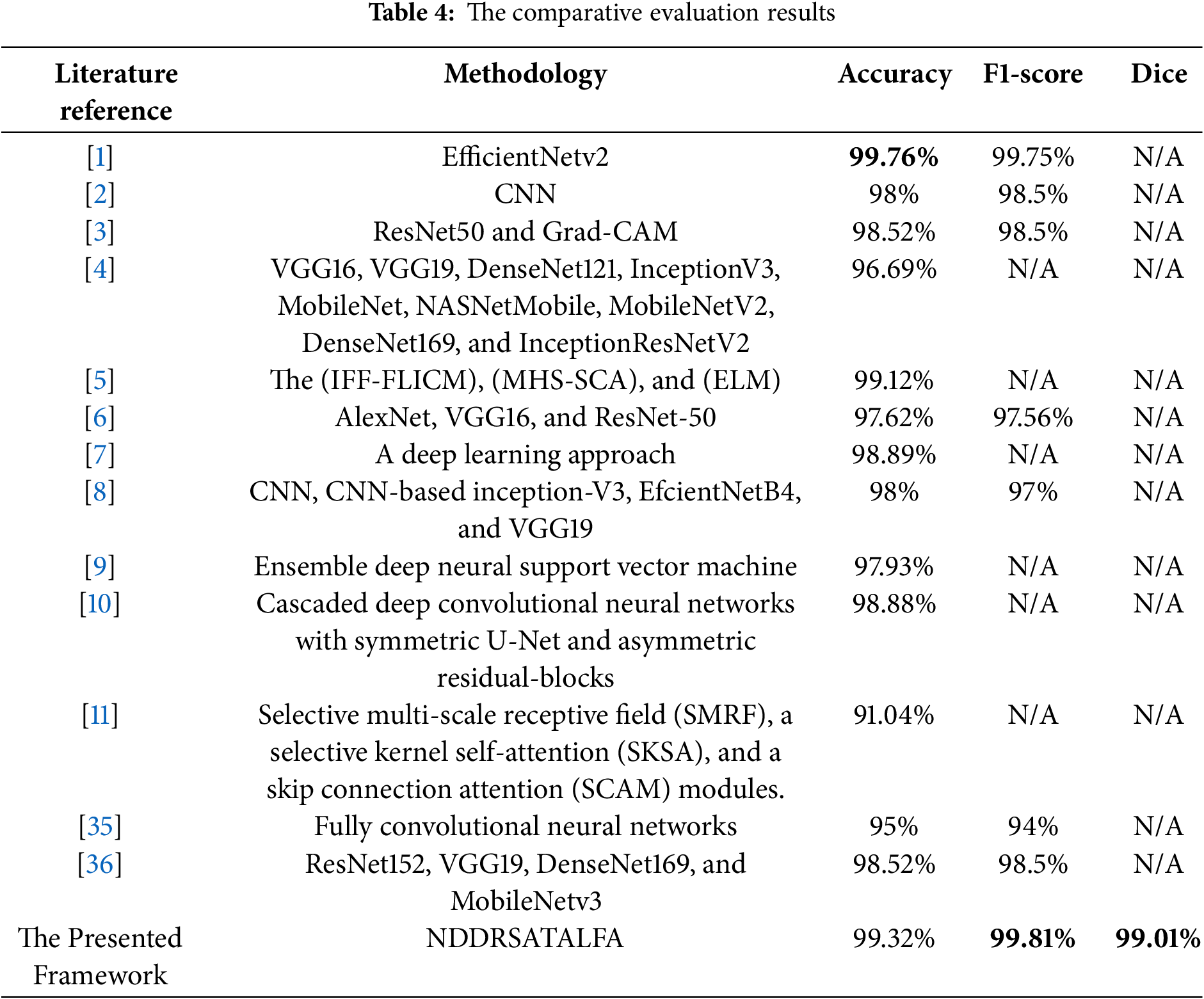

A comparative analysis between several state-of-the-art solutions from the literature and NDDRSATALFA was performed in terms of accuracy, F1-score, Dice, and the applied methodology. Note that each solution was evaluated on its own dataset or datasets. In this study, NDDRSATALFA was compared against the methods developed in [1–11], all of which were published in 2024 and can therefore be considered among the latest solutions in the field. The comparative analysis is listed in Table 4, where the highest results are in bold. Table 4 shows that NDDRSATALFA reached 99.32% accuracy, 99.81% F1-score, and 99.01% Dice. These scores exceed those of all compared works, except for accuracy, where [1] attained a higher score of 99.76%. The work in [11] obtained the lowest accuracy, 91%, while the other models attained accuracies between 97.6% and 99.1%. Table 4 demonstrates that NDDRSATALFA identifies potential regions of interest well and categorizes these masses successfully. Additionally, NDDRSATALFA can segment masses of different shapes, as depicted in Fig. 7.

This study proposed a novel framework, NDDRSATALFA, for brain tumor identification and categorization. NDDRSATALFA demonstrated superior performance against state-of-the-art approaches on two distinct tasks: segmentation and classification. The proposed framework deploys two developed neural networks and a novel residual self-attention network. We believe this solution follows best practices for combining several deep-learning technologies in brain tumor recognition, which supports smooth generalization to unseen data. The performance of NDDRSATALFA, highlighted by its high accuracy score, can be considered a new benchmark in the field. By evaluating NDDRSATALFA against several cutting-edge models, we make a convincing case for applying it in clinical evaluations to improve diagnostic procedures and outcomes significantly. In addition, making the framework lightweight in terms of computational cost and resource allocation would make it well-suited for deployment across different environments, such as mobile phones and other hand-held devices. The presented approach paves the way for more dependable and consistent diagnostic solutions and treatment plans.

Although the proposed framework achieved commendable outcomes, it has several limitations. One limitation is its dependency on high-resolution, well-annotated datasets, which can introduce noticeable bias if not addressed properly. Another limitation observed during implementation was the variation in performance when inputs of another modality were applied: the proposed framework performs well on MRI scans but behaves undesirably with other modalities. Additionally, NDDRSATALFA requires substantial computational resources and extensive tuning of its hyperparameters for optimization. A further limitation is the limited diversity of the public datasets used, which affects the ability of NDDRSATALFA to recognize and classify all tumor types properly. Moreover, its computational and high memory requirements pose real challenges in resource-constrained environments. Nevertheless, NDDRSATALFA shows considerable potential for applying deep-learning technologies to segment and classify different brain tumors from MRI scans, and ongoing technological advances open several research directions for overcoming these obstacles, such as using high-performance machines and better resource allocation to improve robustness and effectiveness.

This research proposed a new framework based on two neural networks and a new residual self-attention network to detect and classify brain tumors in MRI scans. The solution was trained, validated, and tested on six public MRI datasets. Across various experiments, NDDRSATALFA achieved remarkable outcomes, including an accuracy of 99.32%, and can be seen as a new landmark in the field. It uses transfer learning and residual self-attention modules to improve its segmentation and classification capability, and these modules give NDDRSATALFA superior performance over other developed methods. These findings support wider application of image analysis in the medical field and show how the self-attention network increases accuracy, which can improve clinical evaluations and inform new treatment plans. Furthermore, our outcomes could reduce the burden on healthcare providers, pathologists, radiologists, and physicians when dealing with brain tumors.

Nevertheless, NDDRSATALFA leaves several challenges and areas for enhancement, such as the need for more diverse datasets, a less dense architecture, and lower computational complexity. Furthermore, the proposed model still needs to be tested in real clinical settings. Such testing would ensure that NDDRSATALFA agrees with experts' clinical evaluations and would allow its impact on patients to be monitored.

Acknowledgement: The authors would like to thank the Deanship of Scientific Research (DSR) at King Abdulaziz University, Jeddah, Saudi Arabia, for technical and financial support under Grant No. (GPIP:1055-829-2024).

Funding Statement: This project was funded by the Deanship of Scientific Research (DSR) at King Abdulaziz University, Jeddah, Saudi Arabia, under Grant No. (GPIP:1055-829-2024). The authors therefore gratefully acknowledge the DSR for technical and financial support.

Author Contributions: Conceptualization: Ahmed A. Alsheikhy; data curation: Tawfeeq Shawly; formal analysis: Ahmed A. Alsheikhy and Tawfeeq Shawly; funding acquisition: Tawfeeq Shawly; investigation: Ahmed A. Alsheikhy; methodology: Tawfeeq Shawly; supervision: Ahmed A. Alsheikhy; validation: Ahmed A. Alsheikhy and Tawfeeq Shawly; writing – original draft: Ahmed A. Alsheikhy and Tawfeeq Shawly; writing – review and editing: Tawfeeq Shawly. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The datasets used in this research are publicly available from the following sources: Schettler D, 2021, [Online]. Available: https://www.kaggle.com/datasets/dschettler8845/brats-2021-task1 (accessed on 30 January 2025). Awsaf, 2020, [Online]. Available: https://www.kaggle.com/datasets/awsaf49/brats2020-training-data (accessed on 30 January 2025). Cheng J. Brain Tumor Dataset. Figshare: Dataset, 2017, [Online]. Available: https://figshare.com/articles/dataset/brain_tumor_dataset/1512427/5 (accessed on 30 January 2025). Chakrabarty N, 2019, [Online]. Available: https://kaggle.com/datasets/navoneel/brain-mri-images-for-brain-tumor-detection (accessed on 30 January 2025). Hamada A, 2022, [Online]. Available: https://www.kaggle.com/datasets/ahmedhamada0/brain-tumor-detection (accessed on 30 January 2025). Bhuvaji S, 2020, [Online]. Available: https://www.kaggle.com/datasets/sartajbhuvaji/brain-tumor-classification-mri (accessed on 30 January 2025).

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Pacal I, Celik O, Bayram B, Cunha A. Enhancing EfficientNetv2 with global and efficient channel attention mechanisms for accurate MRI-based brain tumor classification. Cluster Comput. 2024;27:11187–212.

2. Alshuhail A, Thajur A, Chandramma R, Mahesh TR, Almusharraf A, Vinoth Kumar V, et al. Refining neural network algorithms for accurate brain tumor classification in MRI imagery. BMC Med Imaging. 2024;24(118):1–20. doi:10.1186/s12880-024-01285-6.

3. Musthafa MM, Mahesh TR, Kumar VV, Guluwadi S. Enhancing brain tumor detection in MRI images through explainable AI using Grad-CAM with Resnet 50. BMC Med Imaging. 2024;24:107. doi:10.1186/s12880-024-01292-7.

4. Raza S, Gul N, Khattak HA, Rehan A, Farid MI, Kamal A, et al. Brain tumor detection and classification using deep feature fusion and stacking concepts. J Popul Ther Clin Pharmacol. 2024;31(1):1339–56. doi:10.53555/jptcp.v31i1.4179.

5. Dash S, Siddique M, Mishra S, Gelmecha DJ, Satapathy S, Rathee DS, et al. Brain tumor detection and classification using IFF-FLICM segmentation and optimized ELM model. J Eng. 2024;2024:8419540. doi:10.1155/2024/8419540.

6. Dhakshnamurthy VK, Govindan M, Sreerangan K, Nagarajan MD, Thomas A. Brain tumor detection and classification using transfer learning models. Eng Proc. 2024;62(1):1. doi:10.3390/engproc2024062001.

7. Agarwal M, Rani G, Kumar A, Kumar PK, Manikandan R, Gandomi AH. Deep learning for enhanced brain tumor detection and classification. Results Eng. 2024;22(3):102117. doi:10.1016/j.rineng.2024.102117.

8. Khalil MZ, Basarslan MS. Brain tumor detection from images and comparison with transfer learning methods and 3-layer CNN. Sci Rep. 2024;14(1):2664. doi:10.1038/s41598-024-52823-9.

9. Anantharajan S, Gunasekaran S, Subramanian T, Venkatesh R. MRI brain tumor detection using deep learning and machine learning approaches. Meas: Sens. 2024;31(3):101026. doi:10.1016/j.measen.2024.101026.

10. Abd-Ellah MK, Awad AI, Ashraf AM, Ibraheem AM. Automatic brain-tumor diagnosis using cascaded deep convolutional neural networks with symmetric U-Net and asymmetric residual-blocks. Sci Rep. 2024;14(1):9501. doi:10.1038/s41598-024-59566-7.

11. Guo B, Cao N, Yang P, Zhang R. SSGNet: selective multi-scale receptive field and kernel self-attention based on group-wise modality for brain tumor segmentation. Electronics. 2024;13(10):1915. doi:10.3390/electronics13101915.

12. Mahmud I, Mamun M, Abdelgawad A. A deep analysis of brain tumor detection from MR images using deep learning networks. Algorithms. 2023;16(4):176. doi:10.3390/a16040176.

13. Alsheikhy AA, Azzahrani AS, Alzahrani AK, Shawly T. An effective diagnosis system for brain tumor detection and classification. Comput Syst Sci Eng. 2023;46(2):2021–37. doi:10.32604/csse.2023.036107.

14. Saeed S, Rezay S, Keshavarz H, Kalhori SRN. MRI-based brain tumor detection using convolutional deep learning methods and chosen machine learning techniques. BMC Med Inform Decis Mak. 2023;23(1):16. doi:10.1186/s12911-023-02114-6.

15. Khan SI, Rahman A, Debnath T, Karim R, Nasir MK, Band SS, et al. Accurate brain tumor detection using deep convolutional neural network. Comput Struct Biotechnol J. 2022 Aug 27;20:4733–45. doi:10.1016/j.csbj.2022.08.039.

16. Patil S, Kirange D. Ensemble of deep learning models for brain tumor detection. Proc Int Conf Mach Learn Data Eng. 2023;218:2468–79. doi:10.1016/j.procs.2023.01.222.

17. Ilhan U, Ilhan A. Brain tumor segmentation based on a new threshold approach. In: Proceedings of the 9th International Conference on Theory and Application of Soft Computing, Computing with Words and Perception, ICSCCW 2017; 2017 Aug 22–23; Budapest, Hungary; 2017. Vol. 120, p. 580–7.

18. Aarthilakshmi M, Meenakshi S, Pushkala AP, Rama V, Prakash NB. Brain tumor detection using machine learning. Int J Sci Technol. 2020;9(4):1976–9.

19. Asiri AA, Shaf A, Ali T, Shakeel U, Irfan M, Mehdar KM, et al. Exploring the power of deep learning: fine-tuned vision transformer for accurate and efficient brain tumor detection in MRI scans. Diagnostics. 2023;13(12):2094. doi:10.3390/diagnostics13122094.

20. Siddique AB, Sakib S, Khan MMR, Tanzeem AK, Chowdhury M, Yasmin N. Deep convolutional neural networks model-based brain tumor detection in brain MRI images. In: Proceedings of the 2020 Fourth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC); 2020; Palladam, India. p. 909–14.

21. Kot E, Krawczyk Z, Siwek K, Krolocki L, Czwarnowski P. Deep learning-based framework for tumour detection and semantic segmentation. Bull Pol Acad Sci Tech Sci. 2021;63(3):e136750. doi:10.24425/bpasts.2021.136750.

22. Bayoumi ES, Abd-Ellah MK, Khalaf AAM, Gharieb RR. Brain tumor automatic detection from MRI images using transfer learning model with deep convolutional neural network. J Adv Eng Trends. 2022;41(2):19–30. doi:10.21608/jaet.2020.42896.1051.

23. Ramtekhar PK, Pandey A, Pawar MK. Accurate detection of brain tumor using optimized feature selection based on deep learning techniques. Multimed Tools Appl. 2023;82(29):44623–53. doi:10.1007/s11042-023-15239-7.

24. Tam A, Sreeja PP, Jayashankari J, Mohamed A, Iroda S, Vijayan V. Identification of brain tumor on MR images with and without segmentation using DL techniques. In: Proceedings of the International Conference on Newer Engineering Concepts and Technology (ICONNECT-2023); 2023; Tiruchirappalli, Tamil Nadu, India. p. 1–7.

25. Praveena M, Rao MK. Brain tumor detection using integrated learning process detection (ILPD). Int J Adv Comput Sci Appl. 2022;13(10):136–41. doi:10.14569/issn.2156-5570.

26. Devkota B, Alsadoon A, Prasad PWC, Singh AK, Elchouemi A. Image segmentation for early stage brain tumor detection using mathematical morphological reconstruction. In: Proceedings of the 6th International Conference on Smart Computing and Communications, ICSCC 2017; 2018; India. Vol. 125, p. 115–23. doi:10.1016/j.procs.2017.12.017.

27. Saudi Vision 2030. 2016 [cited 2024 May 29]. Available from: https://www.vision2030.gov.sa/.

28. Huang Z, Liang M, Qin J, Zhong S, Lin L. Understanding self-attention mechanism via dynamical system perspective. In: Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV); 2023; Paris, France. p. 1412–22.

29. Schettler D. 2021 [cited 2025 Jan 30]. Available from: https://www.kaggle.com/datasets/dschettler8845/brats-2021-task1.

30. Awsaf. 2020 [cited 2025 Jan 30]. Available from: https://www.kaggle.com/datasets/awsaf49/brats2020-training-data.

31. Cheng J. Brain tumor dataset. Figshare: Dataset; 2017 [cited 2025 Jan 30]. Available from: https://figshare.com/articles/dataset/brain_tumor_dataset/1512427/5.

32. Chakrabarty N. 2019 [cited 2025 Jan 30]. Available from: https://kaggle.com/datasets/navoneel/brain-mri-images-for-brain-tumor-detection.

33. Hamada A. 2022 [cited 2025 Jan 30]. Available from: https://kaggle.com/datasets/ahmedhamada0/brain-tumor-detection.

34. Bhuvaji S. 2020 [cited 2025 Jan 30]. Available from: https://www.kaggle.com/datasets/sartajbhuvaji/brain-tumor-classification-mri.

35. Simo AMD, Kouanou AT, Monthe V, Nana MK, Lonla BM. Introducing a deep learning method for brain tumor classification using MRI data towards better performance. Inform Med Unlocked. 2024;44:101423. doi:10.1016/j.imu.2023.101423.

36. Mathivanan SK, Soniamuthu S, Murugesan S, Rajadurai H, Shivaharu BD, Shah MA. Employing deep learning and transfer learning for accurate brain tumor detection. Sci Rep. 2024;14(1):7232. doi:10.1038/s41598-024-57970-7.


Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
