Open Access

REVIEW

Survey on AI-Enabled Resource Management for 6G Heterogeneous Networks: Recent Research, Challenges, and Future Trends

1 Centre of Advanced Communication, Research and Innovation (ACRI), Department of Electrical Engineering, Faculty of Engineering, University of Malaya, Kuala Lumpur, 50603, Malaysia

2 School of Computing Sciences, College of Computing, Informatics and Mathematics, Universiti Teknologi Mara, Shah Alam, 40450, Malaysia

3 Centre for Cyber Security, Faculty of Information Science and Technology (FTSM), Universiti Kebangsaan Malaysia (UKM), Bangi, 43600, Malaysia

4 Faculty of Science and Engineering, Waseda University, Tokyo, 169-8555, Japan

5 Faculty of Telecommunications, Posts and Telecommunications Institute of Technology, Hanoi, 11518, Vietnam

* Corresponding Authors: Kaharudin Dimyati. Email: ; Quang Ngoc Nguyen. Email:

Computers, Materials & Continua 2025, 83(3), 3585-3622. https://doi.org/10.32604/cmc.2025.062867

Received 30 December 2024; Accepted 02 April 2025; Issue published 19 May 2025

Abstract

The forthcoming 6G wireless networks have great potential for establishing AI-based networks that can enhance end-to-end connectivity and manage the massive data of real-time networks. Advances in Artificial Intelligence (AI) have contributed to the development of several innovative technologies by providing sophisticated mathematical models such as machine learning models, deep learning models, and hybrid models. Furthermore, AI methods give intelligent resource management self-configuration and autonomous decision-making capabilities, which in turn improve the performance of 6G networks. Hence, 6G networks rely substantially on AI methods to manage resources. This paper comprehensively surveys recent work on AI-based resource management for 6G networks. Firstly, the AI methods are categorized into Deep Learning (DL), Federated Learning (FL), Reinforcement Learning (RL), and Evolutionary Learning (EL). Then, we analyze the AI approaches according to optimization issues such as user association, channel allocation, power allocation, and mode selection. Thereafter, we provide appropriate solutions to the most significant problems with the existing approaches to AI-based resource management. Finally, various open issues and potential trends related to AI-based resource management applications are presented. In summary, this survey enables researchers to understand these advancements thoroughly and quickly identify remaining challenges that need further investigation.

The rapid advancement of communication infrastructures and the potential proliferation of wireless applications have encouraged the swift adoption of wireless technology [1]. 5G networks have recently been developed to support ultra-reliable and low-latency communications (URLLC), massive machine-type communication (mMTC), and enhanced mobile broadband (eMBB) [2,3]. Anticipated features of 6G networks include a plethora of new services, such as holographic communications, remote surgery and telemedicine, tactile internet, Artificial Intelligence (AI)-driven connectivity, Brain-Computer Interfaces, high-precision positioning, and quantum communication [4,5]. The International Mobile Telecommunications-2030 guidelines set ambitious targets for 6G wireless technology to fulfill the broad and sophisticated needs of future applications such as the Internet of Things (IoT) and smart surfaces [6]. The most important performance indicators anticipated for 6G are a data rate of 1 terabit per second (Tbps), a latency of 0.01 to 0.1 ms, mobility of 1000 km/h, and a connection density of 10 million devices/km² [7]. Compared to 5G, 6G is expected to provide terahertz bandwidth and a higher data rate, along with increased reliability and reduced latency [8,9]. In addition, to fulfill the various demands of 6G, AI is anticipated to facilitate fully autonomous systems that incorporate distributed learning models [10].

The purpose of AI-enabled 6G networks is to automate operations, analyze massive data, and create intelligent cloud, edge, and fog nodes [11]. Ultimately, the main goal is to establish a seamless end-to-end connection that is impossible with current 5G standards [12]. In 6G networks, resources must be deployed intelligently so that heterogeneous devices can meet diverse Quality of Service (QoS) demands. Low-latency and ultra-reliable networks are needed to guarantee real-time data and seamless network connectivity between autonomous vehicles [13]. The physical infrastructure is expected to support various wireless network applications such as extended reality, telemedicine, and video streaming [14]. The development of these applications poses significant issues for resource management in 6G networks, as they require network services with particular performance features, including mobility management, latency reduction, energy efficiency, and spectrum efficiency [15]. The architecture standards for 6G networks must urgently tackle these challenges to maximize the effective utilization of network resources [16]. In addition, effective resource management is essential for facilitating information sharing in Device-to-Device (D2D) communication, map navigation, health alert notifications, etc. [17,18]. Concurrently achieving the requirements of reliability and of energy- and spectrum-efficient communication will be particularly challenging. Therefore, it is essential to develop collaborative optimization solutions for resource management issues in 6G network applications.

These challenges include channel allocation, interference management, user association, and power allocation, which are crucial for meeting diverse needs [19]. Traditional resource management challenges in wireless networks are typically solved with heuristic and suboptimal optimization approaches [20]. Moreover, global optimization algorithms are unable to resolve the NP-hard joint optimization challenges of spectrum efficiency and energy efficiency in the forthcoming 6G networks due to their complexity [21]. The field of artificial intelligence, which includes machine learning algorithms, has emerged as a potential solution for tackling computationally challenging and NP-hard issues [22,23]. This development has encouraged researchers to adopt machine learning algorithms to address joint optimization issues in 6G wireless networks. Additionally, robustness, reliability, and resource efficiency have become greater concerns with 6G networks. Hence, intelligent resource management in 6G networks, which machine learning enables, necessitates a radical departure from conventional resource management approaches [24].

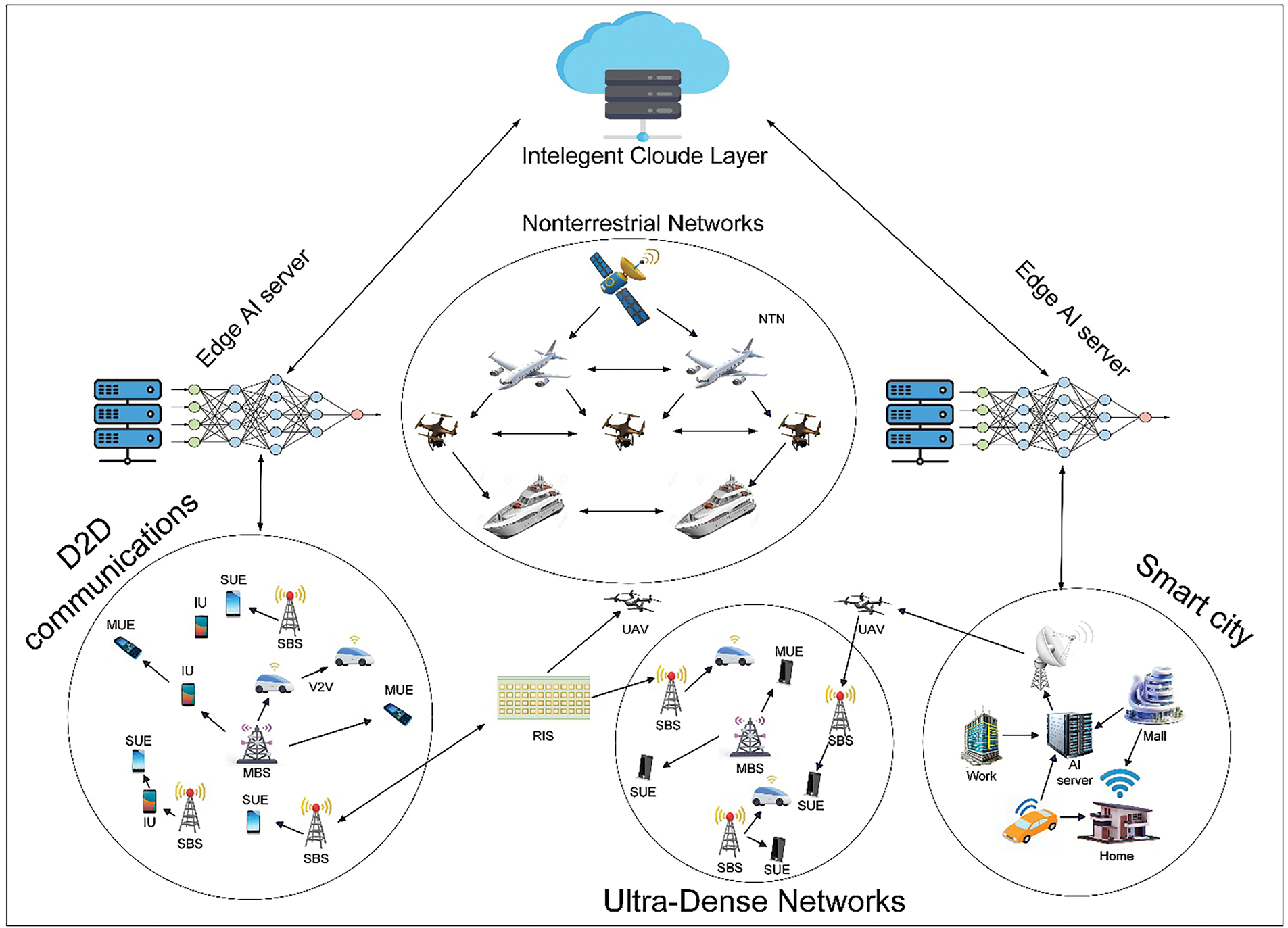

Furthermore, 6G wireless network optimization based on AI methods is influenced by the high data rates offered by the terahertz spectrum [25]. AI approaches are expected to deliver cognitive services, effective spectrum management, and autonomous network installations [26]. Moreover, the integration of 6G networks and machine learning may efficiently enhance resource utilization and enable real-time learning for autonomous systems [27]. Achieving a balance between the anticipated exponential increase in data traffic, the integration of sensor-based services, and the tremendous densification of networks is crucial in the progress toward 6G networks [28]. Thus, the 6G networks enabled by AI will play a crucial role in society and industry, meeting the communication demands of machines and humans [29,30]. Fig. 1 illustrates a vision of a 6G wireless network.

Figure 1: A vision of 6G wireless network

This survey article comprehensively reviews the prospective advantages and challenges of AI approach integration with the latest technologies in 6G networks. Improving the efficiency of 6G wireless networks is addressed, along with the issues of managing resources using AI approaches. This article explains the methods used by current studies to address intelligent resource management in 6G networks using AI techniques. These AI techniques are classified into Deep Learning (DL), Federated Learning (FL), Reinforcement Learning (RL), and Evolutionary Learning (EL). Subsequently, we evaluate the AI methods based on optimization challenges such as user association, channel allocation, power allocation, and mode selection. Furthermore, outstanding issues and possible developments in AI-based resource management are discussed. Fig. 2 shows the survey structure.

Figure 2: Survey structure

2 Motivations and Contributions

Our comprehensive survey addresses the lack of a unified treatment of AI-based resource management for 6G networks and encourages more research on the topic. We review recent works on AI types including DL, FL, RL, and EL, and analyze them according to optimization challenges such as user association, channel allocation, power allocation, and mode selection. Additionally, this review offers a new perspective on and categorization of recent literature, with a particular emphasis on AI-enabled 6G networks. Consequently, we can define the unresolved challenges and issues that are associated with intelligent resource management. In addition, we suggest several future research topics in the design principles of AI-enabled 6G wireless applications.

The main contributions are summarized below:

1. Provide a summary of current AI methods for 6G network resource management applications, including DL, FL, RL, and EL.

2. Investigate the AI methods in the context of optimization problems such as user association, channel allocation, power allocation, and mode selection.

3. Summarize the AI-assisted methods, including the learning types, optimization issues, applied scenarios, advantages, and limitations.

4. Identify the unsolved issues, areas of study that need more investigation, and potential remedies related to the future directions of machine learning applications for the standard design of 6G networks.

In this paper, we discuss different aspects of AI-based 6G wireless networks as shown in Fig. 2. The rest of the paper is organized as follows: In Section 3, we summarize the related research and provide an overview of the current research surveys that use AI in wireless communication networks. Then, we provide an overview of AI-based resource management in Section 4. Section 5 provides an overview of the present AI approaches used for resource management, including DL, FL, RL, and EL. In addition, Section 6 explores the upcoming trends and directions for AI-powered resource management in future wireless communications. Section 7 presents a comparative analysis of several AI strategies for 6G HetNets. Finally, the conclusion of the paper is presented in Section 8.

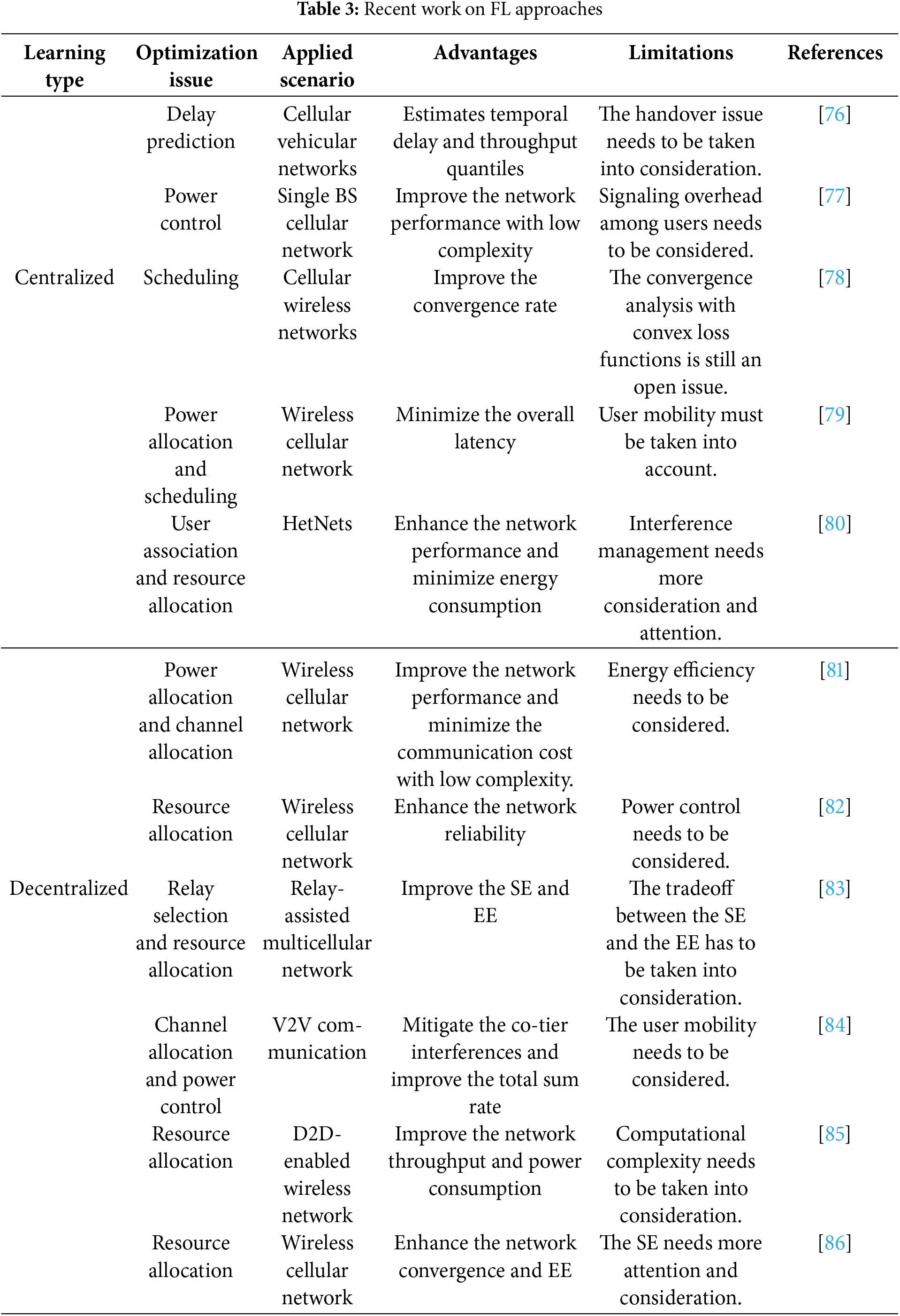

The use of AI for future communications has been the subject of several research studies in the past few years, motivated by the advantages that AI can potentially offer. In [31], the integration of Machine Learning (ML) and Blockchain Technology (BCT) is the main focus of the study. The authors evaluated the implementation of ML in 6G, examining its applications and its incorporation with conventional and non-conventional communication media, as well as its incorporation in the 6G-IoT network. Furthermore, the complicated configuration of wireless communication systems demands an extensive review of privacy and security, which has inspired extensive study of BCT. This review paper analyzed BCT’s characteristics, framework, and utilization in various communication environments. Moreover, the authors performed a comprehensive examination of AI and BCT, studying their effect on communication networks. This integrated approach has specific characteristics that are crucial for wireless communication and networks, including model sharing and decentralized data.

In [32], the authors comprehensively reviewed ML-enabled wireless communication in 6G networks. They investigated current machine learning approaches, including supervised and unsupervised machine learning, federated learning, reinforcement learning, deep learning, and deep reinforcement learning for resource management applications. The authors investigated the performance of ML methods in three different network classifications, specifically D2D, fog-radio access, and vehicular networks. Moreover, they presented a summary of new ML-based algorithms designed to address the challenges regarding resource allocation, task offloading, and handover. Finally, they emphasized the open issues, challenges, and potential solutions, in addition to future research directions, in the framework of 6G wireless applications. In [33], the authors presented an extensive examination and comprehensive evaluation of AI-RAN’s vision and current issues. Firstly, the authors introduced a brief overview of 6G AI-RAN, then discussed the existing 5G RAN methodologies and the challenges that must be addressed to successfully deploy 6G AI-RAN. Furthermore, the study analyzed state-of-the-art research areas in AI-RAN, focusing on spectrum allocation, network design, and resource management issues. Moreover, they focused on several approaches to tackle these issues, which include employing modern ML and edge computing techniques to enhance the efficiency of 6G AI-RAN.

In [34], the authors investigated the implementation of AI-enabled applications to handle various aspects of 6G mobile communications, particularly intelligent network management and mobility, channel coding, massive Multi-Input Multi-Output (MIMO), and beamforming. This research has shown that the 6G framework, employing AI approaches, can intelligently manage network configurations and various resources related to slices, computational power, caching, energy, and communications to meet continually changing demands. Following that, the authors identified many challenges and potential investigation areas for improving AI-based 6G networks. AI-based 6G wireless networks face multiple challenges, specifically in the management of massive data, complex algorithms, interference control, energy efficiency, and privacy. The paper highlighted several future areas of study, which include the implementation of intelligent spectrum management, beamforming based on AI, autonomous networks, the integration of quantum technology and AI, satellite networks enhanced by AI, the emphasis on environmental sustainability, network slicing improved by AI, and the use of distributed ML for communications. In [35], the authors extensively reviewed resource management techniques in Heterogeneous Networks (HetNets). A comprehensive evaluation of the existing resource management methods for HetNets is provided. Moreover, this article reviewed recent studies in various aspects of resource management regarding user association, power allocation, mode selection, and spectrum efficiency to concentrate on existing research gaps. These resource management aspects are organized by approaches, criteria, methods, strategies, and structures in various network scenarios. In addition, this paper presented the efficient approaches utilized by HetNets to address the challenges of intelligent communications.

In [36], the authors extensively investigated the suitability of DRL and RL approaches for resource allocation in 6G network slicing. The authors conducted a comprehensive examination of relevant research studies and examined the feasibility and effectiveness of the suggested methods in tackling issues regarding resource allocation, admission control, resource orchestration, and resource scheduling. Furthermore, they evaluated the methodologies based on the optimization objectives, including the network’s emphasis, the range of potential states and actions, the algorithms employed, the framework of deep neural networks, and the balance between exploration and exploitation. In [37], the authors comprehensively examined resource optimization, covering both current and previous investigations of approaches, indicators, and application scenarios. They also demonstrated the significance of resource optimization for the next 6G networks. Moreover, the authors investigated various current methodologies for evaluating the performance of 6G IoT networks, especially concentrating on metrics such as bandwidth, reliability, latency, EE, and throughput. Finally, this paper analyzed the challenges experienced in this area of study and provided promising techniques for future research to enhance the performance of 6G IoT networks.

In [38], the authors provided a resource management framework that utilizes AI in future Beyond Fifth Generation (B5G)/6G networks to examine the significance of AI in resource allocation for future wireless communications systems. To comprehensively investigate the present innovations in relevant research, this study reviewed and compared resource management strategies in two classifications: AI-based and model-based methods. Furthermore, the authors highlighted the issues that must be overcome to incorporate AI into existing and future wireless networks. These challenges include the requirement for datasets and test cases. Additionally, they discussed opportunities, such as exploring the theoretical performance limits of AI-based resource management, mapping scenarios, goals, and algorithms, utilizing both broad learning and deep learning techniques, and investigating innovative approaches for achieving performance metrics with explainable artificial intelligence. In [39], the authors concentrated on utilizing AI and ML features to enhance 6G networks and optimize resource management. They presented advanced terahertz approaches, including ML-based terahertz channel estimation and spectrum allocation, that have been regarded as innovative in accomplishing high-speed transmission across a wide range of frequencies. Furthermore, they examined AI and ML applications in power management, specifically for energy-harvesting networks. In addition, this review paper concentrated on AI and ML technologies to improve security in IoT systems, which involves the enhancement of authentication, attack detection processes, and access control. They also examined handover management and mobility strategies using Q-learning, DRL, and DL to provide highly reliable and secure connections and meet the requirements of 6G dynamic networks. Intelligent resource allocation methods, such as traffic, storage, and computation offloading techniques, have been recognized as potential approaches for satisfying low-latency demands in 6G applications.

In [40], the authors comprehensively reviewed the influential “learning to optimize” methods in various fields of 6G networks. The major optimization issues and specifically designed ML frameworks are identified and examined. Specifically, this paper focused on algorithm unrolling, graph neural networks, DRL, end-to-end learning, and wireless federated learning for distributed optimization. These techniques are designed to tackle challenging problems that arise in various significant wireless applications. The paper also covers issues such as the architecture of neural networks, theoretical tools employed in various ML techniques, implementation obstacles, and future research objectives. These discussions aimed to provide practical guidance for using ML models in 6G wireless networks. In [41], the authors performed a comprehensive analysis of the implementation of AI and ML in 6G networks to improve the perspective of the future IoT. They discussed the development of communication systems and emphasized the importance of incorporating AI/ML algorithms in the structure of the systems. This paper also explored the potential of AI/ML algorithms to improve the IoT services supplied by smart facilities. It particularly concentrated on fields of application such as smart agriculture, smart healthcare, smart transportation, and smart industry.

In [42], the authors presented an in-depth investigation of various ML, DL, RL, and DRL algorithms that can be employed to address the optimization challenges that arise while developing technologies to satisfy the demands of 6G networks. They demonstrated that ML algorithms may efficiently tackle an extensive range of issues associated with SE, EE, throughput, reducing computation, and developing reliable and secure channels for communications. Nevertheless, while these techniques have been effective in previous research, additional investigation is required to optimize their efficacy. In conclusion, they presented potential ML approaches to efficiently tackle obstacles that could occur in 6G networks. These challenges include expanding coverage, connecting terrestrial and non-terrestrial systems, reducing latency, and incorporating sensing and communication techniques. However, the proposed solutions often lack comprehensive validation in real-world scenarios, highlighting the need for more empirical studies and experimental validation to assess their effectiveness and scalability. In addition, none of the aforementioned surveys categorized machine learning methods into DL, FL, RL, and EL or analyzed the AI approaches according to user association, channel allocation, power allocation, and mode selection. In summary, while the present investigations provide useful insights into resource management in 6G networks, further research is required to tackle the practical issues and restrictions faced in real-world implementations. In contrast to prior investigations that provide a broad summary of AI approaches for 6G networks, our survey provides an organized taxonomy of AI approaches, such as DL, FL, RL, and EL, specifically applied to resource management challenges such as user association, channel allocation, power control, and mode selection. We further demonstrate the trade-offs between AI model accuracy, complexity, and real-time application, hence illuminating their viability in realistic 6G environments. Additionally, a comparative analysis section has been included, differentiating our research from existing surveys regarding scope, contributions, and methodology. This study identifies significant resource management challenges in AI-based 6G networks and proposes innovative research directions, such as hybrid AI models. Table 1 presents a concise overview of the current research on the integration of machine learning in 6G wireless communication networks.

4 An Overview of Resource Management in 6G Networks

Efficient allocation and utilization of network resources is a crucial aspect of 6G wireless communication networks. Meeting the increasing demands of emerging services and applications requires efficient use of resources [34]. 6G networks are expected to be available to billions of devices with wide connectivity, low latency, and high transmission rates [43]. Diverse methods of managing resources, including spectrum allocation, power control, user association, and interference management, are required to accomplish these ambitious objectives [44]. The effective distribution of the accessible spectrum is one of the main obstacles to 6G resource management. 6G is expected to use a diverse array of frequency bands, surpassing those of previous generations [45]. These bands include millimeter-wave (mmWave) and THz. Distinctive characteristics and issues are associated with each of these bands [46]. For example, mmWave and THz bands have enormous data rates but significant path loss and need sophisticated beamforming methods for reliable communication. Dynamic spectrum allocation based on network circumstances and user needs is essential for efficient spectrum management [47]. Power control is an additional significant 6G resource management aspect.
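The path-loss penalty of moving to higher bands can be made concrete with the standard Friis free-space model. The sketch below is illustrative only: the carrier frequencies and the 100 m link distance are assumed values chosen for the example, and the model ignores molecular absorption, blockage, and antenna gains, all of which matter at mmWave and THz frequencies.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB from the Friis equation (isotropic antennas)."""
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    return 20.0 * math.log10(
        4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_PER_S
    )


if __name__ == "__main__":
    # Example carrier frequencies (assumptions, not values taken from the survey).
    bands_hz = {
        "sub-6 GHz (3.5 GHz)": 3.5e9,
        "mmWave (28 GHz)": 28e9,
        "THz (300 GHz)": 300e9,
    }
    for name, freq in bands_hz.items():
        loss = free_space_path_loss_db(100.0, freq)
        print(f"{name}: {loss:.1f} dB at 100 m")
```

Because the loss grows with 20 log10 of the frequency, every tenfold increase in carrier frequency adds 20 dB at a fixed distance, which is why high-gain beamforming is needed to close the link budget in these bands.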

Managing interference is becoming more challenging as the number of devices increases and networks are densified by deploying small cells [48]. To minimize interference, maximize energy efficiency, and guarantee QoS for various applications, reliable power management systems are crucial [29]. The network environment and user mobility must be considered while adjusting transmission power. In addition, user association and load balancing are essential aspects of resource management in 6G networks. As 6G encompasses a wide range of technologies, from macro cells to small cells and from D2D communication to satellite networks, it is crucial to intelligently pair users with the most suitable network nodes [49]. Signal strength, network congestion, and user mobility must all be taken into account. By balancing traffic across networks, effective user association schemes increase network efficiency and improve user experience. The use of high-frequency bands and dense network deployment makes interference control a more significant issue in 6G networks [50,51]. Interference alignment, intelligent reflecting surfaces, and coordinated multi-point transmission are some of the advanced interference reduction strategies that are anticipated to be pivotal in interference management [52]. These strategies need advanced coordination and immediate adjustment to the dynamic network environment.
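To illustrate how signal strength and congestion interact in user association, the following sketch implements a simple greedy, load-aware heuristic. It is a conventional baseline written for this example rather than an AI method or a scheme from the cited works; the function name `associate_users`, the equal-share load model, and the input layout are all assumptions.

```python
import math


def associate_users(rx_power, noise=1.0, load_aware=True):
    """Greedily assign each user to one base station (BS).

    rx_power[u][b] is the linear received power at user u from BS b.
    Returns a list whose u-th entry is the index of the chosen BS.
    """
    if not rx_power:
        return []
    num_bs = len(rx_power[0])
    load = [0] * num_bs  # number of users already attached to each BS
    association = []
    for powers in rx_power:
        total = sum(powers)
        best_bs, best_score = 0, float("-inf")
        for b, p in enumerate(powers):
            sinr = p / (total - p + noise)  # all other BSs act as interferers
            rate = math.log2(1.0 + sinr)    # Shannon rate per unit bandwidth
            # Assumption: a BS shares its resources equally among its users.
            score = rate / (load[b] + 1) if load_aware else rate
            if score > best_score:
                best_bs, best_score = b, score
        load[best_bs] += 1
        association.append(best_bs)
    return association


if __name__ == "__main__":
    # Three users that all hear BS 0 slightly better than BS 1.
    rx = [[4.0, 3.0], [4.0, 3.0], [4.0, 3.0]]
    print(associate_users(rx, load_aware=False))  # pure max-SINR association
    print(associate_users(rx, load_aware=True))   # load-aware association
```

With pure max-SINR association every user in the example attaches to the same base station, whereas the load-aware score spreads users across both, which is the balancing effect the paragraph above describes.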

The management of resources in 6G networks is expected to experience a revolutionary change because of the advent of AI and ML [53]. To optimize resource allocation, anticipate traffic patterns, and react to varying network circumstances, ML algorithms can analyze tremendous amounts of data [54]. For instance, consider the applications of DL models in traffic management for predictive analytics and RL in optimizing power regulation and spectrum allocation via environmental learning [55]. FL is appropriate for user association and load-balancing activities since it provides a means to use distributed information across various devices while maintaining privacy [56]. EL optimizes complicated processes including dynamic resource allocation and network setup, improving network efficiency [57]. In general, the management of resources in 6G wireless communication networks requires a comprehensive methodology that deals with many difficulties related to spectrum allocation, power control, user association, and mode selection. AI/ML methods improve the effectiveness and flexibility of these procedures, thereby enabling the full utilization of 6G networks. As advancements in research and development progress in this area, it will be crucial to use inventive methods and techniques to fulfill the challenging demands of upcoming wireless communication.
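As a minimal sketch of RL-based power control through interaction with the environment, the example below uses stateless (single-state) Q-learning with epsilon-greedy exploration to pick a transmit power level that maximizes energy efficiency. The power levels, channel gain, circuit power, and reward definition are hypothetical values chosen for illustration; a realistic agent would also observe channel and interference state.

```python
import math
import random

POWER_LEVELS_W = [0.1, 0.5, 1.0, 2.0]  # hypothetical discrete transmit powers
GAIN_OVER_NOISE = 10.0                 # hypothetical channel gain / noise power
CIRCUIT_POWER_W = 0.5                  # hypothetical static circuit power


def energy_efficiency(power_w):
    """Reward: spectral efficiency divided by total consumed power."""
    rate = math.log2(1.0 + GAIN_OVER_NOISE * power_w)
    return rate / (power_w + CIRCUIT_POWER_W)


def learn_power_level(steps=2000, alpha=0.1, epsilon=0.2, seed=0):
    """Single-state Q-learning: one Q-value per candidate power level."""
    rng = random.Random(seed)
    q = [0.0] * len(POWER_LEVELS_W)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(q))                   # explore
        else:
            action = max(range(len(q)), key=q.__getitem__)   # exploit
        reward = energy_efficiency(POWER_LEVELS_W[action])
        q[action] += alpha * (reward - q[action])            # incremental update
    best = max(range(len(q)), key=q.__getitem__)
    return POWER_LEVELS_W[best], q


if __name__ == "__main__":
    best_power, q_values = learn_power_level()
    print("learned power level (W):", best_power)
    print("Q-values:", [round(v, 3) for v in q_values])
```

The agent never sees the reward formula; it only observes the reward of each action it tries, which mirrors the "environmental learning" idea while keeping the example small enough to check by hand.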

5 AI-Based Resource Management in 6G Networks

Advancements in AI play an essential role in 6G networks, offering remarkable improvements in connectivity, efficiency, and speed [44]. Researchers are using AI algorithms to optimize network management, forecast traffic patterns, and enhance resource allocation [58]. In dynamic spectrum management, AI models make predictions and assign spectrum resources in real time to maximize throughput while minimizing interference. Improved reliability is also achieved via the deployment of AI-driven predictive maintenance, which identifies and fixes network issues before they affect service quality. Consequently, AI will play a crucial role in developing 6G networks, enabling more intelligent, secure, and faster wireless communication [59]. Deep learning, reinforcement learning, federated learning, and evolutionary learning are some of the machine learning techniques used in modern wireless networks. AI approaches are categorized based on their distinct applications in tackling optimization issues of resource management in 6G networks. These approaches offer different capabilities in terms of scalability, adaptability, and decision-making. In addition, Deep Reinforcement Learning (DRL) and Federated Reinforcement Learning (FRL) are two examples of hybrid techniques that incorporate attributes from several different learning models to improve overall performance. The classification of AI types is shown in Fig. 3.

Figure 3: Classification of AI approaches

Modern wireless networks are undergoing a dramatic transformation due to the efficiency and effectiveness achieved by deep learning algorithms [58]. These methods improve modulation and demodulation accuracy and efficiency in advanced signal processing. Additionally, they are vital in enhancing data throughput and reducing congestion via dynamic resource allocation and traffic prediction, all of which contribute to optimal network management. When applied to wireless networks, deep learning makes them more intelligent, flexible, and reliable. Both supervised and unsupervised learning fall under the general category of deep learning [59,60]. Supervised learning uses labeled data to train algorithms, whereas unsupervised learning uses unlabeled data to find patterns and structures.

Supervised learning trains algorithms on a labeled dataset to produce accurate predictions or classifications for newly acquired data. In [61], the researchers investigate the issue of user association from the perspective of deep learning. They present a deep learning approach to link user equipment to competing macro and small base stations. To find the asymptotically optimum solution used for labeling in supervised learning, the user association issue is formulated as an optimization problem. They analyze the accuracy of the proposed method after training the U-Net model. Based on the simulation results, the proposed scheme outperforms the Genetic Algorithm (GA) scheme in terms of computation time and scalability, and it approaches the GA scheme in terms of cumulative rate gain. However, channel allocation and power control remain open issues. In [62], the authors investigate the user association and resource allocation problems in HetNets. Their primary objective is to optimize Energy Efficiency (EE) while considering the limitations imposed by QoS, power, and interference. In particular, the Lagrange dual decomposition approach is used to handle the problem of user association. On the other hand, semi-supervised learning and Deep Neural Networks (DNN) are utilized for resource allocation. According to the simulation, the proposed approach has the potential to produce greater EE while simultaneously reducing complexity. However, cross-tier interference needs to be considered.
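For orientation, an EE maximization of the kind described for [62] is commonly written in the generic form below. This is a textbook-style formulation under assumed notation, not the exact problem from [62]: $K$ users, transmit power $p_k$, SINR $\gamma_k$, bandwidth $B$, static circuit power $P_{\mathrm{c}}$, minimum rate $R_{\min}$, power budget $P_{\max}$, and interference threshold $I_{\mathrm{th}}$ on the received interference $I_k$ are all symbols introduced here for illustration.

```latex
\max_{\{p_k\}} \; \eta_{\mathrm{EE}}
  = \frac{\sum_{k=1}^{K} R_k}{P_{\mathrm{c}} + \sum_{k=1}^{K} p_k},
\qquad
R_k = B \log_2\!\left(1 + \gamma_k\right),
\qquad
\text{s.t.}\quad
R_k \ge R_{\min}, \quad
0 \le p_k \le P_{\max}, \quad
I_k \le I_{\mathrm{th}} .
```

The ratio objective makes the problem fractional and generally non-convex, which is one reason learning-based approximations are attractive for it.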

In [63], the researchers examined the challenges associated with future TV broadcasting via 5G wireless mobile networks. They present a framework for allocating multimedia resources in TV broadcasting using a network slice-based approach. The system includes a proactive network-slicing architecture and a detailed explanation of its operational mechanism. Then, a prediction technique is specifically developed for resource allocation in the context of TV broadcasting service demand. The suggested architecture prioritizes optimizing energy efficiency by jointly considering transmit power and bandwidth in allocating 5G resources. Deep reinforcement learning is used to effectively handle the system complexity, and a convex optimization technique is presented to obtain the best solution and speed up the training process. Results indicate that the suggested strategy can properly anticipate multicast service needs and increase network energy efficiency under specific QoS criteria and temporal fluctuations. However, multicast service demand should be predicted more accurately using a deep learning model with additional cells and layers. In [64], the authors examine the optimization of spectral efficiency (SE) in the context of a Massive MIMO network, taking into account different numbers of users. The optimization problem is formulated as a joint pilot and data power control problem. Given the non-convex nature of the issue, they developed an innovative iterative technique that reaches an equilibrium point in polynomial time. Furthermore, they provide a deep learning solution to facilitate real-time deployment. Data and pilot powers are predicted by the proposed PowerNet neural network, which only makes use of large-scale fading information. The main contribution is to create a neural network that can accommodate a constantly changing number of users, allowing PowerNet to approximate numerous power control functions with varied inputs and outputs. The results demonstrate the superiority of the proposed approach over the iterative algorithm in terms of SE. Nevertheless, the channel allocation problem needs to be considered.

In [65], the researchers examined the issue of handover in 5G networks. The paper aims to transform the handover issue into a classification problem and then solve it using deep learning, as it is a typical technology that may produce very accurate results for classification problems. The proposed method considers two user features: Signal-to-Interference-plus-Noise Ratio (SINR) and SINR change. The simulation results indicate that the proposed method has the lowest rates of radio connection failure and ping-pong compared to the benchmark mechanisms. However, the system throughput requires further attention. In [66], the authors investigated the power allocation of the Cloud Radio Access Network (C-RAN), optimizing the selection of Remote Radio Heads (RRH) and providing information about the connections associated with those RRHs. A neural network-based optimization model was introduced with the objective of optimizing RRH selection. The Group Sparse Beamforming technique was used to evaluate the model and provide near-optimal solutions for power consumption. The obtained results encourage the use of machine learning methods to mitigate the power consumption and complexity associated with this rising field. However, user fairness needs to be considered.

In [67], an ML approach is employed to address the cell selection problem in 5G Ultra-Dense Networks (UDNs). A cell selection approach based on a neural network is proposed that employs a trained back-propagation model to execute the problem of small base station selection effectively. The objective is to extend the duration of presence within small cells, thereby reducing the handovers. The trained suggested model is capable of predicting the optimal small base station with a high level of precision and a minimal error rate. The results demonstrate that the proposed approach effectively accomplishes its objectives by reducing the rate of handovers and extending the duration of vehicle presence throughout small cells. Consequently, the occurrence of failed and needless handovers is reduced. Furthermore, compared to non-ML approaches, computational complexity is decreased. However, additional input features must be considered throughout training to ensure the model’s applicability across different scenarios and environments.

Unsupervised learning uses algorithms to find patterns, structures, and connections in unlabeled data. In [68], an iterative channel allocation method and a power allocation method based on DNNs with unsupervised learning are proposed in downlink Non-Orthogonal Multiple Access (NOMA)-based HetNet. The optimization issue aims to maximize the total rate while maintaining the QoS demand. The presented algorithm offers a sum rate and outage probability comparable to those of the interior point method, which can provide the ideal solution but has considerably greater complexity than the proposed scheme. Furthermore, when contrasted with the traditional two-sided matching technique, the proposed channel allocation approach obtains a higher NOMA gain. However, the interference among users needs to be considered. In [69], the problem of power allocation and user association in dynamic HetNets is investigated. The authors propose a novel approach for reducing computational complexity in dynamic HetNets using unsupervised learning-based user association and power control algorithms. They develop an unsupervised learning approach using a recurrent neural network that can be adjusted to accommodate different numbers of users. Extensive simulations have shown that the suggested approach outperforms existing optimization-based techniques in terms of fairness performance. However, the inter-cluster interference needs to be considered.

In [70], for mmWave massive MIMO HetNets, the authors suggest two hybrid precoding methods that use unsupervised learning with graph attention networks and convolutional neural networks. The proposed methods significantly enhance the training efficiency and ease the difficulty of acquiring samples. Graph attention networks and multi-head mechanisms are investigated for their great learning capacity for obtaining correct precoding vectors by analyzing network spatial properties and reducing algorithm computational complexity without anticipating graph structure. Extensive simulations demonstrate that the proposed algorithms exhibit significant advantages in terms of enhancing SE and EE while maintaining low computational complexity. Nevertheless, the proposed algorithms were examined with only a limited number of users. In [71], a DNN allocates resources for a 5G massive MIMO network. The multi-objective sine cosine algorithm is used to optimize the objective functions, which are data rate, SINR, power consumption, and EE. Subsequently, the optimized objective functions are passed to the neural network to distribute resources. Additionally, the fairness index for the neural network-based resource distribution procedure is determined. The results of the proposed approach demonstrate that it offers superior performance compared to other existing approaches. However, the tradeoff between SE and EE needs to be considered.

In [72], the authors propose a deep learning algorithm to tackle the power allocation problem in C-RAN. User association is considered in the optimization issue to represent an actual cellular environment accurately. The authors conduct a comprehensive study to examine the trade-offs encountered while using a deep learning-based solution for power allocation. The results show that the proposed method achieves near-optimal performance with low computational complexity. Nonetheless, the proposed deep learning method does not always guarantee an acceptable outcome. In [73], the authors investigate the problem of resource allocation for the URLLC network in order to maximize energy efficiency. The Dinkelbach transformation is used to turn the initial fractional optimization problems into linear problems. A DNN is used to formulate the power control function. The proposed approach provides superior results compared to random power allocation, and the QoS demands are effectively ensured. Simulation results confirm that the proposed approach can significantly enhance the system’s EE. Furthermore, the training method exhibits rapid convergence while maintaining a low complexity. However, an appropriate channel allocation strategy needs to be considered.
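To illustrate the Dinkelbach transformation mentioned for [73], the following minimal sketch maximizes the energy efficiency of a single link, defined here as rate divided by total power. It is not the system model of [73]: the channel gain `g`, the circuit power `p_circuit`, the power budget `p_max`, and the logarithmic rate function are all assumptions chosen for illustration. The ratio objective is replaced by a sequence of parametric problems of the form rate minus `lam` times power, and `lam` is updated until the parametric optimum reaches zero.

```python
import math

def rate(p, g):
    """Achievable rate (bit/s/Hz) of one link with gain g and transmit power p."""
    return math.log2(1.0 + g * p)

def dinkelbach_ee(g=10.0, p_circuit=0.5, p_max=2.0, tol=1e-9, max_iter=100):
    """Maximize rate(p) / (p + p_circuit) over 0 <= p <= p_max (illustrative values)."""
    lam = 0.0  # current estimate of the optimal energy efficiency
    p = p_max
    for _ in range(max_iter):
        if lam > 0.0:
            # Stationary point of rate(p) - lam * (p + p_circuit), clipped to the box.
            p = 1.0 / (lam * math.log(2.0)) - 1.0 / g
            p = min(max(p, 0.0), p_max)
        else:
            p = p_max  # with lam = 0 the rate alone is maximized at full power
        gap = rate(p, g) - lam * (p + p_circuit)
        lam = rate(p, g) / (p + p_circuit)  # Dinkelbach update of the ratio
        if abs(gap) < tol:
            break
    return p, lam

if __name__ == "__main__":
    p_opt, ee_opt = dinkelbach_ee()
    print(f"power = {p_opt:.4f}, energy efficiency = {ee_opt:.4f}")
```

In this toy setting the inner problem has a closed-form solution; in the surveyed works the inner problem is typically handled by a solver or, as in [73], by a DNN-based power control function.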

5.1.3 Open Issues and Proposed Solutions

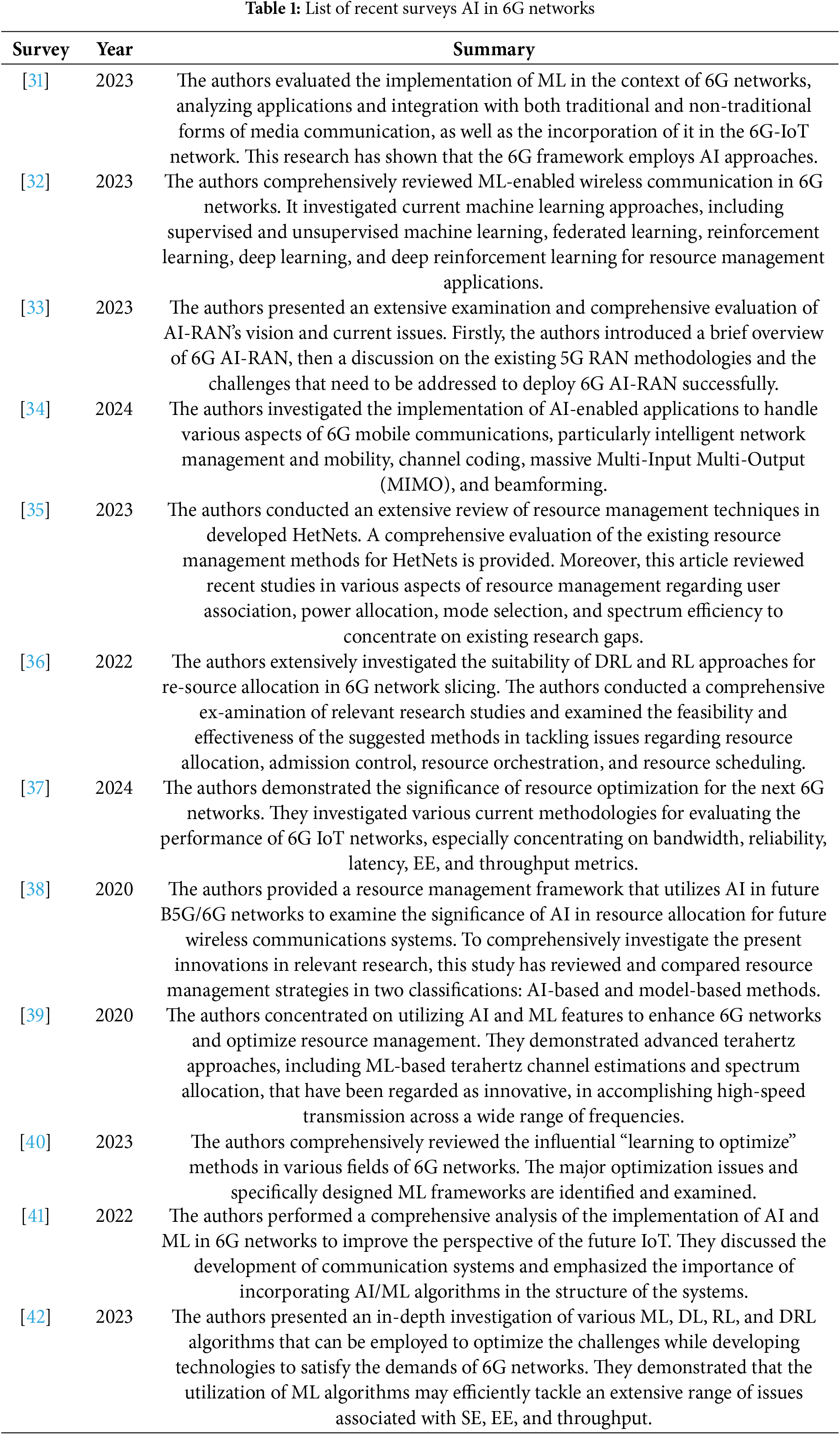

Concerning channel allocation, power allocation, user association, mode selection, and interference control, there are still several unsolved issues with deep learning approaches. 6G environments are dynamic and varied, making it difficult to use supervised DL models for channel and power allocation without large amounts of labeled data. To address this, data augmentation and transfer learning could decrease the need for labeled data and enhance the generalization capacity of the models. Unsupervised DL, in turn, has difficulty capturing the intricate patterns in these tasks because of the lack of labeled data. Advanced clustering and self-supervised learning methods can improve the capacity to learn from unlabeled raw data. Regarding user association and mode selection, it is important to consider the limitations of both supervised and unsupervised DL models. Supervised DL models may struggle to adapt to different scenarios, while unsupervised models may not fully capture user behavior and network conditions. These tasks may be improved by hybrid techniques that combine smaller labeled datasets with larger amounts of unlabeled data. Both supervised and unsupervised DL face enormous challenges in interference control because of its high noise and unpredictability. Interference may be dynamically managed via reinforcement learning, where the model optimizes behaviors depending on rewards. Explainable AI methods play a vital role in offering valuable insights into the decision-making process of deep learning models, and they contribute to the improvement of trust and transparency in AI systems. The primary objective of these solutions is to use DL to its fullest capacity to tackle the complicated issues of 6G wireless networks. Table 2 summarizes the recent work of DL approaches.

Federated learning is revolutionizing 6G networks by allowing intelligent application development [74]. Within this framework, edge devices engage in collaborative training of machine learning models by utilizing their local data, hence obviating the need to transmit sensitive information to a centralized server. This method becomes even more important because of the requirement to efficiently analyze the massive data produced by an enormous number of devices. The distributed architecture of 6G networks is well suited to federated learning since it improves data privacy, optimizes network capacity, and decreases latency [75]. There are two main types of federated learning: centralized and decentralized.

Centralized federated learning uses several edge devices to train models independently and submit their updated parameters to a central server to create a global model. In [76], the authors suggested practical techniques for estimating QoS metrics acquired via network data, specifically with the purpose of implementation of in-vehicle applications. The suggested approach depends on the utilization of federated learning to train regression neural networks. The authors demonstrated that this strategy has the advantage of providing predictions that are part of centralized training, without requiring the transmission of raw measurement data from vehicles. In addition, they validate this method by recovering classical closed-form delay quantiles based on analytical models of basic queueing mechanisms. The authors demonstrated that their methodology overcomes the limitations of basic models by offering quantile estimates for the complicated environment of vehicle communications, which is achieved by considering various application traffic patterns. However, the handover needs to be taken into consideration.
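The centralized scheme described above, in which devices train locally and a server combines their parameters into a global model, can be sketched with federated averaging. This is a minimal sketch under stated assumptions, not the method of [76]: the linear model `y = w0 + w1 * x`, the synthetic noise-free client data, the learning rate, and all function names are hypothetical. Only model parameters leave the clients; the raw samples stay local.

```python
import random

def local_update(weights, data, lr=0.1, epochs=5):
    """Gradient descent on one client's local data for the model y = w0 + w1 * x."""
    w0, w1 = weights
    n = len(data)
    for _ in range(epochs):
        g0 = sum(2.0 * (w0 + w1 * x - y) for x, y in data) / n
        g1 = sum(2.0 * (w0 + w1 * x - y) * x for x, y in data) / n
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return [w0, w1]

def fedavg_round(global_weights, client_datasets):
    """One round: clients train locally, the server averages weighted by sample count."""
    total = sum(len(d) for d in client_datasets)
    new_weights = [0.0, 0.0]
    for data in client_datasets:
        local = local_update(global_weights, data)
        for i in range(2):
            new_weights[i] += len(data) / total * local[i]
    return new_weights

def run_fedavg(rounds=100, seed=0):
    """Three synthetic clients whose data follow y = 1 + 3x (assumed ground truth)."""
    rng = random.Random(seed)
    clients = []
    for n in (20, 40, 60):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        clients.append([(x, 1.0 + 3.0 * x) for x in xs])
    weights = [0.0, 0.0]
    for _ in range(rounds):
        weights = fedavg_round(weights, clients)
    return weights

if __name__ == "__main__":
    print(run_fedavg())
```

Weighting each client by its sample count is one common aggregation rule; other rules, such as uniform averaging, are equally possible in practice.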

In [77], an FL system was considered that includes a single base station (BS) and several mobile users. The mobile users train the local machine learning model using their own data. The authors developed an incentive system based on an auction game involving the BS and the mobile users, in which the BS assumes the role of the auctioneer and the mobile users represent the sellers. In the suggested game, all mobile users submit their bids based on the lowest energy cost they experience while participating in the FL scenario. A primal-dual greedy auction is proposed for determining the winners of the auction and enhancing social welfare. Numerical results demonstrated the performance improvement of the suggested approach. Nevertheless, signaling overhead among users needs to be considered. In [78], a federated learning approach designed specifically for cellular wireless networks was suggested. A centralized computing site is responsible for training a learning model over several users. Furthermore, the authors proposed a scheduling technique that enhances the convergence rate and investigated the impact of local computing steps on algorithm convergence. They demonstrated that federated learning algorithms can tackle these problems provided that wireless channel unreliability is ignored. Nonetheless, convergence analysis with convex loss functions is still an open issue.

In [79], the authors examined the utilization of wireless power transfer (WPT) in assisted FL, where the cellular BS handles the responsibility of charging the wireless devices (WDs) via WPT and also receives the locally trained models of the WDs for model aggregation throughout each iteration of FL. The authors proposed a joint optimization approach for improving the processing rate of each WD, the WPT-duration for the BS to charge each WD, and the number of local iterations for each WD. The objective is to reduce the total latency of FL iterations till the convergence condition is achieved. Despite its non-convex nature, the investigators divided the problem into two subproblems and proposed a simulated annealing-based technique as an approach to solving them in a sequence effectively. The numerical results demonstrated the superiority of the suggested strategy against heuristic techniques. Nevertheless, user mobility must be taken into account. In [80], the authors incorporate mobile edge computing and digital twin technologies into a hierarchical framework of FL. In situations where the users are outside the coverage area of the small base stations, the framework facilitates the involvement of macro base stations in supporting the local computation of the users. This collaboration effectively decreases transmission delay. Additionally, it maintains the privacy of users and facilitates increased participation of them in the training process, hence enhancing the accuracy of the FL. Furthermore, they provided a deep reinforcement learning approach to address the joint optimization issue of dynamic user association and resource allocation. Reducing energy consumption within a certain time delay is the main goal of this method. The simulation results showed that the suggested system successfully decreases the rate of task transmission failures and energy consumption compared to the baseline scheme. 
Additionally, it results in cost savings in communication across the digital twin networks. Nevertheless, interference management requires further attention.

Decentralized federated learning allows edge devices to share and aggregate model updates directly with one another, which supports scalable and robust model training. In [81], a distributed energy-efficient resource allocation mechanism is investigated. Federated reinforcement optimization is proposed to solve the issue of channel assignment and transmit power. The suggested framework effectively tackled the non-convex problem, addressing the challenges of computational complexity and transmission cost. The results obtained from quantitative analysis and numerical simulations demonstrated that the suggested approach performs better than previous decentralized benchmarks. It also effectively reduced communication costs and minimized the data processing load on the base station compared to the centralized technique. However, energy efficiency needs to be considered. In [82], the authors presented three techniques, specifically random scheduling, round-robin, and proportional fair, for resource allocation in cellular networks utilizing FL. Resource allocation efficiency is evaluated using the Fashion-MNIST dataset, taking into account each user’s convergence speed and time. The results indicated that, of the three techniques, proportional fair performs best when evaluated per communication round. The results of the research have significant potential for improving resource allocation and user selection in the field of wireless network architecture. Nonetheless, power control needs to be considered.
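The three scheduling techniques compared in [82] can be sketched as follows. This is a minimal sketch, not the evaluation setup of [82]: it schedules one user per time slot, draws instantaneous rates from an exponential distribution, and tracks average throughput with an exponential moving average. The mean rates, the smoothing factor `beta`, and the function names are assumptions for illustration, and no FL training is performed.

```python
import random

def round_robin(slot, n_users):
    """Serve users cyclically, ignoring channel conditions."""
    return slot % n_users

def proportional_fair(inst_rates, avg_rates):
    """Serve the user with the largest instantaneous-to-average rate ratio."""
    return max(range(len(inst_rates)),
               key=lambda k: inst_rates[k] / max(avg_rates[k], 1e-9))

def simulate(policy, mean_rates, slots=2000, beta=0.05, seed=1):
    """Return how often each user was served and the final average throughputs."""
    rng = random.Random(seed)
    n = len(mean_rates)
    avg = [1e-3] * n
    served = [0] * n
    for t in range(slots):
        # Hypothetical fading model: exponentially distributed rates per user.
        inst = [rng.expovariate(1.0 / m) for m in mean_rates]
        if policy == "random":
            k = rng.randrange(n)
        elif policy == "round_robin":
            k = round_robin(t, n)
        else:
            k = proportional_fair(inst, avg)
        served[k] += 1
        for u in range(n):
            delivered = inst[u] if u == k else 0.0
            avg[u] = (1.0 - beta) * avg[u] + beta * delivered
    return served, avg

if __name__ == "__main__":
    for name in ("random", "round_robin", "proportional_fair"):
        print(name, simulate(name, [1.0, 2.0, 4.0]))
```

Proportional fair exploits favorable channel conditions while still serving users whose average throughput has fallen behind, which is one plausible reason for it to compare well against the two channel-agnostic policies.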

In [83], the researchers investigated relay selection and resource allocation approaches based on FL for multicellular configurations. The main objective is to decrease the training time for the ML models by using the mobile edge computing characteristics of the FL technique. Specifically, a DNN model trained on several edge devices has been investigated for predicting the most effective relay node for each user. The main improvement of the technique given in this study is the involvement of both EE and SE as network metrics, as well as training time and accuracy, in the DNN training and model aggregation process. The results indicate that EE and SE can be greatly enhanced when relay node edge devices perform part of the ML training workload, in comparison to centralized learning-based techniques. Additionally, the FL method improved training accuracy while substantially lowering training time. However, the tradeoff between the SE and the EE has to be taken into consideration. In [84], the authors examined federated deep reinforcement learning to optimize channel allocation and power management for vehicle-to-vehicle (V2V) communication in a decentralized manner. The proposed methodology leverages the capabilities of DRL and FL to effectively address the reliability and delay demands of V2V communication, while simultaneously optimizing the data rates of the network. They created an individual V2V agent employing the dueling double deep Q-network and developed a reward function to train V2V agents simultaneously. The suggested federated scheme has been validated via simulations and demonstrates superiority over state-of-the-art schemes in terms of sum rate. Nevertheless, the mobility of users must be taken into consideration.

In [85], the authors proposed a decentralized resource allocation method based on FL-aided DRL. The objective of this research is to optimize the overall capacity and decrease energy consumption. The authors have provided a brief description of four technologies that support their proposal. D2D communication enhances network performance, FL protects the privacy of users while facilitating a decentralized model training paradigm, and DRL allows users to develop resource allocation policies based on network states. The efficiency of the suggested techniques is evaluated through simulations of the mmWave and THz scenarios separately. The simulation results demonstrated that the suggested approach enables users to dynamically allocate resources based on limited network states, resulting in significant improvements in network performance, specifically in terms of throughput and power consumption. Nonetheless, computational complexity needs to be taken into consideration. In [86], a distributed resource allocation approach is presented to optimize EE while simultaneously guaranteeing high QoS to users. A meta-federated reinforcement learning method is developed to deal with wireless channel issues. Users can optimize their transmit power and channel allocation by employing neural network models. Based on decentralized reinforcement learning, the federated learning approach facilitates cooperation and mutual benefit among users. The results indicated that the proposed meta-federated reinforcement learning framework enhanced the efficiency of the reinforcement learning process and reduced overhead. Furthermore, the proposed framework outperforms the conventional decentralized algorithm in terms of energy efficiency throughout different scenarios. However, the SE demands additional attention and investigation.

5.2.3 Open Issues and Proposed Solutions

In the context of centralized and decentralized FL, several open issues regarding resource management remain challenging. Centralized FL suffers from data privacy concerns and considerable communication costs when a central server aggregates models from distributed nodes. The aggregation process can be ineffective because of the diverse network contexts and the varying quality of local models. Adaptive communication mechanisms and compression are proposed to reduce the size of model updates and the volume of transmitted data. Additionally, it is critical to protect user data while combining models by using privacy-preserving technologies such as secure multiparty computation and differential privacy. On the other hand, decentralized FL raises concerns about coordination and consistency. Blockchain technology and consensus algorithms can synchronize and secure network model updates.

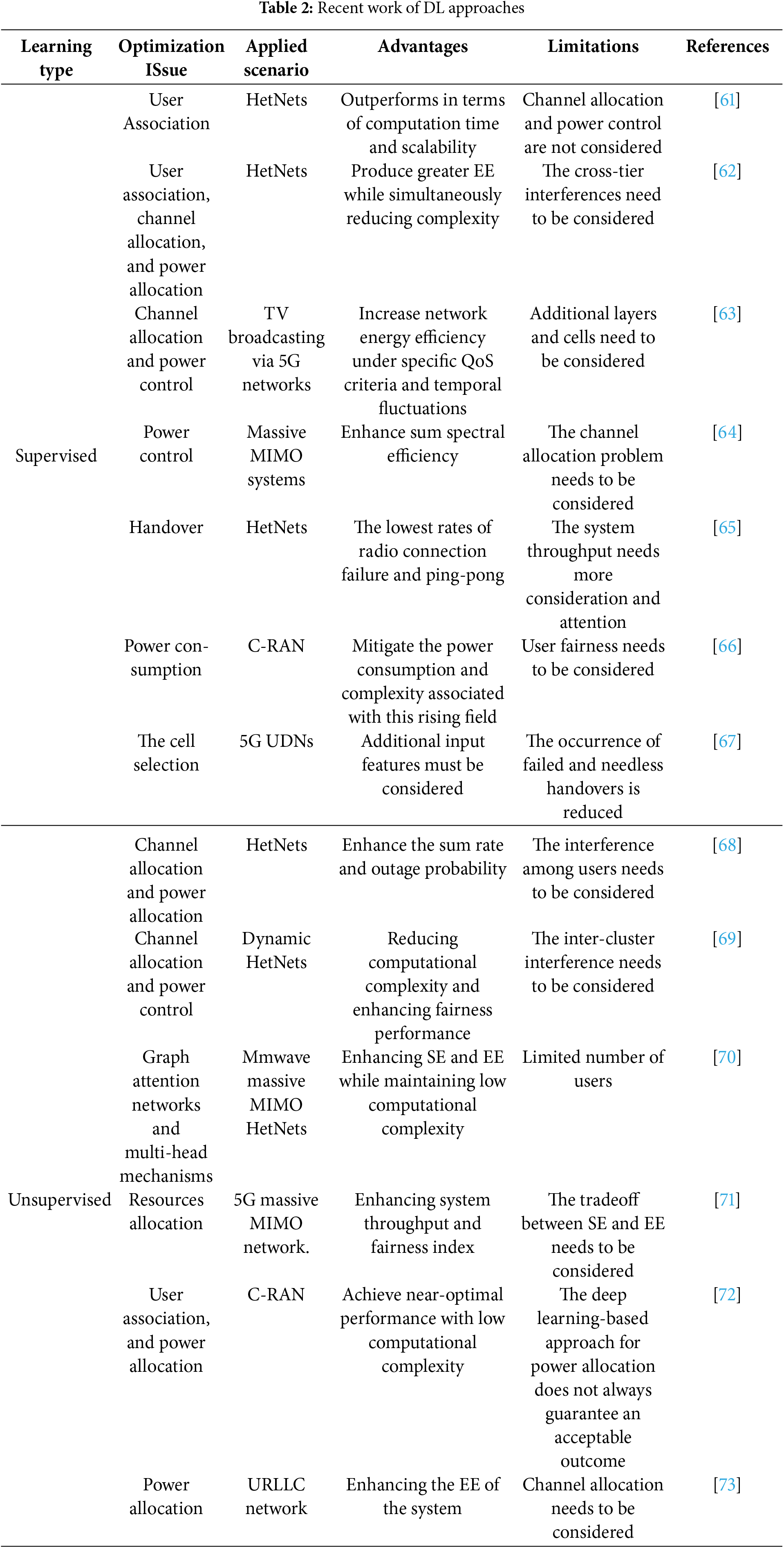

Both centralized and decentralized FL face the challenge of channel and power allocation under dynamic network conditions. Models may be trained to respond dynamically to new data by incorporating reinforcement learning methods into FL frameworks. For user association and mode selection, FL models may capture user behaviors and preferences through user feedback loops and personalized learning paradigms. Distributed optimization methods help both FL approaches handle interference by learning interference patterns locally and cooperating globally to discover optimum solutions. Improved efficiency and scalability of 6G wireless networks are the goals of these FL-centric solutions. Table 3 summarizes the recent work of FL approaches.

5.3 Reinforcement Learning (RL)

Reinforcement learning is expected to significantly impact 6G wireless communication networks by providing novel approaches to enhance network performance and efficiently manage resources [27]. RL involves agents acquiring decision-making skills via their interactions with the environment, where they obtain feedback in the form of penalties or rewards [87]. The trial-and-error process enables the system to adjust and respond to fluctuations in network circumstances, resulting in improved efficiency and reliability [88]. RL has several potential uses, such as in energy management, traffic prediction, spectrum allocation, and other areas where more advanced and autonomous network operations are desired. There are two main subfields within RL: deep reinforcement learning and Q-learning.

5.3.1 Deep Reinforcement Learning

By integrating deep neural networks with conventional reinforcement learning techniques, deep reinforcement learning improves agents’ ability to manage complicated decision-making tasks and high-dimensional state spaces. In [89], the resource allocation problem in D2D communication within cellular networks is investigated. This paper aims to improve the network throughput of both cellular and D2D pairs by determining the appropriate transmission power and spectrum channel for each D2D link. The authors propose a multi-agent deep reinforcement learning approach to reduce computational complexity. This approach involves sharing information among participating devices, such as their positions and resources. The results demonstrate that the suggested method outperforms previous schemes, particularly with a high density of devices, which causes a significant performance improvement. However, the proposed approach needs to be considered with dense user scenarios to examine its effectiveness.

In [90], a deep reinforcement learning-based approach is proposed to jointly optimize the channel and power allocation in a multi-cell network. The proposed approach aims to improve the average system throughput by mitigating co-channel interference and guaranteeing the QoS constraint. The investigated optimization problem is divided into channel allocation and power allocation sub-problems. Particularly, the double deep Q-network method and the deep deterministic policy gradient method are proposed to solve the channel allocation sub-problem and the power allocation sub-problem, respectively. Simulation results demonstrated that the proposed approach achieved superior performance compared to other alternative approaches. However, for communication computing integration to be effective in multi-cell systems, numerous antennas must be considered. In [91], the authors examine the sum rate maximization problem for a wireless power transfer-enabled IoT network. The authors present a DRL technique to determine the sub-optimal offloading decision. Additionally, they develop an efficient solution using the Lagrangian duality technique to find the optimal time allocation. The simulation results confirmed that the proposed approach, which is based on DRL, achieved over 95 percent of the maximum rate while maintaining minimal complexity. Moreover, the proposed approach outperformed the conventional actor-critic method regarding running time, computational efficiency, and convergence speed. However, more complex scenarios need more attention and consideration to prove the superiority of the proposed approach.

In [92], the authors propose a resource allocation approach based on DRL for NOMA-based D2D communications. The DRL-based approach aims to optimize the allocation of channel spectrum and transmit power of D2D links to maximize EE. The proposed approach allows for efficient resource allocation to D2D links by considering the dynamic nature of the environment. The proposed approach demonstrates superior performance regarding fairness, energy efficiency, and coordination of users. The significance of this performance gain is particularly evident in scenarios with severe interference among users. Nonetheless, mode selection needs to be considered to improve the network performance. In [93], the authors investigate the resource allocation problem of NOMA-based D2D communication underlaying HetNets. The introduction of NOMA technology improves the system performance by reducing the interference generated by D2D transmission. A multi-agent deep reinforcement learning approach is proposed to solve the problem of resource allocation, hence improving the system performance. Optimizing power allocation parameters maximizes the D2D user’s throughput, while channel allocation allows cellular user channels to be reused efficiently. The numerical result of the proposed approach demonstrates remarkable convergence and efficiency, which ultimately leads to beneficial system performance. However, the imperfect channel state information needs to be taken into consideration.

In [94], the authors investigate the problem of resource management under imperfect channel state information within IoT networks. A deep reinforcement learning-based approach is proposed to solve the resource management optimization problem. Assisted by a gated recurrent unit layer, the proposed approach is designed to operate in a distributed cooperative manner to enhance the system’s performance. The proposed approach has the potential to produce faster convergence over the current benchmark frameworks while maintaining the same number of iterations. The results of simulations demonstrate the superiority of the proposed approach in comparison to the other reference approaches in terms of EE. Furthermore, the proposed DRL showed its superiority in complex scenarios. Nevertheless, the proposed approach needs to be considered with different scales of networks. In [95], the authors introduce a distributed multi-agent deep reinforcement learning approach to solve a dense wireless network’s joint user association and power allocation optimization problem. The proposed approach is scalable concerning the size and density of the wireless network. Furthermore, the proposed approach takes into consideration the communication delay and feedback. The results of the simulation show that the recommended approach outperforms decentralized approaches in terms of average rates and obtains performance almost equal to, or even better than, a centralized benchmark approach. Nonetheless, the proposed approach needs to be augmented with expert policies that rely on optimization.

Q-Learning, a model-free reinforcement learning algorithm, optimizes decision-making by updating a Q-value table depending on environmental rewards. In [96], the authors investigate the energy efficiency problems associated with D2D communications in HetNets. The authors propose a Q-learning-based methodology to optimize the association between the user equipment and the BS or access point in order to reduce the consumed power. The proposed approach demonstrates the capability to perform sufficient exploration and exploitation operations to achieve efficient optimization. According to the results, the proposed approach achieves a near-optimal solution in the single-cell scenario. However, user association in multi-cell scenarios must be considered. In [97], the authors propose an intelligent D2D clustering strategy together with a joint resource allocation and power control algorithm for the D2D network. A hierarchical clustering technique based on machine learning is presented to construct D2D multicast clusters dynamically, taking into account user preference and reliability amongst D2D multicast users. Then, Q-Learning and Lagrange dual decomposition algorithms are proposed to solve the resource allocation and power control issues, respectively, to optimize the total energy efficiency. Results showed that the proposed approach outperforms conventional techniques in terms of overall energy efficiency and throughput. However, the tradeoff between EE and SE needs to be taken into consideration. In [98], a mode selection approach based on the received signal strength (RSS) threshold is proposed in a dense NOMA-based D2D network. To achieve the highest possible sum rate, they propose a multi-agent reinforcement learning method for adjusting the RSS threshold of each SBS in both downlink and uplink scenarios. 
Numerical results showed that the proposed multi-agent reinforcement learning method achieved higher data transmission rates and greater coverage in dense NOMA-based D2D communication networks. However, power control must be considered.
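The Q-value table update that underlies the Q-learning approaches above can be sketched on a toy channel selection task. This is a minimal sketch under stated assumptions and does not reproduce any of the surveyed schemes: the environment has two channels, the state is the index of the channel currently occupied by an interferer, the interferer alternates channels deterministically, and the reward is 1 when the agent picks the free channel. The learning rate `alpha`, discount factor `gamma`, and exploration rate `epsilon` are illustrative values.

```python
import random

def q_learning(steps=5000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy on a 2-state, 2-action toy task."""
    rng = random.Random(seed)
    n_states, n_actions = 2, 2
    q_table = [[0.0] * n_actions for _ in range(n_states)]
    state = 0  # index of the channel currently occupied by the interferer
    for _ in range(steps):
        # Epsilon-greedy action selection: explore occasionally, otherwise exploit.
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)
        else:
            action = max(range(n_actions), key=lambda a: q_table[state][a])
        reward = 1.0 if action != state else 0.0  # free channel yields reward 1
        next_state = 1 - state                    # interferer hops to the other channel
        # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
        td_target = reward + gamma * max(q_table[next_state])
        q_table[state][action] += alpha * (td_target - q_table[state][action])
        state = next_state
    return q_table

if __name__ == "__main__":
    for s, row in enumerate(q_table := q_learning()):
        print(f"state {s}: Q = {row}")
```

After training, the greedy action in each state is the channel not used by the interferer. The table grows with the number of state-action pairs, which is the scalability limitation that motivates replacing the table with a neural network in deep reinforcement learning.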

In [99], the issue of resource allocation for D2D communications in IoT networks is examined to maximize the system’s overall EE. The Q-learning algorithm is used to address the channel allocation problem, while the power control problem is solved using the Dinkelbach method. Simulation results showed that the proposed approach outperforms prior approaches in the literature and achieves considerable EE improvements via spectrum sharing and D2D and IoT energy harvesting. However, cross-tier interference needs to be considered. In [100], the authors propose a joint power allocation and relay selection approach to enhance energy efficiency in relay-assisted D2D communication networks. The Dinkelbach algorithm and Lagrange dual decomposition are presented to solve the power allocation problem and guarantee the QoS for all users, and a Q-learning algorithm is proposed to solve the relay selection problem. Finally, they provide a comprehensive theoretical examination of the suggested approach in terms of signaling overhead and computational complexity. The simulation results confirm that the suggested approach achieves a total EE for D2D pairs extremely close to the theoretical maximum. Nevertheless, the optimization of channel allocation needs to be considered.

5.3.3 Open Issues and Proposed Solutions

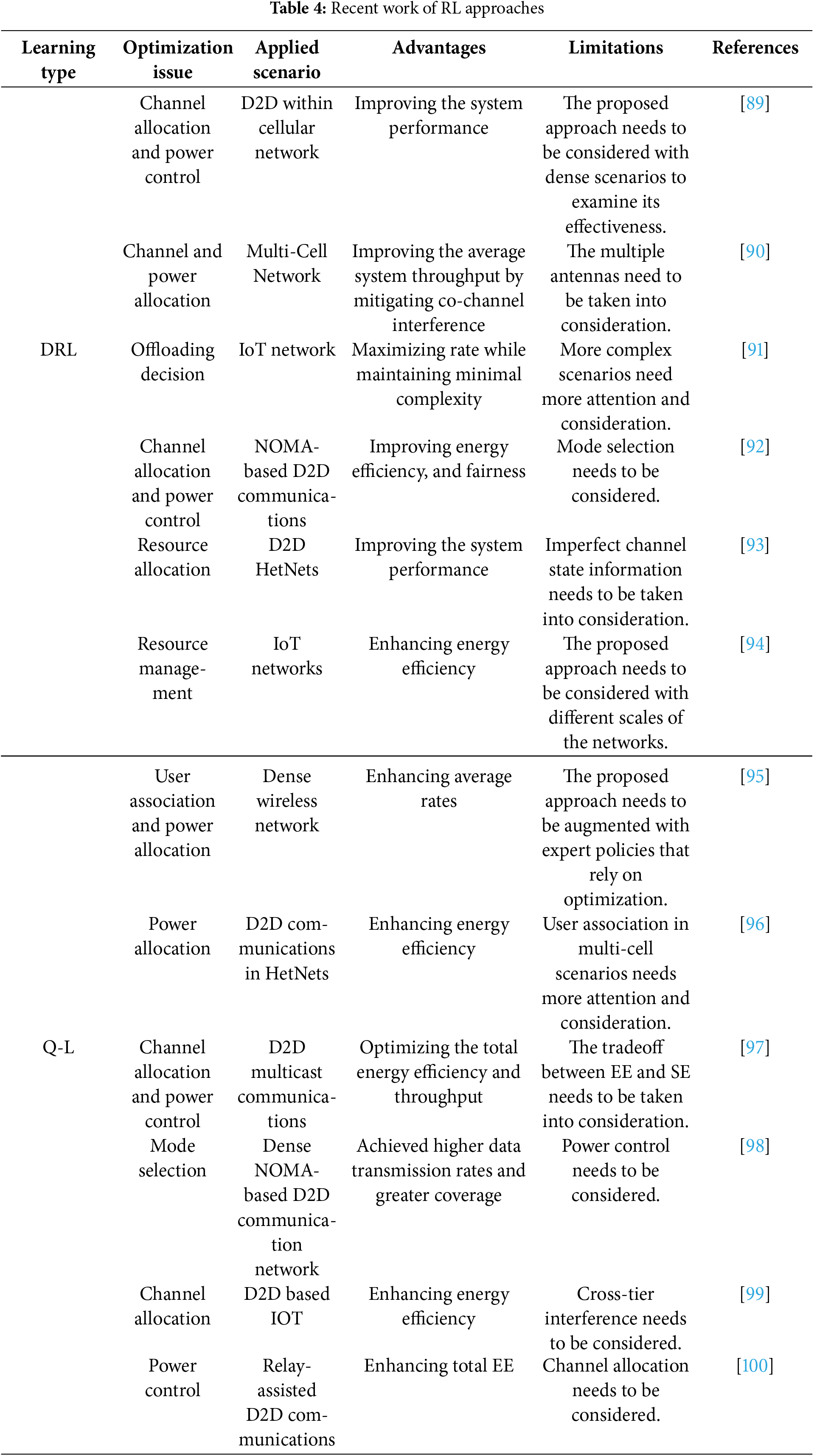

There are still several unsolved issues regarding resource management using Q-learning and DRL. These issues include continuous action spaces, which complicate learning and can result in suboptimal policies and poor convergence. Furthermore, conventional Q-learning has difficulty adapting to new circumstances since it relies on discrete state-action pairs, which are poorly suited to unpredictable and dynamic 6G settings. DRL provides an answer by using neural networks to estimate the Q-values, which facilitates more scalable learning and the handling of continuous spaces. Nevertheless, DRL models suffer from overfitting and instability, especially in contexts with significant variability. Experience replay and target networks are suggested to stabilize training and enhance performance. Transfer learning may improve DRL algorithms for efficient resource management by allowing them to draw on prior knowledge from comparable tasks and accelerate convergence. Multi-agent reinforcement learning improves network performance by allowing agents to collaborate on interference control. Practical implementation in 6G networks requires regularization and explainable AI strategies to make these models robust and interpretable. Table 4 shows the recent work of RL approaches.
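The two stabilization techniques named above, experience replay and target networks, can be sketched without any deep learning framework. This is a minimal sketch with hypothetical names: `ReplayBuffer` stores transitions and samples them at random to break temporal correlation, `td_targets` computes bootstrapped targets from a separate, slowly changing target function, and `sync_target` copies the online parameters into the target parameters. The parameter dictionaries and the callable `q_target` stand in for real neural networks.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Uniform random minibatch, which decorrelates consecutive experiences."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)

def td_targets(batch, q_target, gamma=0.99):
    """Targets y = r + gamma * max_a' Q_target(s', a'), without bootstrapping at terminal states."""
    return [r + (0.0 if done else gamma * max(q_target(s_next)))
            for (_, _, r, s_next, done) in batch]

def sync_target(online_params, target_params):
    """Hard update: overwrite the target parameters with the online parameters."""
    target_params.clear()
    target_params.update(online_params)

if __name__ == "__main__":
    buf = ReplayBuffer(capacity=100)
    for t in range(10):
        buf.push(t, 0, 1.0, t + 1, t == 9)
    batch = buf.sample(4)
    print(td_targets(batch, q_target=lambda s: [0.0, 1.0]))
```

In a full DRL agent, `sync_target` would be called every fixed number of training steps so that the targets change more slowly than the online network.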

5.4 Evolutionary Learning (EL)

Evolutionary learning is becoming popular in 6G wireless communication networks because it simulates natural evolutionary processes to address complicated optimization challenges [101]. This method is very flexible since it evolves solutions across generations using processes like mutation, crossover, and selection, which are ideal for complex and dynamic 6G configurations. Evolutionary learning performs well in network optimization and resource allocation, where standard approaches fail. 6G networks can maximize efficiency, robustness, and performance by leveraging evolutionary concepts [102]. Swarm intelligence and genetic algorithms are two categories of evolutionary learning.

Genetic algorithms replicate natural selection to solve difficult optimization problems by repeatedly selecting, crossing, and mutating candidate solutions. In [103], the authors present a power optimization model that employs a genetic algorithm to manage power allocation effectively. Each access point uses the updated genetic algorithm to determine the optimum power for each cellular user, iterating until the fitness criterion is fulfilled. Furthermore, to improve the network's effectiveness, a weight-based user scheduling mechanism is developed. This algorithm chooses a user for any given base station based on the user's distance and the RSS indicator. The power optimization model and user-scheduling method were given equal weights when analyzing the power consumption and spectrum efficiency performance indicators. The simulation results indicate that the weight-based user-scheduling algorithm exhibits superior performance, which was further validated by using a modified genetic algorithm to optimize weight allocation. Moreover, optimal transmission power distribution improves spectral efficiency while decreasing power consumption. Nevertheless, the authors considered only equal weights in the proposed user scheduling algorithm.
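The select, cross, and mutate loop described above can be sketched for a toy power allocation problem. This is a generic genetic algorithm under stated assumptions, not the updated algorithm of [103]: the fitness function (sum rate minus a power price), the discrete power `levels`, and all parameter values are hypothetical, and at least two users are assumed so that a crossover point exists.

```python
import math
import random


def fitness(powers, gains, noise=1.0, power_price=0.5):
    """Sum rate minus a linear price on total transmit power (toy objective)."""
    total_rate = sum(math.log2(1.0 + g * p / noise)
                     for g, p in zip(gains, powers))
    return total_rate - power_price * sum(powers)


def genetic_power_allocation(gains, levels, pop_size=20, generations=50,
                             mutation_rate=0.1, seed=0):
    """Evolve one power level per user; returns (best individual, history)."""
    rng = random.Random(seed)
    n = len(gains)  # assumed >= 2 so a single-point crossover is possible
    score = lambda ind: fitness(ind, gains)
    pop = [[rng.choice(levels) for _ in range(n)] for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        pop.sort(key=score, reverse=True)
        history.append(score(pop[0]))
        next_pop = [pop[0][:]]  # elitism: carry the best individual over
        while len(next_pop) < pop_size:
            # Selection: binary tournament for each parent.
            a = max(rng.sample(pop, 2), key=score)
            b = max(rng.sample(pop, 2), key=score)
            # Crossover: single cut point.
            cut = rng.randrange(1, n)
            child = a[:cut] + b[cut:]
            # Mutation: resample each gene with a small probability.
            child = [rng.choice(levels) if rng.random() < mutation_rate else x
                     for x in child]
            next_pop.append(child)
        pop = next_pop
    return max(pop, key=score), history


if __name__ == "__main__":
    best, hist = genetic_power_allocation([4.0, 2.0, 1.0, 0.5],
                                          [0.0, 0.5, 1.0, 2.0])
    print(best, round(hist[-1], 3))
```

Because the best individual is always copied into the next generation, the recorded best fitness never decreases, which makes the convergence behavior easy to inspect.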

In [104], the researchers examine the problem of joint user association and power distribution in 5G mmWave networks. This research aims to reduce power consumption while preserving user QoS, taking into account the on/off switching strategy of the BSs. The problem is first formulated as an integer linear program with the objective of achieving the optimum solution. A GA-based heuristic is then presented to cope with the NP-hardness of the problem. In simulations, the suggested GA outperforms the benchmark solutions, especially under high user loads, and delivers a near-optimal solution. Nevertheless, power consumption needs more investigation and consideration. In [105], the authors examine the optimization of topology and routing in integrated access and backhaul (IAB) networks to ensure high coverage probability. They assess the impact of routing on bypassing temporal obstructions and create effective genetic algorithm-based strategies for placing IAB nodes and distributing non-IAB backhaul links. In addition, the service coverage probability, which is defined in terms of the users' minimum rate requirements, is investigated. Tree foliage, antenna gain, and blockage parameters are all taken into consideration. The simulation results indicate that IAB is a compelling strategy for facilitating the network densification necessary for 5G and future generations when implemented in a suitable network architecture. Nevertheless, co-tier interference among small base stations needs more attention and consideration.

In [106], the authors propose a resource allocation scheme based on a Quantum-Inspired Genetic algorithm in D2D-based C-RAN. The proposed approach integrates the principles of genetic and quantum computing algorithms to distribute resource blocks across cellular and D2D users. The low-complexity allocation strategy is determined using the predicted D2D throughput. Moreover, the Quantum-Inspired Genetic algorithm is applied to the population matrix to determine the optimal strategy for distributing resources across several D2D users. Simulation results confirm the superiority of the proposed approach compared to the existing methods. However, power control needs to be considered. In [107], the authors investigate interference mitigation techniques in 5G HetNets. They examine the current subcarrier allocation strategies based on GA and Particle Swarm Optimization (PSO) and the dynamic subchannel allocation approaches based on Fractional Frequency Reuse to determine their limitations. An evolutionary biogeography-based dynamic subcarrier allocation approach is proposed to address the challenges associated with cross-tier interference. The suggested approach can dynamically assign the subchannels to both the macro users and small users. The results indicate that the proposed approach achieves superior SINR for both macro users and small users. Additionally, it reduces the outage probability and enhances spectral efficiency compared to the currently used fractional frequency reuse-based dynamic subcarrier allocation technique. However, power consumption needs to be considered.

Swarm algorithms employ several agents to explore and optimize solutions cooperatively. In [108], the authors provide algorithms that combine a binary PSO with linearly increasing inertia weight and Soft Frequency Reuse (SFR) techniques. The objective is to reduce power consumption in small cells. The binary PSO algorithm is used to achieve small cell on/off switching, hence minimizing system power consumption while maintaining the QoS achieved by the users. Furthermore, the binary PSO method utilizes the linearly increasing inertia weight to improve its convergence and determine the minimal number of active small cells. In addition, they introduce a classification tree-based SFR approach, in which the small cells are partitioned into center and edge regions, with distinct sub-bands assigned to the edge regions of adjacent small cells. This allocation strategy aims to reduce interference among the small cells. The suggested algorithms outperform existing algorithms by reducing network power consumption and small-cell interference, improving system throughput and energy efficiency. However, the accuracy of the proposed algorithm needs to be evaluated using a dynamic inertia weight.
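A binary PSO with a linearly increasing inertia weight for small cell on/off switching can be sketched as below. This is a simplified illustration rather than the algorithm of [108]: the capacity-based feasibility check in `cost`, the inertia range (0.4 to 0.9), the acceleration coefficients, and the velocity clamp are all assumed values chosen for the example.

```python
import math
import random


def cost(active, capacities, demand):
    """Number of active cells, with a penalty when the demand is not served."""
    served = sum(c for a, c in zip(active, capacities) if a)
    if served < demand:
        # Infeasible: always worse than switching every cell on.
        return len(active) + 1 + (demand - served)
    return sum(active)


def binary_pso(capacities, demand, particles=10, iterations=40,
               w_start=0.4, w_end=0.9, c1=2.0, c2=2.0, seed=0):
    """Search for a small set of active cells that still serves the demand."""
    rng = random.Random(seed)
    n = len(capacities)
    # The first particle is the "all cells on" baseline; the rest are random.
    xs = [[1] * n] + [[rng.randint(0, 1) for _ in range(n)]
                      for _ in range(particles - 1)]
    vs = [[0.0] * n for _ in range(particles)]
    pbest = [x[:] for x in xs]
    pbest_cost = [cost(x, capacities, demand) for x in xs]
    g = min(range(particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]
    for t in range(iterations):
        # Inertia weight grows linearly from w_start to w_end.
        w = w_start + (w_end - w_start) * t / max(iterations - 1, 1)
        for i in range(particles):
            for d in range(n):
                v = (w * vs[i][d]
                     + c1 * rng.random() * (pbest[i][d] - xs[i][d])
                     + c2 * rng.random() * (gbest[d] - xs[i][d]))
                vs[i][d] = max(-4.0, min(4.0, v))  # clamp to avoid saturation
                # Sigmoid of the velocity is the probability of the bit being 1.
                prob_on = 1.0 / (1.0 + math.exp(-vs[i][d]))
                xs[i][d] = 1 if rng.random() < prob_on else 0
            c = cost(xs[i], capacities, demand)
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = xs[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = xs[i][:], c
    return gbest, gbest_cost


if __name__ == "__main__":
    print(binary_pso([5, 3, 3, 2, 2, 1], demand=8))
```

Seeding the swarm with the all-on configuration means the returned solution is never worse than the baseline in which no cell is switched off.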

In [109], the authors propose a power consumption-based channel allocation method for NOMA-based 5G wireless backhaul networks that considers the traffic needs in small cells. The research objective is to improve the assignment of uplink/downlink channels in a mutually beneficial manner to enhance user fairness. The method involves two steps. First, the initial channel allocation is modeled as a traveling salesman problem, since that problem resembles many-to-many user-channel allocation. Second, a modified PSO with a decreasing coefficient, which can be regarded as a stochastic approximation method, is used to update the allocation. Adding a random velocity improves the exploration behavior and convergence rate of the modified PSO. The proposed approach outperforms benchmark schemes in fairness and network capacity. Nevertheless, co-tier interference needs to be considered. In [110], the authors propose a self-organizing multi-objective particle swarm optimizer to handle several objective functions, such as user data rate, spectrum efficiency, and energy efficiency, in a 5G wireless network with massive MIMO. The simulation results show that the proposed methodology is an effective and promising way to solve multi-objective problems. However, user fairness must be considered.

In [111], the authors propose a channel selection method that utilizes the Chicken Swarm Optimization (CSO) algorithm to determine the most efficient channel in a massive MIMO network. This scheme aims to optimize power allocation and beam-forming vectors. The primary goal of the objective function is to generate beam-forming vectors that fulfill the SINR requirements. The CSO algorithm generates the beam-forming vectors and the power distribution, which are influenced by the properties of the channel. The channel state information is predicted, and a projection matrix is subsequently constructed using a channel estimation framework. The comparison results indicate that the suggested scheme exhibits superior SE and EE performance compared to the benchmark schemes. However, the proposed method is examined only with a low number of users.

In [112], the authors investigate the use of an artificial neural network to solve a joint resource allocation and relay selection problem, taking into account the mobility of users. The proposed approach aims to achieve maximum spectrum efficiency in cooperative HetNets. To this end, a novel artificial neural network-based approach for resource allocation and relay selection is introduced and compared to a resource allocation and relay selection algorithm based on particle swarm optimization. Numerical results demonstrate that maximum spectrum efficiency is attained by considering relay mobility and allocating resources properly. According to the results, the proposed approach achieves superior spectrum efficiency compared to the PSO algorithm. Nevertheless, power consumption needs more consideration and attention. In [113], the authors explore a PSO-based joint channel allocation and power control approach to enhance network performance and effectively reduce interference in D2D-based cellular networks. By carefully designing the fitness values for the two problems, the algorithm avoids being trapped in infeasible solutions. The simulations show that the proposed algorithm has the potential to enhance the network throughput in D2D-based cellular networks. Furthermore, the method exhibits superior performance compared to the non-joint interference management algorithm considered in the study. However, the user delay requirement needs to be considered.

5.4.3 Open Issues and Proposed Solutions

Resource optimization in 6G wireless networks poses significant challenges for genetic and swarm-based evolutionary learning. In dynamic 6G network environments, these evolutionary algorithms often exhibit unsatisfactory convergence rates and incur significant computational costs. Achieving rapid convergence is difficult for genetic algorithms because they depend on mutation and crossover processes, which can also make it hard to maintain solution diversity. Swarm intelligence algorithms such as ant colony optimization and particle swarm optimization may have difficulty balancing exploration and exploitation when searching for optimum solutions in extremely dynamic environments. Suggested solutions include hybrid approaches that combine evolutionary algorithms with other optimization methods. For example, adaptive parameter tuning might be used to dynamically change algorithm parameters depending on the network state, or machine learning models could be integrated to guide the search process. Distributed and parallel computing approaches can also increase scalability and decrease processing costs. Cooperation among swarm agents may improve the adaptability of interference management to changing interference patterns. These techniques, which support capacity and flexibility, may greatly improve the performance of 6G wireless networks. Table 5 describes the recent work of EL approaches.

6 AI Strategies Analysis in 6G HetNets

A comparative analysis of several AI strategies applied in 6G HetNets, namely DL, FL, RL, and EL, is presented in Fig. 4. The analysis categorizes these strategies by their application to user admission, channel utilization, and power control, and reports the number of research papers dedicated to each aspect. According to the figure, power control is the most thoroughly examined domain across all AI strategies, with the greatest number of publications reported in DL, FL, RL, and EL. This trend highlights the essential function of AI-driven power optimization in 6G networks, where EE and QoS are crucial.

Figure 4: AI strategies distribution in 6G HetNets via different optimization strategies

The attention given to user admission and channel utilization varies across AI strategies. FL places a balanced emphasis on all three categories, signifying its importance in the efficient management of distributed resources. Conversely, RL devotes less attention to user admission, indicating a possible research gap in this domain. The figure shows the increasing significance of AI in tackling critical challenges in 6G HetNets. Nevertheless, the uneven research concentration across strategies suggests that additional investigation is necessary, particularly into the integration of AI methods to optimize resources comprehensively.

7 Future Trends and Research Directions

This section proposes future research trends combining advanced resource management techniques employing AI algorithms in 6G wireless networks.

7.1 Advanced Network Automation AI

With the use of AI in 6G, several network management operations may be automated, including configuration, optimization, and planning [114]. This greatly improves operational efficiency by reducing the need for human intervention. Hence, more effective and efficient network planning techniques may be achievable. For example, AI can potentially be used to predict traffic demand and to allocate resources so that network congestion is detected and relieved. Automation of network service configuration is another area where AI is well suited, which may help reduce errors and improve the reliability of network configuration. AI is also useful for improving the effectiveness and efficiency of networks, for example by altering routing tables to reduce latency or by optimizing resource allocation to increase throughput.

7.2 AI for Green Communication