Open Access

Open Access

REVIEW

A Review of Deep Learning for Biomedical Signals: Current Applications, Advancements, Future Prospects, Interpretation, and Challenges

1 Biomedical Engineering Program, University of Manitoba, Winnipeg, MB R3T 2N2, Canada

2 Department of Electrical and Computer Engineering, University of Manitoba, Winnipeg, MB R3T 2N2, Canada

* Corresponding Author: Zahra Moussavi. Email:

Computers, Materials & Continua 2025, 83(3), 3753-3841. https://doi.org/10.32604/cmc.2025.063643

Received 20 January 2025; Accepted 17 April 2025; Issue published 19 May 2025

Abstract

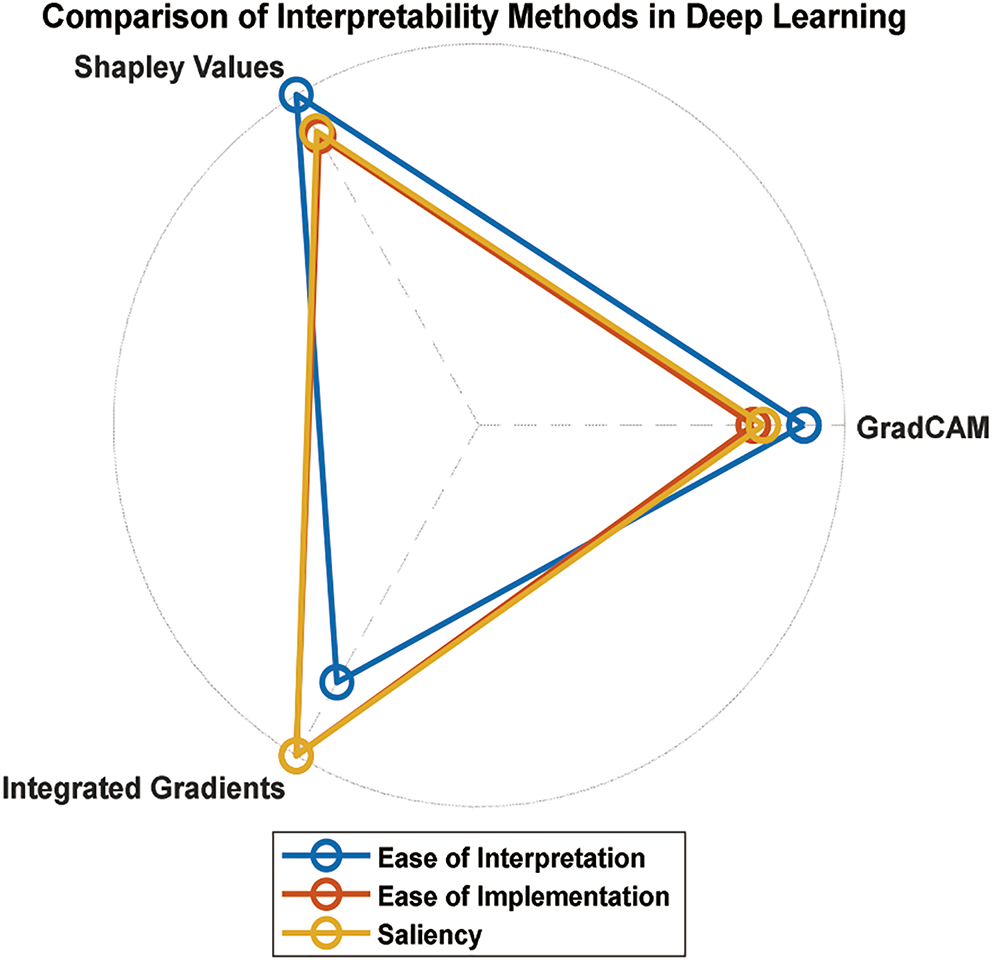

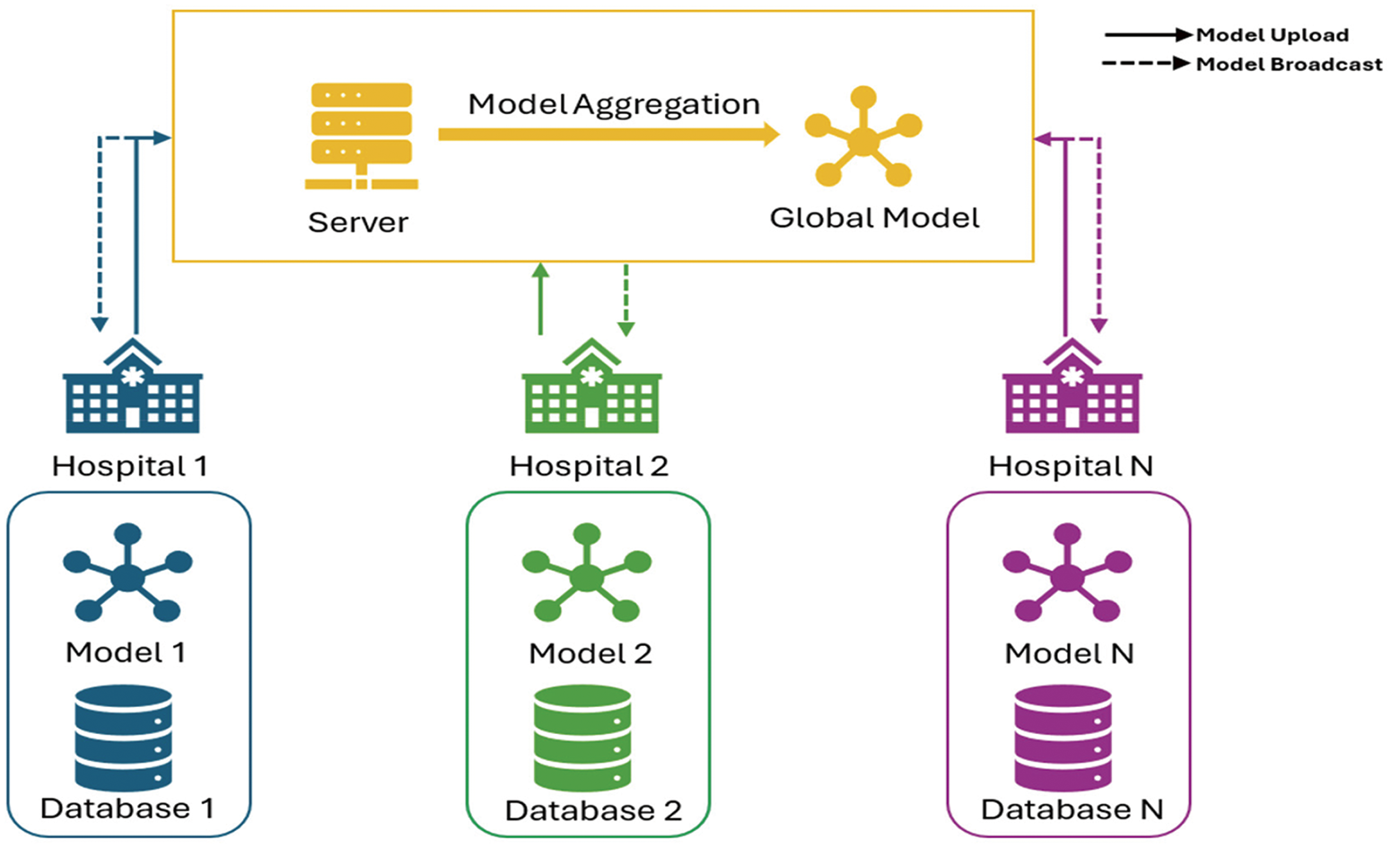

This review presents a comprehensive technical analysis of deep learning (DL) methodologies in biomedical signal processing, focusing on architectural innovations, experimental validation, and evaluation frameworks. We systematically evaluate key deep learning architectures including convolutional neural networks (CNNs), recurrent neural networks (RNNs), transformer-based models, and hybrid systems across critical tasks such as arrhythmia classification, seizure detection, and anomaly segmentation. The study dissects preprocessing techniques (e.g., wavelet denoising, spectral normalization) and feature extraction strategies (time-frequency analysis, attention mechanisms), demonstrating their impact on model accuracy, noise robustness, and computational efficiency. Experimental results underscore the superiority of deep learning over traditional methods, particularly in automated feature extraction, real-time processing, cross-modal generalization, and achieving up to a 15% increase in classification accuracy and enhanced noise resilience across electrocardiogram (ECG), electroencephalogram (EEG), and electromyogram (EMG) signals. Performance is rigorously benchmarked using precision, recall, F1-scores, area under the receiver operating characteristic curve (AUC-ROC), and computational complexity metrics, providing a unified framework for comparing model efficacy. The survey addresses persistent challenges: synthetic data generation mitigates limited training samples, interpretability tools (e.g., Gradient-weighted Class Activation Mapping (Grad-CAM), Shapley values) resolve model opacity, and federated learning ensures privacy-compliant deployments. Distinguished from prior reviews, this work offers a structured taxonomy of deep learning architectures, integrates emerging paradigms like transformers and domain-specific attention mechanisms, and evaluates preprocessing pipelines for spectral-temporal trade-offs. It advances the field by bridging technical advancements with clinical needs, such as scalability in real-world settings (e.g., wearable devices) and regulatory alignment with the Health Insurance Portability and Accountability Act (HIPAA) and General Data Protection Regulation (GDPR). By synthesizing technical rigor, ethical considerations, and actionable guidelines for model selection, this survey establishes a holistic reference for developing robust, interpretable biomedical artificial intelligence (AI) systems, accelerating their translation into personalized and equitable healthcare solutions.Keywords

Abbreviations

| AI | Artificial Intelligence |

| AHAA | American Heart Association |

| BCI | Brain-Computer Interface |

| BNN | Bayesian Neural Networks |

| CBAM | Convolutional Block Attention Module |

| CNN | Convolutional Neural Network |

| CO | Cardiac Output |

| CP | Conformal Prediction |

| DBN | Deep Belief Networks |

| DL | Deep Learning |

| DNN | Deep Neural Networks |

| DWT | Discrete Wavelet Transform |

| ECG | Electrocardiography |

| EEG | Electroencephalography |

| EIG | Enhanced Integrated Gradients |

| EMG | Electromyography |

| EOG | Electrooculography |

| FDA | Food and Drug Administration |

| FSL | Few-Shot Learning |

| FPR | False Positive Rate |

| GAN | Generative Adversarial Network |

| GDPR | General Data Protection Regulation |

| GPU | Graphics Processing Unit |

| Grad-CAM | Gradient-Weighted Class Activation Mapping |

| GRU | Gated Recurrent Unit |

| IG | Integrated Gradients |

| HA | Hierarchical Attention |

| HAN | Hierarchical Attention Networks |

| HFO | High-Frequency Oscillation |

| HIPAA | Health Insurance Portability and Accountability Act |

| HRV | Heart Rate Variability |

| LELE | Locally Explainable Linear Explanations |

| LIME | Local Interpretable Model-Agnostic Explanations |

| LSTM | Long Short-Term Memory |

| MCBAM-GRU | Multistream Convolutional Block Attention Module-Gate Recurrent Unit |

| MEG | Magnetoencephalography |

| ML | Machine Learning |

| MRI | Magnetic Resonance Imaging |

| NIST | National Institute of Standards and Technology |

| NLP | Natural Language Processing |

| PPG | Photoplethysmography |

| PPV | Positive Predictive Value |

| RNN | Recurrent Neural Network |

| ROC | Receiver Operating Characteristic Curve |

| SHAP | Shapley Additive Explanations |

| ShapAAL | Shapley Attributed Ablation with Augmented Learning |

| SR2CNN | Signal Recognition and Reconstruction Convolutional Neural Network |

| STFT | Short-Time Fourier Transform |

| sEMG | Surface Electromyography |

| SVM | Support Vector Machine |

| ZSL | Zero-Shot Learning |

Deep learning (DL) has become a dominant paradigm for processing biomedical signals because it can extract ostensibly unattainable features through conventional methods [1]. In recent years, there have been an increasing number of publications on the application of DL methods to biomedical signals, which has allegedly led to milestone progress in diagnosis, treatment, and personalized healthcare [2–4]. Different DL methods and techniques, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have been applied to analyze numerous types of biomedical signals, such as electroencephalograms (EEGs), electrocardiograms (ECGs), photoplethysmography (PPGs), electrooculography (EOGs), and electromyography (EMG) signals [2,5,6].

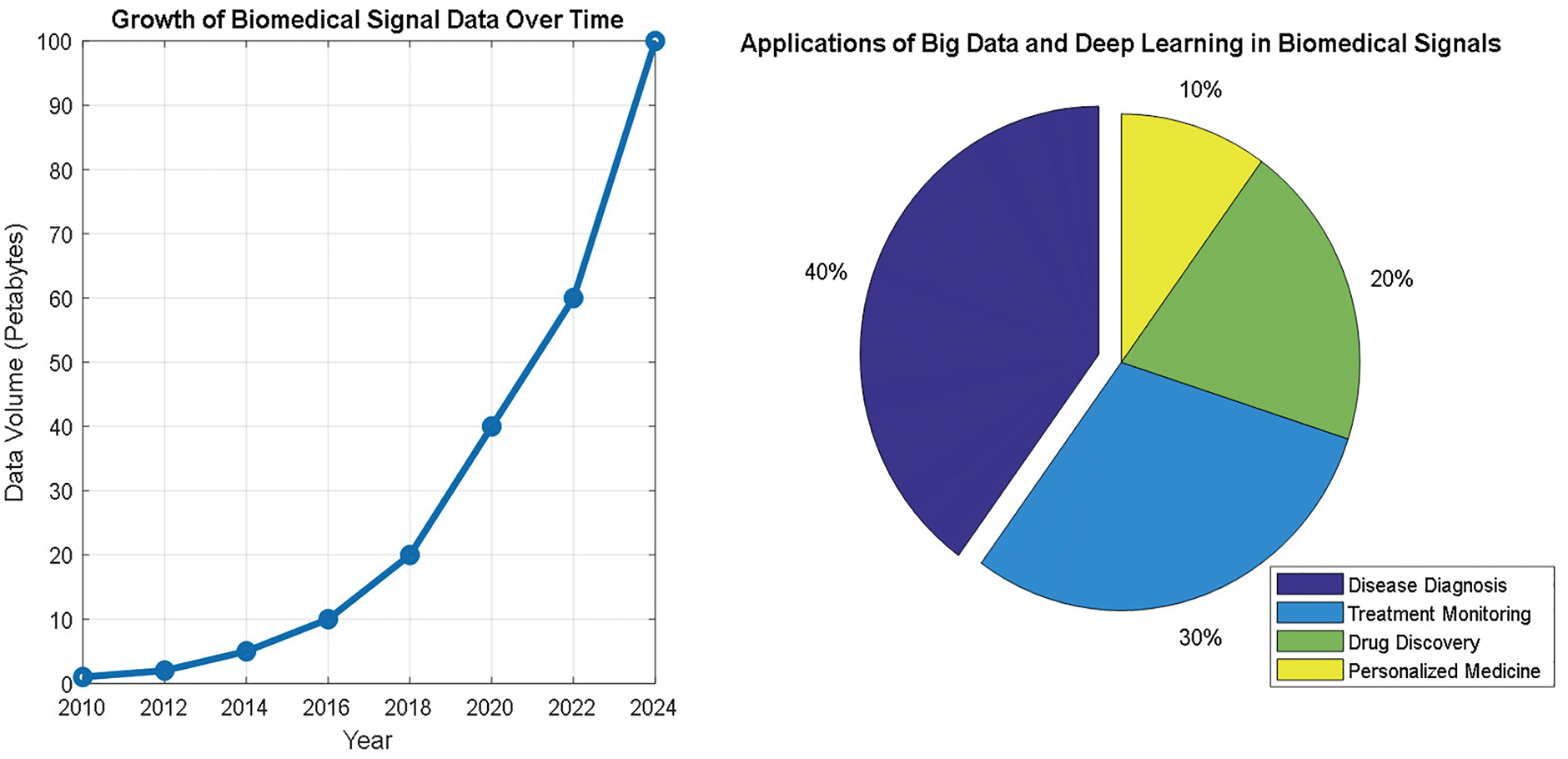

The outcomes of these models are promising, but it is crucial to remember that further validation of their ability to detect all patterns and diseases is needed. This leads to concerns about their reliability in accurate clinical decision-making, and additional evaluations are necessary before they can be widely adopted. However, it has been purportedly used to detect and diagnose several medical conditions, including neuromuscular disorders [7,8], sleep disorders [9,10], epilepsy [11–13], and arrhythmias [2,14–16]. One of the apparent benefits of DL applications in the biomedical signals field is their ability to handle big data. These data became more accessible with the escalation of digital healthcare, and DL models can learn these data to enhance the performance of their outcomes. Moreover, unlabeled data can be used to train DL models, which can be advantageous in scenarios where labeled data are unavailable or costly [17].

The application of DL models for analyzing and interpreting biomedical signals has shown great potential in the medical field. Moreover, these models can provide real-time monitoring of patients’ vital signs and alert clinicians to potential issues. Additionally, these models can analyze large biomedical datasets in depth, identify biomarkers, and predict disease progression, leading to earlier diagnosis and improved treatment outcomes [18]. Thus, these models have the potential to revolutionize healthcare by enabling more personalized and accurate treatment plans. However, further investigations are needed to validate their effectiveness and safety in clinical practice.

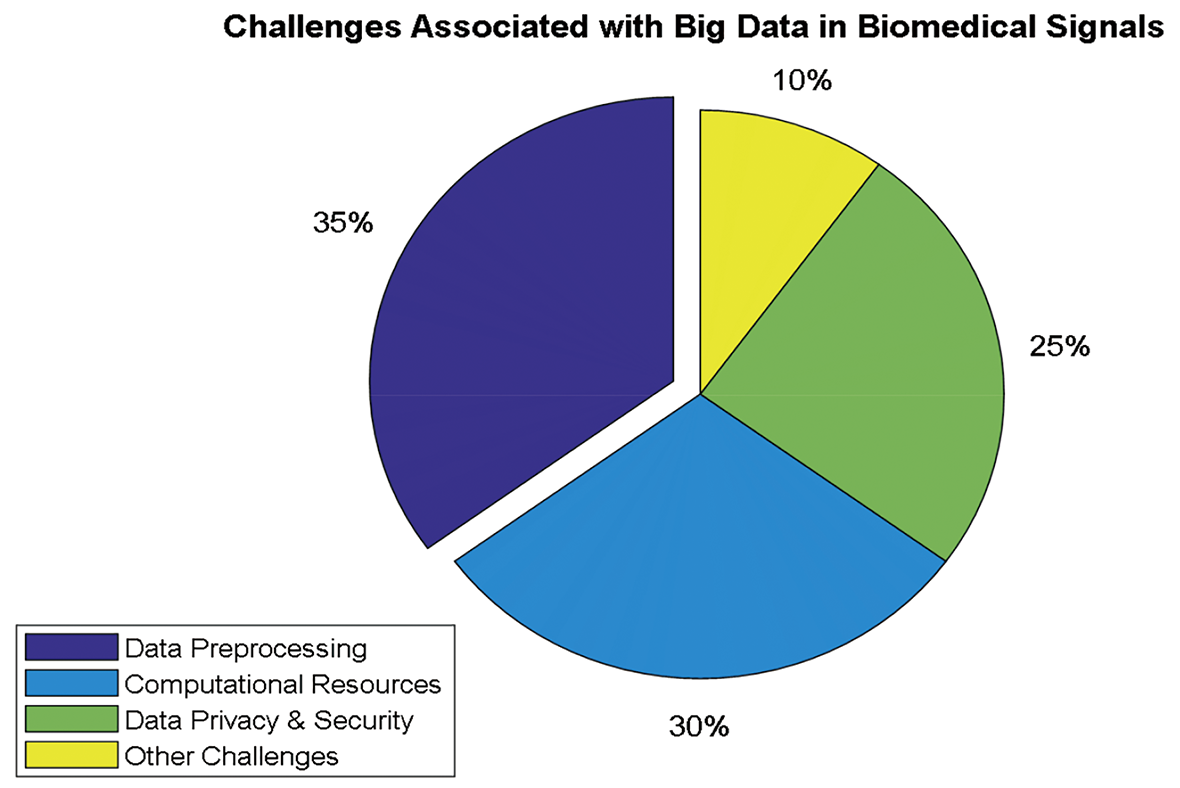

Despite the high-performance results achieved by DL models, several challenges are associated with their deployment in biomedical signal processing. The most challenging part is acquiring and preprocessing data and maintaining their quality. Biomedical signals are often corrupted by noise, artifacts, and interference, making it difficult to extract meaningful features [19,20]. Interpreting DL models can be challenging, as they are frequently analyzed as black boxes, making it difficult to comprehend how a particular verdict was reached [18].

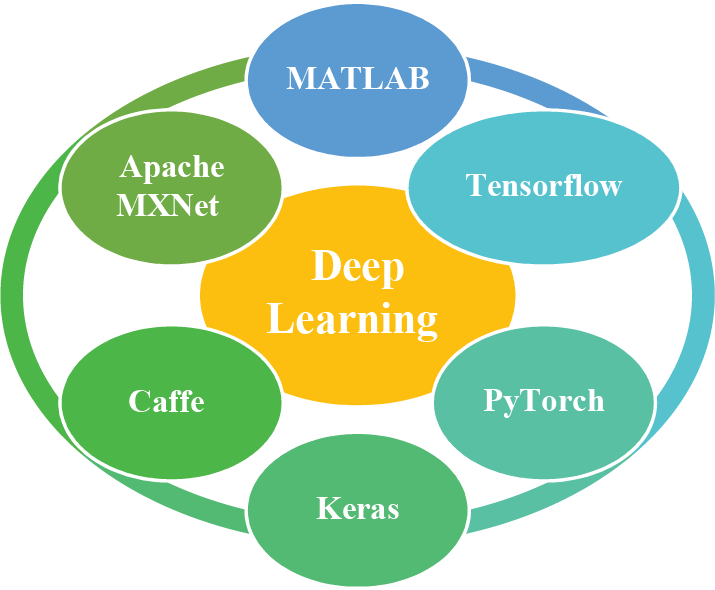

Another formidable challenge in deploying DL to biomedical signals is the necessity for specialized hardware and software. However, DL models require copious computational resources and training them can be computationally exorbitant and protracted [21]. Therefore, specialized hardware is needed to perform the training process. One of the well-known hardware components is the graphics processing unit (GPU) [22]. However, their high power consumption can contribute to climate change if not balanced with renewable energy sources or efficiency measures.

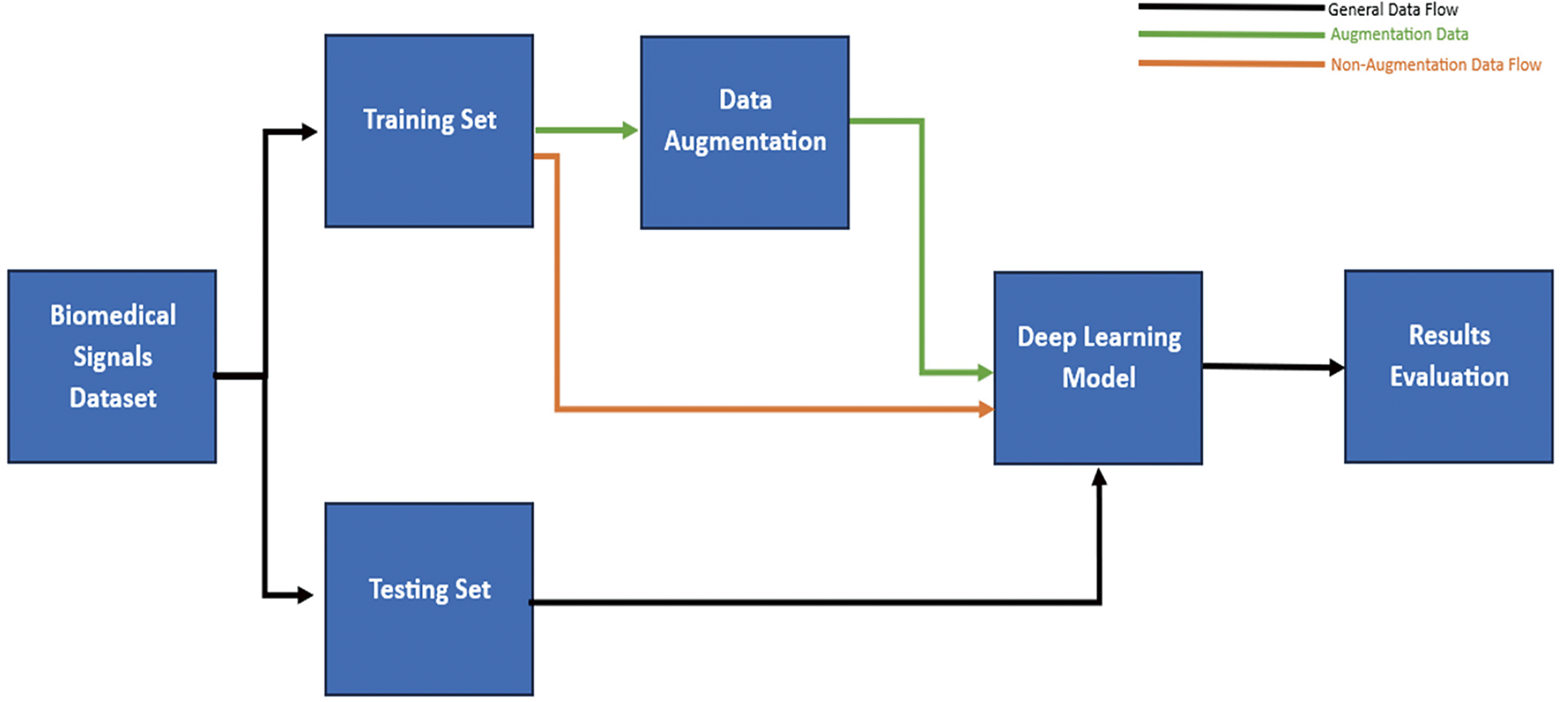

Furthermore, specialized DL software tools are required for the design, evaluation, and execution of models. In addition, these models depend strongly on the quality and quantity of the data used to train them. Therefore, data augmentation techniques, such as noise addition and data balancing, enhance the model’s robustness and diminish the possibility of overfitting. Additionally, developing precise and efficient algorithms for data preprocessing, feature extraction, and normalization is crucial for improving the performance of DL models [19,23].

Furthermore, integrating DL models into clinical systems and practice raises ethical and legal concerns about patient data privacy and ownership. In such integration, ensuring that implementation in healthcare is performed ethically and that the benefits outweigh the risks is essential [21]. However, integrating DL models into clinical systems can change healthcare delivery by connecting clinicians with the most recent insights into patient data. These models can analyze large amounts of biomedical data, learn to find patterns and make previously impossible predictions via conventional machine learning (ML) techniques, enabling more accurate diagnoses and personalized treatment plans [18,20]. In addition, the complexity of these models can make it difficult for healthcare professionals to interpret the results generated. Therefore, providing proper data visualization of the outputs and training of DL models and supporting clinicians in verifying that they can effectively use these models in practice are essential [24]. By fully or partially solving these challenges, DL models can be integrated into clinical workflows to enhance patient outcomes and improve the overall quality of healthcare delivery [17,25].

DL models, especially CNNs, RNNs, and GANs, have recently made substantial advancements in analyzing biomedical signals such as ECGs, EEGs, and PPGs. For example, CNNs have achieved high diagnostic accuracy for ECG-based arrhythmia detection, with studies such as those reporting accuracy rates over 98% on datasets such as the MIT-BIH arrhythmia database [26–28]. Similarly, RNNs have shown effectiveness in capturing sequential dependencies in EEG signals for seizure detection, with sensitivity exceeding 90% in specific applications [13,29]. However, the strengths of these models are often balanced by significant challenges, such as dependency on high-quality data and interpretability limitations. For example, [15] noted that CNNs, despite their high accuracy, struggle with noisy data, whereas [30] reported that the “black-box” nature of RNNs poses a barrier to clinical adoption. These studies illustrate that while DL has transformative potential in biomedical signal analysis, these models must overcome critical limitations to achieve real-world clinical viability.

Objectives

This paper covers various DL models and their diverse applications in biomedical signal processing, thoroughly exploring their current successes and the multifaceted challenges they face. Emphasis is placed on addressing the data used, choosing a suitable model for the application, interpreting the model results, and overcoming challenges, as these are central to effective deployment in clinical settings. The paper also examines opportunities to refine these models for more accurate and efficient predictions, explicitly focusing on facilitating early diagnoses and enabling personalized treatments. Ultimately, this review aims to bridge knowledge gaps by highlighting both the potential and limitations of DL in this rapidly evolving domain.

2 Methodology and Bibliometric Analysis

This paper included mainly journal papers covering general DL applications for biomedical signals. We also included journal and conference papers on DL applications in biomedical signals, except that the conference had no extended documents in a journal.

The search for relevant papers in this review encompassed the period up to the mid of 2024. Relevant manuscripts were identified by searching the following databases and search engines:

• Scopus

• Google Scholar

• PubMed

• IEEE

• ScienceDirect

• Taylor & Francis

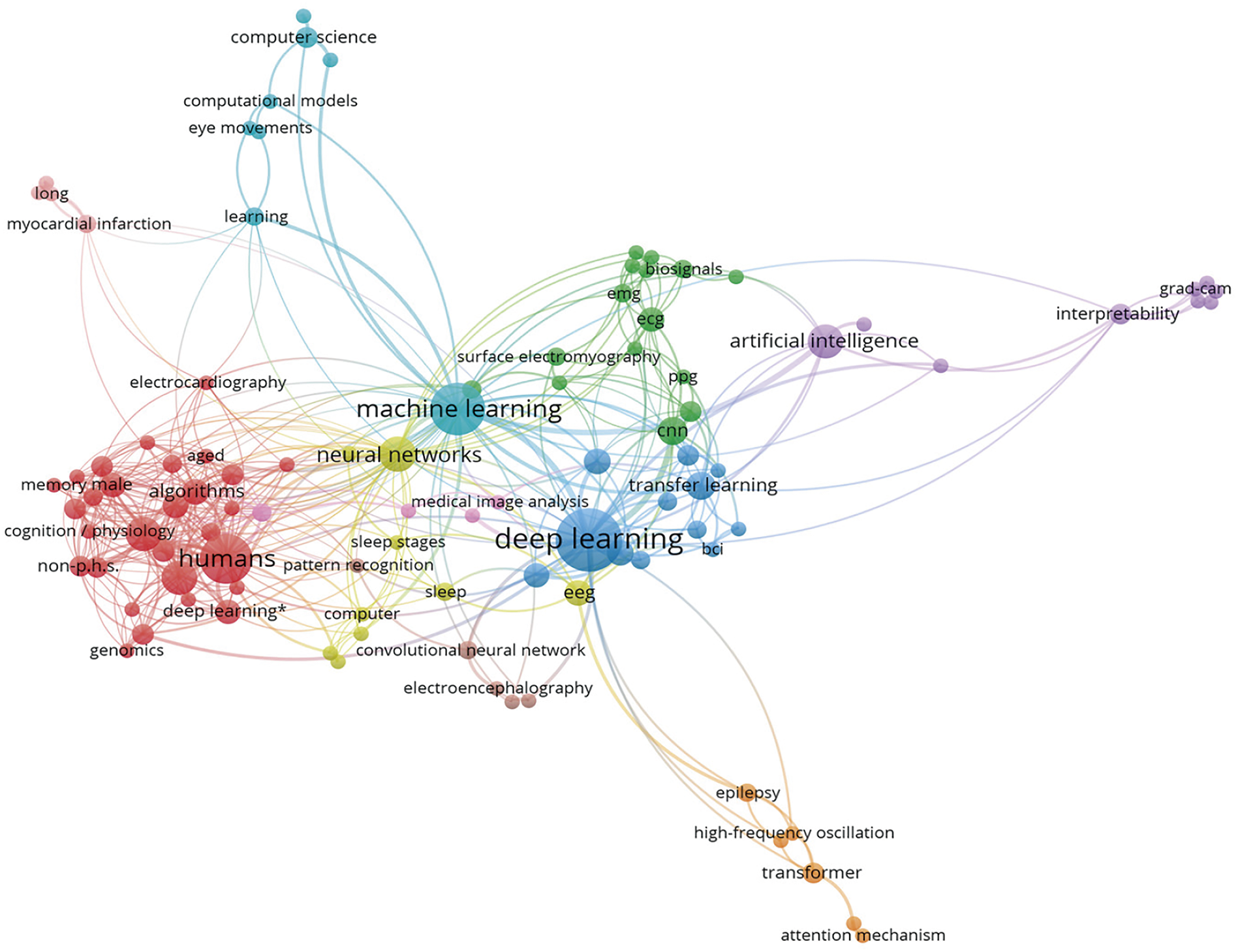

The selection of these databases is essential because they cover a wide range of scholarly articles to ensure comprehensive coverage of this review. Using keywords as a primary search method helps to locate articles that focus on and are relevant to the topic of this review. Keywords are a vital way to navigate between articles and enhance the visibility of published articles. Fig. 1 shows a graphical representation of the keywords used in this review.

Figure 1: Graphical representation of the keywords covered in this review and their relevance to the scope of this paper

The inclusion criteria for this review were as follows:

• This article proposes the application of DL or the analysis of DL in biomedical signals.

• This article addresses one of the predefined research contributions to DL in biomedical signals.

• This article contributes to the theoretical or practical advancements in DL in biomedical signals.

2.3 Search Keywords and Queries

The selected articles were searched via the following keywords: deep learning, biomedical signals, physiological signals, DL, artificial intelligence (AI), diagnosis, ECG, EEG, EMG, CNN, LSTM, and explainable AI. The following is an example of a search query using the keywords DL AND biomedical signals OR physiological signals AND diagnosis.

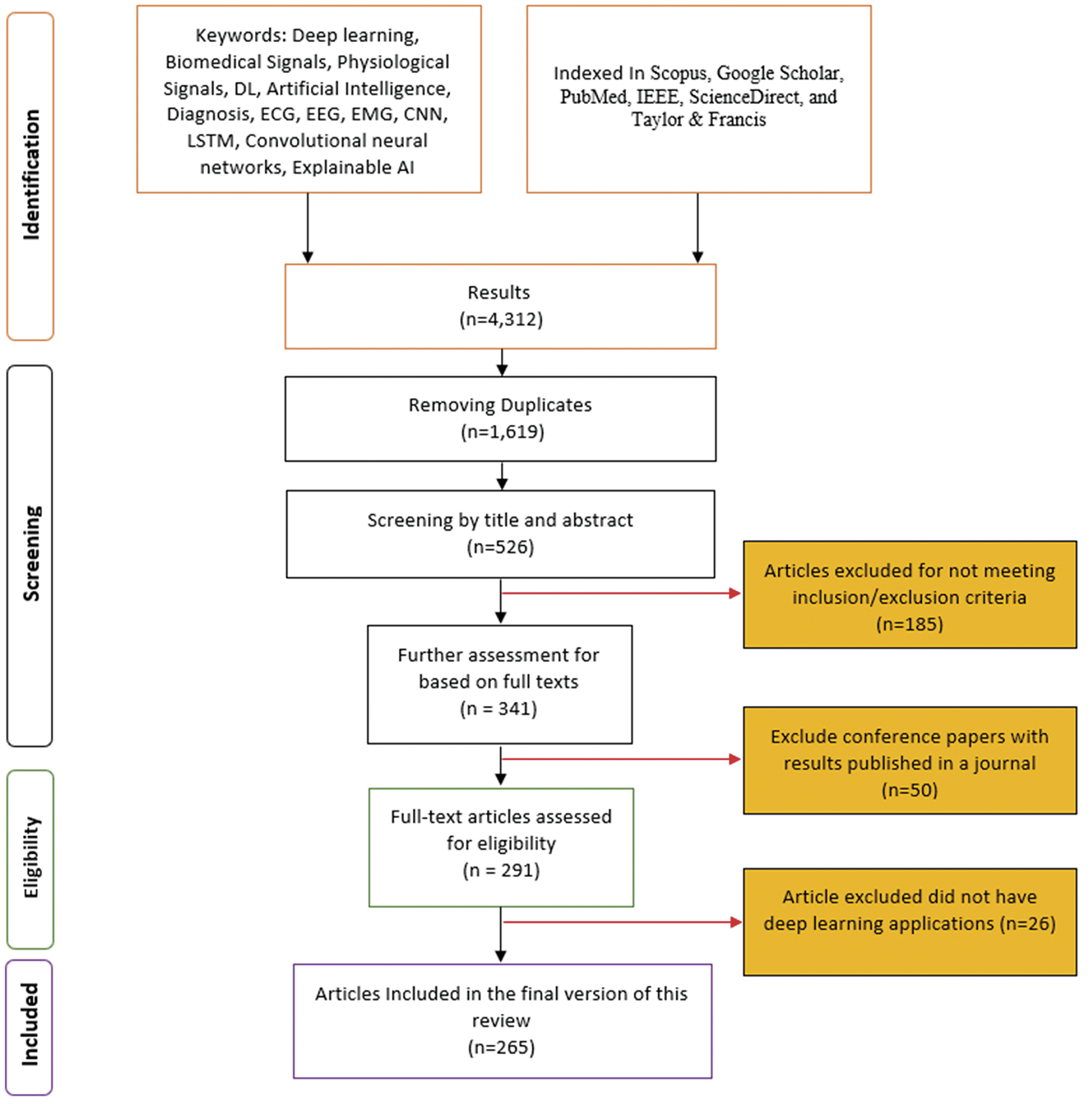

To maintain a focus on the most relevant research, articles that did not directly apply DL techniques to one of the biomedical signals were excluded. Our screening methodology was structured in two stages to ensure thorough screening of articles on DL applications in biomedical signals. In the first stage, we assessed the articles based on their titles and abstracts. The selected articles were searched via the keywords mentioned in Section 2.3. In the second stage, we analyzed the full texts of the selected articles from the first stage. In this stage, the full texts of the articles were used to ensure that they met the inclusion criteria. Fig. 2 shows the flowchart of our screening strategy process.

Figure 2: Flowchart showing the multistage screening process for selecting relevant studies

This section provides an in-depth analysis of the research landscape based on bibliometric data. The analysis is organized into several subsections, each focusing on a different aspect of the analyzed data. The study was performed on all included datasets after duplications were removed.

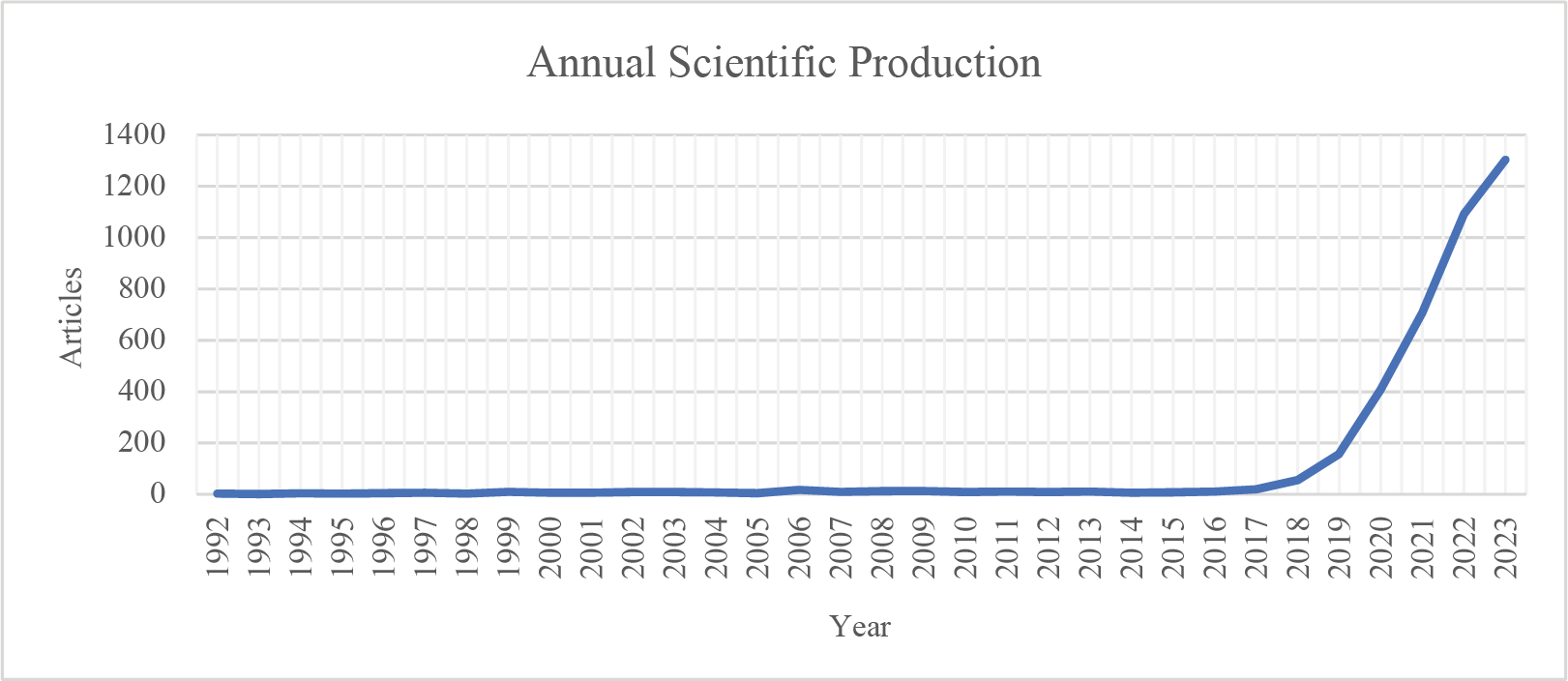

2.5.1 Scientific Production Trends

The analysis of annual publication output reveals a robust increase in scientific production over the past decades. This trend indicates that research interest in this field is growing rapidly, with recent years showing a significant surge in publications. The main findings in this trend analysis are that the dataset shows an increase from approximately 50 publications in the early 2000s to over 400 publications in 2020. A steady growth rate underscores the expanding scope and interest in the field. Fig. 3 shows the number of publications over the years.

Figure 3: Graph depicting the annual publication counts over time

2.5.2 Analysis of Authors’ Research Output over Time

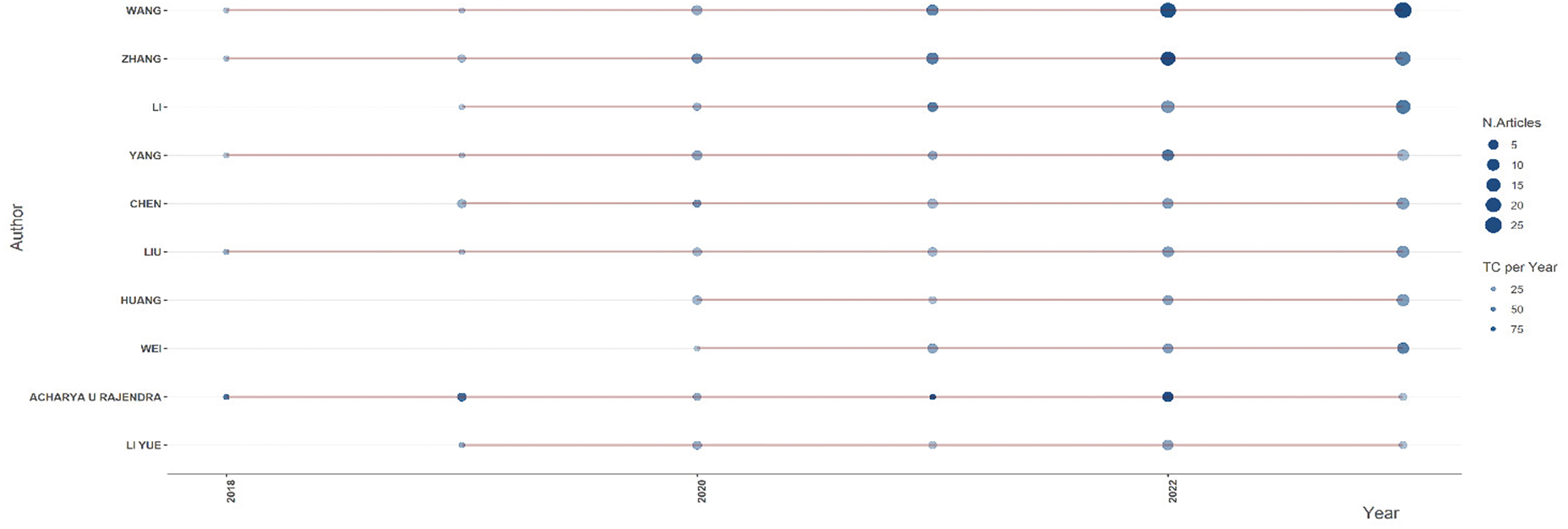

The analysis identifies the most productive authors and evaluates their scholarly impact. Metrics such as publication counts and citations per publication provide insight into the contributions of key researchers. The key findings are the productivity of various authors over time, spanning from 2018 to mid-2024. Fig. 4 shows the authors’ productivity and impact.

Figure 4: Research productivity and citation impact of authors

The analysis reveals several key observations regarding research productivity and scholarly impact. Authors such as Wang, Zhang, Liu, and Li have demonstrated consistent research output over multiple years, with a noticeable peak in 2022. This indicates their sustained contribution to the field and the increasing volume of their work. The most productive years in terms of the number of published articles appear to be 2022 and 2023, as reflected by the more prominent and darker circles in the visualization. This suggests a surge in research activity during these years, possibly driven by advancements in the field or increased research funding and collaboration. Notably, specific authors, such as Acharya U Rajendra, have comparatively fewer publications but have received significant citations in particular years. This highlights the impact of selected high-quality publications garnering considerable scholarly attention. Overall, the general trend suggests increased research productivity and impact over time, with many authors publishing more frequently in recent years. The findings underscore the growing interest in and expansion of research in this domain, as evidenced by the upward trajectory in both publication count and citation impact.

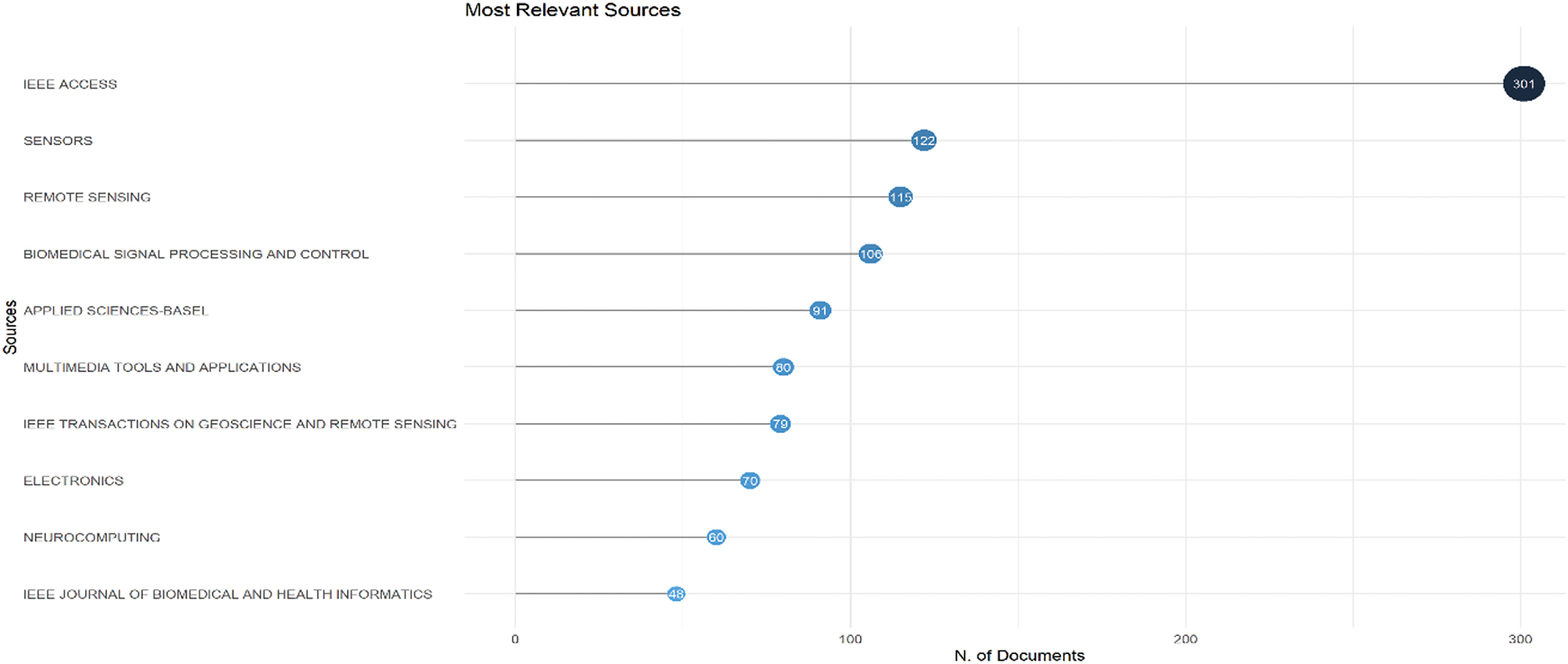

2.5.3 Journal and Source Analysis

Analyzing publication sources provides valuable insight into the dissemination of research within the field. Identifying the most relevant journals and conference proceedings helps us understand the primary venues for publishing influential studies. Fig. 5 shows the most appropriate sources of publications. The dataset highlights the key sources contributing to the body of knowledge in this domain. The most productive journal is IEEE Access, with 301 publications, demonstrating its role as a leading outlet for research dissemination. Sensors and remote sensing have published 122 and 115 articles, respectively, indicating the strong presence of sensor-related applications in the field. Other significant sources include Biomedical Signal Processing and Control (106 articles), Applied Sciences-Basel (91 articles), and Multimedia Tools and Applications (80 articles), emphasizing the interdisciplinary nature of the research. Additionally, journals such as IEEE Transactions on Geoscience and Remote Sensing (79 articles), Electronics (70 articles), Neurocomputing (60 articles), and IEEE Journal of Biomedical and Health Informatics (48 articles) also contributed significantly to the field. The presence of IEEE journals and high-impact computing journals suggest that the research spans multiple domains, integrating biomedical engineering, signal processing, artificial intelligence, and remote sensing. This distribution of publications across various sources reflects the interdisciplinary growth of the field and underscores the importance of computational and sensor-based methodologies in advancing research. The increasing presence of articles in high-impact journals indicates the field’s maturation and expanding recognition in the broader scientific community.

Figure 5: Most relevant sources based on the number of documents published

2.5.4 Country Production Analysis

This section examines countries’ contributions in terms of research citations and publication output, derived from analyzing two datasets: Most Cited Countries and Corresponding Author’s Countries.

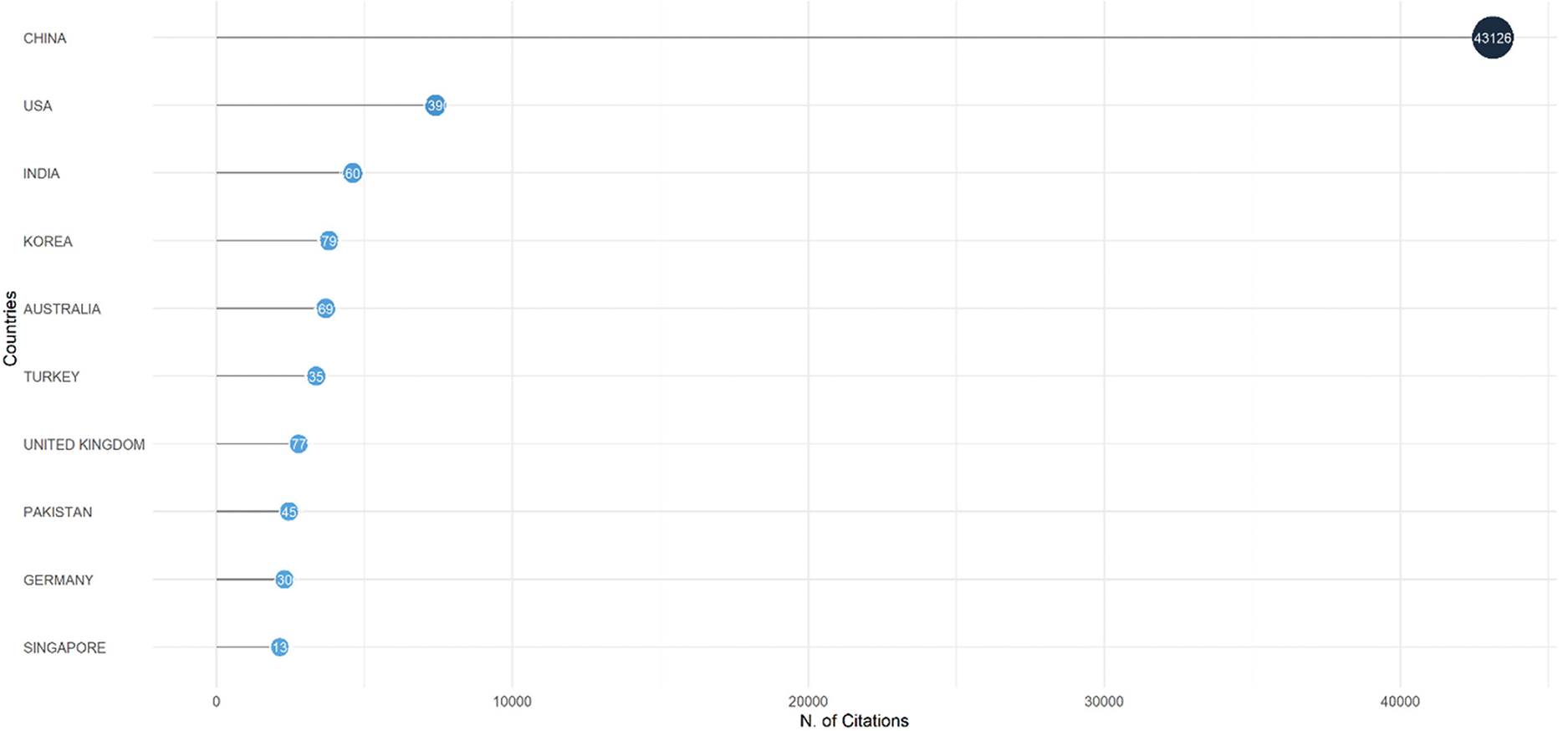

• Citation Analysis

The citation dominance of countries is illustrated in the Most Cited Countries chart (scale: 0–40,000 citations). China leads with the highest citation count, occupying the full scale of the chart (≈40,000 citations). The United States (USA), India, South Korea, and Australia follow in descending order, forming the top five most cited countries. Turkey, the United Kingdom, Pakistan, Germany, and Singapore are also prominent, although their citation volumes are lower than those of China. This trend underscores China’s significant influence on global research. Fig. 6 shows the citation analysis per country for the most cited countries.

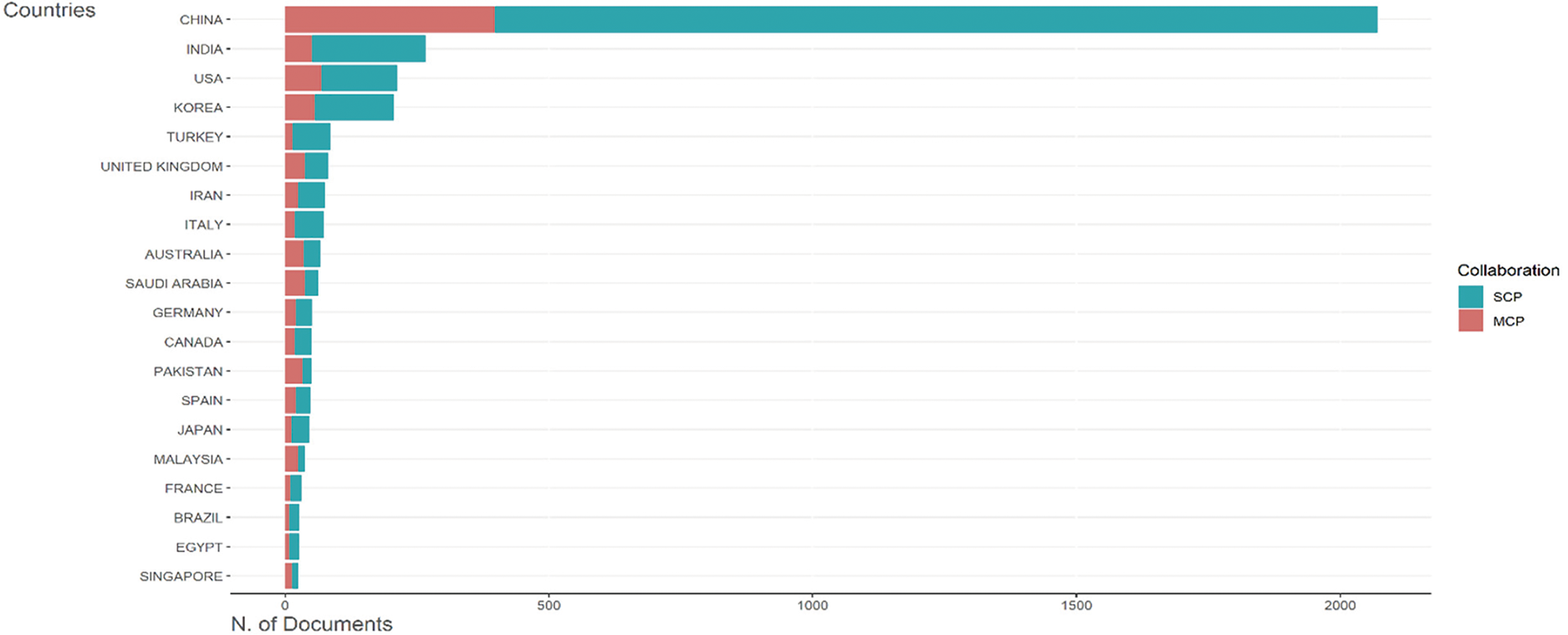

• Publication Output Analysis

Figure 6: Citation analysis per country for the most cited countries

The Corresponding Author’s Countries dataset highlights the number of documents (scale: 0–2000) contributed by countries, categorized into single-country publications (SCPs) and multiple-country publications (MCPs). China again dominates, producing approximately 2000 documents, followed by India, the USA, South Korea, and Turkey. Countries like Iran, Italy, Saudi Arabia, and Japan appear in the publication rankings. Nevertheless, they are absent from the top-cited list, suggesting a disparity between publication volume and citation impact. European nations such as Germany, Spain, France, Canada, and Brazil contribute moderately. Fig. 7 shows the publication analysis per country.

Figure 7: Publication analysis per country

The analysis reveals a stark geographic concentration of research influence. China has emerged as the undisputed leader in citations and publications, reflecting its strategic investments in research and development (R&D), vast academic workforce, and integration into global networks. By contrast, established research hubs such as the USA and Germany maintain strong citation impact despite lower publication volumes relative to China, underscoring their ability to produce high-quality, influential work. Emerging economies, including India, Turkey, and Pakistan, demonstrate growing contributions to global scholarship but face challenges in translating output into citations. This disparity may stem from limited international collaboration, underrepresentation in high-impact journals, or focusing on regionally relevant rather than globally competitive research. Moreover, although their publications are prolific, mid-tier contributors such as Saudi Arabia and Iran lack commensurate citation traction, signaling potential gaps in research visibility or alignment with global priorities. Finally, the dominance of single-country publications (SCPs) across most nations suggests a persistent reliance on domestic expertise and funding. While SCPs strengthen local research ecosystems, the limited prevalence of multiple country publications (MCPs), particularly among emerging economies, highlights untapped opportunities for cross-border partnerships. Such collaboration could enhance the impact of citations, diversify research perspectives, and address global challenges more effectively. These trends underscore the need for policies incentivizing international cooperation while addressing systemic barriers to equitable scholarly recognition.

3 From Traditional Machine Learning to Deep Learning

Machine learning (ML) has become popular in various fields, including biomedical signal processing. Traditional ML methods, such as K-nearest neighbors [31], random forests [32], and gradient boosting [33], have been used for classification [34], regression [35], and clustering tasks [36]. These algorithms require a set of features to be extracted from the input data and then used to train a model. However, the feature engineering process can be time-consuming and requires domain expertise [37,38].

On the other hand, significant attention has been given to DL and its ability to learn features from raw data automatically [39]. DL models, such as CNNs [40], RNNs [41], and deep belief networks (DBNs) [42], have achieved state-of-the-art performance on various tasks, including image and speech recognition, natural language processing (NLP), and biomedical signal processing [39,43].

However, even with the successes achieved by traditional ML methods, the dependency on manual feature engineering has several limitations, particularly in the context of scalability and adaptability [43], and it is also prone to human bias and error [39,43]. This slows the development cycle and limits the model’s generalization ability to new or unforeseen data. In rapidly evolving fields such as biomedical signal processing, where new data types and patterns frequently emerge, the rigidity of traditional feature engineering can hinder the continuous improvement of ML models [3,44,45].

DL addresses these challenges by utilizing neural networks capable of learning directly from raw data, thus automating the feature extraction process. For example, they excel in capturing spatial hierarchies in image data and handling temporal dependencies in sequential data. By removing the need for manual feature engineering, DL models can leverage large datasets to learn more abstract and complex features, leading to improved performance and robustness [46,47].

This shift accelerates the model development process and enhances the potential for discovering novel patterns and insights that human engineers might overlook. Consequently, the transition to DL represents a significant advancement in the field, facilitating more efficient and accurate analysis across diverse applications [43].

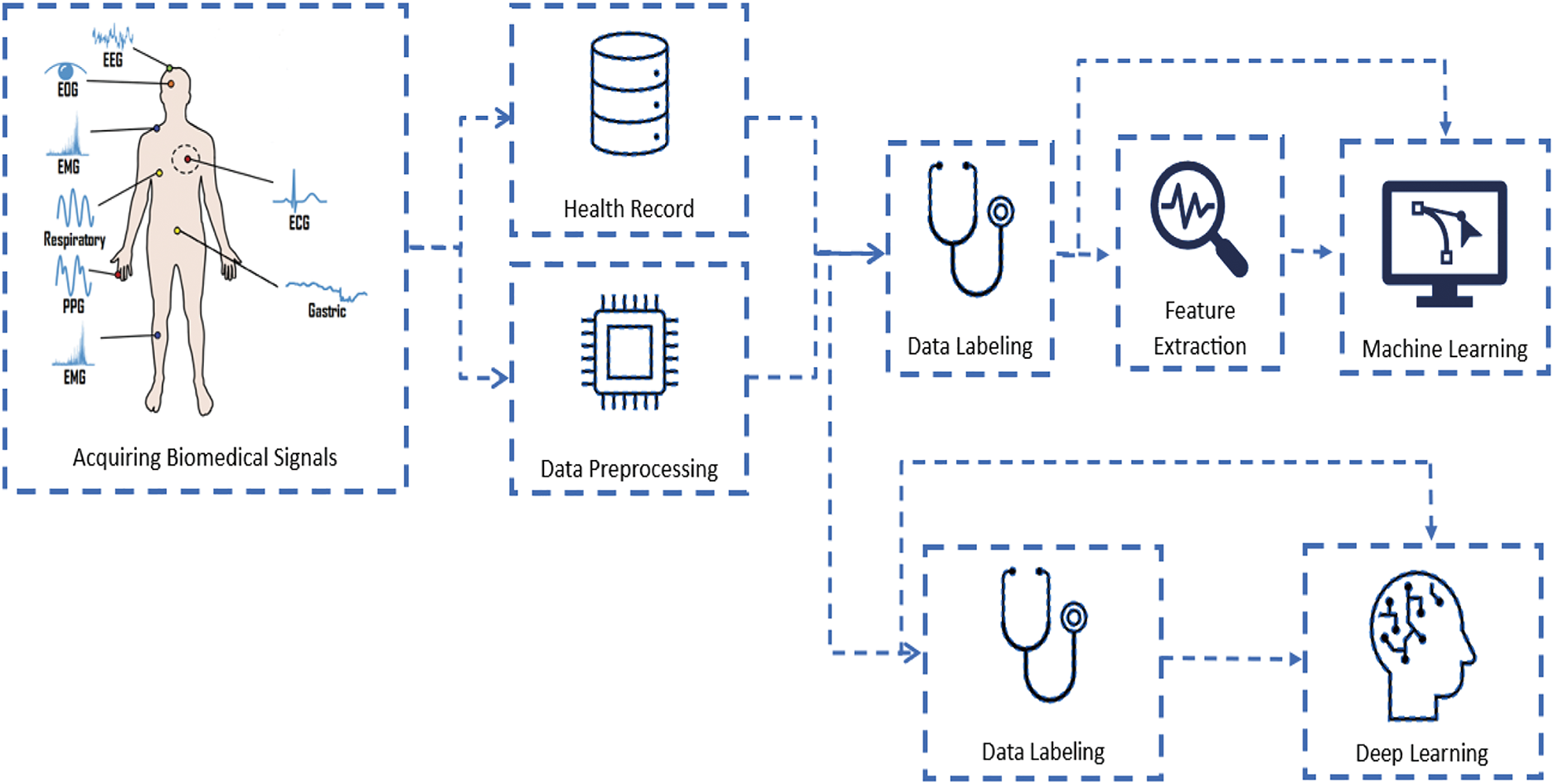

Overall, DL has revolutionized the field of AI and has shown significant promise in biomedical applications. With ongoing advancements in this technology and methods, DL is expected to continue to play an essential role in biomedical research and clinical practice. Fig. 8 shows the difference in data flow between ML and deep learning.

Figure 8: Flow diagram of data from patients to the ML and DL models

ML is a rapidly evolving field that has gained significant attention in recent years because of its ability to learn patterns and make predictions automatically from data. It involves the development of algorithms that can learn from data and improve their performance over time. ML algorithms have been applied in various applications, including image and speech recognition, NLP, and biomedical signal processing [48].

ML has been widely used in biomedical signal processing for classification, regression, and clustering tasks. For example, ML algorithms have been used to classify electroencephalography (EEG) signals into different stages of sleep, predict the development of Alzheimer’s disease via magnetic resonance imaging (MRI) data, and detect abnormal heartbeats in electrocardiography (ECG) signals [49].

Several types of ML algorithms exist, including supervised learning [50], unsupervised learning [51], and reinforcement learning [52]. Supervised learning involves training a model with labeled data, whereas unsupervised learning consists in discovering patterns in unlabeled data. Reinforcement learning involves training a model to make decisions based on a reward system [48,49].

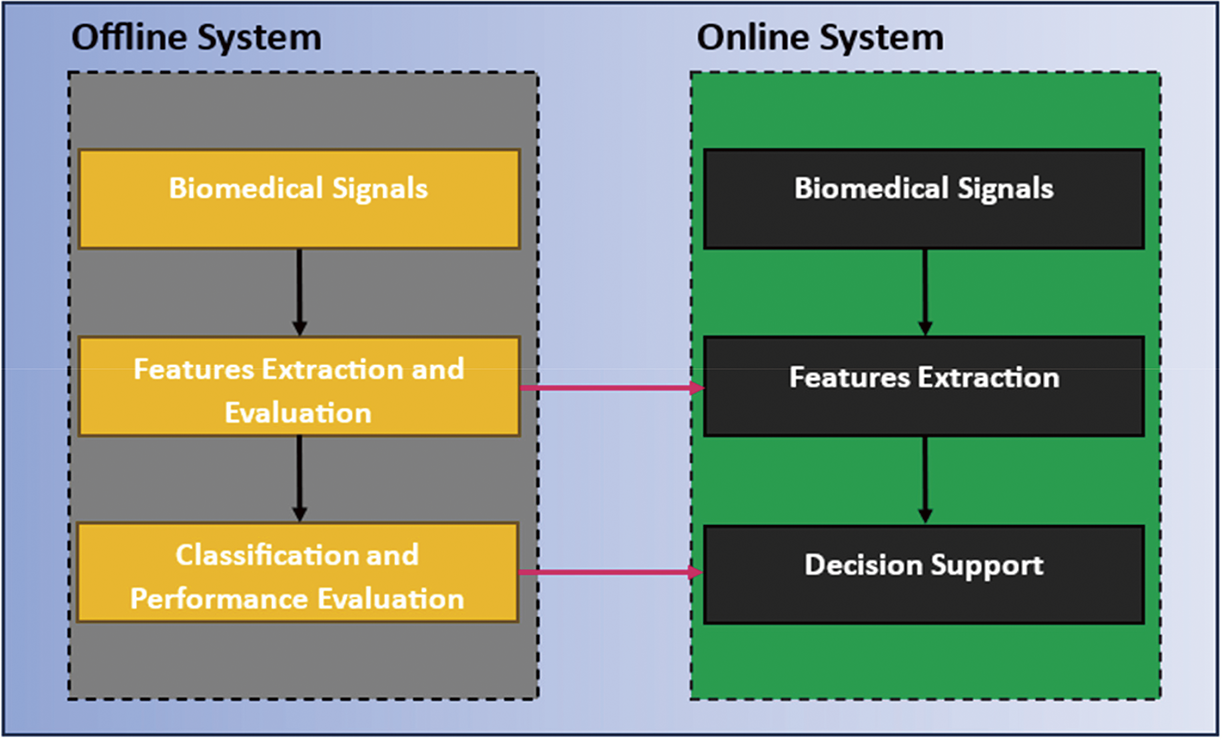

ML algorithms have also been used in biomedical applications, including drug discovery, personalized medicine, and clinical decision support systems. For example, ML algorithms have been used to predict drug interactions and side effects, personalize drug dosages based on patient characteristics, and predict treatment outcomes for various diseases [49]. Fig. 9 shows the block diagram for traditional online and offline detection via ML for biomedical signals.

Figure 9: Block diagram of the traditional prediction system based on biomedical signals

Despite the success of ML, several challenges remain. ML algorithms can suffer from overfitting when the utilized model is too complicated [50]. This leads to the learned parameters of the training data not being generalized to the blind testing data. Moreover, the interpretability of complex models can be challenging, as they usually involve too many computations and layers of abstraction [40,53].

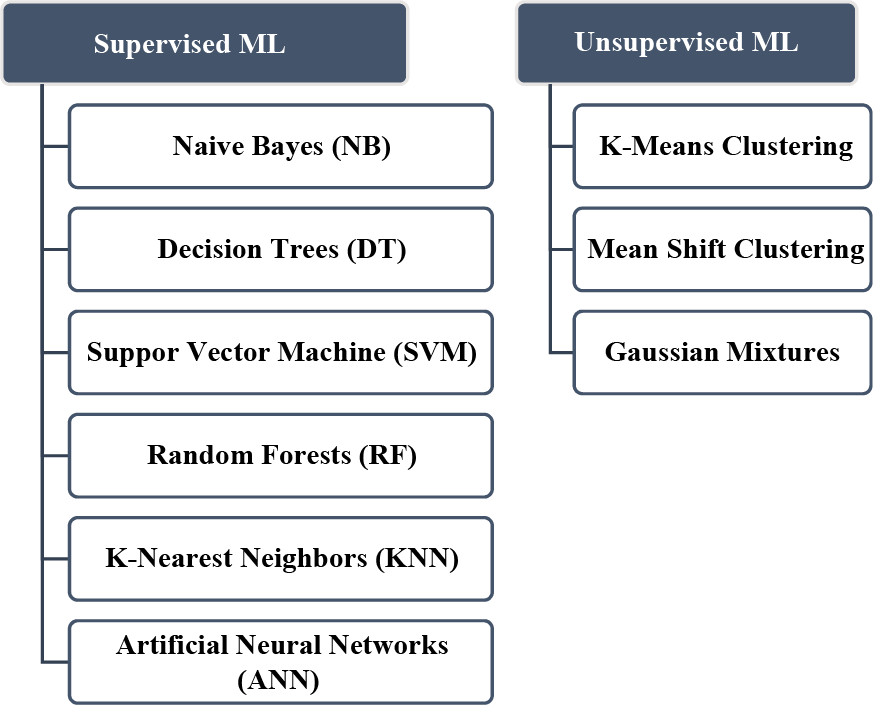

ML has shown significant promise in biomedical signal processing and other biomedical applications. With ongoing advancements in technology and methods, ML is expected to continue to play an essential role in biomedical research and clinical practice [54]. Fig. 10 shows the most popular ML algorithms used for biomedical signals.

Figure 10: Most popular ML algorithms used in biomedical signals

DL has demonstrated superior capabilities in handling complex and unstructured data. The following subsections discuss the most common DL algorithms.

3.2.1 Convolution Neural Networks (CNNs)

CNNs are a type of DL algorithm that has become increasingly popular for image and signal processing applications. CNNs are inspired by the structure and function of the animal visual cortex and are designed to learn hierarchical representations of pictorial and signal data. Unlike traditional ML algorithms, CNNs automatically know features from raw data rather than requiring manual feature extraction [14,55]. CNNs have been applied to various signal-processing tasks, including image classification, object detection, speech recognition, and biomedical signal processing. In the context of biomedical signal processing, CNNs have been used for EEG analysis, ECG analysis, and MRI analysis [44].

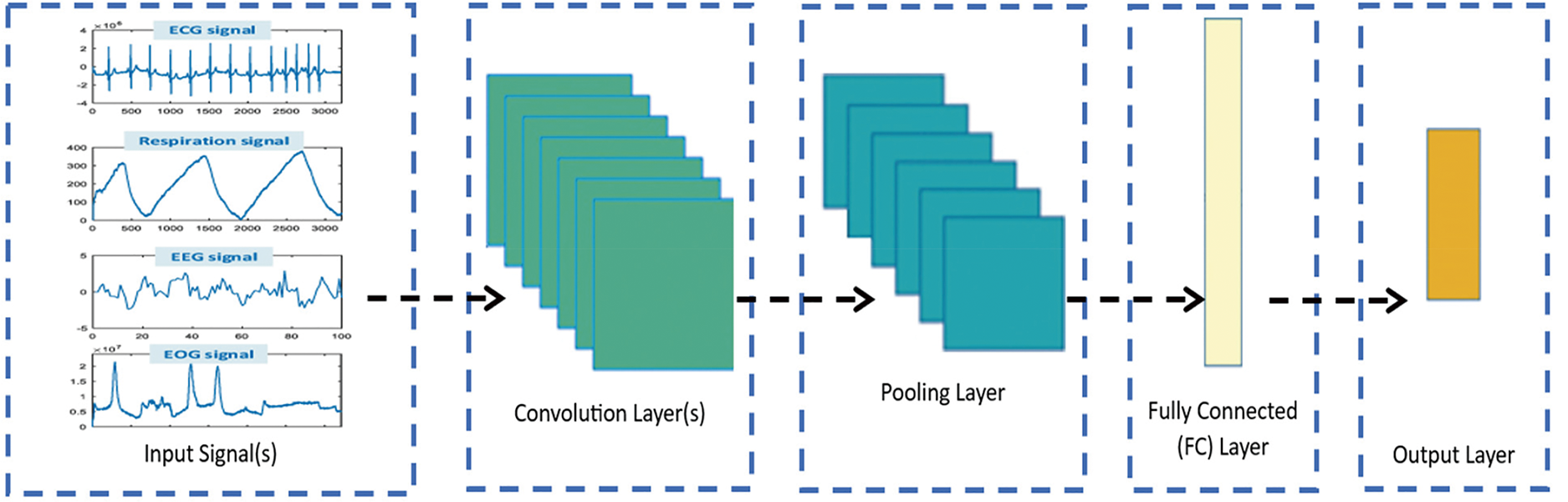

One critical advantage of CNNs is their ability to learn how to extract deep features from data automatically. This can be useful mainly in applications where the signals or features are not well defined or are difficult to detect manually. CNNs are also highly scalable and can be trained on large datasets via parallel processing techniques [1]. Several challenges are associated with training CNNs, including the risk of overfitting and the need for large datasets. Various methods have been developed to address these challenges, such as dropout regularization, data augmentation, and transfer learning [1,40]. In addition to their use in signal processing tasks, CNNs have been applied to various other applications, such as NLP and recommendation systems. DL has achieved state-of-the-art performance in many tasks [40]. DL has emerged as a powerful tool for signal processing tasks, including biomedical signal processing. With ongoing advancements in technology and methods, DL is expected to play an essential role in signal processing and other applications. Fig. 11 shows the general block diagram of CNN.

Figure 11: General block diagram of CNN

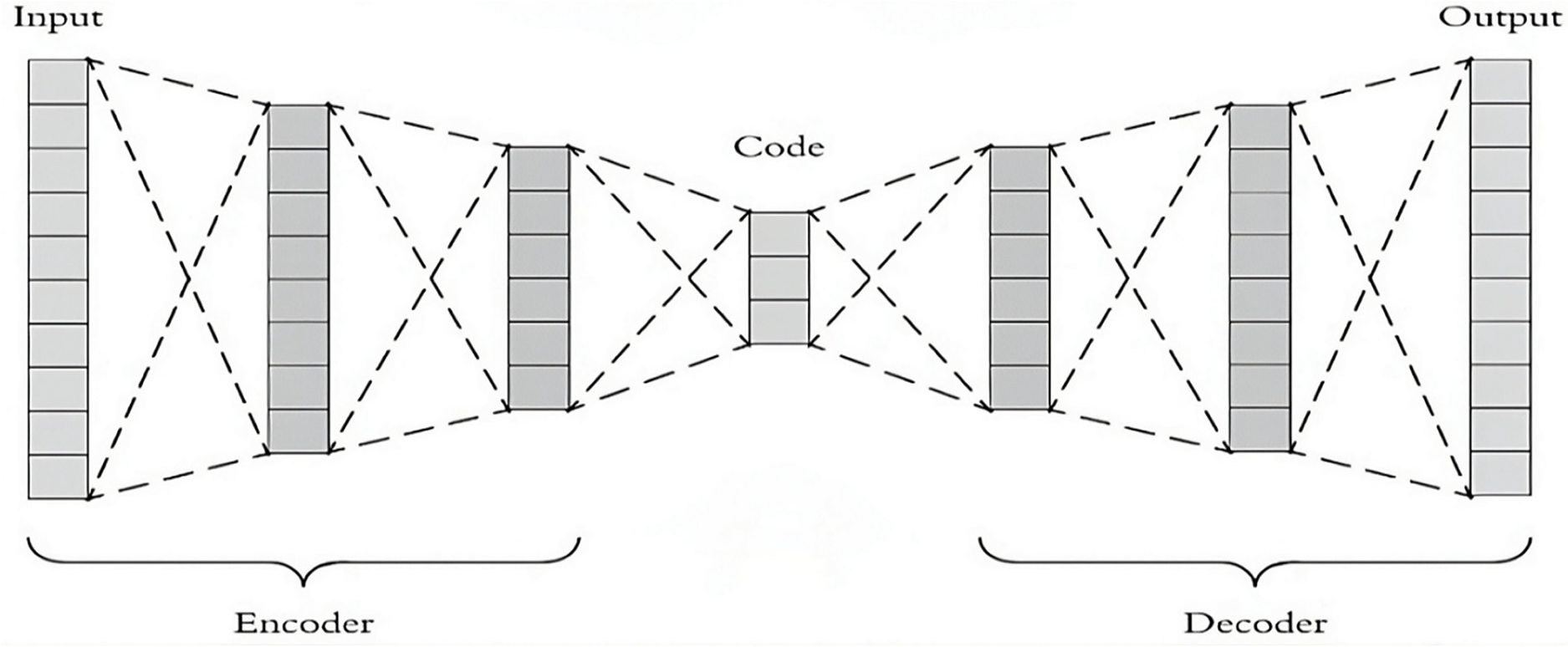

3.2.2 Autoencoder and Stacked Autoencoder

Autoencoders and stacked autoencoders are powerful DL techniques for biomedical signal-processing tasks. An autoencoder is an unsupervised learning algorithm that can learn how to efficiently represent data via the compressed latent space to reconstruct the data [40,56]. It consists of an encoder network that maps the input data to a lower-dimensional latent representation and a decoder network that reconstructs the original input from the latent representation. In biomedical signals, autoencoders have been used for denoising, dimensionality reduction, and feature extraction [57]. By training an autoencoder on a large set of labeled or unlabeled biomedical signals, it can learn to extract meaningful features that capture essential data characteristics. These learned features can subsequently be used for various downstream tasks, such as classification, anomaly detection, and signal synthesis [39].

Stacked autoencoders, or deep autoencoders or DBNs, are extensions of traditional autoencoders with multiple layers of encoding and decoding units. Stacked autoencoders have shown significant promise in biomedical signal analysis because of their ability to learn complex hierarchical representations. Stacked autoencoders can capture higher-level abstractions and intricate patterns in biomedical signals by adding more layers to the autoencoder architecture. This hierarchical representation learning enables more effective feature extraction, improving performance in various biomedical signal processing applications, such as ECG analysis, EEG decoding, and biomedical image analysis [56,58].

In biomedical signal processing, autoencoders and stacked autoencoders have been widely used. For example, autoencoders have been applied in ECG analysis to denoise signals by learning the underlying noise patterns and reconstructing clean ECG signals. Additionally, stacked autoencoders have been employed for feature extraction in EEG decoding tasks, where hierarchical representations of brain signals that capture discriminative information for classifying different cognitive states or detecting anomalies are known [54]. Moreover, in biomedical image analysis, autoencoders and stacked autoencoders have been utilized for tasks such as feature extraction for disease diagnosis from medical images, image denoising, and image quality through superresolution analysis [58]. Fig. 12 shows the general block diagram of the encoders and decoders.

Figure 12: General block diagram of the encoders and decoders

3.2.3 Recurrent Neural Network (RNN)

RNNs have gained significant attention in biomedical signal processing because of their ability to model temporal dependencies and handle sequential data effectively. RNNs are designed to process data sequentially, making them well suited for analyzing biomedical signals, which often exhibit temporal dynamics. RNNs have been successfully applied to various biomedical signal-processing tasks, including ECG, EEG, and speech signal analysis [59,60].

In ECG analysis, RNNs have been used to detect arrhythmias, predict heart rates, and identify abnormalities. By capturing the temporal dependencies in ECG waveforms, RNNs can learn patterns and features crucial for accurately diagnosing and monitoring cardiac conditions. They have shown promising results in detecting various arrhythmias, such as atrial fibrillation, ventricular tachycardia, and heart blocks, contributing to improved clinical decision-making [9,13]. In EEG analysis, RNNs have been applied for brain signal decoding, seizure detection, sleep stage classification, and brain-computer interface (BCI) systems. By modeling the temporal dynamics of EEG signals, RNNs can effectively extract features and patterns that reflect different cognitive states or neurological disorders. They have demonstrated the ability to detect and predict epileptic seizures, classify sleep stages accurately, and enable real-time control in BCI applications [13].

Additionally, RNNs, particularly those with LSTM and GRU components, have been employed for Type-2 diabetes prediction via genomic and tabular data, achieving high performance and showing their effectiveness in predicting chronic diseases from complex datasets [61].

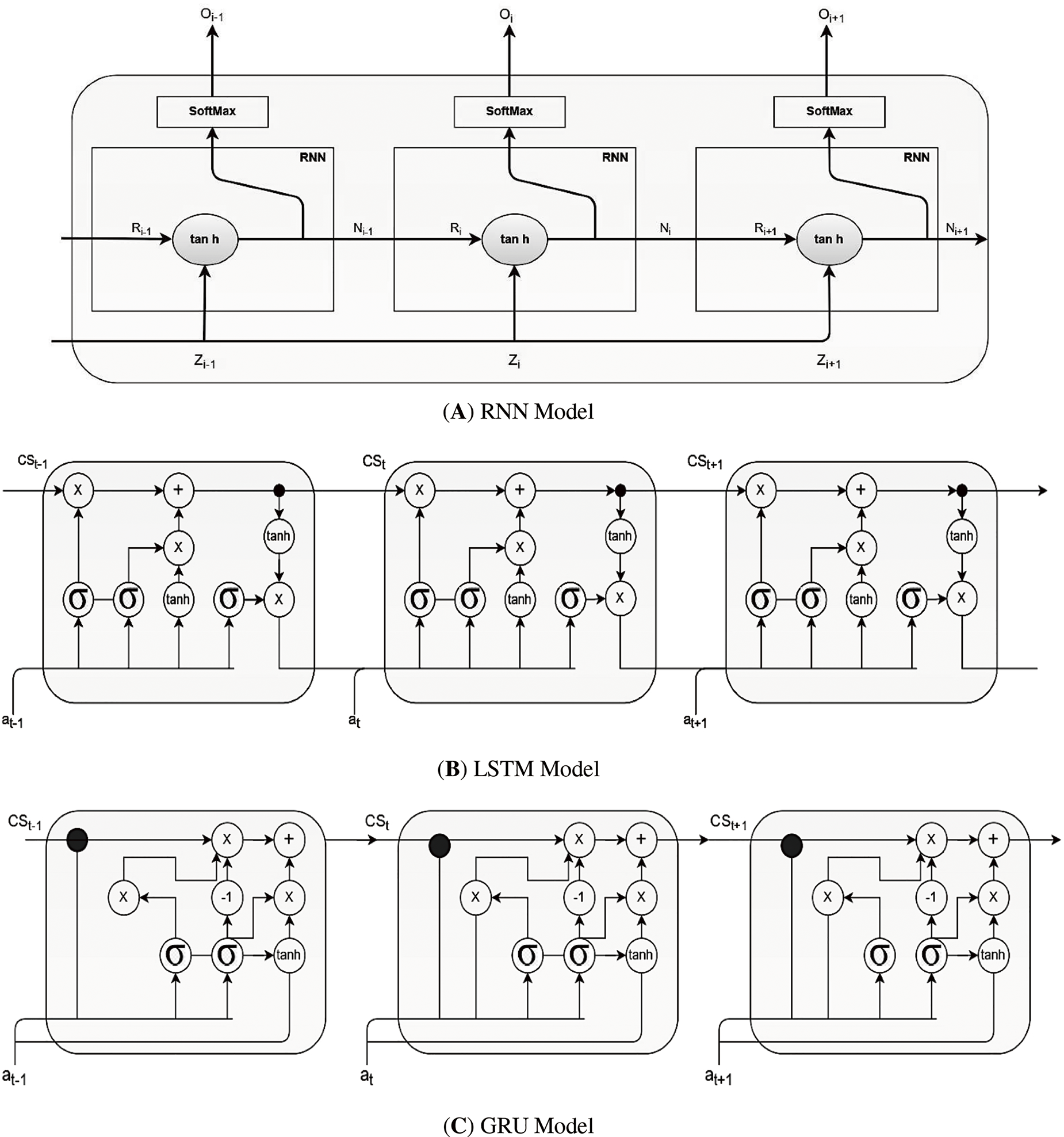

RNNs have also been employed in speech signal analysis for voice recognition, speaker identification, and emotion recognition applications. By considering the temporal context of speech signals, RNNs can capture essential features related to speech dynamics and phonetic patterns [9,62]. They have significantly improved speech recognition accuracy, enabling better speech-based applications in biomedical contexts. Fig. 13 illustrates the distinct architectures of three critical RNN types: the standard RNN, the long short-term memory (LSTM), and the gated recurrent unit (GRU). All three sequential data processes differ in their internal structures and how they handle information flow within the network.

Figure 13: Different types of RNNs

Moreover, when processing sequential biomedical signals such as ECG or EEG data, LSTM networks maintain a cell state that runs through the entire chain of the network, allowing information to persist across time steps. The LSTM cell contains three gates: the forget gate, which determines what data to discard from the cell state; the input gate, which determines what new information to store; and the output gate, which determines what information will be passed to the next step. Fig. 13B illustrates the internal architecture of an LSTM cell, showing how these gates interact to maintain and update the cell state over time. This architecture enables LSTMs to capture long-term dependencies in sequential data, making them particularly effective for arrhythmia detection in ECG signals where temporal patterns are crucial for accurate classification.

The basic RNN unit consists of a single layer, usually an

• Standard RNN

A basic RNN unit consists of a single layer, usually an

where

Despite their simplicity, standard RNNs suffer from the vanishing gradient problem when modeling long-term dependencies. This makes it challenging to retain information over longer sequences.

• Long short-term memory (LSTM)

To mitigate the vanishing gradient problem, LSTMs introduce a cell state and gating mechanisms that regulate the flow of information. An LSTM cell typically has three gates, forget, input, and output gates, which allow the model to retain and utilize information from past inputs effectively [59,60]. The key equations for an LSTM cell are as follows:

where

LSTMs are particularly effective at capturing long-term dependencies in biomedical signals, such as extended ECG waveforms or EEG recordings, by controlling how much past information to keep or forget.

• Gated Recurrent Unit (GRU)

GRUs offer a simplified alternative to LSTMs, using only two gates: update gates and reset gates. These gates regulate the information flow between the previous hidden state and the current candidate state, allowing GRUs to learn long-term dependencies with fewer parameters than LSTMs do [59,60]. The GRU equations are as follows:

where

GRUs often achieve comparable performance to LSTMs on many tasks while being computationally more efficient, making them suitable for real-time biomedical signal analysis and mobile health applications.

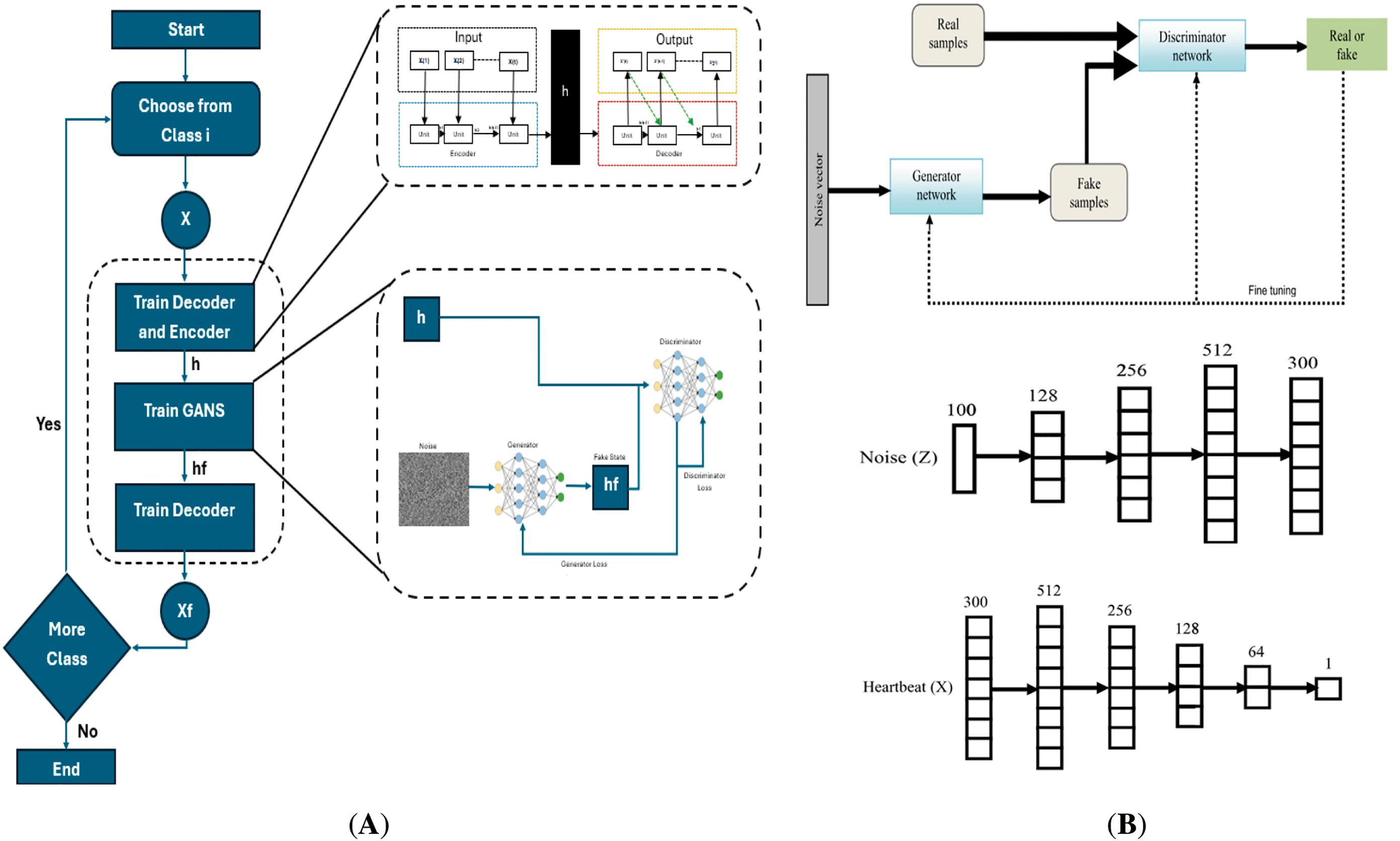

3.2.4 Generative Adversarial Network (GAN)

Generative adversarial networks (GANs) have emerged as robust frameworks for generating synthetic data that resemble accurate biomedical signals. GANs consist of two neural networks, a generator and a discriminator, which are trained adversarially. The generator learns to generate synthetic biomedical signals, whereas the discriminator learns to distinguish between accurate and generated signals. GANs have shown promising applications in various biomedical signal-processing tasks, including data augmentation, anomaly detection, and signal synthesis [63]. A GAN consists of three main components or processes:

• Generator

The generator in a GAN architecture serves as the synthetic data producer. It typically takes random noise as input, often samples from a uniform or normal distribution, and transforms this noise into structured data resembling real-world examples [63]. The architecture generally consists of layers designed to process and refine this noise, which may include recurrent layers for capturing temporal patterns, followed by fully connected layers that apply nonlinear activations to shape the output gradually [63]. Regularization techniques such as dropout are commonly employed to prevent overfitting and ensure diversity in the generated outputs. The final layers produce synthetic data, which aims to mimic the statistical properties and characteristics of accurate biomedical signals such as ECG or EEG waveforms [28,64].

• Discriminator

The discriminator functions as the quality assessor in the GAN framework. Its architecture is designed to analyze input data and determine their authenticity. This typically involves layers that extract relevant features from the input, which may include recurrent or convolutional layers depending on the data type, followed by fully connected layers with appropriate activation functions [28,64]. The final output layer usually produces a probability score indicating whether the input is real (from the actual dataset) or synthetic (generated by the generator). The discriminator’s role is crucial, as it provides the feedback mechanism that guides the generator’s learning process [64,65].

• Adversarial training

The core innovation of GANs lies in their adversarial training process. During training, the generator and discriminator engage in a continuous competition where the generator attempts to produce increasingly realistic synthetic data. In contrast, the discriminator strives to better distinguish real from fake data. This dynamic is formalized as a minimax game where the generator minimizes the probability of detection while the discriminator maximizes its classification accuracy [64,65]. Through multiple iterations of this competition, both networks improve iteratively, ultimately reaching an equilibrium where the generator can produce data that are virtually indistinguishable from real examples, and the discriminator cannot reliably differentiate between them [64,65]. The objective function for GANs is as follows:

where:

•

• where

• where

•

A critical application of GANs in biomedical signals is data augmentation. GANs can generate synthetic signals that augment the limited available data, thereby improving the performance and robustness of ML models. By training the generator to mimic the statistical characteristics of natural signals, GANs can generate realistic synthetic data that expand the training dataset, leading to enhanced model generalizability and performance [28,64].

Another application of GANs in biomedical signals is anomaly detection. GANs can be trained on standard, healthy signals to learn their underlying distribution. The discriminator in the GAN is then used as an anomaly detector to identify deviations from the learned normal distribution. This approach has been successfully applied to detect anomalies in various biomedical signals, such as ECGs, electromyograms (EMGs), and EEGs, enabling early detection of abnormalities and improving patient monitoring [64,65].

Furthermore, GANs have been used for signal synthesis in biomedical applications. For example, GANs have been employed to generate synthetic ECG signals that mimic different cardiac conditions, allowing researchers to study and analyze the effects of specific abnormalities without the need for large, diverse clinical datasets [11,65,66]. Synthetic signals generated by GANs can also be used for training and testing DL models, overcoming the challenges of limited or imbalanced datasets. Fig. 14 shows a general block diagram of the GAN.

Figure 14: (A) Block diagram of a GAN in real-example, (B) Sample architecture for generator and discriminator networks [67] (CC BY 4.0)

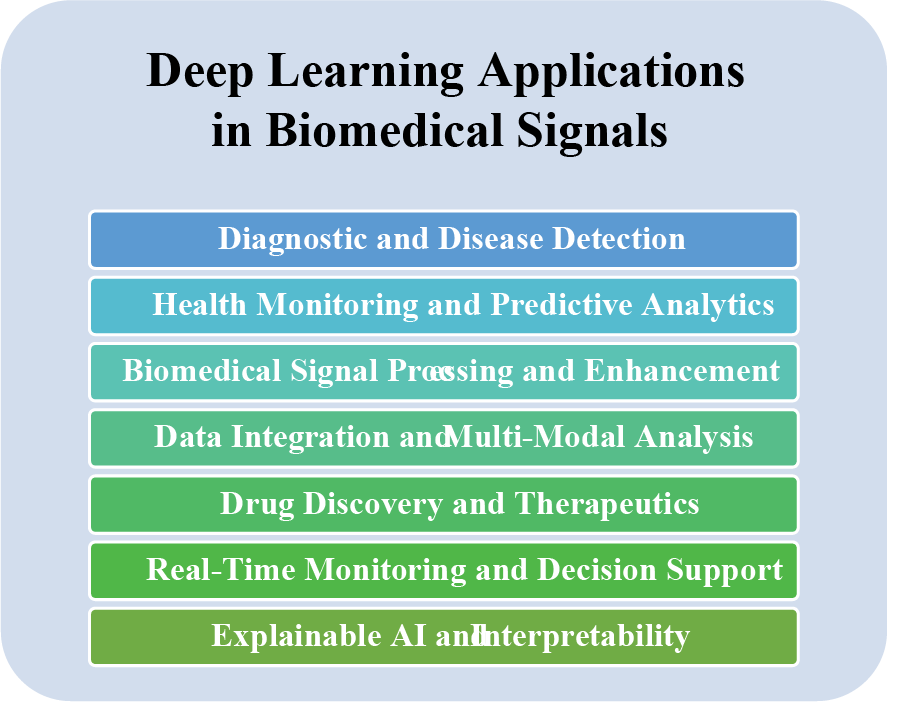

4 DL Applications in Biomedical Signals

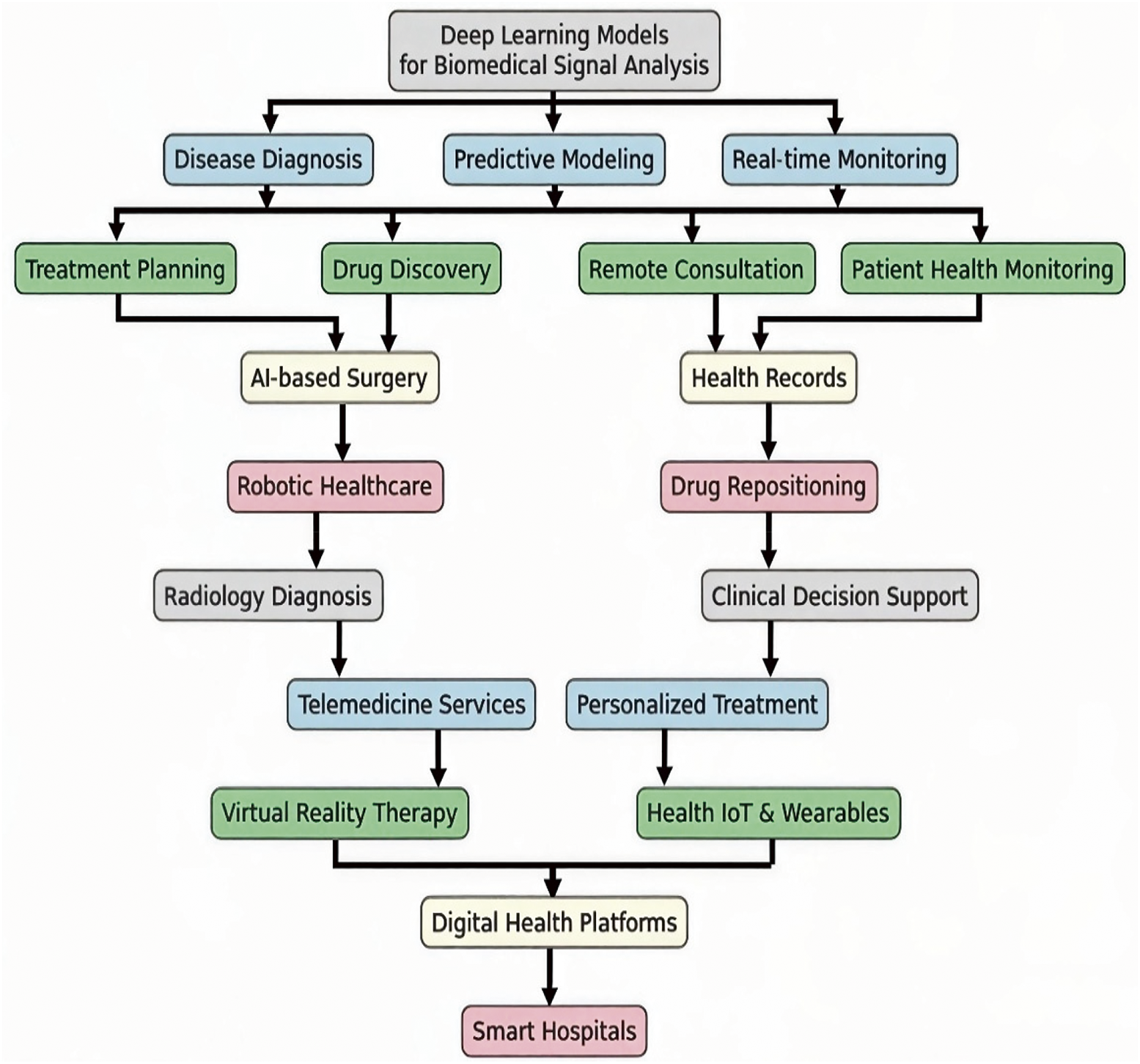

DL has revolutionized the field of biomedical signals, offering powerful tools for analyzing and interpreting complex biomedical data. DL models, such as CNNs, RNNs, and GANs, have been extensively applied in various biomedical signal processing and interpretation tasks, including disease diagnosis, anomaly detection, signal classification, and signal synthesis [9,68]. Owing to its ability to efficiently learn representations from complex and unstructured data, DL has remarkably succeeded in extracting meaningful patterns and features from diverse biomedical signals. This section explores different and recent applications of DL in biomedical signals. The following section discusses these topics in detail. Fig. 15 shows the applications of DL in biomedical signals.

Figure 15: DL applications for biomedical signals

4.1 Diagnostic and Disease Detection

This category uses DL algorithms to analyze biomedical signals and diagnose various medical conditions. It includes applications such as ECG arrhythmia classification [2,69], EEG-based seizure detection [10], and speech and voice analysis for diagnosing disorders.

CNNs have been used to analyze ECGs and accurately identify cardiac arrhythmias. RNNs effectively decoded EEG signals to detect epileptic seizures. These models learn to capture intricate patterns and features from signals, enabling accurate disease identification and aiding in early diagnosis and treatment [2,5,25,28,70].

Anomaly detection is another critical area in which DL has made significant contributions. Training DL models on standard, healthy signals, they can learn and identify deviations from the underlying distribution [10,13,71–74]. This approach has been applied to detect anomalies in various biomedical signals, such as detecting abnormal heart rhythms from ECGs or identifying anomalies in brain signals from EEGs. Deep learning-based anomaly detection can enhance patient monitoring systems and enable early detection of critical events [2,5,12,29,74–76].

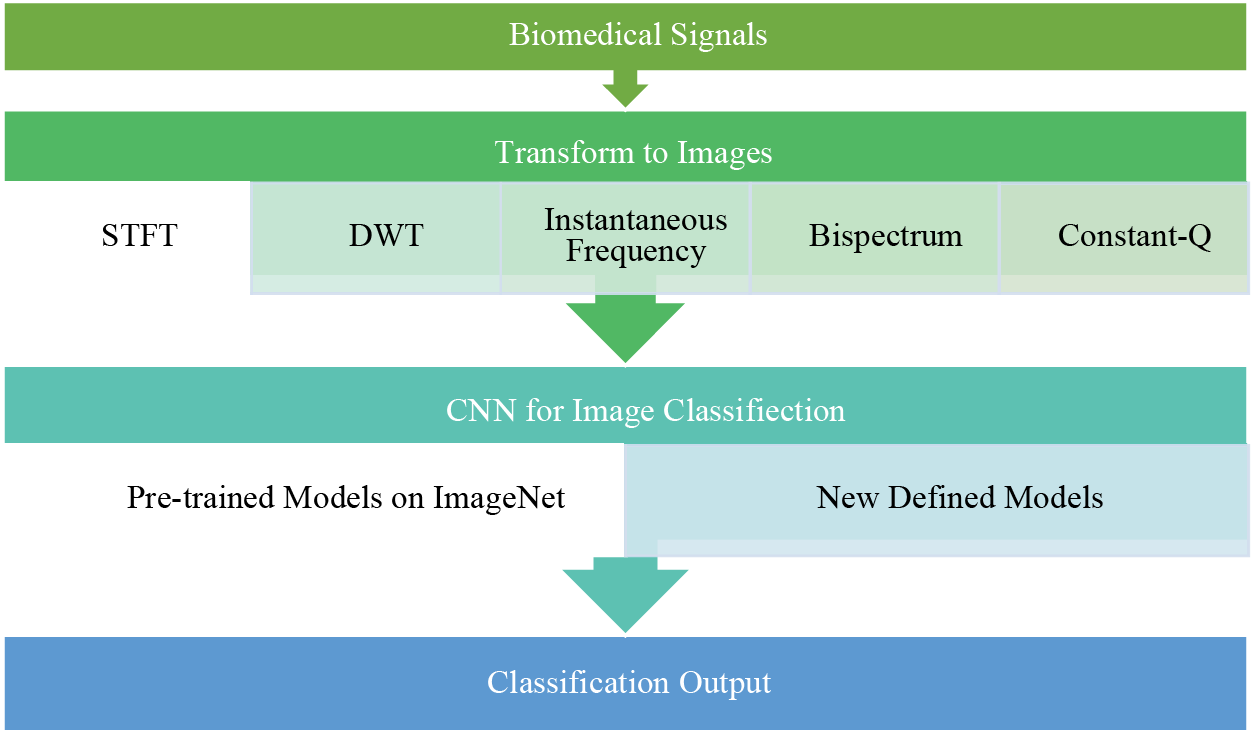

Signal classification is a fundamental task in biomedical signal processing, and DL models have achieved state-of-the-art performance in this area [40]. CNNs, such as short-time Fourier transform (STFT) and discrete wavelet transform (DWT), have been employed to classify images from biomedical signals [77,78]. To detect specific activities or states, RNNs, such as EEGs or EMGs, have been applied to classify time series signals. DL models can automatically learn discriminative features from signals, enabling accurate and efficient classification [56]. Fig. 16 shows how DL uses inputs from biomedical signals as images.

Figure 16: Block diagram using a CNN with image inputs from biomedical signals

4.2 Health Monitoring and Predictive Analytics

DL has shown great potential in using biomedical signals to monitor individuals’ health and predict potential health risks continuously. These signals, including HRV, blood pressure, and glucose levels, offer valuable insights into a person’s physiological state. DL models, such as RNNs and LSTM networks, have been used to analyze temporal patterns in these signals and predict future health events [41,79]. For example, an LSTM-based model effectively predicts hypoglycemic events in patients with type 1 diabetes, providing an early warning system to prevent life-threatening situations [80].

In addition to health monitoring, DL has also been applied to predict disease outcomes and identify potential risk factors based on analyses of longitudinal biomedical data. DL models can identify hidden patterns and complex relationships between signals and patient outcomes by training on large-scale patient data. For example, reference [41] proposed a deep learning-based framework for the early prediction of heart failure-related hospitalization, utilizing electronic health records and physiological signals to achieve high accuracy in forecasting adverse events.

This is a transformative application area for DL in biomedical signals. By harnessing the power of deep neural networks, healthcare professionals can gain valuable insights from continuous health monitoring and make informed decisions for personalized patient care, early disease detection, and risk prediction.

4.3 Biomedical Signal Processing and Enhancement

DL has shown significant potential in enhancing the quality and utility of biomedical signals, which are often corrupted by noise and artifacts that can hinder accurate diagnosis and analysis [81,82]. Deep neural networks have been used to denoise and enhance these signals, improving their fidelity and reliability. For example, a deep learning-based approach using a stacked autoencoder was proposed to effectively denoise electroencephalogram (EEG) signals, reduce artifacts, and improve the accuracy of neurological disorder diagnosis [81]. This represents a critical application area for DL in biomedical signals, where researchers and medical professionals can leverage the capabilities of deep neural networks to effectively denoise, enhance, and augment these signals for improved diagnosis and analysis.

4.4 Data Integration and Multimodal Analysis

To gain comprehensive insights into biomedical signals, DL techniques have shown remarkable potential for combining and analyzing data from multiple sources and modalities. Biomedical research often involves data from various sensors and imaging modalities, and integrating these heterogeneous data can provide a more holistic understanding of complex physiological processes [83,84]. DL models, such as multimodal neural networks and attention mechanisms, have been developed to effectively fuse data from different sources. For example, a multimodal DL framework was proposed that integrated data from ECGs and PPGs to improve the accuracy of heart rate estimation and cardiovascular disease diagnosis [85,86].

DL has also been instrumental in multimodal analysis, combining information from various sources to extract complementary features and facilitate better disease detection and diagnosis. A deep learning-based multimodal analysis approach was developed to diagnose Alzheimer’s disease via structural and functional MRI data, achieving superior performance compared with single-modal analysis [83]. This is an essential application area for DL in biomedical signals, where researchers and clinicians can uncover valuable insights and improve disease detection and diagnosis accuracy and efficiency by integrating data from diverse sources and applying advanced DL techniques.

4.5 Drug Discovery and Therapeutics

This is a rapidly growing field, and DL has been used to accelerate the identification of potential drug candidates and optimize therapeutic strategies. DL techniques have shown great promise in predicting the binding affinity between small molecules and target proteins, which is a crucial step in the design of new drugs [87–89]. CNNs and graph-based DL models have been applied to predict highly accurate protein–ligand interactions. For example, DeepChem, a DL library, was developed to predict the binding affinities of small molecules to protein targets successfully, aiding in virtual screening for potential drug candidates [89].

DL has also significantly optimized drug therapies by analyzing patient data and predicting treatment responses. RNNs and transformer-based models have been applied to electronic health records and genomic data to personalize drug treatment plans and anticipate adverse drug reactions. A DL model was developed that utilized electronic health records to predict patient-specific adverse events, enabling physicians to make informed decisions and reduce the likelihood of harmful drug reactions [90].

This area represents an application for DL in biomedical signals. By using deep neural networks to predict protein–ligand interactions and personalize drug treatments, researchers and clinicians can significantly expedite the drug discovery process and improve patient outcomes in therapeutics.

4.6 Real-Time Monitoring and Decision Support

DL has shown immense potential in providing continuous, real-time analysis of biomedical signals to support clinical decision-making. DL models have been employed to process and analyze streaming data from various sensors and devices, enabling real-time monitoring of patients’ health status and facilitating timely interventions [39,91,92]. For example, deep CNNs have been applied to analyze data from wearable devices, such as smartwatches and fitness trackers, to detect and predict abnormal physiological patterns [92,93].

DL has also provided real-time decision support for critical care settings. By analyzing streaming data from patient monitors and electronic health records, DL models can detect early signs of deterioration and predict adverse events, allowing medical teams to take immediate action. An attention-based DL model was developed for real-time sepsis prediction, achieving high accuracy and sensitivity in identifying patients at risk of septic shock [94].

This topic represents a revolutionary application area for DL in biomedical signals. By leveraging the capabilities of deep neural networks for real-time analysis and prediction, healthcare professionals can receive timely and accurate decision support, leading to improved patient outcomes and more effective healthcare interventions.

4.7 Explainable AI and Interpretability

This is one of the most crucial aspects of applying DL to biomedical signals, where understanding and interpreting model predictions is essential for gaining trust and acceptance from medical professionals. Owing to their complex architecture, DL models are usually considered black boxes and do not understand how decision-making is performed. However, interpretability is vital in biomedical applications to comprehend the rationale behind a model’s predictions and ensure that its recommendations align with medical expertise [95,96].

Recent research has focused on developing explainable DL models that provide insights into the decision-making process. For example, local interpretable model-agnostic explanations (LIMEs) were introduced to explain individual predictions of black-box models such as deep neural networks. Locally explainable linear explanations (LELEs) generate locally interpretable explanations by approximating complex model behavior around a specific instance, allowing medical practitioners to understand why a particular decision was made [97].

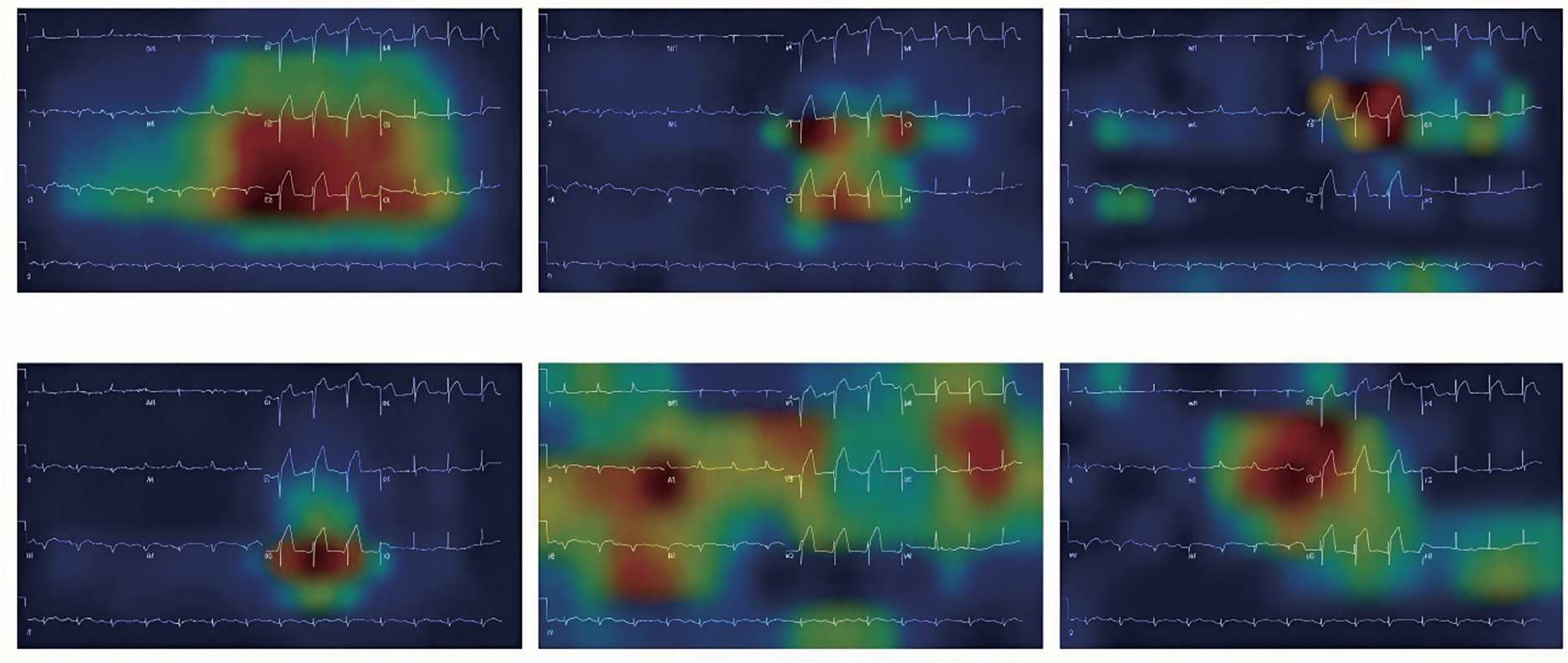

Interpretability is especially crucial when deploying DL models for real-world clinical applications. Medical professionals must trust and validate predictions to ensure patient safety and appropriate treatment plans. Researchers have explored methods to make DL models more interpretable by incorporating attention mechanisms and highlighting specific regions in the input data that influence the model’s output. An attention-based DL model was proposed for arrhythmia detection in ECGs, allowing physicians to understand which parts of the ECG signal were most significant in making the diagnosis [96].

Explainable AI is a crucial area of research when applying DL to biomedical signals. Developing methods and techniques that provide transparent and interpretable insights into DL models’ decision-making processes is essential for fostering trust, facilitating validation, and enabling the safe and effective use of DL in real-world clinical settings.

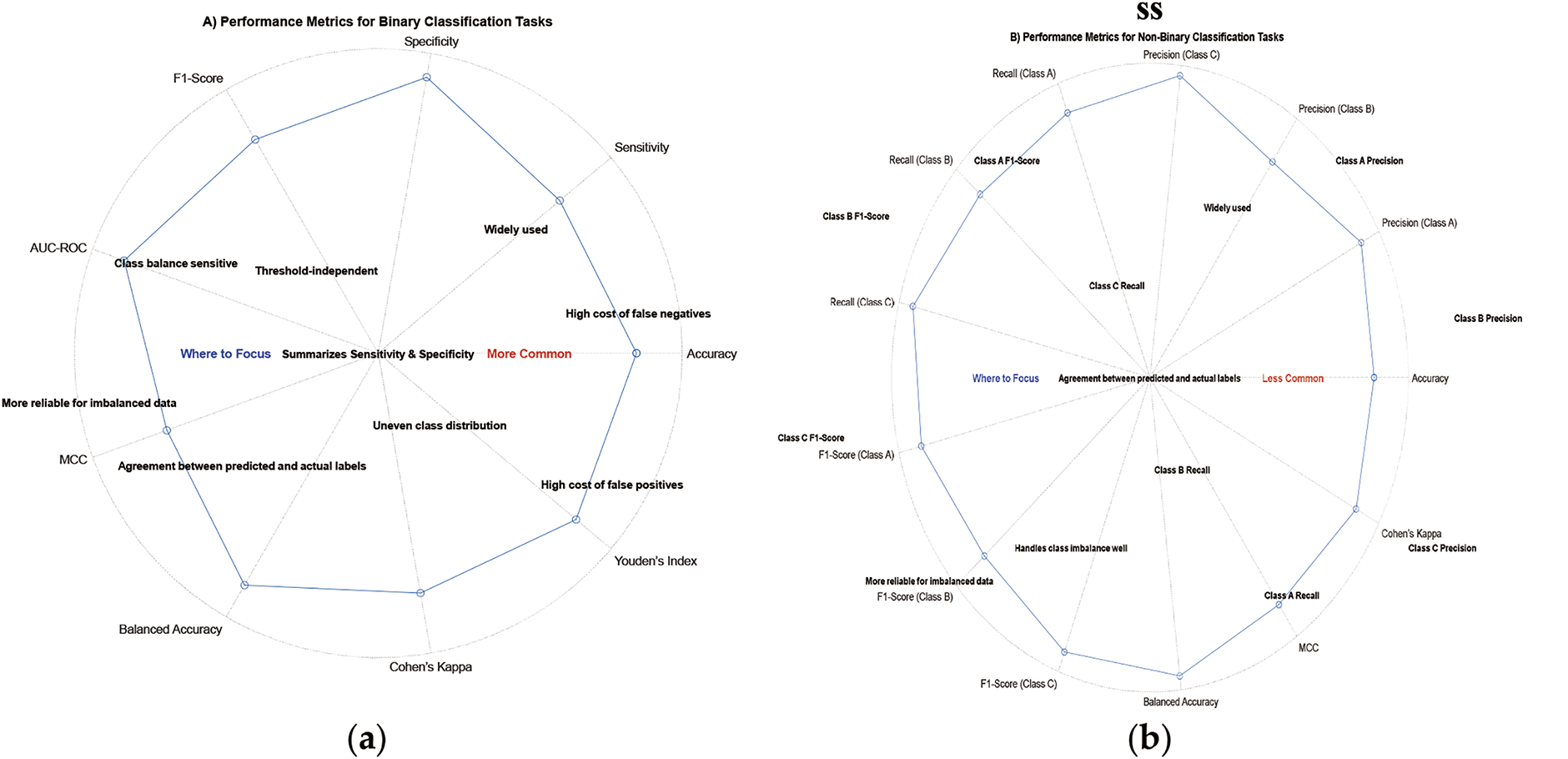

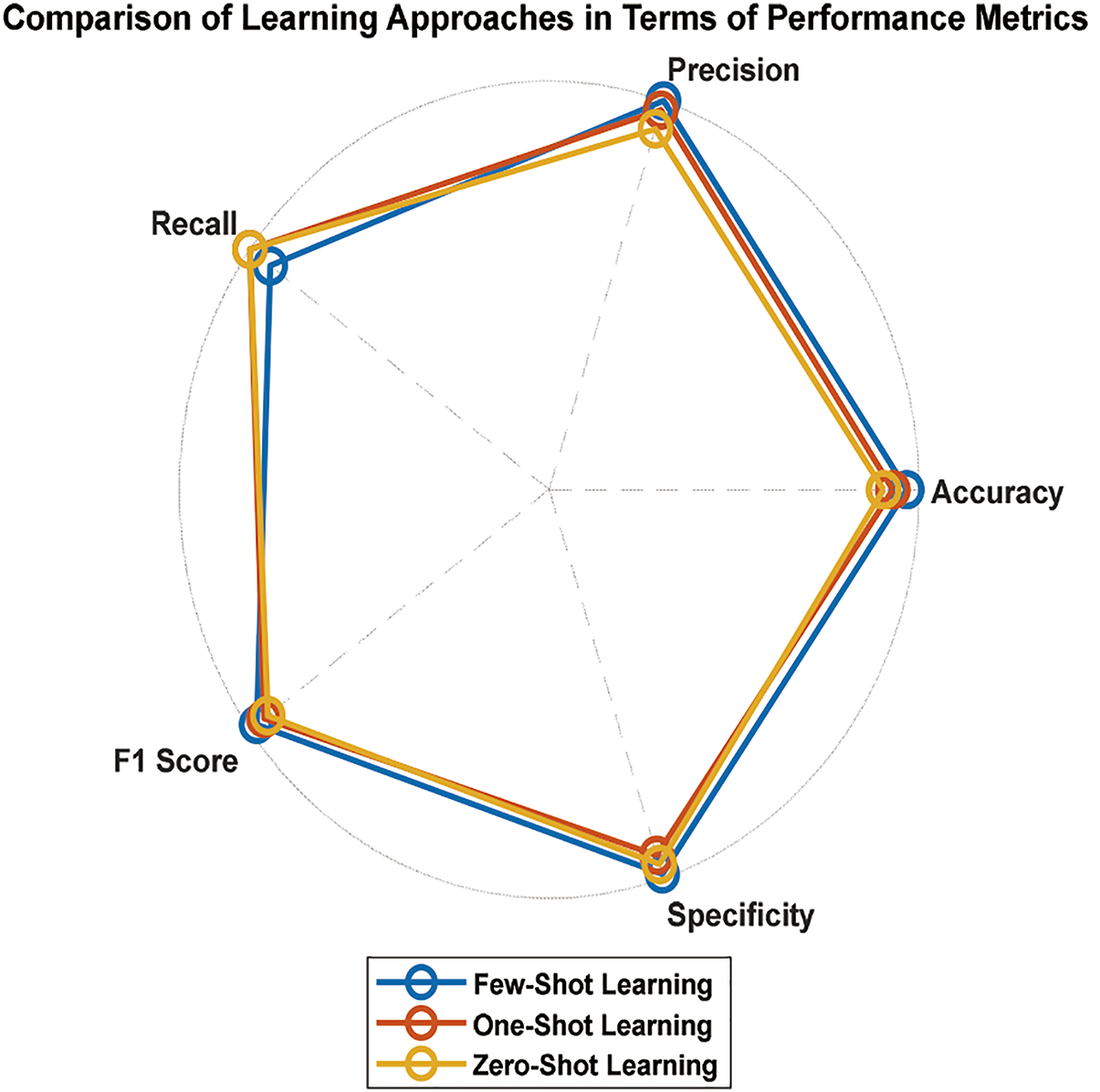

5 Performance Metrics for DL in Biomedical Signals

Assessing the performance of DL models applied to biomedical signals is crucial for evaluating their effectiveness and ensuring their successful application in clinical and research settings [40]. Various performance metrics are commonly used to measure these models’ accuracy, robustness, and generalizability. In this section, we discuss some of the most critical performance metrics and their significance in evaluating the performance of DL models for biomedical signals [38]. These metrics are accuracy, sensitivity (recall), specificity, the F1 score, and the area under the receiver operating characteristic curve (AUC-ROC). The following subsection discusses these metric equations:

Accuracy is one of the most fundamental performance metrics and represents the proportion of correctly classified samples over the total number of samples in the dataset [98]. It is calculated as follows:

where TP (true positives) is the number of correctly classified positive samples, TN (true negatives) is the number of correctly classified negative samples, FP (false positives) is the number of negative samples misclassified as positive, and FN (false negatives) is the number of positive samples misclassified as unfavorable [98].

5.2 Sensitivity (Recall) and Specificity

Sensitivity, also known as recall, measures the ability of a model to identify positive samples correctly. On the other hand, specificity measures the ability to identify negative samples correctly [98]. They are calculated as follows:

High sensitivity is crucial in applications where the cost of false negatives is high, such as in disease diagnosis, whereas high specificity is essential when false positives have severe consequences [98].

The F1 score is the harmonic meaning of precision and recall (sensitivity). It provides a balanced assessment of the model’s performance by considering false positives and negatives [98]. It is calculated as follows:

Precision is the proportion of actual positive samples out of all the predicted positive samples.

5.4 Area Under the Receiver Operating Characteristic Curve (AUC-ROC)

The ROC curve plots the actual positive rate (sensitivity) against the false positive rate (1-specificity) at various classification thresholds. The AUC–ROC metric represents the area under the ROC curve and quantifies the model’s ability to distinguish between positive and negative samples. AUC-ROC values closer to 1 indicate better discriminatory power [98].

5.5 Matthews Correlation Coefficient

The Matthews correlation coefficient (MCC) is a more informative metric for evaluating classification performance, especially in imbalanced datasets. The MCC considers all four confusion matrix components (TP, TN, FP, FN) and produces a value between −1 and 1, where 1 indicates perfect classification, 0 represents random predictions, and −1 signifies complete misclassification. It is calculated as follows:

Unlike accuracy, the MCC remains reliable even when class distributions are skewed, making it a preferred metric for biomedical classification problems.

Balanced accuracy is helpful in imbalanced datasets where standard accuracy may be misleading. It calculates the average sensitivity and specificity, ensuring that both classes contribute equally to the performance measurement. It is defined as:

Balanced accuracy provides a fair assessment when the dataset has a significant class imbalance, making it particularly valuable for biomedical applications such as disease detection.

5.7 Precision (Positive Predictive Value, PPV)

Precision, also known as positive predictive value (PPV), measures the proportion of correctly predicted positive cases among all predicted positive cases. It is defined as:

Positive results are crucial when false positives are costly, such as in cancer screening, where an incorrect positive result can lead to unnecessary biopsies or treatments.

The false positive rate (FPR) measures the proportion of negative samples that were incorrectly classified as positive:

A lower FPR is essential in high-risk applications, such as medical diagnostics, where incorrectly classifying a healthy patient as diseased can lead to unnecessary interventions.

Cohen’s kappa evaluates classification performance by considering agreement beyond what is expected by chance. It is beneficial when working with imbalanced datasets and multiple raters. It is calculated as:

where

A kappa value 1 indicates perfect agreement, whereas 0 indicates random agreement. Cohen’s kappa is especially useful in multiclass classification problems and when comparing multiple models.

5.10 Youden’s Index (J statistic)

Youden’s index provides a single-value measure of a diagnostic test’s performance by considering both sensitivity and specificity:

It ranges from −1 to 1, where 1 indicates perfect classification and 0 suggests no diagnostic ability. Youden’s index is widely used in medical diagnostics to determine the optimal threshold for classification.

The F2 score is a variation of the F1 score that emphasizes recall (sensitivity) more. It is particularly useful in scenarios where false negatives are more costly than false positives, such as critical disease detection.

A higher F2 score is desirable in healthcare applications, where detecting all potential positive cases is more important than minimizing false positives.

5.12 Why Are These Metrics Important?

The choice of performance metrics depends on the specific task and application of deep learning models in biomedical signals. Accuracy is a widely used metric, but more accuracy is needed in imbalanced datasets where the number of positive and negative samples differs significantly. Sensitivity and specificity are essential in scenarios where the cost of false positives or false negatives is asymmetric [40,98]. The F1 score provides a balanced view of the model’s performance and is useful when there is an uneven class distribution. It is essential in applications such as disease diagnosis, where false positives and negatives can have serious consequences, while the F2 score prioritizes recall, which is helpful for disease detection [36,40]. AUC-ROC is particularly valuable in binary classification tasks, as it remains unaffected by the choice of classification thresholds, making it more robust when dealing with imbalanced datasets [40]. The MCC and Cohen’s kappa also provide robust classification performance measures, especially in imbalanced datasets [36,40]. Balanced accuracy ensures that both classes contribute equally, making it more effective than regular accuracy. Youden’s index is particularly valuable in medical diagnostics, as it helps determine the optimal decision threshold [36,40].

5.13 Selecting the Best Metric for Your Problem

The selection of performance metrics depends heavily on the type of task and the application of DL models to biomedical signals. Accuracy is a widely used metric, but greater accuracy is needed for imbalanced datasets where the number of positive and negative samples differs significantly. Sensitivity and specificity are essential in scenarios where the cost of false positives or negatives is asymmetric [40,98].

The F1 score provides a balanced view of the model’s performance and is useful when there is an uneven class distribution. It is essential in applications such as disease diagnosis, where false positives and negatives can have serious consequences [36,40]. AUC-ROC is particularly valuable in binary classification tasks, as it remains unaffected by the choice of classification thresholds, making it more robust when dealing with imbalanced datasets [40].

For imbalanced datasets, metrics such as the MCC, balanced accuracy, Cohen’s kappa, and Youden’s index offer more reliable evaluations than regular accuracy. In medical applications where missing positive cases are costly, the sensitivity, F2 score, and AUC-ROC are more critical than the overall accuracy [36,40]. Conversely, precision and the false positive rate (FPR) should be minimized in applications where false positives carry high consequences. For general classification problems, the F1 score and MCC provide a balanced assessment of model performance [40].

Selecting appropriate performance metrics for evaluating DL models in biomedical signals is crucial for obtaining meaningful insights into their real-world applicability and performance. Researchers and practitioners should carefully consider the specific requirements of their applications and use a combination of metrics to comprehensively assess the model’s capabilities. By doing so, we can advance the field of DL for biomedical signals and promote the adoption of accurate and reliable models in healthcare and related domains [36,99]. Fig. 17 shows the importance of the performance metrics for binary and multiclass scenarios.

Figure 17: Main metrics for two scenarios: (a) Binary; (b) Multiclass

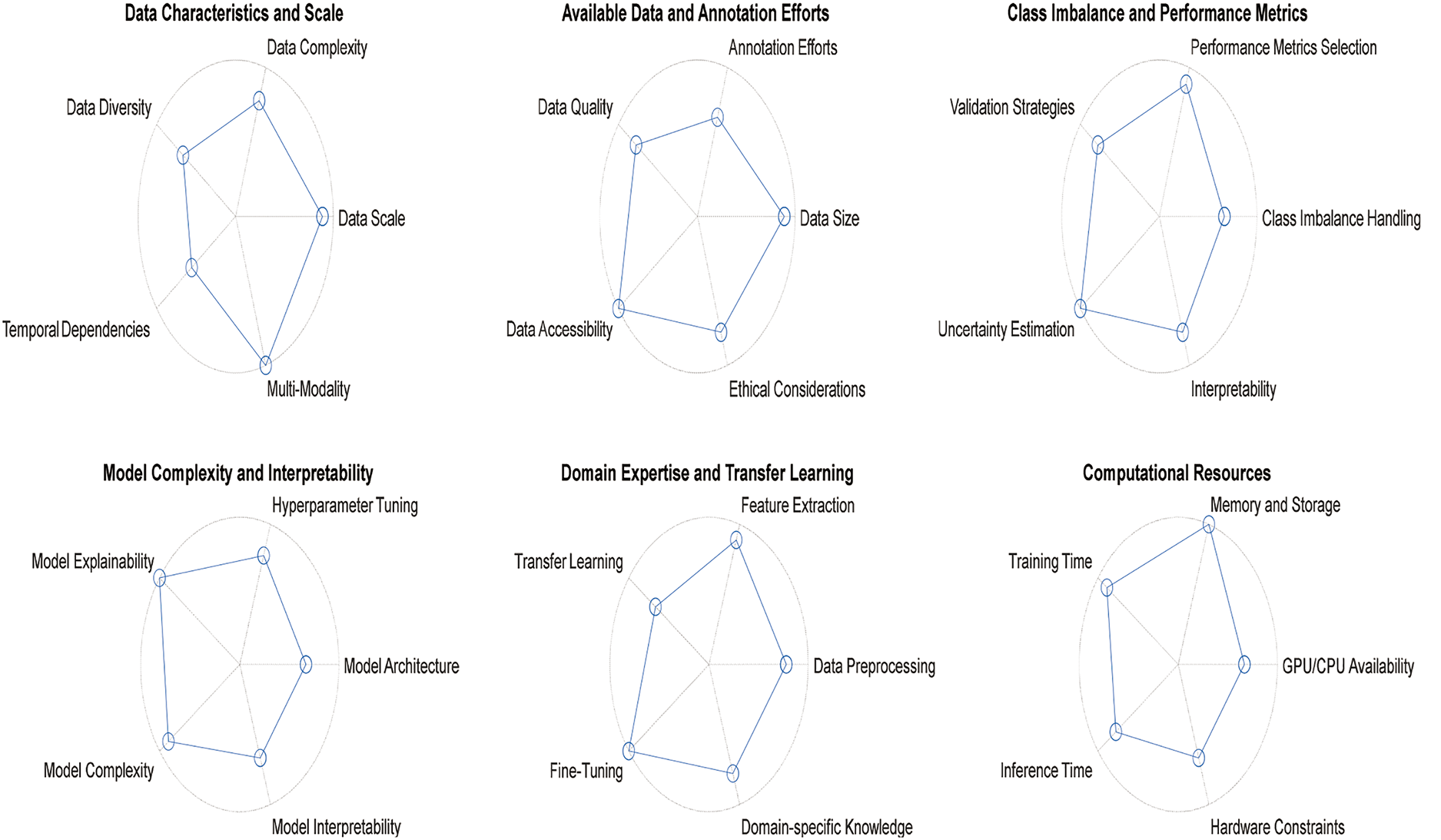

6 Choosing the Perfect Type of DL for Your Data

DL has emerged as a powerful and versatile approach for analyzing biomedical signals because it can automatically learn complex patterns and representations from raw data. However, with the proliferation of different DL architectures and methodologies, selecting the most appropriate type of DL model for a specific biomedical signal dataset has become crucial. This section discusses various considerations to help researchers and practitioners make informed decisions when choosing the perfect type of DL model for their data.

6.1 Data Characteristics and Scale

The first step in choosing a suitable DL model is thoroughly understanding the data characteristics and scaling. Biomedical signals vary widely, including EEGs for brain activity and ECGs for heart activity. Each type of biomedical signal has a unique data format, temporal or spatial resolution, and noise level [100].

For example, CNNs are well suited for image-like data, such as spectrograms and time-frequency representations of signals, as they effectively capture local patterns and spatial dependencies. On the other hand, RNNs or their variants, such as LSTM networks, are adequate for sequential data such as time series or EEG signals, where temporal dependencies and patterns play crucial roles in understanding the underlying physiology [101].

Additionally, the number of classes in classification tasks is another essential consideration. For multiclass or multilabel classification, models such as CNNs with global pooling layers or transformer-based architectures can be adapted to handle multiple classes effectively.

6.2 Available Data and Annotation

The availability of annotated data plays a crucial role in choosing DL models. For supervised tasks, having a substantial amount of labeled data is necessary for training complex models such as deep neural networks. However, obtaining annotated biomedical signal data can be challenging and time-consuming, especially in medical domains with limited expert annotations [102].

Transfer learning can be a practical solution if labeled data are scarce, where pretrained models are fine-tuned on smaller datasets. Pretrained models trained on large-scale datasets such as ImageNet can capture general patterns often useful for related tasks. Fine-tuning these models on specific biomedical signal data can lead to faster convergence and improved performance [103].

6.3 Model Complexity and Interpretability

The complexity of the DL model should align with the available computational resources and interpretability requirements. While deep models such as transformers achieve state-of-the-art performance in various domains, they are computationally demanding. They may require high-end GPUs or specialized hardware for training and inference [104]. On the other hand, simpler models such as logistic regression or decision trees may offer better interpretability but may sacrifice some predictive performance compared with deep neural networks. For applications where interpretability is critical, researchers may opt for models that allow easier visualization and understanding of the learned features [105].

6.4 Class Imbalance and Performance Metrics

In biomedical signal analysis, class imbalance is a common challenge, where one class may dominate the data distribution while others have fewer samples. Accuracy alone may not be a reliable performance metric for imbalanced biomedical signal datasets. Metrics such as sensitivity (recall), specificity, and the F1 score provide a more comprehensive evaluation of the model’s performance [106].

Specialized loss functions such as focal loss or class-weighted approaches can address class imbalance issues and improve the model’s performance on minority classes. Moreover, data augmentation techniques can help balance the class distribution and enhance the model’s generalizability [107].

6.5 Domain Expertise and Transfer Learning

In medical fields, researchers often have domain-specific knowledge that can guide model design, feature selection, and data preprocessing steps. Incorporating domain expertise can lead to better-informed choices in designing the neural network architecture or selecting relevant features for a specific medical task [108]. Transfer learning from models pretrained on similar tasks or domains can also increase performance, especially when labeled data are limited. Fine-tuning pretrained models on target biomedical signal datasets can help the model leverage knowledge from large-scale datasets and improve generalizability [109].

The choice of DL model also depends on the available computational resources, including GPU capabilities, memory, and processing power. While state-of-the-art models may deliver the best performance, they can be computationally expensive and impractical for resource-constrained environments [110]. Researchers can explore model compression techniques, such as model pruning, quantization, or knowledge distillation, to reduce the model’s size and computational requirements without significantly compromising performance [111].

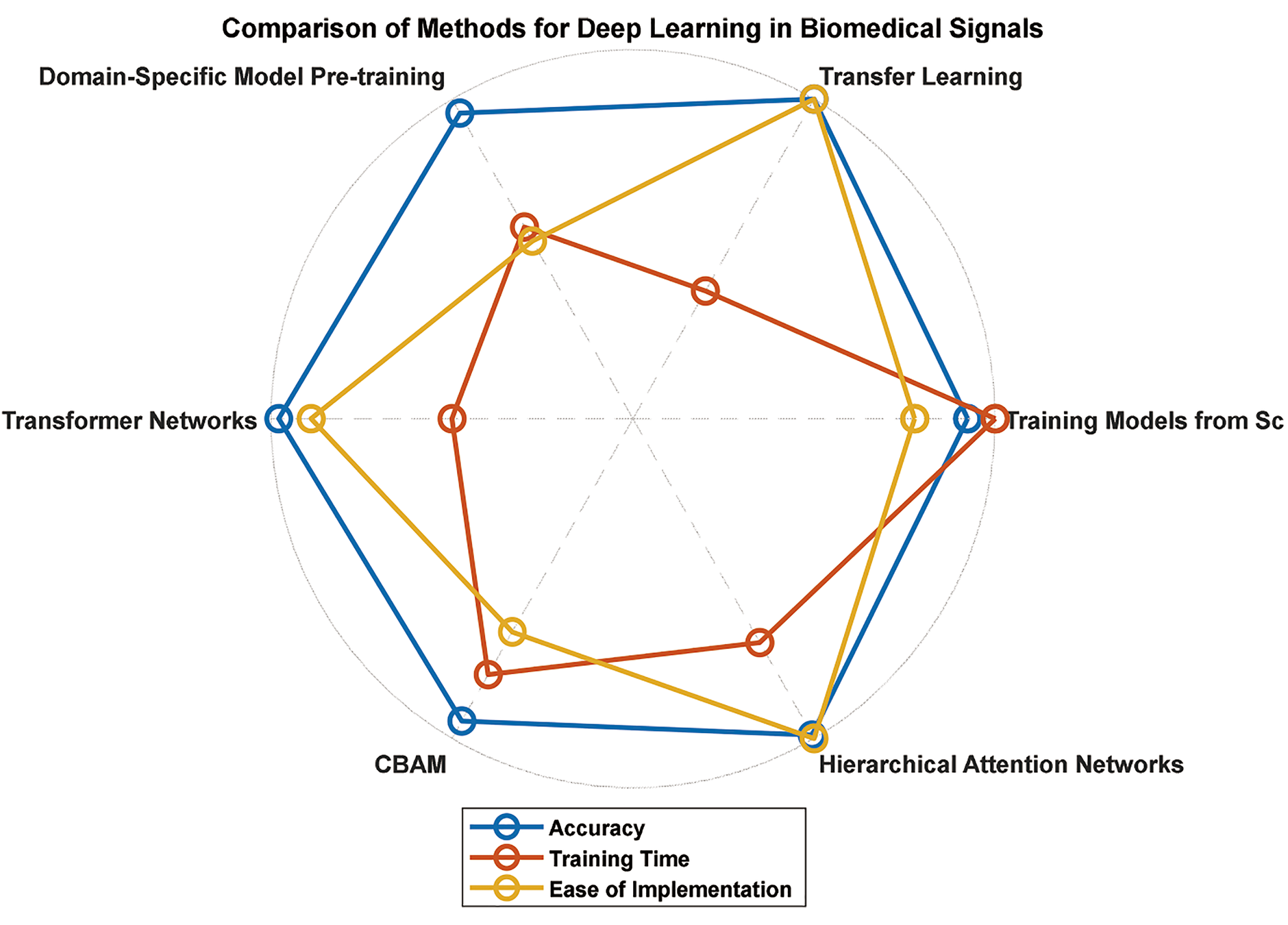

Overall, selecting the perfect type of DL model for biomedical signal analysis requires careful consideration of the data characteristics, available resources, and performance requirements. By understanding the strengths and limitations of different models, researchers can make informed decisions to achieve optimal results in their specific applications. Fig. 18 shows a tradeoff between the parameters of all the abovementioned considerations.

Figure 18: Tradeoff between the parameters of all considerations

While powerful, deep learning models often demand significant computational resources, which can severely restrict their deployment in environments with limited hardware capabilities. In biomedical signal analysis, such constraints are particularly relevant in settings such as mobile health devices, rural clinics with outdated infrastructure, or wearable sensors operating with battery power [110]. These resource-constrained environments face challenges such as insufficient GPU memory, slow processing speeds, and restricted energy budgets, which hinder the use of large-scale models such as transformers or densely connected neural networks. For example, real-time applications such as ECG monitoring on edge devices require models that balance accuracy with low latency. Yet, high-parameter models may fail to meet these requirements even after optimization. This gap between model complexity and practical feasibility underscores the need for lightweight architectures or adaptive frameworks that prioritize efficiency without sacrificing diagnostic reliability [111].

Moreover, the computational burden extends beyond inference, including training and data preprocessing stages. Training state-of-the-art models often requires high-performance computing clusters, which are inaccessible in many research or clinical settings [111]. Even with techniques such as transfer learning or federated learning to mitigate data and resource limitations, the energy consumption and time costs remain prohibitive for continuous operation in low-resource contexts. Consequently, researchers must critically evaluate whether the performance gains of advanced models justify their resource demands or, if more straightforward, specialized architectures such as shallow CNNs or hybrid models offer a more pragmatic solution [110]. Addressing these challenges requires interdisciplinary collaboration to develop hardware-aware algorithms, optimize existing frameworks for deployment, and explore emerging technologies such as neuromorphic computing to bridge the divide between model capability and operational practicality.

7 Methods for Developing and Interpreting DL Models for Biomedical Signals

DL models can be developed via different methodologies, ranging from training models from scratch to leveraging pretrained models through transfer learning. This section discusses several methods for developing DL models, focusing on transfer learning as a practical approach for biomedical signal analysis.

One approach to developing DL models is to train them from scratch. In this method, neural network architecture is designed, initialized with random weights, and trained on the target biomedical signal dataset. While this approach offers complete control over the model architecture and allows for specific customization, it may require a large amount of annotated data and extensive computational resources to achieve competitive performance [112].

Despite their potential challenges, training models from scratch can be suitable when domain-specific expertise suggests that existing pretrained models may not directly apply to the target biomedical signal analysis task [113].

Transfer learning is a powerful technique that leverages knowledge from pretrained models on large-scale datasets and adapts it to new tasks with smaller target datasets. The underlying idea is that lower-level features learned from diverse datasets (e.g., ImageNet) generally apply to various visual recognition tasks [112,114]. By fine-tuning the pretrained model on biomedical signal data, the model can effectively capture relevant patterns and improve performance, even with limited labeled data [115,116].

In transfer learning, there are two main strategies:

Feature Extraction: In this approach, the pretrained model’s convolutional layers are frozen, acting as a feature extractor. The extracted features are fed into a separate classifier for the specific biomedical signal task. This method is effective when the lower-level features learned in the pretrained model are relevant to the target task [40,114–116].

Fine-tuning: Fine-tuning involves using the pretrained model’s lower-level features and adapting the higher-level layers to the target task. During fine-tuning, some or all of the layers in the pretrained model are trainable, allowing the model to adjust its parameters based on the target biomedical signal data [115].

Transfer learning significantly reduces the need for large, annotated datasets, speeds up training, and preserves the knowledge learned from the pretrained model.

7.3 Domain-Specific Model Pretraining

Another method for developing DL models for biomedical signals is domain-specific pretraining. Unlike general-purpose pretraining on large-scale datasets such as ImageNet, domain-specific pretraining focuses on training models on relevant biomedical signal data or related medical datasets [117,118].

Domain-specific pretraining can be helpful when the target biomedical signal dataset differs significantly from generic image datasets. Training the model on more domain-relevant data can be initialized with more task-specific information and may require less fine-tuning on the target task [118].

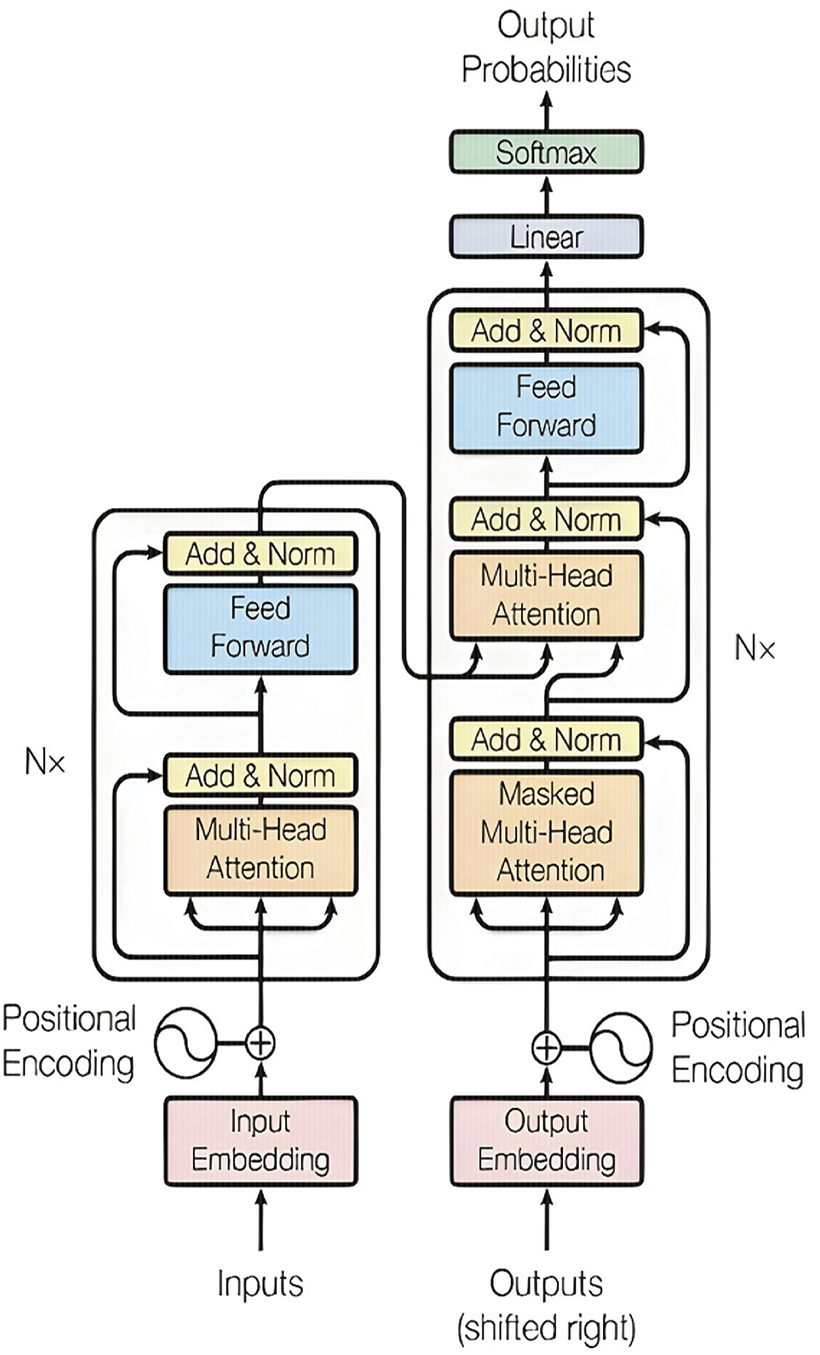

Transformer networks, initially conceived for NLP, have become a cornerstone of modern DL architecture [119]. Their breakthrough lies in the attention mechanism, which enables the model to focus on crucial elements within a sequence, thereby effectively capturing long-range dependencies. This feature has propelled transformers to the forefront of NLP tasks, including machine translation, sentiment analysis, and question answering, outperforming their predecessors and setting new benchmarks in language understanding and generation [120]. Fig. 19 shows the architecture of the transformer.

Figure 19: Transformer network architecture [104] (CC BY 4.0)

However, their impact has not been limited to the realm of NLP. Transformer networks have been successfully repurposed for various other domains, including the analysis of biomedical signals. By leveraging their ability to handle sequential data and discern intricate patterns, transformer-based models have shown potential in tasks such as disease diagnosis, anomaly detection, and medical image analysis [119–121]. This adaptability has paved the way for significant advancements in personalized medicine, offering promising avenues for developing innovative diagnostic tools and more effective healthcare solutions. As researchers continue to explore their potential in diverse fields, the integration of transformer networks is expected to foster breakthroughs in biomedical research, ultimately leading to enhanced healthcare practices and a deeper understanding of complex biological systems [121,122].

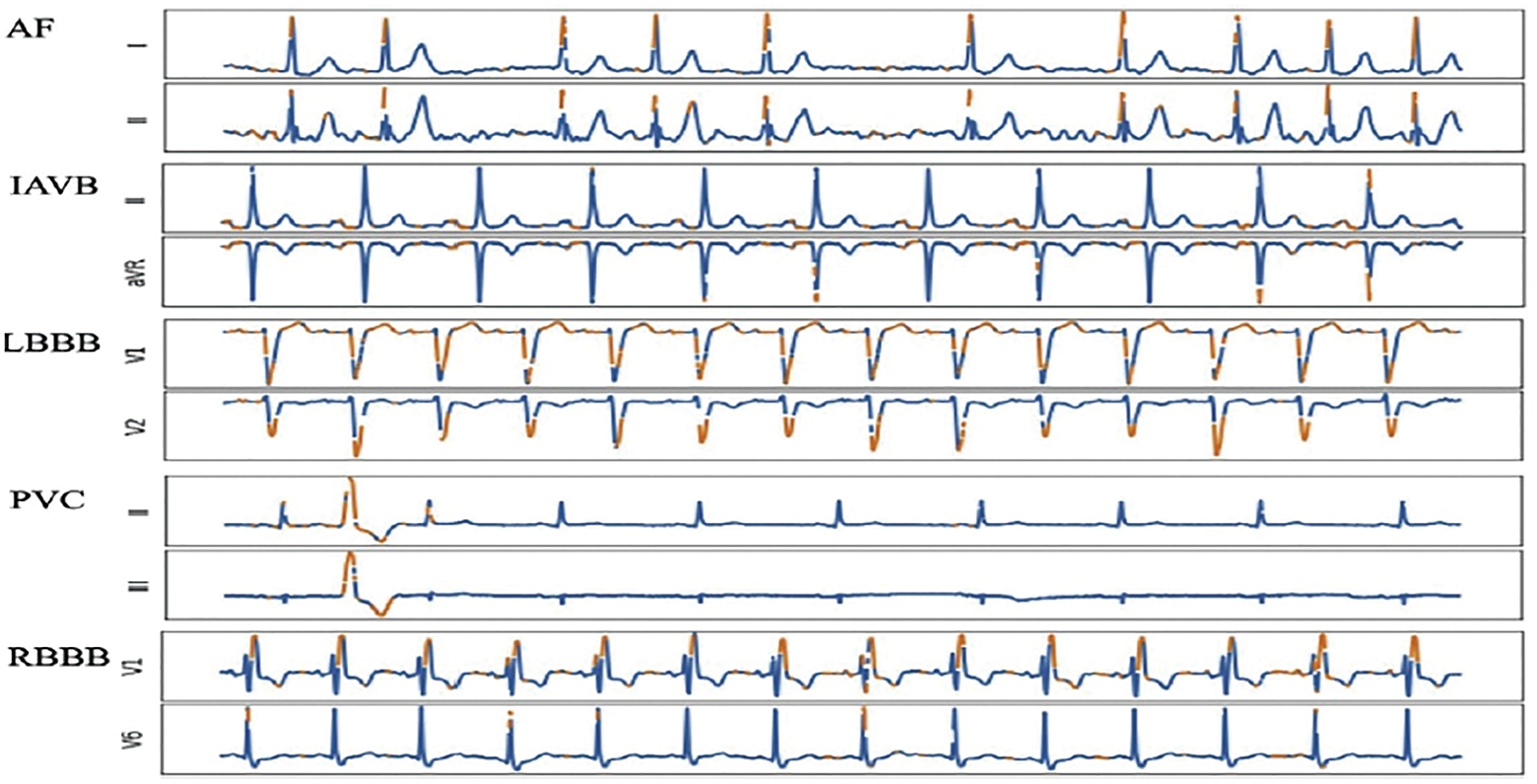

In the field of biomedical signals, transformer networks have shown potential. For example, a study proposed a constrained transformer network for ECG signal processing and arrhythmia classification [123]. The model combines a CNN and a transformer network to extract temporal information from ECG signals and can perform arrhythmia classification with acceptable accuracy [123]. The transformer network pays more attention to the data’s temporal continuity and captures the data’s hidden deep features well1. Another study proposed a transformer-based high-frequency oscillation (HFO) detection framework for biomedical magnetoencephalography (MEG) one-dimensional signal data [122]. The framework included signal segmentation, virtual sample generation, classification, and labeling. The proposed framework outperformed the state-of-the-art HFO classifiers, increasing the classification accuracy by 7% [122]. Recently a transformer mixture model was used for classification of ECG signals [124]. Fig. 20 shows an example of using transformers for biomedical signals classifications.

Figure 20: Schematic representation of the transformer model for biomedical signals classification [124] (CC BY 4.0)

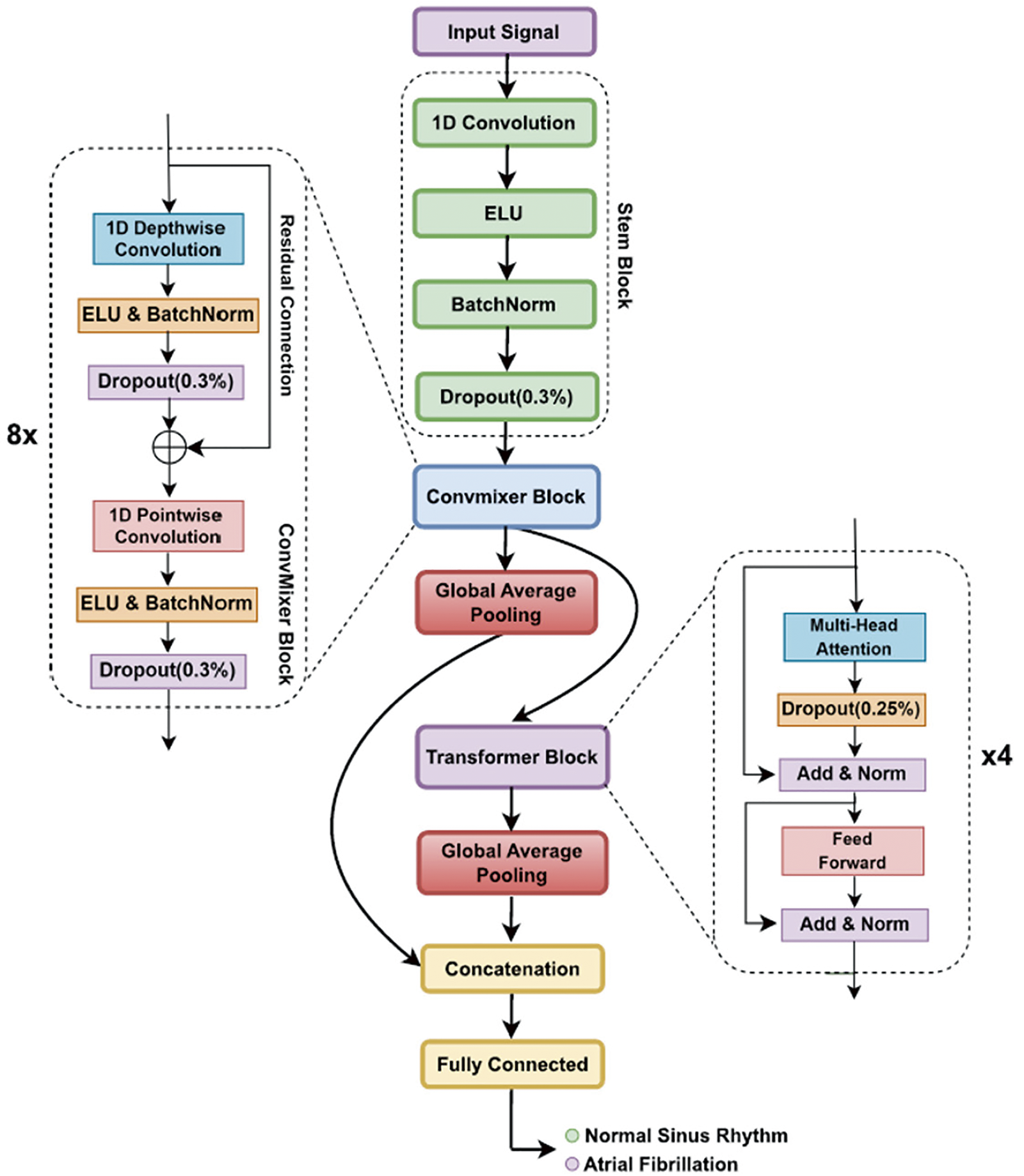

Hybrid DL models combine the power of two types of DL models. These models have shown great promise in analyzing biomedical signals because of the ability of CNNs to perform spatial feature extraction and capture the temporal dependencies of RNNs, such as LSTM networks [125]. By integrating these models, hybrid models can be used to process complex biomedical signals effectively [126]. One of the most popular hybrid models is the CNN-LSTM hybrid model, which first uses CNN layers to extract features from signals and then employs LSTM layers to analyze the sequential nature of these features, enhancing the model’s ability to detect patterns [127]. Such combinations improve overall performance and enable more robust model interpretation, making hybrid models valuable tools in medical diagnostics and personalized healthcare [125–128]. Fig. 21 shows the general block diagram of hybrid DL models using CNN-LSTM.

Figure 21: General block diagram of the hybrid DL model using CNN-LSTM

7.6 Convolutional Block Attention Module (CBAM)