Open Access

REVIEW

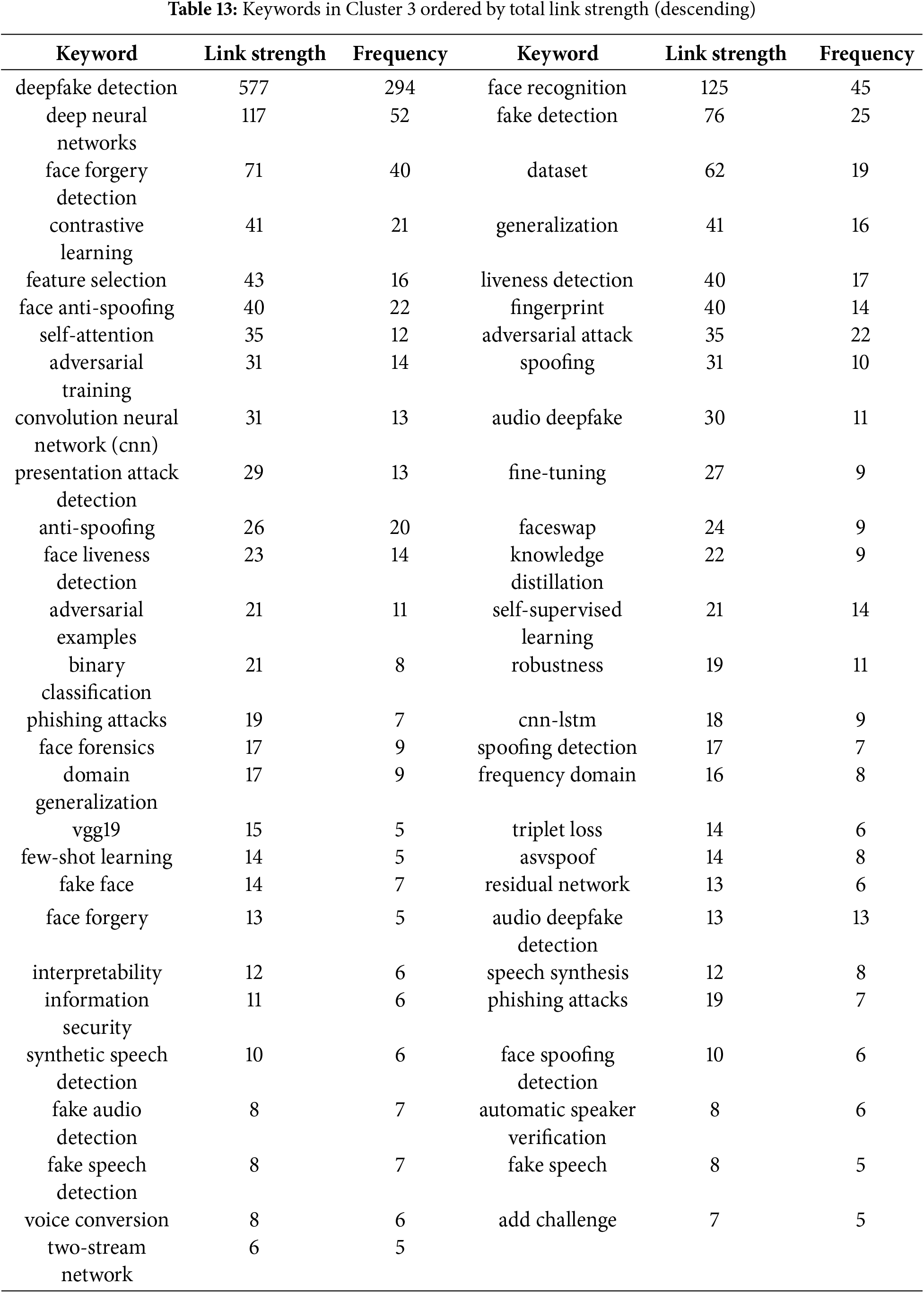

A Contemporary and Comprehensive Bibliometric Exposition on Deepfake Research and Trends

1 Department of Mathematics and Computer Science, Elizade University, Ilara-Mokin, 340271, Nigeria

2 Department of Communication Technology and Network, Universiti Putra Malaysia (UPM), Serdang, 43400, Malaysia

3 Information and Communication Engineering Department, Elizade University, Ilara-Mokin, 340271, Nigeria

4 Department of Computer Science, Faculty of Computing and Artificial Intelligence, Taraba State University, ATC, Jalingo, 660213, Nigeria

5 Department of Engineering Education, Faculty of Engineering and Built Environment, Universiti Kebangsaan Malaysia, Bangi, 43600, Selangor, Malaysia

6 Department of Applied Modelling and Quantitative Methods, Trent University, Peterborough, ON K9L 0G2, Canada

* Corresponding Author: Oluwatosin Ahmed Amodu. Email:

Computers, Materials & Continua 2025, 84(1), 153-236. https://doi.org/10.32604/cmc.2025.061427

Received 24 November 2024; Accepted 07 April 2025; Issue published 09 June 2025

Abstract

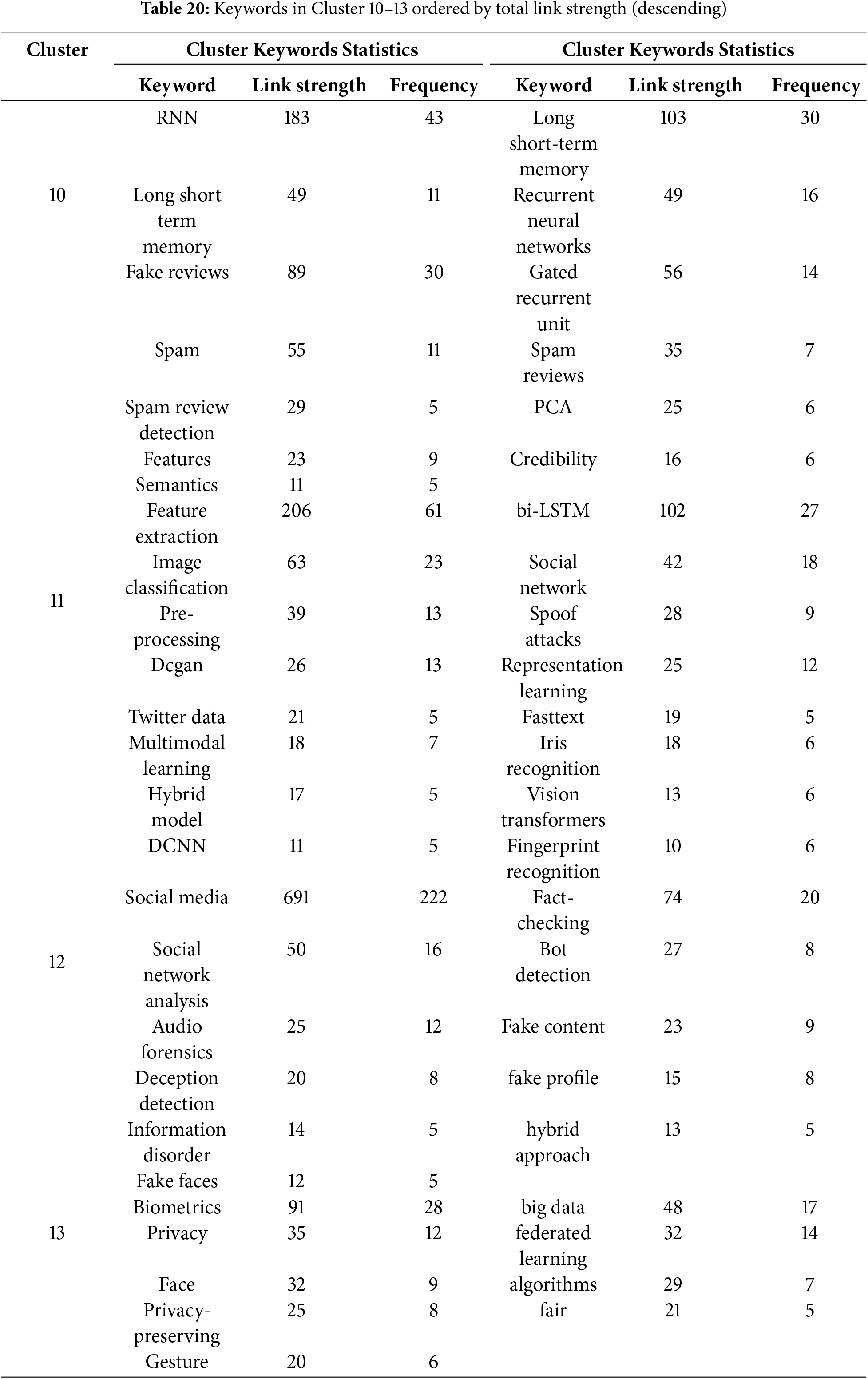

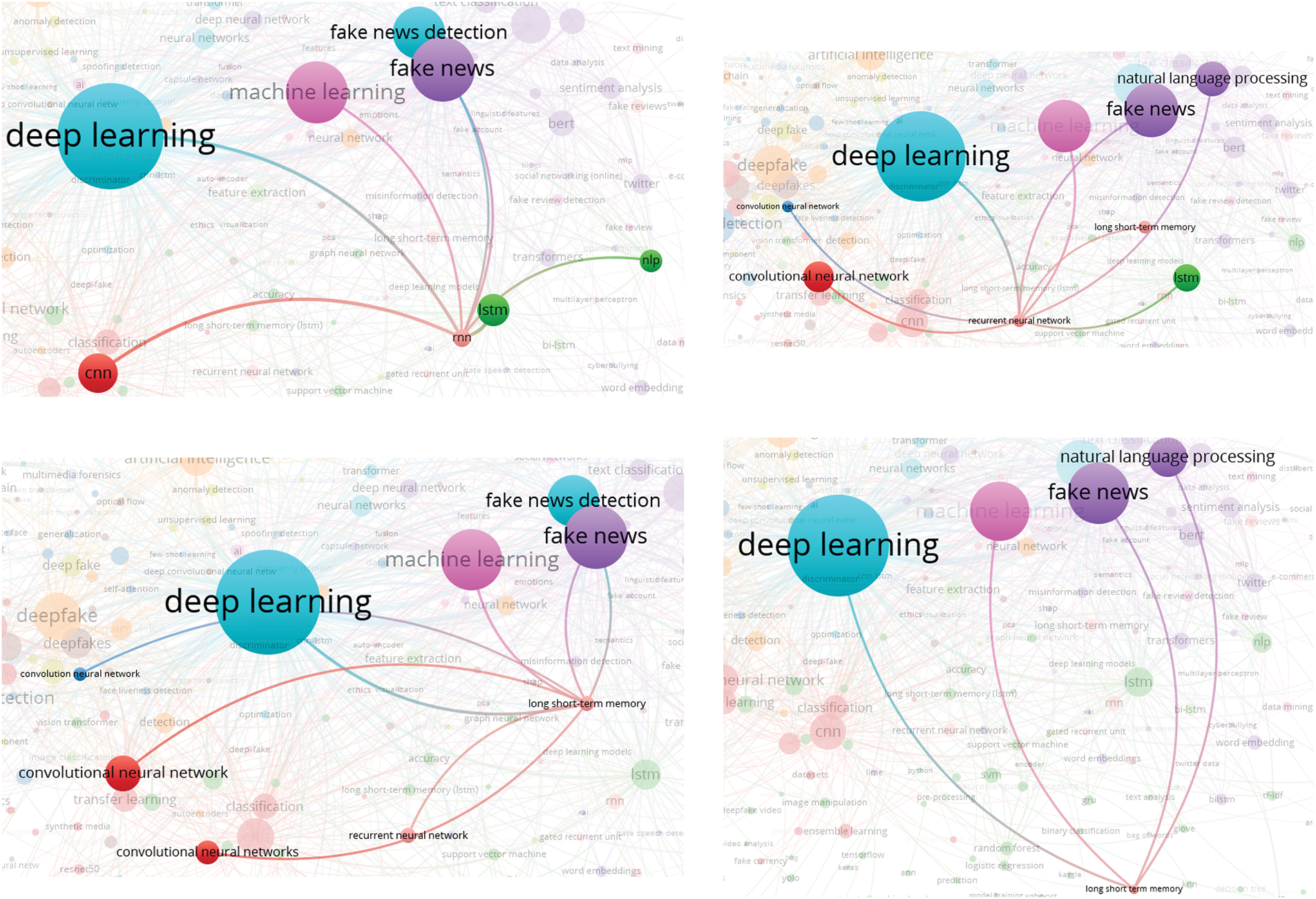

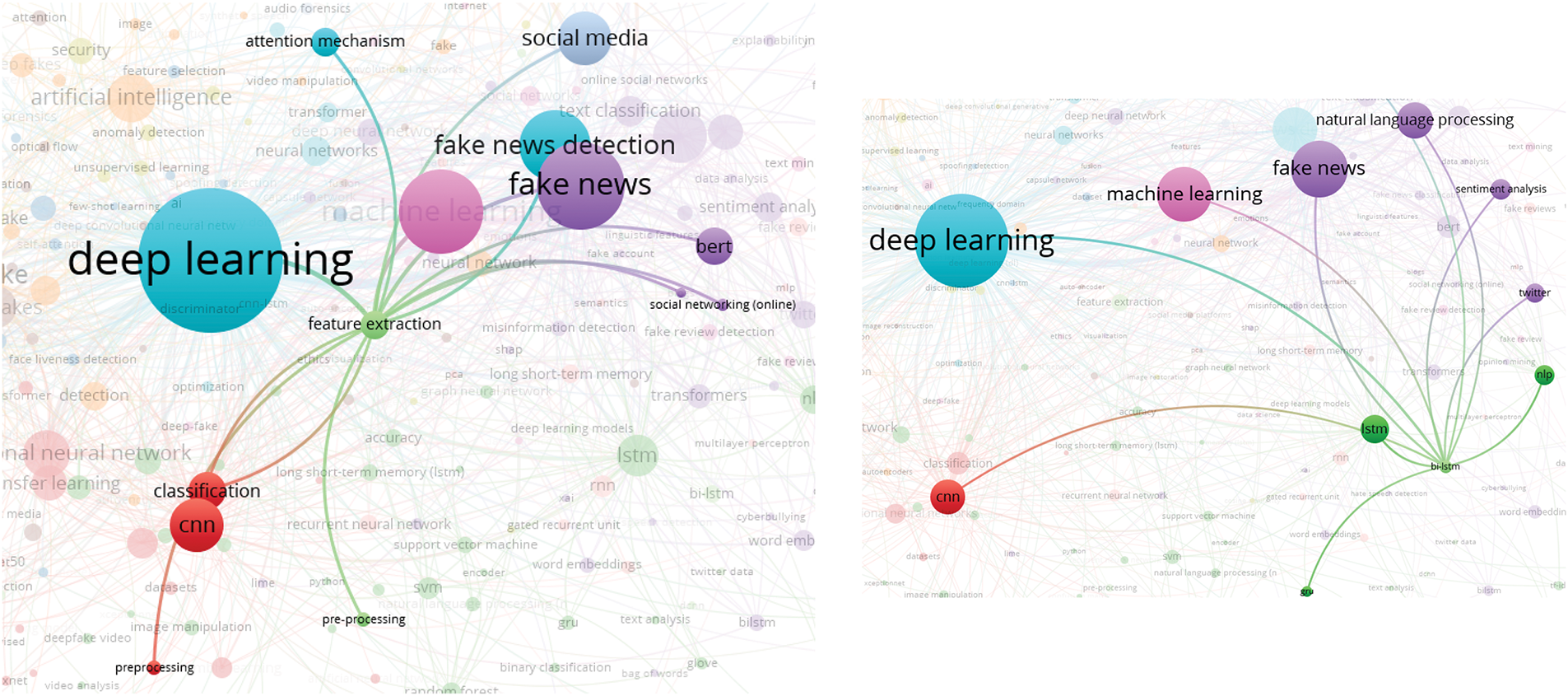

This paper provides a comprehensive bibliometric exposition on deepfake research, exploring the intersection of artificial intelligence and deepfakes as well as international collaborations, prominent researchers, organizations, institutions, publications, and key themes. We performed a search on the Web of Science (WoS) database, focusing on Artificial Intelligence and Deepfakes, and filtered the results across 21 research areas, yielding 1412 articles. Using the VOSviewer visualization tool, we analyzed this WoS data through keyword co-occurrence graphs, emphasizing four prominent research themes. Unlike existing bibliometric papers on deepfakes, this paper goes on to identify and discuss some of the highly cited papers within these themes: deepfake detection, feature extraction, face recognition, and forensics. The discussion highlights key challenges and advancements in deepfake research. Furthermore, this paper discusses pressing issues surrounding deepfakes such as security, regulation, and datasets. We also analyze a second, exhaustive search on the Scopus database focusing solely on Deepfakes (while not excluding AI), which reveals deep learning as the predominant keyword, underscoring AI’s central role in deepfake research. This comprehensive analysis, encompassing over 500 keywords from 8790 articles, uncovered a wide range of methods, implications, applications, concerns, requirements, challenges, models, tools, datasets, and modalities related to deepfakes. Finally, recommendations for policymakers, researchers, and other stakeholders are provided.

Keywords

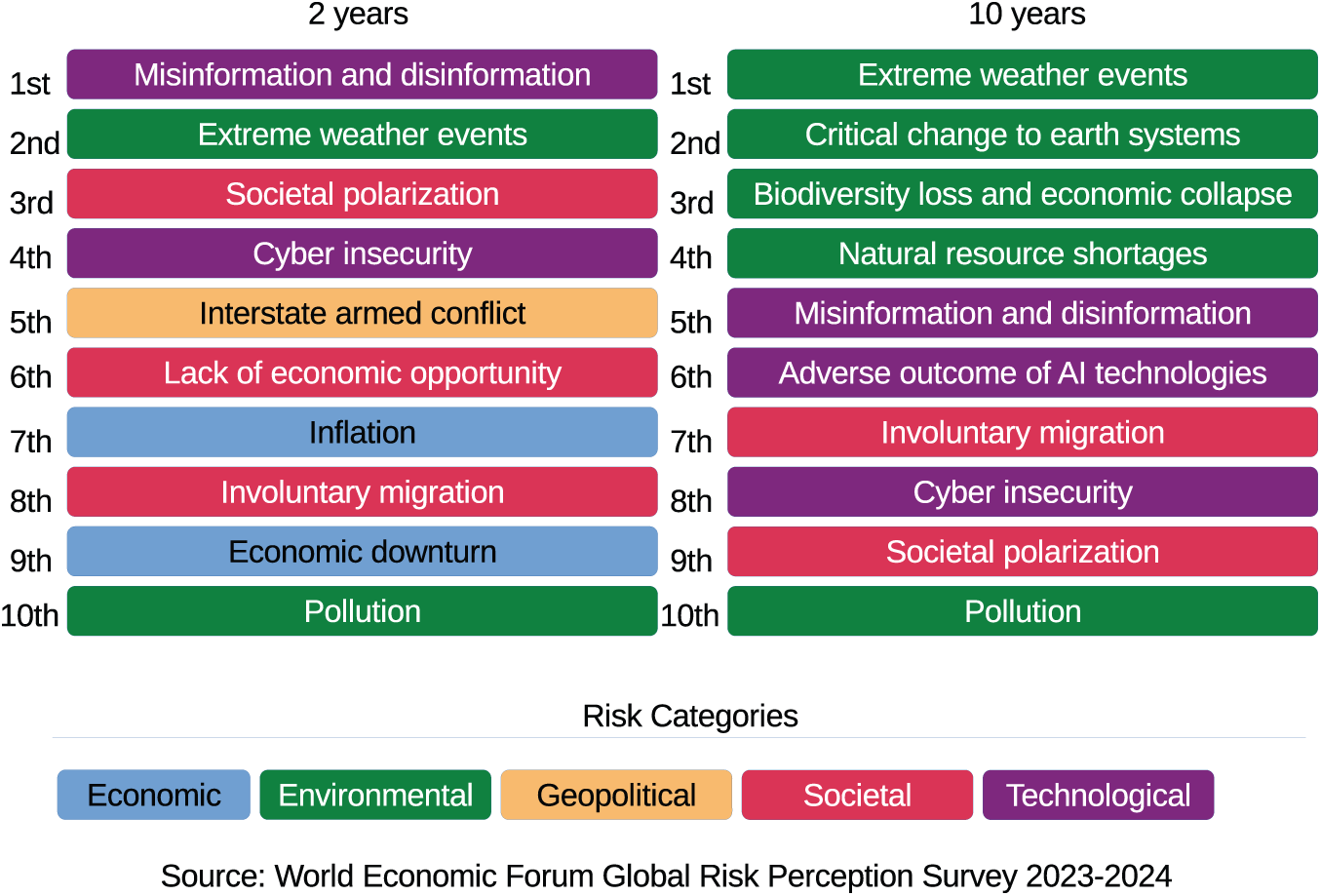

The development of Artificial Intelligence (AI) technology in recent years has raised serious questions and concerns in various sectors, including cybersecurity, politics, and media. The World Economic Forum’s 2024 Global Risks Report [1] identifies AI-powered misinformation and disinformation as the most pressing short-term global threat (refer to Fig. 1). Deepfakes, in particular, contribute significantly to this phenomenon. They enable the creation of highly realistic but fabricated content, such as images, videos, and audio recordings. This technology has been widely exploited to create false information intended to deceive or mislead, for example by manipulating public opinion, damaging reputations, or spreading harmful propaganda.

Figure 1: Top 10 risks by global risks report 2024

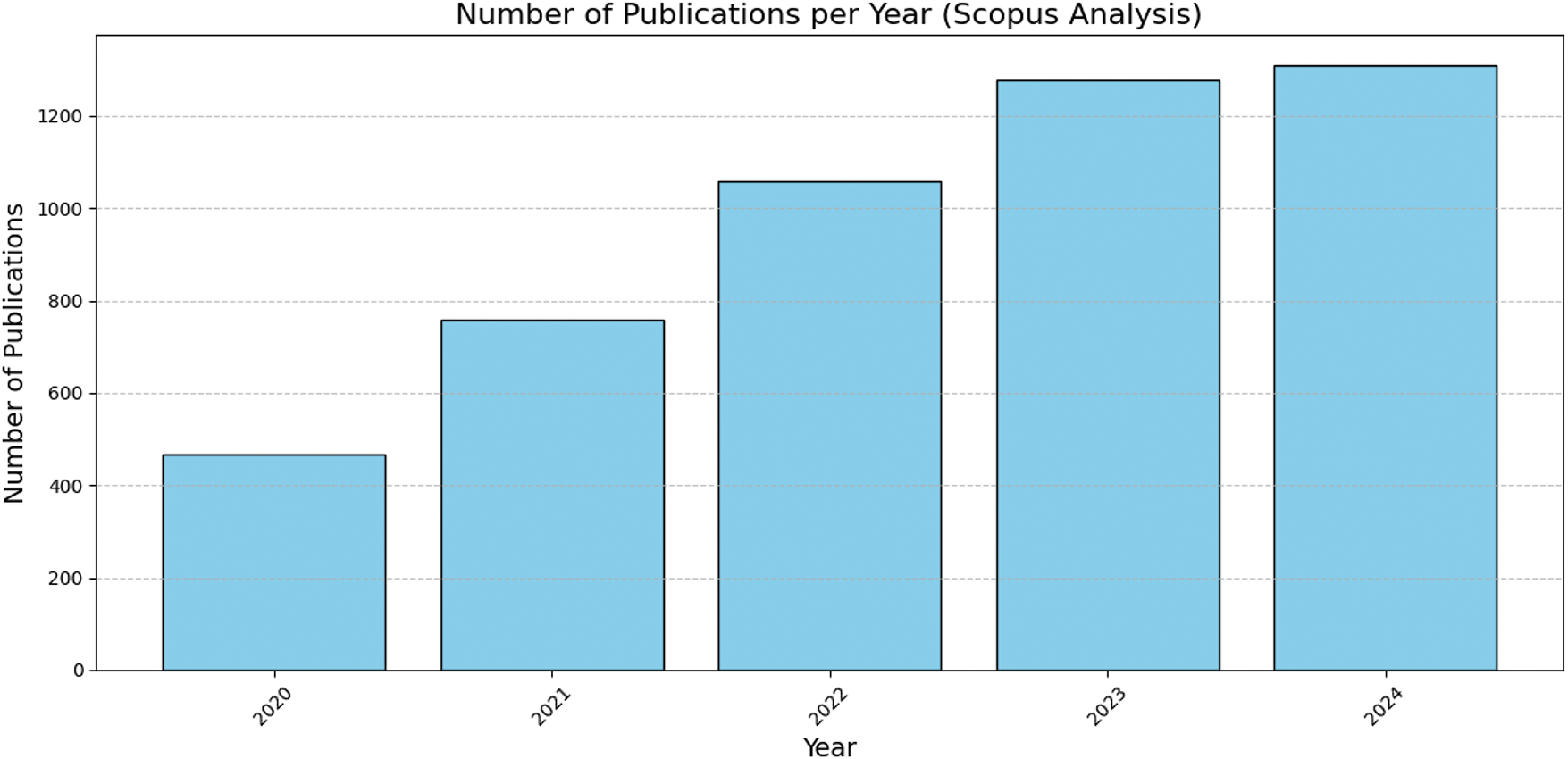

The word “deepfake” first reached the general public in 2017, when a member of the Reddit forum “deepfakes” started posting about the use of generative adversarial networks (GANs) [2] to manipulate videos of well-known individuals. Such algorithms can produce deceptively lifelike media and stunning facial swaps [3]. Fig. 2 shows the number of research papers indexed in Scopus within the last five years, indicating an increasing trend in the number of publications on deepfakes.

Figure 2: Published papers on deepfake within the last five years

Deepfakes pose risks to individuals and organizations, especially when used with malicious intent, potentially damaging reputations and causing societal harm. The potential for malicious use is significant, such as the creation of manipulated representations of public figures or other individuals, often leading to harm or reputation damage [4]. For instance, faces can be superimposed on explicit images, videos, or audio clips, and public figures can be falsely depicted as making harmful statements [5]. Besides disseminating false information, eroding confidence, and causing misdiagnosis, deepfakes can be used to commit cybercrimes such as fraud and to create security threats. They can undermine and destabilize the operations of a company via false claims [6] and are also harmful when presented as evidence, which can make the administration of justice difficult [7]. The prevalence of deepfakes makes it more challenging to filter fake news from real news, thus threatening security via the dissemination of propaganda [8]. The urgency of addressing this issue is reflected in the increasing number of works in the deepfake detection research area. In particular, [9] provides statistical data from 2017 to 2024 demonstrating that research output in deepfake detection significantly exceeds that of deepfake creation. Although machine learning forms the foundation of most deepfake detection methods, challenges remain, such as the scarcity of high-quality datasets and benchmarks [10–13]. Notably, convolutional neural networks (CNNs) have been identified as the most widely used deep learning method for video deepfake detection [14].

Deepfakes can also serve benign purposes, such as enhancing photo quality for magazine covers, and may even be useful in education, fashion, marketing, and healthcare [8]. Other popular applications include interactive digital twins [15], and the deployment of digital avatars or virtual assistants within video conferencing environments [16]. Moreover, smartphone applications such as FaceApp and Facebrity, which leverage deepfake technology, have recently garnered significant public interest [17]. However, as previously discussed, the potential for malicious use of deepfakes appears to outweigh their beneficial applications, raising notable concerns for individuals, organizations, and national security.

Ways to combat deepfakes include legislation and regulation, corporate policies and voluntary action, education and training, and anti-deepfake technology. Such technologies include deepfake detection, content authentication, and deepfake prevention [8]. Enhanced detection methods help address deepfake threats by providing tools to verify content authenticity. Deepfake detection remains an active area of research, with ongoing developments aimed at improving accuracy and adapting to the evolving nature of deepfake technology [5]. Several deepfake detection strategies have been developed in response to growing concerns and have garnered significant attention from specialists and academics in recent years. Deepfake detection involves several steps. The first step, data collection, involves gathering real and deepfake data for analysis. The second step, face detection, involves identifying facial regions to capture characteristics such as emotion, age, and gender. The third step, feature extraction, involves extracting distinguishing features from the face for deepfake identification. The fourth step, feature selection, requires choosing the most relevant features for accurate detection. The fifth step, model selection, involves selecting a suitable model from deep learning, machine learning, or statistical approaches. The final step, model evaluation, involves assessing model performance using various metrics [18]. At the heart of these steps are feature extraction, feature selection, and model selection, where artificial intelligence plays a significant role.
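The six steps above can be read as a simple pipeline. The following is a minimal sketch under stated assumptions, not the method of [18] or of any detector reviewed here: every function name, the toy samples, the two hand-made features, and the threshold "model" are hypothetical stand-ins chosen only to make the order of the steps concrete.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    pixels: list    # toy stand-in for an image or video frame (hypothetical)
    is_fake: bool   # ground-truth label


def collect_data():
    # Step 1: data collection (hard-coded toy data, not a real dataset).
    return [
        Sample([0.10, 0.12, 0.11, 0.13], False),
        Sample([0.20, 0.21, 0.19, 0.22], False),
        Sample([0.10, 0.90, 0.05, 0.95], True),
        Sample([0.80, 0.10, 0.85, 0.15], True),
    ]


def detect_face(sample):
    # Step 2: face detection. Placeholder: treat the whole input as the face.
    return sample.pixels


def extract_features(face):
    # Step 3: feature extraction. Two toy features: mean intensity and the
    # mean absolute difference between neighbouring values ("roughness").
    roughness = mean(abs(a - b) for a, b in zip(face, face[1:]))
    return {"mean": mean(face), "roughness": roughness}


def select_features(features, keep=("roughness",)):
    # Step 4: feature selection. Keep only the named features.
    return [features[name] for name in keep]


def select_model(X, y):
    # Step 5: model selection. Assumed model: a threshold halfway between
    # the class means of the first feature (fake if above the threshold).
    real = mean(x[0] for x, label in zip(X, y) if not label)
    fake = mean(x[0] for x, label in zip(X, y) if label)
    threshold = (real + fake) / 2
    return lambda x: x[0] > threshold


def evaluate(model, X, y):
    # Step 6: model evaluation. Accuracy on the given data.
    return mean(1.0 if model(x) == label else 0.0 for x, label in zip(X, y))


samples = collect_data()
X = [select_features(extract_features(detect_face(s))) for s in samples]
y = [s.is_fake for s in samples]
model = select_model(X, y)
accuracy = evaluate(model, X, y)
```

Here the model is scored on the same toy data it was fitted on, which is only acceptable for illustration; a realistic evaluation would use held-out data and several metrics.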

Bibliometric analysis is a useful technique for comprehending the dynamics of research output and impact across a range of topics [19]. By using quantitative tools to analyze academic literature, researchers can gain insights that guide future research, funding choices, and policy creation. Bibliometric analysis usually entails obtaining information from scholarly databases such as Web of Science (WoS) and Scopus. It can therefore provide a useful framework for comprehending the intricacies of deepfake research, and its use is continuously evolving in this area [20,21] and various other fields [22–26]. Accordingly, bibliometric analysis is applied to the scientific literature to quantify and assess research findings, patterns, and the composition of knowledge within particular fields [27].

Using bibliometric analysis, one can get insight into the evolution of research over time, pinpoint research trends, and highlight notable authors, journals, and institutions. It can also reveal the top-cited author contributions, institutions, and keyword co-occurrences. Therefore, this research aims to investigate current trends and developments in deepfake technology by analyzing publication and citation patterns, identifying key players (countries, organizations, and authors), exploring prominent themes and research interests, and identifying emerging trends within the field. This study focuses on addressing the following research questions:

• What are the distributions of publications on AI-based deepfakes geographically?

• What is the bibliographic coupling of researchers in the field of AI-based deepfakes?

• What are the most influential institutions working on AI-based deepfake research?

• What are the dominant trends from the meta-data (titles, abstracts, and keywords) on the research on AI-based deepfakes?

• Which research areas are the most prominent in the field of AI-based deepfakes and what are the top-cited papers in these areas?

• What lessons can be derived from these identified papers?

• What are the limitations, insights, and future prospects of deepfake detection research?

• What research patterns can be observed from the co-occurrence of keywords within the extensive body of deepfake research, based on a comprehensive analysis of these keywords?

• What are the trends, challenges and recommendations based on the review?

• What are the recommendations for addressing deepfakes for policymakers, researchers, and other stakeholders, such as industries and media outlets?

This paper stands out from previous bibliometric studies on deepfakes through:

• Identifying continental contributions to deepfake research and providing insights on data from WoS.

• Identifying the most prominent keywords by leading authors with the highest number of published documents on deepfakes.

• Identifying top research papers by leading authors with respect to citations based on data from WoS.

• Identifying and classifying key research areas based on the most prominent keywords into four themes: deepfake detection, feature extraction, face recognition, and forensics.

• Reviewing top cited papers that fall under these themes and their contributions.

• Discussing latest developments on global AI regulation initiatives.

• Discussing some of the trends, challenges and recommendations based on the review.

• Providing an exhaustive analysis of a more comprehensive search on deepfake based on data from Scopus.

• Providing insights based on an exhaustive keyword analysis from a comprehensive Scopus search.

• Discussing recommendations for policymakers, researchers, and practitioners.

These novel contributions provide unique insights into the rapidly advancing field of deepfakes. The remaining sections of the document are arranged as follows: Section 2 describes related literature on the bibliometric analysis of deepfakes; Section 3 provides details on the methodology; Section 4 describes the results; Section 5 discusses insights into some prominent research areas, pressing concerns, and key contributions; Section 6 provides the results of the exhaustive search and analysis of research on deepfakes; and Section 7 provides recommendations for addressing deepfakes for policymakers, researchers, and practitioners.

The interest in deepfake research is growing. Accordingly, we provide an overview of related works on the bibliometric analysis of deepfake research together with some of their findings.

2.1 Related Bibliometric Papers

In this section, we review the related work on deepfakes that have considered bibliometric analysis approaches to investigate trends in this area.

The work in [28] investigates misinformation in academia via network analysis of author keywords using bibliometric data. The results indicate that topics related to misinformation have increased in recent years. The work in [29] aims to select the most relevant articles on deepfakes based on data collected from Clarivate Analytics’ Web of Science Core Collection. The authors show that within a period of six years (2018 to 2023), an annual growth rate of over 100% has been experienced, indicating the trends in this research area. Furthermore, the authors identify key authors, collaboration among authors, primary topics studied in research, and major keywords. In addition, the work provides potential techniques to stop the proliferation of deepfakes to ensure information trust.

In [20], the authors use VOSviewer to conduct a comprehensive bibliometric analysis of deepfakes, investigating influential authors and their collaborations, as well as the countries and, more specifically, the institutions investing in this research annually. Using Web of Science, they analyze top document types, source titles, publication trends, and the productivity of various countries, as well as collaborative efforts among institutions, authors, and regions. The authors also use CiteSpace to identify fundamental focal points, research directions, and shifts in citations for keywords, thus presenting an in-depth analysis.

In [30], the authors conduct a meta-analysis on deepfakes to visualize their evolution and related publications. They identify key authors, research institutions, and published papers using bibliometric data. In addition, the authors conduct a survey to test whether participants can differentiate real photos of people from fake AI-generated images. Although the study contains aspects of a bibliometric paper, it is considered a meta-research, survey, and background study. The findings of the study show that humans are falling short of keeping up with AI and must be conscious of its societal impact.

The study in [21] also aims to provide a bibliometric analysis of deepfake technology based on 217 entries spanning a range of 15 years from Scopus. The authors use VOSviewer and R-programming to perform the analysis, and the results indicate that India has the highest number of publications. There is also an emerging rise in publications on the issue of deepfakes. In addition, the authors provide insights into collaboration patterns, key contributors, and the evolving discourse, serving as a foundation for informed decision-making and further research.

The authors in [31] conduct a bibliometric analysis of articles published on deepfakes, focusing on six research questions related to the main research areas, current topics and their relationships, research trends, changes in research topics over time, contributors to deepfake research, and funders of deepfake research. Based on a study of 331 articles obtained from Scopus and Web of Science, the authors provide answers to these questions. Furthermore, they discuss emerging areas, potential development opportunities, applied methods, relationships among prominent researchers, countries conducting the research, and opportunities for practitioners interested in deepfake research.

Previous bibliometric studies have focused on specific aspects of deepfake research, such as fake news detection by Gunawan et al. [32], image anti-forensics by Lu et al. [33], and the negative effects of deepfake content by Garg and Gill [34]. However, these studies are limited in scope and do not provide a comprehensive overview of the field. For instance, Gunawan et al.’s research focuses on deepfake news detection, while Lu et al.’s study explores image anti-forensics. Garg and Gill’s research, although focused on deepfake, primarily examines the negative effects of deepfake content.

Other studies have investigated related topics, such as disinformation through social media [35] and digital forensics investigation models by Ivanova and Stefanov [36]. However, these studies are restricted to specific keywords and do not provide a thorough analysis of the deepfake field. Gil et al.’s research on deepfake technology evolution and trends is based on bibliometric analysis but differentiates itself by focusing on organizations funding deepfake research [31]. Kaushal et al.’s study [20] provides a comprehensive analysis of deepfake research but is limited to influential authors, countries, institutions, and publications.

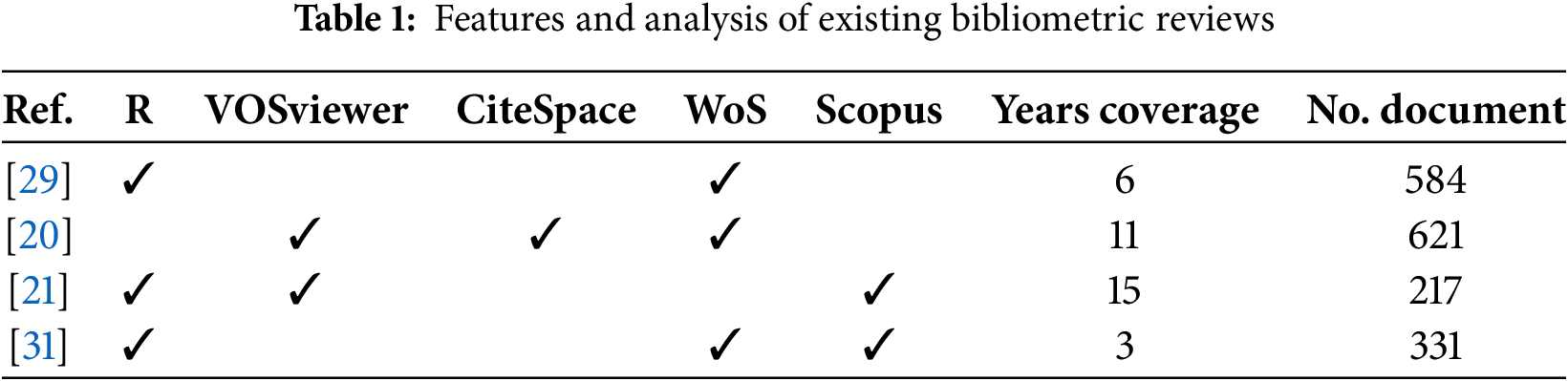

To conduct a bibliometric analysis, a dataset must first be acquired, typically from sources such as Web of Science (WoS) or Scopus, which hold extensive bibliographic information [37], and then analyzed using tools like VOSviewer, the R bibliometric package, or CiteSpace. Prior bibliometric analyses of deepfake research, as outlined in Table 1, reveal an expanding interest in the topic, but their scope remains limited in several respects. Existing bibliometric analyses [20,21,29,31] provide valuable insights into publication trends and scholarly output, covering periods ranging from 3 to 15 years and document counts from 217 to 621. However, these analyses often lack coverage of larger datasets. This study, which analyzes 1412 documents from WoS, responds to the need for more comprehensive exploration created by the rapid development of deepfake technology. This evolution raises pressing ethical concerns, including the use of deepfakes in disinformation campaigns, privacy violations, and potential harm to individuals and organizations. Addressing these issues requires studies that go beyond detection and prevention to consider broader societal implications. Deepfake research remains an engaging field with few comprehensive review studies to provide insights and encourage further research. Conducting more extensive studies will support the development of policies and frameworks to address both technical and ethical challenges. Table 1 presents the key features and analysis of existing bibliometric reviews, highlighting one of the gaps addressed by this study (dataset size and years of coverage).

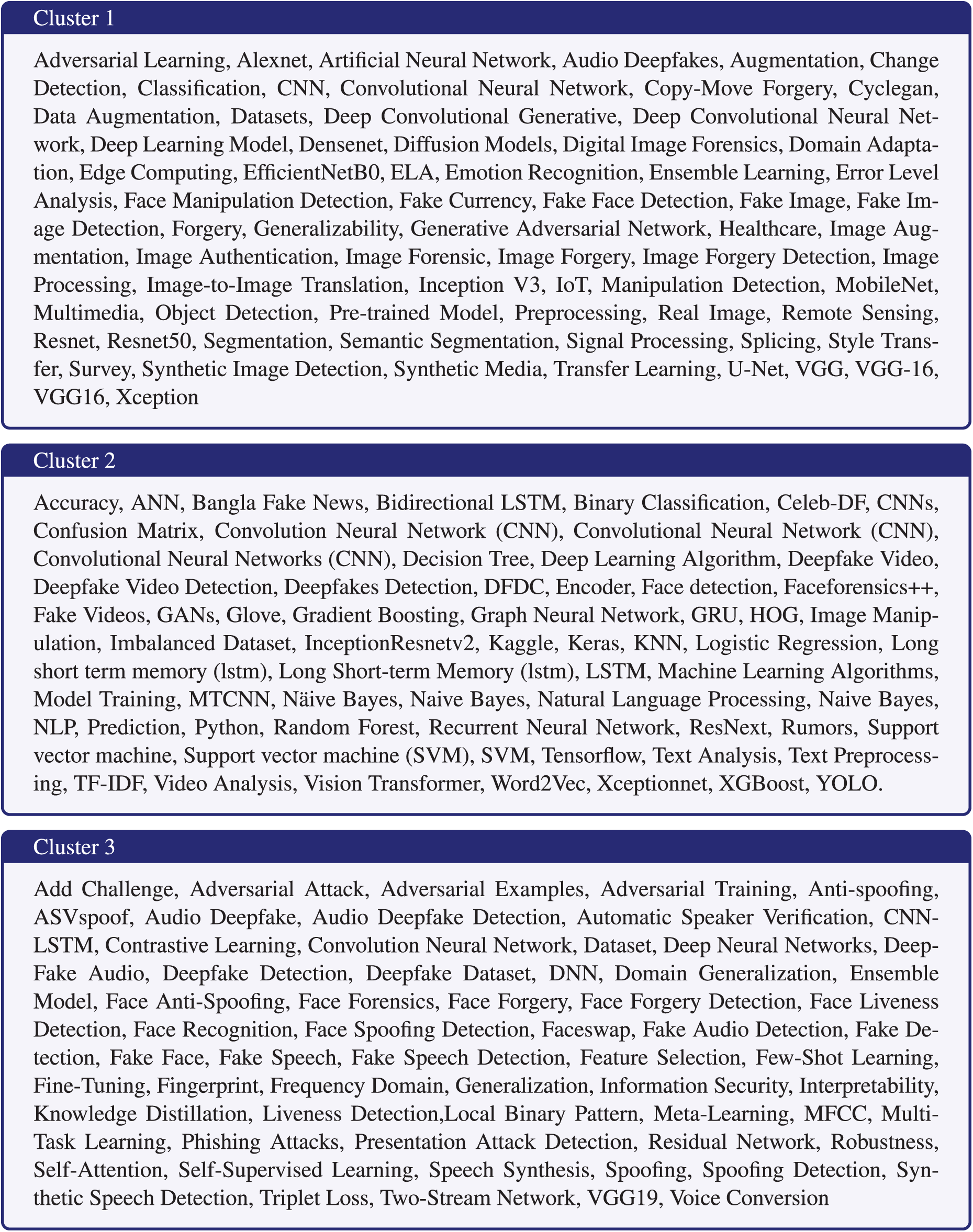

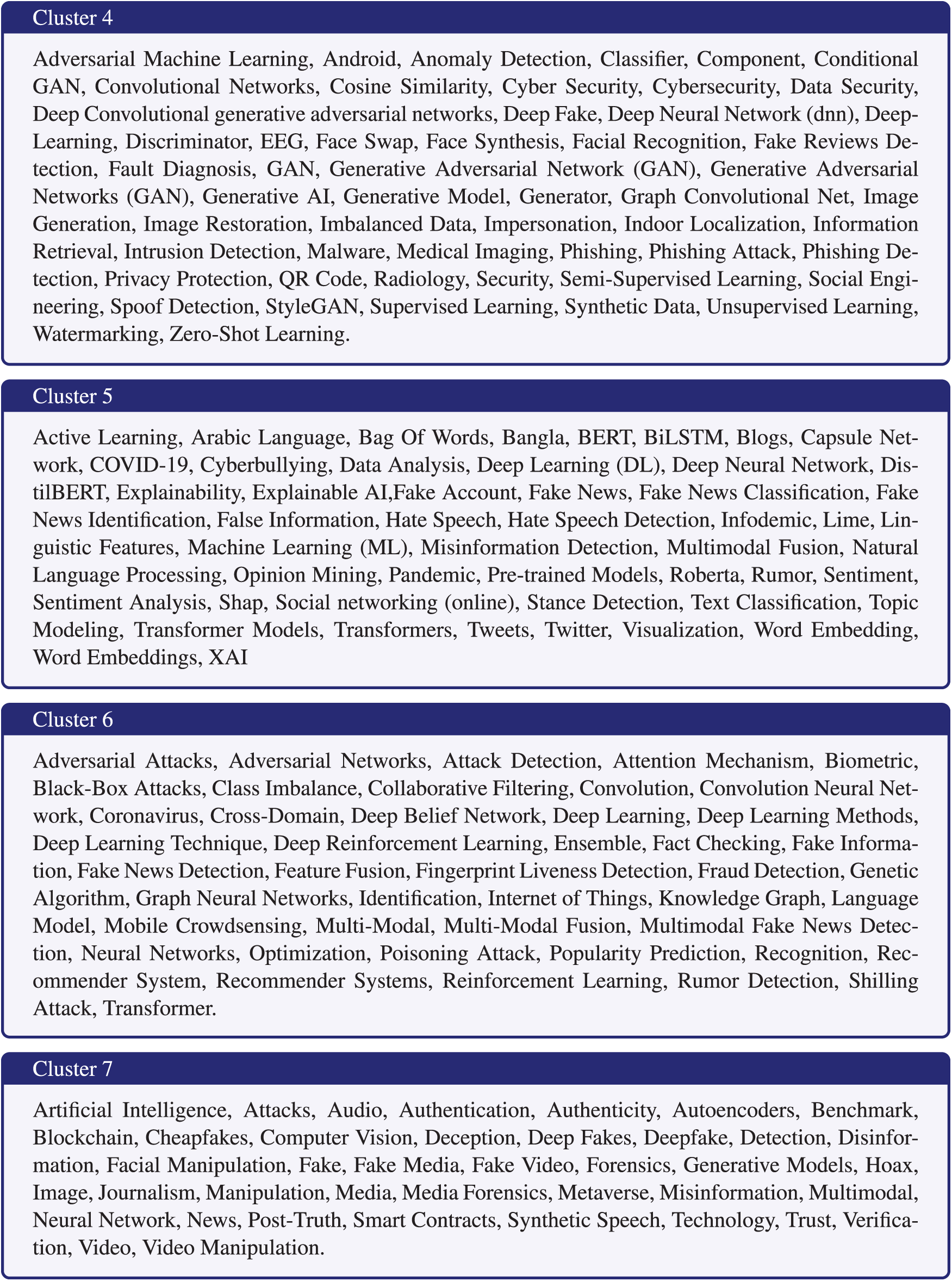

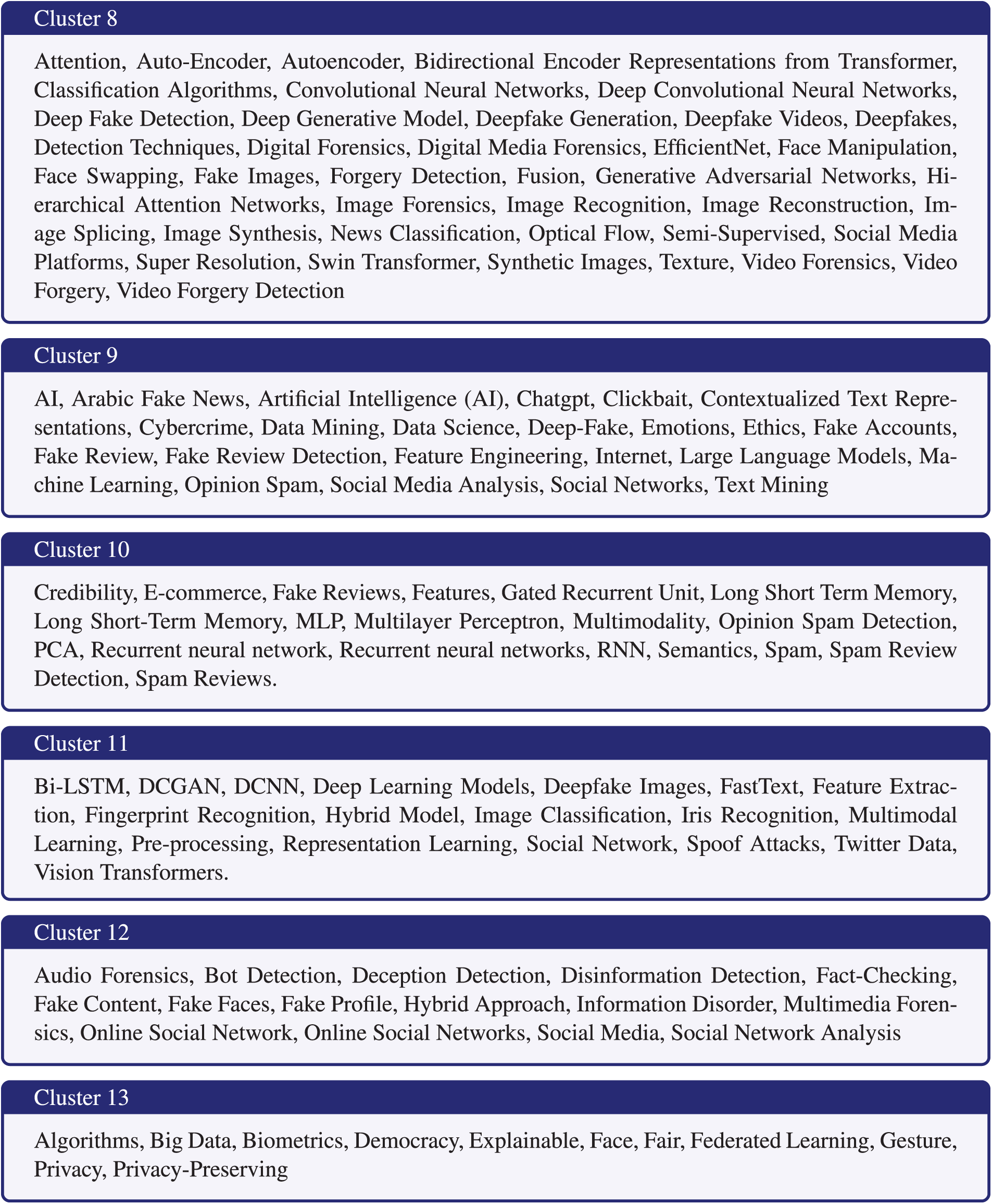

Although prior works have provided different insights into countries, prominent authors, institutions, and keywords, this paper distinguishes itself from prior bibliometric studies in five ways: (1) the size of the dataset; (2) the classification of key research areas into themes, grouping related concepts within a single thematic group wherever applicable; (3) the review of top-cited papers under these themes to identify research patterns and some of the most influential research. Reviewing top-cited papers in each domain provides perspectives and depth absent from other bibliometric papers, with summaries indicating lessons learned. The methodology is also replicable and easy to follow, as the papers selected for review are chosen based on well-defined criteria with strong relevance; (4) a comprehensive analysis of a wide spectrum of keywords that were clustered using VOSviewer, with a discussion of the themes of each cluster. Moreover, insights into the state of deepfake research are provided from a corpus of over 8000 keywords; and (5) recommendations for addressing deepfakes for policymakers, researchers, and practitioners. These contributions are unique to this paper and provide new insights into pivotal areas within the entire deepfake research domain.

This study aims to examine trends in publications and citations, countries’ contributions, prominent authors, influential organizations, recurring themes, thematic elements, research interests, and emerging trends in the field of deepfake research using a distinct approach by conducting an in-depth analysis of prominent selected keywords, including detection, feature extraction, face recognition, and forensics. It then provides a review of the most cited papers in this domain, discussing some of their main contributions, motivations, and relevance. In addition, the dataset from Web of Science used in this paper is much larger than that of many existing works due to rapid advances in research in this area. Thus, many of the findings in this bibliometric analysis differ from those in prior work. Moreover, this study covers 21 research areas, with 1412 results from these areas, showing the large scope covered by the search. Furthermore, details are provided on the contributions of different continents and some of the main funding organizations in prominent countries, keywords associated with researchers with the most documents, top-cited papers by top-cited authors, and deep insights from 8790 keywords obtained from over 5,000 search entries in Scopus. The findings of this research provide valuable insights into the current state of deepfake research, identify research gaps, and offer recommendations for future studies. The study also sheds light on the negative effects of deepfake content and provides a foundation for developing strategies to mitigate these effects.

This study utilizes a bibliometric analysis of research on deepfakes, employing VOSviewer to map and analyze the literature [38–40]. For all analyses conducted in this paper, we used the default VOSviewer settings unless stated otherwise, such as when adjusting the minimum keyword threshold. VOSviewer provides visualizations of three bibliometric networks: a bibliographic coupling network of co-authorship (countries, researchers, and organizations), a co-occurrence network of author keywords, and a text analysis of titles and abstracts. A bibliometric network consists of nodes and edges. The nodes can represent journals, publications, keywords, or researchers, while the edges show the relationship between pairs of nodes. Such relationships can be co-authorship or co-occurrence relations [40]. The primary goal is to elucidate research trends, identify influential contributors and countries, and explore critical themes in deepfake technology. The methodology outlines the data collection and visualization process.
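To make the node-and-edge description concrete, the sketch below builds a small keyword co-occurrence network: each node is a keyword weighted by the number of documents it appears in, and each edge counts the documents in which two keywords appear together. It is an illustration only. The function name, the `min_occurrences` parameter, and the three toy documents are assumptions, and the sketch uses plain (full) counting without the normalization or layout that VOSviewer applies.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_network(keyword_lists, min_occurrences=1):
    """Return (nodes, edges) for a keyword co-occurrence network.

    nodes: keyword -> number of documents containing it
    edges: (keyword_a, keyword_b) -> number of documents containing both
    """
    occurrences = Counter()
    for keywords in keyword_lists:
        occurrences.update(set(keywords))  # count once per document

    # Keep only keywords that meet the minimum occurrence threshold.
    nodes = {k: n for k, n in occurrences.items() if n >= min_occurrences}

    edges = Counter()
    for keywords in keyword_lists:
        kept = sorted({k for k in keywords if k in nodes})
        edges.update(combinations(kept, 2))  # every unordered pair
    return nodes, dict(edges)


# Toy author-keyword lists (hypothetical, not taken from the dataset).
docs = [
    ["deepfake", "deep learning", "detection"],
    ["deepfake", "detection", "forensics"],
    ["deepfake", "deep learning"],
]
nodes, edges = cooccurrence_network(docs, min_occurrences=2)
```

With a threshold of two, "forensics" is dropped because it occurs in only one toy document, which mirrors how a minimum keyword threshold prunes rare terms from the map.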

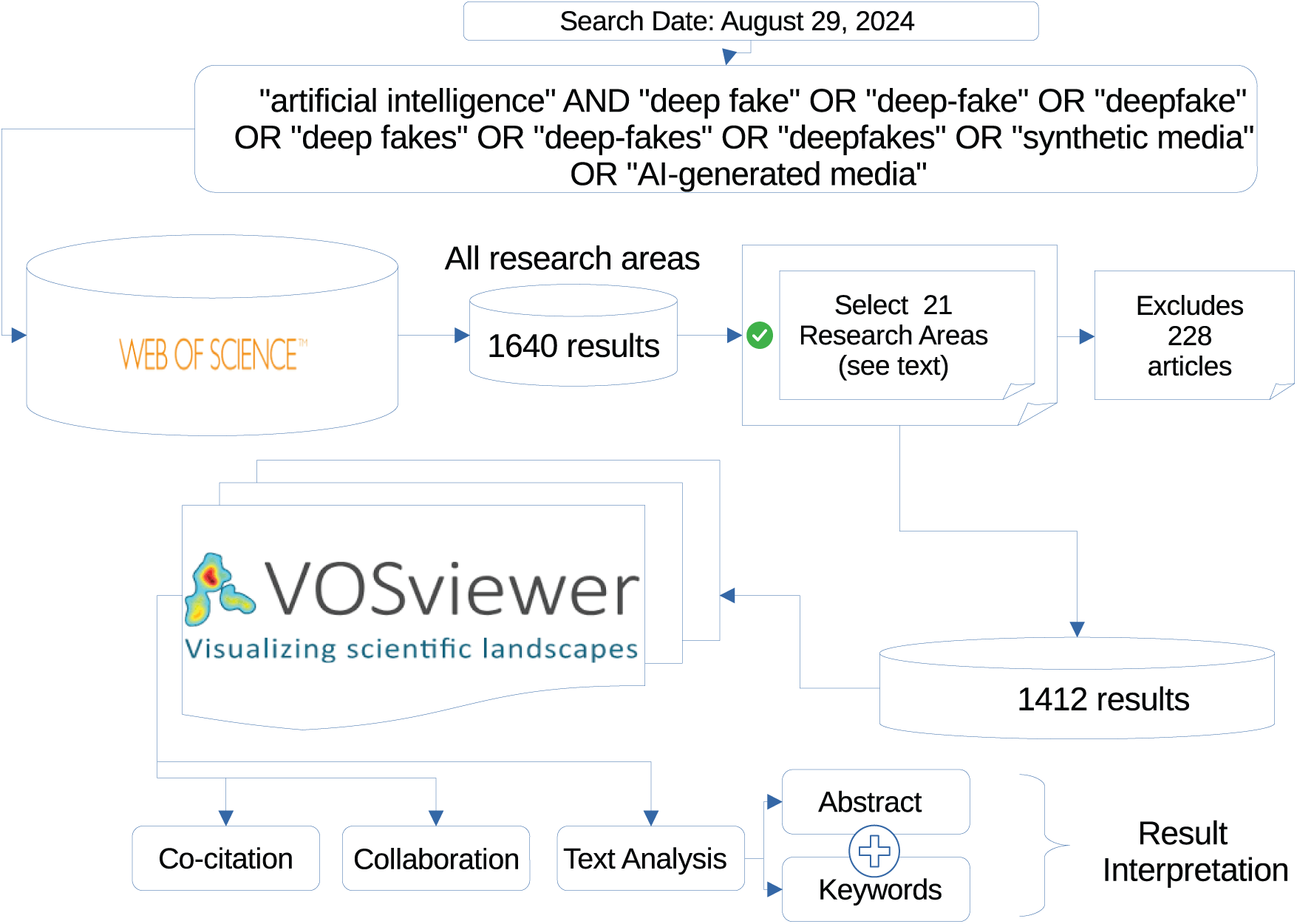

The bibliometric information for this study was collected from the Web of Science (WoS), with each article’s data corresponding to the theme of the study. WoS was chosen for its extensive coverage of peer-reviewed literature and citation information [37,41] and because it favours Natural Sciences and Engineering related disciplines [42]. It was therefore selected to capture a broad spectrum of foundational and recent deepfake technology studies. The search strategy involved querying terms such as “artificial intelligence” AND “deep fake” OR “deep-fake” OR “deepfake” OR “deep fakes” OR “deep-fakes” OR “deepfakes” OR “synthetic media” OR “AI-generated media”. The search was conducted on August 29, 2024, included articles, conference papers, reviews, and proceedings, and returned 1640 documents. The data was downloaded as a tab-delimited file from the Web of Science database. This study partially follows the PRISMA guidelines [43,44].
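A tab-delimited export of this kind can also be inspected outside VOSviewer. The sketch below reads such a file with Python's standard `csv` module. It is a hedged illustration rather than the study's workflow: the column tags `TI` (title), `DE` (author keywords), and `TC` (times cited) are assumed to match the export's header row, and the two inline records are invented sample data.

```python
import csv
import io

# Invented two-record sample standing in for a tab-delimited export.
SAMPLE_EXPORT = (
    "TI\tDE\tTC\n"
    "Paper A\tdeepfake; detection\t12\n"
    "Paper B\tDeepfake; face recognition\t3\n"
)


def read_records(handle):
    """Parse tab-delimited rows into dicts with normalized keywords."""
    reader = csv.DictReader(handle, delimiter="\t")
    records = []
    for row in reader:
        # Author keywords are assumed to be separated by semicolons.
        keywords = [k.strip().lower() for k in row["DE"].split(";") if k.strip()]
        records.append(
            {
                "title": row["TI"],
                "keywords": keywords,
                "citations": int(row["TC"]),
            }
        )
    return records


records = read_records(io.StringIO(SAMPLE_EXPORT))
total_citations = sum(r["citations"] for r in records)
```

For a real export, `io.StringIO(SAMPLE_EXPORT)` would be replaced by an opened file handle, and the header tags should be checked against the actual file before relying on them.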

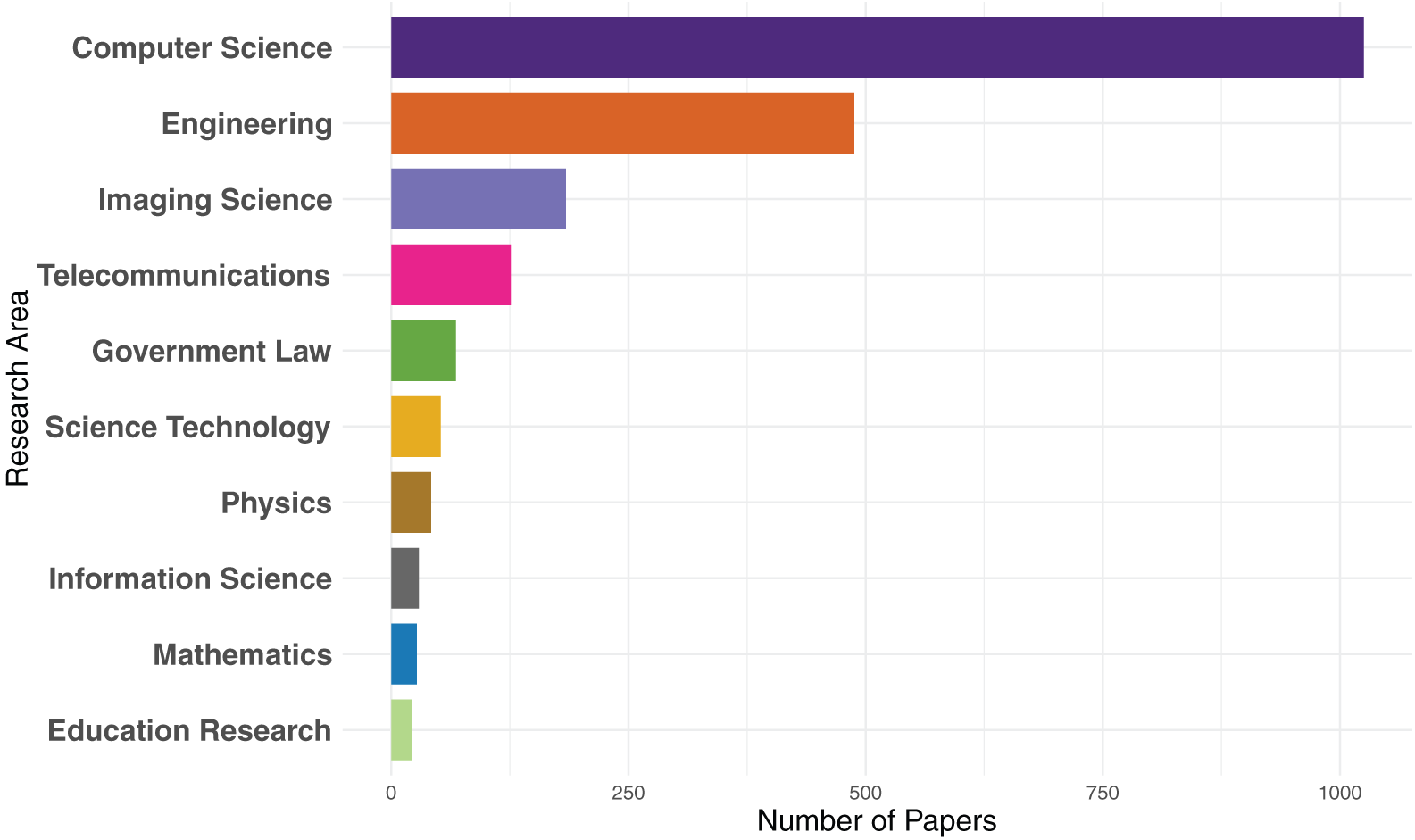

However, because PRISMA is a framework designed specifically for systematic reviews and meta-analyses [43,44], this study selects papers according to the criteria of a bibliometric review. The breakdown of the research process is presented in Fig. 3. The search retrieved results from various research areas, of which 21 research areas related to deepfakes were selected, yielding 1412 results, distributed as follows: Computer Science: 1025; Engineering: 488; Imaging Science: 184; Telecommunications: 126; Government Law: 68; Science Technology: 52; Physics: 42; Information Science: 29; Mathematics: 27; Education Research: 22; Criminology Penology: 15; International Relations: 15; Film, Radio, Television: 13; Art: 12; Surgery: 5; Legal Medicine: 5; Theatre: 4; Medical Ethics: 3; Medical Informatics: 2; Obstetrics Gynecology: 2; Radiology, Nuclear Medicine, Imaging: 2. The choice of database, keywords, and research areas, together with the use of VOSviewer for the presentation of data and visualizations, helps to filter out outliers in the research on deepfakes; thus, no other data cleaning process was required. Fig. 4 lists the top ten deepfake-related research areas in decreasing order, with the number of papers from each research area.
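The per-area counts listed above can be tallied in a few lines. The sketch below ranks the 21 areas and sums their counts, using only the figures given in the text; the variable names are illustrative. The sum comes to 2141, which is larger than the 1412 selected documents. That is consistent with a paper being indexed under more than one research area, although this is an interpretation and not something the source states.

```python
# Counts per research area, copied from the text.
AREA_COUNTS = {
    "Computer Science": 1025,
    "Engineering": 488,
    "Imaging Science": 184,
    "Telecommunications": 126,
    "Government Law": 68,
    "Science Technology": 52,
    "Physics": 42,
    "Information Science": 29,
    "Mathematics": 27,
    "Education Research": 22,
    "Criminology Penology": 15,
    "International Relations": 15,
    "Film, Radio, Television": 13,
    "Art": 12,
    "Surgery": 5,
    "Legal Medicine": 5,
    "Theatre": 4,
    "Medical Ethics": 3,
    "Medical Informatics": 2,
    "Obstetrics Gynecology": 2,
    "Radiology, Nuclear Medicine, Imaging": 2,
}

# Top ten areas in decreasing order of paper count.
top10 = sorted(AREA_COUNTS.items(), key=lambda item: item[1], reverse=True)[:10]

# Total number of (paper, area) assignments across all 21 areas.
total_assignments = sum(AREA_COUNTS.values())

for area, count in top10:
    print(f"{area}: {count}")
print("Total assignments:", total_assignments)
```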

Figure 3: Research methodology

Figure 4: Top 10 deepfake-related research areas and their corresponding number of WoS indexed papers

Limiting the scope to the chosen 21 research areas ensured that documents not directly related to the technological or societal aspects of deepfakes were excluded. This removed 228 of the 1640 documents, leaving 1412 results. The full bibliographic record of each of the 1412 publications was downloaded from the Web of Science database, which supports various file formats, and exported as a tab-delimited text file for analysis.

This section presents and analyzes the bibliographic data, focusing on key metrics such as the most prolific authors, leading countries in publication output, and other relevant trends, as observed in previous bibliometric studies [45–47]. By examining these aspects, this analysis provides a clearer view of the current research landscape, highlighting influential contributors and the regions driving advancements in deepfake research. Note that the influential contributions discussed in this section are based on the number of publications and citations.

4.1 Geographical Distribution of Publications (Citations by Country)

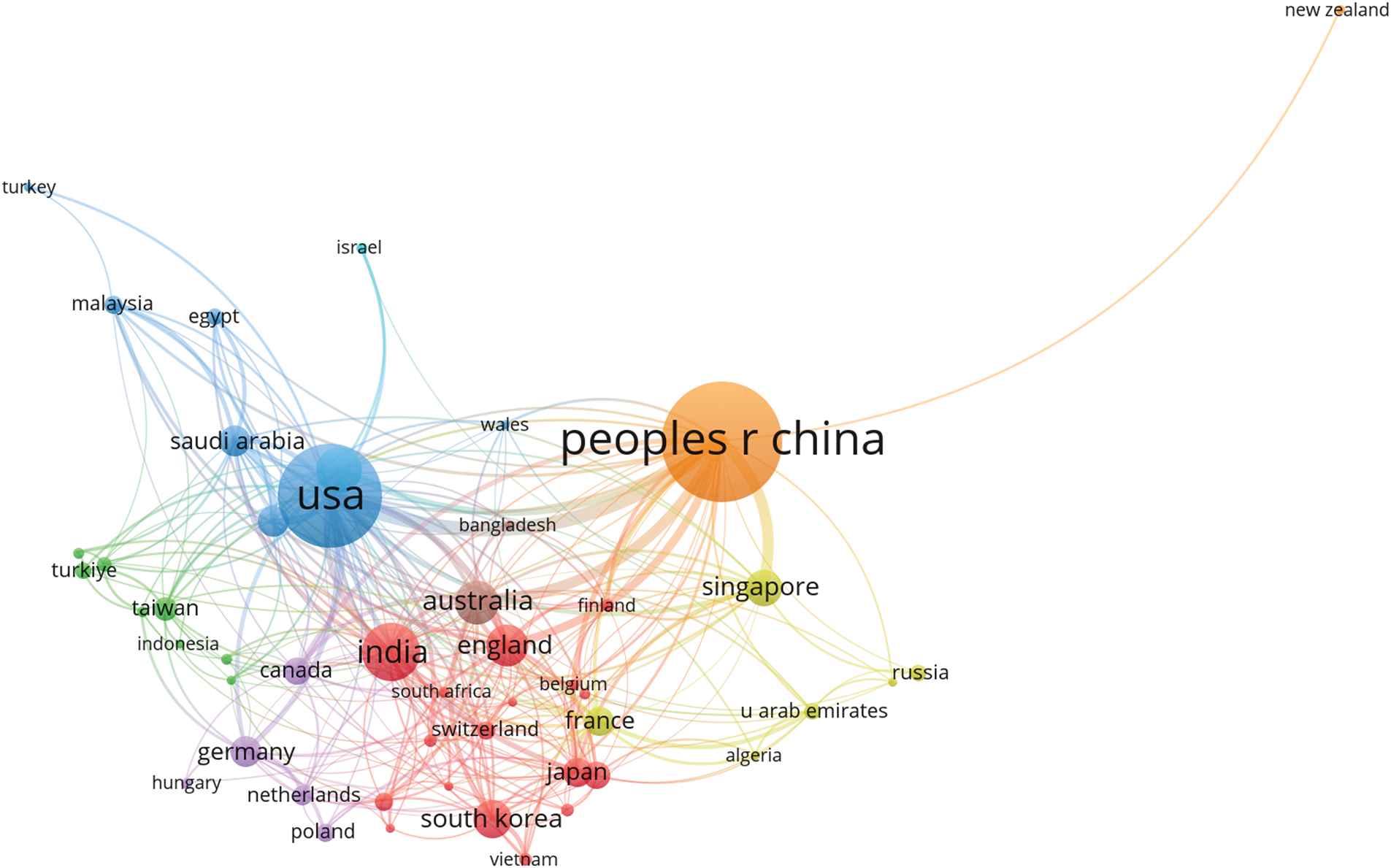

This study examines the geographical distribution of publications by using citations as the unit of analysis. A bibliographic map was generated based on collected data, utilizing bibliographic coupling of country co-authorship with fractional counting. The maximum number of countries per document was set to 25. To ensure a meaningful analysis, the minimum number of documents required for a country to be included in the citation analysis was set to five, which is the default value. Among the 89 countries in the dataset, 49 met this threshold. The final visualization is presented in Fig. 5.

Figure 5: Visualization of the countries and regions meeting the minimum threshold of five publications

Fig. 5 highlights regions and countries with at least five publications related to deepfake research. Each circle represents a country, where larger circles indicate higher publication counts, while smaller circles represent countries with fewer publications. In general, the closer two countries appear in the visualization, the stronger their bibliographic coupling relationship.
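The inclusion threshold described in this subsection amounts to counting documents per country and keeping only countries at or above the minimum. The sketch below shows that filtering step in isolation, as an assumption-laden illustration: the function name and the toy document list are hypothetical, each country is counted once per document, and no fractional counting or coupling is computed.

```python
from collections import Counter


def countries_meeting_threshold(doc_countries, min_documents=5):
    """Return country -> document count for countries at or above the threshold."""
    counts = Counter()
    for countries in doc_countries:
        counts.update(set(countries))  # one count per country per document
    return {c: n for c, n in counts.items() if n >= min_documents}


# Toy affiliation data (hypothetical): one list of countries per document.
toy_docs = (
    [["China", "USA"]] * 3   # three co-authored documents
    + [["China"]] * 2        # two more documents from China only
    + [["Italy"]] * 4        # four documents from Italy only
)

included = countries_meeting_threshold(toy_docs, min_documents=5)
```

In this toy example only China reaches five documents, so it would be the only circle drawn; the circle size in a map like Fig. 5 corresponds to the retained count.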

4.2 Leading Countries Based on the Number of Publications

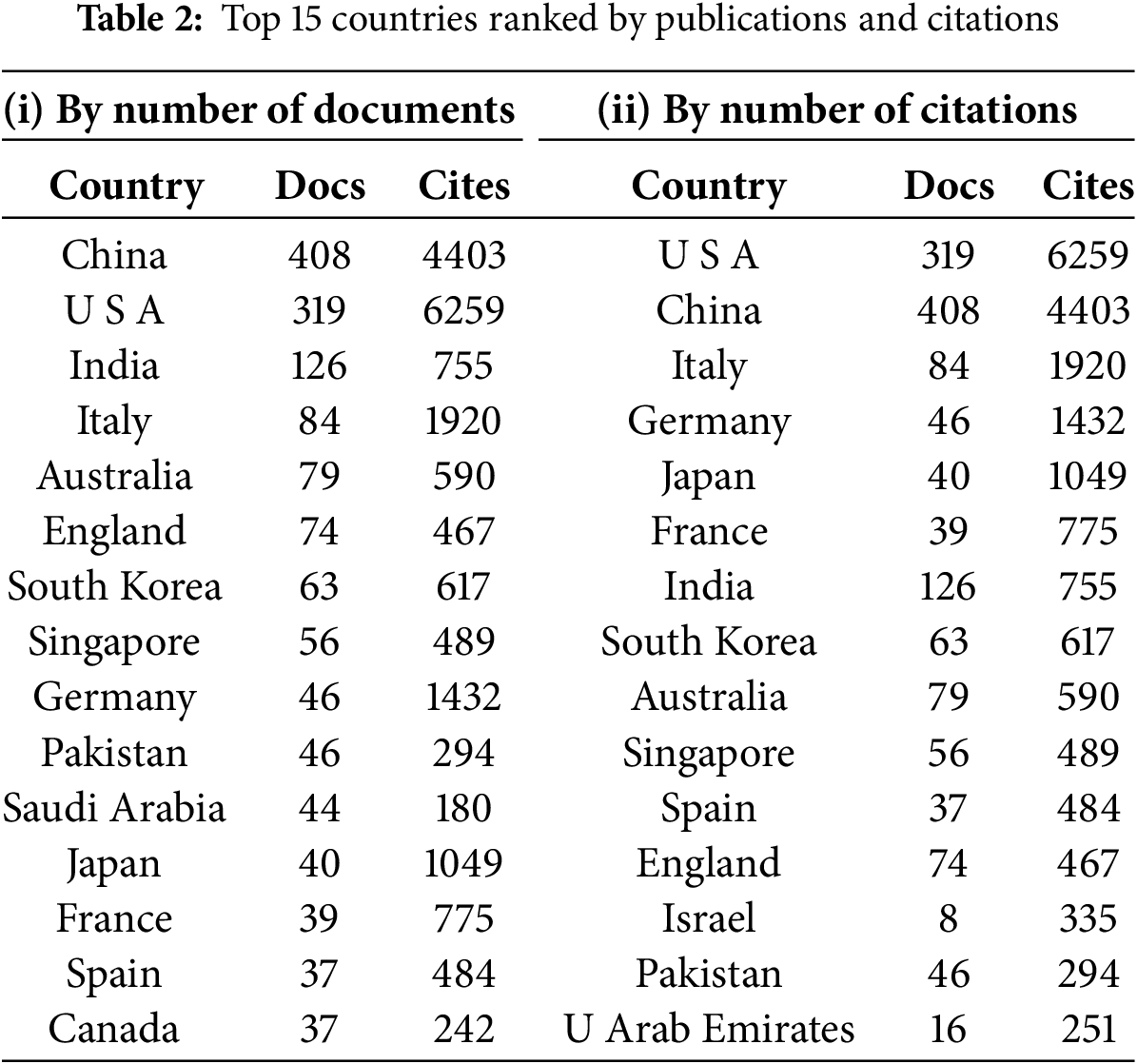

The research contributions of the top 20 countries, ranked by the number of published documents on deepfakes, are presented in Table 2(i). The minimum number of documents required for a country to be included in the visualization was set to 19.

The most prolific country in deepfake research is the People’s Republic of China (hereafter China), with 408 publications and 4403 citations, followed by the United States with 319 publications and 6259 citations, and India with 126 publications and 755 citations. This indicates that China is the leading contributor to deepfake research by volume, followed by the USA. At the lower end of the top 20 list, Norway and Switzerland each have 20 publications, while Malaysia, ranking 20th, has 19 publications.

The analysis also reveals that certain countries, such as Angola, Argentina, Bahrain, Bosnia & Herzegovina, Chile, Cyprus, Fiji, Kosovo, Lebanon, Libya, Morocco, Nepal, Somalia, Tunisia, Uzbekistan, Yemen, Ghana, and Sri Lanka, have contributed only one publication each. Similarly, Nigeria, Northern Ireland, Belarus, Colombia, Trinidad and Tobago, Estonia, and Iraq each have two publications, indicating relatively lower contributions to deepfake research.

This study finds that deepfake research is prioritized in countries such as China, the USA, India, and Italy, likely due to their strong focus on cybersecurity, emerging technologies, and digital innovation. Consequently, deepfake research is concentrated in industrialized nations with substantial public and private funding dedicated to AI and digital technologies. In contrast, countries with fewer publications in this area may have limited access to funding and tend to focus on research addressing socio-economic priorities, such as public health, agriculture, or other pressing local concerns, rather than deepfake technology.

Table 2 presents the top 15 countries ranked by the number of publications and citations.

4.2.1 Leading Countries Based on Number of Citations

Considering the number of citations, the research contributions on deepfakes from the top 15 countries are presented in Table 2 (ii). The analysis was conducted by selecting a minimum of one document per country and including the top 15 countries out of 83. The USA is the most influential country in terms of citations, with 6259 citations from 319 publications, followed by China with 4403 citations from 408 publications and Italy with 1920 citations from 84 publications. The data shows that the USA has significantly more citations on deepfakes than any other country. In contrast, countries such as Saudi Arabia, the Netherlands, and Egypt rank at the bottom of the list, with Saudi Arabia having 180 citations, the Netherlands 178 citations, and Egypt 111 citations. Moreover, the overall analysis indicates that countries such as Cyprus, Ghana, Iran, and Sri Lanka have no citations, while Slovenia, Bosnia & Herzegovina, Kosovo, and Luxembourg each have only one citation.

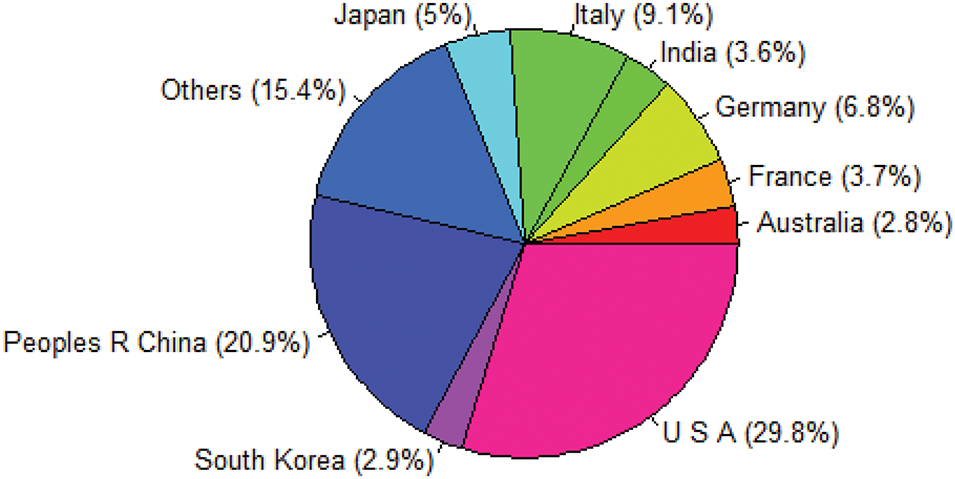

In summary, this section highlights the top countries contributing to deepfake research in terms of both document count and citation ranking. Specifically, China and the USA occupy the top two positions in both categories, while the third position is held by India for documents and by Italy for citations (refer to Fig. 6). Most countries that appear in the document ranking also appear in the citation ranking, with the exceptions of Israel, the United Arab Emirates, and Egypt. These countries are in the top 20 for document citations but not in the document ranking itself. Similarly, Norway, Switzerland, and Malaysia are in the top 20 for document rankings but not in the citation ranking.

Figure 6: The top 10 countries by citations

4.2.2 Continental Insights and Recommendations

First, Asia leads in terms of the number of documents (783), with China contributing more than 52% of the total. North America follows, primarily represented by the USA (319). Europe ranks third (280), with Italy accounting for only 30% of all documents, indicating a more balanced contribution across multiple European countries. Oceania is represented solely by Australia, which has 79 documents—a significant number relative to some European countries with larger populations and more institutions. Africa’s footprint is not observed in the analyzed data.

In terms of citations, North America, represented by the USA (6259), has the highest impact despite ranking second in the number of publications. Research in the USA is supported by funders such as the National Science Foundation, the Defense Advanced Research Projects Agency, and the U.S. Department of Defense. Asia follows, led by China (4403), though many other Asian countries have a lower citation-to-document ratio compared to Europe, where research, particularly from Italy and Germany, has a higher citation impact. Major funders in Europe include the European Commission and the Horizon 2020 Framework Programme. Oceania, represented by Australia (590), also contributes significantly. Africa and the Middle East do not have a notable presence in terms of citations.

Overall, Asia has the highest research volume with a strong citation count. Research in China benefits from funders such as the National Natural Science Foundation of China, the Ministry of Science and Technology of the People’s Republic of China, and the National Key Research and Development Program of China. Other key funding agencies in Asia include the National Research Foundation of Korea. Meanwhile, North America (primarily the USA) produces the most impactful research overall, while Europe generates well-cited publications. Oceania also makes significant contributions, though its citation impact is lower compared to Europe and North America.
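The shares and citation-to-document ratios discussed above can be recomputed directly from the counts quoted in this section. The following is a minimal sketch that uses only the figures stated in the text; the dictionary layout and function names are illustrative choices, not part of the original analysis.

```python
# Document and citation counts as quoted in Sections 4.2.1 and 4.2.2.
countries = {
    "USA": {"documents": 319, "citations": 6259},
    "China": {"documents": 408, "citations": 4403},
    "Italy": {"documents": 84, "citations": 1920},
    "Australia": {"documents": 79, "citations": 590},
}
# Continental document totals as quoted in Section 4.2.2.
continent_documents = {"Asia": 783, "Europe": 280}


def citations_per_document(entry):
    """Average number of citations received per published document."""
    return entry["citations"] / entry["documents"]


def share(part, whole):
    """Percentage of `whole` accounted for by `part`."""
    return 100.0 * part / whole


for name, entry in countries.items():
    print(f"{name}: {citations_per_document(entry):.1f} citations per document")

china_share = share(countries["China"]["documents"], continent_documents["Asia"])
italy_share = share(countries["Italy"]["documents"], continent_documents["Europe"])
print(f"China's share of Asian documents: {china_share:.1f}%")
print(f"Italy's share of European documents: {italy_share:.1f}%")
```

Under these figures, Italy and the USA receive roughly twice as many citations per document as China, which is consistent with the observation that volume and impact do not rank the continents in the same order.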

Given the low participation of some continents and countries in deepfake research, intercontinental collaboration should be encouraged. Deepfake technology is a global concern, as the internet is accessible to all. Collaboration between technologically advanced nations and developing regions would enhance the global research landscape on deepfakes, fostering more comprehensive and diverse contributions to this critical field.

4.3 Bibliographic Coupling Network of Researchers

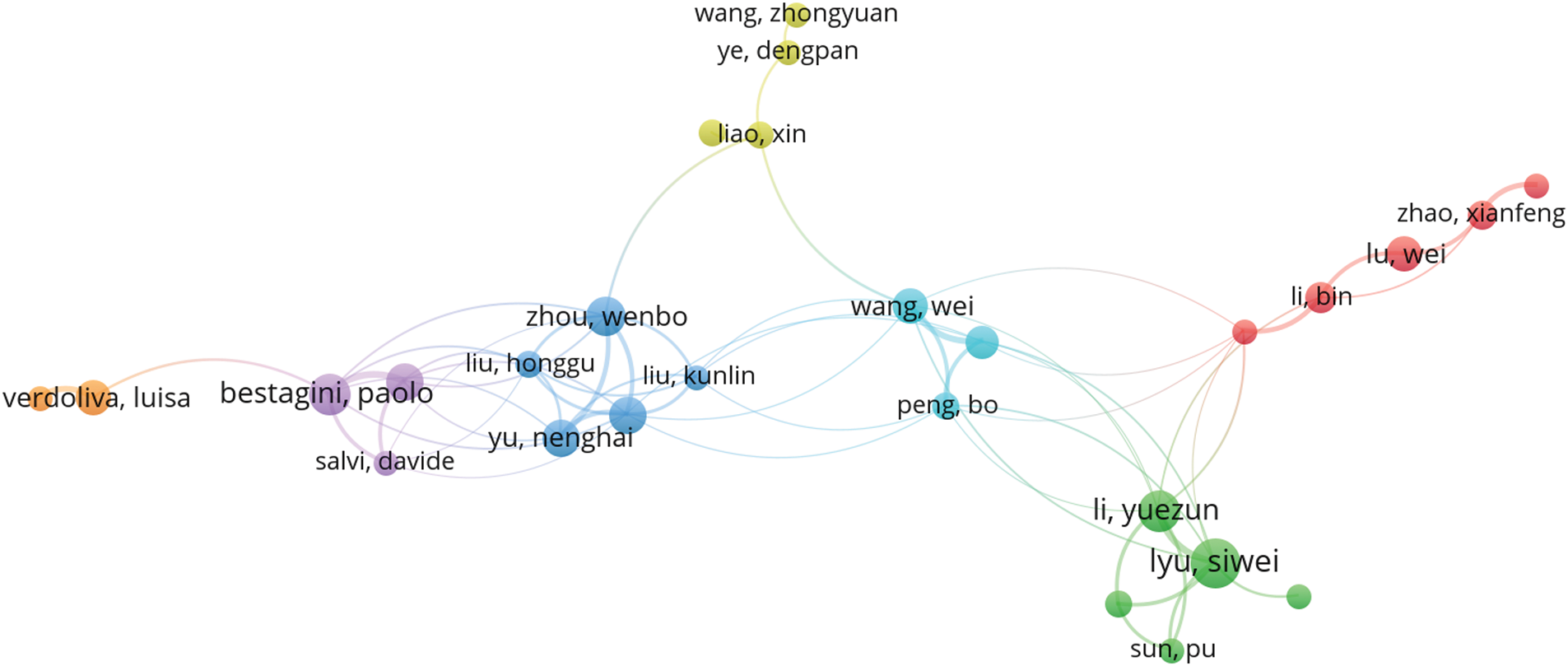

To construct the visualization of researcher citations, we used bibliographic coupling based on co-authorship with fractional counting, setting a maximum of 25 authors per document. VOSviewer requires a minimum document count per author for inclusion in the visualization; we selected the default threshold of five publications. Of the 3991 authors in our dataset, 105 met this criterion. In the visualization shown in Fig. 7, each circle represents a researcher, with larger circles indicating researchers with many publications and smaller circles indicating those with fewer. Generally, the closer any two researchers are within the visualization, the more closely they are related in terms of bibliographic coupling. In other words, researchers positioned near each other tend to cite the same publications, whereas those further apart typically do not.

Figure 7: The visualization of the bibliographic coupling network of researchers
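To make the notion of bibliographic coupling concrete, the sketch below counts the references that two authors cite in common. It is an illustration only: the author labels and reference identifiers are hypothetical, plain (non-fractional) counting is used, and VOSviewer's normalization and layout steps are not reproduced.

```python
from itertools import combinations

# Hypothetical data: the set of references cited by each author.
cited_by_author = {
    "author_a": {"ref1", "ref2", "ref3"},
    "author_b": {"ref2", "ref3", "ref4"},
    "author_c": {"ref5"},
}


def coupling_strength(refs_a, refs_b):
    """Number of cited references that two authors share."""
    return len(refs_a & refs_b)


def coupling_links(cited):
    """Return {(author_1, author_2): strength} for every coupled pair."""
    links = {}
    for a, b in combinations(sorted(cited), 2):
        strength = coupling_strength(cited[a], cited[b])
        if strength > 0:  # authors with no shared references are not linked
            links[(a, b)] = strength
    return links


links = coupling_links(cited_by_author)
print(links)
```

In this toy example only the first two authors are coupled, because they share two references; the third author cites nothing in common with the others and would therefore be placed far from them in a map.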

4.3.1 Leading Authors Based on Number of Documents

The research contributions on deepfakes by the top 20 authors, based on the number of publications, are presented in Table 3(i). This analysis was conducted by setting a minimum of one document per author. The most productive author is Lyu Siwei (USA), with 19 publications and 1541 citations, followed by Javed Ali (Pakistan), with 15 publications and 128 citations, and by Woo Simon (South Korea), Bestagini Paolo (Italy), and Hu Yongjian (China), each with 14 publications and 209, 184, and 21 citations, respectively. The data shows that Lyu Siwei (USA) is the leading contributor to deepfake research, followed by Javed Ali (Pakistan). Authors such as Farid Hany (USA), Tariq Shahroz (Australia), Irtaza Aun (USA), Chen Yu (USA), Jin Xin (China), Jiang Qian (China), and Dong Jing (China) each have nine publications, placing them at the bottom of the top 20 list.

Additionally, the research indicates that nine of the top 20 authors are from China, four from the USA, four from Italy, and one each from Pakistan, South Korea, and Australia. The significant number of authors from China underscores their substantial contribution to deepfake research. Overall, over 200 authors have only one publication. Table 3 presents the top 20 authors, ranked by the number of publications, their citation counts, and countries.

4.3.2 Related Keywords by Authors with the Highest Number of Documents

In this study, we aim to identify the research patterns represented by keywords in the works published by authors with the most documents. These keywords are mainly related to methods and techniques, as well as broader concepts and components of deepfake generation, image analysis, and deepfake and forgery detection. Text, audio, and video forgery are also evident in these keywords. These keywords include Adversarial Learning, Adversarial Networks, Audio Authenticities, Audio Forgery Detection, Data Hiding, Deepfake Detection, Deep Neural Networks, Detection Methods, Detection Models, Digital Image Forensics, Duplication Detection, Face Images, Face Recognition, Face Synthesis, Face Swapping, Facial Expressions, Facial Landmark, Fake Detection, Forgery Detections, Gait Analysis, Gait Recognition, Generalization Capability, Generative Adversarial Networks, Image Analysis, Image Classification, Image Compression, Image Enhancement, Image Features, Image Forensics, Image Matching, Image Processing, Manipulation Techniques, Media Forensics, Neural Network, Neural Networks, Object Detection, Reversible Data Hiding, Reversible Watermarking, Speech Recognition, Synthetic Data, Video Forgery Detection, Voice Replay Attack, Watermark Embedding.

4.3.3 Leading Authors Based on Number of Citations

Regarding citation count, the research contributions on deepfakes by the top 20 authors are presented in Table 3(ii). The most cited author is Lyu Siwei (USA), with 19 publications and 1541 citations, followed by Li Yuezun (China), with 13 publications and 1460 citations, and Riess Christian (Germany), with three publications and 1153 citations. The data indicates that Lyu Siwei (USA) has the highest citation count in deepfake research, followed by Li Yuezun (China).

Authors such as Li Lingzhi (China), Yang Hao (USA), Zhang Ting (China), and Guo Baining (China) are at the lower end of the top 20 list. Li Lingzhi and Yang Hao each have 531 citations, while Zhang Ting and Guo Baining have 41 citations each across two publications.

Among the top 20 most cited authors, nine are from China, four from Germany, three from the USA, and two each from Italy and Japan. The data further indicates that over 100 authors have no citations. Lyu Siwei’s leading citation count suggests that his work is highly influential and widely recognized. He is also the only author in the top three to rank highly in both publication and citation counts. Additionally, the presence of nine Chinese authors in the top 20 underscores China’s significant contribution to deepfake research. Table 3 presents the top 20 authors ranked by citation count, along with their publication numbers and countries.

4.3.4 Top-Cited Papers by the Most Cited Authors

This section briefly explores the two most cited papers by the five most cited authors in deepfake research, each with over 1000 citations. Notably, these two papers collectively involve contributions from Lyu Siwei, Li Yuezun, Riess Christian, Verdoliva Luisa, and Yang Xin. Both papers highlight the importance of high-quality datasets and benchmarks for deepfake research.

The first paper, titled *“Celeb-DF: A Large-Scale Challenging Dataset for DeepFake Forensics”* [10], co-authored by Yuezun Li, Xin Yang, and Siwei Lyu, along with Pu Sun and Honggang Qi, identifies a major limitation in existing deepfake datasets: their low visual quality, which makes them unrealistic compared to deepfake videos circulated online. To address this issue, the authors introduced a new large-scale deepfake video dataset containing 5639 high-quality videos featuring celebrities, generated using an improved synthesis process. A comprehensive evaluation of deepfake detection methods using this dataset demonstrates the challenges it poses and its potential impact on deepfake forensics.

The second paper, titled *“FaceForensics++: Learning to Detect Manipulated Facial Images”* [48], authored by Rossler and co-authors, including Riess Christian and Verdoliva Luisa, proposes an automated, publicly available benchmark for facial manipulation detection. This benchmark standardizes the evaluation of deepfake detection methods by incorporating prominent manipulation techniques at varying compression levels and sizes. The dataset contains over 1.8 million manipulated images, making it significantly larger than previous datasets. A thorough analysis of data-forgery detection techniques reveals that incorporating domain-specific knowledge significantly improves detection accuracy, even under strong compression, and outperforms human observers.

4.4 Most Influential Institutions

The most influential institutions are analyzed based on two criteria: the highest number of publications and the highest number of citations. The results of this analysis are presented in the following sections.

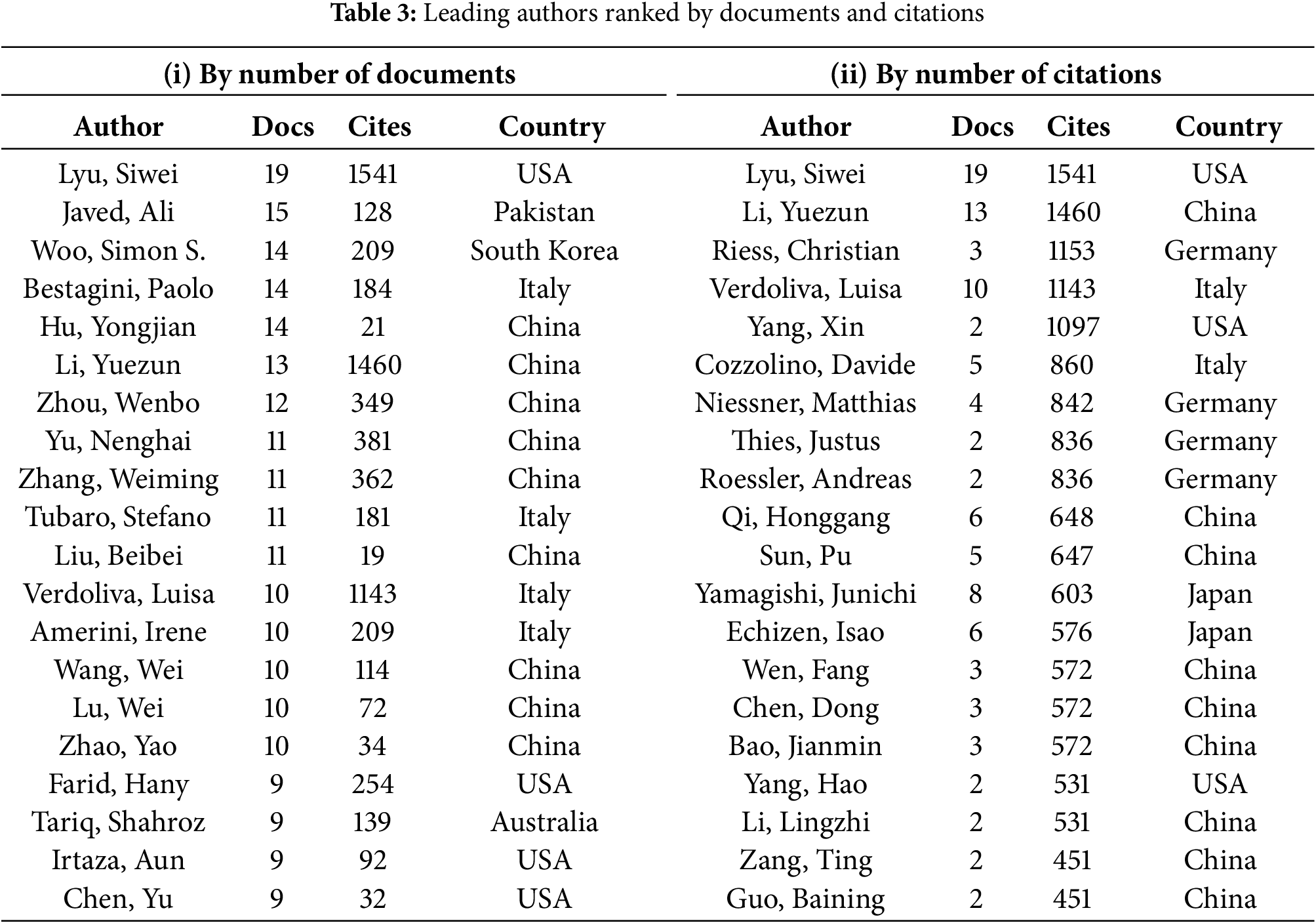

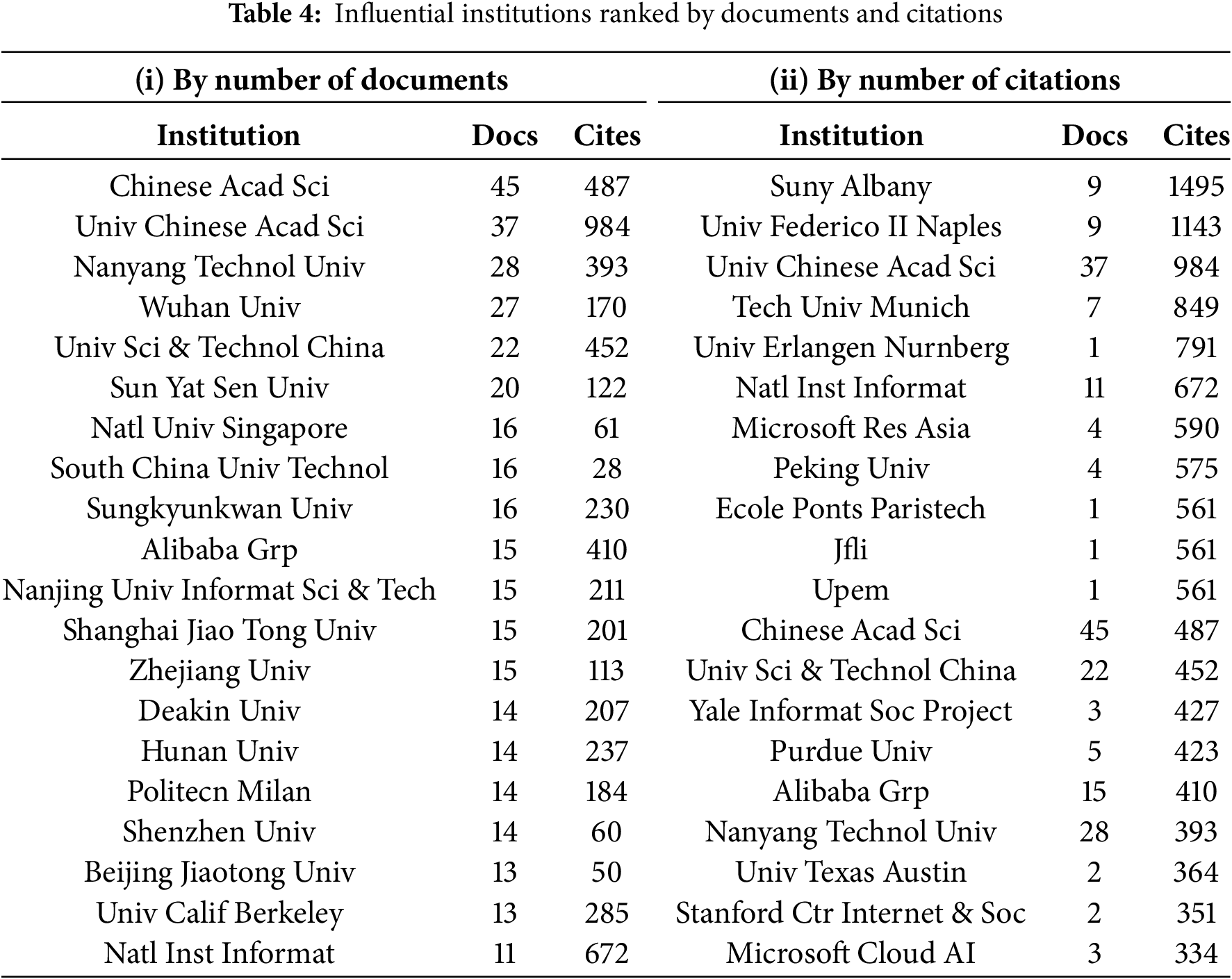

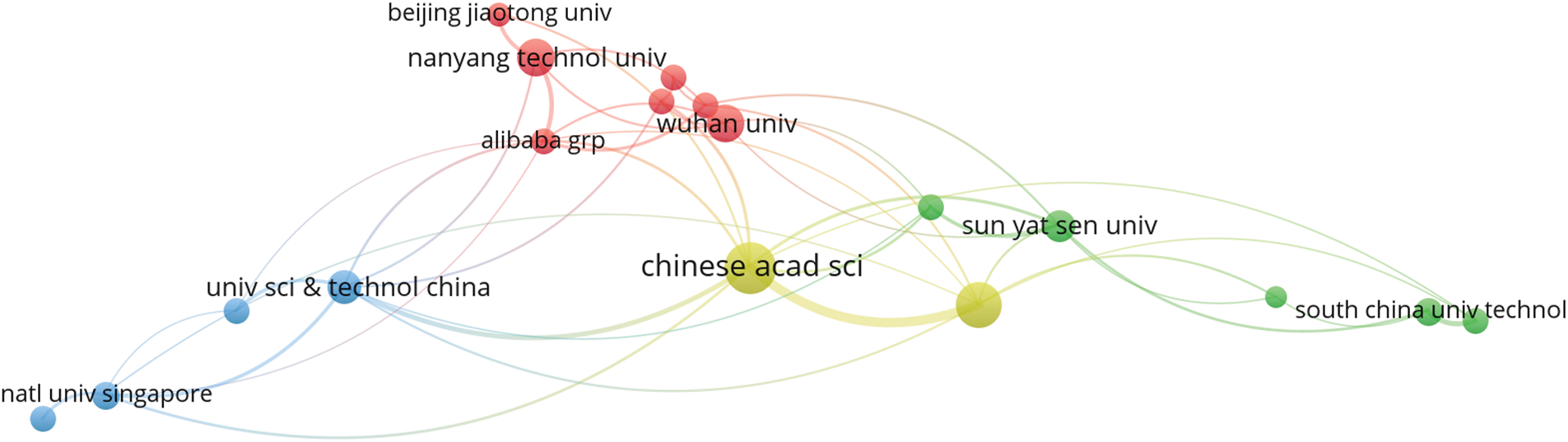

4.4.1 Influential Institutions Based on Number of Documents

An analysis of the most influential institutions reveals that the leading contributor to deepfake research is the Chinese Academy of Sciences, with 45 publications and 487 citations, followed by the University of the Chinese Academy of Sciences, with 37 publications and 984 citations, and Nanyang Technological University, with 28 publications and 393 citations (see Table 4(i)). The institution abbreviations are presented in the table as extracted from VOSviewer.

The data highlights the Chinese Academy of Sciences as the primary contributor to deepfake research, closely followed by the University of the Chinese Academy of Sciences. Among the top 20 institutions, the minimum publication count is 11, with four institutions meeting this threshold: Xi’an University (11 papers, 167 citations), the National Institute of Informatics (11 papers, 672 citations), SUNY Buffalo (11 papers, 93 citations), and Monash University (11 papers, 145 citations). The National Institute of Informatics ranks 20th due to its higher citation count.

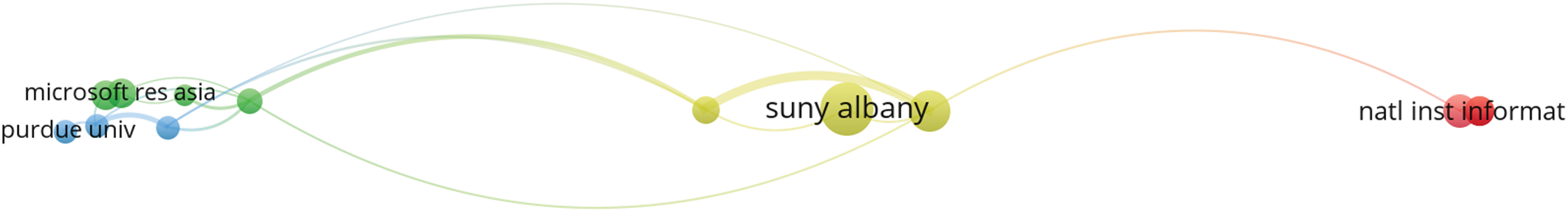

Overall, more than 150 institutions have only one publication. Table 4 presents the top 20 institutions ranked by the number of documents, along with their citation counts. Additionally, some of the top 20 institutions in the network are not directly connected. The largest connected cluster consists of 18 institutions, as shown in Fig. 8.

Figure 8: Visualization of connected organizations using the number of documents to rank (refer to Table 4 for details)

4.4.2 Influential Institutions Based on Number of Citations

This study also examines the most influential institutions based on citation count, complementing the analysis conducted on the number of publications per institution. The results reveal that the most influential institution is SUNY Albany, with nine publications and 1495 citations, followed by the University of Federico II Naples, with nine publications and 1143 citations, and the University of the Chinese Academy of Sciences, with 37 publications and 984 citations, as shown in Table 4 (ii).

The findings indicate that SUNY Albany is the leading contributor to deepfake research in terms of citations, followed by the University of Federico II Naples. Notably, Dr. Siwei Lyu, the most highly cited researcher in this field, is affiliated with SUNY Albany.

Among the top 20 institutions, the lowest publication count is three, which includes Microsoft Cloud AI, with 334 citations. Additionally, the analysis reveals that more than 150 institutions have no citations. Table 4 presents the top 20 institutions ranked by citation count, along with their respective publication numbers.

It is also important to note that some of the top 20 institutions in the network are not directly connected. The largest connected cluster consists of 14 institutions, as shown in Fig. 9.

Figure 9: Visualization of connected organizations using the number of citations to rank (refer to Table 4 for details)

4.4.3 Relevance of Higher Document or Citation: Institutional

The analysis of influential institutions based on the number of publications and citations provides valuable insights into the research landscape of deepfakes. However, it is important to recognize that the number of publications produced by an institution does not necessarily correlate with the number of citations it receives. This discrepancy highlights the distinction between the quantity of research output and its quality or impact within the scientific community.

For instance, while institutions like the University of the Chinese Academy of Sciences have a high number of publications (37), it is institutions such as SUNY Albany that lead in citations (1495 citations from just 9 publications). This suggests that although SUNY Albany has fewer publications, its research has a greater impact, receiving significant attention and citations from other scholars. Microsoft Cloud AI shows the same pattern on a smaller scale: with only 3 publications and 334 citations, it has a relatively high citation rate despite its small output. Conversely, an institution with a higher publication count does not necessarily receive a proportional number of citations.

This comparison underscores the importance of not relying solely on the number of publications as a measure of an institution’s research influence. Citations often provide a more accurate reflection of the quality, relevance, and impact of research, as they indicate how frequently other researchers reference and build upon that work. Therefore, institutions like SUNY Albany, with fewer but highly cited papers, may contribute more significantly to the field than institutions with a larger number of publications but fewer citations.
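The contrast between output and impact can be illustrated by ranking a few of the institutions named above under three criteria. This is a minimal sketch using only the publication and citation counts quoted in Section 4.4; the citations-per-publication ratio is an illustrative indicator introduced here, not a metric reported in the tables.

```python
# (institution, publications, citations) as quoted in Section 4.4.
institutions = [
    ("Chinese Academy of Sciences", 45, 487),
    ("University of the Chinese Academy of Sciences", 37, 984),
    ("Nanyang Technological University", 28, 393),
    ("SUNY Albany", 9, 1495),
    ("Microsoft Cloud AI", 3, 334),
]


def rank_by(rows, key):
    """Institution names sorted from highest to lowest value of `key`."""
    return [row[0] for row in sorted(rows, key=key, reverse=True)]


by_documents = rank_by(institutions, key=lambda row: row[1])
by_citations = rank_by(institutions, key=lambda row: row[2])
# Illustrative ratio: citations received per publication.
by_impact = rank_by(institutions, key=lambda row: row[2] / row[1])

print("By documents:", by_documents)
print("By citations:", by_citations)
print("By citations per publication:", by_impact)
```

With these five institutions, the Chinese Academy of Sciences is first by documents but last by citations per publication, whereas SUNY Albany and Microsoft Cloud AI move to the top once output is normalized.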

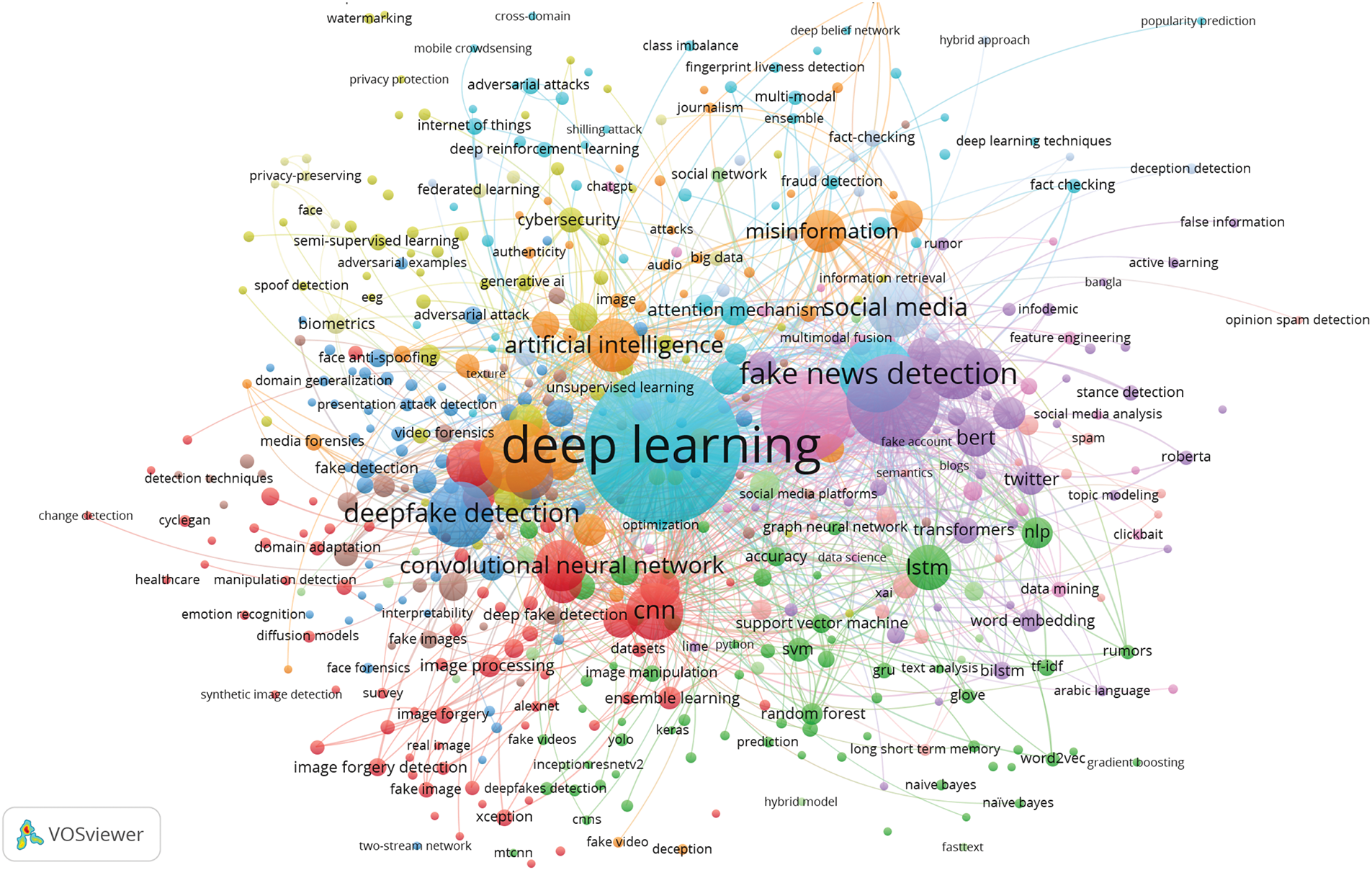

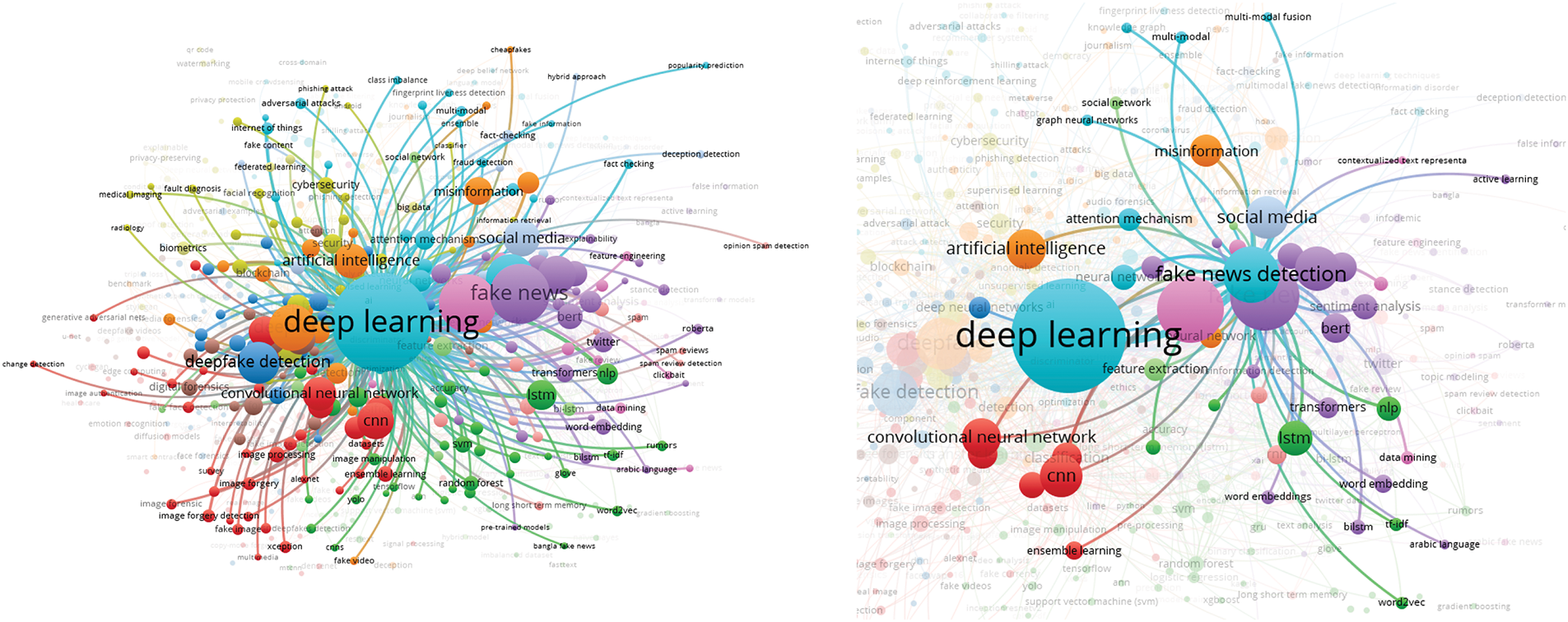

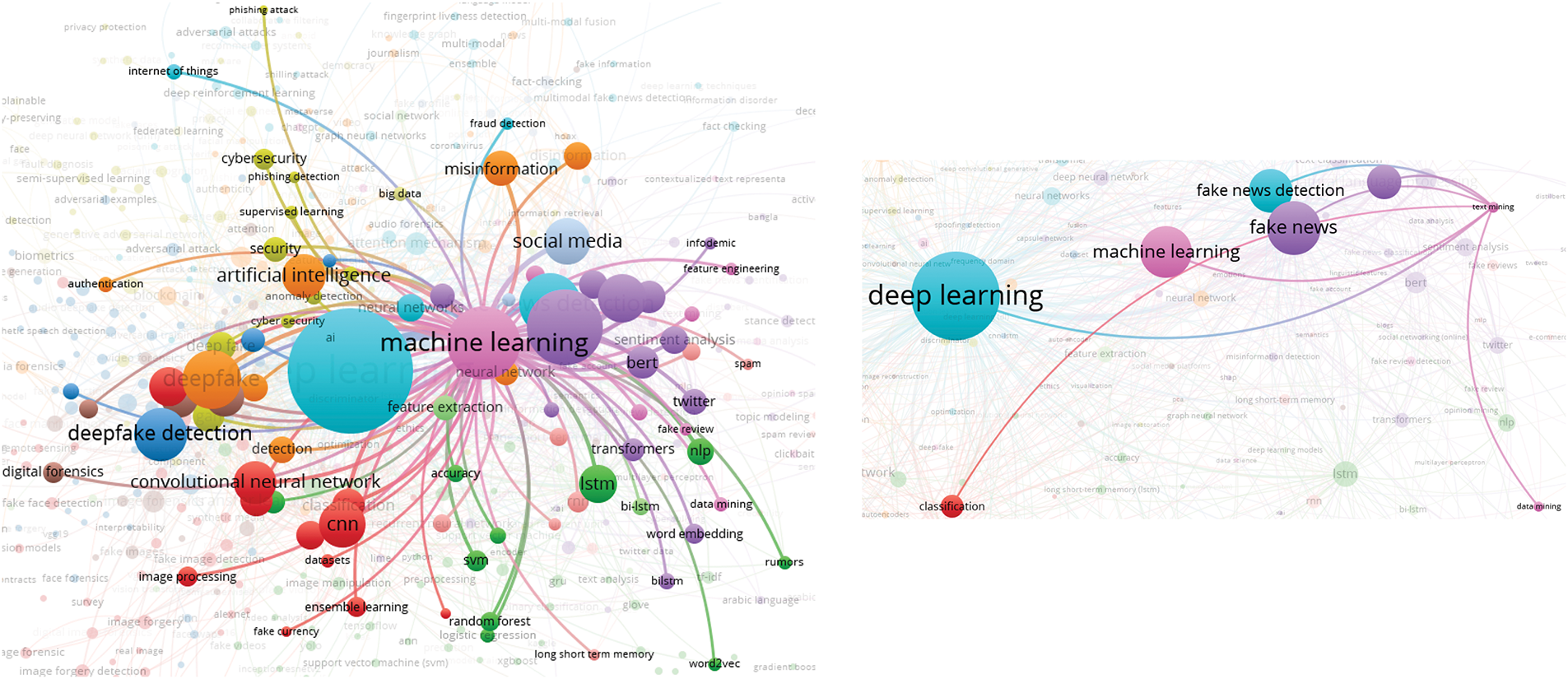

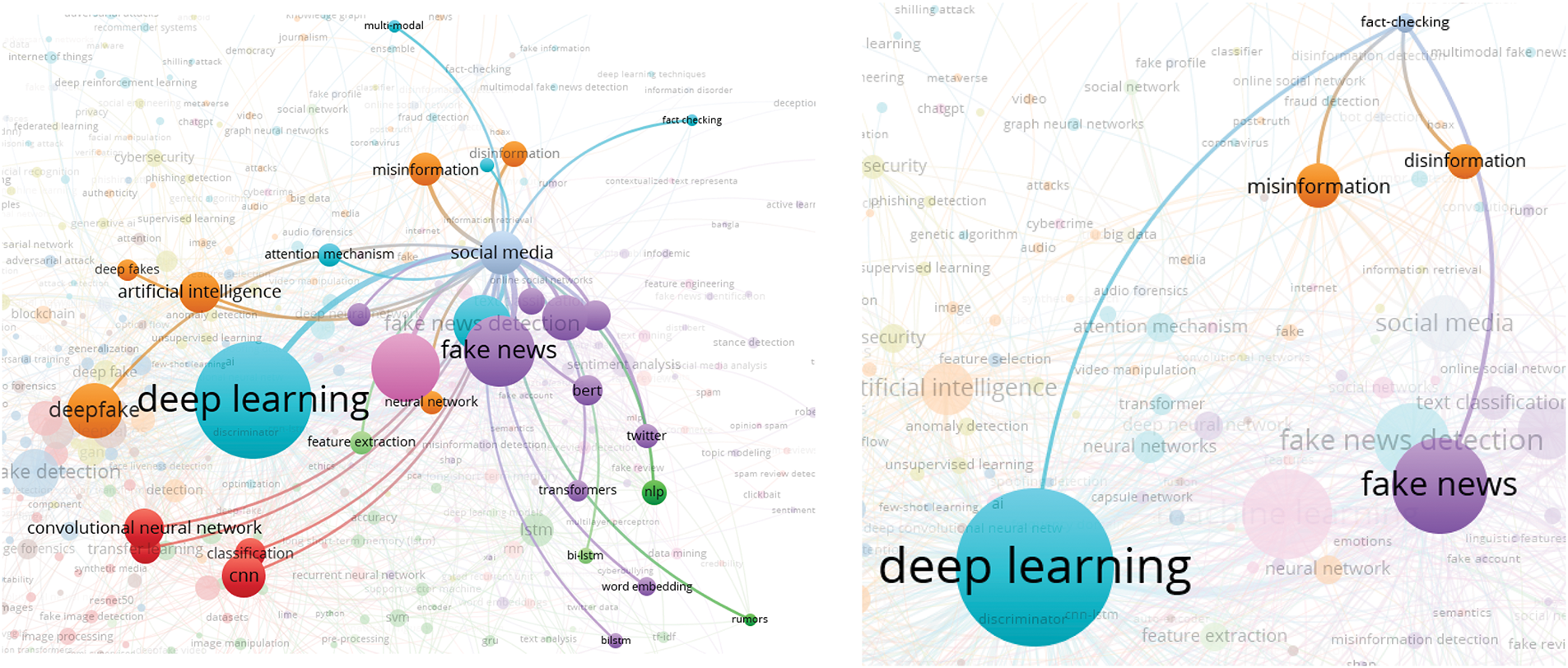

4.5 Co-occurrence Network of Keywords

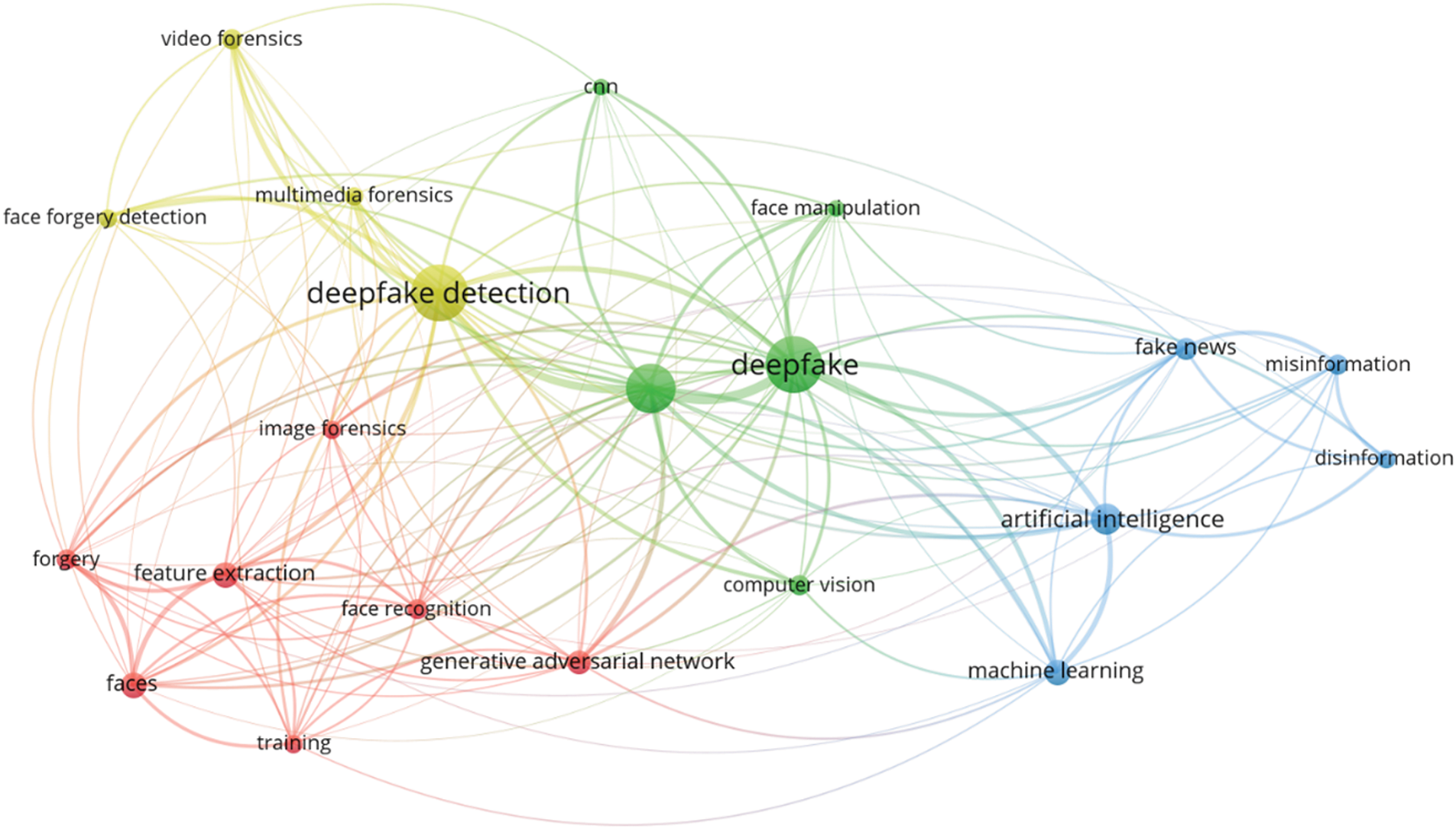

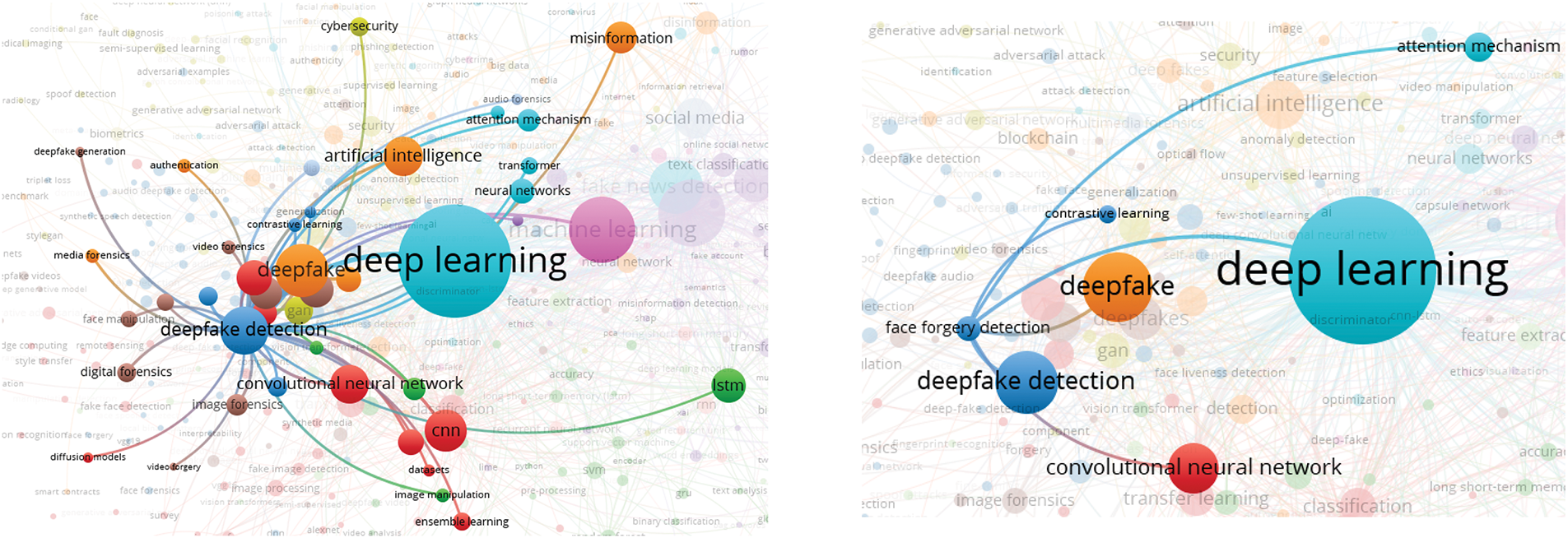

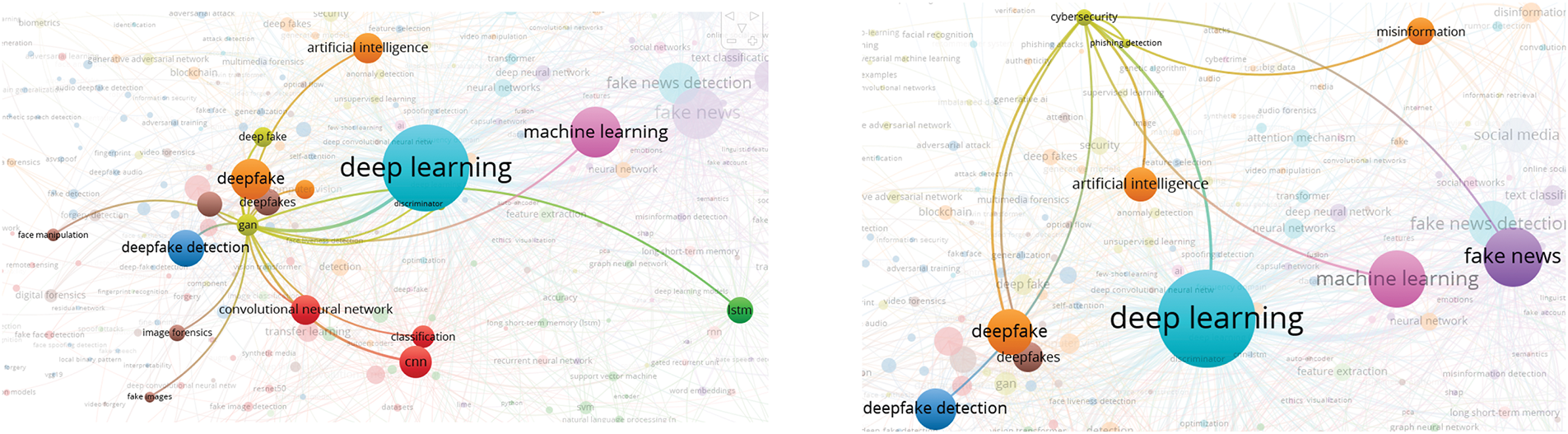

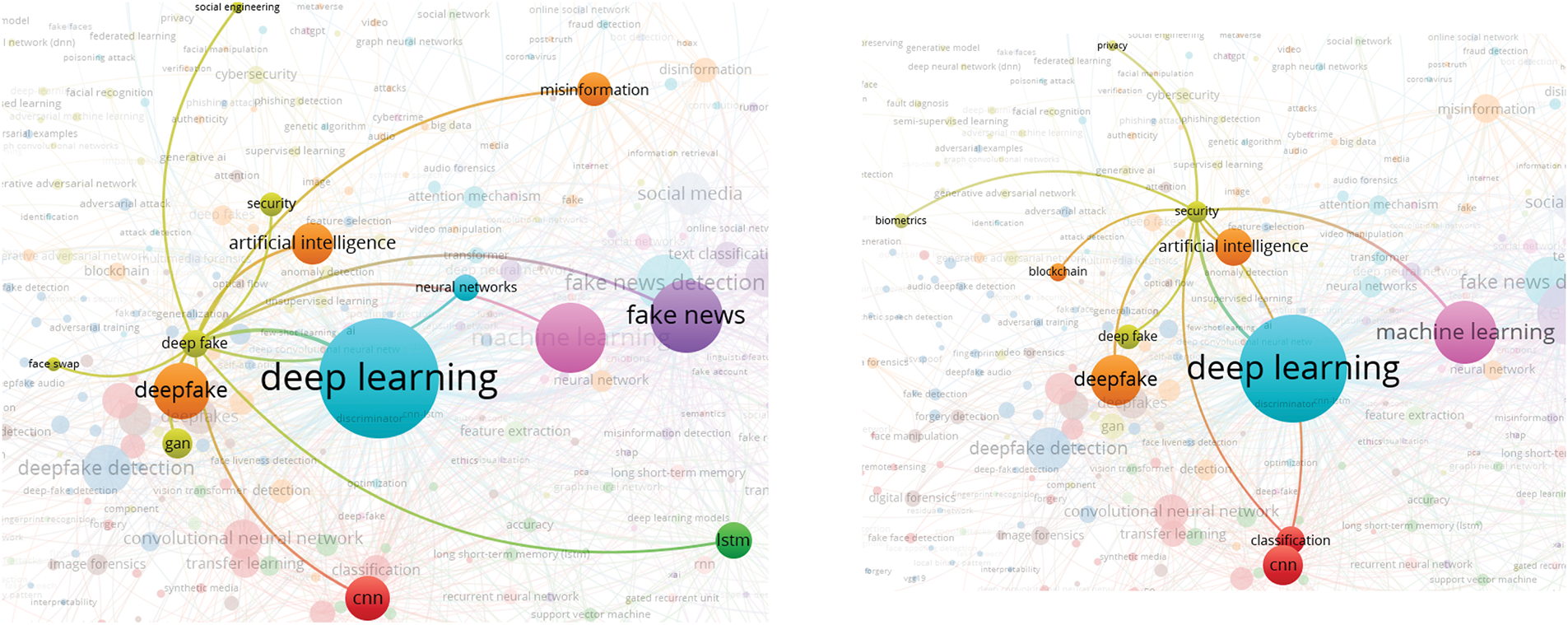

In this analysis, we present a visualization of the keywords used by authors. Specifically, we analyze the co-occurrence of author keywords, applying the fractional counting option. Out of 2809 keywords, we set the minimum occurrence threshold at 25, resulting in a selection of 23 keywords. In the visualization, each circle represents a keyword, with closer proximity indicating a stronger relationship between keywords. The co-occurrence of keywords in publications was analyzed to determine their interconnectedness. The results show that the primary keyword is “deepfake detection,” with prominent related keywords including deepfake, deep learning, artificial intelligence, feature extraction, machine learning, faces, and generative adversarial networks. Additionally, variant forms such as Deepfake, Deepfakes, and deepfake are collectively represented as “Deepfake” in the visualization, as shown in Fig. 10.

Figure 10: Visualization of author keywords
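The steps behind such a keyword map (merging variant spellings, applying a minimum-occurrence threshold, and weighting co-occurrence links) can be sketched as follows. The records are hypothetical, and the fractional weight of 1/(n − 1) per link for a publication with n keywords is an assumption about how fractional counting behaves; VOSviewer's exact handling may differ.

```python
from collections import Counter
from itertools import combinations

# Hypothetical author-keyword lists, one list per publication.
records = [
    ["Deepfake", "deep learning", "deepfake detection"],
    ["deepfakes", "deepfake detection"],
    ["deepfake", "Deep Learning", "face recognition", "deepfake detection"],
]

# Variant forms merged under a single label (illustrative mapping).
VARIANTS = {"deepfakes": "deepfake"}


def normalize(keyword):
    """Lower-case a keyword and map known variants to one label."""
    key = keyword.strip().lower()
    return VARIANTS.get(key, key)


def cooccurrence(records, min_occurrences):
    """Return (occurrence counts, fractional link weights between kept keywords)."""
    cleaned = [sorted({normalize(k) for k in record}) for record in records]
    occurrences = Counter(k for record in cleaned for k in record)
    kept = {k for k, count in occurrences.items() if count >= min_occurrences}
    links = Counter()
    for record in cleaned:
        n = len(record)
        if n < 2:
            continue
        weight = 1.0 / (n - 1)  # assumed fractional weighting per link
        for a, b in combinations(record, 2):
            if a in kept and b in kept:
                links[(a, b)] += weight
    return occurrences, links


occurrences, links = cooccurrence(records, min_occurrences=2)
print(occurrences.most_common())
print(dict(links))
```

Here "face recognition" occurs only once and is dropped by the threshold, in the same way that the threshold of 25 reduces 2809 keywords to 23 in the analysis above.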

In the visualization (see Fig. 10), four clusters are identified. The first cluster, shown in red, focuses on technologies and processes related to deepfake creation and detection. This cluster contains seven keywords, making it the largest, which suggests that researchers in this field prioritize this aspect more than others. Clusters 2 and 3 each contain five keywords. Cluster 2, represented in green, is centered on deepfake generation techniques and technologies, while Cluster 3, in blue, relates to the impact of deepfakes on information integrity and misinformation. Cluster 4, shown in yellow, consists of four keywords and is associated with deepfake detection and forensic analysis, offering opportunities for further research contributions. Cluster 3 has the highest number of connections in the visualization, linking it to most of the other keywords.

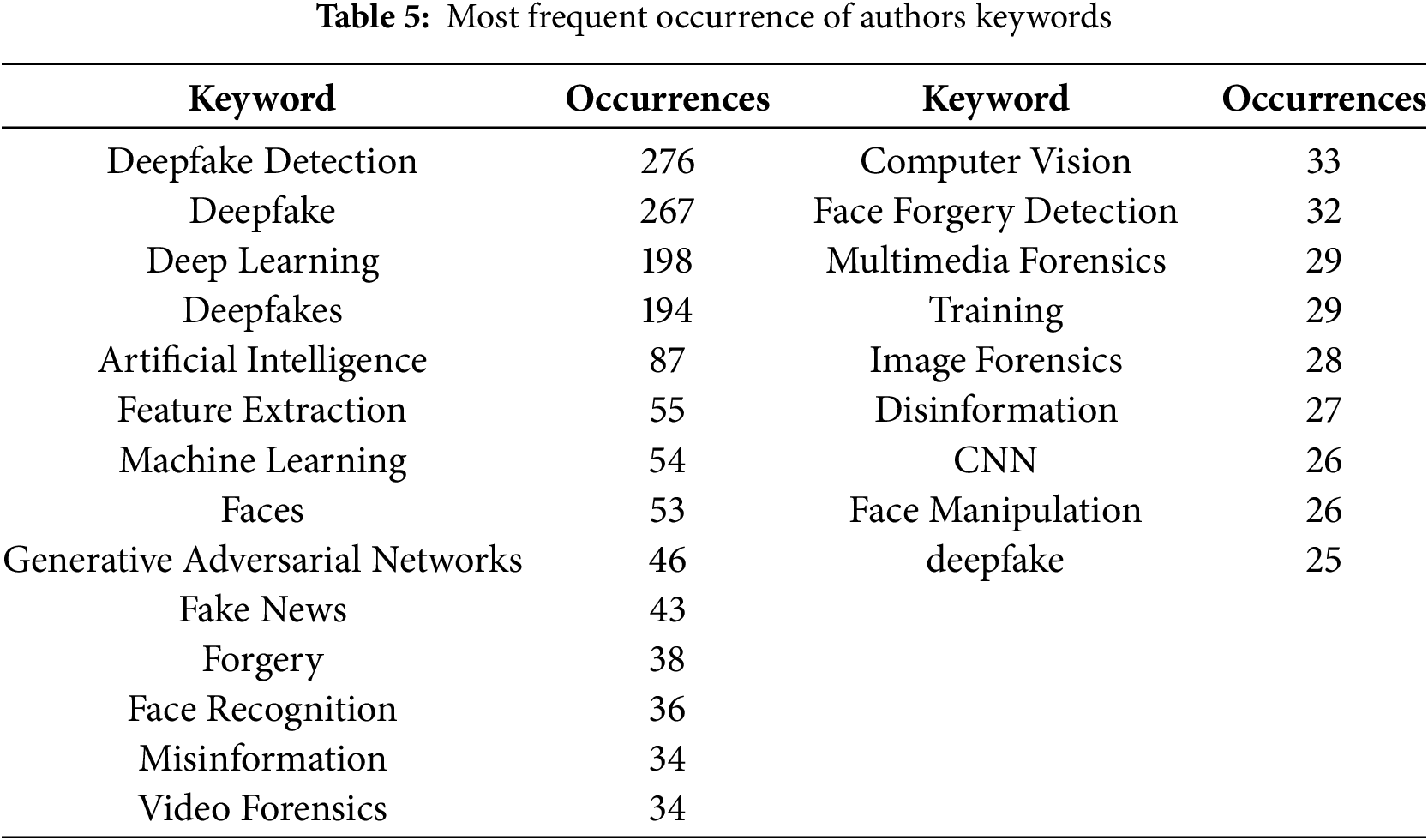

The emphasis on deepfake detection in research suggests that deepfakes are becoming increasingly common, necessitating the development of effective detection systems. Researchers are actively working to improve detection methods capable of accurately identifying deepfake content. Given that deepfakes can be highly realistic, they pose risks such as misinformation, security threats, and reputation damage to individuals and organizations. Consequently, many researchers focus on developing deepfake detection solutions. Table 5 presents the 23 keywords identified (including variant forms such as ‘Deepfake’, ‘Deepfakes’, ‘deepfake’), along with their occurrence counts based on the analysis.

4.6 Text Analysis of Titles and Abstracts

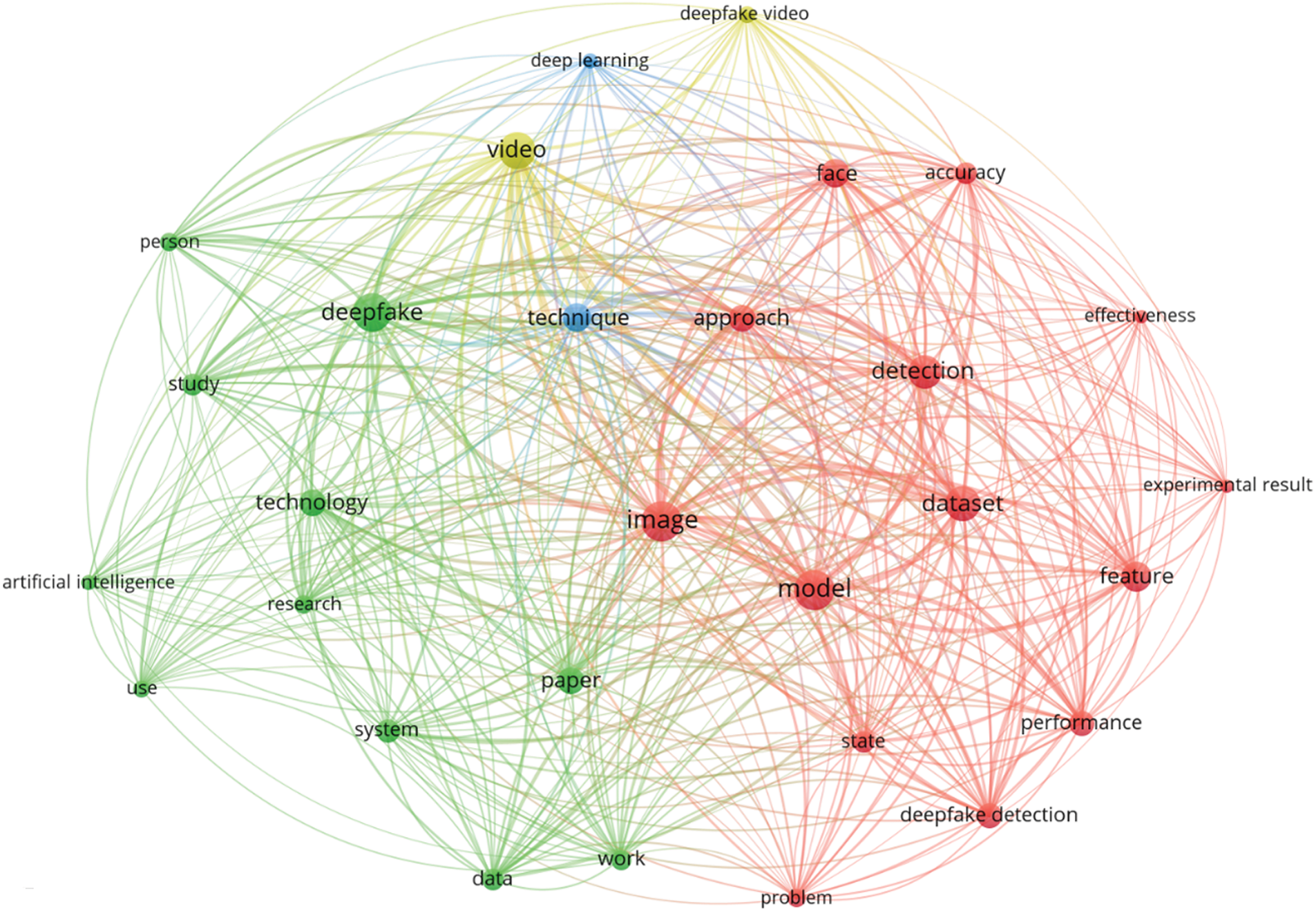

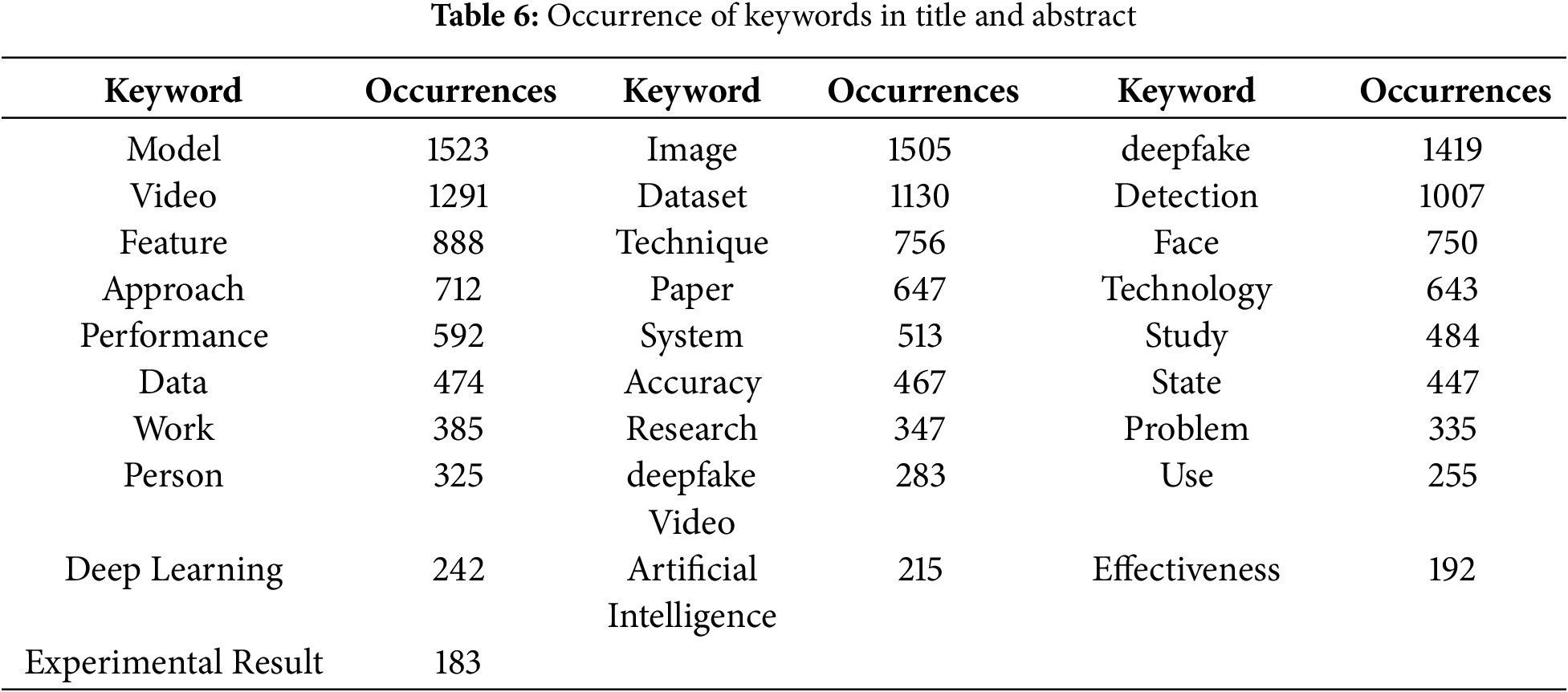

This study also performs a text analysis of titles and abstracts to identify common research themes and focus areas. The minimum number of occurrences for a term is set at 160 out of 25,799 items, with 29 terms meeting this threshold and being considered for visualization. According to the extracted data, the term “model”, most likely referring to detection models, is mentioned most frequently in the literature, followed by other commonly used terms such as image, deepfake, video, dataset, detection, feature, technique, and face.

The network visualization presented in Fig. 11 reveals four clusters. The first cluster, shown in red, focuses on evaluating the accuracy and effectiveness of deepfake detection. This cluster contains 14 keywords, making it the largest, which suggests that researchers in this field place significant emphasis on assessing detection performance. Cluster 2, shown in green, consists of 11 keywords and is centered on advancements in deepfake technology. Clusters 3 and 4 each contain two keywords. Cluster 3, shown in blue, focuses on techniques and deep learning algorithms used in deepfake research, while Cluster 4, shown in yellow, pertains to deepfake and authentic videos. Notably, Cluster 3 has the most connections in the visualization, as it is closely linked to other clusters, with deepfake serving as a key term connected to most other keywords.

Figure 11: Network visualization of text analysis using the title and abstract considering full counting
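The caption above refers to full counting, under which every occurrence of a term in a document is counted, as opposed to binary counting, under which a term is counted at most once per document. The sketch below contrasts the two on hypothetical snippets; it uses plain word tokens and a small illustrative stop-word list, whereas VOSviewer extracts terms with its own text-processing pipeline.

```python
import re
from collections import Counter

# Hypothetical title/abstract snippets.
documents = [
    "A model for deepfake detection. The model uses face features.",
    "Deepfake video dataset for detection.",
]

STOP_WORDS = {"a", "the", "for"}  # illustrative only


def tokenize(text):
    """Lower-case word tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def full_counting(docs):
    """Count every occurrence of every term."""
    counts = Counter()
    for doc in docs:
        counts.update(tokenize(doc))
    return counts


def binary_counting(docs):
    """Count each term at most once per document."""
    counts = Counter()
    for doc in docs:
        counts.update(set(tokenize(doc)))
    return counts


def terms_meeting_threshold(counts, minimum):
    """Alphabetical list of terms with at least `minimum` occurrences."""
    return sorted(term for term, count in counts.items() if count >= minimum)


full = full_counting(documents)
binary = binary_counting(documents)
print(terms_meeting_threshold(full, 2))
print(terms_meeting_threshold(binary, 2))
```

The term "model" passes a threshold of two under full counting because it appears twice in one snippet, but not under binary counting, which shows how the counting choice affects which terms reach a threshold such as the 160 occurrences used above.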

According to the data, seven of the 29 identified terms represent different aspects of deepfake research: deepfake detection, models, features, accuracy, effectiveness, fields, and studies. These terms highlight the central focus on deepfake detection and the associated challenges. Based on these findings, several key observations can be made:

• Deepfake detection is applied to manipulated images, audio, or videos, where faces can be easily altered.

• The rapid advancements in GANs have made the creation of deepfakes more accessible.

• Extensive research has been dedicated to improving models capable of detecting deepfake media.

• Researchers are examining deepfake features, such as inconsistencies in facial movements or lighting, to identify fake content.

• Efforts are being made to assess the effectiveness of detection systems in accurately identifying deepfakes.

• Studies explore the challenges and solutions related to deepfake technology, with significant contributions focused on enhancing detection methods.

Table 6 presents the frequency of keyword occurrences based on titles and abstracts.

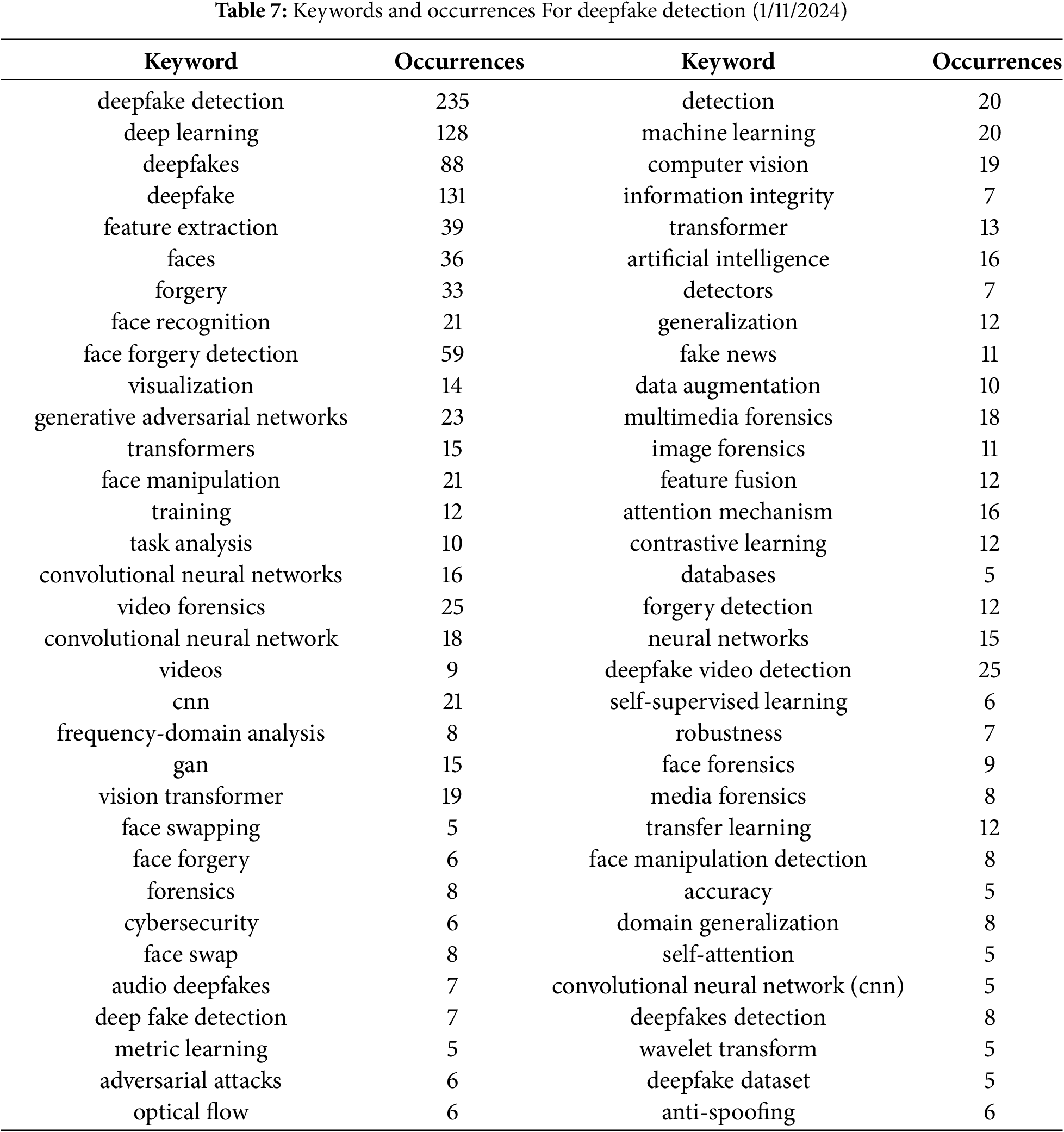

The findings of this study further highlight the importance of deepfake detection. Therefore, we take a closer look at the keywords associated with deepfake detection. In this analysis, we use the following keyword query: (detect OR detection OR detecting) (Title) AND (“Deep fake” OR deepfake OR deep-fake OR “Deep fakes” OR deepfakes OR deep-fakes OR “face forgery” OR “face-forgery” OR “face manipulation” OR “face-manipulation”) (Topic), retrieved from the Web of Science (WoS) database on 1 November 2024. The results are summarized in Table 7, which clearly demonstrates that deepfakes, deepfake detection, and related processes and analyses constitute some of the most frequently used keywords. These include deepfake(s), face recognition, face forgery detection, face manipulation, detectors, feature fusion, forgery detection, deepfake video detection, multimedia forensics, image forensics, face forensics, media forensics, forensics, face swapping, face forgery, face manipulation detection, face swap, and audio deepfakes.
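For readers who wish to rerun this search, the query can be assembled as a WoS advanced-search string using the TI (title) and TS (topic) field tags. The sketch below assumes that the title terms and the topic terms form two separate OR groups joined by AND, which is our reading of the query as written; the helper names are illustrative.

```python
# Title-field terms from the query above.
title_terms = ["detect", "detection", "detecting"]

# Topic-field terms, quoted exactly where the query above quotes them.
topic_terms = [
    '"Deep fake"', "deepfake", "deep-fake",
    '"Deep fakes"', "deepfakes", "deep-fakes",
    '"face forgery"', '"face-forgery"',
    '"face manipulation"', '"face-manipulation"',
]


def or_group(terms):
    """Join terms into a single OR expression."""
    return " OR ".join(terms)


def build_query(title_terms, topic_terms):
    """Combine a title group and a topic group with AND (assumed grouping)."""
    return f"TI=({or_group(title_terms)}) AND TS=({or_group(topic_terms)})"


query = build_query(title_terms, topic_terms)
print(query)
```

Result counts obtained with such a string will depend on the database edition and the retrieval date, so they need not match the figures reported here.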

Additionally, machine learning models, modeling approaches, and detection techniques are commonly referenced. In this context, generative adversarial networks (GANs) emerge as the most prevalent, followed by transformers, artificial intelligence, neural networks, and convolutional neural networks (CNNs). Other relevant techniques include the attention mechanism, contrastive learning, self-supervised learning, and transfer learning.

Furthermore, several desired features of deepfake detection solutions are evident, including generalization, robustness, and accuracy. The importance of security is also reflected in the presence of keywords such as cybersecurity, adversarial attacks, and anti-spoofing. Ethical concerns surrounding deepfakes are also noticeable, with terms such as forgery, information integrity, and fake news appearing frequently.

Similarly, the significance of databases and deepfake datasets is evident from keywords like database and deepfake dataset. Finally, various modeling techniques and analytical mechanisms are represented by keywords such as task analysis, training, feature extraction, feature fusion, frequency-domain analysis, metric learning, contrastive learning, wavelet transform, and optical flow.

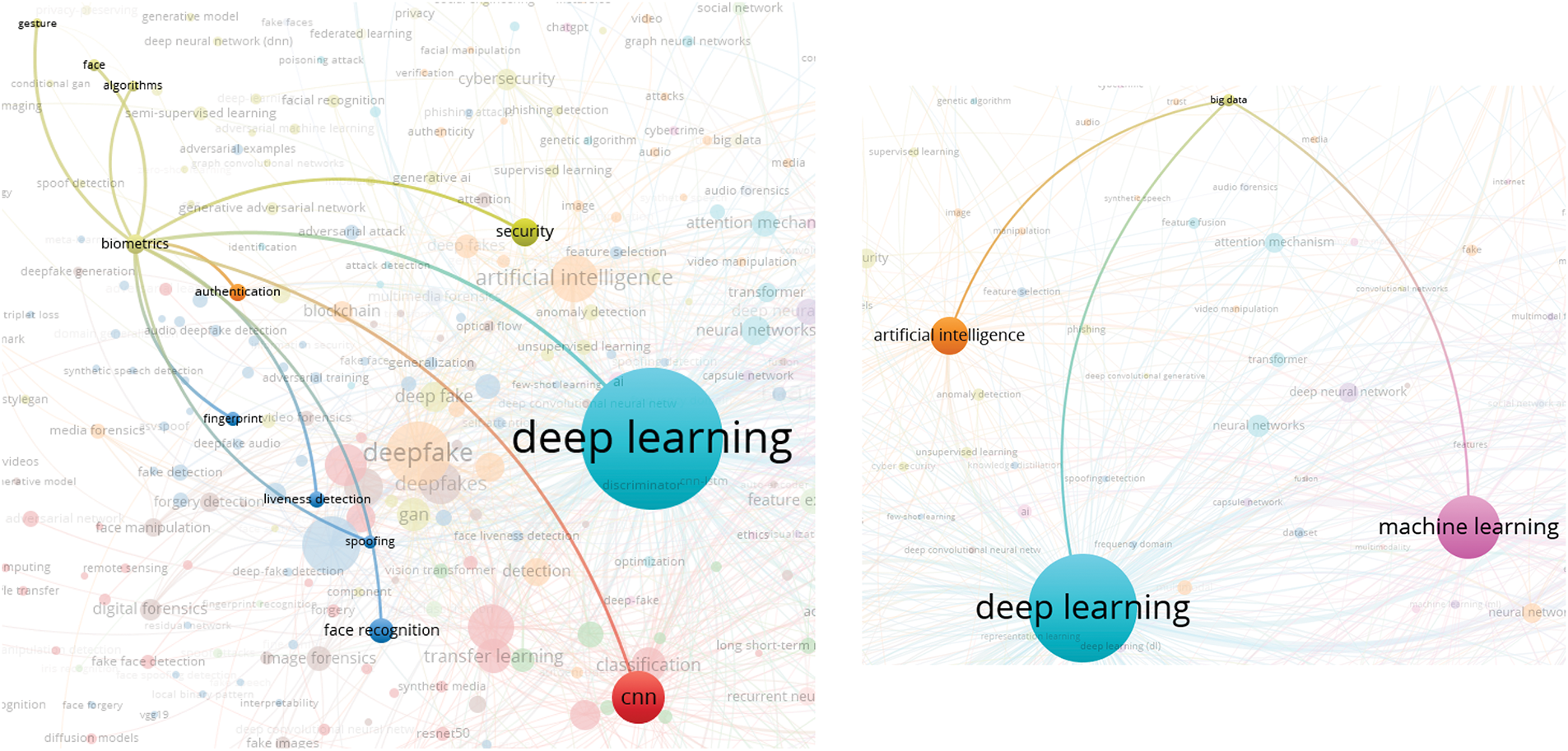

5.1 Prominent Areas and Key Contributions

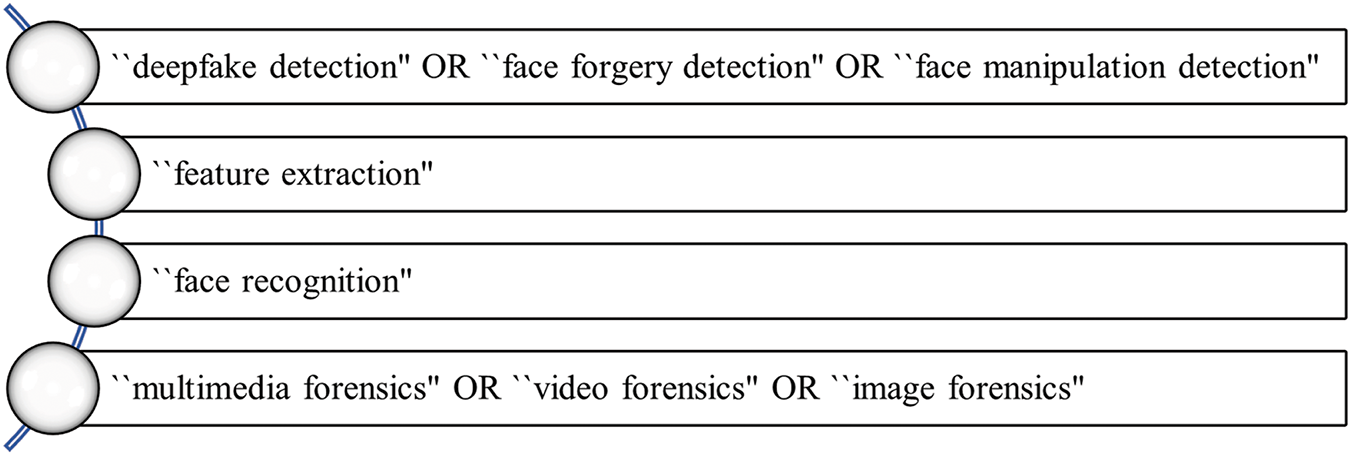

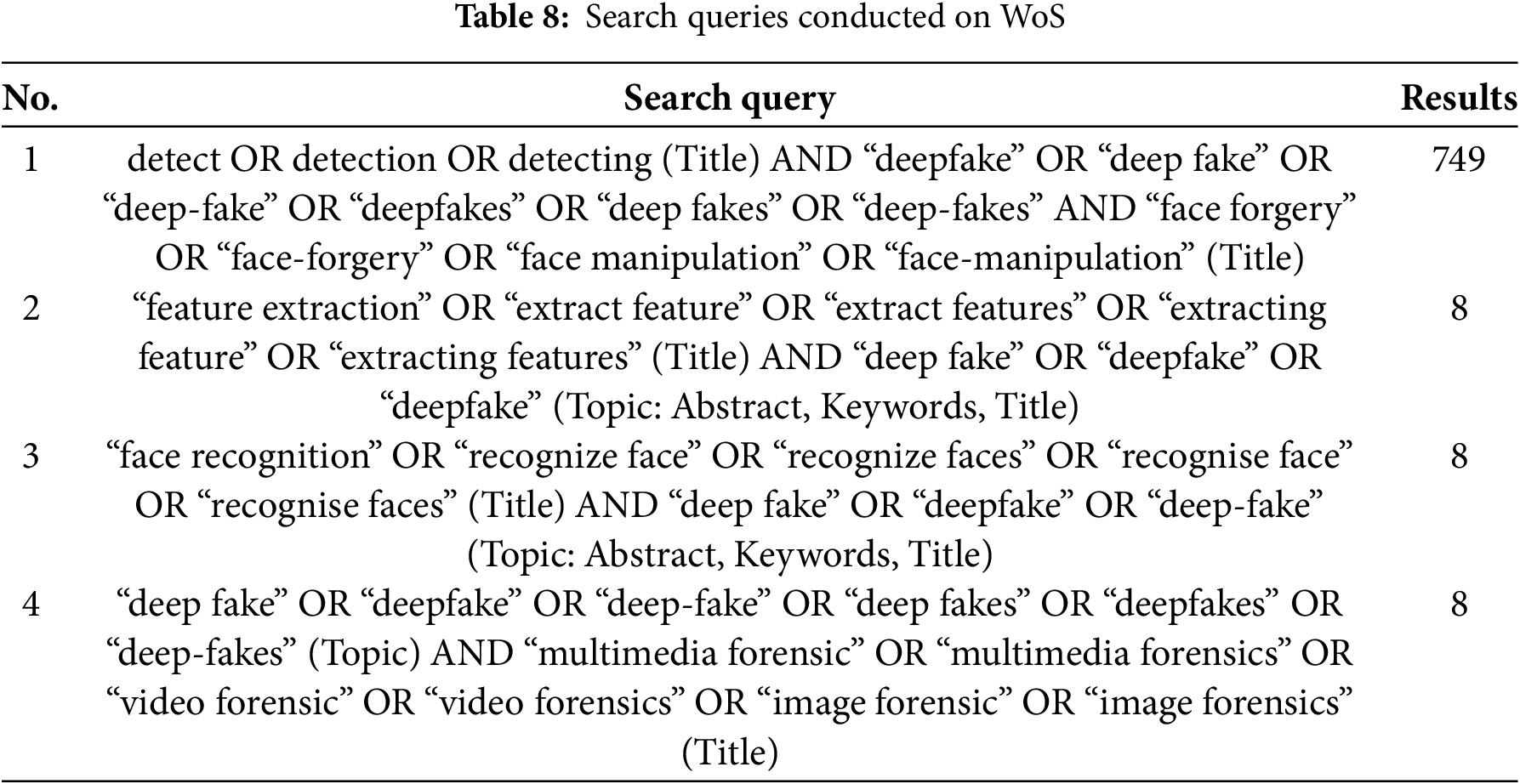

In this section, we discuss some of the prominent areas and key contributions to deepfake research. We first describe how these papers were selected. We identified four themes based on the VOSviewer keyword visualization in Fig. 10; this categorization of major themes is presented in Fig. 12. We then conducted a new search on WoS for each of these categories, as provided in Table 8. Note that WoS results are sensitive to plural forms; hence, the plural forms of deepfake have also been included. Based on these searches, we discuss the top-cited papers and top contributions in this area among papers published between 2021 and 2024.

Figure 12: Four key themes based on the VOSviewer visualization

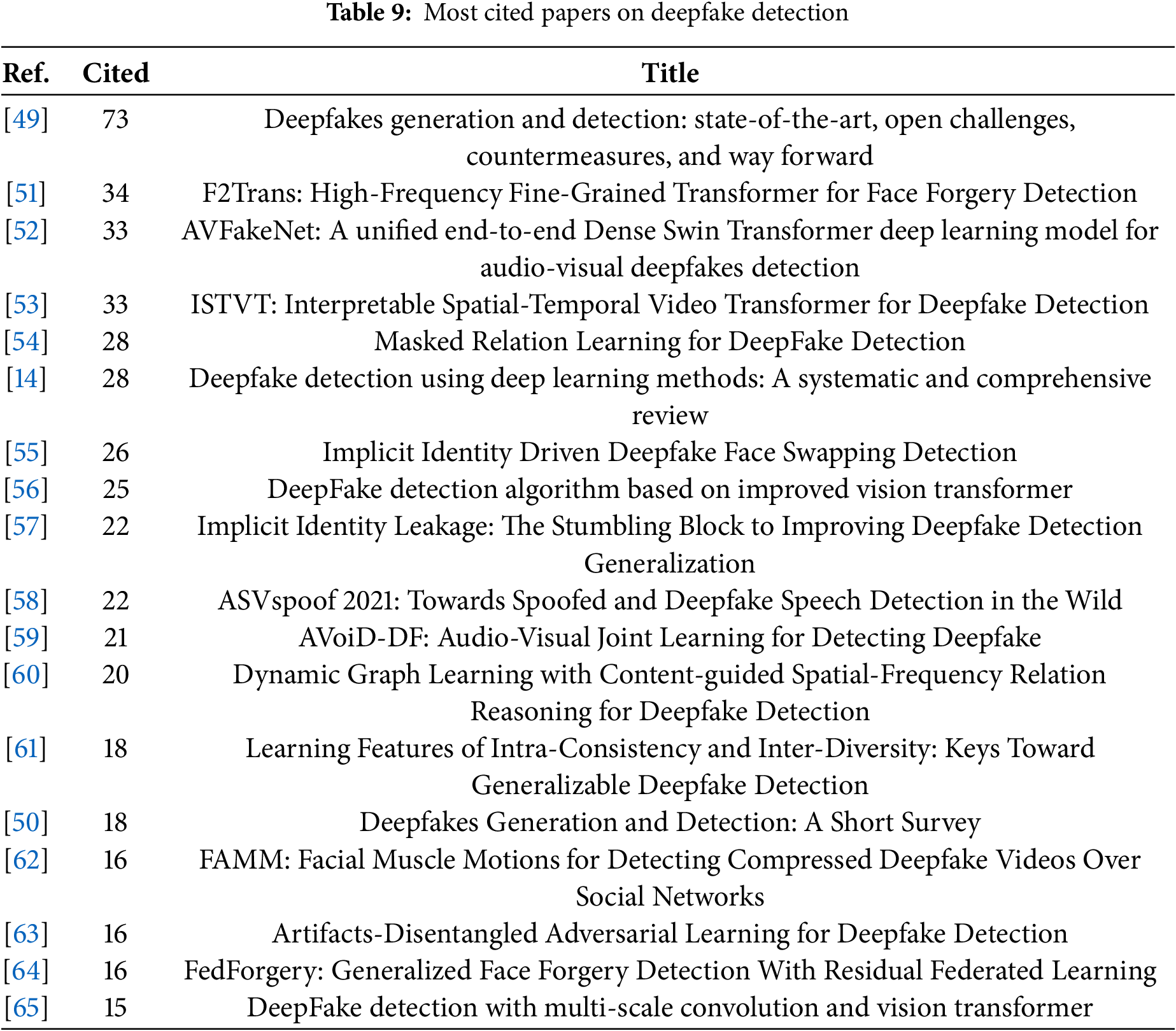

In this section, we present the top-cited articles on deepfake detection or face forgery detection (refer to Table 9). From the search using the keywords “deepfake detection” OR “face forgery detection” OR “face manipulation detection”, we obtained 49 results, which included review papers and technical articles. The top three reviews in this category are presented below, followed by a discussion of the 15 most cited technical papers. For each of the categories discussed, we present the motivation of these works and then provide a summary of the main issues covered.

Deepfakes are of utmost concern due to the threat they pose to modern society [1]. These reviews have highlighted some of the key factors driving the advancement of deepfake technology. The first [49] attributes the availability of deepfakes to easy access to audio-visual content on social media, the availability of modern machine learning tools, libraries, and open-source trained models, and the rapid development of deep learning. In particular, the availability of generative adversarial networks (GANs) has led to the proliferation of disinformation. The second [14] emphasizes the threats of deepfakes to national security and confidentiality, and highlights that it is becoming difficult to distinguish real and fake content with the naked eye, which can lead to several societal challenges, such as deceiving public opinion or the use of doctored evidence in court. Similar to the first study, the third [50] highlights that advances in deep learning techniques and the existence of large multimedia databases make it much easier to manipulate or generate realistic facial images, even for ordinary people with malicious intentions. The following is a summary of the top three reviews on deepfakes.

Considering the ease of access to content on social media, the availability of tools such as Keras or TensorFlow, open-source trained models, and cheaper computing infrastructure, deep learning methods have rapidly evolved. Generative adversarial networks (GANs) now make it possible to generate deepfake media, which can be used to disseminate misinformation and facilitate other social vices, such as financial fraud, hoaxes, and disruptions to government functioning. Thus, the work in [49] provides a comprehensive review of tools and ML approaches for deepfake generation and detection in audio and video, covering manipulation methods, public datasets, performance standards, and results. In addition, it discusses challenges and future directions.

Deep learning has been widely used to solve challenges in academia, industry, and healthcare. However, it has also been used to pose threats to confidentiality, national security, and other areas. Deepfakes, that is, fake images, videos, and speech that are difficult to distinguish from real ones, have become a significant menace. At times, even humans cannot differentiate between false and authentic content, which poses a serious threat to public opinion and court evidence. This motivates the work in [14], which assesses deepfake detection strategies using deep learning, categorizing methods by application (video, image, audio, and hybrid multimedia detection). It provides insights into deepfake generation, detection developments, weaknesses of existing methods, and areas for further investigation, noting that CNNs are the most widely used approach.

Considering the advancements in deep learning techniques and the availability of large, freely accessible databases, laypeople can now generate or manipulate facial samples for different purposes, some of which are malicious. This motivates the work in [50], which provides an overview of deepfake and face manipulation techniques and discusses identity swap, face reenactment, attribute manipulation, and entire-face synthesis, along with current challenges and future research directions.

Apart from the above highly cited reviews on deepfake and face forgery detection, several top-cited contributions to deepfake detection are provided in this section.

Although face forgery detectors have become popular and perform impressively well, they struggle with generalization and robustness. To address these issues in face forgery detection, the authors in [51] propose a high-frequency fine-grained transformer network with two components: CDA, which captures invariant manipulation patterns, and HWS, which filters out low-frequency components to focus on high-frequency forgery cues. Experiments on benchmarks demonstrate the model’s robustness.
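To illustrate what separating low-frequency from high-frequency content means, the sketch below applies a one-level Haar wavelet split to a one-dimensional signal. This is a generic textbook decomposition chosen for illustration; it is not the HWS component of [51], whose internal design is not described here, and the function names are hypothetical.

```python
def haar_split(signal):
    """One-level Haar split of an even-length sequence.

    Returns (low, high): pairwise averages (smooth content) and
    pairwise half-differences (fine detail).
    """
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    evens, odds = signal[0::2], signal[1::2]
    low = [(a + b) / 2 for a, b in zip(evens, odds)]
    high = [(a - b) / 2 for a, b in zip(evens, odds)]
    return low, high


def haar_merge(low, high):
    """Invert haar_split, recovering the original sequence."""
    signal = []
    for l, h in zip(low, high):
        signal.extend([l + h, l - h])
    return signal


flat = [4.0, 4.0, 4.0, 4.0]      # smooth region: no fine detail
spiky = [1.0, 1.0, 5.0, 1.0]     # abrupt local change
print(haar_split(flat))
print(haar_split(spiky))
```

A smooth region produces zero detail coefficients, while an abrupt local change produces a non-zero one; keeping only the detail band is one simple way to discard low-frequency content before further analysis.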

Existing methods for detecting deepfakes often focus on visual or audio modalities alone, with low accuracy in multimodal approaches. To improve this, the authors in [52] propose a unified framework for detecting manipulations in audio-visual streams of deepfake videos. The dense Swin transformer network (AVFakeNet) shows robustness across varied illumination and ethnicity, with experiments confirming its efficiency and generalization.

For robust deepfake detection, researchers explore joint spatial-temporal information, but these models often lack interpretability. Thus, the authors in [53] propose an interpretable spatial-temporal video transformer (ISTVT) to capture spatial artifacts and temporal inconsistencies. Extensive experiments validate its effectiveness and provide visualization-based insights.

Most deepfake detection approaches treat it as a binary classification task, ignoring relationships across regions. This motivates the study in [54], which formulates detection as a graph classification problem, where facial regions are vertices. To reduce redundancy, the authors use masked relation learning, achieving a 2% improvement over state-of-the-art methods.

Face swapping replaces the target face with the source face, generating a fake face that humans find difficult to distinguish from a genuine one. The authors in [55] approach face-swapping detection from the perspective of face identity and propose an implicit identity-driven framework that uses differences between explicit and implicit identities to detect fakes. As shown by experiments and visualizations, this method generalizes well compared with other solutions.

CNNs can identify deepfakes but often suffer from overfitting and struggle to connect local and global features, leading to misclassification. Thus, the authors in [56] propose an efficient vision transformer model that combines CNN and patch-based positioning, showing improved generalization and performance, accurately detecting 2313 out of 2500 fake videos.

Unexpected learned identity representations on images hinder the generalization of binary classifiers for detection. This observation was made by the authors in [57], who analyzed the generalization performance of binary classifiers in deepfake detection and found that implicit identity leakage limits generalization. They propose a method to reduce this effect, outperforming other methods in both in-dataset and cross-dataset evaluations.

Benchmarking is crucial in enabling meaningful comparisons of solutions to popular problems in language and speech processing. Benchmark evaluations can demonstrate the transition from laboratory conditions to scenarios observed in the real world. In this context, ASVspoof is a challenge focused on spoofing and deepfake detection. The paper in [58] summarizes the ASVspoof 2021 challenge, presenting the results of 54 participating teams that concentrated on deepfake and spoofing detection. The results show robustness in countermeasures to logical access tasks and robustness for physical access tasks in real physical spaces. It was also observed that deepfake detection solutions for manipulated, compressed speech are resilient to compression effects but do not generalize across different source datasets. The paper also reviews top-performing systems and challenges and provides a roadmap for the future of ASVspoof development.

Prior research on deepfake detection mostly captures intra-modal artifacts, but real-world deepfakes involve both audio and visual elements. Thus, the authors in [59] propose a joint audio-visual detection method that leverages inconsistencies between modalities. For evaluation, the authors built a new benchmark that focuses on more than one modality and can cover more forgery methods. The proposed method shows a superior performance over other methods in experiments.

Existing face forgery detection methods that combine frequency-aware information with CNNs lack adequate information interaction with image content, which limits their generalizability. Hence, the work in [60] proposes a spatial-frequency dynamic graph method to capture relation-aware features in the spatial and frequency domains via dynamic graph learning, achieving performance improvements over state-of-the-art methods.

Several deepfake detection approaches attempt to learn discriminative features between real and fake faces using an end-to-end trained DNN. However, most of those works suffer from poor generalization among different data sources, forgery methods, and post-processing operations. To address these generalization issues, the authors in [61] propose a transformer-based self-supervised learning method and data augmentation strategy, enhancing the model’s ability to distinguish subtle differences in real and fake images. Experiments validate its superior generalization ability on unseen forgery methods and untrained datasets.

Most detection methods do not perform sufficiently well on compressed videos, which are common among social media uploads. Thus, the authors in [62] propose a facial muscle-motion framework based on residual federated learning for face forgery detection. The proposed framework detects compressed deepfake videos, demonstrating strong performance and resilience to compression effects. In addition, theoretical analysis shows that compression does not affect the construction of facial muscle motion features and that these features differ between deepfake and real videos.

Effective extraction of forgery artifacts is crucial for deepfake detection. However, features extracted by a supervised binary classifier often contain irrelevant information. Moreover, existing algorithms experience performance degradation when there is a mismatch between training and testing datasets. Thus, the study in [63] proposes an artifact-disentangled adversarial learning framework to isolate artifact features, outperforming other methods on benchmark datasets.

Existing face forgery detection methods rely on publicly shared or centralized data for training, overlooking privacy and security concerns when personal data cannot be shared in real-world scenarios. Additionally, variations in artifact types can negatively impact detection accuracy due to differences in data distribution. Thus, the authors in [64] propose a federated learning model (FedForgery) that enhances detection generalization across decentralized data without compromising privacy. Experiments on a publicly available face forgery detection dataset demonstrate the superior performance of the proposed FedForgery.
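To make the idea of training on decentralized data more concrete, the following is a minimal sketch of federated averaging (FedAvg), a generic aggregation rule commonly used in federated learning. It is not the FedForgery algorithm of [64]; the function name `federated_average` and the toy client weights are hypothetical and serve only to illustrate that clients share model parameters rather than raw face data.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Aggregate client model weights, weighting each client by its sample count.

    Hypothetical helper: each client trains locally and sends only its weight
    vector and the number of local samples; raw data never leaves the client.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n_samples in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Each client contributes in proportion to its share of the data.
            global_weights[i] += w * n_samples / total
    return global_weights


if __name__ == "__main__":
    # Two toy clients with two-parameter models and 1 and 3 local samples.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

In a full system, the server would broadcast the aggregated weights back to the clients and repeat the process over several rounds.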

The authors in [65] note that while existing methods perform well on high-quality datasets, their performance on low-quality datasets and in cross-dataset evaluations is often unsatisfactory. To address this, the authors propose a new CNN-based method for deepfake detection. The proposed CNN-based model is combined with a vision transformer to improve the detection of deepfake artifacts at different scales, achieving better detection performance across datasets of different quality levels and good cross-dataset generalization.

In summary, despite the growing popularity of face forgery detectors, achieving good generalization and robustness remains one of their primary challenges, so models capable of effective generalization are essential. Many existing proposals focus exclusively on either the visual or the audio modality and neglect the comprehensive detection of multimodal deepfakes, a task that presents significant challenges. Another critical consideration is the need for interpretable deepfake detection models, as many current approaches lack interpretability. Additionally, effective detection methods should account for inter-relationships across regions in deepfakes to improve performance. Avoiding overfitting is also crucial when designing robust deepfake detection algorithms and frameworks, as is the use of advanced learning architectures to improve detection accuracy. Given the prevalence of low-quality datasets and compressed videos on social media, detection methods must perform reliably under such conditions. Effective extraction of forgery artifacts is essential, and classification algorithms must demonstrate strong performance across diverse datasets for successful deepfake detection. Moreover, many forgery detection methods rely heavily on publicly shared or centralized data, raising significant security and privacy concerns. Variations in artifact types due to differences in data distribution further complicate detection. Finally, benchmarking and comparing solutions to common challenges in language and speech processing, especially those related to deepfakes, is vital. Organizing competitions in this domain can help drive innovation and establish standardized evaluation criteria.

Feature extraction plays a pivotal role in the detection of AI-generated media, especially in the context of deepfakes and other forms of synthetic content. As generative models, such as DeepFake, DALL-E, and various voice synthesis technologies, continue to advance, they produce hyper-realistic images, videos, and audio that challenge traditional authentication and detection systems. Feature extraction techniques help address these challenges by identifying unique patterns, artifacts, and inconsistencies that can distinguish authentic content from manipulated or artificially generated media [66]. Effective feature extraction captures critical details within the data that may not be visually or audibly apparent but are essential for classification and detection. For example, in image-based deepfake detection, methods such as Error Level Analysis (ELA) and Photo Response Non-Uniformity (PRNU) have been employed to highlight compression artifacts or sensor noise patterns that differ between real and synthetic images. In audio deepfake detection, Mel spectrograms and Gammatone spectrograms can reveal subtle frequency anomalies introduced during synthetic generation, while advanced feature extraction through modified neural networks like ResNet enhances the identification of these anomalies.
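As an illustration of why Mel spectrograms emphasize low-frequency detail, the sketch below converts between hertz and the mel scale and computes band edges that are evenly spaced in mel. It assumes the widely used formula m = 2595 log10(1 + f/700); the function names `hz_to_mel`, `mel_to_hz`, and `mel_band_edges` are hypothetical, and the surveyed works may rely on a different variant of the scale.

```python
import math
from typing import List


def hz_to_mel(freq_hz: float) -> float:
    """Convert a frequency in Hz to mels (assumed formula: 2595*log10(1+f/700))."""
    if freq_hz < 0:
        raise ValueError("frequency must be non-negative")
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_edges(f_min: float, f_max: float, n_bands: int) -> List[float]:
    """Return n_bands + 2 band-edge frequencies (Hz), evenly spaced in mel."""
    if n_bands < 1 or f_max <= f_min:
        raise ValueError("need n_bands >= 1 and f_max > f_min")
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_bands + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_bands + 2)]


if __name__ == "__main__":
    # Band edges are narrow at low frequencies and widen toward f_max.
    for edge in mel_band_edges(0.0, 8000.0, 10):
        print(round(edge, 1))
```

Because the bands are narrower at low frequencies, a spectrogram pooled over these bands devotes more resolution to the region where much of the speech energy lies.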

Furthermore, in the selected articles, we found that various cutting-edge feature extraction techniques were designed to improve the robustness and accuracy of deepfake detection across media types. These techniques leverage deep learning architectures, optimized spectrograms, and innovative neural network structures to enhance the granularity and relevance of the extracted features, thus facilitating more precise differentiation between real and manipulated content. For example, the study in [67] detects face swaps by combining error level analysis (ELA) with a Convolutional Neural Network (CNN). By identifying differences in image compression ratios between the fake and original areas, the ELA method exposes counterfeit traces. The CNN is then trained to extract these counterfeit features and classify images as real or fake. This approach offers advantages in accuracy, efficiency, and computational cost, making it a powerful tool for detecting DeepFake-generated images [67]. The work in [68] introduces a novel deep neural network architecture to extract robust lip features for speaker authentication, particularly in the face of deepfake attacks. The proposed network incorporates two innovative units: the Feature-level Difference block (Diffblock) and the Pixel-level Dynamic Response block (DRblock). These units mitigate the impact of static lip information and capture dynamic talking habits. Experimental results on the GRID dataset show that the proposed method accurately distinguishes between genuine and forged lip presentations, surpassing state-of-the-art visual speaker authentication methods in both human and CG imposter scenarios. It is worth noting that recent years have witnessed a surge in audio impersonation attacks, posing a significant threat to voice-based authentication systems and speech recognition applications [66].

To counter the above-mentioned attacks, robust detection methods are imperative. The work in [66] introduces a novel approach to enhance front-end feature extraction for audio impersonation attack detection, specifically focusing on the Hindi language. The proposed model leverages a combination of Gammatone spectrogram, Mel spectrogram, and Ternary Pattern Audio Features (TPAF) spectrogram, followed by an optimized ResNet27 for feature extraction. Subsequently, four different binary classifiers (XGBoost, Random Forest, K-Nearest Neighbors, and Naive Bayes) are employed to classify audio samples as genuine or spoofed. The proposed method achieves a 0.9% Equal Error Rate (EER) for impersonation attacks on the Voice Impersonation Corpus in Hindi Language (VIHL) dataset, outperforming existing techniques [66].
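Since the Equal Error Rate is the headline metric here, a minimal sketch of how it can be estimated from detector scores may be helpful. The sketch assumes that higher scores indicate genuine speech and sweeps over the observed scores as candidate thresholds; the function name `equal_error_rate` and the toy scores are hypothetical, and evaluation toolkits used in practice typically interpolate between thresholds rather than picking the closest one.

```python
from typing import Sequence


def equal_error_rate(genuine_scores: Sequence[float],
                     spoof_scores: Sequence[float]) -> float:
    """Estimate the EER, assuming higher scores mean 'more likely genuine'.

    A sample is accepted when its score is >= the threshold. The EER is taken
    at the threshold where the false acceptance rate (spoofed accepted) and
    the false rejection rate (genuine rejected) are closest.
    """
    if not genuine_scores or not spoof_scores:
        raise ValueError("both score lists must be non-empty")

    best_gap = None
    eer = 0.0
    for threshold in sorted(set(genuine_scores) | set(spoof_scores)):
        far = sum(s >= threshold for s in spoof_scores) / len(spoof_scores)
        frr = sum(g < threshold for g in genuine_scores) / len(genuine_scores)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2.0
    return eer


if __name__ == "__main__":
    genuine = [0.9, 0.8, 0.7, 0.3]   # toy scores for genuine audio
    spoofed = [0.1, 0.2, 0.4, 0.6]   # toy scores for spoofed audio
    print(equal_error_rate(genuine, spoofed))
```

A lower EER indicates that fewer genuine samples are rejected and fewer spoofed samples are accepted at the balanced operating point.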

In addition, the method in [69] uses Gammatone spectrograms and a ResNet27 model to detect Hindi-language audio impersonation attacks, surpassing existing techniques in robustness and accuracy. The work in [70] presents an improved deep learning approach with multi-phase feature extraction (including a Gabor filter and RN50MHA) that accurately detects deepfake images, achieving high detection rates across various datasets. Another study leverages Photo Response Non-Uniformity (PRNU) and Error Level Analysis (ELA) to train CNNs that differentiate photorealistic AI-generated images from real photos, achieving over 95% accuracy [71]. MSFRNet, a multi-scale feature extraction framework, addresses feature omission and redundancy in detecting deepfake images and outperforms standard binary classifiers through a multi-scale prediction network [72]. Finally, VRLBP, a secure fog computing protocol for rotation-invariant local binary pattern (RI-LBP) feature extraction, enhances privacy in outsourced deepfake detection, achieving accuracy close to that of RI-LBP with reduced computational overhead [73].
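For readers unfamiliar with the rotation-invariant local binary pattern, the sketch below computes a plain RI-LBP histogram on a small grayscale image stored as nested lists. It illustrates only the basic descriptor with an 8-pixel neighborhood, not the secure fog computing protocol of [73]; the function names `lbp_code`, `rotation_invariant`, and `ri_lbp_histogram` are hypothetical.

```python
from typing import Dict, List

# Eight neighbors visited clockwise, starting at the top-left pixel.
_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image: List[List[int]], row: int, col: int) -> int:
    """8-bit LBP code: bit k is set when neighbor k is >= the center pixel."""
    center = image[row][col]
    code = 0
    for bit, (dr, dc) in enumerate(_OFFSETS):
        if image[row + dr][col + dc] >= center:
            code |= 1 << bit
    return code


def rotation_invariant(code: int, bits: int = 8) -> int:
    """Map a code to the smallest value among all of its circular bit rotations."""
    mask = (1 << bits) - 1
    best = code
    for _ in range(bits - 1):
        code = ((code >> 1) | (code << (bits - 1))) & mask
        best = min(best, code)
    return best


def ri_lbp_histogram(image: List[List[int]]) -> Dict[int, int]:
    """Histogram of rotation-invariant LBP codes over all interior pixels."""
    hist: Dict[int, int] = {}
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            key = rotation_invariant(lbp_code(image, r, c))
            hist[key] = hist.get(key, 0) + 1
    return hist


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    rotated = [list(row) for row in zip(*img[::-1])]  # rotate 90 degrees clockwise
    print(ri_lbp_histogram(img), ri_lbp_histogram(rotated))
```

Rotating the image by 90 degrees shifts the neighbor ring by two positions, which only rotates the bit pattern, so both histograms in the usage example agree.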

The advancement of generative models, including DeepFake, DALL-E, and various voice synthesis technologies, has enabled the production of synthetic content with a level of realism that complicates conventional authentication and detection efforts. Feature extraction techniques are essential for isolating subtle artifacts, inconsistencies, and patterns, such as compression irregularities or sensor-specific noise, that serve as distinguishing markers between authentic and manipulated content. Recent scholarly efforts underscore the significance of developing advanced feature extraction methods tailored to diverse media modalities. In image-based deepfake detection, techniques such as Error Level Analysis (ELA) and Photo Response Non-Uniformity (PRNU) have proven effective in highlighting compression artifacts and sensor noise anomalies. Similarly, in audio-based detection, spectrogram-based approaches, including Mel and Gammatone spectrograms, integrated with advanced neural networks such as ResNet, have demonstrated efficacy in identifying subtle frequency aberrations induced by synthetic generation. Innovative methodologies have further enhanced the robustness and precision of deepfake detection. Notable examples include convolutional neural networks (CNNs) trained with ELA, which effectively classify manipulated images based on compression disparities, and multi-scale feature extraction frameworks like MSFRNet, which address feature omission and redundancy to improve detection performance. Additionally, models employing novel components such as Diffblock and DRblock for dynamic lip feature extraction have achieved superior accuracy in detecting visual manipulations, while optimized spectrogram-based techniques have demonstrated high efficacy in audio impersonation detection. Despite significant advancements, the persistent evolution of deepfake technologies underscores the critical need for continued innovation in feature extraction methodologies. 
More advanced and computationally efficient techniques are needed to maintain detection accuracy and reliability as synthetic media grows increasingly sophisticated. Such efforts are vital for ensuring the integrity of authentication systems across diverse applications and domains.

The rapid evolution of deep learning and generative models has significantly impacted fields such as computer vision, natural language processing, and multimedia processing, introducing both groundbreaking opportunities and complex challenges. One of the most contentious applications of these advancements is the creation of deepfakes: highly realistic, AI-generated images, videos, or audio clips that convincingly replicate the likeness of real individuals. Enabled by generative adversarial networks (GANs) and other sophisticated deep learning algorithms, deepfakes are increasingly indistinguishable from authentic content and pose serious implications for privacy, security, and ethical standards. Consequently, the field of deepfake detection has gained immense attention in both academic research and industry applications, particularly as public concerns over misuse and manipulation grow.

While many researchers have developed algorithms to identify deepfake content, current literature reveals several persistent challenges in detection methods. Existing techniques often struggle with generalizability across diverse datasets, maintaining efficiency in computationally constrained environments, and effectively handling nuanced presentation attacks like morphing and impersonation [74]. Additionally, there are growing concerns about ethical implications, such as racial bias in face recognition systems, which may be exacerbated by deepfake manipulations [75]. These issues underscore the need for advanced feature extraction techniques, novel neural network architectures, and robust evaluation methodologies to improve the accuracy, efficiency, and fairness of deepfake detection systems.

The selected studies range from novel applications of the Fisherface algorithm combined with Local Binary Pattern Histogram (FF-LBPH) for image analysis [76] to the use of advanced contrastive learning frameworks for video detection [77], illustrating the breadth of techniques being developed to tackle the deepfake problem. Furthermore, research into the cognitive and neural responses to deepfake stimuli highlights new frontiers in detection that leverage human perceptual differences [78], while analyses of racial bias in face recognition APIs underscore the importance of ethical considerations in deploying detection systems. By systematically summarizing and analyzing these diverse approaches, this review aims to provide a comprehensive overview of the state of deepfake detection research, identify key trends and challenges, and suggest directions for future investigation.