Open Access

REVIEW

A Comprehensive Review of Face Detection/Recognition Algorithms and Competitive Datasets to Optimize Machine Vision

1 Department of Computer System Engineering, University of Engineering and Technology, Peshawar, 25000, Pakistan

2 EIAS: Data Science and Blockchain Laboratory, College of Computer and Information Sciences, Prince Sultan University, Riyadh, 11586, Saudi Arabia

3 College of Computer and Information Science, Prince Sultan University, Riyadh, 11586, Saudi Arabia

4 Department of AI and Software, Gachon University, Seongnam-si, 13120, Republic of Korea

5 Department of Econometrics, Tashkent State University of Economics, Tashkent, 100066, Uzbekistan

* Corresponding Author: Muhammad Shahid Anwar. Email:

Computers, Materials & Continua 2025, 84(1), 1-24. https://doi.org/10.32604/cmc.2025.063341

Received 12 January 2025; Accepted 25 April 2025; Issue published 09 June 2025

Abstract

Face recognition has emerged as one of the most prominent applications of image analysis and understanding, gaining considerable attention in recent years. This growing interest is driven by two key factors: its extensive applications in law enforcement and the commercial domain, and the rapid advancement of practical technologies. Despite significant advancements, modern recognition algorithms still struggle in real-world conditions such as varying illumination, occlusion, and diverse facial poses. In such scenarios, human perception remains well above the capabilities of present technology. Using a systematic mapping study, this paper presents an in-depth review of face detection and face recognition algorithms, surveying advancements made between 2015 and 2024. We analyze key methodologies, highlighting their strengths and limitations in the application context. Additionally, we examine the datasets used for face detection/recognition, focusing on their task-specific applications, size, diversity, and complexity. By analyzing these algorithms and datasets, this survey serves as a valuable resource for researchers, identifying research gaps in the field of face detection and recognition and outlining potential directions for future research.

Face recognition (FR) is a biometric technology that recognizes or verifies a person’s identity based on visual features [1]. To match a detected face to a known identity, this technique analyzes facial traits, including the spacing between the eyes, the shape of the nose, and the contours of the face [2]. Face recognition technology is frequently employed for security and authentication purposes, such as unlocking smartphones or entering restricted areas. Such applications have grown in significance in fields including surveillance, security, human-computer interaction, and entertainment [3].

Biometric applications such as facial recognition have gained significance in the context of smart cities. Furthermore, many researchers worldwide have concentrated on developing more reliable and precise techniques and algorithms for these systems and their everyday uses [4]. Every type of security system needs to safeguard personal information. Passwords are the most widely used form of authentication; however, owing to advances in security algorithms and information technologies, many systems are starting to integrate multiple biometric factors for recognition tasks [5]. These biometric factors allow people’s identities to be determined from their physiological or behavioral traits, and they offer several benefits. For instance, the person only needs to be in front of the sensor, and remembering multiple passwords or secret codes is no longer necessary [6]. Recently, many recognition systems based on various biometric characteristics, including face, voice, iris, and fingerprints [7], have been implemented.

Systems that identify people by their biological traits are particularly appealing because they are simple to use. The human face comprises several distinctive structures and traits [8]. For this reason, and given its potential in numerous domains and applications (surveillance, home security, border control, and so forth), face recognition has emerged as one of the most popular biometric authentication systems in recent years [9].

Beyond phones, customers can already use facial recognition technology as a form of identification (ID) at concerts, sports stadiums, and airport check-ins [10]. Furthermore, because such a system does not require the active participation of the person being identified, it can identify people using only images captured by a camera. Moreover, many face recognition algorithms with high identification accuracy have been developed for different search scenarios [11]. Designing new face recognition systems that meet real-time constraints therefore remains an attractive research problem.

Numerous computer vision techniques, including local, subspace, and hybrid approaches, have been proposed for face detection and recognition with good robustness and discrimination [12]. Despite these advancements, face recognition technology faces challenges in real-world applications, particularly under varying occlusion, lighting conditions, and facial expressions. Detecting and recognizing faces is also challenging in emerging, high-demand immersive applications that operate in 360-degree environments, because merging images taken from different angles loses facial information and introduces inconsistencies in facial features. The most innovative methods developed to address these difficulties provide dependable face recognition, but they are relatively sophisticated, require considerable processing time, and use a lot of memory [13]. Owing to rapid advances in digital cameras and portable devices and the growing need for security, face recognition has become a key technology. One of the major problems is the need for large, diverse datasets that adequately reflect the population [14]. Concerns about bias and fairness also arise because many available datasets represent only some demographic groups.

Although there is a sizable and constantly expanding number of face detection and identification datasets accessible, it might be difficult for academics and practitioners to discover and select the most suitable dataset for their specific needs.

Although face recognition technology has made significant progress, current algorithms still face difficulties in real-world conditions, such as lighting changes, varying facial expressions and poses, and occlusions. In many cases, human perception still outperforms these systems. Additionally, the datasets used for training and evaluating these algorithms differ widely in size, diversity, and applicability. This study provides a comprehensive review of face detection and recognition algorithms developed between 2015 and 2024, explores key datasets, and highlights existing research gaps to support future advancements in the field. This paper’s primary contributions are:

• This review paper takes an in-depth look at the most recent face detection and identification methods.

• This work also surveys the face detection/recognition datasets that are currently accessible, considering their size, diversity, and complexity, as well as the specific tasks to which they have been applied.

• Studying these methods, their advantages and disadvantages, and the available datasets helps researchers develop accurate and efficient algorithms.

2 Challenges and Limitations Faced by Face Detection and Recognition

Even an effective face detection and recognition algorithm may encounter several constraints when identifying faces in images. These constraints include:

Pose Variations: Head movements, changes in stance, and changes in camera angle can compromise the efficiency of a face recognition algorithm [1].

Illumination Variation: Environmental factors such as lighting conditions, reflections, and shadows can degrade image quality and, in turn, the effectiveness of the algorithms [5].

Occlusions: Occlusions [7] can complicate and reduce the accuracy of face recognition/detection systems. Examples include hats, mustaches, glasses, etc.

Computational Complexity: Deep learning-based face recognition algorithms can be computationally expensive due to their high memory and processor requirements [11].

Changes in Appearance: Factors such as aging and haircuts can alter a person’s appearance and make it challenging to match them against a database [7].

Limited Training Data: Face recognition algorithms require substantial training data to work effectively [11]. For example, deep learning-based FR algorithms are typically trained on more than one million face images.

Dataset Specificity: Face recognition algorithms are typically reliable only on the datasets they were trained on and may perform poorly on other datasets [15]. For instance, an algorithm trained mainly on photographs of people with lighter skin tones may fail to recognize faces in a dataset of people with darker skin tones.

Fig. 1 depicts some facial appearances that pose issues for face detection/recognition algorithms.

Figure 1: Facial characteristics that cause challenges for algorithms designed for detecting and recognizing faces

The review procedure begins with an initial screening of studies within the scope of the present work. As previously stated, this review focuses on recent, cutting-edge contributions to face detection/recognition performance and their datasets. The flow diagram of the review methodology is presented in Fig. 2. We have organized the entire review procedure into the sections below.

Figure 2: Flow diagram of the review methodology

This work evaluates previous investigations following the systematic mapping study methodology of Ahmad et al. [16]. The review details previous work that directly or indirectly contributes to face detection/recognition systems and datasets. Furthermore, the study defines research questions that state its primary aims. These research questions help readers select an appropriate algorithm or dataset based on their requirements.

After reviewing the existing face detection/recognition literature, we carefully formulated the research questions. We identified research gaps by examining the advancements made and the challenges faced in real-world applications. Our objective was to develop research questions that explore the performance of face detection/recognition algorithms and their limitations.

• How did face detection and recognition evolve between 2015 and 2024, and what improvements have been made?

• What open gaps and challenges do modern face recognition/detection algorithms still face?

• How do different published face detection/recognition datasets compare in terms of size, features, and complexity?

• What are the future research directions for researchers in the field of face detection/recognition?

The keywords were searched directly on publisher websites and Google Scholar using the default search settings. We reviewed the retrieved articles and selected those with pertinent findings for further review. The related studies considered include journal articles, conference proceedings, and book chapters.

The following terms and criteria govern the screening of studies:

• The team considered peer-reviewed publications (2014–2024) from reputable journals, book chapters, and conference proceedings in the domain of artificial intelligence and biometrics.

• We prioritized relevant titles with high citation counts in Google Scholar.

• Quick reviews were conducted for further evaluation and data extraction. During these reviews, we concentrated on the abstract and introduction to understand each study’s difficulties, motivations, and contributions.

• Papers lacking experimental validation, non-English papers, and studies not directly related to face recognition were excluded from the study.

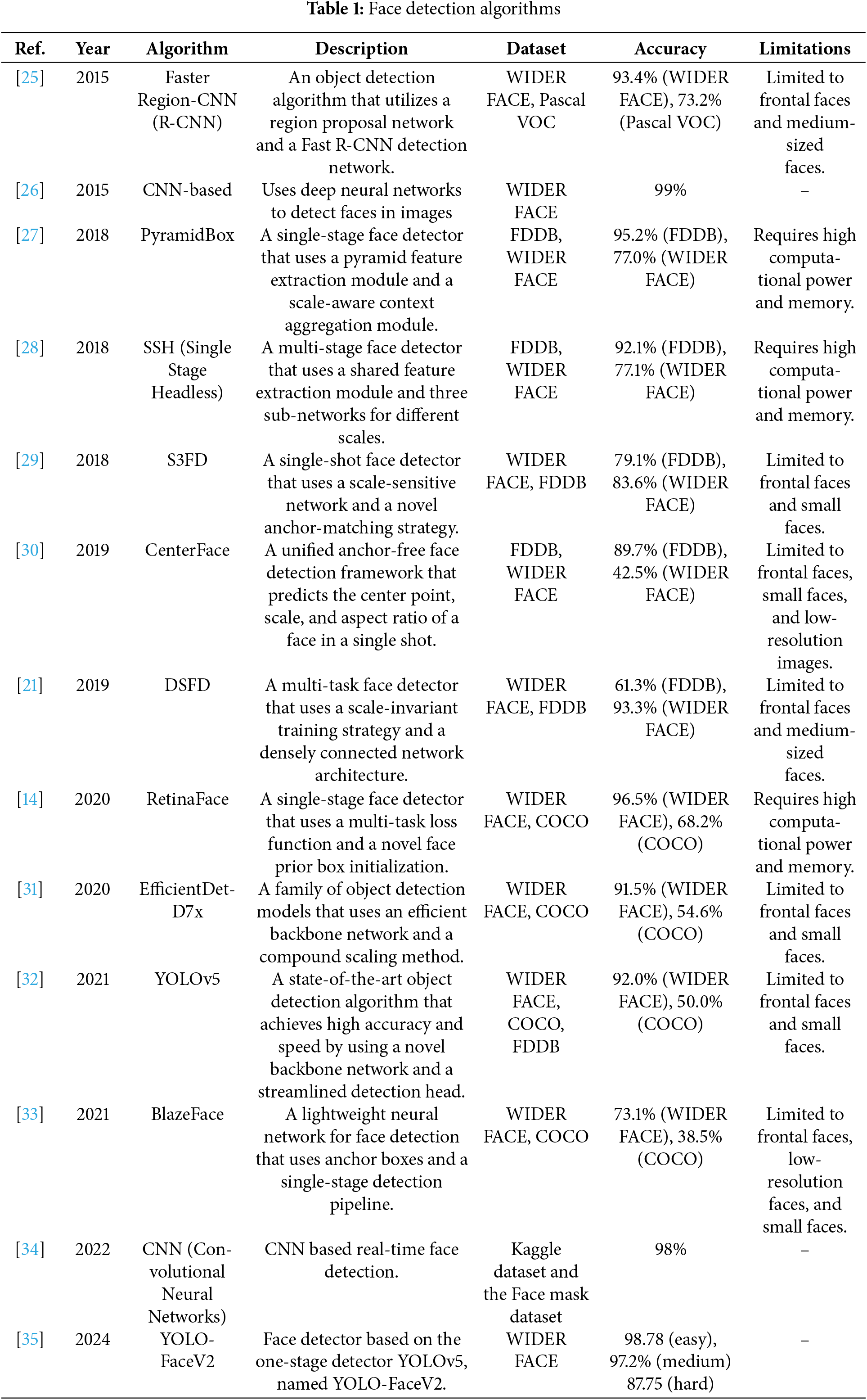

Various data were extracted from the selected publications during the information-gathering process, as indicated in Tables 1–3. A spreadsheet was used to record these data and to further investigate the study’s questions. On this basis, a comprehensive literature evaluation was conducted to identify potential challenges in face detection and recognition. The review also highlights contributions of prior articles that extend beyond the boundaries of artificial intelligence.

Face detection is one of the most significant stages in the FR pipeline. Several research studies have sought to improve face detection (FD) performance [17], with approaches ranging from key point annotation [18] to data augmentation [19]. Face detection builds on the fundamentals of generic object detection and, before deep learning, followed the same history. Detection relied on handcrafted features, such as Haar-like features [20]. These gradually evolved into more formalized and complex techniques designed to cope with variations in pose, expression, lighting, occlusion, and other difficulties, as presented in Fig. 1. The WIDER FACE dataset [21] has been instrumental in advancing face detection techniques, leading to the emergence of innovative approaches such as PyramidAnchors [18], the Dual Shot Face Detector (DSFD) [22], and TinaFace [23], which was among the latest face detection models as of 2021.
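To make the handcrafted-feature era concrete, the following is a minimal Python sketch (assuming NumPy) of how a two-rectangle Haar-like feature can be evaluated with an integral image, the summed-area table that lets any rectangle sum be computed with four lookups. The function names are hypothetical, and the code is an illustration of the general technique, not the implementation from [20].

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """Summed-area table with a leading row/column of zeros,
    so that ii[y, x] equals the sum of img[:y, :x]."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii: np.ndarray, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle with top-left corner (x, y),
    using four table lookups regardless of the rectangle size."""
    return int(ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x])


def haar_two_rect_horizontal(ii: np.ndarray, x: int, y: int, w: int, h: int) -> int:
    """Two-rectangle Haar-like feature: left-half sum minus right-half sum.
    The window width w is assumed to be even."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


if __name__ == "__main__":
    # Toy 4x4 patch: bright left half, dark right half.
    patch = np.array([[9, 9, 1, 1]] * 4)
    ii = integral_image(patch)
    print(rect_sum(ii, 0, 0, 4, 4))                  # 80
    print(haar_two_rect_horizontal(ii, 0, 0, 4, 4))  # 72 - 8 = 64
```

A cascade detector would evaluate many such features at many positions and scales; the toy patch only shows that a bright-left/dark-right pattern yields a large positive response.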

The authors of [24] proposed a technique for detecting small faces that can find hundreds of tiny faces in a single image. They investigated three aspects of the tiny face problem: the role of scale invariance, image resolution, and contextual reasoning. In contrast to prior works, they trained separate detectors for different scales.
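Detectors that operate at several scales usually produce many overlapping candidate boxes for the same face. A common generic remedy is greedy non-maximum suppression (NMS), sketched below under the assumptions that boxes are (x1, y1, x2, y2) NumPy arrays and that 0.3 is a reasonable overlap threshold; this is not claimed to be the exact post-processing used in [24].

```python
import numpy as np


def iou(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """Intersection-over-union between one box and an (N, 4) array of boxes,
    all given as (x1, y1, x2, y2)."""
    ix1 = np.maximum(box[0], boxes[:, 0])
    iy1 = np.maximum(box[1], boxes[:, 1])
    ix2 = np.minimum(box[2], boxes[:, 2])
    iy2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes: np.ndarray, scores: np.ndarray, iou_thresh: float = 0.3) -> list:
    """Greedy NMS: keep the highest-scoring box, discard the remaining boxes
    that overlap it by more than iou_thresh, and repeat on what is left.
    Returns the indices of the kept boxes in descending score order."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_thresh]
    return keep


if __name__ == "__main__":
    boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60]], dtype=float)
    scores = np.array([0.9, 0.8, 0.7])
    print(nms(boxes, scores))  # [0, 2]: the near-duplicate box 1 is suppressed
```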

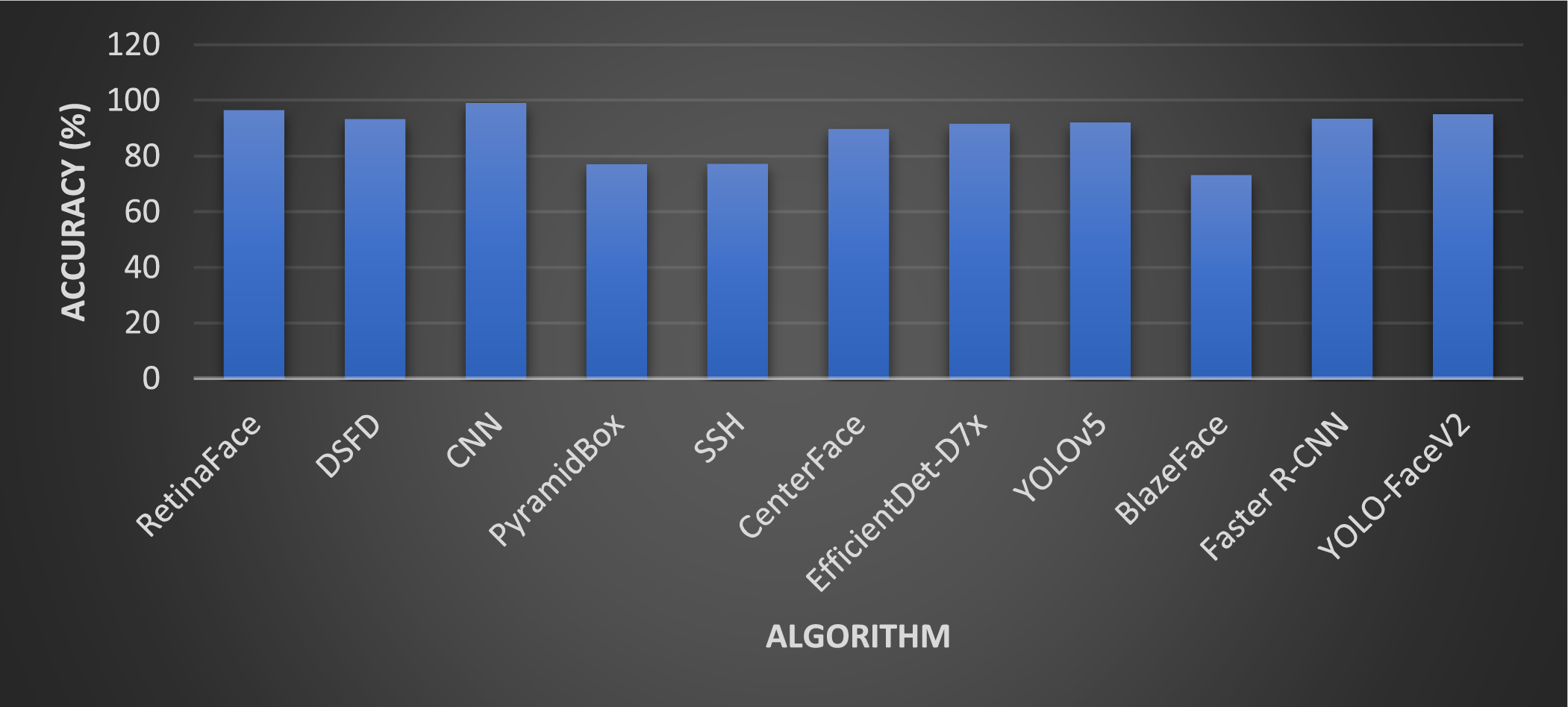

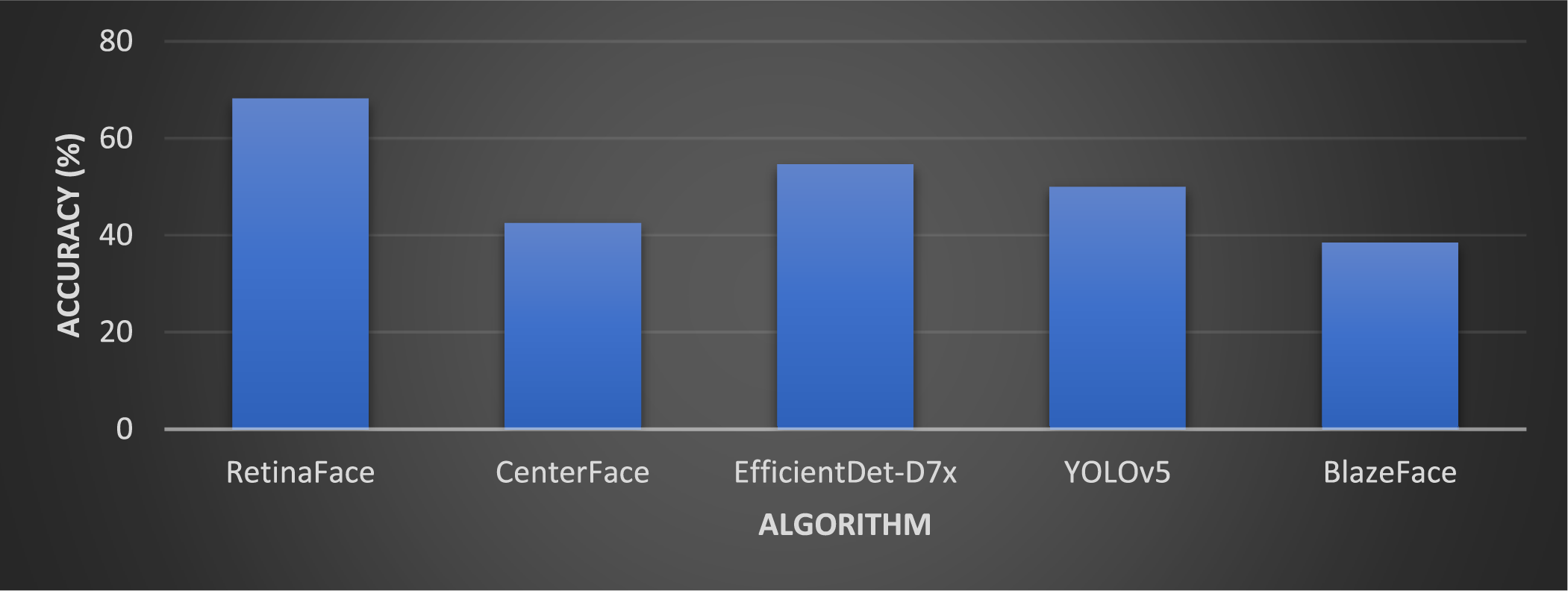

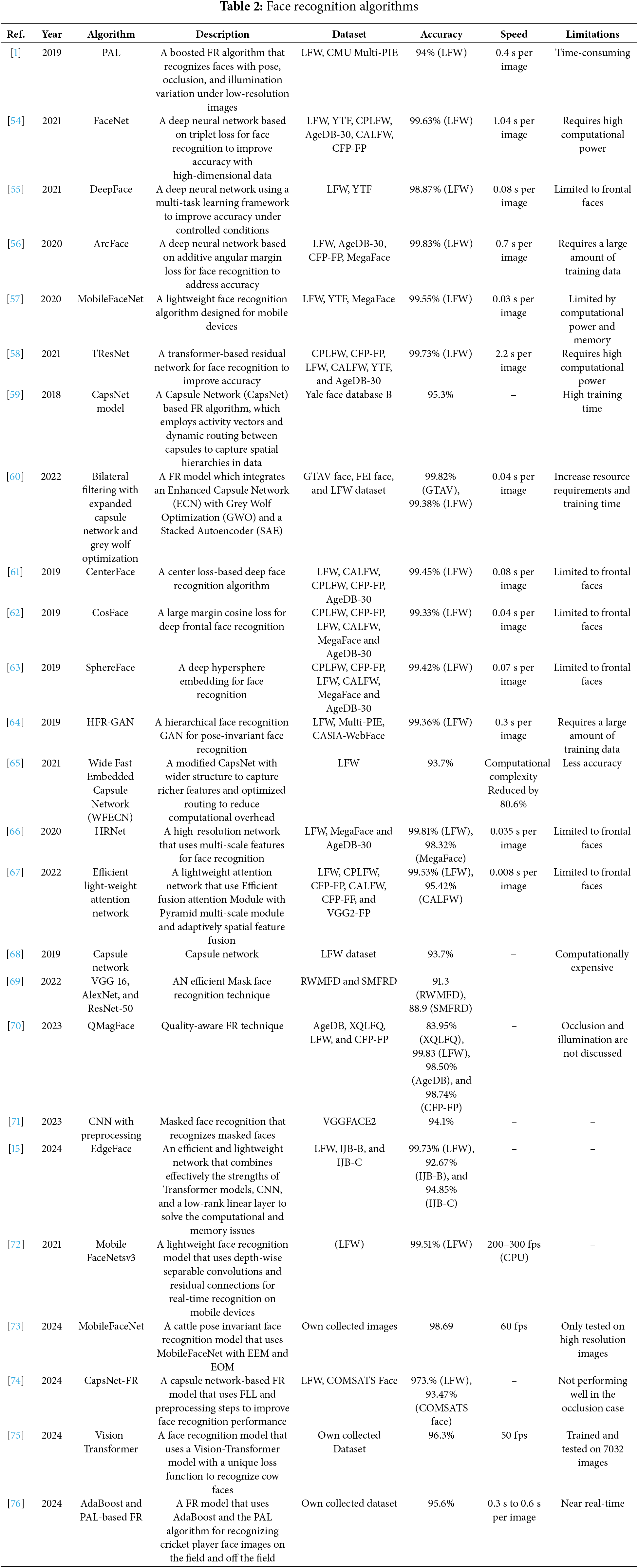

Most face identification algorithms employ either a feature-based or an image-based approach. Feature-based methods extract image features and compare them with a database of known facial features, whereas image-based methods compare training and testing images directly to find the most suitable match. Tables 1 and 2 show the most recently published state-of-the-art face detection and recognition methods. Figs. 3 and 4 compare different face detection approaches tested on the WIDER FACE and COCO datasets.

Figure 3: Comparison of different face detection techniques tested on the WIDER FACE dataset

Figure 4: Comparison of different face detection techniques tested based on the COCO dataset
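The feature-based matching described above can be sketched as a nearest-neighbour search over stored feature vectors. In this hypothetical example, features are assumed to be precomputed by some extractor, similarity is measured with cosine similarity, and a probe whose best score falls below an assumed threshold of 0.5 is rejected as unknown; the identity labels are placeholders.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so that dot products equal cosine similarity."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def identify(probe: np.ndarray, gallery: np.ndarray, labels: list,
             threshold: float = 0.5):
    """Compare one probe feature with every gallery feature (rows of `gallery`).
    Returns (label, score) of the best match, or (None, score) if the best
    cosine similarity is below the rejection threshold."""
    sims = l2_normalize(gallery) @ l2_normalize(probe)
    best = int(np.argmax(sims))
    score = float(sims[best])
    return (labels[best] if score >= threshold else None), score


if __name__ == "__main__":
    gallery = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    names = ["subject_a", "subject_b"]  # hypothetical identities
    print(identify(np.array([0.9, 0.1, 0.0]), gallery, names))  # matches subject_a
    print(identify(np.array([0.0, 0.0, 1.0]), gallery, names))  # rejected: (None, 0.0)
```

Keeping a rejection threshold matters in open-set settings, where a probe may belong to nobody in the database.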

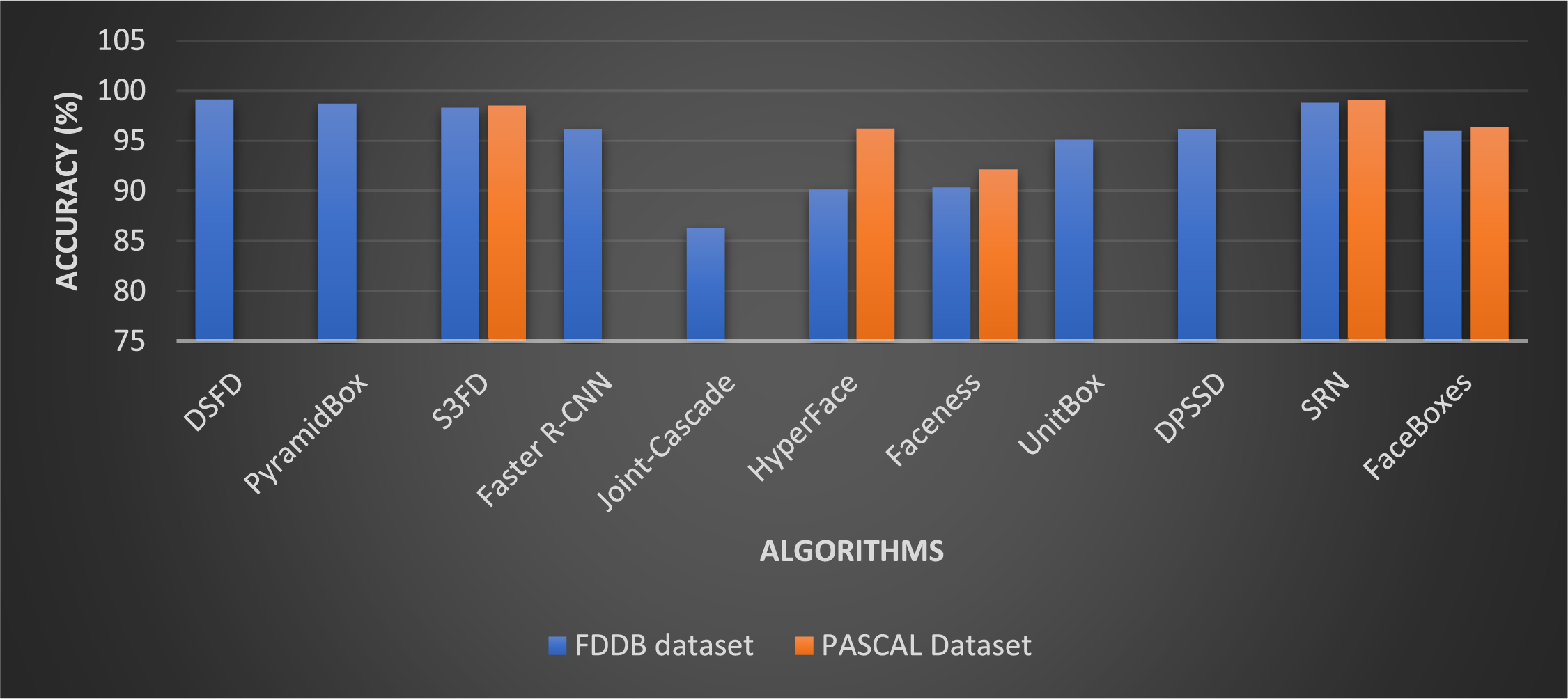

The accuracy and speed values reported here should be considered approximations that may change with the hardware and software setups used for testing. This list is not exhaustive, and there may be other cutting-edge face detection algorithms that are not covered. The limitations listed are based on the information provided in the corresponding publications, and each algorithm may have different strengths and drawbacks depending on the particular use case and requirements [36]. It is therefore critical to consider carefully and choose the best algorithm for a given task. Fig. 5 compares several face detection algorithms on the FDDB and PASCAL datasets. These algorithms include DSFD [22], PyramidBox [27], the Single Shot Scale-invariant Face Detector (S3FD) [29], Joint Cascade CNN [37], HyperFace [38], Faceness [39], UnitBox [40], DPSSD [41], the Selective Refinement Network (SRN) [42], and Joint face detection and facial motion retargeting [43].

Figure 5: Comparison of different face detection techniques tested based on FDDB and PASCAL datasets
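Reported detection accuracies also depend on how detections are matched to annotated faces. As a rough illustration only, the sketch below uses a PASCAL VOC-style rule (a detection counts as correct if it overlaps a not-yet-matched ground-truth box with IoU ≥ 0.5, processed in descending confidence order) to compute precision and recall for one image. The official WIDER FACE and FDDB evaluation tools differ in details and should be used for reported numbers.

```python
def box_iou(a, b) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(dets, scores, gts, iou_thresh=0.5):
    """Greedy matching in descending score order; each ground-truth face can be
    matched at most once, to the detection with the highest qualifying IoU."""
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    matched = [False] * len(gts)
    tp = 0
    for i in order:
        best_j, best_iou = -1, iou_thresh
        for j, gt in enumerate(gts):
            ov = box_iou(dets[i], gt)
            if not matched[j] and ov >= best_iou:
                best_j, best_iou = j, ov
        if best_j >= 0:
            matched[best_j] = True
            tp += 1  # true positive; unmatched detections are false positives
    precision = tp / len(dets) if len(dets) > 0 else 0.0
    recall = tp / len(gts) if len(gts) > 0 else 0.0
    return precision, recall


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    dets = [(0, 0, 10, 10), (1, 1, 11, 11), (100, 100, 110, 110)]
    print(precision_recall(dets, [0.9, 0.8, 0.7], gts))  # (0.333..., 0.5)
```

Sweeping a confidence cut-off over the detections and recording the resulting precision/recall pairs gives the curves from which average precision is usually computed.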

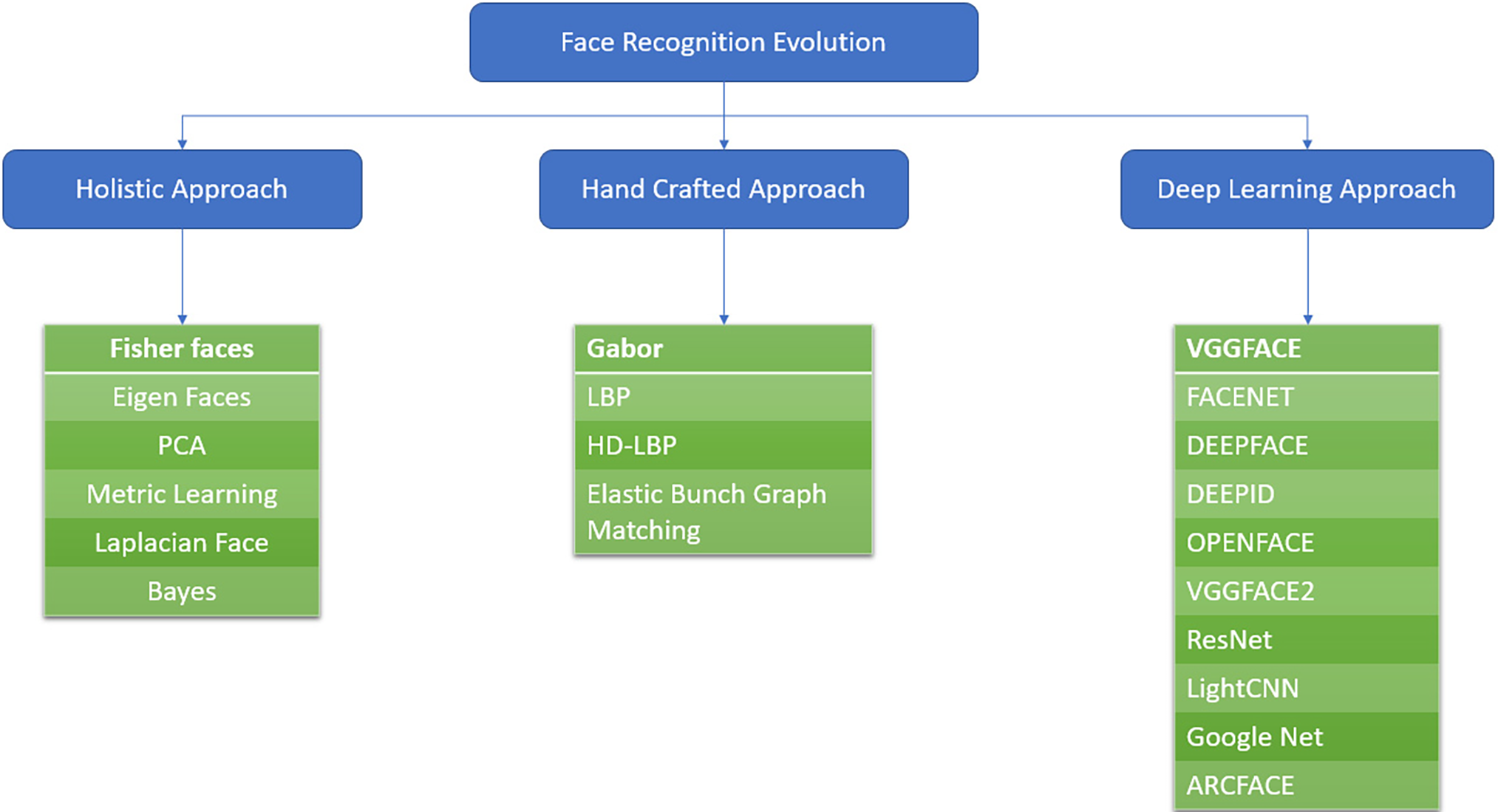

Fig. 6 shows the wide range of FR approaches, which have progressed continuously toward state-of-the-art deep learning (DL) methodologies. Moghaddam et al. [44] released Eigenfaces in the early 1990s, one of the most basic approaches based on low-dimensional feature representation, which marked the beginning of significant development in FR. Their technique represents each face image in the training set as a combination of a small number of basis images, known as Eigenfaces, derived from the principal components of the training data. A subject image is linearly projected onto the Eigenface feature space, and the resulting projection weights are compared with those of the known faces. Additional early work that integrates low-dimensional feature-based representation with holistic techniques is provided by [45,46]. By providing models that are robust to both lighting direction and facial expression, Fisherfaces [47] outperformed Eigenfaces. Because such holistic methods cannot handle unforeseen facial changes that differ from the variations captured in the training dataset, researchers looked for novel methods based on manually designed local facial feature representations. The early 2000s saw the publication of several important articles, such as a Gabor-based FR method using local features [48], a local binary feature-based approach [49], and a high-dimensional feature-based compression approach [50]. These developments, which mostly concentrated on high-dimensional feature representation, achieved better FR results than holistic methods. However, their reliance on handcrafted features limits their effectiveness in practical, diverse, and complex FR scenarios.

Figure 6: Popular face recognition methods
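The Eigenfaces idea can be illustrated with standard principal component analysis. The sketch below, assuming aligned grayscale faces flattened into the rows of a NumPy array, computes eigenfaces via singular value decomposition, projects faces onto them, and labels a probe by its nearest training projection. It is a textbook reconstruction of the technique, not the original authors’ code, and the number of components k is an assumption.

```python
import numpy as np


def fit_eigenfaces(faces: np.ndarray, k: int):
    """faces: (n_images, n_pixels) matrix of flattened, aligned grayscale faces.
    Returns the mean face and the top-k eigenfaces as a (k, n_pixels) array."""
    mean = faces.mean(axis=0)
    centered = faces - mean
    # Rows of vt are the principal directions (eigenfaces) of the centered data,
    # ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:k]


def project(faces: np.ndarray, mean: np.ndarray, eigenfaces: np.ndarray) -> np.ndarray:
    """Linear projection onto the eigenface space: (n_images, k) weights."""
    return (faces - mean) @ eigenfaces.T


def nearest_identity(probe, mean, eigenfaces, train_weights, labels):
    """Label a probe image by Euclidean distance in eigenface space."""
    w = project(probe[None, :], mean, eigenfaces)
    d = np.linalg.norm(train_weights - w, axis=1)
    return labels[int(np.argmin(d))]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    train = rng.random((6, 64))  # stand-in for six 8x8 face images
    labels = ["a", "a", "b", "b", "c", "c"]
    mean, eigenfaces = fit_eigenfaces(train, k=3)
    weights = project(train, mean, eigenfaces)
    noisy_probe = train[2] + 0.01 * rng.standard_normal(64)
    print(nearest_identity(noisy_probe, mean, eigenfaces, weights, labels))
```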

As the limitations of handcrafted features became apparent, learning-based techniques emerged [51]. These approaches, however, perform poorly when confronted with complicated variations in facial appearance that are not captured in the training data. In response, the research community intensified its efforts to overcome FR’s poor performance under non-linear variations in facial appearance and expression.

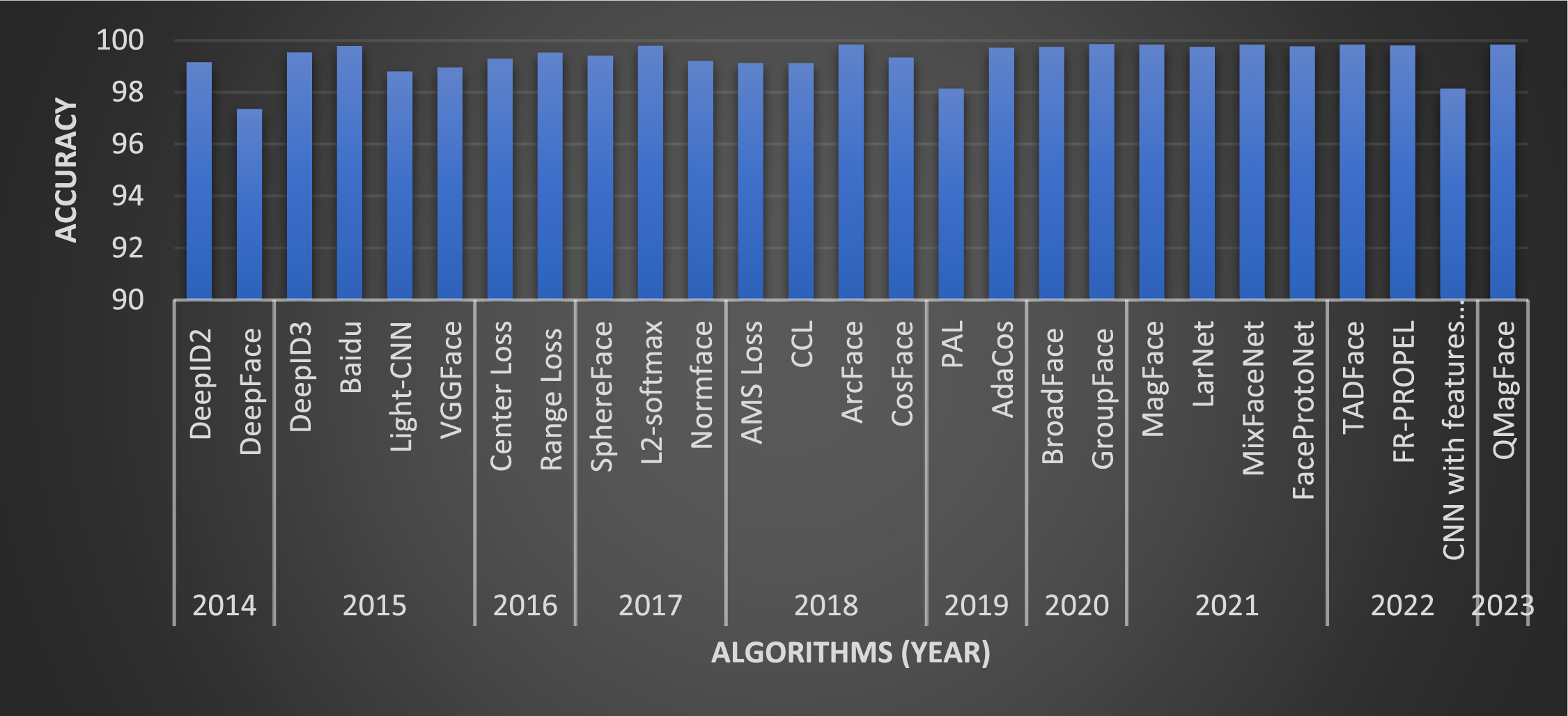

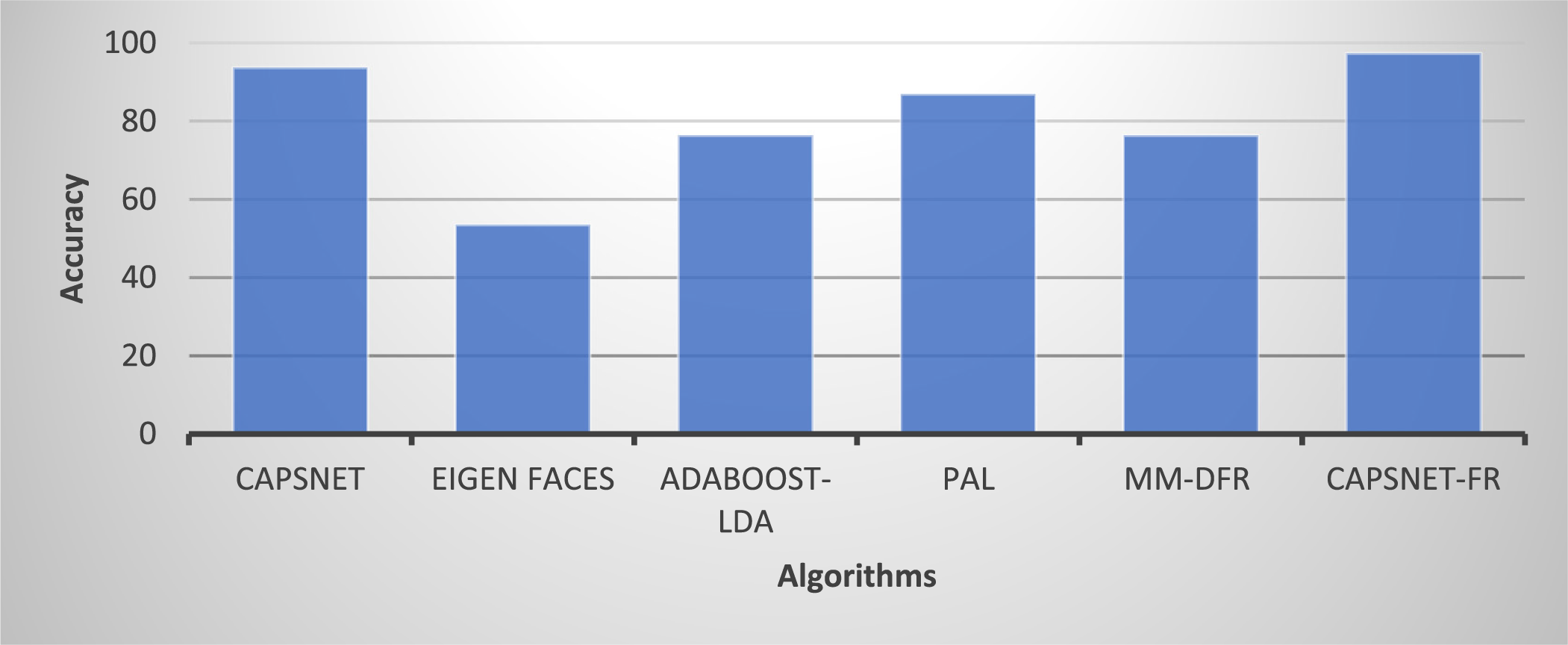

In the last decade, DL has dominated FR research by overcoming the above-mentioned concerns. DL is loosely inspired by the way the human brain processes information and is commonly implemented as layered networks such as CNNs. CNNs received attention for image recognition tasks when AlexNet [52] achieved outstanding accuracy in the 2012 ImageNet competition. DeepFace [53] obtained a then-unprecedented FR accuracy of 97.35% on the Labeled Faces in the Wild (LFW) dataset. Table 2 summarizes this evolution chronologically, citing some major works in FR. Fig. 7 compares the accuracy of published FR algorithms tested on the LFW dataset, and Fig. 8 summarizes the accuracy of FR algorithms on the COMSATS face dataset.

Figure 7: Comparison of published face recognition algorithms based on accuracy (LFW dataset)

Figure 8: Evaluation accuracy of FR algorithms on the COMSATS face dataset
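Accuracies on LFW, such as the 97.35% reported for DeepFace, are verification accuracies: the model scores image pairs, and a threshold decides whether each pair shows the same person. The simplified sketch below, assuming the pair similarity scores are already computed, shows that calculation. Note that the standard LFW protocol selects the threshold by cross-validation on separate folds, whereas the hypothetical `best_threshold` helper tunes it on the same pairs and is therefore optimistic.

```python
import numpy as np


def verification_accuracy(scores: np.ndarray, same: np.ndarray, threshold: float) -> float:
    """Fraction of pairs classified correctly when 'same identity' is predicted
    for similarity >= threshold. scores and same have shape (n_pairs,)."""
    pred = scores >= threshold
    return float(np.mean(pred == same))


def best_threshold(scores: np.ndarray, same: np.ndarray):
    """Pick the observed score that maximizes accuracy on these pairs.
    In a fair protocol this choice is made on training folds only."""
    candidates = np.unique(scores)
    accs = [verification_accuracy(scores, same, t) for t in candidates]
    i = int(np.argmax(accs))
    return float(candidates[i]), accs[i]


if __name__ == "__main__":
    scores = np.array([0.9, 0.8, 0.3, 0.2])        # similarities of four pairs
    same = np.array([True, True, False, False])    # ground-truth pair labels
    print(best_threshold(scores, same))            # (0.8, 1.0)
```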

The accuracy and speed values are estimates and may change depending on the exact implementation and testing conditions. This table is not exhaustive, and there may be other cutting-edge facial recognition methods that are not included. Before selecting the optimal approach for a given use case, it is critical to evaluate how well each algorithm performs on the relevant dataset and hardware configuration, considering factors such as dataset size, processing power, and the desired accuracy and speed.

Facial data augmentation is an effective way to compensate for a lack of facial training data [77]. It increases the amount of training or testing data by modifying real or simulated virtual face samples. The underlying idea is to generate additional samples of each class within a given category. Data augmentation can be applied during training, testing, or both. In general, larger volumes of data tend to improve deep learning performance [78]. Similarly, small object detection performance can be improved by increasing the variety and number of small object samples in the dataset. Face transformation creates new face samples by altering the geometry and the RGB (Red, Green, Blue) channels, or by changing hairstyles, makeup, and facial expressions [79]. Another face transformation method removes or adds accessories such as glasses, hats, and earrings [79].
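A few of the geometric and color transformations mentioned above can be sketched with NumPy on an (H, W, C) uint8 image. The helper names, the brightness offset of ±40, and the 8-pixel crop margin are illustrative assumptions, not values taken from [79].

```python
import numpy as np


def hflip(img: np.ndarray) -> np.ndarray:
    """Mirror an (H, W, C) image left to right (a geometric transformation)."""
    return img[:, ::-1, :]


def adjust_brightness(img: np.ndarray, delta: float) -> np.ndarray:
    """Add a constant to all RGB channels, clipped to the valid 0-255 range."""
    return np.clip(img.astype(np.float32) + delta, 0, 255).astype(np.uint8)


def random_crop(img: np.ndarray, size: int, rng: np.random.Generator) -> np.ndarray:
    """Cut a random size-by-size window out of the image."""
    h, w = img.shape[:2]
    y = rng.integers(0, h - size + 1)
    x = rng.integers(0, w - size + 1)
    return img[y:y + size, x:x + size]


def augment(img: np.ndarray, rng: np.random.Generator) -> list:
    """Produce several new samples of the same identity from one face image."""
    return [
        hflip(img),
        adjust_brightness(img, 40.0),
        adjust_brightness(img, -40.0),
        random_crop(img, min(img.shape[:2]) - 8, rng),
    ]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    face = np.zeros((32, 32, 3), dtype=np.uint8)
    face[:, :16] = 200  # stand-in for a real face crop
    print([a.shape for a in augment(face, rng)])
```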

Memory and computation constraints are the most important factors governing data augmentation algorithms. There are two widely recognized data augmentation approaches: online and offline [80]. Online data augmentation occurs dynamically during training, whereas offline data augmentation generates the data beforehand and stores it in memory. The online approach saves memory, although it may slow training down; offline approaches train faster but consume large amounts of memory [80].
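The memory/time trade-off between the two strategies can be seen in a minimal sketch: the offline variant materializes every augmented copy in a list, while the online variant is a generator that applies one randomly chosen transform each time a sample is drawn. The transform signature `(image, rng) -> image` is a hypothetical convention chosen for this example.

```python
from typing import Callable, Iterator, List

import numpy as np

# Hypothetical convention: a transform takes an image and a random generator.
Transform = Callable[[np.ndarray, np.random.Generator], np.ndarray]


def offline_augment(images: List[np.ndarray], transforms: List[Transform],
                    rng: np.random.Generator) -> List[np.ndarray]:
    """Offline: build every augmented copy before training starts.
    Memory grows with len(images) * (1 + len(transforms))."""
    out = list(images)
    for img in images:
        out.extend(t(img, rng) for t in transforms)
    return out


def online_augment(images: List[np.ndarray], transforms: List[Transform],
                   rng: np.random.Generator, epochs: int) -> Iterator[np.ndarray]:
    """Online: apply one randomly chosen transform whenever a sample is drawn.
    Nothing extra is stored, but the transform cost is paid during training."""
    for _ in range(epochs):
        for img in images:
            t = transforms[rng.integers(len(transforms))]
            yield t(img, rng)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    images = [np.ones((4, 4)) * i for i in range(3)]
    transforms = [lambda im, r: im[:, ::-1],
                  lambda im, r: im + r.normal(0, 1, im.shape)]
    print(len(offline_augment(images, transforms, rng)))           # 9 stored samples
    print(sum(1 for _ in online_augment(images, transforms, rng, epochs=2)))  # 6 drawn
```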

Face alignment is an important part of the facial recognition process. It involves identifying key facial points in a given image and adjusting them to match a standard face template. Face alignment research has achieved growing success in recent years. A typical face alignment approach progressively aligns a standard face shape template to an input facial image by searching the input for predefined facial points. This usually begins with a coarse shape that is refined iteratively over numerous steps and ends when the convergence criteria are met. As the search advances, facial appearance data and the conventional face shape model are combined to locate facial fiducial points.
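Once landmarks have been located, a common final step is to transform the face so that its landmarks match the template. The sketch below, assuming NumPy and landmarks given as (N, 2) arrays, estimates the least-squares similarity transform (scale, rotation, translation) with Umeyama’s closed-form method. Any template coordinates used with it are hypothetical, and warping the image pixels themselves (for example, with an image-processing library) is omitted.

```python
import numpy as np


def similarity_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares scale s, 2x2 rotation R, and translation t such that
    dst ~= s * R @ p + t for every landmark p in src (Umeyama's method)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)                  # 2x2 cross-covariance
    u, sing, vt = np.linalg.svd(cov)
    d = np.sign(np.linalg.det(u) * np.linalg.det(vt))
    D = np.diag([1.0, d])                       # forbid reflections
    R = u @ D @ vt
    scale = np.trace(np.diag(sing) @ D) / (xs ** 2).sum(axis=1).mean()
    t = mu_d - scale * R @ mu_s
    return scale, R, t


def apply_transform(scale: float, R: np.ndarray, t: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map (N, 2) points with the estimated similarity transform."""
    return scale * pts @ R.T + t


if __name__ == "__main__":
    # Hypothetical 5-point template (eyes, nose tip, mouth corners).
    template = np.array([[30., 50.], [70., 50.], [50., 70.], [35., 90.], [65., 90.]])
    theta = np.deg2rad(30)
    rot = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    detected = 2.0 * template @ rot.T + np.array([5.0, -3.0])  # simulated detection
    s, R, t = similarity_transform(detected, template)
    print(round(s, 3), np.allclose(apply_transform(s, R, t, detected), template))
```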

Several excellent review studies have thoroughly documented the evolution of face alignment algorithms from traditional to modern deep learning-based methods [81]. Heatmap regression is a common method for facial landmark localization [82]. Wang et al. [83] proposed the Adaptive Wing loss for heatmap regression, and a PyTorch implementation (AdaptiveWingLoss) was posted on GitHub in 2019 [84]. The Deep Alignment Network (DAN), first presented in [82], is a multi-stage face alignment method based on a deep neural network architecture. DAN first estimates the face shape coarsely and then iteratively refines the result. Zhang et al. [85] used a cascade classifier based on a deep learning approach: they built a deep cascade model with four embedded convolutional cascade layers, each trained to refine the facial landmarks estimated by the previous layer. Another deep convolutional cascade model, DeCaFa, was proposed by [86]. DeCaFa uses an end-to-end CNN with a cascade classifier, preserving the image’s spatial resolution throughout the cascade. Between consecutive cascade layers, a softmax-linked multi-chained transfer layer is applied to produce a landmark-wise output. The authors of [87] combined different face alignment techniques, such as heatmap and coordinate regression networks and spatial attention, to increase the stability and accuracy of the model. Their model gives promising results in aligning occluded face images.
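Heatmap regression, as discussed above, trains a network to output one heatmap per landmark. The sketch below shows only the generic target encoding (a 2-D Gaussian centered on each landmark) and the simplest arg-max decoding. The network itself, the Adaptive Wing loss, and sub-pixel refinements are omitted, and sigma = 2 pixels is an assumed value.

```python
import numpy as np


def gaussian_heatmap(h: int, w: int, cx: float, cy: float, sigma: float = 2.0) -> np.ndarray:
    """Training target for one landmark: a 2-D Gaussian peaked at (cx, cy)."""
    ys, xs = np.mgrid[0:h, 0:w]  # row (y) and column (x) index grids
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * sigma ** 2))


def decode_heatmaps(heatmaps: np.ndarray) -> np.ndarray:
    """Inference: take the arg-max pixel of each heatmap in a (K, H, W) stack
    and return K landmark coordinates as integer (x, y) pairs."""
    k, h, w = heatmaps.shape
    flat_idx = heatmaps.reshape(k, -1).argmax(axis=1)
    ys, xs = np.unravel_index(flat_idx, (h, w))
    return np.stack([xs, ys], axis=1)


if __name__ == "__main__":
    targets = np.stack([gaussian_heatmap(64, 64, 10, 20), gaussian_heatmap(64, 64, 40, 5)])
    print(decode_heatmaps(targets).tolist())  # [[10, 20], [40, 5]]
```

Arg-max decoding is limited to the heatmap’s pixel grid, which is one reason practical systems add sub-pixel refinement on top of it.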

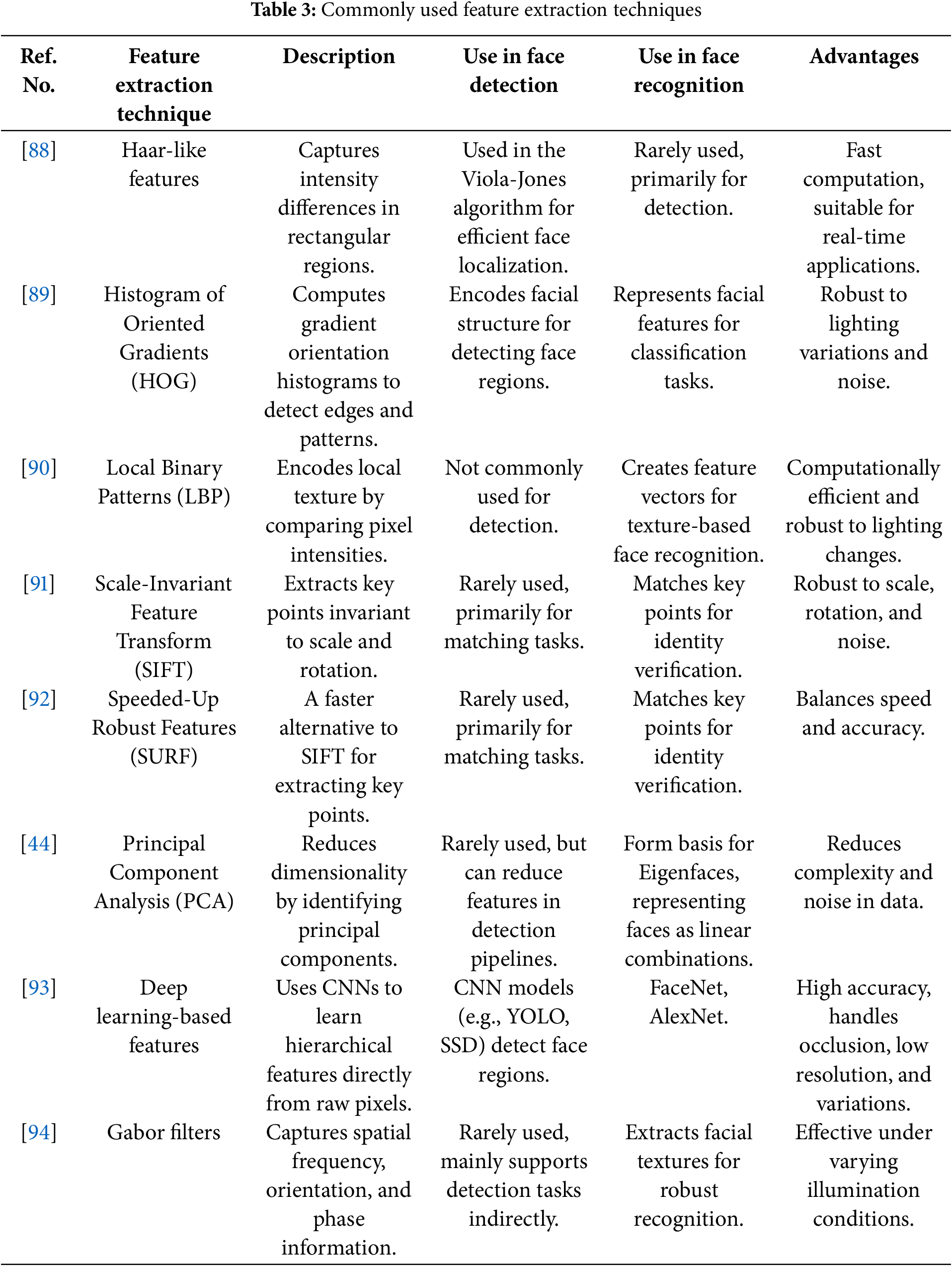

6.3 Feature Extraction Techniques

Feature extraction methods are fundamental to the success of face detection and recognition. These techniques transform raw images into meaningful information to enhance detection/recognition accuracy. Table 3 summarizes some commonly used feature extraction methods, together with their applications and advantages in face recognition and detection.

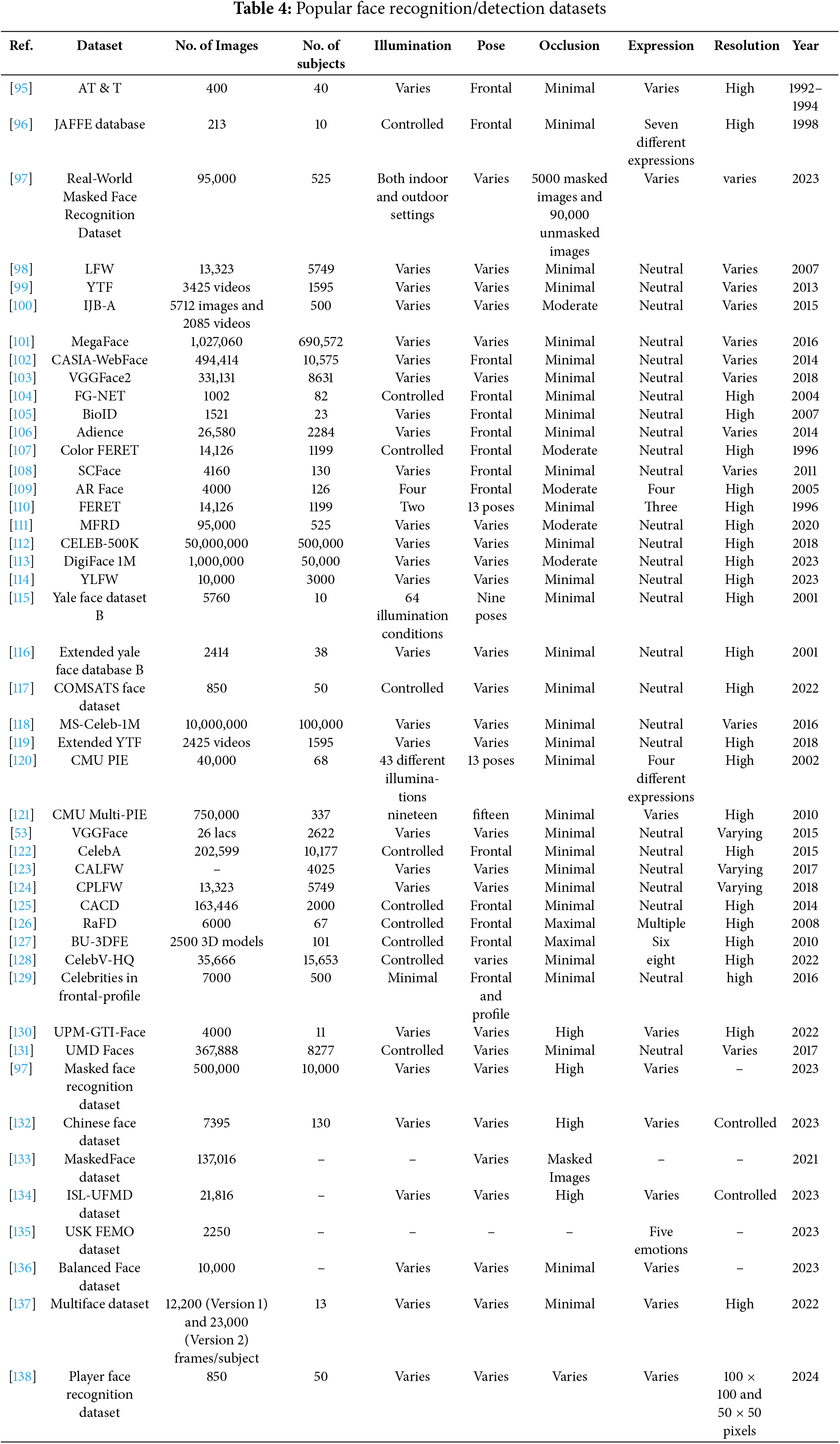
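As one concrete example of a handcrafted feature extractor, the local binary pattern (LBP) descriptor referred to earlier [49] can be sketched as follows: each pixel is encoded by comparing it with its eight neighbors, and the face is described by concatenated per-cell histograms of these codes. The 4×4 grid and the basic radius-1, 256-bin LBP variant are assumptions made for brevity.

```python
import numpy as np


def lbp_image(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbour LBP: each interior pixel gets an 8-bit code whose
    bits mark which neighbours are >= the centre pixel."""
    g = gray.astype(np.int32)
    h, w = g.shape
    c = g[1:-1, 1:-1]
    # Neighbour offsets clockwise from the top-left; bit i has weight 2**i.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    code = np.zeros_like(c)
    for bit, (dy, dx) in enumerate(offsets):
        nb = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        code |= (nb >= c).astype(np.int32) << bit
    return code


def lbp_histogram(gray: np.ndarray, grid=(4, 4)) -> np.ndarray:
    """Face descriptor: concatenated, normalized 256-bin LBP histograms
    computed over a grid of cells."""
    codes = lbp_image(gray)
    feats = []
    for rows in np.array_split(codes, grid[0], axis=0):
        for cell in np.array_split(rows, grid[1], axis=1):
            hist = np.bincount(cell.ravel(), minlength=256).astype(np.float64)
            feats.append(hist / max(hist.sum(), 1.0))
    return np.concatenate(feats)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    face = rng.integers(0, 256, size=(66, 66), dtype=np.uint8)  # stand-in image
    print(lbp_histogram(face).shape)  # (4096,) = 16 cells x 256 bins
```

Two such descriptors can then be compared with a histogram distance (for example, chi-square) to match faces.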

7 Face Detection/Recognition Datasets

The face recognition community has access to a vast array of databases, and face identification algorithms exhibit varying degrees of performance across them. These databases, presented in Table 4, were assembled by different research teams and vary in scope, purpose, and size. Here, we briefly review the salient characteristics of these publicly accessible face recognition datasets, including occlusion, illumination, image resolution, pose variation, and the number of subjects and images. Some databases are covered in less detail because their data are not accessible.

Table 4 provides only a high-level comparison of these datasets and does not account for specific details such as image quality or subject diversity. The choice of dataset should be guided by the precise research question or problem, and the dataset should include diverse images with various characteristics to address the limitations of face recognition algorithms.

8 Addressing the Research Questions

This study addresses several important aspects of face detection and recognition. We restate each research question and summarize the key findings:

1. How did face detection and recognition evolve between 2015 and 2024, and what improvements have been made?

The analysis in Sections 4–6 shows significant improvement, particularly in deep learning approaches and feature extraction techniques, as well as increasing accuracy of the systems under changes in illumination, pose, and occlusion.

2. What open gaps and challenges do modern face recognition/detection algorithms still face?

As discussed in detail in Sections 4–6, several challenges remain, for instance, dataset biases, large pose variations (50° to 90°), systematic discrimination, privacy issues, and loss of efficacy in uncontrolled settings, among others.

3. How do different published face detection/recognition datasets compare in terms of size, features, and complexity?

Section 7 presents an in-depth comparison of several seminal datasets based on parameters such as size, features, complexity, and challenges.

4. What are the future research directions for researchers in the field of face detection/recognition?

Key research gaps and potential directions for future research have been addressed in the conclusion and research directions section.

9 Conclusion and Research Directions

Face recognition systems hold great theoretical and practical importance, making them a popular research topic in image processing and computer vision. Numerous real-world applications heavily utilize this technology, including human-machine interaction, security, surveillance, homeland security, access control, image search, and entertainment. This study compares several publicly available face detection and recognition algorithms based on their approach, accuracy on benchmark datasets, limitations, and descriptions. Further, we contrast several publicly available face detection and identification datasets according to parameters including size, lighting variation, occlusion, and image resolution. We anticipate that this survey will motivate researchers in this area to engage more deeply with facial recognition system methodologies.

Several areas can be investigated in the future to improve face recognition systems’ performance and address existing challenges. A critical challenge is making algorithms more resilient to pose variation, illumination variation, occlusion, and image resolution variation. Although several face recognition algorithms show promising results under these conditions in controlled environments, these challenges remain common in uncontrolled scenarios. Another significant area of growth is developing accurate face recognition models for resource-constrained devices such as mobile phones. One key area that needs improvement is increasing recognition accuracy for face images captured at extreme angles (>50 degrees) and with more than 50% occlusion. Current models appear to be ineffective in these cases, which reduces their usefulness in real-time applications such as surveillance, biometric authentication, and security systems. Additionally, addressing dataset biases, improving robustness against adversarial attacks, and developing more efficient real-time face recognition models are crucial areas for future exploration. Addressing these concerns would enable more accurate face recognition systems that can be deemed trustworthy and reliable. Lastly, continual research and development efforts will be needed to address potential sources of algorithmic bias or data errors and to improve algorithms.

Acknowledgement: The authors thank Prince Sultan University for paying the Article Processing Charges of this article.

Funding Statement: The authors received no specific funding for this study.

Author Contributions: The authors confirm contribution to the paper as follows: Conceptualization, Mahmood Ul Haq and Muhammad Athar Javed Sethi; methodology, Mahmood Ul Haq, Muhammad Athar Javed Sethi, Sadique Ahmad and Naveed Ahmad; validation, Mahmood Ul Haq, Muhammad Shahid Anwar and Alpamis Kutlimuratov; formal analysis, Mahmood Ul Haq, Muhammad Athar Javed Sethi, Sadique Ahmad and Alpamis Kutlimuratov; investigation, Sadique Ahmad, Naveed Ahmad, Muhammad Shahid Anwar and Alpamis Kutlimuratov; resources, Sadique Ahmad, Naveed Ahmad, Muhammad Shahid Anwar and Alpamis Kutlimuratov; writing—original draft preparation, Mahmood Ul Haq; writing—review and editing, Mahmood Ul Haq, Muhammad Athar Javed Sethi and Sadique Ahmad; visualization, Muhammad Athar Javed Sethi, Naveed Ahmad and Alpamis Kutlimuratov; supervision, Muhammad Athar Javed Sethi and Sadique Ahmad; project administration, Muhammad Athar Javed Sethi, Sadique Ahmad, Naveed Ahmad, Muhammad Shahid Anwar and Alpamis Kutlimuratov; funding acquisition, Sadique Ahmad. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The article reviews existing studies only, which are available online on different platforms, e.g., Google Scholar, etc.

Ethical Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Haq MU, Shahzad A, Mahmood Z, Shah AA, Muhammad N, Akram T. Boosting the face recognition performance of ensemble based LDA for pose, non-uniform illuminations, and low-resolution images. KSII Trans Internet Inf Syst. 2019;13(6):3411–64. doi:10.3837/tiis.2019.06.021.

2. Eltaieb RA, El-Banby GM, El-Shafai W, Abd El-Samie FE, Abbas AM. Efficient implementation of cancelable face recognition based on elliptic curve cryptography. Opt Quantum Electron. 2023;55(9):841. doi:10.1007/s11082-023-04641-y.

3. Farouk AE, Abd-Elnaby M, Ashiba HI, El-Banby GM, El-Shafai W, El-Fishawy AS, et al. Secure cancelable face recognition system based on inverse filter. J Opt. 2024;53(3):1667–88. doi:10.1007/s12596-023-01233-7.

4. Ullah H, Haq MU, Khattak S, Khan GZ, Mahmood Z. A robust face recognition method for occluded and low-resolution images. In: 2019 International Conference on Applied and Engineering Mathematics (ICAEM); 2019 Aug 27–29; Taxila, Pakistan. p. 86–91. doi:10.1109/icaem.2019.8853753.

5. Badr IS, Radwan AG, EL-Rabaie EM, Said LA, El-Shafai W, El-Banby GM, et al. Circuit realization and FPGA-based implementation of a fractional-order chaotic system for cancellable face recognition. Multimed Tools Appl. 2024;83(34):81565–90. doi:10.1007/s11042-023-15867-z.

6. Saleem S, Shiney J, Priestly Shan B, Kumar Mishra V. Face recognition using facial features. Mater Today Proc. 2023;80:3857–62. doi:10.1016/j.matpr.2021.07.402.

7. Munawar F, Khan U, Shahzad A, Haq MU, Mahmood Z, Khattak S, et al. An empirical study of image resolution and pose on automatic face recognition. In: 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST); 2019 Jan 8–12; Islamabad, Pakistan. p. 558–63. doi:10.1109/ibcast.2019.8667233.

8. Rajeshkumar G, Braveen M, Venkatesh R, Josephin Shermila P, Ganesh Prabu B, Veerasamy B, et al. Smart office automation via faster R-CNN based face recognition and Internet of Things. Meas Sens. 2023;27:100719. doi:10.1016/j.measen.2023.100719.

9. Abdulhussain SH, Mahmmod BM, AlGhadhban A, Flusser J. Face recognition algorithm based on fast computation of orthogonal moments. Mathematics. 2022;10(15):2721. doi:10.3390/math10152721.

10. Boutros F, Struc V, Fierrez J, Damer N. Synthetic data for face recognition: current state and future prospects. Image Vis Comput. 2023;135:104688. doi:10.1016/j.imavis.2023.104688.

11. Ahmad S, Haq MU, Sethi MAJ, El Affendi MA, Farid Z, Al Luhaidan AS. Mapping faces from above: exploring face recognition algorithms and datasets for aerial drone images. In: Deep cognitive modelling in remote sensing image processing. Hershey, PA, USA: IGI Global; 2024. p. 55–69. doi:10.4018/979-8-3693-2913-9.ch003.

12. Opanasenko VM, Fazilov SK, Mirzaev ON, Kakharov SSU. An ensemble approach to face recognition in access control systems. J Mob Multimed. 2024:749–68. doi:10.13052/jmm1550-4646.20310.

13. Zhang X, Xuan C, Ma Y, Tang Z, Cui J, Zhang H. High-similarity sheep face recognition method based on a Siamese network with fewer training samples. Comput Electron Agric. 2024;225:109295. doi:10.1016/j.compag.2024.109295.

14. Deng J, Guo J, Zhou Y, Yu J, Kotsia I, Zafeiriou S. RetinaFace: single-stage dense face localisation in the wild. arXiv:1905.00641. 2019.

15. George A, Ecabert C, Shahreza HO, Kotwal K, Marcel S. EdgeFace: efficient face recognition model for edge devices. IEEE Trans Biom Behav Identity Sci. 2024;6(2):158–68. doi:10.1109/TBIOM.2024.3352164.

16. Ahmad S, El-Affendi MA, Anwar MS, Iqbal R. Potential future directions in optimization of students’ performance prediction system. Comput Intell Neurosci. 2022;2022:6864955. doi:10.1155/2022/6864955.

17. Hosny KM, AbdElFattah Ibrahim N, Mohamed ER, Hamza HM. Artificial intelligence-based masked face detection: a survey. Intell Syst Appl. 2024;22:200391. doi:10.1016/j.iswa.2024.200391.

18. Yashunin D, Baydasov T, Vlasov R. MaskFace: multi-task face and landmark detector. arXiv:2005.09412. 2020.

19. Li Z, Tang X, Han J, Liu J, He R. PyramidBox++: high performance detector for finding tiny face. arXiv:1904.00386. 2019.

20. Viola P, Jones MJ. Robust real-time face detection. Int J Comput Vis. 2004;57(2):137–54. doi:10.1023/B:VISI.0000013087.49260.fb.

21. Yang S, Luo P, Loy CC, Tang X. WIDER FACE: a face detection benchmark. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016 Jun 27–30; Las Vegas, NV, USA. p. 5525–33. doi:10.1109/CVPR.2016.596.

22. Li J, Wang Y, Wang C, Tai Y, Qian J, Yang J, et al. DSFD: dual shot face detector. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2019 Jun 15–20; Long Beach, CA, USA. p. 5055–64. doi:10.1109/cvpr.2019.00520.

23. Zhu Y, Cai H, Zhang S, Wang C, Xiong Y. TinaFace: strong but simple baseline for face detection. arXiv:2011.13183. 2020.

24. Hu P, Ramanan D. Finding tiny faces. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017 Jul 21–26; Honolulu, HI, USA. p. 1522–30. doi:10.1109/CVPR.2017.166.

25. Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. Adv Neural Inf Process Syst. 2015;28:1–9.

26. Li H, Lin Z, Shen X, Brandt J, Hua G. A convolutional neural network cascade for face detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2015 Jun 7–12; Boston, MA, USA. p. 5325–34. doi:10.1109/CVPR.2015.7299170.

27. Li X, Xiang Y, Li S. Combining convolutional and vision transformer structures for sheep face recognition. Comput Electron Agric. 2023;205:107651. doi:10.1016/j.compag.2023.107651.

28. Najibi M, Samangouei P, Chellappa R, Davis L. SSH: single stage headless face detector. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV); 2017 Oct 22–29; Venice, Italy.

29. Zhang S, Zhu X, Lei Z, Shi H, Wang X, Li SZ. S3FD: single shot scale-invariant face detector. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV); 2017 Oct 22–29; Venice, Italy. p. 192–201. doi:10.1109/ICCV.2017.30.

30. Yu S, Jiang Y, Lu J, Zhou J. CenterFace: joint face detection and alignment using face as point. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2019 Jun 16–20; Long Beach, CA, USA. doi:10.48550/arXiv.1911.03599.

31. Tan M, Pang R, Le QV. EfficientDet: scalable and efficient object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020 Jun 13–19; Seattle, WA, USA. p. 10778–87. doi:10.1109/cvpr42600.2020.01079.

32. Thuan D. Evolution of Yolo algorithm and Yolov5: the state-of-the-art object detention algorithm. [cited 2025 Jan 1]. Available from: https://www.theseus.fi/bitstream/handle/10024/452552/Do_Thuan.pdf?sequence=2.

33. Bazarevsky V, Kartynnik Y, Vakunov A, Raveendran K, Grundmann M. BlazeFace: sub-millisecond neural face detection on mobile GPUs. arXiv:1907.05047. 2019. doi:10.48550/arXiv.1907.05047.

34. Goyal H, Sidana K, Singh C, Jain A, Jindal S. A real time face mask detection system using convolutional neural network. Multimed Tools Appl. 2022;81(11):14999–5015. doi:10.1007/s11042-022-12166-x.

35. Yu Z, Huang H, Chen W, Su Y, Liu Y, Wang X. YOLO-FaceV2: a scale and occlusion aware face detector. Pattern Recognit. 2024;155:110714. doi:10.1016/j.patcog.2024.110714.

36. Kumar A, Kaur A, Kumar M. Face detection techniques: a review. Artif Intell Rev. 2019;52(2):927–48. doi:10.1007/s10462-018-9650-2.

37. Qin H, Yan J, Li X, Hu X. Joint training of cascaded CNN for face detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016 Jun 27–30; Las Vegas, NV, USA. p. 3456–65. doi:10.1109/CVPR.2016.376.

38. Ranjan R, Patel VM, Chellappa R. HyperFace: a deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition. IEEE Trans Pattern Anal Mach Intell. 2019;41(1):121–35. doi:10.1109/TPAMI.2017.2781233.

39. Yang S, Luo P, Loy CC, Tang X. Faceness-Net: face detection through deep facial part responses. IEEE Trans Pattern Anal Mach Intell. 2017;40(8):1845–59. doi:10.48550/arXiv.1701.08393.

40. Yu J, Jiang Y, Wang Z, Cao Z, Huang T. UnitBox: an advanced object detection network. In: Proceedings of the 24th ACM International Conference on Multimedia; 2016 Oct 15–19; Amsterdam, The Netherlands. p. 516–20. doi:10.1145/2964284.2967274.

41. Ranjan R, Bansal A, Zheng J, Xu H, Gleason J, Lu B, et al. A fast and accurate system for face detection, identification, and verification. IEEE Trans Biom Behav Identity Sci. 2019;1(2):82–96. doi:10.1109/TBIOM.2019.2908436.

42. Chi C, Zhang S, Xing J, Lei Z, Li SZ, Zou X. Selective refinement network for high performance face detection. Proc AAAI Conf Artif Intell. 2019;33(1):8231–8. doi:10.1609/aaai.v33i01.33018231.

43. Chaudhuri B, Vesdapunt N, Wang B. Joint face detection and facial motion retargeting for multiple faces. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2019 Jun 15–20; Long Beach, CA, USA. p. 9711–20. doi:10.1109/cvpr.2019.00995.

44. Moghaddam B, Wahid W, Pentland A. Beyond eigenfaces: probabilistic matching for face recognition. In: Proceedings Third IEEE International Conference on Automatic Face and Gesture Recognition; 1998 Apr 14–16; Nara, Japan. p. 30–5. doi:10.1109/AFGR.1998.670921.

45. He X, Yan S, Hu Y, Niyogi P, Zhang HJ. Face recognition using Laplacianfaces. IEEE Trans Pattern Anal Mach Intell. 2005;27(3):328–40. doi:10.1109/TPAMI.2005.55.

46. Wiskott L, Fellous JM, Krüger N, von der Malsburg C. Face recognition by elastic bunch graph matching. In: Intelligent biometric techniques in fingerprint and face recognition. Boca Raton, FL, USA: Routledge; 2022. p. 355–96. doi:10.1201/9780203750520-11.

47. Belhumeur PN, Hespanha JP, Kriegman DJ. Eigenfaces vs. Fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell. 1997;19(7):711–20. doi:10.1109/34.598228.

48. Liu C, Wechsler H. A Gabor feature classifier for face recognition. In: Proceedings Eighth IEEE International Conference on Computer Vision, ICCV 2001; 2001 Jul 7–14; Vancouver, BC, Canada. p. 270–5. doi:10.1109/ICCV.2001.937635.

49. Ahonen T, Hadid A, Pietikainen M. Face description with local binary patterns: application to face recognition. IEEE Trans Pattern Anal Mach Intell. 2006;28(12):2037–41. doi:10.1109/TPAMI.2006.244.

50. Chen D, Cao X, Wen F, Sun J. Blessing of dimensionality: high-dimensional feature and its efficient compression for face verification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2013 Jun 23–28; Portland, OR, USA. p. 3025–32. doi:10.1109/CVPR.2013.389.

51. Lei Z, Pietikäinen M, Li SZ. Learning discriminant face descriptor. IEEE Trans Pattern Anal Mach Intell. 2014;36(2):289–302. doi:10.1109/TPAMI.2013.112.

52. Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst. 2012;25:1–9. doi:10.1145/3065386.

53. Parkhi OM, Vedaldi A, Zisserman A. Deep face recognition. In: Proceedings of the British Machine Vision Conference 2015; 2015 Sep 7–10; Swansea, UK. Durham, UK: British Machine Vision Association; 2015. doi:10.5244/c.29.41.

54. Schroff F, Kalenichenko D, Philbin J. FaceNet: a unified embedding for face recognition and clustering. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2015 Jun 7–12; Boston, MA, USA. p. 815–23. doi:10.1109/CVPR.2015.7298682.

55. Taigman Y, Yang M, Ranzato M, Wolf L. DeepFace: closing the gap to human-level performance in face verification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2014 Jun 23–28; Columbus, OH, USA. p. 1701–8. doi:10.1109/CVPR.2014.220.

56. Deng J, Guo J, Xue N, Zafeiriou S. ArcFace: additive angular margin loss for deep face recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2019 Jun 15–20; Long Beach, CA, USA. p. 4685–94. doi:10.1109/cvpr.2019.00482.

57. Chen Y, Wen Y, Sun J, Li Z. MobileFaceNets: efficient CNNs for accurate real-time face verification on mobile devices. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2018 Jun 18–22; Salt Lake City, UT, USA. p. 3693–701. doi:10.48550/arXiv.1804.07573.

58. Tan W, Guo Y, Wu Z, Zhang Z, Huang R, Li J. TResNet: high-performance GPU-dedicated architecture for deep learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops; 2021 Jun 19–25; Virtual. p. 567–76. doi:10.48550/arXiv.2003.13630.

59. Mukhometzianov R, Carrillo J. CapsNet comparative performance evaluation for image classification. arXiv:1805.11195. 2018.

60. Sreekala K, Cyril CPD, Neelakandan S, Chandrasekaran S, Walia R, Martinson EO. Capsule network-based deep transfer learning model for face recognition. Wirel Commun Mob Comput. 2022;2022:2086613. doi:10.1155/2022/2086613.

61. Wen Y, Zhang K, Li Z, Qiao Y. A discriminative feature learning approach for deep face recognition. In: Proceedings of the European Conference on Computer Vision; 2016 Oct 11–14; Amsterdam, The Netherlands. p. 499–515. doi:10.1007/978-3-319-46478-7_31.

62. Wang H, Wang Y, Zhou Z, Ji X, Gong D, Zhou J, et al. CosFace: large margin cosine loss for deep face recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2018 Jun 18–22; Salt Lake City, UT, USA. p. 5265–74. doi:10.48550/arXiv.1801.09414.

63. Liu W, Wen Y, Yu Z, Li M, Raj B, Song L. SphereFace: deep hypersphere embedding for face recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017 Jul 21–26; Honolulu, HI, USA. p. 6738–46. doi:10.1109/CVPR.2017.713.

64. Zhu X, Zhen L, Yan J, Dong Y, Li SZ. High-fidelity pose and expression normalization for face recognition in the wild. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2015 Jun 7–12; Boston, MA, USA. p. 787–96. doi:10.1109/CVPR.2015.7298679.

65. Eldifrawi I, Abo-Zahhad M, Abdelwahab M, El-Malek AHA. New face recognition algorithm adopting wide fast embedded capsule networks with reduced complexity and preserved accuracy. In: 2021 9th International Japan-Africa Conference on Electronics, Communications, and Computations (JAC-ECC); 2021 Dec 13–14; Alexandria, Egypt. p. 20–5. doi:10.1109/JAC-ECC54461.2021.9691419.

66. Sun S, Zhuang S, Zhou Z, Huang Q, Liu Y, Zhang Z. High-resolution representations for labeling pixels and regions. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2020 Jun 14–19; Seattle, WA, USA. p. 7132–41. doi:10.48550/arXiv.1904.04514.

67. Zhang P, Zhao F, Liu P, Li M. Efficient lightweight attention network for face recognition. IEEE Access. 2022;10:31740–50. doi:10.1109/ACCESS.2022.3150862.

68. Chui A, Patnaik A, Ramesh K, Wang L. Capsule networks and face recognition. [cited 2025 Jan 1]. Available from: https://lindawangg.github.io.

69. Hariri W. Efficient masked face recognition method during the COVID-19 pandemic. Signal Image Video Process. 2022;16(3):605–12. doi:10.1007/s11760-021-02050-w.

70. Terhörst P, Ihlefeld M, Huber M, Damer N, Kirchbuchner F, Raja K, et al. QMagFace: simple and accurate quality-aware face recognition. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV); 2023 Jan 2–7; Waikoloa, HI, USA. p. 3473–83. doi:10.1109/WACV56688.2023.00348.

71. Tsai TH, Lu JX, Chou XY, Wang CY. Joint masked face recognition and temperature measurement system using convolutional neural networks. Sensors. 2023;23(6):2901. doi:10.3390/s23062901.

72. Xiao J, Jiang G, Liu H. A lightweight face recognition model based on MobileFaceNet for limited computation environment. EAI Endorsed Trans IoT. 2022;7(27):1–9. doi:10.4108/eai.28-2-2022.173547.

73. Xu X, Deng H, Wang Y, Zhang S, Song H. Boosting cattle face recognition under uncontrolled scenes by embedding enhancement and optimization. Appl Soft Comput. 2024;164:111951. doi:10.1016/j.asoc.2024.111951.

74. Haq MU, Sethi MAJ, Ben Aoun N, Alluhaidan AS, Ahmad S, Farid Z. CapsNet-FR: capsule networks for improved recognition of facial features. Comput Mater Contin. 2024;79(2):2169–86. doi:10.32604/cmc.2024.049645.

75. Bergman N, Yitzhaky Y, Halachmi I. Biometric identification of dairy cows via real-time facial recognition. Animal. 2024;18(3):101079. doi:10.1016/j.animal.2024.101079.

76. Haq MU, Sethi MAJ, Ahmad S, ELAffendi MA, Asim M. Automatic player face detection and recognition for players in cricket games. IEEE Access. 2024;12:41219–33. doi:10.1109/ACCESS.2024.3377564.

77. Lv JJ, Shao XH, Huang JS, Zhou XD, Zhou X. Data augmentation for face recognition. Neurocomputing. 2017;230:184–96. doi:10.1016/j.neucom.2016.12.025.

78. Masi I, Trân AT, Hassner T, Sahin G, Medioni G. Face-specific data augmentation for unconstrained face recognition. Int J Comput Vis. 2019;127(6):642–67. doi:10.1007/s11263-019-01178-0.

79. Dalvi J, Bafna S, Bagaria D, Virnodkar S. A survey on face recognition systems. arXiv:2201.02991. 2022.

80. Nanni L, Paci M, Brahnam S, Lumini A. Comparison of different image data augmentation approaches. J Imaging. 2021;7(12):254. doi:10.3390/jimaging7120254.

81. Wang N, Gao X, Tao D, Yang H, Li X. Facial feature point detection: a comprehensive survey. Neurocomputing. 2018;275:50–65. doi:10.1016/j.neucom.2017.05.013.

82. Bulat A, Tzimiropoulos G. Two-stage convolutional part heatmap regression for the 1st 3D face alignment in the wild (3DFAW) challenge. In: Computer Vision—ECCV 2016 Workshops; 2016 Oct 11–14; Amsterdam, The Netherlands. Cham, Switzerland: Springer International Publishing. p. 616–24. doi:10.1007/978-3-319-48881-3_43.

83. Wang X, Bo L, Li F. Adaptive wing loss for robust face alignment via heatmap regression. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV); 2019 Oct 27–Nov 2; Seoul, Republic of Korea. p. 6970–80. doi:10.1109/iccv.2019.00707.

84. Hou A. Adaptive wing loss for face alignment [GitHub repository]. San Francisco, CA, USA: GitHub. [cited 2025 Jan 15]. Available from: https://github.com/andrewhou1/Adaptive-Wing-Loss-for-Face-Alignment.

85. Zhang Z, Luo P, Loy CC, Tang X. Facial landmark detection by deep multi-task learning. In: Computer Vision—ECCV 2014: 13th European Conference; 2014 Sep 6–12; Zurich, Switzerland. Cham, Switzerland: Springer International Publishing. p. 94–108. doi:10.1007/978-3-319-10599-4_7.

86. Dapogny A, Cord M, Bailly K. DeCaFA: deep convolutional cascade for face alignment in the wild. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV); 2019 Oct 27–Nov 2; Seoul, Republic of Korea. p. 6892–900. doi:10.1109/iccv.2019.00699.

87. Park H, Kim D. ACN: occlusion-tolerant face alignment by attentional combination of heterogeneous regression networks. Pattern Recognit. 2021;114:107761. doi:10.1016/j.patcog.2020.107761.

88. Besnassi M, Neggaz N, Benyettou A. Face detection based on evolutionary Haar filter. Pattern Anal Appl. 2020;23(1):309–30. doi:10.1007/s10044-019-00784-5.

89. Rahmad C, Asmara RA, Putra DH, Dharma I, Darmono H, Muhiqqin I. Comparison of Viola-Jones Haar cascade classifier and histogram of oriented gradients (HOG) for face detection. IOP Conf Ser Mater Sci Eng. 2020;732(1):012038. doi:10.1088/1757-899x/732/1/012038.

90. Karanwal S. Robust local binary pattern for face recognition in different challenges. Multimed Tools Appl. 2022;81(20):29405–21. doi:10.1007/s11042-022-13006-8.

91. Alamri J, Harrabi R, Ben S. Face recognition based on convolution neural network and scale invariant feature transform. Int J Adv Comput Sci Appl. 2021;12(2):644–54. doi:10.14569/ijacsa.2021.0120281.

92. Setta S, Sinha S, Mishra M, Choudhury P. Real-time facial recognition using SURF-FAST. In: Sharma N, Chakrabarti A, Balas VE, Bruckstein AM, editors. Data management, analytics and innovation. Vol. 2. Singapore: Springer; 2021. p. 505–22. doi:10.1007/978-981-16-2937-2_32.

93. Prasad PS, Pathak R, Gunjan VK, Ramana Rao HV. Deep learning based representation for face recognition. In: ICCCE 2019: Proceedings of the 2nd International Conference on Communications and Cyber Physical Engineering. Singapore: Springer; 2020. p. 419–24. doi:10.1007/978-981-13-8715-9_50.

94. Li HA, Fan J, Zhang J, Li Z, He D, Si M, et al. Facial image segmentation based on Gabor filter. Math Probl Eng. 2021;2021:6620742. doi:10.1155/2021/6620742.

95. Kasikrit. ATT database of faces [Internet]. San Francisco, CA, USA: Kaggle. [cited 2024 Apr 16]. Available from: https://www.kaggle.com/datasets/kasikrit/att-database-of-faces.

96. Kamachi M, Ullah TM, Akamatsu S. Japanese female facial expression (JAFFE) database. In: Proceedings of the International Conference on Automatic Face and Gesture Recognition; 2023 Jan 5–8; Waikoloa Beach, HI, USA. doi:10.5281/zenodo.3451524.

97. Wang Z, Huang B, Wang G, Yi P, Jiang K. Masked face recognition dataset and application. IEEE Trans Biom Behav Identity Sci. 2023;5(2):298–304. doi:10.1109/TBIOM.2023.3242085.

98. Huang GB, Ramesh M, Berg T, Learned-Miller E. Labeled faces in the wild: a database for studying face recognition in unconstrained environments [Internet]. [cited 2024 Apr 16]. Available from: http://vis-www.cs.umass.edu/lfw/.

99. Wolf L, Hassner T, Maoz I. Face recognition in unconstrained videos with matched background similarity. In: CVPR 2011; 2011 Jun 20–25; Colorado Springs, CO, USA. p. 529–34. doi:10.1109/CVPR.2011.5995566.

100. Klare BF, Klein B, Taborsky E, Blanton A, Cheney J, Allen K, et al. Pushing the frontiers of unconstrained face detection and recognition: IARPA Janus Benchmark A. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2015 Jun 7–12; Boston, MA, USA. p. 1931–9. doi:10.1109/CVPR.2015.7298803.

101. Kemelmacher-Shlizerman I, Seitz SM, Miller D, Brossard E. The MegaFace benchmark: 1 million faces for recognition at scale. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016 Jun 27–30; Las Vegas, NV, USA. p. 4873–82. doi:10.1109/CVPR.2016.527.

102. Zhang J, Shan S, Kan M, Chen X. WebFace: a scalable face image dataset with varying pose and age; 2014 [Internet]. [cited 2025 Apr 24]. Available from: https://paperswithcode.com/dataset/casia-webface.

103. Cao Q, Shen L, Xie W, Parkhi OM, Zisserman A. VGGFace2: a dataset for recognising faces across pose and age. In: 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018); 2018 May 15–19; Xi’an, China. p. 67–74. doi:10.1109/FG.2018.00020.

104. Panis G, Lanitis A, Tsapatsoulis N, Cootes TF. Overview of research on facial ageing using the FG-NET ageing database. IET Biom. 2016;5(2):37–46. doi:10.1049/iet-bmt.2014.0053.

105. BioID facial recognition (Version 2.2.2) [Mobile app]. Google Play Store; 2022 [cited 2025 Apr 24]. Available from: https://play.google.com/store/apps/details?id=com.bioid.authenticator.

106. Levi G, Hassner T. Age and gender classification using convolutional neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); 2015 Jun 7–12; Boston, MA, USA. p. 34–42. doi:10.1109/CVPRW.2015.7301352.

107. Color FERET database [Internet]. [cited 2022 May 20]. Available from: https://www.nist.gov/itl/products-and-services/color-feret-database.

108. Grgic M, Delac K, Grgic S. SCface: surveillance cameras face database. Multimed Tools Appl. 2011;51(3):863–79. doi:10.1007/s11042-009-0417-2.

109. Martinez A, Benavente R. The AR face database. Barcelona, Spain: CVC; 1998. Report No.: 24.

110. Phillips PJ, Wechsler H, Huang J, Rauss PJ. The FERET database and evaluation procedure for face-recognition algorithms. Image Vis Comput. 1998;16(5):295–306. doi:10.1016/S0262-8856(97)00070-X.

111. Wang Z, Wang G, Huang B, Xiong Z, Hong Q, Wu H, et al. Masked face recognition dataset and application. arXiv:2003.09093. 2020.

112. Cao J, Li Y, Zhang Z. Celeb-500K: a large training dataset for face recognition. In: 25th IEEE International Conference on Image Processing (ICIP); 2018 Oct 7–10; Athens, Greece. p. 2406–10. doi:10.1109/ICIP.2018.8451704.

113. Bae G, de la Gorce M, Baltrušaitis T, Hewitt C, Chen D, Valentin J, et al. DigiFace-1M: 1 million digital face images for face recognition. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV); 2023 Jan 2–7; Waikoloa, HI, USA. p. 3515–24. doi:10.1109/WACV56688.2023.00352.

114. Medvedev I, Shadmand F, Gonçalves N. Young labeled faces in the wild (YLFW): a dataset for children faces recognition. arXiv:2301.05776. 2023.

115. Center for Computational Vision and Control. Yale face database B [Internet]. New Haven, CT, USA: Yale University; 2001 [cited 2025 Apr 17]. Available from: https://www.cs.yale.edu/cvc/projects/yalefacesB/yalefacesB.html.

116. Georghiades AS, Belhumeur PN, Kriegman DJ. From few to many: illumination cone models for face recognition under variable lighting and pose. IEEE Trans Pattern Anal Mach Intell. 2001;23(6):643–60. doi:10.1109/34.927464.

117. Haq MU, Sethi MAJ, Ullah R, Shazhad A, Hasan L, Karami GM. COMSATS face: a dataset of face images with pose variations, its design, and aspects. Math Probl Eng. 2022;2022:4589057. doi:10.1155/2022/4589057.

118. Guo Y, Zhang L, Hu Y, He X, Gao J. MS-Celeb-1M: a dataset and benchmark for large-scale face recognition. In: Computer Vision—ECCV 2016: 14th European Conference; 2016 Oct 11–14; Amsterdam, The Netherlands. Cham, Switzerland: Springer International Publishing; 2016. p. 87–102. doi:10.1007/978-3-319-46487-9_6.

119. Ferrari C, Berretti S, Del Bimbo A. Extended YouTube faces: a dataset for heterogeneous open-set face identification. In: 2018 24th International Conference on Pattern Recognition (ICPR); 2018 Aug 20–24; Beijing, China. p. 3408–13. doi:10.1109/ICPR.2018.8545642.

120. Sim T, Baker S, Bsat M. The CMU pose, illumination, and expression (PIE) database. In: Proceedings of Fifth IEEE International Conference on Automatic Face Gesture Recognition; 2002 May 21; Washington, DC, USA. p. 53–8. doi:10.1109/AFGR.2002.1004130.

121. Gross R, Matthews I, Cohn J, Kanade T, Baker S. Multi-PIE. Image Vis Comput. 2010;28(5):807–13. doi:10.1016/j.imavis.2009.08.002.

122. Liu Z, Luo P, Wang X, Tang X. Deep learning face attributes in the wild. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV); 2015 Dec 7–13; Santiago, Chile. p. 3730–8. doi:10.1109/ICCV.2015.425.

123. Zheng T, Deng W, Hu J. Cross-age LFW: a database for studying cross-age face recognition in unconstrained environments. arXiv:1708.08197. 2017.

124. Zheng T, Deng W. Cross-pose LFW: a database for studying cross-pose face recognition in unconstrained environments [Internet]. Beijing, China: Beijing University of Posts and Telecommunications; 2018 [cited 2025 Apr 24]. Available from: https://pan.baidu.com/s/1i6iHztN.

125. Papers with Code. CACD dataset [Internet]. [cited 2025 Apr 17]. Available from: http://paperswithcode.com/dataset/cacd.

126. Langner O, Dotsch R, Bijlstra G, Wigboldus DHJ, Hawk ST, van Knippenberg A. Presentation and validation of the Radboud Faces Database. Cogn Emot. 2010;24(8):1377–88. doi:10.1080/02699930903485076.

127. Yin L, Chen X, Sun Y, Worm T, Reale M. A high-resolution 3D dynamic facial expression database. In: Proceedings of the IEEE International Conference on Automatic Face & Gesture Recognition; 2008 Sep 17–19; Amsterdam, The Netherlands. p. 1–6. doi:10.1109/AFGR.2008.4813324.

128. Zhu H, Wu W, Zhu W, Jiang L, Tang S, Zhang L, et al. CelebV-HQ: a large-scale video facial attributes dataset. In: European Conference on Computer Vision (ECCV); 2022. Cham, Switzerland: Springer Nature Switzerland; 2022. p. 650–67. doi:10.1007/978-3-031-20071-7_38.

129. Sengupta S, Chen JC, Castillo C, Patel VM, Chellappa R, Jacobs DW. Frontal to profile face verification in the wild. In: 2016 IEEE Winter Conference on Applications of Computer Vision (WACV); 2016 Mar 7–10; Lake Placid, NY, USA. p. 1–9. doi:10.1109/WACV.2016.7477558.

130. Rodrigo M, González-Sosa E, Cuevas C, García N. UPM-GTI-face: a dataset for the evaluation of the impact of distance and masks in face detection and recognition systems. In: 2022 18th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS); 2022 Nov 29–Dec 2; Madrid, Spain. p. 1–8. doi:10.1109/AVSS56176.2022.9959558.

131. Bansal A, Nanduri A, Castillo CD, Ranjan R, Chellappa R. UMDFaces: an annotated face dataset for training deep networks. In: 2017 IEEE International Joint Conference on Biometrics (IJCB); 2017 Oct 1–4; Denver, CO, USA. p. 464–73. doi:10.1109/BTAS.2017.8272731.

132. Li N, Shen X, Sun L, Xiao Z, Ding T, Li T, et al. Chinese face dataset for face recognition in an uncontrolled classroom environment. IEEE Access. 2023;11:86963–76. doi:10.1109/ACCESS.2023.3302919.

133. Cabani A, Hammoudi K, Benhabiles H, Melkemi M. MaskedFace-Net: a dataset of correctly/incorrectly masked face images in the context of COVID-19. Smart Health. 2021;19:100144. doi:10.1016/j.smhl.2020.100144.

134. Eyiokur FI, Ekenel HK, Waibel A. Unconstrained face mask and face-hand interaction datasets: building a computer vision system to help prevent the transmission of COVID-19. Signal Image Video Process. 2023;17(4):1027–34. doi:10.1007/s11760-022-02308-x.

135. Muhajir M, Oktiana M, Muchtar K, Fitria M, Akhyar A, Pratama MD, et al. USK-FEMO: a face emotion dataset using deep learning for effective learning. In: 2023 2nd International Conference on Computer System, Information Technology, and Electrical Engineering (COSITE); 2023 Aug 2–3; Banda Aceh, Indonesia. p. 199–203. doi:10.1109/COSITE60233.2023.10249834.

136. Mekonnen KA. Balanced face dataset: guiding StyleGAN to generate labeled synthetic face image dataset for underrepresented group. arXiv:2308.03495. 2023.

137. Wuu CH, Zheng N, Ardisson S, Bali R, Belko D, Brockmeyer E, et al. Multiface: a dataset for neural face rendering. arXiv:2207.11243. 2022.

138. Haq MU, Sethi MAJ, Ullah S, Ullah A. The development, applications, challenges, and analysis of a cricket player face recognition dataset. In: Deep cognitive modelling in remote sensing image processing. Hershey, PA, USA: IGI Global; 2024. p. 173–97. doi:10.4018/979-8-3693-2913-9.ch008.


Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
