Open Access

REVIEW

A Systematic Review of Deep Learning-Based Object Detection in Agriculture: Methods, Challenges, and Future Directions

1 Department of Electrical & Instrumentation Engineering, Thapar Institute of Engineering & Technology, Patiala, 147004, Punjab, India

2 Department of Computer Science & Engineering, Thapar Institute of Engineering & Technology, Patiala, 147004, Punjab, India

* Corresponding Authors: Mukesh Dalal. Email: ,

Computers, Materials & Continua 2025, 84(1), 57-91. https://doi.org/10.32604/cmc.2025.066056

Received 28 March 2025; Accepted 30 April 2025; Issue published 09 June 2025

Abstract

Deep learning-based object detection has revolutionized various fields, including agriculture. This paper presents a systematic review, based on the PRISMA 2020 approach, of object detection techniques in agriculture. It explores the evolution of methods and applications over the past three years and highlights the shift from conventional computer vision to deep learning-based methodologies owing to their enhanced efficacy in real time. The review emphasizes the integration of advanced models, such as You Only Look Once (YOLO) v9 and v10, EfficientDet, Transformer-based models, and hybrid frameworks that improve precision, accuracy, and scalability for crop monitoring and disease detection. The review also highlights benchmark datasets and evaluation metrics. It addresses limitations, such as domain adaptation challenges, dataset heterogeneity, and occlusion, while offering insights into prospective research avenues, such as multimodal learning, explainable AI, and federated learning. The main aim of this paper is to serve as a thorough resource guide for scientists, researchers, and stakeholders implementing deep learning-based object detection methods for the development of intelligent, robust, and sustainable agricultural systems.

1 Introduction

Agriculture plays a central role in ensuring global food security, rural development, and economic growth. As agriculture modernizes, several challenges need to be addressed, such as climate change, pest infestations, crop diseases, workforce shortages, and the necessity for sustainable resource management [1]. These challenges can be mitigated by the integration of Artificial Intelligence (AI) and computer vision, which has opened new opportunities for improving efficiency, accuracy, and production in agricultural processes. Object detection has emerged as a pivotal AI technology, facilitating the autonomous identification, localization, and classification of objects such as leaves, fruits, pests, and diseases from images or video frames [2]. Several technologies are used for object detection in agriculture, including IoT sensors with cameras that monitor leaves, fruits, vegetables, and crops in real time. Drones and satellites capture large-scale crop images for analysis and disease or pest detection, and robotic harvesters automate the seeding and picking of fruits and vegetables. Further, owing to advancements in deep learning, object detection methodologies have transitioned from feature-based models to data-driven approaches such as the Convolutional Neural Network (CNN) [3], You Only Look Once (YOLO) [4], and Region-based CNN (R-CNN) [5], which are used for detecting pests, diseases, and stages of growth. Moreover, recent developments in Vision Transformers (ViT) [6], EfficientDet [7], YOLOv9, YOLOv10, and hybrid CNN-transformer architectures have significantly enhanced detection performance in demanding field settings. The agricultural AI business has shown significant expansion in recent years.

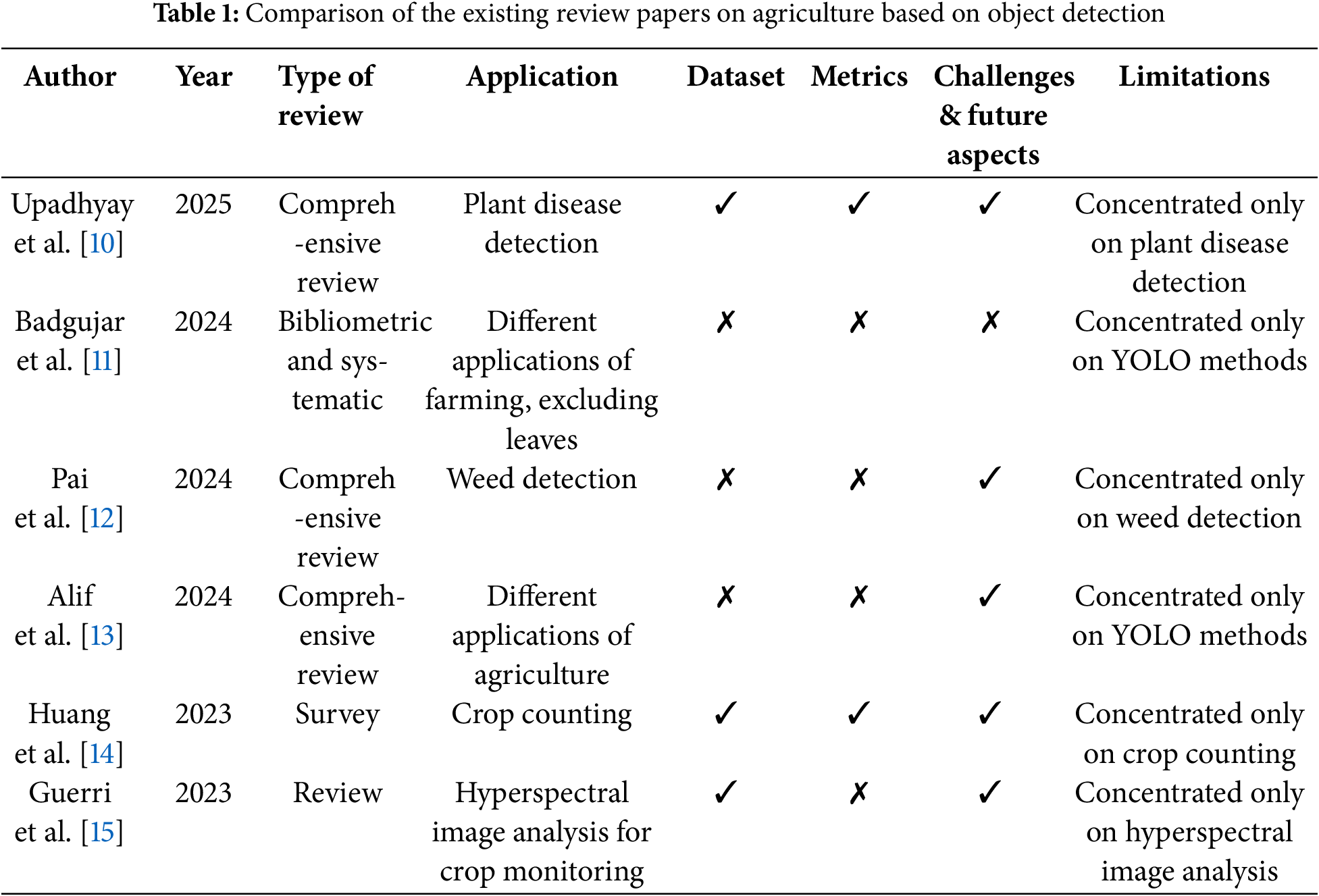

Despite this expansion, a substantial gap exists in reviewing multimodal object detection approaches, benchmark dataset standardization, and lightweight solutions for field-ready deployment, especially in resource-constrained rural environments. This necessitates a comprehensive and timely systematic review that not only compiles current developments but also identifies research gaps and future directions. This paper is thus motivated by the need to bridge the knowledge gap, provide a structured analysis of deep learning-based object detection techniques in agriculture, and guide future research toward building intelligent, sustainable, and scalable agri-tech solutions. A survey by GlobeNewswire indicates that the agricultural AI market was valued at roughly $1.63 billion in 2022 and is anticipated to reach $7.97 billion by 2030, a compound annual growth rate (CAGR) of 21.9% over this period [8]. These statistics underscore the growing incorporation of artificial intelligence technology in agriculture, propelled by the demand for improved efficiency and production in farming methods [9]. Table 1 presents a comparative analysis of the existing review papers based on object detection.
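The growth rate quoted above can be sanity-checked with the standard CAGR formula, (end / start)^(1 / years) - 1. The snippet below is a minimal sketch: the function name `cagr` is illustrative and not taken from the cited survey, and the only inputs are the two market values and the 2022 to 2030 horizon stated in the text.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market figures quoted in the text, in billions of US dollars.
rate = cagr(start_value=1.63, end_value=7.97, years=2030 - 2022)
print(f"Implied CAGR 2022-2030: {rate:.1%}")
```

The implied rate agrees with the reported 21.9% to one decimal place, so the three quoted figures are mutually consistent.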

The current review papers predominantly concentrate on specific objects, methodologies, or applications, such as plant disease detection or weed identification, resulting in a substantial deficiency of cohesive insights across several agricultural sectors. Based on the comparative analysis, the following gaps have been identified: (i) Limited Scope in Previous Research: Most of the prior reviews concentrate on a single object or application, such as exclusively plant disease identification [10] or solely weed detection [12]. Certain methods are exclusive to particular techniques, notably the YOLO family [11]. (ii) Insufficient Exploration of Wider Agricultural Applications: Few studies explore object detection’s application in monitoring, yield estimation, and disease analysis across multiple agricultural objects like leaves, fruits, vegetables, and crops. (iii) Lack of Dataset and Metric Analysis: Numerous reviews [11–13] fail to address datasets or evaluation metrics, which are essential for replicability and performance assessment in deep learning research.

This paper fills the gap by delivering a comprehensive and current analysis of various object detection methodologies, datasets, evaluation metrics, and challenges, thus providing essential guidance to researchers, practitioners, and policymakers aiming to create scalable and effective AI-driven agricultural solutions. The importance of this study lies in its thorough examination of deep learning-based object detection methods utilized for many agricultural elements, including leaves, fruits, vegetables, and crops. The rising demand for precision agriculture and automation in farming necessitates the integration of AI-driven solutions to enhance productivity, sustainability, and resilience. The systematic review was conducted for the last three years, from 2022 to 2024, and also included recent papers. This paper aims to act as a fundamental reference for researchers, scientists, stakeholders, and policymakers working in AI and agriculture, specifically for leaves, fruits, vegetables, and crops aiming to utilize deep learning-based object detection approaches for the development of intelligent, resilient, and sustainable agriculture. The presented systematic review for agriculture based on object detection using deep learning has the following contributions:

• Scope: The paper concentrated on a broader range of object detection techniques based on deep learning for four types of objects: leaves, fruits, vegetables, and crops used for different applications of agriculture, such as disease detection, monitoring, and analysis.

• Recent developments: The paper included a review of recent contributions (last three years, including the current year) in the field of agriculture based on object detection, ensuring the systematic review incorporates current technologies and trends.

• Comprehensive dataset: The paper presented a detailed overview of publicly accessible datasets utilized for object detection in agriculture, with their attributes and sources.

• Evaluation metrics: Because evaluation metrics are among the most important aspects of any research, the paper also discusses the metrics commonly used for object detection in agriculture, highlighting their relevance and effectiveness.

• Challenges and future aspects: The paper highlighted current challenges and research deficiencies in the domain, providing insights on prospective research trajectories to researchers and academicians.

2 Method and Research Questions

This section discusses the details of the method used for review based on the PRISMA approach.

2.1 Inclusion and Exclusion Criteria

A systematic search approach was employed in accordance with PRISMA 2020 standards to collect pertinent literature on object identification, deep learning, agriculture, and smart farming. The investigation was performed across multiple digital repositories, including Google Scholar, IEEE Xplore, Scopus, Springer, ACM, and ScienceDirect. Keyword combinations and Boolean operators were employed to construct the search queries. The search covered January 2022 to January 2025, concentrating on journal papers and conference proceedings in Computer Science, Artificial Intelligence, and Agriculture. The chosen articles were subsequently examined for technical specifications, experimental outcomes, datasets, and assessment criteria. Studies were excluded for four major reasons: they did not employ deep learning; they were not based on object detection; they addressed other fields of agriculture, such as animals or poultry; or they were not written in English.

2.2 Search Procedure and Risk of Bias

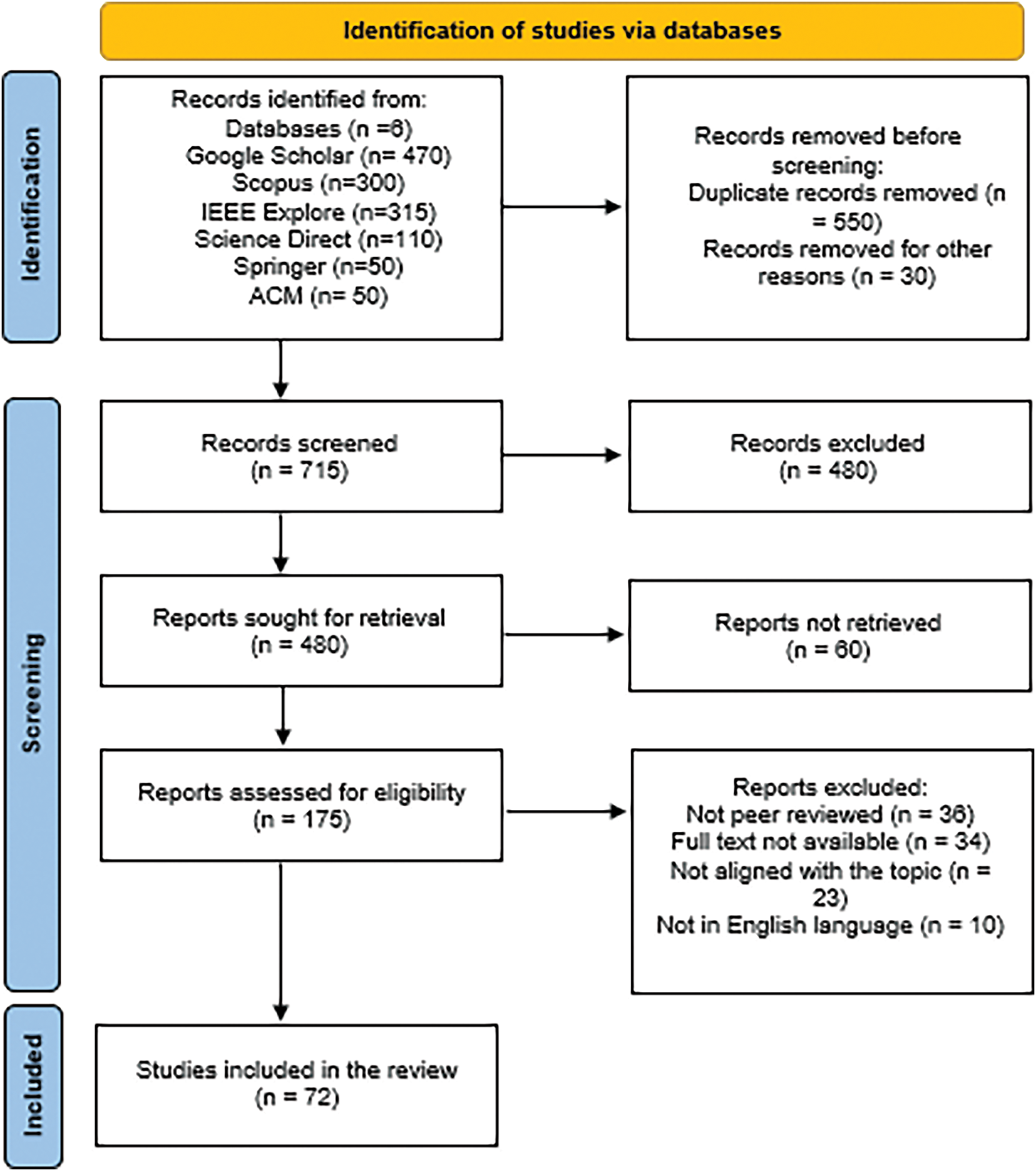

The search queries combined keywords with Boolean operators, for example: “object detection” AND “deep learning” AND “agriculture”; “CNN” OR “YOLO” OR “SSD” OR “Faster R-CNN” OR “EfficientDet” AND “crop” OR “fruit” OR “plant” OR “leaf”; “smart farming” AND “computer vision” AND “detection”. The researchers mitigated information bias by conducting an exploratory literature review, using relevant databases and tools, and avoiding data duplication. The search was completed on January 30, 2025, yielding 1295 studies. The process of inclusion and exclusion, with the details of the databases, is shown in the PRISMA 2020 flow diagram in Fig. 1.
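The way such query strings can be assembled from keyword groups is sketched below. This is only an illustration: the helper names `any_of` and `all_of` are hypothetical, and the explicit parentheses around each OR group are an assumption about the intended grouping, since databases differ in how they rank AND and OR when no parentheses are given.

```python
from typing import Iterable, List


def any_of(terms: Iterable[str]) -> str:
    """Quote each term, join the terms with OR, and parenthesize the group."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def all_of(groups: Iterable[str]) -> str:
    """Require every group to match by joining the groups with AND."""
    return " AND ".join(groups)


# Keyword groups taken from the example queries in the text.
models: List[str] = ["CNN", "YOLO", "SSD", "Faster R-CNN", "EfficientDet"]
targets: List[str] = ["crop", "fruit", "plant", "leaf"]

query = all_of([any_of(models), any_of(targets)])
print(query)
```

Writing the grouping explicitly keeps a query unambiguous when it is reused across repositories with different operator precedence.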

Figure 1: PRISMA 2020 flow diagram

(i) Study Selection

Table 2 shows the results of the search of different databases for the manuscript.

(ii) Synthesis of Results

The synthesis of the 72 studies selected for review indicates an increasing preference for deep learning-based object detection techniques in agriculture, specifically YOLO variants, EfficientDet, and transformer-based frameworks. These models provide speed and precision in real-time agricultural monitoring and pathogen identification applications. Hybrid frameworks that combine computer vision techniques with deep learning are also gaining prominence. Nonetheless, deficiencies in performance measurement and scalability persist, especially in practical implementations.

The systematic review of object detection in agriculture is done on the basis of the following research questions:

1. What are the most commonly used object detection techniques in the era of deep learning in agriculture, specifically for leaves, fruits, vegetables, and crops?

2. What are the most common datasets available for object detection in agriculture?

3. What are the common evaluation metrics used for evaluating object detection techniques in agriculture?

4. Which models in the literature are utilized most, and which are performing better at the present stage?

5. What are the challenges and future directions for researchers and practitioners working in the field of object detection in agriculture?

The review paper is divided into different sections to answer the above research questions, starting with the introduction of deep learning and object detection techniques. Further, the existing techniques are discussed with their comparative analysis for object detection in agriculture considering four objects, namely, leaves, fruits, vegetables, and crops. The review also highlights the benchmark datasets available for object detection in agriculture and evaluation metrics used for implementing and evaluating techniques, answering the second and third research questions, respectively. Moreover, the systematic review discusses the advantages and limitations of the existing object detection techniques. Additionally, answering the last research question, the applications and future aspects are discussed in the context of object detection in agriculture.

3 Overview of Object Detection in the Era of Deep Learning

Deep learning techniques have revolutionized the field of agriculture by providing accurate and efficient solutions for various tasks, such as disease detection, crop monitoring, and yield prediction. Deep learning models such as CNNs, Recurrent Neural Networks (RNNs), Generative Adversarial Networks (GANs) [16], and transfer learning are extensively employed in agriculture for disease detection, image classification, crop surveillance and weed detection. These methodologies are applicable for sequential data tasks, synthetic data generation, and the fine-tuning of pre-trained models on novel datasets. These methodologies can be utilized on drone or satellite-based images, soil moisture, historical climatic data, and additional variables to enhance crop yields and soil vitality. Among different applications of deep learning in agriculture, object detection has been one of the most prominent areas of computer vision, achieving efficiency and efficacy with the help of deep learning techniques. The timeline of the evolution of prominent object detection techniques is shown in Fig. 2.

Figure 2: Timeline of prominent object detection techniques evolution

In agriculture, the objective of object detection is to identify and localize objects such as diseased fruits, leaves, vegetables, and pests on crops in images or videos. This review considers four types of objects in agriculture, namely, leaves, fruits, vegetables, and crops. The applications of object detection for these four objects are shown in Fig. 3.

Figure 3: Agricultural applications of object detection in leaves, fruits, vegetables and crops

For leaf monitoring, researchers have explored several applications in the literature, such as disease and pest detection, stress detection, and nutrient deficiency analysis. In disease detection, researchers have identified diseases such as rust, mildew, and bacterial infections [17,18], and have detected pest infestations by identifying leaf damage patterns [19]. Similarly, for stress detection, researchers have identified drought stress via withering patterns and observed leaf yellowing and curling resulting from severe temperatures [20]. Furthermore, leaf discoloration and irregular shapes have been used to identify nutrient deficiencies. Another important application is the Leaf Area Index (LAI) calculation, which measures leaf density to estimate crop health and growth rates. For fruit and vegetable monitoring, one of the major applications in the literature is ripeness detection, which analyzes texture and color to determine the optimal harvesting time [21]. Another application is automated harvesting, in which robotic arms pick selected fruits and vegetables, increasing efficiency and reducing labor. Additionally, researchers have worked on yield estimation and on grading by size and quality using classification to produce high-quality products [22,23]. The fourth object considered in this review is crops, for which recent work on crop monitoring includes weed detection and removal for precise herbicide application [24].

Furthermore, automated robots have also been developed for weed detection and removal [25]. Researchers are also working on growth stage analysis, in which plants are monitored across growth phases from germination to harvest [26]. The color and size of a crop help determine its stage, that is, whether it is ready to harvest or needs extra care such as additional water [27]. This paper focuses on these four objects, and the related work is reviewed in the next section with a comparative analysis. Fig. 4 shows a typical example of an object detection system for agriculture. Such a system uses deep learning models such as CNNs and EfficientDet, along with attention-based models such as ViT, for different objects and applications. A dataset is curated and pre-processed using techniques like normalization and data augmentation. Post-processing techniques like thresholding, label mapping, and Non-Maximum Suppression improve precision and accuracy. Outputs include qualitative and quantitative results.

Figure 4: Basic flow of object detection in agriculture
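The post-processing stage of the pipeline described above (score thresholding followed by Non-Maximum Suppression) can be sketched in a few lines. This is a generic, greedy NMS written in plain Python for illustration, not the implementation of any reviewed paper; the function names, the box format `(x_min, y_min, x_max, y_max)`, and the default thresholds of 0.5 are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(boxes: Sequence[Box], scores: Sequence[float],
                score_thr: float = 0.5, iou_thr: float = 0.5) -> List[int]:
    """Return indices of detections kept after thresholding and greedy NMS."""
    # Step 1: drop low-confidence detections, then sort the rest by score.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    # Step 2: keep a box only if it does not overlap a higher-scoring kept box.
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep


# Two overlapping detections of one fruit, one separate fruit, one weak detection.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140), (0, 0, 5, 5)]
scores = [0.90, 0.80, 0.75, 0.30]
kept = postprocess(boxes, scores)
print(kept)
```

In this toy input the second box is suppressed because it overlaps the first, and the last box is removed by the score threshold, leaving one detection per object.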

4 Object Detection Techniques in Agriculture

This section discusses the work done by researchers and academicians in the field of object detection by utilizing deep learning models for different applications in agriculture. The section is categorized into four sub-sections covering four objects of agriculture: leaves, fruits, vegetables, and crops.

This subsection describes the techniques used for object detection in leaves for different applications, such as disease detection and classification. Du et al. [28] presented an object detection model for Spodoptera frugiperda using the Faster R-CNN framework and high-spatial-resolution RGB ortho-images from an unmanned aerial vehicle. The model, which distinguishes four classes based on feeding and invasion, achieved a mean Average Precision (mAP) of 43.6% on a test dataset. The model is useful for monitoring pest stress and achieving precise pest control in maize fields. Further, Zhang et al. [29] presented a Tranvolution detection network using GAN modules for plant disease identification. The researchers used a generative model and GAN models in the attention extraction module. The Tranvolution architecture outperformed CNN and ViT, achieving 51.7% precision, 48.1% recall, and 50.3% mAP. Xu et al. [30] introduced a real-time object detection technique for melon leaf diseases in greenhouses, improving early disease diagnosis in agriculture. The Pruned-YOLO v5s + Shuffle (PYSS) model achieved 93.2% and 98.2% mAP@0.5 for powdery mildew and genuine leaves, respectively. The model’s size and inference time were reduced by 85% and 7.5%, making it an economical and portable technique. Andrew et al. [31] used CNN-based models such as VGG-16, ResNet-50, DenseNet-121, and Inception V4 to detect plant diseases in crops, focusing on optimizing hyperparameters. The study also analyzed transfer learning models to improve recognition and classification accuracy, address labeling and classification challenges, and mitigate overfitting. The experiments used the PlantVillage dataset, on which DenseNet-121 achieved a classification accuracy of 99.81%.

Haque et al. [32] used YOLOv5 to classify and detect four common rice leaf diseases in Bangladesh: bacterial leaf blight, brown spot, leaf blast, and sheath blight. The method used a Roboflow AI notebook and pre-trained COCO weights, achieving high accuracy during training on approximately 1500 images. Cho et al. [33] introduced an intelligent agricultural robot that uses object detection, image fusion, and data augmentation to accurately quantify plant development data. Further, Li et al. [34] presented a lightweight, high-precision target detection model using YOLOv4 for recognizing tea buds in complex agricultural environments and achieved an 85.15% detection accuracy for one-bud-one-leaf and one-bud-two-leaf teas. Furthermore, Abid et al. [35] used the YOLOv8 model to automate the detection and classification of leaf diseases in five principal crops in Bangladesh: rice, corn, wheat, potato, and tomato. The YOLOv8 model surpassed its predecessors, with a mAP of 98.3% and an F1 score of 97%. Recently, Li et al. [36] presented a “Plant Leaf Detection transformer with Improved De-noising Anchor boxes (PL-DINO)” methodology that integrates a Convolutional Block Attention Module (CBAM) into the ResNet50 backbone to extract features from leaf images and uses an Equalisation Loss (EQL) to mitigate class imbalance in relevant statistics.

Saberi [37] presented a new framework for classifying leaf diseases in plants and fruits using a modified deep transfer learning model. The model used model engineering, SVM models, and kernel parameters. The ResNet-50 model achieved 99.95% accuracy in genome classification, leaf enumeration, and PLA estimation. Hemanth et al. [38] presented a hybrid model for precision agriculture disease identification, utilizing VGG16 and DenseNet121 for image classification. The model, trained on the “DiseaseLeafNet” dataset, achieved an impressive validation accuracy of 86.53%. Further, Pulugu et al. [39] presented a multi-phase system using sensors and images for disease detection, including preprocessing, feature extraction, model training, and disease identification. Ensemble Deep Reinforcement Learning was used to develop a plant disease prediction model in Pennsylvania, achieving an accuracy of 89.47%. Similarly, Gokeda et al. [40] presented a hybrid model for pest detection based on an IoT-UAV smart agriculture system. Moreover, Guan et al. [41] provided Insect25, an innovative dataset for agricultural pest detection, aimed at overcoming issues such as the scarcity of comprehensive datasets and the performance constraints of detection methods. They proposed GC-Faster RCNN, a hybrid attention mechanism that achieved 0.970 mAP@0.5 and 0.939 mAP@0.75.

Further, Liu et al. [42] introduced an intelligent agricultural robot that uses object detection, image fusion, and data augmentation to accurately quantify plant development data. The robot used a real-time deep learning object detector and fused RGB and depth images to identify target plants with enhanced accuracy. This solution addressed the limitations of traditional detection models and requires fewer calculations than current 3D point cloud-based approaches. Kaur et al. [43] explored the use of deep learning and object detection techniques to identify tomato leaf diseases, a significant risk to global tomato cultivation. The study introduced a deep convolutional Mask R-CNN framework, modifying the RPN network and restructuring its backbone for better detection accuracy. Additionally, Wang et al. [44] developed a method for detecting sweet potato leaves in natural scenes using a modified Faster R-CNN architecture and a visual attention mechanism. The technique, which uses a convolutional block attention module and the DIoU-NMS algorithm, achieved 95.7% precision, surpassing leading object detection approaches. Giakoumoglou et al. [45] presented the Generate-Paste-Blend-Detect method, which produces synthetic datasets for object detection in agriculture, focusing on whiteflies. It used Denoising Diffusion Probabilistic Models to generate objects, blended them into the environment, and then trained an object detection model. The method achieved a mAP of 0.66 using the YOLOv8 object detection model.
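The DIoU-NMS step mentioned above replaces plain IoU in the suppression test with Distance-IoU, which subtracts a penalty based on how far apart the two box centers are. The sketch below follows the commonly used definition, DIoU = IoU - d² / c², where d is the distance between box centers and c is the diagonal of the smallest box enclosing both. It is an illustrative assumption, not the code of the cited work, and the function names and box format are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def diou(a: Box, b: Box) -> float:
    """Distance-IoU: IoU minus the normalized squared center distance."""
    # Squared distance between the two box centers.
    center_dist_sq = (((a[0] + a[2]) - (b[0] + b[2])) / 2) ** 2 \
        + (((a[1] + a[3]) - (b[1] + b[3])) / 2) ** 2
    # Squared diagonal of the smallest box that encloses both boxes.
    diag_sq = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 \
        + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    if diag_sq == 0:
        return iou(a, b)
    return iou(a, b) - center_dist_sq / diag_sq


same = diou((0, 0, 2, 2), (0, 0, 2, 2))
apart = diou((0, 0, 1, 1), (2, 2, 3, 3))
print(same, apart)
```

Because the penalty grows with center distance, two overlapping boxes whose centers are far apart (for example, adjacent leaves) score lower than under plain IoU and are less likely to suppress each other.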

Further, Nwaneto et al. [46] used the YOLOv8 model to detect Taro Leaf Blight (TLB) early in taro plants, improving detection accuracy. The model, integrated into an Android application, offers real-time diagnostic and disease management capabilities to farmers. Field studies showed the model’s efficacy and intuitive design, with 98.53% accuracy on an extensive dataset. Mumtaz and Jalal [47] presented a four-phase classification pipeline to identify plant diseases in precision agriculture. It used histogram equalization, contrast stretching, segmentation methods, SIFT, Harris corner detection, and genetic algorithms for feature extraction and structure recognition. The presented method achieved an accuracy of 92.0%. Preanto et al. [48] introduced a semantic segmentation approach for diagnosing sweet orange leaf diseases using YOLOv8, which supports precision agriculture by addressing the constraints of manual inspection. The approach reported an accuracy of 80.4% in training and validation, compared with 99.12% for ViT. Mahmoud et al. [49] introduced a new strategy to improve root collar detection in precision agriculture using the YOLOv5 neural network model. The model, trained on blueberry image data, achieves a precision of 0.886 on modified images, and smooth perturbation training attains a mAP of 0.828, enhancing its robustness and generalizability. Dai et al. [50] presented a deep information feature fusion extraction network (DFN-PSAN) for plant disease classification in natural field environments, using the YOLOv5 Backbone and Neck network and pyramidal squeezed attention. The model achieves an average accuracy of 95.27% while saving 26% of parameters.

Lin et al. [51] presented the MobileNetv3-YOLOv4 architecture as a highly efficient framework for object detection in smart agriculture, achieving high accuracy and speed. Experimental results showed that the lightweight MobYOLv4 design reduces the parameter count by 82.31% and reaches 28.87 FPS, while the enhanced MobYOLv4 architecture outperforms lightweight models in accuracy, detection speed, and computational complexity, making it well suited to real-time or resource-limited applications. Moreover, Aldakheel et al. [52] used the YOLOv4 algorithm to detect and identify plant leaf diseases using the Plant Village dataset. The algorithm used over 40,000 images from 14 species and focused on rust and scab in apple leaves, using real-time object detection. The presented technique achieved an accuracy of 99.99% on the dataset, and the authors claim that the integration could improve disease prediction and management in agriculture. YOLO-Leaf is a model for detecting apple leaf diseases that addresses challenges in illumination, shadows, and perceptual fields [53]. It uses Dynamic Snake Convolution for feature extraction, BiFormer for augmented attention, and IF-CIoU for enhanced bounding box regression. Experimental results show that YOLO-Leaf outperforms current models in detection accuracy, with reported mAP50 scores of 93.88% and 95.69%, demonstrating the potential of advanced technologies in precision agriculture.

Highlights of the discussed literature

Deep learning models (YOLOv5, YOLOv8, Faster R-CNN, Mask R-CNN, DenseNet, and Transformer-based architectures like PL-DINO) have significantly advanced leaf disease detection. YOLO-based methods dominate in real-time applications, particularly for mobile-friendly implementations. Transformer-based approaches (PL-DINO) improve feature extraction and class imbalance issues. Hybrid models (e.g., Tranvolution with GAN modules, MobileNetv3-YOLOv4) offer lightweight, high-accuracy solutions for resource-limited environments. Ensemble learning and synthetic datasets are emerging trends to improve the generalizability and robustness of agricultural object detection models. Table 3 presents a comparative analysis of the above-mentioned leaf-based reviewed techniques.

For fruits, several researchers have used object detection for different applications. Liu et al. [54] developed a model for detecting and segmenting obscured green fruits. The model used a fully convolutional one-stage object detection framework and introduced a two-layer convolutional block attention network to restore incomplete edges. The model achieved recognition and segmentation accuracies of 81.2% and 85.3% on the Apple dataset and 77.2% and 79.7% on the Apple-ape dataset. Zhang et al. [55] presented a deep learning object detection method for accurately counting the fruit yield of a holly tree. The method uses UAV imagery to create a detailed map of the tree’s surface, and a YOLOX object detection network is trained using innovative techniques. The YOLOX-based fruit-counting technique is effective for various fruits, including apples and lychee, and has high inference efficiency, making it a viable option for real-time use in orchard and plantation management. The statistical correlation between detected and real figures reaches 96% for a ring shot parameter of the tree at R ≤ 1.2 m, 95% at R ≤ 1.6 m, and exceeds 99% at R ≤ 1.2 m, while surpassing 97% at R ≤ 2.0 m. Zhai et al. [56] presented the TEAVit model, a camouflage object detection network designed for identifying green tomatoes in agricultural settings. The model used a hybrid CNN-Transformer architecture, combining the lightweight efficiency of convolutional neural networks with the transformer’s global view and self-attention capabilities. The augmented dataset includes 4944 accurately labeled targets, and the model surpasses existing deep learning methods in object detection.

Liu et al. [23] introduced an MAE-YOLOv8 model for detecting green crisp plums in complex orchard environments. The model, based on YOLOv8s-p2, used an efficient multi-scale attention module, an asymptotic feature pyramid network (AFPN), and minimum point distance intersection over union (MPDIoU) as the regression loss function. The experimental results showed 92.3% precision, 82% recall, 89.4% average precision, and 68 frames per second. Furthermore, Zheng et al. [57] explored object detection in remote sensing imagery, focusing on identifying strawberries on a central Florida strawberry farm. The researchers used an object detection model and an enhanced FaceNet model to detect strawberry fruits and blossoms. The method improved the recognition accuracy of strawberry flowers, unripe fruits, and ripe fruits from 76.28% to 96.98%, 71.64% to 99.09%, and 69.81% to 97.17%, respectively. Jrondi et al. [58] compared two object detection models, DETR and YOLOv8, for fruit detection in agriculture. DETR is effective in localizing fruits, while YOLOv8 improves detection efficacy, particularly for the orange and sweet_orange categories. Both models offer insights for precision agriculture, aiding farmers in yield forecasting and harvest planning. DETR’s ability to identify objects of various sizes and thresholds is beneficial for precise fruit identification, while YOLOv8’s precision and recall rates make it suitable for fast fruit recognition.

Additionally, Zhao et al. [59] aimed to improve the detection accuracy of blueberry fruits by developing a UAV remote sensing target detection dataset for blueberry canopy fruits in an orchard setting. The PAC3 module integrates position data encoding during feature extraction, minimizing the likelihood of overlooking blueberry fruits. The PF-YOLO model outperforms other models, with mAP improvements of 5.5% over YOLOv5s, 6.8% over YOLOv5l, 2.5% over YOLOv5s-p6, 2.1% over YOLOv5l-p6, 5.7% over TPH-YOLOv5, 2.9% over YOLOv8n, 1.5% over YOLOv8s, and 3.4% over YOLOv9c. Furthermore, the AG-YOLO algorithm proposed by Lin et al. [60] for detecting citrus fruits addressed low detection accuracy and missed detections in occluded situations. It used the NextViT architecture and a Global Context Fusion Module to assimilate contextual information. Evaluated on over 8000 outdoor images, the algorithm attained a precision of 90.6%, a mean average precision of 83.2%, and a mAP@50:95 of 60.3%. Lee et al. [61] introduced a machine vision and learning method for detecting flower clusters on apple trees, enabling the prediction of potential yield. A field robot gathered 1500 photos of apple trees, and the method achieved a cluster precision of 0.88 and a percentage error of 14%. Although the model predicted lower yields, it provided insights into tree development and production. The research explores modifications to improve detection, including external illumination, diverse training data conditions, and ground truth boxes for circular items like apples and tomatoes.

Focusing on edge computing, Jiao et al. [62] introduced a real-time litchi detection technique for low-energy edge computing devices. It utilized litchi orchard images and a CNN-based detector, YOLOX, to identify litchi fruit locations. The method achieved a 97.1% compression rate and is suitable for practical orchard settings. Similarly, ref. [63] applied edge AI, using lightweight vision models to help farmers identify orange diseases and achieving 96% accuracy in species classification and object detection. Mao et al. [64] presented RTFD, a lightweight method based on the PicoDet-S model and designed to identify fruit on edge CPU devices. It enhanced real-time detection efficiency by 1.9% and 2.3% for tomato and strawberry datasets, respectively, with no computational loss. The RTFD model has significant potential for intelligent picking robots and is expected to be deployed effectively in edge computing. Zhou et al. [65] presented a framework for detecting and analyzing rod-like crops (fruits and vegetables) using multi-object-oriented detection techniques. It employed Zizania images and deep learning models using OBBLabel. The model achieved a precision of 0.903 and an accuracy of 93.4%. Furthermore, the ITF-WPI cross-modal feature fusion model improves the accuracy of wolfberry pest identification and optimizes data consumption [66]. It employs CNN and Object-Based Linear Localization (OBLL) for the concurrent analysis of images and text. The approach has practical applications in agriculture, pest management of wolfberries, and yield enhancement. Evaluated over multiple datasets, it attained an average accuracy of 97.91%.

Highlights of the discussed literature

These studies focus on improving fruit detection and segmentation in agricultural settings using deep learning techniques. Methods range from CNN-based models like YOLOX and MAE-YOLOv8 for real-time detection to Transformer-enhanced architectures such as TEAVit and AG-YOLO for improved accuracy in complex environments. UAV and remote sensing imagery are leveraged for large-scale monitoring, while object detection frameworks like DETR and YOLOv8 are compared for citrus fruit recognition. Specialized approaches, such as a fully convolutional framework for obscured green fruits and PAC3-integrated models for blueberry detection, enhance precision in challenging scenarios. These advancements contribute to automated harvesting, yield estimation, and smart agriculture applications. Table 4 highlights the comparative analysis of reviewed techniques.

This section covers literature related to vegetables. Siddiquee et al. [67] developed an IoT-enabled smart agriculture monitoring system that detects, quantifies, assesses ripeness, and identifies diseased produce. It was tested on a tomato field in Chittagong, Bangladesh, and aimed to assist farmers in digitally tracking production in Bangladesh and Malaysia. The system uses a CNN with a 90% accuracy rate. Jin et al. [68] developed a deep learning-based model to detect weeds in vegetable crops, identifying vegetables and categorizing all other green entities as weeds. The system, YOLO-v3, achieved a high F1 score of 0.971, with precision and recall values of 0.971 and 0.970, respectively. The research used ImageNet, a dataset with over 14 million labelled images, and three types of Convolutional Neural Networks (CNNs) for visual identification. Guo et al. [20] developed an automated surveillance system for airborne vegetable insect pests in South China using an RGB camera and YOLO-SIP detector. Yellow sticky traps were used for pest sampling, and a computer vision detector, YOLO-SIP, was used to accurately identify pests. The system demonstrated potential for automated pest control in vegetable fields, surpassing existing detectors in counting efficacy. Roy et al. [69] introduced a high-performance real-time fine-grain object detection model derived from an enhanced version of the YOLOv4 algorithm. The model tackles challenges in plant disease detection, including dense distribution, uneven morphology, multi-scale object classes, and textural similarity. The revised network architecture incorporates DenseNet, residual blocks, Spatial Pyramid Pooling, and Path Aggregation Network, with the Hard-Swish function enhancing accuracy through superior nonlinear feature extraction. The model surpasses current detection algorithms in accuracy and speed, offering an efficient approach for identifying plant diseases in intricate situations. 
The model additionally incorporates generalised IoU (GIoU) and Distance-IoU (DIoU) loss to enhance performance. The advanced YOLOv4 method is employed to create a precise real-time high-performance image detection model on a single GPU.
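
To make the two box-regression losses mentioned above concrete, the following is a minimal sketch of GIoU and DIoU losses for a single pair of axis-aligned boxes. It follows the standard published definitions (GIoU subtracts the normalized empty area of the smallest enclosing box; DIoU subtracts the normalized squared centre distance) and is not taken from the implementation of Roy et al. [69]. The function names and the `(x1, y1, x2, y2)` box layout are assumptions made for illustration.

```python
from typing import Tuple

# Assumed box layout: (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
Box = Tuple[float, float, float, float]


def _iou_and_union(a: Box, b: Box) -> Tuple[float, float]:
    """Return (IoU, union area) for two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return (inter / union if union > 0 else 0.0), union


def _enclosing_size(a: Box, b: Box) -> Tuple[float, float]:
    """Width and height of the smallest box enclosing both a and b."""
    return (max(a[2], b[2]) - min(a[0], b[0]),
            max(a[3], b[3]) - min(a[1], b[1]))


def giou_loss(pred: Box, target: Box) -> float:
    """GIoU loss: 1 - (IoU - (|C| - |A u B|) / |C|), C = enclosing box."""
    iou, union = _iou_and_union(pred, target)
    cw, ch = _enclosing_size(pred, target)
    c_area = cw * ch
    giou = iou - (c_area - union) / c_area if c_area > 0 else iou
    return 1.0 - giou


def diou_loss(pred: Box, target: Box) -> float:
    """DIoU loss: 1 - (IoU - d^2 / c^2), d = centre distance, c = enclosing diagonal."""
    iou, _ = _iou_and_union(pred, target)
    cw, ch = _enclosing_size(pred, target)
    diag_sq = cw ** 2 + ch ** 2
    dx = (pred[0] + pred[2]) / 2 - (target[0] + target[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (target[1] + target[3]) / 2
    diou = iou - (dx ** 2 + dy ** 2) / diag_sq if diag_sq > 0 else iou
    return 1.0 - diou
```

Unlike a plain IoU loss, both variants still vary with the box positions when the predicted and ground-truth boxes do not overlap, which is the usual motivation for using them in dense or small-object scenes.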

Furthermore, Reyes-Hung et al. [70] utilized YOLOv7 and YOLOv8 to categorize stress in potato crops using multispectral images captured by drones. The integration of RGB and monochrome images markedly enhanced accuracy metrics for both healthy and stressed plants, yielding values of 0.917 and 0.914, respectively, alongside F1 scores of 0.902 for healthy plants and 0.881 for stressed plants. Ullao et al. [71] presented work from the Sureveg CORE Organic Cofund ERA-Net project, which developed a robotic platform for strip cropping using a CNN Vegetables Detection-Characterization Method. The project explores the benefits of strip cropping systems for organic vegetable cultivation, automated equipment management, and biodegradable waste repurposing. The Automatic Robotic Fertilization Process uses ROS algorithms to identify crop varieties and their central coordinates, with cabbage achieving the highest recognition rate. The method has a high reliability index and a mean accuracy of 90.5%, with minimal error rates in vegetable characterization. Li et al. [72] presented a novel YOLOv5-based method for vegetable disease detection that improves detection range and efficacy for minor diseases. The algorithm’s CSP, FPN, and NMS modules were optimized to mitigate external influences and extend the detection range. Experiments show a mean Average Precision of 93.1% for vegetable disease identification, with few false negatives and false positives. The model’s size is 17.1 MB and the average detection time is 0.03 s. The algorithm is effective in vegetable cultivation and agricultural production, supporting precise planting and informed decision-making.

Liong et al. [73] explored automated inspection systems in the food sector, using computer vision technology to analyze the geometric characteristics of agricultural goods. The DeepLabv3+ algorithm for semantic segmentation, object detection, and object tracking is employed to precisely categorise and evaluate the geometric characteristics of carrots. The system accurately recognizes features like width, length, area, and volume, with average errors of 1.85%, 2.51%, and 5.35%. Islam et al. [74] used eight machine learning and deep learning-based CNN models to classify and segment green vegetables and diseases in Bangladesh. The paper analyzes seven leafy vegetables using five classification models (YOLOv5, YOLOv8, ResNet50, VGG16, and VGG19) and three instance segmentation models (YOLOv5, YOLOv7, and YOLOv8). Combining these models with sensors and remote sensing technologies aids real-time disease identification. López-Correa et al. [75] utilized RetinaNet to automatically identify and categorize weed species in tomato cultivation. The model, trained on RGB images, achieved an average precision of 0.900–0.977. The research also evaluated its efficacy on novel datasets, showing RetinaNet to be an effective method for weed detection in real-world settings.

Rodrigues et al. [76] explored the use of computer vision and deep learning for the dynamic phenological classification of vegetable crops. Four advanced models were evaluated using a dataset containing RGB and grayscale annotations. The YOLOv4 model correctly classified 4123 plants out of 5396 ground-truth instances, demonstrating the potential of CNN-based deep learning in agricultural procedures and thereby advancing sustainable agriculture practices. Hu et al. [21] presented a new technique for precision spraying by agricultural robots on vegetable plants using Multiple Object Tracking and Segmentation (MOTS) methodologies. Moreover, Das et al. [77] developed an autonomous agricultural rover using deep learning algorithms for vegetable harvesting and soil analysis. The model yields a precision score of 0.8518, a recall score of 0.7624, and mean average precision scores of 0.8213 and 0.4419. For real-time identification of different vegetables, Shreenithi et al. [78] presented a Mobile Veggie Detector system based on deep learning that used a smartphone application, eliminating the need for spoken communication. Zhao et al. [79] introduced YOLO-VGG, a new framework that uses two CNNs for crop harvesting. Additionally, Wang et al. [80] presented YOLOv8n-vegetable, a YOLOv8-based model designed to improve vegetable disease identification in greenhouses. The model, which uses a novel IoU loss function, achieved a mAP of 92.91%, a 6.46% increase, and a speed of 271.07 frames per second, demonstrating its competence in vegetable disease detection tasks within greenhouse planting environments.

Highlights of the discussed literature

This collection of studies explores deep learning and computer vision applications in smart agriculture, focusing on vegetable crop monitoring, disease detection, weed classification, and automated farming. Various models, including YOLO variants, RetinaNet, ResNet, and VGG, have been employed to improve accuracy in detecting pests, plant stress, and ripeness assessment. IoT-based systems, robotic automation, and multispectral imaging enhance efficiency in tasks like fertilization, phenological classification, and precision spraying. Additionally, mobile applications and AI-driven object detection improve real-time crop monitoring. The advancements highlight the potential of AI in optimizing agricultural productivity and sustainability. Table 5 shows the comparative analysis of the reviewed techniques.

This section reviews the literature on different crops; here, the term crops refers to wheat, rice, maize, etc. The majority of crop-related tasks are weed detection, yield estimation, disease detection, and growth stage detection.

Kong et al. [81] proposed CropDetdiff, a diffusion learning detector designed for identifying small-scale crop diseases in agricultural settings. It uses dynamic convolutional kernels and a graph attention network to improve feature extraction. Researchers used fuzzy label assignment and a novel loss function, HIoU, to manage detection. CropDetDiff achieved an optimal balance between detection efficacy and operating efficiency, reaching a mean Average Precision (mAP) of 73.2% and 40.1% on two datasets. Dang et al. [82] introduced a novel dataset for cotton cultivation consisting of 5648 images of 12 weed categories. The detection accuracy of different YOLO models varied from 88.14% with YOLOv3-tiny to 95.22% with YOLOv4, and from 68.18% with YOLOv3-tiny to 89.72% with Scaled-YOLOv4. Further, Kalezhi et al. [83] explored the early identification and localization of plant diseases in agriculture using YOLO and Generalised Efficient Layer Aggregation Network (GELAN) for cassava plant leaf diseases. The results showed an improvement in evaluation metrics, exceeding 80% for most disorders. Zou et al. [24] introduced a deep learning-based image augmentation technique for agricultural fields, concentrating on synthetic representations of crops, weeds, and soil. The approach is evaluated on image classification, object detection, and semantic segmentation tasks utilizing ResNet, YOLOv5, and DeepLabV3. The obtained results had an overall accuracy of 0.98, with precision, recall, and F1-score all at 0.99. The findings indicate that the suggested strategy significantly enhances crop categorization, object recognition, and semantic segmentation in agricultural fields, although with minor overfitting.

Further, Lee et al. [84] presented a technique that integrates Image Captioning and Object Detection to improve crop disease diagnosis in Korean agriculture. They utilized a transformer to generate precise diagnostic sentences for disease-infected crops, considering crop types, disease types, damage levels, and symptoms. The mean BLEU score for Image Captioning is 64.96%, while the mAP50 for Object Detection is 0.382. Onler et al. [18] addressed wheat powdery mildew, a disease of global concern caused by the fungus Blumeria graminis tritici. They utilized the YOLOv8m model and achieved a highest precision of 0.79, recall of 0.74, F1 score of 0.77, and average precision of 0.35. Moreover, Gomez et al. [85] investigated the effectiveness of precision weed spraying using two datasets, two GPU types, and multiple object detection algorithms. The work used deep learning-based object detectors like YOLOv3 and CenterNet, and a new statistic called weed coverage rate (WCR) to assess the influence of detector precision on spraying accuracy. YOLOv5m, YOLOv3, and Faster R-CNN demonstrated optimal performance, achieving a frame rate of 980 or 1024, contingent upon the number of cameras utilized. Rai et al. [86] utilized the YOLO-Spot model to detect weeds in aerial images and videos obtained from small unmanned aerial vehicles. It employed fewer parameters and smaller feature map dimensions while attaining considerable prediction accuracy. The optimized model was recommended for integration with remote sensing technologies for targeted weed management.

Kumar et al. [87] utilized YOLOv8 for weed detection in agricultural settings. The presented work achieved an accuracy of 86% in real-time weed recognition, with few false positives. However, the algorithm struggled to distinguish between weed and background instances, resulting in an accuracy of 0.0. The YOLO v8-based weed recognition algorithm has the potential for real-time agricultural tasks, particularly in-field herbicide neutralization, due to its rapid object detection and superior alignment with ground truth bounding boxes. Bhat et al. [88] developed an optimal algorithm for weed detection and management using agricultural imaging data. Researchers utilized Faster R-CNN and YOLOv6 for effective weed and crop detection using an extensive dataset of images captured under different lighting and climatic conditions. The models showed exceptional precision in identifying crops and weeds in agricultural fields, providing high accuracy and rapid processing speeds. Additionally, Zhang et al. [89] presented HR-YOLOv8, an object detection model that uses a dual self-attention mechanism to improve crop growth detection performance. The model incorporates InnerShape (IS)-IoU as the bounding box regression loss and alters the feature fusion component by linking convolution streams from high to low resolution in parallel. Experimental results show a reduction in parameters and an average detection accuracy exceeding the baseline model by 5.2% and 0.6%, respectively. The model also mitigates the imbalanced distribution of categories in the dataset.

Focusing on precision agriculture, Andreau et al. [90] utilized the YOLOv8 framework for insect detection. The authors analyze and assess YOLOv8 models, highlighting their versatility in detecting insects regardless of crop type. The findings help create a robust dataset for future insect identification technologies. Additionally, Darbyshire et al. [91] evaluated the viability of precision spraying in agriculture by employing two datasets, distinct image resolutions, and multiple object detection algorithms to assess the accuracy of weed detection and spraying. The paper presents measurements such as weed coverage rate and area treated to quantify these elements. It was determined that 93% of weeds could be eradicated by treating only 30% of the land via advanced visual techniques. Furthermore, Thakur et al. [92] also utilized YOLOv8 for the detection of crops and weeds; the model was trained and assessed on a specialized crop and weed detection dataset and reached an mAP of 89.7%. Naik et al. [93] used RCNNs to identify and categorize weeds in sesame fields, with the goal of developing an automated pest and weed management system for sesame cultivation that provides farmers with critical insights about weed composition, enhances crop health, increases yield, and reduces herbicide application. The proposed technique achieved a detection accuracy of 96.84% and a weed classification accuracy of 97.79%, reducing reliance on hazardous chemicals.

Moreover, Singh et al. [94] presented an IoT-based real-time object detection system for crop protection and agricultural field security using ESP32-CAM and Raspberry Pi, along with an optimized YOLOv8 model. The Ad-YOLOv8 model integrates IoT and deep learning to tackle issues like scale variation, occlusion, background clutter, light fluctuations, and small object recognition. The system delivered real-time notifications to farmers via Firebase Cloud Messaging, achieving a precision of 97%, recall of 96%, and accuracy of 96%. Furthermore, Sharma et al. [95] also utilized YOLOv8 models for pest detection. Suriyage et al. [96] explored a new weed detection method using the DETR model with a ResNet-50 backbone. The model shows promising performance with a training loss of 2.7083 and a validation loss of 1.9104. Focusing on the security of sustainable agriculture, Singh et al. [97] developed E-YOLOv3 to improve crop protection and reduce crop loss in agricultural settings. The model is well suited to real-time applications and to mitigating agricultural damage caused by humans or animals. It outperforms four previous models with 97% precision, 96% recall, 96% F1 score, 80.81% IoU, and 95.86% mAP. Another work, by Wang et al. [98], introduced Concealed Crop Detection (CCD), a new benchmark for detecting concealed objects in crowded agricultural environments. The authors proposed a Recurrent Iterative Segmentation Network (RISNet) framework to improve current Camouflaged Object Detection (COD) methodologies.

Highlights of the discussed literature

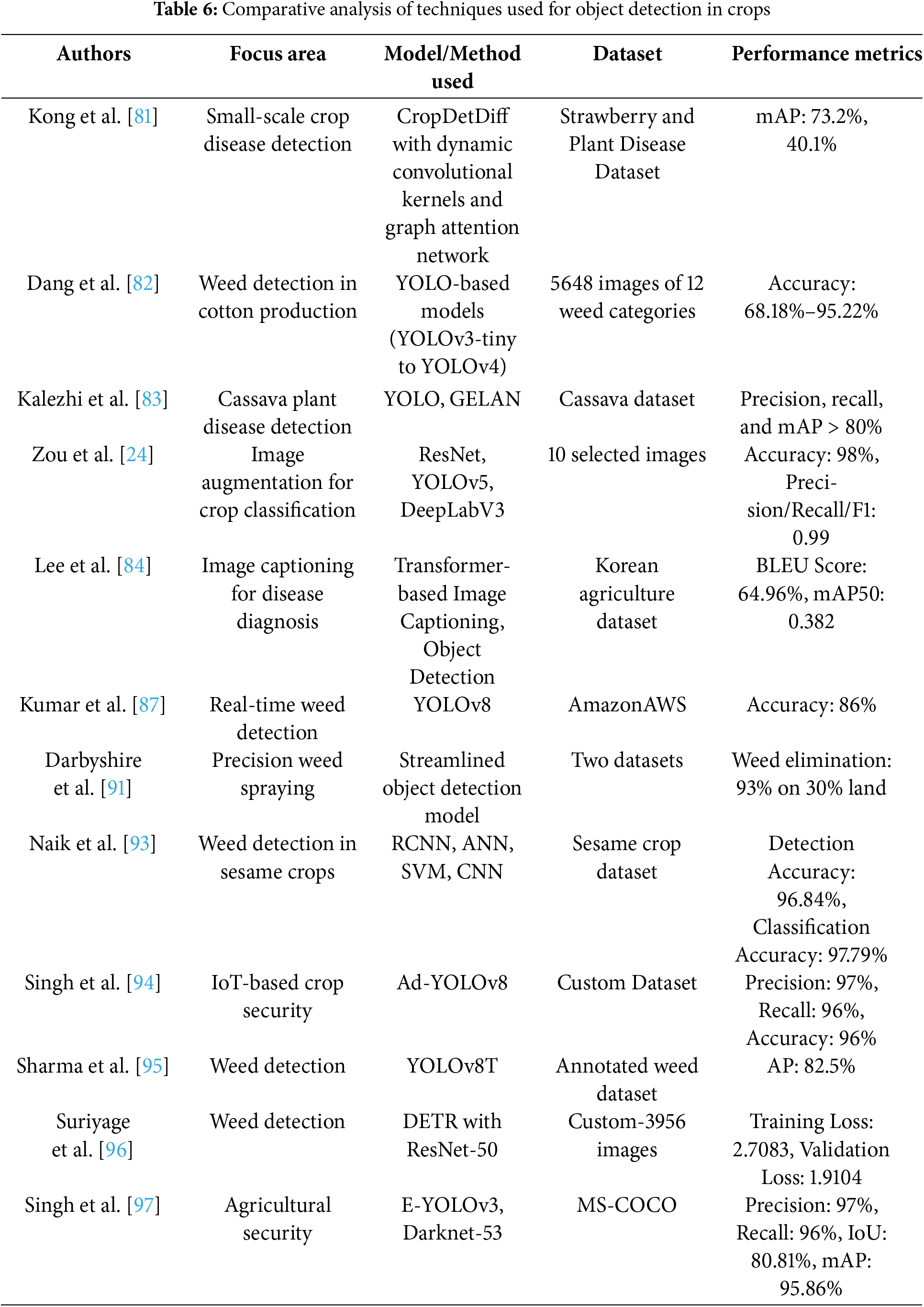

Studies have developed innovative object detection approaches for agricultural applications, focusing on crop disease diagnosis, weed detection, and pest control. These include CropDetdiff, YOLOWeeds, GELAN, and YOLOv8m. These methods have achieved high detection accuracies and improved crop and weed segmentation accuracy. The use of advanced deep learning techniques in these applications demonstrates the potential for real-time agricultural monitoring and decision-making. Table 6 highlights the comparison of different object detection techniques in crops.

This section presents the benchmark datasets for the four object types considered in this review. Several common agricultural datasets are available. The PlantDoc dataset offers authentic images captured in natural environments, which improves model resilience under real-world field settings. The New Plant Diseases Dataset is a comprehensive dataset that provides an extensive compilation of images spanning 38 categories, ideal for training deep learning models for disease identification. More recently, the Benchmark Dataset for Plant Leaf Disease Detection was introduced; it comprises high-resolution images of particular vegetables, suitable for detailed analysis and segmentation tasks. Table 7 summarizes different datasets for leaves, fruits, vegetables and crops (including wheat, rice, etc.), covering details such as the name of the dataset, type of images, dataset size, and the source from which the dataset can be downloaded. The datasets covered in this section mainly focus on diseases, as this is one of the most prominent applications of image datasets used for object detection [99], re-identification [100] and classification of different plant species [101].

Evaluation of object detection models based on deep learning in agriculture necessitates specific metrics for evaluating the efficacy in detection, localization, and classification. Object detection can be done for different objects in agriculture, such as leaves, vegetables, fruits, and crops. Some of the commonly used evaluation metrics are as follows:

i. Precision: The ratio of correctly detected objects to the total number of objects detected. A high precision indicates few false positives.

$$\text{Precision} = \frac{TP}{TP + FP}$$

where TP and FP refer to true positives and false positives, respectively.

ii. Recall: The ratio of correctly detected objects to the total number of actual objects present in the image. A high recall indicates few false negatives.

$$\text{Recall} = \frac{TP}{TP + FN}$$

where FN refers to false negatives.

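iii. F1-Score: The F1-Score is the harmonic mean of precision and recall, offering a balanced assessment.

$$\text{F1} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$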

iv. Frames Per Second (FPS): FPS is used to evaluate the model’s inference velocity, essential for real-time applications such as drone-assisted crop monitoring.

v. Intersection over Union (IoU): IoU is used to measure the precision of object localization by assessing the overlap between the predicted and ground truth bounding boxes. A higher value of IoU (e.g., IoU > 0.5) signifies better localization.

vi. Mean Average Precision (mAP): mAP is a standard metric for object detection. The average precision (AP) is computed for each class at a given IoU threshold, and mAP is the mean of these AP values over all classes.
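
As an illustration of how the metrics listed above fit together, the following sketch computes IoU, matches predictions to ground-truth boxes at an IoU threshold to obtain TP/FP/FN counts, and then derives precision, recall, F1-score, AP, and mAP. It is a simplified, single-image, single-class sketch under stated assumptions: boxes use an `(x1, y1, x2, y2)` layout, predictions are already sorted by descending confidence, matching is greedy and one-to-one, and AP uses all-point interpolation. All function names are hypothetical and do not correspond to any specific benchmark toolkit.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: Sequence[Box], truths: Sequence[Box],
                     thr: float = 0.5) -> List[bool]:
    """Greedy one-to-one matching; preds must be sorted by descending confidence.

    Returns one flag per prediction: True for a true positive, False for a false positive.
    """
    used = set()
    flags = []
    for p in preds:
        best_j, best_v = -1, thr
        for j, t in enumerate(truths):
            v = iou(p, t)
            if j not in used and v >= best_v:
                best_j, best_v = j, v
        if best_j >= 0:
            used.add(best_j)
        flags.append(best_j >= 0)
    return flags


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(flags: Sequence[bool], n_truth: int) -> float:
    """All-point interpolated AP for one class; flags follow confidence order."""
    if n_truth == 0 or not flags:
        return 0.0
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(flags, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / n_truth)
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """mAP as the plain mean of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class) if ap_per_class else 0.0
```

For example, with two ground-truth boxes and three predictions of which the second matches nothing, the sketch yields TP = 2, FP = 1, and FN = 0. Published benchmarks typically aggregate over a whole dataset and often average over several IoU thresholds, which this sketch does not do.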

Common evaluation criteria for AI-driven object detection have limitations due to inconsistent labelling, environmental variability, and class imbalance. The evaluation metrics like mAP, IoU, and F1-score provide benchmarks for object detection models, but their alignment with real-world agricultural needs, particularly for smallholder farmers and resource-limited environments, requires further scrutiny. Metrics should reflect robustness to lighting, partial occlusion, lower-resolution imagery, energy efficiency, and computational load. Aligning metric selection with ground-level use cases, data limitations, and socio-technical constraints is crucial for translating AI-driven object detection into sustainable agricultural tools.

7 Critical Analysis and Discussion

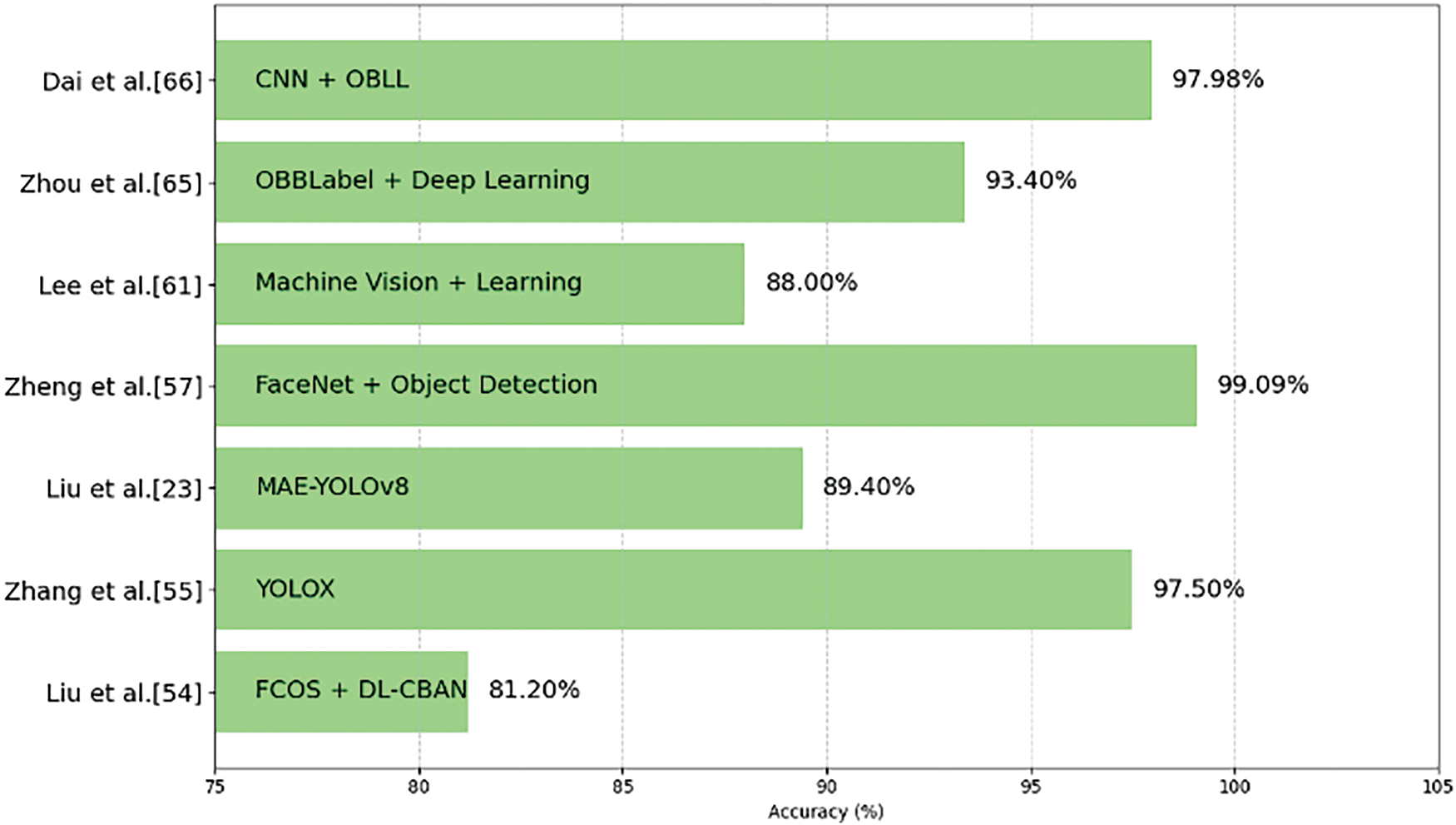

Object detection using deep learning in agriculture provides numerous benefits, including enhanced efficacy, accuracy, adaptability, and scalability. It is utilized for the automation of operations like crop monitoring and disease identification, saving effort and time, and can be used on enormous datasets. Object detection in agriculture can also be applied to a variety of tasks, including image classification and disease detection. In this section, performance analysis of agricultural studies has been done to find the choices for efficient datasets, models, and metrics. In Fig. 5, the bar graph compares the accuracy or mAP values for considered studies for leaves as mentioned in Table 3 for different models such as YOLOv5, Faster R-CNN, DenseNet-121, ResNet-50, YOLOv8, etc.

Figure 5: Comparison of reviewed studies for leaves agricultural applications [28,29,31,32,35–37,39,44–46,48–51]

Fig. 6 visualizes the accuracy or mAP (%) of various studies and object detection models on fruit-related agricultural applications. Zheng et al. [57], using the enhanced FaceNet model, surpass all other models with an accuracy of 99.09%.

Figure 6: Comparison of reviewed studies for fruits agricultural applications [23,54,55,57,61,65,66]

The bar graph in Fig. 7 compares the accuracy/mAP of various studies used in vegetable-related agriculture. The highest-performing model is Li et al. [72] with 93.1% accuracy, followed closely by Wang et al. [80] with 92.91% accuracy and Reyes-Hung et al. [70] with 91.7% accuracy. Most models achieved accuracy or mAP values above 90%, demonstrating their effectiveness in tasks like disease detection, pest identification, and crop monitoring. The graph highlights the growing role of deep learning, particularly YOLO-based architectures, in optimizing agricultural automation.

Figure 7: Comparison of reviewed models for vegetable agricultural applications [67,70–72,80]

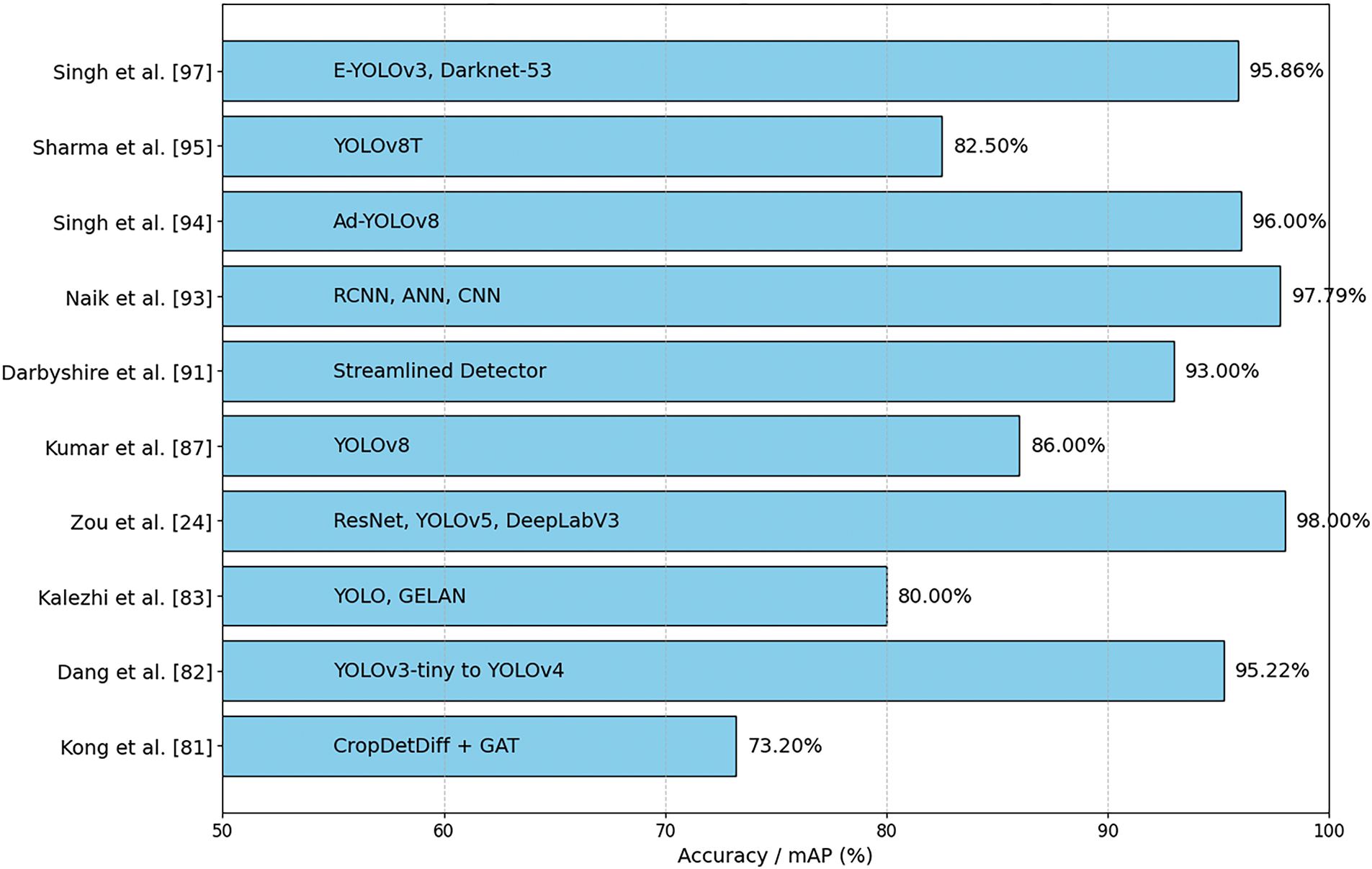

The bar chart in Fig. 8 presents the accuracy (or mAP) of various deep learning models used in recent crop-based studies. Each entry includes the author’s name and the corresponding model on the y-axis, while accuracy is represented on the x-axis. The chart shows that the approaches of Zou et al. [24] (ResNet with YOLO and DeepLab), Singh et al. [94] (YOLOv8), and Naik et al. [93] (RCNN-based) achieved high accuracy, often exceeding 90%. Some studies focusing on weed and disease detection achieved lower accuracy, indicating potential challenges in complex agricultural environments. Overall, YOLO-based models dominate recent advancements in precision agriculture, offering reliable performance for tasks such as crop classification, disease identification, and weed detection.

Figure 8: Comparison of reviewed models for crops agricultural applications [24,81–83,87,91,93–95,97]

This section also presents a critical analysis by summarizing the answers to the research questions highlighted in Section 2.

1. What are the most commonly used object detection techniques in the era of deep learning in agriculture, specifically for leaves, fruits, vegetables and crops?

Answer: Convolutional Neural Network (CNN) methodologies are extensively employed in agriculture for detecting crop diseases, identifying weeds, and detecting and counting fruits. The techniques encompass R-CNN, YOLO, SSD, lightweight and mobile-optimized detectors, transformer-based object detectors, hybrid methodologies, and classical approaches. R-CNN is employed for superior accuracy, whereas YOLO is utilized for real-time detection. Lightweight and mobile-optimized detectors are crucial for edge computing and IoT-driven agricultural systems. Transformer-based object detectors are advancing swiftly thanks to their attention mechanisms and enhanced spatial awareness. Hybrid methodologies combine convolutional neural networks and transformers to achieve elevated precision and scalability. Classical approaches, such as HOG and SIFT/SURF, are infrequently utilized yet still cited in baseline comparisons.

2. What are the most common datasets available for object detection in agriculture?

Answer: Different agricultural datasets are available, covering applications such as disease detection and classification of different crops. One of the most commonly used datasets in the literature is the PlantDoc dataset, which consists of images from natural environments, enhancing model resilience in real-world settings. The New Plant Diseases Dataset, comprising 38 categories, is a comprehensive collection of images ideal for complex analysis and segmentation tasks, particularly for training deep learning models for disease identification. The Benchmark Dataset for Plant Leaf Disease Detection also offers high-resolution images of specific vegetables. Other examples include the Mendeley Leaf Disease Dataset, PlantVillage, Rice Leaf Disease Dataset, AI Challenger 2018, and Fruit-360.

3. What are the common evaluation metrics used for evaluating object detection techniques in agriculture?

Answer: The common evaluation metrics used for evaluating object detection techniques in agriculture include IoU, mAP, F1-Score, Precision, Recall, and Accuracy. The appropriate metrics should be chosen according to the specific agricultural object detection problem. Some recommendations are as follows:

(i) Classification: For classification and counting problems, common metrics are F1-score, IoU, mAP, Recall and Precision.

(ii) Disease Detection: For crop disease detection problems, suitable metrics include the Dice Coefficient, mAP, and IoU.

(iii) Object Detection: Real-time object detection can be evaluated using IoU, mAP, FPS and other common metrics used for classification.

(iv) Estimation: Crop yield estimation can be evaluated using MSE, F1-Score, RMSE, etc.
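
The recommendations above mention two metrics that were not defined in the evaluation metrics section: the Dice coefficient for segmentation-style disease detection and MSE/RMSE for yield estimation. The following is a minimal sketch of both, using their standard definitions. The function names are hypothetical, masks are assumed to be flat sequences of 0/1 values, and yields are assumed to be plain numeric sequences of equal length.

```python
import math
from typing import Sequence


def dice_coefficient(pred_mask: Sequence[int], true_mask: Sequence[int]) -> float:
    """Dice = 2 * |A n B| / (|A| + |B|) for flat binary masks of equal length."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same length")
    inter = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    total = sum(1 for p in pred_mask if p) + sum(1 for t in true_mask if t)
    # Two empty masks agree perfectly, so report 1.0 in that case.
    return 2 * inter / total if total else 1.0


def mse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean squared error between predicted and observed values."""
    if len(pred) != len(true) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(pred)


def rmse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Root mean squared error, in the same units as the yield itself."""
    return math.sqrt(mse(pred, true))
```

RMSE is often easier to interpret than MSE for yield estimation because it is expressed in the same unit as the predicted quantity (for example, fruits per tree).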

4. Which models in the literature are utilized most and which are performing better at the present stage?

Answer: Researchers have used various deep learning methodologies for object detection in agriculture, including YOLO, Faster R-CNN, Mask R-CNN, RetinaNet, EfficientDet, and transformer-based methodologies. YOLO uses a single neural network to predict bounding boxes and class probabilities in one pass, while Faster R-CNN first generates candidate regions using a region proposal network. Mask R-CNN extends Faster R-CNN by predicting pixel-level masks. RetinaNet uses Focal Loss to focus training on difficult samples, while EfficientDet is a one-stage detector that pairs an EfficientNet backbone with a bidirectional feature pyramid network (BiFPN) and compound scaling. Transformer-based methodologies, such as DETR and ViT, have also shown high performance in object detection tasks.
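
To illustrate how Focal Loss down-weights easy samples, the following is a minimal scalar sketch of the binary focal loss, FL(p_t) = -α_t (1 - p_t)^γ log(p_t). The defaults α = 0.25 and γ = 2 are the values commonly quoted for RetinaNet. The function name and the scalar interface are assumptions for illustration; practical detectors apply the loss over tensors of anchor predictions.

```python
import math


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss for one prediction.

    p is the predicted probability of the positive class and y is the label (0 or 1).
    """
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)           # clamp to avoid log(0)
    p_t = p if y == 1 else 1.0 - p             # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    # (1 - p_t)^gamma shrinks the loss of well-classified samples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

With γ = 0 the modulating factor disappears and the expression reduces to α-weighted cross-entropy. With γ = 2, a positive sample predicted at p = 0.9 has its cross-entropy term scaled by (0.1)^2 = 0.01 before the α weighting, so training is dominated by harder samples such as occluded or small objects.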

Further, edge computing is essential for instantaneous decision-making in precision agriculture, because it reduces dependence on internet connectivity and minimizes latency. Deploying deep learning models on edge hardware poses problems related to memory, computational capacity, and energy efficiency. To tackle these issues, researchers are investigating lightweight models such as MobileNet, EfficientDet-Lite, and Tiny-YOLO, which can deliver near real-time inference with little loss of accuracy. Edge AI thus bridges scholarly research and scalable applications in diverse agricultural settings.
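
When comparing lightweight models on an edge device, the FPS metric can be estimated with a simple timing harness. The sketch below is one possible way to do so, assuming a hypothetical `infer` callable that wraps whichever model is being evaluated. It runs a few warm-up calls first, since the first inferences on a device are often slower, and then times the remaining loop with `time.perf_counter`.

```python
import time
from typing import Any, Callable, Iterable


def measure_fps(infer: Callable[[Any], Any], frames: Iterable[Any],
                warmup: int = 3) -> float:
    """Estimate frames per second of `infer` over `frames`.

    `infer` is a placeholder for any single-frame inference function.
    """
    frames = list(frames)
    # Warm-up calls are excluded from the timing.
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")
```

Such a measurement covers only the work done inside `infer`. If image capture, pre-processing, or non-maximum suppression happen outside it, the end-to-end rate observed in the field will be lower.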

5. What are the challenges and future directions for researchers and practitioners working in the field of object detection in agriculture?

Answer: In agriculture, object detection encounters technical obstacles such as restricted datasets and inadequate model generalization. In addition, several real-world conditions are difficult to handle, including weather variability, occlusions, and natural lighting conditions, which complicate real-world implementation and scalability.

The findings of this review predominantly align with prior literature highlighting the efficacy of YOLO-based object detection frameworks in agricultural settings, owing to their equilibrium of speed and precision [13,93]. This review recognizes the extensive utilization of YOLO variations (v5 to v10) for real-time crop monitoring, pest detection, and yield calculation, akin to the findings of Badgujar et al. [11]. This study expands beyond previous reviews that concentrated solely on specific applications, such as plant disease detection or crop counting, by encompassing four distinct object types: leaves, fruits, vegetables, and crops, thereby providing a more comprehensive overview of agricultural use cases. Moreover, Alif et al. [13] underscored the preeminence of YOLO models but neglected to investigate contemporary transformer-based architectures such as PL-DINO or TEAVit, which this publication recognizes as advantageous for addressing class imbalance and occlusion issues. Huang et al. [14] illustrated the potential of deep learning for crop counting; however, their analysis did not adequately address the problems of lightweight deployment and the possibility of multimodal learning, which are identified as significant gaps in the present study. This review builds upon previous research by critically examining emergent trends, including synthetic datasets [45], hybrid CNN-transformer models [56], and the necessity for explainable AI and domain adaptability—topics that were scarcely addressed in earlier reviews. This article differs from Pai et al. [12], which concentrated exclusively on weed detection, by including various applications such as disease diagnosis, phenological stage analysis, and robotic integration, thereby serving as a more comprehensive reference for future agricultural AI systems.

This review paper provides both theoretical and practical contributions. Theoretically, it enhances the academic understanding of how deep learning-based object detection models are adapted and evaluated in various agricultural applications, emphasizing trends, performance constraints, and methodological deficiencies. Practically, the findings provide a valuable resource for researchers, developers, and policymakers seeking to implement AI-driven solutions in precision agriculture by offering insights into appropriate models, datasets, and evaluation metrics for tasks including yield estimation, crop monitoring, and disease detection. To address the current challenges, several future directions are recommended for object detection in agriculture, encompassing multimodal data fusion, real-time edge computing, integration with robotics and drones, and explainable artificial intelligence. Possible uses include automated harvesting, precision spraying, climate-resilient monitoring, and advanced crop management systems for sustainable, high-efficiency agricultural methods.

8 Challenges and Future Aspects

Deep learning-based object detection in agriculture offers clear benefits but also faces challenges such as data scarcity, limited model generalization, computing limitations, regulatory compliance, and the need for domain-specific AI solutions. Agricultural ecosystems are dynamic, so models must remain accurate across regions. Innovations such as self-supervised learning, few-shot learning, multimodal AI, and Edge AI can help overcome these constraints, advancing precision agriculture and facilitating sustainable practices. Strategies like data augmentation, transfer learning, and domain adaptation can improve model resilience and reduce reliance on extensive datasets, while lightweight architectures and edge computing solutions can facilitate real-time execution on limited hardware. Addressing these obstacles is crucial for progressing from experimental settings to scalable, field-ready AI applications in agriculture. The remaining challenges are summarized in this section, followed by future aspects for researchers new to this domain. The challenges are as follows:

(i) Dataset: Agricultural datasets often lack diverse, annotated, and extensive imagery, which complicates classification and detection. Variability in morphology (appearance), overlap between fruits and foliage, and class imbalance present significant obstacles. Researchers should build domain-specific datasets that cover diverse environmental conditions to tackle these data-related difficulties.

(ii) Deep Learning Model & Algorithms: Selecting a deep learning model is another major challenge. The choice must balance generalization across crops, computational speed, model complexity, few-shot and zero-shot learning capability, small object detection, and real-time detection on drones and robots. Lightweight AI models are essential for on-device processing, whereas high-resolution images are required for the early diagnosis of diseases.

(iii) Implementation: Challenges in implementing AI-based agriculture encompass cost, integration with robotics and automation, accessibility, absence of standardization, and user acceptance. Affordable mobile solutions are essential, while accurate object localization and intuitive interfaces are vital for confidence and acceptance.

(iv) Environmental & Real-World Challenges: Environmental obstacles in agricultural images include lighting, background complexity, seasonal fluctuations, weather conditions, and camera and sensor constraints. In the field, factors such as fog, precipitation, variations in sunlight intensity, and dust can degrade the quality of the captured image. Sensor noise and unstructured fields can further reduce precision and accuracy.

(v) Regulatory Compliance: Deep learning in agriculture encounters data protection and regulatory obstacles stemming from GDPR, CCPA, and national AI legislation. Concerns regarding privacy, data ownership, security, and ethics influence AI models for precision agriculture, disease identification, and resource efficiency. Adherence to AI governance policies and resolution of compliance concerns are essential for facilitating AI deployment in agriculture.
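The class imbalance noted in item (i) is commonly mitigated by weighting rare classes more heavily during training. The following is a minimal sketch of one such heuristic, inverse-frequency ("balanced") weighting, and is not a method taken from the reviewed studies. The function name `balanced_class_weights` and the label counts are hypothetical and chosen only for illustration.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical annotation labels from an imbalanced field dataset (assumed counts).
labels = ["leaf"] * 80 + ["fruit"] * 15 + ["pest"] * 5

weights = balanced_class_weights(labels)
for cls, weight in sorted(weights.items(), key=lambda item: item[1]):
    print(f"{cls}: {weight:.3f}")
```

Under this heuristic the rarest class receives the largest weight, so its errors contribute more to a weighted loss. Whether this helps a particular detector depends on the model and dataset, and it complements rather than replaces collecting more examples of rare classes.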

Future Aspects

Agriculture will continue to evolve as technology advances, and several future aspects remain to be explored by researchers, scientists, practitioners, and stakeholders. Some of them are discussed below:

(i) Explainable AI (XAI): Researchers and scientists should focus on developing explainable AI methodologies that elucidate the decision-making process. This will help farmers understand the reasoning behind AI predictions and decisions.

(ii) Cross-Domain Adaptation: Cross-domain adaptation involves training models to generalize across diverse regions and environments, improving their ability to adapt to the many situations encountered in agriculture.

(iii) Agricultural Robotics: Agricultural robotics is a prominent and rapidly evolving field. Future work includes creating robots that use deep learning to automate agricultural tasks such as crop monitoring, crop trimming, and harvesting.

(iv) Multimodal Learning: Multimodal learning integrates diverse data types, such as text, images, sensor data, and satellite data, to improve decision-making and automation in agriculture. Future work includes creating multimodal methodologies that use deep learning models to fuse different information sources for enhanced accuracy and insights.

(v) Neural Architecture Search (NAS): NAS is a technique for automatically constructing deep learning architectures; it is a core component of AutoML and has produced models such as NASNet. For agriculture-specific problems, NAS can identify suitable models for applications such as crop monitoring, yield prediction, precision farming, and crop disease detection. The search aims to enhance efficiency and accuracy while minimizing computational complexity.

(vi) Multispectral Analysis: Multispectral analysis uses several spectral bands, such as visible, near-infrared, and thermal, captured by drones or satellites to assess soil conditions, crop health, and water stress. This method is extensively employed to address real-time problems and supports precision agriculture, crop surveillance, and disease identification to enhance agricultural decision-making.

(vii) Self-Supervised Learning (SSL): Researchers should use SSL to learn from unlabeled data for yield prediction, crop-disease diagnosis, and multimodal analysis. SSL can also enhance domain adaptation, real-time monitoring, and Edge AI deployment of crop disease monitoring when labeled data are limited.
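As an illustration of the multispectral analysis in item (vi), the sketch below computes the Normalized Difference Vegetation Index (NDVI), a widely used crop health indicator derived from the near-infrared and red bands. NDVI is not discussed in this review and is used here only as a familiar example. The reflectance values, the function name `ndvi`, and the 0.3 stress threshold are assumptions made for illustration, not recommended settings.

```python
def ndvi(nir, red, eps=1e-9):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red) for two equally sized 2-D bands."""
    if len(nir) != len(red) or any(len(a) != len(b) for a, b in zip(nir, red)):
        raise ValueError("NIR and red bands must have the same shape")
    return [
        # Pixels with no signal in either band are assigned 0.0 to avoid division by zero.
        [(n - r) / (n + r) if (n + r) > eps else 0.0 for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]


# Hypothetical 2 x 2 reflectance patches (values in [0, 1]) from a drone image.
nir_band = [[0.60, 0.55], [0.30, 0.00]]
red_band = [[0.10, 0.15], [0.25, 0.00]]

index_map = ndvi(nir_band, red_band)

# Flag pixels below an assumed threshold as possibly stressed or non-vegetated.
STRESS_THRESHOLD = 0.3  # illustrative value only
stressed = [[value < STRESS_THRESHOLD for value in row] for row in index_map]
print(stressed)
```

In practice the bands would come from calibrated sensor data, and any threshold would need to be validated per crop and growth stage.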

This paper presented a systematic review of the last three years of research in agriculture, focusing on deep learning-based object detection for four types of objects: leaves, fruits, vegetables, and crops. It traced the evolution of object detection methods in agriculture, from traditional computer vision to deep learning strategies, and described advancements in models such as CNNs, Region-based CNNs, and the YOLO family that enhance precision and efficiency. The paper evaluated benchmark datasets and metrics, underscoring the need for improved object detection algorithms in agriculture. It also identified challenges in creating intelligent and sustainable systems and called for further research into creative solutions. The main contribution of the paper is a thorough examination of recent developments and prospective trajectories in deep learning-based object detection for agriculture, which lays the groundwork for smart agricultural solutions that enhance productivity, sustainability, and food security.

Acknowledgement: The authors would like to thank Thapar Institute of Engineering & Technology, Patiala, for their support.

Funding Statement: The authors received no specific funding for this study.

Author Contributions: Both authors contributed equally to writing the manuscript. Mukesh Dalal: initial draft, data curation, comparative analysis, and thorough proofreading. Payal Mittal: figures, equations, critical analysis, and thorough proofreading. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data will be available on reasonable request.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

Supplementary Materials: The supplementary material is available online at https://www.techscience.com/doi/10.32604/cmc.2025.066056/s1.

References

1. Devlet A. Modern agriculture and challenges. Front Life Sci Relat Technol. 2021;2(1):21–9. doi:10.51753/flsrt.856349.

2. Gupta S, Tripathi AK. Fruit and vegetable disease detection and classification: recent trends, challenges, and future opportunities. Eng Appl Artif Intell. 2024;133:108260. doi:10.1016/j.engappai.2024.108260.

3. O’shea K, Nash R. An introduction to convolutional neural networks. arXiv:1511.08458. 2015.

4. Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: unified, real-time object detection. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016 Jun 27–30; Las Vegas, NV, USA. p. 779–88. doi:10.1109/CVPR.2016.91.

5. Girshick R, Donahue J, Darrell T, Malik J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans Pattern Anal Mach Intell. 2016;38(1):142–58. doi:10.1109/TPAMI.2015.2437384.

6. Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, et al. An image is worth 16 × 16 words: transformers for image recognition at scale. arXiv:2010.11929. 2020.

7. Tan M, Pang R, Le QV. EfficientDet: scalable and efficient object detection. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020 Jun 13–19; Seattle, WA, USA. p. 10778–87. doi:10.1109/cvpr42600.2020.01079.

8. Shahbandeh M. AI in agriculture market value worldwide 2023–2028. [cited 2025 Feb 15]. Available from: https://www.statista.com/statistics/1326924/ai-in-agriculture-marketvalue-worldwide/#:~:text=The%20value%20of%20artificial%20intelligence,billion%20U.S.%20dollars%20y%202028.

9. Mathur A. Artificial intelligence in agriculture market skyrockets to $7.97 billion by 2030 dominated by Tech Giants—Tule Technologies Inc., Precisionhawk Inc. and Easytosee Agtech Sl. The insight partners. [cited 2025 Feb 15]. Available from: https://www.globenewswire.com/news-release/2024/12/16/2997588/0/en/Artificial-Intelligence-in-Agriculture-Market-Skyrockets-to-7-97-Billion-by-2030-Dominated-by-Tech-Giants-Tule-Technologies-Inc-Precisionhawk-Inc-and-Easytosee-Agtech-Sl-The-Insigh.html?ut.

10. Upadhyay A, Chandel NS, Singh KP, Chakraborty SK, Nandede BM, Kumar M, et al. Deep learning and computer vision in plant disease detection: a comprehensive review of techniques, models, and trends in precision agriculture. Artif Intell Rev. 2025;58(3):92. doi:10.1007/s10462-024-11100-x.

11. Badgujar CM, Poulose A, Gan H. Agricultural object detection with you only look once (YOLO) algorithm: a bibliometric and systematic literature review. Comput Electron Agric. 2024;223(4):109090. doi:10.1016/j.compag.2024.109090.

12. Pai DG, Kamath R, Balachandra M. Deep learning techniques for weed detection in agricultural environments: a comprehensive review. IEEE Access. 2024;12(6):113193–214. doi:10.1109/access.2024.3418454.

13. Alif MAR, Hussain M. YOLOv1 to YOLOv10: a comprehensive review of YOLO variants and their application in the agricultural domain. arXiv:2406.10139. 2024.

14. Huang Y, Qian Y, Wei H, Lu Y, Ling B, Qin Y. A survey of deep learning-based object detection methods in crop counting. Comput Electron Agric. 2023;215(11):108425. doi:10.1016/j.compag.2023.108425.

15. Guerri MF, Distante C, Spagnolo P, Bougourzi F, Taleb-Ahmed A. Deep learning techniques for hyperspectral image analysis in agriculture: a review. ISPRS Open J Photogramm Remote Sens. 2024;12(7):100062. doi:10.1016/j.ophoto.2024.100062.

16. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial networks. Commun ACM. 2020;63(11):139–44. doi:10.1145/3422622.

17. Shafi U, Mumtaz R, Shafaq Z, Zaidi SMH, Kaifi MO, Mahmood Z, et al. Wheat rust disease detection techniques: a technical perspective. J Plant Dis Prot. 2022;129(3):489–504. doi:10.1007/s41348-022-00575-x.

18. Önler E, Köycü ND. Wheat powdery mildew detection with YOLOv8 object detection model. Appl Sci. 2024;14(16):7073. doi:10.3390/app14167073.

19. Cui S, Ling P, Zhu H, Keener HM. Plant pest detection using an artificial nose system: a review. Sensors. 2018;18(2):378. doi:10.3390/s18020378.