Open Access

ARTICLE

Towards Efficient Vehicle Recognition: A Unified System for VMMR, ANPR, and Color Classification

1 Department of Software Engineering, Bahria University H-11 Campus, Islamabad, 44000, Pakistan

2 Centre for Smart Systems and Automation, CoE for Robotics and Sensing Technologies, Faculty of Artificial Intelligence and Engineering, Multimedia University, Persiaran Multimedia, Cyberjaya, 63100, Selangor, Malaysia

3 Department of Computer Science, Bahria University E-8 Campus, Islamabad, 44220, Pakistan

* Corresponding Author: Teong Chee Chuah. Email:

(This article belongs to the Special Issue: Computer Vision and Image Processing: Feature Selection, Image Enhancement and Recognition)

Computers, Materials & Continua 2025, 85(2), 3945-3963. https://doi.org/10.32604/cmc.2025.067538

Received 06 May 2025; Accepted 03 July 2025; Issue published 23 September 2025

Abstract

Vehicle recognition plays a vital role in intelligent transportation systems, law enforcement, access control, and security operations, domains that are becoming increasingly dynamic and complex. Despite advancements, most existing solutions remain siloed, addressing individual tasks such as vehicle make and model recognition (VMMR), automatic number plate recognition (ANPR), and color classification separately. This fragmented approach limits real-world efficiency, leading to slower processing, reduced accuracy, and increased operational costs, particularly in traffic monitoring and surveillance scenarios. To address these limitations, we present a unified framework that consolidates all three recognition tasks into a single, lightweight system. The framework utilizes MobileNetV2 for efficient VMMR, YOLO (You Only Look Once) for accurate license plate detection, and histogram-based clustering in the HSV color space for precise color identification. Rather than optimizing each module in isolation, our approach emphasizes tight integration, enabling improved performance and reliability. The system also features adaptive image calibration and robust algorithmic enhancements to ensure consistent results under varying environmental conditions. Experimental evaluations demonstrate that the proposed model achieves a combined accuracy of 93.3%, outperforming traditional methods and offering practical scalability for deployment in real-world transportation infrastructures.

Vehicle recognition technologies are essential for effective traffic management and law enforcement, enabling regulated vehicle flow and controlled access to restricted zones. Modern systems typically rely on three core components: Vehicle Make and Model Recognition (VMMR), Automatic Number Plate Recognition (ANPR), and vehicle color detection. VMMR enhances security by assisting in vehicle identification, supporting enforcement and surveillance tasks [1]. ANPR enables real-time tracking and precise license plate recognition, while color detection systems improve traffic monitoring and public safety through automated color extraction.

Recent advances in deep learning have demonstrated promising results by integrating VMMR and ANPR [1]. Building on these developments, our framework employs MobileNetV2 and YOLO for initial vehicle detection, while YOLOv4-tiny, PaddleOCR, and SVTR-tiny enhance ANPR accuracy under varying environmental conditions. Our approach refines existing methods, prioritizing lightweight architecture and operational efficiency for real-world deployment.

Despite progress in each domain, standalone systems face significant limitations in practical scenarios. VMMR systems often struggle with environmental noise, such as poor lighting, adverse weather, and congested traffic [2]. Similarly, ANPR performance degrades under low-light conditions, non-standard camera angles, and regional regulatory variations. Vehicle color detection is also susceptible to lighting fluctuations and weather-related distortions, leading to misclassifications. Without addressing these challenges, systems fail to provide comprehensive, actionable vehicle data.

Advanced deep learning models like EfficientNet [3] and Vision Transformers [4] have improved feature extraction and pattern recognition. EfficientNet achieves high accuracy through compound scaling, while Vision Transformers use attention mechanisms to capture fine-grained details. However, both demand substantial computational resources, limiting their use in resource-constrained environments.

While individual subsystems have matured, a critical gap remains in developing unified, lightweight frameworks that integrate VMMR, ANPR, and color detection. Our work bridges this gap by combining the strengths of each subsystem to compensate for their weaknesses. We anchor our framework in MobileNetV2, chosen for its efficiency and low computational overhead, making it ideal for real-time, edge-level intelligent transportation applications.

Despite extensive research, few holistic systems reliably integrate these capabilities under diverse conditions. This paper contributes the following:

1. We propose an integrated system combining VMMR, ANPR, and color detection in a modular, extensible architecture. By merging adaptive image processing with deep learning, our solution delivers efficient, multifunctional recognition.

2. Our framework incorporates an augmentation strategy that enhances performance across varying conditions (e.g., illumination changes, weather effects). Combined with optimized inference, this ensures accurate real-time recognition in complex environments.

3. To maximize applicability, we combine MobileNetV2, YOLO-based detection, and HSV color clustering. This hybrid approach improves modularity, scalability, and robustness, making the system viable for traffic monitoring, law enforcement, and security applications.

By consolidating these functionalities into a single framework, our solution eliminates the need for separate systems, offering a more efficient and reliable approach to real-world vehicle recognition.

The novelty of our work lies in combining Vehicle Make and Model Recognition (VMMR), Automatic Number Plate Recognition (ANPR), and color detection into a single, lightweight system, rather than in proposing new solutions for each task. Although considerable progress has been made in VMMR (e.g., CNN-based methods [5]), ANPR (e.g., YOLO-based detection [6]), and color identification (e.g., HSV clustering [7]), these techniques are usually applied in isolation, which makes multi-task vehicle recognition inefficient. Our framework integrates these established methods, using MobileNetV2 for VMMR, YOLO and EasyOCR for ANPR, and histogram-based HSV clustering for color detection, so that the three tasks are processed concurrently, improving performance in applications such as traffic surveillance, law enforcement, and security management in Pakistan. The motivation is the need for a practical, context-aware vehicle recognition system suited to Pakistani traffic conditions, which call for robust and efficient handling of environmental issues (e.g., poor lighting, occlusions) and of region-specific license plate formats (e.g., Pakistani plates). Current approaches, including conventional ANPR schemes [8] and deep learning systems pretrained on datasets from other regions, either cope poorly with local plate variations or incur computational costs that are unacceptable on edge devices. To maximize performance in the target scenario, we train ANPR on a dataset of 4000 Pakistani license plates and VMMR on a dataset of approximately 364 additional Pakistani images (Stanford Cars). By focusing on integration rather than reinvention, our system achieves 93.3% overall accuracy (Section 4) together with computational efficiency, providing a scalable solution for intelligent transportation systems.

This integrated design not only removes the inefficiency of sequential or separate processing but also improves reliability through cross-verification of VMMR, ANPR, and color data. For example, combining the license plate text with the vehicle make and color improves identification accuracy in security applications. Our work therefore contributes a vehicle recognition method that coordinates existing approaches within one practical framework suitable for real-world use.

Vehicle recognition systems are important in traffic monitoring, policing, and security. However, their efficiency is often hindered by the fragmentation of their key components: Vehicle Make and Model Recognition (VMMR), Automatic Number Plate Recognition (ANPR), and color detection. These components are usually built and run as independent or sequential processes, resulting in inefficiencies that impede real-time performance. The problem is especially acute in region-specific settings such as Pakistan, where environmental conditions (poor lighting, occlusions, bad weather) and country-specific license plate formats add further difficulty. In addition, most available recognition algorithms are computationally intensive and therefore cannot be deployed on the resource-limited devices commonly used in practice. The research problem addressed in this work is to devise a unified theoretical framework that combines existing VMMR, ANPR, and color detection methods into one integrated system. The idea is to allow these components to run in parallel so that vehicle recognition is fast, stable, and resilient. In particular, the framework integrates inference of a vehicle’s make and model, reading of its license plate text, and detection of its dominant color into a single streamlined procedure. The integrated system aims to avoid the inefficiencies of isolated systems by ensuring that all components operate collaboratively to improve overall performance in real-life scenarios.

The goal is to develop a system that produces a single result from the integrated recognition tasks while remaining computationally efficient and robust to large variations, especially those that occur in Pakistani traffic conditions. Rather than developing new approaches for each individual task, the framework combines existing methods and techniques into a new approach to vehicle recognition. The proposed system addresses fragmentation and context-specific needs, and thus offers a feasible solution for real-time vehicle identification within intelligent transportation systems.

Vehicle Make and Model Recognition (VMMR), Automatic Number Plate Recognition (ANPR), and car color detection have been the focus of extensive research aimed at enhancing recognition performance across diverse real-world scenarios. While notable advancements have been made in each of these areas, most existing systems have been developed independently, resulting in fragmented solutions that often fail to deliver comprehensive performance in dynamic and varied environments.

VMMR, in particular, poses significant challenges due to high intra-class variance arising from vehicle modifications, inconsistent lighting conditions, and varying viewing angles. For instance, the study in [5] presents a deep learning-based VMMR model that demonstrates high accuracy under controlled settings. By leveraging convolutional neural networks (CNNs) for feature extraction, the system achieved impressive recognition rates. However, its performance declined significantly in real-world scenarios involving inconsistent backgrounds and fluctuating lighting conditions. Another approach in [5] employed a hybrid method combining CNNs and classical image processing techniques. Although effective in structured environments, the model’s reliance on high computational power and large volumes of labeled data limits its scalability and real-time application.

Recent developments in ANPR systems have also garnered attention. Research cited in [8] highlighted the success of traditional image processing techniques, particularly during daytime conditions. However, our analysis reveals a sharp performance drop during nighttime or under adverse weather conditions. Enhanced systems, as described in [8], integrated image preprocessing with deep learning to improve visibility robustness. Despite these improvements, they struggled with unusual license plate fonts and partially obscured characters, indicating room for further refinement.

Color recognition, too, remains sensitive to environmental conditions. The work in [9] introduced a technique that combined histogram equalization with image analysis to compensate for lighting inconsistencies. While this method achieved satisfactory results in stable lighting, it was less effective during rapid illumination changes, such as those caused by natural weather transitions. Further developments, including the machine learning algorithm proposed in [10], improved performance under such dynamic conditions. However, the model faced limitations when differentiating between similar dark shades like navy blue and black, often confusing them.

Integrated vehicle recognition systems combining VMMR, ANPR, and color detection are still relatively rare. Álvarez-Bazo et al. [11] proposed a joint VMMR and ANPR system optimized for well-lit conditions, but it lacked support for color detection. Another notable work by Guerrero-Ibez et al. [12] combined VMMR, ANPR, and facial recognition using SIFT and OCR techniques, achieving 75% accuracy on a toy car dataset. However, the system’s limited scalability and modest accuracy prevent its practical deployment. While several integrated models have demonstrated improved accuracy by using CNNs for VMMR and ANPR, their computational demands render them unsuitable for edge devices or real-time use. Moreover, most existing solutions overlook challenges related to environmental robustness, computational efficiency, and region-specific needs, such as accommodating the variations in Pakistani license plates.

Addressing these gaps, our framework integrates proven methods into a unified architecture that is both efficient and robust. It achieves a significantly improved overall accuracy of 93.3% (as discussed in Section 4), making it a strong candidate for real-world deployment.

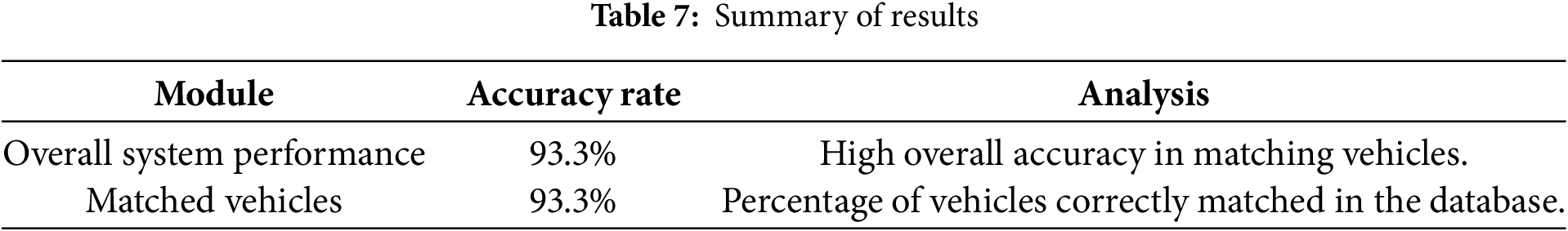

Additionally, scalability to large datasets posed a challenge for the system. The findings of existing work are summarized in Table 1.

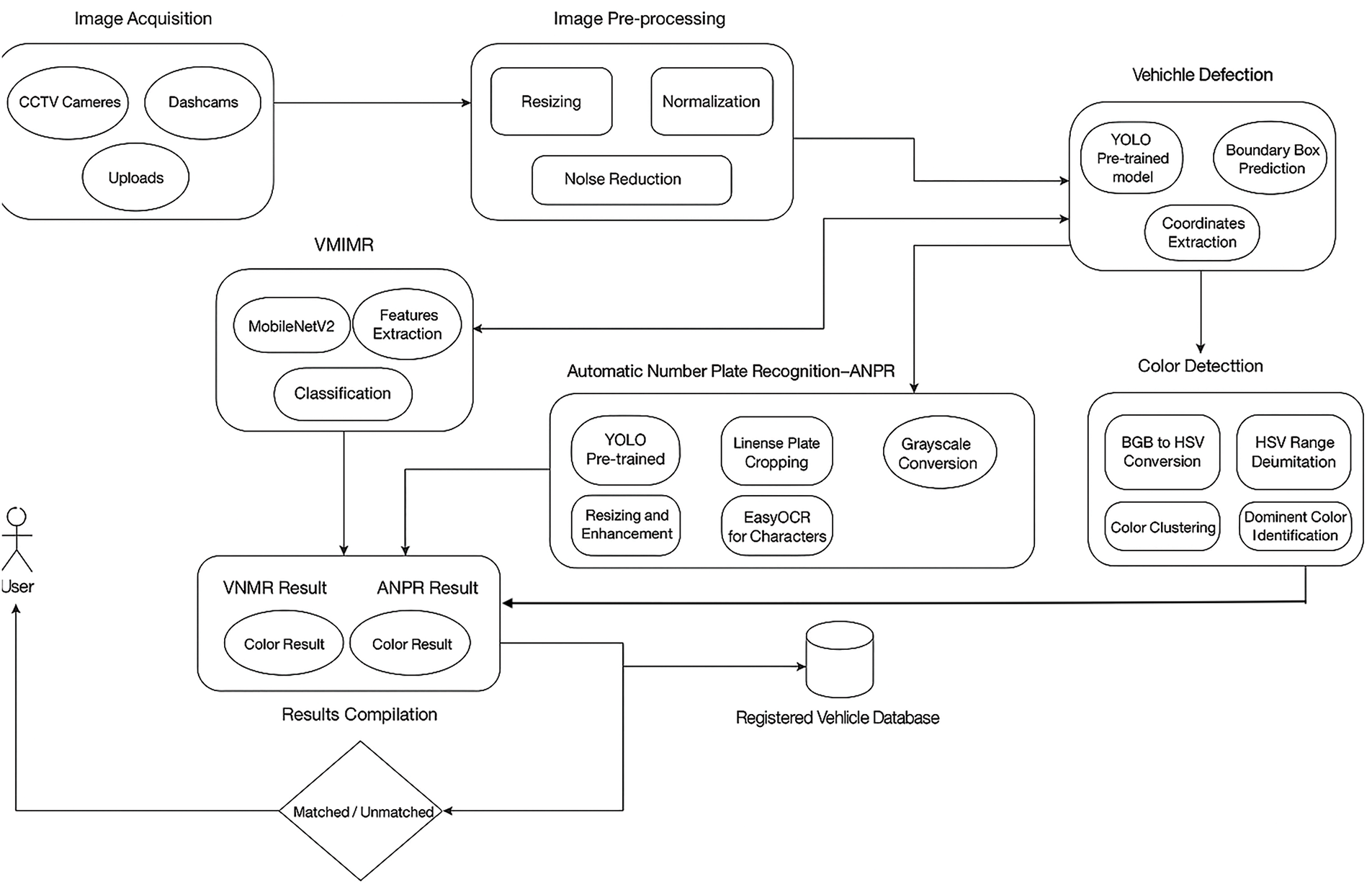

The proposed vehicle recognition and color detection framework employs advanced deep-learning models and image processing techniques to identify cars, license plates, manufacturers, and colors, as shown in Fig. 1. The process begins with image import from CCTV, dashcams, or other sources, followed by checks to ensure images meet acceptable formats, such as .jpg, .jpeg, and .png. Resizing, normalization, noise reduction, and contrast enhancement improve image quality and uniformity.

Figure 1: System architecture modular diagram showing ANPR, color, and VMMR detection modules

Using grid-scanning, YOLO (You Only Look Once) predicts bounding boxes and class probabilities to identify vehicles and output their coordinates [18]. The detected vehicle regions are cropped for further processing. We trained MobileNetV2 to classify automobile manufacturers and models. The choice of MobileNetV2 for the VMMR task was driven by its ability to balance accuracy and computational efficiency. Unlike heavier architectures, MobileNetV2 is designed to perform well on resource-constrained edge devices, ensuring the real-time performance critical to intelligent transportation systems [19]. Additionally, its depth-wise separable convolutions reduce computational cost without compromising feature extraction quality. For ANPR, another YOLO model detects license plates, and EasyOCR extracts the text. License plate OCR begins with grayscale conversion, scaling, and enhancement.

To detect color, BGR vehicle images are converted to the HSV color space, where pixels are clustered by HSV ranges to identify the dominant color. The vehicle make, model, license plate text, and color are then used in applications such as traffic monitoring, automatic toll collection, and smart parking. To handle data volume and scale, containerization and microservices are deployed on edge devices and cloud infrastructure. Integrating these modules with a registered vehicle database allows cross-checking, improving system efficacy. A CSV file backup serves as the test database for real-time verified vehicle information. The proposed framework comprises the following modules: VMMR, ANPR, and color detection, as elaborated next.

3.1 Vehicle Make and Model Recognition (VMMR)

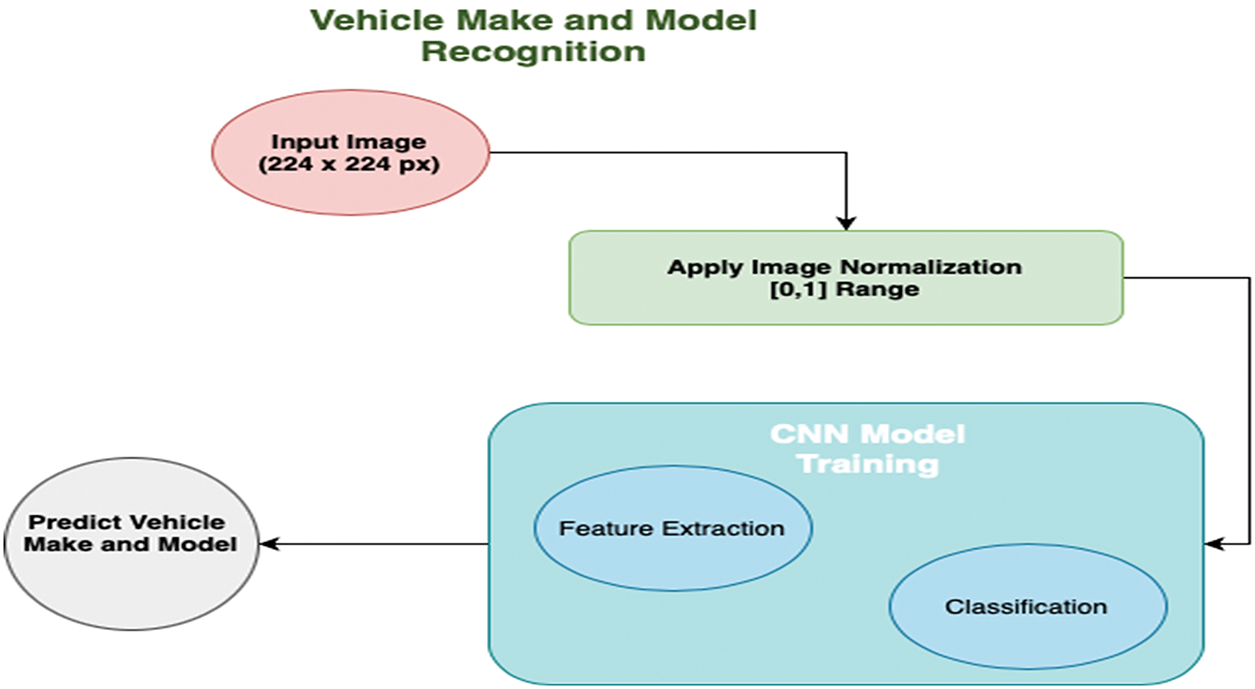

The VMMR module serves as the foundation of our vehicle recognition framework. It leverages Convolutional Neural Networks (CNNs) to accurately identify a vehicle’s make and model from captured images. CNNs are particularly well-suited for this task due to their ability to detect and interpret spatial patterns within visual data.

In this module, the CNN analyzes distinctive design features of vehicles to determine both the manufacturer and model. The process begins with the collection of wide-angle images of various vehicle types, captured from multiple perspectives. This dataset includes images taken under diverse environmental conditions to reflect real-world variability, enabling the CNN to generalize effectively during training.

To enhance robustness, we employ data augmentation techniques such as image rotation and flipping. These transformations expose the model to different visual orientations, allowing it to recognize vehicles even when they appear at unusual angles or are partially obscured. As a result, the model performs more reliably in practical, real-world scenarios. Throughout its layered architecture, the CNN progressively extracts and filters image features such as edges, textures, and shapes, crucial for distinguishing vehicle characteristics. The model is trained using labeled datasets, with optimization algorithms like Adam and SGD applied to fine-tune its parameters for better performance.

We also integrate regularization methods such as dropout and batch normalization to reduce overfitting and improve the model’s adaptability across varying inputs. The final trained model is evaluated using standard performance metrics including accuracy, precision, recall, and F1 score, to ensure it generalizes well to unseen data. Fig. 2 presents a visual overview of the complete workflow, from image input (supporting .JPEG, .JPG, and .PNG formats) to final vehicle identification output.

Figure 2: Conceptual framework of the VMMR module

Popular deep learning frameworks, such as TensorFlow and PyTorch, facilitate the implementation and training of the CNN model, streamlining development for researchers. In addition, transfer learning is applied by using MobileNetV2 pre-trained on the ImageNet dataset. This approach ensures faster convergence during training and enhances generalization to diverse vehicle types and conditions. To adapt the model to real-world scenarios, it was fine-tuned on a curated, domain-specific dataset that reflects variations in lighting, weather, and occlusions. This fine-tuning process enables the model to handle challenging environmental conditions effectively. The use of transfer learning significantly reduces computational overhead and improves real-time performance, making the model suitable for intelligent transportation systems.

3.2 Automatic Number Plate Recognition (ANPR)

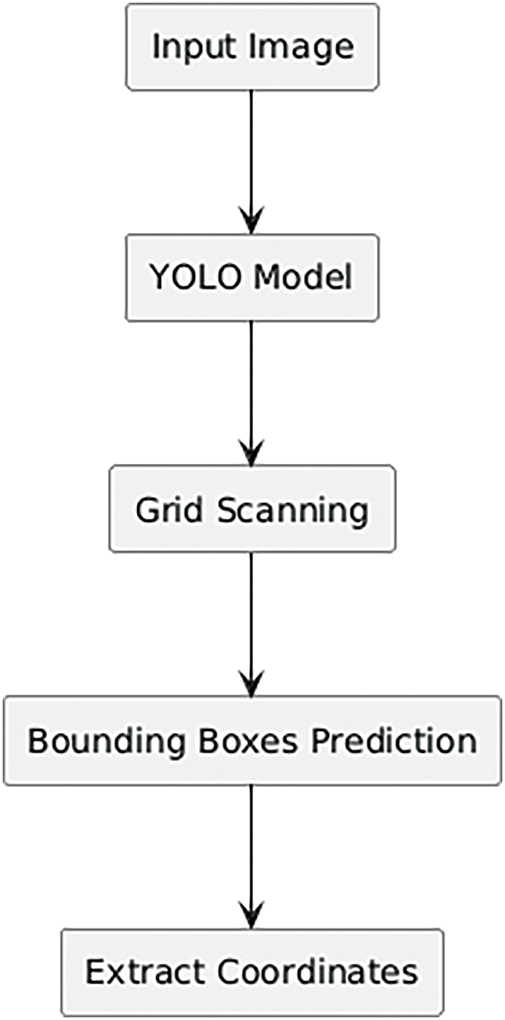

The ANPR module detects and reads license plates from vehicle images, a critical component in vehicle identification systems. This process involves several sub-steps: image preprocessing, license plate detection, and Optical Character Recognition (OCR), each vital to accurately extracting textual information from license plates, as shown in Fig. 3.

Figure 3: Automatic number plate recognition coordinates extraction framework

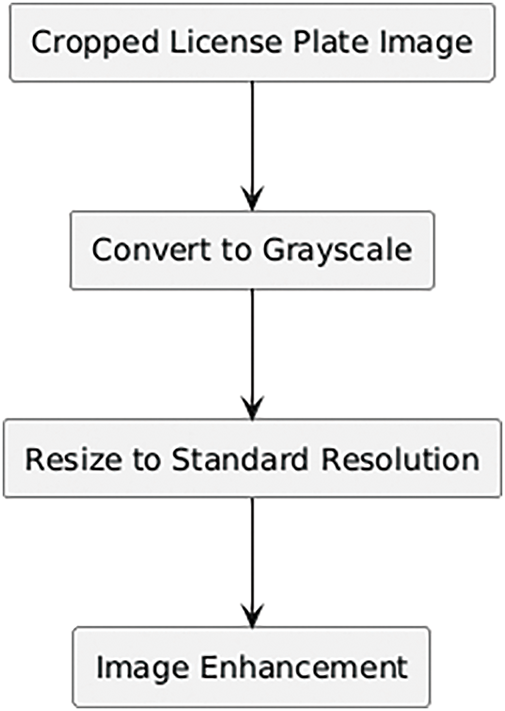

Fig. 4 shows the role of EasyOCR, which receives the grayscale, scaled, and enhanced license plate images. EasyOCR uses CNNs and RNNs to accurately extract text from images by analyzing character patterns and structures, converting images to text. Supporting a variety of license plate fonts and styles, EasyOCR enables applications like toll collection, traffic monitoring, and access control through the automated conversion of license plate images to text.

Figure 4: OCR image processing framework

Detected plates are verified against a set of registered vehicle number plates: the ANPR output is compared with the registered plates to check whether the text read by OCR matches one of them. When a candidate match is found, we calculate a score for the accuracy of the license plate recognition. For example, given two plates, ABC123 and ABD123, the algorithm first verifies whether the detected text contains the original characters. If so, we use the Sequence Matcher (Eq. (1)) to find the Longest Common Matching Subsequence (LCMS), which in this case consists of “AB” and “123”.

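The similarity ratio between the detected text $a$ and the registered text $b$ is

$$ r = \mathrm{SequenceMatcher}(a, b) \tag{1} $$

with

$$ r = \frac{2M}{T} \tag{2} $$

In Eq. (2), M represents the number of matching characters, T is the total character count in both sequences, and r is the similarity ratio. For the example above, M = 5 and T = 12, so r = 10/12 ≈ 0.83. The system marks a positive match whenever r meets or exceeds the required threshold (for example, 0.75).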
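The similarity ratio described above can be illustrated with a minimal sketch. It assumes the Sequence Matcher corresponds to `difflib.SequenceMatcher` from the Python standard library, whose ratio is defined as 2M/T; the helper names `plate_similarity` and `is_match` are hypothetical and not taken from the paper.

```python
from difflib import SequenceMatcher

MATCH_THRESHOLD = 0.75  # example threshold quoted in the text


def plate_similarity(detected: str, registered: str) -> float:
    """Return r = 2M / T for two plate strings (hypothetical helper)."""
    matcher = SequenceMatcher(None, detected, registered, autojunk=False)
    # M: total length of all matching blocks (the final dummy block has size 0).
    m = sum(block.size for block in matcher.get_matching_blocks())
    # T: total number of characters in both sequences.
    t = len(detected) + len(registered)
    return 2.0 * m / t if t else 1.0


def is_match(detected: str, registered: str,
             threshold: float = MATCH_THRESHOLD) -> bool:
    """A detected plate counts as a match when r reaches the threshold."""
    return plate_similarity(detected, registered) >= threshold


r = plate_similarity("ABC123", "ABD123")
print(round(r, 3))                   # 0.833, from M = 5 ("AB" + "123") and T = 12
print(is_match("ABC123", "ABD123"))  # True, since 0.833 >= 0.75
```

With a 0.75 threshold, a six-character plate tolerates one misread character (r ≈ 0.83) but not two adjacent misreads in the middle of the plate, which would lower M to 4 and r to about 0.67.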

After grayscale conversion, the system prepares license plate images by increasing contrast and reducing background noise for better detection. Object detection models such as YOLO and Faster R-CNN then locate candidate plate regions, and they do so reliably even in challenging scenes.

OCR then extracts the text from the detected license plate images. EasyOCR reads the characters using pre-trained models that recognize a variety of fonts and plate designs, which makes recognition robust across license plate formats. Common OCR errors are corrected in post-processing, through spell checking and character-sequence validation, to maintain text accuracy. Image preprocessing and enhancement, including contrast adjustment, are performed with the OpenCV package [20,21].
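The preprocessing steps mentioned above (grayscale conversion followed by contrast enhancement) can be sketched in plain Python. This is only an illustration under stated assumptions: the paper performs these steps with OpenCV and does not specify the enhancement method, so the min-max contrast stretch used here is an assumed stand-in, and the function names are hypothetical.

```python
def to_grayscale(bgr_image):
    """Convert rows of (B, G, R) tuples to rows of 0-255 luminance values.

    Uses the common BT.601 luminance weights (0.299 R, 0.587 G, 0.114 B).
    """
    return [
        [round(0.114 * b + 0.587 * g + 0.299 * r) for b, g, r in row]
        for row in bgr_image
    ]


def stretch_contrast(gray_image):
    """Linearly rescale intensities so the darkest pixel maps to 0
    and the brightest to 255 (assumed enhancement method)."""
    flat = [p for row in gray_image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in gray_image]
    scale = 255.0 / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in gray_image]


# A tiny low-contrast "plate" crop: every pixel lies between 100 and 140.
plate = [
    [(100, 100, 100), (120, 120, 120)],
    [(110, 110, 110), (140, 140, 140)],
]
gray = to_grayscale(plate)
enhanced = stretch_contrast(gray)
print(gray)
print(enhanced)
```

After stretching, the narrow 100 to 140 intensity band spans the full 0 to 255 range, which is the kind of separation between characters and background that makes the subsequent OCR step easier.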

3.3 Color Detection

Color is an important cue for identifying a vehicle. Our color detection module uses the HSV color model to identify and group vehicle colors.

The system first converts images from the BGR color space to HSV. HSV is preferred because it separates color information (hue) from brightness, which makes the module more robust to changes in lighting. The converted image is then segmented using predefined HSV ranges, producing one mask per color that marks all image regions falling within that color’s range.

The vehicle color is determined by examining how pixels cluster by hue within the segmented regions, and the dominant cluster is reported. The HSV thresholds are refined iteratively using real-world observations, so that detection stays accurate across different conditions. OpenCV handles the color space conversion and segmentation efficiently. The predefined HSV ranges cover colors such as red, blue, and gray, with further adjustments based on observed results.
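The range-based color assignment described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it uses the standard-library `colorsys` module instead of OpenCV so that it runs without extra dependencies, and the hue and saturation thresholds are hypothetical values chosen for the example (the paper does not list its ranges). In an OpenCV pipeline the conversion would typically be done with `cv2.cvtColor(image, cv2.COLOR_BGR2HSV)`, where 8-bit hue is stored in the range 0 to 179.

```python
import colorsys
from collections import Counter


def classify_pixel(b, g, r):
    """Assign one BGR pixel (0-255 per channel) to a coarse color class.

    The thresholds below are illustrative assumptions only.
    """
    h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    hue_deg = h * 360.0
    if s < 0.20:                        # low saturation: achromatic pixel
        return "gray"
    if hue_deg < 15 or hue_deg >= 345:  # hue wraps around at red
        return "red"
    if 200 <= hue_deg < 260:
        return "blue"
    return "other"


def dominant_color(bgr_pixels):
    """Return the class that covers the most pixels in the vehicle region."""
    counts = Counter(classify_pixel(b, g, r) for b, g, r in bgr_pixels)
    if not counts:
        return "unknown"
    return counts.most_common(1)[0][0]


# Six bluish pixels, three gray pixels and one red pixel (all in BGR order).
region = [(200, 30, 20)] * 6 + [(128, 128, 128)] * 3 + [(10, 10, 220)]
print(dominant_color(region))  # blue
```

Because the decision depends on hue and saturation rather than on brightness, a darker or lighter rendering of the same paint tends to fall into the same class, which is the property the text attributes to HSV.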

Combining VMMR, ANPR, and color detection yields a robust system for extracting vehicle details from images and for analyzing vehicles in different environments. Input images pass through the modules in sequence, with the output of each step feeding the next. This connected workflow improves efficiency, and the modules reinforce one another: the VMMR module supplies essential vehicle information that supports both license plate recognition and color detection.

The proposed unified approach is summarized in Algorithm 1. Algorithm 1 is a theoretical framework that transforms an input image into a decision outcome, enabling vehicle identification for applications such as traffic monitoring and law enforcement in Pakistani contexts. The process begins with an input image I, representing raw data acquired from sources such as CCTV cameras, dashcams, or manual uploads. This image undergoes a series of transformations, including pre-processing, vehicle detection, VMMR, ANPR with a Sequence Matcher, and color detection, culminating in a feature vector F that is compared against a registered vehicle database DB to produce an output decision (matched or unmatched).

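Algorithm Formulation

The algorithm is defined by a function that maps an input image I and a registered vehicle database DB to an output decision, where:

• I is the input image acquired from CCTV cameras, dashcams, or manual uploads

• DB is the registered vehicle database

• F is the feature vector extracted from I

• the output decision is either matched or unmatched

The transformation of I into F proceeds through the following steps:

i) Pre-processing: The input I is resized, normalized, denoised, and contrast-enhanced.

ii) Vehicle Detection: A detection function locates the vehicle in the pre-processed image and returns its bounding box.

iii) VMMR: A recognition function assigns a make and model to the detected vehicle region.

iv) ANPR: The ANPR process involves multiple steps:

• A plate detection function locates the license plate within the vehicle region.

• A text extraction function reads the plate text from the detected plate.

• A Sequence Matcher function compares the extracted text with registered plates using a reference dataset.

v) Color Detection: A color analysis function determines the dominant color of the vehicle region.

The outputs of these sub-functions are combined into the feature vector F.

The algorithm concludes with a matching function that compares F against DB and returns:

• matched, if F corresponds to a record in DB

• unmatched, otherwise

The objective is to maximize the accuracy of this matching decision.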

The system uses parallel processing to handle large volumes of data efficiently while images are being processed, which improves performance for real-time vehicle recognition. Results are presented through an easy-to-read display showing the vehicle’s make, model, license plate number, and color. The system is implemented primarily in Python, with OpenCV, TensorFlow, and PyTorch supporting image processing, machine learning, and deep learning.

Together, VMMR, ANPR, and color recognition allow the system to identify vehicles comprehensively.
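The overall flow, in which the three modules run in parallel, their outputs are combined into a feature vector, and the result is checked against a CSV-backed registry, might be sketched as below. Everything here is an assumption made for illustration: the three module functions are stubs that return fixed values in place of MobileNetV2, YOLO with EasyOCR, and HSV clustering, the CSV column names are hypothetical, and the matching rule (plate similarity of at least 0.75 plus equal make/model and color) is one plausible reading rather than the rule stated in the paper.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor
from difflib import SequenceMatcher


# Hypothetical stand-ins for the three modules; each would wrap a real model.
def recognise_make_model(image):
    return "Suzuki Alto"


def read_plate(image):
    return "ABC123"


def detect_color(image):
    return "Gray/Silver"


def extract_features(image):
    """Run the three modules concurrently and combine their outputs into F."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        vmmr = pool.submit(recognise_make_model, image)
        anpr = pool.submit(read_plate, image)
        color = pool.submit(detect_color, image)
        return {
            "make_model": vmmr.result(),
            "plate": anpr.result(),
            "color": color.result(),
        }


def match_vehicle(features, db_rows, threshold=0.75):
    """Compare F against each registry row (assumed matching rule)."""
    for row in db_rows:
        r = SequenceMatcher(None, features["plate"], row["plate"]).ratio()
        same_vehicle = (row["make_model"] == features["make_model"]
                        and row["color"] == features["color"])
        if r >= threshold and same_vehicle:
            return "matched", row
    return "unmatched", None


# In-memory stand-in for the CSV test database of registered vehicles.
REGISTERED_CSV = (
    "plate,make_model,color\n"
    "ABD123,Suzuki Alto,Gray/Silver\n"
    "XYZ789,Toyota Corolla,White\n"
)
db = list(csv.DictReader(io.StringIO(REGISTERED_CSV)))

decision, record = match_vehicle(extract_features(image=None), db)
print(decision, record["plate"] if record else None)  # matched ABD123
```

Requiring agreement on make/model and color alongside an approximate plate match is what allows a slightly misread plate (here one wrong character) to still resolve to the correct registry entry, which reflects the cross-checking idea described in the text.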

To ensure robustness and generalizability, our system was validated on public and curated datasets, reflecting the integration of VMMR, ANPR, and color detection for Pakistani traffic scenarios. The Pakistani Vehicles Cars Dataset, with approximately 364 images, was used alongside a curated dataset of 4000 Stanford Cars images spanning 48 classes for training and validation. On the Pakistani dataset, the model achieved 90.63% accuracy, reflecting optimization for local vehicle types.

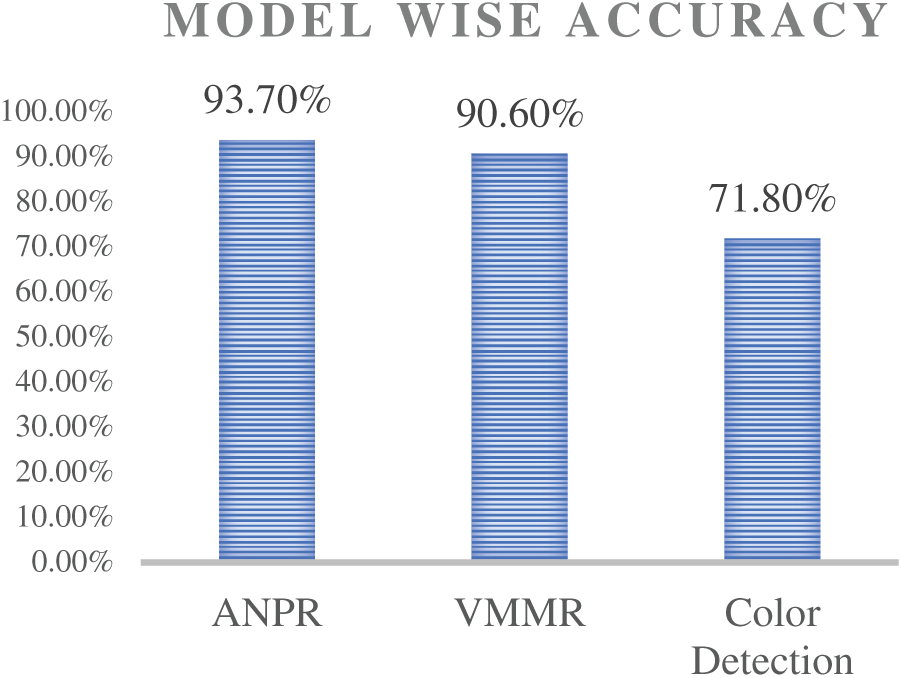

The ANPR module was trained and validated on 364 Pakistani license plates with YOLO, achieving 93.0% detection accuracy and 88.5% OCR accuracy, in line with the overall 93.75% (Fig. 5). These test results indicate reliable performance of the combined system.

Figure 5: Accuracy across modules: VMMR, ANPR and color detection

Fig. 5 shows the accuracy of each module. The ANPR module achieved an accuracy of 93.75% under varying lighting conditions. The VMMR module achieved an accuracy of 90.63% in identifying vehicle makes and models. This high accuracy indicates the module’s ability to process complex visual data, effectively distinguishing different vehicle brands and models.

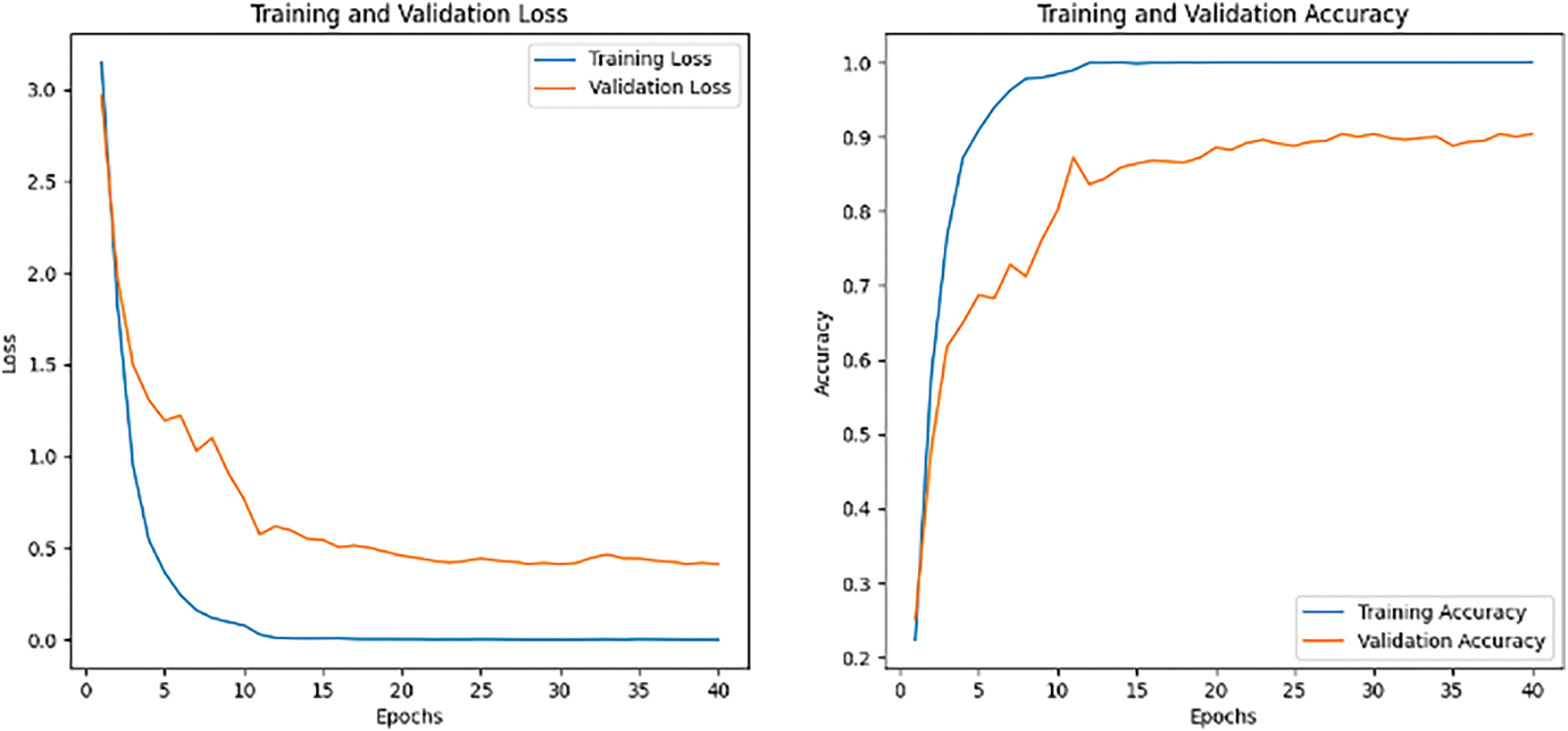

Fig. 6 illustrates the training process of the VMMR system using the CNN architecture, MobileNetV2. The left graph displays the training and validation loss over 40 epochs, while the right graph shows the training and validation accuracy over the same number of epochs. These metrics are crucial for understanding the model’s performance and generalization capabilities.

Figure 6: Training and validation accuracy graph for VMMR model training

The training accuracy plateaus near 0.99, indicating that the model has effectively learned from the training data. However, the validation accuracy levels out around 0.85, with slight fluctuations, suggesting the model’s generalization on new unseen data has reached a peak with the current configuration.

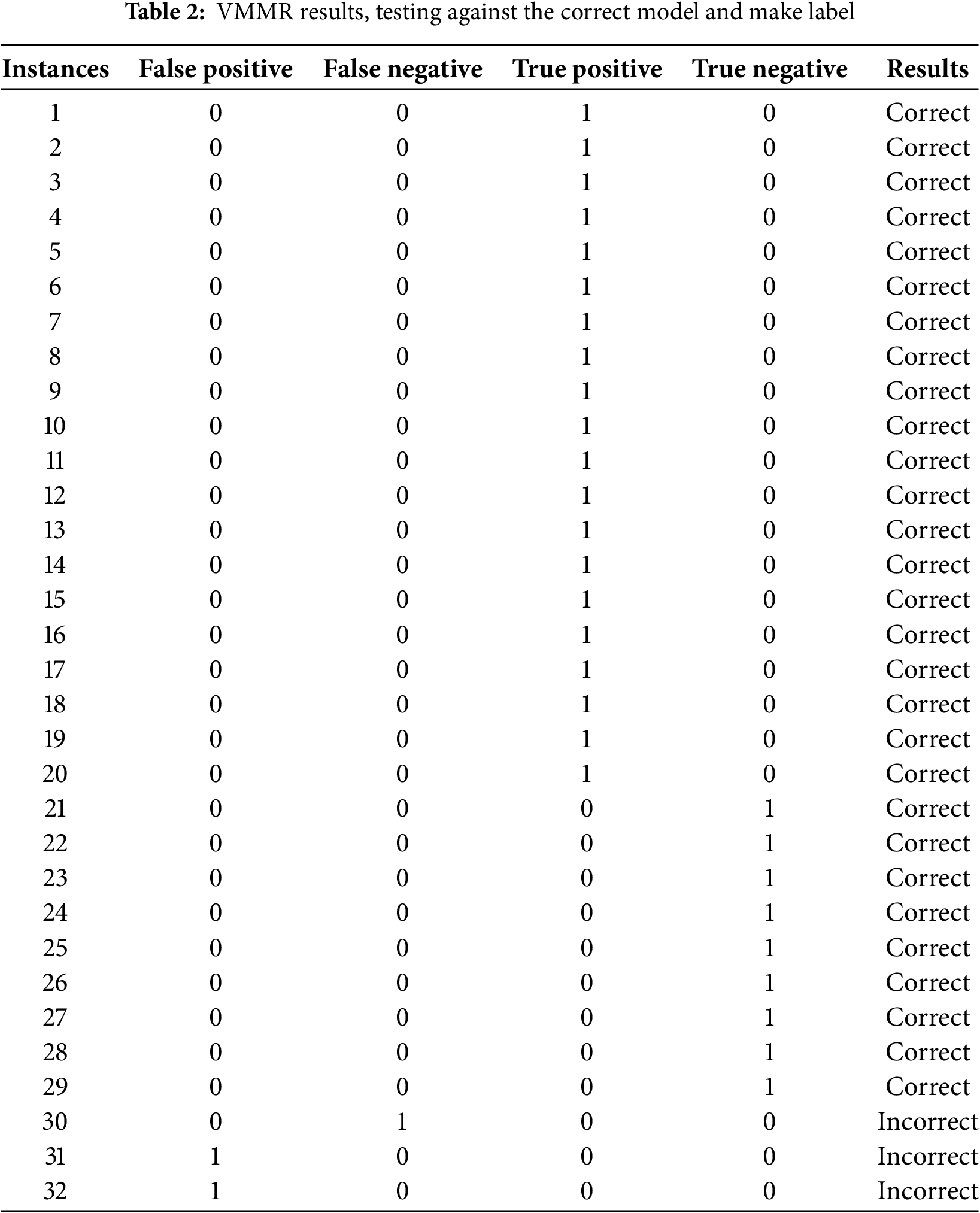

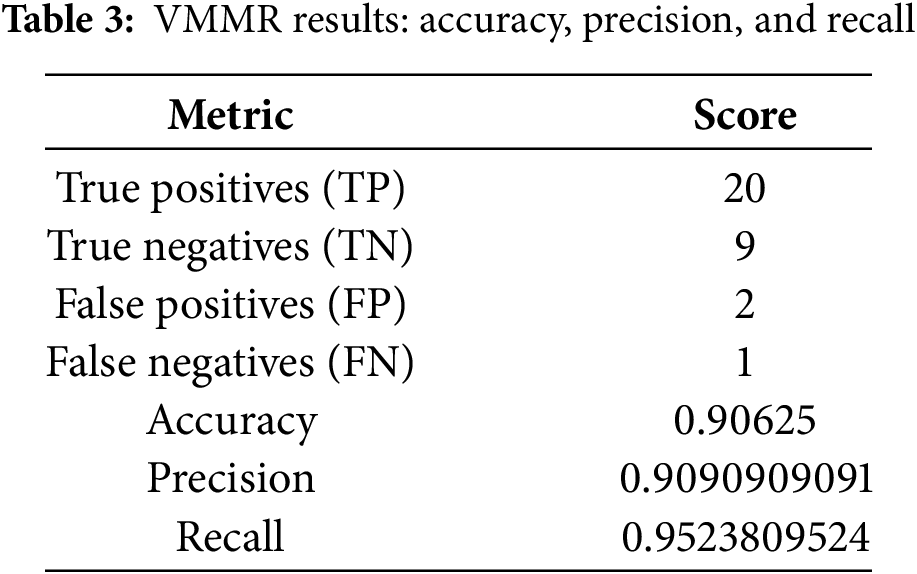

In Table 2, the VMMR outcomes are summarized as follows:

• True Positives (TP): 20 (cases with “TRUE” for Is True Vehicle)

• True Negatives (TN): 9 (cases with “FALSE” for Is True Vehicle)

• False Positives (FP): 2 (cases where “Is True Vehicle” is “FALSE” but the model predicted “TRUE”)

• False Negatives (FN): 1 (cases where “Is True Vehicle” is “TRUE” but the model predicted “FALSE”)

From the VMMR results shown in Table 2, the accuracy, precision, and recall have been calculated and are presented in Table 3.
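As a worked check, the standard metric definitions can be applied to the counts listed above (TP = 20, TN = 9, FP = 2, FN = 1). The sketch below uses only those four counts; the function name is hypothetical, and the values in Table 3 may be rounded differently.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute standard binary-classification metrics from confusion counts."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# Counts taken from the VMMR outcome list above.
metrics = classification_metrics(tp=20, tn=9, fp=2, fn=1)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The accuracy works out to 29/32 = 0.90625, consistent with the 90.63% reported for the VMMR module; precision is 20/22 (about 0.909), recall is 20/21 (about 0.952), and the F1 score is 40/43 (about 0.930).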

The ANPR module demonstrated a notable accuracy of 93.75% in reading license plates, as shown in Fig. 5. This result indicates the module’s effectiveness in preprocessing and accurately extracting license plate information. Techniques such as grayscale conversion and contrast enhancement contribute significantly to the module’s ability to accurately recognize and read license plates.

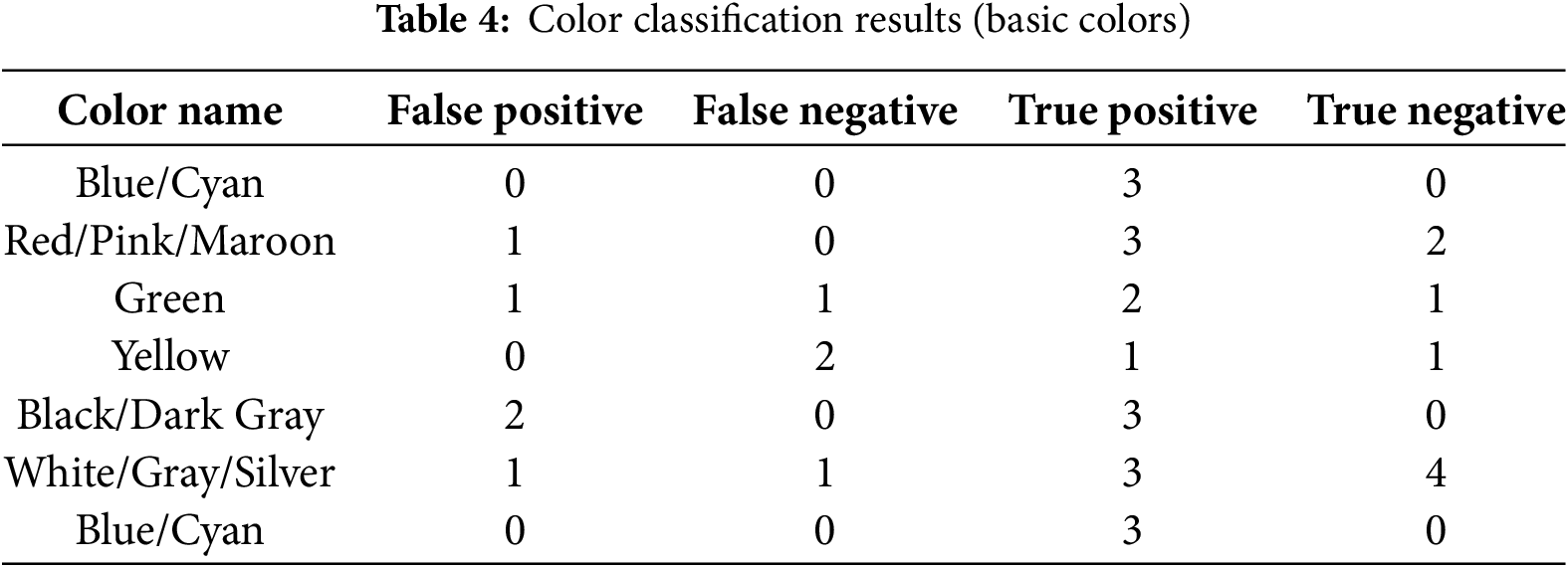

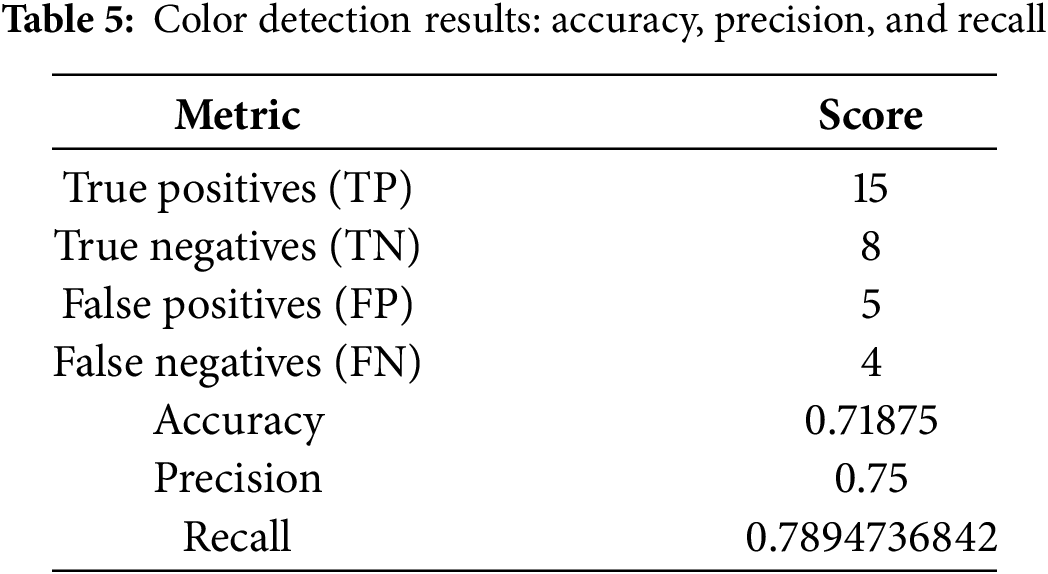

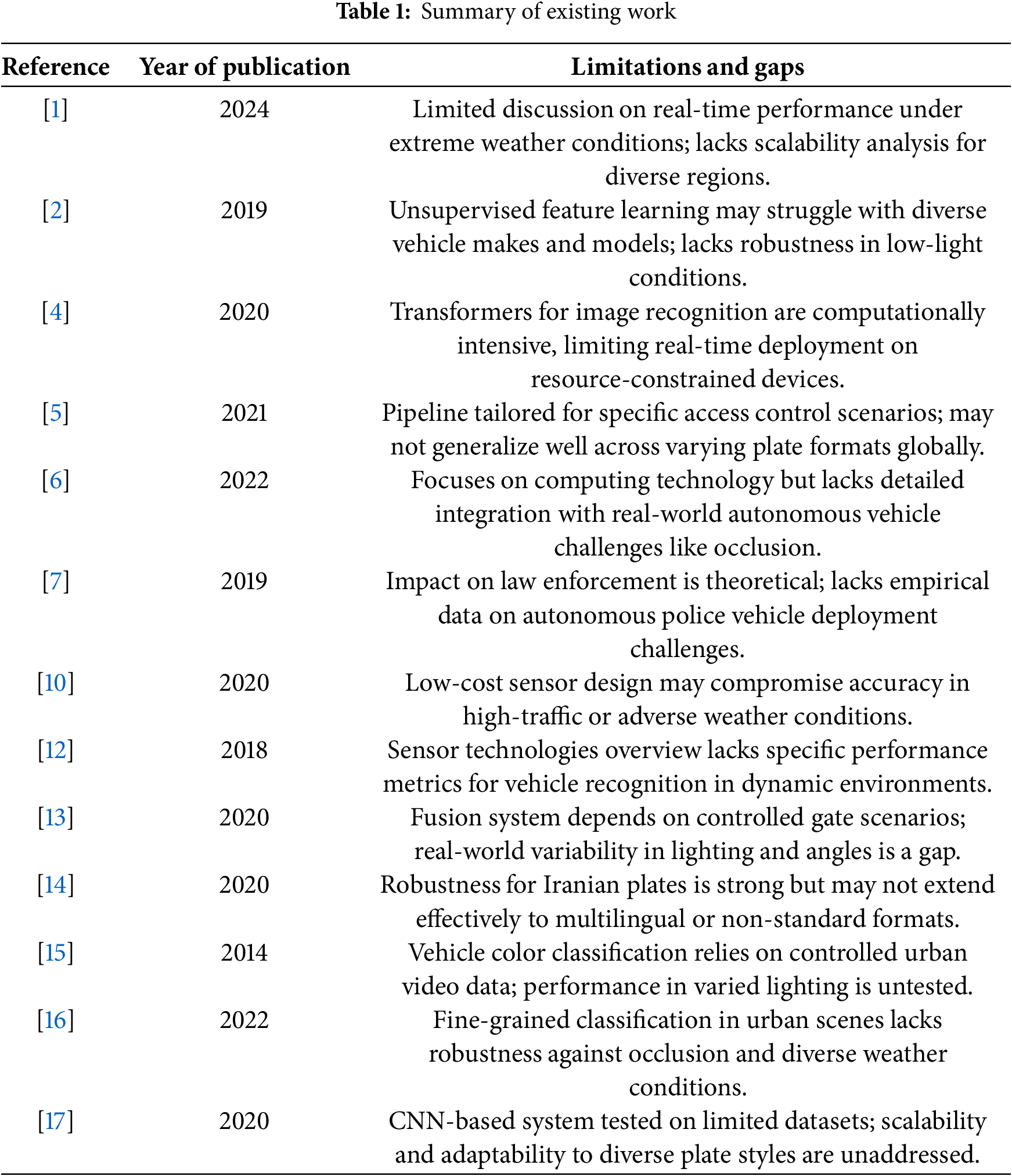

The color detection module, based on the HSV color space, reached an accuracy of 71.87% in color classification (Fig. 5). Although this is lower than the accuracy of VMMR (90.63%) and ANPR (93.75%), it is sufficient to be useful in practice because it complements the other modules in our unified framework. The HSV-based clustering algorithm was selected for its computational simplicity, which makes it suitable for resource-limited edge devices, and for its resistance to illumination changes, which results from decoupling hue from brightness (Section 3.3). Issues such as shadows and color fading are mitigated by adaptive thresholding and preprocessing (e.g., normalization, contrast enhancement). This was evaluated on 32 examples (Table 4), with color detection yielding 15 True Positives out of 32 examples (Table 5), and in the Suzuki Alto case study (92% accuracy, Table 6). Compared with deep learning-based classifiers (e.g., CNNs), which are resource-demanding and need a substantial amount of labeled data, HSV clustering is more computationally efficient, which is why it is included in the system that achieves the overall 93.3% vehicle matching accuracy (Table 7).

The integrated performance of the VMMR, ANPR, and Color Detection modules resulted in a vehicle matching accuracy of 93.33% when cross-referenced with the registered database. This signifies that 93.3% of the vehicles were successfully matched with the registration database, with only 6.7% unmatched. The high success rate underscores the effectiveness of combining results from multiple modules for comprehensive vehicle identification. High accuracy across individual modules, coupled with overall vehicle matching success, indicates the system’s strong potential for practical applications in vehicle recognition and management.

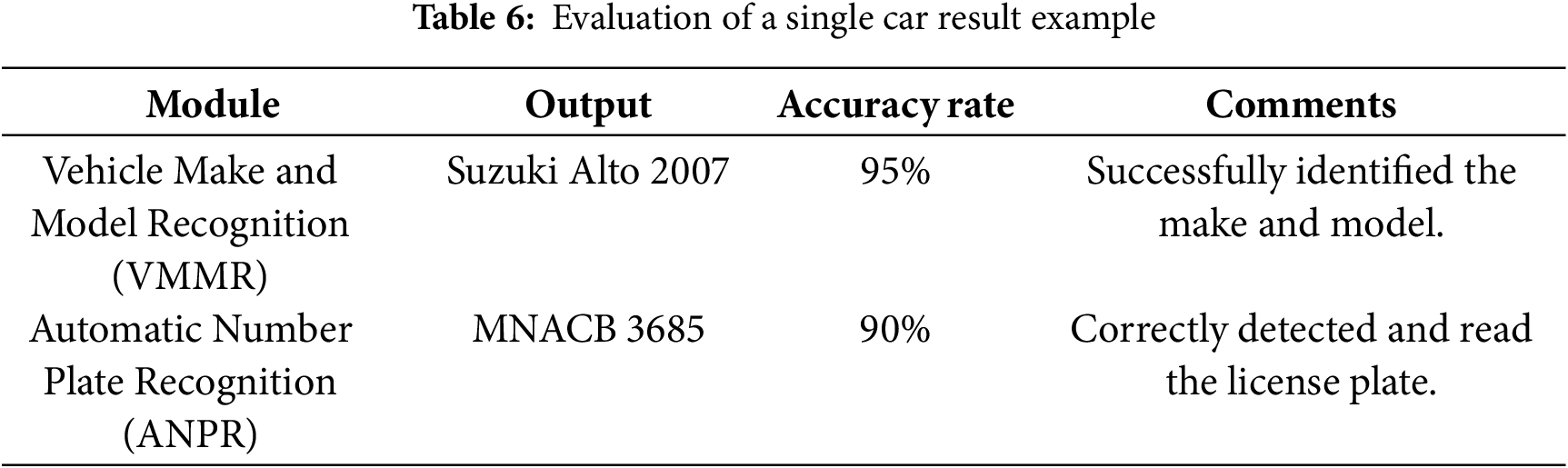

Table 6 presents results for a specific vehicle, the Suzuki Alto 2007, used to test system consistency and robustness. Thirty-five variations of this vehicle’s image were generated by adjusting factors such as viewing angle and lighting conditions. Each image was tested across all three modules (VMMR, ANPR, and Color Detection), with the following consolidated results:

• VMMR: The system correctly identified the Suzuki Alto with an overall VMMR accuracy of 95% across the 35 variations.

• ANPR: The license plate was successfully detected in 32 out of 35 cases. A plate recognition accuracy of 90% was achieved with a matching threshold of 0.75, meaning that the recognized text had to match the reference plate by at least 75% to count as a success.

• Color Detection: In 33 out of 35 cases, the system accurately classified the color as ‘Gray/Silver’, achieving a color detection accuracy of 92%.
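The 0.75 matching threshold in the ANPR result can be illustrated with a short sketch. The similarity measure is an assumption: the text does not specify how the match percentage is computed, so the standard-library `difflib.SequenceMatcher` ratio is used here as one plausible choice. The plate strings are hypothetical.

```python
from difflib import SequenceMatcher


def normalize_plate(text: str) -> str:
    """Uppercase and drop separators so 'abc-123' and 'ABC 123' compare equal."""
    return "".join(ch for ch in text.upper() if ch.isalnum())


def plate_similarity(ocr_text: str, expected: str) -> float:
    """Similarity in [0, 1] between OCR output and the reference plate."""
    return SequenceMatcher(
        None, normalize_plate(ocr_text), normalize_plate(expected)
    ).ratio()


def plate_matches(ocr_text: str, expected: str, threshold: float = 0.75) -> bool:
    """A read counts as successful when similarity reaches the threshold."""
    return plate_similarity(ocr_text, expected) >= threshold


# One misread character ('8' for 'B') still passes the 0.75 threshold.
one_char_error = plate_matches("A8C123", "ABC123")
# An unrelated string does not.
unrelated = plate_matches("XYZ999", "ABC123")
```

A threshold below 1.0 tolerates single-character OCR confusions, at the cost of occasionally accepting a near-identical but different plate. Cross-checking with make, model, and color is one way to limit that risk.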

These unified results for the Suzuki Alto show that the system maintains high accuracy in make and model recognition, license plate recognition, and color classification across the 35 variations of the vehicle image, indicating robustness under varying conditions.

Table 7 shows the results, confirming that the integrated system effectively performs vehicle make and model recognition, license plate recognition, and color detection, resulting in a comprehensive vehicle identification solution. The system’s high accuracy and reliability across modules make it suitable for implementation in applications like traffic monitoring and law enforcement.

The overall system performance is measured by the accuracy of matched vehicles, so it coincides with the matched vehicle accuracy. Visual results and statistical data further validate the system, showing its ability to process and analyze vehicle images under diverse conditions. From a holistic perspective, the system combines several vehicle identification features, with each module contributing to a more complete description of each vehicle. This integrated solution enhances recognition accuracy and offers a practical framework for real-world applications.

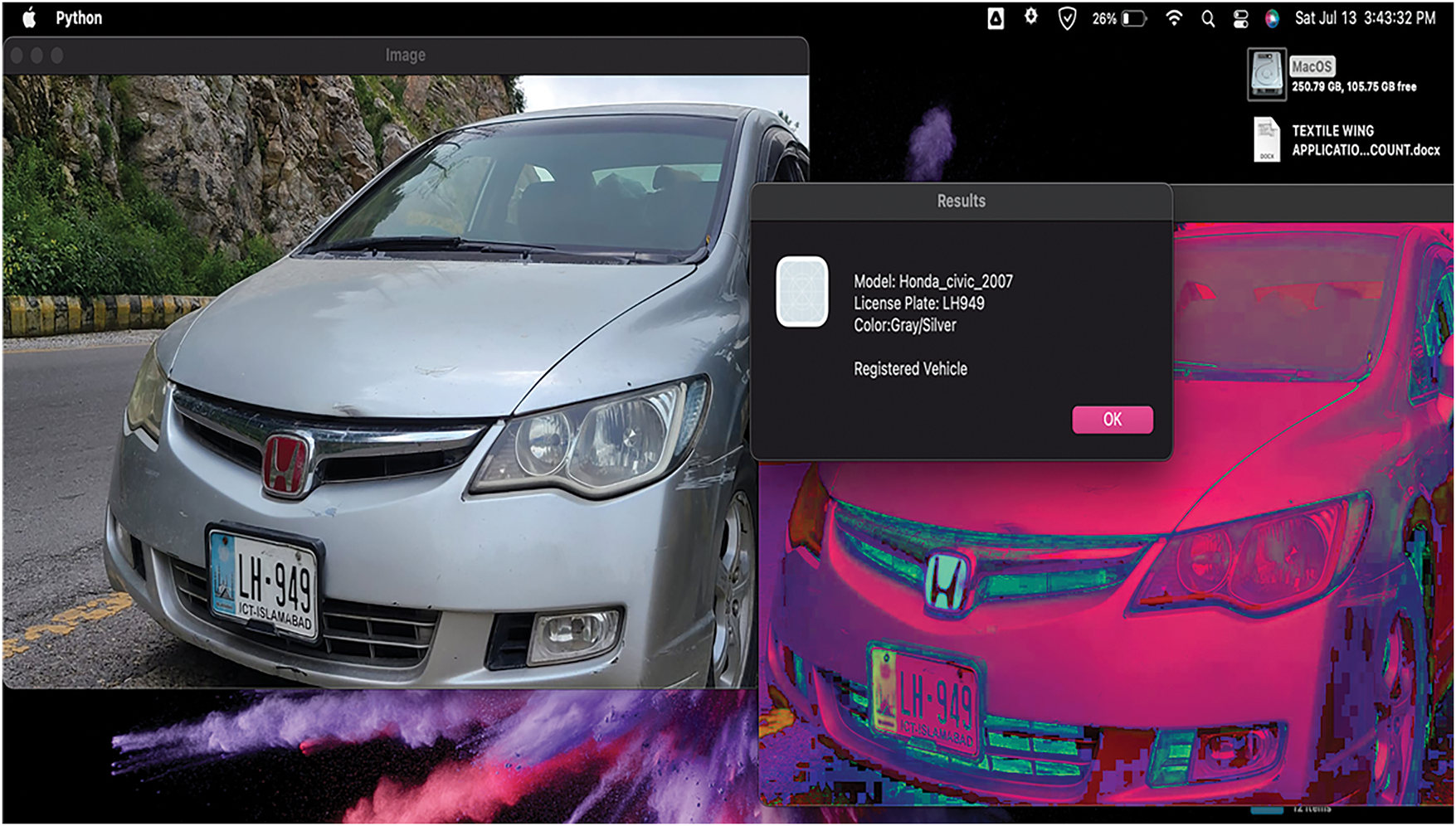

The combined results from the VMMR, ANPR, and Color Detection modules offer a clear view of the system’s capabilities. By merging these components, the vehicle identification system proves to be both robust and effective. As shown in Fig. 7, an example vehicle is detected with key details such as make, model, license plate, and color. This integrated approach highlights the system’s ability to compile data from different modules, forming a complete profile of the vehicle.

Figure 7: Example of detected vehicle showing detected number plate, color, and model

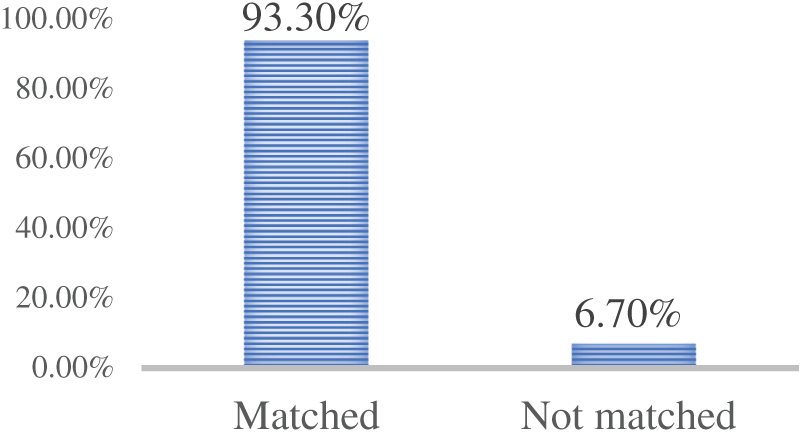

The system’s overall performance in matching vehicles with a registered database is illustrated in Fig. 8. The system successfully matched 93.3% of vehicles, with only 6.7% remaining unmatched. This high matching rate demonstrates the system’s effectiveness in cross-referencing data from each module for accuracy. The success rate emphasizes the system’s reliability and strength in identifying vehicles.

Figure 8: Registered vs. matched vehicles from the database

This study presents a unified approach that integrates Vehicle Make and Model Recognition (VMMR), Automatic Number Plate Recognition (ANPR), and color detection into a cohesive system for advanced vehicle tracking. By leveraging complementary modules, the system enhances accuracy and reliability through cross-verification, minimizing recognition errors. Pixel-level integration further refines the process, enabling more precise and efficient identification—an essential attribute for real-world vehicle recognition and verification systems.

Saadouli et al. [13] proposed a security solution combining license plate recognition, car model identification, and facial recognition for access control. Their method utilized SIFT and DoG for vehicle detection, while OCR and the Viola–Jones algorithm were used for number plate and facial recognition. Although their system achieved a 75% success rate in identifying vehicles, the results were based on tests involving toy cars under controlled conditions, limiting the generalizability of their findings.

In contrast, our research introduces a more scalable and contemporary system employing MobileNetV2 for VMMR, YOLO for ANPR, and HSV-based clustering for color detection. Tested in diverse real-time environments, our system consistently achieved a 93.3% accuracy rate—demonstrating strong performance regardless of lighting or environmental variations. Moreover, the use of deep learning techniques, along with containerized deployment, makes our solution suitable for large-scale smart city implementations.

While the HSV clustering method for color detection yielded lower accuracy (71.87%) compared to deep learning-based classifiers, it offered a lightweight and computationally efficient alternative—particularly advantageous for deployment on edge devices. In future work, we aim to explore hybrid models that combine HSV clustering with lightweight convolutional neural networks (CNNs) to improve accuracy under extreme lighting conditions while maintaining operational efficiency.

Overall, the integrated system proved to be more robust than standalone solutions, achieving a 93.3% matching accuracy (Fig. 8). This demonstrates the effectiveness of the combined modules in delivering reliable performance suitable for real-world intelligent transportation applications.

The integration of VMMR, ANPR, and color detection within a unified framework yields a robust and reliable vehicle identification system, well-suited for traffic monitoring, law enforcement, and vehicle management—particularly in the context of Pakistan. By leveraging proven techniques—MobileNetV2 for vehicle make and model recognition, YOLO combined with EasyOCR for license plate recognition, and HSV clustering for color detection—the proposed system achieves a commendable accuracy of 93.3% (as detailed in Section 4), consistently outperforming modular or standalone solutions. Comprehensive testing on diverse datasets—including the Stanford Cars dataset for VMMR and a custom set of 364 Pakistani license plates for ANPR—demonstrates the system’s effectiveness, adaptability, and resilience under various environmental conditions.

In the future, we intend to extend the ANPR module to support multilingual and international license plates, such as those found in Chinese and European regions, using datasets like CCPD and UFPR-ALPR. Enhancing color recognition under challenging lighting scenarios is another focus, with plans to incorporate lightweight CNN-based classifiers. To improve real-time deployment, we will investigate edge computing solutions, including Docker-based containerization, to minimize latency within smart city infrastructure. In parallel, advanced data augmentation strategies will be developed to better handle occlusion and adverse weather conditions such as fog or rain. Finally, adopting a cloud-native architecture with microservices will enable horizontal scaling, making the system capable of managing large-scale urban traffic networks efficiently. These enhancements aim to reinforce the framework’s real-world applicability, enabling high-performance, real-time vehicle recognition while preserving its modularity and computational efficiency.

Acknowledgement: The authors thank the Multimedia University AI Research Lab and Telekom Research and Development Sdn Bhd for support. We are grateful to the contributors of the Pakistani Cars Image Dataset on Kaggle and to our colleagues for valuable discussions.

Funding Statement: This work was supported in part by Multimedia University Research Fellow under Grant MMUI/250008 and in part by Telekom Research and Development Sdn Bhd under Grant RDTC/241149.

Author Contributions: Conceptualization, Kashif Sultan and Saad Sadiq; Methodology, Saad Sadiq; Software, Saad Sadiq; Validation, Muhammad Usman Hashmi and Kashif Sultan; Formal analysis, Muhammad Sheraz; Investigation, Muhammad Sheraz and Teong Chee Chuah; Data curation, Muhammad Sheraz and Kashif Sultan; Writing—original draft, Saad Sadiq and Kashif Sultan; Writing—review & editing, Muhammad Usman Hashmi and Teong Chee Chuah; Supervision, Kashif Sultan. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data that support the findings of this study are openly available in Kaggle at https://www.kaggle.com/datasets/salmanadam1052/pakistani-cars-image-dataset (accessed on 2 July 2025).

Ethics Approval: There is no ethical approval required.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Mustafa TT, Karabatak M. Real time car model and plate detection system by using deep learning architectures. IEEE Access. 2024;12:107616–30. doi:10.1109/ACCESS.2024.3413554.

2. Nazemi A, Azimifar Z, Shafiee MJ, Wong A. Real-time vehicle make and model recognition using unsupervised feature learning. IEEE Trans Intell Transp Syst. 2020;21(7):3080–90. doi:10.1109/tits.2019.2924830.

3. Tan M, Le Q. EfficientNet: rethinking model scaling for convolutional neural networks. In: Proceedings of the International Conference on Machine Learning (ICML); 2019; Long Beach, CA, USA. doi:10.48550/arXiv.1905.11946.

4. Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, et al. An image is worth 16 x 16 words: transformers for image recognition at scale. arXiv:2010.11929. 2020. doi:10.48550/arXiv.2010.11929.

5. Shashidhar R, Manjunath AS, Kumar RS, Roopa M, Puneeth SB. Vehicle number plate detection and recognition using YOLO-v3 and OCR method. In: 2021 IEEE International Conference on Mobile Networks and Wireless Communications (ICMNWC). Tumkur, Karnataka, India: IEEE; 2021. p. 1–5. doi:10.1109/ICMNWC52512.2021.9688266.

6. Khan MG, Saeed M, Zulfiqar A, Ghadi YY, Adnan M. A novel deep learning based ANPR pipeline for vehicle access control. IEEE Access. 2022;10:64172–84. doi:10.1109/ACCESS.2022.3183017.

7. Gregg A. Autonomous police vehicles: the impact on law enforcement. Homeland Security Affairs; 2019. [cited 2025 Aug 2]. Available from: https://www.hsdl.org/c/view?docid=825205.

8. Shrivastava S, Singh SK, Shrivastava K, Sharma V. CNN-based automated vehicle registration number plate recognition system. In: 2020 2nd International Conference on Advances in Computing, Communication Control and Networking (ICACCCN). Greater Noida, India: IEEE. p. 795–802. doi:10.1109/ICACCCN51052.2020.9362705.

9. Siegel JE, Erb DC, Sarma SE. A survey of the connected vehicle landscape—architectures, enabling technologies, applications, and development areas. IEEE Trans Intell Transp Syst. 2018;19(8):2391–406. doi:10.1109/TITS.2017.2750624.

10. Chen F, Zhao D. Computing technology in autonomous vehicle. In: Li Y, Shi H, editors. Advanced driver assistance systems and autonomous vehicles. Singapore: Springer; 2022. doi:10.1007/978-981-19-5053-7_3.

11. Álvarez-Bazo F, Sánchez-Cambronero S, Vallejo D, Glez-Morcillo C, Rivas A, Gallego I. A low-cost automatic vehicle identification sensor for traffic networks analysis. Sensors. 2020;20(19):5589. doi:10.3390/s20195589.

12. Guerrero-Ibáñez J, Zeadally S, Contreras-Castillo J. Sensor technologies for intelligent transportation systems. Sensors. 2018;18(4):1212. doi:10.3390/s18041212.

13. Saadouli G, Elburdani MI, Al-Qatouni RM, Kunhoth S, Al-Maadeed S. Automatic and secure electronic gate system using fusion of license plate, car make recognition and face detection. In: 2020 IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT). Doha, Qatar; 2020. p. 79–84. doi:10.1109/ICIoT48696.2020.9089615.

14. Tourani A, Shahbahrami A, Soroori S, Khazaee S, Suen CY. A robust deep learning approach for automatic Iranian vehicle license plate detection and recognition for surveillance systems. IEEE Access. 2020;8:201317–30. doi:10.1109/ACCESS.2020.3035613.

15. Wang YC, Han CC, Hsieh CT, Fan KC. Vehicle color classification using manifold learning methods from urban surveillance videos. EURASIP J Image Video Process. 2014;2014(1):48.

16. Najeeb SA, Raza RH, Yusuf A, Sultan Z. Fine-grained vehicle classification in urban traffic scenes using deep learning. In: Proceedings of the 11th International Conference on Robotics, Vision, Signal Processing and Power Applications: Enhancing Research and Innovation through the Fourth Industrial Revolution. Singapore: Springer Singapore; 2022. p. 902–8. doi:10.1007/978-981-19-1589-2_86.

17. Kamoji S, Koshti D, Dmonte A, George SJ, Pereira CS. Image processing based vehicle identification and speed measurement. In: 2020 International Conference on Inventive Computation Technologies (ICICT). Coimbatore, India: IEEE; 2020. p. 523–7. doi:10.1109/ICICT48043.2020.9112482.

18. Sinha H, Soumya GV, Undavalli S, Jeyanthi R. An effective real-time approach to automatic number plate recognition (ANPR) using YOLOv3 and OCR. In: Intelligent Systems, Technologies and Applications: Proceedings of Sixth ISTA 2020, India. Singapore: Springer; 2021. p. 299–314. doi:10.1007/978-981-33-4265-9_21.

19. Tian Y, Han M. Adaptive binarization for vehicle state images based on contrast preserving decolorization and major cluster estimation. IEICE Trans Inf Syst. 2022;105(3):679–88. doi:10.1587/transinf.2021EDP7207.

20. Alamgir RM, Shuvro AA, Al Mushabbir M, Rani NJ, Rahman MM, Ahmed S. Performance analysis of YOLO-based architectures for vehicle detection from traffic images in Bangladesh. In: 2022 25th International Conference on Computer and Information Technology (ICCIT). Cox's Bazar, Bangladesh: IEEE; 2022. p. 982–7. doi:10.1109/ICCIT57467.2022.10052737.

21. Lubna N, Mufti N, Shah SAA. Automatic number plate recognition: a detailed survey of relevant algorithms. Sensors. 2021;21(9):3028. doi:10.3390/s21093028.

Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
