Open Access

Open Access

REVIEW

On Privacy-Preserved Machine Learning Using Secure Multi-Party Computing: Techniques and Trends

1 School of Computing, University of Colombo, Colombo, 00700, Sri Lanka

2 Department of Computer Engineering, University of Peradeniya, Peradeniya, 20400, Sri Lanka

3 School of Computer Science and Mathematics, Liverpool John Moores University, Liverpool, L3 3AF, UK

4 Research and Innovations Centers Division, Rabdan Academy, Abu Dhabi, 22401, United Arab Emirates

* Corresponding Author: Gyu Myoung Lee. Email:

# These authors contributed equally to this work

Computers, Materials & Continua 2025, 85(2), 2527-2578. https://doi.org/10.32604/cmc.2025.068875

Received 09 June 2025; Accepted 13 August 2025; Issue published 23 September 2025

Abstract

The rapid adoption of machine learning in sensitive domains, such as healthcare, finance, and government services, has heightened the need for robust, privacy-preserving techniques. Traditional machine learning approaches lack built-in privacy mechanisms, exposing sensitive data to risks, which motivates the development of Privacy-Preserving Machine Learning (PPML) methods. Despite significant advances in PPML, a comprehensive and focused exploration of Secure Multi-Party Computing (SMPC) within this context remains underdeveloped. This review aims to bridge this knowledge gap by systematically analyzing the role of SMPC in PPML, offering a structured overview of current techniques, challenges, and future directions. Using a semi-systematic mapping study methodology, this paper surveys recent literature spanning SMPC protocols, PPML frameworks, implementation approaches, threat models, and performance metrics. Emphasis is placed on identifying trends, technical limitations, and comparative strengths of leading SMPC-based methods. Our findings reveal that while SMPC offers strong cryptographic guarantees for privacy, challenges such as computational overhead, communication costs, and scalability persist. The paper also discusses critical vulnerabilities, practical deployment issues, and variations in protocol efficiency across use cases.Keywords

Utilizing data in training machine learning models while offering transformative opportunities introduces notable privacy challenges. Datasets containing sensitive information [1], such as medical records or financial data, require stringent confidentiality to safeguard individual and organizational privacy. Although access to such data can enhance model performance and deliver significant advantages, the imperative to protect privacy remains a critical constraint. When trained on diverse and extensive datasets, machine learning models achieve better accuracy and generalization. However, when data is distributed across multiple entities or institutions, privacy concerns emerge as a key obstacle, highlighting the need for effective and robust privacy-preserving methodologies.

The literature review on Secure Multi-Party Computing (SMPC) reveals a significant research gap. While extensive work exists on Privacy-Preserving Machine Learning (PPML), a comprehensive exploration of SMPC’s role within the PPML domain is lacking. This research seeks to fill this gap by systematically examining the current state of SMPC in PPML [2]. The study encompasses fundamental concepts, key approaches, challenges faced, and prospective directions for future research, offering a well-rounded perspective on the topic.

This work also aims to provide valuable insights for researchers and practitioners by presenting an up-to-date survey of SMPC techniques. It includes a detailed comparative analysis of various SMPC methods, emphasizing their strengths and limitations. Furthermore, the study investigates potential threats to SMPC, evaluates diverse SMPC protocols, and discusses metrics for performance assessment and considerations for scalability. By synthesizing these aspects, the paper aspires to equip readers with a nuanced understanding of modern SMPC practices, thereby enabling informed decision-making in both academic and industrial applications.

Guided by recent deployments of privacy-preserving machine learning, we address three concrete questions:

• RQ1. Which SMPC protocols have been integrated into PPML systems since 2012, and how do they compare in terms of security guarantees, model fidelity, and resource overhead?

• RQ2. What SMPC-specific attack surfaces emerge when training or serving ML models, and how effective are existing counter-measures?

• RQ3. Where are the open performance and usability gaps that block real-world adoption, and what research directions can close them?

Relative to prior surveys, we make four specific advances:

1. Comprehensive SMPC-PPML corpus (Sections 4 and 5). We catalogue peer-reviewed works from 2012 to 2025, annotate them along five dimensions (protocol family, adversary model, #parties, dataset, task). (RQ1).

2. Unified benchmark table. We normalise accuracy, computation time, communication cost, and scalability for 17 representative protocols, enabling comparisons that were previously scattered. (RQ1).

3. Structured threat taxonomy (Section 6.3). We identify SMPC-specific attack vectors and map published defences to each vector, highlighting residual risks. (RQ2).

4. Actionable research agenda (Section 8). We derive six concrete open problems from “low latency SMPC for edge devices” to “hybrid SMPC + Differential Privacy (DP) for billion-parameter models” and pair each with measurable success criteria for future work. (RQ3).

The organization of the remaining sections of this survey paper is as follows. Section 3 describes the research methods we adopted for this study. Section 4 provides an overview of standard privacy-preserving techniques. Section 5 delves into the application of privacy-preserving techniques in different phases of the machine learning pipeline. The utilization of SMPC in PPML is the focus of Section 6. This section includes a description of attacks and threats to SMPC, the evaluation metrics for measuring the performance of SMPC-based PPML approaches, and the limitations of SMPC for PPML. The challenges, issues, and open problems in SMPC-based PPML approaches are discussed in Section 8. Moreover, the paper provides several directions for future research in this area by highlighting the gaps in existing research. Finally, Section 9 presents the paper’s conclusion.

This section provides an overview of key methods supporting privacy-preserving machine learning. A chronological overview of major advancements in PPML with SMPC is provided in Fig. 1, highlighting key publications that have shaped the field.

Figure 1: Secure computation timeline [3–18]

2.1 Current Landscape of Privacy Preserving Machine Learning

PPML has become a critical focus in machine learning research, driven by the need to balance data utility with privacy protection and secure machine learning systems [19]. Numerous techniques have been developed to extract information from data without compromising privacy [20,21]. PPML addresses the growing demand for secure machine learning systems by enabling model training and deployment while safeguarding sensitive data. As reliance on cloud-based platforms and decentralized data collection increases, PPML has become foundational in designing privacy-compliant frameworks that meet technical and regulatory requirements.

Over the past two decades, PPML has advanced significantly, with research focusing on maintaining data confidentiality while enabling collaborative model training. A key development in this domain has been the integration of SMPC, leading to three distinct research phases:

1. Foundational Phase (2007–2012): This period saw the emergence of theoretical frameworks for secure machine learning, including protocols for secure gradient descent, logistic regression, and general multiparty computation (SPDZ).

2. Mid-Phase (2013–2020): Research shifted toward practical implementations, leading to the development of secure neural network frameworks and privacy-enhanced models such as SecureML, SecureNN, and CryptFlow.

3. Modern Phase (2021–Present): The focus has been on optimizing performance to enable real-world deployment at scale. Techniques such as Cheetah, PUMA, and SecretFlow have been introduced to enhance computational efficiency and scalability.

2.1.1 Attacks on Machine Learning Models

The implementation of PPML plays a critical role in mitigating attacks on machine learning models. As adversaries persistently seek to exploit vulnerabilities and compromise data integrity, deploying robust countermeasures is essential for strengthening model resilience.

2.1.2 Privacy-Preserving Models in the Real World

In the medical sector, collecting patient data by various institutions and hospitals can pose challenges in pooling the data to train machine learning models due to privacy laws [22–24]. To overcome these challenges, PPML can provide a solution by enabling institutions to collaborate on model training without disclosing patient data. The SMPC case study [25] demonstrated the application of SMPC in healthcare through a garbled circuits approach for patient risk stratification, thereby eliminating the need for centralized data. The authors created a large-scale dataset with over two million patients and 141 million healthcare encounters from Chicago. The system performed SMPC over a Wide Area Network (WAN) in just over seven minutes, showcasing impressive efficiency for the data scale. To overcome the performance bottleneck of naive record linkage methods that require quadratic time, they implemented Cuckoo hashing for efficient and privacy-preserving entity resolution between hospital datasets. This hashing step reduced computational overhead while maintaining accuracy and privacy. The authors illustrated the potential of deploying SMPC-based systems in real-world healthcare by simulating a distributed environment, addressing legal and technical barriers to sharing sensitive patient data. This case study illustrates not only the technical soundness of SMPC for real-world applications but also its potential for deployment in regulated domains, such as healthcare.

Similarly, in the financial sector, the use of PPML is crucial for customer segmentation in private banking, as the protection of customer data is essential for realizing the benefits of data insights held by multiple parties. The modern world has seen the benefits of secure machine learning in various other sectors as well, including [26–28]. The growing demand for PPML has led to the emergence of Privacy-Preserving Machine Learning as a Service (PPMLaaS) [29–31]. For instance, the framework proposed in [31] involves a pool of data perturbation methods that selects the most appropriate approach for the input data. PrivEdge [30] is another example, which trains a model on the private data of each party involved and performs private prediction services using SMPC techniques. Additionally, reference [29] highlights the acceleration of Prediction As a Service through encrypted data.

Privacy preservation techniques can be implemented throughout the various stages of the machine learning pipeline [32]. We can classify them into four broad categories: anonymization techniques, cryptographic techniques, DP [33], and Trusted Execution Environment (TEE) [34].

2.2 What Is Secure Multi-Party Computing?

The central concept of SMPC is to enable multiple parties to collaboratively perform a computational task without exposing their private data. SMPC is highly versatile and can be applied across various domains, including machine learning [35]. Unlike traditional approaches, SMPC eliminates the need for anonymization techniques, as data is never fully disclosed to other parties during the computation process. Secure multi-party computation is also referred to as Secure MPC or SMC. This paper will use the abbreviation SMPC throughout for consistency.

2.3 Applications of Secure Multi-Party Computing

In PPML, SMPC can be utilized during the machine learning pipeline’s training and inference stages, depending on the specific use case. In the training phase, SMPC secures datasets contributed by different parties, ensuring the data remains private while training the model. During inference, SMPC prevents the server hosting the model from accessing the user’s input data, maintaining confidentiality.

Federated Learning (FL), a decentralized machine learning approach, complements SMPC in certain scenarios. FL enables model training across multiple devices, where updates or gradients are shared with a central server for aggregation, improving the global model. SMPC, on the other hand, facilitates collaborative computation by multiple parties on encrypted input data without revealing it. While FL preserves privacy through decentralization, SMPC ensures privacy by operating on encrypted data.

Recently, SMPC has been integrated into FL frameworks [36–38] to secure the sharing of model updates [38–40]. This is particularly critical in cases where updates may contain sensitive information, such as those involving personal data [41]. However, the communication overhead associated with SMPC poses a significant challenge, often leading to performance slowdowns in machine learning tasks. Balancing privacy guarantees with computational efficiency remains a key area of research in applying SMPC to PPML.

We conducted a semi-Systematic Mapping Study (semi-SMS) to explore the scientific literature on PPML, with a specific focus on SMPC. Our survey aims to offer a comprehensive introduction to widely used PPML techniques, examine the landscape of SMPC protocols, and address both the training and inference aspects of SMPC. Additionally, we identify existing research gaps and suggest potential future directions for SMPC, providing researchers with a clear overview of the field’s current state.

To gather relevant literature, we used keywords such as “Privacy-Preserving Machine Learning,” “PPML,” “Secure Multi-Party Computing,” and “SMPC,” resulting in an initial set of research papers. We then applied a backward snowballing approach to include vital references cited by these papers, followed by a forward snowballing approach to identify papers citing them. This method ensured we captured the most relevant and widely cited research in the PPML and SMPC domains.

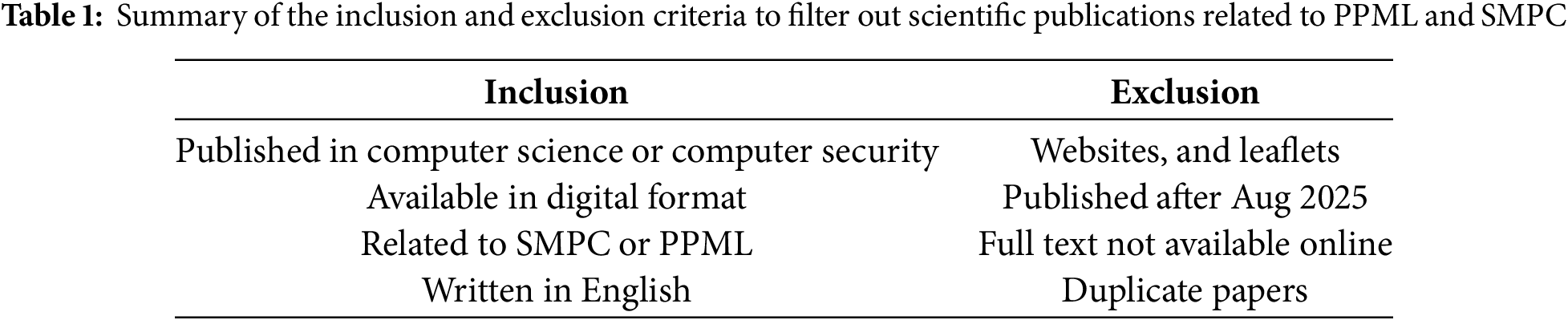

We applied strict inclusion and exclusion criteria to refine the large pool of papers, retaining only the most pertinent studies. Although we did not impose a specific starting date for the publications, we limited the search to works published before 01 August 2025. Table 1 illustrates our adopted inclusion/exclusion criteria.

Finally, we manually reviewed the collected papers to ensure that our analysis included only those directly related to PPML and SMPC. The publishers of the selected papers are depicted in Fig. 2.

Figure 2: Publishers of the selected papers

A semi-SMS offers distinct advantages over methods such as systematic literature reviews (SLRs) for researchers and practitioners seeking to understand emerging and fragmented fields, such as Secure Multi-party Computing (SMPC) for Privacy-Preserving Machine Learning (PPML). While SLRs excel at answering narrow questions, semi-SMS offers a more agile approach to knowledge synthesis, revealing trending research methods, future directions, and gaps. By prioritizing breadth over depth, semi-SMS captures the whole landscape of this rapidly evolving domain of SMPC for PPML, revealing patterns in protocol design, methodological trends in model implementation, and performance gaps that an SLR’s strict inclusion criteria might exclude. This flexibility is vital in a field where new cryptographic techniques and ML model architectures emerge constantly across different security, cryptography, and machine learning venues, and where rigid protocols would miss these innovative papers. For those looking to identify promising research directions in PPML, semi-SMS offers a balanced approach that combines systematic rigor with the flexibility to uncover new insights. In contrast to the exploratory value of mapping studies, an SLR is the superior and ideal method when the research objective is to produce a definitive, trustworthy answer to a specific, well-defined question.

4.1 Privacy Preservation Techniques Overview

In the domain of privacy preservation, several methodologies have been devised to ensure the secure exchange of data across multiple entities. These approaches fall into four primary categories: anonymization, cryptography, data perturbation, and Trusted Execution Environments (TEEs) as depicted in Fig. 3.

Figure 3: A taxonomy of privacy preservation techniques [42–81]

This survey focuses solely on SMPC for machine learning. While techniques such as anonymization, differential privacy, and TEE are important for privacy-preservation, we intentionally excluded them to maintain depth and coherence in our analysis.

Homomorphic Encryption (HE), introduced by Rivest et al. in 1978 [82], revolutionized data privacy by enabling computation directly on encrypted data, thus preserving confidentiality throughout the computational process. The encrypted outputs, when decrypted, are identical to those derived from computations on the plaintext. This intrinsic property obviates reliance on a trusted third party for data handling, enhancing security and privacy integrity.

In contemporary literature, HE is classified into three categories [83] based on the permitted type and the number of operations on the encrypted data:

1. Partially Homomorphic Encryption (PHE): Supports unlimited operations of a single type, such as addition or multiplication, within the encrypted domain.

2. Somewhat Homomorphic Encryption (SWHE): Facilitates a limited number of operations encompassing multiple types.

3. Fully Homomorphic Encryption (FHE): Capable of executing an unrestricted sequence of operations of any type, providing maximum computational flexibility on encrypted data.

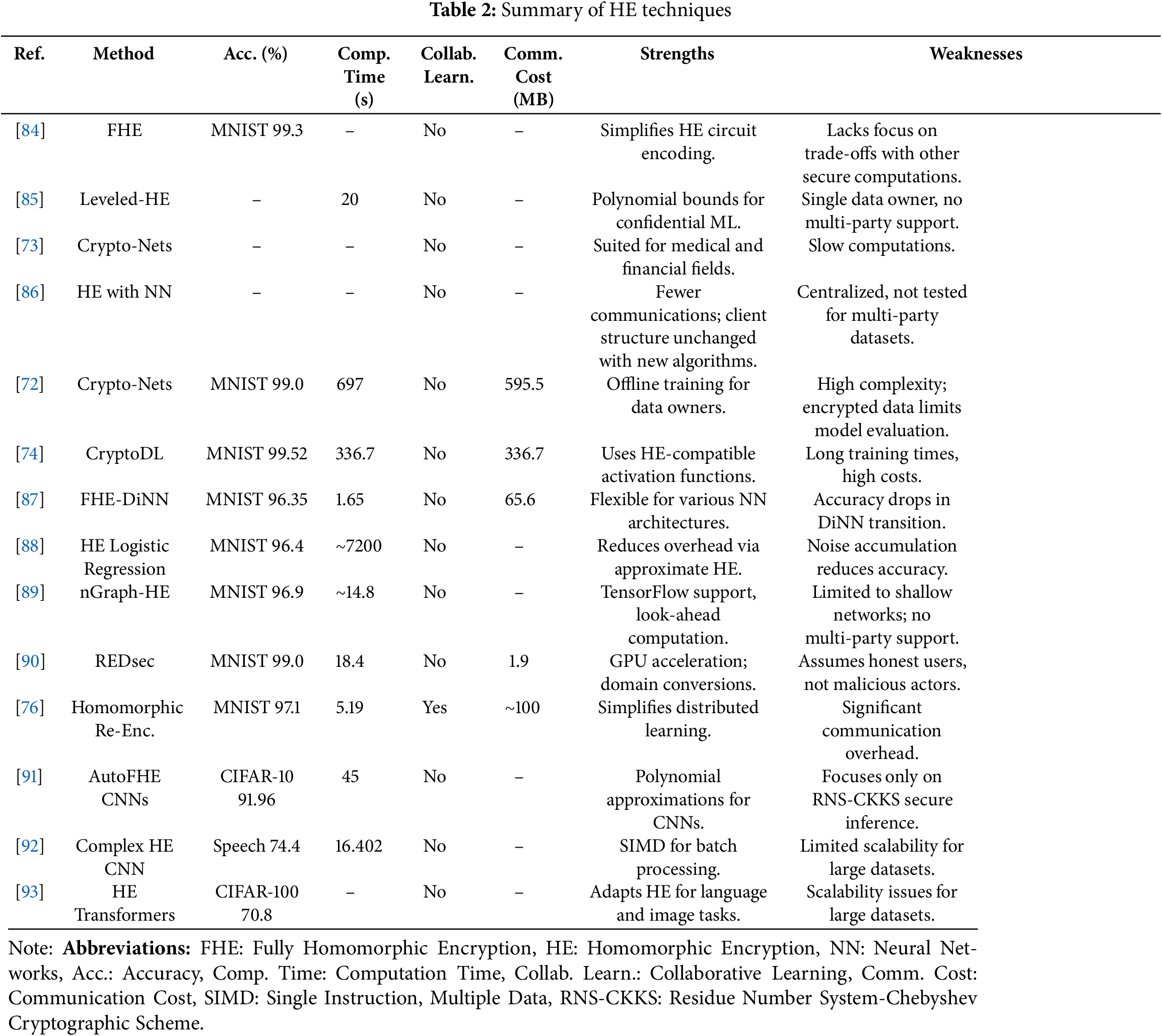

These categories delineate the operational constraints and scope, shaping their respective applications across domains requiring varying levels of computation and privacy guarantees. A summary of HE’s role in privacy-preserving computations across various fields is presented in Table 2. The dashes in the table represent missing values, which are not explicitly reported in the corresponding original papers. We decided not to estimate or impute metrics such as communication cost or runtime to preserve the integrity of the results. Missing values arise from differing experimental setups, complicating comparisons of HE methods. Future work should implement a unified benchmarking approach for more precise comparisons.

HE is widely regarded as a robust cryptographic scheme for enabling privacy-preserving machine learning. It permits computations to be performed directly on encrypted data, maintaining privacy throughout the process. Despite its theoretical advantages, the practical application of HE is often constrained by computational overheads and inefficiencies. Alternative variants such as additive homomorphic encryption [94] and homomorphic re-encryption [76] have been introduced to mitigate these limitations. These alternatives, however, support only a restricted subset of mathematical operations, limiting their utility in complex machine-learning tasks.

Nonetheless, substantial research has been conducted to adapt HE for privacy-preserving machine learning. Notable contributions include studies by Aono et al. [95], and subsequent advancements detailed in works such as [87,96–99]. These studies explore optimization techniques and hybrid approaches to address the inherent challenges associated with HE in machine-learning contexts.

4.2.2 Fully Homomorphic Encryption

Although the concept of HE was first proposed in 1978, it wasn’t until the introduction of FHE by Gentry in 2009 [100] that a practical implementation became feasible. This scheme utilizes lattice-based cryptography, which supports performing addition and multiplication operations on encrypted data. Despite its theoretical feasibility, FHE faced challenges in terms of computational overhead and slowness.

As a result, various variants of HE emerged to overcome these challenges. These include Leveled Homomorphic Encryption (LHE) [101], Homomorphic re-encryption [76,102], Additively Homomorphic Encryption [95], and Multi-key Fully Homomorphic Encryption (Mk-FHE) [71,77].

4.2.3 Functional Encryption (FE)

Functional Encryption (FE), introduced by Sahai and Waters [103] and later formalized by Boneh et al. [104], represents an advanced encryption paradigm designed to enable controlled computation over encrypted data. Unlike traditional public-key encryption, which simply allows a decryption key holder to access the plaintext, FE restricts access to specific outputs of a function computed over the ciphertext. This ensures that sensitive inputs remain confidential while yielding usable outputs for authorized users.

The core challenges in designing FE systems stem from ensuring security and efficiency across all polynomial-time functions. FE systems typically involve significant computational overhead and demand robust, fine-grained access controls [104]. Two critical properties of FE systems are selective disclosure and security against collusion. Selective disclosure ensures that decryption yields only specific functional outputs, while collusion resistance guarantees that even if multiple decryption key holders collaborate, they cannot reconstruct more than the permitted functional outputs.

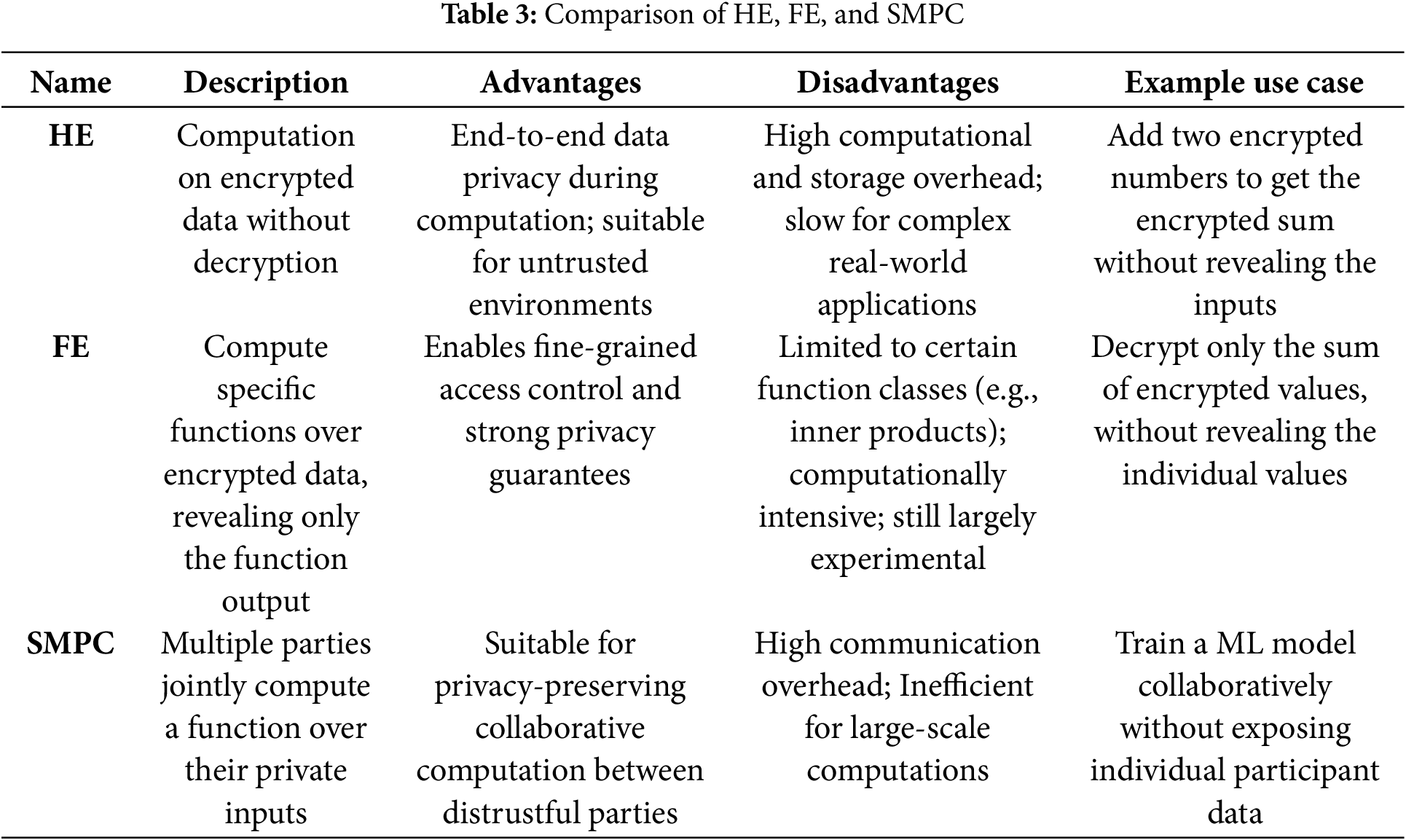

The capabilities of FE make it pivotal for secure data-sharing applications, attribute-based access control, and PPML. By combining stringent access control with advanced cryptographic constructs, FE provides a framework for enabling secure computation in environments requiring high levels of confidentiality and control [105]. Table 3 includes a high-level comparison of cryptographic techniques for PPML.

4.2.4 Secure Multi-Party Computing

The concept of Secure Multi-Party Computing (SMPC) addresses the challenge of maintaining data privacy when multiple parties must pool their data to perform a computation. Originally proposed by [106] and later improved by many, such as [107], SMPC has evolved from a theoretical framework to a practical tool. SMPC can be defined as follows: Consider two or more parties

Figure 4: Overall architecture of SMPC

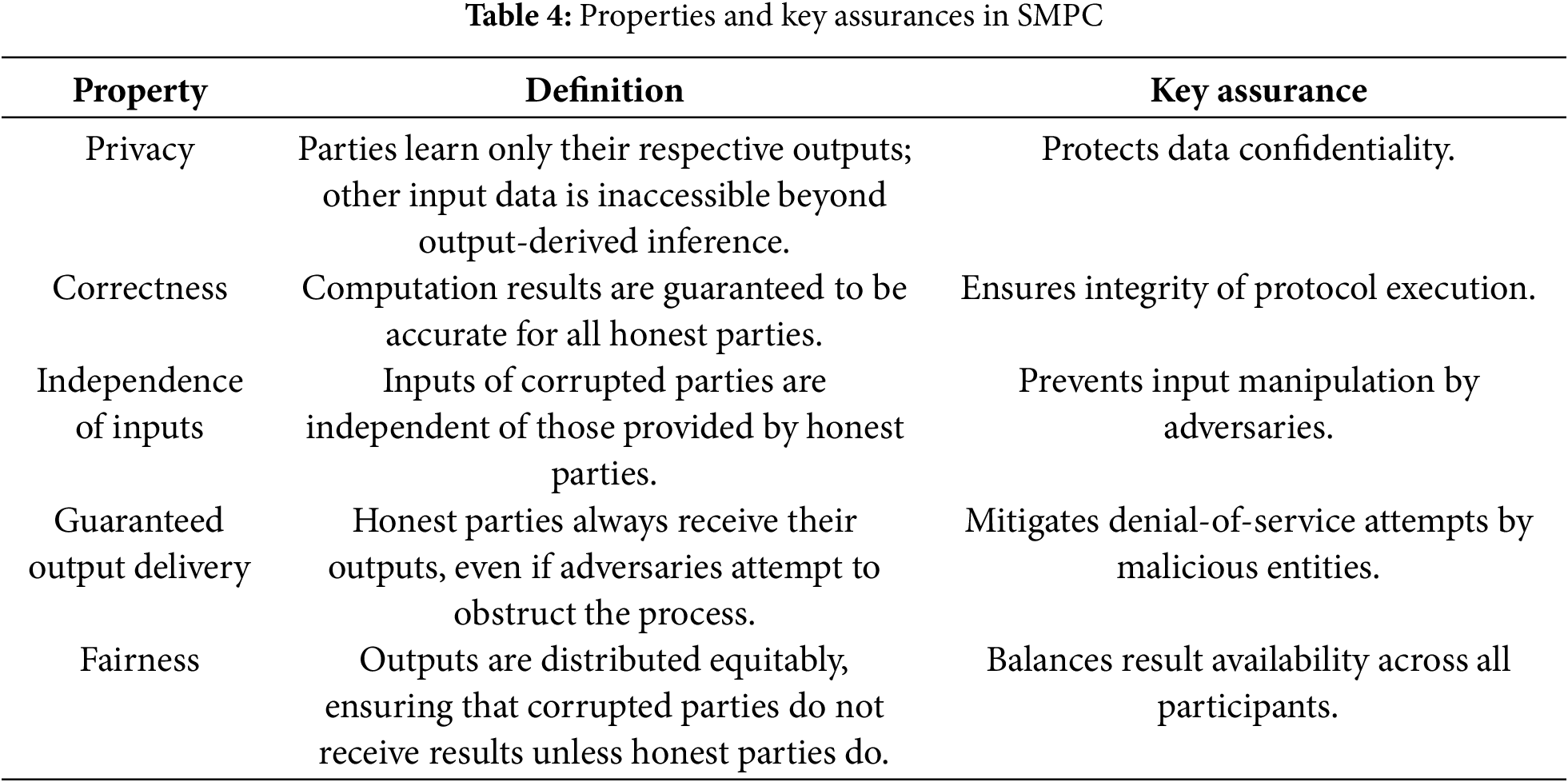

In the context of SMPC, five properties define the security of a protocol. These are illustrated in Table 4. The adversarial models in SMPC protocols are determined by two key factors: the level of adversarial behavior permitted and the corruption strategies employed.

Allowed adversarial behavior:

Adversarial models define the extent of the corrupted parties’ deviation from the protocol. For clarity, consider the scenario where a group of hospitals collaborates to train a machine learning model for early cancer detection using encrypted patient records.

1. Semi-honest adversaries: In this model, all parties, including corrupted ones, faithfully follow the SMPC protocol. However, the adversary (honest-but-curious) may attempt to infer sensitive information by analyzing internal states or intermediate computations [109].

Example: Each hospital adheres to the protocol during model training but records encrypted gradients or intermediate values in an attempt to deduce private data, such as the prevalence of a rare disease at another institution.

2. Malicious adversaries: Malicious, or active, adversaries may arbitrarily deviate from the protocol to disrupt the computation or extract unauthorized information.

Example: A hospital might tamper with its input by substituting real patient outcomes with synthetic data, or it may intentionally send incorrect encrypted gradients to influence the training outcome or leak other hospitals’ inputs.

3. Covert adversaries: Covert adversaries act maliciously only if they believe other parties cannot detect their actions. This model represents rational adversaries discouraged by the risk of discovery and potential consequences.

Example: A hospital might slightly misreport its model update during training if the deviation is subtle enough. However, it avoids significant misbehavior due to concerns about reputational damage and potential penalties from audits.

Corruption strategy:

Their corruption strategy can also determine the categorization of adversaries in SMPC protocols.

1. Static corruption model: In this model, the distinction between honest and corrupted parties is determined before the execution of the protocol.

2. Adaptive corruption model: The adversary can corrupt parties during the computation, and these corrupted parties remain compromised throughout the process.

3. Proactive security model: The adversary can corrupt parties for a specific period.

In addition to adversarial considerations, the design of SMPC protocols also depends on the computed function’s representation. Typically, this representation is either a finite field structure, as demonstrated in works such as [59,110,111], or a ring structure, as seen in studies such as [6,62,112,113]. However, a comprehensive examination of these representations exceeds the scope of the present paper.

Beyond the choice of representation, SMPC implementations must address several critical factors:

• Cryptographic Primitives: Techniques like HE (discussed in sections 4.2.1–4.2.3), secret sharing schemes [114], and zero-knowledge proofs [115] underpin SMPC by enabling computation on encrypted data, secure data reconstruction, and verifiable statements without revealing sensitive information. These primitives are instrumental in maintaining data integrity and confidentiality.

• Communication Security: Establishing secure channels (e.g., TLS protocols) and robust authentication mechanisms is paramount to thwart eavesdropping and man-in-the-middle attacks.

• Computational Complexity: Protocols must optimize the computational overhead associated with cryptographic operations to ensure feasibility for large-scale deployments.

• Scalability: The efficiency of SMPC protocols often degrades with increasing participants. Designing systems capable of maintaining performance under such conditions is vital for practical adoption.

• Participant Dynamics: SMPC protocols sometimes necessitate distinct preprocessing and post-processing phases. Preprocessing includes generating and distributing cryptographic keys and preparatory operations to facilitate secure computation. Post-processing, by contrast, may integrate techniques such as differential privacy to enhance security guarantees further. In real-world scenarios, participants may join or leave computations unpredictably. Addressing this requires robust mechanisms for dynamic membership management during protocol execution.

There are numerous applications of SMPC [28,108,116–119], such as secure key exchange, secure voting, and secure auction, to name a few, which are discussed in Section 7. This paper focuses on the applications of SMPC for PPML. In machine learning, SMPC can be utilized for privacy-preserving training or inference. For privacy-preserving training, SMPC can be implemented in two ways: either multiple parties can pool their private datasets to train a global model on a server, or the user can keep their data private and perform the training on multiple servers such that no single server has access to the original dataset content.

In summary, SMPC provides an essential framework for enabling collaborative computation without compromising data privacy. By addressing complex adversarial behaviors, corruption strategies, and computational requirements, SMPC offers robust solutions to privacy challenges in distributed environments. Its adaptability to cryptographic primitives, communication security measures, and scalability requirements highlight its suitability for high-stakes applications such as PPML. As the demand for secure data processing continues to grow, SMPC stands out as a pivotal approach, ensuring confidentiality and functional integrity in collaborative computations [120].

5 Privacy-Preserved Machine Learning

Machine learning model development typically proceeds through three primary phases: data preparation, training, and inference. Safeguarding the privacy of sensitive data across these stages necessitates the adoption of specialized techniques. These methods must effectively mitigate the risks of exposing sensitive information while maintaining the functionality and performance of machine learning algorithms.

The initial phase, data preparation, involves data collection, cleaning, normalization, and transformation, along with removing extraneous or irrelevant elements. Privacy concerns during this phase stem from potential vulnerabilities to unauthorized access or manipulation of raw data. Privacy-preserving techniques at this stage may include encryption and secure data storage protocols, ensuring that sensitive data remains inaccessible to external threats.

The training phase involves using the processed data to build a machine-learning model. This phase is typically computationally intensive, as the model is iteratively refined until it achieves the desired level of accuracy. Techniques such as differential privacy, secure multiparty computation, or HE can ensure privacy during the training phase. These techniques add random noise to the data to prevent the model from learning about the individual data points while still allowing it to discover patterns in the data.

The final phase is inference, where the trained model makes predictions on new, unseen data. This is typically the phase where data privacy is most at risk, as the predictions made by the model can potentially reveal information about the individual data points. Ensuring privacy during the inference phase can be achieved through techniques such as secure enclaves, HE, or FL. These techniques allow the predictions to be made on encrypted data, preventing any unauthorized access or manipulation of the sensitive information.

In machine learning, ensuring the absence of data leaks during the data preparation phase is critical, as vulnerabilities at this stage may expose sensitive information to malicious actors. Weaknesses in the implementation or insufficient security measures during preprocessing can result in several types of leaks: direct, indirect, and peer-to-peer. For example, utilizing cloud-based platforms for model training introduces risks of direct leakage during data transfer to the cloud. Indirect leakage can occur via parameter updates, where model parameters inadvertently expose sensitive patterns. In distributed frameworks such as FL, peer-to-peer leaks may emerge as models share parameters among nodes.

The academic literature proposes various privacy-preserving techniques addressing these concerns tailored to the preprocessing stage of the machine learning pipeline. Table 5 provides an overview of these methods, highlighting strengths and weaknesses.

DNNs’ vulnerability to adversarial samples has been extensively documented, with adversarial inputs deliberately crafted to mislead these models. To mitigate such vulnerabilities, Wang et al. proposed the RFN method, as described in [121]. This approach focuses on enhancing the resilience of DNNs while preserving classification accuracy. Experimental evaluations demonstrated the efficacy of RFN using the MNIST [128] and CIFAR-10 datasets. Complementary methods, including RFN and FSFN, were introduced by Han et al. [122]. These algorithms, designed to counter gradient-based adversarial attacks, were shown to outperform RFN in terms of effectiveness against such threats.

Guo et al. [123] investigated input transformations as a defensive mechanism for Convolutional Neural Networks (CNNs) against adversarial attacks. Their study incorporated techniques such as bit-depth reduction, JPEG compression, total variance minimization, and image quilting during preprocessing. The findings highlighted the practical effectiveness of total variance minimization and image quilting. Shaham et al. further explored transformation-based defenses in [124], focusing on basis transformation functions, including low-pass filtering, JPEG compression, Principal Component Analysis (PCA), soft-thresholding, and low-resolution wavelet approximations. Among these, JPEG compression was identified as the most effective method under their experimental framework.

Vincent et al. [125] pioneered the use of denoising autoencoders as a training mechanism to enhance resistance against adversarial attacks. However, their implementation failed to eliminate adversarial perturbations entirely. Cho et al. [126] applied denoising autoencoders to generate clean images by removing adversarial noise in the context of semantic segmentation tasks. Despite these advancements, conventional denoising autoencoders remain susceptible to adversarial error amplification, where residual perturbations propagate through network layers. To address this limitation, Liao et al. proposed High-Level Guided Denoiser (High-level Representation Guided Denoiser (HGD)) [127], a flexible and easily trainable method that avoids adversarial error amplification. However, Athalye et al. [129] demonstrated that HGD is ineffective in white-box threat models, underscoring the need for more robust solutions.

Research in privacy-preserving machine learning predominantly emphasizes safeguarding the privacy of data utilized during model training. This entails ensuring that training data remains inaccessible to the party conducting the model training or to multiple collaborating parties responsible for data provision.

Implementing secure protocols for machine learning training processes presents significant benefits in practical applications. For instance, data generated on mobile devices, where computational resources are limited, can be securely transferred to cloud-based infrastructures for model training. This ensures data privacy while leveraging the computational advantages of cloud platforms.

5.2.1 Types of Collaborative Training

The concept of collaborative learning, as elaborated in prior work [130], pertains to the cooperative efforts of multiple entities in training a machine learning model. Such frameworks necessitate stringent privacy safeguards, especially as more parties participate in training. Collaborative machine learning systems can be classified into three primary categories based on the distribution of computational tasks among participants during the training phase.

1. Direct/Central training:

In centralized training, a single server aggregates datasets contributed by one or more entities to train a unified model. The direct training setup is shown in Fig. 5, where a single server aggregates all datasets for model development. This approach allows participants to benefit from a comprehensive model that leverages the combined data while ostensibly maintaining the confidentiality of individual datasets. However, the process often requires local data transmission to the server, potentially compromising privacy.

Figure 5: Direct training

The centralized model also incurs high communication overhead, as all participating entities must transfer their datasets to a central location. This leads to extended model training times and poses scalability challenges, particularly in environments with large or geographically dispersed datasets. The privacy implications of centralized data sharing remain a critical concern in this paradigm, as the central server gains access to the raw data of all contributors.

2. Indirect training:

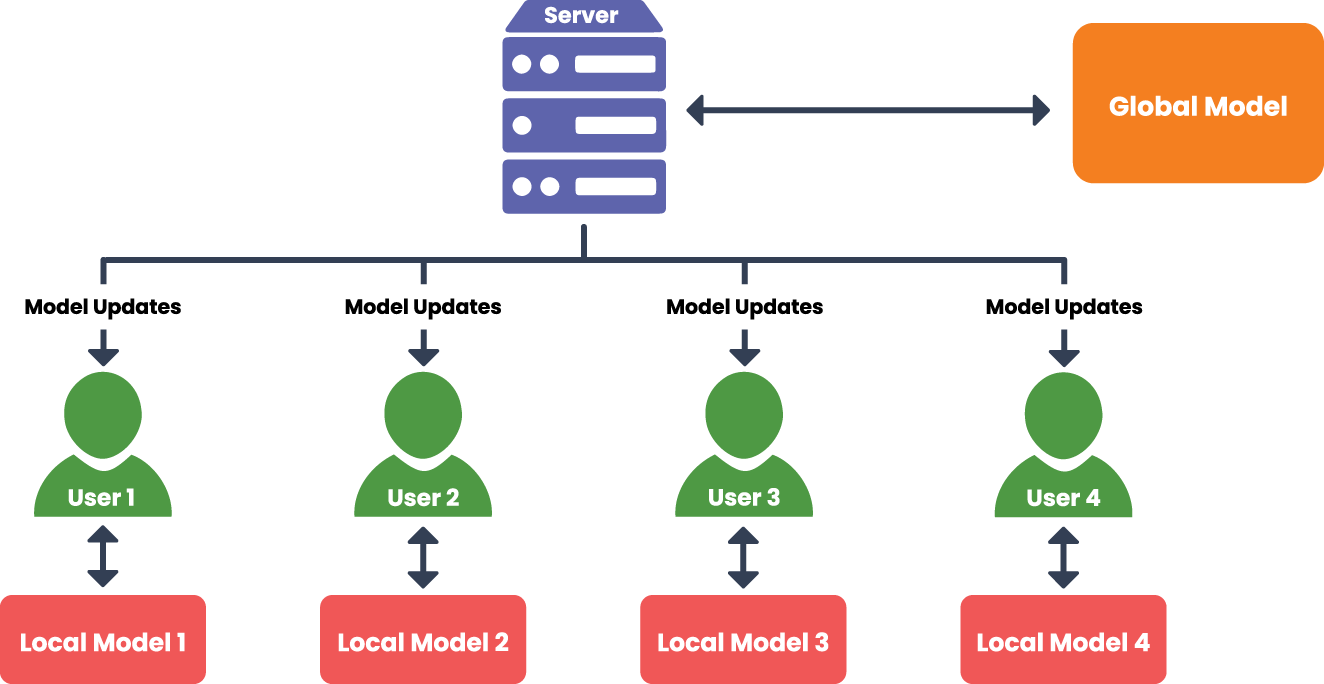

The indirect training paradigm adopts a client-server architecture wherein individual clients are empowered to train models locally. Fig. 6 depicts the indirect training approach, highlighting how clients train locally and share model updates with a central server. The process typically begins with the server disseminating a global model initialized on a designated dataset. Clients download the model parameters, retrain the model locally using their private datasets, and subsequently upload the updated parameters to the server. The server then aggregates the contributions to refine the global model.

Figure 6: Indirect training

This method enhances privacy in several ways. First, sensitive data remains confined to local devices, mitigating risks associated with data sharing and breaches. Second, local processing reduces the potential for interception or unauthorized access during training. Additionally, mathematical techniques such as encryption and randomization can further secure the aggregated parameters, enhancing privacy protection.

Despite these advantages, indirect training is not devoid of privacy risks. Leakage of sensitive information may occur when locally trained parameters are transmitted to the server. Studies have demonstrated that malicious actors could exploit these parameters to infer private data characteristics, highlighting vulnerabilities in this approach (e.g., [38,95,131–134]).

FL exemplifies the indirect training paradigm and has gained significant traction due to its ability to decentralize training. However, the standard FL framework remains susceptible to security challenges. Research addressing these limitations has proposed privacy-preserving enhancements to FL, including mechanisms for secure aggregation, differential privacy, and cryptographic techniques (e.g., [119,135–138]).

3. Peer-to-peer training:

The Peer-to-peer (P2P) training method eliminates the need for a central server, relying instead on a decentralized collaborative framework, as illustrated by Fig. 7. Participants independently train their models on local datasets without sharing the raw data. Instead, model parameters are exchanged among peers according to a pre-established agreement.

Figure 7: Peer-to-peer training

While this approach avoids direct dataset sharing, privacy risks persist. Model parameters exchanged during training may inadvertently reveal sensitive information, potentially leading to data leakage. Several studies have highlighted these vulnerabilities and proposed mitigations to address them (e.g., [23,134,139–142]). These works underscore the importance of privacy-preserving techniques in enhancing the security of P2P training systems.

5.2.2 Privacy-Preserving Techniques Used during the Training Phase

Various techniques are employed to safeguard privacy during the training phase of machine learning models. These methods primarily include HE, FE, and SMPC. Each of these approaches addresses privacy concerns by enabling computations on sensitive data without compromising its confidentiality.

At the inference stage, the central objective is to generate predictions from a pre-trained model utilizing novel input data. These inputs may originate from diverse sources such as mobile devices, cloud servers, or Internet of Things (IoT) devices. A significant concern during this phase revolves around preserving the confidentiality of sensitive data within the inputs to prevent unauthorized access or misuse.

Both HE and SMPC are used to secure machine learning pipelines at the inference stage. HE enables computations on encrypted data without revealing the original data. This technique encrypts the data inputs before sending them to the server for prediction. The encrypted data is then decrypted after the prediction is made. The use of HE can significantly decrease computation efficiency, making it less suitable for real-time applications. However, recent work suggests, this can be achieved with realistic speeds even for dense, Deep Neural Networks (DNNs) [143]. In SMPC, the data inputs are split into multiple shares and distributed among different parties. The computation is then performed on the shares, and the result is combined to obtain the final prediction. This technique can guarantee privacy but requires many communication rounds, making it less efficient for real-time applications.

6 Secure Multi-Party Computing for Privacy Preserving Machine Learning

SMPC enables multiple parties to collaboratively perform computations on their private datasets without revealing sensitive information. In the context of machine learning, SMPC can be utilized to secure various stages of the machine learning pipeline, ensuring data privacy while allowing for collaborative processing. While the theoretical foundations of SMPC are discussed in Section 4.2.4, this section focuses on its practical applications in enhancing the security and privacy of machine learning workflows.

SMPC can be applied across different phases of a machine learning pipeline, from computing loss functions during training to evaluating models during inference. It is one of the two principal methods for protecting data during collaborative machine learning tasks, the other being HE. Although SMPC introduces communication overhead during training, it is generally more cost-efficient compared to FHE.

An essential application of SMPC is its integration with FL, addressing privacy concerns in collaborative learning where model parameters are exchanged without encryption. By combining SMPC with FL, as explored by [144], parties can collaboratively train models while preserving the confidentiality of their individual datasets.

SMPC has a wide range of applications in domains where privacy and security are paramount. It allows parties with private data to jointly perform computations without exposing their underlying data, making it ideal for scenarios constrained by privacy regulations or data sensitivity.

One of the critical application scenarios of PPML using SMPC is FL, where multiple parties with private data collaborate to build a machine learning model without sharing the raw data. This allows the parties to train a shared model on their private data while preserving privacy. It is a helpful solution for building models in scenarios where data is distributed across multiple organizations or devices.

Another application scenario is privacy-preserving data analysis, where SMPC can perform data analysis tasks such as computing aggregate statistics or clustering on sensitive data while preserving privacy. This can be useful in scenarios where data is subject to privacy regulations or is considered sensitive, but insights are still needed to make decisions or drive business outcomes. Consider the following example. Different government institutions hold information about citizens. However, they cannot share the data to collaboratively train a machine learning model due to privacy concerns. SMPC can allow them to pool their data together to train a machine learning model on a cloud service provider that could utilize the insights given by all the data without revealing any of the data. When this model is deployed, SMPC can be used by a citizen who wants to classify their private data according to the trained model, which belongs to the cloud service provider, without revealing the data.

Additionally, SMPC can be used for collaborative model training, enabling multiple parties with private data to jointly train a machine learning model without revealing their data to each other. This can be useful in scenarios where multiple parties have private data they want to use for model training but do not want to share. A prominent example is the provision of Machine Learning as a Service (MLaaS) platforms, such as Tapas [29], PrivEdge [30], and PaaS [31]. SMPC may be utilized both during the training and inference phases.

6.1 Applications of SMPC in Real-World Systems

6.1.1 Healthcare: Federated Cancer Detection

Collaborative studies among European oncology centers have shown that secure multiparty computation (SMPC) can enable federated analysis on MRI radiotherapy data from 48 patients with adrenal metastases. The system maintained patient data locality while preserving diagnostic performance (AUC comparable to centralized baselines), and adhered to GDPR constraints in production settings [145]. In a separate deployment, breast cancer histopathology data across multiple institutions were analyzed using federated learning combined with differential privacy and SMPC-based gradient aggregation. This setup yielded an ROC-AUC of 0.95 under strict privacy guarantees (

6.1.2 Finance: Cross-Institutional Fraud Detection

The SecureFD system demonstrated scalable SMPC-based graph analytics on one billion transaction edges, achieving a 12% improvement in early-stage fraud detection compared to institution-specific models [147]. Similar results have been reported by financial consortia (e.g., VISA and Ant Group), where SMPC was applied to federate transaction features across institutions without any inter-bank data exposure [148].

6.1.3 Genomics: Secure GWAS at Scale

A hybrid protocol combining SMPC and homomorphic encryption enabled secure genome-wide association studies (GWAS) on 23,000 individuals. The method supported correction for population stratification while ensuring raw genotype data remained confidential [149]. Subsequent work has demonstrated the approach can be extended to cohorts of up to one million genomes, with communication complexity scaling sub-linearly with population size [150].

6.1.4 Energy: Household Load Forecasting

In a pilot involving 1600 households, SMPC techniques were used to protect smart-meter data during both model training and inference in a federated short-term load forecasting system. The implementation achieved a 12% reduction in mean absolute error (MAE) compared to single-utility forecasting models [151].

6.2 Advantages and Disadvantages of PPML-SMPC

Incorporating SMPC into machine learning pipelines offers several key advantages:

• Data privacy: SMPC ensures that data remains confidential throughout the computation process, eliminating the need for data sharing among parties.

• Regulatory compliance: Since data does not leave its original location, SMPC helps comply with data privacy regulations like the General Data Protection Regulation (GDPR).

• Security: SMPC provides resistance against adversaries without relying on a central trusted authority and is considered quantum-safe due to data distribution during computation.

• Usability: By preserving data privacy, SMPC allows for the use of raw data without compromising privacy, eliminating the trade-off between data usability and confidentiality.

However, it is important to note that SMPC is not immune to all types of attacks. The potential for malicious behavior by participating parties must be considered, as discussed in Section 6.3.

Despite its advantages, the use of SMPC in privacy-preserving machine learning has certain limitations:

• Communication overhead: SMPC introduces significant communication costs during machine learning tasks, leading to slower computations compared to traditional methods [152–154]. This overhead is less pronounced in smaller models like decision trees but becomes problematic for deep learning models with numerous parameters.

• Trust assumptions: SMPC protocols often assume that the majority of participating parties are honest. If this assumption fails, the privacy of the data may be compromised.

• Complexity: Implementing SMPC can be complex and may require specialized expertise, which could hinder adoption in some settings.

Extensive research has been conducted to address SMPC’s limitations, leading to improvements in its efficiency and practicality. Currently, SMPC has matured to a stage where it can be integrated into practical machine learning workflows, offering a viable alternative to methods like FHE, which significantly increases computational time. By balancing privacy preservation with computational efficiency, SMPC plays a crucial role in advancing privacy-preserving machine learning.

6.3 SMPC-Specific Attacks and Threats

This section examines attack scenarios relevant to the PPML paradigm when employing SMPC. The discussion encompasses various stages of the machine learning lifecycle, emphasizing threat vectors and corresponding mitigation strategies.

Training phase vulnerabilities represent a significant area of concern in SMPC-based privacy-preserving systems [155]. These attacks, which often exploit the collaborative nature of model training, present greater practical risks compared to those targeting inference. Among these, contamination attacks are particularly notable.

Contamination Attacks

Contamination attacks, as characterized in prior research [156], exploit the presence of adversarial actors within a group of parties collaboratively training a machine learning model using SMPC protocols. In such scenarios, adversaries introduce maliciously crafted data into the shared training dataset, effectively poisoning the data pool. The malicious record might be targeted at one attribute, a set of attributes, or even the label of the record. This manipulation results in the model embedding unintended correlations, potentially compromising its reliability or ethical fairness. Data injection and modification attacks fall under contamination attacks, where an adversary modifies the training data to deceive the model. Thus, SMPC models are vulnerable to data injection and modification attacks.

For instance, in a financial consortium involving banks and institutions pooling sensitive client data to train a model for mortgage decisions, a malicious participant could inject data correlating sensitive attributes, such as race or gender, with mortgage outcomes. This would lead to biased and discriminatory outputs when the model is deployed. Variants of this attack include targeted data injection, where specific attributes are manipulated, and broader data modification, which alters multiple elements of the training dataset. Such vulnerabilities highlight the susceptibility of SMPC-based training to integrity violations.

Logic Corruption Attacks

While SMPC protocols inherently resist logic corruption by their cryptographic design, specific attack vectors may arise depending on the underlying encryption schemes or implementation nuances. Adversaries could exploit protocol execution flaws, introduce disruptions in inter-party communications, or leverage weaknesses in SMPC implementations. The resilience of SMPC to such attacks is contingent on robust protocol adherence and secure software engineering practices. Recent laser-based fault-injection work shows full model extraction against garbled-circuit SMPC inference [157]. Practical MAC-key-leakage exploits against SPDZ implementations further illustrate this risk [158].

Ensuring the security of machine learning models during the inference phase is of paramount importance, as models at this stage remain susceptible to a variety of adversarial attacks. This section explores the vulnerabilities inherent to inference pipelines employing SMPC to safeguard user data privacy, regardless of whether SMPC was used during the training process.

Furthermore, inference attacks typically rely on the adversary’s ability to exploit knowledge of the model itself, a factor that remains unaffected by the use of SMPC in the training process. SMPC protocols facilitate secure collaborative computation by leveraging private inputs from multiple parties, thereby ensuring data confidentiality during the computation. This feature provides inherent robustness against certain adversarial techniques, including model extraction, shadow model creation, power side-channel exploitation, membership inference, and linkage attacks.

However, SMPC does not inherently address vulnerabilities to model inversion and memorization attacks. These attack types exploit the ability to reconstruct sensitive input data or extract memorized training data directly from model outputs. To mitigate these risks, additional safeguards must be incorporated. Post-processing techniques, such as the integration of differential privacy mechanisms, output perturbation, rounding, and quantization, can enhance security guarantees and address residual threats effectively.

We assess PPML techniques employing SMPC based on the following criteria: effectiveness, efficiency, privacy, and scalability. Each dimension evaluates a distinct aspect of the integration and performance of SMPC techniques in PPML frameworks.

Effectiveness pertains to how well SMPC models achieve their intended objectives. Evaluative measures include:

• Accuracy: The precision of the model’s outcomes, accounting for trade-offs between accuracy and other metrics such as privacy or efficiency.

• Reconstruction rate [159]: This metric evaluates the system’s ability to recover distributed privacy-preserving components accurately, serving as an indicator of model performance.

Efficiency measures the overhead introduced by SMPC integration within machine learning pipelines, focusing on:

• Inference runtime: The time required to produce predictions. For instance, reference [75] explored runtime optimization in real-world privacy-preserving applications.

• Training time: The duration of the model’s training phase. Research such as [160] emphasizes methods to reduce this cost.

• Communication costs: Significant communication overhead arises from data exchange among participating parties in SMPC systems, increasing the pipeline’s overall execution time.

• Computation costs: Computation-intensive techniques like HE amplify training times due to data encryption overhead.

The privacy assurances of SMPC models are typically underpinned by rigorous theoretical security proofs. These proofs validate the extent to which privacy is preserved within the model. However, maintaining privacy often necessitates a compromise with other performance metrics:

• Privacy-accuracy tradeoff: Increased privacy measures may reduce the model’s predictive accuracy, as observed in studies such as [133].

• Privacy-communication cost tradeoff: Enhanced privacy protections frequently result in higher communication overhead, as noted by [95].

To optimize privacy in SMPC models, it is advisable to deploy a combination of privacy-preserving techniques. Relying solely on a single method is insufficient, as it may not address the full spectrum of potential attack vectors targeting the model or its underlying data. The selection of appropriate techniques should be informed by the specific threat model and operational requirements of the application, ensuring a balanced approach to security and performance.

Scalability in SMPC models refers to the capacity to accommodate an increasing number of participants in the computational process without significant performance degradation. Some SMPC protocols impose inherent limits on the number of parties they can efficiently support. Thus, scalability evaluations must address two critical factors:

• Participant capacity: The ability of the protocol to incorporate a larger number of parties while adhering to its operational constraints.

• Communication overhead: The extent to which communication costs grow as the number of participants increases, with an emphasis on maintaining these costs at a reasonable level to ensure system efficiency.

Assessing and enhancing scalability is essential for the practical application of SMPC models, particularly in scenarios involving large-scale collaborative machine learning.

7.1 Comparison with Existing Surveys

Several surveys have explored SMPC, each offering valuable contributions but with distinct limitations in scope or depth.

Choi and Butler [161] explored integrating Trusted Execution Environments (TEEs) with SMPC in 2019, highlighting hardware security for mobile computation and challenges for constrained devices, but focused solely on SMPC and TEEs. In contrast, our survey offers a broader view of the SMPC landscape, independent of hardware, and includes a wider range of use cases and deployment scenarios. Gamiz et al. [162] conducted a systematic literature review in 2024 on 19 SMPC studies in the context of IoT and Big Data. Their methodology provides insights into SMPC in edge and large-scale computing. However, the limited number of papers hinders the generalizability of their findings. Our approach offers a broader perspective by incorporating SMPC into federated learning, deployment, and real-world use cases, such as medical and financial modeling.

The most closely related work is the recent survey by Zhou et al. [163] in 2024, which focuses on SMPC for machine learning. Our work and theirs both focus on PPML with SMPC, but they only cover SMPC IEEE recommendations, missing important contributions. In contrast, our survey includes diverse publications from ACM, Springer, USENIX, and arXiv, providing a more comprehensive view of SMPC. Earlier surveys on SMPC, such as those by Du et al. (2001) [164], offer foundational insights into its theoretical and practical aspects. However, they fall short in identifying concrete research gaps and future directions, and several of these works are now outdated in light of significant recent advances, particularly in applied PPML settings. Wang et al. (2015) [165] explored SMPC rational adversaries but overlooked modern challenges, such as scalability and deployment.

Our survey offers a high-level overview of SMPC for PPML, highlighting key protocols and applications. We emphasize current research gaps and deployment challenges, aiming to guide future research not only in protocol development but also in real-world implementation.

7.2 Related Work in SMPC for PPML

PPML using SMPC has evolved beyond academic research into practical applications [166,167]. The increasing interest in this field is driven by the need to secure machine learning pipelines in real-world settings facilitated by cloud service providers offering MLaaS. Various algorithms have been proposed, differing in execution speed, privacy guarantees, the number of participating parties, and the accuracy of models compared to non-privacy-preserving counterparts. This section examines the most significant contributions in this area, comparing them based on these characteristics.

SMPC has been effectively applied to basic classification and regression algorithms, where its primary limitation—communication overhead—has minimal impact, allowing for practical deployment. Reference [5] introduced a method for securely aggregating locally trained classifiers. Several studies [168–170] proposed algorithms for secure k-means clustering using SMPC. Reference [171] explored SMPC implementations of fundamental classifiers such as decision trees and Support Vector Machines (SVMs), highlighting SMPC’s adaptability in enhancing privacy without significantly compromising performance.

In the realm of neural networks, SMPC has been proposed for privacy-preserving computation among multiple parties. Reference [9] introduced SecureNN, a three-party protocol supporting operations like matrix multiplication, convolution, ReLU activation, max-pooling, and normalization. Their approach achieved over 99% accuracy on the MNIST dataset while providing security against one semi-honest and one malicious adversary.

However, applying SMPC to deep learning tasks presents significant challenges due to the high communication costs associated with DNNs, which contain millions or billions of parameters. Reference [131] proposed an algorithm for securing deep learning pipelines via SMPC, allowing users to balance communication and computation costs. Reference [172] introduced Trident, a design that improves speed and can be extended to privacy-preserving deep learning, which is particularly beneficial for complex models where computational efficiency and privacy assurance must be carefully balanced.

Hardware-assisted approaches have also been explored. Reference [156] proposed data-oblivious machine learning algorithms supporting SVMs, neural networks, decision trees, and k-means clustering on Intel Skylake processors, demonstrating improved scalability compared to previous SMPC-based solutions.

Researchers have been actively exploring the application of SMPC in FL to enhance security in decentralized communication, particularly in scenarios like IoT platforms. While FL facilitates collaborative model training with some degree of user anonymity, it does not fully safeguard individual data privacy, as model parameters can inadvertently reveal sensitive information. SMPAI [173] proposed an FL technique that integrates SMPC with differential privacy to address these challenges. Simulations in the ABIDES environment evaluated this approach, demonstrating the improved accuracy and communication latency with a growing number of parties. However, these findings are limited to simulations, leaving the SMPAI’s real-world applicability and performance untested. In another effort, reference [174] developed a faster FL solution for vertically partitioned data, incorporating lightweight cryptographic primitives to manage party dropouts effectively. Similarly, reference [144] introduced a two-phase framework for Multi-Party Computing (MPC)-enabled model aggregation using a small committee selected from a larger participant pool. This framework, designed for integration with IoT platforms, outperformed peer-to-peer FL methods in execution and communication efficiency. However, it relies on a trusted environment without adversaries and lacks support for vertical FL and transfer learning. In 2021, reference [175] presented Chain-PPFL, a privacy-preserving FL solution utilizing single-masking and chained communication mechanisms. The approach achieved accuracy and computational complexity comparable to the FedAVG algorithm [38]. Despite its promising results, Chain-PPFL’s privacy-preserving capabilities and performance improvements were validated only through simulations, with no evidence of its effectiveness in decentralized FL applications. These advancements represent significant progress toward practical and robust PPML solutions integrating FL and SMPC, particularly for IoT platforms where data security and computational efficiency are paramount.

In data clustering tasks involving multiple parties, privacy-preserving clustering algorithms are essential. As a result, researchers have extensively explored SMPC-based clustering techniques that ensure privacy, with a particular focus on k-means clustering [168–170,176]. For instance, reference [168] improved computation speed by incorporating parallelism. A concise overview of privacy-preserving clustering methods that leverage SMPC can be found in Table 6.

Beyond classification tasks, SMPC has been applied to other machine-learning problems. Reference [177] demonstrated a protocol for computing item ratings and rankings while preserving accuracy and reducing communication costs by interacting with a mediator rather than multiple vendors. This approach deviates from traditional recommendation systems that require pooling all data together, although it assumes trust in the mediator for intermediate computations on encrypted data.

In recent work, reference [178] proposed a method for feature selection that leverages the anonymity advantages of SMPC. Their technique is independent of the model training phase and can be integrated with any MPC protocol to rank dataset features using a scoring protocol based on Gini impurity.

Furthermore, platforms like Cerebro [179] facilitate collaborative learning by enabling end-to-end computation of machine learning tasks on plaintext data without requiring users to have specialized cryptographic knowledge. This simplification aids in the adoption of privacy-preserving techniques in practical applications.

7.3 Secure Multi-Party Computing Protocols

Table 7 summarizes the main SMPC protocols, comparing key properties and the number of supported parties.

2012–2015: Foundational protocols

SPDZ [6], introduced in 2012 with rigorous security proofs, comprises a secure online phase capable of guarding against active adversaries who can corrupt up to

The authors of the original SPDZ have made significant advancements to address its limitations. For instance, reference [58] resolves the assumption of pre-sharing secret keys by incorporating BGV encryption [180] and delegates numerous computations to the preprocessing phase, thereby reducing costs for the online phase. Additionally, this enhanced version of SPDZ facilitates parallel computations through multithreading capabilities.

ABY [64] introduces a mixed protocol framework for secure Two-Party Computation (2PC) by integrating Arithmetic sharing, Boolean sharing, and Yao’s garbled circuits. Also, the authors infer that oblivious transfer-based multiplication outperforms homomorphic multiplication based on their benchmark observations. Furthermore, they employ standard operations to craft a flexible protocol mixture and leverage the latest optimizations for each protocol utilized. However, it is essential to note that this framework is limited to a passive semi-honest adversary model and lacks support for malicious adversaries, unlike SPDZ. Additionally, ABY lacks scalability beyond 2PC and should accommodate a variety of protocols beyond those used in its initial implementation.

2016–2018: Efficient extensions and hybrid approaches

Reference [181] highlights the contrasting advancement rates between 2PC and MPC, emphasizing the relatively slower progress in MPC. The paper introduces an MPC protocol tailored for the semi-honest adversary setting, achieved through oblivious transfer among parties using multi-party garbled circuits. Key advantages of this approach include constant rounds of communication and support for any number of adversaries. However, additional research is warranted to optimize the efficient utilization of linearly scaling multi-party garbled circuits with varying numbers of parties.

Araki et al. [182] present a Three-Party Computation (3PC) protocol designed for an honest majority, ensuring security in the presence of semi-honest adversaries while maintaining privacy even with malicious parties. However, these guarantees are based on simulation-based definitions and are limited to scenarios with at most one corrupted party. Additionally, the protocol does not accommodate an arbitrary number of parties and necessitates an honest majority. Nevertheless, experimental results indicate the feasibility of secure computation using standard hardware, particularly with fast network speeds and high throughput capabilities.

2019–2021: Deployment-ready frameworks

ASTRA [66] emerged as a highly efficient 3PC protocol operating over a ring of integers modulo

Manticore [186] further refined secure computation frameworks by preventing overflow in machine learning applications, showcasing a unique modular lifting approach that preserves arithmetic operations. Meanwhile, Fantastic Four [187,188] respectively introduced novel four-party and multi-party comparison protocols, each enhancing security features and operational efficiency in scenarios of dishonest majority and complex comparison tasks. For instance, FantasticFour [187] introduces a Four-Party Computation (4PC) protocol while supporting active security against corrupted adversaries in an honest majority setting. While it provides resilience against malicious adversaries, relying on an honest majority remains a limitation when dealing with a compromised party. Lastly, reference [189] demonstrated a secure computation protocol for graph algorithms with an honest majority, efficiently safeguarding graph topology with a 3PC setup that remarkably accelerates computations even for large-scale graphs, proving its practical utility and speed in real-world applications. A notable contribution of this work is the advancement of secure shuffling techniques as a replacement for secure sorting algorithms.

2022–present: Large-scale and cloud focused solutions

Reference [190] presents a cloud-based MPC protocol to ensure security for up to n-1 malicious parties in conjunction with a semi-honest server. Compared to the protocol outlined in [192] for server-aided 2PC protocols, this approach demonstrates a fourfold improvement in execution time and a 2.9-fold reduction in communication costs. It is important to note that the execution time and communication metrics in [192] were not provided initially and were approximated in [190], rendering the above improvements likely estimations. Additionally, the study showcases significant enhancements, such as an estimated 83-fold decrease in execution time, a 1.5-fold reduction in communication among 2 or 4 client parties, and a 42-fold improvement in server communication costs. Moreover, the proposed solution exhibits nearly linear scalability, with communication costs and execution times scaling proportionally with the number of parties involved.

Building upon the foundation of [189], the research discussed in [191] enhances the online efficiency of the secure shuffle protocol outlined in [189] by achieving a twofold reduction in communication costs and the number of online rounds in a 3-party setting. However, it is essential to highlight that while this approach excels in 3-party computation, its communication costs become less favorable than those of [189] when applied to larger values of n. Therefore, while the approach proposed in [191] shows promise for 3PC scenarios, its generalizability is limited.

7.4 Privacy Preserving Machine Learning Techniques That Utilize Secure Multi-Party Computing

A high-level comparison of SMPC-based PPML training approaches is presented in Table 8.

2007–2012: Early foundations and proof of concept

The field of PPML has seen many advances since its inception, including works to extend SMPC techniques to gradient-descent methods. The first work in this area, by [3], proposed a secure two-party protocol for gradient descent, establishing a foundational approach. They proved the proposed protocol is correct and privacy-preserving for the two-party case, with a potential extension to the multiparty case. However, the protocol assumes that the involved two parties are semi-honest. Reference [5] proposed an approach averaging locally trained models with a stochastic component to make the averaged model differentially private. However, the best performance was limited to instances where dataset sizes were equal. Their approach lacks generalizability when data from different parties are from distinct distributions and assumes data are sampled from the same distribution, such as the Laplace distribution. Reference [193] proposed a technique optimizing the overall multiparty objective with a weaker form of differential privacy compared to [5], with performance not dependent on the number of parties or dataset size. They demonstrated that the local model aggregation method proposed by [5] degrades with an increasing number of involved parties, contrary to the original claims.

2013–2020: Scaling up SMPC for neural networks and complex ML tasks

Reference [131] introduced

Reference [195] proposed a work applicable to any DNN without sacrificing accuracy in a cloud computing environment that does not rely on data perturbation or noise addition. Instead, it uses cryptographic tools to preserve privacy in the MPC setting. However, their approach depends on the assumption that involved parties are non-colluding while the cloud server can be malicious, breaking the MPC protocol.

EzPC [196] provides a 2PC framework with formal correctness and security guarantees, outperforming its predecessors by 19

In 2020, reference [197] proposed InvertSqrt, an MPC protocol for efficiently computing the reciprocal of the square root of a value,

2021–present: Robust real-world deployment

In 2021, several advancements were made in SMPC for machine learning. SWIFT [204] is a maliciously secure 3PC over rings that provides guaranteed output delivery. However, it does not extend to multiple semi-honest parties and assumes only one malicious adversary in a 3PC or 4PC setting. CRYPTGPU [205] improves performance by running all operations on a GPU, achieving 2

Similarly, CRYPTEN [12] also offers GPU support and operates under a semi-honest threat model but may be susceptible to side-channel attacks. Falcon [13] supports batch normalization and guarantees security against malicious adversaries with an honest majority. It provides private inference 8

In the realm of SMPC techniques post-2021, various frameworks and platforms have emerged to bolster privacy-preserving computations. Tetrad [209], a 4PC computing framework, improves fairness and robustness over Trident [172] while reducing deployment costs six-fold and achieving speed gains—four times faster in ML training and five times faster in ML inference. However, Tetrad requires function-dependent preprocessing for increased generality. Piranha [210] leverages GPUs to accelerate SMPC computations in 2PC, 3PC, and 4PC settings, enhancing training and inference speeds for PPML techniques by 16–48x compared to respective CPU-based versions. Nevertheless, Piranha assumes the involved parties execute within their trust domains with dedicated GPUs and secure channel communication for secret sharing. NFGen [211] employs piecewise polynomial approximations for nonlinear functions in MPC systems, addressing precision variations caused by fixed-point and floating-point numbers. Regardless, it is limited to two MPC platforms and does not support multi-dimensional nonlinear functions. SecretFlow-SPU [16] features a frontend compiler converting ML programs into MPC-specific representations, with code optimizations and a backend runtime for executing MPC protocols. It achieves 4.1

Spin [17] enables secure computation of attention mechanisms, facilitating privacy-preserving deep learning in MPC settings, including CNN training and transformer inference. It supports Graphics Processing Unit (GPU) acceleration and operates in an n-party dishonest majority setting. While Spin effectively optimizes online computation, it does not address inefficiencies in the precomputation phase. Additionally, its applicability is limited to deep learning models that fit within GPU memory, restricting scalability for larger architectures. EVA-S3PC [18] introduces secure atomic operators for large-scale matrix operations, enabling efficient training and inference of linear regression models in 2PC and 3PC settings under a semi-honest adversary model. It demonstrates superior communication efficiency compared to SecretFlow [16] and CryptGPU [205]. However, EVA-S3PC is evaluated only in a LAN, limiting its scalability to WAN. Furthermore, its design is restricted to a 3PC setting, constraining its applicability to large multi-party scenarios.

Table 9 provides an overview of SMPC-driven secure inference techniques and their associated datasets.

2017–2018: Foundational approaches to secure inference

Before 2019, several studies focused on secure inference in machine learning using SMPC. Reference [215] introduced BaNNeRS, a method for secure inference on Binarized Neural Networks (BNNs) utilizing MP-SPDZ. This approach assumes an honest majority, providing security by aborting execution if a malicious adversary is detected. However, BaNNeRS suffers from high computational overhead, making it relatively slow and unsuitable for real-time applications.

In the same year, reference [212] proposed MiniONN, a technique for transforming existing neural networks into oblivious neural networks. Their method demonstrated significantly lower response latency and reduced message sizes compared to previous work, such as [7], while achieving sub-linear growth in these metrics. Although MiniONN effectively hides neural network parameters such as model weights and bias matrices, it still reveals metadata, including the number of layers, the sizes of weight matrices, and the operations performed in each layer. Furthermore, MiniONN lacks the necessary support for developers and the neural networks commonly used in industrial production environments.

Additionally, reference [213] introduced GAZELLE, which combines HE with 2PC to enable privacy-preserving inference over encrypted data. GAZELLE achieves significant improvements, including a 20–30

2019–2020: Broadning techniques and large datasets

Between 2019 and 2020, significant advancements were made in secure inference techniques. In 2020, CryptFlow [11] was introduced, achieving secure inference with accuracy equivalent to plaintext TensorFlow while outperforming prior methods. Its successor, CryptFlow2 [216], extended this work by enabling secure inference on large-scale datasets, such as those involving ResNet-50 and DenseNet-121 models, with an order-of-magnitude reduction in communication costs and 20

Also in 2020, SwaNN [217] presented a hybrid approach combining PHE and 2PC. Delphi [219] offered a 2PC inference system for neural networks, achieving a 22

2021–2022: Enhanced precision, GPU acceleration, and specialized layers

After 2021, new frameworks continued to enhance the efficiency and practicality of secure inference. Cheetah [14], a 2PC neural network inference system, employed HE protocols to efficiently evaluate convolutional layers, batch normalization, and fully connected layers, while integrating communication-efficient primitives for nonlinear functions like ReLU. Experimental results showed that Cheetah outperformed CryptFlow2 [216], being 5.6

SecFloat [221] introduced a 2PC library specializing in 32-bit single-precision floating-point operations. It achieved six times higher precision and doubled efficiency compared to earlier works like ABY-F circuits [64] and MP-SPDZ [63]. Despite its advancements in precision through floating-point arithmetic, SecFloat does not support double-precision floating points and lacks security against malicious adversaries.