Open Access

ARTICLE

Deep Architectural Classification of Dental Pathologies Using Orthopantomogram Imaging

1 Department of Computer Science, Mohammad Ali Jinnah University, Karachi, 75400, Pakistan

2 Faculty of Computing and Informatics, Multimedia University, Cyberjaya, 63100, Malaysia

3 Department of Information Systems, College of Computer and Information Science, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

4 Department of Computer Science and Artificial Intelligence, College of Computing and Information Technology, University of Bisha, P.O. Box 551, Bisha, 61922, Saudi Arabia

* Corresponding Author: Hafiz Muhammad Attaullah. Email:

Computers, Materials & Continua 2025, 85(3), 5073-5091. https://doi.org/10.32604/cmc.2025.068797

Received 06 June 2025; Accepted 30 July 2025; Issue published 23 October 2025

Abstract

Artificial intelligence (AI), particularly deep learning algorithms utilizing convolutional neural networks, plays an increasingly pivotal role in enhancing medical image examination. It demonstrates the potential for improving diagnostic accuracy within dental care. Orthopantomograms (OPGs) are essential in dentistry; however, their manual interpretation is often inconsistent and tedious. To the best of our knowledge, this is the first comprehensive application of YOLOv5m for the simultaneous detection and classification of six distinct dental pathologies using panoramic OPG images. The model was trained and refined on a custom dataset that began with 232 panoramic radiographs and was later expanded to 604 samples. These included annotated subclasses representing Caries, Infection, Impacted Teeth, Fractured Teeth, Broken Crowns, and Healthy conditions. The training was performed using GPU resources alongside tuned hyperparameters of batch size, learning rate schedule, and early stopping tailored for generalization to prevent overfitting. Evaluation on a held-out test set showed strong performance in the detection and localization of various dental pathologies and robust overall accuracy. At an IoU of 0.5, the system obtained a mean precision of 94.22% and recall of 90.42%, with mAP being 93.71%. This research confirms the use of YOLOv5m as a robust, highly efficient AI technology for the analysis of dental pathologies using OPGs, providing a clinically useful solution to enhance workflow efficiency and aid in sustaining consistency in complex multi-dimensional case evaluations.

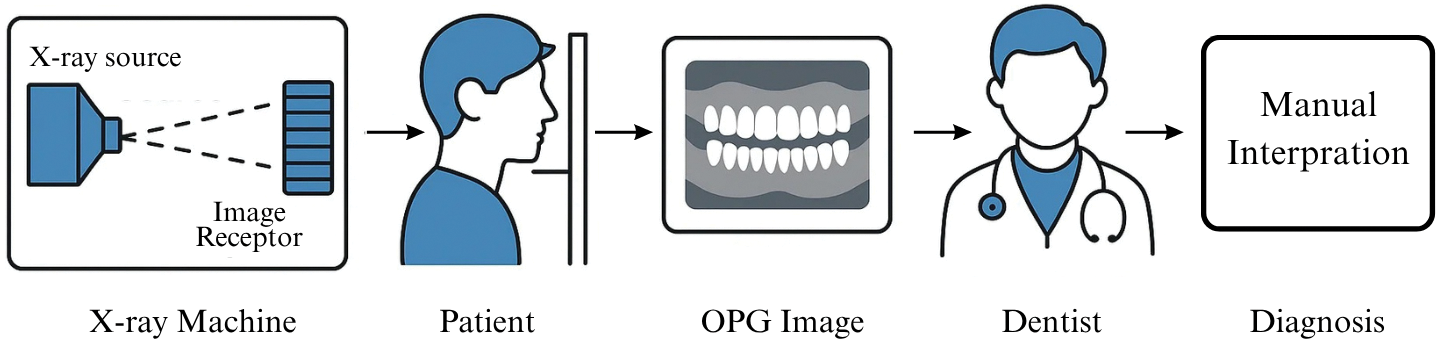

The accurate and timely diagnosis of dental and maxillofacial pathologies is fundamental to effective patient treatment and general health management. OPGs, also called panoramic X-rays, are a cornerstone of dental diagnostics. A panoramic X-ray is a two-dimensional (2-D) dental X-ray examination that captures the entire mouth in a single image, including the teeth, upper and lower jaws, surrounding structures, and tissues [1]. This comprehensive overview is invaluable for the initial screening and detection of various conditions. However, conventional interpretation of these images by dental professionals, while critical, can be time-consuming and subject to variability. The process of interpreting an OPG effectively involves a systematic evaluation of complex images, considering that OPG is a tomographic radiography using a focal trough that approximates the mandible curve [2]. The nuances of effective radiographic interpretation are vital, involving classifying findings and considering various factors [3]. The inherent subjectivity in this process and the challenge of distortion in panoramic images can affect diagnostic outcomes, highlighting a compelling need for innovative solutions to enhance diagnostic capabilities [4]. The limitations inherent in manual OPG analysis have spurred exploration into advanced technological aids. The field of AI is rapidly advancing. The transformative role of AI in dentistry, which utilizes its fundamentals for contemporary applications, is becoming increasingly evident, offering new paradigms for diagnostics and treatment planning [5]. This research, “Deep Architectural Classification of Dental Pathologies Using OPG Imaging,” is motivated by this potential, aiming to develop an automated system that leverages AI to assist dental professionals in the accurate and efficient analysis of OPGs. 
An automated system for OPG analysis must be capable of recognizing and differentiating various dental structures and pathological conditions. This study focuses on six clinically significant classes: Caries, Infection, Impacted Teeth, Fractured Teeth, Broken Crown (BDC/BDR), and Healthy dental structures. The accurate radiographic distinction between these classes is often complex. For instance, dental caries is the most common dental disease, and its early detection is crucial; however, traditional screening methods can be highly subjective, and AI-assisted detection on radiographs can make the examination faster and more precise [6,7]. An effective AI system must learn the distinguishing radiographic features for all such conditions. Orthopantomography is a specialized imaging technique that produces a panoramic view by rotating an X-ray source and detector around the patient’s head, capturing a focal trough that represents the dental arches. The typical workflow, from image acquisition to diagnosis by a dental professional or an AI system, is conceptually illustrated in Fig. 1.

Figure 1: A conceptual illustration of the OPG diagnostic workflow

When an AI model, specifically an object detection framework like YOLOv5, analyzes an OPG, it processes the image through several stages. First, the CNN backbone extracts hierarchical features from the input OPG. Subsequently, the model’s detection head identifies regions of interest, predicts bounding boxes to localize these regions, and simultaneously classifies the contents of each box into one of the predefined dental condition categories [8]. This integrated approach of localization and classification in a single pass, which makes such models suitable for real-time applications, stems from foundational object detection techniques such as those pioneered in “You Only Look Once: Unified, Real-Time Object Detection” [9]. The application of AI, especially deep learning and Convolutional Neural Networks (CNNs), in dentistry has been an area of growing research. Comprehensive reviews on medical image analysis using CNNs highlight the widespread success of these models in various image-based diagnostic tasks due to their ability to learn intricate features directly from data [10]. These prior works establish the feasibility of deep learning in this domain and also point to the remaining challenges. This research contributes to the advancing field of AI in dental diagnostics by developing and evaluating a YOLOv5m-based system for multi-class detection and architectural classification of six common dental pathologies from OPGs. Using a publicly available annotated dataset that promotes reproducibility, the study provides a comprehensive performance analysis with detailed quantitative and qualitative results. The study demonstrates the practical efficacy of a deep learning model as an assistive tool for dental practitioners and addresses multi-class detection in OPGs, reflecting real-world clinical scenarios.
A review of the advancements of AI in dentistry underscores the importance of such comprehensive diagnostic aids for planning more effective therapies and potentially reducing treatment costs [11]. Despite significant advancements in deep learning for dental pathology detection, crucial gaps persist that hinder robust clinical application. Many existing automated diagnostic systems often separate image-level classification from multi-class object detection, thereby failing to provide the precise spatial localization of pathologies within Orthopantomogram (OPG) images. Such simultaneous detection and localization are paramount for accurate diagnosis and targeted treatment planning in clinical settings. Furthermore, current models frequently suffer from limited generalization due to reliance on institution-specific or demographically restricted datasets. To address these critical limitations, particularly the need for a comprehensive and efficient solution for simultaneous detection and classification, our study introduces the first comprehensive application of YOLOv5m for the concurrent detection and classification of six distinct dental pathologies using panoramic OPG images. This approach aims to deliver precise spatial localization and enhance diagnostic consistency, directly addressing the aforementioned challenges in automated dental image analysis.

The remainder of this paper is organized as follows. Section 2 reviews the existing literature on dental image analysis techniques, AI applications in dental radiology, common dental pathologies, and relevant object detection methodologies. Section 3 describes the dataset used. Section 4 details the methodology, covering the YOLOv5m model architecture, data processing, and training. Section 5 presents and analyzes the quantitative and qualitative results. Finally, Section 6 concludes the paper by summarizing the findings, discussing limitations, and proposing future work.

Dental caries remains a primary focus for automated detection due to its prevalence. Various imaging modalities and AI techniques have been explored. Bui, Hamamoto, and Paing proposed a computer-aided diagnosis (CAD) method for caries detection on dental panoramic radiographs by fusing deep activated features with geometric features, achieving high accuracy, sensitivity, and specificity all exceeding 90% with classifiers like SVM and Random Forest [11]. Casalegno et al. developed a CNN-based deep learning model for automated detection and localization of dental lesions in near-infrared transillumination (TI) images, achieving a mean IOU of 72.7% for segmentation and promising AUC scores for binary caries presence detection, even with limited training data [12]. Ding et al. explored using the YOLOv3 algorithm for detecting dental caries in oral photographs taken with mobile phones, finding that a model trained on combined augmented and enhanced images achieved the highest mAP (85.48%) [13]. Bayraktar and Ayan investigated a modified YOLO (CNN) model for diagnosing interproximal caries on 1000 digital bitewing radiographs, reporting an overall accuracy of 94.59% and an AUC of 87.19% [14]. Haghanifar, Majdabadi, and Ko proposed PaXNet, an automatic system using ensemble transfer learning and a capsule network for dental caries detection in panoramic images, achieving 86.05% accuracy with higher recall for severe caries [15]. A study available on ResearchGate investigated detecting dental abnormalities using YOLOv8 models on RGB color images from an oral camera, with YOLOv8s achieving 84% precision, 79% recall, and 85% mAP@0.5 for caries detection [16]. De Araujo Faria et al. introduced an ANN-based method using PyRadiomics features from OPGs to predict and detect radiation-related caries (RRC) in cancer patients, showing high sensitivity (98.8%) and AUC (0.9869) for RRC detection [17].

Detecting conditions affecting the supporting structures of teeth has also been a significant area of AI research. Jiang et al. developed a two-stage deep learning architecture (UNet and YOLO-v4) using 640 panoramic images for radiographic staging of periodontal bone loss, achieving an overall classification accuracy of 0.77, generally outperforming general dental practitioners [18]. Khan et al. investigated automated feature detection and segmentation of common periapical findings (caries, alveolar bone recession, interradicular radiolucencies) on 206 periapical radiographs, finding U-Net and its variants performed best. However, segmentation of interradicular radiolucencies proved challenging [19]. Kibcak et al. developed a U-Net-based system for segmenting dental implants and a CNN for detecting peri-implantitis on 7696 OPGs. The segmentation was highly accurate (DSC 0.986), and the classification model for peri-implantitis achieved a recall of 0.903 and an F1-score of 0.835 [20].

Beyond caries and periodontal issues, AI has been applied to other conditions visible on panoramic radiographs. Murata et al. applied a CNN-based deep learning system to diagnose maxillary sinusitis on panoramic radiographs, achieving high diagnostic performance (accuracy 87.5%, AUC 0.875) comparable to experienced radiologists [21].

Several studies have aimed for more comprehensive diagnostic systems capable of identifying multiple conditions simultaneously. Alabd-Aljabar et al. presented a hybrid transfer learning approach using 1262 OPGs for diagnosing four teeth classes (cavity, filling, impacted, implant), with Vision Transformer (ViT) achieving the highest accuracy at 96% [22]. Zhu et al. developed an AI framework using BDU-Net and nnU-Net to diagnose five dental diseases (impacted teeth, full crowns, residual roots, missing teeth, caries) on 1996 panoramic radiographs. The system showed high specificity across most diseases and efficiency comparable to or exceeding mid-level dentists [23]. Laishram and Thongam devised a CNN-based method to classify tooth types and oral anomalies (fixed partial denture, impacted teeth) from 800 training OPGs, reporting 97.92% accuracy [24]. Almalki et al. proposed a YOLOv3 model to detect and classify cavities, root canals, dental crowns, and broken-down roots from 1200 augmented OPG images, achieving 99.33% accuracy on their dataset [25]. Lee et al. built an AI model to detect 17 fine-grained dental anomalies from approximately 23,000 panoramic images, achieving a high sensitivity of about 0.99 [26]. Laishram and Thongam also proposed a Faster R-CNN-based method to detect and classify oral/dental pathologies (teeth types, fixed partial denture, impacted teeth) from OPGs, reporting over 90% accuracy for detection and 99% for classification [27]. Muresan et al. introduced an approach for automatic teeth detection and classification of 14 dental issues on OPGs using CNN-based semantic segmentation (ERFNet) and image processing, with their teeth detection achieving a 0.93 F1-score [28]. A study accessible on Niscpr.res.in used a hyperparameter-optimized Random Forest ensemble (tuned with a Genetic Algorithm) for classifying tooth types and anomalies in panoramic radiographs, achieving 98% accuracy [29].

Identifying and isolating individual teeth is often a prerequisite for detailed pathological assessment. Tian et al. introduced a method using sparse voxel octrees and 3D CNNs for automatic tooth segmentation and classification on 3D dental models, achieving high accuracy for both tasks using a hierarchical approach [30]. Ayidh Alqahtani et al. validated a deep CNN tool for automated segmentation and classification of teeth with orthodontic brackets on CBCT images, reporting excellent segmentation (IoU 0.99) and highly accurate classification (precision 99%, recall 99.9%) [31]. Hejazi developed a two-step system using a CNN to classify OPGs as normal/abnormal, followed by Faster R-CNN to detect lesions in abnormal images from the Tufts Dental Database, showing promising classification (84.56% accuracy) and detection (82.35% precision) results [32]. Ong et al. developed a three-step deep learning system (YOLOv5, U-Net, EfficientNet) for automated dental development staging on 5133 OPGs based on Demirjian’s method, achieving high mAP (0.995) for detection and good F1 scores for tooth type classification [33]. Lo Giudice et al. evaluated a deep learning CNN for fully automatic mandible segmentation from CBCT scans, finding it highly accurate (DSC difference of 2.8%–3.1% vs. manual) and significantly faster [34]. Singh and Sehgal proposed a 6-layer DCNN for tooth numbering and classification (canine, incisor, molar, premolar) on panoramic images after segmentation, achieving 95% accuracy on an augmented database [35]. Imak et al. developed ResMIBCU-Net, an advanced encoder-decoder network, for automatic impacted tooth segmentation in OPGs, achieving 99.82% accuracy and a 91.59% F1-score [36].

The field also sees diverse AI methodologies beyond standard CNN classification/detection. Minoo and Ghasemi explored VGG16, VGG19, and ResNet50 for classifying calculus, tooth discolouration, and caries from 3392 JPG images of teeth, with ResNet50 performing best (95.23% accuracy) [37]. Cejudo et al. compared baseline CNN, ResNet-34, and CapsNet for classifying four types of dental radiographs, with ResNet-34 showing superior accuracy (>98%) and Grad-CAM providing interpretability [38]. Sumalatha et al. presented a dual-architecture AI system using an ensemble of ResNet-50, EfficientNet-B1, and InceptionResNetV2 for oral disease classification and the DETR model for detecting abnormalities in OPGs [39]. Hasnain et al. employed a CNN to classify dental X-ray images (126 images, augmented) as ‘Normal’ or ‘Affected’ for automating clinical quality evaluation, achieving 97.87% accuracy [40]. A study referenced via ResearchGate utilized a CNN to classify Quantitative Light-Induced Fluorescence (QLF) images for dental plaque assessment, outperforming shallow models with an F1-score of 0.76 for Red Fluorescent Plaque Percentage labels [41].

While our study focuses on the detection of dental pathologies, the broader utility of AI in dental radiography extends to tasks like dental age estimation, where deep learning models, particularly CNNs, have shown promising results in improving accuracy and efficiency over conventional techniques [40]. Similarly, whereas our study uses YOLOv5m for the simultaneous detection of a range of dental pathologies, other research focuses on specialized areas such as root disease classification. One such approach, combining ensemble deep learning architectures with metaheuristic optimization, has reported high accuracy in identifying conditions like periapical lesions and progressive periodontitis, thus expanding the scope of AI in comprehensive dental diagnostics.

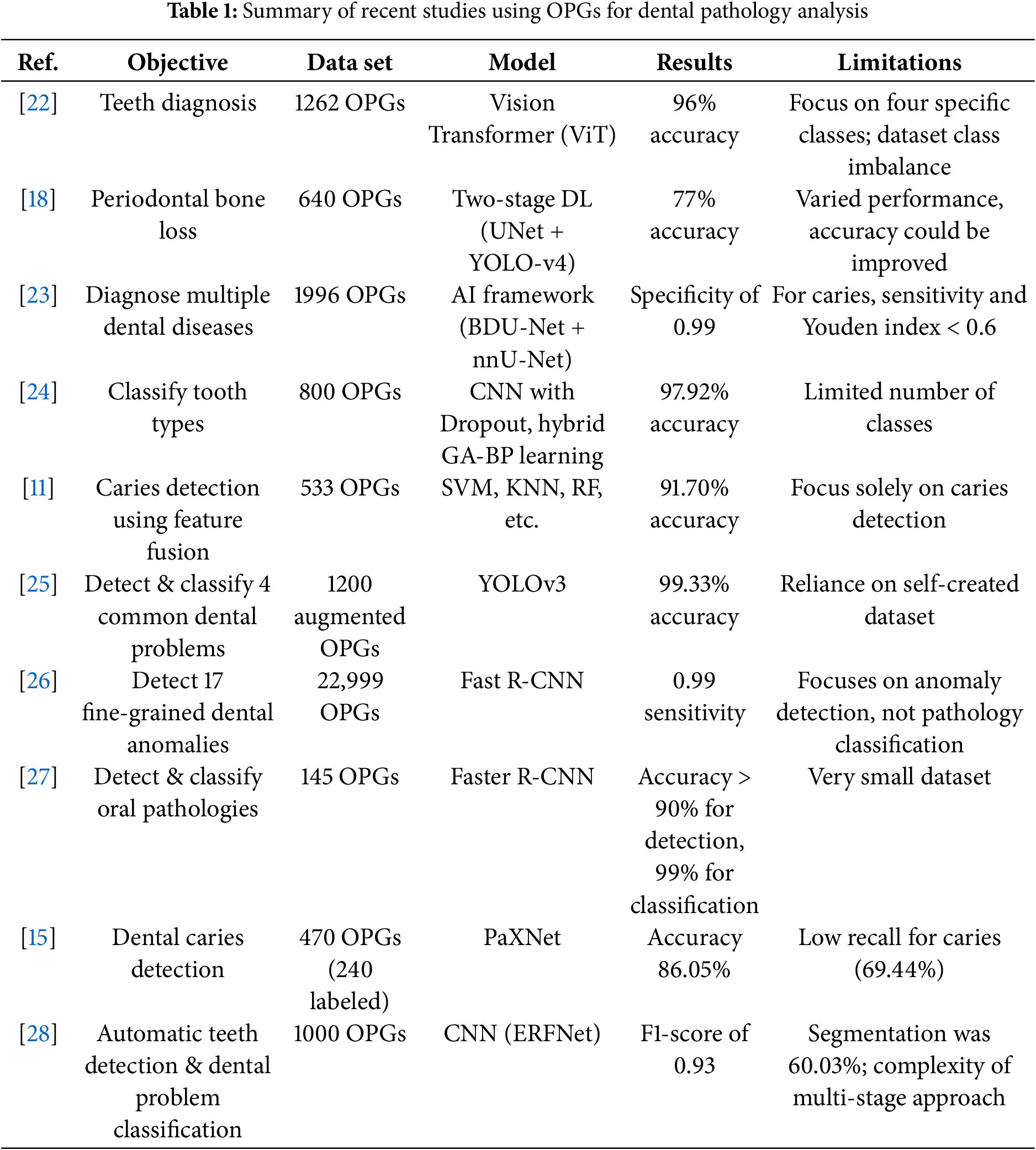

To further contextualize the advancements and remaining challenges in automated dental image analysis, Table 1 provides a comparative summary of several key studies discussed in this review. These studies utilize various AI techniques, primarily on OPGs, to detect and/or classify a range of dental conditions, highlighting diverse methodologies, performance achievements, and inherent limitations.

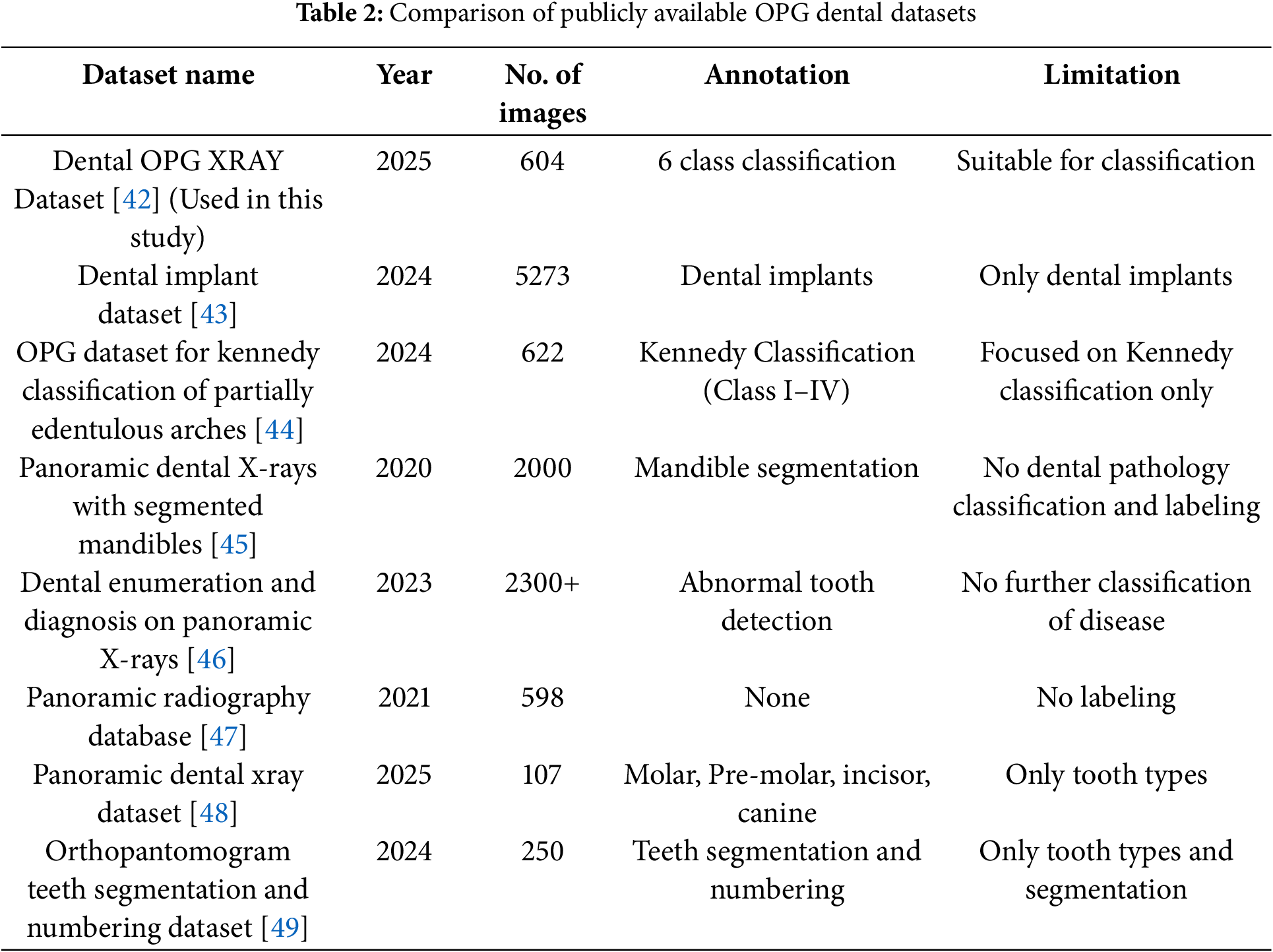

3.1 Dental OPG Dataset Comparison

The “Dental OPG X-RAY Dataset” [42] was selected due to its direct alignment with the research objective of multi-class dental pathology classification using OPG images. To the best of our knowledge, it is the only publicly available OPG dataset with annotations covering these six classes. Other reviewed datasets focus on specific tasks such as implant analysis [43], Kennedy classification [44], mandible segmentation [45], or general abnormality detection without detailed disease classification [46]; lack labelling [47]; or are limited to tooth type identification and segmentation [48,49]. In contrast, the chosen dataset provides annotations for six distinct and clinically relevant dental pathologies, as shown in Table 2. This specific focus on multi-class pathology classification makes it uniquely suitable for training and evaluating the proposed system.

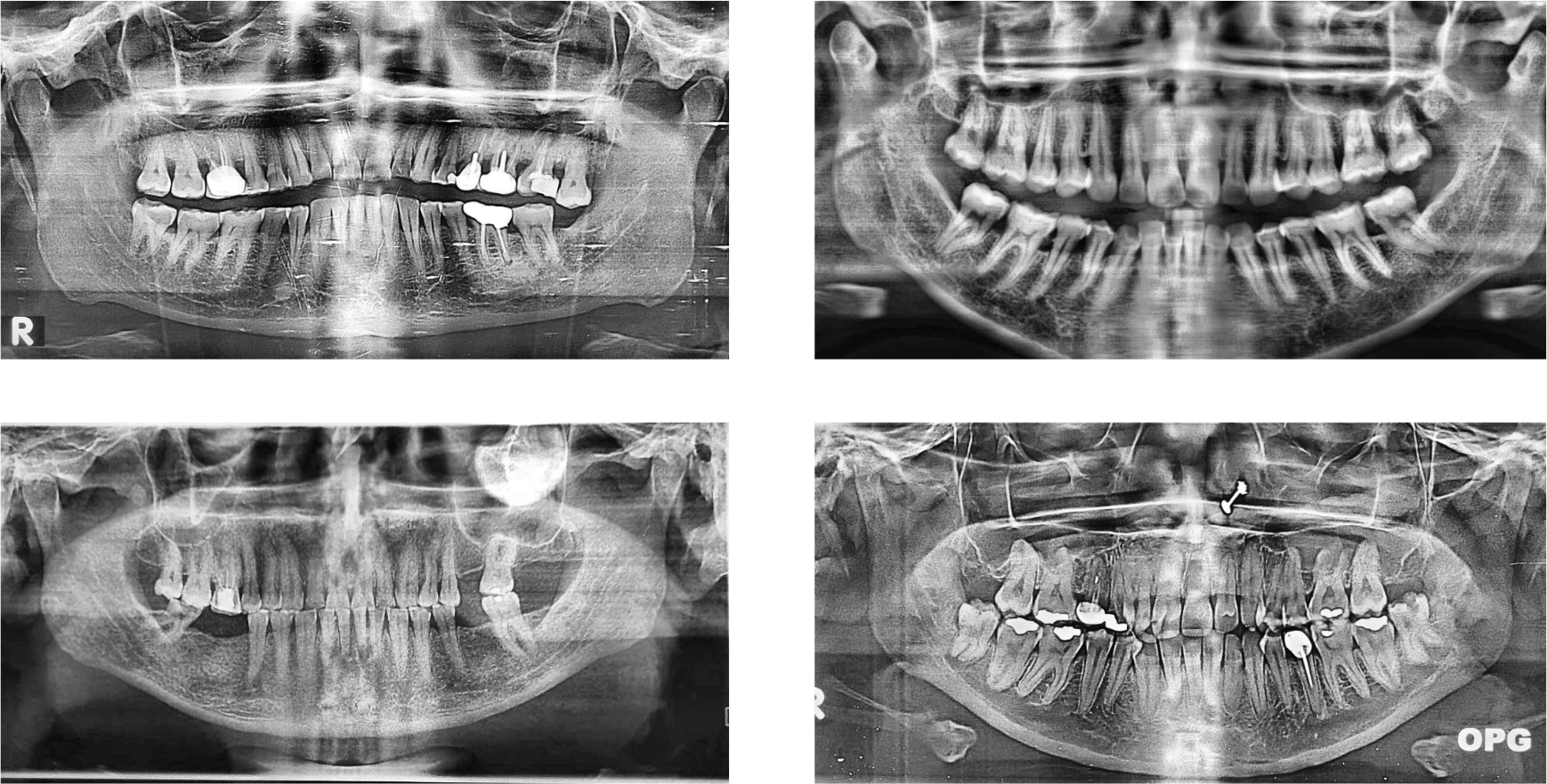

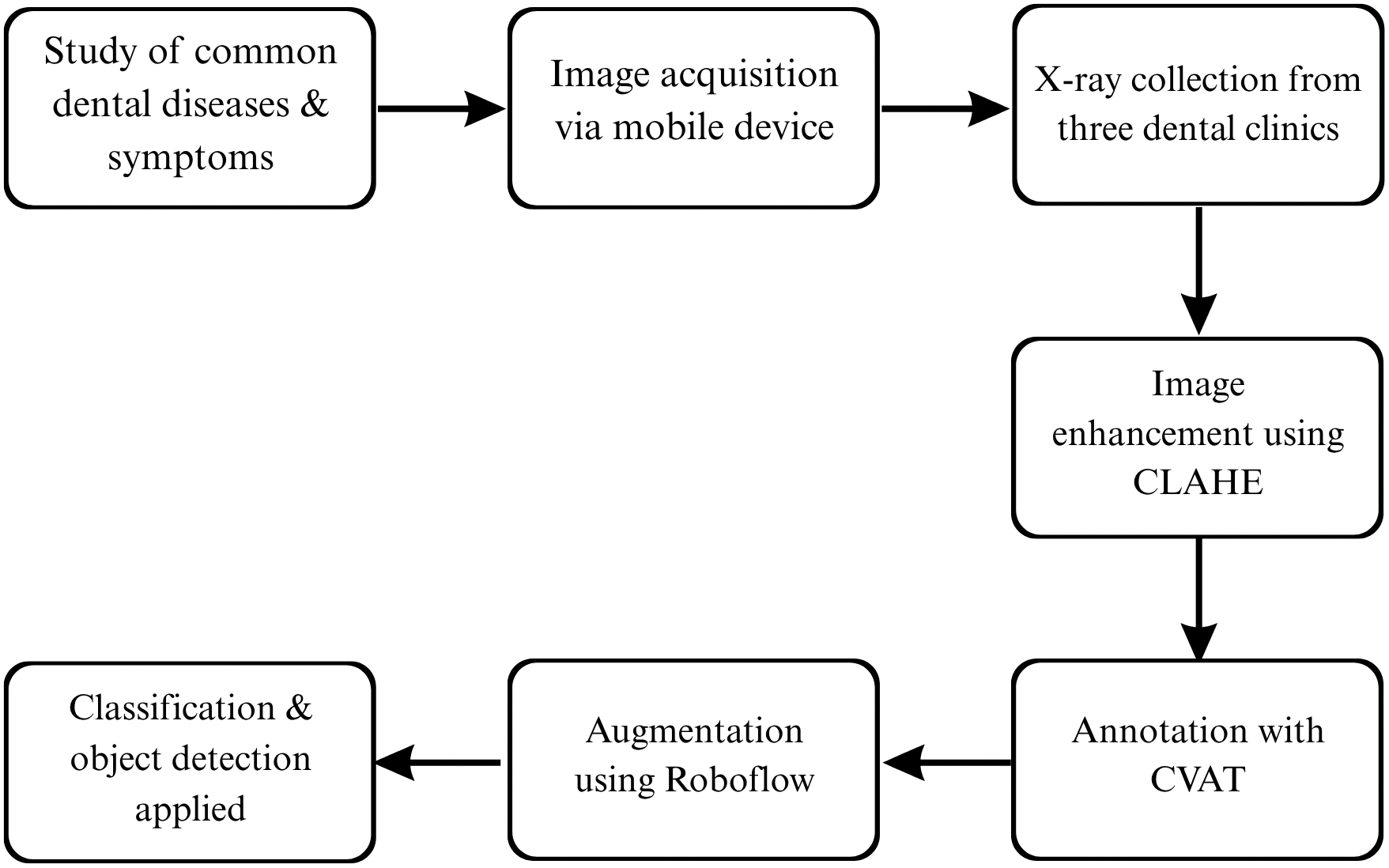

The primary dataset employed in this research is the publicly accessible “Dental OPG X-RAY Dataset” [42]. This resource was specifically curated to support deep-learning research in the detection and classification of dental diseases from panoramic radiographs. The original collection consists of 232 OPGs. The OPG images were initially captured by photographing X-ray films with a 64-megapixel Samsung A52 smartphone camera to ensure clarity and detail, as shown in Fig. 2. Prior to processing for this study, the dataset creators enhanced the images using Contrast-Limited Adaptive Histogram Equalization (CLAHE) to improve the visibility of dental structures.

Figure 2: Examples of panoramic dental radiographs (OPG) from the dataset

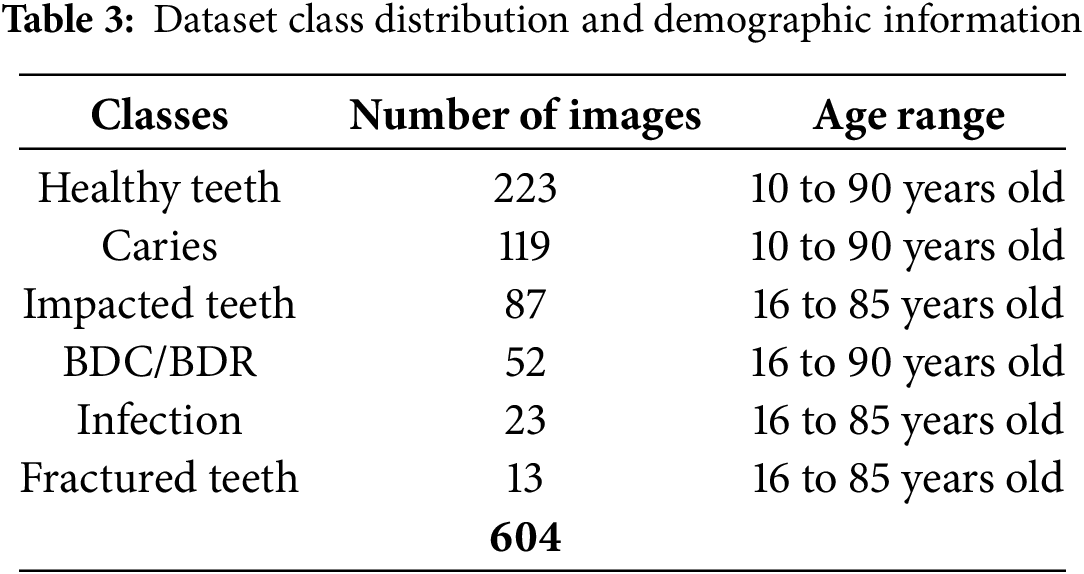

The dataset deliberately excluded individuals under 10 years of age. As stated in the dataset’s description, this targeted selection was made to focus on adult dental structures, which exhibit significant anatomical and pathological variations compared to those of children. The dataset also exhibits severe class imbalance, particularly for ‘Fractured Teeth,’ which has only 13 instances. This low count reflects the inherently lower prevalence of fractured teeth compared to other dental conditions in typical clinical populations, as captured in this dataset [42].

3.3 Data Splitting, Classes and Labeling

For this research, an augmented version of the dataset was primarily used, comprising a total of 604 images. These were split into a Training Set (558 images), a Validation Set (23 images) for hyperparameter tuning and performance checks during training, and a Test Set (23 images) for final, unbiased model evaluation. The dataset is expertly annotated for six distinct classes: healthy teeth, caries (tooth decay), impacted teeth, infection, fractured teeth, and broken crown/socket (BDC/BDR). Dental professionals performed the annotations using CVAT (Computer Vision Annotation Tool) to ensure precision [50]. Table 3 shows a comprehensive breakdown of the annotations in the dataset.
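To make the split proportions explicit, the short sketch below recomputes each subset's share of the 604 images from the counts quoted above. The function name `split_fractions` is illustrative and not part of the original study.

```python
# Split sizes as reported in the text above.
SPLIT_SIZES = {"train": 558, "validation": 23, "test": 23}


def split_fractions(sizes):
    """Return each split's share of the total image count."""
    total = sum(sizes.values())
    return {name: count / total for name, count in sizes.items()}


total_images = sum(SPLIT_SIZES.values())
fractions = split_fractions(SPLIT_SIZES)
for name, share in fractions.items():
    print(f"{name}: {SPLIT_SIZES[name]} images ({share:.1%})")
```

The validation and test sets each account for just under 4% of the images, which is worth keeping in mind when reading the per-class results.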

To enhance the diversity and robustness of our training dataset, thereby significantly improving model generalization and preventing overfitting, a series of carefully selected data augmentation techniques were applied. These transformations were exclusively performed on the training set, after the initial train-test split, to rigorously avoid data leakage and ensure an unbiased evaluation of the model’s performance on unseen data. Specifically, rotations were applied within a range of

The dataset is organized into folders for object detection (with train/validation/test splits) and classification (with images sorted into class-specific folders). It totals 197 MB and is available as a zip file [42]. Fig. 3 shows, step by step, how the dataset was prepared for model training.

Figure 3: A detailed overview of how the dataset was developed

3.4 Dataset Format and Storage

The images are stored in JPG format, with labels for object detection available in YOLO-compatible text files. The images are categorized into two main folders:

1. Object Detection Dataset Folder: This folder contains the original and augmented images, as well as their corresponding annotations. The images are split into training, validation, and test sets.

2. Classification Dataset Folder: In this folder, the images are organized into six separate class folders (one for each dental condition). This folder allows researchers to use the dataset for classification tasks without the object detection annotations.
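As an illustration of the YOLO-compatible label files mentioned above, the following minimal sketch parses one label line and converts it to pixel coordinates. It assumes the standard YOLO text layout (`class x_center y_center width height`, all normalized to the image size). The sample line, the 640-pixel image size, and the class index are hypothetical and are not taken from the dataset.

```python
from typing import NamedTuple


class Box(NamedTuple):
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_yolo_line(line: str, img_w: int, img_h: int) -> Box:
    """Convert one normalized 'class xc yc w h' line to pixel corner coordinates."""
    fields = line.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}")
    class_id = int(fields[0])
    xc, yc, w, h = (float(value) for value in fields[1:])
    return Box(
        class_id,
        (xc - w / 2) * img_w,
        (yc - h / 2) * img_h,
        (xc + w / 2) * img_w,
        (yc + h / 2) * img_h,
    )


# Hypothetical label line: class index 1, box centred in a 640x640 image.
box = parse_yolo_line("1 0.5 0.5 0.25 0.5", img_w=640, img_h=640)
print(box)
```

Which integer maps to which pathology depends on the dataset's own class list, so the index used here should not be read as a specific condition.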

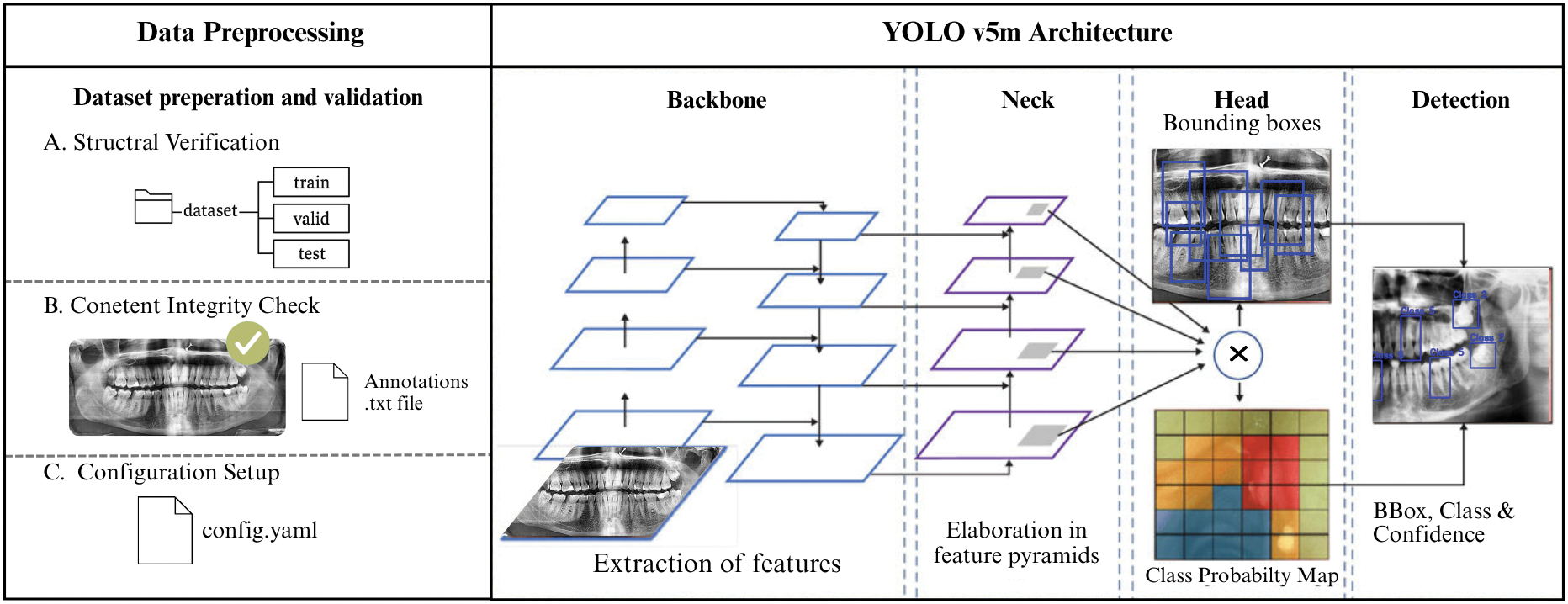

This section outlines the approach used to develop an automated system for classifying dental pathologies from OPG images using deep learning. It covers model selection, dataset preparation, and training strategy. The study uses the YOLO object detection model due to its real-time performance and unified architecture, which performs detection and classification in a single pass. YOLOv5m, developed by Ultralytics, was selected for its balanced trade-off between accuracy and efficiency. The ‘medium’ (m) variant offers strong performance with manageable hardware requirements, making it suitable for potential clinical applications.

The YOLOv5m model consists of three core parts, as shown in Fig. 4:

• Backbone (CSPDarknet53): Extracts image features at multiple scales using CSP connections to enhance learning and reduce computation.

• Neck (PANet): Fuses features across scales via top-down and bottom-up pathways to improve detection of pathologies of varied sizes.

• Head: Outputs predictions including bounding boxes, object confidence scores, and class probabilities across the six dental pathology categories [51,52]; see also the analysis in [53].

This variant includes 106 layers, 25.05 million parameters, and requires about 64 GFLOPs, offering a robust foundation for high-precision detection. The YOLOv5 architecture is inherently designed for multi-object detection. This means that, for each image, it can:

• Predict Multiple Bounding Boxes: It generates multiple bounding boxes, each intended to enclose a detected object (here, a dental pathology).

• Assign Class Probabilities: For each predicted bounding box, it simultaneously assigns a probability for each possible class (e.g., Caries, Infection, Impacted Teeth, etc.).

• Spatial Localization: By providing distinct bounding box coordinates and class labels for each detected pathology, even if they are overlapping or close to each other, the model offers precise spatial localization. This allows it to differentiate between multiple conditions present in the same area or across the image.
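The three capabilities listed above can be sketched as the decoding of a single raw prediction. The sketch assumes the common YOLOv5 output layout, in which each candidate is a row of box centre, box size, an objectness score, and one score per class, and the final confidence is the product of objectness and the best class score. The class order in `CLASS_NAMES`, the 0.25 threshold, and the sample row are assumptions for illustration only.

```python
# Assumed class order; the dataset's real index-to-name mapping may differ.
CLASS_NAMES = [
    "Healthy", "Caries", "Impacted Teeth",
    "Infection", "Fractured Teeth", "Broken Crown",
]


def decode_prediction(row, conf_threshold=0.25):
    """Turn one raw row [xc, yc, w, h, objectness, class scores...] into a detection."""
    xc, yc, w, h, objectness = row[:5]
    class_scores = row[5:]
    if len(class_scores) != len(CLASS_NAMES):
        raise ValueError("row does not contain one score per class")
    best = max(range(len(class_scores)), key=class_scores.__getitem__)
    confidence = objectness * class_scores[best]
    if confidence < conf_threshold:
        return None  # too uncertain to report
    return {
        "box": (xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2),
        "label": CLASS_NAMES[best],
        "confidence": confidence,
    }


# Hypothetical raw row in pixel units for a 640x640 input.
detection = decode_prediction([320, 240, 80, 60, 0.9, 0.02, 0.9, 0.03, 0.02, 0.01, 0.02])
print(detection)
```

Because every candidate row is decoded independently, several pathologies can be reported for the same image, including ones whose boxes overlap.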

Figure 4: Preprocessing and the main components of the YOLOv5 architecture

4.2 Data Preprocessing and Configuration

Dataset Verification: The Dental OPG X-RAY Dataset was reviewed for completeness. All required folders, including original images, augmented data and training/validation/test splits, were present. Each image file (jpg) had a matching label file (txt), with 558 training samples and 23 each in validation and test sets. Basic statistics revealed an average image size of 640

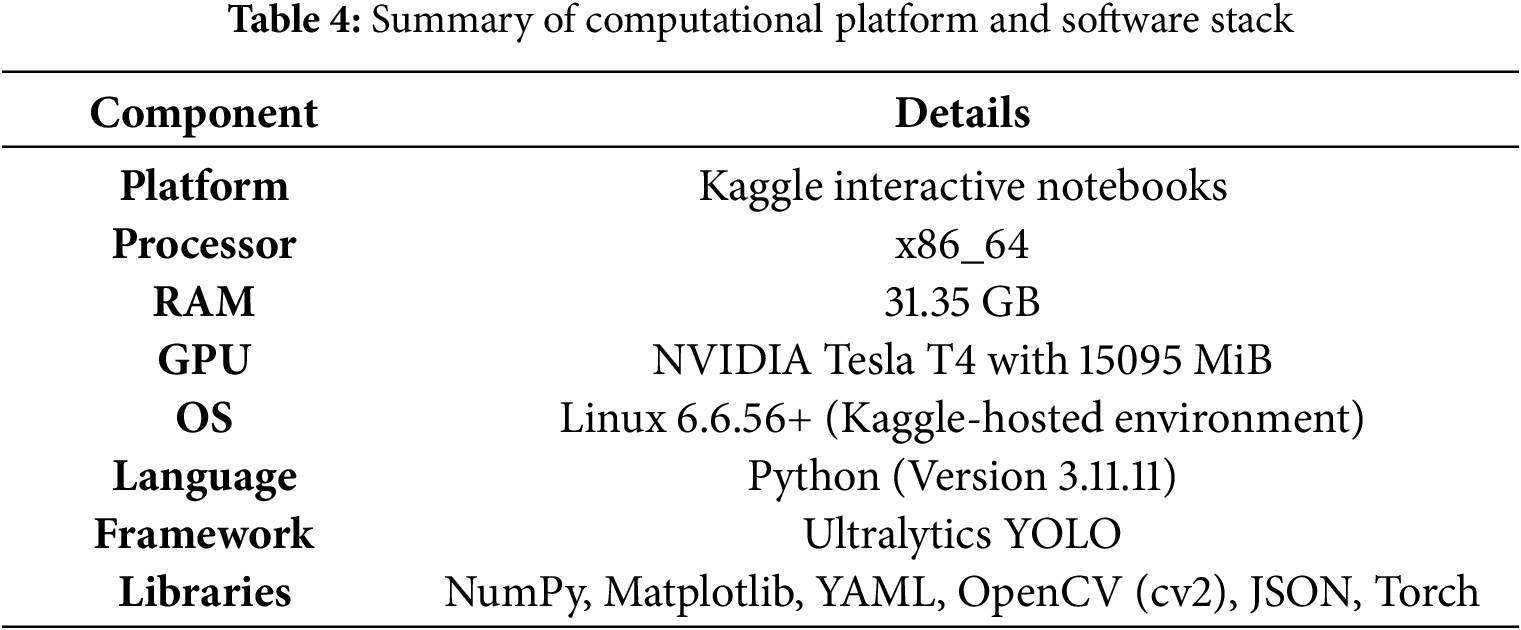

Model development and training were conducted in the environment summarized in Table 4.

4.4 Training Parameters and Strategy

The YOLOv5m model was fine-tuned using pre-trained weights from the COCO dataset, leveraging transfer learning (“Transferred 553/559 items”) for faster convergence. All input OPG images were resized to 640

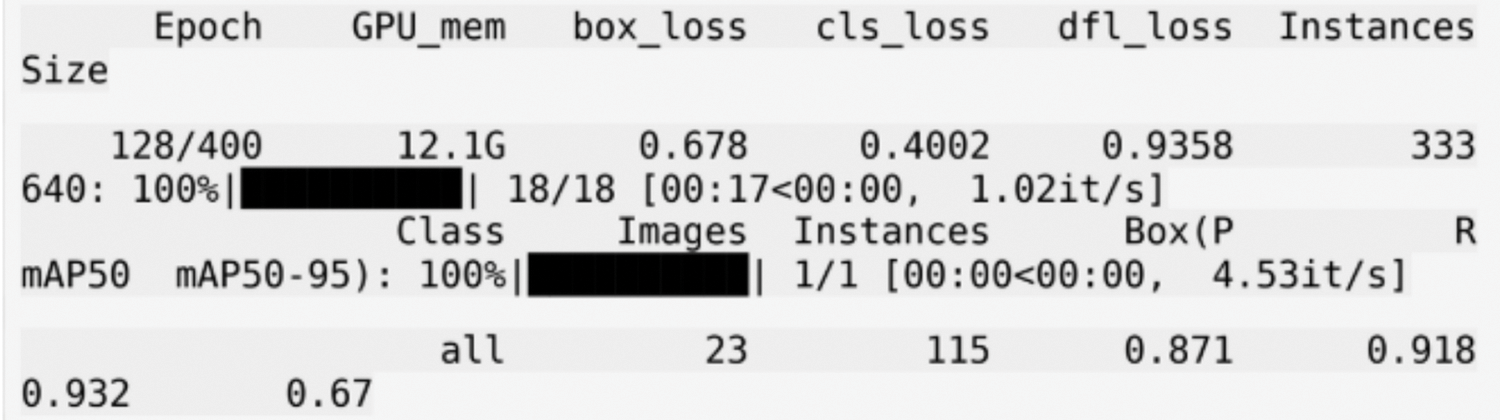

The model was initially set to train for a maximum of 400 epochs. An epoch represents one complete pass through the entire training dataset (558 images). Given a batch size of 32, each epoch consisted of approximately 18 iterations (558 images/32 images per batch

Figure 5: Training logs for the YOLOv5m model
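The iteration count per epoch follows directly from the training set size and batch size quoted in the text. The sketch below reproduces that arithmetic, assuming the final partial batch is kept rather than dropped; the helper names are illustrative.

```python
import math


def iterations_per_epoch(num_images: int, batch_size: int) -> int:
    """Number of batches needed to see every image once (last batch may be partial)."""
    return math.ceil(num_images / batch_size)


def last_batch_size(num_images: int, batch_size: int) -> int:
    """Size of the final batch in an epoch."""
    remainder = num_images % batch_size
    return remainder if remainder else batch_size


steps = iterations_per_epoch(558, 32)
tail = last_batch_size(558, 32)
max_steps = 400 * steps  # upper bound if all 400 epochs were run
print(steps, tail, max_steps)
```

With 558 images and a batch size of 32, this gives 17 full batches plus one batch of 14 images, which is the "approximately 18 iterations" mentioned in the text.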

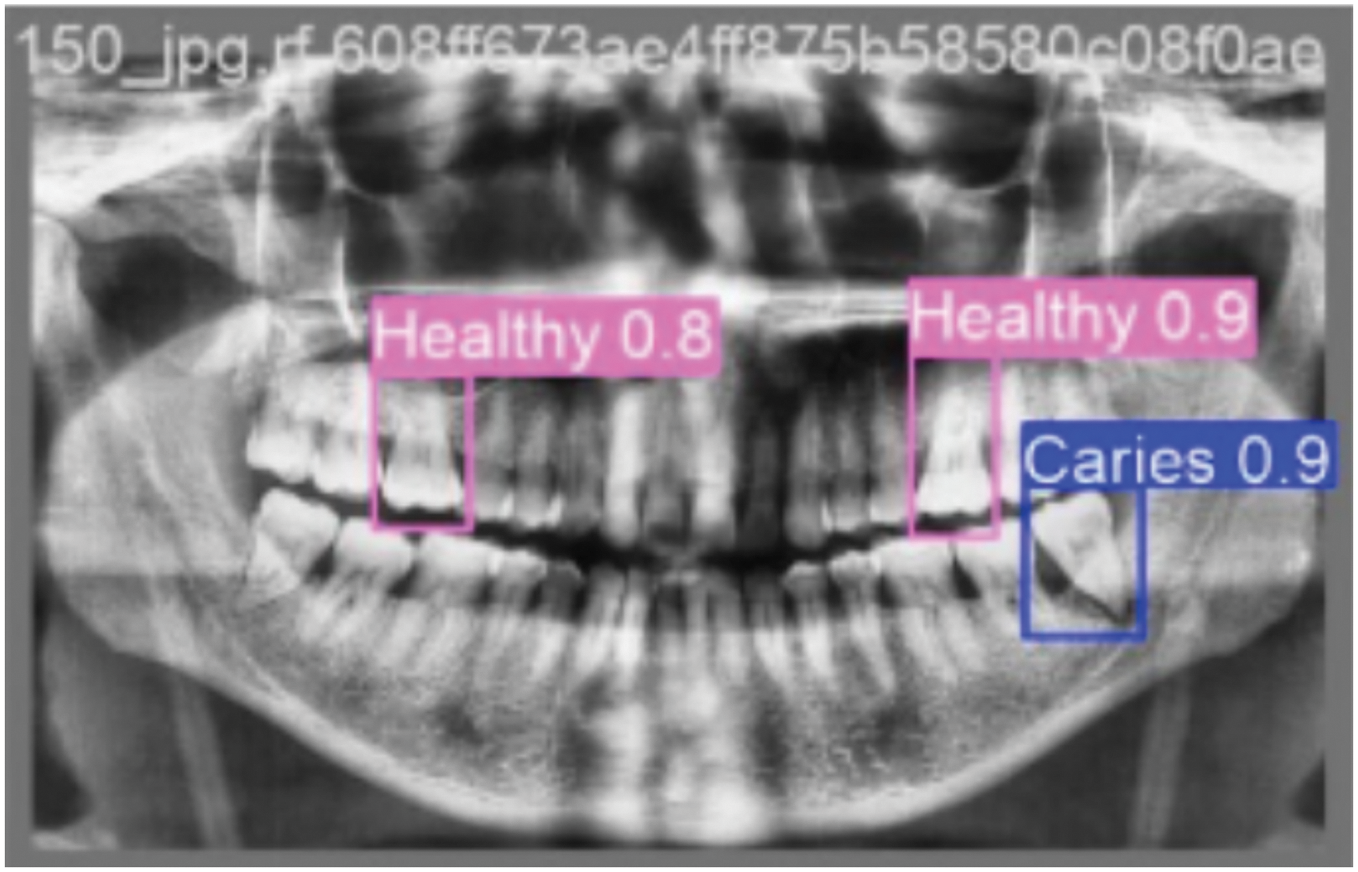

Upon processing an input OPG image during inference, the trained YOLOv5m model generates several key outputs for each detected dental pathology:

• Bounding Boxes: Coordinates defining a rectangular region that localizes the detected pathology.

• Class Labels: The predicted type of dental condition for each bounding box (e.g., Caries, Infection, Impacted Teeth, Fractured Teeth, Broken Crown, or Healthy).

• Confidence Scores: A numerical value (typically between 0 and 1) associated with each detection, indicating the model’s confidence in the presence and classification of the pathology within the predicted bounding box.

These outputs can be visualized by overlaying the bounding boxes and labels on the original OPG image or can be provided in a structured format (e.g., JSON) for further analysis or integration into other systems. Post-processing steps like Non-Maximum Suppression (NMS) are applied to refine these raw detections by eliminating redundant or overlapping boxes for the same object instance. The final output typically consists of the original OPG image overlaid with the predicted bounding box, class label (e.g., “Healthy”, “Caries”), and confidence score for each detected pathology, as shown in Fig. 6.
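The NMS step described above can be illustrated with a minimal greedy, per-class sketch in plain Python. This is not the routine used inside YOLOv5, only an instance of the same idea; the 0.45 IoU threshold and the sample detections are assumed values for illustration.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(detections, iou_threshold=0.45):
    """Greedy per-class NMS over (box, score, label) tuples."""
    kept = []
    for det in sorted(detections, key=lambda d: d[1], reverse=True):
        box, _, label = det
        # Keep the box unless a higher-scoring box of the same class overlaps it too much.
        if all(label != k[2] or iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append(det)
    return kept


# Hypothetical raw detections: two near-duplicate "Caries" boxes and one "Infection" box.
raw = [
    ((100, 100, 200, 200), 0.90, "Caries"),
    ((105, 105, 205, 205), 0.75, "Caries"),
    ((400, 300, 480, 380), 0.80, "Infection"),
]
final = nms(raw)
print([(label, score) for _, score, label in final])
```

In this example the two "Caries" boxes overlap with an IoU of about 0.82, so only the higher-scoring one survives, while the "Infection" box is untouched.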

Figure 6: Annotated bounding boxes highlighting different dental conditions

The trained YOLOv5m model demonstrated efficient processing on the Tesla T4 GPU, with an average total time of approximately 28.3 ms per OPG image (2.6 ms preprocess, 24.9 ms inference, 0.8 ms post-processing/NMS). This corresponds to a throughput of roughly 35 frames per second, underscoring its potential for timely clinical application.
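The throughput figure can be checked from the per-stage timings quoted above. The sketch simply sums the three stages and converts the total latency into images per second; the dictionary keys are illustrative labels.

```python
# Per-image stage timings in milliseconds, as reported in the text.
STAGE_MS = {"preprocess": 2.6, "inference": 24.9, "postprocess_nms": 0.8}

total_ms = sum(STAGE_MS.values())
images_per_second = 1000.0 / total_ms

print(f"{total_ms:.1f} ms per image, about {images_per_second:.1f} images per second")
```

This assumes images are processed one after another; batching or pipelining would change the effective throughput.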

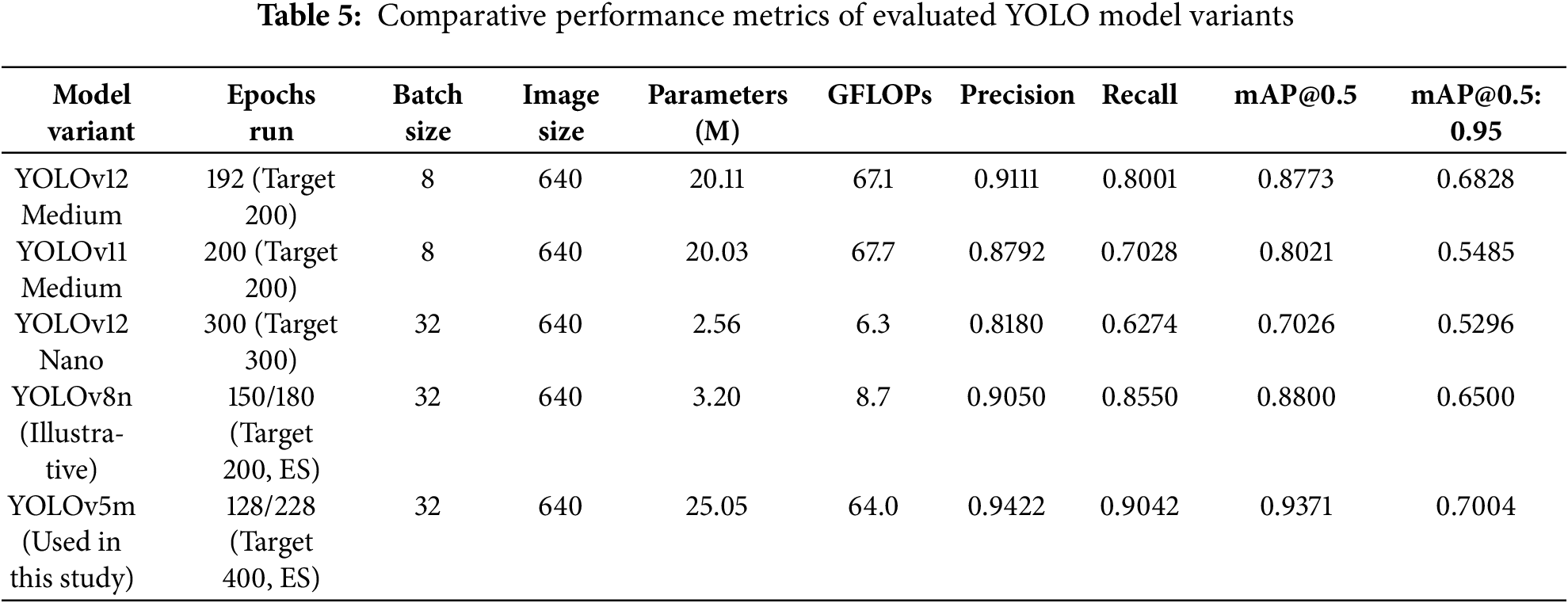

The study evaluated several YOLO variants; their key performance metrics on the test set are summarized in Table 5.

YOLOv5m was selected as the optimal model based on several factors. It demonstrated compelling efficacy in automatically detecting and classifying multiple dental pathologies from OPG images. It achieved the highest overall Precision (94.22%), Recall (90.42%), and mAP@0.5 (93.71%) among the evaluated variants, highlighting its accuracy and reliability. Per-class analysis confirmed robust performance, particularly for critical conditions like Infection, Broken Crown, and Impacted Teeth. Visual evaluations through performance curves and detection examples further substantiate these quantitative findings.

The high performance of the selected YOLOv5m model underscores the significant potential of advanced object detectors to aid in dental diagnostics. This system can contribute to:

• Improved Diagnostic Efficiency: Facilitating rapid screening of OPGs, allowing practitioners to focus on complex cases.

• Enhanced Diagnostic Consistency: Offering an objective baseline to help reduce inter-observer variability.

• Support for Early Detection: Aiding in the identification of subtle or early-stage pathologies due to its strong recall for critical conditions.

• Increased Accessibility: The efficiency of YOLOv5m suggests that such AI tools can be practical for wider clinical adoption, including in resource-constrained settings.

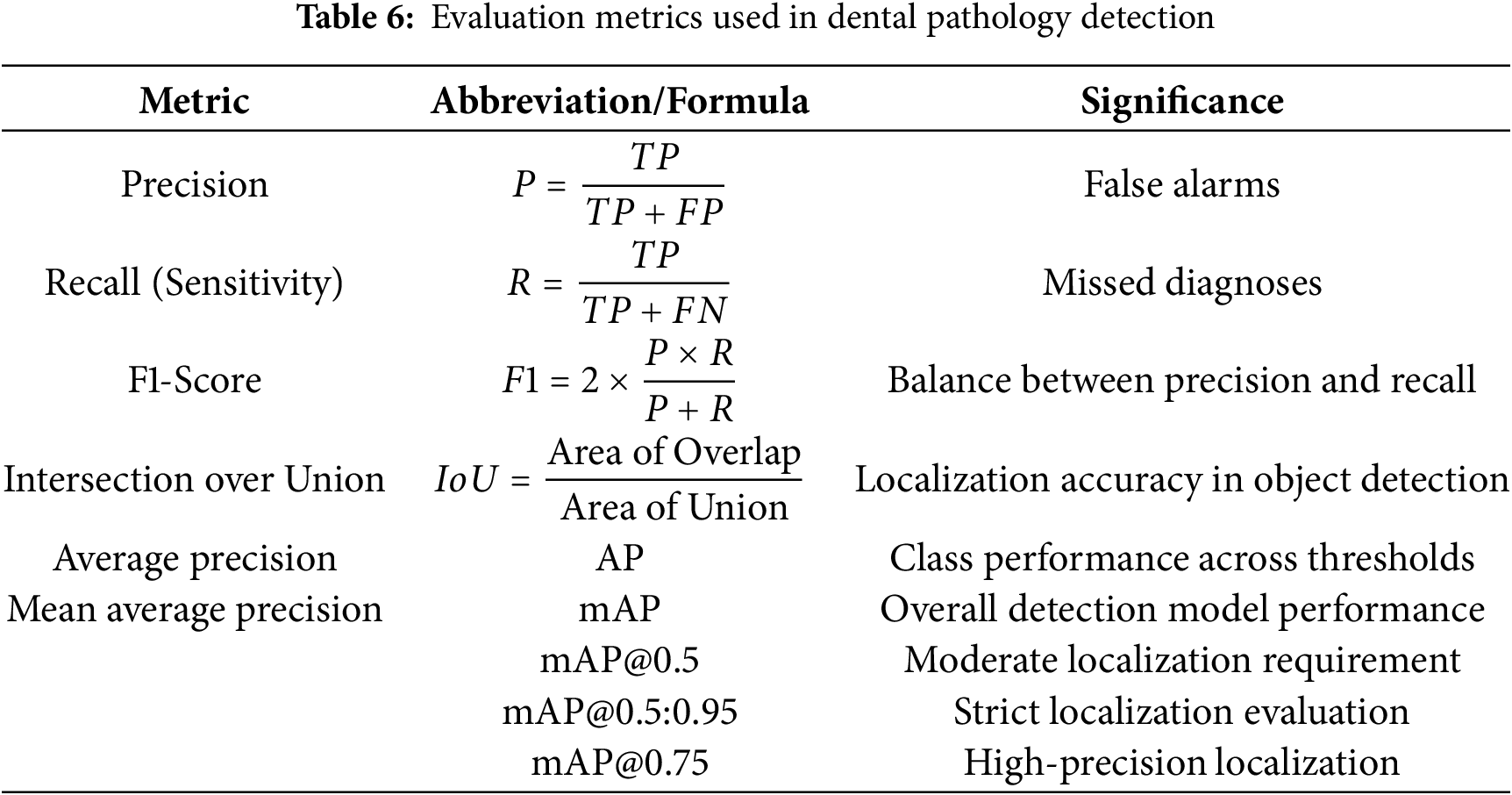

The model’s efficacy was assessed using standard object detection metrics, summarized in Table 6, to provide an objective understanding of its accuracy, localization capabilities, and overall reliability in the context of dental diagnostics.
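For readers who want the standard definitions in executable form, the sketch below computes precision, recall, and F1 from true-positive, false-positive, and false-negative counts, where a detection counts as a true positive when it matches a ground-truth box of the same class at the chosen IoU threshold. The counts are hypothetical. The last lines take the harmonic mean of the mean precision and recall reported in this paper; that value is only an indicative derived figure, not a result reported by the authors.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def detection_metrics(tp: int, fp: int, fn: int):
    """Precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_score(precision, recall)


# Hypothetical counts, for illustration only.
precision, recall, f1 = detection_metrics(tp=90, fp=10, fn=10)
print(f"P={precision:.3f} R={recall:.3f} F1={f1:.3f}")

# Indicative F1 from the reported mean precision and recall (not a reported result).
indicative_f1 = f1_score(0.9422, 0.9042)
print(f"indicative F1 = {indicative_f1:.4f}")
```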

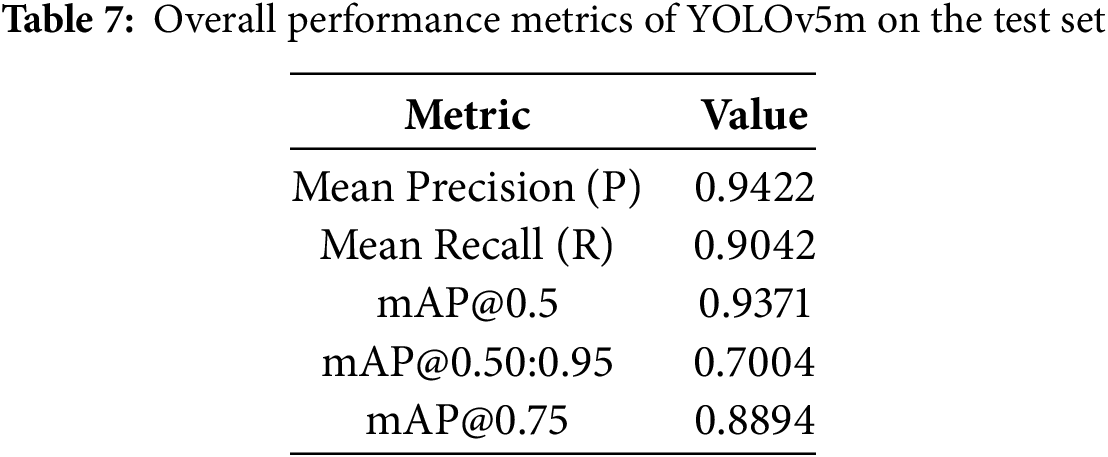

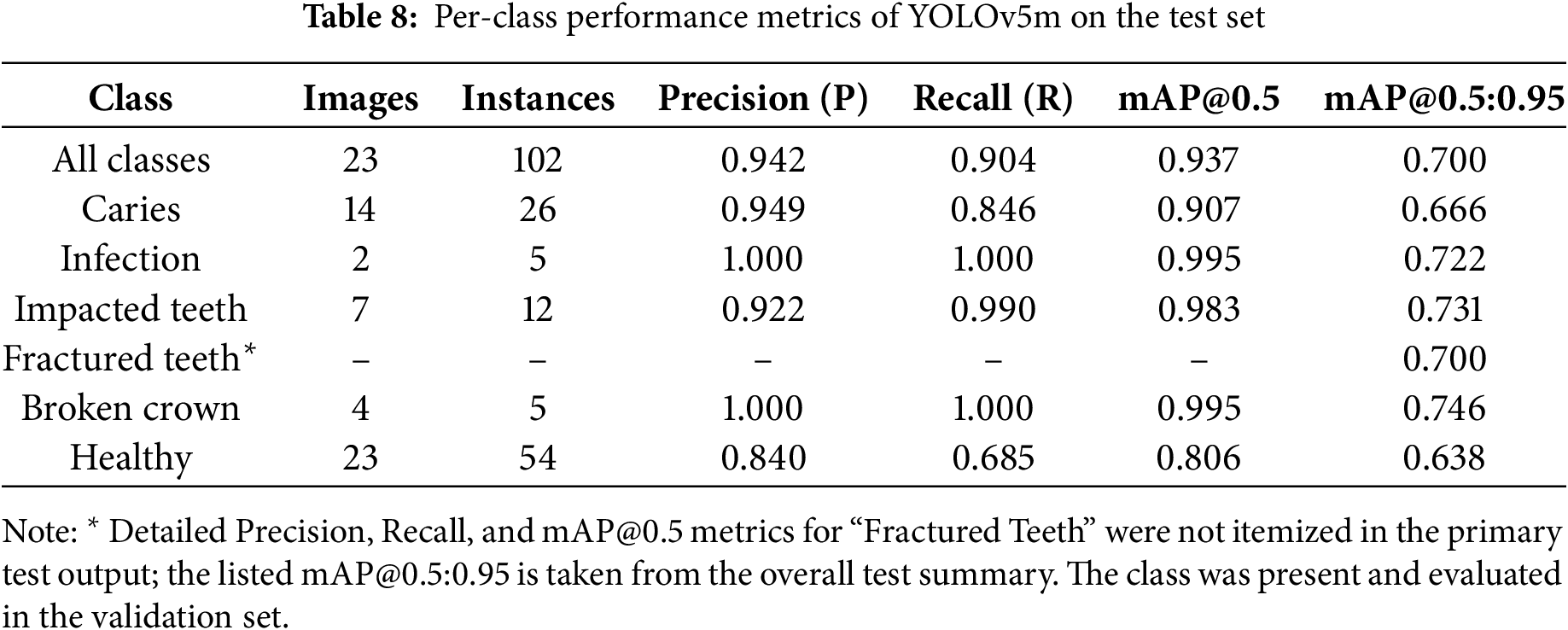

The YOLOv5m model, utilizing weights from the optimal epoch 128 (determined by validation performance and early stopping), was rigorously evaluated on a segregated test set of 23 OPG images containing 102 instances of dental conditions. This ensures an unbiased assessment of its generalization capabilities. The model demonstrated strong overall performance, as summarized in Table 7. The per-class analysis, detailed in Table 8, reveals the model’s varied strengths across the six defined classes.

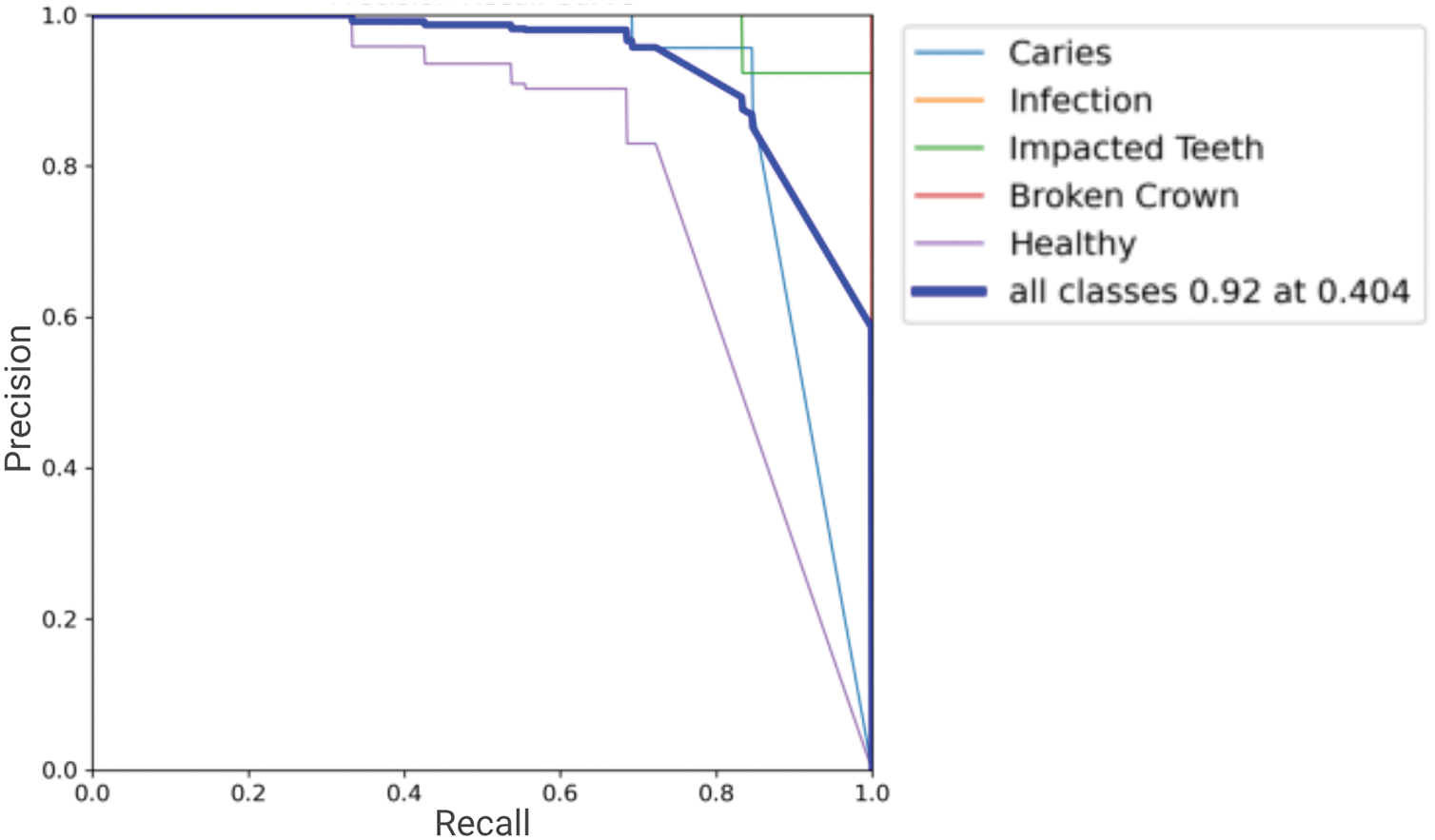

Overall, the class-specific analysis confirms the model’s strong potential as a diagnostic aid, providing reliable detections with a good balance of precision and recall across most pathologies. Areas with relatively lower recall, such as for “Healthy,” indicate opportunities for future refinement. The Precision-Recall (PR) curve is shown in Fig. 7. Its proximity to the top-right corner (Precision = 1, Recall = 1) visually confirms the high mAP@0.5 of 0.9371, demonstrating robust detection accuracy across varying confidence levels.

Figure 7: The Precision-Recall (PR) curve for all classes
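The link between a PR curve and average precision can be sketched as follows. The code uses the all-point interpolation variant (area under the monotone precision envelope), which may differ in detail from the routine used to produce the reported mAP@0.5; the curve points are hypothetical. mAP is then the mean of the per-class AP values.

```python
def average_precision(recalls, precisions):
    """Area under the precision envelope; recalls must be sorted in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [1.0] + list(precisions) + [0.0]
    # Make precision non-increasing as recall grows (right-to-left running maximum).
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap):
    """Mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Hypothetical PR points for a single class.
ap = average_precision([0.2, 0.4, 0.6, 0.8], [1.0, 0.9, 0.8, 0.6])
print(f"AP = {ap:.2f}")
```

A curve that stays close to the top-right corner keeps precision high as recall grows, so the summed area, and therefore the AP, approaches 1.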

F1-Score, Precision, and Recall curves plotted against confidence thresholds are shown in Fig. 8. These illustrate the model’s performance trade-offs: the P-curve shows precision maintenance at different certainty levels, the R-curve depicts recall variation, and the F1-curve peak indicates the optimal confidence for balanced precision and recall.

Figure 8: Confidence based curves (a) Precision confidence (b) Recall confidence (c) F1 confidence
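Reading the optimal operating point off an F1-confidence curve amounts to a simple search, sketched below. The (confidence, precision, recall) triples are hypothetical and only mimic the typical shape of such curves: precision rises and recall falls as the threshold increases.

```python
def best_f1_threshold(points):
    """Return (confidence, f1) for the point with the highest F1 score."""
    best = None
    for confidence, precision, recall in points:
        total = precision + recall
        f1 = 2 * precision * recall / total if total else 0.0
        if best is None or f1 > best[1]:
            best = (confidence, f1)
    return best


# Hypothetical samples from precision-confidence and recall-confidence curves.
curve = [
    (0.1, 0.70, 0.97),
    (0.3, 0.85, 0.94),
    (0.5, 0.93, 0.90),
    (0.7, 0.97, 0.78),
    (0.9, 0.99, 0.40),
]
best_confidence, best_f1 = best_f1_threshold(curve)
print(f"best confidence = {best_confidence}, F1 = {best_f1:.3f}")
```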

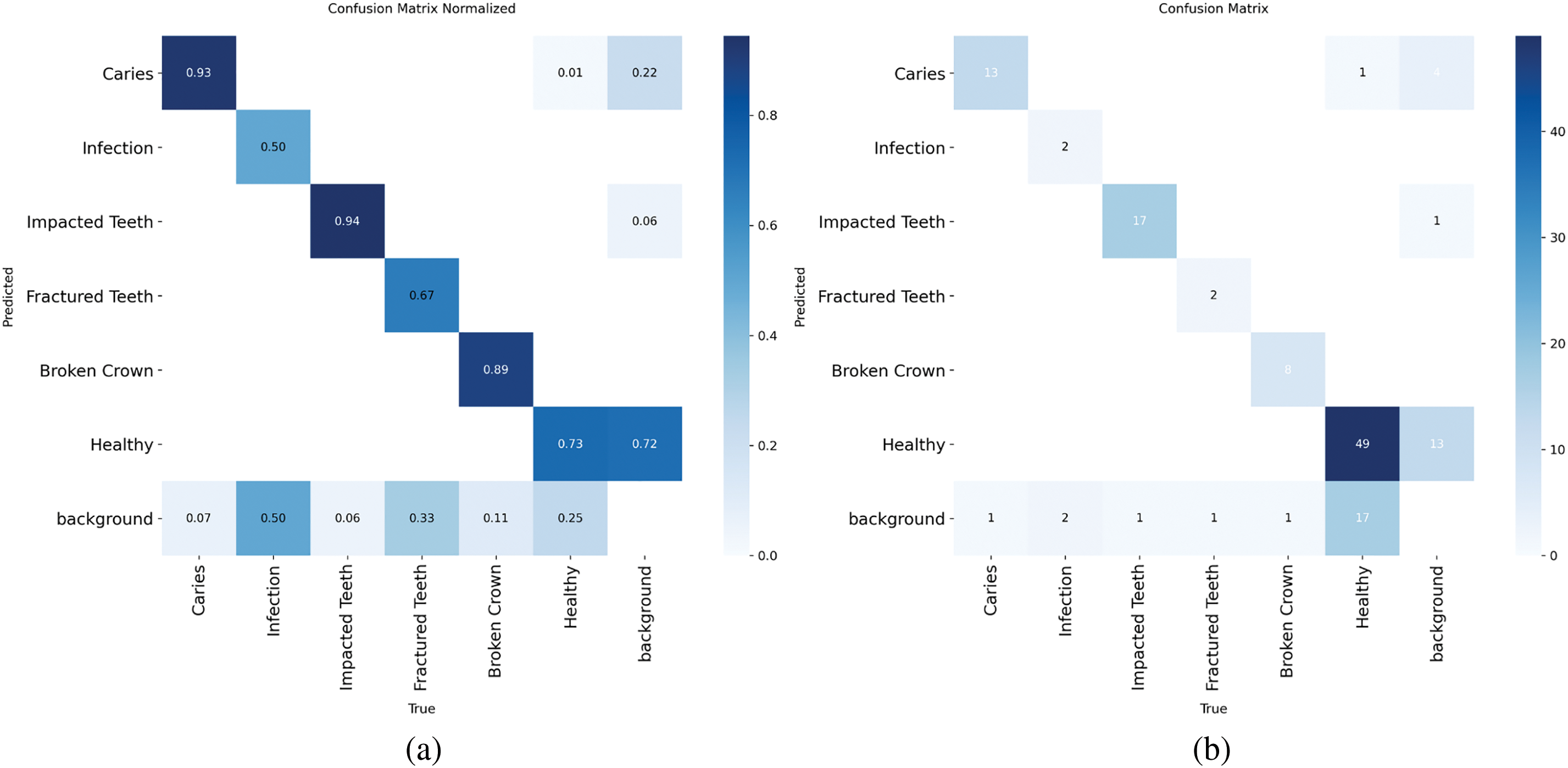

Fig. 9 shows the confusion matrix for classification performance on detected objects. Strong diagonal values indicate high correct classification rates per pathology. Off-diagonal elements highlight any inter-class misclassifications or confusion with background, which are minimal given the high overall precision.

Figure 9: Per-class confusion matrix (a) Normalised (b) Standard
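The relationship between the standard and normalized matrices can be sketched as a per-class normalization. The layout used here (rows are true classes, columns are predicted classes) is an assumption and may be transposed relative to the plotted figure; the counts are hypothetical and cover only three classes for brevity.

```python
def normalize_by_true_class(matrix):
    """Scale each row so it sums to 1; matrix[i][j] counts true class i predicted as j."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([count / total if total else 0.0 for count in row])
    return normalized


# Hypothetical counts for three classes.
counts = [
    [18, 1, 1],
    [2, 27, 1],
    [0, 0, 10],
]
normalized = normalize_by_true_class(counts)
diagonal = [normalized[i][i] for i in range(len(normalized))]
print(diagonal)
```

After normalization, each diagonal entry is the fraction of that class's instances that were classified correctly, which is why strong diagonal values indicate good per-class performance.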

This research successfully demonstrated the efficacy of a YOLOv5m-based automated system for the detection and architectural classification of six key dental pathologies from OPG images. The model achieved strong performance on a segregated test set, with a mean precision of 0.9422, recall of 0.9042, and a mean Average Precision (mAP@0.5) of 0.9371, validating its potential as a reliable and precise diagnostic aid. The system showed particularly robust detection for critical conditions like Infection and Broken Crown and confirmed good model generalization without significant overfitting. This study underscores the viability of YOLOv5m in enhancing dental diagnostic workflows, offering a scalable tool to support practitioners and improve patient care.

Despite these promising outcomes, certain limitations must be acknowledged. Both the primary dataset and the test set (23 images) were small, and some classes, such as “Fractured Teeth,” had too few instances for a detailed per-class metric breakdown in the primary test summary.

Future work should emphasise expanding the dataset to include a greater diversity of multi-center, multi-ethnic, and pediatric OPGs, which will further enhance the model’s generalizability and robustness. We also aim to incorporate additional dental pathologies and disease labels to broaden the scope of detection and classification, while addressing class imbalance with advanced techniques. Furthermore, adding comparative baselines, such as Faster R-CNN or SSD, will provide a more comprehensive evaluation of our YOLOv5m-based approach. Our research will also investigate adapting the model to other imaging modalities, including intraoral X-rays and cone-beam CT.

Our proposed AI-based approach holds significant promise for real-world integration into dental practice. We envision its deployment as a component of a clinical decision support system, potentially through direct integration with OPG X-ray machines to provide immediate diagnostic assistance during patient examinations. The model’s capabilities could also be offered through mobile applications, giving dentists rapid and accessible diagnostic insights. We are also committed to investigating real-time deployment strategies within various clinical environments to ensure seamless and impactful adoption.

In essence, this research affirms the strong potential of AI-driven tools to augment dental diagnostics. While challenges concerning dataset diversity and advanced interpretability remain, the achieved results and the outlined future work offer a clear path toward the broader clinical adoption of such technologies for globally improved dental healthcare.

Acknowledgement: This research was supported by the Princess Nourah bint Abdulrahman University Researchers Supporting Project (number PNURSP2025R195), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. The authors also thank the Deanship of Graduate Studies and Scientific Research at the University of Bisha for supporting this work through the Fast-Track Research Support Program.

Funding Statement: This study received funding from the Princess Nourah bint Abdulrahman University Researchers Supporting Project (PNURSP2025R195) and the University of Bisha through its Fast-Track Research Support Program.

Author Contributions: Conceptualization was carried out by Arham Adnan and Muhammad Tuaha Rizwan, with Muhammad Tuaha Rizwan leading the methodology and handling software development. Validation was performed by Shakila Basheer. Formal analysis was conducted by Hafiz Muhammad Attaullah. Investigation was led by Arham Adnan and Mohammad Tabrez Quasim. Resources were provided by Shakila Basheer and Hafiz Muhammad Attaullah, who also managed data curation. The original draft was prepared by Arham Adnan and Muhammad Tuaha Rizwan. Review and editing were contributed by Hafiz Muhammad Attaullah. Mohammad Tabrez Quasim oversaw visualization and also provided supervision. Project administration and funding acquisition were managed by Hafiz Muhammad Attaullah. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data that support the findings of this study are openly available at https://data.mendeley.com/datasets/c4hhrkxytw/4 (accessed on 29 July 2025).

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. R. S. of N. A. (RSNAA. C. of Radiology (ACR). Panoramic Dental X-ray, Radiologyinfo.org [Internet]. [cited 2025 May 27]. Available from: https://www.radiologyinfo.org/en/info/panoramic-xray. [Google Scholar]

2. Cosson J. Interpreting an orthopantomogram. Aust J Gen Pract. 2020;49(9):550–5. doi:10.31128/AJGP-07-20-5536. [Google Scholar] [CrossRef]

3. Effective radiographic interpretation. Decisions in dentistry [Internet]. [cited 2025 May 28]. Available from: https://decisionsindentistry.com/article/effective-radiographic-interpretation/. [Google Scholar]

4. Bhat S, Birajdar GK, Patil MD. A comprehensive survey of deep learning algorithms and applications in dental radiograph analysis. Healthcare Analy. 2023;4(7):100282. doi:10.1016/j.health.2023.100282. [Google Scholar] [CrossRef]

5. Samaranayake L, Tuygunov N, Schwendicke F, Osathanon T, Khurshid Z, Boymuradov SA, et al. The transformative role of artificial intelligence in dentistry: a comprehensive overview. Part 1: fundamentals of AI, and its contemporary applications in dentistry. Int Dent J. 2025;75(2):383–96. doi:10.1016/j.identj.2025.02.005. [Google Scholar] [PubMed] [CrossRef]

6. Ossowska A, Kusiak A, Świetlik D. Artificial intelligence in dentistry—narrative review. Int J Environ Res Public Health. 2022;19(6):3449. doi:10.3390/ijerph19063449. [Google Scholar] [PubMed] [CrossRef]

7. Manjunatha VA, Parisarla H, Parasher S. A systematic review on recent advancements in 3D surface imaging and Artificial intelligence for enhanced dental research and clinical practice. Int J Maxillofac Imaging. 2024;10(4):132–9. doi:10.18231/j.ijmi.2024.029. [Google Scholar] [CrossRef]

8. Redmon J, Divvala S, Girshick R, Farhadi A. You only look once: unified, real-time object detection. arXiv:1506.02640. 2016. [Google Scholar]

9. Anwar SM, Majid M, Qayyum A, Awais M, Alnowami M, Khan MK. Medical image analysis using convolutional neural networks: a review. J Med Syst. 2018;42(11):226. doi:10.1007/s10916-018-1088-1. [Google Scholar] [PubMed] [CrossRef]

10. Ghaffari M, Zhu Y, Shrestha A. A review of advancements of Artificial intelligence in dentistry. Dent Rev. 2024;4(2):100081. doi:10.1016/j.dentre.2024.100081. [Google Scholar] [CrossRef]

11. Bui TH, Hamamoto K, Paing MP. Deep fusion feature extraction for caries detection on dental panoramic radiographs. Appl Sci. 2021;11(5):2005. doi:10.3390/app11052005. [Google Scholar] [CrossRef]

12. Casalegno F, Newton T, Daher R, Abdelaziz M, Lodi-Rizzini A, Schürmann F, et al. Caries detection with near-infrared transillumination using deep learning. J Dent Res. 2019;94(11):1578–84. doi:10.1177/0022034519871884. [Google Scholar] [PubMed] [CrossRef]

13. Ding B, Zhang Z, Liang Y, Wang W, Hao S, Meng Z, et al. Detection of dental caries in oral photographs taken by mobile phones based on the YOLOv3 algorithm. Ann Transl Med. 2021;9(21):1622. doi:10.21037/atm-21-4805. [Google Scholar] [PubMed] [CrossRef]

14. Bayraktar Y, Ayan E. Diagnosis of interproximal caries lesions with deep convolutional neural network in digital bitewing radiographs. Clin Oral Investig. 2022;26(1):623–32. doi:10.1007/s00784-021-04040-1. [Google Scholar] [PubMed] [CrossRef]

15. Haghanifar A, Majdabadi MM, Ko S-B. PaXNet: dental caries detection in panoramic x-ray using ensemble transfer learning and capsule classifier. arXiv:2012.13666. 2020. [Google Scholar]

16. Rouhbakhshmeghrazi A, Fazelifar A, Alizadeh G. Detecting dental caries with convolutional neural networks using color images. In: 2nd International Congress on Science, Engineering & New Technologies; 2024 Jul 11; Hamburg, Germany. [Google Scholar]

17. De Araujo Faria V, Azimbagirad M, Viani Arruda G, Fernandes Pavoni J, Cezar Felipe J, Dos Santos EMCMF, et al. Prediction of radiation-related dental caries through pyradiomics features and artificial neural network on panoramic radiography. J Digit Imaging. 2021;34(5):1237–48. doi:10.1007/s10278-021-00487-6. [Google Scholar] [PubMed] [CrossRef]

18. Jiang L, Chen D, Cao Z, Wu F, Zhu H, Zhu F. A two-stage deep learning architecture for radiographic staging of periodontal bone loss. BMC Oral Health. 2022;22(1):106. doi:10.1186/s12903-022-02119-z. [Google Scholar] [PubMed] [CrossRef]

19. Khan HA, Haider MA, Ansari HA, Ishaq H, Kiyani A, Sohail K, et al. Automated feature detection in dental periapical radiographs by using deep learning. Oral Surg Oral Med Oral Pathol Oral Radiol. 2021;131(6):711–20. doi:10.1016/j.oooo.2020.08.024. [Google Scholar] [PubMed] [CrossRef]

20. Kibcak E, Buhara O, Temelci A, Akkaya N, Ünsal G, Minervini G. Deep learning-driven segmentation of dental implants and peri-implantitis detection in orthopantomographs: a novel diagnostic tool. J Evidence-Based Dental Pract. 2025;25(1):102058. doi:10.1016/j.jebdp.2024.102058. [Google Scholar] [PubMed] [CrossRef]

21. Murata M, Ariji Y, Ohashi Y, Kawai T, Fukuda M, Funakoshi T, et al. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019;35(3):301–7. doi:10.1007/s11282-018-0363-7. [Google Scholar] [PubMed] [CrossRef]

22. Alabd-Aljabar A, Raisan Z, Adnan M, Dhou S. A hybrid transfer learning approach to teeth diagnosis using orthopantomogram radiographs. IEEE Access. 2024;12(8):178142–52. doi:10.1109/ACCESS.2024.3507925. [Google Scholar] [CrossRef]

23. Zhu J, Chen Z, Zhao J, Yu Y, Li X, Shi K, et al. Artificial intelligence in the diagnosis of dental diseases on panoramic radiographs: a preliminary study. BMC Oral Health. 2023;23(1):358. doi:10.1186/s12903-023-03027-6. [Google Scholar] [PubMed] [CrossRef]

24. Laishram A, Thongam K. Automatic classification of oral pathologies using orthopantomogram radiography images based on convolutional neural network. Int J Interact Multimed Artif Intell. 2021. doi:10.9781/ijimai.2021.10.009. [Google Scholar] [PubMed] [CrossRef]

25. Almalki YE, Din AI, Ramzan M, Irfan M, Aamir KM, Almalki A, et al. Deep learning models for classification of dental diseases using orthopantomography X-ray OPG images. Sensors. 2022;22(19):7370. doi:10.3390/s22197370. [Google Scholar] [PubMed] [CrossRef]

26. Lee S, Kim D, Jeong H-G. Detecting 17 fine-grained dental anomalies from panoramic dental radiography using Artificial intelligence. Sci Rep. 2022;12(1):5172. doi:10.1038/s41598-022-09083-2. [Google Scholar] [PubMed] [CrossRef]

27. Laishram A, Thongam K. Detection and classification of dental pathologies using faster-RCNN in orthopantomogram radiography image. In: 2020 7th International Conference on Signal Processing and Integrated Networks (SPIN); 2020 Feb 27–28; Noida, India. p. 423–8. doi:10.1109/SPIN48934.2020.9071242. [Google Scholar] [CrossRef]

28. Muresan MP, Barbura AR, Nedevschi S. Teeth detection and dental problem classification in panoramic X-ray images using deep learning and image processing techniques. In: 2020 IEEE 16th International Conference on Intelligent Computer Communication and Processing (ICCP); 2020 Sep 3–5; Cluj-Napoca, Romania. p. 457–63. doi:10.1109/ICCP51029.2020.9266244. [Google Scholar] [CrossRef]

29. Singh SB, Laishram A, Thongam K, Singh KM. A random forest-based automatic classification of dental types and pathologies using panoramic radiography images. J Scient Indus Res (JSIR). 2024;83(5):531–43. doi:10.56042/jsir.v83i5.3994. [Google Scholar] [CrossRef]

30. Tian S, Dai N, Zhang B, Yuan F, Yu Q, Cheng X. Automatic classification and segmentation of teeth on 3D dental model using hierarchical deep learning networks. IEEE Access. 2019;7:84817–28. doi:10.1109/ACCESS.2019.2924262. [Google Scholar] [CrossRef]

31. Ayidh Alqahtani K, Jacobs R, Smolders A, Van Gerven A, Willems H, Shujaat S, et al. Deep convolutional neural network-based automated segmentation and classification of teeth with orthodontic brackets on cone-beam computed-tomographic images: a validation study. Eur J Orthod. 2023;45(2):169–74. doi:10.1093/ejo/cjac047. [Google Scholar] [PubMed] [CrossRef]

32. Hejazi K. Deep learning-based system for automated classification and detection of lesions in dental panoramic radiography, [master’s thesis]. Damascus, Syria: Syrian Virtual University; 2024. [Google Scholar]

33. Ong SH, Kim H, Song JS, Shin TJ, Hyun HK, Jang KT, et al. Fully automated deep learning approach to dental development assessment in panoramic radiographs. BMC Oral Health. 2024;24(1):426. doi:10.1186/s12903-024-04160-6. [Google Scholar] [PubMed] [CrossRef]

34. Lo Giudice A, Ronsivalle V, Spampinato C, Leonardi R. Fully automatic segmentation of the mandible based on convolutional neural networks (CNNs). Orthodon Craniof Res. 2021;24(S2):100–7. doi:10.1111/ocr.12536. [Google Scholar] [PubMed] [CrossRef]

35. Singh P, Sehgal P. Numbering and classification of panoramic dental images using 6-layer convolutional neural network. Pattern Recognit Image Anal. 2020;30(1):125–33. doi:10.1134/S1054661820010149. [Google Scholar] [CrossRef]

36. Imak A, Çelebi A, Polat O, Türkoğlu M, Şengür A. ResMIBCU-Net: an encoder-decoder network with residual blocks, modified inverted residual block, and bi-directional ConvLSTM for impacted tooth segmentation in panoramic X-ray images. Oral Radiol. 2023;39(4):614–28. doi:10.1007/s11282-023-00677-8. [Google Scholar] [PubMed] [CrossRef]

37. Minoo S, Ghasemi F. Automated teeth disease classification using deep learning models. Int J Appl Data Sci Eng Health. 2024;1(2):1–9. [Google Scholar]

38. Cejudo JE, Chaurasia A, Feldberg B, Krois J, Schwendicke F. Classification of dental radiographs using deep learning. J Clin Med. 2021;10(7):1496. doi:10.3390/jcm10071496. [Google Scholar] [PubMed] [CrossRef]

39. Sumalatha MR, Tejasree MS, Sowmiya S, Parthasarathi D, Sathish Kumar M. A multi-model approach to oral pathology classification and dental X-ray analysis. In: 2025 3rd International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT); 2025 Feb 5–7; Bengaluru, India. p. 1597–603. doi:10.1109/IDCIOT64235.2025.10914936. [Google Scholar] [CrossRef]

40. Hasnain MA, Ali S, Malik H, Irfan M, Maqbool MS. Deep learning-based classification of dental disease using X-rays. J Comput Biomed Inform. 2023;5(01):82–95. doi:10.56979/501/2023. [Google Scholar] [CrossRef]

41. Imangaliyev S, van der Veen MH, Volgenant CMC, Loos BG, Keijser BJF, Crielaard W, et al. Classification of quantitative light-induced fluorescence images using convolutional neural network. arXiv:1705.09193. 2017. [Google Scholar]

42. Rahman RB, Tanim SA, Alfaz N, Shrestha TE, Miah MSU, Mridha MF. A comprehensive dental dataset of six classes for deep learning based object detection study. Data Brief. 2024;57(1):110970. doi:10.1016/j.dib.2024.110970. [Google Scholar] [PubMed] [CrossRef]

43. Khairkar, Ashwini. Dental implant dataset. Mendeley Data, V1; 2024. doi:10.17632/x4gr6mmwy4.1. [Google Scholar] [CrossRef]

44. Waqas M, Hasan S, Khurshid Z, Kazmi S. OPG dataset for kennedy classification of partially edentulous arches. Mendeley Data; 2024. doi:10.17632/ccw5mvg69r.1. [Google Scholar] [CrossRef]

45. Abdi A, Kasaei S. Panoramic dental X-rays with segmented mandibles. Mendeley Data; 2020. doi:10.17632/hxt48yk462.2. [Google Scholar] [CrossRef]

46. DENTEX - MICCAI23 - Grand Challenge [Internet]. [cited 2025 May 29]. Available from: https://dentex.grand-challenge.org/data/. [Google Scholar]

47. Fretes López VR, Carlos GA, Mello Román JC, Vázquez Noguera JL, Gariba Silva R, Legal-Ayala H, et al. Panoramic radiography database. Zenodo. 2021. doi:10.5281/zenodo.4457648. [Google Scholar] [CrossRef]

48. Brahmi W, Jdey I, Drira F. Panoramic dental Xray dataset. Kaggle. 2025. doi:10.17632/73n3kz2k4k.3. [Google Scholar] [CrossRef]

49. Adnan N, Umer F. Orthopantomogram teeth segmentation and numbering dataset. Data Brief. 2024;57(1):111152. doi:10.1016/j.dib.2024.111152. [Google Scholar] [PubMed] [CrossRef]

50. Aljabri M, AlAmir M, AlGhamdi M, Abdel-Mottaleb M, Collado-Mesa F. Towards a better understanding of annotation tools for medical imaging: a survey. Multimed Tools Appl. 2022;81(18):25877–911. doi:10.1007/s11042-022-12100-1. [Google Scholar] [PubMed] [CrossRef]

51. Jocher G, Stoken A, Borovec J, NanoCode012, Christopher STAN, Changyu L, et al. ultralytics/yolov5: v3.0. Zenodo. 2020. doi:10.5281/zenodo.3983579. [Google Scholar] [CrossRef]

52. Sivri MB, Taheri S, Kırzıoğlu Ercan RG, Yağcı Ü, Golrizkhatami Z. Dental age estimation: a comparative study of convolutional neural network and Demirjian’s method. J Forensic Leg Med. 2024;103(4):102679. doi:10.1016/j.jflm.2024.102679. [Google Scholar] [PubMed] [CrossRef]

53. Enkvetchakul P, Khonjun S, Pitakaso R, Srichok T, Luesak P, Kaewta C, et al. Advanced AI techniques for root disease classification in dental X-rays using deep learning and metaheuristic approach. Intell Syst Applicat. 2025;26(4):200526. doi:10.1016/j.iswa.2025.200526. [Google Scholar] [CrossRef]

Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.