Open Access


ARTICLE

Aerial Images for Intelligent Vehicle Detection and Classification via YOLOv11 and Deep Learner

1 Guodian Nanjing Automation Co., Ltd., Nanjing, 210032, China

2 Faculty of Computing and AI, Air University, Islamabad, 44000, Pakistan

3 College of Computer Science, King Khalid University, Abha, 61421, Saudi Arabia

4 Department of Informatics and Computer Systems, King Khalid University, Abha, 61421, Saudi Arabia

5 Department of Information Systems, College of Computer Engineering and Sciences, Prince Sattam bin Abdulaziz University, Al-Kharj, 16273, Saudi Arabia

6 Jiangsu Key Laboratory of Intelligent Medical Image Computing, School of Artificial Intelligence (School of Future Technology), Nanjing University of Information Science and Technology, Nanjing, 210044, China

7 Cognitive Systems Lab, University of Bremen, Bremen, 28359, Germany

* Corresponding Author: Hui Liu. Email:

# These authors contributed equally to this work

Computers, Materials & Continua 2026, 86(1), 1-19. https://doi.org/10.32604/cmc.2025.067895

Received 15 May 2025; Accepted 02 September 2025; Issue published 10 November 2025

Abstract

As urban landscapes evolve and vehicular volumes soar, traditional traffic monitoring systems struggle to scale and often fail in dense, dynamic, and occluded environments. This paper introduces a novel, unified deep learning framework for vehicle detection, tracking, counting, and classification in aerial imagery, designed for the demands of modern smart city infrastructure. Our approach begins with adaptive histogram equalization to improve aerial image clarity, followed by scene parsing with Mask2Former, which enables robust segmentation even in visually congested settings. Vehicle detection uses the YOLOv11 architecture, which delivers high accuracy in aerial contexts by addressing occlusion, scale variance, and fine-grained object differentiation. We incorporate the efficient ByteTrack algorithm for tracking, preserving vehicle identities across frames. Vehicle counting is performed with an unsupervised DBSCAN-based method that adapts to varying traffic densities. We further introduce a hybrid feature extraction module combining Convolutional Neural Networks (CNNs) with Zernike Moments, capturing both deep semantic and geometric signatures of vehicles. Final classification is performed by NASNet, a model optimized through neural architecture search, ensuring high accuracy across diverse vehicle types and orientations. Extensive evaluations on the VAID benchmark dataset demonstrate strong performance, with 96% detection, 94% tracking, and 96.4% classification accuracy. On the UAVDT dataset, the system attains 95% detection, 93% tracking, and 95% classification accuracy, confirming its robustness across diverse aerial traffic scenarios. These results establish new benchmarks in aerial traffic analysis and validate the framework’s scalability, making it an adaptable solution for next-generation intelligent transportation systems and urban surveillance.

The United Nations World Population Prospects projects that urban areas will house 68% of the global population by 2050 [1]. This rapid growth in urban population density has produced increases in vehicle traffic that strain current traffic monitoring methods; in the US alone, traffic congestion causes annual productivity losses exceeding $87 billion. Growing urban complexity is driving smart cities to adopt automated traffic surveillance systems that scale and support intelligent traffic management. Traditional fixed surveillance cameras and inductive loop detectors, although effective to date, have several drawbacks: limited coverage, high installation costs, sensitivity to obstructed views, and poor scalability to changing conditions. In contrast, aerial imagery obtained via unmanned aerial vehicles (UAVs) and satellites provides a high-altitude, unobstructed view of urban landscapes, making it a promising solution for large-scale traffic monitoring. However, this modality introduces its own set of challenges: high object density, scale variation, viewpoint distortion, and significant background clutter.

Despite the rapid advances in deep learning, few existing frameworks offer a comprehensive, end-to-end solution for vehicle detection, tracking, counting, and classification in aerial imagery. Most approaches focus narrowly on one or two tasks and are often optimized for ground-view datasets, limiting their generalizability in overhead views. This research addresses that gap by introducing a novel, unified deep-learning framework tailored specifically for aerial vehicle analytics within smart city infrastructure.

The key contributions of this paper are as follows:

1. Enhancement of aerial image quality using Adaptive Histogram Equalization (AHE), optimizing contrast and visibility in diverse environmental conditions.

2. Robust scene parsing through the use of Mask2Former, enabling precise semantic segmentation in densely populated, visually complex environments.

3. State-of-the-art object detection via YOLOv11, which improves handling of occlusion, fine-grained object differentiation, and multi-scale detection in aerial contexts.

4. High-performance object tracking using ByteTrack, maintaining consistent vehicle identities across video frames even under occlusion or motion blur.

5. Unsupervised vehicle counting based on a DBSCAN clustering approach, adaptable to variable traffic densities without requiring labeled counting data.

6. A novel hybrid feature extraction module that fuses deep semantic features (via CNNs) with geometric descriptors (via Zernike Moments) for improved vehicle representation.

7. Accurate vehicle classification using NASNet, a neural architecture search-optimized model that delivers superior accuracy across diverse vehicle categories and orientations.

The proposed system performs well on the challenging VAID benchmark, reaching a detection accuracy of 96%, a tracking accuracy of 94%, and a classification accuracy of 96.4%. On UAVDT, it achieves 95% detection, 93% tracking, and 95% classification accuracy. These results confirm that the system performs consistently across diverse aerial traffic situations, including occluded, dynamic, and cluttered scenes, which demonstrates its value as a scalable solution for smart city transportation systems.

The acceleration of smart city development has motivated extensive research on automated traffic surveillance using aerial platforms. Advances in computer vision and deep learning have enabled models that perform the fundamental vehicle monitoring functions: detection, tracking, counting, and classification. This section reviews the main technical approaches and contributions in two areas: vehicle detection and tracking, and vehicle detection and classification.

2.1 Vehicle Detection and Tracking Systems

The development of sophisticated vehicle detection and tracking systems is crucial for enhancing urban mobility, traffic management, and safety. In recent years, aerial surveillance using Unmanned Aerial Vehicles (UAVs) has gained significant traction, offering a unique perspective for real-time traffic monitoring. While various methods have been explored to optimize vehicle detection, these systems still face challenges in accuracy, speed, and adaptability to diverse traffic conditions. Bhaskar and Yong [2] proposed an approach that uses Gaussian Mixture Models (GMM) and blob detection to segment foreground from background, applying morphological operations to reduce noise and improve tracking accuracy. While achieving promising results, their method adapts poorly to aerial viewpoints and varying vehicle sizes. Chen and Meng [3] proposed a self-learning system using FAST and HOG features with Forward–Backward Tracking (FBT), in which feedback from tracking improves detection in dynamic aerial scenes. These methods underscore the need for detection models that remain robust across varying traffic conditions. Building on this, Yusuf et al. [4] introduced a pipeline integrating pixel labeling, particle filtering, geo-referencing, and HOG to detect, classify, and track multiple vehicles with high accuracy. Similarly, Wang et al. [5] developed a UAV-based system with image registration, feature extraction, shape detection, and motion tracking to estimate traffic speed and trajectories across diverse altitudes and angles. Our work extends these efforts by developing an adaptive system for vehicle detection and characterization from UAV imagery.

2.2 Vehicle Detection and Classification Systems

Vehicle detection and classification are critical functions in intelligent traffic surveillance, forming the analytical backbone of modern transportation systems. They support predictive modeling and automated control systems that enhance roadway efficiency and safety. However, the complexities introduced by aerial platforms, such as high altitudes, varied perspectives, and fluctuating lighting, pose significant challenges that traditional ground-based systems are ill-equipped to handle. In an early contribution to this field, Won [6] conducted a thorough review of traffic monitoring systems with particular emphasis on vehicle classification. The review explores the design and deployment of diverse classification technologies, highlighting the evolution of intelligent systems through advances in MEMS, machine learning, and wireless communication. While it lays a strong foundation for understanding the technological landscape, it also underscores persistent technical gaps in deploying accurate, real-time vehicle classification under the dynamic conditions of aerial surveillance. In contrast, Hamzenejadi and Mohseni [7] addressed the core challenge of detecting small vehicles in high-resolution UAV imagery by enhancing the YOLOv5 architecture. Through tailored modifications to the network’s depth and width, they improved both accuracy and inference speed, two critical factors in real-time UAV operations, and their model outperformed baseline YOLOv5 configurations. Building on this momentum, Mustafa and Alizadeh [8] introduced a customized YOLOv4 system augmented with Convolutional Block Attention Modules (CBAM) to increase sensitivity to critical features in UAV-captured imagery.
Their approach, tested on a regional dataset, achieved an mAP of 88.25% at 35 FPS and outperformed several established models, including Faster R-CNN and YOLOv3. More recently, a comprehensive evaluation of YOLOv8 variants was conducted for UAV-based aerial traffic monitoring, offering insights into model performance under diverse environmental and flight conditions [9]. Building on these advances, our research incorporates YOLOv11 to deliver an adaptable, high-performance vehicle detection and classification system that meets the operational and environmental demands of modern aerial surveillance platforms.

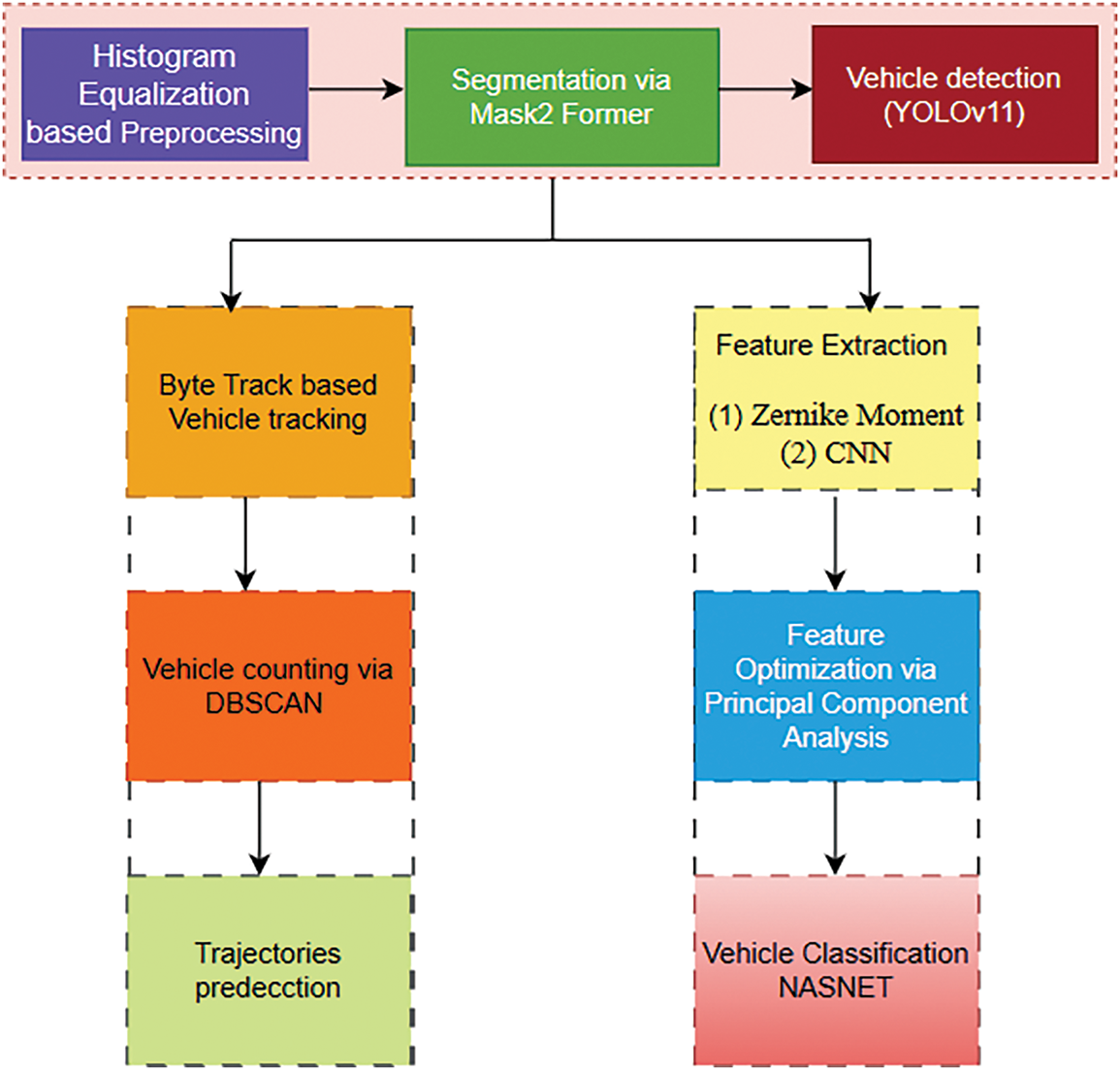

This research proposes a comprehensive deep learning framework for aerial traffic surveillance that integrates detection, tracking, counting, and classification. Traditional methods designed for ground-level imagery often fail on aerial views because of occlusion, perspective distortion, and background complexity. With drones and edge AI becoming central to smart cities, our system addresses the need for scalable, intelligent traffic management. As shown in Fig. 1, the pipeline starts with Adaptive Histogram Equalization (AHE) to enhance image clarity under varying lighting, followed by Mask2Former for accurate scene segmentation. YOLOv11 performs vehicle detection, handling scale variance and occlusion. ByteTrack provides robust multi-object tracking by maintaining consistent vehicle IDs. Traffic flow is analyzed using DBSCAN-based counting and trajectory approximation. For classification, a hybrid CNN-Zernike module extracts combined semantic and geometric features, PCA optimizes these features, and NASNet classifies them with high accuracy and efficiency.

Figure 1: Architecture flow for a smart traffic surveillance system

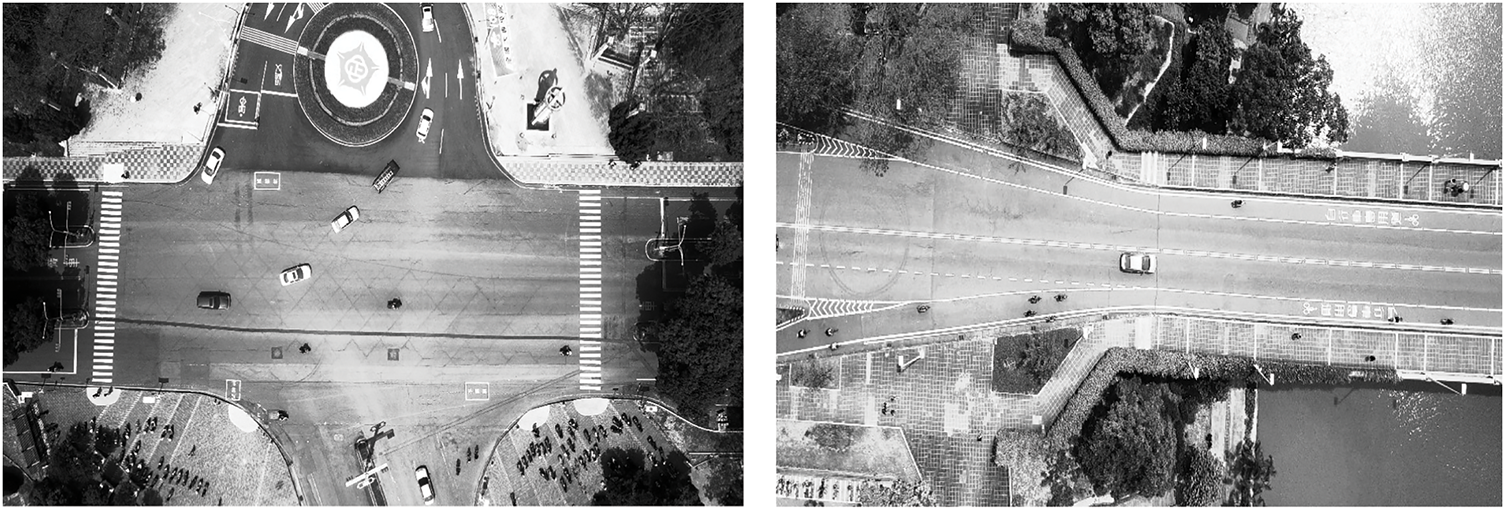

The proposed framework begins by preprocessing aerial imagery with Adaptive Histogram Equalization (AHE), which enhances contrast by redistributing pixel intensities [10]. Because AHE operates locally over different image regions, it preserves detail in both high- and low-contrast areas, as shown in Fig. 2. The intensity values in each local region are adjusted based on the distribution of pixel intensities in the neighborhood, thereby enhancing overall image clarity. The equation for adaptive histogram equalization in a local neighborhood N can be defined as:

Figure 2: Preprocessed images via histogram equalization

where I(x, y) is the original pixel intensity at position (x, y), µN and σN are the mean and standard deviation of intensities within the local neighborhood N, and µT and σT are the global mean and standard deviation of the image.
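The listed variables (a local mean and standard deviation over N, plus a global mean and standard deviation) are consistent with a local-statistics normalization of the form I′(x, y) = µT + σT · (I(x, y) − µN) / σN. The NumPy sketch below implements that form, assuming a square k × k neighborhood and 8-bit grayscale input; both the formula and the default window size k = 31 are our assumptions rather than the paper’s stated equation, and the helper names are hypothetical.

```python
import numpy as np


def box_mean(img: np.ndarray, k: int) -> np.ndarray:
    """Mean over a k x k window (k odd) around each pixel, via an integral image."""
    r = k // 2
    padded = np.pad(img, r, mode="edge")            # replicate image borders
    s = np.cumsum(np.cumsum(padded, axis=0), axis=1)
    s = np.pad(s, ((1, 0), (1, 0)))                 # leading zeros: s[i, j] = sum(padded[:i, :j])
    h, w = img.shape
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def local_contrast_normalize(img: np.ndarray, k: int = 31, eps: float = 1e-6) -> np.ndarray:
    """Assumed form: I' = mu_T + sigma_T * (I - mu_N) / sigma_N (hypothetical reconstruction)."""
    x = img.astype(np.float64)
    mu_n = box_mean(x, k)                           # local mean over neighborhood N
    var_n = np.maximum(box_mean(x * x, k) - mu_n ** 2, 0.0)
    sigma_n = np.sqrt(var_n)                        # local standard deviation
    mu_t, sigma_t = x.mean(), x.std()               # global image statistics
    out = mu_t + sigma_t * (x - mu_n) / (sigma_n + eps)
    return np.clip(out, 0, 255).astype(np.uint8)
```

For comparison, OpenCV’s tile-based contrast-limited variant can be applied with `cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8)).apply(gray)`; it equalizes per-tile histograms instead of rescaling by local statistics.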

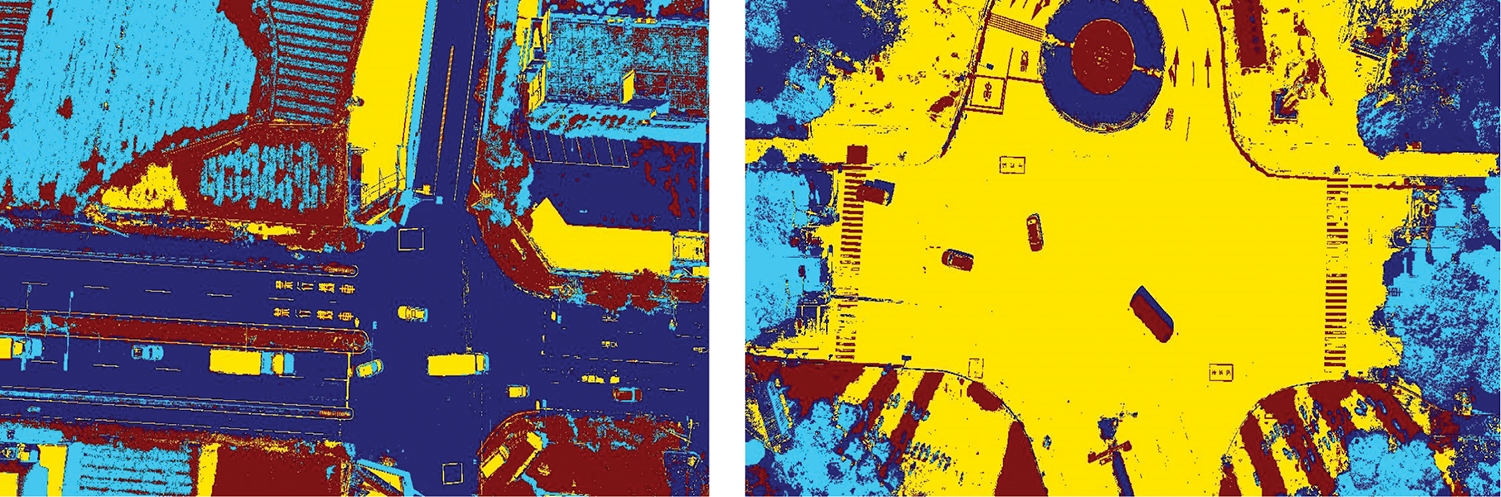

3.2 Mask2Former Based Segmentation

After preprocessing, we use the Mask2Former model for scene segmentation because it is designed for accurate segmentation of objects in complex visual environments. Challenges specific to aerial imaging, such as dense objects, scale variation, and occlusion, limit the effectiveness of standard segmentation procedures [11]. Mask2Former’s transformer-based architecture captures both local and long-range relationships in an image, which helps it distinguish vehicles from other urban scene components. The resulting object masks are crucial for separating vehicles from background structures and vegetation. The segmentation output is mathematically defined as:

Figure 3: Precise vehicle segmentation in complex UAV Urban Scenes using Mask2Former
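Mask2Former predicts a set of Q query masks, each paired with a class distribution over K classes plus a “no object” class. For semantic segmentation, the MaskFormer family combines these by marginalization: each pixel receives argmax over c of Σq pq(c) · mq(x, y). The NumPy sketch below shows only that final combination step on toy logits; it assumes the model’s class and mask logits are already available and is not necessarily the exact formulation used by the authors.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def semantic_inference(class_logits: np.ndarray, mask_logits: np.ndarray) -> np.ndarray:
    """class_logits: (Q, K + 1), last column = "no object"; mask_logits: (Q, H, W).

    Returns an (H, W) map of class indices in [0, K).
    """
    class_probs = softmax(class_logits, axis=-1)[:, :-1]        # (Q, K), drop "no object"
    mask_probs = 1.0 / (1.0 + np.exp(-mask_logits))             # (Q, H, W), per-pixel sigmoid
    scores = np.einsum("qk,qhw->khw", class_probs, mask_probs)  # (K, H, W)
    return scores.argmax(axis=0)
```

In a full pipeline, vehicle pixels would be those whose index equals the vehicle class, and that binary mask would isolate vehicles from buildings and vegetation before detection.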

3.3 Vehicle Detection via YOLOv11

Following segmentation, the system performs vehicle detection with the YOLOv11 (You Only Look Once, version 11) model. YOLOv11 introduces several architectural and algorithmic enhancements over its predecessors that make it well suited for aerial imagery, where objects are often small, partially occluded, and densely clustered [12]. Unlike two-stage detectors, YOLOv11 performs detection in a single forward pass, providing the low latency and high throughput essential for real-time intelligent traffic systems. At the core of YOLOv11’s architecture is a dense prediction strategy that divides the input image into an S × S grid, with each cell predicting multiple bounding boxes, objectness scores, and class probabilities. The detection confidence score is computed as follows:


Figure 4: Vehicle detection result on aerial imagery using YOLOv11 demonstrating accurate detection of small and occluded vehicles
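YOLO-style detectors typically emit many overlapping candidate boxes, which are filtered by a confidence threshold and non-maximum suppression (NMS). The sketch below shows generic class-aware greedy NMS in NumPy, scoring each box as objectness × best class probability in the spirit of the grid formulation described above. It illustrates the post-processing idea only and is not YOLOv11’s internal implementation; the thresholds `conf_thr` and `iou_thr` are illustrative values.

```python
import numpy as np


def iou(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """IoU between one box and an (N, 4) array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter + 1e-9)


def postprocess(boxes, objectness, class_probs, conf_thr=0.25, iou_thr=0.45):
    """Confidence filtering + class-aware greedy NMS. Returns kept indices, labels, scores."""
    labels = class_probs.argmax(axis=1)
    scores = objectness * class_probs.max(axis=1)   # class-specific confidence
    order = np.flatnonzero(scores >= conf_thr)
    order = order[np.argsort(-scores[order])]       # highest score first
    keep = []
    while order.size:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        # drop remaining boxes of the same class that overlap the kept box too much
        suppress = (labels[rest] == labels[best]) & (iou(boxes[best], boxes[rest]) > iou_thr)
        order = rest[~suppress]
    keep = np.array(keep, dtype=int)
    return keep, labels[keep], scores[keep]
```

Keeping NMS class-aware means two vehicles of different classes parked close together are not suppressed against each other.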

3.4 Vehicle Tracking via ByteTrack

After detection, the system performs multi-object vehicle tracking, which maintains individual vehicle identities across successive aerial frames. The system uses ByteTrack as its tracking mechanism; ByteTrack performs well in complex scenes because it associates both high- and low-confidence detections rather than discarding the latter [13]. This is particularly valuable for aerial imagery, where occlusion, scale variation, and detection errors are common. Conventional tracking-by-detection systems discard low-confidence detections, which fragments identity continuity. ByteTrack overcomes this limitation with a two-stage data association strategy: high-confidence detections are matched first, and lower-confidence detections are then re-evaluated and integrated, enabling robust target continuity even in visually ambiguous scenes. The association cost between a tracked object and a detection is defined as follows:

Figure 5: Vehicle tracking via ByteTrack
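The two-stage association can be sketched as follows: tracks are first matched to high-confidence detections, and tracks left unmatched get a second chance against low-confidence detections, with cost 1 − IoU between a track box and a detection box. This NumPy sketch simplifies ByteTrack in several labeled ways: it uses greedy matching instead of the optimal linear assignment in the reference implementation, omits Kalman-filter motion prediction (track boxes are taken as given), and uses illustrative thresholds (`high`, `low`, `thr1`, `thr2`).

```python
import numpy as np


def iou_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise IoU between boxes a (M, 4) and b (N, 4) in (x1, y1, x2, y2) format."""
    x1 = np.maximum(a[:, None, 0], b[None, :, 0])
    y1 = np.maximum(a[:, None, 1], b[None, :, 1])
    x2 = np.minimum(a[:, None, 2], b[None, :, 2])
    y2 = np.minimum(a[:, None, 3], b[None, :, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / (area_a[:, None] + area_b[None, :] - inter + 1e-9)


def greedy_match(cost: np.ndarray, thr: float):
    """Pair rows and columns in order of increasing cost, keeping pairs with cost <= thr."""
    pairs, used_r, used_c = [], set(), set()
    if cost.size:
        for flat in np.argsort(cost, axis=None):
            r, c = (int(v) for v in np.unravel_index(flat, cost.shape))
            if cost[r, c] > thr:
                break                      # costs are sorted, so no later pair qualifies
            if r not in used_r and c not in used_c:
                pairs.append((r, c))
                used_r.add(r)
                used_c.add(c)
    return pairs


def byte_associate(tracks, dets, scores, high=0.5, low=0.1, thr1=0.8, thr2=0.5):
    """Two-stage association. Returns (track, det) matches, new-track dets, unmatched tracks."""
    hi = np.flatnonzero(scores >= high)
    lo = np.flatnonzero((scores >= low) & (scores < high))
    # Stage 1: every track vs. high-confidence detections, cost = 1 - IoU
    pairs1 = greedy_match(1.0 - iou_matrix(tracks, dets[hi]), thr1)
    matches = [(t, int(hi[d])) for t, d in pairs1]
    matched1 = {t for t, _ in pairs1}
    left = [t for t in range(len(tracks)) if t not in matched1]
    # Stage 2: leftover tracks vs. low-confidence detections (stricter threshold)
    pairs2 = greedy_match(1.0 - iou_matrix(tracks[left], dets[lo]), thr2)
    matches += [(left[t], int(lo[d])) for t, d in pairs2]
    matched_t = {t for t, _ in matches}
    matched_d = {d for _, d in matches}
    # unmatched high-score dets start new tracks; unmatched low-score dets are discarded
    new_dets = [int(d) for d in hi if int(d) not in matched_d]
    lost = [t for t in range(len(tracks)) if t not in matched_t]
    return matches, new_dets, lost
```

The second stage is what lets a briefly occluded vehicle, whose detection score drops below `high`, keep its identity instead of spawning a new track.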

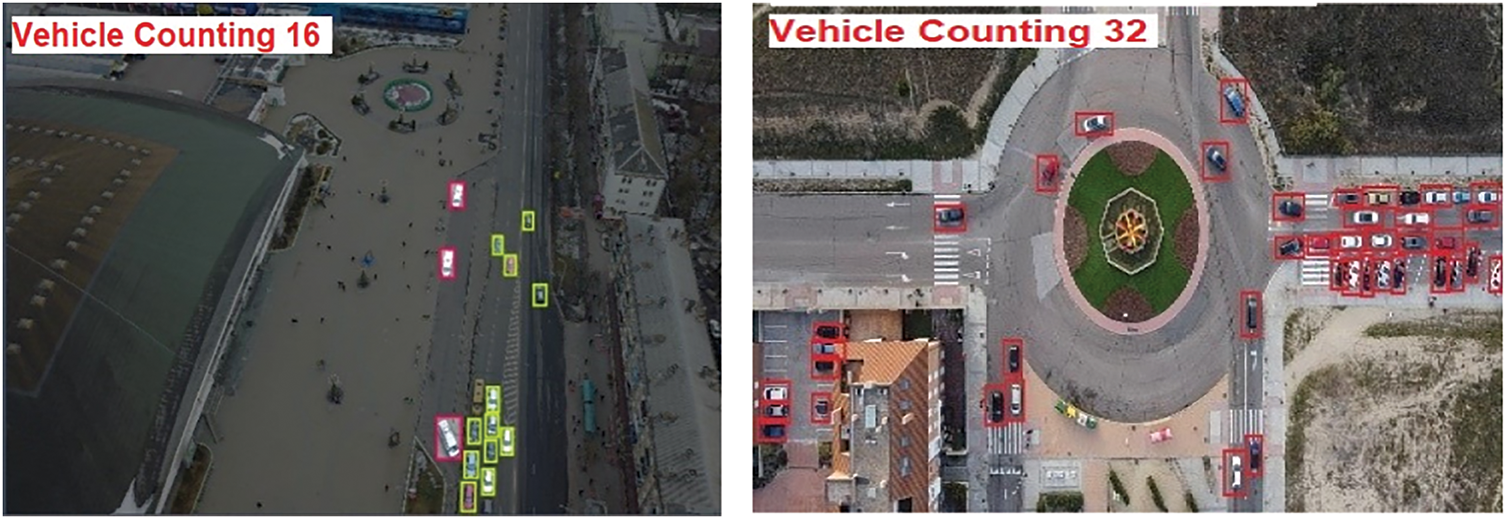

3.5 Vehicle Counting via DBSCAN

To estimate traffic density and vehicle flow accurately, the system employs an unsupervised vehicle counting module based on DBSCAN. This method excels in aerial views, where object density, distortion, and occlusion challenge traditional approaches. DBSCAN adapts to traffic variations by clustering dense regions of tracked centroids while ignoring outliers. Vehicles are modeled as spatiotemporal points [14], and clusters are formed based on proximity and density using two hyperparameters: the neighborhood radius (ε) and the minimum number of neighbors (minPts). A point p is a core point (vehicle) if its ε-neighborhood contains at least minPts points:

$$\left|\{\, q \in D : \lVert p - q \rVert \le \varepsilon \,\}\right| \ge \mathit{minPts}$$

Here, ∥p − q∥ represents the Euclidean distance between vehicle centroids p and q in the tracked set D. Vehicles are counted only when spatial density exceeds this threshold, which suppresses noisy detections and overlapping duplicates. A temporal smoothing function with a sliding window prevents duplicate counts by consolidating identity-preserved tracks. Fig. 6 shows the DBSCAN clustering of tracked vehicle centroids for counting in aerial scenes, which handles occlusions and closely grouped vehicles. The method is adaptable and scalable and requires little maintenance, making it well suited for smart city applications.

Figure 6: DBSCAN based vehicle counting on aerial image
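A minimal DBSCAN follows directly from the core-point criterion (a point is core if at least minPts points, itself included, lie within distance ε). The NumPy sketch below labels each centroid with a cluster id or −1 for noise. The counting rule in `count_vehicles`, one vehicle per cluster, is a hypothetical reading that assumes each cluster gathers the centroids a single vehicle produces over a sliding window of frames; the ε and minPts values in the example are likewise assumptions. scikit-learn’s `sklearn.cluster.DBSCAN(eps=..., min_samples=...)` provides a production implementation.

```python
import numpy as np


def dbscan(points: np.ndarray, eps: float, min_pts: int) -> np.ndarray:
    """Return a cluster id (0, 1, ...) per point, or -1 for noise."""
    n = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    neighbors = [np.flatnonzero(dist[i] <= eps) for i in range(n)]  # includes the point itself
    is_core = np.array([len(nb) >= min_pts for nb in neighbors], dtype=bool)
    labels = np.full(n, -1)
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        labels[i] = cluster
        stack = [i]
        while stack:                      # expand through density-reachable points
            p = stack.pop()
            if not is_core[p]:
                continue                  # border points join the cluster but do not expand it
            for q in neighbors[p]:
                if labels[q] == -1:
                    labels[q] = cluster
                    stack.append(q)
        cluster += 1
    return labels


def count_vehicles(centroids: np.ndarray, eps: float, min_pts: int) -> int:
    """Hypothetical counting rule: one vehicle per dense cluster, noise ignored."""
    labels = dbscan(centroids, eps, min_pts)
    return int(labels.max() + 1) if labels.size else 0
```

The pairwise distance matrix is O(n²) in memory, which is fine for the centroids of one sliding window but would call for a spatial index on larger point sets.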

3.6 Trajectories Approximation

Vehicle trajectories are obtained by tracking the center coordinates of each vehicle’s bounding box across image frames. The resulting trajectories provide important traffic-related information. The centroid-based trajectory estimation could be further extended with trajectory conflict detection and analysis of sudden changes in vehicle motion to support accident prediction. Eqs. (10) and (11) compute the centroid of each bounding box to locate vehicles precisely in each frame. Fig. 7 illustrates vehicle movement trajectories with arrows that highlight directional flow across the scene. These paths are generated by tracking vehicle centroids over time. The visualized trajectories help identify traffic patterns, detect motion anomalies, and support proactive traffic safety measures.

where i and j are the center coordinates of the bounding rectangle.

Figure 7: Vehicle trajectories visualized using arrows to indicate direction and movement across the scene
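Assuming the common convention that a box’s centroid is the midpoint of its corners, ((x1 + x2)/2, (y1 + y2)/2), the sketch below accumulates per-track centroids into trajectories. As a hypothetical illustration of the extension mentioned above, it also flags steps where the heading changes by more than a threshold. The input format (one dict of track id → box per frame), the function names, and the 45° threshold are all assumptions.

```python
import math
from collections import defaultdict


def centroid(box):
    """Midpoint of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def build_trajectories(frames):
    """frames: list of {track_id: (x1, y1, x2, y2)} dicts, one per frame, in time order."""
    traj = defaultdict(list)
    for dets in frames:
        for tid, box in dets.items():
            traj[tid].append(centroid(box))
    return dict(traj)


def headings(points):
    """Heading angle (radians) of each step between consecutive centroids (arrow directions)."""
    return [math.atan2(y2 - y1, x2 - x1) for (x1, y1), (x2, y2) in zip(points, points[1:])]


def sudden_turns(points, max_turn_deg=45.0):
    """Indices of steps whose heading differs from the previous step by more than max_turn_deg."""
    h = headings(points)
    flagged = []
    for k in range(1, len(h)):
        d = math.degrees(abs(h[k] - h[k - 1]))
        d = min(d, 360.0 - d)             # wrap the turn angle into [0, 180]
        if d > max_turn_deg:
            flagged.append(k)
    return flagged
```

Because only the magnitude of the turn is used, the result does not depend on whether the image y-axis points up or down.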

Feature extraction is essential for vehicle classification, enabling the system to capture both deep semantic and geometric characteristics of vehicles from aerial imagery. The extracted features help distinguish vehicles from other objects and improve classification accuracy under diverse environmental conditions. The system performs two extractions in parallel: Convolutional Neural Networks capture semantic features, while Zernike Moments capture geometric shape.

For geometric features, our feature extraction process uses Zernike Moments, which capture vehicle shape and spatial distribution [15]. Zernike Moments are based on a set of orthogonal polynomials defined on the unit disk, and the magnitudes of the moments are rotation invariant, which makes them well suited to aerial vehicle analysis, where apparent vehicle shape varies with orientation, perspective distortion, occlusion, and partial visibility. Each moment combines a radial and an angular component, with low orders describing coarse shape and higher orders finer detail, as shown in Fig. 8. The Zernike Moments for a given vehicle image I(x, y) are calculated using the following equation:


Figure 8: Zernike moments feature extraction of vehicles
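For reference, the standard definition of the Zernike moment of order n and repetition m (with |m| ≤ n and n − |m| even) is Znm = (n + 1)/π ∬ I(x, y) V*nm(ρ, θ) dx dy over the unit disk, where Vnm(ρ, θ) = Rnm(ρ) e^{jmθ} and Rnm is the radial polynomial. The NumPy sketch below discretizes this as a sum over pixel centers inside the disk, multiplied by the pixel area; that normalization and the default maximum order are our choices and may differ from the paper’s.

```python
import numpy as np
from math import factorial


def radial_poly(n: int, m: int, rho: np.ndarray) -> np.ndarray:
    """Zernike radial polynomial R_nm(rho)."""
    m = abs(m)
    out = np.zeros_like(rho)
    for s in range((n - m) // 2 + 1):
        coef = (-1) ** s * factorial(n - s) / (
            factorial(s) * factorial((n + m) // 2 - s) * factorial((n - m) // 2 - s))
        out += coef * rho ** (n - 2 * s)
    return out


def zernike_moment(patch: np.ndarray, n: int, m: int) -> complex:
    """Discrete Z_nm of a square grayscale patch mapped onto the unit disk."""
    if abs(m) > n or (n - abs(m)) % 2:
        raise ValueError("require |m| <= n and n - |m| even")
    size = patch.shape[0]
    coords = (2 * np.arange(size) - size + 1) / (size - 1)   # pixel centres in [-1, 1]
    x, y = np.meshgrid(coords, coords)
    rho, theta = np.hypot(x, y), np.arctan2(y, x)
    inside = rho <= 1.0                                       # keep only the unit disk
    v_conj = radial_poly(n, m, rho) * np.exp(-1j * m * theta) # conjugate basis V*_nm
    pixel_area = (2.0 / (size - 1)) ** 2
    return complex((n + 1) / np.pi * np.sum(patch[inside] * v_conj[inside]) * pixel_area)


def zernike_features(patch: np.ndarray, max_order: int = 4) -> np.ndarray:
    """Rotation-invariant magnitudes |Z_nm| for 0 <= m <= n <= max_order with n - m even."""
    return np.array([abs(zernike_moment(patch, n, m))
                     for n in range(max_order + 1)
                     for m in range(n % 2, n + 1, 2)])
```

Rotating the patch multiplies each Znm by a unit-magnitude phase factor, so the magnitudes returned by `zernike_features` stay unchanged for vehicles seen at different headings.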

3.7.2 Convolutional Neural Networks (CNN)

The CNN branch applies successive convolutional layers with non-linear activations (ReLU) and pooling layers, which reduce spatial resolution while retaining the most informative responses. The governing principle of each CNN operation follows this formula:

where y is the output feature map and σ is the activation function (e.g., ReLU).

Figure 9: CNN-based feature extraction of vehicles
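The convolution, activation, and pooling pattern described above can be illustrated in a few lines of NumPy. The sketch computes y = σ(W ∗ x + b) for one convolutional layer with ReLU, then 2 × 2 max pooling and global average pooling to produce a fixed-length feature vector. It is a didactic sketch with hypothetical random weights, not the trained network used in the paper; like most deep learning frameworks, it implements “convolution” as cross-correlation.

```python
import numpy as np


def conv2d(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation. x: (C_in, H, W), w: (C_out, C_in, k, k), b: (C_out,)."""
    c_out, _, k, _ = w.shape
    out_h, out_w = x.shape[1] - k + 1, x.shape[2] - k + 1
    y = np.empty((c_out, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = x[:, i:i + k, j:j + k]
            # sum over input channels and kernel window for every output channel
            y[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return y


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)


def max_pool(x: np.ndarray, s: int = 2) -> np.ndarray:
    """Non-overlapping s x s max pooling; rows/cols that do not fill a window are dropped."""
    c, h, w = x.shape
    h2, w2 = h // s, w // s
    return x[:, :h2 * s, :w2 * s].reshape(c, h2, s, w2, s).max(axis=(2, 4))


def cnn_features(patch: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """conv -> ReLU -> max-pool -> global average pool: one value per output channel."""
    fmap = max_pool(relu(conv2d(patch, w, b)))
    return fmap.mean(axis=(1, 2))
```

Global average pooling makes the output length depend only on the number of filters, so vehicle crops of different sizes map to vectors of the same length.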

3.8 Feature Optimization via Principal Component Analysis (PCA)

A dedicated feature optimization phase enhances the discriminative power and efficiency of the features extracted by the hybrid CNN-Zernike module. Principal Component Analysis (PCA) reduces redundancy by projecting the features onto a lower-dimensional space that preserves most of the variance, and L2 normalization scales each feature vector to unit length to improve model stability [17]. This combination accelerates training, mitigates overfitting, and yields compact, class-discriminative features for classification. As shown in Fig. 10, the PCA-processed CNN and Zernike features form tight clusters, indicating that the optimization preserves class structure.

Figure 10: PCA-reduced feature clustering of CNN and Zernike
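A minimal sketch of the fusion and reduction step: concatenate CNN and Zernike features, project them onto the top principal components computed by SVD, and L2-normalize each projected vector. Standardizing each feature column before PCA is our assumption (CNN activations and Zernike magnitudes live on very different scales); the paper does not state whether it does so, and the number of components is a free parameter here. The function names are hypothetical.

```python
import numpy as np


def fuse_pca_l2(cnn_feats: np.ndarray, zern_feats: np.ndarray, n_components: int):
    """Return L2-normalised PCA projections, the fitted parameters, and explained variance ratio."""
    X = np.hstack([cnn_feats, zern_feats])                 # (N, D_cnn + D_zern)
    mean, std = X.mean(axis=0), X.std(axis=0) + 1e-12
    Xs = (X - mean) / std                                  # per-feature standardisation (assumption)
    _, s, vt = np.linalg.svd(Xs, full_matrices=False)      # rows of vt are principal axes
    components = vt[:n_components]
    Z = Xs @ components.T                                  # (N, n_components)
    Z /= np.linalg.norm(Z, axis=1, keepdims=True) + 1e-12  # unit-length feature vectors
    explained = float((s[:n_components] ** 2).sum() / (s ** 2).sum())
    return Z, (mean, std, components), explained


def transform(cnn_feats, zern_feats, params):
    """Apply the fitted standardisation, projection, and L2 normalisation to new samples."""
    mean, std, components = params
    Z = ((np.hstack([cnn_feats, zern_feats]) - mean) / std) @ components.T
    return Z / (np.linalg.norm(Z, axis=1, keepdims=True) + 1e-12)
```

Fitting on training features and reusing the returned parameters through `transform` keeps test samples in the same reduced space.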

3.9 Vehicle Classification through Neural Architecture Search Network (NASNet)

After feature extraction and optimization, the refined features are fed into the NASNet architecture for final vehicle classification. NASNet balances high accuracy with computational efficiency, making it well suited for large-scale visual tasks; its building blocks were discovered through automated neural architecture search rather than designed by hand [18]. NASNet categorizes vehicles such as cars, trucks, buses, and motorcycles using the extracted features. Its adaptability supports consistent identification across vehicle types, sizes, and orientations in complex urban aerial scenes, and its deep architecture captures fine-grained features that improve classification precision. In our experiments, NASNet delivered reliable aerial vehicle recognition, making it suitable for smart city surveillance. Fig. 11 illustrates its modular design, with 3 × 3 convolutions, reduction cells for downsampling, and normal cells for feature refinement, enabling accurate analysis across diverse vehicle shapes and positions.

Figure 11: NASNet architecture for vehicle classification

4 Experimental Settings and Analysis

This study used a system with an AMD Ryzen 7 5800H processor (3.20 GHz), 32 GB RAM, and an AMD Radeon RX 6700M GPU with 8 GB VRAM. Experiments were conducted on two aerial datasets, VAID and UAVDT, chosen for their diverse traffic densities, urban landscapes, and varying illumination and weather conditions, ensuring a comprehensive performance evaluation. The proposed framework was compared to state-of-the-art methods, with performance assessed using various evaluation metrics. The discussion covers datasets, experimental methods, and detailed quantitative and qualitative results.

The VAID dataset contains 6000 aerial images of vehicles sorted into eight vehicle types: sedan, pickup, bus, trailer, minibus, truck, cement truck, and car. The images were captured by camera-equipped drones flying at an altitude of 90 to 95 meters, recording high-definition frames of 2720 × 1530 pixels at 23.98 frames per second. The data cover multiple lighting scenarios and traffic volumes. Data were collected at ten sites in southern Taiwan, spanning urban settings, suburban areas, and university campuses, to provide diverse evaluation conditions for the models [16].

The UAVDT dataset serves as a valuable benchmark for evaluating aerial image-based detection, classification, and tracking methods. It comprises 100 video segments, totaling approximately 80,000 frames, captured from UAVs. The dataset covers a wide range of challenging conditions, including varying weather, illumination levels, and occlusion. Videos are recorded at a resolution of 1080 × 540 pixels and 30 frames per second, with annotations provided for multiple object categories, including vehicles and pedestrians. The recorded scenes span several road types, such as arterial roads, highways, intersections, and urban squares.

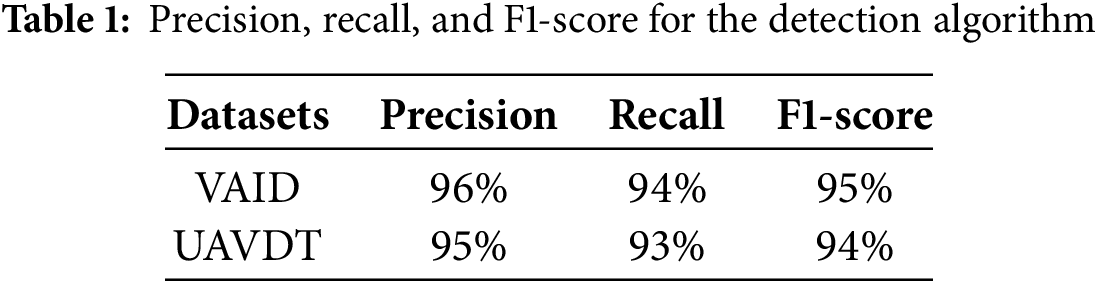

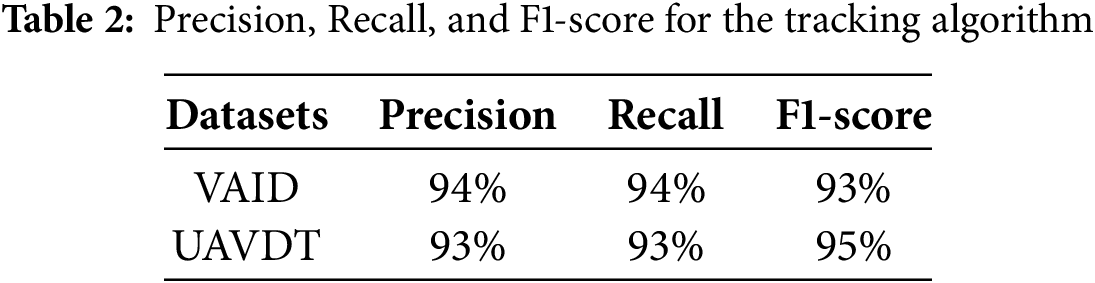

The proposed system was evaluated on two challenging benchmark datasets, VAID and UAVDT, to assess its performance across diverse aerial traffic scenarios. To ensure statistical reliability and reduce the effect of random variation, each experiment was run independently five times, and we report the mean over these runs. Tables 1 and 2 report precision, recall, and F1-score for the vehicle detection module, showing that the system maintains consistently high performance across scenarios.
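The detection metrics follow the usual definitions, precision P = TP/(TP + FP), recall R = TP/(TP + FN), and F1 = 2PR/(P + R), and the reported figures are means over five independent runs. The sketch below computes both; the per-run counts in the example are made-up placeholders, not values from Tables 1 or 2, and reporting the sample standard deviation next to the mean is our suggestion rather than the paper’s protocol.

```python
import statistics


def prf1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall, and F1 from detection counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def summarize_runs(runs):
    """runs: list of (tp, fp, fn) per independent run -> {metric: (mean, sample std)}.

    Needs at least two runs, because the sample standard deviation is undefined for one.
    """
    per_run = [prf1(*counts) for counts in runs]
    names = ("precision", "recall", "f1")
    return {name: (statistics.mean(vals), statistics.stdev(vals))
            for name, vals in zip(names, zip(*per_run))}
```

For example, `summarize_runs([(90, 10, 5), (92, 8, 6), (88, 12, 4), (91, 9, 5), (89, 11, 6)])` gives a mean precision of 0.9 across the five hypothetical runs.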

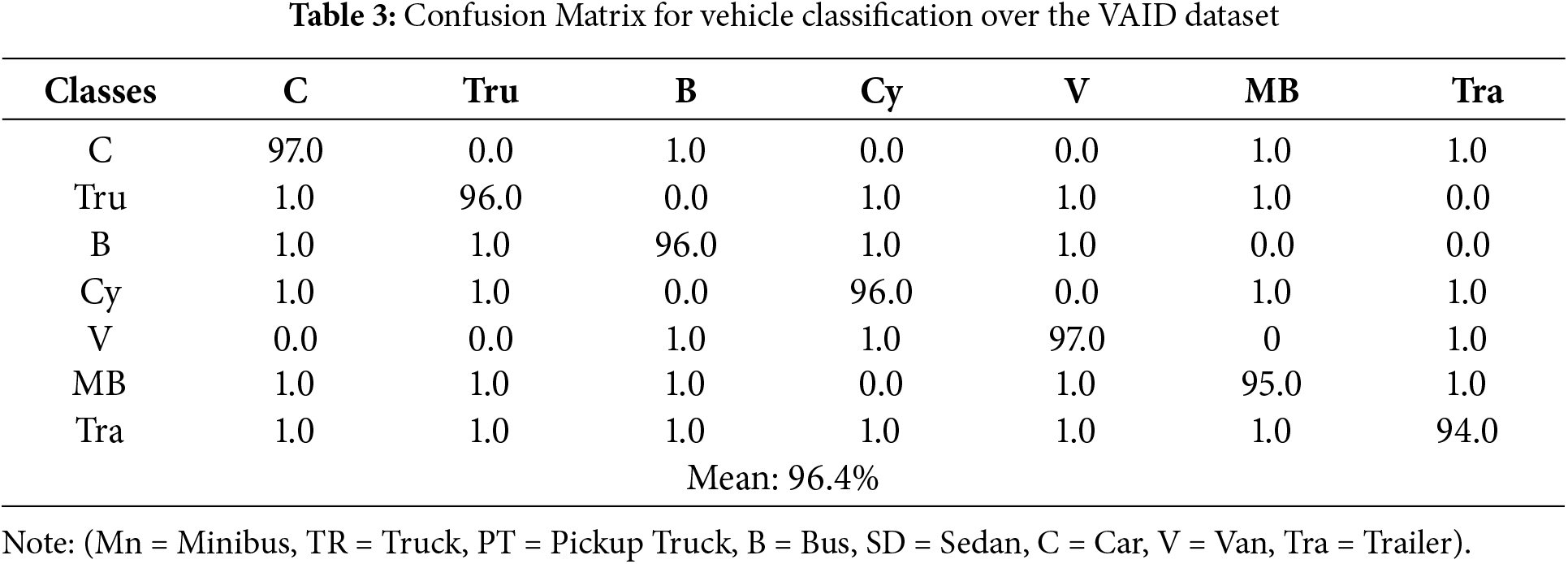

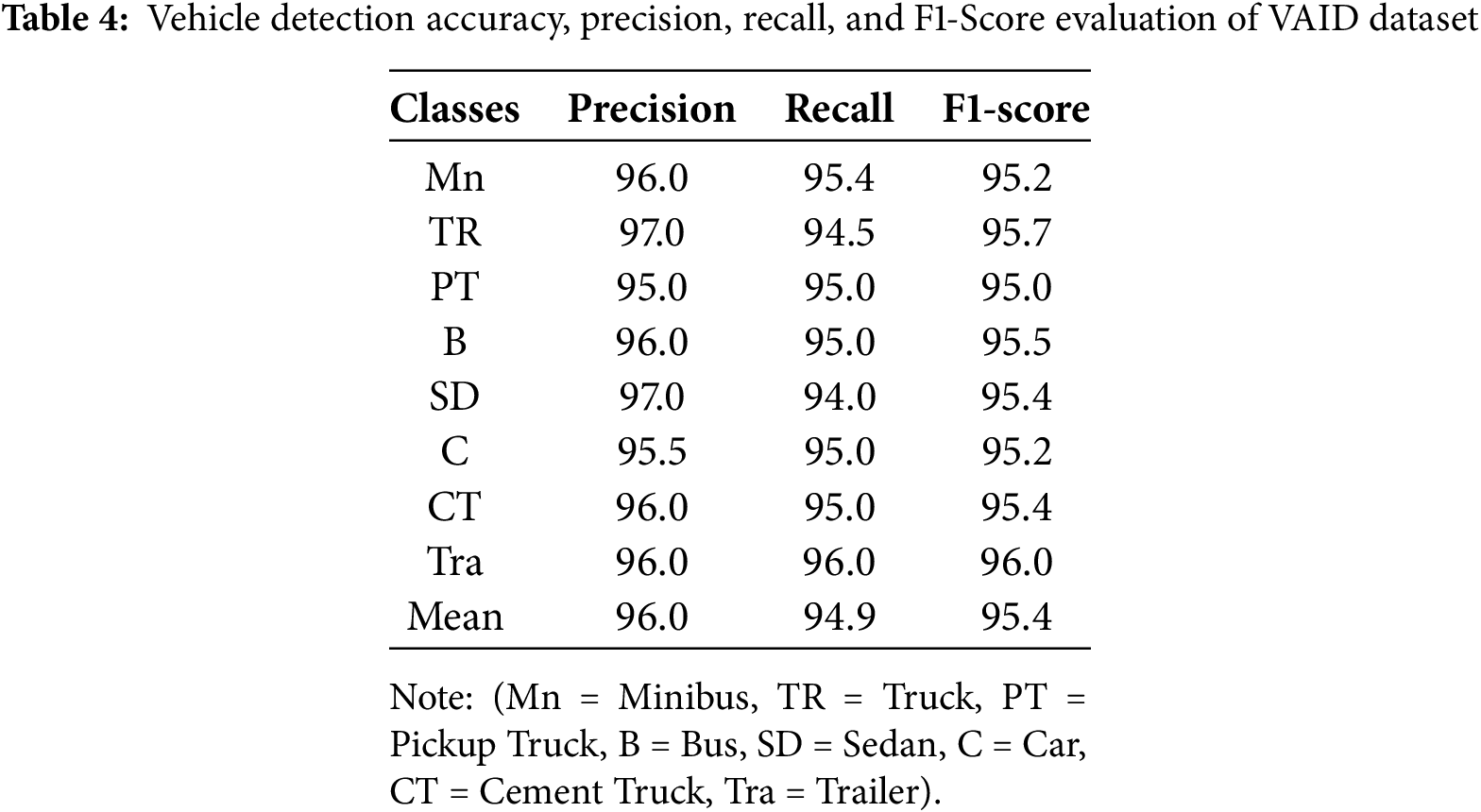

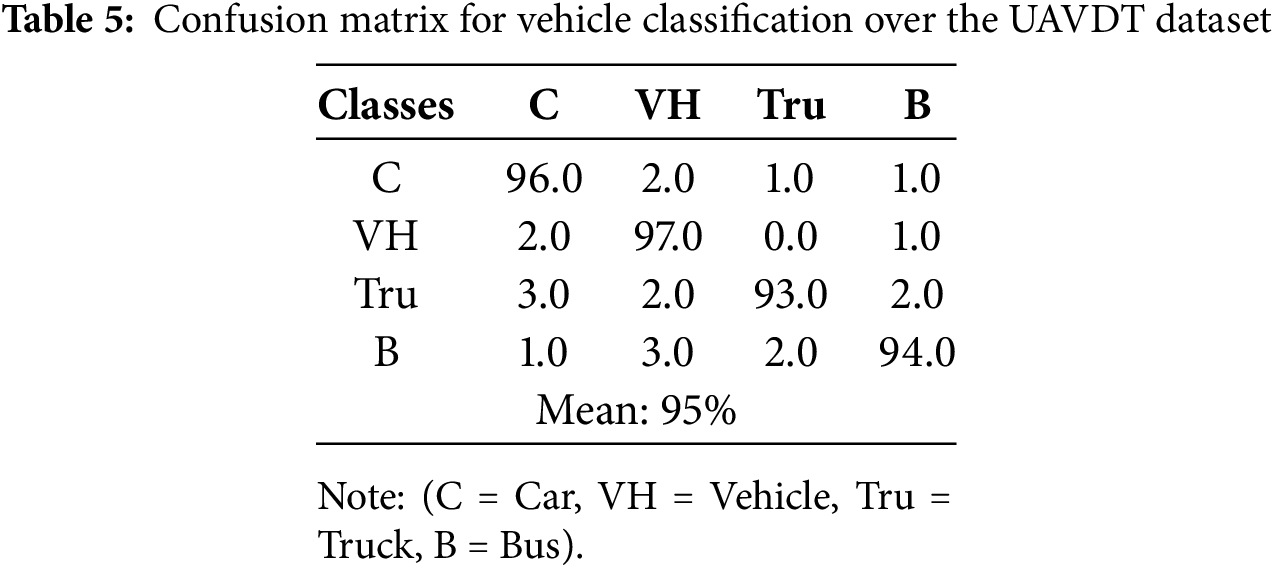

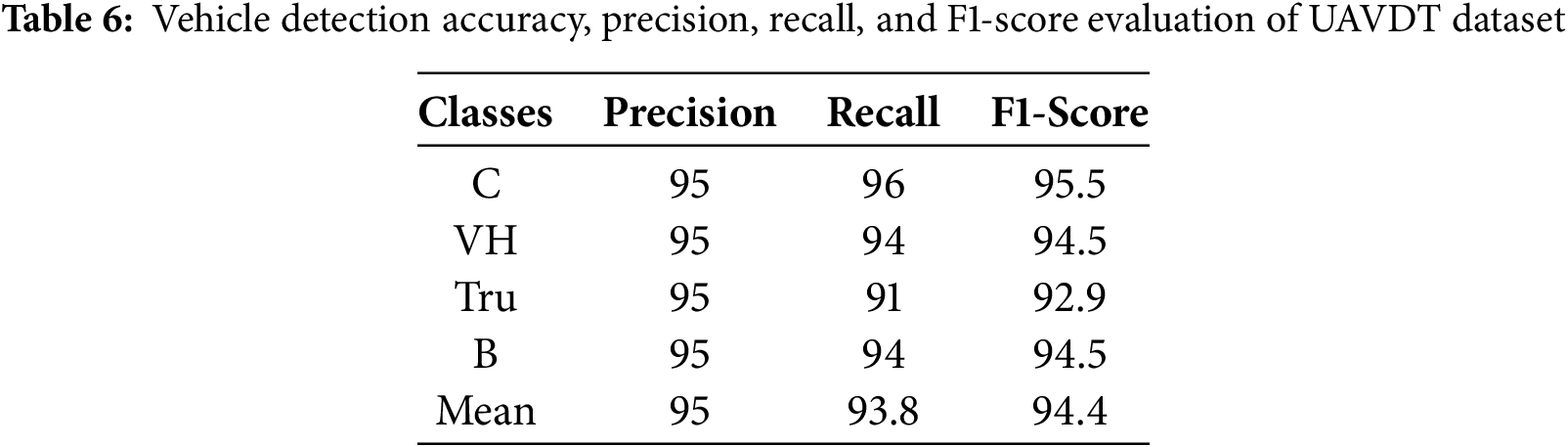

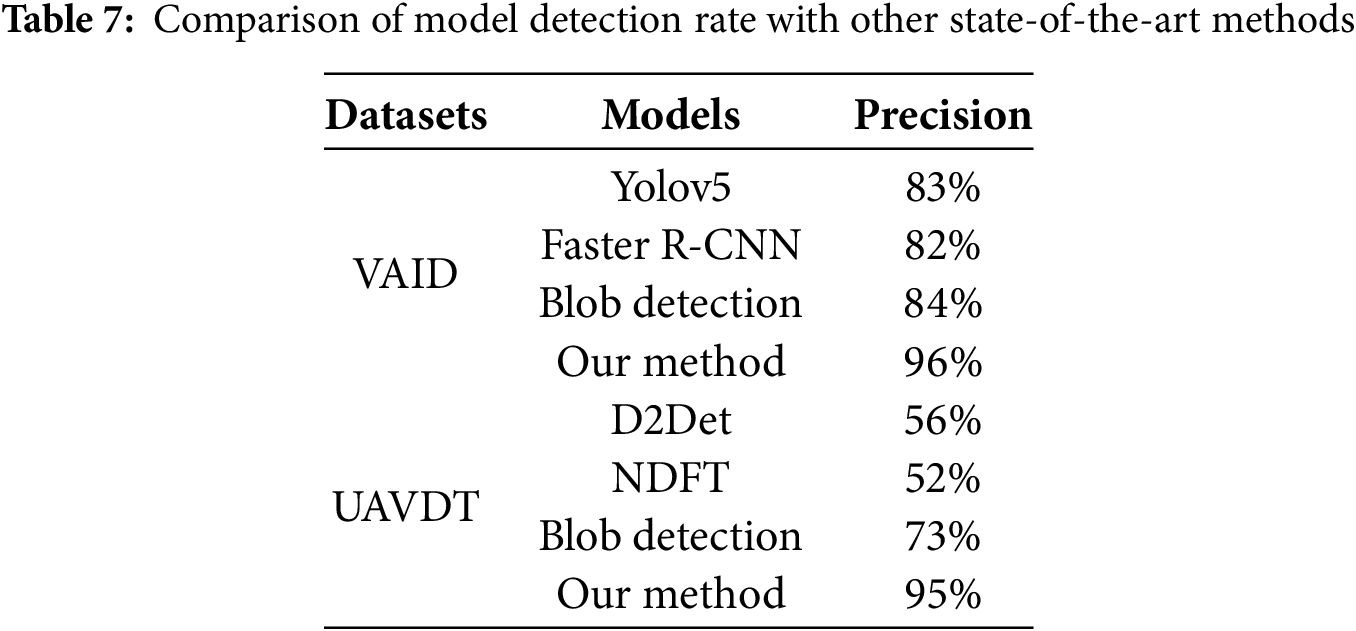

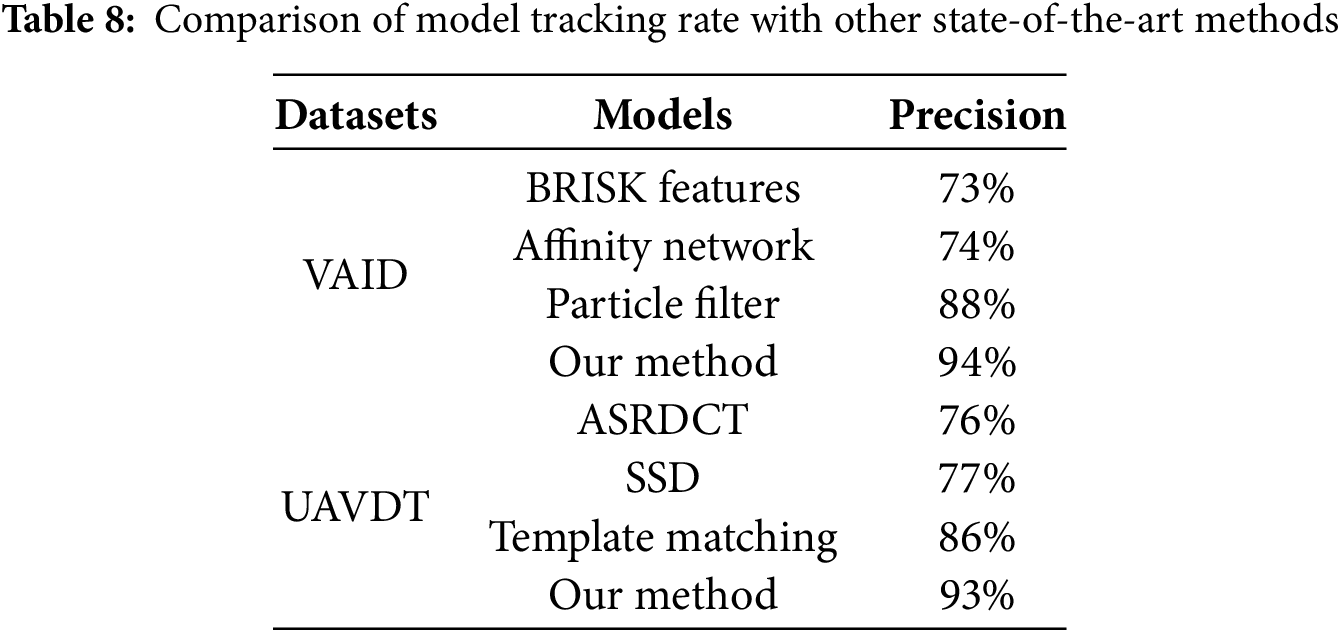

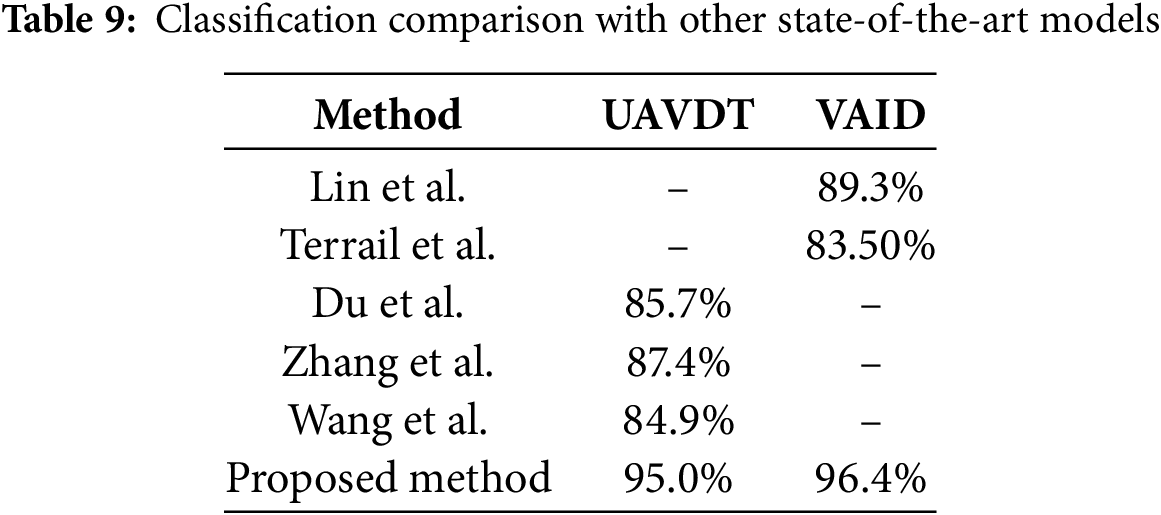

Table 3 shows the confusion matrix for vehicle classification on the VAID dataset, while Table 4 reports detection metrics including accuracy, precision, recall, and F1-score. Similarly, Table 5 presents the confusion matrix for UAVDT, and Table 6 provides the corresponding detection metrics, demonstrating the model’s adaptability. Table 7 compares classification performance with state-of-the-art methods, and Table 8 compares tracking performance across models. Finally, Table 9 contrasts classification results on VAID and UAVDT, highlighting the method’s generalizability and effectiveness for traffic surveillance.
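Per-class metrics of the kind reported alongside a confusion matrix can be derived directly from it. The NumPy sketch below assumes rows index the true class and columns the predicted class (the layout of Tables 3 and 5 is not visible here, so this convention is an assumption) and returns per-class precision, recall, F1, and overall accuracy.

```python
import numpy as np


def per_class_metrics(cm):
    """cm[i, j] = number of samples of true class i predicted as class j (assumed layout)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                                        # correctly classified per class
    precision = tp / np.maximum(cm.sum(axis=0), 1e-12)      # column sums = predicted counts
    recall = tp / np.maximum(cm.sum(axis=1), 1e-12)         # row sums = true counts
    f1 = 2 * precision * recall / np.maximum(precision + recall, 1e-12)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy
```

Transposing the matrix swaps precision and recall, so the row/column convention must match the table being read.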

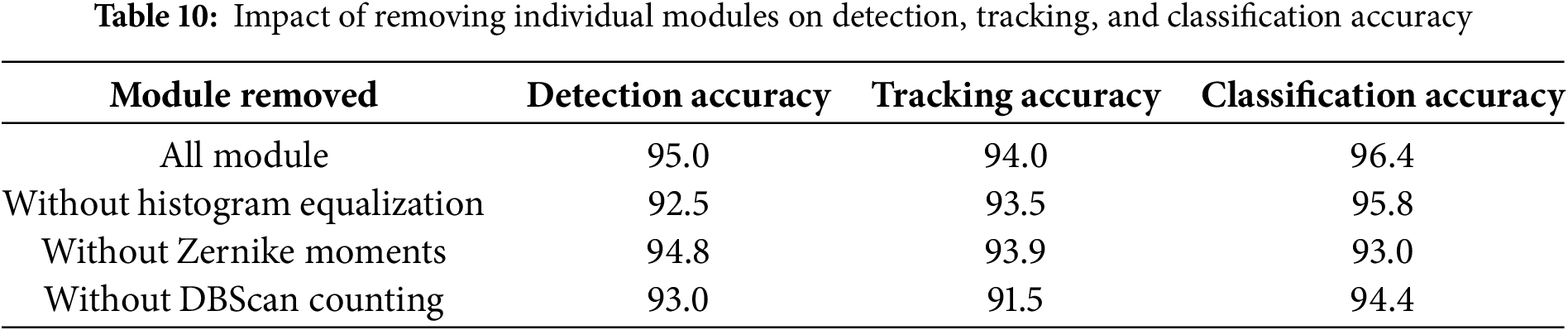

To evaluate the contribution of each key module in the proposed framework, we performed ablation experiments by removing individual components and measuring the impact on detection, tracking, and classification accuracy. The results are summarized in Table 10.

The ablation study shows that removing any of the modules decreases performance. Specifically, removing histogram equalization reduces detection and classification accuracy, indicating its role in improving image quality and feature extraction. Excluding Zernike moments causes a noticeable drop in classification accuracy, confirming their effectiveness in capturing important shape features. Finally, removing DBSCAN counting reduces tracking accuracy, especially in dense traffic, as the clustering method aids precise vehicle counting and tracking.

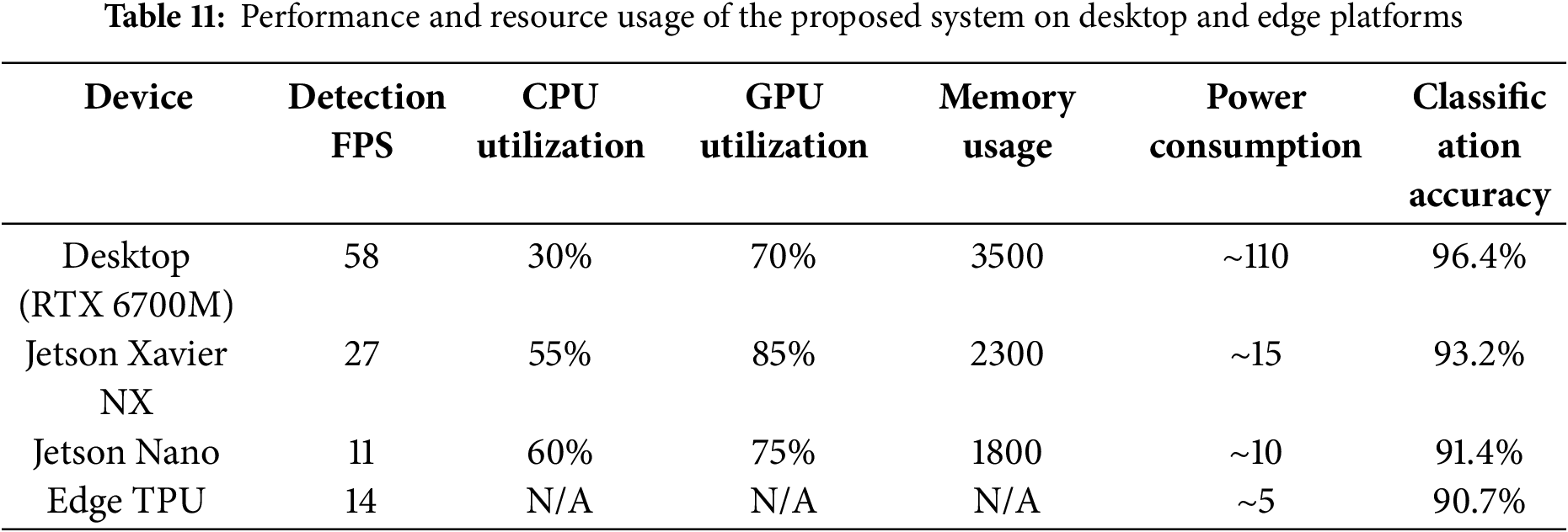

4.4 Edge Deployment Feasibility and Real-World UAV Integration

To demonstrate the practical deployment potential of our vehicle analysis framework for UAV-based smart city applications, we evaluated it on edge computing platforms commonly used in UAV systems: the NVIDIA Jetson Nano, Jetson Xavier NX, and Google Coral Edge TPU. Table 11 summarizes the performance and resource utilization of our system on desktop and embedded platforms.

The slight accuracy reduction observed on edge devices (3%–5%) results primarily from model quantization and input resolution reduction aimed at maintaining acceptable real-time processing speeds. Nevertheless, the framework sustains robust detection, tracking, and classification performance, suitable for UAV-based traffic monitoring and smart city applications. These results validate the practical potential of our system to operate in real-time on power-constrained embedded platforms typical for UAV deployment. An inference speed of 11–27 FPS on Jetson devices supports continuous monitoring while balancing power consumption and hardware limitations.
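To make the quantization effect concrete, the sketch below applies symmetric per-tensor int8 quantization to a weight array: each value is stored as round(w/s) with scale s = max|w|/127, so the reconstruction error per value is at most s/2. This is a generic illustration of where a small accuracy drop comes from, not the quantization scheme or toolchain used in the experiments, which the text does not specify.

```python
import numpy as np


def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor int8 quantisation: w ~= q * scale with q in [-127, 127]."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate real values."""
    return q.astype(np.float64) * scale
```

Every weight is perturbed by up to half a quantization step; these small errors accumulate through many layers, which is consistent with the few-percent accuracy loss observed on the edge devices.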

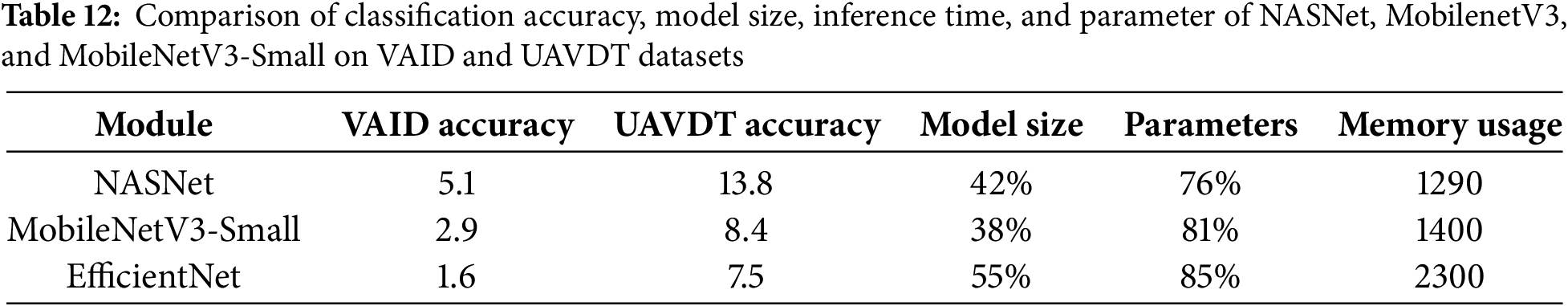

4.5 Lightweight Classifier Comparison

To evaluate the trade-off between classification accuracy and computational efficiency, we compared the proposed NASNet-based classifier with lightweight alternatives: MobileNetV3-Small and EfficientNet-Lite0. These models are widely adopted for deployment on edge and mobile devices due to their efficient architecture and reduced parameter count. All models were trained and tested under identical conditions using the same feature extraction pipeline on the VAID and UAVDT datasets. Table 12 presents the results, including classification accuracy, model size, inference time per image, and number of parameters.

These results indicate that while NASNet yields the highest classification accuracy, MobileNetV3-Small offers 70% faster inference with ~2.3% lower accuracy, making it suitable for scenarios where speed and hardware constraints outweigh marginal accuracy differences. EfficientNet-Lite0 offers a middle ground with better accuracy than MobileNetV3 and reasonable latency.

The proposed aerial traffic surveillance system demonstrates strong performance on the VAID and UAVDT datasets, yet several limitations remain. The framework currently focuses solely on vehicle detection, classification, tracking, and counting, omitting critical urban elements such as pedestrians, cyclists, and infrastructure. Additionally, the limited field of view restricts its suitability for fully automated smart city systems requiring comprehensive situational awareness. In highly congested scenes, overlapping vehicles can cause minor declines in tracking accuracy, though ByteTrack and Mask2Former maintain overall robustness. Furthermore, standard aerial image resolutions limit accurate detection of small or distant vehicles, a challenge that could be addressed by using ultra-high-resolution drone imagery. Another important consideration is potential dataset bias. The VAID dataset may over-represent certain vehicle classes and lacks diverse weather conditions, which could impact the system’s generalizability to extreme or varied environments. Annotation quality and occlusion challenges inherent in aerial datasets may also affect detection and tracking results. Addressing these issues will require future research focusing on multi-class detection frameworks, dynamic occlusion handling, and adaptive processing capable of managing diverse image qualities. Such advances will improve the system’s real-world applicability and performance.

This study presents an integrated deep learning framework for aerial traffic surveillance, combining adaptive histogram equalization, Mask2Former segmentation, YOLOv11 detection, ByteTrack tracking, DBSCAN counting, and hybrid CNN-Zernike moment feature extraction. Extensive evaluation on the VAID and UAVDT datasets demonstrates the system’s strong accuracy, with detection rates around 96%, tracking accuracy near 94%, and classification accuracy exceeding 96% on VAID, with comparable results on UAVDT. Despite this, challenges remain in detecting smaller objects, handling occlusions, and expanding to other urban classes such as pedestrians and cyclists. Future work will focus on enhancing detection capabilities across multiple classes, incorporating dynamic occlusion management, and leveraging ultra-high-resolution imagery to improve fine-grained detection. Real-time edge deployment optimization and UAV operational challenges, including payload constraints, limited battery life, communication bandwidth, and environmental factors, will also be addressed. Ongoing efforts include model compression, adaptive frame rate control, and efficient communication protocols. Planned UAV flight tests will validate system robustness under real-world conditions, supported by hardware-software co-design for seamless integration. These steps will help advance scalable, adaptable, and resilient smart city traffic monitoring solutions.

Acknowledgement: Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R410), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding Statement: The APC was funded by the Open Access Initiative of the University of Bremen and the DFG via SuUB Bremen. The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through Large Group Project under grant number (RGP2/367/46). This research is supported and funded by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R410), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author Contributions: Study conception and design: Ghulam Mujtaba and Wenbiao Liu; data collection: Mohammed Alshehri and Yahya AlQahtani; analysis and interpretation of results: Nouf Abdullah Almujally and Hui Liu; draft manuscript preparation: Ghulam Mujtaba and Hui Liu. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: All datasets used in this study are publicly available.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Gu D, Andreev K, Dupre ME. Major trends in population growth around the world. China CDC Wkly. 2021;3(28):604–13. doi:10.46234/ccdcw2021.160.

2. Bhaskar PK, Yong SP. Image processing based vehicle detection and tracking method. In: Proceedings of the 2014 International Conference on Computer and Information Sciences (ICCOINS); 2014 Jun 3–5; Kuala Lumpur, Malaysia. p. 1–5. doi:10.1109/ICCOINS.2014.6868357.

3. Chen X, Meng Q. Robust vehicle tracking and detection from UAVs. In: Proceedings of the 2015 7th International Conference of Soft Computing and Pattern Recognition; 2015 Nov 13–15; Fukuoka, Japan. p. 241–6. doi:10.1109/SOCPAR.2015.7492814.

4. Yusuf MO, Hanzla M, Rahman H, Sadiq T, Mudawi NA, Almujally NA, et al. Enhancing vehicle detection and tracking in UAV imagery: a pixel labeling and particle filter approach. IEEE Access. 2024;12(11):72896–911. doi:10.1109/ACCESS.2024.3401253.

5. Wang L, Chen F, Yin H. Detecting and tracking vehicles in traffic by unmanned aerial vehicles. Autom Constr. 2016;72(11):294–308. doi:10.1016/j.autcon.2016.05.008.

6. Won M. Intelligent traffic monitoring systems for vehicle classification: a survey. IEEE Access. 2020;8:73340–58. doi:10.1109/ACCESS.2020.2987634.

7. Hamzenejadi MH, Mohseni H. Real-time vehicle detection and classification in UAV imagery using improved YOLOv5. In: Proceedings of the 2022 12th International Conference on Computer and Knowledge Engineering (ICCKE); 2022 Nov 17–18; Mashhad, Iran. p. 231–6. doi:10.1109/ICCKE57176.2022.9960099.

8. Mustafa NE, Alizadeh F. YOLO-based approach for multiple vehicle detection and classification using UAVs in the Kurdistan Region of Iraq. Int J ITS Res. 2025;23(2):747–60. doi:10.1007/s13177-025-00479-8.

9. Bakirci M. Advanced aerial monitoring and vehicle classification for intelligent transportation systems with YOLOv8 variants. J Netw Comput Appl. 2025;237(B):104134. doi:10.1016/j.jnca.2025.104134.

10. Ramamoorthy M, Qamar S, Manikandan R, Jhanjhi NZ, Masud M, AlZain MA. Earlier detection of brain tumor by pre-processing based on histogram equalization with neural network. Healthcare. 2022;10(7):1218. doi:10.3390/healthcare10071218.

11. Cheng B, Choudhuri A, Misra I, Kirillov A, Girdhar R, Schwing AG. Mask2Former for video instance segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Los Angeles, California. arXiv:2112.10764. 2021.

12. Alif MAR. YOLOv11 for vehicle detection: advancements, performance, and applications in intelligent transportation systems. arXiv:2410.22898. 2024.

13. Cong R, Wang Z, Wang Z. Enhanced ByteTrack vehicle tracking algorithm for addressing occlusion challenges. In: Proceedings of the 2024 International Conference on Computational Vision and Robotics (ICCVR); 2025 Apr 1; Cham, Switzerland. p. 110–21. doi:10.1007/978-3-031-85952-6_11.

14. Sun D, Li B, Qian Z. Research of vehicle counting based on DBSCAN in video analysis. In: Proceedings of the 2013 IEEE International Conference on Green Computing and Communications and IEEE Internet of Things and IEEE Cyber, Physical and Social Computing; 2013 Aug 20–23; Beijing, China. p. 1523–7. doi:10.1109/GreenCom-iThings-CPSCom.2013.270.

15. Rao PB, Prasad DV, Kumar CP. Feature extraction using Zernike moments. Int J Latest Trends Eng Technol. 2013;2(2):228–34.

16. Jogin M, Madhulika MS, Divya GD, Meghana RK, Apoorva S. Feature extraction using convolution neural networks (CNN) and deep learning. In: Proceedings of the 2018 3rd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT); 2018 May 18–19; Bangalore, India. p. 2319–23. doi:10.1109/RTEICT42901.2018.9012507.

17. Song FF, Guo Z, Mei D. Feature selection using principal component analysis. In: Proceedings of the 2010 International Conference on System Science, Engineering Design and Manufacturing Informatization; 2010 Aug 12–14; Yichang, China. p. 27–30. doi:10.1109/ICSEM.2010.14.

18. Seo Y, Shin KS. Image classification for vehicle type dataset using state-of-the-art convolutional neural network architecture. In: Proceedings of the 2018 Artificial Intelligence and Cloud Computing Conference; 2018 Dec 21; Tokyo, Japan. p. 139–44. doi:10.1145/3299819.3299822.

Copyright © 2026 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.