Open Access

REVIEW

Advanced Signal Processing and Modeling Techniques for Automotive Radar: Challenges and Innovations in ADAS Applications

1 Department of Electronics and Communication Engineering, Sikkim Manipal Institute of Technology, Majhitar, Sikkim Manipal University, Gangtok, 737136, Sikkim, India

2 Department of Computer Science and Engineering, Sikkim Manipal Institute of Technology, Majhitar, Sikkim Manipal University, Gangtok, 737136, Sikkim, India

3 Department of Electronics and Communication Engineering, Indian Institute of Information Technology, Kalyani, 741235, West Bengal, India

4 Department of Electrical and Electronics Engineering, Faculty of Engineering, University of Lagos, Akoka, Lagos, 100213, Nigeria

5 Bachelor’s Program of Artificial Intelligence and Information Security, Fu Jen Catholic University, 510 Zhongzheng Road, New Taipei City, 242062, Taiwan

* Corresponding Authors: Samarendra Nath Sur. Email: ; Chun-Ta Li. Email:

# These authors contributed equally to this work

Computer Modeling in Engineering & Sciences 2025, 144(1), 83-146. https://doi.org/10.32604/cmes.2025.067724

Received 10 May 2025; Accepted 02 July 2025; Issue published 31 July 2025

Abstract

Automotive radar has emerged as a critical component in Advanced Driver Assistance Systems (ADAS) and autonomous driving, enabling robust environmental perception through precise range-Doppler and angular measurements. It plays a pivotal role in enhancing road safety by supporting accurate detection and localization of surrounding objects. However, real-world deployment of automotive radar faces significant challenges, including mutual interference among radar units and dense clutter due to multiple dynamic targets, which demand advanced signal processing solutions beyond conventional methodologies. This paper presents a comprehensive review of traditional signal processing techniques and recent advancements specifically designed to address contemporary operational challenges in automotive radar. Emphasis is placed on direction-of-arrival (DoA) estimation algorithms such as Bartlett beamforming, Minimum Variance Distortionless Response (MVDR), Multiple Signal Classification (MUSIC), and Estimation of Signal Parameters via Rotational Invariance Techniques (ESPRIT). Among these, ESPRIT offers superior resolution for multi-target scenarios with reduced computational complexity compared to MUSIC, making it particularly advantageous for real-time applications. Furthermore, the study evaluates state-of-the-art tracking algorithms, including the Kalman Filter (KF), Extended KF (EKF), Unscented KF, and Bayesian filter. EKF is especially suitable for radar systems due to its capability to linearize nonlinear measurement models. The integration of machine learning approaches for target detection and classification is also discussed, highlighting the trade-off between the simplicity of implementation in K-Nearest Neighbors (KNN) and the enhanced accuracy provided by Support Vector Machines (SVM). A brief overview of benchmark radar datasets, performance metrics, and relevant standards is included to support future research. 
The paper concludes by outlining ongoing challenges and identifying promising research directions in automotive radar signal processing, particularly in the context of increasingly complex traffic scenarios and autonomous navigation systems.

Graphic Abstract

Keywords

Next-generation vehicles are equipped with Advanced Driver Assistance Systems (ADAS) designed to enhance driving safety while ensuring a safe and stress-free journey [1]. According to the report provided by the World Health Organization (WHO), road traffic accidents resulted in approximately 1.19 million fatalities in 2023 [2]. The high rate of casualties, significant financial losses, and the growing demand for intelligent safety systems have driven manufacturers to advance autonomous driving technologies [3].

In fully automated vehicles, human drivers are replaced by intelligent systems responsible for both sensing and decision-making. The ADAS framework integrates multiple sensors, including radar, LiDAR, and cameras, to ensure reliable vehicle performance and improve driver assistance. Among these, radar is particularly effective for detecting the range and velocity of objects, processing data efficiently, and operating under challenging weather conditions. LiDAR offers high-range accuracy and superior angular resolution but is susceptible to adverse weather conditions and interference [4]. Cameras provide color distinction, high angular resolution, and accurate target classification but cannot measure velocity and range, and their performance is compromised in low-light and adverse weather conditions [5]. Given these limitations, automotive radar is the primary sensing modality for automated vehicles [6].

Radars were developed as military tools during and after World War II [7]. Over time, their applications expanded to include air traffic control, weather radar, ground-penetrating radar, guided-missile target location systems, and more. Automotive radar applications were first developed in the early 1970s as part of a German research program (NTO 49) aimed at reducing road accidents [8]. More recently, the European New Car Assessment Programme (Euro NCAP) for road safety requires functions such as Adaptive Cruise Control (ACC), Autonomous Emergency Braking (AEB), and Lane Change Assist (LCA). In [9], a semi-physical radar modeling technique is adopted to assess the accuracy of the probability density function of radar data, and the Radar Cross Section (RCS) values obtained are similar to the values used for global vehicle target validation in NCAP.

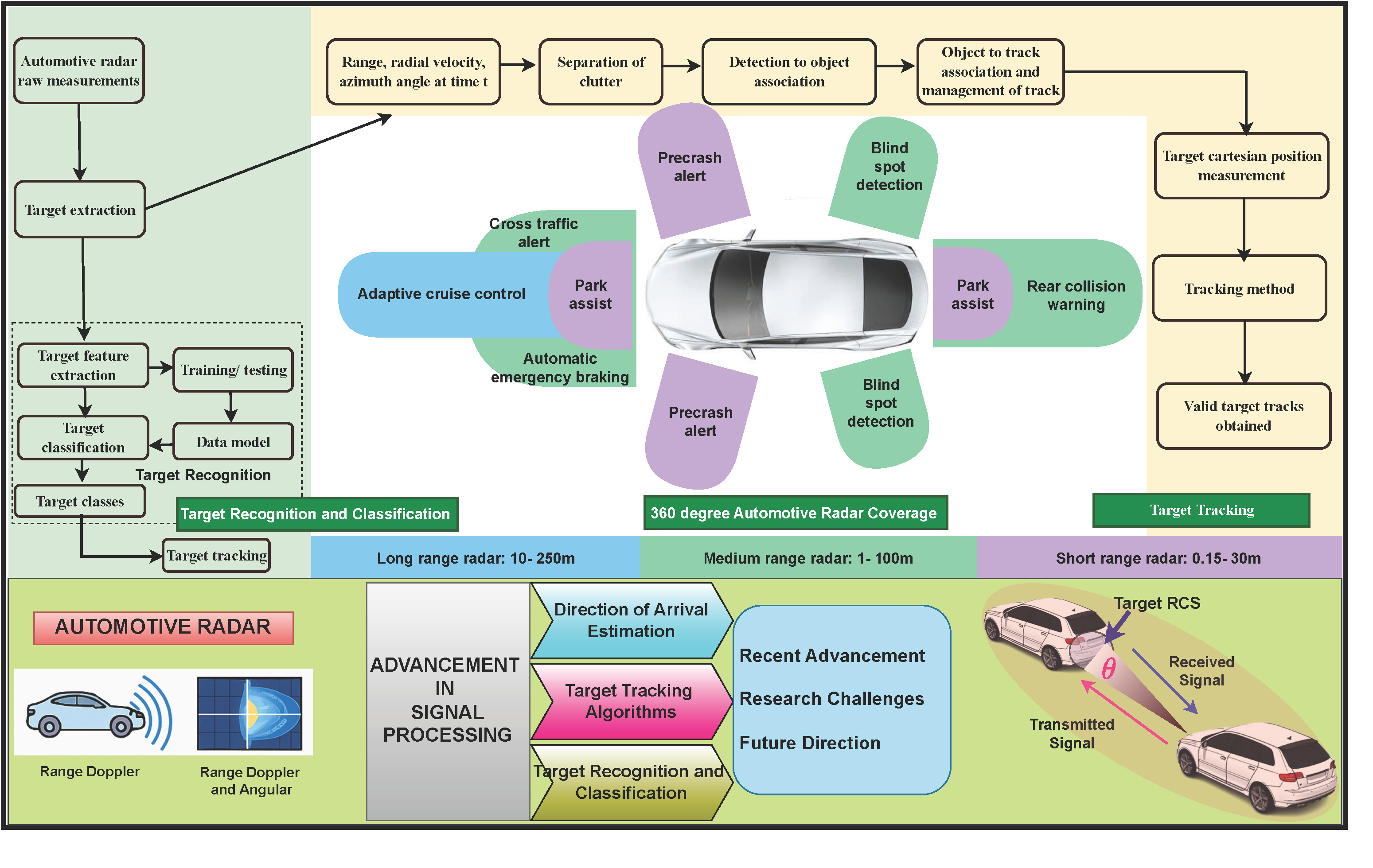

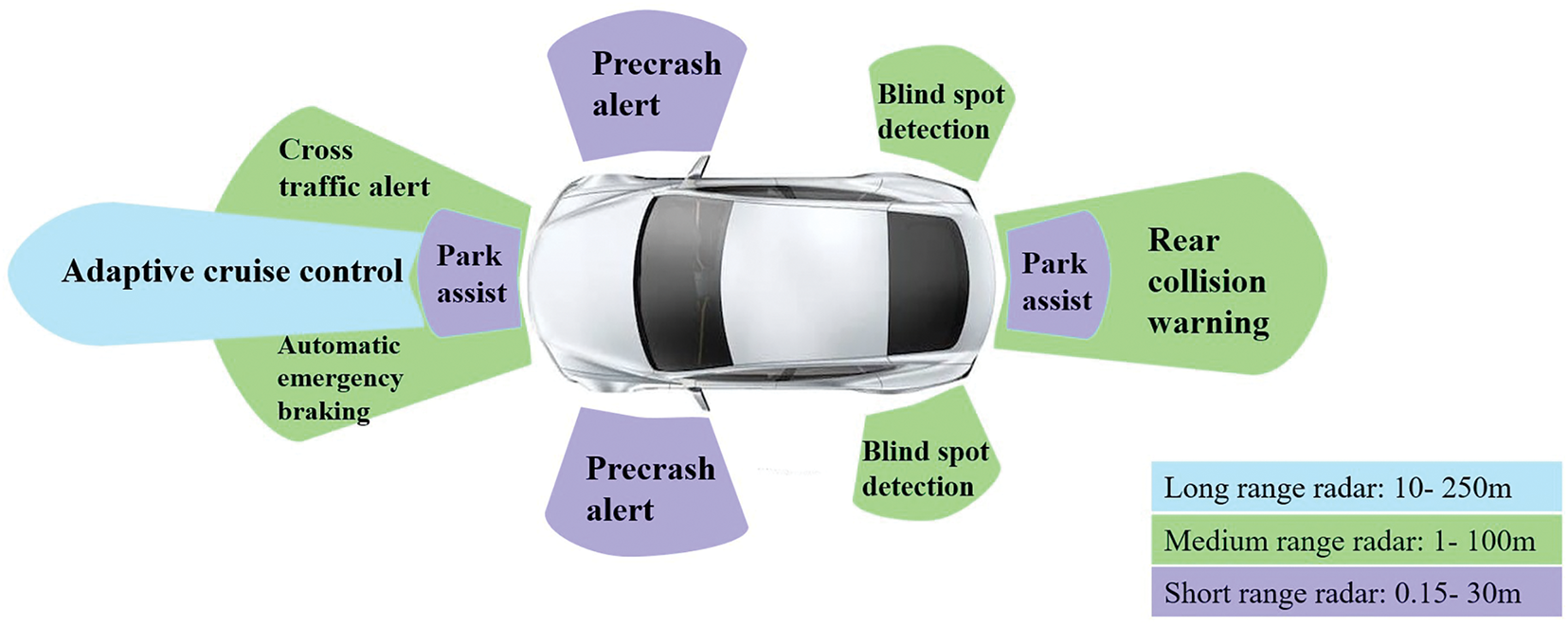

Automotive radars mostly operate in the 24 GHz and 77 GHz bands. The main advantages of the 77–81 GHz band are its 4 GHz bandwidth, which improves range resolution; its Doppler sensitivity, which improves velocity resolution; and its reduced antenna aperture, which makes the sensor easier to fit on vehicles. Automotive radars operating in the 24 GHz band are used for ultra-wideband applications. A planar grid antenna array arrangement for such a radar improves the antenna gain and impedance bandwidth [10]. Performance criteria of automotive radar include target resolution, range resolution, dynamic range in terms of velocity, and direction of arrival of the received signal. Fig. 1 shows 360-degree surround sensing by the radars of an autonomous car, along with their respective functions [11]. A Long-Range Radar (LRR) with a range of 10–250 m is mounted at the front of the vehicle and is suitable for ACC [12]. Medium-Range Radars (MRRs) with a range of 1–100 m are fitted at the front and rear and are used for Lane Change Assist and rear-collision warning. Short-Range Radars (SRRs) with a range of 0.15–30 m are fitted at the four corners of the car and are used for parking assist, obstacle detection, etc. [13].

Figure 1: Vehicle in 360° Automotive radar coverage for collision avoidance

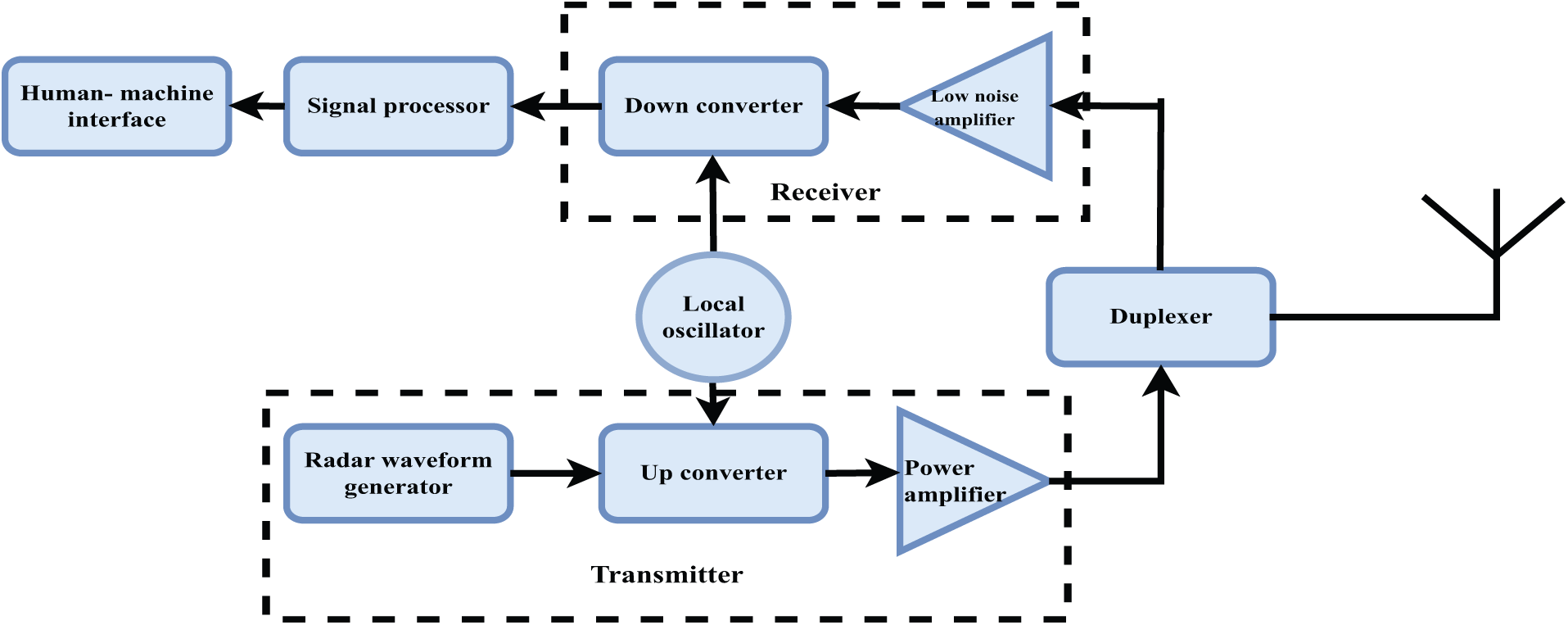

Automotive radar systems are generally composed of three main components: the transmitter, the receiver, and the signal processing subsystem. On the transmitter side, the antenna operates using a frequency-modulated continuous wave (FMCW) chirp waveform [14]. Signal processing at this stage involves generating a series of up-and-down chirps using a frequency-generating circuit, which are then transmitted via the antenna. The power radiated by the antenna is regulated by design constraints, which in turn influence the transmitter architecture. Notably, according to the radar range equation, the maximum detectable range is proportional to the fourth root of the transmitted power. The transmitter typically consists of a waveform generator, an up-converter, and a power amplifier. The waveform generator produces a predefined signal, either continuous or pulsed, at an intermediate frequency (IF). This signal is then converted to a higher radio frequency (RF) by the up-converter and subsequently amplified by a power amplifier with adjustable gain. The transmitted signal reflects off targets and returns to the radar system, where it is received and mixed with a copy of the transmitted signal, resulting in a beat frequency. The receiver must maximize the signal-to-noise ratio (SNR) and suppress unwanted signals and clutter. To achieve this, the receiver includes a low-noise amplifier (LNA) and a down-converter, which uses a local oscillator to convert the RF signal back to IF.

The signal processing subsystem plays a crucial role in extracting range and velocity information. It involves applying a Fourier Transform to the beat frequencies to perform range estimation and analyzing the Doppler-induced phase shifts across multiple chirps to measure target velocity. This is typically accomplished through a two-stage Fast Fourier Transform (FFT): a fast-time FFT for range estimation and a slow-time FFT for Doppler estimation, followed by beamforming techniques [15,16]. Direction of arrival (DOA) estimation is performed using array processing techniques, such as digital beamforming. Based on the extracted information, a target list is generated, enabling detection and analysis of target parameters. This is followed by stationary and dynamic target processing, wherein stationary targets undergo classification while moving targets are subject to tracking and classification. A high-level block diagram of the automotive radar signal processing chain is presented in Fig. 2.
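The two-stage FFT described above can be illustrated with a minimal sketch. It assumes an ideal, noiseless beat signal from a single point target and neglects the Doppler shift within a chirp; all radar parameters (`FC`, `T_CHIRP`, `SLOPE`, `FS`, `N_SAMPLES`, `N_CHIRPS`) and the function names are hypothetical values chosen for illustration, not taken from the reviewed works.

```python
import numpy as np

C = 3.0e8  # speed of light (m/s)

# Hypothetical radar parameters, chosen only for illustration
FC = 77e9                # carrier frequency (Hz)
T_CHIRP = 50e-6          # chirp repetition interval (s)
SLOPE = 300e6 / T_CHIRP  # chirp slope (Hz/s) for an assumed 300 MHz sweep
FS = 10e6                # ADC sampling rate (Hz)
N_SAMPLES = 256          # fast-time samples per chirp
N_CHIRPS = 128           # chirps per frame (slow time)


def simulate_beat_signal(r, v):
    """Ideal beat signal of one point target, shape (N_CHIRPS, N_SAMPLES)."""
    t_fast = np.arange(N_SAMPLES) / FS        # time within one chirp
    k = np.arange(N_CHIRPS)[:, None]          # chirp index (slow time)
    f_beat = 2.0 * SLOPE * r / C              # range-induced beat frequency
    f_dopp = 2.0 * v * FC / C                 # Doppler frequency
    phase = 2 * np.pi * (f_beat * t_fast[None, :] + f_dopp * k * T_CHIRP)
    return np.exp(1j * phase)


def range_doppler_map(beat):
    """Fast-time FFT (range) followed by slow-time FFT (Doppler)."""
    range_fft = np.fft.fft(beat, axis=1)
    return np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)


# Axes that map FFT bins to physical range and velocity
range_axis = np.arange(N_SAMPLES) * C * FS / (2.0 * SLOPE * N_SAMPLES)
vel_axis = np.fft.fftshift(np.fft.fftfreq(N_CHIRPS, d=T_CHIRP)) * C / (2.0 * FC)

rd = range_doppler_map(simulate_beat_signal(r=50.0, v=10.0))
dopp_idx, range_idx = np.unravel_index(np.argmax(np.abs(rd)), rd.shape)
r_est = range_axis[range_idx]
v_est = vel_axis[dopp_idx]
print(f"estimated range {r_est:.2f} m, velocity {v_est:.2f} m/s")
```

The estimates are quantized to the FFT bin spacing, so they match the simulated target only to within one range bin and one velocity bin. A practical chain would add windowing, noise, and a detector such as CFAR before reading off peaks.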

Figure 2: Automotive radar processing system

Operational hurdles for automotive radar include the following [17]:

1. In urban environments, automotive radar systems are significantly affected by multipath propagation, which arises from reflections off surrounding objects such as pedestrians, vehicles, road infrastructure, and animals. These objects exhibit varying Radar Cross Section (RCS), velocities, and movement patterns, necessitating high precision in target detection, localization, tracking, and classification. Multipath interference can introduce false target detections, which can adversely impact overall radar performance. Mitigating these effects requires advanced signal processing techniques, which in turn increase the computational complexity of the system.

2. In automotive radar systems, any object above the road surface that interferes with signal reception is classified as clutter. Clutter originating from nearby obstacles significantly influences the required suppression levels of antenna sidelobes, particularly in the elevation plane. Echoes received through sidelobes can, in some cases, exhibit greater power than returns from weaker targets captured by the main lobe, potentially causing signal interference. Consequently, the design of radar detectors capable of accurately identifying weak targets in the presence of strong clutter is essential to ensure robust and reliable system performance.

3. Automotive radar systems also encounter significant challenges due to interference, which can be broadly classified into three categories: self-interference, intra-vehicle cross-interference, and inter-vehicle cross-interference. Self-interference arises from reflections of the radar signal off the vehicle’s structure, such as the frame or radome, which can hinder the operation of SRR systems. Intra-vehicle cross-interference occurs when multiple radar units installed on the same vehicle have overlapping fields of view, leading to mutual signal disruption. Inter-vehicle cross-interference is induced by radar systems mounted on other vehicles in close proximity, with the severity of interference determined by the relative distance between vehicles and the characteristics of their transmitted waveforms. Addressing these interference sources is critical for maintaining the integrity and reliability of radar-based perception systems in automotive environments.

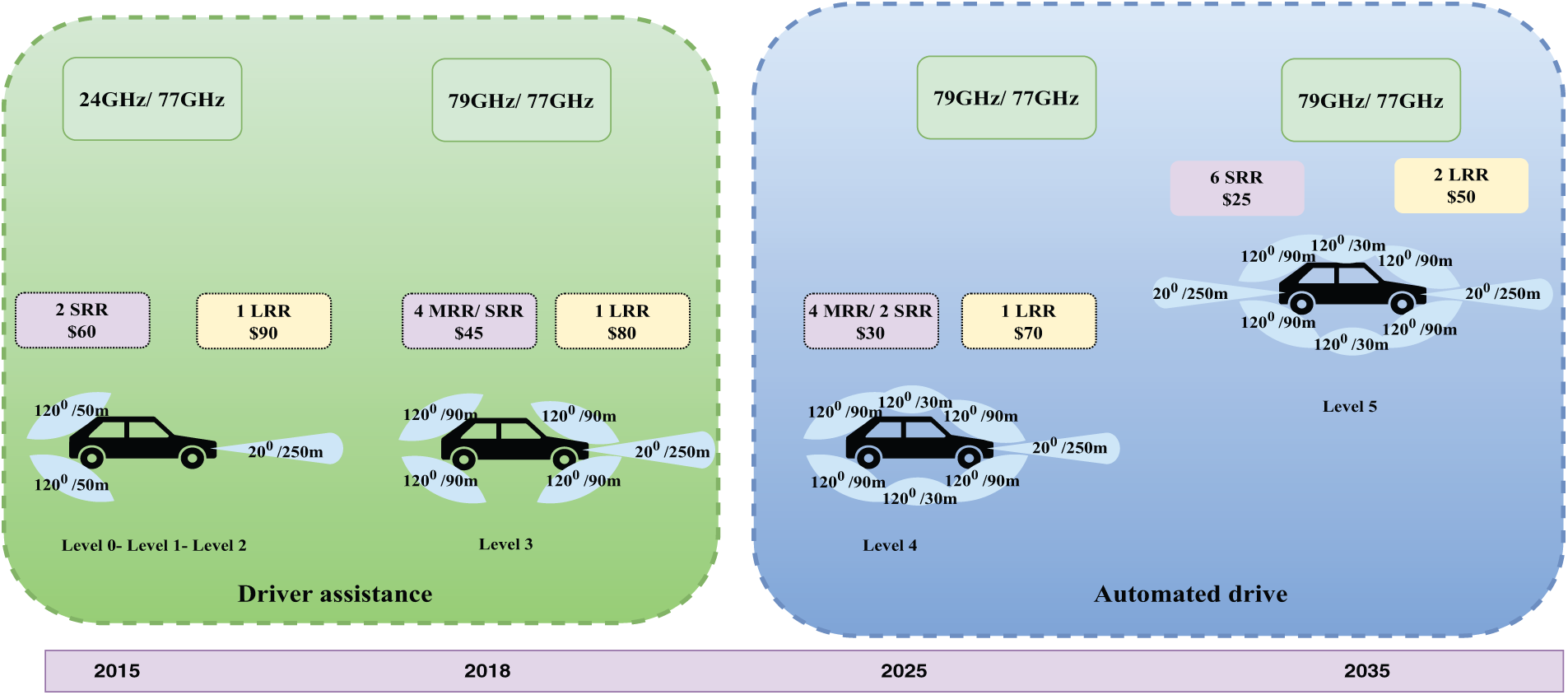

As stated before, the application areas of automotive radars include ACC and AEB, which support the process of vehicle automation. Building on such ADAS functions, the Society of Automotive Engineers (SAE) and the National Highway Traffic Safety Administration have standardized six levels of autonomous driving [18]:

1. Level 0: The driver performs all driving tasks without any automation.

2. Level 1: The automation system takes over either steering or acceleration, as in the Cross Traffic Assist function, while the driver monitors.

3. Level 2: The system takes over functions such as adaptive cruise control and brake assist, but the driver still monitors.

4. Level 3: Most tasks are automated, and the system informs the driver when necessary.

5. Level 4: The whole driving task is automated, and the human driver is notified only in undefined cases.

6. Level 5: Driving is fully automated with no driver intervention.

Fig. 3 presents the evolution of automotive Radar for ADAS applications and economic development [19].

Figure 3: Evolution of automotive radar for ADAS [19]

The contributions of this paper include:

1. A comprehensive overview of automotive radar signal processing techniques, including range and velocity estimation, has been presented. Additionally, a comparative analysis of various waveform types has been conducted, highlighting their respective advantages and limitations in the context of automotive applications. The study also examines different forms of interference encountered in radar systems. Furthermore, a comparative summary of existing review articles on automotive radar offers insight into the current state of research and emerging trends in the field.

2. A detailed analysis of target detection methods and various DOA estimation algorithms has been presented. Comparative evaluations of these algorithms are provided in tabular form, highlighting their respective advantages and limitations. This analysis facilitates a clearer understanding of the trade-offs involved in selecting appropriate DOA estimation techniques for automotive radar applications.

3. Various target tracking algorithms, as proposed in key contributions from existing literature, have been discussed. A comparative table is also included to illustrate the respective advantages and disadvantages of each algorithm, providing insight into their applicability and performance in automotive radar systems.

4. Target recognition and classification using Machine Learning (ML) algorithms has emerged as a significant area of research in automotive radar systems. Various algorithms currently under investigation have been discussed in detail, along with an analysis of their respective strengths and limitations.

5. Key research challenges in the field of automotive radar have also been outlined to support future efforts aimed at addressing these issues and advancing the state of the art.

6. Training ML algorithms requires a large radar dataset that contains a detailed description of the surrounding environment. In this work, important publicly available datasets are described concisely. Additionally, automotive radar evaluation metrics and global standards are provided.

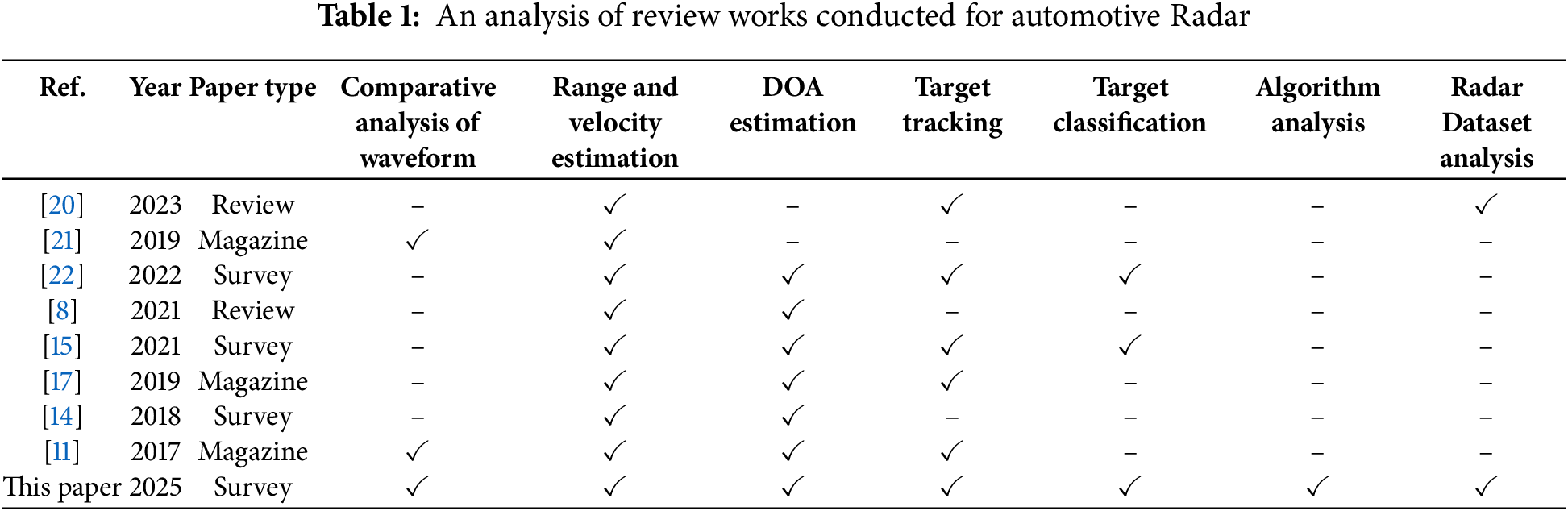

Table 1 presents a comparison between earlier review works on signal processing techniques for automotive radars and this work.

The remainder of this paper is organized as follows: Section 2 provides an overview of automotive radar systems, including fundamental mathematical formulations and commonly used radar waveforms. Section 3 presents a detailed analysis of existing research on target detection and Direction-of-Arrival (DOA) estimation techniques. Section 4 discusses various target-tracking methods explored in the literature. Section 5 reviews recent advances in target recognition and classification approaches. Section 6 outlines the key research challenges and potential future directions in the field of automotive radar. Section 7 presents an overview of the various automotive Radar databases available publicly for further research in this field, as well as the standards and parameter evaluation metrics for automotive Radar. Finally, the paper concludes with a summary of key findings.

2 Overview of Automotive Radar

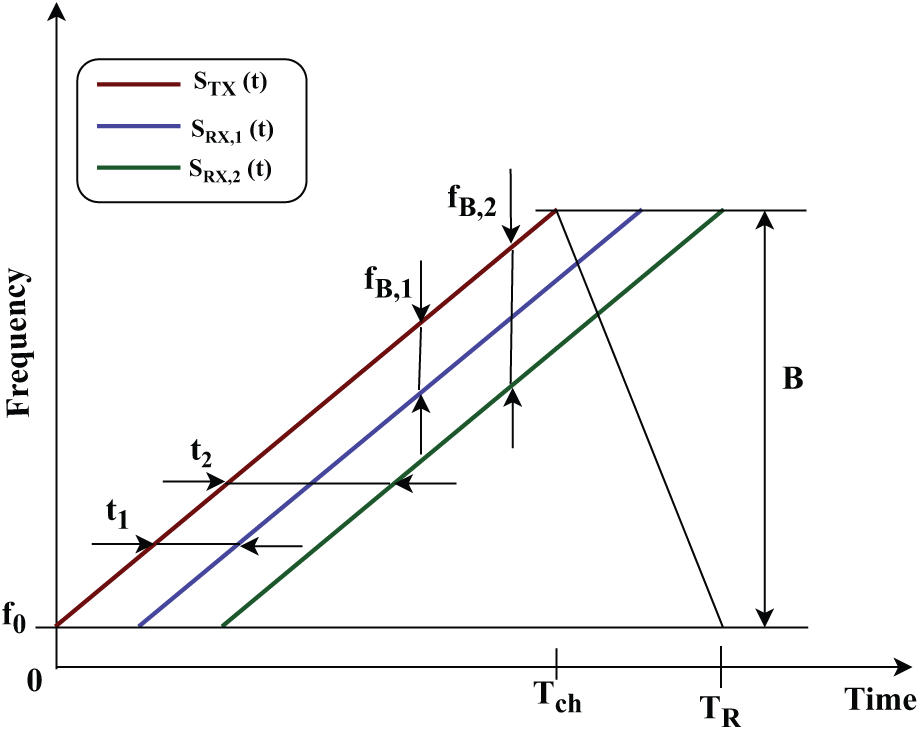

Modern automotive radar generally uses a frequency-modulated continuous wave (FMCW) chirp waveform, operating in the 24 GHz and 76–81 GHz frequency bands. To detect targets, a series of up-chirp and down-chirp signals is generated by a frequency-generating circuit such as a phase-locked loop (PLL) and transmitted through a transmit antenna [20]. This chirp waveform is presented in the time-frequency domain in Fig. 4, where the transmitted wave, received wave, and beat frequency are identified.

Figure 4: Time vs. frequency domain representation of FMCW radar

The transmitted signal

for,

A corresponding echo is reflected from the surroundings for each chirp incident on the targets. For simplicity, a target can be considered as a point target. The received signal is a time-delayed and attenuated form of the transmitted signal. The received signal is presented as,

where

At the receiver, the received signal is multiplied by the transmitted signal and then low-pass filtered to obtain the IF signal. The basic mathematical model for estimating the velocity and range of a moving target is derived by processing this IF signal, as follows:

in which,

Using this

Ambiguity arises when it is unclear whether a received echo originates from the most recent chirp or from an earlier one. The maximum unambiguous range is given by,

where

This shows that range resolution improves as the bandwidth increases.
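As a quick numerical check of the bandwidth dependence, the sketch below assumes the standard FMCW range-resolution expression, c/(2B), whose notation may differ from the expression used in this paper. The 4 GHz value corresponds to the 77–81 GHz band mentioned earlier; the 250 MHz value and the function name `range_resolution` are hypothetical, included only for comparison.

```python
C = 3.0e8  # speed of light (m/s)


def range_resolution(bandwidth_hz):
    """Standard FMCW range resolution c / (2B), in metres (assumed form)."""
    return C / (2.0 * bandwidth_hz)


# 4 GHz: full 77-81 GHz sweep; 250 MHz: hypothetical narrowband case
for bandwidth in (250e6, 4e9):
    res_cm = 100.0 * range_resolution(bandwidth)
    print(f"B = {bandwidth / 1e9:.2f} GHz -> range resolution {res_cm:.2f} cm")
```

Under this assumption, a sixteen-fold increase in sweep bandwidth shrinks the range cell by the same factor, from 60 cm to 3.75 cm.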

Radar measures the velocity of a target using the Doppler effect. Consider two targets at equal distances but moving in opposite directions with corresponding velocities

where

where

For the two targets considered above, the velocities are given as,

where

where

The position of a particular target is shown in a spherical coordinate system presented as (R,

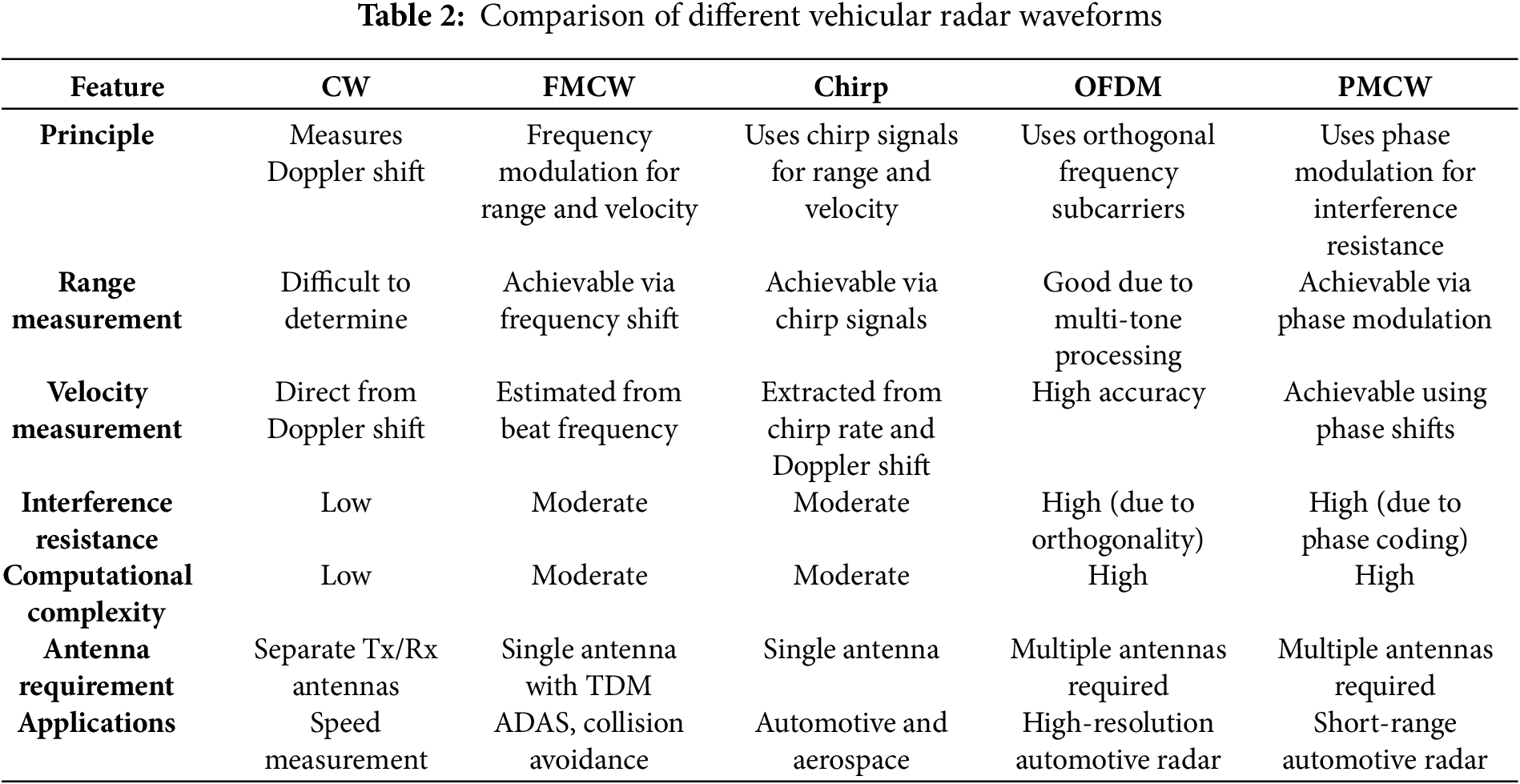

Automotive radar performance is evaluated based on several metrics, including velocity resolution, range resolution, angular resolution, and target detection probability. The choice of waveform has a significant impact on these performance parameters. Radar waveforms are generally categorized into continuous wave (CW), pulsed, and modulated types. Modulated waveforms comprise FMCW, Orthogonal Frequency Division Multiplexing (OFDM), and Phase Modulated Continuous Wave (PMCW) [11]. A detailed discussion of these waveform types is provided in the following.

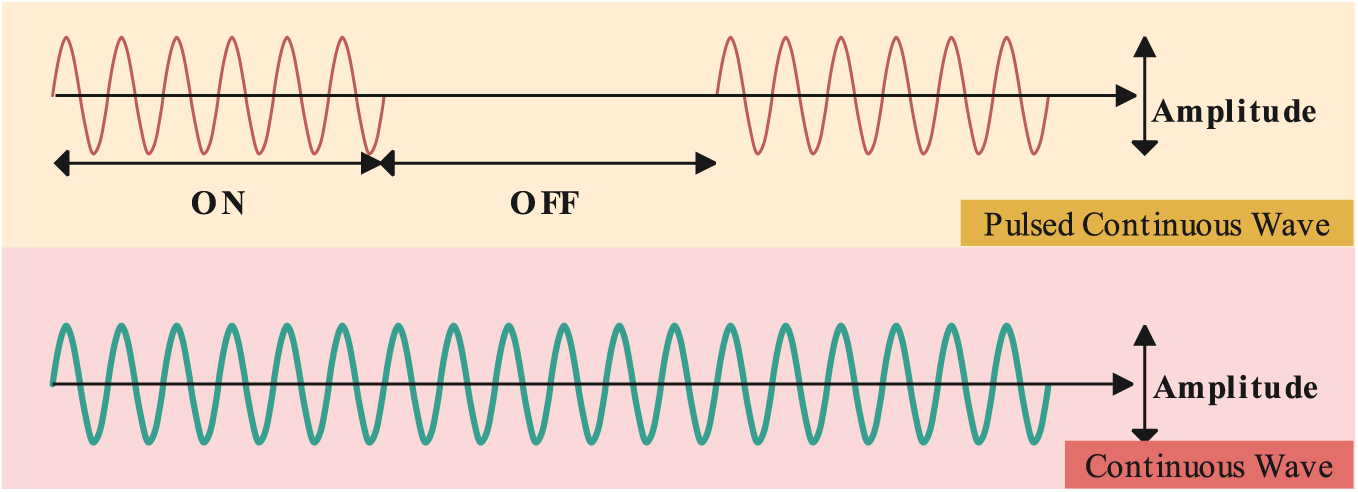

In a CW waveform, the transmitted and received signals are processed using a conjugate product, generating a signal corresponding to the specific target’s Doppler frequency. However, due to the continuous behavior of this waveform, measuring the delay due to the round-trip is challenging, making range resolution difficult to achieve. Typically, CW radar systems require separate antennas for transmission and reception.

In a pulsed waveform, the pulse duration and pulse repetition frequency (PRF) are chosen to meet the required range and velocity estimation performance. A single antenna can be used for both transmission and reception. Fig. 5 compares a continuous wave with a pulsed continuous wave.

Figure 5: Representation of continuous wave and pulsed continuous wave

2.4.3 Frequency Modulated Continuous Wave

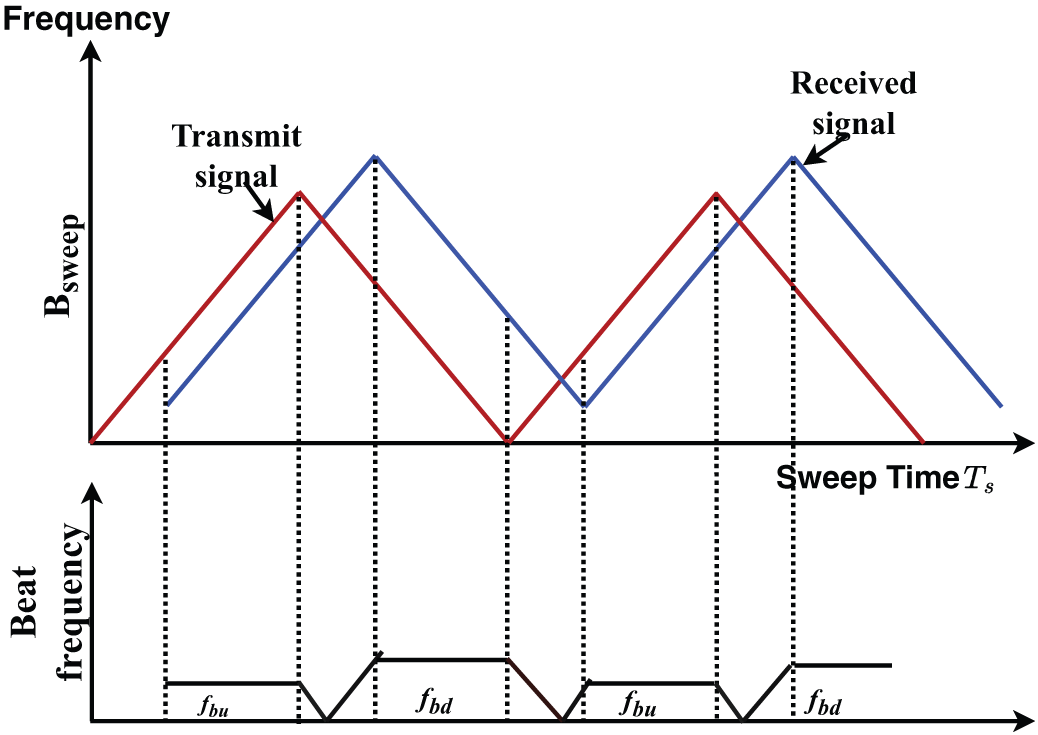

For the FMCW [23] automotive radar, the transmitter modulates the carrier signal with a linear increase of frequency over time for a predefined interval called a chirp. The main characteristic of FMCW is that the velocity and range of the target can be estimated simultaneously using two-dimensional (2D) FFT processing. A wide sweep bandwidth improves range resolution, because the size of a range resolution cell is inversely proportional to the bandwidth. The Doppler resolution is determined by the pulse width and the total number of pulses used for the measurement. In the linear FMCW waveform, the beat frequency for a single moving target is obtained after the received echo signal is mixed with the transmitted signal. Thus, it is composed of a Doppler frequency shift

Here,

Figure 6: Beat frequency generation using chirp signal to estimate range and velocity

Two beat frequencies, one each for the upward slope

By applying the FFT on each reflected chirp, the target’s range is measured as:

After the range FFT, a second Fourier transform, the Doppler FFT, is applied across chirps to obtain the velocities of multiple targets

Stepped FMCW: In this waveform, a sequence of sinusoidal signals is transmitted at distinct frequencies, and the phase shift and steady-state amplitude introduced by the radar channel at each frequency are measured. The target range is obtained using the inverse discrete Fourier transform (IDFT). A sparse stepped-frequency waveform [24] provides lower range sidelobes for the detection of weak targets. Using a sparse array interpolation method, the sidelobes are reduced, which lowers the likelihood of a false alarm when estimating the target angle.

Interrupted FMCW: In interrupted FMCW, the target echo is received only when the timing signal is off. For short-range targets, the total reception time is reduced, making those targets difficult to detect, whereas the effect is reversed for long-range targets. A trade-off therefore has to be made between SRR and LRR operation. An online learning approach based on the Thompson sampling technique can be applied to identify which FMCW waveform is beneficial for target classification [25].

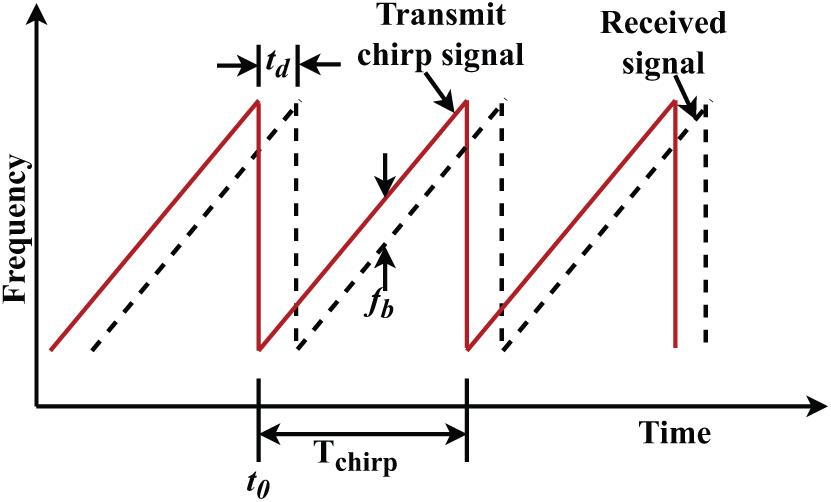

2.4.4 Fast Chirp Ramp Sequence Waveform

The advantage of a fast chirp waveform [26] over a conventional FMCW waveform is that 2D-FFT processing enables accurate estimation of a target's range and velocity. The FFT is applied first within each chirp to obtain range information and then across chirps to obtain velocity information. Additionally, the beat frequencies of the targets lie above the noise corner frequency, providing an improved SNR for detecting weak targets. An example of a fast chirp ramp sequence in the time-frequency domain is shown in Fig. 7.

Figure 7: Fast chirp ramp sequence

OFDM [27] is a digitally modulated waveform comprising a set of orthogonal complex subcarriers. In vehicular radar applications, modulation symbols are mapped onto the complex amplitudes of these subcarriers. The orthogonality among subcarriers is ensured by designing each subcarrier to complete an integer number of cycles within the duration of an OFDM symbol, also referred to as the evaluation interval. To mitigate inter-carrier interference (ICI), the subcarrier spacing must exceed the maximum expected Doppler shift.

At the receiver, the radar modulation symbols can be efficiently demodulated using the FFT, making OFDM a suitable choice for digital vehicular radar systems. The range profile is extracted through frequency-domain channel estimation. Range and velocity estimations are performed along two distinct dimensions. Specifically, target velocity estimation can be viewed as a decomposition of the conventional two-dimensional matched filtering process into two one-dimensional matched filters, each applied independently in its respective measurement domain.

2.4.6 Phase Modulated Continuous Wave

The PMCW waveform [28] consists of a sequence of periodically transmitted symbols that phase modulate a carrier frequency. The target range is estimated through the correlation between the received and transmitted signals. PMCW radar systems require sampling across the full bandwidth of the transmitted signal, necessitating high-speed sampling and high-resolution analog-to-digital converters (ADCs). Binary PMCW waveforms are commonly employed for automotive applications due to their simplicity and robustness. A PMCW waveform consists of a few symbols of binary nature

where

where
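The correlation-based range estimation described for PMCW can be sketched as follows. This is a minimal illustration under stated assumptions: the binary code is a pseudo-random ±1 sequence rather than a specific code family, the echo is modeled as a circularly delayed and attenuated copy of the code with additive noise, and the names `pmcw_range_profile` and `CHIP_RATE` together with all numeric values are hypothetical.

```python
import numpy as np

C = 3.0e8          # speed of light (m/s)
CHIP_RATE = 1.0e9  # hypothetical chip rate (chips/s)


def pmcw_range_profile(tx_code, rx):
    """Circular cross-correlation of the received samples with the code.

    Computed in the frequency domain; index m of the result corresponds
    to a round-trip delay of m chips.
    """
    return np.fft.ifft(np.fft.fft(rx) * np.conj(np.fft.fft(tx_code)))


rng = np.random.default_rng(0)
code = rng.choice([-1.0, 1.0], size=511)  # assumed binary phase code
true_delay = 37                           # round-trip delay in chips

# Echo: attenuated, delayed copy of the code plus complex receiver noise
noise = 0.1 * (rng.standard_normal(511) + 1j * rng.standard_normal(511))
rx = 0.5 * np.roll(code, true_delay) + noise

profile = pmcw_range_profile(code, rx)
est_delay = int(np.argmax(np.abs(profile)))
est_range = C * est_delay / (2.0 * CHIP_RATE)
print(f"estimated delay {est_delay} chips, range {est_range:.2f} m")
```

Because the correlation peak stands well above both the code sidelobes and the noise, the delay is recovered exactly in this idealized setting. The range granularity is one chip, that is, c divided by twice the chip rate.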

2.4.7 Combined Frequency Shift Keying (FSK) Modulated Waveform and FMCW Waveform

This waveform helps to remove ghost targets and to detect multiple targets accurately with high range resolution at short ranges. Here, two stepped Linear Frequency Modulated Continuous Waveforms (LFMCW), designated X and Y, are used, having the same sweep bandwidth and center frequencies, but split by a specific frequency,

Orthogonal waveform using TDM: In TDM MIMO automotive Radar, one transmitting antenna transmits a signal at each time slot. A specific antenna transmits N chirps at each time slot with a switching delay of

Orthogonal waveform using FDM: In this method, different carrier frequencies modulate the transmitter signals, and then these are separated from each other in a way that the nth FMCW chirp is shifted by frequency

Orthogonal waveform using DDM: In this technique, a total N chirps are transmitted in a sequence with

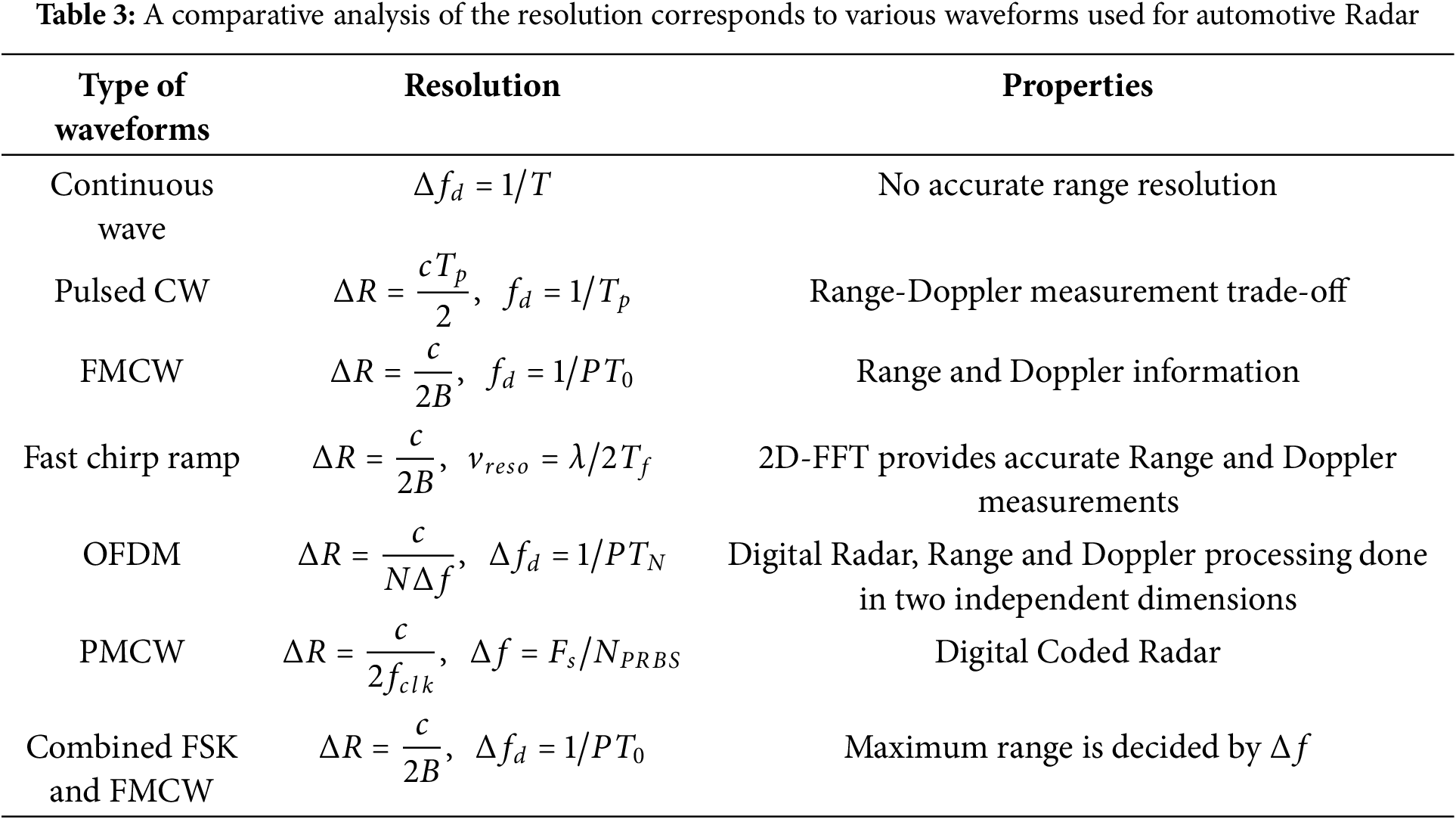

Table 3 presents an analysis of the different types of waveforms, generally used for automotive Radar, based on range and Doppler resolution values.

B = Radar bandwidth, T = time duration when data is obtained, N = samples used in CW and carriers used in OFDM,

For automotive radar, the FMCW chirp waveform is conventionally used because 2D-FFT processing gives accurate range and velocity estimates. A wide RF sweep bandwidth improves range resolution, and a fast ramp slope helps to achieve the maximum unambiguous relative velocity. The fast ramp slope and wide IF bandwidth also facilitate the separation of targets in the beat-frequency domain, so that noise from a strong target interferes less with the detection of a weak target. Recently, however, PMCW radar has been preferred for automotive applications because of its ability to separate targets with weak RCS from those with strong RCS. Binary PMCW radars are also free of range-Doppler coupling and allow radar and communication waveforms to be integrated. A polyphase-coded spread-spectrum radar system can be used to estimate RCS over ultra-high-frequency radio channels [1,16,33–35].

2.5 Waveform Interference in Automotive Radar Systems

Radar-to-radar interference occurs when multiple radars operate in proximity, and the interference level depends on the distance between them and on their waveforms [36]. Consider a vehicle fitted with an interfering radar at a distance R from a victim radar. From the victim's perspective, the interfering radar acts as a target. The interference-to-noise ratio (INR) measures the sensitivity of the victim radar to interference. It depends on the parameters of the interfering radar and the victim radar, the signal modulation of the interfering radar, and the demodulation process of the victim radar. The interfering radar is assumed to have a bandwidth B, and the power spectral density (PSD) of the interference at the victim radar is written as [6],

where

where

When the victim FMCW signal overlaps with the interfering FMCW signal, a specific type of interference results. After down-conversion at the radar receiver, the interference appears as a linear chirp signal that sweeps across the radar's passband, occupying a wide bandwidth. After bandpass filtering, the interference becomes an impulse-like signal in the time domain. The slope and relative timing of the frequency modulation of the victim and interfering radars determine the position and width of this interference signal. The difference in frequency modulation (FM) sweep rates between the interfering radar and the victim radar, along with their timing and frequency alignment, determines the bandwidth of the interference observed after down-conversion in the victim radar.

Type A: the interfering radar and the victim radar sweep with similar time duration

Type B: interfering Radar and victim Radar sweeps with similar time duration

where

This kind of interference arises when the interfering PMCW signal overlaps the victim PMCW signal. A PMCW interferer is assumed with arbitrary, noise-like biphase coding with chip rate

In both mixed cases, an FMCW victim with a PMCW interferer or a PMCW victim with an FMCW interferer, the interference appears noise-like in the time and frequency domains, and the INR is the same.

Interference mitigation techniques for automotive radar fall into two categories: techniques at the transmitter (such as frequency hopping and timing jitter) and techniques at the receiver (such as time-domain excision). Transmit-side techniques are usually designed so that the signals of different radars are nearly orthogonal in a domain such as time, frequency, or polarization. In most cases, interference mitigation is performed at the receiver. For FMCW-on-FMCW interference, matched filtering is usually adopted: it provides integration gain for a target, which appears as a constant-frequency signal, while the interference is spread as noise [6]. For PMCW-on-PMCW interference, Code Division Multiple Access (CDMA) ensures that every radar has a unique spreading code, so the interference becomes a wideband noise signal. For a PMCW victim radar, FMCW interference resembles a jammer in a spread-spectrum system, and adaptive filtering can be used for mitigation. PMCW interference on an FMCW victim can be reduced by separation in the polarization or frequency domain. Additionally, neural network (NN) methods can be used to mitigate multi-channel interference [37]. A signal-separation neural network can separate the interference from the beat signal and reconstruct an interference-free signal.
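Receiver-side time-domain excision can be illustrated with a short sketch. It assumes that the interference shows up as a brief, high-amplitude burst in the beat signal, as described for FMCW-on-FMCW interference, and it uses a simple median-based threshold to flag corrupted samples. This threshold rule, the function name `excise_interference`, and all signal parameters are illustrative assumptions rather than a method taken from the cited works.

```python
import numpy as np


def excise_interference(beat, k=5.0):
    """Zero samples whose magnitude exceeds k times the median magnitude.

    Returns the cleaned signal and the boolean mask of excised samples.
    """
    mag = np.abs(beat)
    mask = mag > k * np.median(mag)
    cleaned = beat.copy()
    cleaned[mask] = 0.0
    return cleaned, mask


rng = np.random.default_rng(1)
n = 1024
samples = np.arange(n)

# Target: constant beat frequency at FFT bin 100, plus weak receiver noise
beat = np.exp(2j * np.pi * 100 * samples / n)
beat += 0.05 * (rng.standard_normal(n) + 1j * rng.standard_normal(n))

# Interference: strong impulse-like burst over 20 samples
burst = 30.0 * np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, 20))
beat[400:420] += burst

cleaned, mask = excise_interference(beat)
peak_bin = int(np.argmax(np.abs(np.fft.fft(cleaned))))
print(f"excised {int(mask.sum())} samples, target peak at bin {peak_bin}")
```

Zeroing leaves a gap that slightly raises the sidelobes of the target peak. Interpolating across the excised samples is a common refinement that this sketch does not attempt.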

3 Target Detection and DOA Estimation

3.1 Signal Processing for Target Detection

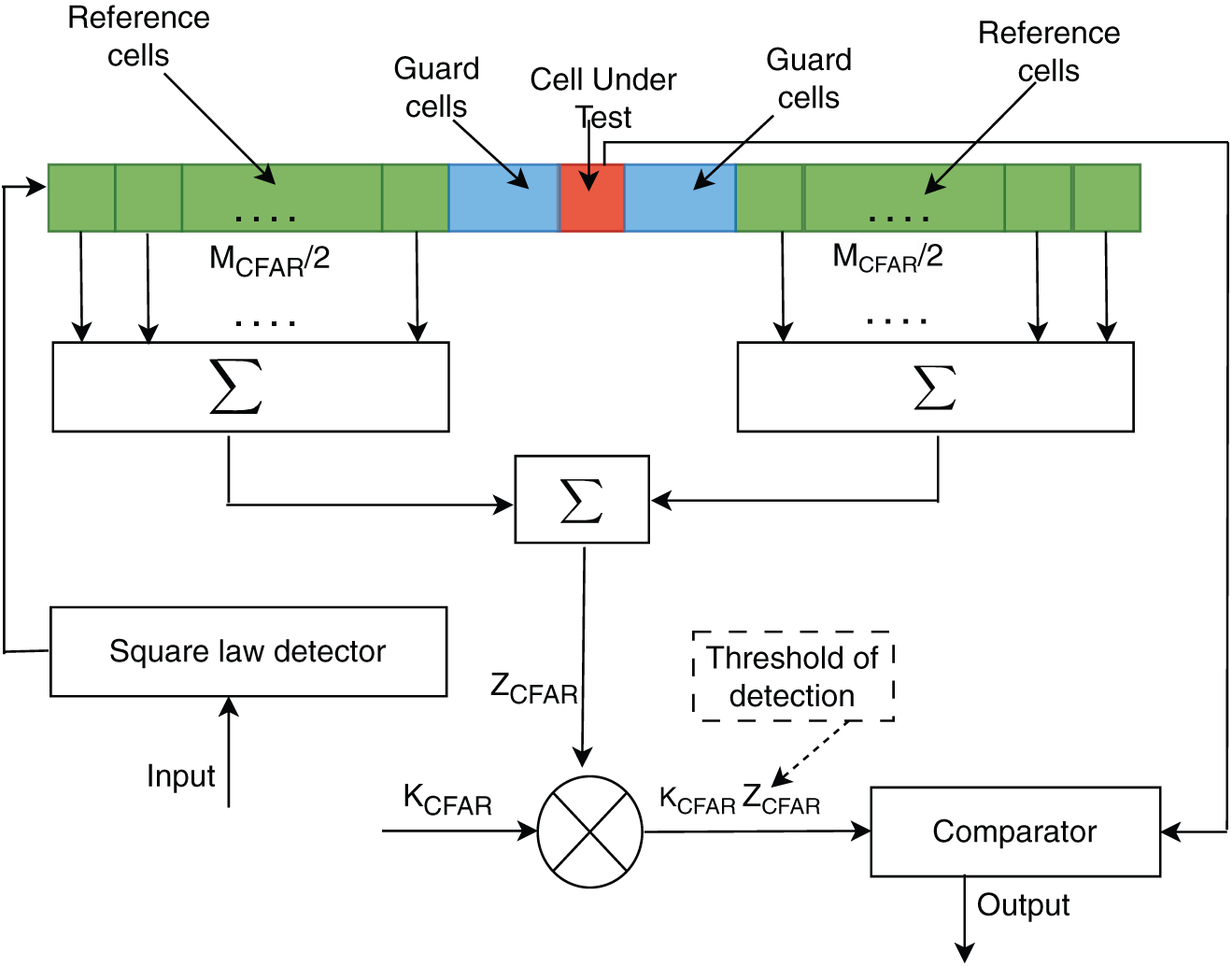

A signal processing framework [17] is required for target detection with automotive radar. The radar is assumed to transmit a series of identical waveforms (such as FMCW chirps, PMCW symbols, or OFDM). These transmitted waveforms are reflected from targets and clutter and, at the receiver, are down-converted into a combination of radar signal echoes plus additive receiver noise. The processing aims to reduce the additive noise and then detect and classify echoes from objects that are separable in the spectral domains of Doppler, range, and Direction of Arrival (DOA). For i =

where

where

where

where

Figure 8: Working principle of the CA-CFAR method

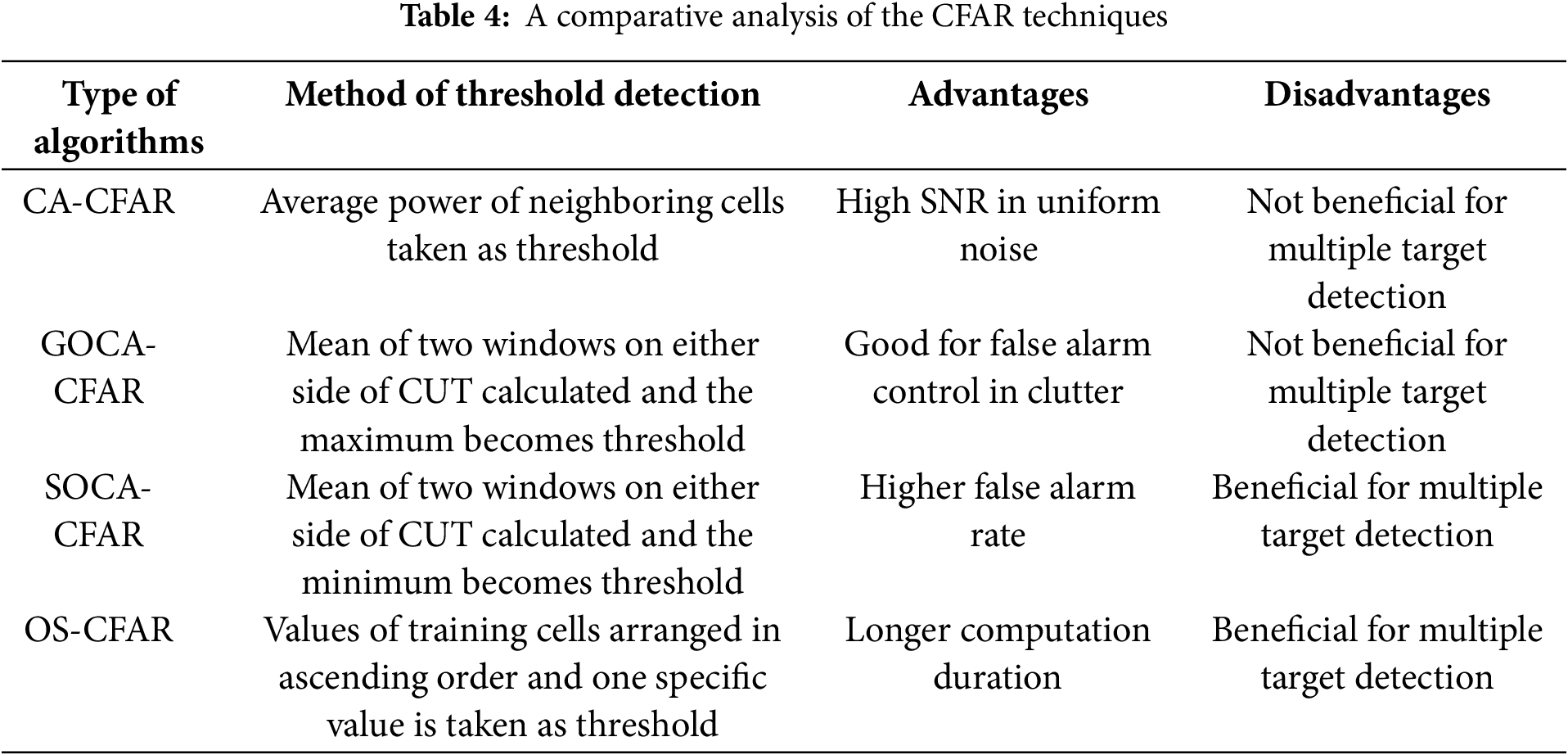

In greatest-of cell-averaging CFAR (GOCA-CFAR), two windows are considered on either side of the cell under test (CUT), each having the same number of training (neighbouring) cells. The mean of each window is calculated, and the larger of the two means is used to set the threshold. In smallest-of cell-averaging CFAR (SOCA-CFAR), on the other hand, the smaller of the two means is used. In ordered-statistic CFAR (OS-CFAR), the values of the training cells are sorted in ascending order, and the value at a chosen rank is selected. The OS-CFAR detector is used in [39] for targets in micro-motion, and an image dilation algorithm is applied to cluster these targets. Deep learning techniques such as the Convolutional Neural Network (CNN) [40] provide a higher target detection rate than CFAR. A comparative analysis of these CFAR methods is presented in Table 4.
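The CFAR variants discussed above differ only in how the noise level is estimated from the training cells, which the following one-dimensional sketch makes explicit. It is a simplified illustration: the threshold multiplier `scale`, the window sizes, the OS rank (three quarters of the sorted training cells by default), and the function name `cfar_1d` are hypothetical choices, and in practice the multiplier would be derived from the desired false-alarm probability for each variant.

```python
import numpy as np


def cfar_1d(power, n_train=8, n_guard=2, scale=8.0, mode="ca", os_rank=None):
    """Boolean detection mask for a 1-D power profile.

    n_train and n_guard are counted per side of the cell under test (CUT).
    """
    n = len(power)
    hits = np.zeros(n, dtype=bool)
    half = n_train + n_guard
    for cut in range(half, n - half):
        lead = power[cut - half:cut - n_guard]         # cells before the CUT
        lag = power[cut + n_guard + 1:cut + half + 1]  # cells after the CUT
        if mode == "ca":      # cell averaging: mean of all training cells
            noise = np.mean(np.concatenate((lead, lag)))
        elif mode == "goca":  # greatest-of: larger of the two window means
            noise = max(lead.mean(), lag.mean())
        elif mode == "soca":  # smallest-of: smaller of the two window means
            noise = min(lead.mean(), lag.mean())
        elif mode == "os":    # ordered statistic: value at a chosen rank
            cells = np.sort(np.concatenate((lead, lag)))
            rank = os_rank if os_rank is not None else (3 * len(cells)) // 4
            noise = cells[rank]
        else:
            raise ValueError(f"unknown CFAR mode: {mode}")
        hits[cut] = power[cut] > scale * noise
    return hits


rng = np.random.default_rng(2)
power = rng.exponential(1.0, 200)  # noise power after square-law detection
power[100] = 100.0                 # one strong target in the middle

detections = {m: cfar_1d(power, mode=m) for m in ("ca", "goca", "soca", "os")}
for m, hits in detections.items():
    print(m, "target detected:", bool(hits[100]))
```

With a single strong target in homogeneous noise, all four variants flag the target cell. Their behavior diverges near clutter edges and closely spaced targets, which is the kind of difference a comparison of these detectors is concerned with.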

Clustering and tracking methods are adopted to refine the detections produced by automotive radar. Target tracking mainly consists of prediction, association, and update steps, as in the Kalman filter. Tracking helps to improve target localization, deduce accurate velocity and trajectory, and build a picture of the target's surroundings. The final task is classification, in which knowledge about the detected and tracked target is obtained from the echoes received from it. This is achieved using selected micro-Doppler features, spatial spread, and other parameters.

3.2 Direction of Arrival (DOA) Estimation

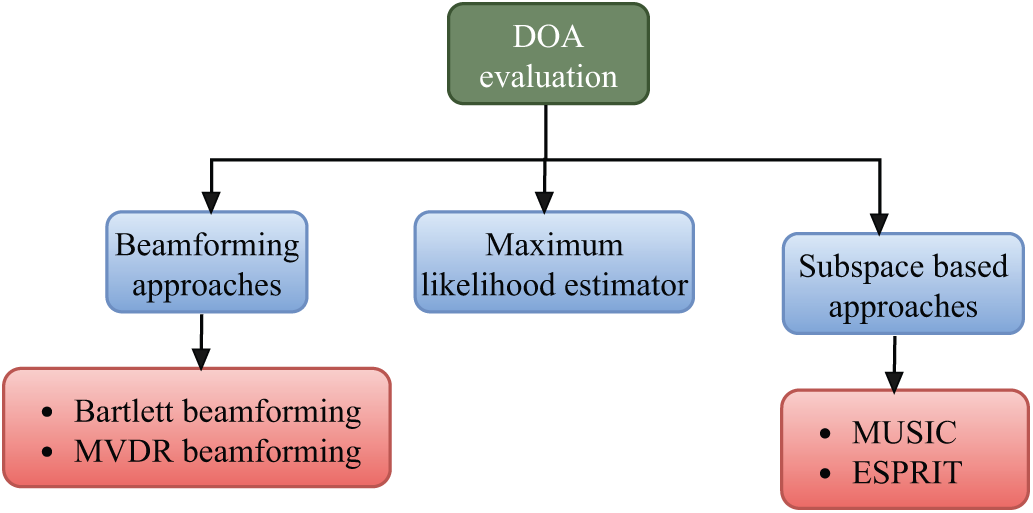

Under real-world road conditions, an unknown number of signal echoes from targets in various directions may arrive at the receiver antenna. These target echoes, combined with noise and interference, pose significant challenges for reliable target detection and tracking. To enable accurate beamforming and to place nulls in the direction of interfering signals, precise estimation of the DOA of the desired target signal is essential. Various DOA estimation techniques for target echoes are illustrated in Fig. 9.

Figure 9: DOA evaluation techniques

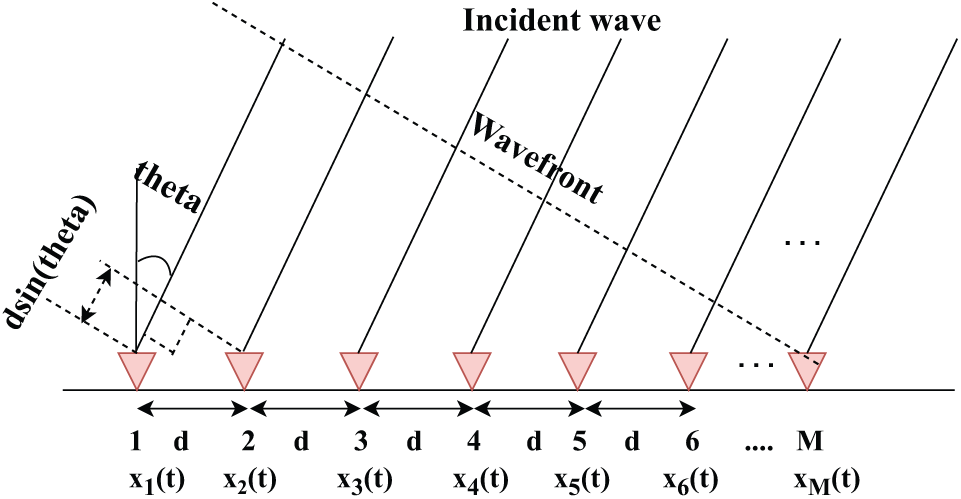

A radar signal model is presented in Fig. 10 to estimate the DOA of the received signal.

Figure 10: Signal model for DOA estimation

An automotive radar system is considered to contain a series of M antenna elements on which signals from

where

where

with H denoting the Hermitian (conjugate) transpose. The power at the output of the sensor array is then given as,

where E[.] is the expectation operation and

Beamforming is a technique used to create a desired radiation pattern by coherently combining signals from multiple antennas, each scaled by an appropriate complex weight. This process enhances signals arriving from a specific direction while suppressing interference and noise from undesired directions. The Bartlett algorithm, also known as conventional beamforming, enhances the signal from a specific direction by compensating for the phase shifts of the incoming wavefront across the array. The optimal weight vector in the Bartlett method can be written as,

The limitations of Bartlett beamforming are: i) it is suited only to a single signal source, and ii) in the presence of multiple sources, it provides low resolution, resulting in ambiguity.
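As an illustration of conventional beamforming, the sketch below scans a steering vector over a grid of angles and evaluates the output power for each. It assumes a uniform linear array with half-wavelength spacing, a steering vector with phase 2πd·sin(θ)/λ per element, and a spectrum normalised by M²; these conventions and the helper names `steering` and `bartlett_spectrum` are assumptions for the example, not necessarily the notation of the model in Fig. 10.

```python
import cmath
import math

def steering(theta_deg, m, spacing=0.5):
    """ULA steering vector; spacing is in wavelengths (assumed convention)."""
    phase = 2 * math.pi * spacing * math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * phase * i) for i in range(m)]

def bartlett_spectrum(snapshots, angles_deg, spacing=0.5):
    """P(theta) = a^H R a / M^2, with R the sample covariance of the snapshots."""
    m = len(snapshots[0])
    spectrum = []
    for theta in angles_deg:
        a = steering(theta, m, spacing)
        # a^H R a equals the average of |a^H x|^2 over the snapshots.
        acc = 0.0
        for x in snapshots:
            acc += abs(sum(ai.conjugate() * xi for ai, xi in zip(a, x))) ** 2
        spectrum.append(acc / (len(snapshots) * m * m))
    return spectrum

# Single noise-free source at 20 degrees seen by an 8-element array.
angles = list(range(-90, 91))
snapshot = steering(20, 8)
spectrum = bartlett_spectrum([snapshot], angles)
peak_angle = angles[spectrum.index(max(spectrum))]
```

With one source the spectrum has a single main lobe at the true direction. The lobe width is fixed by the aperture, which is the source of the resolution limit noted above.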

3.2.2 Minimum Variance Distortionless Response (MVDR)

In this algorithm, a constant (distortionless) gain is maintained for the signal from the desired direction, while smaller weights are assigned to the directions of interfering signals and noise. The weight vector is given as

The weight vector for beamforming angle

The power spectrum at angle

This method provides better resolution than Bartlett beamforming, but not as high as that of subspace methods. To enhance the angular resolution of beamforming algorithms, a transformation vector that represents the relationship between received signals can be determined and used to create extrapolated elements outside the region of the array’s actual antenna elements [42]. With both original and extrapolated signals, the direction of the target echo is estimated with higher resolution.
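A minimal sketch of the MVDR beamformer follows, assuming the standard closed form w = R⁻¹a / (aᴴR⁻¹a) and output power 1 / (aᴴR⁻¹a). The scene (target at 10°, an interferer 20 dB stronger at 40°, unit noise power), the half-wavelength array, and the helper names are hypothetical; a small Gaussian-elimination solver is used only to keep the example free of external libraries.

```python
import cmath
import math

def steering(theta_deg, m, spacing=0.5):
    """ULA steering vector; spacing is in wavelengths (assumed convention)."""
    phase = 2 * math.pi * spacing * math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * phase * i) for i in range(m)]

def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x

def mvdr(cov, theta_deg, spacing=0.5):
    """Return (weights, power) for look direction theta_deg."""
    a = steering(theta_deg, len(cov), spacing)
    r_inv_a = solve(cov, a)                                   # R^-1 a
    denom = sum(ai.conjugate() * yi for ai, yi in zip(a, r_inv_a)).real
    return [y / denom for y in r_inv_a], 1.0 / denom

def response(weights, a):
    """Magnitude of w^H a."""
    return abs(sum(wi.conjugate() * ai for wi, ai in zip(weights, a)))

# Assumed covariance: target + strong interferer + unit-power white noise.
m = 6
a_t, a_i = steering(10, m), steering(40, m)
cov = [[a_t[i] * a_t[j].conjugate()
        + 100 * a_i[i] * a_i[j].conjugate()
        + (1 if i == j else 0)
        for j in range(m)] for i in range(m)]
w, p = mvdr(cov, 10)
gain_target = response(w, a_t)
gain_interferer = response(w, a_i)
```

The weights keep unit gain towards the look direction while placing a deep null on the interferer, which is the behaviour described at the start of this subsection.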

3.2.3 Multiple Signal Classification (MUSIC)

This method is based on the subspace approach, where the covariance matrix is decomposed into a signal subspace and a noise subspace. The steering vectors of the sources are orthogonal to the noise subspace, so a peak in the spatial pseudo-spectrum indicates the required direction. Considering the equation of the received signal as shown in (29) and (30), the covariance matrix is presented as,

where

where the eigenvalues are sorted as,

The parameters can be defined as,

The basic principle of the MUSIC algorithm is that for a real source direction

Therefore,

The MUSIC algorithm observes the angles

The main disadvantages of the MUSIC algorithm are that it requires prior knowledge of the number of signal sources and that it is computationally expensive.

The MUSIC algorithm can be summarised as follows:

1. The sample covariance matrix is computed, using the source covariance matrix

2. The Eigenvalue decomposition of

3. The noise subspace

4. For each angle

5. The DoAs are obtained from the peaks of the pseudo-spectrum.
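The five steps can be sketched for the simplest case of a single source. To stay within the Python standard library, power iteration stands in here for a full eigenvalue decomposition: with one source, the dominant eigenvector u spans the signal subspace and the noise-subspace projector is I − uuᴴ. The array geometry, noise level, number of snapshots, and all function names are assumptions made for the illustration.

```python
import cmath
import math
import random

def steering(theta_deg, m, spacing=0.5):
    """ULA steering vector; spacing is in wavelengths (assumed convention)."""
    phase = 2 * math.pi * spacing * math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * phase * i) for i in range(m)]

def sample_covariance(snapshots):
    """Step 1: R = (1/N) * sum of x x^H over the snapshots."""
    m, n = len(snapshots[0]), len(snapshots)
    return [[sum(x[i] * x[j].conjugate() for x in snapshots) / n
             for j in range(m)] for i in range(m)]

def dominant_eigenvector(cov, iterations=100):
    """Steps 2-3 (single source): power iteration for the signal eigenvector."""
    m = len(cov)
    v = [cov[i][0] for i in range(m)]          # first column as starting vector
    for _ in range(iterations):
        v = [sum(cov[i][j] * v[j] for j in range(m)) for i in range(m)]
        norm = math.sqrt(sum(abs(c) ** 2 for c in v))
        v = [c / norm for c in v]
    return v

def music_single_source(snapshots, angles_deg, spacing=0.5):
    """Steps 4-5: pseudo-spectrum 1 / (a^H E_n E_n^H a) on an angle grid."""
    m = len(snapshots[0])
    u = dominant_eigenvector(sample_covariance(snapshots))
    spectrum = []
    for theta in angles_deg:
        a = steering(theta, m, spacing)
        proj = abs(sum(ui.conjugate() * ai for ui, ai in zip(u, a))) ** 2
        # a^H (I - u u^H) a = M - |u^H a|^2, guarded against round-off.
        spectrum.append(1.0 / max(m - proj, 1e-12))
    return spectrum

# Simulated data: one source at -25 degrees, 50 snapshots, weak noise.
random.seed(0)
a0 = steering(-25, 8)
snapshots = []
for _ in range(50):
    s = cmath.exp(1j * random.uniform(0, 2 * math.pi))
    snapshots.append([s * ai + complex(random.gauss(0, 0.1), random.gauss(0, 0.1))
                      for ai in a0])
angles = list(range(-90, 91))
spectrum = music_single_source(snapshots, angles)
estimate = angles[spectrum.index(max(spectrum))]
```

For several sources, the same pseudo-spectrum applies with the projector built from all noise eigenvectors, which requires a complete eigendecomposition and the number of sources as an input.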

3.2.4 Estimation of Signal Parameters via Rotational Invariance Techniques (ESPRIT)

ESPRIT is computationally less demanding than MUSIC, as it does not require a search over all direction vectors. The signal subspace is spanned by the responses of two subarrays displaced by a known vector, from which the DOA can be obtained. The equation of the received signal is presented in (29) and (30). The assumptions of the ESPRIT algorithm can be stated as,

• The array needs to have a structure of translational invariance (e.g., two identical subarrays).

• Prior information is present for the number of sources

• The source signals are considered uncorrelated.

• Likewise, the noise is uncorrelated with the signals and spatially white.

Now two overlapping subarrays of size

where

Hence, the subarray outputs can be written as:

Here,

For subspace estimation, the data matrix is formed as:

The sample covariance matrix is computed as follows:

The eigen-decomposition of

The matrix

For the estimation of Rotational Invariance and Eigenvalue,

It is assumed that,

for an unknown matrix

For the estimation of

Then the eigenvalues of

Every eigenvalue needs to satisfy the following condition:

Finally, the angle of arrival can be estimated by using:

The ESPRIT algorithm can be summarised as follows:

1.

2. The covariance matrix

3. The signal subspace

4.

5. Estimation of

6. The eigenvalues

7. Finally, estimation of the DoAs is done:

Here, the eigenvalues of

A MUSIC algorithm with enhanced beamspace can lessen the computational complexity and storage space, which is beneficial for automotive Radar [45]. The parameter space can be reduced by utilizing prior information to improve beamformer design. To mitigate the consequences of a lower SNR value and incorrect sample covariance in a single snapshot, a modified estimator can be employed, which considers the relationship between a signal with an interference model of sample covariance and the subspace model. Furthermore, to enhance direction estimation for two closely spaced targets, the averaging of sub-matrices of sample covariance evaluation and the utilization of the Toeplitz structure are employed. This new algorithm offers a higher resolution probability than conventional MUSIC at the same signal-to-noise ratio (SNR). For the processing of range and angle, a single-snapshot MUSIC is used in [46], which reduces the computational complexity. Another process involves obtaining DOA with single-snapshot MUSIC and evaluation of the performance with analog-to-digital allocations [47]. A new way for better resolution of angles is the application of a two-stage MUSIC algorithm [48]. Here, a crude estimation is initially performed using MUSIC. However, this estimation won’t be accurate if the targets are closely placed and have low SNR. Based on these values, each antenna element is directed to specific directions using a calibration technique that focuses on signals coming from particular directions, as presented in the first stage. The Root Mean Squared Error (RMSE) values of this new method with Root-MUSIC are less than those of standard Root-MUSIC in the low SNR region. In [49], a compressive sensing alternating descent conditional gradient (CS-ADCG) algorithm has been used. Using a gridless process and minimization of the atomic norm, the observation scene has been discretized to prepare an atomic set. 
The signal sources’ angles are obtained by measuring the inner product of this atomic set with fragments from every iteration and are used as primary values for searching. Finally, a function for mapping is made of the sources of the signal, and gradient descent is applied for iterative optimization. This step is conducted in a continuous domain to reduce the off-grid effect. To determine DOA in the presence of interference, the variational mode decomposition method is used. Then, with the signal-to-interference ratio obtained from this algorithm, a weighted MUSIC algorithm is applied for obtaining DOA [50].

The time complexity of MUSIC or MVDR is obtained as

A different method of lowering the computational complexity is by application of digital beam-forming (DBF), which is suitable for dynamic environments as seen in road scenarios of automotive Radar [58]. A 77 GHz automotive Radar uses an improved angular resolution DOA algorithm, which is formed by a bigger virtual array using the relative motion observed between the automotive Radar and targets. The proposed DBF-based method can obtain a crude evaluation of the target angle. The radial velocity produced by the relative motion observed between the radar and targets is taken as if only produced by radar, with the targets motionless. Thus, alongside the vehicle’s moving direction, a velocity that differs from its actual velocity can be measured. Lastly, the positions of the array for

Sparse matrix-based representation of Radar signals can be used for DOA estimation in MIMO Radar [60]. For sparse uniform linear array (ULA) structure, FMCW radar is preferred as it can provide highly accurate range information even in high SNR situations [61]. A sparse matrix depiction is developed for a bistatic model of Radar for 2D-location, i.e., range, DOA, and Doppler estimation [62]. Here, the road is characterized by a Cartesian map using which the targets’ coordinates and total multi-path Doppler for target velocity are estimated. In a sparse version of the raw Radar data, after controlling the bistatic formation’s geometry, the source vectors have a familiar support set, which helps in the application of group-sparsity (GS) based optimization. This algorithm for estimating 2D location and Doppler performs better compared to MUSIC. A further addition to this algorithm is the application of a 3-dimensional (3D) multi-static FMCW signal model, followed by an evaluation of the multi-target location and Doppler method using the GS methodology [63]. Furthermore, an association of multi-target parameters via cross-correlation and an ESPRIT algorithm, as well as based on Least Squares, is demonstrated. The GS joint shows better results than MUSIC at every level of SNR in the evaluation of Doppler and location. Additionally, the GS method can be used to determine the Doppler frequency first, and then the Doppler parameters are used for obtaining range parameters, and finally, the DOA is evaluated with these target parameters [64]. A signal processing method for DOA measurement based on Compressive Sensing (CS) theory is presented, which provides good resolution and accuracy while allowing an improved degree of design [65]. This algorithm enables the utilization of configurations with sparse antennas, featuring a reduced number of transmitter and receiver channels, while maintaining a larger effective antenna aperture. 
The authors have provided four sparse reconstruction algorithms along with the MUSIC algorithm. Orthogonal Matching Pursuit (OMP) is better suited for automotive Radar applications, as it offers improved detection efficiency and is faster than MUSIC. A method for ghost target detection is shown in [66] where the CS method is used for angle estimation of direct paths and multipaths.

Deterministic maximum likelihood (DML) is a parametric DOA estimation approach that estimates the DOA by projecting the received signal vectors onto the null space of the steering matrix. In [67], different transmit signals having orthogonal properties are generated with space-time block codes, and depending on the number of transmit antennas, the transmit signals have their phases shifted at regular intervals. Each transmitted signal is matched to its respective transmitting antenna by applying the DML algorithm to find the proper array for DOA estimation. Once the signal transmitted from the first transmitter antenna is identified, the maximum detectable velocity is not compromised, and the accuracy of DOA analysis is improved. When the transmitted signals are not matched, the correlation between the received echo signal and the steering matrix is degraded, and DOA estimation worsens, even if the number of targets is correctly detected. MARS, a super-resolution real-time DOA estimation method based on ML assessment [68,69], is used for automotive Radar. Here, the evaluation results from earlier timestamps are used to create an adequate and reduced search space. To decrease computation time, problems at every step are decomposed into separate sub-problems, and the GPU is utilized for parallel computing. Through simulation experiments, it has been demonstrated that only MARS can handle up to one hundred bins consisting of reflection points with a resolution of 1° within 1 ms. A DOA estimation method based on the Fast Variational Bayesian approach helps to lessen the high sidelobes in sparse arrays and improve resolution for closely placed reflectors [70]. The implementation of sparse Bayesian algorithms for DOA evaluation, which provides improved accuracy and lower hardware costs, is demonstrated in [71].

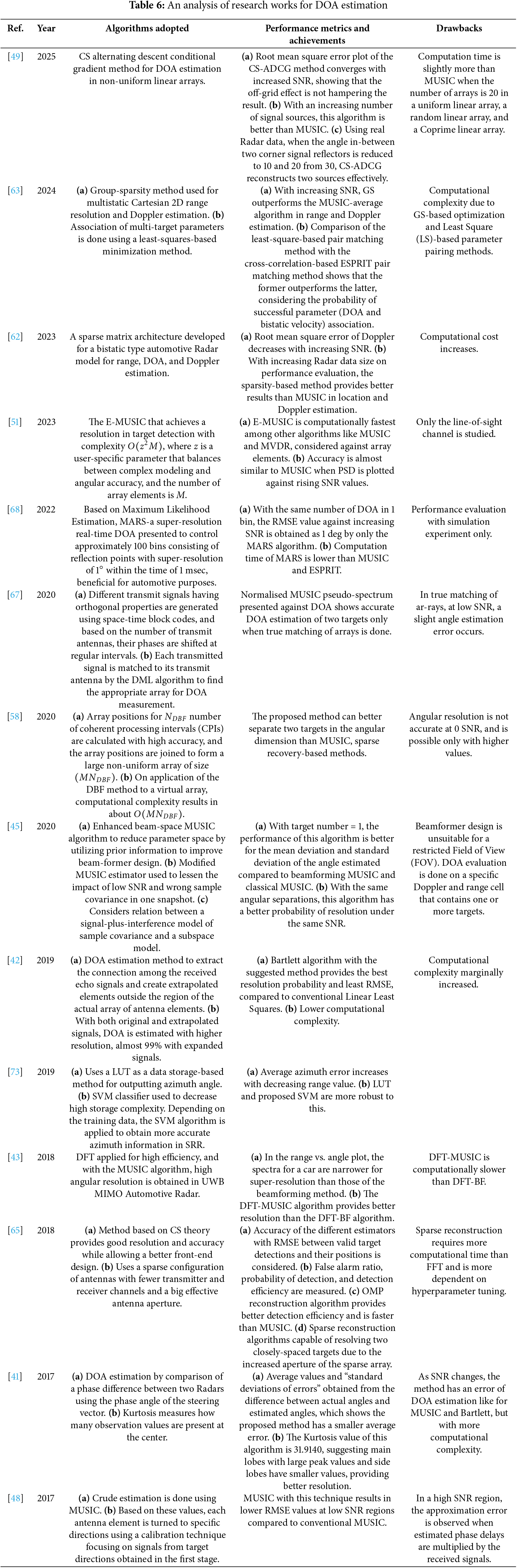

Machine learning algorithms for DOA measurement can be classified based on Regression, model order methods, and spectrum [72]. To improve DOA estimation and reduce computational complexity, in [73], the authors utilize a look-up table (LUT) based on data storage, which can mitigate measurement errors. Then, the authors propose Support Vector Machine (SVM), an ML classifier, to decrease the high storage complexity. The ideal azimuth selection issue is considered a multi-classification problem, in which a considerable quantity of training samples is obtained from the ultra-SRR and used to improve the classifier. Depending on these data for training, the SVM algorithm is applied to receive more precise azimuth information in SRR. Table 6 contains a detailed analysis of research works available regarding DOA estimation of targets using automotive Radar.

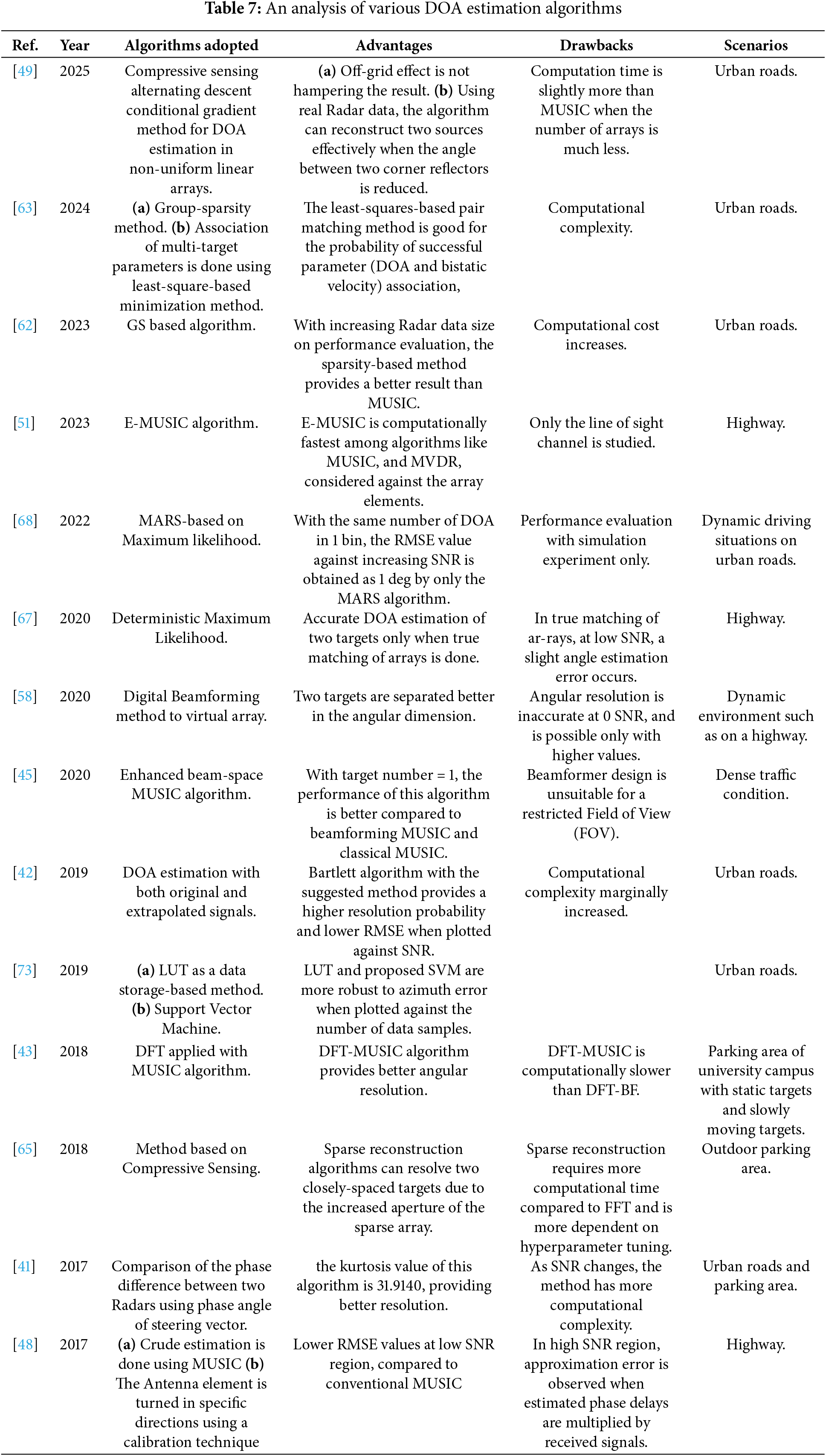

Table 7 contains an analysis of various DOA estimation algorithms.

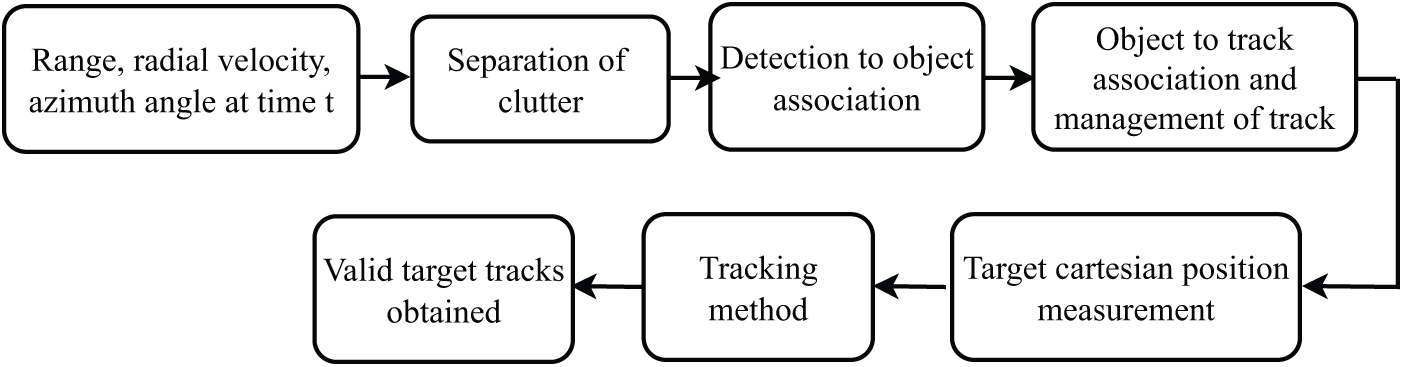

After target detection by Radar, filtering and tracking techniques are required to obtain the target motion dynamics, stay informed about the target’s position, and avoid collision [74,75], as in the ACC application. Different target models exist, such as dynamic target modeling and static target modeling, which are further categorized into occupancy grid mapping, amplitude grid mapping, and free space mapping [76]. Fig. 11 shows a flowchart of the target tracking signal processing method.

Figure 11: Signal processing for target tracking

The target parameters measured are range, velocity, and azimuth angle obtained at time instant t. The target is separated from clutter by discriminating between moving and stationary targets, where stationary targets are not considered. Object association is achieved by grouping multiple detections from the same target into a single object. When an object does not match any existing track, a new track is initiated, resulting in valid and false tracks. Only valid tracks of real targets are input to the tracking filter. The target position represented in Cartesian coordinates obtained from range and angle measurements is fed to tracking filters. The output from the tracking filter is a valid track for a particular target.

The main characteristics of multi-target tracking are [77,78]:

1. Motion model for the target.

2. Prediction and update of the target state.

3. Data association of measurements of tracks.

4. Target Track Management applied for track initiation, confirmation of track, and termination of track.

To detect a target vehicle, motion models are utilized, where the parameters of the target are assembled from sensor data. These models are designed according to the movement of vehicles and classified as constant velocity (CV), constant acceleration (CA), constant turn rate (CTR), and constant turn rate and acceleration (CTRA).
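The first three motion models can be written as one-step state predictions. The sketch below assumes planar motion, a sampling interval `dt`, and the state layouts given in the docstrings; these layouts and the function names are illustrative choices, and CTRA is omitted for brevity.

```python
import math

def predict_cv(state, dt):
    """Constant velocity; state = (x, y, vx, vy)."""
    x, y, vx, vy = state
    return (x + vx * dt, y + vy * dt, vx, vy)

def predict_ca(state, dt):
    """Constant acceleration; state = (x, y, vx, vy, ax, ay)."""
    x, y, vx, vy, ax, ay = state
    return (x + vx * dt + 0.5 * ax * dt ** 2,
            y + vy * dt + 0.5 * ay * dt ** 2,
            vx + ax * dt, vy + ay * dt, ax, ay)

def predict_ctr(state, dt):
    """Constant turn rate; state = (x, y, heading [rad], speed, turn_rate [rad/s])."""
    x, y, heading, speed, omega = state
    if abs(omega) < 1e-9:
        # Straight-line limit avoids division by a vanishing turn rate.
        return (x + speed * math.cos(heading) * dt,
                y + speed * math.sin(heading) * dt,
                heading, speed, omega)
    new_heading = heading + omega * dt
    return (x + speed / omega * (math.sin(new_heading) - math.sin(heading)),
            y + speed / omega * (math.cos(heading) - math.cos(new_heading)),
            new_heading, speed, omega)

# A target turning left through a quarter circle in one second.
turned = predict_ctr((0.0, 0.0, 0.0, 1.0, math.pi / 2), 1.0)
```

In a tracking filter, the chosen model supplies the state transition used in the prediction step, so a mismatch between the model and the true manoeuvre shows up as a larger innovation in the update step.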

4.2 Prediction and Update of Target State

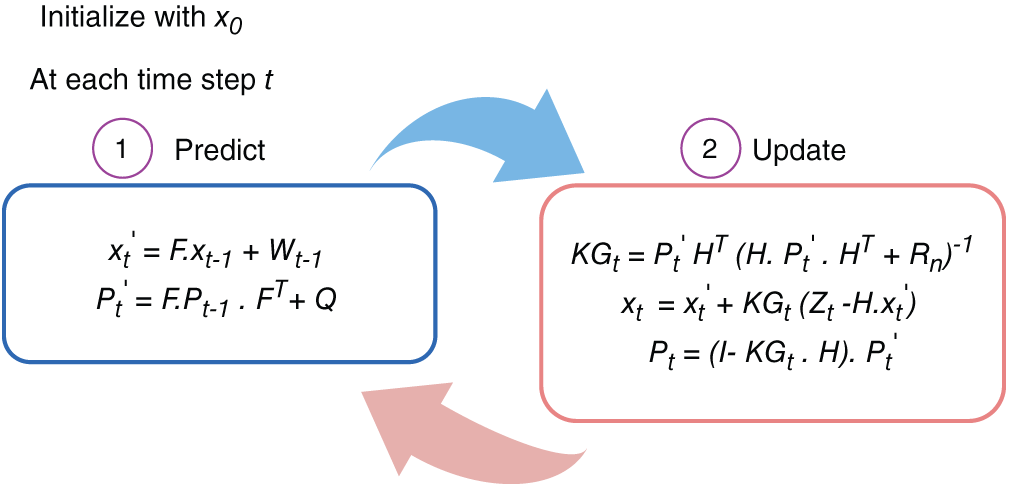

Track initiation establishes a sufficiently accurate track in terms of position, velocity, and direction within the shortest time possible. The Kalman filter (KF) is typically used to estimate the location of actual targets at the current instant in time, utilizing prediction and update processes. The Bayesian method is also being introduced for this purpose.

The KF is a recursive filter used to estimate the state of a linear discrete-time dynamic system from noisy measurements. It consists of a Prediction step and an Update step. Prediction step: A predicted value is computed from the previous estimate (initially, from the initial value), and the error in the prediction is then obtained according to the various noise sources in the Radar system. Predicted value,

where F is the state transition matrix,

where Q is the process noise covariance and T stands for transpose. Update step: The actual measurement coming from the Radar is obtained and named the measured value. The difference between the measured and predicted values is evaluated, and the Kalman gain decides how much of this difference is used to correct the prediction. Using the Kalman gain, the updated state and the updated error are calculated, which serve as the starting point for the prediction in the next iteration. The Kalman gain is the parameter that determines the weight assigned to the predicted and measured values, i.e., whether the estimate lies closer to the predicted value or to the measured value. The output of this Update step is fed back to the Prediction step, and this cycle continues until the error between predicted and real values converges.

where H is the measurement matrix, which maps the state to the measured quantities and discards unwanted information,

where

These new

Figure 12: Kalman filter algorithm

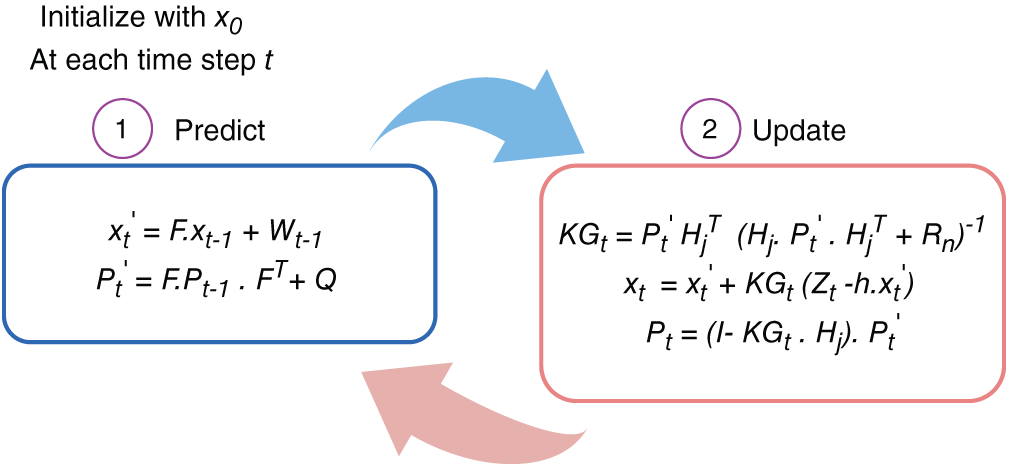
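The predict and update cycle of Fig. 12 can be sketched for a one-dimensional constant-velocity target. The example assumes a state of [position, velocity], F = [[1, dt], [0, 1]], a position-only measurement H = [1, 0], process noise Q = q·I, and measurement noise variance r; these values and the function names are assumptions for illustration, with the 2×2 algebra written out by hand to avoid external libraries.

```python
def kf_predict(x, P, dt, q):
    """Prediction: x' = F x, P' = F P F^T + Q with F = [[1, dt], [0, 1]]."""
    pos, vel = x
    x_pred = [pos + dt * vel, vel]
    a = P[0][0] + dt * P[1][0]                 # first row of F P
    b = P[0][1] + dt * P[1][1]
    P_pred = [[a + dt * b + q, b],
              [P[1][0] + dt * P[1][1], P[1][1] + q]]
    return x_pred, P_pred

def kf_update(x_pred, P_pred, z, r):
    """Update with H = [1, 0]: weigh prediction against measurement z."""
    innovation = z - x_pred[0]                 # measured minus predicted
    s = P_pred[0][0] + r                       # innovation variance
    k0, k1 = P_pred[0][0] / s, P_pred[1][0] / s   # Kalman gain
    x = [x_pred[0] + k0 * innovation, x_pred[1] + k1 * innovation]
    P = [[(1 - k0) * P_pred[0][0], (1 - k0) * P_pred[0][1]],
         [P_pred[1][0] - k1 * P_pred[0][0], P_pred[1][1] - k1 * P_pred[0][1]]]
    return x, P

# Target moving at 2 m/s, measured once per second (noise-free for clarity).
x, P = [0.0, 0.0], [[100.0, 0.0], [0.0, 100.0]]
for k in range(1, 31):
    x, P = kf_predict(x, P, dt=1.0, q=0.01)
    x, P = kf_update(x, P, z=2.0 * k, r=1.0)
```

Although only position is measured, the velocity estimate converges because the off-diagonal covariance terms couple the two state components through the Kalman gain.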

4.2.2 Extended Kalman Filter (EKF)

The limitation of the Kalman filter is that it assumes Gaussian distributions and linear functions. Radar data involves non-linear functions, which must be linearized by approximation. This approximation is typically performed using a first-order Taylor series expansion, after which the EKF equations can be applied. Prediction step: The prediction step is similar to that of the Kalman filter.

where F is a matrix of state transition,

where Q is the process noise covariance and T stands for transpose. Update step: the difference between the measured value and the predicted value is given as,

where

where

Fig. 13 shows a pictorial presentation of the Extended Kalman filter method.

Figure 13: Extended Kalman filter algorithm

4.2.3 Unscented Kalman Filter (UKF)

The UKF is similar to the EKF and tries to address its problems. Here, the nonlinear unscented transformation replaces the linearization step of the EKF. A set of deterministically chosen sample points (sigma points) is passed through the exact nonlinear function, and the probability distribution of the state is approximated from the transformed points.

To work with non-Gaussian Radar systems, Bayesian filtering and the particle filter (PF) are sometimes employed. With the help of random samples, this method estimates the state Probability Density Function (PDF). The model for the system can be shown as,

where F is a transition matrix and

where H is taken as the measurement function and W is taken as zero-mean white noise. The PF algorithm approximates the posterior PDF P(x(t)|Z(1:t)) by particles, which are a set of weighted random samples. The initial prior distribution of the state P(x(0)) and the PDF P(x(t−1)|Z(1:t−1)) at time (t−1) are assumed to be known. The predicted PDF is written as,

The prediction is then updated with the help of the current measurement y(t) based on Bayes’ theorem,

in which P(y(t)|Z(1:t−1)) is a normalizing constant. The optimal state can be derived as

A limitation of this method is that the unknown integrals are hard to compute, so approximations are needed.
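The prediction and update recursion can be made concrete on a discrete grid of candidate states, where the integrals reduce to sums. This is a grid-based Bayes filter, used here as a deterministic stand-in for particles rather than a particle filter itself; the one-dimensional state, the Gaussian motion and measurement models, and all parameter values are assumptions for the example.

```python
import math

def gaussian(x, mean, sigma):
    """Unnormalised Gaussian kernel; normalisation cancels in the update."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)

def bayes_step(grid, prior, measurement, motion=1.0,
               sigma_process=0.5, sigma_meas=1.0):
    """One prediction + update cycle of the Bayesian recursion on a grid."""
    # Prediction: p(x_t | Z_1:t-1) = sum over x' of p(x_t | x') p(x' | Z_1:t-1)
    predicted = [sum(gaussian(x, xp + motion, sigma_process) * w
                     for xp, w in zip(grid, prior))
                 for x in grid]
    # Update: multiply by the likelihood p(y_t | x_t), then normalise
    # (the normalising constant is the sum of the products).
    posterior = [gaussian(measurement, x, sigma_meas) * p
                 for x, p in zip(grid, predicted)]
    total = sum(posterior)
    return [p / total for p in posterior]

# Target advancing 1 m per step; measurements are noise-free for clarity.
grid = [0.5 * i for i in range(41)]            # candidate positions 0 .. 20 m
belief = [1.0 / len(grid)] * len(grid)         # flat initial prior P(x(0))
for z in [6.0, 7.0, 8.0, 9.0, 10.0]:
    belief = bayes_step(grid, belief, z)
estimate = sum(x * p for x, p in zip(grid, belief))   # posterior mean
```

A particle filter follows the same two steps but represents the belief by weighted random samples instead of fixed grid cells, which scales better to higher-dimensional states.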

A single target localization method by applying a collocated MIMO-monopulse approach to FFT processing is adopted in a real-life experiment [79]. To improve the velocity uncertainty of a moving target, a cascaded KF as described in [80], can be applied, where KF is first applied on polar coordinates to derive velocity and predict the acceleration. Next, an EKF is used to improve velocity measurements and minimize measurement error when the motion state is in Cartesian coordinates, and measurements are provided in polar coordinates. An improved adaptive EKF is used in [81] to enhance the robustness and accuracy of the tracking process. A cubature Kalman filter (CKF) applies the cubature rules to approximate recursive Bayesian estimation integrals with a Gaussian assumption. The square-root CKF (SRCKF) algorithm distributes the factors, which are the square roots of the predicted and posterior error covariance matrices, to prevent the square rooting of the matrix. The iterative SRCKF algorithm in [82], iteratively optimizes the SRCKF measurement and update processes by the Gauss-Newton method, leading to a lower error component. In [83], a threshold method is first used to filter out ghost targets and empty targets. This is followed by application of the Adaptive Interactive Multiple Model Kalman Filter and the Hungarian algorithm for association and tracking of multiple targets, which reduces the error as compared to conventional UKF algorithms. In [84], a multi-target tracking algorithm based on a 4D Radar point cloud has been proposed for obtaining the intensity, location, velocity, and structure of the targets. The method provides compensation for point cloud clustering, velocity, static state, and dynamic state updates, as well as 3D border generation of the dynamic target using the Kalman filter, contour updates of the static target, and a target trajectory control procedure. 
Tracking of targets in the presence of velocity ambiguity requires a tracking algorithm with TDM where disambiguation of Doppler is done before angle estimation [85].

A reweighted robust PF (RR-PF) is proven to improve state values in a nonlinear model and is more robust to outliers [86]. The method utilizes inputs from the particle weights of true particles and filters out inputs from unreliable particles through the discriminative treatment of detected Radar data. A track-before-detect (TBD) algorithm uses the targets’ kinematic constraints on road and graph theory algorithms to define every plot as a potential target or clutter [87]. The algorithm involves a discriminant metric, which refers to mathematical calculation rules of a plot and its trajectory, followed by the state transition of the plot, and requires transition conditions. The post-processing is done for motion state estimation of confirmed targets, after which significant results in target detection and effective clutter removal are observed. A multi-frame TBD can adjust the threshold value of detection depending on the existence of mobile targets present within the Radar field-of-view and also considers the self-positioning errors of the ego vehicle [88]. Another application of TBD is the motion compensation technique on the dynamic programming-based TBD [89], which works for ground Radars to decrease the error of the conventional algorithm. In [90], the Cramer-Rao lower bound method is applied to detect the location and velocity of a mobile target, and an active sensing application is further used to improve tracking accuracy.

Linear Regression with KF: A machine learning algorithm like Linear regression can be applied with KF for more accurate estimation of target parameters. Linear regression helps identify a statistical relationship between an independent variable and a dependent variable. Here, time (t) can be assumed as independent and ‘

The motion in both ‘

4.3 Data Association and Measurement of Tracks

Data association is used to combine multiple detections from the same target into a single object. If an object does not match the current track, a new track has to be initialized. Thus, valid and false tracks are produced. The valid tracks are considered for updating the states [91]. In the global nearest neighbor (GNN) algorithm, the association depends on the minimum Euclidean distances between measured and predicted values. However, this algorithm performs poorly in high-clutter regions.
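A minimal sketch of GNN association is shown below. It enumerates all assignments and keeps the one with the smallest total Euclidean distance, which is only practical for a handful of tracks; brute-force enumeration is used here in place of an efficient assignment solver. The gate value and the function name `gnn_associate` are assumptions for the example.

```python
import math
from itertools import permutations

def gnn_associate(predicted, measured, gate=5.0):
    """Return {track_index: measurement_index} minimising the total distance.

    predicted : list of predicted track positions (x, y)
    measured  : list of measured positions (x, y), at least as many as tracks
    gate      : assumed maximum distance for accepting a pairing
    """
    if len(measured) < len(predicted):
        raise ValueError("sketch assumes at least one measurement per track")
    best, best_cost = (), float("inf")
    for perm in permutations(range(len(measured)), len(predicted)):
        cost = sum(math.dist(predicted[t], measured[m])
                   for t, m in enumerate(perm))
        if cost < best_cost:
            best, best_cost = perm, cost
    # Pairings beyond the gate are dropped; the track stays unassociated.
    return {t: m for t, m in enumerate(best)
            if math.dist(predicted[t], measured[m]) <= gate}

predicted_tracks = [(0.0, 0.0), (10.0, 0.0)]
measurements = [(9.5, 0.5), (0.4, -0.2), (30.0, 30.0)]
pairs = gnn_associate(predicted_tracks, measurements)
unassigned = [m for m in range(len(measurements)) if m not in pairs.values()]
```

Measurements left unassigned, such as the third one here, are the candidates for initializing new tracks.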

The Joint Probabilistic Data Association Filter (JPDAF) algorithm is more efficient for tracking targets, where the probability of

A micro-Doppler-based leg tracking framework for pedestrian detection to enable behavioral signs within one measurement cycle has been presented in [92]. A model is designed to estimate the spatial movement of the feet, segment the body in a vertical format, and extract the reflection points resulting from leg movement. An elevation-resolving antenna is used. Then, EKF is used for target tracking. After data association is completed with Joint Probabilistic Data Association (JPDA), the reflection points can be assigned to a particular leg. Then the location, kinematic data, and velocity of each foot can be filtered. In [93], an Interacting Multiple Model (IMM) algorithm with the JPDA algorithm is shown to achieve tracking of multiple maneuvering targets. Since the effect of this algorithm is less pronounced in the nonlinear case, UKF with Doppler measurement is applied to achieve better position and velocity accuracy. In [94], the spatial distribution of the measurement model produced by a target vehicle is presented using a variational Gaussian mixture (VGM) model. For mapping of the extended target tracking problem, the Probability Generating Functional formulation has been used.

An adaptive strong tracking extended KF (ASTEKF) helps to lessen the impact of state transitions and parameter changes on the measurement process and provides better resilience to interference [95]. The adaptive attenuation factor is updated whenever the time fading factor changes, helping to mitigate the divergence problem in the tracking process. This algorithm offers enhanced capacity to track abrupt changes in the target’s motion states. A number of clustering algorithms are used for identification, investigation, and tracking of targets [96]. The Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm can be applied to range-angle data to obtain the centroids of the cluster points, which then provide the target position [97]. An imaging method for a target moving at high speed utilizes the Doppler Range Processing (DRP) method to achieve velocity and range resolutions, thereby obtaining coherent integration gains through range-Doppler processing [98]. Initially, Doppler processing is performed using the FFT on slow-time samples. A velocity bin interpolation method is then applied, and lastly, range processing is done via FFT over Doppler migration lines. The computational complexity of this algorithm is calculated as

A hybrid smooth variable structure filter (SVSF) is presented in [99] by combining a generalized time-varying smoothing boundary layer (GVBL) and Tanh-SVSF to prevent parameter sensitivity and control the unwanted chattering effect. A non-linear generalized variable smoothing boundary layer (NGVBL) parameter is used to create a hybrid switching scheme that leads to an ideal Kalman filter (KF) in cases of low model uncertainty. For solving data association and clustering problems, a Deep Neural Network (DNN), called Radar Tracking Network or TrackNet, can be used, which applies point clouds of Radar data from several timestamps to detect the desired objects on the road and provide tracking information [100]. In this architecture, features are extracted independently for each cell and timestamp using a PointNet++-based method that incorporates farthest point sampling and multi-scale grouping. This is followed by convolution and max-pooling applied to smaller point clouds within each cell. For extended object tracking, a measurement modeling and estimation method, known as the data-region association process, partitions an object into several regions. A simple measurement distribution is defined over each region, and together these form a complex distribution for the whole target [101]. Also, a new gating method is used for data association.

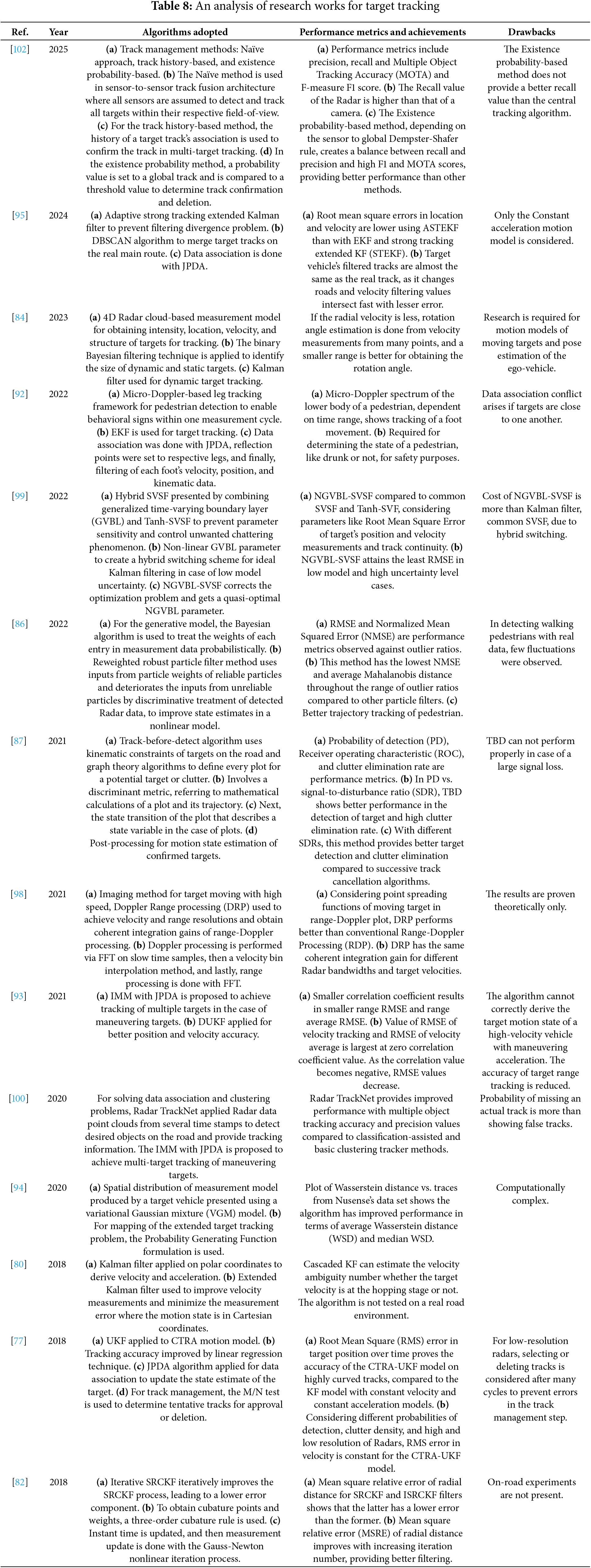

For multiple targets, a management process is required to filter false alarms and efficiently track targets under changing detectability scenarios [102]. Tracks can be classified into two categories: provisional and verified tracks. After each measurement round, the verified tracks are updated with the associated measurements in the first phase. The remaining measurements are tested for association with provisional tracks in the second phase. If these measurements do not correlate with known tracks, they initialize new provisional tracks. After further examination, these tracks are either confirmed or deleted. For this purpose, the M/N test can be applied, where a provisional track is confirmed if at least M detections are obtained in N scans of data. If K or fewer detections are obtained in N scans, the provisional track is rejected. A composite method can be formed by combining two or more M/N tests with a logical OR operation. This provides more accurate results with little increase in computational cost. For target tracking, a medium access control (MAC) technique has been adapted so that automotive Radars can share a common channel, and the best MAC parameter is suggested for a particular vehicle and the corresponding road traffic [103]. Table 8 contains a detailed analysis of research works available regarding target tracking using automotive Radar.
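The M/N confirmation logic and its composite OR form can be sketched as follows. The detection history is assumed to be a list of booleans, one per scan, and the function names and the example values of M, N, and K are hypothetical.

```python
def m_of_n_status(hits, m, n, k):
    """Classify a provisional track from its last n scans.

    hits : list of booleans, True where the track was detected in a scan
    Returns "confirmed" (>= m detections), "deleted" (<= k detections),
    or "provisional" (undecided or fewer than n scans available).
    """
    if len(hits) < n:
        return "provisional"
    count = sum(hits[-n:])
    if count >= m:
        return "confirmed"
    if count <= k:
        return "deleted"
    return "provisional"

def composite_confirmed(hits, tests):
    """Logical OR of several M/N tests, each given as a tuple (m, n, k)."""
    return any(m_of_n_status(hits, m, n, k) == "confirmed"
               for m, n, k in tests)

history = [True, False, True, True, True]
status = m_of_n_status(history, m=3, n=5, k=1)
# A short 2-of-2 test can confirm a track before the 3-of-5 window fills.
early = composite_confirmed([False, False, True, True], [(3, 5, 1), (2, 2, 0)])
```

The composite form lets a short, strict test confirm strong targets quickly while the longer test still catches targets with intermittent detections.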

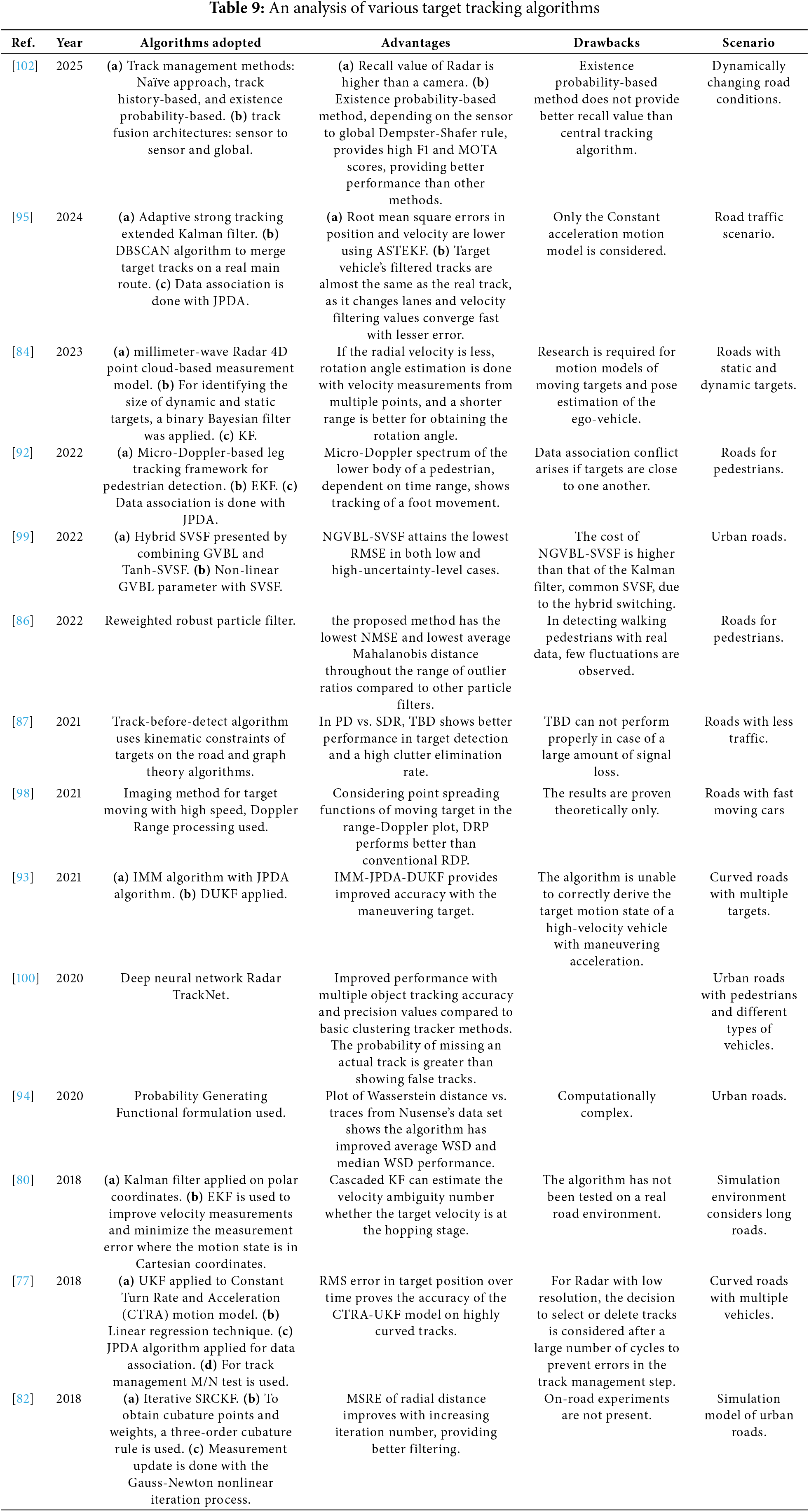

Table 9 contains an analysis of various algorithms for target tracking using automotive Radar.

5 Target Recognition and Classification

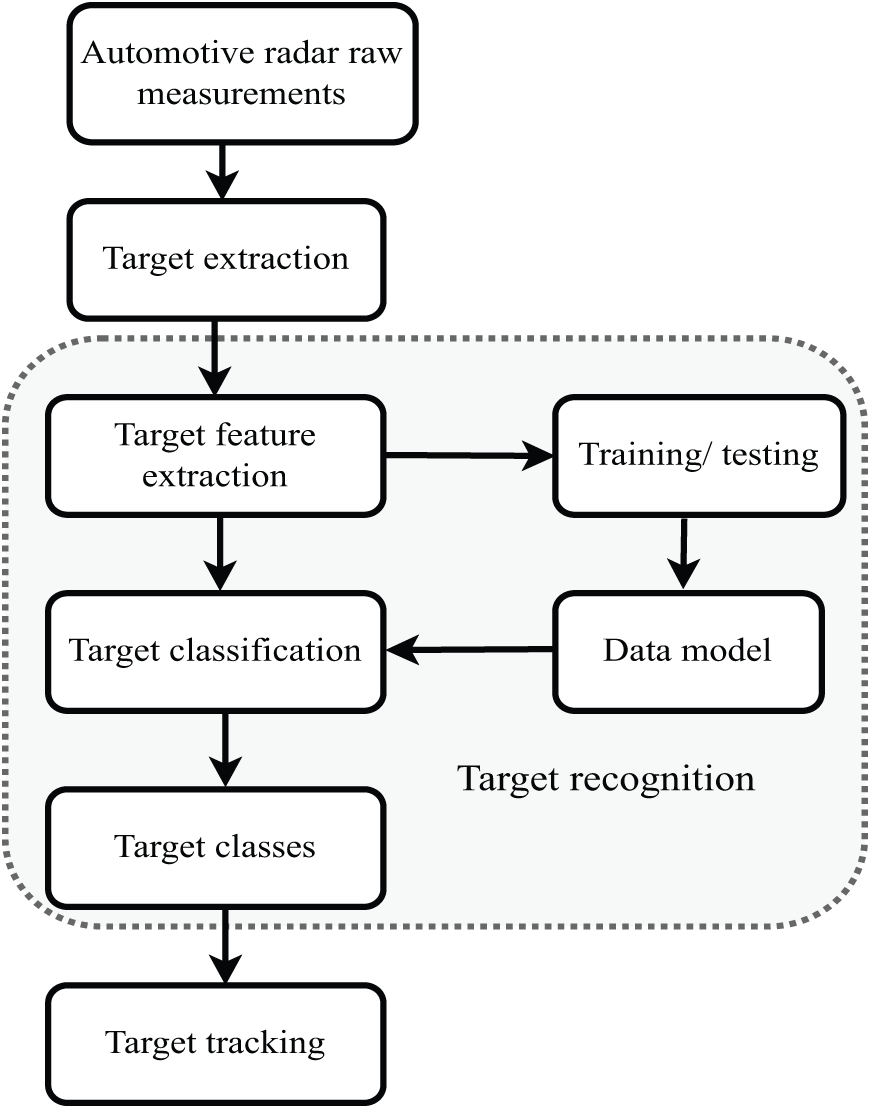

The road scenario in which automotive Radar operates is very cluttered, so the classification of targets with high accuracy is essential. A flowchart for the target recognition and classification process is depicted in Fig. 14. Using the raw Radar data, a potential target is observed and its features like RCS, range, and Doppler are extracted.

Figure 14: Signal flowchart of automotive radar for target recognition and classification

A training data set containing representative Radar data examples is required. The data measured from the target is then considered along with the training data, and the new data, i.e., the new target, is assigned to one of several classes or categories. The target recognition problem in Radars can be addressed from an ML algorithm perspective. The principle of ML is to find patterns in a set of data and then use these patterns to make predictions or to classify the remaining data. ML algorithms are divided into three types: supervised, semi-supervised, and unsupervised learning algorithms. Supervised methods are applied when labeled training datasets are available to predict the output of the algorithm; well-known examples include the K-Nearest Neighbor (KNN) algorithm, the Support Vector Machine (SVM) algorithm, and Artificial Neural Networks (ANN). Semi-supervised methods are used when labeled data is insufficient and unlabeled data is additionally used for training the algorithm; certain Convolutional Neural Networks (CNNs) fall into this category. Unsupervised algorithms such as K-means clustering and Principal Component Analysis (PCA) are applied when labeled data is not available for training purposes.

5.1 K-Nearest Neighbor (KNN) Algorithm

In the scenario of Automotive Radar data, the supervised learning algorithm, KNN algorithm [104], can be used to classify Radar signals into different categories based on their similarity to other signals. To derive this classifier, a training set containing representative examples of the Radar data is required. Data obtained from the Range-Doppler map is considered. The main steps for the KNN algorithm can be written as:

1. Calculation of distance between the fresh data point, known as query, and every data point in the training dataset with the help of a distance metric, e.g., Euclidean distance as used here:

where

2. This distance is computed between the new data point and each existing data point;

3. Once all distances are obtained, these are sorted in ascending order to find the k-nearest neighbors;

4. The k-nearest neighbors with the smallest distances are selected with

5. Lastly, the class of the query point is obtained based on the majority class among the k-nearest neighbors.
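The five steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation of [104]: the function name `knn_classify`, the two-dimensional (range, radial velocity) features, and the labels are all hypothetical values chosen for the example.

```python
import numpy as np
from collections import Counter

def knn_classify(query, train_x, train_y, k=3):
    """Classify one query vector by majority vote among its k nearest neighbors."""
    train_x = np.asarray(train_x, dtype=float)
    query = np.asarray(query, dtype=float)
    # Steps 1-2: Euclidean distance from the query to every training point.
    distances = np.sqrt(np.sum((train_x - query) ** 2, axis=1))
    # Steps 3-4: sort in ascending order and keep the k smallest distances.
    nearest = np.argsort(distances, kind="stable")[:k]
    # Step 5: majority class among the k nearest neighbors.
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical features: (range in m, radial velocity in m/s), with made-up labels.
train_x = [[10.0, 1.2], [12.0, 1.0], [11.0, 1.5],
           [40.0, 15.0], [42.0, 14.0], [38.0, 16.0]]
train_y = ["pedestrian", "pedestrian", "pedestrian",
           "vehicle", "vehicle", "vehicle"]

label = knn_classify([39.0, 15.5], train_x, train_y, k=3)
print(label)
```

In practice the features would usually be normalized first, since the Euclidean distance is otherwise dominated by whichever feature has the largest numerical range.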

5.2 Support Vector Machine (SVM) Algorithm

SVMs are a set of supervised learning algorithms used for target classification, regression, and outlier detection [23]. From the infinitely many possible decision boundaries, the SVM algorithm chooses the hyperplane that leaves the largest margin to the nearest data points, which are called support vectors. A linear classifier is of the form,

where

The optimization problem is stated as,

This problem is expressed by defining the Lagrangian

where

After substituting

The original optimization problem can finally be given as,

So, if
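For reference, the standard hard-margin linear SVM derivation that these steps follow can be sketched as below. The notation is an assumption chosen for illustration and is not necessarily that of [23]: $\mathbf{x}_i$ are the training feature vectors, $y_i \in \{-1, +1\}$ their labels, $\mathbf{w}$ the weight vector, $b$ the bias, and $\alpha_i$ the Lagrange multipliers.

```latex
% Linear classifier (assumed notation)
f(\mathbf{x}) = \mathbf{w}^{T}\mathbf{x} + b

% Primal problem: maximizing the margin 2/\|\mathbf{w}\|
\min_{\mathbf{w},\,b}\ \tfrac{1}{2}\|\mathbf{w}\|^{2}
\quad \text{s.t.} \quad
y_i\left(\mathbf{w}^{T}\mathbf{x}_i + b\right) \ge 1,\quad i = 1,\dots,N

% Lagrangian with multipliers \alpha_i \ge 0
L(\mathbf{w}, b, \boldsymbol{\alpha}) = \tfrac{1}{2}\|\mathbf{w}\|^{2}
  - \sum_{i=1}^{N} \alpha_i \left[\, y_i\left(\mathbf{w}^{T}\mathbf{x}_i + b\right) - 1 \,\right]

% Stationarity conditions
\frac{\partial L}{\partial \mathbf{w}} = 0 \;\Rightarrow\;
\mathbf{w} = \sum_{i=1}^{N} \alpha_i y_i \mathbf{x}_i,
\qquad
\frac{\partial L}{\partial b} = 0 \;\Rightarrow\;
\sum_{i=1}^{N} \alpha_i y_i = 0

% Dual problem obtained after substitution
\max_{\boldsymbol{\alpha}}\ \sum_{i=1}^{N} \alpha_i
  - \tfrac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N}
    \alpha_i \alpha_j\, y_i y_j\, \mathbf{x}_i^{T}\mathbf{x}_j
\quad \text{s.t.} \quad
\alpha_i \ge 0,\quad \sum_{i=1}^{N} \alpha_i y_i = 0
```

Under this formulation, only the training points with $\alpha_i > 0$ (the support vectors) contribute to $\mathbf{w}$, and a new sample $\mathbf{x}$ is assigned to the class given by the sign of $f(\mathbf{x})$.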

A classifier based on bidirectional long short-term memory (LSTM) uses relative velocity, range, and signal amplitude as features to distinguish ground-level targets from overhead targets on real roads, which is useful for collision avoidance [105]. FFT and cell-averaging CFAR (CA-CFAR) have been used in the implementation of this LSTM classifier, which provides a precision of around

A hybrid method combining SVM and CNN techniques for target classification is proposed in [112]. First, the range-Doppler image is obtained by a 2D DFT, followed by the extraction of target features using CA-CFAR and a DBSCAN algorithm. Then, SVM is applied for the first-stage classification, and finally, the remaining image samples with no identified category are fed into the CNN for retraining. Based on the conventional RCS, the Root Radar Cross Section (RRCS) is defined in [113] for real-time target classification using a 77 GHz FMCW Radar. The RRCS is determined by using the amplitudes of the received and transmitted signals in the frequency domain. Hence, the reflection characteristics of targets can be extracted from the amplitude of the transmitted signal. The SVM can be applied to pedestrian and vehicle classification based on the proposed characteristics obtained from the RRCS. Another method, which uses SVM in conjunction with a Deep Learning (DL) model, specifically You Only Look Once (YOLO), for classifying vehicles and humans, is presented in [114]. The range-angle Cartesian plot is transformed into an image and used for training and classification with the YOLO V3 model. The SVM utilizes the target bounding boxes from the YOLO V3 model to enhance classification, and by combining both results, the classification performance is further improved. YOLO V3 is also presented in [115] for classifying humans, vehicles, and aerial vehicles such as drones. It is applied after detecting range and angle using a rotating millimeter-wave (mmWave) FMCW Radar, where the range is calculated from analog-to-digital converter (ADC) samples, and the angle axis is calculated from the rotational frames. Another application of YOLO trained using a transformed range-angle domain is presented in [116].
In a Radar image, YOLO treats the prediction of bounding boxes and class probabilities as a single regression problem, inferring the location and type of the target by looking at the image only once, hence the name. The image is partitioned into grid cells, where each cell predicts bounding boxes and a confidence score representing the likelihood of the target’s presence, based on the intersection over union (IoU). The performance of this model is reported as mean average precision (mAP). YOLO V4 is also used in [117] to obtain IoU values. The YOLO V5 model has been utilized for human classification to achieve improved accuracy [118]. DL can also be applied to imaging Radar data to classify vehicles, pedestrians, and cyclists and to estimate their direction [119].
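As a small illustration of the IoU measure mentioned above, the following sketch computes it for two axis-aligned bounding boxes. The `(x_min, y_min, x_max, y_max)` box format and the example coordinates are assumptions made for this example; YOLO implementations often use a center/width/height format instead.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlap rectangle (empty if the boxes do not intersect).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Hypothetical predicted and ground-truth boxes in pixel coordinates.
predicted = (0, 0, 2, 2)
ground_truth = (1, 1, 3, 3)
score = iou(predicted, ground_truth)  # overlap 1, union 7
```

A detection is typically counted as correct when its IoU with a ground-truth box exceeds a chosen threshold, and mAP is then computed from the resulting precision-recall curves.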

In [120], pedestrians’ walking speed and hand movement are classified by first applying unsupervised Principal Component Analysis (PCA) for feature extraction. Supervised classification algorithms such as SVM and KNN are then used to distinguish between fast walking, slow walking, and slow walking with hands in pockets. A high-fidelity physics-based simulation method has been used in [121] to obtain several spectrograms from the micro-Doppler parameters of vulnerable road users such as pedestrians. These spectrograms serve as the training data for a 5-layer convolutional neural network, which achieves nearly

Considering the information obtained from a target’s statistical RCS, the classification accuracy of around

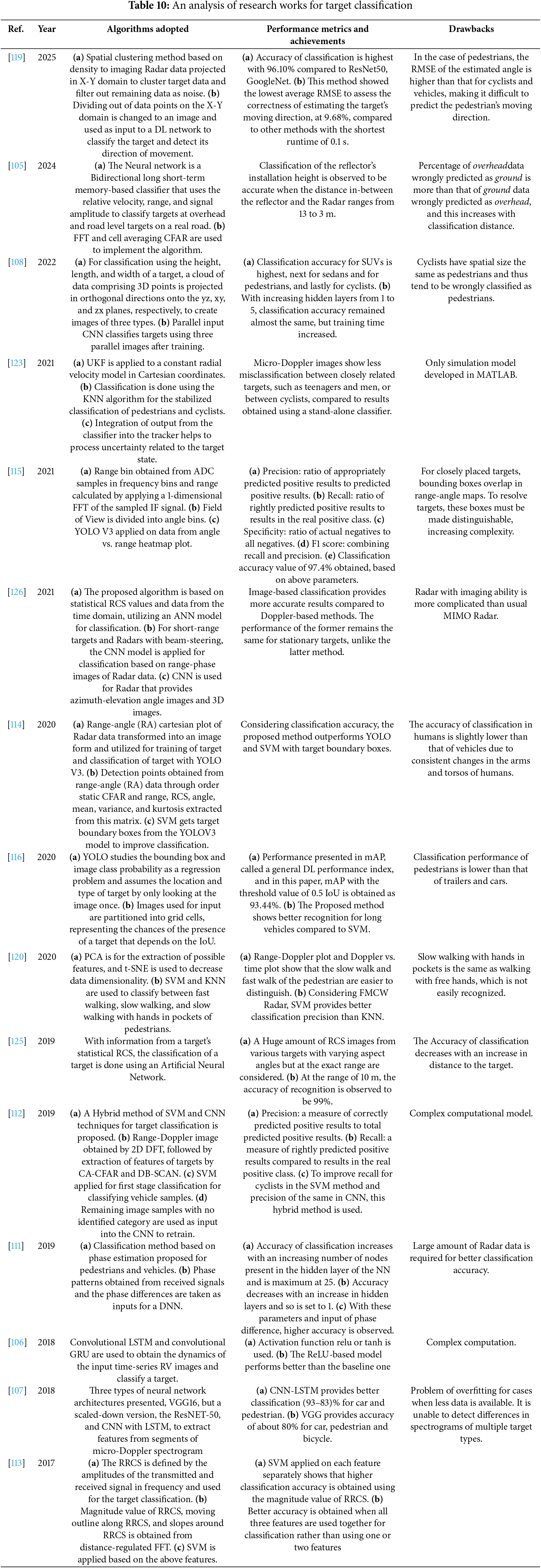

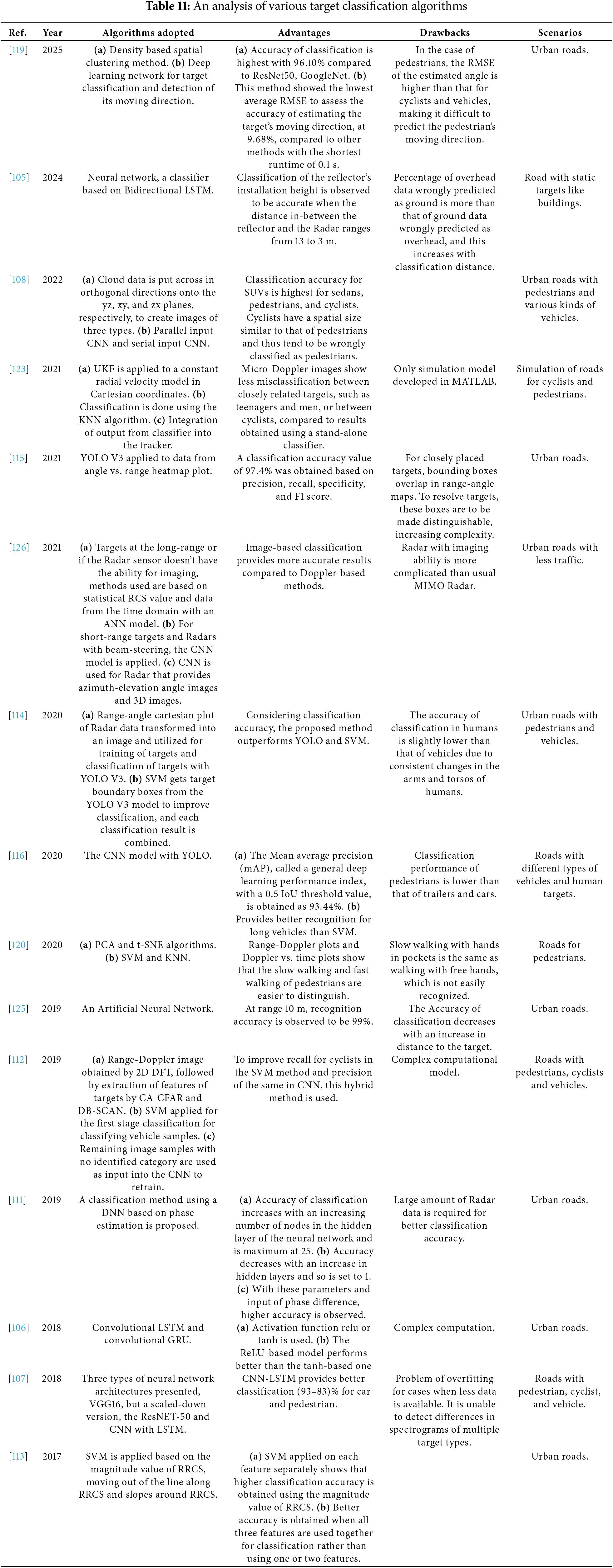

Table 11 contains an analysis of various algorithms used for target classification by automotive Radar.

6 Research Challenges and Future Scope

6.1 Challenges in Automotive Radar Signal Processing

The main challenges in the signal processing of automotive Radar are briefly explained below.

1. Interference—The growing number of radar-equipped vehicles on the road leads to interference, such as self-interference originating from Radar signals reflected by the vehicle and radome, cross-interference between separate Radars on the same vehicle, and cross-interference from Radars on other vehicles. Based on the waveforms, these are primarily FMCW-FMCW and FMCW-PMCW interference, and the interference level depends on the separation between Radars, the beam pattern, and the signal processing method. Interference increases the likelihood of false alarms and obscures actual targets.

Solution: Methods like matched filtering for reducing FMCW waveform interference, and CDMA for reducing PMCW waveform interference are used. Recently, neural network-based mitigation methods have been studied.

2. High resolution—Automotive Radar is required to obtain information on the surrounding targets and classify them. For this purpose, high resolution is required in range, Doppler, elevation, and azimuth angles. High resolution is obtained using 2D-FFT in the range-Doppler domain, increasing the processor cost.

Solution: To maintain a balance between angular resolution and unambiguous field of view in uniform rectangular arrays (URA), the resolution and field of view are controlled through the horizontal and vertical antenna spacings. More research is underway to properly calibrate the array after vehicle integration and throughout the Radar’s lifespan.

3. Estimation of parameters in Multipath and clutter scenarios—The operation scenario includes various targets like pedestrians, vehicles, animals, bridges, road structures, etc. Automotive Radar has to operate accurately to detect, track, and classify every target even in the presence of multipath propagation in urban road scenarios. The multi-path effect increases false alarms. The tracking of low-altitude targets, such as vehicles, is affected mainly by ground clutter.

Solution: Radar detection methods, based on the Convolutional Neural Network, can be used for complex clutter conditions.

4. Multi-target detection and ghost object removal—The detection of multiple targets is a difficult task, as it involves correctly clustering the Radar data associated with each target and tracking the position of every moving target. Multi-target tracking algorithms can be applied to track the positions of multiple targets continuously. Sometimes, multiple reflections of the Radar signal produce ghost targets, i.e., detections of targets that are not actually present.

Solution: To eliminate such an ambiguity, neural network-based classifiers can be used.

5. Detection in low SNR environments: Radars can usually operate in low SNR environments, such as weather conditions with fog or snow. In very adverse conditions, however, detection problems may occur, especially in automotive applications.

Solution: In extremely bad weather conditions, continuous wave Radars with longer observation times are required for long-range detection and high range resolution.

6. Real-time constraints in embedded processing. The operation of automotive Radar is a time-critical process. The Radar is designed to detect and track targets, update target positions, and monitor the surrounding environment, all within a strict time budget; failing to do so can lead to accidents.

Solution: Adaptive signal processing and efficient algorithms with reduced computational complexities help to provide real-time, accurate Radar data.

7. Dataset scarcity and lack of standardization—Authorities such as EURO NCAP and the New Car Assessment Program for Southeast Asia (ASEAN NCAP) exist for automotive Radar standardization. However, a globally standardized set of regulations is still unavailable. Additionally, the scarcity of real-time Radar data is another obstacle to further research in this field.

Solution: Country-specific Radar datasets are available for public use, which resolves this issue to some extent.
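To make the 2D-FFT processing mentioned under the high-resolution challenge concrete, the sketch below builds a range-Doppler map from a synthetic beat signal. It assumes an idealized, noise-free single target placed exactly on an FFT bin; the array sizes, bin positions, Hann windowing, and the function name `range_doppler_map` are illustrative choices and not taken from any cited work.

```python
import numpy as np

def range_doppler_map(beat):
    """Magnitude range-Doppler map from a (num_chirps, samples_per_chirp) beat signal."""
    num_chirps, num_samples = beat.shape
    # Hann windows along both axes reduce sidelobes at the cost of a wider main lobe.
    window = np.hanning(num_chirps)[:, None] * np.hanning(num_samples)[None, :]
    # First FFT along fast time (within a chirp) resolves range.
    range_fft = np.fft.fft(beat * window, axis=1)
    # Second FFT along slow time (across chirps) resolves Doppler.
    rd = np.fft.fft(range_fft, axis=0)
    # Shift the Doppler axis so that zero velocity sits in the middle row.
    return np.abs(np.fft.fftshift(rd, axes=0))

# Hypothetical frame: 32 chirps of 64 samples, one target at range bin 10, Doppler bin 5.
num_chirps, num_samples = 32, 64
range_bin, doppler_bin = 10, 5
n = np.arange(num_samples)[None, :]   # fast-time sample index
m = np.arange(num_chirps)[:, None]    # chirp (slow-time) index
beat = np.exp(2j * np.pi * (range_bin * n / num_samples + doppler_bin * m / num_chirps))

rd_map = range_doppler_map(beat)
peak = np.unravel_index(np.argmax(rd_map), rd_map.shape)
# After the Doppler shift, bin 5 appears at row 5 + num_chirps // 2.
```

For M chirps of N samples each, the 2D FFT costs on the order of MN log(MN) operations per frame and per receive channel, which is why finer range and Doppler resolution translates directly into higher processor cost.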

6.2 Innovations and Future Trends

The focus of research in automotive Radar has shifted from hardware to millimeter-wave systems and RF signal processing methods. Accordingly, recent research has focused on digital modulation techniques, Cognitive Radar, Radar imaging, integrated sensing and communication, machine learning, and Quantum Radar. The research and evolution in this sector are briefly outlined here [15]:

1. AI-driven Radar and Cognitive Radar: Target classification is required for risk assessment, sensing the resources, and finally, automated control. For target recognition and classification, a machine learning algorithm is usually adopted. For this purpose, a large real or synthetic Radar dataset needs to be available for further work. Artificial Intelligence and Machine Learning algorithms can be further utilized for localization, interference reduction, waveform design, and other specialized technologies. For example, neural networks such as CNNs can be applied to the processing of non-clustered target detections [129]. In [130], a Deep Learning and Image Processing-based Height Estimator is applied to create a real-time system that uses image data to obtain the heights of buildings in the paths of unmanned aerial vehicles. Here, Google Street View images are used, which could be replaced with Radar images. An automated multi-path annotation method converts a conventional large Radar dataset into multi-path labeled data, to which deep learning-based signal processing is applied to address the challenges present in such scenarios [131]. A Cognitive Radar [132] can sense the environment, reason, and learn with the help of supervised techniques, and finally adapt its parameters to changes in the scenario.

2. Radar imaging and 4D Radar—Imaging Radar is an innovative application, beneficial for measuring target RCS. Here, the echoes from the target are converted into digital form, sent to the data recorder for processing, and finally shown as an image. Range-azimuth imaging can be obtained by applying a Super-Resolution Angular Spectra Estimation Network [133]. Another such technique is the 4D Radar [134,135], which measures the elevation and azimuth angles, range, and Doppler of the target while providing high resolution and a wider field of view. For 4-dimensional imaging Radar, a MIMO-PMCW waveform is better suited than a MIMO-FMCW waveform [136]. Coherent Radar networks can be applied to create a Radar image with better SNR and azimuth resolution and to obtain the vectorial velocities of targets [137].

3. Integrated sensing and communication (ISAC)—In ISAC, the Radar and communication systems can coexist by sharing the frequency spectrum, by sharing the same hardware, or by using waveforms from the communication system for Radar operation [138]. Understanding ISAC also requires in-depth knowledge of communication systems. For example, in [139], the structure of cell-free massive MIMO has been described for wireless communication networks and green communication methods.

4. Radar simulation and synthetic data for training—To compensate for the scarcity of real-time Radar data, synthetic data is artificially produced. This is very beneficial for the training process of the ML algorithms used for target tracking and classification.

5. Quantum Radar and metamaterial-based Radars—Recent innovations involve Quantum Radar [140], which generates quantum entangled signals related to a reference signal present at the receiver. This Radar performs better than conventional ones under weak signal echoes and high noise, and is quite resilient to deception and electronic jamming.

6.3 Utility of Autonomous Driving

The main application of automotive Radar is to prevent accidents by using warning signals and automated safety functions, and thus achieve the Vision Zero objective of zero deaths in traffic accidents. The main benefits of autonomous driving can be listed as follows:

1. Safer Roads—As stated in the introduction, reducing road accidents is one of the most important motivations for autonomous vehicles. Automotive Radar sensors can perceive the environment better than human drivers; thus, driving errors like drunk driving and sleepy driving will be significantly reduced.

2. Improved traffic management and fuel efficiency—Automated vehicles will lead to better traffic management and reduced accident rates. Additionally, as vehicles are designed to accelerate and brake more efficiently, fuel efficiency is expected to improve, resulting in reduced carbon emissions and thus benefiting the environment as a whole.

3. Free time for drivers—In levels 3, 4, and 5 of automation, most of the driving tasks will be automated, so drivers will have more time to spend on themselves. Accidents caused by drowsy drivers are widespread; with automation, drivers can rest on long rides.

4. Improved way of living—Even disabled persons and older citizens would be able to experience driving instead of relying on others.

5. Providing newer job opportunities—Job opportunities will be created in automobile, electronics, and software engineering, among others. With the mass production of automated cars, their price will eventually decrease, making them more affordable for the general public.

7 Benchmark Datasets and Standards for Automotive Radar

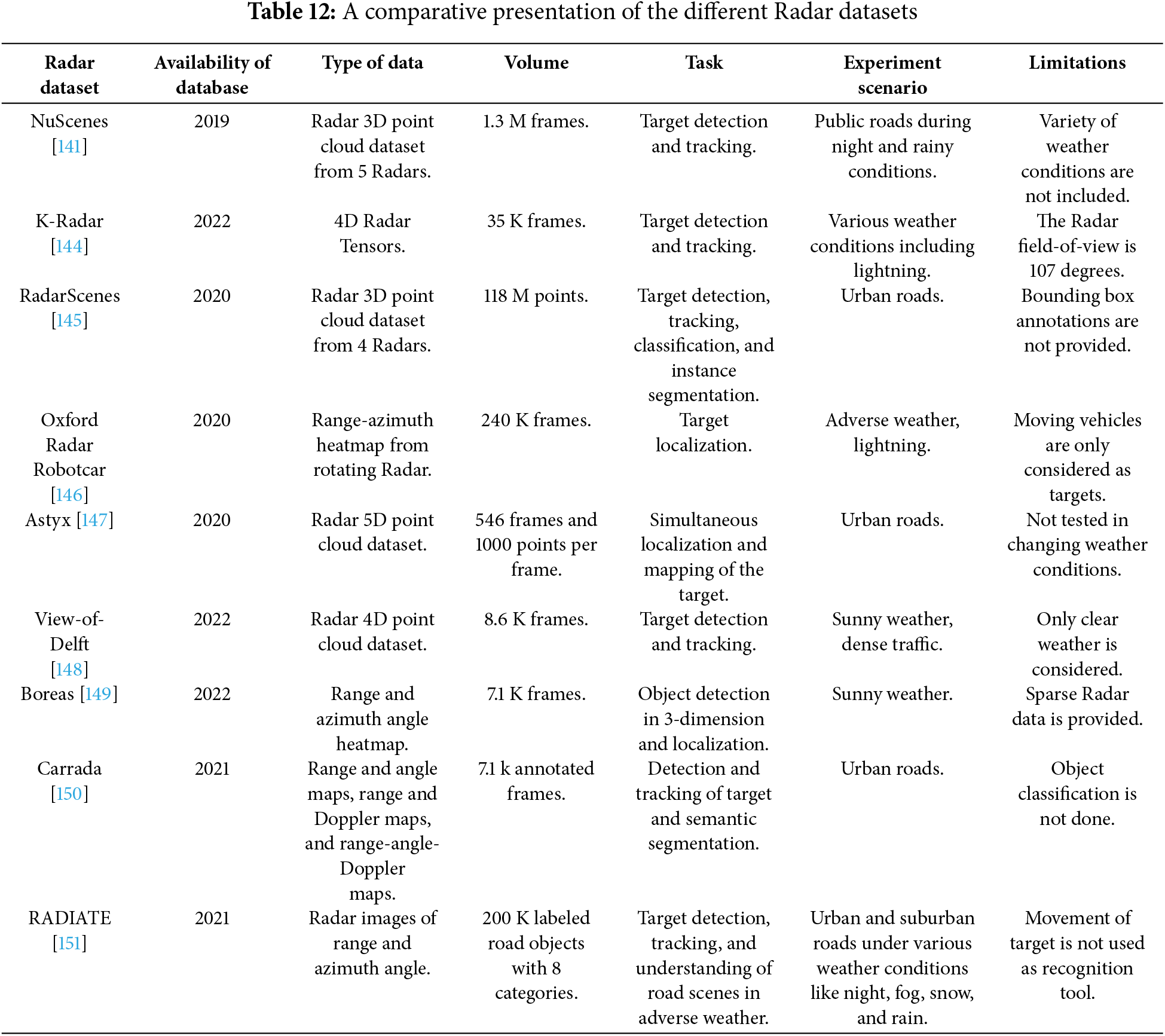

Recent innovations in automotive Radar for target detection, tracking, and classification are being achieved by using ML algorithms. To train these ML algorithms, a large Radar dataset is required, which contains an authentic and detailed description of the surrounding environment. Several Radar datasets are publicly available, and the important ones are described concisely in this work. These datasets are compared in Table 12.