Open Access

Open Access

REVIEW

A Survey of Large-Scale Deep Learning Models in Medicine and Healthcare

School of Computer Science, Nanjing University of Information Science and Technology, Nanjing, 210044, China

* Corresponding Author: Yangyang Guo. Email:

# These authors contribute equally to this work and share first authorship

Computer Modeling in Engineering & Sciences 2025, 144(1), 37-81. https://doi.org/10.32604/cmes.2025.067809

Received 13 May 2025; Accepted 15 July 2025; Issue published 31 July 2025

Abstract

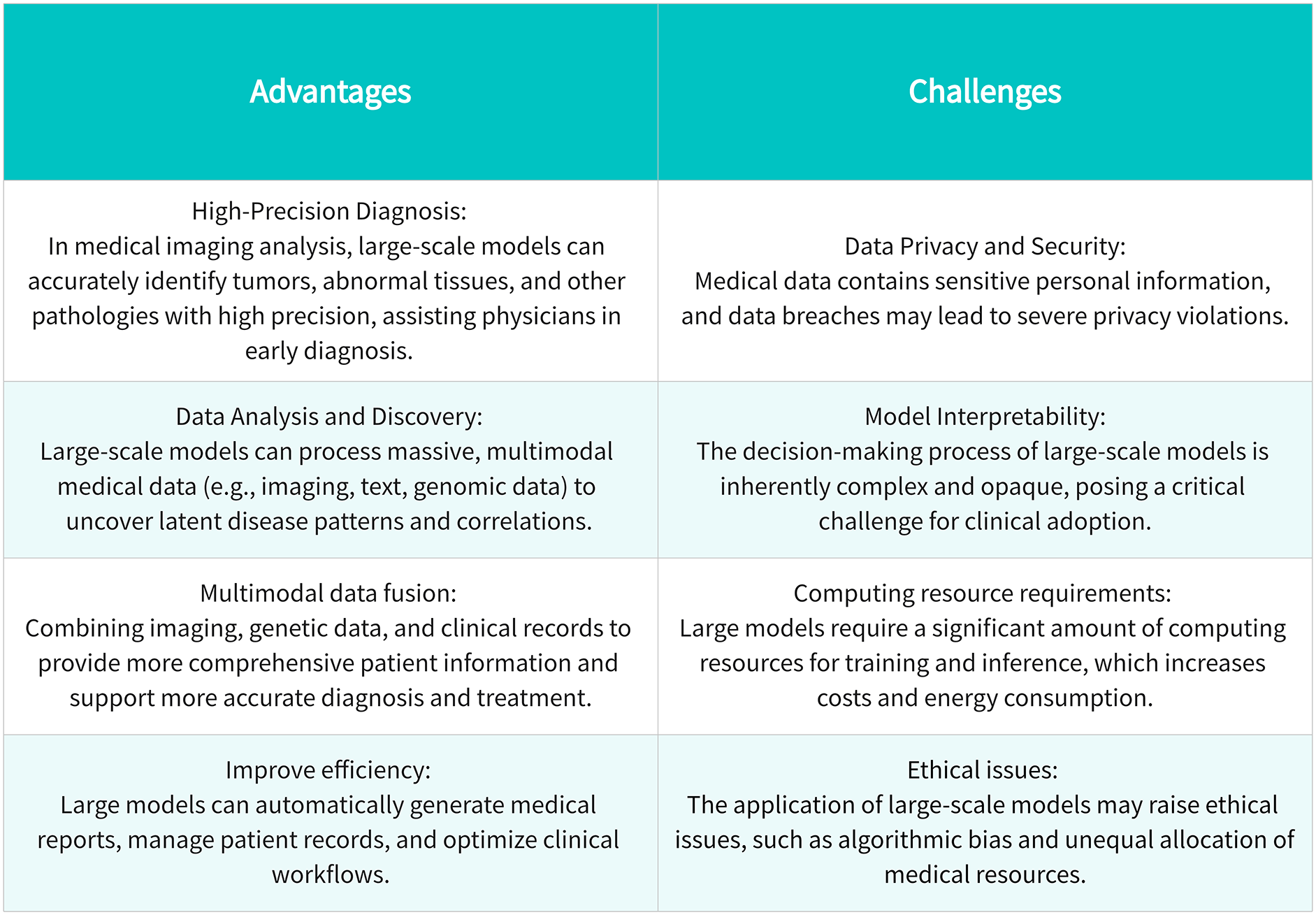

The rapid advancement of artificial intelligence technology is driving transformative changes in medical diagnosis, treatment, and management systems through large-scale deep learning models—a process that brings both groundbreaking opportunities and multifaceted challenges. This study focuses on the medical and healthcare applications of large-scale deep learning architectures, conducting a comprehensive survey to categorize and analyze their diverse uses. The survey results reveal that current applications of large models in healthcare encompass medical data management, healthcare services, medical devices, and preventive medicine, among others. Concurrently, large models demonstrate significant advantages in the medical domain, especially in high-precision diagnosis and prediction, data analysis and knowledge discovery, and enhancing operational efficiency. Nevertheless, we identify several challenges that need urgent attention, including improving the interpretability of large models, strengthening privacy protection, and addressing issues related to handling incomplete data. This research is dedicated to systematically elucidating the deep collaborative mechanisms between artificial intelligence and the healthcare field, providing theoretical references and practical guidance for both academia and industry.Keywords

The convergence of AI and medicine, particularly the incorporation of large-scale deep learning models, introduces unparalleled opportunities and challenges in the domains of medical diagnosis, treatment, and management [1,2]. Recent reviews, such as Nazi and Peng, further consolidate the growing body of research on the potential and constraints of large language models in medical applications, underscoring their transformative potential [3]. In this article, ‘large models’ refer to a class of deep learning neural networks that are characterized by large parameter sizes (usually ranging from billions to trillions) and have demonstrated excellent performance in various tasks such as natural language processing (NLP) and computer vision (CV). The training of these models usually includes two main stages: pretraining and fine-tuning. In the pretraining stage, the model learns general data representations and patterns on large unlabeled datasets through self-supervised learning methods; the fine-tuning stage adapts the model to specific tasks.

Large models obtain rich and general data representations through pretraining, which enables them to effectively adapt to different tasks in the fine-tuning stage, achieving multitask learning and reducing the need for task-specific models. These models demonstrate versatility across domains, executing natural language processing (text generation, machine translation, sentiment analysis), visual computing (image categorization, object recognition, pixel-wise segmentation), and multimodal applications spanning healthcare and finance. Although LLMs have shown promising prospects in healthcare, they also face challenges, including data privacy, model interpretability, and ethical issues. As a cutting-edge representative of AI, large models are swiftly penetrating the healthcare landscape, furnishing more precise, expeditious, and dependable support for clinical decision-making. On November 30, 2022, OpenAI, the American AI company, launched a notable Large Language Model (LLM) named ChatGPT, distinguished by its outstanding natural language processing (NLP) capabilities. The advent of ChatGPT marks a major breakthrough in the application of artificial intelligence in the field of healthcare, providing new possibilities for patient consultation, case analysis, and health education [4]. Currently, research efforts have explored the utilization of authentic clinical texts to develop a generative LLM termed Ga-torTronGPT, assessing its efficacy in biomedical NLP and healthcare text generation [5]. Models trained with synthetic medical text generated by GatorTronGPT outperformed models trained with real clinical text on some tasks. Yet, concurrent with this technological progression, a gamut of contemplative issues has surfaced, scrutinizing not only the prowess of technology but also the quality and enduring nature of healthcare services [6].

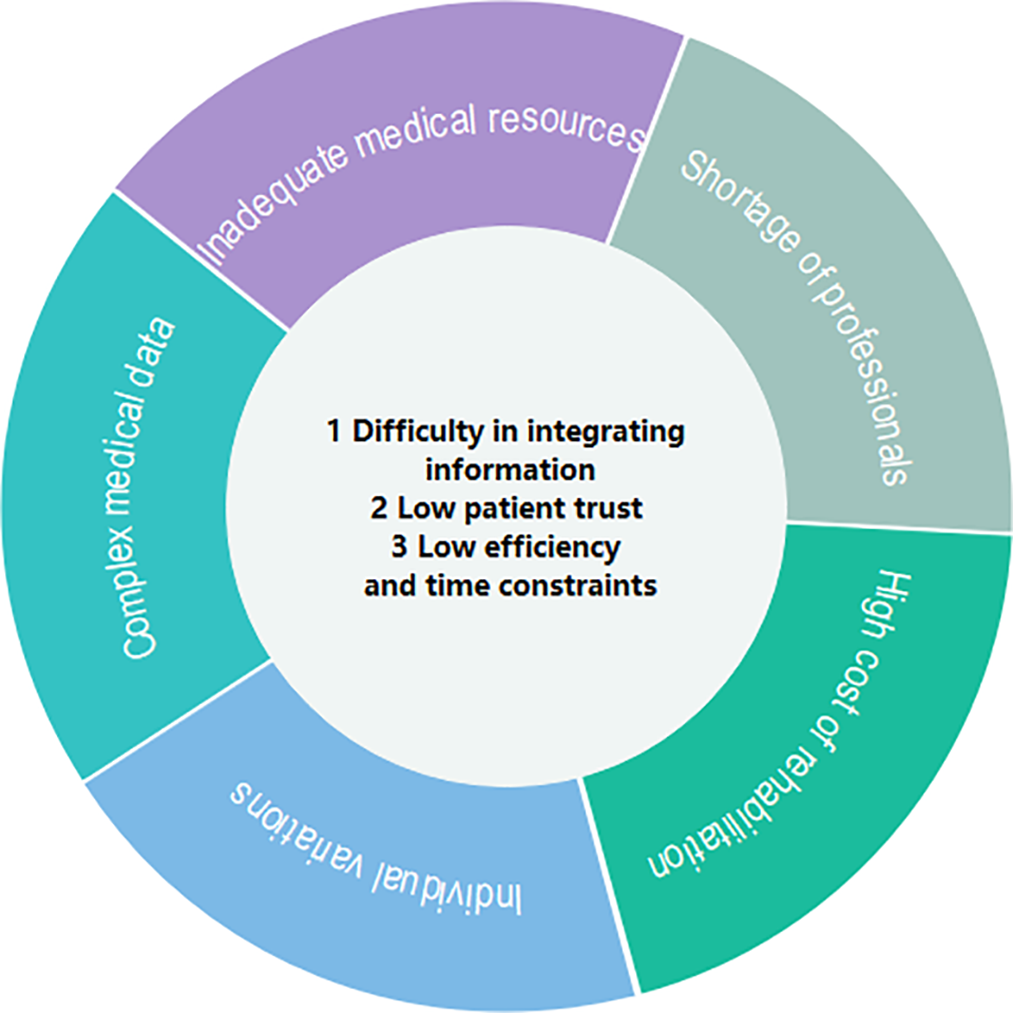

The healthcare sector processes a large amount of data, including patient records, medical images, and laboratory tests. However, traditional manual analysis methods can no longer meet the needs of fast processing and accurate acquisition of key information [7]. Although large-scale deep learning models have achieved remarkable success in natural language processing and image recognition, their complexity and specificity still bring challenges to healthcare applications [8]. The intricacy of medical data is not only evident in its massive volume but also in its heterogeneity and dynamism, presenting unprecedented complexity in the design and application of models [9]. In this context, there is an urgent need to explore how to better leverage large models to serve patients, support medical decision-making, and propel the development of medical research. In practical applications, the complexity of large models and their computational resource requirements pose a series of challenges [10]. For many healthcare institutions and research teams, achieving efficient applications of large models entails overcoming not only technical challenges but also addressing limitations in hardware and resources. Consequently, the pressing issue at hand is how to better apply large models in resource-constrained environments within medicine and healthcare.

Large-scale deep learning models have become a pivotal catalyst for transformative advancements in medical and healthcare domains [11,12]. From the intelligent processing of medical images to the analysis of patient records, these models play an increasingly vital role in clinical decision-making, disease diagnosis, and the formulation of treatment plans [2]. Our work focuses on various applications of large-scale deep learning models in medicine and healthcare, systematically exploring and summarizing the advantages of large models in healthcare. Through a comprehensive analysis of existing research and practical cases, our aim is to provide a thorough understanding of the potential value of large models for healthcare practitioners, researchers, and decision-makers in medicine and healthcare. To ensure comprehensive coverage of relevant literature, we systematically searched multiple databases, notably PubMed and Google Scholar. In the case of Google Scholar, only the first 50 most pertinent studies were included, as further results exhibited diminishing relevance and deviated from the study’s core objectives. Additionally, the technical search was based on abstracts and titles, with our search scope limited to English articles published between 2019 and 2023.

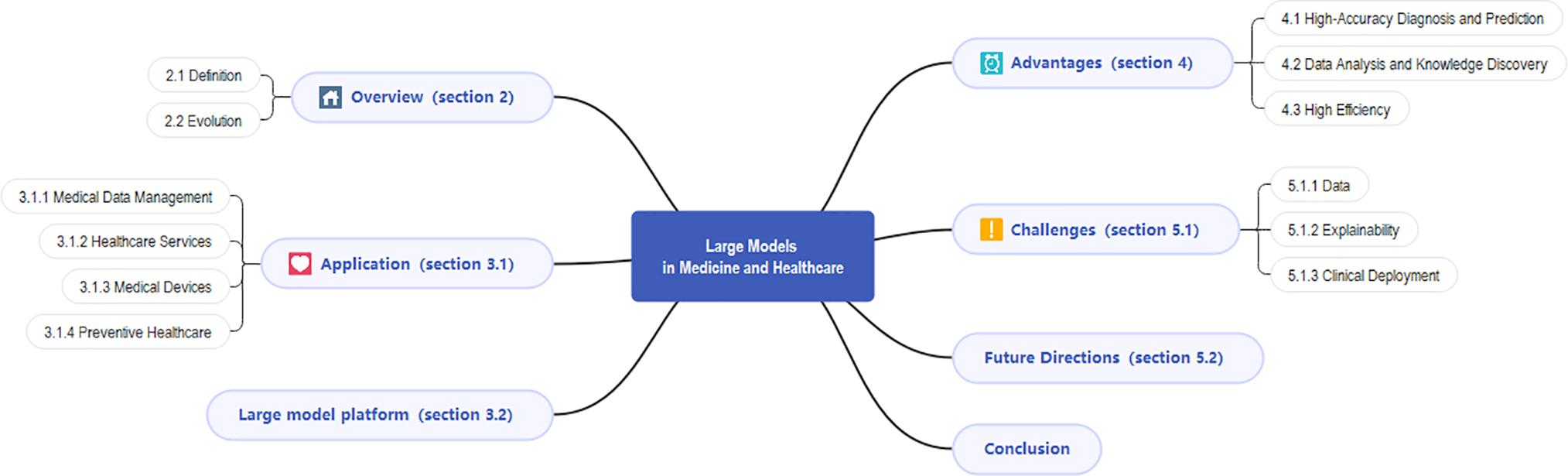

This review will systematically discuss the applications of large models in medicine and healthcare and their unique potential in the realm of medicine. As shown in Fig. 1 below, in Section 2, we will present a comprehensive examination of large-scale models, including their fundamental principles and developmental history. In Section 3, we will primarily analyze the overall application of large models in medicine and healthcare, with Table 1 summarizing existing studies for comparison with our paper. Subsequently, in Section 4, we will concentrate on the advantages of LLMs in healthcare, exploring their specific performances in high-precision diagnosis, data analysis, and knowledge discovery, and enhancing work efficiency. Finally, in Section 5, we will review the current challenges faced by the healthcare sector to gain a more comprehensive understanding of the application prospects of large models in healthcare. This paper will conclude with a conclusion section serving as an overall summary of the research, emphasizing the potential value of large models in the healthcare domain, and proposing directions for further investigation.

Figure 1: Diagram of the content structure

2.1 Definition of the Large Model

Large models, as characterized by their extensive parameter scale ranging from billions to trillions, have become a defining feature of modern deep learning neural networks [13]. Such models require domain-specific adaptations in high-stakes fields like emergency medicine, where real-time interpretation of complex data (e.g., ECG waveforms or trauma imaging) is critical for clinical decision-making [14,15]. To align with the current consensus within the AI community, we recognize models such as SAM for computer vision and Contrastive Language-Image Pre-training (CLIP) for vision-language tasks as more appropriate examples of large models. SAM, a recent advancement in CV, demonstrates the capabilities of large models in handling complex image data, while CLIP showcases the potential of large models in understanding and correlating visual and textual information. Large models have demonstrated versatility beyond NLP and CV, such as Spectrum-BERT (Wang et al.) which adapts bidirectional transformers for spectral data classification, suggesting potential extensions to medical spectral analysis (e.g., spectroscopy-based diagnostics) [16].

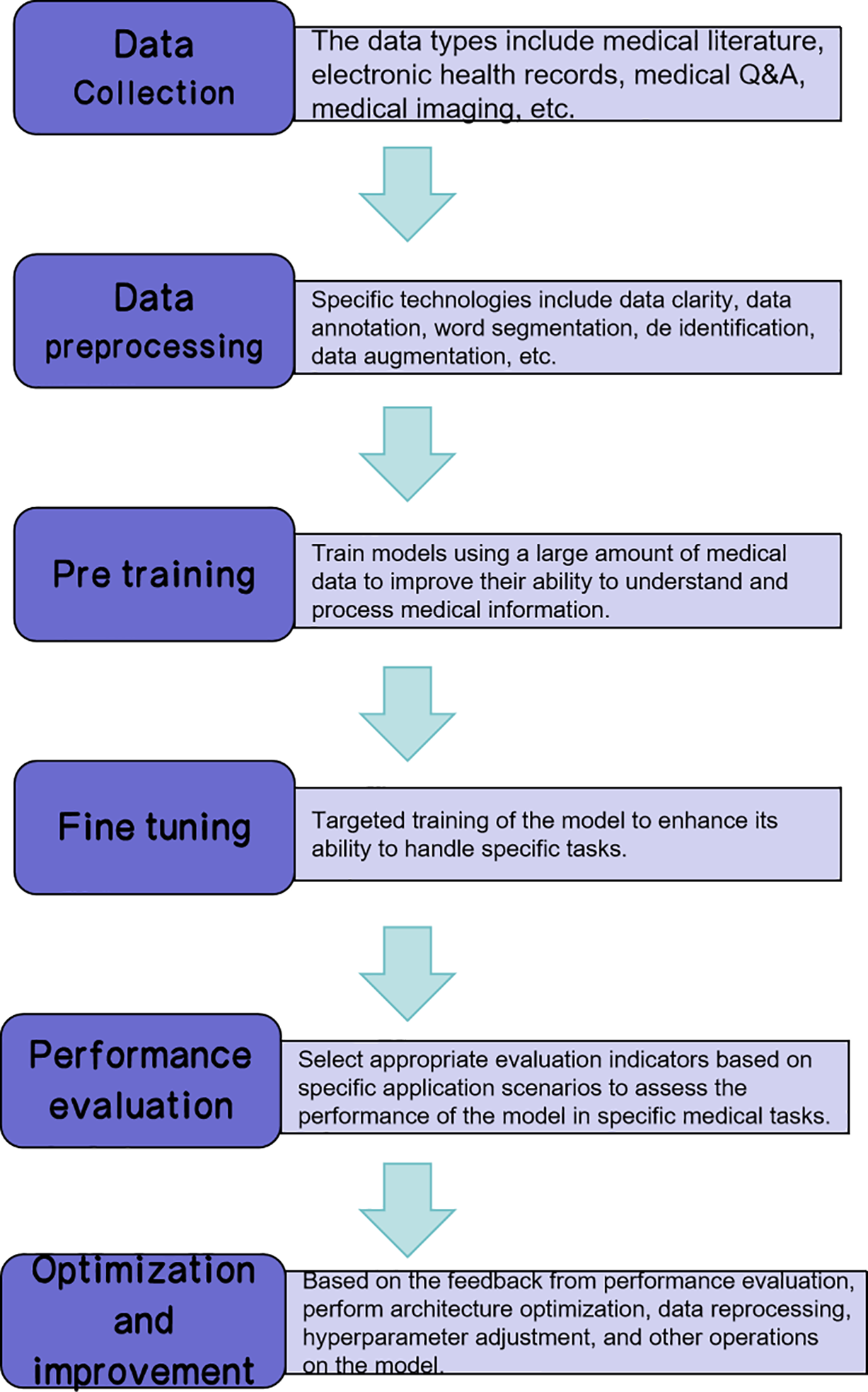

Large models have revolutionized the way we approach multimodal tasks, such as medical diagnosis and financial analysis, by providing a more comprehensive and nuanced understanding of data. Their ability to learn rich and universal representations through pretraining on massive datasets enables them to adapt to a wide array of tasks during the fine-tuning stage, reducing the need for task-specific models and enhancing multitask learning. As shown in Fig. 2, the development of medical big models covers a complete technical chain from multisource data collection to iterative optimization. Among them, the pretraining stage extracts general representations through self-supervised learning, while the fine-tuning stage adapts to specific clinical tasks (such as image classification), and ultimately drives model optimization through performance evaluation.

Figure 2: Technical pipeline of medical large model development

2.2 Evolution of the Large Model

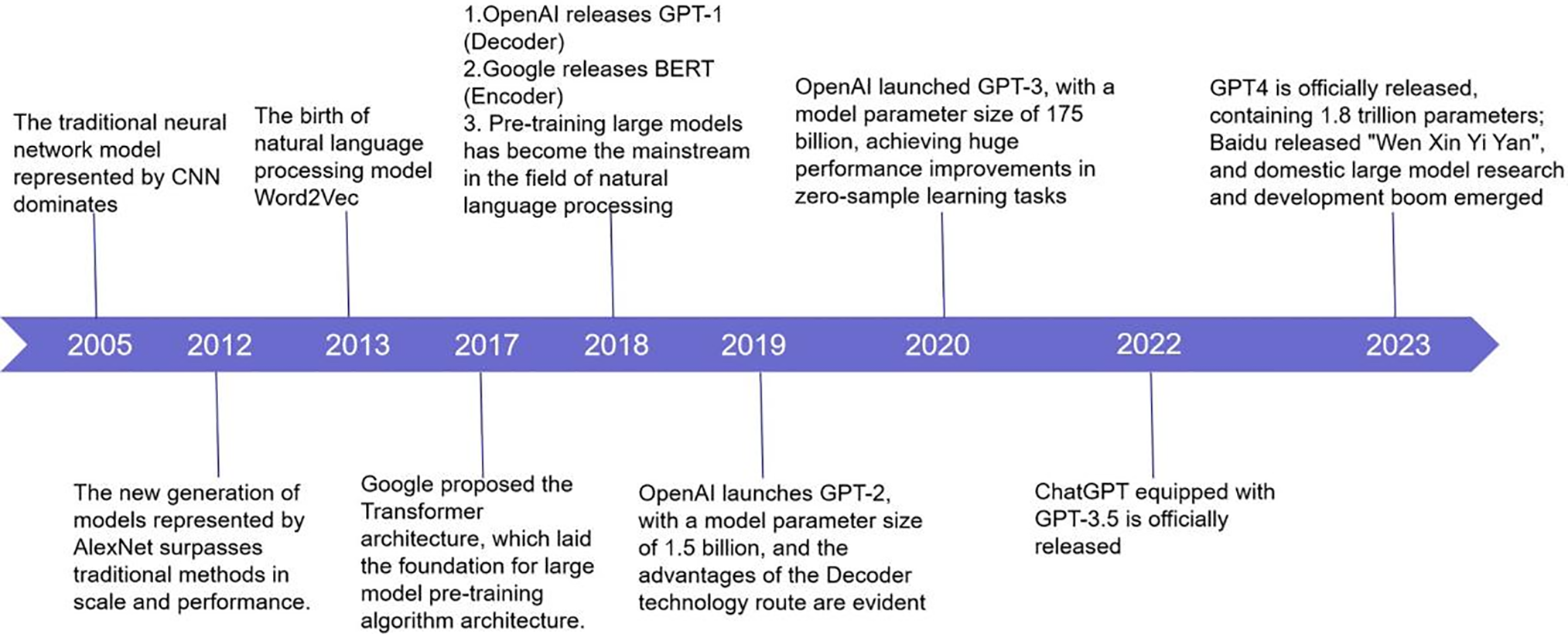

Fig. 3 shows the key developments of large-scale AI models from 2005 to 2024. Each marked event represents a technological breakthrough or the release of an important model. The advancements in these models and technologies have not only accelerated the rapid development of AI but also laid the foundation for its application in the healthcare field. The following timeline outlines the influential models and architectures, particularly significant breakthroughs in the fields of natural language processing (NLP) and computer vision (CV).

Figure 3: The evolution of large models

In Section 2.2.1, we will discuss in detail how the Transformer architecture lays the foundation for a large model pre-training algorithm architecture. Section 2.2.2 will explore the development of the GPT series models, especially the release of GPT-1, GPT-2, and GPT-3, and their performance in natural language processing tasks. Finally, in Section 2.2.3, we will examine current challenges and future directions, while referring to the timeline in Fig. 2 to highlight where these discussion points fit into the evolution of large-scale models.

2.2.1 From Transformer to Large Model

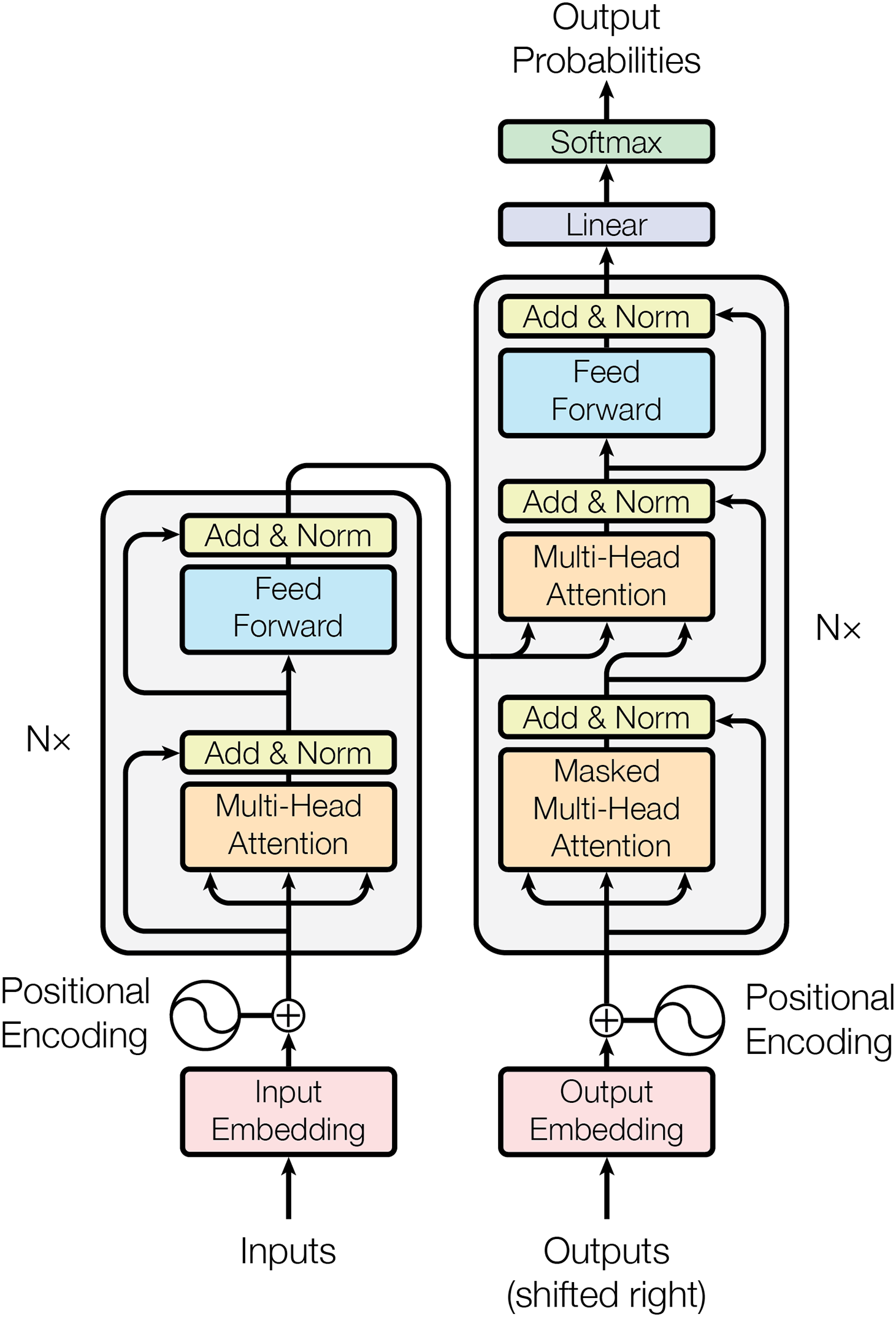

The Transformer architecture represents a paradigm shift in large model development. Departing from traditional Bidirectional Recurrent Neural Networks(RNNs)/Convolutional Neural Networks(CNNs), its purely attention-based design enables parallel sequence processing and superior training efficiency. The architecture’s core innovation-the self-attention mechanism-establishes direct dependencies between arbitrary sequence positions (see Fig. 4). Transformer achieves global modeling of long texts through the attention mechanism and is widely used in medical NLP tasks such as case understanding and symptom analysis [17]. Compared with traditional models such as RNN, its parallel processing capability and modeling efficiency are more suitable for building high-performance medical large models (such as GPT series, BERT series, etc.) [18].

Figure 4: The Transformer’s model architecture

The introduction of the Transformer model provides a theoretical basis and architectural support for building large models [19]. Since Transformers can effectively handle a large number of parameters without being affected by the gradient vanishing or exploding problems in traditional models, researchers began to explore the possibility of improving performance by increasing the size of the model [20].

The Transformer architecture has achieved remarkable success through implementations like GPT (Generative Pre-trained Transformer) [21]. The initial GPT-1 model established the effectiveness of Transformer-based pre-training across diverse NLP applications. This breakthrough was followed by progressively scaled versions—GPT-2 and GPT-3—with the latter’s unprecedented 175 billion parameters demonstrating exceptional few-shot learning and natural language comprehension capabilities [22,23].

In 2018, the research community introduced BERT (Bidirectional Encoder Representations from Transformers), which revolutionized language model pre-training through its innovative Masked Language Modeling (MLM) and Next Sentence Prediction (NSP) objectives. These training mechanisms enable the model to learn deep bidirectional language representations. BERT’s success not only advanced large model development but also established foundational techniques that influenced nearly all subsequent language model architectures in NLP research [24].

With the success of BERT and GPT series models, large models have begun to expand their applications in various fields, including healthcare, financial analysis, etc. These models can adapt to and solve problems in specific fields by fine-tuning on data in specific fields [25]. At the same time, this has also brought about discussions on model generalization ability, data privacy, and ethical issues.

The introduction of the Transformer architecture and the progress of BERT and GPT have greatly promoted the development of the field of artificial intelligence, especially in the construction and application of large-scale models. However, with the increase in model size, new challenges have also been brought about in terms of computing resources, model interpretability, and ethical issues. Future progress in large models needs to not only boost performance but also resolve these accompanying problems. This includes developing more efficient training algorithms, reducing the carbon footprint of the model, improving the interpretability of the model, and ensuring that the decision-making process of the model meets ethical standards. Through these efforts, large models are expected to contribute to the sustainable development of society while promoting the advancement of artificial intelligence technology.

2.2.2 Development of GPT Series Models

The GPT series of models is a large-scale pre-trained language model based on the Transformer architecture launched by OpenAI. Since 2018, the GPT series of models has undergone several important iterations, and each iteration has achieved significant improvements in model scale and performance [26,27].

GPT-1: The first model in the GPT series, which introduced a Transformer-based pre-trained language model and demonstrated its potential in various NLP tasks. GPT-1 is trained by predicting the next word in a text sequence, using a large amount of book and web page data as training material [28].

GPT-2: Based on GPT-1, GPT-2 significantly expanded the model size, with the number of parameters reaching 1.5 billion. GPT-2 uses a more diverse data source during training, including Wikipedia, books, and web pages, which further improves its ability to understand and generate text [29–31].

GPT-3: As a significant advancement in the GPT lineage, GPT-3 marked a breakthrough with its unprecedented scale, boasting 175 billion parameters, ranking it among the world’s largest language models upon release [32]. Beyond sheer size, GPT-3 demonstrated substantial performance gains, particularly in few-shot learning and natural language comprehension. Its versatility enabled zero-shot applications like translation, text summarization, and Question Answering(QA) systems, achieving competitive results without task-specific fine-tuning.

GPT-4o: GPT-4o is OpenAI’s latest model in the GPT series, released on May 13, 2024, where “o” stands for “omni,” highlighting its omnipotent multimodal capabilities. GPT-4o not only processes text, but also can understand and generate voice and visual content, providing a more natural interactive experience. It has a fast response speed, averaging 320 milliseconds, close to the speed of human conversation, and reduces costs by 50% and increases speed by 2 times, significantly improving efficiency [33,34]. In terms of security, GPT-4o addresses the risks of new modalities by filtering training data and adjusting model behavior. The launch of this model heralds an important step forward in artificial intelligence’s simulation of human interaction and demonstrates a new trend in the future development of AI technology. Hurst et al. provided a detailed system card for GPT-4o, highlighting its advanced capabilities and potential applications in various fields, including healthcare.

The progression of the GPT model series has not only accelerated breakthroughs in natural language processing but also unlocked novel potentials for diverse AI applications [35]. As the model size increases, the GPT series models have demonstrated outstanding capabilities in handling complex language understanding and generation tasks [36]. However, as the size of models grows, new challenges also arise, such as the demand for computing resources, model interpretability, and ethical issues. Future research needs to address these accompanying problems while improving model performance to ensure the sustainable development of the technology.

2.2.3 Existing Limitations and Prospective Developments

The application of large-scale models in healthcare presents significant opportunities but is also accompanied by some challenges that need to be addressed through future research and practice [37]. Although large models have great potential in the medical field, they still face challenges such as computing resource consumption, data privacy, and model interpretability. Professor Shen pointed out that efficient training strategies and algorithm optimization are the key to improving training efficiency [38]. Since medical data is highly sensitive, ensuring its privacy and security has become a prerequisite for the actual deployment of the model. For instance, Demelius et al. provide a systematic review of differential privacy in centralized deep learning, outlining improvements in noise calibration and training efficiency [39]. A critical challenge lies in ensuring algorithmic interpretability, particularly for clinical applications where decision transparency is paramount. The medical community requires comprehensible rationales behind model outputs to establish trust and facilitate appropriate adoption in healthcare settings. At the same time, data quality and diversity are also important factors affecting model performance. Data bias may lead to errors in model output, affecting fairness and accuracy. Training data regional bias is a significant limitation of current large-scale models. Most models are trained on large public datasets from North America or Europe, which may result in poor performance in other regions (such as Africa, Asia, etc.). For example, language differences, varying disease spectra, and diverse medical practices can reduce the model’s generalization ability, thus limiting its fair application worldwide. Additionally, training and deploying large-scale models require substantial computational resources, leading to significant carbon emissions. Studies have shown that the energy consumed to train a model similar in scale to GPT-3 is equivalent to the lifetime carbon emissions of a car. To achieve sustainable development, future research should explore green AI strategies, including model compression, knowledge distillation, and more efficient hardware. Finally, regulatory compliance and technical integration issues are also challenges that need to be overcome in large-scale model applications [40]. The strict regulatory requirements in the medical field and the technical integration issues of existing systems need to be fully considered during the development and deployment of the model.

Faced with these challenges, future research needs to explore in multiple directions [41,42]. First of all, enhancing model interpretability is the key to improving clinical deployment of AI models. Researchers need to develop new techniques to improve the interpretability of LLMs and make their decision-making process more transparent. Secondly, interdisciplinary collaboration is also an important way to promote the role of advanced deep learning systems in healthcare and medical applications. Through the collaboration of experts in computer science, data science, medicine, and other fields, we can jointly solve the problems of applying large models in the field of healthcare. In addition, technological innovations, including algorithm optimization, computing efficiency improvement, and the development of new hardware, will promote the application of large models in the healthcare field. At the same time, updating and improving relevant ethical standards and laws, and regulations to ensure that the application of large-scale models complies with ethical standards and legal requirements is also an important direction for future research.

As large-scale models become more widely used in healthcare, ethical and regulatory compliance becomes increasingly important. This requires that patient privacy, data security and compliance must be taken into consideration during the development and application of the model. Global cooperation is also an important direction for the future, especially when facing global public health challenges such as epidemics, which require global cooperation and sharing of data and models to improve response capabilities. In addition, considering the environmental impact of large models and developing more energy-efficient training and operation methods to achieve sustainable development is also a key issue in the future. Overcoming these limitations could enable large-scale models to enhance healthcare delivery significantly, ultimately improving patient outcomes.

To address the challenges of medical data privacy and model interpretability, researchers have proposed a number of specific technical solutions and achieved initial results in the medical field [43].

In terms of privacy protection, Federated Learning is widely used in medical artificial intelligence systems. This method allows models to be trained on local devices without the need to centrally store sensitive data, thereby effectively reducing the risk of data leakage. For example, in a diabetic retinopathy screening project carried out by Google in cooperation with medical institutions, federated learning was successfully used to improve model performance without collecting user data [44]. In addition, Differential Privacy, as a mechanism for adding random noise, is also used in medical text modeling to prevent the reverse inference of individual data [45].

In terms of model interpretability, explainable artificial intelligence (XAI) methods such as LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive Explanations) are integrated into medical decision support systems to help doctors understand model outputs. For example, SHAP is used in breast cancer prediction and critical care scoring models to demonstrate how the model makes risk judgments based on specific physiological indicators [46]. In addition, models that combine attention mechanisms with medical ontology structures (such as Clinical Attention Networks) are emerging, which can highlight key features and cross-validate with medical knowledge graphs, thereby enhancing the medical rationality of explanations.

As technology evolves, future research should continue to promote the deep integration of privacy-enhancing technologies (PETs) and interpretable frameworks to enhance the security and trust of large models in actual medical scenarios.

2.3 Literature Selection Methodology

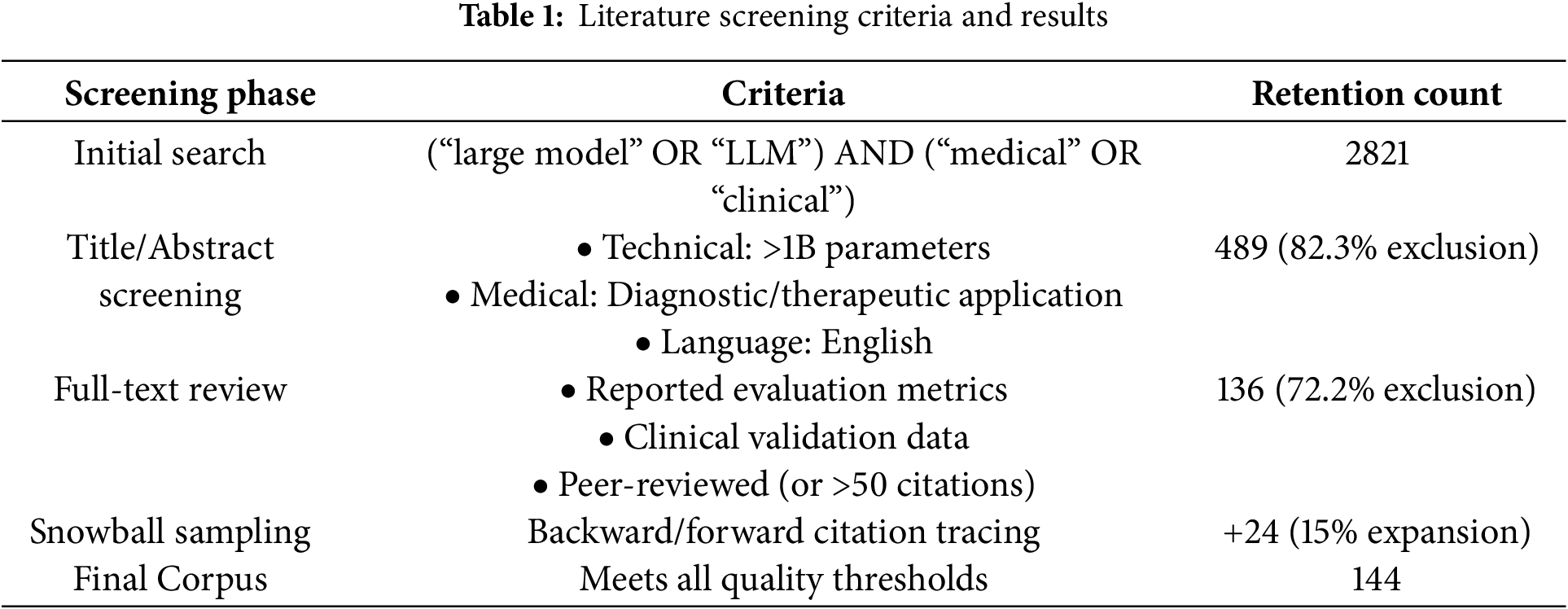

To ensure the rigor of the research method, this paper adopts the systematic literature screening process recommended by the PRISMA guidelines (see Table 1). The literature published between 2005 and 2023 was searched in five major databases (PubMed, IEEE Xplore, Web of Science, China National Knowledge Infrastructure, and arXiv) using a combined search formula ((“big model” OR “LLM”) AND (“medical” OR “clinical”)), which is consistent with the big model development timeline shown in Fig. 3.

3 Integration of Medicine and Large Models

3.1 Application of Large Models in Medicine and Healthcare

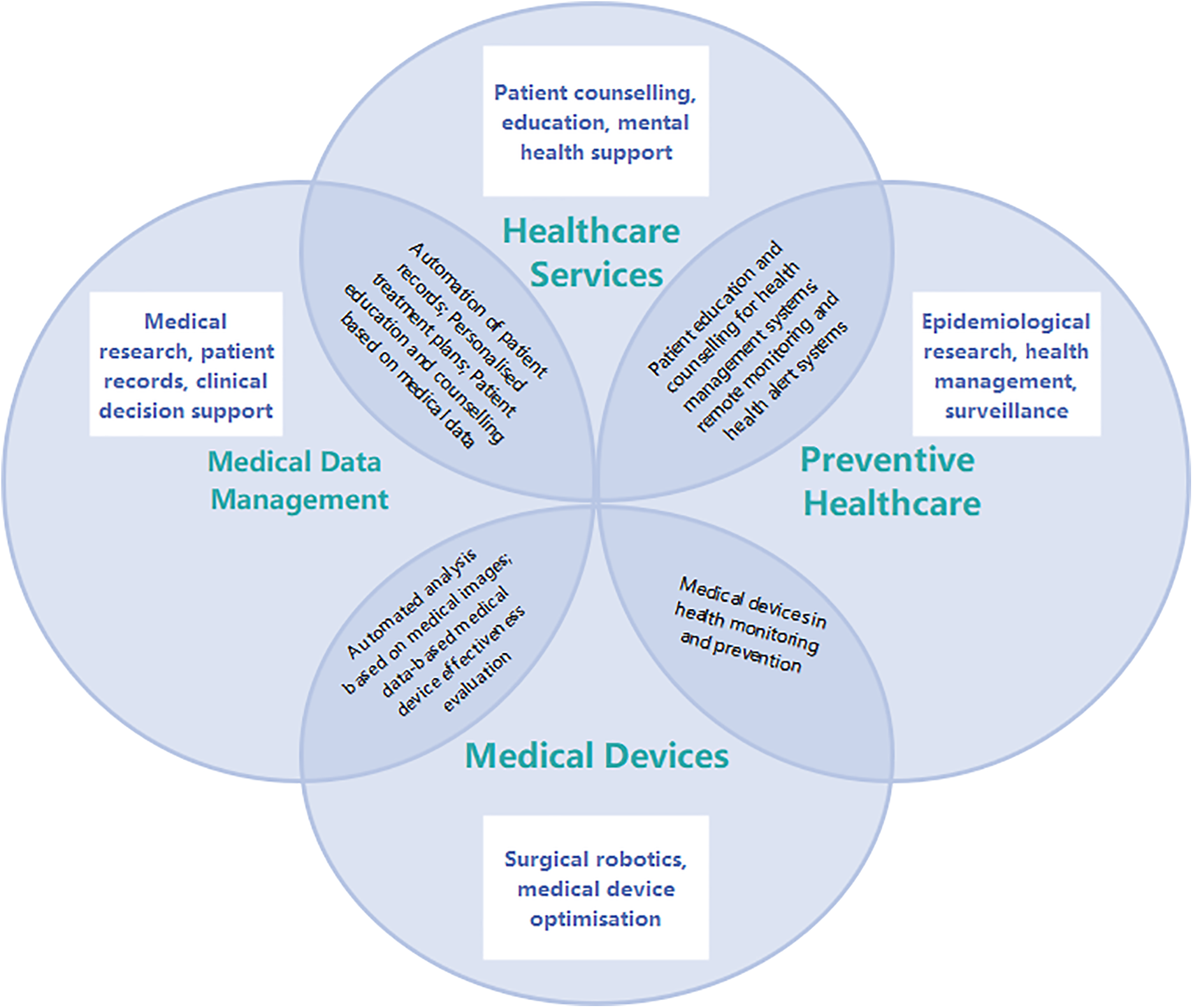

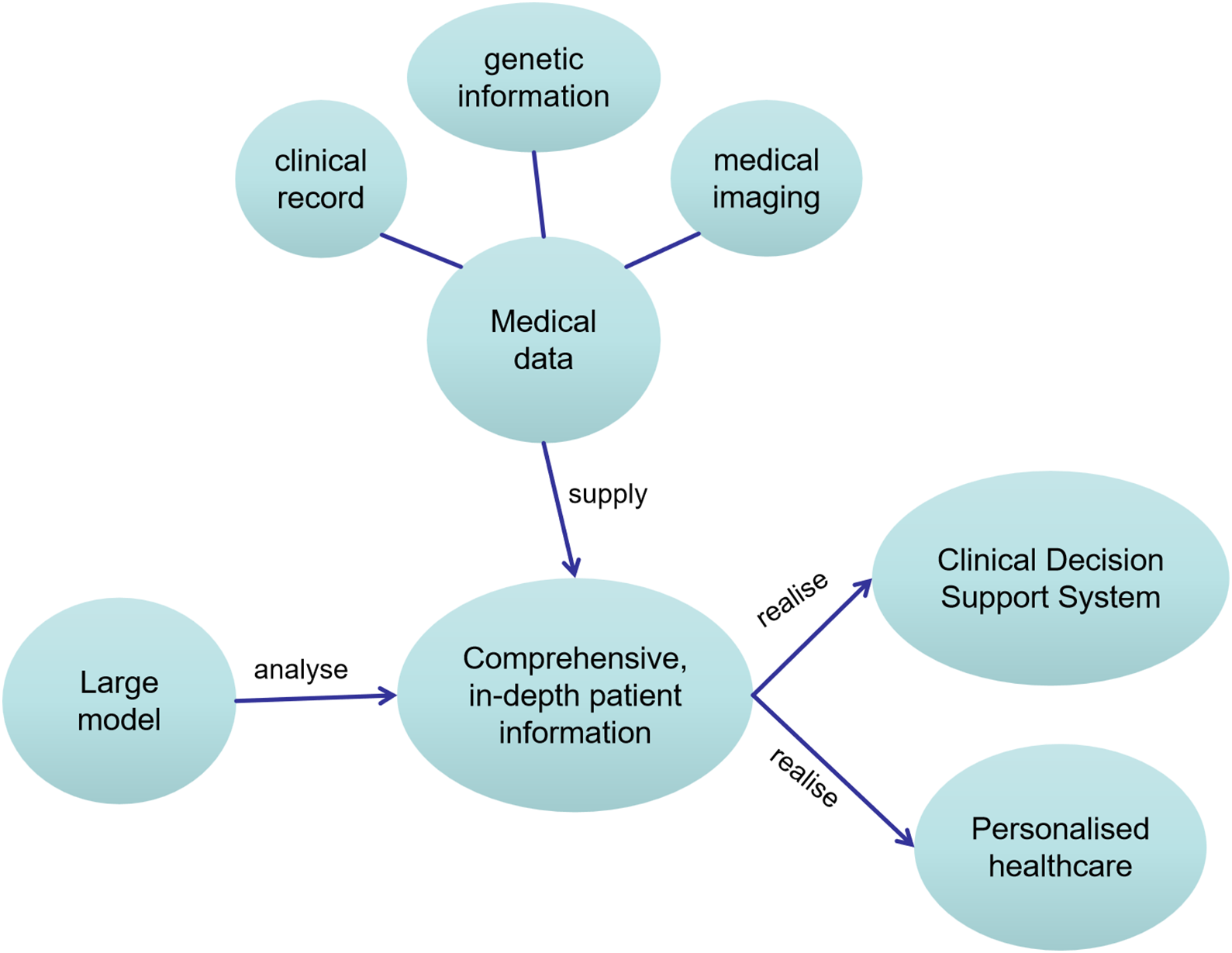

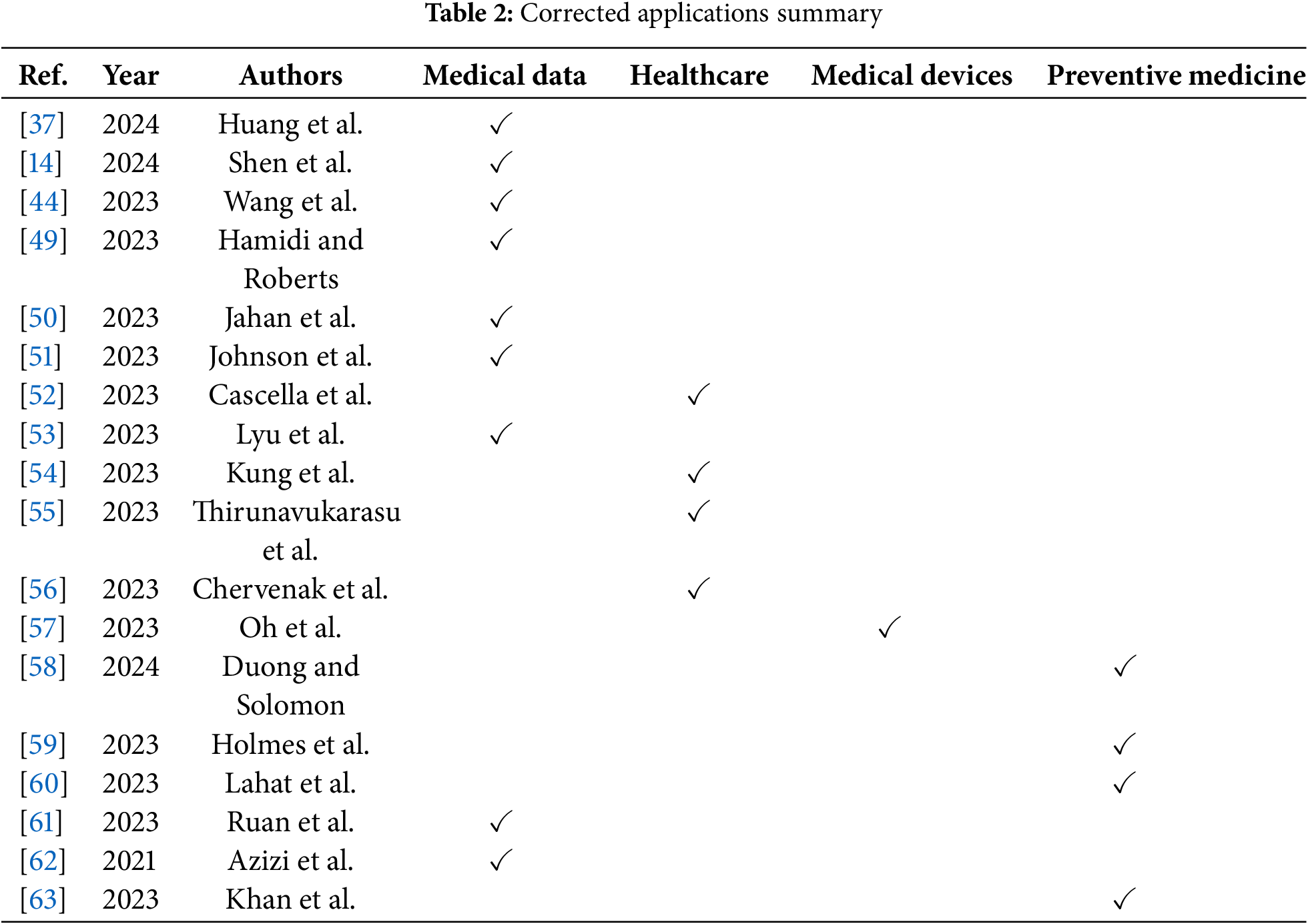

The applications of large models in medicine and healthcare span across various domains, including medical data management, healthcare services, preventive medicine, and medical device innovation, providing novel possibilities for medical research, clinical practices, and patient care [47]. These application areas can intersect, forming comprehensive medical solutions, enhancing healthcare services for patients, expediting medical research, and improving the efficiency of healthcare, as illustrated in Fig. 5. Based on this, Table 2 sums up the existing papers, with four areas of application highlighted below.

Figure 5: Framework of the application of the large model in medicine and healthcare

In order to improve the systematicness and comparability of the literature review, we classified the existing research according to its core tasks. The classification criteria are as follows: (1) If the research focuses on clinical text, image or multimodal data processing, it is classified as “medical data management”; (2) If the research focuses on improving patient service experience or supporting clinical processes, it is classified as “health care services”; (3) If the purpose of the research is disease prediction, epidemic monitoring or health risk intervention, it is classified as “preventive medicine”; (4) Research involving physical tools or systems such as medical equipment, surgical assistance, and remote monitoring is classified as “medical devices”. Some literature involves multiple application directions and may be checked in multiple categories [48]. Table 2 shows the distribution of representative studies under the above classification framework.

The progress of artificial intelligence heavily depends on high-quality data. However, in clinical AI research, a major challenge is the scarcity of large-scale, accurately annotated training datasets, which hinders further development [64]. The application of large models in handling healthcare data is increasingly gaining attention [49–51]. Large models can govern, analyze, and apply healthcare data using cutting-edge methods and technologies, forming multimodal databases that include various data types such as scales, text, images, waveforms, and omics. Recent advances in clinical concept annotation, such as the active transfer learning approach proposed by Abbas et al., demonstrate how contextual word embeddings can improve the efficiency of extracting structured information from unstructured medical records [65]. Here are some ways in which large models are constructed and utilized for multimodal healthcare databases [2]:

• Association of Text and Image Data: Large models can analyze textual medical records, such as case histories and reports, along with medical images like CT scans or X-ray images [66]. This allows doctors and researchers to understand the patient’s condition more easily, enabling accurate diagnosis and treatment.

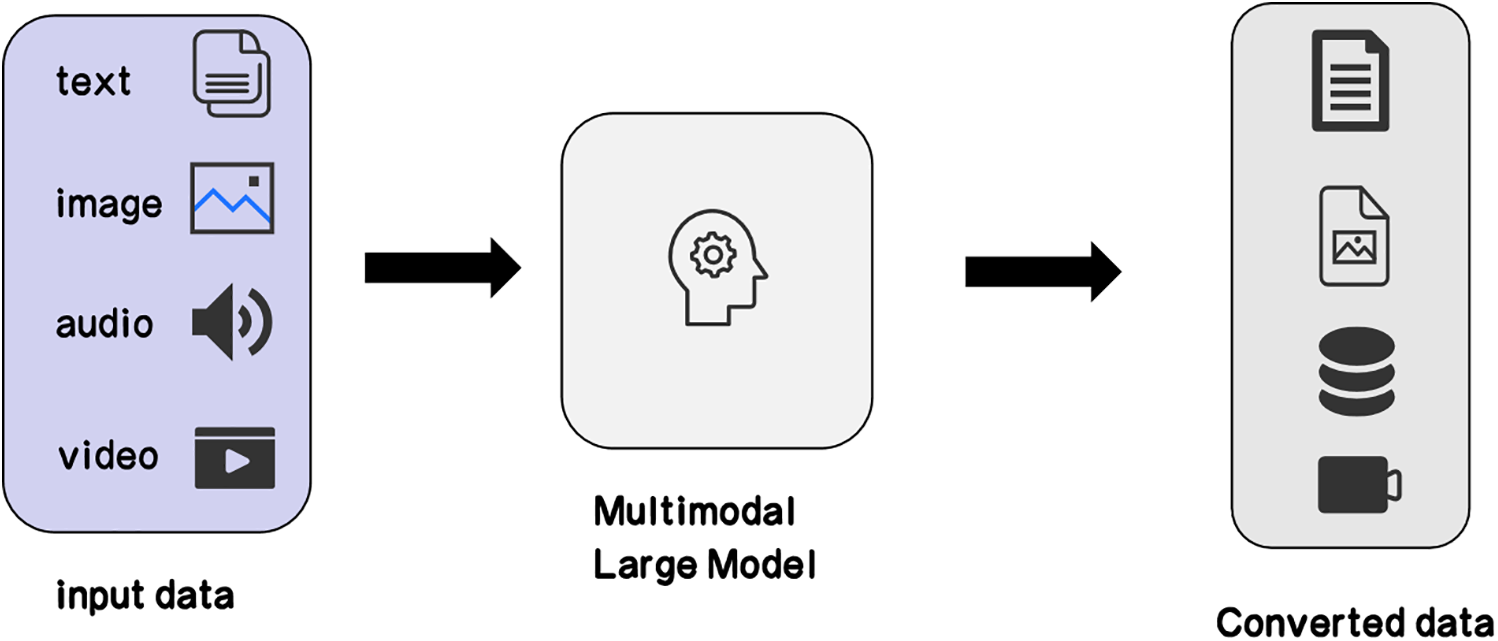

• Annotation of Images and Text: Large models can be used to add textual descriptions to medical images, making them more understandable and manageable. This is useful for building image databases and research, enhancing the accessibility of medical images [67]. As shown in Fig. 6, the integration of multimodal data through large models enables comprehensive annotation and analysis, which is critical for improving medical image interpretability.

• Multimodal Analysis: Large models can correlate and analyze information from different data types, assisting doctors in identifying potential health issues in patients [68]. For example, combining genetic information, clinical data, and medical images can provide more comprehensive diagnostic and treatment recommendations.

• Data Integration and Interaction: Large models can integrate multiple data sources to construct comprehensive patient profiles. This helps healthcare professionals better understand a patient’s medical history and condition, enabling wiser decision-making.

Figure 6: Multimodal large language model

In addition, the exponential growth of medical publications poses significant challenges for healthcare professionals to stay current with cutting-edge research and evolving clinical standards. Large models can automatically read, understand, and summarize medical literature, providing timely information to assist doctors in making wiser diagnostic and treatment decisions [69]. Furthermore, by analyzing patients’ clinical data, genetic information, and lifestyles, large models can help doctors formulate personalized treatment plans. This can improve the effectiveness of treatment and reduce unnecessary medications or therapies. They can also analyze large-scale health data to identify potential epidemic risks and provide early warnings. This is crucial for healthcare institutions and government decision-makers to better prepare for and respond to epidemic outbreaks.

The use of large models in the healthcare sector provides new opportunities to enhance efficiency, accuracy, and accessibility of healthcare [52]. Large models can be utilized to build intelligent healthcare assistants, answering patient queries, providing diagnostic reports, improving recommendations, and even explaining medical reports [53]. Recent clinical trials have demonstrated that AI-powered diagnostic tools, such as mobile phone-based skin cancer analysis, can achieve comparable or even superior accuracy to human specialists in certain scenarios [70–72]. This enhances the accessibility and efficiency of healthcare. The potential for utilizing large language models in assisting medical education and employing large language models (such as ChatGPT) in general practice knowledge testing falls within the healthcare domain [54,55]. These studies explore how advanced language model technology can elevate medical education and its practical applications, introducing new possibilities to the healthcare [73]. Huang highlighted the transformative potential of multi-modal LLMs (e.g., ChatGPT) in dental practice, demonstrating their capacity to improve diagnostic accuracy and therapeutic efficacy [74]. Then Alqahtani et al. highlighted the emergent role of AI and LLMs in higher education and research, demonstrating their potential to transform medical education and research practices [75]. These applications aim to improve medical professional training and enhance the efficiency of healthcare.

In everyday healthcare, large models can be employed to monitor individuals’ health, providing early warnings to prevent health risks or complications that may arise during the recovery process [76]. Large models can contribute to the development of intelligent health assistants or virtual doctors to answer personal health-related queries and provide medical information and advice [77,78]. Recent studies, such as Hua and Eastwood, highlight the growing role of LLMs in mental health care, demonstrating their ability to assist in psychological counseling, symptom screening, and personalized therapy recommendations [79,80]. This aids in enhancing public health knowledge, helping individuals better understand diseases, symptoms, and preventive measures. Through smartphone apps or wearable devices, large models can assist individuals in recording and monitoring their health data, such as step count, heart rate, and sleep quality. This helps people better understand their health status and identify potential problems immediately. By analyzing personal health data, large models can assist individuals in tracking health trends, such as weight management, blood sugar control, and blood pressure management. This facilitates the creation of suitable health plans. Moreover, large models can provide personalized dietary advice based on individuals’ dietary preferences, health goals, and nutritional needs, promoting healthy lifestyles [81]. For patients in the recovery phase, large models can be used to monitor their recovery progress. By analyzing lifestyle data, exercise data, and biological indicators, the model can offer customized feedback and suggestions to help individuals achieve their recovery goals and predict potential outcomes of the recovery process. This aids healthcare professionals and patients in understanding long-term recovery trends and adjusting treatment plans based on these predictions. During the recovery process, large models can assist patients in managing medications, providing medication reminders, monitoring medication adherence, and addressing questions related to medication therapy. Additionally, large models can offer psychological support to patients in recovery. They can answer questions about emotional health, coping with stress and anxiety, or provide resources and advice to help patients address emotional and mental health issues.

Large models analyze CT, Magnetic Resonance Imaging (MRI) and other medical images to identify anatomical structures and provide navigation and path planning support for intelligent surgical robots. They can assist in developing personalized surgical plans, optimizing incision locations and instrument trajectories, and thus improve surgical accuracy and safety [56]. They can identify and segment a patient’s anatomical structures, including vessels, nerves, and tissues, providing accurate navigation and target localization for surgical robots. Based on personalized anatomical structures, large models can assist in planning surgical paths and operational steps. This involves determining optimal incision locations, organ positioning, and the best trajectories for tool movement. This helps predict potential difficulties and risks in advance, optimizing surgical plans. Surgical robots equipped with sensors and cameras provide real-time feedback of the surgical scene. Large models can analyze this data, assisting surgical teams in understanding the surgery’s progress in real-time and making adjustments based on patient physiological features [82,83]. This real-time intelligent feedback helps avoid potential risks and enhances surgical safety [84]. Utilizing the computational capabilities of large models, surgical robots can more accurately locate a patient’s anatomical structures. This is crucial for minimally invasive surgeries, precise cutting, and suturing. Through real-time navigation, surgical teams can execute surgical operations more accurately. Large models can optimize the control systems of surgical robots. Using deep learning algorithms, the model can learn and adapt to the physiological changes of different patients, enabling more precise and intelligent control of surgical tools, ensuring the best treatment outcomes [85]. Virtual reality scenarios created using large models allow doctors and surgical teams to undergo high-quality, realistic training [57]. This helps improve surgeons’ skills, reduce operation risks, and provides a platform for the application of new technologies and instruments. Large models can analyze extensive surgical data, extract lessons learned, and help improve surgical techniques and processes. This data-driven approach contributes to establishing safer and more efficient surgical standards.

Large models play a key role in optimizing medical devices, covering aspects such as design, performance optimization, and user experience enhancement. Large models can be used to simulate and optimize the design process of medical devices [86]. Recent advancements in AI-driven training tools have shown significant potential in the education of emergency medicine doctors, where realistic simulations and virtual environments can improve diagnostic accuracy and clinical skills [87]. For example, in the design of imaging diagnostic devices, the model can consider factors such as patient anatomy and organ structure, optimizing the geometric construction of X-ray or magnetic resonance imaging devices to improve image quality and diagnostic accuracy. Large models can simulate the performance of medical devices, including signal processing, image reconstruction, sensor response, and more. By simulating different working conditions and parameter settings, the performance of the device can be optimized to ensure outstanding performance in various practical scenarios. Large models can analyze the user interface of medical devices, providing improvement suggestions to optimize the user experience. This includes simplifying operation procedures, optimizing the layout of control buttons, improving alarm systems, and reducing the workload and risks for healthcare personnel. For imaging diagnostic devices, large models can be used to optimize image processing algorithms, enhancing image clarity, contrast, and even assisting in automatic anomaly detection. This helps improve the diagnostic accuracy and speed of doctors. Large models can evaluate the energy consumption of medical devices, propose energy-saving solutions, and design more environmentally friendly devices. This is particularly important for devices that operate for extended periods, such as those in operating rooms, as it reduces energy expenses and complies with environmental standards. Large models can simulate the performance changes of medical devices after prolonged use and provide optimization suggestions to extend the device’s lifespan. Additionally, the model can analyze the maintainability of the device, offering designs that are easier to repair and maintain.

Overall, large models provide powerful computational and learning capabilities for the development of intelligent surgical robots, improving surgical precision and safety. This not only helps enhance patient treatment outcomes but also drives technological innovation of surgical medicine. Furthermore, the application of large models in optimizing medical devices not only improves device performance and reliability but also drives innovation in medical technology, providing a better medical experience for patients and healthcare professionals. As technology advances, it is essential to ensure the interpretability of models and their compliance with medical regulations.

The application of large models in epidemiology and genetics covers a wide range of scenarios, with the primary advantage lying in the handling and analysis of large-scale medical data to better understand disease spread, predict disease trends, and formulate effective public health strategies [58]. Here are detailed applications of large models in areas like epidemiological research:

• Disease Spread Prediction: Large models can analyze extensive medical data, including patient records, symptoms, and treatment plans, to predict the spread trends of diseases. This is crucial for early detection and response to emerging viruses or disease pandemics [88].

• Infectious Disease Modeling: Large models can be used to construct models simulating the spread of viruses or bacteria within populations. This helps understand the transmission pathways, speed, and scale of diseases, providing a scientific basis for public health decisions.

• Real-time Epidemic Monitoring: By monitoring medical data, social media information, and other relevant data in real-time, large models provide timely epidemic monitoring. This continuous surveillance enables prompt intervention and timely execution of containment strategies to mitigate disease transmission.

• Optimizing Epidemiological Surveys: Large models assist in designing and optimizing epidemiological surveys, improving data collection efficiency. Through deep learning, models can identify potential risk factors and correlations from multiple data sources, offering a more comprehensive perspective for disease research.

• Assessing Treatment Efficacy: During outbreaks, large models can assess the effectiveness of different treatment options, including drug treatments and vaccinations. By analyzing patient responses and disease progression, models can provide better treatment recommendations for clinical doctors.

• Risk Assessment Modeling: Large models can integrate multidimensional data to build risk assessment models, providing early warnings of potential public health risks [89]. This helps decision-makers take necessary preventive and control measures before an outbreak.

• Genomic Data Analysis: Large models can combine genomics data to study the correlation between individual genes and infectious diseases. This enhances the understanding of why certain individuals may be more susceptible or recover more easily.

The use of large models in epidemiological research provides the medical community with deeper and more comprehensive insights, aiding in the better understanding of diseases, optimizing medical decisions, and providing a scientific basis for public health work [59,60]. Recent advances in AI-driven drug repurposing (e.g., Singh) demonstrate the potential of large models to accelerate therapeutic discovery for infectious diseases by screening existing drug libraries against pathogen targets [90].

With the in-depth application of AI technology in the healthcare field, large model platforms have become key drivers of progress. Through comprehensive medical data synthesis and analytical processing, these platforms enable enhanced clinical decision support, facilitating accurate diagnostics and optimized treatment strategies. In the literature screening of platform applications, studies on publicly available large-scale model-based healthcare platforms were included, focusing on precision diagnosis and treatment optimization. Exclusion criteria included: 1) studies where the platform did not report clinical effects; 2) studies that did not involve specific medical tasks or data applications. This section aims to explore the specific applications of large model platforms in medicine and healthcare, and how they improve the quality of medical services by offering precise diagnostic support, optimizing treatment plans, and enhancing patient care.

Although large general models such as GPT-3 possess powerful language understanding and generation capabilities through pre-training on massive datasets [91], their performance and credibility in the medical domain are still subject to limitations due to the highly specialized and sensitive nature of medical data. To better address the needs of medicine, models specifically designed for healthcare have emerged. These models leverage extensive medical data, including clinical records, medical literature, and medical imaging, during the pretraining phase to gain a better understanding of medical terminologies, complex disease relationships, and medical practices [92]. In the fine-tuning phase, models designed for medicine collaborate with healthcare professionals to learn more accurate clinical judgments and decision-making processes.

One such model is GatorTronGPT, developed for clinical applications using the GPT-3 architecture with 200 billion parameters [5]. Evaluating its performance in medical research and healthcare, with a focus on key functionalities in text generation, revealed that GatorTronGPT achieved state-of-the-art performance on four biomedical NLP benchmark datasets (out of six evaluated). This demonstrates the benefits of GatorTronGPT in biomedical research, generating synthetic clinical text for the development of synthetic clinical NLP models (GatorTronS). Evaluations by medical professionals indicate that GatorTronGPT can generate clinically relevant content with language readability comparable to real-world clinical records. Recent studies propose hybrid evaluation frameworks combining human expertise and automated metrics (e.g., Sblendorio et al.) to dynamically assess LLMs’ clinical feasibility, which aligns with the validation approaches of domain-specific models like GatorTronGPT [93].

This development brings about more precise and reliable applications of artificial intelligence in medicine and healthcare, supporting doctors in making more accurate choices in diagnosis, treatment, and decision-making [61]. Models designed specifically for medicine can not only handle the complexity of medical knowledge but also better adapt to the uniqueness of medical practices [94,95]. This customized approach is expected to enhance the efficiency of healthcare, reduce error rates, and provide patients with more personalized and precise medical services. In the future, as concerns about medical data privacy and security continue to rise, the development of models specifically designed for medicine will likely yield more significant results driven by the advancement of medical artificial intelligence. Fig. 7 provides a brief summary illustration, showcasing the diverse applications and potential impact of large models in medicine and healthcare.

Figure 7: Large model platform designed for medicine

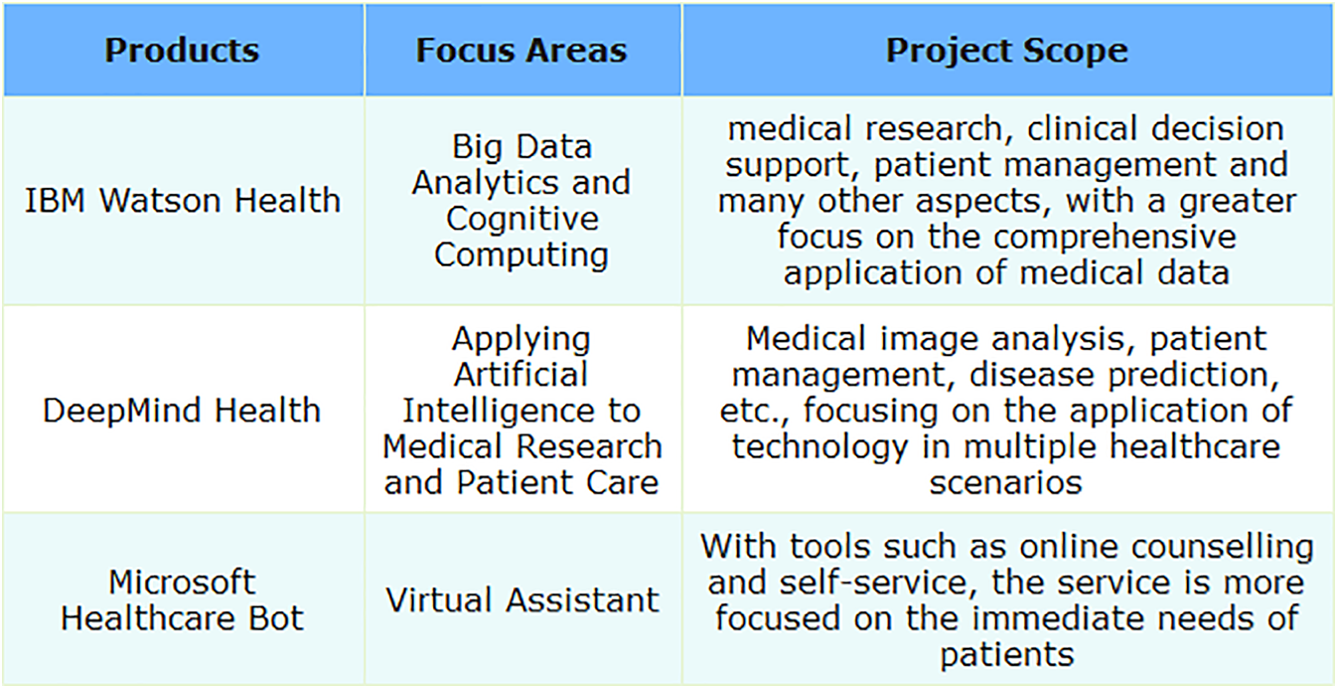

IBM’s healthcare-focused division, Watson Health, utilizes cutting-edge AI and cognitive computing to foster advancements in medical services and enhance industry standards. The platform integrates technologies such as big data analytics, machine learning, and deep learning to process and analyze extensive medical datasets, including clinical data, genetic information, and medical images. IBM Watson Health aims to provide comprehensive solutions to empower healthcare professionals in making more accurate diagnoses, devising personalized treatment plans, and supporting innovation in medical research and health management. By introducing advanced computing capabilities into medicine, IBM Watson Health strives to enhance patients’ medical experiences, reduce healthcare costs, and foster the collaborative development of the entire healthcare ecosystem. It includes multiple tools and solutions such as Watson for Oncology and Watson for Drug Discovery.

DeepMind Health is a department specifically dedicated to applying artificial intelligence to healthcare, covering various aspects including medical image analysis and patient data management. DeepMind Health is a branch of DeepMind, an AI company that is now a subsidiary of Alphabet, focusing on exploring and applying AI technologies to improve medicine. Established in 2016, the mission of DeepMind Health is to use advanced technologies, including deep learning and machine learning, to address complex issues in medicine and healthcare. The department’s research and projects cover areas such as medical image analysis, optimization of patient management systems, disease prediction, and treatment. Notably, DeepMind Health has achieved significant milestones in medical image recognition and processing and has collaborated closely with healthcare professionals in various medical projects, aiming to drive innovative applications of AI in healthcare. What’s more, DeepMind Health’s projects have sparked some controversy regarding medical data privacy, raising concerns about technology and medical ethics.

Microsoft Healthcare Bot is an intelligent robot platform designed specifically for healthcare, launched by Microsoft. The platform combines AI and NLP technologies to provide powerful virtual assistants for healthcare institutions and service providers, aiming to improve the interaction experience between patients and the healthcare system. Microsoft Healthcare Bot can be integrated into healthcare institutions websites, applications, and other digital channels. Through automated responses to common questions, providing health information, and facilitating functionalities like appointment scheduling, it effectively supports patients’ self-service and management. Its flexibility and customizability enable healthcare institutions to tailor the robot’s features according to their needs, better meeting patients’ personalized requirements, and enhancing the efficiency and accessibility of healthcare services. Microsoft Healthcare Bot is a comprehensive virtual assistant platform, offering a wide range of healthcare services with high customizability, allowing institutions to tailor features based on their specific needs and achieve integration across multiple digital channels.

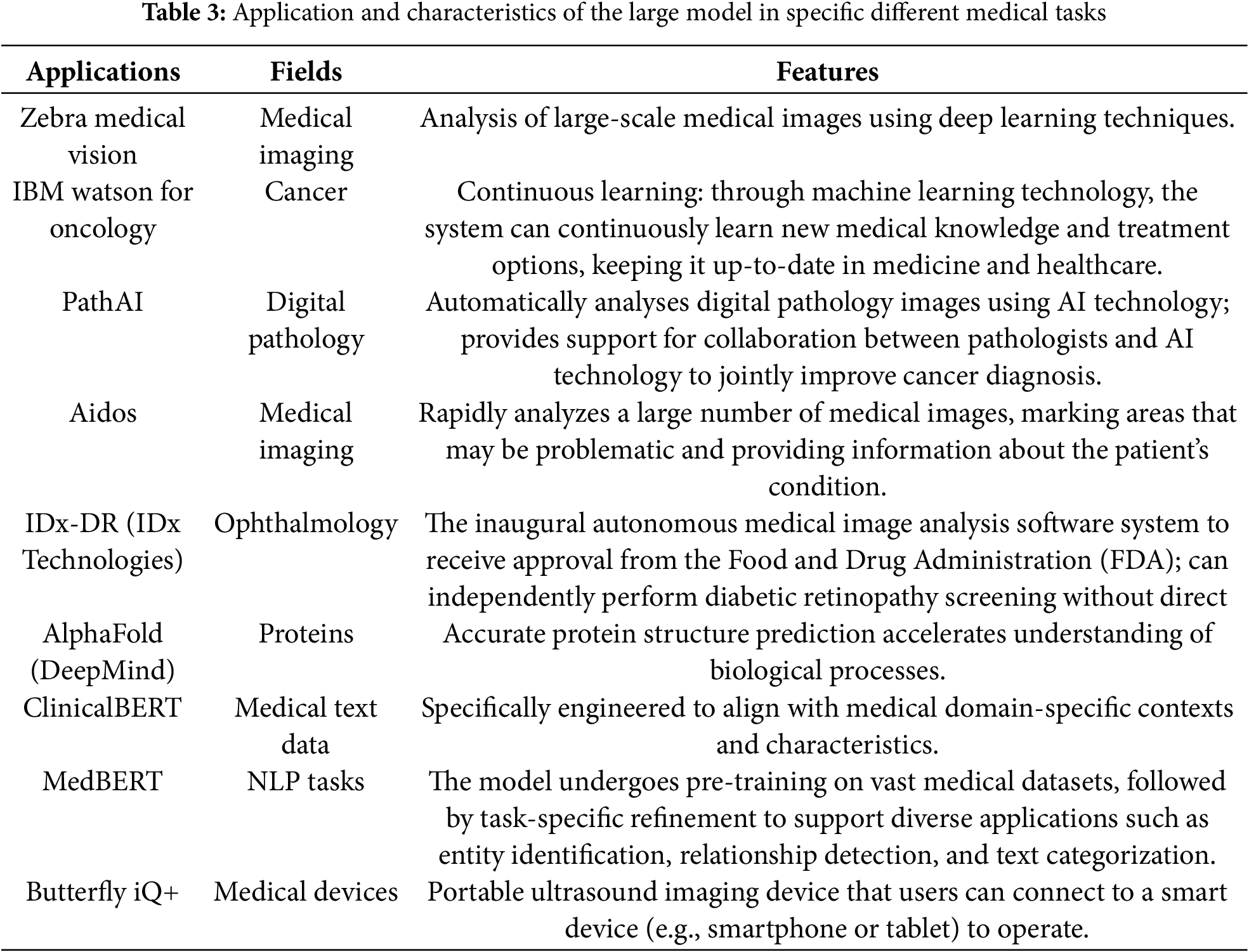

This section introduces several large-scale platforms that have had a significant impact in the medical field. They mainly rely on advanced deep learning and artificial intelligence technologies and are applied to medical data analysis, disease prediction, patient management, and other fields. The following Table 3 summarizes the core technologies and practical applications of these platforms.

ClinicalBERT and MedBERT are pre-trained language models focused on the medical domain to enhance the efficiency of processing medical texts. Both ClinicalBERT and MedBERT are built on the BERT architecture, incorporating pre-training capabilities. ClinicalBERT is an extension of BioBERT, specialized in the biomedical domain, with a primary focus on clinical medical texts. In contrast, MedBERT aims to adapt more broadly to medicine, encompassing biomedical and clinical medicine. ClinicalBERT is primarily used for processing clinical medical texts such as electronic health records and clinical reports, while MedBERT aims for a broader adaptation, covering biomedical literature and clinical medical texts. Due to differences in their design objectives, their performance may vary across specific tasks, and performance comparisons typically require evaluations based on specific tasks and datasets. In summary, ClinicalBERT and MedBERT exhibit differences in design objectives, foundational datasets, and application scope, and the choice between them may depend on researchers’ needs and application scenarios.

Zebra Medical Vision employs deep learning technology to analyze medical images, providing automated diagnostic and screening tools for tasks such as breast cancer screening and lung nodule detection. Caption Health utilizes deep learning and artificial intelligence to offer intelligent ultrasound imaging, enabling physicians to conduct ultrasound examinations without the direct intervention of ultrasound specialists. The emergence of these platforms signifies advancements in large model technology within the healthcare domain, providing more advanced tools and methods for medical diagnosis and research.

Watson for Oncology is a specific application under the IBM Watson Health department. IBM Watson Health is dedicated to leveraging artificial intelligence and cognitive computing technologies to improve healthcare and life sciences. Watson for Oncology, focusing on cancer treatment, aims to provide personalized treatment recommendations to doctors based on patient medical records and extensive medical literature knowledge.

AlphaFold is a model designed for predicting protein structures and is a project by DeepMind, specializing in the biological domain. Its goal is to address significant biological challenges, such as the protein folding problem, using machine learning methods. AlphaFold aims to advance the scientific understanding of protein structures and provide more accurate predictions, which is crucial for drug discovery and disease understanding. AlphaFold has achieved notable success in the Critical Assessment of Structure Prediction (CASP) competition, gaining global attention and being considered a milestone on protein structure prediction.

4 Advantages of Large Models in Healthcare

4.1 High-Accuracy Diagnosis and Prediction

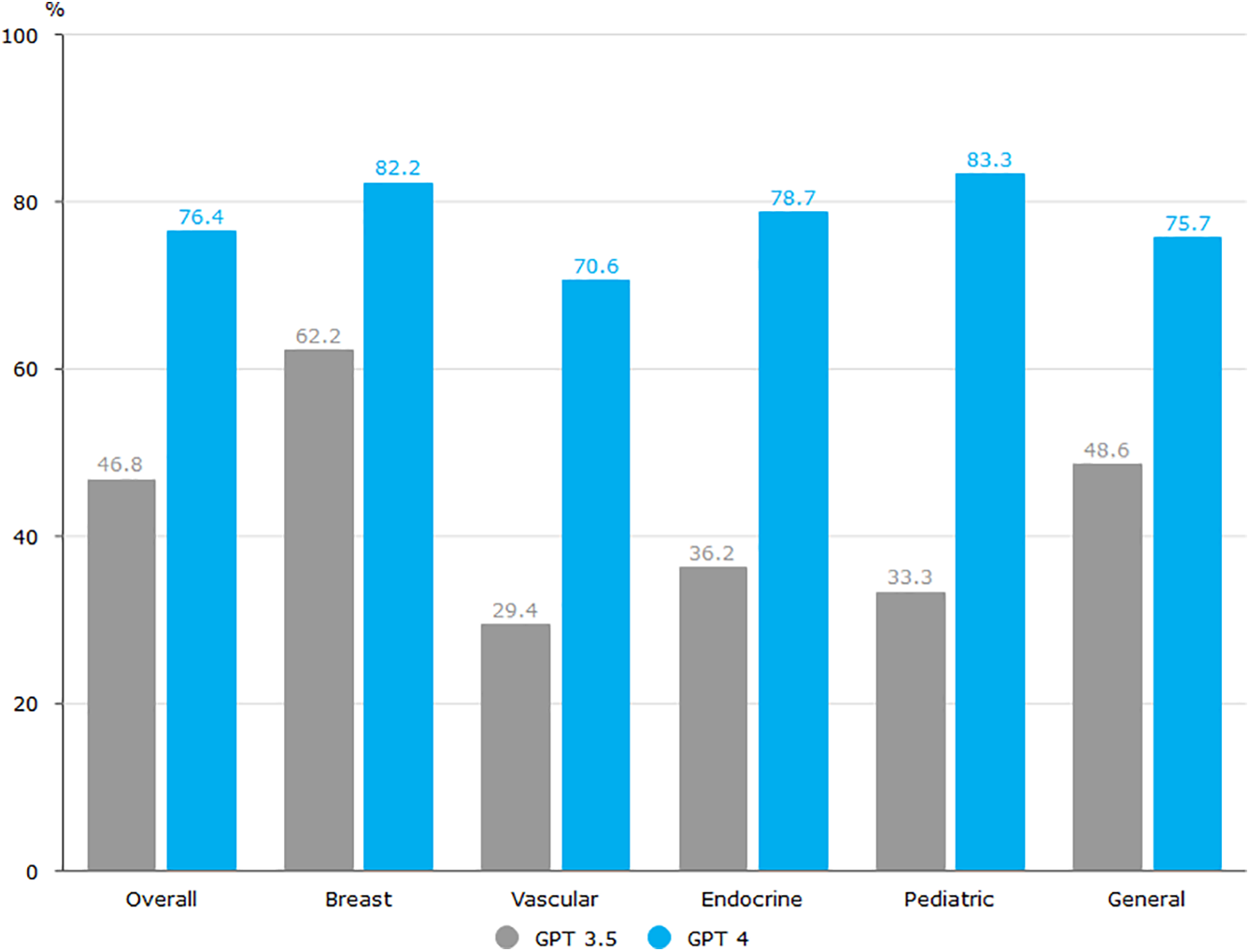

In the healthcare domain, achieving high-accuracy diagnosis and prediction is a crucial advantage of applying large models. This advantage has profound implications for elevating the standards of medical diagnosis and improving patient treatment outcomes. ChatGPT, particularly GPT-4, demonstrated significant comprehension of complex surgical clinical information, achieving an accuracy rate of 76.4% on the Korean Board of General Surgery exam [44]. As shown in Fig. 8 below, the overall accuracy of the GPT-3.5 was 46.8%, while the GPT-4 showed significant improvement with an overall accuracy of 76.4%. Through deep learning and extensive data analysis, large models exhibit a high degree of sensitivity and accuracy in handling medical images, physiological data, and clinical information. Large models, when analyzing patient data, can identify potential risk factors, assisting healthcare professionals in achieving early disease diagnosis and prediction. Consequently, this provides doctors with more reliable auxiliary information, enhancing the precision of medical diagnoses and the accuracy of predictions. Although large language models perform well in text generation, their “hallucination” phenomenon raises serious concerns in the medical context. Models may generate information that is inconsistent with actual medical knowledge, and if doctors are unaware of it, it may lead to misdiagnosis or treatment errors. This risk is particularly critical in clinical decision support systems. Therefore, rigorous validation should be carried out before deployment, supplemented by a manual review mechanism to ensure the accuracy and reliability of the information.

Figure 8: Comparative performance evaluation of CPT-3.5 and CPT-4 across surgical subspecialties

4.1.1 High Precision in Medical Data Analysis

Medical imaging, such as CT scans, MRI, and X-ray images, constitutes a crucial component of clinical diagnosis. Large models, leveraging deep learning techniques, can learn complex image features and patterns, thereby achieving high-precision diagnosis in medical image analysis [96]. Esteva demonstrated that deep learning-enabled computer vision systems can achieve expert-level performance in tasks such as radiology and pathology image interpretation, highlighting the transformative potential of AI in medical imaging [97]. For instance, in tumor detection, large models can accurately label and classify abnormal cells, assisting doctors in early detection of patient lesions and improving the early diagnosis rates of diseases like cancer [98]. Large, accurately annotated datasets are crucial for successful deep learning applications in medical imaging [99]. However, collecting such high-quality labels is particularly difficult for histopathology images due to their unique characteristics, including gigapixel resolutions, diverse cancer subtypes, and significant staining variations. Self-supervised learning (SSL) offers a viable alternative by learning meaningful representations directly from unlabeled data, which can then be adapted to various downstream tasks. Self-supervised learning has shown promise in medical image analysis, particularly in scenarios with limited labeled data. For instance, Ouyang et al. demonstrated that SSL can significantly improve few-shot medical image segmentation by leveraging unlabeled data to learn robust representations [100]. Additionally, data augmentation techniques play a crucial role in enhancing the performance of deep learning models by artificially increasing the size and diversity of training datasets. Visual representations based on Self-Representational Contrastive Learning (SRCL) not only achieved state-of-the-art performance on each dataset but also demonstrated robustness and transferability compared to other SSL methods and ImageNet pretraining (both supervised and self-supervised methods) [101].

While self-supervised pre-training with supervised fine-tuning has demonstrated success in image recognition with limited labeled data, its adoption in medical imaging remains underexplored. Azizi et al. [62] investigated this approach for medical image classification, evaluating performance on two distinct tasks: dermatological condition identification from photographs and multi-label chest X-ray interpretation [102]. Their findings revealed that domain-specific self-supervised pre-training following ImageNet initialization substantially enhanced classifier performance [103]. The researchers further proposed Multi-Instance Contrastive Learning (MICLe), which leverages multiple patient images to create enriched positive pairs for contrastive learning. Notably, the study also demonstrated the resilience of large self-supervised models to distributional variations.

Beyond medical images, large models can also process and analyze clinical text data, such as health records and doctors’ diagnostic reports. Through deep learning, large models can understand and extract key information from textual data, providing comprehensive patient information to healthcare professionals. This textual data analysis aids in accurately identifying a patient’s medical history, symptoms, and treatment feedback, thereby enhancing the overall assessment accuracy of patients.

Pathology reports serve as critical sources of clinical and research data, yet their unstructured narrative format poses significant challenges for qualitative data extraction. Automated keyword extraction offers an efficient solution for summarizing these complex documents and improving processing efficiency. Kim developed a supervised deep learning model incorporating natural language processing to identify three key categories of pathological information: specimen details, procedures, and pathology types [104]. Their study evaluated the model’s performance against conventional extraction methods using 3115 expert-annotated reports, then applied it to analyze 36,014 unlabeled reports with validation through biomedical terminology standards. The findings confirmed the model’s effectiveness in practical clinical data extraction scenarios.

4.1.2 Enhanced Predictive Capability through Multimodal Data Fusion

Large models possess the capability to handle multimodal data, combining medical images with patients’ genetic information and clinical records, among others. The fusion of such data provides a more comprehensive reflection of a patient’s condition, offering more accurate information for diagnosis and prediction [105]. Recent studies further demonstrate the effectiveness of deep learning-based multi-modal fusion in neurodegenerative disease classification, achieving high accuracy in Alzheimer’s disease diagnosis by integrating longitudinal MRI, PET, and clinical data [106]. Leveraging the capabilities of large models, they demonstrate unique advantages in early diagnosis and disease prediction [107]. By learning from extensive disease data, large models can identify potential disease signs and risk factors. For instance, combining imaging and genetic data, large models can predict the risk of certain genetic diseases, providing personalized health management recommendations for patients. Fu provided a comprehensive survey on the evaluation of multimodal large language models (MLLMs), highlighting the importance of multimodal data fusion in enhancing model performance and predictive capabilities [70]. In predicting cardiovascular diseases, large models can analyze various data aspects, including physiological indicators, lifestyle, and genetic information, helping physicians predict the probability that a patient develops a disease and take early intervention measures to reduce the risks of the disease.

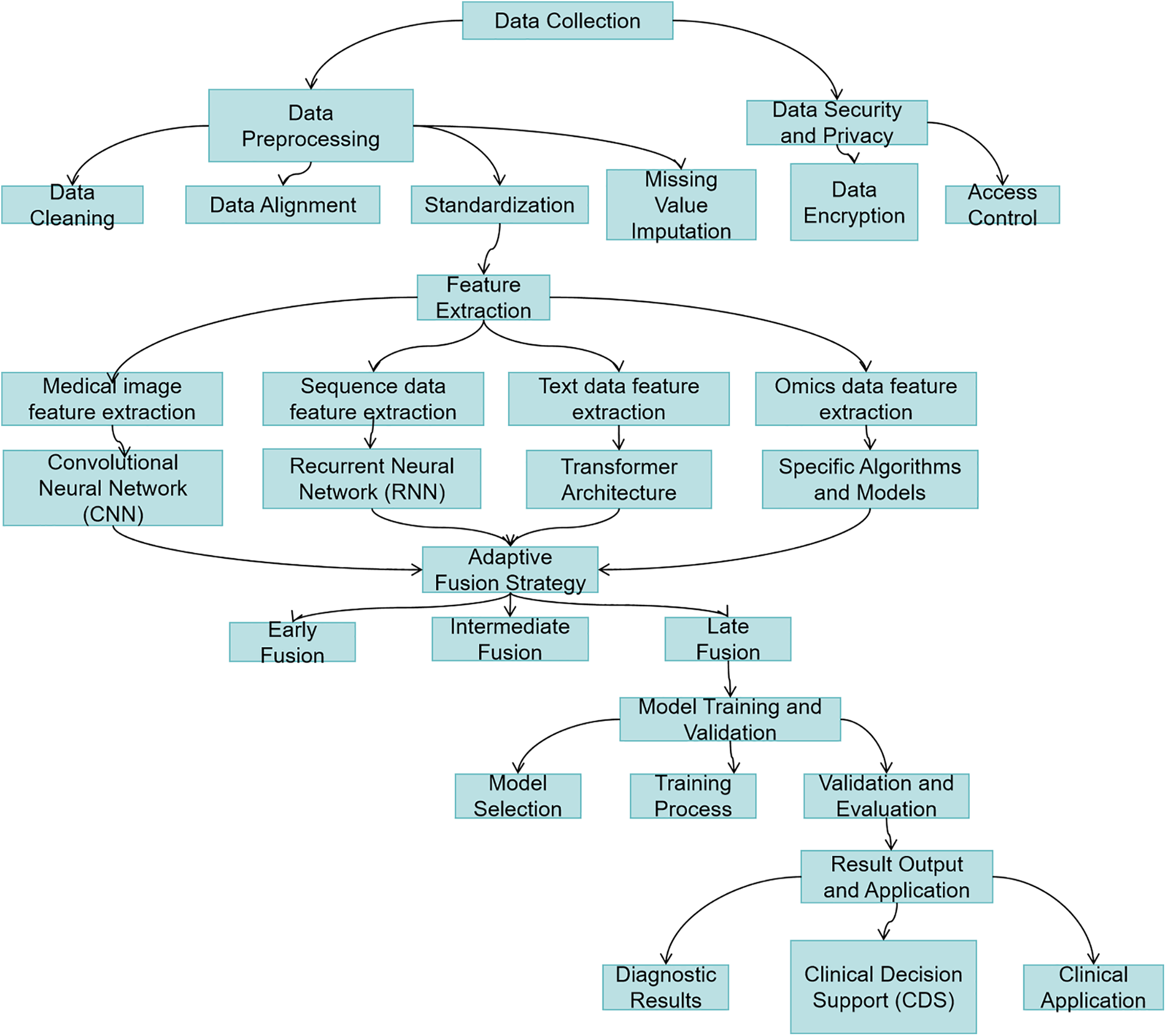

Modern precision medicine increasingly relies on multimodal data integration to enhance clinical decision-making across diagnosis, treatment planning, and outcome prediction [108]. Common quantitative evaluation methods for multimodal large language models (MLLMs) have their limitations, making it difficult to comprehensively evaluate performance. To address these challenges, Fig. 9 illustrates a comprehensive multimodal data processing workflow, which systematically integrates medical data from heterogeneous sources (e.g., imaging, genomics, and clinical records) through critical stages including secure data collection, standardized preprocessing, domain-specific feature extraction, and adaptive fusion strategies. This framework not only highlights the synergy between data diversity and model robustness but also provides a scalable approach to enhance predictive accuracy in real-world clinical settings.

Figure 9: Multimodal healthcare data processing workflow

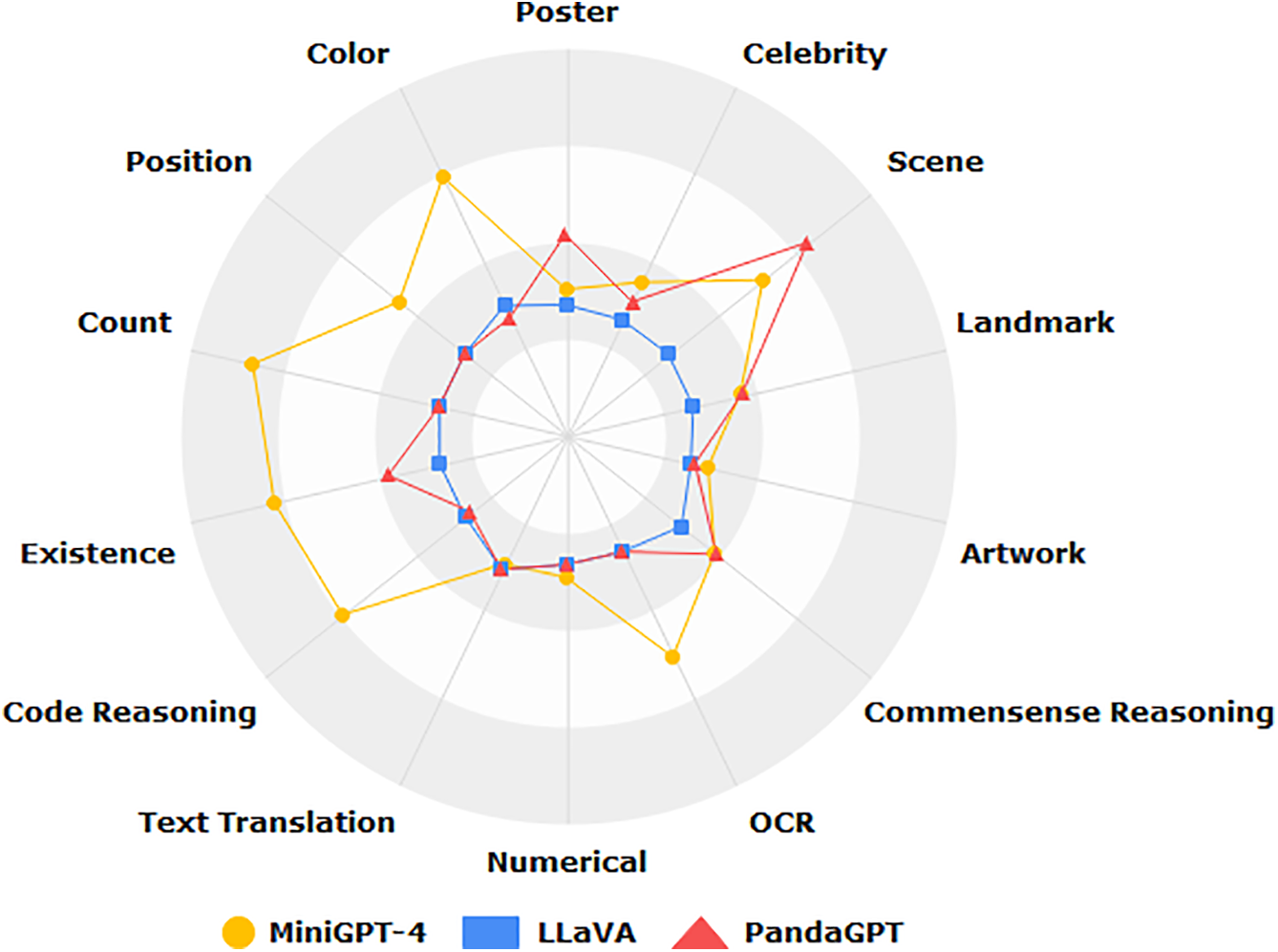

Multimodal Evaluation Benchmark (MME) is the first comprehensive Multimodal Large Language Model(MLLM) assessment benchmark with rich experiments on 14 subtasks to comprehensively assess MLLM, and Fig. 10 shows the results of three MLLM assessments [20]. Mohsen conducted a systematic review of AI-based multimodal data fusion in clinical settings, emphasizing the integration of electronic health records (EHR) with medical imaging [109]. Their work evaluated diverse fusion strategies, disease-specific applications, and machine learning algorithms, while also cataloging available multimodal datasets. The findings revealed that combined EHR and imaging models consistently surpassed unimodal approaches in diagnostic and predictive performance [110]. In a related study, the author developed an end-to-end deep learning model to predict neurodevelopmental impairments (cognitive, language, and motor) in 2-year-olds using multimodal MRI (T2-weighted, DTI, resting-state fMRI) and clinical data [111]. The model achieved prediction accuracies of 88.4%, 87.2%, and 86.7% for each domain, respectively, significantly outperforming single-modality baselines.

Figure 10: Benchmarking multimodal LLMs: a comprehensive evaluation across 14 vision-language tasks

Although multimodal data fusion technology has shown great potential in improving diagnostic accuracy and predictive ability, it still faces many challenges in actual clinical deployment and urgently needs in-depth discussion.

First, the interoperability bottleneck is a major obstacle to achieving multimodal data integration. Data generated by different hospitals or devices often have inconsistent formats and different collection frequencies, and lack common data standards and interfaces, which seriously limit the deployability of the model in a cross-institutional environment. In addition, the time alignment and semantic consistency issues between different modalities often make information fusion difficult, affecting model performance and stability.

Secondly, the annotation cost of high-quality multimodal data is extremely high. Clinical images, texts, genetic data, etc. require a large number of experts to manually annotate, but the resources of medical professionals are extremely limited. This not only increases the cost of research but also limits the scalability of the model in real clinical environments.

Thirdly, deployment in clinical environments is difficult. Large multimodal models usually require high-performance computing resources and complex integrated deployment solutions, and many primary care institutions are limited by technical infrastructure and find it difficult to support the operation of such models. At the same time, clinical staff lack understanding of the internal mechanisms of the model, which also exacerbates its distrust and usage barriers in actual applications.

In addition, real-time and data privacy issues also limit the implementation of multimodal fusion technology. Clinical decisions are usually highly time-sensitive, and the processing of multimodal models is relatively complex and may not meet the requirements of real-time feedback. At the same time, integrating multi-source data may increase the risk of privacy leakage, especially in a medical environment that lacks a sound data governance mechanism.

In summary, the future development of multimodal data fusion systems needs to focus on solving practical obstacles such as standardization, annotation automation, resource optimization, and compliance while improving algorithm performance, so as to achieve large-scale promotion and implementation in clinical practice.

4.1.3 Individualized Treatment Feedback

AI and machine learning are revolutionizing healthcare by sifting through massive amounts of patient data, from medical histories to genetic profiles. These smart systems spot hidden trends and connections, helping doctors predict how different patients might respond to particular therapies. Large models play a crucial role in analyzing treatment feedback and formulating personalized plans. By monitoring patients’ physiological data, treatment history, and genetic information, large models can more accurately predict patient responses to specific medications, providing support for doctors to formulate individualized treatment plans and thereby improving treatment efficacy [84]. Andrews suggested that ML and AI are being used to analyze large datasets containing patient information, medical records, genetic data, and treatment outcomes [112]. And Landi proposed a deep learning-based unsupervised framework for processing heterogeneous EHR and deriving patient representations, efficiently and effectively achieving large-scale patient stratification [113]. This study analyzed electronic health records (EHRs) from 1,608,741 patients across multiple hospital cohorts, encompassing 57,464 clinical concepts. We developed ConvAE, an innovative representation learning model that integrates word embeddings, convolutional neural networks (CNNs), and autoencoders to encode patient trajectories into low-dimensional latent vectors. Hierarchical clustering analysis validated that the model demonstrates effective patient stratification capabilities for both general and disease-specific populations. Notably, when applied to conditions like type 2 diabetes, Parkinson’s disease, and Alzheimer’s disease, ConvAE identified clinically meaningful subtypes that correlated strongly with disease progression patterns, symptom severity, and comorbidity profiles. The findings show that ConvAE effectively creates patient profiles that reveal clinically useful information. This adaptable approach enables deeper analysis of diverse patient subgroups with varying disease causes, while uncovering new opportunities for personalized treatment research using electronic health records.

While large models hold great potential in medicine, they come with important ethical risks. One major concern is algorithmic bias, especially when the training data underrepresents the global population. Data bias can lead to differences in diagnostic accuracy and treatment recommendations, especially for marginalized groups. For example, if the training data comes primarily from North America and Europe, the model may not be able to effectively address different disease prevalence, medical practices, or language differences in other regions when applied to those regions.

For example, a model trained primarily on male and European data may overlook or misunderstand health problems that are more common in women or other racial groups, leading to misdiagnosis or inappropriate treatment. This bias can exacerbate health inequalities and prevent marginalized groups from receiving the same accurate medical services as mainstream groups in terms of diagnosis and treatment. Therefore, ensuring the diversity and inclusiveness of training data is critical to the responsible application of AI in healthcare.

4.2 Data Analysis and Knowledge Discovery

4.2.1 Diversity and Complexity of Data

In healthcare, we are confronted with an ever-expanding and diverse ocean of data, encompassing various types of information across multiple layers, from clinical records to medical images and genomic data [109]. This diversity provides doctors with more comprehensive and in-depth patient information, offering rich material for the development of personalized medical care and treatment plans. However, accompanying this diversity is the complexity of the data, rendering traditional manual analysis methods inadequate when dealing with such vast and complex medical datasets.

Clinical records stand as one of the fundamental and extensively collected data types in the medical domain, incorporating patients’ medical histories, symptom descriptions, diagnostic information, and more [114]. These records are typically unstructured text, covering a wide range of medical knowledge. Yet, their unstructured nature makes traditional manual organization and analysis exceptionally cumbersome. Simultaneously, medical imaging data provides rich visual information, such as X-rays, MRIs, CT scans, etc., playing a crucial role in disease diagnosis and treatment processes. Nevertheless, large-scale medical imaging data requires not only highly specialized interpretation but also effective tools to extract key information. Genomic data has rapidly emerged in recent years, presenting unprecedented opportunities for medical research and treatment. Individual genetic information can reveal the genetic basis of many diseases and provide a theoretical foundation for devising personalized treatment plans [115–117]. AI-driven approaches, as demonstrated by Ozaybi et al., enable efficient analysis of complex biochemical interactions, facilitating novel drug target identification [118]. However, the complexity and high-dimensional features of genomic data make traditional analysis methods insufficient, requiring the assistance of advanced computer technology and algorithms for precise interpretation and analysis.

The emergence of large-scale models opens up brand new possibilities for processing and analyzing medical data, as shown in Fig. 11. Traditional manual analysis methods prove inadequate when faced with these multi-source, high-dimensional, and unstructured data. Firstly, manually processing medical data consumes a significant amount of time and effort, resources that should ideally be allocated more towards patient diagnosis and care. Secondly, manual processing is susceptible to subjective factors, leading to inconsistency and inaccuracy in results. In this era of information, we need more efficient, accurate, and intelligent methods to address the challenges posed by medical data. Deep learning provides a wide array of tools, techniques, and frameworks to tackle these challenges [119]. Deep learning technologies, especially models like CNNs (Convolutional Neural Networks) and NLP (Natural Language Processing), excel in the processing of medical images and text data. These models can learn complex patterns and associations within the data, providing doctors with more comprehensive diagnostic information.

Figure 11: Schematic of knowledge graph for healthcare data analysis and knowledge discovery

4.2.2 Analytical Capabilities of Large Models

Medical data is often multimodal and complex, including medical images, genetic data, and biomarkers. Large models can capture complex relationships and patterns within such data [120]. Through deep learning algorithms, large models can extract valuable information from large-scale, multimodal medical data. For medical images, large models can perform image segmentation, feature extraction, and even automatically annotate abnormal areas. In clinical text, large models can understand context, extract entity information, aiding doctors in better comprehending patient medical histories and symptoms [121]. One core idea of deep learning is to learn abstract representations of data through hierarchical non-linear transformations. Each layer performs a series of complex non-linear operations on the input, gradually extracting high-level features from the data [122]. This layered non-linear transformation allows large models to adapt to complex data distributions and relationships, thereby better modeling latent patterns in the data. Large models, by learning representations of data during the training process, automatically discover key features within input data. This parameter learning approach enables large models to extract useful information from massive datasets, including complex relationships and patterns hidden in the data. Adjusting model weights, large models can optimize the loss function, improving their fit to the training data [123].

4.2.3 Advantages of Large Models in Knowledge Discovery

Large AI models have become powerful tools for medical knowledge discovery, leveraging advanced deep learning and NLP methods to uncover valuable insights from complex healthcare datasets. These models excel at identifying hidden patterns, novel correlations, and emerging trends across diverse medical data sources, significantly enhancing both research capabilities and clinical decision-making. Their ability to process massive datasets and detect intricate relationships makes them particularly valuable for:

• Text Mining and Knowledge Extraction: Large models can deeply understand and analyze text by learning from vast amounts of medical literature and clinical records. Through natural language processing techniques, they can extract keywords, entities, relationships, and events, establishing connections between concepts and forming medical knowledge graphs. These graphs not only assist doctors in better understanding literature but also uncover medical relationships that might exist in literature but have not been explicitly expressed.

• Data Associations and Pattern Recognition: Large models can process various types of medical data, including images, biomarkers, clinical records, etc. Through deep learning techniques, they can identify complex relationships and patterns within this data. This aids in discovering common features in patient populations, potential risk factors for diseases, and the effectiveness of drugs on different patients (e.g., uncovering hidden drug-disease relationships through AI, as in Islam et al.) [124].

• Automated Experiment Design and Research Planning: When analyzing large-scale biomedical data, large models can automatically identify potential research directions and experiment designs [125]. They can guide researchers in choosing appropriate biomarkers, sample sizes, and research methods, thus enhancing research efficiency. This automated research planning helps accelerate progress in medical research, enabling scientists to explore new treatment methods and disease mechanisms more rapidly.

• Large Models and Knowledge Graphs: Knowledge graphs are structured representations of medical knowledge, depicting different entities and their relationships in a graph format. When large models understand and analyze medical text, they can generate meaningful embedding vectors, contributing to the construction of richer knowledge graphs [123,126]. Recent reviews (e.g., Perdomo-Quinteiro & Belmonte-Hernández) systematically analyze knowledge graph-based approaches for drug repurposing, demonstrating their potential in accelerating biomedical discovery by integrating heterogeneous data sources [127].

• Large-Scale Literature Mining: Traditional literature mining methods often face challenges related to the complexity of text and the vast volume of data. Large models, through deep learning, can better capture semantic information in text, discovering correlations and trends in medical literature. This provides researchers with a more extensive and in-depth understanding of literature, expediting the progress of medical research.

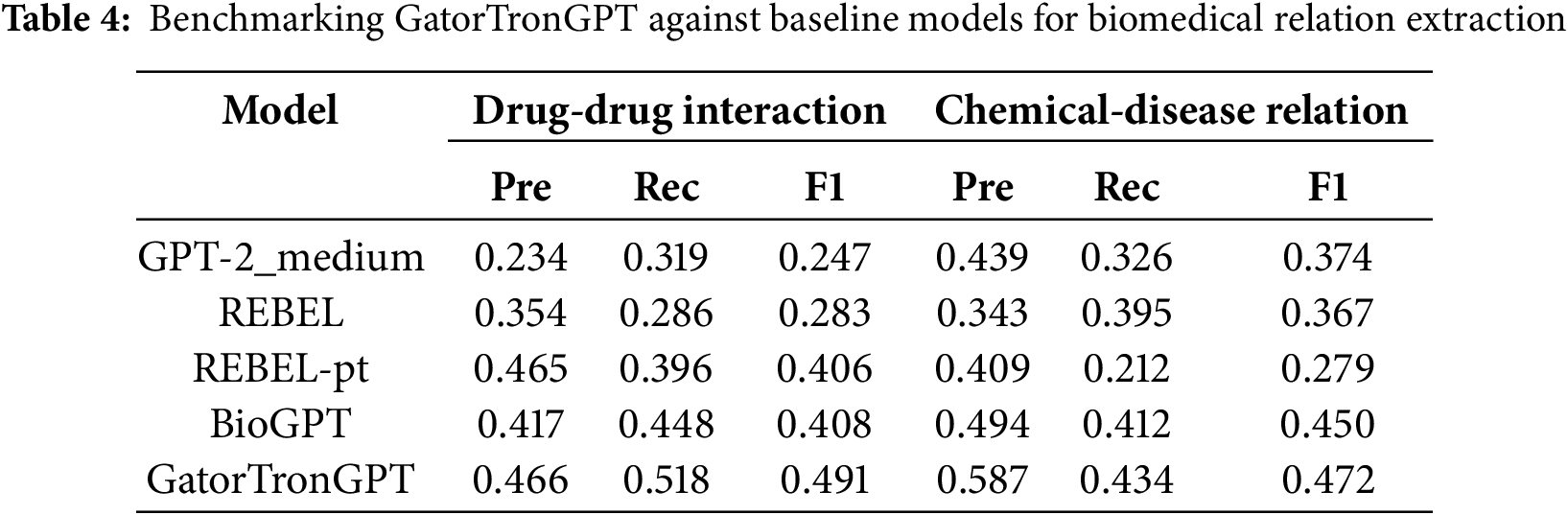

Improving efficiency is a key advantage of applying large models in medicine and healthcare. Through various technological advantages such as automation, data processing, and decision support, large models significantly enhance medical research, clinical diagnosis, treatment decision-making, and more. In this process, large models play crucial roles in automating medical research, analyzing image data, processing and integrating data, optimizing clinical workflows, managing and monitoring patients, and decision support systems. Table 4 presents a performance comparison between GatorTronGPT and four other biomedical transformer models for end-to-end relation extraction tasks, including drug-drug interactions and chemical-disease relations. The results indicate that GatorTronGPT achieved superior performance across all three benchmark datasets when compared to existing models [5].

To enhance the understanding of the performance advantages of GatorTronGPT, this article supplements the task details and indicator settings involved in the evaluation in Table 3. The experiment covers multiple biomedical natural language processing (NLP) tasks, including drug-drug interaction identification (DDI), chemical-disease relations, etc. The evaluation datasets used include BioCreative V Chemical-Disease Relations(CDR), DDIExtraction 2013 and NCBI Disease datasets. The main evaluation indicators include accuracy, precision, recall and F1 score.

GatorTronGPT outperforms existing models such as BioBERT, ClinicalBERT, and PubMedBERT on all datasets. Its performance advantage can be attributed to the following key factors:

• Model architecture advantage: GatorTronGPT is based on the GPT-3 architecture and has 200 billion parameters, which gives it a significant advantage in processing long texts and modeling complex language structures.

• Pre-training data quality: The model is pre-trained using high-quality medical corpus from real clinical records, medical literature, and standardized terminology libraries, thereby enhancing the ability to understand medical semantics.

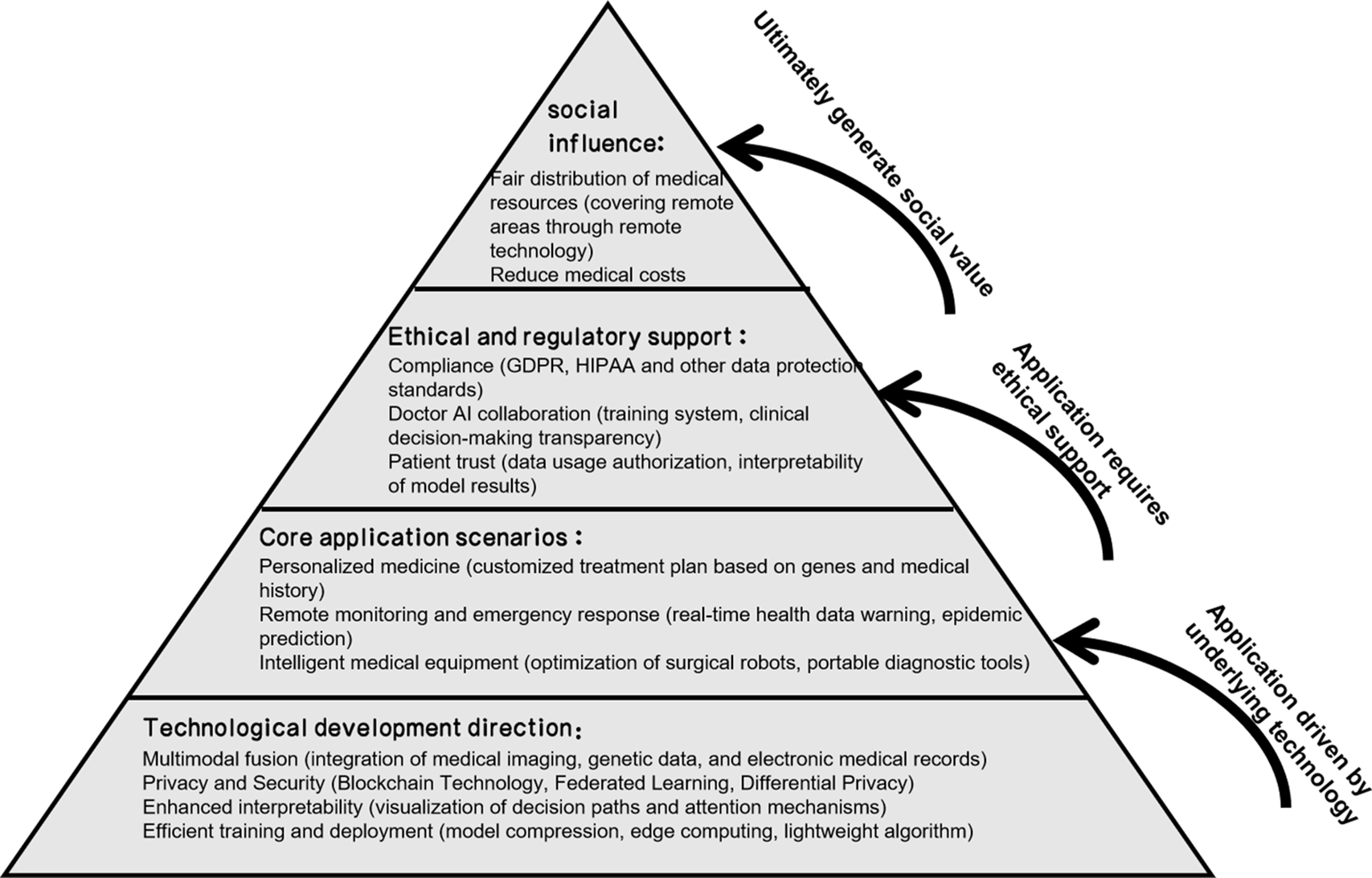

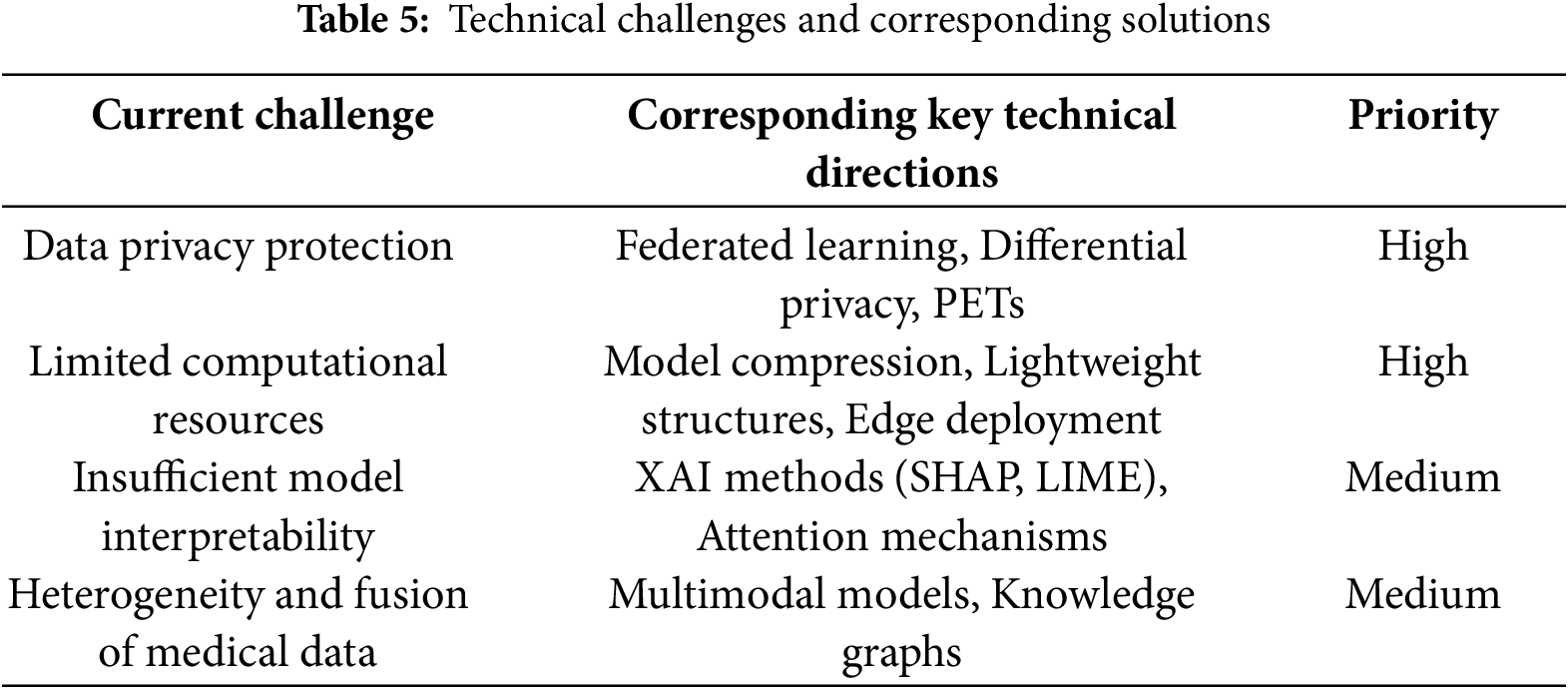

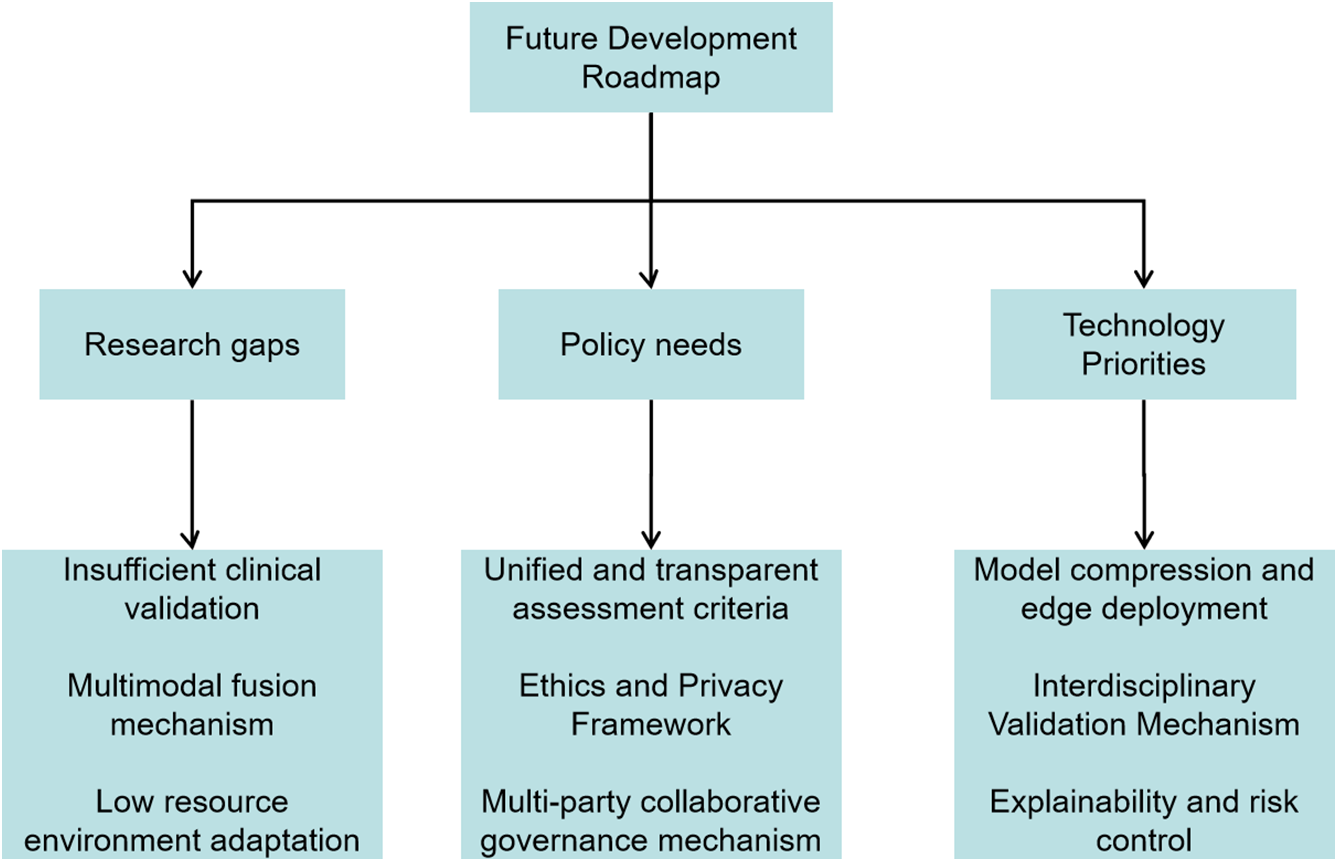

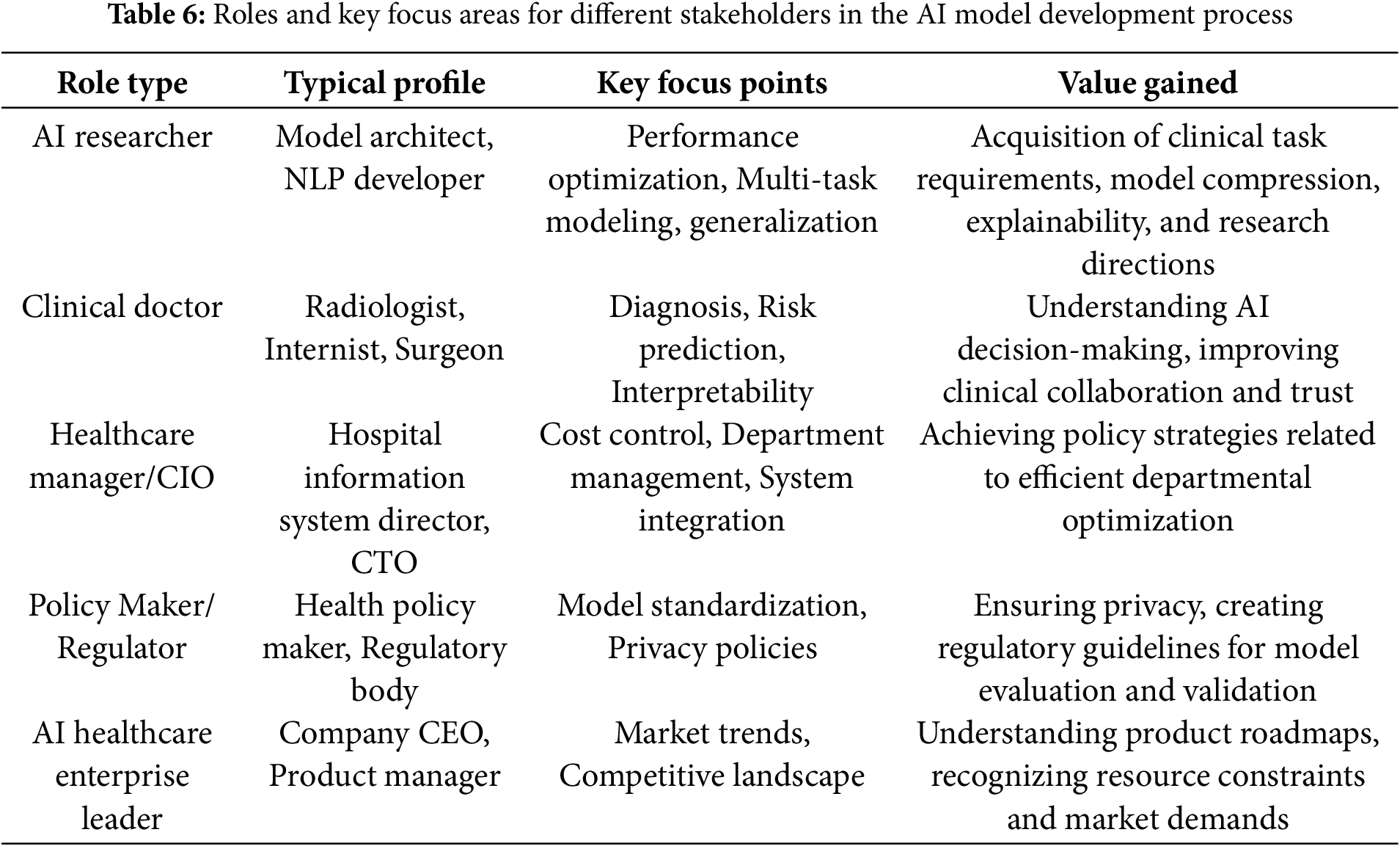

• Task fine-tuning strategy: During the fine-tuning stage, GatorTronGPT was fine-tuned for different NLP tasks, so that it can effectively adapt to various information extraction and relationship recognition tasks.