Open Access

ARTICLE

Displacement Feature Mapping for Vehicle License Plate Recognition Influenced by Haze Weather

1 Department of Electrical Engineering, College of Engineering, Jouf University, Sakakah, 72388, Saudi Arabia

2 College of Computer and Information Sciences, Jouf University, Sakakah, 72388, Saudi Arabia

3 Department of Computer sciences, Applied College, Princess Nourah bint Abdulrahman University, P.O. Box 84428, Riyadh, 11671, Saudi Arabia

4 Department of Computers and Information Technologies, College of Sciences and Arts Turaif, Northern Border University, Arar, 91431, Saudi Arabia

5 School of Electrical Engineering, Southeast University, Nanjing, 210096, China

6 Department of Electrical Engineering, University of Business and Technology, Jeddah, 21448, Saudi Arabia

7 Engineering Mathematics Department, Alexandria University, Lotfy El-Sied st. off Gamal Abd El-Naser, Alexandria, 11432, Egypt

* Corresponding Authors: Radhia Khdhir; Somia Asklany

(This article belongs to the Special Issue: Machine Learning and Deep Learning-Based Pattern Recognition)

Computer Modeling in Engineering & Sciences 2025, 144(3), 3607-3644. https://doi.org/10.32604/cmes.2025.069681

Received 28 June 2025; Accepted 20 August 2025; Issue published 30 September 2025

Abstract

License plate recognition in haze-affected images is challenging because feature distortions such as blurring and elongation displace pixels. This article introduces a Displacement Region Recognition Method (DR2M) to address this problem. The method operates on features that are displaced relative to the training input, observed over defined time frames. It focuses on detecting features that remain relatively stable under haze, using a frame-based analysis to isolate edges minimally affected by visual noise. Edge detection failures are identified by a bilateral neural network trained on displaced features. The training converges bilaterally from the maximum region towards the minimum edges. The training input and detected edges are then used to identify the displacement between observed image frames, so that the license plate region can be extracted and differentiated from the other vehicle regions. The proposed method maps the similarity feature between the detected and identified vehicle regions, which improves plate recognition precision and yields a high F1 score. Under maximum displacement conditions caused by haze, the technique achieves a 10.27% improvement in identification precision, a 10.57% increase in F1 score, and a 9.73% reduction in false positive rate compared to baseline methods. It attains an identification precision of 95.68%, an F1 score of 94.68%, and a false positive rate of 4.32%, indicating robust performance under haze-affected settings.

License plate detection in haze weather is a challenging task in transportation systems. To reduce this complexity, automatic license plate detection (ALPD) models are used [1]. An ALPD model collects hazy images captured by smart devices on the road and recovers the plates of moving vehicles in foggy or hazy weather conditions [2]. The model analyzes the image background and eliminates foggy content and features so that number plates can be detected accurately. It also corrects plate tilt to reduce the effects of foggy weather on the images. The ALPD model thereby maximizes precision in the detection process [3,4]. License Plate Recognition (LPR) methods are also used in fog and haze environments. The LPR method estimates the atmospheric light and surrounding effects in hazed and blurred images [5]. This estimation de-hazes the image features and patterns for detection. The LPR method then detects the exact characters and features of the number plates, which enhances performance in the license plate recognition process [6].

Feature extraction and analysis methods are used for license plate detection in haze weather. They ease the detection process by reducing the computational effort required in later stages [7]. A feature extraction technique extracts the optimal features from the given video footage and improves vehicle detection. It addresses issues such as background subtraction and cast shadow removal. The extraction technique analyzes the vehicle images and produces data for a specific detection task, such as plate detection [8]. It mitigates the issues that cause latency in detecting license plates, and it is also used to process the data and remove the fogged edges of the images [9]. A feature analysis approach is also used for license plate detection in haze weather; it recognises the license plate in complex haze conditions [10]. The approach analyzes the colour, edge, and corner information present in the fogged images. This analysis produces relevant data, which minimizes the computational cost of detection. Both horizontal and vertical histograms are used to remove unnecessary edges in the images. The analysis approach increases the effectiveness of detection systems [11,12].
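The role of horizontal and vertical histograms can be illustrated with a minimal Python sketch. It assumes that a binary edge map is already available; the function names and the `frac` threshold are illustrative choices and are not taken from the cited works.

```python
import numpy as np

def projection_histograms(edge_map: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return (row_profile, col_profile) of a binary edge map."""
    rows = edge_map.sum(axis=1)  # horizontal projection: one count per row
    cols = edge_map.sum(axis=0)  # vertical projection: one count per column
    return rows, cols

def crop_by_projection(edge_map: np.ndarray, frac: float = 0.5) -> tuple[int, int, int, int]:
    """Bounding box (top, bottom, left, right) of the rows and columns whose
    edge count reaches `frac` of the peak; bottom and right are exclusive.
    `frac` is an assumed, illustrative threshold."""
    rows, cols = projection_histograms(edge_map)
    r = np.flatnonzero(rows >= frac * rows.max())
    c = np.flatnonzero(cols >= frac * cols.max())
    return int(r[0]), int(r[-1]) + 1, int(c[0]), int(c[-1]) + 1

# Toy example: a dense 4x10 block of edge pixels plus one stray edge pixel.
edges = np.zeros((12, 20), dtype=np.uint8)
edges[5:9, 6:16] = 1
edges[1, 2] = 1
box = crop_by_projection(edges)
print(box)
```

In this toy case the stray pixel contributes too little to either profile to pass the threshold, so the box closes around the dense block only.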

Machine learning (ML) algorithms are used for license plate detection in haze weather, where they improve the precision of the plate detection process [13]. The Multi-Phase Generative Adversarial Network (MPGAN) is a generative adversarial network model developed to improve picture quality under difficult settings. Its primary goal is to improve object identification and recognition, such as license plate recognition, which includes repairing photos damaged by haze and other visual degradations. The method also uses a visual processing algorithm to improve the quality of hazy images [14]. MPGAN is used here to dehaze the images as needed. The algorithm locates the license plate’s exact position and produces the vehicles’ features in haze weather conditions [15,16], which increases the accuracy and feasibility of license plate detection. Deep learning (DL) algorithm-based detection methods are also used in detection systems [17]. Deep learning uses complex neural network topologies to learn characteristics automatically from raw input, such as photos. These algorithms can decipher intricate visual patterns, allowing accurate identification and classification, such as separating license plate areas from backgrounds with little human intervention. The DL algorithm uses a descriptor classifier to classify the features extracted from the images. It detects license plates with minimal requirements, enhancing the system’s quality [18].

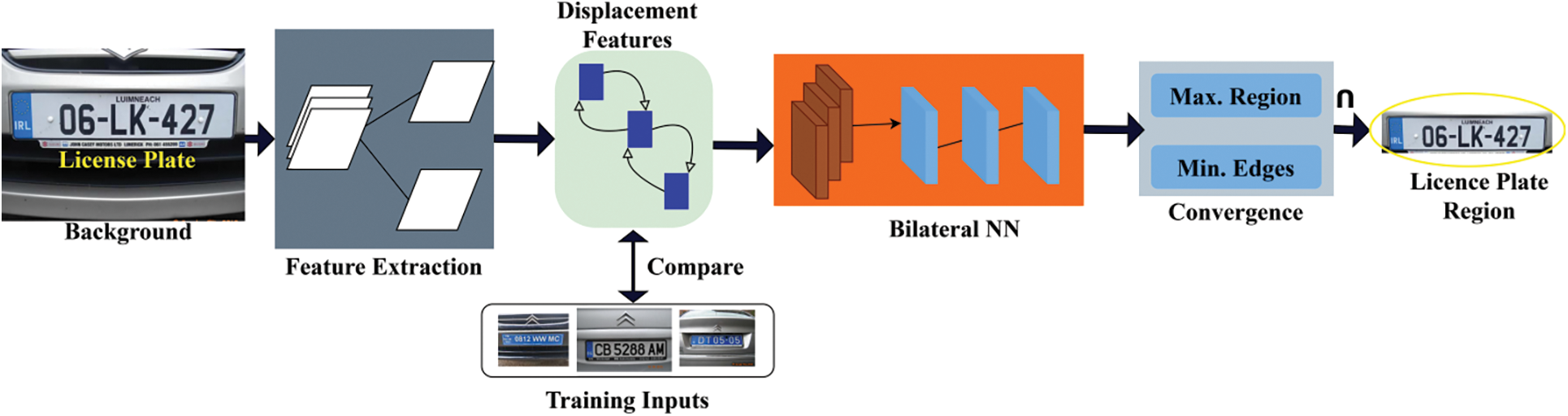

The graphical abstract in Fig. 1 summarises the components and functions of the method and illustrates feature extraction in the Displacement Region Recognition Method (DR2M). The method detects license plate characteristics that would otherwise be missed, especially in low light, and accurate detection relies on plate size and colour. Images are first captured in dim light, where license plates are hard to see. Dehazing then reduces noise and boosts contrast so that the images become crisper. In addition to size, colour intensity and license plate isolation are crucial visual features. These variables are fed into a bidirectional neural network, and displacement mapping compensates for haze-induced variations. The network has two tiers: maximum region extraction layers capture the full license plate area, while minimum region extraction layers concentrate on finer details, such as character edges and boundary lines, that are essential for precise detection. The network calculates displacement from the haze equations by analysing area and edge properties over several frames. The haze equations in the DR2M model describe both region-level and edge-level feature displacement under haze, using maximization for structural stability and minimization for detail preservation. To improve feature identification, convergence estimation refines the bidirectional neural network’s feature mappings until they converge. It tracks residual displacement over iterations and halts updates once stability is reached, which ensures consistent, noise-resistant mappings and improves accuracy in degraded visibility conditions. The maximisation and minimisation equations compare displaced features with their original positions in the picture. Training uses a gradient descent optimiser such as Adam or Stochastic Gradient Descent (SGD) with a suitable learning rate and batch size. The loss function is cross-entropy or mean squared error (MSE), depending on the classification goal. The hazy training photos include license plate positions.

Figure 1: Graphical abstract of the article

The recommended method for recognising license plates in foggy conditions employs a bidirectional neural network and DR2M for accurate recognition. Photos are first captured in low light, when ambient noise and poor visibility make license plates difficult to see. Dehazing then removes noise and boosts contrast to produce clearer images. Apart from size, colour intensity (RGB values or greyscale) and license plate isolation are the most crucial image attributes. These variables are input into a bidirectional neural network and mapped using displacement to account for haze-induced variations. The network architecture has two layers: minimum region extraction layers focus on boundaries and finer features, whereas maximum region extraction layers prioritise the full plate. The network examines edge and area properties over successive frames to calculate displacement and employs equations characterising haze-induced feature changes.
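As an illustration of how displacement between successive frames might be measured, the following sketch estimates a global integer shift between two frames from their edge maps. It uses phase correlation, a standard image registration technique substituted here for illustration only; it is not the DR2M formulation, and the helper names `edge_magnitude` and `estimate_shift` are hypothetical.

```python
import numpy as np

def edge_magnitude(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude via finite differences (a stand-in for an edge detector)."""
    gy, gx = np.gradient(gray.astype(float))
    return np.hypot(gx, gy)

def estimate_shift(ref: np.ndarray, cur: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (dy, dx) translation that maps `ref` onto `cur`
    by phase correlation of the two edge maps (circular shifts assumed)."""
    f_ref = np.fft.fft2(edge_magnitude(ref))
    f_cur = np.fft.fft2(edge_magnitude(cur))
    cross = f_cur * np.conj(f_ref)
    cross /= np.abs(cross) + 1e-12           # keep only the phase
    corr = np.fft.ifft2(cross).real          # peak marks the shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = corr.shape
    # Shifts beyond half the frame wrap around to negative values.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)

# Toy example: a textured patch moved 3 rows down and 2 columns left.
rng = np.random.default_rng(0)
ref = np.zeros((32, 32))
ref[10:20, 8:24] = rng.random((10, 16))
cur = np.roll(ref, shift=(3, -2), axis=(0, 1))
shift = estimate_shift(ref, cur)
print(shift)
```

A single global shift is a deliberate simplification; haze-induced displacement as described above varies across regions and edges, so a practical system would estimate it locally.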

In DR2M, the similarity feature quantifies the correspondence between current and historical plate region–edge mappings. By comparing geometric alignment and feature consistency, it filters out mismatched or unstable detections. This selective acceptance of only high-similarity mappings reduces false positives, thereby enhancing precision and improving the overall recognition reliability.
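A minimal sketch of similarity-based filtering is given here, under the assumption that geometric alignment is measured by intersection over union (IoU) between a candidate plate box and previously accepted boxes. The IoU measure, the 0.5 threshold, and the function names are illustrative assumptions; the sketch covers only the geometric part of the comparison and not feature consistency.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def filter_by_similarity(candidates: list[Box], history: list[Box],
                         threshold: float = 0.5) -> list[Box]:
    """Keep candidates whose best IoU with any historical box reaches
    `threshold` (an assumed value); the rest are treated as unstable."""
    return [c for c in candidates
            if any(iou(c, h) >= threshold for h in history)]

# Toy example: one candidate close to the history, one far away.
history = [(10, 10, 50, 30)]
candidates = [(12, 11, 52, 31), (100, 100, 140, 120)]
accepted = filter_by_similarity(candidates, history)
print(accepted)
```

Rejecting the distant candidate is what reduces false positives in this toy setting: only detections consistent with earlier mappings are passed on.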

The convergence estimation technique refines feature mappings in the bidirectional neural network until convergence to enhance feature detection. As mentioned, the maximisation and minimisation equations compare the displaced features with their original positions in the picture. Training employs the Adam or SGD gradient descent optimisers with a learning rate and batch size tuned to the dataset. The hazy training photos include license plate positions, and the loss function is cross-entropy or mean squared error (MSE), depending on the classification task. After numerous training cycles, the model is evaluated using recall, accuracy, F1 score, and false positive rate to reduce errors. To minimize attention to the background and concentrate on crucial regions like the plate’s edges, the network uses Edge-Guided Sparse Attention (EGSA). The method accurately detects license plates in haze, shifting lighting, and ambient noise.
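The idea of tracking residual displacement and halting once it stabilises can be sketched generically as follows. This is not the paper's update rule: the fixed `step`, the tolerance `tol`, and the function name are assumptions chosen only to show the stopping criterion.

```python
import numpy as np

def refine_until_stable(observed, reference, step=0.5, tol=1e-3, max_iter=100):
    """Move displaced feature points toward their reference positions and
    stop once the mean residual displacement changes by less than `tol`.
    Returns (refined_points, final_residual, iterations_used)."""
    points = np.asarray(observed, dtype=float).copy()
    reference = np.asarray(reference, dtype=float)
    prev, residual, it = np.inf, np.inf, 0
    for it in range(1, max_iter + 1):
        points += step * (reference - points)   # illustrative update rule
        residual = float(np.linalg.norm(reference - points, axis=1).mean())
        if abs(prev - residual) < tol:           # residual has stabilised
            break
        prev = residual
    return points, residual, it

# Toy example: two plate corners displaced by haze, with known references.
observed = [[3.0, 4.0], [13.0, 4.0]]
reference = [[0.0, 0.0], [10.0, 0.0]]
points, residual, iterations = refine_until_stable(observed, reference)
print(iterations, residual)
```

Stopping on the change in residual, rather than on the residual itself, lets the loop also terminate when the mapping settles at a non-zero offset.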

Beyond haze degradation, existing methods often ignore spatial displacement of features, lose fine character details, and lack temporal stability in detection. Many prioritize speed over robustness, struggle under occlusion or illumination changes, and fail to integrate multi-scale features. DR2M directly addresses these issues through displacement mapping, bilateral processing, and convergence estimation.

The main contributions of the article are:

• This paper presents the Displacement Region Recognition Method (DR2M) for license plate identification in vehicles affected by haze. The frame-based analysis uses the minimum observed edges to derive the haze’s effect. A bilateral neural network identifies edge detection errors through displaced feature training.

• The training input and the identified edges are used to determine the displacement between the observed picture frames, which isolates the license plate area from the rest of the vehicle’s image.

• At the highest displacement factor, this method achieves a 10.27% improvement in identification precision, a 10.57% increase in F1 score, and a 9.73% reduction in false positive rate compared to baseline methods.

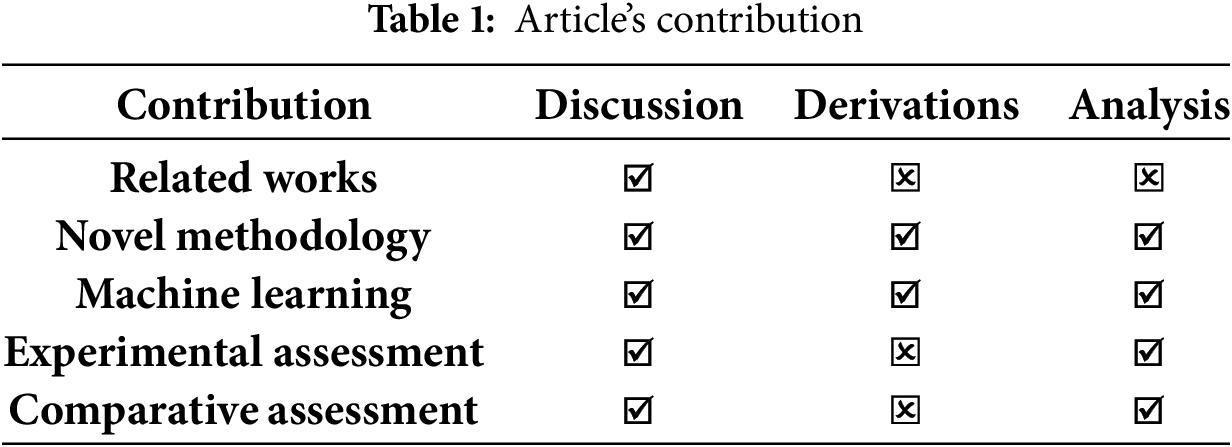

The contributions of the article are also presented in Table 1.

The proposed method presents numerous innovative enhancements compared to current dehazing and License Plate Recognition (LPR) techniques:

• Displacement Feature Mapping implements a dynamic system that monitors and rectifies haze-induced pixel displacements over successive frames. In contrast to conventional dehazing techniques that primarily emphasize picture improvement, DR2M precisely simulates the spatial displacement of license plate features—such as edges and characters—caused by haze, facilitating reliable localization even under significant visual distortion.

• The Bilateral Neural Network is distinguished by its dual-layer architecture: one layer analyzes region-based features (e.g., plate dimensions, morphology), while the other addresses edge-based features (e.g., text margins, outlines). This concurrent feature processing enhances the model’s capacity to distinguish plate regions from background noise, especially in hazy conditions.

• DR2M’s architecture incorporates convergence estimation that realigns displaced features with their original placements over time, a process lacking in the majority of current LPR or dehazing pipelines.

• These advances enable DR2M to enhance image clarity and structurally adjust to haze-induced distortions, resulting in an improved F1 score, heightened detection precision, and diminished false positives relative to state-of-the-art approaches.

DR2M is novel as it integrates displacement feature mapping, bilateral region–edge pathways, and convergence estimation to handle haze-induced spatial drift, edge degradation, and instability. Unlike existing methods focused solely on enhancement or detection, DR2M jointly ensures spatial consistency, detail preservation, and temporal stability, directly addressing these persistent shortcomings.
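The dual-path idea described above, with one path for coarse region features and one for fine edge features, can be sketched with plain array operations. Block-wise maximum and gradient magnitude are assumed stand-ins for the learned region and edge layers; the function names and the block size are hypothetical and no trained network is implied.

```python
import numpy as np

def region_path(gray: np.ndarray, block: int = 4) -> np.ndarray:
    """Coarse region descriptor: block-wise maximum (a max-pooling stand-in)."""
    h, w = gray.shape
    h, w = h - h % block, w - w % block          # drop incomplete border blocks
    tiles = gray[:h, :w].reshape(h // block, block, w // block, block)
    return tiles.max(axis=(1, 3))

def edge_path(gray: np.ndarray) -> np.ndarray:
    """Fine edge descriptor: gradient magnitude at full resolution."""
    gy, gx = np.gradient(gray.astype(float))
    return np.hypot(gx, gy)

def bilateral_features(gray: np.ndarray, block: int = 4) -> tuple[np.ndarray, np.ndarray]:
    """Run both paths on the same input and return (region_map, edge_map)."""
    return region_path(gray, block), edge_path(gray)

# Toy example: a bright rectangular "plate" on a dark background.
gray = np.zeros((16, 16))
gray[4:12, 4:12] = 1.0
region_map, edge_map = bilateral_features(gray)
print(region_map.shape, edge_map.shape)
```

The region map marks where the plate is at low resolution, while the edge map responds only along its border, which mirrors the split between structural stability and detail preservation.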

The rest of the paper is organised as follows. Section 2 reviews the recent literature on the topic. Section 3 introduces the proposed Displacement Region Recognition Method. Section 4 presents the results and discussion. Section 5 concludes the paper.

2.1 Advancements in License Plate Recognition

Silva and Jung [19] introduce IWPOD-NET, a robust network for recognizing license plates in difficult conditions. IWPOD-NET accurately detects license plate corners in diverse conditions, allowing effective perspective rectification. It performs well against the top methods in Automatic License Plate Recognition (ALPR), scoring highly across different datasets. IWPOD-NET was evaluated across various conditions and vehicle types, including motorcycles, showcasing its robustness.

Jiang et al. [20] propose an ALPR method optimized for real-time performance and accuracy in various conditions. License Plate Detection Network (LPDNet) uses an anchor-free approach with a Rotating Gaussian Kernel and centrality loss for precise license plate detection. CRNet, a full-convolution network, improves character recognition speed and accuracy across different license plate types. The method outperforms existing approaches on diverse public datasets.

Lin et al. [21] implement a method using the SOLOv2 model for better license plate detection and segmentation in wide-angle images. The approach improves recognition rates by 12% in challenging environments like indoor parking lots. The method effectively addresses issues such as low lighting and complex backgrounds. Employing instance segmentation accurately handles varying license plate positions and orientations, advancing multiple license plate recognition in diverse conditions.

2.2 Dataset Contributions for ALPR

Wang et al. [22] introduce LSV-LP, a large-scale video dataset with over 1402 videos and 364,607 annotated license plates. LSV-LP improves upon single-image datasets by reflecting greater diversity and complexity in natural scenes. The MFLPR-Net framework leverages information from adjacent frames to enhance license plate detection and recognition (LPDR) performance. Experimental results demonstrate that MFLPR-Net outperforms mainstream methods in accuracy and robustness.

Ramajo-Ballester et al. [23] developed dual license plate recognition and visual features encoding. The proposed method uses license plate recognition and visual encoding for vehicle identification, enhancing robustness and accuracy. Two new open-source datasets, UC3M-LP for license plate detection and UC3M-VRI for vehicle re-identification, have been created and published. The dual identification system shows excellent performance on public and proposed datasets.

2.3 Optimization for Real-Time Performance

Ke et al. [24] present an ultra-fast ALPR approach using an improved Yolov3-tiny model for detecting multiple license plates in diverse environments. Multi-scale Residual Network (MRNet) efficiently recognizes license plates using multi-scale features without needing complex image adjustments or costly Recurrent Neural Networks (RNNs). The method includes a novel data augmentation technique to improve the network’s generalisation ability. The approach enhances efficiency and accuracy in automatic license plate recognition systems.

Cao [25] investigated a Convolutional Neural Network (CNN)-based approach for license plate detection using a Yolo-based algorithm. The Yolo algorithm detects and extracts license plates in a single pass, optimizing efficiency. The model’s accuracy is evaluated on a validation set to identify areas for further optimization. The method improves efficiency and accuracy in license plate detection using convolutional neural networks.

Qin and Liu [26] propose an end-to-end deep neural network for license plate detection and recognition in various conditions. The unified framework avoids the drawbacks of separate detection and recognition methods, making it more practical in real-world applications. The technique accurately localizes license plates, contributing to the accuracy of subsequent tasks. The process enhances performance across diverse scenarios, improving overall system effectiveness.

Wang et al. [27] proposed a GPU-free method for license plate detection using color-edge fusion and a Retina model. The technique combines colour filtering, Sobel detector, Slime Mould Algorithm (SMA) search, Support Vector Machine (SVM), and a trained Retina model for efficient detection without GPUs. It achieves a speedup of 312.37% compared to the original Retina model on CPU devices, with a precision of 97.95%. Evaluated on the CCPD, the method excels in detecting Chinese blue license plates on CPU devices.

Kim et al. [28] introduced AFA-Net to better recognize license plates in low-resolution dash cam footage. AFA-Net uses modules for restoring images, combining features, and reconstructing images to improve recognition accuracy. The method targets challenging conditions typical of dynamic driving environments, focusing on optimizing recognition performance. AFA-Net’s experiments show that accuracy in these conditions is improved compared to other methods.

Zhang et al. [29] presented TOCNet, a network for detecting waterway license plates. Similarity Fusion Module (SFM) enhances the detection of Small License Plate (SLP) targets by using similarity weights to increase model sensitivity. Task-oriented Decoupling Head (TDH) improves localization accuracy through selective fusion, enhancing SLP detection precision in waterway supervision. The method aims to improve the performance of license plate detection in intelligent waterway scenarios.

Seo and Kang [30] proposed a robust model using lightweight anchor-free networks. The method enhances recognition accuracy outdoors by employing attention-based networks with a residual deformable block. The method surpasses traditional approaches on benchmark datasets like CCPD, AOLP, and VBLPD and performs well on Korean handicapped parking card data. The method detects and recognizes license plates in diverse, challenging scenarios.

Shahidi Zandi and Rajabi [31] developed a deep-learning framework for Iranian license plate detection. The method uses YOLOv3 to detect Iranian license plates and Faster R-CNN to recognize and classify the characters. YOLOv3 achieved 99.6% mAP and 98.08% accuracy with an average detection speed of 23 ms, while Faster R-CNN achieved 98.8%. The system works effectively in challenging conditions and outperforms other Iranian license plate recognition systems.

2.5 Environmental Challenges in ALPR

Rao et al. [32] used Yolov5l to detect license plates, adapting it to transfer learning for diverse environments. Adaptive Fusion Feature Segmentation Network (AFF-Net) is introduced to correct large-angle deflections in license plate images by combining segmentation results with original areas. The method enhances recognition accuracy using a Convolutional Recurrent Neural Network (CRNN) with a channel attention mechanism to utilize spatial information effectively. The approach aims to improve license plate recognition in complex and varied scenes.

Vizcarra et al. [33] study adversarial attacks and defenses in deep learning-based License Plate Recognition (LPR) systems. Deep neural networks have advanced Optical Character Recognition (OCR) in LPR systems, which is crucial for accurate plate recognition. The method suggests enhancing LPR system security with image denoising and inpainting techniques. The approach enhances the reliability of LPR systems against adversarial threats.

2.6 Innovations in Feature Extraction and Classification

Liang et al. [34] introduce EGSANet, which uses an Edge-Guided Sparse Attention (EGSA) mechanism to improve license plate detection. EGSA is evaluated on the CCPD and AOLP datasets, consistently showing high precision and strong generalization. The method outperforms existing attention methods and models in detecting license plates, and EGSANet significantly enhances accuracy across various challenging real-world scenarios. EGSA improves license plate identification by focusing on edge information in the input picture, notably license plate borders. Edges help identify objects like license plates by defining their structure and shape, and edge-guided attention helps the model focus on the parts of the picture that contain the most essential information. This strategy is helpful in haze-affected scenes, where license plate detection is difficult due to low visibility, noise, or intricate backgrounds. By attending sparsely to picture edges, EGSA enhances license plate identification while optimizing computational resources. Although haze, fog, and intricate backgrounds are challenging, the strategy improves feature localisation, reduces false positives, and increases robustness to noise, which makes it relevant to practical license plate recognition.

Ramasamy et al. [35] present a new automated number plate recognition approach to reliably and efficiently identify number plates. The first step involves utilizing a pretrained Location-Dependent Ultra-Convolutional Neural Network (LUCNN), a novel ultra-convolutional neural network, to identify key characteristics in the input images. The gathered characteristics are inputted into hybrid single-shot fully convolutional detectors that incorporate a Support Vector Machine (SVM) classifier to distinguish between the city, model, and number of the car and its registration location. The suggested LUCNN+SSVM model successfully extracts the number plate areas from images captured behind vehicles at varying distances. The performance results show that compared to the existing recognition models, the suggested LUCNN+SSVM model achieves a higher accuracy of 98.75% and a lower error range of 1.25%.

Yan et al. [36] created a multilayer memristive neural network circuit for license plate detection. The neural network automatically adjusts outputs to match set targets based on inputs, which is suitable for tasks like pattern recognition. Simulations demonstrate the circuit achieves 93% accuracy in license plate detection and handles memristor variations, enhancing training speed and performance. Using novel memristive technology, the method shows promise for efficient and robust license plate detection.

Subramanian and Aruchamy [37] present a novel speech-emotion recognition (SER) framework to classify spoken emotions. The method pre-processes voice signals to eliminate artefacts and background noise, and merges state-of-the-art speech emotion features with novel energy and phase attributes. The threshold-based feature selection (TFS) technique uses statistics to discover the best features. The proposed architecture is tested on a Tamil emotional dataset (Tamil being a regional language of India) using classical ML and deep learning classifiers. The recommended TFS approach achieves 97.96% accuracy and performs better on this dataset than on the Indian English and Malayalam datasets.

Multi-scale fusion defogging (MsF) is a part of the multi-task learning framework to prepare pictures for processing by the re-identification branch, which successfully reduces image blur [38]. The adaptive preservation of critical features is further enhanced by using a phase attention mechanism. Compared to the semi-supervised joint defogging learning (SJDL) model, the method’s improved performance is especially noticeable in challenging foggy conditions.

A blockchain-based method that involves LPR is used to secure privacy in the Internet of Things [39]. The article proposes a privacy protection mechanism for the Internet of Vehicles (IoV) that uses license plate recognition and blockchain technology. The license plate reader system sends a picture of a license plate to the central gateway, which handles all incoming and outgoing data. As the number of cars in the system increases, the database controller can potentially crash. Whenever a user needs a license plate, a system linked to the blockchain registers it directly, bypassing the gateway.

Based on the survey conducted in [40], current license plate recognition systems encounter significant problems, including suboptimal performance in low visibility, fluctuating illumination, occlusion, and severe weather conditions such as haze or fog. Numerous conventional methods depend significantly on image quality and encounter difficulties with warped or low-resolution plates, leading to erroneous detection and recognition. Moreover, several approaches exhibit insufficient adaptation to various plate formats and environmental fluctuations, constraining their generalizability. These constraints necessitate the development of advanced models such as DR2M, which mitigate haze-induced feature displacements through the utilization of bilateral neural networks for enhanced and precise recognition.

Alongside conventional methods, new research has explored real-time and lightweight license plate recognition systems designed for resource-limited settings. Shashirangana et al. [41] introduced a framework based on neural architecture search (NAS) tailored for edge devices. The model effectively accommodates hardware constraints and ensures fast deployment; nevertheless, it lacks mechanisms to address environmental distortions like haze, which considerably affects its accuracy in outdoor settings characterized by feature displacement and blurred edges. Sayedelahl [42] concentrated on deep learning-driven Arabic license plate identification for intelligent traffic systems, exhibiting great precision for region-specific plate varieties. Nonetheless, the system’s resilience to haze or low visibility conditions is not expressly considered. The method presupposes largely untainted inputs and excludes any haze-aware modules or feature alignment techniques. Tang et al. [43] created an ultra-lightweight ALPR system suitable for microcontrollers, prioritizing cost-effectiveness and energy efficiency. This technique performs well in low-resource scenarios but demonstrates inadequate performance in degraded visual conditions, such as haze, due to its absence of feature rectification or adaptive augmentation processes essential for successful recognition in these environments.

Agarwal and Bansal [44] introduced a YOLO World-based system that accomplishes real-time license plate detection and recognition with excellent accuracy in controlled settings. Nonetheless, as indicated in their findings, the model’s efficacy declines under adverse visibility conditions, such as poor illumination or environmental impediments. In contrast to YOLO World, the proposed DR2M explicitly targets haze-induced distortions and feature displacement, providing enhanced robustness under harsh conditions. This contrast further emphasizes the innovation and practical importance of DR2M in uncontrolled, real-world contexts.

These results highlight a significant gap in the LPR literature: the majority of lightweight or real-time models emphasize speed and economy while frequently neglecting haze-induced feature displacement and edge degradation. This gap directly motivates the proposed DR2M, which combines displacement feature mapping with a bilateral neural network to rectify haze-induced distortions. DR2M enhances recognition accuracy in low visibility conditions while maintaining adaptability for real-time implementation, effectively reconciling resilience and efficiency in LPR systems.

Various technological deficiencies in earlier License Plate Recognition (LPR) systems led to the creation of DR2M. Perspective correction, real-time optimisation, and similar techniques do not remedy haze and other environmental distortions, because they work best with clean scenes or well-organised data. Although Adaptive Feature Attention Network (AFA-Net) and MFLPR-Net improve accuracy in dynamic scenes, haze-induced pixel displacements and feature elongation remain under-researched. SOLOv2 and Yolov5l can detect and segment plates in complicated scenes, but they do not handle haze-displaced features. Most systems ignore environmental factors like haze, which change pixel positions and affect detection and recognition accuracy. This gap is notable given that methods such as TOCNet and EGSANet focus on specific settings or extremely small targets. Because haze distorts features, this area needs further attention: the handling of pixel displacements between frames is poor, edge detection designed for haze is rare, and displaced feature training is not used to enhance recognition accuracy. DR2M was built to solve these issues. Its bidirectional neural network and displaced feature training allow it to distinguish license plates in haze.

According to published research, conventional license plate recognition methods have difficulty dealing with various environmental factors that can drastically affect detection accuracy, such as haze, occlusion, or poor illumination. Classical approaches, including simple Support Vector Machines (SVMs) and Convolutional Neural Networks (CNNs), have problems generalising to new datasets, which causes them to perform inconsistently when used in the real world. When faced with complicated backdrops or low-quality photographs, these methods aren’t very resilient and usually require extensive pre-processing. These shortcomings have prompted several efforts to improve the precision and speed of number plate detection. Innovative approaches such as DR2M and EGSANet combine deep learning architectures with attention mechanisms to enhance the accuracy of feature extraction and detection under challenging environments. Hybrid models like LUCNN+SSVM merge CNN-based feature extraction with SVM classification to improve accuracy and decrease false positives. In addition to fixing the problems with older methods, these additions make real-time processing easier and increase the models’ generalizability to other datasets.

Recent ALPR systems address challenges including intricate scenarios, environmental distortions, and real-time performance. Although AFA-Net and EGSANet perform well, they handle only specific issues such as low resolution or haze, not occlusion, shifting lighting, or missing pixels. YOLO-based frameworks and other existing recognition methods favour speed over accuracy and cannot handle feature loss in harsh conditions.

A bidirectional neural network with displacement mapping manages haze-induced feature changes in the proposed Displacement Region Recognition Method (DR2M). With dual-layer processing for the region and edge-based extraction, DR2M ensures reliable identification under challenging scenarios. Improved detection accuracy with fewer false positives is achieved via convergence estimates and robust feature mapping. DR2M provides a scalable, reliable solution to ALPR system restrictions that will enhance identification accuracy, F1 score, and processing latency.

3 Proposed Displacement Region Recognition Method

This work identifies the license plate number from an input image, with pre-processing carried out first. This step uses an image representation to extract the input image and prepare it for further processing. The aim is to improve precision and F1 score, for which a bilateral neural network is proposed. The method first determines whether the license plate capture is affected by errors due to haze conditions; if the capture occurs during haze, detecting the number on the plate is difficult. Based on this analysis, the background image containing the license plate is acquired as the input and examined. The features of the input image, namely colour and size, are then taken into consideration. By examining these license plate features, the mapping is performed and a clear license plate region is obtained for the vehicle. This approach determines the displacement of feature occurrences identified by the feature extraction method. In this phase, the bilateral neural network is applied to the displaced features. In this context, “bilateral” denotes the dual-path architecture of the proposed neural network, which concurrently analyzes both region-based characteristics (e.g., plate size, shape) and edge-based features (e.g., contours, borders). This dual-layer architecture allows the model to identify both coarse and fine-grained details proficiently, hence augmenting its capacity to manage haze-induced distortions and promoting convergence in feature mapping and recognition.

The DR2M feature extraction procedure is designed to detect displaced features in license plate images, especially when visibility is poor. Colour and size are the primary properties used for accurate detection. Because of its colour, the license plate can be easily distinguished from its surroundings, even under difficult conditions like haze. Using a suitable colour space is important to help the model differentiate between the vehicle’s backdrop and the plate, as haze frequently reduces contrast. Colour extraction employs the distribution and intensity of RGB data to help the model identify the area of the license plate. In some circumstances, the computational effort can be reduced by converting to greyscale while critical colour-based boundaries are retained.
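As an illustration of the colour step described above, the following minimal sketch converts an RGB image to greyscale and builds a simple colour mask. The BT.601 luma weights, the function names `to_greyscale` and `colour_mask`, and the per-channel bounds are assumptions made for this example; the paper does not specify the colour space or thresholds used by DR2M.

```python
def to_greyscale(rgb_image):
    """Convert rows of (r, g, b) tuples in 0-255 to rounded greyscale values."""
    # BT.601 luma weights: an assumed, commonly used choice.
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


def colour_mask(rgb_image, lower, upper):
    """Mark pixels whose three channels all lie within [lower, upper]."""
    return [
        [all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper)) for pixel in row]
        for row in rgb_image
    ]


# Hypothetical bounds for a yellow plate against a dark vehicle body.
sample = [[(250, 220, 30), (20, 20, 20)]]
plate_pixels = colour_mask(sample, lower=(200, 180, 0), upper=(255, 255, 100))
```

A mask of this kind only narrows the search area; under haze the contrast drops, so the bounds would need to be looser than in clear images.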

Because it leverages colour, the model’s ability to recognise plates with various background colours remains resilient, even in haze. Even when haze makes it harder to see details, white or yellow plates may be identified against their darker surroundings. The model compares the sizes of the identified areas to distinguish the license plate from other vehicle parts. This is especially useful when several frames capture the vehicle’s movement or when part of the image is obscured.

• Haze displacement handling manages pixel shifts induced by blurring. The model starts by identifying haze-induced pixel displacement. This displacement shifts license plate features, including characters, making identification harder.

• Maximum and minimum region extraction lets the DR2M bilateral neural network locate and measure displacement. The model analyzes feature similarity across image frames or time intervals to correct for license plate relocation.

• Following displacement, the model realigns pixels using convergence estimation, as previously discussed. After displacement, the bilateral network validates the proper detection of large objects (like the plate) and minute details (like edges and letters).

• Comparing displaced features with training data maps the present features to projected license plate locations, which corrects the displacement. For example, the model can predict when the plate’s location will change due to haze and adjust its detection border accordingly.
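The displacement handling listed above can be sketched in a simplified form: estimate the average shift between reference feature positions and their observed positions, then move the detection border by that shift. The function names and the use of a plain mean are assumptions for illustration; DR2M learns this mapping through its bilateral network rather than computing it in closed form.

```python
def mean_displacement(reference_points, observed_points):
    """Average (dx, dy) shift between paired reference and observed points."""
    n = len(reference_points)
    dx = sum(o[0] - r[0] for r, o in zip(reference_points, observed_points)) / n
    dy = sum(o[1] - r[1] for r, o in zip(reference_points, observed_points)) / n
    return dx, dy


def shift_box(box, displacement):
    """Move an (x, y, w, h) detection border by the estimated displacement."""
    x, y, w, h = box
    dx, dy = displacement
    return (x + dx, y + dy, w, h)


# Hypothetical feature positions from training data and from a hazy frame.
reference = [(0, 0), (10, 0)]
observed = [(2, 1), (12, 3)]
adjusted = shift_box((5, 5, 40, 12), mean_displacement(reference, observed))
```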

Robustness in handling Hazy conditions

1. Blurred or mismatched license plate borders can be created by haze-induced pixel displacement. By considering region-based (based on size) and edge-based (based on colour) characteristics, the bilateral network can manage misalignments and adjust for these changes.

2. Over time, the network fine-tunes its displacement feature recognition algorithm, making it more accurate for shifted plates and less prone to false positives.

The method’s effectiveness in real-world circumstances will be supported by visual and numerical proof, thanks to the thorough treatment of displaced features.

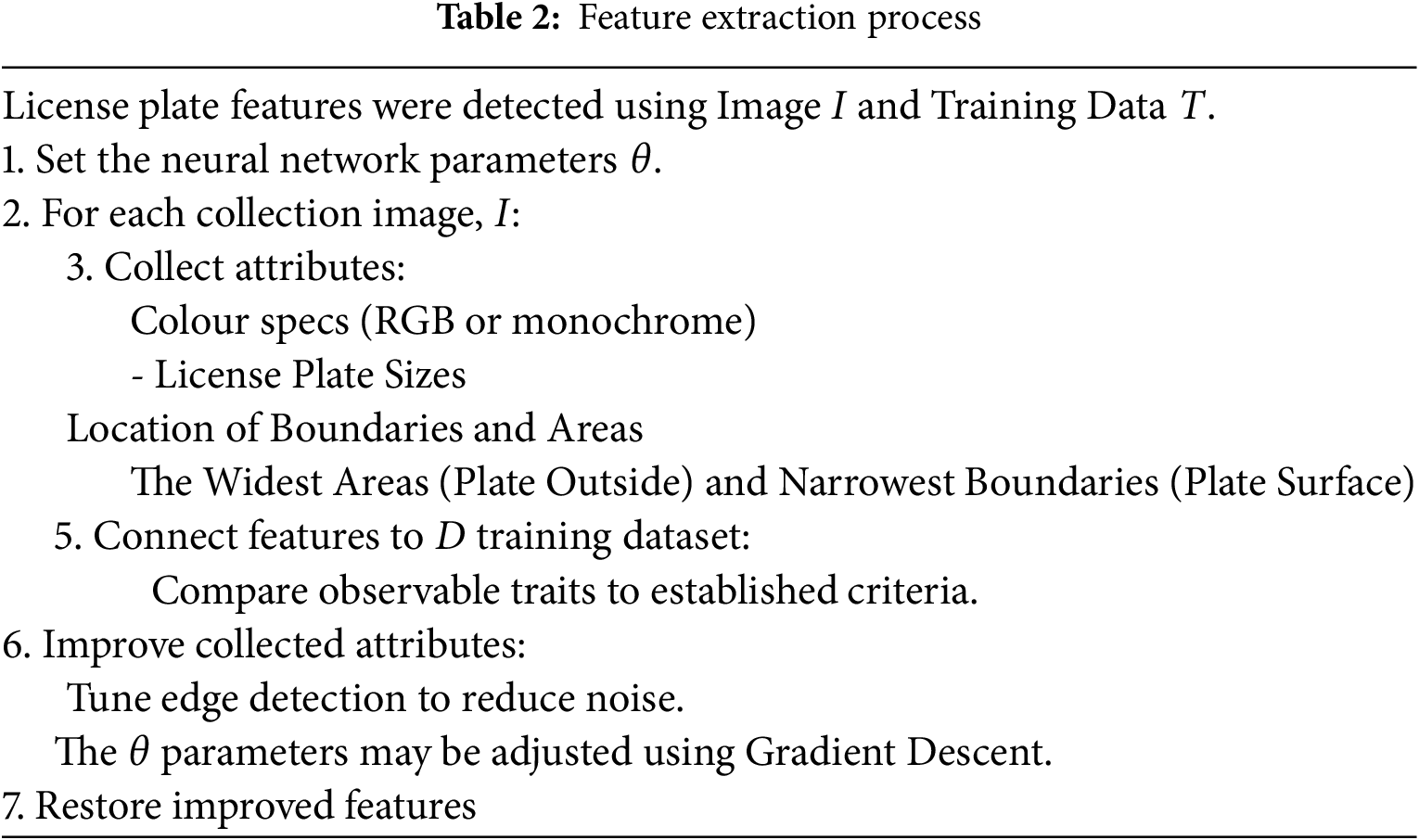

This technique operates in two forms: maximum region and minimum region. The convergence rate is observed from the feature extraction and computation methodology. It considers the background image and provides a better analysis by performing the mapping. The bilateral network works in two layers, covering maximum and minimum region extraction, to establish the convergence rate. This methodology performs license plate recognition and provides reliable computation with the necessary feature extraction mechanism. This step determines the feature mapping for vehicle license plate recognition with two varying layers, which are associated with the region and the edges. The region is processed first, followed by the edges. In this section, mapping is carried out for the training inputs observed under the bilateral neural network, and the displaced features from the extraction process are compared. The first derivation is the image representation formulated in the equation below.

The image representation is equated to the above equation for license plate detection from haze conditions. The region and edges are represented as

Due to the haze, the noisy pixels captured from these necessary features are extracted and show improved image resolution. If the particular image is somewhat noisy, then detection is difficult to ensure the resolution in the higher range, so the extraction of necessary features is very important to identify in haze conditions. The license plate detection in haze conditions is evaluated based on size and colour. Based on these factors, the extraction is followed up accurately in this proposed work for the input image. This stage defines the displacement of features and derives the mapping associated with the vehicle license plate recognition. This strategy provides the identification of license plates at the required time interval. Thus, it derives a reliable image representation from the background image and achieves the detection of the number. The formulation below extracts features from the image representation and improves the image quality.

The feature extraction is observed in the above equation, further categorized by colour and size. Based on these two factors, the font and design of the plate are analyzed, and it is represented as

A background image is taken from this processing step, and the extraction with the mentioned features is performed. On top of this analysis, the background image is acquired to extract the desired features. This mechanism determines the input image’s color and size; from this approach, monitoring is observed periodically. In this examination stage, image identification plays an important role in this extraction process and produces the output. The time requirement is discussed in this topic to extract the necessary features in the required time interval. By progression of this method, feature extraction provides the background image for the efficient region and edge-based computation. The colour indicates the RGB value with some greyscale images; the size describes the font size of the number displayed on the plate. Based on this strategy, the extraction is followed up for the displacement identification of features, including the license plate’s font and design. From this extraction method, the segmentation characteristic is performed, classifying the region and edges.

The characteristic segmentation is equated for the region and edges of the input image. The first derivation is for the region, and the second is for the edges. Based on this approach, the font and design of the input image are considered and work accordingly to extract the features. The feature extraction is carried out by identifying the colour and size of the acquired license plate. In this observation method, the font and design are considered and examined by monitoring the extraction over the mentioned time interval. The feature extraction process is defined by providing this process, and the region and edge-based computation is determined. Region-based execution is used to run the process efficiently. In this mechanism, the characteristic of the license plate is used to illustrate the extraction of color and size from the plate, making the task easy to define the region. From the region, the pixel is blurred due to the haze condition; to address this, both the region and edge detection are followed up in this bilateral layer.

This segmentation plays a vital role in the image’s region and edge; the feature extraction is carried out efficiently from this observation. The process defines the maximum and minimum regions and is identified in this extraction method from these edges. The edges are associated with the feature mapping for the vehicle, which provides license plate detection for the feature position. Based on this strategy, it derives the edge that differs from boundary detection, where it analyzes the edges of the image. From the box format, the edges are distinguished and provide the necessary computation for the segmentation. The segmentation is carried out for the varying computation layers and relies on recognising the vehicle license plate. This approach derives the feature extraction process with the convergence rate for reliable analysis. Based on this evaluation step, recognition is carried out for the different processing sets and giving the characteristics of the region and edges from the image for segmentation. From this method, the analysis for displaced features is progressed to find the feature position changes, which are equated below.

The analysis for the displaced feature is identified in the above equation and represented as

Figure 2:

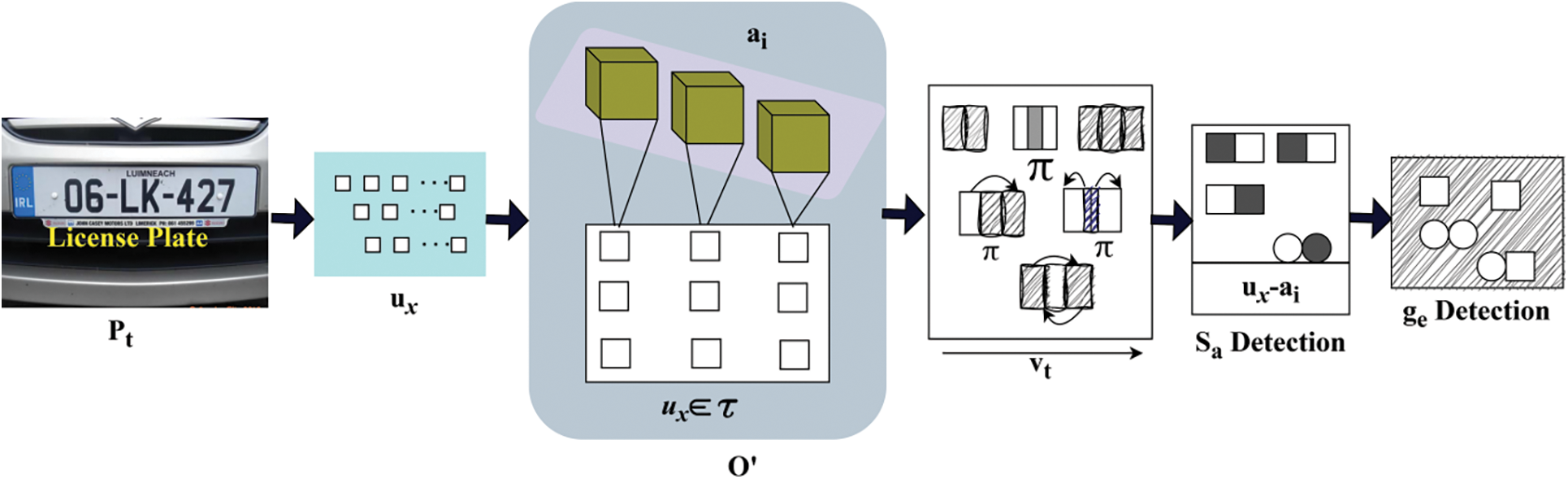

In Fig. 2, the

The periodic monitoring is performed for the displaced features, and from this observation strategy, the time duration is calculated. The comparison is represented as

In this study, the “widest areas” denote the bounding boxes exhibiting the greatest height-width product that comply with the initial color and aspect ratio criteria. The calculation involves segmenting the image into contiguous sections and determining the area of each region as follows:

The “narrowest boundaries” are established using Sobel edge detection, followed by morphological thinning to improve edge definition. Among all identified edges, those possessing the minimal enclosing bounding box around character-level gradients are selected. These denote intricate elements such as letter outlines and plate margins. The widest-area and narrowest-boundary measurements serve as inputs for the bilateral neural network to facilitate efficient region and edge-based license plate detection.
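The widest-area selection described above can be sketched as follows, assuming a binary mask has already been produced by the colour criteria. The 4-connected flood fill, the function names, and the aspect ratio limits are assumptions chosen for the example and are not taken from the paper.

```python
from collections import deque


def bounding_boxes(mask):
    """Return (x, y, w, h) boxes of 4-connected truthy regions in a binary mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            queue = deque([(r, c)])
            seen[r][c] = True
            r0 = r1 = r
            c0 = c1 = c
            while queue:
                y, x = queue.popleft()
                r0, r1 = min(r0, y), max(r1, y)
                c0, c1 = min(c0, x), max(c1, x)
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((c0, r0, c1 - c0 + 1, r1 - r0 + 1))
    return boxes


def widest_area(boxes, min_aspect=2.0, max_aspect=6.0):
    """Pick the box with the largest height-width product among plate-like boxes."""
    # The aspect ratio limits are hypothetical placeholders.
    candidates = [b for b in boxes if min_aspect <= b[2] / b[3] <= max_aspect]
    return max(candidates, key=lambda b: b[2] * b[3], default=None)
```

With a mask containing one 4 × 2 block and one thin vertical strip, only the block passes the aspect ratio test and is returned as the widest area.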

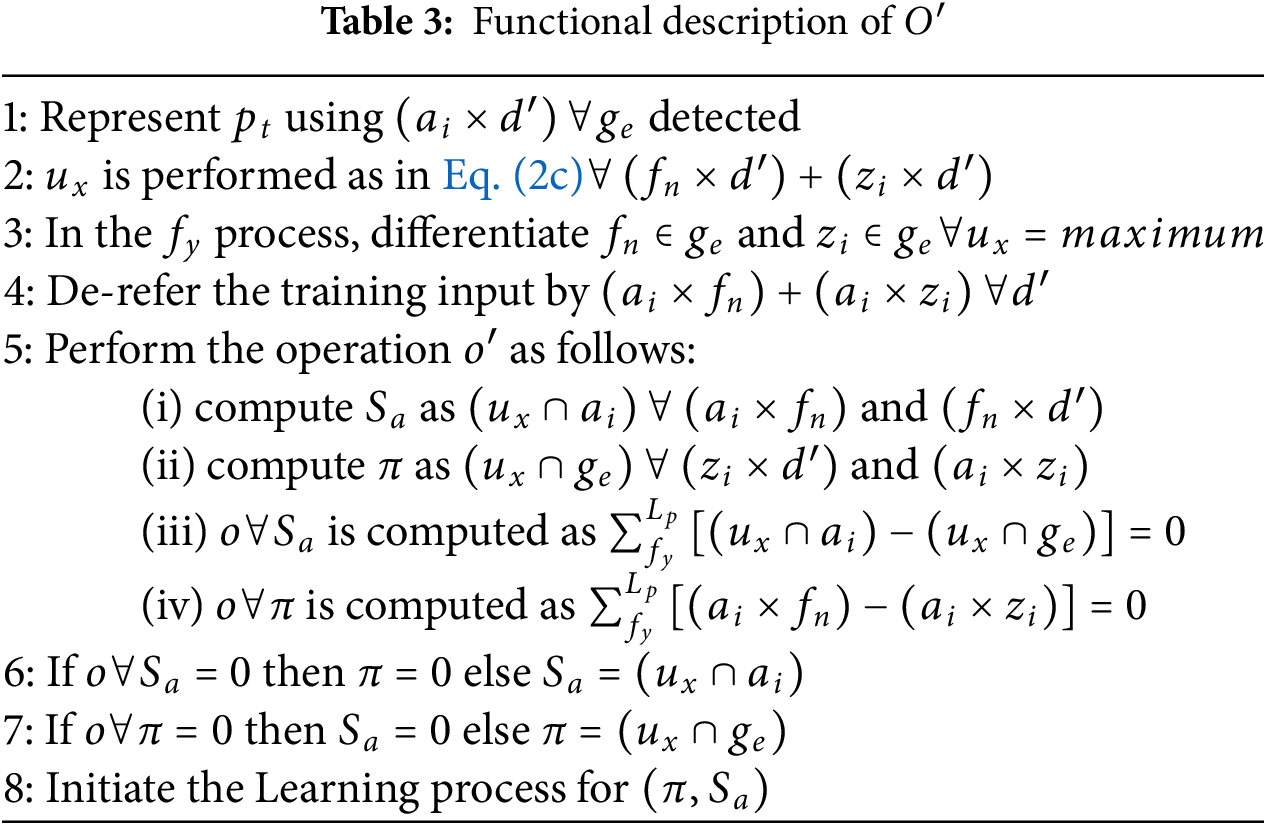

The primary goal of the first method is to recognize the license plate by extracting pertinent information from the input photos, such as the size and color. It uses a neural network to find the largest areas (plates) and the smallest boundaries (edges). Once these features have been mapped to training data for validation, iterative optimization methods, such as gradient descent, refine the findings by decreasing noise and increasing accuracy. The functional description of

This evaluation step maximises the region for vehicle license plate detection. The comparison is made with the existing techniques and derived from reliable processing. Here, it illustrates the feature position and variation and resolves for further evaluation. In this technique, the bilateral neural network is proposed to acquire the input from the displaced features and progress, resulting in the maximum and minimum regions. Based on this structural feature, the comparison is followed up in this technique, the pixel’s position is found, and the fix is used for the analysis. The fixed pixel frame is further computed to recognize the number plate. These steps are processed individually by introducing a bilateral neural network in the following section.

The suggested technique adjusts for changes in light and background noise in hazy license plate identification by integrating a bilateral neural network for displaced feature mapping with colour- and size-based feature extraction. The model can locate the plate in weak or changing lighting because it uses colour intensity to focus on the license plate’s consistent colour contrasts. By focusing on license plate size, the algorithm filters out extraneous background noise. The bilateral neural network enhances detection by integrating edge- and region-based feature extraction, which lowers background noise and lets the model react to illumination changes. The model eliminates background clutter and glare by focusing on the maximum and minimum regions, and edge-guided recognition helps identify the plate’s borders even under low-contrast illumination. These capabilities make license plate identification reliable even in noisy and dynamic environments.

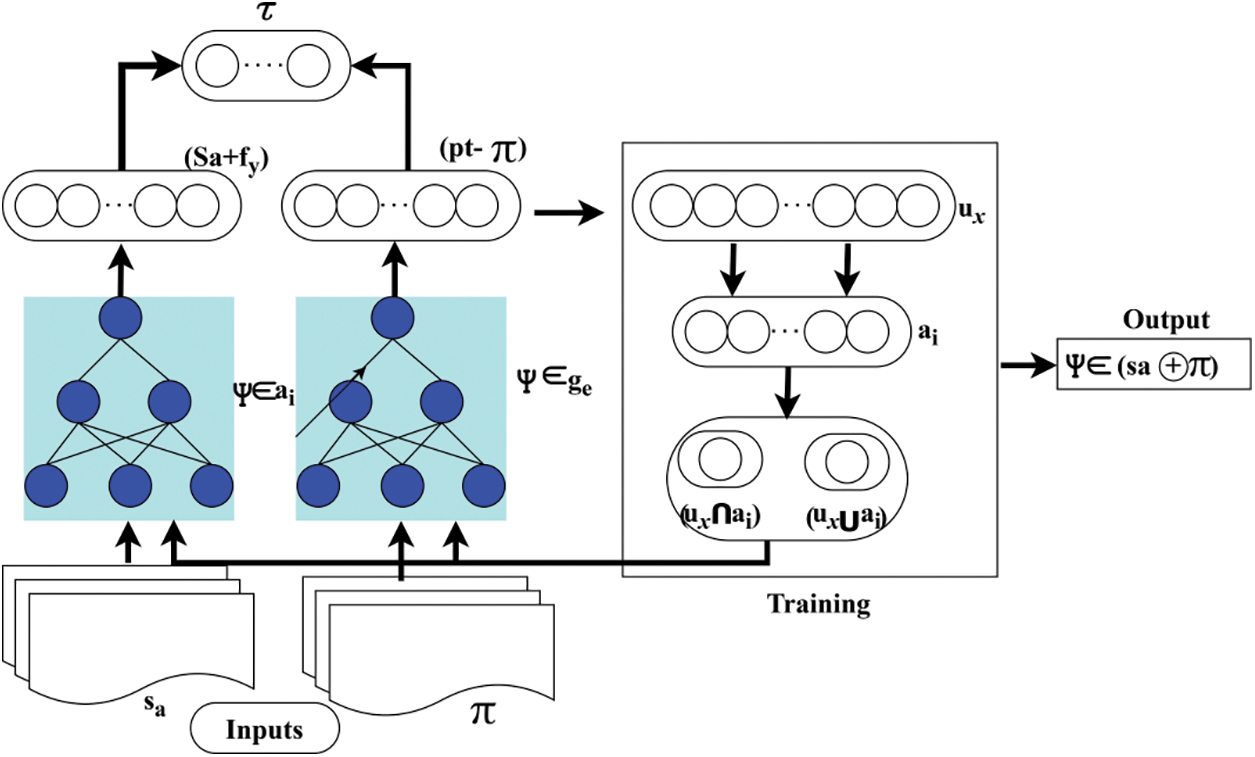

3.1 Bi-Lateral Neural Network for Convergence Estimation

The suggested Displacement Region Recognition Method (DR2M) uses a bilateral neural network architecture to address displaced features in haze-related license plate recognition. A description of its layer connections, activation functions, training techniques, architectural rationale, and computing complexity follows.

1. Layer connectivity:

The algorithm analyses haze-impacted photos in the Input Layer to extract license plate properties, including size, colour, and text. The network uses maximum and minimum region extraction layers to reduce haze-related feature displacement.

The bilateral neural network extracts regions and edges concurrently using two hidden layers. These hidden layers analyze haze-affected visual properties in order to handle displaced features.

The network’s final hidden layers generate intermediate outputs representing maximum (region-level) and minimum (edge-level) features, which are then fused and passed to the output layer to produce the final license plate prediction. This enables the network to accurately recognise license plates by mapping displaced features to their correct positions.

2. Activation function:

The DR2M may use the popular Rectified Linear Unit (ReLU) in its hidden layers as an activation function. ReLU excels at non-linearity and sparse gradients, making it ideal for image-processing neural networks. The output layer can employ softmax or sigmoid activation functions to distinguish between license plate detection and non-detection.

3. Training process:

• The model extracts several parameters to find displaced features. These include colour, size, and typeface. The bilateral network uses the extracted features to determine maximum and minimum regions and estimate convergence rates.

• Displaced features are compared repeatedly with observed image parts, which makes this an iterative operation. The network adjusts its weights to decrease the mismatch between observed and displaced features. It also compares training inputs with displaced features to decrease early training mistakes.

• A cross-entropy loss function or mean squared error (MSE) is used to calculate the difference between the predicted and actual displacement of the features. Training employs gradient descent optimisers such as Adam or SGD to update weights.

• This architecture concurrently extracts characteristics from regions (size, location) and edges (licence plate borders and edges).

• The Maximisation Layer handles areas with more characteristics to simplify extracting the most critical regions. The minimisation layer emphasises edges to improve identification in haze-covered areas.
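The layer connectivity and activation choices outlined above can be illustrated with a very small forward pass: one path for region features, one for edge features, ReLU in the hidden layers, fusion by concatenation, and a sigmoid output. This is a sketch under stated assumptions; the layer sizes, the `params` dictionary layout, and fusion by concatenation are hypothetical and are not the published DR2M architecture.

```python
import math


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def sigmoid(x):
    """Logistic function used here for the plate / non-plate output."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """Fully connected layer: one weight row and one bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def bilateral_forward(region_features, edge_features, params):
    """Run the region (maximum) and edge (minimum) paths, fuse them, and score the plate."""
    region_hidden = relu(dense(region_features, params["region_w"], params["region_b"]))
    edge_hidden = relu(dense(edge_features, params["edge_w"], params["edge_b"]))
    fused = region_hidden + edge_hidden  # list concatenation of the two paths
    logit = dense(fused, [params["out_w"]], [params["out_b"]])[0]
    return sigmoid(logit)


# Hypothetical weights, for illustration only.
params = {
    "region_w": [[1.0, 0.0], [0.0, 1.0]], "region_b": [0.0, 0.0],
    "edge_w": [[1.0], [-1.0]], "edge_b": [0.0, 0.0],
    "out_w": [1.0, 1.0, 1.0, 1.0], "out_b": 0.0,
}
plate_probability = bilateral_forward([1.0, 2.0], [3.0], params)
```

Keeping the two paths separate until the fusion step is what lets coarse region evidence and fine edge evidence be weighted independently at the output.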

The bilateral design’s displacement detection improves license plate identification, especially in haze. This method reduces false positives by distinguishing important from irrelevant features. Here, convergence estimation refers to the changes describing the font and design of the acquired license plate that do not affect the different layers in the neural network. If the first layer works on segmentation, then the second layer also works under the same segmentation mechanism; that is, the two layers share the same computation process. This method is used in our work to find the region and edges of the number plate. To identify these, the maximum and minimum regions are detected, and the bilateral neural network operates on this basis. The neural network is an interconnection of layers, including the hidden and training layers. From this observation, the proposed model analyzes the displaced feature that is equated in Eq. (4) and provides an examination of the region from the input image.

Step 1-Region Assessment: In this proposal, the region examination is carried out to find the required features displaced in the image. This working principle involves the license plate detection under which position it has a deviation. The position deviation is used to determine the displacement of the feature, and the mapping is performed below. The bilateral works to examine the region with the displaced region involve the background image for the analysis, which is given as the input for the initial layer. Eq. (3a) is useful for determining the variance and location of haze-displaced features. In the context of the feature extraction phase, the expression

The examination is

Figure 3: Bilateral network representation

The general representation for

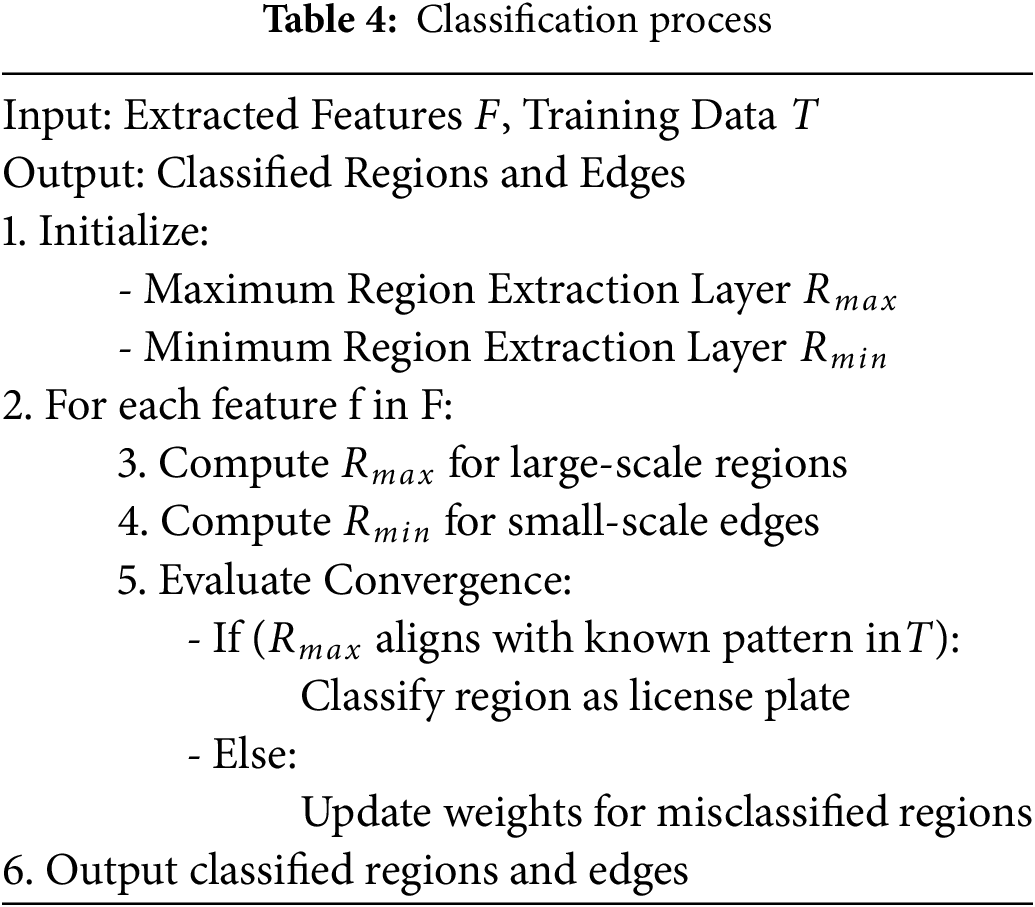

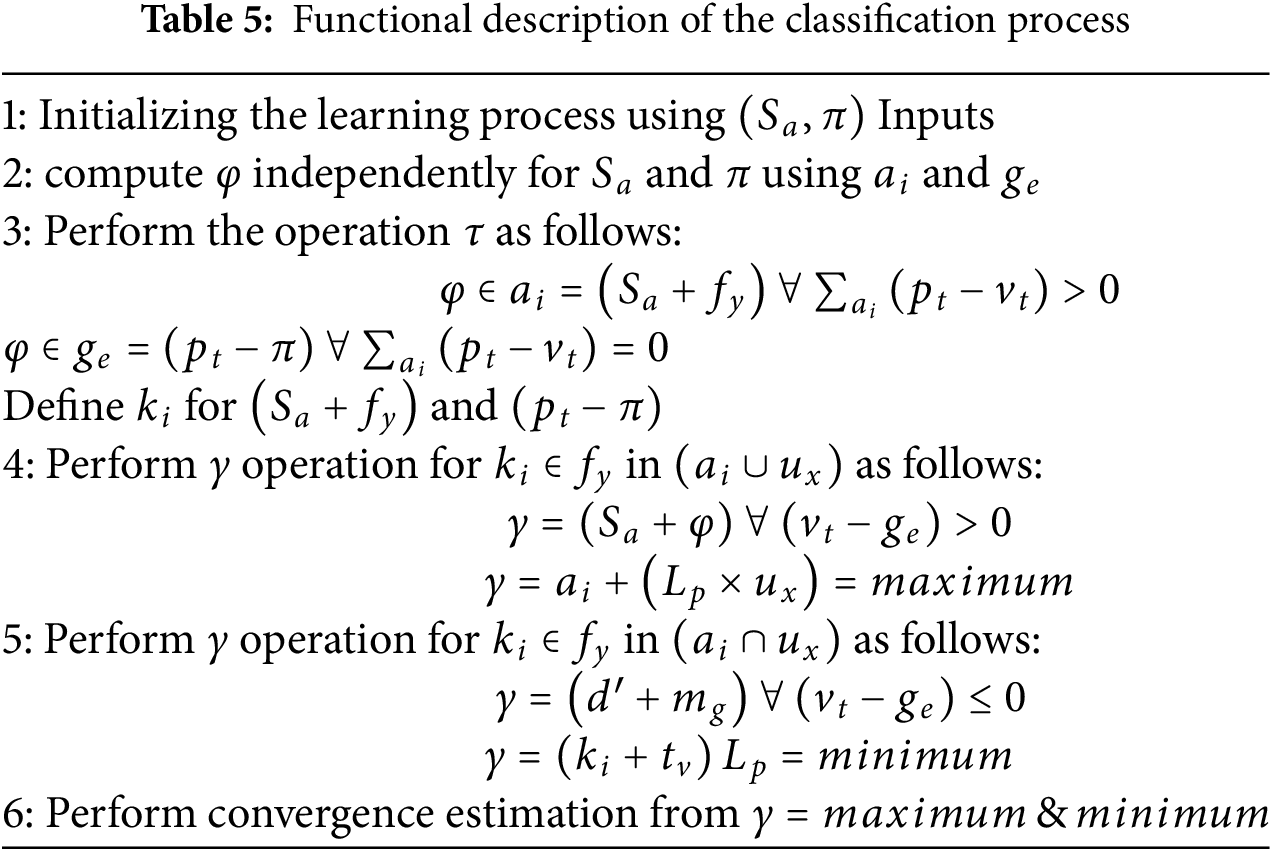

Step 2-Classification: Here, from the input layer, it forwards to the next interlinked layer

The classification performed in the above equation is equated as

The second algorithm determines whether detected areas and edges are license plates. The method employs maximum region extraction for features with a wide scale, like the plate area, and minimum region extraction for details with a small scale, such as edges. A convergence check verifies the classification by checking if the model’s parameters have been updated to fix misaligned features. The functional description of the classification process is given in Table 5.

Step 3-Convergence Estimation: The convergence rate for the proposed work is estimated in this step. It takes the output of the classification model, which ensures maximum region detection, as the basis for convergence estimation. By iteratively fine-tuning the learning process until it reaches an optimum state, convergence estimation ensures that the neural network reliably recognises license plate displacements. Convergence occurs when the neural network successfully detects the proper license plate region and edges and minimizes the discrepancy between successive predictions of displaced features. At this point, the bilateral network’s outputs stabilise. The approach uses minimum and maximum region extraction because of the network’s dual-path topology. This layer separates region-based and edge-based feature sets and determines how they converge.

Eq. (3c) shows the DR2M model’s convergence rate calculation, incorporating many critical factors to provide precise convergence for haze-related license plate identification.

The convergence is represented as

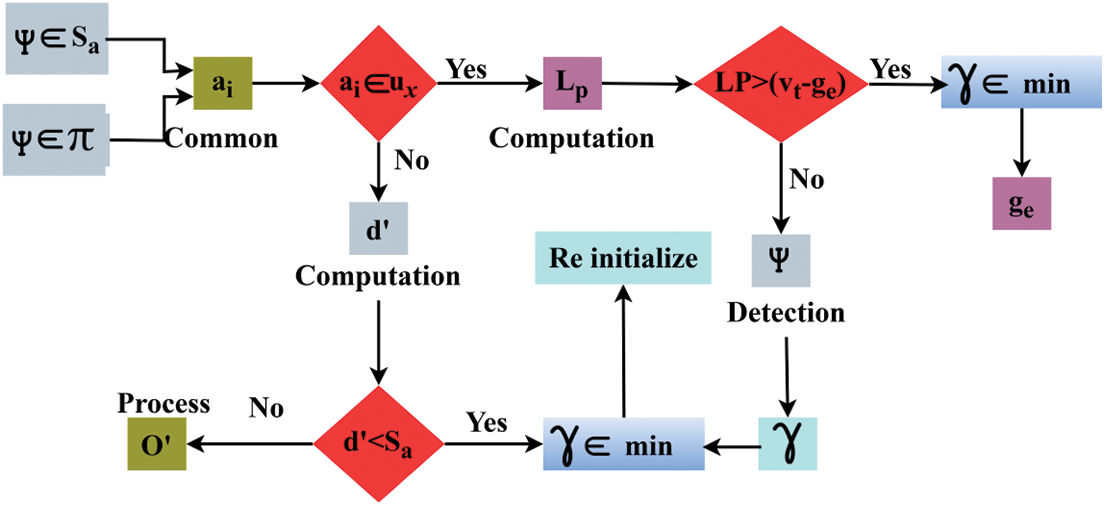

Figure 4: Convergence estimation decision

Fig. 4 depicts the decision-making process for the bilateral network’s convergence. It shows the process flow from extracting input features to identifying maximum and minimum regions, and finally to the output of convergence. The important nodes, such as ϕ, γ, and vr, stand for the steps at which the network examines displaced characteristics such as the region and edge and converges via bilateral processing. The maximum region extraction route is the process by which the neural network determines the largest area of interest by identifying and mapping the displaced characteristics in broader portions of the image, such as the license plate. The minimum region extraction route shows that the neural network also considers smaller features, including edges. The convergence decision-making process is complete when the network stabilizes, shown by the figure’s feedback loop, and the model ends training and makes a correct number plate prediction. The convergence analysis is performed for maximum

Step 4-Training: The DR2M model was trained using the Adam optimizer with an initial learning rate of 0.001 and a step decay schedule that halved the learning rate every 10 epochs. A batch size of 32 was employed, and training ran for 50 epochs, with early stopping based on validation loss to mitigate overfitting. The loss function combined cross-entropy for classification with mean squared error (MSE) for displacement assessment. This setup facilitated consistent convergence and efficient learning of both regional and edge characteristics in haze-affected images.
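The training configuration above can be made concrete with a short sketch of the step decay schedule, a combined loss, and an early stopping check. The learning rate, decay factor, and decay interval come from the text; the equal weighting of the two loss terms (`mse_weight=1.0`), the binary form of the cross-entropy, the patience value, and all function names are assumptions for illustration.

```python
import math


def step_decay_lr(epoch, initial_lr=0.001, drop=0.5, epochs_per_drop=10):
    """Learning rate for a zero-based epoch, halved every 10 epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


def combined_loss(p_plate, is_plate, predicted_shift, true_shift, mse_weight=1.0):
    """Binary cross-entropy on the plate score plus MSE on the displacement."""
    eps = 1e-12  # keeps log() away from zero
    p = min(max(p_plate, eps), 1.0 - eps)
    bce = -(is_plate * math.log(p) + (1 - is_plate) * math.log(1.0 - p))
    mse = sum((a - b) ** 2 for a, b in zip(predicted_shift, true_shift)) / len(true_shift)
    return bce + mse_weight * mse  # assumed weighting; the paper gives none


def should_stop_early(validation_losses, patience=5):
    """Stop when the best validation loss is at least `patience` epochs old."""
    best_epoch = validation_losses.index(min(validation_losses))
    return len(validation_losses) - 1 - best_epoch >= patience


# Learning rates over the 50 training epochs stated in the text.
schedule = [step_decay_lr(epoch) for epoch in range(50)]
```

Under this schedule the rate is 0.001 for epochs 0 to 9, 0.0005 for epochs 10 to 19, and reaches 0.001 × 0.5⁴ in the final ten epochs.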

The training phase gives the previous processing feature detection as the input to enhance the extraction model.

The training phase performs the minimum range of region extraction, including the training input comparison techniques. The training is described as

The distinguishment of license plate variation is processed in the above equation, and it is equated as

Learning rate, batch size, convergence time, and displacement thresholds are hyperparameters that influence the model’s convergence accuracy and speed. A greater learning rate results in faster convergence but could lead to instability. A bigger batch size makes convergence smoother, but it may lead to higher memory use and can delay convergence. Convergence time specifies the amount of time allotted for the process to finish; a shorter allotment ends the process sooner. A highly sensitive displacement threshold detects more edges, but if it is too sensitive, it could produce false positives. Minimisation allows for detecting thin lines and edges, which are the smaller and more subtle feature changes necessary for license plate recognition.

The first and second layers run on the same computation steps from these layers to avoid errors and false rates. From this distinguishment model, the displacement of feature mapping is allowed by comparing training inputs.

The mapping is used to observe the displacement position changes from the input region. In this case, training input is associated with the feature that identifies segmentation phases. The processing stage determines the mapping of current and previous detection of number plates and provides the maximum region extraction. This approach uses a background image to illustrate the mapping process and provides reliable results. It uses the bilateral format as a two-layer computation and provides the mapping with the previous detection phase. Further, the regions and edges are examined below.

The examination is followed up for the mapping with the feature displacement, and from this region, edges are defined by the neural network computation. In this methodology, recognition of the number plate is detected and formulated below. In this phase, the first step is finding how much region is extracted, and the second is determining how many edges are extracted. Based on these techniques, the mapping is followed up for the region- and edge-based examination. From this approach, the identification of vehicle region is recognized below.

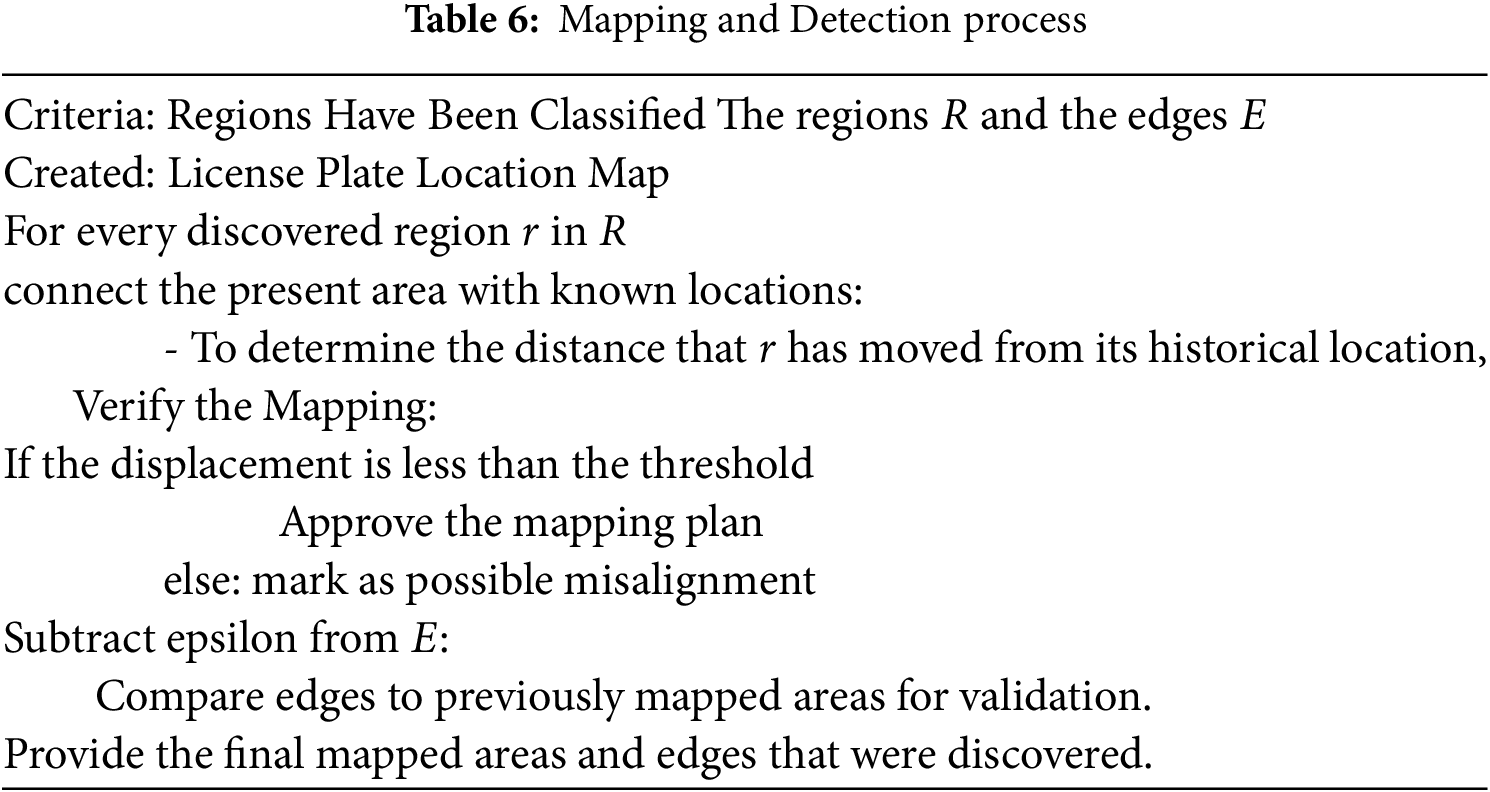

The vehicle region identification is performed in the above equation, and the training section for the interlinked layer is employed, where the classification is followed up. This strategy defines the displacement of feature extraction by mapping with the training input and identifies the vehicle number plate. This methodology illustrates the region and edge detection based on the training inputs and the two layers. Thus, the vehicle detection is satisfied using a bi-lateral neural network and its convergence rate. Table 6 examines the Mapping and Detection Process.

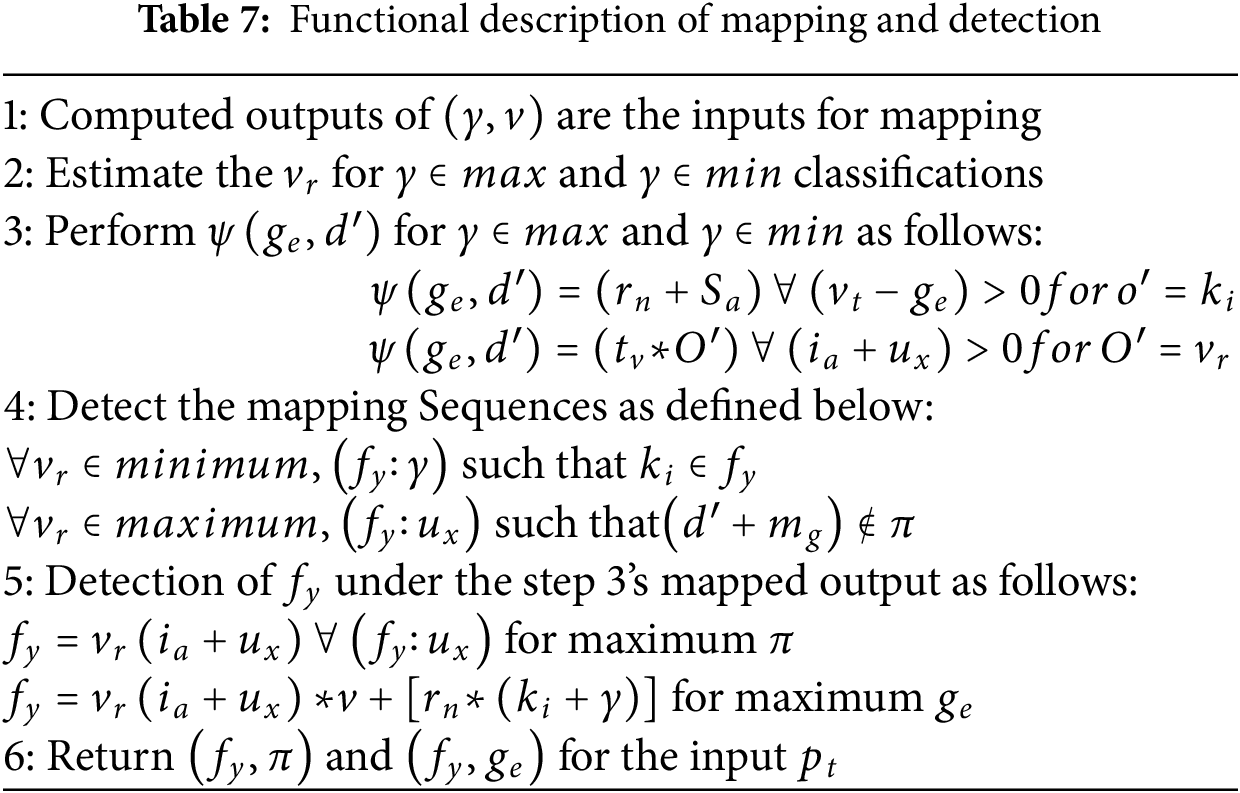

The last technique checks feature alignment by mapping found areas and edges to known locations and computing displacements. It checks for proper detection by comparing present and past traits and highlighting inconsistencies. The license plate’s localization is fine-tuned, and the mapped areas and verified edges are outputted for identification at this stage. The functional description of the mapping and detection is presented in Table 7.

The proposed method maps the similarity feature between the detected and identified vehicle regions. This aids in leveraging the plate recognition precision with a high F1 score.

This method enhances precision by improving the F1 score in this work, which uses the interlinked layer and input layer. This format relies on maximum region extraction and a better convergence rate. The precision is represented as
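For reference, the standard textbook definitions of precision, recall, and F1 score can be sketched as below. These are the usual forms computed from true positives, false positives, and false negatives; they may differ in notation from the paper’s own formulation, and the counts in the example are hypothetical values, not results from the paper.

```python
def precision(tp, fp):
    """Fraction of detected plates that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Fraction of actual plates that were detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    p, r = precision(tp, fp), recall(tp, fn)
    return 2 * p * r / (p + r) if p + r else 0.0


# Hypothetical counts, for illustration only.
example_f1 = f1_score(tp=90, fp=10, fn=30)
```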

3.3.1 Preprocessing (Dehazing/Enhancement)

Preprocessing in DR2M includes steps such as resizing, haze removal, and normalization. These operations work across all image pixels, so the processing time grows in direct proportion to image size. The memory requirement is also proportional to the size of the image, as the entire frame must be stored temporarily. This stage prepares input images for reliable feature extraction by enhancing visibility and contrast. The workload is relatively modest and can be reduced further using GPU-accelerated filters. Since each frame is processed independently, the time needed increases in a linear manner with the dataset size, making it suitable for real-time use when optimized.

3.3.2 Feature Extraction (Region and Edge Features)

Feature extraction identifies both large-scale plate regions and fine-scale character edges. Techniques such as edge detection and region segmentation examine all pixels, so processing time increases in direct proportion to image size. Memory usage is also proportional to image size, as intermediate results like color channels, gradients, and edge maps must be stored. These extracted features are vital for later displacement mapping and classification. Because the operations can be performed in parallel, using a GPU can greatly speed them up. Parameter tuning, such as adaptive threshold settings, helps reduce unnecessary processing while preserving important details even under hazy conditions.
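The edge part of this stage can be illustrated with a plain Sobel gradient computed over every interior pixel, which also shows why the cost grows in proportion to image size. The fixed threshold and the function names are assumptions for the example; the text mentions adaptive threshold settings, which are not reproduced here.

```python
import math

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(grey):
    """Gradient magnitude of a greyscale image; border pixels are left at 0."""
    rows, cols = len(grey), len(grey[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = sum(SOBEL_X[i][j] * grey[r - 1 + i][c - 1 + j]
                     for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * grey[r - 1 + i][c - 1 + j]
                     for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out


def edge_map(grey, threshold):
    """Binary edge map using a fixed (assumed) magnitude threshold."""
    return [[m >= threshold for m in row] for row in sobel_magnitude(grey)]


# A vertical intensity step, as at the side border of a plate.
step_image = [[0, 0, 10, 10] for _ in range(3)]
edges = edge_map(step_image, threshold=20)
```

Each output pixel depends only on its 3 × 3 neighbourhood, which is why this step parallelises well on a GPU.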

3.3.3 Displacement Feature Mapping

Displacement mapping connects features between the current frame and previous frames to capture haze-induced spatial shifts. In the most demanding case, every detected region may be compared with every other region, which can make this step the most time-consuming in DR2M. Memory usage depends on storing both current and historical feature data, which can be large for high-resolution inputs. This step is the main contributor to overall computational cost, but efficiency is improved by limiting comparisons to likely matches based on geometric constraints and similarity checks. This targeted approach strengthens temporal consistency without overwhelming processing resources.
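The pruning idea described above, limiting comparisons to likely matches, can be sketched as follows. Each region from the previous frame is compared only with current regions of similar size that lie within a maximum shift. The gating values `max_shift` and `max_size_ratio`, the nearest-centre rule, and the function names are assumptions for illustration rather than the paper’s exact matching procedure.

```python
import math


def centre(box):
    """Centre point of an (x, y, w, h) box."""
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def match_regions(previous_boxes, current_boxes, max_shift=20.0, max_size_ratio=1.5):
    """Return (prev_index, cur_index, (dx, dy)) for the nearest plausible match."""
    matches = []
    for i, prev in enumerate(previous_boxes):
        px, py = centre(prev)
        best = None
        for j, cur in enumerate(current_boxes):
            # Geometric pruning: skip candidates whose area changed too much.
            ratio = (cur[2] * cur[3]) / (prev[2] * prev[3])
            if not (1 / max_size_ratio <= ratio <= max_size_ratio):
                continue
            cx, cy = centre(cur)
            distance = math.hypot(cx - px, cy - py)
            # Skip candidates that moved further than haze could plausibly shift them.
            if distance > max_shift:
                continue
            if best is None or distance < best[0]:
                best = (distance, j, (cx - px, cy - py))
        if best is not None:
            matches.append((i, best[1], best[2]))
    return matches
```

The worst case is still every region against every other region, but in practice most pairs are rejected by the two cheap checks before any descriptor comparison is needed. This simple version does not enforce one-to-one matching.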

3.3.4 Similarity Feature Computation

The similarity feature calculation measures how closely matched features from different frames resemble each other in both shape and appearance. This step is relatively lightweight compared to displacement mapping, requiring only simple comparisons between the stored descriptors of regions and edges. Memory requirements are also low, since only key descriptors need to be stored. By acting as a filtering stage before classification it helps remove mismatches and reduces false detections. This directly boosts precision and ensures only stable, consistent matches are kept. Because it uses straightforward comparison operations, it has very little impact on overall processing time.
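A lightweight similarity filter of the kind described above might look like the following sketch. Cosine similarity and the 0.9 threshold are assumptions chosen for illustration; the paper does not state which similarity measure or cut-off DR2M uses.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def keep_consistent(descriptor_pairs, threshold=0.9):
    """Keep only matched descriptor pairs that are similar enough."""
    return [(a, b) for a, b in descriptor_pairs if cosine_similarity(a, b) >= threshold]
```

Because each comparison touches only two short vectors, the cost is linear in the number of matches, consistent with the small overhead noted above.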

3.3.5 Bilateral Pathway Inference (Max/Min Processing)

The bilateral pathway is made up of two parallel neural network streams: one that focuses on the largest, most prominent features (such as the plate’s outer shape) and another that focuses on the smallest details (such as character edges). Both pathways run simultaneously, which can increase processing time and memory usage compared to single-path networks. However, this design ensures that both coarse shapes and fine details are preserved under hazy conditions, leading to more robust detection. Using GPU parallelization allows these two streams to run in tandem without significant delays, although architectural optimization is important for speed in real-time applications.

3.3.6 Convergence Estimation

Convergence estimation checks whether the displacement mapping results have stabilized over time. It monitors the changes in detected features across successive frames and stops updating when the results become steady. This helps prevent wasted processing and ensures consistent output. The memory and processing demands are relatively low, but repeated checks over large datasets can add some overhead. Optimizations such as early stopping rules, checking in batches, and reducing the number of frames considered can further improve speed. This stage plays an important role in producing stable, noise-resistant recognition results in video sequences or changing environmental conditions.
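One simple way to express such a stability check is to look at the last few displacement estimates and stop once every successive change falls below a tolerance. The tolerance, the window length, and the function name are assumptions for this sketch; the paper’s own convergence criterion is given by its convergence-rate equation.

```python
import math


def has_converged(displacements, tolerance=0.5, window=3):
    """True when the last `window` successive changes are all below `tolerance`."""
    if len(displacements) < window + 1:
        return False  # not enough frames to judge stability
    recent = displacements[-(window + 1):]
    changes = [
        math.hypot(b[0] - a[0], b[1] - a[1])
        for a, b in zip(recent, recent[1:])
    ]
    return max(changes) < tolerance


# Hypothetical per-frame displacement estimates (dx, dy) in pixels.
history = [(5, 5), (3, 3), (3.1, 3.0), (3.1, 3.1), (3.0, 3.1)]
stable = has_converged(history)
```

Restricting the check to a short window keeps memory use low, since only the most recent estimates need to be stored.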

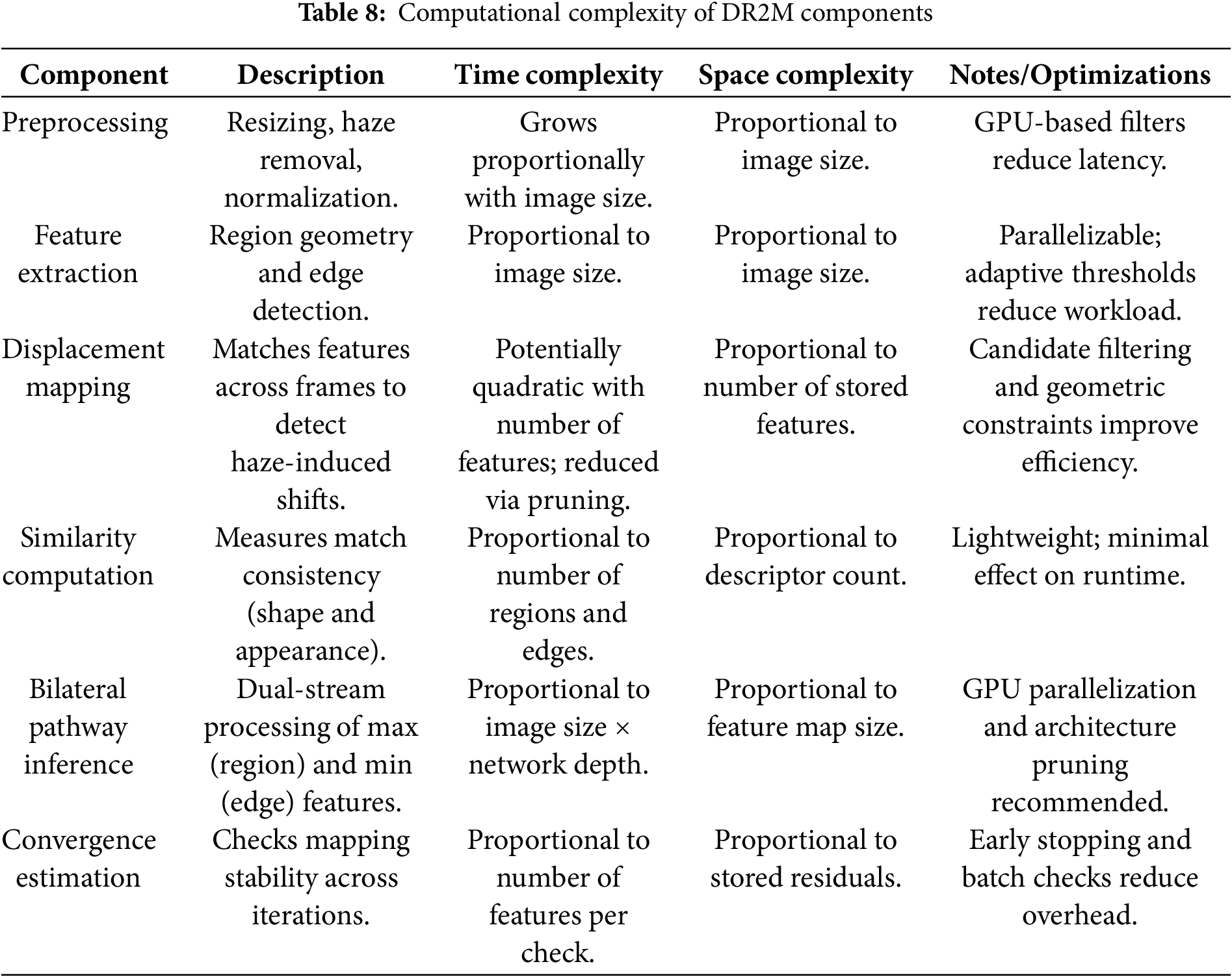

Section 3.3 provides a detailed computational complexity analysis of DR2M, weighing the trade-off between processing overhead and the precision improvements it delivers. The twin-layer architecture of the bilateral neural network inherently increases both time and space requirements, as it must simultaneously evaluate maximum (region-level) and minimum (edge-level) feature pathways and perform displacement mapping between current and previous frames. Displacement mapping, in particular, can become the most resource-intensive stage, especially when processing high-resolution images or large-scale video datasets in real time. While this design is more demanding than single-path approaches, it enables superior detection accuracy under haze by preserving both coarse geometry and fine details while ensuring temporal stability. To keep the approach practical for real-world deployment, computational optimizations are essential. These include parallel processing on GPUs, pruning of candidate matches to reduce mapping overhead, architectural simplification to eliminate redundant computations, and faster convergence criteria to minimize unnecessary iterations. Together, these measures maintain DR2M’s recognition accuracy while allowing it to operate efficiently in challenging, real-time environments. The computational complexity of the DR2M components is further given in Table 8.

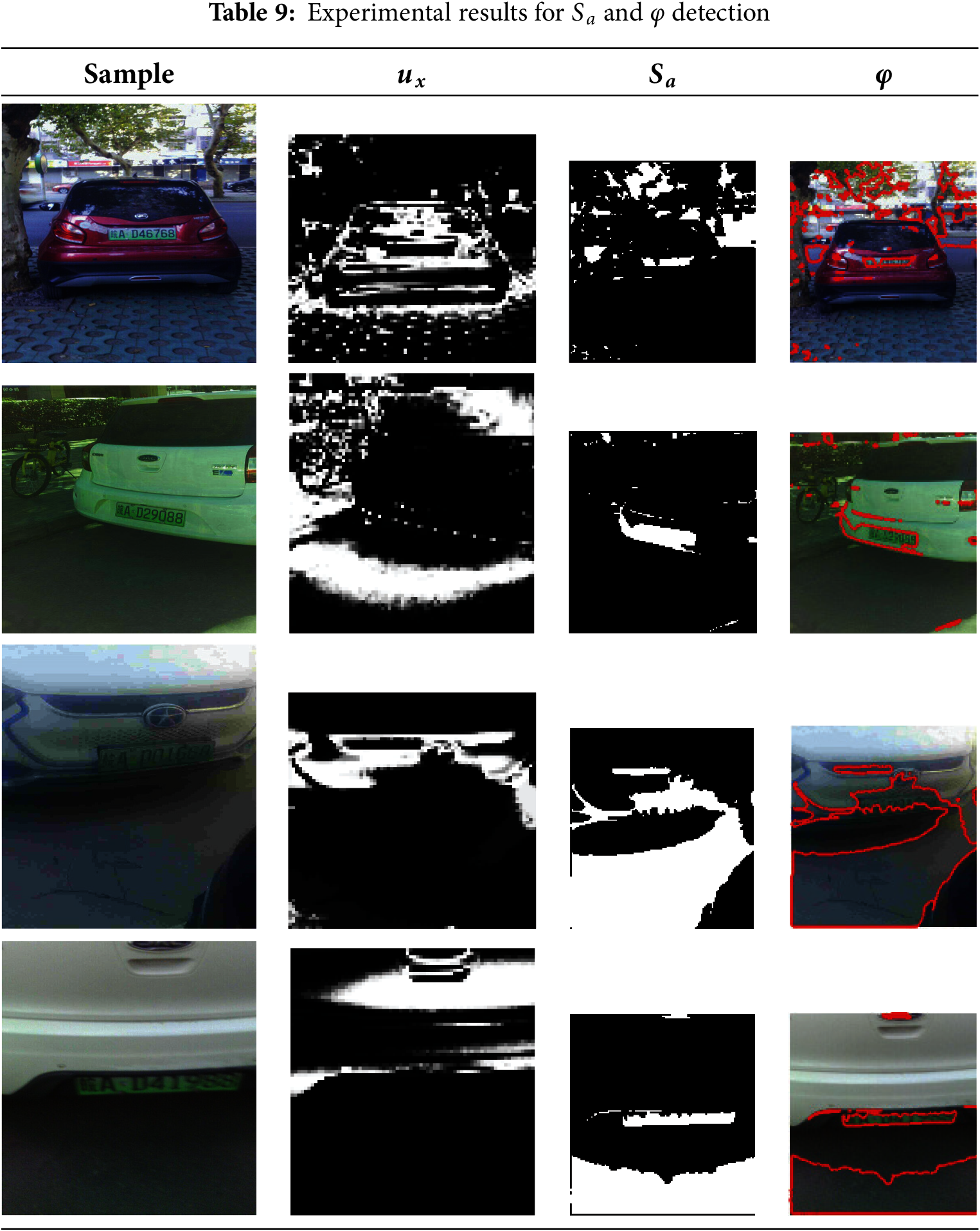

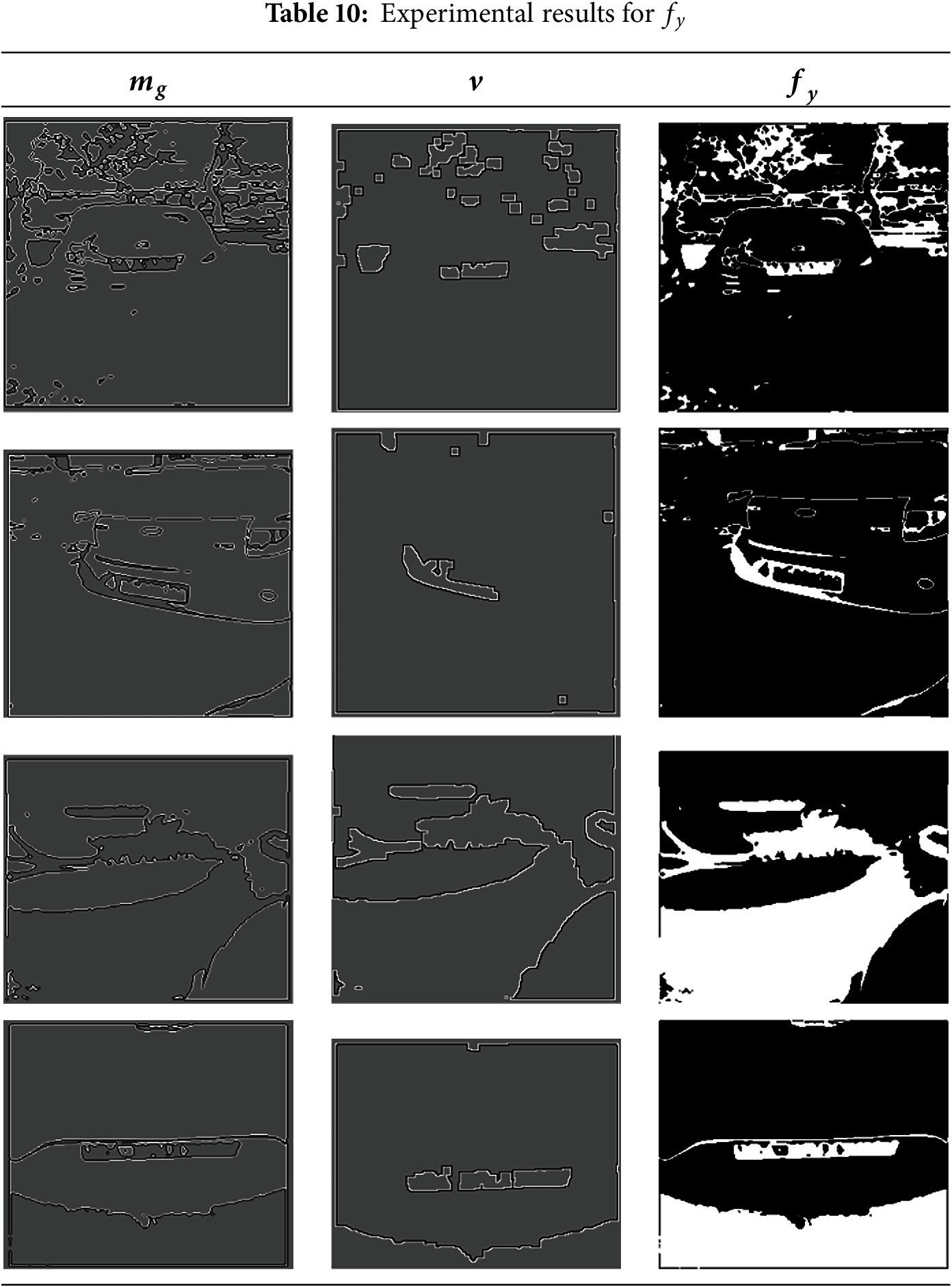
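The pruning of candidate matches mentioned in the complexity discussion can be sketched as follows. This is only an illustration under stated assumptions: features are treated as 2D points, displacement is the Euclidean distance to the nearest point in the previous frame, and candidates outside a search radius are discarded. The function `match_displacements` and the parameter `max_radius` are hypothetical and do not describe the actual DR2M mapping.

```python
import math


def match_displacements(prev_pts, curr_pts, max_radius=5.0):
    """Match each current point to its nearest previous point within max_radius.

    Returns a list of (curr_index, prev_index, displacement) tuples. Points
    with no candidate inside the radius are skipped (pruned).
    """
    matches = []
    for i, (cx, cy) in enumerate(curr_pts):
        best = None
        for j, (px, py) in enumerate(prev_pts):
            # Cheap axis-aligned rejection before the exact distance.
            if abs(cx - px) > max_radius or abs(cy - py) > max_radius:
                continue
            d = math.hypot(cx - px, cy - py)
            if d <= max_radius and (best is None or d < best[1]):
                best = (j, d)
        if best is not None:
            matches.append((i, best[0], best[1]))
    return matches


# Hypothetical feature points in the previous and current frame.
prev_pts = [(10.0, 10.0), (50.0, 50.0)]
curr_pts = [(13.0, 14.0), (90.0, 90.0)]
matches = match_displacements(prev_pts, curr_pts)
```

This version still visits every candidate pair and only skips the exact distance computation for distant ones. A spatial grid or k-d tree would avoid visiting distant candidates altogether.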

The experimental assessment is performed using the CCPD dataset (https://github.com/detectRecog/CCPD, accessed on 10 May 2025). This dataset provides 30 thousand vehicle images at a resolution of 720 × 1160 pixels, recorded at 64 FPS. From this dataset, 10,000 images are used to train the bilateral network, and 5700 images are used to test the proposed method’s efficacy in detecting number plates. The experimental results are tabulated in Tables 9 and 10, corresponding to the intermediate functions of the methods. The software environment was built on Python 3.9, chosen for its wide library ecosystem. TensorFlow 2.8 was used to build and train the bilateral neural network, with PyTorch used for custom layers and benchmarks. OpenCV 4.5 handled image preprocessing and feature extraction, and Scikit-Image handled more complex transforms. CUDA Toolkit 11.6 and NumPy supported numerical operations and data processing, and Matplotlib and Seaborn were used for visual inspection. Development was carried out on Ubuntu 20.04 LTS, chosen for its speed and stability. The hardware comprised an Intel Core i9-10900K CPU (ten cores, twenty threads), an NVIDIA GeForce RTX 3080 GPU with 10 GB VRAM for neural network training and high-resolution image processing, 64 GB DDR4 RAM, and a 1 TB NVMe SSD for handling large files. Two 27-inch 4K displays were used for debugging, and a 2 TB external hard drive was used for dataset backup. This configuration supported efficient, scalable, and reliable real-time license plate recognition under load.

The suggested DR2M model was assessed utilizing two datasets: the Chinese City Parking Dataset (CCPD) and OpenALPR. Given that CCPD predominantly comprises clear images, synthetic haze was applied to 40% of the data utilizing an atmospheric scattering model, wherein the scattering coefficient β was adjusted between 0.5 and 2.5 to replicate varying haze strengths. Likewise, 30% of the OpenALPR dataset was enhanced with haze to assess the model’s generalizability in challenging visual situations. The samples from both datasets were allocated into 70% training, 15% validation, and 15% testing sets to guarantee a rigorous examination.
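The data preparation described above can be illustrated with a short sketch that selects a fraction of samples for haze augmentation and splits the data 70/15/15. The file names, the seed, and the helper names `split_dataset` and `pick_haze_subset` are hypothetical. The text does not state whether haze was applied before or after splitting, so the two steps are shown independently.

```python
import random


def split_dataset(items, train=0.70, val=0.15, seed=0):
    """Shuffle and split into train/validation/test; the remainder is test."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])


def pick_haze_subset(items, fraction, seed=0):
    """Choose which samples receive synthetic haze (e.g. 0.40 for CCPD)."""
    rng = random.Random(seed)
    k = round(len(items) * fraction)
    return set(rng.sample(list(items), k))


# Hypothetical file names standing in for dataset images.
names = [f"img_{i:04d}.jpg" for i in range(1000)]
train_set, val_set, test_set = split_dataset(names)
hazy = pick_haze_subset(names, fraction=0.40)
```

Fixing the seed keeps the split and the haze selection reproducible across runs.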

The DR2M model utilized the Adam optimizer with a starting learning rate of 0.001. A step decay schedule was utilized, halving the learning rate every 10 epochs. The training procedure employed a batch size of 32 and was conducted for a maximum of 50 epochs, incorporating early stopping predicated on validation loss to avert overfitting. The loss function integrated cross-entropy for classification and mean squared error (MSE) for displacement mapping. L2 regularization with a weight decay of 0.0001 and a dropout rate of 0.3 was implemented in the fully connected layers to improve generalization.
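The training schedule above can be written out framework-independently as a sketch. The step decay and loss terms follow the stated settings (initial rate 0.001, halved every 10 epochs, cross-entropy plus MSE). The balancing factor `mse_weight` and the early-stopping `patience` are assumptions, since the text does not report them.

```python
import math


def step_decay_lr(epoch, base_lr=1e-3, drop=0.5, every=10):
    """Learning rate for a 0-indexed epoch: halved every `every` epochs."""
    return base_lr * drop ** (epoch // every)


def combined_loss(class_probs, true_class, pred_disp, true_disp, mse_weight=1.0):
    """Cross-entropy on class probabilities plus weighted MSE on displacements.

    `mse_weight` is an assumed balancing factor; the text does not state one.
    """
    ce = -math.log(max(class_probs[true_class], 1e-12))
    mse = sum((p - t) ** 2 for p, t in zip(pred_disp, true_disp)) / len(true_disp)
    return ce + mse_weight * mse


def should_stop(val_losses, patience=5):
    """Early stopping: True if the best validation loss is `patience` epochs old.

    `patience` is an assumed value; the text only says early stopping was used.
    """
    if len(val_losses) <= patience:
        return False
    best_epoch = val_losses.index(min(val_losses))
    return len(val_losses) - 1 - best_epoch >= patience


schedule = [step_decay_lr(e) for e in (0, 9, 10, 25, 49)]
```

With these settings the learning rate falls from 0.001 in the first ten epochs to 0.0000625 in the last ten of a 50-epoch run.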

A synthetic haze augmentation technique was applied to a portion of the CCPD dataset to replicate hazy conditions, as the original dataset predominantly features clear images. The atmospheric scattering model, grounded in the Koschmieder law, was used to replicate real-world haze by modifying the image’s contrast and color saturation according to the following equation:

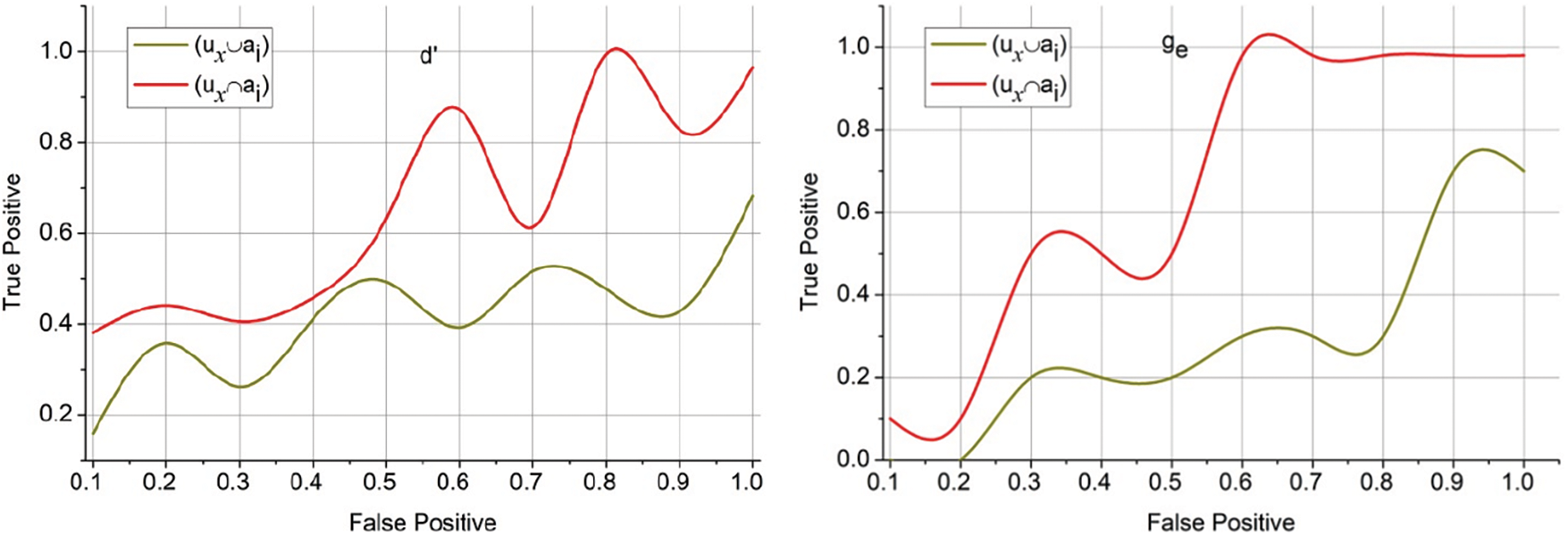

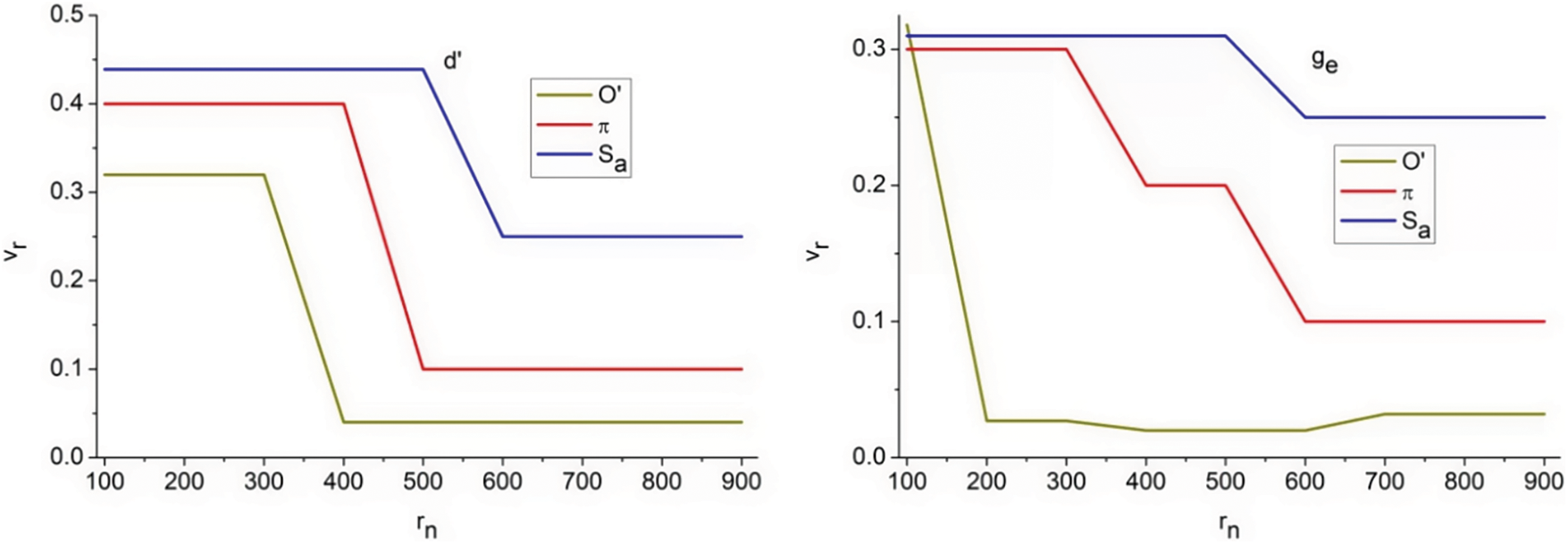
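For illustration, the atmospheric scattering model is commonly written as I(x) = J(x) t(x) + A (1 − t(x)), with transmission t(x) = exp(−β d(x)), where J is the clear image, A the atmospheric light, and d the scene depth. The NumPy sketch below applies this standard form. The depth map, the value of `airlight`, and the function name `add_synthetic_haze` are assumptions, and the notation used in the paper's own equation may differ.

```python
import numpy as np


def add_synthetic_haze(image, depth, beta=1.0, airlight=0.9):
    """Apply I = J * t + A * (1 - t) with t = exp(-beta * depth).

    image:    float array in [0, 1], shape (H, W, 3), the clear scene J
    depth:    float array, shape (H, W), relative scene depth d (assumed input)
    beta:     scattering coefficient (the text varies it between 0.5 and 2.5)
    airlight: global atmospheric light A (assumed value)
    """
    t = np.exp(-beta * depth)[..., np.newaxis]  # transmission, broadcast over RGB
    hazy = image * t + airlight * (1.0 - t)
    return np.clip(hazy, 0.0, 1.0)


rng = np.random.default_rng(0)
clear = rng.random((4, 6, 3))
# Hypothetical depth map: depth falls linearly from 1 (top row) to 0 (bottom row).
depth = np.linspace(1.0, 0.0, 4)[:, np.newaxis] * np.ones((4, 6))
light_haze = add_synthetic_haze(clear, depth, beta=0.5)
heavy_haze = add_synthetic_haze(clear, depth, beta=2.5)
```

Pixels at zero depth are left unchanged, while distant pixels are pulled toward the atmospheric light, more strongly as β increases.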

True and false positives determine the detection precision under the experimental factors considered. Therefore, the true positive rate is assessed against the varying false rate (Fig. 5).

Figure 5: True positive rate assessment for

The constraints for

Figure 6:


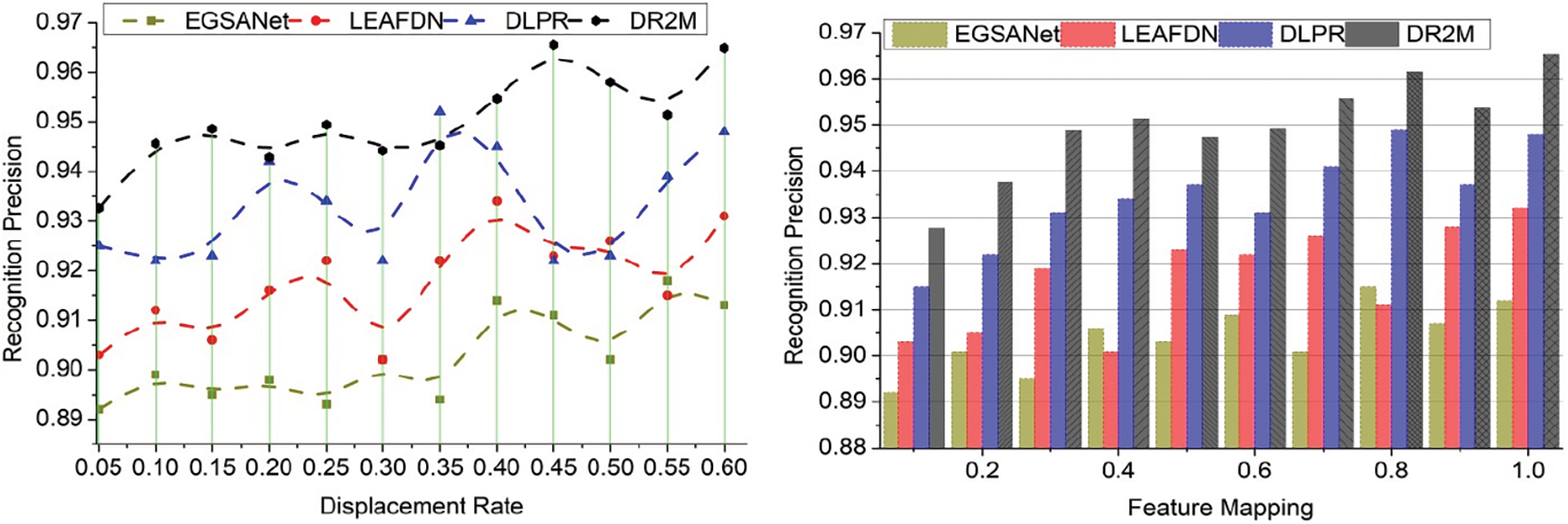

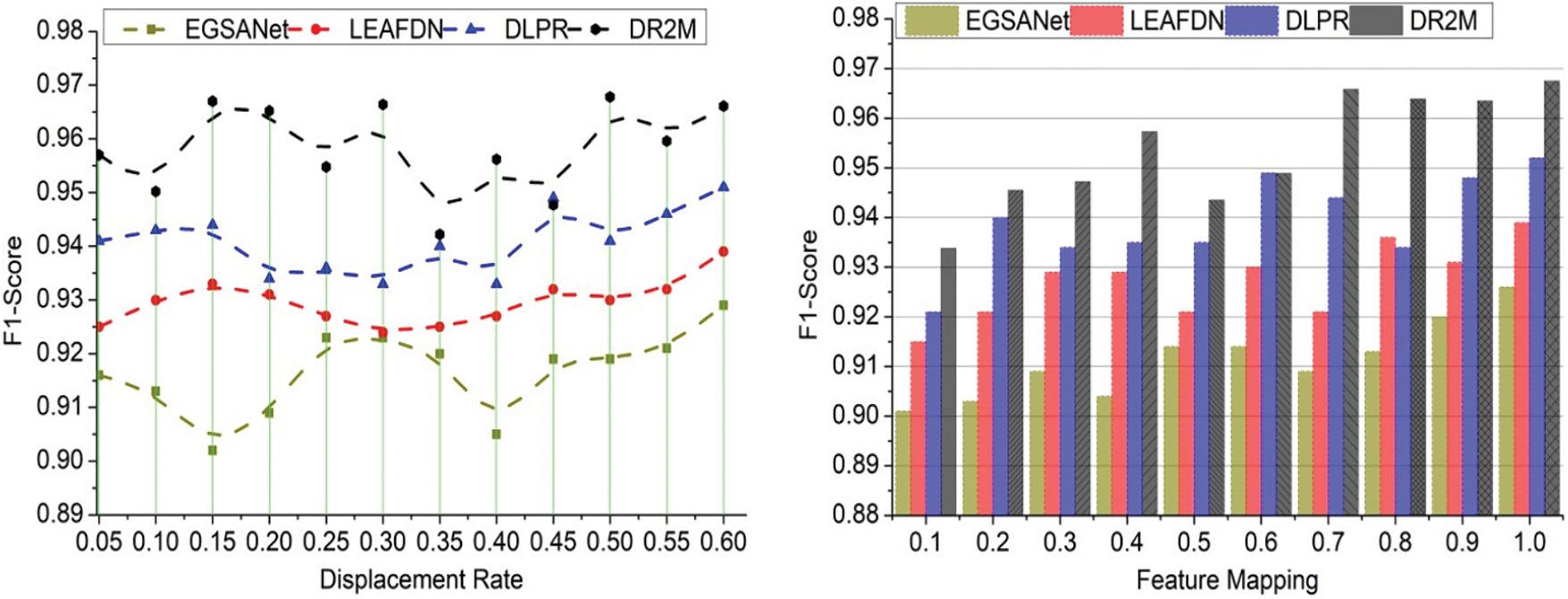

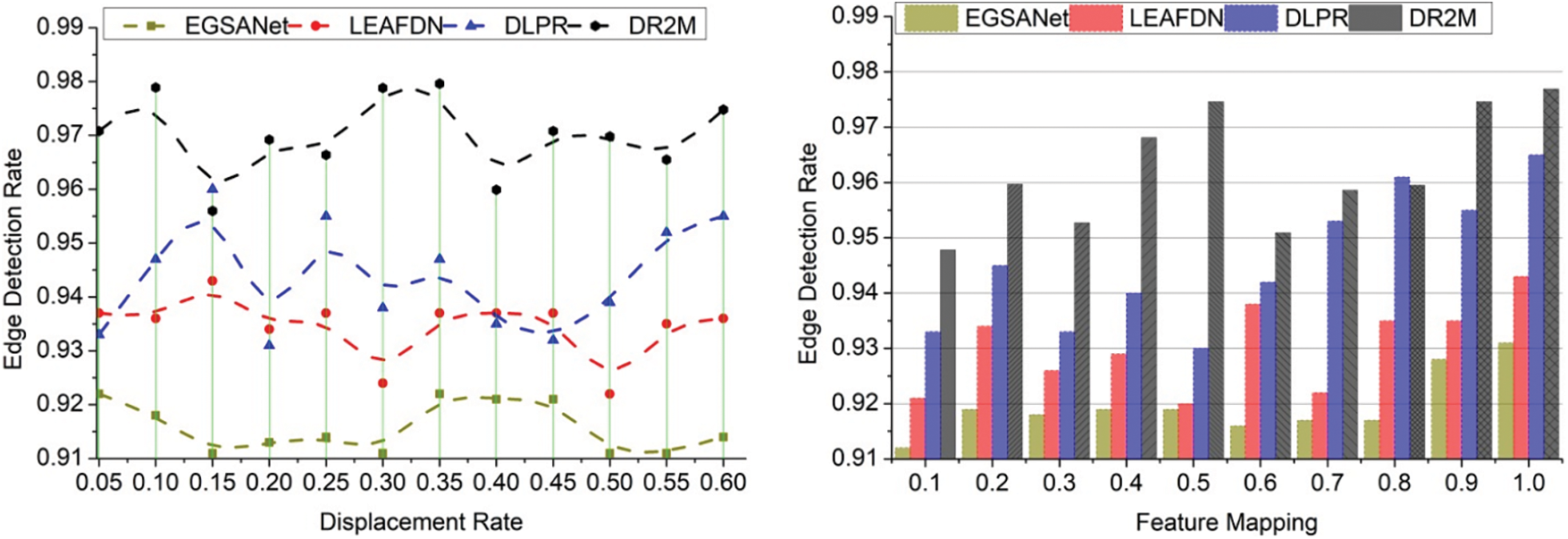

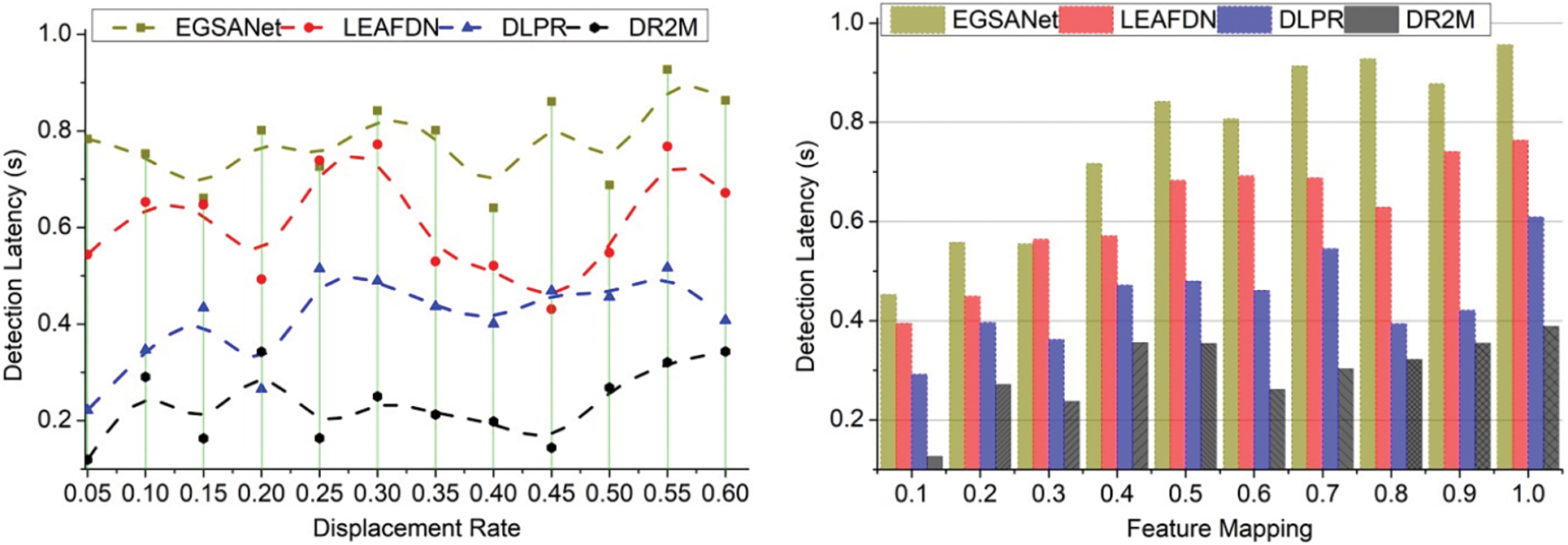

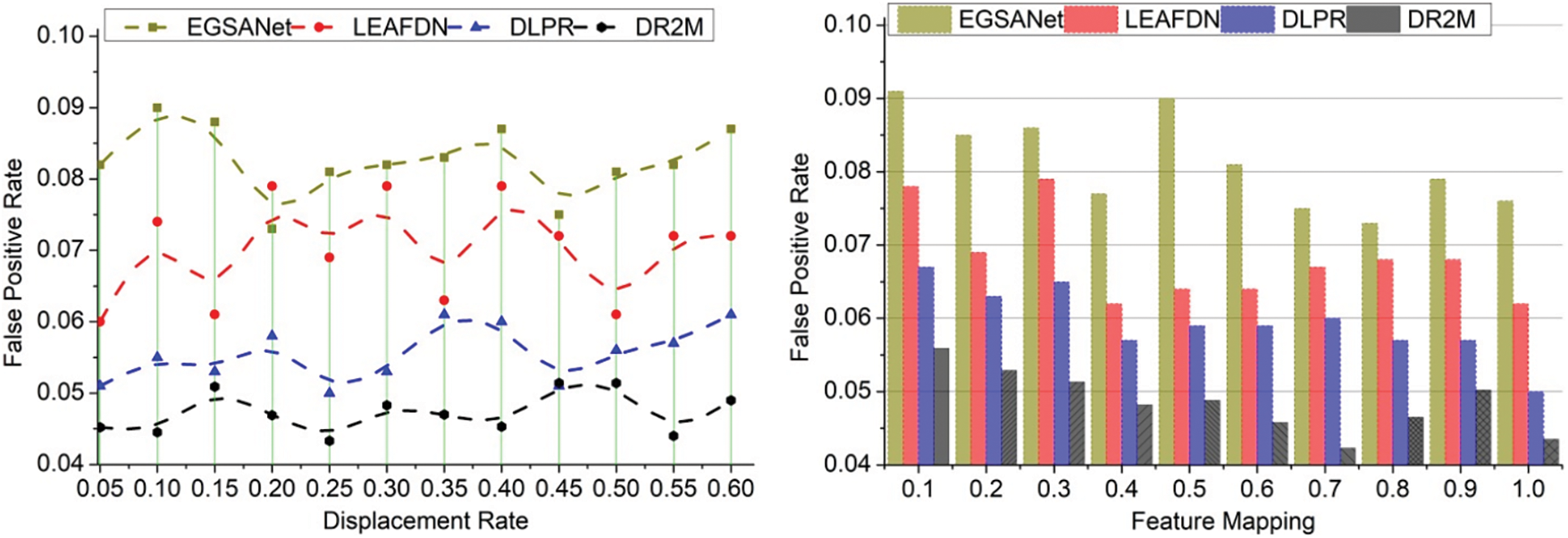

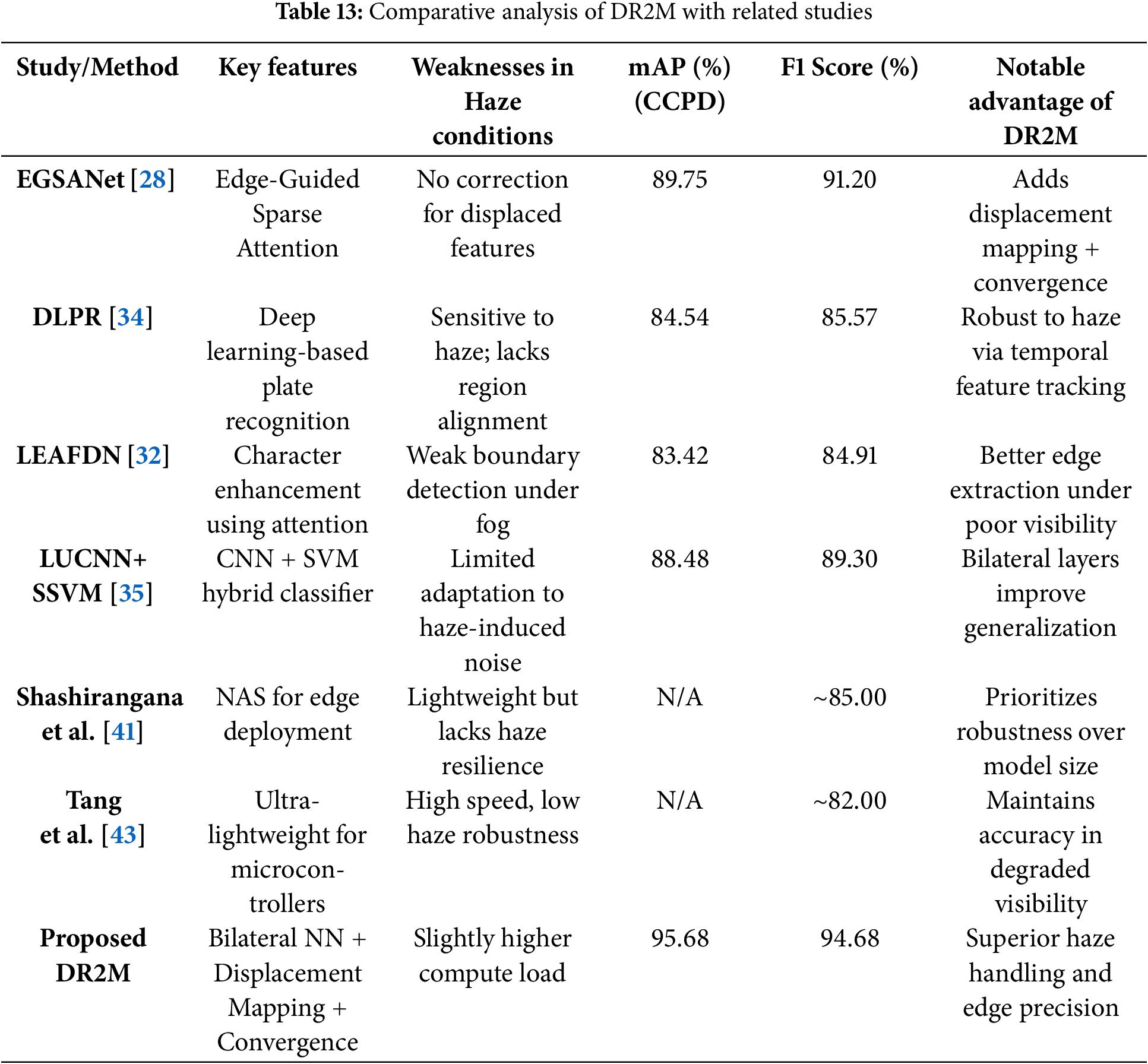

The experimental assessment is validated using recognition precision, F1 score, edge detection rate, detection latency, and false positive rate. The X-axis considers the variations in displacement rate [0.05, 0.6] and feature mapping [0.1, 1] to assess their impact on the above metrics. The assessment encompasses EGSANet [28], LEAFDN [32], DLPR [34], and LUCNN+SSVM [35] methods from the related works for a comparative presentation.
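As a reference for how such metrics are usually computed, the sketch below derives precision, recall, F1 score, and false positive rate from confusion counts using their standard definitions. The counts are hypothetical and serve only to illustrate the arithmetic. The formulations used in this work may differ.

```python
def detection_metrics(tp, fp, tn, fn):
    """Return precision, recall, F1 score, and false positive rate from counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}


# Hypothetical counts, chosen only to illustrate the arithmetic.
m = detection_metrics(tp=90, fp=10, tn=80, fn=20)
```

The guards against empty denominators return 0.0 when a metric is undefined, for example when no positive detections are made.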

The proposed method maintains high recognition precision across varying displacement rates and feature mapping (Fig. 7). It detects the license plate and extracts the necessary features from the background image. The evaluation step locates each feature position and identifies changes in the displaced features, while the processing step applies region- and edge-based detection to improve license plate recognition. The categorization uses two layers: the first handles the region, and the second handles the edges. The evaluation step maps the image features of the current stage to those of the previous stage, and the computation analyzes the vehicle number plate region. Precision improves together with the F1 score, which yields better recognition. Because the computation covers both the region and the edges, it interlinks the first and second layers, and recognition precision is formulated within this interlinked computation.

Figure 7: Recognition precision assessment for displacement rate and feature mapping

The proposed method achieves a higher F1 score across displacement rates and feature mapping (Fig. 8). The approach identifies the maximum and minimum range of values and applies feature mapping, which is carried forward to improve the F1 score. This computation evaluates license plate recognition against the background image and produces a reliable output. A bilateral neural network handles the two layers associated with the region and the edges, which improves license plate recognition from the captured image. The image representation further contributes to the F1 score. The evaluation covers both displacement rate and feature mapping and delivers the desired vehicle recognition, and the F1 score is enhanced accordingly.

Figure 8: F1 score assessment for displacement rate and feature mapping

Fig. 9 compares the edge detection rate for varying displacement rates and feature mapping. Feature mapping shows better computation than the previous detection stage, which involves the displaced position changes acquired from the feature extraction process. The classification model considers the feature positions where displacement occurs due to pixel variations. The processing step maps the vehicle features, with recognition carried out at the specified time interval. The mapped features indicate the color and size of the input image. The necessary features are extracted from the input image to produce the license plate recognition result. Edge detection is then carried forward to achieve the maximum region extraction from the displaced features. It is performed after the region examination given in Eq. (6).

Figure 9: Edge detection rate assessment for displacement rate and feature mapping

The detection latency of the proposed method is lower than that of the other methods under both displacement rate and feature mapping (Fig. 10). These two factors relate to reliable license plate detection and to the feature extraction process. The extracted features affect the latency, which is reflected in the improved precision. The bilateral neural network enhances the precision rate while performing license plate recognition on the image representation. Feature extraction operates on this representation and uses color and size as features, which indicate the region- and edge-based detection. As a result, latency is handled better for reliable processing, and in this examination phase the detection latency decreases while the precision rate improves.

Figure 10: Detection latency assessment for displacement rate and feature mapping

Fig. 11 compares the false positive rate for varying displacement rates and feature mapping. Reducing this rate improves vehicle license plate recognition and supports estimating the convergence rate associated with the maximum and minimum regions. The approach recognizes the number plate through the training phase of the bilateral neural network, which determines the segmentation characteristics of the regions and edges. The evaluation step uses the two layers to detect the displacement of feature positions and their changes, which arise from position variations during acquisition of the input image. The image representation supports reliable processing and better recognition. With this approach, the false positive rate is reduced.

Figure 11: False positive rate assessment for displacement rate and feature mapping

The DR2M model’s strong performance is due to its bilateral neural network architecture, which concurrently processes region-level and edge-level data. This dual-path architecture enables the model to maintain coarse structures such as plate form and size while preserving intricate character edges, even under haze-induced visual distortion. In ambiguous images, where pixel values fluctuate due to scattering, the edge path helps preserve discernible features, while the region path guarantees contextual coherence. The displacement feature mapping process aligns features across frames or image variants, compensating for pixel-level drift caused by haze. Together, these mechanisms reduce false positives and improve localization accuracy. The trade-off is higher computational complexity resulting from dual-path inference and convergence estimation. Nevertheless, DR2M preserves real-time functionality and provides reliable accuracy, particularly in areas with limited visibility, making it well suited to intelligent transportation applications.

The suggested approach targets false positives in number plate identification, which are particularly prevalent in difficult weather such as haze. It employs a bilateral neural network that prioritizes maximum region and minimum edge extraction to ensure correct feature detection. Using displacement feature mapping, the technique distinguishes the real location of the plate from background objects that may be mistaken for license plates. The system combines size- and colour-based feature extraction to reduce the likelihood of mistaking the license plate for other, similarly sized items or ambient noise in foggy environments. This directs the model’s processing capacity to the area most likely to contain the license plate, which improves accuracy in low-light or otherwise challenging environments and decreases the possibility of false positives.

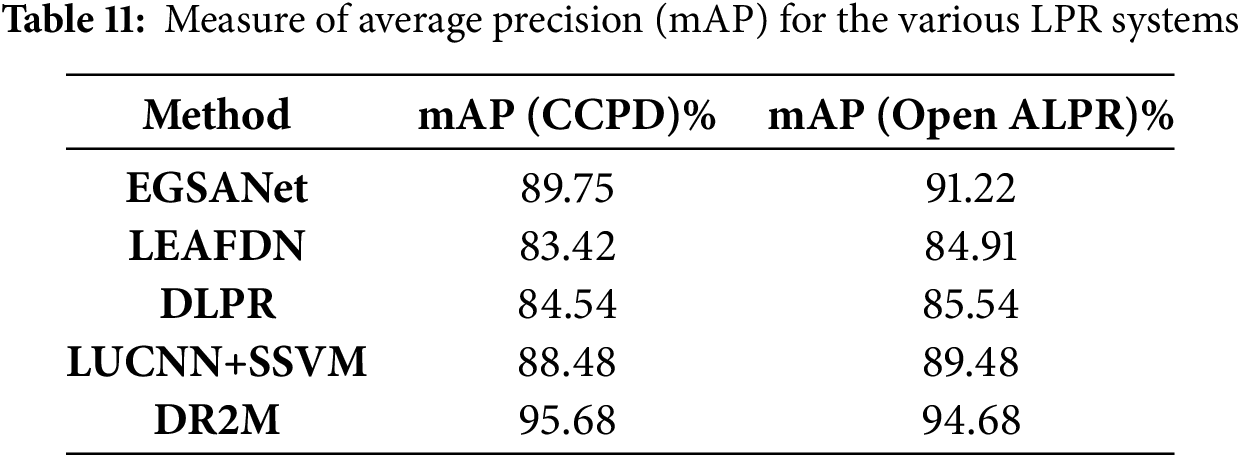

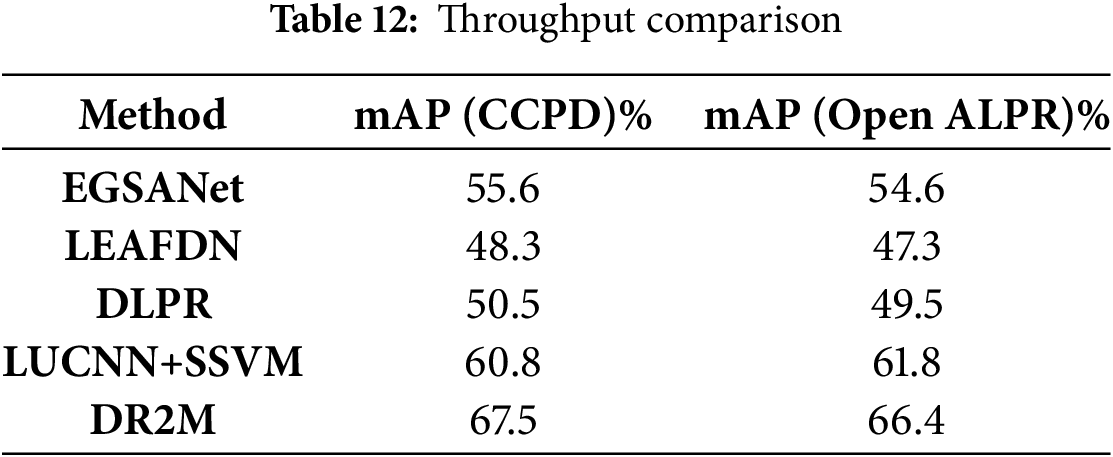

4.3 Mean Average Precision (mAP)

The Mean Average Precision (mAP) of the proposed model (DR2M), shown in Table 11, was compared with that of other state-of-the-art approaches: EGSANet, LEAFDN, DLPR, and LUCNN+SSVM. Including mAP in haze-related investigations is essential for demonstrating a method’s dependability and generalizability. When a model’s mAP stays high in both clear and foggy conditions, the approach can handle real-world variation in weather and atmospheric conditions, which indicates its practical effectiveness. For mAP to represent performance across many difficulties, the datasets used to evaluate it, such as CCPD or OpenALPR, must cover varied situations. A dataset that includes photographs taken under bright, dim, and foggy conditions yields a more accurate and representative mAP and a better picture of how the model would perform in real-world scenarios. Table 11 compares the license plate identification systems on two datasets, CCPD and OpenALPR. With an mAP of 95.68% on CCPD and 94.68% on OpenALPR, DR2M performs best, showing that it is the most accurate and resilient in detecting license plates across situations. EGSANet lags slightly behind DR2M but generalises well, with mAP values of 91.22% on OpenALPR and 89.75% on CCPD. LEAFDN and DLPR show reasonable accuracy, particularly in more difficult real-world scenarios (CCPD), with comparatively lower mAP values: LEAFDN scored 83.42% and 84.91%, while DLPR achieved 84.54% and 85.54%. LUCNN+SSVM adapts to different contexts and achieves strong results, especially on the OpenALPR dataset (mAP = 89.48%). The other approaches perform inconsistently when faced with real-world problems, whereas DR2M leads on both datasets.
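To make the metric concrete, the following sketch computes average precision (AP) as the area under the precision-recall curve with all-point interpolation, and mAP as the mean of per-class APs. The scores and match flags are hypothetical, and the matching rule that marks a detection as a true positive (for example, an IoU threshold) is assumed to have been applied beforehand. The exact protocol behind Table 11 is not specified in the text.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the precision-recall curve with all-point interpolation."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Make precision monotonically non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class):
    """Mean of per-class APs; `per_class` is a list of (scores, flags, n_gt)."""
    aps = [average_precision(*c) for c in per_class]
    return sum(aps) / len(aps)


# Hypothetical detections: confidence scores and whether each matched a plate.
ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], 4)
```

For a single-class task such as license plate detection, mAP reduces to the AP of that one class.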